Abstract

Results

Embedded pragmatic clinical trials (PCTs) are set in routine health care, have broad eligibility criteria, and use routinely collected electronic data. Many consider them a breakthrough innovation in clinical research and a necessary step in clinical trial development. To identify barriers and success factors, we reviewed published embedded PCTs and interviewed 30 researchers and clinical leaders in 7 US delivery systems.

Literature

We searched PubMed, the Cochrane library, and clinicaltrials.gov for studies reporting embedded PCTs. We identified 108 embedded PCTs published in the last 10 years. The included studies had a median of 5540 randomized patients and addressed a variety of diseases, practice settings, and interventions. Eighty‐one used cluster randomization. The median cost per patient was $97 in the 64 trials for which it was possible to obtain cost data.

Interviews

Delivery systems required research studies to align with operational priorities, existing information technology capabilities, and standard quality improvement procedures. Barriers that were identified included research governance, requirements for processes that were incompatible with clinical operations, and unrecoverable costs.

Conclusions

Embedding PCTs in delivery systems can provide generalizable knowledge that is directly applicable to practice settings at much lower cost than conventional trials. Successfully embedding trials requires accommodating delivery systems' needs and priorities.

1. INTRODUCTION

In a learning health system, evidence is both generated and applied as a natural product of the care process.1 An essential element of a learning health system is to collect and analyze data to generate knowledge.2 Many study designs exist to achieve this. While there is no single best approach, the randomized controlled trial (RCT) is the gold standard in knowledge generation.3 While traditional RCTs have strong internal validity, they are expensive and often have limited generalizability.4 Observational studies, while less expensive and often applicable to broad populations, are prone to selection bias and confounding. Because of this, pragmatic randomized clinical trials embedded in routine health care (embedded pragmatic clinical trials [PCTs]) may be an important part of a learning health system: They are less costly and can have better generalizability than traditional RCTs while having stronger internal validity than observational studies.5 , 6 The recent digitalization of health care data represents a potential quantum leap in the possibilities to conduct pragmatic research embedded in the routine delivery of health care.1

Some embedded PCTs have indicated that very large cost‐savings might be possible compared to regular RCTs. The TASTE trial, a registry‐based trial of thrombectomy added to stent placement for acute coronary occlusion, achieved >90% cost‐saving compared with similar randomized trials with a conventional design.7 If this finding holds true generally, it would constitute a breakthrough innovation in clinical research8 and open up a new paradigm of much more cost‐effective clinical research than before.

Major efforts in the United States to promote embedded PCTs include PCORI's National Patient Centered Clinical Research Network, PCORnet,9 and the NIH's Health Care Systems Research Collaboratory.10 However, a real “revolution” in clinical research has yet to occur.11 , 12 Pragmatic trials—embedded or not—continue to be rare; only 344 (0.2%) of 203 788 studies in clinicaltrials.gov are termed “pragmatic.” The relatively slow spread of embedded PCTs may be related to current policies and a paucity of knowledge and experience in both methods and practical means to implement these strategically in health care systems. We thus reviewed the literature to describe characteristics of embedded PCTs and to identify barriers and key success factors, including their costs. We also interviewed researchers involved in embedded research as well as leaders of health systems that have hosted embedded trials.

2. METHODS

2.1. Literature review

We searched PubMed, Cochrane Library, and clinicaltrials.gov for articles reporting embedded PCTs, in English, published in peer‐reviewed journals between January 2006 and March 2016. See Box 1 for the definition used to identify embedded PCTs. The literature search strategy was slightly modified during the search but was designed prior to the literature review, as was the data extraction template. The keywords pragmatic, cluster‐randomized, and registry‐based were used alone and in relevant combinations. Search strings are provided in Appendix A. Abstracts and, if necessary, full text articles were read to see if the study fulfilled the inclusion criteria. Furthermore, “snowballing” was used in which reference lists of review and methodological papers were searched. Finally, researchers active in the field were asked for additional references. The searches were restricted to RCTs. Protocols and articles describing a trial design without reporting a result were excluded, as were reviews. The studies had to have an active comparator that was a clinically relevant alternative for the patient population and broad eligibility criteria (as determined by reviewer). Data for primary end points needed to be collected in electronic, routinely used sources such as electronic health records (EHR), disease registries, other registries, or a combination of these sources, ie, no large infrastructure for data collection intended principally to support the trial's needs was allowed. Hybrid designs are sometimes used where some data such as patient reported outcomes (PROs) are collected for the trial. These were included if the primary end point was collected from existing data sources. We also included trials that used conventional follow‐up for individuals who ceased to receive care at the institution whose electronic data provided the primary outcome. A flow diagram depicting the selection process is provided in Data S1.

Box 1. Definition of embedded PCT.

Pragmatic clinical trials (PCTs) are defined here as ones that randomize patients in routine health care using broad eligibility criteria.1 The unit of randomization can be the individual patient or health care practices, hospitals, or other units. Data collection relies in large measure on data sources like electronic health records or registries that are created as part of usual clinical care. Hybrid forms exist in which some data are collected from these sources and others from sources intended principally to support the trial's needs.

Information about how much a trial cost was rarely available in the publications. When possible, publicly available information about research grants was obtained from relevant databases. If not, authors were asked to provide an estimate of the total cost of the trial, including both direct and indirect costs, to the nearest ¼ million dollars. Ideally, all cost estimates should include both direct costs and indirect costs for the institutions in which the studies take place. Costs were adjusted from year of publication to 2015 dollars using the NIH Biomedical Research and Development Price Index (BRDPI).13 In the case of non‐US studies, the same index has been used. The exchange rates used were summer 2016. Nonparametric methods (Mann‐Whitney if 2 groups, Kruskal‐Wallis if >2 groups) were used to test for statistical significance of differences in medians between groups.
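As a rough illustration of the cost standardization described above, the following Python sketch rounds a reported total cost to the nearest quarter million dollars and converts it to a cost per patient in 2015 dollars. The index values, the `TrialCost` record, and the function names are hypothetical placeholders, not actual BRDPI figures or the authors' code.

```python
import math
from dataclasses import dataclass

# Hypothetical price-index values relative to 2015 (NOT actual BRDPI figures).
ILLUSTRATIVE_INDEX = {2010: 0.90, 2012: 0.94, 2015: 1.00}


@dataclass
class TrialCost:
    total_cost_usd: float      # total cost in publication-year dollars
    publication_year: int
    patients_randomized: int


def round_to_quarter_million(cost_usd: float) -> int:
    """Round a cost estimate to the nearest $250,000 (halves round up)."""
    step = 250_000
    return math.floor(cost_usd / step + 0.5) * step


def cost_per_patient_2015(trial: TrialCost, index=ILLUSTRATIVE_INDEX) -> float:
    """Inflate the total cost to 2015 dollars, then divide by patients randomized."""
    factor = index[2015] / index[trial.publication_year]
    return trial.total_cost_usd * factor / trial.patients_randomized


if __name__ == "__main__":
    example = TrialCost(total_cost_usd=900_000, publication_year=2010,
                        patients_randomized=5000)
    print(round(cost_per_patient_2015(example), 2))
```

With real index values substituted for the placeholders, the same two steps could be applied to each trial before comparing groups.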

One reviewer read each full text article and extracted information. Subsequently, an independent reviewer performed a quality control and re‐examined all the extracted data.

2.2. Interviews

In the spring of 2016, one of the authors (J.R.) conducted semistructured interviews with 30 key informants. Most were researchers, and a smaller number were clinical and administrative leaders. They were selected from organizations with experience conducting PCTs. The interviewees came from 7 different US health care delivery systems that all actively integrate research in clinical practice (Kaiser Permanente Northwest, Portland OR; Kaiser Permanente Northern California, Oakland CA; Hospital Corporation of America, Nashville TN; Intermountain Health Care, Salt Lake City UT; Group Health [which has since become Kaiser Permanente Washington], Seattle WA; Veterans Affairs Boston Healthcare System; and Palo Alto Medical Foundation, Palo Alto CA). Each interview lasted 30 to 60 minutes and focused on success factors, barriers, and the different stages of research from initiation to reporting for embedded PCTs (interview guide in Appendix B). Twenty‐three of the 30 interviews were recorded (telephone interviews were not recorded for technical reasons) and later analyzed; notes were taken for the nonrecorded interviews.

The IRB at Harvard Pilgrim Health Care determined the study to be exempt.

3. RESULTS

3.1. Results literature review

The literature search identified 108 articles that met the inclusion criteria. The number of publications increased over time, with 4 published in 2006‐2007 and 45 in 2014‐2015. Table S1 summarizes the data extracted from articles.

3.2. Settings

About half (55%) of the studies took place exclusively in primary care settings. Of the other studies, 16% were set in both primary care and hospitals and 14% in hospitals, but trials were also set in the community, schools, and, in one case, a prison. There were qualifying studies in 15 countries. The United States accounted for 55% of studies; the UK (14%) and Canada (7%) contributed the next largest numbers. Within the United States, over 30% of studies were performed at Veterans Administration facilities, Kaiser Permanente, and a few other integrated health care systems.

3.3. Disease areas

Infectious diseases accounted for a quarter of the studies. Cardiovascular diseases were second with 18%, followed by diabetes (12%), cancer (9%), and behavioral health (5%).

3.4. Types of interaction

As can be seen in Table 1, more than 30 studies investigated different ways of using prompts and reminders directed at patients and clinicians to, predominantly, increase vaccination or screening rates. Another frequent topic was feedback and other ways to increase adherence to clinical guidelines. Nine trials compared effectiveness between treatments.

Table 1.

Type of interactions in embedded pragmatic clinical trials

| Intervention Level | Types of Interactions | Number of Studies |
|---|---|---|
| Population1 | Prompts and reminders (eg, for screening) to population | 5 |
| Patient | Comparing effectiveness of treatments | 9 |
| Clinician | Prompts and reminders to patients | 22 |
| | Financial | 4 |
| | Multifaceted (often directed both to patients and clinicians) | 9 |
| | Education and information (may be to patients or clinicians) | 10 |
| Organization | Decision support tools | 18 |
| | Prompts and reminders to clinician | 9 |
| | Audit and feedback | 16 |
| | Organizational changes (eg, task shifting) | 7 |
| | Care management | 4 |

Note: Individuals for whom a health system is responsible or residents of a geographic region.

Eighty‐one percent of the studies had unspecified usual care as the comparator or allowed a variety of usual care practices. The rest had a specific usual care comparator.

3.4.1. Trial design

These trials had a median of 5540 patients. Some studies involved millions of patients treated in the randomized health care units. Most (81 of 108) were cluster randomized, and 9 of these used a stepped wedge design. Fifty percent of studies used registries or administrative databases, 38% used EHRs and 12% a combination. Registry‐based trials had a median of 4173 patients and EHR‐based a median of 7740 patients.
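To make the stepped wedge variant of cluster randomization concrete, the sketch below generates a rollout schedule in which every cluster starts in the control condition and crosses over to the intervention at a randomly assigned step. It is a minimal illustration under assumed names (`stepped_wedge_schedule`, the clinic labels, the seed), not a description of how any of the reviewed trials were randomized.

```python
import random


def stepped_wedge_schedule(clusters, n_steps, seed=0):
    """Return {cluster: [0/1 per period]} for a stepped wedge design.

    There are n_steps + 1 periods: period 0 is an all-control baseline,
    and each cluster switches to the intervention (1) at one of the
    steps 1..n_steps and stays on it afterwards.
    """
    rng = random.Random(seed)
    order = list(clusters)
    rng.shuffle(order)  # random order decides who crosses over first
    schedule = {}
    for position, cluster in enumerate(order):
        # Spread clusters as evenly as possible over the crossover steps.
        crossover_period = 1 + position * n_steps // len(order)
        schedule[cluster] = [int(period >= crossover_period)
                             for period in range(n_steps + 1)]
    return schedule


if __name__ == "__main__":
    clinics = ["clinic_A", "clinic_B", "clinic_C",
               "clinic_D", "clinic_E", "clinic_F"]  # hypothetical clusters
    for name, row in sorted(stepped_wedge_schedule(clinics, n_steps=3).items()):
        print(name, row)
```

Because every cluster ends the trial on the intervention, rollout is complete when follow-up ends, which is one reason the design appeals to delivery systems.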

Forty‐three percent of studies collected data specifically for the study. PROs were collected in 11% of the studies. Cost data were collected in 25% of the trials. Individual informed consent was waived in all but 17% of the cluster randomized trials.

3.4.2. Trial costs

Cost data were available for 64 trials. The mean and median cost per patient randomized was $478 and $97, respectively, in 2015 dollars. Twenty‐five percent of studies cost less than $19 per patient; 10 of the trials had a cost per patient above $1000.
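The gap between the mean and the median reflects a right‐skewed distribution in which a few expensive trials pull the mean upward. The sketch below shows the effect on a small set of hypothetical per‐patient costs; the values are invented for illustration and are not the extracted trial data.

```python
from statistics import mean, median

# Hypothetical per-patient costs in 2015 USD (NOT the reviewed trials' data).
costs = [5, 12, 19, 40, 97, 150, 400, 1200, 2400]


def summarize(values):
    """Return the mean, the median, and the count of values above $1000."""
    return {
        "mean": mean(values),
        "median": median(values),
        "n_above_1000": sum(v > 1000 for v in values),
    }


if __name__ == "__main__":
    print(summarize(costs))
```

In a distribution like this, the median is the more representative summary of a typical trial, which is why both statistics are reported.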

As noted in Table 2, US studies had a significantly higher median cost per patient than non‐US studies, $187 vs $27 (P = .0088). Furthermore, registry‐based trials were less expensive than EHR‐based trials, and trials in pediatric populations had a lower median (but not mean) cost per patient than those in adults. Neither difference was statistically significant. The median cost per patient was lower for cluster randomized trials than for trials with individual randomization ($76 vs $204), although the difference was not statistically significant. Behavioral health studies were the most expensive (median $931 vs $90).
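For readers unfamiliar with the Mann‐Whitney test used for the two‐group comparisons, the following sketch computes the U statistic and a two‐sided P value from the normal approximation. It omits tie and continuity corrections, uses invented cost values, and is not the software or the data behind the P values reported here.

```python
import math


def mann_whitney_u(x, y):
    """U statistic for sample x versus sample y; ties count one half."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def two_sided_p_normal(u, n1, n2):
    """Two-sided P value from the normal approximation (no tie correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    # Hypothetical per-patient costs for two groups of trials.
    group_a = [187, 950, 120, 300, 75]
    group_b = [27, 16, 60, 210, 9]
    u = mann_whitney_u(group_a, group_b)
    print(u, two_sided_p_normal(u, len(group_a), len(group_b)))
```

For samples this small an exact test would normally be preferred; the approximation is shown only because it fits in a few lines.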

Table 2.

Median and mean costs per subject for embedded PCTs (2015 USD equivalent)

| | Median (mean) Cost in USD | Number of Trials |
|---|---|---|
| Funder of research | | |
| Ex‐US government funding | 32 (401) | 18 |
| Other | 16 (426) | 10 |
| US government funding | 147 (569) | 32 |
| Country | | |
| USA | 177 (569) | 39 |
| Non‐USA | 27 (330) | 24 |
| Data collected specifically for trial's need | | |
| Yes | 142 (589) | 26 |
| No | 56 (400) | 37 |
| Patient reported outcomes collected | | |
| Yes | 520 (765) | 6 |
| No | 71 (447) | 57 |
| Disease area | | |
| Behavioral | 931 (1467) | 4 |
| Cancer | 105 (361) | 5 |
| CVD | 135 (477) | 12 |
| Diabetes | 215 (490) | 5 |
| Emergency | 213 (672) | 3 |
| General | 16 (278) | 9 |
| Infectious diseases | 56 (466) | 16 |
| Musculoskeletal | 356 (356) | 2 |
| Other | 44 (44) | 2 |
| Respiratory | 378 (294) | 5 |
| Type of interaction | | |
| Audit and feedback | 79 (139) | 8 |
| Care management | 631 (631) | 2 |
| Comparative effectiveness | 360 (877) | 6 |
| Decision support tools | 105 (866) | 11 |
| Education | 29 (749) | 4 |
| Financial | 32 (32) | 1 |
| Multifaceted | 77 (337) | 6 |
| Organizational | 19 (367) | 5 |
| Reminders and prompts ‐ patients | 150 (194) | 14 |
| Reminders and prompts ‐ physicians | 23 (556) | 6 |
| Data source | | |
| EHR | 105 (732) | 24 |
| Registry, administrative database | 42 (339) | 29 |
| Mixed | 140 (271) | 10 |
| Randomization | | |
| Individual | 204 (362) | 16 |
| Cluster | 71 (517) | 47 |
| Informed consent | | |
| Yes | 553 (845) | 10 |
| No | 76 (408) | 48 |
| Pediatric population | | |
| Yes | 33 (746) | 9 |
| No | 103 (404) | 44 |
| Mixed | 24 (558) | 10 |

Hybrid designs, where some data were collected principally for the trials' needs, made the median trial more than twice as expensive.

3.4.3. Research funding

The NIH and AHRQ funded 16 trials each, the Veterans Health Administration 7, and CDC 8 trials. In total, they funded 74% of all US trials. These trials also had more funding than other trials with a median (mean) total funding of $1 million ($1.5 million) per trial in comparison with $0.3 million ($0.5 million) for non‐US government funded studies and $0.1 million ($0.94 million) for other funders. Government funding also dominated in other countries. A few trials were funded by pharmaceutical or medical technology industries, insurance companies, or other private companies. Provider organizations, integrated health care systems, and philanthropic organizations like the Commonwealth Fund have funded some studies.

3.5. Results interviews

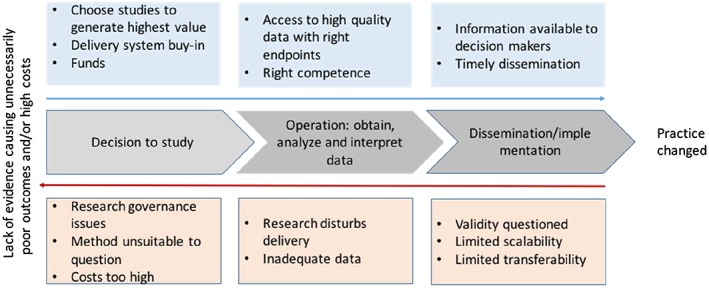

Results from interviews are presented as a conceptual model of barriers and success factors for embedded PCTs. See Figure 1. The model is structured around 3 steps for embedded PCTs to influence practice: (1) the decision of what to study, (2) obtaining, analyzing, and interpreting data, and (3) disseminating and implementing findings. There are success factors (presented on top in Figure 1) and factors that may be obstacles (at the bottom in Figure 1).

Figure 1.

Steps for embedded research to influence practice. Facilitating factors on top and barriers below

3.5.1. Decision of what, where, and how to study

Health system leaders prioritized studies that generate value to health care delivery systems in the short term, did not incur direct costs, and had low opportunity costs. Some decision makers acknowledged the potential return on investment of their directly funding some embedded trials, but were disinclined to self‐fund studies that would benefit other systems at no cost, ie, a free‐riding problem. Informants noted that external funders often required a level of methodological rigor and end points that are not routinely collected, making it more difficult for the systems to incorporate the studies into routine practice.

Many of the interviewed researchers noted a tension between quickly collecting enough information to address the most urgent questions that the delivery system has and conducting studies that provide stronger, more generalizable, evidence. Most administrators and clinical leaders indicated that they usually could not wait for federal grant review and award mechanisms or commit to studies that required long implementation times.

All the interviewed researchers understood the ethical requirements for equipoise and exposure of research subjects to minimal risk. However, most identified the research governance process as an obstacle, because of the length of time it added and inconsistency in determining whether a pragmatic study could be classified as minimal risk and whether the requirement for written informed consent could be waived. One of them noted that cluster randomization was sometimes chosen because it lessened the need for individual informed consent.

3.5.2. Implementation of PCT

In the interviews, some researchers mentioned that if the research disturbs health care delivery too much, this can create obstacles. This can happen if the intervention is burdensome to implement, if clinicians must spend time to recruit patients, obtain informed consent, or record data for the study. Those and other factors may cause slow or insufficient recruitment into the study. A few researchers also discussed issues related to study operations such as practical and technical issues around data collection and that unexpected changes in leadership at study sites (where the new leader has not “bought into” the study) or changes in the usual care control arm may create obstacles.

3.5.3. Dissemination and implementation of results

While all informants noted the importance of disseminating trial results and implementation when appropriate, a couple of interviewees mentioned examples where successful interventions were not implemented. Reasons mentioned by researchers and clinical leaders included questioning the validity of the results, or a determination that results had limited scalability or transferability from a research setting to an operational one.

Although the published reports identified by the literature review were in journals with relatively high impact factors (median = 4.5), a few investigators reported greater difficulty publishing embedded PCTs than other types of clinical research. The time it takes to publish was related to factors such as the quality of peer reviews—pragmatic trials were sometimes reviewed as if they were conventional explanatory clinical trials—and 2 researchers mentioned having to send the paper to many journals. According to one of the interviewed decision makers, the long lead times of research may affect the value of these trials. If researchers take care in investigating questions that are meaningful to health care providers, that effort may be less valuable if the decision makers cannot learn the results in a timeframe they perceive as realistic. However, some researchers pointed to other more informal and faster ways they shared results with the delivery system.

4. DISCUSSION

The embedded PCTs in this review included many more subjects than conventional RCTs. In 2006, the average RCT in PubMed had 36 patients per arm;4 these PCTs were approximately 2 orders of magnitude larger. The ability to study larger numbers of individuals allows study designs that more closely replicate clinical practice situations, with broad inclusion criteria and clinically relevant comparators rather than placebo. Such designs require larger sample sizes to have adequate power. An important caveat is that the intent to replicate clinical practice does not diminish the importance of ensuring that the intervention is implemented in a manner that results in sufficiently different treatment, on average, of randomized individuals.

The trials analyzed in this review were inexpensive (median cost/patient under $100) in comparison with traditional RCTs, where a published estimate put the cost (in 2011) at an average of $16 600 per patient.14 However, cost information could be obtained only for 64 trials in this review, and costs were not measured consistently, which limits generalizability. Furthermore, it is not straightforward to compare costs of embedded PCTs with traditional RCTs, because traditional RCTs are often pharmaceutical trials intended to support licensing applications. A study that analyzed 4 surgical trials, intended to influence practice but not to serve a regulatory need, may therefore serve as another point of reference; it estimated the total cost per enrolled patient at $380 to $1600.15 Overall, PCTs had low total costs compared to conventional trials, as well as low costs per subject. Factors contributing to the low total cost include the relatively low fixed costs of study design, implementation, and oversight. Although these costs are low compared to conventional clinical trials, their total cost is large compared to clinical organizations' typical evaluation budgets.
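The size of the cost gap can be made explicit with simple arithmetic. The sketch below divides the reference costs quoted above by the median embedded PCT cost of $97 per patient; it makes no adjustment for the different dollar years (2011 vs 2015), so the ratios are indicative only, and the constant and function names are illustrative.

```python
PCT_MEDIAN_COST = 97             # median cost per patient in this review (2015 USD)
CONVENTIONAL_RCT_COST = 16_600   # published average per patient (2011 estimate)
SURGICAL_TRIAL_RANGE = (380, 1_600)  # per enrolled patient, 4 surgical trials


def fold_difference(reference_cost, pct_cost=PCT_MEDIAN_COST):
    """How many times more expensive the reference is than the median PCT."""
    return reference_cost / pct_cost


if __name__ == "__main__":
    print(round(fold_difference(CONVENTIONAL_RCT_COST)))
    print([round(fold_difference(c), 1) for c in SURGICAL_TRIAL_RANGE])
```

On these figures the conventional estimate is roughly 170 times the median embedded PCT cost, while the surgical trials are about 4 to 16 times higher.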

Although there are examples of embedded PCTs in a variety of care settings, the most fertile environments have been integrated health care systems with well‐established electronic medical records systems, commitment to ongoing, data‐driven quality improvement, and active research departments. This may raise issues around transferability of results to other settings.

Embedded PCTs have principally been used for rigorous evaluations in health services research of quality improvement initiatives and organizational and structural changes. There is a relative paucity of PCTs that compare alternative prevention, diagnostic, or treatment regimens that are used in routine clinical practice—situations in which there is a presumption of clinical equipoise. This review suggests that it will be both feasible and relatively inexpensive to expand the use of embedded PCTs to evaluate treatment effect in a variety of practice settings and populations, both for the use of pharmaceuticals in real world settings and other interventions.

Limitations include the difficulties in searching the literature for studies that have used a particular design. We have undoubtedly missed published studies that should have been included. The requirement that the primary end point be obtainable from already available data sources excluded trials that used PROs as their primary end point. Restriction to published studies may have selected an unrepresentative subset of studies. Furthermore, the true costs are likely to be underestimated for some studies because it was often not possible to determine what costs were included. We are unaware of information about the true costs to delivery systems for supporting embedded clinical trials. Such information will become more important if organizations contemplate participation in a larger volume of PCTs. Finally, since all the interviewees were based in the United States, the interview information may not be relevant to other countries.

4.1. Conclusion and recommendations

The published experience with embedded PCTs indicates that they can be a relatively inexpensive and effective way to address important knowledge gaps. The PCTs' high external validity makes them well suited to assess comparative effectiveness and cost‐effectiveness. They can also evaluate differences in cost and quality of life that would not be realistic for conventional clinical trials to address. Although at present, they complement rather than replace regulatory trials for pharmaceuticals, some pragmatic aspects may be incorporated into such trials in light of the growing interest in real‐world evidence, and the FDA's commitment to developing standards for using it.16 Until then, PCTs have their main role when there is insufficient knowledge on comparative effectiveness and effectiveness in the real world clinical setting with heterogeneous populations.17

Our interviews highlighted 3 major considerations that must be addressed for embedded PCTs to become more widely used:

1. The decision of what to study and how. Embedded PCTs should be attuned to the needs of health care delivery systems through more direct links between clinical decision makers, investigators, and research sponsors.

One possibility for extending pragmatic embedded research to a larger group of organizations is to create methods for multiple organizations to fund and/or participate in a single, coordinated PCT. Consortium approaches might work particularly well for cluster randomization, but multicenter studies incur substantial added time, effort, and cost to address governance, implementation, and analysis needs.

There are also ways researchers can make embedded research more relevant for health care when designing and evaluating trials.4 A practical way to increase value for end users is for researchers to engage directly and substantively with patients, clinicians, and health system leaders to establish research priorities. Another is to collect resource consumption and/or cost data to guide decisions about implementation.

Research funders for their part should recognize that PCTs are only well suited for some of the questions for which they currently fund conventional RCTs and strive to identify areas of overlap between their needs and priorities and those of delivery systems.

2. Implementation of PCTs. Embedded research must not unduly disturb health care delivery. An important aspect of pragmatic trials is to use data that are available and keep end points few to restrict data collection to a minimum. Nonetheless, it is important to implement the intervention in a manner that assures that the treatment arms will differ sufficiently to assess the impact of the intervention and collect enough data to understand both the effect of the intervention and how well it has been implemented.

3. Disseminate and implement findings. Academic publishers would do well to adopt guidelines specifically for reporting embedded PCTs. They should ensure that the review process evaluates them on their own merits and does not treat pragmatic research as if it were explanatory. Furthermore, the research community may need to develop alternative dissemination venues.

Generally, the pragmatic nature of these trials should facilitate implementation of results in clinical practice. However, as with any change in clinical practice, uptake of findings will be greatly enhanced by implementation toolkits that contain detailed standard operating procedures, instructional guides for local implementers, and resources for staff and patients. Stepped wedge designs18 and point of care trials with adaptive randomization13 are innovative approaches that expedite implementation in the environments where they are tested, because implementation is largely complete by the end of the trial.

Supporting information

Table S1: (Supplementary file 2) summarizes the data extracted.

Data S1: Supporting info item

APPENDIX A.

A.1.

Search terms in PubMed.

Pragmatic[Title/Abstract]) AND (Randomized Clinical Trial[ptyp] AND “2006/01/01”[PDAT]: “2016/03/31”[PDAT]) AND Humans[Mesh]) AND English[Language])))) NOT clinical protocols[MeSH Terms]) NOT statistical analysis plan[Title])) NOT protocol[Title] NOT design[Title/Abstract]) NOT rationale[Title/Abstract]) NOT pilot[Title/Abstract]

Registry‐based[Title/Abstract]) AND (Randomized Clinical Trial[ptyp] AND “2006/01/01”[PDAT]: “2016/03/31”[PDAT]) AND Humans[Mesh]) AND English[Language])))) NOT clinical protocols[MeSH Terms]) NOT statistical analysis plan[Title])) NOT protocol[Title] NOT design[Title/Abstract]) NOT rationale[Title/Abstract]) NOT pilot[Title/Abstract]

Cluster‐randomized[Title/Abstract] AND (Randomized Clinical Trial[ptyp] AND “2006/01/01”[PDAT]: “2016/03/31”[PDAT]) AND Humans[Mesh]) AND English[Language])))) NOT clinical protocols[MeSH Terms]) NOT statistical analysis plan[Title])) NOT protocol[Title] NOT design[Title/Abstract]) NOT rationale[Title/Abstract]) NOT pilot[Title/Abstract]
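The three strings share one template and differ only in the leading keyword. The sketch below assembles a query of that shape with balanced parentheses; the grouping, the function name `build_query`, and the use of straight quotation marks are assumptions made for illustration, and the terms themselves are copied from the strings above.

```python
# Filters and exclusions copied from the search strings in this appendix.
FILTERS = [
    "Randomized Clinical Trial[ptyp]",
    "Humans[Mesh]",
    "English[Language]",
]
EXCLUSIONS = [
    "clinical protocols[MeSH Terms]",
    "statistical analysis plan[Title]",
    "protocol[Title]",
    "design[Title/Abstract]",
    "rationale[Title/Abstract]",
    "pilot[Title/Abstract]",
]


def build_query(keyword, start="2006/01/01", end="2016/03/31"):
    """Compose one search string: (keyword AND filters AND dates) NOT exclusions."""
    date_range = f'("{start}"[PDAT] : "{end}"[PDAT])'
    included = " AND ".join([f"{keyword}[Title/Abstract]", *FILTERS, date_range])
    excluded = "".join(f" NOT {term}" for term in EXCLUSIONS)
    return f"({included}){excluded}"


if __name__ == "__main__":
    for kw in ("Pragmatic", "Registry-based", "Cluster-randomized"):
        print(build_query(kw))
```

Generating the strings from one template keeps the three searches identical apart from the keyword, which makes the search easier to rerun or audit.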

APPENDIX B.

B.1.

Interview guide site visits—researchers

Tell me about your experiences with embedded research.

How do embedded trials differ from regular clinical trials, observational research, or quality improvement work?

In your view, what is the value of this research?

How is the research typically initiated?

How is the research funded?

Can you describe how research results have been disseminated?

Have the findings from your trials been used to inform clinical practice?

What is your overall impression of the possibilities for embedding research into clinical practice? When is it a good idea and when is it not the best method?

What were some of the difficulties you experienced?

Prompts (if topic does not come up in conversation):

Were there challenges associated with:

- Quality or completeness of data?

- Using Patient Reported Outcomes?

- Funding?

- Research governance and informed consent?

- Making room for research in the daily delivery of health care?

- Interactions with leadership and administration at clinics and in the organization?

- Publication of results?

What are the costs of embedded trials and how do they differ from regular trials?

Do you have any suggestions to improve policies to facilitate embedded research?

Interview guide site visits—leaders and administrators

Tell me about your experiences with embedded research.

In your view, what is the value of this research?

How is the research initiated and funded?

What are the costs of embedded trials and how do they differ from regular trials?

What is the role for embedded trials in relation to regular clinical trials, observational research, or quality improvement work?

- How do embedded trials fit in with other ways of generating knowledge?

- What motivates you as a health delivery organization to be involved in generating new knowledge?

- Are evident, short term, cost‐savings necessary?

Have findings from embedded trials been used to inform clinical practice in your organization?

What have been some of the difficulties you have experienced with embedded research?

What are your overall impressions of the possibilities for embedding research into clinical practice?

Do you have any suggestions to improve policies to facilitate embedded research?

Ramsberg J, Platt R. Opportunities and barriers for pragmatic embedded trials: Triumphs and tribulations. Learn Health Sys. 2018;2:e10044. doi:10.1002/lrh2.10044

REFERENCES

- 1. Institute of Medicine. Envisioning a Transformed Clinical Trials Enterprise in the United States: Establishing an Agenda for 2020: Workshop Summary. In: Weisfeld N, English RA, Claiborne AB, eds. Forum on Drug Discovery, Development, and Translation. Washington, DC; 2012.

- 2. Institute of Medicine. Learning What Works: Infrastructure Required for Comparative Effectiveness Research: Workshop Summary. In: Olsen L, Grossmann C, McGinnis JM, eds. The Learning Health System Series. 2007.

- 3. Frieden T. Evidence for health decision making—beyond randomized controlled trials. N Engl J Med. 2017;377:465‐475.

- 4. Ioannidis JP. Why most clinical research is not useful. PLoS Med. 2016;13:e1002049.

- 5. Ford I, Norrie J. Pragmatic trials. N Engl J Med. 2016;375:454‐463.

- 6. Smith R, Chalmers I. Britain's gift: a "Medline" of synthesised evidence. BMJ. 2001;323:1437‐1438.

- 7. James S, Rao SV, Granger CB. Registry‐based randomized clinical trials—a new clinical trial paradigm. Nat Rev Cardiol. 2015;12:312‐316.

- 8. Lauer MS, D'Agostino RB. The randomized registry trial—the next disruptive technology in clinical research? N Engl J Med. 2013;369:1579‐1581.

- 9. PCORI. PCORnet. 2016.

- 10. National Institutes of Health. NIH collaboratory. 2016.

- 11. Institute of Medicine. Public engagement and clinical trials: new models and disruptive technologies. Washington, DC; 2012.

- 12. Shih MC, Turakhia M, Lai TL. Innovative designs of point‐of‐care comparative effectiveness trials. Contemp Clin Trials. 2015;45:61‐68.

- 13. National Institutes of Health. NIH biomedical research and development price index (BRDPI). 2016.

- 14. Berndt ER, Cockburn IM. Price indexes for clinical trial research: a feasibility study. Mon Labor Rev. 2014.

- 15. Shore BJ, Nasreddine AY, Kocher MS. Overcoming the funding challenge: the cost of randomized controlled trials in the next decade. J Bone Joint Surg Am. 2012;94(Suppl 1):101‐106.

- 16. Califf R. 21st century cures act: making progress on shared goals for patients. Available at: https://blogs.fda.gov/fdavoice/index.php/2016/12/21st‐century‐cures‐act‐making‐progress‐on‐shared‐goals‐for‐patients/. December 13, 2016. Accessed on: August 17, 2017.

- 17. van Staa TP, Klungel O, Smeeth L. Use of electronic healthcare records in large‐scale simple randomized trials at the point of care for the documentation of value‐based medicine. J Intern Med. 2014;275:562‐569.

- 18. Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ. 2015;350:h391.

- 19. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290:1624‐1632.
