Abstract

Animals identify, interpret, and respond to complex, natural signals that are often multisensory. The ability to integrate signals across sensory modalities depends on the convergence of sensory inputs at the level of single neurons. Neurons in the amygdala are expected to be multisensory because they respond to complex, natural stimuli, and the amygdala receives inputs from multiple sensory areas. We recorded activity from the amygdala of 2 male monkeys (Macaca mulatta) in response to visual, tactile, and auditory stimuli. Although the stimuli were devoid of inherent emotional or social significance and were not paired with rewards or punishments, the majority of neurons that responded to these stimuli were multisensory. Selectivity for sensory modality was stronger and emerged earlier than selectivity for individual items within a sensory modality. Modality and item selectivity were expressed via three main spike-train metrics: (1) response magnitude, (2) response polarity, and (3) response duration. None of these metrics were unique to a particular sensory modality; rather, each neuron responded with distinct combinations of spike-train metrics to discriminate sensory modalities and items within a modality. The relative proportion of multisensory neurons was similar across the nuclei of the amygdala. The convergence of inputs of multiple sensory modalities at the level of single neurons in the amygdala rests at the foundation for multisensory integration. The integration of visual, auditory, and tactile inputs in the amygdala may serve social communication by binding together social signals carried by facial expressions, vocalizations, and social grooming.

SIGNIFICANCE STATEMENT Our brain continuously decodes information detected by multiple sensory systems. The emotional and social significance of the incoming signals is likely extracted by the amygdala, which receives input from all sensory domains. Here we show that a large portion of neurons in the amygdala respond to stimuli from two or more sensory modalities. The convergence of visual, tactile, and auditory signals at the level of individual neurons in the amygdala establishes a foundation for multisensory integration within this structure. The ability to integrate signals across sensory modalities is critical for social communication and other high-level cognitive functions.

Keywords: amygdala, multisensory, primate

Introduction

From learning that the buzzing of a bee might be accompanied by a painful sting to combining words with body language to understand the emotions of others, the natural world requires organisms to incorporate information from all available senses. High-level association cortices, such as the superior temporal sulcus and PFC, integrate socially significant signals from multiple sensory modalities at the single-neuron level (Barraclough et al., 2005; Sugihara et al., 2006; Chandrasekaran and Ghazanfar, 2009; Dahl et al., 2010; Romanski and Hwang, 2012; Diehl and Romanski, 2014). These areas are bidirectionally connected to the amygdala (Aggleton et al., 1980; Amaral and Price, 1984; Ghashghaei and Barbas, 2002; Sah et al., 2003), a structure known to signal the affective and social significance of stimuli (Leonard et al., 1985; Brothers et al., 1990; Uwano et al., 1995; Zald and Pardo, 1997; Schoenbaum et al., 1999; Paton et al., 2006; Gothard et al., 2007; Hoffman et al., 2007; Bermudez and Schultz, 2010; Haruno and Frith, 2010; Kuraoka and Nakamura, 2012; Genud-Gabai et al., 2013; Gore et al., 2015; Resnik and Paz, 2015; Minxha et al., 2017). The anatomical convergence of inputs of multiple sensory modalities in the amygdala, and the role this structure plays in computing the social/emotional significance of multisensory stimuli, suggest that neurons in the amygdala respond to multiple sensory modalities. However, the degree of convergence of different sensory modalities at the single-neuron level in the amygdala is unknown.

Remarkably few studies have explored the cellular basis of multisensory processing in the primate amygdala, and those that did used tasks in which the social/emotional significance of the stimuli may have affected neural responses (Nishijo et al., 1988; Kuraoka and Nakamura, 2007). Most neurophysiological recordings from the primate amygdala have relied on visual stimuli. Collectively, these studies reported that ∼12%–50% of neurons in the amygdala are visually responsive (Leonard et al., 1985; Brothers et al., 1990; Sugase-Miyamoto and Richmond, 2005; Paton et al., 2006; Gothard et al., 2007; Mosher et al., 2010, 2014; Minxha et al., 2017; Munuera et al., 2018). Recently, Mosher et al. (2016) reported that ∼34% of neurons in the monkey amygdala respond to tactile stimulation of the face. Few studies have explored auditory responses in the amygdala of primates (Genud-Gabai et al., 2013; Resnik and Paz, 2015), and it is unclear what proportion of neurons respond to sounds. Gustatory and olfactory stimuli activate widely varying proportions (8%–50%) of amygdala neurons depending on the behavioral task used (Karádi et al., 1998; Scott et al., 1993, 1999; Kadohisa et al., 2005; Sugase-Miyamoto and Richmond, 2005; Rolls, 2006; Livneh and Paz, 2012). Based solely on these proportions, it is likely that at least some of these neurons process inputs from multiple modalities.

An important challenge in investigating the organization of multisensory inputs in the amygdala is to differentiate the purely sensory component of the neural response from task- or value-related activity. For example, Genud-Gabai et al. (2013) showed that neurons in the amygdala encode whether a stimulus is a “safety signal” regardless of the sensory modality of the stimulus. Likewise, Paton et al. (2006) noted that both value and sensory features of images were often encoded by the same neurons. It is imperative, therefore, to start exploring the organization of sensory modalities in the amygdala using stimuli devoid of inherent, species-specific, or learned affective or behavioral significance.

Here we addressed the following questions regarding multisensory processing in the monkey amygdala using neutral visual, tactile, and auditory stimuli: (1) What are the relative proportions of neurons that respond to single versus multiple sensory modalities? (2) How is information about sensory modality encoded by the spike trains of these cells? (3) Are multisensory neurons more common in nuclei that represent later stages of intra-amygdala processing? We found a large, widely distributed population of neurons in the amygdala that were multisensory. Selectivity for sensory modality was encoded via modulations in response magnitude, polarity, and duration.

Materials and Methods

Surgical procedures

Two adult male rhesus macaques, Monkey F and Monkey B (weight 9 and 14 kg; age 9 and 8 years, respectively), were prepared for neurophysiological recordings from the amygdala. The stereotaxic coordinates of the right amygdala in each animal were determined based on high-resolution 3T structural MRI scans (isotropic voxel size = 0.5 mm for Monkey F and 0.55 mm for Monkey B). A square (26 × 26 mm inner dimensions) polyether ether ketone, MRI-compatible recording chamber was surgically attached to the skull, and a craniotomy was made within the chamber. The implant also included three titanium posts, used to attach the implant to a ring that was locked into a head fixation system. Between recording sessions, the craniotomy was sealed with a silicone elastomer that can prevent growth and scarring of the dura (Spitler and Gothard, 2008). All procedures complied with the National Institutes of Health guidelines for the use of nonhuman primates in research and were approved by the Institutional Animal Care and Use Committee.

Experimental design

Electrophysiological procedures.

Single-unit activity was recorded with linear electrode arrays (V-probes, Plexon) that have 16 equidistant contacts on a 236-μm-diameter shaft. The first contact is located 300 μm from the tip of the probe, and subsequent contacts are spaced 400 μm apart; this arrangement allowed us to monitor simultaneously the entire dorsoventral expanse of the amygdala. Impedance for each contact typically ranged from 0.2 to 1.2 MΩ. The anatomical location of each electrode was calculated by drawing the chamber to scale on each MRI slice and aligning it to fiducial markers (coaxial columns of high-contrast material). During recordings, slip-fitting grids with 1 mm distance between cannula guide-holes were placed in the chamber; this allowed a systematic sampling of most mediolateral and anteroposterior locations in the amygdala (see Fig. 5A).

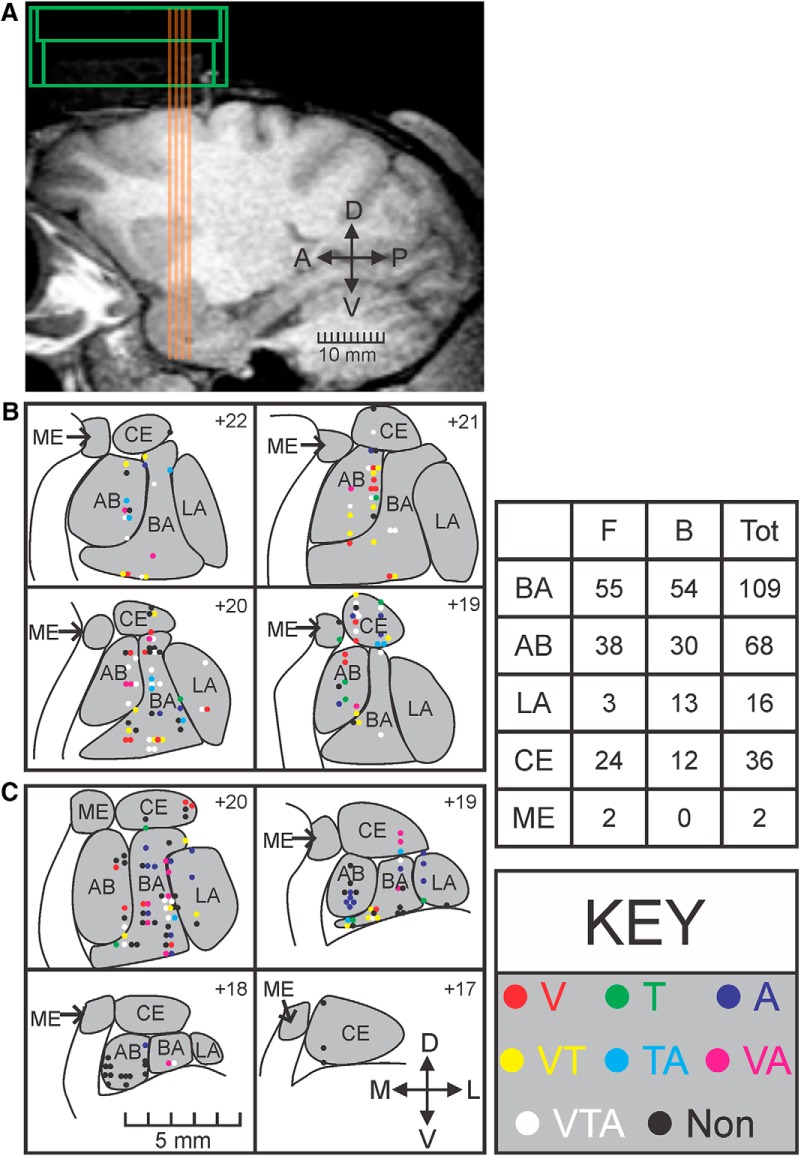

Figure 5.

Anatomical distribution of response type for all recorded cells. A, Sagittal section from the MRI of Monkey F showing the electrode tracks (orange lines) and chamber location (green). B, Anatomical reconstruction of each recorded cell for Monkey F. Each panel represents a coronal section of an MRI with the recorded cells for that anteroposterior position overlaid on top (approximate distance from interaural line shown in top right of each panel). Response types follow the same color code as in Figure 2. Gray represents amygdala nuclei. C, Anatomical reconstruction of each recorded cell for Monkey B. Bottom left, scale bar for all coronal sections. Bottom right, Arrows indicate mediolateral (M-L) and dorsoventral (D-V) axes. The table gives a numerical breakdown of the sampling from the 2 monkeys (Monkey F and Monkey B) from across the nuclei. BA, basal; AB, accessory basal; LA, lateral; CE, central; ME, medial.

The analog signal from each channel on the V-probe was digitized at the headstage (Plexon, HST/16D Gen2) before being sent through a Plexon preamplifier, filtered from 0.3 to 6 kHz, and sampled continuously at 40 kHz. Single units were sorted offline (Plexon offline sorter version 3, RRID:SCR_000012) using predominantly principal component analysis.

Stimulus delivery.

The monkey was seated in a primate chair and placed in a recording booth featuring a 1280 × 720 resolution monitor (ASUSTek Computer), two Audix PH5-VS powered speakers (Audix) to either side of the monitor, a custom-made airflow delivery apparatus (Crist Instruments), and a juice spout (Fig. 1A,B). The airflow system was designed to deliver gentle, nonaversive airflow stimuli to various locations on the face and head (the pressure of the air flow was set to be perceptible but not aversive). The system, based on the designs of Huang and Sereno (2007) and Goldring et al. (2014), consists of a solenoid manifold and an airflow regulator (Crist Instruments), which controlled the intensity of the airflow directed toward the monkey. Low-pressure vinyl tubing lines (ID 1/8 inch) were attached to 10 individual computer-controlled solenoid valves and fed through a series of Loc-line hoses (Lockwood Products). The Loc-line hoses were placed such that they did not move during stimulus delivery and were out of the monkey's line of sight (Fig. 1B). All airflow nozzles were placed ∼2 cm from the monkeys' fur, and outflow was regulated to 20 psi. At this pressure and distance, the air flow caused a visible deflection of the monkeys' fur.

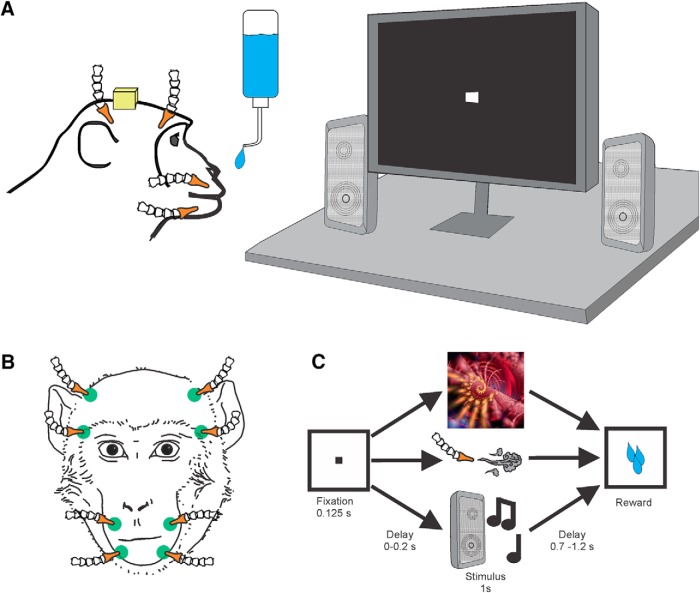

Figure 1.

Experiment paradigm. A, Setup for the delivery of visual, tactile, and auditory stimuli. The monkey was seated in a primate chair facing a monitor and two speakers. Speakers, air flow lines, and juice spout were placed such that they did not block the view of the monitor. B, Frontal view of the Loc-line hoses for tactile stimulation locations (green). All hoses were placed orthogonal to the surface of the skin at ∼2 cm distance and directed toward nonsensitive areas (i.e., away from the eyes and ears). C, Task design. The monkey fixates a target cue on the monitor that is followed by a visual, tactile, or auditory stimulus drawn at random from a stimulus set. Stimulus delivery is followed by a small juice reward.

Stimulus delivery was controlled using custom-written code in Presentation Software (Neurobehavioral Systems). The monkey's eye movements were tracked by an infrared eye tracker (ISCAN, camera type: RK826PCI-01) with a sampling rate of 120 Hz. Eye position was calibrated before every session using a 5 point test. During the task, the animal was required to fixate for 125 ms a central cue (“fixspot”) that subtended 0.35 dva. Successful fixation was followed by the delivery of a stimulus randomly drawn from a pool of neutral visual, tactile, and auditory stimuli. In Monkey F, there was no delay between the fixspot offset and stimulus onset, whereas in Monkey B, a 200 ms delay was used (Fig. 1). Stimulus delivery lasted for 1 s and was followed (after a pause of 700–1200 ms) by juice reward. Each stimulus was presented 12–20 times and was followed by the same amount of juice. Trials were separated by a 4 s intertrial interval.

Each day, a set of eight novel images was selected at random from a large pool of pictures of fractals and objects. Images were displayed centrally on the monitor and covered an area of ∼10.5 × 10.5 dva. During trials with visual stimuli, the monkey was required to keep his gaze within the boundary of the image. If the monkey looked outside of the image boundary, the trial was terminated without reward and repeated following an intertrial interval.

Tactile stimulation was delivered to eight sites on the face and head (Fig. 1B): the lower muzzle, upper muzzle, brow, and above the ears on both sides of the head. The face was chosen because, in our previous study, a large proportion of the neurons in the amygdala responded to tactile stimulation of the face (Mosher et al., 2016). Two “sham” nozzles were directed away from the monkey on either side of the head to control for the noise made by the solenoid opening and/or by the movement of air through the nozzle. Pre-experiment checks ensured that the airflow was perceptible (deflected the hairs) but not aversive. The monkeys displayed slight behavioral responses to the stimuli during the first few habituation sessions, but they did not respond to these stimuli during the experimental sessions.

For each recording session, a set of eight novel auditory stimuli were taken from www.freesound.org, edited to be 1 s in duration, and amplified to have the same maximal volume using Audacity sound editing software (Audacity version 2.1.2, RRID:SCR_007198). Sounds included musical notes from a variety of instruments, synthesized sounds, and real-world sounds (e.g., tearing paper). The auditory stimuli for each session were drawn at random from a stimulus pool using a MATLAB (The MathWorks, version 2016b, RRID:SCR_001622) script.

All stimuli were specifically chosen to be unfamiliar and devoid of any inherent or learned significance for the animal. Stimuli with socially salient content, such as faces or vocalizations, were avoided as were images or sounds associated with food (e.g., fruit or the sound of the feed bin opening). Airflow nozzles were never directed toward the eyes or into the ears to avoid potentially aversive stimulation of these sensitive areas. Because previous studies have failed to find tuning to low-level visual features, such as color, shape, or orientation (Sanghera et al., 1979; Ono et al., 1983; Nakamura et al., 1992), we did not systematically alter these features in our stimulus set.

Statistical analysis

Spike times and waveforms were imported into MATLAB for further analysis using scripts from the Plexon-MATLAB Offline Software Development Kit (Plexon). A neuron was only included in the general analysis if it met three criteria: (1) it was active during at least 144 consecutive trials, (2) the mean firing rate of the cell during either the baseline (−2 to −1 s preceding the onset of the fixspot) or during stimulus delivery (0–1 s after stimulus on) was >1 Hz, and (3) the estimated location of the neuron was within the amygdala. A total of 231 cells (109 from Monkey B, and 122 from Monkey F) met these criteria.

We first established whether each neuron responded to any of the sensory modalities using a sliding window Wilcoxon rank-sum test. This method has been used previously to assess neural responsivity in quiescent structures, such as the amygdala (Kennerley et al., 2009; Rudebeck et al., 2013; Mosher et al., 2016). Briefly, rank-sum tests were used to compare the firing rate in a series of 100 ms bins throughout the stimulation period (each bin stepped by 20 ms) to a pretrial baseline period. If the response in any of the bins was significantly different from the pretrial baseline, the cell was classified as responsive. The α value was Bonferroni–Holm corrected for the number of comparisons per set. In most cases, there were 46 tests per modality (i.e., the tests started with a bin spanning 0–100 ms and ended with a bin spanning 900–1000 ms). In some cases, we found that fixspot responses continued into the stimulation window. To avoid erroneously attributing the responses in these bins to stimulus delivery, we shifted the period in which we ran the rank-sum tests by 200 ms (i.e., the tests started at 200–300 ms and ended at 900–1000 ms, for a total of 36 tests). In these cases, we corrected the α value accordingly. To ensure that the auditory component of the airflow was not the cause of significant activity changes on tactile trials, we compared the neural response to airflow directed toward the monkey and airflow directed away from the monkey (“sham” trials) for each cell. This was done using a series of rank-sum tests comparing the activity in each 100 ms bin between these two conditions (Bonferroni-corrected for the number of comparisons). A bin had to show a significant difference for both baseline versus response and sham versus response for the cell to be classified as tactile responsive.
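The bin counts quoted above (46 and 36 tests) follow directly from the window width and step, and the Bonferroni–Holm procedure is a simple step-down rule. The sketch below illustrates both using only the Python standard library. The function names are hypothetical, and the family-wise α of 0.05 is an assumption made for illustration because the text does not state the level used for this test; the rank-sum p values themselves would come from the recorded data and are not computed here.

```python
def sliding_bins(start_ms, stop_ms, width_ms=100, step_ms=20):
    """Return (onset, offset) pairs for overlapping bins that fit inside
    [start_ms, stop_ms]. Names and defaults are illustrative."""
    last_onset = stop_ms - width_ms
    return [(t, t + width_ms) for t in range(start_ms, last_onset + 1, step_ms)]


def holm_reject(p_values, alpha=0.05):
    """Bonferroni-Holm step-down procedure.

    Returns a list of booleans, in the original order, marking which
    tests remain significant. alpha=0.05 is an assumed default.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p value is compared with alpha / (m - k).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # every larger p value is also retained
    return reject


full_window = sliding_bins(0, 1000)       # 0-100 ms ... 900-1000 ms
shifted_window = sliding_bins(200, 1000)  # 200-300 ms ... 900-1000 ms
n_full, n_shifted = len(full_window), len(shifted_window)  # 46 and 36
```

Under this scheme a cell would be labeled responsive if `any(holm_reject(p_values))` is true for the bins of a given modality.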

To determine the validity of our statistical methods, we performed two control analyses: (1) we calculated the number of responsive cells when varying the bin size, and (2) we calculated the false-discovery rate using nonstimulus periods with two separate methods.

Changing the window size by ±50 ms resulted in <5% changes in the total number of responsive cells. Even doubling the window size to 200 ms only resulted in a 5.6% increase in the number of responsive cells. These changes were not statistically significant (χ2(3, N = 231) = 3.18, p = 0.37). Therefore, the results are likely not attributable to the parameters of the sliding-window Wilcoxon rank-sum analysis.

Although the Bonferroni–Holm correction method is a relatively strict way to control for multiple comparisons, it is possible that we underestimated the rate of false positives generated using the sliding window Wilcoxon rank-sum test. We applied the same analysis described above to periods of time in the interblock interval (i.e., when no task-related activity was present) and found that this test classified 8 of 231 neurons as responsive. This corresponds to a false discovery rate of 3.5%.

To further validate our findings, we calculated the false discovery rate using a Wilcoxon rank-sum based cluster mass test described by Maris and Oostenveld (2007) and applied to single-unit data by numerous groups (Kaminski et al., 2017; Gelbard-Sagiv et al., 2018; Intveld et al., 2018). In this method, a series of Wilcoxon rank-sum tests was again used to assess firing rate differences between baseline bins and a series of 100 ms bins (20 ms steps) taken from the stimulation period for each response category. The longest segment of adjacent bins with significant p values (<0.01) was identified, and the Z statistics within this cluster of bins were summed. This gives a cluster-level test statistic for the stimulation versus baseline comparison. The labels for the bins (baseline vs stimulation) were then shuffled randomly to obtain binned firing rate data that no longer corresponded to the timeline of the task. We extracted the cluster-level Z statistic from 500 different shuffles.

This method gives a distribution of Z statistics that could be obtained due solely to the parameters of the Wilcoxon rank-sum tests and not to differences between the stimulation and baseline periods. If the Z statistic obtained from the nonshuffled data fell within the inner 99th percentile of values, it was considered a false positive, whereas values falling outside of this range were considered true positives. Using this method, which restricts false positives to <1%, we found 168 cells that responded to the stimuli. This value is similar to, though slightly higher than, the value obtained with the Bonferroni–Holm correction method (160 cells). Because both methods return similar results, it is unlikely that the number of responsive cells was due to our statistical analysis methods.
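The two core steps of the cluster mass test, summing Z over the longest run of significant bins and comparing that sum with a shuffle-derived null distribution, can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, the per-bin p and Z values are assumed to come from rank-sum tests run elsewhere, and reading the "inner 99th percentile" as the central 99% of the null distribution (0.5% trimmed from each tail) is an assumption.

```python
def longest_cluster_mass(p_values, z_values, p_threshold=0.01):
    """Sum the Z statistics over the longest run of adjacent bins whose
    p value is below p_threshold. Returns 0.0 if no bin is significant."""
    best_len, best_mass = 0, 0.0
    run_len, run_mass = 0, 0.0
    for p, z in zip(p_values, z_values):
        if p < p_threshold:
            run_len += 1
            run_mass += z
            if run_len > best_len:
                best_len, best_mass = run_len, run_mass
        else:
            run_len, run_mass = 0, 0.0
    return best_mass


def exceeds_null(observed_mass, null_masses, tail_fraction=0.005):
    """True if the observed cluster mass lies outside the central part of
    the null distribution (assumed here to be the central 99%)."""
    ordered = sorted(null_masses)
    k = int(len(ordered) * tail_fraction)
    lower, upper = ordered[k], ordered[-1 - k]
    return observed_mass < lower or observed_mass > upper
```

In use, `null_masses` would hold the cluster masses from the 500 label shuffles, and a cell would count as responsive when `exceeds_null` returns true for its unshuffled cluster mass.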

A χ2 test compared the number of cells that showed visual, tactile, or auditory responses to determine whether there were any differences in the prevalence of these responses among the neurons. Each neuron was then assigned to one of eight possible categories: (1) “visual only,” (2) “tactile only,” (3) “auditory only,” (4) “visual-and-tactile,” (5) “visual-and-auditory,” (6) “tactile-and-auditory,” (7) “visual-and-tactile-and-auditory,” or (8) “nonresponsive” based on the results of the sliding window rank-sum test. We compared the number of neurons observed in each class with the predicted values generated from a binomial probability density function. To generate the expected values for these distributions (Fig. 2E), we used the conditional probabilities obtained from the rates of visual, tactile, and auditory responses across the 231 recorded cells. Conditional probability is given by the following formula:

$$P(A \cap B) = P(A) \times P(B)$$

where P(A) is the probability of Condition A being true and P(B) is the probability of Condition B being true. For example, the conditional probability of a cell responding to visual and tactile but not auditory stimuli is given by the following:

$$P(V \cap T \cap \bar{A}) = P(V) \times P(T) \times [1 - P(A)]$$

This probability is then used to generate a binomial probability density function, which is obtained from the following formula:

$$y = \binom{n}{x}\, p^{x} (1 - p)^{n - x}$$

where y is the probability of observing x cells in a category given n total cells and the conditional probability, p, of a cell being part of that category. If the value of y was <0.00125 (α = 0.01 corrected for 8 comparisons), then the observed value was considered significantly different from the value predicted by the conditional probability. Values that fell below the 0.06 percentile or above the 99.94 percentile of the distribution generated from the binomial probability density function (as shown in Fig. 2E) met this criterion. This is the equivalent of a two-tailed binomial test, Bonferroni-corrected for eight comparisons.
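As a worked illustration of this comparison, the sketch below computes the expected probability of each response category from the per-modality response rates reported in Results (111, 95, and 93 of 231 cells) and evaluates a binomial tail for the trimodal category. Several points are assumptions rather than details from the text: the three modalities are treated as independent when forming the category probability, the observed count of 41 trimodal cells is obtained by summing the VTA column of Table 1 as printed, and the test is phrased as an upper-tail probability compared with half of the corrected α. All names are hypothetical.

```python
from itertools import combinations
from math import comb

N_CELLS = 231
# Per-modality response rates reported in Results.
RATES = {"V": 111 / N_CELLS, "T": 95 / N_CELLS, "A": 93 / N_CELLS}


def category_probability(responsive):
    """Probability of responding to exactly the modalities in `responsive`,
    assuming the modalities are independent (an assumption of this sketch)."""
    p = 1.0
    for modality, rate in RATES.items():
        p *= rate if modality in responsive else 1.0 - rate
    return p


def binomial_pmf(x, n, p):
    """y = C(n, x) * p**x * (1 - p)**(n - x)."""
    return comb(n, x) * p**x * (1.0 - p) ** (n - x)


def upper_tail(x, n, p):
    """P(X >= x) for X ~ Binomial(n, p)."""
    return sum(binomial_pmf(k, n, p) for k in range(x, n + 1))


# The eight category probabilities (including "nonresponsive") sum to 1.
total = sum(category_probability(set(c))
            for r in range(4) for c in combinations("VTA", r))

p_vta = category_probability({"V", "T", "A"})
expected_vta = N_CELLS * p_vta   # about 18.4 cells
observed_vta = 41                # VTA column of Table 1, summed as printed
alpha_per_tail = 0.01 / 8 / 2    # two-tailed, corrected for 8 categories
is_enriched = upper_tail(observed_vta, N_CELLS, p_vta) < alpha_per_tail
```

Under these assumptions, the trimodal category contains roughly twice as many cells as independence would predict, and `is_enriched` evaluates to true.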

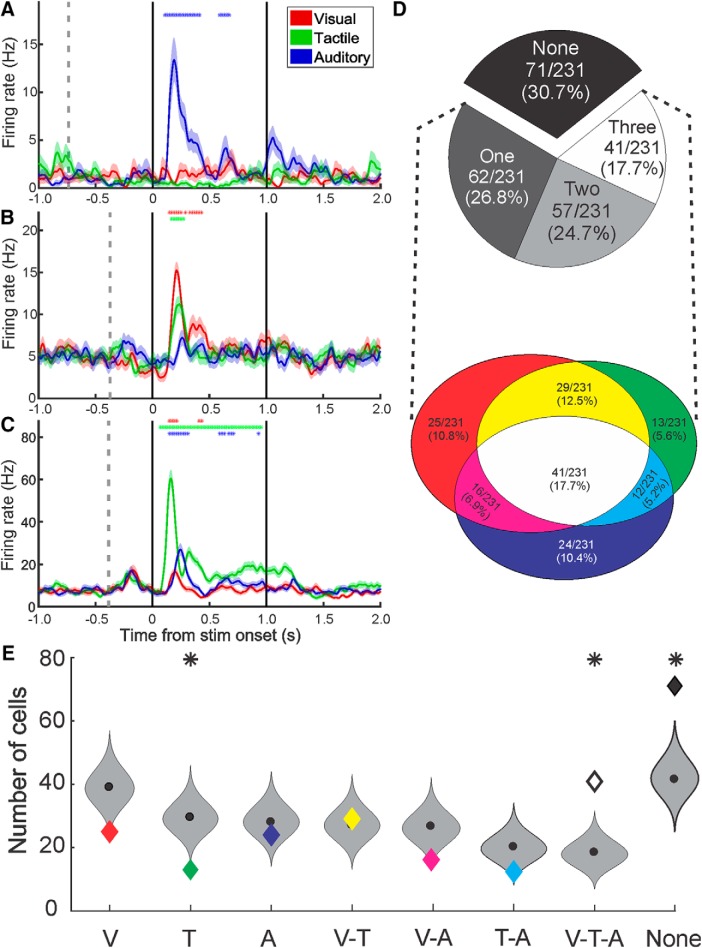

Figure 2.

Types and proportions of multisensory neurons in the monkey amygdala. A–C, Example neural responses to stimuli of a single or multiple sensory modalities: Red represents visual. Green represents tactile. Blue represents auditory. The mean spike density function (solid lines) ± SEM (shaded regions) for each sensory modality shows the amplitude and the time course of the response averaged across eight stimuli. *Time segments in which the firing rate was significantly different from baseline. Vertical gray dotted line indicates the onset of the fixspot. Solid black lines indicate the onset and offset of the stimulus. D, Classification of responses. Top, Pie chart represents the proportion of all recorded cells that responded to 0, 1, 2, or 3 modalities. Bottom, Venn diagram represents the ratio of responsive neurons classified as each combination of responses to visual, tactile, and auditory stimuli: Red represents visual only. Green represents tactile only. Blue represents auditory only. Yellow represents visual-and-tactile. Magenta represents visual-and-auditory. Cyan represents tactile-and-auditory. White represents visual-and-tactile-and-auditory. E, Binomial probability density functions (gray violin plots), cut at the 0.06 and 99.94 percentiles, for the number of cells in each response category given the observed rates of visual, tactile, and auditory responses. Colored diamonds represent the observed number of cells for the category (same color code as in D). Black circle represents the median. Above violin plots, *The observed value was significantly different than expected from the binomial probability density function.

We next determined whether each responsive neuron was selective primarily for sensory modality or specific items within a sensory modality. We used a mixed-effects, nested ANOVA model with modality as a fixed group variable and item within modality as a random nested variable (α = 0.01). The response during the 1 s stimulation period for each stimulus was used for this test. For neurons that were significantly selective for modality, item, or both, we calculated the effect size as the measure of selectivity. The effect size allowed us to compare selectivity for modality to selectivity for items, especially for neurons that showed selectivity for both. It should be noted that the calculation of effect size for group and nested subgroup variables in a nested, mixed-effects ANOVA model is dependent on whether the variable is considered fixed (i.e., a measurement without error) or random (i.e., a subsample of representative values drawn from a larger population) (Kirk, 2013). In this case, sensory modality is considered fixed because this is a categorical variable for which there is no error term; however, item within modality is considered random due to the fact that we are only presenting eight stimuli of an infinite number of potential possibilities.

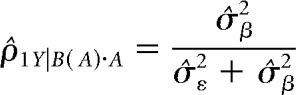

For the fixed variable (i.e., modality), partial ω2 was calculated using the following formula:

|

where

|

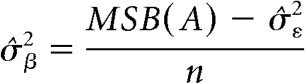

The comparable metric for the random variable (i.e., item), called the intraclass correlation, is obtained from the following formula:

|

where

|

In these formulas, $MS_A$ is the mean square for modality, $MS_{B(A)}$ is the mean square for item nested within modality, n is the degrees of freedom for the subgroup variable (i.e., items nested in modality), p is the degrees of freedom for the group variable (i.e., modality), q is the number of repetitions per stimulus for the jth stimulus in the set, and $\hat{\sigma}_{\varepsilon}^{2}$ is the mean square error for the item (i.e., subgroup) level (Kirk, 2013).

Last, to assess how information about modality or item is encoded over time, we determined the time point of the first significant bin using a mixed-effects, nested ANOVA on a series of partially overlapping bins (similar to the sliding window rank-sum method above). Activity was binned in 100 ms bins (stepped by 20 ms) during the stimulation period and compared with activity in the baseline period (−2 to −1 s preceding fixspot on). The first bin with p < 0.01 was considered the first significant bin and taken as the latency to the onset of response of the neuron. To further ensure that this latency metric was reliable, we also calculated the time that elapsed before the effect size during stimulation exceeded the baseline threshold for modality and for item. To do this, we binned the activity during both baseline and stimulus delivery into partially overlapping 100 ms bins (stepped by 20 ms). We then calculated the mean effect size during baseline, for modality and for item, plus 3.5 SDs, and set these values as our thresholds. Then, for each neuron that showed significant selectivity for modality, item, or both, we found the time point at which the response crossed the appropriate threshold (e.g., if the cell was selective only for modality, we only looked for the first bin where modality selectivity crossed the threshold). It should be noted that a small number of the cells that registered as modality- and/or item-selective did not have a single bin that crossed this 3.5 SD threshold, likely due to relatively small, but sustained, changes in firing rate relative to baseline. These cells were excluded from the timing analysis. Both methods showed that, on average, information about modality was encoded at earlier time points than information about individual items.
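The threshold-crossing latency described above reduces to a few lines. The following sketch assumes the per-bin effect sizes have already been computed for the baseline and stimulation periods; the function name is hypothetical, and the use of the sample standard deviation is an assumption because the text does not specify which estimator was used.

```python
from statistics import mean, stdev


def onset_latency(baseline_effect, stimulus_effect, bin_onsets_ms, n_sd=3.5):
    """Return the onset (ms) of the first stimulation bin whose effect size
    exceeds mean(baseline) + n_sd * SD(baseline), or None if no bin does.

    baseline_effect: per-bin effect sizes from the baseline period
    stimulus_effect: per-bin effect sizes from the stimulation period
    bin_onsets_ms:   onset time of each stimulation bin
    """
    threshold = mean(baseline_effect) + n_sd * stdev(baseline_effect)
    for onset, value in zip(bin_onsets_ms, stimulus_effect):
        if value > threshold:
            return onset
    return None  # such cells were excluded from the timing analysis
```

Returning `None` for cells with no crossing mirrors the exclusion rule stated in the text: weak but sustained responses can be significant over the full 1 s window without any single bin exceeding the threshold.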

Anatomical reconstruction of recording sites

Reconstruction of the recording sites was done by aligning the electrode tracks to high-contrast fiducial markers (see above) aligned to chamber coordinates on postsurgical MRIs. Coronal sections of the MRIs were spaced 1 mm apart and aligned to the grid holes in 3D Slicer (Kikinis et al., 2014) (RRID:SCR_005619) and MRIcro (Rorden and Brett, 2000). The depth of the electrode tip relative to the top of the recording grid was measured every day and used to determine the dorsoventral (z) position of the electrode. Because the location of each hole on the recording grid relative to the chamber boundaries and MRI was known, measurements of the mediolateral (x) and anteroposterior (y) position of the probe were also available. All reconstructions were done by importing the MRIs into CorelDRAW X7 (Corel, RRID:SCR_014235), converting the image to real-world dimensions, and using the x, y, and z coordinates to place a diagram of the electrode over the image. This reconstruction method was previously found to be accurate to within ∼1 mm (Mosher et al., 2010). In Monkey B, the recording sites calculated as described above were verified by MRI and CT scans, each with tungsten electrodes inserted in specific grid positions that pointed to the designated targets.

We tested whether any particular response combination was more likely to be observed in any nucleus of the amygdala using a series of Fisher's exact tests (Table 1). The α value was Bonferroni-corrected for the number of comparisons (α = 0.05/(5 nuclei × 8 response categories) = 0.00125). A series of Fisher's exact tests was more appropriate than a χ2 test for these data because the sample sizes for some of the nuclei were small.
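To make the comparison concrete, the sketch below implements a two-sided Fisher's exact test for a 2 × 2 table with the Python standard library and applies it to one nucleus-by-category pairing. The 2 × 2 layout (one nucleus versus all other nuclei, one response category versus all other categories) is an assumption about how the tests were set up; it is adopted here because it reproduces the odds ratio and p value listed in Table 1 for auditory-only cells in the lateral nucleus. The function name is hypothetical.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact test for the table [[a, b], [c, d]].

    Returns (sample odds ratio, p value). The p value sums the
    hypergeometric probabilities of all tables that are no more
    likely than the observed one.
    """
    row1, col1, total = a + b, a + c, a + b + c + d
    denom = comb(total, row1)

    def prob(k):
        return comb(col1, k) * comb(total - col1, row1 - k) / denom

    k_min = max(0, row1 + col1 - total)
    k_max = min(row1, col1)
    p_obs = prob(a)
    p_value = sum(prob(k) for k in range(k_min, k_max + 1)
                  if prob(k) <= p_obs * (1 + 1e-9))
    odds_ratio = (a * d) / (b * c) if b * c else float("inf")
    return odds_ratio, min(p_value, 1.0)


# Counts taken from Table 1: 6 of 16 LA cells were auditory only,
# compared with 18 of the 215 cells recorded in the other nuclei.
odds_ratio, p_value = fisher_exact_2x2(6, 10, 18, 197)
alpha_corrected = 0.05 / (5 * 8)               # 0.00125
survives_correction = p_value < alpha_corrected
```

With these counts the odds ratio is about 6.57 and the p value is about 0.0027, which is below 0.05 but above the corrected threshold of 0.00125, so the pairing does not survive correction.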

Table 1.

Response category by nucleusᵃ

| Nucleus | Statistic | Non | V | T | A | VT | VA | TA | VTA |
|---|---|---|---|---|---|---|---|---|---|
| BA | n | 29 | 11 | 5 | 9 | 16 | 7 | 7 | 25 |
| | p | 0.2531 | 0.8332 | 0.7729 | 0.3897 | 0.5576 | 0.8019 | 0.5558 | 0.0587 |
| | OR | 0.69 | 0.87 | 0.79 | 0.64 | 1.33 | 0.86 | 1.61 | 1.97 |
| | CI | 0.39, 1.22 | 0.38, 2.00 | 0.24, 2.56 | 0.27, 1.53 | 0.62, 2.86 | 0.31, 2.40 | 0.49, 5.22 | 0.23, 1.24 |
| AB | n | 27 | 9 | 2 | 8 | 7 | 5 | 1 | 8 |
| | p | 0.0588 | 0.4843 | 0.5165 | 0.6382 | 0.5249 | 0.7828 | 0.1877 | 0.183 |
| | OR | 1.84 | 1.44 | 0.47 | 1.25 | 0.72 | 1.12 | 0.21 | 0.54 |
| | CI | 1.01, 3.35 | 0.60, 3.43 | 0.10, 2.22 | 0.51, 3.09 | 0.29, 1.76 | 0.37, 3.36 | 0.03, 1.67 | 0.23, 1.24 |
| LA | n | 2 | 1 | 1 | 6 | 2 | 2 | 0 | 2 |
| | p | 0.1582 | 1.000 | 0.5866 | 0.0027 | 1.000 | 0.3057 | 1.000 | 0.7438 |
| | OR | 0.30 | 0.53 | 1.24 | 6.57 | 0.95 | 2.05 | 0.00 | 0.64 |
| | CI | 0.07, 1.37 | 0.07, 4.20 | 0.15, 0.23 | 2.14, 0.15 | 0.21, 4.42 | 0.42, 9.93 | NA | 0.14, 2.95 |
| CE | n | 12 | 4 | 4 | 1 | 4 | 2 | 4 | 6 |
| | p | 0.8467 | 1.000 | 0.1061 | 0.1391 | 0.7947 | 1.000 | 0.1061 | 1.000 |
| | OR | 1.10 | 1.00 | 2.82 | 0.21 | 0.78 | 0.73 | 2.82 | 0.88 |
| | CI | 0.52, 2.33 | 0.32, 3.10 | 0.80, 9.90 | 0.03, 1.58 | 0.26, 2.39 | 0.16, 3.38 | 0.80, 9.90 | 0.34, 2.27 |
| ME | n | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| | p | 0.5212 | 1.000 | 1.000 | 1.000 | 0.2434 | 1.000 | 1.000 | 1.000 |
| | OR | 2.27 | 0.00 | 0.00 | 0.00 | 6.90 | 0.00 | 0.00 | 0.00 |
| | CI | 0.14, 36.83 | NA | NA | NA | 0.42, 113.3 | NA | NA | NA |

ᵃFor each nucleus, the four rows give the number of cells (n), p value (p), odds ratio (OR), and CI for each response type (statistics generated using a Fisher's exact test comparing the number of cells of each response type between the nuclei). No response by nucleus pairing was significant at the p < 0.05 level when correcting for multiple comparisons (0.05/40 comparisons = 0.00125). BA, basal; AB, accessory basal; LA, lateral; CE, central; ME, medial. Response category labels: Non, nonresponsive; V, visual; T, tactile; A, auditory; VT, visual/tactile; VA, visual/auditory; TA, tactile/auditory; VTA, visual/tactile/auditory.

Details regarding all critical equipment and software are outlined in Table 2.

Table 2.

Research Resource Identifiers (RRIDs) for experiment toolsᵃ

| Reagent or resourceᵇ | Sourceᶜ | Identifierᵈ |
|---|---|---|
| Software | | |
| MATLAB 2016b | The MathWorks | SCR_001622 |
| Audacity: Free Audio Editor and Recorder | https://www.audacityteam.org/download/ | SCR_007198 |
| Plexon: Offline Sorter | http://www.plexon.com/products/offline-sorter | SCR_000012 |
| CorelDRAW Graphics Suite | Corel | SCR_014235 |
| 3D Slicer: MRI viewer | http://slicer.org/ | SCR_005619 |
| Neurobehavioral Systems: Presentation | https://www.neurobs.com/ | Presentation Software |
| Other | | |
| V-Probe | https://plexon.com/ | 16-Channel V-probe |
| V-Probe Headstage | https://plexon.com/ | HST/16D Gen2 |
| Plexon Omniplex System | https://plexon.com/ | Plexon Omniplex Data Acquisition System |
| Airflow regulation system | http://www.cristinstrument.com/ | Custom solenoid manifold and regulator |
| iSCAN infrared eye tracker | http://www.iscaninc.com/ | RK826PCI-01 |

ᵃRRIDs for critical equipment used in the experiments outlined in the manuscript. Resources are grouped by category (Software and Other).

ᵇA general description of the resource.

ᶜThe manufacturer, producer, or URL of a website related to the resource.

ᵈA more detailed identifier (i.e., the RRID or a short description).

Results

The majority of responsive neurons responded to multiple sensory modalities

Although our stimuli were both unfamiliar and devoid of value associations or social significance, they activated a large percentage of neurons (69.3%, 160 of 231) as assessed by the sliding-window rank-sum analysis described in Materials and Methods. Each cell was assigned to one of eight possible types: “visual only,” “tactile only,” “auditory only,” “visual-and-tactile,” “visual-and-auditory,” “tactile-and-auditory,” “visual-and-tactile-and-auditory,” or “nonresponsive.” Examples of neurons that responded to one, two, or all three sensory modalities are shown in Figure 2A–C, respectively. Asterisks indicate the 100 ms bins in which the firing rate deviated significantly from baseline. The relative proportions of cells responding to none, one, two, or all three sensory domains are shown in Figure 2D (top). Similar proportions of visual (111 of 231, 48.1%), tactile (95 of 231, 41.1%), and auditory (93 of 231, 40.3%) responses were observed in this population of neurons (χ2(2, N = 299) = 2.10, p = 0.38). Many neurons were responsive to multiple modalities; therefore, the number of visual, tactile, and auditory responses (299) adds up to more than the total number of cells (231). We found that the majority of responsive neurons (98 of 160, 61.3%) responded to stimuli of more than one sensory modality, with the largest single response type being the “visual-and-tactile-and-auditory” group (Fig. 2D, bottom). These results challenge the view that the monkey amygdala processes predominantly visual stimuli and that other sensory modalities are less effective in activating its neurons.

We next determined whether any particular response combination was observed more often than expected by chance given the overall number of visual, tactile, and auditory responses. We calculated the conditional probabilities for each of the eight groups listed above and used these to create binomial probability distributions for each group. There were three cases in which the observed value was significantly different from the expected value determined by these probabilities (Fig. 2E). The number of cells responding to all three sensory domains and the number responding to none of the sensory domains were higher than expected (two-tailed binomial test, p = 0.0000007 and p = 0.000002, respectively), whereas the “tactile only” category had fewer cells than expected (two-tailed binomial test, p = 0.0002). Furthermore, it is possible that some neurons respond to stimuli of different sensory modalities only when they are presented simultaneously (Ghazanfar et al., 2005; Sugihara et al., 2006; Stein et al., 2014). Because we could present only a subset of stimuli from each sensory modality, and we did not present multiple stimuli concurrently, these numbers are likely a conservative estimate of the propensity of single neurons in the amygdala to respond to stimuli of different sensory domains. Therefore, these data show that amygdala neurons have a high propensity for multisensory responses, even when the stimuli lack social or emotional value.
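The comparison of observed and expected counts can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that visual, tactile, and auditory responsiveness are independent (the null model described in the Discussion), derives the expected probability of each of the eight categories from the reported marginal counts, and defines the two-tailed p value as the total probability of outcomes no more likely than the observed one. The function names are hypothetical, and the resulting p values need not match the reported ones exactly.

```python
from itertools import product
from math import comb

N_CELLS = 231
# Marginal response counts reported in the text.
MARGINALS = {"V": 111, "T": 95, "A": 93}


def expected_probabilities(marginals, n_cells):
    """Probability of each response combination under independence (assumed)."""
    p = {m: k / n_cells for m, k in marginals.items()}
    probs = {}
    for combo in product([False, True], repeat=len(p)):
        label = "".join(m for m, on in zip(p, combo) if on) or "Non"
        prob = 1.0
        for m, on in zip(p, combo):
            prob *= p[m] if on else 1.0 - p[m]
        probs[label] = prob
    return probs


def binom_pmf(k, n, p):
    """Binomial probability of exactly k successes in n trials."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)


def binom_two_tailed(k_obs, n, p):
    """Two-tailed p value: mass of all outcomes no more likely than k_obs."""
    p_obs = binom_pmf(k_obs, n, p)
    total = sum(
        pk
        for pk in (binom_pmf(k, n, p) for k in range(n + 1))
        if pk <= p_obs * (1.0 + 1e-9)  # small tolerance for float ties
    )
    return min(1.0, total)


expected = expected_probabilities(MARGINALS, N_CELLS)
n_nonresponsive = N_CELLS - 160  # 160 of 231 cells were responsive
p_non = binom_two_tailed(n_nonresponsive, N_CELLS, expected["Non"])
```

Under this null model, `expected["Non"] * N_CELLS` gives the number of nonresponsive cells expected by chance, which can be compared with the 71 observed; the same call applies to any other category once its observed count is known.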

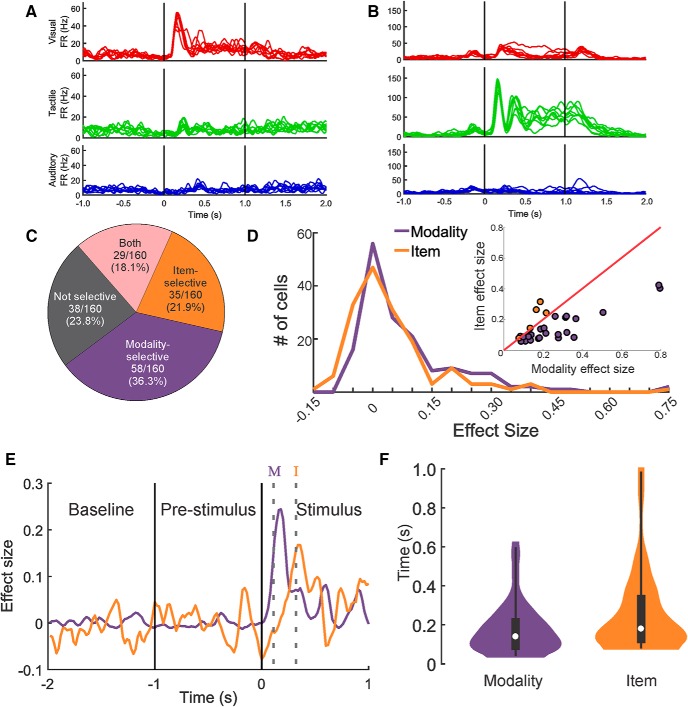

Neurons in the amygdala show greater modality selectivity than item selectivity

We next quantified and compared the difference in responses (1) between sensory modalities and (2) between items within a sensory modality using a mixed-effects, nested ANOVA model with modality as a fixed group variable and items within modality as a random, nested variable (see Materials and Methods). For example, the neuron shown in Figure 3A was modality-selective, responding with a large increase in firing rate to all visual stimuli and significantly smaller increases for auditory and tactile stimuli. This neuron showed no item selectivity because the variation of firing rate induced by the eight stimuli within each sensory modality was low (effect size for modality = 0.48, effect size for item = −0.05). In contrast, the neuron shown in Figure 3B shows a very strong tactile response, a moderate visual response, and a relatively weak auditory response. There is also considerable variability within each sensory domain: note the relatively elevated response to a single visual stimulus, the variability in the later stages of the tactile stimuli, and the elevations in firing rate for two of the auditory stimuli. This neuron was classified as both modality- and item-selective (effect size for modality = 0.80, effect size for item = 0.40). Overall, 76.3% of responsive neurons (122 of 160 cells) were selective for modality, item, or both (Fig. 3C; mixed-effects, nested ANOVA, α = 0.01). Of these selective neurons, a higher proportion were modality-selective (58 of 122, 47.5%) than item-selective (35 of 122, 28.7%) or both modality- and item-selective (29 of 122, 23.8%), suggesting that sensory modality figures more prominently in the population of cells (Fig. 3C; χ2(2, N = 122) = 17.29, p = 0.0001). Figure 3D shows the distributions of the effect sizes for modality and for item for all responsive cells. While the range of effect sizes seen is similar between the two variables, the distribution of the effect sizes for modality is slightly shifted toward higher values. 
This is confirmed by comparing the per-cell difference in the effect size metrics for all responsive cells (paired t test, t(159) = 3.52, p = 0.0006). When restricting this comparison to only cells that were both modality- and item-selective, we found a similar strong bias toward modality selectivity (Fig. 3D, inset; paired t test, t(28) = 4.13, p = 0.0003).
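The structure of the mixed-effects, nested ANOVA can be illustrated with a short sketch. It assumes a balanced design (3 modalities, 8 items per modality, 16 trials per item) and uses synthetic firing rates; the function name and the simulated rates are hypothetical. The effect size measure used in the paper is defined in Materials and Methods and is not reproduced here; the sketch returns only the sums of squares and the two F ratios.

```python
import random
from statistics import fmean


def nested_anova(data):
    """Balanced nested ANOVA; data[m][i] lists trial rates for item i of modality m."""
    a = len(data)        # number of modalities (fixed factor)
    b = len(data[0])     # items per modality (random factor, nested)
    n = len(data[0][0])  # trials per item
    grand = fmean(x for mod in data for item in mod for x in item)
    mod_means = [fmean(x for item in mod for x in item) for mod in data]
    item_means = [[fmean(item) for item in mod] for mod in data]

    ss_mod = b * n * sum((mm - grand) ** 2 for mm in mod_means)
    ss_item = n * sum(
        (im - mm) ** 2
        for mm, items in zip(mod_means, item_means)
        for im in items
    )
    ss_err = sum(
        (x - im) ** 2
        for mod, items in zip(data, item_means)
        for item, im in zip(mod, items)
        for x in item
    )
    ms_mod = ss_mod / (a - 1)
    ms_item = ss_item / (a * (b - 1))
    ms_err = ss_err / (a * b * (n - 1))
    return {
        "ss_mod": ss_mod,
        "ss_item": ss_item,
        "ss_err": ss_err,
        # The fixed modality effect is tested against the random item term.
        "F_modality": ms_mod / ms_item,
        # The random item effect is tested against trial-to-trial error.
        "F_item": ms_item / ms_err,
    }


# Synthetic neuron: strong visual response, no item selectivity (made-up rates).
rng = random.Random(0)
modality_rate = [20.0, 5.0, 5.0]  # visual, tactile, auditory
data = [
    [[rng.gauss(mu, 1.0) for _ in range(16)] for _ in range(8)]
    for mu in modality_rate
]
result = nested_anova(data)
```

Testing modality against the item-within-modality mean square, rather than against trial error, is what makes items a random nested factor: a cell counts as modality-selective only if its between-modality differences exceed the spread among items within each modality.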

Figure 3.

Modality selectivity was more prominent than item selectivity and emerged earlier across the population of neurons. A, A modality-selective neuron with no item selectivity. Mean responses across 16 repetitions of each stimulus are plotted as individual spike density functions and overlaid by modality. B, A modality- and item-selective neuron that responded with large differences to stimulus modality. Smaller, but significant, differences in response to items within a modality were present as well (e.g., the elevated response to a single visual stimulus). C, Proportions of neurons classified as nonselective, modality-selective, item-selective, or both modality- and item-selective. D, Distributions of the effect sizes for modality and item selectivity for all responsive cells. Inset, Scatterplot represents the effect size for modality plotted against the effect size for item for all 29 modality- and item-selective neurons. Purple dots represent cells that had a higher effect size for modality. Orange dots represent cells that had a higher effect size for item. E, Comparison of the effect sizes for modality and item over time for one example cell. Purple and orange solid lines indicate effect size over time for modality and item, respectively. Gray dotted lines indicate the time of the first significant bin for modality (purple M) and item (orange I). F, Distributions of the latencies to the first significant bin for modality and item. White circle represents the median. Black rectangle represents the interquartile range. Thin black lines indicate the 1st to 99th percentile range. Modality selectivity arose earlier than item selectivity.

To determine whether modality- or item-specific information emerges earlier from the spike trains, we performed mixed-effects, nested ANOVAs in a series of 100 ms bins (20 ms steps) throughout stimulus delivery. Figure 3E shows effect size over the baseline, prestimulus, and stimulus delivery periods for one example neuron that was both modality- and item-selective. We found that the latency to the first significant bin was significantly shorter for modality than for item (Fig. 3F; Wilcoxon rank-sum test, Z = −2.64, p = 0.008).
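The latency measure can be sketched as below. This is an illustration under stated assumptions: the analysis window of 0 to 200 ms, the per-bin p values, and the choice to report latency as the start of the first significant bin are all hypothetical, as is the per-bin significance threshold of 0.01 (borrowed from the whole-period ANOVA). Only the 100 ms bin width and 20 ms step come from the text.

```python
def sliding_bins(start_ms, stop_ms, width_ms=100, step_ms=20):
    """Return (bin_start, bin_end) pairs that fit entirely inside the window."""
    bins = []
    t = start_ms
    while t + width_ms <= stop_ms:
        bins.append((t, t + width_ms))
        t += step_ms
    return bins


def first_significant_latency(bins, p_values, alpha=0.01):
    """Start time of the earliest bin with p < alpha, or None if there is none."""
    for (start, _end), p in zip(bins, p_values):
        if p < alpha:
            return start
    return None


bins = sliding_bins(0, 200)
# Made-up per-bin p values for one cell: modality becomes significant first.
p_modality = [0.40, 0.20, 0.004, 0.001, 0.001, 0.001]
p_item = [0.70, 0.50, 0.30, 0.08, 0.006, 0.002]
latency_modality = first_significant_latency(bins, p_modality)
latency_item = first_significant_latency(bins, p_item)
```

Computing one latency per cell and per factor in this way yields the two distributions that the rank-sum test then compares.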

These results demonstrate the primacy of sensory modality over item specificity across the neural population in the amygdala in terms of the higher number of modality-selective neurons, the larger average effect size of modality, and the earlier emergence of modality selectivity. It is likely, therefore, that earlier components of the spike train typically signal modality and later components signal a specific item within a sensory modality.

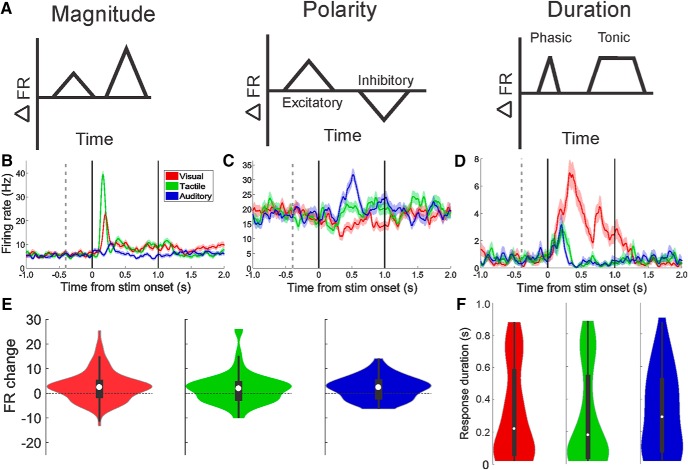

Different spike-train metrics select for sensory modality and items within a modality

Given the relatively small number of neurons in the macaque amygdala, ∼6 million (Carlo et al., 2010; Chareyron et al., 2011), it is likely that variations in response magnitude alone are insufficient to differentiate between the great diversity of stimuli processed therein. Indeed, within the visual modality alone, Mosher et al. (2010) found that amygdala neurons differentiate stimuli using the following: (1) response magnitude (differences in the maximal change in firing rate between baseline and stimulus delivery), (2) polarity of the response (increases or decreases in firing rate during stimulation relative to baseline), and (3) duration of the response (phasic or tonic). We determined whether these metrics (see schematic in Fig. 4A) could also differentiate between sensory modalities. Example neurons that exhibited each of these three types of firing rate modulations are shown in Figure 4B–D. Figure 4B shows a neuron that responded with different magnitudes of firing rate to tactile (largest), visual (intermediate), and auditory (smallest) stimuli. The neuron shown in Figure 4C also responded to all three sensory modalities, but the responses to the visual stimuli were decreases in firing rate, whereas the responses to the tactile and auditory stimuli were increases in firing rate. This type of response was most obvious in neurons that had higher baseline firing rates. Changes in the temporal pattern of the response are illustrated by the neuron shown in Figure 4D. While the tactile and auditory stimuli elicit phasic increases in firing rates, the visual response causes a tonic elevation of firing rate: that is, the elevated firing rate lasted longer than the phasic increase seen for the other two sensory modalities (a significant visual response was seen in 45 of the tested bins, whereas the tactile and auditory responses only lasted for 13 and 7 bins, respectively). 
This neuron also showed a difference in response magnitude, which was determined by finding the 100 ms bin with the highest mean response rate relative to the baseline period across all trials (visual maximal response = 12.4 ± 2.6 SD spikes/s, tactile maximal response = 5.9 ± 2.8 SD spikes/s, auditory maximal response = 5.2 ± 2.7 SD spikes/s, one-way ANOVA, F(2,21), p = 0.001).
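The three spike-train metrics can be summarized per modality with a small helper. This is a sketch under assumptions, not the authors' implementation: it takes the mean baseline rate, the mean rate in each 100 ms bin, and a per-bin significance flag as given, and it treats the peak response as the significant bin with the largest absolute change from baseline. The function name and the example rates are hypothetical.

```python
def response_metrics(baseline_rate, bin_rates, significant):
    """Summarize one modality's response from binned firing rates.

    baseline_rate: mean baseline firing rate (spikes/s)
    bin_rates:     mean firing rate in each stimulus-period bin (spikes/s)
    significant:   one boolean per bin, True where the bin differs from baseline
    """
    deltas = [rate - baseline_rate for rate in bin_rates]
    sig_deltas = [d for d, s in zip(deltas, significant) if s]
    if not sig_deltas:
        return {"magnitude": 0.0, "polarity": 0, "duration_bins": 0}
    peak = max(sig_deltas, key=abs)  # largest change in either direction
    return {
        "magnitude": abs(peak),            # response magnitude (spikes/s)
        "polarity": 1 if peak > 0 else -1,  # +1 excitatory, -1 inhibitory
        "duration_bins": len(sig_deltas),   # phasic = few bins, tonic = many
    }


# Made-up example: a tonic decrease to one modality, a phasic increase to another.
visual = response_metrics(
    10.0, [10, 6, 5, 5, 6, 9], [False, True, True, True, True, False]
)
tactile = response_metrics(
    10.0, [10, 18, 12, 10, 10, 10], [False, True, False, False, False, False]
)
```

In this toy case the two modalities differ in all three metrics at once, which is the sense in which a single neuron can use combinations of metrics to discriminate modalities.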

Figure 4.

Modality and item selectivity are expressed by changes in response magnitude, polarity, and duration. A, Simplified diagrams illustrating the three main spike-train metrics that differentiate between categories of stimuli and items within categories (Mosher et al., 2010). B–D, Examples of each type of spike-train modulation. Each neuron shown here responded to all three sensory domains. E, Distribution of response magnitudes for all responsive neurons. The number of excitatory responses was significantly higher than the number of inhibitory responses for all sensory domains, but no difference was present between domains. Violin plots use the same conventions as in Figure 3F. F, The response duration for visual, tactile, and auditory stimuli shows no significant difference between the groups.

Finally, we determined whether any of these three types of firing rate modulations was specific to any sensory modality. No significant difference was seen between the sensory domains for response magnitude (Fig. 4E; Kruskal–Wallis, F(2,297) = 1.17, p = 0.56), for the proportions of excitatory versus inhibitory responses (Fig. 4E; χ2(2, N = 299) = 1.69, p = 0.43), or for response duration (Fig. 4F; Kruskal–Wallis, F(2,297) = 1.09, p = 0.58). Given that phasic/tonic and excitatory/inhibitory responses are likely to emerge from interactions between inhibitory and excitatory neurons in the amygdala, it appears that sensory inputs of different modalities do not show preferential connectivity with the inhibitory neurons that shape spike-train polarity and duration.

Anatomical distribution of multisensory cells

To examine the anatomical distribution of responsive cells, we reconstructed the location of all recorded neurons by aligning the anteroposterior, mediolateral, and dorsoventral position of a 16-channel V-probe to high-contrast markers on postsurgical MRIs. We found that almost all response combinations could be observed in each amygdala nucleus, with the exception of the medial nucleus (in which we recorded only two analyzable neurons) and the lateral nucleus (in which no tactile-auditory responses were observed) (Fig. 5). It is likely that all response types would have been observed in the lateral nucleus if we had recorded from more cells in this area; however, we have generally found that it is more difficult to isolate cells in the lateral nucleus of the amygdala relative to the basal and accessory basal nuclei.

No clustering of responses was seen quantitatively (Fig. 5B,C; Table 1; Fisher's exact tests, all p > 0.05, Bonferroni-corrected for multiple comparisons). It is possible that the apparent clustering of nonresponsive neurons in the posterior-medial aspects of the amygdala in Monkey B reflects an anatomical gradient of responsivity (16 of 19 cells were categorized as nonresponsive on slices 17 and 18 in Fig. 5C). We did not record from these posterior sites in Monkey F and are therefore unable to assess this issue in depth. Future studies with more systematic sampling from more animals will help to elucidate this issue. The presence of multisensory neurons in all sampled nuclei (i.e., at all stages of intra-amygdala processing) indicates that multisensory processing does not emerge from the hierarchical convergence of inputs from the lateral to the basal and accessory basal nuclei as suggested by anatomical studies (Pitkänen and Amaral, 1991; Jolkkonen and Pitkänen, 1998; Bonda, 2000).
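The Bonferroni criterion and the test behind each Table 1 entry can be sketched as follows. The threshold (0.05/40) and the lateral nucleus p values are taken from Table 1 and its footnote, with the column order assumed to follow the footnote's label list. The 2×2 table passed to the test is a hypothetical example, not data from the paper, and the two-sided p value is defined here as the total probability of tables no more likely than the observed one.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def pmf(x):
        # Hypergeometric probability of x cells in the top-left entry.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = pmf(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    total = sum(
        p for p in map(pmf, range(lo, hi + 1)) if p <= p_obs * (1.0 + 1e-9)
    )
    return min(1.0, total)


N_COMPARISONS = 40  # from the Table 1 footnote
ALPHA_CORRECTED = 0.05 / N_COMPARISONS

# p values listed in Table 1 for the lateral nucleus (assumed order: Non ... VTA).
la_p_values = [0.1582, 1.000, 0.5866, 0.0027, 1.000, 0.3057, 1.000, 0.7438]
significant = [p < ALPHA_CORRECTED for p in la_p_values]

# Hypothetical table: rows = one nucleus vs. all others,
# columns = one response type vs. all other types.
p_example = fisher_exact_two_sided(3, 1, 1, 3)
```

The smallest lateral nucleus p value (0.0027) falls below 0.05 but not below the corrected threshold of 0.00125, which is why no response-by-nucleus pairing is reported as significant.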

Discussion

The majority of responsive neurons in the primate amygdala were multisensory

Single-unit recording studies in the monkey amygdala provide evidence for neurons that respond to all sensory modalities (Leonard et al., 1985; Scott et al., 1993; Karádi et al., 1998; Kadohisa et al., 2005; Paton et al., 2006; Rolls, 2006; Gothard et al., 2007; Mosher et al., 2010, 2016; Livneh et al., 2012; Genud-Gabai et al., 2013; Peck and Salzman, 2014; Chang et al., 2015; Resnik and Paz, 2015). The relative proportion and intra-amygdala distribution of neurons tuned to different sensory modalities are difficult to assess because, with rare exceptions (Nishijo et al., 1988; Kuraoka and Nakamura, 2012), studies use stimuli of a single sensory modality. In our study, only 38.7% of responsive neurons were tuned to a single modality; the rest (61.3%) were multisensory. This may explain why numerous studies report that neurons in the amygdala appear relatively nonresponsive or difficult to activate (i.e., increasing the complexity of the stimulus space would likely result in more responses).

More cells responded to stimuli from all three sensory domains than expected given the conditional probability of getting “visual-and-tactile-and-auditory” responses (i.e., if the chances of getting visual, tactile, and auditory responses were independent and randomly distributed across all neurons). This suggests an active mechanism or connectivity pattern that enhances the convergence of multiple sensory inputs onto single neurons. This pattern is reminiscent of the properties of hidden layers in fully connected neural networks (Touretzky and Pomerleau, 1989; Anderson, 1995).

The hypothesis that there is a large overlap in inputs and/or a high degree of intra-amygdala connectivity is further supported by the failure to find a regional distribution of neurons that respond to a particular sensory modality. It should be noted that we recorded different numbers of neurons from each nucleus (Table 1), and we have not histologically verified all of the recording sites (Monkey B is still alive). Future experiments and histology will fully address these issues. Nevertheless, these findings support the emerging idea that neurons recorded from different nuclei of the amygdala cannot be distinguished based on their neuronal responses to stimuli or task variables (Amir et al., 2015; Resnik and Paz, 2015; Paré and Quirk, 2017; Kyriazi et al., 2018; Munuera et al., 2018).

Modality selectivity and item selectivity were encoded by different spike-train metrics

We report here that coarse information (i.e., sensory modality) is encoded before more detailed information that differentiates between items within a modality. This finding is consistent with a report of shorter response latencies for species-specific stimulus categories by Minxha et al. (2017). This study replicated previous reports that neurons in the monkey amygdala differentiate between categories of stimuli and between items within each category by three main types of firing rate changes: (1) response magnitude, (2) response polarity, and (3) response duration (Mosher et al., 2010). We found that any of these response metrics could distinguish between sensory modalities and/or items within a modality. It appeared, however, that different metrics were used for modality and items; for example, the polarity of the response of the neuron shown in Figure 4C could be used to differentiate between sensory modalities, whereas response magnitude could be used to discriminate items within a modality.

The observed differences in response magnitude, polarity, and duration could arise from circuit motifs that cannot be assessed with the experimental tools currently available in primates. However, it is likely that response polarity and response duration are shaped, at least in part, by different mechanisms of inhibition in the local circuits of the amygdala. If a stimulus of a particular sensory modality activates neurons that provide feedforward inhibition to the recorded neuron, the result would be a modality-specific decrease in firing rate relative to baseline. Likewise, one potential mechanism explaining the phasic responses is feedback inhibition, whereas tonic responses could arise via disinhibition. Disinhibition, in particular, is a common circuit mechanism that has been amply documented using in vivo genetic/molecular manipulations in the rodent amygdala (Paré et al., 2003; Ehrlich et al., 2009; Ciocchi et al., 2010; Han et al., 2017). Importantly, any sensory modality could elicit any type of firing rate modulation; this feature is also suggestive of a fully connected hidden layer (Touretzky and Pomerleau, 1989; Anderson, 1995).

Limitations of this study

Several questions related to multisensory processing in the amygdala were not addressed by this study and will require follow-up experiments. The most important of these questions is whether neurons in the amygdala show multisensory integration, similar to what has been shown in other areas, including the superior temporal sulcus (Beauchamp et al., 2004; Barraclough et al., 2005; Ghazanfar et al., 2005; Dahl et al., 2010; Werner and Noppeney, 2010; Stein et al., 2014), parietal cortex (Duhamel et al., 1998; Avillac et al., 2004; Bremen et al., 2017), PFC (Sugihara et al., 2006; Romanski and Hwang, 2012; Diehl and Romanski, 2014), and subcortical areas, such as the superior colliculus (Wallace and Stein, 1997; Groh and Pai, 2010; Stein et al., 2014). It will be critical to determine whether multisensory integration in the amygdala (whatever cellular and circuit mechanisms it involves) can be induced by stimuli that require joint processing of multiple sensory modalities. For example, are social stimuli (e.g., faces and voices) more likely to be integrated than random objects that produce specific sounds (e.g., a faucet and the sound of running water)? Furthermore, would joint processing of the sight of random objects and the sounds they make favor multisensory integration once these stimuli become predictive of reward or punishment?

Neurons in the amygdala respond to stimuli without social/emotional significance

It is remarkable that ∼70% of cells significantly altered their activity in response to the passive reception of neutral stimuli. The animals had no prior experience with the stimuli, so familiarity is unlikely to drive this activity. It is possible that some of these responses were related to shifts in attention or arousal generated by the stimuli (i.e., despite our efforts, some of the stimuli may have had an unknown significance). Complete dissociation of responses driven by attentional versus sensory factors was beyond the scope of these experiments, yet should be considered in future work. For example, follow-up experiments could assess whether the responses of neurons in the amygdala are tuned to low-level features of tactile stimuli (i.e., does the magnitude of response to nonaversive tactile stimulation scale with the intensity/force profile of the touch?). The firing rate changes elicited by these emotionally neutral, nonsocial stimuli were in the same range as the responses elicited in previous studies by videos of facial expressions and rewards (Leonard et al., 1985; Gothard et al., 2007; Belova et al., 2008; Bermudez and Schultz, 2010). If a role of the amygdala is to signal the presence of highly salient (i.e., emotionally and socially relevant) stimuli, an activation of this magnitude in response to stimuli of low significance is unexpected. It is possible that the amygdala signals the value or behavioral relevance of all encountered stimuli, including the stimuli of low value (that require no arousal decisions about approach/avoidance). Indeed, Paton et al. (2006) noted that “In addition [to image value], image identity had a significant effect in many cells, often overlapping with the representation of value at the single-cell level.” Furthermore, Genud-Gabai et al. 
(2013) demonstrated that stimuli often considered “controls” (i.e., the nonreinforced stimuli in an association task) are well represented by neurons in the amygdala, possibly because these stimuli now are reliable predictors of the lack of an outcome. We intentionally used a simple task featuring stimuli with no inherent value to emphasize that sensory modality and stimulus identity are encoded in the amygdala, almost by default. The default response of large populations of neurons in the amygdala may be independent of association with value (whether unconditioned or conditioned) or social significance. Establishing that certain stimuli are of low significance may be important to prevent the elaboration of energetically costly behavioral and autonomic responses that are typically elicited by stimuli of high behavioral relevance. These processes may not be easily detectable by signals with slower temporal dynamics (e.g., the BOLD signal) but may be important for affective homeostasis and optimal social behavior.

In conclusion, these data demonstrate that single neurons in the amygdala often respond to neutral stimuli from multiple sensory domains. The diverse response profiles and lack of clear anatomical clustering suggest a highly flexible system upon which more elaborate representations of associated value or social significance might be built. Future studies of how neurons in the amygdala integrate the various sensory features, as well as more abstract components of stimuli, will help to elucidate the mechanisms by which the amygdala binds information from multiple sources.

Footnotes

This work was supported by National Institute of Mental Health P50MH100023 and a Medical Research Grant from an Anonymous Foundation. We thank Andrew Fuglevand for help designing this project; and Prisca Zimmerman and Kennya Garcia for help setting up the experiments and collecting the data.

The authors declare no competing financial interests.

References

- Aggleton JP, Burton MJ, Passingham RE (1980) Cortical and subcortical afferents to the amygdala of the rhesus monkey (Macaca mulatta). Brain Res 190:347–368. 10.1016/0006-8993(80)90279-6 [DOI] [PubMed] [Google Scholar]

- Amaral DG, Price JL (1984) Amygdalo-cortical projections in the monkey (Macaca fascicularis). J Comp Neurol 230:465–496. 10.1002/cne.902300402 [DOI] [PubMed] [Google Scholar]

- Amir A, Lee SC, Headley DB, Herzallah MM, Paré D (2015) Amygdala signaling during foraging in a hazardous environment. J Neurosci 35:12994–13005. 10.1523/JNEUROSCI.0407-15.2015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson J. (1995) An Introduction to neural networks. Cambridge, MA: Massachusetts Institute of Technology. [Google Scholar]

- Avillac M, Olivier E, Denève S, Ben Hamed S, Duhamel JR, (2004) Multisensory integration in multiple reference frames in the posterior parietal cortex. Cogn Process 5:159–166. [Google Scholar]

- Barraclough NE, Xiao D, Baker CI, Oram MW, Perrett DI (2005) Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. J Cogn Neurosci 17:377–391. 10.1162/0898929053279586 [DOI] [PubMed] [Google Scholar]

- Beauchamp MS, Lee KE, Argall BD, Martin A (2004) Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41:809–823. 10.1016/S0896-6273(04)00070-4 [DOI] [PubMed] [Google Scholar]

- Belova MA, Paton JJ, Salzman CD (2008) Moment-to-moment tracking of state value in the amygdala. J Neurosci 28:10023–10030. 10.1523/JNEUROSCI.1400-08.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bermudez MA, Schultz W (2010) Reward magnitude coding in primate amygdala neurons. J Neurophysiol 104:3424–3432. 10.1152/jn.00540.2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonda E. (2000) Organization of connections of the basal and accessory basal nuclei in the monkey amygdala. Eur J Neurosci 12:1971–1992. 10.1046/j.1460-9568.2000.00082.x [DOI] [PubMed] [Google Scholar]

- Bremen P, Massoudi R, Van Wanrooij MM, Van Opstal AJ (2017) Audio-visual integration in a redundant target paradigm: a comparison between rhesus macaque and man. Front Syst Neurosci 11:89. 10.3389/fnsys.2017.00089 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brothers L, Ring B, Kling A (1990) Response of neurons in the macaque amygdala to complex social stimuli. Behav Brain Res 41:199–213. 10.1016/0166-4328(90)90108-Q [DOI] [PubMed] [Google Scholar]

- Carlo CN, Stefanacci L, Semendeferi K, Stevens CF (2010) Comparative analyses of the neuron numbers and volumes of the amygdaloid complex in old and new world primates. J Comp Neurol 518:1176–1198. 10.1002/cne.22264 [DOI] [PubMed] [Google Scholar]

- Chandrasekaran C, Ghazanfar AA (2009) Different neural frequency bands integrate faces and voices differently in the superior temporal sulcus. J Neurophysiol 101:773–788. 10.1152/jn.90843.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chang SW, Fagan NA, Toda K, Utevsky AV, Pearson JM, Platt ML (2015) Neural mechanisms of social decision-making in the primate amygdala. Proc Natl Acad Sci U S A 112:16012–16017. 10.1073/pnas.1514761112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chareyron LJ, Banta Lavenex P, Amaral DG, Lavenex P (2011) Stereological analysis of the rat and monkey amygdala. J Comp Neurol 519:3218–3239. 10.1002/cne.22677 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ciocchi S, Herry C, Grenier F, Wolff SB, Letzkus JJ, Vlachos I, Ehrlich I, Sprengel R, Deisseroth K, Stadler MB, Müller C, Lüthi A (2010) Encoding of conditioned fear in central amygdala inhibitory circuits. Nature 468:277–282. 10.1038/nature09559 [DOI] [PubMed] [Google Scholar]

- Dahl CD, Logothetis NK, Kayser C (2010) Modulation of visual responses in the superior temporal sulcus by audio-visual congruency. Front Integr Neurosci 4:10. 10.3389/fnint.2010.00010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Diehl MM, Romanski LM (2014) Responses of prefrontal multisensory neurons to mismatching faces and vocalizations. J Neurosci 34:11233–11243. 10.1523/JNEUROSCI.5168-13.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duhamel JR, Colby CL, Goldberg ME (1998) Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol 79:126–136. 10.1152/jn.1998.79.1.126 [DOI] [PubMed] [Google Scholar]

- Ehrlich I, Humeau Y, Grenier F, Ciocchi S, Herry C, Lüthi A (2009) Amygdala inhibitory circuits and the control of fear memory. Neuron 62:757–771. 10.1016/j.neuron.2009.05.026 [DOI] [PubMed] [Google Scholar]

- Gelbard-Sagiv H, Mudrik L, Hill MR, Koch C, Fried I (2018) Human single neuron activity precedes emergence of conscious perception. Nat Commun 9:2057. 10.1038/s41467-018-03749-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Genud-Gabai R, Klavir O, Paz R (2013) Safety signals in the primate amygdala. J Neurosci 33:17986–17994. 10.1523/JNEUROSCI.1539-13.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghashghaei HT, Barbas H (2002) Pathways for emotion: interactions of prefrontal and anterior temporal pathways in the amygdala of the rhesus monkey. Neuroscience 115:1261–1279. 10.1016/S0306-4522(02)00446-3 [DOI] [PubMed] [Google Scholar]

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK (2005) Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci 25:5004–5012. 10.1523/JNEUROSCI.0799-05.2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goldring AB, Cooke DF, Baldwin MK, Recanzone GH, Gordon AG, Pan T, Simon SI, Krubitzer L (2014) Reversible deactivation of higher-order posterior parietal areas: II. Alterations in response properties of neurons in areas 1 and 2. J Neurophysiol 112:2545–2560. 10.1152/jn.00141.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gore F, Schwartz EC, Brangers BC, Aladi S, Stujenske JM, Likhtik E, Russo MJ, Gordon JA, Salzman CD, Axel R (2015) Neural representations of unconditioned stimuli in basolateral amygdala mediate innate and learned responses. Cell 162:134–145. 10.1016/j.cell.2015.06.027 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG (2007) Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol 97:1671–1683. 10.1152/jn.00714.2006 [DOI] [PubMed] [Google Scholar]

- Groh JM, Pai DK (2010) Looking at sounds: neural mechanisms in the primate brain. Oxford: Oxford UP.

- Han W, Tellez LA, Rangel MJ Jr, Motta SC, Zhang X, Perez IO, Canteras NS, Shammah-Lagnado SJ, van den Pol AN, de Araujo IE (2017) Integrated control of predatory hunting by the central nucleus of the amygdala. Cell 168:311–324.e18. 10.1016/j.cell.2016.12.027

- Haruno M, Frith CD (2010) Activity in the amygdala elicited by unfair divisions predicts social value orientation. Nat Neurosci 13:160–161. 10.1038/nn.2468

- Hoffman KL, Gothard KM, Schmid MC, Logothetis NK (2007) Facial-expression and gaze-selective responses in the monkey amygdala. Curr Biol 17:766–772. 10.1016/j.cub.2007.03.040

- Huang RS, Sereno MI (2007) Dodecapus: an MR-compatible system for somatosensory stimulation. Neuroimage 34:1060–1073. 10.1016/j.neuroimage.2006.10.024

- Intveld RW, Dann B, Michaels JA, Scherberger H (2018) Neural coding of intended and executed grasp force in macaque areas AIP, F5, and M1. Sci Rep 8:17985. 10.1038/s41598-018-35488-z

- Jolkkonen E, Pitkänen A (1998) Intrinsic connections of the rat amygdaloid complex: projections originating in the central nucleus. J Comp Neurol 395:53–72.

- Kadohisa M, Verhagen JV, Rolls ET (2005) The primate amygdala: neuronal representations of the viscosity, fat texture, temperature, grittiness and taste of foods. Neuroscience 132:33–48. 10.1016/j.neuroscience.2004.12.005

- Kaminski J, Sullivan S, Chung JM, Ross IB, Mamelak AN, Rutishauser U (2017) Persistently active neurons in human medial frontal and medial temporal lobe support working memory. Nat Neurosci 20:590–601. 10.1038/nn.4509

- Karádi Z, Scott TR, Oomura Y, Nishino H, Aou S, Lénárd L (1998) Complex functional attributes of amygdaloid gustatory neurons in the rhesus monkeys. Ann N Y Acad Sci 855:488–492. 10.1111/j.1749-6632.1998.tb10611.x

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD (2009) Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci 21:1162–1178. 10.1162/jocn.2009.21100

- Kikinis R, Pieper SD, Vosburgh KG (2014) 3D Slicer: a platform for subject-specific image analysis, visualization, and clinical support. In: Intraoperative imaging and image-guided therapy, pp. 277–289. New York: Springer.

- Kirk R (2013) Experimental design: procedures for the behavioral sciences. Thousand Oaks, CA: Sage.

- Kuraoka K, Nakamura K (2007) Responses of single neurons in monkey amygdala to facial and vocal emotions. J Neurophysiol 97:1379–1387. 10.1152/jn.00464.2006

- Kuraoka K, Nakamura K (2012) Categorical representation of objects in the central nucleus of the monkey amygdala: object categorization within the monkey amygdala. Eur J Neurosci 35:1504–1512. 10.1111/j.1460-9568.2012.08061.x

- Kyriazi P, Headley DB, Paré D (2018) Multi-dimensional coding by basolateral amygdala neurons. Neuron 99:1315–1328.e5. 10.1016/j.neuron.2018.07.036

- Leonard CM, Rolls ET, Wilson FA, Baylis GC (1985) Neurons in the amygdala of the monkey with responses selective for faces. Behav Brain Res 15:159–176. 10.1016/0166-4328(85)90062-2

- Livneh U, Paz R (2012) Aversive-bias and stage selectivity in neurons of the primate amygdala during acquisition, extinction, and overnight retention. J Neurosci 32:8598–8610. 10.1523/JNEUROSCI.0323-12.2012

- Livneh U, Resnik J, Shohat Y, Paz R (2012) Self-monitoring of social facial expressions in the primate amygdala and cingulate cortex. Proc Natl Acad Sci U S A 109:18956–18961. 10.1073/pnas.1207662109

- Maris E, Oostenveld R (2007) Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods 164:177–190. 10.1016/j.jneumeth.2007.03.024

- Minxha J, Mosher C, Morrow JK, Mamelak AN, Adolphs R, Gothard KM, Rutishauser U (2017) Fixations gate species-specific responses to free viewing of faces in the human and macaque amygdala. Cell Rep 18:878–891. 10.1016/j.celrep.2016.12.083

- Mosher CP, Zimmerman PE, Gothard KM (2010) Response characteristics of basolateral and centromedial neurons in the primate amygdala. J Neurosci 30:16197–16207. 10.1523/JNEUROSCI.3225-10.2010

- Mosher CP, Zimmerman PE, Gothard KM (2014) Neurons in the monkey amygdala detect eye-contact during naturalistic social interactions. Curr Biol 24:2459–2464. 10.1016/j.cub.2014.08.063

- Mosher CP, Zimmerman PE, Fuglevand AJ, Gothard KM (2016) Tactile stimulation of the face and the production of facial expressions activate neurons in the primate amygdala. eNeuro 3:ENEURO.0182-16.2016. 10.1523/ENEURO.0182-16.2016

- Munuera J, Rigotti M, Salzman CD (2018) Shared neural coding for social hierarchy and reward value in primate amygdala. Nat Neurosci 21:415–423. 10.1038/s41593-018-0082-8

- Nakamura K, Mikami A, Kubota K (1992) Activity of single neurons in the monkey amygdala during performance of a visual discrimination task. J Neurophysiol 67:1447–1463. 10.1152/jn.1992.67.6.1447

- Nishijo H, Ono T, Nishino H (1988) Topographic distribution of modality-specific amygdalar neurons in alert monkey. J Neurosci 8:3556–3569. 10.1523/JNEUROSCI.08-10-03556.1988

- Ono T, Fukuda M, Nishino H, Sasaki K, Muramoto K-I (1983) Amygdaloid neuronal responses to complex visual stimuli in an operant feeding situation in the monkey. Brain Res Bull 11:515–518. 10.1016/0361-9230(83)90123-5

- Paré D, Royer S, Smith Y, Lang EJ (2003) Contextual inhibitory gating of impulse traffic in the intra-amygdaloid network. Ann N Y Acad Sci 985:78–91. 10.1111/j.1749-6632.2003.tb07073.x

- Paré D, Quirk GJ (2017) When scientific paradigms lead to tunnel vision: lessons from the study of fear. NPJ Sci Learn 2:6. 10.1038/s41539-017-0007-4

- Paton JJ, Belova MA, Morrison SE, Salzman CD (2006) The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 439:865–870. 10.1038/nature04490

- Peck CJ, Salzman CD (2014) Amygdala neural activity reflects spatial attention towards stimuli promising reward or threatening punishment. eLife 3.

- Pitkänen A, Amaral DG (1991) Demonstration of projections from the lateral nucleus to the basal nucleus of the amygdala: a PHA-L study in the monkey. Exp Brain Res 83:465–470.

- Resnik J, Paz R (2015) Fear generalization in the primate amygdala. Nat Neurosci 18:188–190. 10.1038/nn.3900

- Rolls ET (2006) Brain mechanisms underlying flavour and appetite. Philos Trans R Soc Lond B Biol Sci 361:1123–1136. 10.1098/rstb.2006.1852

- Romanski LM, Hwang J (2012) Timing of audiovisual inputs to the prefrontal cortex and multisensory integration. Neuroscience 214:36–48. 10.1016/j.neuroscience.2012.03.025

- Rorden C, Brett M (2000) Stereotaxic display of brain lesions. Behav Neurol 11:191–200.

- Rudebeck PH, Mitz AR, Chacko RV, Murray EA (2013) Effects of amygdala lesions on reward-value coding in orbital and medial prefrontal cortex. Neuron 80:1519–1531. 10.1016/j.neuron.2013.09.036

- Sah P, Faber ES, Lopez De Armentia M, Power J (2003) The amygdaloid complex: anatomy and physiology. Physiol Rev 83:803–834. 10.1152/physrev.00002.2003

- Sanghera MK, Rolls ET, Roper-Hall A (1979) Visual responses of neurons in the dorsolateral amygdala of the alert monkey. Exp Neurol 63:610–626. 10.1016/0014-4886(79)90175-4

- Schoenbaum G, Chiba AA, Gallagher M (1999) Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci 19:1876–1884. 10.1523/JNEUROSCI.19-05-01876.1999

- Scott TR, Karadi Z, Oomura Y, Nishino H, Plata-Salaman CR, Lenard L, Giza BK, Aou S (1993) Gustatory neural coding in the amygdala of the alert macaque monkey. J Neurophysiol 69:1810–1820. 10.1152/jn.1993.69.6.1810

- Scott TR, Giza BK, Yan J (1999) Gustatory neural coding in the cortex of the alert cynomolgus macaque: the quality of bitterness. J Neurophysiol 81:60–71. 10.1152/jn.1999.81.1.60

- Spitler KM, Gothard KM (2008) A removable silicone elastomer seal reduces granulation tissue growth and maintains the sterility of recording chambers for primate neurophysiology. J Neurosci Methods 169:23–26. 10.1016/j.jneumeth.2007.11.026

- Stein BE, Stanford TR, Rowland BA (2014) Development of multisensory integration from the perspective of the individual neuron. Nat Rev Neurosci 15:520–535. 10.1038/nrn3742

- Sugase-Miyamoto Y, Richmond BJ (2005) Neuronal signals in the monkey basolateral amygdala during reward schedules. J Neurosci 25:11071–11083. 10.1523/JNEUROSCI.1796-05.2005

- Sugihara T, Diltz MD, Averbeck BB, Romanski LM (2006) Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J Neurosci 26:11138–11147. 10.1523/JNEUROSCI.3550-06.2006

- Touretzky D, Pomerleau D (1989) What's hidden in the hidden layers? BYTE 14:227–233.

- Uwano T, Nishijo H, Ono T, Tamura R (1995) Neuronal responsiveness to various sensory stimuli, and associative learning in the rat amygdala. Neuroscience 68:339–361. 10.1016/0306-4522(95)00125-3

- Wallace MT, Stein BE (1997) Development of multisensory neurons and multisensory integration in cat superior colliculus. J Neurosci 17:2429–2444. 10.1523/JNEUROSCI.17-07-02429.1997

- Werner S, Noppeney U (2010) Superadditive responses in superior temporal sulcus predict audiovisual benefits in object categorization. Cereb Cortex 20:1829–1842. 10.1093/cercor/bhp248

- Zald DH, Pardo JV (1997) Emotion, olfaction, and the human amygdala: amygdala activation during aversive olfactory stimulation. Proc Natl Acad Sci U S A 94:4119–4124. 10.1073/pnas.94.8.4119