Abstract

Purpose:

To demonstrate a tagging method compatible with RT-MRI for the study of speech production.

Methods:

Tagging is applied as a brief interruption to a continuous real-time spiral acquisition. Tagging can be initiated manually by the operator, cued to the speech stimulus, or be automatically applied with a fixed frequency. We use a standard 2D 1-3-3-1 binomial SPAtial Modulation of Magnetization (SPAMM) sequence with 1 cm spacing in both in-plane directions. Tag persistence in tongue muscle is simulated and validated in-vivo. The ability to capture internal tongue deformations is tested during speech production of American English diphthongs in native speakers.

Results:

We achieved an imaging window of 650–800 ms at 1.5 T, with imaging SNR ≥ 17 and tag CNR ≥ 5 in the human tongue, providing a temporal resolution of 36 frames/sec and an in-plane spatial resolution of 2 mm with real-time interactive acquisition and view-sharing reconstruction. The proposed method captured tongue motion patterns and their relative timing with adequate spatiotemporal resolution during the production of American English diphthongs and consonants.

Conclusion:

Intermittent tagging during real-time MRI of speech production can reveal the internal deformations of the tongue. This capability will allow new investigations of the biomechanics of the lingual subsystems during speech, yielding valuable spatiotemporal information without relying on binning of repeated utterances.

Keywords: tagging, real-time MRI, spiral, speech production, tongue

INTRODUCTION

The vocal tract is a complex system that consists of both movable and immovable structures. Speech production involves complex spatiotemporal coordination of multiple vocal organs in the upper (oral) and lower (pharyngeal) airways. Visualization of the movements of the organs can provide important information about the spatiotemporal properties of speech actions, or “gestures.” Several modalities have been employed to visualize speech, including X-ray [1], computed tomography (CT) [2], electromagnetic articulography (EMA) [3], ultrasound [4] and MRI [5]–[10]. MRI can uniquely provide both static images with excellent soft tissue contrast and dynamic images with high frame rate, without the use of ionizing radiation, making it a promising tool. Real-time MRI (RT-MRI) now plays an important role in interpreting dynamics of vocal tract shaping during speech production, swallowing, and other human functions such as vocal performance [5], [11]–[13].

In speech production, the upper respiratory tract forms a series of connected resonance cavities that can be modified in size and shape using coordinated movements of the velum, jaw, pharyngeal tongue root, tongue body, tongue tip, and lips [6]. Among these articulators, the human tongue is the most powerful enabler of the remarkably complex shaping occurring in speech. The tongue is a muscular hydrostat composed of numerous intrinsic and extrinsic muscles [14]. The internal deformation of tongue muscles cannot be easily inferred from the contours of the tongue surface, and the relationship between muscle activity and tongue shaping is the subject of scientific investigation as an important component in understanding how healthy speech is controlled and how it is disrupted in disease [15], [16]. However, scientists remain reliant on inverse modeling of surface contours, which is heavily contingent on modeling assumptions [16]–[19].

RT-MRI techniques have been extensively used in the last decade to study speech production, specifically the dynamics of vocal tract shaping with a focus on tracking the air-tissue interface at articulator and vocal tract surfaces [13]. Recent RT-MRI advances include improvements in spatiotemporal resolution [12], [20], [21], increasing spatial coverage [22]–[25], reducing reconstruction latency [26]–[28], mitigating off-resonance artifacts [21], [29], and combinations of the above. However, these techniques all lack the ability to measure internal muscle activity and to image and quantify the deformations of local regions within the human tongue, arguably the most important articulator, during natural speech.

Tagged MRI has been used to capture internal tongue deformation since the early 1990s. Static MRI was first used to take snapshots at designated points in a tongue movement to visualize the deformation [30]–[32]. Later, tagged CINE-MRI was employed to analyze the motion of the internal tongue during speech [33]–[35]. Recently, it has been used to provide images for measurement of 4D tongue motion and to generate an atlas of the human tongue during articulation [36], [37]. Such CINE methods rely on repetition with perfect synchronization. This requirement is readily met in cardiac imaging, where tagged MRI is used to analyze cardiac motion [38], [39], because heartbeats in sinus rhythm are highly repeatable, independent of the rate of contraction [40], and can be easily synchronized with ECG. However, the heart differs from the tongue in important ways, most notably in that speech production possesses great token and type variability due to its voluntary, information-encoding, and highly context-sensitive nature. Real-time tagged MRI with Cartesian sampling was explored for cardiac applications [41], [42]. However, these methods provide only 1-D deformation in real time, as they achieve fast imaging either by compromising resolution in the phase-encoding direction [41] or by acquiring only a small island around a harmonic peak in k-space [42]. They need at least two heartbeats to resolve motion in both directions. Real-time Strain ENCoding (SENC) techniques [43], [44], although able to provide quantitative strain for cardiac applications, measure strain in the direction perpendicular to the imaging plane and are not compatible with speech applications.

In this work, we demonstrate a tagging method compatible with RT-MRI for the study of natural human speech production. We apply tagging as a brief interruption of continuous RT-MRI data acquisition. We explore the selection of imaging parameters for such speech studies to optimize image quality and tag persistence. We evaluate this method using simulations and in-vivo studies of American English diphthong and consonant production. We show that the proposed method can capture tongue motion patterns and their relative timing through internal tongue deformation, and therefore provide a potential tool for studying muscle function in speech production and similar scientific and clinical applications.

METHODS

Tagged RT-MRI Implementation

Experiments were performed on a Signa Excite HD 1.5 T scanner with a custom eight-channel upper-airway coil [12]. The pulse sequence was implemented within a real-time imaging platform (RTHawk Research v2.3.4, HeartVista, Inc., Los Altos, CA, USA) [45].

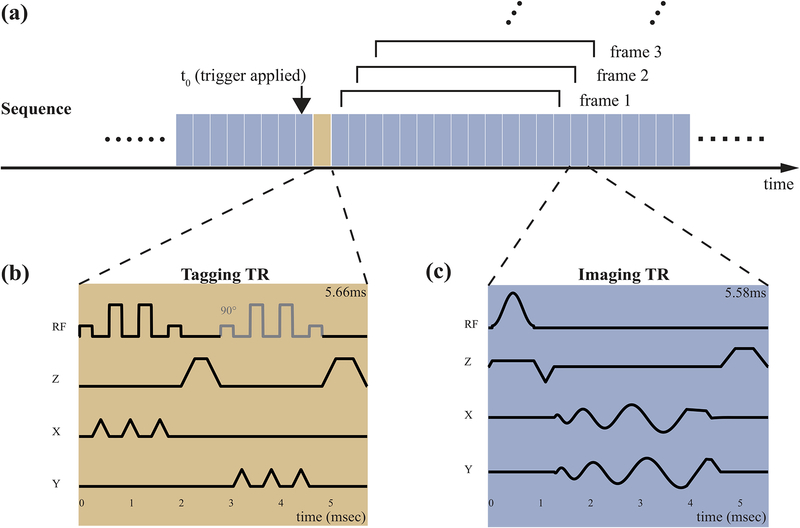

Figure 1 illustrates the acquisition timing and the pulse sequence diagrams for tagging and imaging. As shown in Figure 1a, tagging is applied as a brief interruption to continuous real-time spiral acquisition. A button was added to the RTHawk graphical user interface to allow operator control of intermittent tagging. Pushing the button causes the tagging module to be played immediately after the current imaging TR and before the next imaging TR. Real-time spiral data acquisition experiences only a brief interruption of less than 6 msec (comparable to one imaging TR). The persistence of the tag grid depends on the longitudinal relaxation (T1) of the tongue muscle and on the effect of the imaging RF excitations [46].

Figure 1.

Speech RT-MRI with intermittent tagging. (a) Overall acquisition timing. Continuous imaging is performed using interleaved spiral GRE imaging (c, blue block) with view-sharing reconstruction. Thirteen interleaves with a bit-reversed interleaf order were used to fully sample k-space at each time frame. Tag placement is performed using two 1-3-3-1 SPAMM pulses along x and y (b, yellow block). Note that the second composite SPAMM pulse has a 90° relative phase shift and a slightly larger crusher to avoid stimulated echoes.

Figure 1(b) illustrates the tagging sequence, which is a standard 2D 1-3-3-1 binomial SPAtial Modulation of Magnetization (SPAMM) sequence [47], [48], with a 1 cm spacing in both in-plane directions. Two SPAMM pulses were sequentially applied along the x and y axes, followed by crushers to eliminate any remaining transverse magnetization [49]. The second composite SPAMM sequence had its phase shifted by 90° relative to the first one [48] and used a different crusher area to avoid stimulated echoes. The overall duration was 5.66 msec.
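The two quantities that define a binomial SPAMM module, the sub-pulse flip angles and the tagging gradient area, can be illustrated with a short calculation. This is a minimal sketch and not the sequence code used in this work: the total tip angle of 90° is an assumption (the text does not state it), and the function names are hypothetical. The tag spacing relation used is the standard one, in which one tag period corresponds to 2π of phase accrued between adjacent sub-pulses.

```python
# Proton gyromagnetic ratio divided by 2*pi, in Hz/T.
GAMMA_BAR_HZ_PER_T = 42.577478e6


def binomial_subpulse_angles(total_deg, weights=(1, 3, 3, 1)):
    """Split a total tagging tip angle across binomially weighted sub-pulses."""
    total_weight = sum(weights)
    return [total_deg * w / total_weight for w in weights]


def tagging_gradient_moment(spacing_m):
    """Gradient area (T*s/m) between adjacent sub-pulses for a given tag spacing.

    One tag period corresponds to 2*pi of phase accrued across `spacing_m`,
    so gamma_bar * area * spacing = 1 cycle.
    """
    return 1.0 / (GAMMA_BAR_HZ_PER_T * spacing_m)


# ASSUMPTION: 90 degree total tip angle; the paper does not specify it.
angles = binomial_subpulse_angles(90.0)
# 1 cm tag spacing, as stated in the text.
moment = tagging_gradient_moment(0.01)

print("sub-pulse flip angles (deg):", angles)
print(f"gradient area per lobe: {moment * 1e6:.3f} mT*ms/m")
```

With these assumptions the sub-pulses are 11.25°, 33.75°, 33.75°, and 11.25°, and each tagging gradient lobe needs an area of roughly 2.35 mT·ms/m, which is small compared with what a 40 mT/m gradient system can deliver in a fraction of a millisecond.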

Figure 1(c) illustrates the imaging sequence, which is a standard spiral spoiled gradient echo designed to make maximum use of the gradients (40 mT/m amplitude and 150 mT/m/ms slew rate). The imaging parameters were: FOV 20 cm, slice thickness 7 mm, readout duration 2.49 ms, TE/TR 0.71 ms/5.58 ms, and 13 interleaves with bit-reversed view ordering.
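Because 13 is not a power of two, a bit-reversed ordering needs some convention for handling the unused indices. The sketch below shows one common convention, offered as an assumption rather than the ordering actually used here: bit-reverse over the next power of two (16) and discard indices that fall outside the valid range. The function name is hypothetical.

```python
def bit_reversed_order(n_interleaves):
    """Bit-reversed acquisition order for an arbitrary number of interleaves.

    ASSUMPTION: indices are bit-reversed over the next power of two and
    out-of-range values are skipped; the paper does not state its scheme.
    """
    n_bits = max(1, (n_interleaves - 1).bit_length())
    order = []
    for i in range(1 << n_bits):
        # Reverse the n_bits-wide binary representation of i.
        rev = int(format(i, f"0{n_bits}b")[::-1], 2)
        if rev < n_interleaves:
            order.append(rev)
    return order


order = bit_reversed_order(13)
print(order)  # [0, 8, 4, 12, 2, 10, 6, 1, 9, 5, 3, 11, 7]
```

The point of such an ordering is that any short run of consecutive TRs samples angularly well-separated interleaves, which suits sliding-window reconstruction.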

Coil-by-coil gridding reconstruction with view-sharing was performed on-the-fly during data acquisition. The Walsh method was used to estimate the sensitivity maps for coil combination [50]. We used a sliding-window step size of 5 TRs, resulting in a nominal temporal resolution of 36 frames/sec. The approximate end-to-end reconstruction latency was 27 msec. This setup enables the operator to observe the deformation of the tag lines in real time, to monitor the subject's completion of the designed articulation task, and to determine whether the trigger timing conformed to the design. Concomitant field correction [51] and image unwarping that accounts for gradient nonuniformity [52] were applied with the gridding reconstruction.
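The relationship between TR, sliding-window step size, and nominal frame rate can be checked with a few lines of arithmetic. The sketch below uses the values quoted in this section; the function names are hypothetical and the windowing rule (each frame combines 13 consecutive TRs, advancing by 5 TRs per frame) is a plain reading of the description above, not the reconstruction code itself.

```python
TR_MS = 5.58          # imaging TR from the text
N_INTERLEAVES = 13    # interleaves per fully sampled frame
STEP_TRS = 5          # sliding-window step size


def frame_rate_hz(tr_ms=TR_MS, step_trs=STEP_TRS):
    """Nominal frame rate when a new frame is emitted every `step_trs` TRs."""
    return 1000.0 / (tr_ms * step_trs)


def frame_window(frame_index, n_interleaves=N_INTERLEAVES, step_trs=STEP_TRS):
    """TR indices combined into one view-shared frame."""
    start = frame_index * step_trs
    return list(range(start, start + n_interleaves))


def interleaves_in_window(window, n_interleaves=N_INTERLEAVES):
    """Positions within the repeating interleaf cycle covered by a window."""
    return sorted(tr % n_interleaves for tr in window)


print(f"nominal frame rate: {frame_rate_hz():.1f} frames/sec")
print(f"temporal footprint per frame: {N_INTERLEAVES * TR_MS:.2f} ms")
shared = len(set(frame_window(0)) & set(frame_window(1)))
print(f"TRs shared by consecutive frames: {shared}")
```

Every window of 13 consecutive TRs contains each interleaf exactly once regardless of where it starts, so each frame is fully sampled even though consecutive frames share 8 of their 13 TRs.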

Selection of Acquisition Parameters

Tag persistence was quantitatively evaluated by analyzing the temporal evolution of the tag contrast-to-noise ratio, CNRtag [46]. We assume that the steady-state magnetization Mss is reached prior to tagging. Immediately after the tagging sequence, at time t0, the longitudinal magnetization can be expressed as:

$$M_z(x, y, t_0) = M_{ss}\, Q(x, y) \tag{1}$$

where Q(x, y) represents the modulation function due to the SPAMM sequence. The longitudinal magnetization immediately before the first RF at time t1, considering T1 recovery, is:

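$$M_z(x, y, t_1) = M_{ss}\, Q(x, y)\, e^{-(t_1 - t_0)/T_1} + M_0 \left(1 - e^{-(t_1 - t_0)/T_1}\right) \tag{2}$$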

The first term, denoted MT, contains the fading tag information; the second term, denoted MR, describes the recovery toward the equilibrium magnetization M0. We calculate the temporal evolution of tag contrast by considering n consecutive spiral GRE TRs, each with flip angle α. Each imaging RF pulse scales the longitudinal magnetization by a factor of cos α. The MT component immediately before the nth RF excitation (at time tn) can be expressed as:

$$M_T(x, y, t_n) = M_{ss}\, Q(x, y)\, e^{-(t_n - t_0)/T_1} \cos^{n-1}\alpha \tag{3}$$

and the MR component can be recursively expressed as:

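$$M_R(t_n) = M_R(t_{n-1}) \cos\alpha \; e^{-(t_n - t_{n-1})/T_1} + M_0 \left(1 - e^{-(t_n - t_{n-1})/T_1}\right) \tag{4}$$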

Applying RF pulses during imaging accelerates tag fading, because each pulse consumes part of the longitudinal magnetization. An optimal flip angle can be determined as described below.
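The fading of the tag term and the regrowth of the recovery term can be explored numerically. The following is a minimal sketch, not the simulation code used in this work. It assumes that the first imaging RF follows the tag by exactly one TR, that each subsequent TR multiplies the longitudinal magnetization by cos α and applies T1 recovery, and that Mss is given by the standard spoiled-GRE steady-state expression. The function name, the normalization M0 = 1, and the default modulation value Q = 1 are illustrative choices.

```python
import math


def simulate_components(alpha_deg, n_rf, tr_ms=5.58, t1_ms=850.0, m0=1.0, q=1.0):
    """Tag (M_T) and recovery (M_R) terms immediately before RF pulses 1..n_rf.

    ASSUMPTION: t_1 - t_0 equals one TR, and all later RF pulses are
    separated by one TR.
    """
    alpha = math.radians(alpha_deg)
    e1 = math.exp(-tr_ms / t1_ms)
    # Spoiled-GRE steady-state longitudinal magnetization just before each RF.
    m_ss = m0 * (1.0 - e1) / (1.0 - e1 * math.cos(alpha))

    m_t = [m_ss * q * e1]          # tag term after T1 decay over the first gap
    m_r = [m0 * (1.0 - e1)]        # recovery term over the first gap
    for _ in range(1, n_rf):
        # Each RF scales M_z by cos(alpha); T1 relaxation then acts for one TR.
        m_t.append(m_t[-1] * math.cos(alpha) * e1)
        m_r.append(m_r[-1] * math.cos(alpha) * e1 + m0 * (1.0 - e1))
    return m_t, m_r, m_ss


m_t, m_r, m_ss = simulate_components(alpha_deg=5.0, n_rf=3000)
print(f"M_ss = {m_ss:.4f}")
print(f"M_T after 3000 RF pulses = {m_t[-1]:.2e}")
print(f"M_R after 3000 RF pulses = {m_r[-1]:.4f}")
```

Under these assumptions the tag term decays geometrically toward zero while the recovery term converges to Mss, so the tagged and untagged regions end at the same steady-state level, which is the fading behavior described above.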

The contrast in the image is the part of ΔMT (the peak-to-valley difference in the tag magnetization MT) that is tipped into the transverse plane by the imaging RF pulse. CNRtag after the nth RF excitation is defined as the ratio between this image contrast and the standard deviation σ of the image noise:

$$\mathrm{CNR}_{tag}(t_n) = \frac{\Delta M_T(t_n)\, \sin\alpha}{\sigma} \tag{5}$$

Tag persistence can be defined as the time span between placement of the tag grid and CNRtag dropping below a given threshold; we refer to this span as the threshold time. Markl et al. [53] suggested a CNR threshold of 6 for cardiac applications. In the simulation, σ is calculated as the simulated steady-state signal divided by 15, as suggested by previous experiments [12].
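The threshold time can be estimated by stepping through successive imaging TRs until the tag CNR falls below the chosen cutoff. The sketch below is one possible reading of the procedure, under several stated assumptions: the tag contrast decays by e^(-TR/T1)·cos α per TR, the first RF follows the tag by one TR, the peak-to-valley modulation depth is 1 (fully saturated tag lines), and σ is the steady-state signal at an assumed reference flip angle of 5° divided by 15. The text does not state which flip angle the reference signal corresponds to, so the reference angle and all function names are hypothetical.

```python
import math

TR_MS, T1_MS = 5.58, 850.0


def steady_state_signal(alpha_deg, tr_ms=TR_MS, t1_ms=T1_MS, m0=1.0):
    """Transverse spoiled-GRE steady-state signal, M_ss * sin(alpha)."""
    alpha = math.radians(alpha_deg)
    e1 = math.exp(-tr_ms / t1_ms)
    return m0 * (1.0 - e1) / (1.0 - e1 * math.cos(alpha)) * math.sin(alpha)


def tag_cnr(alpha_deg, n, sigma, depth=1.0, tr_ms=TR_MS, t1_ms=T1_MS):
    """Tag CNR at the n-th imaging RF (n >= 1) after tag placement."""
    alpha = math.radians(alpha_deg)
    e1 = math.exp(-tr_ms / t1_ms)
    decay = e1 ** n * math.cos(alpha) ** (n - 1)
    return depth * steady_state_signal(alpha_deg, tr_ms, t1_ms) * decay / sigma


def threshold_time_ms(alpha_deg, threshold, sigma, tr_ms=TR_MS, max_rf=100000):
    """Time from tag placement until the CNR first drops below `threshold`."""
    for n in range(1, max_rf + 1):
        if tag_cnr(alpha_deg, n, sigma, tr_ms=tr_ms) < threshold:
            return n * tr_ms
    return None  # threshold never crossed within max_rf pulses


# ASSUMPTION: noise level referenced to the steady-state signal at 5 degrees.
SIGMA = steady_state_signal(5.0) / 15.0

for alpha_deg in range(1, 16):
    t = threshold_time_ms(float(alpha_deg), threshold=5.0, sigma=SIGMA)
    print(f"flip angle {alpha_deg:2d} deg: threshold time {t:.0f} ms")
```

Scanning the flip angle in this way exposes the trade-off discussed in this work: small flip angles start with too little contrast, while large flip angles start with more contrast but consume it faster. The absolute numbers depend on the assumed σ and modulation depth and should not be read as a reproduction of the reported results.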

Two healthy volunteers (27/M, 27/F) were scanned to verify tag persistence in the tongue and to identify the optimal imaging flip angle. Fifteen integer flip angles ranging from 1° to 15° were used in the experiment. A wide tag spacing of 5 cm was used to mitigate partial volume effects in the post-processing steps. The noise covariance matrix of the coils was measured in a separate scan with the excitation RF pulses turned off. The measured noise covariance matrix was used to pre-whiten the multi-coil data and to calculate the standard deviation of the noise for normalizing the results. For each flip angle, a separate scan was used to measure the steady-state signal in order to scale properly between simulation and measurements. The subjects were instructed to keep their mouth in a closed neutral position and to remain still during the scan to minimize off-resonance and motion artifacts. The peak and valley values were calculated by averaging over manually selected regions of interest (ROIs). The peak ROI consisted of two 4-by-6-pixel regions drawn in the bright areas of the tongue; the valley ROI was a single 3-by-16-pixel stripe at the center of the dark tag lines.

Triggering Mechanism

In this study, we tested three different tag-triggering schemes to assess the best utilization of the imaging window after the intermittent tagging sequence. Each involved a specific approach to coordinating the tag triggering by the operator with the speech production by a subject (who read linguistic stimuli projected on a screen).

(a) Manual triggering:

In the manual triggering approach, the subjects were instructed to speak the linguistic stimuli (described below) 10 times with a full pause between each production (to ensure the intermediate return to a neutral vocal tract posture). The operator used the first 2–3 utterances to ascertain the token-to-token rhythm or pacing of the subject for application of the tagging module for the rest of the trials. The operator controlled both the button for the tagging module and the projector showing the stimuli one utterance at a time.

(b) Cued triggering:

In the cued triggering approach, the MRI operator and the subject were instructed, respectively, to push the triggering button and to read the stimulus immediately upon its visual appearance on the projector screen.

(c) Periodic triggering:

In the periodic triggering approach, an automatic triggering was implemented in the sequence system. The tagging module was applied every 182 TRs with a period of approximately 1015 ms, which is equivalent to 14 fully sampled frames when no view sharing is applied. The subjects were instructed to say the stimuli for 15 sec with a pause between each individual speech item.
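The periodic-trigger numbers follow directly from the TR and interleaf count. The sketch below reproduces that arithmetic; it counts imaging time only and ignores the 5.66 msec tagging module itself, which appears to be how the quoted period of approximately 1015 ms was obtained. The helper name and the choice to skip a tag before the very first TR are assumptions.

```python
TR_MS = 5.58
N_INTERLEAVES = 13
TAG_EVERY_N_TRS = 182


def is_tag_boundary(tr_index, every=TAG_EVERY_N_TRS):
    """True when the tagging module is played just before this imaging TR.

    ASSUMPTION: no tag is played before the very first TR (index 0).
    """
    return tr_index > 0 and tr_index % every == 0


# Imaging time between tags; the tagging module duration is not included.
period_ms = TAG_EVERY_N_TRS * TR_MS
frames_per_period = TAG_EVERY_N_TRS / N_INTERLEAVES
tags_in_15_s = int(15_000 // period_ms)

print(f"tag period: {period_ms:.2f} ms")
print(f"fully sampled frames per period: {frames_per_period:.0f}")
print(f"tags applied during a 15 s scan: {tags_in_15_s}")
```

Because 182 is an exact multiple of 13, each tag lands at the same position in the interleaf cycle, so every tagged window starts with the same interleaf.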

Speech Experiments

Four healthy volunteers (2 M, 2 F; 27–31 years), all native American English speakers, were scanned. The experiment protocol was approved by our Institutional Review Board, and informed consent was obtained from all volunteers. Audio recording and stimuli presentation were adapted from similar protocols successfully used in previous studies [e.g., 17].

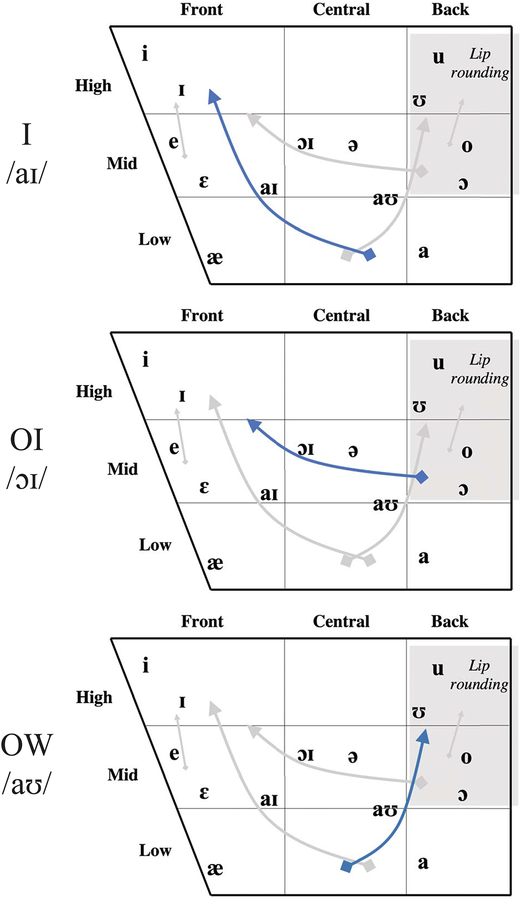

Table 1 shows the American English diphthong vowel stimuli used in this experiment [54]–[56]. Diphthongs are vowels in which the lingual postures, and their concomitant formant frequencies, require relatively large movements from one vowel target to another in the same syllable [54]. The diphthongs /aɪ/, /ɔɪ/ and /aʊ/ were chosen for this study because they involve substantial movement of the tongue when gliding from the initial to the final vowel quality, and the duration of these movements (~180 ms to 300 ms [56]) can be fully covered by the current imaging window. Figure 5 uses American English Vowel Charts to provide a rough schema for understanding the tongue positioning. The blue curve in each chart marks the starting and ending points for the three diphthongs being studied. These vowels in English are known to produce sweeping lingual motions that move the tongue upward from a depressed and/or retracted posture to a raised and fronted or raised and retracted posture as follows: in /aɪ/ from a low-back posture to a high-front posture, in /ɔɪ/ from a mid-back posture (with lip rounding) to a mid-high front posture, and in /aʊ/ from a low posture to a high-back (lip-rounded) posture. In these vowels, as in vowels generally, the tongue is more or less arched; it is not grooved or concave.

Table 1.

American English diphthong stimuli. Starting/ending posture description refers to the approximate tongue position when tagging began (if in a carrier, during the “a”) and ended.

| Stimulus | Carrier phrase | Diphthong | Starting posture | Ending posture | Approximate diphthong articulation duration |
|---|---|---|---|---|---|
| “I” | none | /aɪ/ | Low Back | High Front | 180 ms |
| “oy” | none | /ɔɪ/ | Mid Back | High Front | 300 ms |
| “ow” | none | /aʊ/ | Low Back | Mid/High Back | 180 ms |
| “A buy puppy” | a [·] puppy | /aɪ/ | Mid Central | High Front | 180 ms |
| “A boy puppy” | a [·] puppy | /ɔɪ/ | Mid Central | High Front | 300 ms |
| “A bow puppy” | a [·] puppy | /aʊ/ | Mid Central | Mid/High Back | 180 ms |

Figure 5.

American English Vowel Charts illustrate a rough schema for understanding tongue position observed in the representative frames in Figure 6.

The stimuli were presented both in isolation and in carrier phrases, so as to provide variation for investigating the proposed tagging sequence. Diphthong stimuli in isolation were the words/pseudo-words “I,” “oy,” and “ow.” The stimuli in carrier phrases placed the diphthongs after labial consonants in the words “buy,” “boy,” and “bow.” (For “ow,” subjects were instructed so as to ensure that their pronunciation rhymed with “now.”) A [b], a consonant made with lip rather than lingual closure, was used preceding and following the diphthong to minimize any coarticulation with other nearby lingual sounds. The tagging module was triggered in close temporal proximity to the onset of the diphthong. Different motion patterns and their relative timing during the transition between the component postures of the diphthongs were then imaged. The carrier phrase stimuli (“a buy/boy/bow puppy”) are presented in this work.

Table 2 shows the consonant stimuli used in the experiment. Stimuli occurred in the pseudo-words “ara,” “asha,” and “acha,” so as to place /ɹ/, /ʃ/ and /tʃ/ between two /ə/s having a relatively neutral vocal tract posture. All of these target consonants are produced using a tongue constriction in the anterior hard palate area immediately posterior to the alveolar ridge. /ɹ/ (for this speaker) places the tongue tip in a retroflex posture (though other American English speakers are known to make /ɹ/ with a bunched, tip-down posture), and /ʃ/ and /tʃ/ raise the tongue tip and blade up toward the post-alveolar area; /ʃ/ retains a small airway opening that allows turbulent airflow, while /tʃ/ has a brief stop of airflow as the tongue fully contacts the palate, followed by turbulent airflow as it draws away.

Table 2.

American English consonant stimuli.

| Stimulus | Consonant | Consonant type | Constriction area |
|---|---|---|---|
| “ara” | /ɹ/ | Retroflex Approximant | Lips, Post-alveolar ridge, Pharynx |
| “asha” | /ʃ/ | Postalveolar Fricative | Post-alveolar ridge |
| “acha” | /tʃ/ | Postalveolar Affricate | Post-alveolar ridge |

RESULTS

Acquisition Parameters

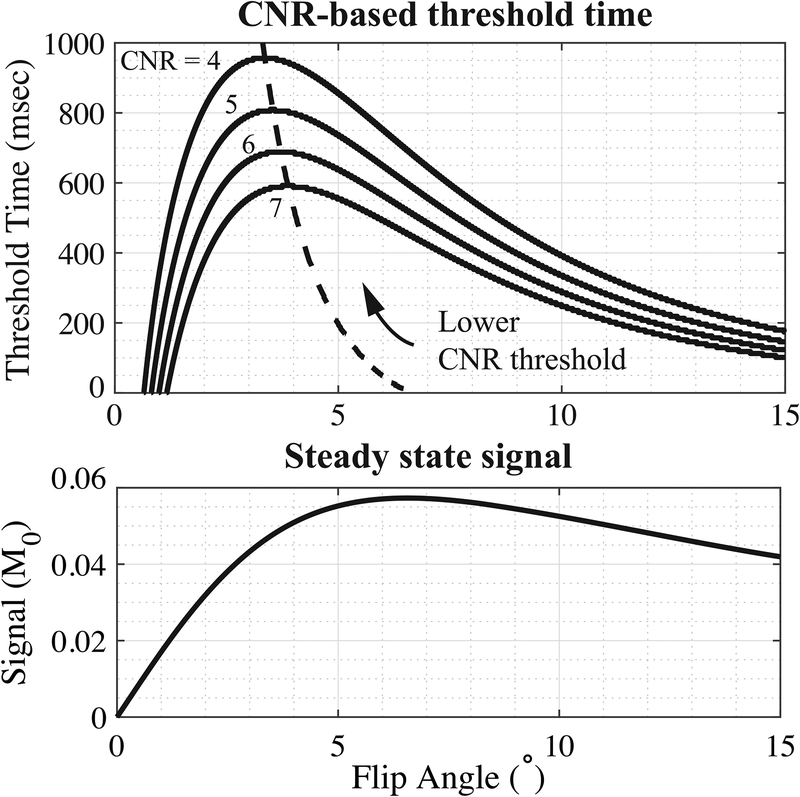

Figure 2 shows CNR-based threshold time and signal intensity as functions of imaging flip angle. The longitudinal relaxation time of the tongue muscle, T1 = 850 ms at 1.5 T, was measured by inversion recovery fast spin echo (IR-FSE) with multiple inversion times. This value agrees with previous literature [6], [57]. Dashed lines in Figure 2(a) indicate the CNR-optimal flip angle, that is, the flip angle that delivers the longest threshold time. The CNR-optimal flip angle increases from 3° to 6.5° as the threshold increases, and higher thresholds yield shorter tag persistence. The Ernst angle for imaging the tongue is αE = 6.2°, as shown in Figure 2(b). The simulation shows a trade-off between CNR-based tag persistence and image SNR when choosing the excitation flip angle.

Figure 2.

Simulation of tag persistence and steady-state signal as a function of imaging flip angle. Top: Threshold time is defined as the time span between the tag being placed and the tag CNR falling below the threshold value (shown for CNR cutoffs of 4, 5, 6, and 7). The dashed line marks the flip angles that will deliver the longest threshold time for each CNR threshold. The longest persistence can be reached at a flip angle of 3–6.5°. Performance suffers quickly if the flip angle is too low, but less so if the flip angle is too high. Bottom: Steady state signal as a function of flip angle for the imaging TR=5.58msec and tongue T1=850msec at 1.5 T. The Ernst angle in this case is 6.2°. The actual imaging flip angle was selected based on both tag persistence and steady-state tongue SNR.

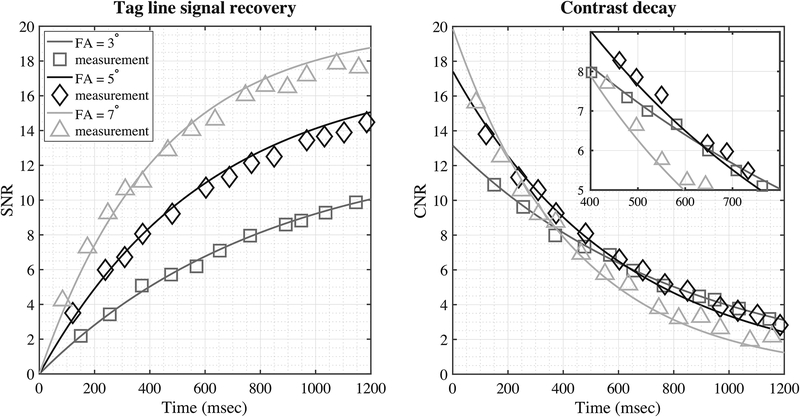

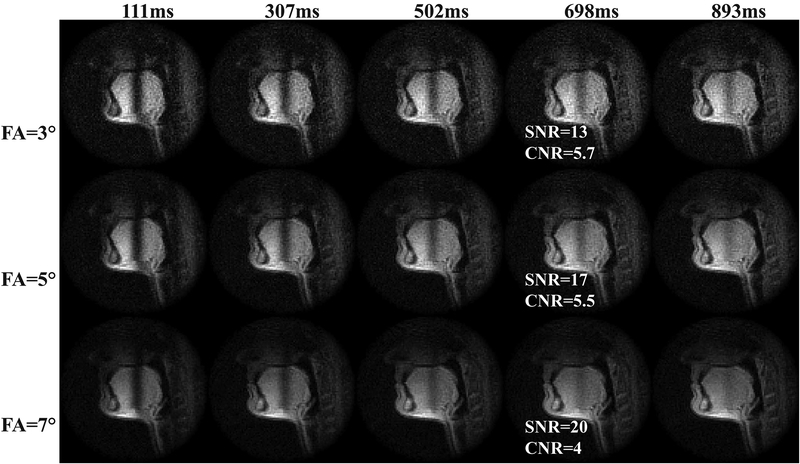

Figure 3 shows an in-vivo experiment on tag persistence in the human tongue. The measured signal at the center of the tag lines and the peak-to-valley contrast are plotted as functions of time alongside the corresponding simulated curves. The curves were normalized by the standard deviation of noise measured in a separate scan. Only a subset of the tested flip angles (3°, 5°, and 7° out of 1–15°) is shown for illustrative purposes. The measured signal conformed to the simulation for all imaging flip angles. The tag lines for FA = 3°, 5°, and 7° recovered to steady-state signal levels with SNRs of 13, 17, and 20, respectively, and did so in progressively shorter times. Note that FA = 7° had the highest imaging SNR; however, the faster decay caused the CNR to drop to 5 in only 600 msec. In contrast, with FA = 3° and 5° the CNR took more than 650 msec to reach the threshold level, with the latter providing 30% higher image SNR in the tongue than the former. In our experience, imaging with a very small flip angle (α < 5°) was sensitive to B1 inhomogeneity in the tongue, as the signal dropped dramatically wherever the actual flip angle was unintentionally lower than prescribed. As an overall result of the above considerations, we used a flip angle of 5° with an imaging window of around 650–800 ms and an ending CNR of 5–6. Figure 4 shows example images of tag fading.

Figure 3.

Tag persistence in the human tongue at 1.5 T. Left: simulation (line) and measurement (symbol) of the tag line signal for the first 1.2 s after the tag module was applied. Right: contrast decay after the tag module was applied. Tongue T1 = 850 msec was measured using an inversion recovery fast spin echo (IR-FSE) sequence with multiple inversion times. The signal and contrast were normalized by the standard deviation of noise, measured in a separate scan with RF excitation turned off.

Figure 4.

Example images of tag fading with imaging flip angles of 3°, 5° and 7°. A wide tag spacing of 5 cm was used to mitigate partial volume effects. At around 700 msec (4th column), FA = 3° and 5° have similar CNR, while the latter has 30% higher SNR. As an overall consideration, we used a flip angle of 5° with an imaging window of around 650–800 ms and an ending CNR of 5–6.

Triggering Mechanism

Manual triggers were likely to miss the beginning of the diphthong even after the operator and the subject had synchronized to the same rhythm with practice. The reflex delay of the human operator and the normal pacing and production variability of the subjects increased the miss rate. Further, the operator’s timing accuracy depended largely on hearing the subject’s speech from the scanner, which was compromised by acoustic scanner noise.

Both cued and periodic triggering performed well. During cued triggering, the normal reflex delay of the subject between seeing the stimulus on the projector screen and starting articulation was largely matched by the reflex delay of the MRI operator in pressing the tagging button, ensuring that the tag was reliably placed before the target tongue movement. Interestingly, in the periodic tagging protocol, the tagging module interrupted the acoustic sound of the readout gradient heard by the subjects and in effect acted as an auditory metronome, causing them to entrain to the tag triggering rhythm and thereby align their productions with the tagging timing after the first 1–2 triggers. Because no voluntary effort was required of the operator, operator alignment errors were not an issue.

Visualization of Tongue Deformation

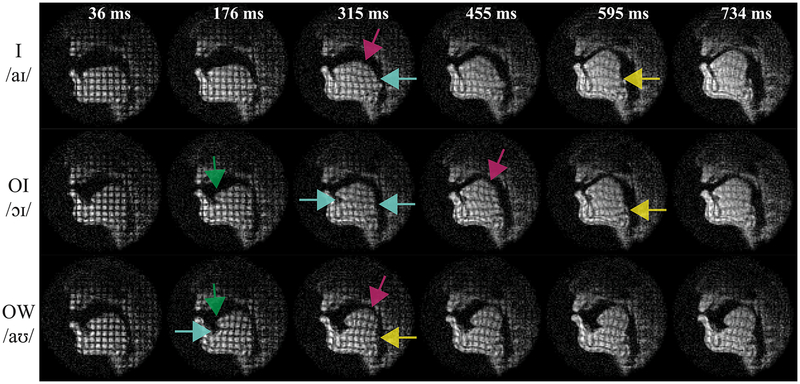

Figure 6 reveals internal tongue movement during three American English diphthong articulation examples. The videos can be found in Supporting Information Video S1. For orientation note that /aɪ/ and /aʊ/ start with similar low and retracted tongue postures (note the pharyngeal narrowing); /aɪ/ and /ɔɪ/ end with similar postures of the tongue bunched up high in the palatal vault; and the starting posture of /ɔɪ/ is similar to the ending posture of /aʊ/ with the tongue high and retracted toward the velum (soft palate).

Figure 6.

Tagged RT-MRI reveals internal tongue deformations and their relative timing during American English diphthong articulation. Each color indicates the start of a different motion pattern: (left to right) tongue tip deformation (green), shear (cyan), tongue body compression (magenta), and tongue root compression (yellow). Importantly, the relative timing of motion patterns is seen; for example, deformation of the tongue tip (green) was followed by shear (cyan) and finally compression of the tongue root (yellow).

Figure 6 contains representative frames, illustrating multiple deformation patterns and capturing their relative timing. Shear between different parts of the tongue can be identified as square grid cells changing into parallelograms. Compression can be identified as square grid cells changing into bi-concave rectangles. Stretching and curving of the tongue can be identified by bent grid lines. Each of these types of deformation occurred during the course of diphthong articulation. Colored arrows mark the start of one specific type of deformation in the representative frames.
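The visual cues described above (squares becoming parallelograms or shortened rectangles) map onto standard strain measures. The sketch below is purely illustrative and is not part of the analysis in this work, which is qualitative: it computes the 2D Green-Lagrange strain of a single grid cell from the deformed edge vectors, assuming the deformation is homogeneous within the cell (so curved, bi-concave edges are not captured). The function name and the example edge vectors are hypothetical.

```python
def green_lagrange_strain(edge_x, edge_y, spacing=1.0):
    """2D Green-Lagrange strain (E_xx, E_yy, E_xy) of one tag-grid cell.

    edge_x, edge_y: deformed (x, y) vectors of the cell edges that were
    originally aligned with x and y and had length `spacing` (same units).
    ASSUMPTION: homogeneous deformation within the cell.
    """
    # Columns of the deformation gradient F are the normalized deformed edges.
    f11, f21 = edge_x[0] / spacing, edge_x[1] / spacing
    f12, f22 = edge_y[0] / spacing, edge_y[1] / spacing
    # E = (F^T F - I) / 2
    exx = 0.5 * (f11 * f11 + f21 * f21 - 1.0)
    eyy = 0.5 * (f12 * f12 + f22 * f22 - 1.0)
    exy = 0.5 * (f11 * f12 + f21 * f22)
    return exx, eyy, exy


# Square sheared into a parallelogram: the y edge leans 0.3 cm toward +x.
sheared = green_lagrange_strain((1.0, 0.0), (0.3, 1.0))
# Square compressed to 80% of its width along x.
compressed = green_lagrange_strain((0.8, 0.0), (0.0, 1.0))

print("sheared cell    (E_xx, E_yy, E_xy):", sheared)
print("compressed cell (E_xx, E_yy, E_xy):", compressed)
```

In this simplified picture a parallelogram shows up as a nonzero shear component E_xy, while compression shows up as a negative normal component along the shortened direction.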

In the case of /aɪ/ (top row), parallelograms emerge at 315msec (cyan), indicating shear between the tongue body and tongue root. Also at this time, bi-concave rectangles can be observed at the top of the tongue body (magenta). These compressions move the tongue forward and somewhat higher. Compression of the tongue root happens later (frame 595 msec), further increasing the height of the tongue into the palatal vault (yellow).

In /ɔɪ/ (middle row), forward stretching of the tongue tip is identified by the vertical tag lines in that area starting to curve (green). Then, as the tongue moves forward and higher, upper-lower shear (cyan), compression in the tongue body (magenta), and some tongue root fronting (yellow) are observed in the later frames.

In /aʊ/ (bottom row), we again see early compression and curving of the tongue tip (green). Shear (cyan) appears as the tongue retracts and bunches toward the pharyngeal wall. Compression in both the tongue body (magenta) and the tongue root (yellow) further moves the tongue upward toward the velum.

The representative frames were chosen specifically to show the timing relations of these various internal tongue deformations, which appear as the four colors being distributed differently in time from left to right. For instance, in the top row, deformation of the tongue body (magenta) and the tongue base (yellow) (which can be thought of as the tongue’s ‘undercarriage’) is seen during /aɪ/, with the former happening earlier (~300 msec) than the latter (~590 msec). Another example is tongue tip deformation, which happened early in all diphthongs tested, as indicated by the green arrows on the left.

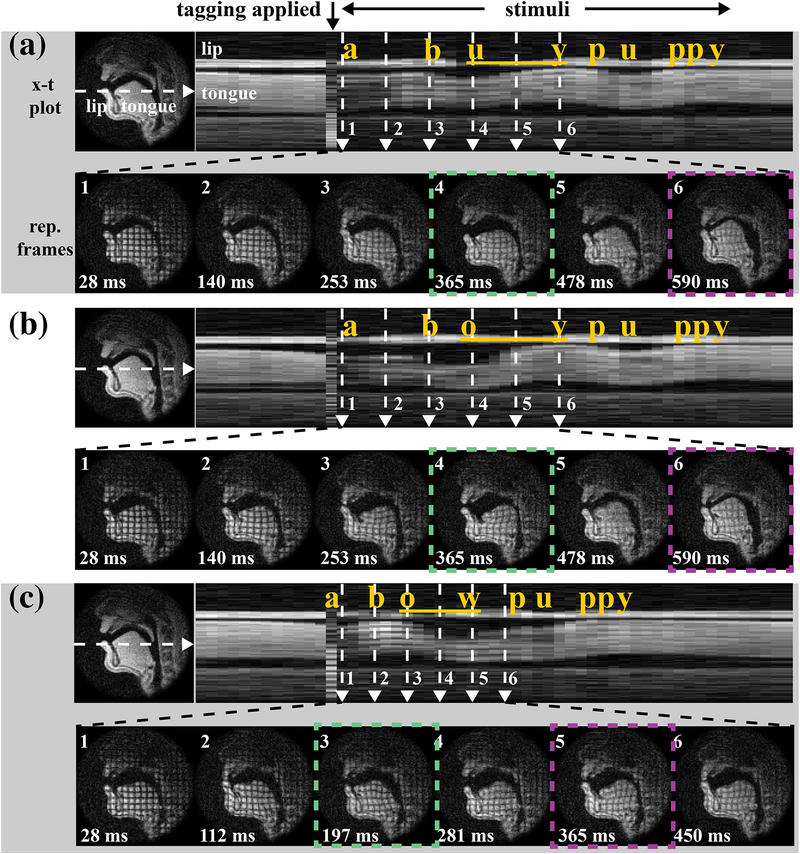

Figure 7 shows diphthongs in carrier phrases: (a) “a buy puppy,” (b) “a boy puppy,” and (c) “a bow puppy.” Supporting Information Video S2–4 shows the three diphthong stimuli in carrier phrases with synchronized audio recording. Intensity-time (x-t) plots are shown in the top rows of (a)-(c) and the moment at which the tagging module was applied is indicated at the very top of the figure and serves as the temporal alignment point for the figures. Six representative frames are zoomed out in the bottom rows with green and magenta dashed squares marking the start and end of the diphthong articulations. (Note that the representative frames in (c) have a shorter time span compared to (a) and (b).) The tag persisted from the beginning of the mid-central /ə/ that preceded the target word in the carrier phrase and successfully visualized deformation of the tongue for the entire course of the target diphthong.

Figure 7.

Tagged RT-MRI reveals deformation relative to the relatively neutral posture of the schwa /ə/ (“a”) of the carrier sentence. Stimuli occurred in carrier phrases: (a) “a buy puppy,” (b) “a boy puppy” and (c) “a bow puppy.” The intensity-time (x-t) plots in the top rows of (a)-(c) indicate tagging timing, and six representative frames are shown across time in each bottom row. Green and magenta dashed squares mark the start and end gestures of the diphthong articulation. Note the differences in internal tongue deformation among the three diphthongs’ starting postures and across their ending postures (for example, the start of /aɪ/ vs. /aʊ/ as in a4 vs. c3, and the start of /ɔɪ/ vs. the end of /aʊ/ as in b4 vs. c5).

The first frames in Figure 7(a)–(c) show the tag applied when the tongue started at a mid-central vowel /ə/ (the initial “a” of the carrier phrase), so that all of the deformations in the later frames are relative to this relatively neutral vocalic schwa posture. Note that while /aɪ/ (a4) and /aʊ/ (c3) start with similar low and retracted tongue postures marked by pharyngeal narrowing, differences in the internal tongue can be immediately visualized in the distinct grid deformations. This confirms a subtle distinction between the starting positions of /aɪ/ and /aʊ/, echoed by the American English Vowel Chart in Figure 5. Similarly, the deformational difference between the starting posture of /ɔɪ/ (b4) and the ending posture of /aʊ/ (c5) was clearly evident; parallelograms appear in both (b4) and (c5), but (c5) additionally shows more bi-concave rectangles, indicating a horizontal squeeze that further packs the tongue up toward the palatal vault. This is consistent with the placement in the second and third vowel charts in Figure 5.

With the relatively neutral schwa posture (frame 1 of each panel) as a reference, the deformations also indicate regional motion within the tongue: in (b3–4), parallelograms in the middle of the tongue indicate shear serving to retract the tongue body back toward the pharyngeal wall; (a6, b6) indicate horizontal compression squeezing the tongue up toward the palate. Little or no further deformation is observed while the most extreme postures, such as (a6, b6, c3), are maintained.

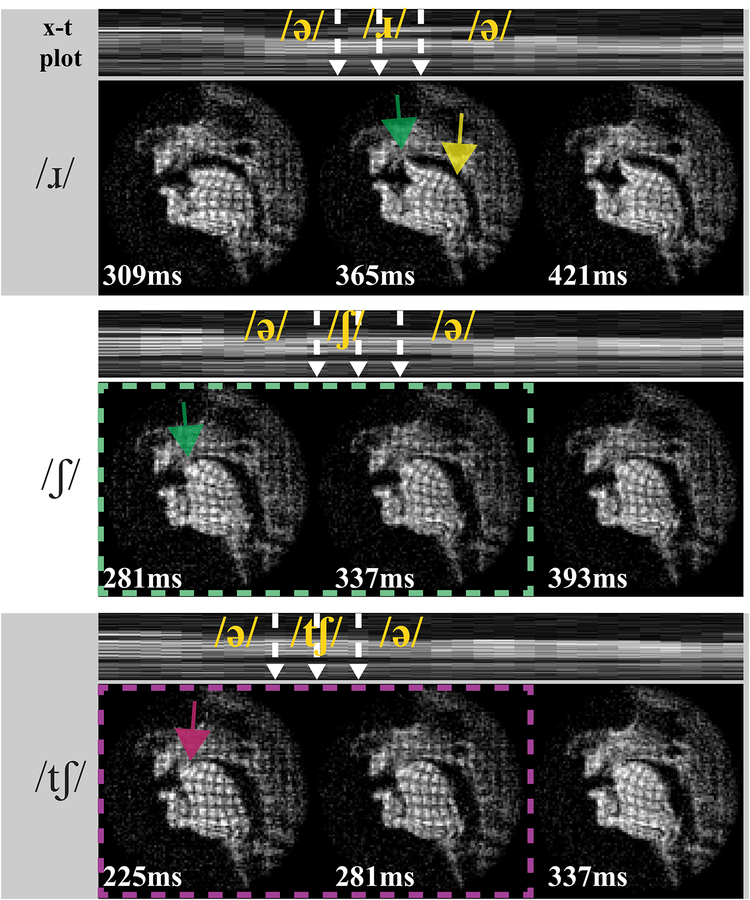

Figure 8 shows different deformation patterns in three example consonant stimuli. In /ɹ/, curved tag lines in the tongue tip (green) are evident, indicating the upward ‘bending’ deformation of the tongue front high into the palatal vault. Note that /ɹ/ has three constrictions: at the lips, in the post-alveolar region, and in the pharynx; /ʃ/ and /tʃ/ have only one constriction, in the post-alveolar region. Thus in /ɹ/, vertical compression in the tongue body (yellow arrows in /ɹ/) is seen because the tongue body and root are squeezed toward the pharyngeal wall. This vertical compression is not present in the other two consonant stimuli. In both /ʃ/ and /tʃ/, the x-t waveforms show a highly similar airway shape (i.e., tongue surface contour), as we would expect for the fricative portion (green dash). However, internal differences are visible, presumably arising from the pull-away characteristics of the blade, which remains pressed or stabilized upward more for /tʃ/ (magenta) than for /ʃ/ (green). Significantly, the tagged images show differences in internal tongue deformation even when tongue surface contours and vocal tract constriction locations are comparable.

Figure 8.

Tagged RT-MRI shows different deformation patterns (relative to preceding schwa postures) during the articulation of consonants /ɹ/, /ʃ/, and /tʃ/. The intensity-time (x-t) plots in top rows indicate tagging timing, and three representative frames are shown across time in each bottom row. All stimuli involve constriction with the tongue tip and/or blade (i.e. the tongue front) in the post-alveolar region of the vocal tract. Interestingly, the tagged images show tongue internal deformation differences (magenta vs. green) even when tongue surface contours and vocal tract constriction locations are comparable.

DISCUSSION

We have demonstrated intermittent tagging during RT-MRI as a potential means to visualize internal tongue motion during speech production. This approach eliminates the need for re-binning data using multiple repetitions and is suitable for investigations of natural speech production. We demonstrated a framework to select imaging parameters in consideration of image quality and tag persistence and achieved an imaging window of approximately 650–800 ms at 1.5T, with imaging SNR ≥ 17 and tag CNR ≥ 5 in human tongue. This work leverages mature speech RT-MRI techniques [12], [45] to provide adequate spatiotemporal resolution for tagged imaging. The resulting method is able to capture tongue motion patterns and their relative timing as exemplified by internal tongue deformation during American English diphthong vowels and consonants. This method can also provide images for further quantification of internal tongue motion [34], [58]–[61].

The proposed method may provide insight into several open questions in speech science and linguistics. For instance, acoustic studies have shown that the vocalic formants of the initial and terminal portions of a diphthong are not necessarily the same as those found for the simple vowels in monophthongs used to describe them [54]. Hsieh et al. [55] hypothesized that strong biomechanical coupling between starting and ending gestures truncates diphthong articulation, leading to less extreme [a] vowels (as compared to the corresponding monophthong). That study used constriction degree to examine diphthong articulation, assuming that constrictions can be identified by higher signal intensity in a region of interest. The tagging method proposed here can enable testing of this and similar hypotheses by directly examining the biomechanical subsystems in the tongue.

The proposed method may also provide insights into disease states that affect speech production. CINE-tagging has been used by Lee et al. [61] to assess tongue impairment in amyotrophic lateral sclerosis (ALS) patients and by Stone et al. [35] to investigate articulation variance between post-glossectomy patients and controls. For these applications, the repeated motion required in CINE-tagging could be burdensome for some patients. In addition, re-binning requires highly consistent repetitions, which are challenging in impaired speech. This challenge is demonstrated in Supporting Information Video S5. The proposed RT-MRI tagging method can substantially simplify the data acquisition and avoid errors from a re-binning process, at the cost of reduced resolution and/or SNR. Lastly, tongue muscle movement patterns in obstructive sleep apnea (OSA) patients have been characterized in clinical studies for treatment evaluation [62]. The proposed method with automatic periodic tagging could potentially allow studies during natural sleep.

We investigated the performance of the proposed intermittent tagging with three triggering mechanisms: manual, cued, and periodic. Cued and periodic tagging performed well for all four subject scans. Although speech rate varies across subjects, the flexible nature of these intermittent-tagging protocols allows the triggering timing to be adjusted accordingly.

As a feasibility effort, this work employed a fairly simple tagging module. We used a 1-3-3-1 SPAMM tagging sequence, as established in the literature, and produced high quality visualization of tag grids in the tongue. There exist many alternatives to SPAMM. Several cardiovascular magnetic resonance (CMR) tagging approaches can potentially be adapted for speech applications [39]. Particularly appealing options include HARmonic Phase (HARP) [63] and Displacement ENcoding with Stimulated Echoes (DENSE) [64], which allow faster and simpler post-processing and analysis. HARP has been adapted for speech production in the CINE framework [33], [36], [37], [58], [61]. A more rapid acquisition using Echo Planar Imaging (EPI) was proposed for cardiac HARP [42], in which only the spectral peak of interest was acquired. DENSE provides higher sensitivity and spatial resolution. However, the technique is derived from the Stimulated Echo Acquisition Mode (STEAM) sequence and suffers from low SNR. Phase contrast imaging has been applied to tissue velocity mapping in myocardial motion [65] as well as in skeletal muscle contraction [66]. This technique encodes velocity information into the phase of the detected signal. Note that all three of these alternatives are phase-sensitive methods; phase errors introduced by unaccounted-for off-resonance need to be carefully considered when adapting them to speech applications [65], [67]–[69].

The SPAMM parameters may also be optimized. We used a grid spacing of 1 cm, but this spacing may need adjustment based on the size of the subject. For example, we expect that a finer grid spacing will be required in smaller subjects, such as young children. The grid spacing may also need to be adapted to the specific muscle groups or vocal tract subsystems of interest (for example, the tongue tip), so that the grid is fine enough to distinguish their contractions and internal movements.
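To make the relationship between grid spacing and the tagging module concrete, the following is a minimal sketch and not the implementation used in this work. It assumes ideal hard sub-pulses, a total tagging flip angle of 90° (an assumption; the value is not stated in this section), and no relaxation or off-resonance during the tagging module. The function names are hypothetical. It computes the gradient area between sub-pulses needed for a given tag spacing, and simulates the longitudinal magnetization left by a 1-3-3-1 binomial train along one tagging direction.

```python
import math

GAMMA_BAR_HZ_PER_T = 42.577e6  # proton gyromagnetic ratio / (2*pi)


def gradient_area_for_spacing(spacing_m):
    """Gradient area (T*s/m) between sub-pulses that yields one full
    2*pi phase cycle over one tag spacing."""
    return 1.0 / (GAMMA_BAR_HZ_PER_T * spacing_m)


def rot_x(m, angle):
    """Rotate magnetization vector m about the x axis (ideal RF sub-pulse)."""
    x, y, z = m
    c, s = math.cos(angle), math.sin(angle)
    return (x, y * c - z * s, y * s + z * c)


def rot_z(m, angle):
    """Rotate m about the z axis (phase accrued under the tagging gradient)."""
    x, y, z = m
    c, s = math.cos(angle), math.sin(angle)
    return (x * c - y * s, x * s + y * c, z)


def spamm_1331_mz(x_m, spacing_m, total_flip_deg=90.0):
    """Longitudinal magnetization after a 1-3-3-1 train at position x_m.

    total_flip_deg is an assumed value; weights 1:3:3:1 sum to 8.
    """
    weights = (1, 3, 3, 1)
    phase = 2.0 * math.pi * x_m / spacing_m
    m = (0.0, 0.0, 1.0)  # start from thermal equilibrium
    for i, w in enumerate(weights):
        m = rot_x(m, math.radians(total_flip_deg * w / 8.0))
        if i < len(weights) - 1:
            m = rot_z(m, phase)  # gradient lobe between sub-pulses
    return m[2]


if __name__ == "__main__":
    spacing = 0.01  # 1 cm, as used in this work
    print("gradient area (T*s/m):", gradient_area_for_spacing(spacing))
    for frac in (0.0, 0.25, 0.5):
        print(frac, spamm_1331_mz(frac * spacing, spacing))
```

Under these assumptions the tag lines (Mz near zero) fall at integer multiples of the spacing and the magnetization is fully preserved midway between them. Halving the spacing doubles the required gradient area, which is one practical cost of a finer grid.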

Improvement in tag persistence is also of interest. Variable flip angle (VFA) has been utilized in spiral myocardial tagging to improve contrast throughout the entire cardiac cycle. Ryf et al. [70] applied larger flip angles in the later stage of the imaging cycle to compensate for the decay of the tagged longitudinal magnetization. Applying VFA to intermittent tagging of speech remains future work.
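As an illustration of the idea behind a ramped flip angle, the sketch below computes a flip-angle schedule that keeps the tagged signal component constant from one excitation to the next. It is a generic textbook-style recursion and is not claimed to be the scheme of Ryf et al. [70] or a scheme evaluated in this work. It assumes ideal spoiling, tracks only the tag-difference component of the longitudinal magnetization, and uses hypothetical values for the number of excitations, TR, and T1.

```python
import math


def constant_signal_flip_angles(n_pulses, tr_ms, t1_ms):
    """Flip angles (degrees) that equalize the tagged signal across pulses.

    Backward recursion: the last pulse is 90 degrees, and
    alpha[n] = atan(E1 * sin(alpha[n + 1])) with E1 = exp(-TR / T1).
    """
    e1 = math.exp(-tr_ms / t1_ms)
    angles = [0.0] * n_pulses
    angles[-1] = math.pi / 2.0
    for n in range(n_pulses - 2, -1, -1):
        angles[n] = math.atan(e1 * math.sin(angles[n + 1]))
    return [math.degrees(a) for a in angles]


def tag_signal_per_pulse(flip_deg_list, tr_ms, t1_ms):
    """Tagged signal component after each excitation (arbitrary units)."""
    e1 = math.exp(-tr_ms / t1_ms)
    delta_mz = 1.0  # tag-difference magnetization right after tagging
    signals = []
    for flip_deg in flip_deg_list:
        a = math.radians(flip_deg)
        signals.append(delta_mz * math.sin(a))
        delta_mz *= math.cos(a) * e1  # RF depletion and T1 decay over one TR
    return signals


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    angles = constant_signal_flip_angles(n_pulses=100, tr_ms=6.0, t1_ms=1000.0)
    signals = tag_signal_per_pulse(angles, tr_ms=6.0, t1_ms=1000.0)
    print("first / last flip angle (deg):", angles[0], angles[-1])
    print("first / last tagged signal:", signals[0], signals[-1])
```

The schedule starts with small flip angles and ramps up toward the end of the imaging window. In a real-time setting the untagged (relaxed) magnetization also contributes to the image, so a practical design would need to balance tag contrast against overall image SNR.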

Motion artifacts exist in some of the current results. This is not surprising, as Lingala et al. [12] pointed out that fully sampled single-slice RT-MRI cannot resolve all tongue movements, especially during faster-paced speech or movements of intrinsically faster subsystems such as the tongue tip. These artifacts can be mitigated by under-sampling and constrained reconstruction methods, which have yet to be explored in combination with tagging.

Imaging at 3T is of interest because it could provide longer tag persistence and higher SNR. We conducted all of our experiments at 1.5T. Previous studies have compared SSFP tagging at 1.5T and 3T for cardiac applications [53]. With the same imaging parameters, the tags would persist approximately 25–30% longer at 3T in human tongue, owing to slower T1 relaxation and higher intrinsic imaging SNR. Persistence can be further improved by using a smaller flip angle, since the Ernst angle is lower for longer T1. However, off-resonance is stronger at higher field strength, especially at air-tissue boundaries, where the susceptibility difference is approximately 9.4 ppm [71]. This could cause blurring of the grid near the tongue surface, or even total disappearance of the grid in fine structures such as the tongue tip. Dynamic off-resonance correction can be incorporated into the reconstruction pipeline to reduce these artifacts [21], [29]. Subjects with a large proton density fat fraction at the base of the tongue (inferior-posterior) will also suffer from signal dephasing due to the 3.5 ppm off-resonance between fat and water [72]. This signal loss can be reduced by shortening the spiral readout duration at the expense of temporal resolution, or by using another sampling pattern with a short readout, such as radial sampling [26].
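The trade-offs above can be explored with a few closed-form expressions. The sketch below is illustrative only: it assumes an ideally spoiled GRE readout, in which the difference between tagged and untagged longitudinal magnetization decays by a factor of E1·cos(α) per TR, and it uses placeholder values for TR, flip angle, and tongue T1 at each field strength (these are assumptions, not measurements from this work). Only the 3.5 ppm fat-water shift is taken from the text.

```python
import math

GAMMA_BAR_HZ_PER_T = 42.577e6  # proton gyromagnetic ratio / (2*pi)


def ernst_angle_deg(tr_ms, t1_ms):
    """Ernst angle for a spoiled GRE sequence: acos(exp(-TR / T1))."""
    return math.degrees(math.acos(math.exp(-tr_ms / t1_ms)))


def tag_contrast_fraction(t_ms, tr_ms, t1_ms, flip_deg):
    """Fraction of the initial tag contrast (in Mz) remaining after t_ms
    of continuous imaging, assuming ideal spoiling."""
    e1 = math.exp(-tr_ms / t1_ms)
    n_excitations = t_ms / tr_ms
    return (e1 * math.cos(math.radians(flip_deg))) ** n_excitations


def off_resonance_hz(ppm, b0_tesla):
    """Frequency offset in Hz for a chemical shift or field offset in ppm."""
    return ppm * 1e-6 * GAMMA_BAR_HZ_PER_T * b0_tesla


if __name__ == "__main__":
    tr_ms, flip_deg = 6.0, 5.0  # placeholder imaging parameters
    # Placeholder T1 values (ms); real tongue T1 should be measured or cited.
    for b0, t1_ms in ((1.5, 1000.0), (3.0, 1400.0)):
        print(
            f"B0={b0} T:",
            "Ernst angle (deg) =", ernst_angle_deg(tr_ms, t1_ms),
            "| contrast left at 700 ms =",
            tag_contrast_fraction(700.0, tr_ms, t1_ms, flip_deg),
            "| fat-water shift (Hz) =", off_resonance_hz(3.5, b0),
        )
```

The fat-water shift evaluates to roughly 224 Hz at 1.5T and 447 Hz at 3T, so for a fixed spiral readout duration the phase accrued by fat doubles at the higher field. This is why a shorter readout becomes more attractive at 3T.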

CONCLUSIONS

We have developed and demonstrated a method for intermittent tagging during real-time MRI of speech production to reveal internal deformations of the tongue. We incorporated 1-3-3-1 SPAMM tagging with rapid spiral GRE to reveal the internal tongue motion during articulation. We showed that this method can capture various motion patterns in the tongue and their relative timing using case examples of American English diphthongs and consonants. The proposed method can potentially provide tools to investigate muscle function or other applications of internal tissue movement in future scientific and clinical research.

Supplementary Material

Supporting Information Video S1. Top: Movie of isolated American English Diphthong shown in Figure 4. This video shows top three rows listed in Table 1. Bottom: each color indicates the start of a different motion pattern: (left to right) tongue tip deformation (green), shear (cyan), tongue body compression (magenta), and tongue root compression (yellow). Importantly, the relative timing of motion patterns is seen in (b); for example, deformation of the tongue tip (green) was followed by shear (cyan) and finally compression of the tongue root (yellow).

Supporting Information Video S2. Movie of tagged real-time MRI with a synchronized audio shown in Figure 5(a). This video shows “a buy puppy” listed in Table 1.

Supporting Information Video S3. Movie of tagged real-time MRI with a synchronized audio shown in Figure 5(b). This video shows “a boy puppy” listed in Table 1.

Supporting Information Video S4. Movie of tagged real-time MRI with a synchronized audio shown in Figure 5(c). This video shows “a bow puppy” listed in Table 1.

Supporting Information Video S5. Significance of tagged real-time MRI for speech. Top row: five trials of tagged real-time MRI during the stimulus “a boy puppy”. In each trial the tagging was triggered at the desired timing and successfully captured the deformation throughout the whole articulation. Bottom row: averaging over five trials shows high SNR but unacceptable quality, due to high intra-subject variability of speech production.

ACKNOWLEDGEMENTS

This work was supported by NIH Grant R01DC007124 and NSF Grant 1514544. We thank Eric Peterson, William Overall and Juan Santos at HeartVista, Inc. for their support with the RTHawk Research system. We acknowledge the support and collaboration of the Speech Production and Articulation kNowledge (SPAN) group at the University of Southern California, Los Angeles, CA, USA.

REFERENCES

- [1] Delattre P and Freeman DC, “A dialect study of American R’s by x-ray motion picture,” Linguistics, vol. 6, no. 44, pp. 29–68, 1968.

- [2] Perrier P, Boë LL-J, and Sock R, “Vocal Tract Area Function Estimation From Midsagittal Dimensions With CT Scans and a Vocal Tract Cast: Modeling the Transition With Two Sets of Coefficients,” J. Speech, Lang. Hear. Res, vol. 35, no. 1, pp. 53–67, February 1992.

- [3] Perkell JS, Cohen MH, Svirsky MA, Matthies ML, Garabieta I, and Jackson MT, “Electromagnetic midsagittal articulometer systems for transducing speech articulatory movements,” J. Acoust. Soc. Am, vol. 92, no. 6, pp. 3078–3096, December 1992.

- [4] Stone M, Shawker TH, Talbot TL, and Rich AH, “Cross-sectional tongue shape during the production of vowels,” J. Acoust. Soc. Am, vol. 83, no. 4, pp. 1586–1596, April 1988.

- [5] Bresch E, Kim Y-C, Nayak K, Byrd D, and Narayanan S, “Seeing speech: Capturing vocal tract shaping using real-time magnetic resonance imaging,” IEEE Signal Process. Mag, vol. 25, no. 3, pp. 123–132, May 2008.

- [6] Scott AD, Wylezinska M, Birch MJ, and Miquel ME, “Speech MRI: Morphology and function,” Physica Medica, vol. 30, no. 6, pp. 604–618, September 2014.

- [7] Demolin D, Hassid S, Metens T, and Soquet A, “Real-time MRI and articulatory coordination in speech,” Comptes Rendus - Biol, 2002.

- [8] Honda K, Takemoto H, Kitamura T, Fujita S, and Takano S, “Exploring human speech production mechanisms by MRI,” IEICE Trans. Inf. Syst, 2004.

- [9] NessAiver MS, Stone M, Parthasarathy V, Kahana Y, and Paritsky A, “Recording high quality speech during tagged cine-MRI studies using a fiber optic microphone,” J. Magn. Reson. Imaging, 2006.

- [10] Ventura SR, Freitas DR, and Tavares JMRS, “Application of MRI and biomedical engineering in speech production study,” Comput. Methods Biomech. Biomed. Engin, 2009.

- [11] Narayanan S, Nayak K, Lee S, Sethy A, and Byrd D, “An approach to real-time magnetic resonance imaging for speech production,” J. Acoust. Soc. Am, vol. 115, no. 4, pp. 1771–1776, April 2004.

- [12] Lingala SG, Zhu Y, Kim Y-C, Toutios A, Narayanan S, and Nayak KS, “A fast and flexible MRI system for the study of dynamic vocal tract shaping,” Magn. Reson. Med, vol. 77, no. 1, pp. 112–125, January 2017.

- [13] Lingala SG, Sutton BP, Miquel ME, and Nayak KS, “Recommendations for real-time speech MRI,” J. Magn. Reson. Imaging, vol. 43, no. 1, pp. 28–44, January 2016.

- [14] Kier WM and Smith KK, “Tongues, tentacles and trunks: the biomechanics of movement in muscular-hydrostats,” Zool. J. Linn. Soc, vol. 83, no. 4, pp. 307–324, April 1985.

- [15] Hiiemae KM and Palmer JB, “Tongue Movements in Feeding and Speech,” Crit. Rev. Oral Biol. Med, vol. 14, no. 6, pp. 413–429, November 2003.

- [16] Green JR, “Mouth Matters: Scientific and Clinical Applications of Speech Movement Analysis,” Perspect. Speech Sci. Orofac. Disord, vol. 25, no. 1, p. 6, July 2015.

- [17] Gerard YPJM, Wilhelms-Tricarico R, Perrier P, “A 3D dynamical biomechanical tongue model to study speech motor control,” Recent Res Dev. Biomech, 2003.

- [18] Buchaillard S, Perrier P, and Payan Y, “A biomechanical model of cardinal vowel production: Muscle activations and the impact of gravity on tongue positioning,” J. Acoust. Soc. Am, 2009.

- [19] Toutios A and Narayanan SS, “Articulatory synthesis of French connected speech from EMA data,” in Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, 2013.

- [20] Fu M et al., “High-resolution dynamic speech imaging with joint low-rank and sparsity constraints,” Magn. Reson. Med, 2015.

- [21] Sutton BP, Conway CA, Bae Y, Seethamraju R, and Kuehn DP, “Faster dynamic imaging of speech with field inhomogeneity corrected spiral fast low angle shot (FLASH) at 3 T,” J. Magn. Reson. Imaging, vol. 32, no. 5, pp. 1228–1237, November 2010.

- [22] Burdumy M et al., “One-second MRI of a three-dimensional vocal tract to measure dynamic articulator modifications,” J. Magn. Reson. Imaging, 2017.

- [23] Zhu Y, Toutios A, Narayanan S, and Nayak K, “Faster 3D vocal tract real-time MRI using constrained reconstruction,” in Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, 2013.

- [24] Fu M et al., “High-frame-rate full-vocal-tract 3D dynamic speech imaging,” Magn. Reson. Med, 2017.

- [25] Lim Y, Zhu Y, Lingala SG, Byrd D, Narayanan S, and Nayak KS, “3D dynamic MRI of the vocal tract during natural speech,” Magn. Reson. Med, November 2018.

- [26] Niebergall A et al., “Real-time MRI of speaking at a resolution of 33 ms: Undersampled radial FLASH with nonlinear inverse reconstruction,” Magn. Reson. Med, vol. 69, no. 2, pp. 477–485, February 2013.

- [27] Lingala SG et al., “Feasibility of through-time spiral generalized autocalibrating partial parallel acquisition for low latency accelerated real-time MRI of speech,” Magn. Reson. Med, 2017.

- [28] Uecker M, Zhang S, Voit D, Karaus A, Merboldt KD, and Frahm J, “Real-time MRI at a resolution of 20 ms,” NMR Biomed, vol. 23, no. 8, pp. 986–994, 2010.

- [29] Lim Y, Lingala SG, Narayanan SS, and Nayak KS, “Dynamic off-resonance correction for spiral real-time MRI of speech,” Magn. Reson. Med, July 2018.

- [30] Kumada K, Niitsu M, Niimi S, and Hirose H, “A Study on the Inner Structure of the Tongue in the Production of the 5 Japanese Vowels by Tagging Snapshot MRI,” Ann Bull RILP, vol. 26, pp. 1–5, 1992.

- [31] Niitsu M, Kumada M, Campeau NG, Niimi S, Riederer SJ, and Itai Y, “Tongue displacement: Visualization with rapid tagged magnetization-prepared MR imaging,” Radiology, 1994.

- [32] Napadow VJ, Chen Q, Wedeen VJ, and Gilbert RJ, “Intramural mechanics of the human tongue in association with physiological deformations,” J. Biomech, 1999.

- [33] Stone M et al., “Modeling the motion of the internal tongue from tagged cine-MRI images,” J. Acoust. Soc. Am, vol. 109, no. 6, pp. 2974–2982, June 2001.

- [34] Parthasarathy V, Prince JL, Stone M, Murano EZ, and NessAiver M, “Measuring tongue motion from tagged cine-MRI using harmonic phase (HARP) processing,” J. Acoust. Soc. Am, vol. 121, no. 1, pp. 491–504, January 2007.

- [35] Stone M, Woo J, Zhuo J, Chen H, and Prince JL, “Patterns of variance in /s/ during normal and glossectomy speech,” Comput. Methods Biomech. Biomed. Eng. Imaging Vis, 2014.

- [36] Woo J et al., “Speech Map: a statistical multimodal atlas of 4D tongue motion during speech from tagged and cine MR images,” Comput. Methods Biomech. Biomed. Eng. Imaging Vis, pp. 1–13, October 2017.

- [37] Woo J et al., “A high-resolution atlas and statistical model of the vocal tract from structural MRI,” Comput. Methods Biomech. Biomed. Eng. Imaging Vis, vol. 3, no. 1, pp. 47–60, January 2015.

- [38] Shehata ML, Cheng S, Osman NF, Bluemke DA, and Lima JAC, “Myocardial tissue tagging with cardiovascular magnetic resonance,” J. Cardiovasc. Magn. Reson, vol. 11, no. 1, p. 55, 2009.

- [39] Ibrahim E-SH, “Myocardial tagging by cardiovascular magnetic resonance: evolution of techniques--pulse sequences, analysis algorithms, and applications,” J. Cardiovasc. Magn. Reson, vol. 13, no. 1, p. 36, July 2011.

- [40] Douglas AS, Rodriguez EK, O’Dell W, and Hunter WC, “Unique strain history during ejection in canine left ventricle,” Am. J. Physiol, vol. 260, no. 5(2), pp. 1596–611, May 1991.

- [41] McVeigh ER and Epstein F, “Myocardial tagging during real-time MRI,” in Conference Proceedings of the 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2001, vol. 3, pp. 2284–2285.

- [42] Sampath S, Derbyshire JA, Atalar E, Osman NF, and Prince JL, “Real-time imaging of two-dimensional cardiac strain using a harmonic phase magnetic resonance imaging (HARP-MRI) pulse sequence,” Magn. Reson. Med, vol. 50, no. 1, pp. 154–163, July 2003.

- [43] Pan L, Stuber M, Kraitchman DL, Fritzges DL, Gilson WD, and Osman NF, “Real-time imaging of regional myocardial function using fast-SENC,” Magn. Reson. Med, 2006.

- [44] Ibrahim ESH et al., “Real-time MR imaging of myocardial regional function using strain-encoding (SENC) with tissue through-plane motion tracking,” J. Magn. Reson. Imaging, 2007.

- [45] Santos JM, Wright GA, and Pauly JM, “Flexible real-time magnetic resonance imaging framework,” in The 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, vol. 3, pp. 1048–1051.

- [46] Fischer SE, McKinnon GC, Maier SE, and Boesiger P, “Improved myocardial tagging contrast,” Magn. Reson. Med, vol. 30, no. 2, pp. 191–200, 1993.

- [47] Axel L and Dougherty L, “MR imaging of motion with spatial modulation of magnetization,” Radiology, vol. 171, no. 3, pp. 841–845, June 1989.

- [48] Axel L and Dougherty L, “Heart wall motion: improved method of spatial modulation of magnetization for MR imaging,” Radiology, vol. 172, no. 2, pp. 349–350, August 1989.

- [49] Young AA, Axel L, Dougherty L, Bogen DK, and Parenteau CS, “Validation of tagging with MR imaging to estimate material deformation,” Radiology, vol. 188, no. 1, pp. 101–108, July 1993.

- [50] Walsh DO, Gmitro AF, and Marcellin MW, “Adaptive reconstruction of phased array MR imagery,” Magn. Reson. Med, 2000.

- [51] King KF, Ganin A, Zhou XJ, and Bernstein MA, “Concomitant gradient field effects in spiral scans,” Magn. Reson. Med, 1999.

- [52] Markl M et al., “Generalized reconstruction of phase contrast MRI: Analysis and correction of the effect of gradient field distortions,” Magn. Reson. Med, 2003.

- [53] Markl M, Scherer S, Frydrychowicz A, Burger D, Geibel A, and Hennig J, “Balanced left ventricular myocardial SSFP-tagging at 1.5T and 3T,” Magn. Reson. Med, vol. 60, no. 3, pp. 631–639, September 2008.

- [54] Lehiste I and Peterson GE, “Transitions, Glides, and Diphthongs,” J. Acoust. Soc. Am, 1961.

- [55] Hsieh FY, Goldstein L, Byrd D, and Narayanan S, “Truncation of pharyngeal gesture in English diphthong [aI],” in Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, 2013.

- [56] Lee S, Potamianos A, and Narayanan S, “Developmental acoustic study of American English diphthongs,” J. Acoust. Soc. Am, 2014.

- [57] Valeti VU et al., “Myocardial tagging and strain analysis at 3 Tesla: Comparison with 1.5 Tesla imaging,” J. Magn. Reson. Imaging, vol. 23, no. 4, pp. 477–480, April 2006.

- [58] Woo J, Stone M, Suo Y, Murano EZ, and Prince JL, “Tissue-point motion tracking in the tongue from cine MRI and tagged MRI,” J. Speech. Lang. Hear. Res, vol. 57, no. 2, pp. S626–36, 2014.

- [59] Woo J et al., “A high-resolution atlas and statistical model of the vocal tract from structural MRI,” Comput. Methods Biomech. Biomed. Eng. Imaging Vis, vol. 3, no. 1, pp. 47–60, January 2015.

- [60] Xing F et al., “Phase Vector Incompressible Registration Algorithm for Motion Estimation from Tagged Magnetic Resonance Images,” IEEE Trans. Med. Imaging, vol. 36, no. 10, pp. 2116–2128, October 2017.

- [61] Lee E et al., “Magnetic resonance imaging based anatomical assessment of tongue impairment due to amyotrophic lateral sclerosis: A preliminary study,” J. Acoust. Soc. Am, vol. 143, no. 4, pp. EL248–EL254, April 2018.

- [62] Brown EC, Cheng S, McKenzie DK, Butler JE, Gandevia SC, and Bilston LE, “Tongue and Lateral Upper Airway Movement with Mandibular Advancement,” Sleep, 2013.

- [63] Osman NF, McVeigh ER, and Prince JL, “Imaging heart motion using harmonic phase MRI,” IEEE Trans. Med. Imaging, vol. 19, no. 3, pp. 186–202, March 2000.

- [64] Aletras AH, Ding S, Balaban RS, and Wen H, “DENSE: Displacement Encoding with Stimulated Echoes in Cardiac Functional MRI,” J. Magn. Reson, 1999.

- [65] Nayak KS et al., “Cardiovascular magnetic resonance phase contrast imaging,” J. Cardiovasc. Magn. Reson, 2015.

- [66] Mazzoli V et al., “Accelerated 4D phase contrast MRI in skeletal muscle contraction,” Magn. Reson. Med, vol. 80, no. 5, pp. 1799–1811, November 2018.

- [67] Kuijer JPA, Hofman MBM, Zwanenburg JJM, Marcus JT, Van Rossum AC, and Heethaar RM, “DENSE and HARP: Two views on the same technique of phase-based strain imaging,” J. Magn. Reson. Imaging, 2006.

- [68] Haraldsson H, Sigfridsson A, Sakuma H, Engvall J, and Ebbers T, “Influence of the FID and off-resonance effects in DENSE MRI,” Magn. Reson. Med, 2011.

- [69] Ryf S, Tsao J, Schwitter J, Stuessi A, and Boesiger P, “Peak-combination HARP: A method to correct for phase errors in HARP,” J. Magn. Reson. Imaging, 2004.

- [70] Ryf S et al., “Spiral MR Myocardial Tagging,” Magn. Reson. Med, vol. 51, no. 2, pp. 237–242, 2004.

- [71] Schenck JF, “The role of magnetic susceptibility in magnetic resonance imaging: MRI magnetic compatibility of the first and second kinds,” Med. Phys, vol. 23, no. 6, pp. 815–850, June 1996.

- [72] Humbert IA, Reeder SB, Porcaro EJ, Kays SA, Brittain JH, and Robbins J, “Simultaneous estimation of tongue volume and fat fraction using IDEAL-FSE,” J. Magn. Reson. Imaging, 2008.
