This cohort study examines whether the frequency of specific words and phrases used in physician trainee narrative evaluations of medical faculty, as quantified by automated text mining, is associated with the gender of the faculty member evaluated.

Key Points

Question

Are there gender-based linguistic differences in narrative evaluations of medical faculty written by physician trainees?

Findings

In this cohort study of 7326 narrative evaluations for 521 medical faculty members, the words art, trials, master, and humor were significantly associated with evaluations of male faculty, whereas the words empathetic, delight, and warm were significantly associated with evaluations of female faculty. Two-word phrase associations included run rounds, big picture, and master clinician in male faculty evaluations and model physician and attention (to) detail in female faculty evaluations.

Meaning

The findings suggest that quantitative linguistic differences between free-text comments about male and female medical faculty may occur in physician trainee evaluations.

Abstract

Importance

Women are underrepresented at higher ranks in academic medicine. However, the factors contributing to this disparity have not been fully elucidated. Implicit bias, defined as unconscious mental attitudes toward a person or group, may be a factor. Although academic medical centers use physician trainee evaluations of faculty to inform promotion decisions, little is known about gender bias in these evaluations. To date, no studies have examined narrative evaluations of medical faculty by physician trainees for differences based on gender.

Objective

To characterize gender-associated linguistic differences in narrative evaluations of medical faculty written by physician trainees.

Design, Setting, and Participants

This retrospective cohort study included all faculty teaching evaluations completed for the department of medicine faculty by medical students, residents, and fellows at a large academic center in Pennsylvania from July 1, 2015, through June 30, 2016. Data analysis was performed from June 1, 2018, through July 31, 2018.

Main Outcomes and Measures

Word use in faculty evaluations was quantified using automated text mining by converting free-text comments into unique 1- and 2-word phrases. Mixed-effects logistic regression analysis was performed to assess associations of faculty gender with frequencies of specific words and phrases present in a physician trainee evaluation.

Results

A total of 7326 unique evaluations were collected for 521 faculty (325 men [62.4%] and 196 women [37.6%]). The individual words art (odds ratio [OR], 7.78; 95% CI, 1.01-59.89), trials (OR, 4.43; 95% CI, 1.34-14.69), master (OR, 4.24; 95% CI, 1.69-10.63), and humor (OR, 2.32; 95% CI, 1.44-3.73) were significantly associated with evaluations of male faculty, whereas the words empathetic (OR, 4.34; 95% CI, 1.56-12.07), delight (OR, 4.26; 95% CI, 1.35-13.40), and warm (OR, 3.45; 95% CI, 1.83-6.49) were significantly associated with evaluations of female faculty. Two-word phrases associated with male faculty evaluations included run rounds (OR, 7.78; 95% CI, 1.01-59.84), big picture (OR, 7.15; 95% CI, 1.68-30.42), and master clinician (OR, 4.02; 95% CI, 1.21-13.36), whereas evaluations of female faculty were more likely to be associated with model physician (OR, 7.75; 95% CI, 1.70-35.39), just right (OR, 6.97; 95% CI, 1.51-32.30), and attention (to) detail (OR, 4.26; 95% CI, 1.36-13.40).

Conclusions and Relevance

The data showed quantifiable linguistic differences between free-text comments about male and female faculty in physician trainee evaluations. Further evaluation of these differences, particularly in association with ongoing gender disparities in faculty promotion and retention, may be warranted.

Introduction

Although women compose half of all US medical school graduates,1 recent evidence has shown ongoing sex disparities in academic job achievements and academic promotion.2,3,4,5,6,7,8 Specifically, women in medicine represent only 38% of academic faculty and 15% of senior leadership positions across the United States.9 This underrepresentation of women has been attributed in part to high rates of attrition at multiple points along the promotion pathway. Although there is robust evidence2,3,4,5,6,7,8,9 showing the presence of these sex differences, the factors leading to disparities in faculty retention and promotion have not been fully elucidated.

One potential influence may be implicit bias, namely unconscious mental attitudes toward a person or group.10 Evidence suggests that implicit biases pervade academia, influencing hiring processes,11 mentorship,12 publication,4 and funding opportunities.13,14,15 In previous studies,16,17,18,19 implicit gender bias also has been quantitatively and qualitatively shown in evaluation of nonmedical academic faculty. Similar biases have also been noted in medical education within narrative evaluations of physician trainees written by medical faculty.20,21 However, despite the critical role of evaluations from physician trainees in promotion decisions for medical faculty, the presence of gender bias within evaluations of medical faculty written by physician trainees has not been adequately assessed.

Thus far, studies22,23 assessing gender differences in faculty evaluations have focused exclusively on numeric scales evaluating teaching effectiveness, with the largest such study showing no significant differences in numeric rating by gender. However, both numeric ratings and written comments are used by academic medical centers for promotion purposes. No studies, to our knowledge, have examined gender differences in narrative evaluations of medical faculty by physician trainees, which could identify implicit biases not captured by numeric ratings.

The use of computer-based language analysis or natural language processing techniques may provide a quantitative method to identify subtle linguistic differences in narrative evaluation that may not be captured by qualitative coding and thematic analysis. The practice of n-gram analysis, which converts text into a sequence of words and phrases for further quantitative analysis,24 has been successfully used to explore linguistic differences between genders in a variety of other settings.25,26,27,28 We hypothesized that use of an n-gram analysis could help quantify gender-associated differences in the vocabulary used by physician trainees in narrative evaluations of medical faculty.

Methods

Setting and Participants

We performed a single-center retrospective cohort analysis of all evaluations of department of medicine faculty that were written by physician trainees at a large academic medical center from July 1, 2015, to June 30, 2016. Anonymous evaluations of faculty, completed by medical students, residents, and fellows, consisted of a cumulative numeric score rating global teaching effectiveness (5-point rating scale: 1, poor; 2, fair; 3, good; 4, very good; and 5, excellent), in addition to mandated free-text comments. These evaluations were submitted by physician trainees within an anonymous online evaluation platform. Other than the global teaching effectiveness score, there were no separate ratings of faculty. Physician trainees completed the evaluations using a web-based platform at the end of each clinical rotation (both inpatient and outpatient), usually representing 1 to 2 weeks of exposure to the attending physician. The institutional review board of the University of Pennsylvania, Philadelphia, approved this study and granted a waiver of consent for participation. A waiver of consent was obtained because the research was retrospective and posed no more than minimal risk to participants, and the research could not be performed without a waiver. Our reporting adheres to the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline for cohort studies.

Data Collection and Processing

The data set included faculty sex, faculty division within the department of medicine, physician trainee sex, physician trainee level (medical student, resident, or fellow), numeric rating scores (the teaching effectiveness score), and free-text comments. To preserve anonymity among the physician trainees, unique physician trainee identifiers were not associated with any evaluations. A third party deidentified all evaluations before the analysis. Text from the narrative portion of the evaluation was anonymized by replacing all first and last names with a placeholder (eg, first name and last name). In addition, text was converted to all lower case letters, and special characters were normalized. All punctuation was removed in the data preprocessing step, and stop words (such as a, the, and this) were removed from the text.
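The preprocessing steps described above can be sketched in a few lines of Python. This is a minimal illustration and not the study's actual pipeline: the stop-word list, the `name_placeholders` mapping, and the placeholder token `lastname` are assumptions introduced here, and special-character normalization is omitted.

```python
import re
import string

# Illustrative stop-word list (an assumption; the study's list is not given).
STOP_WORDS = {"a", "an", "the", "this", "and", "of", "to", "is", "was"}

def preprocess(comment, name_placeholders=None):
    """Lowercase, mask names, strip punctuation, and drop stop words."""
    text = comment.lower()
    # Replace each known name with its placeholder token (hypothetical mapping).
    for name, placeholder in (name_placeholders or {}).items():
        text = re.sub(r"\b" + re.escape(name.lower()) + r"\b", placeholder, text)
    # Remove all ASCII punctuation characters.
    text = text.translate(str.maketrans("", "", string.punctuation))
    # Split on whitespace and discard stop words.
    return [tok for tok in text.split() if tok not in STOP_WORDS]

tokens = preprocess("The attending taught the team skills.")
masked = preprocess("Dr. Smith is a great teacher!", {"Smith": "lastname"})
```

Here `tokens` holds the four words attending, taught, team, and skills, and `masked` holds dr, lastname, great, and teacher. Deleting punctuation outright merges hyphenated words, so a real pipeline might replace punctuation with spaces instead.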

N-gram Text Analysis

To analyze the narrative data, we used n-gram text mining to convert preprocessed free-text comments into discrete words and phrases. N-grams are 1- and 2-word phrases found in the text. We counted the presence of each n-gram in each document and then created a matrix of binary indicators for unigrams (single words) and bigrams (2-word phrases) for analysis. Because bigrams create phrases based on adjacent words within text, each unigram (discrete word) was represented in all n-gram categories. For example, the phrase “the attending taught the team skills” would be changed to “attending taught team skills” during preprocessing and then broken into 4 unigrams (attending, taught, team, and skills) and 3 bigrams (attending taught, taught team, and team skills). Bigrams were included to provide useful local contextual features that unigrams were unable to capture. N-grams occurring fewer than 10 times in the entire corpus were excluded from analysis because the study was not sufficiently powered to detect differences for such rarely occurring terms. Words describing a specific service (such as cardiology or nephrology), location (medical intensive care unit), or procedure (bronchoscopy) were excluded from analysis, given the potential effect of gender imbalance among faculty within specific specialties.
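The conversion from preprocessed comments to a binary indicator matrix might look like the following Python sketch. The function names and the `min_count` parameter are hypothetical labels chosen for illustration. Only the unigram and bigram definitions and the minimum corpus count of 10 come from the text, and the exclusion of service, location, and procedure terms is not shown.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return all runs of n adjacent tokens, joined by a space."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def binary_indicator_matrix(documents, min_count=10):
    """Build a presence/absence matrix of unigrams and bigrams.

    documents: list of token lists (one per evaluation).
    Returns (vocab, rows), where rows[d][j] is 1 if vocab[j] occurs in document d.
    """
    corpus_counts = Counter()
    doc_terms = []
    for toks in documents:
        terms = ngrams(toks, 1) + ngrams(toks, 2)
        corpus_counts.update(terms)   # count every occurrence in the corpus
        doc_terms.append(set(terms))  # presence only, for binary indicators
    # Drop n-grams occurring fewer than min_count times in the whole corpus.
    vocab = sorted(t for t, c in corpus_counts.items() if c >= min_count)
    rows = [[1 if t in terms else 0 for t in vocab] for terms in doc_terms]
    return vocab, rows

example = ["attending", "taught", "team", "skills"]
unigrams = ngrams(example, 1)
bigrams = ngrams(example, 2)
```

For the example phrase, `unigrams` contains the 4 single words and `bigrams` contains attending taught, taught team, and team skills, matching the counts given in the text.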

Statistical Analysis

Data analysis was performed from June 1, 2018, through July 31, 2018. We assessed the associations of faculty gender with word or phrase use in the evaluations. The exposure variables were the frequency of words or phrases, as mined by the n-gram analysis. The dependent variable was faculty gender. Given the sparsity of the data matrix after initial n-gram text mining, variable selection was conducted using elastic net regression.29,30 Elastic net regression is a regularized regression method that eliminates insignificant covariates, preserves correlated variables, and guards against overfitting. To select an appropriate value for the tuning parameter (λ value), we used 10-fold cross-validation and selected the value that minimized the mean cross-validated error and reduced potential overfitting within the model. In addition to the aforementioned variables selected by elastic net regression, several unigrams were preselected for univariate analysis based on previous literature describing gender associations, specifically teacher, first name, and last name.27
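For orientation, one common way to write the elastic net objective for a logistic model is shown below. This is the parameterization used by glmnet-style software and is offered as an assumption: the text does not state which parameterization was used, and the mixing parameter $\alpha$ does not appear in the source. Here $y_i$ is the faculty gender indicator for evaluation $i$, $x_i$ is its vector of binary n-gram indicators, and $\ell$ is the logistic log-likelihood contribution.

```latex
\hat{\beta} = \arg\min_{\beta_0,\,\beta}
  \left[ -\frac{1}{N} \sum_{i=1}^{N} \ell\!\left(y_i,\ \beta_0 + x_i^{\top}\beta\right)
  + \lambda \left( \alpha \lVert \beta \rVert_1
  + \frac{1-\alpha}{2} \lVert \beta \rVert_2^2 \right) \right]
```

The $\lVert \beta \rVert_1$ term shrinks some coefficients exactly to zero, which is what removes covariates, while the $\lVert \beta \rVert_2^2$ term tends to keep groups of correlated covariates together. The tuning parameter $\lambda$ sets the overall penalty strength and is the quantity chosen by cross-validation.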

The frequencies of selected unigram and bigram words were then fitted into a multivariable logistic regression model to assess their associations with faculty gender (using odds ratios [ORs] and 95% CIs). To assess the association of each individual n-gram with gender, each unigram and bigram was used in distinct mixed-effects logistic regression models (clustered on individual faculty members to assess the influence of instructor characteristics). To ensure anonymity of physician trainees, additional demographics (age and race/ethnicity) were not available in the data set, and unique physician trainee identifiers could not be used in the mixed-effects model. A secondary logistic regression was performed to evaluate the association of physician trainee gender with word choice.
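As a worked illustration of how an odds ratio and its 95% CI relate to a logistic regression coefficient, the Python sketch below assumes Wald-type intervals, which are symmetric on the log-odds scale. That assumption is not stated in the text, and the function names are hypothetical. It recovers the implied standard error from the interval reported for the unigram art (OR, 7.78; 95% CI, 1.01-59.89) and then rebuilds the interval from the coefficient.

```python
import math

def or_with_ci(beta, se, z=1.96):
    """Return (OR, lower, upper) from a log-odds coefficient and its SE."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)

def se_from_ci(lower, upper, z=1.96):
    """Recover the SE on the log-odds scale from a reported Wald interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)

# Reported values for "art": OR 7.78 (95% CI, 1.01-59.89).
se = se_from_ci(1.01, 59.89)
odds_ratio, lower, upper = or_with_ci(math.log(7.78), se)
```

The rebuilt limits agree with the reported ones to within rounding, which is consistent with an interval computed on the log-odds scale. The wide interval also shows how imprecise the estimate is for a rarely used word.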

For all analyses, statistical significance was determined using the Benjamini-Hochberg approach for multiple comparisons, with a false discovery rate of 0.10 given the exploratory purposes of our analysis.31,32 This method controls the false discovery rate by comparing each 2-sided P value with its Benjamini-Hochberg critical value, (i/m)Q, where i is the rank of the P value (from smallest to largest), m is the total number of tests, and Q is the false discovery rate.
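The Benjamini-Hochberg step-up procedure can be sketched in Python as follows. The function name and the example P values are made up for illustration and are not taken from the study. Only the critical value (i/m)Q and the false discovery rate of 0.10 come from the text.

```python
def benjamini_hochberg(p_values, q=0.10):
    """Return booleans marking which tests are significant at FDR q."""
    m = len(p_values)
    # Indices of the tests, ordered from smallest to largest P value.
    order = sorted(range(m), key=lambda k: p_values[k])
    # Find the largest rank i whose P value is at or below (i/m) * q.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= (rank / m) * q:
            max_rank = rank
    # Every test ranked at or below max_rank is declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            significant[idx] = True
    return significant

# Hypothetical P values for 5 tests.
flags = benjamini_hochberg([0.001, 0.04, 0.03, 0.20, 0.012], q=0.10)
```

With these 5 hypothetical values, the critical values are 0.02, 0.04, 0.06, 0.08, and 0.10 for ranks 1 through 5, so every test except the one with P = .20 is flagged.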

Overall word counts by comment were tabulated for all evaluations in the cohort, and median word counts were compared across faculty gender, physician trainee gender, and physician trainee level using the Wilcoxon rank sum test (α = .05).

To evaluate quantitative differences between ratings by faculty gender, summary statistics were calculated across all submitted evaluations within the cohort. We performed a descriptive analysis of the overall teaching effectiveness score by comparing mean numeric ratings across faculty gender, physician trainee gender, and physician trainee level using the Wilcoxon rank sum test. All statistical analyses and additional text analysis were completed using Stata, version 15.1 (StataCorp).

Results

Demographic Characteristics

A total of 7326 unique evaluations were collected during the 2015 through 2016 academic year for 521 faculty members in the Department of Medicine at the Perelman School of Medicine, University of Pennsylvania, Philadelphia, including 325 men (62.4%) and 196 women (37.6%). Complete data (including the sex of the faculty member and physician trainee) were available for 6840 (93.4%) evaluations. Individual faculty members had a mean (SD) of 14.1 (13.8) evaluations (range, 1.0-76.0) included in the cohort. Most of the evaluations (5732 [78.2%]) were submitted by physicians in residency or fellowship training, and the remainder were submitted by medical students during clerkship, elective, and subinternship rotations (Table). Male physician trainees completed 3724 of 6840 faculty evaluations (54.4%) with complete data.

Table. Demographic Information of Faculty Evaluation Cohort.

| Characteristic | Total (N = 521), No. (%) | Evaluations (n = 6840), No. (%)<sup>a</sup> |
|---|---|---|
| Faculty | | |
| Male identified | 325 (62.4) | 4448 (61) |
| Female identified | 196 (37.6) | 2878 (39) |
| Physician trainees<sup>b</sup> | | |
| Male identified | NA | 3724 (54.4) |
| Female identified | NA | 3116 (46) |
| Medical students | NA | 1594 (22) |
| Residents or fellows | NA | 5732 (78) |
| Division | | |
| General medicine | 111 (21.3) | 1818 (26.6) |
| Cardiology | 88 (16.9) | 1335 (19.5) |
| Gastroenterology | 51 (9.8) | 558 (8.2) |
| Geriatrics | 14 (2.7) | 137 (2.0) |
| Hematology/oncology | 76 (14.6) | 642 (9.4) |
| Infectious diseases | 47 (9.0) | 485 (7.1) |
| Pulmonary, allergy, and critical care | 62 (11.9) | 1217 (17.8) |
| Rheumatology | 25 (4.8) | 264 (3.8) |
| Renal | 36 (6.9) | 332 (4.8) |
| Sleep medicine | 11 (2.1) | 52 (0.7) |

Abbreviation: NA, not applicable.

<sup>a</sup> Of the 7326 evaluations, 6840 had full data on both medical faculty and physician trainee gender.

<sup>b</sup> No unique physician trainee identifiers were captured within the data set to preserve physician trainee anonymity of evaluation.

Faculty members (N = 521) represented all of the following divisions within the department of medicine: general medicine (n = 111; 21.3%); cardiology (n = 88; 16.9%); gastroenterology (n = 51; 9.8%); geriatrics (n = 14; 2.7%); hematology and oncology (n = 76; 14.6%); infectious diseases (n = 47; 9.0%); pulmonary, allergy, and critical care (n = 62; 11.9%); rheumatology (n = 25; 4.8%); renal (n = 36; 6.9%); and sleep medicine (n = 11; 2.1%).

N-gram Text Analysis

After removal of stop words, n-gram text analysis was used to extract text features, creating a matrix of binary indicators for 1610 unigrams and 1698 bigrams. Variable selection with elastic net regression identified a subset of 151 unigrams and 165 bigrams for further analysis. The complete list of selected variables is included in eTable 1 and eTable 2 in the Supplement.

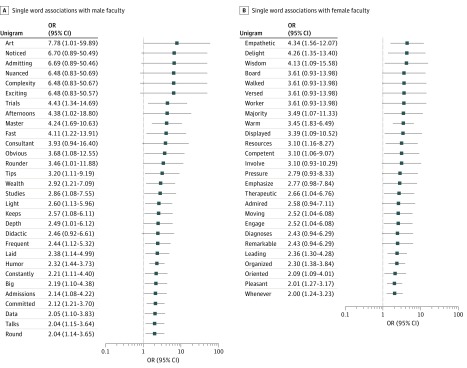

Our findings showed that written evaluations including art (OR, 7.78; 95% CI, 1.01-59.89), trials (OR, 4.43; 95% CI, 1.34-14.69), master (OR, 4.24; 95% CI, 1.69-10.63), and humor (OR, 2.32; 95% CI, 1.44-3.73) were associated with evaluations of male faculty, whereas words including empathetic (OR, 4.34; 95% CI, 1.56-12.07), delight (OR, 4.26; 95% CI, 1.35-13.40), and warm (OR, 3.45; 95% CI, 1.83-6.49) were associated with evaluations of female faculty. Figure 1 highlights all significant single word associations with faculty gender (based on Benjamini-Hochberg threshold). The full list of unigrams that were associated with gender are listed in eTable 1 in the Supplement. Analysis of previously identified words associated with gender, including teacher, first name, and last name, did not reveal significant associations with faculty gender.

Figure 1. Significant Single-Word (Unigram) Associations by Faculty Gender.

OR indicates odds ratio.

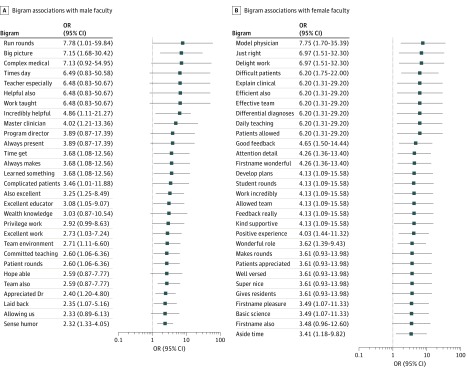

The 2-word phrases that were associated with male faculty evaluations included run rounds (OR, 7.78; 95% CI, 1.01-59.84), big picture (OR, 7.15; 95% CI, 1.68-30.42), and master clinician (OR, 4.02; 95% CI, 1.21-13.36). Female faculty evaluations were more likely to contain phrases such as model physician (OR, 7.75; 95% CI, 1.70-35.39), just right (OR, 6.97; 95% CI, 1.51-32.30), and attention (to) detail (OR, 4.26; 95% CI, 1.36-13.40). All 2-word phrases significantly associated with gender are displayed in Figure 2. The full bigram associations are included in eTable 2 in the Supplement. A secondary analysis did not reveal significant differences in word choice based on the gender of the physician trainee after adjustment for multiple comparisons (eTable 3 in the Supplement).

Figure 2. Significant 2-Word (Bigram) Associations by Faculty Gender.

OR indicates odds ratio.

Descriptive Analysis of Narrative Comments and Numeric Rating (Teaching Effectiveness Score)

Narrative evaluations had a median word count of 32 (IQR, 12-60 words). The median word count was significantly higher for evaluations of female faculty (33 words; IQR, 14-62 words) compared with evaluations of male faculty (30 words; IQR, 12-58 words) (P < .001). Neither physician trainee gender nor training level was significantly associated with narrative evaluation word count.

There was no significant difference in the mean numeric ratings of overall teaching effectiveness between female and male faculty (mean difference, 0.02; 95% CI, –0.05 to 0.03). There was also no significant difference between mean numeric ratings assigned by female and male residents or fellows (0.02; 95% CI, –0.05 to 0.03). Compared with residents, medical students assigned significantly higher ratings to the faculty (0.22; 95% CI, 0.17-0.26; P < .001).

Discussion

Our data showed quantitative linguistic differences in free-text comments based on faculty gender that persisted after adjustment for evaluator gender and level of training. These word choice differences, while not innately negative, reflected notable contrasts in the ways male and female faculty were perceived and evaluated. These differences are particularly relevant if job qualifications are defined using stereotypically male characteristics, which may preclude women from these opportunities if evaluations use different descriptors. To our knowledge, this was the first study to focus on narrative evaluation of faculty within the medical field. Because narrative evaluations are frequently used to guide decisions about reappointment, promotion, salary increases, and consideration for leadership positions, bias in these evaluations may have far-reaching consequences for the career trajectories of medical faculty.

The word associations identified in our cohort affirmed linguistic trends by gender previously reported in nonmedical settings,11,12,16,17,18,19,33,34,35 specifically the use of ability terms (such as master and complexity) associated with men and emotive terms (such as empathetic, delight, and warm) associated with women. This mirrors previous qualitative work21,33,34,35 highlighting gender-based differences in patterns of word choice used in letters of recommendation and performance evaluations that identified differential use of grindstone traits (eg, hardworking), ability traits, and standout adjectives (eg, best). Our results were also consistent with previous findings in other educational settings where trainees (irrespective of age and generational characteristics) displayed biases in ratings of teachers.16,19,20 The linguistic differences noted in our study suggest the need for further evaluation of potential biases in narrative evaluations of faculty.

Despite the linguistic differences, we did not identify differences in numeric rating of teaching effectiveness by faculty gender. We suspect that numeric rating, given the significant positive skew and limited spread of the ratings, may be insensitive to the magnitude and/or types of differences associated with gender bias in this setting. However, this limited range of ratings may lend greater importance to the written comments, and differential word choice may reveal subtle imbalances not appreciated in ratings data. Although the numeric ratings did not differ significantly, there were differences in the descriptors that physician trainees used in evaluations of faculty, and academic medical centers may value the mentioned characteristics differently. Specifically, recent evidence36 highlights that the presence of humor is significantly associated with performance evaluation and assessment of leadership capability. Further study on how the different descriptors used in faculty evaluation are associated with performance appraisal and promotion in academic medical centers is warranted.

Although previous qualitative research has shown the presence of bias in narrative evaluation of physician trainees, this study offers novel insights into the potential for gender bias throughout the spectrum of narrative evaluations in medicine. In addition, the use of natural language processing techniques enabled a robust analysis of a large number of evaluations, exposing word choice differences that may not have been perceived by using qualitative techniques alone. Further study is warranted to explore the effect of gender-based linguistic differences in narrative evaluations on decisions about reappointment, promotion, and salary increases. Clarifying the ultimate effect of these differences on career trajectories of women in medicine may help identify interventions (such as implicit bias training for physician trainees and/or designing faculty evaluation forms to encourage the use of standard descriptors for both genders) to prevent or mitigate implicit bias.

Strengths and Limitations

The strengths of our study included the size and composition of our faculty cohort, representing all clinical divisions within the department of medicine, as well as the inclusion of physician trainee raters spanning from clinical undergraduate medical education (medical school) to graduate medical education (residency and fellowship). In addition, the use of n-gram analysis allowed for objective quantification of word use within the sample. Because n-gram analysis may be limited in capturing longer phrases or context, other natural language processing methods, such as embedding vectors or recurrent neural network models, may be more appropriate for that purpose and are an important area of future work. Further qualitative research may be warranted to examine the context of the word choice differences identified in this study.

A major limitation of the current study is generalizability, since our data set included faculty in the department of medicine within a single large academic institution. However, because the cohort consisted of narrative evaluations written by physician trainees from various geographic backgrounds and previous training institutions, we believe that the results are likely to be representative of academic faculty evaluations at other institutions. Future work may be warranted to determine whether similar associations exist in specialties other than internal medicine and its subspecialties.

Although we were unable to ascertain objective differences in attributes of faculty based on gender, our study highlights that there are differences in which attributes are perceived by physician trainee evaluators. It is possible that existing biases about the inherent value of these characteristics may play a role in interpretation and valuation of these comments by promotion committees. Also, we were not able to assess the effect of underrepresented minority status, given the small numbers of faculty and physician trainees within our institution with this status as well as the observational nature of this study. Although our n-gram analysis identified differences in unigram and bigram descriptors applied to male vs female faculty members' performance, it did not fully capture contextual differences in comments, which would require additional qualitative study and/or application of additional computational techniques.

Conclusions

This study identified quantitative linguistic differences in physician trainee evaluations of faculty on the basis of gender. Stereotypical gender phrases were identified in physician trainee evaluations of medical faculty (such as ability and cognitive terms in male faculty evaluations and emotive terms in female faculty evaluations). There were no significant differences in numeric ratings between female and male faculty members, which may add greater importance to the language used in narrative comments. Overall, these findings suggest the presence of gender differences in narrative evaluations of faculty written by physician trainees. Further research may be warranted to explore the context of the specific words and phrases identified and to understand the downstream effect of such evaluation differences on the career trajectories of women in medicine.

eTable 1. Unigram Associations With Female Faculty Sex (Following Variable Selection With Elastic Net Regression)

eTable 2. Bigram Associations With Female Faculty Sex (Following Variable Selection With Elastic Net Regression)

eTable 3. Secondary Analysis of Unigram Association With Trainee Female Sex

References

- 1. Lautenberger DM, Dandar VM, Raezer CL, Sloane RA. The State of Women in Academic Medicine: The Pipeline and Pathways to Leadership, 2013-2014. Washington, DC: Association of American Medical Colleges; 2014.

- 2. Jena AB, Khullar D, Ho O, Olenski AR, Blumenthal DM. Sex differences in academic rank in US medical schools in 2014. JAMA. 2015;314(11):-. doi: 10.1001/jama.2015.10680

- 3. Jagsi R, Griffith KA, Stewart A, Sambuco D, DeCastro R, Ubel PA. Gender differences in salary in a recent cohort of early-career physician-researchers. Acad Med. 2013;88(11):1689-1699. doi: 10.1097/ACM.0b013e3182a71519

- 4. Jagsi R, Guancial EA, Worobey CC, et al. The “gender gap” in authorship of academic medical literature—a 35-year perspective. N Engl J Med. 2006;355(3):281-287. doi: 10.1056/NEJMsa053910

- 5. Jagsi R, Griffith KA, Stewart A, Sambuco D, DeCastro R, Ubel PA. Gender differences in the salaries of physician researchers. JAMA. 2012;307(22):2410-2417. doi: 10.1001/jama.2012.6183

- 6. Reed DA, Enders F, Lindor R, McClees M, Lindor KD. Gender differences in academic productivity and leadership appointments of physicians throughout academic careers. Acad Med. 2011;86(1):43-47. doi: 10.1097/ACM.0b013e3181ff9ff2

- 7. Kaplan SH, Sullivan LM, Dukes KA, Phillips CF, Kelch RP, Schaller JG. Sex differences in academic advancement: results of a national study of pediatricians. N Engl J Med. 1996;335(17):1282-1289. doi: 10.1056/NEJM199610243351706

- 8. Jena AB, Olenski AR, Blumenthal DM. Sex differences in physician salary in US public medical schools. JAMA Intern Med. 2016;176(9):1294-1304. doi: 10.1001/jamainternmed.2016.3284

- 9. Association of American Medical Colleges. US medical school applications and matriculants by school, state of legal residence, and sex, 2018-2019. https://www.aamc.org/download/321442/data/factstablea1.pdf. Published November 9, 2018. Accessed September 25, 2018.

- 10. Staats C. State of the science: implicit bias review. http://kirwaninstitute.osu.edu/wp-content/uploads/2014/03/2014-implicit-bias.pdf. Published March 2, 2014. Accessed December 15, 2018.

- 11. Gorman EH. Gender stereotypes, same-gender preferences, and organizational variation in the hiring of women: evidence from law firms. Am Sociol Rev. 2005;70(4):702-728. doi: 10.1177/000312240507000408

- 12. Moss-Racusin CA, Dovidio JF, Brescoll VL, Graham MJ, Handelsman J. Science faculty’s subtle gender biases favor male students. Proc Natl Acad Sci USA. 2012;109(41):16474-16479. doi: 10.1073/pnas.1211286109

- 13. Pohlhaus JR, Jiang H, Wagner RM, Schaffer WT, Pinn VW. Sex differences in application, success, and funding rates for NIH extramural programs. Acad Med. 2011;86(6):759-767. doi: 10.1097/ACM.0b013e31821836ff

- 14. Hechtman LA, Moore NP, Schulkey CE, et al. NIH funding longevity by gender. Proc Natl Acad Sci USA. 2018;115(31):7943-7948. doi: 10.1073/pnas.1800615115

- 15. Zhou CD, Head MG, Marshall DC, et al. A systematic analysis of UK cancer research funding by gender of primary investigator. BMJ Open. 2018;8(4):e018625. doi: 10.1136/bmjopen-2017-018625

- 16. Young S, Rush L, Shaw D. Evaluating gender bias in ratings of university instructors’ teaching effectiveness. Int J Scholarsh Teach Learn. 2009;3(2):1-14. doi: 10.20429/ijsotl.2009.030219

- 17. Hirshfield LE. ‘She’s not good with crying’: the effect of gender expectations on graduate students’ assessments of their principal investigators. Gend Educ. 2014;26(6):601-617. doi: 10.1080/09540253.2014.940036

- 18. Laube H, Massoni K, Sprague J, Ferber AL. The impact of gender on the evaluation of teaching: what we know and what we can do. NWSA J. 2007;19(3):87-104.

- 19. Mengel F, Sauermann J, Zölitz U. Gender bias in teaching evaluations. J Eur Econ Assoc. 2018;17(2):535-566. doi: 10.1093/jeea/jvx057

- 20. Dayal A, O’Connor DM, Qadri U, Arora VM. Comparison of male vs female resident milestone evaluations by faculty during emergency medicine residency training. JAMA Intern Med. 2017;177(5):651-657. doi: 10.1001/jamainternmed.2016.9616

- 21. Mueller AS, Jenkins TM, Osborne M, Dayal A, O’Connor DM, Arora VM. Gender differences in attending physicians’ feedback to residents: a qualitative analysis. J Grad Med Educ. 2017;9(5):577-585. doi: 10.4300/JGME-D-17-00126.1

- 22. McOwen KS, Bellini LM, Guerra CE, Shea JA. Evaluation of clinical faculty: gender and minority implications. Acad Med. 2007;82(10)(suppl):S94-S96. doi: 10.1097/ACM.0b013e3181405a10

- 23. Morgan HK, Purkiss JA, Porter AC, et al. Student evaluation of faculty physicians: gender differences in teaching evaluations. J Womens Health (Larchmt). 2016;25(5):453-456. doi: 10.1089/jwh.2015.5475

- 24. Brown PF, deSouza PV, Mercer RL, Della Pietra VJ, Lai JC. Class-based n-gram models of natural language. Comput Linguist. 1992;18(4):468-479.

- 25. Schmader T, Whitehead J, Wysocki VH. A linguistic comparison of letters of recommendation for male and female chemistry and biochemistry job applicants. Sex Roles. 2007;57(7-8):509-514. doi: 10.1007/s11199-007-9291-4

- 26. Recasens M, Danescu-Niculescu-Mizil C, Jurafsky D. Linguistic models for analyzing and detecting biased language. In: Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics. Sofia, Bulgaria: Association for Computational Linguistics; 2013:1650-1659.

- 27. Terkik A, Prud’hommeaux E, Alm CO, Homan C, Franklin S. Analyzing gender bias in student evaluations. In: Proceedings of COLING 2016, 26th International Conference of Computational Linguistics. Osaka, Japan: The COLING 2016 Organizing Committee; 2016:868-876.

- 28. Aull L. Gender in the American anthology apparatus: a linguistic analysis. Adv in Lit Stud. 2014;2(1):38-45. doi: 10.4236/als.2014.21008

- 29. Marafino BJ, Boscardin WJ, Dudley RA. Efficient and sparse feature selection for biomedical text classification via the elastic net: application to ICU risk stratification from nursing notes. J Biomed Inform. 2015;54:114-120. doi: 10.1016/j.jbi.2015.02.003

- 30. Zou H, Hastie T. Regularization and variable selection via the elastic net. J R Stat Soc B. 2005;67(2):301-320. doi: 10.1111/j.1467-9868.2005.00503.x

- 31. Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc, Ser B. 1995;57(1):289-300.

- 32. Pike N. Using false discovery rates for multiple comparisons in ecology and evolution. Methods Ecol Evol. 2011;2(3):278-282. doi: 10.1111/j.2041-210X.2010.00061.x

- 33. Trix F, Psenka C. Exploring the color of glass: letters of recommendation for female and male medical faculty. Discourse Soc. 2003;14(2):191-220. doi: 10.1177/0957926503014002277

- 34. Fischbach A, Lichtenthaler PW, Horstmann N. Leadership and gender stereotyping of emotions. J Pers Psychol. 2015;14(3):153-162. doi: 10.1027/1866-5888/a000136

- 35. Eagly AH, Makhijani MG, Klonsky BG. Gender and the evaluation of leaders: a meta-analysis. Psychol Bull. 1992;111(1):3-22. doi: 10.1037/0033-2909.111.1.3

- 36. Evans JB, Slaughter JE, Ellis APJ, Rivin JM. Gender and the evaluation of humor at work [published online February 7]. J Appl Psychol. 2019. doi: 10.1037/apl0000395
