Abstract

The movement towards open science is a consequence of seemingly pervasive failures to replicate previous research. This transition comes with great benefits but also significant challenges that are likely to affect those who carry out the research, usually early career researchers (ECRs). Here, we describe key benefits, including reputational gains, increased chances of publication, and a broader increase in the reliability of research. The increased chances of publication are supported by exploratory analyses indicating null findings are substantially more likely to be published via open registered reports in comparison to more conventional methods. These benefits are balanced by challenges that we have encountered and that involve increased costs in terms of flexibility, time, and issues with the current incentive structure, all of which seem to affect ECRs acutely. Although there are major obstacles to the early adoption of open science, overall, open science practices should both benefit ECRs and improve the quality of research. We review 3 benefits and 3 challenges and provide suggestions from the perspective of ECRs for moving towards open science practices, which we believe scientists and institutions at all levels would do well to consider.

This Perspective article offers a balanced perspective on both the benefits and the challenges involved in the adoption of open science practices, with an emphasis on the implications for Early Career Researchers.

Introduction

Pervasive failures to replicate published work have raised major concerns in the life and social sciences, which some have gone so far as to call a ‘crisis’ ([1–6], also see [7–9]). The potential causes are numerous, well documented, and require a substantive change in how science is conducted [2,4,10–16]. A shift to open science methods (summarised in Table 1) has been suggested as a potential remedy to many of these concerns [2,4,17]. These encompass a range of practices aimed at making science more reliable, including wider sharing and reanalysis of code, data, and research materials [2,18]; valuing replications and reanalyses [2,5,6,19,20]; changes in statistical approaches with regard to power [21,22] and how evidence is assessed [23]; interactive and more transparent ways of presenting data graphically [24,25]; potentially the use of double-blind peer review [26]; and the use of formats such as preprints [27] and open access publishing. In our experience of these, the adoption of study preregistration and registered reports (RRs) is the change that most affects how science is conducted. These approaches require hypotheses and analysis pipelines to be declared publicly before data collection [2,14,28,29] (although protocols can be embargoed). This makes the crucial distinction between confirmatory hypothesis testing and post hoc exploratory analyses transparent. In the case of RRs, hypotheses and methods are peer reviewed on the basis of scientific validity, statistical power, and interest, and RRs can receive in-principle acceptance for publication before data is collected [29,30]. RRs can thereby increase the chances of publishing null findings, as we demonstrate in ‘Benefit 1: Greater faith in research’. These preregistration approaches also circumvent many of the factors that have contributed to the current problems of replication [2,4,11,19].
Preregistering hypotheses and methods renders so-called hypothesizing after the results are known (HARKing) [31] impossible and prevents manipulation of researcher degrees of freedom or p hacking [4]. In addition, because most RR formats involve peer review prior to data collection, the process can improve experimental design and methods through recommendations made by reviewers.

Table 1. Open science practices.

Some methods introduced or suggested by the open science community to improve scientific practices.

| Category | Practices |
| --- | --- |
| Resources | Sharing of code, data, research materials, and methods [2,19]. |
| Publishing formats | Registered reports [28], preregistrations [17], exploratory reports [32], preprints [27], open access publishing [33], as well as new evaluation and peer review processes [24]. |
| Research questions | Pursuing replications and reanalyses [2,5,6,9,19]. |
| Methodology | Changes in statistical approaches for power [21,22], how evidence is assessed [23] and communicated [34], as well as documenting data analysis in a way that facilitates reproducing results [35]. |

There are promising and important reasons to implement and promote open science methods, as well as career-motivated reasons [27,36,37]. However, there are also major challenges that are underrepresented and particularly affect those who carry out the research, most commonly early career researchers (ECRs). Here, we review 3 areas of challenge posed by open science practices, which are balanced against 3 beneficial aspects, with a focus on ECRs working in quantitative life sciences. Both challenges and benefits are accompanied by suggestions, in the form of tips, which may help ECRs to surmount these challenges and reap the rewards of open science. We conclude that, overall, open science methods are unavoidable if concerns around replication are to be addressed, that they are increasingly expected, and that ECRs in particular can benefit from being involved early on.

Three challenges

Challenge 1: Restrictions on flexibility

Statistical hypothesis testing is the predominant approach for addressing research questions in quantitative research, but a point often underemphasised is that a hypothesis can only be truly held before the data are looked at, usually before the data are collected. Open science methods, in particular preregistrations and RRs, respect this distinction and require separating exploratory analyses from planned confirmatory hypothesis testing. This distinction lies at the centre of RRs and preregistrations [29,30], in which timelines are fixed, enforcing a true application of hypothesis testing but also forcing researchers to stop developing an experiment and start collecting data. Once data collection has started, new learning about analysis techniques, subsequent publications, and exploration of patterns in data cannot inform confirmatory hypotheses or the preregistered experimental design. This restriction can be exasperating because scientists do not tend to stop thinking about and thus developing their experiments. Continuous learning during the course of an investigation is difficult to reconcile with a hard distinction between confirmatory and exploratory research but may be the price of unbiased science [4,11]. Open science methods do not preclude the possibility of serendipitous discovery, but confirmation requires subsequent replication, which entails additional work. Exploratory analyses can be added after registration; however, they can and should have a lower evidential status than preregistered tests. Although this particular loss of flexibility only directly and unavoidably affects the preregistered aspects of open science, the distinction between exploratory and confirmatory enquiry is a more general principle advocated in open science [32]. Closed orthodox science simply allows for the incorporation of new ideas more flexibly, if questionably.

Informing and formulating research questions based on data exploration is recommended. Being open to and guided by the data rather than mere opinions also has many merits. However, robust statistical inference requires that the time for it is restricted to the piloting (or learning) phase. Historically, ECRs have often been provided with existing data sets and learned data analyses through data exploration. Exploratory analyses and learning are desirable but are only acceptable if explicitly separated from planned confirmatory analyses [28,29]. The common practice of maintaining ambiguity between the two can convey an advantage to the traditional researcher because failure to acknowledge the difference exploits the assumption that presented analyses are planned. Distinguishing explicitly between planned and exploratory analyses can thus only disadvantage the open researcher, because denoting a subset of analyses as exploratory reduces their evidential status. We believe, however, that this apparent disadvantage is the scientifically correct approach and is increasingly viewed as a positive and necessary distinction [2,4,28]. The restriction on flexibility imposed by explicit differentiation between exploratory and confirmatory science represents a major systemic shift in how science is understood, planned, and conducted—the impact of which is often underestimated.

The more restrictive structures of open science can result in mistakes having greater ramifications than within a more closed approach. Transparent documentation and data come with higher error visibility, and the flexibility to avoid acknowledging mistakes is lost. However, for science, unacknowledged or covered-up mistakes are certainly problematic. We therefore support the view that mistakes should be handled openly, constructively, and, perhaps most importantly, in a positive nondetrimental way [38,39]. Mistakes can and will happen, but by encouraging researchers to be open about them and not reprimanding others for them, open science can counter incentives to hide mistakes.

Besides higher visibility, mistakes can also have greater ramifications owing to the loss of flexibility in responding to them and the fixed timelines, particularly under preregistered conditions. When developing full a priori analysis pipelines, researchers should attempt to anticipate all potential outcomes and contingencies. It is rarely possible to anticipate all contingencies, and the anticipation itself can lead to problems. For example, we have spent considerable time developing complex exhaustive analyses, which may never be used because registered preliminary assumption checks failed. Had a more flexible approach been adopted, the unnecessary time investment would likely have never been made. Amendments to preregistrations and RRs are perfectly acceptable, as are iterative studies, but such changes and additions will also take time. Beyond preregistration, the greater scrutiny that comes with open science, particularly open data and code, means that there are fewer options to exploit researcher degrees of freedom. These examples illustrate how open science researchers can pursue higher standards than closed science but can encounter difficulties and restrictions because of doing so.

Tips

Pilot data are essential when developing complex a priori analysis pipelines, and pilot data can be explored without constraint. Make and expect a distinction between planned and exploratory analyses. Preregistered and RR experiments are likely to have a higher evidential value than closed science experiments in the future, so researchers should be encouraged to use these formats. Be open about mistakes and do not reprimand others for their mistakes; rather, applaud honesty.

Challenge 2: The time cost

There are theoretical reasons why open science methods could save time. For example, a priori analysis plans constrain the number of analyses, or reviewers may be less suspicious of demonstrably a priori hypotheses. However, in our experience, these potential benefits rarely come to fruition in the current system. The additional requirements of open and reproducible science often consume more time: Archiving, documenting, and quality controlling of code and data takes time. Considerably more time consuming is the adoption of preregistrations or RRs, because full analysis pipelines, piloting, manuscripts, and peer review (for RRs) must occur prior to data collection, which is only then followed by the more traditional, but still necessary, stages involved in publication such as developing (exploratory) analyses, writing the final manuscript, peer reviewing, etc. For comparison, it is usually easier and quicker (although questionable) to develop complex analyses on existing final data sets rather than on separate subsets of pilot data or simulated data, as required under preregistration. In our experience, these additional requirements can easily double the duration of a project. Data collection also takes longer in open experiments, which often have higher power requirements, particularly when conducted as RRs [2,21]. The ECR who adopts open science methods will likely complete fewer projects within a fixed period in comparison to peers who work with traditional methods. Therefore, very careful consideration needs to be given to the overall research strategy as early as possible in projects, because resources are limited for ECRs within graduate programs and post-doctoral positions.
Although there are discussions around reducing training periods for ECRs [40,41], the additional time requirements of open science, and in particular of preregistration and RRs, might be seen as countermanding factors that require longer periods of continuous employment to allow ECRs to adopt open science practices. Less emphasis on moving between institutions than is currently the norm may also help alleviate these concerns by allowing for longer projects. The increased time cost, in our experience, presents the greatest challenge in conducting open science and acutely afflicts ECRs and thus may require rethinking how ECR training and research is organised by senior colleagues.

Tips

Preregistered, well-powered experiments are preferable to those that are not. However, it should be expected and planned for that these will take substantially longer than would otherwise be the case. Where possible, researchers at all levels should take this time cost into account, whether in planning research or questions of employment and reward.

Challenge 3: Incentive structure isn’t in place yet

Open science is changing how science is conducted, but it is still developing and will take time to consolidate in the mainstream [42,43]. Systems that reward open science practices are currently rare, and researchers are primarily assessed according to traditional standards. For instance, assessment structures such as the national Research Excellence Framework (REF) in the United Kingdom or the Universities Excellence Initiative in Germany, as well as research evaluations within universities, are yet to fully endorse and reward the full range of open science practices. Some reviewers and editors at journals and funders remain to be convinced of the necessity or suitability of open methods. Although many may view open science efforts neutrally or positively, they rarely weigh proportionally the sacrifices made in terms of flexibility and productivity. For example, reviewers tend to apply the same critical lens irrespective of when tested hypotheses were declared.

High-profile journals tend to reward a good story with positive results, but loss of flexibility limits the extent to which articles can be finessed, and it reduces the likelihood of positive results (see ‘Benefit 1’). The requirement for novelty can also countermand the motivation to perform replications, which, as recent findings indicate, are necessary [4,5,11,44]. Some journals are taking a lead in combatting questionable research practices and have signed guidelines promoting open methods [45,46]. However, levels of adoption are highly variable. Although many prestigious journals, institutions, and senior researchers declare their support for open methods, as yet, few have published using them.

Within open science, standards are still developing. At present, there is a lack of consensus over single-blind, double-blind, and open peer review [26]. Levels of preregistration vary dramatically [47], with some registrations only outlining hypotheses without analysis plans. Although this approach may guard against HARKing and be tactically advantageous for individuals, it does little to prevent p hacking and may eventually diminish the perceived value of preregistrations. There is also a practical concern around statistical power. High standards are admirable (e.g., Nature Human Behaviour requires all frequentist hypothesis tests in RRs be powered to at least 95%), but within limited ECR research contracts, they run up against feasibility constraints, particularly for resource-intensive (e.g., neuroimaging, clinical studies) or complex multilevel experiments that are likely to contain low to medium effect sizes. Such constraints might skew areas of investigation and raise new barriers specifically for ECRs trying to work openly. However, developments in the assessment of evidence might alleviate some of these concerns in the future [22,23,48].
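To give a sense of scale for the power requirement discussed above, the following is a rough sketch rather than a substitute for a proper power analysis. It assumes a two-sided comparison of two independent groups and uses the normal approximation n ≈ 2(z_{1−α/2} + z_{power})² / d² per group. The function name `n_per_group` and the effect sizes are illustrative assumptions, not values taken from the studies cited here.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.95):
    """Approximate participants per group for a two-sided, two-sample comparison.

    Uses the normal approximation, which slightly underestimates the
    sample size an exact t-test calculation would give.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = standard_normal.inv_cdf(power)          # quantile for desired power
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size_d ** 2)


if __name__ == "__main__":
    # Hypothetical small (d = 0.2) and medium (d = 0.5) standardised effects.
    for d in (0.2, 0.5):
        for power in (0.80, 0.95):
            n = n_per_group(d, power=power)
            print(f"d={d}, power={power:.0%}: about {n} per group")
```

Under these assumptions, moving from 80% to 95% power for a medium effect (d = 0.5) raises the requirement from roughly 63 to roughly 104 participants per group, and the requirement grows much faster for smaller effects.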

The challenges described above mean that ECRs practicing open science are likely to have fewer published papers by the time they are applying for their next career stage. Compounding this issue is the dilution of authorship caused by the move towards more collaborative work practices (although, see [49]). ECR career progression critically depends on the number of first and last author publications in high-profile journals [1,4,18]. These factors make it more difficult for ECRs to compete for jobs or funding with colleagues taking a more orthodox approach [27]. Furthermore, although senior colleagues may find their previous work devalued by failed replications, they are likely to have already secured the benefits from quicker and less robust research practices [43]. They may then expect and teach comparable levels of productivity, which has the potential to be a source of tension [50].

The trade-off between quality and quantity appears to be tipped in favour of quantity in the current incentive structure. As long as open science efforts are not formally recognised, it seems ECRs who pursue open science are put at a disadvantage compared with ECRs who have not invested in open science [42,51]. However, reproducible science is increasingly recognized and supported, as we will discuss in the next section. Overall, ECRs are likely to be the ones who put in the effort to implement open science practices and may thus be most affected by the described obstacles. We believe academics at all levels and institutions should take into account these difficulties because the move towards open and reproducible science may be unavoidable and can ultimately benefit the whole community and beyond.

To summarise, ECRs currently face a situation in which demands on them are increasing. However, the structures that might aid a move towards more open and robust practices are not widely implemented or valued yet. We hope that one consequence of the well-publicised failures to replicate previous work and the consequent open science movement will be a shift in emphasis from an expectation of quantity to one of quality. This would include greater recognition, understanding, and reward for open science efforts, including replication attempts, broader adoption of preregistration and RRs, expectation of explicit distinctions between confirmatory and exploratory analyses, and longer, continuous ECR positions from which lower numbers of completed studies are expected.

Tips

Early adoption of open methods and high standards requires careful planning at an early stage of investigations, but doing so should place ECRs ahead of the curve as practices evolve. Be strategic about which open science practices suit your research. Persevere, focus on quality rather than quantity, and, when evaluating others’ work, give credit for efforts made towards the common good.

Three benefits

Benefit 1: Greater faith in research

“Science is an ongoing race between our inventing ways to fool ourselves, and our inventing ways to avoid fooling ourselves.” [52]. A scientist might observe a difference between conditions in their data, think they thought something similar previously, apply a difference test (e.g., a t test), and report a headline significant result. However, researchers rarely have perfect access to previous intentions and may have even forgotten thinking the opposite effect was plausible. Preregistration prevents this form of, often unconscious, error by providing an explicit timeline and record, as well as guarding against other forms of questionable research practices [19,29,30]. ECRs are at a particularly high risk of committing such errors due to lack of experience [53]. Preregistration also forces researchers to gain a more complete understanding of analyses (including stopping plans and smallest effect sizes of interest) and to attempt to anticipate all potential outcomes of an experiment [23]. Therefore, open science methods such as RRs can improve the quality and reliability of scientific work. As such procedures become more widely known, the gain in quality should reflect positively on ECRs who adopt them early.

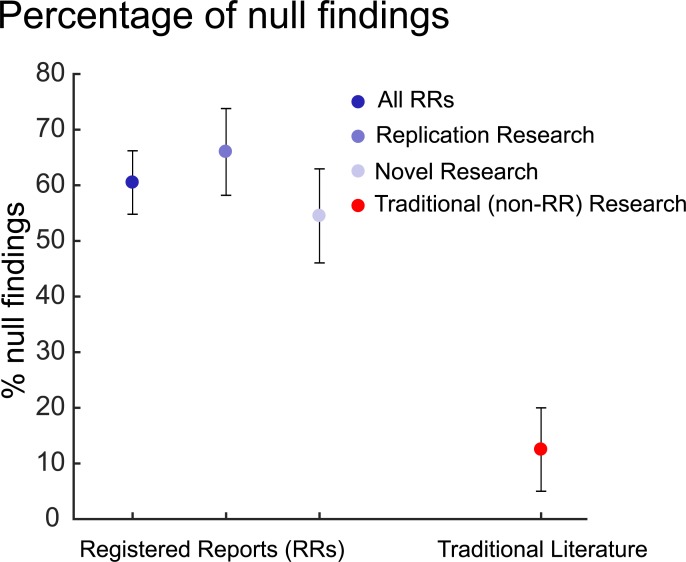

RRs not only guard against questionable practices but can also increase the chances of publication because they offer a path to publication irrespective of null findings. In well-designed and adequately powered experiments, null findings are often informative [23]. Furthermore, if the current incentive structure has skewed the literature toward positive findings, a higher prevalence of null findings is likely to be a better reflection of scientific enquiry. If this were the case, then we would expect more null findings in RRs and preregistrations than in the rest of the literature. To test this, we surveyed a list of 127 published biomedical and psychological science RRs compiled (September 2018) by the Center for Open Science (see S1 Text and https://osf.io/d9m6e/), of which 113 RRs were included in final analyses. For each RR, we counted the number of clearly stated, a priori, discrete hypotheses per preregistered experiment. We assessed the percentage of hypotheses that were not supported and compared it with percentages previously reported within the wider literature. Of the hypotheses we surveyed, 60.5% (see Fig 1) were not supported by the experimental data (see S1 Data and https://osf.io/wy2ek/), which is in stark contrast to the estimated 5% to 20% of null findings in the traditional literature [46,47]. The principal binomial test was applied with an uninformed prior (beta prior scaling parameters a and b set to 1), using the open software package JASP (version 0.8.5.1) [54]. Data suggested that even compared to a liberal estimate of 30% published null results, a substantially larger proportion of hypotheses was not supported among RRs (60.5% versus test value 30%, 95% confidence interval [54.7%–66.1%], p < 0.001; Bayes Factor = 2.0 × 10^24). Moreover, the percentage of unsupported hypotheses was similar, if not slightly higher for replication attempts (66.0% [57.9%–73.5%]) compared with novel research (54.5% [46.0%–62.9%]) amongst the surveyed RRs.
Because these comparisons are between estimates that we have surveyed and published estimates, we highlight their exploratory nature. However, these analyses suggest that RRs increase the chances for publishing null findings. Adoption of RRs might therefore reduce the chances of ECRs’ work going unpublished. Furthermore, the difference between the incidences of null findings in RRs and that of the wider literature can be interpreted as an estimate of the file drawer problem [14]. Because RRs guarantee the publication of work irrespective of its statistical significance, the ECR publishes irrespective of the study’s outcome.
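For readers who want to see how a Bayes factor of this kind can arise, the following minimal sketch computes a two-sided Bayes factor for a binomial proportion with a Beta(a, b) prior against a fixed test value. It is not the JASP implementation, and the count of 179 unsupported hypotheses out of 296 is an assumption reconstructed from the percentages and hypothesis totals reported in this section (153 + 143 hypotheses, 60.5% unsupported), not a figure taken from S1 Data.

```python
import math


def log_beta(a, b):
    """Natural log of the Beta function, via log-gamma for numerical stability."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def log10_bf10(k, n, theta0, a=1.0, b=1.0):
    """log10 Bayes factor for H1: theta ~ Beta(a, b) versus H0: theta = theta0.

    k successes in n trials; the binomial coefficient cancels between the
    two marginal likelihoods, so it is omitted.
    """
    log_m1 = log_beta(k + a, n - k + b) - log_beta(a, b)
    log_m0 = k * math.log(theta0) + (n - k) * math.log(1 - theta0)
    return (log_m1 - log_m0) / math.log(10)


# Assumed counts (reconstructed from the reported percentages, see lead-in).
K_UNSUPPORTED = 179
N_HYPOTHESES = 296

if __name__ == "__main__":
    result = log10_bf10(K_UNSUPPORTED, N_HYPOTHESES, theta0=0.30)
    print(f"proportion unsupported: {K_UNSUPPORTED / N_HYPOTHESES:.1%}")
    print(f"log10(BF10) against a test value of 30%: {result:.2f}")
```

With these assumed counts and the uniform prior (a = b = 1), the Bayes factor is on the order of 10^24, in line with the value reported above.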

Fig 1. Percentages of null findings among RRs and traditional (non-RR) literature [46,47], with their respective 95% confidence intervals.

In total, we extracted n = 153 hypotheses from RRs that were declared as replication attempts and n = 143 hypotheses that were declared as original research. The bounds of the confidence intervals shown for traditional literature were based on estimates (5% and 20%, respectively) of null findings that have been previously reported for traditional literature [46,47]. Data is available on the Open Science Framework (https://osf.io/wy2ek/) and in S1 Data. RR, registered report.

A core aim of the open science movement is to make science more reliable. All the structures of open science are there to make this so. Sharing of protocols and data leads to replication, reproduction of analyses, and greater scrutiny. This increased scrutiny can also be a great motivator to ensure good quality data and analyses. Sharing data and analyses is increasingly common and expected [55], and soon we anticipate findings may only be deemed fully credible if they are accompanied by accessible data and transparent analysis pathways [56]. Instead of relying on trust, open science allows verification through checking and transparent timelines. There is also an educational aspect to this: when code and data are available, one can reproduce results presented in papers, which also facilitates understanding. More fundamentally, replication of findings is core to open science and paramount in increasing reliability, which can benefit scientists at all levels.

Tips

Make your work as accessible as possible and preregister experiments when suitable. If your research group lacks experience in open science practices, consider initiating the discussion. Do not be afraid of null results, but design and power experiments such that null results can be informative and register them to raise the chances of publication. When preparing preregistrations or RRs, we recommend consulting the material provided by the Center for Open Science [57]. Be aware of the additional time and power demands preregistered and RR experiments can require.

Benefit 2: New helpful systems

The structures developed around open and reproducible science are there to help researchers and promote collaboration [58]. These structures include a range of software tools, publishing mechanisms, incentives, and international organisations. These can help ECRs in documenting their work, improving workflows, supporting collaborations, and ultimately progressing their training.

Open science software such as web-based, version-controlled repositories like GitHub and Bitbucket [59] can help with storing and sharing code. In combination with scripting formats like R Markdown [60] and Jupyter Notebooks for Python [35], ECRs can build up well-documented and robust code libraries that may be reused for future projects and for teaching purposes. Ultimately, the most thankful recipient may be yourself in a few years’ time. New open tools can aid robust and reproducible data analysis in a user-friendly way. For instance, the open-source Brain Imaging Data Structure (BIDS) application was designed to standardise analysis pipelines in neuroimaging [61]. Well-commented, standardised, and documented code and data are critical for making science open and improve programs, as do the additional checks when they are shared.

Open science tools can further assist ECRs in scrutinising existing work. For instance, the open software p-curve analysis was developed in response to the skew in the literature in favour of positive results and facilitates estimating publication bias within research areas ([62]; although, see [63]). Another useful checking tool is statcheck, an R toolbox that scans documents for inconsistencies in reported statistical values [64]. Overall, these examples can guide the ECR towards becoming a more rigorous researcher and may also help them to exert some healthy self-scepticism via additional checks [51].
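The kind of consistency check described above can be illustrated with a small sketch. This is not statcheck's implementation (statcheck is an R package and handles several test types); it only shows the underlying idea for the simplest case, a reported z statistic, by recomputing the two-sided p value and comparing it with the reported one up to rounding. The function names and the example values are hypothetical.

```python
import math


def two_sided_p_from_z(z):
    """Two-sided p value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def is_consistent(z, reported_p, decimals=3):
    """True if reported_p agrees with the recomputed p value up to rounding.

    `decimals` is the number of decimal places the p value was reported to.
    """
    tolerance = 0.5 * 10 ** -decimals
    return abs(two_sided_p_from_z(z) - reported_p) <= tolerance + 1e-12


if __name__ == "__main__":
    # Hypothetical reported results: (z statistic, reported p, decimals).
    reported = [(2.20, 0.028, 3), (1.50, 0.03, 2)]
    for z, p, decimals in reported:
        status = "consistent" if is_consistent(z, p, decimals) else "inconsistent"
        print(f"z = {z:.2f}, reported p = {p}: {status}")
```

A flagged inconsistency is a prompt to recheck the reported values, not proof of an error, since one-sided tests or corrections can legitimately change the p value.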

The open science movement also provides opportunities to access free high-quality, often standardised data. For instance, in genetics the repository Addgene [65], in neuroanatomy the Allen Brain Atlas [66], in brain imaging the Human Connectome Project [67], and in biomedicine the UK Biobank [68] present rich data sources that may be particularly useful for ECRs, who often have limited funds. Furthermore, distributed laboratory networks such as the Psychological Science Accelerator [58], which supports crowdsourced research projects, and the open consortium Enhancing Neuroimaging Genetics Through Meta Analysis (ENIGMA) [69] allow ECRs to partake in international collaborations. However, although these new open forms of collaboration are often beneficial and productive, the coordination of timelines between the researchers involved and expectations of contributions can be challenging and require clear and open communication.

ECRs who work with existing data sets can also benefit from new publishing formats such as secondary RRs and exploratory reports [32]. These allow preregistration of hypotheses and analysis plans for data that have already been acquired. Although still under development, exploratory reports are intended for situations in which researchers don’t have strong a priori predictions and in which authors agree to fully share data and code [32]. Similar to RRs, they are outcome independent in terms of statistical significance, and publication is based on transparency and an intriguing research question. As such, this format may present an entry to preregistration for ECRs that can help build expertise in open science methods.

There is a spectrum of open science practices and tools at researchers’ disposal. These range from making data publicly available right through to fully open RRs. Generally, researchers should be encouraged to adopt as much as possible, but one should not let the perfect be the enemy of the good. Some research questions are exploratory, may be data driven, or are iterative, which may be less well suited to preregistration. Preregistration also presents problems for complex experiments, because it can be difficult to anticipate all potential outcomes. There are also often constraints on when and if data can be made available, such as anonymization. Dilemmas also arise when elegant experimental designs are capable of probing both confirmatory and exploratory questions, in which case it is recommended that only confirmatory aspects are preregistered.

Tips

Make use of new tools that facilitate sharing and documenting your work efficiently and publicly. Think about whether your research question can be addressed with existing, open data sets. Free training options in open science methods are growing [30]; try to make use of them. Making data and materials, such as code, available is a relatively low-cost entry into open science. ECRs should be encouraged to adopt as many open practices as possible but select the methods that fit their research question with feasibility in mind.

Benefit 3: Investment in your future

Putting more of your work and data in the public domain is central to open science and increases ECRs’ opportunities for acknowledgment, exchange, collaboration, and advancement [43,70]. It also renders preclinical and translational research more robust and efficient [71] and can accelerate the development of life-saving drugs, for instance, in response to public health emergencies [70,72]. Reuse of open data can lead to publications [43], which may not have happened under closed science [73]. ECRs can receive citations for data alone when stored at public repositories such as the Open Science Framework [74,75], and articles with published open data receive more citations than articles that don’t share data [76]. Preprints and preregistrations are also citable and appear to increase citation rates [27]. As such, ECRs who make use of these open science methods can accumulate additional citations early on and thereby evidence the impact of their work [77].

Moreover, it has also been highlighted that authors may receive early media coverage based on preprints [27], which we see confirmed in our experience with a preprint based on an earlier version of this article [78,79]. Openness in science can even promote equality by making resource-costly data or rarely available observations accessible to a wider range of communities [74,75]. In theory, data sharing increases the longevity and therefore utility of data, whereas in closed science, data usability declines drastically over time [80]; although it is worth noting that this particular advantage is negated by inadequate data documentation [8]. More generally, open data are open to anyone: accessing, using, and publishing with open data does not require a big grant, and therefore open science can facilitate widened participation and diversity for ECRs. In short, open science should improve the quality of work and get researchers recognised for their efforts. These benefits apply to individual career progression but also benefit science in general and thus may create a virtuous cycle.

Beyond academia, working reproducibly should put ECRs in a better career position. For instance, reproducibility has been raised as a major concern in industrial contexts such as software development [81] and in industrial biomedical and pharmaceutical research [82]. Therefore, for ECRs considering a career transition towards industry, adopting open science methods and reproducible research practices might allow them to stand out. This advantage may outweigh possible disadvantages (e.g., a shorter publication list) when it comes to career paths outside of academia [83].

Early adoption of open and reproducible methods is an investment in the future and can put researchers ahead of the curve. In the wake of numerous failed replications of previous research, employers and grant funders increasingly see open and reproducible science as a necessary requirement and heavily encourage its adoption [51]. Recently, funders have also offered funding specifically for replications and open science research projects. Some have called for data sharing [84], which is becoming a requirement for a range of biomedical journals [85]. Open access publishing has seen a rapid increase in uptake, rising by a factor of 4 to 5 between 2006 and 2016 [27], with several journals rewarding open science efforts [46]. Initiatives by leading scientific bodies demonstrate that the need for an open science culture is starting to be recognised and that such a culture is increasingly desired and should become the norm [18,19,27,30,38,39]. In this spirit, the Montreal Neurological Institute, a leading neuroscience institution, has recently declared itself to be a fully open science centre [86]. Other examples are the universities LMU München and Cologne (Germany) as well as Cardiff (UK), which have recently asked some candidates applying for positions in psychology to provide a track record of open science methods. Therefore, adoption of open science practices is likely to bring career benefits and to keep growing, especially as it is a one-way street: once adopted, it is very hard to revert to a traditional approach. For example, once the distinction between confirmatory and exploratory research is understood and implemented, it is difficult to unknow [4].

Tips

Early adoption of open science practices, which can be evidenced, will likely confer career advantages in the future. Explore opportunities for open science collaborations in consortia or research networks and connect with others to build a local open science community. Look out for open science funding opportunities, which are increasingly available. Consider the level of open science conducted when deciding where to work. Where possible, support open science initiatives.

Conclusion

Overall, we believe open methods are worthwhile, positive, necessary, and inevitable but can come at a cost that ECRs would do well to consider. We have summarised 3 main benefits that the ECR can gain when working with open science methods and, perhaps more importantly, how open science methods allow us to place greater faith in scientific work. We also emphasise that there are obstacles, particularly for the ECR. The adoption of open practices requires a change in attitude and productivity expectations, which need to be considered by academics at all levels, as well as funders. Yet, taken together, we think that capitalising on the benefits is a good investment for both the ECR and science and should be encouraged where possible. Pervasive failures to replicate previous research make the transition to open science methods necessary, and despite the challenges, early adoption of open practices will likely pay off for both the individual and science.

Supporting information

(DOCX)

RR, registered report.

(XLSX)

Acknowledgments

We would also like to thank the following people (in alphabetical order) for their helpful input: Rhian Barrance, Dorothy Bishop, Lara Boyd, Chris Chambers, Emily Hammond, Nikolaus Kriegeskorte, Jeanette Mumford, Russell Poldrack, Robert Thibault, Adam Thomas, Kevin Weiner, and Kirstie Whitaker.

Abbreviations

- ECR: early career researcher

- HARKing: hypothesizing after the results are known

- REF: Research Excellence Framework

- RR: registered reports

Data Availability

All relevant data is available on the Open Science Framework (https://osf.io/wy2ek/) and in the supplementary material of this article.

Funding Statement

We would like to thank the Wellcome Trust (104943/Z/14/Z; https://wellcome.ac.uk/) and Health and Care Research Wales (HS/14/20; https://www.healthandcareresearch.gov.wales/) for their financial support. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Footnotes

Provenance: Not commissioned; externally peer reviewed

References

- 1. Higginson AD, Munafò MR. Current Incentives for Scientists Lead to Underpowered Studies with Erroneous Conclusions. PLoS Biol. 2016;14(11): e2000995. doi:10.1371/journal.pbio.2000995 PMCID: PMC5104444

- 2. Munafò M, Nosek B, Bishop D, Button K, Chambers C, Percie du Sert N, et al. A manifesto for reproducible science. Nat Hum Behav. Nature Publishing Group; 2017;1: 0021. doi:10.1038/s41562-016-0021

- 3. Moonesinghe R, Khoury MJ, Janssens ACJW. Most Published Research Findings Are False—But a Little Replication Goes a Long Way. PLoS Med. 2007;4(2): e28. doi:10.1371/journal.pmed.0040028

- 4. Chambers C. The seven deadly sins of psychology: A manifesto for reforming the culture of scientific practice. Princeton University Press; 2017.

- 5. Errington TM, Iorns E, Gunn W, Tan FE, Lomax J, Nosek BA. An open investigation of the reproducibility of cancer biology research. Elife. 2014;3: 1–9. doi:10.7554/eLife.04333

- 6. Nosek BA, Errington TM. Making sense of replications. Elife. 2017;6: 4–7. doi:10.7554/eLife.23383

- 7. Harris R. Reproducibility issues. Chem Eng News. 2017;95.

- 8. Wallach JD, Boyack KW, Ioannidis JPA. Reproducible research practices, transparency, and open access data in the biomedical literature, 2015–2017. PLoS Biol. 2018;16(11): e2006930. doi:10.1371/journal.pbio.2006930

- 9. Mehler D. The replication challenge: Is brain imaging next? In: Raz A, Thibault RT, editors. Casting Light on the Dark Side of Brain Imaging. 1st ed. Academic Press; 2019. pp. 84–88. doi:10.1016/B978-0-12-816179-1.00010-4

- 10. Button KS, Ioannidis JPA, Mokrysz C, Nosek BA, Flint J, Robinson ESJ, et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat Rev Neurosci. 2013;14: 365–376. doi:10.1038/nrn3475

- 11. Iqbal SA, Wallach JD, Khoury MJ, Schully SD, Ioannidis JPA. Reproducible Research Practices and Transparency across the Biomedical Literature. PLoS Biol. 2016;14(1): e1002333. doi:10.1371/journal.pbio.1002333

- 12. Szucs D, Ioannidis JPA. Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature. PLoS Biol. 2017;15(3): e2000797. doi:10.1371/journal.pbio.2000797

- 13. Ramsey S, Scoggins J. Practicing on the Tip of an Information Iceberg? Evidence of Underpublication of Registered Clinical Trials in Oncology. Oncologist. NIH Public Access; 2008;13: 925. doi:10.1634/theoncologist.2008-0133

- 14. Rosenthal R. The file drawer problem and tolerance for null results. Psychol Bull. 1979;86: 638–641. doi:10.1037/0033-2909.86.3.638

- 15. Tomkins A, Zhang M, Heavlin WD. Reviewer bias in single- versus double-blind peer review. Proc Natl Acad Sci U S A. National Academy of Sciences; 2017;114: 12708–12713. doi:10.1073/pnas.1707323114

- 16. Willingham S, Volkmer J, Gentles A, Sahoo D, Dalerba P, Mitra S, et al. The challenges of replication. Elife. eLife Sciences Publications Limited; 2017;6: 6662–6667. doi:10.7554/eLife.23693

- 17. Nosek BA, Ebersole CR, DeHaven AC, Mellor DT. The preregistration revolution. Proc Natl Acad Sci. 2018;2017: 201708274. doi:10.1073/pnas.1708274114

- 18. Nosek B, Spies J, Motyl M. Scientific Utopia. Perspect Psychol Sci. 2012;7: 615–631. doi:10.1177/1745691612459058

- 19. Freedman LP, Cockburn IM, Simcoe TS. The economics of reproducibility in preclinical research. PLoS Biol. 2015;13(6): e1002165. doi:10.1371/journal.pbio.1002165

- 20. Mazey L, Tzavella L. Barriers and solutions for early career researchers in tackling the reproducibility crisis in cognitive neuroscience. Cortex. 2018;113: 357–359. doi:10.1016/j.cortex.2018.12.015

- 21. Button KS, Ioannidis JPA, Mokrysz C, Nosek BA, Flint J, Robinson ESJ, et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat Rev Neurosci. 2013;14: 365–376. doi:10.1038/nrn3475

- 22. Algermissen J, Mehler DMA. May the power be with you: are there highly powered studies in neuroscience, and how can we get more of them? J Neurophysiol. 2018;119: 2114–2117. doi:10.1152/jn.00765.2017

- 23. Dienes Z. Understanding psychology as a science: An introduction to scientific and statistical inference. Palgrave Macmillan; 2008.

- 24. Introducing eLife’s first computationally reproducible article [Internet]. 2019. Available from: https://elifesciences.org/labs/ad58f08d/introducing-elife-s-first-computationally-reproducible-article. [cited 2019 March 25].

- 25. Living Figures–an interview with Björn Brembs and Julien Colomb [Internet]. 2014. Available from: https://blog.f1000.com/2014/09/09/living-figures-interview/. [cited 2019 March 25].

- 26. Tomkins A, Zhang M, Heavlin WD. Reviewer bias in single- versus double-blind peer review. Proc Natl Acad Sci U S A. National Academy of Sciences; 2017;114: 12708–12713. doi:10.1073/pnas.1707323114

- 27. McKiernan EC, Bourne PE, Brown CT, Buck S, Kenall A, Lin J, et al. How open science helps researchers succeed. Elife. 2016;5: 1–19. doi:10.7554/eLife.16800

- 28. Chambers CD. Registered Reports: A new publishing initiative at Cortex. Cortex. 2013;49: 609–610. doi:10.1016/j.cortex.2012.12.016

- 29. Nosek BA, Ebersole CR, DeHaven AC, Mellor DT. The preregistration revolution. Proc Natl Acad Sci. 2018;2017: 201708274. doi:10.1073/pnas.1708274114

- 30. Toelch U, Ostwald D. Digital open science—Teaching digital tools for reproducible and transparent research. PLoS Biol. 2018;16(7): e2006022. doi:10.1371/journal.pbio.2006022

- 31. Kerr N. HARKing: Hypothesizing after the results are known. Personal Soc Psychol Rev. 1998;2: 196–217. doi:10.1207/s15327957pspr0203_4

- 32. McIntosh RD. Exploratory reports: A new article type for Cortex. Cortex. Elsevier Ltd; 2017;96: A1–A4. doi:10.1016/j.cortex.2017.07.014

- 33. Laakso M, Welling P, Bukvova H, Nyman L, Bjoerk B-C, Hedlund T. The Development of Open Access Journal Publishing from 1993 to 2009. PLoS ONE. 2011;6(6): e20961. doi:10.1371/journal.pone.0020961

- 34. Hanel PHP, Mehler DMA. Beyond reporting statistical significance: Identifying informative effect sizes to improve scientific communication. Public Underst Sci. 2019. doi:10.1177/0963662519834193

- 35. Kluyver T, Ragan-Kelley B, Pérez F, Granger B, Bussonnier M, Frederic J, Kelley K, Hamrick J, Grout J, Corlay S, Ivanov P, Avila D, Abdalla S, Willing C, Jupyter Development Team. Jupyter Notebooks–a publishing format for reproducible computational workflows. Positioning and Power in Academic Publishing: Players, Agents and Agendas; 2016. pp. 87–90. doi:10.3233/978-1-61499-649-1-87

- 36. Markowetz F. Five selfish reasons to work reproducibly. Genome Biol. 2015;16: 274. doi:10.1186/s13059-015-0850-7

- 37. Wagenmakers EJ, Dutilh G. Seven Selfish Reasons for Preregistration. APS Obs. 2016;29.

- 38. Bishop DVM. Fallibility in science: Responding to errors in the work of oneself and others. Adv Methods Pract Psychol Sci. 2017; 1–9. doi:10.7287/peerj.preprints.3486v1

- 39. Lewandowsky S, Bishop D. Research integrity: Don’t let transparency damage science. Nature. 2016;529: 459–461. doi:10.1038/529459a

- 40. National Academy of Sciences; National Academy of Engineering and Institute of Medicine. The Postdoctoral Experience Revisited. Washington, D.C.: The National Academies Press; 2014. doi:10.17226/18982

- 41. Pickett CL, Corb BW, Matthews CR, Sundquist WI, Berg JM. Toward a sustainable biomedical research enterprise: Finding consensus and implementing recommendations. Proc Natl Acad Sci U S A. 2015;112. doi:10.1073/pnas.1509901112

- 42. Moher D, Naudet F, Cristea IA, Miedema F, Ioannidis JPA, Goodman SN. Assessing scientists for hiring, promotion, and tenure. PLoS Biol. 2018;16(3): e2004089. doi:10.1371/journal.pbio.2004089

- 43. Poldrack RA. The Costs of Reproducibility. Neuron. Elsevier Inc.; 2019;101: 11–14. doi:10.1016/j.neuron.2018.11.030

- 44. Baker M. 1,500 scientists lift the lid on reproducibility. Nature. 2016;533: 452–454. doi:10.1038/533452a

- 45. Nosek BA, Alter G, Banks GC, Borsboom D, Bowman SD, Breckler SJ. Scientific standards. Promoting an open research culture. Science. 2015;348. doi:10.1126/science.aab2374

- 46. Kidwell MC, Lazarević LB, Baranski E, Hardwicke TE, Piechowski S, Falkenberg L-S, et al. Badges to Acknowledge Open Practices: A Simple, Low-Cost, Effective Method for Increasing Transparency. PLoS Biol. 2016;14(5): e1002456. doi:10.1371/journal.pbio.1002456

- 47. Hardwicke TE, Ioannidis JPA. Mapping the universe of registered reports. Nat Hum Behav. Springer US; 2018;2: 793–796. doi:10.1038/s41562-018-0444-y

- 48. Smith PL, Little DR. Small is beautiful: In defense of the small-N design. Psychonomic Bulletin & Review; 2018. doi:10.3758/s13423-018-1451-8

- 49. Fontanarosa P, Bauchner H, Flanagin A. Authorship and Team Science. JAMA. American Medical Association; 2017;318: 2433. doi:10.1001/jama.2017.19341

- 50. Brecht K. “Bullied Into Bad Science”: An Interview with Corina Logan–JEPS Bulletin. 2017. Available from: http://blog.efpsa.org/2017/10/23/meet-corina-logan-from-the-bullied-into-bad-science-campaign/. [cited 2017 Nov 14].

- 51. Flier J. Faculty promotion must assess reproducibility. Nature. 2017;549: 133–133. doi:10.1038/549133a

- 52. Nuzzo R. How scientists fool themselves–and how they can stop. Nature. 2015;526: 182–185. doi:10.1038/526182a

- 53. Fanelli D, Costas R, Ioannidis JPA. Meta-assessment of bias in science. Proc Natl Acad Sci. 2017;114: 3714–3719. doi:10.1073/pnas.1618569114

- 54. JASP Team. JASP (Version 0.8.5.1) [Computer software]. 2017.

- 55. Eglen SJ, Marwick B, Halchenko YO, Hanke M, Sufi S, Gleeson P, et al. Toward standard practices for sharing computer code and programs in neuroscience. Nat Neurosci. Nature Publishing Group; 2017;20: 770–773. doi:10.1038/nn.4550

- 56. Editorial. Referees’ rights. Peer reviewers should not feel pressured to produce a report if key data are missing. Nature. 2018;560: 409. doi:10.1038/d41586-018-06006-y

- 57. Centre for Open Science Blog. A Preregistration Coaching Network [Internet]. 2017. Available from: https://cos.io/blog/preregistration-coaching-network/. [cited 2 Nov 2017].

- 58. Moshontz H, Campbell L, Ebersole CR, IJzerman H, Urry HL, Forscher PS, et al. The Psychological Science Accelerator: Advancing Psychology through a Distributed Collaborative Network. Adv Methods Pract Psychol Sci. 2018. doi:10.31234/OSF.IO/785QU

- 59. Biecek P, Kosinski M. archivist: An R Package for Managing, Recording and Restoring Data Analysis Results. J Stat Softw. 2017;82(11). doi:10.18637/jss.v082.i11

- 60. Baumer B, Cetinkaya-Rundel M, Bray A, Loi L, Horton NJ. R Markdown: Integrating A Reproducible Analysis Tool into Introductory Statistics. BioRxiV. 2014. doi:10.5811/westjem.2011.5.6700

- 61. Gorgolewski KJ, Alfaro-Almagro F, Auer T, Bellec P, Capotă M, Chakravarty MM, et al. BIDS apps: Improving ease of use, accessibility, and reproducibility of neuroimaging data analysis methods. PLoS Comput Biol. 2017;13(3): e1005209. doi:10.1371/journal.pcbi.1005209

- 62. Simonsohn U, Nelson LD, Simmons JP. P-Curve: A Key to the File Drawer [Internet]. 2013. Available from: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2256237. [cited 2019 March 25].

- 63. Bishop DVM, Thompson PA. Problems in using p-curve analysis and text-mining to detect rate of p-hacking and evidential value. PeerJ. PeerJ Inc.; 2016;4: e1715. doi:10.7717/peerj.1715

- 64. Nuijten MB, Hartgerink CHJ, van Assen MALM, Epskamp S, Wicherts JM. The prevalence of statistical reporting errors in psychology (1985–2013). Behav Res Methods. Behavior Research Methods; 2016;48: 1205–1226. doi:10.3758/s13428-015-0664-2

- 65. Kamens J. Addgene: Making Materials Sharing “Science As Usual.” PLoS Biol. 2014;12(11): e1001991. doi:10.1371/journal.pbio.1001991

- 66. Jones AR, Overly CC, Sunkin SM. The Allen Brain Atlas: 5 years and beyond. Nat Rev Neurosci. Nature Publishing Group; 2009;10: 821–828. doi:10.1038/nrn2722

- 67. Van Essen DC, Ugurbil K, Auerbach E, Barch D, Behrens TEJ, Bucholz R, et al. The Human Connectome Project: A data acquisition perspective. Neuroimage. 2012;62: 2222–2231. doi:10.1016/j.neuroimage.2012.02.018

- 68. Sudlow C, Gallacher J, Allen N, Beral V, Burton P, Danesh J, et al. UK Biobank: An Open Access Resource for Identifying the Causes of a Wide Range of Complex Diseases of Middle and Old Age. PLoS Med. 2015;12: 1–10. doi:10.1371/journal.pmed.1001779

- 69. Thompson PM, Stein JL, Medland SE, Hibar DP, Vasquez AA, Renteria ME, et al. The ENIGMA Consortium: large-scale collaborative analyses of neuroimaging and genetic data. Brain Imaging Behav. Springer; 2014;8: 153–82. doi:10.1007/s11682-013-9269-5

- 70. Modjarrad K, Moorthy VS, Millett P, Gsell P-S, Roth C, Kieny M-P. Developing Global Norms for Sharing Data and Results during Public Health Emergencies. PLoS Med. 2016;13(1): e1001935. doi:10.1371/journal.pmed.1001935

- 71. Steward O, Balice-Gordon R. Rigor or mortis: Best practices for preclinical research in neuroscience. Neuron. Elsevier Inc.; 2014;84: 572–581. doi:10.1016/j.neuron.2014.10.042

- 72. Yozwiak NL, Schaffner SF, Sabeti PC. Data sharing: Make outbreak research open access. Nature. 2015;518: 477–479. doi:10.1038/518477a

- 73. Lowndes JSS, Best BD, Scarborough C, Afflerbach JC, Frazier MR, O’Hara CC, et al. Our path to better science in less time using open data science tools. Nat Ecol Evol. 2017;1: 0160. doi:10.1038/s41559-017-0160

- 74. Milham MP, Ai L, Koo B, Xu T, Amiez C, Balezeau F, et al. An Open Resource for Non-human Primate Imaging. Neuron. 2018;100: 61–74.e2. doi:10.1016/j.neuron.2018.08.039

- 75. Weiss A, Wilson ML, Collins DA, Mjungu D, Kamenya S, Foerster S, et al. Personality in the chimpanzees of Gombe National Park. Sci Data. Nature Publishing Group; 2017;4: 170146. doi:10.1038/sdata.2017.146

- 76. Piwowar HA, Vision TJ. Data reuse and the open data citation advantage. PeerJ. 2013; 1–25. doi:10.7717/peerj.175

- 77. Sarabipour S, Debat HJ, Emmott E, Burgess SJ, Schwessinger B, Hensel Z. On the value of preprints: An early career researcher perspective. PLoS Biol. 2019;17(2): e3000151. doi:10.1371/journal.pbio.3000151

- 78. Allen CPG, Mehler DMA. Open Science challenges, benefits and tips in early career and beyond. PsyArXiv. 2018. doi:10.31234/OSF.IO/3CZYT

- 79. Warren M. First analysis of ‘pre-registered’ studies shows sharp rise in null findings. Nature. 2018.

- 80. Vines TH, Albert AYK, Andrew RL, Débarre F, Bock DG, Franklin MT, et al. The Availability of Research Data Declines Rapidly with Article Age. 2014. doi:10.1016/j.cub.2013.11.014

- 81. Stodden V, McNutt M, Bailey DH, Deelman E, Gil Y, Hanson B, et al. Enhancing reproducibility for computational methods. Science. 2016;354: 1240–1241. doi:10.1126/science.aah6168

- 82. Jarvis MF, Williams M. Irreproducibility in Preclinical Biomedical Research: Perceptions, Uncertainties, and Knowledge Gaps. Trends Pharmacol Sci. Elsevier Ltd; 2016;37: 290–302. doi:10.1016/j.tips.2015.12.001

- 83. Many junior scientists need to take a hard look at their job prospects. Nature. 2017;550: 429–429. doi:10.1038/550429a

- 84. Kiley R, Peatfield T, Hansen J, Reddington F. Data Sharing from Clinical Trials—A Research Funder’s Perspective. N Engl J Med. Massachusetts Medical Society; 2017;377: 1990–1992. doi:10.1056/NEJMsb1708278

- 85. Taichman DB, Sahni P, Pinborg A, Peiperl L, Laine C, James A, et al. Data Sharing Statements for Clinical Trials—A Requirement of the International Committee of Medical Journal Editors. N Engl J Med. Massachusetts Medical Society; 2017;376: 2277–2279. doi:10.1056/NEJMe1705439

- 86. Poupon V, Seyller A, Rouleau GA. The Tanenbaum Open Science Institute: Leading a Paradigm Shift at the Montreal Neurological Institute. Neuron. Cell Press; 2017;95: 1002–1006. doi:10.1016/J.NEURON.2017.07.026
