Abstract

The concept of a preattentive feature has been central to vision and attention research for about half a century. A preattentive feature is a feature that guides attention in visual search and that cannot be decomposed into simpler features. While that definition seems straightforward, there is no simple diagnostic test that infallibly identifies a preattentive feature. This paper briefly reviews the criteria that have been proposed and illustrates some of the difficulties of definition.

About 50 years ago, researchers were doing experiments in which observers needed to look for one type of target among distractor items. For instance, they might have been asked to look for a circle among squares [1]. In some cases, including that search for circles among squares, the reaction time (RT) to declare that the target was present or to declare that all the items were the same did not vary as a function of how many items were presented. The slope of the RT x set size function was near zero. This strongly suggested that all the items were being processed “in parallel” [2]. In other cases, the RT increased as a roughly linear function of set size, suggesting a serial process [though that need not be the case 3,4]. What made some targets “pop-out”? A leading thought has long been that observers “might have based their decision on a single distinctive feature” [p691 of 5]. The idea of a feature took center stage with Anne Treisman’s [6] “Feature Integration Theory” (FIT). The theory proposed that a limited set of features could be identified in parallel across the entire visual field. Any act of object identification that was based on a combination of features would require the deployment of serial attention to the object so that its features could be ‘bound’ into a recognizable, perceptual whole [7].
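To make the slope measure concrete, the following minimal Python sketch fits a least-squares line to mean RT as a function of set size. The function name `rt_slope` and all RT values are hypothetical, invented purely for illustration; they are not data from any of the cited studies.

```python
def rt_slope(set_sizes, mean_rts):
    """Return (slope in ms/item, intercept in ms) of the least-squares
    line relating mean RT to set size."""
    n = len(set_sizes)
    if n < 2 or n != len(mean_rts):
        raise ValueError("need at least two matched (set size, RT) points")
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts) / n
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    if sxx == 0:
        raise ValueError("set sizes must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical mean RTs (ms) at three set sizes; illustrative values only.
set_sizes = [4, 8, 16]
popout_rts = [450, 452, 451]       # RT barely changes with set size
inefficient_rts = [500, 600, 800]  # RT grows linearly with set size

popout_slope, _ = rt_slope(set_sizes, popout_rts)
inefficient_slope, _ = rt_slope(set_sizes, inefficient_rts)
print(f"pop-out search:     {popout_slope:.2f} ms/item")
print(f"inefficient search: {inefficient_slope:.2f} ms/item")
```

A slope near zero is the signature of the "pop-out" case described above. As the text notes, a steep linear slope is compatible with a serial process but does not by itself prove one.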

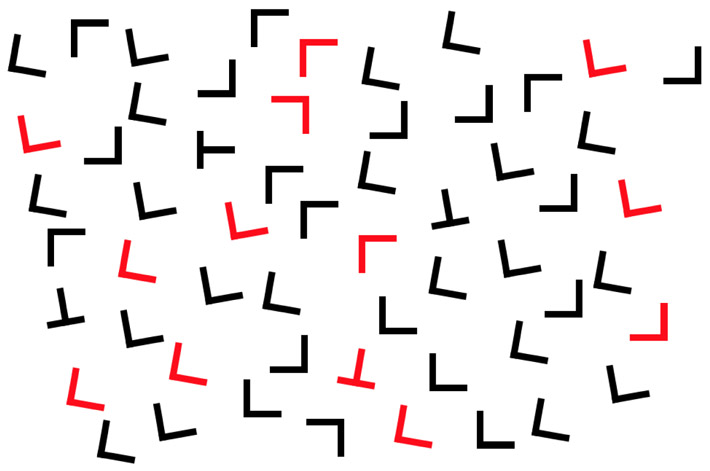

While FIT proposed that visual search tasks were either based on parallel detection of a feature or serial binding of features, it became clear that the data produced more of a continuum than a dichotomy [8]. Even when feature information was not adequate to complete a search task, it could be used to guide attention toward a target. In a clear example, Egeth and colleagues [9] had observers search for a specific letter among other red and black letters. If observers knew that the target was red, they could restrict attention to the red items, resulting in a search that was more efficient than it would have been if it were not guided by color (look for the red T in Figure 4, below). Wolfe et al. [10] argued that you could guide by more than one feature at a time. Thus, in a search for a red vertical, observers could guide to red and to vertical. Triple conjunctions (e.g. find the Big Red Vertical) could be more efficient, given three sources of guidance. See Friedman-Hill & Wolfe [11] for evidence that multiple features guide at the same time.

Figure 3. a: Plus intersections do not support texture segmentation (find the plus region). b: Size and orientation do support texture segmentation.

Not all features guide attention. Many features of the visual stimulus contribute to other aspects of visual perception. Object recognition is, perhaps, the most straightforward example. Reading this document relies on distinguishing amongst the ‘features’ of the letters of the alphabet, and subtle properties of shape and texture are used to distinguish one fruit from the next or one make of automobile from others. Some specific examples of features that do not guide will be mentioned below. A different set of features arises from the convolutional neural networks (CNNs) that have revolutionized object recognition by machine vision. After training, such networks can distinguish between thousands of object classes [12]. These classifications are based on a set of features (typically several thousand) that the network has learned. Those features may turn out to be very similar to the features that support human object recognition. However, it is unlikely that they form a set of guiding features for human visual search. The set of features that can guide human search has been reviewed elsewhere [13,14]. Here, we will briefly review the properties of preattentive/guiding features and how they operate in visual search tasks.

Some definitions

In this field, definitions have always been open to debate. Ever since William James famously asserted in Chapter 11 of his 1890 Principles of Psychology [15] that “Everyone knows what attention is”, it has been clear that everyone knows but no one agrees on the definition, in part because the term “attention” covers many processes in the nervous system. Here we are referring specifically to visual selective attention: that aspect of visual attention that allows features of an object to be ‘bound’ into a recognizable object. That definition requires that we define ‘bound’ and ‘binding’ [7,16]. That is, perhaps, best done through a figure like Figure 1.

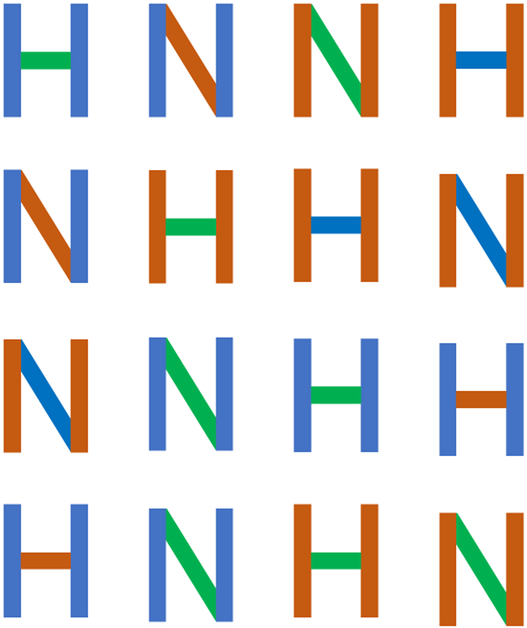

Figure 1: Find the two brown and green “N”s.

Visual selective attention is the attention required to determine that there are brown and green N’s here. Other factors, like crowding [17], play a role in this illustration, but some form of scrutiny would be required in any version of this search for specific letters in particular color combinations [18].

Something is seen at the location of brown and green N’s before they are positively identified. That experience of oriented regions colored blue, brown, and green can be called “preattentive” because it is what is available before visual selective attention is deployed to those locations, enabling the colors and orientations to be bound into a recognizable green and brown N. Different properties of a stimulus – here colors and orientations – are processed by different specialized parts of the nervous system. “Binding” refers to the process that creates a representation of the stimulus that registers the relationships between those properties as would be required for most acts of object recognition.

This does not mean that there are no attentional demands when the task involves identifying even a simple color or orientation [19], nor does it mean that only the simplest tasks can be done in the near absence of attention. Quite complex tasks such as the categorization of scenes [20,21] can be accomplished at above chance levels even if the binding of features into recognized objects is prevented. Such tasks are not attention-free [22,23]. Some type of attention seems to be required for any conscious perception [24].

Selective attention also improves performance on tasks involving preattentive processes [25] (see Carrasco’s review in this issue for an extended discussion). The manner in which selective attention does its work is open to debate. We tend to envision selective attention as being deployed from item to item in series [26,27]. In other accounts, attentional resources are spread in parallel across multiple items [28-30]. For the purposes of this brief review, when we say “attention”, we mean visual selective attention that is needed for object recognition. Its application, however accomplished, takes some time. Something is visible and processed before attention’s work is done. That experience and those processes are what we refer to as “preattentive”.

How do preattentive features guide attention?

Some featural information is available preattentively. There are three basic ways in which that feature information guides attention in visual search tasks. Bottom-up, stimulus-based guidance is based on local feature contrast.

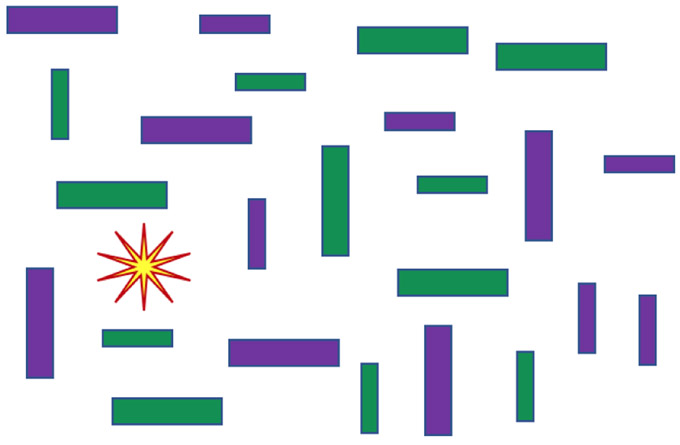

Thus, in Figure 2, your attention will be summoned to the red and yellow starburst, without any need for instruction. The item differs from its neighbors in color and shape features like “terminator number” [31]. Indeed, it would be hard not to attend to that item. In contrast, once you are told what to look for, top-down, user-driven guidance will rapidly get your attention to a big green vertical item. If you are asked about small purple horizontal items, a new top-down setting will quickly reveal a rough line of three such items, changing your percept without changing the stimulus. There has been much debate about the relative priority of top-down and bottom-up processing with ardent partisans of bottom-up [32,33] and top-down [34]. In fact, each can dominate attentional deployment under the correct circumstances. More recently, it has become clear that a third factor, the history of prior guidance, is important, too. If you randomly select an item in Figure 1, you will quickly be able to find other items sharing those features because the first selection “primes” subsequent selection [35-38]. Bottom-up guidance is very fast – producing an “exogenous” shift of attention. Top-down takes longer to put into place and can be thought of as producing an “endogenous” shift [39].

Figure 2: Find a big green vertical target.

Not all guidance in visual search is feature guidance. Scene structure can guide attention very effectively [40-46]. If you are looking for a coffee maker in a kitchen, your search will be highly constrained even though the basic visual features of a coffee maker can vary quite widely. You will tend to look on kitchen counters, not the floor and not on a dining table in the kitchen. Note that you will tend to look in the same places whether or not the coffee maker is present because it is not the coffee maker’s features that are relevant in scene guidance. Of course, an observer’s understanding of the gist and structure of a scene is based on its visual properties, but those visual features define the meaning of the scene, not the target. Scene features do not behave like preattentive features. Thus, for example, search for a natural scene among urban scenes is not efficient [47].

The characteristics of guiding features

There are a number of properties that are considered diagnostic of preattentive / guiding features. Each has its ambiguities, and none is perfectly diagnostic.

1) A guiding feature should guide.

If a target is defined by a unique feature, it should not matter how many distractors are present; the slope of the RT x set size function should be near zero. As Duncan and Humphreys [48] describe, this will be true only if the unique target feature is sufficiently different from the distractor feature. The required target-distractor difference that will support efficient search tends to be much greater than the just noticeable difference for that feature [e.g. color 49, orientation 50]. If the distractors are heterogeneous, the efficiency of search will decline as a function of the degree of distractor variation [48]. Efficiency will be especially impacted if the target and distractors are not “linearly separable” [51], where linear separability means that a line can be drawn in the feature space that separates the target from the distractors. Yellow among orange and red is linearly separable and search is efficient. Orange among yellow and red is not linearly separable and search is less efficient [52]. Similar rules apply, for example, in orientation [53].
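The linear separability rule can be illustrated with a deliberately simplified sketch. It assumes, hypothetically, that red, orange, and yellow can be placed as three ordered points on a single feature axis (the numeric positions below are arbitrary stand-ins, not measured color coordinates). On one dimension, a "line" separating target from distractors reduces to a single cut point, so the target is linearly separable only if it lies outside the range spanned by the distractors.

```python
# Hypothetical positions on a one-dimensional red-to-yellow axis.
# Only the ordering matters for this sketch; the values are arbitrary.
COLOR_POSITION = {"red": 0.0, "orange": 1.0, "yellow": 2.0}


def is_linearly_separable_1d(target, distractors):
    """True if a single cut point on the axis can put the target on one
    side and every distractor on the other side."""
    t = COLOR_POSITION[target]
    d = [COLOR_POSITION[name] for name in distractors]
    return t < min(d) or t > max(d)


# Yellow target among orange and red distractors: separable.
print(is_linearly_separable_1d("yellow", ["orange", "red"]))  # True
# Orange target among yellow and red distractors: flanked on both sides.
print(is_linearly_separable_1d("orange", ["yellow", "red"]))  # False
```

In a feature space with two or more dimensions, the analogous question is whether the target falls outside the region enclosed by the distractors, which this one-dimensional sketch does not attempt to compute.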

Sometimes search is efficient even if no single feature defines the target. Targets defined by conjunctions of highly salient features can produce RT x set size slopes near zero. For instance, Theeuwes & Kooi [54] showed that a search for a black O among black Xs and white Os was very efficient. It would be a mistake to think of the “black O” conjunction as a basic feature in its own right because it is composed of two other more basic features: shape and luminance polarity. They simply combine in this case to guide conjunction search particularly efficiently. This occurs for higher order conjunctions as well [e.g. things like vertical green crescents 55]. This point can be and has been debated. For instance, people are very good at detecting the presence of an animal in a scene in a brief glimpse [56,57]. One argument would be that animals are defined by a particular, perhaps probabilistic, conjunction of basic features, but others argue that low level visual features cannot explain the behavior [58].

2) Search asymmetries: Detecting the presence of a feature is easier than detecting its absence.

Treisman introduced the idea of a search asymmetry as “A diagnostic for preattentive processing of separable features” [59]. A search asymmetry is a situation where search for A among B is more efficient than search for B among A. In a truly diagnostic case, A among B will be very efficient while B among A will be inefficient. Perhaps the clearest instance of this is motion [60], where finding a moving item among stationary items is much easier than finding a stationary item among moving items. Treisman’s useful idea was that the presence of a feature (e.g. motion) is easier to detect than its absence [61]. Thus, orange among yellow is easier than yellow among orange because orange is defined by the presence of the feature of “redness” while, in comparison to orange, yellow is defined by the absence of redness. Asymmetries are not completely unambiguous as evidence for the presence of preattentive features. Stimulus factors like target eccentricity, which might be thought to be irrelevant to the status of a candidate feature, will modulate the strength or even the presence of an asymmetry [62] and, in some cases, the task itself may be asymmetric. For example, as Rosenholtz [for a set of important cautions about asymmetries see 63] pointed out, in the classic motion asymmetry, the harder case is search for a stationary target among heterogeneous, randomly moving distractors while the other side of the asymmetry is the easier search for a moving item among homogeneous, stationary distractors.

Search asymmetries have also been used as evidence for preattentive features that are not simple visual properties. For example, it has been proposed that emotionally valent (e.g. ‘angry’) objects (notably, faces) are found more efficiently among neutral distractors than vice versa, suggesting the existence of preattentive processing of “emotional features” [64]. Asymmetries are important here because the search tasks tend to produce relatively inefficient searches. It can be argued that the steep RT x set size slopes come from low stimulus salience [48], leaving the asymmetry between two inefficient searches as the evidence for featural status for emotional valence [65]. However, asymmetries can also be produced if one type of distractor is harder to reject than another. Thus, for example, if each face is processed in series and it takes longer to move away from an angry face than from a neutral face, then search for neutral among angry will be less efficient. Asymmetries are most convincing when one of the two searches is efficient. The point is arguable, but, in the case of emotional valence, the asymmetry probably does not reflect featural status [66].

3) Groups or regions, defined by a unique preattentive feature, segment from a background defined by other features.

As a negative example, Figure 3a shows that intersection type (here T vs “plus” intersections) does not behave like a basic feature [67]. The evidence is that attentional scrutiny is required to notice that the bottom third of the figure is composed of plus intersections.

In Figure 3b, adequate variation in orientation and/or size produces clear texture segmentation, as basic features should. Conjunctions, though they can support efficient search, do not support texture segmentation [68], more evidence that the conjunction, itself, is not a guiding feature. Turning to grouping, in the lower left quadrant of Figure 3b, four orientation oddballs form a rough diamond. The colored items in the upper left quadrant may seem to form more of a red triangle with an added blue oddball [69]. Different types of features can be governed by somewhat different rules. Recent studies showed that even the most conventional features do not always support global display segmentation. In displays mixing many colors or orientations, observers are very efficient at selecting the subset of items based on hue. They are much less efficient at doing so based on color saturation or line orientation [70,71], although both features can pop-out in visual search [72]. Therefore, easy segmentation of groups and regions and target pop-out do not always unambiguously converge to diagnose basic features.

4) Features are organized into modular dimensions.

This point requires a bit of care about terminology. “Red”, for this purpose, would be a feature. “Color” is the dimension. “Vertical” is a feature. “Orientation” is a dimension, and so forth. The distinction is useful because it is possible to guide attention to a dimension as well as to a feature [73,74]. Thus, you could give someone the instruction to find orientation oddballs and ignore color. Moreover, as Treisman [75] suggested, in disjunctive search, it is harder to search for features in two different dimensions (find red or vertical) than in the same (find red or green). In conjunctive search, where two features are attached to one item, it is more efficient to search for an item that is red AND vertical than one that is red AND green [18].

5) Adaptation is not a distinctive property of basic features.

It is tempting to think that features should produce featural aftereffects. After all, for instance, orientation is a feature dimension and there are orientation (tilt) aftereffects [76,77]. The same is true for size, motion, and others [78]. However, lack of an aftereffect does not disqualify a candidate feature. For example, line terminations [31] and topological properties like the presence and absence of holes [79] are probably guiding features without having associated aftereffects. Moreover, presence of an aftereffect is not clear evidence for feature status. Contingent aftereffects like the McCollough effect [80,81] occur for many conjunctions of features [82] even though the conjunctions are not features in their own right. Other stimuli like faces show clear adaptation effects [83,84], but properties like Asian vs European that do produce aftereffects are unlikely to serve as guiding features. On balance, it seems that adaptation effects are not diagnostic of guiding feature status.

6) Preattentive features are derived from but not the same as early vision features.

Treisman [85] originally suggested that her preattentive features were the same as the features that electrophysiologists were finding in primary visual cortex [86]. For a modern version of this thought see Zhaoping [87]. We presume that the features that guide attention are derived from early visual processes. Thus, in Figure 4, one of the target “T”s is composed of vertical and horizontal lines. The others are tilted. Knowing that does not help you to find it. The 10 deg differences in orientation, while readily detectable, are not able to guide attention [50,88] because guiding features are coarsely coded. In contrast, as noted earlier, knowing that one of the Ts is red helps considerably.

Figure 4: Look for the red T. Look for the untilted T.

Guiding features also appear to be coded categorically. Semantic categories like “number” vs “letter” probably do not serve to guide search [89,90]. However, more perceptual categories have a role to play. If we define vertical as “0 deg”, it is easier to search for a −10 deg target among −50 and 50 deg distractors than it is to search for a 10 deg target among −30 and 70 deg distractors. Wolfe et al. [53] concluded that the important difference was that the target was the only “steep” item in the first condition. The second condition is a simple 20 deg rotation of the first, but now the target is merely the “steepest” item. Even though the relative geometries are the same in the two conditions, the first condition is the easier search (see also [91]). Similar effects have been reported in color [92,93], but see [94].
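The two orientation conditions can be worked through in a short sketch. The category boundary is an assumption made only for illustration: an item is treated as “steep” if it lies within 45 deg of vertical (0 deg). The text does not specify where the boundary falls, and the function names are hypothetical.

```python
def is_steep(angle_deg, boundary_deg=45.0):
    """Assumed category rule: 'steep' means within boundary_deg of
    vertical, where vertical is 0 deg. The 45 deg value is an assumption."""
    return abs(angle_deg) < boundary_deg


def target_is_only_steep(target_deg, distractor_degs):
    """True if the target is steep and no distractor is steep."""
    return is_steep(target_deg) and not any(is_steep(d) for d in distractor_degs)


def relative_offsets(target_deg, distractor_degs):
    """Distractor orientations expressed relative to the target."""
    return sorted(d - target_deg for d in distractor_degs)


condition_1 = (-10, [-50, 50])  # target, distractors (deg from vertical)
condition_2 = (10, [-30, 70])   # condition 1 rotated by 20 deg

# The relative geometry is identical in the two conditions.
print(relative_offsets(*condition_1))      # [-40, 60]
print(relative_offsets(*condition_2))      # [-40, 60]

# Only in condition 1 is the target the unique "steep" item.
print(target_is_only_steep(*condition_1))  # True
print(target_is_only_steep(*condition_2))  # False
```

Under this assumed boundary, the −30 deg distractor in the second condition also counts as steep, so the target there is merely the steepest item and carries no unique categorical label.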

One way to summarize this section would be to argue that attention is guided by a set of coarse, perhaps categorical, features, abstracted from early visual processes. In this view, you do not see guiding features, you use them [95]. This view leaves unclear the relationship of guidance to texture segmentation and grouping. Clearly, in those cases, what we are calling guiding features also shape what we see.

Can you learn a new feature?

Is the set of preattentive features fixed or can new features be learned? Most tasks improve with practice. In search, some inefficient searches can become efficient, as in the pioneering work of Schneider and Shiffrin [96] or the work of Carrasco et al. on conjunctions of two colors [97]. After some reports of failures to train efficient search, another group managed to make previously inefficient conjunction searches efficient [98,99]. Efficient searches for categories like “vehicle” have also been taken as evidence for learned features [57,100]. Have these conjunctions and vehicles become features? The alternative is that observers have learned to use basic features in a way that permits efficient search [101], similar to the account we offered for efficient search for salient conjunctions earlier.

If a new feature was behind newly efficient search, we would expect the new feature to behave like a feature in other tasks (e.g. texture segmentation). Evidence for such transfer has been weak [102], but in recent work, we obtained some transfer of a quite arbitrary property from one type of stimulus to another [103]. In part because the criteria for feature status, discussed above, remain unclear, it is hard to definitively determine if a new feature has been created by training.

Conclusions

At the end of this brief review, we find ourselves with an essentially pragmatic, operational answer to the question: What is a preattentive feature? A preattentive feature is a feature that guides attention in visual search and that cannot be decomposed into simpler features. Thus, color and orientation are features. A color X orientation conjunction, even if it supports efficient search, is not a feature because the efficient search can be attributed to guidance by color and orientation, acting independently. There are other phenomena that go along with feature status, e.g. search asymmetries and texture segmentation, but there is no airtight set of diagnostics to rule one attribute into preattentive feature status and others out of that status. From the days of Treisman’s “feature maps”, one approach has been to reify features into building blocks with their own separate existence. One of us, for example, keeps publishing tables of these features [13,14], which could lead to the expectation that there will be specific pieces of the brain that embody each feature. A more nuanced way to think of preattentive features might be to think of them as the way that basic visual information is used by the mechanisms of selective visual attention. There are restrictions on the guidance of selective attention. One way to think of those restrictions is to describe attention as guided by a limited set of coarsely coded visual properties. We might not need to imagine that these exist as separate modules, defining a physical stage of processing. They might be what emerges when the human search engine makes use of the human visual system.

Highlights.

Preattentive features guide attention.

Guidance can be bottom-up (stimulus-driven) or top-down (user-driven).

Features typically produce efficient visual search and texture segmentation.

Features may produce visual search “asymmetries”, but the issue is somewhat complex.

It remains unclear if training allows people to learn a new preattentive feature.

Acknowledgements:

This research was supported by NIH CA207490, US Army Research Office: ARO R00000000000588 to JMW and the Program for Basic Research of the Higher School of Economics (project TZ-57) to ISU.

Footnotes

Both authors state that they have no Declarations of interest.


References

- 1. Donderi DC, Zelnicker D: Parallel processing in visual same-different decisions. Perception & Psychophysics (1969) 5(4):197–200.

- 2. * Egeth HW: Parallel versus serial processes in multidimensional stimulus discrimination. Perception & Psychophysics (1966) 1:245–252. This is a good paper to look at in order to remember that the history of work in this field goes back to before Treisman and Gelade (1980).

- 3. * Townsend JT: A note on the identification of parallel and serial processes. Perception and Psychophysics (1971) 10:161–163. Townsend has spent many years warning us that the distinction between serial and parallel processes may be important in a theory but it is not easy to decide between patterns of RT data that come from underlying serial and parallel processes.

- 4. Townsend JT, Wenger MJ: The serial-parallel dilemma: A case study in a linkage of theory and method. Psychonomic Bulletin & Review (2004) 11(3):391–418.

- 5. Egeth H, Jonides J, Wall S: Parallel processing of multielement displays. Cognitive Psychology (1972) 3:674–698.

- 6. * Treisman A, Gelade G: A feature-integration theory of attention. Cognitive Psychology (1980) 12:97–136. Even if you think you know all about Feature Integration Theory, this paper will be worth reading or re-reading.

- 7. Treisman A: The binding problem. Current Opinion in Neurobiology (1996) 6:171–178.

- 8. Wolfe JM: What do 1,000,000 trials tell us about visual search? Psychological Science (1998) 9(1):33–39.

- 9. * Egeth HE, Virzi RA, Garbart H: Searching for conjunctively defined targets. J Exp Psychol: Human Perception and Performance (1984) 10:32–39. This paper is not known as widely as it should be. It is a clear, early demonstration of the guidance of attention by basic features (see Figure 4 of the present paper).

- 10. Wolfe JM, Cave KR, Franzel SL: Guided search: An alternative to the feature integration model for visual search. J Exp Psychol - Human Perception and Perf (1989) 15:419–433.

- 11. Friedman-Hill SR, Wolfe JM: Second-order parallel processing: Visual search for the odd item in a subset. J Experimental Psychology: Human Perception and Performance (1995) 21(3):531–551.

- 12. * Kriegeskorte N: Deep neural networks: A new framework for modeling biological vision and brain information processing. Ann Review of Vision Science (2015) 1:417–446. It hardly needs to be mentioned that deep neural networks have transformed computer-based object recognition. This is a useful introduction to that world.

- 13. Wolfe JM, Horowitz TS: What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience (2004) 5(6):495–501.

- 14. * Wolfe JM, Horowitz TS: Five factors that guide attention in visual search. Nature Human Behaviour (2017) 1:0058. This paper offers a recent list of the basic, preattentive features. The paper you are reading is, in a sense, a companion to this one.

- 15. James W: The principles of psychology. Henry Holt and Co, New York (1890).

- 16. von der Malsburg C: The correlation theory of brain function. Max-Planck-Institute for Biophysical Chemistry, Göttingen, Germany, Internal Report 81–2 (1981). Reprinted in Models of Neural Networks II (1994), Domany E, van Hemmen JL, and Schulten K, eds. (Berlin: Springer).

- 17. Whitney D, Levi DM: Visual crowding: A fundamental limit on conscious perception and object recognition. Trends in Cognitive Sciences (2011) 15(4):160–168.

- 18. Wolfe JM, Yu KP, Stewart MI, Shorter AD, Friedman-Hill SR, Cave KR: Limitations on the parallel guidance of visual search: Color x color and orientation x orientation conjunctions. J Exp Psychol: Human Perception and Performance (1990) 16(4):879–892.

- 19. Joseph JS, Chun MM, Nakayama K: Attentional requirements in a "preattentive" feature search task. Nature (1997) 387:805.

- 20. Thorpe S, Fize D, Marlot C: Speed of processing in the human visual system. Nature (1996) 381(6 June):520–522.

- 21. Greene MR, Oliva A: Recognition of natural scenes from global properties: Seeing the forest without representing the trees. Cognitive Psychology (2008) 58(2):137–176.

- 22. Cohen MA, Alvarez GA, Nakayama K: Natural-scene perception requires attention. Psychological Science (2011).

- 23. Evans KK, Treisman A: Perception of objects in natural scenes: Is it really attention free? J Exp Psychol Hum Percept Perform (2005) 31(6):1476–1492.

- 24. Cohen MA, Cavanagh P, Chun MM, Nakayama K: The attentional requirements of consciousness. Trends in Cognitive Sciences (2012) 16(8):411–417.

- 25. * Yeshurun Y, Carrasco M: Attention improves performance in spatial resolution tasks. Vision Research (1999) 39:293–306. A good source for the role selective attention plays in enhancing the representation of attended objects.

- 26. Woodman GF, Luck SJ: Serial deployment of attention during visual search. J Exp Psychol: Human Perception and Performance (2003) 29(1):121–138.

- 27. Wolfe JM: Moving towards solutions to some enduring controversies in visual search. Trends Cogn Sci (2003) 7(2):70–76.

- 28. McElree B, Carrasco M: The temporal dynamics of visual search: Evidence for parallel processing in feature and conjunction searches. J Exp Psychol Hum Percept Perform (1999) 25(6):1517–1539.

- 29. Palmer J, Verghese P, Pavel M: The psychophysics of visual search. Vision Res (2000) 40(10–12):1227–1268.

- 30. Hulleman J, Olivers CNL: The impending demise of the item in visual search. Behav Brain Sci (2017) 1–20.

- 31.Cheal M, Lyon D: Attention in visual search: Multiple search classes. Perception and Psychophysics (1992) 52(2):113–138.

- 32.Van der Stigchel S, Belopolsky AV, Peters JC, Wijnen JG, Meeter M, Theeuwes J: The limits of top-down control of visual attention. Acta Psychol (2009) 132(3):201–212.

- 33. *.Theeuwes J: Feature-based attention: It is all bottom-up priming. Philosophical Transactions of the Royal Society B: Biological Sciences (2013) 368(1628). Jan Theeuwes is a leading proponent of the idea that feature guidance is a bottom-up process. We think he is wrong but this is a clear statement of that position.

- 34. *.Chen X, Zelinsky GJ: Real-world visual search is dominated by top-down guidance. Vision Res (2006) 46(24):4118–4133. Greg Zelinsky offers an alternative to the bottom-up views of Jan Theeuwes. Like so many debates, the debate between top-down and bottom-up guidance is not an either/or choice. It is both/and.

- 35.Maljkovic V, Nakayama K: Priming of popout: I. Role of features. Memory & Cognition (1994) 22(6):657–672.

- 36.Wolfe JM, Butcher SJ, Lee C, Hyle M: Changing your mind: On the contributions of top-down and bottom-up guidance in visual search for feature singletons. J Exp Psychol: Human Perception and Performance (2003) 29(2):483–502.

- 37.Awh E, Belopolsky AV, Theeuwes J: Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences (2012) 16(8):437–443.

- 38.Kristjánsson A, Campana G: Where perception meets memory: A review of repetition priming in visual search tasks. Attention, Perception & Psychophysics (2010) 72(1):5–18.

- 39.Klein RM: On the control of attention. Canadian Journal of Experimental Psychology (2009) 63(3):240–252.

- 40. *.Henderson JM, Hayes TR: Meaning guides attention in real-world scene images: Evidence from eye movements and meaning maps. Journal of Vision (2018) 18(6):10–10. Guidance by features is important in visual search. However, in the real world of meaningful scenes, the structure of those scenes and the relationships between objects become powerful guiding forces in their own right. This paper is part of the new literature exploring scene guidance.

- 41.Vo ML, Wolfe JM: The interplay of episodic and semantic memory in guiding repeated search in scenes. Cognition (2013) 126(2):198–212.

- 42.Ehinger KA, Hidalgo-Sotelo B, Torralba A, Oliva A: Modeling search for people in 900 scenes: A combined source model of eye guidance. Visual Cognition (2009) 17(6):945–978.

- 43.Pereira EJ, Castelhano MS: Peripheral guidance in scenes: The interaction of scene context and object content. Journal of Experimental Psychology: Human Perception and Performance (2014) 40(5):2056–2072.

- 44.Castelhano MS, Heaven C: The relative contribution of scene context and target features to visual search in scenes. Atten Percept Psychophys (2010) 72(5):1283–1297.

- 45.Henderson JM, Ferreira F: Scene perception for psycholinguists. In: The interface of language, vision, and action: Eye movements and the visual world. Henderson JM, Ferreira F (Eds), Psychology Press, New York: (2004):1–58.

- 46.Biederman I, Glass AL, Stacy EW: Searching for objects in real-world scenes. J of Experimental Psychology (1973) 97:22–27.

- 47.Greene MR, Wolfe JM: Global image properties do not guide visual search. J Vis (2011) 11(6).

- 48.Duncan J, Humphreys GW: Visual search and stimulus similarity. Psychological Review (1989) 96:433–458.

- 49.Nagy AL, Sanchez RR: Critical color differences determined with a visual search task. J Optical Society of America - A (1990) 7(7):1209–1217.

- 50.Foster DH, Westland S: Multiple groups of orientation-selective visual mechanisms underlying rapid oriented-line detection. Proc R Soc Lond B (1998) 265:1605–1613.

- 51.D'Zmura M: Color in visual search. Vision Research (1991) 31(6):951–966.

- 52.Bauer B, Jolicœur P, Cowan WB: Visual search for colour targets that are or are not linearly-separable from distractors. Vision Research (1996) 36(10):1439–1466.

- 53.Wolfe JM, Friedman-Hill SR, Stewart MI, O'Connell KM: The role of categorization in visual search for orientation. J Exp Psychol: Human Perception and Performance (1992) 18(1):34–49.

- 54.Theeuwes J, Kooi JL: Parallel search for a conjunction of shape and contrast polarity. Vision Research (1994) 34(22):3013–3016.

- 55.Nordfang M, Wolfe JM: Guided search for triple conjunctions. Atten Percept Psychophys (2014) 76(6):1535–1559.

- 56.Thorpe SJ, Gegenfurtner KR, Fabre-Thorpe M, Bulthoff HH: Detection of animals in natural images using far peripheral vision. Eur J Neurosci (2001) 14(5):869–876.

- 57.Li FF, VanRullen R, Koch C, Perona P: Rapid natural scene categorization in the near absence of attention. Proc Natl Acad Sci U S A (2002) 99(14):9596–9601.

- 58.Drewes J, Trommershauser J, Gegenfurtner KR: Parallel visual search and rapid animal detection in natural scenes. Journal of Vision (2011) 11(2).

- 59.Treisman A, Souther J: Search asymmetry: A diagnostic for preattentive processing of separable features. J Exp Psychol - General (1985) 114:285–310.

- 60.Dick M, Ullman S, Sagi D: Parallel and serial processes in motion detection. Science (1987) 237:400–402.

- 61.Treisman A, Gormican S: Feature analysis in early vision: Evidence from search asymmetries. Psych Rev (1988) 95:15–48.

- 62.Carrasco M, McLean TL, Katz SM, Frieder KS: Feature asymmetries in visual search: Effects of display duration, target eccentricity, orientation and spatial frequency. Vision Research (1998) 38(3):347–374.

- 63.Rosenholtz R: Search asymmetries? What search asymmetries? Perception and Psychophysics (2001) 63(3):476–489.

- 64.Eastwood JD, Smilek D, Merikle PM: Differential attentional guidance by unattended faces expressing positive and negative emotion. Percept Psychophys (2001) 63(6):1004–1013.

- 65.Frischen A, Eastwood JD, Smilek D: Visual search for faces with emotional expressions. Psychological Bulletin (2008) 134(5):662–676.

- 66.Horstmann G, Bergmann S, Burghaus L, Becker S: A reversal of the search asymmetry favoring negative schematic faces. Visual Cognition (2010) 18(7):981–1016.

- 67.Wolfe JM, DiMase JS: Do intersections serve as basic features in visual search? Perception (2003) 32(6):645–656.

- 68.Wolfe JM: "Effortless" texture segmentation and "parallel" visual search are not the same thing. Vision Research (1992) 32(4):757–763.

- 69.Wolfe JM, Chun MM, Friedman-Hill SR: Making use of texton gradients: Visual search and perceptual grouping exploit the same parallel processes in different ways. In: Early vision and beyond. Papathomas T, Chubb C, Gorea A, Kowler E (Eds), MIT Press, Cambridge, MA: (1995):189–198.

- 70. *.Sun P, Chubb C, Wright CE, Sperling G: Human attention filters for single colors. Proceedings of the National Academy of Sciences (2016) 113(43):E6712–E6720. The virtue of this paper is that it presents a novel method for looking at the way the preattentive features (here, color) do their work in guiding attention.

- 71. *.Sun P, Chubb C, Wright CE, Sperling G: The centroid paradigm: Quantifying feature-based attention in terms of attention filters. Attention, Perception, & Psychophysics (2016) 78(2):474–515. This paper offers a more complete development of a novel method for measuring “attention filters”.

- 72.Lindsey DT, Brown AM, Reijnen E, Rich AN, Kuzmova Y, Wolfe JM: Color channels, not color appearance or color categories, guide visual search for desaturated color targets. Psychol Sci (2010) 21(9):1208–1214.

- 73.Müller HJ, Heller D, Ziegler J: Visual search for singleton feature targets within and across feature dimensions. Perception & Psychophysics (1995) 57(1):1–17.

- 74.Rangelov D, Müller HJ, Zehetleitner M: The multiple-weighting-systems hypothesis: Theory and empirical support. Atten Percept Psychophys (2012) 74(3):540–552.

- 75.Treisman A: Features and objects: The fourteenth Bartlett memorial lecture. The Quarterly J of Experimental Psychology (1988) 40A(2):201–237.

- 76.Gibson JJ, Radner M: Adaptation, after-effect and contrast in the perception of tilted lines: I. Quantitative studies. J Exp Psychol (1937) 20:453–467.

- 77.Campbell FW, Maffei L: The tilt aftereffect: A fresh look. Vision Research (1971) 11:833–840.

- 78.Braddick O, Campbell FW, Atkinson J: Channels in vision: Basic aspects. In: Perception: Handbook of sensory physiology. Held R, Leibowitz WH, Teuber H-L (Eds), Springer-Verlag, Berlin: (1978):3–38.

- 79.Chen L: The topological approach to perceptual organization. Visual Cognition (2005) 12(4):553–637.

- 80.McCollough C: Color adaptation of edge-detectors in the human visual system. Science (1965) 149:1115–1116.

- 81.Houck MR, Hoffman JE: Conjunction of color and form without attention. Evidence from an orientation-contingent color aftereffect. J Exp Psychol: Human Perception and Performance (1986) 12:186–199.

- 82.Mayhew JEW, Anstis SM: Movement aftereffects contingent on color, intensity, and pattern. Perception and Psychophysics (1972) 12:77–85.

- 83.Webster MA, MacLeod DIA: Visual adaptation and face perception. Philosophical Transactions of the Royal Society B: Biological Sciences (2011) 366(1571):1702–1725.

- 84.Ng M, Boynton GM, Fine I: Face adaptation does not improve performance on search or discrimination tasks. Journal of Vision (2008) 8(1):1–20.

- 85.Treisman A: Features and objects in visual processing. Scientific American (1986) 255(Nov):114B–125.

- 86.Zeki SM: Functional specialisation in the visual cortex of the rhesus monkey. Nature (1978) 274(5670):423–428.

- 87. *.Zhaoping L: From the optic tectum to the primary visual cortex: Migration through evolution of the saliency map for exogenous attentional guidance. Current Opinion in Neurobiology (2016) 40:94–102. Zhaoping Li has been a leading proponent of thinking of preattentive features as having their roots in early stages of visual cortical processing.

- 88.Foster DH, Ward PA: Horizontal-vertical filters in early vision predict anomalous line-orientation identification frequencies. Proceedings of the Royal Society of London B (1991) 243:83–86.

- 89.Jonides J, Gleitman H: A conceptual category effect in visual search: O as letter or digit. Perception and Psychophysics (1972) 12:457–460.

- 90.Krueger LE: The category effect in visual search depends on physical rather than conceptual differences. Perception and Psychophysics (1984) 35(6):558–564.

- 91.Kong G, Alais D, Van der Berg E: Orientation categories used in guidance of attention in visual search can differ in strength. Atten Percept Psychophys (2017) 79(8):2246–2256.

- 92.Daoutis CA, Pilling M, Davies IRL: Categorical effects in visual search for colour. Visual Cognition (2006) 14(2):217–240.

- 93.Wright O: Categorical influences on chromatic search asymmetries. Visual Cognition (2012) 20(8):947–987.

- 94.Brown AM, Lindsey DT, Guckes KM: Color names, color categories, and color-cued visual search: Sometimes, color perception is not categorical. Journal of Vision (2011) 11(12).

- 95.Wolfe JM, Vo ML, Evans KK, Greene MR: Visual search in scenes involves selective and nonselective pathways. Trends Cogn Sci (2011) 15(2):77–84.

- 96.Schneider W, Shiffrin RM: Controlled and automatic human information processing: I. Detection, search, and attention. Psychol Rev (1977) 84:1–66.

- 97.Carrasco M, Ponte D, Rechea C, Sampedro MJ: "Transient structures": The effects of practice and distractor grouping on a within-dimension conjunction search. Perception and Psychophysics (1998) 60(7):1243–1258.

- 98.Lobley K, Walsh V: Perceptual learning in visual conjunction search. Perception (1998) 27(10):1245–1255.

- 99.Ellison A, Walsh V: Perceptual learning in visual search: Some evidence of specificities. Vision Res (1998) 38(3):333–345.

- 100.Treisman A: How the deployment of attention determines what we see. Vis Cognition (2006) 14(4-8):411–443.

- 101.Su Y, Lai Y, Huang W, Tan W, Qu Z, Ding Y: Short-term perceptual learning in visual conjunction search. Journal of Experimental Psychology: Human Perception and Performance (2014) 40(4):1415–1424.

- 102.Treisman A, Vieira A, Hayes A: Automaticity and preattentive processing. American Journal of Psychology (1992) 105:341–362.

- 103.Utochkin IS, Wolfe JM: How do 25,000+ visual searches change the visual system? Poster presented at the Annual Meeting of the Vision Science Society, May 2018 (2018).