Abstract

Objective

The objective was to systematically assess lung cancer risk prediction models by critical evaluation of methodology, transparency and validation in order to provide a direction for future model development.

Methods

English- and Chinese-language databases (including PubMed, Embase, the Cochrane Library, Web of Science, the China National Knowledge Infrastructure, Wanfang, the Chinese BioMedical Literature Database, and official cancer websites) were searched electronically up to April 30, 2018. The main items extracted were input data, assumptions and sensitivity analyses. Model validation was assessed from statements in the publications regarding internal validation, external validation and/or cross-validation.

Results

Twenty-two studies (containing 11 multiple-use and 11 single-use models) were included. The original models were developed between 2003 and 2016, most of them in the United States. Multivariable logistic regression was the most widely used method for model development. The area under the curve (AUC) ranged from 0.57 to 0.87 across models, and the C statistic from 0.59 to 0.85. Six studies performed external validation and three performed cross-validation. Two models had a high risk of bias. The most frequently used variables were age and smoking duration (6 models each), and 5 models included family history of lung cancer.

Conclusions

The prediction accuracy of the models was high overall, indicating that it is feasible to use models for high-risk population prediction. However, model development and reporting were suboptimal, with a high risk of bias. This risk affects prediction accuracy and hinders the uptake and further development of the models. In view of this, model developers need to pay more attention to controlling the risk of bias and verifying validity when developing models.

Keywords: Lung neoplasms; carcinoma, bronchogenic; risk assessment; models, theoretical

Introduction

Lung cancer is the most common cause of cancer death worldwide. In 2012, there were 1.82 million new cases of lung cancer, accounting for 12.9% of all new cancers, and 1.56 million lung cancer deaths, nearly 1 in 5 of all cancer deaths (1). In Europe, lung cancer is the most common cause of cancer death in males (267,000 deaths, 24.8%) and the second most common cause of cancer death in females (121,000 deaths, 14.2%) (2). The National Lung Screening Trial (NLST) in the United States found a 20% relative reduction in lung cancer mortality among long-term, high-risk smokers who were screened with low-dose computed tomography (LDCT) (3). This finding suggests that screening, particularly when targeted with sensitive risk models, may reduce lung cancer mortality. Hence, population screening for the early detection of lung cancer is an important part of current clinical research.

However, LDCT screening has disadvantages, including radiation exposure, false positives and overdiagnosis. It is therefore essential to identify the most appropriate target population to maximize the benefits of screening and minimize its adverse effects. Targeting screening programs at high-risk groups identified through preliminary assessment can improve screening efficiency and reduce costs and wasted resources. Indeed, the success of any screening program depends directly on the assessment of high-risk groups (4,5), which is accomplished with lung cancer prediction models (6,7). To help define the target population for lung cancer screening, some models calculate an individual's risk of lung cancer based on previous results (8). Model predictions can improve clinical intervention and follow-up care, and can guide the selection of screening populations to promote optimal use of resources. Since Bach's study (9), research has increasingly focused on predictive models of lung cancer. Current models, based on traditional variables, biomarkers, LDCT and data mining techniques, have good sensitivity and specificity. The objective of this study was to evaluate prediction models for groups at high risk of lung cancer in order to provide a direction for further model development.

Materials and methods

Search strategies and eligibility criteria

A systematic literature search was performed in English and Chinese databases, including Embase, PubMed, Web of Science, the Cochrane Library, the Chinese BioMedical Literature Database (CBM), WanFang Data and the China National Knowledge Infrastructure (CNKI). The search combined MeSH subject headings and free-text terms. Search terms included lung neoplasms, lung cancer, mass screening, early detection of cancer, risk factors, high-risk population, high-risk group, high-risky population, decision support techniques, prediction model and forecast model. The PubMed search strategy is given below as an example:

#1 “lung Neoplasms”[MeSH] OR “lung Neoplasms”[Title/Abstract] OR “lung cancer”[Title/Abstract]

#2 “Mass Screening”[MeSH] OR “Early Detection of Cancer”[MeSH] OR “Screening”[Title/Abstract]

#3 “high risk”[Title/Abstract]

#4 “decision support techniques”[MeSH] OR “prediction model” [Title/Abstract] OR “forecast model”[Title/Abstract]

#5 #1 AND #2 AND #3 AND #4
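For reproducibility, the block-wise strategy above can be assembled programmatically. The sketch below is illustrative only: `BLOCKS`, `or_block` and `combined_query` are hypothetical helpers, not part of the original study. It joins the synonyms within each block with OR and the blocks with AND, mirroring #5, and it does not call any PubMed API; the resulting string is simply pasted into the PubMed search box.

```python
# Hypothetical helper (not part of the original study): rebuild query #5
# from the PubMed search blocks #1-#4 listed above.
BLOCKS = {
    1: ['"lung Neoplasms"[MeSH]', '"lung Neoplasms"[Title/Abstract]',
        '"lung cancer"[Title/Abstract]'],
    2: ['"Mass Screening"[MeSH]', '"Early Detection of Cancer"[MeSH]',
        '"Screening"[Title/Abstract]'],
    3: ['"high risk"[Title/Abstract]'],
    4: ['"decision support techniques"[MeSH]', '"prediction model"[Title/Abstract]',
        '"forecast model"[Title/Abstract]'],
}


def or_block(terms):
    """Join the synonyms of one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def combined_query(blocks):
    """Query #5: AND the concept blocks together in numeric order (#1 to #4)."""
    return " AND ".join(or_block(blocks[key]) for key in sorted(blocks))


if __name__ == "__main__":
    print(combined_query(BLOCKS))
```

Keeping each concept in its own OR block means a synonym can be added to one concept without changing how the concepts are combined.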

The inclusion criteria were: 1) lung cancer screening; 2) a prediction model for the high-risk population; and 3) reporting of model validity and the statistical method used. The exclusion criteria were: 1) publications in languages other than Chinese or English, or without an available full text; 2) studies not related to lung cancer screening or early diagnosis of lung cancer; 3) duplicate publications; 4) reviews and other secondary research; 5) conference abstracts; and 6) patents.

Selection of eligible studies and data extraction

Two researchers independently screened the literature, extracted data and cross-checked the results. Disagreements were resolved by discussion or, if necessary, referred to a third researcher. If information could not be extracted from an article, the researchers contacted the original authors for clarification. Titles and abstracts were read first to exclude clearly unrelated studies, and the full texts were then read to determine inclusion. The extracted data mainly included: 1) basic information such as publication year, country or region, study design, statistical method, population information, modeling sample, area under the receiver-operating characteristic curve (AUC) and concordance index (C-index); 2) transparency information, namely included variables, model expressions, limitations, financial support, conflicts of interest and validation methods; 3) risk of bias, including blinding, data bias, sensitivity analysis of uncertain variables, calibration and external validation; 4) variables included in each model: sociodemographic factors, exposure history, smoking history, medical history, family history and genetic risk factors; 5) validation, including internal validation, cross-validation and external validation; and 6) basic information on single-use models.

Framework for qualitative assessment of multiple-use models

In this study, models were divided into multiple-use and single-use models. Multiple-use models were assessed by model description, transparency and risk of bias. Model descriptions included publication date, country or region, study type, statistical method, population information, modeling samples, model samples and model accuracy (AUC or C-index).

Transparency refers to the degree to which specific information about a model is disclosed. Greater transparency promotes the use of a model by exposing its development process, statistical methods, included parameters, model structure and other pertinent information to users (7). This study therefore evaluated the transparency of each model in terms of included variables, model expression, limitations, financial support and conflicts of interest.

Validity directly reflects the accuracy of a model's predictions in practice and is an important criterion for its actual application. This study evaluated the internal validity, cross-validity and external validity of the included models. Internal validation checks the soundness of the mathematical methods and model structure during model construction; by repeatedly training on the development data, it helps avoid unintentional calculation errors and improves the internal accuracy of the model. Cross-validation examines how different models solve the same problem. External validation compares the model with real-world data to investigate its predictive accuracy. All of these validations should be completed and their results compared (10,11).

Risk of bias was assessed using the McGinn checklist (12) and the results of Jamie's study (13). From these, a five-item checklist was developed covering blinded evaluation of outcomes, blinded evaluation of predictors, sensitivity analysis of uncertain variables, calibration and external validation. These items were used to evaluate the risk of bias of the clinical prediction tools, and each study was rated as having a high, moderate or low risk of bias. Studies with a high risk of bias had a fatal flaw that made their results very uncertain. Studies with a low risk of bias met all criteria, making their results more certain. Studies that did not meet all criteria but had no fatal flaw (making their results somewhat uncertain) were rated as having a moderate risk of bias (Table 1).

Table 1. Framework for quality assessment of multiple-use models

| Term | Content |
|---|---|
| Transparency | 1. Variables included (Yes or No) |
| | 2. Model expression (Yes or No) |
| | 3. Limitation |
| | 4. Financial support |
| | 5. Conflict of interest |
| | 6. Validation |
| Risk of bias | 1. Blind evaluation of outcome (Yes or No) |
| | 2. Blind evaluation of predictor (Yes or No) |
| | 3. Sensitivity analysis (Yes or No) |
| | 4. Calibration (Yes or No) |
| | 5. External validation (Yes or No) |
| Validation methods | 1. Internal validation |
| | 2. Cross-validation |
| | 3. External validation |

Results

Basic information

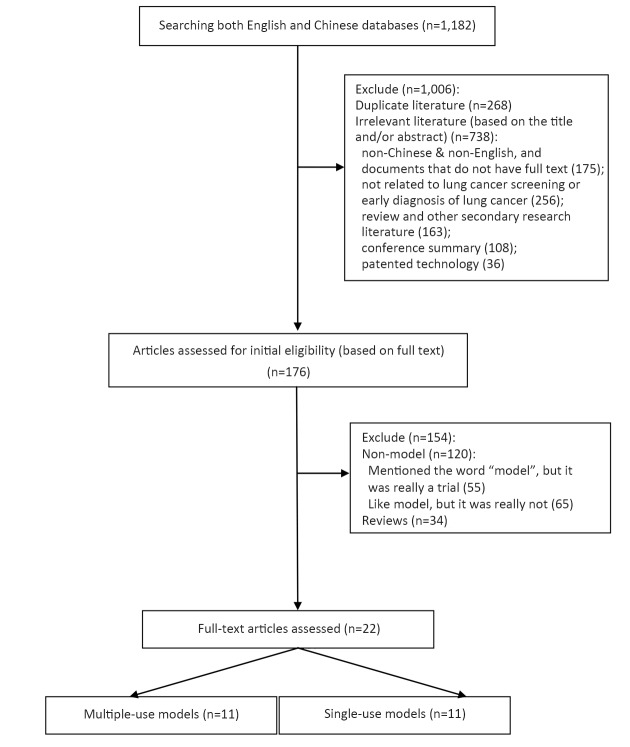

A total of 11 models that were used multiple times were included in this study (Figure 1). Three of those were derived versions. The earliest model was the Bach model published in 2003. The largest number of published models was from the United States, with the remaining from the United Kingdom and Canada. These models were based on case-control studies (six studies) and cohort studies (five studies). Statistically, most of the studies used logistic regression, while three of the models used Cox regression.

Figure 1. Flowchart of the screening results.

Two of the models included racial factors. The other models were mainly restricted by age and smoking history. Participants ranged in age from 20 to 80 years, and most were 50−75 years old. Smoking history was categorized as never, former or current smoking (Table 2).

Table 2. Characteristics of multiple-use models

| Model | Year | Country or region | Research design | Statistical methods | Population | Modeling sample | AUC (95% CI) | C-index (95% CI) |
|---|---|---|---|---|---|---|---|---|
| Bach (9) | 2003 | US | Cohort study | Cox proportional hazards regression | Aged 50−69 years, current and former smokers | 18,172 | 0.72 | |
| Spitz (14) | 2007 | US | Case-control study | Logistic | Never, former and current smokers | Cancer case 1,851/Control 2,001; Never smokers: cancer case 330/Control 379; Former smokers: cancer case 784/Control 884; Current smokers: cancer case 737/Control 738 | Never smokers: 0.57 (0.47−0.66); Former smokers: 0.63 (0.58−0.69); Current smokers: 0.58 (0.52−0.64) | Never smokers: 0.59 (0.51−0.67); Former smokers: 0.63 (0.58−0.67); Current smokers: 0.65 (0.60−0.69) |
| Spitz (15) | 2008 | US | Case-control study | Logistic | Current and former smokers, White non-Hispanic cases | Current smokers: cancer case 350/Control 244; Former smokers: cancer case 375/Control 371 | Former smokers: 0.70 (0.66−0.74); Current smokers: 0.73 (0.69−0.77) | |
| LLP (16) | 2008 | United Kingdom | Case-control study | Logistic | Aged 20−80 years | Cancer case 579/Control 1,157 | 0.71 | |
| LLPi (17) | 2015 | United Kingdom | Case-control study | Cox proportional hazards regression | Aged 45−79 years | 8,760: cancer case 237, control 8,523 | 0.852 (0.831−0.873) | |
| PLCO (18) | 2009 | Canada | Cohort study | Logistic | Aged 55−74 years who were free of the cancers under study | 12,314 | 0.865 | |
| PLCO (19) | 2011 | Canada | Cohort study | Logistic | Aged 55−74 years; Model 1: the PLCO control arms; Model 2: smokers only | Model 1: 70,962; Model 2: 38,254 | Model 1: 0.859 (0.8476−0.8707); Model 2: 0.809 (0.7957−0.8219) | |
| PLCOM (20) | 2012 | Canada | Cohort study | Logistic | Aged 55−74 years, former smokers | 36,286 | 0.803 (0.782−0.813) | |
| Etzel (21) | 2008 | US | Case-control study | Logistic | African-Americans | Cancer case 491/Control 497 | 0.75 | |
| Pittsburgh (22) | 2016 | US | Case-control study | Logistic | Aged 55−74 years, current and former smokers | LDCT 25,929/CXR 25,648 | LDCT 0.679/CXR 0.687 | |
| Hoggart (23) | 2012 | United Kingdom | Cohort study | Survival analysis | Aged 40−65 years, current, former and never smokers | 169,035 (90% of the data) | 1-year: current 0.82, former 0.83, never 0.84; 5-year: current 0.77, former 0.72, never 0.79 | |

AUC, area under receiver-operating characteristic curve; 95% CI, 95% confidence interval; C-index, concordance index; LDCT, low-dose computed tomography; CXR, chest X-ray.

Modeling samples ranged from 594 to 70,962. Model accuracy (discrimination) was measured by the AUC or the C statistic. Across the models, the AUC ranged from 0.57 to 0.87 and the C statistic from 0.59 to 0.85.
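The AUC and C statistic in Table 2 both measure discrimination: the probability that a randomly chosen person who develops lung cancer is assigned a higher predicted risk than a randomly chosen person who does not. For a binary outcome without censoring the two coincide. The minimal sketch below computes this concordance probability directly from all case/non-case pairs, counting ties as one half. The function name and toy data are illustrative assumptions; survival-type C-indices (for example Harrell's C) additionally handle censored follow-up, which is not shown.

```python
from itertools import product


def concordance_auc(risks, outcomes):
    """Return the AUC (equivalently, the C statistic for a binary outcome).

    risks    -- predicted lung cancer risk for each person
    outcomes -- 1 if the person developed lung cancer, 0 otherwise
    Every case is compared with every non-case: a pair scores 1 if the case
    has the higher predicted risk, 0.5 for a tie and 0 otherwise.
    """
    if len(risks) != len(outcomes):
        raise ValueError("risks and outcomes must have the same length")
    cases = [r for r, y in zip(risks, outcomes) if y == 1]
    non_cases = [r for r, y in zip(risks, outcomes) if y == 0]
    if not cases or not non_cases:
        raise ValueError("need at least one case and one non-case")
    score = 0.0
    for case_risk, non_case_risk in product(cases, non_cases):
        if case_risk > non_case_risk:
            score += 1.0
        elif case_risk == non_case_risk:
            score += 0.5
    return score / (len(cases) * len(non_cases))


if __name__ == "__main__":
    # Hypothetical toy data: three cases and two non-cases (5 of 6 pairs concordant).
    print(concordance_auc([0.9, 0.8, 0.3, 0.6, 0.2], [1, 1, 1, 0, 0]))
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of cases from non-cases. The pairwise loop is quadratic in sample size, which is fine for illustration; large cohorts would use a rank-based formula or an established statistics package.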

Transparency

All models included in this study listed their variables, but only two reported the model expression. The main limitations reported were the narrow populations in which the models were assessed, the lack of adequate external validation, and the inability of the models to assess an individual's lung cancer risk accurately. The research was supported by national and regional projects or by public welfare funds such as the Lung Cancer Foundation. Only four studies stated that there were no conflicts of interest; the remaining studies did not report on this. Six studies performed external validation and three performed cross-validation (Table 3).

Table 3. Transparency assessment of multiple-use models

| Model | Variables included | Model expression | Limitation | Financial support | Conflict of interest | Validation |
|---|---|---|---|---|---|---|
| Bach | Y | N | It does not distinguish among the risks of different histologic types of lung cancer, and it is relevant only to one subset (albeit a large subset) of at-risk individuals: those aged 50 years or older who have a smoking history. | Research institution; National project fund | N | Internal validation/Cross-validation |
| Spitz (2007) | Y | N | The models may not be sufficiently discriminatory to allow accurate risk assessment at the individual level. They need to be validated in independent populations. | Research institution; National project fund | N | External validation |
| Spitz (2008) | Y | N | No independent validation. | Research institution; National project fund | Y | Cross-validation |
| LLP | Y | Y | More work is needed to test the applicability of the model in diverse populations, including those from diverse geographic regions. | Research institution; Foundation | N | Cross-validation |
| LLPi | Y | Y | More work is needed to test the applicability of the model in diverse populations, including those from diverse geographic regions. | Regional project fund; Foundation | Y | Internal validation |
| PLCO (2009) | Y | N | The model was developed in asymptomatic individuals. It is unclear whether its performance will be substantially different in symptomatic individuals presenting to clinicians. | National project fund | N | Internal validation |
| PLCO (2011) | Y | N | The models may not be generalizable to other populations. Data on exposure to radon, asbestos, second-hand smoke, occupational carcinogens, and history of adult pneumonia were not available for analysis. | National project fund | N | Internal validation/External validation |
| PLCOM2012 | Y | N | Excluded persons who had never smoked. | Research institution | N | External validation |
| Etzel | Y | N | The study was hospital-based and the controls were drawn only from the metropolitan area of Houston, Texas; therefore, the results may vary in other geographic locations; the sample size of the study was small. | National project fund | Y | Internal validation/External validation |
| Pittsburgh | Y | N | The model is derived in preselected high-risk populations and not necessarily applicable to the general population of smokers, and it was derived and tested in the United States and applicability to other populations will need to be tested. | Research institution; National project fund | N | External validation |
| Hoggart | Y | N | Measures of carcinogens are limited to occupational exposures. | European Union project fund | Y | External validation |

LLP, Liverpool Lung Project; PLCO, the Prostate, Lung, Colorectal and Ovarian; Y, reported; N, not reported.

Risk of bias

Two of the included models had a high risk of bias and the remaining nine had a moderate risk. Only one model (Bach) reported a sensitivity analysis of uncertain variables, and only one model (Spitz 2008) used blinded evaluation of predictors and outcomes during development. Notably, six models were calibrated after development, which contributed to their moderate rather than high risk of bias (Table 4).

Table 4. Risk of bias assessment of multiple-use models

| Model | Blind evaluation of outcome | Blind evaluation of predictor | Sensitivity analysis | Calibration | External validation | Risk of bias |
|---|---|---|---|---|---|---|
| Bach | N | N | Y | Y | N | M |
| Spitz (2007) | N | N | N | N | Y | M |
| Spitz (2008) | Y | Y | N | Y | N | M |
| LLP | N | N | N | N | N | H |
| LLPi | N | N | N | Y | N | M |
| PLCO (2009) | N | N | N | N | N | H |
| PLCO (2011) | N | N | N | N | Y | M |
| PLCOM2012 | N | N | N | Y | Y | M |
| Etzel | N | N | N | Y | Y | M |
| Pittsburgh | N | N | N | Y | Y | M |
| Hoggart | N | N | N | N | Y | M |

Y, reported; N, not reported; H, high risk; M, moderate risk.
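The Methods describe the rating in terms of a "fatal flaw" without specifying which checklist item is fatal. A simple counting rule happens to reproduce every rating in Table 4: high risk when none of the five items is met, low risk when all five are met, and moderate otherwise. The sketch below encodes that rule and checks it against the transcribed table. The rule is an assumption inferred from the table, not one stated by the authors, and the names `rate_risk_of_bias` and `TABLE_4` are hypothetical.

```python
# Order of the five checklist items, matching the columns of Table 4.
ITEMS = ("blind_outcome", "blind_predictor", "sensitivity_analysis",
         "calibration", "external_validation")

# Table 4 transcribed as (item flags in ITEMS order, reported rating).
TABLE_4 = {
    "Bach": ("NNYYN", "M"),
    "Spitz (2007)": ("NNNNY", "M"),
    "Spitz (2008)": ("YYNYN", "M"),
    "LLP": ("NNNNN", "H"),
    "LLPi": ("NNNYN", "M"),
    "PLCO (2009)": ("NNNNN", "H"),
    "PLCO (2011)": ("NNNNY", "M"),
    "PLCOM2012": ("NNNYY", "M"),
    "Etzel": ("NNNYY", "M"),
    "Pittsburgh": ("NNNYY", "M"),
    "Hoggart": ("NNNNY", "M"),
}


def rate_risk_of_bias(flags):
    """Assumed rule: 'H' if no item is met, 'L' if all are met, else 'M'."""
    if len(flags) != len(ITEMS) or set(flags) - {"Y", "N"}:
        raise ValueError("expected one 'Y' or 'N' flag per checklist item")
    met = flags.count("Y")
    if met == 0:
        return "H"
    if met == len(ITEMS):
        return "L"
    return "M"


if __name__ == "__main__":
    for model, (flags, reported) in TABLE_4.items():
        print(f"{model:14s} {flags} rule={rate_risk_of_bias(flags)} table={reported}")
```

No included model met all five items, so the low-risk branch is not exercised by the models in Table 4.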

Validity

Internal validation reuses the development data, through repeated resampling, to check the consistency of the results. Three models were internally validated by bootstrapping, one study used a subset of its sample, and one study used data sets from five similar studies. For cross-validation, two studies used 10-fold and one used 3-fold cross-validation. Only six studies were externally validated, with external validation samples ranging from 325 to 44,233 cases (Table 5).
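Table 5 shows two resampling schemes for reusing development data: k-fold cross-validation (10-fold or 3-fold) and bootstrapping (200 or 1,000 replicates). Here "cross-validation" is the statistical resampling technique, distinct from the model-to-model comparison described under Methods. The sketch below only shows how the samples are drawn; fitting the model on each training set and computing the AUC on the held-out data are omitted, and the function names are hypothetical.

```python
import random


def kfold_indices(n, k, seed=0):
    """Split people 0..n-1 into k near-equal folds after a seeded shuffle.

    Returns k (train, test) pairs: each fold is the test set exactly once,
    and the remaining k-1 folds form the corresponding training set.
    """
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # fold sizes differ by at most 1
    splits = []
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((sorted(train), sorted(test)))
    return splits


def bootstrap_sample(n, seed=0):
    """Draw one bootstrap replicate: n indices sampled with replacement.

    Also returns the 'out-of-bag' indices that were never drawn.
    """
    rng = random.Random(seed)
    drawn = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(drawn))
    return drawn, out_of_bag


if __name__ == "__main__":
    # 10-fold split of 100 hypothetical participants (scheme reported for Bach and LLP).
    for train, test in kfold_indices(100, 10):
        print(len(train), len(test))  # 90 10 for every fold
    # 200 bootstrap replicates (scheme reported for LLPi and PLCO 2011).
    replicates = [bootstrap_sample(100, seed=b) for b in range(200)]
    print(len(replicates))
```

In a full internal validation, the model would be refitted on each training set or bootstrap replicate and evaluated on the held-out or original data, so that the reported AUC is not inflated by testing on the same data used for fitting.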

Table 5. Validation and samples of multiple-use models

| Model | Internal validation | Cross-validation | External validation |
|---|---|---|---|
| Bach | Model operated 3 times on data from five related study sites | 10-fold cross-validation | |
| Spitz (2007) | | | 25% of the data |
| Spitz (2008) | | 3-fold cross-validation | |
| LLP | | 10-fold cross-validation | |
| LLPi | Bootstrap, 200 times | | |
| PLCO (2009) | Bootstrap, 1,000 times | | |
| PLCO (2011) | Bootstrap, 200 times | | 44,233 |
| PLCOM2012 | | | 37,332 |
| Etzel | 156 | | 325 |
| Pittsburgh | | | 3,642 |
| Hoggart | | | 10% of the data |

LLP, Liverpool Lung Project; PLCO, the Prostate, Lung, Colorectal and Ovarian.

Inclusion of variables

The variables included in the models covered six domains: sociodemographic factors, exposure history, smoking history, medical history, family history and genetic risk factors. The most frequently used variables were age and smoking duration (6 models each), followed by family history of lung cancer (5 models) (Table 6).

Table 6. Variables of multiple-use models

| Variables | Bach | Spitz (2007) | Spitz (2008) | LLP | LLPi | PLCO (2009) | PLCO (2011) | PLCOM2012 | Etzel | Pittsburgh | Hoggart |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Sociodemographic factors | | | | | | | | | | | |
| Age | Y | Y | Y | Y | Y | Y | | | | | |
| Gender | Y | Y | Y | Y | | | | | | | |
| Race or ethnic group | Y | | | | | | | | | | |
| Education | Y | Y | Y | Y | | | | | | | |
| BMI | Y | Y | Y | Y | | | | | | | |
| Exposure history | | | | | | | | | | | |
| Dust exposures | Y | Y | Y | Y | | | | | | | |
| Asbestos exposure | Y | Y | Y | Y | Y | | | | | | |
| Environmental tobacco smoke exposure | Y | | | | | | | | | | |
| Smoking history | | | | | | | | | | | |
| Age stopped smoking | Y | Y | | | | | | | | | |
| Smoking duration | Y | Y | Y | Y | Y | Y | | | | | |
| Pack-years smoked | Y | Y | Y | Y | | | | | | | |
| Smoking status | Y | Y | Y | | | | | | | | |
| Smoking intensity | Y | Y | | | | | | | | | |
| Smoking quit time | Y | Y | | | | | | | | | |
| Cigarettes per day | Y | | | | | | | | | | |
| Time since smoking cessation | Y | | | | | | | | | | |
| Medical history | | | | | | | | | | | |
| Emphysema | Y | Y | | | | | | | | | |
| Hay fever | Y | Y | Y | Y | | | | | | | |
| Bleomycin sensitivity | Y | | | | | | | | | | |
| Prior diagnosis of pneumonia | Y | | | | | | | | | | |
| Prior diagnosis of malignant tumor | Y | Y | | | | | | | | | |
| COPD | Y | Y | Y | Y | | | | | | | |
| Chest X-ray in past 3 years | Y | | | | | | | | | | |
| Personal history of cancer | Y | | | | | | | | | | |
| Asthma | Y | | | | | | | | | | |
| Family history | | | | | | | | | | | |
| Family history of cancer | Y | Y | Y | | | | | | | | |
| First-degree relatives with cancer | Y | | | | | | | | | | |
| Family history of lung cancer | Y | Y | Y | Y | Y | | | | | | |
| Nodule | Y | | | | | | | | | | |
| Family history of smoking-related cancer | Y | | | | | | | | | | |
| Genetic risk factors | | | | | | | | | | | |
| DNA repair capacity | Y | | | | | | | | | | |
| chr15q25 | Y | | | | | | | | | | |
| chr5p15 | Y | | | | | | | | | | |

BMI, body mass index; COPD, chronic obstructive pulmonary disease; LLP, Liverpool Lung Project; PLCO, the Prostate, Lung, Colorectal and Ovarian; Y, the variable was included in the model.

Single-use models

The single-use models were mostly from China, with two from the United States and one from Germany. Study designs were cohort or case-control, and most of the Chinese studies were case-control. Statistical methods were diverse: in addition to logistic and Cox regression, data mining techniques such as artificial neural networks, support vector machines and decision trees, as well as Fisher discriminant analysis, were employed. Beyond the variables listed above, tumor markers, gene loci and psychological factors were introduced, providing a valuable reference for model prediction. Most models were built on existing samples, the smallest of which comprised 114 participants. Some studies did not report prediction accuracy or validation (Table 7).

Table 7. Single-use models for lung cancer prediction

| Model | Year | Country or region | Research design | Statistical methods | Variables included | Modeling sample | Validation | AUC (95% CI) | C-index (95% CI) |
|---|---|---|---|---|---|---|---|---|---|
| Wozniak MB (24) | 2015 | Germany | Case-control study | Logistic | Gender, age and smoking status, 24 microRNAs | 100 cases; 100 controls | Internal validation | 0.874 | N |
| Wang X (25) | 2015 | China | Case-control study | Logistic | Gender, age, education, BMI, family history, medical history, exposure history, lifestyle | 705 cases; 988 controls | Internal validation | 0.8851 | N |
| Muller DC (26) | 2017 | US | Cohort study | Flexible parametric survival | Gender, smoking history, medical history, family history | 502,321 | Internal validation | N | 0.85 (0.82−0.87) |
| Ma S (27) | 2016 | China | Cohort study | Logistic | Gender, age, smoking, prolactin, CRP, NY-ESO-1, HGF | 543 | External validation | 0.86 (0.83−0.88) | N |
| Wu X (28) | 2016 | China | Cohort study | Cox regression analysis | Age, gender, smoking pack-years, BMI, family history, medical history, exposure history, biomarkers | 395,875 | Internal validation; External validation | 0.851; never smokers 0.806, light smokers 0.847, heavy smokers 0.732 | N |
| Gu F (29) | 2017 | US | Cohort study | Cox proportional hazard model | Age, gender, race/ethnicity, education, family history, BMI, smoking status, smoking history | 18,729 | N | Incidence model: 0.6941; Death model: 0.7376 | N |
| Lin KF (30) | 2017 | China | Cohort study | Logistic | Age, gender, BMI, nodule number, family history of lung cancer, family history of other cancer | 784 | N | N | N |
| Sha R (31) | 2017 | China | Case-control study | Logistic | Age, gender, BMI, family history | 227 cases; 454 controls | N | Model 1: 0.827 (0.794−0.861); Model 2: 0.836 (0.804−0.868) | N |
| Lin H (32) | 2011 | China | Case-control study | Logistic | Gender, age, smoking status, medical history, exposure history, family history | 633 cases; 565 controls | N | N | 0.881 |
| Ni R (33) | 2016 | China | Case-control study | ANN, SVM, Decision tree | Gender, age, medical history, smoking history, drinking history, family history | 214 | External validation | N | 0.972 |
| Li H (34) | 2012 | China | Case-control study | Logistic | Gender, age, smoking status, SNPs | N | N | 0.637 | N |
| Feng YJ (35) | 2013 | China | Case-control study | Logistic, Decision tree, ANN, SVM | Gender, age, smoking history, DNMT1, DNMT3a | 136 cancer; 140 benign lung disease; 145 control | External validation | Logistic: 0.923; Decision tree: 0.946; ANN: 0.877; SVM: 0.851 | N |
| Wang N (36) | 2012 | China | Case-control study | Fisher, Decision tree, ANN | Gender, age, smoking status, medical history, genetic factors | 251 cases; 256 controls | N | Fisher: 0.722; Decision tree: 0.929; ANN: 0.894 | N |
| Zhang HQ (37) | 2012 | China | Case-control study | Decision tree, ANN, Logistic, Fisher | Ferritin, AFP, CEA, NSE, CA199, CA242, CA125, CA153, HGH9 | 150 cases; 150 controls | External validation | Decision tree: 0.923; ANN: 0.86; Logistic: 0.809; Fisher: 0.765 | N |
| Sun RL (38) | 2013 | China | Case-control study | Logistic | Family history, smoking status, lifestyle, psychology | 563 cases; 563 controls | N | N | N |
| Nie GJ (39) | 2009 | China | Case-control study | ANN, Logistic | Tumor marker | 53 cases; 61 controls | External validation | ANN: 0.88; Logistic: 0.82 | N |
| Chang TT (40) | 2011 | China | Case-control study | Fisher | Gender, age, smoking status, medical history, exposure history | 807 cases; 807 controls | External validation | Non-lung cancer: 0.823; Lung cancer: 0.745 | N |

AUC, area under receiver-operating characteristic curve; 95% CI, 95% confidence interval; C-index, concordance index; ANN, artificial neural network; SVM, support vector machine; BMI, body mass index; CRP, C-reactive protein; HGF, hepatocyte growth factor; SNP, single nucleotide polymorphism; DNMT, DNA methyltransferase; AFP, alpha-fetoprotein; CEA, carcinoembryonic antigen; NSE, neuron-specific enolase; CA, carbohydrate antigen; HGH, human growth hormone; N, not reported.

Discussion

This study included 11 multiple-use models and 17 single-use models. The multiple-use models were developed in Europe and North America. Many models were based on large-scale national projects, such as the NLST (multicenter randomized controlled trial, 53,456 participants) (41), the Liverpool Lung Project (LLP; case-control study: 800 cases and 400 controls; cohort study: 7,500 participants) (42), and the Prostate, Lung, Colorectal and Ovarian (PLCO) trial (multicenter randomized controlled trial, 74,000 participants) (43). These projects provided large quantities of detailed data for model development. Most studies used case-control or cohort designs, which are convenient for model construction.

A model that is used multiple times can also be updated. In four studies, an existing model was used to derive subsequent models that supplemented and adjusted the original. These updated versions used the same analytical approach as their predecessors but differed in the scope of the population covered.

Since the Bach model was developed, many studies have focused on the form of predictive models. Predictive models have been highly valued by the academic community in recent years, and the set of risk factors has gradually been enriched on the basis of the Bach model. Some predictive models include parameters such as tumor markers and genes, which have accelerated model development. Variables now go beyond basic information and family history, and single-use models in particular have moved beyond the traditional risk factors. New medical information technologies have also improved the accuracy of the models.

Transparency is important for the promotion and application of models. Through dual disclosure in technical documents and non-technical articles, users can understand how a model was developed, which provides instruction and guidance for its application (10). The multiple-use models included in this study have relatively good transparency, although most of the cited studies did not report the model expression. Reporting the expression matters for model dissemination: when it is reported along with the included variables, others can consider and weigh the importance of each variable in prediction. In addition, some studies did not report conflicts of interest, so the independence of those models cannot be assured.
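As a generic illustration of what a published model expression contains (standard notation, not taken from any included model), a logistic model gives each person's predicted risk directly from the intercept and coefficients, whereas a Cox model additionally requires the baseline survival S0(t) at the prediction horizon t:

```latex
% Logistic regression: predicted probability for person i with predictors x_{i1},...,x_{im}
\hat{p}_i = \frac{1}{1 + \exp\!\left[-\left(\beta_0 + \sum_{j=1}^{m} \beta_j x_{ij}\right)\right]}

% Cox proportional hazards: individual hazard, and absolute risk by time t
h_i(t) = h_0(t)\,\exp\!\left(\sum_{j=1}^{m} \beta_j x_{ij}\right), \qquad
\hat{F}_i(t) = 1 - S_0(t)^{\exp\left(\sum_{j=1}^{m} \beta_j x_{ij}\right)}
```

Without the coefficients (and, for a Cox model, S0(t)), users can see which variables a model uses but cannot reproduce an individual's risk estimate.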

The existence of bias makes the accuracy of model predictions difficult to assess and can distort the apparent influence of predictors on the results. Bias can enter model development at many stages, including study design, field survey, data entry and data analysis, and in turn affects predictive accuracy. Many tools exist for bias evaluation, such as the Cochrane tool for randomized controlled trials (RCTs) (44), QUADAS for diagnostic test studies (45), the Newcastle-Ottawa Scale (NOS) for cohort and case-control studies (46), and AMSTAR for systematic reviews (47). Tools for evaluating bias in model development, however, are still immature. This study developed a bias evaluation checklist based on related research and found that the risk of bias in lung cancer prediction models is high. The main problems are the lack of blinding and the lack of sensitivity analysis of uncertain variables. Without blinding, the researchers' subjective judgment may influence the results. Sensitivity analysis of uncertain variables is an important step in refining the variables and the main method for improving model validity. Lack of calibration increases the risk of bias in a model's predictions.

Some models lack external validation. Validation should be an ongoing process for a model (48). Conducting validation throughout the modeling process is essential because mistakes can then be found and corrected early in model development; late validation leaves little time to remedy problems. The likelihood of finding mistakes increases with the number of validation rounds, minimizing the chance that the final model contains serious errors. For all models, the validation process and its results should be reported. External validation compares a model's predictions with outcomes observed in real-world data. It is critical to model development because the ultimate goal of a model is application in practice to ensure that the best choices are made (7). However, only six of the included studies were externally validated. Although the other studies performed internal validation or cross-validation, these alone are not adequate for predictive models. Building on this study, a new evaluation model is being developed for 2 million high-risk individuals from the Cancer Screening in Urban China Program; it will integrate validity, risk of bias and other relevant factors.
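Comparing predictions with observed outcomes in independent data is also how calibration is checked. A minimal sketch, assuming binary outcomes over a fixed follow-up period with no censoring: the observed-to-expected (O/E) ratio summarizes overall calibration, and a grouped table of mean predicted risk versus observed event rate is the layout behind a calibration plot. The function names and example data are hypothetical.

```python
def observed_expected_ratio(risks, outcomes):
    """Observed number of cases divided by the sum of predicted risks.

    O/E > 1: the model under-predicts; O/E < 1: it over-predicts.
    """
    if len(risks) != len(outcomes) or not risks:
        raise ValueError("need equal-length, non-empty inputs")
    expected = sum(risks)
    if expected <= 0:
        raise ValueError("sum of predicted risks must be positive")
    return sum(outcomes) / expected


def calibration_table(risks, outcomes, groups=10):
    """Split people into `groups` near-equal bins ordered by predicted risk.

    Returns one (mean predicted risk, observed event rate) pair per bin.
    """
    if len(risks) != len(outcomes):
        raise ValueError("risks and outcomes must have the same length")
    if not 1 <= groups <= len(risks):
        raise ValueError("groups must be between 1 and the number of people")
    pairs = sorted(zip(risks, outcomes))
    n = len(pairs)
    table = []
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        mean_risk = sum(r for r, _ in chunk) / len(chunk)
        event_rate = sum(y for _, y in chunk) / len(chunk)
        table.append((mean_risk, event_rate))
    return table


if __name__ == "__main__":
    # Hypothetical external validation sample of eight people.
    risks = [0.05, 0.10, 0.10, 0.20, 0.30, 0.40, 0.60, 0.80]
    outcomes = [0, 0, 0, 0, 1, 0, 1, 1]
    print(observed_expected_ratio(risks, outcomes))
    print(calibration_table(risks, outcomes, groups=4))
```

A well-calibrated model has an O/E ratio near 1 and, within each risk group, an observed event rate close to the mean predicted risk; good discrimination (a high AUC) does not guarantee either.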

Conclusions

This study considered risk prediction models for populations at high risk of lung cancer and rigorously evaluated multiple-use models for transparency, risk of bias and variables. Various models have been developed for different populations and used to predict lung cancer risk based on various factors (e.g., age and smoking status). The prediction accuracy of the models was high overall, indicating that it is feasible to use models to identify high-risk populations. However, the process of model development and reporting is not optimal: the models have a high risk of bias, which affects their credibility and predictive accuracy and hinders their promotion and further development. In view of this, model developers need to pay more attention to bias risk control and validity verification when developing models.

Acknowledgements

This study is supported by the National Key R&D Program of China (No. 2017YFC1308700), the National Natural Science Foundation of China (No. 81602930), and the Chinese Academy of Medical Sciences Initiative for Innovative Medicine (No. 2017-I2M-1-005).

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

Contributor Information

Jiang Li, Email: lij0515@sina.com.

Yao Huang, Email: huangyao93@163.com.

References

- 1. Bray F, Ferlay J, Soerjomataram I, et al. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. 2018;68:394–424. doi: 10.3322/caac.21492.

- 2. Ferlay J, Colombet M, Soerjomataram I, et al. Cancer incidence and mortality patterns in Europe: Estimates for 40 countries and 25 major cancers in 2018. Eur J Cancer. 2018;103:356–87. doi: 10.1016/j.ejca.2018.07.005.

- 3. National Lung Screening Trial Research Team. Reduced lung-cancer mortality with low-dose computed tomographic screening. N Engl J Med. 2011;365:395–409. doi: 10.1056/NEJMoa1102873.

- 4. Lopes Pegna A, Picozzi G, Mascalchi M, et al. Design, recruitment and baseline results of the ITALUNG trial for lung cancer screening with low-dose CT. Lung Cancer. 2009;64:34–40. doi: 10.1016/j.lungcan.2008.07.003.

- 5. Field JK, Chen Y, Marcus MW, et al. The contribution of risk prediction models to early detection of lung cancer. J Surg Oncol. 2013;108:304–11. doi: 10.1002/jso.23384.

- 6. Cassidy A, Duffy SW, Myles JP, et al. Lung cancer risk prediction: a tool for early detection. Int J Cancer. 2007;120:1–6. doi: 10.1002/ijc.22331.

- 7. Field JK. Lung cancer risk models come of age. Cancer Prev Res (Phila). 2008;1:226–8. doi: 10.1158/1940-6207.CAPR-08-0144.

- 8. Marcus MW, Raji OY, Field JK. Lung cancer screening: identifying the high risk cohort. J Thorac Dis. 2015;7(Suppl 2):S156–62. doi: 10.3978/j.issn.2072-1439.2015.04.19.

- 9. Bach PB, Kattan MW, Thornquist MD, et al. Variations in lung cancer risk among smokers. J Natl Cancer Inst. 2003;95:470–8. doi: 10.1093/jnci/95.6.470.

- 10. Eddy DM, Hollingworth W, Caro JJ, et al. Model transparency and validation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force-7. Value Health. 2012;15:843–50. doi: 10.1016/j.jval.2012.04.012.

- 11. Kleijnen JP. Verification and validation of simulation models. Eur J Oper Res. 1995;82:145–62. doi: 10.1016/0377-2217(94)00016-6.

- 12. McGinn TG, Guyatt GH, Wyer PC, et al. Users’ guides to the medical literature: XXII: how to use articles about clinical decision rules. Evidence-Based Medicine Working Group. JAMA. 2000;284:79–84. doi: 10.1001/jama.284.1.79.

- 13. Carter JL, Coletti RJ, Harris RP. Quantifying and monitoring overdiagnosis in cancer screening: a systematic review of methods. BMJ. 2015;350:g7773. doi: 10.1136/bmj.g7773.

- 14. Spitz MR, Hong WK, Amos CI, et al. A risk model for prediction of lung cancer. J Natl Cancer Inst. 2007;99:715–26. doi: 10.1093/jnci/djk153.

- 15. Spitz MR, Etzel CJ, Dong Q, et al. An expanded risk prediction model for lung cancer. Cancer Prev Res (Phila). 2008;1:250–4. doi: 10.1158/1940-6207.CAPR-08-0060.

- 16. Cassidy A, Myles JP, van Tongeren M, et al. The LLP risk model: an individual risk prediction model for lung cancer. Br J Cancer. 2008;98:270–6. doi: 10.1038/sj.bjc.6604158.

- 17. Marcus MW, Chen Y, Raji OY, et al. LLPi: Liverpool Lung Project risk prediction model for lung cancer incidence. Cancer Prev Res (Phila). 2015;8:570–5. doi: 10.1158/1940-6207.CAPR-14-0438.

- 18. Tammemagi MC, Freedman MT, Pinsky PF, et al. Prediction of true positive lung cancers in individuals with abnormal suspicious chest radiographs: a prostate, lung, colorectal, and ovarian cancer screening trial study. J Thorac Oncol. 2009;4:710–21. doi: 10.1097/JTO.0b013e31819e77ce.

- 19. Tammemagi CM, Pinsky PF, Caporaso NE, et al. Lung cancer risk prediction: Prostate, Lung, Colorectal and Ovarian Cancer Screening Trial models and validation. J Natl Cancer Inst. 2011;103:1058–68. doi: 10.1093/jnci/djr173.

- 20. Tammemägi MC, Katki HA, Hocking WG, et al. Selection criteria for lung-cancer screening. N Engl J Med. 2013;368:728–36. doi: 10.1056/NEJMoa1211776.

- 21. Etzel CJ, Kachroo S, Liu M, et al. Development and validation of a lung cancer risk prediction model for African-Americans. Cancer Prev Res (Phila). 2008;1:255–65. doi: 10.1158/1940-6207.CAPR-08-0082.

- 22. Wilson DO, Weissfeld J. A simple model for predicting lung cancer occurrence in a lung cancer screening program: The Pittsburgh Predictor. Lung Cancer. 2015;89:31–7. doi: 10.1016/j.lungcan.2015.03.021.

- 23. Hoggart C, Brennan P, Tjonneland A, et al. A risk model for lung cancer incidence. Cancer Prev Res (Phila). 2012;5:834–46. doi: 10.1158/1940-6207.CAPR-11-0237.

- 24. Wozniak MB, Scelo G, Muller DC, et al. Circulating microRNAs as non-invasive biomarkers for early detection of non-small-cell lung cancer. PLoS One. 2015;10:e0125026. doi: 10.1371/journal.pone.0125026.

- 25. Wang X, Ma K, Cui J, et al. An individual risk prediction model for lung cancer based on a study in a Chinese population. Tumori. 2015;101:16–23. doi: 10.5301/tj.5000205.

- 26. Muller DC, Johansson M, Brennan P. Lung cancer risk prediction model incorporating lung function: Development and validation in the UK Biobank Prospective Cohort Study. J Clin Oncol. 2017;35:861–9. doi: 10.1200/JCO.2016.69.2467.

- 27. Ma S, Wang W, Xia B, et al. Multiplexed serum biomarkers for the detection of lung cancer. EBioMedicine. 2016:210–8. doi: 10.1016/j.ebiom.2016.08.018.

- 28. Wu X, Wen CP, Ye Y, et al. Personalized risk assessment in never, light, and heavy smokers in a prospective cohort in Taiwan. Sci Rep. 2016;6:36482. doi: 10.1038/srep36482.

- 29. Gu F, Cheung LC, Freedman ND, et al. Potential impact of including time to first cigarette in risk models for selecting ever-smokers for lung cancer screening. J Thorac Oncol. 2017;12:1646–53. doi: 10.1016/j.jtho.2017.08.001.

- 30. Lin KF, Wu HF, Huang WC, et al. Propensity score analysis of lung cancer risk in a population with high prevalence of non-smoking related lung cancer. BMC Pulm Med. 2017;17:120. doi: 10.1186/s12890-017-0465-8.

- 31. Sha R. Study on influencing factors of lung cancer in Anhui province. Hefei: Anhui Medical University, 2017.

- 32. Lin H, Zhong WZ, Yang XN, et al. Forecasting model of risk of cancer in lung cancer pedigree in a case-control study. Zhongguo Fei Ai Za Zhi. 2011;14:581–7. doi: 10.3779/j.issn.1009-3419.2011.07.04.

- 33. Ni R. Establishment of the diagnosis model of lung cancer based on epidemiology, clinical symptom, tumor marker and imaging characteristics. Zhengzhou: Zhengzhou University, 2016.

- 34. Li H. Prediction of lung cancer risk in a Chinese population using a multifactorial genetic model. Shanghai: Fudan University, 2012.

- 35. Feng YJ. Application of combined epigenetics markers in the early diagnosis of lung cancer based on data mining techniques. Zhengzhou: Zhengzhou University, 2013.

- 36. Wang N. Study of the early warning model for lung cancer based on data mining. Zhengzhou: Zhengzhou University, 2012.

- 37. Zhang HQ. Application of tumor markers protein biochip in the aided diagnosis of lung cancer based on data mining technology. Zhengzhou: Zhengzhou University, 2012.

- 38. Sun RL, Zhang CL, Xu DX. Lung cancer risk factors in Qingdao: retrospective study of 563 cases. Zhongguo Ai Zheng Fang Zhi Za Zhi. 2013;5:304–7.

- 39. Nie GJ. The application of artificial neural network technology and tumor markers in lung cancer’s early warning. Zhengzhou: Zhengzhou University, 2009.

- 40. Chang TT. Establishment and investigation of the management system for the prevention and cure of incipient tumors in the elderly of Soochow City. Suzhou: Soochow University, 2011.

- 41. National Lung Screening Trial Research Team. The National Lung Screening Trial: overview and study design. Radiology. 2011;258:243–53. doi: 10.1148/radiol.10091808.

- 42. Field JK, Smith DL, Duffy SW, et al. The Liverpool Lung Project research protocol. Int J Oncol. 2005;27:1633–45. doi: 10.3892/ijo.27.6.1633.

- 43. Prorok PC, Andriole GL, Bresalier RS, et al. Design of the Prostate, Lung, Colorectal and Ovarian (PLCO) Cancer Screening Trial. Control Clin Trials. 2000;21(6 Suppl):273S–309S. doi: 10.1016/S0197-2456(00)00098-2.

- 44. Higgins JP, Altman DG, Gøtzsche PC, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomized trials. BMJ. 2011;343:d5928. doi: 10.1136/bmj.d5928.

- 45. Whiting P, Rutjes AW, Reitsma JB, et al. The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol. 2003;3:25. doi: 10.1186/1471-2288-3-25.

- 46. Wells G, Shea B, O’Connell D, et al. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomized studies in meta-analyses. Available online: http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp

- 47. Shea BJ, Hamel C, Wells GA, et al. AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. J Clin Epidemiol. 2009;62:1013–20. doi: 10.1016/j.jclinepi.2008.10.009.

- 48. Ingalls RG, Rossetti MD, Smith JS, et al. Quality assessment, verification, and validation of modeling and simulation applications. Simulation Conference. IEEE, 2004.