Abstract

Background

Early accurate detection of all skin cancer types is essential to guide appropriate management and to improve morbidity and survival. Melanoma and squamous cell carcinoma (SCC) are high‐risk skin cancers which have the potential to metastasise and ultimately lead to death, whereas basal cell carcinoma (BCC) is usually localised with potential to infiltrate and damage surrounding tissue. Anxiety around missing early curable cases needs to be balanced against inappropriate referral and unnecessary excision of benign lesions. Teledermatology provides a way for generalist clinicians to access the opinion of a specialist dermatologist for skin lesions that they consider to be suspicious without referring the patients through the normal referral pathway. Teledermatology consultations can be 'store‐and‐forward' with electronic digital images of a lesion sent to a dermatologist for review at a later time, or can be live and interactive consultations using videoconferencing to connect the patient, referrer and dermatologist in real time.

Objectives

To determine the diagnostic accuracy of teledermatology for the detection of any skin cancer (melanoma, BCC or cutaneous squamous cell carcinoma (cSCC)) in adults, and to compare its accuracy with that of in‐person diagnosis.

Search methods

We undertook a comprehensive search of the following databases from inception up to August 2016: Cochrane Central Register of Controlled Trials, MEDLINE, Embase, CINAHL, CPCI, Zetoc, Science Citation Index, US National Institutes of Health Ongoing Trials Register, NIHR Clinical Research Network Portfolio Database and the World Health Organization International Clinical Trials Registry Platform. We studied reference lists and published systematic review articles.

Selection criteria

Studies evaluating skin cancer diagnosis by teledermatology alone, or in comparison with face‐to‐face diagnosis by a specialist clinician, against a reference standard of histological confirmation or clinical follow‐up and expert opinion. We also included studies evaluating the referral accuracy of teledermatology against a reference standard of face‐to‐face diagnosis by a specialist clinician.

Data collection and analysis

Two review authors independently extracted all data using a standardised data extraction and quality assessment form (based on QUADAS‐2). We contacted authors of included studies where information related to the target condition of any skin cancer was missing. Data permitting, we estimated summary sensitivities and specificities using the bivariate hierarchical model. Due to the scarcity of data, we undertook no covariate investigations for this review. For illustrative purposes, we plotted estimates of sensitivity and specificity on coupled forest plots for each diagnostic threshold and target condition under consideration.
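The bivariate hierarchical model fits logit sensitivity and logit specificity jointly, with correlated between-study random effects, and is normally fitted with dedicated mixed-model software. The sketch below is a deliberately simpler and different technique: it pools only one margin (for example, sensitivity) using DerSimonian-Laird random-effects weighting on the logit scale, so it ignores the sensitivity-specificity correlation and threshold effects that the bivariate model captures. The function names, the 0.5 continuity correction and the study counts are assumptions for illustration only; they are not methods or data taken from this review.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1 - p))


def inv_logit(x: float) -> float:
    return 1 / (1 + math.exp(-x))


def pool_logit_proportions(events, totals, cc=0.5):
    """DerSimonian-Laird random-effects pooling of study proportions on the logit scale.

    events/totals: per-study counts, e.g. true positives / all reference-standard
    positives for sensitivity. Returns (pooled proportion, lower 95% limit,
    upper 95% limit, tau^2).
    """
    ys, vs = [], []
    for x, n in zip(events, totals):
        # Continuity correction when a study has 0% or 100%, so the logit is finite.
        if x == 0 or x == n:
            x, n = x + cc, n + 2 * cc
        ys.append(logit(x / n))
        vs.append(1 / x + 1 / (n - x))  # approximate within-study variance of the logit
    w = [1 / v for v in vs]
    y_fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, ys))  # Cochran's Q
    k = len(ys)
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    # Method-of-moments between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 else 0.0
    w_re = [1 / (v + tau2) for v in vs]
    y_re = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return inv_logit(y_re), inv_logit(y_re - 1.96 * se), inv_logit(y_re + 1.96 * se), tau2


# Hypothetical per-study counts (NOT data from this review): detected / all malignant.
est, lo, hi, tau2 = pool_logit_proportions([45, 90, 28], [48, 95, 30])
print(f"pooled sensitivity {est:.1%} (95% CI {lo:.1%} to {hi:.1%}), tau^2 = {tau2:.3f}")
```

Because the pooled logit is a weighted average of the study logits, the pooled proportion always lies between the smallest and largest study estimates; the bivariate model adds the joint modelling of specificity on top of this idea.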

Main results

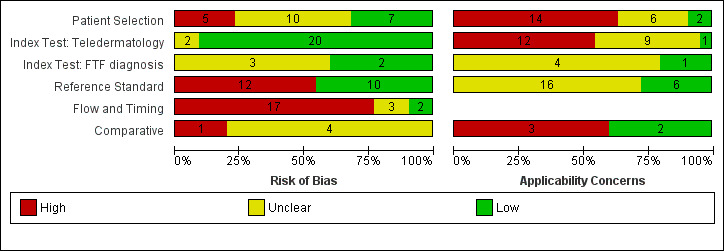

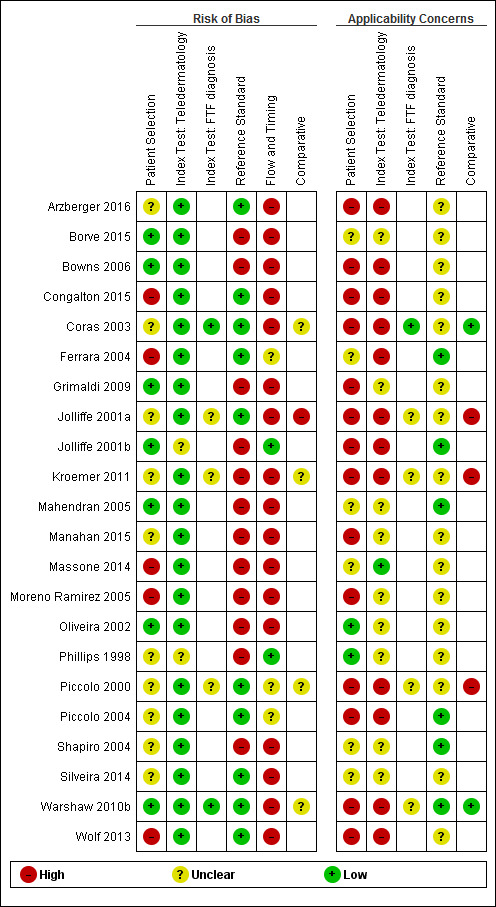

The review included 22 studies: 16 reporting diagnostic accuracy data for 4057 lesions and 879 malignant cases, and six reporting referral accuracy data for 1449 lesions and 270 'positive' cases as determined by the reference standard face‐to‐face decision. Methodological quality was variable, with poor reporting hindering assessment. The overall risk of bias was high or unclear for participant selection, reference standard, and participant flow and timing in at least half of all studies; the majority were at low risk of bias for the index test. Applicability of study findings was of high or unclear concern for most studies in all domains assessed. This was due to the recruitment of participants from secondary care settings or specialist clinics rather than from the primary care or community‐based settings in which teledermatology is more likely to be used, and to the acquisition of lesion images by dermatologists or in specialist imaging units rather than by primary care clinicians.

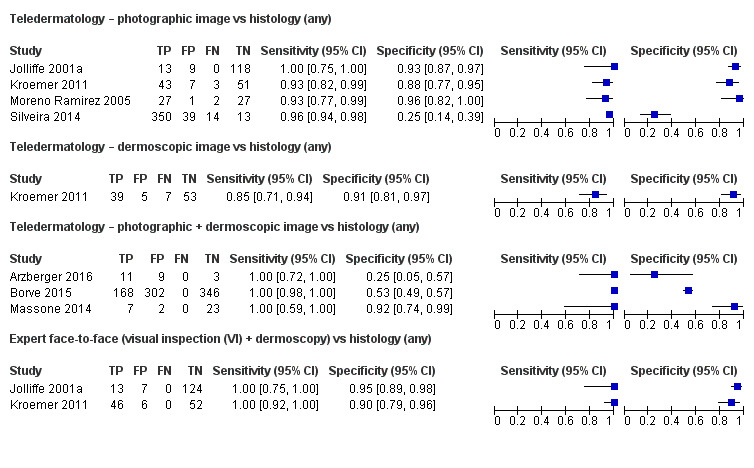

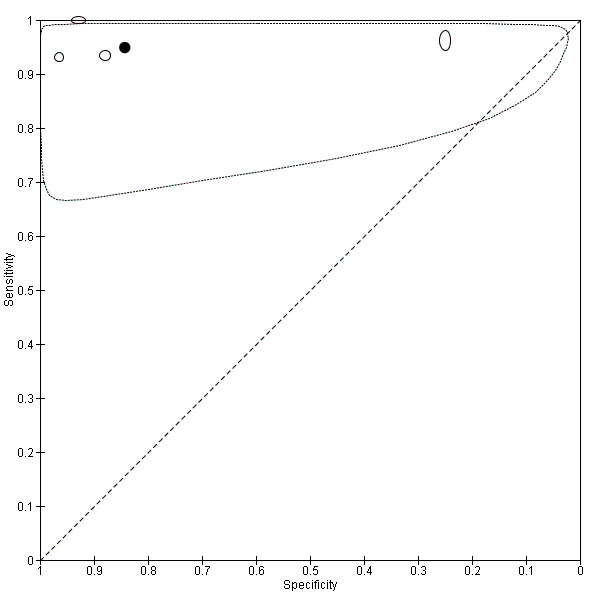

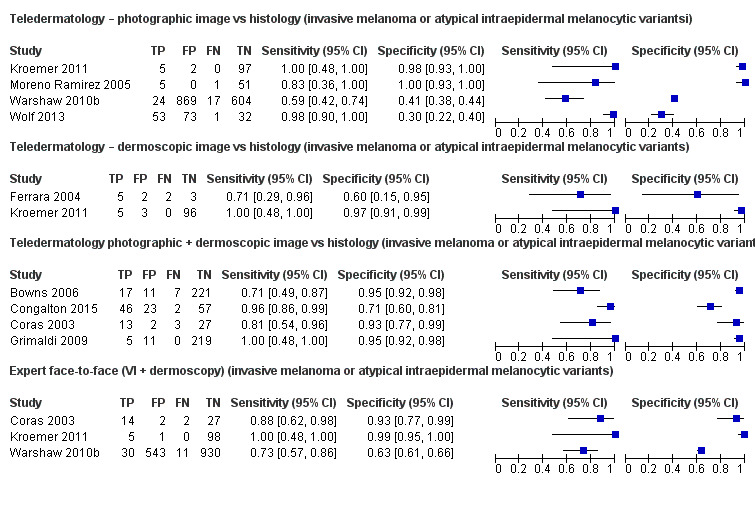

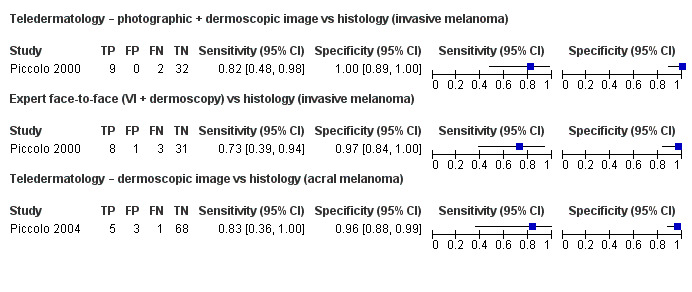

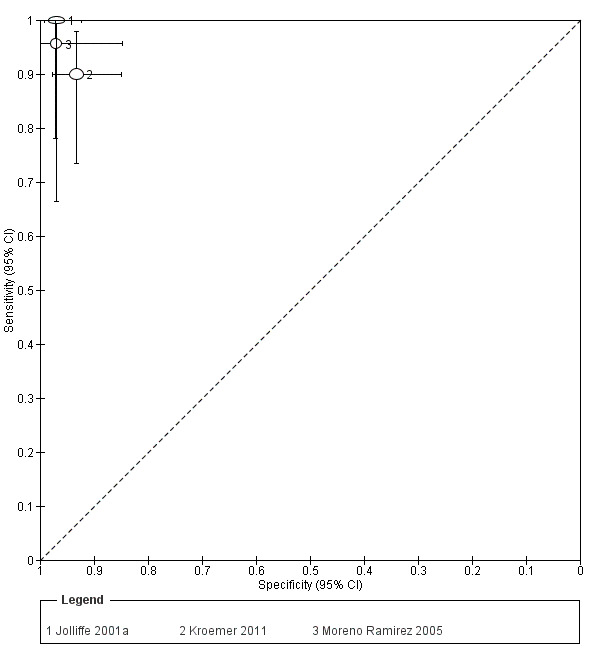

Seven studies provided data for the primary target condition of any skin cancer (1588 lesions and 638 malignancies). For the correct diagnosis of lesions as malignant using photographic images, summary sensitivity was 94.9% (95% confidence interval (CI) 90.1% to 97.4%) and summary specificity was 84.3% (95% CI 48.5% to 96.8%) (from four studies). Individual study estimates using dermoscopic images or a combination of photographic and dermoscopic images generally suggested similarly high sensitivities with highly variable specificities. Limited comparative data suggested similar diagnostic accuracy between teledermatology assessment and in‐person diagnosis by a dermatologist; however, data were too scarce to draw firm conclusions. For the detection of invasive melanoma or atypical intraepidermal melanocytic variants, both sensitivities and specificities were more variable. Sensitivities ranged from 59% (95% CI 42% to 74%) to 100% (95% CI 48% to 100%) and specificities from 30% (95% CI 22% to 40%) to 100% (95% CI 93% to 100%), with reported diagnostic thresholds including the correct diagnosis of melanoma, classification of lesions as 'atypical' or 'typical', and the decision to refer or to excise a lesion.
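An upper limit of exactly 100% arises whenever every case in a study is correctly classified, and small studies then give wide lower limits. The interval method behind these per-study figures is not stated here, so the sketch below assumes the exact (Clopper-Pearson) binomial interval and computes it by bisection using only the Python standard library. The function names and the counts in the usage line are hypothetical and are not taken from the included studies.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def clopper_pearson(x: int, n: int, alpha: float = 0.05, tol: float = 1e-10):
    """Exact (Clopper-Pearson) confidence interval for the proportion x/n."""

    def solve(f):
        # Bisection for the root of a function that is positive at p=0 and
        # decreases to negative at p=1.
        lo, hi = 0.0, 1.0
        while hi - lo > tol:
            mid = (lo + hi) / 2
            if f(mid) > 0:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower limit: the p at which P(X >= x) equals alpha/2.
    lower = 0.0 if x == 0 else solve(lambda p: alpha / 2 - (1 - binom_cdf(x - 1, n, p)))
    # Upper limit: the p at which P(X <= x) equals alpha/2.
    upper = 1.0 if x == n else solve(lambda p: binom_cdf(x, n, p) - alpha / 2)
    return lower, upper


lower, upper = clopper_pearson(5, 5)  # hypothetical: 5 of 5 melanomas detected
print(f"5/5 detected: 95% CI {lower:.0%} to {upper:.0%}")
```

With these hypothetical counts the lower limit is about 48%, showing how a 100% point estimate based on only a handful of melanomas is compatible with much lower true sensitivity.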

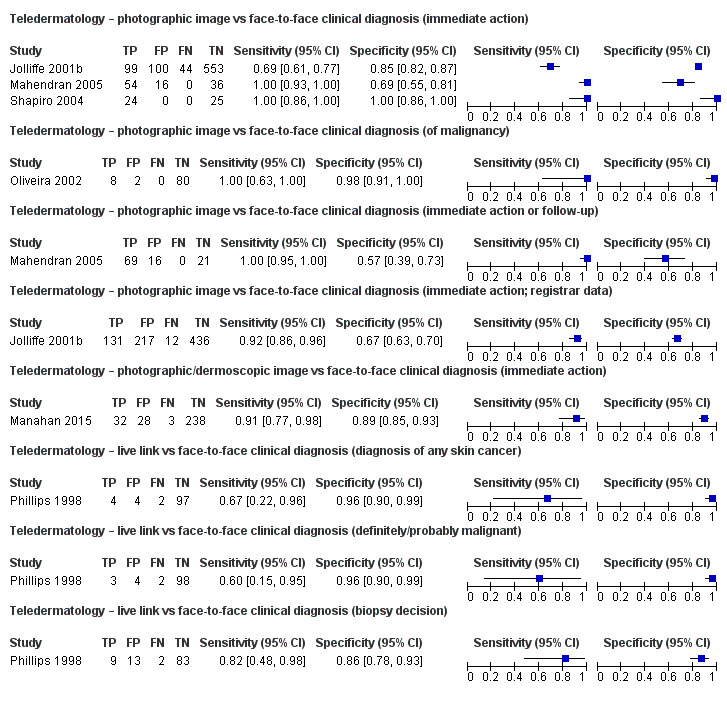

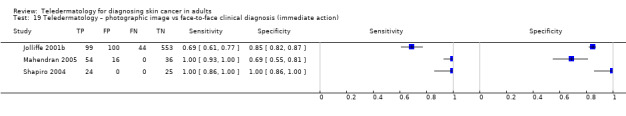

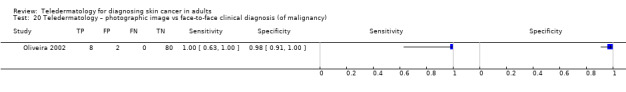

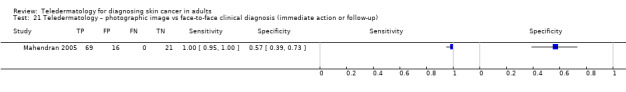

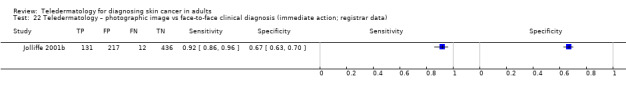

Referral accuracy data comparing teledermatology against a face‐to‐face reference standard suggested good agreement for lesions considered to require some positive action by face‐to‐face assessment (sensitivities of over 90%). For lesions considered of less concern when assessed face‐to‐face (e.g. lesions not recommended for excision or referral), agreement was more variable, with teledermatology specificities ranging from 57% (95% CI 39% to 73%) to 100% (95% CI 86% to 100%). This suggests that remote assessment is more likely than in‐person assessment to recommend excision, referral or follow‐up.

Authors' conclusions

Studies were generally small and heterogeneous, and methodological quality was difficult to judge due to poor reporting. Bearing in mind concerns regarding the applicability of study participants and of lesion image acquisition in specialist settings, our results suggest that teledermatology can correctly identify the majority of malignant lesions. Using a more widely defined threshold to identify 'possibly' malignant cases or lesions that should be considered for excision is likely to appropriately triage those lesions requiring face‐to‐face assessment by a specialist. Despite the increasing international use of teledermatology, the evidence base to support its ability to accurately diagnose lesions and to triage lesions from primary to secondary care is lacking, and further prospective and pragmatic evaluation is needed.

Keywords: Adult; Humans; Carcinoma, Basal Cell; Carcinoma, Basal Cell/diagnostic imaging; Carcinoma, Squamous Cell; Carcinoma, Squamous Cell/diagnostic imaging; Data Accuracy; Dermatology; Dermatology/methods; Diagnostic Errors; Diagnostic Errors/statistics & numerical data; Melanoma; Melanoma/diagnostic imaging; Photography; Physical Examination; Physical Examination/methods; Sensitivity and Specificity; Skin Neoplasms; Skin Neoplasms/diagnostic imaging; Telemedicine; Telemedicine/methods

Plain language summary

What is the diagnostic accuracy of teledermatology for the diagnosis of skin cancer in adults?

Why is improving the diagnosis of skin cancer important?

There are different types of skin cancer. Melanoma is one of the most dangerous forms and it is important to identify it early so that it can be removed. If it is not recognised when first brought to the attention of doctors (also known as a false‐negative test result), treatment can be delayed, resulting in the melanoma spreading to other organs in the body and possibly causing early death. Cutaneous squamous cell carcinoma (cSCC) and basal cell carcinoma (BCC) are usually localised skin cancers, although cSCC can spread to other parts of the body and BCC can cause disfigurement if not recognised early. Calling something a skin cancer when it is not really a skin cancer (a false‐positive result) may result in unnecessary surgery and other investigations that can cause stress and worry to the patient. Making the correct diagnosis is important. Mistaking one skin cancer for another can lead to the wrong treatment being used or to a delay in effective treatment.

What is the aim of the review?

The aim of this Cochrane Review was to find out whether teledermatology is accurate enough to identify which people with skin lesions need to be referred to see a specialist dermatologist (a doctor concerned with diseases of the skin) and who can be safely reassured that their lesion (damage or change of the skin) is not malignant. We included 22 studies to answer this question.

What was studied in the review?

Teledermatology means sending pictures of skin lesions or rashes to a specialist for advice on diagnosis or management. It is a way for primary care doctors (general practitioners (GPs)) to get an opinion from a specialist dermatologist without having to refer patients through the normal referral pathway. Teledermatology can involve sending photographs or magnified images of a skin lesion taken with a special camera (dermatoscope) to a skin specialist to look at or it might involve immediate discussion about a skin lesion between a GP and a skin specialist using videoconferencing.

What are the main results of the review?

The review included 22 studies: 16 studies comparing teledermatology diagnoses to the final lesion diagnoses (diagnostic accuracy) for 4057 lesions and 879 malignant cases, and six studies comparing teledermatology decisions to the decisions that would be made with the patient present (referral accuracy) for 1449 lesions and 270 'positive' cases.

The studies were very different from each other in terms of the types of people with lesions suspicious for skin cancer included and the type of teledermatology used. A single reliable estimate of the accuracy of teledermatology could not be made. For correctly identifying a lesion as a skin cancer, data suggested that fewer than 7% of malignant skin lesions were missed by teledermatology. Study results were too variable to tell us how many people would be referred unnecessarily for a specialist dermatology appointment following a teledermatology consultation. However, without access to teledermatology services, most of the lesions included in these studies would likely be referred to a dermatologist.

How reliable are the results of the studies of this review?

In the included studies, the final diagnosis of skin cancer was made by lesion biopsy (taking a small sample of the lesion so it could be examined under a microscope) and the absence of skin cancer was confirmed by biopsy or by follow‐up over time to make sure the skin lesion remained negative for melanoma. This is likely to have been a reliable method for deciding whether people really had skin cancer. In a few studies, a diagnosis of no skin cancer was made by a skin specialist rather than biopsy. This is less likely to have been a reliable method for deciding whether people really had skin cancer*. Poor reporting of what was done in the studies made it difficult for us to say how reliable the study results are. Selecting some patients from specialist clinics instead of primary care, and using different ways of doing teledermatology, were common problems.

Who do the results of this review apply to?

Studies were conducted in Europe (64%), North America (18%), South America (9%) and Oceania (9%). The average age of people who were studied was 52 years; however, several studies included at least some people under the age of 16 years. The percentage of people with skin cancer ranged between 2% and 88%, with an average of 30%, which is much higher than would be observed in a primary care setting in the UK.
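Why the percentage of people with skin cancer matters can be shown with Bayes' theorem. The sketch below is an illustration under stated assumptions: it takes the summary sensitivity (94.9%) and specificity (84.3%) reported for teledermatology with photographic images for any skin cancer, assumes these stay the same in every setting (in practice accuracy can shift with the mix of patients), and applies them at the lowest, average and highest percentages of skin cancer seen in the studies. The function name is hypothetical.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) from Bayes' theorem for a given pre-test prevalence."""
    tp = sensitivity * prevalence              # true positives per person tested
    fn = (1 - sensitivity) * prevalence        # missed cancers
    fp = (1 - specificity) * (1 - prevalence)  # benign lesions called positive
    tn = specificity * (1 - prevalence)        # benign lesions correctly reassured
    return tp / (tp + fp), tn / (tn + fn)


# Summary estimates for photographic images, any skin cancer; assumed constant across settings.
SENS, SPEC = 0.949, 0.843
for prev in (0.02, 0.30, 0.88):
    ppv, npv = predictive_values(SENS, SPEC, prev)
    print(f"prevalence {prev:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

Under these assumptions, at a prevalence of 2% only about 11% of positive teledermatology results would be true skin cancers, although a negative result would still be correct more than 99% of the time.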

What are the implications of this review?

Teledermatology is likely to be a good way of helping GPs to decide which skin lesions need to be seen by a skin specialist. Our review suggests that using magnified images, in addition to photographs of the lesion, improves accuracy. More research is needed to establish the best way of providing teledermatology services.

How up‐to‐date is this review?

The review authors searched for and used studies published up to August 2016.

*In these studies, biopsy, clinical follow‐up or specialist clinician diagnosis were the reference comparisons.

Summary of findings

'Summary of findings' table

| Question: | What is the diagnostic accuracy of teledermatology for the detection of skin cancer in adults? | ||

| Population: | Adults with lesions suspicious for skin cancer | ||

| Index test: | TD using photographic or dermoscopic (or both) images | ||

| Comparator test: | Face‐to‐face diagnosis using visual inspection or dermoscopy (or both) | ||

| Target condition: | Any skin cancer, including invasive melanoma and atypical intraepidermal melanocytic variants, BCC and cSCC | ||

| Reference standard: | Histology with or without long‐term follow‐up (diagnostic accuracy); expert face‐to‐face diagnosis (for referral accuracy) | ||

| Action: | If accurate, positive results ensure that malignant lesions are not missed and are appropriately referred for specialist assessment or treated appropriately in a non‐referred setting, and those with negative results can be safely reassured and discharged. | ||

| Quantity of evidence | Number of studies | Total lesions | Total cases |

| Diagnostic accuracy | 16 | 4057 | 879 |

| Referral accuracy | 6 | 1449 | 270 |

| Limitations | |||

| Risk of bias: | Low risk for participant selection in 7 studies; high risk (5) from case‐control design (2) or inappropriate exclusion criteria (4). Low risk for teledermatology assessments (22). Low risk for comparison with face‐to‐face diagnosis (2/5); unclear (3). Low risk for reference standard (10/22); high risk from use of expert diagnosis alone – referral accuracy (6) or inadequate reference standard (6). High risk for participant flow (17) due to differential verification (5), and exclusions following recruitment (14); timing of tests not mentioned in 14 studies. | ||

| Applicability of evidence to question: | High concern (14/22) for applicability of participants due to recruitment from secondary care or specialist clinics (12) or inclusion of multiple lesions per participant (6). High concern for applicability of teledermatology assessments (12/22) due to images acquired by dermatologists in secondary care settings or in medical imaging units rather than images acquired in primary care. Low concern for reference standard (6/22); unclear concern due to lack of information concerning the expertise of the histopathologist (13) or expert face‐to‐face diagnosis (3). | ||

| Findings: | |||

| 7 studies reported diagnostic accuracy data for the primary target condition of any skin cancer; 9 studies for the detection of invasive melanoma or atypical intraepidermal melanocytic variants; 2 studies for invasive melanoma alone; and 4 studies for BCC alone. 6 studies reported only referral accuracy data (teledermatology decisions versus face‐to‐face decisions). The findings presented are based on results for the primary target condition of any skin cancer and for the detection of invasive melanoma or atypical intraepidermal melanocytic variants. | |||

| Diagnostic accuracy data | Number of datasets | Total lesions | Total malignant |

| Test: TD using photographic images for any skin cancer | 4 | 717 | 452 |

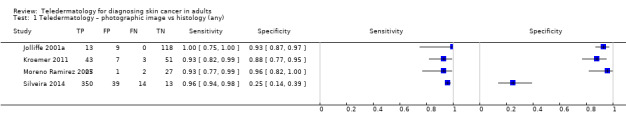

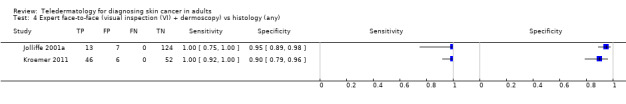

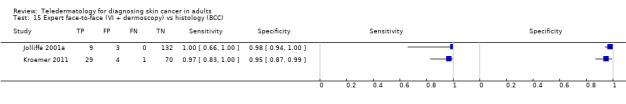

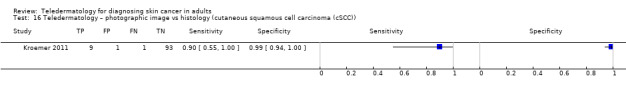

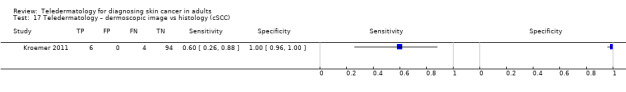

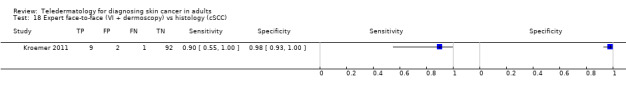

| 3 studies reported a cross‐tabulation of lesion final diagnoses against the diagnosis on teledermatology such that data could be extracted for the detection of any malignancy, regardless of any misclassification of 1 skin cancer for another (e.g. a BCC diagnosed as a melanoma or vice versa); 1 study presented data for the detection of 'malignant' versus benign cases with no breakdown of individual lesion diagnoses. Summary sensitivity was 94.9% (95% CI 90.1% to 97.4%) and summary specificity 84.3% (95% CI 48.5% to 96.8%). In the 2 studies providing a direct comparison between TD assessment and in‐person diagnosis by a dermatologist, the data suggested similar accuracy between approaches; however, data were too scarce to draw firm conclusions. | |||

| Test: TD using clinical and dermoscopic images for any skin cancer | 3 | 928 | 215 |

| Sensitivities were 100% in all 3 studies. Specificities ranged from 25% (95% CI 5% to 57%) to 92% (95% CI 74% to 99%). Studies used varying thresholds to decide test positivity and included highly selected populations. No statistical pooling was undertaken. | |||

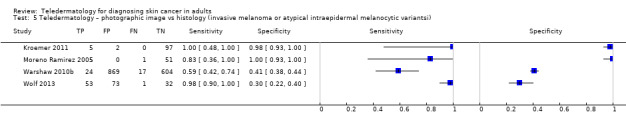

| Test: TD using photographic images for invasive melanoma or atypical intraepidermal melanocytic variants | 4 | 1834 | 106 |

| Sensitivities ranged from 59% (95% CI 42% to 74%) to 100% (95% CI 48% to 100%) and specificities from 30% (95% CI 22% to 40%) to 100% (95% CI 93% to 100%). Diagnostic thresholds were correct diagnosis of melanoma (3) or classification as 'atypical' or 'typical'. Populations also varied: some included only atypical or higher‐risk pigmented lesions and excluded equivocal lesions, while others included both pigmented and non‐pigmented lesions from people who were either self‐referred or whose lesions were deemed to require excision. The number of melanomas missed ranged from 0 to 17. | |||

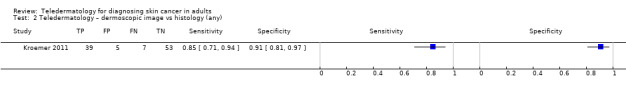

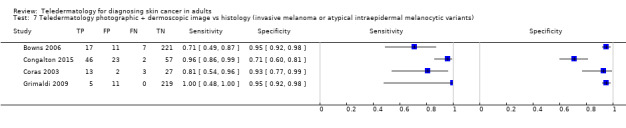

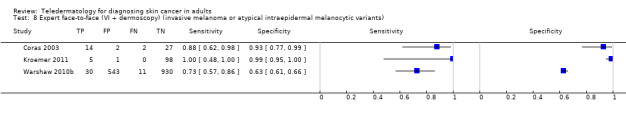

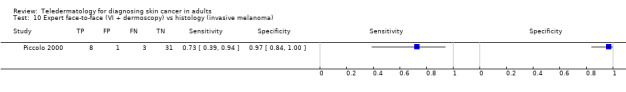

| Test: TD using photographic and dermoscopic images for invasive melanoma or atypical intraepidermal melanocytic variants | 4 | 664 | 93 |

| Summary estimates were 85.4% (95% CI 68.3% to 94.1%) for sensitivity and 91.6% (95% CI 81.1% to 96.5%) for specificity. Sensitivities were lower for the correct diagnosis of melanoma (71% to 81%) compared to the decision to refer or to excise a lesion (96% to 100%). The number of melanomas missed ranged from 0 to 7. | |||

| All data (referral accuracy) | 6 | 1449 | 270 |

| TD diagnoses were reported based on photographic images alone (4), photographic and dermoscopic images (1) and live‐link TD (1). Diagnostic decisions on TD varied, including the diagnosis of malignancy, the decision to excise a lesion, the decision to refer versus not refer, or to excise or follow up at a later date. For store‐and‐forward TD, sensitivities were generally above 90%, indicating good agreement between remote image‐based decisions and the face‐to‐face reference standard for lesions considered to require some positive action by face‐to‐face assessment. Specificities were more variable, ranging from 57% (95% CI 39% to 73%) to 100% (95% CI 86% to 100%), suggesting that remote assessment is more likely to recommend excision, referral or follow‐up for lesions considered of less concern when assessed face‐to‐face. | |||

BCC: basal cell carcinoma; CI: confidence interval; cSCC: cutaneous squamous cell carcinoma; TD: teledermatology.

Background

This review is one of a series of Cochrane Diagnostic Test Accuracy (DTA) reviews on the diagnosis and staging of melanoma and keratinocyte skin cancers conducted for the National Institute for Health Research (NIHR) Cochrane Systematic Reviews Programme. For the purposes of these reviews, diagnostic accuracy is assessed by the sensitivity and specificity of a test. Appendix 1 shows the content and structure of the programme, and Appendix 2 provides a glossary of terms used.
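As a minimal sketch of these two measures, assume each lesion has been cross-tabulated as index-test positive or negative against the reference standard (histology or follow-up for diagnostic accuracy, the face-to-face decision for referral accuracy). Sensitivity is then TP / (TP + FN), the proportion of reference-standard positives that the test identifies, and specificity is TN / (TN + FP), the proportion of reference-standard negatives correctly called negative. The class name and the counts in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Cross-tabulation of index test result against the reference standard."""

    tp: int  # test positive, reference standard positive (e.g. confirmed malignancy)
    fp: int  # test positive, reference standard negative
    fn: int  # test negative, reference standard positive (missed cases)
    tn: int  # test negative, reference standard negative

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


example = TwoByTwo(tp=95, fp=30, fn=5, tn=170)  # hypothetical counts
print(f"sensitivity {example.sensitivity:.0%}, specificity {example.specificity:.0%}")
```

Here 95 of 100 reference-standard positives and 170 of 200 reference-standard negatives are correctly classified, giving a sensitivity of 95% and a specificity of 85%.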

Target condition being diagnosed

There are three main forms of skin cancer. Melanoma has the highest skin cancer mortality (Cancer Research UK 2017); however, the most common skin cancers in Caucasian populations are those arising from keratinocytes: basal cell carcinoma (BCC) and cutaneous squamous cell carcinoma (cSCC) (Gordon 2013; Madan 2010). In 2003, the World Health Organization estimated that between two and three million 'non‐melanoma' skin cancers (of which BCC is estimated to account for around 80% and cSCC around 16% of cases) and 132,000 melanoma skin cancers occur globally each year (WHO 2003).

In this DTA review there are three target conditions of interest: melanoma, BCC and cSCC.

Melanoma

Melanoma arises from uncontrolled proliferation of melanocytes – the epidermal cells that produce pigment or melanin. Cutaneous melanoma refers to any skin lesion with malignant melanocytes present in the dermis, primarily including superficial spreading, nodular, acral lentiginous and lentigo maligna melanoma variants (see Figure 1). Melanoma in situ refers to abnormal melanocytes that are contained within the epidermis and have not yet invaded the dermis, but are at risk of progression to melanoma if left untreated. Lentigo maligna, a subtype of melanoma in situ in chronically sun‐damaged skin, denotes another form of proliferation of abnormal melanocytes. Melanoma in situ and lentigo maligna are both atypical intraepidermal melanocytic variants. All forms of melanoma in situ can progress to invasive melanoma if growth breaches the dermo‐epidermal junction during a vertical growth phase; however, malignant transformation is both lower and slower for lentigo maligna than for melanoma in situ (Kasprzak 2015). Melanoma is one of the most dangerous forms of skin cancer, with the potential to metastasise to other parts of the body via the lymphatic system and bloodstream. It accounts for only a small percentage of skin cancer cases but is responsible for up to 75% of skin cancer deaths (Boring 1994; Cancer Research UK 2017).

Figure 1. Sample photograph of superficial spreading melanoma (left), basal cell carcinoma (centre) and squamous cell carcinoma (right). Copyright © 2012 Dr Rubeta Matin: reproduced with permission.

The incidence of melanoma rose to over 200,000 newly diagnosed cases worldwide in 2012 (Erdmann 2013; Ferlay 2015), with an estimated 55,000 deaths (Ferlay 2015). The highest incidence was observed in Australia, with 13,134 new cases of melanoma of the skin in 2014 (ACIM 2017), and in New Zealand, with 2341 registered cases in 2010 (HPA and MelNet NZ 2014). In the USA, the predicted incidence in 2014 was 73,870 per annum and the predicted number of deaths was 9940 (Siegel 2015). The highest rates in Europe are in north‐western Europe and the Scandinavian countries, with the highest incidence reported in Switzerland, at 25.8 per 100,000 people in 2012. Rates in England have more than tripled, from 4.6 and 6.0 per 100,000 in men and women respectively in 1990, to 18.6 and 19.6 per 100,000 in 2012 (EUCAN 2012). Indeed, in the UK, melanoma has one of the fastest rising incidence rates of any cancer, with the biggest projected increase in incidence between 2007 and 2030 (Mistry 2011). In the decade leading up to 2013, age‐standardised incidence increased by 46%, with 14,500 new cases in 2013 and 2459 deaths in 2014 (Cancer Research UK 2017). Rates are higher in women than in men; however, incidence in men is increasing faster than in women (Arnold 2014). The rising incidence in melanoma is thought to be primarily related to an increase in recreational sun exposure and tanning bed use and an increasingly ageing population with higher lifetime recreational ultraviolet (UV) exposure, in conjunction with possible earlier detection (Belbasis 2016; Linos 2009). Belbasis 2016 provides a detailed review of putative risk factors, including eye and hair colour, skin type and density of freckles, history of melanoma, sunburn and presence of particular lesion types.

A database in the USA of over 40,000 patients from 1998 onwards, which assisted the development of the 8th American Joint Committee on Cancer (AJCC) Staging System, indicated a five‐year survival of 97% to 99% for stage I melanoma, dropping to 32% to 93% in stage III disease depending on tumour thickness, the presence of ulceration and the number of involved nodes (Gershenwald 2017). While these figures represent substantial improvements relative to survival in 1975 (Cho 2014), increasing incidence between 1975 and 2010 means that mortality rates have remained static during the same period. This observation, coupled with the increasing incidence of localised disease, suggests that improved survival rates may be due to earlier detection and heightened vigilance (Cho 2014). New targeted therapies for advanced (stage IV) melanoma (e.g. BRAF inhibitors) have improved survival, and immunotherapies are evolving such that long‐term survival is being documented (Pasquali 2018; Rozeman 2017). No new data regarding the survival prospects for patients with stage IV disease were analysed for the AJCC 8 staging guidelines due to lack of contemporary data (Gershenwald 2017).

Basal cell carcinoma

BCC can arise from multiple stem cell populations, including from the bulge and interfollicular epidermis (Grachtchouk 2011). BCC growth is usually localised, but it can infiltrate and damage surrounding tissue, sometimes causing considerable destruction and disfigurement, particularly when located on the face (Figure 1). The four main subtypes of BCC are superficial, nodular, morphoeic or infiltrative, and pigmented. They typically present as slow‐growing asymptomatic papules, plaques or nodules which may bleed or form ulcers that do not heal (Firnhaber 2012). People with a BCC often present to healthcare professionals with a non‐healing lesion rather than specific symptoms such as pain. Many lesions are diagnosed incidentally (Gordon 2013).

BCC most commonly occurs on sun‐exposed areas of the head and neck (McCormack 1997), and it is more common in men and in people over the age of 40. Different authors have attributed a rising incidence of BCC in younger people to increased recreational sun exposure (Bath‐Hextall 2007a; Gordon 2013; Musah 2013). Other risk factors include Fitzpatrick skin types I and II (Fitzpatrick 1975; Lear 1997; Maia 1995); previous skin cancer history; immunosuppression; arsenic exposure; and genetic predisposition, such as in basal cell naevus (Gorlin's) syndrome (Gorlin 2004; Zak‐Prelich 2004). Annual incidence is rising worldwide: Europe has experienced a mean increase of 5.5% per year since the late 1970s and the USA 2% per year, while estimates for the UK suggest a steeper increase, at a rate of an additional 6 per 100,000 people per year (Lomas 2012). The rising incidence has been explained by an ageing population; changes in the distribution of known risk factors, particularly UV radiation; and improved detection due to increased awareness among both practitioners and the general population (Verkouteren 2017). Hoorens 2016 points to evidence for a gradual increase in the size of BCCs over time, with delays in diagnosis ranging from 19 to 25 months.

According to National Institute for Health and Care Excellence (NICE) guidance (NICE 2010), low‐risk BCCs are nodular lesions occurring in people older than 24 years who are not immunosuppressed and do not have Gorlin syndrome. Furthermore, they should be located below the clavicle; should be small (diameter of less than 1 cm) with well‐defined margins; not recurrent following incomplete excision; and not in awkward or highly visible locations (NICE 2010). Superficial BCCs are also typically low risk and may be amenable to medical treatments such as photodynamic therapy (PDT) or topical chemotherapy (Kelleners‐Smeets 2017). Assigning BCCs as low or high risk influences the management options (Batra 2002; Randle 1996).

Advanced locally destructive BCC can be found on 'high‐risk' anatomical areas such as the eyebrow, eyelid, nose, ear and temple (these sites are at higher risk of invisible spread and are therefore more at risk of incomplete excision (Baxter 2012; Lear 2014)), and it can arise from long‐standing untreated lesions or from a recurrence of aggressive BCC after primary treatment (Lear 2012). Very rarely, BCC metastasises to regional and distant sites resulting in death, especially in cases of large neglected lesions in people who are immunosuppressed or those with Gorlin syndrome (McCusker 2014). Rates of metastasis are reported at 0.0028% to 0.55% (Lo 1991), with very poor survival rates. It is recognised that basosquamous carcinoma (which behaves more like a high‐risk SCC and is not considered a true BCC) is likely to have accounted for many cases of apparent BCC metastasis, hence the spuriously high incidence of up to 0.55% reported in some studies, which is not seen in clinical practice (Garcia 2009).

Squamous cell carcinoma of the skin

Primary cSCC arises from the keratinocytes of the outermost layer (epidermis) of the skin. People with cSCC often present with an ulcer or firm (indurated) papule, plaque or nodule (Firnhaber 2012; Griffin 2016), often with an adherent crust and poorly defined margins (Madan 2010). This type of carcinoma can arise in the absence of a precursor lesion or it can develop from pre‐existing actinic keratosis or Bowen's disease (considered by some clinicians to be squamous cell carcinoma in situ); the estimated annual risk of progression being less than 1% to 20% for newly arising lesions (Alam 2001), and 5% for pre‐existing lesions (Kao 1986). It remains locally invasive for a variable length of time, but it has the potential to spread to the regional lymph nodes or via the bloodstream to distant sites, especially in immunosuppressed individuals (Lansbury 2010). High‐risk lesions are those arising on the lip or ear, recurrent cSCC, lesions arising on non‐exposed sites, scars or chronic ulcers, tumours more than 20 mm in diameter and with depth of invasion more than 4 mm, and poor differentiation on pathological examination (Motley 2009). Perineural invasion of nerves at least 0.1 mm in diameter is a further documented risk factor for high‐risk cSCC (Carter 2013).

Chronic ultraviolet light exposure through recreation or occupation is strongly linked to cSCC occurrence (Alam 2001). It is particularly common in people with fair skin and in less common genetic disorders of pigmentation, such as albinism, xeroderma pigmentosum and recessive dystrophic epidermolysis bullosa (Alam 2001). Other recognised risk factors include immunosuppression; chronic wounds; arsenic or radiation exposure; certain drug treatments, such as voriconazole and BRAF mutation inhibitors; and previous skin cancer history (Baldursson 1993; Chowdri 1996; Dabski 1986; Fasching 1989; Lister 1997; Maloney 1996; O'Gorman 2014). In solid organ transplant recipients, cSCC is the most common form of skin cancer; the risk of developing cSCC has been estimated at 65 to 253 times that of the general population (Hartevelt 1990; Jensen 1999; Lansbury 2010). Overall, local and metastatic recurrence of cSCC at five years is estimated at 8% and 5% respectively (Rowe 1992). The five‐year survival rate of metastatic cSCC of the head and neck is around 60% (Moeckelmann 2018).

Treatment

For primary melanoma, the mainstay of definitive treatment is wide local surgical excision of the lesion, to remove both the tumour and any malignant cells that might have spread into the surrounding skin (Garbe 2016; Marsden 2010; NICE 2015a; SIGN 2017; Sladden 2009). Recommended lateral surgical margins vary according to tumour thickness (Garbe 2016), and to stage of disease at presentation (NICE 2015a).

Treatment options for BCC and cSCC include surgery, other destructive techniques such as cryotherapy or electrodesiccation, and topical chemotherapy. A Cochrane Review of 27 randomised controlled trials (RCTs) of interventions for BCC found very little good‐quality evidence for any of the interventions used (Bath‐Hextall 2007b). Complete surgical excision of primary BCC has a reported five‐year recurrence rate of less than 2% (Griffiths 2005; Walker 2006), leading to significantly fewer recurrences than treatment with radiotherapy (Bath‐Hextall 2007b). After standard excision biopsy with 4 mm peripheral surgical margins and apparently clear histopathological margins (on serial vertical sections), the reported five‐year recurrence rate is around 4% (Drucker 2017). Mohs micrographic surgery, whereby surgeons microscopically examine horizontal sections of the tumour peri‐operatively and undertake re‐excision until the margins are tumour‐free, is an option for high‐risk lesions on the face where standard wider excision margins might lead to incomplete excision or considerable functional impairment (Bath‐Hextall 2007b; Lansbury 2010; Motley 2009; Stratigos 2015). Bath‐Hextall 2007b found one trial comparing Mohs micrographic surgery with a 3 mm surgical margin excision in BCC (Smeets 2004); the update of this study showed non‐significantly lower recurrence at 10 years with Mohs micrographic surgery (4.4% with Mohs micrographic surgery compared to 12.2% after surgical excision; P = 0.10) (van Loo 2014).

The main treatments for high‐risk BCC are standard surgical excision, Mohs micrographic surgery or radiotherapy. For low‐risk or superficial subtypes of BCC, or for people with small or multiple (or both) BCCs at low‐risk sites (Marsden 2010), destructive techniques other than excisional surgery may be used (e.g. electrodesiccation and curettage or cryotherapy (Alam 2001; Bath‐Hextall 2007b)). Alternatively, non‐surgical ('non‐destructive') treatments may be considered (Bath‐Hextall 2007b; Drew 2017; Kim 2014), including topical chemotherapy such as imiquimod (Williams 2017), 5‐fluorouracil (Arits 2013) or ingenol mebutate (Nart 2015), and photodynamic therapy (PDT) (Roozeboom 2016). Non‐surgical treatments are most frequently used for superficial forms of BCC, with one head‐to‐head trial suggesting that topical imiquimod is superior to PDT and 5‐fluorouracil (Jansen 2018). Although non‐surgical approaches are increasingly used, they do not allow histological confirmation of tumour clearance, and their efficacy depends on accurate characterisation of the histological subtype and depth of tumour. The 2007 Cochrane review of BCC interventions found only limited evidence, from very small RCTs, for these approaches (Bath‐Hextall 2007b), a gap that subsequent studies have only partially addressed (Bath‐Hextall 2014; Kim 2014; Roozeboom 2012). Most BCC trials have compared interventions within the same treatment class, and few have compared medical versus surgical treatments (Kim 2014).

Vismodegib, a first‐in‐class Hedgehog signalling pathway inhibitor, is now available for the treatment of metastatic or locally advanced BCC on the basis of the pivotal ERIVANCE BCC study (Sekulic 2017). It is licensed for use in these patients where surgery or radiotherapy is inappropriate; for example, it has been used to treat locally advanced periocular and orbital BCCs, achieving orbital salvage in patients who would otherwise have required exenteration (Wong 2017). However, NICE has recommended against the use of vismodegib on the basis of cost‐effectiveness and uncertainty in the evidence (NICE 2017).

A systematic review of interventions for primary cSCC found only one RCT eligible for inclusion (Lansbury 2010). Current practice therefore relies on evidence from observational studies, as reviewed in Lansbury 2013, for example. Surgical excision with predetermined margins is usually the first‐line treatment (Motley 2009; Stratigos 2015). Estimates of recurrence after Mohs micrographic surgery, surgical excision or radiotherapy, which are likely to have been evaluated in higher‐risk populations, have shown pooled recurrence rates of 3%, 5.4% and 6.4%, respectively, with overlapping confidence intervals (CI); the review authors advised caution when comparing results across treatments (Lansbury 2013).

Index test(s)

Teledermatology is a term used to describe the delivery of dermatological care through information and communication technology (Bashshur 2015). It uses imaging modalities to provide specialist dermatology services either to other healthcare professionals (such as general practitioners (GPs)), or to patients directly (Ndegwa 2010). It is considered a valuable tool in the diagnosis and management of skin disease because of the visual nature of skin lesions and rashes (Warshaw 2011). Teledermatology allows an increased information flow between primary care physicians and dermatologists, which could lead to a reduction in waiting times and limit unnecessary referrals (Ndegwa 2010; Warshaw 2011; Bashshur 2015). In rural areas, where access to speciality services can have significant and potentially off‐putting travel and time implications for the patient, teledermatology has the potential to widen access to specialist opinion.

Teledermatology consultations can be conducted in two main ways: store‐and‐forward ('asynchronous') and live interactive ('synchronous') (Ndegwa 2010). With the store‐and‐forward approach, clinicians and patients are separated in both time and space: electronic digital images are taken and then transmitted to a dermatologist for review at a later, unspecified time (Warshaw 2011). The images can be digital photographic (or 'macroscopic') images, or magnified dermoscopic images taken using a dermatoscope. Images are often accompanied by a summary of the patient's history and demographic information as part of a consultation package (Ndegwa 2010). Recent developments in smartphone technology have also introduced a new platform for transferring lesion images from one setting to another (Chuchu 2018). The store‐and‐forward approach has the advantage of requiring less sophisticated technology and lower‐cost equipment (Warshaw 2011); however, it does not allow the specialist to take a direct history, request additional views or explain the purpose of management in detail to the patient or referrer (Ndegwa 2010).

Live interactive teledermatology uses videoconferencing and image transmission to connect the patient, referrer and dermatologist in real time (Ndegwa 2010). The dermatologist and patient can interact verbally in a similar manner to a traditional clinic‐based encounter, but more extensive telecommunications infrastructure and time are needed (Ndegwa 2010).

Clinical pathway

The diagnosis of melanoma can take place in primary, secondary and tertiary care settings, by both generalist and specialist healthcare providers. In the UK, people with concerns about a new or changing skin lesion will usually present first to their GP or, less commonly, directly to a specialist in secondary care, who could be a dermatologist, plastic surgeon, other specialist surgeon (such as an ear, nose and throat specialist or maxillofacial surgeon), or ophthalmologist (Figure 2). Current UK guidelines recommend that all suspicious pigmented lesions presenting in primary care should be assessed by taking a clinical history and by visual inspection guided by the revised seven‐point checklist (MacKie 1990). Clinicians should refer people with suspected melanoma or cSCC for appropriate specialist assessment within two weeks (Chao 2014; Marsden 2010; NICE 2015a). Evidence is emerging, however, to suggest that excision of melanoma by GPs is not associated with increased risk compared with outcomes in secondary care (Murchie 2017). In the UK, routine referral is usually recommended for low‐risk BCCs, with urgent referral for those in whom a delay could have a significant impact on outcomes, for example due to large lesion size or a critical site (NICE 2015b). Appropriately qualified generalist care providers in the UK increasingly undertake the management of low‐risk BCC, for example by excising low‐risk lesions (NICE 2010). Similar guidance is in place in Australia (CCAAC Network 2008).

Figure 2. Current clinical pathway for people with skin lesions.

Teledermatology consultations can aid more appropriate triage of lesions, providing reassurance for benign lesions and referral via urgent or non‐urgent routes to secondary care (e.g. for suspected BCC). The distinction between setting and examiner qualifications and experience is important, as specialist clinicians might work in primary care settings (e.g. in the UK, GPs with a special interest in dermatology and skin surgery who have undergone appropriate training), and generalists might practise in secondary care settings (e.g. GPs working alongside dermatologists in secondary care, or plastic surgeons who do not specialise in skin cancer). The level of skill and experience in skin cancer diagnosis varies among both generalist and specialist care providers and affects test accuracy.

For referred lesions, the specialist clinician will use history‐taking, visual inspection of the lesion (in comparison with other lesions on the skin), usually in conjunction with dermoscopic examination, and palpation of the lesion and associated regional nodal basins to inform a clinical decision. If melanoma is suspected, urgent excision biopsy with a 2 mm margin is recommended (Lederman 1985; Lees 1991); for cSCC, excision with predetermined surgical margins or a diagnostic biopsy may be considered. BCCs and premalignant lesions potentially eligible for non‐surgical treatment may undergo a diagnostic biopsy before therapy is started. Equivocal melanocytic lesions for which a definitive clinical diagnosis cannot be reached may undergo surveillance to identify any changes that would indicate excision biopsy, with reassurance and discharge for lesions that remain stable over a period of time.

Prior test(s)

Although smartphone applications and community‐ or high street pharmacy‐based teledermatology services (e.g. the Boots 'Mole Scanning Service' www.boots.com/health‐pharmacy‐advice/skin‐services/mole‐scanning‐service) can increasingly be accessed directly by people who have concerns about a skin lesion (Kjome 2017), the diagnosis of skin cancer is still based on history taking and clinical examination by a suitably qualified clinician. In the UK, this is typically undertaken at two decision points – first in primary care where the GP makes a decision to refer or not to refer, and then a second time by a dermatologist or other secondary care clinician where a decision is made to biopsy or excise or not.

Visual inspection of the skin is undertaken iteratively, using both implicit pattern recognition (non‐analytical reasoning) and more explicit 'rules' based on conscious analytical reasoning (Norman 2009), the balance of which will vary according to experience and familiarity with the diagnostic question. Various attempts have been made to formalise the "mental rules" involved in analytical pattern recognition for melanoma (Friedman 1985; Grob 1998; MacKie 1985; MacKie 1990; Sober 1979; Thomas 1998); however, visual inspection for keratinocyte skin cancers relies primarily on pattern recognition. Accuracy has been shown to vary according to the expertise of the clinician. Primary care physicians have been reported to miss over 50% of BCCs (Offidani 2002) and to misdiagnose around 33% of BCCs (Gerbert 2000). In contrast, one Australian study found that trained dermatologists were able to detect 98% of BCCs, but with a specificity of only 45% (Green 1988).

A range of technologies has emerged to aid diagnosis and reduce the number of diagnostic biopsies or inappropriate surgical procedures. Dermoscopy has become the most widely used tool for improving the diagnostic accuracy of pigmented lesions, in particular for melanoma (Dinnes 2018a); it is less well established for the diagnosis of BCC or cSCC. Dermoscopy (also referred to as dermatoscopy or epiluminescence microscopy) uses a hand‐held microscope and incident light (with or without oil immersion) to reveal subsurface images of the skin at increased magnification of ×10 to ×100 (Kittler 2001). Used alongside clinical examination, dermoscopy has been shown in some studies to increase the sensitivity of clinical diagnosis of melanoma from around 60% to as much as 90% (Bono 2006; Carli 2002; Kittler 1999; Stanganelli 2000), with much smaller effects in others (Benelli 1999; Bono 2002). The accuracy of dermoscopy depends on the experience of the examiner (Kittler 2001); when used by untrained or less‐experienced examiners, its accuracy may be no better than that of clinical inspection alone (Binder 1997; Kittler 2002).

The diagnostic accuracy, and comparative accuracy, of visual inspection and dermoscopy have been evaluated in a further three reviews in this series (Dinnes 2018a; Dinnes 2018b; Dinnes 2018c).

Role of index test(s)

The use of teledermatology by primary care or other generalist clinicians has the potential to ensure that people with suspicious lesions are appropriately referred for examination by a specialist clinician, and that people with non‐suspicious lesions are appropriately reassured and managed in primary care. If triage is accurate, the proportion of people referred unnecessarily will be minimised and lesions requiring urgent referral and treatment will be correctly identified. By facilitating access to more specialist services, selective dermatology referral could ultimately reduce costs while enabling a faster, more reliable and more efficient service (Piccolo 2002). Increased information flow between primary care physicians and dermatologists also has the potential to increase knowledge and reduce isolated decision‐making (Bashshur 2015).

When diagnosing potentially life‐threatening conditions such as melanoma, the consequences of falsely reassuring a person that they do not have skin cancer can be fatal, as the delay to diagnosis means that the window for successful early treatment may be missed. To minimise these false‐negative diagnoses, a good diagnostic test for melanoma should demonstrate high sensitivity and high negative predictive value (i.e. very few of those with a negative test result will actually have a melanoma). False‐positive test results (reflecting poor specificity and a high false‐positive rate), which lead to the removal of benign lesions, are arguably less serious errors than missing a potentially fatal melanoma, but they have implications for patient welfare and costs. False‐positive diagnoses cause unnecessary scarring from the biopsy or excision procedure, increase patient anxiety while they await the definitive histology results, and increase healthcare costs as the number of lesions that must be removed to yield one melanoma diagnosis increases.
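The measures discussed above all derive from a 2×2 table of index test results against the reference standard. The Python sketch below uses invented lesion counts (not data from any included study) to show how sensitivity, specificity, negative predictive value and the number of lesions removed per melanoma found relate to one another; the class and method names are illustrative only, and the last measure assumes that every test‐positive lesion is excised.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Lesion counts cross-classifying an index test against a reference standard."""

    tp: int  # test positive, target condition present (e.g. melanoma confirmed)
    fp: int  # test positive, target condition absent (benign lesion flagged)
    fn: int  # test negative, target condition present (missed cancer)
    tn: int  # test negative, target condition absent (correctly reassured)

    def sensitivity(self) -> float:
        # Share of lesions with the target condition that the test flags.
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of benign lesions that the test correctly calls negative.
        return self.tn / (self.tn + self.fp)

    def negative_predictive_value(self) -> float:
        # Share of negative results that truly lack the target condition.
        return self.tn / (self.tn + self.fn)

    def removed_per_cancer_found(self) -> float:
        # Assuming every test-positive lesion is excised: lesions removed per
        # cancer diagnosed, i.e. the reciprocal of the positive predictive value.
        return (self.tp + self.fp) / self.tp


# Hypothetical counts for illustration only; not data from any included study.
table = TwoByTwo(tp=45, fp=180, fn=5, tn=770)
print(f"sensitivity: {table.sensitivity():.2f}")          # 45/50
print(f"specificity: {table.specificity():.2f}")          # 770/950
print(f"NPV: {table.negative_predictive_value():.3f}")    # 770/775
print(f"lesions removed per melanoma: {table.removed_per_cancer_found():.1f}")  # 225/45
```

Lowering a test's positivity threshold usually moves lesions from fn to tp but also from tn to fp, so sensitivity rises together with the number of lesions removed per cancer found; this is the trade‐off described for BCC below.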

Delay in diagnosis of a BCC as a result of a false‐negative result is not as serious as for melanoma, because BCCs are usually slow growing and unlikely to metastasise. However, delayed diagnosis can result in a larger and more complex excision, with consequently greater morbidity. Very sensitive diagnostic tests for BCC may come at the cost of lower specificity, leading to a higher false‐positive rate and an enormous burden of skin surgery, so a balance between sensitivity and specificity is needed. The situation for cSCC is more similar to melanoma in that the consequences of falsely reassuring a person that they do not have skin cancer can be serious and potentially fatal. Thus, a good diagnostic test for cSCC should demonstrate high sensitivity and a correspondingly high negative predictive value. In summary, a test that can reduce false‐positive clinical diagnoses without missing true cases of disease has patient and resource benefits. False‐positive clinical diagnoses cause unnecessary morbidity from the biopsy, could lead to initiation of inappropriate therapies, and increase patient anxiety.

Alternative test(s)

Teledermatology provides an alternative to the standard referral process from primary to secondary care as a means for primary care clinicians (and therefore patients) to access specialist opinion. Members of the general public can also seek advice on skin lesions via smartphone applications or from services provided within community or 'high street' pharmacies; however, these are not considered direct alternatives to teledermatology services.

Several other tests that may have a role in the diagnosis of skin cancer have been reviewed as part of our series of systematic reviews, including visual inspection and dermoscopy (Dinnes 2018a; Dinnes 2018b; Dinnes 2018c), smartphone applications (Chuchu 2018), reflectance confocal microscopy (Dinnes 2018d; Dinnes 2018e), optical coherence tomography (Ferrante di Ruffano 2018a), computer‐assisted diagnosis techniques applied to various types of images, including those generated by dermoscopy, diffuse reflectance spectrophotometry and electrical impedance spectroscopy (Ferrante di Ruffano 2018b), and high‐frequency ultrasound (Dinnes 2018f). Evidence permitting, the accuracy of available tests will be compared in an overview review, exploiting within‐study comparisons of tests and allowing the analysis and comparison of commonly used diagnostic strategies in which tests may be used singly or in combination.

Rationale

Our series of reviews of diagnostic tests used to assist clinical diagnosis of melanoma aimed to identify the most accurate approaches to diagnosis and to provide clinical and policy decision‐makers with the highest possible standard of evidence on which to base decisions. With increasing rates of skin cancer and the push towards the use of dermoscopy and other high‐resolution image analysis in primary care, the anxiety around missing early cases needs to be balanced against the risk of over‐referral, to avoid sending too many people with benign lesions for a specialist opinion. It is questionable whether all skin cancers detected by sophisticated techniques, even in specialist settings, help to reduce morbidity and mortality, or whether newer technologies run the risk of increasing false‐positive diagnoses. It is also possible that the use of some technologies (e.g. widespread use of dermoscopy in primary care without training) could actually result in harm by missing melanomas if they are used to replace traditional history‐taking and clinical examination of the entire skin. Many branches of medicine have noted the danger of such "gizmo idolatry" among doctors (Leff 2008).

Although teledermatology is increasingly used, the accuracy of different approaches to providing teledermatology services (e.g. store‐and‐forward versus live‐link modalities, and use of clinical versus dermoscopic images) has yet to be fully established. A review by Warshaw 2011 suggested that both store‐and‐forward and live‐link teledermatology had acceptable diagnostic accuracy and concordance when compared with clinical face‐to‐face diagnosis; however, clinic‐based dermatology had superior diagnostic accuracy to store‐and‐forward teledermatology consultations. As with any technology requiring significant investment, a full understanding of the benefits, including patient acceptability and cost‐effectiveness in comparison with usual practice, should be obtained before such an approach can be recommended; establishing diagnostic and referral accuracy is one of the key components. Given the rapidly changing evidence base in skin cancer diagnosis, there is a need for an up‐to‐date analysis of the accuracy of teledermatology for skin cancer diagnosis.

This review followed a generic protocol which covered the full series of Cochrane DTA reviews for the diagnosis of melanoma (Dinnes 2015a); aspects of this review which relate to the diagnosis of BCC and cSCC follow the generic protocol that was written to cover the reviews in the series for the diagnosis of keratinocyte skin cancers (Dinnes 2015b). The 'Background' and 'Methods' sections of this review therefore use some text that was originally published in the protocols (Dinnes 2015a; Dinnes 2015b), and text that overlaps some of our other reviews (Chuchu 2018; Dinnes 2018a; Dinnes 2018b).

Objectives

To determine the diagnostic accuracy of teledermatology for the detection of any skin cancer (melanoma, BCC or cSCC) in adults, and to compare its accuracy with that of in‐person diagnosis.

Accuracy was estimated separately according to the type of teledermatology images used:

photographic images;

dermoscopic images;

photographic and dermoscopic images.

Secondary objectives

To determine the diagnostic accuracy of teledermatology for the detection of invasive melanoma or atypical intraepidermal melanocytic variants in adults, and to compare its accuracy with that of in‐person diagnosis.

To determine the diagnostic accuracy of teledermatology for the detection of invasive melanoma only, in adults, and to compare its accuracy with that of in‐person diagnosis.

To determine the diagnostic accuracy of teledermatology for the detection of BCC in adults, and to compare its accuracy with that of in‐person diagnosis.

To determine the diagnostic accuracy of teledermatology for the detection of cSCC in adults, and to compare its accuracy with that of in‐person diagnosis.

To determine the referral accuracy of teledermatology (i.e. to compare diagnostic decision making based on teledermatology images with that of an in‐person consultation).

Investigation of sources of heterogeneity

We set out to address a range of potential sources of heterogeneity for investigation across our series of reviews, as outlined in our generic protocols (Dinnes 2015a; Dinnes 2015b), and described in Appendix 4; however, our ability to investigate these was necessarily limited by the available data on each individual test reviewed.

Methods

Criteria for considering studies for this review

Types of studies

We included test accuracy studies that allow comparison of the result of the index test with that of a reference standard, including the following:

studies where all participants receive a single index test and a reference standard;

studies where all participants receive more than one index test and a reference standard;

studies where participants are allocated (by any method) to receive different index tests or combinations of index tests and all receive a reference standard (between‐person comparative (BPC) studies);

studies that recruit series of participants unselected by true disease status;

diagnostic case‐control studies that separately recruit diseased and non‐diseased groups (see Rutjes 2005); however, we did not include studies that compared results for malignant lesions to those for healthy skin (i.e. with no lesion present);

both prospective and retrospective studies; and

studies where previously acquired clinical or dermoscopic images were retrieved and prospectively interpreted for study purposes.

We excluded studies from which we could not extract 2×2 contingency data, or that included fewer than five cases of melanoma, BCC or cSCC or fewer than five benign lesions. For studies of referral accuracy where a lesion's final diagnosis was not reported, we required at least five 'positive' cases as identified by the expert diagnosis reference standard. The size threshold of five was arbitrary; however, such small studies are unlikely to add precision to estimates of accuracy.
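The size rules above can be expressed as two small predicates. This is a minimal Python sketch, not the review's screening tool: the function names are hypothetical, and it assumes that the threshold of five applies to the cases of the target condition being analysed and that 2×2 data were also required for referral accuracy studies.

```python
def eligible_accuracy_study(
    has_2x2_data: bool, n_target_cases: int, n_benign: int, minimum: int = 5
) -> bool:
    """Minimum-size rule for diagnostic accuracy data (hypothetical helper).

    n_target_cases: lesions with melanoma, BCC or cSCC (the target condition).
    n_benign: lesions with a benign final diagnosis.
    """
    return has_2x2_data and n_target_cases >= minimum and n_benign >= minimum


def eligible_referral_study(
    has_2x2_data: bool, n_expert_positive: int, minimum: int = 5
) -> bool:
    """Rule for referral accuracy studies that report no final diagnosis.

    n_expert_positive: lesions called 'positive' by the face-to-face expert.
    """
    return has_2x2_data and n_expert_positive >= minimum


print(eligible_accuracy_study(True, n_target_cases=4, n_benign=120))  # → False
print(eligible_accuracy_study(True, n_target_cases=5, n_benign=5))    # → True
print(eligible_referral_study(True, n_expert_positive=12))            # → True
```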

Studies available only as conference abstracts were excluded; however, attempts were made to identify full papers for potentially relevant conference abstracts (Searching other resources).

Participants

We included studies in adults with lesions suspicious for skin cancer or adults at high risk of developing skin cancer. We excluded studies that recruited only participants with malignant or benign final diagnoses.

We excluded studies conducted in children, or which clearly reported inclusion of more than 50% of participants aged 16 and under.

Index tests

We included studies evaluating teledermatology alone, or teledermatology in comparison with face‐to‐face diagnosis.

The following index tests were eligible for inclusion:

store‐and‐forward teledermatology;

real‐time 'live link' teledermatology.

Data for face‐to‐face clinical diagnosis against a histological reference standard were also included where reported, to allow a direct comparison with teledermatology.

Although primary care clinicians can in practice be specialists in skin cancer, we considered primary care physicians as generalist practitioners and dermatologists as specialists. Within each group, we extracted any reporting of special interest or accreditation in skin cancer.

Target conditions

We defined the primary target condition as the detection of any skin cancer, primarily cutaneous melanoma, BCC or cSCC.

We considered four additional definitions of the target condition in secondary analyses, namely, the detection of:

invasive cutaneous melanoma alone;

invasive cutaneous melanoma or atypical intraepidermal melanocytic variants (including melanoma in situ, lentigo maligna);

BCC;

cSCC.

We also considered referral accuracy, comparing decisions made using teledermatology with face‐to‐face decisions for the same lesions. These decisions could include the decision to excise a lesion, to follow up a lesion or to refer a lesion for face‐to‐face assessment.

Reference standards

Teledermatology can be assessed in terms of diagnostic accuracy in comparison to the final lesion diagnosis and referral accuracy in comparison to a face‐to‐face expert management decision.

To establish diagnostic accuracy, the ideal reference standard was histopathological diagnosis in all eligible lesions, performed by a qualified pathologist or dermatopathologist. Ideally, reporting should have been standardised, detailing a minimum dataset that includes the histopathological features of melanoma needed for staging under the AJCC Staging System (e.g. Slater 2014). We did not apply this minimum dataset requirement as a necessary inclusion criterion, but extracted any pertinent information.

Partial verification (applying the reference test only to a subset of those undergoing the index test) was of concern given that lesion excision or biopsy were unlikely to be carried out for all benign‐appearing lesions within a representative population sample. Therefore, to reflect what happens in reality, we accepted clinical follow‐up of benign‐appearing lesions as an eligible reference standard, while recognising the risk of differential verification bias (as misclassification rates of histopathology and follow‐up will differ). Additional eligible reference standards included cancer registry follow‐up and 'expert opinion' with no histology or clinical follow‐up.

All of the above were considered eligible reference standards for establishing lesion final diagnoses (diagnostic accuracy) with the following caveats:

all study participants with a final diagnosis of the target disorder must have had a histological diagnosis, either subsequent to the application of the index test or after a period of clinical follow‐up, and

at least 50% of all participants with benign lesions must have had either a histological diagnosis or clinical follow‐up to confirm benignity.
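The two caveats can be checked mechanically once each lesion's verification route is known. The Python sketch below is illustrative only: the class and field names are hypothetical, and it applies the caveats per lesion (the review's unit of analysis) rather than per participant as worded above. Benign lesions not confirmed by histology or follow‐up are assumed to rest on the other eligible reference standards (cancer registry follow‐up or expert opinion).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class VerifiedLesion:
    target_condition: bool    # final diagnosis is the target disorder
    histology: bool           # histological diagnosis obtained at any point
    clinical_follow_up: bool  # benignity confirmed by clinical follow-up


def reference_standard_acceptable(lesions: Sequence[VerifiedLesion]) -> bool:
    """Check both reference standard caveats for one study (hypothetical helper)."""
    cases = [les for les in lesions if les.target_condition]
    benign = [les for les in lesions if not les.target_condition]

    # Caveat 1: every case of the target disorder must be histologically
    # confirmed, whether straight after the index test or after follow-up.
    if not all(les.histology for les in cases):
        return False

    # Caveat 2: at least half of the benign lesions must be confirmed by
    # histology or clinical follow-up; integer arithmetic avoids rounding.
    confirmed = sum(les.histology or les.clinical_follow_up for les in benign)
    return 2 * confirmed >= len(benign)


# Hypothetical study: 6 histology-confirmed cases and 45 benign lesions,
# 25 of which were confirmed by histology or clinical follow-up.
study = (
    [VerifiedLesion(True, True, False)] * 6
    + [VerifiedLesion(False, True, False)] * 10
    + [VerifiedLesion(False, False, True)] * 15
    + [VerifiedLesion(False, False, False)] * 20  # expert opinion only
)
print(reference_standard_acceptable(study))  # → True
```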

To establish referral accuracy of teledermatology (i.e. the ability of the remote observer to approximate an in‐person diagnosis), the action recommended by the remote observer was compared with an in‐person 'expert opinion' reference standard (i.e. the diagnosis or management recommendation of an appropriately qualified clinician made face‐to‐face with the study participant).
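For referral accuracy, the remote decision and the in‐person decision for the same lesion form the 2×2 table, with the face‐to‐face decision playing the role of the reference standard. The Python sketch below shows that cross‐classification under stated assumptions: 'positive' means the management action of interest (for example, refer or excise), the function name is hypothetical, and the decision pairs are invented.

```python
from typing import Iterable, Tuple


def referral_accuracy(pairs: Iterable[Tuple[bool, bool]]) -> dict:
    """Cross-classify remote and in-person decisions for the same lesions.

    Each pair is (teledermatology_positive, face_to_face_positive). The
    face-to-face decision is treated as the reference standard.
    """
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for remote, in_person in pairs:
        if remote and in_person:
            counts["tp"] += 1
        elif remote:
            counts["fp"] += 1  # remote observer acts; in-person clinician would not
        elif in_person:
            counts["fn"] += 1  # remote observer misses a lesion needing action
        else:
            counts["tn"] += 1
    counts["sensitivity"] = counts["tp"] / (counts["tp"] + counts["fn"])
    counts["specificity"] = counts["tn"] / (counts["tn"] + counts["fp"])
    return counts


# Hypothetical decisions for 50 lesions; not data from any included study.
decisions = (
    [(True, True)] * 8 + [(False, True)] * 2 + [(True, False)] * 5 + [(False, False)] * 35
)
result = referral_accuracy(decisions)
print(result["sensitivity"], result["specificity"])  # → 0.8 0.875
```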

Search methods for identification of studies

Electronic searches

The Information Specialist (SB) carried out a comprehensive search for published and unpublished studies. A single large literature search was conducted to cover all topics in the programme grant (see Appendix 1 for a summary of reviews included in the programme grant). This allowed for the screening of search results for potentially relevant papers for all reviews at the same time. A search combining disease‐related terms with terms related to the test names, using both text words and subject headings, was formulated. The search strategy was designed to capture studies evaluating tests for the diagnosis or staging of skin cancer. As the majority of records were related to the searches for tests for staging of disease, a filter using terms related to cancer staging and to accuracy indices was applied to the staging test search, to try to eliminate irrelevant studies, for example, those using imaging tests to assess treatment effectiveness. A sample of 300 records that would be missed by applying this filter was screened and the filter adjusted to include potentially relevant studies. When piloted on MEDLINE, inclusion of the filter for the staging tests reduced the overall number of records by around 6000. The final search strategy, incorporating the filter, was subsequently applied to all bibliographic databases as listed below (Appendix 5). The final search result was cross‐checked against the list of studies included in five systematic reviews; our search identified all but one of the studies, and this study was not indexed on MEDLINE. The Information Specialist devised the search strategy, with input from the Information Specialist from Cochrane Skin. No additional limits were used.

We searched the following bibliographic databases to 29 August 2016 for relevant published studies:

MEDLINE via Ovid (from 1946);

MEDLINE In‐Process & Other Non‐Indexed Citations via Ovid;

Embase via Ovid (from 1980).

We searched the following bibliographic databases to 30 August 2016 for relevant published studies:

the Cochrane Central Register of Controlled Trials (CENTRAL) 2016, Issue 7, in the Cochrane Library;

the Cochrane Database of Systematic Reviews (CDSR) 2016, Issue 8, in the Cochrane Library;

Cochrane Database of Abstracts of Reviews of Effects (DARE) 2015, Issue 2;

CRD HTA (Health Technology Assessment) database 2016, Issue 3; and

CINAHL (Cumulative Index to Nursing and Allied Health Literature via EBSCO from 1960).

We searched the following databases for relevant unpublished studies using a strategy based on the MEDLINE search:

CPCI (Conference Proceedings Citation Index), via Web of Science™ (from 1990; searched 28 August 2016); and

SCI (Science Citation Index Expanded™) via Web of Science™ (from 1900, using the 'Proceedings and Meetings Abstracts' Limit function; searched 29 August 2016).

We searched the following trials registers using the search terms 'melanoma', 'squamous cell', 'basal cell' and 'skin cancer' combined with 'diagnosis':

Zetoc (from 1993; searched 28 August 2016).

The US National Institutes of Health Ongoing Trials Register (www.clinicaltrials.gov); searched 29 August 2016.

NIHR Clinical Research Network Portfolio Database (www.nihr.ac.uk/research‐and‐impact/nihr‐clinical‐research‐network‐portfolio/); searched 29 August 2016.

The World Health Organization International Clinical Trials Registry Platform (apps.who.int/trialsearch/); searched 29 August 2016.

We aimed to identify all relevant studies regardless of language or publication status (published, unpublished, in press, or in progress). We applied no date limits.

Searching other resources

We screened relevant systematic reviews identified by the searches for their included primary studies, and included any missed by our searches. We checked the reference lists of all included papers, and subject experts within the author team reviewed the final list of included studies. There was no electronic citation searching.

Data collection and analysis

Selection of studies

At least one review author (JDi or NC) screened titles and abstracts, and discussed and resolved any queries by consensus. A pilot screen of 539 MEDLINE references showed good agreement (89% with a kappa of 0.77) between screeners. Primary test accuracy studies and test accuracy reviews (for scanning of reference lists) of any test used to investigate suspected melanoma, BCC or cSCC were included at initial screening. Both a clinical reviewer (from one of a team of 12 clinician reviewers) and a methodologist reviewer (JDi or NC) independently applied inclusion criteria to all full‐text articles (Appendix 6), and resolved disagreements by consensus or by a third party (JDe, CD, HW and RM). We contacted authors of eligible studies when insufficient data were presented to allow for the construction of 2×2 contingency tables.
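Percentage agreement and kappa, as reported for the pilot screen, can be computed from a 2×2 table of two screeners' include/exclude decisions. The Python sketch below implements Cohen's kappa for that case; the function name is hypothetical, and the counts are invented. They happen to give 89% agreement, but they are not the review's pilot data, and the kappa they yield is not meant to reproduce the reported 0.77.

```python
def agreement_and_kappa(both_include: int, only_a: int, only_b: int, both_exclude: int):
    """Percentage agreement and Cohen's kappa for two screeners' decisions.

    only_a: records included by screener A but excluded by screener B.
    only_b: records included by screener B but excluded by screener A.
    """
    n = both_include + only_a + only_b + both_exclude
    observed = (both_include + both_exclude) / n
    # Agreement expected by chance, from each screener's marginal include rate.
    p_a = (both_include + only_a) / n
    p_b = (both_include + only_b) / n
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical screening counts for 100 records; not the review's pilot data.
observed, kappa = agreement_and_kappa(both_include=30, only_a=5, only_b=6, both_exclude=59)
print(f"agreement {observed:.0%}, kappa {kappa:.2f}")  # → agreement 89%, kappa 0.76
```

Kappa discounts the agreement expected by chance, which is why it is lower than raw percentage agreement when most records are excluded by both screeners.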

Data extraction and management

One clinical (as detailed above) and one methodological reviewer (JDi, NC or LFR) independently extracted data concerning details of the study design, participants, index test(s) or test combinations, and criteria for index test positivity, reference standards and data required to complete a 2×2 diagnostic contingency table for each index test using a piloted data extraction form. Data were extracted at all available index test thresholds. We resolved disagreements by consensus or by a third party (JDe, CD, HW, and RM). We entered data into Review Manager 5 (Review Manager 2014).

We contacted authors of included studies where information on the target condition was unclear (in particular, to allow invasive cancers to be differentiated from 'in situ' variants) or where diagnostic thresholds were missing. We contacted authors of conference abstracts published from 2013 to 2015 to ask whether full data were available. Where no full paper was available, we marked conference abstracts as 'pending' and will revisit them in a future review update.

Dealing with multiple publications and companion papers

Where we identified multiple reports of a primary study, we maximised the yield of information by collating all available data. Where there were inconsistencies in reporting or overlapping study populations, we first contacted study authors for clarification; if this was unsuccessful, we used the most complete and up‐to‐date data source where possible.

Assessment of methodological quality

We assessed risk of bias and applicability of included studies using the QUADAS‐2 checklist (Whiting 2011), tailored to the review topic (see Appendix 7). We piloted the modified QUADAS‐2 tool on five included full‐text articles. One clinical reviewer (as detailed above) and one methodological reviewer (JDi, NC or LFR) independently assessed quality for the remaining studies; we resolved disagreements by consensus or by a third party (JDe, CD, HW and RM).

Statistical analysis and data synthesis

Our unit of analysis was the lesion rather than the participant, for two reasons: first, initial treatment of skin cancer is directed at the lesion rather than given systemically (so it is important to identify each cancerous lesion correctly in each person); and second, the lesion is the unit in which the primary studies most commonly reported data. Although test errors could in theory be correlated when the same person contributes several lesions, most studies included very few people with multiple lesions, so any impact on the findings was likely to be very small, particularly compared with other concerns about risk of bias and applicability. To avoid counting lesions more than once, we included only one dataset per study in each analysis.

For the diagnosis of melanoma, any BCCs or invasive cSCCs in the 'disease‐negative' group that teledermatology identified as melanomas (that is, correctly flagged as malignant, albeit with the wrong diagnosis) were counted as true‐negative rather than false‐positive results, on the basis that excising such lesions would be a positive outcome for the participants concerned. For the diagnosis of BCC, however, we counted any melanomas or cSCCs mistaken for BCCs as false‐positive results. This decision reflected the fact that the clinical management of a lesion considered to be a BCC may differ considerably from that of a melanoma or cSCC, so that misdiagnosis could lead to a negative outcome for the participants concerned, for example if a treatment other than excision were initiated.
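The asymmetric counting rule for the melanoma and BCC target conditions can be expressed as a small decision function. This is a hypothetical sketch assuming simplified single‐label diagnoses (`"melanoma"`, `"BCC"`, `"cSCC"` standing for invasive cSCC, anything else treated as benign); it ignores melanoma in situ and study‐specific positivity thresholds.

```python
# Hypothetical diagnosis labels; "cSCC" stands for invasive cSCC.
MELANOMA, BCC, CSCC = "melanoma", "BCC", "cSCC"


def classify_lesion(target: str, histology: str, td_diagnosis: str) -> str:
    """Return 'TP', 'FP', 'FN' or 'TN' for one lesion and one target condition."""
    disease_positive = histology == target
    test_positive = td_diagnosis == target
    if disease_positive:
        return "TP" if test_positive else "FN"
    if not test_positive:
        return "TN"
    # Test positive, but the lesion does not have the target condition.
    if target == MELANOMA and histology in {BCC, CSCC}:
        # Another malignancy flagged as melanoma would still be excised,
        # so it is counted as a true negative rather than a false positive.
        return "TN"
    # Everything else, e.g. a melanoma or cSCC mistaken for a BCC, is a false positive.
    return "FP"


print(classify_lesion(MELANOMA, BCC, MELANOMA))  # TN
print(classify_lesion(BCC, MELANOMA, BCC))       # FP
```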

For preliminary investigations of the data, we plotted estimates of sensitivity and specificity on coupled forest plots and in receiver operating characteristic (ROC) space for each index test, target condition and reference standard combination. When meta‐analysis was possible and there were at least four studies, we used a bivariate model to obtain summary estimates of sensitivity and specificity (Chu 2006; Reitsma 2005). When there were fewer than four studies and little or no heterogeneity in ROC space, we pooled sensitivity and specificity using fixed‐effect logistic regression (Takwoingi 2017). We included data for face‐to‐face diagnosis only if reported in comparison to teledermatology diagnosis. Using these direct (head‐to‐head) comparisons, a comparative meta‐analysis to compare the accuracy of teledermatology with face‐to‐face diagnosis was not possible because there were too few studies. However, we tabulated results from the studies and estimated differences in sensitivity and specificity. Since these comparative studies did not report the cross‐classified results of the two index tests in participants with and without a particular form of skin cancer, we were unable to compute CIs for the differences using methods that accounted for the paired nature of the data. Therefore, we assumed independence between the sensitivities and between the specificities of the two tests, and calculated 95% CIs for the differences using the Newcombe‐Wilson method without continuity correction (Newcombe 1998). We performed analyses using Stata version 15 (Stata 2017).
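Two of the simpler calculations described above can be sketched in Python; the review's analyses were run in Stata, so this is an independent illustration with hypothetical function names and counts. `pooled_fixed_effect` assumes that fixed‐effect logistic regression with no covariates reduces to pooling the counts across studies (the logit of the pooled proportion is then the maximum likelihood estimate). `newcombe_wilson_diff` implements Newcombe's hybrid score interval built from Wilson score limits without continuity correction, treating the two proportions as independent, as the review did. The bivariate random‐effects model is not shown.

```python
import math
from statistics import NormalDist

Z = NormalDist().inv_cdf(0.975)  # about 1.96, for 95% intervals


def expit(b):
    return 1 / (1 + math.exp(-b))


def pooled_fixed_effect(counts):
    """Pool one accuracy measure (e.g. sensitivity) across a few studies.

    counts: list of (events, total) pairs, e.g. (TP, TP + FN) per study.
    For logit(p) = beta with no random effects, the MLE is the logit of the
    pooled proportion and its Wald SE is sqrt(1/events + 1/non-events).
    Requires 0 < events < total.
    """
    events = sum(x for x, _ in counts)
    total = sum(n for _, n in counts)
    beta = math.log(events / (total - events))
    se = math.sqrt(1 / events + 1 / (total - events))
    return expit(beta), expit(beta - Z * se), expit(beta + Z * se)


def wilson(x, n):
    """Wilson score 95% interval for a single proportion x/n (no continuity correction)."""
    centre = 2 * x + Z**2
    spread = Z * math.sqrt(Z**2 + 4 * x * (n - x) / n)
    denom = 2 * (n + Z**2)
    return (centre - spread) / denom, (centre + spread) / denom


def newcombe_wilson_diff(x1, n1, x2, n2):
    """p1 - p2 with Newcombe's hybrid score interval, assuming independent samples."""
    p1, p2 = x1 / n1, x2 / n2
    l1, u1 = wilson(x1, n1)
    l2, u2 = wilson(x2, n2)
    diff = p1 - p2
    lower = diff - math.sqrt((p1 - l1) ** 2 + (u2 - p2) ** 2)
    upper = diff + math.sqrt((u1 - p1) ** 2 + (p2 - l2) ** 2)
    return diff, lower, upper


# Hypothetical counts for illustration only.
print(pooled_fixed_effect([(8, 10), (15, 20)]))  # pooled estimate = 23/30
print(newcombe_wilson_diff(56, 70, 48, 80))      # approx (0.200, 0.052, 0.334)
```

With these illustrative counts, the difference 56/70 − 48/80 = 0.20 has a 95% CI of roughly 0.05 to 0.33. Because the two sensitivities (or specificities) are treated as independent, this interval ignores any pairing of lesions across the two tests.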

Investigations of heterogeneity

We examined heterogeneity by visually inspecting forest plots of sensitivity and specificity, and summary ROC (SROC) plots. We were unable to perform meta‐regression to investigate potential sources of heterogeneity due to insufficient numbers of studies.

Sensitivity analyses

There were too few data to perform sensitivity analyses.

Assessment of reporting bias

Because of uncertainty about the determinants of publication bias for diagnostic accuracy studies and the inadequacy of tests for detecting funnel plot asymmetry (Deeks 2005), we did not perform tests to detect publication bias.

Results

Results of the search

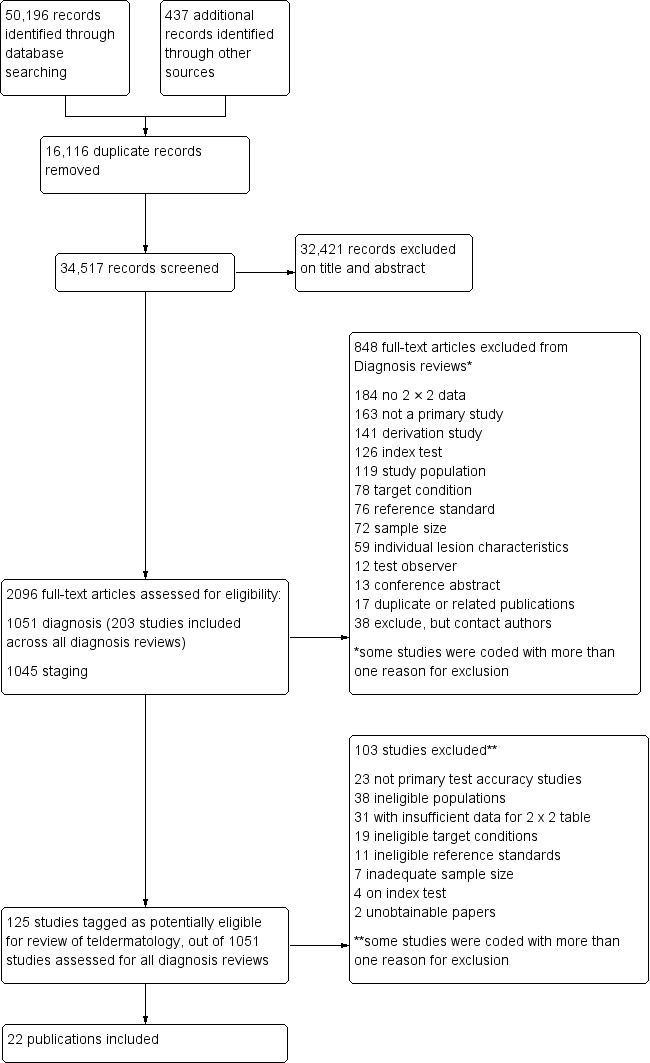

The search identified 34,517 unique references, which we screened for inclusion on the basis of title and abstract. Of these, we reviewed 1051 full‐text papers for eligibility for any one of the suite of reviews of tests to assist in the diagnosis of melanoma or keratinocyte skin cancer; 203 publications were included in at least one review in the series and 848 were excluded (see Figure 3, PRISMA flow diagram of search and eligibility results).

Figure 3. PRISMA flow diagram.

Of the 125 studies tagged as potentially eligible for this review of teledermatology, we included 22 publications. The main reasons for exclusion were: lack of test accuracy data to complete a 2×2 contingency table (31 studies); ineligible populations (38 studies), target conditions (19 studies) or reference standards (11 studies); not being a test accuracy study (23 studies); and inadequate sample size (seven studies), defined as fewer than five cases of skin cancer, fewer than five benign lesions or, for referral accuracy, fewer than five 'positive' cases identified by the expert diagnosis reference standard. Of the 31 studies for which a 2×2 table could not be constructed, 11 reported only agreement between observers or between teledermatology and the reference standard, and 14 had at least one item of missing data. The 103 excluded studies, with reasons for exclusion, are listed in the Characteristics of excluded studies table; a list of all studies excluded from the full series of reviews is available as a separate PDF (please contact skin.cochrane.org for a copy).

We contacted the corresponding authors of 12 studies to request further information that would allow study inclusion or clarify diagnostic thresholds or target condition definitions. Four authors responded, allowing inclusion of all four of their studies in this review (Borve 2015; Mahendran 2005; Warshaw 2010b; Wolf 2013). One of these authors could not provide all of the information requested, so the data presented in that paper could be only partially included (Warshaw 2010b).

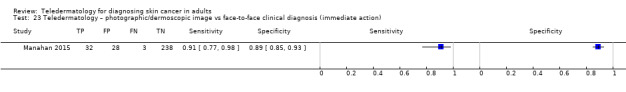

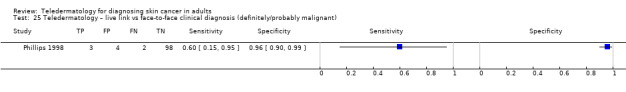

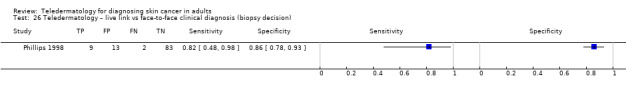

The 22 included study publications reported on 22 cohorts of lesions and provided 96 datasets (individual 2×2 contingency tables). Sixteen studies (73%), including 4057 lesions and 879 malignant cases, reported data on the diagnostic accuracy of teledermatology (Arzberger 2016; Borve 2015; Bowns 2006; Congalton 2015; Coras 2003; Ferrara 2004; Grimaldi 2009; Jolliffe 2001a; Kroemer 2011; Massone 2014; Moreno Ramirez 2005; Piccolo 2000; Piccolo 2004; Silveira 2014; Warshaw 2010b; Wolf 2013), five of which also reported data on the diagnostic accuracy of expert face‐to‐face clinical diagnosis (Coras 2003; Jolliffe 2001a; Kroemer 2011; Piccolo 2000; Warshaw 2010b). Six studies (27%), including 1449 lesions, reported data on the referral accuracy of teledermatology (i.e. teledermatology diagnosis or management action as the index test versus expert face‐to‐face diagnosis or management action as the reference standard) (Jolliffe 2001b; Mahendran 2005; Manahan 2015; Oliveira 2002; Phillips 1998; Shapiro 2004); these studies included 270 'positive' cases as determined by the reference standard face‐to‐face decision. A cross‐tabulation of studies by reported comparisons, target conditions and types of image used is provided in Table 2, and summary study details are presented in Appendix 8.

1. Cross‐tabulation of included studies against target condition assessed, by type of images used for teledermatology.

| Studies of diagnostic accuracy –TD vs histology |

FTF dxa vs histology |

TD vs expert FTF |

||||

| Study | Any skin cancer | Melanomab | BCC | cSCC | Action | |

| Arzberger 2016 | Photo/Derm (excise or not) | — | — | — | — | — |

| Borve 2015 | Photo/Derm (dx as malignant/possibly malignant) | — | — | — | — | — |

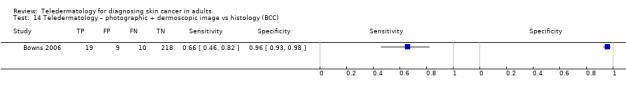

| Bowns 2006 | — | Photo/Derm (dx as MM/MiS) | Photo/Derm (dx as BCC) | — | — | — |

| Congalton 2015 | — | Photo/Derm (excise or not) | — | — | — | — |

| Coras 2003 | — | Photo/Dermc (dx as MM/MiS) | — | — | dx as MM | — |

| Ferrara 2004 | — | Derm only (dx as MM/MiS) | — | — | — | — |

| Grimaldi 2009 | — | Photo/Dermc (excise or not) | — | — | (GP dx as suspicious for malignancy; data not included) | — |

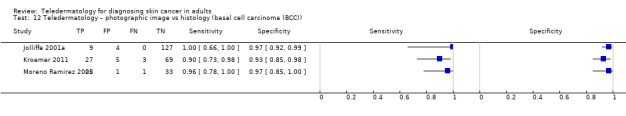

| Jolliffe 2001a | Photo onlyc (dx as any SC) | < 5 MM | Photo onlyc (dx as BCC) | — | dx as any SC dx as BCC |

— |

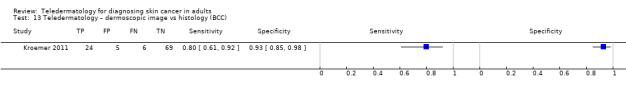

| Kroemer 2011 | Photo onlyc Derm onlyc (dx as malignant) |

Photo onlyc Derm onlyc (dx as MM/MiS) |

Photo onlyc Derm onlyc (dx as BCC) |

Photo onlyc Derm onlyc (dx as cSCC) |

dx as malignant dx as MM/MiS dx as BCC dx as cSCC |

— |

| Massone 2014 | Photo/Derm (dx as malignant) | — | — | — | — | — |

| Moreno Ramirez 2005 | Photol only (dx as malignant) | Photo only (dx as MM/MiS) | Photo only (dx as BCC) | — | — | — |

| Piccolo 2000 | — | Photo/Dermc (dx as MM) | — | — | dx as MM | — |

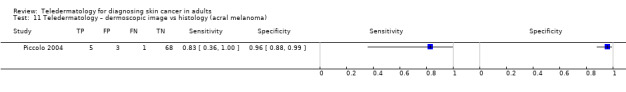

| Piccolo 2004 | — | Derm only (dx as MM) | — | — | — | — |

| Silveira 2014 | Photo only (dx as malignant) | — | — | — | — | — |

| Warshaw 2010b | — | Photo onlyc (dx as MM/MiS) | — | — | dx of MM/MiS | — |

| Wolf 2013 | — | Photo only (dx as atypical) | — | — | — | — |

| Studies of referral accuracy – TD vs expert FTF decision | ||||||

| Jolliffe 2001b | — | — | — | — | — | Clin only (refer or not) |

| Mahendran 2005 | — | — | — | — | — | Clin only (excise or not) |

| Manahan 2015 | — | — | — | — | — | Clin/Derm (see FTF) |

| Oliveira 2002 | — | — | — | — | — | Clin only (dx as malignant) |

| Phillips 1998 | — | — | — | — | — | Live link (dx as any SC; definitely/probably malignant/ excise or not) |

| Shapiro 2004 | — | — | — | — | — | Clin only (excise or not) |

BCC: basal cell carcinoma; clin: clinical; cSCC: cutaneous squamous cell carcinoma; Derm: dermoscopic images; dx: diagnosis; FTF: face‐to‐face; GP: general practitioner; MiS: melanoma in situ (atypical intraepidermal melanocytic variants); MM: invasive melanoma; Photo: photographic images; SC: skin cancer; TD: teledermatology.

aFace‐to‐face diagnosis by an expert/dermatologist unless otherwise stated.

bAll include melanoma in situ as disease positive apart from Piccolo 2000 and Piccolo 2004, which reported detection of invasive melanoma only.

cIncluded a direct comparison with expert face‐to‐face diagnosis versus histology.

Studies were primarily prospective case series (18 studies; 82%), with two retrospective case series (9%) (Moreno Ramirez 2005; Piccolo 2004) and two case‐control studies (9%) (Ferrara 2004; Wolf 2013). Three of these four studies retrospectively selected previously acquired images for prospective evaluation (Ferrara 2004; Piccolo 2004; Wolf 2013). Studies were conducted in Europe (14 studies; 64%), including five from Austria (Arzberger 2016; Kroemer 2011; Massone 2014; Piccolo 2000; Piccolo 2004) and four from the UK (Bowns 2006; Jolliffe 2001a; Jolliffe 2001b; Mahendran 2005); North America (four studies; 18%; Phillips 1998; Shapiro 2004; Warshaw 2010b; Wolf 2013); South America (two studies; 9%; Oliveira 2002; Silveira 2014); and Australia (Manahan 2015) or New Zealand (Congalton 2015) (two studies; 9%). Eight studies (36%) included only pigmented lesions (Coras 2003; Grimaldi 2009; Jolliffe 2001a; Moreno Ramirez 2005; Piccolo 2000; Wolf 2013) or melanocytic lesions (Ferrara 2004; Piccolo 2004), with Piccolo 2004 restricted to acral lesions; the remainder included any suspicious lesion.

Ten studies were based in primary care or community settings. Seven studies acquired lesion images in primary care (Borve 2015; Grimaldi 2009; Mahendran 2005; Massone 2014; Moreno Ramirez 2005; Oliveira 2002; Shapiro 2004); two were conducted in community skin cancer screening outreach programmes, with images acquired for 'remote' assessment by specialists in a secondary care setting (Silveira 2014) or assessed by live videoconferencing (Phillips 1998); and one recruited participants at high risk of melanoma who used a smartphone to photograph their own lesions that they 'did not like the look of' (Manahan 2015). No studies were conducted in high street pharmacy‐type settings. Five of the 10 studies reported the diagnostic accuracy of the image‐based teledermatology assessment (Borve 2015; Grimaldi 2009; Massone 2014; Moreno Ramirez 2005; Silveira 2014), and five examined referral accuracy, comparing the teledermatology assessment against specialist in‐person assessment (Mahendran 2005; Manahan 2015; Oliveira 2002; Phillips 1998; Shapiro 2004). Nine of these 10 studies were prospective in design and one was retrospective (Moreno Ramirez 2005).