Abstract

Background

Early accurate detection of all skin cancer types is essential to guide appropriate management, to reduce morbidity and to improve survival. Melanoma and cutaneous squamous cell carcinoma (cSCC) are high‐risk skin cancers which have the potential to metastasise and ultimately lead to death, whereas basal cell carcinoma (BCC) is usually localised with potential to infiltrate and damage surrounding tissue. Anxiety around missing early curable cases needs to be balanced against inappropriate referral and unnecessary excision of benign lesions. Computer‐assisted diagnosis (CAD) systems use artificial intelligence to analyse lesion data and arrive at a diagnosis of skin cancer. When used in unreferred settings ('primary care'), CAD may assist general practitioners (GPs) or other clinicians to more appropriately triage high‐risk lesions to secondary care. Used alongside clinical and dermoscopic suspicion of malignancy, CAD may reduce unnecessary excisions without missing melanoma cases.

Objectives

To determine the accuracy of CAD systems for diagnosing cutaneous invasive melanoma and atypical intraepidermal melanocytic variants, BCC or cSCC in adults, and to compare their accuracy with that of dermoscopy.

Search methods

We undertook a comprehensive search of the following databases from inception up to August 2016: Cochrane Central Register of Controlled Trials (CENTRAL); MEDLINE; Embase; CINAHL; CPCI; Zetoc; Science Citation Index; US National Institutes of Health Ongoing Trials Register; NIHR Clinical Research Network Portfolio Database; and the World Health Organization International Clinical Trials Registry Platform. We studied reference lists and published systematic review articles.

Selection criteria

Studies of any design that evaluated CAD alone, or in comparison with dermoscopy, in adults with lesions suspicious for melanoma or BCC or cSCC, and compared with a reference standard of either histological confirmation or clinical follow‐up.

Data collection and analysis

Two review authors independently extracted all data using a standardised data extraction and quality assessment form (based on QUADAS‐2). We contacted authors of included studies where information related to the target condition or diagnostic threshold was missing. We estimated summary sensitivities and specificities separately by type of CAD system, using the bivariate hierarchical model. We compared CAD with dermoscopy using (a) all available CAD data (indirect comparisons), and (b) studies providing paired data for both tests (direct comparisons). We tested the contribution of human decision‐making to the accuracy of CAD diagnoses in a sensitivity analysis by removing studies that gave CAD results to clinicians to guide diagnostic decision‐making.
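The bivariate hierarchical model mentioned above can be written in its widely used random‐effects form, sketched below. The notation is ours rather than the review's, and fitting details (software, estimation method) are not stated in this section. For each study i, the true positives TP_i among n_{1i} lesions with the target condition and the true negatives TN_i among n_{0i} lesions without it are treated as binomial, and the study‐specific logit sensitivity and logit specificity are drawn from a bivariate normal distribution; the between‐study correlation is commonly interpreted as allowing for a trade‐off between sensitivity and specificity across studies.

```latex
\begin{aligned}
\mathrm{TP}_i &\sim \mathrm{Binomial}(n_{1i},\, \mathrm{Se}_i), \qquad
\mathrm{TN}_i \sim \mathrm{Binomial}(n_{0i},\, \mathrm{Sp}_i),\\
\begin{pmatrix} \operatorname{logit}\mathrm{Se}_i \\ \operatorname{logit}\mathrm{Sp}_i \end{pmatrix}
&\sim \mathcal{N}\!\left(
\begin{pmatrix} \mu_{\mathrm{Se}} \\ \mu_{\mathrm{Sp}} \end{pmatrix},
\begin{pmatrix} \sigma^2_{\mathrm{Se}} & \sigma_{\mathrm{Se,Sp}} \\ \sigma_{\mathrm{Se,Sp}} & \sigma^2_{\mathrm{Sp}} \end{pmatrix}
\right),\\
\text{summary sensitivity} &= \operatorname{logit}^{-1}(\mu_{\mathrm{Se}}), \qquad
\text{summary specificity} = \operatorname{logit}^{-1}(\mu_{\mathrm{Sp}}).
\end{aligned}
```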

Main results

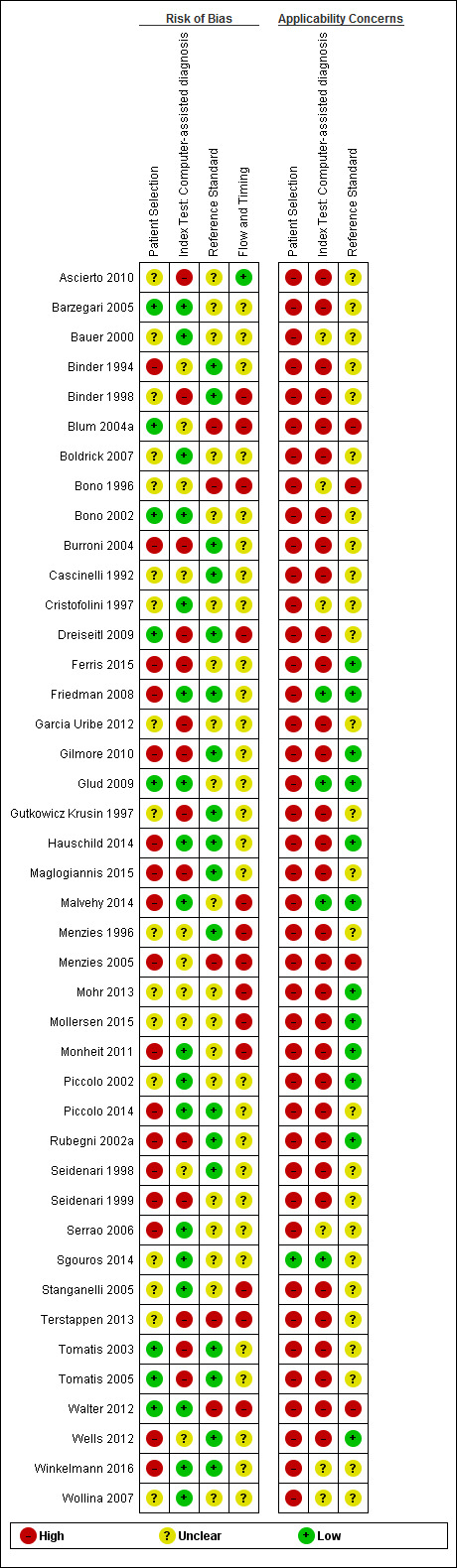

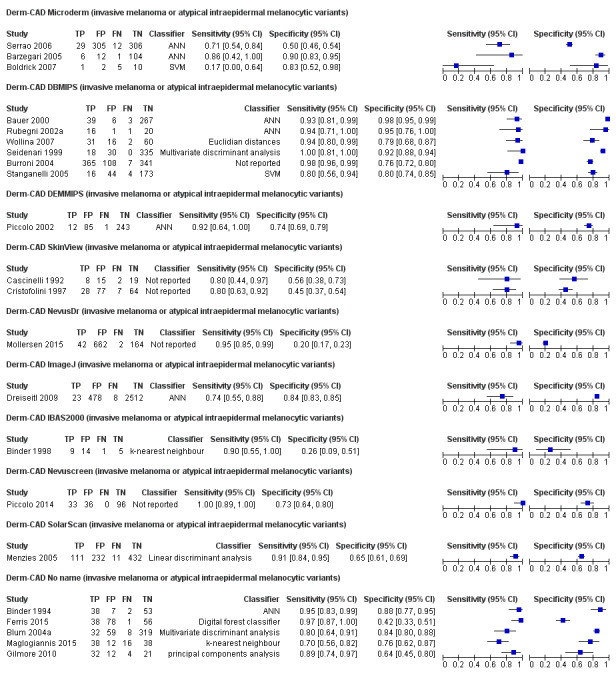

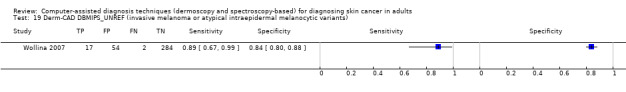

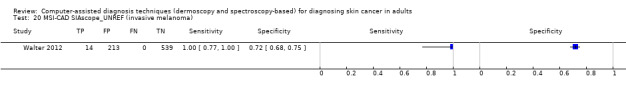

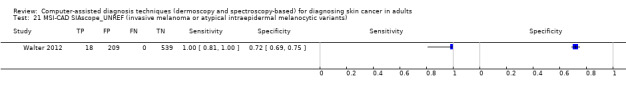

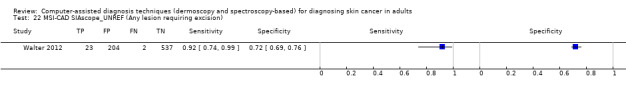

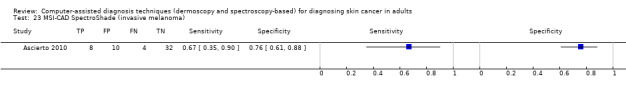

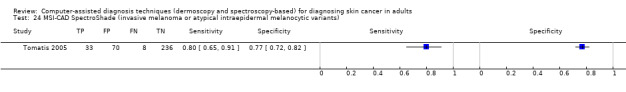

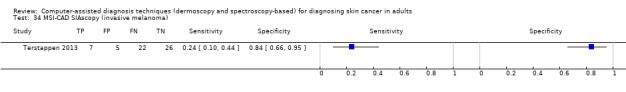

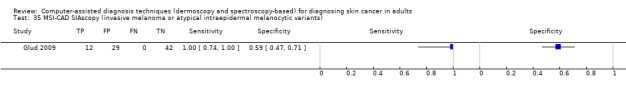

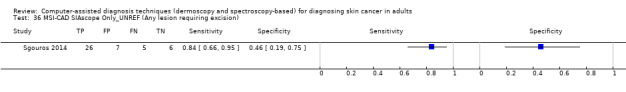

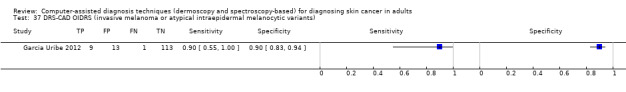

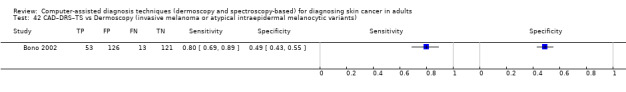

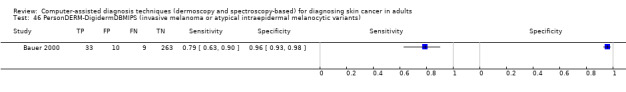

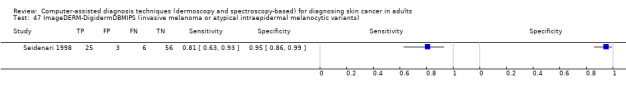

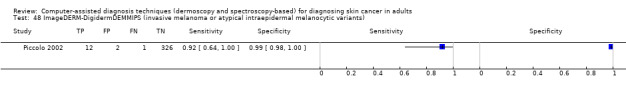

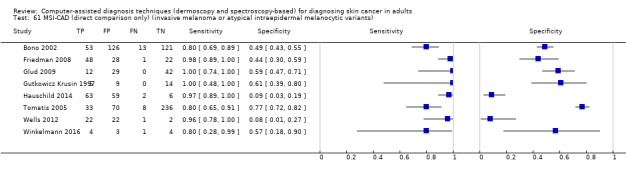

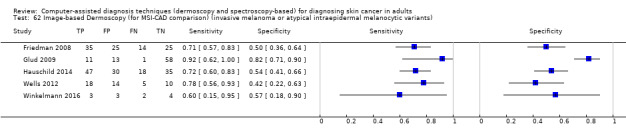

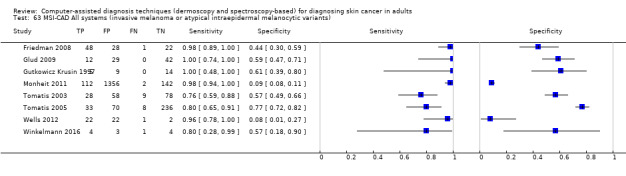

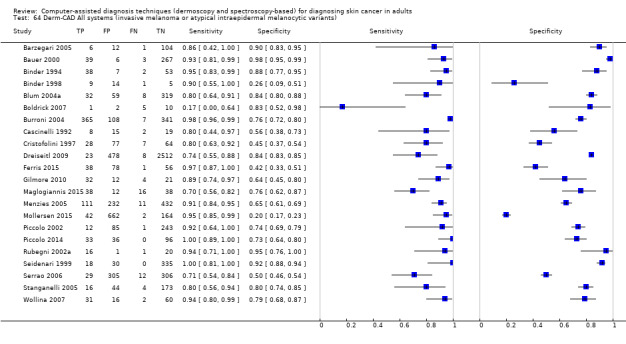

We included 42 studies, 24 evaluating digital dermoscopy‐based CAD systems (Derm–CAD) in 23 study cohorts with 9602 lesions (1220 melanomas, at least 83 BCCs, 9 cSCCs), providing 32 datasets for Derm–CAD and seven for dermoscopy. Eighteen studies evaluated spectroscopy‐based CAD (Spectro–CAD) in 16 study cohorts with 6336 lesions (934 melanomas, 163 BCCs, 49 cSCCs), providing 32 datasets for Spectro–CAD and six for dermoscopy. These consisted of 15 studies using multispectral imaging (MSI), two studies using electrical impedance spectroscopy (EIS) and one study using diffuse‐reflectance spectroscopy. Studies were incompletely reported and at unclear to high risk of bias across all domains. Included studies inadequately addressed the review question, owing to an abundance of low‐quality studies, poor reporting, and recruitment of highly selected groups of participants.

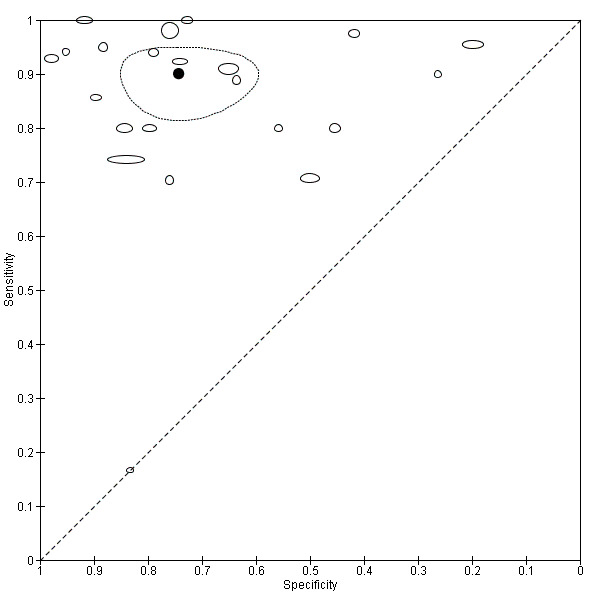

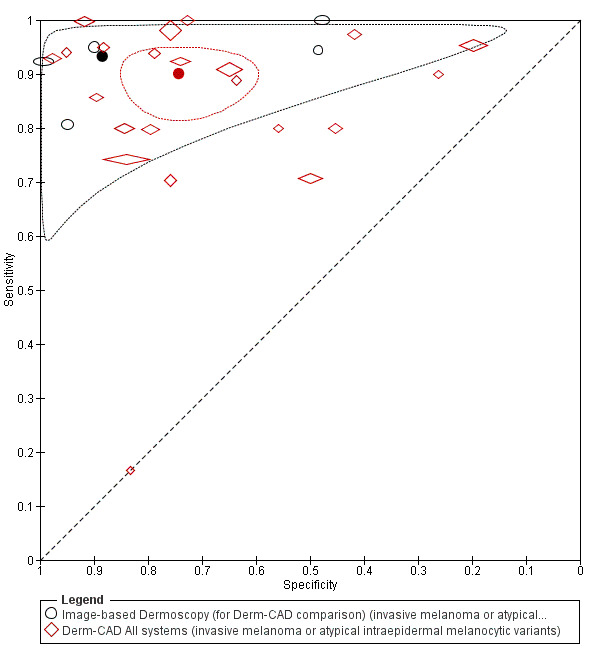

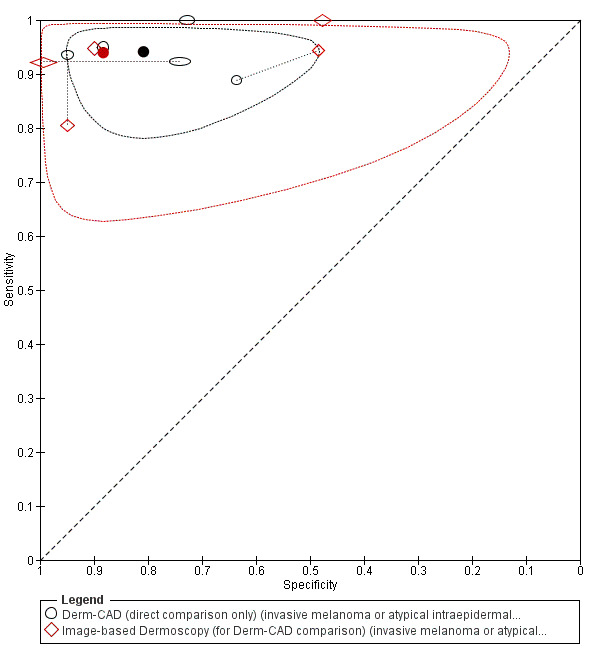

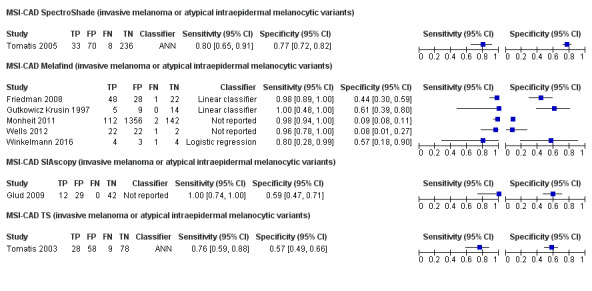

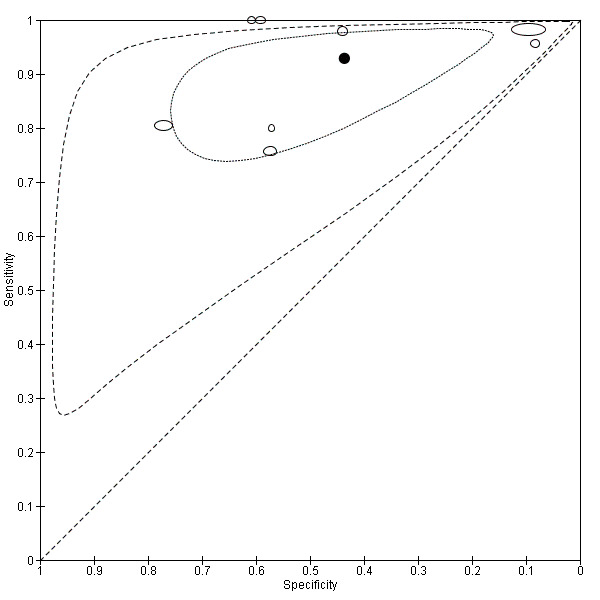

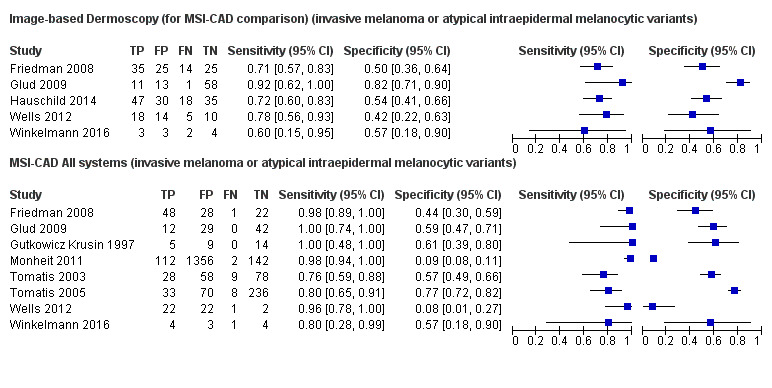

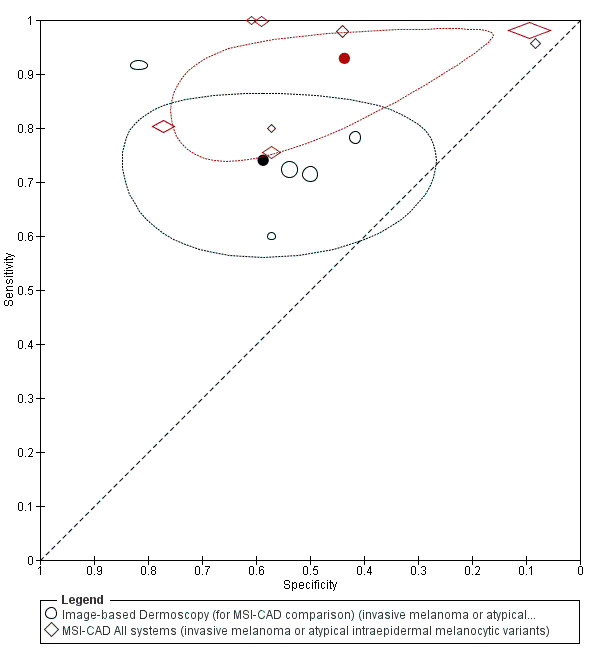

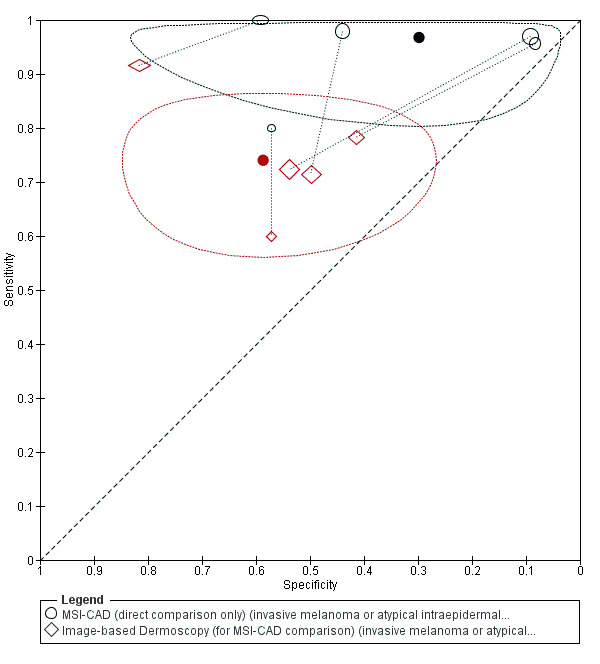

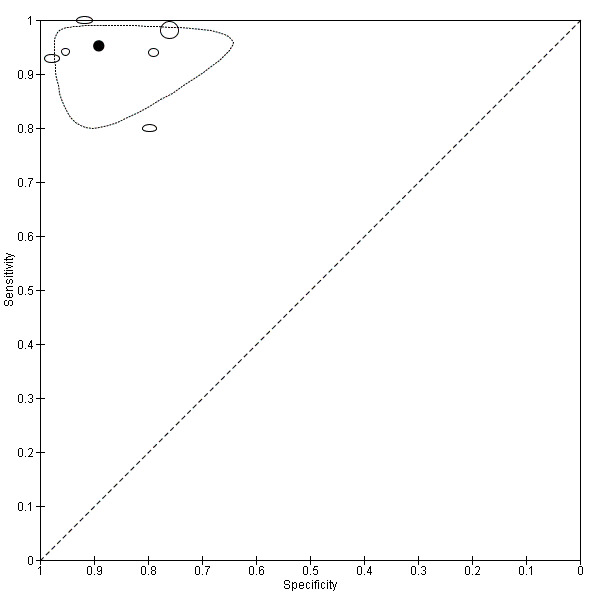

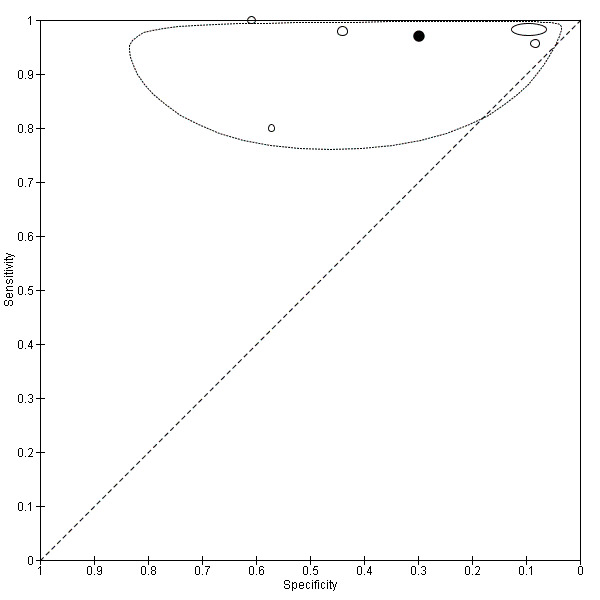

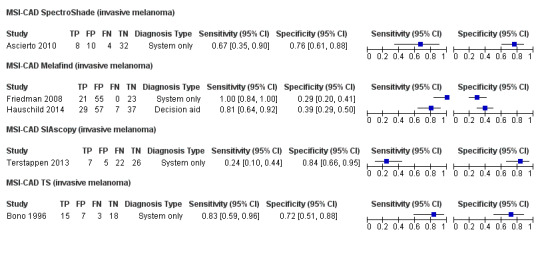

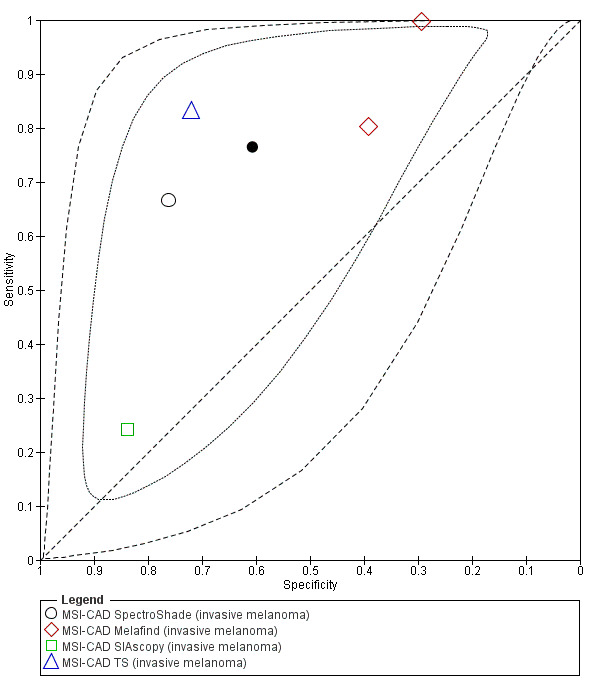

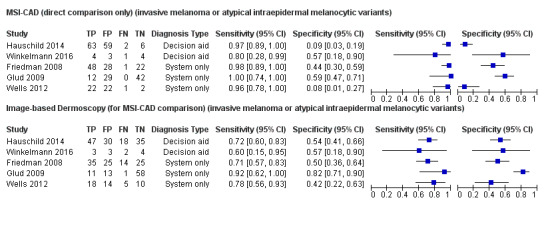

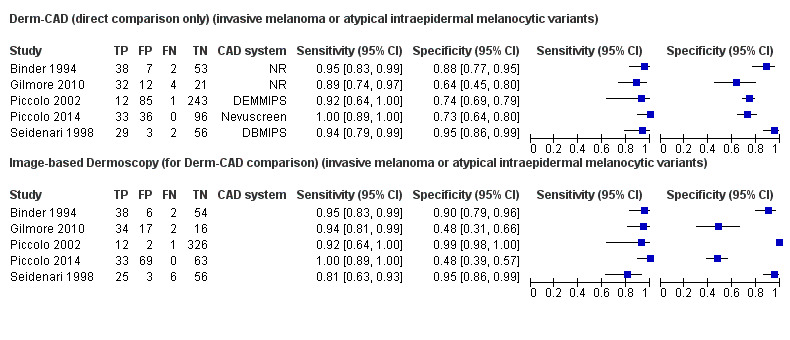

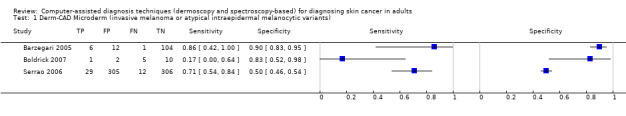

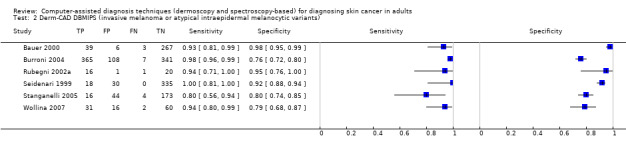

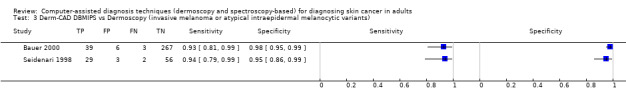

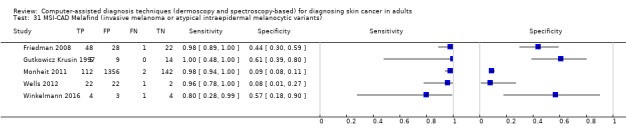

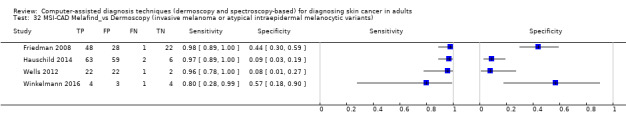

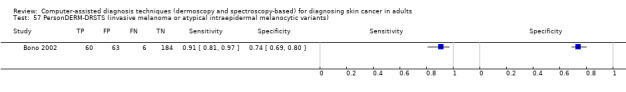

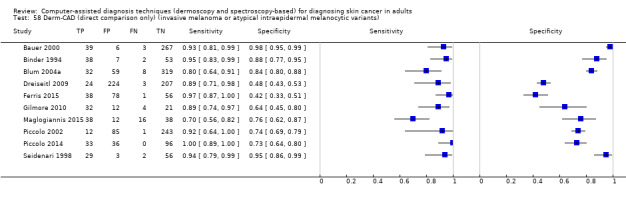

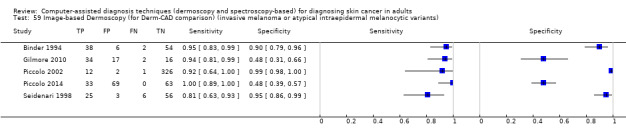

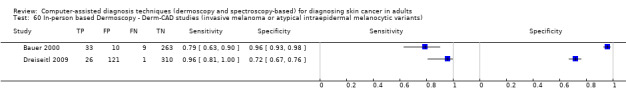

Across all CAD systems, we found considerable variation in the hardware and software technologies used, the types of classification algorithm employed, the methods used to train the algorithms, and the lesion morphological features extracted and analysed, both between CAD systems and even between studies evaluating CAD systems. Meta‐analysis found that CAD systems had high sensitivity for correct identification of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in highly selected populations, but low and very variable specificity, particularly for Spectro–CAD systems. Pooled data from 22 studies estimated the sensitivity of Derm–CAD for the detection of melanoma as 90.1% (95% confidence interval (CI) 84.0% to 94.0%) and specificity as 74.3% (95% CI 63.6% to 82.7%). Pooled data from eight studies estimated the sensitivity of multispectral imaging CAD (MSI–CAD) as 92.9% (95% CI 83.7% to 97.1%) and specificity as 43.6% (95% CI 24.8% to 64.5%). When applied to a hypothetical population of 1000 lesions at the mean observed melanoma prevalence of 20%, Derm–CAD would miss 20 melanomas and would lead to 206 false‐positive results for melanoma. MSI–CAD would miss 14 melanomas and would lead to 451 false‐positive results for melanoma. Preliminary findings suggest CAD systems are at least as sensitive as assessment of dermoscopic images for the diagnosis of invasive melanoma and atypical intraepidermal melanocytic variants. Because of the paucity of studies, we are unable to make summary statements about the use of CAD in unreferred populations, its accuracy in detecting keratinocyte cancers, or its use in any setting as a diagnostic aid.
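The hypothetical‐population figures above follow directly from the pooled point estimates. The minimal sketch below reproduces them, assuming only the point estimates (confidence intervals are ignored); the function name `expected_counts` is our own. It multiplies sensitivity by the number of melanomas and (1 − specificity) by the number of benign lesions in a cohort of 1000, then rounds to whole lesions.

```python
def expected_counts(sensitivity: float, specificity: float,
                    prevalence: float, n: int = 1000) -> dict:
    """Expected 2x2 counts in a hypothetical cohort, rounded to whole lesions."""
    diseased = n * prevalence               # lesions with melanoma
    non_diseased = n - diseased             # benign lesions
    tp = sensitivity * diseased             # melanomas correctly flagged by CAD
    fp = (1 - specificity) * non_diseased   # benign lesions wrongly flagged
    return {
        "TP": round(tp),
        "FN": round(diseased - tp),         # melanomas missed
        "FP": round(fp),
        "TN": round(non_diseased - fp),     # benign lesions correctly cleared
    }


# Pooled point estimates reported above, at 20% prevalence.
derm_cad = expected_counts(0.901, 0.743, 0.20)
msi_cad = expected_counts(0.929, 0.436, 0.20)
print("Derm-CAD:", derm_cad)
print("MSI-CAD:", msi_cad)
```

Summing TP and FP gives the number of positive CAD results (386 for Derm–CAD and 637 for MSI–CAD). Passing 0.07 or 0.40 as the prevalence gives the counts in the other columns of the summary of findings tables.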

Authors' conclusions

In highly selected patient populations all CAD types demonstrate high sensitivity, and could prove useful as a back‐up for specialist diagnosis to assist in minimising the risk of missing melanomas. However, the evidence base is currently too poor to understand whether CAD system outputs translate to different clinical decision‐making in practice. Insufficient data are available on the use of CAD in community settings, or for the detection of keratinocyte cancers. The evidence base for individual systems is too limited to draw conclusions on which might be preferred for practice. Prospective comparative studies are required that evaluate already evaluated CAD systems as diagnostic aids, in comparison with face‐to‐face dermoscopy, and in participant populations that are representative of those in which the test would be used in practice.

Keywords: Adult; Humans; Electric Impedance; Algorithms; Carcinoma, Basal Cell; Carcinoma, Basal Cell/diagnosis; Carcinoma, Basal Cell/diagnostic imaging; Carcinoma, Basal Cell/pathology; Carcinoma, Squamous Cell; Carcinoma, Squamous Cell/diagnosis; Carcinoma, Squamous Cell/diagnostic imaging; Carcinoma, Squamous Cell/pathology; Clinical Decision‐Making; Dermoscopy; Dermoscopy/methods; Dermoscopy/standards; Diagnosis, Computer‐Assisted; Diagnosis, Computer‐Assisted/methods; Diagnosis, Computer‐Assisted/standards; False Positive Reactions; Melanoma; Melanoma/diagnosis; Melanoma/diagnostic imaging; Melanoma/pathology; Sensitivity and Specificity; Skin Neoplasms; Skin Neoplasms/diagnosis; Skin Neoplasms/diagnostic imaging; Skin Neoplasms/pathology

Plain language summary

What is the diagnostic accuracy of computer‐assisted diagnosis techniques for the detection of skin cancer in adults?

Why is improving the diagnosis of skin cancer important?

There are a number of different types of skin cancer, including melanoma, cutaneous squamous cell carcinoma (cSCC) and basal cell carcinoma (BCC). Melanoma is one of the most dangerous forms. If it is not recognised early, treatment can be delayed, and this risks the melanoma spreading to other organs in the body, which may eventually lead to death. Both cSCC and BCC are considered less dangerous, as they are localised (less likely to spread to other parts of the body compared to melanoma). However, cSCC can spread to other parts of the body and BCC can cause disfigurement if not recognised early. Diagnosing a skin cancer when it is not actually present (a false‐positive result) might result in unnecessary surgery and other investigations and can cause stress and anxiety to the patient. Missing a diagnosis of skin cancer (a false‐negative result) may result in the wrong treatment being used or lead to a delay in effective treatment.

What is the aim of the review?

The aim of this Cochrane Review was to find out how accurate computer‐assisted diagnosis (CAD) is for diagnosing melanoma, BCC or cSCC. The review also compared the accuracy of two different types of CAD, and compared the accuracy of CAD with diagnosis by a doctor using a handheld illuminated microscope (a dermatoscope; 'dermoscopy'). We included 42 studies to answer these questions.

What was studied in the review?

A number of tools are available to skin cancer specialists which allow a more detailed examination of the skin compared to examination by the naked eye alone. Currently, most skin cancer specialists use a dermatoscope, which magnifies the skin lesion (a mole or area of skin with an unusual appearance in comparison with the surrounding skin) using a bright light source. CAD tests are computer systems that analyse information about skin lesions obtained from a dermatoscope or from other techniques that use light to describe the features of a skin lesion (spectroscopy), and produce a result indicating whether skin cancer is likely to be present. We included CAD systems that get their information from dermoscopic images of lesions (Derm–CAD), or that use data from spectroscopy. Most of the spectroscopy studies used data from multispectral imaging (MSI–CAD) and are the main focus here. When a skin cancer specialist finds a lesion suspicious on visual examination, with or without additional dermoscopy, results from CAD systems can be used alone to make a diagnosis of skin cancer (CAD–based diagnosis), or can be used by doctors in addition to their visual inspection of a skin lesion to help them reach a diagnosis (CAD–aided diagnosis). Researchers examined how useful CAD systems are to help diagnose skin cancers in addition to visual inspection and dermoscopy.

What are the main results of the review?

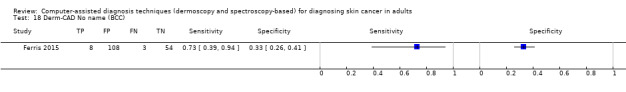

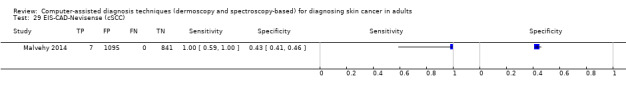

The review included 42 studies looking at CAD systems for the diagnosis of melanoma. There was not enough evidence to determine the accuracy of CAD systems for the diagnosis of BCC (3 studies) or cSCC (1 study).

Derm‐CAD results for diagnosis of melanoma

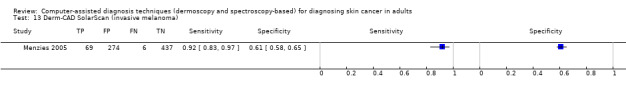

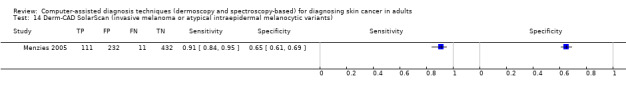

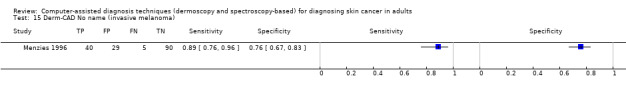

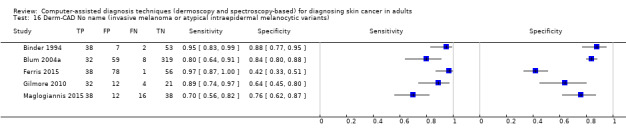

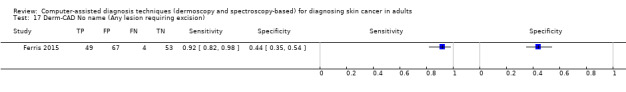

The main results for Derm‐CAD are based on 22 studies including 8992 lesions.

Applied to a group of 1000 skin lesions, of which 200 (20%) are given a final diagnosis* of melanoma, the results suggest that:

‐ An estimated 386 people will have a Derm–CAD result suggesting that a melanoma is present, and of these 206 (53%) will not actually have a melanoma (false‐positive result)

‐ Of the 614 people with a Derm–CAD result indicating that no melanoma is present, 20 (3%) will in fact have a melanoma (false‐negative result)

There was no evidence to suggest that dermoscopy or Derm–CAD was different in its ability to detect or rule out melanoma.

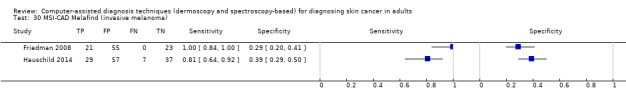

MSI‐CAD results for diagnosis of melanoma

The main results for MSI–CAD are based on eight studies including 2401 lesions. In a group of 1000 people, of whom 200 (20%) actually have melanoma*:

‐ An estimated 637 people will have an MSI–CAD result suggesting that a melanoma is present, and of these 451 (71%) will not actually have a melanoma (false‐positive result)

‐ Of the 363 people with an MSI–CAD result indicating that no melanoma is present, 14 (4%) will in fact have a melanoma (false‐negative result)

Compared with Derm–CAD, MSI–CAD detects more melanomas, but possibly produces more false‐positive results (an increase in unnecessary surgery).

How reliable are the results of the studies of this review?

Incomplete reporting of studies made it difficult for us to judge how reliable they were. Many studies had important limitations. Some studies only included particular types of skin lesions or excluded lesions that were considered difficult to diagnose. Importantly, most of the studies only included skin lesions with a biopsy result, which means that only a sample of lesions that would be seen by a doctor in practice were included. These characteristics may result in CAD systems appearing more or less accurate than they actually are.

Who do the results of this review apply to?

Studies were largely conducted in Europe (29, 69%) and North America (8, 19%). Mean age (reported in 6/42 studies) ranged from 32 to 49 years for melanoma. The percentage of people with a final diagnosis of melanoma ranged from 1% to 52%. It was not always possible to tell whether suspicion of skin cancer in study participants was based on clinical examination alone, or both clinical and dermoscopic examinations. Almost all studies were done in people with skin lesions who were seen at specialist clinics rather than by doctors in primary care.

What are the implications of this review?

CAD systems appear to be accurate for identification of melanomas in skin lesions that have already been selected for excision on the basis of clinical examination (visual inspection and dermoscopy). It is possible that some CAD systems identify more melanomas than doctors using dermoscopy images. However, CAD systems also produced far more false‐positive diagnoses than dermoscopy, and could lead to considerable increases in unnecessary surgery. The performance of CAD systems for detecting BCC and cSCC skin cancers is unclear. More studies are needed to evaluate the use of CAD by doctors for the diagnosis of skin cancer in comparison to face‐to‐face diagnosis using dermoscopy, both in primary care and in specialist skin cancer clinics.

How up‐to‐date is this review?

The review authors searched for and used studies published up to August 2016.

*In these studies, biopsy, clinical follow‐up, or specialist clinician diagnosis were the reference standards (means of establishing the final diagnosis).

Summary of findings

'Summary of findings' table.

| Question: | What is the diagnostic accuracy of computer‐assisted diagnosis for the detection of: i) cutaneous invasive melanoma and atypical intraepidermal melanocytic variants, ii) BCC, or iii) cSCC in adults? | |||||

| Population: | Adults with lesions suspicious for skin cancer, including: | |||||

| Index test: | Computer‐assisted diagnosis (CAD) | |||||

| Comparator test: | Dermoscopy | |||||

| Target condition: | Cutaneous invasive melanoma and atypical intraepidermal melanocytic variants, or basal cell carcinoma (BCC), or cutaneous squamous cell carcinoma (cSCC) | |||||

| Reference standard: | Histology with or without long‐term follow‐up | |||||

| Action: | If accurate, positive results of CAD will identify skin cancers that could otherwise be missed, while negative results will stop patients having unnecessary excision of skin lesions | |||||

| Quantity of evidence | ||||||

| Number of studies | 42 (a) | Total lesions with test results | 13,445 | Total with target condition | 2452 | |

| Limitations | ||||||

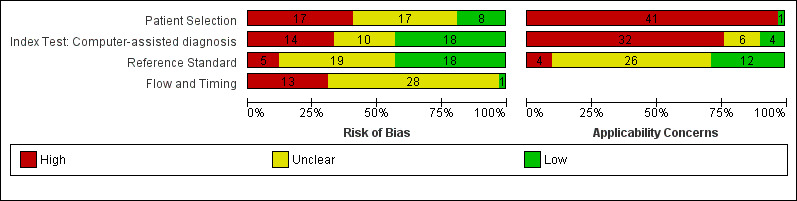

| Risk of bias: | Patient selection methods were poorly reported, with some concern (34/42) due to use of case‐control designs, exclusion of difficult‐to‐diagnose types of lesion, and inadequate reporting to assess risks of bias. CAD was generally evaluated in independent populations (35/42). There was some concern because it was not clear that the reference standard was interpreted blind to the CAD results in 19/42 studies. Differential verification was used in 6/42 studies, and participants were excluded in 10/42, primarily due to technical difficulties with CAD. Timing of tests was not mentioned in 28/42 studies. | |||||

| Applicability of evidence to question: | High concern for poor clinical applicability of included studies. Almost all studies recruited narrowly‐defined populations (41/42) or multiple lesions per participant (14/42) or both, and may not be representative of populations eligible for CAD. Studies provided little information regarding the thresholds used for presence of malignancy (16/42) and often evaluated unestablished thresholds (23/42). Studies that trained algorithms provided scarce information on the range of conditions included in training sets. Little information was given about the expertise of the histopathologist. | |||||

| FINDINGS: All analyses are undertaken on subgroups of the studies | ||||||

| All included studies considered the detection of melanoma, three of which also looked at the detection of BCC and one at the detection of cSCC. There are therefore insufficient data to make summary statements about the accuracy of CAD for the detection of BCC or cSCC. All results below consider the detection of the primary target condition: cutaneous invasive melanoma and atypical intraepidermal melanocytic variants. | ||||||

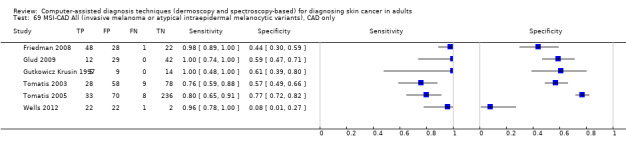

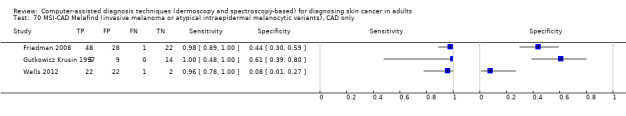

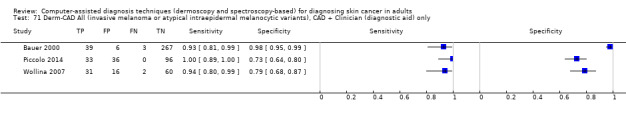

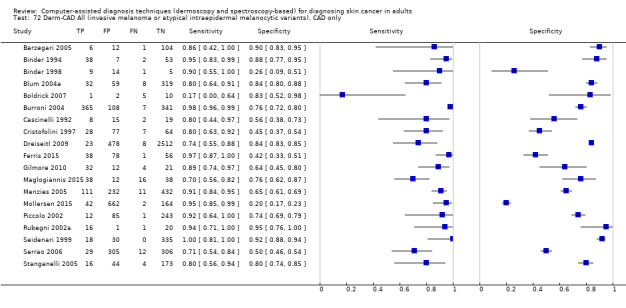

| Test: | Digital dermoscopy‐based CAD (all systems) |

| Quantity of evidence: | Number of studies: 22; total lesions with test results: 8992; total with melanoma: 1063 |

| Sensitivity (95% CI): | 90.1% (84.0% to 94.0%) |

| Specificity (95% CI): | 74.3% (63.6% to 82.7%) |

| Consistency: | Sensitivity estimates consistent. Some heterogeneity in specificity between studies |

Numbers estimated in a cohort of 1000 people being tested (b):

| Outcome | Consequences | Prevalence 7% | Prevalence 20% | Prevalence 40% |
|---|---|---|---|---|
| True positives | Receive necessary excision | 63 | 180 | 360 |
| False positives | Receive unnecessary excision | 239 | 206 | 154 |
| False negatives | Do not receive required excision | 7 | 20 | 40 |
| True negatives | Appropriately do not receive excision | 691 | 594 | 446 |
| PPV | | 21% | 46% | 70% |
| NPV | | 99% | 97% | 92% |

| Test: | Multispectral imaging‐based CAD (all systems) |

| Quantity of evidence: | Number of studies: 8; total lesions with test results: 2401; total with melanoma: 286 |

| Sensitivity (95% CI): | 92.9% (83.7% to 97.1%) |

| Specificity (95% CI): | 43.6% (24.8% to 64.5%) |

| Consistency: | Sensitivity estimates consistent. High heterogeneity in specificity between studies |

Numbers estimated in a cohort of 1000 people being tested (b):

| Outcome | Consequences | Prevalence 7% | Prevalence 20% | Prevalence 40% |
|---|---|---|---|---|
| True positives | Receive necessary excision | 65 | 186 | 372 |
| False positives | Receive unnecessary excision | 525 | 451 | 338 |
| False negatives | Do not receive required excision | 5 | 14 | 28 |
| True negatives | Appropriately do not receive excision | 405 | 349 | 262 |
| PPV | | 12% | 29% | 52% |
| NPV | | 99% | 96% | 90% |

(a) Six studies with overlapping lesions (Seidenari 1998 and Seidenari 1999; Tomatis 2003 and Bono 2002; Monheit 2011 and Hauschild 2014). (b) Numbers estimated at the 25th, 50th (median) and 75th percentiles of invasive melanoma and atypical intraepidermal melanocytic variants prevalence, observed across 42 studies reporting evaluations of CAD. BCC ‐ basal cell carcinoma; CAD ‐ computer‐assisted diagnosis; CI ‐ confidence interval; cSCC ‐ cutaneous squamous cell carcinoma; NPV ‐ negative predictive value; PPV ‐ positive predictive value
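Because PPV and NPV depend on prevalence as well as on sensitivity and specificity, the predictive values in the tables above can be recomputed with Bayes' theorem. In the minimal sketch below (the function name `predictive_values` is ours), recomputing from the rounded point estimates gives values that may differ from the tabulated ones by about one percentage point, for example the PPV of Derm–CAD at 20% prevalence.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple:
    """Return (PPV, NPV) for a test applied at a given prevalence (Bayes' theorem)."""
    tp = sensitivity * prevalence                # true-positive fraction
    fp = (1 - specificity) * (1 - prevalence)    # false-positive fraction
    fn = (1 - sensitivity) * prevalence          # false-negative fraction
    tn = specificity * (1 - prevalence)          # true-negative fraction
    return tp / (tp + fp), tn / (tn + fn)


# Pooled point estimates (sensitivity, specificity) from the tables above.
SYSTEMS = {"Derm-CAD": (0.901, 0.743), "MSI-CAD": (0.929, 0.436)}
PREVALENCES = (0.07, 0.20, 0.40)   # 25th, 50th and 75th percentiles

for name, (sens, spec) in SYSTEMS.items():
    for prev in PREVALENCES:
        ppv, npv = predictive_values(sens, spec, prev)
        print(f"{name} at {prev:.0%} prevalence: PPV {ppv:.0%}, NPV {npv:.0%}")
```

As prevalence rises, PPV increases and NPV falls for both systems, which is why the same test can look very different in referred and unreferred populations.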

Background

This review is one of a series of Cochrane Diagnostic Test Accuracy (DTA) Reviews on the diagnosis and staging of melanoma and keratinocyte skin cancers conducted for the National Institute for Health Research (NIHR) Cochrane Systematic Reviews Programme. Appendix 1 shows the content and structure of the programme. Table 2 provides a glossary of terms used, and a table of acronyms used is provided in Appendix 2.

1. Glossary of terms.

| Term | Definition |

| Artificial intelligence | Computer systems undertaking tasks that normally require human intelligence, such as decision–making or visual perception |

| Atypical intraepidermal melanocytic variant | Unusual area of darker pigmentation contained within the epidermis that may progress to an invasive melanoma; includes melanoma in situ and lentigo maligna |

| Atypical naevi | Unusual looking but noncancerous mole or area of darker pigmentation of the skin |

| Basaloid cells | Cells in the skin that look like those in the epidermal basal layer |

| BRAF V600 mutation | BRAF is a human gene that makes a protein called B‐Raf which is involved in the control of cell growth. BRAF mutations (damaged DNA) occur in around 40% of melanomas, which can then be treated with particular drugs. |

| BRAF inhibitors | Therapeutic agents that inhibit the mutated serine‐threonine protein kinase BRAF; used to treat BRAF‐mutated metastatic melanoma. |

| Breslow thickness | A scale for measuring the thickness of melanomas by the pathologist using a microscope, measured in mm from the top layer of skin to the bottom of the tumour. |

| Congenital naevi | A type of mole present at birth |

| Dermoscopy | A technique whereby a handheld microscope is used to allow more detailed, magnified examination of the skin compared to examination by the naked eye alone |

| Dermo‐epidermal junction | The area where the lower part of the epidermis and top layer of the dermis meet |

| Dermis | Layer of skin below the epidermis, composed of living tissue and containing blood capillaries, nerve endings, sweat glands, hair follicles and other structures |

| Desmoplastic subtypes of SCC | An aggressive squamous cell carcinoma variant characterised by a proliferation of fibroblasts and formation of fibrous connective tissue |

| Electrodesiccation | The use of high‐frequency electric currents to cut, destroy or cauterise tissue. It is performed with the use of a fine needle‐shaped instrument |

| Electrical impedance spectroscopy | The measurement of electrical current properties as they pass through skin tissues, to retrieve information on cellular structures |

| Epidermis | Outer layer of the skin |

| False negative | An individual who is truly positive for a disease, but whom a diagnostic test classifies as disease‐free. |

| False positive | An individual who is truly disease‐free, but whom a diagnostic test classifies as having the disease. |

| Histopathology/Histology | The study of tissue, usually obtained by biopsy or excision, for example under a microscope. |

| Incidence | The number of new cases of a disease in a given time period. |

| Index test | A diagnostic test under evaluation in a primary study |

| Interferometry | The measurement of waves of light or sound after interference in order to extract information |

| Lentigo maligna | Unusual area of darker pigmentation contained within the epidermis which includes malignant cells but with no invasive growth. May progress to an invasive melanoma |

| Lymph node | Lymph nodes filter the lymphatic fluid (clear fluid containing white blood cells) that travels around the body to help fight disease; they are located throughout the body often in clusters (nodal basins). |

| Melanocytic naevus | An area of skin with darker pigmentation (or melanocytes) also referred to as ‘moles’ |

| Meta‐analysis | A form of statistical analysis used to synthesise results from a collection of individual studies. |

| Metastases/metastatic disease | Spread of cancer away from the primary site to somewhere else through the bloodstream or the lymphatic system. |

| Morbidity | Detrimental effects on health. |

| Mortality | Either (1) the condition of being subject to death; or (2) the death rate, which reflects the number of deaths per unit of population in relation to any specific region, age group, disease, treatment or other classification, usually expressed as deaths per 100, 1000, 10,000 or 100,000 people. |

| Multidisciplinary team | A team with members from different healthcare professions and specialties (e.g. urology, oncology, pathology, radiology, and nursing). Cancer care in the National Health Service (NHS) uses this system to ensure that all relevant health professionals are engaged to discuss the best possible care for that patient. |

| Naevus | A mole or collection of pigment cells (plural: naevi or nevi) |

| Optical coherence tomography (OCT) | Based on the same principle as ultrasound, OCT uses a handheld probe to measure the optical scattering of near‐infrared (1310 nm) light waves (rather than sound waves) from under the surface of the skin |

| Prevalence | The proportion of a population found to have a condition. |

| Prognostic factors/indicators | Specific characteristics of a cancer or the person who has it which might affect the patient’s prognosis. |

| Receiver operating characteristic (ROC) plot | A plot of the sensitivity and 1 minus the specificity of a test at the different possible thresholds for test positivity; represents the diagnostic capability of a test with a range of binary test results |

| Receiver operating characteristic (ROC) analysis | The analysis of a ROC plot of a test to select an optimal threshold for test positivity |

| Recurrence | Recurrence is when new cancer cells are detected following treatment. This can occur either at the site of the original tumour or at other sites in the body. |

| Reference Standard | A test or combination of tests used to establish the final or ‘true’ diagnosis of a patient in an evaluation of a diagnostic test |

| Reflectance confocal microscopy (RCM) | A microscopic technique using infrared light (either in a handheld device or a static unit) that can create images of the deeper layers of the skin |

| Resolution | Resolution in an imaging system refers to its ability to distinguish two points in space as being separate points; resolution is measured in two directions: axial and lateral. |

| Sensitivity | In this context the term is used to mean the proportion of individuals with a disease who have that disease correctly identified by the study test |

| Specificity | The proportion of individuals without the disease of interest (in this case with benign skin lesions) who have that absence of disease correctly identified by the study test |

| Spectroscopy | Study of the interaction between matter and electromagnetic radiation |

| Spindle subtypes of SCC | A squamous cell carcinoma variant characterised by poorly differentiated spindle cells surrounded by collagenous stroma |

| Staging | Clinical description of the size and spread of a patient’s tumour, fitting into internationally agreed categories. |

| Stratum corneum | The outermost layer of the epidermis. This layer is the most superficial layer of skin, which is composed of flattened skin cells organised like a brick wall. In normal conditions cells are not nucleated at this layer |

| Subclinical (disease) | Disease that is usually asymptomatic and not easily observable, e.g. by clinical or physical examination. |
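The glossary definitions of sensitivity, specificity and the ROC plot can be illustrated with a short sketch. Everything in it is hypothetical: the lesion labels and CAD scores are invented, and treating a score at or above a threshold as a positive result is an assumption rather than a description of how any particular CAD system reports its output.

```python
def sens_spec(labels, scores, threshold):
    """Sensitivity and specificity when 'score >= threshold' counts as test-positive."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)  # cancers flagged
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)   # cancers missed
    tn = sum(1 for y, s in pairs if y == 0 and s < threshold)   # benign cleared
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)  # benign flagged
    return tp / (tp + fn), tn / (tn + fp)


def roc_points(labels, scores):
    """(1 - specificity, sensitivity) pairs, using each distinct score as a threshold."""
    points = []
    for threshold in sorted(set(scores), reverse=True):
        sens, spec = sens_spec(labels, scores, threshold)
        points.append((1 - spec, sens))
    return points


# Hypothetical toy data: 1 = melanoma on histology, 0 = benign lesion;
# scores are invented CAD outputs between 0 and 1.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.2, 0.1]

print(sens_spec(labels, scores, 0.5))
print(roc_points(labels, scores))
```

Lowering the threshold moves along the ROC curve towards higher sensitivity and lower specificity.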

Target condition being diagnosed

There are three main forms of skin cancer. Melanoma is the most widely known amongst the general population, yet the commonest skin cancers in white populations are those arising from keratinocytes: basal cell carcinoma (BCC) and cutaneous squamous cell carcinoma (cSCC) (Gordon 2013; Madan 2010). In 2003, the World Health Organization estimated that between two and three million ‘non‐melanoma’ skin cancers (of which BCC and cSCC are estimated to account for around 80% and 16% of cases, respectively) and 132,000 melanoma skin cancers occur globally each year (WHO 2003).

In this DTA review there are three target conditions of interest: (a) melanoma; (b) basal cell carcinoma (BCC); and (c) cutaneous squamous cell carcinoma (cSCC).

Melanoma

Melanoma arises from uncontrolled proliferation of melanocytes, i.e. the epidermal cells that produce pigment or melanin. Cutaneous melanoma refers to any skin lesion with malignant melanocytes present in the dermis, and includes superficial spreading, nodular, acral lentiginous, and lentigo maligna melanoma variants (see Figure 1). Melanoma in situ refers to malignant melanocytes that are contained within the epidermis and have not invaded the dermis, but are at risk of progression to melanoma if left untreated. Lentigo maligna, a subtype of melanoma in situ in chronically sun‐damaged skin, denotes another form of proliferation of abnormal melanocytes. Lentigo maligna can progress to invasive melanoma if its growth breaches the dermo‐epidermal junction during a vertical growth phase (when it is a 'lentigo maligna melanoma'). However, lentigo maligna is less likely to undergo malignant transformation, and does so more slowly, than melanoma in situ (Kasprzak 2015). Melanoma in situ and lentigo maligna are both atypical intraepidermal melanocytic variants. Melanoma is one of the most serious forms of skin cancer, with the potential to metastasise to other parts of the body through the lymphatic system and bloodstream. It accounts for only a small proportion of skin cancer cases but is responsible for up to 75% of deaths from this disease (Boring 1994; Cancer Research UK 2017).

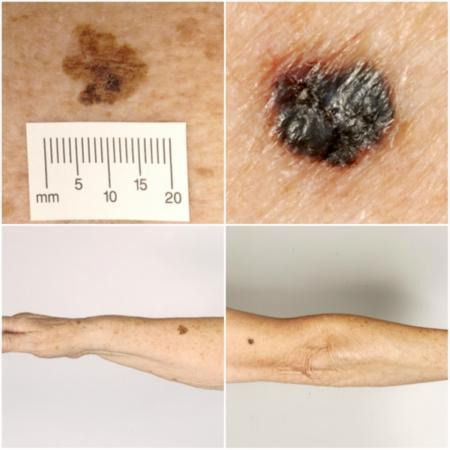

1.

Sample photographs of superficial spreading melanoma (left) and nodular melanoma (right). Copyright © 2010 Dr Rubeta Matin: reproduced with permission.

The incidence of melanoma rose to over 200,000 newly‐diagnosed cases worldwide in 2012 (Erdmann 2013; Ferlay 2015), with an estimated 55,000 deaths (Ferlay 2015). The highest incidence is observed in Australia, with 13,134 new cases of melanoma of the skin in 2014 (ACIM 2017), and in New Zealand, with 2341 registered cases in 2010 (HPA and MelNet NZ 2014). For 2014 in the USA, the predicted number of new cases was 73,870 and the predicted number of deaths was 9940 (Siegel 2015). The highest rates in Europe are seen in north‐western Europe and the Scandinavian countries, with the highest incidence reported in Switzerland: 25.8 per 100,000 in 2012. Rates in England have more than tripled, from 4.6 and 6.0 per 100,000 in men and women, respectively, in 1990, to 18.6 and 19.6 per 100,000 in 2012 (EUCAN 2012). Indeed, in the UK, melanoma has one of the fastest‐rising incidence rates of any cancer, and has the biggest projected increase in incidence between 2007 and 2030 (Mistry 2011). In the decade leading up to 2013, age‐standardised incidence increased by 46%, with 14,500 new cases in 2013 and 2459 deaths in 2014 (Cancer Research UK 2017). Rates are higher in women than in men, but incidence in men is increasing faster than in women (Arnold 2014). The rising incidence of melanoma is thought to be primarily related to an increase in recreational sun exposure and tanning bed use and an increasingly ageing population with higher lifetime recreational ultraviolet (UV) exposure, in conjunction with possible earlier detection (Belbasis 2016; Linos 2009). Putative risk factors are reviewed in detail elsewhere (Belbasis 2016).

A database of over 40,000 US patients from 1998 onwards, which assisted the development of the 8th edition of the American Joint Committee on Cancer (AJCC) Staging System, indicated a five‐year survival of 97% to 99% for stage I melanoma, dropping to between 32% and 93% in stage III disease, depending on tumour thickness, the presence of ulceration and the number of involved nodes (Gershenwald 2017). While these figures represent substantial improvements relative to survival in 1975 (Cho 2014), mortality rates have remained static during the same period. This observation, coupled with the increasing incidence of localised disease, suggests that improvements in survival may be due to earlier detection and heightened vigilance (Cho 2014). New targeted therapies for advanced (stage IV) melanoma (e.g. BRAF inhibitors) have improved survival, and immunotherapies are evolving such that long‐term survival is being documented (Pasquali 2018; Rozeman 2017). No new survival data for patients with stage IV disease were analysed for the AJCC 8th edition staging guidelines, owing to a lack of contemporary data (Gershenwald 2017).

Basal cell carcinoma

BCC can arise from multiple stem cell populations, including those of the hair follicle bulge and the interfollicular epidermis (Grachtchouk 2011). BCC growth is usually localised, but it can infiltrate and damage surrounding tissue, sometimes causing considerable destruction and disfigurement, particularly when located on the face (Figure 2). The four main subtypes of BCC are superficial, nodular, morphoeic (infiltrative) and pigmented. Lesions typically present as slow‐growing, asymptomatic papules, plaques or nodules that may bleed or form ulcers that do not heal (Firnhaber 2012). The diagnosis is often made incidentally rather than because people present with symptoms (Gordon 2013).

Figure 2. Sample photographs of BCC (left) and cSCC (right). Copyright © 2012 Dr Rubeta Matin: reproduced with permission.

BCC most commonly occurs on sun‐exposed sites on the head and neck (McCormack 1997) and is more common in men and in people over the age of 40. A rising incidence of BCC in younger people has been attributed to increased recreational sun exposure (Bath‐Hextall 2007a; Gordon 2013; Musah 2013). Other risk factors include Fitzpatrick skin types I and II (Fitzpatrick 1975; Lear 1997; Maia 1995), previous skin cancer history, immunosuppression, arsenic exposure, and genetic predisposition such as in basal cell naevus (Gorlin) syndrome (Gorlin 2004; Zak‐Prelich 2004). Annual incidence is increasing worldwide; Europe has experienced an average increase of 5.5% a year over the last four decades and the USA 2% a year, while estimates for the UK suggest that incidence is increasing more steeply, by an additional 6 per 100,000 persons a year (Lomas 2012). The rising incidence has been attributed to an ageing population, changes in the distribution of known risk factors, particularly ultraviolet radiation, and improved detection due to increased awareness amongst both practitioners and the general population (Verkouteren 2017). Hoorens 2016 points to evidence for a gradual increase in the size of BCCs over time, with delays in diagnosis ranging from 19 to 25 months.

According to National Institute for Health and Care Excellence (NICE) guidance (NICE 2010), low‐risk BCCs that may be considered for excision are nodular lesions occurring in people older than 24 years who are not immunosuppressed and do not have Gorlin syndrome. Furthermore, low‐risk lesions should be located below the clavicle and be small (less than 1 cm) with well‐defined margins; they should not be recurrent following incomplete excision, difficult to reach surgically, or in highly visible locations (NICE 2010). Superficial BCCs are also typically low risk and may be amenable to medical treatments such as photodynamic therapy or topical chemotherapy (Kelleners‐Smeets 2017). Assigning BCCs as low or high risk influences the management options (Batra 2002; Randle 1996).

Advanced locally‐destructive BCC can arise from longstanding untreated lesions or from a recurrence of aggressive basal cell carcinoma after primary treatment (Lear 2012). Very rarely, BCC metastasises to regional and distant sites, resulting in death, especially in cases of large neglected lesions in people who are immunosuppressed or who have Gorlin syndrome (McCusker 2014). Reported rates of metastasis range from 0.0028% to 0.55% (Lo 1991), with very poor survival rates. It is recognised that baso‐squamous carcinoma (which behaves more like a high‐risk cSCC and is not considered a true BCC) is likely to have accounted for many cases of apparent BCC metastasis; hence the spuriously high incidence of up to 0.55% reported in some studies, which is not seen in clinical practice (Garcia 2009).

Squamous cell carcinoma of the skin

Primary cSCC arises from the keratinocytes in the epidermis or its appendages. People with cSCC often present with an ulcer or a firm (indurated) papule, plaque or nodule (Griffin 2016), frequently with an adherent crust (Madan 2010). cSCC can arise in the absence of a precursor lesion, or it can develop from pre‐existing actinic keratosis (dysplastic epidermis) or Bowen's disease (considered by some to be cSCC in situ). The estimated annual risk of progression ranges from less than 1% to 20% (Alam 2001), with a figure of 5% reported for lesions developing from pre‐existing dysplasia (Kao 1986). cSCC remains locally invasive for a variable length of time, but has the potential to spread to the regional lymph nodes or through the bloodstream to distant sites, especially in immunosuppressed individuals (Lansbury 2010). High‐risk lesions are those arising on the lip or ear, recurrent cSCC, lesions arising on non‐exposed sites, scars or chronic ulcers, and tumours that are larger than 20 mm in diameter, have a histological depth of invasion greater than 4 mm, or are poorly differentiated on histopathological examination (Motley 2009).

Chronic ultraviolet light exposure through recreation or occupation is strongly linked to cSCC occurrence (Alam 2001). cSCC is particularly common in people with fair skin and in people with rare genetic disorders such as albinism, xeroderma pigmentosum and recessive dystrophic epidermolysis bullosa (RDEB) (Alam 2001). Other recognised risk factors include immunosuppression; chronic wounds; arsenic or radiation exposure; certain drug treatments, such as voriconazole and BRAF inhibitors; and previous skin cancer history (Baldursson 1993; Chowdri 1996; Dabski 1986; Fasching 1989; Lister 1997; Maloney 1996; O'Gorman 2014). In solid organ transplant recipients, cSCC is the most common form of skin cancer; the risk of developing cSCC has been estimated at 65 to 253 times that of the general population (Hartevelt 1990; Jensen 1999; Lansbury 2010). Overall, local and metastatic recurrence of cSCC at five years is estimated at 8% and 5% respectively. The five‐year survival rate of metastatic cSCC of the head and neck is around 60% (Moeckelmann 2018).

Treatment

For primary melanoma, the mainstay of definitive treatment is wide local surgical excision of the lesion, to remove both the tumour and any malignant cells that might have spread into the surrounding skin (Garbe 2016; Marsden 2010; NICE 2015; SIGN 2017; Sladden 2009). Recommended lateral surgical margins vary according to tumour thickness (Garbe 2016) and stage of disease at presentation (NICE 2015).

Treatment options for BCC and cSCC include surgery, other destructive techniques such as cryotherapy or electrodesiccation, and topical chemotherapy. A Cochrane Review of 27 randomised controlled trials (RCTs) of interventions for BCC found very little good‐quality evidence for any of the interventions used (Bath‐Hextall 2007b). Complete surgical excision of primary BCC has a reported five‐year recurrence rate of less than 2% (Griffiths 2005; Walker 2006), leading to significantly fewer recurrences than treatment with radiotherapy (Bath‐Hextall 2007b). Following standard excision biopsy with 4 mm peripheral surgical margins and apparently clear histopathological margins (on serial vertical sections), the reported five‐year recurrence rate is around 4% (Drucker 2017). Mohs micrographic surgery, in which horizontal sections of the tumour are examined microscopically during the operation and re‐excision is undertaken until the margins are tumour‐free, can be considered for high‐risk lesions where standard wider excision margins of surrounding healthy skin might lead to considerable functional impairment (Bath‐Hextall 2007b; Lansbury 2010; Motley 2009; Stratigos 2015). Bath‐Hextall and colleagues (Bath‐Hextall 2007b) found a single trial comparing Mohs micrographic surgery with 3 mm standard excision in BCC (Smeets 2004); the update of this study showed a non‐significantly lower recurrence rate at 10 years with Mohs micrographic surgery (4.4% compared with 12.2% after surgical excision, P = 0.10) (Van Loo 2014).

The main treatments for high‐risk BCC are wide local excision, Mohs micrographic surgery and radiotherapy. For low‐risk or superficial subtypes of BCC, or for small and/or multiple BCCs at low‐risk sites (Marsden 2010), destructive techniques other than excisional surgery may be used (e.g. electrodesiccation and curettage or cryotherapy (Alam 2001; Bath‐Hextall 2007b)). Alternatively, non‐surgical (or non‐destructive) treatments may be considered (Bath‐Hextall 2007a; Drew 2017; Kim 2014), including topical chemotherapy such as imiquimod (Williams 2017), 5‐fluorouracil (Arits 2013), ingenol mebutate (Nart 2015) and photodynamic therapy (Bath‐Hextall 2007b; Roozeboom 2016). Although non‐surgical techniques are increasingly used, they do not allow histological confirmation of tumour clearance, and their use depends on accurate characterisation of the histological subtype and depth of tumour. The 2007 systematic review of BCC interventions found limited evidence from very small RCTs for these approaches (Bath‐Hextall 2007b), a gap that has been only partially addressed by subsequent studies (Bath‐Hextall 2014; Kim 2014; Roozeboom 2012). Most BCC trials have compared interventions within the same treatment class, and few have compared medical versus surgical treatments (Kim 2014).

Vismodegib, a first‐in‐class Hedgehog signalling pathway inhibitor, is now available for the treatment of metastatic or locally‐advanced BCC based on the pivotal study ERIVANCE BCC (Sekulic 2012). It is licensed for use in patients where surgery or radiotherapy is inappropriate, e.g. for treating locally‐advanced periocular and orbital BCCs with orbital salvage of patients who otherwise would have required exenteration (Wong 2017). However, NICE has recently recommended against the use of vismodegib, based on cost effectiveness and uncertainty of evidence (NICE 2017).

A systematic review of interventions for primary cSCC found only one RCT eligible for inclusion (Lansbury 2010). Current practice therefore relies on evidence from observational studies, as reviewed in Lansbury 2013, for example. Surgical excision with predetermined margins is usually the first‐line treatment (Motley 2009; Stratigos 2015). Estimates of recurrence after Mohs micrographic surgery, surgical excision, or radiotherapy, which are likely to have been evaluated in higher‐risk populations, have shown pooled recurrence rates of 3%, 5.4% and 6.4% respectively, with overlapping confidence intervals; the review authors advise caution when comparing results across treatments (Lansbury 2013).

Index test(s)

Computer‐assisted diagnosis (CAD) describes a range of artificial intelligence‐based techniques that automate the diagnosis of skin cancer by using a computer to analyse lesion images or other lesion data and determine the likelihood of malignancy or the need for excision. Each CAD system has a data collection component, which collects imaging or non‐visual data (e.g. electrical impedance measurements) from the suspicious lesion and feeds them to a data processing component, which then performs a series of analyses to arrive at a diagnostic classification.

Images are acquired using a number of different techniques, most commonly digital dermoscopy (Derm–CAD), which creates digital subsurface images of the skin using a computer coupled with a dermatoscope, video camera and digital television (Esteva 2017; Rajpara 2009). Commercially‐available systems include the DB–MIPS® (DB–Dermo MIPS) (Biomips Engineering SRL, Siena, Italy), MicroDERM (Visiomed AG, Germany), SolarScan (Polartechnics Ltd, Australia) and MoleExpert (DermoScan GmbH, Germany), all of which are hand‐held digital or video dermatoscopes that communicate with CAD analysis software (see Figure 3).

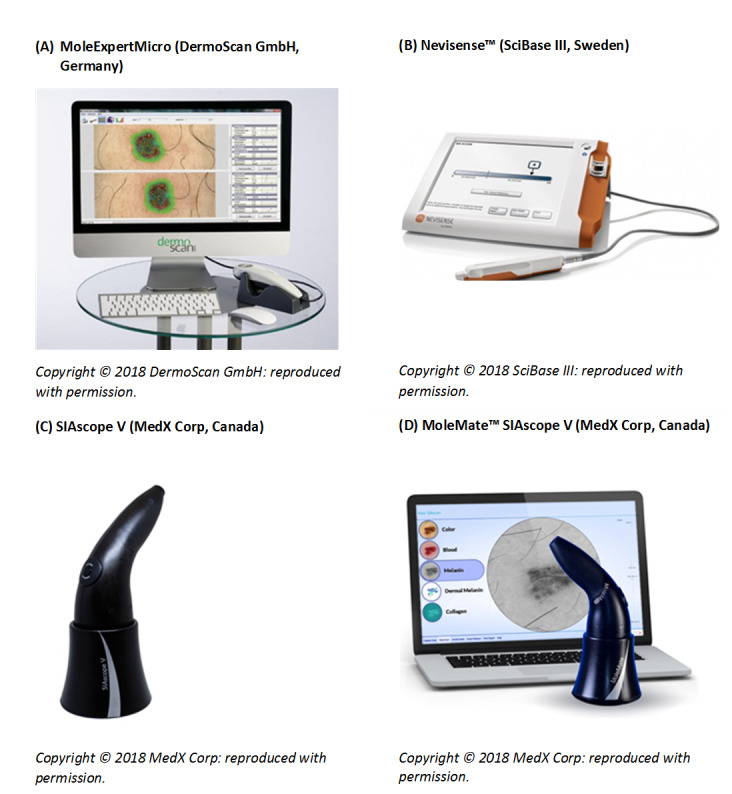

Figure 3. Examples of commercially available CAD systems using digital dermoscopy (A), electrical impedance spectroscopy (B) and multispectral imaging (C and D). Reproduced with permission of the manufacturers. Copyright © 2018 MedX Corp, Canada; DermoScan GmbH, Germany; SciBase III, Sweden: reproduced with permission.

Other systems use spectroscopy (Spectro‐CAD), whereby information on cell characteristics (such as cell shape or size) is gathered by measuring how electromagnetic waves pass through skin lesions. This information is most commonly acquired using multispectral imaging (MSI–CAD), which produces computer‐generated graphic representations of lesion morphology by detecting light reflected at several wavelengths across the lesion. By far the most common of these techniques is diffuse reflectance spectrophotometry imaging (DRSi), which uses light that diffusely penetrates the skin to a depth of 2 to 2.5 mm beneath the surface to produce reflectance images at a number of specific wavelengths across the visible and near‐infrared spectrum (approximately 400 to 1000 nm), capturing variations in light attenuation and scattering from melanin, collagen and blood vessel structures.
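To make the idea of per‐wavelength attenuation concrete, the following Python sketch converts calibrated reflectance values at several wavelengths into apparent absorbance, -log10(R), and compares a lesion with the surrounding skin band by band. It is an illustration under stated assumptions only: the band centres (`BANDS_NM`) and all reflectance values are hypothetical, and the sketch does not reproduce the processing of SIAscope™, MelaFind® or any other commercial system.

```python
import math

# Hypothetical band centres (nm) within the ~400-1000 nm range described above;
# real devices use their own manufacturer-specific wavelengths.
BANDS_NM = [450, 550, 650, 750, 850, 950]


def apparent_absorbance(reflectance: float) -> float:
    """Convert a calibrated reflectance R in (0, 1] to apparent absorbance -log10(R)."""
    if not 0.0 < reflectance <= 1.0:
        raise ValueError("reflectance must be in (0, 1]")
    return -math.log10(reflectance)


def band_contrast(lesion: dict[int, float], skin: dict[int, float]) -> dict[int, float]:
    """Per-band difference in apparent absorbance, lesion minus surrounding skin.

    Positive values mean the lesion attenuates more light at that wavelength,
    the kind of signal a CAD system might turn into a classification feature.
    """
    return {nm: apparent_absorbance(lesion[nm]) - apparent_absorbance(skin[nm])
            for nm in BANDS_NM}


if __name__ == "__main__":
    # Invented values: the lesion reflects less light, mostly at shorter wavelengths.
    skin = {450: 0.40, 550: 0.45, 650: 0.60, 750: 0.65, 850: 0.66, 950: 0.64}
    lesion = {450: 0.10, 550: 0.12, 650: 0.25, 750: 0.40, 850: 0.50, 950: 0.55}
    for nm, delta in band_contrast(lesion, skin).items():
        print(f"{nm} nm: {delta:+.2f}")
```

Working in absorbance rather than raw reflectance means that attenuation from successive absorbers adds rather than multiplies, which is why it is a common starting point in reflectance spectroscopy.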

DRSi developed from diffuse reflectance spectroscopy, a non‐visual spectroscopic technique which uses optical reflectance to distinguish between lesion types based on spectral shape and calibrated level of reflected light for wavelengths continuously varying from the ultraviolet (320 nm) to the near‐infrared (1100 nm) with a high spectral resolution (4 nm) (e.g. Marchesini 1992; Wallace 2000b). Commercially available DRSi computer‐assisted diagnosis systems include the SIAscope™ (MedX Health Corp, Canada), a hand–held unit that communicates with CAD analysis software (Figure 3). The MelaFind® system (Strata Skin Sciences (formerly Mela Sciences Inc), Horsham, PA, USA) was Food and Drug Administration (FDA)‐approved; however, it no longer appears to be commercially available.

The Nevisense™ system (SciBase III, Sweden; Figure 3) is also commercially available, but is based on electrical impedance spectroscopy (EIS), a non‐optical method that seeks to provide information on cellular features by measuring how tissue responds to an electrical current passed through it. With Nevisense™, a probe applies an alternating voltage across a skin lesion and the same probe measures the resulting current, giving the tissue impedance, which reflects a combination of tissue resistance and capacitance. At high frequencies, current flows easily through all tissue components, including cells, but at low frequencies it tends to flow only through the extracellular space. The shape of the impedance spectrum is therefore sensitive to cellular components and dimensions, internal structure and cellular arrangements. The Nevisense™ EIS system measures at four depths and at 35 frequencies logarithmically distributed from 1.0 kHz to 2.5 MHz, using a 5 × 5 mm electrode covered in tiny pins that penetrate the stratum corneum.
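The frequency dependence described above can be illustrated with a textbook three‐element model of tissue, in which an extracellular resistance lies in parallel with an intracellular resistance in series with the cell‐membrane capacitance. The Python sketch below generates 35 logarithmically spaced frequencies between 1.0 kHz and 2.5 MHz, matching the range quoted for Nevisense™, and evaluates the model's impedance at some of them. The circuit and its component values (`R_E`, `R_I`, `C_M`) are illustrative assumptions rather than device parameters, and the Nevisense™ classification algorithm is not represented.

```python
import math


def log_spaced_frequencies(f_min_hz: float, f_max_hz: float, n: int) -> list[float]:
    """Return n frequencies evenly spaced on a logarithmic scale, including both ends."""
    ratio = (f_max_hz / f_min_hz) ** (1.0 / (n - 1))
    return [f_min_hz * ratio ** k for k in range(n)]


def tissue_impedance(freq_hz: float, r_extra: float, r_intra: float,
                     c_membrane: float) -> complex:
    """Complex impedance (ohms) of a simple three-element cell model.

    r_extra:    resistance of the extracellular current path
    r_intra:    resistance of the intracellular current path
    c_membrane: cell-membrane capacitance, in series with r_intra
    """
    omega = 2 * math.pi * freq_hz
    z_membrane = 1 / (1j * omega * c_membrane)  # very large at low frequency
    z_cells = r_intra + z_membrane              # path through the cells
    return r_extra * z_cells / (r_extra + z_cells)  # the two paths in parallel


if __name__ == "__main__":
    # Hypothetical component values chosen only to show the trend.
    R_E, R_I, C_M = 1000.0, 500.0, 1e-9
    for f in log_spaced_frequencies(1.0e3, 2.5e6, 35)[::8]:
        z = tissue_impedance(f, R_E, R_I, C_M)
        print(f"{f:>12.0f} Hz  |Z| = {abs(z):7.1f} ohm")
```

At low frequencies the membrane capacitance blocks current from entering the cells, so |Z| approaches the extracellular resistance; at high frequencies the capacitance is effectively short‐circuited and |Z| falls towards the parallel combination of both resistances.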

Other non‐visual sources of lesion data include Raman spectroscopy, in which a laser is used to excite vibrations in molecules which then impart wavelength shifts to some of the scattered light waves, creating spectral patterns that are related to the molecular structure of lesions (Maglogiannis 2009), and fluorescence spectroscopy which uses a laser to excite electrons, causing molecules to absorb and then re‐emit light in spectral patterns that are also related to the molecular structure of lesions (Rallan 2004).
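Raman spectra are conventionally reported as the shift between the excitation and scattered light expressed in wavenumbers (cm⁻¹), which does not depend on the laser wavelength used. The short Python function below shows this conversion; the 785 nm excitation and 850 nm scattered wavelengths in the example are arbitrary illustrative values, not settings taken from any study in this review.

```python
def raman_shift_cm1(excitation_nm: float, scattered_nm: float) -> float:
    """Raman shift in wavenumbers (cm^-1) between excitation and scattered light.

    A wavelength in nm converts to wavenumbers as 1e7 / wavelength. A positive
    result is a Stokes shift: the scattered photon has lost energy to a
    molecular vibration, so its wavelength is longer than the laser's.
    """
    return 1e7 / excitation_nm - 1e7 / scattered_nm


if __name__ == "__main__":
    print(f"{raman_shift_cm1(785.0, 850.0):.1f} cm^-1")  # about 974 cm^-1
```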

All CAD systems use machine learning, whereby a classification algorithm learns the features of groups of lesions (i.e. diagnostic types) through exposure to a ‘training set’ of lesions of known histological diagnosis. This process creates a model designed to distinguish between these lesion types in future observations. Examples of machine learning algorithms include discriminant analysis, decision trees, neural networks, fuzzy logic, k‐nearest neighbours, logistic regression and support vector machines (SVMs), each of which uses different mathematical equations to set out how observed features relate to a given diagnosis (Maglogiannis 2009; Masood 2013). Model outputs also vary, in part according to the type of data used to acquire lesion information, and can take the form of binary outputs indicating malignancy versus benignity (e.g. the MelaFind® system), risk scores that can be used at varying thresholds (e.g. the DANAOS system used by MicroDERM), or graphical representations of the CAD pattern analysis that highlight areas of concern within a lesion (e.g. the SIAgraphs produced by SIAscope™). Artificial intelligence systems using continuous learning algorithms, in which the computer system continues to develop its classification algorithm as each new case is examined rather than stopping learning at the end of a training period, are not addressed in this review.
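As an illustration of the training and output stages described above, the Python sketch below fits a logistic regression model (one of the algorithms listed) to a small, invented training set of lesions with known histology, then returns either a continuous risk score or a binary label at a chosen threshold. The two features ('asymmetry' and 'colour variation', scaled 0 to 1), the training values and the function names are all hypothetical; no commercial CAD system is claimed to work this way.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def risk_score(w, b, x) -> float:
    """Continuous output: model-estimated probability that the lesion is malignant."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def classify(w, b, x, threshold=0.5) -> int:
    """Binary output: 1 ('malignant') if the risk score reaches the threshold."""
    return int(risk_score(w, b, x) >= threshold)


def train_logistic(features, labels, lr=0.5, epochs=5000):
    """Fit logistic regression weights by batch gradient descent on log-loss.

    features: list of feature vectors, one per training lesion
    labels:   1 = malignant, 0 = benign (known histological diagnosis)
    """
    n, n_feat = len(features), len(features[0])
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in zip(features, labels):
            err = risk_score(w, b, x) - y  # gradient of log-loss w.r.t. the logit
            for j in range(n_feat):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Invented training set: [asymmetry, colour variation]
TRAIN_X = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.30], [0.30, 0.20],
           [0.80, 0.70], [0.70, 0.90], [0.90, 0.80], [0.75, 0.60]]
TRAIN_Y = [0, 0, 0, 0, 1, 1, 1, 1]

if __name__ == "__main__":
    w, b = train_logistic(TRAIN_X, TRAIN_Y)
    new_lesion = [0.55, 0.50]
    print(f"risk score: {risk_score(w, b, new_lesion):.2f}")
    for t in (0.3, 0.5, 0.7):
        print(f"threshold {t}: label {classify(w, b, new_lesion, t)}")
```

Lowering the threshold labels more lesions as malignant (higher sensitivity, lower specificity), which is how a single risk‐score model can be tuned to the different settings discussed later in this section.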

Clinical pathway

The diagnosis of skin lesions occurs in primary‐, secondary‐ and tertiary‐care settings, by both generalist and specialist healthcare providers. In the UK, people with concerns about a new or changing lesion will present to their general practitioner (GP) rather than directly to a specialist in secondary care. A GP with clinical concerns will normally refer the patient to a specialist in secondary care: usually a dermatologist, but sometimes a surgical specialist such as a plastic surgeon or an ophthalmic surgeon. Suspicious skin lesions may also be identified in a referral setting, for example by a general surgeon, and referred for a consultation with a skin cancer specialist (Figure 4). Skin cancers identified by other specialist surgeons (such as an ear, nose and throat (ENT) specialist or maxillofacial surgeon) will usually be diagnosed and treated without further referral.

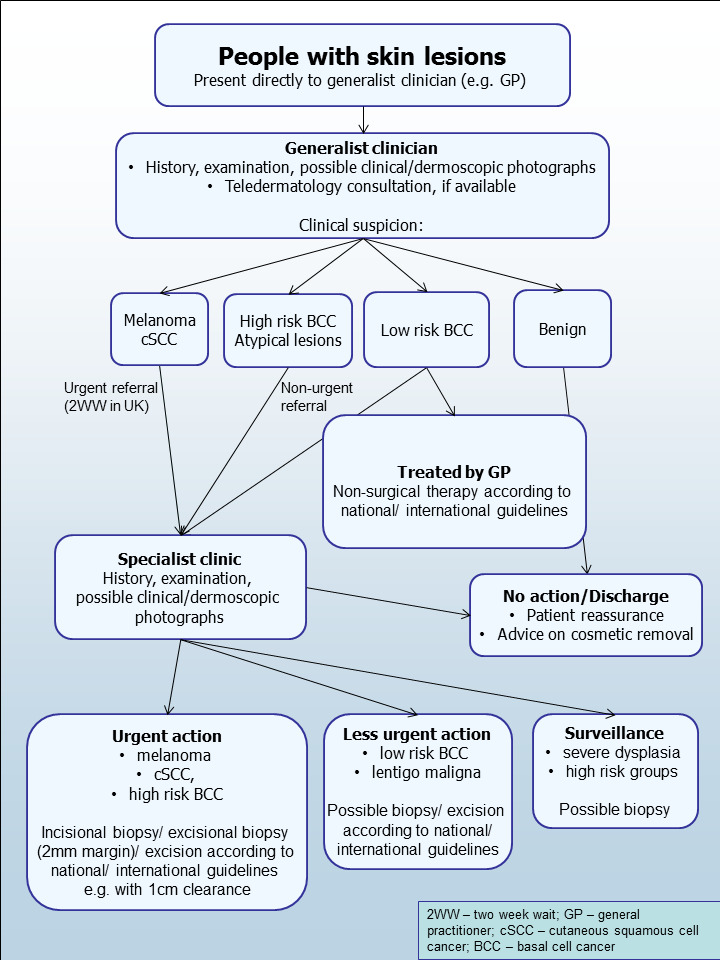

Figure 4. Current clinical pathway for people with skin lesions.

Current UK guidelines recommend that all suspicious pigmented lesions presenting in primary care should be assessed by taking a clinical history and performing visual inspection (VI) using the seven‐point checklist (MacKie 1990); lesions suspected to be melanoma or cSCC should be referred for appropriate specialist assessment within two weeks (Chao 2013; Marsden 2010; NICE 2015). Evidence is emerging, however, to suggest that excision of melanoma by GPs is not associated with increased risk compared with excision in secondary care (Murchie 2017). In the UK, routine referral is usually recommended for low‐risk BCCs, with urgent referral for those in whom a delay could have a significant impact on outcomes, for example due to large lesion size or critical site (NICE 2015). Appropriately‐qualified generalist care providers in the UK increasingly undertake management of low‐risk BCC, for example by excising low‐risk lesions (NICE 2010). Similar guidance is in place in Australia (CCAAC Network 2008).
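The seven‐point checklist is a simple additive scoring rule. The Python sketch below implements the weighted version set out in the NICE suspected‐cancer guidance, as we understand it: change in size, irregular shape and irregular colour are major features scoring 2 points each; a largest diameter of 7 mm or more, inflammation, oozing and change in sensation are minor features scoring 1 point each; and a total of 3 or more prompts a suspected‐cancer referral. MacKie's original 1990 checklist differs in detail, and the feature identifiers used here are hypothetical labels.

```python
# Weighted seven-point checklist (weights as described in the lead-in).
MAJOR_FEATURES = {"change_in_size", "irregular_shape", "irregular_colour"}  # 2 points each
MINOR_FEATURES = {"diameter_7mm_or_more", "inflammation", "oozing",
                  "change_in_sensation"}                                     # 1 point each
REFERRAL_THRESHOLD = 3


def seven_point_score(observed: set[str]) -> int:
    """Sum the weighted checklist score for the features observed on a lesion."""
    unknown = observed - MAJOR_FEATURES - MINOR_FEATURES
    if unknown:
        raise ValueError(f"unrecognised features: {sorted(unknown)}")
    return 2 * len(observed & MAJOR_FEATURES) + len(observed & MINOR_FEATURES)


def meets_referral_threshold(observed: set[str]) -> bool:
    """True if the weighted score reaches the referral threshold."""
    return seven_point_score(observed) >= REFERRAL_THRESHOLD


if __name__ == "__main__":
    lesion = {"irregular_colour", "inflammation"}
    print(seven_point_score(lesion), meets_referral_threshold(lesion))  # 3 True
```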

For referred lesions, the specialist clinician will use history‐taking, visual inspection of the lesion (in conjunction with other skin lesions), palpation of the lesion and associated lymph nodes, and dermoscopic examination to inform a clinical decision. If melanoma is suspected, urgent excision biopsy with 2 mm margins is recommended (Lederman 1985; Lees 1991); for cSCC, excision with predetermined surgical margins or a diagnostic biopsy may be considered. BCC and pre‐malignant lesions potentially eligible for non‐surgical treatment may undergo a diagnostic biopsy before therapy is started if there is diagnostic uncertainty. Equivocal melanocytic lesions for which a definitive clinical diagnosis cannot be reached may undergo surveillance to identify any changes that would indicate the need for excision biopsy; lesions that remain stable over a period of time can be discharged with reassurance.

Theoretically, teledermatology consultations may aid appropriate triage of lesions into urgent referral; non‐urgent secondary‐care referral (e.g. for suspected basal cell carcinoma); or, where available, referral to an intermediate care setting, e.g. clinics run by GPs with a special interest in dermatology. The distinction between setting and examiner qualifications and experience is important, as specialist clinicians might work in primary‐care settings (for example, in the UK, GPs with a special interest in dermatology and skin surgery who have undergone appropriate training), and generalists might practise in secondary‐care settings (for example, plastic surgeons who do not specialise in skin cancer). The level of skill and experience in skin cancer diagnosis will vary among both generalist and specialist care providers and will also affect test accuracy.

Prior test(s)

Although smartphone applications and community‐based teledermatology services can increasingly be directly accessed by people who have concerns about a skin lesion (Chuchu 2018b), visual inspection of a suspicious lesion by a clinician is usually the first in a series of tests to diagnose skin cancer. In the UK this usually takes place in primary care, but in many countries people with suspicious lesions can present directly to a specialist setting.

A range of technologies has emerged to aid skin cancer diagnosis, both to ensure that malignancies (especially melanoma) are not missed and to minimise unnecessary surgical procedures. Dermoscopy using a hand‐held microscope has become the most widely used tool for clinicians to improve the accuracy of diagnosis of pigmented lesions, in particular for melanoma (Argenziano 1998; Argenziano 2012; Haenssle 2010; Kittler 2002), although it is less well established for the diagnosis of BCC or cSCC. Dermoscopy is frequently combined with visual inspection of a lesion in secondary‐care settings, and is also increasingly used in primary care, particularly in countries such as Australia (Youl 2007). The diagnostic accuracy, and comparative accuracy, of visual inspection and dermoscopy have been evaluated in a further three reviews in this series (Dinnes 2018a; Dinnes 2018b; Dinnes 2018c).

Consideration of the degree of prior testing that study participants have undergone is key to the interpretation of test accuracy indices, as these are known to vary according to the disease spectrum (or case‐mix) of included participants (Lachs 1992; Leeflang 2013; Moons 1997; Usher‐Smith 2016). Spectrum effects are often observed when tests developed further down the referral pathway are applied in settings where participants have undergone limited prior testing, typically resulting in lower sensitivity and higher specificity (Usher‐Smith 2016). Studies of individuals with suspicious lesions at the initial clinical presentation stage ('test‐naïve') are likely to include a wider range of diagnoses and a higher proportion of people with benign diagnoses than studies of participants who have been referred for a specialist opinion on the basis of visual inspection (with or without dermoscopy) by a generalist practitioner. Furthermore, studies in more specialist settings may focus on equivocal or difficult‐to‐diagnose lesions rather than lesions with a more general level of clinical suspicion. However, the direction of effect is not consistent across tests and diseases; the mechanisms at work are often more complex than prevalence alone and can be difficult to identify (Leeflang 2013). A simple categorisation of studies according to primary, secondary or specialist setting therefore may not always adequately reflect the key differences in disease spectrum that can affect test performance.

Role of index test(s)

Skin cancer diagnosis, whether by visual inspection alone or with the use of dermoscopy, is undertaken iteratively, using both implicit pattern recognition (non‐analytical reasoning) and more explicit ‘rules’ based on conscious analytical reasoning (Norman 2009), the balance of which will vary according to experience and familiarity with the diagnostic question. In the hands of experienced dermatologists, dermoscopy has been shown to enhance the accuracy of skin cancer detection (especially melanoma) compared with unaided visual examination (Dinnes 2018b; Dinnes 2018c). The subjectivity involved in interpreting lesion morphology is thought to underlie the decrease in accuracy that occurs when the dermatoscope is used by less experienced clinicians (Binder 1995).

The addition of computer–based diagnosis to these investigations has potential to increase the detection of melanomas by reducing the clinicians’ reliance on subjective information, which is necessarily interpreted using their experience of past cases. The additive value of CAD systems is also likely to vary with differences in setting, prior testing and selection of participants, as previously discussed (Prior test(s)). CAD systems could therefore fulfil three different roles in clinical practice: (1) to help GPs, or other clinicians working in unreferred settings, to appropriately triage lesions for referral; (2) as part of a remote diagnostic service; or (3) as an expert–level second opinion to specialists in referral settings. All three roles would rely on CAD being as sensitive for the diagnosis of melanoma as experienced dermatologists. On the other hand, the specificity required for CAD to add value differs for each of these three situations, as discussed below.

If sensitive enough, use of CAD in primary care could allow more appropriate triage of higher‐risk lesions to secondary care by increasing the early detection of potentially malignant lesions. Although a relatively low specificity (high false‐positive rate) may be acceptable in a primary‐care setting, limiting false‐positive diagnoses would still benefit the health service by avoiding unnecessary referrals, and would relieve patient anxiety more promptly. Similarly, CAD could be used remotely to inform the need for referral, with images or other diagnostic data sent to specialist clinics, or even to commercial organisations, for remote interpretation, much as teledermatology is already used. In this circumstance, a relatively high specificity would be required in order to avoid unacceptable increases in rates of referral to specialist centres.

Finally, when CAD is used in referral settings as a complement to in‐person diagnosis by a specialist, even if it could be shown to pick up difficult‐to‐diagnose melanomas that might be missed on visual inspection or dermoscopy, the specificity of the system would need to be very high so as not to inordinately increase the burden of skin surgery. False‐positive diagnoses not only cause unnecessary scarring from a biopsy or excision procedure, but also increase patient anxiety while people await definitive histological results, and increase healthcare costs as the number of lesions that must be removed to yield one melanoma diagnosis increases. Pigmented lesions are common, so in populations where melanoma rates are increasing, even a small increase in the threshold for excising lesions would avoid a considerable burden for both patients and healthcare providers, as long as the lesions not excised turn out to be benign. The use of CAD to detect melanoma in specialist clinics would only be advantageous if it could be shown to detect skin cancers that would otherwise be missed, or to decrease unnecessary surgical intervention (i.e. removal of false‐positive lesions) with no loss of sensitivity.
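The link between specificity and the number of lesions removed per melanoma can be made explicit with Bayes' theorem. In the Python sketch below, the positive predictive value (PPV) is the proportion of test‐positive lesions that are melanoma, and its reciprocal is the number of lesions excised per melanoma found if every test‐positive lesion is removed. The sensitivity, specificity and prevalence values are hypothetical and are not taken from studies in this review.

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """Proportion of test-positive lesions that truly are melanoma."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def number_needed_to_excise(sensitivity: float, specificity: float,
                            prevalence: float) -> float:
    """Lesions excised per melanoma detected, assuming all test positives are excised."""
    return 1 / positive_predictive_value(sensitivity, specificity, prevalence)


if __name__ == "__main__":
    # Hypothetical: 5% of assessed lesions are melanoma, sensitivity held at 90%.
    for spec in (0.9, 0.8, 0.6):
        nne = number_needed_to_excise(0.9, spec, 0.05)
        print(f"specificity {spec:.0%}: {nne:.1f} excisions per melanoma")
```

With these assumed figures, lowering specificity from 90% to 60% roughly triples the number of excisions per melanoma detected (from about 3.1 to 9.4), even though sensitivity is unchanged.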

Delay in diagnosis of a BCC as a result of a false‐negative test is not as serious as for melanoma, because BCCs are usually slow‐growing and very unlikely to metastasise. Nevertheless, delayed diagnosis can result in larger and more complex surgical procedures, with consequently greater morbidity. However, very sensitive diagnostic tests for BCC may come at the cost of lower specificity, leading to a higher false‐positive rate and an increased burden of skin surgery, so a balance between sensitivity and specificity is needed. The greatest potential advantage of CAD in the management of BCC is likely to lie in its ability to perform rapid, non‐invasive assessments of multiple lesions (common in people with BCC (Lear 1997)).

The situation for cSCC is more similar to melanoma, in that the consequences of falsely reassuring a person that they do not have skin cancer can be serious and potentially fatal, given that removal of an early cSCC is usually curative. Thus, a good diagnostic test for cSCC should demonstrate high sensitivity and a corresponding high negative predictive value. A test that can also reduce false‐positive clinical diagnoses without missing true cases of cSCC has patient and resource benefits.

Alternative test(s)

A number of other tests that may have a role in the diagnosis of skin cancer in a specialist setting have been reviewed as part of our series of systematic reviews, including reflectance confocal microscopy (RCM) (Dinnes 2018d; Dinnes 2018e), optical coherence tomography (OCT) (Ferrante di Ruffano 2018a), high‐frequency ultrasound (Dinnes 2018f) and exfoliative cytology (Ferrante di Ruffano 2018b). Other tests with a role in earlier settings include teledermatology (Chuchu 2018a) and smartphone applications (Chuchu 2018b). Reviews on the accuracy of gene expression testing and volatile organic compounds could not be performed as planned, owing to an absence of relevant studies. Evidence permitting, we will compare the accuracy of available tests in an overview review, exploiting within‐study comparisons of tests and allowing the analysis and comparison of commonly used diagnostic strategies where tests may be used singly or in combination.

We also considered and excluded a number of tests from this review, such as tests used for screening (e.g. total body photography of those with large numbers of typical or atypical naevi) or monitoring (e.g. CAD systems used to monitor the progression of suspicious skin lesions).

Lastly, we did not assess the accuracy of histopathological confirmation following lesion excision, because it is the established reference standard for melanoma diagnosis and will be one of the standards against which the index tests are evaluated in these reviews.

Rationale

Our series of reviews of diagnostic tests used to assist clinical diagnosis of skin cancer aims to identify the most accurate approaches to diagnosis and to provide clinical and policy decision‐makers with the highest possible standard of evidence on which to base diagnostic and treatment decisions. With increasing rates of melanoma and basal cell carcinoma, and a trend to adopt dermoscopy and other high‐resolution image analysis in primary care, the anxiety around missing early malignant lesions needs to be balanced against the risk of unnecessary referrals that send people with benign lesions for a specialist opinion. It is questionable whether all skin cancers identified by sophisticated techniques, even in specialist settings, help to reduce morbidity and mortality. There is also concern that newer technologies may increase false‐positive diagnoses. Furthermore, some technologies could actually cause harm; for example, widespread use of dermoscopy in primary care with little or no training could result in missed melanomas if it replaces traditional history‐taking and clinical examination of the entire skin. Many branches of medicine have noted the danger of such "gizmo idolatry" amongst doctors (Leff 2008).

The central premise underlying CAD is that it provides quantitative, objective and expert–level assessments of lesion features, lessening the need for specialist training and lengthy experience in test use. Given the reliance on specialist training and experience to make accurate skin cancer diagnoses using dermoscopy, CAD has the potential to improve patient health by widening access to specialist diagnostic capabilities in primary and secondary care. If it is sufficiently sensitive, introducing CAD could increase the early detection of skin cancers, which is critical to improving outcomes, particularly for melanoma and cSCC. As with any technology requiring significant investment, its benefits, including patient acceptability and cost‐effectiveness compared with usual practice, should be fully understood before such an approach can be recommended; establishing diagnostic and referral accuracy is one of the key components.

We identified four published systematic reviews focusing on the accuracy of CAD, two synthesising the performance of Derm–CAD systems (Ali 2012; Rajpara 2009), and two reviewing both Derm–CAD and Spectro–CAD systems (Rosado 2003; Vestergaard 2008). All are limited by out–of–date search periods (Ali 2012 up to 2011, Rajpara 2009 and Vestergaard 2008 up to 2007, Rosado 2003 up to 2002), which is a key concern in the rapidly advancing field of machine learning. Another concern for Ali 2012, Rajpara 2009 and Rosado 2003 is their inclusion of studies which are ineligible for this Cochrane Review due to the absence of an independent validation set, a methodological feature likely to inflate the apparent accuracy of predictive models (Altman 2009). Rosado 2003 also selected datasets on the basis of highest performance, and pooled accuracy estimates for Derm–CAD with Spectro–CAD, which we consider to be two different diagnostic tests. There is therefore a need for an up‐to‐date and rigorous review of the accuracy of dermoscopy–based CAD and of spectroscopy–based CAD which explicitly considers the following key characteristics.

Because CAD models are created by analysing patterns in archived datasets, the degree to which they are likely to make accurate classifications of new observations in real‐life clinical situations relies on the generalisability of the training sets used to develop them (Horsch 2011). Training sets that contain few lesions, or a restricted range of the different diagnoses encountered in clinical practice, are likely to produce models that misclassify new observations due to inadequate learning. Other important attributes thought to influence diagnostic ability are the segmentation process (how the lesion’s border is detected by the computer), which features are selected for analysis (akin to the selection of features in the algorithms used in epiluminescence microscopy (ELM) dermoscopy, e.g. the ABCD rule or the seven–point checklist), the algorithm used, and the type of information produced by the CAD system (e.g. binary outputs indicating presence of malignancy, or visual images of lesions such as macro– or microscopic photographs or graphical representations highlighting suspect structures).

This review follows a generic protocol which covers the full series of Cochrane DTA Reviews for the diagnosis of melanoma (Dinnes 2015a) and for the diagnosis of keratinocyte cancers (Dinnes 2015b). The Background and Methods sections of this review therefore use some text that was originally published in these protocols (Dinnes 2015a; Dinnes 2015b) and text that overlaps some of our other reviews (Dinnes 2018a; Dinnes 2018b; Ferrante di Ruffano 2018a).

Objectives

To determine the accuracy of CAD systems for diagnosing cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults, and to compare the accuracy of CAD systems with that of clinician diagnosis using dermoscopy.

To determine the accuracy of CAD systems for diagnosing BCC in adults, and to compare the accuracy of CAD systems with that of clinician diagnosis using dermoscopy.

To determine the accuracy of CAD systems for diagnosing cSCC in adults, and to compare the accuracy of CAD systems with that of clinician diagnosis using dermoscopy.

Secondary objectives

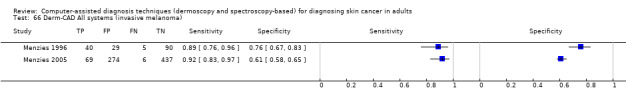

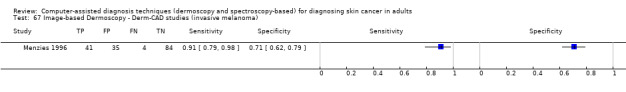

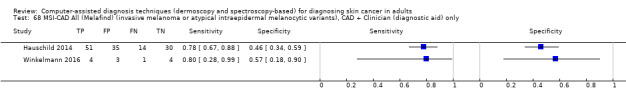

To determine the accuracy of CAD systems for diagnosing invasive melanoma alone in adults, and to compare the accuracy of CAD systems with that of clinician diagnosis using dermoscopy

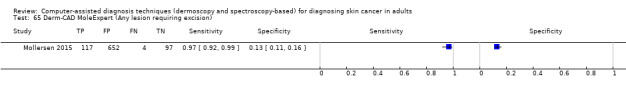

To determine the accuracy of CAD systems for identifying any lesion requiring excision (due to any skin cancer or high–grade dysplasia) in adults, and to compare the accuracy of CAD systems with that of clinician diagnosis using dermoscopy

For each of the primary target conditions:

To compare the diagnostic accuracy of CAD systems to clinician diagnosis using dermoscopy, where both tests have been evaluated in the same studies (direct comparisons);

To determine the diagnostic accuracy of individual CAD systems;

To compare the accuracy of CAD–based diagnosis to CAD–assisted diagnosis (CAD results used by clinicians as a diagnostic aid);

Where CAD systems are used as a diagnostic aid, to determine the effect of observer experience on diagnostic accuracy.

Investigation of sources of heterogeneity

We set out to investigate a range of potential sources of heterogeneity across our series of reviews, as outlined in our generic protocols (Dinnes 2015a; Dinnes 2015b) and described in Appendix 3; however, the limited data available for each individual test reviewed did not permit this.

Methods

Criteria for considering studies for this review

Types of studies

We included test accuracy studies that assessed the result of the index test against that of a reference standard, including the following:

Studies where all participants received a single index test and a reference standard;

Studies where all participants received more than one index test and reference standard;

Studies where participants were allocated (by any method) to receive different index tests or combinations of index tests and all received a reference standard (between‐person comparative studies (BPC));

Studies that recruited series of participants unselected by true disease status (referred to as case series for the purposes of this review);

Diagnostic case‐control studies that separately recruited diseased and non‐diseased groups (see Rutjes 2005); however, we did not include studies that compared results for malignant lesions to those for healthy skin (i.e. with no lesion present);

Both prospective and retrospective studies;

Studies where previously‐acquired clinical or dermoscopic images were retrieved and prospectively interpreted for study purposes.

We excluded studies from which we could not extract or derive 2 x 2 contingency data of the number of true positives, false positives, false negatives and true negatives, and studies that included fewer than five skin cancer cases or fewer than five benign lesions. The threshold of five is arbitrary; however, such small studies are unlikely to add precision to estimates of accuracy.
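As an illustration of what the 2 x 2 requirement and the size threshold involve, the following minimal Python sketch derives sensitivity and specificity from the four cell counts and applies the minimum of five cancers and five benign lesions. The names `TwoByTwo`, `meets_size_threshold`, `sensitivity` and `specificity` are hypothetical, and the example counts are invented for illustration rather than taken from any included study.

```python
from dataclasses import dataclass

MIN_CASES = 5  # threshold stated in the review: at least five cancers and five benign lesions


@dataclass
class TwoByTwo:
    tp: int  # skin cancers with a positive index test result
    fp: int  # benign lesions with a positive index test result
    fn: int  # skin cancers with a negative index test result
    tn: int  # benign lesions with a negative index test result

    @property
    def diseased(self) -> int:
        return self.tp + self.fn

    @property
    def non_diseased(self) -> int:
        return self.fp + self.tn


def meets_size_threshold(t: TwoByTwo, minimum: int = MIN_CASES) -> bool:
    """True if the study has enough skin cancers and enough benign lesions."""
    return t.diseased >= minimum and t.non_diseased >= minimum


def sensitivity(t: TwoByTwo) -> float:
    """Proportion of skin cancers correctly classified as positive."""
    return t.tp / t.diseased


def specificity(t: TwoByTwo) -> float:
    """Proportion of benign lesions correctly classified as negative."""
    return t.tn / t.non_diseased


# Invented counts: 50 cancers and 150 benign lesions.
study = TwoByTwo(tp=45, fp=30, fn=5, tn=120)
print(meets_size_threshold(study))                                # True
print(round(sensitivity(study), 2), round(specificity(study), 2))  # 0.9 0.8
```

A study with, say, four melanomas would fail `meets_size_threshold` even if its 2 x 2 data were fully reported.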

Studies available only as conference abstracts were excluded; however, attempts were made to identify full papers for potentially relevant conference abstracts (see Searching other resources).

Participants

We included studies in adults with pigmented or non–pigmented skin lesions considered to be suspicious for melanoma or an atypical intraepidermal melanocytic variant or a keratinocyte skin cancer (BCC or cSCC). Studies examining adults at high risk of developing skin cancer, including those with a family history or previous history of skin cancer, atypical or dysplastic naevus syndrome, or genetic cancer syndromes were also eligible for inclusion.

We excluded studies that recruited only participants with malignant diagnoses.

We excluded studies conducted in children, and studies that clearly reported inclusion of more than 50% of participants aged 16 and under.

Index tests

Studies reporting accuracy data for tests using automated diagnosis were eligible for inclusion, whether diagnosis was produced independently by the CAD system (system–based diagnosis), or by a clinician using a CAD system as a diagnostic aid (computer–assisted diagnosis). CAD systems using any type of data capture were eligible, including imaging and non–imaging modalities. We included all machine learning algorithms.

We included studies developing new algorithms or methods of diagnosis (i.e. derivation studies) if they evaluated the new approach using a separate 'test set' of participants or images.

We excluded studies if they:

Evaluated a new statistical model or algorithm in the same participants or images as those used to train the model (i.e. absence of an independent test set);

Used cross‐validation approaches such as 'leave‐one‐out' cross‐validation (Efron 1983); or

Evaluated the accuracy of the presence or absence of individual lesion characteristics or morphological features, with no overall diagnosis of malignancy.
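The reason for excluding evaluations in the same participants or images used to train the model can be shown with a toy example. The sketch below is not taken from any included study: it assumes a single invented numeric "lesion feature" and labels assigned completely at random, then uses a 1‐nearest‐neighbour classifier (chosen here only because it is simple to write in plain Python). Evaluated on its own training data the classifier appears perfect, whereas on an independent test set it performs at roughly chance level, which is its true accuracy for labels that carry no signal.

```python
import random


def nearest_neighbour_predict(train, x):
    """1-nearest-neighbour: return the label of the closest training point."""
    closest = min(train, key=lambda item: abs(item[0] - x))
    return closest[1]


def accuracy(train, data):
    """Proportion of points in `data` whose label the classifier predicts correctly."""
    correct = sum(nearest_neighbour_predict(train, x) == y for x, y in data)
    return correct / len(data)


rng = random.Random(2016)  # fixed seed so the toy example is reproducible


def make_set(n):
    # Hypothetical 'lesions': one random feature value and a label that is pure
    # noise, so no model can genuinely do better than chance.
    return [(rng.random(), rng.choice(["benign", "malignant"])) for _ in range(n)]


training_set = make_set(200)
test_set = make_set(200)

print(accuracy(training_set, training_set))  # 1.0: every training lesion is its own nearest neighbour
print(accuracy(training_set, test_set))      # roughly chance level (about 0.5) on unseen lesions
```

The gap between the two figures is the optimism that an independent test set is intended to remove.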

Although primary‐care clinicians can in practice be specialists in skin cancer, we considered primary‐care physicians as generalist practitioners and dermatologists as specialists. Within each group, we extracted any reporting of special interest or accreditation in skin cancer.

Target conditions

We defined the primary target conditions as the detection of:

Any form of invasive cutaneous melanoma, or atypical intraepidermal melanocytic variants (i.e. including melanoma in situ, or lentigo maligna, which has a risk of progression to invasive melanoma)

BCC

cSCC

We considered two additional target conditions in secondary analyses, namely the detection of:

Any form of invasive cutaneous melanoma alone

Any skin lesion requiring excision: all forms of skin cancer listed above, as well as melanoma in situ, lentigo maligna, and lesions with severe melanocytic dysplasia.
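Because the same lesion can count as a target condition in one analysis but not in another, the definitions above can be written as sets of final diagnoses. The following Python sketch is illustrative only: the diagnosis labels and the names `PRIMARY_TARGETS`, `INVASIVE_MELANOMA_ONLY`, `REQUIRES_EXCISION` and `is_target_positive` are hypothetical, and the melanoma set lists only the intraepidermal variants named in the text. The function simply tests membership; it does not decide whether non‐target lesions are analysed as negatives or excluded.

```python
# Hypothetical final-diagnosis labels; the review does not prescribe these codes.
PRIMARY_TARGETS = {
    "melanoma": {"invasive melanoma", "melanoma in situ", "lentigo maligna"},
    "BCC": {"basal cell carcinoma"},
    "cSCC": {"cutaneous squamous cell carcinoma"},
}

# Secondary target 1: invasive melanoma alone.
INVASIVE_MELANOMA_ONLY = {"invasive melanoma"}

# Secondary target 2: any lesion requiring excision (all the above plus severe dysplasia).
REQUIRES_EXCISION = set().union(*PRIMARY_TARGETS.values()) | {"severe melanocytic dysplasia"}


def is_target_positive(final_diagnosis: str, target: set) -> bool:
    """True if the lesion's final diagnosis belongs to the given target condition."""
    return final_diagnosis in target


print(is_target_positive("lentigo maligna", PRIMARY_TARGETS["melanoma"]))  # True
print(is_target_positive("lentigo maligna", INVASIVE_MELANOMA_ONLY))       # False
print(is_target_positive("severe melanocytic dysplasia", REQUIRES_EXCISION))  # True
```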

Reference standards

The ideal reference standard is histopathological diagnosis in all eligible lesions. A qualified pathologist or dermatopathologist should perform histopathology. Ideally, reporting should be standardised, detailing a minimum dataset that includes the histopathological features of melanoma needed to determine stage according to the American Joint Committee on Cancer (AJCC) Staging System (e.g. Slater 2014). We did not apply this as a necessary inclusion criterion, but extracted any pertinent information.

Partial verification (applying the reference test only to a subset of those undergoing the index test) was of concern, given that lesion excision or biopsy is unlikely to be carried out for all benign‐appearing lesions within a representative population sample. To reflect what happens in reality, we therefore accepted clinical follow‐up of benign‐appearing lesions as an eligible reference standard, whilst recognising the risk of differential verification bias (as misclassification rates of histopathology and follow‐up will differ).

Additional eligible reference standards included cancer registry follow‐up and 'expert opinion' with no histology or clinical follow‐up. Cancer registry follow‐up is considered less desirable than active clinical follow‐up, as follow‐up is not under the control of the study investigators. Furthermore, if participant‐based rather than lesion‐based analyses are presented, it may be difficult to determine whether a malignant lesion detected during follow‐up is the same lesion that originally tested negative on the index test.

We considered all of the above to be eligible reference standards, with the following caveats:

All study participants with a final diagnosis of the target skin cancer disorder must have a histological diagnosis, either subsequent to the application of the index test or after a period of clinical follow‐up

At least 50% of all participants with benign lesions must have either a histological diagnosis or clinical follow‐up to confirm benignity.
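The two caveats above amount to a simple eligibility rule on verification counts. The Python sketch below expresses that rule under our own hypothetical naming (`VerificationCounts`, `reference_standard_eligible`); it compares integers rather than computing a percentage, so that exactly 50% of benign participants verified is treated as meeting the threshold.

```python
from dataclasses import dataclass


@dataclass
class VerificationCounts:
    malignant_total: int      # participants with a final diagnosis of the target cancer
    malignant_histology: int  # ...of whom have a histological diagnosis
    benign_total: int         # participants with benign lesions
    benign_verified: int      # ...of whom have histology or clinical follow-up


def reference_standard_eligible(c: VerificationCounts) -> bool:
    """Apply both caveats: every cancer confirmed histologically, and at least
    half of benign participants confirmed by histology or clinical follow-up."""
    all_cancers_histology = c.malignant_histology == c.malignant_total
    # Integer form of benign_verified / benign_total >= 0.5; a study with no
    # benign participants would already be excluded under the participant criteria.
    benign_half_verified = c.benign_total > 0 and 2 * c.benign_verified >= c.benign_total
    return all_cancers_histology and benign_half_verified


print(reference_standard_eligible(VerificationCounts(40, 40, 100, 60)))  # True
print(reference_standard_eligible(VerificationCounts(40, 39, 100, 60)))  # False: one cancer lacks histology
print(reference_standard_eligible(VerificationCounts(40, 40, 100, 49)))  # False: under 50% of benign verified
```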

Search methods for identification of studies

Electronic searches

The Information Specialist (SB) carried out a comprehensive search for published and unpublished studies. A single large literature search was conducted to cover all topics in the programme grant (see Appendix 1 for a summary of reviews included in the programme grant). This allowed for the screening of search results for potentially relevant papers for all reviews at the same time. A search combining disease‐related terms with terms related to the test names, using both text words and subject headings, was formulated. The search strategy was designed to capture studies evaluating tests for the diagnosis or staging of skin cancer. As the majority of records were related to the searches for tests for staging of disease, a filter using terms related to cancer staging and to accuracy indices was applied to the staging test search, to try to eliminate irrelevant studies, for example, those using imaging tests to assess treatment effectiveness. A sample of 300 records that would be missed by applying this filter was screened, and the filter was adjusted to include potentially relevant studies. When piloted on MEDLINE, inclusion of the filter for the staging tests reduced the overall number of records by around 6000. The final search strategy, incorporating the filter, was subsequently applied to all bibliographic databases as listed below (Appendix 4). The final search result was cross‐checked against the list of studies included in five systematic reviews; our search identified all but one of the studies, and this study was not indexed on MEDLINE. The Information Specialist (SB) devised the search strategy, with input from the Information Specialist from Cochrane Skin. No additional limits were used.

We searched the following bibliographic databases to 29 August 2016 for relevant published studies:

MEDLINE via OVID (from 1946);

MEDLINE In‐Process & Other Non‐Indexed Citations via OVID;

Embase via OVID (from 1980).

We searched the following bibliographic databases to 30 August 2016 for relevant published studies:

Cochrane Central Register of Controlled Trials (CENTRAL) Issue 7, 2016, in the Cochrane Library;

Cochrane Database of Systematic Reviews (CDSR) Issue 8, 2016 in the Cochrane Library;

Cochrane Database of Abstracts of Reviews of Effects (DARE) Issue 2, 2015;

CRD Health Technology Assessment (HTA) database Issue 3, 2016;

Cumulative Index to Nursing and Allied Health Literature (CINAHL) (via EBSCO from 1960).

We searched the following databases for relevant unpublished studies using a strategy based on the MEDLINE search:

CPCI (Conference Proceedings Citation Index), via Web of Science™ (from 1990; searched 28 August 2016);

SCI Science Citation Index Expanded™ via Web of Science™ (from 1900, using the 'Proceedings and Meetings Abstracts' Limit function; searched 29 August 2016).

We searched the following trials registers using search terms 'melanoma', 'squamous cell', 'basal cell' and 'skin cancer' combined with 'diagnosis':

Zetoc (from 1993; searched 28 August 2016);

The US National Institutes of Health Ongoing Trials Register (www.clinicaltrials.gov); searched 29 August 2016;

NIHR Clinical Research Network Portfolio Database (www.nihr.ac.uk/research‐and‐impact/nihr‐clinical‐research‐network‐portfolio/); searched 29 August 2016;

The World Health Organization International Clinical Trials Registry Platform (apps.who.int/trialsearch/); searched 29 August 2016.

We aimed to identify all relevant studies, regardless of language or publication status (published, unpublished, in press, or in progress). We applied no date limits.

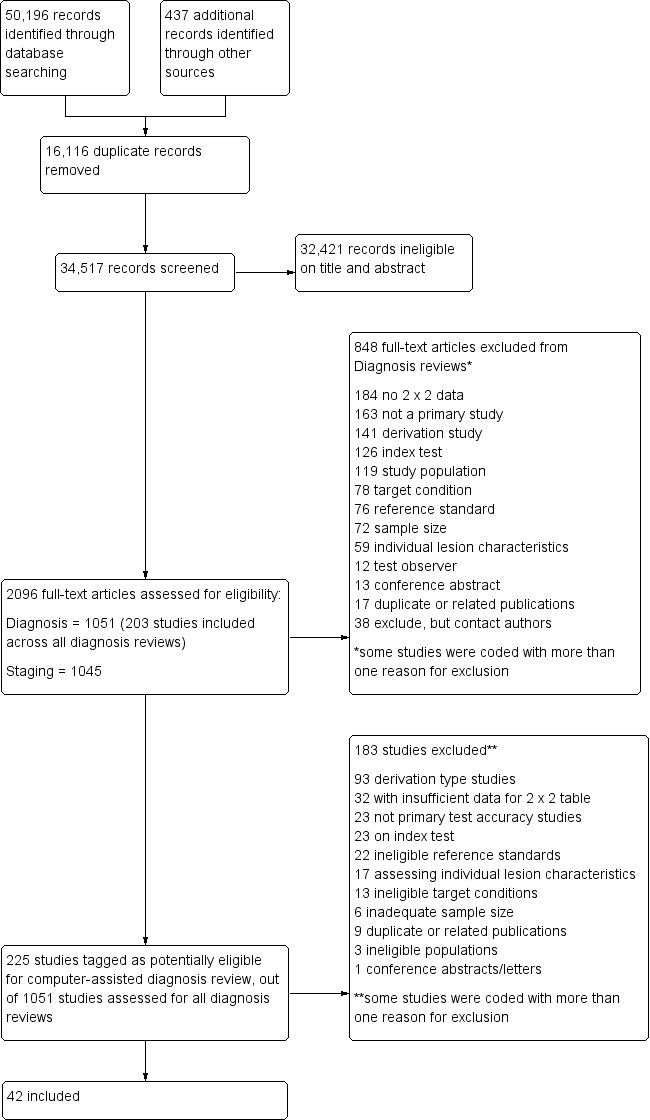

Searching other resources