Abstract

Background

Melanoma accounts for a small proportion of all skin cancer cases but is responsible for most skin cancer‐related deaths. Early detection and treatment can improve survival. Smartphone applications are readily accessible and potentially offer an instant risk assessment of the likelihood of malignancy so that the right people seek further medical attention from a clinician for more detailed assessment of the lesion. There is, however, a risk that melanomas will be missed and treatment delayed if the application reassures the user that their lesion is low risk.

Objectives

To assess the diagnostic accuracy of smartphone applications to rule out cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults with concerns about suspicious skin lesions.

Search methods

We undertook a comprehensive search of the following databases from inception to August 2016: Cochrane Central Register of Controlled Trials; MEDLINE; Embase; CINAHL; CPCI; Zetoc; Science Citation Index; US National Institutes of Health Ongoing Trials Register; NIHR Clinical Research Network Portfolio Database; and the World Health Organization International Clinical Trials Registry Platform. We studied reference lists and published systematic review articles.

Selection criteria

Studies of any design evaluating smartphone applications intended for use by individuals in a community setting who have lesions that might be suspicious for melanoma or atypical intraepidermal melanocytic variants versus a reference standard of histological confirmation or clinical follow‐up and expert opinion.

Data collection and analysis

Two review authors independently extracted all data using a standardised data extraction and quality assessment form (based on QUADAS‐2). Due to scarcity of data and poor quality of studies, we did not perform a meta‐analysis for this review. For illustrative purposes, we plotted estimates of sensitivity and specificity on coupled forest plots for each application under consideration.

Main results

This review reports on two cohorts of lesions published in two studies. Both studies were at high risk of bias from selective participant recruitment and high rates of non‐evaluable images. Concerns about applicability of findings were high due to inclusion only of lesions already selected for excision in a dermatology clinic setting, and image acquisition by clinicians rather than by smartphone app users.

We report data for five mobile phone applications and 332 suspicious skin lesions with 86 melanomas across the two studies. Across the four artificial intelligence‐based applications that classified lesion images (photographs) as melanomas (one application) or as high risk or 'problematic' lesions (three applications) using a pre‐programmed algorithm, sensitivities ranged from 7% (95% CI 2% to 16%) to 73% (95% CI 52% to 88%) and specificities from 37% (95% CI 29% to 46%) to 94% (95% CI 87% to 97%). The single application using store‐and‐forward review of lesion images by a dermatologist had a sensitivity of 98% (95% CI 90% to 100%) and specificity of 30% (95% CI 22% to 40%).
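This section does not say which interval method was used for the 95% CIs quoted above. The sketch below assumes the Wilson score interval, which is one common choice for a binomial proportion. It shows how sensitivity and specificity, each with a 95% CI, can be computed from a 2×2 table. The counts are hypothetical and are not data from the included studies.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def accuracy_2x2(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Sensitivity = TP/(TP+FN); specificity = TN/(TN+FP); each with a Wilson 95% CI."""
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    return {
        "sensitivity": (sens, *wilson_interval(tp, tp + fn)),
        "specificity": (spec, *wilson_interval(tn, tn + fp)),
    }


# Hypothetical counts for illustration only (not taken from the included studies).
result = accuracy_2x2(tp=40, fn=15, tn=90, fp=60)
for name, (est, lo, hi) in result.items():
    print(f"{name}: {est:.0%} (95% CI {lo:.0%} to {hi:.0%})")
```

Different interval methods (for example, exact binomial intervals) give somewhat different limits, especially for proportions near 0% or 100%.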

The number of test failures (lesion images analysed by the applications but classed as 'unevaluable' and excluded by the study authors) ranged from 3 to 31 (or 2% to 18% of lesions analysed). The store‐and‐forward application had one of the highest rates of test failure (15%). At least one melanoma was classed as unevaluable in three of the four application evaluations.
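The included studies excluded unevaluable images before calculating accuracy, so the reported sensitivities may be optimistic. The sketch below uses hypothetical counts, not data from the included studies, and compares two calculations. The first gives sensitivity with test failures excluded. The second is an intention-to-diagnose-style calculation in which every unevaluable melanoma counts as missed. The function names are illustrative.

```python
def sensitivity_excluding_failures(tp: int, fn: int) -> float:
    """Sensitivity as reported when unevaluable images are dropped from the analysis."""
    return tp / (tp + fn)


def sensitivity_failures_as_missed(tp: int, fn: int, unevaluable_melanomas: int) -> float:
    """Intention-to-diagnose style: each unevaluable melanoma counts as a false negative."""
    return tp / (tp + fn + unevaluable_melanomas)


# Hypothetical counts: 50 melanomas photographed, 5 returned as unevaluable,
# and 44 of the remaining 45 correctly flagged as high risk.
tp, fn, unevaluable = 44, 1, 5
print(f"failures excluded:       {sensitivity_excluding_failures(tp, fn):.1%}")
print(f"failures counted missed: {sensitivity_failures_as_missed(tp, fn, unevaluable):.1%}")
```

With 5 of 50 melanomas unevaluable, a sensitivity of about 98% with failures excluded falls to 88% when those failures are counted as missed.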

Authors' conclusions

Smartphone applications using artificial intelligence‐based analysis have not yet demonstrated sufficient promise in terms of accuracy, and they are associated with a high likelihood of missing melanomas. Applications based on store‐and‐forward images could have a potential role in the timely presentation of people with potentially malignant lesions by facilitating active self‐management health practices and early engagement of those with suspicious skin lesions; however, they may incur a significant increase in resource and workload. Given the paucity of evidence and low methodological quality of existing studies, it is not possible to draw any implications for practice. Nevertheless, this is a rapidly advancing field, and new and better applications with robust reporting of studies could change these conclusions substantially.

Keywords: Adult, Humans, Algorithms, Diagnostic Errors, Diagnostic Errors/statistics & numerical data, Early Detection of Cancer, Early Detection of Cancer/instrumentation, Early Detection of Cancer/methods, Melanoma, Melanoma/diagnostic imaging, Mobile Applications, Sensitivity and Specificity, Skin Neoplasms, Skin Neoplasms/diagnostic imaging, Smartphone, Triage, Triage/methods

Plain language summary

How accurate are smartphone applications ('apps') for detecting melanoma in adults?

What is the aim of the review?

We wanted to find out how well smartphone applications can help the general public understand whether their skin lesions might be melanoma.

Why is improving the diagnosis of malignant melanoma skin cancer important?

Melanoma is one of the most dangerous forms of skin cancer. Not recognising a melanoma (a false negative test result) could delay seeking appropriate advice and surgery to remove it. This increases the risk of the cancer spreading to other organs in the body and possibly causing death. Diagnosing a skin lesion as a melanoma when it is not present (a false positive result) may cause anxiety and lead to unnecessary surgery and further investigations.

What was studied in the review?

Specialised applications ('apps') that provide advice on skin lesions or moles that might cause people concern are widely available for smartphones. Some apps allow people to photograph any skin lesion they might be worried about and then receive guidance on whether to get medical advice. Some apps automatically classify lesions as high or low risk, while others act as store‐and‐forward devices, sending images to an experienced professional, such as a dermatologist, who then makes a risk assessment based on the photo. Cochrane researchers found two studies evaluating five apps used to assess suspicious skin lesions: four that used automated analysis of images and one that used a store‐and‐forward approach.

What are the main results of the review?

The review included two studies with 332 lesions, including 86 melanomas, analysed by at least one smartphone application. Both studies used photographs of moles or skin lesions that were about to be removed because doctors had already decided they could be melanomas. The photographs were taken by doctors instead of people taking pictures of their lesions with their own smartphones. For these reasons, we are not able to make a reliable estimate about how well the apps actually work.

Four apps that produce an immediate (automated) assessment of a skin lesion or mole that has been photographed by the smartphone missed between 7 and 55 melanomas.

One app that sends the photograph of a mole or skin lesion to a dermatologist for assessment missed only one melanoma. Another 6 melanomas examined by the dermatologist via the application were not classified as high risk; instead, the dermatologist was unable to classify these lesions as either 'atypical' (possibly a melanoma) or 'typical' (definitely not a melanoma).

How reliable are the results of the studies of this review?

The small number and poor quality of included studies reduce the reliability of the findings. The people included were not typical of those who would use the applications in real life. The final diagnosis of melanoma was made by histology, which is likely to have been a reliable method for deciding whether patients really had melanoma*. However, the studies excluded between 2% and 18% of images because the applications failed to produce a recommendation.

Who do the results of this review apply to?

Studies took place in the USA and Germany. They did not report key patient information such as age and gender. The percentage of people with a final diagnosis of melanoma was 18% in one study and 35% in the other, much higher than that observed in community settings. The definition of eligible patients was narrow in comparison to likely users of the applications. The photographs used were taken by doctors rather than by smartphone users, which seriously impacts the applicability of results.

What are the implications of this review?

Current smartphone applications using automated analysis are observed to have a high chance of missing melanomas (false negatives). Store‐and‐forward image applications could have a potential role in the timely identification of people with potentially malignant lesions by facilitating early engagement of those with suspicious skin lesions, but they have resource and workload implications.

The development of applications to help identify people who might have melanoma is a fast‐moving field. The emergence of new applications, higher quality and better reported studies could change the conclusions of this review substantially.

How up‐to‐date is this review?

The review authors searched for and used studies published up to August 2016.

*In these studies biopsy was the reference standard (means of establishing final diagnoses).

Summary of findings


| Question | What is the diagnostic accuracy of smartphone applications for detecting cutaneous melanoma in adults? |
|---|---|
| Participants | Adults with suspicious skin lesions |
| Prior testing and prevalence | Studies did not report the basis for participant selection. One selected a sample of lesions previously imaged during routine care just before excision of the lesion. The second study evaluated the test on patients who had been referred for further screening of the lesion by a specialist. Prevalence of melanoma was 18% and 35%. |
| Settings | Secondary care |
| Target condition(s) | Invasive melanoma and atypical intraepidermal melanocytic variants |
| Index test | Smartphone applications intended for use by the general public. Lesions not visualised by the applications were excluded |
| Reference standard | Histology |
| Action | If accurate, positive results of smartphone applications will help to highlight lesions of concern to the lay public, promoting earlier diagnosis of melanoma and reducing consultations for benign lesions. |
| Limitations | |
| Risk of bias | Patient selection methods at high risk of bias due to selective inclusion of lesion types (2/2) and use of a case‐control design (1/2). Test interpretation was blinded to reference standard and pre‐specified for artificial intelligence‐based diagnosis (2/2). Reference standard blinding not described. Timing of index and reference standards not reported. Exclusions due to test failures were not reported (1/2) or their final diagnoses were not described (2/2) |
| Applicability of evidence to question | High concerns about applicability due to unrepresentative participant samples with high disease prevalences (2/2). Test not applied and interpreted by the intended user of the application (2/2). Reference standard interpretation by experienced histopathologists was not described (1/2). |
| Total number of studies | 2 |
| Detection of melanoma | |
| Quantity of evidence | Number of studies: 2; total participants with test results: 332; total with target condition: 86ᵃ |
| Findings | Across the four artificial intelligence‐based applications that classified lesion images (photographs) as either melanomas (one application) or as high risk or 'problematic' lesions (three applications), sensitivities ranged from 7% (95% CI 2% to 16%) to 73% (95% CI 52% to 88%) and specificities from 37% (95% CI 29% to 46%) to 94% (95% CI 87% to 97%). This means that between 27% and 93% of invasive melanomas or atypical intraepidermal melanocytic variants were not picked up as high risk by the automated applications (or as melanomas by one of the four applications). With a prevalence of melanoma ranging between 18% and 37% for these evaluations, the number of melanomas missed was 7 to 55. The single application using store‐and‐forward review of lesion images by a dermatologist had a sensitivity of 98% (95% CI 90% to 100%) and specificity of 30% (95% CI 22% to 40%); the dermatologist missed one melanoma. The number of test failures (lesion images analysed by the applications but classed as unevaluable and excluded by the study authors) ranged from 3 to 31 (or 2% to 18% of lesions analysed). The store‐and‐forward application had one of the highest rates of test failure (15%). At least one melanoma was classed as unevaluable in three of the four application evaluations, with the highest number of melanomas excluded for the dermatologist evaluating the store‐and‐forward images (6/60 melanomas assessed). |

ᵃ Of the 60 melanomas included in one study, the four applications successfully analysed 54 to 60.
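Predictive values depend on prevalence as well as on sensitivity and specificity. This is one reason the high prevalences in these studies limit applicability to community settings. The sketch below applies Bayes' theorem to the store‐and‐forward application's point estimates quoted above (sensitivity 98%, specificity 30%). It uses the two study prevalences and, for comparison, a hypothetical community prevalence of 1%. The function name is illustrative.

```python
def predictive_values(sens: float, spec: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) from sensitivity, specificity and pre-test prevalence (Bayes' theorem)."""
    tp = sens * prevalence              # true positives per unit population
    fp = (1 - spec) * (1 - prevalence)  # false positives
    fn = (1 - sens) * prevalence        # false negatives
    tn = spec * (1 - prevalence)        # true negatives
    return tp / (tp + fp), tn / (tn + fn)


# 35% and 18% are the study prevalences; 1% is a hypothetical community-like value.
for prev in (0.35, 0.18, 0.01):
    ppv, npv = predictive_values(0.98, 0.30, prev)
    print(f"prevalence {prev:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

At 35% prevalence the PPV is about 43%. At a hypothetical 1% prevalence it falls to about 1.4%, although sensitivity and specificity are unchanged.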

Background

This is one of a series of Cochrane Diagnostic Test Accuracy (DTA) Reviews on the diagnosis and staging of melanoma and keratinocyte skin cancers as part of the National Institute for Health Research (NIHR) Cochrane Systematic Reviews Programme. Appendix 1 shows the content and structure of the programme. Appendix 2 provides a glossary of terms used.

Target condition being diagnosed

Melanoma arises from uncontrolled proliferation of melanocytes, the epidermal cells that produce pigment or melanin (Thompson 2003). Melanoma can occur in any organ that contains melanocytes, including mucosal surfaces, the back of the eye, and the lining around the spinal cord and brain (McLaughlin 2005), but it most commonly arises in the skin (Erdmann 2013; Ferlay 2015). Cutaneous melanoma refers to any skin lesion with malignant melanocytes present in the dermis and includes superficial spreading, nodular, acral lentiginous, and lentigo maligna melanoma variants (see Figure 1). Melanoma in situ refers to malignant melanocytes that are contained within the epidermis and have not yet invaded the dermis, but which are at risk of progression to melanoma if left untreated (Thompson 2003; SEER 2007). Lentigo maligna, a subtype of melanoma in situ in chronically sun‐damaged skin, denotes another form of proliferation of abnormal melanocytes. Lentigo maligna can progress to invasive melanoma if its growth breaches the dermo‐epidermal junction during a vertical growth phase (when it becomes known as 'lentigo maligna melanoma'); however, its malignant transformation is less frequent and slower than that of melanoma in situ (Kasprzak 2015). Melanoma in situ and lentigo maligna are both atypical intraepidermal melanocytic variants (Thompson 2003; SEER 2007). Melanoma is one of the most dangerous forms of skin cancer, with the potential to metastasise to other parts of the body via the lymphatic system and bloodstream; it accounts for only a small percentage of all skin cancer cases but is responsible for up to 75% of skin cancer deaths (Boring 1994; Cancer Research UK 2017a).

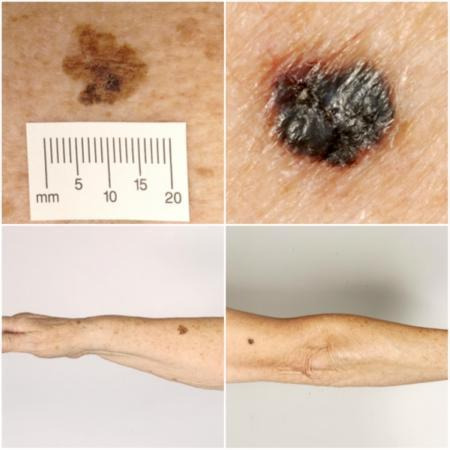

Figure 1. Sample photographs of superficial spreading melanoma (left) and nodular melanoma (right). Copyright © 2010 Dr Rubeta Matin: reproduced with permission.

The annual incidence of melanoma exceeded 200,000 newly diagnosed cases worldwide in 2012 (Erdmann 2013; Ferlay 2015), with an estimated 55,000 deaths (Ferlay 2015). The highest incidence is observed in Australia, with 13,134 new cases of melanoma of the skin in 2014 (ACIM 2017), and in New Zealand, with 2341 registered cases in 2010 (HPA and MelNet NZ 2014). For 2014 in the USA, the predicted number of new cases was 73,870 and the predicted number of deaths was 9940 (Siegel 2015). The highest rates in Europe are seen in north‐western Europe and the Scandinavian countries, with the highest incidence reported in Switzerland: 25.8 per 100,000 in 2012. Rates in England more than tripled between 1990 and 2012, from 4.6 and 6.0 per 100,000 in men and women, respectively, to 18.6 and 19.6 per 100,000 (EUCAN 2012). Indeed, in the UK, melanoma has one of the fastest rising incidence rates of any cancer and the biggest projected increase in incidence between 2007 and 2030 (Mistry 2011). In the decade leading up to 2013, age‐standardised incidence increased by 46%, with 14,500 new cases in 2013 and 2459 deaths in 2014 (Cancer Research UK 2017b). Rates are higher in women than in men; however, incidence in men is increasing faster than in women (Arnold 2014).
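As a quick arithmetic check on the England rates (EUCAN 2012) quoted above, the ratio of 2012 to 1990 rates per 100,000 is:

```latex
\frac{18.6}{4.6} \approx 4.0 \quad (\text{men}), \qquad \frac{19.6}{6.0} \approx 3.3 \quad (\text{women})
```

Rates therefore rose roughly fourfold in men and slightly more than threefold in women.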

The rising incidence of melanoma is thought to be primarily related to an increase in recreational sun exposure, tanning bed use and an increasingly ageing population with higher lifetime recreational ultraviolet (UV) exposure, in conjunction with possible earlier detection (Linos 2009; Belbasis 2016). Putative risk factors are reviewed in detail elsewhere (Belbasis 2016); however, risk factors can be broadly divided into host or environmental factors. Host factors include pale skin and light hair or eye colour; older age (Geller 2002); male sex (Geller 2002); previous skin cancer (Tucker 1985); genetically inherited skin disorders, for example xeroderma pigmentosum (Lehmann 2011); a family history of melanoma (Gandini 2005a); and predisposing skin lesions, such as high melanocytic naevus counts (Gandini 2005a), clinically atypical naevi (Gandini 2005a), or large congenital naevi (Swerdlow 1995). Environmental factors include recreational and occupational (work‐related) exposure to sunlight (both cumulative and episodic burning) (Gandini 2005b; Armstrong 2017); artificial tanning (Boniol 2012); and immunosuppression, such as that seen in organ transplant recipients or HIV‐positive individuals (DePry 2011). Lower socioeconomic class may be associated with delayed presentation and thus more advanced disease at diagnosis (Reyes‐Ortiz 2006).

Five‐year survival for stage I melanoma is reported to be 91% to 95%, falling to 27% to 69% in stage III disease (Balch 2009). Tumour thickness, the presence of tumour ulceration and age are the main determinants of melanoma prognosis, and prognostic tools have been developed that include such features (Mahar 2016). Before the advent of targeted therapies and immunotherapies, metastatic melanoma (involving distant sites and visceral organs) resulted in median survival of six to nine months with a three‐year survival of 15% (Balch 2009; Korn 2008). Despite rising incidence, melanoma mortality appears to be stable (Apalla 2017). Between 1975 and 2010, five‐year relative survival for melanoma in the US increased from 80% to 94% but mortality rates showed little change, at 2.1 deaths per 100,000 in 1975 and 2.7 per 100,000 in 2010 (Cho 2014). Increasing incidence of localised disease over the same period (from 5.7 to 21 per 100,000) suggests that the observed survival benefits may be due to earlier detection and heightened vigilance (Cho 2014); however, targeted therapies for stage IV melanoma (e.g. BRAF inhibitors) have improved survival expectation, and immunotherapies are demonstrating potential for long‐term survival (Pasquali 2018).

Treatment of melanoma

For primary melanoma, the mainstay of definitive treatment is wide local excision of the lesion, to remove both the tumour and any malignant cells that might have spread into the surrounding skin (Sladden 2009; Marsden 2010; NICE 2015; Garbe 2016; SIGN 2017). Recommended surgical margins vary according to tumour thickness, as described in Garbe 2016, and stage of disease at presentation, as in NICE 2015 guidelines. The role of narrower (e.g. 1 cm healthy tissue) excision margins for thinner lesions is still debated (Sladden 2009; Wheatley 2016). Following histological confirmation of diagnosis, the lesion is staged according to the American Joint Committee on Cancer (AJCC) Staging System to guide treatment (Balch 2009). Stage 0 refers to melanoma in situ; stages I to II, localised melanoma; stage III, regional metastasis; and stage IV, distant metastasis (Balch 2009). The main prognostic indicators can be divided into histological and clinical factors. Histologically, Breslow thickness is the single most important predictor of survival, as it is a quantitative measure of tumour invasion that correlates with the propensity for metastatic spread (Balch 2001). Microscopic ulceration, mitotic rate, microscopic satellites, regression, lymphovascular invasion, and nodular (rapidly growing) or amelanotic (lacking in melanin pigment) subtypes are also associated with worse prognosis (Shaikh 2012; Moreau 2013).

Independent of tumour thickness, prognosis is worse in older people (Geller 2002); males (Geller 2002); those with recurrent lesions (Dong 2000); and in those with distant lymph node involvement (microscopic or macroscopic), metastatic disease, or both, at the time of primary presentation (Balch 2009). There is debate regarding the prognostic effect from primary lesion site, with some evidence suggesting a worse prognosis for truncal lesions or those on the scalp or neck (Zemelman 2014).

Index test(s)

Smartphones are rapidly evolving from communication and entertainment devices to tools with specialised applications ('apps') that are intimately involved in many aspects of daily life (Kassianos 2015). The processing powers of modern smartphones allow their use in more demanding tasks such as image analysis (Massone 2007). Melanoma risk assessment tools are recent additions and include applications such as Mel App and Skin Scan (Robson 2012).

Once downloaded to a user's mobile phone (both Android and Apple iOS platforms), the applications can act as an information resource about melanoma or other skin cancer, provide guidance on whether people should seek medical advice for a particular lesion that they have photographed with the mobile phone, or be used to monitor skin lesions to identify any changes over time (Kassianos 2015).

Some applications that provide guidance on particular skin lesions can use internally programmed algorithms (or 'artificial intelligence') to catalogue and classify the lesion images. Others are store‐and‐forward applications that forward the photograph of the lesion to an experienced professional such as a dermatologist for review and then communicate a recommendation regarding the nature of the lesion to the user (essentially allowing members of the public direct access to a teledermatology‐type service) (Kassianos 2015).

The artificial intelligence‐based applications use algorithms to compare the acquired image against a bank of exemplar images of malignant and benign lesions or compare the image against a host of benign and malignant lesion characteristics learned from analysing thousands of other images to assess the likelihood of melanoma. These algorithms are generally based on fractal analysis. A fractal, in biology, is a natural phenomenon that exhibits a repeating pattern at every scale (Landini 2011). Fractal analysis can provide a quantitative measure of irregularity where regularity is expected. With regard to melanoma, this includes irregularities in a lesion's physical characteristics, such as those used in established algorithms to assist in the diagnosis of melanoma (e.g. the 'ABCs' of melanoma (Friedman 1985)), as well as texture, patterns, and other geometric features. Fractal analysis has been used for diagnosis of other cancers, for example, mammography for breast cancer (Rangayyan 2007; Raguso 2010), but it has not historically been made available to consumers for assessment of their own malignancy risk. A major benefit of fractal analysis is that it is automated and thus observer‐independent.
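The paragraph above describes fractal analysis only in general terms, and the apps' own algorithms are not described. As a rough illustration, the sketch below uses box counting, one standard way to estimate a fractal dimension. It covers the lesion border with boxes of decreasing size and fits the slope of log(box count) against log(1/box size). A smooth border should give a value near 1, and a more irregular border a larger value. The function names, the border definition and the box sizes are illustrative assumptions, and NumPy is assumed to be available.

```python
import numpy as np


def lesion_border(mask: np.ndarray) -> np.ndarray:
    """Border pixels of a boolean lesion mask: lesion pixels with at least one 4-neighbour outside."""
    padded = np.pad(mask, 1, constant_values=False)
    interior = (
        padded[1:-1, 1:-1]
        & padded[:-2, 1:-1]
        & padded[2:, 1:-1]
        & padded[1:-1, :-2]
        & padded[1:-1, 2:]
    )
    return mask & ~interior


def box_counting_dimension(points: np.ndarray, sizes=(2, 4, 8, 16, 32)) -> float:
    """Estimate the box-counting dimension of the True pixels in a 2-D boolean array."""
    counts = []
    for s in sizes:
        # Trim so the image divides evenly into s x s boxes, then count occupied boxes.
        h = (points.shape[0] // s) * s
        w = (points.shape[1] // s) * s
        boxes = points[:h, :w].reshape(h // s, s, w // s, s).any(axis=(1, 3))
        counts.append(int(boxes.sum()))
    if min(counts) == 0:
        raise ValueError("no foreground pixels at some box size")
    # Slope of log(count) against log(1/size) estimates the dimension.
    slope, _ = np.polyfit(np.log(1.0 / np.asarray(sizes, dtype=float)), np.log(counts), 1)
    return float(slope)


# Usage: a synthetic round "lesion" on a 256 x 256 grid.
yy, xx = np.mgrid[:256, :256]
disc = (yy - 128) ** 2 + (xx - 128) ** 2 < 100 ** 2
print(f"border dimension: {box_counting_dimension(lesion_border(disc)):.2f}")
```

Real lesion images would first need segmentation into a lesion mask, and the result depends on image resolution and on the box sizes chosen.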

A recent review by Kassianos 2015 identified 39 available smartphone applications related to melanoma; most were multifunctional in that they provided information about melanoma in addition to lesion classification or a means of monitoring a given lesion. Just under half of the applications (46%; 18/39) provided some form of image analysis, and just under a quarter (23%; 9/39) used 'store‐and‐forward' lesion image review by a dermatologist. Those providing image analysis often did not describe how the photographic images were processed and analysed to provide advice on the likelihood of melanoma (Kassianos 2015). The review authors described four applications as providing an assessment of the likelihood of melanoma: two used an artificial intelligence‐based algorithm based on the ABCDE method (assessing asymmetry, borders, colour, diameter and evolution), one provided a risk approximation based on the completion of a visual analogue scale by the application user, and one provided insufficient information regarding the method involved (Kassianos 2015). Between 2014 and 2018, 235 new dermatology smartphone applications became available (an increase of 80.8%), including dozens of new teledermatology applications, whose number rose from 32 to 106 (Flaten 2018).
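The ABCDE method mentioned above begins with asymmetry. Purely as a toy illustration, the sketch below computes an asymmetry score for a segmented lesion mask. It is not the method of any app discussed here, since most did not describe their algorithms. The function name and scoring rule are hypothetical, and only a single left-right mirror axis is checked.

```python
import numpy as np


def asymmetry_index(mask: np.ndarray) -> float:
    """Toy asymmetry score in [0, 1] for a boolean lesion mask (hypothetical rule).

    The mask is cropped to its bounding box and mirrored left-to-right; the score is the
    fraction of lesion pixels with no mirrored counterpart (0 = perfectly symmetric).
    """
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        raise ValueError("mask contains no lesion pixels")
    crop = mask[rows[0] : rows[-1] + 1, cols[0] : cols[-1] + 1]
    mirrored = np.fliplr(crop)
    # XOR counts pixels in crop-not-mirror plus mirror-not-crop; the two halves are equal.
    unmatched = np.logical_xor(crop, mirrored).sum() / 2
    return float(unmatched / crop.sum())


# Usage: a symmetric rectangle scores 0; a right-angled triangle does not.
rect = np.zeros((10, 10), dtype=bool)
rect[2:8, 3:7] = True
tri = np.zeros((10, 10), dtype=bool)
for i in range(10):
    tri[i, : i + 1] = True
print(asymmetry_index(rect), round(asymmetry_index(tri), 3))
```

A practical score would need to check several axes through the lesion's centre and would be sensitive to segmentation quality.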

Clinical pathway

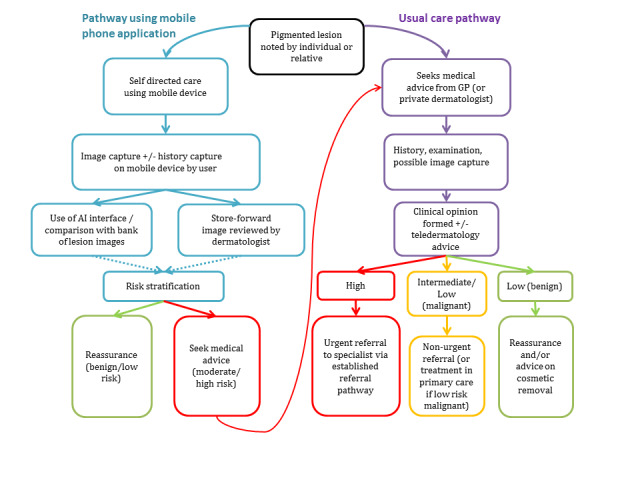

Individuals or their relatives are often best placed to recognise suspicious or changing skin lesions and may use a range of resources to become better informed about their concerns. Smartphone applications could have a role very early on in the clinical pathway, as they are readily accessible and potentially offer an instant risk assessment of the likelihood of malignancy, reassuring those with benign appearing lesions and effectively triaging those who need to seek further medical attention from a clinician for more detailed assessment of the lesion (Figure 2).

Figure 2. Example pathway for an individual using a smartphone application to examine a suspicious mole in settings where smartphones are available.

In the UK, people with concerns about a new or changing lesion (either based on skin self‐examination alone or with the aid of a mobile phone application) will then present to their general practitioner (GP) rather than directly to a specialist in secondary care (Figure 3). If the GP has concerns, they may refer the patient to a specialist in secondary care, usually a dermatologist but sometimes a plastic surgeon or an ophthalmologist. Other systems may be in place in other countries, with the possibility of presenting directly to a skin specialist. Other specialists may also identify suspicious skin lesions, for example, a general surgeon or other specialist surgeon (including an ear, nose, and throat (ENT) specialist) (Figure 3), and refer patients to a specialist consultation with a dermatologist or plastic surgeon. Current UK guidelines recommend that GPs assess all suspicious pigmented lesions presenting in primary care by taking a clinical history and visually inspecting them using the revised seven‐point checklist (MacKie 1990); GPs should urgently refer suspicious pigmented skin lesions for specialist assessment within two weeks (Marsden 2010; Chao 2013; NICE 2015). Evidence is emerging, however, to suggest that excision of melanoma by GPs is not associated with increased risk compared with outcomes in secondary care (Murchie 2017). The specialist clinician will use history‐taking, visual inspection of the lesion (in comparison with other lesions on the skin), and usually dermoscopy to inform a clinical decision. If clinicians suspect melanoma, then urgent excision is advised. Other lesions, such as suspected dysplastic naevi or pre‐malignant lesions such as lentigo maligna, may also be referred for a diagnostic biopsy, further surveillance, or reassurance and discharge.

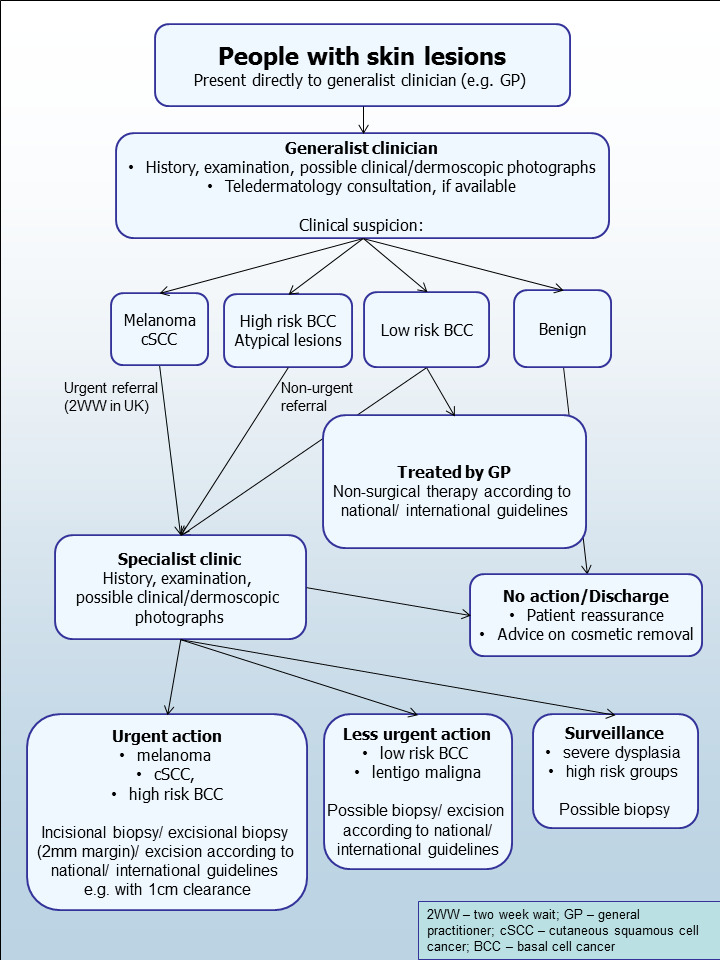

Figure 3. Current clinical pathway for people with skin lesions.

Role of index test(s)

Advances in smartphone technology have provided innovative platforms through which people can become more educated about their medical conditions, leading to better engagement with healthcare professionals (Robertson 2014). The use of smartphone technology can facilitate active self‐management health practices and provide patients with information related to their condition (Tyagi 2012). Because these assessments are self‐initiated, take place outside clinical settings, and are often interactive and personalised, psychological barriers to seeking medical advice can diminish (Tyagi 2012). The advances in smartphone technology provide new strategies for engaging patients in the management of potentially suspicious skin lesions, increasing the likelihood of detecting melanoma earlier in the progression of the disease. Early detection of melanoma is crucial for patients, dramatically improving survival and reducing morbidity (Balch 2009). There is added value for users and healthcare professionals alike, as more educated patients can better engage with their doctors, making consultations more effective and efficient (Robertson 2014).

The greatest concern about mobile phone applications in this context relates to their ability to accurately stratify lesions by risk of melanoma, particularly given the potential for falsely reassuring people that their lesion is benign. There is real concern that people could be dissuaded from seeking healthcare advice if the app deems their lesion to be low risk (Robson 2012). The most useful applications will therefore be those that prioritise sensitivity over specificity for the detection of melanoma. However, this feeds a further concern: those who use such applications may be the 'worried well' rather than those who might actually have melanoma, and low specificity could flood limited healthcare resources with unnecessary referrals or simply generate profits for private providers who may take advantage of public cancer anxiety.
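An automated classifier that outputs a risk score must choose a cut-off for calling a lesion high risk. Lowering the cut-off catches more melanomas but also flags more benign lesions, which is the trade-off behind prioritising sensitivity. The sketch below chooses the highest cut-off that meets a target sensitivity and reports the resulting specificity. The scores and function names are hypothetical and do not come from any app discussed here.

```python
import math


def threshold_for_sensitivity(melanoma_scores, target):
    """Highest cut-off at which at least `target` of melanomas score >= cut-off."""
    if not melanoma_scores or not 0 < target <= 1:
        raise ValueError("need at least one melanoma score and 0 < target <= 1")
    ranked = sorted(melanoma_scores, reverse=True)
    # Number of melanomas that must be flagged; the small epsilon guards against float error.
    k = max(1, math.ceil(target * len(ranked) - 1e-9))
    return ranked[k - 1]


def sensitivity_specificity(threshold, melanoma_scores, benign_scores):
    """Lesions scoring at or above the threshold are called high risk."""
    sens = sum(s >= threshold for s in melanoma_scores) / len(melanoma_scores)
    spec = sum(s < threshold for s in benign_scores) / len(benign_scores)
    return sens, spec


# Hypothetical risk scores from an automated classifier (illustrative only).
melanoma = [0.9, 0.8, 0.7, 0.6, 0.2]
benign = [0.1, 0.3, 0.5, 0.65, 0.75]
for target in (0.6, 0.8, 1.0):
    t = threshold_for_sensitivity(melanoma, target)
    sens, spec = sensitivity_specificity(t, melanoma, benign)
    print(f"target {target:.0%}: threshold {t}, sensitivity {sens:.0%}, specificity {spec:.0%}")
```

In this toy data, raising sensitivity from 80% to 100% lowers specificity from 60% to 20%. This is the pattern behind the concern about unnecessary referrals.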

Alternative test(s)

For the purposes of our series of reviews, we consider each component of the diagnostic process, including visual inspection or clinical examination (whether delivered in‐person or remotely via teledermatology), as a diagnostic or index test, the accuracy of which can be established in comparison with a reference standard of diagnosis, either alone or in combination with other available technologies.

Once an individual or their relatives have identified a suspicious lesion, the only alternative to the use of a mobile phone application is to immediately seek medical advice from their GP or specialist clinician. The clinician will then use history‐taking, visual inspection of the lesion (in comparison with other lesions on the skin), and usually dermoscopy to inform a clinical decision (Figure 2). Our series of systematic reviews has also assessed the accuracy of visual inspection alone and dermoscopy plus visual inspection (Dinnes 2018a; Dinnes 2018b).

Chuchu 2018 has also conducted a review of the accuracy of teledermatology, whereby dermatologists receive clinical photographs or dermoscopic images of a skin lesion, traditionally from non‐specialist clinicians, and give a specialist opinion on a suspicious lesion. This can be done on a store‐and‐forward basis, using digital cameras or mobile phones to acquire photographs or dermoscopic images of a lesion, or via a live video link. According to UK guidelines, 'full dermatology' services (i.e. a replacement for a face‐to‐face consultation) require both clinical and dermoscopic images, whereas 'triage teledermatology' services process only dermoscopic images where facilities permit (BAD 2013).

Teledermatology not only allows clinicians rapid access to expert opinion but may lead to a reduction in waiting times and limit unnecessary referrals (Ndegwa 2010; Warshaw 2010; Bashshur 2015). In rural areas, where people's access to speciality services can have significant and potentially off‐putting travel and time implications, teledermatology has the potential to increase access to specialist opinion.

Teledermatology is also becoming available in a community setting, especially within community or 'high street' pharmacies (for example, the Boots 'Mole Scanning Service', www.boots.com/health‐pharmacy‐advice/skin‐services/mole‐scanning‐service), and is therefore a potential alternative to smartphone applications. Due to their extended opening hours, ease of access, presence of healthcare professionals and availability of consultation rooms, community pharmacies are increasingly providing early detection services (Kjome 2016), such as mole scanning by trained pharmacy staff. In theory, using pharmacies as a first‐line filter to separate those with skin lesions requiring follow‐up from those who do not gives general practitioners and specialists more time and resources for those who require intervention (Kjome 2016).

A number of other tests that may have a role in the diagnosis of melanoma in a specialist setting have been reviewed as part of our series of systematic reviews, including reflectance confocal microscopy, optical coherence tomography, computer‐assisted diagnosis or artificial intelligence‐based techniques, and high‐frequency ultrasound (Dinnes 2018c; Ferrante di Ruffano 2018a; Ferrante di Ruffano 2018b; Dinnes 2018d).

Evidence permitting, we will compare the accuracy of available tests in an overview of reviews, exploiting within‐study comparisons of tests and allowing the analysis and comparison of commonly used diagnostic strategies where tests may be used alone or in combination.

Rationale

Our series of reviews of diagnostic tests used to assist clinical diagnosis of melanoma aims to identify the most accurate approaches to diagnosis and provide clinical and policy decision‐makers with the highest possible standard of evidence on which to base decisions. With increasing rates of melanoma incidence and the push towards the use of dermoscopy and other high‐resolution image analysis in primary care without adequate evidence of effectiveness or safety, the anxiety around missing early cases needs to be balanced against the risk of over‐referral, to avoid sending too many people with benign lesions for a specialist opinion. It is questionable whether all skin cancers picked up by sophisticated techniques, even in specialist settings, help to reduce morbidity and mortality, or whether newer technologies run the risk of increasing false positive results. It is also possible that use of some technologies (e.g. widespread use of dermoscopy in primary care with no training) could actually result in harm by missing melanomas if they are used as replacements for traditional history‐taking and clinical examination of the entire skin; many branches of medicine have noted the danger of such 'gizmo idolatry' amongst doctors (Leff 2008).

Smartphone applications in general are already widely available and used by consumers, and the popularity of such platforms to offer clinical and diagnostic advice is steadily increasing. Given the rapidly changing evidence base and lack of available systematic reviews on the topic, there is a need for an up‐to‐date analysis of the accuracy of smartphone applications.

As several reviews for each topic area followed the same methodology, we prepared generic protocols in order to avoid duplication of effort: one for diagnosis of melanoma, Dinnes 2015a, and one for diagnosis of keratinocyte skin cancers, Dinnes 2015b. The Background and Methods sections of this review therefore use some text that was originally published in the protocol concerning the evaluation of tests for the diagnosis of melanoma (Dinnes 2015a) and some text that overlaps some of our other reviews (Dinnes 2018b).

Objectives

To assess the diagnostic accuracy of smartphone applications to rule out cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults with concerns about suspicious skin lesions.

Methods

Criteria for considering studies for this review

Types of studies

We included test accuracy studies that allow comparison of the result of the index test with that of a reference standard, including the following.

Studies where all participants receive a single index test and a reference standard.

Studies where all participants receive more than one index test and a reference standard.

Studies where participants are allocated (by any method) to receive different index tests or combinations of index tests, and all receive a reference standard (between‐person comparative studies (BPC)).

Studies that recruit series of participants unselected by true disease status (referred to as case series for the purposes of this review).

Diagnostic case‐control studies that separately recruit diseased and non‐diseased groups (see Rutjes 2005).

Both prospective and retrospective studies.

Studies where previously acquired clinical or dermoscopic images were retrieved and prospectively interpreted for study purposes.

We excluded studies from which we could not extract 2 × 2 contingency data, and small studies with fewer than five disease‐positive or disease‐negative participants. Although the size threshold of five is arbitrary, such small studies are likely to give unreliable estimates of sensitivity or specificity and may be biased, as small randomised controlled trials of treatment effects can be.
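A minimal Python sketch of the 2 × 2 data and the size rule described above. The `TwoByTwo` class and `meets_size_threshold` function are hypothetical names, and the counts in the usage line are invented for illustration rather than taken from any study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical container for one index test's 2 x 2 contingency data."""
    tp: int  # index test positive, reference standard positive
    fp: int  # index test positive, reference standard negative
    fn: int  # index test negative, reference standard positive
    tn: int  # index test negative, reference standard negative

    @property
    def diseased(self) -> int:
        return self.tp + self.fn

    @property
    def non_diseased(self) -> int:
        return self.fp + self.tn

    def sensitivity(self) -> float:
        # Proportion of reference-standard positives flagged by the test
        return self.tp / self.diseased

    def specificity(self) -> float:
        # Proportion of reference-standard negatives cleared by the test
        return self.tn / self.non_diseased


def meets_size_threshold(table: TwoByTwo, minimum: int = 5) -> bool:
    """Size rule: at least `minimum` disease-positive AND at least
    `minimum` disease-negative participants are required."""
    return table.diseased >= minimum and table.non_diseased >= minimum


example = TwoByTwo(tp=18, fp=30, fn=2, tn=70)  # invented counts
print(example.sensitivity(), example.specificity(), meets_size_threshold(example))
```

A study with, say, only four disease‐positive participants would fail `meets_size_threshold` however many disease‐negative participants it included.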

Studies available only as conference abstracts were excluded; however, attempts were made to identify full papers for potentially relevant conference abstracts (Searching other resources).

Participants

We included studies in adults with pigmented skin lesions or lesions suspicious for melanoma. These could include those at high risk of developing melanoma, including those with a family history or previous history of melanoma skin cancer, atypical or dysplastic naevus syndrome, or genetic cancer syndromes. Ideally, participants should be recruited from community settings; however, due to an anticipated paucity of data, we considered participants recruited from any setting as eligible. We excluded studies that recruited only participants with malignant diagnoses and studies that compared test results in participants with malignancy compared with test results based on 'normal' skin as controls, due to the bias inherent in such comparisons (Rutjes 2006). We excluded studies with more than 50% of participants aged 16 and under.

Index tests

We included studies evaluating smartphone applications intended for use by any individual (or member of the public) with a smartphone in a community setting who has a skin lesion that concerns them. We considered applications to be intended for use by smartphone users if they used standard photographs acquired by the mobile phone. We considered applications to be intended for clinician use (e.g. by GPs seeking specialist dermatologist opinion through store‐and‐forward teledermatology assessments) if they required dermoscopic or other microscopic attachments to acquire magnified images.

We included studies developing new mobile phone applications (i.e. derivation studies) if they used a separate independent 'test set' of participants or images to evaluate the new approach.

We excluded studies if they:

used a statistical model to produce a data‐driven equation or algorithm based on multiple diagnostic features, with no separate test set;

used cross‐validation approaches such as 'leave‐one‐out' cross‐validation (Efron 1983); or

evaluated the accuracy of the presence or absence of individual lesion characteristics or morphological features, with no overall diagnosis of malignancy.

Target conditions

The target condition was cutaneous melanoma and atypical intraepidermal melanocytic variants (i.e. including melanoma in situ or lentigo maligna, which have a risk of progression to invasive melanoma).

Reference standards

The preferred reference standard for establishing the final diagnosis of a skin lesion is histopathological diagnosis of the excised lesion or biopsy sample in all eligible lesions. Histopathological assessment is not a perfect reference standard because only part of the lesion is sampled for examination, so tumour cells in non‐sampled portions may be missed. As it is a subjective assessment, there is also some degree of inter‐observer variation, especially for borderline lesions. A qualified pathologist or dermatopathologist should perform histopathology. Ideally, reporting should be standardised, detailing a minimum dataset including the histopathological features of melanoma needed to determine staging according to the American Joint Committee on Cancer (AJCC) Staging System (e.g. Slater 2014). We did not apply the reporting standard as a necessary inclusion criterion but extracted any pertinent information.

Due to the potential for partial verification (with lesion excision or biopsy unlikely to be carried out for all benign‐appearing lesions within a representative population sample), we also accepted clinical follow‐up of benign‐appearing lesions, cancer registry follow‐up and 'expert opinion' with no histology or clinical follow‐up as eligible reference standards. We considered the risk of differential verification bias (as misclassification rates of histopathology and follow‐up will differ) in our quality assessment of studies.

We considered all of the above reference standards for establishing final diagnoses of the lesion, with the following caveats:

all study participants with a final diagnosis of the target disorder must have a histological diagnosis, either subsequent to the application of the index test or after a period of clinical follow‐up; and

at least 50% of all participants with benign lesions must have either a histological diagnosis or clinical follow‐up to confirm benignity.
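The two reference standard caveats above amount to a simple check on per‐lesion verification data. The sketch below assumes a hypothetical `Lesion` record with three boolean fields; real extraction forms would record this information differently, and the function name is illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lesion:
    """Hypothetical per-lesion verification record."""
    target_condition: bool    # final diagnosis of melanoma or a variant
    histology: bool           # diagnosis confirmed by histopathology
    clinical_follow_up: bool  # benign status confirmed by clinical follow-up


def reference_standard_acceptable(lesions: list[Lesion]) -> bool:
    """Apply the two caveats on the reference standard."""
    cases = [les for les in lesions if les.target_condition]
    benign = [les for les in lesions if not les.target_condition]
    # Caveat 1: every lesion with the target condition needs histology
    if not all(les.histology for les in cases):
        return False
    # Caveat 2: at least half of benign lesions need histology or follow-up
    if not benign:
        return True
    verified = sum(les.histology or les.clinical_follow_up for les in benign)
    return verified / len(benign) >= 0.5


melanoma = Lesion(target_condition=True, histology=True, clinical_follow_up=False)
benign_followed = Lesion(target_condition=False, histology=False, clinical_follow_up=True)
benign_unverified = Lesion(target_condition=False, histology=False, clinical_follow_up=False)
print(reference_standard_acceptable([melanoma, benign_followed, benign_unverified]))
```

Here exactly half of the benign lesions are verified, so the dataset meets the 50% threshold.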

The ability of a smartphone application to correctly triage those who need further assessment of suspicious skin lesions is not, however, the only outcome of interest for this type of test. It is possible to estimate referral accuracy (or the ability of the smartphone application to approximate an in‐person lesion assessment) by comparing the action recommended by the smartphone application with the management recommendation from face‐to‐face assessment by an appropriately qualified clinician. To this end, 'expert opinion' alone is an eligible reference standard for our reviews of both mobile phone applications and teledermatology.

Search methods for identification of studies

Electronic searches

The Information Specialist (SB) carried out a comprehensive search for published and unpublished studies. A single large literature search was conducted to cover all topics in the programme grant (see Appendix 1 for a summary of reviews included in the programme grant), which allowed search results to be screened for potentially relevant papers for all reviews at the same time. A search was formulated combining disease‐related terms with terms related to the test names, using both text words and subject headings. The search strategy was designed to capture studies evaluating tests for the diagnosis or staging of skin cancer. As the majority of records were related to the searches for tests for staging of disease, a filter using terms related to cancer staging and to accuracy indices was applied to the staging test search to try to eliminate irrelevant studies (for example, those using imaging tests to assess treatment effectiveness). A sample of 300 records that would be missed by applying this filter was screened, and the filter was adjusted to include potentially relevant studies. When piloted on MEDLINE, inclusion of the filter for the staging tests reduced the overall number of records by around 6000. The final search strategy, incorporating the filter, was subsequently applied to all bibliographic databases listed below (Appendix 3). The final search result was cross‐checked against the list of studies included in five systematic reviews; our search identified all but one of the studies, and this study was not indexed on MEDLINE. The Information Specialist devised the search strategy, with input from the Information Specialist from Cochrane Skin. No additional limits were used.

We searched the following bibliographic databases to 29 August 2016 for relevant published studies.

MEDLINE via OVID (from 1946);

MEDLINE In‐Process & Other Non‐Indexed Citations via OVID; and

Embase via OVID (from 1980).

We searched the following bibliographic databases to 30 August 2016 for relevant published studies.

The Cochrane Central Register of Controlled Trials (CENTRAL; 2016, Issue 7), in the Cochrane Library.

The Cochrane Database of Systematic Reviews (CDSR; 2016, Issue 8), in the Cochrane Library.

Cochrane Database of Abstracts of Reviews of Effects (DARE; 2015, Issue 2).

CRD HTA (Health Technology Assessment) database (2016; Issue 3).

CINAHL (Cumulative Index to Nursing and Allied Health Literature via EBSCO from 1960).

We searched the following databases for relevant unpublished studies using a strategy based on the MEDLINE search:

CPCI (Conference Proceedings Citation Index), via Web of Science™ (from 1990; searched 28 August 2016); and

SCI Science Citation Index Expanded™ via Web of Science™ (from 1900, using the 'Proceedings and Meetings Abstracts' Limit function; searched 29 August 2016).

We searched the following trials registers using the search terms 'melanoma', 'squamous cell', 'basal cell' and 'skin cancer' combined with 'diagnosis':

Zetoc (from 1993; searched 28 August 2016).

The US National Institutes of Health Ongoing Trials Register (www.clinicaltrials.gov); searched 29 August 2016.

NIHR Clinical Research Network Portfolio Database (www.nihr.ac.uk/research‐and‐impact/nihr‐clinical‐research‐network‐portfolio/); searched 29 August 2016.

The World Health Organization International Clinical Trials Registry Platform (apps.who.int/trialsearch/); searched 29 August 2016.

We aimed to identify all relevant studies regardless of language or publication status (published, unpublished, in press, or in progress). We applied no date limits.

Searching other resources

We have screened relevant systematic reviews identified by the searches for their included primary studies, and we included any missed by our searches. We have checked the reference lists of all included papers, and subject experts within the author team reviewed the final list of included studies. We did not perform electronic citation searching.

Data collection and analysis

Selection of studies

At least one author (JDi or NC) screened titles and abstracts, discussing and resolving any queries by consensus. A pilot screen of 539 MEDLINE references showed good agreement (89% with a kappa of 0.77) between screeners. At initial screening, we included primary test accuracy studies and test accuracy reviews (for scanning of reference lists) of any test used to investigate suspected melanoma, basal cell carcinoma (BCC), or cutaneous squamous cell carcinoma (cSCC). Both a clinical reviewer (from a team of 12 clinician reviewers) and a methodologist reviewer (JDi or NC) applied inclusion criteria (Appendix 4) to all full text articles, resolving disagreements by consensus or by consultation with a third party (JDe, CD, HW or RM). We contacted authors of eligible studies when they presented insufficient data to allow for the construction of 2 × 2 contingency tables.
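Assuming the kappa reported for the pilot screen is Cohen's kappa, it corrects the observed agreement for the agreement expected by chance. The Python sketch below uses hypothetical function names and invented counts; the second function only rearranges the reported 89% agreement and kappa of 0.77 to show the chance agreement they imply (about 52%). It does not reproduce the actual pilot data.

```python
def cohens_kappa(both_include: int, only_a: int, only_b: int, both_exclude: int) -> float:
    """Cohen's kappa for two screeners making include/exclude decisions.

    Arguments are the four cells of the screeners' 2 x 2 agreement table.
    """
    n = both_include + only_a + only_b + both_exclude
    observed = (both_include + both_exclude) / n
    # Each screener's marginal proportion of 'include' decisions
    a_include = (both_include + only_a) / n
    b_include = (both_include + only_b) / n
    # Agreement expected by chance if the screeners decided independently
    expected = a_include * b_include + (1 - a_include) * (1 - b_include)
    return (observed - expected) / (1 - expected)


def implied_chance_agreement(observed: float, kappa: float) -> float:
    """Rearrange kappa = (po - pe) / (1 - pe) to recover pe."""
    return (observed - kappa) / (1 - kappa)


# Invented counts for 100 references, purely to exercise the function
print(round(cohens_kappa(40, 5, 5, 50), 3))
# Chance agreement implied by the reported pilot figures (89%, kappa 0.77)
print(round(implied_chance_agreement(0.89, 0.77), 2))
```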

Data extraction and management

One clinical (as detailed above) and one methodological reviewer (JDi, NC or LFR) independently extracted data concerning details of the study design, participants, index test(s) or test combinations and criteria for index test positivity, reference standards, and data required to populate a 2 × 2 diagnostic contingency table for each index test using a piloted data extraction form. Data were extracted at all available index test thresholds. We resolved disagreements by consensus or in consultation with a third party (JDe, CD, HW or RM).

We contacted authors of included studies where there was missing information related to the target condition (in particular to allow the differentiation of invasive cancers from in situ variants) or diagnostic threshold. We contacted authors of conference abstracts published from 2013 to 2015 to ask whether full data were available, marking them as 'pending' when we could not obtain a full paper. We will revisit these in future review updates.

Where we identified multiple reports of a primary study, we maximised yield of information by collating all available data. Where there were inconsistencies in reporting or overlapping study populations, we contacted study authors for clarification in the first instance. If contact with authors was unsuccessful, we used the most complete and up‐to‐date data source where possible.

Assessment of methodological quality

We assessed risk of bias and applicability of included studies using the QUADAS‐2 checklist (Whiting 2011), tailored to the topic of skin cancer diagnosis (see Appendix 5). We piloted the modified QUADAS‐2 tool on five full‐text articles. One clinical and one methodological reviewer (JDi, NC or LFR) independently assessed quality for the remaining studies, resolving any disagreement by consensus or in consultation with a third party where necessary (JDe, CD, HW or RM).

Statistical analysis and data synthesis

Due to scarcity of data and the poor quality of studies, we did not undertake a meta‐analysis for this review. For illustrative purposes, we plotted estimates of sensitivity and specificity on coupled forest plots for each application under consideration. Our unit of analysis was the lesion rather than the patient, as initial treatment in skin cancer is directed to the lesion and not systemically to a patient (thus it is important to be able to correctly identify cancerous lesions within each patient). Moreover, this is the most common way in which the primary studies reported data. Although there is a theoretical possibility of correlations of test errors when multiple lesions are included from the same patients, most studies include very few patients with multiple lesions, and any potential impact on findings is likely to be very small, particularly in comparison with other concerns regarding risk of bias and applicability. For each analysis, we included only one dataset per study to avoid multiple counting of lesions.
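The review does not state how the 95% confidence intervals for each sensitivity and specificity were calculated. Exact (Clopper‐Pearson) intervals are a common choice for single proportions. The standard‐library sketch below finds the interval limits by bisection on the binomial distribution, and it gives values consistent with those reported in the Findings for counts that can be back‐calculated from them. Treat the choice of method as an assumption. The function names are illustrative.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p); returns 0.0 when k < 0."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(g, target: float, iterations: int = 100) -> float:
    """Bisection for p in [0, 1] such that g(p) = target, g increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if g(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Clopper-Pearson) two-sided CI for a binomial proportion x/n."""
    if n <= 0 or not 0 <= x <= n:
        raise ValueError("need n > 0 and 0 <= x <= n")
    # Lower limit: P(X >= x | p) = alpha/2; this tail probability rises with p
    lower = 0.0 if x == 0 else _solve_increasing(
        lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2)
    # Upper limit: P(X <= x | p) = alpha/2, i.e. P(X > x | p) = 1 - alpha/2
    upper = 1.0 if x == n else _solve_increasing(
        lambda p: 1 - binom_cdf(x, n, p), 1 - alpha / 2)
    return lower, upper


# App 4 in Wolf 2013: 98% of 54 melanomas can only mean 53 were detected
lo, hi = clopper_pearson(53, 54)
print(f"{53/54:.0%} (95% CI {lo:.0%} to {hi:.0%})")
```

For these counts the sketch prints 98% (95% CI 90% to 100%), matching the interval reported for App 4. Because each application was applied to a fixed set of lesions, each interval describes uncertainty for that single dataset only. It says nothing about between‐study heterogeneity.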

Investigations of heterogeneity

We examined heterogeneity between studies by visually inspecting the forest plots of sensitivity and specificity and summary receiver operating characteristic (ROC) plots. We did not identify enough studies to allow meta‐regression to investigate potential sources of heterogeneity.

Sensitivity analyses

We did not perform any sensitivity analyses.

Assessment of reporting bias

Due to uncertainty about the determinants of publication bias for diagnostic accuracy studies and the inadequacy of tests for detecting funnel plot asymmetry (Deeks 2005), we did not perform any tests to detect publication bias.

Results

Results of the search

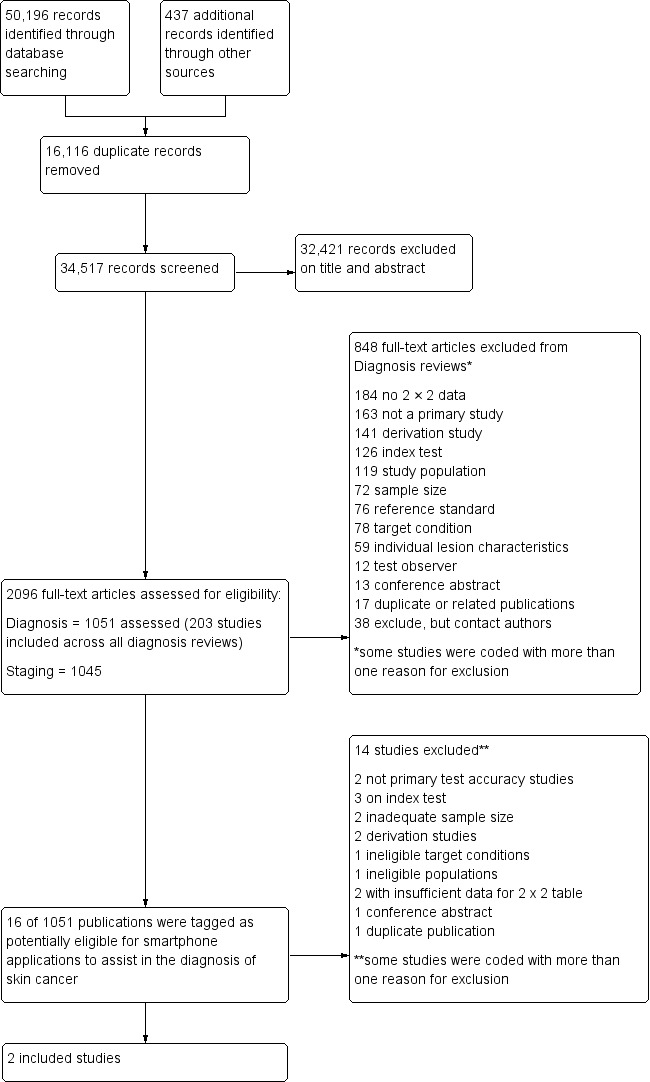

We screened a total of 34,517 unique references for inclusion. Of these, we reviewed 1051 full‐text papers for eligibility and included 203 publications in at least one of the suite of reviews of tests to assist in the diagnosis of melanoma or keratinocyte skin cancer. Figure 4 provides a PRISMA flow diagram of search and eligibility results. We considered 16 studies to be potentially eligible for this review of smartphone applications and ultimately included two publications. Figure 4 lists the reasons for exclusion, while the Characteristics of excluded studies tables list both the studies and the reasons we excluded them. Two studies included fewer than five melanoma cases (Massone 2007; Robson 2012); three studies used an inappropriate index test (including two studies where mobile phones were used to capture dermoscopic (Massone 2007) or otherwise magnified (Diniz 2016) images in a specialist clinic setting); two studies were derivation studies that did not separate data for training and test sets (Ramlakhan 2011; Wadhawan 2011), and one study was a duplicate or related publication (Von Braunmühl 2015 reported data for the same patients as Maier 2015). A list of all studies excluded from the full series of reviews is available as a supplementary file (please contact skin.cochrane.org for a copy).

Figure 4. PRISMA flow diagram.

Across all of our reviews, we contacted the corresponding authors of 84 studies to ask them to supply further information needed to allow study inclusion (37 studies), to clarify diagnostic thresholds (18 studies) or to define the target condition (29 studies). We received responses from 39 authors, allowing the inclusion of 4 studies across various reviews (and 1 study for this review, Wolf 2013), and providing data clarifications for 23 others.

This review reports on two cohorts of lesions published in two studies that provide six datasets (Wolf 2013; Maier 2015). The applications successfully analysed a total of 332 lesions, including 86 melanomas. The studies did not report the number of participants with lesions included in the studies.

Wolf 2013 retrospectively evaluated photographs of lesions selected from their dermatology database of lesions that had been scheduled for excision, using a case‐control type design. Health professionals routinely captured these lesion images from participants who had presented with suspicious skin lesions in a dermatological setting, rather than in the community setting where these applications are intended to be used. The study included only lesions with a final diagnosis of melanoma, melanoma in situ, lentigo, benign naevi (including compound, junctional and low grade dysplastic naevi), dermatofibroma, seborrhoeic keratosis and haemangioma. It included lesions with good quality photographs (as assessed by one or two dermatologists) and a clear histological diagnosis, and excluded lesions that were uncommon or had an equivocal diagnosis (such as 'melanoma cannot be ruled out' or 'atypical melanocytic proliferation') and lesions with moderate or high grade atypia. Investigators excluded more than half the images reviewed for inclusion in the study (52%; 202/390) due to poor image quality, the presence of identifiable patient features or insufficient clinical or histological information. The applications analysed a further 3 to 29 lesions each, but the trialists considered these to be unevaluable (test failures; see Findings).

Maier 2015 conducted a prospective case series of patients with melanocytic skin lesions seen routinely at the department of dermatology for skin cancer screening. It is unclear whether participants were referred or could access the dermatology clinic directly. Up to three smartphone images (photographs) per lesion were taken, presumably by the dermatologist, before the lesion was excised; however, the authors did not clearly describe the image acquisition process. The study excluded 20/195 lesions (10%) due to poor image quality or incomplete imaging. The study authors excluded a further 31 lesions, which we considered to be test failures for the purposes of this review (see Findings), including 13 due to "two‐point differences" (explained as non‐consecutive risk classes, presumably for different images of the same lesion) and 18 "tie‐cases" (defined as having an equal number of results in two consecutive risk classes, e.g. 1 high risk, 1 medium risk and 1 low risk result).

Wolf 2013 took place in the USA and Maier 2015 in Germany. Neither study reported information on the number of patients recruited or their characteristics (e.g. age and sex); total numbers of included lesions were 188 in Wolf 2013 and 144 in Maier 2015. Both studies reported on the accuracy of smartphone applications for detecting melanoma and its atypical intraepidermal melanocytic variants. The prevalence of melanoma was 18% in Maier 2015 and 35% in Wolf 2013.

Wolf 2013 evaluated four different smartphone applications, withholding their names to avoid consumer bias and numbering them one to four for the accuracy assessment. Three applications used artificial intelligence‐based classification of lesion images: 'problematic' versus 'okay' (App 1), 'melanoma' versus 'not melanoma' (App 2) and 'high risk' versus 'medium/low‐risk' (App 3). Data for App 3 could also be dichotomised as 'high/medium risk' (test positive) versus 'low risk' (test negative). App 4 used a store‐and‐forward approach with remote lesion assessment by a qualified dermatologist. Users could run this application on either a smartphone or a website; lesion images were uploaded and transmitted remotely to a dermatologist, who made an assessment and returned it to the user within 24 hours. The output given was 'atypical' versus 'typical'.
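Because App 3 returned three ordered risk levels, its accuracy depends on where the output is cut. The sketch below uses invented outputs and hypothetical function names. It dichotomises the same results at the two cut‐points used in this review (high versus medium/low, and high/medium versus low), which shows why lowering the threshold trades specificity for sensitivity.

```python
from collections import Counter

LEVELS = ("low", "medium", "high")  # ordered risk classes returned by the app


def sens_spec(outputs, has_melanoma, positive_levels):
    """Sensitivity and specificity when `positive_levels` count as test positive."""
    if any(out not in LEVELS for out in outputs):
        raise ValueError("unexpected risk level")
    calls = [out in positive_levels for out in outputs]
    cells = Counter(zip(calls, has_melanoma))  # keys: (test positive, melanoma)
    tp, fn = cells[(True, True)], cells[(False, True)]
    tn, fp = cells[(False, False)], cells[(True, False)]
    return tp / (tp + fn), tn / (tn + fp)


# Invented results for six lesions, three of them melanomas
outputs = ["high", "medium", "low", "medium", "low", "low"]
has_melanoma = [True, True, True, False, False, False]

print(sens_spec(outputs, has_melanoma, {"high"}))            # cut like App 3(a)
print(sens_spec(outputs, has_melanoma, {"high", "medium"}))  # cut like App 3(b)
```

Moving 'medium' into the positive class turns one missed melanoma into a true positive. It also turns one benign lesion into a false positive, mirroring the direction of change reported for App 3 in the Findings.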

Maier 2015 evaluated an automated risk assessment algorithm using the SkinVision App; this relied on fractal image analysis of three images per lesion. The application classified lesions as 'high risk' versus 'medium or low risk'. The study also reported the diagnostic accuracy of face‐to‐face clinical diagnosis by a dermatologist for the same lesions.

In both studies the reference standard diagnosis was made by histology alone (all lesions were either biopsied or excised).

Methodological quality of included studies

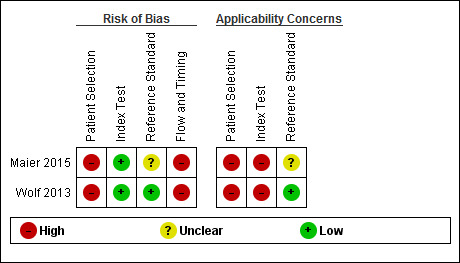

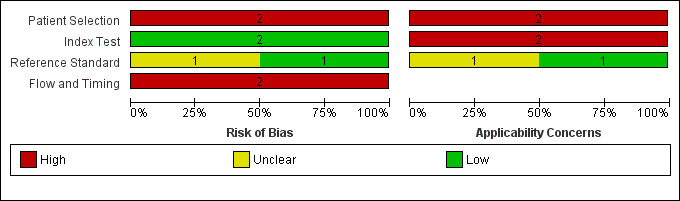

Figure 5 and Figure 6 summarise the overall methodological quality of included studies.

Figure 5. Risk of bias and applicability concerns summary: review authors' judgements about each domain for each included study

Figure 6. Risk of bias and applicability concerns graph: review authors' judgements about each domain presented as percentages across included studies

We assessed both studies as being at high risk of bias for participant selection due to the inappropriate exclusion of lesions that would otherwise have been eligible for assessment with the applications. Wolf 2013 also used a case‐control design, including only lesions with particular final diagnoses. Similarly, both studies raised high concern regarding the applicability of included participants and setting, due to unclear reporting of patient samples and of whether multiple lesions per patient were included. Wolf 2013 excluded lesions that were uncommon or had equivocal diagnoses. Both studies included only lesions selected for excision. This is not a representative spectrum of lesions that would be observed in daily life but rather a highly selected sample of participants. These participants would have already presented to a doctor with concerns about a particular lesion and are therefore likely to represent a more severe spectrum of abnormality, which will artificially increase the sensitivity of the test in comparison to use by smartphone users in general.

We assessed both studies as being at low risk of bias in the index test domain, with the artificial intelligence‐based assessment made without knowledge of the histological diagnosis. Both used a pre‐specified threshold. However, both studies raised high concern about the applicability of the index tests, which were not used as intended in practice. Wolf 2013 used archived photographs of lesions rather than images taken using a phone and did not report the real names of the applications used. Maier 2015 did use smartphone images; however, the details of the imaging process were unclear.

Both studies reported the use of an acceptable reference standard; however, Maier 2015 did not report blinding of histology to the index test result. Only Wolf 2013 reported histopathological interpretation by an experienced dermatopathologist.

Both studies were at high risk of bias for flow and timing due to the exclusion of unevaluable images from further analysis (although the authors did report the numbers excluded, allowing test failure rates to be computed). Both were unclear about the interval between image capture and performance of the reference standard.

Findings

Detection of invasive melanoma or atypical intraepidermal melanocytic variants

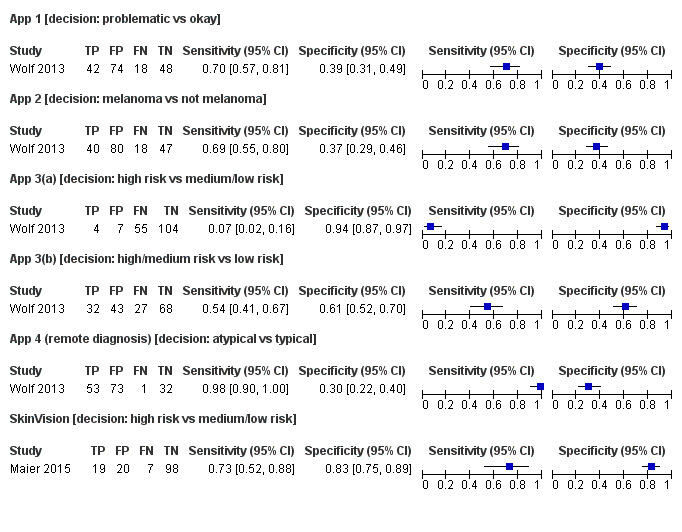

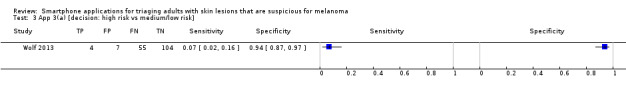

Across the five different applications that the two studies assessed, sensitivities for the detection of invasive melanoma or atypical intraepidermal melanocytic variants ranged from 7% (95% confidence interval (CI) 2% to 16%) to 98% (95% CI 90% to 100%) and specificities from 30% (95% CI 22% to 40%) to 94% (95% CI 87% to 97%; Figure 7).

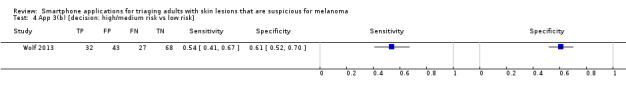

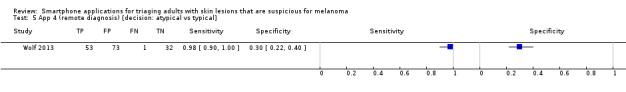

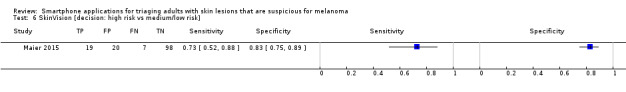

Figure 7. Forest plot of tests: showing sensitivity and specificity of all the applications for the detection of cutaneous melanoma and atypical intraepidermal variants

1 App 1 [problematic vs okay], 2 App 2 [mel vs not mel], 3 App 3(a) [high risk vs medium+low risk], 4 App 3(b) [high+medium risk vs low risk], 5 App 4 (remote diagnosis) [atypical vs typical], 6 SkinVision [high risk vs medium/low risk].

One of the four artificial intelligence‐based applications classified lesions as melanoma or not melanoma. For App 2 in Wolf 2013 (185 lesions and 58 melanoma cases), sensitivity was 69% (95% CI 55% to 80%) and specificity was 37% (95% CI 29% to 46%).

The remaining three artificial intelligence‐based algorithms attempted to categorise lesions as high risk or 'problematic' or not (Figure 7). Sensitivities were around 70% for App 1 in Wolf 2013 (70%, 95% CI 57% to 81%) and for the SkinVision app in Maier 2015 (73%, 95% CI 52% to 88%) but only 7% (95% CI 2% to 16%) for App 3 in Wolf 2013. The corresponding specificities for the three applications were 39% (95% CI 31% to 49%; Wolf 2013; 182 lesions and 60 melanomas), 83% (95% CI 75% to 89%; Maier 2015; 144 lesions and 26 melanomas), and 94% (95% CI 87% to 97%; Wolf 2013; 170 lesions and 59 melanomas). Decreasing the threshold for considering lesions as test positive (i.e. including both high and medium risk lesions) for App 3 in Wolf 2013 (denoted App 3b in Figure 7) increased sensitivity from 7% to 54% (95% CI 41% to 67%), with a fall in specificity from 94% to 61% (95% CI 52% to 70%).

The final application, App 4 in Wolf 2013, was a store‐and‐forward system, with a dermatologist classifying lesion images as atypical or typical. The sensitivity for this application was 98% (95% CI 90% to 100%) and specificity 30% (95% CI 22% to 40%; 159 lesions and 54 melanoma cases).

This application, however, recorded the highest percentage of 'test failures' in the Wolf 2013 study (i.e. eligible lesions analysed by the applications but recorded as unevaluable). The test failure rates were 3% (App 1), 2% (App 2), 6% (App 3) and 15% (App 4; designated by the dermatologist as 'send another photograph' or 'unable to categorise') (Table 2). Three of the four applications classed at least one melanoma as unevaluable; for the store‐and‐forward application (App 4), the dermatologist conducting the assessment classed 6 (10%) melanomas as unevaluable.

1. Index test failures – lesions not evaluable by smartphone application.

| Study and application | Lesions (melanomas) successfully assessed by each app | Unevaluable lesions, n (%) | Unevaluable melanomas, n (%) |
| --- | --- | --- | --- |
| Wolf 2013: 188 lesions (60 melanomas)ᵃ | | | |
| Application 1 | 182 (60) | 6 (3%) | 0 (0%) |
| Application 2 | 185 (58) | 3 (2%) | 2 (3%) |
| Application 3 | 170 (59) | 12 (6%) | 1 (2%) |
| Application 4 | 159 (54) | 29 (15%) | 6 (10%) |
| Maier 2015: 175 lesions (number of melanomas not reported; between 26 and 40)ᵇ | | | |
| SkinVision | 144 (26) | 31 (18%) | Not reported (≤ 14) |

ᵃ 52% (202/390) of all images reviewed for inclusion in the study were excluded prior to analysis by the applications.

ᵇ 10% (20/195) of all images reviewed for inclusion in the study were excluded due to poor image quality or incomplete imaging. The original sample of 195 lesions included 40 melanomas. The number of melanomas excluded on the basis of image quality and the number analysed by the application but not included by the study authors (considered as test failures for the purposes of this review) were not separately reported.

The SkinVision application described in Maier 2015 classed a total of 31 lesions (18%; 31/175) as unevaluable (Table 2). The study did not report the number of melanomas classed as unevaluable; however, the study authors excluded 35% of all melanomas originally eligible for the study (14/40) due to poor image quality or because the images were classed as unevaluable.
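The test failure percentages in the index test failures table can be reproduced as unevaluable lesions divided by all lesions submitted to each application (188 for Wolf 2013, 175 for Maier 2015). Using that denominator is our inference from the reported figures, not a definition stated by the study authors. The function name below is illustrative.

```python
def failure_rate(unevaluable: int, submitted: int) -> float:
    """Share of lesions submitted to an app that it could not evaluate."""
    return unevaluable / submitted


# (unevaluable lesions, lesions submitted), taken from the failures table
REPORTED = {
    "Wolf 2013, Application 1": (6, 188),
    "Wolf 2013, Application 2": (3, 188),
    "Wolf 2013, Application 3": (12, 188),
    "Wolf 2013, Application 4": (29, 188),
    "Maier 2015, SkinVision": (31, 175),
}

for name, (unevaluable, submitted) in REPORTED.items():
    print(f"{name}: {failure_rate(unevaluable, submitted):.0%}")
```

The same calculation with the 60 melanomas in Wolf 2013 as the denominator gives the melanoma‐level figures, for example 6/60 = 10% for Application 4.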

Maier 2015 also reported the accuracy of face‐to‐face clinical diagnosis of the same lesions by a dermatologist. These data are not directly comparable to those that the application generated, as the in‐person assessment relates to the diagnosis of melanoma whereas the SkinVision application was developed to identify lesions at high risk of melanoma. The sensitivity of the face‐to‐face assessment was 85% (95% CI 65% to 96%) and specificity, 97% (95% CI 93% to 99%; Figure 8; 144 lesions and 26 melanomas).

Figure 8. Forest plot of tests: SkinVision automated diagnosis compared to face‐to‐face clinical diagnosis by a dermatologist. Tests: 6, SkinVision [high risk vs medium/low risk]; 7, face‐to‐face clinical diagnosis [high risk vs medium/low risk].

Investigations of heterogeneity

We were unable to undertake investigations of heterogeneity listed in the protocol due to an insufficient number of studies.

Discussion

Summary of main results

This review aimed to assess the accuracy of smartphone applications for detecting invasive melanoma or atypical intraepidermal melanocytic variants. We included two studies with a total of 332 lesions, 86 of which were melanomas (Table 1).

Studies were generally of poor methodological quality. Both studies were at low risk of bias only in the index test domain. Poor reporting did not always allow adequate judgement of the quality of the reference standard. Study participants were highly selected compared with those who might choose to use a smartphone application to check a skin lesion that was causing them concern. Both studies used photographs of skin lesions that were already scheduled for excision in a dermatology clinic, and clinicians rather than people using their own smartphones took the photographs, potentially yielding higher‐quality images. Both studies blinded index test interpretation and used pre‐specified test thresholds and adequate reference standards. However, one study did not report whether the reference standard was interpreted blind to the lesion images, and one did not mention interpretation by an experienced histopathologist. We are therefore unable to make a reliable estimate of the accuracy of smartphone applications for detecting melanoma or atypical intraepidermal melanocytic variants.

Across the four artificial intelligence‐based applications that classified lesion images (photographs) as melanomas (one application) or as high risk or 'problematic' lesions (three applications), sensitivities ranged from 7% (95% CI 2% to 16%) to 73% (95% CI 52% to 88%) and specificities from 37% (95% CI 29% to 46%) to 94% (95% CI 87% to 97%). This means that the automated applications failed to flag between 27% and 93% of invasive melanomas or atypical intraepidermal melanocytic variants as requiring further assessment by a clinician (or, for one of the four applications, as melanoma). With a prevalence of melanoma ranging from 18% to 37% across these evaluations, the number of melanomas missed ranged from 7 to 55.
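The proportion of melanomas missed is one minus sensitivity, and the number missed is that proportion multiplied by the number of melanomas evaluated. The sketch below illustrates this arithmetic. The text does not say which evaluation produced each end of the sensitivity range, so we assume, purely for illustration, that the 73% sensitivity applies to the 26 melanomas analysed by SkinVision and the 7% sensitivity to an evaluation with 59 melanomas (as for Application 3 in the index test failures table). The function names are hypothetical.

```python
# Illustrative arithmetic only: pairing each sensitivity with a number of
# melanomas is an assumption, not something stated in the review text.


def miss_rate(sensitivity: float) -> float:
    """Proportion of true melanomas not flagged (1 - sensitivity)."""
    if not 0.0 <= sensitivity <= 1.0:
        raise ValueError("sensitivity must lie between 0 and 1")
    return 1.0 - sensitivity


def expected_missed(n_melanomas: int, sensitivity: float) -> int:
    """False negatives implied by a sensitivity, rounded to whole lesions."""
    return round(n_melanomas * miss_rate(sensitivity))


def prevalence(n_melanomas: int, n_lesions: int) -> float:
    """Proportion of analysed lesions that were melanomas."""
    if n_lesions <= 0:
        raise ValueError("number of lesions must be positive")
    return n_melanomas / n_lesions


if __name__ == "__main__":
    # SkinVision in Maier 2015: 26 melanomas among 144 analysed lesions.
    print(f"prevalence: {prevalence(26, 144):.0%}")
    # Assumed pairings of sensitivity with the number of melanomas evaluated.
    for n_mel, sens in [(26, 0.73), (59, 0.07)]:
        print(f"sensitivity {sens:.0%}: miss rate {miss_rate(sens):.0%}, "
              f"about {expected_missed(n_mel, sens)} of {n_mel} melanomas missed")
```

Under these assumed pairings the arithmetic gives 7 and 55 missed melanomas, matching the ends of the range reported above, and the SkinVision evaluation gives the lower prevalence bound of 18%.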

The single application using store‐and‐forward review of lesion images by a dermatologist had a sensitivity of 98% (95% CI 90% to 100%) and a specificity of 30% (95% CI 22% to 40%); the dermatologist missed one melanoma.
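As a worked check, suppose the store‐and‐forward application is Application 4 in the index test failures table. This is our assumption, but it matches the 15% test failure rate and six unevaluable melanomas described in the next paragraph, and it leaves 54 melanomas assessed. One missed melanoma (a false negative, FN) then implies 53 true positives (TP), which reproduces the reported sensitivity:

```latex
\[
\text{Sensitivity} = \frac{TP}{TP + FN} = \frac{53}{53 + 1} \approx 0.981,
\qquad
\text{Miss rate} = 1 - \text{Sensitivity} = \frac{1}{54} \approx 0.019
\]
```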

The number of test failures (lesion images that the applications analysed but that the study authors classed as unevaluable and excluded) ranged from 3 to 31 (or 2% to 18% of lesions analysed). The store‐and‐forward application had one of the highest rates of test failure (15%). Three of the four applications classed at least one melanoma as unevaluable, with the highest number of excluded melanomas (6/60 melanomas assessed) resulting from dermatologist evaluation of the store‐and‐forward images.

Strengths and weaknesses of the review

The strengths of this review include an in‐depth and comprehensive electronic literature search, systematic review methods including double extraction of papers by both clinicians and methodologists, and contact with authors to allow study inclusion or clarify data. We planned a clear analysis structure to allow estimation of test accuracy in different study populations and undertook a detailed and replicable analysis of methodological quality.

We did not identify any other systematic reviews of smartphone applications during the preparation of this review. However, Kassianos 2015 systematically attempted to identify all smartphone applications available as of July 2014 by searching the online stores of smartphone providers (Apple and Android) and then systematically extracting data about the applications from their online descriptions. The authors made no attempt to identify any diagnostic test accuracy research underlying the applications. Notably, Kassianos 2015 identified 39 applications, whereas we were able to identify test accuracy evaluations for only five. We did not contact developers of commercially available smartphone applications for further accuracy data; a future update of this review could do so.

The main concerns for the review are the clinical applicability of the findings and the exclusion of unevaluable test results, which is likely to have led to overestimation of sensitivity.

Applicability of findings to the review question

The data included in this review are unlikely to be generally applicable to the intended setting. In both studies, participants were people who had skin lesions already scheduled for excision in a dermatology clinic rather than smartphone users with concerns about a new or changing mole or skin lesion, and dermatologists probably took the photographs used with the applications in the clinic setting. One study also excluded equivocal lesions and those with moderate or high‐grade atypia, both of which may be more likely to produce unevaluable results or to be misclassified by the application.

Authors' conclusions

Implications for practice.

We could not produce any summary estimates of test accuracy to answer the research question for this review. Smartphone applications using artificial intelligence‐based analysis have not yet demonstrated sufficient promise in terms of accuracy, and they are associated with a high likelihood of missing melanomas. Available data have limited applicability in practice due to selective participant recruitment from secondary referral settings and the use of images not acquired by the intended users of the smartphone applications (i.e. members of the public). Applications based on store‐and‐forward images may have a role in the timely presentation of people with potentially malignant lesions by facilitating active self‐management health practices and early engagement of those with suspicious skin lesions; however, a store‐and‐forward approach has resource and workload implications.

Given the paucity of evidence and low methodological quality, we cannot draw any implications for practice. Nevertheless, this is a fast‐moving field, and new and better apps and better reported studies could change these conclusions substantially.

Implications for research.

Prospective evaluation of smartphone applications for identifying people with suspicious skin lesions who should seek further medical advice from a suitably qualified clinician is required to fully understand the accuracy of these tools. Studies should take place in a clinically relevant community or primary care setting, recruiting smartphone users who may have concerns about their risk of developing melanoma or about a new or changing skin lesion. Studies might compare the recommendation from the smartphone application with that of a GP following a face‐to‐face clinical diagnosis of the same lesion. In such a study it is important that the GP assesses all lesions examined using the smartphone in the same way, blinded to the smartphone recommendation. Although histological confirmation of melanoma versus not melanoma is the ideal reference standard, it is neither practical nor ethical for study participants with lesions at low risk of malignancy. Systematic follow‐up of non‐excised lesions over a five‐year period would avoid over‐reliance on a histological reference standard and would also make results more generalisable to routine practice. Although a pragmatic evaluation amongst the general population of smartphone users would be challenging, studies could include those most at risk of developing melanoma, in whom the prevalence of disease would be higher. Use of the test by smartphone users themselves, rather than by healthcare professionals or equipment experts, is also key to ensuring the clinical applicability of study findings and to determining the true test failure rate, which could seriously inhibit the use of smartphone applications in practice. Any future research study should conform to the updated Standards for Reporting of Diagnostic Accuracy (STARD) guideline (Bossuyt 2015).

What's new

| Date | Event | Description |
|---|---|---|
| 19 December 2018 | Amended | Affiliations, Disclaimer and Sources of support updated |

Acknowledgements

Members of the Cochrane Skin Cancer Diagnostic Test Accuracy Group include:

the full project team (Susan Bayliss, Naomi Chuchu, Clare Davenport, Jonathan Deeks, Jacqueline Dinnes, Lavinia Ferrante di Ruffano, Kathie Godfrey, Rubeta Matin, Colette O'Sullivan, Yemisi Takwoingi, Hywel Williams);

our 12 clinical reviewers (Rachel Abbott, Ben Aldridge, Oliver Bassett, Sue Ann Chan, Alana Durack, Monica Fawzy, Abha Gulati, Jacqui Moreau, Lopa Patel, Daniel Saleh, David Thompson, Kai Yuen Wong) and 2 methodologists (Lavinia Ferrante di Ruffano and Louise Johnston), who assisted with full text screening, data extraction and quality assessment across the entire suite of reviews of the diagnosis and staging of skin cancer;

our expert advisors and co‐authors Abhilash Jain and Fiona Walter; and

all members of our Advisory Group (Jonathan Bowling, Seau Tak Cheung, Colin Fleming, Matthew Gardiner, Abhilash Jain, Susan O'Connell, Pat Lawton, John Lear, Mariska Leeflang, Richard Motley, Paul Nathan, Julia Newton‐Bishop, Miranda Payne, Rachael Robinson, Simon Rodwell, Julia Schofield, Neil Shroff, Hamid Tehrani, Zoe Traill, Fiona Walter, Angela Webster).

The Cochrane Skin editorial base wishes to thank Urbà González, who was the Dermatology Editor for this review; and the clinical referees, Saul Halpern and David de Berker. We also wish to thank the Cochrane DTA editorial base and colleagues, as well as Meggan Harris, who copy‐edited this review.

Appendices

Appendix 1. Current content and structure of the Programme Grant

| No. | List of reviews | Number of studies |
|---|---|---|
| | Diagnosis of melanoma | |
| 1 | Visual inspection | 49 |
| 2 | Dermoscopy +/‐ visual inspection | 104 |
| 3 | Teledermatology | 22 |
| 4 | Smartphone applications | 2 |
| 5a | Computer‐assisted diagnosis – dermoscopy‐based techniques | 42 |
| 5b | Computer‐assisted diagnosis – spectroscopy‐based techniques | Review amalgamated into 5a |
| 6 | Reflectance confocal microscopy | 18 |
| 7 | High‐frequency ultrasound | 5 |
| | Diagnosis of keratinocyte skin cancer (BCC and cSCC) | |
| 8 | Visual inspection +/‐ dermoscopy | 24 |
| 5c | Computer‐assisted diagnosis – dermoscopy‐based techniques | Review amalgamated into 5a |
| 5d | Computer‐assisted diagnosis – spectroscopy‐based techniques | Review amalgamated into 5a |
| 9 | Optical coherence tomography | 5 |
| 10 | Reflectance confocal microscopy | 10 |
| 11 | Exfoliative cytology | 9 |
| | Staging of melanoma | |
| 12 | Imaging tests (ultrasound, CT, MRI, PET‐CT) | 38 |
| 13 | Sentinel lymph node biopsy | 160 |
| | Staging of cSCC | |
| | Imaging tests | Review dropped; only one study identified |
| 13 | Sentinel lymph node biopsy | Review amalgamated into 13 above (n = 15 studies) |

Appendix 2. Glossary of terms

| Term | Definition |

| Atypical intraepidermal melanocytic variant | Unusual area of darker pigmentation contained within the epidermis that may progress to an invasive melanoma; includes melanoma in situ and lentigo maligna |

| Atypical naevi | Unusual looking but non‐cancerous mole or area of darker pigmentation of the skin |

| BRAF V600 mutation | BRAF is a human gene that makes a protein called B‐Raf which is involved in the control of cell growth. BRAF mutations (damaged DNA) occur in around 40% of melanomas, which can then be treated with particular drugs. |

| BRAF inhibitors | Therapeutic agents that inhibit the serine‐threonine protein kinase BRAF; used to treat BRAF‐mutated metastatic melanoma. |

| Breslow thickness | A scale for measuring the thickness of melanomas by the pathologist using a microscope, measured in mm from the top layer of skin to the bottom of the tumour |

| Congenital naevi | A type of mole found on infants at birth |

| Dermoscopy | A technique in which a handheld microscope is used to allow a more detailed, magnified examination of the skin than is possible with the naked eye alone |

| False negative | An individual who is truly positive for a disease, but whom a diagnostic test classifies as disease‐free |

| False positive | An individual who is truly disease‐free, but whom a diagnostic test classifies them as having the disease |

| Histopathology/histology | The study of tissue, usually obtained by biopsy or excision, for example under a microscope |

| Incidence | The number of new cases of a disease in a given time period |

| Index test | A diagnostic test under evaluation in a primary study |

| Lentigo maligna | Unusual area of darker pigmentation contained within the epidermis which includes malignant cells but with no invasive growth. May progress to an invasive melanoma |

| Lymph node | Lymph nodes filter the lymphatic fluid (clear fluid containing white blood cells) that travels around the body to help fight disease; they are located throughout the body often in clusters (nodal basins). |

| Melanocytic naevus | An area of skin with darker pigmentation (or melanocytes), also referred to as a mole |

| Meta‐analysis | A form of statistical analysis used to synthesise results from a collection of individual studies |

| Metastases/metastatic disease | Spread of cancer away from the primary site to somewhere else through the bloodstream or the lymphatic system |

| Micrometastases | Micrometastases are metastases so small that they can only be seen under a microscope |