Abstract

Background

Establishing epidemiological models and making predictions are useful for the prevention and control of human brucellosis. Autoregressive integrated moving average (ARIMA) models can capture the long-term trends and the periodic variations in time series. However, these models cannot handle nonlinear trends correctly. Recurrent neural networks can address problems that involve nonlinear time series data. In this study, we built prediction models for human brucellosis in mainland China with Elman and Jordan neural networks. The fitting and forecasting accuracy of the neural networks was compared with that of a traditional seasonal ARIMA model.

Methods

The reported human brucellosis cases were obtained from the website of the National Health and Family Planning Commission of China. The human brucellosis cases from January 2004 to December 2017 were assembled as monthly counts. The training set, observed from January 2004 to December 2016, was used to build the seasonal ARIMA model and the Elman and Jordan neural networks. The test set, observed from January 2017 to December 2017, was used to test the forecast results. The root mean squared error (RMSE), mean absolute error (MAE) and mean absolute percentage error (MAPE) were used to assess the fitting and forecasting accuracy of the three models.

Results

There were 52,868 cases of human brucellosis in mainland China from January 2004 to December 2017. We observed a long-term upward trend and seasonal variation in the original time series. In the training set, the RMSE and MAE of the Elman and Jordan neural networks were lower than those of the ARIMA model, whereas the MAPE of the Elman and Jordan neural networks was slightly higher than that of the ARIMA model. In the test set, the RMSE, MAE and MAPE of the Elman and Jordan neural networks were far lower than those of the ARIMA model.

Conclusions

The Elman and Jordan recurrent neural networks achieved much higher forecasting accuracy than the seasonal ARIMA model. These models are more suitable than the traditional ARIMA model for forecasting nonlinear time series data, such as human brucellosis cases.

Electronic supplementary material

The online version of this article (10.1186/s12879-019-4028-x) contains supplementary material, which is available to authorized users.

Keywords: Time series analysis, Human brucellosis, Recurrent neural network

Background

Brucellosis is an anthropozoonosis caused by bacteria of the genus Brucella [1]. Human brucellosis results from eating undercooked meat or drinking the unpasteurized milk of infected animals, or from coming into contact with their secretions. The epidemiological characteristics of brucellosis in industrialized countries have undergone dramatic changes over the past few decades. Brucellosis was previously endemic in these countries but is now primarily related to returning travelers [2]. Brucellosis can still cause huge economic losses in developing countries [3]. Although the mortality of brucellosis in humans is less than 1%, it can still cause severe debilitation and disability [4]. Brucellosis is listed as a class II infectious disease by the Chinese Disease Prevention and Control of Livestock and Poultry and as a class II reportable infectious disease by the Chinese Centers for Disease Control and Prevention (CDC) [5]. Currently, human brucellosis is still a major public health problem that endangers the life and health of people in China.

Surveillance and early warning are critical for the detection of infectious disease outbreaks. Therefore, establishing epidemiological models and making predictions are useful for the prevention and control of human brucellosis. Autoregressive integrated moving average (ARIMA) models are time domain methods in time series analysis and have been widely used in infectious disease forecasting [6–10]. ARIMA models can capture the long-term trends and the periodic variations in time series [6]. However, the models cannot handle nonlinear trends correctly [11, 12]. In contrast, neural networks are flexible, nonlinear tools capable of approximating arbitrary functions [13–15]. Recurrent neural networks (RNNs) can address problems that involve time series data. These models have been mainly used in adaptive control, system identification, and most famously in speech recognition. Elman and Jordan neural networks are two popular recurrent neural networks that have delivered outstanding performance in a wide range of applications [16–20]. To our knowledge, no researchers have used these two neural networks to forecast the time series data of human brucellosis.

In this study, we built prediction models for human brucellosis in mainland China by using Elman and Jordan neural networks. In addition, the fitting and forecasting accuracy of the neural networks was compared with that of a seasonal ARIMA model.

Methods

Data sources

The reported human brucellosis cases were obtained from the website of the National Health and Family Planning Commission (NHFPC) of China (http://www.nhc.gov.cn). All of the human brucellosis cases were diagnosed according to clinical symptoms such as undulant fever, sweating, nausea, vomiting, myalgia, arthralgia, an enlarged liver, and an enlarged spleen [21]. The cases were also confirmed by a serologic test in accordance with the case definition of the World Health Organization (WHO). The human brucellosis cases from January 2004 to December 2017 were assembled as monthly counts. The dataset analyzed during the study is included in Additional file 1. The dataset was split into two sections: a training set and a test set. The training set, observed from January 2004 to December 2016, was used to build models, and the test set, observed from January 2017 to December 2017, was used to test the forecast results.

Decomposition of the time series

We first plotted the time series of human brucellosis cases and looked for trend and seasonal variations. One of the assumptions of an ARIMA model is that the time series is stationary, that is, its mean, variance, and autocorrelation are constant over time. Logarithm and square root transformations of the original series were performed to stabilize the variance. A first-order difference and a seasonal difference were used to remove the long-term trend and the seasonal variation, respectively. An augmented Dickey-Fuller (ADF) test was performed to check the stationarity of the transformed time series.
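
The transformation and differencing steps described above can be sketched as follows. This is a minimal illustration on a synthetic series, not the surveillance data; the helper name `difference` and the synthetic trend-plus-cycle series are assumptions made for the example, and the ADF test itself is not reproduced here.

```python
import numpy as np

def difference(series, lag=1):
    """Return series[t] - series[t - lag]; the first `lag` values are lost."""
    series = np.asarray(series, dtype=float)
    return series[lag:] - series[:-lag]

# Synthetic stand-in for 156 monthly counts (NOT the surveillance data):
# an upward trend plus a 12-month cycle.
months = np.arange(156)
counts = 1000 + 20 * months + 500 * np.sin(2 * np.pi * months / 12)

root = np.sqrt(counts)                       # variance-stabilizing transform
detrended = difference(root, lag=1)          # first-order difference (d = 1)
stationary = difference(detrended, lag=12)   # seasonal difference (D = 1, s = 12)

print(len(counts), len(stationary))  # 156 143
```

Each difference discards as many leading values as its lag, so a first-order difference followed by a lag-12 seasonal difference removes 13 observations in total.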

We used an additive model to decompose the time series:

$$x_t = m_t + s_t + z_t$$

where at time t, xt is the observed series, mt is the trend, st is the seasonal effect, and zt is an error term. The smoothing algorithm used in this study is loess [22], a locally weighted regression technique.

The seasonal ARIMA model construction

In the early 1970s, ARIMA models were proposed by the statisticians Box and Jenkins, and they have been considered among the most widely used models for time series analysis [23]. A seasonal ARIMA model includes autoregressive and moving average terms at lag s. The seasonal ARIMA(p, d, q) (P, D, Q)s model is written with the backward shift operator B as follows:

$$\Theta_P(B^s)\,\theta_p(B)\,(1 - B^s)^D (1 - B)^d x_t = \Phi_Q(B^s)\,\phi_q(B)\,w_t$$

where ΘP, θp, ΦQ, and ϕq are polynomials of orders P, p, Q, and q, respectively, xt is the observed series, and wt is white noise. After a time series has been transformed to be stationary, the plots of the autocorrelation function (ACF) and partial autocorrelation function (PACF) are used as a rough guide to reasonable models to try. Once the model order has been identified, the coefficients of the autoregressive and moving average terms must be estimated and tested for significance. Maximum likelihood estimation (MLE) is generally used for this parameter test. A best-fitting model is chosen with an appropriate criterion after trying out a wide range of models. A Ljung-Box Q statistic of the residuals is commonly used to judge whether the residuals are white noise. This statistic is a one-tailed statistical test. If the p value is greater than the significance level, then the residuals are regarded as white noise. A Brock-Dechert-Scheinkman (BDS) test is applied to the residuals of the best-fitting seasonal ARIMA model to detect nonlinearity in the original time series. A BDS test can test for nonlinearity, provided that any linear dependence has been removed from the data. In this study, we wrote a function in the R language that could fit a range of likely candidate ARIMA models automatically. The R script of the function is included in Additional file 2. The conditional sum of squares (CSS) method, which was more robust, was used in the arima function. The consistent Akaike information criterion (CAIC) [24] was used to select the best-fitting model. The formula of CAIC is written as follows:

$$\mathrm{CAIC} = -2\,LL + K\,(\ln(n) + 1)$$

where LL is the model log likelihood estimate, K is the number of model parameters, and n is the sample size. Good models are obtained by minimizing the CAIC.
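
As a small illustration of how CAIC trades goodness of fit against model size, the sketch below evaluates the criterion for two hypothetical models. The function name `caic` and the log likelihood values are invented for the example and are not results from this study.

```python
import math

def caic(log_likelihood, n_params, n_obs):
    """Consistent AIC: -2 * LL + K * (ln(n) + 1). Smaller is better."""
    return -2.0 * log_likelihood + n_params * (math.log(n_obs) + 1.0)

# Hypothetical fits on the same 143 observations (values are made up):
# the larger model fits slightly better but pays a higher penalty.
small_model = caic(log_likelihood=-390.0, n_params=3, n_obs=143)
large_model = caic(log_likelihood=-389.5, n_params=5, n_obs=143)

best = "small" if small_model < large_model else "large"
print(best)  # small
```

Because the penalty per parameter grows with ln(n), CAIC favors more parsimonious models than AIC as the sample size increases.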

Building Elman and Jordan recurrent neural networks

Normalization is an important procedure in building a neural network, as it helps to avoid a difficult training process and the resulting convergence problems. We used the min-max method to scale all the data to between zero and one. The formula for the min-max method is the following:

$$x_t' = \frac{x_t - x_{\min}}{x_{\max} - x_{\min}}$$

where xt represents the human brucellosis cases at time t, and xmin and xmax are the minimum and maximum of the series. Since the time series data of human brucellosis cases had strong seasonality that appeared to depend on the month, we created twelve time-lagged variables as input features. Therefore, we selected twelve as the number of input neurons. There was one output neuron representing the forecast value of the cases of the next month. The input matrix and the output matrix of the modeling dataset used in this study are written as follows:

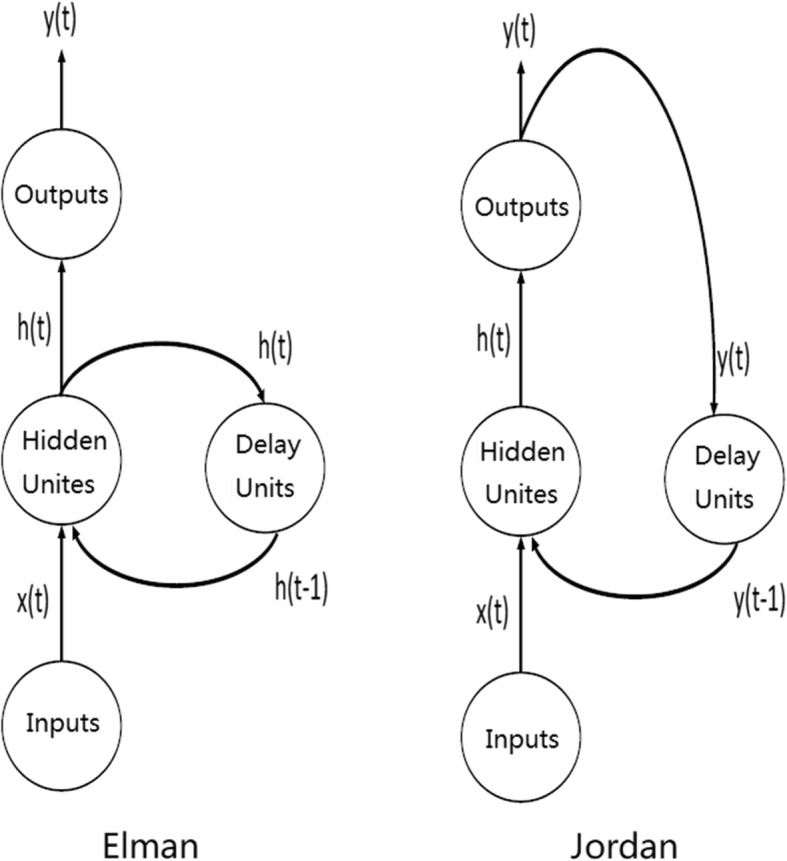
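
The scaling and lagging steps can be sketched as follows. This is an illustrative sketch only: the helper names `min_max_scale` and `make_lagged`, the placeholder series, and the column order (oldest lag first) are assumptions, and the layout in the authors' R script may differ.

```python
import numpy as np

def min_max_scale(x):
    """Scale values linearly so the minimum maps to 0 and the maximum to 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())

def make_lagged(series, n_lags=12):
    """Build rows of n_lags consecutive values and the value that follows each row."""
    series = np.asarray(series, dtype=float)
    n_rows = len(series) - n_lags
    inputs = np.stack([series[i:i + n_lags] for i in range(n_rows)])
    targets = series[n_lags:]
    return inputs, targets

# Placeholder for the 156 monthly training counts (NOT the real data).
series = np.arange(156, dtype=float)
scaled = min_max_scale(series)
X, y = make_lagged(scaled, n_lags=12)

print(X.shape, y.shape)  # (144, 12) (144,)
```

With 156 monthly values and 12 lags, 144 input rows remain, each paired with the count of the following month.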

The structure of Elman and Jordan neural networks is illustrated in Fig. 1. Elman and Jordan neural networks consist of an input layer, a hidden layer, a delay layer, and an output layer. The delay neurons of an Elman neural network are fed from the hidden layer, while the delay neurons of a Jordan neural network are fed from the output layer.

Fig. 1.

Structure of the Elman and Jordan neural networks
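
The difference between the two architectures lies only in where the delay (context) units take their values from. The following sketch makes that explicit with a simplified forward pass; the function `run_recurrent`, the tanh hidden activation, the linear output, and the random weights are all assumptions for illustration and do not reproduce the RSNNS implementation.

```python
import numpy as np

def run_recurrent(inputs, w_in, w_ctx, w_out, kind):
    """Run a one-hidden-layer Elman or Jordan network over a sequence.

    kind="elman":  context units copy the previous hidden activations.
    kind="jordan": context units copy the previous output.
    """
    n_hidden = w_in.shape[1]
    n_out = w_out.shape[1]
    context = np.zeros(n_hidden if kind == "elman" else n_out)
    outputs = []
    for x in inputs:
        hidden = np.tanh(x @ w_in + context @ w_ctx)
        out = hidden @ w_out                      # linear output unit
        context = hidden if kind == "elman" else out
        outputs.append(out)
    return np.array(outputs)

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 12, 7, 1
inputs = rng.uniform(size=(5, n_in))              # five rows of 12 lagged values
w_in = rng.normal(size=(n_in, n_hidden))
w_out = rng.normal(size=(n_hidden, n_out))

elman_out = run_recurrent(inputs, w_in, rng.normal(size=(n_hidden, n_hidden)), w_out, "elman")
jordan_out = run_recurrent(inputs, w_in, rng.normal(size=(n_out, n_hidden)), w_out, "jordan")
print(elman_out.shape, jordan_out.shape)  # (5, 1) (5, 1)
```

In this simplified form the two networks give the same output at the first step, because the context starts at zero, and diverge afterwards as different signals are fed back.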

Several parameters should be set when building an Elman or Jordan neural network, such as the number of units in the hidden layer, the maximum number of learning iterations, the initialization function, the learning function, and the update function. In this study, the maximum number of iterations was set to 5000. If the learning rate is too large, training may oscillate or settle in a poor local minimum. Therefore, the learning rate is usually between 0 and 1. By trial and error, the learning rates of the Elman and Jordan neural networks were set to 0.75 and 0.55, respectively. One hidden layer was sufficient for this study. To avoid overfitting during the training process, we adopted leave-one-out cross-validation (LOOCV) in the original training set to select the optimal number of units in the hidden layer. A single observation was used as the validation set, and the remaining observations made up the new training set. We then trained the neural network on the remaining observations and computed the squared error for the single held-out observation. We repeated the procedure until every observation in the original training set had been held out once, and averaged the errors to obtain the mean squared error (MSE). The number of units in the hidden layer was varied from 5 to 25. The optimal number of units in the hidden layer was the one with the lowest mean MSE. Parallel computation was used to accelerate the LOOCV procedure on a Dell PowerEdge T430 server with 12 threads. The remaining parameters of the two models were set to their defaults. The R script used to build the neural network models is included in Additional file 3.
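
The LOOCV selection loop can be sketched as below. To keep the example fast and dependency-free, a stand-in model is used instead of an Elman or Jordan network: a fixed random tanh hidden layer with a least-squares output layer. That stand-in, the helper names, the synthetic data, and the coarse grid of hidden sizes (5, 10, 15, 20, 25 rather than every value from 5 to 25) are all assumptions for illustration.

```python
import numpy as np

def loocv_mse(X, y, fit, predict):
    """Mean squared error over leave-one-out folds."""
    n = len(y)
    errors = []
    for i in range(n):
        keep = np.arange(n) != i                  # hold out observation i
        model = fit(X[keep], y[keep])
        pred = predict(model, X[i:i + 1])[0]
        errors.append((pred - y[i]) ** 2)
    return float(np.mean(errors))

def make_random_feature_net(n_hidden, seed=0):
    """Stand-in model: fixed random tanh hidden layer + least-squares output."""
    def fit(X, y):
        rng = np.random.default_rng(seed)
        w = rng.normal(size=(X.shape[1], n_hidden))
        beta, *_ = np.linalg.lstsq(np.tanh(X @ w), y, rcond=None)
        return w, beta

    def predict(model, X):
        w, beta = model
        return np.tanh(X @ w) @ beta

    return fit, predict

# Synthetic regression data (NOT the brucellosis series).
rng = np.random.default_rng(1)
X = rng.uniform(size=(40, 3))
y = np.sin(X.sum(axis=1)) + 0.05 * rng.normal(size=40)

scores = {}
for n_hidden in range(5, 26, 5):
    fit, predict = make_random_feature_net(n_hidden)
    scores[n_hidden] = loocv_mse(X, y, fit, predict)

best = min(scores, key=scores.get)
print(best, scores[best])
```

Each candidate size requires one model fit per observation, which is why the procedure becomes expensive for iteratively trained networks and benefits from running folds in parallel.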

Model comparison

Three performance indexes, root mean squared error (RMSE), mean absolute error (MAE) and mean absolute percentage error (MAPE), were used to assess the fitting and forecasting accuracy of the three models. MAE is the simplest measure of fitting and forecasting accuracy. The absolute error is the absolute value of the difference between the actual value and the predicted value, and MAE indicates how large an error we can expect from the forecast on average. To judge whether an error is large or small relative to the quantity being forecast, we can express the absolute error in percentage terms. MAPE is calculated as the average of the unsigned percentage errors. MAPE is sensitive to small actual values and should not be used when working with low-volume data. Since MAE and MAPE are based on the mean error, they may understate the impact of large rare errors. RMSE is calculated to adjust for large rare errors. We first square the errors, then calculate the mean of the squared errors and take the square root of the mean. This yields a measure of the size of the error that gives more weight to the large rare errors. We can also compare RMSE and MAE to judge whether the forecast contains large rare errors. Generally, the larger the difference between RMSE and MAE, the more inconsistent the error size is.
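
For reference, the three indexes can be written as follows. The notation is chosen here for illustration and is an assumption: yt denotes the actual value, ŷt the predicted value, and n the number of compared values. MAPE is written as a proportion rather than a percentage, which matches how the values are reported in this article (for example, 0.236).

$$\mathrm{MAE} = \frac{1}{n}\sum_{t=1}^{n}\left|y_t - \hat{y}_t\right|, \qquad \mathrm{MAPE} = \frac{1}{n}\sum_{t=1}^{n}\left|\frac{y_t - \hat{y}_t}{y_t}\right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2}$$

Because squaring weights large errors more heavily, RMSE is never smaller than MAE, and the two are equal only when all absolute errors have the same size.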

Data analysis

All data analyses were conducted by using R software version 3.5.1 on an Ubuntu 18.04 operating system. The decomposition of the time series was performed with the function stl in package stats. Seasonal ARIMA models were built with the function arima in package stats. Elman and Jordan recurrent neural networks were built with the functions elman and jordan in the RSNNS package, respectively. In this study, the statistical significance level was set at 0.05.

Results

Characteristics of human brucellosis cases in mainland China

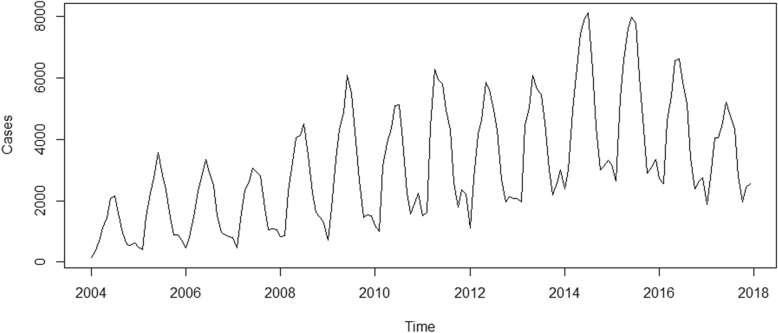

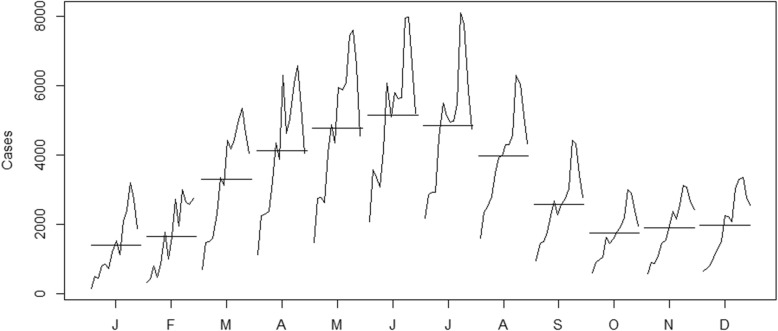

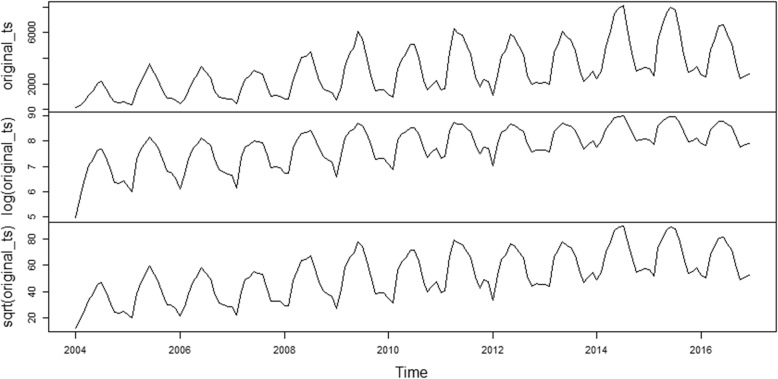

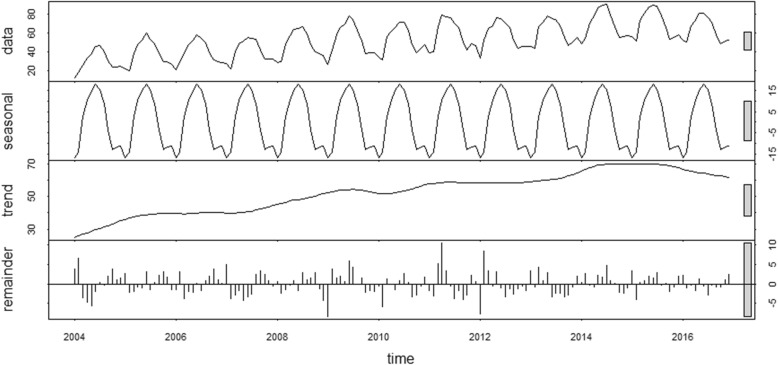

There were 52,868 cases of human brucellosis in mainland China from January 2004 to December 2017. As shown in Fig. 2, we observed a general upward trend and seasonal variation in the original time series. The number of human brucellosis cases was higher in summer and lower in winter (Fig. 3). As shown in Fig. 2, the variance seemed to increase with the level of the time series. The variance from 2004 to 2007 was the smallest, followed by that from 2008 to 2013, and the variance from 2014 to 2016 was the largest. Therefore, the variance was nonconstant, and the time series had to be transformed to stabilize it. Generally, a logarithm or square root transformation can stabilize the variance. The original, logarithm transformed, and square root transformed time series of human brucellosis cases are plotted in Fig. 4. The variance of the logarithm transformed series seemed to decrease with the level of the series. For the square root transformed series, the variance appeared to be fairly consistent. After trying both methods, we found that the square root transformation was more appropriate for this study. The decomposition of the time series after square root transformation is plotted in Fig. 5. The gray bars on the right side of the plot allow for easy comparison of the magnitudes of the components. The square root transformed time series and its seasonal, trend, and noise components are shown from top to bottom, respectively. The seasonal component did not change over time. The trend component showed a general upward trend from 2004 to 2015 and declined slightly in 2016. There was no apparent pattern in the noise. The results of the decomposition were satisfactory.

Fig. 2.

Time series plot for cases of human brucellosis in Mainland China from 2004 to 2017

Fig. 3.

Month plot for cases of human brucellosis in Mainland China from 2004 to 2017

Fig. 4.

Plot of original time series, logarithm and square root transformed human brucellosis cases

Fig. 5.

Seasonal decomposition of the square root transformed human brucellosis cases

Seasonal ARIMA model

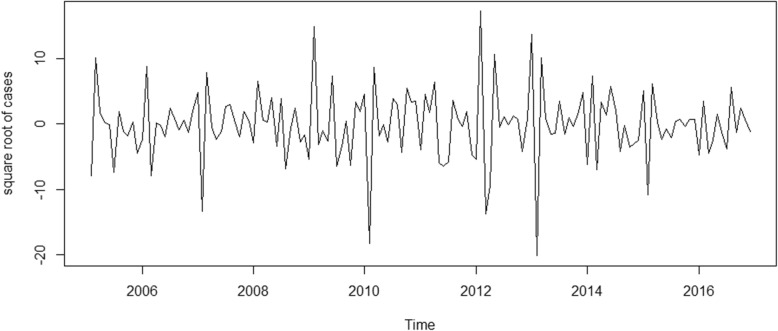

A first-order difference and a seasonal difference made the time series of square root transformed human brucellosis cases look relatively stationary (Fig. 6). The ADF test of the differenced time series suggested that it was stationary (ADF test: t = −5.327, P < 0.01). Therefore, the parameters d and D for a seasonal ARIMA model were set to 1 and 1, respectively.

Fig. 6.

Plot of square root transformed human brucellosis cases after a first-order difference and a seasonal difference

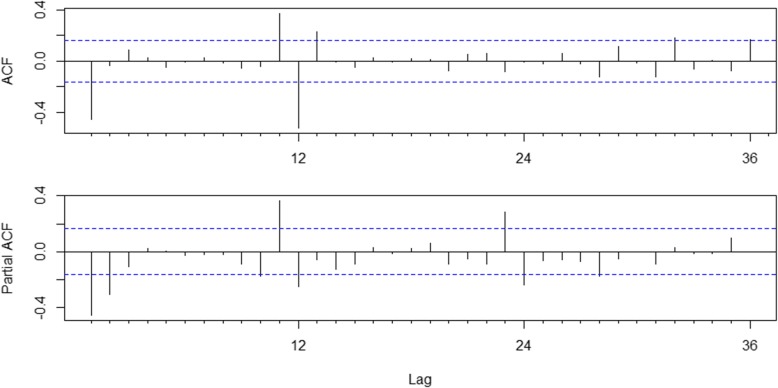

The plots of ACF and PACF are shown in Fig. 7. The significant spike at lag 1 in the ACF suggested a possible nonseasonal MA(1) component, and the significant spike at lag 12 in the ACF suggested a possible seasonal MA(1) component. The significant spikes at lags 1 and 2 in the PACF suggested a possible nonseasonal AR(2) component, and the significant spikes at lags 12 and 24 in the PACF suggested a possible seasonal AR(2) component. Therefore, we tried the parameters p from 0 to 2, q from 0 to 1, P from 0 to 2, and Q from 0 to 1. Combining these parameters gave 36 likely candidate models. Models that did not pass the residual and parameter tests were discarded, which left five models. We found that the ARIMA (2,1,0) × (0,1,1)12 model had the smallest CAIC (798.731) among the candidate models (Table 1). The Ljung-Box Q statistic of the residuals indicated no significant difference (P = 0.097) at the significance level of 0.05. Therefore, we considered the residuals to be white noise. The estimated parameters of the optimal seasonal ARIMA model are listed in Table 2. The results of the BDS test are shown in Table 3, and all of the p values were smaller than the significance level of 0.05. The results suggested that the time series of human brucellosis in mainland China from 2004 to 2016 was not linear.
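
The size of the candidate grid follows directly from the parameter ranges given above, as the short enumeration below shows. The tuple layout used to represent each model is an assumption for illustration; the fitting and testing of each candidate, done in the authors' R script, is not reproduced.

```python
from itertools import product

p_values, q_values = range(0, 3), range(0, 2)   # nonseasonal AR and MA orders
P_values, Q_values = range(0, 3), range(0, 2)   # seasonal AR and MA orders
d, D, s = 1, 1, 12                              # differencing orders and period

# Each candidate is ((p, d, q), (P, D, Q, s)).
candidates = [
    ((p, d, q), (P, D, Q, s))
    for p, q, P, Q in product(p_values, q_values, P_values, Q_values)
]

print(len(candidates))  # 36
```

The grid contains 3 × 2 × 3 × 2 = 36 combinations, including the selected ARIMA (2,1,0) × (0,1,1)12 model.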

Fig. 7.

Autocorrelation and partial autocorrelation plots for the differenced stationary time series

Table 1.

Comparison of five candidate seasonal ARIMA models

| Model | CAIC | Ljung-Box Q | P value |
|---|---|---|---|
| ARIMA (0,1,1) × (0,1,1)12 | 799.923 | 22.787 | 0.199 |
| ARIMA (0,1,1) × (1,1,0)12 | 817.906 | 22.472 | 0.212 |
| ARIMA (0,1,1) × (2,1,0)12 | 813.588 | 25.848 | 0.103 |
| ARIMA (2,1,0) × (0,1,1)12 | 798.731 | 26.107 | 0.097 |
| ARIMA (2,1,0) × (1,1,0)12 | 823.300 | 22.852 | 0.196 |

Table 2.

Estimate parameters of the seasonal ARIMA (2,1,0) × (0,1,1)12 model

| Model parameter | Estimate | Standard error | 95% CI of estimate |
|---|---|---|---|
| AR1 | −0.392 | 0.085 | (−0.559, −0.225) |
| AR2 | −0.220 | 0.081 | (−0.378, −0.062) |
| Seasonal MA1 | −0.726 | 0.063 | (−0.849, −0.603) |

Table 3.

Results of BDS test for the residuals of seasonal ARIMA model

| Epsilon | Dimension | Statistic | p-value |
|---|---|---|---|
| 1.838 | 2 | 2.751 | 0.006 |
| 1.838 | 3 | 3.532 | 0.000 |
| 1.838 | 4 | 2.997 | 0.002 |
| 3.677 | 2 | 2.241 | 0.025 |
| 3.677 | 3 | 2.603 | 0.009 |
| 3.677 | 4 | 2.524 | 0.012 |
| 5.515 | 2 | 2.371 | 0.018 |
| 5.515 | 3 | 2.452 | 0.014 |
| 5.515 | 4 | 2.317 | 0.021 |
| 7.354 | 2 | 2.624 | 0.009 |
| 7.354 | 3 | 2.572 | 0.010 |
| 7.354 | 4 | 2.472 | 0.013 |

Elman and Jordan neural networks

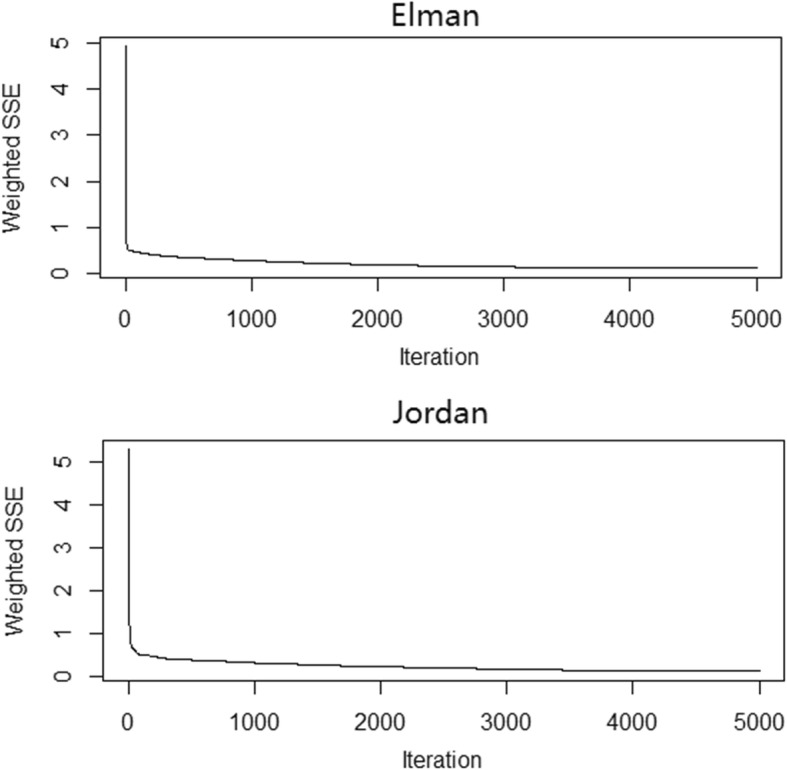

When we used the LOOCV approach in the training set, the lowest mean MSE was 0.018 for the Elman neural network, with 7 units in the hidden layer, and 0.010 for the Jordan neural network, with 8 units in the hidden layer. The plot of the training error by iteration is shown in Fig. 8. The error dropped sharply within the first iterations, which indicated that the model was learning from the data. The error then declined at a more modest rate until 5000 iterations.

Fig. 8.

Training error by iteration for Elman and Jordan neural networks

Comparison of the three models

The training set was used to build models. A first-order difference and a seasonal difference were performed in building the seasonal ARIMA model. Therefore, we lost the first 13 values in the training set, and the remaining 143 values were compared. We created 12 time-lagged variables as input features for the Elman and Jordan neural networks. Therefore, 144 values were compared in the training set for the neural networks. The fitting and forecasting accuracy of the three models are shown in Table 4. In the training set, the RMSE and MAE of the Elman and Jordan neural networks were lower than those of the ARIMA model, whereas the MAPE of the Elman and Jordan neural networks was slightly higher than that of the ARIMA model. In the test set, the RMSE, MAE and MAPE of the Elman and Jordan neural networks were far lower than those of the ARIMA model. The Jordan neural network had the lowest RMSE, MAE and MAPE in the test set and was therefore the best forecasting model. The actual cases of human brucellosis in mainland China from January to December 2017 and the cases forecasted by the three models are presented in Table 5.

Table 4.

Comparison of the fitting and forecasting accuracy of the three models

| Performance index | Training set: ARIMA | Training set: Elman | Training set: Jordan | Test set: ARIMA | Test set: Elman | Test set: Jordan |
|---|---|---|---|---|---|---|
| RMSE | 405.746 | 297.181 | 361.283 | 1050.018 | 684.450 | 561.442 |
| MAE | 294.190 | 231.061 | 287.370 | 873.840 | 502.926 | 374.737 |
| MAPE | 0.112 | 0.115 | 0.113 | 0.236 | 0.156 | 0.113 |

Table 5.

The actual and forecasted cases of human brucellosis in mainland China from January to December 2017 of the three models

| Month | Actual values | ARIMA | Elman | Jordan |
|---|---|---|---|---|
| Jan | 1874 | 2274 | 2148 | 2140 |
| Feb | 2740 | 2390 | 2395 | 2399 |
| Mar | 4055 | 4568 | 4211 | 4267 |
| Apr | 4048 | 5530 | 5077 | 5376 |
| May | 4539 | 6521 | 6085 | 5763 |
| Jun | 5203 | 6721 | 4450 | 5175 |
| Jul | 4742 | 6330 | 4873 | 4795 |
| Aug | 4330 | 5228 | 4376 | 4421 |
| Sep | 2781 | 3527 | 3478 | 3141 |
| Oct | 1953 | 2448 | 2890 | 2269 |
| Nov | 2427 | 2675 | 2489 | 2361 |
| Dec | 2549 | 2819 | 2612 | 2337 |
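
As a consistency check, the test-set indexes in Table 4 can be recomputed from the monthly values in Table 5. The sketch below does this; the function name `accuracy` is an assumption, and because Table 5 lists forecasts rounded to whole cases, the recomputed values are expected to agree with Table 4 only approximately.

```python
import numpy as np

# Actual and forecasted monthly cases for 2017, copied from Table 5.
actual = np.array([1874, 2740, 4055, 4048, 4539, 5203,
                   4742, 4330, 2781, 1953, 2427, 2549], dtype=float)
forecasts = {
    "ARIMA":  [2274, 2390, 4568, 5530, 6521, 6721, 6330, 5228, 3527, 2448, 2675, 2819],
    "Elman":  [2148, 2395, 4211, 5077, 6085, 4450, 4873, 4376, 3478, 2890, 2489, 2612],
    "Jordan": [2140, 2399, 4267, 5376, 5763, 5175, 4795, 4421, 3141, 2269, 2361, 2337],
}

def accuracy(actual, predicted):
    """Return RMSE, MAE and MAPE (as a proportion) for one set of forecasts."""
    error = np.asarray(predicted, dtype=float) - actual
    return {
        "rmse": float(np.sqrt(np.mean(error ** 2))),
        "mae": float(np.mean(np.abs(error))),
        "mape": float(np.mean(np.abs(error / actual))),
    }

results = {name: accuracy(actual, values) for name, values in forecasts.items()}
for name, metrics in results.items():
    print(name, {key: round(value, 3) for key, value in metrics.items()})
```

The recomputed values reproduce the ranking reported in Table 4, with the Jordan network lowest on all three indexes and the ARIMA model highest.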

Discussion

There are two families of methods for time series analysis: frequency domain methods and time domain methods. Seasonal ARIMA models, which belong to the time domain methods, have been regarded as among the most useful models in seasonal time series prediction [25]. There is no need to use any extra surrogate variables [26]. The analysis usually relies on the outcome variable series alone, without considering the factors that affect the outcome variable. This is practical because the time series data of all influencing factors are rarely available. Before model identification, the time series should be made stationary by data transformation and differencing. Generally, the more differences are used, the more data are lost. Fortunately, we only used a first-order difference and a seasonal difference in this study. Eventually, the ARIMA (2,1,0) × (0,1,1)12 model was chosen as the optimal model according to the value of CAIC. The seasonal ARIMA model accurately captured the seasonal fluctuation of human brucellosis cases in mainland China. However, the forecasting accuracy in the test set was not satisfactory. The MAPE of the seasonal ARIMA model reached 0.236 in the test set. The most likely reason was that the time series data of human brucellosis cases in mainland China was not linear. As shown in Fig. 5, although we could observe a long-term upward trend in the trend component, some curvature remained after the seasonal and irregular components had been extracted. The results of the BDS test also supported that the time series of human brucellosis in mainland China from 2004 to 2016 was not linear.

There are mainly two approaches for nonlinear time series forecasting [27]. One approach is model-based parametric nonlinear methods, such as the smooth transition autoregressive (STAR) model, the threshold autoregressive (TAR) model, the nonlinear autoregressive (NAR) model, and the nonlinear moving average (NMA) model. In theory, these parametric nonlinear methods are superior to the traditional ARIMA model in capturing nonlinear relationships in the data. However, there are too many possible nonlinear patterns in practice, which restricts the usefulness of these models. The other approach is nonparametric data-driven methods, of which neural networks are the most widely used. Neural networks are inspired by the structure of a biological nervous system. These networks can capture the patterns and hidden functional relationships existing in a given set of data, even when these relationships are unknown or hard to identify [28]. Recurrent neural networks contain hidden states that are distributed across time. This characteristic suggests that these networks can efficiently store much information about the past. Therefore, these networks have an advantage in dealing with time series data. Elman and Jordan neural networks are two widely used recurrent neural networks. Elman neural networks have been used in many practical applications, such as the price prediction of crude oil futures [29], weather forecasting [30], water quality forecasting [31], and financial time series prediction [28]. Jordan neural networks have been used in wind speed forecasting [32] and stock market volatility monitoring [33]. All of these applications have achieved good forecasting performance. In this study, we tried these two neural network models to predict human brucellosis cases in mainland China.

In the training set, the MAPE values of the Elman and Jordan neural networks were 0.115 and 0.113, respectively, almost the same as the MAPE of the seasonal ARIMA model at 0.112, while the RMSE and MAE of the Elman and Jordan neural networks were lower than those of the ARIMA model. The RMSE and MAE of the Elman neural network were the lowest, whereas the MAPE of the Elman neural network was the highest in the training set. The most likely reason was that the Elman neural network fitted large values more accurately but fitted small values less accurately in this study. Importantly, the Elman and Jordan neural networks achieved much higher forecasting accuracy in the test set. The RMSE, MAE, and MAPE of the Elman and Jordan neural networks were far lower than those of the seasonal ARIMA model. Therefore, Elman and Jordan recurrent neural networks are more appropriate than the seasonal ARIMA model for forecasting nonlinear time series data, such as human brucellosis cases.

However, we must admit that neural network models still have some limitations. First, neural network models are black boxes, i.e., we cannot know how much each input variable influences the output variables. Second, there are no fixed rules to determine the structure and parameters of neural network models. The choices depend on the experience of the researchers. Third, it is computationally very expensive and time consuming to train neural network models. Neural network models require processors with parallel processing power to accelerate the training process. Some researchers have built hybrid models combining ARIMA models and neural network models to analyze time series data and achieved good results. We will try hybrid models for human brucellosis in the future.

There were also some limitations to this study. First, the NHFPC of China only reported the data from 2004 to 2017. More time series data on brucellosis cases could improve the accuracy of forecasting models. Second, the present study is an ecological study, and we cannot avoid ecological fallacy. Third, the factors that affect the occurrence of human brucellosis, such as pathogens, host, natural environment, vaccines and socioeconomic variations, were not considered when we constructed the models.

Conclusions

In this study, we established a seasonal ARIMA model and two recurrent neural networks, namely, the Elman and Jordan neural networks, to conduct short-term prediction of human brucellosis cases in mainland China. The Elman and Jordan recurrent neural networks achieved much higher forecasting accuracy. These models are more appropriate for forecasting nonlinear time series data, such as human brucellosis, than the traditional ARIMA model.

Additional files

Monthly cases of human brucellosis in Mainland China from 2004 to 2017. (XLSX 13 kb)

R script of the function that can fit a range of likely candidate ARIMA models automatically. (TXT 1 kb)

R script to perform the Elman and Jordan neural network models with leave-one-out-cross-validation method. (TXT 2 kb)

Acknowledgements

We thank Nature Research Editing Service of Springer Nature for providing us a high-quality editing service.

Funding

This study is supported by the National Natural Science Foundation of China (Grant No. 81202254 and 71573275) and the Science Foundation of Liaoning Provincial Department of Education (LK201644 and LFWK201719). The funders had no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Availability of data and materials

The datasets analyzed during the current study are included in the additional file 1. The documents included in this study are also available from the corresponding author Bao-Sen Zhou on reasonable request.

Abbreviations

- ACF: Autocorrelation function
- ADF: Augmented Dickey-Fuller
- ARIMA: Autoregressive integrated moving average
- BDS: Brock-Dechert-Scheinkman
- CAIC: Consistent Akaike information criterion
- CDC: Centers for Disease Control and Prevention
- CSS: Conditional sum of squares
- LOOCV: Leave-one-out cross-validation
- MAE: Mean absolute error
- MAPE: Mean absolute percentage error
- MLE: Maximum likelihood estimation
- MSE: Mean squared error
- NAR: Nonlinear autoregressive
- NHFPC: National Health and Family Planning Commission
- NMA: Nonlinear moving average
- PACF: Partial autocorrelation function
- RMSE: Root mean squared error
- RNNs: Recurrent neural networks
- STAR: Smooth transition autoregressive
- TAR: Threshold autoregressive
- WHO: World Health Organization

Authors’ contributions

WW designed and drafted the manuscript. SYA and PG participated in the data collection. WW and DSH participated in the data analysis. BSZ assisted in drafting the manuscript. The final manuscript has been read and approved by all the authors.

Ethics approval and consent to participate

Research institutional review boards of China Medical University approved the protocol of this study, and determined that the analysis of publicly open data of human brucellosis cases in mainland China was part of continuing public health surveillance of a notifiable infectious disease and thus was exempt from institutional review board assessment. All data were supplied and analyzed in the anonymous format, without access to personal identifying or confidential information.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Wei Wu, Email: wuwei@cmu.edu.cn.

Shu-Yi An, Email: shuyian_cdc@163.com.

Peng Guan, Email: pguan@cmu.edu.cn.

De-Sheng Huang, Email: dshuang@cmu.edu.cn.

Bao-Sen Zhou, Email: bszhou@cmu.edu.cn.

Supplementary Materials

Monthly cases of human brucellosis in Mainland China from 2004 to 2017. (XLSX 13 kb)

R script of the function that can fit a range of likely candidate ARIMA models automatically. (TXT 1 kb)

R script to fit the Elman and Jordan neural network models with leave-one-out cross-validation. (TXT 2 kb)

Data Availability Statement

The datasets analyzed during the current study are included in Additional file 1. The documents included in this study are also available from the corresponding author, Bao-Sen Zhou, on reasonable request.