Abstract

The methods available for solving the inverse problem of photoacoustic tomography promote only one feature–either being smooth or sharp–in the resultant image. The fusion of photoacoustic images reconstructed from distinct methods improves the individually reconstructed images, with the guided filter based approach being state-of-the-art, which requires that implicit regularization parameters are chosen. In this work, a deep fusion method based on convolutional neural networks has been proposed as an alternative to the guided filter based approach. It has the combined benefit of using less data for training without the need for the careful choice of any parameters and is a fully data-driven approach. The proposed deep fusion approach outperformed the contemporary fusion method, which was proved using experimental, numerical phantoms and in-vivo studies. The improvement obtained in the reconstructed images was as high as 95.49% in root mean square error and 7.77 dB in signal to noise ratio (SNR) in comparison to the guided filter approach. Also, it was demonstrated that the proposed deep fuse approach, trained on only blood vessel type images at measurement data SNR being 40 dB, was able to provide a generalization that can work across various noise levels in the measurement data, experimental set-ups as well as imaging objects.

1. Introduction

Photoacoustic (PA) imaging is a non-invasive imaging modality which renders optical resolution at ultrasonic depth inside the biological tissue [1–4]. In photoacoustic tomography (PAT), a short (nano-second) laser pulse irradiates the biological tissue under investigation. The incident optical energy is absorbed by the biological tissue, which results in the rise in temperature, generating pressure waves because of thermoelastic expansion and photon absorption. The ultrasonic detectors record the pressure waves at the imaging domain boundary. These acquired pressure waves are utilized in an inverse fashion for computing the initial pressure distribution. The information about the tissue functional parameters, such as hemoglobin, water, oxyhemoglobin, bilirubin, melanin [5] is given by the optical absorption. The applications of PA imaging includes ophthalmology [6], oncology [7,8], neurosciences [9], dermatology [10], cardiology [11], and monitoring tissue health conditions.

Photoacoustic tomography involves solving the initial problem by utilizing the data acquired at the boundary at time “t” to compute the initial pressure distribution at time “t” = 0. This initial value problem is solved using several reconstruction techniques. These include analytical algorithms (delay and sum, and back-projection (BP)) as well as time-reversal (TR) algorithms [12, 13]. These are computationally effective, but need large amount of data for providing meaningful results as compared to the model based methods. The requirement of huge data result in prolonged scan time or costly experimental setups. Also, the setups utilized for capturing the pressure waves generally cover only an aperture resulting in limited data scenarios [14–16]. Often, reconstruction using analytical methods or time reversal method for limited data cases result in poor image quality and poor contrast. The model-based methods generally have improved quantitative accuracy in these limited data cases [17–20]. Since model based methods are iterative, they are more robust to noise and are used for real time reconstructions [12, 21]. These methods are typically computationally expensive as compared to analytical methods and require careful choice of reconstruction (regularization) parameters. The reconstructed image characteristics obtained using these methods are typically complimentary as compared to the analytical methods [22–24].

The post-processing of reconstructed images is a standard procedure in the field of medical imaging. The reconstructed images are generally histogram normalized or a filter is used to enhance the image characteristics. In PA imaging, there have been previous attempts to enhance the resolution for Optical Resolution Photoacoustic Microscopy [25]. The method involves the use of blind deconvolution to estimate the blur kernel using Wiener filter [25]. Similar to these efforts, other techniques were developed which consists of two steps. The model based image reconstruction is the first step and utilizing the model resolution matrix to deblur the image is the second step [22]. The deblurring step is computationally expensive and requires computational time comparable with the first step of model based reconstruction [22,26].

Computer vision community has recently used fusion methods to combine the best features from two different images to get a single image that is improved version compared to the individual input images [27]. Especially in areas of multi-focus and multi-camera fusion, the use of fusion based methods have shown to produce superior results [28]. Recently introduced modified guided filtering [29] based fusion method in PA imaging was shown to improve the reconstructed images. It uses a set of regularization parameters, which need to be selected carefully, to obtain the final image output. In this work, a convolutional neural network (CNN) based architecture for fusion is proposed, to combine the best features from two input images obtained using distinct reconstruction methods. Firstly, the reconstructions are obtained using two different methods (example being Linear Back Projection and Lanczos Tikhonov). These reconstructed images were used as input and the network was trained using the target phantom as the output image. The proposed technique was compared with standard reconstruction techniques, such as total variation (TV) regularization and Lanczos Tikhonov as well as contemporary fusion methods, such as modified guided filtering. It was shown to out perform these methods in a systematic evaluation involving numerical as well as experimental studies. The proposed method (termed as PA-Fuse) was evaluated at different noise levels to show the robustness of the architecture. It was also assessed with inputs other than the network was trained for and shown that the reconstruction results obtained were conforming to broad utility. The experiments were performed with structures that were not seen by the network and also on an experimental phantom data and shown to perform better than contemporary methods. Also, to show the universal utility of the proposed PA-Fuse method, reconstructed results from different experimental setups were utilized to improve the reconstruction results without retraining the network.

2. Photoacoustic image reconstruction

The forward model of PA imaging involves the computation of pressure waves collected by the ultrasonic detectors placed at the imaging domain boundary. The propagation of PA waves inside the tissue is governed by [4]

| (1) |

Here, the pressure at time t and position x is denoted by P(x, t), the speed of sound is denoted by c, specific heat capacity is denoted by Cp, the thermal expansion coefficient is denoted by β, and the energy deposited per unit time per unit volume is denoted by H(x, t).

2.1. System matrix building

The setup used here for numerical experiments is similar to the ones used in Refs. [23,24,30]. It is summarized here briefly for completeness. The dimension of the imaging domain is n × n. It is reshaped into a vector by resizing it to a vector of size n2 × 1 and denoted as x. A (dimension of m × n2) denotes the system matrix. Here m denotes the product of number of time samples acquired by the detectors and the total number of detectors present at the boundary. Impulse response is calculated for each entry of the image. This impulse response is stacked as columns of the system matrix. The data denoted as b of size m × 1 denotes the data collected by the detectors at the imaging domain boundary [23,24]. The forward model is given as

| (2) |

Here, b represents the measurement vector (m × 1 (m = 512 × 100)) and x represents the solution vector (n2 × 1 (n = 201)). The Linear Back-Projected (LBP), which is the simplest image reconstruction method, image can be obtained as [22,31]

| (3) |

with T representing the transpose. The Back-projection (BP) methods are computationally efficient as they are single step in nature [21]. The BP based methods are not used for quantitative imaging as they provide only qualitative results. The starting vector for the iterative reconstruction techniques is generally the BP image. [20,24]. Here, the BP image (xLBP) was used as the input image for the fusion based methods.

2.2. Lanczos Tikhonov regularization method

Since the photoacoustic reconstruction is ill-conditioned for the limited data cases, Tikhonov regularization is a widely used technique for the regularization of these ill-conditioned problems. This technique minimizes the residue of the equations forming the system matrix combined with a regularization term. The objective function is given as

| (4) |

with δ being the regularization parameter. Higher value of regularization parameter over-smooth the image, while a lower value magnifies the noise level in the image. The minimized function and the l2 norm are represented as Ω and ‖.‖2 respectively. Minimizing this equation results in

| (5) |

Solving Eq. 5 takes O(n6) operations and thus computationally expensive. Thus, Lanczos bidiagonalization has been employed here to reduce the dimensionality of the problem at hand, which reduces the memory requirement and also reduces the number of operations [23]. The dimensionality reduction is performed using the Lanczos bidiagonalization method on the system matrix (A) [32]. The Lanczos matrices (Ek - left Lanczos matrix, Fk - right Lanczos matrix), the bidiagonal matrix (Bk) and the system matrix (A) are associated as [23,30]:

| (6) |

| (7) |

| (8) |

The l2 norm of b is represented by γ0 and ek denotes the unit vector of dimension k (which is 1 at the k’th position and 0 at other places). The dimension of Fk and Ek are (n2 × k) and (m × k), correspondingly, where k denotes the number of bidiagonalization iterations. Utilizing Eqns. (6,7,8), the residual can be written as:

| (9) |

x(k) denotes the lower dimensional representation of x when k << n2. Utilizing the property and substitution of Eq. (9) in Eq. (4) and, the new cost function is

| (10) |

The new solution becomes

| (11) |

| (12) |

using the first order condition. For a fixed k and regularization parameter δ [33], the is an estimate of x(k). For a given value of the regularization parameter δ and k = n2, xestimate = xTikhonov. xestimate is an approximation of xTikhonov for all other cases. There exist an optimal value of k which decreases the error between xestimate and xTikhonov [30,32]. The quality of reconstructed image is highly dependent on choice of k and δ, the contemporary method for an automated choice of these parameters is based on error estimate, which provides optimal solution. For more details of the error estimate method, the reader can refer to Ref. [34]. The solution obtained via optimal choice of k and δ using error estimate method will be refereed as xLTO, with LTO referring to Lanczos Tikhonov solution obtained via Optimal choice of reconstruction parameters.

2.3. Total variational regularization method

The smooth nature of the solution in Tikhonov regularization (Eq. 4) is promoted because of the l2 norm present in the objective function. Another method to solve this ill-conditioned problem is by proposing a term minimizing the variation in the solution, and called as total variation (TV) regularization method [35,36]. The cost minimization function can be given as

| (13) |

η denotes the regularization parameter and ‖.‖TV represents the isotropic total variation [37]. Alternating Direction Method of Multipliers (ADMM) framework is utilized for TV regularization which is given in Ref. [38]. The Split Augmented Lagrangian shrinkage Algorithm (SALSA) (given in Algorithm 1), was utilized in this work. The LBP image (Eq. 3) was used as the initialization of x for the SALSA algorithm. The variables f0 and u0 were initialized to zero. The reconstructed PA image obtained using TV regularization is represented by xTV.

Algorithm 1.

SALSA Algorithm

| Input: A, η, b, k = 0, u0, f0 |

| Output: x k+1 |

| Repeat till convergence is satisfied |

| fk+1 = fk − (xk+1 − uk+1) |

| k = k + 1 |

2.4. Image guided filter based fusion

Recently proposed guided filter based method combines the feature from two different reconstructions techniques and was shown to perform better as compared to the contemporary methods for photoacoustic imaging [29]. The cartoon of the fusion process for PA imaging is given in Fig. 1. For complete information the reader can refer to Ref. [29]. The modified guided filtering algorithm is given here (Algorithm 2) for completeness.

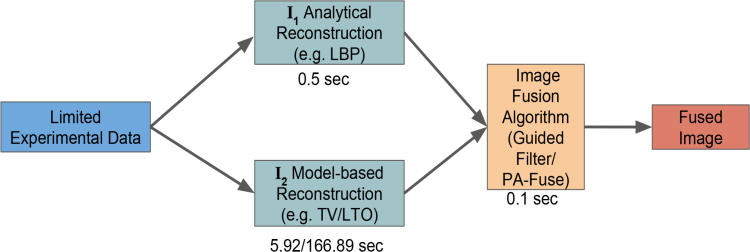

Fig. 1.

Flowchart of the fusion process. The typical computational times for each process is given below each block.

Algorithm 2.

Modified Guided Filter(xI, xG, w, ∊, α, β), go(.) represents performing operation ‘o’ on the arguments

| Input: xI − Input Image to be filtered (xLTO or xTV) |

| xGI − Guidance Image (xLBP) |

| w − Radius of the Window (patch size) |

| ∊, α, β − Regularization Parameters |

| Output xF − Output Image |

| meanxGI = gmean(xGI); meanxI = gmean(xI) |

| corrxGI = gmean(xGI. * xGI); corrxGI−I = gmean(xGI. * xI) |

| varxGI = corrxGI − meanxGI. * meanxGI; covxGI−I = corrxGI−I − meanxGI. * meanxI |

| a = (covxGI−I./(varxGI + ∊))α; b = meanxI − β * a. * meanxGI |

| meanb = gmean(b); meana = gmean(a) |

| xF = meana. * xGI + meanb |

2.5. PA-Fuse (proposed method)

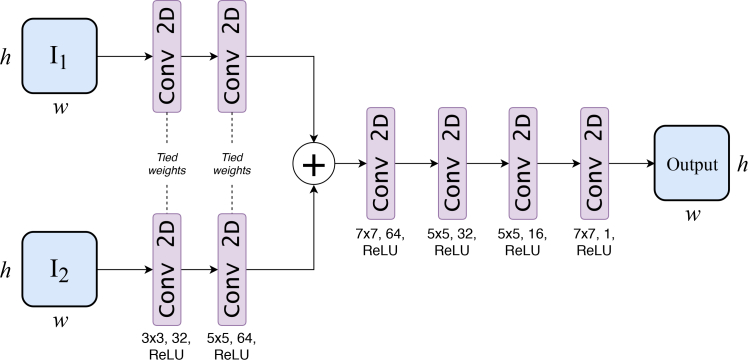

Network architecture: With I1 and I2 as input (obtained from two reconstruction methods as discussed in above sections), the objective is to fuse them together to generate a final image that has combined features of I1 and I2. This is objective of the proposed PA-Fuse (𝒩) model (the fusion process is depicted in Fig. 1). This model has been motivated from the DeepFuse model proposed by Prabhakar et al. [39]. The network architecture of the proposed PA-Fuse model is shown in Fig. 2. In here, Siamese network was utilized to extract similar features for both I1 and I2. The mathematical description of operations in the proposed PA-Fuse network are given below. Let us denote the feature map of Image j at layer i as , convolutional filters at layer c as fc, and convolution operation on d with filter e as d ⊛ e. Then, the series of operations will be as follows:

| (14) |

The similar features are extracted by applying same convolutional filters f1 for layer 1 (and f2 for layer 2) to both images I1 and I2. As filter sizes are same, the extracted feature types will be same. Thus, they can be merged directly.

Fig. 2.

Network architecture of proposed PA-Fuse model. It consists of three stages: feature encoder to extract salient features, feature aggregator to combine them, and feature decoder to reconstruct fused image. In the feature encoder section, feature extraction of input images I1 and I2 using two convolutional layers with tied weights were achieved. The extracted features are fused using sum operation and fused image was reconstructed using four convolutional layers.

The proposed model is sufficient enough to handle increase in training samples. Hence, network parameters updating is not necessary. However, there is a need to adjust training strategy to stabilize the training. This could be achieved by annealing the learning rate parameter over the training epochs. One of the widely followed annealing strategy is to reduce the learning rate by a fixed factor every few epochs (which was not needed here).

In this part, two convolutional layers that has 32 filters with kernel size of 3×3 and 64 filters with kernel size being 5×5 were deployed. Both input images (size of h × w) are passed into the Siamese network to extract feature blobs of size h × w × 1 × 64. These layers have 51,584 trainable parameters. As each feature in the extracted feature blob represent similar feature type, they can be combined by sum operation. The combined feature map is passed onto four convolutional layers to generate a single channel fused image (Ô). The network 𝒩 was trained with ℒ2 loss computed between Ô and ground truth Ogt:

| (15) |

These four layers have 265,601 parameters to be trained beyond the combined feature map (sum operation in Fig. 4). The training images were extracted from databases STARE [40], DRIVE [41], and CHASE [42]. The total number of images used were 4600 with 201 × 201 dimension. These images were used to generate the experimental data (b) using the k-Wave toolbox [43]. The data was then added with a white Gaussian noise resulting in Signal-to-Noise-Ratio (SNR) of 40 dB. This data was utilized in an inverse framework to generate the images using the Back-Projected (xLBP) and Lanczos Tikhonov Optimal (xLTO) reconstruction techniques. The total patches (having size 77 × 77) numbering 1,00,000 out of which 80,000 were used for training the network while 20,000 were used for validation of the network. The code was written in Keras [44] using Tensorflow [45] as the backend for training and testing the network. The ‘Mean Square Error’ was utilized as loss function and Adam optimizer for training the network [46]. The learning rate, the first momentum, and the second momentum were set to be 1 × 10−3, 0.9, and 0.999 respectively.

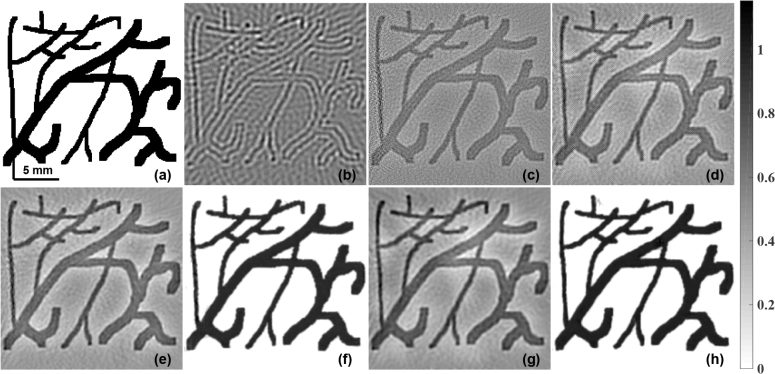

Fig. 4.

Target blood vessel (BV) phantom is shown in (a). The reconstructed initial pressure distribution with SNR of measurement data being 40 dB using (b) LBP, (c) LTO, and (d) TV regularization. The results obtained using modified guided filter with (b) as guiding image and to be guided image being (c) and (d) are correspondingly given in (e) and (g). The results using the PA-Fuse with one input image being (b) and another being (c) and (d) are correspondingly given in (f) and (h).The RMSE and CNR of these images are correspondingly presented in Table-1.

The computations performed in this work, including the training, was on a workstation having Dual Intel Skylake Xeon 4116 (24 core) with 2.10 GHz clock speed having two NVIDIA Tesla P100 12GB GPU having 64GB RAM. The total training time was approximately 8.27 hours.

3. Figures of merit

To compare the quality of the PA image obtained using till now discussed methods, the image metrics, such as Signal-to-Noise Ratio (SNR), Root Mean Square Error (RMSE), and Contrast-to-Noise Ratio (CNR) were used. For numerical phantoms, RMSE and CNR were employed as the target initial pressure was known for these, while for experimental phantoms SNR was used as the evaluation criteria.

3.1. Root mean square error (RMSE)

RMSE is generally used to compare the reconstruction quality as an absolute metric and is defined as [47,48]:

| (16) |

where target initial pressure distribution is denoted by xtarget, the reconstructed initial pressure distribution is denoted by xrecon, and the total number of pixels by N. The lower the value, the closer it is to the reconstructed image quantitatively.

3.2. Contrast-to-Noise Ratio (CNR)

CNR is defined as follows [49–52]:

| (17) |

where v and m denotes the variance and the mean for the background (back) and the region of interest (roi) for the initial pressure distribution reconstruction. The and represents the area ratio. Aroi denotes the area of the region of interest and Aback denotes the area of the background. Note that the initial pressure values being greater than zero is treated as the roi and rest becomes the background. Higher value of CNR represents improved contrast recovery of the reconstruction method.

3.3. Signal-to-noise ratio (SNR)

SNR is defined as [53]:

| (18) |

where PPressure represents the peak initial pressure distribution and nI represents the standard deviation of the image. Higher SNR represents improved quality and less noise in the reconstructed image. The term SNRr was used to differentiate it from the SNR in the measurement vector (b).

4. Numerical and experimental studies

Three different numerical phantoms with distinct characteristics were used in this work for comparing the performance of discussed methods. The numerical phantoms will be helpful in objectively assessing the performance of the reconstruction as the measurement of the actual initial pressure distribution is challenging in the real experimental scenarios, including phantoms. The ultrasonic detectors are placed at a radius of 22 mm and the dimension of the computational grid is 501 × 501 (0.1 mm/pixel). The experimental data generation was performed on a high dimensional grid (401 × 401) and the reconstruction was done on a lower dimensional grid (201 × 201). The Fig. 3 depicts these grids in a schematic manner, including the position of 100 acoustic detectors. White Gaussian noise was added to the data generated to result in SNR levels of the measured data being 30 dB to 50 dB for testing the PA-Fuse model. An open source MATLAB based toolbox k– WAVE [43] was deployed for generation of the data. The data collection was performed at a sampling rate of 20 MHz and time samples were 512. Hundred ultrasonic transducers with 70 % bandwidth and a center frequency of 2.25 MHz placed at equi-distance on the boundary were utilized to collect the boundary data (b). Thus, the system matrix formed had a dimension of 51200 × 40401. The simulation was performed assuming speed of sound in medium as 1500 m/s, along with the assumption that medium is non-absorptive and non-dispersive.

Fig. 3.

Schematic diagram of photoacoustic data acquisition geometry along with depiction of position of 100 acoustic detectors (shown by dots) around the imaging domain. The data generation grid had a dimension of 401×401 and reconstruction grid was 201×201.

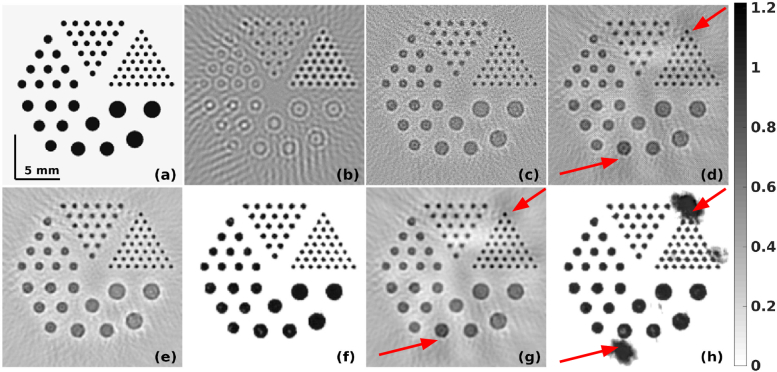

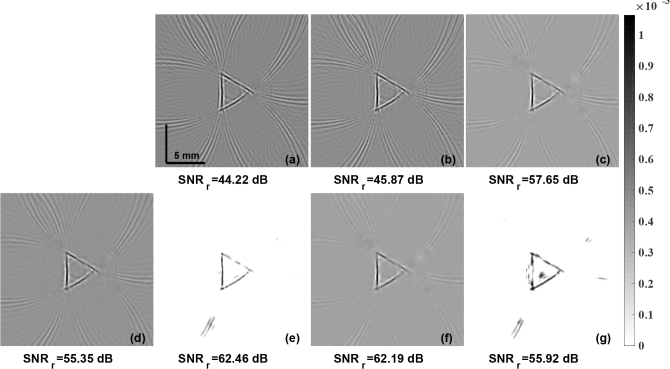

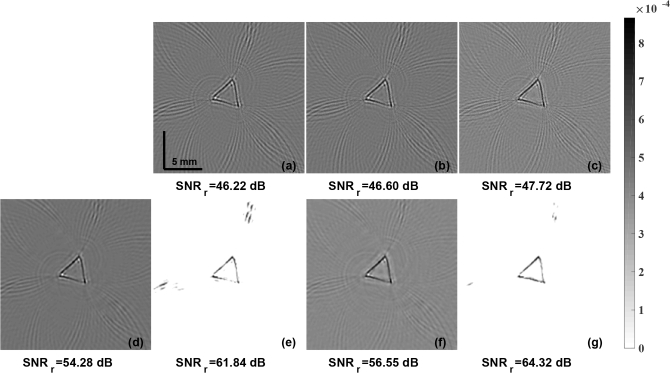

The maximum initial pressure distribution of 1 kPa was set for these numerical phantoms. The Blood vessel (BV) phantom consists of thin and thick blood vessels imitating the real blood vessel structures (shown in Fig. 4(a)). The modified Derenzo phantom was also used which consists of circular objects having varying radius to evaluate the performance of the proposed method in terms of varying size (shown in Fig. 6(a)). Another phantom was made which consists of the letters ‘PAT’ (shown in Fig. 7(a)) was utilized to show the superiority of the reconstruction performance of the proposed PA-Fuse method in terms of recovering the sharp edges.

Fig. 6.

Target modified Derenzo phantom is shown in (a). The reconstructed initial pressure distribution with SNR of measurement data being 40 dB using (b) LBP, (c) LTO, and (d) TV regularization. The results obtained using modified guided filter with (b) as guiding image and to be guided image being (c) and (d) are correspondingly given in (e) and (g). The results using the PA-Fuse with one input image being (b) and another being (c) and (d) are correspondingly given in (f) and (h). The RMSE and CNR of these images are correspondingly presented in Table-1. The red arrows in the TV (reconstructed or fused) images show non-uniform background induced artifacts.

Fig. 7.

Target PAT phantom is shown in (a). The reconstructed initial pressure distribution with SNR of measurement data being 40 dB using (b) LBP, (c) LTO, and (d) TV regularization. The results obtained using modified guided filter with (b) as guiding image and to be guided image being (c) and (d) are correspondingly given in (e) and (g). The results using the PA-Fuse with one input image being (b) and another being (c) and (d) are correspondingly given in (f) and (h).The RMSE and CNR of these images are correspondingly presented in Table-1.

To validate the proposed method experimentally, a horse hair phantom data having triangular-shape and in vivo rat brain data obtained using different image acquisition set ups with Nd:YAG laser and pulsed laser diode (PLD) laser were utilized respectively. The details about the experimental set up and data acquisition for triangular-shaped horse hair phantom data was provided in Refs. [54] & [55]. The in vivo rat brain data collection details were provided in Ref. [55]. Note that all animal experiments conducted as part of this work followed the guidelines and regulations accepted by the institutional Animal Care and Use committee of Nanyang Technological University, Singapore (Animal Protocol Number ARF-SBS/NIE-A0263).

5. Results and discussion

The initial pressure distribution reconstruction for the Blood Vessel (BV) phantom using the LBP is given in Fig. 4(b). The reconstruction using the Lanczos Tikhonov (LTO) method and TV based regularization were shown in Fig. 4(c) and Fig. 4(d) respectively. The optimal parameters for the Lanczos method are calculated by utilizing the error estimate method. The fused result using the guided filter based approach using the LBP reconstruction as the guiding image and the input image being xLTO, and xTV were shown in Fig. 4(e) and Fig. 4(g) respectively. The reconstruction results using the proposed PA-Fuse method with LBP as one input image and other being xLTO and xTV were presented in Fig. 4(f) and Fig. 4(h) respectively. Table-1 presents the quantitative comparison of these results. The improvement obtained with proposed PA-Fuse method using the xLTO as another input (xLBP was always one of the standard input) is 85.41% in RMSE and 147.46% in CNR as compared to the guided filter based fusion method with the same input. In case of measurement data SNR level being 30 dB, the improvement using PA-Fuse in terms of RMSE and CNR was 41.68% and 214.42% respectively and similar improvement was observed in case of 50 dB SNR level. Note that the training of the PA-Fuse network was performed with measurement data SNR being 40 dB, but the network was able to generalize and provide improved performance at other SNR levels as well. On similar note, even though the network was trained with xLTO, from results it is clear that the proposed PA-Fuse model was able to improve the TV based reconstruction result as well.

Table 1.

The figures of merit, RMSE and CNR, for the resultant images using reconstruction methods examined here with SNR in measurement data being 30 dB, 40 dB, and 50 dB utilizing three numerical phantoms (blood vessel (BV), Derenzo, and PAT). For the case of guided filter, guiding image was always LBP and other input being given being given as argument. The results of the proposed method, PA-Fuse, are given in bottom two rows with one input being LBP image and another image given as argument. The highest RMSE and CNR in each column are marked in bold.

| Phantom | Modified Derenzo | Blood Vessel (BV) | PAT | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

| ||||||||||||||||||

| SNR | 30 dB | 40 dB (Fig. 6) | 50 dB | 30 dB | 40 dB (Fig. 4) | 50 dB | 30 dB | 40 dB (Fig. 7) | 50 dB | |||||||||

|

| ||||||||||||||||||

| Metric | RMSE | CNR | RMSE | CNR | RMSE | CNR | RMSE | CNR | RMSE | CNR | RMSE | CNR | RMSE | CNR | RMSE | CNR | RMSE | CNR |

|

| ||||||||||||||||||

| LBP | 0.1646 | 0.17 | 0.1666 | 0.17 | 0.1675 | 0.17 | 0.2373 | 0.74 | 0.2383 | 0.74 | 0.2380 | 0.74 | 0.2242 | 1.01 | 0.2202 | 1.02 | 0.2192 | 1.02 |

| LTO | 0.1260 | 0.73 | 0.1249 | 1.12 | 0.1534 | 1.14 | 0.2031 | 1.59 | 0.1901 | 1.99 | 0.1904 | 1.95 | 0.2614 | 1.86 | 0.2172 | 2.83 | 0.2256 | 3.09 |

| TV | 0.2061 | 0.95 | 0.1231 | 1.48 | 0.1166 | 1.49 | 0.1499 | 2.47 | 0.1505 | 2.60 | 0.1695 | 2.48 | 0.2077 | 2.80 | 0.1335 | 3.99 | 0.1393 | 4.08 |

| Guided Filter (LTO) | 0.0902 | 1.15 | 0.0689 | 2.57 | 0.0499 | 4.20 | 0.1771 | 2.02 | 0.1310 | 3.75 | 0.1047 | 4.74 | 0.2442 | 2.24 | 0.1852 | 4.29 | 0.0899 | 8.07 |

| Guided Filter (TV) | 0.0726 | 2.63 | 0.0802 | 2.40 | 0.0783 | 2.66 | 0.1406 | 3.19 | 0.1368 | 3.50 | 0.1312 | 3.78 | 0.1007 | 6.54 | 0.1154 | 6.25 | 0.1154 | 7.12 |

| PA-Fuse (LTO) | 0.0181 | 31.55 | 0.0063 | 159.82 | 0.0079 | 93.75 | 0.0610 | 10.03 | 0.0229 | 9.28 | 0.0192 | 8.83 | 0.0208 | 14.92 | 0.0052 | 20.34 | 0.0057 | 21.49 |

| PA-Fuse (TV) | 0.0542 | 1.55 | 0.0294 | 2.33 | 0.0168 | 4.19 | 0.0291 | 9.03 | 0.0191 | 9.02 | 0.0310 | 8.77 | 0.0229 | 7.38 | 0.0060 | 16.75 | 0.0053 | 16.70 |

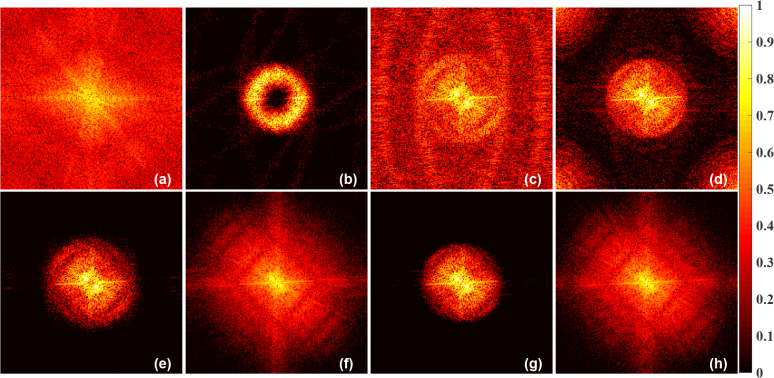

The LBP image (Fig. 4(b)) is smooth, has many streak artifacts, and also lacks sharp features as available data is limited. The LTO/TV regularized reconstruction gives sharp features with caveat that they enhance the noise present in the boundary data. These features (less noise in the LBP image and sharp features present in the LTO/TV image) are combined to provide a fused output that is less noisy with reduced streak artifacts. The frequency spectrum plots of corresponding to reconstructed images of the blood vessel phantom given in Fig. 4 were shown in Fig. 5. The low frequency components are at the center of these plots while the edges represents the high frequency components. The resulting fused images Fig. 4(e–h) significantly changes the spectrum of the frequency components (correspondingly in Fig. 5(e–h)). The fused image contains low-frequency components of the LBP and high-frequency components present in the LTO/TV. The fused image filters/excludes high frequency components, which is typically attributed to the noise in the reconstructed image, while retaining the other components which contains the useful information. Thus fused images figures of merit are significantly improved compared to individual images (refer to Table-1).

Fig. 5.

The logarithm of amplitude of the Fourier spectrum of results pertaining to (a) Target BV Phantom (spatial distribution is given in Fig. 4(a)), (b) Linear Back-Projection (LBP) (spatial distribution is given in Fig. 4(b)), (c) Lanczos-Tikhonov-Optimal (LTO) (spatial distribution is given in Fig. 4(c)), (d) Total Variation (TV) (spatial distribution is given in Fig. 4(d)), (e) Modified guided filter based fused output with LBP and LTO as inputs (spatial distribution is given in Fig. 4(e)), (f) Proposed PA-Fuse output with LBP and LTO as inputs (spatial distribution is given in Fig. 4(f)), (g) Modified guided filter based fused output with LBP and TV as inputs (spatial distribution is given in Fig. 4(g)), and (h) Proposed PA-Fuse output with LBP and TV as inputs (spatial distribution is given in Fig. 4(h)).

The reconstructed initial pressure distribution for the modified Derenzo phantom using the LBP is given in Fig. 6(b). The reconstruction using the Lanczos Tikhonov Optimal (LTO) method and total variation (TV) based methods are shown in Fig. 6(c) and Fig. 6(d) respectively. The fused result using the guided filter based approach using the LBP reconstruction as the guiding image and the input image being xLTO, and xTV were presented in Fig. 6(e) and Fig. 6(g) respectively. The proposed PA-Fuse method results with one input image being xLBP and another being xLTO and xTV were shown in Fig. 6(f) and Fig. 6(h) respectively. Table-1 presents the RMSE and CNR (figures of merit) for the methods discussed here. The improvement obtained with the proposed PA-Fuse using the xLTO as input is 90.85% in RMSE and 6118.67% in CNR as compared to the guided filter based fusion method with the same input for measurement data SNR being 40 dB. For case SNR being 30 dB, the improvement obtained in terms of RMSE and CNR was 75% and 1099.61% respectively using the proposed PA-Fuse compared to guided filter based approach and similar improvement was observed when measurement data SNR being 50 dB. It is also important to observe that the PA-Fuse was trained with images consisting of blood vessel networks, but the network was able to generalize to provide improved fusion even for case of circular structures as present in this numerical phantom case. Note that the artifacts observed in Fig. 6(h) are mainly due to the TV based approach reconstruction result not being optimal for these circular objects and the same artifacts can be seen in the original reconstructed image (Fig. 6(d)) as well. The artifacts are due to non-uniform background and presence of black patches in the Fig. 6(d) (indicated by red arrows). These non-uniformities were not present in the LTO image (Fig. 6(c)) and hence the final output was not affected for the fusion techniques. Even Figs. 6(g) and (h) has these artifacts manifested as one of the input image was Fig. 6(d), with guided filtered fusion (Fig. 6(g)) providing more optimal result in this case. Note that the proposed PA-Fuse network was not trained on these type of images with circular objects, thus provides only sub-optimal result. The network can be retrained with images having these features to eliminate the observed black patches in the fused result (indicated by red arrows in Fig. 6(h)).

The reconstructed initial pressure distribution for the ‘PAT’ phantom using the LBP is given in Fig. 7(b). The reconstruction using the Optimal Lanczos method and total variation based method were given in Fig. 7(c) and Fig. 7(d) respectively. The guided filter based fusion results using the LBP reconstruction as the guiding image and the input image being xLTO, and xTV were shown in Fig. 7(e) and Fig. 7(g) respectively. Results with the proposed PA-Fuse method with one input image being LBP and another input image being xLTO, and xTV are shown in Fig. 7(f) and Fig. 7(h) correspondingly. The RMSE and CNR, similar to earlier results were presented in Table-1. The improvement obtained with proposed PA-Fuse using the xLTO as the input is 95.49% in RMSE and 225.44% in CNR as compared to the guided filter based fusion method. In case of 30 dB SNR level of the measurement data, the improvement obtained in terms of RMSE and CNR was 79.34% and 128.13% respectively and similar improvement was observed in case of 50 dB SNR level. Again, the PA-Fuse network has not been trained with images containing any straight/sharp edges, it was able to provide improved fusion for this numerical phantom as well.

Also from Table-1, it can been seen that for the proposed PA-Fuse method with input image obtained using Lanczos Tikhonov (LTO) consistently resulted in better figures of merit. This can be attributed to optimal choice of reconstruction parameters that were chosen based on error estimation technique utilized in LTO, which results in better quality image.

The initial pressure distribution reconstruction for the experimental horse hair phantom, data collected with utilization of Nd:YAG laser, using the LBP method is shown in Fig. 8(a). The PA images reconstructed using the Optimal Lanczos method and total variation based methods were shown in Fig. 8(b) and Fig. 8(c) respectively. The guided filter based fusion results using the LBP reconstruction as the guiding image and the input image being xLTO, and xTV were presented in Fig. 8(d) and Fig. 8(f) respectively. Results with the proposed PA-Fuse method with one input image being LBP and another input image being xLTO, and xTV are shown in Fig. 8(e) and Fig. 8(g) respectively. The improvement obtained with proposed PA-Fuse using the xLTO as the input is 7.11 dB in SNRr as compared to the guided filter based fusion method. The background artifacts, even in these experimental scenario for which the PA-Fuse was trained, were completely eliminated in the reconstructed image using the proposed PA-Fuse method with a clear improvement in the contrast.

Fig. 8.

The initial pressure distribution reconstruction for the experimental horse hair phantom data obtained using Nd:YAG laser with LBP, LTO, and TV regularization methods are given in (a), (b), and (c) respectively. The results obtained using modified guided filter with (a) as guiding image and to be guided image being (b) and (c) are correspondingly given in (d) and (f). The results using the PA-Fuse with one input image being (a) and another being (b) and (c) are correspondingly given in (e) and (g). SNRr (in dB), of each PA image is given below.

To further evaluate the robustness of the proposed method, experiment involving data acquired using PLD laser was conducted. In here, the SNR of the measurement data is expected to be much lower compared to the case of utilizing Nd:YAG laser. The reconstructed initial pressure distribution in this case using the LBP method is shown in Fig. 9(a). The reconstructed PA images using the LTO and TV based methods were presented in Fig. 9(b) and Fig. 9(c) respectively. The guided filter based fusion results using the LBP reconstruction as the guiding image and the input image being xLTO, and xTV were shown in Fig. 9(d) and Fig. 9(f) respectively. Results with the proposed PA-Fuse method with one input image being LBP and another input image being xLTO, and xTV are shown in Fig. 9(e) and Fig. 9(g) respectively. The SNR of these reconstructed images were given below. The improvement obtained with proposed PA-Fuse using the xTV as the input is 7.77 dB in SNRr in comparison to modified guided filter based fusion method, in agreement to the earlier case (Fig. 8). This experiment showed the versatility of the proposed PA-Fuse working across experimental set-ups without the additional need for retraining.

Fig. 9.

The initial pressure distribution reconstruction for experimental horse hair phantom data obtained using PLD laser with LBP, LTO, and TV regularization methods are given in (a), (b), and (c) respectively. The results obtained using modified guided filter with (a) as guiding image and to be guided image being (b) and (c) are correspondingly given in (d) and (f). The results using the PA-Fuse with one input image being (a) and another being (b) and (c) are correspondingly given in (e) and (g). SNRr (in dB), of each PA image is given below.

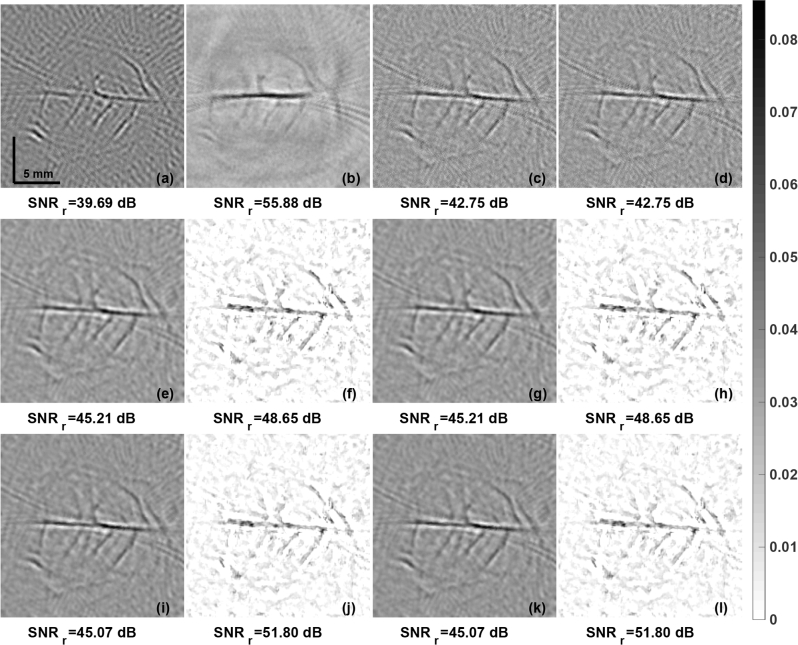

One of the PA imaging important application is in the area of pre-clinical imaging. The reconstruction results obtained using in vivo rat brain were presented in Fig. 10. The reconstruction is shown in Fig. 10(a) using the LBP method. The PA images obtained using LTO and TV reconstruction methods were given in Fig. 10(b) and Fig. 10(c) respectively. The guided filter based fusion results using the LBP reconstruction as the guiding image and the input image being xLTO, and xTV were shown in Fig. 10(d) and Fig. 10(f) respectively. Results with the proposed PA-Fuse method with one input being LBP and another input image being xLTO, and xTV are shown in Fig. 10(e) and Fig. 10(g) respectively. The improvement obtained with proposed PA-Fuse using the xLTO as the input is 3.44 dB as compared to the guided filter based fusion method. Again, the utility of PA-Fuse method even in this in vivo case was clearly demonstrated through this experiment. Another set of experiments were performed where the reconstruction obtained using Delay and Sum (DAS) was used instead of LBP as the input image. The initial pressure distribution obtained using Delay and Sum (DAS) [54] method was given in Fig. 10(b). It was used as one input for the PA-Fuse method instead of the LBP image and the experiments were performed for the same set of LTO and TV images. There is an improvement obtained in SNRr for the fused result as compared to the LTO and TV image (last row of Fig. 10). Since the network was not trained for these DAS images, the improvement obtained was not optimal. Even though the SNRr obtained using DAS compared to rest reconstruction methods (including LBP, LTO, and TV) is significantly better, as DAS is only a analytical technique, it does not provide any quantitative results (the image units are arbitrary). This experiment also confirms that the proposed network including guided filter based methods needs to be trained/tailored for the input images. Other reconstruction results (other than those trained/tailored) may not provide improved fused output.

Fig. 10.

The initial pressure distribution reconstruction for the rat brain in-vivo data with LBP, Delay-and-Sum (DAS), LTO, and TV regularization methods are given in (a), (b), (c), and (d) respectively. The results obtained using the modified guided filter with (a) as guiding image and to be guided image as (c) and (d) are shown in (e) and (g) respectively. The results using the PA-Fuse with one input image being (a) and another being (b) and (c) are correspondingly given in (f) and (h). The results obtained using the modified guided filter with (b) as guiding image and to be guided image as (c) and (d) are shown in (i) and (k) respectively. The results using the PA-Fuse with one input image being (b) and another being (c) and (d) are correspondingly given in (j) and (l). SNRr (in dB), of each PA image is given below.

Image post-processing techniques are commonly used for improving the reconstructed images across imaging modalities. In this work, fusion based approach was utilized as a post-processing step for improving the photoacoustic image reconstruction. Unlike, multi-focus experiments conducted in computer vision, here the input images were obtained using distinct reconstruction methods. One standard input that was utilized for these fusion approaches is linear back projected image, which is easy to obtain in real-experiments and provide good qualitative assessment of acquired data. Also, the fusion methods that were presented here (both guided filter as well as proposed PA-Fuse) are highly computationally efficient with their run times being as low as 1 milli-second. Through systematic evaluation via numerical, experimental, and in-vivo cases, it was clearly demonstrated that the PA-Fuse network outperformed the guided filter based approach and is generalizable across various measurement noise levels, experimental conditions as well structures present in the target/expected photoacoustic image. The PA-Fuse model performed extremely well with the suppression of background noise (streaking artifacts), improvement of the reconstructed image and generalization to unseen phantoms. Here LTO was used as one of the input image to train the network, while the testing was done even on the TV images, which also gave complimentary features as compared to the LBP based image. Hence the method generalizes even on images reconstructed using different techniques showing the superiority of the proposed method. Overall, the deep fusion based method show very good promise in terms of improving the reconstructed photoacoustic images and will surely pave a way for making photoacoustic imaging being main stream modality to image the soft tissue vascularity.

6. Conclusions

A deep learning based fusion model was proposed in this work to improve the model-based reconstruction for photoacoustic tomography. The proposed PA-Fuse model was shown to be a generic model working well across different measurement noise levels, structures in the target image, as well as experimental set-ups. It was also demonstrated that the input image for the fusion model from other reconstruction methods (than the trained one) can also be utilized for achieving improved performance. The improvement in the SNR of the reconstructed image as compared to the state-of-the-art fusion method is as high as 7.77 dB for in-vivo rat brain, making it very attractive in real-time. Moreover, as opposed to the state-of-the-art fusion methods, the proposed PA-Fuse model being fully an automated approach that is driven by the data, it does not require any choice of reconstruction parameters and thus eliminates the bias associated with careful choice of these parameters.

Funding

DST-ICPS division under Data Science (DS) research program (Proposal No: T-851).

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References

- 1.Wang L. V., Hu S., “Photoacoustic tomography: in vivo imaging from organelles to organs,” Science 335, 1458–1462 (2012). 10.1126/science.1216210 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Pramanik M., Ku G., Li C., Wang L. V., “Design and evaluation of a novel breast cancer detection system combining both thermoacoustic (ta) and photoacoustic (pa) tomography,” Med. Phys. 35, 2218–2223 (2008). 10.1118/1.2911157 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Upputuri P. K., Pramanik M., “Recent advances toward preclinical and clinical translation of photoacoustic tomography: a review,” J. Biomed. Opt. 22, 041006 (2017). 10.1117/1.JBO.22.4.041006 [DOI] [PubMed] [Google Scholar]

- 4.Zhou Y., Yao J., Wang L. V., “Tutorial on photoacoustic tomography,” J. Biomed. Opt. 21, 061007 (2016). 10.1117/1.JBO.21.6.061007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Cerussi A. E., Berger A. J., Bevilacqua F., Shah N., Jakubowski D., Butler J., Holcombe R. F., Tromberg B. J., “Sources of absorption and scattering contrast for near-infrared optical mammography,” Acad. Radiol. 8, 211–218 (2001). 10.1016/S1076-6332(03)80529-9 [DOI] [PubMed] [Google Scholar]

- 6.Jiao S., Jiang M., Hu J., Fawzi A., Zhou Q., Shung K. K., Puliafito C. A., Zhang H. F., “Photoacoustic ophthalmoscopy for in vivo retinal imaging,” Opt. Express 18, 3967–3972 (2010). 10.1364/OE.18.003967 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Ermilov S. A., Khamapirad T., Conjusteau A., Leonard M. H., Lacewell R., Mehta K., Miller T., Oraevsky A. A., “Laser optoacoustic imaging system for detection of breast cancer,” J. Biomed. Opt. 14, 024007 (2009). 10.1117/1.3086616 [DOI] [PubMed] [Google Scholar]

- 8.Heijblom M., Steenbergen W., Manohar S., “Clinical photoacoustic breast imaging: the twente experience,” IEEE Pulse 6, 42–46 (2015). 10.1109/MPUL.2015.2409102 [DOI] [PubMed] [Google Scholar]

- 9.Yao J., Wang L., Yang J.-M., Maslov K. I., Wong T. T., Li L., Huang C.-H., Zou J., Wang L. V., “High-speed label-free functional photoacoustic microscopy of mouse brain in action,” Nat. Methods 12, 407–410 (2015). 10.1038/nmeth.3336 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Ford S. J., Bigliardi P. L., Sardella T. C., Urich A., Burton N. C., Kacprowicz M., Bigliardi M., Olivo M., Razansky D., “Structural and functional analysis of intact hair follicles and pilosebaceous units by volumetric multispectral optoacoustic tomography,” J. Investig. Dermatol. 136, 753–761 (2016). 10.1016/j.jid.2015.09.001 [DOI] [PubMed] [Google Scholar]

- 11.Jansen K., Van Der Steen A. F., van Beusekom H. M., Oosterhuis J. W., van Soest G., “Intravascular photoacoustic imaging of human coronary atherosclerosis,” Opt. Lett. 36, 597–599 (2011). 10.1364/OL.36.000597 [DOI] [PubMed] [Google Scholar]

- 12.Wang K., Su R., Oraevsky A. A., Anastasio M. A., “Investigation of iterative image reconstruction in three-dimensional optoacoustic tomography,” Phys. Medicine Biol. 57, 5399–5423 (2012). 10.1088/0031-9155/57/17/5399 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Rosenthal A., Ntziachristos V., Razansky D., “Acoustic inversion in optoacoustic tomography: A review,” Curr. Med. Imaging Rev. 9, 318–336 (2013). 10.2174/15734056113096660006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Xu Y., Wang L. V., Ambartsoumian G., Kuchment P., “Reconstructions in limited-view thermoacoustic tomography,” Med. Phys. 31, 724–733 (2004). 10.1118/1.1644531 [DOI] [PubMed] [Google Scholar]

- 15.Arridge S. R., Betcke M. M., Cox B. T., Lucka F., Treeby B. E., “On the adjoint operator in photoacoustic tomography,” Inverse Probl. 32, 115012 (2016). 10.1088/0266-5611/32/11/115012 [DOI] [Google Scholar]

- 16.Arridge S., Beard P., Betcke M., Cox B., Huynh N., Lucka F., Ogunlade O., Zhang E., “Accelerated high-resolution photoacoustic tomography via compressed sensing,” Phys. Medicine Biol. 61, 8908–8940 (2016). 10.1088/1361-6560/61/24/8908 [DOI] [PubMed] [Google Scholar]

- 17.Dean-Ben X. L., Ntziachristos V., Razansky D., “Acceleration of optoacoustic model-based reconstruction using angular image discretization,” IEEE Transactions on Med. Imaging 31, 1154–1162 (2012). 10.1109/TMI.2012.2187460 [DOI] [PubMed] [Google Scholar]

- 18.Dean-Ben X. L., Buehler A., Ntziachristos V., Razansky D., “Accurate model-based reconstruction algorithm for three-dimensional optoacoustic tomography,” IEEE Transactions on Med. Imaging 31, 1922–1928 (2012). 10.1109/TMI.2012.2208471 [DOI] [PubMed] [Google Scholar]

- 19.Buehler A., Rosenthal A., Jetzfellner T., Dima A., Razansky D., Ntziachristos V., “Model-based optoacoustic inversions with incomplete projection data,” Med. Phys. 38, 1694–1704 (2011). 10.1118/1.3556916 [DOI] [PubMed] [Google Scholar]

- 20.Gutta S., Bhatt M., Kalva S. K., Pramanik M., Yalavarthy P. K., “Modeling errors compensation with total least squares for limited data photoacoustic tomography,” IEEE J. Sel. Top. Quantum Electron. 25, 1–14 (2019). 10.1109/JSTQE.2017.2772886 [DOI] [Google Scholar]

- 21.Paltauf G., Viator J., Prahl S., Jacques S., “Iterative reconstruction algorithm for optoacoustic imaging,” The J. Acoust. Soc. Am. 112, 1536–1544 (2002). 10.1121/1.1501898 [DOI] [PubMed] [Google Scholar]

- 22.Prakash J., Raju A. S., Shaw C. B., Pramanik M., Yalavarthy P. K., “Basis pursuit deconvolution for improving model-based reconstructed images in photoacoustic tomography,” Biomed. Opt. Express 5, 1363–1377 (2014). 10.1364/BOE.5.001363 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Shaw C. B., Prakash J., Pramanik M., Yalavarthy P. K., “Least squares qr-based decomposition provides an efficient way of computing optimal regularization parameter in photoacoustic tomography,” J. Biomed. Opt. 18, 080501 (2013). 10.1117/1.JBO.18.8.080501 [DOI] [PubMed] [Google Scholar]

- 24.Awasthi N., Kalva S. K., Pramanik M., Yalavarthy P. K., “Vector extrapolation methods for accelerating iterative reconstruction methods in limited-data photoacoustic tomography,” J. Biomed. Opt. 23, 071204 (2018). 10.1117/1.JBO.23.7.071204 [DOI] [PubMed] [Google Scholar]

- 25.Chen J., Lin R., Wang H., Meng J., Zheng H., Song L., “Blind-deconvolution optical-resolution photoacoustic microscopy in vivo,” Opt. Express 21, 7316–7327 (2013). 10.1364/OE.21.007316 [DOI] [PubMed] [Google Scholar]

- 26.Prakash J., Dehghani H., Pogue B. W., Yalavarthy P. K., “Model-resolution-based basis pursuit deconvolution improves diffuse optical tomographic imaging,” IEEE Transactions on Med. Imaging 33, 891–901 (2014). 10.1109/TMI.2013.2297691 [DOI] [PubMed] [Google Scholar]

- 27.Mitchell H. B., Image Fusion: Theories, Techniques and Applications (Springer Science & Business Media, 2010). 10.1007/978-3-642-11216-4 [DOI] [Google Scholar]

- 28.Li S., Kang X., Hu J., “Image fusion with guided filtering,” IEEE Transactions on Image Process. 22, 2864–2875 (2013). 10.1109/TIP.2013.2244222 [DOI] [PubMed] [Google Scholar]

- 29.Awasthi N., Kalva S. K., Pramanik M., Yalavarthy P. K., “Image-guided filtering for improving photoacoustic tomographic image reconstruction,” J. Biomed. Opt. 23, 091413 (2018). 10.1117/1.JBO.23.9.091413 [DOI] [PubMed] [Google Scholar]

- 30.Prakash J., Yalavarthy P. K., “A lsqr-type method provides a computationally efficient automated optimal choice of regularization parameter in diffuse optical tomography,” Med. Phys. 40, 033101 (2013). 10.1118/1.4792459 [DOI] [PubMed] [Google Scholar]

- 31.Zeng G. L., Medical Image Reconstruction: A Conceptual Tutorial (Springer, 2010). 10.1007/978-3-642-05368-9 [DOI] [Google Scholar]

- 32.Paige C. C., Saunders M. A., “Lsqr: An algorithm for sparse linear equations and sparse least squares,” ACM Transactions on Math. Softw. 8, 43–71 (1982). 10.1145/355984.355989 [DOI] [Google Scholar]

- 33.Kilmer M. E., O’Leary D. P., “Choosing regularization parameters in iterative methods for ill-posed problems,” SIAM J. on Matrix Analysis Appl. 22, 1204–1221 (2001). 10.1137/S0895479899345960 [DOI] [Google Scholar]

- 34.Bhatt M., Acharya A., Yalavarthy P. K., “Computationally efficient error estimate for evaluation of regularization in photoacoustic tomography,” J. Biomed. Opt. 21, 106002 (2016). 10.1117/1.JBO.21.10.106002 [DOI] [PubMed] [Google Scholar]

- 35.Tao M., Yang J., He B., “Alternating direction algorithms for total variation deconvolution in image reconstruction,” TR0918, Dep. Math. Nanjing Univ. (2009). [Google Scholar]

- 36.Wang Y., Yang J., Yin W., Zhang Y., “A new alternating minimization algorithm for total variation image reconstruction,” SIAM J. on Imaging Sci. 1, 248–272 (2008). 10.1137/080724265 [DOI] [Google Scholar]

- 37.Chambolle A., “An algorithm for total variation minimization and applications,” J. Math. Imaging Vis. 20, 89–97 (2004). 10.1023/B:JMIV.0000011321.19549.88 [DOI] [Google Scholar]

- 38.Eckstein J., Bertsekas D. P., “On the douglas-rachford splitting method and the proximal point algorithm for maximal monotone operators,” Math. Program. 55, 293–318 (1992). 10.1007/BF01581204 [DOI] [Google Scholar]

- 39.Prabhakar K. R., Srikar V. S., Babu R. V., “Deepfuse: A deep unsupervised approach for exposure fusion with extreme exposure image pairs,” in 2017 IEEE International Conference on Computer Vision (ICCV). IEEE, (2017), pp. 4724–4732. 10.1109/ICCV.2017.505 [DOI] [Google Scholar]

- 40.Hoover A., Kouznetsova V., Goldbaum M., “Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response,” IEEE Transactions on Med. Imaging 19, 203–210 (2000). 10.1109/42.845178 [DOI] [PubMed] [Google Scholar]

- 41.Staal J., Abràmoff M. D., Niemeijer M., Viergever M. A., Van Ginneken B., “Ridge-based vessel segmentation in color images of the retina,” IEEE Transactions on Med. Imaging 23, 501–509 (2004). 10.1109/TMI.2004.825627 [DOI] [PubMed] [Google Scholar]

- 42.Fraz M. M., Remagnino P., Hoppe A., Uyyanonvara B., Rudnicka A. R., Owen C. G., Barman S. A., “An ensemble classification-based approach applied to retinal blood vessel segmentation,” IEEE Transactions on Biomed. Eng. 59, 2538–2548 (2012). 10.1109/TBME.2012.2205687 [DOI] [PubMed] [Google Scholar]

- 43.Treeby B. E., Cox B. T., “k-wave: Matlab toolbox for the simulation and reconstruction of photoacoustic wave fields,” J. Biomed. Opt. 15, 021314 (2010). 10.1117/1.3360308 [DOI] [PubMed] [Google Scholar]

- 44.Chollet F., et al. , “Keras: Deep learning library for theano and tensorflow,” URL: https://keras.io/k 7 (2015). [Google Scholar]

- 45.Abadi M., Agarwal A., Barham P., Brevdo E., Chen Z., Citro C., Corrado G. S., Davis A., Dean J., Devin M., et al. , “Tensorflow: Large-scale machine learning on heterogeneous distributed systems,” arXiv preprint arXiv:1603.04467 (2016).

- 46.Kingma D., Ba J. A., “A method for stochastic optimization,” arXiv preprint arXiv:1412.6980 (2014).

- 47.Gandhi N., Allard M., Kim S., Kazanzides P., Bell M. A. L., “Photoacoustic-based approach to surgical guidance performed with and without a da vinci robot,” J. Biomed. Opt. 22, 121606 (2017). 10.1117/1.JBO.22.12.121606 [DOI] [Google Scholar]

- 48.Pai P. P., De A., Banerjee S., “Accuracy enhancement for noninvasive glucose estimation using dual-wavelength photoacoustic measurements and kernel-based calibration,” IEEE Transactions on Instrumentation Meas. 67, 126–136 (2018). 10.1109/TIM.2017.2761237 [DOI] [Google Scholar]

- 49.Song X., Pogue B. W., Jiang S., Doyley M. M., Dehghani H., Tosteson T. D., Paulsen K. D., “Automated region detection based on the contrast-to-noise ratio in near-infrared tomography,” Appl. Opt. 43, 1053–1062 (2004). 10.1364/AO.43.001053 [DOI] [PubMed] [Google Scholar]

- 50.Kruger R. A., Kuzmiak C. M., Lam R. B., Reinecke D. R., Del Rio S. P., Steed D., “Dedicated 3d photoacoustic breast imaging,” Med. Phys. 40 13301 (2013). 10.1118/1.4824317 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Pourebrahimi B., Yoon S., Dopsa D., Kolios M. C., “Improving the quality of photoacoustic images using the short-lag spatial coherence imaging technique,” in Photons Plus Ultrasound: Imaging and Sensing 2013, vol. 8581 (International Society for Optics and Photonics, 2013), p. 85813Y. [Google Scholar]

- 52.Van de Sompel D., Sasportas L. S., Jokerst J. V., Gambhir S. S., “Comparison of deconvolution filters for photoacoustic tomography,” PloS One 11, e0152597 (2016). 10.1371/journal.pone.0152597 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Li L., Zhu L., Shen Y., Wang L. V., “Multiview hilbert transformation in full-ring transducer array-based photoacoustic computed tomography,” J. Biomed. Opt. 22, 076017 (2017). 10.1117/1.JBO.22.7.076017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Kalva S. K., Pramanik M., “Experimental validation of tangential resolution improvement in photoacoustic tomography using modified delay-and-sum reconstruction algorithm,” J. Biomed. Opt. 21, 086011 (2016). 10.1117/1.JBO.21.8.086011 [DOI] [PubMed] [Google Scholar]

- 55.Kalva S. K., Upputuri P. K., Pramanik M., “High-speed, low-cost, pulsed-laser-diode-based second-generation desktop photoacoustic tomography system,” Opt. Lett. 44, 81–84 (2019). 10.1364/OL.44.000081 [DOI] [PubMed] [Google Scholar]