Significance

When individuals lose a sense early in life, widespread neural reorganization occurs. This extraordinary plasticity plays a critical role in allowing blind and deaf individuals to make better use of their remaining senses. Here, we show that area hMT+, selective for visual motion in sighted individuals, responds to auditory frequency as well as auditory motion after early blindness. Remarkably, auditory frequency tuning persisted in two adult sight-recovery subjects, despite their recovered ability to see visual motion. Thus, auditory frequency selectivity coexists with the neural architecture required for visual motion processing. In blind individuals, selectivity for auditory motion and frequency seems to exist within a conserved neural architecture capable of supporting analogous computations in the visual domain should vision be restored.

Keywords: sensory systems, plasticity, visual cortex, sight recovery, blindness

Abstract

Previous studies report that human middle temporal complex (hMT+) is sensitive to auditory motion in early-blind individuals. Here, we show that hMT+ also develops selectivity for auditory frequency after early blindness, and that this selectivity is maintained after sight recovery in adulthood. Frequency selectivity was assessed using both moving band-pass and stationary pure-tone stimuli. As expected, within primary auditory cortex, both moving and stationary stimuli successfully elicited frequency-selective responses, organized in a tonotopic map, for all subjects. In early-blind and sight-recovery subjects, we saw evidence for frequency selectivity within hMT+ for the auditory stimulus that contained motion. We did not find frequency-tuned responses within hMT+ when using the stationary stimulus in either early-blind or sight-recovery subjects. We saw no evidence for auditory frequency selectivity in hMT+ in sighted subjects using either stimulus. Thus, after early blindness, hMT+ can exhibit selectivity for auditory frequency. Remarkably, this auditory frequency tuning persists in two adult sight-recovery subjects, showing that, in these subjects, auditory frequency-tuned responses can coexist with visually driven responses in hMT+.

Compensatory cross-modal reorganization is often thought to respect the functional modularity observed in nondeprived cortex. For example, in people who lose vision early in life, regions associated with visual object processing are implicated in the processing of haptic and auditory information about objects (1, 2); the ventral visual word-form area responds during Braille reading (3, 4); and regions within the occipital dorsal stream, whose activity is linked to visual spatial processing in sighted subjects, are engaged when blind subjects perform tasks involving the spatial attributes of auditory and tactile stimuli (5, 6).

Consistent with this notion of “functional constancy” (7), several fMRI studies have demonstrated novel responses to auditory motion in human middle temporal complex (hMT+) after early blindness (8–10) and sight recovery (7). These responses contain information about the direction of auditory motion (10) and can be used to predict perceptual decisions about motion direction, suggesting that hMT+ may support enhanced performance on auditory motion tasks (11, 12).

In normally sighted individuals, including nonhuman primates, it has been shown that neurons within MT and the medial superior temporal area, and the human analog hMT+, have complex receptive fields with properties that include tuning for spatial frequency, retinotopic location, orientation, and binocular disparity, as well as direction of motion and speed (13–22). These tuning properties are thought to be critical for interpreting the motion of objects in 3D space (13, 16–18).

If hMT+ in early-blind individuals is functionally analogous to hMT+ in sighted individuals, we could expect to observe selectivity for features like auditory frequency, in addition to selectivity for direction of auditory motion, reflecting complex neural receptive fields in the auditory domain. Watkins et al. (23) previously found that, within an area defined as hMT+ based on anatomical criteria, two of five anophthalmic subjects showed significant responses to stationary pure-tone stimuli compared with a silent baseline. The frequency that elicited the largest response varied across voxels, leading the authors to suggest the potential existence of tonotopic organization in hMT+. However, this study did not examine whether frequency selectivity was statistically reliable, and their reliance on a purely anatomical definition of hMT+ makes it likely that their region of interest (ROI) included regions outside hMT+ (7, 24). It is also possible that these responses to pure-tone stimuli in hMT+ might be limited to anophthalmic individuals, in whom underdevelopment of the eyes prevents both visual stimulation and spontaneous retinal waves during prenatal development, resulting in a more extreme developmental deprivation of the visual cortex. Indeed, differences between anophthalmic and early-blind individuals have been noted previously in studies examining visual cortex recruitment during language tasks (25).

Here, we used fMRI to measure auditory frequency tuning in auditory and occipital cortex in two sight-recovery subjects (SR01, SR02), four early-blind individuals (EB01–EB04), and six age-matched sighted controls (SC01–SC06). See Table 1 for subject details and demographics. Sight-recovery subjects afford a unique opportunity to localize hMT+ functionally, since they show robust visual as well as cross-modal auditory motion responses (7). To assess frequency tuning, we estimated a population receptive field (pRF) that characterizes the blood oxygen level-dependent (BOLD) response as a function of auditory frequency for each voxel (26, 27), using both stationary pure-tone and moving band-pass stimuli.

Table 1.

Subject demographics and clinical descriptions

| Subject | Sex | Age, y | Clinical description |
|---|---|---|---|
| SR01 | Male | 62 | Blinded in chemical accident at age 3.5 y; vision partially restored (postoperative acuity 20:1,000) after a corneal stem cell replacement in the right eye at age 46 y |
| SR02 | Female | 62 | Congenital blindness due to retinopathy of prematurity and cataracts; vision partially restored (postoperative acuity 20:400) after cataract removal in the right eye at age 43 y |
| EB01 | Male | 32 | Leber’s congenital amaurosis; low light perception |
| EB02 | Female | 38 | Retinopathy of prematurity; no light perception in right eye; minimal peripheral light perception in left eye; 3 mo premature |
| EB03 | Female | 55 | Retinopathy of prematurity; low light perception until retina detachment at age 25 y; 2 mo premature |
| EB04 | Male | 52 | Congenital glaucoma; no light perception |

Results

Since it is impossible to obtain a functional definition of visual hMT+ in early-blind individuals, our initial analysis relied on a purely anatomical definition of hMT+, based on the Jülich probabilistic atlas thresholded at 25% (as in ref. 23; SI Appendix, Methods). We found no difference in the volume of hMT+ across early-blind and sight-recovery subjects vs. sighted controls using parametric [t (10) = 1.62, P = 0.14] or nonparametric (Wilcoxon rank sum, P = 0.31) tests (see Table 2 for individual data). Moreover, because sulcal and gyral landmarks informed the anatomical ROIs (28), the hMT+ location was approximately consistent across the subject groups with reference to cortical folding.

Table 2.

Volume of hMT+ ROIs and percentage showing frequency tuning

| Subject | MT definition | MT size LH, cm³ | MT size RH, cm³ | Tuned: moving LH, % | Tuned: moving RH, % | Tuned: stationary LH, % | Tuned: stationary RH, % |
|---|---|---|---|---|---|---|---|
| SR01 | Functional | 5.51 | 4.56 | **16.46** | **12.44** | 0 | 0 |
| SR02 | Functional | 2.13 | 5.88 | 3.19 | **12.74** | 0 | 0 |
| SR01 | Jülich | 1.86 | 4.49 | **43.90** | **32.32** | 0 | 0 |
| SR02 | Jülich | 1.68 | 4.11 | 0 | 3.31 | 0 | 0 |
| EB01 | Jülich | 5.4 | 1.86 | **23.53** | 4.88 | 0 | 0 |
| EB02 | Jülich | 1.41 | 5.76 | 0 | 0.39 | 0 | 0 |
| EB03 | Jülich | 3.7 | 1.68 | **54.60** | **8.11** | 0 | **5.41** |
| EB04 | Jülich | 1.5 | 3.95 | 0 | **8.05** | 0 | 0 |
| SC01 | Jülich | 4.54 | 1.45 | 0 | 0 | 0 | 0 |
| SC02 | Jülich | 5.47 | 1.2 | 0.41 | 0 | 0 | 0 |
| SC03 | Jülich | 2.45 | 1.2 | 0.93 | 0 | NA | NA |
| SC04 | Jülich | 2.45 | 2.06 | 0.00 | 0 | NA | NA |
| SC05 | Jülich | 1.57 | 4.04 | NA | NA | 0 | 0 |
| SC06 | Jülich | 3.9 | 1.66 | NA | NA | 0 | 0 |

Rows represent individual subjects. For sight-recovery subjects, we report results for both functional and anatomical (Jülich) definitions of hMT+. Columns represent stimuli (moving vs. stationary) and hemisphere. Permutation tests using stimulus label randomization (see main text) suggested that our threshold of r > 0.2 corresponds to a mean false-positive rate of 5.20% with an upper 5% confidence bound of 5.35%. Percentages above the upper 5% confidence bound of this false-positive rate are shown in bold. LH, left hemisphere; NA, not available; RH, right hemisphere.

As described in SI Appendix, Methods, we used a voxel-level encoding model (pRF) to find the center (f0, best frequency) and SD (σ) of the Gaussian function in log frequency space that best predicted the measured time course within each voxel. To assess whether voxels showed frequency tuning, we used a stringent leave-one-out cross-validation procedure, in which we repeatedly found the best-fitting center and SD values for the pRF model using all but one scan for each subject, and then calculated the correlation between predicted and obtained time courses for the left-out scan. Voxels with a mean cross-validated correlation coefficient of r > 0.2 across folds were considered to have frequency tuning.
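The leave-one-scan-out procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the hemodynamic response is omitted, the fit is a coarse grid search, and training scans are combined by averaging their correlations. All function names (`gaussian_prf`, `fit_prf`, `cross_validated_r`, `is_frequency_tuned`) are hypothetical.

```python
import math

def gaussian_prf(log_freqs, center, sigma):
    """Gaussian sensitivity on a log-frequency axis, one value per time point."""
    return [math.exp(-0.5 * ((lf - center) / sigma) ** 2) for lf in log_freqs]

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either series is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def fit_prf(scans, centers, sigmas):
    """Grid search for the (center, sigma) with the highest mean correlation
    across the training scans. Each scan is (log_freqs, time_course).
    Averaging correlations across scans is an assumption of this sketch."""
    best = None
    for c in centers:
        for s in sigmas:
            r = sum(pearson(gaussian_prf(lf, c, s), tc) for lf, tc in scans) / len(scans)
            if best is None or r > best[0]:
                best = (r, c, s)
    return best[1], best[2]

def cross_validated_r(scans, centers, sigmas):
    """Fit on all scans but one, score on the held-out scan, average over folds."""
    fold_rs = []
    for i, (lf, tc) in enumerate(scans):
        train = scans[:i] + scans[i + 1:]
        c, s = fit_prf(train, centers, sigmas)
        fold_rs.append(pearson(gaussian_prf(lf, c, s), tc))
    return sum(fold_rs) / len(fold_rs)

def is_frequency_tuned(scans, centers, sigmas, threshold=0.2):
    """Apply the r > 0.2 criterion to the mean cross-validated correlation."""
    return cross_validated_r(scans, centers, sigmas) > threshold
```

Because the held-out scan never contributes to the fitted center and SD, a voxel can pass the threshold only if its tuning generalizes across scans.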

For visualizing tonotopic maps and for analyses examining how pRF parameters varied across blind and sighted subjects, we used pRF estimates based on the full set of runs and a noncross-validated correlation threshold of r > 0.2; it should be noted that this corresponds to a slightly more inclusive criterion than a cross-validated correlation threshold of 0.2.

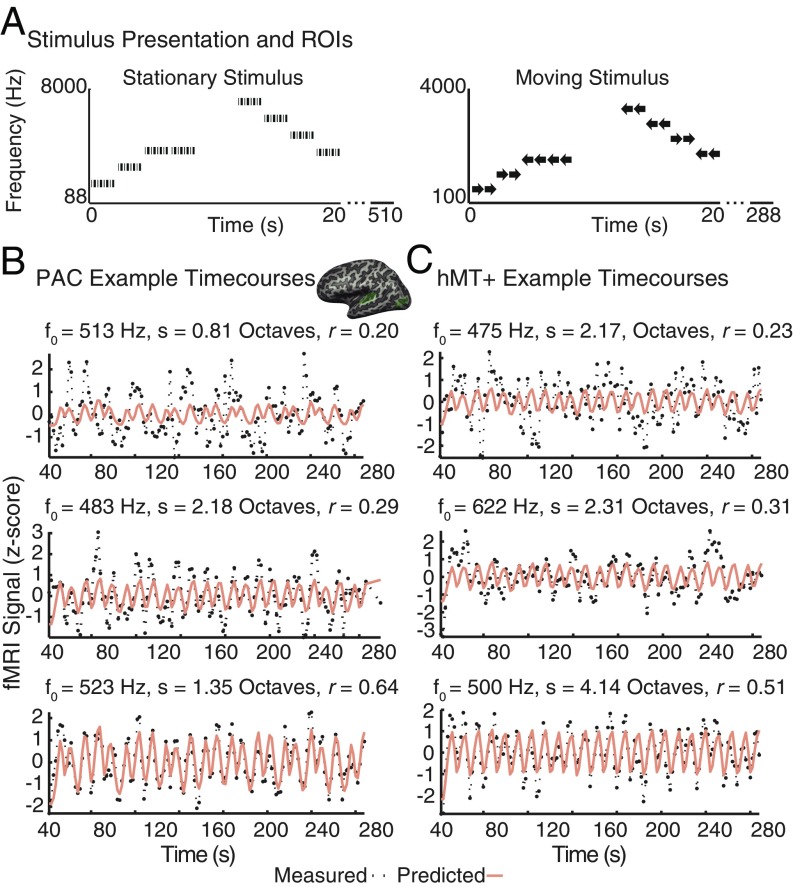

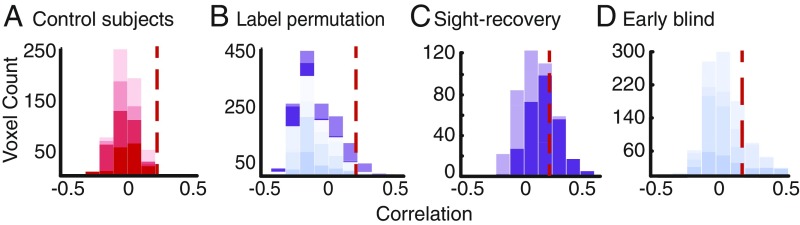

Fig. 1A shows example stationary and moving auditory stimuli. Fig. 1 B and C show example measured and predicted time courses for representative frequency-tuned voxels, selected to span the range of data quality included in subsequent analysis, within primary auditory cortex (PAC) and hMT+ for sight-recovery subject SR01, for a single scan with the moving auditory stimulus. We first tested whether a significant number of voxels within hMT+ show frequency tuning for the auditory stimulus that contained motion. Fig. 2A shows the distribution of cross-validated correlation values obtained for sighted control subjects (red bars). Only 0.26% of voxels showed correlation values above 0.2 (see Table 2 for individual data). Fig. 2B shows results for a randomized stimulus representation, which serves as a null model for sight-recovery and early-blind subjects. To preserve the temporal structure within individual stimulus blocks, we randomized stimulus-block order while retaining the ascending or descending frequency series structure within each block (SI Appendix, Methods). Data from sight-recovery (purple) and early-blind subjects (blue) were fit using this randomized stimulus. A correlation threshold of r > 0.2 generated a mean false-positive rate of 5.20% with an upper 5% confidence bound of 5.35%. We classified an hMT+ ROI as having significantly more voxels tuned for frequency than would be expected by chance if the percentage of frequency-tuned voxels was higher than this upper 5% confidence bound.
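The logic of this permutation null can be sketched as below. The sketch makes several assumptions: the helper names are hypothetical, the model refit on each shuffled stimulus is left out, and the "upper 5% confidence bound" is taken to be the false-positive rate exceeded by 5% of permutations (the reading given in the Fig. 2 legend).

```python
import random

def shuffle_block_order(blocks, rng):
    """Permute the order of stimulus blocks; the frequency series inside
    each block (ascending or descending) is left untouched."""
    order = list(range(len(blocks)))
    rng.shuffle(order)
    return [list(blocks[i]) for i in order]

def fraction_above(correlations, threshold=0.2):
    """Percentage of voxels whose cross-validated r exceeds the threshold."""
    return 100.0 * sum(r > threshold for r in correlations) / len(correlations)

def null_summary(false_positive_rates, upper_percent=5):
    """Mean false-positive rate across permutations, plus the rate that only
    `upper_percent` percent of permutations exceed."""
    rates = sorted(false_positive_rates)
    n_exceed = (len(rates) * upper_percent) // 100
    upper_bound = rates[len(rates) - n_exceed - 1]
    mean_rate = sum(rates) / len(rates)
    return mean_rate, upper_bound

def roi_exceeds_chance(percent_tuned, upper_bound):
    """An ROI counts as frequency tuned if it beats the upper null bound."""
    return percent_tuned > upper_bound
```

With the values reported here, `roi_exceeds_chance(16.46, 5.35)` is true for the left hemisphere of SR01 (functional ROI), whereas `roi_exceeds_chance(3.19, 5.35)` is false for the left hemisphere of SR02.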

Fig. 1.

(A) Schematic representation of stimuli used for tonotopic mapping. For the stationary stimulus (Left) each 2-s frequency block contained eight pure tone bursts (50 or 200 ms). For the moving stimulus (Right), each 2-s block contained a pair of 1-s bursts that both traveled in the same direction, as implied by the arrows. Motion direction varied pseudorandomly across trials. Motion along the frontoparallel plane was simulated using ITDs. (B and C) Example time courses for frequency-tuned voxels within PAC (B) and hMT+ (C). The center Inset shows the locations of the PAC and hMT+ ROIs from which the voxels were selected. The measured voxel time course for a single scan is plotted in black; the predicted time-course (based on model fits carried out on separate scans) for each voxel is plotted in orange. Three voxels are shown for each ROI: one with a cross-validated correlation value just over 0.2 (representing the time course of voxels that just pass correlation threshold), one with a cross-validated correlation of 0.3 (close to the median of voxels included in our analysis), and one with a cross-validated correlation value close to 0.6 (representing the upper end of our cross-validated correlation values). Fitted pRF parameters [center frequency (f0), σ (s), and correlation (r)] are given for each voxel, above the corresponding time-course.

Fig. 2.

Histograms of mean cross-validated correlation values within hMT+. In each plot, individual subject data are stacked and color coded using distinct intensity levels. (A) Sighted control subjects (red). For a cross-validated threshold of r > 0.2 (marked by a red dashed line in each plot), 0.26% of voxels showed frequency tuning within the control group. (B) Permutation null model for sight-recovery (purple) and early-blind (blue) subjects. This model generated an expected false-positive rate of 5.20% with an upper 5% confidence bound of 5.35% (i.e., 5% of permutations resulted in a false-positive rate greater than 5.35%). (C) Individual sight-recovery subjects. (D) Individual early-blind subjects.

Fig. 2C shows results for sight-recovery subjects within an anatomically defined ROI. Using the Jülich definition of hMT+, both hemispheres in SR01 contained a higher percentage of frequency-tuned voxels than expected based on the upper 5% confidence limit of our permutation analysis. Neither hemisphere of SR02 contained a higher percentage of frequency-tuned voxels than the upper 5% confidence limit of our permutation analysis. Fig. 2D shows results for early-blind subjects, again using an anatomical ROI. In four of eight hemispheres, a higher percentage of voxels showed frequency tuning than was expected based on the upper 5% confidence limit of our permutation analysis. See Table 2 for individual sight-recovery, early-blind, and sighted subjects in terms of both percentages and cortical volume.

For any given individual, an hMT+ ROI defined from the Jülich probabilistic atlas at a 25% threshold is expected to contain a significant proportion of voxels that do not fall within hMT+. Thus, one important advantage of including sight-recovery subjects in our subject pool is that hMT+ can be identified more accurately in these subjects by using a visual motion localizer.

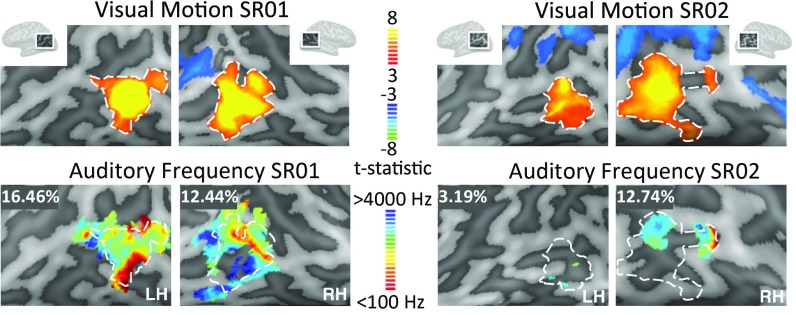

Responses to this visual motion localizer closely overlapped auditory frequency-tuned responses within hMT+ (Fig. 3). With this more accurate functional definition of hMT+, both hemispheres in SR01 and the right hemisphere of SR02 (Table 2) showed a higher percentage of voxels with frequency tuning than the upper 5% confidence limit of our permutation analysis.

Fig. 3.

(Upper) BOLD responses to the visual motion localizer in SR01 and SR02 (false discovery rate adjusted P < 0.05). (Lower) Best-frequency maps plotted on the cortical surface in SR01 and SR02 estimated using the auditory moving stimulus. Functionally defined hMT+ is shown with a white dashed outline. For visualization, pRF estimates were based on the full set of runs, with a threshold of r > 0.2 (not cross-validated). The percentage of voxels showing frequency tuning (defined as having a cross-validated correlation of r > 0.2) within this functionally defined hMT+ ROI is reported in the Upper Left of each frequency-tuning map. Each Inset spans ∼9 cm. LH, left hemisphere; RH, right hemisphere.

Both sight-recovery subjects showed typical visual motion perception, given their acuity, and were able to correctly report the direction of moving random-dot fields. Percent coherence thresholds for sight-recovery subjects reporting the direction of motion of a random-dot kinematogram did not differ from those of sighted controls (25% and 24% for SR01 and SR02, respectively, and 22.6% ± 8.6% for n = 4 control subjects). As for the fMRI visual motion localizer, these visual stimuli were full contrast, with a single dot subtending 1°. Thus, auditory frequency tuning within hMT+ not only persists after sight recovery, but also coexists with functional visual motion perception.

For the stationary stimulus, only one early-blind individual (EB03) showed more frequency-tuned voxels within hMT+ than predicted by the upper 5% confidence limit of our permutation analysis (Table 2). Thus, selectivity for frequency seems to depend on the presence of motion in the stimulus, but not on the use of a motion-related task.

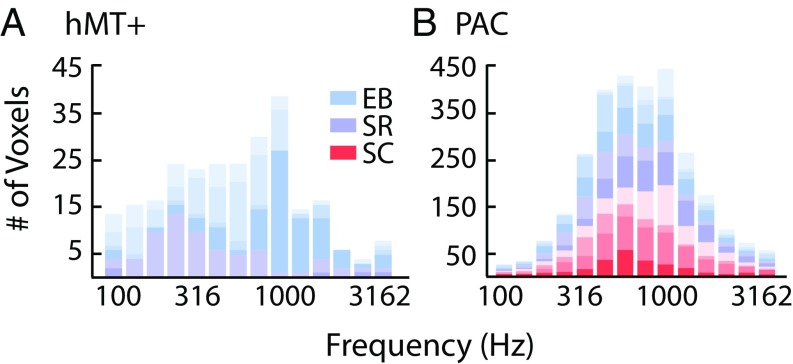

As shown in Fig. 4, hMT+ best-frequency values spanned much of the tested frequency range in both sight-recovery subjects (also see Fig. 3) and in two of the four early-blind subjects. Voxels were clustered within a narrower frequency range for one other early-blind subject (EB04), and, as described above, one early-blind subject (EB02) had very few voxels with frequency tuning.

Fig. 4.

(A) The distribution of auditory frequency tuning within hMT+ ROIs in early-blind (EB; blue) and sight-recovery (SR; purple) subjects. Because almost no voxels (0.26%) passed a cross-validated threshold of r > 0.2 in the sighted group, no sighted controls (SC; red) are plotted. (B) The distribution of auditory frequency tuning within PAC ROIs in early-blind (blue), sight-recovery (purple), and sighted controls (red).

We next tested whether the cross-modal recruitment of hMT+ in blind subjects involves a biased distribution of auditory frequencies, which might suggest a specialized role for hMT+ in analyzing specific sound categories. Both in sight-recovery and in early-blind subjects, the distribution of frequencies did not differ significantly between hMT+ and PAC. Bootstrapped χ2 tests of independence revealed no significant difference between the distribution of frequency values in hMT+ and auditory cortex either for sight-recovery subjects [χ2 (6, 2) = 175.02, P = 0.086] or for early-blind individuals [χ2 (6, 4) = 79.91, P = 0.54]. We interpret this null result cautiously, given the sample size and the restricted frequency range of the moving stimulus. However, we do not see any evidence for a trend toward greater representation of a specific frequency band, and therefore we suggest that the distribution of frequencies represented within hMT+ may be roughly similar to the distribution found in PAC.

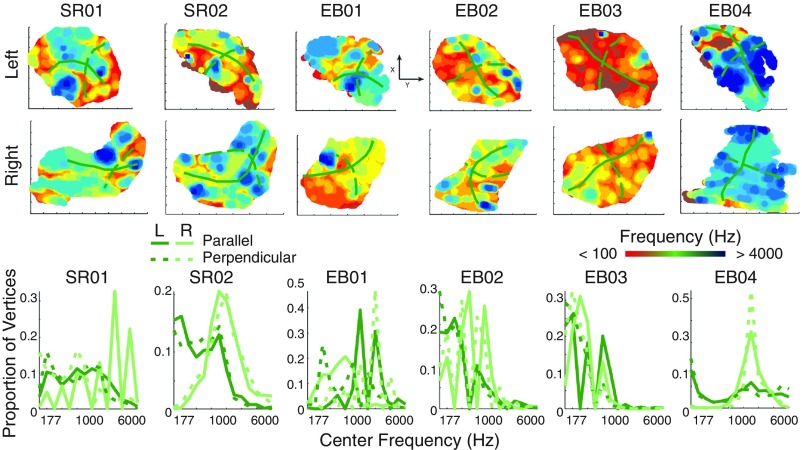

Finally, to examine whether hMT+ showed tonotopic organization, we asked whether best-frequency centers showed a systematic progression across the cortical surface, beyond what might be expected from the spatial blurring of the BOLD signal. Because there is significant variability in individual anatomies in the middle occipital region, we examined tonotopic organization at the individual level. We first defined a generous anatomical ROI that spanned the middle occipital gyrus (MoG) and included each individual subject’s anatomical hMT+ ROI. We then projected (i) unthresholded pRF centers (estimated using the full set of runs) from each subject’s hMT+ ROI and (ii) curvature values from the MoG ROI into a 2D grid to visualize pRF centers on a flat map, as shown in Fig. 5, Upper. We then selected pRF centers either along the gyrus (green solid line) or orthogonal to the gyrus (green dashed line). If pRF centers followed a spatially ordered, tonotopic arrangement relative to the MoG, then the distribution of frequency centers should differ for parallel vs. orthogonal pRF centers. As shown in Fig. 5, Lower, the distribution of frequency centers relative to the MoG (i) varied across subjects and (ii) was not distinct for parallel vs. orthogonal directions. This analysis would, of course, be insensitive to tonotopic organization along certain other directions (e.g., 45°). However, visual inspection of the data shown in Fig. 5 fails to suggest any hint of systematic organization along any other direction across subjects. Results were similar for various levels of thresholding.

Fig. 5.

(Upper) Flat maps showing frequency center values within hMT+ for sight-recovery and early-blind subjects. Best frequency was estimated using the full set of runs, and all voxels within the hMT+ ROI (no correlation threshold) were included for each subject. The location of the MoG is indicated in each flat map with a solid green line. A dashed green line indicates the orientation of the perpendicular ROI. Each flat map represents a cortical area of ∼5 cm². (Lower) Distribution of frequency centers running along the MoG (solid lines) or perpendicular to the MoG (dashed lines) for each sight-recovery and early-blind subject.

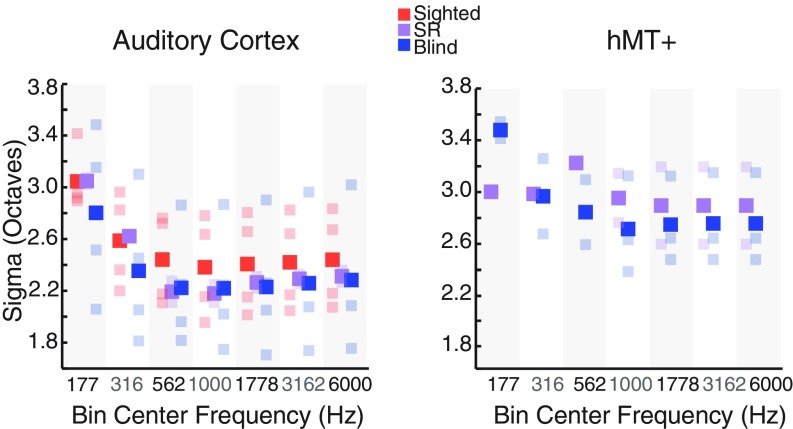

One advantage of the pRF method is that it provides an estimate of tuning width for each voxel. Fig. 6 shows tuning width estimates, obtained using the moving stimulus, for sight-recovery, early-blind, and sighted subjects in both PAC and hMT+. Tuning widths in PAC were narrower in sight-recovery and early-blind individuals than in sighted subjects (Wilcoxon rank sum test, P = 0.046). This effect was also significant when only early-blind subjects were included in the blind group (Wilcoxon rank sum test, P < 0.001). Tuning widths in hMT+ in early-blind and sight-recovery subjects were generally similar to those found within PAC, although bandwidths within hMT+ varied less clearly with stimulus frequency. We note, however, that the moving stimulus contained only five frequency bands, so it was not designed to optimally estimate tuning bandwidth as a function of frequency. Given this, and the relatively small number of subjects, this finding of narrower bandwidths should be considered provisional.

Fig. 6.

pRF tuning width as a function of frequency. Voxels were sorted into half-octave bins based on their best-frequency values. Best frequency and tuning widths were estimated using the full set of runs, and voxels above a correlation threshold of r > 0.2 (not cross-validated) were included. Mean tuning width was calculated for each bin, separately for the auditory cortex (Left) and hMT+ (Right) ROIs. Sighted, early-blind, and sight-recovery (SR) subjects are plotted in red, blue, and purple, respectively. Group means are plotted in saturated colors; individual subject means are plotted in corresponding desaturated colors.
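The half-octave binning used for this figure can be illustrated with a short sketch. The reference frequency `ref_hz` that anchors the bin edges is a hypothetical choice (the bin edges actually used are not stated here), and both function names are illustrative.

```python
import math
from collections import defaultdict

def half_octave_bin(best_freq_hz, ref_hz=100.0):
    """Index of the half-octave bin containing best_freq_hz.
    Bin 0 starts at ref_hz (an assumed anchor); each bin spans 0.5 octave."""
    return math.floor(math.log2(best_freq_hz / ref_hz) / 0.5)

def mean_width_by_bin(voxels, ref_hz=100.0):
    """voxels: iterable of (best_freq_hz, tuning_width) pairs.
    Returns {bin_index: mean tuning width}, with bins in ascending order."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for best_freq_hz, width in voxels:
        b = half_octave_bin(best_freq_hz, ref_hz)
        sums[b] += width
        counts[b] += 1
    return {b: sums[b] / counts[b] for b in sorted(sums)}
```

Binning on a log2 axis keeps the bins equally wide in octaves, which matches the log-frequency axis on which the pRF is defined.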

It has previously been shown that PAC can be accurately and consistently defined using either pure tones or complex, naturalistic stimuli (29–34). Consistent with these studies, we found that the moving and stationary stimuli produced a tonotopic map for all subjects within auditory cortex, as shown in SI Appendix, Fig. S1. SI Appendix, Table S1 gives the proportion of frequency-tuned voxels within PAC for each subject using the moving stimulus, as estimated using our cross-validated threshold of 0.2. Across all subjects, the median percentage of voxels that passed this threshold was 34.74%. This can be considered an upper limit for the sensitivity of our cross-validated pRF analysis.

We did not find a significant difference in the number of voxels fit above threshold either within or outside PAC (within belt and parabelt regions) using the moving vs. stationary stimulus (Wilcoxon rank sum test for the number of voxels fit above threshold in PAC, moving vs. stationary, P = 0.56; Wilcoxon rank sum test for belt + parabelt, P = 1).

As shown in Fig. 4, distributions for frequency within PAC were highly similar across subject groups: Bootstrapped χ2 tests of independence (SI Appendix, Methods) revealed no effect of early blindness on the distribution of frequency tuning within PAC. Bootstrapping results were similar, regardless of whether analyses included sight-recovery subjects [early-blind + sight-recovery vs. controls: χ2 (6, 6) = 15.03, P = 0.76] or were restricted to subjects with ongoing visual deprivation [early-blind vs. controls: χ2 (6, 4) = 7.76, P = 0.94].

Discussion

To compensate for their loss of vision, blind individuals rely more heavily on auditory information to track the movement of objects in space. Here, we assessed frequency tuning within hMT+ in two sight-recovery subjects, four early-blind subjects, and six age-matched sighted controls. Within PAC, we observed similar tonotopic maps for auditory frequency in all subjects, regardless of whether a stationary pure-tone stimulus or a moving band-pass stimulus was used. Within visually defined hMT+, for the moving stimulus, we saw evidence for frequency tuning both in sight-recovery subjects and in a subset of the early-blind subjects. We saw no evidence for frequency tuning within hMT+ in the sighted controls.

Our failure to find frequency tuning using the stationary stimulus is unlikely to have been driven by the difference in spectral composition between the two stimuli (pure tones vs. 0.8-octave band-pass stimuli): Within auditory cortex, both stimuli reliably elicited responses in both PAC (which is thought to be narrowly tuned for frequency) and parabelt regions (which are thought to be more broadly tuned). Thus, our results suggest that the presence of motion in the stimulus may be necessary to drive responses in frequency-tuned regions of hMT+. However, we note that our results do not depend on the use of a motion-related task, since subjects performed a one-back task on stimulus frequency.

We note that our results likely underestimate the true prevalence of frequency tuning within hMT+ in these subjects. First, auditory fMRI is intrinsically noisy, which places an upper limit on correlations between model predictions and data from a single scan. For comparison across subjects, fewer than 35% of voxels within PAC were fit above a threshold of r = 0.2 using a cross-validated analysis. Second, the large anatomical hMT+ ROI was defined based on a 25% probabilistic threshold. Thus, an individual voxel is only expected to overlap the true anatomical location of hMT+ 25% of the time. Based on these two factors alone, even if hMT+ showed similar frequency tuning to PAC, across subjects we would expect only ∼9% of voxels to satisfy our correlation threshold and genuinely be within hMT+. Across sight-recovery and early-blind subjects, ∼14% of voxels within the hMT+ ROI showed frequency tuning.
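As a rough check on the ∼9% figure, and assuming (as the argument above implicitly does) that passing the correlation threshold and lying within true hMT+ are independent events, the expected proportion is the product of the two rates quoted above:

$$
P(\text{tuned and within hMT+}) \approx 0.35 \times 0.25 = 0.0875 \approx 9\%
$$

The observed ∼14% exceeds this back-of-envelope expectation, which is consistent with the claim that the reported percentages are conservative.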

It has been suggested that, in early-blind individuals, hMT+ may play a role analogous to that of the planum temporale in sighted individuals (12). In early-blind individuals, the recruitment of hMT+ for auditory motion processing has been shown to coincide with a loss of auditory motion selectivity in the planum temporale (12, 35), suggesting a developmental process in which cortical regions compete for a given functional role. In sighted individuals, the planum temporale is selective for auditory motion (e.g., refs. 12, 36, and 37) and exhibits auditory frequency preferences at a voxelwise level, but lacks clear tonotopic organization (38). As described above, we observed a very similar pattern of auditory frequency response preferences for blind subjects in hMT+, which may reflect an underlying similarity in the organization of inputs to and/or computations carried out within hMT+ in blind individuals and in planum temporale in sighted individuals.

Although this work establishes the presence of frequency selectivity within hMT+ using a simple model of frequency tuning, it is worth noting that the auditory receptive fields within hMT+ may well be spectrotemporally complex, as is the case for much of human auditory cortex. Future work will be needed, both to characterize auditory receptive field properties within hMT+ and to understand how the recruitment of hMT+ might help early-blind subjects segregate moving auditory objects within complex auditory environments.

Despite severe deprivation amblyopia due to loss of vision early in life (caused by corneal scarring in his single remaining eye in SR01, and untreated bilateral cataracts in SR02), both sight-recovery subjects showed robust visually driven responses in hMT+, making it possible to obtain a precise functional definition of hMT+ in both individuals. Although hMT+ has a relatively consistent position in relation to sulcal patterns, the stereotaxic location and size of hMT+ varies across subjects (39), and the close proximity of regions selective for tactile and auditory motion (7, 24) makes a purely anatomical definition problematic. Thus, the inclusion of sight-recovery subjects provides critical evidence that the frequency tuning observed in these individuals does indeed overlap with regions selective for visual motion.

As described elsewhere (40–42), SR01 had normal visual experience up to age 3.5 y, at which stage his visual motion processing should have been fairly mature. Thus, it appears that congenital or very early blindness is not required for auditory frequency tuning to emerge: The mechanism underlying the recruitment of visual hMT+ for processing auditory motion and frequency must retain plasticity until at least age 3 y.

Finally, these results suggest that after cross-modal plasticity has occurred within hMT+, it is not disrupted by the resumption of visual motion responses. Both individuals with recovered sight showed responses to auditory motion and frequency tuning in hMT+ that resembled those of early-blind subjects. One possible explanation for this “functional flexibility” may be that, in blind individuals, auditory motion processing is accomplished within a conserved neural architecture that can continue to support computations relevant for processing visual motion, if vision is ever restored.

Methods

Participants.

Two sight-recovery subjects (both age 61 y, one male), four early-blind subjects (ages 33 to 56 y, two males; cause and onset of blindness given in Table 1), and six age-matched sighted subjects participated in two sessions of MRI. Subjects reported normal hearing and no history of neurological or psychiatric illness. All subjects gave informed consent, and all procedures, including recruitment and testing, followed the guidelines of the University of Washington Human Subjects Division and were reviewed and approved by the University of Washington Institutional Review Board.

Stimuli and Task.

Frequency selectivity was assessed using two kinds of stimuli: (i) stationary pure tones and (ii) a moving band-pass stimulus traveling smoothly along the frontoparallel plane. For both stimulus types, subjects performed a one-back task on the center frequency, responding via button press each time a stimulus block repeated the exact frequency of the previous block (10% of trials). Details of stimulus creation and sound system calibration are provided in SI Appendix, Methods. Center frequencies for the moving stimulus were selected to occupy the middle to lower end of the audible range to maximize the efficacy of the interaural time difference (ITD) cue, since sensitivity to ITDs is highest in the range of 700 to 1,400 Hz for both pure tones (43, 44) and complex stimuli with carrier frequencies up to roughly 3,900 Hz (45).

MRI Acquisition and Preprocessing.

All subjects participated in two scanning sessions, one for the stationary stimulus, and one for the moving stimulus. Sight-recovery subjects also participated in a separate visual motion localizer scan as described in SI Appendix, Methods. All preprocessing and anatomical ROI selection was carried out using BrainVoyager QX software (version 2.3.1; Brain Innovation B.V.). Additional details of MRI acquisition, data preprocessing, and ROI selection are provided in SI Appendix, Methods.

pRF and Statistical Analysis.

Preprocessed fMRI data within the anatomical ROIs were analyzed using methods described in greater detail in Thomas et al. (27) and in SI Appendix, Methods. Briefly, for each voxel, we assumed a 1D Gaussian sensitivity profile on a log auditory frequency axis. Using custom software written in MATLAB, we found, for each voxel, the center (f0, best frequency) and SD (σ) of the Gaussian that, when multiplied by the stimulus over time and convolved with a canonical hemodynamic response function (46), produced the predicted time course that best correlated with the measured time course. Quality of model fits was quantified using a leave-one-scan-out cross-validation procedure, as described in SI Appendix, Methods. Within each ROI, pRF model fits were compared with a null model created using a randomized stimulus representation. Group differences in model parameters were assessed using a nonparametric approach, as also described in SI Appendix, Methods.
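The core of the pRF fit can be illustrated with a minimal Python sketch. It is not the authors' MATLAB implementation. Several choices are assumptions made only for illustration: the exhaustive grid search (the optimization method is not specified here), the gamma-shaped response function and its parameters, the 2-s sampling interval, the five stimulus frequencies, the block timing, and all function names. Cross-validation and the null-model comparison are omitted.

```python
import math


def gaussian_prf(log_f, f0, sigma):
    """1D Gaussian sensitivity profile evaluated on a log-frequency axis."""
    return math.exp(-0.5 * ((log_f - f0) / sigma) ** 2)


def gamma_hrf(n_samples, tr=2.0, tau=1.5, n=3):
    """Gamma-shaped impulse response sampled every tr seconds (illustrative parameters)."""
    kernel = []
    for i in range(n_samples):
        t = i * tr
        kernel.append((t / tau) ** (n - 1) * math.exp(-t / tau)
                      / (tau * math.factorial(n - 1)))
    return kernel


def predict(stim, log_freqs, f0, sigma, kernel):
    """Multiply the Gaussian by the stimulus at each time point, then convolve with the HRF."""
    weights = [gaussian_prf(lf, f0, sigma) for lf in log_freqs]
    neural = [sum(s * w for s, w in zip(row, weights)) for row in stim]
    # Causal convolution, truncated to the length of the scan.
    return [sum(neural[t - k] * kernel[k] for k in range(min(t + 1, len(kernel))))
            for t in range(len(neural))]


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def fit_voxel(measured, stim, log_freqs, f0_grid, sigma_grid, kernel):
    """Grid search (an assumed stand-in) for the f0 and sigma that maximize correlation."""
    best_r, best_f0, best_sigma = -2.0, None, None
    for f0 in f0_grid:
        for sigma in sigma_grid:
            r = pearson(predict(stim, log_freqs, f0, sigma, kernel), measured)
            if r > best_r:
                best_r, best_f0, best_sigma = r, f0, sigma
    return best_r, best_f0, best_sigma


# Hypothetical stimulus: five frequencies, each block 3 samples on and 2 samples off.
log_freqs = [math.log2(f) for f in (200, 400, 800, 1600, 3200)]
stim = []
for block in [2, 0, 4, 1, 3] * 2:
    on = [1.0 if k == block else 0.0 for k in range(len(log_freqs))]
    off = [0.0] * len(log_freqs)
    stim += [on, on, on, off, off]

kernel = gamma_hrf(10)

# Simulated noise-free voxel tuned to 800 Hz with an SD of 0.5 octaves.
measured = predict(stim, log_freqs, math.log2(800), 0.5, kernel)
best_r, best_f0, best_sigma = fit_voxel(
    measured, stim, log_freqs, log_freqs, [0.25, 0.5, 1.0, 2.0], kernel)
```

Because the simulated voxel is noise free and its true parameters lie on the search grid, the fit recovers a best frequency of 800 Hz and an SD of 0.5 octaves. With measured data, the maximum correlation would be lower and would need to be assessed against held-out scans and a null model, as described in the text.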

Acknowledgments

We acknowledge funding from NIH National Eye Institute Grants R01EY014645 (to I.F.) and EY023268 (to F.J.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1815376116/-/DCSupplemental.

References

- 1. Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- 2. Amedi A, et al. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat Neurosci. 2007;10:687–689. doi: 10.1038/nn1912.

- 3. Büchel C, Price C, Friston K. A multimodal language region in the ventral visual pathway. Nature. 1998;394:274–277. doi: 10.1038/28389.

- 4. Reich L, Szwed M, Cohen L, Amedi A. A ventral visual stream reading center independent of visual experience. Curr Biol. 2011;21:363–368. doi: 10.1016/j.cub.2011.01.040.

- 5. Collignon O, et al. Functional specialization for auditory-spatial processing in the occipital cortex of congenitally blind humans. Proc Natl Acad Sci USA. 2011;108:4435–4440. doi: 10.1073/pnas.1013928108.

- 6. Renier LA, et al. Preserved functional specialization for spatial processing in the middle occipital gyrus of the early blind. Neuron. 2010;68:138–148. doi: 10.1016/j.neuron.2010.09.021.

- 7. Saenz M, Lewis LB, Huth AG, Fine I, Koch C. Visual motion area MT+/V5 responds to auditory motion in human sight-recovery subjects. J Neurosci. 2008;28:5141–5148. doi: 10.1523/JNEUROSCI.0803-08.2008.

- 8. Bedny M, Konkle T, Pelphrey K, Saxe R, Pascual-Leone A. Sensitive period for a multimodal response in human visual motion area MT/MST. Curr Biol. 2010;20:1900–1906. doi: 10.1016/j.cub.2010.09.044.

- 9. Poirier C, et al. Auditory motion perception activates visual motion areas in early blind subjects. Neuroimage. 2006;31:279–285. doi: 10.1016/j.neuroimage.2005.11.036.

- 10. Wolbers T, Zahorik P, Giudice NA. Decoding the direction of auditory motion in blind humans. Neuroimage. 2011;56:681–687. doi: 10.1016/j.neuroimage.2010.04.266.

- 11. Lewald J. Exceptional ability of blind humans to hear sound motion: Implications for the emergence of auditory space. Neuropsychologia. 2013;51:181–186. doi: 10.1016/j.neuropsychologia.2012.11.017.

- 12. Jiang F, Stecker GC, Fine I. Auditory motion processing after early blindness. J Vis. 2014;14:4. doi: 10.1167/14.13.4.

- 13. Priebe NJ, Cassanello CR, Lisberger SG. The neural representation of speed in macaque area MT/V5. J Neurosci. 2003;23:5650–5661. doi: 10.1523/JNEUROSCI.23-13-05650.2003.

- 14. Huk AC, Dougherty RF, Heeger DJ. Retinotopy and functional subdivision of human areas MT and MST. J Neurosci. 2002;22:7195–7205. doi: 10.1523/JNEUROSCI.22-16-07195.2002.

- 15. Albright TD. Direction and orientation selectivity of neurons in visual area MT of the macaque. J Neurophysiol. 1984;52:1106–1130. doi: 10.1152/jn.1984.52.6.1106.

- 16. Bridge H, Parker AJ. Topographical representation of binocular depth in the human visual cortex using fMRI. J Vis. 2007;7:15.1–15.14. doi: 10.1167/7.14.15.

- 17. Rokers B, Cormack LK, Huk AC. Disparity- and velocity-based signals for three-dimensional motion perception in human MT+. Nat Neurosci. 2009;12:1050–1055. doi: 10.1038/nn.2343.

- 18. Czuba TB, Huk AC, Cormack LK, Kohn A. Area MT encodes three-dimensional motion. J Neurosci. 2014;34:15522–15533. doi: 10.1523/JNEUROSCI.1081-14.2014.

- 19. Born RT, Bradley DC. Structure and function of visual area MT. Annu Rev Neurosci. 2005;28:157–189. doi: 10.1146/annurev.neuro.26.041002.131052.

- 20. Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: A comparison of neuronal and psychophysical performance. J Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

- 21. Britten KH, Shadlen MN, Newsome WT, Movshon JA. Responses of neurons in macaque MT to stochastic motion signals. Vis Neurosci. 1993;10:1157–1169. doi: 10.1017/s0952523800010269.

- 22. Van Essen DC, Maunsell JH, Bixby JL. The middle temporal visual area in the macaque: Myeloarchitecture, connections, functional properties and topographic organization. J Comp Neurol. 1981;199:293–326. doi: 10.1002/cne.901990302.

- 23. Watkins KE, et al. Early auditory processing in area V5/MT+ of the congenitally blind brain. J Neurosci. 2013;33:18242–18246. doi: 10.1523/JNEUROSCI.2546-13.2013.

- 24. Jiang F, Beauchamp MS, Fine I. Re-examining overlap between tactile and visual motion responses within hMT+ and STS. Neuroimage. 2015;119:187–196. doi: 10.1016/j.neuroimage.2015.06.056.

- 25. Watkins KE, et al. Language networks in anophthalmia: Maintained hierarchy of processing in ‘visual’ cortex. Brain. 2012;135:1566–1577. doi: 10.1093/brain/aws067.

- 26. Dumoulin SO, Wandell BA. Population receptive field estimates in human visual cortex. Neuroimage. 2008;39:647–660. doi: 10.1016/j.neuroimage.2007.09.034.

- 27. Thomas JM, et al. Population receptive field estimates of human auditory cortex. Neuroimage. 2015;105:428–439. doi: 10.1016/j.neuroimage.2014.10.060.

- 28. Malikovic A, et al. Cytoarchitectonic analysis of the human extrastriate cortex in the region of V5/MT+: A probabilistic, stereotaxic map of area hOc5. Cereb Cortex. 2007;17:562–574. doi: 10.1093/cercor/bhj181.

- 29. Formisano E, et al. Mirror-symmetric tonotopic maps in human primary auditory cortex. Neuron. 2003;40:859–869. doi: 10.1016/s0896-6273(03)00669-x.

- 30. Humphries C, Liebenthal E, Binder JR. Tonotopic organization of human auditory cortex. Neuroimage. 2010;50:1202–1211. doi: 10.1016/j.neuroimage.2010.01.046.

- 31. Striem-Amit E, Hertz U, Amedi A. Extensive cochleotopic mapping of human auditory cortical fields obtained with phase-encoding FMRI. PLoS One. 2011;6:e17832. doi: 10.1371/journal.pone.0017832.

- 32. Talavage TM, et al. Tonotopic organization in human auditory cortex revealed by progressions of frequency sensitivity. J Neurophysiol. 2004;91:1282–1296. doi: 10.1152/jn.01125.2002.

- 33. Da Costa S, et al. Human primary auditory cortex follows the shape of Heschl’s gyrus. J Neurosci. 2011;31:14067–14075. doi: 10.1523/JNEUROSCI.2000-11.2011.

- 34. Saenz M, Langers DR. Tonotopic mapping of human auditory cortex. Hear Res. 2014;307:42–52. doi: 10.1016/j.heares.2013.07.016.

- 35. Dormal G, Rezk M, Yakobov E, Lepore F, Collignon O. Auditory motion in the sighted and blind: Early visual deprivation triggers a large-scale imbalance between auditory and “visual” brain regions. Neuroimage. 2016;134:630–644. doi: 10.1016/j.neuroimage.2016.04.027.

- 36. Lewis JW, Beauchamp MS, DeYoe EA. A comparison of visual and auditory motion processing in human cerebral cortex. Cereb Cortex. 2000;10:873–888. doi: 10.1093/cercor/10.9.873.

- 37. Alink A, Euler F, Kriegeskorte N, Singer W, Kohler A. Auditory motion direction encoding in auditory cortex and high-level visual cortex. Hum Brain Mapp. 2012;33:969–978. doi: 10.1002/hbm.21263.

- 38. Langers DR, Backes WH, van Dijk P. Representation of lateralization and tonotopy in primary versus secondary human auditory cortex. Neuroimage. 2007;34:264–273. doi: 10.1016/j.neuroimage.2006.09.002.

- 39. Dumoulin SO, et al. A new anatomical landmark for reliable identification of human area V5/MT: A quantitative analysis of sulcal patterning. Cereb Cortex. 2000;10:454–463. doi: 10.1093/cercor/10.5.454.

- 40. Fine I, et al. Long-term deprivation affects visual perception and cortex. Nat Neurosci. 2003;6:915–916. doi: 10.1038/nn1102.

- 41. Huber E, et al. A lack of experience-dependent plasticity after more than a decade of recovered sight. Psychol Sci. 2015;26:393–401. doi: 10.1177/0956797614563957.

- 42. Levin N, Dumoulin SO, Winawer J, Dougherty RF, Wandell BA. Cortical maps and white matter tracts following long period of visual deprivation and retinal image restoration. Neuron. 2010;65:21–31. doi: 10.1016/j.neuron.2009.12.006.

- 43. Brughera A, Dunai L, Hartmann WM. Human interaural time difference thresholds for sine tones: The high-frequency limit. J Acoust Soc Am. 2013;133:2839–2855. doi: 10.1121/1.4795778.

- 44. Macpherson EA, Middlebrooks JC. Listener weighting of cues for lateral angle: The duplex theory of sound localization revisited. J Acoust Soc Am. 2002;111:2219–2236. doi: 10.1121/1.1471898.

- 45. Henning GB. Detectability of interaural delay in high-frequency complex waveforms. J Acoust Soc Am. 1974;55:84–90. doi: 10.1121/1.1928135.

- 46. Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.
