Abstract

The matrix-completion problem has attracted a lot of attention, largely as a result of the celebrated Netflix competition. Two popular approaches for solving the problem are nuclear-norm-regularized matrix approximation (Candès and Tao, 2009; Mazumder et al., 2010), and maximum-margin matrix factorization (Srebro et al., 2005). These two procedures are in some cases solving equivalent problems, but with quite different algorithms. In this article we bring the two approaches together, leading to an efficient algorithm for large matrix factorization and completion that outperforms both of these. We develop a software package softImpute in R for implementing our approaches, and a distributed version for very large matrices using the Spark cluster programming environment.

Keywords: matrix completion, alternating least squares, svd, nuclear norm

1. Introduction

We have an m × n matrix X with observed entries indexed by the set Ω; i.e., Ω = {(i, j) : Xij is observed}. Following Candès and Tao (2009), we define the projection PΩ(X) to be the m × n matrix with the observed elements of X preserved, and the missing entries replaced with 0. Likewise, $P_\Omega^\perp(X)$ projects onto the complement of the set Ω.

Inspired by Candès and Tao (2009), Mazumder et al. (2010) posed the following convex-optimization problem for completing X:

$$\min_{M}\ \tfrac{1}{2}\|P_\Omega(X) - P_\Omega(M)\|_F^2 + \lambda\|M\|_*, \qquad (1)$$

where the nuclear norm ||M||* is the sum of the singular values of M (a convex relaxation of the rank). They developed a simple iterative algorithm for solving Problem (1), with the following two steps iterated till convergence:

- Replace the missing entries in X with the corresponding entries from the current estimate $\hat M$:

$$\hat X \leftarrow P_\Omega(X) + P_\Omega^\perp(\hat M); \qquad (2)$$

- Update $\hat M$ by computing the soft-thresholded SVD of $\hat X$:

$$\hat X = UDV^T, \qquad (3)$$

$$\hat M \leftarrow U S_\lambda(D) V^T, \qquad (4)$$

where the soft-thresholding operator Sλ operates element-wise on the diagonal matrix D, and replaces Dii with (Dii − λ)+. With large λ many of the diagonal elements will be set to zero, leading to a low-rank solution for Problem (1).
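The two steps above can be sketched for a small dense matrix as follows. This is a minimal illustration assuming NumPy; it computes a full SVD at every iteration and deliberately omits the reduced-rank, sparse-plus-low-rank and warm-start tricks discussed next. The names `soft_impute` and `objective` are hypothetical.

```python
import numpy as np

def soft_impute(X, mask, lam, n_iter=500, tol=1e-12):
    """Dense sketch of iterations (2)-(4) for Problem (1).

    X    : m x n float array; entries where mask is False are ignored
    mask : boolean m x n array, True on the observed set Omega
    lam  : regularization parameter lambda
    """
    M = np.zeros(X.shape)                                     # current estimate M-hat
    for _ in range(n_iter):
        X_hat = np.where(mask, X, M)                          # step (2): fill in
        U, d, Vt = np.linalg.svd(X_hat, full_matrices=False)  # step (3): SVD
        M_new = (U * np.maximum(d - lam, 0.0)) @ Vt           # step (4): soft-threshold
        change = np.linalg.norm(M_new - M) ** 2
        scale = max(np.linalg.norm(M) ** 2, np.finfo(float).tiny)
        M = M_new
        if change < tol * scale:                              # small relative change: stop
            break
    return M


def objective(X, mask, M, lam):
    """Criterion (1): 0.5 * ||P_Omega(X) - P_Omega(M)||_F^2 + lam * ||M||_*."""
    resid = np.where(mask, X - M, 0.0)
    return 0.5 * np.sum(resid ** 2) + lam * np.linalg.svd(M, compute_uv=False).sum()
```

Because the soft-thresholding zeroes every singular value below λ, the returned estimate is typically of low rank even though each SVD here is computed in full.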

For large matrices, step (3) could be a problematic bottleneck, since we need to compute the SVD of the filled matrix $\hat X$. For the Netflix problem, (m, n) ≈ (400K, 20K), so storing $\hat X$ requires 8 × 10^9 floating-point numbers (32 GB in single precision), which in itself could pose a problem. However, since only about 1% of the entries are observed (for the Netflix dataset), sparse-matrix representations can be used.

Mazumder et al. (2010) use two tricks to avoid these computational nightmares:

- Anticipating a low-rank solution, they compute a reduced-rank SVD in step (3); if the smallest of the computed singular values is less than λ, this gives the desired solution. A reduced-rank SVD can be computed by using an iterative Lanczos-style method as implemented in PROPACK (Larsen, 2004), or by other alternating-subspace methods (Golub and Van Loan, 2012).

- They rewrite $\hat X$ in (2) as

$$\hat X = \left[P_\Omega(X) - P_\Omega(\hat M)\right] + \hat M. \qquad (5)$$

The first piece is as sparse as X, and hence inexpensive to store and compute. The second piece is low rank, and also inexpensive to store. Furthermore, the iterative methods mentioned in the first item require left and right multiplications of $\hat X$ by skinny matrices, which can exploit this special structure.
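To make the second trick concrete, here is a sketch (assuming NumPy, with Ω stored as coordinate arrays `rows`, `cols`; all function names are hypothetical) of multiplying $\hat X$ in form (5) by a skinny matrix on the right or on the left. The low-rank piece is held in factored form $\hat M = AB^T$ (for instance A = U S_λ(D), B = V), so no m × n array is ever formed.

```python
import numpy as np

def residual_values(rows, cols, x_obs, A, B):
    """Entries of P_Omega(X) - P_Omega(A B^T) on Omega, in O(r |Omega|) flops."""
    return x_obs - np.einsum("ij,ij->i", A[rows], B[cols])


def right_multiply(rows, cols, s_vals, A, B, W):
    """Return X_hat @ W, where X_hat = S + A B^T and S is sparse with values s_vals on Omega."""
    out = A @ (B.T @ W)                                # low-rank piece; A B^T never formed
    np.add.at(out, rows, s_vals[:, None] * W[cols])    # sparse piece: out[i] += S_ij * W[j]
    return out


def left_multiply(rows, cols, s_vals, A, B, W):
    """Return X_hat.T @ W, i.e. the left multiplication W^T X_hat, transposed."""
    out = B @ (A.T @ W)                                # low-rank piece
    np.add.at(out, cols, s_vals[:, None] * W[rows])    # sparse piece: out[j] += S_ij * W[i]
    return out
```

`np.add.at` is used instead of fancy-index assignment because row and column indices repeat across observed entries, and the contributions must accumulate.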

This softImpute algorithm works very well, and although an SVD needs to be computed each time step (3) is evaluated, this step can use the previous solution as a warm start. As one gets closer to the solution, the warm starts tend to be better, and so the final iterations tend to be faster.

Mazumder et al. (2010) also considered a path of such solutions, with decreasing values of λ. As λ decreases, the rank of the solutions tends to increase, and at each λℓ the iterative algorithms can use the solution $\hat M_{\lambda_{\ell-1}}$ (with λℓ−1 > λℓ) as a warm start, padded with some additional dimensions.

Rennie and Srebro (2005) consider a different approach. They impose a rank constraint, and consider the problem

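$$\min_{A,B}\ F(A,B) := \tfrac{1}{2}\|P_\Omega(X - AB^T)\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right), \qquad (6)$$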

where A is m × r and B is n × r. This so-called maximum-margin matrix factorization (MMMF) criterion is not convex in A and B, but it is bi-convex: for fixed B the function F(A, B) is convex in A, and for fixed A the function F(A, B) is convex in B. Alternating minimization algorithms (ALS) are often used to minimize Problem (6). Consider A fixed; we wish to solve Problem (6) for B. It is easy to see that this problem decouples into n separate ridge regressions, with each column Xj of X as a response, and the r columns of A as predictors. Since some of the elements of Xj are missing, and hence ignored, the corresponding rows of A are deleted for the jth regression. So these are really separate ridge regressions, in that the regression matrices are all different (even though they all derive from A). By symmetry, with B fixed, solving for A amounts to m separate ridge regressions.
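A minimal sketch of the B-update just described, assuming NumPy and a dense boolean mask for Ω (the name `als_update_B` is hypothetical). Each column j solves its own r × r ridge system, built only from the rows of A at which Xij is observed.

```python
import numpy as np

def als_update_B(X, mask, A, lam):
    """Solve Problem (6) for B with A fixed: one ridge regression per column of X."""
    n = X.shape[1]
    r = A.shape[1]
    B = np.empty((n, r))
    for j in range(n):
        obs = mask[:, j]                        # rows i with X_ij observed
        A_j = A[obs]                            # delete rows of A for missing entries
        lhs = A_j.T @ A_j + lam * np.eye(r)     # a different r x r matrix for every j
        B[j] = np.linalg.solve(lhs, A_j.T @ X[obs, j])
    return B
```

The loop makes the cost structure visible: n separate r × r factorizations per B-update, which is what the fully observed formulation introduced below avoids.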

There is a remarkable fact that ties the solutions to Problems (6) and (1) (see, for example, Mazumder et al., 2010). If the solution $\hat M = U S_\lambda(D) V^T$ to Problem (1) (as produced by steps (3) and (4) at convergence) has rank q ≤ r, then it provides a solution to Problem (6). That solution is

$$\hat A = U_r S_\lambda(D_r)^{1/2}, \qquad \hat B = V_r S_\lambda(D_r)^{1/2}, \qquad (7)$$

where Ur, for example, represents the sub-matrix formed by the first r columns of U, and likewise Dr is the top r × r diagonal block of D. Note that for any solution to Problem (6), multiplying $\hat A$ and $\hat B$ on the right by an orthogonal r × r matrix R would give an equivalent solution. Likewise, when r ≥ q, any solution $(\hat A, \hat B)$ to Problem (6) gives a solution $\hat A \hat B^T$ to Problem (1).

In this paper we propose a new algorithm that profitably draws on ideas used both in softImpute and MMMF. Consider the two steps (3) and (4). We can alternatively solve the optimization problem

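$$\min_{A,B}\ \tfrac{1}{2}\|\hat X - AB^T\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right), \qquad (8)$$

and as long as we use enough columns in A and B, we will have $\hat A \hat B^T = \hat M = U S_\lambda(D) V^T$. There are several important advantages to this approach: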

Since $\hat X$ is fully observed, the (ridge) regression operator is the same for each column, and so is computed just once. This reduces the computation of an update of A or B over ALS by a factor of r.

By orthogonalizing the r-column matrices A or B at each iteration, the regressions are simply matrix multiplies, very similar to those used in the alternating subspace algorithms for computing the SVD.

This quadratic regularization amounts to shrinking the higher-order components more than the lower-order components, and this tends to offer a convergence advantage over the previous approach (compute the SVD, then soft-threshold).

Just like before, these operations can make use of the sparse plus low-rank property of $\hat X$.
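By contrast with the column-by-column ALS update, when the response matrix $\hat X$ is fully observed the n ridge regressions in (8) share the single r × r matrix $A^TA + \lambda I$, so the B-update is one solve against all columns at once. A sketch under the same assumptions (NumPy; hypothetical function name):

```python
import numpy as np

def ridge_update_B_full(X_hat, A, lam):
    """Solve (8) for B with A fixed, when X_hat is fully observed.

    All n columns share the matrix A^T A + lam I, so a single solve
    with n right-hand sides replaces n separate factorizations.
    """
    r = A.shape[1]
    lhs = A.T @ A + lam * np.eye(r)
    return np.linalg.solve(lhs, A.T @ X_hat).T
```

If A is kept in the form UD with U having orthonormal columns, then $A^TA + \lambda I = D^2 + \lambda I$ is diagonal, and the solve reduces to rescaling the rows of $U^T\hat X$; this is the benefit of the orthogonalization advantage listed above.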

As an important additional modification, we update $\hat X$ at each step using the most recently computed $\hat A$ or $\hat B$. All combined, this hybrid algorithm tends to be faster than either approach on its own; see the simulation results in Section 6.1.

For the remainder of the paper, we present this softImpute-ALS algorithm in more detail, and show that it converges to the solution to Problem (1) for r sufficiently large. We demonstrate its superior performance on simulated and real examples, including the Netflix data. We briefly highlight two publicly available software implementations, and describe a simple approach to centering and scaling of both the rows and columns of the (incomplete) matrix.

2. Rank-restricted Soft SVD

In this section we consider a complete matrix X, and develop a new algorithm for finding a rank-restricted SVD. In the next section we will adapt this approach to the matrix-completion problem. We first give two theorems that are likely known to experts; the proofs are very short, so we provide them here for convenience.

Theorem 1 Let X be an m × n matrix (fully observed), and let 0 < r ≤ min(m, n). Consider the optimization problem

$$\min_{Z:\ \mathrm{rank}(Z) \le r}\ \tfrac{1}{2}\|X - Z\|_F^2 + \lambda\|Z\|_*. \qquad (9)$$

A solution is given by

$$\hat Z = U_r S_\lambda(D_r) V_r^T, \qquad (10)$$

where the rank-r SVD of X is $U_r D_r V_r^T$ and Sλ(Dr) = diag[(σ1 − λ)+, …, (σr − λ)+].

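Proof We will show that, for any Z, the following inequality holds:

$$\tfrac{1}{2}\|X - Z\|_F^2 + \lambda\|Z\|_* \ \ge\ f_\lambda(\sigma(Z)) := \tfrac{1}{2}\|\sigma(X) - \sigma(Z)\|_2^2 + \lambda\sum_i \sigma_i(Z), \qquad (11)$$

where fλ(σ(Z)) is a function of the singular values of Z, and σ(X) denotes the vector of singular values of X, such that σi(X) ≥ σi+1(X) for all i = 1, …, min{m, n} − 1.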

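To show inequality (11), it suffices (since λ||Z||* = λ Σi σi(Z) appears on both sides) to show that

$$\|X - Z\|_F^2 \ \ge\ \sum_i \left(\sigma_i(X) - \sigma_i(Z)\right)^2,$$

which follows as an immediate consequence of the well-known Von Neumann trace inequality (Mirsky, 1975; Stewart and Sun, 1990):

$$\mathrm{Tr}(X^T Z) \ \le\ \sum_i \sigma_i(X)\,\sigma_i(Z),$$

that provides an upper bound to the trace of the product of two matrices in terms of the inner product of their singular values. Indeed, expanding $\|X - Z\|_F^2 = \|X\|_F^2 + \|Z\|_F^2 - 2\,\mathrm{Tr}(X^T Z)$ and using $\|X\|_F^2 = \sum_i \sigma_i(X)^2$ and $\|Z\|_F^2 = \sum_i \sigma_i(Z)^2$ gives the claim.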

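Observing that rank(Z) ≤ r if and only if ||σ(Z)||0 ≤ r, we have established:

$$\min_{Z:\ \mathrm{rank}(Z)\le r}\ \left\{\tfrac{1}{2}\|X - Z\|_F^2 + \lambda\|Z\|_*\right\} \ \ge\ \min_{\sigma \ge 0:\ \|\sigma\|_0 \le r}\ f_\lambda(\sigma). \qquad (12)$$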

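Observe that the optimization problem in the right-hand side of (12) is a separable vector optimization problem. We claim that the optimum values of the two problems appearing in (12) are in fact equal. To see this, note that a minimizer of the right-hand side of (12) is

$$\hat\sigma_i = (\sigma_i(X) - \lambda)_+ \ \text{ for } i \le r, \qquad \hat\sigma_i = 0 \ \text{ for } i > r:$$

each coordinate contributes (σi(X) − si)²/2 + λsi, a nonzero si can lower that contribution by at most (σi(X) − λ)+²/2, and this gain is largest for the largest σi(X). If the SVD of X is given by UDV^T, then the choice $\hat Z = U \operatorname{diag}(\hat\sigma) V^T$ satisfies rank($\hat Z$) ≤ r and

$$\tfrac{1}{2}\|X - \hat Z\|_F^2 + \lambda\|\hat Z\|_* = f_\lambda(\hat\sigma).$$

This shows that:

$$\min_{Z:\ \mathrm{rank}(Z)\le r}\ \left\{\tfrac{1}{2}\|X - Z\|_F^2 + \lambda\|Z\|_*\right\} = \min_{\sigma \ge 0:\ \|\sigma\|_0 \le r} f_\lambda(\sigma) = f_\lambda(\hat\sigma), \qquad (13)$$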

and thus concludes the proof of the theorem. ■

This generalizes a similar result where there is no rank restriction, and the problem is convex in Z. For r < min(m, n), Problem (9) is not convex in Z, but the solution can be characterized in terms of the SVD of X.
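Theorem 1 can be checked numerically. The sketch below (NumPy; function names hypothetical) forms the solution (10) from a truncated SVD; the accompanying check compares criterion (9) at that point against randomly perturbed rank-r candidates, none of which should do better.

```python
import numpy as np

def rank_restricted_soft_svd(X, r, lam):
    """Solution (10) of Problem (9): U_r S_lam(D_r) V_r^T."""
    U, d, Vt = np.linalg.svd(X, full_matrices=False)
    d_shrunk = np.maximum(d[:r] - lam, 0.0)            # S_lambda(D_r)
    return (U[:, :r] * d_shrunk) @ Vt[:r]


def criterion_9(X, Z, lam):
    """0.5 * ||X - Z||_F^2 + lam * ||Z||_*."""
    return 0.5 * np.linalg.norm(X - Z) ** 2 + lam * np.linalg.svd(Z, compute_uv=False).sum()
```

Perturbing the factors $U_r S_\lambda(D_r)^{1/2}$ and $V_r S_\lambda(D_r)^{1/2}$ (rather than adding a generic matrix to $\hat Z$) keeps every candidate at rank at most r, so the comparison stays inside the feasible set of (9).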

The second theorem relates this problem to the corresponding matrix factorization problem.

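Theorem 2 Let X be an m × n matrix (fully observed), and let 0 < r ≤ min(m, n). Consider the optimization problem

$$\min_{A \in \mathbb{R}^{m\times r},\ B \in \mathbb{R}^{n\times r}}\ \tfrac{1}{2}\|X - AB^T\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right). \qquad (14)$$

A solution is given by $\hat A = U_r S_\lambda(D_r)^{1/2}$ and $\hat B = V_r S_\lambda(D_r)^{1/2}$, and all solutions satisfy $\hat A \hat B^T = \hat Z$, where $\hat Z$ is as given in (10).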

We make use of the following lemma from Srebro et al. (2005); Mazumder et al. (2010), which we give without proof:

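Lemma 1 For any matrix Z, the following holds:

$$\|Z\|_* = \min_{A,B:\ Z = AB^T}\ \tfrac{1}{2}\left(\|A\|_F^2 + \|B\|_F^2\right).$$

If rank(Z) = k ≤ min{m, n}, then the minimum above is attained at a factor decomposition Z = A_{m×k} B_{n×k}^T.

Proof (of Theorem 2). Using Lemma 1, we have that

$$\min_{A,B}\ \tfrac{1}{2}\|X - AB^T\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right) = \min_{Z:\ \mathrm{rank}(Z)\le r}\ \tfrac{1}{2}\|X - Z\|_F^2 + \lambda\|Z\|_*.$$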

The conclusions follow from Theorem 1. ■

Note, in both theorems the solution might have rank less than r.

Inspired by the alternating subspace iteration algorithm (a.k.a. Orthogonal Iterations, Chapter 8, Golub and Van Loan, 2012) for the reduced-rank SVD, we present Algorithm 2.1, an alternating ridge-regression algorithm for finding the solution to Problem (9).
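The body of Algorithm 2.1 is not reproduced in this text. The following is one possible rendering of the alternating ridge-regression idea for Problem (9), assuming NumPy. It keeps the iterate as U diag(D²) Vᵀ with orthonormal U and V, as the Remarks below describe; it runs a fixed number of iterations instead of a convergence test, and finishes with an SVD of XV followed by soft-thresholding. The function name and these details are illustrative assumptions, not the paper's exact pseudocode.

```python
import numpy as np

def soft_svd_als(X, r, lam, n_iter=300, seed=0):
    """Alternating ridge regressions for Problem (9) (illustrative sketch).

    Invariant after each half-step: A = U diag(D), B = V diag(D), so that
    A B^T = U diag(D**2) V^T with U, V having orthonormal columns.
    Returns U, S_lambda(sigma) and V.
    """
    m, n = X.shape
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((m, r)))   # random orthonormal start
    D = np.ones(r)                                      # A = U diag(D) with D = I_r
    for _ in range(n_iter):
        # B-step: B^T = (D^2 + lam I)^{-1} D U^T X, since A^T A = D^2 is diagonal.
        Bt = (D / (D ** 2 + lam))[:, None] * (U.T @ X)
        # Re-express A B^T = U (B D)^T in SVD form: B D = V diag(D_new^2) R^T.
        V, D2, Rt = np.linalg.svd(Bt.T * D, full_matrices=False)
        U, D = U @ Rt.T, np.sqrt(D2)
        # A-step: A^T = (D^2 + lam I)^{-1} D V^T X^T, then re-orthogonalize likewise.
        At = (D / (D ** 2 + lam))[:, None] * (V.T @ X.T)
        U, D2, Rt = np.linalg.svd(At.T * D, full_matrices=False)
        V, D = V @ Rt.T, np.sqrt(D2)
    # Final clean-up: SVD of X V, then soft-threshold to expose exact zeros.
    U, sigma, Rt = np.linalg.svd(X @ V, full_matrices=False)
    return U, np.maximum(sigma - lam, 0.0), V @ Rt.T
```

At a fixed point the diagonal update reads d² = d²σ/(d² + λ), i.e. d² = σ − λ, which is how the shrinkage in (10) emerges from plain ridge regressions. Adding λ back to the returned values recovers the top r singular values of X when they all exceed λ.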

Remarks

At each step the algorithm keeps the current solution in “SVD” form, by representing A and B in terms of orthogonal matrices. The computational effort needed to do this is exactly that required to perform each ridge regression, and once done makes the subsequent ridge regression trivial.

The proof of convergence of this algorithm is essentially the same as that for an alternating subspace algorithm, a.k.a. Orthogonal Iterations (Chapter 8, Thm 8.2.2; Golub and Van Loan, 2012) (without shrinkage).

In principle step (7) is not necessary, but in practice it cleans up the rank nicely.

This algorithm lends itself naturally to distributed computing for very large matrices X; X can be chunked into smaller blocks, and the left and right matrix multiplies can be chunked accordingly. See Section 8.

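- There are many ways to check for convergence. Suppose we have a pair of iterates (U, D², V) (old) and (Ũ, D̃², Ṽ) (new); then the relative change in Frobenius norm is given by

$$\frac{\|UD^2V^T - \tilde U \tilde D^2 \tilde V^T\|_F^2}{\|UD^2V^T\|_F^2} = \frac{\mathrm{tr}(D^4) + \mathrm{tr}(\tilde D^4) - 2\,\mathrm{tr}(D^2 U^T\tilde U \tilde D^2 \tilde V^T V)}{\mathrm{tr}(D^4)}, \qquad (19)$$

which is not expensive to compute, since it involves only r × r matrices.

- If X is sparse, then the left and right matrix multiplies can be achieved efficiently by using sparse matrix methods.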

Likewise, if X is sparse, but has been column and/or row centered (see Section 9), it can be represented in “sparse plus low rank” form; once again left and right multiplication of such matrices can be done efficiently.

An interesting feature of this algorithm is that a reduced rank SVD of X is available from the solution, with the rank determined by the particular value of λ used. The singular values would have to be corrected by adding λ to each. There is empirical evidence that this is faster than without shrinkage, with accuracy biased more toward the larger singular values.
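The relative-change criterion in the Remarks above can be evaluated from r × r quantities only. A sketch assuming NumPy, with the diagonal of D² stored as a vector `d2` (the function name is hypothetical):

```python
import numpy as np

def relative_change(U, d2, V, U_new, d2_new, V_new):
    """Relative squared Frobenius change from U diag(d2) V^T to U_new diag(d2_new) V_new^T.

    Assumes U, V, U_new, V_new have orthonormal columns, so only r x r products are needed.
    """
    old_sq = np.sum(d2 ** 2)                           # ||U D^2 V^T||_F^2 = tr(D^4)
    new_sq = np.sum(d2_new ** 2)                       # tr(D_new^4)
    left = d2[:, None] * (U.T @ U_new) * d2_new        # D^2 (U^T U_new) D_new^2
    cross = np.trace(left @ (V_new.T @ V))             # tr(D^2 U^T U_new D_new^2 V_new^T V)
    return (old_sq + new_sq - 2.0 * cross) / old_sq
```

The cost is O((m + n)r²) for the two cross-products, compared with O(mnr) for forming both m × n matrices explicitly.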

3. The softImpute-ALS Algorithm

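Now we return to the case where X has missing values, and the non-missing entries are indexed by the set Ω. We present Algorithm 3.1 (softImpute-ALS) for solving Problem (6):

$$\min_{A,B}\ \tfrac{1}{2}\|P_\Omega(X - AB^T)\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right),$$

where A (m × r) and B (n × r) are each of rank at most r ≤ min(m, n).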

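The algorithm exploits the decomposition

$$\|P_\Omega(X - AB^T)\|_F^2 = \|P_\Omega(X) + P_\Omega^\perp(AB^T) - AB^T\|_F^2. \qquad (24)$$

Suppose we have current estimates for A and B, and we wish to compute the new $\tilde B$. We will replace the first occurrence of AB^T in the right-hand side of (24) with the current estimates, leading to a filled-in $X^* = P_\Omega(X) + P_\Omega^\perp(AB^T)$, and then solve for $\tilde B$ in

$$\min_{\tilde B}\ \tfrac{1}{2}\|X^* - A\tilde B^T\|_F^2 + \tfrac{\lambda}{2}\|\tilde B\|_F^2.$$

Using the same notation, we can write (importantly)

$$X^* = P_\Omega(X) + P_\Omega^\perp(AB^T) = \left[P_\Omega(X) - P_\Omega(AB^T)\right] + AB^T. \qquad (25)$$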

This is the efficient sparse + low-rank representation for high-dimensional problems; efficient to store and also efficient for left and right multiplication.

Remarks

This algorithm is a slight modification of Algorithm 2.1, where in step 2(a) we use the latest imputed matrix X* rather than X.

The computations in step 2(b) are particularly efficient. In (22) we use the current version of A and B to predict at the observed entries Ω, and then perform a multiplication of a sparse matrix on the left by a skinny matrix, followed by rescaling of the rows. In (23) we simply rescale the rows of the previous version for BT.

- After each update, we maintain the integrity of the current solution. By Lemma 1 we know that the solution to

$$\min_{A,B:\ AB^T = Z}\ \tfrac{1}{2}\left(\|A\|_F^2 + \|B\|_F^2\right) \qquad (26)$$

is given by the SVD of Z = UD²V^T, with A = UD and B = VD. Our iterates maintain this each time A or B changes in step 2(c), with no additional significant computational cost.

- The final step is as in Algorithm 2.1. We know the solution should have the form of a soft-thresholded SVD. The alternating ridge regression might not exactly reveal the rank of the solution. This final step tends to clean this up, by revealing exact zeros after the soft-thresholding.

Algorithm 3.1: Rank-Restricted Efficient Maximum-Margin Matrix Factorization (softImpute-ALS).
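The A-update that these remarks describe, $A \leftarrow X^*B(B^TB + \lambda I)^{-1}$ with X* in the sparse-plus-low-rank form (25), can be computed from the observed entries alone. A sketch assuming NumPy and coordinate arrays `rows`, `cols`, `x_obs` for Ω (names hypothetical). Unlike Algorithm 3.1, it does not keep A and B in orthogonalized SVD form, so it solves a general r × r system instead of simply rescaling rows.

```python
import numpy as np

def update_A(rows, cols, x_obs, A, B, lam):
    """One softImpute-ALS A-update, A_new = X* B (B^T B + lam I)^{-1}, using only Omega.

    rows, cols, x_obs : coordinates and values of the observed entries of X
    """
    m, r = A.shape
    s_vals = x_obs - np.einsum("ij,ij->i", A[rows], B[cols])  # P_Omega(X - A B^T): O(r |Omega|)
    SB = np.zeros((m, r))
    np.add.at(SB, rows, s_vals[:, None] * B[cols])             # sparse piece times B
    BtB = B.T @ B                                              # O(n r^2)
    XsB = SB + A @ BtB                                         # X* B, with X* never formed
    # Right-multiply by (B^T B + lam I)^{-1}; the matrix is symmetric, so solve on the transpose.
    return np.linalg.solve(BtB + lam * np.eye(r), XsB.T).T
```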

In Section 5 we discuss (the lack of) optimality guarantees of fixed points of Algorithm 3.1 (in terms of criterion (1)). We note that the output of softImpute-ALS can easily be piped into softImpute as a warm start. This typically exits almost immediately in our R package softImpute.

4. Broader Perspective and Related Work

Block coordinate descent (for example, Bertsekas, 1999) is a classical method in optimization that is widely used in the statistical and machine learning communities (Hastie et al., 2009). It is especially useful when the optimization problem associated with each block is relatively simple. The algorithm presented in this paper is a stylized variant of block coordinate descent. At a high level, vanilla block coordinate descent (which we call ALS) applied to Problem (6) performs a complete minimization with respect to one variable with the other fixed, before switching to the other variable. softImpute-ALS instead performs a partial minimization of a very specific form. Razaviyayn et al. (2013) study convergence properties of generalized block-coordinate methods that apply to a fairly large class of problems. The same paper presents asymptotic convergence guarantees, i.e., the iterates converge to a stationary point (Bertsekas, 1999). Asymptotic convergence is fairly straightforward to establish for softImpute-ALS. We also describe global convergence rate guarantees for softImpute-ALS in terms of various metrics of convergence to a stationary point. Perhaps more interestingly, we connect the properties of the stationary points of the non-convex Problem (6) to the minimizers of the convex Problem (1), which seems to be well beyond the scope and intent of Razaviyayn et al. (2013).

Variations of alternating-minimization strategies are popularly used in the context of matrix completion (Chen et al., 2012; Koren et al., 2009; Zhou et al., 2008). Jain et al. (2013) analyze the statistical properties of vanilla alternating-minimization algorithms for Problem (6) with λ = 0, i.e.,

$$\min_{A,B}\ \tfrac{1}{2}\|P_\Omega(X - AB^T)\|_F^2,$$

where one attempts to minimize the above function via alternating least squares, i.e., first minimizing with respect to A (with B fixed) and vice versa. They establish statistical performance guarantees of the alternating strategy under incoherence conditions on the singular vectors of the underlying low-rank matrix; the assumptions are similar in spirit to the work of Candès and Tao (2009); Candès and Recht (2008). However, as pointed out by Jain et al. (2013), their alternating-minimization methods typically require |Ω| to be larger than that required by convex optimization based methods (Candès and Recht, 2008). We refer the interested reader to more recent work of Hardt (2014), analyzing the statistical properties of alternating minimization methods.

Our present work differs in spirit from that described above (Jain et al., 2013; Hardt, 2014). Our main goal here is to develop non-convex algorithms for the optimization of Problem (6) for arbitrary λ and rank r. A special case of our framework corresponds to the case where Problem (6) is equivalent to Problem (1), for proper choices of r and λ. In this particular case, we study in Section 5 when our algorithm softImpute-ALS converges to a global minimizer of Problem (1); this can be verified by a minor check that requires computing the low-rank SVD of a matrix that can be written as the sum of a sparse and a low-rank matrix. Thus softImpute-ALS can be thought of as a non-convex algorithm that solves the convex nuclear-norm-regularized Problem (1). Hence softImpute-ALS inherits the statistical properties of the convex Problem (1) as established in Candès and Tao (2009); Candès and Recht (2008). We have also demonstrated in Figures 1 and 3 that softImpute-ALS is much faster than the alternating least squares schemes analyzed in Jain et al. (2013); Hardt (2014).

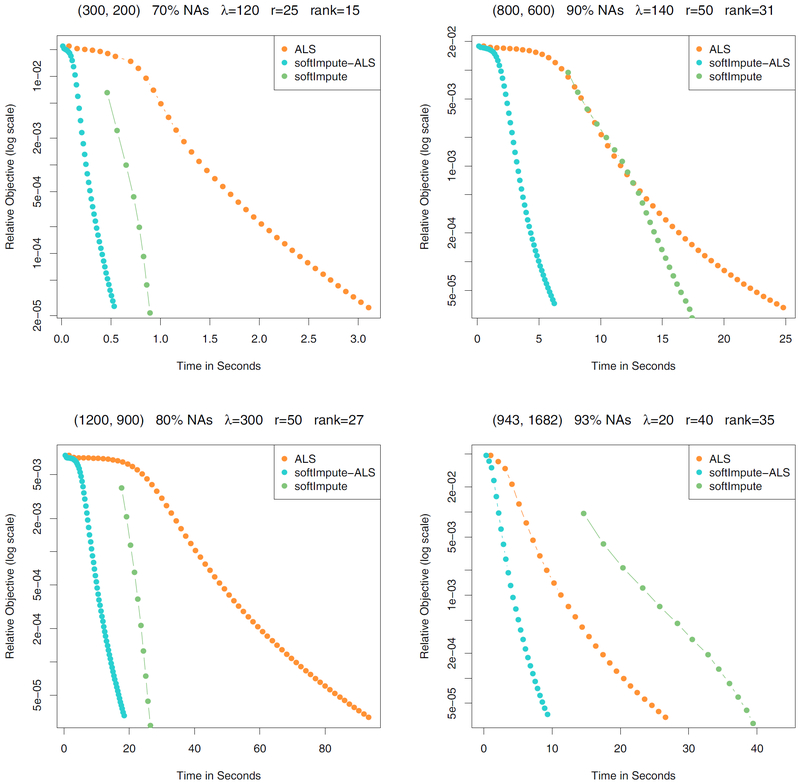

Figure 1: Four timing experiments. Each figure is labelled according to size (m × n), percentage of missing entries (NAs), value of λ used, rank r used in the ALS iterations, and rank of solution found. The first three are simulation examples, with increasing dimension. The last is the MovieLens 100K data. In all cases, softImpute-ALS (blue) wins handily against ALS (orange) and softImpute (green).

Figure 3: Left: timing results on the Netflix matrix, comparing ALS with softImpute-ALS. Right: timing on the MovieLens 10M matrix. In both cases we see that while ALS makes bigger gains per iteration, each iteration is much more costly.

Note that the use of non-convex methods to obtain minimizers of convex problems has been studied in Burer and Monteiro (2005); Journée et al. (2010). The authors study nonlinear optimization algorithms using non-convex matrix factorization formulations to obtain global minimizers of convex SDPs. The results presented in the aforementioned papers also require one to check whether a stationary point is a local minimizer; this typically requires checking the positive definiteness of a matrix of size O(mr + nr) × O(mr + nr) and can be computationally demanding if the problem size is large. In contrast, the condition (derived in this paper) that needs to be checked to certify whether softImpute-ALS, upon convergence, has reached the global solution to the convex optimization Problem (1), is fairly intuitive and straightforward.

5. Algorithmic Convergence Analysis

In this section we investigate the theoretical properties of the softImpute-ALS algorithm in the context of Problems (1) and (6).

We show that the softImpute-ALS algorithm converges to a first order stationary point for Problem (6) at a rate of O(1/K), where K denotes the number of iterations of the algorithm. We also discuss the role played by λ in the convergence rates. We establish the limiting properties of the estimates produced by the softImpute-ALS algorithm: properties of the limit points of the sequence (Ak, Bk) in terms of Problems (1) and (6). We show that for any r in Problem (6) the sequence produced by the softImpute-ALS algorithm leads to a decreasing sequence of objective values for the convex Problem (1). A fixed point of the softImpute-ALS algorithm need not correspond to the minimum of the convex Problem (1). We derive simple necessary and sufficient conditions that must be satisfied for a stationary point of the algorithm to be a minimum for Problem (1); the conditions can be verified by a simple structured low-rank SVD computation.

We begin the section with a formal description of the updates produced by the algorithm in terms of the original objective function (6) and its majorizers (27) and (28). Theorem 3 establishes that the updates lead to a decreasing sequence of objective values F(Ak, Bk) in (6). Section 5.1 (Theorem 4 and Corollary 1) derives the finite-time convergence rate properties of the proposed algorithm softImpute-ALS. Section 5.2 provides descriptions of the first order stationary conditions for Problem (6), the fixed points of the algorithm softImpute-ALS and the limiting behavior of the sequence (Ak, Bk), k ≥ 1 as k → ∞. Section 5.3 (Lemma 4) investigates the implications of the updates produced by softImpute-ALS for Problem (6) in terms of the Problem (1). Section 5.3.1 (Theorem 6) studies the stationarity conditions for Problem (6) vis-a-vis the optimality conditions for the convex Problem (1).

The softImpute-ALS algorithm may be thought of as an EM or, more generally, an MM-style algorithm (majorization minimization), where every imputation step leads to an upper bound to the training error part of the loss function. The resultant loss function is minimized with respect to A; this leads to a partial minimization of the objective function with respect to A. The process is repeated with the other factor B, and continued until convergence.

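Recall the objective function in Problem (6):

$$F(A,B) = \tfrac{1}{2}\|P_\Omega(X - AB^T)\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right).$$

We define the surrogate functions

$$Q_A(Z_1|A,B) := \tfrac{1}{2}\|P_\Omega(X) + P_\Omega^\perp(AB^T) - Z_1B^T\|_F^2 + \tfrac{\lambda}{2}\|Z_1\|_F^2 + \tfrac{\lambda}{2}\|B\|_F^2, \qquad (27)$$

$$Q_B(Z_2|A,B) := \tfrac{1}{2}\|P_\Omega(X) + P_\Omega^\perp(AB^T) - AZ_2^T\|_F^2 + \tfrac{\lambda}{2}\|A\|_F^2 + \tfrac{\lambda}{2}\|Z_2\|_F^2. \qquad (28)$$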

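Consider the function $g(Z) := \tfrac{1}{2}\|P_\Omega(X) - P_\Omega(Z)\|_F^2$, which is the training error as a function of the outer product Z = AB^T, and observe that for any Z and $\bar Z$ we have:

$$g(Z) \ \le\ \tfrac{1}{2}\|P_\Omega(X) + P_\Omega^\perp(\bar Z) - Z\|_F^2, \qquad (29)$$

since the right-hand side equals $g(Z) + \tfrac{1}{2}\|P_\Omega^\perp(\bar Z - Z)\|_F^2$; equality holds above at $Z = \bar Z$. This leads to the following simple but important observations:

$$Q_A(Z_1|A,B) \ \ge\ F(Z_1,B)\ \ \forall Z_1, \qquad Q_B(Z_2|A,B) \ \ge\ F(A,Z_2)\ \ \forall Z_2, \qquad (30)$$

suggesting that QA(Z1|A, B) is a majorizer of F(Z1, B) (as a function of Z1); similarly, QB(Z2|A, B) majorizes F(A, Z2). In addition, equality holds as follows:

$$Q_A(A|A,B) = F(A,B) = Q_B(B|A,B). \qquad (31)$$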

We also define . Using these definitions, we can succinctly describe the softImpute-ALS algorithm in Algorithm 5.1. This is almost equivalent to Algorithm 3.1, but more convenient for theoretical analysis. It has the orthogonalization and redistribution of in step 3 removed, and step 5 removed. Observe that the softImpute-ALS algorithm can be described as the following iterative procedure:

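$$A_{k+1} \in \operatorname*{arg\,min}_{Z_1}\ Q_A(Z_1|A_k,B_k), \qquad (32)$$

$$B_{k+1} \in \operatorname*{arg\,min}_{Z_2}\ Q_B(Z_2|A_{k+1},B_k). \qquad (33)$$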

We will use the above notation in our proof.

We can easily establish that softImpute-ALS is a descent method, that is, its iterates never increase the function value.

Theorem 3 Let {(Ak, Bk)} be the iterates generated by softImpute-ALS. The function values are monotonically decreasing:

$$F(A_k,B_k) \ \ge\ F(A_{k+1},B_k) \ \ge\ F(A_{k+1},B_{k+1}).$$

Proof Let the current iterate estimates be (Ak, Bk). We will first consider the update in A, leading to Ak+1, as defined in (32).

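Note that $F(A_k,B_k) = Q_A(A_k|A_k,B_k) \ge Q_A(A_{k+1}|A_k,B_k)$, where the equality follows from (31) and the inequality holds by definition of Ak+1 in (32).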

Using (30) we get that QA(Ak+1| Ak, Bk) ≥ F(Ak+1,Bk). Putting together the pieces we get: F(Ak, Bk) ≥ F(Ak+1, Bk).

Using an argument exactly similar to the above for the update in B we have:

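$$F(A_{k+1},B_k) = Q_B(B_k|A_{k+1},B_k) \ \ge\ Q_B(B_{k+1}|A_{k+1},B_k) \ \ge\ F(A_{k+1},B_{k+1}). \qquad (34)$$

This establishes that F(Ak, Bk) ≥ F(Ak+1, Bk+1) for all k, thereby completing the proof of the theorem. ■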
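Theorem 3 is easy to observe numerically. The sketch below (NumPy; dense imputation; function names hypothetical) implements iterations (32) and (33) literally, re-imputing with the newest factors before each half-step, and records F(Ak, Bk) after every sweep; by the theorem the recorded values never increase.

```python
import numpy as np

def objective_F(X, mask, A, B, lam):
    """Objective (6): 0.5 ||P_Omega(X - A B^T)||_F^2 + lam/2 (||A||_F^2 + ||B||_F^2)."""
    resid = np.where(mask, X - A @ B.T, 0.0)
    return 0.5 * np.sum(resid ** 2) + 0.5 * lam * (np.sum(A ** 2) + np.sum(B ** 2))


def soft_impute_als(X, mask, r, lam, n_iter=100, seed=0):
    """Dense sketch of iterations (32)-(33); returns A, B and the values F(A_k, B_k)."""
    m, n = X.shape
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((m, r))
    B = rng.standard_normal((n, r))
    I = np.eye(r)
    history = [objective_F(X, mask, A, B, lam)]
    for _ in range(n_iter):
        X_star = np.where(mask, X, A @ B.T)                        # impute with A_k B_k^T
        A = np.linalg.solve(B.T @ B + lam * I, B.T @ X_star.T).T   # (32): minimize Q_A
        X_star = np.where(mask, X, A @ B.T)                        # impute with A_{k+1} B_k^T
        B = np.linalg.solve(A.T @ A + lam * I, A.T @ X_star).T     # (33): minimize Q_B
        history.append(objective_F(X, mask, A, B, lam))
    return A, B, np.array(history)
```

With λ > 0 both r × r systems are positive definite, so each minimizer in (32) and (33) is unique; the dense imputation is only for readability, and a large-scale version would use the sparse-plus-low-rank form (25) instead.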

5.1. softImpute-ALS: Rates of Convergence

The previous section derives some elementary properties of the softImpute-ALS algorithm, namely the updates lead to a decreasing sequence of objective values. We will now derive some results that inform us about the rate at which the softImpute-ALS algorithm reaches a stationary point.

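We begin with the following lemma, which presents a lower bound on the successive difference in objective values of F(A, B).

Lemma 2 Let (Ak, Bk) denote the values of the factors at iteration k. We have the following:

$$F(A_k,B_k) - F(A_{k+1},B_{k+1}) \ \ge\ \tfrac{1}{2}\left(\|(A_k - A_{k+1})B_k^T\|_F^2 + \|A_{k+1}(B_k - B_{k+1})^T\|_F^2\right) + \tfrac{\lambda}{2}\left(\|A_k - A_{k+1}\|_F^2 + \|B_k - B_{k+1}\|_F^2\right). \qquad (35)$$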

Proof See Section A.1 for the proof. ■

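For any two matrices A and B, respectively define A+, B+ as follows:

$$A^+ \in \operatorname*{arg\,min}_{Z_1} Q_A(Z_1|A,B), \qquad B^+ \in \operatorname*{arg\,min}_{Z_2} Q_B(Z_2|A^+,B). \qquad (36)$$

We will consequently define the following:

$$\Delta\big((A,B),(A^+,B^+)\big) := \tfrac{1}{2}\left(\|(A - A^+)B^T\|_F^2 + \|A^+(B - B^+)^T\|_F^2\right) + \tfrac{\lambda}{2}\left(\|A - A^+\|_F^2 + \|B - B^+\|_F^2\right). \qquad (37)$$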

Lemma 3 Δ((A, B), (A+, B+)) = 0 iff A, B is a fixed point of softImpute-ALS.

Proof See Section A.2, for a proof. ■

We will use the following notation:

$$\eta_k := \Delta\big((A_k,B_k),(A_{k+1},B_{k+1})\big). \qquad (38)$$

Thus ηk can be used to quantify how close (Ak, Bk) is to a stationary point.

If ηk > 0 it means that Algorithm softImpute-ALS will make progress in improving the quality of the solution. As a consequence of the monotone decreasing property of the sequence of objective values F(Ak, Bk) and Lemma 2, we have that, ηk → 0 as k → ∞. The following theorem characterizes the rate at which ηk converges to zero.

Theorem 4 Let (Ak, Bk),k ≥ 1 be the sequence generated by the softImpute-ALS algorithm. The decreasing sequence of objective values F(Ak, Bk) converges to F∞ ≥ 0 (say) and the quantities ηk → 0.

Furthermore, we have the following finite convergence rate of the softImpute-ALS algorithm:

$$\min_{1 \le k \le K} \eta_k \ \le\ \frac{F(A_1,B_1) - F_\infty}{K}. \qquad (39)$$

Proof See Section A.3 ■

The above theorem establishes a convergence rate of softImpute-ALS; in other words, for any ϵ > 0, we need at most K = ⌈(F(A1, B1) − F∞)/ϵ⌉ iterations to arrive at a point (Ak*, Bk*) such that ηk* ≤ ϵ, where 1 ≤ k* ≤ K.

Note that Theorem 4 establishes convergence of the algorithm for any value of λ ≥ 0. We found in our numerical experiments that the value of λ has an important role to play in the speed of convergence of the algorithm. In the following corollary, we provide convergence rates that make the role of λ explicit.

The following corollary employs three different distance measures to measure the closeness of a point from stationarity.

Corollary 1 Let (Ak, Bk), k ≥ 1 be defined as above. Assume that for all k ≥ 1

| (40) |

where, ℓU, ℓL are constants independent of k.

Then we have the following:

| (41) |

| (42) |

| (43) |

where ∇A F(A, B) (respectively, ∇B F(A, B)) denotes the partial derivative of F(A, B) with respect to A (respectively, B).

Proof See Section A.4. ■

Inequalities (41)–(43) are statements about different notions of distances between successive iterates. These may be employed to understand the convergence rate of softImpute-ALS.

Assumption (40) is a minor one. While it may not be straightforward to estimate ℓU prior to running the algorithm, a finite value of ℓU is guaranteed as soon as λ > 0. The lower bound satisfies ℓL > 0 if both Ak and Bk have rank r and the rank remains the same across the iterates. If the solution to Problem (6) has a rank smaller than r, then ℓL = 0; however, this situation is typically avoided since a small value of r leads to lower computational cost per iteration of the softImpute-ALS algorithm. The constants appearing as a part of the rates in (41)–(43) depend upon λ; they are smaller for larger values of λ. Finally, we note that the algorithm does not require any information about the constants ℓL, ℓU appearing as a part of the rate estimates.

5.2. softImpute-ALS: Asymptotic Convergence

In this section we derive some properties of the limiting behavior of the sequence (Ak, Bk), in particular we examine some elementary properties of the limit points of the sequence (Ak, Bk).

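At the beginning, we recall the notion of first order stationarity of a point (A*, B*). We say that (A*, B*) is a first order stationary point for Problem (6) if the following holds:

$$\nabla_A F(A^*,B^*) = 0, \qquad \nabla_B F(A^*,B^*) = 0. \qquad (44)$$

An equivalent restatement of condition (44) is:

$$A^* \in \operatorname*{arg\,min}_{Z_1} Q_A(Z_1|A^*,B^*), \qquad B^* \in \operatorname*{arg\,min}_{Z_2} Q_B(Z_2|A^*,B^*), \qquad (45)$$

i.e., (A*, B*) is a fixed point of the softImpute-ALS algorithm updates.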

We now consider uniqueness properties of the limit points of (Ak, Bk), k ≥ 1. Even in the fully observed case, the stationary points of Problem (6) are not unique in (A*, B*), due to orthogonal invariance. Addressing convergence of (Ak, Bk) becomes trickier if two singular values of A*B*^T are tied. In this vein we have the following result:

Theorem 5 Let {(Ak, Bk)}k be the sequence of iterates generated by Algorithm 5.1. For λ > 0, we have:

- Every limit point of {(Ak, Bk)}k is a stationary point of Problem (6).

- Let B* be any limit point of the sequence Bk, k ≥ 1, with Bν → B*, where ν is a subsequence of {1, 2, …}. Then the sequence Aν converges.

- Similarly, let A* be any limit point of the sequence Ak, k ≥ 1, with Aμ → A*, where μ is a subsequence of {1, 2, …}. Then the sequence Bμ converges.

Proof See Section A.5 ■

The above theorem is a partial result about the uniqueness of the limit points of the sequence Ak, Bk. The theorem implies that if the sequence Ak converges, then the sequence Bk must converge and vice-versa. More generally, for every limit point of Ak, the associated Bk (sub)sequence will converge. The same result holds true for the sequence Bk.

Remark 1 Note that the condition λ > 0 is enforced for technical reasons, so that the sequence (Ak, Bk) remains bounded. If λ = 0, then replacing A ← cA and B ← B/c, for any c > 0, leaves the objective function unchanged. Thus one may take c → ∞, making the sequence of updates unbounded without making any change to the values of the objective function.
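A quick numerical illustration of Remark 1, assuming NumPy (the function name, sizes and the value λ = 0.1 are arbitrary choices for the sketch): with λ = 0 the rescaled pair (cA, B/c) gives the same objective for every c, while any λ > 0 penalizes both very large and very small c.

```python
import numpy as np

def objective_F(X, mask, A, B, lam):
    """Objective (6)."""
    resid = np.where(mask, X - A @ B.T, 0.0)
    return 0.5 * np.sum(resid ** 2) + 0.5 * lam * (np.sum(A ** 2) + np.sum(B ** 2))


rng = np.random.default_rng(11)
X = rng.standard_normal((10, 8))
mask = rng.random(X.shape) < 0.5
A, B = rng.standard_normal((10, 2)), rng.standard_normal((8, 2))
scales = [1e-3, 1.0, 10.0, 1e3]

# lam = 0: the value is the same for every c, although ||cA|| or ||B/c|| is unbounded.
unpenalized = [objective_F(X, mask, c * A, B / c, 0.0) for c in scales]
# lam > 0: the ridge term lam/2 (c^2 ||A||^2 + ||B||^2 / c^2) blows up as c -> 0 or c -> infinity.
penalized = [objective_F(X, mask, c * A, B / c, 0.1) for c in scales]
```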

5.3. Implications of softImpute-ALS updates in terms of Problem (1)

The sequence (Ak, Bk) generated by Algorithm 5.1 is geared towards minimizing criterion (6); it is interesting to explore what implications the sequence might have for the convex Problem (1). In particular, we know that F(Ak, Bk) is decreasing; does this imply that criterion (1), evaluated at $A_kB_k^T$, is also monotone? We show below that it is indeed possible to obtain a monotone decreasing sequence with a minor modification. These modifications are exactly those implemented in Algorithm 3.1 in step 3.

The idea that plays a crucial role in this modification is the following inequality (for a proof see Mazumder et al. (2010); see also remark 3 in Section 3):

$$\|AB^T\|_* \ \le\ \tfrac{1}{2}\left(\|A\|_F^2 + \|B\|_F^2\right).$$

Note that equality holds above if we take a particular choice of A and B given by:

$$A = UD^{1/2}, \qquad B = VD^{1/2}, \qquad (46)$$

where $AB^T = UDV^T$ is the SVD of AB^T. The above observation implies that if (Ak, Bk) is generated by softImpute-ALS then

$$\tfrac{1}{2}\|P_\Omega(X - A_kB_k^T)\|_F^2 + \lambda\|A_kB_k^T\|_* \ \le\ F(A_k,B_k),$$

with equality holding if Ak, Bk are represented via (46). Note that this reparametrization does not change the training error portion of the objective F(Ak, Bk), but it can only decrease the ridge regularization term, and hence it never increases the overall objective value when compared to that achieved by softImpute-ALS without the reparametrization (46).

We thus have the following Lemma:

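Lemma 4 Let the sequence (Ak, Bk) generated by softImpute-ALS be stored in terms of the factored SVD representation (46). This results in a decreasing sequence of objective values in the nuclear norm penalized Problem (1):

$$\tfrac{1}{2}\|P_\Omega(X - A_kB_k^T)\|_F^2 + \lambda\|A_kB_k^T\|_* \ \ge\ \tfrac{1}{2}\|P_\Omega(X - A_{k+1}B_{k+1}^T)\|_F^2 + \lambda\|A_{k+1}B_{k+1}^T\|_*,$$

with $\tfrac{1}{2}\|P_\Omega(X - A_kB_k^T)\|_F^2 + \lambda\|A_kB_k^T\|_* = F(A_k,B_k)$ for all k. The sequence thus converges to F∞.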

Note that, F∞ need not be the minimum of the convex Problem (1). It is easy to see this, by taking r to be smaller than the rank of the optimal solution to Problem (1).
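The reparametrization (46) can be carried out without forming the m × n product. The sketch below (NumPy; function name hypothetical) obtains the SVD of AB^T through thin QR factorizations of A and B and an r × r SVD, then splits the singular values evenly between the two factors. The product, and hence the training error, is unchanged, while half the ridge term drops to exactly the nuclear norm of AB^T.

```python
import numpy as np

def rebalance(A, B):
    """Rewrite A B^T as (U D^{1/2})(V D^{1/2})^T, where A B^T = U D V^T is its SVD (cf. (46)).

    Works through QR factors, so the m x n product is never formed.
    """
    Qa, Ra = np.linalg.qr(A)                       # A = Qa Ra
    Qb, Rb = np.linalg.qr(B)                       # B = Qb Rb
    Us, d, Vt = np.linalg.svd(Ra @ Rb.T)           # r x r SVD of the core Ra Rb^T
    root = np.sqrt(d)
    return (Qa @ Us) * root, (Qb @ Vt.T) * root
```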

5.3.1. A Closer Look at the Stationary Conditions

In this section we inspect the first order stationary conditions of the non-convex Problem (6) alongside those for the convex Problem (1). We will see that a first order stationary point of the convex Problem (1) leads to factors (A, B) that are stationary for Problem (6). However, the converse of this statement need not be true in general. Nevertheless, given an estimate delivered by softImpute-ALS (upon convergence), it is easy to verify whether it is a solution to Problem (1).

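Note that Z* is the optimal solution to the convex Problem (1) iff:

$$P_\Omega(Z^* - X) + \lambda\,\mathrm{sgn}(Z^*) = 0,$$

where sgn(Z*) is a sub-gradient of the nuclear norm ||Z||* at Z*. Using the standard characterization (Lewis, 1996) of sgn(Z*), the above condition is equivalent to:

$$P_\Omega(Z^* - X) + \lambda\, U^*\,\mathrm{sgn}(D^*)\,V^{*T} = 0, \qquad (47)$$

where the full SVD of Z* is given by $U^* D^* V^{*T}$; sgn(D*) is a diagonal matrix with ith diagonal entry given by $\mathrm{sgn}(d^*_{ii})$, where $d^*_{ii}$ is the ith diagonal entry of D*, with sgn(d) = 1 for d > 0 and sgn(0) allowed to take any value in [−1, 1].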

If a limit point (A*, B*) of the softImpute-ALS algorithm satisfies the stationarity condition (47) above, with Z* = A*B*^T, then Z* is the optimal solution of the convex problem. We note that A*B*^T need not necessarily satisfy the stationarity condition (47).

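(A, B) satisfy the stationarity conditions of softImpute-ALS if the following conditions are satisfied:

$$A \in \operatorname*{arg\,min}_{Z_1} Q_A(Z_1|A,B), \qquad B \in \operatorname*{arg\,min}_{Z_2} Q_B(Z_2|A,B),$$

where we assume that A, B are represented in terms of (46). This gives us:

$$P_\Omega(AB^T - X)\,V + \lambda U = 0, \qquad P_\Omega(AB^T - X)^T U + \lambda V = 0, \qquad (48)$$

where $AB^T = UDV^T$ is the reduced-rank SVD, i.e., all diagonal entries of D are strictly positive.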

A stationary point of the convex problem corresponds to a stationary point of softImpute-ALS, as seen by a direct verification of the conditions above. In the following we investigate the converse: when does a stationary point of softImpute-ALS correspond to a stationary point of Problem (1), i.e., satisfy condition (47)? Towards this end, we make use of the ridged least-squares update used by softImpute-ALS. Assume that all matrices Ak, Bk have r columns.

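At stationarity, i.e., at a fixed point of softImpute-ALS, we have the following:

$$X^* = P_\Omega(X) + P_\Omega^\perp(A^*B^{*T}), \tag{49}$$

$$A^* = X^* B^* \left(B^{*T}B^* + \lambda I\right)^{-1}, \tag{50}$$

$$X^* = P_\Omega(X) + P_\Omega^\perp(A^*B^{*T}), \tag{51}$$

$$B^* = X^{*T} A^* \left(A^{*T}A^* + \lambda I\right)^{-1}. \tag{52}$$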

Lines (50) and (52) can be thought of as alternating multiple ridge regressions for the fully observed matrix X* = PΩ(X) + P⊥Ω(A*B*ᵀ).

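The above fixed-point updates are very closely related to the following optimization problem:

$$\min_{A \in \mathbb{R}^{m\times r},\, B \in \mathbb{R}^{n\times r}}\ \tfrac{1}{2}\|X^* - AB^T\|_F^2 + \tfrac{\lambda}{2}\left(\|A\|_F^2 + \|B\|_F^2\right). \tag{53}$$

By Theorem 1, the solution to (53) is given by the nuclear norm thresholding operation (with a rank-r constraint) on the matrix X* = PΩ(X) + P⊥Ω(A*B*ᵀ):

$$\hat A \hat B^T = U_r\, \mathcal{S}_\lambda(D_r)\, V_r^T, \tag{54}$$

where U_r D_r V_rᵀ is the rank-r truncated SVD of X*.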

Suppose the convex optimization Problem (1) has a solution Z* with rank(Z*) = r*.

Then, for A*B*ᵀ to be a solution to the convex problem, the following conditions are sufficient:

- r* ≤ r;

- A*, B* must be the global minimum of Problem (53). Equivalently, the outer product A*B*ᵀ must be the solution to the fully observed nuclear norm regularized problem:

$$A^*B^{*T} = \arg\min_{Z}\ \tfrac{1}{2}\left\|P_\Omega(X) + P_\Omega^{\perp}(A^*B^{*T}) - Z\right\|_F^2 + \lambda \|Z\|_*. \tag{55}$$

Condition (55) can be verified fairly easily: it requires a low-rank SVD of the matrix PΩ(X) + P⊥Ω(A*B*ᵀ), a direct application of Algorithm 2.1. This task is computationally attractive due to the “sparse plus low-rank” structure of this matrix: PΩ(X) + P⊥Ω(A*B*ᵀ) = PΩ(X − A*B*ᵀ) + A*B*ᵀ. We summarize the above discussion in the following theorem, where we of course assume that λ > 0.

Theorem 6 Let (Ak, Bk), k ≥ 1, be the sequence generated by softImpute-ALS and let (A*, B*) denote a limit point of the sequence. Suppose that Problem (1) has a minimizer with rank at most r. If Z* = A*B*ᵀ solves the fully observed nuclear norm regularized problem (55), then Z* is a solution to the convex Problem (1).
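Condition (55) can be checked numerically. The following is a minimal dense NumPy sketch for small problems, under stated assumptions: it forms PΩ(X) + P⊥Ω(ABᵀ) explicitly and uses a full SVD, whereas at scale one would use a low-rank SVD (Algorithm 2.1) that exploits the sparse-plus-low-rank structure. It relies on the fact that, for λ > 0, the fully observed problem has the unique solution Sλ(X*) = U(D − λI)₊Vᵀ. The function names svt and solves_convex_problem and the relative tolerance are hypothetical.

```python
import numpy as np

def svt(M, lam):
    """Soft-thresholded SVD S_lam(M) = U (D - lam)_+ V^T (dense; small examples only)."""
    U, d, Vt = np.linalg.svd(M, full_matrices=False)
    return (U * np.maximum(d - lam, 0.0)) @ Vt

def solves_convex_problem(X, mask, A, B, lam, tol=1e-8):
    """Check condition (55): is A B^T the solution of the fully observed problem?

    X* = P_Omega(X) + P_Omega^perp(A B^T) is formed densely here for clarity.
    The fully observed problem has the unique solution S_lam(X*), so (55)
    holds exactly when S_lam(X*) equals A B^T (up to a relative tolerance).
    """
    Z = A @ B.T
    X_star = np.where(mask, X, Z)          # observed entries from X, the rest from A B^T
    gap = np.linalg.norm(svt(X_star, lam) - Z)
    return gap <= tol * max(1.0, np.linalg.norm(Z))
```

If the check fails at a softImpute-ALS limit point, one natural response is to rerun with a larger operating rank r, in line with the requirement r* ≤ r above.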

5.4. Computational Complexity and Comparison to ALS

The computational cost of softImpute-ALS can be broken down into three steps. First consider only the cost of the update to A. The first step is forming the matrix X* = PΩ(X − ABᵀ) + ABᵀ, which requires O(r|Ω|) flops for the PΩ(ABᵀ) part, while the low-rank part ABᵀ is never explicitly formed. The matrix B(BᵀB + λI)⁻¹ requires O(2nr² + r³) flops to form; although we keep it in SVD factored form, the cost is the same. The multiplication X*B(BᵀB + λI)⁻¹ requires O(r|Ω| + mr² + nr²) flops, using the sparse plus low-rank structure of X*. The total cost of an iteration is O(2r|Ω| + mr² + 3nr² + r³).
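To make the cost accounting concrete, here is a minimal NumPy sketch of one A-update. It assumes the observed entries are stored as coordinate triplets (rows, cols, vals); the function name update_A and this storage layout are hypothetical choices for illustration, not the softImpute package's code. The key identity is X*R = PΩ(X − ABᵀ)R + A(BᵀR) with R = B(BᵀB + λI)⁻¹, so the dense m × n matrix X* is never formed.

```python
import numpy as np

def update_A(rows, cols, vals, A, B, lam):
    """One softImpute-ALS update of A (illustrative sketch).

    Computes X* B (B^T B + lam I)^{-1} with X* = P_Omega(X - A B^T) + A B^T,
    touching only the |Omega| observed entries plus small dense products.
    """
    m, r = A.shape
    # Residuals x_ij - a_i . b_j on the observed entries only: O(r |Omega|).
    resid = vals - np.einsum("ij,ij->i", A[rows], B[cols])
    # R = B (B^T B + lam I)^{-1}; the Gram matrix is symmetric, so solve then transpose.
    G = B.T @ B + lam * np.eye(r)
    R = np.linalg.solve(G, B.T).T
    # Sparse part P_Omega(X - A B^T) @ R, accumulated into rows: O(r |Omega|).
    sparse_part = np.zeros((m, r))
    np.add.at(sparse_part, rows, resid[:, None] * R[cols])
    # Low-rank part A (B^T R): O(n r^2 + m r^2).
    return sparse_part + A @ (B.T @ R)
```

The B-update is the same routine applied to the transposed problem: update_A(cols, rows, vals, B, A, lam).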

As mentioned in Section 1, alternating least squares (ALS) is a popular algorithm for solving the matrix factorization problem in Equation (6); see Algorithm 5.2. The ALS algorithm is an instance of block coordinate descent applied to (6).

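Recall that the updates for ALS are given by

$$A_{k+1} = \arg\min_{A}\ \tfrac{1}{2}\|P_\Omega(X - AB_k^T)\|_F^2 + \tfrac{\lambda}{2}\|A\|_F^2, \tag{56}$$

$$B_{k+1} = \arg\min_{B}\ \tfrac{1}{2}\|P_\Omega(X - A_{k+1}B^T)\|_F^2 + \tfrac{\lambda}{2}\|B\|_F^2, \tag{57}$$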

and each row of A and B can be computed via a separate ridge regression. The cost of each ridge regression is O(|Ωj|r² + r³), so the cost of one iteration is O(2|Ω|r² + mr³ + nr³). Hence one iteration of ALS requires roughly r times as many flops as one iteration of softImpute-ALS. We will see in the next sections that while ALS may decrease the criterion more than softImpute-ALS at each iteration, it tends to be slower overall because its cost per iteration is higher by a factor O(r).
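For contrast, here is a sketch of the ALS A-update (56) in the same hypothetical coordinate-triplet layout. Each row solves its own r × r ridge system built from the columns it observes, which is the source of the extra factor of r; the function name als_update_A is illustrative only.

```python
import numpy as np

def als_update_A(rows, cols, vals, B, m, lam):
    """ALS update of A given B (illustrative sketch).

    Row i solves (B_i^T B_i + lam I) a_i = B_i^T x_i, where B_i holds the rows
    of B for the columns observed in row i. Every row has its own Gram matrix.
    """
    r = B.shape[1]
    order = np.argsort(rows, kind="stable")           # group observed entries by row
    rows_s, cols_s, vals_s = rows[order], cols[order], vals[order]
    bounds = np.searchsorted(rows_s, np.arange(m + 1))
    A = np.zeros((m, r))
    for i in range(m):
        lo, hi = bounds[i], bounds[i + 1]
        Bi = B[cols_s[lo:hi]]                          # |Omega_i| x r
        G = Bi.T @ Bi + lam * np.eye(r)                # O(|Omega_i| r^2)
        A[i] = np.linalg.solve(G, Bi.T @ vals_s[lo:hi])  # O(r^3)
    return A
```

The B-update (57) is the same routine with rows and columns swapped: als_update_A(cols, rows, vals, A, n, lam).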

Dependence of Computational Complexity on Ω: The computational guarantees derived in Section 5.1 present a worst-case view of the rate at which softImpute-ALS converges to an approximate stationary point; the results apply to any data and an arbitrary Ω. Tighter rates can be derived under additional assumptions. For example, in the special case where Ω corresponds to a fully observed matrix, softImpute-ALS becomes Algorithm 2.1. For λ = 0, Algorithm 2.1 with Ω fully observed is exactly equivalent to the Orthogonal Iteration algorithm of Golub and Van Loan (2012). Theorem 8.2.2 in Golub and Van Loan (2012) shows that the left orthogonal subspace corresponding to A converges to the left singular subspace of X under the assumption that σr(X) > σr+1(X); the rate is linear³ and depends on the ratio σr+1(X)/σr(X). Similar results hold for the left orthogonal subspace of B, which converges to the right singular subspace of X. Since the left subspaces of A and B generated by Algorithm 2.1 with λ > 0 are the same as those for λ = 0, the same linear rate of convergence holds for Algorithm 2.1 applied to Problem (14).
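To illustrate the λ = 0, fully observed case, the sketch below runs plain orthogonal (subspace) iteration by alternating QR factorizations. It is a textbook version for illustration, not the softImpute package's svd.als; the function name, the random start, and the fixed iteration count are assumptions. When σr(X) > σr+1(X), the basis U approaches the top-r left singular subspace at a linear rate governed by σr+1(X)/σr(X).

```python
import numpy as np

def orthogonal_iteration(X, r, n_iter=200, seed=0):
    """Orthogonal (subspace) iteration for the top-r singular subspaces of X.

    Each sweep applies X X^T once to the left basis, so the left-subspace error
    shrinks roughly by (sigma_{r+1} / sigma_r)^2 per sweep.
    """
    rng = np.random.default_rng(seed)
    V, _ = np.linalg.qr(rng.standard_normal((X.shape[1], r)))
    for _ in range(n_iter):
        U, _ = np.linalg.qr(X @ V)      # orthonormal basis for the left subspace
        V, _ = np.linalg.qr(X.T @ U)    # orthonormal basis for the right subspace
    return U, V
```

In the accompanying check, X is built with singular values (10, 8, 6, 1, 0.5), so for r = 2 the relevant ratio is 6/8 and 200 sweeps are ample.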

For a general Ω it is not clear to us whether the rates in Section 5.1 can be improved. However, for a sparse Ω the computational cost of every iteration of softImpute-ALS is significantly smaller than for a dense observation pattern, so in practice a large number of iterations can be performed at very low cost.

6. Experiments

In this section we run some timing experiments on simulated and real datasets, and show performance results on the Netflix and MovieLens data.

6.1. Timing experiments

Figure 1 shows timing results on four datasets. The first three are simulation datasets of increasing size, and the last is the publicly available MovieLens 100K data. These experiments were all run in R using the softImpute package; see Section 7. Three methods are compared:

ALS: Alternating Least Squares as in Algorithm 5.2;

softImpute-ALS: our new approach, as defined in Algorithm 3.1 or 5.1;

softImpute: the original algorithm of Mazumder et al. (2010), as laid out in steps (2)–(4).

We used an R implementation of each of these in order to make the comparisons as fair as possible. In particular, algorithm softImpute requires a low-rank SVD of a complete matrix at each iteration. For this we used the function svd.als from our package, which uses alternating subspace iterations, rather than other optimized code that is available for this task. Likewise, there exists optimized code for regular ALS for matrix completion, but instead we used our R version to make the comparisons fairer. We are trying to determine how the computational trade-offs play out, and thus need a level playing field.

Each subplot in Figure 1 is labeled according to the size of the problem, the fraction missing, the value of λ used, the operating rank r of the algorithms, and the rank of the solution obtained. All three methods involve alternating subspace methods; the first two are alternating ridge regressions, and the third alternating orthogonal regressions. These are conducted at the operating rank r, anticipating a solution of smaller rank. Upon convergence, softImpute-ALS performs step (5) in Algorithm 3.1, which can truncate the rank of the solution. Our implementation of ALS does the same.

For the three simulation examples, the data are generated from an underlying Gaussian factor model, with true ranks 50, 100, 100; the missing entries are then chosen at random. Their sizes are (300, 200), (800, 600) and (1200, 900) respectively, with between 70–90% missing. The MovieLens 100K data has 100K ratings (1–5) for 943 users and 1682 movies, and hence is 93% missing.

We picked a value of λ for each of these examples (through trial and error) so that the final solution had rank less than the operating rank. Under these circumstances, the solution to the criterion (6) coincides with the solution to (1), which is unique under non-degenerate situations.

There is a fairly consistent message from each of these experiments. softImpute-ALS wins handily in each case, and the reasons are clear:

Even though it uses more iterations than ALS, they are much cheaper to execute (by a factor O(r)).

softImpute wastes time on its early SVD, even though it is far from the solution. Thereafter it uses warm starts for its SVD calculations, which speeds each step up, but it does not catch up.

6.2. Netflix Competition Data

We used our softImpute package in R to fit a sequence of models on the Netflix competition data. Here there are 480,189 users, 17,770 movies and a total of 100,480,507 ratings, making the resulting matrix 98.8% missing. There is a designated test set (the “probe set”), a subset of 1,408,395 of these ratings, leaving 99,072,112 for training.

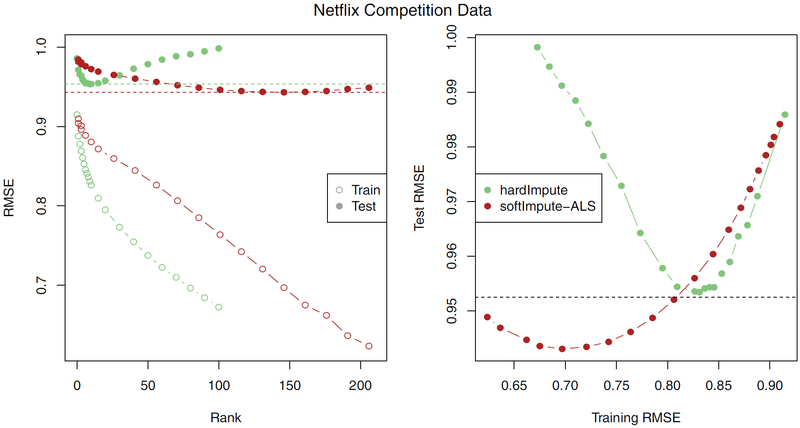

Figure 2 compares the performance of hardImpute (Mazumder et al., 2010) with softImpute-ALS on these data. hardImpute uses rank-restricted SVDs iteratively to estimate the missing data, similar to softImpute but without shrinkage. The shrinkage helps here, leading to a best test-set RMSE of 0.943. This is a 1% improvement over the “Cinematch” score, somewhat short of the prize-winning improvement of 10%.

Figure 2: Performance of hardImpute versus softImpute-ALS on the Netflix data. hardImpute uses a rank-restricted SVD at each step of the imputation, while softImpute-ALS does shrinking as well. The left panel shows the training and test error as a function of the rank of the solution, an imperfect calibration in light of the shrinkage. The right panel gives the test error as a function of the training error. hardImpute fits more aggressively, and overfits far sooner than softImpute-ALS. The horizontal dotted line is the “Cinematch” score, the target to beat in this competition.

Both methods benefit greatly from using warm starts. hardImpute is solving a non-convex problem, while the intention is for softImpute-ALS to solve the convex Problem (1). This will be achieved if the operating rank is sufficiently large. The idea is to decide on a decreasing sequence of values for λ, starting from λmax (the smallest value for which the solution Ẑλ = 0, which equals the largest singular value of PΩ(X)). Then for each value of λ, we use an operating rank somewhat larger than the rank of the previous solution, with the goal of obtaining a solution rank smaller than the operating rank. The sequence of twenty models took under six hours of computing on a Linux cluster with 300 GB of RAM (with a fairly liberal relative convergence criterion of 0.001), using the softImpute package in R.
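A warm-start path starts from λmax = σ1(PΩ(X)): for any λ ≥ λmax, Ẑ = 0 satisfies the optimality condition of Problem (1), because PΩ(X)/λ then has spectral norm at most one and is therefore a subgradient of the nuclear norm at zero. The sketch below computes such a grid of twenty values; the geometric spacing and min_ratio are assumptions for illustration, since the text does not specify how the sequence of λ values was chosen.

```python
import numpy as np

def lambda_path(PX, n_lambda=20, min_ratio=1e-2):
    """Decreasing grid of lambda values for a warm-started path (sketch).

    PX is P_Omega(X) with missing entries set to zero. lam_max is its largest
    singular value, the smallest lambda at which the solution of (1) is zero.
    Geometric spacing down to min_ratio * lam_max is an illustrative choice.
    """
    lam_max = np.linalg.norm(PX, ord=2)    # spectral norm = largest singular value
    return lam_max * np.geomspace(1.0, min_ratio, n_lambda)
```

Each λ in the path would then be fitted starting from the previous solution, with an operating rank a little larger than that solution's rank.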

Figure 3 (left panel) gives timing comparison results for one of the Netflix fits, this time implemented in Matlab. The right panel gives timing results on the smaller MovieLens 10M matrix. In these applications we need not get a very accurate solution, and so early stopping is an attractive option. softImpute-ALS reaches a solution close to the minimum in about 1/4 the time it takes ALS.

7. R Package softImpute

We have developed an R package softImpute for fitting these models (Hastie and Mazumder, 2013), which is available on CRAN. The package implements both softImpute and softImpute-ALS. It can accommodate large matrices if the number of missing entries is correspondingly large, by making use of sparse-matrix formats. There are functions for centering and scaling (see Section 9), and for making predictions from a fitted model. The package also has a function svd.als for computing a low-rank SVD of a large sparse matrix, with row and/or column centering. More details can be found in the package vignette on the first author's web page, at http://web.stanford.edu/~hastie/swData/softimpute/vignette.html.

8. Distributed Implementation

We provide a distributed version of softImpute-ALS (given in Algorithm 5.1), built upon the Spark cluster programming framework.

8.1. Design

The input matrix to be factored is split row-by-row across many machines. The transpose of the input is also split row-by-row across the machines. The current model (i.e., the current guess for A, B) is replicated and held in memory on every machine. Thus the total time taken by the computation is proportional to the number of non-zeros divided by the number of CPU cores, with the restriction that the model should fit in memory.

At every iteration, the current model is broadcast to all machines, such that there is only one copy of the model on each machine. Each CPU core on a machine will process a partition of the input matrix, using the local copy of the model available. This means that even though one machine can have many cores acting on a subset of the input data, all those cores can share the same local copy of the model, thus saving RAM. This saving is especially pronounced on machines with many cores.

The implementation, written in Scala, is available online with documentation at http://git.io/sparkfastals. It has a method named multByXstar, corresponding to line 3 of Algorithm 5.1, which multiplies X* by another matrix on the right, exploiting the “sparse-plus-low-rank” structure of X*. This method has signature:

This method has four parameters. The first parameter X is a distributed matrix consisting of the input, split row-wise across machines. The full documentation for how this matrix is spread across machines is available online⁴. The multByXstar method takes a distributed matrix, along with local matrices A, B, and C, and performs line 3 of Algorithm 5.1 by multiplying X* by C. Similarly, the method multByXstarTranspose performs line 5 of Algorithm 5.1.

After each call to multByXstar, the machines each will have calculated a portion of A. Once the call finishes, the machines each send their computed portion (which is small and can fit in memory on a single machine, since A can fit in memory on a single machine) to the master node, which will assemble the new guess for A and broadcast it to the worker machines. A similar process happens for multByXstarTranspose, and the whole process is repeated every iteration.
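The following single-process NumPy sketch mimics the row-partitioned design: each "worker" holds one row block of the input as coordinate triplets plus replicated copies of the factors, computes its slice of X*C, and the "master" stacks the slices. The function names and the block tuple layout (start_row, n_rows, rows, cols, vals) are hypothetical; they do not reproduce the Scala multByXstar signature, and no Spark API is used.

```python
import numpy as np

def mult_by_xstar_block(rows, cols, vals, A_block, B, C):
    """Worker-side sketch: this block's rows of X* C.

    rows are local row indices within the block; A_block holds the matching
    rows of A, and B, C are the replicated factors. Uses
    X* C = P_Omega(X - A B^T) C + A (B^T C).
    """
    resid = vals - np.einsum("ij,ij->i", A_block[rows], B[cols])
    out = A_block @ (B.T @ C)                          # low-rank part for this block
    np.add.at(out, rows, resid[:, None] * C[cols])    # sparse correction on observed entries
    return out

def mult_by_xstar(blocks, A, B, C):
    """Master-side sketch: gather every worker's slice and stack them in row order."""
    parts = []
    for start, n_rows, rows, cols, vals in blocks:
        parts.append(mult_by_xstar_block(rows, cols, vals, A[start:start + n_rows], B, C))
    return np.vstack(parts)
```

Each worker's output has only r columns per local row, which is why the slices sent back to the master are small.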

8.2. Experiments

We report iteration times using an Amazon EC2 cluster with 10 slaves and one master, of instance type “c3.4xlarge”. Each machine has 16 CPU cores and 30 GB of RAM. We ran softImpute-ALS on matrices of varying sizes, setting k = 5; iteration runtimes are given in Table 1. Where possible, hardware acceleration was used for local linear algebra operations, via breeze and BLAS.

Table 1: Running times for distributed softImpute-ALS

| Matrix Size | Number of Nonzeros | Time per Iteration (s) |
|---|---|---|
| 10⁶ × 10⁶ | 10⁶ | 5 |
| 10⁶ × 10⁶ | 10⁹ | 6 |
| 10⁷ × 10⁷ | 10⁹ | 139 |

The popular Netflix prize matrix has 17,770 rows, 480,189 columns, and 100,480,507 non-zeros. We report results in Table 1 on several larger matrices, up to 10 times larger.

9. Centering and Scaling

We often want to remove row and/or column means from a matrix before performing a low-rank SVD or running our matrix completion algorithms. Likewise we may wish to standardize the rows and/or columns to have unit variance. In this section we present an algorithm for doing this in a way that is sensitive to the storage requirements of very large, sparse matrices. We first present our approach, and then discuss implementation details.

We have an m × n two-dimensional array X, with pairs (i, j) ∈ Ω observed and the rest missing. The goal is to standardize the rows and columns of X to mean zero and variance one simultaneously. We consider the mean/variance model

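$$X_{ij} \sim (\mu_{ij}, \sigma_{ij}^2), \tag{58}$$

with

$$\mu_{ij} = \alpha_i + \beta_j, \tag{59}$$

$$\sigma_{ij} = \tau_i \gamma_j. \tag{60}$$

Given the parameters of this model, we would standardize each observation via

$$\tilde X_{ij} = \frac{X_{ij} - \alpha_i - \beta_j}{\tau_i \gamma_j}. \tag{61}$$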

If model (58) were correct, then each entry of the standardized matrix, viewed as a realization of a random variable, would have population mean/variance (0,1). A consequence would be that realized rows and columns would also have means and variances with expected values zero and one respectively. However, we would like the observed data to have these row and column properties.

Our representation (59)–(60) is not unique, but is easily fixed to be so. We can include a constant μ0 in (59) and then have αi and βj average 0. Likewise, we can have an overall scaling σ0, and then have log τi and log γj average 0. Since this is not an issue for us, we suppress this refinement.

We are not the first to attempt this dual centering and scaling. Indeed, Olshen and Rajaratnam (2010) implement a very similar algorithm for complete data, and discuss convergence issues. Our algorithm differs in two simple ways: it allows for missing data, and it learns the parameters of the centering/scaling model (61) (rather than just applying them). This latter feature will be important for us in our matrix-completion applications; once we have estimated the missing entries in the standardized matrix X̃, we will want to reverse the centering and scaling on our predictions.

In matrix notation we can write our model

$$\tilde X = D_\tau^{-1}\left(X - \alpha\mathbf{1}^T - \mathbf{1}\beta^T\right) D_\gamma^{-1}, \tag{62}$$

where Dτ = diag(τ1, τ2, …, τm), Dγ is defined similarly, and the missing values are represented in the full matrix as NAs (e.g., as in R). Although it is not the focus of this paper, this centering model is also useful for large, complete, sparse matrices X (with many zeros, stored in sparse-matrix format). Centering would destroy the sparsity, but from (62) we see that the centered matrix can be stored in “sparse-plus-low-rank” format. Such a matrix can easily be multiplied on the left and right, and hence is ideal for alternating subspace methods for computing a low-rank SVD. The function svd.als in the softImpute package (Section 7) can accommodate such structure.
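The sparse-plus-low-rank remark amounts to the product identity X̃v = Dτ⁻¹(Xw − α(1ᵀw) − 1(βᵀw)) with w = Dγ⁻¹v. The sketch below assumes a fully observed sparse X stored as coordinate triplets; the function name is hypothetical and this is not the svd.als implementation. It costs O(nnz + m + n) and never forms the dense centered matrix; the product with X̃ᵀ is analogous.

```python
import numpy as np

def standardized_matvec(rows, cols, vals, n_rows, alpha, beta, tau, gamma, v):
    """Return X_tilde @ v for X_tilde = D_tau^{-1} (X - alpha 1^T - 1 beta^T) D_gamma^{-1}.

    X is sparse and given by coordinate triplets (rows, cols, vals); entries
    not listed are true zeros. The dense centered matrix is never formed.
    """
    w = v / gamma                                                      # D_gamma^{-1} v
    Xw = np.bincount(rows, weights=vals * w[cols], minlength=n_rows)   # sparse part, O(nnz)
    low_rank = alpha * w.sum() + beta @ w                              # alpha (1^T w) + 1 (beta^T w)
    return (Xw - low_rank) / tau                                       # apply D_tau^{-1}
```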

9.1. Method-of-moments Algorithm

We now present an algorithm for estimating the parameters. The idea is to write down four systems of estimating equations that demand that the transformed observed data have row means zero and variances one, and likewise for the columns. We then iteratively solve these equations, until all four conditions are satisfied simultaneously. We do not in general have any guarantees that this algorithm will always converge except in the noted special cases, but empirically we typically see rapid convergence.

Consider the estimating equation for the row-means condition (for each row i)

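$$\frac{1}{n_i}\sum_{j\in\Omega_i}\frac{X_{ij} - \alpha_i - \beta_j}{\tau_i\gamma_j} = 0, \tag{63}$$

where Ωi = {j|(i, j) ∈ Ω}, and ni = |Ωi| ≤ n. Rearranging we get

$$\alpha_i = \frac{\sum_{j\in\Omega_i} (X_{ij} - \beta_j)/\gamma_j}{\sum_{j\in\Omega_i} 1/\gamma_j}. \tag{64}$$

This is a weighted mean of the partial residuals Xij − βj with weights inversely proportional to the column standard-deviation parameters γj. By symmetry, we get a similar equation for βj,

$$\beta_j = \frac{\sum_{i\in\Omega_j} (X_{ij} - \alpha_i)/\tau_i}{\sum_{i\in\Omega_j} 1/\tau_i}, \tag{65}$$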

where Ωj = {i|(i, j) ∈ Ω}, and mj = |Ωj| ≤ m.

Similarly, the variance conditions for the rows are

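$$\frac{1}{n_i}\sum_{j\in\Omega_i}\frac{(X_{ij} - \alpha_i - \beta_j)^2}{\tau_i^2\gamma_j^2} = 1, \tag{66}$$

which simply says

$$\tau_i^2 = \frac{1}{n_i}\sum_{j\in\Omega_i}\frac{(X_{ij} - \alpha_i - \beta_j)^2}{\gamma_j^2}. \tag{67}$$

Likewise

$$\gamma_j^2 = \frac{1}{m_j}\sum_{i\in\Omega_j}\frac{(X_{ij} - \alpha_i - \beta_j)^2}{\tau_i^2}. \tag{68}$$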

The method-of-moments estimators require iterating these four sets of equations (64), (65), (67), (68) until convergence. We monitor the following functions of the “residuals”:

| (69) |

| (70) |

In experiments, R appears to converge to zero very fast, perhaps linearly. In Appendix B we present slightly different versions of these estimators that are more suitable for sparse-matrix calculations.
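A compact NumPy sketch of cycling through (64), (65), (67) and (68) on the observed entries follows. The function names and the stopping rule (maximum parameter change, used here instead of monitoring the residual functions (69)–(70)) are assumptions. As the text notes, convergence is guaranteed only in special cases such as centering only, which is the case exercised by the accompanying check.

```python
import numpy as np

def _safe_div(num, den, fill):
    """num / den where den > 0, and `fill` elsewhere (rows/columns with no data)."""
    return np.divide(num, den, out=np.full_like(num, fill, dtype=float), where=den > 0)

def moments_standardize(X, mask, center=True, scale=True, n_iter=500, tol=1e-10):
    """Method-of-moments estimates of (alpha, beta, tau, gamma) from observed entries.

    Cycles through the mean updates (64)-(65) and the variance updates
    (67)-(68); entries with mask == False are ignored. With scale=True this
    assumes every row and column keeps a positive residual variance.
    """
    m, n = X.shape
    W = mask.astype(float)                  # 1 on Omega, 0 elsewhere
    Xo = np.where(mask, X, 0.0)             # zero-fill so every sum runs over Omega
    alpha, beta = np.zeros(m), np.zeros(n)
    tau, gamma = np.ones(m), np.ones(n)
    for _ in range(n_iter):
        old = np.concatenate([alpha, beta, tau, gamma])
        if center:
            w_row = W / gamma[None, :]      # weights 1 / gamma_j, eq. (64)
            alpha = _safe_div(((Xo - beta[None, :]) * w_row).sum(1), w_row.sum(1), 0.0)
            w_col = W / tau[:, None]        # weights 1 / tau_i, eq. (65)
            beta = _safe_div(((Xo - alpha[:, None]) * w_col).sum(0), w_col.sum(0), 0.0)
        if scale:
            R2 = W * (Xo - alpha[:, None] - beta[None, :]) ** 2   # squared residuals on Omega
            tau = np.sqrt(_safe_div((R2 / gamma[None, :] ** 2).sum(1), W.sum(1), 1.0))
            gamma = np.sqrt(_safe_div((R2 / tau[:, None] ** 2).sum(0), W.sum(0), 1.0))
        new = np.concatenate([alpha, beta, tau, gamma])
        if np.max(np.abs(new - old)) < tol:
            break
    return alpha, beta, tau, gamma
```

With scale=False the loop is exactly the Gauss-Seidel backfitting for the unbalanced two-way ANOVA model mentioned below.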

In practice we may not wish to apply all four standardizations, but instead a subset. For example, we may wish to only standardize columns to have mean zero and variance one. In this case we simply set the omitted centering parameters to zero, and scaling parameters to one, and skip their steps in the iterative algorithm. In certain cases we have convergence guarantees:

Column-only centering and/or scaling. Here no iteration is required; the centering step precedes the scaling step, and we are done. Likewise for row-only.

Centering only, no scaling. Here the situation is exactly that of an unbalanced two-way ANOVA, and our algorithm is exactly the Gauss-Seidel algorithm for fitting the two-way ANOVA model. This is known to converge, modulo certain degenerate situations.

For the other cases we have no guarantees of convergence.

We present an alternative sequence of formulas in Appendix B which allows one to simultaneously apply the transformations, and learn the parameters.

10. Discussion

We have presented a new algorithm for matrix completion, suitable for solving Problem (1) for very large problems, as long as the solution rank is manageably low. Our algorithm capitalizes on the different strengths and weaknesses of each of the popular alternatives:

ALS has to solve a different regression problem for every row/column, because each has a different pattern of missingness, and this can be costly. softImpute-ALS solves a single regression problem, once and simultaneously for all the rows/columns, because it operates on a filled-in matrix which is complete. Although these steps are typically not as strong as those of ALS, the speed advantage more than compensates.

softImpute wastes time in early iterations computing a low-rank SVD of a far-from-optimal estimate, in order to make its next imputation. One can think of softImpute-ALS as simultaneously filling in the matrix at each alternating step, as it is computing the SVD. By the time it is done, it has the solution sought by softImpute, but with far fewer iterations.

softImpute-ALS allows for an extremely efficient distributed implementation (Section 8), and hence can scale to large problems, given a sufficiently large computing infrastructure.

Acknowledgments

The authors thank Balasubramanian Narasimhan for helpful discussions on distributed computing in R. The first author thanks Andreas Buja and Stephen Boyd for stimulating “footnote” discussions that led to the centering/scaling in Section 9. Trevor Hastie was partially supported by grant DMS-1407548 from the National Science Foundation, and grant R01-EB001988–15 from the National Institutes of Health. Rahul Mazumder was funded in part by Columbia University’s start-up funds and a grant from the Betty-Moore Sloan Foundation.

Appendix A. Proofs from Section 5.1

Here, we gather some proofs and technical details from Section 5.1.

A.1. Proof of Lemma 2

To prove this we begin with the following elementary result concerning a ridge regression problem:

Lemma 5 Consider a ridge regression problem

| (71) |

with β* ∈ arg minβ H(β). Then the following inequality is true:

Proof The proof follows from the second order Taylor Series expansion of H(β):

and observing that ∇H(β*) =0. ■
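For readers reconstructing the argument, here is the computation behind Lemma 5, written under the assumption that (71) is the standard ridge objective H(β) = ½‖y − Mβ‖² + (λ/2)‖β‖² with response y and design matrix M (symbols introduced here for illustration only). Because H is quadratic, its second-order Taylor expansion is exact, and the first-order term vanishes at β*:

$$
\begin{aligned}
\nabla H(\beta) &= -M^T(y - M\beta) + \lambda\beta, \qquad \nabla^2 H(\beta) = M^TM + \lambda I,\\
H(\beta) &= H(\beta^*) + \nabla H(\beta^*)^T(\beta - \beta^*) + \tfrac12 (\beta - \beta^*)^T\left(M^TM + \lambda I\right)(\beta - \beta^*),\\
H(\beta) - H(\beta^*) &= \tfrac12\|M(\beta - \beta^*)\|_2^2 + \tfrac{\lambda}{2}\|\beta - \beta^*\|_2^2 \;\ge\; \tfrac{\lambda}{2}\|\beta - \beta^*\|_2^2 .
\end{aligned}
$$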

We will need to obtain a lower bound on the decrease F(Ak, Bk) − F(Ak+1, Bk). Towards this end we make note of the following chain of inequalities:

| (72) |

| (73) |

| (74) |

| (75) |

| (76) |

| (77) |

where line (73) follows from (31), and line (76) follows from (30).

Clearly, from lines (72) and (77) we have

| (78) |

| (79) |

where (79) follows from (78) using Lemma 5.

Similarly, following the above steps for the B-update we have:

| (80) |

Adding (79) and (80) we get (35) concluding the proof of the lemma.

A.2. Proof of Lemma 3

Let us use the shorthand Δ in place of Δ((A, B), (A+, B+)) as defined in (37).

First observe that in result (35) we may substitute (Ak, Bk) ← (A, B) and (Ak+1, Bk+1) ← (A+, B+). This leads to the following:

| (81) |

Clearly, if (A, B) is a fixed point, then Δ = 0.

Let us consider the converse, i.e., the case when Δ = 0. Note that if Δ = 0, then each of the summands appearing in the definition of Δ is also zero. We now use the result in (78) and (79), which follows from the proof of Lemma 2 and says:

Now the right-hand side of the above equation is zero (since Δ = 0), which implies that QA(A|A, B) − QA(A+|A, B) = 0. An analogous result holds for B.

Using the nesting property (34), it follows that F(A, B) = F(A+, B+), thereby showing that (A, B) is a fixed point of the algorithm.

A.3. Proof of Theorem 4

We make use of (35) and sum both sides of the inequality over k = 1, …, K, which leads to:

| (82) |

Since F(Ak, Bk) is a decreasing sequence that is bounded below, it converges, say to F∞. It follows that:

| (83) |

Using (83) along with (82) we have the following convergence rate:

thereby completing the proof of the theorem.

A.4. Proof of Corollary 1

Recall the definition of ηk

Since we have assumed that

we then have:

Using the above in (82) and assuming that ℓL > 0, we have the bound:

| (84) |

Suppose instead of the proximity measure:

we use the proximity measure:

Then observing that:

we have:

Using the above bound in (82) we arrive at a bound which is similar in spirit to (41) but with a different proximity measure on the step-sizes:

| (85) |

It is useful to contrast results (41) and (42) with the case λ = 0.

| (86) |

The convergence rate with the other proximity measure on the step-sizes has the following two cases:

| (87) |

Assumption (40) can be interpreted as providing upper bounds on the local Lipschitz constants of the gradients of QA(Z|Ak, Bk) and QB(Z|Ak+1, Bk) for all k:

| (88) |

The above leads to convergence rate bounds on the (partial) gradients of the function F(A, B), i.e.,

A.5. Proof of Theorem 5

Proof Part (a):

We make use of the convergence rate derived in Theorem 4. As k → ∞, it follows that ηk → 0. This describes the fate of the objective values F(Ak, Bk), but does not tell us about the properties of the sequence (Ak, Bk). To this end, note that if λ > 0, then the sequence (Ak, Bk) is bounded and thus has a limit point. Let (A*, B*) be any limit point of the sequence (Ak, Bk). It follows by a simple subsequence argument that F(Ak, Bk) → F(A*, B*) and that (A*, B*) is a fixed point of Algorithm 5.1 and, in particular, a first-order stationary point of Problem (6).

Part (b):

The sequence (Ak, Bk) need not have a unique limit point; however, we show below that for every subsequence of Bk that converges, the corresponding subsequence of Ak also converges.

Suppose, Bk → B* (along a subsequence k ∈ ν). We will show that the sequence Ak for k ∈ ν has a unique limit point.

We argue by contradiction. Suppose there are two limit points of Ak, k ∈ ν, namely A1 (along a subsequence ν1 ⊂ ν) and A2 (along a subsequence ν2 ⊂ ν), with A1 ≠ A2.

Consider the objective value sequence: F (Ak, Bk). For fixed Bk the update in A from Ak to Ak+1 results in

Take k1 ∈ ν1 and k2 ∈ ν2, we have:

| (89) |

| (90) |

where line (90) follows from Lemma 5. As k1, k2 → ∞, we have

However, the left-hand side of (89) converges to zero, which is a contradiction. This implies that A1 = A2, i.e., Ak for k ∈ ν has a unique limit point.

Exactly the same argument holds for the sequence Bk, leading to the conclusion of the other part of Part (b). ■

Appendix B. Alternative Computing Formulas for Method of Moments

In this section we present the same algorithm, but use a slightly different representation. For matrix-completion problems, this does not make much of a difference in terms of computational load. But we also have other applications in mind, where the large matrix X may be fully observed, but is very sparse. In this case we do not want to actually apply the centering operations; instead we represent the matrix as a “sparse-plus-low-rank” object, a class for which we have methods for simple row and column operations.

Consider the row means (for each row i). We introduce a change Δαi from the old to the new αi. Then we have

| (91) |

where as before Ωi = {j|(i, j) ∈ Ω}. Rearranging we get

| (92) |

where

| (93) |

Then αi ← αi + Δαi. By symmetry, we get a similar equation for Δβj.

Likewise for the variances.

| (94) |

| (95) |

Here we modify τi by a multiplicative factor Δτi. The solution is

| (96) |

By symmetry, we get a similar equation for Δγj.

The method-of-moments estimators amount to iterating these four sets of equations until convergence. We can now monitor the changes via

| (97) |

which should converge to zero.

Footnotes

1. Actually MMMF also refers to the margin-based loss function that they used, but we will nevertheless use this acronym.

2. By global convergence rate, we mean an upper bound on the maximum number of iterations that need to be taken by an algorithm to reach an ϵ-accurate first-order stationary point. This rate applies for any starting point of the algorithm.

3. Convergence is measured in terms of the usual notion of distance between subspaces (Golub and Van Loan, 2012); it is also assumed that the initialization is not completely orthogonal to the target subspace, which is typically met in practice due to the presence of round-off errors.

Contributor Information

Trevor Hastie, Department of Statistics, Stanford University, CA 94305, USA.

Rahul Mazumder, Department of Statistics, Columbia University, New York, NY 10027, USA.

Jason D. Lee, Institute for Computational and Mathematical Engineering, Stanford University, CA 94305, USA

Reza Zadeh, Databricks, 2030 Addison Street, Suite 610, Berkeley, CA 94704, USA.

References

- Bertsekas Dimitri P. Nonlinear Programming. Athena Scientific, Belmont, Massachusetts, 2nd edition, 1999. ISBN 1886529000.

- Burer Samuel and Monteiro Renato D.C. Local minima and convergence in low-rank semidefinite programming. Mathematical Programming, 103(3):427–444, 2005.

- Candès Emmanuel and Recht Benjamin. Exact matrix completion via convex optimization. Foundations of Computational Mathematics, 2008. doi: 10.1007/s10208-009-9045-5.

- Candès Emmanuel J and Tao Terence. The power of convex relaxation: Near-optimal matrix completion, 2009. URL http://www.citebase.org/abstract?id=oai:arXiv.org:0903.1476.

- Chen Caihua, He Bingsheng, and Yuan Xiaoming. Matrix completion via an alternating direction method. IMA Journal of Numerical Analysis, 32(1):227–245, 2012.

- Golub G and Van Loan C. Matrix Computations. Johns Hopkins University Press, 3rd edition, 2012.

- Hardt Moritz. Understanding alternating minimization for matrix completion. In Foundations of Computer Science (FOCS), 2014 IEEE 55th Annual Symposium on, pages 651–660. IEEE, 2014.

- Hastie Trevor and Mazumder Rahul. softImpute: Matrix Completion via Iterative Soft-Thresholded SVD, 2013. URL http://CRAN.R-project.org/package=softImpute. R package version 1.0.

- Hastie Trevor, Tibshirani Robert, and Friedman Jerome. The Elements of Statistical Learning, Second Edition: Data Mining, Inference, and Prediction (Springer Series in Statistics). Springer New York, 2nd edition, 2009. ISBN 0387848576.

- Jain Prateek, Netrapalli Praneeth, and Sanghavi Sujay. Low-rank matrix completion using alternating minimization. In Proceedings of the Forty-Fifth Annual ACM Symposium on Theory of Computing, pages 665–674. ACM, 2013.

- Journée Michel, Bach Francis, Absil P-A, and Sepulchre Rodolphe. Low-rank optimization on the cone of positive semidefinite matrices. SIAM Journal on Optimization, 20(5):2327–2351, 2010.

- Koren Yehuda, Bell Robert, and Volinsky Chris. Matrix factorization techniques for recommender systems. Computer, 42(8):30–37, 2009.

- Larsen RM. PROPACK: software for large and sparse SVD calculations, 2004. URL http://sun.stanford.edu/~rmunk/PROPACK/.

- Lewis A. Derivatives of spectral functions. Mathematics of Operations Research, 21(3):576–588, 1996.

- Mazumder Rahul, Hastie Trevor, and Tibshirani Rob. Spectral regularization algorithms for learning large incomplete matrices. Journal of Machine Learning Research, 11:2287–2322, 2010.

- Mirsky Leon. A trace inequality of John von Neumann. Monatshefte für Mathematik, 79(4):303–306, 1975.

- Olshen Richard and Rajaratnam Bala. Successive normalization of rectangular arrays. Annals of Statistics, 38(3):1638–1664, 2010.

- Razaviyayn Meisam, Hong Mingyi, and Luo Zhi-Quan. A unified convergence analysis of block successive minimization methods for nonsmooth optimization. SIAM Journal on Optimization, 23(2):1126–1153, 2013.

- Rennie J and Srebro N. Fast maximum margin matrix factorization for collaborative prediction. In ICML, 2005.

- Srebro Nathan, Rennie Jason, and Jaakkola Tommi. Maximum margin matrix factorization. Advances in Neural Information Processing Systems, 17, 2005.

- Stewart G and Sun Ji-Guang. Matrix Perturbation Theory. Academic Press, Boston, 1st edition, 1990. ISBN 0126702306.

- Zhou Yunhong, Wilkinson Dennis, Schreiber Robert, and Pan Rong. Large-scale parallel collaborative filtering for the Netflix prize. In Algorithmic Aspects in Information and Management, pages 337–348. Springer, 2008.