Abstract

Auditory neurons encode stimulus history, which is often modelled using a span of time-delays in a spectro-temporal receptive field (STRF). We propose an alternative model for the encoding of stimulus history, which we apply to extracellular recordings of neurons in the primary auditory cortex of anaesthetized ferrets. For a linear-non-linear STRF model (LN model) to achieve a high level of performance in predicting single unit neural responses to natural sounds in the primary auditory cortex, we found that it is necessary to include time delays going back at least 200 ms in the past. This is an unrealistic time span for biological delay lines. We therefore asked how much of this dependence on stimulus history can instead be explained by dynamical aspects of neurons. We constructed a neural-network model whose output is the weighted sum of units whose responses are determined by a dynamic firing-rate equation. The dynamic aspect performs low-pass filtering on each unit’s response, providing an exponentially decaying memory whose time constant is individual to each unit. We find that this dynamic network (DNet) model, when fitted to the neural data using STRFs of only 25 ms duration, can achieve prediction performance on a held-out dataset comparable to the best performing LN model with STRFs of 200 ms duration. These findings suggest that integration due to the membrane time constants or other exponentially-decaying memory processes may underlie linear temporal receptive fields of neurons beyond 25 ms.

Author summary

The responses of neurons in the primary auditory cortex depend on the recent history of sounds over seconds or less. Typically, this dependence on the past has been modelled by applying a wide span of time delays to the input, although this is likely to be biologically unrealistic. Real neurons integrate the history of their activity due to the dynamical properties of their cell membranes and other components. We show that a network with a realistically narrow span of delays and with units having dynamic characteristics like those found in neurons, succinctly models neural responses recorded from ferret primary auditory cortex. Because these integrative properties are widespread, our dynamic network provides a basis for modelling responses in other neural systems.

Introduction

The tuning properties of auditory neurons are commonly described by a spectro-temporal receptive field (STRF) model, which characterizes the linear dependence of the neural response on the sound spectrum at a range of latencies [1–14]. A static non-linearity is often applied to the output from the linear STRF—this linear non-linear (LN) model estimates the responses of neurons significantly better than the linear estimate [15,16]. While STRF models are somewhat successful in explaining the dependence of neural responses on the past few hundred milliseconds of stimulus history [17], these models do not show how this temporal aspect of receptive fields might be implemented biologically. The most direct biological interpretation of the STRF model would involve auditory cortical neurons receiving inputs at a range of simple delays spanning out to 200 ms; however, this is biologically unrealistic. The onset latencies of neurons in the ventral division of the medial geniculate body (vMGB), which provides the primary ascending input to primary auditory cortex (A1), are typically less than ~14 ms in the ferret [18], the animal species that provided the data that were modelled in this study. In the cat vMGB response latencies are typically less than ~20 ms [19], in the mouse less than ~10 ms [20], and in the marmoset less than ~40 ms [21]. These values are far less than the duration of stimulus history which influences the responses of A1 neurons. Although a few vMGB units do show onset latencies beyond this range (in cat up to 70 ms [19], in mouse up to 60 ms [20], and in marmoset up to 300 ms [21]), this small fraction is unlikely to be the main determinant of the dependence of the neural response in primary auditory cortex on stimulus history beyond a few tens of milliseconds. This is because there are other plausible mechanisms, such as the dynamic temporally-integrative properties intrinsic to neurons.

In this study, using recordings from anesthetised ferrets, we asked how much of the dependence on recent stimulus history—the temporal receptive fields, of primary auditory cortical neurons can instead be explained by certain of these simple dynamical aspects of neurons–an approach more consistent with the known biology. To model a neuron’s response, we constructed a neural network model whose output is the weighted sum of the responses of multiple units, each of which resembles an LN model. However, the response of each unit of the network was modified in accordance with a dynamic firing-rate equation. This low-pass filters the unit’s response (by convolving with an exponential decay impulse response), providing a simple exponentially decaying memory. This integrative characteristic can be related to the capacitance and resistance of nerve cell membranes, and the individual time constant of each unit can be related to the membrane time constant of real neurons [22]. The dynamic aspect of our network can alternatively be interpreted in terms of other neurobiological dynamical phenomena, such as channel-based neural adaptation, short term synaptic plasticity, or recurrent network properties. We found that our biologically-motivated dynamical model can accurately capture the temporal receptive fields of neurons without the need for a wide span of latencies of input.

Materials and methods

Ethics statement

In vivo electrophysiological recordings in ferrets were performed under ketamine (5 mg/kg/h) and medetomidine (0.022 mg/kg/h) anaesthesia. All animal procedures were performed under license from the UK Home Office and were approved by the University of Oxford Committee on Animal Care and Ethical Review.

Stimuli

Models were fitted to single-unit neural responses of anesthetized ferrets to natural sound stimuli. Altogether, 20 sound clips were presented containing human speech in different languages, other animal vocalizations (e.g. ferret) and environmental sounds (e.g. wind and water). All clips were 5 s long with a sampling rate of 48,828.125 Hz and with root mean square intensity ranging from 75 to 82 dB SPL.

Experimental setup and neural responses

The electrophysiological data used in this study were taken from a series of experiments used in a previous study by Harper et al. [23]. Briefly, recordings were made from the primary auditory cortical areas, A1 and the anterior auditory field (AAF) of 6 adult pigmented ferrets (5 females and 1 male) under ketamine (5 mg/kg/h) and medetomidine (0.022 mg/kg/h) anaesthesia for 20 repeats of the 20 natural sound clips played in random order. All animal procedures were performed under license from the United Kingdom Home Office and were approved by the local ethical review committee. In total, 56 penetrations resulted in 549 single and multi-units, of which 284 were single units. A single unit was taken for analysis only if its activity was driven by the stimulus according to the noise ratio (see below). For each unit, the number of spikes was counted in each 5 ms time bin and averaged over repeats, to provide a response profile yn(t), where t is time, and n is the clip number. The total number of time bins in a clip is T. For simpler notation, we will drop the subscript n, unless we note otherwise.

Noise ratio

The noise ratio was calculated as a measure of how much the response of each unit is dependent on the stimuli [9,24]. The noise ratio was measured over all 20 stimuli and 20 repeats. Any unit with a noise ratio > 40 were excluded from the study. Also, only putative single units were used. This resulted in 73 neurons for the study.

Cochleagrams

The models receive the input as a cochleagram, a spectrogram-like transformation of the sound waveform. The cochleagram approximates the spectral filtering performed by the auditory periphery and was calculated as follows [23,25]. For each sound clip, the amplitude spectrum was measured using 10-ms Hanning windows, overlapping by 5ms. The number of frequency channels was then reduced by weighted summation using overlapping triangular windows to provide 34 log-spaced channels (500 Hz to 22,627 Hz center frequencies, adapted from melbank.m (http://www.ee.ic.ac.uk/hp/staff/dmb/voicebox/voicebox.html)). Next, a log function was applied to the value in each time-frequency bin, and any values below a low threshold was set to the threshold. The cochleagram was normalized to zero mean and unit variance over the training set (see Cross-validation and model testing). The input xfq(t) at time t was the chunk of cochleagram preceding t, given by xfq(t) = Kf(t−q+1), where f is the frequency channel and q is the latency and Kf(t) is the cochleagram.

The LN model

The two stage LN model consists of a linear model followed by a sigmoid output nonlinearity.

Linear stage:

| (1) |

where a(t) is the model neuron's activation, wfq is the input weight and b is the baseline activation. Both w and b are free parameters of the model and were estimated by regressing neural response y(t) against input x(t) using glmnet [26]. To overcome overfitting, the weights were regularized using an L1-norm (LASSO regularization). Thus a(t) can be seen as the best linear estimate of y(t) from x(t). The regularization hyperparameter λ controlled the strength of the regularization. To select λ, the model was subjected to k-fold cross-validation (see Cross-validation and model testing).

Nonlinear stage: The nonlinear stage of the model was a parameterized sigmoid nonlinearity:

| (2) |

The four parameters ρi of the function were fitted by minimizing the squared error between v(t) and y(t).

The network receptive field (NRF) model

The NRF model is an artificial neural network with a single hidden layer of 20 hidden units (HU) which converge onto an output unit (OU). Each unit of the model is similar to a single LN model. The activation of the j-th HU, aj(t) is,

| (3) |

The output of the j-th HU, vj(t) is,

| (4) |

where g(aj(t)) is a sigmoid non-linear activation function, 1/(1+exp(−aj(t))). The HUs feed these outputs to the OU. The activation function of the OU ao(t) is,

| (5) |

The output vo(t) of the OU is,

| (6) |

which is the model’s estimate of the neuron’s response at time t.

The dynamic network (DNet) model

A neuron’s response depends on the activity of its presynaptic neurons through the total synaptic current they evoke. However, a neuron’s membrane potential, and consequently its firing rate, is not an instantaneous function of this current. Due to the capacitance and resistance of the neural cell membrane, the membrane potential and hence the firing rate is approximately a low-pass filtered version of the synaptic current. A common simple dynamic model of neurons that captures this phenomenon is the differential equation (Eq 7) known as the firing-rate equation [22].

| (7) |

Here, τcont is the membrane time constant, v is post-synaptic firing rate, I(tcont) is the synaptic current at time tcont,F() is a nonlinear function that relates synaptic current to the post-synaptic firing rate, and tcont is time. Here we use tcont and τcont with the superscript ‘cont’ to denote that for this equation these variables operate in continuous time rather than discrete time. τcont determines how rapidly firing rate approaches steady state for a constant synaptic current, and can be related to the membrane time constant [22]. Although the relationship is not strict, we shall call τcont the membrane time constant. This is equivalent to convolving the instantaneous output of function F() with an exponential decay impulse response.

Eq 7 can be discretized by the Euler method, where c = 1 time-bin = 5 ms. We redefine v and I to depend on time-bin number, t, rather than continuous time (t = tcont / c, where t is an integer). Hence, the discretized form of the firing rate equation is:

| (8) |

The dynamic network model incorporates units that behave like Eq 8. In recognition that the characteristics of units in our model may not exactly correspond to biophysical properties of neurons described by the firing rate equation, and to be consistent with the NRF model, we replace F() with g() and the current I(t−1) with activation a(t).

As a result, activation aj(t) of the j-th HU of the DNet model is the same as the NRF model,

| (9) |

However, the output vj(t) is different from that of the NRF model, incorporating the discretized firing-rate equation.

| (10) |

Similarly, the activation ao(t) of the OU is,

| (11) |

and the output vo(t) of the OU is,

| (12) |

Here, τj and τo are the membrane time constants of j-th hidden unit and output unit respectively.

The sDNet model

The firing-rate equation (Eq 7) assumes that the time constant that governs the relationship between the current and the firing rate is substantially larger than the time constant that governs the decay of post-synaptic potentials. However, if we assume the reverse, i.e., that the synaptic time constant is substantially larger, a different simple model of the relationship between the firing rate and the current becomes appropriate [22]. This model is given by:

| (13) |

| (14) |

Here, I is synaptic current and v is post-synaptic firing rate, z(tcont) is the immediate influence of the presynaptic spikes on the synaptic current, F() is the nonlinear function of synaptic current to post-synaptic firing rate, is synaptic time constant, and tcont is time.

Similar discretization, like that used for the DNet model (see above), can be used for Eq 13 to use this for the units of a neural network model. Again, replacing the synaptic current, I, with the activation a, the activation of hidden units of the sDNet model can be modelled as the following:

| (15) |

And the hidden unit output, replacing F() with g(), is given by:

| (16) |

Hence, in the sDNet model, the activation of each HU, rather than the output of the nonlinearity, is convolved with an exponential decay impulse response.

Similarly, for the output units,

| (17) |

And the output,

| (18) |

Here, τsj and τso are the synaptic time constants of j-th hidden unit and output unit respectively.

Objective function of the NRF and DNet models

The free parameters wjfq, wj, bj, and bo (also τj and τo for the DNet and τsj and τso for the sDNet) were optimized by minimizing the squared error between vo(t) and y(t) subject to L1-regularization of the weights. Thus, the objective function is given by,

| (19) |

Here, n is included to indicate clip number, but was left out of other equations for simplicity. N is the number of clips used in training.

Parameter estimation

All models except for the LN model are fitted by minimizing the objective function with respect to the free parameters using the sum-of-function optimizer algorithm [27]. In using this algorithm, we take one clip to be one minibatch. The optimization algorithm requires calculation of the error gradients in respect to each of the parameters. For the NRF model, error gradients are calculated using standard chain rule (backpropagation). For the dynamic models, the process is similar (see below).

For the network models, before training, the weights were initialized by modified Glorot initialization from a uniform distribution ranging from— to + , where fq is the number of input weights to a HU and l = 1 is the number of output weights from a HU. The biases were initialized similarly [28].

For the DNet model, to prevent the term running into mathematical error (when τ = 0), we substitute with . Parameters, dj and do (for hidden and output units) were each independently initialized with the square root of a random variable from an exponential distribution with a mean of 1. Similar, substitution is used for the sDNet model.

Error-gradient calculation for the DNet model

To estimate the parameters, the gradients of the error with respect to various parameters are calculated using a method similar to the one used for real time recurrent learning [29].

Substituting , the dynamic equations for HUs (Eq 10) and OU (Eq 12) of the DNet model become respectively:

| (20) |

| (21) |

Using the chain rule, the gradient of the error term, E(t) at time t in respect to the weights of the output layer, wj,

| (22) |

| (23) |

The prime denotes the derivative of the function. Similarly, the gradient of the error term, E(t) in respect to the bias of the output layer, bo,

| (24) |

| (25) |

Finally, the gradient of the error term, E(t) with respect to the parameter, do,

| (26) |

| (27) |

Hence, the gradient is passed forward from the current time step to the next time step to be used in calculation of the gradient at that new time step. At t = 1, the values of and are undetermined. So, at time t = 1, the values of these terms are set to zero.

The gradients of the error term, E(t), with respect to the rest of the parameters of the DNet model and the sDNet model are obtained similarly.

Cross-validation and model testing

From the data for the 20 sound stimuli, 4 were chosen as a test set which was not used during training and cross-validation. The cross-validation set (the remaining 16 stimuli) was used to fit the models using k-fold cross validation, where k = 8. The cross-validation set was randomly divided into a training set of 14 stimuli and a validation set of 2 stimuli. The model was trained on the training set for 18 different values of the hyperparameter λ. A log spaced range of lambda values was used, but with a somewhat lower density at the extremes. For the LN model, the exact values of λ used were: 1.00 x 10−1, 2.00 x 10−2, 1.17 x 10−2, 6.84 x 10−3, 4.00 x 10−3, 2.34 x 10−3, 1.37 x 10−3, 8.00 x 10−4, 4.68 x 10−4, 2.74 x 10−4, 1.60 x 10−4, 9.36 x 10−5, 5.41 x 10−5, 3.20 x 10−5, 6.40 x 10−6, 1.28 x 10−6, 2.56 x 10−7, and 5.12 x 10−8. For the rest of the models, the values of λ used were: 1.00 x 10−3, 2.00 x 10−4, 1.17 x 10−4, 6.84 x 10−5, 4.00 x 10−5, 2.34 x 10−5, 1.37 x 10−5, 8.00 x 10−6, 4.68 x 10−6, 2.74 x 10−6, 1.60 x 10−6, 9.36 x 10−7, 5.41 x 10−7, 3.20 x 10−7, 6.40 x 10−8, 1.28 x 10−8, 2.56 x 10−9, and 5.12 x 10−10. For each of the fitted models, neural responses were then predicted for the validation set, and the correlation coefficient between the actual neural responses and the prediction was measured. This process was repeated 8 times for different non-overlapping validation sets. The model was then retrained with the whole cross-validation set using the λ value that provided the highest mean correlation coefficient over all 8 folds. Next, the retrained network was used to predict the neural responses to the test set. All the correlation coefficients and normalized correlation coefficients shown are for this held out test set, and all the model parameters shown are for the retrained network.

To ensure that all models receive the same amount of training data, models with different latency spans of STRFs are provided with exactly the same durations of neural response. This was done by clipping the necessary number of time points from the beginning of the neural response data.

Effective hidden units

The hidden layer of each of the networks contained 20 HUs, but because their weights were L1-regularized, they tended to develop substantive weights only if they explained aspects of the neural response that were not explained by any other units. As a result, many HUs developed weights close to zero, and thus the network models tended to have only a small number of effective HUs. The ‘effectiveness’ of each unit j was calculated [23] as the variance over time of the unit’s weighted output wovj(t). Any HU with variance greater than 5% of the sum of the variances of all 20 HUs is considered effective.

IE score

The IE score [23] measures whether the degree to which a HU is excitatory or inhibitory, on a scale between -1 and 1. Most inhibitory is IE = -1, when all of the input weights are negative or zero, and the output weight is positive, or when all of the input weights are positive or zero and the output weight is negative. Most excitatory is IE = 1, when all of the input weights are positive or zero, and the output weight is positive, or when all of the input weights are negative or zero and the output weight is negative.

| (28) |

Membrane time constant

Because the membrane time constant is modelled as 1+d2 and the duration of each time step is 5 ms, the value of membrane time constant in ms is 5 (1+d2).

Knocking out large time constants of the DNet model

After fitting a network model, HUs with time constant greater than the length of the STRF are selected. The output weights connecting these units to the output unit are then set to zero. As a result, HUs with large time constants become inactive during the test process. Everything else in the model remained unchanged.

Results

The neural response dataset used in this study was recorded from the primary auditory cortical areas, A1 and AAF, of anesthetized ferrets in response to a diverse selection of natural sounds (20 stimuli of 5 s duration), including speech, animal vocalizations and environmental sounds [23,25]. In total, 73 single-unit neural recordings showed sensitivity to the sound stimuli (see Methods), as measured by their noise ratios, and these were analysed in this study.

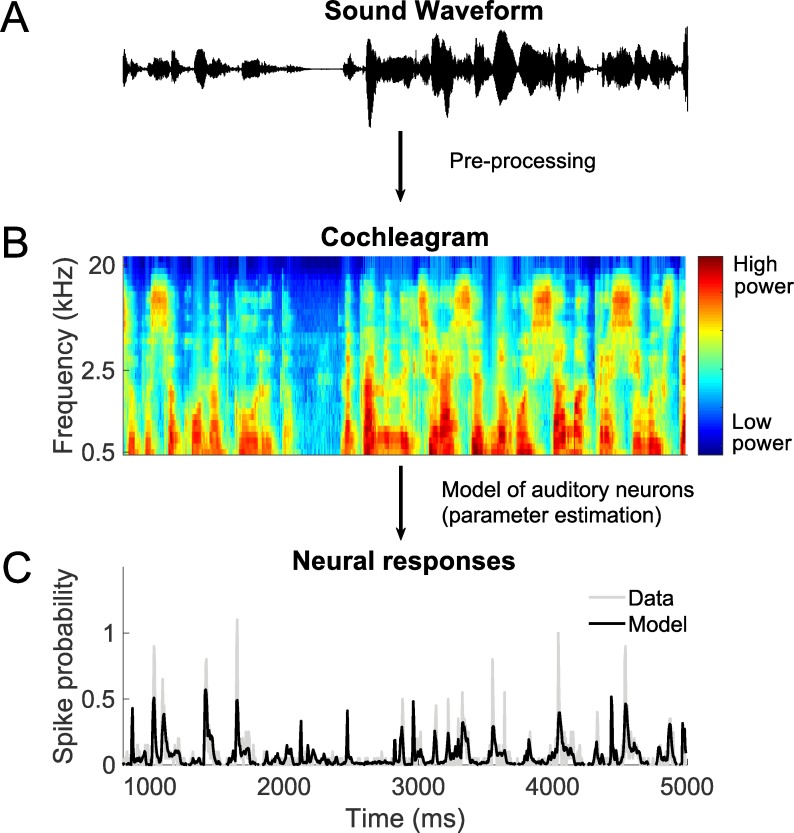

To probe the computations underlying stimulus encoding, models were fitted to estimate the neural firing rate (averaged over stimulus repeats) of each neuron as a function of the preceding sound stimulation (Fig 1). First, for all models, the sound stimuli were pre-processed to generate a spectrogram-like representation (a cochleagram) that approximates the processing that occurs in the cochlea and auditory nerve. Second, using responses to 16 of the natural stimuli, a parameterized model was trained to estimate the firing rate as a function of the preceding cochleagram. After parameters of the models were fitted to the data, their fit quality was tested on a held-out test set composed of the responses to the remaining 4 natural stimuli.

Fig 1. The models have two-stages.

A. The first stage involves pre-processing that mimics the auditory periphery, where the sound waveform is converted into a time-frequency representation (cochleagram). B. The output of the first stage is then used as input to a model that is fitted to the neural responses of auditory cortical neurons. C. After parameter estimation, the model was used to predict neural responses to arbitrary stimuli.

Using standard models, access to 200 ms of recent stimulus history is needed to achieve best prediction

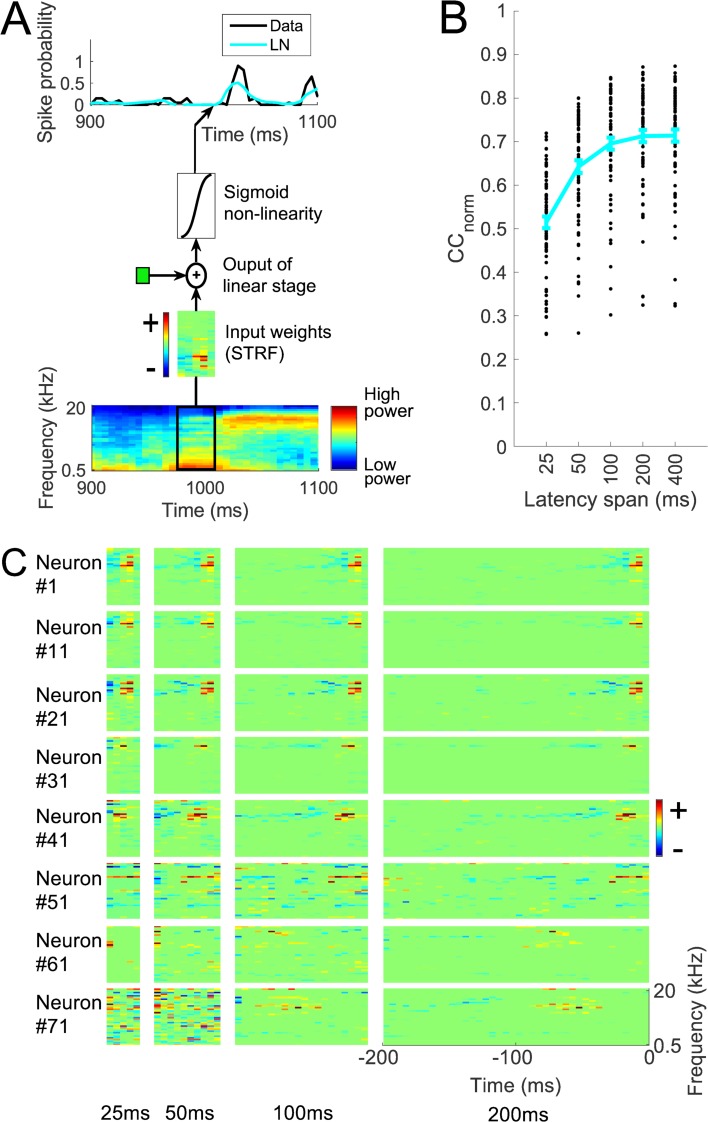

For each neuron, an LN model was fitted to estimate its firing rate as a function of the preceding cochleagram. The LN model consists of an STRF, followed by a sigmoid nonlinearity (Fig 2A). As mentioned in the Methods, the STRF is the weighted sum of the cochleagram over frequency components at a span of latencies. The effect of different spans of latencies (lengths) of the STRF was investigated. For the held-out test set, and averaged over the 73 neurons, the mean normalized correlation coefficient, mean CCnorm [30,31], between the actual neural responses and the prediction of LN models with 25, 50, 100, 200, and 400 ms long STRFs are respectively 0.51, 0.64, 0.69, 0.71, and 0.71 (Fig 2B), where 1.0 is the maximum achievable prediction given the estimated neuronal noise and 0.0 indicates that there is no correlation between actual and predicted neural responses. The means of non-normalized Pearson correlation coefficients (CC) are 0.40, 0.50, 0.54, 0.55, and 0.55 respectively. Fig 2C shows STRFs of some example neurons estimated by the LN model.

Fig 2. Prediction of neural responses by the linear non-linear (LN) model.

A. The LN model, which consists of a spectrotemporal receptive field (STRF) followed by a sigmoid nonlinearity. B. Normalized correlation coefficients (CCnorm) between neural responses and the prediction of the LN model with different latency spans. Each black dot represents a neuron, the curve indicates the mean over 73 neurons and error bars indicate standard error of the mean. C. STRFs of some example neurons, for LN models with different latency spans. Red, excitatory fields, blue, inhibitory. Excitatory and inhibitory fields extend up to 100 ms in most cases and up to 200 ms in some cases.

A network receptive field model (the NRF model) was also developed and fitted to each neuron. This model consisted of the weighted sum of multiple LN-like units. This model is the same as a previously published version [23], except that it uses sigmoid nonlinearities instead of scaled tanh non-linearities. A sigmoid non-linearity was used to be consistent with the dynamic network model that we will come to, which requires non-negative outputs and hence also uses sigmoid non-linearities. The NRF model we use here is essentially an artificial neural network with a single hidden layer, using a sigmoid non-linearity in its hidden units and its single output unit (Fig 3A). The NRF model makes use of multiple component STRFs, which allows modelling of neuronal sensitivity to non-linear interactions of multiple features [23]. STRFs of at least 400 ms are needed for best performance in predicting neural responses (Fig 3B). For the test set, the mean CCnorm between neural responses and the model predictions made by NRF models with 25, 50, 100, 200 and 400 ms long component STRFs are 0.51, 0.64, 0.70, 0.72 and 0.73, respectively (mean CC 0.40, 0.50, 0.54, 0.55 and 0.56, respectively). The NRF model predicts the neural responses equal to or better than the LN model over all durations examined, although by a more modest amount than seen in an earlier study [23]. This may be because the NRF model in this study uses a sigmoid rather than tanh nonlinearity, or because the earlier study [23] used a different way of selecting the test set. Fig 3C shows the input weights (analogous to STRFs) for the ‘effective’ HUs (see Methods) of the model-fit for one example neuron, obtained using the NRF model. Fig 3D and Fig 3E show the ‘effectiveness’ (see Methods) and IE score (see Methods) of the units shown in Fig 3C.

Fig 3. Prediction of neural responses by the network receptive field (NRF) model.

A. The NRF model, which involves a weighted sum of multiple LN-model like units, each with an STRF. B. Normalized correlation coefficients (CCnorm) between neural responses and the prediction of the NRF model with different latency spans. Each black dot represents a neuron, the curve indicates the mean over 73 neurons and error bars indicate standard error of the mean. C. Component STRFs of an example neuron (Neuron #1). Each column represents an NRF model with different latency spans. D. ‘Effectiveness’ (see Methods) and E. IE score (see Methods) of the components STRFs shown in panel C.

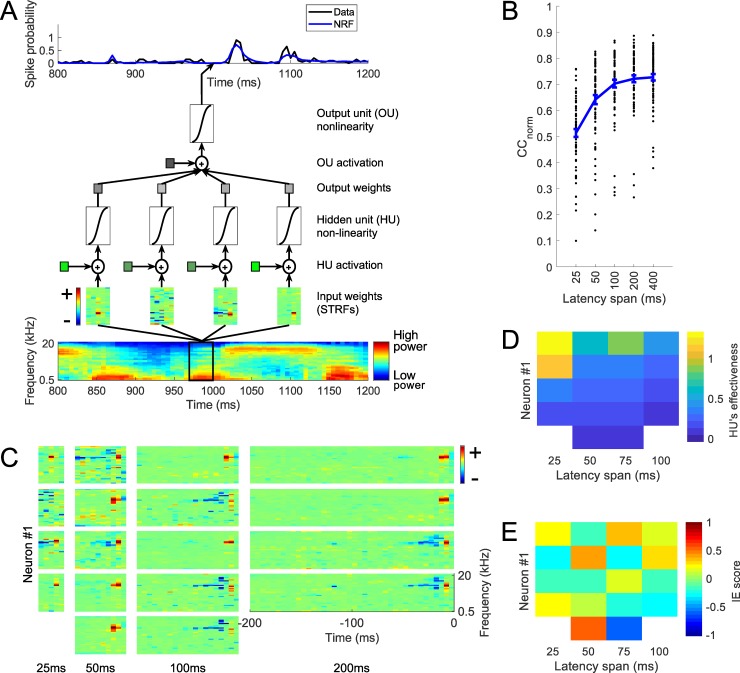

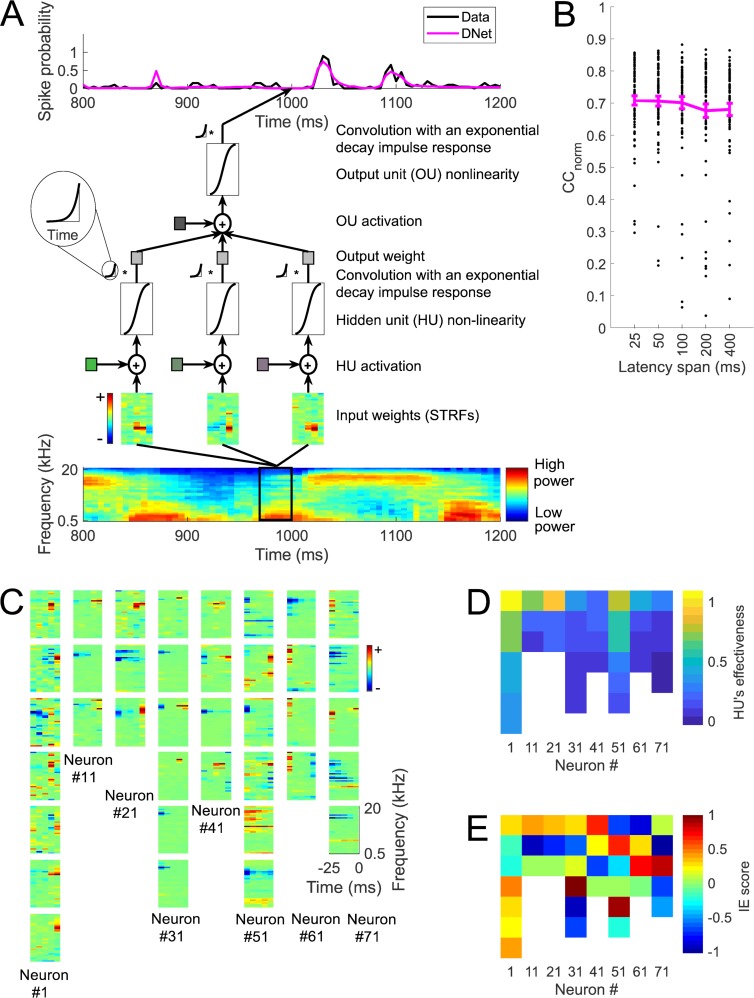

The DNet model can characterize neural responses using very short duration STRFs

Although both the LN and NRF models can capture dependence on stimulus history using a span of input latencies extending up to at least 200 ms, there is little biological evidence for such a wide span of latencies in the auditory thalamic neurons that feed to auditory cortex (see Introduction). With the aim of better understanding the biological underpinnings of stimulus history dependence in auditory cortical responses, a model with a dynamic aspect was developed that integrates the output of its component units over time in a manner similar to those of real neurons (see Methods). Each unit in the DNet model includes exponentially-decaying response integration, motivated by the integrative properties of real neuronal membranes (Fig 4A). This model is in essence the NRF model but with dynamic units, each modelled by the dynamic firing-rate differential equation (Eq 7) [22]. That is, each unit of the model applies an exponentially decaying impulse response to the output of their non-linear activation function. Each unit’s decay rate is governed by its individually fitted time constant, τ (see Methods).

Fig 4. Prediction of neural responses using a dynamic network (DNet) model.

A. The DNet model. The cochleagram is passed through a set of linear-nonlinear filters, whose response is then integrated over time using an exponentially-decaying impulse response to produce the hidden units’ outputs. A weighted sum of these outputs is passed through a similar output unit to make a prediction of the neural response. B. CCnorm for the DNet model with different latency spans. Each black dot is a neuron, the curve indicates the mean over 73 neurons and error bars are standard error of the mean. C. The input weights (STRFs) for the ‘effective’ hidden units (see Methods) of 8 example neurons (each column is a different neuron) for the DNet model. D. ‘Effectiveness’ (see Methods) and E. IE score (see Methods) of the hidden units shown in panel C.

We found that the mean CCnorm between the actual neural responses and the predictions of the DNet model with 25, 50, 100, 200 and 400 ms STRFs are respectively 0.71, 0.71, 0.70, 0.68 and 0.68 (Fig 4B) (mean CC 0.55, 0.55, 0.54, 0.52 and 0.52, respectively). Because the 25-ms model performs the best and the biological range of latencies are typically less than 30 ms[32], we focused on the 25-ms DNet model for further analysis. Fig 4C shows the input weights (analogous to STRFs) for the ‘effective’ hidden units (see Methods) of the model fits for 8 example neurons, using a DNet model with 25-ms long STRFs. Fig 4D and 4E show the ‘effectiveness’ (see Methods) and IE score (see Methods) of the units shown in Fig 4C.

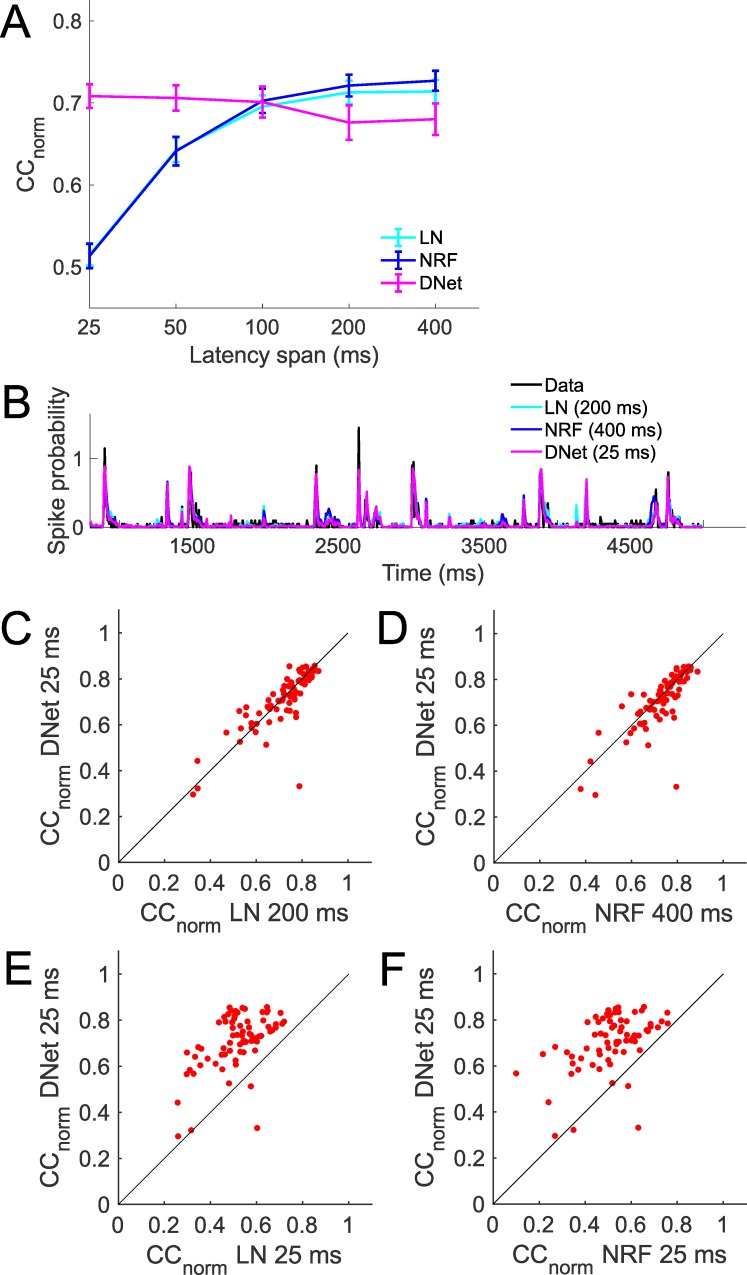

The 25-ms DNet model out performs the DNet with longer (200 ms and 400 ms) STRFs, as measured by prediction of neural responses on a held-out test set averaged over all 73 neurons (Fig 5A). It also achieves performance similar to the best performing LN model, but performs a little worse than the best performing NRF model (Fig 5A). This can be seen qualitatively in the time course of the prediction for an example neuron and stimulus in Fig 5B. A neuron by neuron comparison shows that this result is consistent for most neurons (Fig 5C and 5D). Also, the 25-ms LN and NRF models are outperformed by a large margin by the 25-ms DNet model (Fig 5A). A neuron by neuron comparison shows that this result is also consistent for almost all neurons (Fig 5E and 5F).

Fig 5. Comparison of the performance of the DNet model with other models.

A. Mean CCnorm of linear-nonlinear (LN), network receptive field (NRF) and dynamic network (DNet) models. B. Time course of predictions by best performing versions of each of the LN, NRF and DNet models, compared with real neural responses for an example neuron and stimulus. C. Neuron by neuron comparison of CCnorm values between the 25-ms DNet model and 200-ms LN model. Each red dot is a neuron. D. Neuron by neuron comparison of CCnorm values between the 25-ms DNet model and 400-ms NRF model. E. Neuron by neuron comparison of CCnorm values between the 25-ms DNet model and 25-ms LN model. F. Neuron by neuron comparison of CCnorm values between the 25-ms DNet model and 25-ms NRF model.

Note that the DNet model is equivalent to the NRF model when the discretized time constant for every DNet model unit is at the smallest possible value (τ = 1, d = 0). Hence, because all possible NRF models are a subset of all possible DNet models, the DNet should in theory always equal or outperform the NRF model. The fact that this is not the case suggests that, at least in some cases, the very best fits are not being found for the DNet model, perhaps as a consequence of some overfitting. That the DNet model performs worse for large latency spans, where there are more variables, is again probably due to challenges in fitting the DNet model, likely due to some overfitting to the training set.

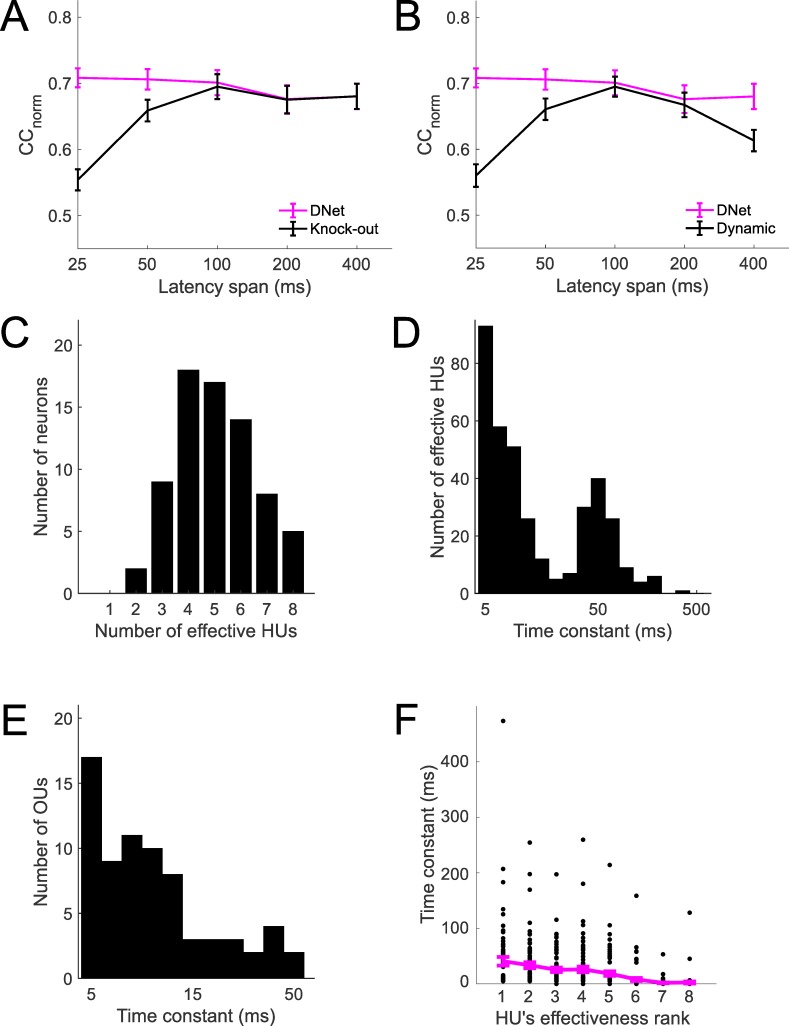

Network structure and response integration are both required for an effective DNet

To understand how much of an effect HUs of the DNet model with long time constants have on the model’s predictions, the fitted model was modified by ‘knocking out’ (see Methods) HUs with time constants longer than the duration of their STRFs. This was achieved by setting the output weights of those units to zero, while keeping all other parameters intact. We found that the 25-ms DNet model is the DNet whose CCnorm is most reduced by this alteration (Fig 6A).

Fig 6. Multiple time constants in a network architecture are required to capture the dependence of neural response on stimulus history.

A. DNet model performance when large time constants are knocked out. B. Performance of the single-unit dynamic model. C. Number of effective hidden units (HUs) used to model each of the 73 neurons using the DNet model. D. Distribution of time constants of HUs of the DNet model. E. Distribution of time constants of the output units (OUs) of the DNet model. F. Relationship between time constant and HU effectiveness for the DNet model.

A single-unit dynamic model was also developed to examine the effect of a time constant in an LN-like single STRF model. Prediction performance of this model was found to be worse than the LN model (Fig 6B). This indicates that both long time constants and a network architecture are needed for the 25-ms DNet model to achieve prediction performance similar to that of standard models.

Although the DNet model had 20 hidden units, the L1 regularization eliminated unnecessary units. Hence there were fewer effective HUs, and for the 25-ms DNet model this number was found to be between 2 and 8 (Fig 6C). We defined an effective HU as one whose effectiveness (variance of weighted output, see Methods) exceeded a certain threshold.

In the 25-ms DNet model, the time constants of the effective HUs ranged between 5 and 475 ms, often far greater than the duration of the STRFs (Fig 6D). The time constants of the OUs of the DNet model ranged from 5 to 130 ms (Fig 6E). To investigate whether long time constants are associated with effective HUs, for each neuron HUs were ranked in order of effectiveness and the mean of the time constants of all HUs in the same rank (over all neurons) were calculated. This showed that high-ranked HUs tend to have long time constants (Fig 6F).

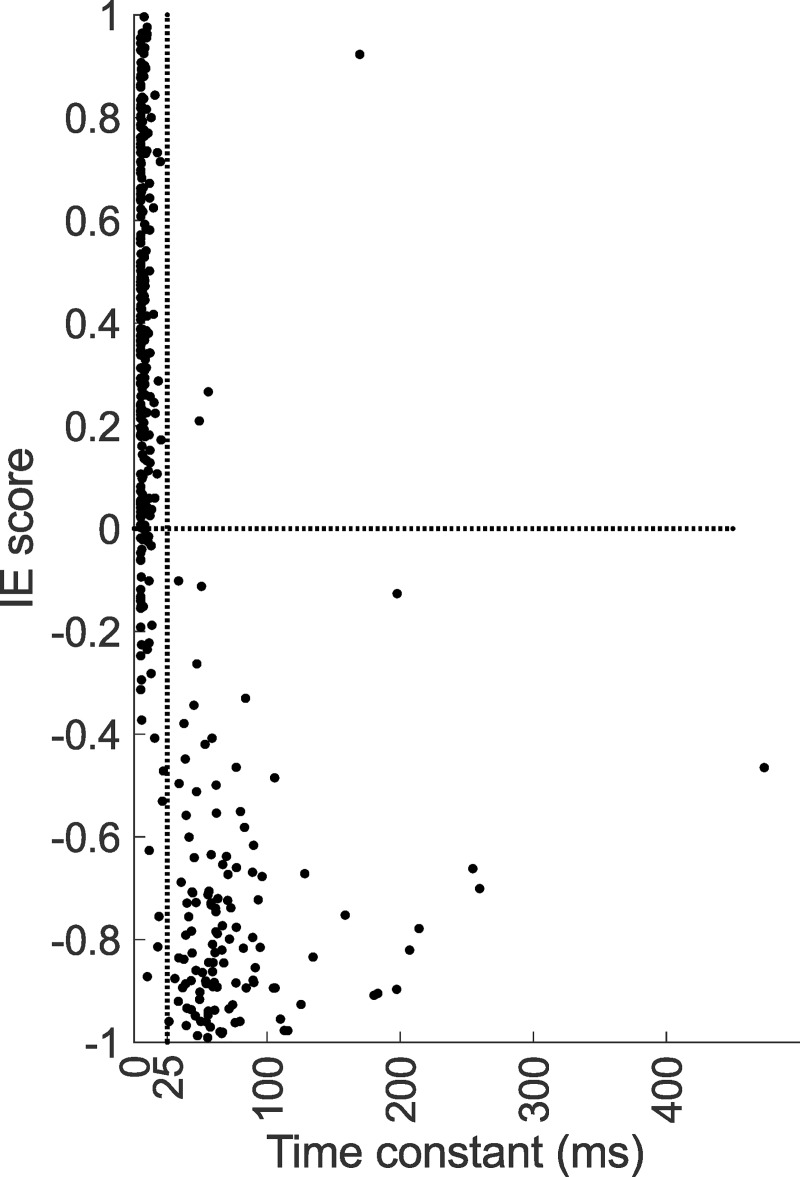

Hidden units with long time constants tend to be more inhibitory

To test whether a relationship exists between the time constant and unit’s inhibitory/excitatory nature, we classified the effective HUs of the 25-ms DNet model into excitatory or inhibitory using an IE score (see Methods). If the output weight is positive, an IE score of 1 means a unit with entirely excitatory influence and an IE score of -1 means a unit with entirely inhibitory influence. We found that almost all units having large time constants are inhibitory in nature (Fig 7).

Fig 7. Relationship between inhibitory/excitatory (IE) score and time constant.

Almost all the HUs of the DNet model with time constants greater than the duration of the STRFs (units right of the vertical dotted line, the duration of the 25 ms STRFs) are inhibitory in nature. IE score = inhibitory/excitatory score.

Membrane time constant versus synaptic time constant

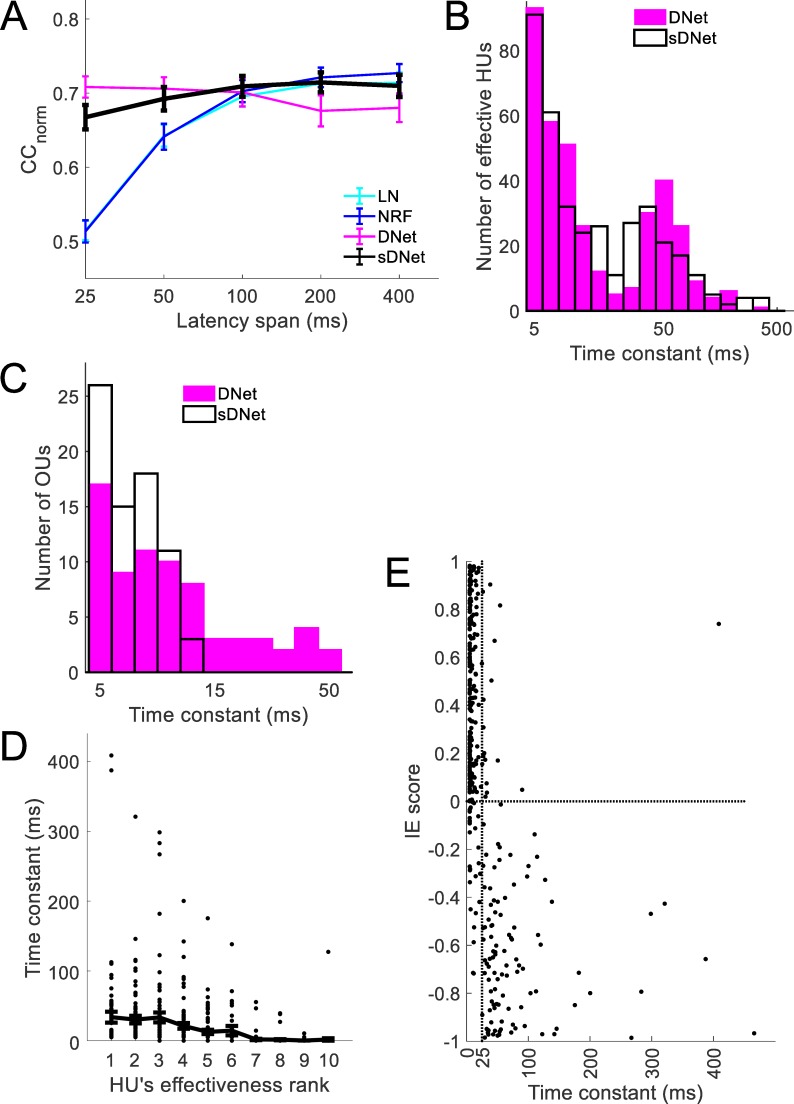

In the DNet model, the integration governed by the time constant occurs after each unit’s nonlinearity. Although the time constant is conceived and implemented in a manner consistent with a membrane constant, within the network it may capture other dynamic neural phenomena such as synaptic time constants, forms of a channel-based or synaptic adaptation, or certain forms of network recurrency. An alternative network model places the integration just before the nonlinearity, which is more consistent with the time constant being synaptic in nature [22]. To investigate how much of the neural activity can be explained by such a model, we modified the DNet model to form the sDNet model (synaptic DNet model), with each unit governed by Eqs 13 and 14 [22].

The sDNet model achieves best performance in predicting neural responses when the duration of the STRFs is 200 ms (CCnorm = 0.71) (Fig 8A). Although the sDNet with 25-ms STRFs predicts neural responses better (CCnorm = 0.67) than the 25-ms LN model (CCnorm = 0.51) and the 25-ms NRF model (CCnorm = 0.51), it is worse than the 25-ms DNet model (CCnorm = 0.71). This suggests that a large percentage of the variance of neural responses can be explained by a time constant before non-linearity, but that having a time constant after the non-linearity further improves prediction.

Fig 8. A modified DNet model with synaptic time constants (sDNet model).

A. Performance of the sDNet model. B. Distribution of the values of time constants of hidden units (HUs) in the sDNet and DNet model C. Distribution of the values of time constants of the output units (OUs) of the sDNet and DNet model. D. Relationship between a HU’s time constant and its effectiveness for the sDNet model. E. Relationship between a HU’s inhibitory/excitatory (IE) score and its time constant for the sDNet model. Most of the HUs with time constants greater than the duration of the STRFs (units right of the vertical dotted line) are inhibitory in nature.

While the largest time constants of the effective HUs of the sDNet model can be as large as ~470 ms (Fig 8B), the distribution of time constants of output units have smaller values compared to the DNet model (Fig 8C). As with the DNet model the more effective HUs of the sDNet model tend to have longer time constants (Fig 8D) and units with long time constants tend to be inhibitory in nature (Fig 8E).

Discussion

We find that a model of primary auditory cortical neurons which incorporates exponentially-decaying memory processes–the DNet model–can capture some of the temporal aspects of the receptive field using very short (25 ms) component STRFs in a network. Standard models such as the LN model (and the NRF model) represent temporal receptive fields of neurons using delay lines whose latencies extend up to a few hundred milliseconds. Our results suggest that, beyond 25ms, the temporal receptive field can be modelled more succinctly by exponentially-decaying memory processes than by delay lines.

When auditory neurons are fitted using a standard LN model, the resulting STRFs often contain narrowband inhibitory fields that decay over approximately 200 ms. In contrast, we find that the STRFs of the DNet model have short inhibitory fields with long time constants. This suggests that these temporally extended inhibitory fields in STRFs may reflect neural mechanisms that can be approximated by exponentially-decaying memory processes.

Although they have not been described in the ferret, a few vMGB units do show onset latencies beyond a few tens of milliseconds: in cat latencies up to 70 ms have been described [19], in mouse up to 60 ms [20], and in marmoset up to 300 ms [21]. Areas other than vMGB that project to cortex may also include long latency responses. Hence, we cannot rule out the possibility that long-latency inputs at least partially explain the dependence of auditory cortical neurons on stimulus history–indeed it is likely that they do to some extent. However, these long-latency responses are relatively rare and we show that it is possible to explain a lot of the dependence of the neural response in primary auditory cortex by exponentially-decaying memory properties consistent with those properties intrinsic to neurons, such as membrane dynamics.

There are many biological mechanisms which may underlie exponentially-decaying memory processes. Neurons have various intrinsic dynamic integrative and adaptive characteristics originating from basic membrane dynamics, channel-based adaptation, post-synaptic potentials and short-term synaptic plasticity [22]. Recurrent network effects could also underlie such memory processes. In a previous study, a model with synaptic depression has been shown to partially capture the integration of stimulus history in A1 [33]. Here, we found that a better prediction of neural responses can be achieved by a model based on the integrative properties of neural membranes (the DNet model) than by the one based on the dynamics of synaptic potentials (the sDNet model). We note, however, that although the DNet model was developed with membrane dynamics in mind, aspects of all the above listed dynamic processes could be captured by the same exponentially-decaying memory in the DNet. These different processes are not exclusive of each other, and combinations of them may contribute to the effects we find.

The firing rate of A1 neurons has been demonstrated to depend on stimulus history up to ~4 s in the past [17]. This dependence cannot be fully captured by linear models such as STRFs (17). This indicates that there are some nonlinear dynamic processes that provide history dependence which cannot be captured either by a linear or LN models, nor by the DNet model. Perhaps this is because the DNet model has limited capacity to capture temporal dynamics that are not exponential in nature.

Much of the relationship between stimulus and neural responses results from neurons being embedded in a network structure and from the nonlinear properties of the units in this network. From the auditory periphery to the A1, there are numerous feedforward, feedback and lateral connections. Many previous models of auditory cortical neurons have not explicitly represented this network character. However, some recent models have incorporated non-linear contextual effects [14,25,34–36]. Furthermore, a few models do explicitly incorporate multiple receptive fields [37–43] or more extensive network structure [23], which perform better in predicting neural responses of A1/AAF neurons than STRF models [23,37].

When modelling the dependence of neural responses on stimulus history, one approach is to include the history of a neuron’s spikes and those of its recorded neighbours in a generalized linear model (GLM) model [35,44]. However, unlike the DNet model, this approach does not model multi-layer network structure with hidden units that are inferred but not recorded. Modelling hidden network structure has been applied to the salamander retina, where in vitro responses to simple artificial stimuli are well predicted by a network model (LNFDSNF) with various non-linearities and response delays mimicking the retinal pathway [45]. The LNFDSNF model is particularly relevant to our study because it is a network model whose units have some memory of their past activity, though the form of this memory differs from that of the DNet model. The LNFDSNF model units have a feedback kernel that acts before the nonlinearity—in contrast, the DNet model units integrate their activity after the nonlinearity using an infinite impulse response that decays exponentially.

The succinct and biologically-motivated description of neural responses provided by the DNet model provides a basis from which to develop further models to account for the ~30% of the CCnorm that remains to be explained. One variant of the model that can be developed involves a network with multiple hidden layers where the units each have just a single latency (i.e. each unit in the first hidden layer uses just a spectral receptive field at a single delay). This approach provides an opportunity to build deeper neural-network-like models with fewer parameters, potentially overcoming overfitting problems for deep network models of neural responses [46]. This network can provide a basis for new network models that incorporate a greater range of intrinsic dynamic processes more explicitly, for example modelling in each unit its basic membrane dynamics, synaptic depression and channel-based adaptation together, with different appropriate time constants in each case. Such models may show more predictive power.

Neurons are intrinsically dynamic systems, each with memory of past events. Furthermore, neurons are embedded in networks. We show that models incorporating both these characteristics can be valuable for explaining moment to moment in vivo responses of neurons in the auditory cortex. This is an arguably more biologically plausible way of capturing the history dependence of neural responses than a set of delay lines. In artificial neural networks, memory is usually implemented through recurrent connections between units. However, real biological neurons also have intrinsic memory processes with particular characteristics. Including such single-unit memory has potential advantages for explaining the in vivo responses of biological neurons and may also be useful in artificial neural networks. In summary, the dynamic network model we present here provides a biologically-plausible alternative encoding model to the classic LN model, as well as a basis for further development of encoding models with hidden units with diverse intrinsic dynamic processes.

Data Availability

The experimental data underlying the results presented in the study were previously published by Harper et al. (2016) and are available from https://osf.io/ayw2p/. All codes are available from https://github.com/monzilur/DNet.

Funding Statement

BDBW, NSH, and AJK were supported by Wellcome Trust funding (WT108369/Z/2015/Z). MR was supported by the Clarendon Fund Scholarship. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Aertsen AMHJ Johannesma PIM, Hermes DJ. Spectro-temporal receptive fields of auditory neurons in the grassfrog. Biol Cybern. 1980;38: 235–248. [DOI] [PubMed] [Google Scholar]

- 2.Aertsen AMHJ Johannesma PIM. A comparison of the spectro-temporal sensitivity of auditory neurons to tonal and natural stimuli. Biol Cybern. 1981;42: 145–156. [DOI] [PubMed] [Google Scholar]

- 3.deCharms RC, Blake DT, Merzenich MM. Optimizing sound features for cortical neurons. Science. 1998;280: 1439–1444. [DOI] [PubMed] [Google Scholar]

- 4.Klein DJ, Depireux DA, Simon JZ, Shamma SA. Robust spectrotemporal reverse correlation for the auditory system: optimizing stimulus design. J Comput Neurosci. 2000;9: 85–111. [DOI] [PubMed] [Google Scholar]

- 5.Theunissen E, Sen K, Doupe AJ. Spectral-temporal receptive fields of nonlinear auditory neurons. J Neurosci. 2000;20: 2315–2331. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Schnupp JWH, Mrsic-Flogel TD, King AJ. Linear processing of spatial cues in primary auditory cortex. Nature. 2001;414: 200–204. 10.1038/35102568 [DOI] [PubMed] [Google Scholar]

- 7.Escabí MA, Schreiner CE. Nonlinear spectrotemporal sound analysis by neurons in the auditory midbrain. J Neurosci. 2002;22: 4114–4131. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Miller LM, Escabí MA, Read HL, Schreiner CE. Spectrotemporal receptive fields in the lemniscal auditory thalamus and cortex. J Neurophysiol. 2002;87: 516–527. 10.1152/jn.00395.2001 [DOI] [PubMed] [Google Scholar]

- 9.Linden JF, Liu RC, Sahani M, Schreiner CE, Merzenich MM. Spectrotemporal structure of receptive fields in areas AI and AAF of mouse auditory cortex. J Neurophysiol. 2003;90: 2660–2675. 10.1152/jn.00751.2002 [DOI] [PubMed] [Google Scholar]

- 10.Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci. 2003;6: 1216–1223. 10.1038/nn1141 [DOI] [PubMed] [Google Scholar]

- 11.Gill P, Zhang J, Woolley SMN, Fremouw T, Theunissen FE. Sound representation methods for spectro-temporal receptive field estimation. J Comput Neurosci. 2006;21: 5–20. 10.1007/s10827-006-7059-4 [DOI] [PubMed] [Google Scholar]

- 12.Christianson GB, Sahani M, Linden JF. The consequences of response nonlinearities for interpretation of spectrotemporal receptive fields. J Neurosci. 2008;28: 446–455. 10.1523/JNEUROSCI.1775-07.2007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Gourévitch B, Noreña A, Shaw G, Eggermont JJ. Spectrotemporal receptive fields in anesthetized cat primary auditory cortex are context dependent. Cereb Cortex. 2009;19: 1448–1461. 10.1093/cercor/bhn184 [DOI] [PubMed] [Google Scholar]

- 14.David S V, Mesgarani N, Fritz JB, Shamma SA. Rapid synaptic depression explains nonlinear modulation of spectro-temporal tuning in primary auditory cortex by natural stimuli. J Neurosci. 2009;29: 3374–3386. 10.1523/JNEUROSCI.5249-08.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Machens CK, Wehr MS, Zador AM. Linearity of cortical receptive fields measured with natural sounds. J Neurosci. 2004;24: 1089–1100. 10.1523/JNEUROSCI.4445-03.2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Simoncelli EP, Paninski L, Pillow J, Schwartz O. Characterization of neural responses with stochastic stimuli In: Gazzaniga M, editor. The New Cognitive Neurosciences. 3rd ed MIT Press; 2004. pp. 327–338. [Google Scholar]

- 17.Asari H, Zador AM. Long-lasting context dependence constrains neural encoding models in rodent auditory cortex. J Neurophysiol. 2009;102: 2638–2656. 10.1152/jn.00577.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Homma NY, Happel MFK, Nodal FR, Ohl FW, King AJ, Bajo VM. A Role for Auditory Corticothalamic Feedback in the Perception of Complex Sounds. J Neurosci. Society for Neuroscience; 2017;37: 6149–6161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Calford MB. The parcellation of the medial geniculate body of the cat defined by the auditory response properties of single units. J Neurosci. 1983;3: 2350–2364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Anderson LA, Linden JF. Physiological differences between histologically defined subdivisions in the mouse auditory thalamus. Hear Res. Elsevier; 2011;274: 48–60. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Bartlett EL, Wang X. Correlation of neural response properties with auditory thalamus subdivisions in the awake marmoset. J Neurophysiol. American Physiological Society Bethesda, MD; 2011;105: 2647–2667. 10.1152/jn.00238.2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Dayan P, Abbott LF. Theoretical neuroscience: computational and mathematical modeling of neural systems. Massachusetts Institute of Technology Press; 2001. [Google Scholar]

- 23.Harper NS, Schoppe O, Willmore BDB, Cui ZF, Schnupp JWH, King AJ. Network receptive field modeling reveals extensive integration and multi-feature selectivity in auditory cortical neurons. PLoS Comput Biol. 2016;12: e1005113 10.1371/journal.pcbi.1005113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Rabinowitz NC, Willmore BDB, Schnupp JWH, King AJ. Contrast gain control in auditory cortex. Neuron. 2011;70: 1178–1191. 10.1016/j.neuron.2011.04.030 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Willmore BDB, Schoppe O, King AJ, Schnupp JWH, Harper NS. Incorporating midbrain adaptation to mean sound level improves models of auditory cortical processing. J Neurosci. 2016;36: 280–289. 10.1523/JNEUROSCI.2441-15.2016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models. J Stat Softw. 2010;33: 1–3. [PMC free article] [PubMed] [Google Scholar]

- 27.Sohl-Dickstein J, Poole B, Ganguli S. Fast large-scale optimization by unifying stochastic gradient and quasi-Newton methods. arXiv Prepr arXiv13112115 2013; [Google Scholar]

- 28.Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. Proc AISTATS. 2010. pp. 249–256. [Google Scholar]

- 29.Williams RJ, Zipser D. A Learning Algorithm for Continually Running Fully Recurrent Neural Networks. Neural Comput. 1989;1: 270–280. [Google Scholar]

- 30.Hsu A, Borst A, Theunissen F. Quantifying variability in neural responses and its application for the validation of model predictions. Netw Comput Neural Syst. 2004;15: 91–109. [PubMed] [Google Scholar]

- 31.Schoppe O, Harper NS, Willmore BDB, King AJ, Schnupp JWH. Measuring the performance of neural models. Front Comput Neurosci. 2016;10: 1–11. 10.3389/fncom.2016.00001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Bizley JK, Nodal FR, Nelken I, King AJ. Functional organization of ferret auditory cortex. Cereb Cortex. 2005;15: 1637–1653. 10.1093/cercor/bhi042 [DOI] [PubMed] [Google Scholar]

- 33.David S V, Shamma SA. Integration over multiple timescales in primary auditory cortex. J Neurosci. 2013;33: 19154–19166. 10.1523/JNEUROSCI.2270-13.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Ahrens MB, Linden JF, Sahani M. Nonlinearities and contextual influences in auditory cortical responses modeled with multilinear spectrotemporal methods. J Neurosci. 2008;28: 1929–1942. 10.1523/JNEUROSCI.3377-07.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Calabrese A, Schumacher JW, Schneider DM, Paninski L, Woolley SMN. A generalized linear model for estimating spectrotemporal receptive fields from responses to natural sounds. PLoS One. 2011;6: e16104 10.1371/journal.pone.0016104 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Rabinowitz NC, Willmore BDB, Schnupp JWH, King AJ. Spectrotemporal contrast kernels for neurons in primary auditory cortex. J Neurosci. 2012;32: 11271–11284. 10.1523/JNEUROSCI.1715-12.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Atencio CA, Sharpee TO, Schreiner CE. Cooperative nonlinearities in auditory cortical neurons. Neuron. 2008;58: 956–966. 10.1016/j.neuron.2008.04.026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Atencio CA, Sharpee TO, Schreiner CE. Hierarchical computation in the canonical auditory cortical circuit. Proc Natl Acad Sci. 2009;106: 21894–21899. 10.1073/pnas.0908383106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Sharpee TO. Computational Identification of Receptive Fields. Annu Rev Neurosci. 2013;36: 103–120. 10.1146/annurev-neuro-062012-170253 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Sharpee TO, Atencio CA, Schreiner CE. Hierarchical representations in the auditory cortex. Curr Opin Neurobiol. Elsevier Ltd; 2011;21: 761–767. 10.1016/j.conb.2011.05.027 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Sharpee T, Rust NC, Bialek W. Analyzing neural responses to natural signals: maximally informative dimensions. Neural Comput. 2004;16: 223–250. 10.1162/089976604322742010 [DOI] [PubMed] [Google Scholar]

- 42.Kozlov AS, Gentner TQ. Central auditory neurons have composite receptive fields. Proc Natl Acad Sci. 2016;113: 1441–1446. 10.1073/pnas.1506903113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.McFarland JM, Cui Y, Butts DA. Inferring nonlinear neuronal computation based on physiologically plausible inputs. Bethge M, editor. PLoS Comput Biol. Public Library of Science; 2013;9: e1003143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Pillow JW, Shlens J, Paninski L, Sher A, Litke AM, Chichilnisky EJ, et al. Spatio-temporal correlations and visual signalling in a complete neuronal population. Nature. 2008;454: 995–999. 10.1038/nature07140 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Real E, Asari H, Gollisch T, Meister M. Neural circuit inference from function to structure. Curr Biol. Elsevier Ltd.; 2017;27: 189–198. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Meyer AF, Williamson RS, Linden JF, Sahani M. Models of neuronal stimulus-response functions: elaboration, estimation, and evaluation. Front Syst Neurosci. 2017;10: 109 10.3389/fnsys.2016.00109 [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The experimental data underlying the results presented in the study were previously published by Harper et al. (2016) and are available from https://osf.io/ayw2p/. All codes are available from https://github.com/monzilur/DNet.