Abstract

Background: Surgical educators are increasingly exploring surgical simulation and other nonclinical teaching adjuncts in the education of trainees. The simulators range from purpose-built machines to inexpensive smartphone or tablet-based applications (apps). This study evaluates a free surgery module from one such app, Touch Surgery, to assess its validity and usefulness in training for hand surgery procedures across varied levels of surgical experience. Methods: Participants were divided into 3 cohorts: fellowship-trained hand surgeons, orthopedic surgery residents, and medical students. Participants were trained in the use of the Touch Surgery app. Each participant completed the Carpal Tunnel Release module 3 times, and the score was recorded for each trial. Participants also completed a customized Likert survey regarding their opinions on the usefulness and accuracy of the app. Statistical analysis using a 2-tailed t test and analysis of variance was performed to evaluate performance within and between cohorts. Results: All cohorts performed better on average with each subsequent simulation attempt. For all attempts, the experts outperformed the novice and intermediate participants, while the intermediate cohort outperformed the novice cohort. Novice users consistently gave the app better scores for usefulness as a training tool, and demonstrated more willingness to use the product. Conclusions: The study confirms app validity and usefulness by demonstrating that every cohort’s simulator performance improved with consecutive use, and participants with higher levels of training performed better. Also, user confidence in this app’s veracity and utility increased with lower levels of training experience.

Keywords: carpal tunnel release, surgical simulation, resident education, medical student education, surgical app

Introduction

Surgical training has been historically built upon extensive patient-based exposure in the operating room under the apprenticeship model.1 The original Halstedian model emphasized graduated responsibility throughout training until self-sufficiency was accomplished by the end of residency. With recent limitations in postgraduate training duty hours, increasing complexity of surgical interventions, heightened focus on operating room efficiency, and expectations of patient safety and satisfaction, the importance of simulated surgical training as an adjunctive measure to supplement the traditional apprenticeship surgical education model is becoming increasingly evident.1,9

Touch Surgery is a free interactive smart device application (app) that aims to provide a realistic cognitive motor skill simulation and surgical step rehearsal, based on the technique and sequential steps that are hallmarks of a given surgical intervention, across an array of surgical specialties.16 The app guides the user through operations by breaking them down into their component steps. The user can practice an operation by taking a “tutorial” module and then take a “test” module to assess his or her understanding of the component steps and techniques. The app does not teach either the pathology of a disease or the actual technical surgical skill needed to manage it; rather, it aims to familiarize the user with a particular procedure’s surgical anatomy, relevant medications and instruments, and the necessary surgical steps to be taken by the surgeon during the operation.

Initial studies using the Touch Surgery app observed construct, content, and face validity of orthopedic trauma modules and demonstrated performance differences based on user experience.15,16 However, no prior work has assessed the app’s validity and user satisfaction in hand surgery.

The primary aim of this study was to assess the app’s validity and the correlation between app performance and surgical expertise level, as well as to determine whether practice with the simulator translates to improved participant performance. The study’s secondary aim was to evaluate user satisfaction with the app and to obtain end-user information regarding its utility in medical training.

Methods

Touch Surgery Module

The Carpal Tunnel Surgery Phase 2: Carpal Tunnel Release module in the Touch Surgery app was used. Medical students, orthopedic surgery residents, and hand surgery fellowship-trained orthopedic attendings were recruited for participation in the study. Medical students were excluded if they had (1) prior exposure to the Touch Surgery app or (2) prior clinical exposure to a carpal tunnel release. Orthopedic surgery residents and attendings were excluded if they had (1) prior exposure to the Touch Surgery app or (2) no prior clinical exposure to a carpal tunnel release.

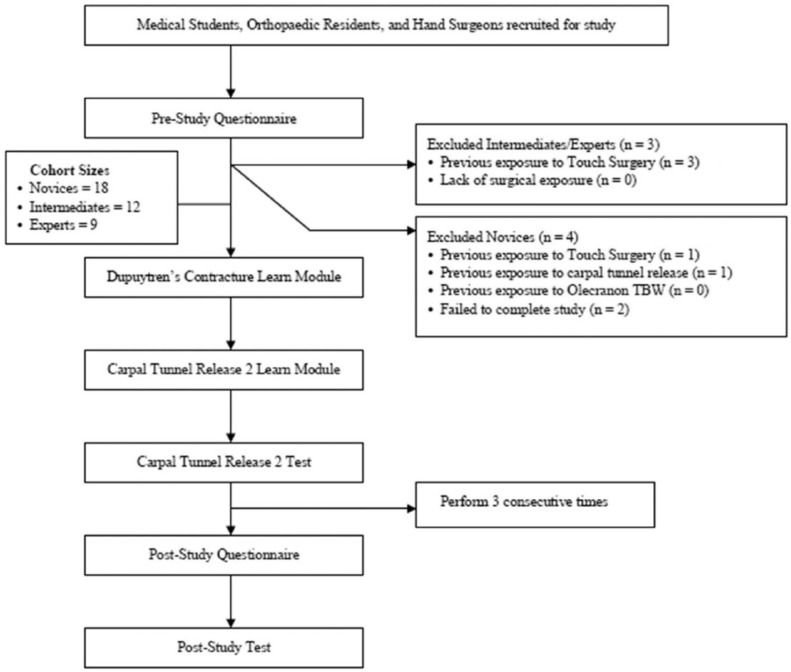

Medical students, orthopedic residents, and hand surgery attendings meeting inclusion criteria were placed into novice (medical students), intermediate (residents), and expert (attendings) cohorts based upon level of training. All study participants carried out the study modules on standard iPad tablets (9.4 inches × 6.6 inches; Apple, Cupertino, California) to ensure a similar operating interface. All participants carried out the Touch Surgery module “learn” and “test” simulations in a standardized fashion, as shown in the study flow diagram in Figure 1. Participants began with the Dupuytren’s Contracture Learn Module to gain familiarity with the application’s interactive touch screen movements and commands. Next, participants completed the Carpal Tunnel Release Learn Module, followed by the Carpal Tunnel Release test module 3 consecutive times. Scores, as generated by the Touch Surgery app, were recorded for each attempt.

Figure 1. Study flow diagram.

After completing these modules, all participants were polled on their level of agreement with statements pertaining to the Touch Surgery app. Answers were assessed using a 5-point Likert scale questionnaire, with assessments from 1 to 5 being defined as follows: strongly disagree, disagree, neutral, agree, and strongly agree.

The novice cohort was given a 12-item Likert questionnaire assessing both face validity of the Touch Surgery app and user satisfaction with the app. The intermediate and expert cohorts were surveyed with a 15-item Likert questionnaire, with an additional 3 items assessing content validity of the surgical simulations. Poststudy tests on the sequence of surgical steps involved in a Carpal Tunnel Release (8-item test) were carried out by all study participants at the conclusion of the study.

Statistical Analysis

All entries were logged into a database and analyzed for differences within cohorts using a 2-tailed Student t test and across cohorts using analysis of variance and descriptive statistics.
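As a rough illustration of the two tests named above, the sketch below computes a paired t statistic (one cohort's scores on two attempts) and a one-way analysis of variance F statistic (several cohorts on one attempt) using only the Python standard library. Pairing attempts within participants is an assumption, since the text does not state whether the t test was paired. The function names and the toy numbers are hypothetical and are not study data.

```python
import math
import statistics


def paired_t_statistic(first, second):
    """t statistic for paired samples (same participants, two attempts)."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(first, second)]
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error


def one_way_anova_f(groups):
    """F statistic for a one-way analysis of variance across groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Toy placeholder values only (NOT data from the study).
toy_attempt_1 = [0, 0]
toy_attempt_2 = [1, 3]
toy_groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]

print(paired_t_statistic(toy_attempt_1, toy_attempt_2))
print(one_way_anova_f(toy_groups))
```

Converting these statistics to 2-tailed P values additionally requires the t and F distributions, which a statistics package would normally supply.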

Results

A total of 18 out of 22 medical students recruited for the novice cohort met inclusion criteria and were enrolled in the study. Four students had prior experience with the Touch Surgery app and were excluded. The average age of the novice cohort was 23.2 years. Overall, 61.1% of the novice cohort participants were male and 83.3% of participants were first-year medical students, with the remaining 16.7% being second-year medical students.

In the intermediate cohort, 12 out of 14 recruited residents met inclusion criteria and were enrolled. Two of the recruited participants had prior experience with the Touch Surgery app and were excluded. The average age was 29 years, and all but one participant were male.

In the expert cohort, 9 out of 10 recruited attendings met inclusion criteria and were enrolled. The average age of the attending participants was 43 years. All attendings were hand surgery fellowship-trained orthopedic surgeons.

All cohorts performed better on average with subsequent simulation attempts (P < .05; Table 1). Novice, intermediate, and expert users demonstrated average improvements of 24%, 19%, and 15%, respectively, over the course of the 3 consecutive simulations. For all attempts, the experts outperformed the novice and intermediate participants, and the intermediate cohort outperformed the novice cohort (P < .05).

Table 1. Performance Scores for CTR.

| | CTR 1 | CTR 2 | CTR 3 | P value for differences within cohort performance |
|---|---|---|---|---|
| Novice performance | 72.1 | 82.7 | 89.5 | <.001 |
| Intermediate performance | 79.7 | 89.4 | 95.0 | <.001 |
| Expert performance | 83.9 | 95.3 | 96.1 | .004 |
| P value for differences between cohort performance | <.01 | <.001 | <.01 | |

Note. Scores for each cohort from attempts 1 to 3. Scores are provided by the Touch Surgery app as a normal function of the program, and are graded out of 100 points. Scores demonstrate statistically significant increase throughout the study within each cohort. Scores are significantly higher in each cohort as the participant level of training increases. CTR = carpal tunnel release.
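The improvement percentages reported in the Results appear to be relative gains from the first to the third attempt, computed from the cohort means in Table 1. That reading is an inference from the numbers rather than something the text states, and the names below are illustrative. A minimal sketch of the arithmetic:

```python
# Mean scores per attempt (CTR 1, CTR 2, CTR 3), copied from Table 1.
TABLE_1_MEANS = {
    "novice": (72.1, 82.7, 89.5),
    "intermediate": (79.7, 89.4, 95.0),
    "expert": (83.9, 95.3, 96.1),
}


def relative_improvement(first, last):
    """Percentage gain from the first score to the last, relative to the first."""
    return (last - first) / first * 100


for cohort, scores in TABLE_1_MEANS.items():
    gain_points = scores[-1] - scores[0]
    gain_percent = relative_improvement(scores[0], scores[-1])
    print(f"{cohort}: +{gain_points:.1f} points ({gain_percent:.1f}% relative)")
```

Under this assumption the relative gains round to 24%, 19%, and 15%, matching the reported figures, while the absolute gains are 17.4, 15.3, and 12.2 points out of 100.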

When queried about how accurately the simulation follows the steps of a carpal tunnel release surgery (Table 2), the average Likert score was 2.7 for both intermediate and expert users. Novice users reported average Likert scores of 4.6 and 4.4 for the app’s usefulness in learning a new operation and in rehearsing a known operation, respectively. Moreover, novice users consistently gave the app better scores for usefulness as a training tool, and demonstrated more willingness to use the product. The lowest scores for realism and usefulness came from the expert cohort, with all average measures of usefulness and realism rated at neutral or negative.

Table 2. Participant Survey Results.

| Statement | Average novice Likert score | Average intermediate Likert score | Average expert Likert score |
|---|---|---|---|
| Touch Surgery is a useful surgical training tool. | 4.3 | 3.8 | 2.9 |
| Touch Surgery is a useful surgical assessment tool. | 3.6 | 3.1 | 2.8 |
| Touch Surgery would be very useful in learning new operations. | 4.6 | 3.9 | 3.0 |
| Touch Surgery would be very useful to rehearse a known operation. | 4.4 | 3.8 | 2.9 |
| Touch Surgery should be implemented in surgical training curriculum. | 4.1 | 3.5 | 2.7 |
| Touch Surgery graphics were realistic. | 3.4 | 3.1 | 2.8 |
| Touch Surgery simulated environment was realistic. | 3.1 | 2.5 | 2.3 |
| Touch Surgery procedural steps were realistic. | 3.8 | 3.2 | 2.8 |
| I am willing to use Touch Surgery in the future. | 4.4 | 3.6 | 3.0 |
| Touch Surgery was easy to use. | 3.9 | 4.0 | 3.0 |
| Touch Surgery would be my first choice to prepare for an operation. | 3.0 | 1.5 | 2.0 |
| Touch Surgery should be made available to all surgical trainees. | 4.3 | 3.8 | 3.0 |
| Touch Surgery accurately describes and presents the correct sequence of operative steps in the carpal tunnel module. | — | 2.7 | 2.7 |
| Touch Surgery is a valid training module for teaching Orthopaedic Surgeries. | — | 3.3 | 2.9 |

Note. Average responses to the questions on the customized Likert survey administered after use of the app. Questions are graded on a scale of 1 (strongly disagree) to 5 (strongly agree).
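The pattern in Table 2 can be checked directly from the reported averages. The sketch below, with shortened statement labels and helper names that are illustrative only, lists the statements whose scores do not fall from novice to intermediate to expert, and computes the novice-minus-expert gap for the 12 statements answered by all three cohorts.

```python
# (novice, intermediate, expert) average Likert scores for the 12 statements
# answered by all three cohorts, copied from Table 2 (labels shortened).
TABLE_2_SCORES = {
    "useful surgical training tool": (4.3, 3.8, 2.9),
    "useful surgical assessment tool": (3.6, 3.1, 2.8),
    "useful in learning new operations": (4.6, 3.9, 3.0),
    "useful to rehearse a known operation": (4.4, 3.8, 2.9),
    "should be implemented in curriculum": (4.1, 3.5, 2.7),
    "graphics were realistic": (3.4, 3.1, 2.8),
    "simulated environment was realistic": (3.1, 2.5, 2.3),
    "procedural steps were realistic": (3.8, 3.2, 2.8),
    "willing to use in the future": (4.4, 3.6, 3.0),
    "easy to use": (3.9, 4.0, 3.0),
    "first choice to prepare for an operation": (3.0, 1.5, 2.0),
    "should be available to all trainees": (4.3, 3.8, 3.0),
}


def breaks_training_order(novice, intermediate, expert):
    """True when scores do not fall from novice to intermediate to expert."""
    return not (novice >= intermediate >= expert)


exceptions = [
    statement
    for statement, scores in TABLE_2_SCORES.items()
    if breaks_training_order(*scores)
]
novice_minus_expert = {
    statement: round(novice - expert, 1)
    for statement, (novice, _, expert) in TABLE_2_SCORES.items()
}

print("Statements breaking the novice > intermediate > expert order:", exceptions)
print("Smallest novice-minus-expert gap:", min(novice_minus_expert.values()))
```

With the values as transcribed in Table 2, novice averages exceed expert averages on every shared statement, and two statements (ease of use and first choice to prepare for an operation) depart from the strict novice, intermediate, expert ordering.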

Discussion

Interest in surgical simulation training in medical school and residency has increased as a consequence of reduced duty hours and decreased surgical assisting opportunities.13 As a result, surgical simulation mediums have come under scientific analysis in various surgical specialties. Within the General Surgery literature, both analog8 and electronic2,17 simulation techniques have been demonstrated to improve surgical skill in randomized controlled trials. Similar trials have demonstrated effective simulator training in Gynecology,12 Anesthesiology,10 and Endovascular Surgery.11

In the orthopedic literature, much of the early research has focused on arthroscopic procedures. This is in part a result of the technology available in the form of arthroscopic simulators, and in part due to the difficulty of training arthroscopic surgeons. Studies by Gomoll et al4,5 and Srivastava et al14 demonstrated that more experienced surgeons perform better on arthroscopy simulators. Martin et al7 demonstrated that electronic simulator performance translates to performance in cadaver models. These early studies demonstrated surgical simulation effectiveness, but none demonstrated that the simulators provided training that later improved surgical performance. However, Howells et al, in a 2008 randomized controlled trial, demonstrated that training with an arthroscopy simulator resulted in improved operating room effectiveness in inexperienced trainees.6 This study provided direct evidence in the orthopedic literature that simulator training is transferable to operating room efficiency.

We analyzed the performance of medical students, residents, and attending hand surgeons on the Touch Surgery app’s Carpal Tunnel Release module over 3 consecutive trials on the program to demonstrate the app’s validity, assess whether performance was consistent with expertise level, determine whether user performance improved with practice, and obtain user reviews. Overall, the study demonstrated that every cohort’s simulator performance improved with every consecutive use, that performance was consistent with the user’s level of training, and that user confidence in simulator veracity and utility decreases with increased levels of training.

We found that each cohort’s performance on the simulator reliably improved with practice. This is an important aspect of simulation training, as it indicates a positive, dose-dependent effect on participants’ knowledge and technical proficiency (cognitive aspects of the surgical task). Gallagher and colleagues discussed surgical training as consisting of psychomotor and cognitive elements.3 Based on their interpretation, simulator training in one element reduces the attention required for that element of the task, and allows greater attention to the other. By this formulation, improvement of participants’ awareness of procedural steps, equipment requirements, anatomy, and so on, will allow for more focus during actual training on psychomotor aspects of the procedure. Improved app performance, therefore, is an indication of progression in the cognitive aspects of the procedure, and may allow for operative training to focus more on the psychomotor aspects of surgery.

Our study results also demonstrated that higher levels of surgical experience correlated with better performance on the app’s module. While this does not indicate that the simulator improves surgical skill, it does provide evidence of validity, in that there is a relationship between surgical experience and simulator performance.

In terms of user feedback, medical students had the most favorable average responses to the Touch Surgery simulator, followed by residents and then attendings, for all but 2 Likert items in Table 2: “Touch Surgery would be my first choice to prepare for an operation,” which attendings rated higher than residents, and “Touch Surgery was easy to use,” which residents rated marginally higher than medical students. Medical students were highly enthusiastic with regard to the simulator’s ability to assess surgical understanding and efficacy in teaching new operations. It is unclear why participants with a higher level of training were less confident in the utility of the program, and further research will be required to determine the simulation needs (if any) of this cohort.

The remaining Likert questions demonstrated a high level of satisfaction with the app, especially among the novice cohort. Perceived usefulness in this group averaged 4.6/5 and 4.4/5 for learning new operations and rehearsing known operations, respectively, and agreement with the statement “I am willing to use Touch Surgery in the future” averaged 4.4/5. These results, taken together, indicate that this type of simulation app fulfills a perceived need among the novice trainee cohort.

The most significant weakness of this study is the absence of proof that enhanced simulator performance translates to enhanced clinical performance. In general, surgical simulation involves the development of both cognitive and technical skills of the surgical trainee. The Touch Surgery app focuses on cognitive development, specifically cognitive task analysis. Therefore, while participants clearly improved their skills in the simulator with practice, the possibility remains that these skills are useful in the simulator only and not translatable to clinical practice. Further assessment of the real-world efficacy of this simulator will require a randomized trial of simulator training with real-world operating room efficacy as an outcome measure.

In the current era of rapidly improving electronic and virtual reality technology, surgical simulators provide a promising means for enhancing surgical training. The use of smaller, less expensive simulators may allow for improved dissemination of these benefits as surgical training moves into the 21st century. In conclusion, this study shows that participants with more prior surgical experience had better initial performance on the Touch Surgery app, and that performance of the simulated surgical procedure improved with practice. These are important early indicators of the app’s validity and utility as a training tool. The study also demonstrates that medical students and residents were most enthusiastic about the app’s real-world utility in helping them prepare for surgical procedures.

Footnotes

Ethical Approval: This study was approved by our institutional review board.

Statement of Human and Animal Rights: Research protocol was approved by the institutional review board at our institution. All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008.

Statement of Informed Consent: Informed consent was obtained from all individual participants included in the study.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iDs: J Tulipan https://orcid.org/0000-0003-1932-1042

JT Labrum IV https://orcid.org/0000-0003-0478-0764

References

1. Fitzgibbons SC, Chen J, Jagsi R, et al. Long-term follow-up on the educational impact of ACGME duty hour limits: a pre-post survey study. Ann Surg. 2012;256(6):1108-1112.

2. Franzeck FM, Rosenthal R, Muller MK, et al. Prospective randomized controlled trial of simulator-based versus traditional in-surgery laparoscopic camera navigation training. Surg Endosc. 2012;26(1):235-241.

3. Gallagher AG, Ritter EM, Champion H, et al. Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg. 2005;241(2):364-372.

4. Gomoll AH, O’Toole RV, Czarnecki J, et al. Surgical experience correlates with performance on a virtual reality simulator for shoulder arthroscopy. Am J Sports Med. 2007;35(6):883-888.

5. Gomoll AH, Pappas G, Forsythe B, et al. Individual skill progression on a virtual reality simulator for shoulder arthroscopy: a 3-year follow-up study. Am J Sports Med. 2008;36(6):1139-1142.

6. Howells NR, Gill HS, Carr AJ, et al. Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br. 2008;90(4):494-499.

7. Martin KD, Belmont PJ, Schoenfeld AJ, et al. Arthroscopic basic task performance in shoulder simulator model correlates with similar task performance in cadavers. J Bone Joint Surg Am. 2011;93(21):e1271-e1275.

8. Palter VN, Grantcharov T, Harvey A, et al. Ex vivo technical skills training transfers to the operating room and enhances cognitive learning: a randomized controlled trial. Ann Surg. 2011;253(5):886-889.

9. Reznick RK, MacRae H. Teaching surgical skills—changes in the wind. N Engl J Med. 2006;355(25):2664-2669.

10. Samuelson ST, Burnett G, Sim AJ, et al. Simulation as a set-up for technical proficiency: can a virtual warm-up improve live fibre-optic intubation? Br J Anaesth. 2016;116(3):398-404.

11. See KW, Chui KH, Chan WH, et al. Evidence for endovascular simulation training: a systematic review. Eur J Vasc Endovasc Surg. 2016;51(3):441-451.

12. Shore EM, Grantcharov TP, Husslein H, et al. Validating a standardized laparoscopy curriculum for gynecology residents: a randomized controlled trial. Am J Obstet Gynecol. 2016;215(2):204.e1-204.e11.

13. Sonnadara RR, Van Vliet A, Safir O, et al. Orthopedic boot camp: examining the effectiveness of an intensive surgical skills course. Surgery. 2011;149(6):745-749.

14. Srivastava S, Youngblood PL, Rawn C, et al. Initial evaluation of a shoulder arthroscopy simulator: establishing construct validity. J Shoulder Elbow Surg. 2004;13(2):196-205.

15. Sugand K, Mawkin M, Gupte C. Training effect of using Touch Surgery for intramedullary femoral nailing. Injury. 2016;47(2):448-452.

16. Sugand K, Mawkin M, Gupte C. Validating Touch Surgery: a cognitive task simulation and rehearsal app for intramedullary femoral nailing. Injury. 2015;46(11):2212-2216.

17. Zendejas B, Cook DA, Bingener J, et al. Simulation-based mastery learning improves patient outcomes in laparoscopic inguinal hernia repair: a randomized controlled trial. Ann Surg. 2011;254(3):502-509; discussion 509-511.