Abstract

Over the past few decades, we have witnessed a dramatic rise in life expectancy owing to significant advances in medical science and technology, as well as increased awareness about nutrition, education, and environmental and personal hygiene. Consequently, the elderly population in many countries is expected to rise rapidly in the coming years. A rapidly growing elderly population is expected to adversely affect the socioeconomic systems of many nations through the costs associated with its healthcare and wellbeing. In addition, diseases related to the cardiovascular system, eye, respiratory system, skin and mental health are widespread globally. However, most of these diseases can be avoided and/or properly managed through continuous monitoring. To enable continuous health monitoring and to serve growing healthcare needs, affordable, non-invasive and easy-to-use healthcare solutions are critical. The ever-increasing penetration of smartphones, coupled with their embedded sensors and modern communication technologies, makes them an attractive technology for enabling continuous and remote monitoring of an individual’s health and wellbeing with negligible additional cost. In this paper, we present a comprehensive review of the state-of-the-art research and developments in smartphone-sensor-based healthcare technologies. A discussion on regulatory policies for medical devices and their implications for smartphone-based healthcare systems is presented. Finally, some future research perspectives and concerns regarding smartphone-based healthcare systems are described.

Keywords: smartphone, remote healthcare, mHealth, telehealth, medical device, regulation, smartphone sensor

1. Introduction

Life expectancy in many countries has increased drastically over the last several decades. This large increase can be attributed primarily to remarkable advances in healthcare and medical technologies, and to growing consciousness about health, nutrition, sanitation, and education [1,2,3,4]. However, this increased life expectancy, combined with a globally decreasing birthrate, is expected to result in a large aging population in the near future. In fact, the elderly population over the age of 65 years is expected to outnumber children under the age of 14 years by 2050 [3]. Furthermore, approximately 15% of the world’s population has some form of disability, and 110–190 million adults suffer from major functional difficulties [5]. Disability of any form limits a person’s mobility and independence, which can prevent or delay them from receiving necessary healthcare support on time. In addition, a significant number of people around the globe suffer from chronic diseases and medical conditions such as cardiovascular diseases, lung diseases, different forms of cancer, diabetes and diabetes-related complications. It is reported that six of ten American adults (>18 years) suffer from at least one chronic disease, with four of ten having multiple chronic conditions [6]. Further, chronic diseases account for ~65–70% of total mortality among the ten leading causes of death [7]. In fact, heart disease and cancer together account for 48% of all deaths, making them the leading causes of mortality [7,8].

In addition, diabetes, i.e., chronically unregulated blood sugar, is likely to be the seventh leading cause of death by 2030 [9]. Diabetes increases the risk of long-term complications such as kidney failure, limb amputation, and diabetic retinopathy (DR). DR is an eye disease that results from damage to the retinal blood vessels caused by prolonged exposure to excessive blood glucose. It may lead to blindness if not treated in time. In fact, DR was estimated to account for 5 million cases of blindness globally in 2002 [10]. Other prevalent eye diseases include cataract, glaucoma, and age-related macular degeneration (AMD), which together with DR caused 65% of all blindness globally in 2010, with cataract alone accounting for 51% [11]. Furthermore, poor air quality in many large cities exposes city-dwellers to asthma and other lung diseases. Globally, an estimated 235 million people currently suffer from asthma, which caused 383,000 deaths in 2015 [12]. Therefore, the demand for healthcare services is understandably rising more rapidly than ever before.

An important issue related to providing adequate healthcare services is the continuously increasing cost of pharmaceuticals, modern medical diagnostic procedures and in-facility care services, which together render existing healthcare services unaffordable. To give one example, the 2017 budget of the Province of Ontario in Canada allocated an additional $11.5 billion to the healthcare sector over the following three years [13]. Further, total health spending per Canadian was expected to be $6839 in 2018, representing more than 11% of Canada’s GDP; these numbers are similar to those of most other OECD (Organization for Economic Co-operation and Development) countries [14]. Therefore, present-day healthcare services are likely to impose a substantial socioeconomic burden on many nations, particularly developing and least developed ones [15,16,17,18,19]. Furthermore, a large fraction of the elderly rely on other persons such as family members, friends and volunteers, or on expensive formal care services such as caregivers and elderly care centers, for their daily living and healthcare needs [20,21,22]. Therefore, affordable, high-quality healthcare and monitoring services are urgently needed, particularly for persons with limited access to healthcare facilities or living on constrained or fixed budgets. Fortunately, many medical complications can be avoided or properly managed through continuous, long-term monitoring of key physiological parameters and activities of the elderly [22,23,24,25]. Long-term health monitoring enables early diagnosis of developing diseases. However, current practice requires frequent visits to, or long-term stays at, expensive healthcare facilities. In addition, a shortage of skilled healthcare personnel and limited financial capability, coupled with increasing healthcare costs [26], contribute to the bottleneck in realizing long-term health monitoring.
Smartphone-based healthcare systems, on the other hand, can potentially enable a cost-effective alternative for long-term health monitoring and may allow the healthcare personnel to monitor and assess their patients remotely without interfering with their daily activities [22,27].

The enormous advances in energy-efficient, high-speed computing and communication technologies have revolutionized the global telecom industry. Furthermore, significant progress in display, sensor and battery technologies has paved the way for modern mobile devices such as smartphones and tablets, which enable seamless internet connectivity, entertainment, and health and fitness monitoring on the go, along with conventional voice and text communication. Smartphones have grown in popularity over the past decade, and by 2021 the number of smartphones worldwide is expected to exceed 3.8 billion [28]. Modern smartphones come with a number of embedded sensors such as a high-resolution complementary metal-oxide semiconductor (CMOS) image sensor, global positioning system (GPS) sensor, accelerometer, gyroscope, magnetometer, ambient light sensor and microphone. These sensors can be used to measure several health parameters such as heart rate (HR), HR variability (HRV) and respiratory rate (RR), and to assess health conditions such as skin and eye diseases, thus turning the communication device into a continuous, long-term health monitoring system. Table 1 presents the health parameters and conditions that can be monitored using current embedded smartphone sensors. The data measured by the sensors can be analyzed and displayed on the phone and/or transmitted to a distant healthcare facility or healthcare personnel for further investigation over wireless mobile communication platforms such as 3G, high-speed packet access (HSPA), and long-term evolution (LTE). These platforms offer high-speed, seamless internet connectivity even on the go, thereby allowing people to remain connected with their healthcare providers [7,22].

Table 1.

Smartphone sensors used for health monitoring.

| Monitored Health Issues | Typically Used Smartphone Sensors |
|---|---|
| Cardiovascular activity, e.g., heart rate (HR) and HR variability (HRV) | Image sensor (camera), microphone |
| Eye health | Image sensor (camera) |
| Respiratory and lung health | Image sensor (camera), microphone |
| Skin health | Image sensor (camera) |
| Daily activity and fall | Motion sensors (accelerometer, gyroscope, proximity sensor), global positioning system (GPS) |
| Sleep | Motion sensors (accelerometer, gyroscope) |
| Ear health | Microphone |
| Cognitive function and mental health | Motion sensors (accelerometer, gyroscope), camera, light sensor, GPS |

In this article, we present a detailed review of the current state of research and development in health monitoring systems based on the embedded sensors of smartphones. In Section 2, we discuss the evolution of smartphones. Some important recent works on different health monitoring systems are presented in Section 3, followed by a discussion (Section 4) of the regulatory policies associated with smartphone-based medical devices. Finally, Section 5 concludes the paper with a discussion of future research perspectives and some key challenges in realizing smartphone-based medical devices.

2. Evolution of Smartphones

In 1992, IBM announced a ground-breaking device named the Simon Personal Communicator that brought together the functionalities of a cellular phone and a Personal Digital Assistant (PDA) [29,30,31], thus introducing the concept of the so-called ‘smartphone’ to the cellular phone industry. Simon featured a 4.5” × 1.4” monochrome LCD touchscreen and came with a stylus and a charging base station. Along with conventional voice communication, the device could also send and receive emails, faxes, and pages, features that were later associated with smartphones. Simon offered a notepad, an address book, a calendar, a world clock and an appointment scheduler, and could also run third-party applications [29,30,31]. Although it was a giant leap for IBM, Simon was expensive, costing the customer $899 with a service contract. Being far ahead of its time and carrying such a high price tag, Simon failed to attract customers. Even though IBM sold approximately 50,000 units in 6 months [32], it chose not to make a second-generation Simon.

In 1996, Nokia revealed a clamshell phone, the Nokia 9000 Communicator, which opened to reveal a full QWERTY keyboard and physical navigation buttons flanking a monochrome LCD screen nearly as big as the device itself. It offered web browsing in addition to most of the features of IBM’s Simon. However, it was Ericsson that first coined the term ‘Smart-phone’, for its Ericsson GS 88. The GS 88, also known as ‘Penelope’, was strikingly similar to the Nokia 9000. It was, however, never released to the public, arguably because of its weight and poor battery quality [31]. Later, in 2000, Ericsson officially used the term ‘Smartphone’ for the first time for the Ericsson R380, which was much cheaper, smaller and lighter than the Nokia 9000. The R380 was the first device to use the mobile-specific Symbian operating system (OS) and only the second phone, after IBM’s Simon, to have a touchscreen. The R380 was also one of the first smartphones to use the wireless application protocol (WAP) for faster and smoother web browsing.

In 2002, Handspring and RIM released their first smartphones, the Treo-180 and the BlackBerry 5810 (5820 for Europe), respectively [29,30,31]. The Treo-180 combined the functionality of a phone, an email messaging device and a PDA, and enabled users to check the calendar while talking on the phone and to dial directly from the contact list. The BlackBerry 5810 featured enterprise e-mail and instant messaging services, text messaging, and a WAP browser. However, it lacked an integrated microphone, so the user had to attach a headset to make or receive calls. Both the BlackBerry 5810 and the Treo-180 featured a large monochrome screen and a QWERTY keyboard like a PDA. However, unlike the BlackBerry 5810, the Treo-180 came in a clamshell format with a visible antenna and a hinged lid that flipped up to serve as the earpiece for phone conversations. RIM then released the BlackBerry 6210, also known as the ‘BlackBerry Quark’, in the following year, featuring a built-in microphone and speaker [30,31].

In 2000, both Samsung and Sharp introduced camera phones in their respective local markets. In South Korea, Samsung released the SCH-V200, which featured a 1.5″ TFT-LCD display and a 0.35-megapixel video graphics array (VGA) camera that could capture up to 20 images. A few months later, Sharp released the J-SH04 in Japan with a 256-color display and a built-in 0.11-megapixel CMOS camera. Although the camera resolution of the J-SH04 was much lower than that of the SCH-V200, the J-SH04 was the first phone with a truly phone-integrated camera and allowed images to be transferred directly from the phone, whereas the SCH-V200 combined two separate devices in one enclosure and therefore required the pictures to be transferred to a computer for sharing. However, none of these camera phones supported web browsing and email communication until Sanyo launched the first smartphone with a built-in camera in 2002. The Sanyo SCP-5300 came in a clamshell format and featured dual color displays, a WAP browser, and an integrated 0.3-megapixel camera with a short-range LED light sensor pro flash. It also offered brightness and white balance control, a self-timer, digital zoom, and several filter effects such as sepia, black-and-white, and negative colors.

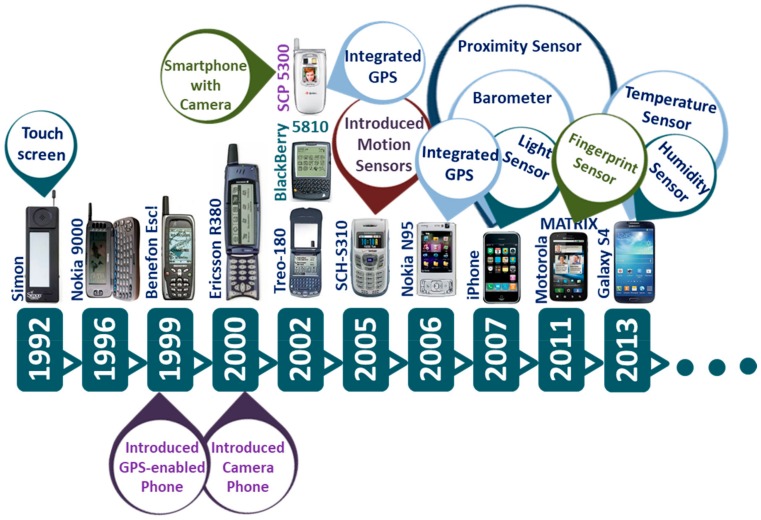

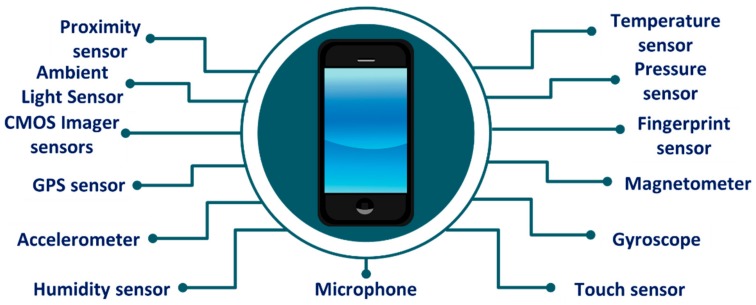

Fast forward a decade, and modern smartphones feature a number of sensors such as a high-resolution, high-speed CMOS image sensor, GPS sensor, accelerometer, gyroscope, magnetometer, ambient light sensor, microphone, and fingerprint sensor (Figure 1). In addition, the processing and data storage capabilities of current smartphones have improved significantly. Figure 2 shows the built-in sensors found in most present-day smartphones.

Figure 1.

Evolution of smartphones and smartphone-embedded sensors over time.

Figure 2.

Built-in sensors in a typical present-day smartphone.

3. Smartphone Sensors for Health Monitoring

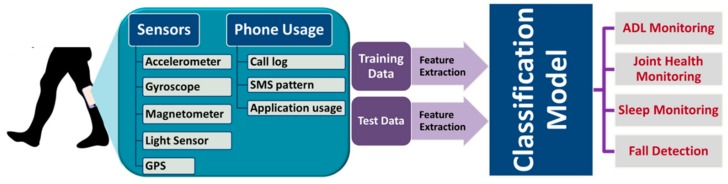

As discussed earlier, modern smartphones are fitted with a number of sensors. These sensors allow for active and/or passive sensing of several health parameters and health conditions. The data measured by smartphone sensors, sometimes coupled with device-usage information such as call logs, app usage and short message service (SMS) patterns, can provide valuable information about an individual’s physical and mental health over a long period of time. In this way, the smartphone can potentially be turned into a viable and cost-effective device for continuous health monitoring. In the following sections (Section 3.1, Section 3.2, Section 3.3, Section 3.4, Section 3.5, Section 3.6 and Section 3.7), we discuss how the smartphone can be used to monitor cardiovascular, pulmonary, eye, skin and mental health, as well as daily activity.

3.1. Cardiovascular Health Monitoring

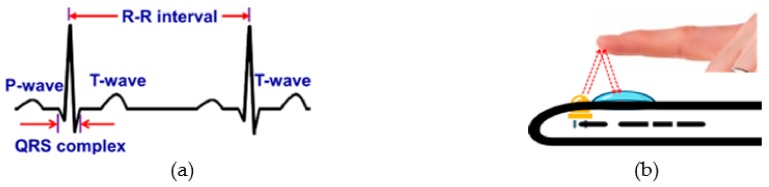

Heart rate (HR), or pulse rate, is one of the four ‘vital signs’ that are routinely monitored by physicians and is used to diagnose heart-related conditions such as different types of arrhythmia [22,33]. HR and HRV are typically extracted from the electrocardiogram (ECG) (Figure 3a). However, ECG systems, particularly conventional 12-lead systems, are expensive, restrict the user’s movement and require trained medical professionals to operate them in clinical settings. HR and HRV can also be measured using portable, hand-held single-lead ECG devices [22,34]. Furthermore, with the advancement of wearable sensor technologies, HR and HRV can now be obtained using commercial fitness trackers such as Fitbit® (San Francisco, CA, USA), Jawbone® (San Francisco, CA, USA), Striiv® (Redwood City, CA, USA), and Garmin® (Olathe, KS, USA) [22].

Figure 3.

Measuring heart rate (a) from a typical trace of a single lead Electrocardiogram (ECG) signal, and (b) using a smartphone camera.

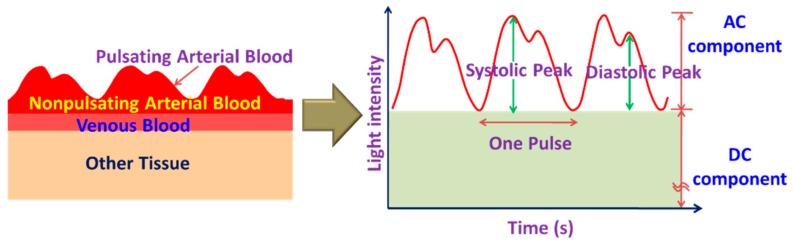

However, these portable and wearable systems require additional accessories, which can be avoided by exploiting sensors embedded in the smartphone, such as the camera and microphone, to monitor HR and HRV. Using the smartphone camera, it is possible to estimate HR and HRV from the photoplethysmogram (PPG) signal derived from a video of bare skin, such as the fingertip (Figure 3b) or the face. The light absorption characteristics of hemoglobin in blood differ from those of the surrounding body tissues such as flesh and bone. PPG estimates volumetric changes in blood by detecting the fluctuation in the transmissivity and/or reflectivity of light through the tissue with arterial pulsation (Figure 4) [22,35]. Although most commercial systems use near-infrared (NIR) or red light sources [22,35], some researchers [36,37,38,39,40] exploited the smartphone’s built-in white flashlight to illuminate the tissue and measure the PPG.
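To make the PPG principle concrete, the following minimal NumPy sketch averages one color channel over every video frame to obtain a raw PPG trace. It assumes the video has already been decoded into an array of shape (frames, height, width, 3) in RGB order; the function name `ppg_from_frames` is hypothetical and is not taken from any of the cited works.

```python
import numpy as np


def ppg_from_frames(frames: np.ndarray, channel: int = 0) -> np.ndarray:
    """Return a raw PPG trace: the spatial mean of one colour channel per frame.

    frames  -- array of shape (n_frames, height, width, 3), RGB order (assumed)
    channel -- 0 = red, 1 = green, 2 = blue
    """
    if frames.ndim != 4 or frames.shape[-1] != 3:
        raise ValueError("expected frames with shape (n_frames, height, width, 3)")
    # Average the chosen channel over all pixels of each frame.
    trace = frames[..., channel].astype(float).mean(axis=(1, 2))
    # Subtract the DC level so that only the pulsatile (AC) component remains.
    return trace - trace.mean()
```

Each frame is reduced to a single number, so the pulsation shows up as a slow oscillation of that number over time. Which channel works best depends on the setup; the studies discussed in this section report the red channel for flash-illuminated fingertips and the green channel for face videos.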

Figure 4.

Photoplethysmograph (PPG) signal obtained from the pulsatile flow of blood volume.

Most published smartphone-based HR and HRV monitoring applications [36,37,38,39,40] follow a similar approach, in which these parameters are extracted from the PPG signal either by measuring the pulse-to-pulse time difference in the time domain [39] or by finding the dominant frequency in the frequency domain [36,38]. In Reference [39], HR and HRV were estimated from the PPG signal obtained from the fingertip using the flashlight and camera of a smartphone. The green channel of the video data was used to derive the pulse signal after low-frequency band-pass filtering. Instead of using a conventional peak/valley detection method, the authors detected the steepest slope of each pulse wave and evaluated the correlation of the PPG signal with a pulse wave pattern to determine the cardiac cycles. The calculated HR and HRV were highly correlated with those measured by a commercial ECG monitor, although the improvement in measurement accuracy of the proposed algorithm over the conventional method was not reported. In addition, the authors did not evaluate the performance of the algorithm in the presence of bodily movement. In Reference [36], a previously reported motion detection technique [41,42] was employed to identify and discard video data heavily corrupted by motion artifacts. The PPG signal was derived from a video of the index fingertip recorded using all three channels (red, green and blue) of a smartphone camera. The authors calculated a threshold based on the difference between the maximum and the minimum intensity and, for each frame, summed the pixel values with intensity greater than the threshold to obtain the PPG signal. The periodic change in blood volume during the cardiac cycles was reflected more clearly in the PPG signal acquired through the red channel than in the other two channels.
The pulse rate was finally extracted by performing a simple Fast Fourier Transform (FFT) analysis on the red-channel PPG, achieving an average accuracy of 98% with a maximum error of three beats per minute (bpm) with respect to the actual pulse. However, the algorithm cannot correct motion artifacts in the video; rather, it relies on restarting the video recording when the movement exceeds a predefined level.
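The two routes described above, pulse-to-pulse timing in the time domain and the dominant frequency in the frequency domain, can be sketched as follows. This is an illustrative NumPy sketch, not the published algorithm of [36] or [39]: the 0.7–3.5 Hz pulse band, the threshold of half the maximum slope and the 0.33 s refractory period are assumed values, and `rmssd` is just one common time-domain HRV measure chosen for illustration.

```python
import numpy as np


def heart_rate_fft(ppg, fs, band=(0.7, 3.5)):
    """HR in bpm from the dominant spectral peak of a PPG trace.

    band -- plausible pulse frequencies in Hz (0.7-3.5 Hz = 42-210 bpm, assumed)
    """
    x = np.asarray(ppg, dtype=float)
    x = x - x.mean()
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[mask][np.argmax(spectrum[mask])]


def beat_times_steepest_slope(ppg, fs, min_interval=0.33):
    """Beat times (s) at the steepest upstroke of each pulse wave.

    A beat is a local maximum of the first derivative that exceeds half of
    the largest slope and lies at least `min_interval` s after the previous
    beat (both thresholds are assumptions).
    """
    slope = np.diff(np.asarray(ppg, dtype=float)) * fs
    threshold = 0.5 * slope.max()
    beats, last = [], None
    for i in range(1, len(slope) - 1):
        is_peak = slope[i] >= slope[i - 1] and slope[i] > slope[i + 1]
        far_enough = last is None or (i - last) / fs >= min_interval
        if is_peak and slope[i] > threshold and far_enough:
            beats.append(i)
            last = i
    return np.array(beats) / fs


def rmssd(intervals):
    """Root mean square of successive inter-beat-interval differences (s)."""
    return float(np.sqrt(np.mean(np.diff(intervals) ** 2)))
```

For a PPG trace `ppg` sampled at `fs` Hz, `heart_rate_fft(ppg, fs)` returns HR directly, while `np.diff(beat_times_steepest_slope(ppg, fs))` yields the inter-beat intervals from which both HR (60 / mean interval) and HRV can be derived. The frequency-domain route is simpler and tolerates noise well, but only the time-domain route gives beat-by-beat intervals for HRV.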

In Reference [38], both the front and rear cameras of a smartphone were used to simultaneously monitor HR and respiration rate (RR). HR was obtained as usual from the PPG signal of the fingertip placed on top of the rear camera, while the front camera was used to estimate RR by detecting the movement of the chest and the abdomen. HR and RR were obtained by identifying the dominant frequency of the respective signals in the frequency domain (Welch’s power spectral density). The authors reported high (95%) agreement between the measured HR and that obtained using a standard ECG in 11 healthy subjects, with differences ranging from −5.6 to 5.5 beats per minute. The RR estimated from the chest and abdominal walls resulted in average median errors of 1.4% and 1.6%, respectively. To achieve a wide dynamic range of 6–60 breaths per minute, an automatic region-of-interest (ROI) selection protocol was used, which selected the signal from either the abdomen or the chest based on the absolute value of the mean autocorrelation. However, this approach requires the body to remain still during video recording to avoid motion artifacts (MA). MA can be eliminated or reduced by incorporating MA estimation algorithms [41,42,43] into the system. In addition, extra caution is necessary when estimating RR in the presence of colorful and patterned clothes, which can affect the fidelity of the video recordings and thereby the estimation accuracy of RR.
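The frequency-domain RR estimate described above can be approximated with the sketch below, which computes a Welch-style power spectral density in plain NumPy and picks the strongest peak in the 0.1–1.0 Hz band (6–60 breaths per minute). The exact ROI selection rule of [38] is only described as using the absolute value of the mean autocorrelation, so `periodicity_score`, which uses the peak normalized autocorrelation instead, is a hypothetical stand-in; the function names and segment length are likewise assumptions.

```python
import numpy as np


def welch_psd(x, fs, nperseg=256):
    """Welch-style PSD (up to a constant factor): average of Hann-windowed
    periodograms over 50%-overlapping segments."""
    x = np.asarray(x, dtype=float)
    nperseg = min(nperseg, len(x))
    step = max(nperseg // 2, 1)
    win = np.hanning(nperseg)
    periodograms = []
    for start in range(0, len(x) - nperseg + 1, step):
        seg = x[start:start + nperseg]
        seg = seg - seg.mean()  # remove the segment's DC level
        periodograms.append(np.abs(np.fft.rfft(win * seg)) ** 2)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.mean(periodograms, axis=0)


def dominant_rate_per_min(x, fs, band=(0.1, 1.0), nperseg=256):
    """Rate (cycles/min) of the strongest PSD peak inside `band` (Hz).
    The default band, 0.1-1.0 Hz, corresponds to 6-60 breaths per minute."""
    freqs, psd = welch_psd(x, fs, nperseg)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[mask][np.argmax(psd[mask])]


def periodicity_score(x, fs, band=(0.1, 1.0)):
    """Hypothetical ROI criterion: peak normalised autocorrelation over lags
    whose periods fall inside `band`."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    energy = np.dot(x, x)
    lags = range(int(fs / band[1]), int(fs / band[0]) + 1)
    return max(np.dot(x[:-k], x[k:]) / energy for k in lags)


def select_roi(chest, abdomen, fs):
    """Return the name of the more periodic breathing signal."""
    if periodicity_score(chest, fs) >= periodicity_score(abdomen, fs):
        return "chest"
    return "abdomen"
```

Averaging several shorter periodograms (Welch's method) trades frequency resolution for a smoother, lower-variance spectrum, which helps when the breathing signal from a video is noisy.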

All the HR monitoring systems discussed above are contact-based; they require the user to keep the fingertip in close contact with the smartphone camera lens with sufficient pressure. Any change in finger position or illumination may result in an erroneous estimate of HR [44]. Contactless monitoring systems, on the other hand, estimate HR from the PPG signal derived from a video of the face. One such non-contact cardiac pulse monitoring application, named FaceBeat, was presented in Reference [45]. The application was based on an algorithm similar to that of the first-of-its-kind video-based HR monitoring system proposed in Reference [46], which used the webcam of a laptop for video recording. FaceBeat extracts the cardiac pulse and measures HR from a video of the user’s face recorded with the front camera of the smartphone. The photodetector array of the camera sensor detects the variation in light reflected from a specific region of interest (ROI) of the face as the blood volume in the facial blood vessels changes. The authors exploited the green channel of the recorded video, which, as reported in References [40,44,47,48], is the most suitable for evaluating HR, particularly in the presence of motion artifacts. They used independent component analysis (ICA) to remove noise and motion artifacts from the video data of the ROI and performed frequency domain analysis on both the raw and the decomposed signals to extract HR and HRV. The HR thus measured showed a maximum average error of 1.5% with respect to the reference ECG signal obtained from a commercial ECG monitor. However, the complex computation required by the application increases the processor load and computation time, thereby increasing power consumption and reducing battery life.

Unlike the conventional approach, which estimates HR from the fluctuation of reflected light in specific color channels (R, G, B), researchers in Reference [49] proposed a method that estimates both HR and RR by detecting variations in the hue of the light reflected from the face. A 20 s video of the subject’s face was recorded, but only the forehead region was analyzed in the frequency domain to determine the dominant frequencies of the time-varying average hue. The authors reported highly accurate HR and RR measurements, which showed a higher correlation with the measurements from standard instruments than the green-channel PPG did. Nevertheless, this approach may not work if the forehead is covered by hair, a headband or a cap, or bears scar tissue. Although face-based contactless HR monitoring offers a more convenient alternative to contact-based systems, its performance can vary with illumination, skin color, facial hair, scars and movement of the face. Table 2 summarizes some smartphone-sensor-based cardiovascular monitoring systems reported in the literature.
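The hue-based idea of [49] can be illustrated with a short sketch that converts the mean color of a forehead ROI in each frame to hue using Python's standard `colorsys` module and then finds the dominant frequencies. This is an assumption-laden illustration, not the authors' code: it takes the hue of the averaged color rather than averaging per-pixel hues, expects RGB values scaled to [0, 1], and uses assumed search bands of 0.7–3.5 Hz for HR and 0.1–0.5 Hz for RR.

```python
import colorsys

import numpy as np


def hue_trace(roi_frames):
    """Hue (0-1) of the mean RGB colour of a forehead ROI, one value per frame.

    roi_frames -- array of shape (n_frames, height, width, 3), RGB in [0, 1] (assumed)
    """
    frames = np.asarray(roi_frames, dtype=float)
    mean_rgb = frames.reshape(len(frames), -1, 3).mean(axis=1)
    # Convert the averaged colour, which avoids averaging a circular quantity (hue).
    return np.array([colorsys.rgb_to_hsv(*rgb)[0] for rgb in mean_rgb])


def dominant_rate_bpm(signal, fs, band):
    """Rate per minute of the strongest spectral peak inside `band` (Hz)."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[mask][np.argmax(spectrum[mask])]
```

With a 20 s ROI sequence `roi` down-sampled to 10 fps, `h = hue_trace(roi)` followed by `dominant_rate_bpm(h, 10.0, (0.7, 3.5))` and `dominant_rate_bpm(h, 10.0, (0.1, 0.5))` gives HR and RR estimates from the same hue signal. At 10 fps over 20 s, the spectral resolution is 0.05 Hz, i.e., 3 beats or breaths per minute.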

Table 2.

Smartphone-sensors for cardiovascular health monitoring.

| Ref. | Year | Measured Signs | Type | Smartphone Model | Sensor Used | Video Resolution | Frame Rate (fps) | Video Length | Method | Performance w.r.t. Standard Monitors | # of Subjects |
|---|---|---|---|---|---|---|---|---|---|---|---|
| [37] | 2018 | HR, HRV | Contact-based (index finger) | iPhone 6, Apple Inc., Cupertino CA | Front camera | 1280 × 720 | 240 | 5 min | • Reflection of light from the finger is measured. | Pearson correlation coefficient (PC) for most parameters between PPG and ECG: >0.99 | 50 (11 F, 39 M) |
| [39] | 2016 | HR, HRV | Contact-based (index finger) | iPhone 4S, Apple Inc., Cupertino CA | Rear camera | -- | 30 | 5 min | • Combination of steepest-slope detection of the pulse wave derived from the green channel of the reflected light and its correlation to an optimized pulse wave pattern. | PC: >0.99 (HR), ≥0.90 (HRV) | 68 (28 F, 40 M) |
| [38] | 2016 | HR, RR | Contact-based (HR) and contactless (RR) | HTC One M8, HTC Corporation, New Taipei City, Taiwan | Front (for RR) and rear (for HR) camera | RR: 320 × 240 (ROI: 49 × 90, abdomen); HR: 176 × 144 (ROI: 176 × 72) | 30 (down-sampled to 20 (RR), 25 (HR)) | -- | • Frequency domain analysis of the noncontact video recordings of chest and abdominal motion. | Average of median errors for RR: 1.43%–1.62% between 6 and 60 breaths per minute | 11 (2 F, 9 M) |
| [45] | 2012 | HR | Contactless (face) | iPhone 4, Apple Inc., Cupertino CA | Front camera | 640 × 480 | 30 | 20 s | • Analysis of the raw video signal (green channel) and ICA-decomposed signals of the face in the frequency domain. | Error rate: 1.1% (raw signal), 1.5% (ICA-decomposed signals) | 10 (2 F, 8 M) |
| [49] | 2018 | HR, RR | Contactless (face) | LG G2, LG Electronics Inc., Korea | Rear camera | -- | 30 (down-sampled to 10) | 20 s | • Frequency domain analysis of the color variations in the reflected light (hue) from the face. | PC: 0.9201 (HR) and 0.6575 (RR) | 25 (10 F, 15 M) |
| [36] | 2016 | HR | Contact-based (index finger) | -- | Rear camera | 1920 × 1080 | -- | -- | • Frame-difference-based motion detection for improving data quality. • Uses all 3 channels (R, G, B) for PPG extraction. • Blood volume flow was observed clearly in the red channel. | Average accuracy: 98% | 20 |
| [50] | 2015 | Pulse, HR, HRV | Contact-based (index finger) | Motorola Moto X, Motorola, Libertyville, IL and Samsung S 5 | Rear camera | 640 × 480 | 30 | 100 s | • Extracts PPG by averaging the green channel data of the video. • HR is calculated by detecting consecutive PPG peaks. | PC of pulse and R-R interval from two phone models: >0.95 | 11 |
| [40] | 2014 | HR, NPV | Contact-based (index finger) | iPhone 4S, Apple Inc., Cupertino CA | Rear camera | ROI: 192 × 144 | 30 | 20 s | • HR and NPV were measured in the presence of a controlled motion (6 Hz) of the left hand. • Evaluated the effect of motion artifact (MA) on the PPG in all three color (R, G, B) channels. | Higher SNR for B and G channel PPG in the presence of 6 Hz MA. PC: HR >0.996 (R, G, B), NPV = 0.79 (G) | 12 (M) |
| [51] | 2014 | HR, HRV | Contact-based (index finger) | Sony Xperia S, Sony Corporation, Tokyo, Japan | Rear camera | -- | -- | 60 s | • HR was estimated by detecting consecutive PPG peaks and also the dominant frequency. • Combines several parameters (HR, HRV, Shannon entropy) to detect atrial fibrillation (AF). | HR error rate: 4.8%; AF detection: 97% specificity, 75% sensitivity | -- |
| [52] | 2012 | HR, HRV | Contact-based (index finger) | iPhone 4S and Motorola Droid, Motorola, Libertyville, IL | Rear camera | ROI: 50 × 50 | 30 (iPhone), 20 (Droid) | 2 min (iPhone), 5 min (Droid) | • Several ECG parameters were extracted with two different smartphone models in both supine and tilt positions and compared with data obtained from a standard five-lead ECG. | PC: ~1.0 (HR); PC for other ECG parameters: 0.72–1 (Droid), 0.8–1 (iPhone) | 9 (iPhone), 13 (Droid) |
| [44] | 2012 | HR | Contact-based (index finger) | HTC HD2 and Samsung Galaxy S | Rear camera | ROI: 288 × 352 (HTC), 480 × 720 (Samsung) | 25 (HTC), 30 (Samsung) | 6 s | • HR is calculated by detecting consecutive PPG peaks. | Error: ±2 bpm | 10 |
| [53] | 2012 | HR | Contact-based (index finger) | Motorola Droid, Motorola, Libertyville, IL | Rear camera | ROI: 176 × 144 | 20 | 5 min | • HR was obtained from the PPG signals while sitting, reading and video gaming using Android-based software. | PC: ≥0.99; Error: ±2.1 bpm | 14 (11 F, 3 M) |

3.2. Pulmonary Health Monitoring

Air pollution across the globe has increased significantly in the last decade [54], putting billions of people at increased risk of chronic pulmonary conditions such as chronic cough, asthma, chronic obstructive pulmonary disease (COPD) and lung cancer. In addition, tobacco smoking is one of the key risk factors for lung cancer and other pulmonary diseases [55]. In fact, lung cancer is the most common form of cancer and caused ~19% of all cancer-related deaths in 2018 [55]. Therefore, early detection of lung diseases and continuous monitoring of pulmonary health are paramount for timely and effective medical intervention. Many researchers [56,57,58,59,60,61,62] have used the smartphone microphone to detect the sounds of coughing and breathing and analyzed the recorded audio signals in an effort to develop a cost-effective, portable tool for rapid assessment of pulmonary health.

The smartphone can also be used for lung rehabilitation exercises. In Reference [56], an interactive game was developed in which users dodge obstacles by inhaling and exhaling. The game, ‘Flappy Breath’, was designed for smartphones and used either the built-in microphone or a Bluetooth-enabled stretchable chest belt to detect breathing. When played using the microphone, the game detects the frequency and strength of the input sound, calculates the average volume of airflow, and calibrates itself to identify the frequencies corresponding to silence, inhalation and exhalation. However, interference from other nearby sound sources can corrupt the sound of breathing and may even make the game unresponsive to breathing. In contrast, the game does not require any initial calibration when played using the chest belt, which also keeps the user’s hands free but incurs an additional cost. The app can also store a person’s breathing patterns and airflow information over a long period of time, which can be useful for long-term monitoring of lung health.
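A calibration step of the kind described above can be illustrated, in a much simplified form, by learning an energy threshold from a short recording of silence and then flagging audio frames that exceed it. This hypothetical NumPy sketch only separates breathing from silence; it does not reproduce the frequency-based discrimination of inhalation and exhalation used in Flappy Breath, and the 50 ms frame length and the mean + 3 × standard deviation rule are assumptions.

```python
import numpy as np


def frame_rms(audio, fs, frame_ms=50.0):
    """RMS energy of consecutive non-overlapping frames of `frame_ms` ms."""
    n = int(fs * frame_ms / 1000)
    audio = np.asarray(audio, dtype=float)
    usable = len(audio) // n * n
    frames = audio[:usable].reshape(-1, n)
    return np.sqrt(np.mean(frames ** 2, axis=1))


def calibrate_threshold(silence, fs, k=3.0):
    """Energy threshold learned from a recording of silence (mean + k * std)."""
    rms = frame_rms(silence, fs)
    return rms.mean() + k * rms.std()


def breathing_frames(audio, fs, threshold):
    """Boolean mask: True for frames whose energy exceeds the silence threshold."""
    return frame_rms(audio, fs) > threshold
```

Because the threshold is learned from the user's own environment, it adapts to steady background noise, but, as noted above, transient sounds from nearby sources can still exceed it and be mistaken for breathing.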

In Reference [57], a cough detection algorithm was used to analyze the audio signal recorded by the smartphone microphone to detect cough events. The authors first obtained the spectrogram of the time-varying audio signal and found that coughs produce higher energy over a wide frequency range than other sounds such as throat clearing, speech, and noise. Based on this distinct pattern, the spectrograms of cough sounds from several training sequences were isolated, normalized and analyzed using principal component analysis (PCA). A subset of the key principal components was then used to reconstruct the cough signal with high fidelity and, finally, to classify cough events. The authors reported a sensitivity of 92% and a false positive rate of 0.5% in recognizing cough events using a random forest classifier. However, the phone was placed around the user’s neck or in the shirt pocket to improve the quality of the recorded sound, which may not be comfortable or always feasible for regular use. In addition, further research is needed to implement the algorithm on a mobile platform for a complete phone-based cough detection system. A similar phone-based system for identifying and monitoring nasal symptoms such as nose blowing, sneezing and runny nose was proposed in Reference [58]. This system also records GPS location data whenever a nose-related event occurs, thereby keeping a record of contextual information about the event for future reference.
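The spectrogram-plus-PCA idea described above can be sketched with NumPy alone: compute a magnitude spectrogram, fit principal components to normalized training spectra via the singular value decomposition, and reconstruct new spectra from a few components. The frame length, hop size and function names are assumptions, and the random forest classification step of [57] is deliberately omitted; the projection coefficients or the reconstruction error could serve as features for such a classifier.

```python
import numpy as np


def magnitude_spectrogram(audio, n_fft=256, hop=128):
    """Columns are Hann-windowed FFT magnitudes of successive frames."""
    audio = np.asarray(audio, dtype=float)
    win = np.hanning(n_fft)
    starts = range(0, len(audio) - n_fft + 1, hop)
    return np.stack([np.abs(np.fft.rfft(win * audio[s:s + n_fft])) for s in starts], axis=1)


def unit_norm(v):
    """Scale a spectral vector to unit Euclidean norm (loudness normalisation)."""
    return v / np.linalg.norm(v)


def fit_pca(X, n_components):
    """PCA via SVD. X: (n_samples, n_features). Returns mean and component rows."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def reconstruct(x, mean, components):
    """Project x onto the principal subspace and map it back."""
    coeffs = components @ (x - mean)
    return mean + components.T @ coeffs
```

A spectrum that resembles the training coughs is reconstructed almost exactly from a few components, whereas speech or throat clearing leaves a larger residual `np.linalg.norm(x - reconstruct(x, mean, comps))`.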

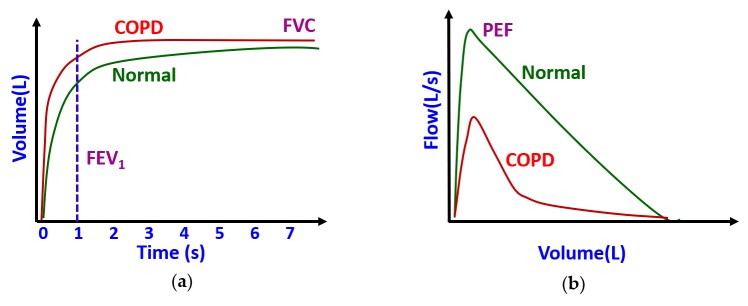

Several researchers [58,59,60,61,62] used smartphone microphones to realize low-cost spirometers on a mobile platform. Spirometers are widely used in clinical settings to quantify the flow and volume of air inspired and expired by the lungs during breathing. In standard spirometry, a volume-time (VT) curve (Figure 5a) and a flow-volume (FV) curve (Figure 5b) are obtained from a forced exhalation. These curves are used to extract clinically important parameters such as forced vital capacity (FVC), forced expiratory volume in 1 s (FEV1), peak expiratory flow (PEF), and the ratio of FEV1 to FVC (FEV1/FVC), which are routinely used to determine the degree of airflow obstruction in patients with pulmonary disorders such as asthma, COPD and cystic fibrosis. However, clinical spirometers are expensive, often costing several thousand dollars. A smartphone-based spirometer can therefore be a viable low-cost alternative, particularly for people in remote areas and in underdeveloped countries.

Figure 5.

Typical spirometric curves: (a) volume-time curve and (b) flow-volume curve.
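
The parameters above follow directly from a flow-time signal. The sketch below assumes a clean, calibrated flow signal in L/s whose first sample is the onset of forced exhalation; clinical spirometry determines time zero more carefully (back-extrapolation), which is omitted here. The function name and the synthetic signal are hypothetical. Plotting flow against the integrated volume would give the FV curve.

```python
import math

def spirometry_parameters(flow, fs):
    """FVC, FEV1, PEF and FEV1/FVC from an expiratory flow signal.

    flow: flow rate in L/s, assumed to start at the onset of forced exhalation.
    fs:   sampling rate in Hz.
    """
    dt = 1.0 / fs
    volume = [0.0]  # volume-time (VT) curve by trapezoidal integration
    for a, b in zip(flow, flow[1:]):
        volume.append(volume[-1] + 0.5 * (a + b) * dt)
    fvc = volume[-1]                                # total exhaled volume (L)
    fev1 = volume[min(round(fs), len(volume) - 1)]  # volume after 1 s (L)
    pef = max(flow)                                 # peak expiratory flow (L/s)
    return {"FVC": fvc, "FEV1": fev1, "PEF": pef, "FEV1/FVC": fev1 / fvc}

# Synthetic exponentially decaying flow, sampled at 100 Hz for 6 s.
fs = 100
flow = [8.0 * math.exp(-i / fs / 0.6) for i in range(6 * fs)]
print({name: round(value, 2) for name, value in spirometry_parameters(flow, fs).items()})
```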

A smartphone-based spirometer, ‘SpiroSmart’, was proposed in Reference [58]; it uses the built-in microphone to record the sound of forced exhalation and sends the audio data to a remote server for analysis and parameter extraction. The server runs an algorithm that estimates the flow rate from the audio signal of forced exhalation. The authors first compensated for the loss of air pressure as the sound travels from the mouthpiece to the microphone. They then estimated the air pressure at the opening of the mouth and converted it to flow rate. This signal was further processed to extract a set of features over 15 ms windows to approximate the flow rate over time. Finally, the spirometric parameters were estimated from the flow rate features using regression techniques (bagged decision trees and mean-squared-error-based regression) and k-means clustering. In addition, the FV curve was estimated by regression using a combination of a conditional random field (CRF) and a bagged decision tree. The authors reported a median error of ~8% for the four spirometric parameters relative to measurements from a clinical spirometer. In later work [60,61], the same research group proposed a call-in system, ‘SpiroCall’, in which users, instead of recording the sound on the phone, call in directly so that the audio of forced exhalation is recorded on a remote server. SpiroCall runs an algorithm similar to that of SpiroSmart on the server to estimate the spirometric parameters from the audio data. Transmitting the exhalation sound over voice channels was found to have a negligible effect on the bandwidth and resolution of the signal as well as on the accuracy of the system, making it suitable for remote monitoring of lung health.
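
The windowing step can be sketched as follows. This is only a stand-in: it summarizes each non-overlapping 15 ms window by its RMS amplitude, a crude intensity envelope, whereas SpiroSmart extracted a richer feature set per window. The 8 kHz sampling rate (typical of narrow-band telephone audio) and the synthetic decaying tone are assumptions.

```python
import math

def windowed_rms(signal, fs, window_ms=15.0):
    """RMS of each non-overlapping window of window_ms milliseconds."""
    n = max(1, int(fs * window_ms / 1000.0))  # samples per window
    envelope = []
    for start in range(0, len(signal) - n + 1, n):
        frame = signal[start:start + n]
        envelope.append(math.sqrt(sum(x * x for x in frame) / n))
    return envelope

# A decaying 500 Hz tone standing in for 1 s of exhalation audio at 8 kHz.
fs = 8000
audio = [math.exp(-t / fs / 0.5) * math.sin(2 * math.pi * 500 * t / fs) for t in range(fs)]
envelope = windowed_rms(audio, fs)
print(len(envelope))  # 66 windows of 120 samples
```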

In the smartphone-based spirometer proposed in Reference [62], the authors attached a commercial mouthpiece to the smartphone using a custom 3D-printed adapter made of polylactic acid (PLA). After down-sampling and filtering the original audio signal, the authors performed a time-frequency analysis of the recorded signal using variable frequency complex demodulation (VFCDM). From the VFCDM output, they derived two curves: (i) maximum power of each sample against accumulated maximum power, and (ii) accumulated maximum power against time. These resembled the typical FV curve and VT curve, respectively. Finally, FVC, FEV1 and PEF were extracted from these curves. Although the individual parameters showed poor correlation with the reference, the FEV1/FVC ratio, also known as the Tiffeneau-Pinelli index and a key metric in diagnosing chronic obstructive pulmonary disease (COPD), was found to be highly correlated with the reference measurements, with a root mean squared error (RMSE) of ~5.5% and ~14.5% in healthy persons and COPD patients, respectively.

In References [63,64], a smartphone application, ‘LungScreen’, was developed as a personalized tool for lung cancer risk assessment. The application assesses the risk of lung cancer based on the user’s responses to an interactive questionnaire covering the duration of tobacco use, occupational environment and family history of lung cancer. Based on this information, it instantly classifies users into one of three risk groups: low, moderate or high. Using location information from the smartphone’s GPS sensor, users at high risk of lung cancer are referred and navigated to their nearest screening centers for further investigation. Although the app can be useful for initial, rapid screening of lung cancer, its sensitivity and specificity were not reported [63]. In addition, in Reference [64], 32 of the 158 participants (Baranya County, Hungary) whom the app identified as being at high risk of non-small-cell lung carcinoma (NSCLC) were found positive in low-dose computed tomography (LDCT) screening; however, the false negative rate (FNR) of the app was not reported.
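
The figures reported for [64] support only one accuracy measure. Treating LDCT positivity as the reference (an assumption, since a positive LDCT is not a confirmed diagnosis), the counts give the app’s positive predictive value in that cohort, while the false negative rate cannot be computed because users rated low or moderate risk were not screened:

```python
def positive_predictive_value(confirmed_positive, flagged_high_risk):
    """Share of app-flagged (high-risk) users found positive by the reference test."""
    return confirmed_positive / flagged_high_risk

# Counts reported for Reference [64]: 32 LDCT-positive among 158 flagged users.
ppv = positive_predictive_value(32, 158)
print(f"PPV = {ppv:.1%}")  # PPV = 20.3%

# The FNR, FN / (FN + TP), needs FN: users the app rated low or moderate risk
# who nevertheless have cancer. Those users were not screened, so FN is unknown.
```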

3.3. Ophthalmic Health Monitoring

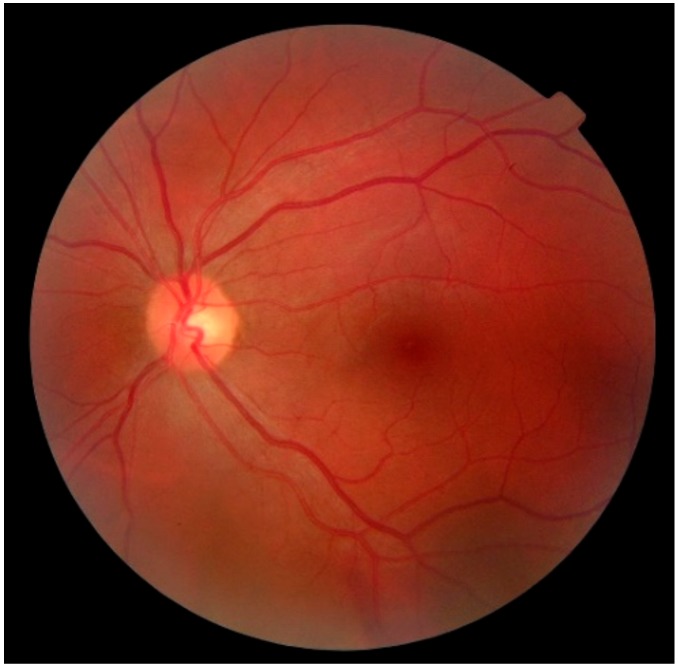

Diabetic Retinopathy (DR) is a common complication of diabetes that, if diagnosed late and left untreated, can lead to blindness. Currently, seven-field stereoscopic dilated fundus photography is considered the ‘gold standard’ for diagnosing DR by the Early Treatment Diabetic Retinopathy Study (ETDRS) group [65,66]. However, this protocol requires an expensive imaging system, specially trained photographers, and specialized processing and storage of films. Single-field digital fundus photography (Figure 6), although not as comprehensive as seven-field stereoscopic dilated fundus photography, can still serve as a screening tool for DR before a detailed ophthalmic evaluation and management [67]. Single-field digital fundus imaging is less expensive and more convenient than standard seven-field stereoscopic ETDRS photography. However, it is still costly, with complete imaging systems priced from several thousand to ten thousand dollars [68], which limits its large-scale adoption for diagnosing DR and other eye-related diseases, especially in developing and underdeveloped countries. Meanwhile, the ever-increasing popularity and rapid technological advances of smartphone cameras, coupled with modern cloud-based image processing, storage and management services, have paved the way for low-cost and efficient remote screening and diagnosis of ophthalmic diseases. Smartphone-based fundus imaging can potentially be useful in inpatient consultations and emergency room visits [69].

Figure 6.

Image of the retinal fundus of a healthy eye; Source: https://pixabay.com/en/eye-fundus-close-1636542/.

At present, some smartphone applications are available to perform simple ophthalmic tests, although their reliability and performance are often not guaranteed. To diagnose Diabetic Macular Edema (DME), Diabetic Retinopathy (DR) and most types of age-related macular degeneration (AMD), a good-quality fundus image with an adequate field-of-view is critical. A resolution of at least 50 pixels/° and an imager larger than 1024 × 768 pixels are required [70], and most modern smartphone cameras meet these requirements. The idea of using the smartphone camera for retinal fundus imaging was first presented in Reference [69]. In this work, the retinal fundus was imaged with a smartphone camera through a 20 diopter (D) lens, and a pen torch was used for illumination. Although it was the first of its kind, the system was not user-friendly and could not ensure good image quality, because the examiner had to hold the pen torch, the smartphone and the lens simultaneously while directing the light, focusing the camera and, finally, pressing the touchscreen to capture the image. An alternative method for capturing good-quality fundus images was proposed in Reference [71]. Unlike Reference [69], this system used the smartphone’s embedded flashlight as the light source for the camera. The user can use one hand to control the smartphone for focusing, magnifying and recording a video while directing the light into the patient’s retina and holding a 20D or 28D ophthalmic lens with the other hand. A still image of the retinal fundus was then obtained from the video sequence. In Reference [72], the authors demonstrated capturing fundus images from human and rabbit eyes using an approach similar to that of [71]. They used an inexpensive application (Filmic Pro, Cinegenix LLC, Seattle, WA, USA; http://fimicpro.com/) to control the illumination, focus and light exposure while recording the video.
Although all of these systems captured fundus images, none was validated against or compared with standard systems. Furthermore, these techniques require the use of both hands, leaving no hand free for indentation. In addition, their potential for peripheral retinal imaging was not explored.
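
The resolution requirement quoted above [70] reduces to simple arithmetic if the image’s pixels are assumed to be spread uniformly over the captured field of view (lens distortion ignored). The helper below is hypothetical; it treats 50 pixels/° as a minimum angular sampling and 1024 × 768 as a minimum image size, and the sensor sizes and fields of view in the usage lines are illustrative.

```python
def check_fundus_resolution(width_px, height_px, fov_deg,
                            min_px_per_deg=50.0, min_size=(1024, 768)):
    """Return (meets_requirements, pixels_per_degree) for a camera/optics pair.

    fov_deg is the angular field of view spanned by the image width; pixels are
    assumed to be spread uniformly across it (lens distortion is ignored).
    """
    px_per_deg = width_px / fov_deg
    size_ok = width_px >= min_size[0] and height_px >= min_size[1]
    return (px_per_deg >= min_px_per_deg and size_ok), px_per_deg

# A hypothetical 12-megapixel sensor (4032 x 3024) imaging a 20 degree field.
print(check_fundus_resolution(4032, 3024, 20.0))    # (True, 201.6)
# A 1024 x 768 image spread over a 55 degree field falls short of 50 px/deg.
print(check_fundus_resolution(1024, 768, 55.0)[0])  # False
```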

In Reference [73], a lightweight 3D-printed attachment was designed for smartphones to capture high-quality fundus images. Unlike the systems reported in References [69,71,72], the attachment holds an ophthalmic lens at a recommended but adjustable distance from the smartphone’s camera lens and can use either the phone’s built-in flash or an external light source for illumination. A similar smartphone attachment with an external LED light source was used in Reference [68] for near visual acuity testing and fundus imaging, thus enabling remote screening of DR patients. A similar fixture was also presented in Reference [74], with slots to accommodate a smartphone and hold a 20 D lens, allowing physicians to operate the system with one hand and keep the other hand free for indentation. Along with central fundus images, both systems [73,74] enable capturing high-quality images of the peripheral retina, such as the ora serrata and pars plana. Thus, they can be useful in screening for peripheral lesions as well, although the researchers in Reference [73] did not explore this possibility.

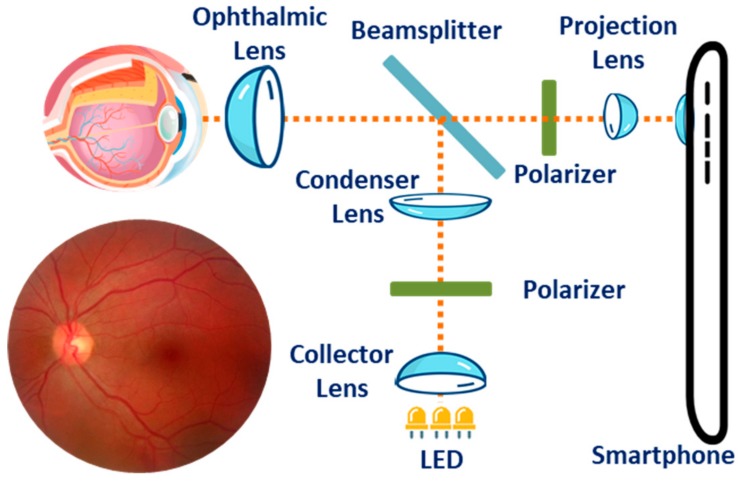

In Reference [75], a 3D-printed plastic attachment (14 × 15.25 × 9 cm³) was developed for smartphones to enable high-quality wide-field imaging of the retinal fundus. The attachment houses three white LEDs for illumination; optical components including a 54D lens, a 20 mm focal-length achromatic lens for light collection, two polarizers and a beam splitter; and a phone holder to ensure proper alignment between the camera sensor and the imaging optics. The smartphone can adjust the illumination level at the retina by regulating a battery-powered on/off dimming circuit that independently controls each LED. Variability in axial length and refractive error in the subject’s eyes was corrected by exploiting the smartphone’s auto-focus mechanism. The complete imaging system, which the authors named the Ocular CellScope, cost only $883 and was reportedly capable of capturing a wide field-of-view (~55°) in a single fundus image with a dilated pupil, making it a promising low-cost alternative to most commercial retinal fundus imaging systems.

Similar to the Ocular CellScope, a much smaller (47 × 18 × 10 mm³) and less expensive ($400) magnetic attachment called D-Eye was reported in References [76] and [77]. The device houses similar optical components, except for the light source: D-Eye uses the smartphone’s embedded flashlight. Its cross-polarization technique significantly reduced corneal Purkinje reflections, making it possible to easily visualize the optic disc and screen patients, particularly for glaucoma, even with undilated pupils. The authors reported a field of view of ∼20° for each fundus image, with significant agreement with dilated retinal biomicroscopy, which is conventionally used for grading the severity of diabetic retinopathy. Furthermore, fundus imaging of babies was convenient because they are spontaneously attracted to the non-disturbing light emitted by the device. In addition, the device allows the examiner to work at an ergonomically convenient distance (> ~1 cm) while using the smartphone’s screen to focus the light on the patient’s retinal fundus. A stitching algorithm can be used to create a composite image, thus increasing the field-of-view of the retinal fundus. Figure 7 shows the typical arrangement of the optical components for fundus imaging with a smartphone.

Figure 7.

Typical arrangement of the optical components for fundus imaging with a smartphone.
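
Why cross-polarization suppresses corneal reflections can be seen from Malus’s law, I = I₀cos²θ. Specular glare largely preserves the illumination’s polarization, so an analyzer crossed at θ = 90° blocks it, whereas light scattered by the retina is largely depolarized and roughly half of it passes. The sketch idealizes both cases (perfect polarizers, fully polarized glare, fully depolarized retinal light); real tissue and optics fall in between, and this is not a model of any specific device.

```python
import math

def through_analyzer_polarized(intensity, angle_deg):
    """Malus's law: linearly polarized light through an analyzer at angle_deg."""
    return intensity * math.cos(math.radians(angle_deg)) ** 2

def through_analyzer_depolarized(intensity):
    """An ideal polarizer transmits half of unpolarized light at any orientation."""
    return 0.5 * intensity

# Idealized case: corneal glare keeps the illumination's polarization,
# while light scattered back from the retina is fully depolarized.
glare = through_analyzer_polarized(1.0, 90.0)  # crossed polarizers
retina = through_analyzer_depolarized(1.0)
print(f"glare: {glare:.3f}, retina: {retina:.3f}")  # glare: 0.000, retina: 0.500
```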

A portable eye examination kit (PEEK), presented in References [78,79], exploits the smartphone camera to realize a portable ophthalmic imaging system. The system can perform an automatic cataract test by acquiring an image of the eye with the smartphone camera and comparing it with a set of preloaded images of cataract-affected eyes of different severity. However, additional external hardware is required to make PEEK suitable for fundus imaging. This hardware primarily comprises a red LED to illuminate blood vessels and hemorrhages, a blue LED to observe corneal abrasions and ulcers after staining the eye with fluorescein, and a lens to magnify the image. The image of the retinal fundus is then displayed on the smartphone screen for diagnostic purposes. The system was successfully used to identify visual impairment in children [80,81] and to monitor how prolonged exposure to extreme environments, such as the long, frigid darkness of the Antarctic winter, affects the health of explorers’ eyes [82].
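
Comparing a captured eye image with preloaded references can be sketched as a nearest-neighbour search. The example is hypothetical, as PEEK’s actual comparison method is not described here: it assumes 8-bit grayscale pixels from the pupil region, summarizes each image by a normalized intensity histogram, and returns the label of the reference with the smallest L1 distance. The pixel values are toy data.

```python
def histogram(pixels, bins=8):
    """Normalized intensity histogram of 8-bit grayscale pixel values."""
    counts = [0] * bins
    for p in pixels:
        counts[min(p * bins // 256, bins - 1)] += 1
    return [c / len(pixels) for c in counts]

def l1_distance(a, b):
    """Sum of absolute bin differences between two histograms."""
    return sum(abs(x - y) for x, y in zip(a, b))

def closest_reference(pixels, references, bins=8):
    """Label of the reference image whose histogram is closest (L1) to the query."""
    query = histogram(pixels, bins)
    return min(references,
               key=lambda label: l1_distance(query, histogram(references[label], bins)))

# Toy pupil-region pixels: a clear pupil is mostly dark, an opaque lens mostly bright.
references = {
    "clear": [20] * 90 + [200] * 10,
    "dense cataract": [20] * 20 + [200] * 80,
}
print(closest_reference([25] * 30 + [210] * 70, references))  # dense cataract
```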

3.4. Skin Health Monitoring

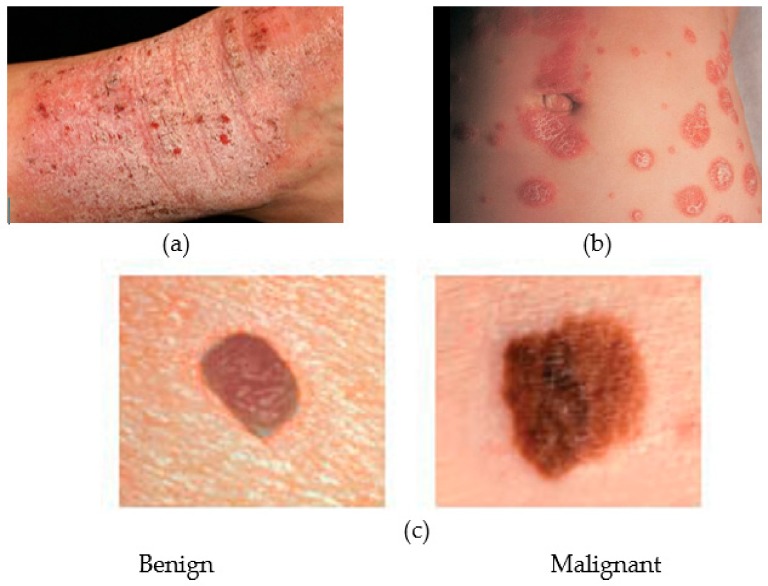

Skin cancer, which is caused by the abnormal growth of skin tissue, is one of the most common human cancers. Experts have identified three major types of skin cancer: basal cell carcinoma (BCC), squamous cell carcinoma (SCC) and melanoma, the malignant form of which is the most dangerous of the three. Melanoma is primarily caused by overexposure to the sun’s harmful ultraviolet rays, which hinder melanin synthesis by damaging the genetic material of the melanin-producing melanocyte cells of the skin, thus putting people at high risk of skin cancer. Malignant melanoma tends to spread to other parts of the body and may become fatal if not diagnosed and treated early. According to the American Cancer Society, an estimated 91,270 new cases of skin melanoma would be diagnosed in the US in 2018, and an estimated 9320 people would die of the disease [83]. Skin cancer is characterized by the development of precancerous lesions of varying shape, size, color and texture. Apart from skin cancer, other skin conditions such as psoriasis, eczema and moles require medical attention, causing lost productivity and increased medical expenditures. In fact, one in four Americans was treated for at least one skin disease in 2013, costing $11 billion in lost productivity and $75 billion in treatment and associated costs [84]. Therefore, a low-cost solution for the early detection of skin disease would be of immense value. Given their popularity, smartphones can offer a viable solution for the early diagnosis of skin diseases, particularly for remote screening and long-term monitoring of skin lesions. Figure 8 shows some common medical conditions associated with the skin.

Figure 8.

Several types of skin diseases: (a) Eczema, (b) Psoriasis and (c) two forms of Melanoma.

To evaluate the efficacy of smartphone-based imaging in assessing the evolution of skin lesions, a cross-sectional study of skin disease was conducted in Reference [85]. Photographs taken and sent by the patients themselves reportedly helped physicians strengthen, modify or confirm the diagnosis in 76.5% of the patients, thus significantly influencing the diagnosis and treatment of skin diseases. Furthermore, compared with the conventional paper-based referral system, smartphone-based teledermoscopy (TDS) referral schemes were observed to significantly reduce the waiting time for skin-cancer patients [86]. In addition, the quality of most images captured by the smartphones was good enough to reliably improve triage decisions, potentially making the management of patients with skin cancer faster and more efficient than with traditional paper-based referrals.

Most existing portable solutions for skin disease detection rely on conventional image processing techniques combined with conventional monochrome or RGB color imaging [87,88]. However, owing to their poor spatial and spectral resolution, conventional imaging approaches may not be suitable for resolving heterogeneous skin lesions [89,90] and can potentially lead to diagnostic inaccuracies. Spectral imaging techniques, on the other hand, exploit the spectral reflectance characteristics of affected skin sites [91,92] to resolve heterogeneous lesions and have been reported to be useful in differentiating skin diseases, such as early-stage melanoma from dysplastic nevi and melanocytic nevi [93], and in distinguishing various acne lesion types [94]. To study the feasibility of using smartphones for skin chromophore mapping, a ring-shaped light source for the smartphone camera was presented in Reference [95]. The ring comprises one white LED and three LEDs with different emission spectra (red, green and blue), as well as two orthogonally oriented polarizers. The polarizers reduce the specularly reflected light from the skin surface, allowing the camera sensor to detect primarily the light scattered within the skin [96]. The white light enables identification of skin malformations, while the distribution of skin chromophores is estimated from the reflected or scattered red, green and blue light. Three different smartphones were tested both in vitro and in vivo to estimate the Hemoglobin index (HI) and the Melanin index (MI). In the in vitro tests, both HI and MI reportedly increased with the concentration of absorbers in the phantoms. In vivo tests further showed that hemangiomas and nevi had higher HI and MI, respectively, than healthy skin.
Although the results from all the smartphones were promising, they lacked consistency to some extent, which was attributed to the built-in automatic camera settings of some smartphones, such as white balance and ISO, and to differences in the spectral sensitivities of the cameras.
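
Chromophore indices of this kind are often computed from log-reflectance at a few wavelengths. The sketch below assumes one widely used two-band formulation from narrow-band skin reflectance meters, a melanin index from red reflectance and an erythema (hemoglobin-related) index from the red-to-green ratio; it is not necessarily the method of [95]. Applying it to broad-band smartphone RGB channels is an approximation, in line with the camera-dependent variability reported above. Reflectances are assumed to be calibrated to (0, 1], and the sample values are hypothetical.

```python
import math

def melanin_index(r_red):
    """Melanin index, 100 * log10(1 / R_red), with R_red a reflectance in (0, 1]."""
    return 100.0 * math.log10(1.0 / r_red)

def erythema_index(r_green, r_red):
    """Erythema (hemoglobin-related) index, 100 * log10(R_red / R_green)."""
    return 100.0 * math.log10(r_red / r_green)

# Hypothetical calibrated (green, red) reflectances for healthy skin and a vascular lesion.
samples = {"healthy": (0.40, 0.60), "vascular lesion": (0.25, 0.55)}
for name, (r_green, r_red) in samples.items():
    print(name, round(melanin_index(r_red), 1), round(erythema_index(r_green, r_red), 1))
```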

A miniaturized (92 × 89 × 51 mm³) smartphone-based multispectral imaging (MSI) system, somewhat similar in principle to the approach in Reference [95], was implemented in Reference [90] to provide a portable, low-cost means of skin disease diagnosis. Instead of using four different LEDs as in Reference [95], the system uses the smartphone’s flashlight together with nine narrow-band band-pass filters on a motorized filter wheel to sequentially obtain light of different wavelengths. These components, along with a plano-concave lens, a mirror, a 10× magnifying lens and two linear polarizers, are housed in a compact enclosure. An Android application (SpectroVision) was developed to control a custom interface circuit, perform multispectral imaging and analyze skin lesions. By controlling the motorized filter wheel remotely via Bluetooth, the application captures nine images at wavelengths ranging from 440 nm to 690 nm, plus one white-light image. The captured images are sent over WiFi or LTE to a skin diagnosis/management platform, where they are further processed (gray-scale conversion, shading correction, calibration and normalization). The system was used to perform a quantitative analysis of nevus regions. In addition, the quantification of acne lesions using ratiometric spectral imaging and analysis was reported, demonstrating the potential of the system for diagnosing and managing skin lesions.
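
Shading correction and calibration are commonly implemented as flat-field correction against a dark frame and a white-reference frame, after which two spectral bands can be divided pixel by pixel to form a ratiometric image. The sketch below assumes that standard approach and uses tiny flattened frames; the exact processing performed on the platform in [90], and the band wavelengths named here, are not taken from the source.

```python
def flat_field(raw, dark, white, eps=1e-9):
    """Reflectance per pixel: (raw - dark) / (white - dark)."""
    return [(r - d) / max(w - d, eps) for r, d, w in zip(raw, dark, white)]

def ratio_image(band_a, band_b, eps=1e-9):
    """Pixel-wise ratio of two reflectance images (a simple ratiometric map)."""
    return [a / max(b, eps) for a, b in zip(band_a, band_b)]

# Hypothetical 3-pixel frames; the 560 nm and 660 nm bands are illustrative.
dark = [10, 10, 12]      # frame captured with the light source off
white = [210, 200, 212]  # frame of a white reference target
band_560 = flat_field([110, 105, 62], dark, white)
band_660 = flat_field([170, 162, 172], dark, white)
print(band_560)  # [0.5, 0.5, 0.25]
print(ratio_image(band_560, band_660))
```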

To capture the cellular details of human skin with a smartphone, a low-cost, first-of-its-kind confocal microscope was developed in Reference [97]. A two-dimensional confocal image of the skin was obtained using a slit aperture and a diffraction grating, thus avoiding any additional beam-scanning devices. The diffraction grating spread the focused illumination line over different locations on the tissue according to wavelength. The authors reported observing characteristic cellular structures of human skin, including spinous and basal keratinocytes and the papillary dermis, with lateral and axial resolutions of 2 μm and 5 μm, respectively. However, compared with commercial systems, the system has a shallower imaging depth, which can be attributed to the slit aperture and the shorter-wavelength (595 nm) light source of the smartphone device. In addition, the low illumination efficiency limits the imaging speed to only 4.3 fps. A longer-wavelength light source and better coupling with the illumination slit [98] may improve the imaging depth and speed. Nevertheless, the small size (16 × 18 × 19 cm³) and low cost ($4200) of such a high-resolution imaging system may enable its use for standard histopathologic analysis in rural areas with limited resources.

A smartphone-based system named DERMA/care was proposed in Reference [99] to assist in the screening of melanoma. An inexpensive, small (6 cm × 4 cm × 2 cm) off-the-shelf microscope was mounted on the smartphone camera to capture high-resolution images of the skin. In addition, a mobile application was developed to extract characteristic features from images of affected skin: textural features such as entropy, contrast and variance, and geometric features such as area, perimeter and diameter. These features were then fed to a support vector machine (SVM) classifier to classify skin lesions automatically. In another work, a multi-layer perceptron (MLP) was employed on a smartphone to analyze skin images captured by its camera, enabling skin cancer detection [100]. A similar application was proposed in Reference [101], which, upon capturing an image of the skin, can alert users to a potential sunburn and/or the severity of melanoma. There, a novel method was introduced to compute the time-to-skin-burn from the burn frequency level and the UV index level. Additionally, for dermoscopic image analysis, a smartphone system was developed that incorporates algorithms for image acquisition, hair detection and exclusion, lesion segmentation, feature extraction and classification. After excluding hair and identifying the region of interest (ROI), a comprehensive set of features was extracted and fed to a two-level classifier. The authors reported high accuracy (>95%) in classifying benign, atypical and melanoma images.
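
The textural and geometric features named above have simple definitions. The sketch computes entropy (over an intensity histogram) and variance of the lesion pixels, plus area, a boundary-pixel perimeter estimate and an equivalent-circle diameter from a binary lesion mask. These definitions are common choices assumed for illustration; DERMA/care’s exact definitions may differ, contrast (usually derived from a gray-level co-occurrence matrix) is omitted, and the SVM stage is not shown.

```python
import math

def lesion_features(gray, mask, bins=16):
    """Textural and geometric features of a lesion.

    gray: 2D list of 8-bit intensities; mask: 2D list of 0/1 (1 = lesion).
    Assumes the mask contains at least one lesion pixel.
    """
    rows, cols = len(mask), len(mask[0])
    values = [gray[r][c] for r in range(rows) for c in range(cols) if mask[r][c]]
    n = len(values)

    # Textural features over lesion pixels.
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    counts = [0] * bins
    for v in values:
        counts[min(v * bins // 256, bins - 1)] += 1
    entropy = -sum((k / n) * math.log2(k / n) for k in counts if k)

    # Geometric features: a lesion pixel is on the boundary if any
    # 4-neighbour lies outside the lesion (or outside the image).
    def in_lesion(r, c):
        return 0 <= r < rows and 0 <= c < cols and mask[r][c] == 1

    perimeter = sum(
        1
        for r in range(rows)
        for c in range(cols)
        if mask[r][c] and not all(in_lesion(r + dr, c + dc)
                                  for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)))
    )
    diameter = 2.0 * math.sqrt(n / math.pi)  # diameter of a circle with equal area
    return {"entropy": entropy, "variance": variance,
            "area": n, "perimeter": perimeter, "diameter": diameter}

mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]
gray = [[30, 30, 30, 30],
        [30, 100, 200, 30],
        [30, 100, 200, 30],
        [30, 30, 30, 30]]
print(lesion_features(gray, mask))
```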

3.5. Mental Health Assessment

As mentioned earlier, present-day smartphones have a number of embedded sensors, such as an accelerometer, GPS receiver, light sensor and microphone. Data from these sensors can be collected passively and, coupled with the user’s phone usage information such as call history, SMS patterns and application usage, may potentially be used to digitally phenotype an individual’s behavior and assess their mental health. For example, an individual’s stress level or emotional state can be inferred from their voice when a phone conversation is recorded with the smartphone’s microphone [102,103,104]. In addition, the accelerometer can provide information about physical activity and movement during sleep, while the GPS can provide information about location and thus the context and variety of activities. The smartphone therefore offers a less intrusive and more precise alternative to the traditional self-reporting approach and may be very useful in assessing an individual’s mental wellbeing.

Many researchers have used smartphone data to assess or predict aspects of an individual’s general mental health, such as social anxiety [105], mood [106,107] or daily stress level [108]. In Reference [105], the GPS location data of 16 university students were analyzed, and a significant negative correlation was reported between time spent at religious locations and social anxiety. Accelerometer data, along with device activity, call history and SMS patterns, were analyzed in References [106] and [107] to predict mood. A prediction model based on the Markov-chain Monte Carlo method was developed in Reference [107], achieving an accuracy of 70% in mood prediction. In Reference [106], personalized linear regression was used to predict mood from the smartphone data. In Reference [108], device usage information and data from smartphone sensors (accelerometer, GPS, light sensor, microphone) were used in an attempt to determine the factors associated with daily stress levels and mental health status. The researchers found that sleep duration and mobility were correlated with daily stress levels, and that speech duration, geospatial activity, sleep duration and kinesthetic activity were associated with mental health status.
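
“Personalized” modelling here means fitting a separate model for each user instead of one population model. A minimal sketch, assuming a single hypothetical predictor (daily minutes of phone activity) and an ordinary least-squares line per user; the actual features and model details of [106] are not reproduced:

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def fit_personalized(data):
    """data: {user: (feature_values, mood_scores)} -> {user: (intercept, slope)}."""
    return {user: fit_line(xs, ys) for user, (xs, ys) in data.items()}

def predict(models, user, x):
    """Mood prediction for one user from that user's own fitted line."""
    a, b = models[user]
    return a + b * x

# Hypothetical feature: daily minutes of phone activity; target: self-reported mood.
data = {
    "user_a": ([10, 20, 30, 40], [3.0, 4.0, 5.0, 6.0]),  # mood rises with activity
    "user_b": ([10, 20, 30, 40], [6.0, 5.0, 4.0, 3.0]),  # mood falls with activity
}
models = fit_personalized(data)
print(round(predict(models, "user_a", 50), 2), round(predict(models, "user_b", 50), 2))  # 7.0 2.0
```

On these toy data, a single pooled model fitted to both users would have a slope of exactly zero, which illustrates why per-user models can capture relationships that a population model averages away.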

Some works in the literature also exploited smartphone sensor data and usage information to assess specific mental health conditions such as depression [109,110,111,112,113,114], bipolar disorder [115,116,117,118,119], schizophrenia [119,120,121,122] and autism [123]. A significant correlation between some GPS location features and depressive symptoms was observed in Reference [111]. In Reference [114], the authors analyzed data from the smartphone’s GPS, accelerometer, light sensor and microphone, as well as the call history, application usage and SMS patterns of 48 university students. They observed that the students’ depression was significantly and negatively correlated with sleep and with conversation frequency and duration. Some researchers [109,110,112,113] attempted to predict depression from smartphone sensor data. In Reference [109], depression was predicted from the smartphone’s accelerometer, GPS and light sensor data. In Reference [113], both support vector machine (SVM) and random forest classifiers were used to predict depression from GPS and accelerometer data, as well as from the calendar, call history, device activity and SMS patterns. However, the prediction accuracy in References [109] and [113] was only slightly better than chance. In Reference [110], only GPS data were used to predict depression, and moderate sensitivity and specificity were achieved with an SVM classifier. In Reference [112], both GPS data and device activity information were exploited to predict depressive symptoms; using a logistic regression classifier, the authors achieved a prediction accuracy of 86%.
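
As an example of a GPS feature, location variance (the logarithm of the summed variances of latitude and longitude) has been used in this literature and can be correlated with questionnaire scores. The sketch assumes that definition, which is not necessarily the feature set of [111] or [114], and implements Pearson correlation by hand; the traces and scores are hypothetical.

```python
import math
from statistics import pvariance

def location_variance(lats, lons):
    """log(var(latitude) + var(longitude)); requires some movement (sum > 0)."""
    return math.log(pvariance(lats) + pvariance(lons))

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical GPS traces (lat, lon in degrees) and depression questionnaire scores.
participants = [
    ([45.00, 45.05, 45.10], [-75.70, -75.60, -75.50], 5),
    ([45.00, 45.01, 45.02], [-75.70, -75.69, -75.68], 10),
    ([45.000, 45.001, 45.002], [-75.700, -75.699, -75.698], 18),
]
features = [location_variance(lat, lon) for lat, lon, _ in participants]
scores = [score for _, _, score in participants]
print(round(pearson(features, scores), 2))  # negative: less movement, higher score
```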

In Reference [115], significant correlations between activity levels, measured with the smartphone’s accelerometer, and bipolar states were observed in some individual patients. In Reference [119], the authors used Bluetooth and GPS data and battery consumption information from the smartphone to track an individual’s social interactions and activities, and found that these data were significantly correlated with depressive and manic symptoms. In References [116,117], the smartphone’s accelerometer and GPS data were used to detect the mental state and state changes of persons with bipolar disorder. The authors reported detecting state changes with 96% precision and 94% recall and achieved an accuracy of 80% in state recognition [116]. Using additional information from the microphone and call logs, the precision and recall increased to 97%, improving the reliability of the system. However, the state recognition accuracy was somewhat reduced, which was attributed to noisy ground truth and inconsistencies in the participants’ daily behavioral patterns [117]. In Reference [118], the smartphone’s accelerometer and light sensor data, call history and SMS patterns were exploited in generalized and personalized models to predict the state of persons with bipolar disorder, achieving a precision and recall of 85% and 86%, respectively.

In Reference [120], a study was conducted on the feasibility and acceptance of passive sensing by smartphone sensors among people with schizophrenia. Most persons with schizophrenia were found to be open to smartphone sensing, and two-thirds expressed interest in receiving feedback, but a third expressed concern about privacy. In Reference [121], GPS location information was exploited to recognize outdoor activities among people with schizophrenia and thereby to infer social functioning. In Reference [122], the authors proposed a system called CrossCheck that used data from the GPS, accelerometer, light sensor and microphone, as well as call history, application usage and SMS patterns, to predict changes in mental health among patients with schizophrenia. They collected data from 21 patients and observed statistically significant associations between the patients’ mental health status and features corresponding to sleep, mobility, conversations and smartphone usage. The authors used random forest regression to predict the mental health indicators of patients with schizophrenia and reported a mean error of 7.6% with respect to scores derived from the participants’ responses to a questionnaire.

Recently, Apple Inc.’s ResearchKit initiative launched a mobile application called “Autism and Beyond” [123]. The application uses the iPhone’s front-facing camera to capture images of users’ facial expressions in response to standardized stimuli and analyzes these images using algorithms designed for emotion recognition. It can potentially identify individuals who are at risk of autism and other developmental disorders. A large-scale trial is currently underway to assess the validity and utility of this approach.

3.6. Activity and Sleep Monitoring Systems

Daily physical activities such as walking, running and climbing stairs involve several joints and muscles of the body and require proper coordination between the nervous system and the musculoskeletal system. Therefore, any abnormality in the functioning of these biological systems may affect the natural patterns of these activities. For example, persons in the early stages of Parkinson’s disease tend to take small, shuffling steps and occasionally have difficulty starting, stopping and turning while walking [22,124]. Additionally, due to the gradual age-related deterioration of motor control, older adults are at high risk of falls and mobility disability. In fact, an estimated 10% (2.7 million) of Canadians aged 15 years and over had mobility-related disabilities in 2017 [125]. Furthermore, falls in older adults may cause hip and bone fractures, joint injuries and traumatic brain injury, which not only require long recovery times but also restrict physical movement, thereby affecting an individual’s daily activities. In addition, fall-related fractures are reportedly strongly correlated with mortality [124]. Moreover, nearly one-third (30%) of Canadian adults between 18 and 79 years of age were estimated to be at intermediate or high risk for sleep apnea [126], which is often associated with high blood pressure, heart failure, diabetes, stroke, attention deficit/hyperactivity disorder and an increased rate of automobile accidents [127,128]. Therefore, quantitative assessment of gait, knee joints and daily activities, including sleep, is critical for the early diagnosis of musculoskeletal or cognitive diseases and sleep disorders, for fall and balance assessment, and for post-injury rehabilitation.

Most existing activity monitoring systems rely on a network of cameras fixed at key locations in a home [22,129]. Although such systems can provide comprehensive information about complex gait activities, they are expensive and generally have a limited field of view. In recent years, there has been growing interest in using smartphone-embedded motion sensors, such as accelerometers, gyroscopes and magnetometers, together with location sensors such as GPS, for real-time monitoring of human gait and activities of daily living (ADL) [130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157]. These sensors measure the linear and angular motion of the body and the location of the user, which can be used to quantify and classify gait events and activities in real time. A general architecture of smartphone-based activity monitoring is presented in Figure 9.

Figure 9.

General architecture of a smartphone-based activity monitoring system.

At the heart of an activity monitoring and recognition system is the classification or recognition algorithm. However, signal processing and the extraction of appropriate features also play critical roles in realizing a computationally efficient and reliable system. Signal processing may include filtering, data normalization and data windowing (segmentation). Subsequently, a set of key features from the statistical, temporal, spatial and frequency domains is extracted and fed into the classification model. Table 3 lists typical features extracted from motion signals. Finally, a classification model such as a support vector machine (SVM) [137,138,155,158,159], naive Bayes (NB) [136,149,155,156], k-means clustering [138,149], logistic regression [134,155,156], k-nearest neighbor (KNN) [133,136,155,156,158], neural network (NN) [140,141,143,151,152], or a combination of models [134,140,141,148,150] is employed for activity recognition.
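
The preprocessing stage described above (filtering, normalization and windowing) can be sketched in a few lines of Python. This is a minimal illustration, not the method of any cited work: it assumes a single accelerometer axis sampled at 50 Hz, a 5-sample moving-average low-pass filter, z-score normalization and 128-sample windows with 50% overlap, all of which are illustrative choices.

```python
import math
from typing import List, Sequence


def moving_average(signal: Sequence[float], width: int = 5) -> List[float]:
    """Causal low-pass filter: mean of the current and up to width-1 previous samples."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - width + 1): i + 1]
        out.append(sum(window) / len(window))
    return out


def z_normalize(signal: Sequence[float]) -> List[float]:
    """Zero-mean, unit-variance scaling (population standard deviation)."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / n)
    if std == 0:
        return [0.0] * n
    return [(x - mean) / std for x in signal]


def sliding_windows(signal: Sequence[float], size: int,
                    overlap: float = 0.5) -> List[List[float]]:
    """Fixed-length segmentation with fractional overlap; the incomplete tail is dropped."""
    step = max(1, int(size * (1 - overlap)))
    return [list(signal[i:i + size])
            for i in range(0, len(signal) - size + 1, step)]


# Usage: 10 s of a synthetic 2 Hz signal sampled at 50 Hz
fs = 50
raw = [math.sin(2 * math.pi * 2 * t / fs) for t in range(10 * fs)]
windows = sliding_windows(z_normalize(moving_average(raw)), size=128, overlap=0.5)
print(len(windows), len(windows[0]))  # 6 128
```

Each window would then be passed to the feature extraction stage; overlapping windows are a common way to avoid missing activity transitions that straddle a window boundary.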

Table 3.

Typical features extracted from motion signals [22].

| Spatial Domain | Temporal Domain | Frequency Domain | Statistical Domain |
|---|---|---|---|
| Step length | Double support time | Spectral power | Correlation |
| Stride length | Stance time | Peak frequency | Mean |
| Step width | Swing time | Maximum spectral amplitude | Standard deviation |
| Walking speed | Step time | Energy | RMS acceleration |
| | Stride time | | Covariance |
| | Cadence (steps/min) | | Signal vector magnitude (SMV) |
| | | | Kurtosis |
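
Several of the statistical and frequency-domain features in Table 3 can be computed per window as shown below. This is a sketch using only the Python standard library; a naive DFT stands in for an FFT to keep it self-contained. The exact definitions (population statistics, non-excess kurtosis, energy as summed squared one-sided DFT magnitudes divided by the window length) are common conventions assumed here, and the cited works may define these features differently.

```python
import cmath
import math
from typing import Dict, List, Sequence


def dft_magnitudes(x: Sequence[float]) -> List[float]:
    """One-sided DFT magnitudes (bins 0..N//2), computed naively in O(N^2)."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def window_features(ax: Sequence[float], ay: Sequence[float],
                    az: Sequence[float], fs: float) -> Dict[str, float]:
    """A few statistical and frequency-domain features of one window."""
    n = len(ax)
    # Signal vector magnitude: per-sample Euclidean norm of the three axes
    smv = [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(ax, ay, az)]
    mean = sum(smv) / n
    var = sum((s - mean) ** 2 for s in smv) / n
    rms = math.sqrt(sum(s * s for s in smv) / n)
    # Pearson (non-excess) kurtosis; 0.0 if the window is constant
    kurt = sum((s - mean) ** 4 for s in smv) / n / var ** 2 if var > 0 else 0.0
    # Remove the mean (gravity/DC) so the spectral peak reflects motion
    mags = dft_magnitudes([s - mean for s in smv])
    peak_bin = max(range(1, len(mags)), key=lambda k: mags[k])
    return {
        "mean": mean,
        "std": math.sqrt(var),
        "rms": rms,
        "kurtosis": kurt,
        "peak_freq_hz": peak_bin * fs / n,
        # Assumed definition: sum of squared one-sided magnitudes / N
        "energy": sum(m * m for m in mags[1:]) / n,
    }


# Usage: 2 s at 50 Hz; vertical axis = gravity plus a 2 Hz oscillation
fs = 50.0
n = 100
az = [9.81 + math.sin(2 * math.pi * 2.0 * t / fs) for t in range(n)]
feats = window_features([0.0] * n, [0.0] * n, az, fs)
print(round(feats["peak_freq_hz"], 2), round(feats["kurtosis"], 2))  # 2.0 1.5
```

Spatial and temporal gait features (step length, stance time, cadence) additionally require step or heel-strike detection and are not covered by this sketch.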

The Manhattan distance metric was used in Reference [130] to compare the accelerometer data of an average gait cycle from a test sample with three template cycles corresponding to three different walking speeds. The authors tried both statistical and machine learning approaches, and the highest accuracy (~99%) in classifying the three walking speeds was achieved with an SVM. An important limitation of this approach is that it relies on local peak and valley detection to identify gait cycles, and the consistency of these peaks and valleys varies with walking speed and style. A two-stage continuous hidden Markov model (CHMM) was proposed in Reference [131] for human activity recognition. Optimal feature subsets were first selected using random forest importance measures. Static and dynamic activities were then distinguished by a first-level CHMM, followed by a second-level CHMM that performed a finer classification of the activities with an accuracy of ~92%. In Reference [132], an incremental classification approach based on a fuzzy min-max (FMM) neural network was used to learn six activities: walking, ascending and descending stairs, sitting, standing, and lying. The authors then applied a classification and regression tree (CART) algorithm to predict these activities and reported a recognition accuracy of ~96.5%. A voting scheme was adopted in Reference [134] to combine the outputs of an ensemble of classifiers, including a J48 decision tree, logistic regression (LR) and a multilayer perceptron (MLP). These authors reported identifying four activities (walking, jogging, sitting and standing) with an accuracy of more than 97%. However, the approach performed poorly in recognizing ascending and descending stairs, for which the accuracy dropped to ~85% and ~73%, respectively.
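
The template-matching idea described for Reference [130] can be sketched as a nearest-template classifier under the Manhattan (L1) distance. The sketch assumes that gait cycles have already been segmented (for example, by peak and valley detection) and resamples them to a common length; the function names and the 5-sample templates are hypothetical and are not data from [130].

```python
from typing import Dict, List, Sequence


def resample(cycle: Sequence[float], length: int) -> List[float]:
    """Linearly interpolate one gait cycle to `length` samples (length >= 2)."""
    last = len(cycle) - 1
    out = []
    for i in range(length):
        pos = i * last / (length - 1)
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(cycle[lo] * (1 - frac) + cycle[hi] * frac)
    return out


def average_cycle(cycles: Sequence[Sequence[float]], length: int) -> List[float]:
    """Point-wise mean of several cycles after resampling to a common length."""
    resampled = [resample(c, length) for c in cycles]
    return [sum(col) / len(col) for col in zip(*resampled)]


def manhattan(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 distance: sum of absolute point-wise differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify_speed(cycles: Sequence[Sequence[float]],
                   templates: Dict[str, Sequence[float]]) -> str:
    """Label of the template closest (L1 distance) to the average test cycle."""
    length = len(next(iter(templates.values())))
    avg = average_cycle(cycles, length)
    return min(templates, key=lambda label: manhattan(avg, templates[label]))


# Usage with hypothetical 5-sample templates (not data from [130])
templates = {
    "slow": [0.0, 1.0, 0.0, -1.0, 0.0],
    "normal": [0.0, 2.0, 0.0, -2.0, 0.0],
    "fast": [0.0, 3.0, 0.0, -3.0, 0.0],
}
cycles = [
    [0.0, 2.1, 0.0, -1.9, 0.0],
    [0.0, 1.0, 2.0, 1.0, 0.0, -1.0, -2.0, -1.0, 0.0],
]
print(classify_speed(cycles, templates))  # normal
```

Because the decision rests entirely on the segmented cycles, errors in peak and valley detection propagate directly into the average cycle, which is the limitation of this approach noted in the text.
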
In Reference [135], four smartphones attached to the waist, back, leg and wrist captured motion data from their accelerometers and gyroscopes for activity measurement, environmental data from humidity, temperature and barometric pressure sensors, and location estimates from Bluetooth beacons. A modified conditional random field (CRF) algorithm was implemented on each unit to classify the activities individually using a set of suitable features extracted from the preprocessed sensor data. The decisions from the individual units were then assessed according to their relevance to the respective body positions to determine the final activity. The authors reported identifying 19 daily activities, including cooking, cleaning utensils, and using the bathroom sink and the refrigerator, with more than 80% accuracy.
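
Decision-level fusion of the kind used in Reference [135], where per-unit decisions are assessed according to their relevance to body position, and the ensemble voting of Reference [134] can both be illustrated with weighted majority voting. This is a hypothetical sketch: how [135] quantifies relevance is not described here, so the per-position weights below are invented for illustration, and with all weights equal the scheme reduces to plain majority voting.

```python
from collections import defaultdict
from typing import Dict, Mapping


def weighted_vote(decisions: Mapping[str, str],
                  weights: Mapping[str, Mapping[str, float]],
                  default_weight: float = 1.0) -> str:
    """Fuse per-unit activity decisions by weighted voting.

    decisions: body position -> activity predicted by that unit.
    weights: body position -> {activity: relevance weight}; missing
    entries fall back to default_weight (plain majority voting).
    """
    scores: Dict[str, float] = defaultdict(float)
    for position, activity in decisions.items():
        scores[activity] += weights.get(position, {}).get(activity, default_weight)
    # Highest score wins; iterating in sorted order breaks ties alphabetically
    return max(sorted(scores), key=lambda a: scores[a])


decisions = {"waist": "walking", "back": "walking",
             "leg": "standing", "wrist": "cooking"}
print(weighted_vote(decisions, {}))  # walking (plain majority)
# Hypothetical relevance weights: the wrist unit is trusted more for cooking
relevance = {"wrist": {"cooking": 3.0}, "leg": {"standing": 1.5}}
print(weighted_vote(decisions, relevance))  # cooking
```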

An orientation-independent activity recognition system based on smartphone-embedded inertial sensors was reported in Reference [137]. The raw sensor data were processed using techniques including coordinate transformation and principal component analysis. A set of statistical features was extracted from the processed signals and fed to several classifiers, including an artificial neural network (ANN), KNN and SVM, to identify the same six activities (walking, ascending and descending stairs, sitting, standing, and lying) investigated in References [131,132,133]. The authors also presented an online-independent SVM (OISVM) for incremental learning that can cope with inherent differences among the measured signals resulting from variability in device placement and among participants. Using OISVM, they reported an accuracy of ~89%. To address the high computational cost of machine learning techniques for activity recognition, a hardware-friendly support vector machine (HF-SVM) based on fixed-point arithmetic was proposed in Reference [138]. The authors reported a recognition accuracy of 89%, comparable to that of a conventional SVM.
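
The idea behind a hardware-friendly SVM such as that of Reference [138] is to replace floating-point operations with integer (fixed-point) arithmetic. The sketch below applies this to the decision function of a linear SVM, f(x) = w·x + b, using an assumed Q-format with 8 fractional bits; the weights, bias, feature vector and bit width are hypothetical, and [138] may use a different kernel, word length or quantization scheme.

```python
from typing import Sequence

FRAC_BITS = 8  # assumed Q-format: real value = integer / 2**FRAC_BITS


def to_fixed(x: float, frac_bits: int = FRAC_BITS) -> int:
    """Quantize a real number to a fixed-point integer (round to nearest)."""
    return int(round(x * (1 << frac_bits)))


def linear_svm_fixed(w_q: Sequence[int], b_q: int, x_q: Sequence[int],
                     frac_bits: int = FRAC_BITS) -> int:
    """Decision value w.x + b using integer multiply, add and shift only.

    Each product carries 2*frac_bits fractional bits, so the accumulated
    dot product is shifted right by frac_bits (arithmetic shift, which
    floors negative values) before adding the bias.
    """
    acc = sum(wi * xi for wi, xi in zip(w_q, x_q))
    return (acc >> frac_bits) + b_q


def linear_svm_float(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Floating-point reference implementation of the same decision function."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


# Usage with hypothetical weights and a hypothetical feature vector
w, b, x = [0.75, -1.25, 0.5], 0.2, [1.2, 0.4, -0.6]
d_fixed = linear_svm_fixed([to_fixed(v) for v in w], to_fixed(b),
                           [to_fixed(v) for v in x])
d_float = linear_svm_float(w, b, x)
print(d_fixed / (1 << FRAC_BITS), round(d_float, 4))  # 0.296875 0.3
```

The small gap between the two decision values is quantization error; as long as it does not flip the sign of f(x), the predicted class is unchanged.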

Some researchers [139,140,141,142,143,150,151,152] exploited more advanced techniques, such as deep neural networks, for activity recognition. Unlike conventional machine learning approaches, these techniques do not require separate feature extraction and feature selection steps; rather, they learn the features automatically while performing activity recognition. In References [140,141], a deep convolutional neural network (Convnet) was formed by stacking several convolutional and pooling layers to extract key features from the raw sensor data. Coupled with an MLP, the Convnet classified the same six activities as in References [131,132,133] with an accuracy of ~95%. Incorporating additional features extracted from the temporal fast Fourier transform (tFFT) of the raw data improved the performance of the Convnet by a further ~1%. The authors in Reference [142] implemented an activity recognition system using a bidirectional long short-term memory (BLSTM)-based incremental learning approach that exploits both the vertical and horizontal components of the preprocessed sensor data to obtain a two-dimensional feature. Several such BLSTM classifiers were then combined to form a multicolumn BLSTM (MBLSTM). The authors compared MBLSTM with several classifiers, including SVM, KNN and BLSTM, and MBLSTM achieved the lowest error rate (~15%) in recognizing seven activities: jumping, running, normal walking, quick walking, step walking, and ascending and descending stairs. In Reference [150], a smartphone-based activity recognition system was developed using a deep belief network (DBN). The authors first extracted a set of 561 features from the motion signals after a signal processing step that included filtering and data windowing. Kernel principal component analysis (KPCA) was then applied to these features, and only the first 100 principal components were fed into the DBN to train the model for activity recognition. The authors reported an accuracy of ~96% in recognizing 12 activities: standing, sitting, lying down, walking, ascending and descending stairs, stand-to-sit, sit-to-stand, sit-to-lie, lie-to-sit, stand-to-lie, and lie-to-stand. However, unlike most deep learning-based systems, the approach in Reference [150] requires a stand-alone feature extraction step, which increases the computational load. In general, the key issue with deep learning-based approaches is their high computational cost, which can make them unsuitable for real-time applications, especially on devices with limited processing capabilities.
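
The convolution and pooling layers that a Convnet stacks to learn features directly from raw sensor data, as in References [140,141], can be illustrated with a single-channel forward pass. This is a minimal standard-library sketch: the kernel below is a hypothetical hand-picked difference filter, whereas a trained network learns many kernels per layer by backpropagation, typically in a deep learning framework.

```python
from typing import List, Sequence


def conv1d(x: Sequence[float], kernel: Sequence[float],
           bias: float = 0.0) -> List[float]:
    """'Valid' 1D cross-correlation with stride 1, as computed by CNN layers."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]


def relu(x: Sequence[float]) -> List[float]:
    """Rectified linear activation applied element-wise."""
    return [max(0.0, v) for v in x]


def max_pool1d(x: Sequence[float], size: int = 2) -> List[float]:
    """Non-overlapping max pooling; an incomplete tail is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def conv_block(x: Sequence[float], kernel: Sequence[float],
               bias: float = 0.0, pool: int = 2) -> List[float]:
    """One convolution -> ReLU -> max-pooling stage."""
    return max_pool1d(relu(conv1d(x, kernel, bias)), pool)


# Usage: a hypothetical difference kernel responds to rising edges
x = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0]
kernel = [-1.0, 1.0]
print(conv_block(x, kernel))  # [1.0, 2.0, 0.0]
```

Stacking several such blocks shortens the sequence while widening the receptive field, which is how a Convnet builds progressively more abstract motion features before the MLP classifier.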

Some researchers exploited smartphones for fall detection [144,145,157,158,159,160,161,162] and posture monitoring [146,147]. In Reference [144], the angle between the longitudinal axis of the device and the gravitational vector was continuously monitored using the smartphone’s embedded motion sensors. When this angle drops below a predetermined threshold of 40°, the system registers the event as a fall. When worn on the waist, the system was reportedly able to distinguish a fall from normal body motions, namely lying, sitting, static standing and horizontal/vertical activities, with high accuracy. However, the system requires the phone to remain attached to the waist, which may not be comfortable or always feasible for users. A similar fall detection system was implemented in Reference [145], where the smartphone was kept in the shirt pocket with its front facing the body to keep the orientation of the sensors consistent. The system monitors changes in acceleration along the three axes and detects a fall if a change occurs faster than an experimentally established minimum duration of normal activities of daily living. However, neither Reference [144] nor Reference [145] provided quantitative information on the accuracy of the system. In Reference [159], the authors proposed an SVM-based fall detection algorithm. They recorded the user’s motion data with the smartphone’s built-in accelerometer, with the phone placed in the front shirt pocket. The participants performed several ADLs as well as simulated falls. The authors reported distinguishing fall events from non-fall activities with high sensitivity (~97%) and specificity (~95%). Detailed reviews of smartphone-based fall detection systems are presented in References [161,162].
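
Sensitivity and specificity, the metrics reported for the SVM-based detector in Reference [159], follow directly from the confusion matrix of fall and non-fall events: sensitivity = TP/(TP + FN) and specificity = TN/(TN + FP). The sketch below computes both; the labels and counts are invented for illustration and are not results from [159].

```python
from typing import Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int],
                            y_pred: Sequence[int]) -> Tuple[float, float]:
    """Labels: 1 = fall, 0 = non-fall (ADL). Returns (sensitivity, specificity)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    # NaN signals that a class is absent, so the ratio is undefined
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


# Invented example: 30 simulated falls (29 detected), 40 ADL trials (2 false alarms)
y_true = [1] * 30 + [0] * 40
y_pred = [1] * 29 + [0] + [0] * 38 + [1] * 2
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")  # sensitivity=0.967, specificity=0.950
```

Sensitivity measures how many real falls are caught, while specificity measures how rarely normal activities trigger false alarms; both matter for a fall detector that users must tolerate in daily life.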

In Reference [146], the authors reported a smartphone-based posture monitoring application named Smart Pose. Accelerometer data and facial images were captured simultaneously while the user held the smartphone and faced it. The pitch and roll of the device, and thereby the cumulative average of the tilt angle (CATA) of the user’s neck, were estimated, assuming that the device’s orientation correlates with and represents the position of the user’s neck. Bad posture is detected when the CATA falls outside the acceptable range of 80° to 100°. The authors compared their system with a commercial three-dimensional posture analysis system and reported that Smart Pose achieved similar performance. An application named iBalance-ABF was proposed in Reference [147] to assess body balance and provide corresponding audio feedback to the user. The smartphone’s embedded accelerometer, gyroscope and magnetometer were used to determine the mediolateral trunk tilt. When the trunk tilt angle exceeds an adjustable, predetermined threshold, the system sends audio feedback to the user through earphones. However, the smartphone must be mounted on a belt at the level of the L5 vertebra on the back, which requires assistance. Nevertheless, iBalance-ABF can be useful, particularly for older adults seeking to improve their posture and balance.
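
A Smart Pose-style check can be sketched as follows: estimate a tilt angle from each accelerometer sample, maintain its cumulative average (the CATA), and flag bad posture when the CATA leaves the 80° to 100° range given in Reference [146]. The mapping from accelerometer axes to neck tilt (atan2 of the y and z components, 90° when the phone is held upright facing the user) is an assumption made for illustration; [146] derives the angle from the device's pitch and roll and also uses facial images, which this sketch omits.

```python
import math
from typing import Iterable, List, Tuple


def tilt_angle_deg(ay: float, az: float) -> float:
    """Assumed tilt proxy: angle of gravity in the device's y-z plane.

    90 deg when the phone is held upright (gravity along +y); values on
    either side of 90 mean the phone, and by assumption the neck, is
    tilted toward or away from the user (sign depends on axis convention).
    """
    return math.degrees(math.atan2(ay, az))


def cata_posture(samples: Iterable[Tuple[float, float]], low: float = 80.0,
                 high: float = 100.0) -> List[Tuple[float, bool]]:
    """Running (CATA, bad_posture) pairs; bad when CATA leaves [low, high]."""
    results = []
    total = 0.0
    for n, (ay, az) in enumerate(samples, start=1):
        total += tilt_angle_deg(ay, az)
        cata = total / n
        results.append((cata, not (low <= cata <= high)))
    return results


g = 9.81
upright = (g, 0.0)
tilted = (g * math.sin(math.radians(50)), g * math.cos(math.radians(50)))
for cata, bad in cata_posture([upright, upright, tilted, tilted]):
    print(f"CATA={cata:.1f} bad={bad}")
```

Averaging cumulatively smooths out brief glances away from the screen, so only a sustained change in tilt pushes the CATA outside the acceptable range.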

Smartphone sensor-based applications for monitoring knee joints [162,163], sleep patterns [164,165,166] and sleep disorders [167] have also been reported. Table 4 summarizes several recent smartphone-sensor based activity and sleep monitoring systems. It should also be noted that some published studies used external inertial measurement units (IMUs) for activity recognition [7,22,142]. For example, the authors in Reference [143] combined a convolutional neural network (CNN) and an LSTM to form a deep convolutional LSTM (DeepConvLSTM) and used it for activity recognition. The CNN extracts key features from the signals automatically, while the LSTM models their temporal patterns. The authors reported recognizing a complex set of daily activities with high performance (F1 score > 0.93). Smartphone-embedded motion sensors such as accelerometers, gyroscopes and magnetometers could replace these external IMUs and potentially achieve similar performance. However, recognizing a complex set of activities, as in Reference [143], requires several IMUs or smartphones to be attached to different parts of the body, which is not cost-effective and may not be practical.
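
The F1 score quoted for DeepConvLSTM in Reference [143] is the harmonic mean of precision and recall, F1 = 2PR/(P + R); for multi-class activity recognition it is often computed per class and then averaged. The sketch below computes per-class and macro-averaged F1 on invented labels; which averaging [143] used is not stated here, so the macro average is an assumption.

```python
from typing import Dict, Hashable, Sequence


def f1_per_class(y_true: Sequence[Hashable],
                 y_pred: Sequence[Hashable]) -> Dict[Hashable, float]:
    """F1 score for every class appearing in the true or predicted labels."""
    scores = {}
    for c in set(y_true) | set(y_pred):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[c] = (2 * precision * recall / (precision + recall)
                     if precision + recall else 0.0)
    return scores


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


y_true = ["walk", "walk", "sit", "sit", "stand"]
y_pred = ["walk", "sit", "sit", "sit", "stand"]
print({c: round(s, 3) for c, s in sorted(f1_per_class(y_true, y_pred).items())})
print(round(macro_f1(y_true, y_pred), 3))  # 0.822
```

Unlike plain accuracy, a macro-averaged F1 is not dominated by frequent classes, which matters when short activities such as transitions are rare in the recordings.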

Table 4.

Smartphone-sensor based activity monitoring systems.

| Ref. | Proposition | Phone | Sensors | Experiment Protocol | n | Method | Performance/Comment |
|---|---|---|---|---|---|---|---|
| [130] | Human activity and gait recognition | Samsung Nexus S | a, ω | • Subjects walked ~30 m at each of three different walking speeds • Smartphone in the trouser pocket • Sampling rate: 150 samples/s |