Abstract

Convolutional neural networks (CNNs) can not only classify images but also generate the key features used for classification; for example, a Google neural network learned to identify cats simply by watching YouTube videos. In this paper, crop models are distilled by a CNN to evaluate the ability of deep learning to identify the plant physiology knowledge behind such crop models simply by learning. Because big data on crop growth are difficult to collect, a crop model was used to generate datasets. The generated datasets were fed into a CNN for distillation of the crop model. The models trained by the CNN were evaluated by visualizing saliency maps. In this study, three saliency maps were calculated using all datasets (case 1) and using datasets with spikelet sterility due to either high temperature at anthesis (case 2) or cool summer damage (case 3). The results of case 1 indicated that the CNN determined the developmental index of paddy rice, which was implemented in the crop model, simply by learning. Moreover, the CNN identified the important individual environmental factors affecting the grain yield. Although the CNN had no prior knowledge of spikelet sterility, cases 2 and 3 indicated that the CNN recognized that paddy rice becomes sensitive to daily mean and maximum temperatures during specific periods. Such deep learning approaches can be used to accelerate the understanding of crop models and make the models more portable. Moreover, the results indicated that CNNs can be used to develop new plant physiology theories simply by learning.

Introduction

Recently, machine learning has experienced tremendous advancements. Deep learning has provided solutions to many tasks that could not be solved by conventional machine learning. One remarkable achievement of deep learning is AlphaGo [1] (developed by DeepMind), a computer program that plays the game Go and can beat professional human Go players without any handicaps.

Convolutional neural networks (CNNs), which are typically used in image processing tasks and AlphaGo, represent a powerful deep learning method. The remarkable accuracy of CNNs was demonstrated when they outperformed conventional image processing in ImageNet Large Scale Visual Recognition Challenge 2012 (ILSVRC-2012) [2–5]. In addition to their accuracy, an interesting feature of CNNs is that they do not require feature engineering. Instead, CNNs alone generate the key features used for classifying images from the input, and then classify the images using these features. For instance, a Google CNN learned to detect cats after being trained by watching YouTube videos.

Machine learning, including deep learning, can also be used for agricultural purposes. Most research utilizing machine learning in agriculture has focused on image processing tasks, e.g., weed detection and identification [6, 7], disease detection [8–14], pest identification [15–17], stress phenotyping [18], internode length estimation [19], vegetation area detection [20], flower detection [21], leaf counting [22], and fruit detection [23–27]. However, machine learning can be utilized as an alternative approach for discovering embedded knowledge that may be present in a dataset [28]. This type of approach includes maize yield prediction using decision tree models [29]; comparison of machine learning methods for the yield prediction of peppers, beans, corn, potatoes, and tomatoes [30]; support vector machine (SVM)-based crop models for paddy rice [31]; identifying important environmental features for maize production using random forests [32]; evaluation of relations between meteorological factors and rice yield variability using conditional inference forests [28]; identification of important variables for modeling Andean blackberry production using artificial neural networks [33]; and predicting the photosynthetic capacity of leaves using partial least squares regression [34].

As described above, machine learning has already been used to predict crop growth from environmental information. However, there are several problems associated with such research designs. First, data collection in agriculture is a challenging task. Machine learning, especially deep learning, requires a huge amount of data to produce a model with high generalizing capability [14]. For instance, in ILSVRC-2012, 1.2 million images were provided for training. Given the various growing times of important crops, it may take a year to collect a single dataset. For this reason, research that attempts to utilize machine learning for crop growth prediction tends to lack sufficient data. One way to solve this problem is to use crop models [35–39] that can generate a huge number of datasets on crop growth via simulation.

Crop models and machine learning models differ greatly in how the models are constructed. Crop models perform an abstraction of the dynamic mechanisms of a plant’s physiological stages by fitting them into a mathematical model [30, 40], whereas machine learning produces models based on statistical theories and does not require any prior knowledge about physical mechanisms [30]. To date, there has been no study discussing the relations between machine learning models and crop models. If such a relation could be found, it would suggest that meaningful plant physiology models can be generated by machine learning. It would also mean that machine learning can develop new plant physiology theories by learning, which is similar to the Google AI that learned to identify cats. In this context, a previous study [14] showed that CNNs can use the visual cues employed by an expert rater to identify and quantify the symptoms of plant diseases.

In this research, a crop model has been distilled by applying a CNN to big data from crop growth generated by the crop model. The generated model is then analyzed by deep learning to find relations between the crop model and the deep learning model. Finally, the ability of deep learning to find the plant physiology knowledge behind the distilled crop model from the given data is discussed.

Materials and methods

Crop model

Paddy rice is a key crop in Asian countries, and thus, many crop models for paddy rice have been developed to date [35, 41–45]. SImulation Model for RIce-Weather relations (SIMRIW) [35] is a simplified process model for simulating the growth and yield of irrigated rice in relation to weather. In comparison with other crop models, SIMRIW requires fewer parameters to be provided in advance; hence, it is applicable to a wide range of environments [35]. Furthermore, SIMRIW requires adjusted parameters for specific rice varieties, but these have already been studied for the major rice varieties in Japan [46].

SIMRIW is a simplified process model for simulating the potential growth and yield of irrigated rice in relation to temperature, solar radiation, and CO2 concentration in the atmosphere [35]. The model is based on the principle that the grain yield YG of a crop forms a specific proportion of the total dry matter production Wt:

$$Y_G = h\,W_t \tag{1}$$

where h is the harvest index.

In SIMRIW, Wt is determined by the amount of short-wave radiation absorbed by the canopy. This relation is described as follows:

$$\Delta W_t = C_s\,S_s \tag{2}$$

where Cs is the conversion efficiency of absorbed short-wave radiation and Ss is the daily total absorbed radiation.
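The two relations above can be illustrated with a minimal sketch. It assumes that the total dry matter Wt is simply the sum of the daily products of Cs and Ss, and that the harvest index h is applied once at the end. The function name `grain_yield` and the numbers are hypothetical and are not taken from SIMRIW.

```python
def grain_yield(daily_cs, daily_ss, harvest_index):
    """Return Y_G = h * W_t, with W_t accumulated as the sum of daily C_s * S_s.

    daily_cs: radiation conversion efficiency for each day
    daily_ss: absorbed short-wave radiation for each day
    harvest_index: fraction of total dry matter that becomes grain
    """
    if len(daily_cs) != len(daily_ss):
        raise ValueError("daily_cs and daily_ss must have the same length")
    total_dry_matter = sum(cs * ss for cs, ss in zip(daily_cs, daily_ss))
    return harvest_index * total_dry_matter


# Hypothetical values for three days, chosen only for illustration.
example = grain_yield([2.0, 2.0, 1.0], [10.0, 20.0, 30.0], 0.5)
print(example)  # 45.0
```

In the actual model, Cs and h vary with the growth stage, as described in the following paragraphs.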

The developmental processes of rice crops are strongly influenced by environment and crop genotype [35]. In SIMRIW, these are described by the developmental index (DVI), which is defined as 0.0 at crop emergence, 1.0 at heading, and 2.0 at maturity.

The DVI on day t is calculated by accumulating the developmental rate (DVR) up to that day:

$$DVI_t = \sum_{i=0}^{t} DVR_i \tag{3}$$
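The accumulation of the developmental rate can be sketched as a running sum. The helper names below are hypothetical, and the constant daily rate of 0.25 is an illustrative value rather than a SIMRIW output.

```python
from itertools import accumulate


def developmental_index(daily_dvr):
    """Accumulate daily developmental rates into a daily developmental index series."""
    return list(accumulate(daily_dvr))


def days_to_maturity(daily_dvr, maturity=2.0):
    """Return the 1-based day on which the index first reaches maturity, or None."""
    for day, dvi in enumerate(developmental_index(daily_dvr), start=1):
        if dvi >= maturity:
            return day
    return None


# Hypothetical constant rate: heading (1.0) on day 4, maturity (2.0) on day 8.
rates = [0.25] * 10
print(developmental_index(rates)[:4])  # [0.25, 0.5, 0.75, 1.0]
print(days_to_maturity(rates))         # 8
```

A series that never reaches 2.0 returns `None`, which corresponds to the datasets that are excluded later in the analysis.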

Day length and temperature are the major environmental factors determining DVR [35]; hence, DVR at 0.0 ≤ DVI ≤ 1.0 is defined as

where Tmean and L are daily mean temperature and day length, respectively. DVI* is the value of DVI at which the crop becomes sensitive to photoperiod, Lc is the critical day length, Th is the temperature at which DVR is half the maximum rate at the optimal temperature, and Gv is the minimum number of days required for heading of a cultivar under optimal conditions. A and B are empirical constants.

DVR from heading to maturity (1.0 < DVI ≤ 2.0) is defined as

| (7) |

where Gr is the minimum number of days for the grain-filling period under optimal conditions. Kr and Tcr are empirical constants.

The amount of absorbed radiation (Ss) is a function of leaf area index (LAI). Daily dry matter production ΔWt is calculated by multiplying the Ss value by an appropriate value of the radiation conversion efficiency Cs (Eq 2). Cs is constant for the first half of the grain-filling stage and decreases gradually toward zero for the second half:

| (8) |

in which P is CO2 concentration (ppm), C0 is the radiation conversion efficiency at 330 ppm CO2, Rm is the asymptotic limit of relative response to CO2, and Kc, C, B, and t are empirical constants.

In SIMRIW, the harvest index h is defined as

| (11) |

where hm is the maximum harvest index of a cultivar under optimal conditions, Kh is an empirical constant, and γ is the percentage of spikelet sterility.

The harvest index decreases when the fraction of sterile spikelets increases or when crop growth stops before completing development due to cool summer temperatures or frost [35]. In SIMRIW, the effect of cool summer damage occurs in the period when the rice panicle is most sensitive to cool temperatures (0.75 < DVI < 1.2) and can be described as follows:

| (12) |

| (13) |

where γL is the percentage of sterility due to cool summer damage. γ0, Kq, and a are empirical constants.

Sterile spikelets are also increased by high temperature at anthesis. In SIMRIW, this is described as follows:

| (14) |

where γH is the percentage of sterility due to high temperature at anthesis and TH is the average daily maximum temperature (Tmax) at 0.96 < DVI ≤ 1.22. The actual spikelet sterility γ is calculated as the maximum of γL and γH.
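Two steps in this description can be sketched without the sterility equations themselves: averaging Tmax over the anthesis window to obtain TH, and taking the larger of the two sterility percentages. The function names and sample values are hypothetical, and the mapping from TH to γH is deliberately left out.

```python
def mean_tmax_at_anthesis(dvi, tmax, lower=0.96, upper=1.22):
    """Average the daily maximum temperature over days with lower < DVI <= upper.

    Returns None when no day falls inside the window.
    """
    window = [t for d, t in zip(dvi, tmax) if lower < d <= upper]
    if not window:
        return None
    return sum(window) / len(window)


def actual_sterility(gamma_l, gamma_h):
    """Combine cool-summer and high-temperature sterility by taking the maximum."""
    return max(gamma_l, gamma_h)


# Hypothetical four-day series: only the second and third days fall in the window.
t_h = mean_tmax_at_anthesis([0.9, 1.0, 1.1, 1.3], [30.0, 34.0, 36.0, 40.0])
print(t_h)                           # 35.0
print(actual_sterility(12.0, 20.0))  # 20.0
```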

A schematic representation of the processes of growth, development, and yield formation of rice implemented in SIMRIW is shown in S1 Fig. Refer to S1 Table for details of the variables.

Meteorological data acquisition

Meteorological data were obtained from the Agro-Meteorological Grid Square Data (hereinafter referred to as Grid Data) provided by the National Agriculture and Food Research Organization [47]. Grid Data provides daily data on air temperature, humidity, precipitation, and solar irradiance all over Japan with a 1-km resolution. The available data include past data from 1980 until present as well as forecast data for 26 days ahead.

Meteorological data from 1980 to 2016 on daily mean temperature, daily maximum temperature, and daily total global solar radiation were obtained from Grid Data. Day length was calculated based on the day of the year and latitude. Since CO2 concentration was not available in Grid Data, it was set as a constant value (350 ppm). Since environmental conditions were considerably similar within the 1-km interval, meteorological data were obtained for every 10 km. The meteorological data were divided by year and grid. Consequently, 132,460 datasets were obtained.
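The text does not state which formula was used to derive day length from the day of the year and latitude. As an illustration only, the sketch below uses a common astronomical approximation (a cosine model of solar declination combined with the sunrise equation). The function name is hypothetical, and this is an assumption rather than the method used in this study.

```python
import math


def day_length_hours(day_of_year, latitude_deg):
    """Approximate day length in hours from day of year (1-365) and latitude in degrees."""
    # Solar declination in radians, simple cosine approximation.
    declination = -math.radians(23.44) * math.cos(
        2.0 * math.pi * (day_of_year + 10) / 365.0
    )
    # Cosine of the sunrise hour angle, clamped to handle polar day and polar night.
    x = -math.tan(math.radians(latitude_deg)) * math.tan(declination)
    x = max(-1.0, min(1.0, x))
    return 24.0 / math.pi * math.acos(x)


# Around the summer solstice at 35 degrees north the day is longer than 14 hours.
print(round(day_length_hours(172, 35.0), 1))
```

Because the approximation ignores atmospheric refraction and the equation of time, it is suitable only as a rough stand-in for the day length input L.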

Data generation using crop model

The meteorological data obtained from Grid Data were fed into SIMRIW to generate crop growth data. The parameters for Koshihikari, the most common paddy rice variety in Japan, were used for the SIMRIW simulation.

In most rice production areas in Japan, rice is planted in the beginning of May and harvested in the beginning of October of the same year. Therefore, the planting date was set as May 01 of the same year as meteorological data of each dataset. As a result, the amount of plant growth data obtained from SIMRIW was the same as the amount of meteorological data (132,460 datasets).

Climatic conditions are extremely different in the north and south parts of Japan. Therefore, in some of the grids, the DVI increase was considerably slow in certain years due to overly hot or cool temperatures. Datasets that did not reach 2.0 of DVI by October 05 of the same year as the planting date were excluded from further analysis.

Distillation of crop model using CNN

The neural network design considered for this research is shown in S2 Fig. The first layer of the network was a 3 × 3 pixel convolutional layer with a stride of 1 × 1 pixel (in the horizontal and vertical directions) and padding of 2 × 2 pixels (in the horizontal and vertical directions). This convolutional layer mapped the single channel in the input to 32 feature maps using a 3 × 3 pixel kernel function. The second layer was a 3 × 3 pixel convolutional layer with a stride of 1 × 1 pixel and padding of 2 × 2 pixels, which mapped the 32 feature maps of the first layer to 64 feature maps. Rectified linear unit (ReLU) layers were adopted in all convolutional connected layers. The third and final layers were fully connected to produce a single value of prediction. Herein, the mean squared error (MSE) was used as a loss function. An Adam optimizer [48] was used to minimize the error.
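To make the layer geometry concrete, the sketch below computes the feature-map sizes after the two convolutional layers using the standard convolution size formula. It assumes an input of 184 rows by 5 columns and that the stated padding of 2 is applied on every side of the input. Both are assumptions for illustration, and the helper names are hypothetical.

```python
def conv_output_size(size, kernel=3, stride=1, padding=2):
    """Standard convolution output size along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def network_shapes(rows=184, cols=5):
    """Return (rows, cols, channels) for the input and after each convolutional layer."""
    shapes = [(rows, cols, 1)]
    for filters in (32, 64):
        rows = conv_output_size(rows)
        cols = conv_output_size(cols)
        shapes.append((rows, cols, filters))
    return shapes


for shape in network_shapes():
    print(shape)
# (184, 5, 1)
# (186, 7, 32)
# (188, 9, 64)
```

Under these assumptions, each convolution enlarges the map by two cells per dimension, so the first fully connected layer would receive 188 × 9 × 64 = 108,288 values.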

Meteorological data were shaped to a 2D array and fed into the CNN to obtain the prediction of the grain yield. The rows of the 2D array were related to days from the planting date, and the columns were related to the meteorological factors L, Tmean, Tmax, Ss, and P. Since the CNN architecture considered herein requires the same shape of inputs, zero padding in the vertical direction was applied when DVI reached 2.0 within 184 days from the planting date. Each meteorological factor was normalized before being fed into the CNN. The data were randomly split into 75% for training and 25% for validation. As shown in S3 Fig, the validation data were within the range of the training data, timewise and spatially. After the normalization, random noise ranging between -0.001 and 0.001 was added to the training and validation data (see S4 Fig and S2 Table for the results with different ranges of the random noise). The training was stopped when validation loss did not improve for 10 consecutive epochs.
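The preprocessing steps described above can be sketched with NumPy. The normalization method is not specified in the text, so per-factor standardization is assumed here. The function names, the order of normalization and padding, and the random test data are likewise assumptions made for illustration.

```python
import numpy as np

N_DAYS = 184     # fixed number of rows (days from planting)
N_FACTORS = 5    # columns: L, Tmean, Tmax, Ss, P


def to_fixed_shape(series):
    """Zero-pad a (days, factors) array in the vertical direction to N_DAYS rows."""
    series = np.asarray(series, dtype=float)
    padded = np.zeros((N_DAYS, N_FACTORS))
    padded[: series.shape[0]] = series[:N_DAYS]
    return padded


def prepare(datasets, rng, noise=0.001, train_fraction=0.75):
    """Normalize each factor, pad, add uniform noise, and draw a random split.

    Returns the input array plus index arrays for training and validation,
    so that the grain yields can be split with the same indices.
    """
    stacked = np.concatenate(datasets, axis=0)
    mean = stacked.mean(axis=0)
    std = stacked.std(axis=0)
    std[std == 0.0] = 1.0  # avoid division by zero for constant factors
    x = np.stack([to_fixed_shape((np.asarray(d) - mean) / std) for d in datasets])
    x = x + rng.uniform(-noise, noise, size=x.shape)
    order = rng.permutation(len(datasets))
    n_train = int(round(train_fraction * len(datasets)))
    return x, order[:n_train], order[n_train:]


rng = np.random.default_rng(0)
fake = [rng.normal(size=(n, N_FACTORS)) for n in (150, 160, 170, 184)]
x, train_idx, val_idx = prepare(fake, rng)
print(x.shape, len(train_idx), len(val_idx))  # (4, 184, 5) 3 1
```

Returning indices rather than two separate arrays keeps the inputs and the corresponding grain yields aligned after the random split.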

Since the objective of this study was not the evaluation of accuracy, the model that produced the lowest validation loss was used for further analysis.
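The stopping rule and the choice of the best model can be sketched as a patience loop over validation losses. The function name and the synthetic loss curve are hypothetical and serve only to show the mechanics.

```python
def early_stopping(val_losses, patience=10):
    """Return (best_epoch, stop_epoch), both 1-based.

    Training stops once the validation loss has not improved for
    `patience` consecutive epochs, and the best epoch is kept for later use.
    """
    best_epoch, best_loss = None, float("inf")
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses)


# Synthetic curve: the loss improves until epoch 45 and is worse afterwards.
losses = [100.0 - e for e in range(1, 46)] + [60.0] * 20
print(early_stopping(losses))  # (45, 55)
```

With a patience of 10, training ends ten epochs after the best epoch, and the weights saved at the best epoch are the ones carried forward.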

Evaluation of the CNN model

There are several ways to evaluate and explain trained CNN models, e.g., layer visualizations and attention maps. In this study, saliency maps [49], a type of attention map, were used to visualize the salient meteorological factors and timings that most contributed to grain yields.

Positive saliency, which increases the output (in this case, grain yield), was calculated based on the final dense layer of the CNN. Inputs used as initial seeds for the calculation were randomly selected from the training datasets (n = 500).
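The idea of positive saliency can be illustrated with a small sketch that estimates the gradient of a scalar prediction with respect to each input cell and keeps only the positive part. Finite differences are used here for simplicity, which is a substitute for the backpropagated gradients that saliency map implementations normally compute. The function names and the toy linear model are hypothetical.

```python
import numpy as np


def positive_saliency(predict, x, eps=1e-4):
    """Estimate d(predict)/d(x) by central differences and keep positive values only."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for idx in np.ndindex(x.shape):
        step = np.zeros_like(x)
        step[idx] = eps
        grad[idx] = (predict(x + step) - predict(x - step)) / (2.0 * eps)
    return np.maximum(grad, 0.0)


def mean_positive_saliency(predict, seeds):
    """Average the positive saliency over several seed inputs."""
    return np.mean([positive_saliency(predict, s) for s in seeds], axis=0)


# Toy linear model: the output rises with cells that have positive weights.
weights = np.array([[1.0, -2.0], [0.0, 3.0]])
toy_model = lambda a: float(np.sum(weights * a))
print(positive_saliency(toy_model, np.zeros((2, 2))))
```

For the toy model, only the cells with positive weights receive nonzero saliency, mirroring how positive saliency highlights inputs whose increase raises the predicted yield.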

Three types of saliency were calculated in this study. First, saliency was calculated using all datasets. Next, datasets with higher γH or γL were extracted and used for saliency calculation to evaluate the differences in saliency when spikelet sterility occurred.

Implementation

All calculations were made using Python 3.6 on an Ubuntu 16.04 Linux system. All experiments were executed on the Amazon Elastic Compute Cloud (EC2) with a single GPU of NVIDIA Tesla K80. SIMRIW is available as an R script [50]. In this study, the R script was ported to Python and used for data generation. The CNN model was implemented in Keras 2.1.5 [51]. The calculation of saliency maps was made using the keras-vis package [52].

Source code developed for this research is available online (https://github.com/ky0on/simriw and https://github.com/ky0on/pysimriw). All the data collected and generated in this study are also available online [53].

Results

Crop modeling

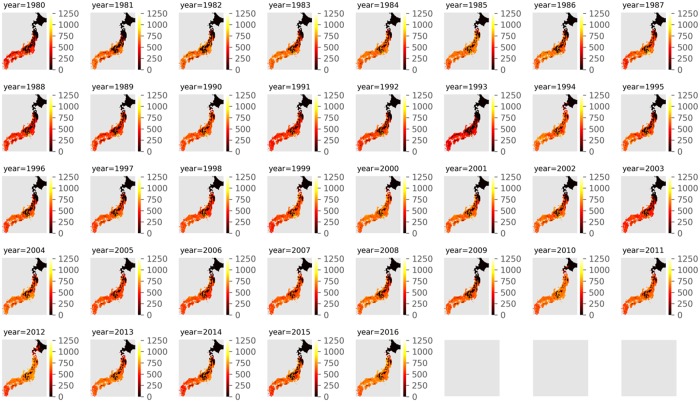

Fig 1 shows a heatmap of the grain yield simulated by SIMRIW. In northern areas of Japan, DVI does not reach 2.0 because of cold temperatures; hence, the grain yield is considerably low. Fig 2 shows a histogram of final DVI in all datasets. In total, 48% of datasets did not reach 2.0 of DVI.

Fig 1. Heatmap of the grain yield simulated by SIMRIW.

In northern areas of Japan, DVI does not reach 2.0 because of cold temperatures; hence, the grain yield is considerably low.

Fig 2. Histogram of final DVI of all datasets.

In total, 48% of datasets do not reach 2.0 of DVI.

Table 1 presents a summary of the harvest index considering γH and γL. Datasets for which DVI does not reach 2.0 were excluded from Table 1. The minimum values of the harvest index considering γH and γL are 0.15 and 0.13, respectively. The 10th percentiles are 0.36 and 0.35, respectively. Datasets below the 10th percentile were used for saliency calculation to evaluate the effect of spikelet sterility due to high temperature at anthesis or cool summer damage.

Table 1. Summary of the harvest index considering γH and γL, where final DVI ≥ 2.0.

| Harvest index | Mean | Std | Min | 10% | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|---|
| Considering γH | 0.37 | 0.01 | 0.15 | 0.36 | 0.37 | 0.38 | 0.38 | 0.38 |
| Considering γL | 0.36 | 0.01 | 0.13 | 0.35 | 0.36 | 0.36 | 0.36 | 0.36 |
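Selecting the datasets below the 10th percentile of the harvest index can be sketched with NumPy as follows. The function name and the evenly spaced sample values are hypothetical and do not reproduce the simulated data.

```python
import numpy as np


def low_harvest_index_subset(harvest_index, percentile=10.0):
    """Return indices of datasets whose harvest index is below the given percentile."""
    h = np.asarray(harvest_index, dtype=float)
    threshold = np.percentile(h, percentile)
    return np.flatnonzero(h < threshold), threshold


# Hypothetical harvest indices for 100 datasets, evenly spaced for illustration.
h = 0.30 + 0.001 * np.arange(100)
selected, threshold = low_harvest_index_subset(h)
print(len(selected))  # 10
```

The returned indices can then be used to draw the seed inputs for the saliency calculation in cases 2 and 3.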

Crop model distillation

CNN training stopped at epoch 55 and required 1 h for its completion. In the validation process, 3.1 ms ± 37 μs were required to produce 10 predictions.

Losses during CNN training and validation are shown in S4 Fig. The training loss improves considerably in the first 20 epochs and only slightly thereafter. The validation loss also improves as the epochs increase, with fluctuations. Overall, the smallest validation loss is observed in the 45th epoch. The trained model of this epoch was thus used for further evaluation.

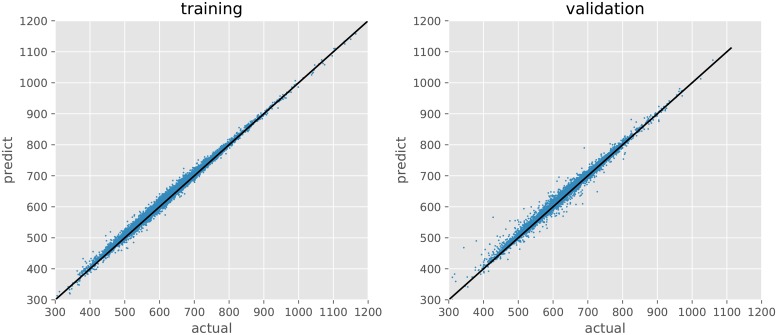

Fig 3 shows the relation between the actual and predicted grain yields in the training and validation processes. The predictions are considerably close to the actual values in both processes. MSE between the actual and predicted values is 41.5 and 68.2 in the training and validation processes, respectively.

Fig 3. Actual and predicted grain yields in training and validation.

MSE between the actual and predicted values is 52.9 and 81.7 in the training and validation processes, respectively.

Model visualization

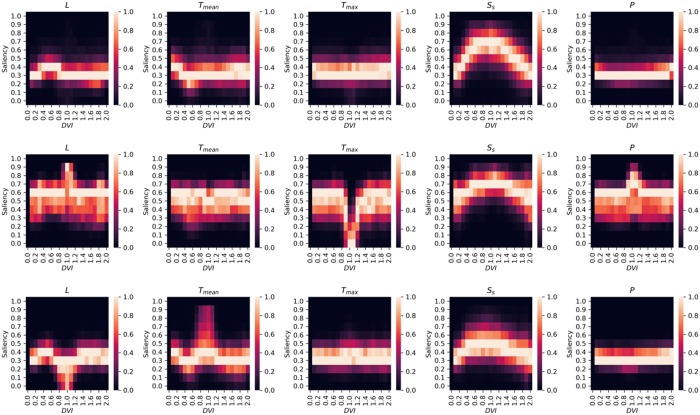

Fig 4 shows the relations between the saliency of environmental elements and DVI, which represents the growing stage of paddy rice. The panels at the top of Fig 4 show the saliencies calculated using all datasets, while those in the middle and at the bottom were calculated using datasets with spikelet sterility due to high temperature at anthesis and cool summer damage, respectively. The saliencies of environmental elements change along with DVI. Moreover, there are some differences in the saliency of each environmental element when different datasets were provided.

Fig 4. Positive saliencies of different meteorological elements and DVI.

Saliencies were calculated from 500 datasets that were randomly selected from (top) all datasets, (middle) datasets below the 10th percentile of the harvest index considering γH, and (bottom) datasets below the 10th percentile of the harvest index considering γL.

Discussion

Herein, distillation of crop models was conducted to investigate the ability of deep learning to find the plant physiology knowledge behind the distilled crop model from given data. Although most research utilizing machine learning in agriculture lacks sufficient data, this problem was overcome via simulations using crop models. The performance of the model generated by the distillation was analyzed. In addition, what the model had learned was examined to determine whether there were any cues related to the plant physiological theories behind the distilled crop model. A CNN, a state-of-the-art method based on deep learning, was used for distillation.

In this study, saliency was calculated using all datasets (case 1) and datasets that faced spikelet sterility due to high temperature at anthesis (case 2) or cool summer damage (case 3). In case 1, the positive saliency of Ss increases rapidly at 0.2 ≤ DVI ≤ 1.0. Subsequently, the saliency of Ss decreases again. In fact, in SIMRIW, Ss is defined as a function of leaf area index; the leaf area index increases until DVI reaches 1.0, after which it starts to decrease. In contrast, the positive saliency of Tmean decreases considerably at 0.4 ≤ DVI ≤ 0.7, which means that negative saliency increases and has some influence on the grain yield in this range. At this range of DVI, Koshihikari rice growth is affected by Tmean as well as L. Although the saliency of L does not increase within the range, that of Ss, an environmental element similar to L, does increase. These results indicate that the CNN found a developmental index similar to DVI. Moreover, the CNN found the important individual meteorological factors affecting the grain yield along with the developmental index. Based on these results, the CNN is shown to be capable of finding the plant physiology knowledge behind SIMRIW simply by learning climate and plant growth (grain yield) data.

In case 2, the positive saliency of Tmax decreases to almost 0 at 0.9 ≤ DVI ≤ 1.2. In fact, the percentage of spikelet sterility due to high temperature at anthesis is determined by an accumulated daily maximum temperature in the range 0.96 ≤ DVI ≤ 1.2 in SIMRIW. In case 3, the positive saliency of Tmean is found to have slightly increased in the range 0.7 ≤ DVI ≤ 1.2. In SIMRIW, cool temperature stress concerning spikelet sterility is measured based on the daily mean temperature in the range 0.75 ≤ DVI ≤ 1.2. These results indicate that although the CNN has no prior knowledge of spikelet sterility, it recognizes that paddy rice becomes sensitive to Tmean or Tmax during certain periods. Similar to the results of case 1, the CNN successfully found the plant physiology theories behind the crop model simply by learning.

The results demonstrate that machine learning can find plant physiology theories simply by learning climate and plant growth data generated by a crop model without any explicit modeling of the underlying theories. This approach may be helpful for understanding the basic theories behind crop models. For instance, Fig 4 makes it easy to understand the importance of each environmental factor input to SIMRIW across the range of DVI. Moreover, the results indicate that machine learning has the potential to discover new theories, even for crops whose plant physiological theory has not yet been revealed, simply by learning.

Explaining some saliencies is a challenging task. For instance, the saliency of P suddenly increases at 0.9 ≤ DVI ≤ 1.2 only when datasets with higher γH were provided. Such a theory was not implemented in SIMRIW. However, SIMRIW has multiple empirical parameters that are difficult to understand, and such saliencies may be related to these parameters.

In addition, machine learning can accelerate crop growth simulation. The CNN and SIMRIW models required 3.1 ms ± 37 μs and 782 ms ± 2.12 ms, respectively, to produce predictions for 10 datasets. Since the trained model is saved in the Keras format, it can be easily used from Python, and also from JavaScript by employing TensorFlow.js [54], to convert crop models into web applications. Moreover, the proposed approach can be applied to any crop model (even if it is complex) to make the model easier to use and more portable.
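The size of this acceleration follows directly from the two reported mean times. The short sketch below computes the ratio; the function name is hypothetical and only the mean values are used, ignoring the reported deviations.

```python
def speedup(baseline_ms, accelerated_ms):
    """Return how many times faster the accelerated model is than the baseline."""
    return baseline_ms / accelerated_ms


# Mean times per 10 predictions reported above: SIMRIW 782 ms, CNN 3.1 ms.
ratio = speedup(782.0, 3.1)
print(f"The CNN is roughly {ratio:.0f} times faster")  # roughly 252 times
```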

Due to ongoing climate change, the agricultural skills and knowledge accumulated over the centuries may not be beneficial in the near future. Thus, data and artificial intelligence methods are needed to improve farming methods. Although machine learning requires big data, which cannot be easily obtained in agriculture due to growing times, this limitation can be overcome using crop models to generate big data on crop growth. In the future, real big data will be required to assess the ability of machine learning to discover new plant physiology theories. To this end, it is essential to determine how to effectively collect data on cultivation environments, crop growth, and the cultivation management conducted by farmers using IoT technologies, which are rapidly developing.

Supporting information

S1 Fig. Refer to S1 Table for details of the variables.

(TIF)

S2 Fig.

(EPS)

S3 Fig. Black and red pixels represent the data used for training and validation, respectively.

(TIFF)

S4 Fig.

(EPS)

S1 Table.

(PDF)

S2 Table.

(PDF)

Acknowledgments

I would like to thank Takashi Togami (SoftBank Corp.) and Norio Yamaguchi (Ubiden Inc.) for their helpful advice.

Data Availability

Data are available from GitHub and Zenodo (https://github.com/ky0on/pysimriw; https://github.com/ky0on/simriw; https://zenodo.org/record/2582678).

Funding Statement

The author is an employee of PS Solutions Corp. and SoftBank Corp. This work was supported by PS Solutions Corp. and SoftBank Corp. PS Solutions Corp. provided support in the form of salaries for the author and funding for computational resources and English corrections. SoftBank Corp. provided funding for publication. The specific roles of the author are articulated in the "author contributions" section.

References

- 1. Silver D, Huang A, Maddison CJ, Guez A, Sifre L, van den Driessche G, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016;529(7587):484–489. doi:10.1038/nature16961

- 2. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016.

- 3. Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. International Conference on Learning Representations (ICLR). 2015.

- 4. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2015;07-12-June:1–9.

- 5. Krizhevsky A, Sutskever I, Hinton GE. ImageNet Classification with Deep Convolutional Neural Networks. Advances In Neural Information Processing Systems. 2012; p. 1–9. doi:10.1016/j.protcy.2014.09.007

- 6. Rahman M, Blackwell B, Banerjee N, Saraswat D. Smartphone-based hierarchical crowdsourcing for weed identification. Computers and Electronics in Agriculture. 2015;113:14–23. doi:10.1016/j.compag.2014.12.012

- 7. Ishak AJ, Hussain A, Mustafa MM. Weed image classification using Gabor wavelet and gradient field distribution. Computers and Electronics in Agriculture. 2009;66(1):53–61. doi:10.1016/j.compag.2008.12.003

- 8. Ma J, Li X, Wen H, Fu Z, Zhang L. A key frame extraction method for processing greenhouse vegetables production monitoring video. Computers and Electronics in Agriculture. 2015;111:92–102. doi:10.1016/j.compag.2014.12.007

- 9. Mutka A, Bart R. Image-based phenotyping of plant disease symptoms. Frontiers in Plant Science. 2015;5(734). doi:10.3389/fpls.2014.00734

- 10. Huang S, Qi L, Ma X, Xue K, Wang W, Zhu X. Hyperspectral image analysis based on BoSW model for rice panicle blast grading. Computers and Electronics in Agriculture. 2015;118:167–178. doi:10.1016/j.compag.2015.08.031

- 11. Yamamoto K, Togami T, Yamaguchi N. Super-Resolution of Plant Disease Images for the Acceleration of Image-based Phenotyping and Vigor Diagnosis in Agriculture. Sensors. 2017;17(11):2557. doi:10.3390/s17112557

- 12. Sladojevic S, Arsenovic M, Anderla A, Culibrk D, Stefanovic D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Computational Intelligence and Neuroscience. 2016. doi:10.1155/2016/3289801

- 13. Ferentinos KP. Deep learning models for plant disease detection and diagnosis. Computers and Electronics in Agriculture. 2018. doi:10.1016/j.compag.2018.01.009

- 14. Ghosal S, Blystone D, Singh AK, Ganapathysubramanian B, Singh A, Sarkar S. An explainable deep machine vision framework for plant stress phenotyping. Proceedings of the National Academy of Sciences of the United States of America. 2018;115(18):4613–4618. doi:10.1073/pnas.1716999115

- 15. da Silva FL, Grassi Sella ML, Francoy TM, Costa AHR. Evaluating classification and feature selection techniques for honeybee subspecies identification using wing images. Computers and Electronics in Agriculture. 2015;114:68–77. doi:10.1016/j.compag.2015.03.012

- 16. Boniecki P, Koszela K, Piekarska-Boniecka H, Weres J, Zaborowicz M, Kujawa S, et al. Neural identification of selected apple pests. Computers and Electronics in Agriculture. 2015;110:9–16. doi:10.1016/j.compag.2014.09.013

- 17. Venugoban K, Ramanan A. Image Classification of Paddy Field Insect Pests Using Gradient-Based Features. International Journal of Machine Learning and Computing. 2014;4(1).

- 18. Ghosal S, Blystone D, Singh AK, Ganapathysubramanian B, Singh A, Sarkar S. An explainable deep machine vision framework for plant stress phenotyping. Proceedings of the National Academy of Sciences of the United States of America. 2018;115(18):4613–4618. doi:10.1073/pnas.1716999115

- 19. Yamamoto K, Guo W, Ninomiya S. Node Detection and Internode Length Estimation of Tomato Seedlings Based on Image Analysis and Machine Learning. Sensors. 2016;16(7):1044. doi:10.3390/s16071044

- 20. Guo W, Rage UK, Ninomiya S. Illumination invariant segmentation of vegetation for time series wheat images based on decision tree model. Computers and Electronics in Agriculture. 2013;96:58–66. doi:10.1016/j.compag.2013.04.010

- 21. Guo W, Fukatsu T, Ninomiya S. Automated characterization of flowering dynamics in rice using field-acquired time-series RGB images. Plant Methods. 2015;11(7):1–14.

- 22. Ubbens J, Cieslak M, Prusinkiewicz P, Stavness I. The use of plant models in deep learning: an application to leaf counting in rosette plants. Plant Methods. 2018;14(1):6. doi:10.1186/s13007-018-0273-z

- 23. Linker R, Cohen O, Naor A. Determination of the number of green apples in RGB images recorded in orchards. Computers and Electronics in Agriculture. 2012;81:45–57. doi:10.1016/j.compag.2011.11.007

- 24. Kurtulmus F, Lee WS, Vardar A. Immature peach detection in colour images acquired in natural illumination conditions using statistical classifiers and neural network. Precision Agriculture. 2013;15(1):57–79. doi:10.1007/s11119-013-9323-8

- 25. Yamamoto K, Guo W, Yoshioka Y, Ninomiya S. On Plant Detection of Intact Tomato Fruits Using Image Analysis and Machine Learning Methods. Sensors. 2014;14(7):12191–12206. doi:10.3390/s140712191

- 26. Sa I, Ge Z, Dayoub F, Upcroft B, Perez T, McCool C. DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors. 2016;16(8):1222. doi:10.3390/s16081222

- 27. Rahnemoonfar M, Sheppard C. Deep Count: Fruit Counting Based on Deep Simulated Learning. Sensors. 2017;17(4):905. doi:10.3390/s17040905

- 28. Delerce S, Dorado H, Grillon A, Rebolledo MC, Prager SD, Patiño VH, et al. Assessing Weather-Yield Relationships in Rice at Local Scale Using Data Mining Approaches. PLOS ONE. 2016;11(8):e0161620. doi:10.1371/journal.pone.0161620

- 29. Shekoofa A, Emam Y, Shekoufa N, Ebrahimi M, Ebrahimie E. Determining the Most Important Physiological and Agronomic Traits Contributing to Maize Grain Yield through Machine Learning Algorithms: A New Avenue in Intelligent Agriculture. PLOS ONE. 2014;9(5):e97288. doi:10.1371/journal.pone.0097288

- 30. Gonzalez-Sanchez A, Frausto-Solis J, Ojeda-Bustamante W. Predictive ability of machine learning methods for massive crop yield prediction. Spanish Journal of Agricultural Research. 2014;12(2). doi:10.5424/sjar/2014122-4439

- 31. Su YX, Xu H, Yan LJ. Support vector machine-based open crop model (SBOCM): Case of rice production in China. Saudi Journal of Biological Sciences. 2017;24(3):537–547. doi:10.1016/j.sjbs.2017.01.024

- 32. Marko O, Brdar S, Panić M, Šašić I, Despotović D, Knežević M, et al. Portfolio optimization for seed selection in diverse weather scenarios. PLOS ONE. 2017;12(9):e0184198. doi:10.1371/journal.pone.0184198

- 33. Jiménez D, Cock J, Satizábal HF, Barreto SMA, Pérez-Uribe A, Jarvis A, et al. Analysis of Andean blackberry (Rubus glaucus) production models obtained by means of artificial neural networks exploiting information collected by small-scale growers in Colombia and publicly available meteorological data. Computers and Electronics in Agriculture. 2009;69(2):198–208. doi:10.1016/j.compag.2009.08.008

- 34. Heckmann D, Schlüter U, Weber APM. Machine Learning Techniques for Predicting Crop Photosynthetic Capacity from Leaf Reflectance Spectra. Molecular Plant. 2017;10(6):878–890. doi:10.1016/j.molp.2017.04.009

- 35. Horie T, Nakagawa H, Centeno HGS, Kropff MJ. The rice crop simulation model SIMRIW and its testing. In: Modeling the Impact of Climatic Change on Rice Production in Asia. CAB International; 1995. p. 51–66. Available from: http://ci.nii.ac.jp/naid/10011491332/en/.

- 36. Jones JW, Dayan E, Allen LH, Challa H. A dynamic tomato growth and yield model (TOMGRO). Transactions of the ASAE. 1991;34(2):663–672. doi:10.13031/2013.31715

- 37. Bouman B, Kropff M, Tuong T, Wopereis M, ten Berge H, van Laar H. ORYZA2000: modeling lowland rice. vol. 1. IRRI; 2001.

- 38. Keating BA, Carberry PS, Hammer GL, Probert ME, Robertson MJ, Holzworth D, et al. An overview of APSIM, a model designed for farming systems simulation. In: European Journal of Agronomy; 2003. 10.1016/S1161-0301(02)00108-9 [DOI] [Google Scholar]

- 39. Jones JW, Tsuji GY, Hoogenboom G, Hunt LA, Thornton PK, Wilkens PW, et al. Decision support system for agrotechnology transfer: DSSAT v3. In: Igarss; 2014; 1998. [Google Scholar]

- 40.Safa B, Khalili A, Teshnehlab M, Liaghat A. Artificial Neural Networks Application to Predict Wheat Yield using Climatic Data. 2004;.

- 41.McMennamy JA, O’Toole JC, Institute IRR. RICEMOD: A Physiologically Based Rice Growth and Yield Model. IRRI research paper series. International Rice Research Institute; 1983. Available from: https://books.google.co.jp/books?id=kijZMQEACAAJ.

- 42.Ritchie JT, Alociija EC, Singh U, Uehara G. IBSNAT and the CERES-Rice model. In: Weather and Rice. IRRI; 1987. p. 271–281.

- 43.Tang L, Zhu Y, Hannaway D, Meng Y, Liu L, Chen L, et al. RiceGrow: A rice growth and productivity model. NJAS—Wageningen Journal of Life Sciences. 2009;.

- 44.Stockle CO, Donatelli M, Nelson R, Stöckle CO, Donatelli M, Nelson R, et al. CropSyst, a cropping systems simulation model. European Journal of Agronomy. 2003;.

- 45.van Diepen CA, Wolf J, van Keulen H, Rappoldt C. WOFOST: a simulation model of crop production. Soil Use and Management. 1989;.

- 46.Horie T. Development of Dynamic Model for Predicting Growth and Yield of Rice. Report of the Grant-in-Aid for Scientific Research (no.03404007) by Ministry of Education, Science, Sports and Culture.; 1994. Available from: https://kaken.nii.ac.jp/en/grant/KAKENHI-PROJECT-03404007/.

- 47. Ohno H, Sasaki K, Ohara G, Nakazono K. Development of grid square air temperature and precipitation data compiled from observed, forecasted, and climatic normal data. Climate in Biosphere. 2016;16:71–79. 10.2480/cib.J-16-028 [DOI] [Google Scholar]

- 48.Kingma DP, Ba J. Adam: A Method for Stochastic Optimization. 2014;.

- 49.Simonyan K, Vedaldi A, Zisserman A. Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps. 2013;.

- 50.R-Forge. cropsim; 2018. https://github.com/rforge/cropsim.

- 51.Chollet F, et al. Keras; 2015. https://keras.io.

- 52.Kotikalapudi R, contributors. keras-vis; 2017. https://github.com/raghakot/keras-vis.

- 53.Yamamoto K. Distillation of crop models to learn plant physiology theories using machine learning; 2019. Available from: 10.5281/zenodo.2582678. [DOI] [PMC free article] [PubMed]

- 54.tensorflow. tfjs; 2018. https://github.com/tensorflow/tfjs.

Supplementary Materials

Refer to S1 Table for details of the variables. (TIF)

Black and red pixels represent the data used for training and validation, respectively. (TIFF)

Data Availability Statement

Data are available from GitHub and Zenodo (https://github.com/ky0on/pysimriw; https://github.com/ky0on/simriw; https://zenodo.org/record/2582678).