Abstract

The U.S. Environmental Protection Agency (EPA) is faced with the challenge of efficiently and credibly evaluating chemical safety, often with limited or no available toxicity data. The expanding number of chemicals found in commerce and the environment, coupled with the time and resource requirements for traditional toxicity testing and exposure characterization, continues to underscore the need for new approaches. In 2005, EPA charted a new course to address this challenge by embracing computational toxicology (CompTox) and investing in the technologies and capabilities to push the field forward. The return on this investment has been demonstrated through results and applications across a range of human and environmental health problems, as well as initial application to regulatory decision-making within programs such as the EPA’s Endocrine Disruptor Screening Program. The CompTox Initiative at EPA is more than a decade old. This manuscript presents a blueprint to guide its strategic and operational direction over the next five years. The primary goal is to obtain broader acceptance of the CompTox approaches for application to higher tier regulatory decisions, such as chemical assessments. To achieve this goal, the blueprint expands and refines the use of high-throughput and computational modeling approaches to transform the components of chemical risk assessment, while systematically addressing key challenges that have hindered progress. In addition, the blueprint outlines further investments in cross-cutting efforts to characterize uncertainty and variability, develop software and information technology tools, provide outreach and training, and establish scientific confidence for application to different public health and environmental regulatory decisions.

Keywords: computational toxicology, predictive toxicology, high throughput assays, toxicokinetics, exposure, risk assessment, ToxCast, ExpoCast, Tox21, cheminformatics, CompTox

Background and History

Human health chemical assessments at the EPA have traditionally relied upon toxicity data from animal bioassays and epidemiological studies to inform the derivation of non-cancer and cancer toxicity values. Hazard and exposure-response studies typically measure the impact of chemical exposure on apical endpoints (e.g., histological changes) and have historical and legal precedent for use in risk assessment and risk management. The strengths of these traditional testing approaches are offset by high costs and lengthy test durations, resulting in a significant gap between the large number of chemicals in commerce that result in human exposure and the small number of well-studied chemicals with available animal or human data. This data gap poses a considerable challenge for the EPA, which is mandated to apply the best available science to protecting human health and the environment through timely and informed chemical safety decisions.

The challenge of “too many chemicals, too little data” for chemical toxicity testing has been recognized for over three decades (NRC, 1984). Rapid advances in biotechnology and computational modeling were viewed as providing a potential solution to address this challenge. The opportunities afforded by these new approaches led to the development and release of a strategic plan for EPA’s computational toxicology (CompTox) research program (EPA, 2003) and subsequent formation of the National Center for Computational Toxicology (NCCT). Shortly after its formation, NCCT launched the Toxicity Forecaster (ToxCast) project for in vitro high-throughput screening (HTS) of environmental chemicals relevant to the Agency’s mission (Dix et al., 2007). In the first phase of ToxCast, EPA screened 310 data-rich chemicals, predominantly pesticides, across ~700 assay endpoints (Kavlock et al., 2012). In the second phase, EPA expanded the ToxCast library to ~1,000 chemicals and tested the chemicals across a set of ~900 assay endpoints (Richard et al., 2016). The assays in the initial ToxCast portfolio were primarily repurposed from pharmaceutical screening efforts, but were selected for potential relevance to assessing chemical impacts on human health, including cytotoxicity, genotoxicity, cell growth, cell differentiation, cell signaling, and transcriptional regulation (Kavlock, et al., 2012).

As part of the CompTox strategic initiative, EPA, in collaboration with the National Toxicology Program (NTP), funded the formation of an expert committee by the U.S. National Academies of Science and National Research Council (NRC) to provide recommendations to advance the field. The committee’s deliberations resulted in the 2007 report “Toxicity Testing in the 21st Century” (NRC, 2007). The report supported a fundamental shift from chemical safety decisions based on apical animal endpoints toward broader application of in vitro testing and predictive toxicology methods. The new approach relies on quantifying the disruption of molecular events and cellular pathways using higher throughput, in vitro assays and integrating results across diverse chemistries and biological endpoints using computational modeling. It also goes hand-in-hand with rapid data generation, reduced cost to generate toxicity data, and more directed, hypothesis-driven toxicity and epidemiological studies. In response to the NRC report, EPA partnered with two National Institutes of Health organizations, the National Center for Advancing Translational Sciences (NCATS) and NTP, to form a federal partnership in 2008 (Collins et al., 2008; Kavlock et al., 2009). This partnership, named Tox21, aimed to develop and pilot high-throughput in vitro screening technology for application to toxicity testing. In 2010, the U.S. Food & Drug Administration (FDA) joined the partnership. The Tox21 federal partners contributed to a combined library of over 8,500 chemicals and screened those chemicals across more than 80 assay endpoints (Thomas et al., 2018; Tice et al., 2013).

ToxCast and Tox21 typically perform automated screens in concentration-response format, generating thousands of concentration-response curves per assay. Automated data analysis workflows have been developed to normalize the data and estimate potency and efficacy values for each chemical-assay endpoint combination. The ToxCast data analysis pipeline has evolved over time; the current iteration includes a robust curve-fitting algorithm, classification of responses as active or inactive based on baseline noise and efficacy criteria, and data quality flags that indicate concerns with noisy data, artifacts, and systematic assay errors (Filer et al., 2016). To facilitate transparency and increase scientific confidence, the Tox21 data are available through PubChem (https://pubchem.ncbi.nlm.nih.gov/), and the ToxCast data are available in raw and processed form from the ToxCast data download site (https://www.epa.gov/chemical-research/toxicity-forecaster-toxcasttm-data) as well as through EPA’s CompTox Chemicals Dashboard (https://comptox.epa.gov/dashboard). The Tox21 and ToxCast chemical structure libraries are available for download in multiple formats. In addition, major portions of the Tox21 and ToxCast chemical sample libraries have been characterized using analytical methods to confirm chemical identity and to establish purity and concentration (https://tripod.nih.gov/tox21/samples). Finally, an owner’s manual for ToxCast is available that details chemical library management, including procurement, curation, and quality control, and documents assay annotations, data analysis procedures, and assay performance characteristics (https://www.epa.gov/chemical-research/toxcast-owners-manual-guidance-exploring-data).
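To make the curve-fitting and hit-calling steps concrete, the sketch below fits a Hill model by grid search and calls a series active when both the fitted top and the maximum observed response exceed a noise-based cutoff. It is a simplified Python illustration, not the ToxCast pipeline itself (which is distributed as the tcpl R package described by Filer et al., 2016); the fixed Hill slope, the cutoff of three times the scaled median absolute deviation (MAD) of baseline responses, and all function names are assumptions made for this example.

```python
import math
import statistics


def hill(conc, top, ac50, slope=1.0):
    """Hill model with the bottom asymptote fixed at zero (normalized data)."""
    return top / (1.0 + (ac50 / conc) ** slope)


def fit_hill(concs, resps, slope=1.0, n_grid=120):
    """Least-squares fit of (top, AC50) with a fixed slope.

    AC50 is searched on a log10 grid extending one decade beyond the tested
    range; for each candidate AC50 the optimal top has a closed form.
    """
    lo = math.log10(min(concs)) - 1.0
    hi = math.log10(max(concs)) + 1.0
    best = None
    for i in range(n_grid + 1):
        ac50 = 10 ** (lo + (hi - lo) * i / n_grid)
        basis = [hill(c, 1.0, ac50, slope) for c in concs]
        top = sum(b * r for b, r in zip(basis, resps)) / sum(b * b for b in basis)
        sse = sum((r - top * b) ** 2 for b, r in zip(basis, resps))
        if best is None or sse < best[2]:
            best = (top, ac50, sse)
    return best


def scaled_mad(values):
    """Median absolute deviation scaled to approximate a normal standard deviation."""
    med = statistics.median(values)
    return 1.4826 * statistics.median([abs(v - med) for v in values])


def hit_call(concs, resps, baseline, k=3.0):
    """Classify a concentration-response series as active or inactive."""
    cutoff = k * scaled_mad(baseline)
    top, ac50, _ = fit_hill(concs, resps)
    active = top >= cutoff and max(resps) >= cutoff
    return {"active": active, "top": top, "ac50": ac50 if active else None,
            "cutoff": cutoff}


concs = [0.1, 0.3, 1, 3, 10, 30, 100]  # micromolar
baseline = [-2.0, 1.0, 0.5, -1.0, 1.5, -0.5, 2.0, 0.0]  # vehicle-control responses
active_series = [hill(c, 80.0, 5.0) for c in concs]  # noiseless synthetic curve
print(hit_call(concs, active_series, baseline))
```

A real pipeline would compare several candidate models, estimate the slope, and attach quality flags; the grid search here simply keeps the example dependency-free.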

Computational modeling approaches are essential for interpreting HTS data and applying them to predict toxicity. Published models to date have been diverse in form and function, and include statistical and dynamic models as well as empirical and biologically-based models. Two examples, pathway-based models for the estrogen and androgen receptors, combine data from multiple assays to reduce noise and compensate for technological deficiencies (Judson et al., 2015; Kleinstreuer et al., 2016). Statistical models have also been developed to predict toxicological hazards such as hepatotoxicity (Liu et al., 2015), identify mode-of-action (MOA) based on cancer pathways (Kleinstreuer et al., 2013a), and estimate toxicological ‘tipping points’ associated with the concentration-dependent transition from cellular adaptation to adversity (Frank et al., 2018; Shah et al., 2016b). Lastly, HTS assay data have been used in dynamic computational models of biological processes to understand the potential mechanistic consequences of chemical perturbations. Examples include vascular angiogenesis (Kleinstreuer et al., 2013b), palatal fusion (Hutson et al., 2017), and genital tubercle development (Leung et al., 2016).

An important recommendation in the 2007 NRC report was the need to place in vitro HTS results into a dose and exposure context to support chemical safety decisions. To provide a dose context, EPA and the Hamner Institutes for Health Sciences collaborated to adapt approaches developed in the pharmaceutical industry that parameterize toxicokinetic models using in vitro assay measurements (Rotroff et al., 2010; Wetmore et al., 2015b; Wetmore et al., 2012a). These methods relied on in vitro measurements of plasma protein binding using equilibrium dialysis and of intrinsic hepatic clearance using primary hepatocytes. In vitro-to-in vivo extrapolation (IVIVE) approaches are used to scale the experimental data and subsequently develop toxicokinetic models that estimate steady-state blood concentrations associated with a given administered dose. The development and application of these methods to convert potency values for hundreds of chemicals from the ToxCast and Tox21 HTS assays into administered dose equivalents gave rise to the term high-throughput toxicokinetics (HTTK). Many of the HTTK models are publicly available as an R package (Pearce et al., 2017b).
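The steady-state logic behind HTTK reverse dosimetry can be sketched as follows. Assuming linear kinetics, a well-stirred liver model, and renal clearance equal to glomerular filtration of the unbound fraction, the code computes the steady-state plasma concentration for a 1 mg/kg/day dose and uses it to convert an in vitro AC50 into an oral equivalent dose. The physiological constants are illustrative adult values chosen for this sketch, not parameters taken from the httk R package, and the function names are hypothetical.

```python
# Illustrative physiological constants for a 70 kg adult (assumptions for this sketch).
BODY_WEIGHT_KG = 70.0
GFR_L_PER_H = 6.7               # glomerular filtration rate
Q_LIVER_L_PER_H = 90.0          # hepatic blood flow
HEPATOCYTES_1E6_PER_G = 110.0   # hepatocellularity, 10^6 cells per g liver
LIVER_G = 1820.0                # liver mass


def css_um(dose_mg_per_kg_day, fub, clint_ul_min_1e6_cells, mw_g_per_mol):
    """Steady-state plasma concentration (uM) for a constant oral dose rate."""
    # Scale in vitro intrinsic clearance (uL/min/10^6 cells) to the whole liver (L/h).
    clint_l_h = clint_ul_min_1e6_cells * HEPATOCYTES_1E6_PER_G * LIVER_G * 60.0 / 1e6
    # Well-stirred liver model applied to the unbound fraction in plasma (fub).
    cl_hepatic = Q_LIVER_L_PER_H * fub * clint_l_h / (Q_LIVER_L_PER_H + fub * clint_l_h)
    cl_renal = GFR_L_PER_H * fub
    dose_mg_per_h = dose_mg_per_kg_day * BODY_WEIGHT_KG / 24.0
    css_mg_per_l = dose_mg_per_h / (cl_renal + cl_hepatic)
    return css_mg_per_l / mw_g_per_mol * 1000.0  # mg/L -> umol/L


def oral_equivalent_dose(ac50_um, fub, clint_ul_min_1e6_cells, mw_g_per_mol):
    """Dose (mg/kg/day) predicted to give a steady-state concentration equal to the AC50."""
    # Css is linear in dose, so a single 1 mg/kg/day run is enough to rescale.
    css_per_unit_dose = css_um(1.0, fub, clint_ul_min_1e6_cells, mw_g_per_mol)
    return ac50_um / css_per_unit_dose


css = css_um(1.0, fub=0.1, clint_ul_min_1e6_cells=10.0, mw_g_per_mol=300.0)
oed = oral_equivalent_dose(5.0, fub=0.1, clint_ul_min_1e6_cells=10.0, mw_g_per_mol=300.0)
print(f"Css at 1 mg/kg/day: {css:.3f} uM; oral equivalent of a 5 uM AC50: {oed:.2f} mg/kg/day")
```

The linearity assumption is what makes the conversion a single division; it breaks down if clearance saturates at high doses.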

In parallel with the generation of HTS data, legacy in vivo toxicity studies were curated and stored in a computable format, together with relevant metadata, in the Toxicity Reference Database (ToxRefDB). The initial objective in compiling ToxRefDB was to provide a resource for comparison with HTS data. As a result, the initial chemicals in ToxRefDB were chosen to maximize overlap with ToxCast Phase I (Richard, et al., 2016). The initial release of the ToxRefDB data in 2009 covered chronic non-cancer, cancer, developmental, and reproductive studies for over 300 chemicals (Knudsen et al., 2009; Martin et al., 2009a; Martin et al., 2009b). ToxRefDB has been improved iteratively with the addition of more study sources, increased numbers of chemicals, and refinements in the data provided. Curation of the legacy in vivo toxicity studies has enabled the examination of a range of toxicological questions and facilitated comparisons of in vivo, in vitro, and computational modeling outcomes, including the development of computational models to quantitatively predict in vivo dose response (Truong et al., 2017), identification of chemicals associated with a particular endpoint of concern (Al-Eryani et al., 2015), examination of cross-species concordance in chemical responses (Judson et al., 2017), association of mechanistic and pathway-level responses with organ and tissue outcomes (Kleinstreuer et al., 2011; Shah et al., 2011), and retrospective analyses to assess the value of a particular endpoint or study for safety decisions (Theunissen et al., 2014).

The data landscape for chemical exposures is even sparser than that for chemical toxicity (Egeghy et al., 2012), yet it is equally critical for establishing risk. To address this challenge, EPA began a research effort parallel to ToxCast called the Exposure Forecaster (ExpoCast) (Cohen Hubal et al., 2010). The intent of this effort was to develop computationally based exposure predictions for thousands of chemicals from limited data. To obtain these exposure predictions, the Systematic Empirical Evaluation of Models (SEEM) framework was developed to allow for the calibration and evaluation of multiple exposure models using chemical concentrations from biomonitoring studies (Wambaugh et al., 2013). The SEEM framework provides calibrated exposure estimates for thousands of chemicals, as well as the uncertainty around those predictions. In the first application of the SEEM framework, a combination of traditional chemical fate and transport model estimates, production volume, and a binary indicator of whether a chemical was used in the indoor environment provided marginal predictivity of biomonitoring data from the U.S. National Health and Nutrition Examination Survey (NHANES) (Wambaugh, et al., 2013). The next version of SEEM improved on this approach by using production volume and a combination of chemical use indicators to predict general-population exposure to nearly 8,000 chemicals (Wambaugh et al., 2014). The latest iteration uses chemical structure and physicochemical properties to predict the likely exposure pathways for a chemical, followed by consensus exposure predictions from thirteen different exposure models (Ring et al., 2018). The consensus model predicts median intake rates, with credible intervals, for 479,926 chemicals (Ring, et al., 2018).
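The calibration idea behind SEEM can be illustrated with a single exposure model: regress log10 intake rates inferred from biomonitoring data on log10 model predictions, then use the fitted line and the residual spread to produce calibrated estimates, with an uncertainty band, for chemicals that lack biomonitoring data. The published framework combines several models and, as the references to credible intervals indicate, uses a Bayesian treatment; the ordinary least squares fit and normal-theory band below are simplifying assumptions, and the function names are hypothetical.

```python
import math
import statistics


def calibrate(model_predictions, inferred_intakes):
    """Fit log10(inferred) = a + b * log10(predicted) by ordinary least squares.

    Returns the intercept a, the slope b, and the residual standard deviation
    in log10 units (requires at least three chemicals).
    """
    x = [math.log10(v) for v in model_predictions]
    y = [math.log10(v) for v in inferred_intakes]
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma = math.sqrt(sum(r * r for r in residuals) / (len(x) - 2))
    return intercept, slope, sigma


def calibrated_estimate(intercept, slope, sigma, model_prediction, z=1.96):
    """Calibrated median intake and an approximate 95% band, in the input units.

    The band reflects residual scatter only and ignores uncertainty in the
    fitted intercept and slope.
    """
    mu = intercept + slope * math.log10(model_prediction)
    return 10 ** mu, (10 ** (mu - z * sigma), 10 ** (mu + z * sigma))


# Hypothetical model predictions and biomonitoring-inferred intakes (mg/kg/day).
preds = [1e-3, 1e-2, 1e-1, 1.0, 10.0]
inferred = [v * 10 ** (0.2 if i % 2 else -0.2) * 0.1 * v ** -0.5
            for i, v in enumerate(preds)]
a, b, s = calibrate(preds, inferred)
print(calibrated_estimate(a, b, s, 100.0))
```

Working in log space reflects the fact that exposure estimates span many orders of magnitude, so errors are more naturally expressed as fold differences than as absolute differences.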

Initial Successes and Application to Decision Making

The success of the ToxCast and Tox21 efforts in the context of EPA’s CompTox program has been demonstrated across a range of human and environmental health applications. Within EPA, the data have been used for identifying modes-of-action (MOAs) for industrial and environmental chemicals (Kleinstreuer et al., 2014; Shah, et al., 2011), rapid testing of chemicals in emergency response situations (Judson et al., 2010), prioritizing chemicals for analytical chemistry suspect screening analysis (Newton et al., 2018; Rager et al., 2016), and building adverse outcome pathways (AOPs) (Bell et al., 2016; Oki et al., 2016). In addition, the data have been used to inform analog selection in read-across (Shah et al., 2016a), build predictive models for pathway activity and toxicological hazard (Judson, et al., 2015; Kleinstreuer, et al., 2016), and identify priority contaminants for environmental surveillance and monitoring (Blackwell et al., 2017; Li et al., 2017; Newton, et al., 2018; Schroeder et al., 2016).

Initial translation of the developments in CompTox to regulatory decision-making has occurred primarily within the EPA’s Endocrine Disruptor Screening Program (EDSP). In a series of Science Advisory Panel (SAP) meetings, the application of HTS assays (EPA, 2012), the development of HTTK and exposure models for dose and exposure context (EPA, 2014b), and the integration of the information using computational modeling (EPA, 2014a) were peer reviewed. Following the SAP meetings, EPA announced its intent to use the high-throughput and computational approaches for predicting estrogen receptor activity to replace specific in vitro and in vivo tests in the Tier 1 EDSP battery and to prioritize chemicals for further evaluation (EPA, 2015). Further applications of CompTox tools and data have been outlined in the recently released strategic plan to promote the development and application of alternative methods in the Toxic Substances Control Act (TSCA) program (EPA, 2018) and in the process for selecting candidates for prioritization under TSCA (https://www.epa.gov/assessing-and-managing-chemicals-under-tsca/identifying-existing-chemicals-prioritization-under-tsca).

The public release of the ToxCast and Tox21 data, with supporting documentation and applications, has facilitated and promoted their use across the broader scientific and regulatory communities. Examples include identifying MOAs for specific industrial and environmental chemicals (Leet et al., 2014; Wills et al., 2015); mechanistic support in cancer hazard assessments by international bodies (Smith et al., 2016); assessment of chemical alternatives (Chen et al., 2016; NRC, 2014); prioritizing chemicals for biomonitoring (Krowech et al., 2016); and building training sets for Quantitative Structure-Activity Relationship (QSAR) modeling (Mansouri et al., 2016a; Norinder et al., 2016; Sedykh et al., 2011). In addition, the external community has used the data across a wide range of applications, including providing mechanistic support for chemical hazard in pesticide risk assessments (CalEPA, 2016); screening for substances of high concern (ECHA, 2017); regulatory and economic impact assessments (EC, 2016); prediction of toxicological hazard (Bhhatarai et al., 2015); serving as a key component in a tiered toxicity testing framework (Thomas et al., 2013); and identification of potential toxicological liabilities for pharmaceuticals (Shah et al., 2014). Health Canada has also outlined a plan that uses CompTox data to identify priorities for risk assessment within its Chemicals Management Plan (http://www.ec.gc.ca/ese-ees/default.asp?lang=En&n=172614CE-1). The diversity of external organizations using the ToxCast and Tox21 data supports the continued development and public release of toxicity and exposure data. Expanding the implementation and application of CompTox tools and approaches remains both a challenge and a goal of this blueprint.

A Blueprint for the Future

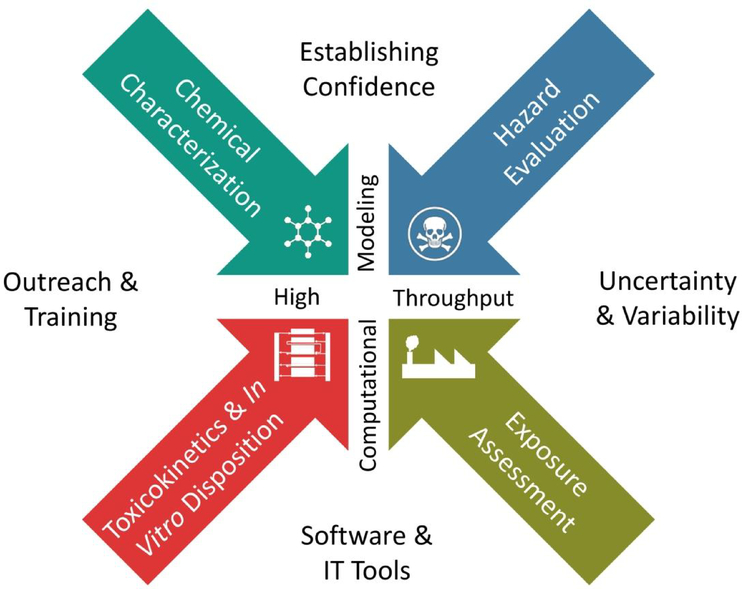

The mission of the CompTox research efforts across EPA is to integrate advances in chemical, biomedical, computational, and informatics sciences to efficiently and economically evaluate the safety of chemicals. To date, the CompTox effort has been successful in developing and applying new approaches to chemical characterization, toxicity testing, toxicokinetics, and exposure modeling. The resulting data streams enable a shift away from evaluating the toxicity of small numbers of chemicals based on apical endpoints in animal studies toward evaluating thousands of chemicals based on disruption of molecular events and cellular pathways using high-throughput and computational approaches. Despite this progress, the shift to predictive approaches and reliance on pathway perturbations is not complete. The primary goal of this blueprint is to obtain broader acceptance of the CompTox approaches for application to higher tier regulatory decisions, such as chemical assessments. A blueprint for the next five years requires expanding the scope of existing CompTox efforts, systematically addressing key challenges that have limited progress to date, and making additional investments in important cross-cutting areas. The emphasis of the CompTox efforts continues to be the use of computational modeling and high-throughput approaches to transform the components used to understand the potential health risks of chemicals: chemical characterization, hazard evaluation, toxicokinetics, and exposure assessment (Fig. 1). Surrounding these components are cross-cutting efforts in characterizing uncertainty and variability; developing software and information technology tools that facilitate translation; outreach and training activities; and establishing scientific confidence for different regulatory decisions.

Figure 1.

Key elements of the EPA CompTox blueprint to obtain broader acceptance of the new approaches and application to higher tier regulatory decisions. The CompTox efforts will continue to emphasize the use of computational modeling and high-throughput approaches to connect and transform the traditional components in chemical risk assessment. Cross-cutting efforts in characterizing uncertainty and variability, development of software and information technology tools, outreach and training, and establishing scientific confidence enable translation to regulatory decision making.

Chemical Characterization

The foundation of toxicology, toxicokinetics, and exposure is embedded in the physics and chemistry of chemical-biological interactions. Accurate characterization of chemical structure, linked to commonly used identifiers such as names and Chemical Abstracts Service Registry Numbers (CASRNs), is essential to support both predictive modeling of the data and the dissemination and application of the data for chemical safety decisions. Through earlier efforts, EPA built the Distributed Structure-Searchable Toxicity (DSSTox) database to serve as a central resource for curated chemical structure information and to provide the cheminformatics foundation for the ToxCast, Tox21, and ExpoCast initiatives (Richard, 2004). The original DSSTox database consisted of a manually curated set of approximately 20,000 chemicals spanning a dozen lists of high interest to EPA and the toxicology and QSAR modeling communities (Richard, 2004).
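Linking structures to identifiers such as CASRNs is a routine curation step, and one simple automated quality check is the CASRN check digit. The sketch below implements the standard check-digit rule (the digits before the check digit are weighted 1, 2, 3, and so on from the right, and the weighted sum modulo 10 must equal the check digit). It illustrates the kind of check a curation workflow can apply and is not a description of DSSTox's own curation code.

```python
import re

# CASRN format: 2-7 digits, a hyphen, 2 digits, a hyphen, and a single check digit.
CASRN_PATTERN = re.compile(r"^(\d{2,7})-(\d{2})-(\d)$")


def is_valid_casrn(casrn: str) -> bool:
    """Return True if the string is a well-formed CASRN with a correct check digit."""
    match = CASRN_PATTERN.match(casrn.strip())
    if match is None:
        return False
    body = match.group(1) + match.group(2)
    check_digit = int(match.group(3))
    # Weight each digit by its position counted from the right, starting at 1.
    total = sum(weight * int(digit) for weight, digit in enumerate(reversed(body), start=1))
    return total % 10 == check_digit


# Water, benzene, and bisphenol A, followed by a corrupted and a malformed entry.
for candidate in ["7732-18-5", "71-43-2", "80-05-7", "7732-18-4", "not-a-cas"]:
    print(candidate, is_valid_casrn(candidate))
```

A passing check digit only shows that the number is internally consistent; it does not confirm that the CASRN has been assigned or that it maps to the intended structure, which still requires cross-checking against names and structures.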

Over the past several years, EPA has updated and expanded DSSTox to include state-of-the-art cheminformatics tools for managing chemical and list registration through manual and automated curation into several quality bin levels. DSSTox currently provides structures, nomenclature and IDs, list associations, and physicochemical property and environmental fate and transport data for more than 760,000 substances, encompassing not only all chemicals evaluated in the ToxCast and Tox21 testing programs (Richard, et al., 2016) but also a much broader landscape of chemicals of interest for CompTox and ExpoCast research. The EPA CompTox Chemicals Dashboard (https://comptox.epa.gov/dashboard/) provides a portal for EPA researchers, the public, and the scientific community to access DSSTox chemistry data (Williams et al., 2017). As an integral part of the blueprint, chemical curation efforts will continue to expand into high-priority areas to provide a comprehensive, harmonized source of quality chemical information in support of CompTox research.

Another important component of the CompTox chemical characterization effort is a robust chemical management infrastructure that can support all of the Agency’s chemical screening programs. EPA has developed an information management system (IMS) for documenting and tracking all aspects of the physical chemical sample library supporting ToxCast and Tox21. The IMS includes inventory tracking, supplier information, and analytical quality control information for both the neat materials and HTS assays, as well as the plate, shipment, and sample identifiers used in HTS assays. EPA’s ToxCast chemical library has been used for high-throughput in vitro bioactivity screening, in vitro and in vivo toxicokinetic studies, and as analytical standards for exposure characterization across a range of media and methods (Sobus et al., 2019). In addition, through collaborative agreements with external stakeholders, EPA has provided portions of the ToxCast chemical library to over 130 academic, industry, and governmental collaborators in exchange for results that can be incorporated into EPA’s databases and publicly released. Apart from supporting library management activities, the cheminformatics infrastructure is being upgraded to support non-targeted and suspect screening analysis (McEachran et al., 2018; McEachran et al., 2017). EPA investments in non-targeted and suspect screening analysis support a broad range of research activities, including environmental monitoring in diverse media [e.g., (Strynar et al., 2015)], exposure characterization (Sobus, et al., 2019), metabolite identification, and biomonitoring.

Beyond structural curation and chemical management, a high-quality, structure-based cheminformatics platform is essential for supporting computational chemistry and structure-based modeling activities. (Q)SAR models have been built and provided through the EPA CompTox Chemicals Dashboard (https://comptox.epa.gov/dashboard/) for a range of physicochemical properties, toxicity, and environmental fate endpoints (Mansouri et al., 2018; Mansouri et al., 2016b). Embedded in the “Predictions” tab of the Dashboard is the ability to predict hazard and physicochemical properties using the Toxicity Estimation Software Tool (TEST) suite of QSAR models (https://www.epa.gov/chemical-research/toxicity-estimation-software-tool-test). Other QSAR models will be added in the future.

Systematic read-across approaches also use chemical structure information to predict a range of hazard-related effects for data-poor chemicals (Shah, et al., 2016a). In traditional read-across, chemical structure, together with expert judgment based on physicochemical properties, metabolism considerations, and toxicological mechanisms when available, is used to identify appropriate analogs, which are then used to infer the effects of a target chemical (Wang et al., 2012; Wu et al., 2010). The reliance on expert judgment to address uncertainties has hindered the use of traditional read-across for regulatory decision-making (Patlewicz et al., 2018; Patlewicz et al., 2016). The investment in systematic read-across approaches attempts to quantify this uncertainty and to provide a benchmark for assessing whether other contexts of similarity (e.g., physicochemical, metabolic, or biological as assessed using HTS data) reduce it (Helman et al., 2018). Finally, chemical structural descriptors are being used for systematic chemical categorization and prediction of hazard and exposure-related properties. ToxPrint chemotypes have been used to identify structure-activity enrichments in HTS assays related to neurotoxicity (Strickland et al., 2018) and hepatic steatosis (Nelms et al., 2018), while these and other structural descriptors have been used to predict functional use and weight fractions in personal care products (Isaacs et al., 2016). In the blueprint for the future, EPA will continue to invest in computational chemistry and structure-based approaches with the goal of leveraging chemical information to associate chemical structures with hazard, toxicokinetic, and exposure characteristics, as well as to resolve biological associations within the HTS data landscape.
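A similarity-weighted read-across prediction, broadly in the spirit of the systematic approach of Helman et al. (2018), can be sketched as follows: represent each chemical by a binary structural fingerprint (here a set of feature identifiers), rank candidate analogs by Jaccard (Tanimoto) similarity to the target, and average the analogs' outcomes, weighted by similarity, over the k nearest neighbors. The fingerprints, outcomes, and choice of k below are hypothetical, and the code is not the EPA implementation.

```python
def jaccard(a, b):
    """Jaccard (Tanimoto) similarity between two sets of fingerprint features."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def read_across(target_fp, analogs, k=3):
    """Similarity-weighted average outcome over the k most similar analogs.

    analogs: list of (fingerprint_set, outcome) pairs, with outcome 1.0 for
    active/toxic and 0.0 for inactive. Returns None if no analog shares features.
    """
    scored = sorted(((jaccard(target_fp, fp), outcome) for fp, outcome in analogs),
                    key=lambda pair: pair[0], reverse=True)[:k]
    total_similarity = sum(sim for sim, _ in scored)
    if total_similarity == 0:
        return None
    return sum(sim * outcome for sim, outcome in scored) / total_similarity


# Hypothetical structural features and binary outcomes for four source analogs.
target = {"aromatic_ring", "phenol", "halogen", "ester"}
analogs = [
    ({"aromatic_ring", "phenol", "halogen", "ester"}, 1.0),
    ({"aromatic_ring", "phenol", "halogen"}, 1.0),
    ({"aliphatic_chain", "amine"}, 0.0),
    ({"aromatic_ring", "phenol", "aliphatic_chain", "amine"}, 0.0),
]
print(read_across(target, analogs))
```

Because the prediction is an explicit function of similarity, its uncertainty can be assessed systematically, for example by checking how prediction performance changes as k or the similarity context (structural versus bioactivity fingerprints) is varied.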

Hazard Evaluation

Toxicity testing aims to identify all the potential hazards that a chemical can elicit in an organism, while attempting to characterize the dose-response relationships for those hazards. Consistent with the recommendations by the NRC (NRC, 2007, 2017), the ToxCast program used in vitro HTS technologies to identify potential cellular pathways and processes disrupted by chemicals. Throughout the development and execution of ToxCast and Tox21, key limitations of the current suite of HTS assays have been identified (Tice, et al., 2013). The limitations include inadequate coverage of biological targets and pathways, reduced or distinct xenobiotic metabolism compared to in vivo, and limited evaluation of volatile chemicals and chemicals insoluble in dimethyl sulfoxide. Apart from these technical limitations, application of in vitro test systems in toxicology has also been hampered by the inability to translate perturbations at the molecular level to possible tissue-, organ-, and organism-level effects. Moving forward, the CompTox effort is taking a new approach to hazard identification and characterization that directly addresses many of these challenges and integrates multiple technologies and cheminformatic approaches into a tiered testing framework requiring multi-disciplinary partnership between experimental and computational investigators.

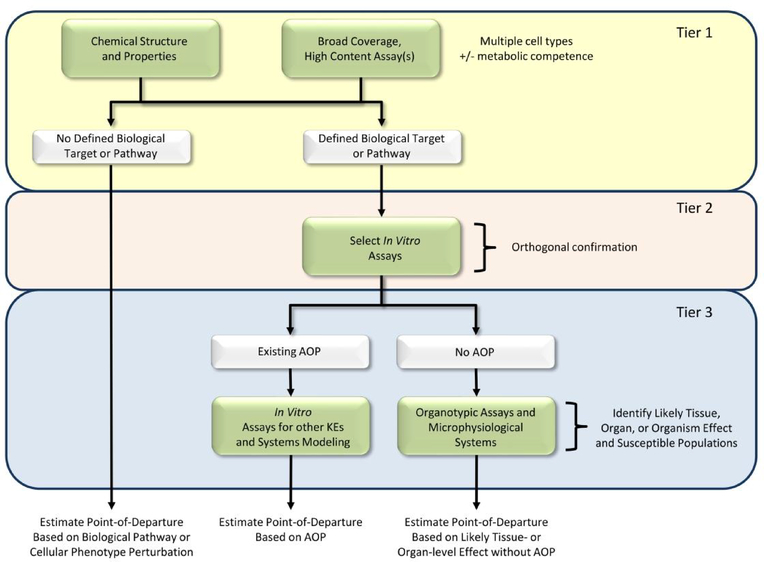

The first tier of testing casts the broadest net possible for capturing the potential hazards associated with chemical treatment as well as groups them based on similarity in the potential hazards (Fig. 2). The grouping of chemicals can be based on both chemical structure considerations as well as experimental data. The use of chemical structural descriptors to inform hazard and identify potential analogs was described in the preceding section. For experimental hazard characterization, two approaches were selected and developed to provide the most robust and comprehensive evaluation of chemical disruption of molecular events and biological processes. The first approach uses RNA-seq-based multiplexed read-outs of gene expression to interrogate the effects of chemical treatment across the entire transcriptome. EPA is applying new technologies that are automatable, high-throughput, and capable of measuring transcriptomic changes directly from cell lysates in 384-well format (Yeakley et al., 2017). This approach, termed high-throughput transcriptomics (HTTr), allows for cost-efficient screening of thousands of chemicals in concentration-response format. Although other ‘omic technologies, such as metabolomics and proteomics, can be used to interrogate other aspects of biological pathway perturbations, the costs and amount of biological material required per sample currently exceed available resources and preclude their use in high throughput screening applications.

Figure 2.

Tiered testing framework for hazard characterization. Tier 1 uses both chemical structure and broad coverage, high content assays across multiple cell types for comprehensively evaluating the potential effects of chemicals and grouping them based on similarity in potential hazards. For chemicals from Tier 1 without a defined biological target/pathway, a quantitative point-of-departure for hazard is estimated based on the absence of biological pathway or cellular phenotype perturbation. Chemicals from Tier 1 with a predicted biological target or pathway are evaluated in Tier 2 using targeted follow-up assays. In Tier 3, the likely tissue, organ, or organism-level effects are considered based on either existing adverse outcome pathways (AOPs) or more complex culture systems. Quantitative points-of-departure for hazard are estimated based on the AOP or responses in the complex culture system.

The second approach uses high-content imaging of cultured cells labeled with multiple fluorescent probes to measure the effects of chemical treatment on subcellular organelles and structural features (e.g., mitochondria, endoplasmic reticulum, nuclear morphology) (Bray et al., 2016). Image analysis algorithms are used to quantify a broad range of shape, compartmental, spatial, and intensity-related characteristics associated with the probes in an approach referred to as phenotypic profiling (Feng et al., 2009). EPA is applying a high-throughput version of this approach in an automatable, 384-well format to measure changes in hundreds of phenotypic parameters per cell. This approach, termed high-throughput phenotypic profiling (HTPP), also allows for cost-efficient screening of thousands of chemicals in concentration-response format. Over the next several years, the HTTr and HTPP approaches will be applied to multiple cell types, which will provide broad, complementary coverage of molecular and phenotypic responses across a much larger swath of biological space than the existing ToxCast and Tox21 assay portfolios. Statistical methods that characterize diversity in basal gene expression across many different cell types are being used to identify maximally diverse sets of cell types for use in both HTTr and HTPP screening. While diversity in basal gene expression may not fully reflect the diversity in chemical-induced changes in gene expression (i.e., responses) across cell types, these methods are a data driven approach for selecting cell-based screening models with diverse expression in potential biological targets and pathways.
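The text does not name the statistical method used to pick maximally diverse cell types, so the following is only one plausible stand-in: greedy max-min (farthest-point) selection, which repeatedly adds the cell type whose basal expression profile is farthest from its nearest already-selected neighbor. The cell-type names and two-gene profiles are hypothetical; real profiles would span thousands of genes and would typically be normalized before computing distances.

```python
import math


def select_diverse(profiles, n, seed):
    """Greedy max-min selection of n cell types from basal expression profiles.

    profiles: dict mapping cell-type name -> tuple of expression values.
    seed: name of the cell type used to start the selection.
    """
    n = min(n, len(profiles))
    chosen = [seed]
    while len(chosen) < n:
        candidates = [name for name in profiles if name not in chosen]
        # Pick the candidate whose distance to its closest chosen cell type is largest.
        best = max(candidates,
                   key=lambda name: min(math.dist(profiles[name], profiles[c]) for c in chosen))
        chosen.append(best)
    return chosen


profiles = {
    "cell_A": (0.0, 0.0),
    "cell_B": (0.1, 0.0),   # nearly redundant with cell_A
    "cell_C": (10.0, 0.0),
    "cell_D": (0.0, 10.0),
}
print(select_diverse(profiles, 3, seed="cell_A"))
```

The greedy rule avoids redundant cell types (cell_B is picked last), but, as the text notes, diversity in basal expression is only a proxy for diversity in chemical-induced responses.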

The resulting data will be compared with large databases of reference chemicals and genetic perturbations (e.g., RNAi knockdown and cDNA overexpression) to associate patterns of transcriptional (De Abrew et al., 2016; Lamb et al., 2006) and phenotypic changes (Bray et al., 2017) with a potential biological target or MOA. For chemicals without a close match to a reference chemical or genetic perturbation, the potency of the most sensitive pathway or phenotypic effect will be estimated (Thomas et al., 2011; Thomas, et al., 2013). Previous studies have suggested that most environmental and industrial chemicals are biologically promiscuous and that the most sensitive pathway or biological response provides a conservative estimate of the point-of-departure for adverse in vivo effects (Thomas, et al., 2013; Wetmore et al., 2013). By comparison, methods for defining predictive points-of-departure from in vitro high-content imaging-based assays are still in the early stages of development (Shah, et al., 2016b; Wink et al., 2018). Chemicals with a close match to a reference chemical or genetic perturbation move to a second tier of testing.
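The Tier 1 decision described above can be summarized as a small triage function: compare a chemical's response signature with reference signatures, send close matches to targeted Tier 2 confirmation, and otherwise report the potency of the most sensitive pathway as a point-of-departure. Pearson correlation and the 0.8 threshold are assumptions made for illustration (connectivity-mapping methods such as Lamb et al., 2006, use rank-based enrichment scores instead), and all signatures, reference names, and pathway potencies are hypothetical.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length signatures."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = math.sqrt(sum((a - mx) ** 2 for a in x))
    syy = math.sqrt(sum((b - my) ** 2 for b in y))
    return sxy / (sxx * syy) if sxx and syy else 0.0


def tier1_triage(signature, references, pathway_potencies, threshold=0.8):
    """Route a chemical to Tier 2 confirmation or to a Tier 1 point-of-departure.

    references: dict of reference name -> signature (same feature order).
    pathway_potencies: dict of pathway name -> benchmark concentration (uM).
    """
    best_name, best_r = max(((name, pearson(signature, ref)) for name, ref in references.items()),
                            key=lambda pair: pair[1])
    if best_r >= threshold:
        return {"next_step": "tier2_confirmation", "match": best_name, "r": best_r}
    # No close match: the most sensitive pathway sets a conservative point-of-departure.
    most_sensitive = min(pathway_potencies, key=pathway_potencies.get)
    return {"next_step": "tier1_pod", "pathway": most_sensitive,
            "pod_um": pathway_potencies[most_sensitive]}


references = {"receptor_agonist_ref": (2.0, 4.0, 6.0, 8.0),
              "enzyme_inhibitor_ref": (4.0, 3.0, 2.0, 1.0)}
potencies = {"oxidative_stress": 12.0, "dna_damage_response": 3.5, "er_stress": 40.0}
print(tier1_triage((1.0, 2.0, 3.0, 4.0), references, potencies))
print(tier1_triage((1.0, -1.0, 1.0, -1.0), references, potencies))
```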

For the second tier of testing, EPA is restructuring its portfolio of ToxCast assays to allow small sets of chemicals to be run in specific orthogonal in vitro assays to confirm the interaction with the biological target or MOA identified in the first tier (Fig. 2). For example, if the global transcriptomic evaluation for an environmental chemical shows a close match with a reference chemical that is a known inhibitor of a specific enzyme or an agonist of a particular receptor, a targeted biochemical or cell-based assay will be run to confirm the interaction. The portfolio of assays for orthogonal confirmation may include existing ToxCast assays as well as new assays not in the current portfolio. Computational modeling will integrate the results from the first and second tiers of testing to develop consensus predictions of the biological target or MOA and estimate the potency for the interaction with the biological target or pathway.

In the third tier of testing, chemicals with a verified interaction with a biological target or pathway will be linked with a likely adverse outcome using the AOP framework (Ankley et al., 2010; Fig. 2). For chemicals that interact with a molecular initiating event or key event in a known AOP, the remaining key events in the pathway will be evaluated in more complex organotypic cell culture models or microphysiological systems. These more complex models will enable translation of target and pathway perturbations to possible tissue- and organ-level effects. They can be built from human pluripotent stem cells and tailored to capture the cellular and biomechanical features of a tissue, allowing evaluation of the key events leading from molecular perturbation to a phenotypic outcome. If the biological target or pathway perturbed by the chemical is not associated with an existing AOP, knowledge of the target or pathway will be used to guide development of new AOPs and culture models of the adverse outcome where possible, or additional research as needed. Previous efforts have used crowd-sourcing (https://aopwiki.org/) and computational approaches (Oki, et al., 2016) for AOP development. Virtual tissue models can also be constructed to organize key event relationships and allow quantitative predictions of the dose- and time-dependent responses associated with the magnitude of perturbation as it propagates across levels of biological organization. Biologically inspired, agent-based cellular systems models, for example, reconstruct tissues in silico cell-by-cell and interaction-by-interaction and can execute dynamic tissue simulations that advance through critical determinants of an adverse phenotype (Hutson, et al., 2017; Kleinstreuer, et al., 2013b; Leung, et al., 2016). Current models focus primarily on developmental responses; however, the portfolio of models can be extended to organ and tissue responses in adults.
By simulating in vitro data under various in vivo exposure scenarios (e.g., dose- or stage-dependent responses, critical pathways, non-chemical stressors), these computer models return a probabilistic rendering of where, when, and how a defect might occur, yielding a potency estimate for the interaction with the biological target or pathway and the likely adverse response.
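The idea of propagating a perturbation through key event relationships to a probability of an adverse outcome can be illustrated with a toy quantitative AOP: chained Hill functions with Monte Carlo sampling of individual-specific potency and tolerance. This is not an agent-based virtual tissue model; the two-step chain, the Hill parameters, and the threshold distribution are all hypothetical.

```python
import math
import random


def hill(x, ec50, n=1.0):
    """Fractional response (0-1) of a downstream event to an upstream signal x."""
    if x <= 0.0:
        return 0.0
    return x ** n / (ec50 ** n + x ** n)


def probability_of_adverse_outcome(dose_um, n_individuals=2000, seed=7):
    """Fraction of simulated individuals whose key event exceeds their tolerance (toy qAOP)."""
    rng = random.Random(seed)
    affected = 0
    for _ in range(n_individuals):
        # Molecular initiating event (MIE): target engagement with individual-specific potency.
        mie = hill(dose_um, ec50=rng.lognormvariate(math.log(1.0), 0.5), n=1.0)
        # Key event (KE): hypothetical, steep key event relationship downstream of the MIE.
        ke = hill(mie, ec50=0.5, n=4.0)
        # Adverse outcome if the KE exceeds an individual-specific tolerance threshold.
        if ke > rng.uniform(0.3, 0.9):
            affected += 1
    return affected / n_individuals
```

Because the same seed yields the same simulated individuals at every dose, the returned probabilities increase monotonically with dose, giving a simple probabilistic dose-response for the adverse outcome.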

Apart from the development of the tiered testing framework, EPA is systematically addressing other technical limitations associated with the current ToxCast and Tox21 efforts. To diversify the chemical space evaluated in the HTS assays, EPA is assembling a library of DMSO-insoluble but water-soluble chemicals that is currently being tested. In addition, novel air-liquid interface exposure systems are being used to expose cells to volatile chemicals in concentration-response format, albeit at lower throughput than the existing assays in the ToxCast portfolio (Zavala et al., 2018). The water-soluble library and volatile chemical exposures are being paired with the HTTr platform as part of the tiered testing framework.

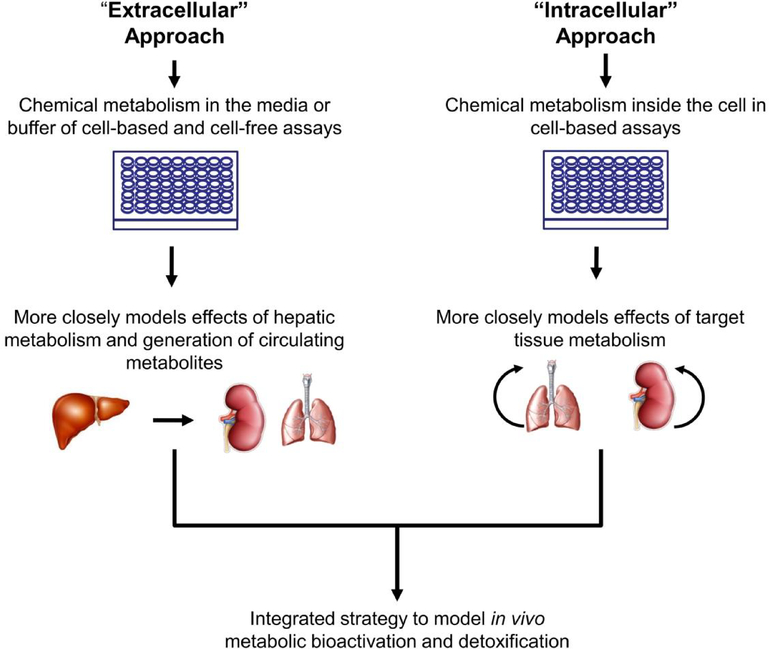

The challenges associated with the lack of metabolic competence for many of the current assays are also being addressed using a two-part strategy broken into ‘extracellular’ and ‘intracellular’ approaches (Fig. 3). In the ‘extracellular’ approach, chemical metabolism will occur in the media of cell-based assays or the buffer of cell-free assays. This part of the strategy is intended to model first-pass metabolism by the liver and exposure of distal target tissues to circulating metabolites. Multiple technologies and approaches are being evaluated to provide the relevant metabolic activity to the assay media or buffer. However, it is likely that multiple technological solutions will be required given the diversity of the assay platforms in the existing ToxCast and Tox21 assay portfolio and the new assays in the tiered testing framework. One promising approach is to embed S9 or microsomal fractions within an alginate matrix and attach the matrix to plastic protrusions on custom designed multi-well plate lids. The protrusions extend down into the well of the plates to allow chemical metabolism to occur without the S9 or microsomal fractions interfering with the assay readouts or causing cytotoxicity. Proof-of-concept experiments have been performed and show bioactivation of known reference chemicals. In the ‘intracellular’ strategy, chemical metabolism will occur inside the cell. This part of the strategy is intended to model the effects of target tissue metabolism. In one approach, chemically-modified mRNAs corresponding to different xenobiotic metabolizing enzymes are synthesized and transfected into target cell types singly or in multiplexed ratios that mimic specific target tissues (e.g., liver) (DeGroot et al., 2018). The approach has shown promise for endowing a range of different cell types with metabolic activity at or near the levels associated with primary cells over a fixed time frame. 
As part of the blueprint, both the extracellular and intracellular approaches will be applied to retrofit multiple in vitro assays in the first and second tiers of the new testing framework.

Figure 3.

Integrated strategy to model in vivo bioactivation and detoxification in a diverse range of in vitro assays. The extracellular approach generates metabolites in the media or buffer of in vitro assays and models the effects of hepatic metabolism on peripheral tissues. The intracellular approach generates metabolites inside the cell and models the effects of target tissue metabolism.

Toxicokinetics and In Vitro Disposition

Dosimetry is an essential component for translating in vitro concentration metrics into equivalent external exposures. Previous EPA efforts in this area have been relatively simple: they modeled all chemicals uniformly, used chemical-specific information to account for only a small number of the factors affecting toxicokinetics, and assumed complete absorption and steady-state conditions. In a previous analysis of 349 chemicals, this relatively simple and uniform approach produced estimates of steady-state blood concentrations within a factor of three of the reported literature values for ~60% of the chemicals for which experimental measurements of protein binding and liver metabolism were successful (~90% were within a factor of ten) (Wambaugh et al., 2015). In the future, EPA will examine the domain of applicability of the HTTK models across a broader range of environmental chemicals and incorporate additional assays and in silico tools that address known limitations of existing approaches. For example, EPA has performed high-throughput bioavailability measurements using the Caco-2 model (Hilgers et al., 1990). Comparisons of the HTTK models with in vivo toxicokinetic studies have suggested that bioavailability can be a significant contributor to the poor agreement observed for some chemicals (Wambaugh et al., 2018).
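A steady-state HTTK calculation of this kind, with renal clearance of the unbound chemical plus well-stirred hepatic clearance under complete absorption, can be sketched as follows. The physiological constants and the example chemical are assumptions chosen for illustration; the published EPA implementation is the httk R package, not this code. The sketch also includes the reverse-dosimetry step (in vitro AC50 to oral equivalent dose) and the factor-of-N agreement metric used to summarize model performance.

```python
from dataclasses import dataclass

# Assumed adult physiology (illustrative values, not EPA model parameters).
BODY_WEIGHT_KG = 70.0
GFR_L_PER_H = 6.7       # glomerular filtration rate
Q_LIVER_L_PER_H = 90.0  # hepatic blood flow


@dataclass
class Chemical:
    name: str
    mw_g_per_mol: float
    fup: float            # fraction unbound in plasma, from in vitro protein binding
    clint_l_per_h: float  # whole-liver intrinsic clearance, already scaled from in vitro data


def css_um(chem, dose_mg_per_kg_day=1.0):
    """Steady-state plasma concentration (uM) for a constant oral dose, assuming full absorption."""
    dose_mg_per_h = dose_mg_per_kg_day * BODY_WEIGHT_KG / 24.0
    renal = GFR_L_PER_H * chem.fup
    # Well-stirred liver model for hepatic clearance of the unbound chemical.
    hepatic = (Q_LIVER_L_PER_H * chem.fup * chem.clint_l_per_h
               / (Q_LIVER_L_PER_H + chem.fup * chem.clint_l_per_h))
    css_mg_per_l = dose_mg_per_h / (renal + hepatic)
    return css_mg_per_l / chem.mw_g_per_mol * 1000.0  # mg/L -> umol/L


def oral_equivalent_dose(ac50_um, chem):
    """Dose (mg/kg/day) predicted to give a steady-state concentration equal to the AC50.

    Linear kinetics are assumed, so Css scales proportionally with dose.
    """
    return ac50_um / css_um(chem, 1.0)


def fraction_within_fold(predicted, observed, fold=3.0):
    """Share of predictions within a multiplicative factor `fold` of the observations."""
    ok = sum(1 for p, o in zip(predicted, observed) if max(p / o, o / p) <= fold)
    return ok / len(predicted)
```

The linearity assumption is what makes reverse dosimetry a single division; saturable metabolism or binding would break it.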

To address the assumption of steady-state kinetics, EPA is investing in the refinement and development of computational chemistry and structure-based models of tissue partitioning and volume of distribution. The computational chemistry approaches extend previous efforts in the pharmaceutical industry to estimate tissue partition coefficients from physicochemical properties (Pearce et al., 2017a; Schmitt, 2008) to capture a broader range of chemical space (Wambaugh, et al., 2018). The development of dynamic toxicokinetic and physiologically based toxicokinetic (PBTK) models is necessary to calculate important aspects of toxicokinetics, such as the time needed to reach steady state; whereas most ToxCast chemicals analyzed to date reach steady state within weeks, some environmental and industrial chemicals may require decades or more. PBTK models also allow for dosimetric anchoring of toxicokinetic studies and modeling of tissue dosimetry (e.g., maximal and/or time-integrated concentration) in critical time windows under non-steady-state conditions (e.g., windows of developmental susceptibility).
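Why some chemicals need decades to approach steady state follows from first-order kinetics: under constant exposure in a one-compartment model, concentration rises as C(t) = Css(1 - e^(-kt)), so reaching a fraction f of steady state takes -ln(1 - f)/k, about 3.3 half-lives for 90%. The sketch below assumes a one-compartment model with first-order elimination; it does not capture the tissue-specific dynamics that PBTK models provide.

```python
import math


def half_life_h(clearance_l_per_h, vd_l):
    """Elimination half-life for a one-compartment model: t1/2 = ln(2) * Vd / CL."""
    return math.log(2) * vd_l / clearance_l_per_h


def time_to_fraction_of_css(fraction, t_half):
    """Time for constant exposure to reach `fraction` of steady state (same units as t_half).

    C(t) = Css * (1 - exp(-k t)) with k = ln(2) / t_half, so t = -ln(1 - f) / k.
    """
    if not 0.0 < fraction < 1.0:
        raise ValueError("fraction must be between 0 and 1 (exclusive)")
    k = math.log(2) / t_half
    return -math.log(1.0 - fraction) / k
```

For a hypothetical slowly cleared, widely distributed chemical (Vd = 500 L, CL = 0.01 L/h), the half-life is about four years, so reaching 90% of steady state takes on the order of a decade.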

While in vitro toxicokinetic methods provide significantly faster alternatives to traditional toxicokinetic testing, these methods still require the time-consuming and sometimes difficult development of chemical-specific analytical methods for measuring chemical concentrations. For this reason, in silico approaches based on chemical structure features and physicochemical properties are being developed to predict in vitro toxicokinetic data (Ingle et al., 2016). These in silico models allow toxicokinetics, exposure, and hazard to be combined for large screening libraries such as Tox21 (Sipes et al., 2017), whereas methods limited to in vitro-measured toxicokinetics can address only hundreds of chemicals at a time (Wetmore et al., 2015a; Wetmore et al., 2012b).

Apart from the need to understand and predict in vivo toxicokinetic behavior, the shift to in vitro models for hazard characterization has necessitated an understanding of in vitro disposition (i.e., the fate and movement of a chemical within an in vitro assay) (Blaauboer, 2010; Fischer et al., 2018; Fischer et al., 2017; Zhang et al., 2018). Previous EPA efforts have generally relied on nominal concentrations as the basis for estimates of in vitro potency; however, for some chemicals, taking into account binding to plastic, intracellular transport, and lipid association can result in a significantly different biologically effective concentration for a chemical (Croom et al., 2015; Groothuis et al., 2015; Kramer et al., 2015; Meacham et al., 2005; Mundy et al., 2004). To overcome this challenge, EPA is collaborating with NTP under the Tox21 consortium to empirically measure differences between nominal and cellular concentrations for a set of chemicals to determine whether in vitro disposition can be modeled using computational approaches, thereby providing a means to identify the appropriate in vitro dose metric (Thomas, et al., 2018).
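The gap between nominal and biologically effective concentrations can be illustrated with a simple equilibrium mass balance in which the chemical added to a well distributes among free medium, serum protein, well plastic, and cells. This is a deliberately simplified sketch, not a published in vitro disposition model: the partition coefficients and well geometry are hypothetical inputs, and volatilization, degradation, and kinetics are ignored.

```python
def free_medium_concentration(c_nominal_um, v_medium_l,
                              k_protein_l_per_g=0.0, protein_g_per_l=0.0,
                              k_plastic_l_per_m2=0.0, plastic_area_m2=0.0,
                              k_cell=0.0, v_cell_l=0.0):
    """Equilibrium free concentration (uM) in the medium after partitioning.

    Total chemical = C_free * [V_medium * (1 + K_protein * [protein])
                               + K_plastic * A_plastic + K_cell * V_cell]
    Every partitioning term has units of volume (L), so the ratio is in umol/L.
    """
    total_umol = c_nominal_um * v_medium_l
    capacity_l = (v_medium_l * (1.0 + k_protein_l_per_g * protein_g_per_l)
                  + k_plastic_l_per_m2 * plastic_area_m2
                  + k_cell * v_cell_l)
    return total_umol / capacity_l


def cell_concentration_um(c_free_um, k_cell):
    """Cellular concentration implied by a cell:medium partition coefficient (assumed linear)."""
    return k_cell * c_free_um
```

With no binding terms the free concentration equals the nominal one; each added sink lowers it, which is why potency based on nominal concentrations can misstate the effective concentration for strongly binding chemicals.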

Exposure Assessment

Exposure data are essential to providing a risk context for doses that result in a particular hazard. To estimate exposure for a broad range of chemicals, relatively simple computational models have been used to predict median exposure rates for the total U.S. population (Wambaugh, et al., 2014). However, the uncertainty around these exposure predictions is relatively large. Although more complex exposure models may reduce uncertainty, they require detailed parameterization of the weight fraction and off-gassing of the chemical in hundreds of products, together with detailed characterization of human activity, which is difficult to scale to thousands of chemicals (Isaacs et al., 2014). Based on the finding that consumer product use was a significant source of exposure (Wallace et al., 1987; Wambaugh, et al., 2013), EPA is increasing its effort to develop new databases of chemicals known to be in consumer products, to allow parameterization of more complex exposure models and to reduce uncertainty in exposure predictions (Isaacs, et al., 2016). The updated database will include new sources of data from Safety Data Sheets and reported chemical functional uses (Dionisio et al., 2018). In addition to these data mining and curation activities, EPA is developing computational models that predict likely uses for a chemical based on its structure and is validating the results with chemicals having known uses and functions (Phillips et al., 2017). Behavioral economics is also a key piece of this puzzle: which consumer products are purchased and brought into the home, how and how often they are used, and in what combinations (Egeghy et al., 2016). Portions of this information are routinely collected by retailers and market research firms for business purposes; however, these data are generally not available for ExpoCast applications.
Future efforts will focus on acquiring these data to evaluate current and ongoing population-level consumer product use patterns. The ultimate goal of these efforts is to predict screening-level exposure rates for any chemical structure by integrating formulation science, behavioral economics, and mechanistic fate and transport modeling to delineate linkages among inherent properties, functional role, product formulation, use scenarios, and environmental and biological concentrations (Egeghy, et al., 2016).
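Why weight fractions and use patterns matter can be seen in a simple aggregate screening calculation: the daily dose from each product is the mass of product used times the chemical's weight fraction, use frequency, and the fraction that reaches the person, summed over products and normalized by body weight. This sketch is an illustrative assumption, not any specific EPA exposure model, and all parameter names and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProductUse:
    name: str
    weight_fraction: float     # mass of chemical per mass of product
    grams_per_use: float       # mass of product used per event
    uses_per_day: float        # use frequency
    fraction_to_person: float  # assumed fraction of the chemical reaching the person


def aggregate_dose_mg_per_kg_day(uses, body_weight_kg=70.0):
    """Screening-level aggregate dose summed over all products that contain the chemical."""
    total_mg_per_day = sum(
        u.weight_fraction * u.grams_per_use * 1000.0 * u.uses_per_day * u.fraction_to_person
        for u in uses
    )
    return total_mg_per_day / body_weight_kg
```

For example, a hypothetical lotion containing 1% of a chemical, used at 10 g once per day with 10% reaching the person, contributes 10 mg/day, or about 0.14 mg/kg/day for a 70 kg adult. Every term multiplies the result, so an error in any one parameter propagates directly into the exposure estimate.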

To provide experimental data on chemicals in the indoor environment, new analytical chemistry methods, such as non-targeted analysis (NTA) and suspect screening analysis (SSA), are being used to characterize the chemical composition of indoor media such as house dust (Rager, et al., 2016), of items people frequently encounter (e.g., household products and articles of commerce) (Phillips et al., 2018), and of point-of-use drinking water filters (Newton, et al., 2018). These analytical methods have identified many chemicals not previously known to be present in these items and can provide semi-quantitative estimates of their concentrations or relative mass fractions. The NTA and SSA efforts are supported by the work on the cheminformatics infrastructure, while the strengths and limitations of the technology are being evaluated through activities such as the EPA Non-Targeted Analysis Collaborative Trial (ENTACT) (Sobus, et al., 2019; Sobus et al., 2018; Ulrich et al., 2019). As an important part of the blueprint, the high-throughput exposure modeling activities and the non-targeted and suspect screening analysis efforts will converge to expand the domain of applicability of the models and reduce overall uncertainty.
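At its core, suspect screening compares measured accurate masses against the theoretical monoisotopic masses of candidate chemicals within a mass-error tolerance in parts per million. The sketch below is a simplified illustration: it matches neutral masses only, whereas real SSA and NTA workflows also consider ion adducts, isotope patterns, retention time, and fragmentation spectra. The measured masses and the 5 ppm tolerance are hypothetical.

```python
import re

# Monoisotopic masses (u) of the most abundant isotope of each element used below.
MONOISOTOPIC = {"C": 12.0, "H": 1.00782503207, "N": 14.0030740048,
                "O": 15.99491461956, "Cl": 34.96885268}


def monoisotopic_mass(formula):
    """Neutral monoisotopic mass from a simple formula string such as 'C15H16O2'."""
    mass = 0.0
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        mass += MONOISOTOPIC[element] * (int(count) if count else 1)
    return mass


def ppm_error(measured, theoretical):
    return (measured - theoretical) / theoretical * 1e6


def match_suspects(measured_masses, suspects, tol_ppm=5.0):
    """Return (measured mass, suspect name, ppm error) for every match within tolerance."""
    theoretical = {name: monoisotopic_mass(f) for name, f in suspects.items()}
    hits = []
    for m in measured_masses:
        for name, t in theoretical.items():
            err = ppm_error(m, t)
            if abs(err) <= tol_ppm:
                hits.append((m, name, round(err, 2)))
    return hits
```

A mass match alone yields only a tentative identification. This is one reason the cheminformatics infrastructure and collaborative trials such as ENTACT are needed to evaluate how reliable these assignments are.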

Databases and new measurements of chemical occurrence in the human environment provide necessary but insufficient information to predict human exposure. The release of a chemical (e.g., by off-gassing or migration) from the matrix in which it is found must also be characterized. New high-throughput models of chemical emissivity are being developed using existing data (e.g., on chemical migration from packaging into food; Biryol et al., 2017), and new data are being collected with the aim of building chemical emissivity models relevant to consumer products.

Finally, advances in exposure science are being used to address chemical mixtures. From a toxicological standpoint, testing all possible combinations of even a hundred chemicals is prohibitively expensive. However, exposure monitoring and modeling combined with advanced data mining methods can identify prevalent mixtures of chemicals that occur frequently either in the environment (Tornero-Velez et al., 2012) or in biomonitoring data (Kapraun et al., 2017). In the future, a small number of these prevalent mixtures, out of the astronomically large number of possible combinations, will be evaluated using high-throughput toxicity testing approaches, enabling a more efficient and focused approach to the problem.
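The idea of mining monitoring data for prevalent mixtures can be illustrated with frequent itemset counting: for each sample, record which chemicals were detected, then count how often each combination co-occurs and keep those above a minimum support. The sketch below enumerates combinations by brute force and is practical only for small detection lists; scalable algorithms such as Apriori prune the search. The support threshold and data are hypothetical, and this is not the specific method of the cited studies.

```python
from collections import Counter
from itertools import combinations


def frequent_combinations(samples, min_support=0.1, max_size=3):
    """Chemical combinations detected together in at least `min_support` of samples.

    samples: list of sets of chemicals detected in each sample (e.g., each participant).
    Returns {sorted tuple of chemicals: support}.
    """
    n = len(samples)
    counts = Counter()
    for detected in samples:
        chems = sorted(detected)
        for size in range(2, max_size + 1):
            for combo in combinations(chems, size):
                counts[combo] += 1
    return {combo: c / n for combo, c in counts.items() if c / n >= min_support}
```

Because only combinations that actually co-occur in samples are ever counted, the search space is driven by the data rather than by the full combinatorial space of possible mixtures.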

Uncertainty and Variability

A critical piece of any chemical assessment or prioritization activity is an understanding of the uncertainty and variability surrounding each component used in the process. In this context, uncertainty is defined as a lack of knowledge, while variability measures the differences across a population. For decades, the uncertainty and variability in traditional animal studies were most often addressed in risk assessments using standardized adjustment factors called “uncertainty/variability factors” (EPA, 2002). In contrast, efforts to characterize uncertainty and variability in high-throughput hazard, toxicokinetic, and exposure data have generally focused on chemical-specific methods. This focus helps identify where in the process additional data may be useful for reducing uncertainty in evidence that informs risk decisions.

In HTS assays, statistical methods are being developed and evaluated to establish uncertainty bounds around measured potency and efficacy values (Watt et al., 2018). These approaches involve resampling the data and refitting the concentration-response curves thousands of times to quantitatively estimate the uncertainty in potency and efficacy. To address inter-individual variability, the Tox21 consortium is working to integrate cells derived from induced pluripotent stem cells (iPSCs) into different HTS assays to evaluate the range of responses across genetically diverse individuals (Thomas, et al., 2018).
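The resample-and-refit idea can be sketched as a nonparametric bootstrap: resample concentration-response pairs with replacement, refit a Hill model to each resample, and take percentiles of the refitted AC50 values as an uncertainty interval. This is an illustrative sketch, not the ToxCast pipeline (tcpl) or the Watt et al. implementation. The three-parameter Hill model, the grid-search fitting, and the percentile interval are assumptions chosen so the example needs only the Python standard library.

```python
import random

# Assumed search grid: log10(AC50) from -2 to 3 in steps of 0.05, and a few Hill slopes.
LOG_AC50_GRID = [i * 0.05 for i in range(-40, 61)]
HILL_SLOPES = (0.5, 1.0, 1.5, 2.0, 3.0, 4.0)


def fit_hill(conc, resp):
    """Least-squares fit of y = top * c^n / (AC50^n + c^n) by grid search.

    For each (AC50, n) pair the model is linear in `top`, so the best `top`
    has a closed form; the pair with the smallest squared error is kept.
    """
    best = None
    for log_ac50 in LOG_AC50_GRID:
        ac50 = 10.0 ** log_ac50
        for n in HILL_SLOPES:
            g = [c ** n / (ac50 ** n + c ** n) for c in conc]
            gg = sum(v * v for v in g)
            if gg == 0.0:
                continue
            top = sum(v * y for v, y in zip(g, resp)) / gg
            sse = sum((y - top * v) ** 2 for v, y in zip(g, resp))
            if best is None or sse < best[0]:
                best = (sse, ac50, n, top)
    _, ac50, n, top = best
    return ac50, n, top


def bootstrap_ac50(conc, resp, n_boot=1000, seed=0):
    """Resample (conc, resp) pairs with replacement, refit, return a 95% percentile interval."""
    rng = random.Random(seed)
    idx = range(len(conc))
    estimates = []
    for _ in range(n_boot):
        sample = [rng.choice(idx) for _ in idx]
        ac50, _, _ = fit_hill([conc[i] for i in sample], [resp[i] for i in sample])
        estimates.append(ac50)
    estimates.sort()
    return estimates[int(0.025 * (n_boot - 1))], estimates[int(0.975 * (n_boot - 1))]
```

The same loop can record the refitted `top` values to bound efficacy. A production pipeline would use a continuous optimizer and model selection rather than a fixed grid.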

In toxicokinetic modeling, the experimental uncertainty in plasma protein binding and hepatic metabolism measurements is being propagated through the toxicokinetic models that estimate steady-state blood concentrations from a given administered dose. Inter-individual variability is incorporated into toxicokinetic models using Monte Carlo methods that vary physiological parameters (such as age and body weight) according to population distributions (Ring et al., 2017). Finally, the ExpoCast SEEM analysis provides a statistical framework that assigns quantitative estimates of uncertainty and can describe median exposure predictions for a range of demographic groups within the U.S. population. In future efforts, the characterization of uncertainty and variability for each component (hazard, toxicokinetics, and exposure) will be integrated to provide overall confidence bounds in a chemical-specific manner.
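The Monte Carlo idea can be sketched by sampling physiological parameters for many simulated individuals, adding multiplicative noise to the measured protein binding and clearance, and summarizing the resulting distribution of steady-state concentrations. All distributions below are illustrative assumptions; this is not the population simulator described by Ring et al. (2017). For simplicity the sketch lumps uncertainty and variability into one loop, whereas a two-dimensional Monte Carlo would keep them separate.

```python
import math
import random


def simulate_css_mg_per_l(fup, clint_l_per_h, n=5000, seed=42):
    """Median and 95th percentile of steady-state plasma concentration at 1 mg/kg/day.

    Body weight, GFR, liver blood flow, and clearance vary across simulated individuals;
    fup and clint also receive multiplicative noise standing in for measurement uncertainty.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        bw = rng.lognormvariate(math.log(70.0), 0.2)  # assumed body-weight distribution (kg)
        scale = bw / 70.0                              # crude linear scaling with body weight
        gfr = 6.7 * scale * rng.lognormvariate(0.0, 0.3)
        q_liver = 90.0 * scale * rng.lognormvariate(0.0, 0.2)
        fup_i = min(1.0, fup * rng.lognormvariate(0.0, 0.3))
        clint_i = clint_l_per_h * scale * rng.lognormvariate(0.0, 0.5)
        # Well-stirred hepatic clearance plus renal filtration of the unbound chemical.
        hepatic = q_liver * fup_i * clint_i / (q_liver + fup_i * clint_i)
        dose_mg_per_h = 1.0 * bw / 24.0
        results.append(dose_mg_per_h / (gfr * fup_i + hepatic))
    results.sort()
    return results[n // 2], results[int(0.95 * (n - 1))]
```

Using an upper percentile rather than the median when converting in vitro potency to an oral equivalent dose is one way to protect the more sensitive members of a population.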

In parallel with characterizing the uncertainty and variability of high-throughput and computational approaches, hundreds of legacy in vivo toxicity studies have been curated by the EPA through the CompTox efforts and released using ToxRefDB (Knudsen, et al., 2009; Martin, et al., 2009a; Martin, et al., 2009b). Over the past four years, the digitization and curation efforts have been expanded to capture quantitative dose response data, study quality scoring, and explicit evaluation of negative study endpoints. In the blueprint for the future, the updated data will be evaluated to qualitatively and quantitatively assess the variability and uncertainty of the legacy in vivo toxicity studies that have underpinned regulatory decisions on hazard classification, labeling, and quantitative risk assessment. The characterization of variability and uncertainty in the traditional models has taken on additional importance given the mandate in the amended Toxic Substances Control Act (TSCA) legislation that states that alternative approaches need to provide “information of equivalent or better scientific quality and relevance...” (https://www.congress.gov/114/plaws/publ182/PLAW-114publ182.pdf).

Software Applications, Tools, and Information Technology Support

In addition to advancing the science in fields that inform chemical safety, applying high-throughput and computationally derived data to regulatory decisions requires a parallel investment in a range of translational activities. Dashboards, software applications, databases, and models provide an efficient means to assemble, integrate, and deliver computational toxicology data to inform the assessment of health and ecological risks. The existing portfolio of computational toxicology dashboards is being upgraded and expanded to provide a broader range of data and capabilities related to chemistry, toxicology, toxicokinetics, and exposure. The data in the dashboards are integrated from a variety of internal and external sources. To ensure reliability, procedures are being put in place to verify the quality of the data and their attribution to the correct source. These procedures are moving the dashboards from a research endeavor toward the reliability necessary for regulatory application.

In addition to the data-delivery dashboards, new decision-support software applications will be released to support EPA regulatory activities. These applications will contain a selection of guided workflows, each focused on supporting a specific type of regulatory decision. For example, a chemical prioritization workflow is being built to allow flexible integration of experimental and computational data related to toxicity, exposure, persistence, and bioaccumulation. The workflow will allow the data to be transformed in multiple ways, with user-defined scoring and weighting as well as options for handling missing data. The workflow is intended to show the prioritization process transparently and allow users to explore the relative impact of incorporating different data and approaches. Another workflow under development will focus on estimating human health values for data-poor chemicals. This workflow assembles all available traditional and alternative hazard data on a chemical and guides the user through a stepwise approach to select a critical effect and identify effect levels from dose-response data. Because the workflow provides a complete picture of the available data, the user can evaluate and estimate the corresponding uncertainty. To support efficient delivery of tools, software applications, and dashboards, key investments are needed in data integration, software development, and scalable information technology infrastructure.
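The user-defined scoring, weighting, and missing-data options described for the prioritization workflow can be illustrated with a weighted average of min-max normalized metrics. This is a hypothetical sketch of the general technique, not the design of EPA's workflow. It assumes every metric is oriented so that larger values mean greater concern (for example, hazard expressed as an inverse point-of-departure), and it offers two illustrative missing-data policies.

```python
def prioritize(chemicals, weights, missing="skip"):
    """Rank chemicals by a weighted average of min-max normalized metrics.

    chemicals: {name: {metric: value or None}}, larger values meaning greater concern.
    weights:   {metric: non-negative weight}
    missing:   "skip" drops a missing metric and renormalizes the remaining weights;
               "worst_case" assigns the missing metric the maximum normalized score (1.0).
    """
    ranges = {}
    for metric in weights:
        values = [d[metric] for d in chemicals.values() if d.get(metric) is not None]
        ranges[metric] = (min(values), max(values)) if values else None
    scores = {}
    for name, data in chemicals.items():
        total = weight_sum = 0.0
        for metric, w in weights.items():
            value = data.get(metric)
            if value is None or ranges[metric] is None:
                if missing == "worst_case":
                    total += w * 1.0
                    weight_sum += w
                continue
            lo, hi = ranges[metric]
            norm = 0.5 if hi == lo else (value - lo) / (hi - lo)
            total += w * norm
            weight_sum += w
        if weight_sum > 0:  # chemicals with no usable data are left unranked
            scores[name] = total / weight_sum
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

Switching between the two policies shows how the handling of data gaps alone can move a chemical up or down the list. Making that effect visible is the kind of transparency the workflow is intended to provide.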

Outreach and Training

Together with investments in information technology tools, another important piece of research translation is an increase in outreach and training activities to engage the scientific community, organizations responsible for regulatory decisions, and other stakeholder groups (e.g., U.S. Congress, industry, non-governmental organizations). The initial focus of outreach was to solicit ideas from the scientific community on analyzing and interpreting CompTox and high-throughput data. These outreach and training efforts have continued and include activities such as the Computational Toxicology Communities of Practice webinars, coordinated presentations and demonstrations at scientific conferences, workshops on relevant topics in the field, and collaborative research efforts with organizations that have an interest in using CompTox data and approaches.

As the science has advanced towards application to regulatory decisions, outreach efforts are expanding to engage organizations that make regulatory decisions about chemicals as well as other stakeholder groups. Since the decision-context for using the data differs across organizations (e.g., chemicals found in water, Superfund sites), a customized and collaborative approach is being undertaken to encourage decision-makers to provide an overview of their specific decision context, while researchers tailor the development of the tools and research to meet these needs. The collaborative approach typically involves numerous webinars, one-on-one meetings and exchanges of relevant chemical information. In addition to these activities, outreach and training are being augmented through the development of training videos and customized online tutorials.

It is anticipated that as EPA’s CompTox effort continues to expand and mature, the need for outreach and training for the regulatory, regulated, and broader scientific communities will continue. Given the increased focus on regulatory applications, additional targeted sessions will be offered for regulators from state, federal, and international entities. The education and training will cover both use and interpretation of the data and use of specific tools, such as the dashboards and workflows currently under development. To aid in the education and training process, EPA has released a ToxCast Owner’s Manual that covers in detail all aspects of the ToxCast screening effort, including chemical procurement, assay annotation and performance characteristics, data analysis, and data availability (https://www.epa.gov/chemical-research/toxcast-owners-manual-guidance-exploring-data).

The use of computational toxicology data for supporting regulatory decisions requires transparency and a focus on data quality and quality control procedures. To promote transparency and reproducibility, EPA will continue to release the raw and processed data, as well as the computational models, databases, and tools that underpin the research. In the past, EPA has delivered data and models through a combination of its own data download site, supplemental files for scientific publications, and public repositories. Going forward, the amount of raw and processed data generated by many of the new approaches will increase dramatically (e.g., HTTr and HTPP will produce hundreds of terabytes of data), posing challenges for data availability. Nonetheless, EPA remains committed to making the data and models publicly available to the stakeholder community and will implement a defined process to establish consistent procedures for releasing this information.

To encourage broader acceptance of new approaches to testing and assessment, EPA is also moving to describe the assays and data in internationally accepted reporting formats. For example, the ToxCast assays related to endocrine endpoints have been described using a format consistent with Organisation for Economic Co-operation and Development (OECD) Guidance Document 211; these descriptions are posted on the ToxCast data download site (https://www.epa.gov/chemical-research/toxicity-forecaster-toxcasttm-data) and integrated into the bioactivity summary data table in the EPA CompTox Chemicals Dashboard. For quality control and assurance, EPA is implementing standardized processes to verify data quality, ensure reproducibility, and flag suspect data.

Establishing Scientific Confidence

A final element for application of CompTox data, and perhaps the most critical, is establishing scientific confidence in the results. Traditional approaches to instill confidence in alternative methods, including in vitro assays and computational models, involve lengthy and resource-intensive validation procedures that typically focus on one-for-one replacement of regulatory endpoints of interest (Patlewicz et al., 2015; Stephens et al., 2013). In the future, EPA, through its involvement in the Tox21 consortium, will develop a generalizable and scalable evaluation framework for performance standards for many of the new approach methods (Thomas, et al., 2018). The framework will provide guidelines to ensure the reproducibility and performance of the methods, which will be necessary for acceptance in the regulatory community. In addition to performance standards, establishing scientific confidence will require collaborative partnerships with regulators to evaluate alternative methods for regulatory application and to perform case studies examining the application of CompTox data and tools to a variety of regulatory applications and decision scenarios. EPA has released a strategy for application of new approach methods under TSCA (https://www.epa.gov/assessing-and-managing-chemicals-under-tsca/strategic-plan-reduce-use-vertebrate-animals-chemical). As an important component of the strategy, EPA researchers and regulators will be directly engaged to ensure the methods and data are applicable to regulatory decisions. At the international level, participation in regulatory case studies has already begun under the umbrella of a multinational series of workshops focused on advancing progress in chemical risk assessment (Kavlock, 2016; Kavlock et al., 2018), as well as at the OECD.

Conclusions

The rapid advances in computational toxicology and alternative testing have moved the field from a nascent research effort to the beginning stages of public health and environmental regulatory application. This blueprint for the EPA CompTox effort aims to solidify the research and translational gains and to obtain broader acceptance of the alternative approaches and their application to higher tier regulatory decisions. The new blueprint broadens the scientific scope beyond the initial focus on HTS, data generation, and computational modeling to research activities in multiple areas critical to rapidly and efficiently informing chemical safety decisions. The additional research activities cover the remaining components of the vision outlined in the 2007 NRC Report “Toxicity Testing in the 21st Century” for chemical characterization, dose and extrapolation modeling, and exposure (NRC, 2007) and are consistent with a risk-based tiered testing framework that accounts for the biological promiscuity of environmental and industrial chemicals (Thomas, et al., 2013). In each area, the challenges inhibiting progress or contributing the greatest uncertainty are being systematically addressed. In addition to the research activities, the new blueprint includes parallel investments in translational tools, training and outreach, transparency, and establishing scientific confidence. These parallel investments are necessary to facilitate the application of computational toxicology data to a broader continuum of regulatory decisions. The CompTox blueprint for the future is built on lessons learned from past successes and is designed to address 21st Century public health and environmental decision-making with new scientific approaches to advance the safety evaluation of chemicals.

Acknowledgements

The U.S. Environmental Protection Agency has provided administrative review and has approved this paper for publication. The views expressed in this paper are those of the authors and do not necessarily reflect the views of the U.S. Environmental Protection Agency. Reference to commercial products or services does not constitute endorsement. The authors thank Drs. Annette Guiseppi-Elie and Michael DeVito for their helpful technical reviews of the manuscript.

Funding

The United States Environmental Protection Agency through its Office of Research and Development provided funding for the development of this article.

References

- Al-Eryani L, Wahlang B, Falkner KC, Guardiola JJ, Clair HB, Prough RA, and Cave M (2015). Identification of Environmental Chemicals Associated with the Development of Toxicant-associated Fatty Liver Disease in Rodents. Toxicol Pathol 43(4), 482–97.

- Ankley GT, Bennett RS, Erickson RJ, Hoff DJ, Hornung MW, Johnson RD, Mount DR, Nichols JW, Russom CL, Schmieder PK, et al. (2010). Adverse outcome pathways: a conceptual framework to support ecotoxicology research and risk assessment. Environ Toxicol Chem 29(3), 730–41.

- Bell SM, Angrish MM, Wood CE, and Edwards SW (2016). Integrating Publicly Available Data to Generate Computationally Predicted Adverse Outcome Pathways for Fatty Liver. Toxicol Sci 150(2), 510–20.

- Bhhatarai B, Wilson DM, Bartels MJ, Chaudhuri S, Price PS, and Carney EW (2015). Acute Toxicity Prediction in Multiple Species by Leveraging Mechanistic ToxCast Mitochondrial Inhibition Data and Simulation of Oral Bioavailability. Toxicol Sci 147(2), 386–96.

- Biryol D, Nicolas CI, Wambaugh J, Phillips K, and Isaacs K (2017). High-throughput dietary exposure predictions for chemical migrants from food contact substances for use in chemical prioritization. Environ Int 108, 185–194.

- Blaauboer BJ (2010). Biokinetic modeling and in vitro-in vivo extrapolations. J Toxicol Environ Health B Crit Rev 13(2–4), 242–52.

- Blackwell BR, Ankley GT, Corsi SR, DeCicco LA, Houck KA, Judson RS, Li S, Martin MT, Murphy E, Schroeder AL, et al. (2017). An “EAR” on Environmental Surveillance and Monitoring: A Case Study on the Use of Exposure-Activity Ratios (EARs) to Prioritize Sites, Chemicals, and Bioactivities of Concern in Great Lakes Waters. Environ Sci Technol 51(15), 8713–8724.

- Bray MA, Gustafsdottir SM, Rohban MH, Singh S, Ljosa V, Sokolnicki KL, Bittker JA, Bodycombe NE, Dancik V, Hasaka TP, et al. (2017). A dataset of images and morphological profiles of 30 000 small-molecule treatments using the Cell Painting assay. Gigascience 6(12), 1–5.

- Bray MA, Singh S, Han H, Davis CT, Borgeson B, Hartland C, Kost-Alimova M, Gustafsdottir SM, Gibson CC, and Carpenter AE (2016). Cell Painting, a high-content image-based assay for morphological profiling using multiplexed fluorescent dyes. Nature Protocols 11(9), 1757–74.

- CalEPA (2016). Dicrotophos Risk Characterization Document: Occupational and Residential Bystander Exposures.

- Chen Y, Shu L, Qiu Z, Lee DY, Settle SJ, Que Hee S, Telesca D, Yang X, and Allard P (2016). Exposure to the BPA-Substitute Bisphenol S Causes Unique Alterations of Germline Function. PLoS Genet 12(7), e1006223.

- Cohen Hubal EA, Richard A, Aylward L, Edwards S, Gallagher J, Goldsmith MR, Isukapalli S, Tornero-Velez R, Weber E, and Kavlock R (2010). Advancing exposure characterization for chemical evaluation and risk assessment. J Toxicol Environ Health B Crit Rev 13(2–4), 299–313.

- Collins FS, Gray GM, and Bucher JR (2008). Toxicology: Transforming environmental health protection. Science 319(5865), 906–7.

- Croom EL, Shafer TJ, Evans MV, Mundy WR, Eklund CR, Johnstone AF, Mack CM, and Pegram RA (2015). Improving in vitro to in vivo extrapolation by incorporating toxicokinetic measurements: a case study of lindane-induced neurotoxicity. Toxicology and Applied Pharmacology 283(1), 9–19.

- De Abrew KN, Kainkaryam RM, Shan YK, Overmann GJ, Settivari RS, Wang X, Xu J, Adams RL, Tiesman JP, Carney EW, et al. (2016). Grouping 34 Chemicals Based on Mode of Action Using Connectivity Mapping. Toxicol Sci 151(2), 447–61.

- DeGroot DE, Swank A, Thomas RS, Strynar M, Lee MY, Carmichael PL, and Simmons SO (2018). mRNA transfection retrofits cell-based assays with xenobiotic metabolism. J Pharmacol Toxicol Methods 92, 77–94.

- Dionisio KL, Phillips K, Price PS, Grulke CM, Williams AJ, Biryol D, Hong T, and Isaacs KK (2018). The chemical and products database, a resource for exposure-relevant data on chemicals in consumer products. Scientific Data, in press.

- Dix DJ, Houck KA, Martin MT, Richard AM, Setzer RW, and Kavlock RJ (2007). The ToxCast program for prioritizing toxicity testing of environmental chemicals. Toxicol Sci 95(1), 5–12.

- EC (2016). Screening of available evidence on chemical substances for the identification of endocrine disruptors according to different options in the context of an Impact Assessment.

- ECHA (2017). Screening Definition Document: Scenarios to be Implemented for Searching Potential Substances of Concern for Substance Evaluation and Regulatory Risk Management.

- Egeghy PP, Judson R, Gangwal S, Mosher S, Smith D, Vail J, and Cohen Hubal EA (2012). The exposure data landscape for manufactured chemicals. Sci Total Environ 414, 159–66.

- Egeghy PP, Sheldon LS, Isaacs KK, Ozkaynak H, Goldsmith MR, Wambaugh JF, Judson RS, and Buckley TJ (2016). Computational Exposure Science: An Emerging Discipline to Support 21st-Century Risk Assessment. Environmental Health Perspectives 124(6), 697–702.

- EPA (2002). A Review of the Reference Dose and Reference Concentration Processes.

- EPA (2003). Framework for Computational Toxicology Research Program in ORD.

- EPA (2012). Prioritization of the Endocrine Disruptor Screening Program Universe of Chemicals for an Estrogen Receptor Adverse Outcome Pathway Using Computational Toxicology Tools. In (Office of Chemical Safety and Pollution Prevention, Ed.). US Environmental Protection Agency, Federal Register.

- EPA (2014a). Integrated Bioactivity and Exposure Ranking: A Computational Approach for the Prioritization and Screening of Chemicals in the Endocrine Disruptor Screening Program. In (Office of Chemical Safety and Pollution Prevention, Ed.). US Environmental Protection Agency, Federal Register.

- EPA (2014b). New High-throughput Methods to Estimate Chemical Exposure. In (Office of Chemical Safety and Pollution Prevention, Ed.). US Environmental Protection Agency, Federal Register.

- EPA (2015). Use of High Throughput Assays and Computational Tools; Endocrine Disruptor Screening Program; Notice of Availability and Opportunity for Comment. In (Office of Chemical Safety and Pollution Prevention, Ed.). US Environmental Protection Agency, Federal Register.

- EPA (2018). Strategic Plan to Promote the Development and Implementation of Alternative Test Methods Within the TSCA Program.

- Feng Y, Mitchison TJ, Bender A, Young DW, and Tallarico JA (2009). Multi-parameter phenotypic profiling: using cellular effects to characterize small-molecule compounds. Nat Rev Drug Discov 8(7), 567–78.

- Filer DL, Kothiya P, Setzer RW, Judson RS, and Martin MT (2016). tcpl: the ToxCast pipeline for high-throughput screening data. Bioinformatics 33(4), 618–620.

- Fischer FC, Abele C, Droge STJ, Henneberger L, Konig M, Schlichting R, Scholz S, and Escher BI (2018). Cellular Uptake Kinetics of Neutral and Charged Chemicals in in Vitro Assays Measured by Fluorescence Microscopy. Chem Res Toxicol 31(8), 646–657.

- Fischer FC, Henneberger L, Konig M, Bittermann K, Linden L, Goss KU, and Escher BI (2017). Modeling Exposure in the Tox21 in Vitro Bioassays. Chem Res Toxicol 30(5), 1197–1208.

- Frank CL, Brown JP, Wallace K, Wambaugh JF, Shah I, and Shafer TJ (2018). Defining toxicological tipping points in neuronal network development. Toxicology and Applied Pharmacology 354, 81–93.

- Groothuis FA, Heringa MB, Nicol B, Hermens JL, Blaauboer BJ, and Kramer NI (2015). Dose metric considerations in in vitro assays to improve quantitative in vitro-in vivo dose extrapolations. Toxicology 332, 30–40.

- Helman G, Shah I, and Patlewicz G (2018). Extending the Generalised Read-Across approach (GenRA): A systematic analysis of the impact of physicochemical property information on read-across performance. Comp Toxicol 8, 35–50.

- Hilgers AR, Conradi RA, and Burton PS (1990). Caco-2 cell monolayers as a model for drug transport across the intestinal mucosa. Pharm Res 7(9), 902–10.

- Hutson MS, Leung MC, Baker NC, Spencer RM, and Knudsen TB (2017). Computational model of secondary palate fusion and disruption. Chem Res Toxicol 30(4), 965–979.

- Ingle BL, Veber BC, Nichols JW, and Tornero-Velez R (2016). Informing the Human Plasma Protein Binding of Environmental Chemicals by Machine Learning in the Pharmaceutical Space: Applicability Domain and Limits of Predictability. Journal of Chemical Information and Modeling 56(11), 2243–2252.

- Isaacs KK, Glen WG, Egeghy P, Goldsmith MR, Smith L, Vallero D, Brooks R, Grulke CM, and Ozkaynak H (2014). SHEDS-HT: an integrated probabilistic exposure model for prioritizing exposures to chemicals with near-field and dietary sources. Environ Sci Technol 48(21), 12750–9.

- Isaacs KK, Goldsmith MR, Egeghy P, Phillips K, Brooks R, Hong T, and Wambaugh JF (2016). Characterization and prediction of chemical functions and weight fractions in consumer products. Toxicol Reports 3, 723–732.

- Judson RS, Magpantay FM, Chickarmane V, Haskell C, Tania N, Taylor J, Xia M, Huang R, Rotroff DM, Filer DL, et al. (2015). Integrated Model of Chemical Perturbations of a Biological Pathway Using 18 In Vitro High-Throughput Screening Assays for the Estrogen Receptor. Toxicol Sci 148(1), 137–54.

- Judson RS, Martin MT, Patlewicz G, and Wood CE (2017). Retrospective mining of toxicology data to discover multispecies and chemical class effects: Anemia as a case study. Regul Toxicol Pharmacol 86, 74–92.

- Judson RS, Martin MT, Reif DM, Houck KA, Knudsen TB, Rotroff DM, Xia M, Sakamuru S, Huang R, Shinn P, et al. (2010). Analysis of eight oil spill dispersants using rapid, in vitro tests for endocrine and other biological activity. Environ Sci Technol 44(15), 5979–85.

- Kapraun DF, Wambaugh JF, Ring CL, Tornero-Velez R, and Setzer RW (2017). A Method for Identifying Prevalent Chemical Combinations in the U.S. Population. Environmental Health Perspectives 125(8), 087017.

- Kavlock R (2016). Practitioner Insights: Bringing New Methods for Chemical Safety into the Regulatory Toolbox; It is Time to Get Serious. In Daily Environment Report (Vol. 223). Bloomberg BNA, Bureau of National Affairs, Washington, DC.

- Kavlock R, Chandler K, Houck K, Hunter S, Judson R, Kleinstreuer N, Knudsen T, Martin M, Padilla S, Reif D, et al. (2012). Update on EPA’s ToxCast program: providing high throughput decision support tools for chemical risk management. Chem Res Toxicol 25(7), 1287–302.

- Kavlock RJ, Austin CP, and Tice RR (2009). Toxicity testing in the 21st century: implications for human health risk assessment. Risk Anal 29(4), 485–7; discussion 492–7.

- Kavlock RJ, Bahadori T, Barton-Maclaren TS, Gwinn MR, Rasenberg M, and Thomas RS (2018). Accelerating the Pace of Chemical Risk Assessment. Chem Res Toxicol 31(5), 287–290.

- Kleinstreuer NC, Ceger P, Watt ED, Martin M, Houck K, Browne P, Thomas RS, Casey WM, Dix DJ, Allen D, et al. (2016). Development and Validation of a Computational Model for Androgen Receptor Activity. Chem Res Toxicol doi: 10.1021/acs.chemrestox.6b00347.

- Kleinstreuer NC, Dix DJ, Houck KA, Kavlock RJ, Knudsen TB, Martin MT, Paul KB, Reif DM, Crofton KM, Hamilton K, et al. (2013a). In vitro perturbations of targets in cancer hallmark processes predict rodent chemical carcinogenesis. Toxicol Sci 131(1), 40–55.

- Kleinstreuer NC, Dix DJ, Rountree M, Baker N, Sipes N, Reif D, Spencer R, and Knudsen T (2013b). A computational model predicting disruption of blood vessel development. PLoS Comput Biol 9(4), e1002996.

- Kleinstreuer NC, Judson RS, Reif DM, Sipes NS, Singh AV, Chandler KJ, Dewoskin R, Dix DJ, Kavlock RJ, and Knudsen TB (2011). Environmental impact on vascular development predicted by high-throughput screening. Environmental Health Perspectives 119(11), 1596–603.

- Kleinstreuer NC, Yang J, Berg EL, Knudsen TB, Richard AM, Martin MT, Reif DM, Judson RS, Polokoff M, Dix DJ, et al. (2014). Phenotypic screening of the ToxCast chemical library to classify toxic and therapeutic mechanisms. Nature Biotechnology 32(6), 583–91.

- Knudsen TB, Martin MT, Kavlock RJ, Judson RS, Dix DJ, and Singh AV (2009). Profiling the activity of environmental chemicals in prenatal developmental toxicity studies using the U.S. EPA’s ToxRefDB. Reprod Toxicol 28(2), 209–19.

- Kramer NI, Di Consiglio E, Blaauboer BJ, and Testai E (2015). Biokinetics in repeated-dosing in vitro drug toxicity studies. Toxicol In Vitro 30(1 Pt A), 217–24.

- Krowech G, Hoover S, Plummer L, Sandy M, Zeise L, and Solomon G (2016). Identifying Chemical Groups for Biomonitoring. Environmental Health Perspectives 124(12), A219–A226.

- Lamb J, Crawford ED, Peck D, Modell JW, Blat IC, Wrobel MJ, Lerner J, Brunet JP, Subramanian A, Ross KN, et al. (2006). The Connectivity Map: using gene-expression signatures to connect small molecules, genes, and disease. Science 313(5795), 1929–35.

- Leet JK, Lindberg CD, Bassett LA, Isales GM, Yozzo KL, Raftery TD, and Volz DC (2014). High-content screening in zebrafish embryos identifies butafenacil as a potent inducer of anemia. PLoS ONE 9(8), e104190.

- Leung MC, Hutson MS, Seifert AW, Spencer RM, and Knudsen TB (2016). Computational modeling and simulation of genital tubercle development. Reprod Toxicol 64, 151–61.