Introduction

Awareness is emerging that patient-reported outcomes (PROs) are vital to big data efforts that seek to incorporate measures of patients’ quality of life (QOL) into analyses of the efficacy of care [1,2]. PRO domains can include functional status, symptoms (intensity, frequency), satisfaction (with medication), multiple domains of well-being, and global satisfaction with life. Today, virtually all validity issues for PROs have either been resolved or been addressed by clear guidelines [3–5].

Although the value of using PROs has been established, the challenge remains to measure QOL through PROs with the observational rigor of any other vital sign or lab test, yet with the ease of administration and rapid processing that the clinical environment demands (Figure 1).

Figure 1.

Vision for use of PROs as part of clinical decision frameworks

Given the growth in the use of electronic databases, successful use of QOL data through PROs in many settings means success in gathering electronic responses as part of routine care. This enables the use of this data dimension as part of big data explorations of outcomes and interactions with treatment strategies. The potentially large volume of data also presents challenges [6]. Traditional analytical approaches used for limited datasets do not simply scale up to larger datasets, particularly where the number of variables exceeds the sample size available to make proper statistical inferences. Large datasets could provide new opportunities to define both statistical and clinical significance. However, a practical vision for how the data will be used in modeling patient outcomes and developing metrics is needed to ensure that the large volume of data does not languish as it has in past efforts [7–9].

The objective in this manuscript is to highlight the successful development of PRO systems and analytics applied to large sets of PRO data to identify clinically meaningful effects. Issues identified and lessons learned which are applicable to other practice settings will be shared.

Approaches to use of PROs for guiding clinical action

Successful implementation of PROs requires finding an optimal balance between minimizing the impact on staff and patient time and maximizing the amount of actionable information that can be extracted from the responses. While the community will be familiar with a variety of validated instruments (e.g. FACT-x, IPSS) used to measure QOL in the context of clinical trials, it may be less familiar with instruments developed for use in the context of routine clinical care. Three examples are described in order of increasing numbers of QOL domains monitored.

The big three - screening with 3 QOL domains

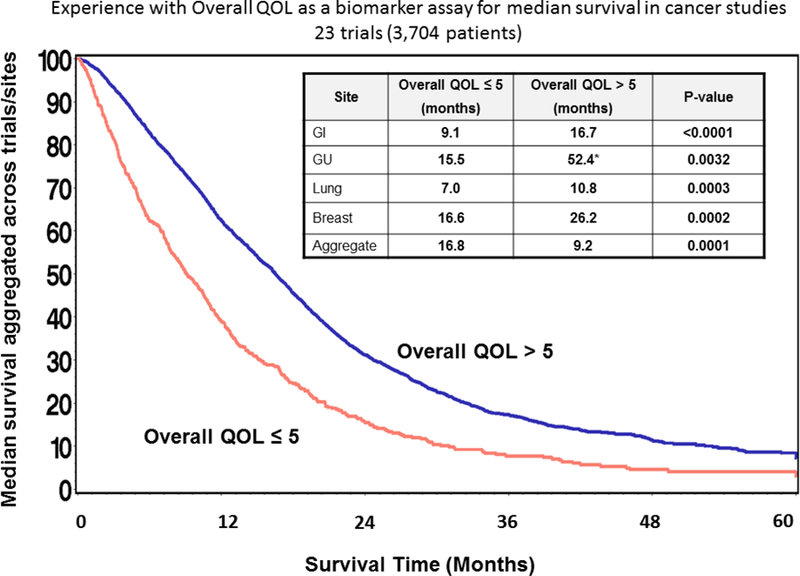

Use of a simple, single-item global measure of overall patient QOL, in which patients rate their overall QOL on a 0–10 scale (Figure 2a, question 1), was explored for relevance to clinical practice and was found to be diagnostic and prognostic for survival in a wide spectrum of cancer patients [10]. More specifically, an overall QOL PRO score of 5 or less on a 0–10 point numerical analogue response scale indicated a clinically significant deficit in overall quality of life [11]. This measure has been validated repeatedly in cancer patients and other populations, and the cutoff has been independently verified by other investigations [12]. A score below 5.0 or a change of 2 points over time is indicative of a need for immediate exploration and intervention for the QOL deficit [13]. A deficit in overall quality of life is associated with a doubling of the risk of death at one, two, and five years across a broad spectrum of cancer patients [11]. Figure 3 summarizes experience at Mayo Clinic by Sloan et al. with the use of this metric as a biomarker for survival as part of 23 trials involving several disease sites. This single-item QOL measurement approach was expanded to include the domains of pain and fatigue (Figure 2a, questions 2 and 3) based on literature indicating that they are prognostic indicators for how well a patient will deal with the effects of cancer and therapeutic intervention, including survival. The cutoff score for a clinically meaningful deficit in pain and fatigue is still 5 or worse (with worse being higher scores in this case, since symptoms are routinely reported with higher scores representing worse symptoms).

Figure 2.

Clinically based PROs quantifying a) three primary clinical domains and b) twelve domains using the LASA

Figure 3.

Overall Quality of Life has been demonstrated as a predictor for overall survival as part of several cancer trials

Since July 2010, over 30,000 individual clinical visits to the Mayo Clinic Cancer Center have incorporated the three single-item measures of overall QOL, pain, and fatigue. This approach has been well received by both patients and clinicians [14]. Between 20% and 50% of patients, depending upon the oncology clinic, have reported QOL deficits and had clinical interventions or treatment modified as a result.

LASA – screening with 12 QOL domains generating care path response

QOL literature reviews and meta-analyses have identified the most important aspects of well-being to monitor when an overall QOL deficit emerges [15]. A twelve-item linear analog self-assessment (LASA) PRO was developed to extend the three-domain form using the same 0–10 scale (Figure 2b) [16]. It was piloted on a web-based system.

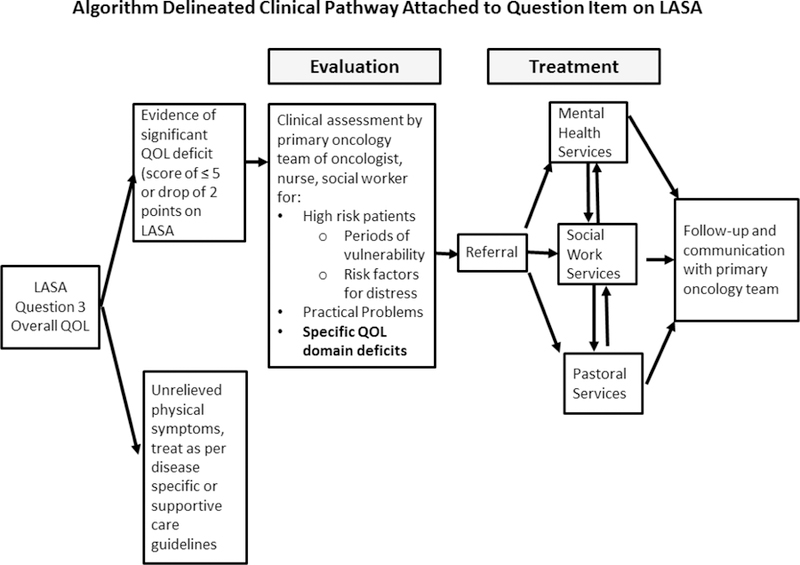

An algorithm delineating clinical pathways based on the US National Comprehensive Cancer Network (NCCN) guidelines for dealing with distress in cancer patients is attached to each domain (Figure 4) [17]. The system generates a report visually indicating a clinically meaningful deficit or change from the previous visit, and a flow chart of pathways for deficit management. A score of 5 or worse, or a worsening of two points, initiates the activation of the NCCN guideline; otherwise the clinical team will simply treat any unrelieved symptoms as indicated via routine care.
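The trigger rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the pilot system's implementation: items are assumed to be scored 0–10, the direction of "worse" is passed in explicitly (lower is worse for overall QOL and well-being items, higher is worse for symptom items such as pain and fatigue), and the names `needs_pathway`, `CUTOFF`, and `CHANGE` are hypothetical.

```python
from typing import Optional

# Assumed thresholds taken from the text: a score of 5 or worse, or a
# worsening of two points since the previous visit.
CUTOFF = 5
CHANGE = 2


def needs_pathway(score: int, previous: Optional[int] = None,
                  higher_is_worse: bool = False) -> bool:
    """Return True if the item should activate its care pathway."""
    if higher_is_worse:
        # Symptom-style item: larger numbers mean a worse state.
        deficit = score >= CUTOFF
        worsening = previous is not None and score - previous >= CHANGE
    else:
        # Well-being-style item: smaller numbers mean a worse state.
        deficit = score <= CUTOFF
        worsening = previous is not None and previous - score >= CHANGE
    return deficit or worsening
```

For example, `needs_pathway(7, previous=9)` flags a two-point drop in a well-being item even though the score itself is above the cutoff, while `needs_pathway(8, previous=9)` does not.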

Figure 4.

Clinical care pathways linked to LASA questions are used to direct interventions

The pilot of the real-time 12-item LASA QOL assessment monitoring system included 148 patients receiving radiotherapy over five to eight weeks [18]. Using this monitoring system increased clinic visit time by 4 minutes on average but was not seen to add more work to the clinical practice. The primary concern raised by clinicians regarding the QOL assessment system was that the clinical guidelines needed to be more directive and specific. Over 96% of patients endorsed the use of the system, and over 90% of the clinicians indicated that they were satisfied with the enhanced communication provided by the system for the clinic visits. After participating in this protocol, patients reported on average a 5-point QOL score improvement in pain severity; a 4-point QOL score improvement for fatigue level; and 1–2 points better QOL in the PRO domains of mental well-being, social activity, spiritual well-being, and pain frequency. Approximately 22% and 32% of patients reported a clinically significant improvement over the 5–7 week course of radiotherapy in mental well-being and emotional well-being, respectively. Finding these deficits in well-being and intervening to alleviate them uncovers issues that would otherwise go undetected. Given the linkage now established between poor QOL and survival, these systems have the potential to substantially alleviate morbidity and mortality among cancer patients.

Beacon - Web-based screening of QOL domains directing interventions

An interactive system using patient-reported outcomes (PROQOL) was developed for diabetic patients that expands the clinical pathway approach by delineating ten categories of possible patient concerns depicted with visual cues. The system asks only one question: “Please touch the picture that corresponds to your single biggest concern right now ….” (Figure 5) [19].

Figure 5.

The BEACON system a) uses a touch screen to simplify patient input and b) has been incorporated into clinical care pathways

The patient is then presented with a checklist of potential aspects affecting them within the identified domain of concern and asked to check all that apply or to specify something else not listed. The patient is subsequently asked a few items relating to key domains of QOL for longitudinal follow-up.

The PROQOL was piloted in patients with diabetes and used to direct clinical response (Figure 5b). Money was the biggest concern (29%), followed by physical health, emotional health, monitoring health, and health behaviors. Five out of 10 patients reporting money concerns indicated that they had problems paying their medical bills, and 4 patients had put off or postponed getting health care they needed.

The PROQOL system has been adapted and tested in cancer, surgery, and palliative care, involving over 500 patients to date. Results indicate a 1–3-minute administration time and a saving of clinical consult time. The system is particularly appropriate for implementation in radiation oncology, as the regular visit schedule and predictable access to patients allow for close and timely monitoring of progress in alleviating the biggest concerns expressed by patients. Comments from participants and clinicians indicated that the PROQOL fulfilled previously unassessed and unmet needs, remedying a longstanding omission in traditional care management.

Implications of large PRO sample sizes for defining statistical and clinical significance

PRO data collection, with its large volume and longitudinal nature, can be taken as an example for big data analytics. As in such big data analyses, the primary advantage, having a large amount of data, is also a limitation in terms of statistical analysis. Common pitfalls such as nonresponse or missing information, cohort selection bias, and choice of statistical significance levels are among the many issues that need to be dealt with. For instance, p-values for many analyses become of dubious value because the number of observations involved is so large that any statistical test will have virtually 100% power to detect minuscule effect sizes. This follows from the Law of Large Numbers, which can be stated, under some general assumptions, as saying that any proportion based on a representative sample will be accurate to within the square root of the inverse of the sample size (1/√n). For example, in a recent ASTRO guideline [20], it was stated that response rates for radiation therapy among proton beam patients can vary by as much as 50%, depending upon the disease type and stage. More specifically, if we were to estimate the proportion of patients demonstrating a tumor response to proton beam therapy among prostate cancer patients, and we saw an estimate of 35% for a sample of 100 patients, then we would know with 95% confidence that the true response rate in the population is somewhere between 25% and 45% (10% accuracy). In the context of big data, once the sample size expands to 10,000 or more, the 95% confidence margin shrinks to 1% (34%–36%), so that for almost all intents and purposes the point estimate of 35% can be used as the true population value. So what does this mean for reporting summary statistics in big data studies? Basically, the summary statistics for a dataset of 10,000 patients or more should be considered as population norms, as long as the case can be made that the sample of 10,000 people is representative of the overall population.
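The arithmetic behind the 1/√n rule of thumb can be checked with a short Python sketch. The function names are illustrative assumptions; the second function uses the standard normal-approximation (Wald) margin for a proportion, which is slightly tighter than the conservative rule for an observed rate of 35%.

```python
import math


def margin_conservative(n: int) -> float:
    """Rule-of-thumb 95% margin of error for a proportion: 1 / sqrt(n)."""
    return 1.0 / math.sqrt(n)


def margin_wald(p: float, n: int, z: float = 1.96) -> float:
    """Normal-approximation 95% margin: z * sqrt(p * (1 - p) / n)."""
    return z * math.sqrt(p * (1.0 - p) / n)


# Observed response rate of 35% at the two sample sizes used in the text.
for n in (100, 10_000):
    print(n, round(margin_conservative(n), 4), round(margin_wald(0.35, n), 4))
```

With n = 100 the conservative margin is 0.10 (the 25%–45% interval), and with n = 10,000 it is 0.01 (34%–36%); the Wald margins are about 0.0935 and 0.0093 respectively.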

As the sample size expands, however, the power of the test increases to the point that even small differences become statistically significant despite the fact that their clinical meaning has not changed. Hence statistical tests of hypotheses become basically meaningless, which has huge implications for traditional multivariate modeling processes such as linear or logistic regression methods, which may include variables in the model because their impact is statistically significant even though it is clinically unimportant.
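A small Python sketch makes the point concrete. It assumes a two-arm comparison with equal arm sizes and a known unit variance, so that a two-sample z-test applies; the effect size of 0.05 s.d. is an arbitrary, clinically trivial value chosen for illustration, and `two_sided_p` is a hypothetical helper name.

```python
import math


def two_sided_p(d: float, n_per_arm: int) -> float:
    """Two-sided p-value of a two-sample z-test for a standardized difference d."""
    # With unit variance in each arm, the standard error of the difference in
    # means is sqrt(2 / n), so the test statistic is d * sqrt(n / 2).
    z = abs(d) * math.sqrt(n_per_arm / 2.0)
    # P(|Z| > z) for a standard normal Z.
    return math.erfc(z / math.sqrt(2.0))


# The same trivial effect (0.05 s.d.) evaluated at growing sample sizes.
for n in (100, 1_000, 10_000, 100_000):
    print(n, two_sided_p(0.05, n))
```

The effect size never changes, yet the p-value falls from clearly non-significant at 100 patients per arm to far below 0.001 at 10,000 per arm.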

For resolving clinically significant differences, the key statistic is the observed effect size: the number of standard deviations (s.d.) by which treatment efforts differ. In general, 20% of a standard deviation is widely accepted as a relatively small effect size, while 80% of a standard deviation is a large effect size for any outcome [21]. Further, effect sizes of at least 50% of a standard deviation have been established by extensive application in clinical studies to be non-ignorable [4,11,22]. Calibration of clinical significance through effect sizes also allows for calibration over time and across disease sites and trials.
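As a minimal sketch of how such an effect size might be computed and read against these conventions, the Python below uses the pooled-standard-deviation form of the standardized mean difference. The label strings and the function names are illustrative assumptions, not terminology from the cited references.

```python
import math
from statistics import mean, variance


def cohens_d(a, b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


def label(d):
    """Map |d| onto the 0.2 / 0.5 / 0.8 s.d. conventions described in the text."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate (non-ignorable)"
    if size >= 0.2:
        return "small"
    return "negligible"
```

Because the effect size is expressed in standard deviation units, the same labels can be applied to any 0–10 item, or to any other outcome, regardless of its original scale.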

One of the biggest lessons learned for statistical analysis planning for big data is to specify a priori at least a set of major hypotheses and analysis plans before building the database. This will save database development and modification work that otherwise will be needed to massage the data into a form ready to answer research questions. Most notable among such efforts has been the caBIG experience, where despite tremendous resources applied to the building of a database, the return on investment in terms of scientific findings has yet to be realized.

Recently, Sloan et al. examined the implications of applying 0.5 s.d. effect size thresholds, assuming an exponential survival distribution, and compared them with the ASCO clinician-recommended targets for clinically significant improvement in median survival for several disease site groups described by Ellis et al. [23,24]. The threshold recommendation for pancreatic cancer patients eligible for FOLFIRINOX (median survival 10–11 months) was 4–5 months (0.25–0.35 s.d.), compared to 7.2–7.9 months for a 0.5 s.d. effect size. Threshold recommendations were closer for colon cancer (median survival 4–6 months): 3–5 months (0.4–0.9 s.d.), compared to 2.9–4.3 months for 0.5 s.d.
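The 0.5 s.d. figures quoted above are consistent with a simple property of the exponential distribution: its standard deviation equals its mean, which is the median divided by ln 2. The Python sketch below reproduces that arithmetic; it is a reconstruction under this assumption rather than the cited authors' own code, and `sd_threshold` is a hypothetical name.

```python
import math


def sd_threshold(median_months: float, effect_size: float = 0.5) -> float:
    """Months of improvement equal to `effect_size` s.d. of an exponential
    survival time with the given median (s.d. = mean = median / ln 2)."""
    sd = median_months / math.log(2.0)
    return effect_size * sd


# Median survival of 10 and 11 months, as in the pancreatic cancer example.
for median in (10, 11):
    print(median, round(sd_threshold(median), 1))
```

This prints 7.2 and 7.9 months for medians of 10 and 11 months; the same function gives 2.9 and 4.3 months for medians of 4 and 6 months.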

Discussion

Incorporating PROs into clinical practice ensures that the most outstanding issue will be identified, essentially through a process of patient ‘self-triage,’ opening the door for clear and unambiguous self-reporting of the greatest challenge or threat the patient is experiencing to their quality of life, and possibly, to their survival. This ‘self-triage’ is critical, not only for the patient-reported outcomes, but also for effective clinical practice.

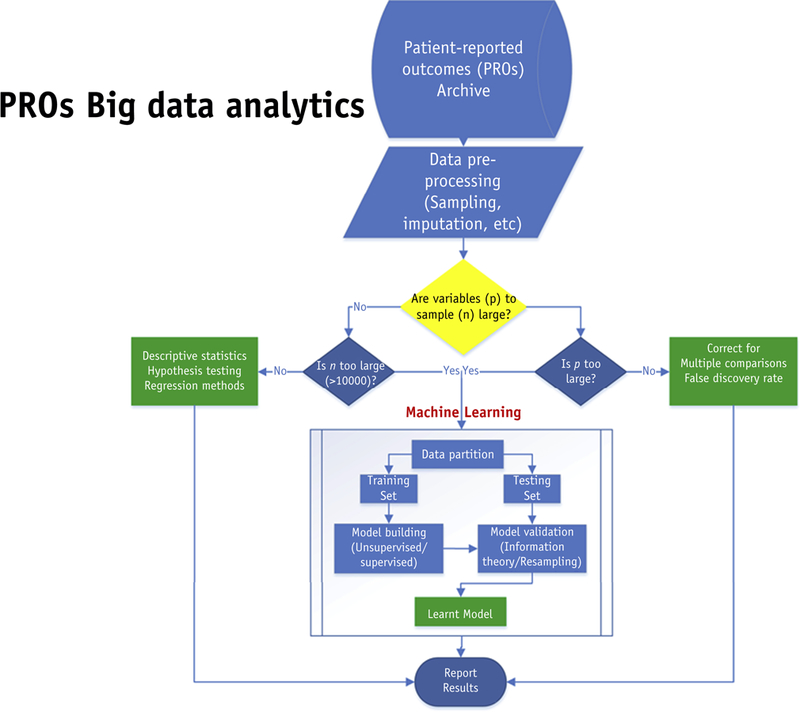

Incorporation of PRO metrics into models for patient response can be anticipated to lead to better decision guidance (Figure 6). However, large samples will require sensitivity analysis methods such as resampling techniques. Data reduction techniques based on unsupervised machine learning, such as principal component analysis and clustering techniques, become indispensable tools for summarizing complex relationships across many variables in a succinct and practical manner. For example, k-means or hierarchical clustering is now applied in generating heatmaps for gene expression studies.
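As one illustration of the resampling techniques mentioned above, the following Python sketch computes a percentile bootstrap confidence interval for a mean score. The scores are invented purely for illustration, and the function name, number of resamples, and seed are arbitrary assumptions.

```python
import random
from statistics import mean


def bootstrap_ci(values, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(values)
    # Resample with replacement n_boot times and record each resample's mean.
    means = sorted(mean(rng.choices(values, k=n)) for _ in range(n_boot))
    lo = means[int((alpha / 2) * n_boot)]
    hi = means[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


# Hypothetical 0-10 overall QOL scores, not data from any study.
scores = [7, 8, 5, 6, 9, 4, 7, 8, 6, 5, 7, 9, 3, 8, 6]
low, high = bootstrap_ci(scores)
print(low, mean(scores), high)
```

Repeating an analysis on resampled data in this way shows how stable an estimate is without relying on a p-value, which is the kind of sensitivity check that large PRO datasets call for.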

Figure 6.

With demonstration of the capability of PROs to act as prognostic indicators, their importance as part of modeling outcomes is underscored.

Future research should apply big data analytics using machine learning methods to investigate whether there is a differential effect of patient-reported outcomes in comparative cancer treatment analyses and clinical significance. The figure below indicates the steps involved.

As previously indicated, standard statistical techniques to carry out this work exist and are readily applicable. What is truly novel is the method of interpretation, which requires analysts and clinicians to recognize that, given the large sample sizes involved, the meaning of a statistically significant p-value is substantially, if not totally, reduced. With results based on data from thousands of patients and hundreds of data points per patient, the likelihood of capitalizing on chance increases along with the power to detect trivially small effect sizes. It remains the purview of the clinical investigator to guard against spurious findings that may achieve statistical significance but fail to have sufficient relevance to change clinical practice.

Footnotes

This work was supported in part by Public Health Service grants CA-25224, CA-37404, CA-35431, CA-35415, CA-35103, and CA-35269.

References

- 1. Abernethy AP, Coeytauz R, Rowe K, Wheeler JL, Lyerly HK. Electronic patient-reported data capture as the foundation of a learning health care system. JCO 2009. 27:6522.

- 2. Sloan JA, Berk L, Roscoe J, Fisch MJ, Shaw EG, Wyatt G, Morrow GR, Dueck AC. Integrating patient-reported outcomes into cancer symptom management clinical trials supported by the National Cancer Institute-sponsored clinical trials networks. J Clin Oncol 2007. November 10;25(32):5070–7. Review.

- 3. Sloan J (ed) Current problems in Cancer. Integrating QOL assessments for clinical and research purposes 2005, 2006, Volumes 29,30.

- 4. Brundage M, Blazeby J, Revicki D, Bass B, de Vet H, Duffy H, Efficace F, King M, Lam CL, Moher D, Scott J, Sloan J, Snyder C, Yount S, Calvert M: Patient-reported outcomes in randomized clinical trials: development of ISOQOL reporting standards. Qual Life Res 2013, 22(6):1161–75. 10.1007/s11136-012-0252-1

- 5. Sprangers MA, Sloan JA, Barsevick A, Chauhan C, Dueck AC, Raat H, Shi Q, Van Noorden CJ; GENEQOL Consortium. Scientific imperatives, clinical implications, and theoretical underpinnings for the investigation of the relationship between genetic variables and patient-reported quality-of-life outcomes. Qual Life Res 2010. December;19(10):1395–403. 10.1007/s11136-010-9759-5. Epub 2010 Oct 14.

- 6. Hastie Trevor, Tibshirani Robert, and Friedman Jerome. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer-Verlag, 2 edition, 2009.

- 7. Bakken S, Reame N. The Promise and Potential Perils of Big Data for Advancing Symptom Management Research in Populations at Risk for Health Disparities. Annu Rev Nurs Res 2016;34:247–60. 10.1891/0739-6686.34.247.

- 8. Covitz Peter A., Hartel Frank, Schaefer Carl, De Coronado Sherri, Fragoso Gilberto, Sahni Himanso, Gustafson Scott and Buetow Kenneth H. (April 23, 2003). “caCORE: A common infrastructure for cancer informatics”. Bioinformatics 19 (18): 2404–2412. 10.1093/bioinformatics/btg335.

- 9. Foley John (April 8, 2011). “Report Blasts Problem-Plagued Cancer Research Grid”. Information Week. Retrieved June 10, 2013.

- 10. Sloan JA, Xhao X, Novotny PJ, Wampfler J, Garces Y, Clark MM, et al. (2012). Relationship between Deficits in Overall Quality of Life and Non-Small-Cell Lung Cancer Survival. Journal of Clinical Oncology, 30(14), 1498–1504.

- 11. Singh Jasvinder A, Satele Daniel, Pattabasavaiah Suneetha, Buckner Jan C and Sloan Jeff A. Normative data and clinically significant effect sizes for single-item numerical linear analogue self-assessment (LASA) scales. Health and Quality of Life Outcomes 2014, 12:187. 10.1186/s12955-014-0187-z.

- 12. Gotay CC, Kawamoto CT, Bottomley A, Efficace F: The prognostic significance of patient-reported outcomes in cancer clinical trials. J Clin Oncol 2008, 26: 1355–1363. 10.1200/JCO.2007.13.3439

- 13. Huschka MM, Mandrekar SJ, Schaefer PL, Jett JR, Sloan JA: A pooled analysis of quality of life measures and adverse events data in north central cancer treatment group lung cancer clinical trials. Cancer 2007, 109: 787–795.

- 14. Hubbard Joleen M., Grothey Axel F., McWilliams Robert R., Buckner Jan C. and Sloan Jeff A. Physician Perspective on Incorporation of Oncology Patient Quality-of-Life, Fatigue, and Pain Assessment Into Clinical Practice. JOP March 25, 2014. 10.1200/JOP.2013.001276.

- 15. Cleeland CS, Sloan JA, Cella D, Chen C, Dueck AC, Janjan NA, Liepa AM, Mallick R, O’Mara A, Pearson JD, Torigoe Y, Wang XS, Williams LA, Woodruff JF; ASCPRO (Assessing the Symptoms of Cancer Using Patient-Reported Outcomes) Multisymptom Task Force. Recommendations for including multiple symptoms as endpoints in cancer clinical trials: a report from the ASCPRO (Assessing the Symptoms of Cancer Using Patient-Reported Outcomes) Multisymptom Task Force. Cancer 2013. January 15;119(2):411–20. 10.1002/cncr.27744. Epub 2012 Aug 28.

- 16. Halyard MY, Tan A, Callister MD, Ashman JB, Vora SA, Wong W, Schild SE, Atherton PJ and Sloan JA. Assessing the clinical significance of real-time quality of life (QOL) data in cancer patients treated with radiation therapy. Journal of Clinical Oncology, 2010. ASCO Annual Meeting Abstracts. Vol 28, No 15_suppl (May 20 Supplement), 2010: 9107

- 17. Mohler JL. The 2010 NCCN clinical practice guidelines in oncology on prostate cancer. J Natl Compr Canc Netw 2010;8(2):145.

- 18. Halyard MY. The use of real-time patient-reported outcomes and quality-of-life data in oncology clinical practice. Expert Rev Pharmacoecon Outcomes Res 2011. October;11(5):561–70.

- 19. Ridgeway JL, Beebe TJ, Chute CG, Eton DT, Hart LA, et al. A Brief Patient-Reported Outcomes Quality of Life (PROQOL) Instrument to Improve Patient Care. PLoS Med 2013, 10(11).

- 20. Koontz Bridget F., Benda Rashmi, De Los Santos Jennifer, Hoffman Karen E., Huq M. Saiful, Morrell Rosalyn, Sims Amber, Stevens Stephanie, Yu James B., Chen Ronald C. US radiation oncology practice patterns for posttreatment survivor care. Practical Radiation Oncology, Jan-Feb, 2016. Volume 6, Issue 1, Pages 50–56.

- 21. Cohen J (1988). Statistical power analysis for the behavioral sciences. Hillsdale, NJ: Psychology Press.

- 22. Revicki DA, Cella D, Sloan JA, Lenderking WR, & Aaronson NK (2006). Responsiveness and minimal important differences for patient reported outcomes. Health and Quality of Life Outcomes, 4(70).

- 23. Ellis LM, Bernstein DS, Voest EE, Berlin JD, Sargent D, Cortazar P, et al. (2014). American Society of Clinical Oncology Perspective: Raising the Bar for Clinical Trials by Defining Clinically Meaningful Outcomes. Journal of Clinical Oncology