Abstract

In neuroscientific studies, the naturalness of face presentation differs: roughly a third of published studies use close-up full-coloured faces, a third use close-up grey-scaled faces and another third employ cutout grey-scaled faces. Whether and how these methodological choices affect emotion-sensitive components of event-related brain potentials (ERPs) is still unclear. Therefore, this pre-registered study examined ERP modulations to fearful and neutral facial expressions presented as close-up full-coloured faces, close-up grey-scaled faces or grey-scaled cutouts, while attention was directed to non-face oddballs. Results revealed no interaction of face naturalness and emotion for any ERP component, but large main effects of both factors. Specifically, fearful faces and decreasing face naturalness elicited substantially enlarged N170 and early posterior negativity amplitudes, and lower face naturalness also resulted in a larger P1. This pattern reversed for the late positive potential (LPP), which increased linearly with increasing naturalness. The absence of an interaction suggests that face naturalness and emotion are decoded in parallel at these early stages. Researchers interested in strong modulations of early components should use cutout grey-scaled faces, whereas those interested in a pronounced late positivity should use close-up coloured faces.

Keywords: EEG/ERP, faces, emotion, face naturalness, face realism

Introduction

Human facial expressions are a quick channel for social communication (Tsao and Livingstone, 2008; Jack and Schyns, 2015). The human face enables the observer to recognise a unique identity and conveys information about age, gender and race, as well as emotional states (Jack and Schyns, 2015). Not surprisingly, there is great interest in understanding how humans process faces and in determining the neurophysiological correlates of face perception (e.g. see Bentin et al., 1996; Haxby et al., 2000).

Event-related potentials (ERPs) are a powerful method for investigating neuronal responses to faces. They are modulated both by cognitive factors (e.g. expertise with or familiarity of faces; see Sagiv and Bentin, 2001; Itier et al., 2011) and by perceptual factors. The latter include, for example, the spatial frequencies, luminance and colour information of the stimulus (e.g. see Balas and Pacella, 2015; Prete et al., 2015; Schindler et al., 2018), as well as presentation features such as the temporal (flickering) frequency (e.g. see Boremanse et al., 2013).

Often, the earliest component of interest is the occipitally scored P1, a positive peak between 80 and 120 ms that is thought to reflect early stages of stimulus detection, discrimination and vigilance (Mangun and Hillyard, 1991; Vogel and Luck, 2000; Bublatzky and Schupp, 2012). The P1 is often found to be larger for faces than for objects (Bentin et al., 1996; Allison et al., 1999). Findings on emotional modulations of the P1 are mixed: some studies report them (e.g. see Foti et al., 2010; Blechert et al., 2012), while others do not (e.g. see Wieser et al., 2012; Smith et al., 2013). Recently, it was hypothesised that these discrepancies might be explained by the intensity of the facial expressions (Müller-Bardorff et al., 2018).

In contrast to the P1, the subsequent negative occipito-temporal N170, which peaks between 130 and 190 ms, seems to be face-sensitive (Allison et al., 1999; Sagiv and Bentin, 2001; Ganis et al., 2012; Schendan and Ganis, 2013). The N170 is viewed as a structural encoding component (Eimer, 2011). Further, a recent meta-analysis showed that the N170 is reliably modulated by emotional compared to neutral expressions (Hinojosa et al., 2015). The following occipito-temporal negativities [N250r and early posterior negativity (EPN), peaking between 200 and 300 ms] seem to relate to the recognition of individual faces (Schweinberger and Neumann, 2016). The EPN is also enlarged for emotional compared to neutral stimuli, including faces (Wieser et al., 2010; Bublatzky et al., 2014), and is thought to index early attention mechanisms (e.g. Schupp et al., 2004).

Finally, the late positive potential (LPP) arises from ~400 ms onwards over parietal regions. Faces seem to elicit a larger late positivity than scrambled images or objects (Allison et al., 1999; González et al., 2011), and numerous studies report enhanced LPP amplitudes for emotional compared to neutral stimuli (e.g. for faces see Blechert et al., 2012; Bublatzky et al., 2014). The LPP is thought to index stimulus evaluation and controlled attention processes (Schupp et al., 2006; Hajcak et al., 2009).

To investigate emotional ERP modulations, researchers have presented emotional faces in various ways. While some studies use grey-scaled faces (e.g. see Righi et al., 2012; Peltola et al., 2014), others use full-coloured faces (e.g. see Calvo et al., 2013; Bublatzky et al., 2017). Further, to reduce perceptual differences, faces are often presented as cutouts showing only the core parts of the face. This follows the notion that not all parts of the face carry relevant information about the emotional expression. For fearful faces, for example, the eyes constitute a crucial region for recognising the emotional expression (e.g. see Adolphs, 2008; Wegrzyn et al., 2015), as well as for modulating ERP responses (Li et al., 2018).

Reviewing the last decade of scientific literature on emotional ERP modulations for faces (2008–2018; see Supplementary Table S1 for detailed references), we found that of 100 published studies, roughly a third each used close-up coloured faces (28), close-up grey-scaled faces (22) or cutout grey-scaled faces (27). However, in most cases, the rationale for the stimulus selection is missing or not clearly described, and the effect of the stimuli on ERP responses is not sufficiently explained. In some cases, the heterogeneous use of face manipulations might contribute to conflicting findings: so far, all studies that did not find an EPN emotion effect used close-up coloured faces (Herbert et al., 2013; Thom et al., 2013; Brenner et al., 2014). For the P1, N170 and LPP components, both emotion effects and null findings have been reported with all face manipulations; however, the size of the emotion effects might depend on the face manipulation used. Thus, the present study aimed to investigate the effects of the three most frequently used face manipulations in a within-subject design.

Cutting out uninformative noise homogenises the stimulus set, which reduces interstimulus perceptual variance, a factor known to influence the N170 (e.g. see Thierry et al., 2007a,b; but see also Bentin et al., 2007; Rossion and Jacques, 2008). On the other hand, it can be hypothesised that face-specific context enhances emotional responsiveness, since specific facial features (colour, hair, etc.) might contribute to a perceived unique identity. Hence, both early and late stages of processing could be affected, because the expressions of a perceived unique person may be emotionally more salient (e.g. see Schulz et al., 2012; Itz et al., 2014; Schindler et al., 2017). In this vein, many studies show that broader contextual information modulates face perception: ERP modulations were observed when contextual information was provided for a face, either as affective background pictures (Wieser and Keil, 2013) or as verbal information (Wieser and Brosch, 2012). Further, preceding emotional or neutral sentences modulated EPN as well as LPP responses to inherently neutral expressions. Thus, faces are integrated and processed together with the available contextual features, which could even include peripheral facial features.

To explore the impact of face naturalness on emotional responses, we presented faces at the three most common naturalness levels: close-up coloured faces, close-up grey-scaled faces and cutout grey-scaled faces. Based on the ERP literature, we expected main effects of emotional expression, leading to larger N170, EPN and, if LPP modulations could be observed at all, LPP amplitudes. Since the three types of face image manipulation differ perceptually, main effects of face naturalness were also expected for the P1 and N170. Furthermore, we expected an enlarged LPP for more naturalistic faces (note that this expectation was mentioned in the pre-registration but not stated as a formal hypothesis). Crucially, we tested for interactions of emotional expression and face naturalness, that is, whether contextual face information or, alternatively, the reduction of uninformative noise leads to more pronounced emotional modulations. These theoretical predictions, together with a detailed description of the analysis pipeline, were pre-registered on the Open Science Framework (https://osf.io/5fkt4/).

Materials and methods

Participants

Thirty-seven participants were recruited at the University of Münster. Participants gave written informed consent and received 10 euros per hour for participation. All participants had normal or corrected-to-normal vision, were right-handed and had no reported history of neurological or psychiatric disorders. One participant aborted the experiment, leaving 36 participants for the final electroencephalography (EEG) analyses. These 36 participants (24 female) were on average 24.06 years old (s.d. = 3.43). Average rated tiredness (1 = fully awake, 10 = fully tired) was 2.73 (s.d. = 1.51) before testing, 5.52 (s.d. = 1.73) during the face perception experiment and 5.09 (s.d. = 2.03) after testing.

Stimuli

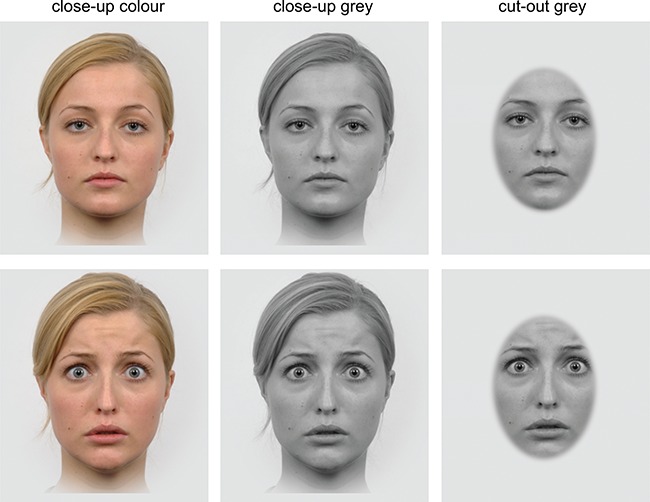

The faces were taken from the Radboud Faces Database (Langner et al., 2010). For the experiment, faces were additionally converted to greyscale, and cutouts showing no hair were created. Thirty-six identities (18 male, 18 female) were used, showing either fearful or neutral expressions, each presented in three naturalness conditions: a coloured close-up of the face, a grey-scaled close-up, and a grey-scaled cutout of the core face. The cutout had an elliptical shape with x- and y-radii of 2.29° and 3.77° and blurred edges. As exemplified in Figure 1, the cutout removed the hair, the ears and the neck in each image. In line with the suggested maximal influence of fearful faces in naturalistic environments (Hedger et al., 2015), the face pictures subtended a visual angle of ~6.2° (bizygomatic diameter). Stimuli were presented on a gamma-corrected display (Iiyama G-Master GB2488HSU) running at 60 Hz with a Michelson contrast of 0.9979 (Lmin = 0.35 cd/m2; Lmax = 327.43 cd/m2). The background luminance was kept at 262.53 cd/m2.
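The reported display contrast follows from the two luminance extremes via the standard Michelson definition, C = (Lmax − Lmin)/(Lmax + Lmin). The short check below is a plain-Python illustration of that arithmetic; the function name is our own and not part of any software used in the study.

```python
def michelson_contrast(l_min: float, l_max: float) -> float:
    """Michelson contrast (Lmax - Lmin) / (Lmax + Lmin); luminances in cd/m^2."""
    if l_min < 0 or l_max <= 0 or l_min > l_max:
        raise ValueError("expected 0 <= l_min <= l_max and l_max > 0")
    return (l_max - l_min) / (l_max + l_min)


# Minimum and maximum display luminance as reported for the monitor
print(f"{michelson_contrast(0.35, 327.43):.4f}")  # 0.9979
```

Because the minimum luminance is close to zero, the contrast approaches its upper bound of 1.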

Fig. 1.

Example facial stimuli showing fearful and neutral expressions. Note that the background colour was identical in all conditions and that the size of the displayed facial features (e.g. eyes, nose and mouth) was kept constant.

Procedure

Participants were instructed to avoid eye movements and blinks during stimulus presentation. To ensure that participants fixated the presented faces, gaze position was monitored online with an eye tracker (EyeLink 1000, SR Research Ltd, Mississauga, Canada): stimulus presentation was paused whenever participants were not directing their gaze at a circular region with a radius of 0.7° around the fixation mark. If a gaze deviation was detected for more than 5 s despite a participant's attempt to fixate the centre, the eye tracker calibration procedure was automatically initiated. For 11 participants, eye tracking data could not be recorded due to technical difficulties; in these cases, we relied on participants following the instruction to fixate the central fixation mark. Additionally, participants were instructed to respond to non-face trials by pressing the space bar. Feedback was provided for hits (key presses within 1 s after non-face presentation), slow responses (key presses between 1 and 3 s) and false alarms (key presses outside these windows) via a corresponding text presented for 2 s at the screen centre. Non-faces consisted of phase-scrambled faces, i.e. random patterns. The three face naturalness levels were presented in separate blocks, with block order counterbalanced across participants. Within each block, 60 fearful faces, 60 neutral faces and 5 non-face oddballs were presented in randomised order. In each trial, a fixation mark was presented for a jittered duration between 300 and 700 ms, followed by a face for 50 ms and then a blank screen for 500 ms before the next trial started. After testing, participants were asked about the effort and difficulty of the experiment, their tiredness during and after the experiment, and the face categories towards which they subjectively experienced the most intense emotional responses.
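The block and trial structure of the procedure can be sketched as follows. The condition labels, the cyclic assignment of the six possible block orders, the exact treatment of the 1 s and 3 s response boundaries and all function names are assumptions for illustration; the text only states that block order was counterbalanced and gives the trial counts and timings.

```python
import itertools
import random
from collections import Counter

# Hypothetical labels for the three naturalness blocks
CONDITIONS = ("close-up colour", "close-up grey", "cutout grey")


def block_order(participant_index: int) -> tuple:
    """Cycle through all 3! = 6 block orders (an assumed counterbalancing scheme)."""
    orders = list(itertools.permutations(CONDITIONS))
    return orders[participant_index % len(orders)]


def make_block(naturalness: str, rng: random.Random,
               n_per_emotion: int = 60, n_oddballs: int = 5) -> list:
    """One block: 60 fearful, 60 neutral and 5 non-face trials in random order."""
    trials = ([{"stimulus": "fearful"} for _ in range(n_per_emotion)]
              + [{"stimulus": "neutral"} for _ in range(n_per_emotion)]
              + [{"stimulus": "non-face"} for _ in range(n_oddballs)])
    rng.shuffle(trials)
    for trial in trials:
        trial["naturalness"] = naturalness
        trial["fixation_ms"] = rng.uniform(300.0, 700.0)  # jittered fixation mark
        trial["stimulus_ms"] = 50.0                        # brief stimulus presentation
        trial["blank_ms"] = 500.0                          # blank screen before next trial
    return trials


def classify_press(press_s: float, oddball_onsets_s: list) -> str:
    """Label a key press by its delay from the most recent non-face onset (seconds)."""
    earlier = [t for t in oddball_onsets_s if t <= press_s]
    if not earlier:
        return "false alarm"
    delay = press_s - max(earlier)
    if delay < 1.0:
        return "hit"
    if delay < 3.0:
        return "slow"
    return "false alarm"


if __name__ == "__main__":
    rng = random.Random(1)
    session = [make_block(cond, rng) for cond in block_order(0)]
    print([len(block) for block in session])  # [125, 125, 125]
    print(Counter(t["stimulus"] for t in session[0]))
```

Cycling through all permutations gives each of the six block orders to six of the 36 participants, which is one simple way to counterbalance three blocks.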

It is important to note that participants completed two preceding experiments. The first experiment took ~50 min; it manipulated perceptual load and directed attention to letters while task-irrelevant angry, happy or neutral faces or scrambled distracters were presented. Afterwards, participants had a long break to rest. They then completed a face perception experiment lasting approximately 10 min, which presented fearful and neutral faces with manipulated spatial frequencies.

EEG recording and preprocessing

The EEG signal was recorded from 64 BioSemi active electrodes using BioSemi's ActiView software (www.biosemi.com). Four additional electrodes measured horizontal and vertical eye movements. The sampling rate was 512 Hz. As recording reference, BioSemi uses two separate electrodes as ground electrodes, a Common Mode Sense active electrode and a Driven Right Leg passive electrode, which form a feedback loop that keeps the average potential of the participant close to the reference voltage of the A/D box. Data were re-referenced offline to the average reference, and a 0.1 Hz high-pass forward filter (6 dB/oct) as well as a 30 Hz zero-phase low-pass filter (24 dB/oct) were applied. Eye movements were corrected using the automatic eye artefact correction method implemented in BESA (Ille et al., 2002). Filtered data were segmented from 100 ms before until 800 ms after stimulus onset, and the 100 ms pre-stimulus interval was used for baseline correction. On average, 4.49 electrodes (s.d. = 2.21) were interpolated. On average, 52.83 trials were retained for close-up coloured fearful faces, 52.36 for close-up coloured neutral faces, 52.06 for close-up grey-scaled fearful faces, 53.75 for close-up grey-scaled neutral faces, 53.64 for cutout grey-scaled fearful faces and 53.75 for cutout grey-scaled neutral faces. The number of retained trials did not differ between emotional expressions (F(1,35) = 0.44, P = 0.513, partial η2 = 0.012) or face naturalness levels (F(2,70) = 0.72, P = 0.492, partial η2 = 0.020), and there was no interaction of the two factors (F(2,70) = 1.25, P = 0.294, partial η2 = 0.034).
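The segmentation and baseline step can be illustrated with a minimal single-channel sketch, assuming a continuous signal sampled at 512 Hz and stimulus onsets given as sample indices. Rounding the window edges to whole samples (−51 to +409 samples around onset) and discarding epochs that run past the recording edges are our assumptions; filtering, re-referencing, the BESA eye artefact correction and electrode interpolation are not reproduced.

```python
def epoch_and_baseline(signal, onsets, fs=512.0, tmin=-0.1, tmax=0.8):
    """Cut epochs around stimulus onsets and subtract the pre-stimulus mean.

    signal: list of samples from one channel; onsets: sample indices of stimulus onset.
    Returns a list of baseline-corrected epochs (lists of floats).
    """
    if tmin >= 0:
        raise ValueError("tmin must be negative to leave a pre-stimulus baseline")
    start_offset = round(tmin * fs)  # -51 samples at 512 Hz (about -100 ms)
    stop_offset = round(tmax * fs)   # +410, exclusive: last sample at about 799 ms
    n_baseline = -start_offset       # number of pre-stimulus samples
    epochs = []
    for onset in onsets:
        first, stop = onset + start_offset, onset + stop_offset
        if first < 0 or stop > len(signal):
            continue  # skip epochs that would run past the edges of the recording
        segment = signal[first:stop]
        baseline = sum(segment[:n_baseline]) / n_baseline
        epochs.append([x - baseline for x in segment])
    return epochs


if __name__ == "__main__":
    flat = [5.0] * 2000  # a constant signal is removed entirely by baseline correction
    epochs = epoch_and_baseline(flat, [100, 1000, 1990])
    print(len(epochs), len(epochs[0]))  # 2 461
```

Subtracting the pre-stimulus mean per epoch removes slow offsets that survive the high-pass filter, so that post-stimulus amplitudes are expressed relative to the baseline.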

EEG data analyses

EEG scalp data were statistically analysed with the ElectroMagnetic EncephaloGraphy Software (EMEGS; Peyk et al., 2011). Two (emotion: fearful vs neutral expression) by three (face naturalness: close-up colour vs close-up grey-scale vs cutout grey-scale) repeated-measures analyses of variance (ANOVAs) were conducted to investigate the main effects of emotional expression and face naturalness, as well as their interaction, in the time windows and electrode clusters of interest. In addition, because we anticipated in the pre-registration that ERP modulations might not be large enough to detect subtle effects of naturalness, we planned Bayesian t-tests to detect possible differences in emotional modulations between close-up coloured faces and cutout grey-scaled faces (see https://osf.io/5fkt4/). The null hypothesis was specified as a point-null prior (i.e. standardised effect size δ = 0), whereas the alternative hypothesis was defined as a Jeffreys–Zellner–Siow prior, i.e. a folded Cauchy distribution centred around δ = 0 with a scale factor of r = 0.707. Bayes factors (BF01) above (below) 1 indicate that the data are more (less) likely under the null than under the alternative hypothesis. Partial eta-squared (partial η2) was estimated to describe effect sizes, where partial η2 = 0.02 describes a small, partial η2 = 0.13 a medium and partial η2 = 0.26 a large effect (Cohen, 1992). Time windows were set from 80 to 100 ms for the P1, from 130 to 170 ms for the N170, from 230 to 330 ms for the EPN and from 400 to 600 ms for the LPP. Since the N170 peaked at about 140 ms, we carefully rechecked and validated the trigger timing; a peak latency in this range is in line with the literature (e.g. see Itier and Taylor, 2004).
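The Bayes factor for a paired (one-sample) t statistic with a Cauchy prior on δ can be illustrated by numerical integration over the standard representation in which δ given g is normal with variance g, and g follows an inverse-gamma(1/2, r²/2) prior, which marginally yields δ ~ Cauchy(0, r). This is a minimal stdlib sketch, not the software used for the reported analyses. It assumes a two-sided (non-folded) prior; a one-sided variant is not shown. The function name and the integration settings are our own choices.

```python
import math


def jzs_bf01(t: float, n: int, r: float = 0.707, steps: int = 4000) -> float:
    """BF01 for a one-sample or paired t statistic under a two-sided Cauchy(0, r) prior.

    H1: delta | g ~ Normal(0, g), g ~ InverseGamma(1/2, r**2 / 2).
    The integral over g is evaluated with Simpson's rule after substituting u = ln(g).
    """
    nu = n - 1

    def integrand(g: float) -> float:
        # Inverse-gamma(1/2, r^2/2) prior density on g
        prior = r / math.sqrt(2 * math.pi) * g ** -1.5 * math.exp(-r * r / (2 * g))
        # Kernel of the t likelihood given g (constant shared with H0 omitted)
        kernel = ((1 + n * g) ** -0.5
                  * (1 + t * t / ((1 + n * g) * nu)) ** (-(nu + 1) / 2))
        return kernel * prior

    lo, hi = -20.0, 20.0   # ln(g) range; the integrand is negligible outside it
    h = (hi - lo) / steps  # steps must be even for Simpson's rule
    total = 0.0
    for k in range(steps + 1):
        g = math.exp(lo + k * h)
        weight = 1 if k in (0, steps) else (4 if k % 2 else 2)
        total += weight * integrand(g) * g  # dg = g du
    marginal_h1 = total * h / 3
    marginal_h0 = (1 + t * t / nu) ** (-(nu + 1) / 2)
    return marginal_h0 / marginal_h1


if __name__ == "__main__":
    # Hypothetical t values for a difference in emotion effects with n = 36
    for t_value in (0.0, 1.0, 3.0):
        print(t_value, round(jzs_bf01(t_value, 36), 3))
```

The t statistic here would come from comparing the fearful-minus-neutral difference at one naturalness level with that at another, within participants; BF01 shrinks as t grows, and even t = 0 yields only bounded evidence for the null at a given sample size.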
For the P1, an occipital cluster (O1, O2, Oz, PO7, PO8) was examined, while for the N170 and EPN time windows, two symmetrical occipital clusters were examined (left: O1, PO7, P7, P9; right: O2, PO8, P8, P10). Laterality did not affect the results (for detailed analyses see the supplement). For the LPP, a centro-parietal cluster was examined (P1, P2, Pz, CP1, CP2, CPz; see Supplementary Figure S1 for an overview of the data and the electrode clusters used).
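Scoring a component then amounts to averaging the ERP over the samples of its time window and over the channels of its cluster. The sketch assumes a per-condition average ERP stored as a mapping from channel name to samples, starting 100 ms before onset at 512 Hz; the windows and clusters are copied from the text, while the data layout and the function name are hypothetical. The reported analyses were run in EMEGS, which this code does not reproduce.

```python
import math

FS = 512.0    # sampling rate in Hz
T0_S = -0.1   # time of the first epoch sample relative to stimulus onset (s)

# Time windows (ms) and electrode clusters as listed in the text
WINDOWS_MS = {"P1": (80, 100), "N170": (130, 170), "EPN": (230, 330), "LPP": (400, 600)}
CLUSTERS = {
    "P1": ["O1", "O2", "Oz", "PO7", "PO8"],
    "N170/EPN left": ["O1", "PO7", "P7", "P9"],
    "N170/EPN right": ["O2", "PO8", "P8", "P10"],
    "LPP": ["P1", "P2", "Pz", "CP1", "CP2", "CPz"],
}


def window_mean(erp, channels, window_ms, fs=FS, t0_s=T0_S):
    """Mean amplitude over all samples inside window_ms and all channels of a cluster.

    erp: hypothetical layout mapping channel name -> list of averaged samples.
    """
    start_ms, stop_ms = window_ms
    first = math.ceil((start_ms / 1000 - t0_s) * fs)  # first sample at or after start
    last = math.floor((stop_ms / 1000 - t0_s) * fs)   # last sample at or before stop
    values = [x for ch in channels for x in erp[ch][first:last + 1]]
    return sum(values) / len(values)


if __name__ == "__main__":
    # Toy ERP: every channel holds a constant value, so cluster means are easy to check
    names = sorted({ch for cluster in CLUSTERS.values() for ch in cluster})
    toy = {ch: [float(i)] * 461 for i, ch in enumerate(names)}
    print(window_mean(toy, CLUSTERS["LPP"], WINDOWS_MS["LPP"]))
```

One mean value per participant, condition and component is what enters the 2 × 3 repeated-measures ANOVAs.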

Eye tracking data

The eye tracking data were only used for online gaze control. We did not perform any offline analyses, as the experiment was designed to discourage eye movements and the interstimulus intervals were likely too short to observe systematic changes in pupil dilation.

Results

Manipulation check

In an open questionnaire, some participants reported the highest emotionality for close-up coloured faces, whereas most reported similar emotional responses to all fearful faces (19 participants). Others reported no intense emotional experience for any face (5 participants) or did not comment (8 participants).

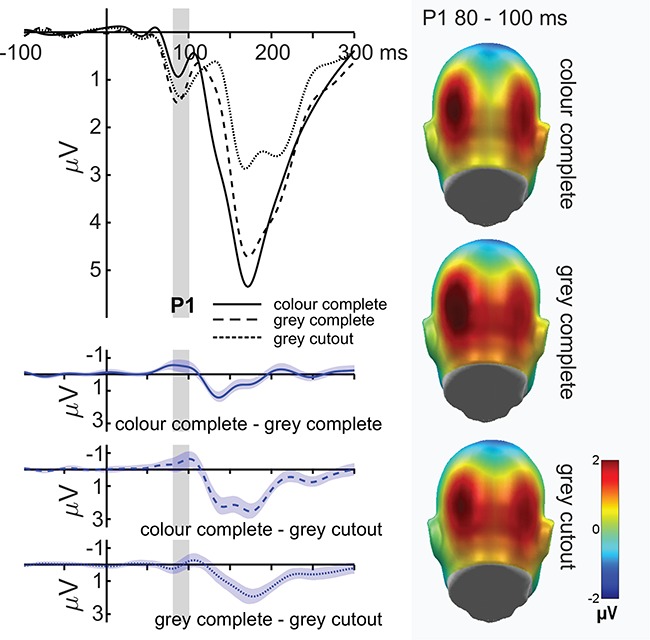

P1 component

For the P1, there was no main effect of emotion (F(1,35) = 3.02, P = 0.091, partial η2 = 0.079), no main effect of face naturalness (F(2,70) = 1.79, P = 0.175, partial η2 = 0.049; see Figure 2) and no interaction between emotion and face naturalness (F(2,70) = 0.59, P = 0.942, partial η2 = 0.002). Exploratory Bayesian t-tests comparing the two extreme naturalness levels (see the pre-registered protocol on the Open Science Framework, https://osf.io/5fkt4/) revealed that the absence of a difference in emotion effects between close-up coloured faces and grey-scaled cutout faces was about five times more likely than the presence of a difference (BF01 = 5.316, error % = 0.0000207).

Fig. 2.

P1 modulations by depicted face naturalness. The left panel shows the time course for all face naturalness conditions, averaged over electrodes O1, Oz and O2. The right panel shows the amplitudes for each face naturalness condition. P1 amplitudes did not significantly differ between the naturalness levels. All difference plots (blue) contain 95% bootstrap confidence intervals of intraindividual differences.

Exploratory P1 component analyses for face naturalness

Since main effects of face naturalness were expected but absent for the P1, we reanalysed the P1 with more lateral sensors (left: P9, P7, PO7, O1; right: P10, P8, PO8, O2; see Supplementary Figures S1 and S2 and the supplement for detailed analyses) and with more medial sensors (O1, Oz, O2). For the medial sensor group, no main effect of emotion was found (F(1,35) = 3.74, P = 0.061, partial η2 = 0.061), but a significant main effect of face naturalness was observed (F(1.69,59.25) = 3.37, P = 0.048, partial η2 = 0.088; see Figure 2). Here, close-up coloured faces elicited a smaller P1 than close-up grey-scaled faces (P = 0.008), whereas the difference from cutout grey-scaled faces was not significant (P = 0.081). The two grey-scaled conditions did not differ from one another (P = 0.821). Again, no interaction between emotion and face naturalness was detected (F(2,70) = 0.18, P = 0.840, partial η2 = 0.005).

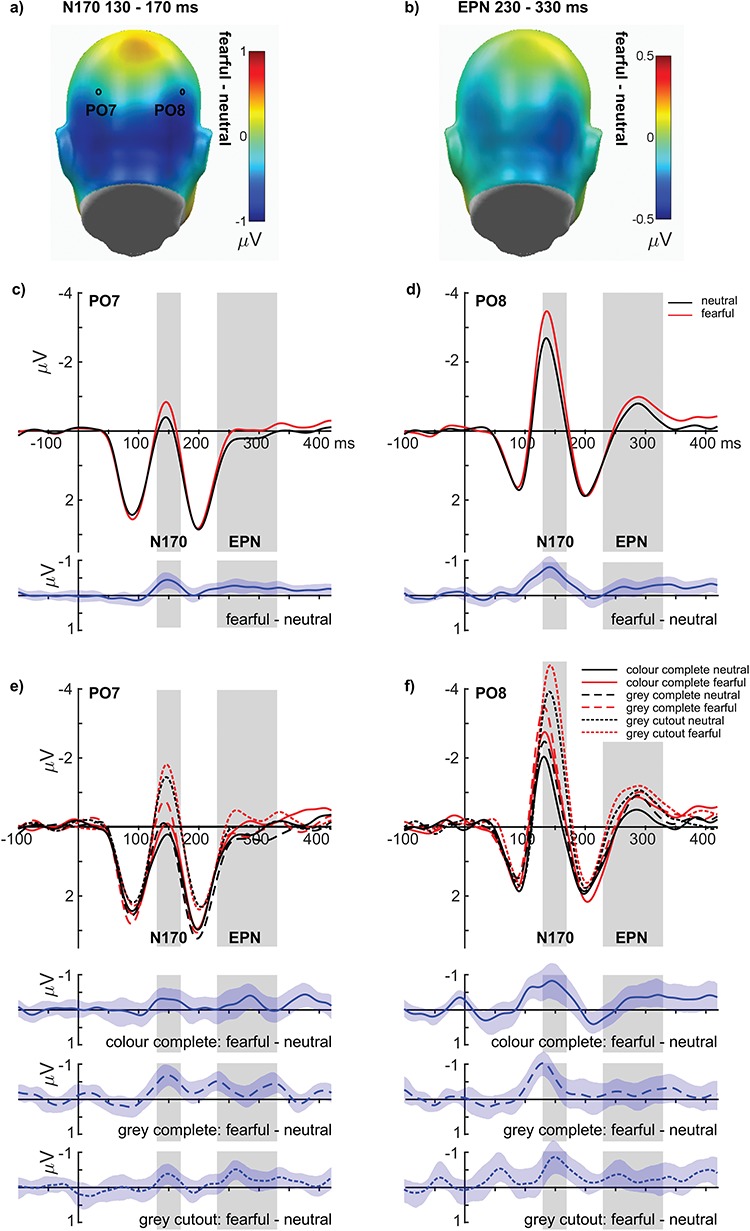

N170

For the N170, large main effects of emotion (F(1,35) = 40.34, P < 0.001, partial η2 = 0.535; see Figure 3A, C and D) and face naturalness (F(2,70) = 60.55, P < 0.001, partial η2 = 0.634; see Figure 4A and B) were found, but no interaction between emotion and face naturalness (F(2,70) = 1.44, P = 0.243, partial η2 = 0.040; see Figure 3E and F). Fearful expressions elicited a larger N170 than neutral expressions. Regarding face naturalness, cutout grey-scaled faces elicited the largest N170 amplitudes, followed by close-up grey-scaled faces and then close-up coloured faces. Polynomial trend tests showed that N170 amplitudes increased linearly with decreasing face naturalness (F(1,35) = 78.79, P < 0.001; 92% of the naturalness variance explained), while a quadratic contrast was also significant (F(1,35) = 15.13, P < 0.001; 8% of the variance explained). Exploratory Bayesian t-tests comparing emotion effects between the two extreme naturalness levels showed that the absence of a difference in emotion effects between close-up coloured faces and grey-scaled cutout faces was approximately four times more likely than the presence of a difference (BF01 = 4.392, error % = 0.00001435).
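The polynomial trend tests can be sketched with orthogonal contrast weights for three equally spaced, ordered levels: linear (−1, 0, 1) and quadratic (1, −2, 1), applied to each participant's condition means. Treating the naturalness levels as equally spaced and reading "variance explained" as each contrast's share of the naturalness sum of squares are our assumptions, since the text does not spell out the computation; the function names are hypothetical.

```python
import math
from statistics import mean, stdev

# Orthogonal polynomial weights for three equally spaced, ordered levels
LINEAR = (-1, 0, 1)
QUADRATIC = (1, -2, 1)


def trend_test(scores, weights):
    """Single-df repeated-measures contrast.

    scores: one (colour close-up, grey close-up, grey cutout) tuple per participant.
    Returns F(1, n - 1), i.e. the squared one-sample t on the contrast scores,
    and the contrast sum of squares n * mean(L)**2 / sum(w**2).
    """
    n = len(scores)
    contrast = [sum(w * x for w, x in zip(weights, row)) for row in scores]
    m, s = mean(contrast), stdev(contrast)
    if s == 0:
        f_value = math.inf if m != 0 else math.nan
    else:
        f_value = (m / (s / math.sqrt(n))) ** 2
    ss = n * m ** 2 / sum(w * w for w in weights)
    return f_value, ss


def trend_shares(scores):
    """Shares of the naturalness sum of squares carried by the linear and quadratic trends."""
    _, ss_lin = trend_test(scores, LINEAR)
    _, ss_quad = trend_test(scores, QUADRATIC)
    total = ss_lin + ss_quad
    return ss_lin / total, ss_quad / total


if __name__ == "__main__":
    # Toy amplitudes for four hypothetical participants, most to least natural
    toy = [(1.0, 2.0, 4.0), (2.0, 2.5, 5.0), (0.5, 1.0, 3.5), (1.5, 3.0, 5.5)]
    lin_share, quad_share = trend_shares(toy)
    print(f"linear {lin_share:.1%}, quadratic {quad_share:.1%}")  # linear 93.4%, quadratic 6.6%
```

With three levels, the two orthogonal contrasts exhaust the naturalness effect, so their sums of squares add up to the full condition sum of squares and the two shares sum to one.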

Fig. 3.

Emotion effects for the N170 and EPN components. (A and B) Difference topographies, showing enhanced negativity for fearful faces over occipital areas. (C and D) Time course of the main effect of emotion at electrodes PO7 and PO8. (E and F) Time course for all conditions, showing similar emotion effects at electrodes PO7 and PO8. All difference plots (blue) contain 95% bootstrap confidence intervals of intraindividual differences.

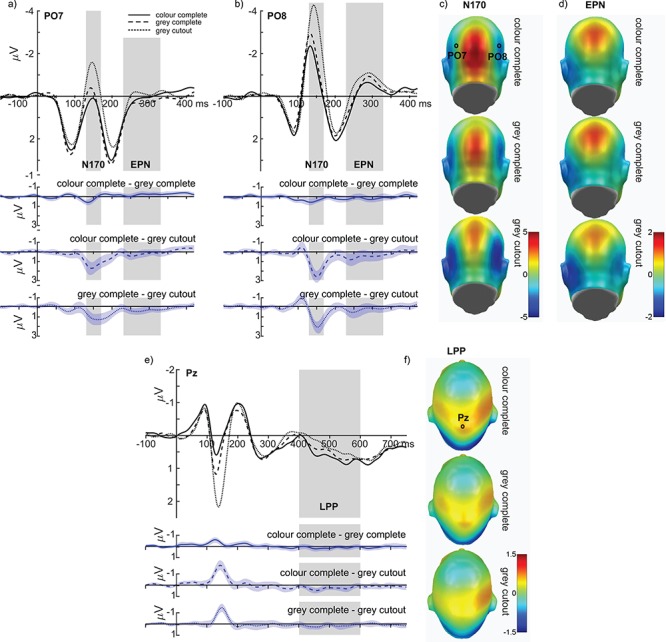

Fig. 4.

Naturalness effects for the N170, EPN and LPP components. (A) and (B) display the time course for electrodes PO7 and PO8, respectively. (C) and (D) show the topographies for each face naturalness level in the EPN and LPP interval, respectively, indicating stronger negativity and lower positivity for decreasing face naturalness. (E) and (F) display time courses and topographies for the LPP component. All difference plots (blue) contain 95% bootstrap confidence intervals of intraindividual differences.

EPN

Regarding the EPN, main effects of emotion (F(1,35) = 8.21, P = 0.007, partial η2 = 0.190; see Figure 3B–D) and face naturalness (F(2,70) = 4.40, P = 0.016, partial η2 = 0.112; see Figure 4A, B and D) were found, while no interaction between emotion and face naturalness was observed (F(2,70) = 0.104, P = 0.901, partial η2 = 0.003; see Figure 3E and F). Fearful expressions elicited a larger posterior negativity than neutral expressions. Regarding face naturalness, cutout grey-scaled faces elicited a larger EPN than both close-up grey-scaled and close-up coloured faces (ps < 0.05). Polynomial trend tests showed that EPN amplitudes increased linearly with decreasing face naturalness (F(1,35) = 4.766, P = 0.036; 77% of the naturalness variance explained), whereas the quadratic contrast was not significant (F(1,35) = 3.48, P = 0.071; 23% of the variance explained). Exploratory Bayesian t-tests comparing emotion effects between the two extreme naturalness levels revealed that the absence of a difference in emotion effects between close-up coloured faces and grey-scaled cutout faces was about five times more likely than the presence of a difference (BF01 = 5.555, error % = 0.00002235).
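Because partial η2 = SS_effect/(SS_effect + SS_error) and F = (SS_effect/df_effect)/(SS_error/df_error), each reported effect size can be recovered from the F ratio and its degrees of freedom as F·df_effect/(F·df_effect + df_error). The sketch applies this identity to the EPN statistics above; the helper name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# EPN statistics as reported: (effect, F, df_effect, df_error)
EPN = [("emotion", 8.21, 1, 35), ("naturalness", 4.40, 2, 70), ("interaction", 0.104, 2, 70)]

for label, f, df1, df2 in EPN:
    print(label, f"{partial_eta_squared(f, df1, df2):.3f}")
# emotion 0.190
# naturalness 0.112
# interaction 0.003
```

The same identity can serve as a quick consistency check for any F ratio and effect size reported together.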

LPP

For the LPP, no main effect of emotion was found (F(1,35) = 0.002, P = 0.968, partial η2 < 0.001), whereas a main effect of face naturalness was observed (F(2,70) = 5.28, P = 0.007, partial η2 = 0.131; see Figure 4E and F). Again, no interaction between emotion and face naturalness was observed (F(2,70) = 0.640, P = 0.531, partial η2 = 0.018). Close-up coloured faces elicited the largest LPP amplitudes, which were significantly larger than for cutout grey-scaled faces (P = 0.002) but not significantly larger than for close-up grey-scaled faces (P = 0.148). Close-up and cutout grey-scaled faces did not differ significantly either (P = 0.097). Polynomial trend tests this time showed a strong linear increase in LPP amplitudes with increasing face naturalness (F(1,35) = 11.343, P = 0.002; 99.7% of the naturalness variance explained), while the quadratic contrast was not significant (F(1,35) = 0.034, P = 0.071; 0.3% of the variance explained). Exploratory Bayesian t-tests comparing emotion effects between the two extreme naturalness levels showed that the absence of a difference in emotion effects between close-up coloured faces and grey-scaled cutout faces was about three times more likely than the presence of a difference (BF01 = 3.221, error % = 0.00000322).

Discussion

As predicted, main effects of emotion were detected for the N170 and EPN components. In line with a recent meta-analysis (Hinojosa et al., 2015), emotional expressions already influenced the N170, with larger amplitudes for fearful than for neutral faces. Drawing on their literature review, Hinojosa et al. (2015) reasoned that this might reflect parallel processing of the emotional expression and facial information (see also Joyce and Rossion, 2005 and Eimer, 2011; for a review on structural encoding and person discrimination, see Calder and Young, 2005). Further, in line with previous research, enhanced processing of fearful compared to neutral faces was found for the EPN (Luo et al., 2010; Wieser et al., 2012; Morel et al., 2014; Peltola et al., 2014). Interestingly, modulations of the N170 and EPN by emotional expressions have been found across different common tasks, with emotion effects of similar size in a gender discrimination, an explicit emotion discrimination and an oddball detection task (Itier and Neath-Tavares, 2017). The EPN component is related to early attentional selection, and this differential processing is thought to reflect enhanced early attention devoted to evolutionarily more relevant (i.e. fearful) faces (Schupp et al., 2006).

No emotional modulation was observed for either very early (P1) or later, elaborative stimulus processing (LPP). This is partly in line with the mixed results of previous research and, regarding the LPP, might be due to the relatively low emotional engagement required by the present face perception task. Particularly in passive viewing designs, LPP emotion effects are sometimes not found (Rellecke et al., 2012; Yuan et al., 2014; Schindler et al., 2017). In this study, participants simply had to look at the faces and occasionally respond to a non-face oddball. Thus, no elaborate attention to the briefly presented facial expressions was needed for correct task performance, which might explain the absence of late emotion effects in the present study.

Regarding face naturalness, we expected main effects on early components (P1, N170) as well as on the LPP, where enlarged amplitudes might reflect higher perceived distinctiveness. Surprisingly, the initial, pre-registered P1 analysis showed no main effect of face naturalness. However, exploratory analyses using more occipital sensors (O1, Oz and O2) revealed a significant main effect: close-up coloured faces elicited a smaller P1 than close-up grey-scaled faces and, as a trend, than cutout grey-scaled faces. Thus, while P1 effects are detectable, their spatial extent is limited, which could relate to the rather small visual angle of the stimuli and/or to the blockwise presentation mode, which may have introduced adaptation effects.

The subsequently peaking N170, EPN and LPP amplitudes were linearly modulated by face naturalness, with N170 and EPN amplitudes increasing linearly as face naturalness decreased. One explanation for the strong N170 effects might be the decrease in interstimulus perceptual variance (ISPV) that accompanies decreasing naturalness (e.g. see Thierry et al., 2007a,b). With each step of decreasing face naturalness, we removed information (first colour, then hair information), which necessarily decreased the variance among all faces in the respective condition. Indeed, controlling for ISPV has been found to reduce or even abolish differences in N170 amplitudes between faces and objects (e.g. see Thierry et al., 2007a,b), although even with zero variance, faces elicit larger N170 amplitudes than objects (Ganis et al., 2012; Schendan and Ganis, 2013). In our study, removing the more variable colour and, especially, hair and neck information made the stimuli more alike (e.g. see Figure 1). Whereas ISPV has been related to the N170 component (i.e. stimulus sets that are less variable as indexed by pixel-by-pixel correlations), we are not aware of any study showing that stimulus variability also affects the EPN.
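The text relates ISPV to pixel-by-pixel correlations between stimuli. One simple index of this kind, sketched here under the assumption that each image is available as an equally sized, flattened list of greyscale pixel values, is the mean pairwise Pearson correlation across a stimulus set: higher values mean more similar images, i.e. lower ISPV. This illustrates the general idea only; it is not the procedure of Thierry et al. or of the present study, and the function names are hypothetical.

```python
import itertools
import math
from statistics import mean


def pearson(a, b):
    """Pixel-by-pixel Pearson correlation between two flattened greyscale images."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("uniform image: correlation is undefined")
    return cov / math.sqrt(var_a * var_b)


def mean_pairwise_similarity(images):
    """Mean correlation over all image pairs; higher values indicate lower ISPV."""
    if len(images) < 2:
        raise ValueError("need at least two images")
    return mean(pearson(a, b) for a, b in itertools.combinations(images, 2))


if __name__ == "__main__":
    # Toy 2x2 'images', flattened row by row
    homogeneous = [[0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 2.0, 3.5], [0.0, 1.2, 2.0, 3.0]]
    heterogeneous = [[0.0, 1.0, 2.0, 3.0], [3.0, 2.0, 1.0, 0.0], [1.0, 3.0, 0.0, 2.0]]
    print(mean_pairwise_similarity(homogeneous) > mean_pairwise_similarity(heterogeneous))
```

Under this index, removing highly variable regions such as hair and neck would be expected to raise the mean pairwise correlation of a stimulus set.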

Another interpretation of the enlarged N170 and EPN amplitudes relates to increased processing difficulty for less natural faces. Although identity recognition was not task-relevant, it often occurs spontaneously and is much harder for cutout faces. Task difficulty has been shown to elicit larger N170 amplitudes, for instance, for low-frequency filtered faces in a challenging gender categorisation task (Goffaux et al., 2003). Furthermore, larger N170 amplitudes have been reported for inverted compared to upright faces (e.g. see Latinus and Taylor, 2006), and this face inversion effect even correlates with task performance (Jacques and Rossion, 2007).

Interestingly, at later stages of processing, we observed the opposite pattern, with larger amplitudes in the LPP time window for more naturalistic faces. Enhanced LPP responses have been observed in previous studies for faces with exaggerated facial features, or for real compared to less realistic and less distinctive cartoon faces (e.g. see Schulz et al., 2012; Itz et al., 2014; Schindler et al., 2017). In the present experiment, the very same faces were shown, with only colour and hair information added. This manipulation might have increased subjective distinctiveness or face uniqueness, and a relation between distinctive or unique faces and enhanced LPPs has been reported previously (e.g. Kaufmann and Schweinberger, 2008; Schulz et al., 2012). However, our manipulation of face naturalness is not a strict manipulation of face uniqueness or face distinctiveness. Still, in line with our predictions, faces with richer information (including colour and hair) might be perceived as more unique and/or distinct, leading to a larger late positivity.

Crucially, we predicted interactions between emotional expression and face naturalness. The basic idea was that faces with more contextual information (i.e. colour and hair) display more diagnostic emotion features than grey-scaled cutout faces, thus leading to a more pronounced neural differentiation between fearful and neutral faces. However, the present data do not show significant interactions between face naturalness and emotion for any of the investigated ERP components. Moreover, the Bayesian tests provided moderate support for the null hypothesis (i.e. no interaction), even when comparing only the two extreme levels (complete coloured faces vs cutout grey-scaled faces). Thus, the present results are in line with approximately a third of the reviewed studies (see Supplementary Table S1) and suggest that the effects of emotion and naturalness on face processing are relatively independent of one another.

Limitations and future directions

It should be noted that we only used fearful and neutral facial expressions; thus, our findings should not be generalised to other emotional expressions. Furthermore, face pictures were presented very briefly (50 ms), which likely precluded a more in-depth elaboration of the facial expressions. This might account for the absence of emotion effects on the LPP component. However, in line with previous studies that used even shorter presentation times (e.g. 8 ms or 20 ms; Smith, 2012; Walentowska and Wronka, 2012), emotional modulations were observed for the N170 and EPN components. These findings support the notion of spontaneous and rather automatic selective emotion processing at early stages of the visual processing stream (e.g. Schupp et al., 2006). In addition, overall stimulus size might have modulated the present ERP findings. Specifically, the cutout faces contain less information (i.e. no hair); however, this was necessary to avoid changing the size of the core facial features (e.g. the eyes), which are known to strongly affect ERP amplitudes (see Li et al., 2018). Moreover, fatigue or habituation effects might have been involved, as participants completed two other face experiments directly before the present study. Although previous research suggests that emotional ERP effects are presumably not strongly affected by massive stimulus repetition (for a review, see Ferrari et al., 2017), future research should examine habituation effects with regard to face naturalness. Finally, our manipulation of face naturalness is not universal; for example, different levels of public face concealment are common in different cultures.

Future studies may examine whether face naturalness, uniqueness and realism act in parallel with, or interact with, facial emotion and/or identity processing. Moreover, how different levels of attention affect the processing of faces that vary in naturalness deserves further examination. For instance, the present finding of independent emotion and face naturalness effects may vary depending on whether participants attend to the faces or whether the faces serve as distractors. By systematically manipulating both face naturalness and perceived uniqueness, the similarities and differences between these concepts could be better understood. This could be achieved, for instance, by manipulating facial features in real, caricature or cartoon faces.

Conclusion

As the key finding, we showed that emotional ERP effects to fearful expressions did not interact with the most commonly used face naturalness manipulations. Although large main effects of both facial fear and face naturalness were observed for the N170 and EPN components, no interaction was detected for either component. Moreover, face naturalness appears to modulate the N170, EPN and LPP components in a linear fashion. Early components (N170 and EPN) were enlarged for facial stimuli depicting less distracting information (e.g. no hair), whereas later processing stages (LPP) were generally enhanced for faces depicting more detailed contextual information. We recommend that researchers interested in strong modulations of early components use cutout grey-scaled faces, while those interested in a pronounced late positivity use close-up coloured faces, which provide more realistic information.

Conflict of interest

None declared.

Supplementary Material

Acknowledgments

We acknowledge support from the Open Access Publication Fund of the University of Muenster. F.B. was supported by the German Research Foundation (Deutsche Forschungsgemeinschaft; BU 3255/1-1). We thank Josefine Eck and Laura Gutewort for their help with data acquisition, Nele Johanna Bögemann and Julian Koc for their valuable corrections and all participants contributing to this study.

References

- Adolphs R. (2008). Fear, faces, and the human amygdala. Current Opinion in Neurobiology, 18(2), 166–172. 10.1016/j.conb.2008.06.006

- Allison T., Puce A., Spencer D.D., McCarthy G. (1999). Electrophysiological studies of human face perception. I: potentials generated in occipitotemporal cortex by face and non-face stimuli. Cerebral Cortex, 9(5), 415–430. 10.1093/cercor/9.5.415

- Balas B., Pacella J. (2015). Artificial faces are harder to remember. Computers in Human Behavior, 52, 331–337. 10.1016/j.chb.2015.06.018

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8(6), 551–565. 10.1162/jocn.1996.8.6.551

- Bentin S., Taylor M.J., Rousselet G.A., et al. (2007). Controlling interstimulus perceptual variance does not abolish N170 face sensitivity. Nature Neuroscience, 10(7), 801–802. 10.1038/nn0707-801

- Blechert J., Sheppes G., Di Tella C., Williams H., Gross J.J. (2012). See what you think: reappraisal modulates behavioral and neural responses to social stimuli. Psychological Science, 23(4), 346–353. 10.1177/0956797612438559

- Boremanse A., Norcia A.M., Rossion B. (2013). An objective signature for visual binding of face parts in the human brain. Journal of Vision, 13(11), 1–18. 10.1167/13.11.6

- Brenner C.A., Rumak S.P., Burns A.M.N., Kieffaber P.D. (2014). The role of encoding and attention in facial emotion memory: an EEG investigation. International Journal of Psychophysiology, 93(3), 398–410. 10.1016/j.ijpsycho.2014.06.006

- Bublatzky F., Gerdes A.B.M., White A.J., Riemer M., Alpers G.W. (2014). Social and emotional relevance in face processing: happy faces of future interaction partners enhance the late positive potential. Frontiers in Human Neuroscience, 8, 1–10. 10.3389/fnhum.2014.00493

- Bublatzky F., Pittig A., Schupp H.T., Alpers G.W. (2017). Face-to-face: perceived personal relevance amplifies face processing. Social Cognitive and Affective Neuroscience, 12(5), 811–822. 10.1093/scan/nsx001

- Bublatzky F., Schupp H.T. (2012). Pictures cueing threat: brain dynamics in viewing explicitly instructed danger cues. Social Cognitive and Affective Neuroscience, 7, 611–622.

- Calder A.J., Young A.W. (2005). Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience, 6(8), 641–651. 10.1038/nrn1724

- Calvo M.G., Marrero H., Beltrán D. (2013). When does the brain distinguish between genuine and ambiguous smiles? An ERP study. Brain and Cognition, 81(2), 237–246. 10.1016/j.bandc.2012.10.009

- Cohen J. (1992). A power primer. Psychological Bulletin, 112(1), 155–159.

- Eimer M. (2011). The face-sensitive N170 component of the event-related brain potential. In The Oxford Handbook of Face Perception. Oxford: Oxford University Press. 10.1093/oxfordhb/9780199559053.013.0017

- Ferrari V., Codispoti M., Bradley M.M. (2017). Repetition and ERPs during emotional scene processing: a selective review. International Journal of Psychophysiology, 111, 170–177.

- Foti D., Olvet D.M., Klein D.N., Hajcak G. (2010). Reduced electrocortical response to threatening faces in major depressive disorder. Depression and Anxiety, 27(9), 813–820. 10.1002/da.20712

- Ganis G., Smith D., Schendan H.E. (2012). The N170, not the P1, indexes the earliest time for categorical perception of faces, regardless of interstimulus variance. NeuroImage, 62(3), 1563–1574. 10.1016/j.neuroimage.2012.05.043

- Goffaux V., Jemel B., Jacques C., Rossion B., Schyns P.G. (2003). ERP evidence for task modulations on face perceptual processing at different spatial scales. Cognitive Science, 27(2), 313–325. 10.1207/s15516709cog2702_8

- González I.Q., León M.A.B., Belin P., Martínez-Quintana Y., García L.G., Castillo M.S. (2011). Person identification through faces and voices: an ERP study. Brain Research, 1407, 13–26. 10.1016/j.brainres.2011.03.029

- Hajcak G., Dunning J.P., Foti D. (2009). Motivated and controlled attention to emotion: time-course of the late positive potential. Clinical Neurophysiology, 120(3), 505–510. 10.1016/j.clinph.2008.11.028

- Haxby J.V., Hoffman E.A., Gobbini M.I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–233. 10.1016/S1364-6613(00)01482-0

- Hedger N., Adams W.J., Garner M. (2015). Fearful faces have a sensory advantage in the competition for awareness. Journal of Experimental Psychology: Human Perception and Performance, 41(6), 1748.

- Herbert C., Sfaerlea A., Blumenthal T. (2013). Your emotion or mine: labeling feelings alters emotional face perception—an ERP study on automatic and intentional affect labeling. Frontiers in Human Neuroscience, 7, 1–14. 10.3389/fnhum.2013.00378

- Hinojosa J.A., Mercado F., Carretié L. (2015). N170 sensitivity to facial expression: a meta-analysis. Neuroscience and Biobehavioral Reviews, 55, 498–509. 10.1016/j.neubiorev.2015.06.002

- Ille N., Berg P., Scherg M. (2002). Artifact correction of the ongoing EEG using spatial filters based on artifact and brain signal topographies. Journal of Clinical Neurophysiology, 19(2), 113–124.

- Itier R.J., Neath-Tavares K.N. (2017). Effects of task demands on the early neural processing of fearful and happy facial expressions. Brain Research, 1663, 38–50. 10.1016/j.brainres.2017.03.013

- Itier R.J., Taylor M.J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex, 14(2), 132–142. 10.1093/cercor/bhg111

- Itier R.J., Van Roon P., Alain C. (2011). Species sensitivity of early face and eye processing. NeuroImage, 54(1), 705–713. 10.1016/j.neuroimage.2010.07.031

- Itz M.L., Schweinberger S.R., Schulz C., Kaufmann J.M. (2014). Neural correlates of facilitations in face learning by selective caricaturing of facial shape or reflectance. NeuroImage, 102(Pt 2), 736–747. 10.1016/j.neuroimage.2014.08.042

- Jack R.E., Schyns P.G. (2015). The human face as a dynamic tool for social communication. Current Biology, 25(14), R621–R634. 10.1016/j.cub.2015.05.052

- Jacques C., Rossion B. (2007). Early electrophysiological responses to multiple face orientations correlate with individual discrimination performance in humans. NeuroImage, 36(3), 863–876. 10.1016/j.neuroimage.2007.04.016

- Joyce C., Rossion B. (2005). The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clinical Neurophysiology, 116(11), 2613–2631. 10.1016/j.clinph.2005.07.005

- Kaufmann J.M., Schweinberger S.R. (2008). Distortions in the brain? ERP effects of caricaturing familiar and unfamiliar faces. Brain Research, 1228, 177–188. 10.1016/j.brainres.2008.06.092

- Langner O., Dotsch R., Bijlstra G., Wigboldus D.H.J., Hawk S.T., van Knippenberg A. (2010). Presentation and validation of the Radboud Faces Database. Cognition and Emotion, 24(8), 1377–1388. 10.1080/02699930903485076

- Latinus M., Taylor M.J. (2006). Face processing stages: impact of difficulty and the separation of effects. Brain Research, 1123(1), 179–187. 10.1016/j.brainres.2006.09.031

- Li S., Li P., Wang W., Zhu X., Luo W. (2018). The effect of emotionally valenced eye region images on visuocortical processing of surprised faces. Psychophysiology, 55(5), e13039. 10.1111/psyp.13039

- Luo W., Feng W., He W., Wang N.Y., Luo Y.J. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. NeuroImage, 49, 1857–1867.

- Mangun G.R., Hillyard S.A. (1991). Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. Journal of Experimental Psychology: Human Perception and Performance, 17, 1057–1074.

- Morel S., George N., Foucher A., Chammat M., Dubal S. (2014). ERP evidence for an early emotional bias towards happy faces in trait anxiety. Biological Psychology, 99, 183–192. 10.1016/j.biopsycho.2014.03.011

- Müller-Bardorff M., Bruchmann M., Mothes-Lasch M., et al. (2018). Early brain responses to affective faces: a simultaneous EEG-fMRI study. NeuroImage, 178, 660–667. 10.1016/j.neuroimage.2018.05.081

- Peltola M.J., Yrttiaho S., Puura K., et al. (2014). Motherhood and oxytocin receptor genetic variation are associated with selective changes in electrocortical responses to infant facial expressions. Emotion, 14(3), 469.

- Peyk P., De Cesarei A., Junghöfer M. (2011). ElectroMagnetoEncephalography software: overview and integration with other EEG/MEG toolboxes. Computational Intelligence and Neuroscience, 2011, 1–11.

- Prete G., Capotosto P., Zappasodi F., Laeng B., Tommasi L. (2015). The cerebral correlates of subliminal emotions: an electroencephalographic study with emotional hybrid faces. European Journal of Neuroscience, 42(11), 2952–2962. 10.1111/ejn.13078

- Rellecke J., Sommer W., Schacht A. (2012). Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biological Psychology, 90(1), 23–32.

- Righi S., Marzi T., Toscani M., Baldassi S., Ottonello S., Viggiano M.P. (2012). Fearful expressions enhance recognition memory: electrophysiological evidence. Acta Psychologica, 139(1), 7–18. 10.1016/j.actpsy.2011.09.015

- Rossion B., Jacques C. (2008). Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. NeuroImage, 39(4), 1959–1979. 10.1016/j.neuroimage.2007.10.011

- Sagiv N., Bentin S. (2001). Structural encoding of human and schematic faces: holistic and part-based processes. Journal of Cognitive Neuroscience, 13(7), 937–951. 10.1162/089892901753165854

- Schendan H.E., Ganis G. (2013). Face-specificity is robust across diverse stimuli and individual people, even when interstimulus variance is zero. Psychophysiology, 50(3), 287–291. 10.1111/psyp.12013

- Schindler S., Schettino A., Pourtois G. (2018). Electrophysiological correlates of the interplay between low-level visual features and emotional content during word reading. Scientific Reports, 8(1), 12228. 10.1038/s41598-018-30701-5

- Schindler S., Zell E., Botsch M., Kissler J. (2017). Differential effects of face-realism and emotion on event-related brain potentials and their implications for the uncanny valley theory. Scientific Reports, 7, 45003. 10.1038/srep45003

- Schulz C., Kaufmann J.M., Kurt A., Schweinberger S.R. (2012). Faces forming traces: neurophysiological correlates of learning naturally distinctive and caricatured faces. NeuroImage, 63(1), 491–500. 10.1016/j.neuroimage.2012.06.080

- Schupp H.T., Flaisch T., Stockburger J., Junghöfer M. (2006). Emotion and attention: event-related brain potential studies. Progress in Brain Research, 156, 31–51. 10.1016/S0079-6123(06)56002-9

- Schupp H.T., Öhman A., Junghöfer M., Weike A.I., Stockburger J., Hamm A.O. (2004). The facilitated processing of threatening faces: an ERP analysis. Emotion, 4(2), 189–200. 10.1037/1528-3542.4.2.189

- Schweinberger S.R., Neumann M.F. (2016). Repetition effects in human ERPs to faces. Cortex, 80, 141–153. 10.1016/j.cortex.2015.11.001

- Smith M.L. (2012). Rapid processing of emotional expressions without conscious awareness. Cerebral Cortex, 22(8), 1748–1760. 10.1093/cercor/bhr250

- Smith E., Weinberg A., Moran T., Hajcak G. (2013). Electrocortical responses to NIMSTIM facial expressions of emotion. International Journal of Psychophysiology, 88(1), 17–25. 10.1016/j.ijpsycho.2012.12.004

- Thierry G., Martin C.D., Downing P.E., Pegna A.J. (2007a). Is the N170 sensitive to the human face or to several intertwined perceptual and conceptual factors? Nature Neuroscience, 10(7), 802.

- Thierry G., Martin C.D., Downing P., Pegna A.J. (2007b). Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nature Neuroscience, 10(4), 505–511. 10.1038/nn1864

- Thom N., Knight J., Dishman R., Sabatinelli D., Johnson D.C., Clementz B. (2013). Emotional scenes elicit more pronounced self-reported emotional experience and greater EPN and LPP modulation when compared to emotional faces. Cognitive, Affective, & Behavioral Neuroscience, 14(2), 849–860. 10.3758/s13415-013-0225-z

- Tsao D.Y., Livingstone M.S. (2008). Mechanisms of face perception. Annual Review of Neuroscience, 31, 411–437. 10.1146/annurev.neuro.30.051606.094238

- Vogel E.K., Luck S.J. (2000). The visual N1 component as an index of a discrimination process. Psychophysiology, 37, 190–203.

- Walentowska W., Wronka E. (2012). Trait anxiety and involuntary processing of facial emotions. International Journal of Psychophysiology, 85(1), 27–36. 10.1016/j.ijpsycho.2011.12.004

- Wegrzyn M., Bruckhaus I., Kissler J. (2015). Categorical perception of fear and anger expressions in whole, masked and composite faces. PLoS One, 10(8), e0134790. 10.1371/journal.pone.0134790

- Wieser M.J., Brosch T. (2012). Faces in context: a review and systematization of contextual influences on affective face processing. Frontiers in Psychology, 3, 471. 10.3389/fpsyg.2012.00471

- Wieser M.J., Gerdes A.B.M., Greiner R., Reicherts P., Pauli P. (2012). Tonic pain grabs attention, but leaves the processing of facial expressions intact—evidence from event-related brain potentials. Biological Psychology, 90(3), 242–248. 10.1016/j.biopsycho.2012.03.019

- Wieser M.J., Keil A. (2013). Fearful faces heighten the cortical representation of contextual threat. NeuroImage, 86, 317–325. 10.1016/j.neuroimage.2013.10.008

- Wieser M.J., Pauli P., Reicherts P., Mühlberger A. (2010). Don’t look at me in anger! Enhanced processing of angry faces in anticipation of public speaking. Psychophysiology, 47, 271–280.

- Yuan L., Zhou R., Hu S. (2014). Cognitive reappraisal of facial expressions: electrophysiological evidence of social anxiety. Neuroscience Letters, 577, 45–50.
