Abstract

Both visual and inertial cues are salient in heading determination. However, optic flow can ambiguously represent self-motion or environmental motion. It is unclear how visual and inertial heading cues are determined to have a common cause and integrated, versus perceived independently. In four experiments, visual and inertial headings were presented simultaneously, and ten subjects reported visual or inertial headings in separate trial blocks. Experiment 1 examined inertial headings within 30° of straight ahead and visual headings that were offset by up to 60°. Perception of the inertial heading was shifted in the direction of the visual stimulus by as much as 35° at the 60° offset, while perception of the visual stimulus remained largely uninfluenced. Experiment 2 used a ±140° range of inertial headings with up to 120° visual offset. This experiment found variable behavior between subjects, with most perceiving the sensory stimuli to be shifted towards an intermediate heading but a few perceiving the headings independently. The visual and inertial headings influenced each other even at the largest offsets. Experiments 3 and 4 used inertial headings similar to those of experiments 1 and 2, respectively, except that subjects reported the direction of environmental motion. Experiment 4 displayed perceptual influences similar to those in experiment 2, but in experiment 3 the percepts were independent. The results suggest that in most subjects visual and inertial stimuli tend to be perceived as having a common cause at offsets up to 90°, although perception varies significantly between individuals. Limiting the range of inertial headings caused the visual heading to dominate perception.

Keywords: human, visual, vestibular, multisensory, psychophysics

Introduction

When moving through an earth-fixed environment, we are exposed to visual and inertial (e.g. vestibular and somatosensory) stimuli. Both vision, in the form of optic flow[1, 2], and the inertial senses[3–6] provide cues to heading direction. Previous work has examined how visual and inertial cues are combined to yield a unified heading perception. These experiments introduced small offsets between the visual and inertial headings while varying the relative reliability of the stimuli[7–9]. In such situations, the visual and inertial stimuli are integrated near-optimally according to their relative reliabilities, consistent with a Bayesian ideal observer[10–12].
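Reliability-based integration can be sketched as follows. This is a minimal illustration assuming Gaussian cue noise, where each cue's reliability is the inverse of its variance; the function name and the example values are hypothetical, and headings are treated as linear values, which is reasonable only for the small offsets used in those studies.

```python
import math

def combine_cues(h_vis, sd_vis, h_inert, sd_inert):
    """Reliability-weighted (maximum-likelihood) combination of two heading cues.

    Weights are proportional to reliability (1 / variance). Headings in degrees
    are treated as linear, not circular, quantities (illustrative assumption).
    """
    r_vis = 1.0 / sd_vis ** 2
    r_inert = 1.0 / sd_inert ** 2
    w_vis = r_vis / (r_vis + r_inert)
    combined = w_vis * h_vis + (1.0 - w_vis) * h_inert
    # The combined estimate has lower variance than either cue alone.
    combined_sd = math.sqrt(1.0 / (r_vis + r_inert))
    return combined, combined_sd, w_vis

# Hypothetical example: equally reliable cues 10 degrees apart.
est, sd, w = combine_cues(h_vis=10.0, sd_vis=5.0, h_inert=0.0, sd_inert=5.0)
print(est, round(sd, 3), w)  # 5.0 3.536 0.5
```

With equal reliabilities the combined heading falls midway between the cues; making the visual cue more reliable pulls the estimate toward the visual heading.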

Sensory systems continually process stimuli from multiple sensory modalities and sources. In some situations it is appropriate to integrate sensory signals, while in others separate consideration is more appropriate. For multisensory integration to occur, the sensory signals must be perceived to have a common cause, which has led to a causal inference model of multisensory perception[13–15]. Prior studies of visual-inertial heading perception have shown that a multisensory integration strategy is used[7, 16–18], but in these experiments the offset between the stimuli was small. Even without an offset, during eccentric gaze the visual and inertial headings were perceived to differ by about 15° when presented separately, suggesting that physiological offsets exist and that integration occurs under such circumstances[12]. It is not always appropriate to perceive optic flow and inertial heading in the same direction. Although inertial information almost always represents self-motion, in situations such as moving through a crowd or during a snow storm, visual optic flow cues may represent external motion rather than self-motion through an earth-fixed environment. Thus, there are situations in which visual and inertial headings should be perceived as having independent causes and should not be integrated. One study demonstrated that when visual and inertial headings were separated, subjects tended to use only one sensory modality to indicate heading, and detection thresholds were larger than in the visual-only condition[19]. This suggests that multisensory integration does not occur in every situation. In that particular case, it may be because the motion profiles of the visual and inertial stimuli were perceived as dissimilar, in part because of the characteristics of the stimuli used.
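The causal inference idea can be illustrated with a commonly used Gaussian formulation, assumed here for illustration; the models in [13–15] and [20] may differ in detail. Under a common cause, both measurements are noisy copies of a single heading drawn from a Gaussian prior; under independent causes, each measurement has its own heading. The function name, parameters, and prior are hypothetical, and headings are treated as linear rather than circular values.

```python
import math

def p_common_cause(x_vis, x_inert, sd_vis, sd_inert, sd_prior,
                   p_common=0.5, mu_prior=0.0):
    """Posterior probability that two heading measurements share one cause.

    Gaussian causal-inference sketch (illustrative assumption); angles in
    degrees are treated as linear values.
    """
    v1, v2, vp = sd_vis ** 2, sd_inert ** 2, sd_prior ** 2
    # C = 1: one shared heading; the measurement pair is bivariate Gaussian.
    det = v1 * v2 + v1 * vp + v2 * vp
    quad = ((x_vis - x_inert) ** 2 * vp
            + (x_vis - mu_prior) ** 2 * v2
            + (x_inert - mu_prior) ** 2 * v1) / det
    like_c1 = math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(det))
    # C = 2: two independent headings, each drawn from the prior.
    quad2 = ((x_vis - mu_prior) ** 2 / (v1 + vp)
             + (x_inert - mu_prior) ** 2 / (v2 + vp))
    like_c2 = math.exp(-0.5 * quad2) / (
        2 * math.pi * math.sqrt((v1 + vp) * (v2 + vp)))
    num = p_common * like_c1
    return num / (num + (1 - p_common) * like_c2)

# Hypothetical sweep: symmetric disparities around straight ahead.
for disparity in (0, 20, 40, 90):
    p = p_common_cause(disparity / 2, -disparity / 2, 10, 10, 60)
    print(disparity, round(p, 3))
```

In this formulation the probability of a common cause falls steeply with disparity, which is why data showing integration at large offsets are informative about the limits of such models.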

Causal inference strategies have been examined in visual-inertial heading integration[20]. Human subjects were presented with visual and inertial heading stimuli within ±25° of straight ahead, with cue disparities of up to ±40°. They were asked to judge whether the stimuli were in the same or different directions (explicit causal inference) or whether the inertial stimulus was to the right or left of the visual stimulus (implicit causal inference). They found that causal inference was best fit by a model that was independent of visual cue reliability (coherence). Because the study included only headings near straight ahead and cue disparities no greater than 40°, the limits of integration could not be fully explored. At the largest cue disparity tested (40°), subjects still judged the cues to be the same in about 25% of trials, and at all other disparities tested this proportion was 50% or greater. Thus, the range of cue disparities over which the cues can reliably be identified as different was not explored. A second limitation was that subjects judged the inertial heading only as left or right of the visual heading. Because only relative headings were reported, disparities of 10–40° had a similar effect, and the influence on absolute heading estimates was unknown.

Current knowledge of the effect of cue disparity on visual-inertial heading integration is limited. Some studies have limited the disparity to ±40°[20] or ±20°[21], which is insufficient to explore the limits of common causation. One study demonstrated that visual-inertial heading offsets of up to 90° were still perceived as having a common cause in most subjects[22]. A later experiment by the same group examined the effects of stimulus duration, velocity, and acceleration on perception of common causation in visual-inertial headings, but the maximum difference between visual and inertial headings was again limited to ±90°[23]. In those experiments, subjects were instructed to estimate the direction of self-motion but were not told to consider visual and inertial headings separately. These instructions implied that the visual and inertial headings should not be considered independently, which may have influenced perception. The current study presents synchronous visual and inertial headings and asks subjects to report the direction of each in separate trial blocks. The effects on perception of the range of headings presented and of visual stimulus polarity (i.e. reporting environmental motion vs. self-motion) are considered.

Methods

Ethics Statement

The research was conducted according to the principles expressed in the Declaration of Helsinki. Written informed consent was obtained from all participants. The protocol and written consent form were approved by the University of Rochester research science review board prior to the study being conducted.

Human Subjects

Ten subjects participated in the experiments. Six of the subjects were female (#1, 3, 4, 6, 9, and 10). Mean age was 43 ± 19 years (mean ± SD, range 24–70). Subjects had no history of dizziness or vestibular disease, and they had vision that was normal or corrected to normal. Because the experiments were done over a period of time, not all subjects were available to participate in each experiment.

Equipment

Inertial stimuli were delivered using a 6-degree-of-freedom motion platform (Moog, East Aurora, NY, model 6DOF2000E) similar to that used for human motion perception studies in other laboratories [7, 24–26] and described previously for the current laboratory in past heading estimation studies[6, 27]. Subjects sat in a padded racing style seat which included a four-point safety harness. A helmet was used to couple the head to the motion platform.

During visual, inertial, and combined stimulus conditions, an audible white noise was reproduced from two platform-mounted speakers on either side of the subject as previously described [28]. The noise from the platform was similar regardless of motion direction or test condition. Tests in the current laboratory previously demonstrated that subjects could not predict the platform motion direction based on sound alone[28].

Stimuli

Visual and inertial stimuli were presented concurrently and represented the same motion profile. The inertial stimuli consisted of a single-cycle, 2 s (0.5 Hz) sine wave in acceleration. This motion profile has previously been used for heading estimation in the horizontal plane [6]. The visual component was presented on a color LCD screen with a resolution of 1920 × 1080 pixels, positioned 50 cm from the subject so that it filled 98° of the horizontal field of view. The visual component consisted of a star field that simulated movement of the observer through a random-dot cloud with binocular disparity, as described previously [6]. Each star was a yellow triangle 0.5 cm high, with its width at the plane of the screen adjusted for distance. Star density was 0.01 per cm³. The farthest stars were 150 cm away and the nearest 20 cm (a depth of field of 130 cm). Visual coherence was set to 70% for each trial block to give the visual and inertial cues similar relative reliability in typical subjects[12]. Coherence was defined as one minus the fraction of points that were randomly repositioned between 60 Hz visual frames.
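The two numerically defined stimulus properties above can be sketched as follows. This is an illustration under stated assumptions, not the laboratory's stimulus code: the peak acceleration is hypothetical (it is not given in this section), the function names are hypothetical, and the lateral extent of the dot cloud is an arbitrary choice; only the 2 s cycle, the 20–150 cm depth range, the 60 Hz frame rate, and the 70% coherence come from the text.

```python
import math
import random

CYCLE_S = 2.0      # single-cycle duration from the text (0.5 Hz)
PEAK_ACCEL = 0.5   # m/s^2, hypothetical: the peak amplitude is not given here
FRAME_HZ = 60      # visual frame rate from the text

def motion_profile(t, a_peak=PEAK_ACCEL, period=CYCLE_S):
    """Acceleration, velocity and displacement at time t (0 <= t <= period)."""
    w = 2 * math.pi / period
    accel = a_peak * math.sin(w * t)
    vel = (a_peak / w) * (1 - math.cos(w * t))        # zero at start and end
    disp = (a_peak / w) * (t - math.sin(w * t) / w)   # net travel a*T^2/(2*pi)
    return accel, vel, disp

def update_dots(dots, step, coherence=0.7, rng=random):
    """Advance a dot cloud by one frame at the given coherence.

    A fraction (1 - coherence) of dots is redrawn at random positions; the
    rest move by -step, opposite the observer's per-frame displacement.
    Dots are (x, y, z) in cm. The 20-150 cm depth range is from the text;
    the +/-100 cm lateral extent is hypothetical. A full implementation would
    also recycle dots that leave the depth range.
    """
    n_random = round((1 - coherence) * len(dots))
    scrambled = set(rng.sample(range(len(dots)), n_random))
    new_dots = []
    for i, (x, y, z) in enumerate(dots):
        if i in scrambled:
            new_dots.append((rng.uniform(-100, 100), rng.uniform(-100, 100),
                             rng.uniform(20, 150)))
        else:
            new_dots.append((x - step[0], y - step[1], z - step[2]))
    return new_dots, scrambled

# Usage: forward step (cm) per frame at mid-cycle, where velocity peaks.
_, v_mid, _ = motion_profile(1.0)
step_cm = v_mid * 100 / FRAME_HZ
```

Integrating a single sine cycle of acceleration gives a velocity that starts and ends at zero, so the platform begins and ends the stimulus at rest.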

Experimental Procedure

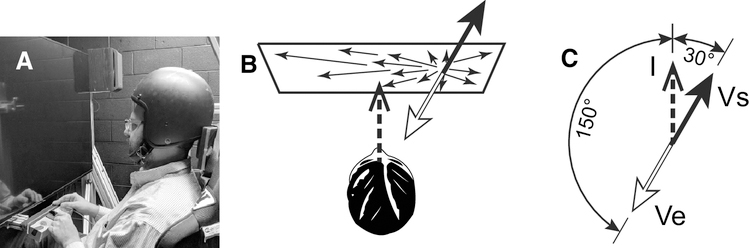

Four experiments were conducted with varying offsets between the visual and inertial heading stimuli. The experiments differed in the directions and offsets that were possible, as well as in the instructions given to subjects. Each experiment was divided into two blocks, one in which subjects reported the inertial heading and another in which they reported the visual heading, although both stimuli were always presented concurrently. Subjects reported heading using a mechanical dial centered in front of them below the screen. The dial could be rotated freely about a vertical axis without any discontinuities. After orienting the dial, subjects pressed a button on either side of it to indicate they were finished. Subjects were asked to report their perception in three different ways in different trial blocks (Fig 1): 1) the direction of inertial motion (I); 2) the direction of self-motion implied by the visual stimulus (Vs), which can be described as the direction the stars were coming from; and 3) the direction of implied environmental motion (Ve), which can be described as the direction the stars were moving. A negative angle corresponds to the left and a positive angle to the right. Sound cues were used to denote the start of each trial, as previously described [27].

Figure 1.

Experimental setup and diagram demonstrating the relationship between the stimulus directions subjects were asked to report. Panel A: The subject sat in front of a viewing screen with a dial to report the perceived heading direction. Panel B: Example of paired visual-inertial stimuli in which the inertial stimulus (I) is straight ahead. Panel C: The associated visual stimulus (Vs) corresponding to self-motion through a fixed environment is shifted 30° to the right. This same visual stimulus can also be interpreted as environmental motion in the opposite direction relative to a fixed observer (Ve).
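The relationship between Vs and Ve in Fig 1 is a 180° rotation. A minimal sketch, assuming angles in degrees with the document's sign convention (negative left, positive right) and wrapping to [−180°, 180°); the function names are hypothetical:

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def env_direction(vs_heading):
    """Environmental-motion direction (Ve) implied by a self-motion heading (Vs)."""
    return wrap_deg(vs_heading + 180.0)

print(env_direction(30))    # -150.0  (Fig 1C: Vs 30 deg right)
print(env_direction(-150))  # 30.0    (the mapping is its own inverse)
print(env_direction(160))   # -20.0   (a 160 deg Vs offset reads as a 20 deg Ve offset)
```

The last line shows why the reversed visual stimuli of experiment 3 (offsets of ±120–160°) correspond to Ve reports of ±20–60°, matching the ideal Vs reports of experiment 1.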

Experiment 1

The inertial stimuli had a range of ±30° in 10° increments and the visual offset had a range of ±60° in 20° increments, so that the maximum visual heading was 90°. The visual and inertial headings were delivered in random order throughout the trial block, with each visual-inertial combination (49 unique stimuli in total) delivered twice. In one block, subjects were asked to report the inertial heading (I), and in the other block the self-motion heading implied by the visual stimulus (Vs). Experiment 1 included subjects 1, 2, 3, 9, and 10. There were 196 stimulus presentations per subject.

Experiment 2

This experiment was similar to experiment 1, except that the inertial stimulus range was expanded to ±140° in 35° increments and the visual offset range to ±120° in 30° increments. Subjects were again instructed to report I and Vs in separate trial blocks. This experiment was done because the range of stimuli used in experiment 1 was not sufficient to produce conditions in which the visual and inertial stimuli were perceived completely independently. Experiment 2 included subjects 1, 3, 4, 5, 6, 9, and 10. There were 324 stimulus presentations per subject, with 81 unique stimulus combinations.
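The trial counts for experiments 1 and 2 follow from the stimulus grids. A minimal sketch; the helper name is hypothetical, and two repetitions per combination in each of two blocks is stated for experiment 1 and inferred for experiment 2 from its 324 presentations and 81 combinations:

```python
import itertools

def design(inertial_max, inertial_step, offset_max, offset_step, reps=2, blocks=2):
    """All (inertial heading, visual offset) pairs and total presentations."""
    inertial = range(-inertial_max, inertial_max + 1, inertial_step)
    offsets = range(-offset_max, offset_max + 1, offset_step)
    combos = list(itertools.product(inertial, offsets))
    return combos, len(combos) * reps * blocks

exp1, n1 = design(30, 10, 60, 20)    # 7 inertial x 7 offsets
exp2, n2 = design(140, 35, 120, 30)  # 9 inertial x 9 offsets
print(len(exp1), n1)  # 49 196
print(len(exp2), n2)  # 81 324
print(max(i + o for i, o in exp1))  # 90, the largest visual heading in experiment 1
```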

Experiment 3

The same range of inertial and visual stimuli as in experiment 1 was used. However, the visual stimuli were in the opposite direction relative to experiment 1, and subjects were asked to report the direction of environmental visual motion (Ve). Thus, compared with experiment 1, the visual stimuli were reversed, but because of the instructions given to the subjects the ideal responses were the same. As in experiment 1, subjects also reported the direction of inertial motion (I) in a separate block. This experiment investigated whether the reported stimulus direction, rather than the actual stimulus direction, influences perception. Experiment 3 included all subjects. There were 196 stimulus presentations per subject.

Experiment 4

The same range of inertial and visual stimuli as in experiment 2 was used, with the same instructions as in experiment 3, i.e. environmental visual motion (Ve) was reported. Unlike in experiment 3, the visual and inertial stimuli covered nearly the full range of directions, so there was no need to reverse the direction of the visual stimuli. Experiment 4 included subjects 1, 2, 4, 5, 9, and 10. There were 324 stimulus presentations per subject.

Data Analysis

Perceived headings (both inertial and visual) were expressed within ±180° relative to the delivered inertial heading. To normalize the level of influence across offsets, the inertial heading was subtracted from both the visual and the inertial responses. Thus, the ideal normalized inertial response was always zero and the ideal normalized visual response was always the offset. Because the study began with an exploration of the influence of visual stimuli on inertial heading perception, a paired two-tailed t-test was run between the visual and inertial responses at each inertial angle (p<0.05). Visual offsets had a similar effect regardless of their direction relative to the inertial heading (e.g. 30° and −30° had similar influences but in opposite directions). The two opposite offsets were therefore combined by reflecting the responses to the negative offsets: responses when the visual offset was to the left were inverted so they could be pooled with those when the visual stimulus was to the right of the inertial heading. Because combining positive and negative offsets gave twice as many responses at each nonzero offset as at the 0° offset, a one-way ANOVA was used for comparisons across all subjects. Individual subject responses were compared using a repeated-measures ANOVA (rmANOVA), with significance determined using a Bonferroni test. All statistical analyses and linear regressions were performed using Kaleidagraph 4.5 (Synergy Software, Reading PA).
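The normalization and reflection steps can be sketched as follows. This is a minimal illustration, not the analysis actually run in Kaleidagraph; the function names and the example trials are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def wrap_deg(angle):
    """Wrap an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def normalize_response(response, inertial, offset):
    """Return (normalized response, folded offset) for one trial.

    The inertial heading is subtracted so the ideal inertial response is 0
    and the ideal visual response equals the visual offset. For negative
    offsets the sign is flipped so left and right offsets can be pooled.
    """
    rel = wrap_deg(response - inertial)
    if offset < 0:
        return wrap_deg(-rel), -offset
    return rel, offset

def pooled_means(trials):
    """Mean normalized response per folded offset.

    trials: iterable of (response, inertial_heading, visual_offset) in degrees.
    """
    groups = defaultdict(list)
    for response, inertial, offset in trials:
        rel, folded = normalize_response(response, inertial, offset)
        groups[folded].append(rel)
    return {off: mean(vals) for off, vals in sorted(groups.items())}

# Hypothetical inertial reports: inertial heading 20 deg with a -40 deg visual
# offset (report 5 deg), and 0 deg with a +40 deg offset (report 35 deg).
print(pooled_means([(5, 20, -40), (35, 0, 40)]))  # {40: 25.0}
```

In the first hypothetical trial the report is 15° left of the inertial heading, toward a visual stimulus on the left, so after reflection it counts as a 15° shift toward vision.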

Results

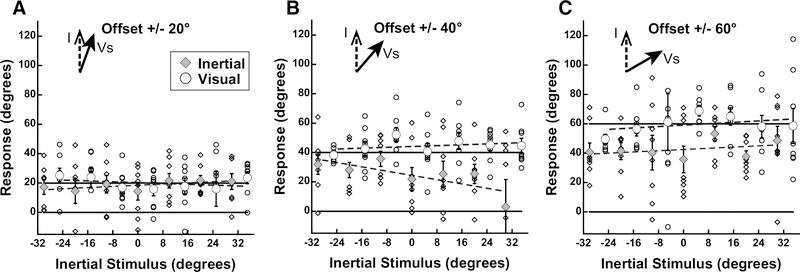

Figure 2 shows the performance of a single subject, illustrating typical trial-to-trial variation.

Figure 2.

Subject 3 was asked to report the direction of suggested self-motion through a fixed environment (Vs, solid arrow). The inertial stimulus direction is shown on the x-axis (abscissa), while the visual stimulus is shown relative to the inertial stimulus on the y-axis (ordinate). Solid lines show ideal performance for inertial headings (solid line at 0) and visual headings (solid line at the offset). Trials in which the visual stimulus was reported are shown as open circles, and inertial trials as gray diamonds. Each visual and inertial trial response is shown (small diamonds and circles), along with the average. Dashed lines represent a linear regression fit to the actual responses. This example is from experiment 1, but similar variation was seen in each experiment. Results at reflected offset angles (e.g. −60° and 60°) were combined and averaged for each offset: A, 20°; B, 40°; C, 60°.

Experiment 1

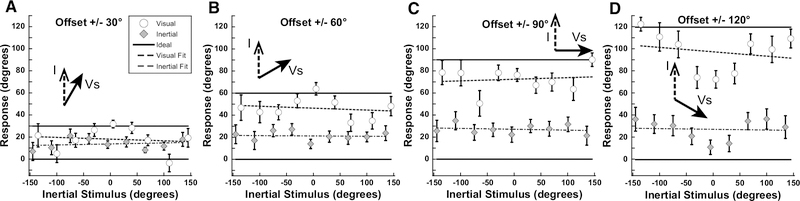

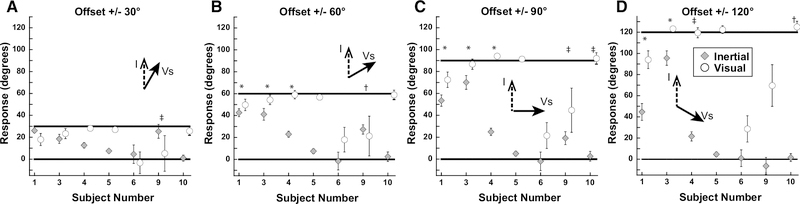

Inertial stimuli were limited to the range near straight ahead (±30°). Visual stimuli were offset ±20, 40, or 60° relative to the inertial heading. In all conditions, when subjects were asked to report the direction of the inertial stimulus there was a strong bias in the direction of the visual stimulus (Fig. 3). Headings were expressed such that the ideal visual response was the offset relative to the inertial heading and the ideal inertial response was zero. For the smallest offset of 20°, the perceived direction was similar whether subjects were asked to report the visual (22 ± 17°; mean ± SD) or the inertial (14 ± 15°) direction. For offsets of 40° and 60°, the mean visual response remained near ideal at 46° and 62°, respectively. At all offsets, the inertial stimulus had minimal influence on perceived direction when subjects were asked to report the visual stimulus (Vs), whereas the perception of inertial heading was influenced by the visual stimulus at all offsets. With the largest (60°) visual offset, the inertial heading was shifted 35 ± 25° towards the visual stimulus. Performance varied between subjects: subjects 1, 2, and 3 perceived the inertial heading close to the visual heading, subject 9 showed a smaller influence of the visual on the inertial heading, and subject 10 perceived them relatively independently (Fig. 4). In most subjects, the perceived inertial heading was strongly biased towards the visual heading over the range of offsets tested. In every condition, inertial heading perception was significantly shifted towards the visual heading (p<0.05 for 0° vs 20°, 40°, and 60°; one-way ANOVA; Bonferroni test). The visual heading was slightly overestimated, i.e. perceived as slightly farther from the inertial heading than it actually was.
One potential confounding factor in this experiment was that subjects may have learned that inertial stimuli fell only within a relatively narrow range around straight ahead (±30°), while the visual stimulus could fall well outside this range; such knowledge may have influenced perception.
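One way to express the shifts reported for experiment 1 as a percentage of the visual offset is sketched below. The exact definition behind the percentages in Fig 11 is not stated in this section, so these two formulas and their names are assumptions; they use the normalized responses, in which the ideal inertial response is 0 and the ideal visual response is the offset.

```python
def visual_influence_on_inertial(mean_inertial_rel, offset):
    """Percent of the visual offset by which the inertial percept moved toward vision.

    Hypothetical definition: 0% = ideal inertial report, 100% = full visual capture.
    """
    return 100.0 * mean_inertial_rel / offset

def inertial_influence_on_visual(mean_visual_rel, offset):
    """Percent of the visual offset by which the visual percept moved toward the inertial heading.

    Hypothetical definition: negative values mean the visual heading was overestimated.
    """
    return 100.0 * (offset - mean_visual_rel) / offset

# Values reported for the 60 deg offset in experiment 1.
print(round(visual_influence_on_inertial(35, 60), 1))  # 58.3
print(round(inertial_influence_on_visual(62, 60), 1))  # -3.3
```

Under this definition, the 35° inertial shift at the 60° offset is more than half of the offset, while the visual percept was pushed slightly away from the inertial heading.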

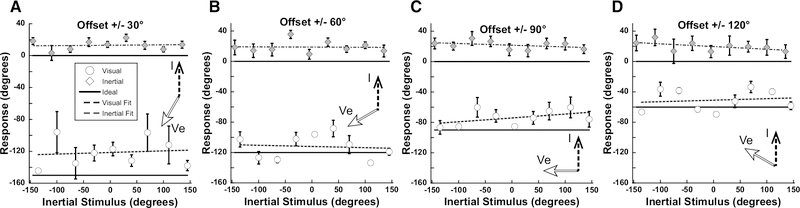

Figure 3.

Aggregate results from experiment 1. Inertial stimuli were limited to the range of ±30° with visual offsets of ±20, ±40, or ±60°. Data are plotted as in Fig. 2, with the inertial stimulus direction on the abscissa and the visual offset on the ordinate. Error bars represent the standard error of the mean (SEM). Ideal performance is shown as a solid line and fits to the perceived heading as a dashed line. Fits were performed by linear least squares regression using Kaleidagraph software. For each offset (20°, 40°, 60°), visual R values were 0.49, 0.14, and 0.12, respectively, while inertial R values were 0.73, 0.38, and 0.09, respectively. The inertial angle has been subtracted from the visual and inertial responses.
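The dashed fits and R values come from ordinary least-squares regression of the normalized response on inertial heading. The sketch below shows that calculation; the function name and the example data are hypothetical, and whether the R values reported in the captions are signed or unsigned is not stated, so this version returns the signed Pearson correlation.

```python
import math

def linear_fit(x, y):
    """Least-squares line y = slope * x + intercept, plus Pearson R.

    Assumes x and y each have nonzero variance.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r

# Hypothetical normalized inertial responses vs. inertial heading (degrees).
headings = [-30, -20, -10, 0, 10, 20, 30]
responses = [30, 38, 31, 36, 33, 40, 35]
slope, intercept, r = linear_fit(headings, responses)
```

A slope and R near zero indicate that the normalized response, and hence the visual influence, changed little across the inertial headings tested.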

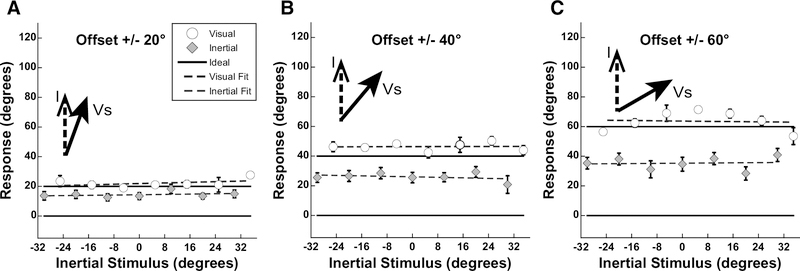

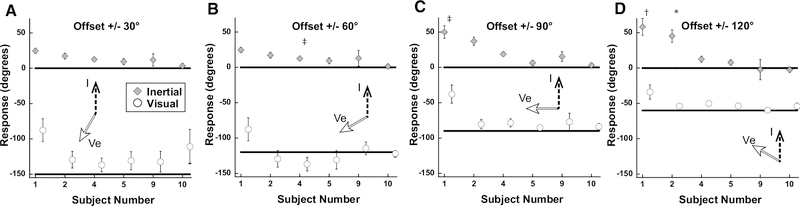

Figure 4.

Results of experiment 1 averaged by subject across the range of inertial headings. Error bars represent ±1 SEM. *: significant shift in heading perception vs 0° offset in both positive and negative offsets (i.e. ±20°). †: significant shift in heading perception vs 0° offset in positive offset (i.e. +20°), but not negative offset (i.e. −20°). ‡: significant shift in heading perception vs 0° offset in negative offset (i.e. −20°), but not positive offset (i.e. +20°). Significance measured using rmANOVA; Bonferroni test.

Experiment 2

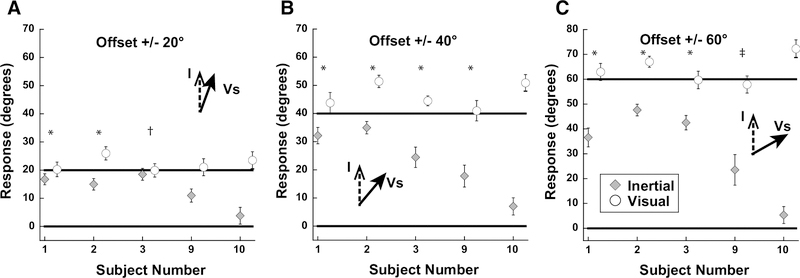

To address the potential effects of the narrow range of stimuli and relatively small offsets between visual and inertial stimuli tested in experiment 1, the range of inertial stimuli was expanded to ±140° in 35° increments and the visual offsets were increased to ±30, 60, 90, and 120°. With a ±30° offset, the visual and inertial stimuli were perceived to have a similar direction (p = 0.1407, paired t-test), intermediate between the two (Fig. 5A). With larger offsets, the visual and inertial headings were perceived as different (p < 0.001 at every heading), but each was significantly influenced by the other modality such that the perceived differences were smaller than the actual offsets (Fig. 5B–D). In contrast to experiment 1, the mean visual response always underestimated the actual visual offset, suggesting that visual heading perception was influenced by the inertial heading. However, at every offset the perceived inertial heading was shifted towards the visual heading by a greater amount, suggesting that the visual heading still had the larger influence. In each condition, inertial heading perception was significantly shifted towards the visual heading (p<0.05 for 0° vs 30°, 60°, 90°, and 120°; one-way ANOVA; Bonferroni test). Thus, during combined stimulus presentation the perceived headings (dashed lines) were closer together than the actual headings (solid lines). In this experiment, perception varied between subjects (Fig. 6): subjects 4, 5, and 10 perceived the visual and inertial stimuli relatively independently; subject 6 perceived the visual stimulus as similar to the inertial heading; and subjects 1 and 3 tended to perceive the inertial heading as closer to the visual heading. Subject 9 perceived the inertial and visual headings near a combination of the two stimuli, except at the ±120° offset, where they were perceived independently.

Figure 5.

Aggregate results from experiment 2. Subjects reported inertial (diamond) or visual (circle) stimulus similar to experiment 1. The inertial stimulus range was expanded to ±140° in 35° increments with a visual stimulus offset of ±30 to ±120° in 30° increments. Error bars represent ±1 SEM. Fits to the perceived heading are shown as a dashed line. Fits were performed by linear least squares regression using Kaleidagraph software. For each offset (30°, 60°, 90°,120°), visual R values were: 0.18, 0.33, 0.07, and 0.23, respectively, while inertial R values were: 0.34, 0.25, 0.14, and 0.003, respectively. Inertial angle has been subtracted from the visual and inertial responses.

Figure 6.

Results of experiment 2 by subject. The values represent the average of all inertial stimulus offsets. These results show variation in perception between subjects: subjects #4, 5, and 10 tended to perceive the visual and inertial stimuli in different directions, while the remaining subjects reported them in a similar direction. Error bars represent ±1 SEM. *: significant shift in heading perception vs 0° offset in both positive and negative offsets (i.e. ±30°). †: significant shift in heading perception vs 0° offset in positive offset (i.e. +30°), but not negative offset (i.e. −30°). ‡: significant shift in heading perception vs 0° offset in negative offset (i.e. −30°), but not positive offset (i.e. +30°). Significance measured using rmANOVA; Bonferroni test.

Experiment 3

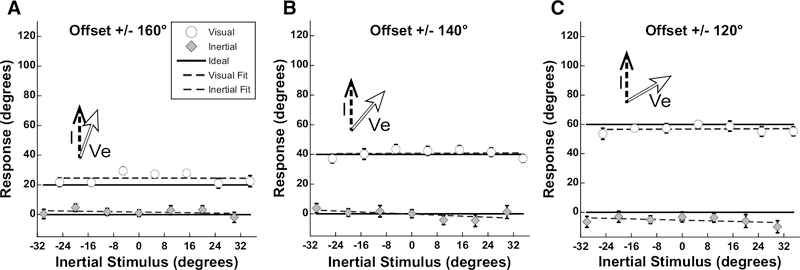

Experiment 3 examined the potential effect of reporting a visual heading direction even when it was not consistent with moving through a fixed environment. The limited range of inertial and visual stimuli from experiment 1 was used, but the direction of the visual stimuli was shifted 180°. Subjects were asked to report the inertial heading (I) in one trial block (as before) and the direction of environmental visual motion (Ve) in the other. Thus, the ideal response for the visual stimulus was the same as in experiment 1, although the visual stimulus moved in the opposite direction. Perception of visual motion and inertial motion was near ideal (Fig. 7), with the visual and inertial stimuli having minimal influence on how the other was perceived. Inertial heading perception was significantly shifted (relative to 0°) in the 60° condition (p<0.05; one-way ANOVA; Bonferroni test). Performance across subjects was relatively uniform (Fig. 8).

Figure 7.

Aggregate results from experiment 3: The inertial stimuli were similar to experiment 1, but the visual stimuli moved in the opposite direction and subjects were asked to report the direction of environmental motion (Ve). Inertial stimuli covered the range of ±30° and visual stimuli were offset by ±160, ±140, or ±120°. These offsets were chosen such that the reported direction of Ve would be ±20, ±40, or ±60° from I, similar to the report of Vs in experiment 1. Subjects reported the direction of inertial motion as the direction they were going; thus, the range of stimuli was such that the direction of self-motion implied by visual motion was never within the range of inertial headings tested. Perception of both inertial and visual motion was close to ideal. Error bars represent ±1 SEM. Fits were performed by linear least squares regression using Kaleidagraph software. For each offset (20°, 40°, 60°), visual R values were 0.02, 0.13, and 0.03, respectively, while inertial R values were 0.38, 0.63, and 0.09, respectively. The inertial angle has been subtracted from the visual and inertial responses.

Figure 8.

Results of experiment 3 by subject. Values represent the average of all inertial offsets tested. All subjects perceived the visual and inertial stimuli in separate directions. Error bars represent ±1 SEM and, if not shown, are smaller than the plot symbol. ‡: significant shift in heading perception vs 0° offset in negative offset (i.e. −30°), but not positive offset (i.e. +30°). Significance measured using rmANOVA; Bonferroni test.

Experiment 4

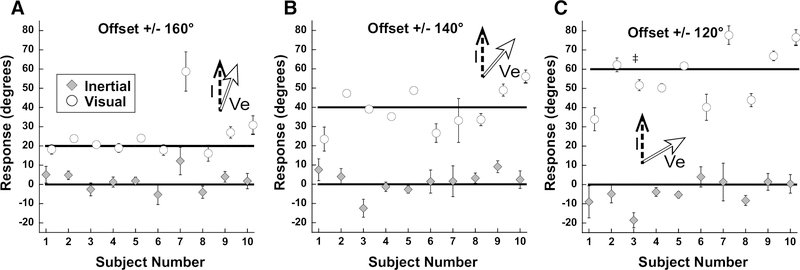

Experiment 4 examined the effect of reporting the direction of visual environmental motion (Ve) and inertial heading (I) when a wider range of stimuli was used. The stimuli were the same as in experiment 2, but subjects were asked to report Ve, which was opposite Vs. Unlike experiment 3, the visual stimulus headings were not reversed, so the only change relative to experiment 2 was in the instructions given to the subjects. This change in how the visual stimulus direction was reported had no effect on inertial stimulus perception, which continued to deviate towards Vs, even though subjects were asked to report the opposite direction. The perception of Ve was shifted towards I by an average of 12° for the largest offset (Fig. 9A) and by 13–36° for smaller offsets (Fig. 9B–C). Inertial heading perception was significantly shifted (p<0.05) for 0° vs 60°, 90°, and 120° (one-way ANOVA; Bonferroni test). Examination of individual subjects revealed that much of this deviation was due to subject 1, while the inertial stimulus had little influence on Ve in most subjects (Fig. 10).

Figure 9.

Aggregate results from experiment 4: Visual and inertial stimuli were similar to experiment 2. Subjects reported the direction of inertial motion as the direction they were going, and reported the visual motion as the direction of environmental motion (i.e. opposite the direction implied by motion through a fixed environment). In each case the perceived direction of visual environmental motion (Ve) was smaller (closer to the inertial heading) than ideal. The direction of inertial motion continued to be biased towards Vs (180° from Ve). Fits were performed by linear least squares regression using Kaleidagraph software. For each offset (30°, 60°, 90°, 120°), visual R values were 0.03, 0.16, 0.35, and 0.04, respectively, while inertial R values were 0.66, 0.42, 0.43, and 0.02, respectively. The inertial angle has been subtracted from the visual and inertial responses.

Figure 10.

Results of experiment 4 by subject. Values represent the average of all inertial offsets tested. *: significant shift in heading perception vs 0° offset in both positive and negative offsets (i.e. ±30°). †: significant shift in heading perception vs 0° offset in positive offset (i.e. +30°), but not negative offset (i.e. −30°). ‡: significant shift in heading perception vs 0° offset in negative offset (i.e. −30°), but not positive offset (i.e. +30°). Significance measured using rmANOVA; Bonferroni test.

Although the experiments included subjects from a wide age range (24 to 70 years), they were not designed to examine effects of aging (e.g. by defining groups by age). Effects seen in some subjects, such as segregation of visual and inertial perception, appeared to occur across the age range and were unlikely to be age related.

Discussion

The current findings extend those of previous experiments that demonstrated perception of common causation with relatively large offsets between visual and inertial headings. Previous work examined visual-inertial offsets of up to ±90°, with subjects reporting their overall perception of the combined stimulus[22, 23]. Those studies concluded that perceiving the visual and inertial stimuli as a single heading (i.e. common causality) was part of the multisensory integration process, but the design of the experiments also forced subjects to report only a single heading. Some prior work has reported perception of individual sensory modalities, but over a more limited range of offsets that did not reach the point where stimuli were reliably perceived independently; for instance, other studies have used offsets of only 10°[17], 20°[21], or 40°[20]. The current experiments contribute to this area by examining a wider range of headings, collecting independent reports of visual and inertial headings, and separating the effects of visual environmental motion from the appearance of self-motion through a fixed environment.

Integration of visual-auditory cues has been studied much more extensively than integration of visual-inertial heading cues. The best-known example of visual-auditory cue integration is the “ventriloquism” effect, in which a sound is perceived as coming from a different location when a visual stimulus consistent with common causation is present. The angular limit for the “ventriloquism” effect is about 30° in the horizontal plane[29–31]. The current study and other recent studies of visual-inertial heading integration[22, 23] demonstrate that the binding window is at least 3-fold larger. This raises the question of why visual-inertial integration is relatively insensitive to large offsets. One possibility is that visual heading perception occurs in a retina-based coordinate system, such that gaze offsets bias visual heading estimates[12, 32], while inertial headings are perceived relative to body coordinates[33, 34]. One way to deal with such a situation would be to reconcile these signals into a common coordinate system, but this does not occur[12, 34]. In the absence of a common coordinate system, it might be appropriate to allow both sensory modalities to influence each other even at large offsets.

The visual-inertial heading integration strategy varied between subjects in some conditions. When the headings were close together and limited to near straight ahead (experiment 1), or when subjects were asked to report visual headings as the direction of environmental motion (Ve, experiments 3 and 4), the strategy was relatively uniform between subjects. However, when the full range of inertial headings was possible and paired with potentially large offsets in the visual stimulus direction, performance varied (Fig. 6). Subjects 1 and 3 tended to exhibit a visual capture strategy in which both the inertial and visual stimuli were perceived closer to the visual heading. This was similar to the strategy used by most subjects in experiment 1, when the range of stimuli and offsets was limited. Subject 6 had the opposite, inertial capture strategy, in which both stimuli were perceived closer to the inertial stimulus. The remaining subjects perceived the visual and inertial stimuli separately even with relatively small offsets. Thus, it seems that a full range of strategies may be used when the range of possible stimuli and offsets is large. In a subset of subjects, heading differences as great as 120° were perceived as similar (Fig. 5D, subjects 3 & 6), while others perceived headings as different at differences as small as 20° (Fig. 4A, subject 10). When the same subject was tested across conditions, they tended to show similar trends across the offsets tested. The reason for this variation between subjects is unknown, as all subjects received similar instructions and individuals with a history of motion sickness or migraine were not included. We believe all subjects had a similar expectation that the visual and inertial stimuli would not necessarily be in a similar direction, but subjects were not told which offsets were possible in each trial block, and expectations could have varied.
Previous work in this area that considered individual subject performance has also demonstrated that strategies can be different across participants[22, 23], which makes it difficult to generate a theory that predicts individual performance.

These experiments used a task in which visual and inertial stimuli were presented together and the direction of each was reported in separate trial blocks. This is not the only way to approach the question of common causality. Others have addressed the issue by comparing responses in unisensory conditions with perception during combined stimulus presentations[22, 23]. Asking subjects to judge only one sensory modality when two are presented might imply that the two modalities are separate, while asking for a combined percept might imply that they are the same, or might force subjects to consciously choose an intermediate heading even if the headings were perceived as different. Another option would be to ask subjects to report whether the two modalities represent the same or different headings, or whether one modality is to the left or right of the other. To our knowledge, no such experiments have been done with visual-inertial integration.

In conclusion, limiting the range of inertial headings presented allowed visual headings, which had a larger possible range, to dominate perception of the combined stimulus. With a wider range of stimuli, there was significant variation in how subjects perceived simultaneous visual and inertial headings. In most subjects, the visual and inertial headings influenced each other such that perception was shifted towards an intermediate heading even with large offsets. When subjects were asked to report visual motion as the direction of environmental motion, the inertial heading had little influence.

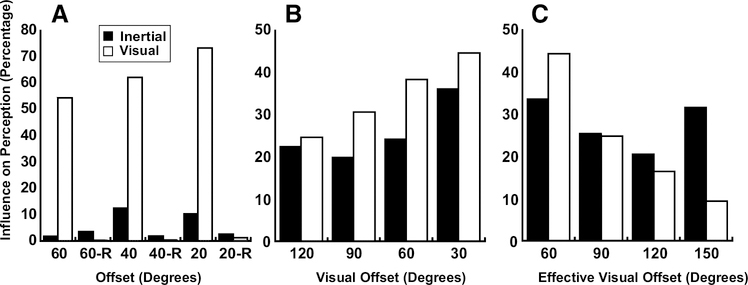

Figure 11.

White bars show the percentage by which inertial heading perception changed due to visual stimuli. Black bars show the percentage by which visual heading perception changed due to inertial stimuli. A. Experiment 1: as the visual-inertial offset increased, the influence of visual stimuli on inertial perception decreased. B. Experiment 2: visual influence on inertial perception continued to decrease with larger offsets. C. Experiment 3: negligible visual influence on inertial perception. D. Experiment 4: visual influence on inertial perception decreased as the effective visual offset increased.
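The caption does not define how the percentage change is computed, and the methods are not part of this section. As a minimal sketch, assuming the percentage is the shift in the mean reported heading divided by the imposed offset of the other modality, the calculation could look like the following. The function names are hypothetical, and the wrapping step is an added assumption so that shifts crossing ±180° (possible with the ±140° inertial range and large offsets of experiment 2) are measured the short way around the circle. Using the abstract's figures, a 35° shift of the inertial percept at a 60° visual offset corresponds to roughly 58% under this assumed definition.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the interval (-180, 180]."""
    wrapped = (angle + 180.0) % 360.0 - 180.0
    return 180.0 if wrapped == -180.0 else wrapped


def percent_influence(baseline_response, combined_response, offset):
    """Shift of the reported heading, as a percentage of the other cue's offset.

    baseline_response: mean reported heading with no offset (degrees)
    combined_response: mean reported heading with the offset applied (degrees)
    offset: signed offset of the other modality (degrees, nonzero)
    """
    if offset == 0:
        raise ValueError("offset must be nonzero")
    # Signed angular shift, taken the short way around the circle
    shift = wrap_deg(combined_response - baseline_response)
    return 100.0 * shift / offset


if __name__ == "__main__":
    print(percent_influence(0.0, 35.0, 60.0))     # shift of 35° at a 60° offset
    print(percent_influence(170.0, -160.0, 60.0))  # 30° shift that crosses ±180°
```

A value near 100% would correspond to capture by the offset cue, and a value near 0% to independent perception of the two headings.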

Acknowledgements

The authors would like to thank Kyle Critelli for technical assistance as well as editing the final paper. Grant support was provided by NIDCD R01 DC013580.

References

- 1. Gibson JJ. The perception of the visual world. Boston: Houghton Mifflin; 1950. 235 p.

- 2. Warren WH Jr., Hannon DJ. Direction of self-motion perceived from optical flow. Nature. 1988;336:162–3.

- 3. Guedry FE Jr. Psychophysics of vestibular sensation. In: Kornhuber HH, editor. Handbook of Sensory Physiology. Vol. VI/2. New York: Springer-Verlag; 1974. p. 3–154.

- 4. Telford L, Howard IP, Ohmi M. Heading judgments during active and passive self-motion. Exp Brain Res. 1995;104(3):502–10.

- 5. Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci. 2007;10(8):1038–47.

- 6. Crane BT. Direction Specific Biases in Human Visual and Vestibular Heading Perception. PLoS One. 2012;7(12):e51383. Epub 2012/12/07. doi: 10.1371/journal.pone.0051383.

- 7. Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic re-weighting of visual and vestibular cues during self-motion perception. J Neurosci. 2009;29(49):15601–12. doi: 10.1523/JNEUROSCI.2574-09.2009.

- 8. Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11(10):1201–10. doi: 10.1038/nn.2191.

- 9. Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE. Neural correlates of reliability-based cue weighting during multisensory integration. Nat Neurosci. 2012;15(1):146–54. doi: 10.1038/nn.2983.

- 10. Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27(12):712–9.

- 11. Fetsch CR, DeAngelis GC, Angelaki DE. Visual-vestibular cue integration for heading perception: applications of optimal cue integration theory. Eur J Neurosci. 2010;31(10):1721–9. doi: 10.1111/j.1460-9568.2010.07207.x.

- 12. Crane BT. Effect of eye position during human visual-vestibular integration of heading perception. J Neurophysiol. 2017. doi: 10.1152/jn.00037.2017.

- 13. Körding KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L. Causal inference in multisensory perception. PLoS One. 2007;2(9):e943. doi: 10.1371/journal.pone.0000943.

- 14. Sato Y, Toyoizumi T, Aihara K. Bayesian inference explains perception of unity and ventriloquism aftereffect: identification of common sources of audiovisual stimuli. Neural Comput. 2007;19(12):3335–55. Epub 2007/11/01. doi: 10.1162/neco.2007.19.12.3335.

- 15. Wozny DR, Beierholm UR, Shams L. Probability matching as a computational strategy used in perception. PLoS Comput Biol. 2010;6(8):e1000871. doi: 10.1371/journal.pcbi.1000871.

- 16. Angelaki DE, Gu Y, DeAngelis GC. Visual and vestibular cue integration for heading perception in extrastriate visual cortex. J Physiol. 2011;589(Pt 4):825–33. doi: 10.1113/jphysiol.2010.194720.

- 17. Butler JS, Smith ST, Campos JL, Bulthoff HH. Bayesian integration of visual and vestibular signals for heading. J Vis. 2010;10(11):23. doi: 10.1167/10.11.23.

- 18. Butler JS, Campos JL, Bulthoff HH, Smith ST. The role of stereo vision in visual-vestibular integration. Seeing Perceiving. 2011;24(5):453–70. doi: 10.1163/187847511X588070.

- 19. de Winkel KN, Weesie J, Werkhoven PJ, Groen EL. Integration of visual and inertial cues in perceived heading of self-motion. J Vis. 2010;10(12):1. Epub 2010/11/05. doi: 10.1167/10.12.1.

- 20. Acerbi L, Dokka K, Angelaki DE, Ma WJ. Bayesian comparison of explicit and implicit causal inference strategies in multisensory heading perception. PLoS Comput Biol. 2018;14(7):e1006110. Epub 2018/07/28. doi: 10.1371/journal.pcbi.1006110.

- 21. Ramkhalawansingh R, Butler JS, Campos JL. Visual-vestibular integration during self-motion perception in younger and older adults. Psychol Aging. 2018;33(5):798–813. Epub 2018/07/13. doi: 10.1037/pag0000271.

- 22. de Winkel KN, Katliar M, Bulthoff HH. Forced fusion in multisensory heading estimation. PLoS One. 2015;10(5):e0127104. doi: 10.1371/journal.pone.0127104.

- 23. de Winkel KN, Katliar M, Bulthoff HH. Causal Inference in Multisensory Heading Estimation. PLoS One. 2017;12(1):e0169676. doi: 10.1371/journal.pone.0169676.

- 24. MacNeilage PR, Banks MS, DeAngelis GC, Angelaki DE. Vestibular heading discrimination and sensitivity to linear acceleration in head and world coordinates. J Neurosci. 2010;30(27):9084–94. Epub 2010/07/09. doi: 10.1523/JNEUROSCI.1304-10.2010.

- 25.Grabherr L, Nicoucar K, Mast FW, Merfeld DM. Vestibular thresholds for yaw rotation about an earth-vertical axis as a function of frequency. Exp Brain Res. 2008;186(4):677–81. Rodriguez & Crane Visual-inertial heading estimation [DOI] [PubMed] [Google Scholar]

- 26. Cuturi LF, MacNeilage PR. Systematic biases in human heading estimation. PLoS One. 2013;8(2):e56862. doi: 10.1371/journal.pone.0056862.

- 27. Crane BT. Human Visual and Vestibular Heading Perception in the Vertical Planes. J Assoc Res Otolaryngol. 2014;15(1):87–102. doi: 10.1007/s10162-013-0423-y.

- 28. Roditi RE, Crane BT. Directional asymmetries and age effects in human self-motion perception. J Assoc Res Otolaryngol. 2012;13(3):381–401.

- 29. Recanzone GH. Interactions of auditory and visual stimuli in space and time. Hear Res. 2009;258(1–2):89–99. Epub 2009/04/28. doi: 10.1016/j.heares.2009.04.009.

- 30. Thurlow WR, Jack CE. Certain determinants of the “ventriloquism effect”. Percept Mot Skills. 1973;36(3):1171–84. Epub 1973/06/01. doi: 10.2466/pms.1973.36.3c.1171.

- 31. Witkin HA, Wapner S, Leventhal T. Sound localization with conflicting visual and auditory cues. J Exp Psychol. 1952;43(1):58–67. Epub 1952/01/01.

- 32. Crane BT. Coordinates of Human Visual and Inertial Heading Perception. PLoS One. 2015;10(8):e0135539. doi: 10.1371/journal.pone.0135539.

- 33. Chen X, DeAngelis GC, Angelaki DE. Diverse spatial reference frames of vestibular signals in parietal cortex. Neuron. 2013;80(5):1310–21. doi: 10.1016/j.neuron.2013.09.006.

- 34. Yang L, Gu Y. Distinct spatial coordinate of visual and vestibular heading signals in macaque FEFsem and MSTd. eLife. 2017;6. doi: 10.7554/eLife.29809.