Abstract

Objectives

Hospital (consented) autopsy rates have dropped precipitously in recent decades. Online medical information is now a common resource used by the general public. Given clinician reluctance to request hospital postmortem examinations, we assessed whether healthcare users have access to high quality, readable autopsy information online.

Design

A cross-sectional analysis of 400 webpages. Readability was determined using the Flesch-Kincaid score, grade level and Coleman-Liau Index. Authorship, DISCERN score and Journal of the American Medical Association (JAMA) criteria were applied by two independent observers. Health On the Net Code of Conduct (HON-code) certification was also assessed. Sixty-five webpages were included in the final analysis.

Results

The overall quality was poor (mean DISCERN=38.1/80, 28.8% did not fulfil a single JAMA criterion and only 10.6% were HON-code certified). Quality scores were significantly different across author types, with scientific and health-portal websites scoring highest by DISCERN (analysis of variance (ANOVA), F=5.447, p<0.001) and JAMA (Kruskal-Wallis, p<0.001) criteria. HON-code certified sites were associated with higher JAMA (Mann-Whitney U, p<0.001) and DISCERN (t-test, t=3.5, p=0.001) scores. The most frequent author type was government (27.3%), which performed lower than average on DISCERN scores (ANOVA, F=5.447, p<0.001). Just 5% (3/65) were at or below the recommended eighth grade reading level (aged 13–15 years).

Conclusions

Although there were occasional high quality web articles containing autopsy information, these were diluted by irrelevant and low quality sites, set at an inappropriately high reading level. Given the paucity of high quality articles, healthcare providers should familiarise themselves with the best resources and direct the public accordingly.

Keywords: quality, readability, autopsy, online information, DISCERN, post-mortem

Strengths and limitations of this study.

This is the first study to measure the quality and readability of online information available to the public in the often emotive and controversial area of autopsy practice.

The study included articles from the four most commonly used English language search engines and reviewed all the top 25 hits to ensure adequate selection.

Two measures of readability and three measures of quality were used.

Commonly used and validated indices were utilised to measure quality and readability and were independently assessed by two observers.

The study does not include websites beyond the top 25 hits on search engines, which may miss important articles; however, this cut-off was set to include only the articles that a general member of the public is likely to encounter online.

Introduction

The general public increasingly accesses health information online; in 2016, 71% of European Union residents used the internet every day1 and 72% of American adults have used the internet to access health information.2 Despite its ease of availability, there have been concerns regarding the quality and readability of these unregulated online resources.3–5 In recent decades, there has also been a dramatic reduction in autopsy practice. The rate of consented hospital autopsies has reduced from 25% three decades ago to <1% in recent years in the UK,6 with similar reductions seen worldwide.7–10 The reasons for this decline are multifactorial. There are concerns among physicians that families are unlikely to consent to autopsy, particularly given the organ retention scandals of the mid-1990s.11 However, one study suggests that the majority of families (89%) may agree to a postmortem examination once they are properly informed.12 Another factor is that, given the advances in new technology in medicine, some clinicians no longer consider autopsy necessary,13 although the evidence suggests that, even in the intensive-therapy unit, where patients are heavily investigated, the major misdiagnosis rate at autopsy is 28% and almost one-third of these were class I errors.14 Other reasons for the decline in autopsy practice include clinician distaste for the procedure,15 reduced clinical interest16 and concerns regarding medical litigation.17

As clinicians are not offering postmortem examinations, it is incumbent on pathologists to ensure that the public are properly informed about their importance. Unfortunately, there appears to be a frequent, inaccurate depiction of autopsy practice in film and television, at a time when postmortem examinations need to be advocated. This is particularly the case given ongoing medical advances, which can improve the accuracy of the autopsy but also need to be audited to inform an understanding of the potential consequences of new technologies on individual patients.18 In order to better inform patients, various tools have been created to ensure accurate and reproducible appraisals of online information. These include the DISCERN19 and Journal of the American Medical Association (JAMA)5 criteria, along with the HON-code certification,20 which all assess the quality of the information. The Flesch-Kincaid (FK) and Coleman-Liau Index (CLI) are formulae which measure the ease of readability of articles.21 It is recommended that online health information be kept to a seventh to eighth grade (aged 13–15 years in the US schooling system) reading level.22 We hypothesised that the quality of online information would be poor in relation to autopsy given its inherent controversy as a procedure. The aim of the current study was thus to assess the quality and readability of online information from the most popular English-language search engines, which are Google, Yahoo, Bing and ask.com, respectively.23

Materials and methods

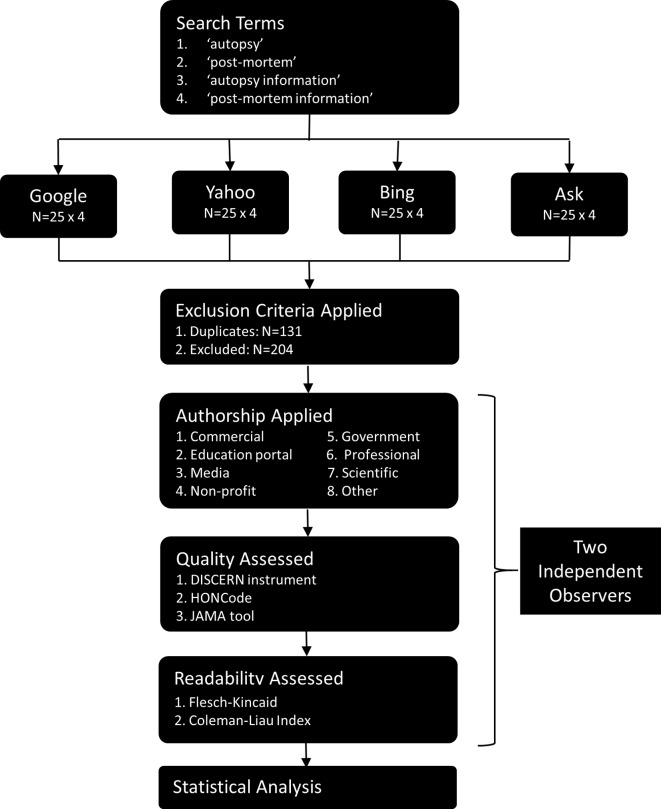

Ethical approval was not deemed necessary for this study. The search terms ‘autopsy’, ‘post-mortem’, ‘autopsy information’ and ‘post-mortem information’ were entered, respectively, into Google, Bing, Yahoo and Ask from a local internet protocol (IP) address in September and October 2017 (figure 1). English language filters were applied. The first 25 links were reviewed from each of these 16 searches and were assessed for inclusion and exclusion criteria (400 links total). This cut-off was based on a study which suggested that online consumers of health information rarely search beyond the first 25 links.24 Articles were included if they were in English, >300 words and contained information pertaining to human autopsy. Exclusion criteria were: dictionary definitions; videos/images without a text component over 300 words; foreign language; and websites not concerning human autopsy. Duplicate websites (n=131) were also excluded.

Figure 1.

Flow diagram of methods of website inclusion.
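The screening steps described above can be sketched in code. This is a minimal illustration only: the `Page` record, its field names and the order in which the checks are applied are assumptions made for the example, not the authors' actual workflow, which was carried out by manual review.

```python
from dataclasses import dataclass

# Search set-up as described in the methods.
SEARCH_TERMS = ["autopsy", "post-mortem", "autopsy information", "post-mortem information"]
SEARCH_ENGINES = ["Google", "Bing", "Yahoo", "Ask"]
LINKS_PER_SEARCH = 25
MIN_WORDS = 300  # articles had to contain more than 300 words


@dataclass
class Page:
    """Hypothetical record for one search hit (field names are illustrative)."""
    url: str
    language: str                 # e.g. "en"
    word_count: int               # words in the text component
    about_human_autopsy: bool
    is_dictionary_definition: bool = False


def total_links_reviewed() -> int:
    """4 terms x 4 engines x 25 links per search."""
    return len(SEARCH_TERMS) * len(SEARCH_ENGINES) * LINKS_PER_SEARCH


def screen(pages):
    """Return pages that pass the inclusion/exclusion criteria, dropping duplicates."""
    seen_urls = set()
    included = []
    for page in pages:
        if page.url in seen_urls:          # duplicate website
            continue
        seen_urls.add(page.url)
        if page.language != "en":          # foreign language
            continue
        if page.word_count <= MIN_WORDS:   # too little text
            continue
        if page.is_dictionary_definition:  # dictionary definition
            continue
        if not page.about_human_autopsy:   # irrelevant or non-human content
            continue
        included.append(page)
    return included
```

For example, `total_links_reviewed()` reproduces the 400 links reviewed, and `screen()` applied to a list of `Page` records returns only the unique, eligible pages.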

DISCERN

The DISCERN tool is a 16 part standardised assessment of the quality and reliability of literature, developed by the National Health Service in the UK.19 Articles are scored from 16 to 80, with a higher score indicating better quality. The final score has been grouped according to quality level previously and is summarised in table 1.25 The DISCERN score was assessed by two independent observers (BH and PB) with a good correlation (intraclass correlation (ICC)=0.712, p<0.001) and any disagreements were resolved by consensus decision prior to the final analysis.

Table 1.

DISCERN score according to quality level.

| DISCERN score | Quality standard |
| --- | --- |
| <27 | Very poor |
| 27–38 | Poor |
| 39–50 | Fair |
| 51–62 | Good |
| >62 | Excellent |
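The banding in table 1 can be expressed as a small lookup function. This is a sketch only; the function name is hypothetical, and placing non-integer values (such as a mean score) in the band whose lower bound they exceed is an assumption made here, since table 1 lists integer ranges.

```python
def discern_quality(score: float) -> str:
    """Map a total DISCERN score (16 to 80) to the quality bands of table 1."""
    if not 16 <= score <= 80:
        raise ValueError("DISCERN total scores range from 16 to 80")
    if score < 27:
        return "Very poor"
    if score < 39:   # 27-38 (assumption: fractional means fall in the lower band)
        return "Poor"
    if score < 51:   # 39-50
        return "Fair"
    if score < 63:   # 51-62
        return "Good"
    return "Excellent"
```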

JAMA and HON-code

The JAMA score is a less rigorous four-part scoring system in which articles are assessed for the presence of authorship, sources, disclosures and date.5 This was independently assessed by BH and PB with good correlation (ICC=0.799, p<0.001) and any disagreements were resolved by consensus prior to the final analysis. Additionally, each article was assessed for the presence or absence of the HON-code certification toolbar.26
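Because the JAMA score is simply a count of the four criteria that are present, it can be illustrated in a few lines. The dictionary keys and function name below are hypothetical labels chosen for this sketch.

```python
# The four JAMA benchmark criteria named in the text.
JAMA_CRITERIA = ("authorship", "sources", "disclosures", "date")


def jama_score(criteria_present: dict) -> int:
    """Count how many of the four JAMA criteria a web article fulfils (0 to 4)."""
    return sum(1 for criterion in JAMA_CRITERIA if criteria_present.get(criterion, False))
```

An article that lists its authors and a date but gives no sources or disclosures would therefore score 2, and an article fulfilling none of the criteria would score 0.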

Readability

Readability was assessed with an online readability tool27 using the FK and CLI methods. The FK method primarily assesses the mean sentence length and the mean number of syllables per word, while the CLI assesses the average number of letters and sentences per 100 words. There was a significant correlation between the two methods (Pearson’s r=0.687, p<0.001). The FK grade and CLI both correspond to a school grade (ie, an FK grade of eight refers to a reading level which should be understandable to a child in the eighth grade, ~14 years old). Conversely, the FK score is inversely related to grade level: a score below approximately 70 indicates text above an eighth grade reading level.
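For readers unfamiliar with these indices, the standard published formulae are sketched below. The functions take pre-computed counts, because counting syllables and sentences automatically requires heuristics; the online tool used in the study may count them differently, so its output need not match these functions exactly. The function names are illustrative.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """FK score (Flesch Reading Ease): higher values mean easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """FK grade: approximate US school grade needed to understand the text."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def coleman_liau_index(letters: int, words: int, sentences: int) -> float:
    """CLI: grade level from letters and sentences per 100 words."""
    letters_per_100_words = letters / words * 100
    sentences_per_100_words = sentences / words * 100
    return 0.0588 * letters_per_100_words - 0.296 * sentences_per_100_words - 15.8
```

Longer sentences and longer words raise both grade-level indices and lower the FK score, which is why the FK score moves in the opposite direction to the FK grade and CLI.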

Authorship

Website authorship was categorised according to a recognised classification system.28 Commercial websites aimed to make a profit by providing a service (eg, www.nationalautopsyservices.com). Government sites were regulated by an official governmental body and largely included coronial services (eg, www.manchester.gov.uk), although NHS Trust websites which were written by a healthcare professional were included in the professional category. Health portal websites included many articles on various health topics (eg, www.medscape.com). Media sites included newspapers and magazines (eg, www.newyorker.com). Non-profit sites included charities and supportive and educational organisations that were not aiming to make a profit (eg, www.bereavementadvice.org). The ‘other’ category included sites which did not fit any of the other categories (eg, www.myjewishlearning.com). Professional websites were written by experts in the field of autopsy (eg, www.rcpath.org). Scientific sites were academic journals (eg, www.ncbi.nlm.nih.gov). Authorship categories were assigned by two independent investigators; any disagreements were reviewed and a consensus decision was achieved in each case.

Patient and public involvement

No patients or members of the public were involved in the design or planning of this study.

Data availability

The data collected independently by both observers (BH and PB) are available in online supplementary appendix A. The data after consensus agreement are available in online supplementary appendix B.

bmjopen-2018-023804supp001.xlsx (13.3KB, xlsx)

bmjopen-2018-023804supp002.xlsx (11.8KB, xlsx)

Statistical analysis

All normally distributed data were displayed as mean and SD and analysed using parametric tests (t-tests, ANOVA). Otherwise, the data were displayed as median and IQR and non-parametric tests were used (Mann-Whitney U, Kruskal-Wallis). For ANOVA, post hoc analysis was performed using Tukey testing, while for Kruskal-Wallis, post hoc comparisons were performed using Bonferroni-adjusted Mann-Whitney tests. All statistical analysis was performed using SPSS V.24. Significance was set at p<0.05.
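The Bonferroni adjustment mentioned above multiplies each raw p value by the number of comparisons made, capping the result at 1. The sketch below illustrates the arithmetic only; the number of pairwise comparisons actually performed is not stated in the text, so the helper that counts all possible pairs among the author categories is an assumption, and the function names are hypothetical.

```python
from math import comb


def n_pairwise_comparisons(n_groups: int) -> int:
    """Number of distinct pairs among n_groups (assumes every pair is compared)."""
    return comb(n_groups, 2)


def bonferroni_adjust(p_values):
    """Return Bonferroni-adjusted p values: each p multiplied by the number of tests, capped at 1."""
    n_tests = len(p_values)
    return [min(1.0, p * n_tests) for p in p_values]
```

With eight author categories, comparing every pair would give 28 Mann-Whitney tests, so a raw p value would need to be below 0.05/28 to remain significant after adjustment.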

Results

Sixty-five websites were included in the analysis. Each web article was categorised according to author type: 27.3% were governmental, 24.2% were professional, 13.6% were non-profits, 9.1% were media, 7.6% were education portals, 6.1% were commercial, 3% were scientific and 7.6% were classified as other. 10.6% (n=7) were HON-code certified.

Quality

The mean overall DISCERN score was poor at 38.1 (SD 11.7) out of a possible 80.

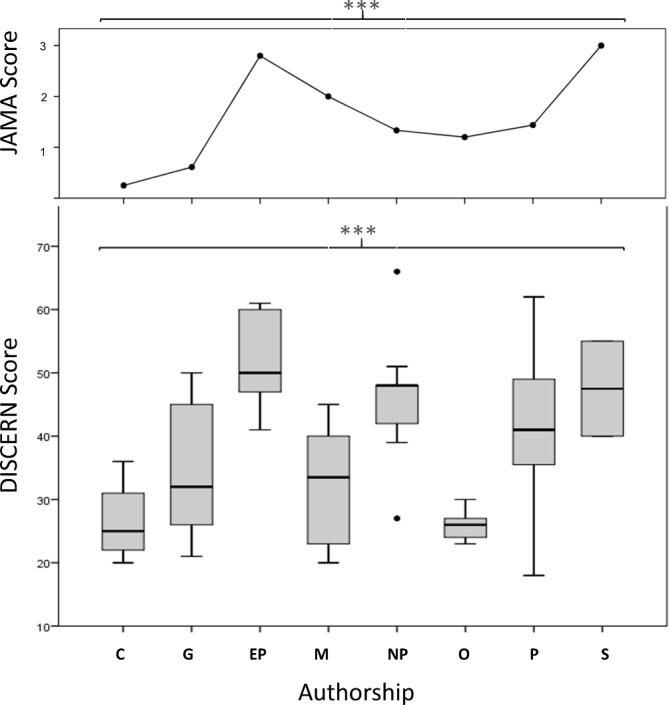

There was a significant difference in the DISCERN score across the various authorship categories (ANOVA, F=5.447, p<0.001). A post hoc Tukey analysis showed that education portal sites had significantly higher scores than commercial (p=0.005), government (p=0.015), media (p=0.032) and other (p=0.002) sites, while non-profit sites had higher scores than commercial (p=0.025) and other (p=0.009) sites.

The median JAMA criteria score was 1 (IQR=2) and 19/65 (28.8%) received the lowest possible score of 0. There was a significant difference in JAMA score across author groups (Kruskal-Wallis, p<0.001). Bonferroni-adjusted Mann-Whitney tests were used for post hoc comparisons and showed that education portal sites had a significantly higher score than commercial (p=0.026) and government (p=0.004) websites (table 2). HON-code positive sites were associated with significantly higher JAMA (Mann-Whitney U, p<0.001) and DISCERN (t-test, t=3.5, p=0.001) scores than HON-code negative sites (table 3).

Table 2.

Results of each scoring system stratified according to authorship, presented as mean±SD.

| | C | G | EP | M | NP | O | P | S | Sig |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FK grade | 12.2±2.2 | 11.5±1.5 | 11.1±4.3 | 12.1±2.6 | 11.8±2.0 | 12.8±2.2 | 12.0±2.2 | 15.2±0.0 | P=0.496 |
| FK score | 40.4±13.4 | 47.8±6.4 | 46.3±20.9 | 45.0±14.1 | 46.4±10.4 | 40.5±9.4 | 43.6±12.0 | 25.4±5.2 | P=0.287 |
| CLI | 12.3±2.4 | 10.2±1.3 | 11.2±2.2 | 11.0±2.0 | 10.3±1.9 | 11.6±1.8 | 11.3±1.9 | 13.5±0.7 | P=0.109 |
| DISCERN | 26.5±6.8 | 34.4±9.4 | 51.8±8.6 | 32.5±9.7 | 46.1±10.4 | 26.0±2.7 | 41.0±11.1 | 47.5±10.6 | ***P<0.001 |
| JAMA | 0.25±0.5 | 0.6±1.0 | 2.8±0.8 | 2.0±6.3 | 1.3±1.0 | 1.2±0.8 | 1.4±1.1 | 3.0±0.0 | ***P<0.001 |

C, commercial; CLI, Coleman-Liau Index; EP, education portal; FK, Flesch-Kincaid; G, governmental; JAMA, Journal of the American Medical Association; M, media; NP, non-profit; O, other; P, professional; S, scientific; Sig, significance.

Table 3.

Results of each scoring system stratified according to HON-code, presented as mean±SD. DISCERN is the score from the DISCERN Instrument. CLI, Coleman-Liau Index; FK, Flesch-Kincaid; JAMA, Journal of the American Medical Association.

| | HON-code +ve | HON-code –ve | Sig |
| --- | --- | --- | --- |
| FK grade | 11.0±3.8 | 12.1±2.0 | P=0.25 |
| FK score | 46.3±19.0 | 44.3±10.6 | P=0.65 |
| CLI | 11.2±2.0 | 10.9±1.8 | P=0.63 |
| DISCERN | 51.7±7.5 | 36.4±11.1 | ***P=0.001 |
| JAMA | 2.9±0.7 | 1.1±1.0 | ***P<0.001 |

CLI, Coleman-Liau Index; FK, Flesch-Kincaid; JAMA, Journal of the American Medical Association.

Readability

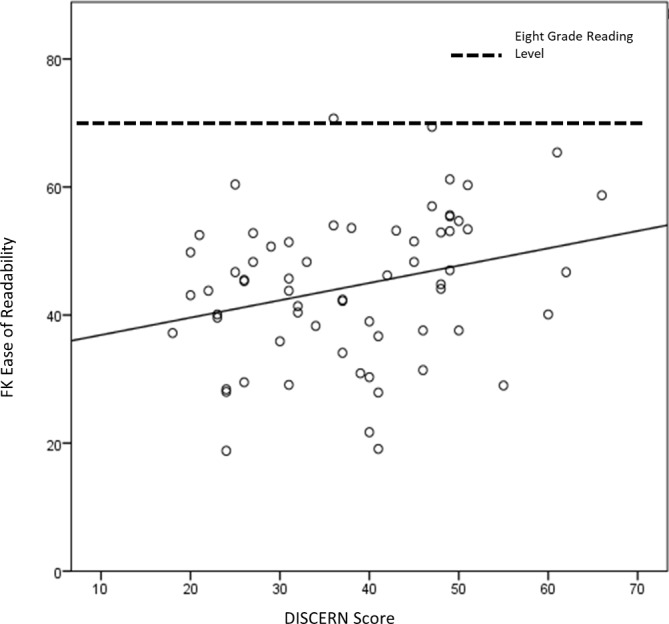

The overall mean CLI, FK grade and score were 11.0 (SD=1.8), 12.0 (SD=2.2) and 44.5 (SD=11.5), respectively. The vast majority of the articles (62/65, 95%) were above the suggested eighth grade reading level, meaning that only four articles (6%, 95% CI 1.7% to 15%) were at or below this level (figure 2). There was no significant difference across the author types in terms of CLI (ANOVA, F=1.781, p=0.109), FK grade (ANOVA, F=0.923, p=0.496) or FK score (ANOVA, F=1.260, p=0.287) (table 2). The presence of HON-code certification was not associated with any change in readability. In addition, the quality, authorship or readability of the web article was not associated with the position of the article in the top 25 search engine hits (data not shown).

Figure 2.

Scatter plot comparing ease of readability (FK score) and DISCERN quality scores. FK, Flesch-Kincaid.
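The 95% CI quoted for the proportion of articles at or below the eighth grade reading level can be approximated with an exact binomial interval. The method used to derive the published interval is not stated, so the Clopper-Pearson approach below is an assumption; it is implemented with the standard library only, solving for each bound by bisection, and the function names are illustrative.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(func, target: float, iterations: int = 60) -> float:
    """Bisection on [0, 1] for a function that increases with p."""
    low, high = 0.0, 1.0
    for _ in range(iterations):
        mid = (low + high) / 2
        if func(mid) < target:
            low = mid
        else:
            high = mid
    return (low + high) / 2


def clopper_pearson(successes: int, n: int, alpha: float = 0.05):
    """Exact two-sided (1 - alpha) confidence interval for a binomial proportion."""
    if successes == 0:
        lower = 0.0
    else:
        # Lower bound: P(X >= successes | p) = alpha / 2
        lower = _solve_increasing(lambda p: 1 - binom_cdf(successes - 1, n, p), alpha / 2)
    if successes == n:
        upper = 1.0
    else:
        # Upper bound: P(X <= successes | p) = alpha / 2
        upper = _solve_increasing(lambda p: 1 - binom_cdf(successes, n, p), 1 - alpha / 2)
    return lower, upper


# Counts taken from the text: four articles out of the 65 analysed.
lower, upper = clopper_pearson(4, 65)
print(f"{lower:.1%} to {upper:.1%}")
```

The wide interval reflects the small number of articles involved: with so few readable pages in the sample, the true proportion can only be estimated imprecisely.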

Discussion

The rapid rise in internet popularity over the last decades has taken place during a period which has seen a dramatic decline in hospital autopsy rates.1 6 The reasons for this are not completely understood; however, it is clear that the public is increasingly accessing their medical information online.2 In the present article, we have shown that this online autopsy information is of poor quality, unreliable, often irrelevant and mostly above the recommended eighth grade reading level (aged 13–15 years).

Initially, given that most internet users begin a search using a search engine, and we have assessed the most popular websites, our current study is an accurate representation of what the average English-speaking person will encounter when accessing autopsy information online. The average quality was poor according to DISCERN, almost one-third of articles did not fulfil a single JAMA criterion and only 10.6% were HON-code certified. It must be noted certain articles were of high quality, particularly in the health portal, professional and scientific categories (figure 3). Unfortunately, access to these articles is significantly diluted by poor quality and irrelevant webpages.

Figure 3.

There is a significant difference in quality, as measured by both the DISCERN (boxplot) and JAMA (line plot) instruments, across web articles of different authorship. C, commercial; G, governmental; EP, Education portal; JAMA, Journal of the American Medical Association; M, media; NP, non-profit; O, other; P, professional; S, scientific. ***p<0.001.

Our study has shown that <15% of articles (95% CI 1.7% to 15%) are set at or below the suggested eighth grade readability level, which is disturbingly low.22 This is in line with other studies across several health areas which also show that online health information is often set at an excessively high reading level.29–31 This is concerning because the high reading level required to comprehend this information means that a cohort of society may be excluded from a meaningful discourse on the topic of autopsy practice, regardless of the quality of the information. These issues will be further compounded if, as it appears, clinicians do not want hospital autopsies and are thus unlikely to engage in any meaningful communication with relatives about them, unless instructed to do so for legal reasons.32

The author type was assessed and approximately half of the articles were from government or professional sources. It makes sense that governmental legal documents featured heavily because the majority of autopsies are now requested by the coroner and hospital autopsies have become rare.33 However, given the mandatory nature of coronial autopsies, online coroner’s websites do not need to be very informative as to the intricacies of the autopsy, and this is reflected in the lower quality scores for governmental sites. As these make up a sizeable component of the webpages, this further dilutes the high quality articles and compounds the issue. The education portal and scientific articles were of significantly higher quality, a feature that is commonly seen in online health information.34 This result makes sense because these are more likely to be written by healthcare professionals, who are familiar with the subject matter. An often cited issue with the internet is that everyone gets an equal voice, regardless of how informed they are on the subject.35 The problem with this is that it dilutes the potentially useful information being provided by actual experts in a particular area. This was seen here in the author category defined as other, which was of very poor quality. Furthermore, it is also worth mentioning that over half of the top 25 (n=204, 51%) articles were irrelevant and omitted on the basis of our exclusion criteria. Although many of these were dictionary definitions, a number contained reference to the supernatural36 and the horror genre.37 38 At best, these associations are unhelpful for the general public; however, it is possible that they may lead to deleterious associations between autopsies and sinister motives, when ideally, autopsy should be associated with its true motives, which are often altruistic and for the good of society.33

There are several limitations to this study. First, only the top 25 web articles were included, which was based on prior evidence that consumers of health information rarely search beyond this number.24 It is possible that particularly enthusiastic internet users may search beyond this number of articles. In addition, readability is one factor that contributes to the comprehensibility of a website; however, diagrams, photographs, charts and videos may also play a role. This limitation has been acknowledged previously.34 Another possible study design would have been to test an individual’s comprehension of the subject before and after reading a given article to assess the quality and comprehensibility of the information. However, this would be heavily confounded by factors such as an individual’s IQ, education and memory, and comprehension would likely increase after each article reviewed, thus necessitating a high number of participants; designing this study in practice was therefore unfeasible. Instead, we used the JAMA and DISCERN tools, which, although open to a minimal amount of interpretation on the part of the assessor, have definite criteria and are thus reliable measures of quality, as demonstrated by the high levels of interobserver correlation.

Conclusion and future directions

Thomas Jefferson wrote ‘An informed citizenry is the only true repository of the public will’. We found here that the quality and readability of online information on autopsy are generally of a poor standard. Although there are certain author types that tend to publish higher quality information, this is diluted by unreliable and often irrelevant information. Given the reduction in hospital autopsy requests, we need to look at alternative strategies to get the message of autopsy importance out to the public. The internet has now become an omnipresent force; perhaps online information should be considered as a means of getting this message across to help stem the precipitous drop in hospital autopsy rates. There is no doubt that the current online information could do with improvement.

Footnotes

Contributors: BH: hypothesis creation, study design, data collection and analysis, interpretation, literature search, generation of figures and manuscript preparation. PB: data collection and analysis. SO: study design and data collection. MO: supervision, interpretation and manuscript preparation.

Funding: Imperial College London provided the open access article publishing charge. The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: There are no additional unpublished data.

Patient consent for publication: Not required.

References

- 1. Eurostat. Digital economy and society statistics - households and individuals. 2017. http://ec.europa.eu/eurostat/statistics-explained/index.php/File:Frequency_of_internet_use,_2016_(%25_of_individuals_aged_16_to_74)_YB17.png

- 2. Fox S. The social life of health information. 2013. http://www.pewresearch.org/fact-tank/2014/01/15/the-social-life-of-health-information/

- 3. Marshall JH, Baker DM, Lee MJ, et al. Assessing internet-based information used to aid patient decision-making about surgery for perianal Crohn’s fistula. Tech Coloproctol 2017;21:461–9. doi:10.1007/s10151-017-1648-2

- 4. O’Connell Ferster AP, Hu A. Evaluating the quality and readability of Internet information sources regarding the treatment of swallowing disorders. Ear Nose Throat J 2017;96:128–38. doi:10.1177/014556131709600312

- 5. Silberg WM, Lundberg GD, Musacchio RA. Assessing, controlling, and assuring the quality of medical information on the Internet: Caveant lector et viewor--Let the reader and viewer beware. JAMA 1997;277:1244–5.

- 6. Turnbull A, Osborn M, Nicholas N. Hospital autopsy: Endangered or extinct? J Clin Pathol 2015;68:601–4. doi:10.1136/jclinpath-2014-202700

- 7. McKelvie PA, Rode J. Autopsy rate and a clinicopathological audit in an Australian metropolitan hospital--cause for concern? Med J Aust 1992;156:456–62.

- 8. Limacher E, Carr U, Bowker L, et al. Reversing the slow death of the clinical necropsy: developing the post of the Pathology Liaison Nurse. J Clin Pathol 2007;60:1129–34. doi:10.1136/jcp.2006.044420

- 9. Wood MJ, Guha AK. Declining clinical autopsy rates versus increasing medicolegal autopsy rates in Halifax, Nova Scotia. Arch Pathol Lab Med 2001;125:924–30.

- 10. Gaensbacher S, Waldhoer T, Berzlanovich A. The slow death of autopsies: a retrospective analysis of the autopsy prevalence rate in Austria from 1990 to 2009. Eur J Epidemiol 2012;27:577–80. doi:10.1007/s10654-012-9709-3

- 11. Carr U, Bowker L, Ball RY. The slow death of the clinical post-mortem examination: implications for clinical audit, diagnostics and medical education. Clin Med 2004;4:417–23. doi:10.7861/clinmedicine.4-5-417

- 12. Tsitsikas DA, Brothwell M, Chin Aleong JA, et al. The attitudes of relatives to autopsy: a misconception. J Clin Pathol 2011;64:412–4. doi:10.1136/jcp.2010.086645

- 13. Nemetz PN, Tanglos E, Sands LP, et al. Attitudes toward the autopsy--an 8-state survey. MedGenMed 2006;8:80.

- 14. Winters B, Custer J, Galvagno SM, et al. Diagnostic errors in the intensive care unit: a systematic review of autopsy studies. BMJ Qual Saf 2012;21:894–902. doi:10.1136/bmjqs-2012-000803

- 15. Hull MJ, Nazarian RM, Wheeler AE, et al. Resident physician opinions on autopsy importance and procurement. Hum Pathol 2007;38:342–50. doi:10.1016/j.humpath.2006.08.011

- 16. Sinard JH. Factors affecting autopsy rates, autopsy request rates, and autopsy findings at a large academic medical center. Exp Mol Pathol 2001;70:333–43. doi:10.1006/exmp.2001.2371

- 17. Society MP. The rising cost of clinical negligence who pays the price? 2017.

- 18. Bajwa M. Emerging 21(st) Century Medical Technologies. Pak J Med Sci 2014;30:649–55. doi:10.12669/pjms.303.5211

- 19. Charnock D, Shepperd S. Learning to DISCERN online: applying an appraisal tool to health websites in a workshop setting. Health Educ Res 2004;19:440–6. doi:10.1093/her/cyg046

- 20. Boyer C, Selby M, Scherrer JR, et al. The Health On the Net Code of Conduct for medical and health Websites. Comput Biol Med 1998;28:603–10. doi:10.1016/S0010-4825(98)00037-7

- 21. DuBay W. The Principles of Readability. 2004:72. files.eric.ed.gov/fulltext/ED490073.pdf

- 22. MedlinePlus. How to Write Easy-to-Read Health Materials. 2017. https://medlineplus.gov/etr.html

- 23. Top 15 Most Popular Search Engine. 2015. http://www.ebizmba.com/articles/search-engines

- 24. Eysenbach G, Köhler C. How do consumers search for and appraise health information on the world wide web? Qualitative study using focus groups, usability tests, and in-depth interviews. BMJ 2002;324:573–7. doi:10.1136/bmj.324.7337.573

- 25. San Giorgi MRM, de Groot OSD, Dikkers FG. Quality and readability assessment of websites related to recurrent respiratory papillomatosis. Laryngoscope 2017;127:2293–7. doi:10.1002/lary.26521

- 26. Health on the Net Foundation. 2017. https://www.hon.ch/HONcode/

- 27. Media B. Readability Formulas. 2017. http://www.readabilityformulas.com/

- 28. Arif N, Ghezzi P. Quality of online information on breast cancer treatment options. Breast 2018;37:6–12. doi:10.1016/j.breast.2017.10.004

- 29. Stewart JR, Heit MH, Meriwether KV, et al. Analyzing the Readability of Online Urogynecologic Patient Information. Female Pelvic Med Reconstr Surg 2019;25:29–35. doi:10.1097/SPV.0000000000000518

- 30. Fowler GE, Baker DM, Lee MJ, et al. A systematic review of online resources to support patient decision-making for full-thickness rectal prolapse surgery. Tech Coloproctol 2017;21:853–62. doi:10.1007/s10151-017-1708-7

- 31. Kugar MA, Cohen AC, Wooden W, et al. The readability of psychosocial wellness patient resources: improving surgical outcomes. J Surg Res 2017;218:43–8. doi:10.1016/j.jss.2017.05.033

- 32. Ayoub T, Chow J. The conventional autopsy in modern medicine. J R Soc Med 2008;101:177–81. doi:10.1258/jrsm.2008.070479

- 33. Turnbull A, Martin J, Osborn M. The death of autopsy? Lancet 2015;386:2141. doi:10.1016/S0140-6736(15)01049-1

- 34. O’Neill SC, Baker JF, Fitzgerald C, et al. Cauda equina syndrome: assessing the readability and quality of patient information on the Internet. Spine 2014;39:E645–9. doi:10.1097/BRS.0000000000000282

- 35. Stokes P. No, You’re Not Entitled To Your Opinion. 2012. http://theconversation.com/no-youre-not-entitled-to-your-opinion-9978

- 36. Wikipedia. Alien autopsy. 2017. en.wikipedia.org/wiki/Alien_autopsy

- 37. Peaceville.com. Puncturing The Grotesque. 2017. https://burningshed.com/index.php?_route_=store/peaceville/related-stores-peaceville/autopsy-peaceville

- 38. Gierasch A. Autopsy. 2008. http://www.imdb.com/title/tt0443435/