Abstract

Approaching rewards and avoiding punishments could be considered as core principles governing behavior. Experiments from behavioral economics have shown that choices involving gains and losses follow different policy rules, suggesting that appetitive and aversive processes might rely on different brain systems. Here we contrast this hypothesis with recent neuroscience studies exploring the human brain from brainstem nuclei to cortical areas. Although some circuits show rigid specialization, many others appear to process both appetitive and aversive stimuli, in a flexible manner that depends on a context-wise subjective reference point. Moreover, appetitive and aversive aspects are often integrated into net values that are signaled with enhanced activity in ‘positive regions’, and suppressed activity in ‘negative regions’. This dichotomy might explain why drugs or lesions can produce valence-specific effects, biasing decisions towards approaching a reward or avoiding a punishment.

Introduction

« Good and evil, reward and punishment, are the only motives to a rational creature: these are the spur and reins whereby all mankind are set on work, and guided. » Since these famous words of John Locke, various experiments have been conducted in order to understand how reward and punishment can guide behavior. In standard decision theory [1], choices are based on option values, i.e. estimates of how good or bad the outcome would be. After outcomes are experienced, option values can then be updated following reinforcement learning theory [2]. In principle, the same decision and learning rules could be applied to appetitive and aversive values. In other words, reward and punishment would be two sides of the same dimension.

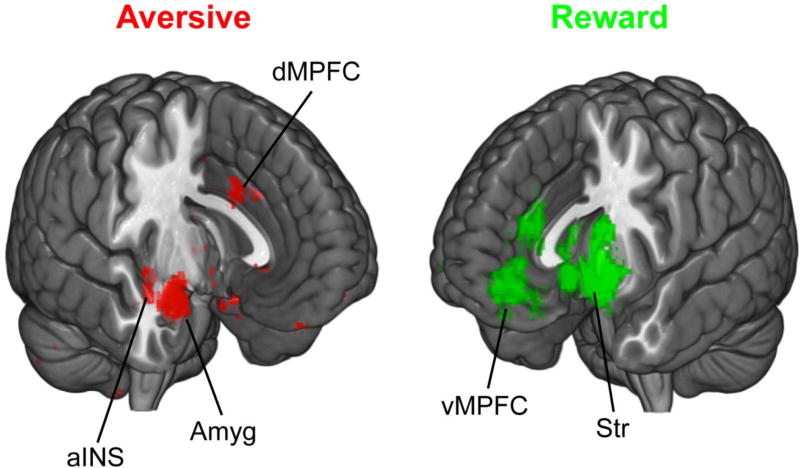

Yet several findings in behavioral economics have suggested that human agents have different attitudes toward gains and losses, as if these were split into two domains separated by a reference point. For instance, the endowment effect shows that people are willing to pay more to retain something they own than to obtain the same thing from someone else [3]. According to the framing effect, people are risk-averse when considering potential monetary gains, but risk-seeking when dealing with losses [4]. Also, losses are not discounted with time in the same manner as gains, as seen in the so-called “sign effect” [5]. These observations suggest that the human brain has evolved two different systems dedicated to appetitive and aversive processes, which may exert distinct influences on behavioral biases and clinical symptoms. Meta-analyses of neuroimaging studies lend support to this distinction by highlighting neural circuits associated with processing appetitive and aversive reinforcers (see Figure 1).
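The asymmetry between gains and losses around a reference point can be illustrated with a reference-dependent value function of the kind used in prospect theory [4]. The sketch below is only illustrative: the function name `prospect_value` is hypothetical, the parameter values (curvature 0.88, loss aversion 2.25) are commonly quoted estimates that do not come from this review, and probability weighting is deliberately left out.

```python
def prospect_value(outcome, reference=0.0, alpha=0.88, beta=0.88, loss_aversion=2.25):
    """Subjective value of an outcome relative to a reference point.

    alpha, beta and loss_aversion are illustrative assumptions,
    not values reported in this review.
    """
    delta = outcome - reference
    if delta >= 0:
        # Gains: concave curve, which yields risk aversion.
        return delta ** alpha
    # Losses: convex curve scaled by loss aversion, which yields risk seeking.
    return -loss_aversion * (-delta) ** beta


# A sure outcome versus a 50/50 gamble with the same expected amount.
sure_gain = prospect_value(50)
gamble_gain = 0.5 * prospect_value(100) + 0.5 * prospect_value(0)
sure_loss = prospect_value(-50)
gamble_loss = 0.5 * prospect_value(-100) + 0.5 * prospect_value(0)

print("gain domain: prefers sure option:", sure_gain > gamble_gain)
print("loss domain: prefers gamble:", gamble_loss > sure_loss)
```

Under these assumptions the same function prefers the sure option for gains and the gamble for losses, and shifting `reference` turns a nominal gain into a subjective loss.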

Figure 1.

A large-scale meta-analysis of neuroimaging studies of reward (560 total reports) and aversive (169 total reports) processes (Neurosynth; Yarkoni et al. 2011). Aversive includes dorsal medial prefrontal cortex (dMPFC), anterior insula (aINS) and amygdala (Amyg). Reward includes striatum (Str, encompassing nucleus accumbens, caudate and putamen) and ventromedial prefrontal cortex (vMPFC).

In this review, we explore this dissociation by examining recent evidence from experiments using functional magnetic resonance imaging (fMRI), pharmacological manipulations and clinical studies. Specifically, we discuss a) the dissociation of neural systems signaling appetitive and aversive value in the human brain; b) the integration of positive and negative features (benefits and costs) into a net decision value; and c) the effect of contextual shifts in appetitive versus aversive value coding. When discussing appetitive values, we consider data using primary, secondary and more abstract rewards (e.g., food, money and art respectively), while aversive values include action costs like effort or punishing outcomes such as pain or financial loss. We take the approach of first examining neuromodulatory signals sent by deep brainstem nuclei and then extending our discussion to higher brain areas that are more accessible to neuroimaging techniques.

Appetitive and aversive signaling by neuromodulators

Appetitive and aversive processes appear to be disturbed in many pathological conditions. For instance, depression might be characterized by a negative bias - an exacerbation of the aversive aspects of life events and/or dampening of positive experiences, which is typically alleviated by serotonin reuptake inhibitors [6]. In decision-making, such biases may affect how potential benefits are weighed against potential costs. If the potential reward seems less attractive, or if the required effort seems too demanding, patients would prefer to do nothing. This reduction of behavior would be clinically classified as apathy and is commonly seen in conditions such as Parkinson’s disease, which is typically treated with dopamine enhancers [7]. These observations motivated the investigation of neuromodulators such as dopamine and serotonin in the study of appetitive and aversive processing, since they were implicated in the etiology or treatment of neuropsychiatric diseases associated with a shift in how benefits are weighed against costs.

Weighing costs and benefits

The trade-off between effort and reward has been explored for a long time in animals, using tasks involving a choice between going for a small food reward that requires little effort or a larger reward that requires more effort (e.g., climbing a barrier). The willingness to accept higher efforts for higher rewards is critically dependent on dopamine levels in the nucleus accumbens [8]. This has been replicated in humans, using monetary rewards in exchange for physical efforts exerted on a handgrip. The propensity to choose high effort – big reward options was shown to depend on dopamine level in the striatum and ventromedial prefrontal cortex measured with PET imaging, and to be enhanced by administration of d-amphetamine [9, 10]. Thus, the arbitrage between costs and benefits may depend on neuromodulators such as dopamine. This might explain cases of apathy, such as those observed in depression, negative schizophrenia or Parkinson’s disease, where dopaminergic targets such as the striatum have a blunted response to reward [11, 12].
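One simple way to express such a cost/benefit arbitrage is to subtract a weighted effort cost from the reward and pass the difference between options through a softmax (logistic) choice rule. This is a minimal sketch, not the model used in the cited studies: the linear cost, the names `net_value` and `p_choose_high_effort`, and the idea that a neuromodulator acts by changing `effort_weight` are all assumptions made for illustration.

```python
import math


def net_value(reward, effort, effort_weight):
    """Reward discounted by a linear effort cost (illustrative assumption)."""
    return reward - effort_weight * effort


def p_choose_high_effort(low, high, effort_weight, temperature=1.0):
    """Logistic probability of choosing the high-effort, big-reward option.

    low and high are (reward, effort) pairs in arbitrary units.
    """
    diff = net_value(*high, effort_weight) - net_value(*low, effort_weight)
    return 1.0 / (1.0 + math.exp(-diff / temperature))


low_option = (1.0, 0.2)   # small reward, little effort
high_option = (4.0, 0.8)  # big reward, more effort

# Hypothetical: a lower effort weight stands in for a state in which effort
# is less costly; a higher weight stands in for an apathetic state.
for weight in (2.0, 8.0):
    p = p_choose_high_effort(low_option, high_option, effort_weight=weight)
    print(f"effort_weight={weight}: P(high effort) = {p:.2f}")
```

With these made-up numbers, raising the effort weight is enough to flip the preference from the high-effort to the low-effort option, without any change in how reward itself is valued.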

The shift in cost/benefit trade-off does not imply that different brain systems are involved in appetitive and aversive processes. It could be that dopamine level increases with positive events, favoring approach behavior, and decreases with negative events, favoring avoidance behavior. An a priori argument against this possibility is that the possible range for coding is too narrow on the negative side, since the baseline firing rate of dopamine neurons is quite low (3–5 Hz, [13]). This argument suggests the existence of an opponent system that would code negative events positively (with increased firing rate). Dissociating these opponent systems involves comparing choices made between two appetitive options on the one hand and two aversive options on the other hand. This is not trivial, since subjects might reframe their expectations if they know the condition (appetitive or aversive) in which they are, such that not being punished can become rewarding, or vice-versa [14].

Learning by carrots or by sticks

Clear separation of appetitive and aversive processing has been operationalized in instrumental learning paradigms. These tasks involve subjects learning by trial and error to select the option that is more rewarding or to avoid the option that is more punishing. Simple models of instrumental learning [2] propose that chosen option value is updated based on the outcome, in proportion to reward prediction error (actual minus predicted reward). Single-unit recording studies in monkeys have suggested that dopamine neurons might precisely encode reward prediction errors [15]. Encoding of reward prediction errors has also been reported in humans using both single-unit electrophysiology [16] and brainstem fMRI [17]. Whole-brain fMRI results mostly show reward prediction errors correlating with dopaminergic targets in the prefrontal cortex [18] and the striatum [19]. Interestingly, the magnitude of the prediction error signal in the striatum has been found to correlate with behavioral performance [20, 21].
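The value update described above can be written in a few lines. This is a minimal sketch of a delta-rule update, assuming a single option, a fixed learning rate and deterministic rewards; the function names and parameter values are illustrative and are not taken from the cited studies.

```python
def update_value(value, reward, learning_rate=0.1):
    """Update an option value in proportion to the reward prediction error."""
    prediction_error = reward - value  # actual minus predicted reward
    new_value = value + learning_rate * prediction_error
    return new_value, prediction_error


def learn(rewards, initial_value=0.0, learning_rate=0.1):
    """Apply the update over a sequence of outcomes for one chosen option."""
    value = initial_value
    history = []
    for reward in rewards:
        value, prediction_error = update_value(value, reward, learning_rate)
        history.append((value, prediction_error))
    return history


# An option that always pays 1.0: the prediction error shrinks as the
# predicted value approaches the delivered reward.
history = learn([1.0] * 50)
print("first trial (value, prediction error):", history[0])
print("last trial  (value, prediction error):", history[-1])
```

The same rule applies unchanged to punishments if outcomes are coded as negative numbers, which is why, in principle, one system could handle both domains.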

The claim that dopamine neurons could also encode aversive events such as effort and punishment is more debated, as many electrophysiology and voltammetry studies have observed that the effect of aversive stimuli is small compared to that of unexpected reward [22]. However, reliable activations related to noxious stimuli have been recorded in dopaminergic nuclei, sometimes in distinct populations of dopaminergic cells [23, 24], or possibly in non-dopaminergic cells [25, 26]. Direct manipulations using optogenetics and microstimulation have been more conclusive in showing a specific involvement of dopamine neurons in reward processing and appetitive behavior [27, 28]. These studies are consistent with the idea that dopamine might influence future choices by increasing the values of chosen options following reward obtainment.

This idea has recently been tested in humans. Dopamine enhancers given to healthy subjects or to patients with Parkinson’s disease improve reward learning but leave unaffected, or sometimes even impair, punishment learning [20, 29]. Conversely, dopamine blockers given to healthy subjects or patients with Tourette syndrome impair reward learning but not punishment learning [30, 31]. Furthermore, reward-related learning has been shown to depend on subject-wise striatal dopamine level measured with PET, and on polymorphism of dopamine receptor or transporter genes [32, 33]. The increase in sensitivity to positive reinforcement might explain the compulsive behaviors, such as hypersexuality or pathological gambling, developed by patients with Parkinson’s disease under dopamine receptor agonists [34].
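A valence-specific drug effect of this kind can be mimicked in a learning model by using separate learning rates for positive and negative prediction errors. The sketch below is a toy illustration only: mapping a dopaminergic manipulation onto the parameter `alpha_gain` is a modeling assumption made here, and the function names and numerical values are hypothetical rather than estimates from the cited studies.

```python
def update_value(value, outcome, alpha_gain, alpha_loss):
    """Delta rule with separate learning rates for each prediction error sign."""
    prediction_error = outcome - value
    alpha = alpha_gain if prediction_error >= 0 else alpha_loss
    return value + alpha * prediction_error


def learned_value(outcome, n_trials, alpha_gain, alpha_loss):
    """Value of an option after repeatedly receiving the same outcome."""
    value = 0.0
    for _ in range(n_trials):
        value = update_value(value, outcome, alpha_gain, alpha_loss)
    return value


# Hypothetical conditions: only the gain learning rate differs between them.
conditions = {"enhanced": 0.3, "blocked": 0.1}
for label, alpha_gain in conditions.items():
    reward_value = learned_value(+1.0, 10, alpha_gain, alpha_loss=0.1)
    punish_value = learned_value(-1.0, 10, alpha_gain, alpha_loss=0.1)
    print(f"{label}: reward option {reward_value:.2f}, punished option {punish_value:.2f}")
```

In this toy setting, changing `alpha_gain` alters how quickly the rewarded option gains value while leaving learning about the punished option untouched, which is the qualitative pattern described in the paragraph above.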

In the face of the evidence for dopamine being implicated in the reward domain, many authors have looked for opponent systems that would be dedicated to the punishment domain. Among neuromodulators, an influential theory has suggested that serotonin could encode punishment prediction errors and therefore teach the brain to avoid repeating unfortunate choices [35]. However, evidence for this proposal is scant, since electrophysiological recording and pharmacological manipulation studies have implicated serotonin in both reward and punishment processing [36, 37]. The theory has been revisited to incorporate the role assigned to serotonin in inhibition, as opposed to invigoration, of behavior [38]. Some studies have indeed reported that decreasing serotonin level through tryptophan depletion impaired punishment-induced inhibition [39, 40], but the evidence remains mixed. Serotonin has been implicated in other types of costs such as the cost of information sampling [41] or the opportunity cost induced by waiting for reward delivery [42, 43]. Thus, it is tempting to conclude that serotonin has a differential implication in the cost rather than the benefit domain. However, whether serotonin helps to avoid costs or to overcome costs (in order to get more reward) is still a matter of debate [44, 45].

Beyond neuromodulation: whole-brain mapping of appetitive and aversive values

Common neural currencies

Outside the brainstem, the search for opponent systems underlying appetitive and aversive processes has been extended to the entire brain in humans using fMRI. Seminal studies in animals have allowed for the delineation of a neural circuit involved in processing rewards (for review see [46]). Central to this circuit is the role of the striatum and ventromedial prefrontal cortex (vmPFC) in representing and adjusting the value of a stimulus to inform decision-making (e.g., [47, 48]), particularly in humans, as highlighted by recent neuroimaging meta-analyses (e.g., [49, 50]). Both regions are engaged by typical rewards such as food, liquids and money (for review see [51, 52]), but also by more abstract rewards including art (e.g., [53]), attractive faces (e.g., [54]), social feedback (e.g., [55]) and even intrinsic experiences related to well-being and positive emotions (e.g., [56]). The ability of the striatum and vmPFC to represent distinct types of rewards allows for the flexible comparison of such stimuli when making decisions (e.g., valuation of a monetary reward in comparison with social praise). Indeed, the vmPFC in particular has been associated with representing the difference in subjective value of distinct goods (e.g., mugs; [57]; snacks; [58]) as a function of how much people are willing to pay for them (e.g., [59]), which provides a “common currency” where value signals from distinct rewards are coded on a common scale to inform decision-making [52].

While the neural circuits underlying reward processing are well characterized, there are more questions surrounding the neural basis of aversive processing. Aversive outcomes that elicit intense feelings of arousal such as disgusting pictures are associated with activity in the insula (e.g., [60]). Aversive conditioning with primary reinforcers such as shock is thought to depend on the integrity of the amygdala [61], which has been posited to code for the associability of a conditioned stimulus [62] and to assist in computing how aversive an outcome is prior to decision-making [63]. In contrast to primary reinforcers such as shock, aversive outcomes associated with secondary reinforcers (e.g. financial loss) yield inconsistent results with respect to amygdala activation, with some studies reporting activation [64], particularly in the context of framing [65], and others reporting none, perhaps due to the intensity of the stimuli [66]. However, responses to monetary loss are often observed in the striatum [67] and medial prefrontal cortex [68], characterized by a decrease in activity (compared to a neutral baseline) that can correlate with behavioral decisions [69]. The insula has also been shown to respond to monetary losses, especially the anticipation of a loss [68], which has been related to risk predictions [70] and decisions to be risk-averse [71]. Finally, the dorsal anterior cingulate, part of the dorsomedial prefrontal cortex, has been linked with aversive values such as calculating the effort (i.e., cost) necessary to obtain a reward [72].

fMRI studies also provide several examples of overlapping appetitive and aversive processes in the human brain. The striatum, for example, while often linked to reward processes, is also recruited during aversive learning [66], perhaps reflecting a role in actively coping with negative reinforcers [73] as in avoidance learning [74, 75]. Consistent with a role of the striatum in reinforcement learning, aversive prediction error signals are also observed in this region, although compared to reward prediction errors, aversive signals are argued to be spatially distinct within the striatum [76] and selectively potentiated under a stressful state [77]. Finally, putamen activity in response to both appetitive [78] and aversive [79] Pavlovian cues exerts a behavioral influence on instrumentally learned behavior. Taken together, neuroimaging evidence suggests that appetitive and aversive processing overlaps in the human striatum, albeit with some noted spatial distinctions. Notably, appetitive and aversive processing have been associated with anterior and posterior parts of the striatum respectively [76] and even ventral and dorsal divisions respectively, with the dorsal striatum reflecting more anxious feelings associated with a decision [80].

Contextual dependencies

The activity in value-related regions in response to appetitive and aversive information has been suggested to reflect the relative magnitude of the potential outcome with respect to a reference point, as observed by increases in activation for gains and decreases for losses during receipt in striatum [67] and mPFC [68]. This could be viewed as evidence for separate systems, or could be thought of as an overlapping system that represents value on a continuous scale. Indeed, when decisions involve both gains and losses, and costs and benefits must be integrated to inform choices, the vmPFC in particular has been observed to reflect appetitive and aversive values in an economically coherent way. For example, it has been argued that loss aversion during decisions – i.e., greater sensitivity to losses than gains – is reliant on this relative coding with respect to a reference point in neural regions associated with value [81]. This is also observed with primary appetitive and aversive reinforcers (e.g., food) which have been shown to be similarly represented in the vmPFC to inform decision-making [82].

Thus, the calculation of costs and benefits to inform decisions involves putative reward circuitry in a manner that suggests a calculation of positive values (to attain a reward or avoid a punishment). Importantly, this value calculation has to take into account not only the appetitive and aversive values but also the context in which they are experienced. Context can refer to a person’s state (e.g., stressed individuals show blunted striatal responses to monetary gains [83], akin to the blunted response seen in individuals with depression [11]), but can also simply refer to the subjective value of a reinforcer (e.g., benefit) in the presence of a different reinforcer (e.g., cost). For instance, an individual’s preference for control during decision-making has been associated with ventral striatum activity [84], a bias posited to be driven by greater prediction errors [85]. Interestingly, this preference for control is apparent when the choice is solely tied to a potential monetary gain or to avoiding a monetary loss, but it is abolished when losses and gains are presented concurrently [86]. This suggests that the context in which an appetitive reinforcer is viewed (i.e., in the presence or absence of a potential aversive stimulus) can influence value-related neural signals. In accordance with this, context-dependent shifts in risk preferences (greater preference for risk in the context of a prior loss) are observed in vmPFC [87]. An interesting effect of context is the case of the “near miss” during gambling where an aversive event (a miss) is treated as an appetitive event due to the proximity to what would have been a “win” response. In such cases, near miss trials evoke activity in reward-related regions such as the striatum and have increased connectivity with the insula [88].

The calculation of subjective value when a decision involves potential gains and losses often involves a consideration of cost-benefit comparisons that factors in an individual’s susceptibility to loss aversion and sensitivity towards risk. However, at times decisions that can lead to a reward may also be associated with costs of different modalities (e.g., effort or avoiding pain). When costs (pain by electric shock) and benefits (monetary rewards) are presented together, decision-making is influenced by the interaction of the two, as the appeal of benefits is attenuated with increases in costs, a process that leads to attenuation of ventral striatum and vmPFC prediction error signals [89] and stronger coupling of vmPFC and amygdala pathways [90]. A potential mechanistic explanation of how cost-benefit computations may occur supports the idea of two potential systems that interact prior to decision-making [63]. Specifically, Basten and colleagues suggest that the vmPFC and dorsolateral PFC calculate the difference between costs and benefits that arise from anticipatory signals within the amygdala and ventral striatum respectively.

Conclusions and moving forward

In this review, we highlight neural systems involved in appetitive and aversive processes that can exert distinct behavioral biases. While some neural regions have been primarily linked with appetitive or aversive processes (e.g., ventral striatum and anterior insula respectively), alluding to the existence of two separate systems, we also note examples in which this dichotomy is not fully supported, suggesting that there may not be regions responsible for processing solely one domain or the other. Rather, the classification of values as appetitive or aversive may flexibly depend on the context in which the information is perceived and reflect changes in value in accordance with a subjective reference point. Even when correlations with appetitive and aversive processes are observed at the scale of brain networks using fMRI, electrophysiological studies often show much more diversity at the single-cell level, as exemplified by findings of dopaminergic and non-dopaminergic cells in the ventral tegmental area. One question for future investigations is to understand how aggregate signals recorded with human neuroimaging techniques can emerge from the computations operated by individual neurons.

Yet some functional specialization has been consistently observed at the population level and helps explain why certain neural perturbations have valence-dependent effects, such as those induced by dopaminergic drugs or by focal brain lesions (Box 1). This has been helped by the use of parametric designs, which avoid confounding value coding with differences or commonalities in sensory features. A key finding is that some brain regions such as the vmPFC integrate information about appetitive and aversive values across different reinforcer modalities (from basic rewards such as food to more abstract ones such as social reinforcers), so as to compute a net value that might guide decision making in a cost-benefit type of calculation. How such net value signal connects to the motor representations that underpin the behavior is another matter for future investigations. It remains possible that some low-level representations of aversive and appetitive values (as perhaps in neuromodulatory systems) might directly map onto approach and avoidance motor patterns, and compete for behavioral control in a more implicit fashion than the cost-benefit arbitrage that is operated in higher-level cortical areas. New and more direct techniques, such as optogenetics, or a combination of methods, such as the use of pharmacological manipulations with neuroimaging techniques, may allow for better insight and characterization of neural systems involved in appetitive and aversive processes.

BOX 1. Effects of focal lesions on appetitive versus aversive processes.

Activation studies using either electrophysiology or fMRI have failed to identify brain regions that would be purely dedicated to reward or punishment. However, some consensus has emerged about some neural representation of net value (in which appetitive aspects are discounted by aversive aspects). For instance, common findings in fMRI studies are the positive correlation with net value in ventral fronto-striatal circuits, and the negative correlation in the anterior insula and sometimes amygdala [50]. These correlations do not mean that these regions are necessary for integrating appetitive and aversive values in decision making. However, these findings can be corroborated by studies with brain-damaged patients which provide a better argument for causality. For instance, patients with Huntington’s disease were impaired in reward-based learning as soon as neural degeneration reached their ventral striatum [75]. On the contrary, patients with glioma affecting the anterior insula were impaired in punishment-based learning but not reward-based learning [75]. Damage to the amygdala is known to impair avoidance of aversive objects, as described in the Kluver-Bucy syndrome [91]. A recent study investigating choices between lotteries reported that patients with amygdala calcification did not exhibit the change in risk attitude between gain and loss domains (the so-called framing effect) and were therefore considered as more rational than healthy subjects [92]. Thus, damage to regions that positively encode net value is likely to reduce the contribution of appetitive processes and damage to regions encoding net value negatively might impair the contribution of aversive processes to decision making. The situation is more balanced with lesions to the ventromedial prefrontal cortex, in keeping with the putative role of this region in integrating appetitive and aversive dimensions. Such a lesion was shown to affect information integration in multi-attribute decision-making [93], to amplify inconsistencies in choices between appetitive food items [94], but also to impair learning from negative feedback [95].

Highlights.

- The same brain regions process rewards or punishments across reinforcer modalities

- No strict separation of brain systems processing appetitive and aversive events

- Appetitive or aversive depends on a context-dependent subjective reference point

- Some brain regions integrate appetitive and aversive aspects into net values

- Net values are positively encoded in some brain regions, negatively in others

Acknowledgments

M.P. was supported by funding from the European Union Human Brain Project. M.R.D. was supported by funding from the National Institute on Drug Abuse (DA027764). The authors wish to acknowledge David Smith for assistance with figures.

Footnotes

Conflict of interest: The authors declare no conflict of interest.

References

- 1. Von Neumann J, Morgenstern O. Theory of games and economic behavior. Princeton: Princeton University Press; 1944. xviii, 625 p.

- 2. Sutton RS, Barto AG. Reinforcement learning: an introduction. Adaptive computation and machine learning. Cambridge, Mass.: MIT Press; 1998. xviii, 322 p.

- 3. Kahneman D, Knetsch JL, Thaler RH. Experimental Tests of the Endowment Effect and the Coase Theorem. Journal of Political Economy. 1990;98(6):1325–1348.

- 4. Kahneman D, Tversky A. Prospect Theory: An Analysis of Decision Under Risk. Econometrica. 1979;47(2)

- 5. Thaler R. Some Empirical-Evidence on Dynamic Inconsistency. Economics Letters. 1981;8(3):201–207.

- 6. Harmer CJ, Cowen PJ. 'It's the way that you look at it'--a cognitive neuropsychological account of SSRI action in depression. Philos Trans R Soc Lond B Biol Sci. 2013;368(1615):20120407. doi: 10.1098/rstb.2012.0407.

- 7. Marin RS. Differential diagnosis and classification of apathy. Am J Psychiatry. 1990;147(1):22–30. doi: 10.1176/ajp.147.1.22.

- 8. Salamone JD, Correa M. The Mysterious Motivational Functions of Mesolimbic Dopamine. Neuron. 2012;76(3):470–485. doi: 10.1016/j.neuron.2012.10.021.

- 9. Treadway MT, et al. Neural Mechanisms of Effort-Based Decision-Making in Depressed Patients. Biological Psychiatry. 2012;71(8):311s–311s.

- 10. Wardle MC, et al. Amping Up Effort: Effects of d-Amphetamine on Human Effort-Based Decision-Making. Journal of Neuroscience. 2011;31(46):16597–16602. doi: 10.1523/JNEUROSCI.4387-11.2011.

- 11. Whitton AE, Treadway MT, Pizzagalli DA. Reward processing dysfunction in major depression, bipolar disorder and schizophrenia. Curr Opin Psychiatry. 2015;28(1):7–12. doi: 10.1097/YCO.0000000000000122.

- 12. Chaudhuri KR, Schapira AH. Non-motor symptoms of Parkinson's disease: dopaminergic pathophysiology and treatment. Lancet Neurol. 2009;8(5):464–74. doi: 10.1016/S1474-4422(09)70068-7.

- 13. Glimcher PW. Understanding dopamine and reinforcement learning: the dopamine reward prediction error hypothesis. Proc Natl Acad Sci U S A. 2011;108(Suppl 3):15647–54. doi: 10.1073/pnas.1014269108.

- 14. Kim H, Shimojo S, O'Doherty JP. Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. Plos Biology. 2006;4(8):1453–1461. doi: 10.1371/journal.pbio.0040233.

- 15. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–9. doi: 10.1126/science.275.5306.1593.

- 16. Zaghloul KA, et al. Human Substantia Nigra Neurons Encode Unexpected Financial Rewards. Science. 2009;323(5920):1496–1499. doi: 10.1126/science.1167342.

- 17. D'Ardenne K, et al. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319(5867):1264–7. doi: 10.1126/science.1150605.

- 18**. Rutledge RB, et al. Testing the Reward Prediction Error Hypothesis with an Axiomatic Model. Journal of Neuroscience. 2010;30(40):13525–13536. doi: 10.1523/JNEUROSCI.1747-10.2010. An fMRI study that systematically tests whether activity in various brain regions satisfies a set of axioms that define signaling of reward prediction error.

- 19. O'Doherty JP, et al. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38(2):329–337. doi: 10.1016/s0896-6273(03)00169-7.

- 20. Pessiglione M, et al. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442(7106):1042–1045. doi: 10.1038/nature05051.

- 21. Schonberg T, et al. Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. J Neurosci. 2007;27(47):12860–7. doi: 10.1523/JNEUROSCI.2496-07.2007.

- 22. Gan JO, Walton ME, Phillips PE. Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat Neurosci. 2010;13(1):25–7. doi: 10.1038/nn.2460.

- 23. Brischoux F, et al. Phasic excitation of dopamine neurons in ventral VTA by noxious stimuli. Proc Natl Acad Sci U S A. 2009;106(12):4894–9. doi: 10.1073/pnas.0811507106.

- 24**. Matsumoto M, Hikosaka O. Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature. 2009;459(7248):837–41. doi: 10.1038/nature08028. Electrophysiological recordings in midbrain neurons of non-human primates reveal distinct subsets of dopamine neurons that were distinguished by anatomical location and by responses to appetitive stimuli only, or to both appetitive and aversive stimuli.

- 25.Cohen JY, et al. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature. 2012;482(7383):85–8. doi: 10.1038/nature10754. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Ungless MA, Grace AA. Are you or aren't you? Challenges associated with physiologically identifying dopamine neurons. Trends Neurosci. 2012;35(7):422–30. doi: 10.1016/j.tins.2012.02.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Arsenault JT, et al. Role of the primate ventral tegmental area in reinforcement and motivation. Curr Biol. 2014;24(12):1347–53. doi: 10.1016/j.cub.2014.04.044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Steinberg EE, et al. A causal link between prediction errors, dopamine neurons and learning. Nat Neurosci. 2013;16(7):966–73. doi: 10.1038/nn.3413. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Frank MJ, Seeberger LC, O'Reilly R C. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 2004;306(5703):1940–3. doi: 10.1126/science.1102941. [DOI] [PubMed] [Google Scholar]

- 30.Palminteri S, et al. Pharmacological modulation of subliminal learning in Parkinson's and Tourette's syndromes. Proc Natl Acad Sci U S A. 2009;106(45):19179–19184. doi: 10.1073/pnas.0904035106.

- 31.van der Schaaf ME, et al. Establishing the dopamine dependency of human striatal signals during reward and punishment reversal learning. Cereb Cortex. 2014;24(3):633–42. doi: 10.1093/cercor/bhs344.

- 32**.Frank MJ, et al. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proc Natl Acad Sci U S A. 2007;104(41):16311–6. doi: 10.1073/pnas.0706111104. A computational dissociation of how three dopamine-related polymorphisms impact instrumental learning from reward and punishment.

- 33**.Cools R, et al. Striatal dopamine predicts outcome-specific reversal learning and its sensitivity to dopaminergic drug administration. J Neurosci. 2009;29(5):1538–43. doi: 10.1523/JNEUROSCI.4467-08.2009. A combination of PET imaging and pharmacology showing that the same dose of a D2 agonist can improve either reward- or punishment-based learning, depending on baseline striatal dopamine.

- 34.Voon V, et al. Chronic dopaminergic stimulation in Parkinson's disease: from dyskinesias to impulse control disorders. Lancet Neurol. 2009;8(12):1140–9. doi: 10.1016/S1474-4422(09)70287-X.

- 35.Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Netw. 2002;15(4–6):603–16. doi: 10.1016/s0893-6080(02)00052-7.

- 36.Cohen JY, Amoroso MW, Uchida N. Serotonergic neurons signal reward and punishment on multiple timescales. Elife. 2015;4. doi: 10.7554/eLife.06346.

- 37.Palminteri S, et al. Similar Improvement of Reward and Punishment Learning by Serotonin Reuptake Inhibitors in Obsessive-Compulsive Disorder. Biol Psychiatry. 2012;72(3):244–250. doi: 10.1016/j.biopsych.2011.12.028.

- 38**.Boureau YL, Dayan P. Opponency revisited: competition and cooperation between dopamine and serotonin. Neuropsychopharmacology. 2011;36(1):74–97. doi: 10.1038/npp.2010.151. An influential theory that integrates the opposite roles of dopamine and serotonin in two dimensions: reward versus punishment and invigoration versus inhibition of behavior.

- 39.Cools R, et al. Tryptophan depletion disrupts the motivational guidance of goal-directed behavior as a function of trait impulsivity. Neuropsychopharmacology. 2005;30(7):1362–73. doi: 10.1038/sj.npp.1300704.

- 40.Crockett MJ, Clark L, Robbins TW. Reconciling the role of serotonin in behavioral inhibition and aversion: acute tryptophan depletion abolishes punishment-induced inhibition in humans. J Neurosci. 2009;29(38):11993–9. doi: 10.1523/JNEUROSCI.2513-09.2009.

- 41.Crockett MJ, et al. The effects of acute tryptophan depletion on costly information sampling: impulsivity or aversive processing? Psychopharmacology (Berl). 2012;219(2):587–97. doi: 10.1007/s00213-011-2577-9.

- 42.Fonseca MS, Murakami M, Mainen ZF. Activation of dorsal raphe serotonergic neurons promotes waiting but is not reinforcing. Curr Biol. 2015;25(3):306–15. doi: 10.1016/j.cub.2014.12.002.

- 43.Schweighofer N, et al. Low-serotonin levels increase delayed reward discounting in humans. J Neurosci. 2008;28(17):4528–32. doi: 10.1523/JNEUROSCI.4982-07.2008.

- 44.Dayan P. Instrumental vigour in punishment and reward. Eur J Neurosci. 2012;35(7):1152–68. doi: 10.1111/j.1460-9568.2012.08026.x.

- 45.Miyazaki K, Miyazaki KW, Doya K. The role of serotonin in the regulation of patience and impulsivity. Mol Neurobiol. 2012;45(2):213–24. doi: 10.1007/s12035-012-8232-6.

- 46.Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35(1):4–26. doi: 10.1038/npp.2009.129.

- 47.Balleine BW, O'Doherty JP. Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology. 2010;35(1):48–69. doi: 10.1038/npp.2009.131.

- 48.Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9(7):545–56. doi: 10.1038/nrn2357.

- 49.Clithero JA, Rangel A. Informatic parcellation of the network involved in the computation of subjective value. Soc Cogn Affect Neurosci. 2014;9(9):1289–302. doi: 10.1093/scan/nst106.

- 50.Bartra O, McGuire JT, Kable JW. The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage. 2013;76:412–27. doi: 10.1016/j.neuroimage.2013.02.063.

- 51.Delgado MR. Reward-related responses in the human striatum. Ann N Y Acad Sci. 2007;1104:70–88. doi: 10.1196/annals.1390.002.

- 52**.Levy DJ, Glimcher PW. The root of all value: a neural common currency for choice. Curr Opin Neurobiol. 2012;22(6):1027–38. doi: 10.1016/j.conb.2012.06.001. A meta-analysis of neuroimaging studies highlighting the role of the vmPFC in representing different types of reward in a common scale.

- 53.Abitbol R, et al. Neural mechanisms underlying contextual dependency of subjective values: converging evidence from monkeys and humans. J Neurosci. 2015;35(5):2308–20. doi: 10.1523/JNEUROSCI.1878-14.2015.

- 54.O'Doherty J, et al. Beauty in a smile: the role of medial orbitofrontal cortex in facial attractiveness. Neuropsychologia. 2003;41(2):147–55. doi: 10.1016/s0028-3932(02)00145-8.

- 55.Izuma K, Saito DN, Sadato N. Processing of social and monetary rewards in the human striatum. Neuron. 2008;58(2):284–94. doi: 10.1016/j.neuron.2008.03.020.

- 56.Speer ME, Bhanji JP, Delgado MR. Savoring the Past: Positive Memories Evoke Value Representations in the Striatum. Neuron. 2014;84(4):847–856. doi: 10.1016/j.neuron.2014.09.028.

- 57.FitzGerald TH, Seymour B, Dolan RJ. The role of human orbitofrontal cortex in value comparison for incommensurable objects. J Neurosci. 2009;29(26):8388–95. doi: 10.1523/JNEUROSCI.0717-09.2009.

- 58.Chib VS, et al. Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. J Neurosci. 2009;29(39):12315–20. doi: 10.1523/JNEUROSCI.2575-09.2009.

- 59**.Smith DV, et al. Distinct value signals in anterior and posterior ventromedial prefrontal cortex. J Neurosci. 2010;30(7):2490–5. doi: 10.1523/JNEUROSCI.3319-09.2010. One of the first fMRI studies to suggest the existence of a common neural currency, by demonstrating that each participant's willingness to trade monetary reward for viewing a beautiful face was predicted by the posterior VMPFC's differential response to receiving monetary rewards and viewing beautiful faces.

- 60.Calder AJ, et al. Disgust sensitivity predicts the insula and pallidal response to pictures of disgusting foods. Eur J Neurosci. 2007;25(11):3422–8. doi: 10.1111/j.1460-9568.2007.05604.x.

- 61.Phelps EA, LeDoux JE. Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron. 2005;48(2):175–87. doi: 10.1016/j.neuron.2005.09.025.

- 62.Li J, et al. Differential roles of human striatum and amygdala in associative learning. Nat Neurosci. 2011;14(10):1250–2. doi: 10.1038/nn.2904.

- 63**.Basten U, et al. How the brain integrates costs and benefits during decision making. Proc Natl Acad Sci U S A. 2010;107(50):21767–72. doi: 10.1073/pnas.0908104107. An fMRI study of how the brain integrates neural signals associated with costs and benefits in the vmPFC and dlPFC to inform decision-making.

- 64.Yacubian J, et al. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci. 2006;26(37):9530–7. doi: 10.1523/JNEUROSCI.2915-06.2006.

- 65.De Martino B, et al. Frames, biases, and rational decision-making in the human brain. Science. 2006;313(5787):684–7. doi: 10.1126/science.1128356.

- 66.Delgado MR, Jou RL, Phelps EA. Neural systems underlying aversive conditioning in humans with primary and secondary reinforcers. Front Neurosci. 2011;5. doi: 10.3389/fnins.2011.00071.

- 67.Delgado MR, et al. Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol. 2000;84(6):3072–3077. doi: 10.1152/jn.2000.84.6.3072.

- 68.Knutson B, et al. A region of mesial prefrontal cortex tracks monetarily rewarding outcomes: characterization with rapid event-related fMRI. Neuroimage. 2003;18(2):263–72. doi: 10.1016/s1053-8119(02)00057-5.

- 69**.Bhanji JP, Delgado MR. Perceived Control Influences Neural Responses to Setbacks and Promotes Persistence. Neuron. 2014;83(6):1369–1375. doi: 10.1016/j.neuron.2014.08.012. In this fMRI investigation, decreased activation in response to negative outcomes is observed in both striatum and vmPFC. This activity correlates with behavioral decisions to persist based on the perceived context in which the negative feedback is experienced (participant feels in control or not in control).

- 70.Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci. 2008;28(11):2745–52. doi: 10.1523/JNEUROSCI.4286-07.2008.

- 71.Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47(5):763–70. doi: 10.1016/j.neuron.2005.08.008.

- 72.Skvortsova V, Palminteri S, Pessiglione M. Learning To Minimize Efforts versus Maximizing Rewards: Computational Principles and Neural Correlates. J Neurosci. 2014;34(47):15621–15630. doi: 10.1523/JNEUROSCI.1350-14.2014.

- 73.LeDoux JE, Gorman JM. A call to action: overcoming anxiety through active coping. Am J Psychiatry. 2001;158(12):1953–5. doi: 10.1176/appi.ajp.158.12.1953.

- 74.Delgado MR, et al. Avoiding negative outcomes: tracking the mechanisms of avoidance learning in humans during fear conditioning. Front Behav Neurosci. 2009;3. doi: 10.3389/neuro.08.033.2009.

- 75**.Palminteri S, et al. Critical Roles for Anterior Insula and Dorsal Striatum in Punishment-Based Avoidance Learning. Neuron. 2012;76(5):998–1009. doi: 10.1016/j.neuron.2012.10.017. A computational characterization of how brain damage can causally and differentially affect instrumental learning from reward and punishment.

- 76.Seymour B, et al. Differential encoding of losses and gains in the human striatum. J Neurosci. 2007;27(18):4826–31. doi: 10.1523/JNEUROSCI.0400-07.2007.

- 77.Robinson OJ, et al. Stress increases aversive prediction error signal in the ventral striatum. Proc Natl Acad Sci U S A. 2013;110(10):4129–33. doi: 10.1073/pnas.1213923110.

- 78.Prevost C, et al. Neural correlates of specific and general Pavlovian-to-Instrumental Transfer within human amygdalar subregions: a high-resolution fMRI study. J Neurosci. 2012;32(24):8383–90. doi: 10.1523/JNEUROSCI.6237-11.2012.

- 79.Lewis AH, et al. Avoidance-based human Pavlovian-to-instrumental transfer. Eur J Neurosci. 2013;38(12):3740–8. doi: 10.1111/ejn.12377.

- 80.Shenhav A, Buckner RL. Neural correlates of dueling affective reactions to win-win choices. Proc Natl Acad Sci U S A. 2014;111(30):10978–83. doi: 10.1073/pnas.1405725111.

- 81.Tom SM, et al. The neural basis of loss aversion in decision-making under risk. Science. 2007;315(5811):515–8. doi: 10.1126/science.1134239.

- 82.Plassmann H, O'Doherty JP, Rangel A. Appetitive and aversive goal values are encoded in the medial orbitofrontal cortex at the time of decision making. J Neurosci. 2010;30(32):10799–808. doi: 10.1523/JNEUROSCI.0788-10.2010.

- 83.Porcelli AJ, Lewis AH, Delgado MR. Acute stress influences neural circuits of reward processing. Front Neurosci. 2012;6. doi: 10.3389/fnins.2012.00157.

- 84.Leotti LA, Delgado MR. The inherent reward of choice. Psychol Sci. 2011;22(10):1310–8. doi: 10.1177/0956797611417005.

- 85.Cockburn J, Collins AG, Frank MJ. A reinforcement learning mechanism responsible for the valuation of free choice. Neuron. 2014;83(3):551–7. doi: 10.1016/j.neuron.2014.06.035.

- 86.Leotti LA, Delgado MR. The value of exercising control over monetary gains and losses. Psychol Sci. 2014;25(2):596–604. doi: 10.1177/0956797613514589.

- 87.Losecaat Vermeer AB, Boksem MA, Sanfey AG. Neural mechanisms underlying context-dependent shifts in risk preferences. Neuroimage. 2014;103:355–63. doi: 10.1016/j.neuroimage.2014.09.054.

- 88.van Holst RJ, Chase HW, Clark L. Striatal connectivity changes following gambling wins and near-misses: Associations with gambling severity. Neuroimage Clin. 2014;5:232–9. doi: 10.1016/j.nicl.2014.06.008.

- 89.Talmi D, et al. How humans integrate the prospects of pain and reward during choice. J Neurosci. 2009;29(46):14617–26. doi: 10.1523/JNEUROSCI.2026-09.2009.

- 90.Park SQ, et al. Neurobiology of value integration: when value impacts valuation. J Neurosci. 2011;31(25):9307–14. doi: 10.1523/JNEUROSCI.4973-10.2011.

- 91.Baron-Cohen S, et al. The amygdala theory of autism. Neurosci Biobehav Rev. 2000;24(3):355–64. doi: 10.1016/s0149-7634(00)00011-7.

- 92.De Martino B, Camerer CF, Adolphs R. Amygdala damage eliminates monetary loss aversion. Proc Natl Acad Sci U S A. 2010;107(8):3788–92. doi: 10.1073/pnas.0910230107.

- 93.Fellows LK. Deciding how to decide: ventromedial frontal lobe damage affects information acquisition in multi-attribute decision making. Brain. 2006;129(Pt 4):944–52. doi: 10.1093/brain/awl017.

- 94.Camille N, et al. Ventromedial frontal lobe damage disrupts value maximization in humans. J Neurosci. 2011;31(20):7527–32. doi: 10.1523/JNEUROSCI.6527-10.2011.

- 95.Wheeler EZ, Fellows LK. The human ventromedial frontal lobe is critical for learning from negative feedback. Brain. 2008;131(Pt 5):1323–31. doi: 10.1093/brain/awn041.