Abstract

Brain-computer interfaces (BCIs) translate neuronal information into commands to control external software or hardware, which can improve the quality of life for both healthy and disabled individuals. Here, a multi-modal BCI that combines motor imagery (MI) and steady-state visual evoked potential (SSVEP) is proposed to achieve stable control of a quadcopter in three-dimensional physical space. The complete information common spatial pattern (CICSP) method is used to extract two MI features that steer the quadcopter left-forward and right-forward, and canonical correlation analysis (CCA) is employed to classify SSVEP for rise and fall. Eye blinking switches between these two modes while the quadcopter hovers. Real-time feedback is provided to subjects by a global camera. Two flight tasks were conducted in physical space to verify the reliability of the BCI system. In the simpler task, subjects controlled the quadcopter to fly forward along a zigzag pattern and pass through a gate. In the more complex task, the quadcopter was controlled to pass through two gates successively along an S-shaped route. The performance of the BCI system was quantified using several metrics, and subjects achieved 86.5% accuracy in the complex flight task. The results demonstrate that the multi-modal BCI can increase the accuracy rate, reduce the task burden, and improve the performance of the BCI system in the real world.

Keywords: multi-modal EEG, motor imagery, SSVEP, eye movement, quadcopter flight control

Introduction

Brain-computer interfaces (BCIs) provide an entirely new way for both disabled and healthy people to communicate and interact with the external world. Such a BCI system is realized through sensing, processing, and actuation (Nicolas-Alonso and Gomez-Gil, 2012). An electrophysiological signal is first collected, amplified, and digitized. A computer then interprets the underlying neurophysiology of the signal in order to translate the user's intent into specific commands. These commands are finally executed by external devices. Moreover, the user can receive feedback and adjust their thoughts to generate updated and adapted commands (Yuan and He, 2014). Among non-invasive BCIs, the scalp-recorded electroencephalogram (EEG) is widely used due to its high temporal resolution and convenience of use. Brain patterns including event-related desynchronization/synchronization (ERD/ERS), steady-state visual evoked potential (SSVEP), and the P300 potential are utilized in EEG-based BCIs (Hong and Khan, 2017). During motor imagery, local neuron populations over the sensorimotor area responsible for the imagined task desynchronize, which decreases mu-beta power and is accompanied by increased synchronization in the non-task hemisphere. These phenomena are termed ERD and ERS, respectively (Pfurtscheller et al., 2006). SSVEP is a continuous oscillating response recorded over the posterior scalp to a stimulus flickering at a constant frequency. The amplitude of SSVEP is enhanced when a subject's attention is cued to the stimulus (Xie et al., 2016). P300 is one component of event-related potentials (ERPs), which indicate responses to specific cognitive, sensory, or motor events. The presentation of a stimulus in an oddball paradigm produces a positive peak about 300 ms after stimulus onset (Xie et al., 2014; Ramadan and Vasilakos, 2017).

Many researchers have recently begun to focus on BCI-based navigation of flying robots in real space, since such robots can move flexibly in three dimensions and could be replaced by any remote mobile device that interacts meaningfully with the real world. Shi et al. investigated unmanned aerial vehicle (UAV) control using a left/right-hand MI-based BCI and semi-autonomous navigation for indoor target searching, where the MI EEG was employed only to accept or reject the current feasible direction (Shi et al., 2015). Kim et al. demonstrated the viability of flight control using a hybrid interface with EEG and eye tracking. Eight different directions were obtained from eye tracking, while mental concentration detected by EEG was used only for switching (Kim et al., 2014). Although these flying robots were controlled with relatively high accuracy, the BCI was responsible for only a small part of the control system.

Other researchers have investigated brain-controlled flying robots in which the BCI handles all of the commands. On the one hand, Royer et al. used four-class hand motor imagery to fly a virtual helicopter in a 3D world with the aid of intelligent control strategies (Royer et al., 2010). LaFleur et al. successfully conducted a quadcopter flight control experiment using an MI-based BCI in the physical world. Separable control of three dimensions was obtained by imagining clenching of the left hand, the right hand, or both hands, together with an idle state. The success rate reached 79.2% (LaFleur et al., 2013). To improve the recognition rate of motor imagery signals, various feature extraction algorithms such as the common spatial pattern (CSP), the cross-correlation method, and neural networks based on complex Morlet wavelets have been investigated (Shi et al., 2015; Das et al., 2016; Zhang et al., 2019). Moreover, attention must be paid to computational cost to ensure real-time operation. To this end, an improved CSP method called the complete information common spatial pattern (CICSP) is selected in our system, which employs additional intermediate spatial filters to extract more discriminable features in motor imagery. These early efforts have laid the groundwork for other research teams; however, in this scenario, imagining individual movements may impose a mental burden. Furthermore, performing a continuous mental task to control a quadcopter in real time can be exhausting and, combined with environmental distractions, could lead to a loss of control over the quadcopter.

On the other hand, SSVEP-based evoked BCI systems can be set up easily with almost no training. Other researchers have focused on developing SSVEP-based BCI systems that require less time and have a lower error rate (Middendorf et al., 2000; Liu et al., 2018). To reduce the eye discomfort caused by flickering, Wang et al. designed a wearable BCI system based on 4-class SSVEP that was presented using a head-mounted device (Wang et al., 2018). Although this alleviates the user's visual burden to some extent, it was only tested in a simulated 3D environment.

Considering these problems, a multi-modal BCI based on the combination of different brain patterns was recently introduced, which is capable of reaching specific targets more successfully than a single-modal BCI (Li et al., 2016). The active MI task combined with the reactive SSVEP task is commonly used to constitute a multi-modal BCI system. This combination reduces the mental burden and slows the build-up of visual fatigue over time. Such a multi-modal BCI can follow one of two protocols: performing imagined movements and focusing visual attention on an oscillating stimulus simultaneously, or executing the two tasks separately (Allison et al., 2010, 2012). Previous work found dual-task interference; that is, performing a simultaneous SSVEP task might impair the performance of an ERD task, whereas performing a secondary task (such as ERD) does not impair the performance of a primary task (such as SSVEP) (Pfurtscheller et al., 2010a; Das et al., 2016). Performing the two tasks separately could therefore be more in line with the physiological basis. Nevertheless, only a few studies have investigated a protocol that performs motor imagery and visual attention separately. One study developed a sequentially operating hybrid BCI that used a one-channel imagery-based BCI to turn an SSVEP BCI on and off (Pfurtscheller et al., 2010b; Brunner et al., 2011). Horki et al. designed a hybrid BCI in which imagining brisk dorsiflexion of the feet controlled the opening and closing of a gripper, and focusing on flickering lights controlled the extension and flexion of the elbow (Horki et al., 2011). Duan et al. employed three SSVEP signals and one feet motor imagery signal to design a hybrid BCI system that could provide both manipulation and mobility commands to a service robot. Moreover, the alpha rhythm served as a switch from SSVEP to motor imagery (Duan et al., 2015). The methods described above inspired the protocol of the multi-modal BCI-controlled quadcopter in our research.

In this study, we investigate the capacity of a multi-modal BCI combining MI and SSVEP to control a flying robot in the three-dimensional physical world, aiming to enhance the user's ability to interact with the outside world. Subjects are trained to imagine left/right-hand movement and to gaze at two flickering lights, which generate ERD/ERS and SSVEP to actuate the quadcopter in the horizontal and vertical directions, respectively. Moreover, the two modes are switched by eye blinking, since people who have motor neuron disease can nonetheless perceive and respond to the external world effectively through visual stimuli and eye movements (Lin et al., 2010). Control commands decoded from EEG are transmitted via Wi-Fi at a fixed time interval to the quadcopter to update its direction of motion. The real-time video acquired from the global camera is sent back to the subject's monitor in order to complete the BCI control by telepresence. Two outdoor flight experiments were performed, and several metrics were used to evaluate the performance of the multi-modal BCI system.

The remainder of this paper is arranged as follows. In section Materials and Methods, the experimental set-up and paradigm are explained, and the methods of EEG pattern recognition are given. In addition, the metrics of performance analysis are also introduced in this section. The experimental results are depicted in section Results. Section Discussion includes the discussion. Finally, section Conclusion summarizes the conclusions obtained.

Materials and Methods

Architecture of BCI System

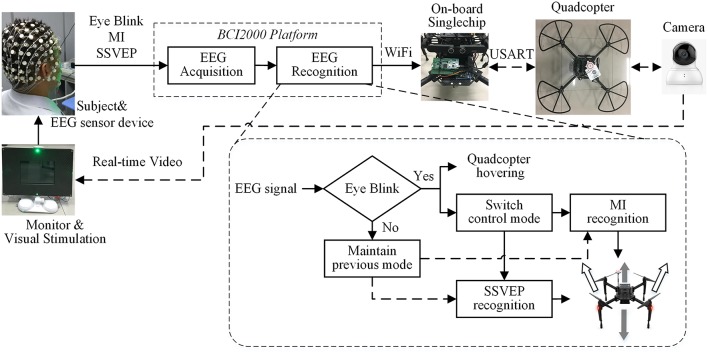

Figure 1 shows the architecture of the multi-modal BCI system for quadcopter flight control. Subjects perform three types of tasks (motor imagery, SSVEP, and eye blinking) to control the quadcopter flying in the physical environment. The EEG signals were collected with a comfortable and easy-to-use Geodesic Sensor Net (Electrical Geodesics Inc, OR) and fed into the Net Amps 300 amplifier (Electrical Geodesics Inc, OR) to obtain low-noise, high-quality data. EEG data acquisition and pattern recognition were run on the BCI2000 platform. The EEG data were decoded into five specific patterns: flying left-forward, flying right-forward, rise, fall, and flight mode switch while hovering, as indicated by the gray and white arrows. The decoded outputs were transmitted via Wi-Fi to the quadcopter's onboard single-chip microcomputer, where they were converted into control instructions. The instructions were then sent to the flight control system over USART to achieve continuous control of the quadcopter. The subject watched real-time video of the experimental site from a mounted camera on the monitor and, at the same time, executed the appropriate tasks to control the quadcopter independently. Two flight modes, MI mode and SSVEP mode, took charge of actuation in the horizontal and vertical dimensions, respectively, and the two modes were switched by eye blinking. For SSVEP stimulation, subjects had to gaze at one of the two green LEDs placed at the top and bottom of the monitor, whereas MI mode required no external stimulus.

Figure 1.

Architecture of the multi-modal BCI system for Quadcopter control.

Experiment Layout

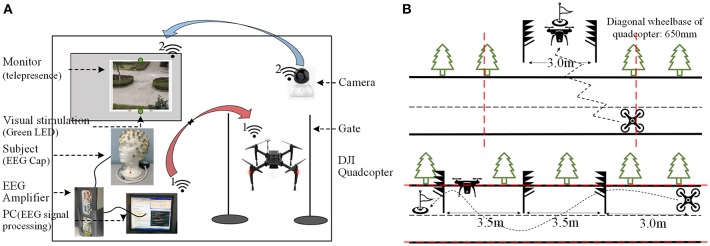

Figure 2A illustrates the experiment layout for flight control using the BCI system. The flight environment was set up on vacant land outside the laboratory building, while subjects controlled the flight independently from a corner of the land using telepresence. Subjects were asked to sit in a comfortable chair with their arms relaxed on the armrests. The monitor was adjusted so that the screen was exactly at the center of the subject's visual field. The length and width of the screen were 16 and 12 cm, respectively, and the distance between the two LEDs was 23.5 cm. Outdoors, each gate was formed by two barriers between which the quadcopter could pass, and the top of the gate was ~2.5 m above the ground. The quadcopter was not permitted to fly above the top of the gate. Subjects were situated at the back of the flight field to ensure safety. Since the subject's direct view was blocked, the flight status of the quadcopter was presented to the subject on a monitor fed by the camera.

Figure 2.

The view of the experiment layout. (A) The sketch map of the experiment set-up. (B) The simpler and more complex flight tasks.

To demonstrate the reliability of the BCI system, the flight tasks consisted of two phases, as shown in Figure 2B. The first task was relatively simple, allowing subjects to familiarize themselves with the real-time BCI system in the physical world, and the second task was more complex in order to test the performance of the flight control system. The pre-designed trajectory of the first flight task is shown in the top panel of Figure 2B. The quadcopter was controlled to fly forward along a zigzag pattern while its height was adjusted so that it could pass through the gate, which was 3 m wide and 1.5 m high, and it subsequently landed at the designated destination area. The second task is illustrated in the bottom panel of Figure 2B. The quadcopter's starting position was located nearly on the extension of the line connecting the three obstacles, 3 m away from the first obstacle. Subjects were asked to control the quadcopter to pass through two gates successively along an S-shaped route. The quadcopter bypassed the first barrier and then adjusted its flight direction to pass through the first 3.5-m-wide gate, which was immediately followed by a second direction change; it then passed through the second gate of the same size and finally landed at the designated destination area. During the experiment, subjects had to issue all instructions themselves according to the task and destination, of which they were informed in advance. Other experimental personnel were not allowed to give any tips to the subjects. To ensure flight safety, the boundaries of the control area were defined as the vertical extension lines of the trees beside the two barriers for the simple task, and as the two edges of the road for the complex flight task (as shown by the red dotted line in Figure 2B). Once the quadcopter went beyond the boundaries, the experimenter landed the quadcopter in a safe position using the remote controller and immediately declared the end of the trial.

The Matrice 100 (M100) quadcopter (DJI, Shenzhen, China) was chosen as the external device for the BCI experiment because of its extensive open-source platform, which is well suited to scientific research, and because its capabilities can be expanded with an onboard embedded system that supports serial communication as well as the DJI SDK. It provides an interface for programming a wide range of speeds and yaw rates in three dimensions. In addition, the M100 quadcopter provides stable and reliable flight, with up to 40 min of flight time.

Calibration Phase

Before steering an actual quadcopter, a training phase was carried out in a virtual quadcopter flight simulator developed by DJI, mainly to calibrate the subjects' control signals. Target instructions (left-forward, right-forward, rise, fall, and eye blinking) were announced to the subject by sound. EEG signals were collected to train the recognition methods for the three modes. Training continued until subjects obtained a success rate of 80% or above for each of the three modes (Zhang et al., 2016).

Experimental Paradigm

Each trial started after the quadcopter took off and hovered about 1 m above the ground. Imagining left-hand movement turned the quadcopter to the left-forward direction at an angle of −42 degrees with a forward speed of 0.25 m/s, while imagining right-hand movement turned the quadcopter to the right-forward direction at the symmetrical angle and the same speed. Gazing at the top flickering LED made the quadcopter climb at 0.2 m/s, while gazing at the bottom flickering LED made it descend with a vertical speed of −0.3 m/s. When the system switched to MI mode, the LEDs stopped flickering and subjects had to concentrate on imagining left- or right-hand movement continuously. A conscious eye blink kept the quadcopter hovering and, at the same time, switched between the two flight modes. A 1.5 s window of raw EEG signal was classified online to generate a control output, which was sent wirelessly every 1 s to the quadcopter's onboard single chip.
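The command mapping and mode switching described in this paragraph can be sketched as a small state machine. This is a minimal illustration rather than the authors' implementation: the names `Command`, `FlightController`, and the class labels (`"left"`, `"right"`, `"top"`, `"bottom"`, `"blink"`) are hypothetical, the right-forward angle is assumed to be +42 degrees by symmetry, the starting mode is assumed to be MI, and a label that does not belong to the active mode is assumed to result in hovering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    yaw_deg: float       # heading relative to straight ahead (negative = left)
    forward_mps: float   # forward speed in m/s
    vertical_mps: float  # vertical speed in m/s (positive = climb)


HOVER = Command(0.0, 0.0, 0.0)

# Speeds and the left angle come from the text; +42 degrees is assumed by symmetry.
COMMANDS = {
    ("MI", "left"): Command(-42.0, 0.25, 0.0),
    ("MI", "right"): Command(42.0, 0.25, 0.0),
    ("SSVEP", "top"): Command(0.0, 0.0, 0.2),
    ("SSVEP", "bottom"): Command(0.0, 0.0, -0.3),
}


class FlightController:
    """Hypothetical controller: maps one decoded label per update to a command."""

    def __init__(self, mode="MI"):  # starting mode is an assumption
        self.mode = mode

    def step(self, label):
        if label == "blink":
            # A conscious blink hovers and toggles between MI and SSVEP modes.
            self.mode = "SSVEP" if self.mode == "MI" else "MI"
            return HOVER
        # Labels outside the active mode fall back to hovering (assumption).
        return COMMANDS.get((self.mode, label), HOVER)
```

For example, calling `step("left")`, then `step("blink")`, then `step("top")` on a fresh controller would yield a left-forward command, a hover while switching to SSVEP mode, and a climb command, in that order.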

Nine human subjects (one female and eight males, aged 22–32) were recruited to participate in this experiment. The details of the subjects are listed in Table 1. Three of them had previously participated in BCI experiments, and the rest were naive to the experiment. All subjects had normal or corrected-to-normal visual acuity. The experimental procedures were approved by the Northwestern Polytechnical University Hospital Ethics Committee. Subjects attended the experiment on 8 non-consecutive days, with about 5 trials per session each day. Subjects were instructed to avoid body movement during each trial. The raw EEG signal was recorded from 12 electrodes (CP1, CP2, FC1, FC2, FC3, FC4, C1, C2, C3, C4, Oz, Fp2), although the Geodesic Sensor Net contained 64 electrodes of the standard 10/20 international EEG positioning system. The reference electrode, Cz, was placed on the central-parietal area. All impedances were kept below 5 kΩ. EEG data were band-pass filtered between 0.3 and 100 Hz and sampled at 1,000 Hz. The EEG processing was performed in MATLAB R2013a (The MathWorks, Inc., Natick, MA, USA). If the quadcopter, navigated by the subject, passed through the gate successfully, a “gate acquisition” was recorded; if the quadcopter went beyond the borders of the control area, an “out of borders” event was recorded.

Table 1.

The details of subjects.

| Subject number | Age | Sex | Handedness | Corrected visual acuity | Former experimental experience |
|---|---|---|---|---|---|
| 1 | 23 | Male | Right | 0.8/0.8 | Yes |
| 2 | 22 | Female | Right | 0.9/0.8 | No |
| 3 | 32 | Male | Right | 1/0.9 | No |
| 4 | 22 | Male | Right | 1/1.2 | No |
| 5 | 25 | Male | Right | 0.8/0.9 | Yes |
| 6 | 25 | Male | Left | 0.8/0.8 | Yes |
| 7 | 25 | Male | Right | 1/1 | No |
| 8 | 26 | Male | Right | 0.9/0.9 | No |
| 9 | 26 | Male | Right | 1/0.9 | No |

Pattern Recognition Methods

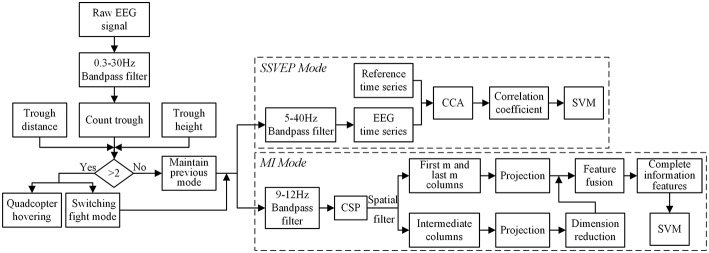

Figure 3 illustrates the procedure of the eye blinking, SSVEP, and MI recognition algorithms. First, each block of the raw EEG signal is checked for eye blinking using the trough counting method. If the number of troughs within a block is higher than 2, the quadcopter keeps hovering and the two flight modes are switched. Otherwise, the signal block is passed to the currently active mode, either SSVEP or MI, for processing.

Figure 3.

The procedure of recognition for three EEG patterns.

Eye Blinking

Eye blinking was used to switch between the two flight modes. Conscious eye blinking is defined as deliberate, forceful blinking at a fixed frequency; that is, blinking twice within a 1.5 s EEG data block. The EEG time series of conscious eye blinking was recorded from the Fp2 channel, which is located above the right orbit over the prefrontal lobe. It has been demonstrated that downward vertical eye movement generates a large-magnitude negative deflection, so that each eye blink appears as a pronounced trough (Corby and Kopell, 1972). A trough counting method was proposed to detect the subject's eye blinking. The flow chart of the method is shown in the left part of Figure 3. A 0.3–30 Hz bandpass filter was employed, since eye movement activity is maximal at frequencies below 4 Hz. This removed the EEG baseline drift and high-frequency noise while preserving the characteristic waveform of eye blinking. The number of troughs was then counted in a 1.5 s time window under two constraints: the average distance between two troughs, d, and the minimum absolute height of a trough, h. These two constraints were intended to differentiate conscious eye blinking from normal blinking and from other noise such as head movements or frowning. The parameter d was set to 0.75 s, while the parameter h was obtained by averaging the filtered EEG measurements of the calibration dataset. If the number of troughs was >2, the subject was considered to be blinking consciously; otherwise, the block was further classified within the MI/SSVEP modes.
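A trough counter of this kind could look roughly like the following sketch, which operates on an already band-pass-filtered Fp2 trace. It is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the text does not specify exactly how d enters the constraint, so it is treated here as a minimum spacing between accepted troughs, and the decision threshold is left as the parameter `min_troughs` (defaulting to the two blinks per block that define a conscious blink).

```python
import math


def count_troughs(signal, fs, min_depth, min_gap_s):
    """Count negative peaks deeper than min_depth and at least min_gap_s apart."""
    min_gap = int(round(min_gap_s * fs))
    troughs = []  # sample indices of accepted troughs
    for i in range(1, len(signal) - 1):
        is_local_min = signal[i] < signal[i - 1] and signal[i] <= signal[i + 1]
        if not is_local_min or signal[i] > -min_depth:
            continue  # not a minimum, or too shallow to be a deliberate blink
        if troughs and i - troughs[-1] < min_gap:
            # Too close to the previous trough: keep only the deeper of the two.
            if signal[i] < signal[troughs[-1]]:
                troughs[-1] = i
            continue
        troughs.append(i)
    return len(troughs)


def is_conscious_blink(signal, fs, min_depth, min_gap_s=0.75, min_troughs=2):
    """Decision rule; the threshold min_troughs is an assumption, not from the source."""
    return count_troughs(signal, fs, min_depth, min_gap_s) >= min_troughs


def synthetic_blinks(fs, duration_s, centers_s, depth=100.0, width_s=0.05):
    """Toy trace for illustration: one Gaussian-shaped negative deflection per blink."""
    n = int(fs * duration_s)
    return [
        -sum(depth * math.exp(-0.5 * ((k / fs - c) / width_s) ** 2) for c in centers_s)
        for k in range(n)
    ]
```

With a toy 1.5 s trace containing two deflections 0.8 s apart, `count_troughs` reports two troughs, whereas a trace with a single deflection reports one and is not treated as a conscious blink under the default threshold.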

SSVEP

SSVEP is a periodic evoked potential induced by rapidly repetitive visual stimulation, typically at frequencies >6 Hz. Subjects were able to make the quadcopter rise and fall simply by gazing at the top and bottom LEDs flickering at 12.4 and 18 Hz, respectively (Liu et al., 2018). The EEG signals were bandpass filtered between 5 and 40 Hz, which included the fundamental frequency of the visual stimulus and its first harmonic (Müller-Putz et al., 2005). The canonical correlation analysis (CCA) frequency recognition method, one of the most commonly used feature extraction methods for SSVEP, was employed for SSVEP detection, as shown in the upper right part of Figure 3. In general, CCA measures the correlation between two sets of variables. Considering two sets of variables $X$ and $Y$, CCA aims to find a pair of vectors $a$ and $b$ that maximize the correlation between $x = a^T X$ and $y = b^T Y$. The maximization problem can be described as below:

$$\max_{a,b}\ \rho(x, y) = \frac{E\left[a^T X Y^T b\right]}{\sqrt{E\left[a^T X X^T a\right]\,E\left[b^T Y Y^T b\right]}} \tag{1}$$

In this approach, the CCA coefficients are calculated between the EEG measurements recorded from channel Oz and each reference time series. The reference series is a set of Fourier components at the stimulus frequency (the flickering frequency $f_i$ of the $i$-th LED), which can be described as below:

$$Y_i = \begin{bmatrix} \sin(2\pi f_i t) \\ \cos(2\pi f_i t) \\ \vdots \\ \sin(2\pi N_h f_i t) \\ \cos(2\pi N_h f_i t) \end{bmatrix} \tag{2}$$

where $N_h = 2$ represents the number of harmonics and $t$ denotes the sample times. The stimulus frequency with the largest coefficient is taken as the SSVEP frequency (Lin et al., 2006; Duan et al., 2015).
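The frequency recognition step can be illustrated with a short, dependency-free sketch. Because only one EEG channel (Oz) is used, the first canonical correlation reduces to the multiple correlation coefficient between that channel and the sine/cosine reference set, which is what the code computes by least squares. The function names and the synthetic test signal are hypothetical, and this is not the authors' implementation.

```python
import math


def _solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))
        w[r] = s / M[r][r]
    return w


def _center(v):
    m = sum(v) / len(v)
    return [x - m for x in v]


def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine and cosine references at freq and its harmonics."""
    refs = []
    for h in range(1, n_harmonics + 1):
        for fn in (math.sin, math.cos):
            refs.append([fn(2 * math.pi * h * freq * k / fs) for k in range(n_samples)])
    return refs


def canonical_correlation(x, refs):
    """Single-channel CCA: multiple correlation of x with the reference set."""
    x = _center(x)
    Y = [_center(r) for r in refs]
    gram = [[sum(p * q for p, q in zip(r1, r2)) for r2 in Y] for r1 in Y]
    cross = [sum(p * q for p, q in zip(r, x)) for r in Y]
    total = sum(v * v for v in x)
    if total == 0.0:
        return 0.0
    w = _solve(gram, cross)
    explained = sum(c * wi for c, wi in zip(cross, w))
    return math.sqrt(max(0.0, explained / total))


def detect_frequency(x, fs, candidates=(12.4, 18.0)):
    """Return the candidate stimulus frequency with the largest correlation."""
    scores = {f: canonical_correlation(x, reference_signals(f, fs, len(x)))
              for f in candidates}
    return max(scores, key=scores.get)
```

A multi-channel version would require a full CCA solver; the single-channel shortcut is used here only because it keeps the sketch self-contained.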

Motor Imagery

EEG signals from 10 electrodes (CP1, CP2, FC1, FC2, FC3, FC4, C1, C2, C3, C4), which were distributed symmetrically over the sensorimotor areas of the two hemispheres, were used to extract MI features with an improved common spatial pattern method called the complete information common spatial pattern (CICSP). The aim of conventional CSP is to construct an optimal spatial filter that maximizes the variance of one class while minimizing that of the other through simultaneous diagonalization of the two covariance matrices (Ramoser et al., 2000). Normally, only the first few and last few vectors of the spatial filter, which are most suitable for discriminating the two MI tasks, are used to construct the classifier, whereas CICSP also extracts useful information from the intermediate columns of the spatial filter using a dimension reduction method. The EEG signals were first bandpass filtered to 9–12 Hz, which encompasses the mu frequency band, and were subsequently spatially filtered with a common average reference (CAR) filter (McFarland et al., 1997). The spatial filter was then calculated using the CICSP method. Two populations $X_1$ and $X_2$ of left and right motor imagery EEG measurements were spatially filtered by $Q_1$ and $Q_2$, leading to new time series $Z_{ij}$, formulated as follows:

$$Z_{ij} = Q_i^T X_j, \quad i, j \in \{1, 2\} \tag{3}$$

where $i$ and $j$ denote the index of the spatial filters and EEG populations, respectively. $Q_1$ consists of the first $m$ and the last $m$ columns of the spatial filter matrix, and $Q_2$ consists of its intermediate columns. The CSP features are calculated by

$$\lambda_{ij} = \frac{\mathrm{diag}\left(Z_{ij} Z_{ij}^T\right)}{\mathrm{tr}\left[Z_{ij} Z_{ij}^T\right]} \tag{4}$$

where diag(·) denotes the diagonal elements of the matrix, tr[·] is the sum of the diagonal elements, $f_1 = [\lambda_{11}; \lambda_{12}]$ represents the first $m$ and last $m$ feature vectors, and $f_2 = [\lambda_{21}; \lambda_{22}]$ represents the intermediate feature vectors of the two tasks. The dimension of $f_2$ can then be reduced using principal component analysis (PCA) to extract the most useful information, leading to $\tilde{f}_2$:

$$\tilde{f}_2 = \mathrm{PCA}(f_2) \tag{5}$$

$f_1$ and $\tilde{f}_2$ were concatenated to form the complete information feature vector $F$, which was classified using a linear-kernel support vector machine (SVM); the SVM classifier model was trained on the calibration dataset (Chang and Lin, 2011).

In addition, during the online implementation, the 1.5-s EEG measurement $X'$ was separated into three 0.5-s EEG segments $X'_k$, where $k = \{1, 2, 3\}$. The segments $X'_k$ were projected through the intermediate spatial filter $Q_2$, which was calculated in the calibration phase, and the CSP features were then calculated by (4). These features were reduced to one dimension, which was taken as the intermediate feature vector for one EEG measurement.
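The feature computation itself, projecting EEG through a spatial filter and taking each filtered component's share of the total power (the diagonal of $Z Z^T$ divided by its trace), can be sketched as below, together with the split of an online window into three equal segments. This is a hedged illustration only: the function names are hypothetical, the spatial filter `Q` is taken as given, and the CICSP filter estimation, the PCA reduction, and the SVM are not shown.

```python
def spatial_filter(Q, X):
    """Z = Q^T X, with Q as channels x filters and X as channels x samples."""
    n_channels = len(X)
    n_filters = len(Q[0])
    n_samples = len(X[0])
    return [
        [sum(Q[ch][k] * X[ch][t] for ch in range(n_channels)) for t in range(n_samples)]
        for k in range(n_filters)
    ]


def normalized_variance(Z):
    """diag(Z Z^T) / tr(Z Z^T): each filtered row's share of the total power."""
    power = [sum(v * v for v in row) for row in Z]
    total = sum(power)
    return [p / total for p in power]


def split_windows(X, n_parts=3):
    """Split a channels x samples block into consecutive equal-length segments."""
    step = len(X[0]) // n_parts
    return [[row[k * step:(k + 1) * step] for row in X] for k in range(n_parts)]
```

By construction the features of one block sum to 1, so they describe how power is distributed across the filtered components rather than its absolute level.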

Performance Analysis

An analogous information transfer rate (ITR) metric was created for the physical-world BCI task.

$$\text{Analogous ITR} = \frac{\log_2\left(\frac{D}{W} + 1\right)}{\text{AGAT}} \tag{6}$$

The numerator of formula (6) is an index of difficulty computed using the Fitts' law formalization (Decety and Jeannerod, 1995), where $D$ is the displacement from the initial position to the center of the gate and $W$ is the width of the gate; the denominator is the average gate acquisition time (AGAT) defined below. For both flight tasks, $D$ is 4.75 m, and $W$, as shown in Figure 2B, is 3.5 m. The quadcopter passing through the gate and landing at the designated area was considered a successful completion, whereas flying around the gate or flying beyond the boundaries was deemed a failure. The computation is relatively simple, depends on only two distances, and reflects the subject's ability to give specific instructions throughout the entire control process, including the time spent correcting unintended commands. This metric is a rough estimate of ITR, so several more specific metrics were also introduced to evaluate the performance of the BCI system. The average gate acquisition time (AGAT) was used to evaluate the speed of control, calculated as the total flight time divided by the total number of gates passed. Out Boundaries Per Unit Time (OBUT) reports the average number of boundary crossings per unit of flight time. The Percent Task Correct (PTC) metric reports the success rate of the BCI system, defined as the number of passes through the gate divided by the sum of the number of passes through the gate and the number of failures. The formulas are listed below (Doud et al., 2011).

$$\text{AGAT} = \frac{\text{total flight time}}{\text{number of gates passed}} \tag{7}$$

$$\text{OBUT} = \frac{\text{number of boundary crossings}}{\text{total flight time}} \tag{8}$$

$$\text{PTC} = \frac{\text{number of gates passed}}{\text{number of gates passed} + \text{number of failures}} \times 100\% \tag{9}$$
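As a worked check of these metrics, the sketch below computes them from per-subject counts. It assumes that the analogous ITR is the Fitts' index of difficulty, log2(D/W + 1), divided by AGAT, and that failures are counted per gate (two gates per trial in the complex task); under these assumptions the outputs agree with the values tabulated for the complex flight task in Table 4. The function name and argument names are hypothetical.

```python
import math


def flight_metrics(flight_time_min, gates_passed, gates_missed, boundary_crossings,
                   distance_m=4.75, gate_width_m=3.5):
    """Return AGAT (min/gate), OBUT (per min), PTC (%) and analogous ITR (bit/min)."""
    agat = flight_time_min / gates_passed
    obut = boundary_crossings / flight_time_min
    ptc = 100.0 * gates_passed / (gates_passed + gates_missed)
    # Fitts' index of difficulty in bits (assumed form of the numerator).
    index_of_difficulty = math.log2(distance_m / gate_width_m + 1)
    itr = index_of_difficulty / agat
    return {"AGAT": agat, "OBUT": obut, "PTC": ptc, "ITR": itr}


# Subject 5, complex task: 27.4 min, 36 of 40 gates passed, no boundary crossings.
sub5 = flight_metrics(27.4, 36, 4, 0)
# Subject 8, complex task: 30.0 min, 33 of 40 gates passed, 3 boundary crossings.
sub8 = flight_metrics(30.0, 33, 7, 3)
```

For subject 5 this gives an AGAT of about 0.76 min/gate, a PTC of 90%, and an analogous ITR of about 1.63 bit/min; for subject 8 it gives an OBUT of 0.10 per minute and a PTC of 82.5%.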

Results

Results in Calibration Phase

The success rates of the three modes were obtained using all EEG samples from the calibration phase for the nine subjects and are listed in Table 2. According to the results, the average correct recognition rate was 83.44% for SSVEP, 80.45% for MI, and 99.07% for eye blinking. The success rate of the entire task reached 87.65%. In the SSVEP experiment, the best performance was 99.38%, whereas the worst was 50.63%. Similarly, in the detection of MI, the best success rate was 93.70%, and the worst performance was below the level of chance. These two worst success rates came from the same subject (subject 4), who proved unable to use the BCI approach. For comparison purposes, Table 2 also shows the performance of the CSP method in MI recognition. The CICSP method outperformed CSP for eight of the nine subjects, raising the average accuracy from 77.02% to 80.45%, a relative improvement of 4.45%. A paired t-test revealed a significant difference between the accuracy rates of CICSP and CSP (P = 0.009). All nine subjects performed remarkably well in eye blinking, six of whom reached 100%. Five subjects (subjects 5, 6, 7, 8, and 9) achieved a success rate of at least 80% in every mode; these five subjects participated in the flight experiments. The average success rates of SSVEP, MI, and eye blinking among these five subjects were 92.06, 90.20, and 98.75%, respectively. The success rate of the entire task was 93.67% among these five subjects.

Table 2.

Experimental results in terms of success rate for 9 subjects in calibration phase.

| Subject | SSVEP correct recognition rate | MI correct recognition rate (CSP) | MI correct recognition rate (CICSP) | Eye blinking correct recognition rate | Success rate of the entire task |
|---|---|---|---|---|---|
| 1 | 78.44% | 82.61% | 84.43% | 100% | 87.62% |
| 2 | 62.19% | 74.72% | 78.82% | 97.92% | 79.64% |
| 3 | 99.38% | 51.54% | 60.14% | 100% | 86.51% |
| 4 | 50.63% | 41.62% | 49.63% | 100% | 66.75% |
| 5 | 97.76% | 86.94% | 88.76% | 97.92% | 94.81% |
| 6 | 97.06% | 91.51% | 93.70% | 100% | 96.92% |
| 7 | 97.62% | 92.04% | 91.63% | 100% | 96.42% |
| 8 | 87.05% | 85.99% | 87.97% | 95.83% | 90.28% |
| 9 | 80.80% | 86.20% | 88.95% | 100% | 89.92% |
| Average | 83.44% | 77.02% | 80.45% | 99.07% | 87.65% |

SSVEP, steady-state visually evoked potential; MI, motor imagery.
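The CSP versus CICSP comparison can be re-derived from the MI columns of Table 2. The sketch below computes the relative improvement in mean accuracy and the paired t statistic using only the standard library; the variable and function names are hypothetical, and the p-value itself is not computed. A t statistic of roughly 3.4 with 8 degrees of freedom is consistent with the reported P = 0.009.

```python
import math
from statistics import mean, stdev

# MI recognition rates (%) for subjects 1-9, taken from Table 2.
csp = [82.61, 74.72, 51.54, 41.62, 86.94, 91.51, 92.04, 85.99, 86.20]
cicsp = [84.43, 78.82, 60.14, 49.63, 88.76, 93.70, 91.63, 87.97, 88.95]


def paired_t_statistic(a, b):
    """t statistic of the paired differences b - a (degrees of freedom = n - 1)."""
    diffs = [y - x for x, y in zip(a, b)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


relative_gain = 100.0 * (mean(cicsp) - mean(csp)) / mean(csp)  # percent
t_stat = paired_t_statistic(csp, cicsp)
n_improved = sum(1 for x, y in zip(csp, cicsp) if y > x)
```

The relative gain evaluates to about 4.45%, and CICSP is higher for eight of the nine subjects, with subject 7 as the exception.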

Results in Actual Environment

The performance of the quadcopter flight experiment using the multi-modal BCI system is presented below. As shown in Table 3, each subject performed 10 trials of the simple flight task; two of the five subjects achieved a PTC of 100%, and the average PTC was 92%. Only two subjects flew the quadcopter beyond the boundaries, once each, and the total flight time was 4.6 min on average. The simple flight task aimed to give subjects a first experience of the brain-controlled quadcopter, and the experimental results demonstrated that subjects were able to reach the gate repeatedly.

Table 3.

Experimental results and performance of simple flight task.

| Subject (BCI control) | Total trials | Number of times passing through gate | Number of times beyond boundaries | PTC (%) | Total flight time (min) |
|---|---|---|---|---|---|
| Sub5 | 10 | 9 | 0 | 90 | 4.8 |
| Sub6 | 10 | 10 | 0 | 100 | 3.8 |
| Sub7 | 10 | 10 | 0 | 100 | 4.2 |
| Sub8 | 10 | 9 | 1 | 90 | 5.2 |
| Sub9 | 10 | 8 | 1 | 80 | 5.1 |
| Average | 10 | 9.2 | 0.4 | 92 | 4.6 |

BCI, brain computer interface; PTC, percent task correct.

To compare BCI control with a conventional control approach and with a baseline level, two additional experiments were performed: one using a remote controller instead of the BCI, and one using the BCI in the absence of the subject's intent. In the remote controller experiment, an experimenter who was experienced but not proficient completed the complex task. In this protocol, the maximum rise and fall speeds were equal to those of the BCI. In the horizontal plane, motion was allowed in any direction rather than only the two BCI actuations, and the maximum speed was likewise restricted to that of the BCI control. The baseline experiment aimed to identify the extent to which the subjects' performance could be attributed to the BCI system. A subject was instructed to sit quietly and watch a video of a quadcopter flight at the experimental site, which served as fake feedback, without executing any mental or visual task or any eye movement. The BCI system was set up identically to the actual experimental protocol. EEG signals were recorded and, after recognition, controlled the actuation of the quadcopter. It should be noted that the poor baseline performance cannot be attributed to an unrealistic EEG magnitude or to random noise, because true EEG signals were employed as the input to the system.

In the complex task, subjects passing through two gates sequentially was considered a success, while passing through one gate was regarded as a half success. Subjects were successful in achieving accurate control of the quadcopter in the actual environment. The performances in various metrics are listed in Table 4 according to the experimental record, and the results of “remote control” and “baseline level” were also evaluated for the purpose of comparison. PTC represented the success rate among all trials. The average among five subjects for PTC was up to 86.5%, which was slightly lower than the RC control (100%) but considerably higher than the baseline level (2.5%). In other words, subjects were able to achieve an average of ~35 gates per 20 trials. Subject 6 reached the highest PTC (97.5%), where 39 gates were hit. Subject 9 passed through 27 gates and performed worst in the calibration phase among the five subjects. It took 26.8 min of total flight time on average for the 20 trials. The best performer, subject 6, spent the shortest amount of time (20.7 min) to complete the flight task, whereas the total flight time of subject 9 was 1.6 times longer than that of subject 6. Because more time was needed to correct the misclassification of commands, the subject had a strong sense of frustration after navigating beyond the boundary, which affected the performance in the following trial. The average time for the quadcopter traveling through a gate was 0.80 min with a constant forward speed of 0.25 m/s, which was nearly 2.5 times longer than the remote-control protocol (0.32 min); however, this was far below the time required for the baseline (20min). The average number of beyond-boundary flights was around 2; thus, the OBUT metric for the BCI control was equal to 0.06, which was far below the baseline. In fact, the quadcopter flew out of the boundary quickly after taking off in each trial. 
The five subjects who participated in the complex flight experiment displayed an average analogous ITR of 1.69 bit/min compared to 3.69 bit/min for the RC protocol; individual subject values are listed in Table 4. The analogous ITR for the BCI control was 18.8 times higher than that of the baseline level, which indicates that subjects intentionally modulated their EEG signals in order to complete the task at a high success rate.

Table 4.

Experimental results and performance in various metrics of complex flight task.

| Subject | Total trials | Number of successes | Number of half successes | Number of times passing through gate | Number of times beyond boundaries |
|---|---|---|---|---|---|
| Sub5 | 20 | 16 | 4 | 36 | 0 |
| Sub6 | 20 | 19 | 1 | 39 | 0 |
| Sub7 | 20 | 19 | 0 | 38 | 1 |
| Sub8 | 20 | 16 | 1 | 33 | 3 |
| Sub9 | 20 | 12 | 3 | 27 | 5 |

| Subject | Total flight time (min) | AGAT (min/gate) | OBUT (number/min) | PTC (%) | Analogous ITR (bit/min) |
|---|---|---|---|---|---|
| Sub5 | 27.4 | 0.76 | 0 | 90.0 | 1.63 |
| Sub6 | 20.7 | 0.53 | 0 | 97.5 | 2.33 |
| Sub7 | 22.1 | 0.58 | 0.05 | 95.0 | 2.13 |
| Sub8 | 30.0 | 0.90 | 0.10 | 82.5 | 1.37 |
| Sub9 | 34.0 | 1.25 | 0.15 | 67.5 | 1.00 |
| Average | 26.8 | 0.80 | 0.06 | 86.5 | 1.69 |
| Remote control | – | 0.32 | 0 | 100 | 3.90 |
| Baseline | – | 20 | 7 | 2.5 | 0.09 |

AGAT, average gate acquisition time; OBUT, out boundaries per unit time; PTC, percent task correct; Analogous ITR, analogous information transfer rate.
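
The derived columns of Table 4 can be recomputed from the raw counts. The sketch below assumes, based on the tabulated values rather than on a definition given in this section, that PTC is the number of gates passed divided by the 40 gates available in 20 trials, that AGAT is the total flight time divided by the number of gates passed, and that OBUT is the out-of-boundary count divided by the total flight time. The function name `flight_metrics` and the layout of `records` are illustrative only. Under these assumptions the results agree with the table to within rounding.

```python
# Raw per-subject records copied from Table 4:
# (successes, half successes, out-of-boundary flights, total flight time in min)
records = {
    "Sub5": (16, 4, 0, 27.4),
    "Sub6": (19, 1, 0, 20.7),
    "Sub7": (19, 0, 1, 22.1),
    "Sub8": (16, 1, 3, 30.0),
    "Sub9": (12, 3, 5, 34.0),
}

GATES_PER_TRIAL = 2  # the complex task has two gates per trial


def flight_metrics(successes, halves, out_of_bounds, minutes, trials=20):
    """Return (gates, PTC %, AGAT min/gate, OBUT per min) under the assumed definitions."""
    # A success passes both gates; a half success passes one.
    gates = GATES_PER_TRIAL * successes + halves
    ptc = 100.0 * gates / (GATES_PER_TRIAL * trials)
    agat = minutes / gates
    obut = out_of_bounds / minutes
    return gates, ptc, agat, obut


results = {name: flight_metrics(*rec) for name, rec in records.items()}

for name, (gates, ptc, agat, obut) in results.items():
    print(f"{name}: gates={gates}, PTC={ptc:.1f}%, AGAT={agat:.2f}, OBUT={obut:.2f}")

# Group averages, computed as the mean of the per-subject values.
n = len(results)
mean_ptc = sum(r[1] for r in results.values()) / n
mean_agat = sum(r[2] for r in results.values()) / n
mean_obut = sum(r[3] for r in results.values()) / n
print(f"Average: PTC={mean_ptc:.1f}%, AGAT={mean_agat:.2f}, OBUT={mean_obut:.2f}")
```

The analogous ITR column is not recomputed here because its formula is not stated in this section.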

Discussion

The present work demonstrates that subjects can control a quadcopter in the real world with a multi-modal EEG-based BCI. The purpose of a multi-modal BCI is to increase the number of control commands, reduce the task burden, and improve the recognition success rate (Hong and Khan, 2017). With this practical BCI, the user could successfully and efficiently navigate a quadcopter toward a target while avoiding obstacles in an actual outdoor environment, observed from a fixed view. The multi-modal BCI system could restore the capacity of disabled people to explore the real world as well as extend the abilities of fully capable people in more practical ways. In the future, this multi-modal BCI system could serve further practical purposes. (1) Injured soldiers could rely on it to remain in combat on battlefields. (2) It could assist astronauts in accomplishing multitask missions in space. (3) Patients with severe disabilities could use it for transporting objects (Nourmohammadi et al., 2018).

The use of blinking to facilitate the multi-modal BCI was a deliberate design choice. At the very beginning, switching between the two modes was performed automatically. If the coefficient in the detection of SSVEPs was higher than a threshold, the EEG block was treated as the SSVEP mode; otherwise, it was treated as the motor imagery mode. Since the two modes were detected from channels located over two separate areas of the brain, the evoked potential and the induced potential would not affect each other. Under this scheme, the LEDs had to keep flickering during the whole experiment. However, gazing at the flickering lights disturbed the concentration required for the mental tasks, which led to an increasing number of flights beyond the boundaries. Therefore, a switch was introduced in our work to turn the LEDs on and off. Because navigating a quadcopter outdoors at a low altitude is a time-sensitive task, some prototypes have incorporated non-mental features such as eye blinking and muscle movement as a precise switch to minimize the manipulation delay. Since the detection of facial muscle movement needs more electrodes, eye blinking was selected in our work. Once a subject's conscious eye blinking is well trained, with a fixed frequency and consistent strength (at least as high as the trained strength), the parameter d (the average distance between two troughs) in the blink-recognizing algorithm remains constant. The parameter h (the minimum absolute height of the trough) needs to be estimated using the dataset recorded in the calibration phase. Therefore, the blink-recognizing algorithm is robust, and the rest of the BCI system worked properly as well. Because an eye blink does not carry mental information, the quadcopter remains hovering during a blink instead of engaging in reckless movement.
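
The automatic mode selection that was tried first (thresholding the SSVEP detection coefficient of each EEG block) can be sketched as follows. This is a minimal illustration and not the authors' implementation: it computes the largest canonical correlation between a block and sine/cosine references with NumPy, in the manner of standard CCA frequency recognition. The function names, the stimulus frequencies (10 and 15 Hz), the sampling rate, the number of harmonics, and the threshold of 0.5 are all hypothetical values chosen for the example, and the EEG is synthetic.

```python
import numpy as np


def max_canonical_corr(X, Y):
    """Largest canonical correlation between the columns of X and Y (rows are samples).

    Assumes both matrices have full column rank after centering.
    """
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    # Orthonormal bases of the two column spaces; the singular values of
    # Qx^T Qy are the canonical correlations.
    Qx, _ = np.linalg.qr(Xc)
    Qy, _ = np.linalg.qr(Yc)
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return float(min(s[0], 1.0))


def reference_signals(freq, n_samples, fs, n_harmonics=2):
    """Sine and cosine references at freq and its harmonics."""
    t = np.arange(n_samples) / fs
    cols = []
    for k in range(1, n_harmonics + 1):
        cols.append(np.sin(2 * np.pi * k * freq * t))
        cols.append(np.cos(2 * np.pi * k * freq * t))
    return np.column_stack(cols)


def select_mode(block, fs, stim_freqs, threshold):
    """Label a block 'SSVEP' if its best CCA coefficient exceeds threshold, else 'MI'."""
    scores = {
        f: max_canonical_corr(block, reference_signals(f, block.shape[0], fs))
        for f in stim_freqs
    }
    best = max(scores, key=scores.get)
    if scores[best] > threshold:
        return "SSVEP", best, scores[best]
    return "MI", None, scores[best]


# Synthetic demonstration (hypothetical parameters, not recorded EEG).
rng = np.random.default_rng(0)
fs, n = 250, 500                      # 2 s block at 250 Hz
t = np.arange(n) / fs
ssvep_block = 0.5 * rng.standard_normal((n, 3)) + np.sin(2 * np.pi * 10 * t)[:, None]
rest_block = 0.5 * rng.standard_normal((n, 3))

mode_a = select_mode(ssvep_block, fs, [10.0, 15.0], threshold=0.5)
mode_b = select_mode(rest_block, fs, [10.0, 15.0], threshold=0.5)
print(mode_a)
print(mode_b)
```

In such a scheme the references are only meaningful while the LEDs flicker continuously, which is the constraint that motivated replacing the automatic rule with a blink-operated switch.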

During the initial phase of the actual quadcopter control experiments, the noise generated by the flying quadcopter in the flight field made some of the subjects nervous, which affected the experimental results. Subjects adapted to the environment over time. Since subjects could clearly see the consequences of task failure, such as flying into the bushes, hitting obstacles, or even crashing, this could influence their motivation to succeed in the experiment.

The control commands generated by our current multi-modal BCI system could be further extended. Since CICSP has been demonstrated to be an efficient method for multi-class motor imagery classification, in future work we plan to extend the CICSP method to multi-class ERD/ERS classification and to place more flickering LEDs on the screen to generate SSVEP features, so as to actuate more motions in three dimensions.

Generally speaking, multi-modal EEG signals can be used together to generate a series of control commands for subjects interacting with the real world. The performance of our multi-modal BCI system presents some advantages compared with previous studies (LaFleur et al., 2013; Duan et al., 2015; Wang et al., 2018). Duan et al. utilized a hybrid BCI system on an actual humanoid robot. In their simulation experiment, the average success rate for the entire task was higher than 80%, which was lower than that of our system (93.67%). Their overall success rate on an actual device was 73.3%, lower than our PTC (86.5%), which represents the success rate among all trials. Moreover, their manipulation task was achieved with the aid of a visual servo module on a service robot. By contrast, the quadcopter in our experiment was controlled using only EEG signals throughout the flight. Wang et al. used a 4-class SSVEP paradigm presented in a virtual reality environment to control a simulated quadcopter. The online accuracy reached 78%, which was lower than the overall accuracy rate of our multi-modal BCI (86.5%). Although their approach alleviates the user's visual burden to some extent, it was only conducted in a simulated environment. LaFleur et al. controlled a quadcopter in the physical world using the MI mode alone. The average ITR of that work was 1.16, which was lower than that of our multi-modal BCI system (1.69). The results indicate that our multi-modal BCI system could effectively increase the accuracy rate while alleviating both mental burden and visual fatigue to a large extent.

Conclusion

This paper presents a multi-modal BCI system to accurately and stably control a quadcopter to pass through gates in three-dimensional physical space. The MI and SSVEP modes, which are associated with two types of regular EEG patterns (ERD/ERS and SSVEP), were employed to actuate the flight in the horizontal and vertical directions. The two modes were rapidly switched by eye blinking. The ERD/ERS and SSVEP patterns were analyzed using the CICSP feature extraction method and the CCA frequency recognition method, respectively. Eye blinking was detected by a peak-counting method within each EEG data block. Subjects were able to navigate the quadcopter through a series of gates in the outdoor environment continuously, accurately, and rapidly. Several metrics for real-world BCI systems were used to assess the performance of this system. The PTC reached 86.5% and the analogous ITR reached 1.69 bit/min for five subjects, with an average gate acquisition time of nearly 0.80 min. This system could give people who suffer from paralyzing disorders the ability to interact with the three-dimensional real world. The multi-modal BCI could increase the accuracy rate while alleviating both the mental burden and the visual fatigue to a large extent.

Ethics Statement

This study was carried out in accordance with the recommendations for research involving human subjects of the Northwestern Polytechnical University Hospital Ethics Committee. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Northwestern Polytechnical University Hospital Ethics Committee.

Author Contributions

XD, SX, and XX contributed equally to this work. They conceptualized the idea and proposed the experimental design. XD also wrote the programs, performed all data analysis, and wrote the manuscript at all stages. SX and XX revised the manuscript at all stages. YM and ZX conducted the experiments. All authors read and approved the final manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank the subjects for participating in the BCI experiments and the reviewers for their helpful comments.

Footnotes

Funding. This research is supported partly by the National Science Fund of China (Grant No. 61273250), the Key R&D Program of Shaanxi (2018ZDXM-GY-101), Shaanxi Innovation Capability Support Program (2018GHJD-10), the Fundamental Research Funds for the Central Universities (No. 3102018XY021), the Innovation Foundation for Doctor Dissertation of Northwestern Polytechnical University (CX201951), and the Seed Foundation of Innovation and Creation for Graduate Students in Northwestern Polytechnical University (No. ZZ2018142).

References

- Allison B. Z., Brunner C., Altstätter C., Wagner I. C., Grissmann S., Neuper C. (2012). A hybrid ERD/SSVEP BCI for continuous simultaneous two dimensional cursor control. J. Neurosci. Meth. 209, 299–307. doi: 10.1016/j.jneumeth.2012.06.022

- Allison B. Z., Brunner C., Kaiser V., Müller-Putz G. R., Neuper C., Pfurtscheller G. (2010). Toward a hybrid brain–computer interface based on imagined movement and visual attention. J. Neural Eng. 7:026007. doi: 10.1088/1741-2560/7/2/026007

- Brunner C., Allison B. Z., Altstätter C., Neuper C. (2011). A comparison of three brain–computer interfaces based on event-related desynchronization, steady state visual evoked potentials, or a hybrid approach using both signals. J. Neural Eng. 8:025010. doi: 10.1088/1741-2560/8/2/025010

- Chang C., Lin C. (2011). LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 1–27. doi: 10.1145/1961189.1961199

- Corby J. C., Kopell B. S. (1972). Differential contributions of blinks and vertical eye movements as artifacts in EEG recording. Psychophysiology 9, 640–644. doi: 10.1111/j.1469-8986.1972.tb00776.x

- Das A. K., Suresh S., Sundararajan N. (2016). A robust interval Type-2 Fuzzy Inference based BCI system, in 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest.

- Decety J., Jeannerod M. (1995). Mentally simulated movements in virtual reality: does Fitt's law hold in motor imagery? Behav. Brain Res. 72, 127–134. doi: 10.1016/0166-4328(96)00141-6

- Doud A. J., Lucas J. P., Pisansky M. T., He B. (2011). Continuous three-dimensional control of a virtual helicopter using a motor imagery based brain-computer interface. PLoS ONE 6:e26322. doi: 10.1371/journal.pone.0026322

- Duan F., Lin D., Li W., Zhang Z. (2015). Design of a multimodal EEG-based hybrid BCI system with visual servo module. IEEE Trans. Autonom. Mental Dev. 7, 332–341. doi: 10.1109/TAMD.2015.2434951

- Hong K. S., Khan M. J. (2017). Hybrid Brain–computer interface techniques for improved classification accuracy and increased number of commands: a review. Front. Neurorobot. 11:35. doi: 10.3389/fnbot.2017.00035

- Horki P., Solis-Escalante T., Neuper C., Müller-Putz G. (2011). Combined motor imagery and SSVEP based BCI control of a 2 DoF artificial upper limb. Med. Biol. Eng. Comput. 49, 567–577. doi: 10.1007/s11517-011-0750-2

- Kim B. H., Kim M., Jo S. (2014). Quadcopter flight control using a low-cost hybrid interface with EEG-based classification and eye tracking. Comput. Biol. Med. 51, 82–92. doi: 10.1016/j.compbiomed.2014.04.020

- LaFleur K., Cassady K., Doud A., Shades K., Rogin E., He B. (2013). Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain–computer interface. J. Neural Eng. 10:046003. doi: 10.1088/1741-2560/10/4/046003

- Li Y., Pan J., Long J., Yu T., Wang F., Yu Z., et al. (2016). Multimodal BCIs: target detection, multidimensional control, and awareness evaluation in patients with disorder of consciousness. P. IEEE 104, 332–352. doi: 10.1109/JPROC.2015.2469106

- Lin J., Chen K., Yang W. (2010). EEG and eye-blinking signals through a Brain-Computer Interface based control for electric wheelchairs with wireless scheme, in 4th International Conference on New Trends in Information Science and Service Science (Gyeongju).

- Lin Z., Zhang C., Wu W., Gao X. (2006). Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE T. Bio. Med. Eng. 53, 2610–2614. doi: 10.1109/TBME.2006.886577

- Liu C., Xie S., Xie X., Duan X., Wang W., Obermayer K. (2018). Design of a video feedback SSVEP-BCI system for car control based on improved MUSIC method, in 2018 6th International Conference on Brain-Computer Interface (BCI) (GangWon).

- McFarland D. J., McCane L. M., David S. V., Wolpaw J. R. (1997). Spatial filter selection for EEG-based communication. Electroencephalogr. Clin. Neurophysiol. 103, 386–394. doi: 10.1016/S0013-4694(97)00022-2

- Middendorf M., McMillan G., Calhoun G., Jones K. S. (2000). Brain-computer interfaces based on the steady-state visual-evoked response. IEEE Trans. Rehabil. Eng. 8, 211–214. doi: 10.1109/86.847819

- Müller-Putz G. R., Scherer R., Brauneis C., Pfurtscheller G. (2005). Steady-state visual evoked potential (SSVEP)-based communication: impact of harmonic frequency components. J. Neural Eng. 2, 123–130. doi: 10.1088/1741-2560/2/4/008

- Nicolas-Alonso L. F., Gomez-Gil J. (2012). Brain computer interfaces, a review. Sensors 12, 1211–1279. doi: 10.3390/s120201211

- Nourmohammadi A., Jafari M., Zander T. O. (2018). A survey on unmanned aerial vehicle remote control using brain–computer interface. IEEE T. Hum. Mach. Syst. 48, 337–348. doi: 10.1109/THMS.2018.2830647

- Pfurtscheller G., Allison B. Z., Brunner C., Solis-Escalante T., Scherer R., et al. (2010a). The hybrid BCI. Front. Neurosci. 4:3. doi: 10.3389/fnpro.2010.00003

- Pfurtscheller G., Brunner C., Schlögl A., Lopes da Silva F. H. (2006). Mu rhythm (de)synchronization and EEG single-trial classification of different motor imagery tasks. Neuroimage 31, 153–159. doi: 10.1016/j.neuroimage.2005.12.003

- Pfurtscheller G., Solis-Escalante T., Ortner R., Linortner P., Müller-Putz G. R. (2010b). Self-paced operation of an SSVEP-based orthosis with and without an imagery-based “brain switch:” a feasibility study towards a hybrid BCI. IEEE T. Neur. Sys. Reh. 18, 409–414. doi: 10.1109/TNSRE.2010.2040837

- Ramadan R. A., Vasilakos A. V. (2017). Brain computer interface: control signals review. Neurocomputing 223, 26–44. doi: 10.1016/j.neucom.2016.10.024

- Ramoser H., Muller-Gerking J., Pfurtscheller G. (2000). Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Trans. Rehabil. Eng. 8, 441–446. doi: 10.1109/86.895946

- Royer A. S., Doud A. J., Rose M. L., He B. (2010). EEG control of a virtual helicopter in 3-dimensional space using intelligent control strategies. IEEE T. Neur. Sys. Reh. 18, 581–589. doi: 10.1109/TNSRE.2010.2077654

- Shi T., Wang H., Zhang C. (2015). Brain computer interface system based on indoor semi-autonomous navigation and motor imagery for unmanned aerial vehicle control. Expert Syst. Appl. 42, 4196–4206. doi: 10.1016/j.eswa.2015.01.031

- Wang M., Li R., Zhang R., Li G., Zhang D. (2018). A wearable SSVEP-based BCI system for quadcopter control using head-mounted device. IEEE Access 6, 26789–26798. doi: 10.1109/ACCESS.2018.2825378

- Xie S., Liu C., Obermayer K., Zhu F., Wang L., Xie X., et al. (2016). Stimulator selection in SSVEP-based spatial selective attention study. Comput. Intell. Neurosci. 2016:9. doi: 10.1155/2016/6410718

- Xie S., Wu Y., Zhang Y., Zhang J., Liu C. (2014). Single channel single trial P300 detection using extreme learning machine: Compared with BPNN and SVM, in 2014 International Joint Conference on Neural Networks (IJCNN) (Beijing).

- Yuan H., He B. (2014). Brain–computer interfaces using sensorimotor rhythms: current state and future perspectives. IEEE T. Bio. Med. Eng. 61, 1425–1435. doi: 10.1109/TBME.2014.2312397

- Zhang R., Li Y., Yan Y., Zhang H., Wu S., Yu T., et al. (2016). Control of a wheelchair in an indoor environment based on a brain–computer interface and automated navigation. IEEE T. Neur. Sys. Reh. 24, 128–139. doi: 10.1109/TNSRE.2015.2439298

- Zhang Z., Duan F., Solé-Casals J., Dinarès-Ferran J., Cichocki A., Yang Z., et al. (2019). A novel deep learning approach with data augmentation to classify motor imagery signals. IEEE Access 7, 15945–15954. doi: 10.1109/ACCESS.2019.2895133