Abstract

In the current digital era, conventional e-learning methods have become inadequate for the requirements of upgraded learning processes, especially in higher education. Adopting Cloud computing can transform e-learning into a flexible, shareable, content-reusable, and scalable learning methodology. Although many Cloud e-learning frameworks have been proposed in the literature, little research has examined the usability factors that predict continuance intention to use Cloud e-learning applications. In this study, five usability factors, namely Computer Self Efficacy (CSE), Enjoyment (E), Perceived Ease of Use (PEU), Perceived Usefulness (PU), and User Perception (UP), were identified for factor analysis. All five independent variables were hypothesized to be positively associated with the dependent variable, Continuance Intention (CI). A survey was conducted among 170 IT students at a private university in Malaysia, who were given one trimester to experience the usability of a Cloud e-learning application. A questionnaire of thirty questions was formulated and used as the instrument to analyse the effect of the usability factors on continuance intention, and the collected data were analysed using SMARTPLS 3.0. The results show that computer self-efficacy and enjoyment, as intrinsic motivations, significantly predict continuance intention, whereas perceived ease of use, perceived usefulness, and user perception were insignificant. This outcome implies that computer self-efficacy and enjoyment significantly affect students' willingness to continue using a Cloud e-learning application in their studies. The discussion and implications of this study are relevant to researchers and practitioners of educational technology in higher education.

Keywords: Psychology, Behavioral psychology, Information science, Education, Cloud e-learning, Continuance intention, Usability factors, Structural equation modeling

1. Introduction

In the 21st century, learners are visually sophisticated and accustomed to digital media (Chunwijitra et al., 2013). The educational landscape is changing swiftly owing to heavy technology adoption among the new generation of learners. Digital devices running applications such as Facebook, chat apps, and YouTube have changed people's way of living, including communication and social affairs, as well as education methods (Tiyar and Khoshsima, 2015). The increasing trend towards interactive video content creation and collaborative technologies appears to support the belief that enhanced educational technologies and learning systems help engage learners and improve learning productivity (Chunwijitra et al., 2013). “Higher education is emphasising more on higher order experiences and outcomes which requires a major transformation in knowledge and communication-based society” (Thomas, 2011). Thus, conventional e-learning methods are no longer adequate to cater for the needs of upgraded learning processes, especially in higher education.

The growing demand for e-learning content, especially multimedia elements, brings rapid storage growth and dynamic concurrency demands that conventional e-learning methods cannot adequately handle. Creating multimedia e-learning content is expensive and time-consuming; hence, reusable and shareable e-learning content is essential for producing multimedia content efficiently. In addition, learning content in conventional learning methods is too inflexible to support highly distributed learning resources. Conventional methods therefore lack the ability to optimize resource allocation, to handle the enormous storage growth of multimedia elements, and to distribute costs.

The readiness of state-of-the-art Internet and Cloud technologies inspires the vision of an e-learning framework that employs Cloud technology to make learning content more flexible and to address the issues of conventional learning methods. Cloud computing has been adopted to support cooperative learning and remote e-learning in Cloud computing environments, as well as the transformation of the computer fundamentals curriculum in universities (Lin, 2011). Embracing Cloud technology in e-learning can form an upgraded education domain that shares the Cloud characteristics of elasticity, flexibility, efficiency, and reliability (Gong et al., 2010). Students can take courses online and carry out learning activities at their own pace, whereas lecturers can manage learning content, activities, and assessment anytime and anywhere via Cloud applications (Riahi, 2015). Another distinct advantage of Cloud technology is the ability to share, process, edit, and store huge amounts of learning content within educational environments (Abusfian Elgelany, 2017). Cloud computing also offers higher learning institutions a low-cost solution for their researchers, academicians, and students while improving learning performance (Al-Zoube et al., 2010; Riahi, 2015). Therefore, this study proposes a Cloud e-learning framework that embraces most of the Cloud characteristics, and a Cloud e-learning application was subsequently designed and developed according to the principles of the proposed framework.

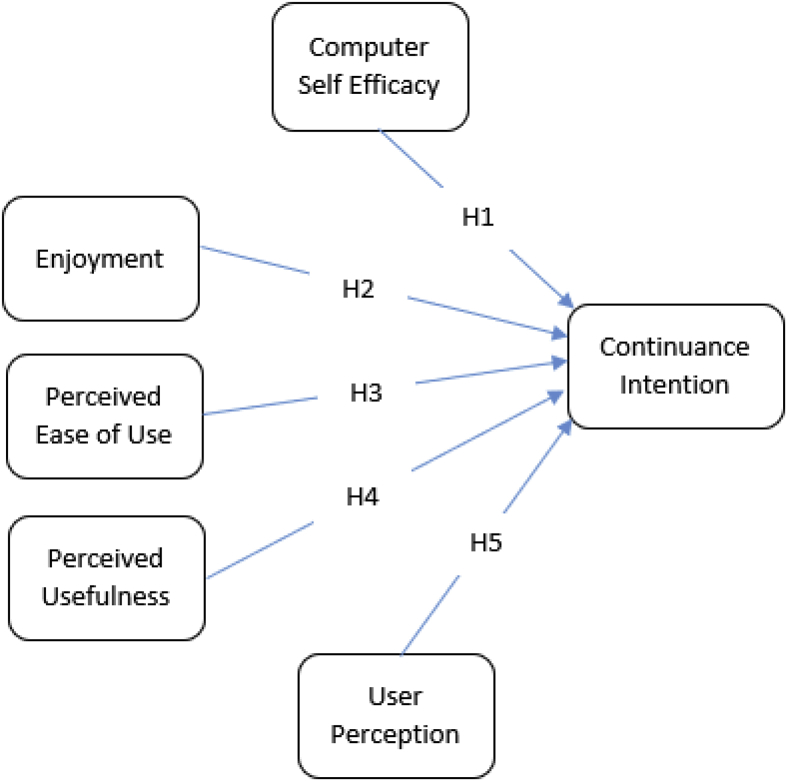

The objective of this study is to propose and validate a theoretical model for predicting continuance intention to use a Cloud e-learning application among IT students at a private university in Malaysia. A comprehensive Cloud e-learning application was designed and developed, incorporating a series of Cloud learning tools and Web 2.0 tools. Literature on technology acceptance (Abu-Al-Aish & Love, 2013; Briz-Ponce and García-Peñalvo, 2015; Calisir et al., 2014; Davis et al., 1992; Davis, 1989; Hamid et al., 2016; Hess et al., 2014; Mou, Shin and Cohen, 2017a, 2017b; Padilla-Meléndez et al., 2013), continuance intention (Almaghrabi et al., 2011; Amoroso and Chen, 2017; Han et al., 2018; Susanto et al., 2016; Tella and Olasina, 2014; Tiyar and Khoshsima, 2015), usability standards (Bahn et al., 2007; Quesenbery, 2005; Traynor, 2011), and related topics was reviewed to identify the key usability factors. Four prominent models and theories relating to user perceptions of information technology (IT) and user acceptance of information systems (IS) were identified, namely the Technology Acceptance Model (Davis, 1989), the Theory of Planned Behaviour (Ajzen, 1991), Social-Cognitive Theory (Bandura, 1986), and the Motivational Model (Scott et al., 1988; Vallerand, 1997). The proposed theoretical model thus adopts five usability factors from these models: Computer Self Efficacy from Social-Cognitive Theory (Bandura, 1986); Enjoyment from the Motivational Model (Scott et al., 1988; Vallerand, 1997); Perceived Ease of Use and Perceived Usefulness from the Technology Acceptance Model (Davis, 1989); and User Perception from Combined TAM-TPB (Taylor and Todd, 1995). This study focuses on students' behavioural intention towards Cloud e-learning. The five factors were adopted into the proposed framework because they allow continuance intention to use the Cloud e-learning application to be assessed directly from the perspectives of user perception and usability.
Perceived ease of use, perceived usefulness, and user perception were chosen to capture students' perception and understanding of Cloud e-learning, which subsequently lead to continuance intention to use its application. Computer self-efficacy and enjoyment were chosen to capture students' subjective experiences, such as feeling confident and enjoying themselves while learning via Cloud applications. All independent variables are hypothesized to be positively associated with Continuance Intention (CI) to use the Cloud e-learning application.

Frameworks that integrate IS theories and technology acceptance models allow researchers to understand user behavioural intention from different viewpoints (Gan and Balakrishnan, 2018). However, a suitable technology acceptance framework for Cloud e-learning has yet to be established. Findings from this study can therefore offer new insights into Cloud e-learning acceptance in higher education.

2. Background

2.1. Relevant cloud e-learning frameworks

E-learning, as defined by Brandon Hall Research Reports (Hall, 2005), is instruction delivered electronically, partially or solely, via a web browser such as Netscape Navigator, through the Internet, or via multimedia platforms such as CD-ROM or DVD. In short, e-learning is an Internet-based learning method (Riahi, 2015).

Cloud computing is an essential service-oriented computing paradigm that consistently demonstrates excellent scalability, flexibility, and accessibility (Zhang et al., 2017). Cloud computing is also a key technology for resource sharing (Kalagiakos and Karampelas, 2011). Because it distributes computation and storage resources as services, Cloud computing has become a desirable technology in teaching and learning (Dong et al., 2009). Adopting Cloud computing in e-learning can produce an upgraded learning process that shares the Cloud characteristics of scalability, flexibility, accessibility, and shareability (Gong et al., 2010).

Cloud e-learning is the adoption of Cloud computing technology in the field of e-learning, where all hardware and software computing resources can be engaged as e-learning services (Riahi, 2015). In 2009, Sulistio et al. presented CLoudIA, a private Cloud architecture, together with its modules and components such as a Monitoring Management component and a Security component (Sulistio et al., 2009). CLoudIA provides on-demand creation and configuration of virtual machine images, enabling students to have their own Java environment for experimentation, containing MySQL, PHP, and an Apache web server. In the same year, another notable e-learning framework, the BlueSky Cloud framework, was presented (Dong et al., 2009). BlueSky addressed resource utilization and scalability issues in e-learning.

Shaik Saidhbi (2012) presented the Ethiopian Universities Hybrid Cloud (EUHC), which offers the combined benefits of public and private Clouds by adopting a hybrid Cloud in Ethiopian universities. In 2013, a relatively complete academic Cloud framework was presented (Madhumathi and Ganapathy, 2013). Their framework specifies the virtualization technology to be used to build an academic Cloud on top of the existing university infrastructure, so as to use resources more effectively and to support quality of service (QoS) objectives such as high availability, performance, reliability, scalability, load balancing, and security in Cloud service models. The following year, Kaur and Chawla proposed Cloud E-Learning (CEL), a platform for implementing advanced Java e-learning in the Cloud (Kaur and Chawla, 2014). The framework describes well-defined learning content in its learning application layer.

In 2017, Rajput and Deora proposed a Cloud framework for e-learning consisting of five layers incorporating three Cloud services (Rajput and Deora, 2017). The framework also adopted virtualization technology to build an academic Cloud on top of the university's existing infrastructure in Cloud service models. In the same year, a model utilizing a social Cloud was proposed (Encalada and Castillo Sequera, 2017). The model helped universities teach practical IT skills by implementing ecosystems, and also allowed students to foster all the educational pillars through IT training from massive open online courses (MOOCs). In 2018, Shukur, Khanapi and Ghani proposed a Cloud computing framework for higher education institutes in developing countries (CCF_HEI_DC). The six-layer framework can be implemented in any developing country. A new Cloud service named Data as a Service (DaaS) was added to the model to provide both raw data and generated data (Shukur et al., 2018).

Based on the literature review, the strengths of existing Cloud learning frameworks were adopted as added value in the present research. A Cloud e-learning framework was subsequently proposed to shape a new education domain that shares the Cloud characteristics, particularly reusability and shareability. The proposed Cloud e-learning framework consists of five layers, namely the User Interface Layer, Application Layer, Cloud Management Layer, Data Information Layer, and Virtual Infrastructure Layer. Each layer in the framework is made up of a series of components serving different purposes. The highlight of the proposed framework is the Data Information Layer, which contains Cloud e-learning objects that are flexible, reusable, and shareable.

2.2. Relevant theoretical models to explain IT usage and usability factors

The Technology Acceptance Model (TAM) by Davis (1989) has been extensively studied and adopted for its simplicity and predictive accuracy in the field of technology acceptance. TAM has been extended by numerous researchers and applied to various technologies, including mobile banking (Lule et al., 2012), e-learning (Cheung and Vogel, 2013; Chow et al., 2012), teleconferencing (Park et al., 2014b), short message service (Muk and Chung, 2015), e-government (Hamid et al., 2016; Lin et al., 2011), and mobile learning (Park et al., 2012; Gan and Balakrishnan, 2016, 2017, 2018).

Continuance intention has been the subject of substantial theoretical development (Rahman et al., 2017), such as IS continuance (Almaghrabi et al., 2011; Amoroso and Chen, 2017; Han et al., 2018; Susanto et al., 2016; Tella and Olasina, 2014; Tiyar and Khoshsima, 2015) and post-adoption usage (Jia et al., 2016; Ong and Lin, 2016). Continuance intention reflects post-adoption behaviour and the intention to continue using an information system (Limayem and Cheung, 2011). IS continuance research derives from prior theories and models, such as the theory of planned behaviour (TPB) and the technology acceptance model (TAM), that attempt to explain user intention towards IT usage (Tella and Olasina, 2014).

The Theory of Planned Behaviour (TPB) originated in social psychology and holds that behavioural intention predicts actual user behaviour (Ajzen, 1991). TPB introduced perceived behavioural control (PBC) as a determinant of intention. PBC is determined by the perceived importance of skills, resources, and opportunities to achieve an outcome. Combined TAM-TPB (Taylor and Todd, 1995) links the predictors of TPB with the constructs in TAM. It is also known as the “decomposed” theory of planned behaviour because the belief structure is decomposed in this model (Lau, 2011). Lau (2011) investigated user perceptions of the adoption of Web 2.0 tools among hospital-based nurses and concluded that the identified behavioural perceptions are crucial to understanding the intention to adopt Web 2.0 for knowledge sharing, learning, social interaction, and the production of collective intelligence. User perception is often associated with measures of perceived usability (Zhuang et al., 2016).

In TAM, Davis (1989) identified two key beliefs, perceived usefulness and perceived ease of use, to model user acceptance of information technologies. Perceived ease of use refers to the extent to which an individual perceives that using a system is easy and effortless (Davis, 1989), whereas perceived usefulness refers to the degree to which an individual believes that using a particular system would enhance his or her job performance (Davis, 1989). TAM posits that computer usage is determined by the behavioural intention to use a system, which is jointly determined by perceived ease of use and perceived usefulness. Previous studies revealed that an individual who perceives a system to be easy to use is more likely to perceive it as useful (Adams et al., 1992; Morris and Dillon, 1997) and more likely to use it, especially among novice users (Lau, 2008). Numerous studies have validated perceived ease of use and perceived usefulness as major determinants of attitude towards technology acceptance (Abu-Al-Aish & Love, 2013; Briz-Ponce and García-Peñalvo, 2015; Calisir et al., 2014; Davis et al., 1992; Davis, 1989; Hamid et al., 2016; Hess et al., 2014; Mou, Shin and Cohen, 2017a, 2017b; Padilla-Meléndez et al., 2013).

Another line of research that can help explain IT usage patterns was inspired by Social-Cognitive Theory (SCT) model (Bandura, 1986). SCT provides a basis for understanding, predicting, and changing human behaviour and suggests that human behavioural change is affected by personal factors and environmental conditions. A pivotal principle of SCT is the concept of self-efficacy. SCT advocates that adoption requires not only the benefits brought forth by the technology, but also the skills and confidence of a user towards the technology (Bandura, 1986).

Computer self-efficacy, an individual's confidence in his or her capability to use new technology and applications, is a vital predictor of technology acceptance (Bandura, 1977, 1989; Compeau and Higgins, 1995). “The degree of self-efficacy of an individual affects his or her capabilities to garner motivation, cognitive resources, and courses of action needed to meet situational demands” (Bandura, 1989). Computer self-efficacy also affects decisions, goals, the amount of effort invested in a task, and how long individuals persist through challenges and complications (Liew et al., 2014). Computer self-efficacy builds up an individual's motivational base for navigating a computer-based environment (Deimann and Keller, 2006). A study by Liew et al. (2014) on the effect of computer self-efficacy on behaviour in simulation-based learning provided partial evidence that computer self-efficacy affects how one behaves in simulation-based learning. An empirical study on mobile technology acceptance by Gan and Balakrishnan (2017) reported a consistent result, in which computer self-efficacy moderately predicted behavioural intention. In education-related literature, computer self-efficacy has been shown to have a positive effect on technology acceptance for learning purposes (Alqurashi, 2016; Chester et al., 2011; Doménech-Betoret et al., 2017; Hillier et al., 2013; Schunk, 1985; D. Shank and Cotten, 2014; Valencia-Vallejo et al., 2016).

In motivational psychology, motivation theory has supported numerous studies of behaviour. Motivation theory distinguishes two major types of motivation: extrinsic and intrinsic (Scott et al., 1988). Davis et al. (1992) tested the effects of extrinsic (e.g., perceived usefulness) and intrinsic (e.g., enjoyment) motivation on user behavioural intention in the workplace and found both to be strong predictors of behavioural intention to use technology. Motivation theory has since been applied in various studies to understand new technology adoption and use (Davis et al., 1992; Koo et al., 2015; Park et al., 2014a; Venkatesh and Speier, 1999).

Enjoyment can be defined as the extent to which an individual experiences happiness when performing a task, without the need for external reinforcement (Davis et al., 1992; Scott et al., 1988; Vallerand, 1997). Enjoyment is commonly portrayed as an intrinsic motivation that drives people to do something because they enjoy doing it. Multiple studies revealed that enjoyment as an intrinsic motivator is a significant factor in predicting continuance intention and IT acceptance (Gan and Balakrishnan, 2016, 2017; Park et al., 2014a; Teo and Noyes, 2011; Venkatesh, 2000; Wang et al., 2013; Wu and Gao, 2011; Yi and Hwang, 2003; Zhang et al., 2008). Evidence suggests that when tasks create a high level of enjoyment, people are willing to spend more time and effort on them, show increased exploratory behaviour, and display greater acceptance of information technology.

3. Model

In recent years, more attention has been given to post-acceptance or continued usage behaviour because the study of IS acceptance is already approaching maturity (Lin and Ong, 2010). Based on the literature reviewed (Almaghrabi et al., 2011; Amoroso and Chen, 2017; Han et al., 2018; Limayem and Cheung, 2011; Susanto et al., 2016; Tella and Olasina, 2014), continuance intention was selected as the dependent variable to explain Cloud e-learning continuance, and complementary theories such as the technology acceptance model (TAM), social cognitive theory (SCT), and the motivational model (MM) were integrated to better understand Cloud e-learning continuance.

The main objective of this study is to establish a theoretical model of the usability factors predicting continuance intention to use a Cloud e-learning application. Five usability factors, namely computer self-efficacy, enjoyment, perceived ease of use, perceived usefulness, and user perception, were identified and adopted to examine the continuance intention to use the Cloud e-learning application among a group of IT students.

Computer self-efficacy and enjoyment play a crucial role as intrinsic motivators (Giesbers et al., 2013; Gan and Balakrishnan, 2017, 2018; Park et al., 2012) for continuance intention. In the context of this study, computer self-efficacy can be defined as the confidence level of a student in his or her capability to use Cloud e-Learning application, whereas enjoyment can be defined as the degree to which a student experiences joy when using Cloud e-Learning application. Hence, the following hypotheses were derived.

H1

Computer Self Efficacy positively affects Continuance Intention to use Cloud e-learning application.

H2

Enjoyment positively affects Continuance Intention to use Cloud e-learning application.

Perceived ease of use and perceived usefulness from TAM (Davis, 1989) are well supported across a wide range of studies but have not yet been explicitly validated in Cloud e-learning continuance research; they are therefore adopted as determinants of continuance intention to use the Cloud e-learning application. It is assumed that the application's complete and user-friendly functionality makes students likely to perceive it as easy to use and useful for learning. If the Cloud e-learning application is relatively useful and easy to use, students will be more willing to learn about its features and will ultimately intend to continue using it. Thus, the following hypotheses were derived.

H3

Perceived Ease of Use positively affects Continuance Intention to use Cloud e-learning application.

H4

Perceived Usefulness positively affects Continuance Intention to use Cloud e-learning application.

Lastly, user perception can be defined as the degree to which a student understands the concept of the Cloud e-learning application, e.g., its appearance, activities, content, and technical aspects (Zhuang et al., 2016). Hence, the following hypothesis was derived.

H5

User Perception positively affects Continuance Intention to use Cloud e-learning application.

The proposed theoretical model is thereby portrayed in Fig. 1.

Fig. 1. The proposed theoretical model.
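Read as a linear structural model, the form usually assumed in PLS-SEM path analysis, Fig. 1 and hypotheses H1 to H5 can be summarized in a single equation. This is a sketch rather than a specification stated by the study: the path coefficients $\beta_1, \dots, \beta_5$ and the disturbance term $\zeta$ are notation introduced here for illustration.

```latex
\mathrm{CI} = \beta_1\,\mathrm{CSE} + \beta_2\,\mathrm{E} + \beta_3\,\mathrm{PEU}
            + \beta_4\,\mathrm{PU} + \beta_5\,\mathrm{UP} + \zeta,
\qquad
H_k:\ \beta_k > 0 \quad (k = 1, \dots, 5)
```

Under this reading, each hypothesis is supported only if its estimated path coefficient is positive and statistically significant.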

4. Methodology

4.1. Sampling

Convenience sampling was used for this empirical study. The targeted respondents were a group of IT students at Multimedia University (MMU), Malaysia. Students enrolled in the IT course participated in the survey regardless of gender, age range, year of study, and IT major. These students were conveniently accessible to the researcher and, more importantly, possessed basic knowledge of and ability in handling educational technology, making them suitable to represent computer-literate students who would be interested in adopting Cloud e-learning in their studies. Questionnaires were distributed to the students at the end of the trimester, and data collection ended when all the students had submitted the completed questionnaire.

4.2. Measurement instrument design and development

A 30-item Likert-scale questionnaire was formulated. The questionnaire, written in English, measured self-perceived characteristics; therefore, the questions were phrased in terms of the respondents' own understanding. Since the Cloud e-learning application was designed for IT students, the questions were also expressed in an IT-relevant context. The main question items were built on the six identified constructs (CSE, E, PEU, PU, UP, and CI) of the proposed theoretical model. The 5-point Likert items were labelled “Strongly Disagree”, “Disagree”, “Neutral”, “Agree” and “Strongly Agree”, and scored from 1 to 5, respectively.
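As a minimal sketch of the 1-to-5 coding described above, the snippet below maps the five response labels to scores and averages one respondent's items for a construct. The function names and sample answers are hypothetical, and the unweighted item mean is only a descriptive summary; PLS-SEM software such as SMARTPLS estimates its own weighted construct scores.

```python
from statistics import mean

# Score assigned to each Likert label, as described in the questionnaire design.
LIKERT_SCORES = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def encode(labels):
    """Convert Likert labels to integer scores (raises KeyError on an unknown label)."""
    return [LIKERT_SCORES[label] for label in labels]


def construct_score(labels):
    """Unweighted mean of one respondent's items for a single construct (descriptive only)."""
    return mean(encode(labels))


if __name__ == "__main__":
    # Hypothetical answers to three items of one construct; not data from this study.
    answers = ["Agree", "Strongly Agree", "Neutral"]
    print(encode(answers))           # [4, 5, 3]
    print(construct_score(answers))  # 4
```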

To establish the content validity of the constructs, several interviews with experts were conducted. A pilot test was then conducted in which the questionnaire was distributed to 10 selected students from the same class before the actual data collection. Of the ten selected students, seven (70%) were male and three (30%) were female. Two students (20%) were aged 17–20 and eight (80%) were aged 21–24. The largest group was in their third year of study (40%), followed by first-year and second-year students (30% each). All the students were from the IT field, across four majors, namely Security Technology (ST), Data Communication and Networking (DCN), Artificial Intelligence (AI) and Information Technology Management (ITM), at 30%, 30%, 20% and 20%, respectively. The students were given 15 minutes to complete the questionnaire, and feedback was gathered via face-to-face interviews. During the interviews, students were asked whether they understood or agreed with the definition of the study, the objectives of the survey, consensus, demographic details, the 5-point Likert scale, the clarity of the questions, etc. The interviews ensured that the survey items made sense and were suitable for the scope of the study. More importantly, the pilot test verified that the students understood the definitions and terms used in the questionnaire. Comments from the interviews were then compiled, and the questionnaire was revised accordingly; several survey item terms and phrases were refined to improve clarity. For further details, please see the Appendices.

4.3. Research design and procedures

In educational research, research designs can be classified as qualitative, quantitative, or mixed methods (Daniel, 2016; Johnson and Christensen, 2012). The usefulness of qualitative and quantitative approaches in educational research has been widely discussed and debated (Cohen et al., 2011; Daniel, 2016; Johnson and Christensen, 2012; Queirós et al., 2017; Rahman, 2016).

Qualitative research is concerned with meanings, concepts, definitions, metaphors, symbols, and descriptions of things (Berg and Lune, 2012). Qualitative research instruments include observation, interviews, and field notes, with data collected in natural settings. The data collected can provide a complete description of the research with regard to the participants involved (Daniel, 2016). However, results from qualitative research are static and cannot be generalized (Johnson and Christensen, 2012), which makes a purely qualitative approach unsuitable for a continuance study of Cloud e-learning in higher education.

Quantitative research, on the other hand, “emphasizes quantification in the collection and analysis of data” (Bryman, 2012). The quantitative approach uses statistical data as a tool for research description and analysis, requiring much less time and fewer resources (Connolly, 2007; Daniel, 2016). Data collection and analysis in quantitative research make generalization possible, i.e., results obtained from a particular group can reflect the wider society in terms of samples, contents, and patterns (Cohen et al., 2011; Shank and Brown, 2007). In addition, quantitative research relies on hypothesis testing with clear guidelines and objectives, so a study can be replicated in other settings and at other times and is expected to yield consistent results (Shank and Brown, 2007). In this study, hypotheses are tested by investigating cause-and-effect relationships to predict continuance intention to use the Cloud e-learning application. The findings are then generalized to the wider higher education population in Malaysia.

4.4. Data collection

Surveys are among the most widely used research methods in technology acceptance studies. They allow data to be collected through questions that reflect the opinions, perceptions, and behaviours of a group of respondents (Queirós et al., 2017). Surveys can also offer high representativeness of the entire population at low cost. Therefore, a quantitative survey was adopted as the data collection method in this study to investigate continuance intention to use the Cloud e-learning application.

Quantitative research requires an adequate sample for empirical study (Hair et al., 2010). Sample size is always an important consideration in Structural Equation Modelling (SEM) because it significantly affects the reliability and validity of parameter estimates, model fit, and statistical power (Aguirre-Urreta and Rönkkö, 2015; Shah and Goldstein, 2006; Wolf et al., 2013). Various rules have been suggested for determining the minimum sample size for regression analyses. According to Hair et al. (2017), the minimum sample size in PLS-SEM can be determined by the often-cited 10 times rule, which states that the sample size should be ten times the larger of (a) the largest number of formative indicators used to measure a single construct and (b) the largest number of structural paths directed at a particular endogenous construct. In this study, all the constructs are reflective. The endogenous construct with the largest number of exogenous predictors is continuance intention (CI), which has 5 predictors. This implies that the study requires a minimum sample of 50 (10 × 5). With this recommendation in mind, the study gathered 170 responses from the targeted population, well above the minimum.
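The 10 times rule reduces to a one-line calculation. The sketch below restates it with the study's inputs (no formative indicators; five paths pointing at CI); the function name is hypothetical. Note that the rule is a rough heuristic, and power-based approaches are often recommended alongside it.

```python
def ten_times_rule(max_formative_indicators: int, max_inbound_paths: int) -> int:
    """Minimum PLS-SEM sample size under the 10 times rule of thumb.

    max_formative_indicators: largest number of formative indicators on any single construct
    max_inbound_paths: largest number of structural paths directed at any single construct
    """
    return 10 * max(max_formative_indicators, max_inbound_paths)


if __name__ == "__main__":
    # All constructs are reflective (0 formative indicators); CI receives 5 paths.
    minimum = ten_times_rule(max_formative_indicators=0, max_inbound_paths=5)
    collected = 170
    print(minimum)               # 50
    print(collected >= minimum)  # True
```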

At the beginning of the trimester, the 170 sampled students were given thorough instruction and explanation about the Cloud e-learning application. They were strongly encouraged to make full use of the application for learning. They were also informed that they would complete a survey at the end of the trimester to record their thoughts after using the Cloud e-learning application. Students were given approximately four months to experience the application, and guidance was provided throughout to ensure an optimal experience. At the same time, a non-participatory observation approach was taken to observe the students in a neutral and non-interfering manner (Patton, 2014). At the end of the trimester, all 170 students cooperated fully and answered the printed questionnaire about their experience with the Cloud e-learning application, giving a response rate of 100%. The data were then entered into Excel and saved in Comma-Separated Values (CSV) format. The CSV file was subsequently imported into SMARTPLS 3.0 for statistical analysis.
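The data-preparation step can be sketched with Python's standard `csv` module, assuming (as is typical for PLS-SEM tools) one row per respondent, one column per indicator, and a header row of indicator names. The indicator names `CSE1`, `E1`, `CI1` and the helper functions are hypothetical; the study itself used Excel for this step.

```python
import csv
import io


def responses_to_csv(rows, fieldnames):
    """Serialize survey rows (one dict per respondent) to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def response_rate(returned: int, distributed: int) -> float:
    """Percentage of distributed questionnaires that were returned."""
    return 100.0 * returned / distributed


if __name__ == "__main__":
    # Hypothetical indicator names and scores, for illustration only.
    fields = ["CSE1", "E1", "CI1"]
    rows = [{"CSE1": 4, "E1": 5, "CI1": 4}, {"CSE1": 3, "E1": 4, "CI1": 3}]
    print(responses_to_csv(rows, fields), end="")
    print(response_rate(170, 170))  # 100.0
```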

4.5. Statistical analysis techniques

To test the proposed hypotheses, structural equation modelling (SEM) was used. SEM is considered a second-generation multivariate data analysis method and has gained popularity among social scientists because of its ability to test theoretically supported, additive causal models (Chin, 1998; Michael Haenlein, 2004; Ramayah et al., 2018). SEM can test multiple regression models or equations simultaneously.

There are currently two streams of SEM, namely covariance-based SEM (CB-SEM) and partial least squares SEM (PLS-SEM). CB-SEM examines the fit between the observed and theoretical covariance matrices and focuses less on explained variance, whereas PLS-SEM focuses more on prediction and estimation and aims to maximize the variance in the dependent variables explained by the independent variables (Gan and Balakrishnan, 2018; Ramayah et al., 2018). PLS-SEM is therefore useful for prediction, as it assesses the degree to which a set of exogenous constructs predicts the endogenous constructs (Fornell and Bookstein, 1982).

Based on this review of statistical analysis techniques, PLS-SEM was deemed an appropriate choice given the objective of this study, which is to predict continuance intention to use a Cloud e-Learning application in higher education with the aim of improving learning productivity. SMARTPLS 3.0 was used to run confirmatory factor analysis (CFA) and to verify the internal consistency reliability and validity of the theoretical model. The structural model was then estimated and the proposed hypotheses were tested.

5. Results

5.1. Demographic analysis

A total of 170 IT students at a private university in Malaysia participated in the study by completing a questionnaire. Table 1 shows the demographic details of the respondents. Of the 170 respondents, 134 (78.8%) were male and 36 (21.2%) were female. A higher male-to-female ratio is common in technical courses such as IT and Engineering in higher education; according to a report published in 2017 by Malaysia's Ministry of Higher Education (MOE), male graduates outnumbered female graduates by 10% in technical fields of study (MOE, 2017). The average age of the respondents was 22 years. The majority of the respondents were in their third year of study (52.4%), followed by first year (27.6%), second year (18.2%) and foundation (1.8%). All the respondents were from the IT field, in four majors, namely Security Technology (ST), Data Communication and Networking (DCN), Artificial Intelligence (AI) and Information Technology Management (ITM), at 47.6%, 31.2%, 10.6% and 10.6% respectively. Of the 170 respondents, 166 (97.6%) possessed at least one digital device with internet access, such as a smartphone, laptop or tablet, and 155 (91.2%) had used their digital devices for learning purposes.

Table 1.

Demographic profile of respondents.

| Characteristic | Category | Count | Percentage (%) |
|---|---|---|---|
| Gender | Male | 134 | 78.8 |
| | Female | 36 | 21.2 |
| Age Range | 17–20 | 14 | 8.2 |
| | 21–24 | 154 | 90.6 |
| | 25–28 | 2 | 1.2 |
| Year of Study | Foundation | 3 | 1.8 |
| | First Year | 47 | 27.6 |
| | Second Year | 31 | 18.2 |
| | Third Year | 89 | 52.4 |
| IT Major | AI | 18 | 10.6 |
| | DCN | 53 | 31.2 |
| | ITM | 18 | 10.6 |
| | ST | 81 | 47.6 |
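As an arithmetic check on Table 1, the sketch below recomputes each percentage from the counts (n = 170) and confirms that every characteristic accounts for all respondents. The dictionary layout and the helper `percentages` are our own illustration, not part of the study's analysis pipeline.

```python
# Counts transcribed from Table 1 (n = 170).
table1 = {
    "Gender": {"Male": 134, "Female": 36},
    "Age Range": {"17–20": 14, "21–24": 154, "25–28": 2},
    "Year of Study": {"Foundation": 3, "First Year": 47, "Second Year": 31, "Third Year": 89},
    "IT Major": {"AI": 18, "DCN": 53, "ITM": 18, "ST": 81},
}
N = 170


def percentages(counts, n):
    """Return each category's share of n as a percentage rounded to one decimal."""
    return {category: round(100 * count / n, 1) for category, count in counts.items()}


for characteristic, counts in table1.items():
    # Every characteristic should cover all respondents exactly once.
    assert sum(counts.values()) == N, characteristic
    print(characteristic, percentages(counts, N))

# Device ownership reported in the text: 166 of 170 respondents.
print(round(100 * 166 / N, 1))  # 97.6
```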

5.2. Factor analysis

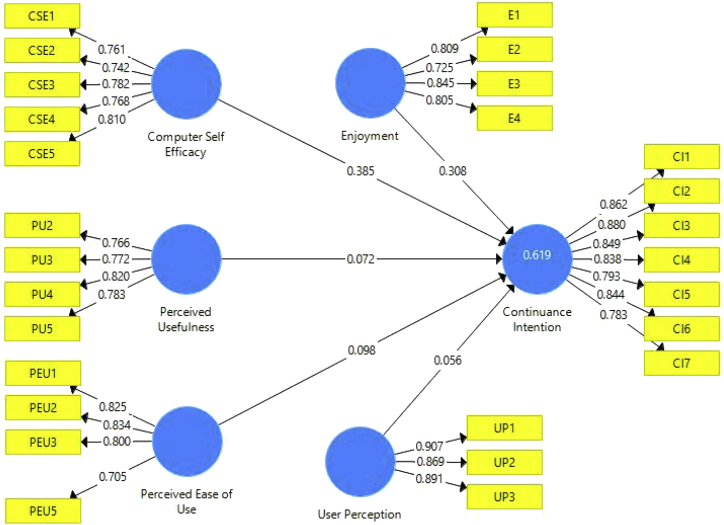

A total of six constructs, namely Computer Self Efficacy (CSE), Enjoyment (E), Perceived Ease of Use (PEU), Perceived Usefulness (PU), User Perception (UP) and Continuance Intention (CI), were identified and adopted for the usability factor analysis. They comprise five exogenous variables, each measured by five research items, and one endogenous variable measured by seven research items. The structural model and its path coefficients are illustrated in Fig. 2.

Fig. 2.

Structural model and path coefficients.

The coefficient of determination (R²) was used to evaluate the predictive accuracy of the structural model for its endogenous construct. The structural model yields R² = 0.619 for the endogenous construct CI. This implies that the five exogenous constructs (CSE, E, PEU, PU and UP) explain 61.9% of the variance in CI, which indicates moderate predictive power (Chin, 1998; Hair et al., 2017; Henseler, 2010).
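A minimal sketch of how R² is computed from observed and predicted scores and labelled with Chin's (1998) rule of thumb (0.67 substantial, 0.33 moderate, 0.19 weak). The helper names are hypothetical, and SMARTPLS 3.0 reports R² directly, so this only illustrates the definition and the classification of the reported value of 0.619.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(observed) / len(observed)
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(observed, predicted))
    ss_tot = sum((y - mean) ** 2 for y in observed)
    return 1 - ss_res / ss_tot


def describe_r2(r2):
    """Label R² with Chin's (1998) thresholds: 0.67 substantial, 0.33 moderate, 0.19 weak."""
    if r2 >= 0.67:
        return "substantial"
    if r2 >= 0.33:
        return "moderate"
    if r2 >= 0.19:
        return "weak"
    return "below weak"


print(describe_r2(0.619))  # moderate
```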

The inner model suggests that CSE and E are strong predictors that significantly affect CI, with CSE (β = 0.385, t-value = 4.933) emerging as the strongest predictor, followed by E (β = 0.308, t-value = 3.803). Both t-values exceed 1.645, the critical value for a one-tailed test at the 5% significance level (α = 0.05), so the positive relationships of CSE and E with CI are statistically significant (Hair et al., 2017).
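The one-tailed decision rule can be reproduced with Python's standard library. This sketch assumes, as is usual for bootstrapped PLS path coefficients, that the t statistic is evaluated against the standard normal distribution; the helper names are illustrative.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # large-sample approximation of the t distribution


def one_tailed_critical(alpha=0.05):
    """Critical value for a one-tailed (upper-tail) test at significance level alpha."""
    return STD_NORMAL.inv_cdf(1 - alpha)


def one_tailed_p(t):
    """Upper-tail p-value for an observed t statistic."""
    return 1 - STD_NORMAL.cdf(t)


crit = one_tailed_critical(0.05)  # approximately 1.645
for name, t in [("CSE", 4.933), ("E", 3.803), ("PEU", 1.036)]:
    print(name, t > crit, f"p = {one_tailed_p(t):.2g}")
```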

In the assessment of the theoretical model, three main criteria, namely internal consistency reliability, convergent validity and discriminant validity, were adopted. Convergent validity is the extent to which a measure correlates positively with alternative measures of the same construct. To assess convergent validity, the outer loadings of the indicators and the average variance extracted (AVE) were measured (Hair et al., 2017). Outer loadings of 0.7 or higher (Hair et al., 2010) are deemed acceptable, indicating adequate convergent validity. In addition, AVE values of 0.5 or higher (Bagozzi and Yi, 1988; Fornell and Larcker, 1981; Hair et al., 2017) also indicate satisfactory convergent validity. Composite reliability (CR) measures internal consistency by taking into account the outer loadings of the indicators (Gefen et al., 2000). CR values above 0.7 can be regarded as satisfactory (Fornell and Larcker, 1981; Gefen et al., 2000; Ramayah et al., 2018). Table 2 displays the loadings of each construct's indicators together with its CR and AVE.

Table 2.

Convergent validity and composite reliability.

| Construct | Items | Loadings | CR | AVE |
|---|---|---|---|---|
| Computer Self Efficacy (CSE) | CSE1 | 0.761 | 0.881 | 0.597 |
| | CSE2 | 0.742 | | |
| | CSE3 | 0.782 | | |
| | CSE4 | 0.768 | | |
| | CSE5 | 0.81 | | |
| Continuance Intention (CI) | CI1 | 0.862 | 0.942 | 0.699 |
| | CI2 | 0.88 | | |
| | CI3 | 0.849 | | |
| | CI4 | 0.838 | | |
| | CI5 | 0.793 | | |
| | CI6 | 0.844 | | |
| | CI7 | 0.783 | | |
| Enjoyment (E) | E1 | 0.809 | 0.874 | 0.635 |
| | E2 | 0.725 | | |
| | E3 | 0.845 | | |
| | E4 | 0.805 | | |
| Perceived Ease of Use (PEU) | PEU1 | 0.825 | 0.871 | 0.628 |
| | PEU2 | 0.834 | | |
| | PEU3 | 0.8 | | |
| | PEU5 | 0.705 | | |
| Perceived Usefulness (PU) | PU2 | 0.766 | 0.866 | 0.617 |
| | PU3 | 0.772 | | |
| | PU4 | 0.82 | | |
| | PU5 | 0.783 | | |
| User Perception (UP) | UP1 | 0.907 | 0.919 | 0.791 |
| | UP2 | 0.869 | | |
| | UP3 | 0.891 | | |

Note: E5, PEU4 and PU1 were deleted due to low loadings.

Research items that failed to adequately measure the latent variables were removed from further empirical testing. All retained indicators had outer loadings ≥ 0.7, which is considered acceptable (Hair et al., 2010) and indicative of high convergent validity (Byrne, 2001). In other words, all indicators achieved the threshold value, so satisfactory indicator reliability was achieved. The Average Variance Extracted (AVE) was evaluated for all constructs to check convergent validity. All the constructs met the satisfactory AVE level of ≥ 0.5, confirming convergent validity (Hair et al., 2017). As for internal consistency reliability, all the constructs also met the satisfactory CR level of ≥ 0.7 (Hair et al., 2017). It is thus concluded that the constructs met the reliability and convergent validity requirements at this stage.
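Both CR and AVE follow directly from the standardized outer loadings. The sketch below applies the standard formulas (assumed here to match those used by SMARTPLS 3.0) to the CSE loadings from Table 2. Because the published loadings are rounded to three decimals, recomputed values for other constructs may differ from Table 2 in the third decimal.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of (1 - loading^2))."""
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


cse = [0.761, 0.742, 0.782, 0.768, 0.81]  # CSE loadings from Table 2
print(round(composite_reliability(cse), 3), round(average_variance_extracted(cse), 3))  # 0.881 0.597
```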

Subsequently, discriminant validity was assessed to determine the extent to which each construct is truly distinct from the other constructs in the model. To assess discriminant validity, the Fornell-Larcker criterion, the cross-loading criterion (Hair et al., 2017) and the heterotrait-monotrait ratio of correlations (HTMT) (Henseler et al., 2015) were used. Fornell and Larcker (1981) suggested that “the square roots of AVE in each latent variable can be used to establish discriminant validity if the value is larger than other correlation values among the latent variables”. The results in Table 3 indicate that all constructs exhibited satisfactory discriminant validity: the square roots of the AVEs of the reflective constructs CSE (0.773), CI (0.836), E (0.797), PEU (0.792), PU (0.785) and UP (0.889) were all higher than the inter-construct correlations in the same rows and columns (Fornell and Larcker, 1981).

Table 3.

Discriminant validity – Fornell-Larcker criterion.

| | CSE | CI | E | PEU | PU | UP |
|---|---|---|---|---|---|---|
| CSE | **0.773** | | | | | |
| CI | 0.697 | **0.836** | | | | |
| E | 0.568 | 0.678 | **0.797** | | | |
| PEU | 0.592 | 0.63 | 0.684 | **0.792** | | |
| PU | 0.616 | 0.637 | 0.699 | 0.742 | **0.785** | |
| UP | 0.634 | 0.613 | 0.619 | 0.731 | 0.718 | **0.889** |

Note: Values on the diagonal (bolded) represent square root of AVE while off-diagonals represent correlations.
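The Fornell-Larcker comparison in Table 3 can be checked mechanically: for every pair of constructs, their correlation must be smaller than the square root of each construct's AVE. The sketch below transcribes the AVEs from Table 2 and the correlations from Table 3; the function names are hypothetical.

```python
from math import sqrt

constructs = ["CSE", "CI", "E", "PEU", "PU", "UP"]
ave = {"CSE": 0.597, "CI": 0.699, "E": 0.635, "PEU": 0.628, "PU": 0.617, "UP": 0.791}  # Table 2

# Lower triangle of the latent-variable correlations in Table 3: (row, column) -> r.
corr = {
    ("CI", "CSE"): 0.697,
    ("E", "CSE"): 0.568, ("E", "CI"): 0.678,
    ("PEU", "CSE"): 0.592, ("PEU", "CI"): 0.63, ("PEU", "E"): 0.684,
    ("PU", "CSE"): 0.616, ("PU", "CI"): 0.637, ("PU", "E"): 0.699, ("PU", "PEU"): 0.742,
    ("UP", "CSE"): 0.634, ("UP", "CI"): 0.613, ("UP", "E"): 0.619, ("UP", "PEU"): 0.731,
    ("UP", "PU"): 0.718,
}


def corr_of(a, b):
    """Look up a correlation regardless of the order of the two constructs."""
    return corr[(a, b)] if (a, b) in corr else corr[(b, a)]


def fornell_larcker_violations(constructs, ave, corr_of):
    """Return construct pairs whose correlation is not below both square roots of AVE."""
    violations = []
    for i, a in enumerate(constructs):
        for b in constructs[i + 1:]:
            if abs(corr_of(a, b)) >= min(sqrt(ave[a]), sqrt(ave[b])):
                violations.append((a, b))
    return violations


print(fornell_larcker_violations(constructs, ave, corr_of))  # []
```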

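For the cross-loading criterion, the loadings of the indicators on their assigned constructs were all higher than their loadings on all other constructs (Table 4), indicating that the indicators of different constructs are not interchangeable. With one exception, the difference between an indicator's loading on its own construct and its highest loading on any other construct was at least 0.1 (Chin, 1998; Snell and Dean, 2017); the exception is PEU5, whose loading on PEU (0.705) exceeds its loading on PU (0.611) by 0.094.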

Table 4.

Discriminant validity – cross-loading criterion (bolded values represent the loadings of indicators on the assigned constructs).

| Indicator | CSE | CI | E | PEU | PU | UP |
|---|---|---|---|---|---|---|
| CI1 | 0.651 | **0.862** | 0.64 | 0.613 | 0.6 | 0.587 |
| CI2 | 0.614 | **0.88** | 0.585 | 0.553 | 0.577 | 0.491 |
| CI3 | 0.574 | **0.849** | 0.606 | 0.515 | 0.504 | 0.476 |
| CI4 | 0.56 | **0.838** | 0.498 | 0.505 | 0.515 | 0.512 |
| CI5 | 0.587 | **0.793** | 0.554 | 0.466 | 0.471 | 0.5 |
| CI6 | 0.548 | **0.844** | 0.571 | 0.49 | 0.522 | 0.512 |
| CI7 | 0.535 | **0.783** | 0.499 | 0.533 | 0.53 | 0.506 |
| CSE1 | **0.761** | 0.566 | 0.478 | 0.494 | 0.52 | 0.543 |
| CSE2 | **0.742** | 0.499 | 0.435 | 0.418 | 0.418 | 0.504 |
| CSE3 | **0.782** | 0.554 | 0.452 | 0.396 | 0.423 | 0.445 |
| CSE4 | **0.768** | 0.443 | 0.364 | 0.425 | 0.44 | 0.47 |
| CSE5 | **0.81** | 0.606 | 0.453 | 0.538 | 0.563 | 0.488 |
| E1 | 0.44 | 0.537 | **0.809** | 0.575 | 0.58 | 0.529 |
| E2 | 0.419 | 0.436 | **0.725** | 0.507 | 0.491 | 0.539 |
| E3 | 0.536 | 0.606 | **0.845** | 0.553 | 0.568 | 0.512 |
| E4 | 0.41 | 0.566 | **0.805** | 0.547 | 0.586 | 0.413 |
| PEU1 | 0.475 | 0.464 | 0.529 | **0.825** | 0.581 | 0.602 |
| PEU2 | 0.483 | 0.469 | 0.54 | **0.834** | 0.556 | 0.551 |
| PEU3 | 0.434 | 0.502 | 0.53 | **0.8** | 0.584 | 0.603 |
| PEU5 | 0.473 | 0.543 | 0.552 | **0.705** | 0.611 | 0.55 |
| PU2 | 0.477 | 0.467 | 0.467 | 0.619 | **0.766** | 0.601 |
| PU3 | 0.392 | 0.472 | 0.6 | 0.522 | **0.772** | 0.475 |
| PU4 | 0.515 | 0.515 | 0.552 | 0.585 | **0.82** | 0.628 |
| PU5 | 0.543 | 0.541 | 0.575 | 0.602 | **0.783** | 0.55 |
| UP1 | 0.575 | 0.562 | 0.541 | 0.705 | 0.701 | **0.907** |
| UP2 | 0.543 | 0.512 | 0.559 | 0.583 | 0.599 | **0.869** |
| UP3 | 0.572 | 0.56 | 0.553 | 0.658 | 0.613 | **0.891** |
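The cross-loading criterion can be checked per indicator by subtracting its highest loading on any other construct from its loading on its own construct. The sketch below transcribes Table 4 and assumes, following this study's naming scheme, that an indicator's construct is its name without the trailing digits. Run on these values, it flags PEU5 as the only indicator with a margin below 0.1.

```python
columns = ["CSE", "CI", "E", "PEU", "PU", "UP"]
# Rows of Table 4: indicator -> loadings on (CSE, CI, E, PEU, PU, UP).
cross = {
    "CI1": [0.651, 0.862, 0.64, 0.613, 0.6, 0.587],
    "CI2": [0.614, 0.88, 0.585, 0.553, 0.577, 0.491],
    "CI3": [0.574, 0.849, 0.606, 0.515, 0.504, 0.476],
    "CI4": [0.56, 0.838, 0.498, 0.505, 0.515, 0.512],
    "CI5": [0.587, 0.793, 0.554, 0.466, 0.471, 0.5],
    "CI6": [0.548, 0.844, 0.571, 0.49, 0.522, 0.512],
    "CI7": [0.535, 0.783, 0.499, 0.533, 0.53, 0.506],
    "CSE1": [0.761, 0.566, 0.478, 0.494, 0.52, 0.543],
    "CSE2": [0.742, 0.499, 0.435, 0.418, 0.418, 0.504],
    "CSE3": [0.782, 0.554, 0.452, 0.396, 0.423, 0.445],
    "CSE4": [0.768, 0.443, 0.364, 0.425, 0.44, 0.47],
    "CSE5": [0.81, 0.606, 0.453, 0.538, 0.563, 0.488],
    "E1": [0.44, 0.537, 0.809, 0.575, 0.58, 0.529],
    "E2": [0.419, 0.436, 0.725, 0.507, 0.491, 0.539],
    "E3": [0.536, 0.606, 0.845, 0.553, 0.568, 0.512],
    "E4": [0.41, 0.566, 0.805, 0.547, 0.586, 0.413],
    "PEU1": [0.475, 0.464, 0.529, 0.825, 0.581, 0.602],
    "PEU2": [0.483, 0.469, 0.54, 0.834, 0.556, 0.551],
    "PEU3": [0.434, 0.502, 0.53, 0.8, 0.584, 0.603],
    "PEU5": [0.473, 0.543, 0.552, 0.705, 0.611, 0.55],
    "PU2": [0.477, 0.467, 0.467, 0.619, 0.766, 0.601],
    "PU3": [0.392, 0.472, 0.6, 0.522, 0.772, 0.475],
    "PU4": [0.515, 0.515, 0.552, 0.585, 0.82, 0.628],
    "PU5": [0.543, 0.541, 0.575, 0.602, 0.783, 0.55],
    "UP1": [0.575, 0.562, 0.541, 0.705, 0.701, 0.907],
    "UP2": [0.543, 0.512, 0.559, 0.583, 0.599, 0.869],
    "UP3": [0.572, 0.56, 0.553, 0.658, 0.613, 0.891],
}


def assigned_construct(indicator):
    """Construct name = indicator name without trailing digits (e.g. 'PEU5' -> 'PEU')."""
    return indicator.rstrip("0123456789")


def cross_loading_margins(table, columns):
    """Own loading minus the highest loading on any other construct, per indicator."""
    margins = {}
    for indicator, loadings in table.items():
        own = columns.index(assigned_construct(indicator))
        others = [l for j, l in enumerate(loadings) if j != own]
        margins[indicator] = round(loadings[own] - max(others), 3)
    return margins


margins = cross_loading_margins(cross, columns)
print({k: v for k, v in margins.items() if v < 0.1})  # {'PEU5': 0.094}
```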

Henseler et al. (2015) proposed HTMT as an alternative approach to assessing discriminant validity; it is the ratio of the between-construct (heterotrait-heteromethod) correlations to the within-construct (monotrait-heteromethod) correlations. HTMT was used to ensure that every construct in this study is truly distinct from the others. Table 5 reports the HTMT values for each pair of constructs; none of their confidence intervals contains the value of 1, indicating sufficient discriminant validity (Henseler et al., 2015). The Fornell-Larcker criterion, the cross-loading criterion and HTMT thus confirmed the discriminant validity of the constructs; in other words, the constructs are empirically distinct from one another.

Table 5.

Discriminant validity – HTMT.

| | CSE | CI | E | PEU | PU | UP |
|---|---|---|---|---|---|---|
| CSE | – | | | | | |
| CI | 0.784 | – | | | | |
| E | 0.686 | 0.773 | – | | | |
| PEU | 0.717 | 0.723 | 0.846 | – | | |
| PU | 0.749 | 0.739 | 0.87 | 0.924 | – | |
| UP | 0.745 | 0.682 | 0.747 | 0.872 | 0.864 | – |
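HTMT is the mean heterotrait-heteromethod correlation divided by the geometric mean of the two constructs' average monotrait-heteromethod correlations (Henseler et al., 2015). The sketch below computes it from an item-level correlation matrix; the two-item constructs and their correlations are hypothetical, because the item correlation matrix of this study is not reported. Confidence intervals would come from repeating this calculation on bootstrap resamples, which is not shown.

```python
from itertools import combinations, product
from math import sqrt
from statistics import fmean


def htmt(R, items_i, items_j):
    """HTMT for constructs i and j from item correlations R, keyed by item pairs.

    Numerator: mean correlation between items of i and items of j (heterotrait).
    Denominator: geometric mean of the average within-construct correlations (monotrait).
    """
    def r(a, b):
        return R[(a, b)] if (a, b) in R else R[(b, a)]

    hetero = [r(a, b) for a, b in product(items_i, items_j)]
    mono_i = [r(a, b) for a, b in combinations(items_i, 2)]
    mono_j = [r(a, b) for a, b in combinations(items_j, 2)]
    return fmean(hetero) / sqrt(fmean(mono_i) * fmean(mono_j))


# Hypothetical two-item constructs A and B (not data from this study).
R = {("a1", "a2"): 0.64, ("b1", "b2"): 0.81,
     ("a1", "b1"): 0.36, ("a1", "b2"): 0.36, ("a2", "b1"): 0.36, ("a2", "b2"): 0.36}
print(round(htmt(R, ["a1", "a2"], ["b1", "b2"]), 3))  # 0.5
```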

5.3. Hypothesis testing

The evaluation of the structural model is presented in Table 6 and discussed below. Before the paths are interpreted, lateral collinearity among the predictors must be ruled out. It was assessed with the variance inflation factor (VIF), using the usual rule of thumb that VIF values of 5 or above (equivalently, tolerance values of 0.2 or below) indicate a potential collinearity problem. All inner VIF values for the independent variables ranged from 1.909 to 3.016, clearly below 5, indicating that lateral collinearity was not a concern in this study (Hair et al., 2017).

Table 6.

Lateral collinearity assessment and hypothesis testing.

| Hypothesis | Relationship | VIF | Std Beta | Std Error | t-value | R² | f² | Q² |
|---|---|---|---|---|---|---|---|---|
| H1 | CSE -> CI | 1.909 | 0.385 | 0.078 | 4.933*** | 0.619 | 0.204 | 0.398 |
| H2 | E -> CI | 2.296 | 0.308 | 0.081 | 3.803*** | | 0.109 | |
| H3 | PEU -> CI | 2.960 | 0.098 | 0.094 | 1.036 | | 0.008 | |
| H4 | PU -> CI | 3.016 | 0.072 | 0.092 | 0.778 | | 0.004 | |
| H5 | UP -> CI | 2.738 | 0.056 | 0.077 | 0.719 | | 0.003 | |

Note: ***p < 0.001. R² and Q² refer to the endogenous construct CI and are therefore reported once.
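For a set of predictors, the VIF of predictor j equals 1 / (1 - R²_j), where R²_j comes from regressing j on the remaining predictors; equivalently, it is the j-th diagonal element of the inverse of the predictors' correlation matrix. The sketch below applies that identity to the latent-variable correlations of CSE, E, PEU, PU and UP transcribed from Table 3. It assumes the inner VIFs are based on latent-variable correlations; because Table 3 is rounded, the output is only an approximate comparison with the VIF column of Table 6, not a reproduction of it. The Gauss-Jordan `invert` helper is our own.

```python
def invert(matrix):
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Swap in the row with the largest absolute value in this column.
        pivot = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for i in range(n):
            if i != col:
                factor = aug[i][col]
                aug[i] = [v - factor * w for v, w in zip(aug[i], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr):
    """VIF_j = j-th diagonal element of the inverse predictor correlation matrix."""
    inv = invert(corr)
    return [inv[j][j] for j in range(len(corr))]


# Predictor correlations in the order CSE, E, PEU, PU, UP (from Table 3).
R = [
    [1.0, 0.568, 0.592, 0.616, 0.634],
    [0.568, 1.0, 0.684, 0.699, 0.619],
    [0.592, 0.684, 1.0, 0.742, 0.731],
    [0.616, 0.699, 0.742, 1.0, 0.718],
    [0.634, 0.619, 0.731, 0.718, 1.0],
]
print([round(v, 3) for v in vifs(R)])
```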

In this study, five direct hypotheses were developed between the constructs. To test their significance, t-statistics for all paths were generated with the SMARTPLS 3.0 bootstrapping function. Based on the path coefficients in Table 6, two of the five relationships had t-values > 1.645 and are thus significant at the 0.05 level. Specifically, CSE (β = 0.385, t-value = 4.933, p < 0.001) and E (β = 0.308, t-value = 3.803, p < 0.001) are positively related to CI. Hence, H1 and H2 are supported, whereas H3, H4 and H5 are not.
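The bootstrapping step can be sketched as follows: resample cases with replacement, re-estimate the path on each resample, take the standard deviation of the resampled estimates as the standard error, and divide the original estimate by it. This is a simplified stand-in, not the SMARTPLS 3.0 procedure: it bootstraps a single standardized slope (equal to Pearson's r for one predictor) rather than the full PLS algorithm, and the data are randomly generated and hypothetical. The same ratio β / SE underlies the t-values in Table 6; for CSE, 0.385 / 0.078 ≈ 4.94, close to the reported 4.933 (the gap reflects rounding of the standard error).

```python
import random
from statistics import fmean, pstdev, stdev


def standardized_slope(xs, ys):
    """Standardized slope of y on a single predictor x (equal to Pearson's r)."""
    mx, my = fmean(xs), fmean(ys)
    cov = fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    return cov / (pstdev(xs) * pstdev(ys))


def bootstrap_t(xs, ys, n_boot=1000, seed=42):
    """Return (estimate, bootstrap SE, t) with t = estimate / SE."""
    rng = random.Random(seed)
    n = len(xs)
    estimate = standardized_slope(xs, ys)
    boot = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        boot.append(standardized_slope([xs[i] for i in idx], [ys[i] for i in idx]))
    se = stdev(boot)
    return estimate, se, estimate / se


# Hypothetical data: 170 cases with a built-in positive relationship.
gen = random.Random(7)
xs = [gen.gauss(0, 1) for _ in range(170)]
ys = [0.4 * x + gen.gauss(0, 1) for x in xs]
beta, se, t = bootstrap_t(xs, ys)
print(f"beta={beta:.3f}  SE={se:.3f}  t={t:.2f}  significant={t > 1.645}")
```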

Next, the effect size of each predictor construct was evaluated using Cohen's f² (J. Cohen, 1988). Following Cohen's (1988) conventions of 0.02, 0.15 and 0.35 for small, medium and large effects, the f² of CSE (0.204) indicates a medium effect and that of E (0.109) a small effect, whereas the f² values of PEU (0.008), PU (0.004) and UP (0.003) fall below the 0.02 threshold for a small effect.
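Cohen's f² measures how much R² drops when one predictor is omitted, relative to the unexplained variance: f² = (R²_included - R²_excluded) / (1 - R²_included). The sketch below implements that formula and Cohen's (1988) thresholds and applies them to the f² values in Table 6; the label "negligible" for values below 0.02 is our own wording, and the helper names are hypothetical.

```python
def cohens_f2(r2_included, r2_excluded):
    """Effect size of one predictor: drop in R² when it is omitted, scaled by 1 - R²_included."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def label_f2(f2):
    """Cohen's (1988) conventions: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# f² values reported in Table 6.
for name, f2 in [("CSE", 0.204), ("E", 0.109), ("PEU", 0.008), ("PU", 0.004), ("UP", 0.003)]:
    print(name, f2, label_f2(f2))
```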

The predictive relevance of the model, measured by Stone-Geisser's Q² for the endogenous construct CI, was 0.398. This value is clearly above zero and above the medium threshold, indicating that the exogenous constructs (CSE, E, PEU, PU and UP) have medium predictive relevance for CI (Hair et al., 2017; Geisser, 1974; Stone, 1974).
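Blindfolding omits every D-th data point, re-estimates the model, and predicts the omitted points; Q² then compares the squared prediction errors (SSE) with the squared errors of a trivial prediction (SSO). The sketch below shows only this final ratio, Q² = 1 - SSE / SSO, under the assumption that the indicator data are standardized so the trivial prediction is zero; the omission loop is not shown, and the example values are hypothetical. A Q² above zero indicates predictive relevance.

```python
def q_squared(omitted_observed, omitted_predicted):
    """Stone-Geisser Q² = 1 - SSE / SSO over the data points omitted during blindfolding.

    Assumes standardized indicator data (mean 0), so SSO, the squared error of the
    trivial zero prediction, is the sum of squared observed values.
    """
    sse = sum((y - y_hat) ** 2 for y, y_hat in zip(omitted_observed, omitted_predicted))
    sso = sum(y ** 2 for y in omitted_observed)
    return 1 - sse / sso


# Hypothetical omitted points and their model predictions.
print(q_squared([2.0, -2.0], [1.0, -1.0]))  # 0.75
```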

6. Discussion

The objective of this study was to validate the usability factors that predict continuance intention to use a Cloud e-Learning Application in higher education. Five usability factors, namely CSE, E, PU, PEU and UP, were identified from the literature, including the technology acceptance model and the motivational model, to predict continuance intention (CI).

The factor analysis first showed moderate predictive power (R² = 0.619): the five exogenous constructs (CSE, E, PEU, PU and UP) moderately predicted (Chin, 1998; Hair et al., 2017; Henseler, 2010) continuance intention (CI) to use the Cloud e-Learning Application. In addition, the proposed theoretical model had Q² = 0.398, indicating that the exogenous constructs (CSE, E, PEU, PU and UP) have medium predictive relevance (Hair et al., 2017; Geisser, 1974; Stone, 1974) for the endogenous construct CI.

The findings also showed that CSE (t-value = 4.933, p < 0.001) and E (t-value = 3.803, p < 0.001) were strong predictors of CI, while PEU, PU and UP were not significant. Previous studies have demonstrated the importance of computer self-efficacy and enjoyment as intrinsic motivators of continuance intention (Giesbers et al., 2013; Gan and Balakrishnan, 2017, 2018; Park et al., 2012). The present result is therefore consistent with our prediction that computer self-efficacy and enjoyment predict continuance intention.

This result suggests two possible theoretical explanations for why computer self-efficacy is crucial to continuance intention. First, according to Deimann and Keller (2006), computer self-efficacy affects the motivational base of a user navigating a computer-based environment; in the context of our study, this factor may have increased the likelihood of students learning optimally via the Cloud e-Learning application. Second, according to Langford and Reeves (1998), computer self-efficacy is correlated with a user's ability to understand computer-based applications. Hence, in the context of our study, students who were able to understand and handle the flow and design of the Cloud e-Learning application were more likely to continue using it. Overall, computer self-efficacy could boost a user's confidence in using computer applications, thereby positively affecting continuance intention to use the Cloud e-Learning application.

Enjoyment was also found to be a strong predictor, with the second-highest beta coefficient (β = 0.308) in the proposed theoretical model. This result aligns with previous studies that identified enjoyment as a key factor in predicting technology acceptance and continuance intention (Gan and Balakrishnan, 2016, 2017; Park et al., 2014a, 2014b; Teo and Noyes, 2011; Venkatesh, 2000; Wang et al., 2013; Wu and Gao, 2011; Yi and Hwang, 2003; Zhang et al., 2008). A notable example of enjoyment as an influential factor is Facebook, which remained the most popular social media platform (Beldad and Hegner, 2017; Duggan et al., 2015) despite its complex features. Consistent with this literature, the results suggest that the Cloud e-Learning application brought elements of fun and enjoyment to the students, making them willing to continue learning via the application.

On the other hand, perceived ease of use, perceived usefulness and user perception produced contrary results, as they were insignificant in the proposed theoretical model. This contradicts studies that validated perceived ease of use and perceived usefulness as significant predictors of technology and information system acceptance (Abu-Al-Aish and Love, 2013; Briz-Ponce and García-Peñalvo, 2015; Calisir et al., 2014; Hamid et al., 2016; Hess et al., 2014; Mou et al., 2017a, 2017b; Padilla-Meléndez et al., 2013). This study thus failed to substantiate the traditional TAM and previous studies on the effects of perceived ease of use and perceived usefulness on continuance intention to use a Cloud e-Learning application. Collectively, the insignificance of perceived ease of use suggests that resistance towards new technology may no longer be as pivotal as it once was. For example, perceived ease of use and perceived usefulness were weak predictors of students' behavioral intentions to use YouTube for procedural learning (Lee and Lehto, 2013) and of students' adoption intention of mobile technology for aiding student-lecturer interactions (Gan and Balakrishnan, 2017, 2018). Other studies aligned with the present outcome also found that enjoyment was more significant than perceived ease of use and perceived usefulness in predicting technology acceptance among teachers (Chen et al., 2012; Teo and Noyes, 2011). Lastly, the result for user perception contradicts studies that demonstrated a positive, moderate relationship between user perception and user behavior (Zhuang et al., 2016). The rejection of H3, H4 and H5 may signify a shift in thinking among adolescents and young adults who are adept with digital technologies (Gan and Balakrishnan, 2017, 2018).

7. Conclusion

The evolution of e-learning has produced innovative applications that support the teaching and learning endeavours of teachers and students, and numerous educational frameworks and applications, especially for e-learning, have been presented in recent years. Understanding students' continuance intention to use Cloud e-learning applications is therefore crucial. This study validated the proposed theoretical model for predicting continuance intention to use a Cloud e-learning application among IT students at a private university in Malaysia. Five usability factors, namely Computer Self Efficacy (CSE), Enjoyment (E), Perceived Ease of Use (PEU), Perceived Usefulness (PU), and User Perception (UP), were hypothesized to be positively associated with Continuance Intention (CI).

The proposed theoretical model explains 61.9% of the variance in IT students' continuance intention to use the Cloud e-Learning application (R² = 0.619). Of the five hypothesized predictors, two were found to exert significant positive effects on continuance intention. Computer self-efficacy and enjoyment have strong positive effects on continuance intention, indicating the relevance of the Cloud e-learning application's functional and enjoyment features. This finding suggests that, for students to be willing to continue using a Cloud e-learning application, how they feel and whether they enjoy the learning process via the application are vital. However, perceived ease of use, perceived usefulness and user perception, despite their prominence in various acceptance studies, did not show a significant impact on continuance intention. This finding suggests that young technology users may not invest much cognitive effort in such evaluations, which may reflect their confidence in using Cloud e-learning applications. The rejected hypotheses all concern students' perceptions of the application, indicating that students' perception or understanding of the technology does not drive their continuance intention to use Cloud e-learning applications. Instead, continuance intention to use Cloud e-learning is strongly influenced by students' positive subjective experiences, such as feeling confident and enjoying themselves while learning via Cloud applications. It is therefore important to ensure that Cloud applications bring fun and entertaining elements into students' learning processes to encourage higher continuance intention to use Cloud e-learning applications. It is also crucial that students are taught basic IT knowledge during their studies to boost their confidence in using any e-learning application and to enhance learning productivity.

A limitation of this study is that the respondents were limited to IT students at one higher learning institution. IT students are generally more technology-savvy than non-IT students, so the hypothesis tests could yield different results with a wider range of respondents. Future work could therefore include more predictive factors from other theories and models in the theoretical model, and expand data collection to other higher learning institutions to obtain more generalizable results.

Declarations

Author contribution statement

Lillian-Yee-Kiaw Wang: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Sook-Ling Lew, Siong-Hoe Lau: Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data.

Meng-Chew Leow: Conceived and designed the experiments; Performed the experiments.

Funding statement

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Competing interest statement

The authors declare no conflict of interest.

Additional information

No additional information is available for this paper.

Appendix A. Supplementary data

The following is the supplementary data related to this article:

References

- Abu-Al-Aish A., Love S. Factors influencing students’ acceptance of m-learning: an investigation in higher education. Int. Rev. Res. Open Distance Learn. 2013;14(5):82–107. [Google Scholar]

- Abusfian Elgelany W.G.A. Cloud computing: empirical studies in higher education. Int. J. Adv. Comput. Sci. Appl. 2017;8(10):1–12. http://net.educause.edu/section_params/conf/CCW10/highered.pdf Retrieved from. [Google Scholar]

- Adams D.A., Nelson R.R., Todd P. Perceived usefulness, ease of use, and usage of information technology: a replication. MIS Q. 1992;16(2):227–247. [Google Scholar]

- Aguirre-Urreta M., Rönkkö M. Sample size determination and statistical power analysis in PLS using R : an annotated tutorial simsem and matrixpls . You can download an up to date version at sample size determination and statistical power analysis in PLS using R : an annotated tutoria. Commun. Assoc. Inf. Syst. 2015;36(January 2015):33–51. [Google Scholar]

- Ajzen I. Theory of planned behavior Theory of planned behavior. Organ. Behav. Hum. Decis. Process. 1991;50(2):179–211. [Google Scholar]

- Al-Zoube M., Abou El-Seoud S., Wyne M.F. Cloud computing based E-learning system. Int. J. Distance Educ. Technol. 2010;8(2):58–71. [Google Scholar]

- Almaghrabi T., Dennis C., Halliday S.V., Binali A.A. Determinants of customer continuance intention of online shopping. Int. J. Bus. Sci. Appl. Manag. 2011;6(1):41–65. http://elibrary.vu.nl/primo_library/libweb/action/display.do?tabs=detailsTab&ct=display&fn=search&doc=TN_doaj_xmloai%3Adoaj.org%2Farticle%3Ab91ec7f58bf04d23bb90109b447d69d1&indx=1&recIds=TN_doaj_xmloai%3Adoaj.org%2Farticle%3Ab91ec7f58bf04d23bb90109b447d69 Retrieved from. [Google Scholar]

- Alqurashi E. Self-efficacy in online learning environments: a literature review. Contemp. Issues Educ. Res. 2016;9(1):45. [Google Scholar]

- Amoroso D.L., Chen Y.A.N. Constructs Affecting Continuance intention in consumers with mobile financial apps : a dual factor approach. J. Inf. Technol. Manag. 2017;XXVIII(3) http://jitm.ubalt.edu/XXVIII-3/article1.pdf Retrieved from. [Google Scholar]

- Bagozzi R.P., Yi Y. On the evaluation of structural equation models. J. Acad. Mark. Sci. 1988;16(1):74–94. [Google Scholar]

- Bahn S., Lee C., Jo J.H., Suh W.Y., Song J., Yun M.H. Vols 1–4. 2007. Incorporating user acceptance into usability evaluation scheme for the user interface of mobile services; pp. 492–496. (2007 Ieee International Conference on Industrial Engineering and Engineering Management). [Google Scholar]

- Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol. Rev. 1977;84(2):191–215. doi: 10.1037//0033-295x.84.2.191. [DOI] [PubMed] [Google Scholar]

- Bandura A. Prentice-Hall; Englewood Cliffs, N.J. : 1986. Social Foundations of Thought and Action : a Social Cognitive Theory. ©1986. [Google Scholar]

- Bandura A. Regulation of cognitive processes through perceived self-efficacy. Dev. Psychol. 1989;25(5):729–735. [Google Scholar]

- Beldad A.D., Hegner S.M. More photos from me to thee: factors influencing the intention to continue sharing personal photos on an online social networking (OSN) site among young adults in The Netherlands. Int. J. Hum. Comput. Interact. 2017;33(5):410–422. [Google Scholar]

- Berg B.L., Lune H. eighth ed. Pearson; Boston: 2012. Qualitative Research Methods for the Social Sciences. [Google Scholar]

- Brandon Hall Research Reports . 2005. E-learning Reports.http://www.brandon-hall.com Retrieved from. [Google Scholar]

- Briz-Ponce L., García-Peñalvo F. An empirical assessment of a technology acceptance model for apps in medical education. J. Med. Syst. 2015;39(11):1–5. doi: 10.1007/s10916-015-0352-x. [DOI] [PubMed] [Google Scholar]

- Bryman A. fourth ed. Oxford University Press; 2012. Social Research Methods. [Google Scholar]

- Byrne B.M. Structural equation modeling with AMOS, EQS, and LISREL: comparative approaches to testing for the factorial validity of a measuring instrument. Int. J. Test. 2001;1(1):55–86. [Google Scholar]

- Calisir F., Gumussoy C.A., Bayraktaroglu A.E., Karaali D. Predicting the intention to use a web-based learning system: perceived content quality, anxiety, perceived system quality, image, and the technology acceptance model. Hum. Factors Ergon. Manuf. 2014;24(5):515–531. [Google Scholar]

- Chen C., Shih B., Yu S. Disaster prevention and reduction for exploring teachers’ technology acceptance using a virtual reality system and partial least squares techniques. Nat. Hazards. 2012;62(3):1217–1231. [Google Scholar]

- Chester A., Buntine A., Hammond K., Atkinson L. Podcasting in education: student attitudes, behaviour and self-efficacy. Educ. Technol. Soc. 2011;14(2):236–247. [Google Scholar]

- Cheung R., Vogel D. Predicting user acceptance of collaborative technologies: an extension of the technology acceptance model for e-learning. Comput. Educ. 2013;63:160–175. [Google Scholar]

- Chin W.W. Commentary: issues and opinion on structural equation modeling. MIS Q. 1998;22(1) https://www.jstor.org/stable/249674 Retrieved from. [Google Scholar]

- Chow M., Herold D.K., Choo T.-M., Chan K. Extending the technology acceptance model to explore the intention to use Second Life for enhancing healthcare education. Comput. Educ. 2012;59(4):1136–1144. [Google Scholar]

- Chunwijitra S., John Berena A., Okada H., Ueno H. Advanced content authoring and viewing tools using aggregated video and slide synchronization by key marking for web-based e-Learning system in higher education. IEICE Trans. Info Syst. 2013;E96–D(8):1754–1765. [Google Scholar]

- Cohen J. second ed. Lawrence Erlbaum Associates; Hillsdale, NJ: 1988. Statistical Power Analysis for the Behavioral Science. [Google Scholar]

- Cohen L., Manion L., Morrison K. seventh ed. Routledge; London ; New York: 2011. Research Methods in Education. [Google Scholar]

- Compeau D., Higgins C. Computer self-efficacy: development of a measure and initial test. MIS Q. 1995;19:189–211. [Google Scholar]

- Connolly P. Routledge; London: 2007. Quantitative Data Analysis in Education: A Critical Introduction Using SPSS. [Google Scholar]

- Daniel E. The usefulness of qualitative and quantitative approaches and methods in researching problem-solving ability in science education curriculum. J. Educ. Pract. 2016;7(15):100–191. [Google Scholar]

- Davis F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989;13(3):319–340. [Google Scholar]

- Davis F.D., Bagozzi R.P., Warshaw P.R. Extrinsic and intrinsic motivation to use computers in the workplace. J. Appl. Soc. Psychol. 1992;22:1111–1132. [Google Scholar]

- Deimann Markus, Keller J.M. Volitional aspects of multimedia learning. J. Educ. Multimedia Hypermedia. 2006;15(2):137–158. https://www.learntechlib.org/primary/p/6140/ Retrieved from. [Google Scholar]

- Doménech-Betoret F., Abellán-Roselló L., Gómez-Artiga A. Self-efficacy, satisfaction, and academic achievement: the mediator role of students’ expectancy-value beliefs. Front. Psychol. 2017;8(JUL):1–12. doi: 10.3389/fpsyg.2017.01193. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dong B., Zheng Q., Qiao M., Shu J., Yang J. Cloud Computing: First International Conference. CloudCom; 2009. BlueSky cloud Framework : an E-learning framework embracing cloud computing; pp. 577–582. [Google Scholar]

- Duggan M., Ellison N., Lampe C., Lenhart A., Madden M. 2015. Social Media Update 2014.http://www.pewinternet.org/2015/01/09/social-media-update-2014/ Retrieved from. [Google Scholar]

- Encalada W.L., Castillo Sequera J.L. Model to implement virtual computing labs via cloud computing services. Symmetry. 2017;9(7):1–15. [Google Scholar]

- Fornell C., Bookstein F.L. Two structural equation models: LISREL and PLS applied to consumer exit-voice theory. J. Mark. Res. 1982;19(4):440–452. [Google Scholar]

- Fornell C., Larcker D.F. Evaluating structural equation models with unobserved variables and measurement error. J. Mark. Res. 1981 [Google Scholar]

- Gan C.L., Balakrishnan V. An empirical study of factors affecting mobile wireless technology adoption for promoting interactive lectures in higher education. Int. Rev. Res. Open Distance Learn. 2016;17(1):214–239.

- Gan C.L., Balakrishnan V. Predicting acceptance of mobile technology for aiding student-lecturer interactions: an empirical study. Australas. J. Educ. Technol. 2017;33(2):143–158.

- Gan C.L., Balakrishnan V. Mobile technology in the classroom: what drives student-lecturer interactions? Int. J. Hum. Comput. Interact. 2018;34(7):666–679.

- Gefen D., Straub D., Boudreau M.-C. Structural equation modeling and regression: guidelines for research practice. Commun. Assoc. Inf. Syst. 2000;4(7):1–77.

- Geisser S. A predictive approach to the random effect model. Biometrika. 1974;61(1):101–107.

- Giesbers B., Rienties B., Tempelaar D., Gijselaers W. Investigating the relations between motivation, tool use, participation, and performance in an e-learning course using web-videoconferencing. Comput. Hum. Behav. 2013;29(1):285–292.

- Gong C., Liu J., Zhang Q., Chen H., Gong Z. The characteristics of cloud computing. Parallel Process. 2010:275–279.

- Hair J.F., Black W.C., Babin B.J., Anderson R.E. seventh ed. Pearson Education; Upper Saddle River, NJ: 2010. Multivariate Data Analysis: A Global Perspective.

- Hair J.F., Hult G.T.M., Ringle C.M., Sarstedt M. SAGE Publications, Inc; Thousand Oaks, CA: 2017. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM).

- Hamid A.A., Razak F.Z.A., Bakar A.A., Abdullah W.S.W. The effects of perceived usefulness and perceived ease of use on continuance intention to use e-government. Proc. Econ. Finance. 2016;35:644–649.

- Han M., Wu J., Wang Y., Hong M. A model and empirical study on the user’s continuance intention in online China brand communities based on customer-perceived benefits. J. Open Innov. Technol. Market Complex. 2018;4(4):46.

- Henseler J. On the convergence of the partial least squares path modeling algorithm. Comput. Stat. 2010;25(1):107–120.

- Henseler J., Ringle C.M., Sarstedt M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015;43(1):115–135.

- Hess T.J., McNab A.L., Basoglu K.A. Reliability generalization of perceived ease of use, perceived usefulness, and behavioral intentions. MIS Q. 2014;38(1):1–28.

- Hillier E., Beauchamp G., Whyte S. A study of self-efficacy in the use of interactive whiteboards across educational settings: a European perspective from the iTILT project. Educ. Futures. 2013;5(2):3–23. Retrieved from http://educationstudies.org.uk/wp-content/uploads/2013/11/emily_hillier_besav3.pdf

- Jia Q., Guo Y., Barnes S.J. Proceedings of the International Conference on Electronic Business (ICEB); September 2016. E2.0 post-adoption: extending the IS continuance model based on the technology-organization-environment framework; pp. 695–707.

- Johnson R.B., Christensen L.B. fourth ed. SAGE Publications, Inc; California: 2012. Educational Research: Quantitative, Qualitative, and Mixed Approaches.

- Kalagiakos P., Karampelas P. 2011 5th International Conference on Application of Information and Communication Technologies (AICT). IEEE; 2011. Cloud computing learning; pp. 1–4.

- Kaur G., Chawla S. Cloud e-learning application: architecture and framework. SSRG Int. J. Comp. Sci. Eng. 2014;1(4):1–5.

- Koo C., Chung N., Nam K. Assessing the impact of intrinsic and extrinsic motivators on smart green IT device use: reference group perspectives. Int. J. Inf. Manag. 2015;35(1):64–79.

- Langford M., Reeves T. The relationships between computer self-efficacy and personal characteristics of the beginning information systems student. J. Comput. Inf. Syst. 1998;38(4):41–45.

- Lau S.H. Multimedia University; 2008. An Empirical Study of Students' Acceptance of Learning Objects.

- Lau A. Hospital-based nurses’ perceptions of the adoption of Web 2.0 tools for knowledge sharing, learning, social interaction and the production of collective intelligence. J. Med. Internet Res. 2011;13(4). doi: 10.2196/jmir.1398.

- Lee D.Y., Lehto M.R. User acceptance of YouTube for procedural learning: an extension of the technology acceptance model. Comput. Educ. 2013;61(1):193–208.

- Liew T.W., Tan S.-M., Seydali R. The effects of learners’ differences on variable manipulation behaviors in simulation-based learning. J. Educ. Technol. Syst. 2014;43(1):13–34.

- Limayem M., Cheung C. Predicting the continued use of internet-based learning technologies: the role of habit. Behav. Inf. Technol. 2011;30(1):91–99.

- Lin K.M. E-Learning continuance intention: moderating effects of user e-learning experience. Comput. Educ. 2011;56(2):515–526.

- Lin M.Y., Ong C. Pacific Asia Conference on Information Systems, PACIS 2010. 2010. Understanding information systems continuance intention: a five-factor model of personality perspective; pp. 367–376.

- Lin F., Fofanah S.S., Liang D. Assessing citizen adoption of E-government initiatives in Gambia: a validation of the technology acceptance model in information system success. Gov. Inf. Q. 2011;28:271–279.

- Lule I., Omwansa T.K., Waema T.M.P. Application of technology acceptance model (TAM) in m-banking adoption in Kenya. Int. J. Comput. Intell. Res. 2012;6(1):31–43.

- Madhumathi C., Ganapathy G. An academic cloud framework for adapting e-learning in universities. Int. J. Adv. Res. Comp. Commun. Eng. 2013;2(11):4480–4484. Retrieved from https://www.ijarcce.com/upload/2013/november/66-S-Madhu Mathi -AN academic.pdf

- Haenlein M., Kaplan A.M. A beginner’s guide to partial least squares analysis. Understanding Statistics. 2004;3(4):283–297.

- MOE. 2017. Quick Facts 2017: Malaysia Educational Statistics. Educational Data Sector, Educational Planning and Research Division, Ministry of Education Malaysia; Putrajaya. Retrieved from https://www.moe.gov.my/images/Terbitan/Buku-informasi/QUICK-FACTS-2017/20170809_QUICK-FACTS_2017_FINAL5_interactive.pdf

- Morris M.G., Dillon A. How user perceptions influence software use. IEEE Software. 1997;14(4):58–64.

- Mou J., Shin D.-H., Cohen J. Tracing college students’ acceptance of online health services. Int. J. Hum. Comput. Interact. 2017;33(5):371–384.

- Mou J., Shin D.-H., Cohen J. Understanding trust and perceived usefulness in the consumer acceptance of an e-service: a longitudinal investigation. Behav. Inf. Technol. 2017;36(2):125–139.

- Muk A., Chung C. Applying the technology acceptance model in a two-country study of SMS advertising. J. Bus. Res. 2015;68(1):1–6.

- Ong C.S., Lin M.Y.C. Is being satisfied enough? Well-being and IT post-adoption behavior: an empirical study of Facebook. Inf. Dev. 2016;32(4):1042–1054.

- Padilla-Meléndez A., Aguila-Obra A.R., Garrido-Moreno A. Perceived playfulness, gender differences and technology acceptance model in a blended learning scenario. Comput. Educ. 2013;63:306–317.

- Park S.Y., Nam M., Cha S. University students’ behavioral intention to use mobile learning: evaluating the technology acceptance model. Br. J. Educ. Technol. 2012;43:592–605.

- Park E., Baek S., Ohm J., Chang H.J. Determinants of player acceptance of mobile social network games: an application of extended technology acceptance model. Telematics Inf. 2014;31(1):3–15.

- Park N., Rhoads M., Hou J., Lee K. Understanding the acceptance of teleconferencing systems among employees: an extension of the technology acceptance model. Comput. Hum. Behav. 2014;39:118–127.

- Patton M.Q. fourth ed. SAGE Publications, Inc; 2014. Qualitative Research & Evaluation Methods: Integrating Theory and Practice.

- Queirós A., Faria D., Almeida F. Strengths and limitations of qualitative and quantitative research methods. Eur. J. Educ. Stud. 2017;3(9):369–387.

- Quesenbery W. IEEE International Professional Communication Conference. 2005. Usability standards: connecting practice around the world; pp. 451–457.

- Rahman M.S. The advantages and disadvantages of using qualitative and quantitative approaches and methods in language “testing and assessment” research: a literature review. J. Educ. Learn. 2016;6(1):102–112.

- Rahman M.N.A., Syed Zamri S.N.A., Eu L.K. A meta-analysis study of satisfaction and continuance intention to use educational technology. Int. J. Acad. Res. Bus. Soc. Sci. 2017;7(4):1059–1072.

- Rajput L.S., Deora B.S. International Conference on Innovation Research in Science, Technology and Management. 2017. Developing a cloud based e-learning framework for higher education institutions (HEI); pp. 237–244.

- Ramayah T., Cheah J., Chuah F., Ting H., Memon M.A. second ed. Pearson; 2018. Partial Least Squares Structural Equation Modeling (PLS-SEM) Using SmartPLS 3.0: An Updated Guide and Practical Guide to Statistical Analysis. Retrieved from https://scholar.google.com/scholar?hl=en&as_sdt=0,5&cluster=6234200078132602017

- Riahi G. E-learning systems based on cloud computing: a review. Proc. Comp. Sci. 2015;62:352–359.

- Saidhbi S. A cloud computing framework for Ethiopian higher education institutions. IOSR J. Comput. Eng. 2012;6(6):1–9. Retrieved from http://www.iosrjournals.org/iosr-jce/papers/Vol6-Issue6/A0660109.pdf

- Schunk D.H. Self-efficacy and classroom learning. Psychol. Sch. 1985;22(2):208–223.

- Scott W., Farh J.-L., Podsakoff P.M. The effects of “intrinsic” and “extrinsic” reinforcement contingencies on task behavior. Organ. Behav. Hum. Decis. Process. 1988;41(3):405–425.

- Shah R., Goldstein S.M. Use of structural equation modeling in operations management research: looking back and forward. J. Oper. Manag. 2006;24(2):148–169.

- Shank G., Brown L. Routledge; New York: 2007. Exploring Educational Research Literacy.

- Shank D., Cotten S. Does technology empower urban youth? The relationship of technology use to self-efficacy. Comput. Educ. 2014;70(1):184–193.

- Shukur B.S., Khanapi M., Ghani A. International Conference on Intelligent and Interactive Computing IIC2018. 2018. A cloud computing framework for higher education institutes in developing countries (CCF_HEI_DC); pp. 1–11.

- Snell S.A., Dean J.W. Integrated manufacturing and human resource management: a human capital perspective. Acad. Manag. J. 1992;35(3):467–504.

- Stone M. Cross-validation and multinomial prediction. Biometrika. 1974;61(3):509–515.

- Sulistio A., Reich C., Doelitzscher F. CloudCom ’09 Proceedings of the 1st International Conference on Cloud Computing. 2009. Cloud infrastructure & applications – CloudIA; pp. 583–588.

- Susanto A., Chang Y., Ha Y. Determinants of continuance intention to use the smartphone banking services: an extension to the expectation-confirmation model. Ind. Manag. Data Syst. 2016;116(3):508–525.

- Taylor S., Todd P.A. Understanding information technology usage: a test of competing models. Inf. Syst. Res. 1995;6(2):144–176.

- Tella A., Olasina G. Predicting users’ continuance intention toward E-payment system. Int. J. Inf. Syst. Soc. Chang. 2014;5(1):47–67.

- Teo T., Noyes J. An assessment of the influence of perceived enjoyment and attitude on the intention to use technology among pre-service teachers: a structural equation modeling approach. Comput. Educ. 2011;57(2):1645–1653.

- Thomas P.Y. 2011. Cloud Computing: A Potential Paradigm for Practising the Scholarship of Teaching and Learning; pp. 1–7.

- Tiyar F.R., Khoshsima H. Understanding students’ satisfaction and continuance intention of e-learning: application of expectation–confirmation model. World J. Educ. Technol. 2015;7(3):157.

- Traynor B. IEEE International Professional Communication Conference. 2011. Usability standards – evolution, access and practice.

- Valencia-Vallejo N., López-Vargas O., Sanabria-Rodríguez L. Self-efficacy in computer-based learning environments: a bibliometric analysis. Psychology. 2016;7(14):1839–1857.

- Vallerand R.J. Toward a hierarchical model of intrinsic and extrinsic motivation. Adv. Exp. Soc. Psychol. 1997;29:271–360.

- Venkatesh V. Determinants of perceived ease of use: integrating control, intrinsic motivation, and emotion into the technology acceptance model. Inf. Syst. Res. 2000;11(4):342–365.

- Venkatesh V., Speier C. Computer technology training in the workplace: a longitudinal investigation of the effect of mood. Organ. Behav. Hum. Decis. Process. 1999;79(1):1–28. doi: 10.1006/obhd.1999.2837.

- Wang Y.S., Yeh C.H., Liao Y.W. What drives purchase intention in the context of online content services? The moderating role of ethical self-efficacy for online piracy. Int. J. Inf. Manag. 2013.

- Wolf E.J., Harrington K.M., Clark S.L., Miller M.W. Sample size requirements for structural equation models: an evaluation of power, bias, and solution propriety. Educ. Psychol. Meas. 2013;73(6):913–934. doi: 10.1177/0013164413495237.

- Wu X., Gao Y. Applying the extended technology acceptance model to the use of clickers in student learning: some evidence from macroeconomics classes. Am. J. Bus. Educ. 2011;4(7):43–50.