Visual Abstract

Keywords: eye–hand coordination, hand dominance, humans, internal model, visuomotor tracking

Abstract

Skilled motor behavior relies on the ability to control the body and to predict the sensory consequences of this control. Although there is ample evidence that manual dexterity depends on handedness, it remains unclear whether control and prediction are similarly impacted. To address this issue, right-handed human participants performed two tasks with either the right or the left hand. In the first task, participants had to move a cursor with their hand so as to track a target that followed a quasi-random trajectory. This hand-tracking task allowed testing the ability to control the hand along an imposed trajectory. In the second task, participants had to track with their eyes a target that was self-moved through voluntary hand motion. This eye-tracking task allowed testing the ability to predict the visual consequences of hand movements. As expected, results showed that hand tracking was more accurate with the right hand than with the left hand. In contrast, eye tracking was similar in terms of spatial and temporal gaze attributes whether the target was moved by the right or the left hand. Although these results extend previous evidence for different levels of control by the two hands, they show that the ability to predict the visual consequences of self-generated actions does not depend on handedness. We propose that the greater dexterity exhibited by the dominant hand in many motor tasks stems from advantages in control, not in prediction. Finally, these findings support the notion that prediction and control are distinct processes.

Significance Statement

Humans often exhibit greater manual dexterity with the dominant hand. Here we assessed whether handedness similarly impacts control and prediction, two key processes for skilled motor behavior. Using two eye–hand coordination tasks that differently rely on control and prediction, we show that, although handedness impacts the accuracy of hand movement control, it has virtually no influence on the ability to predict the visual consequences of hand movements. We propose that the superior performance of the dominant hand stems from advantages in control, not in prediction. In addition, these findings provide further evidence that prediction and control are distinct neural processes.

Introduction

Skilled motor behavior relies on the brain learning both to control the body and to predict the consequences of this control (Flanagan et al., 2003). Control turns desired consequences into motor commands, whereas prediction turns motor commands into expected sensory consequences (Kawato, 1999; Wolpert et al., 2011; Shadmehr, 2017). Although there is ample evidence that manual dexterity depends on handedness, it remains unclear whether the superiority of the dominant hand stems from more efficient control and/or predictive mechanisms. Here, two eye–hand coordination tasks, known to rely differently on control and prediction, were used to determine whether these two processes are similarly influenced by handedness.

Motor control is generally more efficient for the dominant hand than the non-dominant hand. This idea is supported by numerous reports comparing the time to complete tests of manual dexterity (Bryden and Roy, 2005; Noguchi et al., 2006; Wang et al., 2011), as well as reports comparing the accuracy and variability of reaching movements (Carson et al., 1993; Elliott et al., 1993; Roy et al., 1994; Carey and Liddle, 2013; Schaffer and Sainburg, 2017). The effect of handedness on prediction, however, has been less explored. Nonetheless, indirect evidence hints at the possibility that prediction could also be superior for the dominant hand. For instance, it has been suggested that dominant hand movements rely on a better prediction of intersegmental dynamics (Sainburg and Kalakanis, 2000; Pigeon et al., 2013; Sainburg, 2014). Similarly, motor imagery, known to engage predictive mechanisms (Kilteni et al., 2018), has been shown to be more accurate for the dominant hand (Gandrey et al., 2013).

To assess whether the effect of handedness differs for control and prediction of hand movements, we tested right-handed participants on two types of eye–hand coordination tasks, each task being completed either by the right or the left hand. The first task was a hand-tracking task designed to assess the ability of participants to control their hand movement along an imposed trajectory (Carey et al., 1994; Foulkes and Miall, 2000; Sarlegna et al., 2010; Aoki et al., 2016; Moulton et al., 2017). During this task, participants had to control a cursor by means of a joystick so as to track a visual target that followed an unpredictable trajectory (Ogawa and Imamizu, 2013; Mathew et al., 2018). The second task was an eye-tracking task designed to test the ability of participants to predict the visual consequences of their hand movements. This time, participants were required to track with the eyes a target that was moved by their hand (Vercher et al., 1996; Landelle et al., 2016; Danion et al., 2017; Mathew et al., 2017). Such eye tracking of a self-moved target is known to rely on predictive mechanisms, supposedly based on the hand efference copy (Steinbach and Held, 1968; Scarchilli et al., 1999) as evidenced by the reduced temporal lag between eye and target position compared with eye tracking a target that is moved by an external agent (Steinbach and Held, 1968; Gauthier and Hofferer, 1976; Domann et al., 1989; Vercher et al., 1996).

In line with a large body of literature on arm reaching movements (Carson et al., 1993; Elliott et al., 1993; Roy et al., 1994; Carey and Liddle, 2013), previous studies have shown that the dominant (right) hand is more accurate for tracking a continuously moving target (Simon et al., 1952; Aoki et al., 2016; but see Carey et al., 1994; Moulton et al., 2017). We thus hypothesized that hand tracking, which reflects control, would be more accurate with the dominant hand. However, to our knowledge, the possible influence of handedness on eye tracking a self-moved target has never been explored. In previous studies investigating this task, either only the right dominant hand was used (Vercher et al., 1993, 1996; Scarchilli and Vercher, 1999; Chen et al., 2016a; Landelle et al., 2016; Danion et al., 2017; Mathew et al., 2017, 2018) or no (or incomplete) information was provided regarding participants’ handedness or the hand used in the task (Steinbach and Held, 1968; Steinbach, 1969; Gauthier and Hofferer, 1976; Gauthier et al., 1988). To date, we are aware of only a single study in which dominant and non-dominant hands were used (Chen et al., 2016b), but the putative impact of handedness was not reported.

Methods

Participants

Twenty-eight healthy right-handed volunteers (mean ± SD age, 26.6 ± 5.4 years; 13 females) were recruited. Handedness of participants was verified using the Oldfield Handedness Inventory (Oldfield, 1971) with a mean laterality quotient of 87.5 ± 12.9%. The experimental paradigm (2016-02-03-007) was approved by the local ethics committee of Aix-Marseille University and complied with the Declaration of Helsinki. All participants gave written consent before participation.

Apparatus

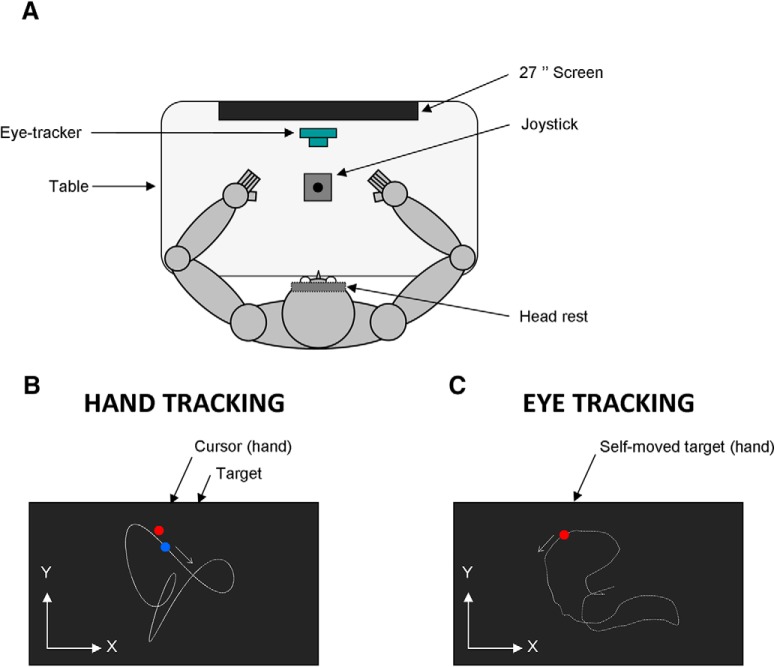

Figure 1 shows the experimental setup. Participants were comfortably seated in a dark room facing a screen (Benq, 1920 × 1080 pixels, 27 inches, 144 Hz) positioned in the frontal plane 57 cm away from their eyes. Note that 1° of visual angle is approximately equivalent to a distance of 1 cm on the screen at an eye-to-screen distance of 57 cm. Participants’ head movements were restrained by a chin rest and a padded forehead rest so that the eyes in primary position were directed toward the center of the screen. Both right and left forearms were resting on the table. To prevent vision of their hands, a piece of cardboard was positioned under the participants’ chin. Participants were required to hold with the hand a joystick (812 series, Megatron; with 25° of inclination along the x- and y-axes with no force bringing it back to the central position). The analog output of the joystick was sent to a data acquisition system (Keithley ADwin Real Time, Tektronix) and sampled at 1000 Hz.
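The near equivalence between 1° of visual angle and 1 cm on the screen can be checked with basic trigonometry. The sketch below assumes a flat screen and a stimulus centred on the line of sight; the function name is illustrative.

```python
import math

def visual_angle_to_cm(angle_deg: float, distance_cm: float = 57.0) -> float:
    """Extent on a flat screen that subtends angle_deg at the eye.

    Assumes the extent is centred on the line of sight (an assumption made
    for this illustration).
    """
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

# At the 57 cm viewing distance reported above, 1 degree is just under 1 cm.
cm_per_degree = visual_angle_to_cm(1.0)
print(f"{cm_per_degree:.3f} cm per degree")
```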

Figure 1.

Schematic drawing of the experimental setup. A, Top view of the participant sitting in the experimental setup. B, Schematic view of the screen during the hand tracking condition. C, Schematic view of the screen during the eye tracking condition (see Materials and Methods). The target trajectory (white dotted trace) and x–y reference system is displayed for illustration purposes but was not visible to the participant.

Eye movements were recorded using an infrared video-based eye tracker (EyeLink 1000 Desktop, SR Research). Horizontal and vertical positions of the right eye were recorded at a sampling rate of 1000 Hz. The output from the eye tracker was calibrated before every block of trials by recording the raw eye positions as participants fixated a grid composed of nine known locations. The mean values during 1000 ms fixation intervals at each location were then used off-line for converting raw eye data to horizontal and vertical eye position in degrees of visual angle.
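The text does not specify how the nine fixation means were turned into a raw-to-degrees conversion. One simple possibility, shown here purely as an assumption, is a least-squares affine fit between the mean raw positions and the known grid locations. The function names and the example grid are hypothetical.

```python
import numpy as np

def fit_affine_calibration(raw_xy, known_deg):
    """Least-squares affine map from raw eye samples to degrees.

    raw_xy:    (9, 2) mean raw positions during the fixation intervals
    known_deg: (9, 2) known grid locations in degrees of visual angle
    Returns a (3, 2) coefficient matrix (assumed model, not the authors').
    """
    raw_xy = np.asarray(raw_xy, dtype=float)
    design = np.column_stack([raw_xy, np.ones(len(raw_xy))])
    coeffs, *_ = np.linalg.lstsq(design, np.asarray(known_deg, dtype=float), rcond=None)
    return coeffs

def apply_calibration(raw_xy, coeffs):
    """Convert raw samples of shape (n, 2) to degrees with the fitted map."""
    raw_xy = np.atleast_2d(np.asarray(raw_xy, dtype=float))
    return np.column_stack([raw_xy, np.ones(len(raw_xy))]) @ coeffs
```

A higher-order polynomial fit would follow the same pattern with extra columns in the design matrix.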

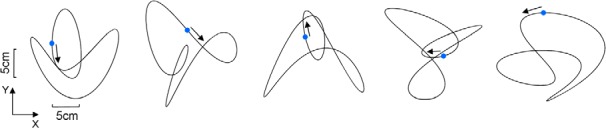

Procedure

Participants performed one of two tracking tasks. In the hand-tracking task, participants had to move the joystick with their hand, so as to bring the cursor (red disk, 0.5 cm diameter) as close as possible to the target (blue disk, 0.5 cm in diameter) moving along a predefined trajectory. This task was used to probe the ability to control hand movements along an imposed trajectory (Tong and Flanagan, 2003; Ogawa and Imamizu, 2013; Mathew et al., 2018). The motion of the target resulted from the combination of sinusoids: two along the frontal axis (one fundamental and a second or third harmonic), and two along the sagittal axis (same procedure). The following equations determined the target’s motion:
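The equations are not reproduced in this copy of the text. One form consistent with the description above and with the parameters listed in Table 1 (two amplitudes, one harmonic number, and one phase per axis) is given below. The choice of cosine for x and sine for y, and the sign of the phase term, are assumptions rather than details recovered from the source.

$$x(t) = A_{1x}\cos(\omega t) + A_{2x}\cos(h_x \omega t - \varphi_x)$$

$$y(t) = A_{1y}\sin(\omega t) + A_{2y}\sin(h_y \omega t - \varphi_y)$$

Here $\omega = 2\pi f_0$ with $f_0$ the fundamental frequency, $h_x$ and $h_y$ are the harmonic numbers (2 or 3), and $\varphi_x$ and $\varphi_y$ are the phases.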

This technique was used to generate pseudorandom 2D patterns while preserving smooth changes in velocity and direction (Mrotek and Soechting, 2007; Soechting et al., 2010). A total of five patterns with identical lengths were used throughout the experiment (Table 1; Fig. 2). All trajectories had a period of 5 s (fundamental = 0.2 Hz). During this task, participants did not receive any explicit constraints regarding their gaze, meaning they were free to look at the target, the cursor, or both (Danion and Flanagan, 2018).

Table 1.

Target trajectory parameters in the hand-tracking task

| Trajectory | A1x, cm | A2x, cm | Harmonic x | Phase x, ° | A1y, cm | A2y, cm | Harmonic y | Phase y, ° |
|---|---|---|---|---|---|---|---|---|
| 1 | 5 | 5 | 2 | 45 | 5 | 5 | 3 | −135 |
| 2 | 4 | 5 | 2 | −60 | 3 | 5 | 3 | −135 |
| 3 | 4 | 5.1 | 3 | −60 | 4 | 5.2 | 2 | −135 |
| 4 | 5 | 5 | 3 | 90 | 3.4 | 5 | 2 | 45 |
| 5 | 5.1 | 5.2 | 2 | −90 | 4 | 5 | 3 | 22.5 |
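As an illustration of how the parameters in Table 1 could define a trajectory, the sketch below sums a fundamental and one harmonic on each axis. The exact functional form (cosine on x, sine on y, phase subtracted inside the harmonic term) is an assumption, as is the function name; only the parameter values and the 0.2 Hz fundamental come from the text.

```python
import numpy as np

def target_trajectory(t, a1x, a2x, hx, phase_x_deg, a1y, a2y, hy, phase_y_deg, f0=0.2):
    """Target position (cm) at times t (s) for one row of Table 1.

    Assumed form: fundamental plus one harmonic per axis.
    """
    w = 2.0 * np.pi * f0
    x = a1x * np.cos(w * t) + a2x * np.cos(hx * w * t - np.radians(phase_x_deg))
    y = a1y * np.sin(w * t) + a2y * np.sin(hy * w * t - np.radians(phase_y_deg))
    return x, y

# Trajectory 1 of Table 1 over one 10 s trial, sampled at 1000 Hz.
t = np.arange(0.0, 10.0, 0.001)
x, y = target_trajectory(t, 5, 5, 2, 45, 5, 5, 3, -135)
```

Because every component is a multiple of the 0.2 Hz fundamental, any trajectory built this way repeats every 5 s, in line with the period stated in the text.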

Figure 2.

Target trajectories used during the hand-tracking task. The blue dot shows the initial position of the target, and the arrow shows its initial direction (see Materials and Methods).

In the eye-tracking task, participants were instructed to voluntarily move the joystick held in one hand so as to move a cursor (red disk, 0.5 cm in diameter) on the screen while concurrently keeping their eyes as close as possible to the cursor, which was thus a self-moved target. This task was used to probe the ability to predict the visual consequences of one’s hand movement (Vercher et al., 1995; Chen et al., 2016a; Landelle et al., 2016; Danion et al., 2017). Constraints were given with regard to the target (and thus hand) movement. First, participants were asked to generate random movements so as to make target motion as unpredictable as possible (Steinbach and Held, 1968; Landelle et al., 2016; Mathew et al., 2017). To facilitate the production of random movements, a template was provided on the screen during demonstration trials. Second, to maintain consistency across participants and trials, we ensured that, for each trial, mean tangential target velocity was close to 16 cm/s (thereby preserving task difficulty). This was done by computing mean target velocity online and by providing participants with verbal feedback during the experimental trials such as “please move faster” or “please slow down” when necessary. This procedure ensured minimal changes in mean target velocity across participants, trials, and hands. Participants were encouraged to cover the whole extent of the screen.
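A minimal sketch of the velocity check described above is given here. The 16 cm/s target value comes from the text; the tolerance band, the function names, and the use of finite differences are assumptions made for illustration.

```python
import numpy as np

def mean_tangential_velocity(x_cm, y_cm, fs_hz):
    """Mean tangential speed (cm/s) of a 2D position trace sampled at fs_hz."""
    vx = np.gradient(np.asarray(x_cm, dtype=float)) * fs_hz
    vy = np.gradient(np.asarray(y_cm, dtype=float)) * fs_hz
    return float(np.mean(np.hypot(vx, vy)))

def velocity_feedback(mean_v, target_v=16.0, tolerance=2.0):
    """Return a verbal prompt, or None when the speed is acceptable.

    The tolerance of 2 cm/s is a hypothetical value, not taken from the study.
    """
    if mean_v < target_v - tolerance:
        return "please move faster"
    if mean_v > target_v + tolerance:
        return "please slow down"
    return None
```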

For both eye and hand-tracking tasks, we employed a fixed mapping between the joystick motion and the cursor motion with 25° of joystick inclination resulting in 15 cm on the screen. This mapping was such that a rightward/leftward hand motion corresponded to a rightward/leftward cursor motion, and a forward/backward hand motion corresponded to an upward/downward cursor motion. The duration of a trial was 10 s for both the eye- and hand-tracking tasks.
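The mapping can be sketched as a fixed gain, assuming it is linear over the whole joystick range (the text gives only the end point of 25° for 15 cm). The function name and sign conventions are illustrative.

```python
def joystick_to_cursor_cm(tilt_x_deg, tilt_y_deg, max_tilt_deg=25.0, max_cm=15.0):
    """Map joystick inclination (degrees) to cursor position (cm).

    Assumes a linear gain: 15 cm / 25 degrees = 0.6 cm per degree.
    Positive x is rightward; positive y (forward tilt) is upward on the screen.
    """
    gain = max_cm / max_tilt_deg
    return tilt_x_deg * gain, tilt_y_deg * gain
```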

Participants were split into two groups that performed either the eye- or the hand-tracking task. One group of participants (N = 14, 8 males, mean age = 25.4 ± 4.0 years) performed the hand-tracking task, which consisted of one block of 10 trials with one hand followed by another block of 10 trials with the other hand. Half of the participants started with the right hand. The second group of participants (N = 14, 7 males, mean age = 27.9 ± 6.4 years) followed the same type of protocol but with the eye-tracking task, i.e., each participant performed a block of 10 trials with each hand. Similarly, half of the participants started with the right hand. Before the beginning of the experiment, each participant performed a few practice trials (2 or 3) to become familiar with the task. Separate groups of participants were tested for hand and eye tracking because learning can transfer across these two tasks (Mathew et al., 2018).

To ensure that the eye-tracking task relied on predictive mechanisms, some participants of the second group (N = 10) completed 10 more trials in which they were asked to track with their eyes the target trajectories they had previously generated with their hand. During those trials, for each participant, we played back the last five target trajectories that he or she had generated with the right and left hand (Angel and Garland, 1972; Landelle et al., 2016; Mathew et al., 2017). Not only did this procedure allow for within-participant comparisons, it also minimized possible effects due to changes in target kinematics. The original order of trial presentation was maintained for each participant. We reasoned that if predictive mechanisms linking hand and eye actions are engaged when eye tracking the self-moved target, eye tracking of a self-moved target should be more accurate than eye tracking of a target, which follows the same trajectory but is moved by an external agent (Vercher et al., 1995; Landelle et al., 2016; Mathew et al., 2017).

Data analysis

To assess hand-tracking performance, the following dependent variables were computed for each trial. First, we measured the mean Euclidean distance between the cursor (moved by hand) and the externally moved target (Gouirand et al., 2019). Second, we evaluated the time lag between the cursor and the target by means of cross-correlations (Danion et al., 2017). This procedure was conducted separately for the vertical and the horizontal axes, and the resulting lags were then averaged. To assess eye-tracking performance, the following dependent variables were computed for each trial. First, we measured the mean Euclidean distance between the eye and the self-moved target (Mathew et al., 2018). Second, we evaluated the time lag between gaze and target using the method described above. For all analyses, the first second of each trial was discarded.
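The two dependent variables can be sketched as follows. This is an illustrative implementation, not the authors' code: the search window of ±500 ms, the use of Pearson correlation at each shift, and the function names are assumptions.

```python
import numpy as np

def mean_euclidean_distance(ax, ay, bx, by):
    """Mean 2D distance between two traces sampled at the same instants."""
    return float(np.mean(np.hypot(np.asarray(ax) - np.asarray(bx),
                                  np.asarray(ay) - np.asarray(by))))

def lag_ms(follower, leader, fs_hz=1000.0, max_lag_ms=500.0):
    """Shift (ms) that maximizes the correlation between follower and leader.

    Positive values mean the follower trails the leader.
    """
    f = np.asarray(follower, dtype=float)
    l = np.asarray(leader, dtype=float)
    max_shift = int(round(max_lag_ms * fs_hz / 1000.0))
    shifts = np.arange(-max_shift, max_shift + 1)
    corr = []
    for s in shifts:
        if s >= 0:
            a, b = f[s:], l[:len(l) - s]
        else:
            a, b = f[:s], l[-s:]
        corr.append(np.corrcoef(a, b)[0, 1])
    return float(shifts[int(np.argmax(corr))] * 1000.0 / fs_hz)
```

Following the text, the lag would be computed once on the horizontal component and once on the vertical component, and the two values averaged.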

To gain more insight into gaze behavior in both tasks, a sequence of analyses was performed to separate periods of smooth pursuit, saccades, and blinks (Landelle et al., 2016; Danion et al., 2017; Mathew et al., 2017). Blinks were identified from the pupil diameter (which was also recorded). This procedure led to the removal of 0.3% of eye recordings. Eye position time series in x- and y-axes were then separately low-pass filtered with a Butterworth filter (4th order) using a cutoff frequency of 25 Hz. The resultant eye position signals were differentiated to obtain the velocity traces. Tangential eye velocity was calculated from velocity traces in x- and y-axes. The eye velocity signals were low-pass filtered (Butterworth, 4th order, cutoff frequency: 25 Hz) to remove the noise from the numerical differentiation. The resultant eye velocity signals were then differentiated to provide the acceleration traces, which were also low-pass filtered (Butterworth, 4th order, cutoff frequency: 25 Hz). Saccades were identified based on the acceleration and deceleration peaks (>1500 cm/s²). Further visual inspection allowed us to identify smaller saccades (<1 cm) that could not be identified automatically by our program. Based on these computations, we evaluated for each trial the mean rate and amplitude of catch-up saccades, as well as the gain of smooth pursuit in both tasks (Mathew et al., 2017; Danion and Flanagan, 2018).
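The filtering and thresholding steps can be sketched with SciPy as below. Several details are assumptions: zero-phase filtering with `filtfilt` (the text does not say whether the filter was applied forward only), computing acceleration from the tangential speed, and flagging every sample above threshold rather than locating peaks. Function names are illustrative.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def lowpass(signal, fs_hz=1000.0, cutoff_hz=25.0, order=4):
    """4th-order Butterworth low-pass, applied forward and backward (zero phase)."""
    b, a = butter(order, cutoff_hz, btype="low", fs=fs_hz)
    return filtfilt(b, a, signal)

def saccade_mask(x_cm, y_cm, fs_hz=1000.0, accel_threshold=1500.0):
    """Boolean mask of samples whose |acceleration| exceeds the threshold (cm/s^2)."""
    x, y = lowpass(x_cm, fs_hz), lowpass(y_cm, fs_hz)
    # Differentiate position, then filter the velocity traces.
    vx = lowpass(np.gradient(x) * fs_hz, fs_hz)
    vy = lowpass(np.gradient(y) * fs_hz, fs_hz)
    speed = np.hypot(vx, vy)
    # Differentiate speed, then filter the acceleration trace.
    accel = lowpass(np.gradient(speed) * fs_hz, fs_hz)
    return np.abs(accel) > accel_threshold
```

Blink removal and the visual inspection step for small saccades are not covered by this sketch.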

To provide more information about the dynamics of the tracking error in both tasks, power spectral analyses of the hand-target and eye-target distance were performed in the 0–5 Hz frequency range. To assess whether the complexity of hand/target motion was similar for the right and left hand during the eye-tracking task, approximate entropy (ApEn) was used as an index to characterize the unpredictability of a signal (Pincus, 1991); the larger the approximate entropy the more unpredictable the signal is. To compute approximate entropy we used the following MATLAB function: https://fr.mathworks.com/matlabcentral/fileexchange/32427-fast-approximate-entropy [with the following settings: embedded dimension = 2, tolerance = 0.2 × STD(target trajectory)]. Approximate entropy was measured separately on the x- and y-axes.
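For readers without access to the MATLAB function, approximate entropy can be written directly from its definition (Pincus, 1991). The sketch below is not the File Exchange implementation cited above; it is a plain NumPy version using the same settings (embedding dimension 2, tolerance 0.2 × SD). Its memory use grows with the square of the signal length, so long recordings would need to be downsampled first.

```python
import numpy as np

def approximate_entropy(signal, m=2, r_factor=0.2):
    """Approximate entropy with tolerance r = r_factor * SD of the signal."""
    u = np.asarray(signal, dtype=float)
    n = len(u)
    r = r_factor * np.std(u)

    def phi(dim):
        # All overlapping templates of length dim.
        templates = np.array([u[i:i + dim] for i in range(n - dim + 1)])
        # Chebyshev distance between every pair of templates.
        dist = np.max(np.abs(templates[:, None, :] - templates[None, :, :]), axis=2)
        # Fraction of templates within r of each template (self-match included).
        counts = np.mean(dist <= r, axis=1)
        return np.mean(np.log(counts))

    return float(phi(m) - phi(m + 1))
```

A regular signal such as a sine wave yields a lower value than white noise of the same length, which matches the interpretation given in the text.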

Statistics

Paired t tests and repeated-measures ANOVAs were used to assess the effects of HAND (i.e., Right/Left), FREQUENCY, and AGENCY (Self/External). Newman–Keuls post hoc tests were used whenever needed. Kolmogorov–Smirnov tests showed that none of the dependent variables significantly deviated from a normal distribution. A 0.05 significance threshold was used for all analyses.
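As a reminder of what the paired t test computes, the sketch below derives the statistic from the per-participant differences. The function name is illustrative; no study data are reproduced here.

```python
import math

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for two matched samples."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mean_d = sum(d) / n
    # Sample standard deviation of the differences (n - 1 in the denominator).
    sd_d = math.sqrt(sum((v - mean_d) ** 2 for v in d) / (n - 1))
    return mean_d / (sd_d / math.sqrt(n)), n - 1
```

With 14 participants per group, the test has 14 − 1 = 13 degrees of freedom.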

Results

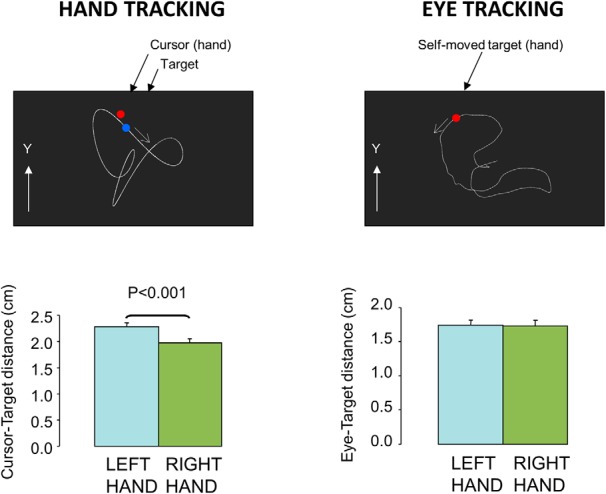

Typical trials

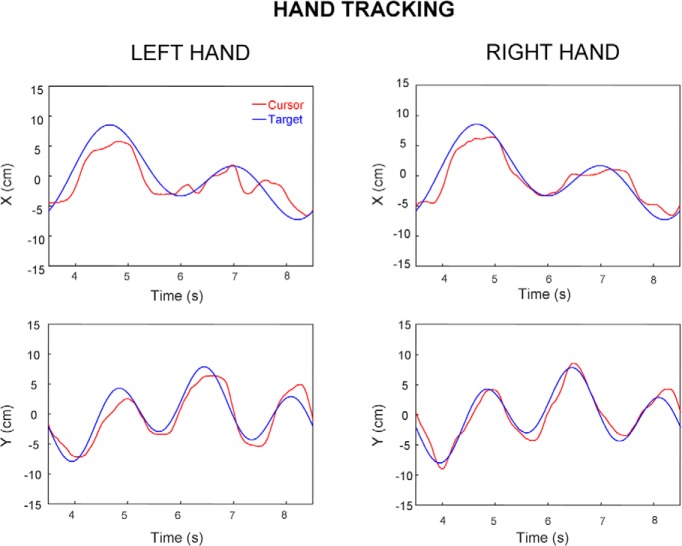

Figure 3 plots two representative portions of trials performed by one right-handed participant who tracked the visual target either with the right or the left hand. As can be seen, this figure suggests that hand tracking was more accurate when using the right (dominant) hand.

Figure 3.

Typical portions of hand tracking trials performed by the same participant with the same target trajectory. Left and right columns, respectively, display the performance of left and right hands. Top and bottom rows, respectively, display the horizontal and vertical components of hand (cursor, red) and target (blue) movement. The cursor is generally closer to the target when being moved by the right hand compared with the left hand.

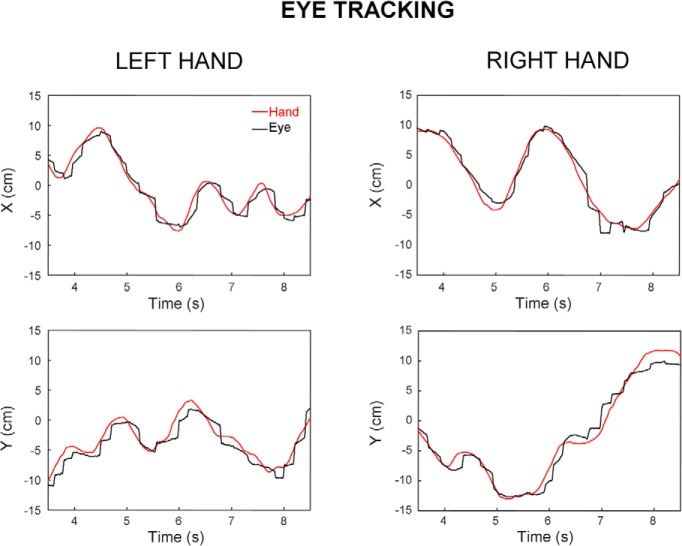

Figure 4 shows two representative portions of trials performed by another right-handed participant who had to track with the eyes a target moved either by the right (right column) or left hand (left column). In this case, visual inspection does not suggest any evident difference in eye-tracking accuracy across hands. In the next sections, we analyze in more detail the possible effect of handedness on eye and hand tracking across all participants.

Figure 4.

Typical portions of eye tracking trials performed by the same participant. Left and right columns, respectively, display eye-tracking performance when moving the target either with the left or right hand. Top and bottom rows, respectively, display the horizontal and vertical components of hand (self-moved target; red) and eye (black) movement.

Hand tracking is more accurate with the dominant hand

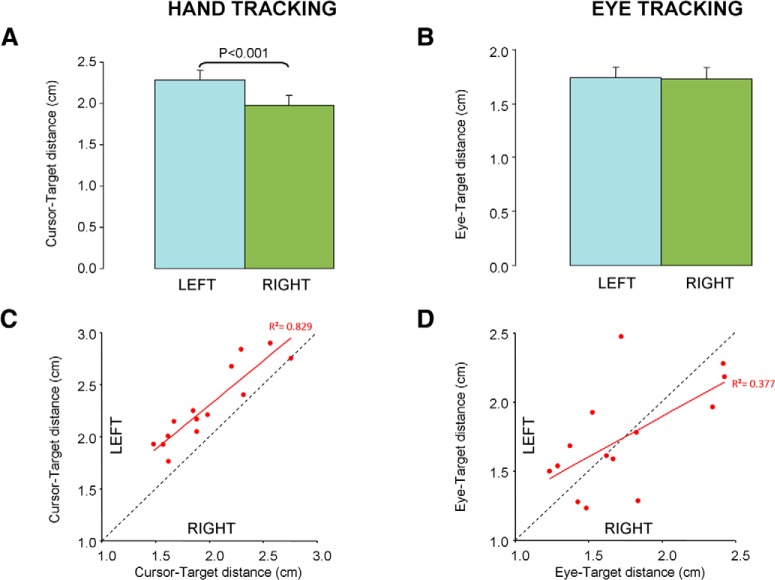

Mean data showed that right-handed participants tracked the target more accurately with the right than the left hand (Fig. 5A). On average, the cursor-target distance was 16% larger when using the left hand (2.29 ± 0.39 vs 1.98 ± 0.37 cm; t(13) = 6.96; p < 0.001). Figure 5C shows that this difference was quite systematic across participants, and also that the accuracies of the right and left hand were correlated across participants (R = 0.91; p < 0.001). Regarding the temporal relationship between cursor and target, the lag did not significantly differ between the right and left hands (70 vs 77 ms; t(13)=1.41; p = 0.18), and those lags were correlated across participants (R = 0.83; p < 0.001).
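The 16% figure follows from the two group means, taking the right hand as the reference:

$$\frac{2.29 - 1.98}{1.98} \approx 0.157$$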

Figure 5.

Effect of handedness on tracking accuracy. A, Mean group hand tracking error when tracking the target with the right or the left hand. Error bars represent the standard error of the mean. B, Same as A for eye-tracking error. C, Correlation between right and left hand-tracking performance. Each red dot represents one participant. The red line indicates the linear regression, and the dotted black line indicates equality between right and left hand. D, Same as C for eye tracking when moving the target with either the right or the left hand.

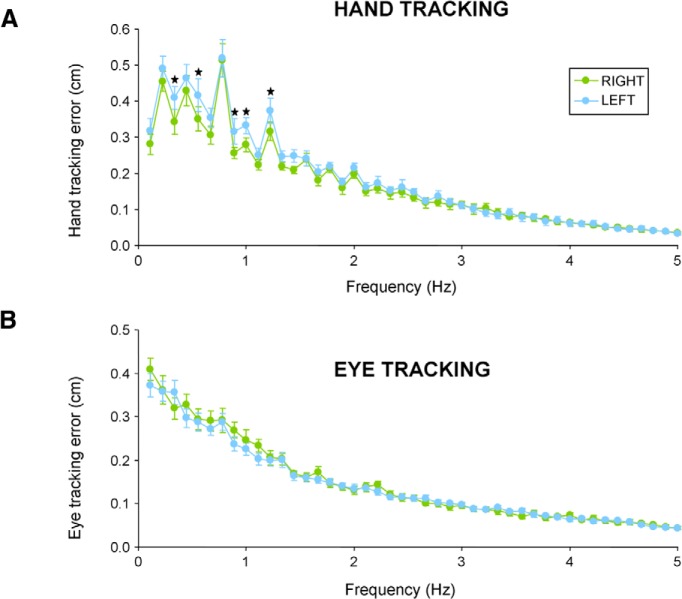

Figure 6A presents the corresponding power spectrum of hand tracking error as a function of hand. A two-way ANOVA with FREQ (45 levels: 0.11–5 Hz with 0.11 Hz step) and HAND showed a main effect of HAND (F(1,13)=10.2; p < 0.01), as well as an effect of FREQ (F(44,572)=74.76; p < 0.001) and an interaction between the two (F(44,572)=1.7; p < 0.01). Post hoc analysis of the interaction showed that bins in which hand-tracking errors were larger with the left hand were in the 0.3–1.2 Hz frequency range.
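The 45 frequency levels and the 0.11 Hz step are what a Fourier analysis of a 9 s window would produce. The sketch below assumes the spectrum was computed over the 9 s that remain after discarding the first second of a 10 s trial, at the 1000 Hz sampling rate; this is an inference from the text, not a stated detail.

```python
import numpy as np

fs_hz = 1000.0
analysed_s = 9.0  # assumed: 10 s trial minus the discarded first second
n_samples = int(fs_hz * analysed_s)

# Frequencies of the one-sided spectrum; resolution is 1 / 9 s = 0.111 Hz.
freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs_hz)
bins = freqs[(freqs > 0) & (freqs <= 5.0 + 1e-9)]

def power_spectrum(error, fs=fs_hz):
    """One-sided power spectrum of a mean-removed tracking-error trace."""
    e = np.asarray(error, dtype=float)
    e = e - e.mean()
    return np.fft.rfftfreq(len(e), d=1.0 / fs), np.abs(np.fft.rfft(e)) ** 2 / len(e)

print(len(bins), round(bins[0], 2), round(bins[-1], 2))
```

With 45 levels and 14 participants, the error degrees of freedom for FREQ and for the interaction are (45 − 1) × (14 − 1) = 572, as reported.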

Figure 6.

Effect of handedness on the power spectrum of tracking error in each task. A, Power spectrum of cursor-target distance during hand tracking. B, Power spectrum of eye-target distance during eye tracking. Error bars represent the standard error of the mean. Black stars indicate frequency bin in which a significant difference across hands was observed (p < 0.05).

Further analyses were conducted to examine whether those differences in hand tracking accuracy were associated with different gaze behaviors. T tests showed no significant differences between gaze behaviors when tracking the target with the right or left hand, neither in terms of eye-target distance (1.50 vs 1.54 cm; t(13)=0.74; p = 0.47), nor in terms of saccade rate (2.72 vs 2.68 sac/s; t(13)=0.49; p = 0.63), saccade amplitude (2.0 vs 2.0 cm; t(13)=0.16; p = 0.87) or even smooth-pursuit gain (0.82 vs 0.82; t(13)=0.68; p = 0.51). We conclude that the greater accuracy of the right hand for manual tracking does not stem from a better monitoring of target motion by the eyes.

Handedness does not influence eye tracking of a self-moved target

In contrast to hand tracking, participants exhibited similar levels of performance in eye tracking when moving the target with the right or left hand (Fig. 5B). Indeed we found no significant difference in tracking accuracy across hands (t(13)=0.11; p = 0.92) with mean group eye-target distance being respectively 1.73 ± 0.40 and 1.74 ± 0.39 cm when using the right or left hand. The accuracy of eye tracking when using the right and left hand was correlated across participants (R = 0.61; p = 0.01; Fig. 5D). Regarding the temporal relationship between eye and target, we found that the eye followed the target by ∼40 ms but the lags for the right and left hands did not significantly differ (41 vs 45 ms; t(13)=1.30; p = 0.22), and were correlated with each other (R = 0.57; p < 0.05).

Similar gaze strategies appeared to be used with both hands. Indeed t tests showed no significant effects of HAND for smooth-pursuit gain (0.62 vs 0.63; t(13)=1.25; p = 0.23), saccade rate (3.03 vs 3.15 sac/s; t(13)=1.41; p = 0.18), and saccade amplitude (2.0 vs 2.1 cm; t(13)=1.08; p = 0.30). For all these dependent variables, the correlation between hands was significant (each R > 0.64, each p < 0.01). Analysis of target motion randomness by means of approximate entropy along either the x- or y-axis showed no significant effect of HAND (each t(13)<1.64, p > 0.12). Further analyses of mean target tangential velocity also failed to show a significant difference across hands (15.9 vs 15.9 cm/s; t(13)=0.05; p = 0.96).

Regarding FFT analyses of eye tracking error, Figure 6B presents the corresponding power spectrum associated with each hand. A two-way ANOVA showed a main effect of FREQ (F(44,572)=125.45; p < 0.001) but no significant main effect of HAND (F(1,13)=0.36; p = 0.55) and no significant interaction between FREQ and HAND (F(44,572)=1.03; p = 0.41). These results further support the view that eye tracking had similar dynamics when moving the target with the right or the left hand. Overall eye tracking was rather insensitive to which hand was used to move the target.

The lack of significant differences across hands in the eye-tracking task should not automatically lead to the conclusion that handedness does not influence eye tracking of a self-moved target. To quantify the evidence in favor of the null hypothesis, we used Bayesian statistics with the free JASP software (https://jasp-stats.org). Repeating the previous t tests with the Bayesian approach led to BF10 scores that ranged between 0.27 and 0.62, providing anecdotal to substantial evidence in favor of the null hypothesis (Lee and Wagenmakers, 2014). None of these Bayesian t tests provided evidence for the alternative hypothesis.
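To make the verbal labels concrete, the sketch below converts a BF10 value into evidence for the null (BF01 = 1/BF10) and applies the conventional cut points of 1, 3, and 10. The function name and the decision to stop at "strong" are choices made for this illustration. The label "substantial" follows Jeffreys' wording, which is sometimes rendered as "moderate".

```python
def evidence_for_null(bf10: float) -> str:
    """Verbal label for the evidence favoring the null hypothesis."""
    bf01 = 1.0 / bf10
    if bf01 < 1.0:
        return "favors the alternative"
    if bf01 < 3.0:
        return "anecdotal"
    if bf01 < 10.0:
        return "substantial"
    return "strong or more"

# The two extremes of the reported range.
print(evidence_for_null(0.62), evidence_for_null(0.27))
```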

Additional evidence that prediction underlies eye tracking of a self-moved target: self-moved versus externally-moved target

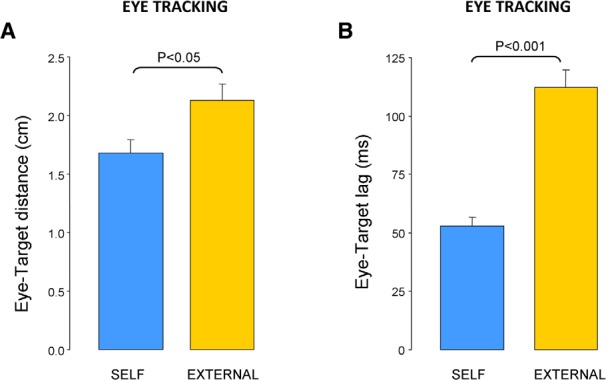

For comparison purposes, 10 participants of the eye-tracking group were also asked to track with their eyes target trajectories that each of them had previously generated during the self-moved condition. Figure 7 shows that eye-tracking performance was less accurate in those playback trials with an externally-moved target than those in which they moved the target themselves. This view was confirmed by a two-way ANOVA (AGENCY×HAND) showing a main effect of AGENCY (F(1,9)=6.59; p < 0.05) on eye-target distance, which was 27% larger during trials with an externally-moved target than during self-moved trials (2.13 vs 1.68 cm; Fig. 7A). There was no significant effect of HAND (F(1,9)=0.10; p = 0.75), or interaction between HAND and AGENCY (F(1,9)=0.16; p = 0.69). Similar results were obtained when analyzing the eye-target lag (Fig. 7B) as we found a main effect of AGENCY (F(1,9)=51.06; p < 0.001) showing a twofold increase in the eye-target lag in playback trials with an externally-moved target compared with self-moved trials (112 vs 53 ms, respectively). There was no significant effect of HAND (F(1,9)=1.82; p = 0.21) or interaction (F(1,9)=2.00; p = 0.19). These results are consistent with the idea of predictive mechanisms linking eye and hand actions when participants have to track a self-moved target.

Figure 7.

Comparison between eye tracking a self-moved target and an externally moved target. A, Effect of agency on eye-target distance. B, Effect of agency on eye-target lag. Error bars represent SEM.

Discussion

Our main objective was to tease apart the possible effect of handedness on prediction and control of hand movements. To achieve this objective, we investigated interlimb differences when performing either a hand-tracking or an eye-tracking task. Our main observation is that, in contrast to hand tracking, which was clearly impacted by handedness, eye tracking was nearly identical irrespective of whether the target was moved by the right or the left hand. We now discuss these findings and their implications for prediction and control of hand movements in more detail.

Handedness matters for hand tracking

We found that when asked to move a cursor along an imposed trajectory, right-handed participants were more accurate when using their right (dominant) hand compared with the left (non-dominant) hand. Indeed, as shown by our analyses, the cursor-target distance was lower when participants used their right hand. Our FFT analyses further confirmed the superiority of the right hand with lower tracking error between 0.3 and 1.2 Hz, a frequency range that matches with rather slow (voluntary) visuomotor feedback loops. Overall these results are consistent with previous studies that explored the effect of hand dominance during hand tracking (Simon et al., 1952; Carey et al., 2003; Aoki et al., 2016), as well as other studies investigating reaching movements (Carson et al., 1993; Elliott et al., 1993; Roy et al., 1994; Carey and Liddle, 2013; Schaffer and Sainburg, 2017), and conventional tests of manual dexterity (Bryden and Roy, 2005; Noguchi et al., 2006).

Despite clear differences in hand tracking accuracy, there were strong correlations between the right and left hand behavior across participants, both in terms of cursor-target distance and cursor-target lag. Our observations echo another study showing that the consistency of hand reaching movements is correlated across hands (Haar et al., 2017b). Altogether, these observations suggest that the neural circuits driving right and left hand actions are coupled to some extent. This coupling across hands can stem from various factors including visual perception, motivation/arousal, and decisional/planning processes.

Because during hand tracking, gaze is related more closely to the target than the cursor (Danion and Flanagan, 2018), it was crucial to assess whether the asymmetry across hands could be explained by different gaze behaviors. Our analyses of gaze showed that neither the eye-target distance, nor the saccade rate, the saccade amplitude or the smooth-pursuit gain, were influenced by handedness. We conclude that the lower performance exhibited by the left hand does not stem from poorer processing of visual information about the target motion. Altogether, those results suggest that the ability to generate adequate hand motor commands to bring the cursor close to the moving target is better for the right hand. These findings thus extend the idea that there is a right hand advantage for trajectory control toward a stationary target (Sainburg and Kalakanis, 2000; Bagesteiro and Sainburg, 2002; Mutha et al., 2012) to the condition of a moving target.

Handedness does not matter for eye tracking a self-moved target

We consistently found no significant difference in eye-tracking performance when moving the target with the right or the left hand. This view was supported by similar eye-target distance, eye-target lag, saccade rate, saccade amplitude, smooth pursuit gain, and spectral analyses of error. One possible confound could be that right hand motion was faster and/or more complex than left hand motion but we showed that mean target velocity, as well as randomness of target motion were similar for both hands, the latter observation being consistent with a report comparing the randomness of right and left finger movements (Newell et al., 2000). Finally, because one could argue that predictive mechanisms were not at play in our eye-tracking task, we performed additional trials demonstrating that eye-tracking performance was substantially improved when the target was self-moved compared with when it was externally moved, which fits with many other studies (Steinbach and Held, 1968; Vercher et al., 1995; Chen et al., 2016b; Landelle et al., 2016). Overall, our study suggests that the ability to predict visual consequences arising from voluntary hand actions does not depend on handedness. At first sight this conclusion may seem inconsistent with the idea of Sainburg et al. (1995) that the dominant hand has an advantage for predicting intersegmental torques (Yadav and Sainburg, 2014), but in our opinion this ability could also reflect a better inverse model of arm dynamics.

One may wonder to what extent increasing the difficulty of eye tracking a self-moved target could have helped to further tease apart the predictive mechanisms engaged for each hand. Pilot data collected when first exploring this task with the right hand (Landelle et al., 2016) showed that faster hand/target motion led to a drop in eye-tracking performance, making the involvement of predictive mechanisms less obvious (i.e., the difference between self-moved and externally moved target conditions faded). Whether this drop in predictive performance induced by increasing task difficulty would be similar for both hands remains to be explored.

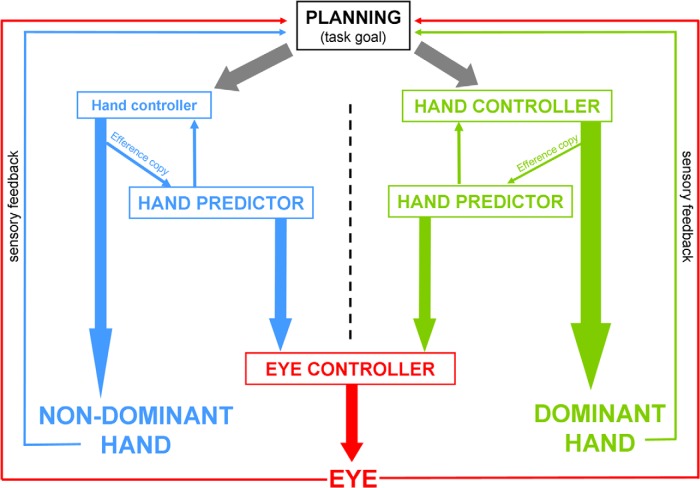

Implications for control and prediction of the right and left hands: toward a possible scheme

The main goal of the study was to determine whether control and prediction are similarly influenced by handedness, as we hoped to clarify whether the superiority of the dominant hand stems from more efficient control, prediction, or both. We found that right-handed participants were more accurate when using their right hand for hand tracking, an effect expected from the literature, but this right-hand advantage was not observed in the eye-tracking task. Moreover, we observed in each task that the performances of the right and left hands were correlated, such that a participant who performed poorly with one hand was likely to also perform poorly with the other hand. In Figure 8 we propose a hypothetical scheme that could account for all these observations. Although this scheme is largely inspired by other accounts in which an inverse model (also called controller) and a forward model (also called predictor) contribute to hand movements (Kawato, 1999; Wolpert and Flanagan, 2001; Diedrichsen et al., 2010; Shadmehr et al., 2010; Scott, 2012), we emphasize the possible difference between dominant and non-dominant hand actions.

Figure 8.

Possible scheme accounting for separate effects of handedness on hand tracking and eye tracking. High-level planning of cursor/target motion is effector independent, which may partly explain the correlated hand performances. However, each hand is associated with a separate controller and predictor. During eye tracking of a self-moved target, the eye controller is fed by the predictor of the moving hand. Both predictors have a similar accuracy, resulting in similar performance when tracking with the eyes a target moved by the dominant (right) or non-dominant (left) hand. However, the controller of the dominant hand is more accurate, resulting in better performance when tracking a visual target with this hand.

A parsimonious explanation for better hand tracking with the dominant hand is that the controller (inverse model) in charge of this hand issues motor commands that allow the desired (target) position to be reached more adequately. This possibility receives credit from several brain imaging studies showing a larger hand representation in the primary motor cortex of the dominant hemisphere (Triggs et al., 1994; Amunts et al., 1996; Volkmann et al., 1998; Hammond, 2002), a brain region often invoked as a possible site for an inverse model (Shadmehr and Krakauer, 2008; Scott, 2012). As for the correlation in performance across hands, this effect may arise from common visual processing of target motion (i.e., similar gaze behavior), motivational factors, and effector-independent planning linking ongoing cursor and target states to desired cursor motion (Medendorp et al., 2003), all taking place upstream from the computation of the motor commands issued by the inverse model. This correlation could also be supported by the fact that upper limb movements involve effector-independent representations in the contralateral and ipsilateral hemispheres (Haar et al., 2017a), as well as bilateral representations (Berlot et al., 2019).

As eye-tracking performance was similar across hands, a first option would be to consider that a single forward model is in charge of predicting the visual consequences of both hand movements. Such a shared forward model fed by higher-order signals, for instance hand direction in extrinsic coordinates at the planning stage (Crawford et al., 2004), would account for the lack of hand dominance effect. However, one problem with this scheme is that we observed only moderate correlation in eye-tracking performance across hands (especially compared with hand tracking, supposedly driven by separate controllers). As a result, we favor the hypothesis that there are separate forward models in charge of predicting the visual consequences of each hand movement. In line with earlier suggestions (Steinbach and Held, 1968; Vercher et al., 1996; Scarchilli et al., 1999), we propose that these forward models are fed by the associated hand efference copy, a signal that could be issued upstream of the primary motor cortex (Voss et al., 2007; Mathew et al., 2017). In contrast with inverse models, our findings suggest that dominant and non-dominant forward models have a similar accuracy, meaning that their ability to predict the outcome of hand movements is not impacted by the correctness of the input signal. The fact that eye-tracking performance was correlated across hands suggests that these two forward models might not be fully independent of each other. Although brain regions such as the parietal cortex and the cerebellum have often been invoked for their contribution to sensory prediction (Blakemore and Sirigu, 2003; Pasalar et al., 2006; Miall et al., 2007; Mulliken et al., 2008; Shadmehr and Krakauer, 2008; Scott, 2012), lateralization and/or possible asymmetries in these structures remain poorly understood. Yet there is evidence that volume asymmetries in the cerebellum may depend on handedness (Ocklenburg et al., 2016; but see Snyder et al., 1995). Despite several lines of evidence that the cerebellum is key for eye–hand coordination (Vercher and Gauthier, 1988; Miall et al., 2001), the possible structural asymmetry of the cerebellum did not seem to significantly influence eye-tracking performance.
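The logic of the scheme discussed above can be illustrated with a toy simulation. This is a minimal sketch under loudly stated assumptions, not a model fitted to the data of this study: the noise levels in `CONTROLLER_SD` and `PREDICTOR_SD`, the uniform "planning quality" factor, and the numbers of simulated participants and samples are all hypothetical values chosen only to show how separate controllers of unequal accuracy, combined with predictors of equal accuracy, could yield a hand-tracking asymmetry without an eye-tracking asymmetry.

```python
import math
import random

# Hypothetical noise levels (arbitrary units), chosen only for illustration.
CONTROLLER_SD = {"right": 1.0, "left": 1.5}  # dominant controller assumed more accurate
PREDICTOR_SD = {"right": 1.0, "left": 1.0}   # both predictors assumed equally accurate


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def mean_abs_error(rng, sd, n_samples):
    """Mean absolute tracking error for zero-mean Gaussian noise of given SD."""
    return sum(abs(rng.gauss(0.0, sd)) for _ in range(n_samples)) / n_samples


def simulate(n_participants=200, n_samples=500, seed=0):
    """Simulate per-participant tracking errors for each task and hand."""
    rng = random.Random(seed)
    errors = {task: {"right": [], "left": []} for task in ("hand", "eye")}
    for _ in range(n_participants):
        # Effector-independent planning quality, shared by both hands.
        planning = rng.uniform(0.5, 1.5)
        for hand in ("right", "left"):
            # Hand tracking: shared planning scaled by the hand-specific controller.
            hand_sd = planning * CONTROLLER_SD[hand]
            errors["hand"][hand].append(mean_abs_error(rng, hand_sd, n_samples))
            # Eye tracking: limited by the predictor of the moving hand.
            errors["eye"][hand].append(mean_abs_error(rng, PREDICTOR_SD[hand], n_samples))
    return errors


def summarize(errors):
    """Group means per task and hand, plus across-hand correlation in hand tracking."""
    def mean(values):
        return sum(values) / len(values)

    return {
        "hand_right": mean(errors["hand"]["right"]),
        "hand_left": mean(errors["hand"]["left"]),
        "eye_right": mean(errors["eye"]["right"]),
        "eye_left": mean(errors["eye"]["left"]),
        "hand_r": pearson(errors["hand"]["right"], errors["hand"]["left"]),
    }


if __name__ == "__main__":
    for name, value in summarize(simulate()).items():
        print(f"{name}: {value:.3f}")
```

In this sketch the shared planning factor produces correlated hand-tracking errors across hands even though the controllers are separate. The toy model deliberately leaves eye-tracking errors uncorrelated across hands, so it does not capture the moderate correlation reported for eye tracking.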

The scheme presented in Figure 8 in which we hypothesize different controllers but similar predictors raises a question: why do participants exhibit worse hand-tracking performance with the left hand, if prediction is supposedly as accurate for right and left hand movements? It has been proposed that forward modeling provides internal feedback loops optimizing the accuracy of hand movements (Desmurget and Grafton, 2000), so why can’t the predictor of the left hand compensate for the putatively weaker controller of the left hand? We see several possible reasons. First, the eye-tracking task used in the current study suggests similar abilities to predict the visual consequences of right and left hand movements, but it remains unclear whether this finding extends to somatosensory consequences of right and left hand movements. This reasoning goes along with the proposition that the brain could predict separately the visual and the somatosensory consequences of actions (Miall et al., 1993) by using different neural populations (Liu et al., 2003). Moreover our eye-tracking task tested the ability of the eye to make use of predicted hand movements, but it did not explicitly test the internal feedback loops associated with the control of hand movements (Desmurget and Grafton, 2000). One possibility could be that in these two contexts, eye and hand rely differently on predictions made for visual and proprioceptive consequences of hand movement. In addition, one may hypothesize that in the current context in which the mapping between the cursor and the joystick is one-to-one (no perturbation), the coupling between the predictor and the controller is weaker than when adaptation is required (Honda et al., 2018).

Final comments

Although it is usually difficult to tease apart the contribution of forward and inverse models (Lalazar and Vaadia, 2008; Mulliken et al., 2008), the current design allowed us to unpack these contributions, and revealed an asymmetrical effect of handedness on prediction and control. What are the implications of this finding with respect to the greater dexterity exhibited by the dominant hand in a wide range of tasks? At this stage, our results suggest that the dominant hand advantage stems from better control, but not necessarily from better prediction. Although brain imaging studies have provided evidence for functional and structural asymmetries between the right and left hemispheres of the human brain (Hammond, 2002; Toga and Thompson, 2003), some of these being correlated with handedness (Kim et al., 1993; Elbert et al., 1995; Amunts et al., 1996), here we show that handedness does not impact the ability to predict visual consequences of hand actions. More generally, these findings provide further evidence that prediction and control are distinct processes (Kawato, 1999; Flanagan et al., 2003; Shadmehr, 2017).

Synthesis

Reviewing Editor: Gustavo Deco, Universitat Pompeu Fabra

Decisions are customarily a result of the Reviewing Editor and the peer reviewers coming together and discussing their recommendations until a consensus is reached. When revisions are invited, a fact-based synthesis statement explaining their decision and outlining what is needed to prepare a revision will be listed below. The following reviewer(s) agreed to reveal their identity: Hiroshi Imamizu, Charalambos Papaxanthis.

Reviewer 1:

The authors examined whether handedness affects prediction of the visual consequence of hand movements. They made participants track with their eyes a target that was self-moved by their dominant or non-dominant hands. This task tests the ability to predict the visual consequence of hand movements from motor commands. Their results indicate that the ability to predict the visual consequences of self-generated actions does not depend on handedness. This suggests that greater dexterity exhibited by the dominant hand stems from advantages in control but not in prediction.

This is an interesting work that covers an overlooked issue in human motor control by a clever combination of simple experiments. The manuscript is clearly and concisely written with respect to the introduction, method and result sections. However, there seem to be gaps between their results and conclusions that should be filled by additional analysis or discussion.

1. The authors assume predictors separately for the left and right hands (e.g., Figure 8), and concluded no significant difference in performance between the two predictors. However, is there any possibility that there is only one predictor that predicts visual consequence from higher order signals, which are unspecific to the hands (such as a hand direction in the extrinsic coordinates at the planning stage), and that output signal from the single model is utilized by the eye controller?

2. Their conclusion is based on observation that there is a significant effect of handedness on accuracy of the hand tracking task but not the eye tracking task. However, there seems to be larger individual difference in the eye tracking task (Figure 5D) than the hand tracking task (Figure 5C). Is there any possibility that the difference affected the results?

Reviewer 2:

In the present study the authors examined whether on-line control and predictive processes are similarly impacted by manual dexterity. Right-handed participants performed two tasks either with the right or the left hand. In the first task, which tested the ability to control online the hand along an imposed trajectory, participants had to move a cursor with their hand so as to track a target that followed a quasi-random trajectory. In the second task, which tested the ability to predict the visual consequences of hand movements, participants had to track with their eyes a target that was self-moved through voluntary hand motion. The main results showed that hand tracking was more accurate with the right hand than with the left hand. In contrast, eye tracking was similar in terms of spatial and temporal gaze attributes whether the target was moved by the right or the left hand. The authors propose that the greater dexterity exhibited by the dominant hand in many motor tasks stems from advantages in control, not in prediction.

Comments:

The study is interesting and original. The paper is well-written and the results are clearly presented.

One question is whether the hand-tracking and the eye-tracking tasks were of similar difficulty. Could the authors clarify this point? In the same line, do the authors think that differences between the two hands would appear (eye-tracking task) if hand trajectories were more complex and faster?

From a computational point of view, if predictive mechanisms do not differ between hands, and theoretically both hands can take advantage of similar internal correction processes, why is the control process better for the right than the left hand? Is the authors' explanation about a better ‘inverse model’ for the right hand sufficient (lines 413-417)? This point merits further development in the discussion.

Please explicitly state that variables followed normal distribution.

References

- Amunts K, Schlaug G, Schleicher A, Steinmetz H, Dabringhaus A, Roland PE, Zilles K (1996) Asymmetry in the human motor cortex and handedness. Neuroimage 4:216–222. doi:10.1006/nimg.1996.0073

- Angel RW, Garland H (1972) Transfer of information from manual to oculomotor control system. J Exp Psychol 96:92–96.

- Aoki T, Rivlis G, Schieber MH (2016) Handedness and index finger movements performed on a small touchscreen. J Neurophysiol 115:858–867. doi:10.1152/jn.00256.2015

- Bagesteiro LB, Sainburg RL (2002) Handedness: dominant arm advantages in control of limb dynamics. J Neurophysiol 88:2408–2421. doi:10.1152/jn.00901.2001

- Berlot E, Prichard G, O’Reilly J, Ejaz N, Diedrichsen J (2019) Ipsilateral finger representations in the sensorimotor cortex are driven by active movement processes, not passive sensory input. J Neurophysiol 121:418–426. doi:10.1152/jn.00439.2018

- Blakemore SJ, Sirigu A (2003) Action prediction in the cerebellum and in the parietal lobe. Exp Brain Res 153:239–245. doi:10.1007/s00221-003-1597-z

- Bryden PJ, Roy EA (2005) A new method of administering the grooved pegboard test: performance as a function of handedness and sex. Brain Cogn 58:258–268. doi:10.1016/j.bandc.2004.12.004

- Carey JR, Bogard CL, King BA, Suman VJ (1994) Finger-movement tracking scores in healthy subjects. Percept Mot Skills 79:563–576. doi:10.2466/pms.1994.79.1.563

- Carey JR, Comnick KT, Lojovich JM, Lindgren BR (2003) Left- versus right-hand tracking performance by right-handed boys and girls: examination of performance asymmetry. Percept Mot Skills 97:779–788. doi:10.2466/pms.2003.97.3.779

- Carey DP, Liddle J (2013) Hemifield or hemispace: what accounts for the ipsilateral advantages in visually guided aiming? Exp Brain Res 230:323–331. doi:10.1007/s00221-013-3657-3

- Carson RG, Goodman D, Chua R, Elliott D (1993) Asymmetries in the regulation of visually guided aiming. J Mot Behav 25:21–32. doi:10.1080/00222895.1993.9941636

- Chen J, Valsecchi M, Gegenfurtner KR (2016a) LRP predicts smooth pursuit eye movement onset during the ocular tracking of self-generated movements. J Neurophysiol 116:18–29. doi:10.1152/jn.00184.2016

- Chen J, Valsecchi M, Gegenfurtner KR (2016b) Role of motor execution in the ocular tracking of self-generated movements. J Neurophysiol 116:2586–2593.

- Crawford JD, Medendorp WP, Marotta JJ (2004) Spatial transformations for eye–hand coordination. J Neurophysiol 92:10–19. doi:10.1152/jn.00117.2004

- Danion FR, Flanagan JR (2018) Different gaze strategies during eye versus hand tracking of a moving target. Sci Rep 8:10059. doi:10.1038/s41598-018-28434-6

- Danion F, Mathew J, Flanagan JR (2017) Eye tracking of occluded self-moved targets: role of haptic feedback and hand-target dynamics. eNeuro 3:ENEURO.0101-17.2017. doi:10.1523/ENEURO.0101-17.2017

- Desmurget M, Grafton S (2000) Forward modeling allows feedback control for fast reaching movements. Trends Cogn Sci 4:423–431.

- Diedrichsen J, Shadmehr R, Ivry RB (2010) The coordination of movement: optimal feedback control and beyond. Trends Cogn Sci 14:31–39. doi:10.1016/j.tics.2009.11.004

- Domann R, Bock O, Eckmiller R (1989) Interaction of visual and non-visual signals in the initiation of smooth pursuit eye movements in primates. Behav Brain Res 32:95–99.

- Elbert T, Pantev C, Wienbruch C, Rockstroh B, Taub E (1995) Increased cortical representation of the fingers of the left hand in string players. Science 270:305–307.

- Elliott D, Roy EA, Goodman D, Carson RG, Chua R, Maraj BKV (1993) Asymmetries in the preparation and control of manual aiming movements. Can J Exp Psychol Can Psychol Expérimentale 47:570–589. doi:10.1037/h0078856

- Flanagan JR, Vetter P, Johansson RS, Wolpert DM (2003) Prediction precedes control in motor learning. Curr Biol CB 13:146–150.

- Foulkes AJ, Miall RC (2000) Adaptation to visual feedback delays in a human manual tracking task. Exp Brain Res 131:101–110.

- Gandrey P, Paizis C, Karathanasis V, Gueugneau N, Papaxanthis C (2013) Dominant vs. nondominant arm advantage in mentally simulated actions in right handers. J Neurophysiol 110:2887–2894. doi:10.1152/jn.00123.2013

- Gauthier GM, Hofferer JM (1976) Eye tracking of self-moved targets in the absence of vision. Exp Brain Res 26:121–139.

- Gauthier GM, Vercher JL, Mussa Ivaldi F, Marchetti E (1988) Oculo-manual tracking of visual targets: control learning, coordination control and coordination model. Exp Brain Res 73:127–137.

- Gouirand N, Mathew J, Brenner E, Danion F (2019) Eye movements do not play an important role in the adaptation of hand tracking to a visuomotor rotation. J Neurophysiol 121:1967–1976. doi:10.1152/jn.00814.2018

- Haar S, Dinstein I, Shelef I, Donchin O (2017a) Effector-invariant movement encoding in the human motor system. J Neurosci 37:9054–9063. doi:10.1523/JNEUROSCI.1663-17.2017

- Haar S, Donchin O, Dinstein I (2017b) Individual movement variability magnitudes are explained by cortical neural variability. J Neurosci 37:9076–9085. doi:10.1523/JNEUROSCI.1650-17.2017

- Hammond G (2002) Correlates of human handedness in primary motor cortex: a review and hypothesis. Neurosci Biobehav Rev 26:285–292.

- Honda T, Nagao S, Hashimoto Y, Ishikawa K, Yokota T, Mizusawa H, Ito M (2018) Tandem internal models execute motor learning in the cerebellum. Proc Natl Acad Sci U S A 115:7428–7433. doi:10.1073/pnas.1716489115

- Kawato M (1999) Internal models for motor control and trajectory planning. Curr Opin Neurobiol 9:718–727.

- Kilteni K, Andersson BJ, Houborg C, Ehrsson HH (2018) Motor imagery involves predicting the sensory consequences of the imagined movement. Nat Commun 9:1617. doi:10.1038/s41467-018-03989-0

- Kim SG, Ashe J, Hendrich K, Ellermann JM, Merkle H, Uğurbil K, Georgopoulos AP (1993) Functional magnetic resonance imaging of motor cortex: hemispheric asymmetry and handedness. Science 261:615–617.

- Lalazar H, Vaadia E (2008) Neural basis of sensorimotor learning: modifying internal models. Curr Opin Neurobiol 18:573–581. doi:10.1016/j.conb.2008.11.003

- Landelle C, Montagnini A, Madelain L, Danion F (2016) Eye tracking a self-moved target with complex hand-target dynamics. J Neurophysiol 116:1859–1870. doi:10.1152/jn.00007.2016

- Lee MD, Wagenmakers E-J (2014) Bayesian cognitive modeling: a practical course. Cambridge: Cambridge UP.

- Liu X, Robertson E, Miall RC (2003) Neuronal activity related to the visual representation of arm movements in the lateral cerebellar cortex. J Neurophysiol 89:1223–1237. doi:10.1152/jn.00817.2002

- Mathew J, Eusebio A, Danion F (2017) Limited contribution of primary motor cortex in eye–hand coordination: a TMS study. J Neurosci 37:9730–9740. doi:10.1523/JNEUROSCI.0564-17.2017

- Mathew J, Bernier PM, Danion FR (2018) Asymmetrical relationship between prediction and control during visuomotor adaptation. eNeuro 5:ENEURO.0280-18.2018. doi:10.1523/ENEURO.0280-18.2018

- Medendorp WP, Goltz HC, Vilis T, Crawford JD (2003) Gaze-centered updating of visual space in human parietal cortex. J Neurosci 23:6209–6214.

- Miall RC, Christensen LOD, Cain O, Stanley J (2007) Disruption of state estimation in the human lateral cerebellum. PLoS Biol 5:e316. doi:10.1371/journal.pbio.0050316

- Miall RC, Reckess GZ, Imamizu H (2001) The cerebellum coordinates eye and hand tracking movements. Nat Neurosci 4:638–644. doi:10.1038/88465

- Miall RC, Weir DJ, Wolpert DM, Stein JF (1993) Is the cerebellum a Smith predictor? J Mot Behav 25:203–216. doi:10.1080/00222895.1993.9942050

- Moulton E, Galléa C, Kemlin C, Valabregue R, Maier MA, Lindberg P, Rosso C (2017) Cerebello-Cortical differences in effective connectivity of the dominant and non-dominant hand during a visuomotor paradigm of grip force control. Front Hum Neurosci 11:511.

- Mrotek LA, Soechting JF (2007) Target interception: hand-eye coordination and strategies. J Neurosci 27:7297–7309. doi:10.1523/JNEUROSCI.2046-07.2007

- Mulliken GH, Musallam S, Andersen RA (2008) Forward estimation of movement state in posterior parietal cortex. Proc Natl Acad Sci U S A 105:8170–8177. doi:10.1073/pnas.0802602105

- Mutha PK, Haaland KY, Sainburg RL (2012) The effects of brain lateralization on motor control and adaptation. J Mot Behav 44:455–469. doi:10.1080/00222895.2012.747482

- Newell KM, Challis S, Morrison KM (2000) Dimensional constraints on limb movements. Hum Mov Sci 19:175–201. doi:10.1016/S0167-9457(00)00010-5

- Noguchi T, Demura S, Nagasawa Y, Uchiyama M (2006) An examination of practice and laterality effects on the Purdue pegboard and moving beans with tweezers. Percept Mot Skills 102:265–274. doi:10.2466/pms.102.1.265-274

- Ocklenburg S, Friedrich P, Güntürkün O, Genç E (2016) Voxel-wise grey matter asymmetry analysis in left- and right-handers. Neurosci Lett 633:210–214. doi:10.1016/j.neulet.2016.09.046

- Ogawa K, Imamizu H (2013) Human sensorimotor cortex represents conflicting visuomotor mappings. J Neurosci 33:6412–6422. doi:10.1523/JNEUROSCI.4661-12.2013

- Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9:97–113.

- Pasalar S, Roitman AV, Durfee WK, Ebner TJ (2006) Force field effects on cerebellar Purkinje cell discharge with implications for internal models. Nat Neurosci 9:1404–1411. doi:10.1038/nn1783

- Pigeon P, DiZio P, Lackner JR (2013) Immediate compensation for variations in self-generated Coriolis torques related to body dynamics and carried objects. J Neurophysiol 110:1370–1384. doi:10.1152/jn.00104.2012

- Pincus SM (1991) Approximate entropy as a measure of system complexity. Proc Natl Acad Sci U S A 88:2297–2301. doi:10.1073/pnas.88.6.2297

- Roy MS, Lachapelle P, Polomeno RC, Frigon JY, Leporé F (1994) Human strabismus: evaluation of the interhemispheric transmission time and hemiretinal differences using a reaction time task. Behav Brain Res 62:63–70.

- Sainburg RL (2014) Convergent models of handedness and brain lateralization. Front Psychol 5:1092. doi:10.3389/fpsyg.2014.01092

- Sainburg RL, Ghilardi MF, Poizner H, Ghez C (1995) Control of limb dynamics in normal subjects and patients without proprioception. J Neurophysiol 73:820–835. doi:10.1152/jn.1995.73.2.820

- Sainburg RL, Kalakanis D (2000) Differences in control of limb dynamics during dominant and nondominant arm reaching. J Neurophysiol 83:2661–2675. doi:10.1152/jn.2000.83.5.2661

- Sarlegna FR, Baud-Bovy G, Danion F (2010) Delayed visual feedback affects both manual tracking and grip force control when transporting a handheld object. J Neurophysiol 104:641–653. doi:10.1152/jn.00174.2010

- Scarchilli K, Vercher JL (1999) The oculomanual coordination control center takes into account the mechanical properties of the arm. Exp Brain Res 124:42–52.

- Scarchilli K, Vercher JL, Gauthier GM, Cole J (1999) Does the oculo-manual co-ordination control system use an internal model of the arm dynamics? Neurosci Lett 265:139–142.

- Schaffer JE, Sainburg RL (2017) Interlimb differences in coordination of unsupported reaching movements. Neuroscience 350:54–64. doi:10.1016/j.neuroscience.2017.03.025

- Scott SH (2012) The computational and neural basis of voluntary motor control and planning. Trends Cogn Sci 16:541–549. doi:10.1016/j.tics.2012.09.008

- Shadmehr R (2017) Learning to predict and control the physics of our movements. J Neurosci 37:1663–1671. doi:10.1523/JNEUROSCI.1675-16.2016

- Shadmehr R, Krakauer JW (2008) A computational neuroanatomy for motor control. Exp Brain Res 185:359–381. doi:10.1007/s00221-008-1280-5

- Shadmehr R, Smith MA, Krakauer JW (2010) Error correction, sensory prediction, and adaptation in motor control. Annu Rev Neurosci 33:89–108. doi:10.1146/annurev-neuro-060909-153135

- Simon JR, Crow TW, Lincoln RS, Smith KU (1952) Effects of handedness on tracking accuracy. Percept Mot Skills Res Exch 4:53–57. doi:10.2466/PMS.4.3.53-57

- Snyder PJ, Bilder RM, Wu H, Bogerts B, Lieberman JA (1995) Cerebellar volume asymmetries are related to handedness: a quantitative MRI study. Neuropsychologia 33:407–419.

- Soechting JF, Rao HM, Juveli JZ (2010) Incorporating prediction in models for two-dimensional smooth pursuit. PLoS One 5:e12574. doi:10.1371/journal.pone.0012574

- Steinbach MJ (1969) Eye tracking of self-moved targets: the role of efference. J Exp Psychol 82:366–376.

- Steinbach MJ, Held R (1968) Eye tracking of observer-generated target movements. Science 161:187–188.

- Toga AW, Thompson PM (2003) Mapping brain asymmetry. Nat Rev Neurosci 4:37–48. doi:10.1038/nrn1009

- Tong C, Flanagan JR (2003) Task-specific internal models for kinematic transformations. J Neurophysiol 90:578–585. doi:10.1152/jn.01087.2002

- Triggs WJ, Calvanio R, Macdonell RAL, Cros D, Chiappa KH (1994) Physiological motor asymmetry in human handedness: evidence from transcranial magnetic stimulation. Brain Res 636:270–276. doi:10.1016/0006-8993(94)91026-X

- Vercher JL, Gauthier GM (1988) Cerebellar involvement in the coordination control of the oculo-manual tracking system: effects of cerebellar dentate nucleus lesion. Exp Brain Res 73:155–166.

- Vercher JL, Gauthier GM, Guédon O, Blouin J, Cole J, Lamarre Y (1996) Self-moved target eye tracking in control and deafferented subjects: roles of arm motor command and proprioception in arm-eye coordination. J Neurophysiol 76:1133–1144. doi:10.1152/jn.1996.76.2.1133

- Vercher JL, Quaccia D, Gauthier GM (1995) Oculo-manual coordination control: respective role of visual and non-visual information in ocular tracking of self-moved targets. Exp Brain Res 103:311–322.

- Vercher JL, Volle M, Gauthier GM (1993) Dynamic analysis of human visuo-oculo-manual coordination control in target tracking tasks. Aviat Space Environ Med 64:500–506.

- Volkmann J, Schnitzler A, Witte OW, Freund HJ (1998) Handedness and asymmetry of hand representation in human motor cortex. J Neurophysiol 79:2149–2154. doi:10.1152/jn.1998.79.4.2149

- Voss M, Bays PM, Rothwell JC, Wolpert DM (2007) An improvement in perception of self-generated tactile stimuli following theta-burst stimulation of primary motor cortex. Neuropsychologia 45:2712–2717. doi:10.1016/j.neuropsychologia.2007.04.008

- Wang Y-C, Magasi SR, Bohannon RW, Reuben DB, McCreath HE, Bubela DJ, Gershon RC, Rymer WZ (2011) Assessing dexterity function: a comparison of two alternatives for the NIH Toolbox. J Hand Ther 24:313–320; quiz 321. doi:10.1016/j.jht.2011.05.001

- Wolpert DM, Diedrichsen J, Flanagan JR (2011) Principles of sensorimotor learning. Nat Rev Neurosci 12:739–751. doi:10.1038/nrn3112

- Wolpert DM, Flanagan JR (2001) Motor prediction. Curr Biol 11:R729–R732.

- Yadav V, Sainburg RL (2014) Limb dominance results from asymmetries in predictive and impedance control mechanisms. PLoS One 9:e93892. doi:10.1371/journal.pone.0093892