Abstract

Organisms must frequently make cost-benefit decisions based on time, risk, and effort when choosing which rewards to pursue. Various tasks have been developed to assess effort-based choice in rats, and experimenters have found largely similar results across tasks and brain regions. In this review, we focus primarily on the convergence of different effort-based choice tasks in which either the quality or the quantity of reward is manipulated. In the former, the rat is typically presented with the option to work for a preferred reward or select a less preferred, but freely available, reward. In such paradigms, the rewards are of different identities and are confirmed to differ in value by a food preference test in which both are freely available. In the latter task type, rats are required to select between a higher and a lower magnitude of the same reward, but each with a similar effort requirement. We discuss the strengths and limitations of these paradigms, and describe the brain regions that have been probed, noting where findings converge and where they are equivocal. Results are also reviewed with reference to the need for future work, and to the broader impacts and implications of studies probing the mechanisms of effort.

Keywords: Anterior cingulate cortex, Basolateral Amygdala, Discounting, Reinforcement learning, Orbitofrontal Cortex

Introduction

How are effort decisions experienced in daily life? It is a situation we have all encountered: it has been a long day in the lab, perhaps you are trying to get that last bit of data for the conference next week, or you have an impending grant deadline. All that brain power requires energy, so what is the next thought on the journey home? That it is time to eat and there are many options to choose from. On the one hand, you can choose to cook your favorite meal, but there is an associated cost of going to the grocery store, gathering all the ingredients, making the trek home, and finally preparing the meal. Of course, it is always possible to simply purchase fast food, but that option is not nearly as tasty as your favorite meal, albeit less effort to procure. Herein lies the problem: how do we choose between these options with varying effort requirements, and what are the underlying neural mechanisms? There are several rodent models designed to address this question. This review will focus on convergent findings in the mechanisms of effort-based choice as assessed by several different behavioral paradigms and a set of behavioral economic analyses. Although a large amount of work has been published in this field, we will focus on summarizing the similarities across different tasks measuring the same construct of effort.

Quality effort-based choice

Much like the hungry scientist, rats can be presented with a choice between working for a preferred option versus selecting a low effort (freely available), but less preferred option. This procedure was first published in 1991, when Salamone and colleagues (Salamone et al. 1991) reported that either haloperidol or 6-hydroxydopamine lesions decreased effort output on a fixed-ratio 5 (FR5) schedule of reinforcement for the preferred Bioserv pellets and instead biased behavior toward the lower effort lab chow. Since then, similar qualitative effort-based choice procedures have been adopted, primarily by varying the schedule of reinforcement. Others have provided the preferred food type on progressive ratio (PR) schedules (Randall et al. 2012; Randall et al. 2014; Randall et al. 2015; Yohn et al. 2016; Hart and Izquierdo 2017; Hart et al. 2017; Thompson et al. 2017; Hart, Gerson, and Izquierdo 2018; Munster and Hauber 2017) or random ratio schedules with higher work requirements than FR5 (Trifilieff et al. 2013; Bailey et al. 2018).

In a typical protocol administered by our lab, rats are first given fixed-ratio (FR)-1 training where each lever press earns a single sucrose pellet. Rats are kept on this schedule until they earn at least 30 pellets within 30 minutes. Following this, rats are shifted to a PR schedule where the required number of presses for each pellet increases according to the formula:

$$n_i = 5e^{(i/5)} - 5$$

where $n_i$ is equal to the number of presses required on the ith ratio, rounded to the nearest whole number (Richardson and Roberts 1996), with the ratio advancing after 5 successive schedule completions. Rats are then tested on the PR schedule until they earn at least 30 pellets on any given day (~5 d). Upon meeting this criterion, a ceramic ramekin containing 18 g of lab chow is introduced during testing (modified from Randall et al. 2012). Rats are then free to select between consuming freely available but less preferred chow or lever pressing for preferred sucrose pellets. This phase is what we refer to as quality effort-based choice.
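
As a rough illustration of how such a schedule unfolds, the sketch below computes the press requirement for each successive pellet. It assumes the Richardson and Roberts (1996) form $n_i = 5e^{(i/5)} - 5$ and assumes that each ratio is repeated five times before the schedule steps up; the function names `pr_ratio` and `pr_schedule` are hypothetical and not taken from any published code.

```python
import math


def pr_ratio(i):
    """Presses required on the i-th ratio: 5 * e^(i/5) - 5, rounded."""
    return round(5 * math.exp(i / 5) - 5)


def pr_schedule(n_pellets, completions_per_ratio=5):
    """Press requirement for each successive pellet.

    Assumption: every ratio must be completed `completions_per_ratio`
    times before the schedule advances to the next ratio.
    """
    return [pr_ratio(k // completions_per_ratio + 1) for k in range(n_pellets)]


if __name__ == "__main__":
    print([pr_ratio(i) for i in range(1, 8)])  # first seven ratios
    print(sum(pr_schedule(30)))                # total presses for 30 pellets
```

Under these assumptions the first seven ratios are 1, 2, 4, 6, 9, 12, and 15 presses, so the 30-pellet criterion corresponds to 170 lever presses in a session.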

Quantity effort-based choice

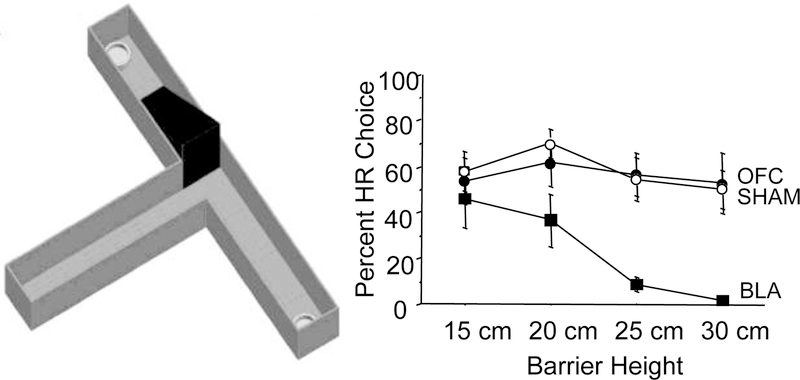

Just as palatable high-carbohydrate pellets are preferable to lab chow, more of a food option is preferable to less of the same food option for hungry humans and other animals. In quantity effort-based choice tasks, subjects must exert more effort to obtain the larger amount of food. The first such procedure made use of a T-maze apparatus where rats could choose between two arms of a maze (Figure 1). Selection of one arm was reinforced by two highly palatable pellets that rats could simply approach without difficulty. Behavior toward the other arm was reinforced by four pellets, but rats had to climb a tall barrier to reach them (Salamone, Cousins, and Bucher 1994). There are variations of this maze task. For example, in an earlier study from our lab we assessed such effortful choices between a high- and low-magnitude reward on a T-maze (Experiment 1; Ostrander et al. 2011) and effortful choices when a cue signaled changes in that reward magnitude (Experiment 2), which requires more flexibility than the average effort-choice paradigm. Unlike the quality effort-based choice tasks, in such quantity effort-based choice T-maze tasks rats are required to first learn about the reward values associated with each arm of the maze (discrimination training with a free sampling phase). In this learning phase, one goal arm is baited with a high magnitude reward (HR), such as 2 cereal loops, and the other with a low magnitude reward (LR), such as ½ cereal loop. The rat is allowed to sample freely from both arms at the beginning of testing. In such paradigms, HR and LR arm designations are counterbalanced among rats but remain constant for each rat for the duration of testing. These T-maze paradigms also typically include forced-choice trials, in which either the HR or the LR arm is blocked, ‘forcing’ the animal to experience the reward contingencies/values; these trials serve as reminders throughout testing. Forced-choice trials typically occur on no more than 2 trials within a session, and during all other trials the rat is allowed to freely select either the HR or LR arm.

Figure 1. Quantity effort-based T-maze and sample data.

(Left) An example of an effortful choice T-maze apparatus containing a start arm and two goal arms, each baited with either a high reward, HR (2 cereal loops) or low reward, LR (½ cereal loop). Barriers are placed in the maze that rats must climb to obtain the HR. (Right) Mean ± SEM percent HR choices per lesion group as a function of increasing barrier heights: 15, 20, 25, and 30 cm. Lower scores indicate greater work aversion. Basolateral amygdala (BLA)-lesioned rats displayed significantly fewer HR choices compared to orbitofrontal cortex (OFC)-lesioned or SHAM-operated rats. Adapted from Ostrander et al. 2011.

Notably, T-maze paradigms often feature far fewer trials of choice behavior than automated operant procedures, and thereby limit the application of theoretical models that require richer datasets. Automated quantity effort-based choice tasks have since been developed. A frequently used paradigm involves rats selecting between two different levers, which earn either a small magnitude (often 1 or 2 pellets) or a large magnitude (4 pellets) of the same food type (Floresco, Tse, and Ghods-Sharifi 2008). While the low effort/low reward lever requires only a single press, the high effort/high reward lever requires either 5, 10, or 20 presses, often presented in discrete trial blocks in ascending order.

To our knowledge, no study has examined how experience with effort-based choice carries over across tasks (i.e. order effects: quality to quantity, or vice-versa) or to other reward-based behaviors (e.g. transfer: effort tasks to reinforcer devaluation tasks, or effort tasks to reversal learning tasks). For example, if a rat chooses the high effort option in one task, does this attribute select for certain characteristics that enhance or detract from performance on a subsequent paradigm? How does learning about value in a cost-benefit paradigm bias performance on reinforcement learning, in general? These questions have not yet been fully addressed.

Convergent findings

Ventral Striatum

Much of the motivating force behind developing effort-based choice tasks was to further understand the behavioral functions of dopamine in the ventral striatum (VS). While early theories posited that dopamine was the “reward molecule” (Wise et al. 1978; Wise, Spindler, and Legault 1978), this idea fell out of favor in light of the many other functions of dopamine, including aversively motivated behaviors (McCullough, Sokolowski, and Salamone 1993), and reward prediction error (RPE) signals that drive learning (Niv, Duff, and Dayan 2005; Gershman 2017). Salamone and colleagues found that ventral striatal dopamine D2 receptor blockade or dopamine depletion made rats less willing to exert high levels of effort for the preferred food in the first published example of a quality effort-based choice task. Additionally, rats’ consumption of the freely available less preferred alternative increased. This work challenged the idea that ventral striatal dopamine directly signaled the reinforcing properties of appetitive stimuli: rats were simply less willing to work for reward, but their behavior was still directed toward the acquisition and consumption of food. The early findings in the VS suggested interesting possibilities for nuance in effort-related behavior, and make it the ideal region to begin detailing where there have been convergent findings in different tasks.

As mentioned above, both systemic dopamine receptor blockade and ventral striatal dopamine depletion decreased choice of the high effort option (lever pressing on a fixed ratio 5 schedule for highly palatable pellets) and instead increased consumption of the freely available lab chow (Salamone et al. 1991). Ventral striatal effects have been found in numerous other effort tasks since then. Indeed, the first quality effort-based choice task was quickly followed by the first quantity effort-based choice task using a T-maze (Salamone, Cousins, and Bucher 1994). Similar to the fixed ratio 5/chow choice procedure, both haloperidol and ventral striatal dopamine depletion decreased choice of the high effort option; in this case climbing a 44 cm barrier to acquire twice as many pellets as the low effort option.

These original findings have been validated several times in both quality and quantity effort-based choice tasks. In the FR5/chow task, both D1 and D2 receptor blockade in nucleus accumbens core and shell decreased high effort lever pressing and instead biased animals toward low effort chow consumption (Nowend et al. 2001). These effects seem to be mediated primarily by the accumbens core, as inactivations with smaller injection volumes of core, but not shell, produce similar decreases in choice of the high effort option in a quantity effort-based choice task where rats selected between high effort/high reward and low effort/low reward levers (Ghods-Sharifi and Floresco 2010), and dopamine depletions of accumbens shell were not sufficient to suppress reinforced lever pressing (Sokolowski and Salamone 1998). Interestingly, the lever quantity effort-based choice task pioneered by Stan Floresco and colleagues (Floresco, Tse, and Ghods-Sharifi 2008) allows both options to be equated in their temporal delay to reward, which is experimentally problematic in quality-based tasks; accumbens core inactivations have the same effect when delays are equated as when the time delays are not equivalent. Thus, the effects of accumbens core manipulations are not due to delay intolerance and instead reflect a robust and consistent role of the VS, particularly accumbens core, in supporting effort-based choice across tasks.

Anterior cingulate cortex

The idea that VS is not the only part of the brain involved in cost-benefit analyses involving effort took approximately 10 years to gain popularity following publication of the first effort-based choice tasks. Much of this research focused on the anterior cingulate cortex (ACC) based on its anatomical connectivity with the striatum (Brog et al. 1993; Berendse, Galis-de Graaf, and Groenewegen 1992) and its role in motivated behavior generally (Paus 2001). The first work to probe ACC in effort adopted the aforementioned T-maze quantity effort-based choice task in rats with extensive lesions encompassing ACC, prelimbic, and infralimbic cortex (Walton, Bannerman, and Rushworth 2002), which was soon after followed with smaller lesions targeting ACC (Walton et al. 2003). In both of these cases, lesions decreased choice of the high effort/high reward option. Barrier climbing ability and reward magnitude discrimination were unaffected under conditions where both arms were impeded by a barrier (i.e. equal work).

These first two quantity-based findings were soon replicated and expanded upon by exposing ACC-lesioned rats to a qualitative choice task in which rats chose between lever pressing for sucrose pellets on a progressive ratio and consuming freely available lab chow (Schweimer and Hauber 2005). Interestingly, although Schweimer and Hauber (2005) replicated the ACC lesion effect in a T-maze quantitative choice task, they found no group differences when the same animals were subjected to the progressive ratio qualitative choice task. This was pursued several years later, when ACC-lesioned rats were tested in a similar progressive ratio qualitative choice task with a slightly modified progressive ratio schedule (Hart et al. 2017). Notably, our lab in this case did find group differences in the progressive ratio qualitative choice task, replicating the earlier T-maze findings. Thus, the discrepancy between these findings could be due to differences in the schedule of reinforcement, or perhaps prior experience in the T-maze attenuated any group differences that might otherwise have been observed on the progressive ratio choice task. Nevertheless, there is now a consistent line of evidence implicating ACC in choosing a higher effort option over a less effortful alternative, whether the high effort option is better in terms of quantity or quality.

Basolateral amygdala

Regions of the amygdala, particularly the basolateral complex (BLA), project to both the striatum (McDonald 1991) and frontal cortex (McDonald 1991), and it was not long until an experimental line of evidence probing BLA in effort-based choice was initiated. The first such study made use of a T-maze quantity-based choice task. Floresco and colleagues found that BLA inactivations, like ACC lesions and VS manipulations, decrease choice of the high effort option without affecting motoric ability or reward discrimination (Floresco and Ghods-Sharifi 2007). This effect was later replicated in a similar T-maze quantitative choice task with BLA lesions (Ostrander et al. 2011). Replication of the T-maze findings in a lever-based quantitative choice task confirmed that effects of BLA manipulations on effort-based choice were not due to delay discounting (Ghods-Sharifi, St Onge, and Floresco 2009). In the first test of BLA effects on the progressive ratio qualitative choice task (Hart and Izquierdo 2017), pharmacological inactivations via baclofen-muscimol produced effects similar to those seen in quantitative tasks, decreasing choice of the high effort option without affecting motoric ability or appetite.

Although not explicitly examining the role of each of the aforementioned regions alone, disconnection studies provide considerable insight into the mechanisms of effort-based choice. By unilaterally inactivating or lesioning one structure and doing the same to another structure in the contralateral hemisphere, one can disrupt serial communication between two structures, thus determining the role of this communication in effort-based choice. Consistent with the idea that VS, ACC, and BLA act as part of a circuit that regulates effort-related functions (Salamone et al. 2007), both disconnection of ACC and VS (Hauber and Sommer 2009) as well as BLA and ACC (Floresco and Ghods-Sharifi 2007) have similar effects as interfering with any of these regions alone. With modern neuroscience tools including optogenetics and designer receptors exclusively activated by designer drugs, more precise circuit dissection can be achieved. For example, dopamine neurons can be targeted based on genetic characteristics to determine their role in PR responding (Fischbach-Weiss, Reese, and Janak 2018), and specific terminals from one region can be silenced in an efferent target (Lichtenberg et al. 2017).

Other regions

For the sake of brevity, here we will group together other brain regions where the current literature regarding their role in effort-related decision-making is mixed, starting with dorsomedial striatum (DMS). DMS has a longstanding role in controlling movement vigor, often defined as velocity or latency (Wang, Miura, and Uchida 2013; Opris, Lebedev, and Nelson 2011; Bartholomew et al. 2016; Panigrahi et al. 2015). How exactly vigor relates to effort choice, however, is unclear. While vigor is certainly a component of effortful behavior, work output is also characterized by persistence and duration of activity (Salamone and Correa 2012). While DMS is unequivocally involved in vigor, its role in effort-related decision-making is less clear. For example, DMS dopamine depletion and related manipulations do not have any effects on effort-based choice as assessed by the FR5 qualitative choice task (Nunes et al. 2013; Font et al. 2008), and no effects of methamphetamine withdrawal on c-Fos immunoreactivity were found in DMS following testing in the PR effort-based choice qualitative task (Hart, Gerson, and Izquierdo 2018). Nevertheless, others have found that DMS dopamine receptor blockade does reduce random-ratio 15 reinforced lever pressing in a qualitative choice task (Bailey et al. 2018). Given that DMS dopamine depletion can alter inter-response times, a measure of vigor, without affecting total lever presses (Salamone et al. 1993), it is plausible that DMS is involved in the total amount of effort subjects will exert as well as the vigor with which effort is exerted, and the extent to which DMS is involved in these depends on the schedule of reinforcement and other task constraints.

The orbitofrontal cortex (OFC), while clearly involved in many behaviors related to reward-learning and decision-making (Izquierdo 2017), has a less clear role in effort. Both OFC D1 receptor blockade (Cetin et al. 2004) and optogenetic inhibition (Gardner et al. 2018) decreased PR responding. Others found that pharmacologic inhibition increased PR responding, while pharmacologic excitation decreased PR responding (Munster and Hauber 2017). Ostrander et al. (2011) reported no such quantity effort-based choice impairment (Figure 1), only learning impairments when animals were required to use cues to make choices. In short, it is unknown whether these seemingly divergent findings are due to different interference methods, stereotaxic targeting, schedules of reinforcement, or whether/how cues are present (Ostrander et al. 2011), so future experiments should clarify the role of OFC in effort as well as in effort-based choice.

While the results of subthalamic nucleus (STN) manipulations are not mixed, they are certainly within the purview of this review. The STN receives most input from the ventral pallidum (Canteras et al. 1990), a major output structure of the striatum. While all of the aforementioned lesion and inactivation studies showed that ACC, VS, and BLA manipulations decrease effort for a preferred reward, STN lesions increase effort (PR responding), at least for food (Baunez, Amalric, and Robbins 2002; Baunez et al. 2005). Interestingly, the same STN lesions that increase PR responding for food decrease PR responding for cocaine (Baunez et al. 2005). Why is it that STN exerts opposite control over natural versus drug reinforcers, does its role extend to effort choice behavior, and is this the case for other parts of the brain? Future experiments should shed light on these questions.

Comparing effect sizes: Quantity stronger than Quality

A direct comparison of identical manipulations (e.g. haloperidol and nucleus accumbens dopamine depletion) on effort-based choice involving quantity/T-maze climbing vs. quality/lever pressing would be very informative (Salamone et al. 1991; 1994). Yet there are several limitations in directly comparing quality and quantity procedures: i. to compare effect sizes one needs the means and standard deviations of the different groups, and in many of the older studies these values are not reported and appear only in figures as means with standard error bars; ii. there are strain differences that may factor significantly into these comparisons; iii. there are differences in the ecological validity of the paradigms; and iv. there may be differences in the neural interference methods (e.g. lesion, pharmacological inactivation) that could account for choice behavior. As an example of the third point, manual T-maze tasks are easier for rodents to learn than automated lever responding tasks, perhaps because they more closely mimic naturalistic foraging behavior observed in the wild. Relatedly, it may be more important for an animal’s survival to distinguish more vs. less (i.e. relative quantities) than more nuanced qualitative differences. In support of this, it has also been proposed that the ability to determine magnitude or represent numerosity is a subcortical process that is highly evolutionarily conserved (Collins, Park, and Behrmann 2017).

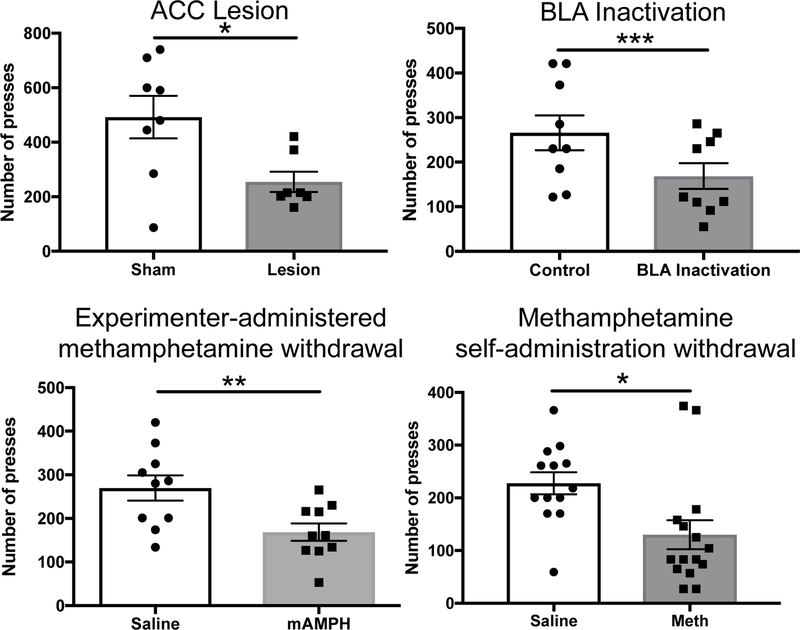

To overcome some of these issues, we compared the results from two reports from our lab: Ostrander et al. (2011) and Hart & Izquierdo (2017). Both of these studies involved targeting of the BLA using identical stereotaxic coordinates in male Long-Evans rats obtained from the same vendor (Charles River Laboratories, Hollister CA). We reanalyzed high reward (HR) choice probabilities when the HR arm was impeded by the tallest (30 cm) barrier in sham-operated (SHAM) rats versus those with BLA lesions via ibotenic acid. We then compared this effect size with that obtained when rats selected between lever pressing for a sucrose pellet reward or consuming freely-available lab chow, following BLA baclofen/muscimol inactivation (or vehicle). When we computed Cohen’s d for each of these studies, we found that BLA lesions resulted in a much larger effect size in quantity/T-maze climbing effort-based choice (d=2.87) compared to BLA inactivation in quality-lever press responding effort-based choice (d=0.95). It is tempting to attribute this difference in effect size solely to the manipulation strength (lesion vs. inactivation), yet it is known that recovery of function often occurs after “permanent” lesions and chronic microinfusions can similarly produce damage to surrounding tissue (Otchy et al. 2015). The difference is likely not explained by the manipulation technique because ACC lesions (Hart et al. 2017) also result in an effect size of d = 1.39 on quality effort-based choice, similar to BLA inactivation. Indeed, of the different experiments we have conducted in our lab investigating effects on quality effort-based choice, none of the effect sizes match that of quantity effort-based choice: experimenter-administered methamphetamine, d=1.29 (Thompson et al., 2017); intravenous methamphetamine self-administration d=1.05 (Hart et al. 2018), see Figure 2. This lends support to the idea that the representation of reward magnitude is more salient than reward identity.
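
For readers who wish to run a similar comparison, the sketch below shows one common way to compute Cohen's d from group summary statistics, together with the conversion from a standard error of the mean (as typically read off figure error bars) to a standard deviation. It assumes the pooled-standard-deviation form of Cohen's d, which may differ from the variant used for the values reported here; the function names and the numbers in the example are hypothetical and are not data from any of the cited studies.

```python
import math


def sem_to_sd(sem, n):
    """Recover a standard deviation from a standard error of the mean."""
    return sem * math.sqrt(n)


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Cohen's d using the pooled standard deviation of two groups."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


if __name__ == "__main__":
    # Hypothetical summary statistics: percent HR choice in two groups of 8 rats.
    sd_control = sem_to_sd(3.5, 8)
    sd_lesion = sem_to_sd(3.5, 8)
    print(cohens_d(80.0, sd_control, 8, 60.0, sd_lesion, 8))
```

Because d scales the group difference by within-group variability, two studies with the same mean difference can yield very different effect sizes if one paradigm produces more variable behavior than the other.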

Figure 2. Comparison of different manipulations on the same quality effort-based paradigm in our lab.

(Top left) ACC lesions decreased lever pressing in the context of a competing, low effort reward. (Top right) BLA inactivations decreased high effort lever pressing in the context of choice. (Bottom left) Withdrawal from experimenter-administered methamphetamine decreased choice lever pressing. (Bottom right) Withdrawal from methamphetamine intravenous self-administration decreased choice lever pressing. Data are adapted from Hart et al. (2017), Hart & Izquierdo (2017), Thompson et al. (2017), and Hart et al. (2018).

Theoretical Frameworks

Psychologists have adopted concepts from economics to explain behavior. Namely, the concept of demand elasticity, that is, how much demand for a good changes when only its price changes, has been applied to understanding fixed-ratio responding. For example, ventral striatal dopamine depletions have no effect on fixed-ratio 1 responding for food but reduce lever pressing to a greater extent as the work requirement increases to fixed-ratio 64 (Aberman and Salamone 1999). Thus, ventral striatal dopamine depletions increase demand elasticity and decrease “willingness to pay”.

Economic analyses may be useful for comparing cost-benefit decisions in humans and in experimental animals (Bentzley, Fender, and Aston-Jones 2013; Hursh 1980; Hursh and Silberberg 2008). Economic measures may have high translational value because estimates of cost sensitivity and other parameters can be used to roughly equate measures across different schedules of reinforcement, reinforcers, and species in different tasks. Using these economic models, demand curves are generated that allow us to estimate consumption at the lowest effort cost (Q0), the rate of decline in consumption as a function of increasing effort cost (α), and an index of demand inelasticity derived from α, essential value (EV). These measures have been shown to predict addiction-like behaviors (Murphy et al. 2009; Petry 2001; Gray and MacKillop 2014; Galuska et al. 2011; Bentzley and Aston-Jones 2015), decreased food demand (Galuska et al. 2011), and they can dissociate unconstrained consumption (i.e. Q0) from willingness to pay (i.e. α) under dopamine antagonism or depletion (Aberman and Salamone 1999; Salamone et al. 2016; Salamone et al. 2017). We recently fit an exponential model (Hursh and Silberberg 2008) to our effort-based choice data (Hart, Gerson, and Izquierdo 2018):

$$\log_{10} Q = \log_{10} Q_0 + k\left(e^{-\alpha Q_0 C} - 1\right)$$

where Q = consumption at a given price C, or cost (FR value), Q0 = demand intensity, consumption at lowest price, k = constant parameter reflecting the range of consumption values in log10 units, α = demand elasticity, or the derived demand parameter reflecting the rate of consumption decline associated with increasing price. Q0 and α are calculated based on the number of pellets earned at each ratio. Because the number of pellets earned at each ratio in our task is restricted to five, differences in α would be due to animals reaching higher/lower ratios or earning more/fewer pellets at a given ratio. It is unclear, therefore, how this model captures more than the session average performance since it allows no value update (see below), though this criticism can apply to other computational models of cost-benefit choice, including delay discounting. Other models, described below, may better capture within-session sensitivity to cost or benefit. For these economic models, for each rat, we have also previously calculated Essential Value (EV) to quantify the ‘strength’ of the reinforcer (i.e. sucrose pellets) (Kearns et al. 2017), based on the value of the exponential rate constant. As indicated above, the value of α is the rate of consumption decline and k is the consumption range for each rat in the following:

$$EV = \frac{1}{100 \cdot \alpha \cdot k^{1.5}}$$

All behavioral economic indices can then be correlated with brain activity data, and regression analyses conducted to predict indices from brain data, as in Hart et al. (2018). Some factors worthy of future consideration in the context of how behavioral economic indices fit effort paradigms are: i. how direct comparisons of quantity and quality may differentially impact behavioral economic indices, ii. how different reinforcers could differentially support performance because they are presented in an open or closed economy, and iii. how different food restriction protocols in the homecage environment may influence effort-based performance in the operant chamber. As mentioned previously, T-maze tasks generate impoverished datasets relative to lever pressing tasks, and are unlikely to yield the number of trials necessary to fit these exponential models to behavioral data.
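
To make the roles of Q0, k, and α more concrete, the following sketch evaluates an exponential demand curve and the corresponding essential value. It assumes the Hursh and Silberberg (2008) form log10 Q = log10 Q0 + k(e^(−α·Q0·C) − 1) and the commonly used definition EV = 1/(100·α·k^1.5); the parameter values are hypothetical, and the code illustrates the equations only, not the fitting procedure used in the cited work.

```python
import math


def demand(cost, q0, k, alpha):
    """Predicted consumption Q at price `cost` under exponential demand."""
    log_q = math.log10(q0) + k * (math.exp(-alpha * q0 * cost) - 1)
    return 10 ** log_q


def essential_value(alpha, k):
    """Essential value: larger values mean less elastic demand."""
    return 1.0 / (100.0 * alpha * k ** 1.5)


if __name__ == "__main__":
    # Hypothetical parameters for one rat (not fitted to real data).
    params = {"q0": 5.0, "k": 2.0, "alpha": 0.002}
    for fr in (1, 5, 10, 20, 40):
        print(fr, round(demand(fr, **params), 2))
    print(essential_value(params["alpha"], params["k"]))
```

In this form, Q0 sets where the curve starts at zero cost, k bounds how far consumption can fall (by k log10 units), and α alone controls how quickly that fall happens, which is why α and EV are treated as indices of willingness to pay.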

Standard reinforcement learning algorithms may also be used to capture behavior on either quality- or quantity-based effort choice paradigms, but to our knowledge they have not been applied to rat behavioral data such as those described in this review. A conventional temporal difference model (Sutton and Barto 1998; Sutton 1988) incorporating a prediction error term may be used, since prediction error has been shown to reflect marginal utility (Stauffer, Lak, and Schultz 2014). Such a model would allow estimation of a more dynamic, within-session value change in effort-based choice. At first glance this may not seem a viable solution, because in typical effort choice tasks the rat has already learned about the contingencies and can easily discriminate the options. However, the rat could still learn about the (diminishing) value of the preferred, more effortful option as the session goes on. Changes could be driven either by changes in subjective effort or by the subjective benefit of the reward, and no model effectively dissociates these possibilities. It will be interesting to fit this model to existing effort-choice datasets in the future, and to use the discount factor and/or the diminishing utility function as a variable to correlate with neural activity in the regions identified here.
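
As a purely illustrative sketch of this idea, the code below applies a standard TD(0) update to the value of the high effort option while the utility of each additional pellet declines with satiety. The exponential utility function, its decay rate, the learning rate, and the starting value are all assumptions chosen for illustration; this is not a model that has been fit to any dataset discussed here.

```python
import math


def td_update(value, reward, next_value, learning_rate=0.1, discount=0.9):
    """One TD(0) step; returns the updated value and the prediction error."""
    prediction_error = reward + discount * next_value - value
    return value + learning_rate * prediction_error, prediction_error


def marginal_utility(pellets_eaten, decay=0.05):
    """Hypothetical diminishing utility of one more pellet as satiety grows."""
    return math.exp(-decay * pellets_eaten)


def simulate_session(n_pellets=30, learning_rate=0.1):
    """Track the learned value of the high effort option within one session."""
    value = 1.0  # assumed: the rat starts the session knowing the contingency
    trace = []
    for eaten in range(n_pellets):
        # Each trial ends with the pellet, so there is no successor state value.
        value, _ = td_update(value, marginal_utility(eaten), 0.0, learning_rate)
        trace.append(value)
    return trace


if __name__ == "__main__":
    for trial, value in enumerate(simulate_session(), start=1):
        print(trial, round(value, 3))
```

Even though the contingency is fully learned at the outset, the negative prediction errors generated by the shrinking utility pull the value of the effortful option down across the session, which is the kind of within-session change a session-average demand model cannot express.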

Finally, it should be noted that in such effort-based paradigms the more effortful, higher-valued option is often associated with more variability or uncertainty than the lower-valued option. This can be either because the animal ‘gives up’ on one more often, or simply because the value is more dependent on satiety level on any given day. Therefore, it will be useful to compare models used in explaining effects on expected uncertainty or known risk (Stolyarova and Izquierdo 2017) to these data as well.

Why effort is important

To be successful at foraging, organisms must evaluate the cost of reward (i.e., risk, delay, effort) and often appreciate this cost in relative terms (i.e. in comparison with other options that may be concurrently available). This ability is of interest to a variety of fields including psychology, neuroscience, behavioral ecology, and ethology (Dayan and Daw 2008). Effort, delay, and risk discounting paradigms generally do not assess how animals gather information about the options in unknown or changing reward environments (i.e. learning) but instead test how well they maximize or exploit rewards in well-known conditions (i.e. performance). As mentioned above, one important exception is that the rat must learn about and adapt to the decreasing value of each option, if food rewards are involved. This would ostensibly make brain regions involved in updating behavior according to motivational state changes critical to this behavior. When we consider a hierarchy of costs, some are given priority consideration: for example, risk of punishment or predation is weighed more heavily than physical effort costs, delay costs, or risk of non-reward. Similarly, the opportunity to exploit a resource often comes at the expense of exploring another (Addicott et al. 2017), known as the explore-exploit tradeoff. This is likely not a factor in environments where resources are not depleting, and where reinforcement contingencies are known to the organism. Physical effort has underpinnings deeply embedded in the mesocorticolimbic system. Indeed, we have found similarities between inhibition of normal functioning of ACC (Hart et al. 2017), inhibition of BLA (Hart and Izquierdo 2017), and chronic methamphetamine experience, whether experimenter- or self-administered (Thompson et al. 2017; Hart, Gerson, and Izquierdo 2018), in making rats ‘selectively lazy’ for the qualitatively preferred option (Figure 2). It would be interesting to determine how exposure to other drugs of abuse may influence performance on this task.

Though this review focuses on convergence in effort tasks, future work should determine whether this is the case for tasks where other aforementioned costs (time, risk) are involved. To our knowledge, paradigms for assessing delay costs (Bickel, Odum, and Madden 1999; Coffey et al. 2003; Floresco, Tse, and Ghods-Sharifi 2008; Mendez et al. 2010) and risk costs (Floresco and Whelan 2009; St Onge, Stopper, et al. 2012; St Onge, Ahn, et al. 2012; Larkin, Jenni, and Floresco 2016; Ghods-Sharifi, St Onge, and Floresco 2009) have exclusively employed rewards that vary in quantity, save a few exceptions where the larger reward carried a higher probability of shock, rather than non-reinforcement (Orsini et al. 2015; Orsini et al. 2017; Simon et al. 2009). Interestingly, a recent report showed that D2 receptor stimulation results in decreased risky choice under risk of shock (Blaes et al. 2018), whereas others have found increased risky choice with D2 receptor stimulation under risk of non-reinforcement (St Onge and Floresco 2009). This suggests that risk of punishment versus non-reinforcement may have nonoverlapping mechanisms. Is this the case for other neural substrates that regulate risk-based decisions? Would testing mechanisms found in typical delay-discounting paradigms provide similar results in a quality-based delay discounting task? These remain empirical questions.

Overcoming effort costs to acquire food is necessary for survival in humans and other animals. Of course, other appetitive events, such as exercise, sex, or drugs are often impeded by effort costs, and behavior directed toward any one of these events may or may not be adaptive depending on several situational factors. For example, it might be maladaptive for an obese rat to pursue more food at the cost of other rewards. It may be similarly maladaptive for addicted individuals to pursue drugs. Growing evidence points to similar underlying mechanisms in effort for food as those in willingness to exercise (Correa et al. 2016; Lopez-Cruz et al. 2018) or to seek drugs (Kavanagh et al. 2015). Future experiments should test whether the circuitry that is known to regulate effort to acquire food similarly affects other rewards. This line of work is important because effort tasks have high translational value (Treadway et al. 2009; Wardle et al. 2011; Treadway and Zald 2011; Treadway et al. 2012): effort is implicated in many conditions, including drug withdrawal (Thompson et al. 2017; Hart, Gerson, and Izquierdo 2018), depression (Treadway et al. 2012), and schizophrenia (Gold et al. 2013; Treadway et al. 2015). Further, fatigue, which can be characterized as decreased effort, is one of the most common symptoms in general medicine (Demyttenaere, De Fruyt, and Stahl 2005), and tasks assessing effort could be useful in modeling effort-related symptoms that occur in depression and other conditions (Nunes et al. 2013).

Psychological science and other fields are currently undergoing a major reproducibility crisis (Estimating the reproducibility of psychological science 2015). That several tasks, methods, and brain region manipulations have produced consistent and replicable findings bodes well for this line of work. Indeed, the existing literature characterizing the neural bases of effort opens the door for future research investigating how these regions act as a network that regulates decisions about how much effort to exert not just for food, but for drugs, sex, aggression, and other reinforcers. Moreover, with increasingly powerful tools to record electrical activity of hundreds of neurons (Jun et al. 2017) and open source optical imaging methods (Cai et al. 2016) coupled with sophisticated computational approaches (Wikenheiser, Marrero-Garcia, and Schoenbaum 2017; Sadacca et al. 2018; Mashhoori et al. 2018), future research can reveal many functions of the mammalian brain in effort and beyond.

Highlights

Different behavioral paradigms have been used to assess effort-based choice in rats

The rat selects between an effortful, preferred vs. a low effort, less preferred option

Generally, the preferred option is better in quality or magnitude

We discuss a variety of experimental and theoretical approaches related to effort

We highlight areas for future work and the relevance to the human condition

Acknowledgements

This work was supported by CRCNS R01DA047870, UCLA’s Division of Life Sciences Recruitment and Retention Fund, and UCLA Academic Senate Grant (Izquierdo). We also acknowledge the UCLA Dissertation Year Fellowship (Hart). We thank members of the Izquierdo lab for thoughtful feedback and discussions of an earlier version of this review.

Abbreviations

- ACC

Anterior cingulate cortex

- BLA

Basolateral amygdala

- DMS

Dorsomedial striatum

- OFC

Orbitofrontal cortex

- STN

Subthalamic nucleus

- VS

Ventromedial striatum

References

- Aberman JE and Salamone JD 1999. Nucleus accumbens dopamine depletions make rats more sensitive to high ratio requirements but do not impair primary food reinforcement. Neuroscience, 92: 545–52.

- Addicott MA, Pearson JM, Sweitzer MM, Barack DL and Platt ML 2017. A Primer on Foraging and the Explore/Exploit Trade-Off for Psychiatry Research. Neuropsychopharmacology, 42: 1931–1939.

- Bailey MR, Goldman O, Bello EP, Chohan MO, Jeong N, Winiger V, Chun E, Schipani E, Kalmbach A, Cheer JF, Balsam PD and Simpson EH 2018. An Interaction between Serotonin Receptor Signaling and Dopamine Enhances Goal-Directed Vigor and Persistence in Mice. J Neurosci, 38: 2149–2162.

- Bartholomew RA, Li H, Gaidis EJ, Stackmann M, Shoemaker CT, Rossi MA and Yin HH 2016. Striatonigral control of movement velocity in mice. Eur J Neurosci, 43: 1097–110.

- Baunez C, Amalric M and Robbins TW 2002. Enhanced food-related motivation after bilateral lesions of the subthalamic nucleus. J Neurosci, 22: 562–8.

- Baunez C, Dias C, Cador M and Amalric M 2005. The subthalamic nucleus exerts opposite control on cocaine and ‘natural’ rewards. Nat Neurosci, 8: 484–9.

- Bentzley BS and Aston-Jones G 2015. Orexin-1 receptor signaling increases motivation for cocaine-associated cues. Eur J Neurosci, 41: 1149–56.

- Bentzley BS, Fender KM and Aston-Jones G 2013. The behavioral economics of drug self-administration: a review and new analytical approach for within-session procedures. Psychopharmacology (Berl), 226: 113–25.

- Berendse HW, Galis-de Graaf Y and Groenewegen HJ 1992. Topographical organization and relationship with ventral striatal compartments of prefrontal corticostriatal projections in the rat. J Comp Neurol, 316: 314–47.

- Bickel WK, Odum AL and Madden GJ 1999. Impulsivity and cigarette smoking: delay discounting in current, never, and ex-smokers. Psychopharmacology (Berl), 146: 447–54.

- Blaes SL, Orsini CA, Mitchell MR, Spurrell MS, Betzhold SM, Vera K, Bizon JL and Setlow B 2018. Monoaminergic modulation of decision-making under risk of punishment in a rat model. Behav Pharmacol, 29: 745–761.

- Brog JS, Salyapongse A, Deutch AY and Zahm DS 1993. The patterns of afferent innervation of the core and shell in the “accumbens” part of the rat ventral striatum: immunohistochemical detection of retrogradely transported fluoro-gold. J Comp Neurol, 338: 255–78.

- Cai DJ, Aharoni D, Shuman T, Shobe J, Biane J, Song W, Wei B, Veshkini M, La-Vu M, Lou J, Flores SE, Kim I, Sano Y, Zhou M, Baumgaertel K, Lavi A, Kamata M, Tuszynski M, Mayford M, Golshani P and Silva AJ 2016. A shared neural ensemble links distinct contextual memories encoded close in time. Nature, 534: 115–8.

- Canteras NS, Shammah-Lagnado SJ, Silva BA and Ricardo JA 1990. Afferent connections of the subthalamic nucleus: a combined retrograde and anterograde horseradish peroxidase study in the rat. Brain Res, 513: 43–59.

- Cetin T, Freudenberg F, Fuchtemeier M and Koch M 2004. Dopamine in the orbitofrontal cortex regulates operant responding under a progressive ratio of reinforcement in rats. Neurosci Lett, 370: 114–7.

- Coffey SF, Gudleski GD, Saladin ME and Brady KT 2003. Impulsivity and rapid discounting of delayed hypothetical rewards in cocaine-dependent individuals. Exp Clin Psychopharmacol, 11: 18–25.

- Collins E, Park J and Behrmann M 2017. Numerosity representation is encoded in human subcortex. Proc Natl Acad Sci U S A, 114: E2806–E2815.

- Correa M, Pardo M, Bayarri P, Lopez-Cruz L, San Miguel N, Valverde O, Ledent C and Salamone JD 2016. Choosing voluntary exercise over sucrose consumption depends upon dopamine transmission: effects of haloperidol in wild type and adenosine A(2)AKO mice. Psychopharmacology (Berl), 233: 393–404.

- Dayan P and Daw ND 2008. Decision theory, reinforcement learning, and the brain. Cogn Affect Behav Neurosci, 8: 429–53.

- Demyttenaere K, De Fruyt J and Stahl SM 2005. The many faces of fatigue in major depressive disorder. Int J Neuropsychopharmacol, 8: 93–105.

- Estimating the reproducibility of psychological science. 2015. Science, 349: aac4716.

- Fischbach-Weiss S, Reese RM and Janak PH 2018. Inhibiting Mesolimbic Dopamine Neurons Reduces the Initiation and Maintenance of Instrumental Responding. Neuroscience, 372: 306–315.

- Floresco SB and Ghods-Sharifi S 2007. Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb Cortex, 17: 251–60.

- Floresco SB, Tse MT and Ghods-Sharifi S 2008. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology, 33: 1966–79.

- Floresco SB and Whelan JM 2009. Perturbations in different forms of cost/benefit decision making induced by repeated amphetamine exposure. Psychopharmacology (Berl), 205: 189–201.

- Font L, Mingote S, Farrar AM, Pereira M, Worden L, Stopper C, Port RG and Salamone JD 2008. Intra-accumbens injections of the adenosine A2A agonist CGS 21680 affect effort-related choice behavior in rats. Psychopharmacology (Berl), 199: 515–26.

- Galuska CM, Banna KM, Willse LV, Yahyavi-Firouz-Abadi N and See RE 2011. A comparison of economic demand and conditioned-cued reinstatement of methamphetamine-seeking or food-seeking in rats. Behav Pharmacol, 22: 312–23.

- Gardner MP, Conroy JC, Styer CV, Huynh T, Whitaker LR and Schoenbaum G 2018. Medial orbitofrontal inactivation does not affect economic choice. Elife, 7.

- Gershman SJ 2017. Dopamine, Inference, and Uncertainty. Neural Comput, 29: 3311–3326.

- Ghods-Sharifi S and Floresco SB 2010. Differential effects on effort discounting induced by inactivations of the nucleus accumbens core or shell. Behav Neurosci, 124: 179–91.

- Ghods-Sharifi S, St Onge JR and Floresco SB 2009. Fundamental contribution by the basolateral amygdala to different forms of decision making. J Neurosci, 29: 5251–9.

- Gold JM, Strauss GP, Waltz JA, Robinson BM, Brown JK and Frank MJ 2013. Negative symptoms of schizophrenia are associated with abnormal effort-cost computations. Biol Psychiatry, 74: 130–6.

- Gray JC and MacKillop J 2014. Interrelationships among individual differences in alcohol demand, impulsivity, and alcohol misuse. Psychol Addict Behav, 28: 282–7.

- Hart EE, Gerson JO and Izquierdo A 2018. Persistent effect of withdrawal from intravenous methamphetamine self-administration on brain activation and behavioral economic indices involving an effort cost. Neuropharmacology, 140: 130–138.

- Hart EE, Gerson JO, Zoken Y, Garcia M and Izquierdo A 2017. Anterior cingulate cortex supports effort allocation towards a qualitatively preferred option. Eur J Neurosci, 46: 1682–1688.

- Hart EE and Izquierdo A 2017. Basolateral amygdala supports the maintenance of value and effortful choice of a preferred option. Eur J Neurosci, 45: 388–397.

- Hauber W and Sommer S 2009. Prefrontostriatal circuitry regulates effort-related decision making. Cereb Cortex, 19: 2240–7.

- Hursh SR 1980. Economic concepts for the analysis of behavior. J Exp Anal Behav, 34: 219–38.

- Hursh SR and Silberberg A 2008. Economic demand and essential value. Psychol Rev, 115: 186–98.

- Izquierdo A 2017. Functional Heterogeneity within Rat Orbitofrontal Cortex in Reward Learning and Decision Making. J Neurosci, 37: 10529–10540.

- Jun JJ, Steinmetz NA, Siegle JH, Denman DJ, Bauza M, Barbarits B, Lee AK, Anastassiou CA, Andrei A, Aydin C, Barbic M, Blanche TJ, Bonin V, Couto J, Dutta B, Gratiy SL, Gutnisky DA, Hausser M, Karsh B, Ledochowitsch P, Lopez CM, Mitelut C, Musa S, Okun M, Pachitariu M, Putzeys J, Rich PD, Rossant C, Sun WL, Svoboda K, Carandini M, Harris KD, Koch C, O’Keefe J and Harris TD 2017. Fully integrated silicon probes for high-density recording of neural activity. Nature, 551: 232–236.

- Kavanagh KA, Schreiner DC, Levis SC, O’Neill CE and Bachtell RK 2015. Role of adenosine receptor subtypes in methamphetamine reward and reinforcement. Neuropharmacology, 89: 265–73.

- Kearns DN, Kim JS, Tunstall BJ and Silberberg A 2017. Essential values of cocaine and non-drug alternatives predict the choice between them. Addict Biol, 22: 1501–1514.

- Larkin JD, Jenni NL and Floresco SB 2016. Modulation of risk/reward decision making by dopaminergic transmission within the basolateral amygdala. Psychopharmacology (Berl), 233: 121–36.

- Lichtenberg NT, Pennington ZT, Holley SM, Greenfield VY, Cepeda C, Levine MS and Wassum KM 2017. Basolateral Amygdala to Orbitofrontal Cortex Projections Enable Cue-Triggered Reward Expectations. J Neurosci, 37: 8374–8384.

- Lopez-Cruz L, San Miguel N, Carratala-Ros C, Monferrer L, Salamone JD and Correa M 2018. Dopamine depletion shifts behavior from activity based reinforcers to more sedentary ones and adenosine receptor antagonism reverses that shift: Relation to ventral striatum DARPP32 phosphorylation patterns. Neuropharmacology, 138: 349–359.

- Mashhoori A, Hashemnia S, McNaughton BL, Euston DR and Gruber AJ 2018. Rat anterior cingulate cortex recalls features of remote reward locations after disfavoured reinforcements. Elife, 7.

- McCullough LD, Sokolowski JD and Salamone JD 1993. A neurochemical and behavioral investigation of the involvement of nucleus accumbens dopamine in instrumental avoidance. Neuroscience, 52: 919–25.

- McDonald AJ 1991. Organization of amygdaloid projections to the prefrontal cortex and associated striatum in the rat. Neuroscience, 44: 1–14.

- Mendez IA, Simon NW, Hart N, Mitchell MR, Nation JR, Wellman PJ and Setlow B 2010. Self-administered cocaine causes long-lasting increases in impulsive choice in a delay discounting task. Behav Neurosci, 124: 470–7.

- Munster A and Hauber W 2017. Medial Orbitofrontal Cortex Mediates Effort-related Responding in Rats. Cereb Cortex: 1–11.

- Murphy JG, MacKillop J, Skidmore JR and Pederson AA 2009. Reliability and validity of a demand curve measure of alcohol reinforcement. Exp Clin Psychopharmacol, 17: 396–404.

- Niv Y, Duff MO and Dayan P 2005. Dopamine, uncertainty and TD learning. Behav Brain Funct, 1: 6.

- Nowend KL, Arizzi M, Carlson BB and Salamone JD 2001. D1 or D2 antagonism in nucleus accumbens core or dorsomedial shell suppresses lever pressing for food but leads to compensatory increases in chow consumption. Pharmacol Biochem Behav, 69: 373–82.

- Nunes EJ, Randall PA, Hart EE, Freeland C, Yohn SE, Baqi Y, Muller CE, Lopez-Cruz L, Correa M and Salamone JD 2013. Effort-related motivational effects of the VMAT-2 inhibitor tetrabenazine: implications for animal models of the motivational symptoms of depression. J Neurosci, 33: 19120–30.

- Opris I, Lebedev M and Nelson RJ 2011. Motor Planning under Unpredictable Reward: Modulations of Movement Vigor and Primate Striatum Activity. Front Neurosci, 5: 61.

- Orsini CA, Hernandez CM, Singhal S, Kelly KB, Frazier CJ, Bizon JL and Setlow B 2017. Optogenetic Inhibition Reveals Distinct Roles for Basolateral Amygdala Activity at Discrete Time Points during Risky Decision Making. J Neurosci, 37: 11537–11548.

- Orsini CA, Trotta RT, Bizon JL and Setlow B 2015. Dissociable roles for the basolateral amygdala and orbitofrontal cortex in decision-making under risk of punishment. J Neurosci, 35: 1368–79.

- Ostrander S, Cazares VA, Kim C, Cheung S, Gonzalez I and Izquierdo A 2011. Orbitofrontal cortex and basolateral amygdala lesions result in suboptimal and dissociable reward choices on cue-guided effort in rats. Behav Neurosci, 125: 350–9.

- Otchy TM, Wolff SB, Rhee JY, Pehlevan C, Kawai R, Kempf A, Gobes SM and Olveczky BP 2015. Acute off-target effects of neural circuit manipulations. Nature, 528: 358–63.

- Panigrahi B, Martin KA, Li Y, Graves AR, Vollmer A, Olson L, Mensh BD, Karpova AY and Dudman JT 2015. Dopamine Is Required for the Neural Representation and Control of Movement Vigor. Cell, 162: 1418–30.

- Paus T 2001. Primate anterior cingulate cortex: where motor control, drive and cognition interface. Nat Rev Neurosci, 2: 417–24.

- Petry NM 2001. A behavioral economic analysis of polydrug abuse in alcoholics: asymmetrical substitution of alcohol and cocaine. Drug Alcohol Depend, 62: 31–9.

- Randall PA, Lee CA, Nunes EJ, Yohn SE, Nowak V, Khan B, Shah P, Pandit S, Vemuri VK, Makriyannis A, Baqi Y, Muller CE, Correa M and Salamone JD 2014. The VMAT-2 inhibitor tetrabenazine affects effort-related decision making in a progressive ratio/chow feeding choice task: reversal with antidepressant drugs. PLoS One, 9: e99320.

- Randall PA, Lee CA, Podurgiel SJ, Hart E, Yohn SE, Jones M, Rowland M, Lopez-Cruz L, Correa M and Salamone JD 2015. Bupropion increases selection of high effort activity in rats tested on a progressive ratio/chow feeding choice procedure: implications for treatment of effort-related motivational symptoms. Int J Neuropsychopharmacol, 18.

- Randall PA, Pardo M, Nunes EJ, Lopez Cruz L, Vemuri VK, Makriyannis A, Baqi Y, Muller CE, Correa M and Salamone JD 2012. Dopaminergic modulation of effort-related choice behavior as assessed by a progressive ratio chow feeding choice task: pharmacological studies and the role of individual differences. PLoS One, 7: e47934.

- Richardson NR and Roberts DC 1996. Progressive ratio schedules in drug self-administration studies in rats: a method to evaluate reinforcing efficacy. J Neurosci Methods, 66: 1–11.

- Sadacca BF, Wied HM, Lopatina N, Saini GK, Nemirovsky D and Schoenbaum G 2018. Orbitofrontal neurons signal sensory associations underlying model-based inference in a sensory preconditioning task. Elife, 7.

- Salamone JD and Correa M 2012. The mysterious motivational functions of mesolimbic dopamine. Neuron, 76: 470–85.

- Salamone JD, Correa M, Farrar A and Mingote SM 2007. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl), 191: 461–82.

- Salamone JD, Correa M, Yohn S, Lopez Cruz L, San Miguel N and Alatorre L 2016. The pharmacology of effort-related choice behavior: Dopamine, depression, and individual differences. Behav Processes, 127: 3–17.

- Salamone JD, Correa M, Yohn SE, Yang J-H, Somerville M, Rotolo RA and Presby RE 2017. Behavioral activation, effort-based choice, and elasticity of demand for motivational stimuli: Basic and translational neuroscience approaches. Motivation Science, 3: 208–229.

- Salamone JD, Cousins MS and Bucher S 1994. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav Brain Res, 65: 221–9.

- Salamone JD, Kurth PA, McCullough LD, Sokolowski JD and Cousins MS 1993. The role of brain dopamine in response initiation: effects of haloperidol and regionally specific dopamine depletions on the local rate of instrumental responding. Brain Res, 628: 218–26.

- Salamone JD, Steinpreis RE, McCullough LD, Smith P, Grebel D and Mahan K 1991. Haloperidol and nucleus accumbens dopamine depletion suppress lever pressing for food but increase free food consumption in a novel food choice procedure. Psychopharmacology (Berl), 104: 515–21.

- Schweimer J and Hauber W 2005. Involvement of the rat anterior cingulate cortex in control of instrumental responses guided by reward expectancy. Learn Mem, 12: 334–42.

- Simon NW, Gilbert RJ, Mayse JD, Bizon JL and Setlow B 2009. Balancing risk and reward: a rat model of risky decision making. Neuropsychopharmacology, 34: 2208–17.

- Sokolowski JD and Salamone JD 1998. The role of accumbens dopamine in lever pressing and response allocation: effects of 6-OHDA injected into core and dorsomedial shell. Pharmacol Biochem Behav, 59: 557–66.

- St Onge JR, Ahn S, Phillips AG and Floresco SB 2012. Dynamic fluctuations in dopamine efflux in the prefrontal cortex and nucleus accumbens during risk-based decision making. J Neurosci, 32: 16880–91.

- St Onge JR and Floresco SB 2009. Dopaminergic modulation of risk-based decision making. Neuropsychopharmacology, 34: 681–97.

- St Onge JR, Stopper CM, Zahm DS and Floresco SB 2012. Separate prefrontal-subcortical circuits mediate different components of risk-based decision making. J Neurosci, 32: 2886–99.

- Stauffer WR, Lak A and Schultz W 2014. Dopamine reward prediction error responses reflect marginal utility. Curr Biol, 24: 2491–500.

- Stolyarova A and Izquierdo A 2017. Complementary contributions of basolateral amygdala and orbitofrontal cortex to value learning under uncertainty. Elife, 6.

- Sutton RS 1988. Learning to predict by the methods of temporal differences. Machine learning, 3: 9–44.

- Sutton RS and Barto AG 1998. Introduction to reinforcement learning. MIT Press, Cambridge.

- Thompson AB, Gerson J, Stolyarova A, Bugarin A, Hart EE, Jentsch JD and Izquierdo A 2017. Steep effort discounting of a preferred reward over a freely-available option in prolonged methamphetamine withdrawal in male rats. Psychopharmacology (Berl), 234: 2697–2705.

- Treadway MT, Bossaller NA, Shelton RC and Zald DH 2012. Effort-based decision-making in major depressive disorder: a translational model of motivational anhedonia. J Abnorm Psychol, 121: 553–8.

- Treadway MT, Buckholtz JW, Schwartzman AN, Lambert WE and Zald DH 2009. Worth the ‘EEfRT’? The effort expenditure for rewards task as an objective measure of motivation and anhedonia. PLoS One, 4: e6598.

- Treadway MT, Peterman JS, Zald DH and Park S 2015. Impaired effort allocation in patients with schizophrenia. Schizophr Res, 161: 382–5.

- Treadway MT and Zald DH 2011. Reconsidering anhedonia in depression: lessons from translational neuroscience. Neurosci Biobehav Rev, 35: 537–55.

- Trifilieff P, Feng B, Urizar E, Winiger V, Ward RD, Taylor KM, Martinez D, Moore H, Balsam PD, Simpson EH and Javitch JA 2013. Increasing dopamine D2 receptor expression in the adult nucleus accumbens enhances motivation. Mol Psychiatry, 18: 1025–33.

- Walton ME, Bannerman DM, Alterescu K and Rushworth MF 2003. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci, 23: 6475–9.

- Walton ME, Bannerman DM and Rushworth MF 2002. The role of rat medial frontal cortex in effort-based decision making. J Neurosci, 22: 10996–1003.

- Wang AY, Miura K and Uchida N 2013. The dorsomedial striatum encodes net expected return, critical for energizing performance vigor. Nat Neurosci, 16: 639–47.

- Wardle MC, Treadway MT, Mayo LM, Zald DH and de Wit H 2011. Amping up effort: effects of d-amphetamine on human effort-based decision-making. J Neurosci, 31: 16597–602.

- Wikenheiser AM, Marrero-Garcia Y and Schoenbaum G 2017. Suppression of Ventral Hippocampal Output Impairs Integrated Orbitofrontal Encoding of Task Structure. Neuron, 95: 1197–1207 e3.

- Wise RA, Spindler J, deWit H and Gerberg GJ 1978. Neuroleptic-induced “anhedonia” in rats: pimozide blocks reward quality of food. Science, 201: 262–4.

- Wise RA, Spindler J and Legault L 1978. Major attenuation of food reward with performance-sparing doses of pimozide in the rat. Can J Psychol, 32: 77–85.

- Yohn SE, Lopez-Cruz L, Hutson PH, Correa M and Salamone JD 2016. Effects of lisdexamfetamine and s-citalopram, alone and in combination, on effort-related choice behavior in the rat. Psychopharmacology (Berl), 233: 949–60.