Abstract

Perceivers’ ability to correctly identify the internal states of social targets, known as empathic accuracy (EA), is critical to social interactions, but little work has examined the specific types of information that support EA. In the current study, social targets varying in trait emotional expressivity were videotaped while discussing emotional autobiographical events. Perceivers watched these videos and inferred targets’ affect while having access to only visual or auditory information, or both. EA was assessed as the correlation between perceivers’ inferences and targets’ self-ratings. Results suggest that auditory, and especially verbal, information is critical to EA. Furthermore, targets’ expressivity predicted both target behavior and EA, an effect influenced by the valence of the events they discussed. Specifically, expressive targets produced more nonverbal negative cues, and higher levels of EA when perceivers could only see them discussing negative events; expressive targets also produced more positive verbal cues, and higher levels of EA when perceivers could only hear them discussing positive events. These results are discussed in relation to social display rules and clinical disorders involving social deficits.

Keywords: empathy, emotional expressivity, social cognition

Empathy (understanding and responding to the emotional and mental states of others) is central to human social life, and impairments in empathy, such as those seen in autism and psychopathy, result in severe deficits in social function (Blair, 2005). Given its importance, empathy has become the focus of a growing amount of research, and various theories about the mechanisms underlying empathic abilities have been put forth (Davis, 1994, Chapters 1 & 2; Decety & Jackson, 2004; Keysers & Gazzola, 2006, 2007; Preston & de Waal, 2002). Current theories recognize that empathy subsumes related but independent subcomponents, including sharing the affect of others, feeling concern over the well-being of others, and being able to accurately judge the thoughts and feelings of others; the last of these is known as empathic accuracy (EA; see Ickes, 1997; Ickes, Stinson, Bissonnette, & Garcia, 1990; Levenson & Ruef, 1992). EA is commonly operationalized as the correspondence between the thoughts and feelings social targets report experiencing, and the thoughts and feelings that perceivers infer from targets’ behavior (Ickes et al., 1990; Levenson & Ruef, 1992).

Early work on EA was aimed primarily at identifying the dispositions of “accurate perceivers” (Dymond, 1949; Taft, 1955), but this effort failed to identify dispositions that could consistently predict EA (Ickes et al., 2000). More recent work suggests that EA is fundamentally interpersonal, and may depend more on the dispositions of targets than on those of perceivers, as suggested by studies finding that EA depends on the extent to which targets express their emotions (Flury, Ickes, & Schweinle, in press; Zaki, Bolger, & Ochsner, 2008).

Focusing on targets as central to accuracy raises new questions about EA: namely, what types of informational cues (e.g., facial expressions or semantic information) do targets produce that lead to EA in general, and how does production of such cues relate to or explain the increased emotional “readability” of emotionally expressive targets? While such questions could help clarify the interpersonal sources of EA, they have not yet been explored. The current study sought to address this gap in knowledge by exploring the informational bases of EA in greater detail than previous work.

Contributions of Visual, Auditory, and Semantic Information to EA

One major question concerns the extent to which EA generally depends on visual, auditory, and semantic information. The variety of informational cues that targets produce is underscored by considering the difference between witnessing the emotions of a grimacing child as compared with those of a friend whose facial expression and prosody are neutral as she tells you she has failed an important exam. In the first case, a perceiver has access to clearly affective visual cues. In the second case, that perceiver has access to detailed semantic knowledge about the target (e.g., how salient the exam was to her) and can use this knowledge to make “top-down,” rule-based inferences about the target’s affect (e.g., “people who fail important exams tend to feel bad”). Some research suggests that verbal and nonverbal cues to emotion may be processed by separable neural systems (Keysers & Gazzola, 2007; Uddin, Iacoboni, Lange, & Keenan, 2007), and may independently inform judgments about targets’ emotions (Carroll & Russell, 1996). Exactly how and when different types of cues combine to support empathically accurate judgments, however, is not yet clear.

Prior work suggests that several types of information provided by targets play important, independent roles in providing the informational basis for EA. One type of information is the words targets use. Two previous studies (Gesn & Ickes, 1999; Hall & Schmid Mast, 2007) have explored the effects of informational “channels” on EA, by having perceivers infer the internal states of targets either while having access to both auditory and visual information, or under conditions where only a subset of information (e.g., silent video, written transcripts of target speech) was available. These studies found that verbal information alone produced EA levels similar to those observed when both verbal and visual information were available, suggesting that verbal information is the primary source of EA. Notably, neither study examined the specific verbal content (e.g., the use of specific types of emotion language) that predicted increases in EA.

Although intriguing, the interpretability of these findings may be limited by methodological concerns. Regardless of the channel of information available to them (e.g., visual only or transcript only) perceivers in these studies were instructed to provide written descriptions of what they believed targets were thinking or feeling. These descriptions were then compared with targets’ own written descriptions of their internal states to produce a measure of EA. Because the measure of EA in these studies depends on producing a verbal description of target states, it may be unsurprising that verbal information was most valuable to perceivers in identifying those thoughts. It remains unclear whether verbal content would prove as important in circumstances where EA is not measured verbally.

A second type of cue that predicts EA is nonverbal affective behavior, such as emotional facial expressions or other nonverbal (e.g., postural, prosodic) cues. The importance of nonverbal behavior has been highlighted by studies that do not ask for written descriptions of targets’ thoughts and feelings, but instead ask perceivers to make decisions about social relationships between targets (such as deciding, based on a conversation, which of two targets is in a position of authority over the other). Whereas some of these studies have found that accuracy about these scenarios was better predicted by visual than auditory cues (e.g., Archer & Akert, 1977), others have found that each type of information produces roughly equal levels of accuracy (Archer & Akert, 1980). Although the precise nature of the behaviors driving accuracy in these studies has not been identified, this work presents a counterargument to the primacy of verbal cues in predicting EA.

To examine the relative contribution of different informational channels to EA, the present study compared EA for perceivers with access to only video, only sound, and both video and sound from clips recorded by targets. Following the work reviewed above, we predicted that auditory, and specifically semantic, information would produce higher levels of EA than visual information (Hypothesis 1). Unlike previous studies, however, we calculated EA not from qualitative written reports that might be biased toward identifying verbal information as critical, but from the correlation between a perceiver’s judgments about target affect and the targets’ self-reported affect (see also Levenson & Ruef, 1992). Thus, the present study examined whether verbal information predicts EA even when a quantitative measure of EA is employed.

Target Expressivity and Production of Affective Cues

The second main question we wished to address concerned the means through which expressive targets become more emotionally “readable” to perceivers than less expressive targets. Prior work has demonstrated that targets’ emotional expressivity predicts perceivers’ levels of EA for those targets (Zaki et al., 2008). The preceding review suggests that this effect may occur if expressive targets produce more informational cues about their affect, for example by producing more intense and frequent affective facial expressions or using more affective language. The types of cues targets provide, and the relationship of production of specific affective cues to individual differences in expressivity, however, have not been explored. The current study examined the types of affect cues that targets provided by separately coding targets’ nonverbal behavior (such as facial expressions) and the semantic content of their speech, allowing us to examine the relationship between expressivity and the production of specific affective cues.

The Effect of Valence on Production of Affective Cues

Related to the overall relationship between expressivity and production of affective cues, there is reason to believe that the types of cues expressive individuals produce may vary across features of situations, such as whether they are experiencing negative or positive affect. For example, one study used individual differences in expressivity to predict the correspondence between affect experienced by targets watching emotional films and the amount of visual affective cues (i.e., smiling, crying) they produced. Interestingly, expressivity predicted a relationship between affective experience and visual affect cues during negative, but not positive, films (Gross, John, & Richards, 2000).

Why would expressive people translate their experience into emotional behavior in a valence-asymmetric way? Gross et al. (2000) suggested that this dissociation results from the effects of social display rules. Individuals in many cultures are socialized to express more positive than negative affect (Ekman & Friesen, 1969). Thus, in social situations people often inhibit negative affective displays, smiling more and frowning less than when alone, even under negative circumstances (Jakobs, Manstead, & Fischer, 2001). The motivations to positively slant affective displays are numerous: observers judge smiling targets as affiliative and competent (Harker & Keltner, 2001; Knutson, 1996) and displays of positive affect predict adaptive personal outcomes, such as greater psychological adjustment after bereavement (Papa & Bonanno, 2008). As a result, positive displays are common and may not be diagnostic of targets’ internal states. Indeed, people often smile to be polite, or to obscure their negative feelings (Ansfield, 2007; Hecht & LaFrance, 1998). Importantly, an individual’s expressivity may affect their adherence to social display rules. Emotionally expressive people are less likely to report suppressing negative affect, and have been shown to display more negative affect in social situations (King & Emmons, 1990). By contrast, social display rules may motivate targets at all levels of expressivity to display positive visual cues (such as smiling).

Unlike previous studies, perceivers in the current study inferred emotion from several targets varying in their levels of self-reported emotional expressivity. We also asked targets to discuss both positive and negative content while being videotaped. We hypothesized that expressive targets would produce more affective cues than less expressive ones, but that this would vary depending on the valence of the content they discussed. Specifically, theories about social display rules suggested that expressive targets would produce more visual affective cues, such as emotional facial expressions, when experiencing negative affect (Hypothesis 2). By contrast, when discussing positive content, we predicted that all targets would produce high levels of positive visual cues, regardless of their levels of expressivity. However, in our prior work, target expressivity predicted higher levels of EA for both negative and positive affect, suggesting that expressive targets do provide more positive affective cues than less expressive targets, but that these cues may not be visual. Instead, given the importance of auditory and semantic information in predicting EA (Gesn & Ickes, 1999) we predicted that expressive targets would produce more auditory and semantic affective cues, such as emotional language, when experiencing positive affect (Hypothesis 3).

Target Expressivity Predicting EA Through Affective Cues

Finally, we examined the sources through which target expressivity would predict EA depending on informational conditions and valence. In general, we expected expressive targets’ affective readability to be mediated by their increased production of affective cues, but only under conditions in which they produce increased amounts of such cues. Specifically, we hypothesized that target expressivity would predict EA overall, but that this would be qualified by an interaction of expressivity and valence. That is, if expressive targets produced more visual affect cues only when discussing negative content, expressivity would predict EA in the visual only condition for negative, but not positive videos. Similarly, if expressive targets produce more auditory or verbal affect cues for positive content, then expressivity would predict EA in the auditory only condition for positive, but not negative videos (Hypothesis 4). Finally, we predicted that the effects of expressivity in each of these conditions would be driven by the cues that targets produced, and that target expressivity would predict EA through expressive targets’ production of negative visual and positive auditory affect cues (Hypothesis 5).

Method

The current study comprised two phases. In the first, we assembled a library of stimulus videotapes. For Phase 1 (described in greater detail in Zaki et al., 2008, 2009), targets (N = 14, 7 female, mean age = 26.5) participated in exchange for monetary compensation and signed informed consent as per the regulations of the Columbia University Institutional Review Board. Targets first completed the 16-item Berkeley Expressivity Questionnaire (BEQ; see Gross, 2000), a standard measure of emotional expressivity with high reliability and validity (J. Gross & John, 1997). After completing this questionnaire, targets were videotaped while discussing four positive and four negative emotional events from their lives. Within 30 min after they had discussed these events, targets watched the videotapes that had been made of them, and continuously rated how positive or negative they had felt while speaking, using a sliding Likert scale (on which 1 = “very negative” and 9 = “very positive”). This scale was similar to the affect rating “dial” used by Levenson and Ruef (1992), and allowed targets to update their affect ratings at any point during the video by moving an indicator along the scale. After rating each video, targets made summary judgments on a 9-point scale of how positive or negative and how aroused they had felt while speaking.

Stimuli

Two targets did not consent to the use of their videotapes as stimuli, and one target showed inadequate variability in their affect ratings for meaningful analysis of EA. Of the remaining 88 videos collected from 11 targets, 40 were chosen to balance the number of negative and positive videos and the number of videos with male and female targets. Videos were also chosen such that both positive and negative clips, while differing in the overall valence, did not differ in their summary arousal ratings.

Phase 2 Protocol

Perceivers (N = 95) gave informed consent to participate in the next phase of the experiment in exchange for either payment or course credit. Perceivers completed the Balanced Emotional Empathy Scale (BEES), a standard measure of emotional empathy that assesses responders’ tendency to share the affect of others with high reliability and validity (Mehrabian, 1972). Perceivers were then randomized into one of three conditions in this between-subjects design. All three conditions involved watching a series of 20 target videos. A pseudorandomized Latin square design ensured that perceivers saw an equal number of positive and negative videos, and that each video was viewed by an approximately equal number of perceivers. While watching these videos, perceivers used the same sliding Likert scale that targets had used to continuously rate how positive or negative they thought targets felt at each moment.

The groups into which perceivers were randomized differed only in the informational channels they had access to while judging target emotions. In the sound only condition, perceivers could hear, but not see, targets while inferring their emotions. In the visual only condition, perceivers could see, but not hear, targets. The visual and sound condition utilized data from perceivers in our previous study (Zaki et al., 2008), who could both see and hear targets. Because of equipment failure, data from one participant in the sound only condition and one participant in the visual only condition were unusable, leaving a total of 93 perceivers for subsequent analyses (30 in the visual only condition, 30 in the sound only condition, and 33 in the visual and sound condition).

Analysis of EA

Data reduction and time-series correlations were performed using Matlab 7.1 (Mathworks, Sherborn, MA). Affect-rating data were averaged across 5-s periods and each 5-s mean served as one point in subsequent time-series analyses. Perceivers’ ratings of target affect were then correlated with targets’ affect ratings. The resulting coefficients are referred to as accuracy for a given perceiver/clip combination. This resulted in an accuracy score for each of 20 videos watched by each of the 93 perceivers (for a total of 1,860 correlation-based accuracy scores). All correlation coefficients were r-to-Z transformed to be normally distributed for subsequent analyses (transformed values of EA are used in all figures except Figure 2).
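The accuracy computation described above can be sketched in a few lines. This is a minimal illustration in Python rather than the Matlab code used in the study. The function names and the `sample_rate_hz` parameter are assumptions, since the sampling rate of the rating scale is not reported here.

```python
import numpy as np

def bin_means(ratings, sample_rate_hz, bin_seconds=5.0):
    """Average a continuous rating series over consecutive fixed-width bins."""
    ratings = np.asarray(ratings, dtype=float)
    samples_per_bin = int(round(sample_rate_hz * bin_seconds))
    n_bins = len(ratings) // samples_per_bin  # drop any incomplete trailing bin
    trimmed = ratings[: n_bins * samples_per_bin]
    return trimmed.reshape(n_bins, samples_per_bin).mean(axis=1)

def empathic_accuracy(perceiver, target, sample_rate_hz, bin_seconds=5.0):
    """Return (r, z) for one perceiver/clip pair.

    r is the Pearson correlation between the binned perceiver and target
    ratings; z is its Fisher r-to-z transform. A constant rating series
    yields r = nan, and |r| = 1 yields an infinite z.
    """
    p = bin_means(perceiver, sample_rate_hz, bin_seconds)
    t = bin_means(target, sample_rate_hz, bin_seconds)
    r = float(np.corrcoef(p, t)[0, 1])
    z = float(np.arctanh(r))  # Fisher r-to-z transform
    return r, z

# Hypothetical ratings sampled once per second, binned here in 2-s windows
target_ratings = [1, 2, 3, 4, 5, 6, 7, 8]
perceiver_ratings = [1, 1, 2, 2, 5, 5, 4, 4]
r, z = empathic_accuracy(perceiver_ratings, target_ratings,
                         sample_rate_hz=1.0, bin_seconds=2.0)
```

Group-level analyses would then operate on the z values, with r reported only for descriptive purposes.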

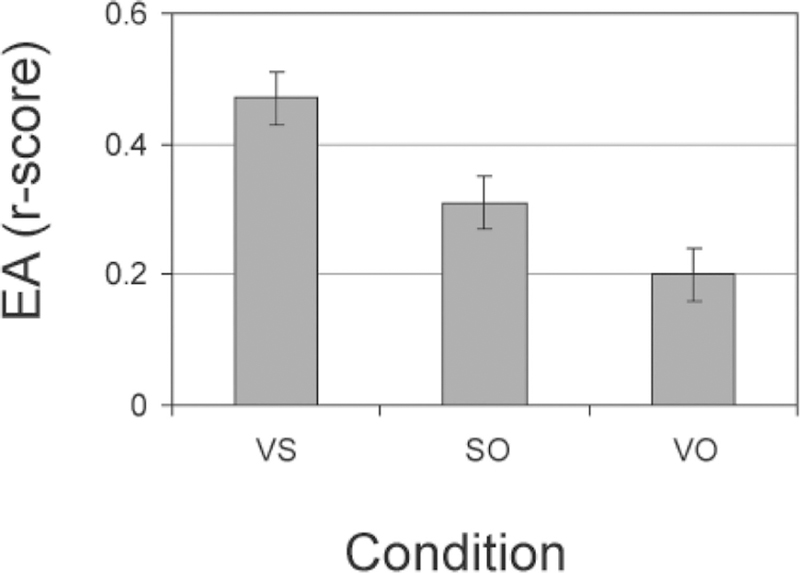

Figure 2.

Mean EA (represented by r scores) by condition. VS = visual-and-sound; VO = visual-only; SO = sound-only; EA = empathic accuracy.

Target Behavior

As we were interested in targets’ specific use of affective language and nonverbal cues, these behaviors were independently coded. The Linguistic Inquiry and Word Count program, developed by James Pennebaker and colleagues (LIWC, see Pennebaker, Francis, & Booth, 2001), was used to analyze the content of transcripts of target speech in each target video. The LIWC program extracts information about the proportion of words from various categories (e.g., words describing places) present in a given speech transcript. Of particular interest to us was the amount of affective language targets employed. Given that many types of emotional language are separately coded by the LIWC, we factor analyzed results from the LIWC output using a Varimax procedure, requiring each resulting factor to have an eigenvalue of at least 1. The results produced 11 factors, one of which was highly correlated with many affective language categories, including both positive and negative emotion words. We hereby refer to this factor as “affective language” (for loadings of this factor along various emotional language dimensions, see Table 1).

Table 1.

The Linguistic Factor Loadings for “Affective Language Usage”

| Category | r Score |
|---|---|
| Affective processes | 0.48 |
| Positive emotion | 0.32 |
| Positive feeling | 0.88 |
| Negative emotion | 0.37 |
| Anger | 0.51 |
| Sadness | 0.66 |
| Tentative | 0.56 |

Note. The column represents correlations between this factor and the usage of individual linguistic categories by targets. Only correlations larger than .3 are shown.
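The factor-retention and rotation steps can be illustrated with a short sketch. It assumes principal-component extraction from a correlation matrix, which the text does not specify, and uses a standard SVD-based varimax iteration. The function names and the toy correlation matrix are hypothetical and do not reproduce the LIWC data.

```python
import numpy as np

def kaiser_loadings(corr, min_eigenvalue=1.0):
    """Unrotated loadings for components whose eigenvalue meets the cutoff."""
    eigvals, eigvecs = np.linalg.eigh(np.asarray(corr, dtype=float))
    order = np.argsort(eigvals)[::-1]  # sort components by descending eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals >= min_eigenvalue
    return eigvecs[:, keep] * np.sqrt(eigvals[keep])

def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonally rotate loadings toward simple structure (varimax criterion)."""
    L = np.asarray(loadings, dtype=float)
    p, k = L.shape
    R = np.eye(k)
    d = 0.0
    for _ in range(max_iter):
        Lr = L @ R
        col_ss = np.diag((Lr ** 2).sum(axis=0))
        u, s, vt = np.linalg.svd(L.T @ (Lr ** 3 - Lr @ col_ss / p))
        R = u @ vt
        d_new = s.sum()
        if d_new < d * (1 + tol):  # criterion has stopped improving
            break
        d = d_new
    return L @ R

# Toy correlation matrix: two pairs of strongly correlated categories
toy_corr = np.array([
    [1.0, 0.8, 0.0, 0.0],
    [0.8, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.8],
    [0.0, 0.0, 0.8, 1.0],
])
unrotated = kaiser_loadings(toy_corr)
rotated = varimax(unrotated)
```

Because the rotation is orthogonal, each category's communality (its row sum of squared loadings) is unchanged; only the distribution of loadings across factors changes.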

To code nonverbal affective behavior, we used the Global Behavioral Coding system developed by Gross and Levenson (1993). This coding system uses rules developed by Ekman & Friesen (1975/2003) to assess visual cues of sadness, anger, disgust, fear, and happiness, on a 0 – 6 scale. In addition, we coded the overall valence and intensity of any emotions they displayed. The intensity measurement was of special interest to us, as it is theoretically unrelated to the valence of their emotional expression, and provided a simple, global measure of the strength of targets’ nonverbal emotional displays. Two independent coders trained in the use of this system and blind to the hypotheses of the study rated silent versions of each video for each of these categories, producing reliable ratings (mean Cronbach’s alpha: .81; for category specific alphas, see Table 2).

Table 2.

Reliability Ratings Based on Two Independent Coders’ Assessment of Target Nonverbal Emotional Behavior in Each Video

| Category | Cronbach’s alpha |
|---|---|
| Anger | 0.78 |
| Happiness | 0.94 |
| Sadness | 0.69 |
| Disgust | 0.67 |
| Fear | 0.85 |
| Valence | 0.88 |
| Intensity | 0.83 |
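For readers unfamiliar with the reliability index reported in Table 2, the following sketch computes Cronbach’s alpha with coders treated as “items” and videos as cases. The function name and the example ratings are hypothetical; the study’s coding data are not reproduced here.

```python
import numpy as np

def cronbach_alpha(ratings):
    """Cronbach's alpha for a cases-by-raters matrix (rows = videos, columns = coders)."""
    ratings = np.asarray(ratings, dtype=float)
    k = ratings.shape[1]                          # number of coders
    rater_vars = ratings.var(axis=0, ddof=1)      # variance of each coder's ratings
    total_var = ratings.sum(axis=1).var(ddof=1)   # variance of the summed ratings
    return k / (k - 1) * (1 - rater_vars.sum() / total_var)

# Hypothetical intensity ratings (0-6 scale) from two coders for four videos
example_ratings = [[1, 2], [2, 1], [3, 4], [4, 3]]
alpha = cronbach_alpha(example_ratings)
```

With only two coders, alpha is equivalent to the Spearman-Brown stepped-up correlation when the coders’ rating variances are equal.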

Modeling Sources of Accuracy

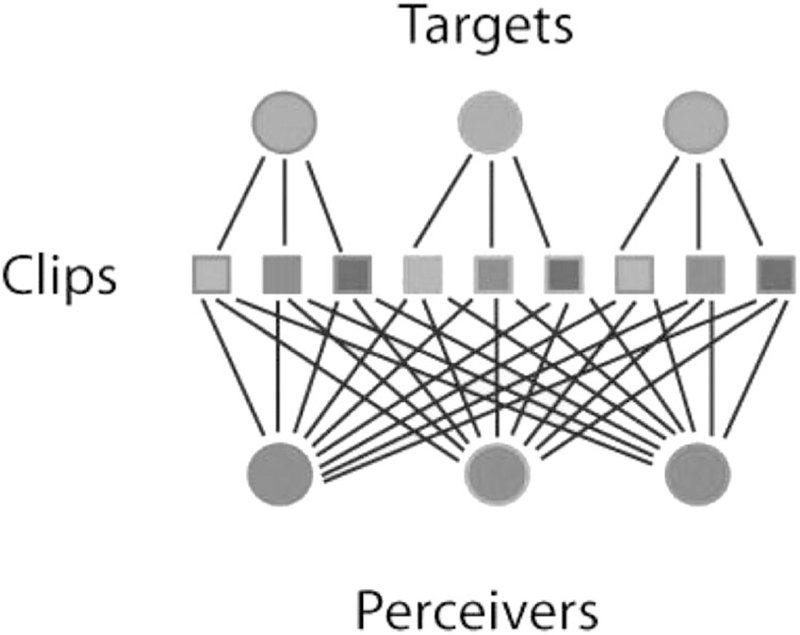

Empathic accuracy was modeled as a function of our predictors using a mixed linear model in SAS 9.1 (SAS Institute, 2002). The data reported here are multilevel: the time-series correlation EA scores for perceiver/clip combinations (total N = 1,860) were nested within perceivers (N = 93), and within scores for that clip across all the perceivers who viewed it. Furthermore, clips (N = 40) were nested within targets (N = 11). A diagram of this data structure can be found in Figure 1. This structure is ideal for studying interpersonal phenomena like EA, in that data can be modeled at the level that is most appropriate for a given analysis. For each analysis described below, we have specified the level at which results were examined, as either the “clip/perceiver pair level,” the “clip level,” the “perceiver level,” or the “target level.”

Figure 1.

The multilevel structure of the current dataset. Each target made multiple clips, which were then viewed by multiple perceivers. Components of accuracy can be modeled at the level of the perceiver, target, clip, or the unique pairings of clips and perceivers (the “interaction”).

The number of truly independent predictors varied for each level of analysis described here, and we adjusted our degrees of freedom accordingly. When sample sizes are not large, some statisticians advocate using a t-distribution and using some approximation of degrees of freedom that takes into account the number of parameters estimated and the nonindependence of the observations. A conservative approach toward choosing appropriate degrees of freedom is to base them on the numbers of subjects rather than the number of subjects multiplied by the number of repeated measurements. We used this approach, and constrained the degrees of freedom to the appropriate number given the N at the level at which each analysis was performed. For example, when analyzing the effects of our between-subjects manipulation of informational channels, the data from each of our 93 perceivers are nonindependent, and we constrained the degrees of freedom for such tests accordingly. Regardless of the level at which results are examined, targets and perceivers were treated as random effects. This approach allows us to parse variance in EA as a function of targets, perceivers, and unique interactions between them (see Figure 1).

Results

Contributions of Visual, Auditory, and Semantic Information to EA (Hypothesis 1)

First, we tested the contribution of auditory and visual information to EA, under the prediction that auditory information alone would produce greater EA than visual information alone. As this was a test of our between-perceiver manipulation, these analyses were performed at the perceiver level. Results of this analysis are presented in Figure 2. When both auditory and visual channels were available to them, perceivers were moderately accurate about target affect (average r = .47). Perceivers were less accurate when only utilizing auditory information (average r for sound only = .31), and even less accurate when using only visual information (average r for visual only = .21). Accuracy in all conditions was significantly above chance (all ps < .001), suggesting that any type of information is useful when inferring affect from targets. Some channels were more useful than others, however. The main effect of channel was highly significant, F(2, 91) = 92.15, p < .001, as were contrasts of visual and sound versus sound only, F(1, 92) = 38.96, p < .001, and of sound only versus visual only, F(1, 92) = 21.02, p < .001. This pattern of decreasing accuracy from visual-and-sound to sound-only to visual-only matches the results obtained by Hall and Schmid Mast (2007), and by Gesn and Ickes (1999).

Target Expressivity and Production of Affective Cues (Hypotheses 2 and 3)

Our second set of predictions centered on targets’ production of visual and verbal affective cues as a function of those targets’ individual levels of self-reported expressivity. We predicted that expressive targets would produce more intense visual affect cues than less expressive targets only when discussing negative content (Hypothesis 2), and would produce more nonvisual cues, such as affective language, when discussing positive content (Hypothesis 3). These analyses were conducted at the target level. We first entered both valence and target expressivity into a single model as predictors of targets’ production of affective cues, and found a significant interaction between these factors in predicting both verbal, F(2, 9) = 9.14, p < .001, and visual, F(2, 9) = 10.29, p < .001, affective cues. To further explore the sources of these interactions, expressivity was modeled as a predictor of target behavior separately for positive and negative clips.

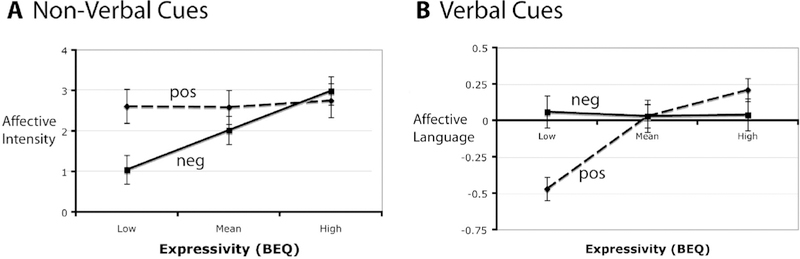

Nonverbal affective cues.

On average, independent observers rated our targets as displaying moderate affective intensity through their facial expressions and other nonverbal cues (M = 2.43 on a scale of 0 – 6). Consistent with theories about social display rules, targets demonstrated more intense nonverbal cues overall when discussing positive experiences than when discussing negative experiences (Mpos = 2.75, Mneg = 2.11, t(9) = 4.18, p < .005). Additionally, when targets discussed negative experiences, expressivity was a strong predictor of their nonverbal affective intensity (r = .57, p < .001), but this relationship was not apparent when targets talked about positive experiences (r = .01, p > .5; see Figure 3a). This pattern is consistent with the prediction that, although people generally inhibit negative as compared to positive nonverbal expressions, expressive targets display more intense nonverbal affect while discussing negative events (Hypothesis 2).

Figure 3.

(A) Nonverbal affective intensity (scored by independent raters using the global behavioral coding system), predicted by valence and expressivity. Intensity is presented for positive (pos) and negative (neg) valence, at low (−1 SD), mean, and high (+1 SD) levels of target expressivity. (B) The usage of affective language predicted by valence and expressivity. Affective language usage is presented for positive and negative valence, at low (−1 SD), mean, and high (+1 SD) levels of target expressivity. BEQ = Berkeley Expressivity Questionnaire.

Affective language usage.

Affective language, as determined by the unit average of all individual linguistic categories loading significantly on the affective language factor produced by the LIWC analysis, constituted 2.29% of all speech. Results of factor scores in subsequent analyses are centered at zero. Target expressivity was a significant predictor of affective language (mean r(9) = .26, t = 12.45, p < .001). However, this effect was moderated by an interaction of expressivity and valence in predicting affective language usage. Overall, targets used more affective language while discussing negative than while discussing positive experiences (Mpos = −.11, Mneg = .06; t(9) = 3.82, p < .01). However, while discussing positive experiences, expressivity was a strong predictor of affective language usage, whereas it was only a marginal predictor of affective language when discussing negative experiences (positive: mean r(9) = .53, p < .001; negative: mean r(9) = .05, p = .08; see Figure 3b). These results suggest that discussing negative experiences produces a relatively high amount of affective language regardless of a target’s expressivity, whereas for positive experiences, the amount of affective language used by targets is dependent on individual differences in expressivity, consistent with Hypothesis 3.

Target Expressivity Predicting EA Through Affective Cues (Hypotheses 4 and 5)

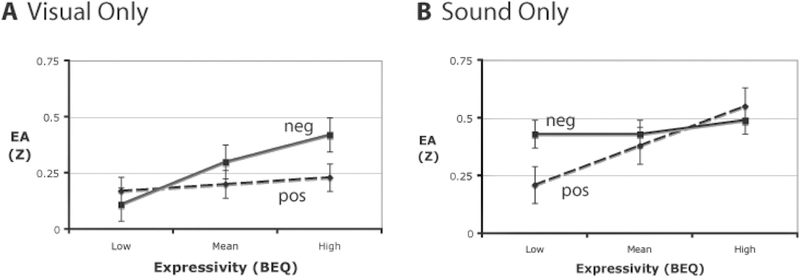

We were also interested in the effect of target expressivity on EA under different conditions and different affective valence. Specifically, we predicted that target expressivity would predict EA most when perceivers had access to the informational channel that differentiated high and low expressivity targets: in the visual only condition for negative videos, and auditory only condition for positive videos (Hypothesis 4). Our results were consistent with this prediction. Including target expressivity, valence, and informational channel in a single model as predictors of EA resulted in a significant three-way interaction between these factors, F(1, 9) = 11.39, p < .001. To further explore the sources of this interaction, we examined the pattern of results for each informational condition separately. When perceivers had access to both informational channels, target expressivity predicted EA when videos were positive (b(9) = .30, p < .01) and marginally predicted EA when videos were negative (b(9) = .17, p < .07). The interaction between valence and expressivity was not significant (b(9) = .04, p > .7). When perceivers had access to only visual information, target expressivity predicted EA for negative videos (b(9) = .16, p < .01), but not for positive videos (b(9) = .02, p > .80), and the interaction between valence and expressivity was significant (b(9) = .20, p < .005; see Figure 4a), consistent with similar findings by Gross et al. (2000). When perceivers had access to only auditory information, this effect was reversed: target expressivity marginally predicted EA for positive (b(9) = .16, p < .07), but not for negative videos (b(9) = .04, p > .40), and the interaction between expressivity and valence was marginally significant (b(9) = .11, p < .07; see Figure 4b).

Figure 4.

EA predicted by condition, valence, and expressivity. EA is presented for visual only and sound only conditions, and both positive (pos) or negative (neg) valence. Data are plotted at low (−1 SD), mean, and high (+1 SD) levels of target expressivity. EA = empathic accuracy; BEQ = Berkeley Expressivity Questionnaire.

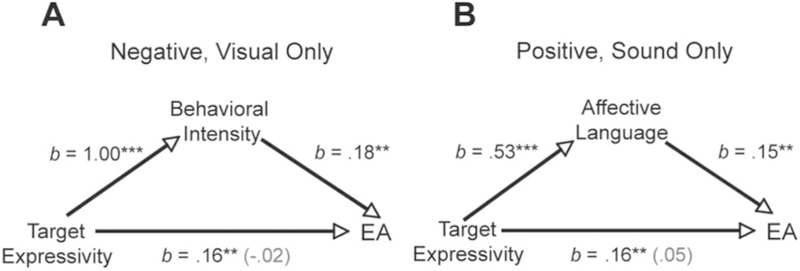

Mediation analyses.

The data presented above suggest that expressive targets indeed produce more verbal positive cues and more nonverbal negative cues than less expressive targets. Furthermore, expressive targets produced higher levels of EA than nonexpressive targets when discussing positive experiences in the sound only condition, and when discussing negative experiences in the visual only condition. Is the effect of expressivity on EA explained by expressive targets’ increased usage of verbal and nonverbal cues? We predicted that expressive targets would become more affectively “readable” in certain informational conditions, and when discussing content of specific affective valence, through their use of specific affect cues (Hypothesis 5). To test this, we performed two mediation analyses.

First, we tested whether the effect of expressivity on EA in the sound only, positive affect condition was mediated by affective language usage. Consistent with the emerging model, expressivity predicted affective language usage, and affective language usage predicted sound-only EA when controlling for expressivity (b(8) = .14, p < .05). Furthermore, controlling for affective language usage eliminated the effect of expressivity on EA (b(8) = .05, p > .3; Sobel Z = 2.27, p < .05; see Figure 5a), suggesting that expressivity predicts EA for positive affect through expressive targets’ use of more positive affective language.

Figure 5.

Expressivity predicting empathic accuracy through targets’ verbal and nonverbal affect cues. For both graphs, expressivity is presented on the left, and empathic accuracy (EA) in the relevant (labeled) condition is presented at the right. The relevant verbal (affective language usage) or nonverbal (global behavior coding system “nonverbal affective intensity”) affective cues provided by targets are used as mediators. Red text indicates the coefficient of the main effect when controlling for the mediator. † p < .1. * p < .05. ** p < .01. *** p < .001.

Second, we tested whether the effect of expressivity on EA in the visual only, negative affect condition was mediated by the intensity of targets’ nonverbal affective behavior. In this condition, expressivity was a strong predictor of nonverbal affective intensity (b = 1.00, p < .001), and when controlling for expressivity, nonverbal intensity remained a significant predictor of EA (b(8) = .24, p < .01). Furthermore, controlling for nonverbal intensity eliminated the effect of expressivity on EA (b(8) = −.02, p > .7; Sobel Z = 3.32, p < .001; see Figure 5b), suggesting that when expressive targets discuss negative experiences, they produce higher levels of EA than nonexpressive targets because they produce more nonverbal affective cues.
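For reference, the Sobel statistic cited in both mediation analyses is conventionally computed from the predictor-to-mediator path (a), the mediator-to-outcome path controlling for the predictor (b), and the standard errors of the two paths. The sketch below shows that standard formula. The numeric inputs are hypothetical, because the standard errors of the paths are not reported in this section, and the function name is illustrative.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Return the Sobel z statistic and its two-tailed normal p value."""
    indirect = a * b  # estimated indirect (mediated) effect
    se_indirect = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = indirect / se_indirect
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p under N(0, 1)
    return z, p


# Hypothetical path estimates and standard errors (NOT values from this study).
z, p = sobel_test(a=0.5, se_a=0.1, b=0.4, se_b=0.1)
print(f"Sobel Z = {z:.2f}, p = {p:.4f}")
```

A large Z indicates that the indirect path through the mediator is reliably different from zero, which is the inference drawn for affective language usage and nonverbal affective intensity above.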

Other predictors of EA.

Finally, though they were not part of our hypotheses, we also tested the effects of valence and perceivers’ self-reported empathic affect sharing on EA, as these factors have been theorized to contribute to interpersonal accuracy. As these analyses compared perceivers across our conditions, they were performed at the perceiver level. Valence had no overall effect on EA, F(1, 92) = .23, p > .6, and did not interact with channel, F(2, 91) = 2.15, p > .1. Similarly, and consistent with previous findings (e.g., Ickes et al., 1990; Levenson & Ruef, 1992), there was no overall effect of perceiver self-reported affect sharing (as indicated by the BEES) on EA (r = −.04, p > .5). Furthermore, perceiver self-reported empathy did not have any effect on EA in any of the individual informational conditions or for positive or negative clips (all ps > .2).

Discussion

The current study sought to expand on previous work on the kinds of information that support accurate inferences about another’s emotions. By utilizing a modified empathic accuracy paradigm, we were able to assess the types of affective cues targets give off, and the types of information perceivers use to infer emotions from targets. By using several targets varying in their levels of emotional expressivity, we were able to examine how dispositional differences influence targets’ use of these cues. Finally, by examining EA both as a function of informational condition and specific target behaviors, we could explore how targets’ individual differences translate into EA through differences in specific kinds of expressive behavior.

Contributions of Visual, Auditory, and Semantic Information to EA

What types of information support EA? We used a quantitative, correlational measure of EA to assess the informational sources of accuracy. Because EA in this study was operationalized as the correlation between perceiver ratings of target affect and targets’ ratings of their own affect over time, this measure was not biased toward making EA dependent on either verbal or visual information. Nonetheless, we reproduced the patterns reported by both Gesn and Ickes (1999) and Hall and Schmid Mast (2007): having access to both channels produced the highest level of EA, verbal information alone produced higher EA than visual information alone, and all conditions produced EA that was significantly above chance. These effects did not vary by valence, and were unaffected by perceiver empathy, reproducing previous results (Ickes et al., 1990; Levenson & Ruef, 1992; Zaki et al., 2008). Therefore, our data support and build on prior work by demonstrating that even when using nonverbal measures of EA, auditory information alone is more effective in producing EA than is visual information only.
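The correlational operationalization described above can be sketched in a few lines. This is a minimal illustration that assumes the target and perceiver ratings have already been aligned into equal-length series (one value per time bin). The example ratings and the function name are hypothetical, and any further preprocessing used in the study is not shown.

```python
import math


def empathic_accuracy(target_ratings, perceiver_ratings):
    """Pearson correlation between two aligned rating time series."""
    n = len(target_ratings)
    if n != len(perceiver_ratings) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_t = sum(target_ratings) / n
    mean_p = sum(perceiver_ratings) / n
    dev_t = [t - mean_t for t in target_ratings]
    dev_p = [p - mean_p for p in perceiver_ratings]
    ss_t = sum(d * d for d in dev_t)
    ss_p = sum(d * d for d in dev_p)
    if ss_t == 0 or ss_p == 0:
        return float("nan")  # correlation is undefined for a flat series
    cross = sum(a * b for a, b in zip(dev_t, dev_p))
    return cross / math.sqrt(ss_t * ss_p)


# Hypothetical ratings on a negative-to-positive scale (NOT study data).
target = [1, 2, 4, 6, 7, 5, 3]
perceiver = [2, 2, 5, 6, 8, 5, 2]
print(round(empathic_accuracy(target, perceiver), 2))
```

Because the score depends only on how the two series covary over time, it does not require perceivers to name specific thoughts or feelings, which is why the measure is neutral with respect to verbal and visual information.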

One important question is whether EA for auditory information is driven by verbal cues, such as the affective language targets use, or nonverbal auditory information such as prosody. Though our later analyses and the two previous studies on informational channels and EA (Gesn & Ickes, 1999; Hall & Schmid Mast, 2007) suggest that verbal information is the main source of EA in the auditory channel, this could be because our targets produced verbal content and prosody that were matched in valence, and therefore provided redundant information. There are other situations in which prosody could provide extra information critical to affect inference, such as when prosody and verbal content provide conflicting information. For example, an otherwise positive statement may seem neutral or even negative depending on the valence with which a target delivers it, and conflicts between content and prosody can affect the processing of target speech (Decety & Chaminade, 2003). Furthermore, positive and negative prosody for otherwise neutral statements can alter perceivers’ moods accordingly (Neumann & Strack, 2000), providing a nonverbal, auditory source of emotional contagion. Sources of accuracy when informational channels provide noncongruent information should be explored in further research.

Target Expressivity and Production of Affective Cues

What types of expressive cues do targets produce, and how do these cues vary as a function of targets’ emotional expressivity? We hypothesized that following social display rules, targets would be motivated to produce positive, and inhibit negative, nonverbal cues. Consistent with this idea, overall, targets did produce more positive than negative nonverbal behavior. This is consistent with self-report and behavioral data demonstrating that people display more positive than negative emotions through facial expressions and other visual cues (Gross & John, 1995) and that this effect is strengthened when they are being observed by others: people in public settings both smile more (Kraut & Johnston, 1979; Ruiz-Belda, Fernandez-Dols, Carrera, & Barchard, 2003) and frown less (Jakobs et al., 2001) than they do in private.

We also predicted that while expressive targets would produce more affective cues than their nonexpressive counterparts, the type of cues they produce would vary as a function of valence. Specifically, because expressive individuals may adhere less to social display rules than nonexpressive ones, we believed that they would produce more negative visual affect cues. Consistent with this, when discussing negative (but not positive) content, target expressivity predicted the amount of nonverbal affective cues targets produced. However, as previous research had shown that expressive targets produce higher levels of EA for positive affect as well, we reasoned that they might produce more nonvisual affect cues when discussing positive content. Consistent with this idea, when discussing positive (but not negative) content, target expressivity predicted targets’ affective language usage.

Target Expressivity Predicting EA Through Affective Cues

How do the cues targets produce relate to EA? We hypothesized that EA, overall, would be higher for highly expressive targets, but that the conditions in which this effect was strongest would track with the behavioral differences shown by expressive and nonexpressive targets. Consistent with this notion, expressivity predicted EA when targets visually expressed negative affect, or when they verbally expressed positive affect. Furthermore, the effects of expressivity on EA in each of these conditions were mediated by expressive targets’ use of specific nonverbal and verbal affect cues. These results support the intriguing hypothesis that while emotionally expressive people use more affectively laden language, and also present more nonverbal cues such as facial expressions than nonexpressive targets, these behaviors and their interpersonal consequences may depend on the affective valence targets are experiencing (Gross & John, 1997).

Expressive targets in this sample produced more negative nonverbal affective cues than less expressive targets. Based on social display rules and their consequences, displaying negative affect could be seen as socially and personally maladaptive. However, using EA, we were able to demonstrate a possible social benefit to such displays. Because negative facial expressions tend to be avoided in social situations, they may carry particular salience for perceivers when they do occur, enabling perceivers to make accurate judgments about targets’ affect and to act accordingly, for example, by providing social support to targets. Furthermore, in cases when it is appropriate to experience negative emotions, failing to display such cues can disrupt social interactions, causing interaction partners to feel less rapport with targets and provoking anxiety (Butler et al., 2003).

In the domain of positive affect, however, facial expressions may not be as diagnostic. People commonly smile in social situations, even under ostensibly negative circumstances such as watching sad films in the company of others (Jakobs et al., 2001). Furthermore, smiling in many situations is uncorrelated with positive affect, especially when individuals are under social pressure to be positive (as, e.g., when in the company of high authority figures, see Hecht & LaFrance, 1998). As such, more common positive facial expressions may be less diagnostic of internal states. Our data suggest that when discussing positive experiences, expressive individuals instead use more affective language, which in turn makes their emotions clearer to others.

Taken together, these results speak to a remarkable calibration between the cues expressive targets display and the types of information perceivers utilize. Without instructions (and most likely without knowing they are doing so), expressive individuals not only produce more affective cues than less expressive targets, but also seem to spontaneously produce the type of emotional cue (verbal or visual) most effective for communicating affect of the valence they are experiencing. These cues in turn help to facilitate EA. This is especially interesting in that it allows us to frame individual differences in emotional expression in light of their impact on EA.

Limitations

While these data clarify and expand on previous knowledge about the informational bases of EA, it is important to note that the methodology used here in some ways limits the inferences that can be drawn from our findings. We used a continuous, single-axis (from negative to positive) rating of affect, made by both targets and perceivers, and a time-series correlation between target and perceiver ratings as a measure of EA. This follows the method used by Levenson and Ruef (1992), but importantly does not tap perceivers’ ability to infer specific thoughts and feelings experienced by targets. As such, we cannot conclude that the effects of informational channel and expressivity found here would hold for perceivers trying to infer the specific content of targets’ experience, as opposed to its valence and intensity. Continuous measures like the ones used in the current study and other measures employing a verbal measure of EA complement each other by assessing the effects of situational context and individual difference on EA for both affect fluctuations over time and the specific content of target emotional and mental states.

Future Directions

The present study suggests several possibilities for clinical and intervention studies of EA as well as testable hypotheses about manipulations that could improve EA generally. There are two main avenues for such studies, involving sources of EA related to targets and perceivers. With respect to targets, understanding the specific channels through which targets produce clear affect cues could inform interventions aimed at training targets to communicate their affect more clearly. For example, future studies could manipulate targets’ self-presentation goals, instructing them to display their affect specifically through verbal or visual channels, with the goal of training targets to produce the clearest affective cues possible. Similar practices could be utilized in psychosocial interventions for schizophrenia and depression. Both of these illnesses are associated with impoverished emotional expressivity (Brune et al., 2008; Gaebel & Wolwer, 2004), which may worsen the ability of others to accurately understand what patients are experiencing.

With respect to perceivers, experimental studies could focus on orienting perceivers to attend to one modality or another when inferring target affect of different valences. The current data suggest that EA could be improved by teaching perceivers to flexibly orient their attention to cues of specific modalities depending on their initial assessments about the valence of a target’s affect. Such findings could inform clinical work in disorders such as autism spectrum disorder (Ponnet, Buysse, Roeyers, & De Clercq, 2007; Roeyers, Buysse, Ponnet, & Pichal, 2001) and schizophrenia (Allen, Strauss, Donohue, & van Kammen, 2007; Mueser et al., 1996; cf. Couture, Penn, & Roberts, 2006), which are characterized by failures in social cognition and emotion perception.

Interestingly, more recent studies suggest that social-cognitive deficits in autism are not uniform, and that it is important to map the contextual domains in which they are most severe. For example, a recent study found that autistic individuals demonstrated worse EA than controls, but when they watched a highly structured social interaction (in which strangers interviewed each other instead of having a more naturalistic conversation), their performance improved to almost the level of controls (Ponnet et al., 2007). Presumably, structured social interactions in which targets are answering direct questions about their preferences and history produce clearer verbal cues to their internal states. Examining the particular informational bases that allow otherwise impaired perceivers to improve their EA could be critical to improving social relationships in these populations.

Conclusion

Understanding the emotions of others is a critical social ability, and target individual differences predict how effectively targets can communicate their emotions to perceivers. However, surprisingly little work has explored the specific types of information that form the basis of accurate interpersonal understanding. The current study provides new insights into this topic by confirming that auditory information is a primary source of EA and further demonstrating that individual differences in target expressivity affect EA through varying informational channels, depending on the valence of the emotion being discussed. Overall, these data serve to broaden the study of specific sources of EA, and suggest both basic and clinical directions for future work.

Acknowledgments

We thank Alison Levy, Daniel Chazin, and Natalie Berg for assistance in data collection, Sam Gershman and Jared van Snellenberg for advice in writing analysis scripts, and NIDA Grant 1R01DA022541–01 for support of this work.

References

- Allen DN, Strauss GP, Donohue B, & van Kammen DP (2007). Factor analytic support for social cognition as a separable cognitive domain in schizophrenia. Schizophrenia Research, 93, 325–333.

- Ansfield M (2007). Smiling when distressed: When a smile is a frown turned upside down. Personality and Social Psychology Bulletin, 33, 763–775.

- Archer D, & Akert R (1977). Words and everything else: Verbal and nonverbal cues in social interpretation. Journal of Personality and Social Psychology, 35, 443–449.

- Archer D, & Akert R (1980). The encoding of meaning: A test of three theories of social interaction. Sociological Inquiry, 50, 393–419.

- Blair RJ (2005). Responding to the emotions of others: Dissociating forms of empathy through the study of typical and psychiatric populations. Consciousness and Cognition, 14, 698–718.

- Brune M, Sonntag C, Abdel-Hamid M, Lehmkamper C, Juckel G, & Troisi A (2008). Nonverbal behavior during standardized interviews in patients with schizophrenia spectrum disorders. Journal of Nervous and Mental Disease, 196, 282–288.

- Butler EA, Egloff B, Wilhelm FH, Smith NC, Erickson EA, & Gross JJ (2003). The social consequences of expressive suppression. Emotion, 3, 48–67.

- Carroll JM, & Russell JA (1996). Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology, 70, 205–218.

- Couture SM, Penn DL, & Roberts DL (2006). The functional significance of social cognition in schizophrenia: A review. Schizophrenia Bulletin, 32(Suppl. 1), S44–S63.

- Davis M (1994). Empathy: A social psychological approach. Madison, WI: Brown & Benchmark Publishers.

- Decety J, & Chaminade T (2003). Neural correlates of feeling sympathy. Neuropsychologia, 41, 127–138.

- Decety J, & Jackson PL (2004). The functional architecture of human empathy. Behavioral and Cognitive Neuroscience Reviews, 3, 71–100.

- Dymond R (1949). A scale for the measurement of empathic ability. Journal of Consulting Psychology, 13, 127–133.

- Ekman P, & Friesen W (1975/2003). Unmasking the face. Cambridge, MA: Malor Books.

- Ekman P, & Friesen WV (1969). The repertoire of nonverbal behavior: Categories, origin, usage, and coding. Semiotica, 1, 49–98.

- Flury J, Ickes W, & Schweinle W (2008). The Borderline Empathy Effect: Do high BPD individuals have greater empathic ability? Or are they just more difficult to “read”? Journal of Research in Personality, 42, 312–322.

- Gaebel W, & Wolwer W (2004). Facial expressivity in the course of schizophrenia and depression. European Archives of Psychiatry and Clinical Neuroscience, 254, 335–342.

- Gesn P, & Ickes W (1999). The development of meaning and contexts for empathic accuracy: Channel and sequence effects. Journal of Personality and Social Psychology, 77, 746–761.

- Gross J (2000). The Berkeley Expressivity Questionnaire. In Maltby J, Lewis CA & Hill A (Eds.), Commissioned reviews on 300 psychological tests. Lampeter, Wales: Edwin Mellen Press.

- Gross J, & John OP (1997). Revealing feelings: Facets of emotional expressivity in self-reports, peer ratings, and behavior. Journal of Personality and Social Psychology, 72, 435–448.

- Gross JJ, & John OP (1995). Facets of emotional expressivity: Three self-report factors and their correlates. Personality and Individual Differences, 19, 555–568.

- Gross J, John O, & Richards J (2000). The dissociation of emotion expression from emotion experience: A personality perspective. Personality and Social Psychology Bulletin, 26, 712–726.

- Gross JJ, & Levenson RW (1993). Emotional suppression: Physiology, self-report, and expressive behavior. Journal of Personality and Social Psychology, 64, 970–986.

- Hall JA, & Schmid Mast M (2007). Sources of accuracy in the empathic accuracy paradigm. Emotion, 7, 438–446.

- Harker L, & Keltner D (2001). Expressions of positive emotion in women’s college yearbook pictures and their relationship to personality and life outcomes across adulthood. Journal of Personality and Social Psychology, 80, 112–124.

- Hecht M, & LaFrance M (1998). License or obligation to smile: The effect of power and sex on amount and type of smiling. Personality and Social Psychology Bulletin, 24, 1332–1342.

- Ickes W (1997). Empathic accuracy. New York: Guilford Press.

- Ickes W, Buysse A, Pham H, Rivers K, Erickson J, Hankock M, et al. (2000). On the difficulty of distinguishing “good” and “poor” perceivers: A social relations analysis of empathic accuracy data. Personal Relationships, 7, 219–234.

- Ickes W, Stinson L, Bissonnette V, & Garcia S (1990). Naturalistic social cognition: Empathic accuracy in mixed-sex dyads. Journal of Personality and Social Psychology, 59, 730–742.

- Jakobs E, Manstead AS, & Fischer AH (2001). Social context effects on facial activity in a negative emotional setting. Emotion, 1, 51–69.

- Keysers C, & Gazzola V (2006). Towards a unifying neural theory of social cognition. Progress in Brain Research, 156, 379–401.

- Keysers C, & Gazzola V (2007). Integrating simulation and theory of mind: From self to social cognition. Trends in Cognitive Science, 11, 194–196.

- King LA, & Emmons RA (1990). Conflict over emotional expression: Psychological and physical correlates. Journal of Personality and Social Psychology, 58, 864–877.

- Knutson B (1996). Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior, 20, 165–182.

- Kraut R, & Johnston R (1979). Social and emotional messages of smiling: Ethological approach. Journal of Personality and Social Psychology, 37, 1539–1553.

- Levenson RW, & Ruef AM (1992). Empathy: A physiological substrate. Journal of Personality and Social Psychology, 63, 234–246.

- Mehrabian A, & Epstein N (1972). A measure of emotional empathy. Journal of Personality, 40, 525–543.

- Mueser KT, Doonan R, Penn DL, Blanchard JJ, Bellack AS, Nishith P, et al. (1996). Emotion recognition and social competence in chronic schizophrenia. Journal of Abnormal Psychology, 105, 271–275.

- Neumann R, & Strack F (2000). “Mood contagion”: The automatic transfer of mood between persons. Journal of Personality and Social Psychology, 79, 211–223.

- Ostrom C (1990). Time series analysis: Regression techniques. Thousand Oaks, CA: Sage.

- Papa A, & Bonnano G (2008). Smiling in the face of adversity: The interpersonal and intrapersonal functions of smiling. Emotion, 8, 1–12.

- Pennebaker JW, Francis ME, & Booth RJ (2001). Linguistic Inquiry and Word Count (LIWC): A computerized text analysis program. Mahwah, NJ: Erlbaum Publisher.

- Ponnet K, Buysse A, Roeyers H, & De Clercq A (2007). Mind-reading in young adults with ASD: Does structure matter? Journal of Autism and Developmental Disorders, 38, 905–918.

- Preston SD, & de Waal FB (2002). Empathy: Its ultimate and proximate bases. Behavioral and Brain Sciences, 25, 1–20; discussion 20–71.

- Roeyers H, Buysse A, Ponnet K, & Pichal B (2001). Advancing advanced mind-reading tests: Empathic accuracy in adults with a pervasive developmental disorder. Journal of Child Psychology and Psychiatry and Allied Disciplines, 42, 271–278.

- Ruiz-Belda M, Fernandez-Dols J, Carrera P, & Barchard K (2003). Spontaneous facial expressions of happy bowlers and soccer fans. Cognition and Emotion, 17, 315–326.

- SAS Institute. (2002). SAS 9.1.3 help and documentation.

- Taft R (1955). The ability to judge people. Psychological Bulletin, 52, 1–22.

- Uddin LQ, Iacoboni M, Lange C, & Keenan JP (2007). The self and social cognition: The role of cortical midline structures and mirror neurons. Trends in Cognitive Science, 11, 153–157.

- Zaki J, Bolger N, & Ochsner K (2008). It takes two: The interpersonal nature of empathic accuracy. Psychological Science, 19, 399–404.

- Zaki J, Weber J, Bolger N, & Ochsner K (2009). The neural bases of empathic accuracy. Proc Natl Acad Sci U S A, 27, 11382–11387.