Abstract

Classic theories of emotion posit that awareness of one’s internal bodily states (interoception) is a key component of emotional experience. This view has been indirectly supported by data demonstrating similar patterns of brain activity—most importantly, in the anterior insula—across both interoception and emotion elicitation. However, no study has directly compared these two phenomena within participants, leaving it unclear whether interoception and emotional experience truly share the same functional neural architecture. The current study addressed this gap in knowledge by examining the neural convergence of these two phenomena within the same population. In one task, participants monitored their own heartbeat; in another task they watched emotional video clips and rated their own emotional responses to the videos. Consistent with prior research, heartbeat monitoring engaged a circumscribed area spanning insular cortex and adjacent inferior frontal operculum. Critically, this interoception-related cluster also was engaged when participants rated their own emotion, and activity here correlated with the trial-by-trial intensity of participants’ emotional experience. These findings held across both group-level and individual participant-level approaches to localizing interoceptive cortex. Together, these data further clarify the functional role of the anterior insula and provide novel insights about the connection between bodily awareness and emotion.

Keywords: Insula, interoception, emotion, embodied cognition, fMRI

The role of bodily states in emotional experience has fascinated psychologists for over a century, with both classic and modern theories of emotion positing that bodily states contribute to—and might even be essential for—emotional experience (Damasio, 1999; James, 1894; Schachter & Singer, 1962; Valins, 1966). This “embodied” model of emotion has been supported by several pieces of indirect behavioral evidence. For example, emotional experience is influenced by alterations in individuals’ arousal levels (Schachter & Singer, 1962) as well as false feedback about internal bodily states (Valins, 1966). Further, individuals who are more accurate about (and presumably more aware of) their bodily states report more intense emotional experiences than less somatically aware individuals (Barrett, Quigley, et al., 2004; Critchley, Wiens, et al., 2004; Pollatos, Gramann, & Schandry, 2007; Wiens, 2005).

In the past decade, neuroscience research has produced two findings that, together, similarly support an embodied view of emotion. First, some cortical regions, and especially the anterior insula (AI), are engaged when individuals attend to—or attempt to control—a number of internal bodily states, including pain, temperature, heart rate, and arousal (Critchley, 2004; Peyron, Laurent, & Garcia-Larrea, 2000; Williams, Brammer, et al., 2000), supporting a role for the AI in the perception of one’s internal bodily state (interoception). Second, the AI is engaged by a wide variety of emotion elicitation tasks and types of cues (Kober, Barrett, et al., 2008; Lindquist, Wager, et al., 2011; Singer, Seymour, et al., 2004; Wager & Feldman Barrett, 2004; Wicker, Keysers, et al., 2003), which supports a role for the AI in the experience of emotion.

The apparent convergence of interoceptive and emotional processes in the AI has motivated neuroscientists to argue that perceptions of internal bodily states—as supported by this region—are central to emotional experience (Craig, 2002, 2009; Lamm & Singer, 2010; Singer, Critchley, & Preuschoff, 2009). However, sub-regions of insula can differ functionally (Kurth, Zilles, et al., 2010), and the actual convergence of emotion and interoception in insular cortex remains unclear because no studies have compared the functional neuroanatomy of these two phenomena within the same participants.

The current study addressed this gap in extant knowledge. Participants were scanned using functional MRI while completing two tasks: they first watched videos of people recounting emotional stories, and rated their own emotional experience in response to these videos. They then completed an interoception localizer task that required them to monitor their own heartbeat. This design allowed us to examine the extent to which neural structures engaged by interoception, and especially the AI, also were engaged by monitoring one’s own emotions. We further used participants’ trial-by-trial ratings to determine whether activity in AI clusters engaged by interoception also tracked the intensity of emotions that participants experienced. Given both empirical evidence and theoretical arguments that bodily states are a key component of emotional responses (Davis, Senghas, et al., 2010; James, 1884; Stepper & Strack, 1993), we predicted that AI subregions responsive to interoception would also be engaged by emotional experience.

METHODS

Participants and task

Sixteen participants (11 female, mean age = 19.10, SD = 1.72, all right handed with no history of neurological disorders) took part in this study in exchange for monetary compensation and completed informed consent in accordance with the standards of the Columbia University Institutional Review Board. They were then scanned using fMRI while performing two types of tasks (See Figure 1):

Figure 1.

Task schematic. During the emotion task, participants watched videos of people describing emotional autobiographical events; participants continuously rated either (i) how they (participants) felt while watching the video or (ii) the direction of the speaker’s eye gaze. During the interoception task, participants made responses corresponding to (i) their own heartbeat, (ii) repeating tones, or (iii) their heartbeat in the presence of repeating tones.

Emotion

Participants viewed 12 videos in which social targets (not actors) described emotional autobiographical events (for more information on these stimuli and tasks, see Zaki, Bolger, & Ochsner, 2008; Zaki & Ochsner, 2011, 2012; neural correlates of participants’ accuracy about target emotions are described by Zaki, Weber, et al., 2009). Videos met the requirements that (1) an equal number of videos contained male and female targets, (2) an equal number of videos contained positive and negative emotions, and (3) no video was longer than 180 seconds (mean = 125 sec).

While watching each video, participants continuously rated either (1) their own emotions, or (2) eye gaze direction of the person in the video. This eye gaze control condition allowed us to isolate neural structures preferentially engaged by explicitly focusing on one’s emotions, controlling for low-level features of the video stimuli or participants’ general need to attend to the person in the video. Each participant saw six videos in each condition, and the specific videos viewed in each condition were counterbalanced across participants. Videos were presented across three functional runs, each containing 6 videos (2 from each of the conditions). Runs lasted ~9–13 minutes, as determined by video lengths.

Prior to the presentation of each video, participants were cued for 3 seconds with a prompt on the screen, which indicated which task they would be performing while viewing that video. Following this, videos were presented in the center of the screen. A question orienting participants to the task they should perform was presented above the video, and a 9-point Likert scale was presented below the video. At the beginning of each video, the number 5 was presented in bold. Whenever participants pressed an arrow key, the bolded number shifted in that direction (e.g., if they pressed the left arrow key, the bolded number shifted from 5 to 4). Participants could change their rating an unlimited number of times during a video, and the number of ratings made during each video was recorded. Labels for the scale and task cues depended on condition.

The question “how are you feeling?” presented above the video cued participants to continuously rate their own emotional experience (emotion rating condition). The points on the Likert scale represented affective valence (1 = “very negative,” 9 = “very positive”). The question “where is this person looking?” cued participants to continuously rate how far to the left or right targets’ eye-gaze was directed; for this condition the points on the Likert scale represented direction (1 = “far left,” 9 = “far right”, eye gaze rating condition).

Interoception

Following the emotion task, participants performed a task designed to localize interoception-related brain activity through monitoring of an internal bodily state: heart rate. In a modified version of Wiens’ (2005) method for isolating interoception from exteroception (cf. Critchley, 2004), we compared attention to one’s heartbeat with attention to auditory tones.

This modified task included 3 classes of judgments. (1) During the heartbeat monitoring alone condition, participants were instructed to make a keypress response each time they felt their heart beat. No external stimuli were presented during this condition. (2) During the tone monitoring condition, participants were instructed to make a keypress response each time they heard a tone. Prior to the experiment, we recorded each participant’s resting heart rate, and tones were presented at this rate, with an additional 25% random variance to roughly simulate heart rate variability. For example, if a given participant’s resting heart rate was 60 beats per minute, they would hear one tone every 1.00 ± 0.25 seconds.
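The tone timing described above can be illustrated with a minimal sketch. The function name `tone_intervals` is hypothetical, and the uniform jitter distribution is an assumption: the text specifies only "25% random variance" around the resting beat period, not how that variance was drawn.

```python
import random

def tone_intervals(resting_bpm, n_tones, jitter=0.25, seed=None):
    """Return n_tones inter-tone intervals (in seconds) around the resting beat period."""
    rng = random.Random(seed)
    base = 60.0 / resting_bpm  # mean seconds per heartbeat
    # Assumption: jitter is drawn uniformly within +/- jitter * base.
    return [base * (1.0 + rng.uniform(-jitter, jitter)) for _ in range(n_tones)]

# A participant with a resting heart rate of 60 bpm hears one tone
# every 1.00 +/- 0.25 seconds, as in the example in the text.
intervals = tone_intervals(resting_bpm=60, n_tones=30, seed=0)
print(min(intervals), max(intervals))
```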

Although this modified task allowed for a contrast of attention to one’s heartbeat on the one hand, or to tones on the other hand, it also necessarily included a less interesting difference across conditions: heartbeat monitoring alone included only one type of stimulus – one’s heartbeat – whereas tone monitoring included two types of stimuli – one’s heartbeat and the external tones. To address this potential confound, we added (3) a heartbeat monitoring with tone condition. The heartbeat monitoring with tone condition had the same two types of stimuli as the tone monitoring condition. During the heartbeat monitoring with tone condition, participants heard tones (as in the tone monitoring condition) but were instructed to make a response each time they felt their heartbeat (as in the heartbeat monitoring alone condition).

Importantly, the only aspect common to both heartbeat monitoring conditions, but not the tone monitoring condition, was interoception. Thus, by comparing each heartbeat monitoring condition to the tone monitoring condition, and then examining only those regions common to both contrasts (see below), we were able to better, and more conservatively, isolate brain activity related to interoceptive attention. Each task was presented in six 30-second blocks across two 300-second functional runs. Each block was preceded by a two-second visual presentation of the phrase “rate heart beat” or “rate tones,” indicating which task participants should perform during that block. Blocks were separated by a fixation cross, presented for 3±1 seconds.

Imaging acquisition and analysis

Images were acquired using a 1.5 Tesla GE Twin Speed MRI scanner equipped to acquire gradient-echo, echoplanar T2*-weighted images (EPI) with blood oxygenation level dependent (BOLD) contrast. Each volume comprised 26 axial slices of 4.5mm thickness and a 3.5 × 3.5mm in-plane resolution, aligned along the AC-PC axis. Volumes were acquired continuously every 2 seconds. Three emotion/eye-gaze rating functional runs were acquired from each participant, followed by two interoceptive attention functional runs. Each run began with 5 ‘dummy’ volumes, which were discarded from further analyses. At the end of the scanning session, a T1-weighted structural image was acquired for each participant.

Images were preprocessed and analyzed using SPM2 (Wellcome Department of Cognitive Neurology, London, UK) and custom code in Matlab 7.1 (The Mathworks, Natick, MA). All functional volumes from each run were realigned to the first volume of that run, spatially normalized to the standard MNI-152 template, and smoothed using a Gaussian kernel with a full width half maximum (FWHM) of 6mm. The intensity of all volumes from each run was centered at a mean value of 100, trimmed to remove volumes with intensity levels more than 3 standard deviations from the run mean, and detrended by removing the line of best fit. After this processing, all three video-watching runs were concatenated into one consecutive timeseries for the regression analysis. The two heartbeat detection runs were similarly concatenated, and analyzed separately.
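The run-level intensity steps (centering at 100, trimming at 3 standard deviations, and linear detrending) can be sketched on a one-dimensional series of per-volume intensities. This is an illustration under stated assumptions, not the custom Matlab code used in the study: the function name is hypothetical, centering is implemented as multiplicative scaling, the population standard deviation is used, and detrending removes the slope while preserving the run mean.

```python
from statistics import mean, pstdev

def scale_trim_detrend(intensities, target_mean=100.0, sd_cutoff=3.0):
    """Return (volume_index, intensity) pairs after scaling, trimming, and detrending.

    Requires at least two retained volumes so that a line can be fit.
    """
    # 1. Scale the run so that its mean intensity equals target_mean (assumed multiplicative).
    run_mean = mean(intensities)
    scaled = [v * target_mean / run_mean for v in intensities]

    # 2. Drop volumes more than sd_cutoff standard deviations from the run mean.
    mu, sd = mean(scaled), pstdev(scaled)
    kept = [(t, v) for t, v in enumerate(scaled) if abs(v - mu) <= sd_cutoff * sd]

    # 3. Fit a least-squares line to the retained volumes and subtract its slope.
    times = [t for t, _ in kept]
    values = [v for _, v in kept]
    t_bar, v_bar = mean(times), mean(values)
    slope = (sum((t - t_bar) * (v - v_bar) for t, v in kept)
             / sum((t - t_bar) ** 2 for t in times))
    return [(t, v - slope * (t - t_bar)) for t, v in kept]

# A run with pure linear drift becomes flat after detrending.
drifting_run = [100 + 0.5 * t for t in range(20)]
print(scale_trim_detrend(drifting_run)[:3])
```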

Our analytic strategy for the neuroimaging data comprised two main steps. We first isolated brain activity engaged by the emotion and interoception tasks. For the emotion task, we separately analyzed (i) the main effect of monitoring one’s own emotion, and (ii) brain activity scaling parametrically with self-reported emotional experience, on a video-by-video basis. The video-by-video emotional experience values captured the same type of emotional monitoring as analysis (i), by averaging across each video, and then allowed for an analysis of brain activity that changed as that average emotional intensity changed. Second, we identified functional overlaps between interoception- and emotion-related brain activity, at both group and individual levels (see below).

Neural correlates of interoception

Each heartbeat monitoring task differed from the control, tone monitoring, task in more than one way. Heartbeat monitoring alone differed from tone monitoring both in the need to attend to interoceptive (heart rate) cues and also in its absence of external stimuli (tones). Heartbeat monitoring with tone differed from tone monitoring both in the need to attend to interoceptive cues, and in the need to block out an external distracter (tones). Thus, comparing either heartbeat monitoring condition alone to tone monitoring could be considered functionally ambiguous. However, the only task parameter common to both heartbeat monitoring tasks, but not the tone monitoring task, was participants’ attention to their heartbeat. As such, we computed a conjunction of two contrasts: (1) heartbeat monitoring alone > tone monitoring and (2) heartbeat monitoring with tone > tone monitoring. This analysis provided a conservative assessment of brain activity related to interoceptive attention in general, as opposed to the presence/absence of tones or the need to filter out distracters, neither of which was common to the two conjoined contrasts.

Neural correlates of emotion monitoring and intensity

We examined the neural correlates of emotion in two ways. First we identified brain regions related to monitoring emotion. For this analysis, we performed a main effects contrast of emotion rating > eye-gaze rating. This comparison is analogous to those made in many previous studies, in which explicit monitoring and judgment of one’s own emotions or mental states is compared to lower level judgments made about similar stimuli (Mitchell, Banaji, & Macrae, 2005; Ochsner, Knierim, et al., 2004; Winston, O’Doherty, & Dolan, 2003).

Second, we identified brain regions whose activity correlated on a video-by-video basis with the intensity of participants’ emotional experience. In this analysis regressors were constructed by using the mean intensity of emotion during each video as parametric modulators. Because we were interested in the intensity of affective experiences irrespective of their positive or negative valence, we operationalized this construct as the absolute distance separating a participant’s judgment of their experience from the “neutral” rating of 5 on the 9-point Likert scale. For example, if a participant’s mean self-rated emotion during one video was 3 on the 9-point scale, the time during which this video was presented would be given a weight of 2, meaning that the overall intensity of their affective experience diverged by 2 scale points from the neutral rating of 5. This analysis also included a regressor of no interest corresponding to the number of ratings perceivers had made per minute during each video, to control for the possibility that an increased number of ratings could drive brain activity. A separate regressor corresponding to the overall emotion rating condition was included in this model to ensure that brain activity related to emotional intensity was independent of the overall task of monitoring emotions.
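The intensity weight for each video can be written as a one-line computation. The sketch below assumes a simple unweighted mean over the sampled ratings; the function name is hypothetical, and the text does not specify whether the per-video mean was weighted by time.

```python
def emotional_intensity(ratings, neutral=5.0):
    """Absolute distance of a video's mean rating from the neutral scale point."""
    mean_rating = sum(ratings) / len(ratings)
    return abs(mean_rating - neutral)

# A mean rating of 3 and a mean rating of 7 both yield a weight of 2,
# because intensity is defined irrespective of valence.
print(emotional_intensity([3, 3, 3]))  # 2.0
print(emotional_intensity([6, 7, 8]))  # 2.0
```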

Anatomical overlap between interoception and emotion

We assessed anatomical overlap between interoception and emotion using two approaches, one at the group level and one at the participant-specific level.

At the group level, we computed a series of conjunction analyses, using the minimum statistic approach advocated by Nichols et al. (2005); these analyses allowed us to isolate overlap between group-level contrast maps corresponding to (1) interoception, (2) monitoring emotion, and (3) video-by-video self-reported emotional intensity. We first computed a 2-way conjunction between the interoception and monitoring emotion contrasts, to determine whether attending to one’s bodily states overlapped functionally with monitoring one’s own emotions. Second, we computed a 2-way conjunction between the interoception and video-by-video emotional intensity contrasts. Finally, we computed a 3-way conjunction between interoception, monitoring emotion, and emotional intensity contrasts. Monitoring emotion and emotional intensity represent two separate aspects of an emotion that together more richly define the emotional response. Thus, this 3-way conjunction tested the extent to which interoceptive cortex was involved in both of these facets of emotion.
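The minimum statistic logic can be sketched as follows: a voxel survives a conjunction only if its smallest t value across the conjoined maps exceeds the threshold. The function name and the toy t values are hypothetical, maps are represented as flat lists of voxelwise t values, and the cluster extent threshold is omitted.

```python
def min_stat_conjunction(t_maps, threshold):
    """Return the voxelwise minimum t value and a mask of voxels surviving threshold."""
    conjunction = [min(voxel_ts) for voxel_ts in zip(*t_maps)]
    mask = [t > threshold for t in conjunction]
    return conjunction, mask

# Hypothetical t values for four voxels in three contrast maps.
interoception = [5.8, 3.1, 0.4, 4.0]
monitoring = [4.8, 1.2, 3.9, 3.6]
intensity = [4.2, 2.9, 3.5, 1.0]

conj, mask = min_stat_conjunction([interoception, monitoring, intensity], threshold=2.5)
print(conj)  # [4.2, 1.2, 0.4, 1.0]
print(mask)  # [True, False, False, False]
```

Because the conjunction statistic is a minimum, adding a map can only lower it, which is why a 3-way conjunction cannot be more extensive than either 2-way conjunction at the same per-map threshold.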

Participant-specific overlap between interoception and emotion tasks

Cortical structure and functional anatomy differ across individuals (Brett, Johnsrude, & Owen, 2002). To account for this variance, we supplemented our group analysis with a participant-specific functional localizer approach. This analysis was designed to isolate interoception-related cortex in each participant and interrogate activity in this functionally defined region during the emotion tasks (for other uses of this approach, see Mitchell, 2008; Saxe & Kanwisher, 2003; Schwarzlose, Baker, & Kanwisher, 2005). For each participant, we identified the peak in the interoceptive contrast (i.e., the conjunction of heartbeat monitoring alone > tone monitoring and heartbeat monitoring with tone > tone monitoring) anatomically closest to the group AI peak (MNI coordinates: 46, 24, −4), at a lenient threshold of p < .01, uncorrected. We then formed spherical ROIs with a radius of 6mm about each participant’s interoception-related peak. Finally, we extracted parameter estimates from these ROIs corresponding to each participant’s emotion rating > eye gaze rating, and video-by-video emotional intensity contrast maps.
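The localizer procedure (choose the participant peak nearest the group peak, then build a 6mm sphere around it) can be sketched as below. The function names, the candidate peak coordinates, and the 2mm voxel grid are hypothetical; only the group peak and the 6mm radius come from the text.

```python
import math

GROUP_AI_PEAK = (46, 24, -4)  # group-level AI peak in MNI space, from the text

def nearest_peak(candidate_peaks, reference=GROUP_AI_PEAK):
    """Return the candidate peak with the smallest Euclidean distance to reference."""
    return min(candidate_peaks, key=lambda peak: math.dist(peak, reference))

def sphere_voxels(center, radius_mm=6.0, voxel_mm=2.0):
    """List grid coordinates within radius_mm of center (voxel_mm grid is an assumption)."""
    n = int(radius_mm // voxel_mm)
    steps = [i * voxel_mm for i in range(-n, n + 1)]
    return [(center[0] + dx, center[1] + dy, center[2] + dz)
            for dx in steps for dy in steps for dz in steps
            if dx * dx + dy * dy + dz * dz <= radius_mm ** 2]

# Hypothetical suprathreshold peaks for one participant.
peaks = [(40, 20, 0), (10, 32, 50), (-6, 0, 34)]
peak = nearest_peak(peaks)
roi = sphere_voxels(peak)
print(peak, len(roi))
```

Parameter estimates for the emotion contrasts would then be averaged over the voxels in `roi`.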

Thresholding

Main effect maps were thresholded at p < .005, with a spatial extent threshold of k = 30, corresponding to a threshold of p < .05, corrected for multiple comparisons, as assessed through Monte Carlo simulations implemented in Matlab (Slotnick, Moo, et al., 2003). To compute appropriate thresholds for maps of the 2- and 3-way conjunctions, we employed Fisher’s (1925) method, which combines probabilities of multiple hypothesis tests using the formula:

$$\chi^2_{2k} = -2 \sum_{i=1}^{k} \ln(p_i)$$

where $p_i$ is the p-value for the ith test being combined, k is the number of tests being combined, and the resulting statistic has a chi-square distribution with 2k degrees of freedom. Thus, thresholding each test at p values of .01 for a 2-way conjunction and .024 for a 3-way conjunction corresponded to a combined threshold p value of .001, uncorrected. We combined these values with an extent threshold of k = 20, again corresponding to a corrected threshold of p < .05 as assessed using Monte Carlo simulations.
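The per-test thresholds can be checked numerically. Fisher’s method sums −2 ln(p) over the k tests and refers the result to a chi-square distribution with 2k degrees of freedom. The sketch below (function name hypothetical) uses the closed-form chi-square survival function for even degrees of freedom, so only the standard library is needed.

```python
import math

def fisher_combined_p(p_values):
    """Combine independent p-values with Fisher's method."""
    k = len(p_values)
    statistic = -2.0 * sum(math.log(p) for p in p_values)
    half = statistic / 2.0
    # Chi-square survival function for 2k degrees of freedom (closed form, even df):
    # exp(-x/2) * sum_{j=0}^{k-1} (x/2)^j / j!
    return math.exp(-half) * sum(half ** j / math.factorial(j) for j in range(k))

# Thresholds reported in the text: both combine to approximately .001.
print(round(fisher_combined_p([0.01, 0.01]), 4))           # 0.001
print(round(fisher_combined_p([0.024, 0.024, 0.024]), 4))  # 0.001
```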

RESULTS

Behavioral results

Interoception manipulation check

We first performed a two-part manipulation check on data from the interoception task, to confirm that participants had performed it correctly. (1) Because tones were presented to participants at a frequency analogous to their resting heart rates, we verified that they made comparable numbers of responses during heartbeat monitoring and tone monitoring blocks. (2) If participants were performing the correct task (e.g. responding to tones only in the tone monitoring condition) we would expect their responses to be time-locked to the presentation of tones during the tone monitoring condition, but not during the heartbeat monitoring with tone condition. Thus, we predicted that the average lag time between tone presentations and subsequent responses would be shorter during the tone monitoring condition than during the heartbeat monitoring with tone condition. Both of these predictions were borne out. First, response rates did not differ significantly across heartbeat monitoring alone, heartbeat monitoring with tone, and tone monitoring conditions (all ps > .20). Second, the average lag time was significantly lower during the tone monitoring condition (mean = 0.37 sec) than during the heartbeat monitoring with tone condition (mean = 0.72 sec, t(15) = 8.11, p < .001). These results suggest that participants did, in fact, monitor their heartbeat, or tones, during the appropriate task conditions.
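The lag measure used in the second check can be sketched as the mean delay between each tone and the first keypress that follows it. The function name and the example timestamps are hypothetical, and the handling of tones with no later response (they are skipped) is an assumption.

```python
from bisect import bisect_right

def mean_lag(tone_times, response_times):
    """Mean delay (seconds) from each tone to the first response after it."""
    responses = sorted(response_times)
    lags = []
    for tone in tone_times:
        i = bisect_right(responses, tone)  # index of first response strictly after the tone
        if i < len(responses):
            lags.append(responses[i] - tone)
    return sum(lags) / len(lags) if lags else None

# Responses time-locked to tones give a short, consistent lag;
# responses paced by another signal give a longer one on average.
tones = [0.0, 1.0, 2.0, 3.0]
print(mean_lag(tones, [0.3, 1.3, 2.3, 3.3]))
print(mean_lag(tones, [0.9, 1.7, 2.5, 3.9]))
```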

Emotion ratings in response to videos

Participants experienced moderately intense emotions while watching both negative and positive target videos during the emotion rating condition (mean negative = 3.62, mean positive = 6.42, overall intensity as measured by divergence from the scale’s neutral point = 1.40).

Response rate

Individuals made significantly more ratings during the eye-gaze rating condition (mean = 14.11 ratings/minute) than during the emotion rating condition (mean = 10.21 ratings/minute), t(15) = 3.02, p < .01. Across all conditions, participants made, on average, at least one rating every 6.1 seconds, suggesting that they were engaged in both tasks. Rating rates were controlled for in all imaging analyses.

Neuroimaging results

Neural correlates of interoception

The conjunction of heartbeat monitoring alone > tone monitoring and heartbeat monitoring with tone > tone monitoring revealed a pattern of activity strikingly similar to that reported in prior studies of interoception, including a large cluster of activation spanning the right AI and adjacent inferior frontal operculum (IFO), as well as activity in the right middle frontal gyrus and the mid cingulate cortex (see Table 1 and Figure 2); all of these regions were also engaged by prior heartbeat detection tasks (e.g., Critchley et al., 2004).

Table 1.

Brain areas more engaged by heartbeat monitoring than tone monitoring

| Region | x | y | z | T score | Volume (vox) |
|---|---|---|---|---|---|
| Anterior Insula/Frontal Operculum | 46 | 24 | −4 | 5.85 | 316 |
| Dorsomedial PFC | 14 | 32 | 52 | 3.76 | 37 |
| Middle Frontal Gyrus | 54 | 26 | 24 | 4.6 | 126 |
| Midcingulate cortex | −6 | 0 | 34 | 4.83 | 54 |
| Hypothalamus | 10 | −14 | −10 | 3.96 | 57 |

Note: Coordinates are in the stereotaxic space of the Montreal Neurologic Institute. T values reflect the statistical difference between conditions, as computed by SPM.

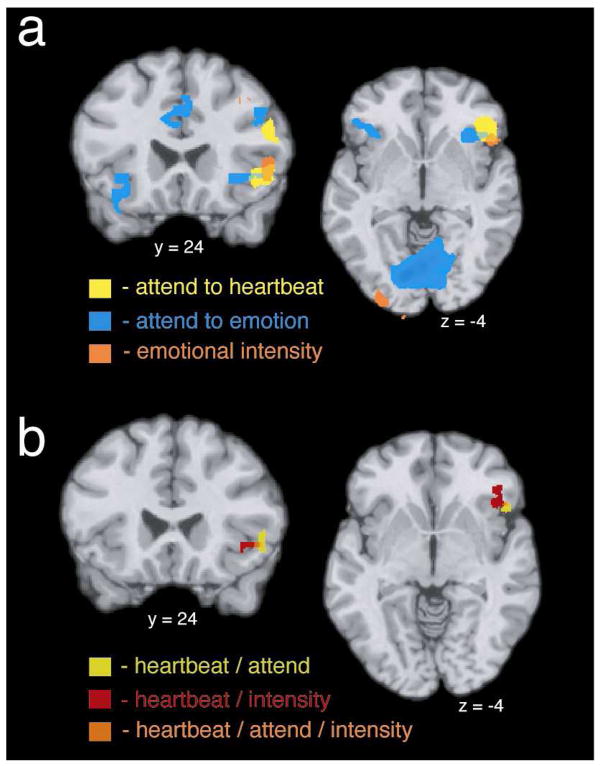

Figure 2.

A: Brain activity related to interoception, monitoring one’s own emotions, and video-by-video variation in the intensity of self-rated emotional experience. B: Conjunction maps representing functional overlap between interoception, monitoring emotion, and video-by-video variation in emotional intensity in AI and adjacent areas of paralimbic cortex.

Neural correlates of monitoring emotion

Compared to eye-gaze rating, emotion rating engaged neural structures commonly associated with appraisals of emotional states, including the medial prefrontal cortex, posterior cingulate cortex, and temporal lobes (Mitchell, 2009; Ochsner, et al., 2004). Notably, emotion rating, as compared to eye-gaze rating, engaged an area spanning the right AI and IFO (see Table 2 and Figure 2a).

Table 2.

Brain areas more engaged by emotion rating than by eye gaze rating

| Region | x | y | z | T score | Volume (vox) |
|---|---|---|---|---|---|
| Anterior Insula/Frontal Operculum | 34 | 18 | −16 | 4.82 | 477 |
| Anterior Insula/Frontal Operculum | −32 | 22 | −2 | 4.58 | 100 |
| Medial PFC | −2 | 48 | 14 | 3.78 | 128 |
| Dorsomedial PFC | −2 | 48 | 38 | 3.46 | 31 |
| Dorsomedial PFC | −2 | 36 | 60 | 4.83 | 76 |
| Superior Frontal Gyrus | −46 | 18 | 36 | 6.46 | 187 |
| Anterior Cingulate Cortex | −4 | 20 | 38 | 4.07 | 145 |
| Posterior Cingulate | 0 | −30 | 36 | 3.84 | 145 |
| Posterior Cingulate | −2 | −54 | 42 | 5.96 | 394 |
| Angular Gyrus | −46 | −70 | 34 | 5.35 | 453 |
| Thalamus | −2 | −14 | 12 | 4.49 | 81 |
| Lingual Gyrus | 8 | −76 | −4 | 7.29 | 1743 |
| Cerebellum | 24 | −82 | −40 | 3.78 | 73 |

Note: Coordinates are in the stereotaxic space of the Montreal Neurologic Institute. T values reflect the statistical difference between conditions, as computed by SPM.

Neural correlates of video-by-video emotional intensity

A similar pattern emerged for emotional intensity. AI/IFO activity tracked the intensity of participants’ self-reported emotional experience during each video, across the emotion rating condition (see Table 3 and Figure 2a).

Table 3.

Brain areas parametrically tracking emotional intensity on a video-by-video basis

| Region | x | y | z | T score | Volume (vox) |
|---|---|---|---|---|---|
| Anterior Insula/Frontal Operculum | 46 | 18 | 2 | 4.24 | 100 |
| Anterior Insula | −30 | 16 | −12 | 3.64 | 55 |
| Middle Frontal Gyrus | 22 | 24 | 46 | 4.1 | 52 |
| Ventral Striatum | −16 | 4 | −12 | 3.55 | 42 |
| Occipital Cortex | −38 | −82 | 4 | 4.77 | 155 |

Note: Coordinates are in the stereotaxic space of the Montreal Neurologic Institute.

Functional convergence of interoception and emotion

Strikingly, both 2-way conjunctions—between interoception and monitoring emotion, and between interoception and video-by-video emotional intensity—as well as the 3-way conjunction—between interoception, monitoring emotion, and video-by-video emotional intensity—produced highly circumscribed overlap, limited to one cluster in the AI/IFO (see Table 4 and Figure 2b).

Table 4.

Conjunctions between attending to heartbeat, rating one’s own emotion, and intensity of one’s own emotion

| Region | x | y | z | T score | Volume (vox) |
|---|---|---|---|---|---|
| Heartbeat rating ∩ Rating Emotion | | | | | |
| Anterior Insula/Frontal Operculum | 46 | 22 | −4 | 3.58 | 39 |
| Heartbeat rating ∩ Emotional Intensity | | | | | |
| Anterior Insula/Frontal Operculum | 50 | 22 | 0 | 2.89 | 35 |
| Heartbeat rating ∩ Rating Emotion ∩ Emotional Intensity | | | | | |
| Anterior Insula/Frontal Operculum | 52 | 22 | −2 | 2.51 | 31 |

Note: Coordinates are in the stereotaxic space of the Montreal Neurologic Institute.

Participant-specific neural correlates of interoception

Based on the above group analyses, we extracted interoception-related activation peaks from each participant, most closely corresponding with the group AI peak. Participant-specific peaks were, on average, within a Euclidean distance of 10mm of the group activation peak, and fell within insular cortex or the adjacent IFO when examined visually against participants’ anatomical images (for each individual’s peak coordinates, see Table S1).

Participant-specific functional overlap between interoception and emotion

We extracted parameter estimates for each participant’s interoception-related AI peak coordinates from their contrasts related to (i) monitoring emotion and (ii) video-by-video emotional intensity. Results of these analyses were consistent with the group effects described above: activity in participant-specific interoceptive cortex was engaged by monitoring emotion (emotion rating as opposed to eye-gaze rating, t(15) = 3.99, p = .001), and tracked with the video-by-video intensity of participants’ self-rated emotional experience, t(15) = 2.69, p < .02.

DISCUSSION

The role of bodily experience in emotion has been a topic of interest in psychology for over a century (e.g., James, 1884), and more recently, neuroscience research has added converging support for the idea that bodily feelings and emotional experience share overlapping information processing mechanisms. Neuroscientific models now posit that the processing of visceral information in right AI may support this overlap between the perception of bodily and emotional states (Lamm & Singer, 2010). Specifically, a long research tradition, elegantly summarized by Craig (2002, 2003, 2009), suggests that right lateralized AI plays a specific role in evaluating the subjective relevance of bodily states. Such “second-order” representations of the body (i) characterize the interoception task in the current study, and (ii) are posited to directly support the link between bodily states and emotions.

Here we leveraged the neuroimaging logic of association (cf. Henson, 2006) to test the hypothesis that interoception and emotional experience might share key information processing features, by examining functional overlap between these phenomena within a single population. Participants engaged overlapping clusters of AI and adjacent IFO when attending to their internal bodily states and when monitoring their own emotional states. Importantly, the amount of activity in the overlap region correlated with trial-by-trial variance in the intensity of the emotions they reported experiencing. This functional overlap was highly circumscribed and selective to an area of the AI previously identified as related to interoception and second-order bodily representations (Craig, 2009; Critchley, 2009). As such, our data provide important converging evidence for a model in which such second-order representations are a key feature of emotion generation and experience and—more broadly—that emotional experience is intimately tied to information about internal bodily states.

The history of emotion research can, at one level, be cast as a counterpoint between theories upholding the embodied model we support here, on the one hand, and so-called “appraisal” theories, on the other. Appraisal theories have often suggested that bodily information is either too slow (Cannon, 1927) or too undifferentiated (Schachter & Singer, 1962) to support emotional states, and that these states must instead be “constructed” based on top-down (and likely linguistic) information about goals, contexts, and the like (Barrett, Lindquist, & Gendron, 2007; Barrett, Mesquita, et al., 2007; Scherer, Schorr, & Johnstone, 2001). The current data are not at odds with the idea that appraisal influences emotion. It is possible, indeed highly probable, that appraisals affect every stage of emotional experience (Barrett, Mesquita, et al., 2007), in part by altering bodily responses to affective elicitors (Gross, 1998; Ochsner & Gross, 2005). Within that framework, the current data suggest that bodily representations nonetheless constitute a key feature of emotional awareness and experience.

Limitations and future directions

It is worth noting three limitations of the current study. First, the interoception task used in this study, unlike some prior work, did not include a performance measure to assess individual differences in interoceptive acuity. That being said, both participants’ behavior and the strong convergence between interoceptive cortex as isolated by our analysis and others’ work (Critchley, 2004) support the view that our manipulation indeed tapped attention to internal bodily states. Future work should build on the known relationship between individual differences in interoceptive acuity and emotional experience, by exploring whether activity in clusters of AI or adjacent cortex common to both interoception and emotional experience covaries with such individual differences.

Second, the control condition we used to isolate emotional brain activity, eye-gaze monitoring, may not be entirely “non-emotional.” Eye gaze is a salient social signal that can provide information about a person’s moods (Macrae et al., 2002; Mason et al., 2005). As such, our use of this control condition provided a conservative comparison: we compared explicit monitoring of one’s own emotional state with a condition that may itself have included implicit processing of emotional states as part of monitoring eye gaze.

Third, although the logic of association posits that two tasks or cognitive phenomena that engage overlapping brain regions may also involve overlapping information processing mechanisms, this logic is far from conclusive. Neuroimaging operates at a relatively coarse level of analysis; as such, overlapping activation at the level of voxels in no way signals overlapping engagement at the level of neurons or even functional groups of neurons. Techniques such as multivoxel pattern analysis (MVPA) offer more fine-grained information about the spatial characteristics of brain activity, and future work should apply such techniques to further explore the physiological overlap between bodily states and emotional experience. However, it is important to note that even techniques such as MVPA cannot be used to draw direct inferences about neurophysiology. Instead of providing concrete answers about neurophysiological overlap, the current data lend converging support to a growing body of behavioral (Barrett et al., 2004) and neuroimaging (Pollatos et al., 2007) data suggesting that bodily awareness and emotion are intimately linked.
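The gap between regional overlap and pattern-level similarity can be made concrete with a toy example. The eight voxel values below are invented for illustration and do not come from the study; the sketch only shows that two tasks can produce identical region-averaged activity while their multivoxel patterns are entirely dissimilar, which is the kind of distinction pattern-based analyses are designed to detect.

```python
import numpy as np

# Hypothetical responses of 8 voxels in one region to two tasks (invented values).
pattern_a = np.array([2.0, 0.0, 2.0, 0.0, 2.0, 0.0, 2.0, 0.0])
pattern_b = np.array([0.0, 2.0, 0.0, 2.0, 0.0, 2.0, 0.0, 2.0])

# A region-average analysis sees the same activation in both tasks.
mean_a = pattern_a.mean()
mean_b = pattern_b.mean()

# A pattern-level analysis compares the spatial profile across voxels.
pattern_r = np.corrcoef(pattern_a, pattern_b)[0, 1]

print("region means:", mean_a, mean_b)
print("pattern correlation:", pattern_r)
```

Here the two region means are equal, yet the voxelwise patterns are perfectly anticorrelated. As the text notes, even such pattern-level differences describe voxels rather than neurons.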

A “convergence zone” for the representations of the body and emotion

Since William James, the relationship between bodily states and emotional experience has attracted broad interest from psychologists, and more recently, neuroscientific data have suggested that cortical regions such as the AI may instantiate the convergence between these phenomena. Previous authors have speculated specifically that activity in the AI reflects the functional overlap of emotional and bodily experiences (Lamm & Singer, 2010). The current study provides the strongest support to date for this idea and, in doing so, speaks directly to an integrated view of affective and autonomic processing.

Acknowledgments

This work was supported by Autism Speaks Grant 4787 (to J.Z.) and National Institute on Drug Abuse Grant 1R01DA022541-01 (to K.O.).

Footnotes

Participants viewed an additional 6 videos while making judgments about the emotions of the person in the video; findings related to these videos are not discussed here, but can be found in Zaki et al. (2009).

Eye-gaze and eye-gaze direction are, at some level, social cues (Macrae, Hood, et al., 2002; Mason, Tatkow, & Macrae, 2005), which, in this case, might pertain to emotions expressed by the individuals in the video, and attending to eye-gaze can engage some neural structures commonly associated with social perception (Calder, Lawrence, et al., 2002). As such, comparing emotion rating with eye-gaze rating provided an especially conservative contrast that focused specifically on explicit attention to emotion, as opposed to incidental processing of social information (see Discussion) or attentional and motoric demands.

Due to excessive electromagnetic noise in the scanner environment, we were not able to provide real-time feedback to participants regarding their heartbeat, precluding us from calculating interoceptive accuracy (see Discussion). However, a modified version of that procedure permitted us to focus on the variable of primary interest, which was neural activity in response to interoceptive attention to one’s own bodily states.

References

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends Cogn Sci. 2007;11(8):327–332. doi: 10.1016/j.tics.2007.06.003.

- Barrett LF, Mesquita B, Ochsner KN, Gross JJ. The experience of emotion. Annu Rev Psychol. 2007;58:373–403. doi: 10.1146/annurev.psych.58.110405.085709.

- Barrett LF, Quigley KS, Bliss-Moreau E, Aronson KR. Interoceptive sensitivity and self-reports of emotional experience. J Pers Soc Psychol. 2004;87(5):684–697. doi: 10.1037/0022-3514.87.5.684.

- Brett M, Johnsrude IS, Owen AM. The problem of functional localization in the human brain. Nat Rev Neurosci. 2002;3(3):243–249. doi: 10.1038/nrn756.

- Calder AJ, Lawrence AD, Keane J, Scott SK, Owen AM, Christoffels I, et al. Reading the mind from eye gaze. Neuropsychologia. 2002;40(8):1129–1138. doi: 10.1016/s0028-3932(02)00008-8.

- Cannon W. The James-Lange theory of emotion: a critical examination and an alternative theory. American Journal of Psychology. 1927;39:106–124.

- Craig AD. How do you feel? Interoception: the sense of the physiological condition of the body. Nat Rev Neurosci. 2002;3(8):655–666. doi: 10.1038/nrn894.

- Craig AD. Interoception: the sense of the physiological condition of the body. Curr Opin Neurobiol. 2003;13(4):500–505. doi: 10.1016/s0959-4388(03)00090-4.

- Craig AD. How do you feel--now? The anterior insula and human awareness. Nat Rev Neurosci. 2009;10(1):59–70. doi: 10.1038/nrn2555.

- Critchley HD. The human cortex responds to an interoceptive challenge. Proc Natl Acad Sci U S A. 2004;101(17):6333–6334. doi: 10.1073/pnas.0401510101.

- Critchley HD. Psychophysiology of neural, cognitive and affective integration: fMRI and autonomic indicants. Int J Psychophysiol. 2009;73(2):88–94. doi: 10.1016/j.ijpsycho.2009.01.012.

- Critchley HD, Wiens S, Rotshtein P, Ohman A, Dolan RJ. Neural systems supporting interoceptive awareness. Nat Neurosci. 2004;7(2):189–195. doi: 10.1038/nn1176.

- Damasio A. The Feeling of What Happens: Body and Emotion in the Making of Consciousness. New York: Harcourt; 1999.

- Davis JI, Senghas A, Brandt F, Ochsner KN. The effects of BOTOX injections on emotional experience. Emotion. 2010;10(3):433–440. doi: 10.1037/a0018690.

- Fisher RA. Statistical Methods for Research Workers. Edinburgh: Oliver & Boyd; 1925.

- Gross JJ. The emerging field of emotion regulation: An integrative review. Review of General Psychology. 1998;2(3):271.

- Henson R. Forward inference using functional neuroimaging: dissociations versus associations. Trends Cogn Sci. 2006;10(2):64–69. doi: 10.1016/j.tics.2005.12.005.

- James W. What is an emotion? Mind. 1884;9:188–205.

- James W. The physical basis of emotion. Psychological Review. 1894;1:516–529. doi: 10.1037/0033-295x.101.2.205.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. Neuroimage. 2008;42(2):998–1031. doi: 10.1016/j.neuroimage.2008.03.059.

- Kurth F, Zilles K, Fox PT, Laird AR, Eickhoff SB. A link between the systems: functional differentiation and integration within the human insula revealed by meta-analysis. Brain Struct Funct. 2010;214(5–6):519–534. doi: 10.1007/s00429-010-0255-z.

- Lamm C, Singer T. The role of anterior insular cortex in social emotions. Brain Struct Funct. 2010;214(5–6):579–591. doi: 10.1007/s00429-010-0251-3.

- Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF. The brain basis of emotion: A meta-analytic review. Behavioral and Brain Sciences. 2011:1–86. doi: 10.1017/S0140525X11000446.

- Macrae CN, Hood BM, Milne AB, Rowe AC, Mason MF. Are you looking at me? Eye gaze and person perception. Psychol Sci. 2002;13(5):460–464. doi: 10.1111/1467-9280.00481.

- Mason MF, Tatkow EP, Macrae CN. The look of love: gaze shifts and person perception. Psychol Sci. 2005;16(3):236–239. doi: 10.1111/j.0956-7976.2005.00809.x.

- Mitchell JP. Activity in right temporo-parietal junction is not selective for theory-of-mind. Cereb Cortex. 2008;18(2):262–271. doi: 10.1093/cercor/bhm051.

- Mitchell JP. Inferences about mental states. Philos Trans R Soc Lond B Biol Sci. 2009;364(1521):1309–1316. doi: 10.1098/rstb.2008.0318.

- Mitchell JP, Banaji MR, Macrae CN. The link between social cognition and self-referential thought in the medial prefrontal cortex. J Cogn Neurosci. 2005;17(8):1306–1315. doi: 10.1162/0898929055002418.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25(3):653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Ochsner KN, Gross JJ. The cognitive control of emotion. Trends Cogn Sci. 2005;9(5):242–249. doi: 10.1016/j.tics.2005.03.010.

- Ochsner KN, Knierim K, Ludlow DH, Hanelin J, Ramachandran T, Glover G, et al. Reflecting upon feelings: an fMRI study of neural systems supporting the attribution of emotion to self and other. J Cogn Neurosci. 2004;16(10):1746–1772. doi: 10.1162/0898929042947829.

- Peyron R, Laurent B, Garcia-Larrea L. Functional imaging of brain responses to pain. A review and meta-analysis (2000). Neurophysiol Clin. 2000;30(5):263–288. doi: 10.1016/s0987-7053(00)00227-6.

- Pollatos O, Gramann K, Schandry R. Neural systems connecting interoceptive awareness and feelings. Hum Brain Mapp. 2007;28(1):9–18. doi: 10.1002/hbm.20258.

- Saxe R, Kanwisher N. People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind”. Neuroimage. 2003;19(4):1835–1842. doi: 10.1016/s1053-8119(03)00230-1.

- Schachter S, Singer JE. Cognitive, social, and physiological determinants of emotion state. Psychological Review. 1962;69:379–99. doi: 10.1037/h0046234.

- Scherer KR, Schorr A, Johnstone T, editors. Appraisal Processes in Emotion. Oxford University Press; 2001.

- Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. J Neurosci. 2005;25(47):11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Singer T, Critchley HD, Preuschoff K. A common role of insula in feelings, empathy and uncertainty. Trends Cogn Sci. 2009;13(8):334–340. doi: 10.1016/j.tics.2009.05.001.

- Singer T, Seymour B, O’Doherty J, Kaube H, Dolan RJ, Frith CD. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303(5661):1157–1162. doi: 10.1126/science.1093535.

- Slotnick SD, Moo LR, Segal JB, Hart J Jr. Distinct prefrontal cortex activity associated with item memory and source memory for visual shapes. Brain Res Cogn Brain Res. 2003;17(1):75–82. doi: 10.1016/s0926-6410(03)00082-x.

- Stepper S, Strack F. Proprioceptive determinants of emotional and nonemotional feelings. J Pers Soc Psychol. 1993;64:211–220.

- Valins S. Cognitive effects of false heart-rate feedback. J Pers Soc Psychol. 1966;4(4):400–408. doi: 10.1037/h0023791.

- Wager T, Feldman Barrett L. From affect to control: Functional specialization of the insula in motivation and regulation. PsychExtra. 2004.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G. Both of us disgusted in My insula: the common neural basis of seeing and feeling disgust. Neuron. 2003;40(3):655–664. doi: 10.1016/s0896-6273(03)00679-2.

- Wiens S. Interoception in emotional experience. Curr Opin Neurol. 2005;18(4):442–447. doi: 10.1097/01.wco.0000168079.92106.99.

- Williams LM, Brammer MJ, Skerrett D, Lagopolous J, Rennie C, Kozek K, et al. The neural correlates of orienting: an integration of fMRI and skin conductance orienting. Neuroreport. 2000;11(13):3011–3015. doi: 10.1097/00001756-200009110-00037.

- Winston JS, O’Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage. 2003;20(1):84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Zaki J, Bolger N, Ochsner K. It takes two: The interpersonal nature of empathic accuracy. Psychological Science. 2008;19(4):399–404. doi: 10.1111/j.1467-9280.2008.02099.x.

- Zaki J, Ochsner K. Reintegrating accuracy into the study of social cognition. Psychol Inquiry. 2011;22(3):159–182.

- Zaki J, Ochsner K. The neuroscience of empathy: Progress, pitfalls, and promise. Nat Neurosci. 2012;15(5):675–680. doi: 10.1038/nn.3085.

- Zaki J, Weber J, Bolger N, Ochsner K. The neural bases of empathic accuracy. Proc Natl Acad Sci U S A. 2009;106(27):11382–11387. doi: 10.1073/pnas.0902666106.
