Significance

Our senses provide rich information about the world. For example, we might learn that elephants are large and gray by seeing one in a zoo. Sensory experience is not always necessary, however. People born blind have knowledge about “visual” ideas (e.g., color and light). How is appearance information acquired in the absence of sensory access? A seemingly obvious idea is that blind individuals learn from sighted people’s verbal descriptions. We compared blind and sighted people’s knowledge of the appearance of common animals and found that individuals who are blind infer appearance from other properties (e.g., taxonomy and habitat). In the absence of direct sensory access, knowledge of appearance is acquired primarily through inference, rather than through memorization of verbally stipulated facts.

Keywords: blindness, vision, language, animals

Abstract

How does first-person sensory experience contribute to knowledge? Contrary to the suppositions of early empiricist philosophers, people who are born blind know about phenomena that cannot be perceived directly, such as color and light. Exactly what is learned and how remains an open question. We compared knowledge of animal appearance across congenitally blind (n = 20) and sighted individuals (two groups, n = 20 and n = 35) using a battery of tasks, including ordering (size and height), sorting (shape, skin texture, and color), odd-one-out (shape), and feature choice (texture). On all tested dimensions apart from color, sighted and blind individuals showed substantial albeit imperfect agreement, suggesting that linguistic communication and visual perception convey partially redundant appearance information. To test the hypothesis that blind individuals learn about appearance primarily by remembering sighted people’s descriptions of what they see (e.g., “elephants are gray”), we measured verbalizability of animal shape, texture, and color in the sighted. Contrary to the learn-from-description hypothesis, blind and sighted groups disagreed most about the appearance dimension that was easiest for sighted people to verbalize: color. Analysis of disagreement patterns across all tasks suggests that blind individuals infer physical features from non-appearance properties of animals such as folk taxonomy and habitat (e.g., bats are textured like mammals but shaped like birds). These findings suggest that in the absence of sensory access, structured appearance knowledge is acquired through inference from ontological kind.

We learn about the world around us from multiple, redundant sources. We might find out that elephants are gray and have long trunks by observing them in a zoo, hearing people talk about them, reading about them in books, or all of the above. For example, take J. K. Rowling’s description of an imaginary creature in Fantastic Beasts and Where to Find Them:

“The Clabbert is a tree-dwelling creature, in appearance something like a cross between a monkey and a frog. It originated in the southern states of America, though it has since been exported worldwide. The smooth and hairless skin is a mottled green, the hands and feet are webbed, and the arms and legs are long and supple, enabling the Clabbert to swing between branches with the agility of an orangutan. The head has short horns, and the wide mouth, which appears to be grinning, is full of razor-sharp teeth” (ref. 1, p. 13).

Understanding that a Clabbert looks like a cross between a monkey and a frog and is “mottled green” arguably makes use of previous sensory experiences of monkeys, frogs, and the color green.

One approach to disentangling different types of experiences is to compare the knowledge of people with different sensory histories, such as people who are blind from birth and those who are sighted. Empiricist philosophers engaged in thought experiments about blindness (2–4). Locke argued that no amount of explanation or motivation would enable a blind person to understand light and color. He reasoned that a blind person who learns that marigolds are yellow could describe them as “yellow” but mistakenly believe that yellow referred to a marigold’s texture (2).

Empirical studies suggest, however, that blind adults and children do have knowledge about aspects of the world that can only be experienced directly through sight. Young blind children distinguish between different acts of seeing, understanding that one can “look” without “seeing” (5). Blind and sighted adults generate similar features for visual words and judge the same visual verbs to be semantically similar (6, 7). For example, sighted and blind adults alike distinguish among acts of visual perception along dimensions of duration and intensity (e.g., staring is intense and prolonged, whereas peeking is brief) and among light emission events along dimensions of periodicity and intensity (e.g., flash is intense and periodic, whereas glow is low intensity and stable) (7).

Contrary to Locke’s supposition, blind individuals also have knowledge of colors. From early in development, blind children understand that colors are properties of physical but not mental objects and can only be perceived with the eyes (5). Blind adults know that warm colors, like orange and red, are similar to each other but different from cool colors like blue and green (8–10). One study found that while semantic similarity judgments for common objects are highly correlated across sighted and blind participants (r > 0.88), blind individuals are less likely than the sighted to take color into account (11). This is true even for blind individuals who correctly report object colors (e.g., that bananas are yellow). On the whole, blind individuals turn out to know many things about appearance and vision.

However, the nature, extent, and origins of appearance knowledge acquired without sensory access remain poorly understood. In the current study, we examined blind and sighted people’s knowledge of distal objects—specifically, animals—as a window into the role of first-person sensory experience in knowledge acquisition. Animals are an interesting case because they have multiple physical features such as size, texture, shape, and color. These features are characteristic of kind and stable over time. Which aspects of this information are learned through vision, and which can be acquired similarly without it? Does acquisition in the absence of vision differ across dimensions that are exclusively visual (e.g., color) and dimensions that are in principle accessible via other modalities but, practically speaking, typically perceived through vision (e.g., the size, height, shape, and skin texture of large animals)?

Studying knowledge of animal appearance in blindness further provides a window into how language conveys information. A logical possibility is that language provides individuals who are blind with information about visual phenomena. This could occur in a number of different ways, however (5, 12). One obvious possibility is that blind individuals learn by remembering verbal descriptions of appearance or stipulated facts such as “marigolds are yellow,” as Locke had in mind. Many languages, like English, have elaborate vocabularies for colors, light events, and verbs of visual perception, which could be used in such descriptions (e.g., refs. 13 and 14).

If indeed blind individuals learn about visual appearance primarily from descriptions of appearance, then a straightforward prediction is that blind and sighted people’s knowledge about appearance will differ most in cases of low verbalizability. Verbal descriptions of physical appearance are often vague, leaving gaps to be filled in by pragmatics, prior knowledge, and context (e.g., “tall” when it refers to a tree versus a man) (15–19). Physical dimensions of concrete objects (e.g., size or color) vary continuously, and direct sensory experience provides access to this analog information. Words, on the other hand, refer to discrete aspects of these dimensions (e.g., a cherry and a raspberry are both red, and lions and elephants are both large) (17, 20, 21). The learn-from-description hypothesis therefore predicts that when probed deeply, differences will emerge in what blind and sighted people know about appearance and that blind and sighted individuals will disagree most about things that are difficult to verbalize.

A different, although not mutually exclusive, possibility is that blind individuals infer physical appearance from non–appearance-related knowledge, such as that of ontological kinds, together with an understanding of how appearance is related to ontological category. Children use knowledge about object kind and how kind relates to physical properties to infer aspects of appearance they cannot observe directly (e.g., animal insides) (22–24). For example, children infer that two different-looking animals will have more similar insides than an animal and a similar-looking artifact, even before they know what the insides actually are (e.g., ref. 25). Conversely, children use what they know about appearance (e.g., shape) to infer object kind (i.e., two things that have the same shape are likely to be the same type of object) (26, 27). Furthermore, previous studies with sighted adults suggest that people use taxonomic information to infer animal properties they have not learned directly (e.g., to what disease an animal is most vulnerable) (e.g., ref. 28). Individuals who are blind might infer external properties of objects in an analogous manner; for instance, inferring that robins have feathers and a winged shape from knowing that they are birds. On this view, language serves as an indirect source of information about appearance by providing information about ontological kind. Unlike the learn-from-description hypothesis, the inference-from-kind hypothesis predicts that blind and sighted individuals are most likely to agree on those aspects of appearance that are predictable from kind, irrespective of verbalizability.

To test these hypotheses, we assessed knowledge of animal shape, skin texture, color, size, and height among individuals who are born blind and those who are sighted by presenting names of animals verbally, in written print (sighted), or in Braille (blind) (Fig. 1). Participants rated the overall familiarity of the animals tested to determine whether visual access affects the subjective feeling of familiarity. Participants were asked to rank animals by their size and height and performed a shape odd-one-out task (which animal is most different in shape?) and a texture forced choice task (does this animal have skin, scales, feathers, or fur?). Participants also sorted animals into groups according to their similarity in three different appearance dimensions, shape, texture, and color, and were asked to verbally label their sorting piles. Similarity matrices were generated based on the sorting data and compared across groups and dimensions, as well as to a matrix of biological taxonomic similarity. We then quantified the verbalizability of each dimension (texture, shape, and color) by calculating Simpson’s Diversity Index (SDI). SDI takes into account the number of words used (fewer = more verbalizable), as well as agreement across speakers, and has previously been used to compare verbalizability of different physical dimensions across languages (e.g., refs. 13, 14, and 29). Participants also performed a set of non–appearance-related sorting tasks (i.e., sorting animals by diet and habitat and sorting objects by typical location). We compared the responses of a blind group to two groups of sighted participants (one tested in the laboratory and one on Amazon Mechanical Turk).

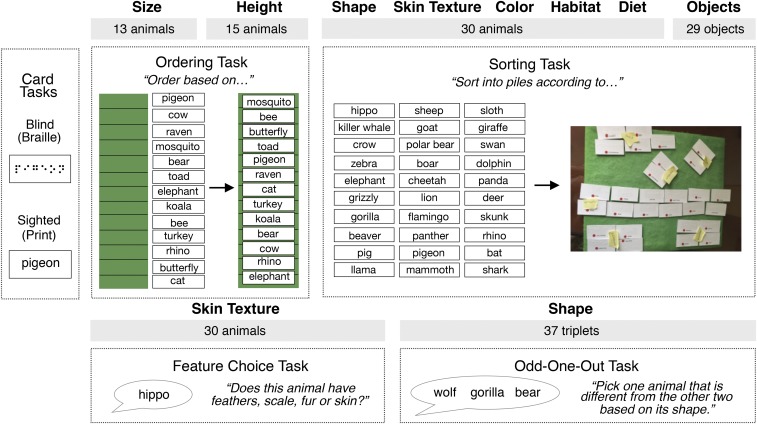

Fig. 1.

Experimental tasks used to probe knowledge about size (card ordering), height (card ordering), shape (card sorting and odd-one-out), skin texture (card sorting and feature choice), and color (card sorting). Card tasks required participants to order or sort cards based on a given dimension. Names of animals (or objects) were written on cardboard cards, in Braille for blind and in print for sighted participants.

Results

Familiarity Ratings.

An ordinal logistic mixed regression (random effects for subject and item) revealed that blind participants rated animals as overall less familiar than the sighted (Fig. 2A; sighted ratings, M = 3.26, SD = 0.39; blind ratings, M = 2.58, SD = 0.55; sighted compared with blind, odds ratio β = 10.18; Wald z statistic, z = 3.43, P = 0.0006). However, blind and sighted participants’ familiarity ratings were still highly correlated (Spearman’s correlation, rho = 0.82; permutation test, P < 0.0001).

Fig. 2.

(A) Familiarity ratings [1 (least familiar) to 4 (most familiar)] for all unique animals used across tasks (n = 94). (B) Verbalizability of sighted participants’ sorting group descriptions (color, texture, and shape). Simpson’s Diversity Index calculated for individual animals. Mean ± SEM. *P < 0.05, ***P < 0.001.

Animal Size and Height Ordering.

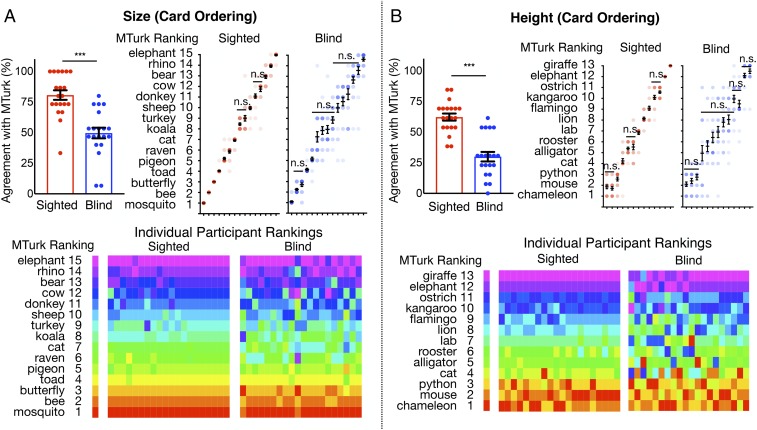

Blind participants and two groups of sighted participants (one in the laboratory and one on Amazon’s Mechanical Turk) ordered animals according to their size (13 animals) and height (15 animals), from smallest to largest and shortest to tallest (Fig. 1). When rankings were averaged across participants within each group, the resulting orderings were nearly identical across groups, and a subset of blind participants showed high agreement with the sighted (Fig. 3). However, on average, blind participants showed significantly lower agreement with sighted MTurk participants than in-laboratory sighted participants did (bar graphs in Fig. 3; for size, sighted, M = 80.67%, SD = 17.29%; blind, M = 49.67%, SD = 19.4%, Mann–Whitney U = 45, P < 0.0001; for height, sighted, M = 62.31%, SD = 12.94%; blind, M = 30%, SD = 17.45%, Mann–Whitney U = 29, P < 0.0001). In particular, relative to the sighted, blind participants made fewer distinctions between individual pairs of animals (Fig. 3).

Fig. 3.

Results for animal (A) size and (B) height (card ordering). Bar graphs show sighted and blind participants’ overall agreement with rankings obtained from MTurk. Plots on the Upper Right show average rankings provided for each animal. Pairwise comparisons between successive animals are significant (P < 0.05) unless noted otherwise (n.s.). Rainbow plots show individual participants’ rankings. Each column is a single participant, ordered from lowest to highest (left to right) in agreement with average MTurk ranking. Error bars are mean ± SEM. ***P < 0.001.

Sorting by Shape, Texture, and Color.

Correlations within dimension, within and across groups.

To analyze results from the card sorting task (30 animals), for each pair of animals, 1 was given if two animals were placed in the same pile and 0 otherwise. Individual subject binary matrices were then averaged across participants within group and sorting round to generate group similarity matrices.
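The matrix construction described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function names `sort_to_matrix` and `group_matrix`, the four-animal list, and the two participants' piles are all hypothetical.

```python
import numpy as np

def sort_to_matrix(piles, animals):
    """Binary matrix for one participant: 1 if two animals share a pile, else 0."""
    index = {name: i for i, name in enumerate(animals)}
    matrix = np.zeros((len(animals), len(animals)))
    for pile in piles:
        ids = [index[name] for name in pile]
        for i in ids:
            for j in ids:
                matrix[i, j] = 1.0
    return matrix

def group_matrix(all_piles, animals):
    """Average the binary matrices across participants within a group."""
    return np.mean([sort_to_matrix(p, animals) for p in all_piles], axis=0)

# Hypothetical toy data: two participants sorting four animals.
animals = ["dolphin", "shark", "pigeon", "crow"]
participant_a = [["dolphin", "shark"], ["pigeon", "crow"]]
participant_b = [["dolphin"], ["shark"], ["pigeon", "crow"]]

group = group_matrix([participant_a, participant_b], animals)
print(group[0, 1])  # dolphin-shark: grouped together by one of two participants
print(group[2, 3])  # pigeon-crow: grouped together by both participants
```

Each cell of the averaged matrix is the proportion of participants who placed that pair in the same pile, which is the quantity plotted as color in the similarity matrices.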

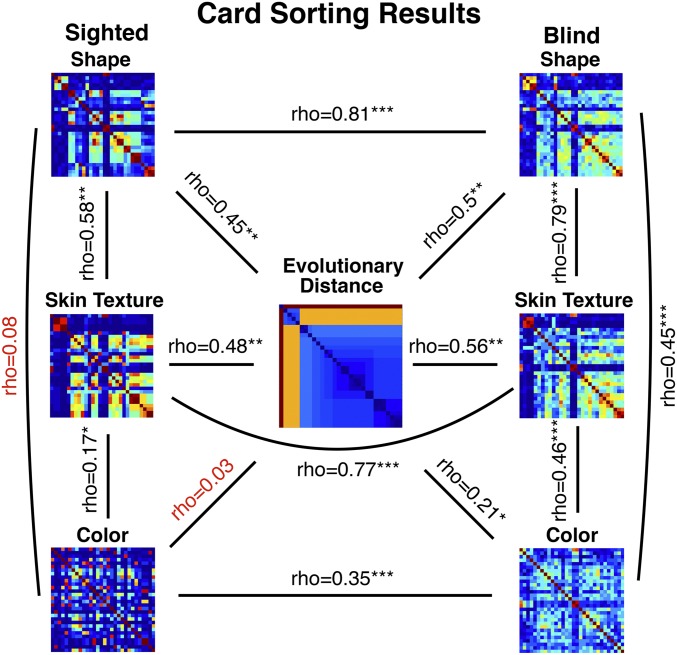

For shape, group similarity matrices were significantly correlated across blind and sighted groups (Fig. 4; Spearman’s rho = 0.81, P < 0.0001). Note that significances for all group matrix correlations were determined through a permutation test (Mantel), where a distribution of correlation coefficients is obtained by randomly scrambling the rows and columns of one matrix (n = 1,000). We next correlated individual subjects to the group matrices using a leave-one-subject-out procedure. All statistics reported below were performed on Fisher z-transformed coefficients. The individual sighted to sighted group correlations were slightly but not significantly higher than the blind individual to sighted group and blind individual to blind group correlations [Fig. 5, bar graphs; S-to-S correlation coefficients, M = 0.6, SD = 0.24; B-to-S correlation coefficients, M = 0.46, SD = 0.32; comparing B-to-S vs. S-to-S, t (38) = 1.55, P = 0.13; B-to-B correlation coefficients, M = 0.46, SD = 0.31; comparing B-to-B vs. S-to-S, t (38) = 1.6, P = 0.12].
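The permutation test and the leave-one-subject-out procedure described above might look roughly like the sketch below. Several details are assumptions rather than facts from the text: rows and columns are permuted jointly with the same random order (the usual Mantel convention), the P value is one-sided with a +1 correction, only the upper triangle of each matrix is correlated, and all function names and the random toy data are hypothetical.

```python
import numpy as np

def rank_average(x):
    """Ranks with ties assigned their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks

def spearman(a, b):
    """Spearman's rho as the Pearson correlation of the ranks."""
    return float(np.corrcoef(rank_average(a), rank_average(b))[0, 1])

def upper(m):
    """Upper triangle without the diagonal, so each pair is counted once."""
    return m[np.triu_indices_from(m, k=1)]

def mantel(a, b, n_perm=1000, seed=0):
    """Permutation (Mantel) test for the correlation of two similarity matrices."""
    rng = np.random.default_rng(seed)
    observed = spearman(upper(a), upper(b))
    count = 0
    for _ in range(n_perm):
        p = rng.permutation(a.shape[0])
        permuted = a[np.ix_(p, p)]  # same order applied to rows and columns
        if spearman(upper(permuted), upper(b)) >= observed:
            count += 1
    return observed, (count + 1) / (n_perm + 1)

def leave_one_out_z(subject_matrices):
    """Fisher z of each subject's correlation with the mean of the other subjects."""
    mats = np.asarray(subject_matrices, dtype=float)
    zs = []
    for i in range(len(mats)):
        others = np.delete(mats, i, axis=0).mean(axis=0)
        zs.append(np.arctanh(spearman(upper(mats[i]), upper(others))))
    return np.array(zs)

# Hypothetical toy data: a random symmetric 8 x 8 similarity matrix.
rng = np.random.default_rng(1)
base = rng.random((8, 8))
base = (base + base.T) / 2
rho, p_value = mantel(base, base)

subjects = []
for _ in range(4):
    noise = rng.normal(0, 0.1, (8, 8))
    subjects.append(base + (noise + noise.T) / 2)
z_scores = leave_one_out_z(subjects)
```

For across-group comparisons (e.g., blind individual to sighted group), no subject needs to be left out, because the individual does not contribute to the other group's mean matrix.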

Fig. 4.

Correlations of sorting results (shape, skin texture, and color) with taxonomy (evolutionary distance), as well as within-group and across-group correlations. All matrices are ordered based on the optimal order for evolutionary distance (see SI Appendix, Fig. S2, for enlarged version of the taxonomy matrix, with a full legend). Red indicates nonsignificant correlations (Spearman’s rho). *P < 0.05, **P < 0.01, ***P < 0.001.

Fig. 5.

Results from card sorting task for animal shape, skin texture, and color. (Upper Left) Sorting similarity matrices averaged across participants within each group and sorting round (color indicates percentage of participants who grouped a given pair of animals into the same sorting pile). Within each sorting dimension, matrices are ordered according to the optimal order for sighted participants’ sorting results. (Right) Legends for similarity matrices, with groups of animals that correspond to number labels within matrices. Bar graphs show correlation of individual participant to group similarity matrices, within group (sighted individual to sighted group and blind individual to blind group) and across group (blind individual to sighted group). Mean ± SEM. **P < 0.01, ***P < 0.001.

Group-wise skin texture matrices were also similar across blind and sighted groups (Fig. 4; rho = 0.77, P < 0.0001). However, unlike with shape, individual blind participants’ sortings were significantly different from those of sighted participants and more variable within the blind group [Fig. 5, bar graphs; S-to-S correlation coefficients, M = 0.74, SD = 0.28; B-to-S, M = 0.44, SD = 0.14; comparing B-to-S vs. S-to-S, t (37) = 4.15, P = 0.0002; B-to-B correlation coefficients, M = 0.51, SD = 0.19; comparing B-to-B vs. S-to-S, t (37) = 3.11, P = 0.004].

Group differences for color sorting were even more pronounced than for skin texture. The correlation between group matrices was smaller, although still significant (rho = 0.35, P < 0.0001), and blind participants’ answers were significantly different from those of the sighted as well as more variable [Fig. 5, bar graphs; S-to-S correlation coefficients, M = 0.53, SD = 0.09; B-to-S, M = 0.12, SD = 0.12; comparing B-to-S vs. S-to-S, t (38) = 12.11, P < 0.0001; B-to-B correlation coefficients, M = 0.18, SD = 0.09; comparing B-to-B vs. S-to-S, t (38) = 12.7, P < 0.0001].

A one-way ANOVA confirmed that the degree to which blind and sighted groups disagreed differed across dimensions [comparing B-to-S across shape, texture, color, F(2,56) = 4.73, P = 0.01]. Pairwise comparisons further confirmed that group differences were more pronounced for color than for shape or texture [B-to-S for shape vs. texture, t (37) = 0.21, P = 0.8; shape vs. color, t (38) = 4.39, P < 0.0001; texture vs. color, t (37) = 7.5, P < 0.0001].

Visual inspection of the group matrices suggests that for shape and skin texture, blind and sighted participants made similar groupings based on physical features that covary with taxonomy and habitat (Fig. 5). For example, both groups separated aquatic animals (dolphin, shark, and killer whale) from birds (pigeon, crow, swan, and flamingo) and four-legged land mammals. In contrast, small clusters within the broad taxonomic class of four-legged land animals differed across groups. For instance, when sorting by shape, only sighted participants grouped gorilla, grizzly bear, polar bear, panda, sloth, beaver, and skunk as being distinct from other four-legged animals such as pigs, boars, and sheep. Analogously, when sorting by skin texture, only sighted participants grouped hippo, rhino, elephant, and pig into a distinct subcategory.

For color, sighted participants formed groups for white (polar bear, sheep, and swan), pink (pig and flamingo), black (gorilla, panther, crow, and bat), black and white (killer whale, panda, skunk, and zebra), yellow (giraffe, cheetah, and lion), brown (e.g., deer and grizzly bear), and gray (pigeon, dolphin, shark, elephant, rhino, and hippo) animals with high consistency. Blind participants’ color sortings, however, did not reveal any such groups.

Correlations with taxonomy (evolutionary distance).

There was an increase in the across- relative to within-dimension correlations in the blind relative to the sighted group (SI Appendix, Fig. S1). For the sighted group, all within-dimension correlations were significantly higher than across-dimension correlations (e.g., shape sighted individual to sighted group higher than shape to texture and shape to color). For the blind group, this effect was significant for texture, in the predicted direction but not significant for shape, and not present for color.

For shape, both blind and sighted group similarity matrices were significantly correlated with evolutionary distance (Fig. 4; sighted, rho = 0.45, P = 0.001; blind, rho = 0.5, P = 0.002). Correlations between individual participants’ shape sorting matrices and taxonomy likewise showed no group difference [SI Appendix, Fig. S3; t (38) = 0.45, P = 0.7]. Similarly, for skin texture, both blind and sighted group similarity matrices were significantly correlated with evolutionary distance (Fig. 4; sighted, rho = 0.48, P = 0.001; blind, rho = 0.56, P = 0.001). Individual participants’ correlations with taxonomy again did not differ across groups [SI Appendix, Fig. S3; t (37) = 0.21, P = 0.8]. For color, only the blind group’s color similarity matrix was correlated with evolutionary distance (Fig. 4; sighted, rho = 0.03, P = 0.75; blind, rho = 0.21, P = 0.02). Unlike with shape and skin texture, the correlations between individual participants’ matrices and taxonomy differed significantly across groups [SI Appendix, Fig. S3; t (38) = 2.94, P = 0.006].

Verbal labels of sorting groups for shape, skin texture, and color sorting.

Verbalizability of sighted participants’ descriptions for sorting piles (Simpson’s Diversity Index; see Methods for detail) was lowest for shape, followed by skin texture, then color (Fig. 2B; shape, M = 0.11, SD = 0.03; skin texture, M = 0.17, SD = 0.12; color, M = 0.47, SD = 0.22; color vs. texture, Wilcoxon signed-rank test, P < 0.0001; texture vs. shape, P = 0.02). Note that the average number of sorting groups created by sighted participants was comparable across dimensions: shape = 6.8, texture = 5.1, and color = 7. An analysis of blind participants’ verbal descriptions can be found in SI Appendix, Fig. S4.
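As an illustration of the verbalizability measure, the sketch below computes Simpson's Diversity Index for the labels given to a single animal. The exact formula is given in the Methods, which are not reproduced here, so the form used (the probability that two labels drawn without replacement are identical) is an assumption based on the common definition of the index; the function name and the example labels are hypothetical.

```python
from collections import Counter

def simpson_diversity_index(labels):
    """Sum of n(n - 1) over label types, divided by N(N - 1).

    Higher values mean that speakers converge on the same label
    (assumed here to correspond to higher verbalizability).
    """
    counts = Counter(labels)
    n_total = sum(counts.values())
    if n_total < 2:
        return 0.0  # agreement is undefined with fewer than two labels
    agreeing_pairs = sum(n * (n - 1) for n in counts.values())
    return agreeing_pairs / (n_total * (n_total - 1))

# Hypothetical labels from five sighted participants for the pile containing "elephant".
color_labels = ["gray", "gray", "gray", "gray", "grey-blue"]
shape_labels = ["four legs", "large", "bulky body", "big with trunk", "large"]

print(simpson_diversity_index(color_labels))  # 12 / 20 = 0.6
print(simpson_diversity_index(shape_labels))  # 2 / 20 = 0.1
```

In this toy case the color labels are short and shared across participants, giving a higher index than the varied shape labels, which mirrors the direction of the reported group means.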

Performance on non-appearance sorting tasks.

Correlations between group matrices were high and significant across all non-appearance feature sorting tasks (SI Appendix, Fig. S5; objects, rho = 0.8, P < 0.0001; habitat, rho = 0.83, P < 0.0001; diet, rho = 0.78, P < 0.0001). For sorting objects based on where they are stored, blind participants’ answers did not differ from those of the sighted group, although they were more variable [S-to-S correlation coefficients, M = 0.95, SD = 0.11; B-to-S, M = 0.88, SD = 0.19; S-to-S vs. B-to-S, t (37) = 1.36, P = 0.18; B-to-B, M = 0.78, SD = 0.14; S-to-S vs. B-to-B, t (37) = 4.11, P = 0.0002]. For sorting animals by where they live, there were no within- or across-group differences [S-to-S correlation coefficients, M = 0.59, SD = 0.11; B-to-S, M = 0.53, SD = 0.16; S-to-S vs. B-to-S, t (33) = 1.39, P = 0.17; B-to-B, M = 0.53, SD = 0.15; S-to-S vs. B-to-B, t (33) = 1.39, P = 0.17]. Somewhat surprisingly, blind and sighted groups differed in how they sorted animals by diet [S-to-S correlation coefficients, M = 0.57, SD = 0.16; B-to-S, M = 0.36, SD = 0.26; S-to-S vs. B-to-S, t (38) = 3.04, P = 0.004; B-to-B, M = 0.34, SD = 0.18; S-to-S vs. B-to-B, t (38) = 4.33, P = 0.0001].

Shape Odd-One-Out Triplet Task.

For the shape odd-one-out task, blind and sighted participants’ responses were compared with responses of sighted MTurk participants (i.e., for each triplet, the animal chosen by a plurality of MTurk participants as the odd-one-out was considered the sighted agreed upon answer). Blind participants showed less agreement than the sighted with sighted MTurk participants (Fig. 6A; percentage of correct trials, sighted, M = 74.44%, SD = 9.22%; blind, M = 55.12%, SD = 10.65%). A mixed logistic model (subject and trial as random effects and responses coded as consistent or inconsistent with expected answer) showed a significant effect of group (sighted compared with blind, odds ratio, β = 2.77; z = 6.51, P < 0.0001). On some of the trials where taxonomy was pitted against shape, blind and sighted groups diverged in their answers (e.g., for wolf/gorilla/bear, 70% of sighted participants picked wolf, while 63% of blind participants chose gorilla, and for parrot/giraffe/ostrich, 65% of sighted said parrot, and 63% of blind participants answered giraffe).

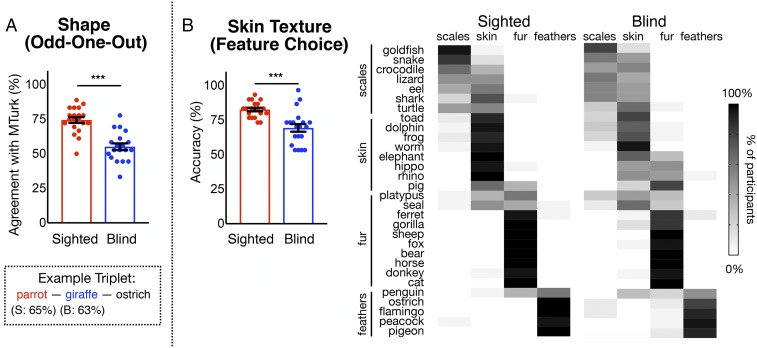

Fig. 6.

(A) Results for animal shape (odd-one-out triplets task). Bar graphs show sighted and blind participants’ overall agreement with MTurk participants. Box shows one example triplet, with the most common sighted group answer in red and most common blind group answer in blue. (B) Results for skin texture (feature choice task). Bar graphs show sighted and blind participants’ overall agreement with MTurk participants. Grayscale figures show percentages of participants who chose scales, feathers, skin, or fur for all items. Error bars are mean ± SEM. ***P < 0.001.

Skin Texture: Feature Choice Task.

For the skin texture feature choice task, answers were scored according to whether they agreed with the following criteria: for reptiles and fish, scales; feathers for birds; fur for mammals whose hides are covered in fur or hair (e.g., gorilla, sheep, horse, and cat); and skin for all other mammals (e.g., dolphin, elephant, and pig) (see Methods for complete list). While there was substantial agreement overall in blind and sighted people’s responses, there were also clear differences between groups (Fig. 6B; sighted agreement to criteria, M = 82.67%, SD = 5.68%; blind agreement, M = 69.33%, SD = 12.36%; result of mixed logistic regression, sighted compared with blind, odds ratio, β = 2.77; z = 6.51, P < 0.0001).

Inspection of the data suggests that blind and sighted participants were similarly likely to say that birds have feathers (e.g., 100% of the sighted and 90% of blind participants said pigeons have feathers; Fig. 6B). However, blind participants differed from the sighted in classifying mammals according to whether they have skin or fur. A sizeable proportion of blind participants answered that elephant (30%), rhino (45%), and hippo (50%) have fur, whereas almost all sighted participants (>95%) responded that they had skin. Interestingly, blind participants reported that sharks have scales (55%), while sighted reported that they have skin (75%). In fact, sharks have fine scales called placoid scales, which are not easily visible with the naked eye.

Discussion

Language Is an Efficient but Incomplete Source of Information About Appearance: Partially Shared Knowledge of Animal Appearance Among Blind and Sighted.

The present results show that blind and sighted individuals living in the same culture share a substantial body of information about the shape, skin texture, height, and size of common animals, and this knowledge can be queried with verbal stimuli. There was considerable agreement between sighted and blind groups, even in cases where the majority of blind individuals are unlikely to have had direct sensory access. For example, 15 out of 20 blind and 19 out of 20 sighted subjects judged elephants to be bigger than rhinos. Participants in both groups reported that birds have feathers and that most land mammals have fur. In the card sorting task, 66% of the variance for shape and 59% of the variance for texture was common to sighted and blind groups. For shape, texture, height, and size, blind participants tended to agree among themselves and with sighted participants on distinctions between groups of animals, and a subset (>30%) of blind participants made judgments that were indistinguishable from those of the sighted.
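The shared-variance figures for the card sorting task appear to follow from squaring the group-level Spearman correlations reported in the Results (rho = 0.81 for shape and rho = 0.77 for skin texture). This reading is an assumption, since the computation is not spelled out in the text:

```latex
\rho_{\text{shape}}^{2} = 0.81^{2} \approx 0.66, \qquad
\rho_{\text{texture}}^{2} = 0.77^{2} \approx 0.59
```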

Despite substantial shared knowledge, there were differences across groups as well. First, while blind people agreed with the sighted about which animals were most familiar, overall, blind individuals rated animals as less familiar. (Note that since we only measured familiarity for animals, we cannot rule out the possibility that blind and sighted individuals were merely using different criteria.) Disagreement about appearance across groups was most pronounced for color and apparent even for dimensions such as size, height, shape, and texture, which are in principle available via touch. For size, height, shape, and texture, there was greater disagreement regarding the appearance of individual animals than subgroups of animals. For example, when sorting by height and size, blind and sighted participants agreed that elephants and rhinos are larger than bears and cows and that elephants and giraffes are taller than kangaroos and lions. However, only sighted participants also systematically judged bears to be larger than cows and giraffes to be taller than elephants. Similar effects emerged across all of the tested dimensions.

On the whole, when it comes to appearance, blind and sighted people share knowledge of animal groupings (e.g., fish, birds, reptiles and amphibians, land mammals, and sea mammals), knowledge of which animals belong to which groups, and knowledge of which physical features are most likely to be observed in each group (e.g., birds have feathers). There is also some shared knowledge about the appearance of individual animals, which is likely acquired from verbal descriptions (e.g., that elephants are one of the larger land mammals). However, language and vision are not equivalent sources of information about what things look like (17, 21, 30, 31). Whether because descriptions do not contain the relevant information or because representations of appearance are not learned from description as easily as they are from perception, representations of appearance developed with and without vision are different.

The current results inform an ongoing discussion about how the source of knowledge affects the nature of the representations formed. A number of recent neuroimaging studies have suggested that blind and sighted people have similar neural representations of object appearance in the ventral occipitotemporal cortex and elsewhere in the brain (32–36). This neuroimaging work has focused on neural differences between broad categories of objects, such as faces and places. The present results suggest that when it comes to distinctions in the appearance of individual entities within the animal category, representations acquired through language versus visual perception are not indistinguishable. One possibility is that blind and sighted individuals share broad category distinctions but differ on the fine-grained, within-category distinctions. Alternatively, it has recently been suggested that representations of appearance (and shape in particular) are more different across sighted and blind groups for living things than other categories such as artifacts because in the case of living things, shape cannot be inferred from motor interactions (37). Whatever the explanation, blind and sighted people differ in the details of their representations of the surface properties of distal objects.

There is also evidence that relative to the sighted, blind individuals develop more elaborated mechanisms for recognition and categorization through audition and touch, showing superior recognition of voices and Braille-like patterns (38, 39). Together, these results suggest that humans develop representations of surface properties of objects that are adapted to their own sensory experience.

Learning About Appearance Through Inference from Ontological Kind.

While some of the differences in the knowledge of sighted and blind groups are likely related to the difficulty of describing complex continuous dimensions in words, the present results suggest that description and verbally stipulated facts are not the primary source of information about appearance for people who are blind. When looking across dimensions, blind and sighted participants disagreed most on the dimension that was easiest to verbalize: color. Sighted subjects’ descriptions of their color groups were succinct, typically one or two words; precisely captured how members of a group were similar to each other (e.g., black and white for panda, killer whale, zebra, and skunk); and were common across participants (groups were labeled as white, brown, pink/red, gray, black, black and white, and yellow by 90% of sighted participants). Thus, the aspects of color knowledge that were tested in the current study are highly verbalizable. However, color was the only dimension for which no single blind participant was indistinguishable from the sighted group, and the correlation between sighted and blind groups was lower than the correlation across dimensions within the blind group. In other words, despite being verbalizable, animal colors are not systematically acquired by people who are blind.

By contrast, shape was the least verbalizable of the tested dimensions. When sighted participants were asked to label their shape-based sorting piles, labels were long, inefficient, and variable across participants and did not closely mirror how animals were sorted. Descriptions frequently contained features that applied to multiple groups of animals and therefore failed to capture the similarities unique to the members of a specific group (e.g., four legs or large). Thus, in the current study, shape was less verbalizable than color. Consistent with these results, previous findings suggest that shape is less verbalizable than color across languages (13). However, blind and sighted participants agreed most about shape.

Why are blind individuals more likely to agree with the sighted about animal shape than color? It is possible that despite being easier to describe, animal color is talked about less. We are not aware of any evidence for this idea, however. Another explanation can be found in the writings of Locke (2). He argued that a blind person could learn the shape but not the color of a novel statue from description because blind people have previously experienced shape but not color nonverbally (i.e., through touch). According to this idea, when a sighted person learns that a polar bear is white, they can apply the word to their memory of white, whereas blind individuals only have access to the association between the words white and polar bear. By contrast, hearing that something is round is equivalent for sighted and blind individuals.

Locke’s inaccessibility explanation cannot be fully ruled out based on the present data. However, prior studies have shown that blind individuals can and do learn about colors as well as other purely visual phenomena (e.g., sparkle) (7–10). As noted in the introduction, Landau and Gleitman (5) showed that Kelli, a blind 4-y-old, knew that colors are physical properties perceptible only with the eyes. Therefore, it is not the case that blind individuals do not learn anything about color. Rather, it seems that the colors of objects, and animal colors in particular, are less likely to be shared across blind and sighted individuals. These findings do not support the idea that blind individuals learn about what things look like primarily from sighted people’s descriptions of appearance (i.e., the learn-from-description hypothesis).

Instead, the data suggest that blind individuals use folk taxonomy and other knowledge about animals (e.g., their habitat) to make inferences about what animals look like. Such inference works better for shape and texture than for color because color is less inferentially related to other properties of animals such as their taxonomic category and behavior. The inference-from-kind hypothesis is supported by the disagreement patterns that arose between the sighted and blind groups across the dimensions tested.

For blind but not sighted participants, sorting based on color was correlated with taxonomy. There is no correlation in the sighted presumably because sighted people learn the colors of common animals from vision, and color and folk taxonomy are not in fact correlated (polar bears, swans, and sheep are all white). As noted above, even for shape, size, and texture, there was greater agreement between blind and sighted participants about the appearance of broad folk taxonomic groups (e.g., birds and aquatic animals) than about animals within these groups (e.g., among mammals), with blind participants sometimes collapsing perceptual subgroups made by the sighted within a taxonomic class. For example, when sorting by shape, blind participants tended to group all land mammals together, while sighted participants separated gorilla, polar bear, grizzly, panda, and sloth from the other quadrupeds (e.g., deer, rhino, and lion). Blind people were no more likely than the sighted, however, to group birds with mammals or to put sea-dwelling animals with land animals. Similarly, during texture sorting, blind participants grouped all land mammals together, whereas the sighted made distinctions within them, such as between short-haired animals (e.g., deer, zebra, and giraffe) and those with fur (e.g., grizzly, gorilla, and mammoth). Analogously, in the texture feature choice task, sighted participants distinguished between mammals whose skin is covered with fur (e.g., foxes and bears) and those that are hairless (e.g., hippos and elephants). By contrast, a sizable subset (>30%) of blind participants responded that hippos, elephants, rhinos, and pigs have fur. Blind participants were no more likely than the sighted to say that a bird had fur or skin, however. Blind participants were more likely than the sighted to report that sharks have scales (55% vs. 20%), which is what one would infer from the fact that they are fish. By contrast, the majority of sighted participants reported that sharks have skin (sharks have fine scales that are difficult to discern through visual perception). Further, blind participants’ judgments were more correlated across dimensions than those of the sighted, supporting the idea of a common source of information.

Perhaps the best evidence for the idea that blind individuals relied on taxonomy to infer appearance comes from the odd-one-out shape task. When animal kind was pitted against appearance, sighted participants went with appearance, whereas the blind group tended to go with taxonomy (e.g., for parrot-ostrich-giraffe, a majority of sighted participants picked parrot as the odd-one-out, whereas blind participants chose giraffe; for wolf-gorilla-bear, sighted picked wolf, and blind picked gorilla). Together, this evidence suggests that blind individuals use their knowledge of folk taxonomic distinctions, as well as other semantic information about animals (e.g., whether they live on land or in water), to make inferences about their appearance.

Humans across cultures organize animals into taxonomies and use these to make inferences about their physical and nonphysical properties. Animals belonging to the same taxa are judged to share characteristics such as how they behave, grow, and catch diseases (e.g., refs. 40 and 41). There is also evidence that differences in both amount and type of expertise about animals and plants influence the degree to which people rely on taxonomy in making semantic judgments about them (42, 43). As noted in the introduction, children and adults also use knowledge about what category an animal belongs to, to make inferences about its unobservable properties, such as its insides (e.g., refs. 25 and 44). Analogously, individuals who are blind infer the surface appearance of an animal from an understanding of the category to which the animal belongs and of the way that category predicts surface properties (e.g., most land mammals walk and have four legs). Interestingly, although blind participants inferred color from taxonomy more than the sighted, they were less likely to do so than for shape and texture, suggesting that like the sighted, blind individuals are sensitive to the fact that color is less predictive of kind than shape (26, 45).

In all, the results favor the inference-from-kind hypothesis over the learn-from-description hypothesis. The primary way in which language transmits appearance information appears to be indirect. Language conveys information about kind and about how kind is related to surface features (e.g., that ostriches are birds, and birds have wings and feathers). The rest is left to inferential mechanisms shared by sighted and blind people alike (22, 44, 46, 47).

How Different Is the Knowledge of People Who Are Blind and Sighted? Some Caveats.

The present study focused on knowledge of appearance and, in doing so, only scratched the surface of people’s knowledge about animals. For example, although the present data suggest that blind and sighted people share folk taxonomic knowledge, the experiments were not designed to test it. In future work it would be interesting to ask whether there are any differences in folk taxonomies across people who live in the same culture but have different access to visual and linguistic sources. In some cases, we might expect vision to provide useful information about taxonomy. For example, watching lions hunt might tell us that they are related to other carnivores, and their shape provides clues about their relationship to other cats. In other cases, vision might be misleading, causing people to believe that evolutionarily distinct animals are similar (e.g., dolphins and sharks). Such differences in access may lead to intriguing taxonomic disagreements.

There is also other knowledge about animals that is almost certainly common to people who are sighted and blind that was not measured in the current study. Previous research has shown that even young children reason about animals in causal ways that go well beyond what they look like. For example, young children know that animals breathe, have internal invisible parts (e.g., hearts), have babies, and can hear and see things (e.g., refs. 48 and 49). Older children develop more sophisticated concepts about life (e.g., that things can be alive but inanimate), death, and species kind (49). Blind and sighted adults almost certainly share such knowledge, which we did not test.

It is also possible that appearance-related knowledge is less shared for animals than for other categories of objects among blind and sighted people. It has been previously noted that people living in industrialized societies have rather impoverished knowledge of animals relative to those in societies in closer contact with nature (41, 50, 51). For example, Itzaj Maya people have different and richer representations of plant and animal life than American undergraduates living in Michigan (41). Blind and sighted individuals living in industrialized societies might therefore be more likely to share appearance-related knowledge of more ecologically relevant objects. Consistent with this idea, Landau and Gleitman (5) reported that the blind 4-y-old Kelli knew the colors of some familiar objects (e.g., that her dog was golden, milk was white, and grass was green). Blind adults may also be more likely to agree with the sighted about the colors of common fruits and vegetables than the colors of common animals (11). Nevertheless, although the degree of shared object color knowledge may increase with ecological relevance and sheer frequency, we hypothesize that the general principles observed in the current study will still apply: individuals who are blind will be most likely to agree with the sighted about those aspects of appearance that are easiest to predict based on intuitive theories of how appearance works.

Methods

Participants.

Twenty congenitally blind (16F/4M) and 20 control sighted (13F/7M) participants took part in the study (SI Appendix, Table S1). Control participants were matched to blind participants on age (blind group, M = 29.75 y of age, SD = 9.12; sighted group, M = 29.95, SD = 2.12) and years of education (blind group, M = 15.5, SD = 2.12; sighted group, M = 15.35, SD = 2.38). One additional blind participant (participant 21) was dropped because further screening revealed that they were not a native English speaker. All blind participants were tested at the 2016 National Federation of the Blind Convention in Orlando, Florida. Most participants completed all of the experimental tasks. However, the following tasks were only completed by a subset of blind participants: object sorting (n = 19), habitat sorting (n = 15), skin texture sorting (n = 19), and shape odd-one-out (n = 19). To assess general cognitive abilities, the Woodcock Johnson III (WJIII) Tests of Achievement (subtests Word ID, Word Attack, Synonyms, Antonyms, and Analogies) and letter span test (forward and backward) were administered. All participants scored within two SDs of the mean of the current group on the WJIII tests. Additional participants were recruited from Amazon’s Mechanical Turk (MTurk) for height card ordering (n = 10), size card ordering (n = 10), and shape odd-one-out tasks (n = 15). Experimental procedures were approved by the Johns Hopkins Homewood Institutional Review Board. All participants provided informed consent and were compensated for their participation.

Materials and Methods.

Blind and sighted participants were asked about the size (card ordering), height (card ordering), shape (card sorting and odd-one-out tasks), skin texture (card sorting and feature choice tasks), and color (card sorting) of animals (Fig. 1). As a practice condition for card sorting, participants sorted household objects based on where they are stored. To probe knowledge about non-appearance features of animals, we additionally asked about the habitat and diet of animals (card sorting). Participants also rated their familiarity with all animals presented during the task (94 animals) on a scale of 1–4. For half of the participants (both blind and sighted), sorting tasks were administered first, followed by all other tasks (and vice versa for the other half of participants). Within sorting tasks, the order of sorting rounds was kept the same, as was the order of tasks within nonsorting tasks (familiarity ratings, size, height, shape odd-one-out, then texture feature choice).

Card Ordering Task (Size and Height).

Participants were asked to order a list of either 13 (size) or 15 (height) animals. Multiple lists of animals were first piloted on MTurk (in the online version, participants typed the order of animals into a list of slots). Various lists were tested to ensure that the ordering was neither too easy nor too difficult. The final orderings (Fig. 3) were based on the average rankings for each item across MTurk participants (e.g., for mosquito, most participants gave a rank of 1, but a few also responded 2, resulting in a ranking average of 1.3). Individual MTurk participants’ agreement with the group ranking was 89.2% for size (SD = 12.7%) and 66% for height (SD = 15.8%), where agreement was calculated as the percentage of animals that were given the same rank.
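The averaging and agreement computation described above can be sketched as follows. This is a minimal illustration, not the authors' script: the function names and the toy pilot data are hypothetical, and turning average ranks into an integer group ordering by simple sorting is an assumption.

```python
from statistics import mean

def average_ranks(rankings):
    """rankings: list of dicts mapping animal -> rank (1 = smallest)."""
    animals = rankings[0].keys()
    return {a: mean(r[a] for r in rankings) for a in animals}

def group_order(rankings):
    """Sort animals by average rank and assign integer group ranks (assumed step)."""
    avg = average_ranks(rankings)
    ordered = sorted(avg, key=avg.get)
    return {a: i + 1 for i, a in enumerate(ordered)}

def agreement(individual, group):
    """Percentage of animals given the same rank as in the group ordering."""
    same = sum(1 for a in group if individual[a] == group[a])
    return 100.0 * same / len(group)

# Hypothetical pilot data, not taken from the study.
pilot = [
    {"mosquito": 1, "mouse": 2, "cat": 3, "horse": 4},
    {"mosquito": 1, "mouse": 2, "cat": 3, "horse": 4},
    {"mosquito": 2, "mouse": 1, "cat": 3, "horse": 4},
]
group = group_order(pilot)
scores = [agreement(p, group) for p in pilot]
```

In this toy set, mosquito receives ranks 1, 1, and 2, so its average rank rounds to 1.3, mirroring the example in the text.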

Participants were instructed to order the animals first based on their size (“the overall amount of space that an animal takes up in the world”) and then based on their height (“the distance from the ground to the highest point of the animal when it is in its typical posture…for instance, a snake would typically have its whole body flat on the ground, so you are thinking about the distance from the ground to the top of its head when it is lying down, whereas a crocodile usually lies flat on its stomach, and for other four-legged animals like dogs, think about their height when they are standing on all fours”).

For blind participants, animal or object names were written in Braille (contracted, Grade II) on small cardboard cards (around 3 × 1 in. in size), while names were written in print for sighted participants. At the start of the task, the experimenter placed all 13 (size)/15 (height) cards vertically in one of two predetermined orders (counterbalanced) and instructed the participants to read the animal cards out loud, starting from the top. Participants then started ordering the cards by picking the animal that they thought was the smallest/shortest. The experimenter then placed the chosen animal on the first slot of a long felt board placed next to the vertically arranged animal cards (Fig. 1) and asked the participant to continue (i.e., pick the next smallest/shortest animal and so on). At any time, participants could reread and change the ordering of the cards on the board. Once all animal cards had been placed on the board, the experimenter read the final list out loud to make sure participants were satisfied with the ordering. In-laboratory sighted and blind participants’ rankings were compared against the average MTurk rankings.

Card Sorting Task (Shape, Skin Texture, Color, Habitat, Diet, and Objects).

In the card sorting task, participants sorted the same 30 animal cards into groups according to various sorting rules. The animals were selected so as to maximize differential sorting across rules. There were five different animal sorting rules: three related to perceptible physical features (shape, skin texture, and color) and the remaining two to nonphysical features (habitat and diet). Participants also sorted 29 objects according to where they are stored around the house, as a practice round.

Participants were informed that they would sort the same cards multiple times, each time according to a different rule, with no restrictions on the number of groups that can be formed. At the start of each sorting, the experimenter handed the cards to the participants one at a time, reading them out loud. Cards had Velcro taped to the back such that blind participants could easily place and remove them from a felt board which served as their sorting surface. Participants were free to reread and adjust their groups at any time. At the end of each sorting, participants were asked to provide a verbal label to describe each group (“How would you describe this group?”). To ensure that participants were satisfied with their groupings, the experimenter read the label provided by the participant, along with the members of the group, out loud (e.g., “In this group that you labeled ‘Kitchen’, you have spoon, bowl, fork, and spatula”).

The object sorting task was administered first and served as a practice round to get participants accustomed to the task. Before each sorting round, participants were given the specific rule (e.g., “Sort into different groups according to where these objects are usually stored”). The experimenter then instructed the participants to sort the animals according to (i) their shape (“the outline of their body”), (ii) the texture of their skin, (iii) their color or pattern, (iv) the habitat (“where they live”), and (v) their diet (“what they eat”). All participants performed the task in this fixed order. Before the start of the animal sorting tasks, participants were further reminded that some animals may fall into different groups depending on the specific individual of the species (e.g., dogs can have many different shapes) and to decide based on their intuition of the most common kind of a given animal (e.g., a Labrador).

Card Sorting Task Similarity Matrix Analysis.

A similarity matrix was constructed for each participant and each sorting round, assigning, for any given pair of objects or animals, 1 if the two items had been placed in the same group and 0 otherwise. The matrices of all participants were averaged to obtain one group matrix per group (Figs. 4 and 5). The two group matrices were then correlated. To examine variability within each group, each individual blind or sighted participant’s matrix was correlated to the average of the group with the single individual left out (within-group, sighted individual to sighted group or blind individual to blind group, S-S and B-B). In addition, each individual blind participant’s matrix was correlated with the sighted group matrix (across group, blind individual to sighted group, B-S). Spearman correlation was used for all matrix correlations, and Fisher’s z transformation was applied to the resulting rank correlation coefficients (rho) to allow comparison across groups.
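The pipeline in this paragraph (binary co-sorting matrices, group averaging, leave-one-out Spearman correlation, Fisher z) can be sketched as below. This is an illustrative stdlib-only version, not the authors' code: all function names and the toy piles are hypothetical, and clipping rho before the z transform is an added assumption to keep the transform finite.

```python
from itertools import combinations
from math import atanh, sqrt

def similarity_matrix(piles, items):
    """Return {(a, b): 1 or 0} for every item pair: 1 if sorted into the same pile."""
    pile_of = {item: k for k, pile in enumerate(piles) for item in pile}
    return {(a, b): int(pile_of[a] == pile_of[b]) for a, b in combinations(items, 2)}

def mean_matrix(matrices):
    """Average several participants' matrices into one group matrix."""
    return {pair: sum(m[pair] for m in matrices) / len(matrices) for pair in matrices[0]}

def tied_ranks(values):
    """Rank values from 1, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks

def pearson(x, y):
    # Undefined (division by zero) if either vector is constant,
    # e.g., a participant who puts every item in one pile.
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def spearman(m1, m2):
    """Spearman rho between two matrices defined over the same item pairs."""
    pairs = list(m1)
    return pearson(tied_ranks([m1[p] for p in pairs]), tied_ranks([m2[p] for p in pairs]))

def fisher_z(rho, limit=0.999999):
    # Clipping is an assumption of this sketch: atanh is undefined at exactly +/-1.
    return atanh(max(-limit, min(limit, rho)))

def leave_one_out(matrices):
    """Fisher z of each individual's correlation with the mean of the others."""
    return [fisher_z(spearman(m, mean_matrix(matrices[:i] + matrices[i + 1:])))
            for i, m in enumerate(matrices)]

# Hypothetical sorts from three participants.
items = ["shark", "dolphin", "eagle", "bat"]
a = similarity_matrix([{"shark", "dolphin"}, {"eagle", "bat"}], items)
b = similarity_matrix([{"shark", "dolphin"}, {"eagle"}, {"bat"}], items)
c = similarity_matrix([{"shark"}, {"dolphin"}, {"eagle", "bat"}], items)
rho_ab = spearman(a, b)
within_group_z = leave_one_out([a, b, c])
```

The across-group (B-S) comparison would reuse `spearman` with an individual blind participant's matrix and the sighted group's `mean_matrix`.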

We examined similarity across sorting rules within each group (e.g., shape vs. color for blind group) as well as similarity across groups within each sorting rule (e.g., blind vs. sighted for shape) (Fig. 4). For within-group correlations, the group matrices for shape, texture, and color were correlated with each other, resulting in three correlation coefficients. For across-group correlations, the blind group and sighted group matrices were correlated for each sorting rule. Significance of group matrix correlations was determined using a permutation test.
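The text does not specify how the permutation test was constructed. One common choice for correlating two similarity matrices is a Mantel-style test, in which item labels of one matrix are shuffled to build a null distribution. The sketch below assumes that design; the function names, number of permutations, one-sided p-value, and toy matrices are all hypothetical.

```python
import random
from itertools import combinations
from math import sqrt

def tied_ranks(values):
    """Rank values from 1, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks

def spearman(x, y):
    """Spearman rho between two equal-length lists (Pearson on tied ranks)."""
    rx, ry = tied_ranks(x), tied_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    return cov / sqrt(sum((a - mx) ** 2 for a in rx) * sum((b - my) ** 2 for b in ry))

def condensed(matrix, order):
    """Upper-triangle values of a symmetric matrix keyed by frozenset item pairs."""
    return [matrix[frozenset(pair)] for pair in combinations(order, 2)]

def permutation_test(m1, m2, items, n_perm=2000, seed=0):
    """One-sided Mantel-style test: shuffle the item labels of m2."""
    rng = random.Random(seed)
    x = condensed(m1, items)
    observed = spearman(x, condensed(m2, items))
    hits = 0
    for _ in range(n_perm):
        shuffled = list(items)
        rng.shuffle(shuffled)
        # Small tolerance guards against floating-point noise in ties.
        if spearman(x, condensed(m2, shuffled)) >= observed - 1e-12:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)

# Hypothetical group matrices: both groups sort mostly by animal class.
items = ["shark", "salmon", "eagle", "parrot", "lion", "deer"]
kind = {"shark": "fish", "salmon": "fish", "eagle": "bird",
        "parrot": "bird", "lion": "mammal", "deer": "mammal"}
pairs = list(combinations(items, 2))
group_a = {frozenset(p): 0.9 if kind[p[0]] == kind[p[1]] else 0.1 for p in pairs}
group_b = {frozenset(p): 0.8 if kind[p[0]] == kind[p[1]] else 0.2 for p in pairs}
rho, p_value = permutation_test(group_a, group_b, items)
```

Shuffling labels rather than individual cells keeps each permuted matrix a valid similarity matrix, which is why this design is often preferred for matrix correlations.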

To examine the degree to which participant sorting was predictable based on taxonomic similarities between animals, each group matrix, for each sorting round, was further correlated with an evolutionary distance similarity matrix (Fig. 4). Evolutionary distance between pairs of animals was obtained using an online database pooling published data from ∼2,000 studies of species diversification (timetree.org) (ref. 52 and SI Appendix, Fig. S2). To enable statistical comparison of correlations with taxonomy across blind and sighted groups, each individual participant’s similarity matrix was additionally correlated with the evolutionary distance matrix and z-transformed (SI Appendix, Fig. S3).

Sorting Pile Descriptions Analysis.

During the card sorting task, participants were asked to label each of the piles they created. For each sorting rule (shape, texture, or color) and each animal, a verbalizability (or codability) score was calculated using Simpson’s Diversity Index (13, 29):

$$D = \frac{\sum_{i=1}^{R} n_i (n_i - 1)}{N (N - 1)}$$

where, for unique words 1 to R provided for each animal, n_i is the count of how many times word i was used across participants, and N is the total number of words. The index ranges from 0 to 1, where a score of 0 would indicate that the same word was never used more than once (i.e., low verbalizability), and 1 would suggest that all participants provided the same word (i.e., high verbalizability).

All words provided by participants were treated as discrete utterances, with the following exceptions, which were treated as single words: upside-down, on the ground, and any “is-like” statement (e.g., fish-like and human-like).
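A minimal sketch of the verbalizability score follows, assuming the standard form of Simpson's Diversity Index that matches the definitions of n, N, and R given above. The tokenizer is a hypothetical simplification: it keeps hyphenated forms (e.g., upside-down, fish-like) as single tokens and joins "on the ground" into one token, and the example labels are invented.

```python
import re
from collections import Counter

def tokenize(label):
    """Split a pile label into words, keeping the stated exceptions whole."""
    text = label.lower().replace("on the ground", "on_the_ground")
    # Hyphenated forms such as "upside-down" or "fish-like" stay as one token.
    return re.findall(r"[a-z_]+(?:-[a-z_]+)*", text)

def simpson_index(words):
    """Sum of n(n - 1) over unique words, divided by N(N - 1)."""
    counts = Counter(words)
    total = sum(counts.values())
    if total < 2:
        return 0.0  # Assumed convention: undefined cases score 0.
    return sum(n * (n - 1) for n in counts.values()) / (total * (total - 1))

# Hypothetical labels given by four participants to the pile containing "zebra".
labels_for_zebra = ["black and white", "Black and white", "black and white", "striped"]
words = [w for label in labels_for_zebra for w in tokenize(label)]
score = simpson_index(words)
```

Here the ten tokens contain three words used three times each, so the score is 18/90 = 0.2; a single shared one-word label across all participants would score 1.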

Odd-One-Out Triplets Task (Shape).

During the shape triplets task, participants were presented with three animals at a time and asked to choose the animal that is different from the other two based on overall shape. The task was administered verbally for both blind and sighted participants. Participants were reminded to ignore other features such as color, size, texture, or type of animal. Triplets of animals were picked based on piloting on MTurk and created to minimize confounds with nonshape dimensions like size and category (e.g., wolf, bear, and gorilla; parrot, giraffe, and ostrich; Fig. 6A). Answers were scored as correct or incorrect based on the answer agreed on by the MTurk group.
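Scoring against the MTurk group can be sketched as below, assuming that "the answer agreed on" means the most frequent (modal) choice for each triplet. The function names and response data are hypothetical.

```python
from collections import Counter

def modal_answers(pilot_responses):
    """pilot_responses: dict mapping triplet -> list of chosen odd-one-out animals."""
    # most_common breaks ties by first occurrence; real data may need a tie rule.
    return {t: Counter(choices).most_common(1)[0][0]
            for t, choices in pilot_responses.items()}

def accuracy(responses, key):
    """Proportion of triplets on which a participant matches the group answer."""
    return sum(responses[t] == key[t] for t in key) / len(key)

# Hypothetical pilot choices for two triplets.
pilot = {
    ("parrot", "ostrich", "giraffe"): ["parrot", "parrot", "giraffe", "parrot"],
    ("wolf", "gorilla", "bear"): ["wolf", "wolf", "gorilla", "wolf"],
}
key = modal_answers(pilot)
participant = {("parrot", "ostrich", "giraffe"): "giraffe",
               ("wolf", "gorilla", "bear"): "wolf"}
score = accuracy(participant, key)
```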

Feature Choice Task (Texture).

To further probe knowledge about the texture of animals, participants performed a feature choice task. The task was administered verbally for both blind and sighted participants. The experimenter read out loud the names of 30 animals (Fig. 6B) one at a time, and participants had to decide whether the animal had scales, feathers, skin, or fur. If skin was picked, participants were further asked whether the skin is smooth or rough, and for fur, they were asked whether the fur is short, medium, or long in length and whether it is thick or fine. The 30 animals used for this task were different from the 30 animals used in the sorting task. For a majority of the 30 animals, there is a clear correct answer: birds have feathers, reptiles and fish have scales, and some animals have no hair or fur (e.g., worm, frog, toad, and dolphin). Animals that have skin with some short hairs or bristles (e.g., pig) were scored as correct for “skin” responses, and animals that have fur in some but not all parts of the body (e.g., horse and donkey) were scored correct if “fur” was the answer.
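The scoring rules in this paragraph can be sketched as an answer key plus a per-animal tally of responses. The key below contains only the four cases stated in the text (pig, horse, a bird, and frog); the function names and the responses are hypothetical.

```python
from collections import Counter

# Accepted answers, limited to cases the text states explicitly.
ANSWER_KEY = {
    "pig": {"skin"},         # skin with some short hairs or bristles
    "horse": {"fur"},        # fur on some but not all parts of the body
    "parrot": {"feathers"},  # birds have feathers
    "frog": {"skin"},        # no hair or fur
}

def score(answers, key=ANSWER_KEY):
    """Proportion of keyed animals for which the answer is accepted."""
    return sum(answers[animal] in key[animal] for animal in key) / len(key)

def response_proportions(responses):
    """responses: list of (animal, answer) pairs pooled over one group."""
    totals = Counter(animal for animal, _ in responses)
    counts = Counter(responses)
    return {pair: n / totals[pair[0]] for pair, n in counts.items()}

# Hypothetical data: one participant's answers and one group's pooled responses.
one_participant = {"pig": "fur", "horse": "fur", "parrot": "feathers", "frog": "skin"}
accuracy = score(one_participant)
pooled = [("pig", "fur"), ("pig", "skin"), ("pig", "skin"), ("pig", "skin")]
proportions = response_proportions(pooled)
```

Group-level proportions of this kind correspond to the comparisons reported in the discussion, such as the share of each group answering "scales" for sharks.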

The data, processing code, and analysis scripts reported in this paper are available in a GitHub repository, https://github.com/judyseinkim/Animals, as well as on Open Science Framework (https://osf.io/zgucm/) (53).

Acknowledgments

We thank the participants, the blind community, and the National Federation of the Blind for making this research possible; Lindsay Yazzolino for insights that served as motivation for this project; and Erin Brush for assistance with data collection. We are grateful to Justin Halberda, Jon Flombaum, Chaz Firestone, and Barbara Landau for their astute feedback on data interpretation and the reviewers for helpful suggestions that improved the paper significantly.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The data, processing code, and analysis scripts reported in this paper have been deposited in a GitHub repository (https://github.com/judyseinkim/Animals) and posted on Open Science Framework (https://osf.io/zgucm/).

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1900952116/-/DCSupplemental.

References

- 1.Scamander N., Rowling J. K., Fantastic Beasts and Where to Find Them (Scholastic, New York, NY, 2017). [Google Scholar]

- 2.Locke J., An essay concerning human understanding. Nature 114, 462 (1924). [Google Scholar]

- 3.Berkeley G., An Essay Towards a New Theory of Vision (Kessinger Publishing, LLC, 1709). [Google Scholar]

- 4.Hume D., Essays and Treatises on Several Subjects (Palala Press, 1758). [Google Scholar]

- 5.Landau B., Gleitman L., Language and Experience: Evidence from the Blind Child (Harvard University Press, Cambridge, MA, 1985). [Google Scholar]

- 6.Lenci A., Baroni M., Cazzolli G., Marotta G., BLIND: A set of semantic feature norms from the congenitally blind. Behav. Res. Methods 45, 1218–1233 (2013). [DOI] [PubMed] [Google Scholar]

- 7.Bedny M., Koster-Hale J., Elli G., Yazzolino L., Saxe R., There’s more to “sparkle” than meets the eye: Knowledge of vision and light verbs among congenitally blind and sighted individuals. Cognition 189, 105–115 (2019). [DOI] [PubMed] [Google Scholar]

- 8.Shepard R. N., Cooper L. A., Representation of colors in the blind, color-blind, and normally sighted. Psychol. Sci. 3, 97–104 (1992). [Google Scholar]

- 9.Marmor G. S., Age at onset of blindness and the development of the semantics of color names. J. Exp. Child Psychol. 25, 267–278 (1978). [DOI] [PubMed] [Google Scholar]

- 10.Saysani A., Corballis M. C., Corballis P. M., Colour envisioned: Concepts of colour in the blind and sighted. Vis. Cogn. 26, 382–392 (2018). [Google Scholar]

- 11.Connolly A. C., Gleitman L. R., Thompson-Schill S. L., Effect of congenital blindness on the semantic representation of some everyday concepts. Proc. Natl. Acad. Sci. U.S.A. 104, 8241–8246 (2007). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Gleitman L. R., Cassidy K., Nappa R., Papafragou A., Trueswell J. C., Hard words. Lang. Learn. Dev. 1, 23–64 (2005). [Google Scholar]

- 13.Majid A., et al. , Differential coding of perception in the world’s languages. Proc. Natl. Acad. Sci. U.S.A. 115, 11369–11376 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Winter B., Perlman M., Majid A., Vision dominates in perceptual language: English sensory vocabulary is optimized for usage. Cognition 179, 213–220 (2018). [DOI] [PubMed] [Google Scholar]

- 15.Van Deemter K., Utility and language generation: The case of vagueness. J. Philos. Log. 38, 607–632 (2009). [Google Scholar]

- 16.Solt S., Vagueness and imprecision: Empirical foundations. Annu. Rev. Linguist. 1, 107–127 (2015). [Google Scholar]

- 17.Landau B., Jackendoff R., Whence and whither in spatial language and spatial cognition? Behav. Brain Sci. 16, 255–265 (1993). [Google Scholar]

- 18.Fussell S. R., Krauss R. M., Coordination of knowledge in communication: Effects of speakers’ assumptions about what others know. J. Pers. Soc. Psychol. 62, 378–391 (1992). [DOI] [PubMed] [Google Scholar]

- 19.Westerbeek H., Koolen R., Maes A., Stored object knowledge and the production of referring expressions: The case of color typicality. Front. Psychol. 6, 935 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Talmy L., “How language structures space” in Spatial Orientation, Pick H., Ed. (Springer, 1983), pp. 225–282. [Google Scholar]

- 21.Pinker S., The Stuff of Thought: Language as a Window into Human Nature (Penguin, 2007). [Google Scholar]

- 22.Gelman S. A., Wellman H. M., Insides and essences: Early understandings of the non-obvious. Cognition 38, 213–244 (1991). [DOI] [PubMed] [Google Scholar]

- 23.Keil F. C., Concepts, Kinds, and Conceptual Development (MIT Press, Cambridge, MA, 1989). [Google Scholar]

- 24.Medin D., Ortony A., “Psychological essentialism” in Similarity and Analogical Reasoning, Vosniadou S., Ortony A., Eds. (Cambridge University Press, 1989), p. 179. [Google Scholar]

- 25.Simons D. J., Keil F. C., An abstract to concrete shift in the development of biological thought: The insides story. Cognition 56, 129–163 (1995). [DOI] [PubMed] [Google Scholar]

- 26.Landau B., Smith L. B., Jones S. S., The importance of shape in early lexical learning. Cogn. Dev. 3, 299–321 (1988). [Google Scholar]

- 27.Soja N. N., Carey S., Spelke E. S., Perception, ontology, and word meaning. Cognition 45, 101–107 (1992). [DOI] [PubMed] [Google Scholar]

- 28.Coley J. D., Medin D. L., Proffitt J. B., Lynch E., Atran S., Inductive reasoning in folkbiological thought. Folkbiology 205, 211–212 (1999). [Google Scholar]

- 29.Majid A., Burenhult N., Odors are expressible in language, as long as you speak the right language. Cognition 130, 266–270 (2014). [DOI] [PubMed] [Google Scholar]

- 30.Rubinstein D., Levi E., Schwartz R., Rappoport A., “How well do distributional models capture different types of semantic knowledge?” in Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Association for Computational Linguistics, 2015), vol. 2, pp. 726–730. [Google Scholar]

- 31.Collell G., Moens M. F., “Is an image worth more than a thousand words? On the fine-grain semantic differences between visual and linguistic representations” in Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers (The COLING 2016 Organizing Committee, 2016), pp. 2807–2817. [Google Scholar]

- 32.He C., et al., Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage 79, 1–9 (2013).

- 33.Mahon B. Z., Anzellotti S., Schwarzbach J., Zampini M., Caramazza A., Category-specific organization in the human brain does not require visual experience. Neuron 63, 397–405 (2009).

- 34.Peelen M. V., He C., Han Z., Caramazza A., Bi Y., Nonvisual and visual object shape representations in occipitotemporal cortex: Evidence from congenitally blind and sighted adults. J. Neurosci. 34, 163–170 (2014).

- 35.Kitada R., et al., Early visual experience and the recognition of basic facial expressions: Involvement of the middle temporal and inferior frontal gyri during haptic identification by the early blind. Front. Hum. Neurosci. 7, 7 (2013).

- 36.van den Hurk J., Van Baelen M., Op de Beeck H. P., Development of visual category selectivity in ventral visual cortex does not require visual experience. Proc. Natl. Acad. Sci. U.S.A. 114, E4501–E4510 (2017).

- 37.Bi Y., Wang X., Caramazza A., Object domain and modality in the ventral visual pathway. Trends Cogn. Sci. 20, 282–290 (2016).

- 38.Bull R., Rathborn H., Clifford B. R., The voice-recognition accuracy of blind listeners. Perception 12, 223–226 (1983).

- 39.Wong M., Gnanakumaran V., Goldreich D., Tactile spatial acuity enhancement in blindness: Evidence for experience-dependent mechanisms. J. Neurosci. 31, 7028–7037 (2011).

- 40.Medin D. L., Atran S., The native mind: Biological categorization and reasoning in development and across cultures. Psychol. Rev. 111, 960–983 (2004).

- 41.Lopez A., Atran S., Coley J. D., Medin D. L., Smith E. E., The tree of life: Universal and cultural features of folkbiological taxonomies and inductions. Cognit. Psychol. 32, 251–295 (1997).

- 42.Boster J. S., Johnson J. C., Form or function: A comparison of expert and novice judgments of similarity among fish. Am. Anthropol. 91, 866–889 (1989).

- 43.Medin D. L., Ross N., Atran S., Burnett R. C., Blok S. V., Categorization and reasoning in relation to culture and expertise. Psychol. Learn. Motiv. 41, 1–41 (2002).

- 44.Gopnik A., Meltzoff A. N., Learning, Development, and Conceptual Change (MIT Press, 1997).

- 45.Diesendruck G., Bloom P., How specific is the shape bias? Child Dev. 74, 168–178 (2003).

- 46.Schulz L. E., Standing H. R., Bonawitz E. B., Word, thought, and deed: The role of object categories in children’s inductive inferences and exploratory play. Dev. Psychol. 44, 1266–1276 (2008).

- 47.Williams J. J., Lombrozo T., Rehder B., The hazards of explanation: Overgeneralization in the face of exceptions. J. Exp. Psychol. Gen. 142, 1006–1014 (2013).

- 48.Johnson S. C., Carey S., Knowledge enrichment and conceptual change in folkbiology: Evidence from Williams syndrome. Cognit. Psychol. 37, 156–200 (1998).

- 49.Keil F. C., “The origins of an autonomous biology” in Modularity and Constraints in Language and Cognition: The Minnesota Symposia on Child Psychology, Gunnar M. R., Maratsos M., Eds. (Psychology Press, 1992), vol. 24, pp. 103–137.

- 50.Atran S., Folk biology and the anthropology of science: Cognitive universals and cultural particulars. Behav. Brain Sci. 21, 547–569, discussion 569–609 (1998).

- 51.Atran S., et al., Folkbiology doesn’t come from folkpsychology: Evidence from Yukatek Maya in cross-cultural perspective. J. Cogn. Cult. 1, 3–42 (2001).

- 52.Hedges S. B., Marin J., Suleski M., Paymer M., Kumar S., Tree of life reveals clock-like speciation and diversification. Mol. Biol. Evol. 32, 835–845 (2015).

- 53.Kim J. S., Elli G., Bedny M., Furry hippos and scaly sharks: Knowledge of animal appearance among blind and sighted individuals. Open Science Framework. https://osf.io/zgucm/. Deposited 1 April 2019.

Associated Data
