Abstract

What information single neurons receive about general neural circuit activity is a fundamental question for neuroscience. Somatic membrane potential (Vm) fluctuations are driven by the convergence of synaptic inputs from a diverse cross-section of upstream neurons. Furthermore, neural activity is often scale-free, implying that some measurements should be the same, whether taken at large or small scales. Together, convergence and scale-freeness support the hypothesis that single Vm recordings carry useful information about high-dimensional cortical activity. Conveniently, the theory of “critical branching networks” (one purported explanation for scale-freeness) provides testable predictions about scale-free measurements that are readily applied to Vm fluctuations. To investigate, we obtained whole-cell current-clamp recordings of pyramidal neurons in visual cortex of turtles of unknown sex. We isolated fluctuations in Vm below the firing threshold and analyzed them by adapting the definition of “neuronal avalanches” (i.e., spurts of population spiking). The Vm fluctuations that we analyzed were scale-free and consistent with critical branching. These findings recapitulated results from large-scale cortical population data obtained separately in complementary experiments using microelectrode arrays described previously (Shew et al., 2015). Simultaneously recorded local field potential (LFP) did not provide a good match, demonstrating the specific utility of Vm. Modeling shows that estimation of dynamical network properties from neuronal inputs is most accurate when networks are structured as critical branching networks. In conclusion, these findings extend evidence of critical phenomena while also establishing subthreshold pyramidal neuron Vm fluctuations as an informative gauge of high-dimensional cortical population activity.

SIGNIFICANCE STATEMENT The relationship between membrane potential (Vm) dynamics of single neurons and population dynamics is indispensable to understanding cortical circuits. Just as important to the biophysics of computation are emergent properties such as scale-freeness, where critical branching networks offer insight. This report makes progress on both fronts by comparing statistics from single-neuron whole-cell recordings with population statistics obtained with microelectrode arrays. Not only are fluctuations of somatic Vm scale-free, they match fluctuations of population activity. Thus, our results demonstrate appropriation of the brain's own subsampling method (convergence of synaptic inputs) while extending the range of fundamental evidence for critical phenomena in neural systems from the previously observed mesoscale (fMRI, LFP, population spiking) to the microscale, namely, Vm fluctuations.

Keywords: balanced networks, membrane potential, neural computation, neuronal avalanches, renormalization group, scale-free

Introduction

How do cortical population dynamics impact single neurons? What can we learn about cortical population dynamics from single neurons? These questions are central to neuroscience. Uncovering the functional significance of multiscale organization within cerebral cortex requires knowing the relationship between the dynamics of networks and individual neurons within them (Nunez et al., 2013).

For pyramidal neurons in the visual cortex, somatic spike generation is ambiguously related to presynaptic firing (Tsodyks and Markram, 1997; Brunel et al., 2014; Gatys et al., 2015; Stuart and Spruston, 2015; Moore et al., 2017). Such neurons pass spiking information to many postsynaptic neurons (Lee et al., 2016). However, a presynaptic pool with multifarious neighboring and distant neurons (Hellwig, 2000; Wertz et al., 2015) provides excitatory and inhibitory synaptic inputs throughout the soma and complex dendritic architecture (Magee, 2000; Larkum et al., 2008; Moore et al., 2017). Input propagation to the axon hillock has both active and passive features (London and Häusser, 2005), and the membrane potential (Vm) response is increasingly nonlinear near the action potential threshold. Thus, because Vm reflects these details of input propagation through the network, it carries more information than spiking alone.

Most computational neuroscientists use spiking data because spikes are “the currency of the brain” (Wolfe et al., 2010) and extracellular recording is straightforward compared with whole-cell recording. Yet, the paucity of single-neuron spiking (Shoham et al., 2006) and limited foreknowledge about connections (Helmstaedter, 2013) make extracellular single-unit observation an impoverished means of studying neuronal circuits. In contrast, subthreshold Vm fluctuations contain rich information about the circuits containing each neuron (Sachidhanandam et al., 2013; Petersen, 2017). Integral to gaining a neuron's view of the brain is uncovering relationships between the statistics of Vm fluctuations and fluctuations of local spiking and then contrasting against other plausible one-dimensional signals.

We look for such relationships in the strict predictions and rigorous measurements of scale-freeness used to identify a fragile network connectivity pattern known as “critical branching.” This pattern exhibits emergent properties valuable for information processing, such as higher susceptibility and dynamic range (Haldeman and Beggs, 2005; Beggs, 2008; Shew and Plenz, 2013; Shriki and Yellin, 2016; Timme et al., 2016), but, without extension (Porta and Copelli, 2018), it does not capture some neuronal dynamics (Poil et al., 2008, 2012). The pattern is as follows: on average over all neuronal avalanches (spiking above baseline; Friedman et al., 2012), one spike leads to exactly one other spike. In most networks, one spike leads on average to fewer or more than one other spike; such networks are “subcritical” and “supercritical,” respectively. Among the dazzling emergent properties of “criticality” are universality, self-similarity, and scale-free correlations (Stanley, 1999).

These are as follows: A “universality class” is a set of otherwise dissimilar systems that exhibit identical statistics only at their “critical points.” “Self-similarity” includes fractal patterns and power laws in geometrical analysis of avalanches (power laws are “scale-invariant,” popularly called “scale-free”). Avalanches of any duration have identical average shapes after normalization (Shaukat and Thivierge, 2016). Avalanche areas grow with duration as another power law (Sethna et al., 2001). However, observation methods must be consistent with event propagation (Priesemann et al., 2009; Yu et al., 2014; Levina and Priesemann, 2017). Additionally, pairwise correlations as functions of distance or time lag also follow power laws (Chialvo, 2010), meaning that any input has a nonzero chance of propagating arbitrarily far in space or time.

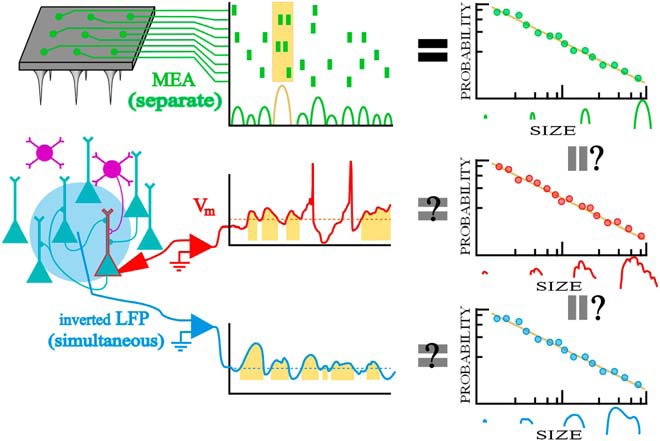

In summary, the theory of critical branching networks offers superb standards of comparison for three reasons: neuronal avalanche analysis applies to Vm, offers promising insights, and makes precise predictions about fluctuation geometry. We study both Vm fluctuations and criticality with one simple question: Do Vm fluctuations match the scale-free statistics of cortical populations (see Fig. 1)?

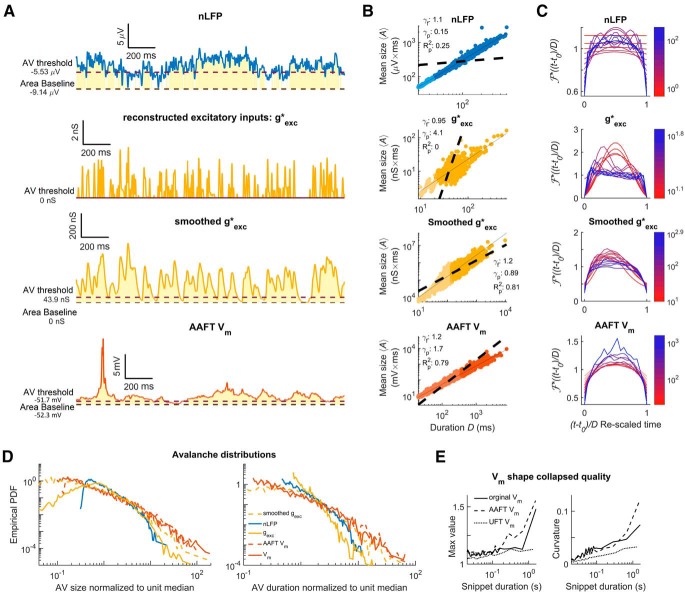

Figure 1.

Will fluctuations in somatic Vm and comparable signals reflect the scale-free nature of neuronal avalanches from microelectrode array data? A recurrent network with excitatory (teal) and inhibitory (purple) neurons is measured in three ways: MEA (green/top), whole-cell recording (red/middle), and LFP (blue/bottom). Neuronal avalanches (highlighted in gold) are inferred from the population raster, and fluctuations of the Vm and inverted LFP signals are analyzed like avalanches. Neuronal avalanches are defined as spurts of activity separated by quiet periods for MEA data, or as excursions above the 25th percentile for continuous nonzero data. The ultimate question is whether Vm fluctuations will recapitulate the entire neuronal avalanche analysis previously conducted on MEA data, including power laws in size and duration as well as a universal avalanche shape. This is abridged in the rightmost column, which illustrates power-law distributions.

To address this question, we simultaneously recorded somatic Vm from pyramidal neurons and local field potential (LFP) in visual cortex and performed avalanche analysis on fluctuations. We found that subthreshold Vm fluctuation statistics match published microelectrode array (MEA) data. We used surrogate testing to show why negative LFP fluctuations do not match, and modeling to demonstrate dependence on critical branching.

Materials and Methods

Surgery and visual cortex

All procedures were approved by Washington University's Institutional Animal Care and Use Committees and conform to the National Institutes of Health's Guide for the Care and Use of Laboratory Animals. Fourteen adult red-eared sliders (Trachemys scripta elegans, 150–1000 g) were used for this study; their sex was not recorded. Turtles were anesthetized with propofol (2 mg of propofol/kg) and then decapitated. Dissection proceeded as described previously (Saha et al., 2011; Crockett et al., 2015; Wright et al., 2017a).

To summarize, immediately after decapitation, the brain was excised from the skull with the right eye intact and bathed in cold extracellular saline containing the following (in mM): 85 NaCl, 2 KCl, 2 MgCl2·6H2O, 20 dextrose, 3 CaCl2·2H2O, 45 NaHCO3. The dura was removed from the left cortex and right optic nerve, and the right eye was hemisected to expose the retina. The rostral tip of the olfactory bulb was removed, exposing the ventricle that spans the olfactory bulb and cortex. A cut was made along the midline from the rostral end of the remaining olfactory bulb to the caudal end of the cortex. The preparation was then transferred to a perfusion chamber (Warner RC-27LD recording chamber mounted to PM-7D platform) and placed directly on a glass coverslip surrounded by Sylgard. A final cut was made to the cortex (orthogonal to the previous cut and stopping short of the border between medial and lateral cortex), allowing the cortex to be pinned flat with the ventricular surface exposed. Multiple perfusion lines delivered extracellular saline to the brain and retina in the recording chamber (adjusted to pH 7.4 at room temperature).

We identified the visual cortex using a phenomenological approach described previously (Shew et al., 2015). In brief, this region was centered on the anterior lateral cortex, in agreement with voltage-sensitive dye studies (Senseman and Robbins, 1999, 2002). Anatomical studies identify this as a region of cortex receiving projections from lateral geniculate nucleus (Mulligan and Ulinski, 1990). We further identified a region as belonging to the visual cortex when the average LFP response to visual stimulation there crossed a given threshold, and we patched neurons within that neighborhood (radius of ∼300 μm).

Intracellular recordings

For whole-cell current-clamp recordings, patch pipettes (4–8 MΩ) were pulled from borosilicate glass and filled with a standard electrode solution containing the following (in mM): 124 KMeSO4, 2.3 CaCl2·2H2O, 1.2 MgCl2, 10 HEPES, and 5 EGTA, adjusted to pH 7.4 at room temperature. Cells were targeted for patching using a differential interference contrast microscope (Olympus). Vm recordings were collected using an Axoclamp 900A amplifier, digitized by a data acquisition panel (National Instruments PCIe-6321), and recorded using a custom LabVIEW program (National Instruments), sampling at 10 kHz. As described previously (Crockett et al., 2015; Wright and Wessel, 2017; Wright et al., 2017a,b), after initial patching and before recording from a cell, current was injected to elicit spiking. This current injection test was also repeated intermittently between recording trials. Recording did not proceed if a cell spiked inconsistently (e.g., failure to spike, insufficient spike amplitude) in response to injected current or exhibited extreme depolarization in response to small current injection amplitudes. If a clog or loss of seal was suggested by unusually erratic Vm on short timescales, the current injection test was performed and, upon failure, the affected recording was marked for exclusion from analysis. We excluded cells that did not display a stable resting Vm for long enough to yield a sufficient number of avalanches. Up to three whole-cell recordings were made simultaneously. In total, we obtained recordings from 51 neurons from 14 turtles.

Recorded Vm fluctuations taken in the dark (no visual stimulation) were interpreted as ongoing activity. Such ongoing cortical activity was interrupted by visual stimulation of the retina with whole-field flashes and naturalistic movies as described previously (Wright and Wessel, 2017; Wright et al., 2017a,b). An uninterrupted recording of ongoing activity lasted for 2–5 min. Periods of visual stimulation were too short and were too frequently interrupted by action potentials to yield the great number of avalanches required for rigorous power-law fitting.

A sine-wave removal algorithm was used to remove 60 Hz line noise. Action potentials in turtle cortical pyramidal neurons are relatively rare. An algorithm was used to detect spikes and the Vm recordings between spikes were extracted and filtered from 0 to 100 Hz. Vm recordings were detrended by subtracting the fifth percentile in a sliding 2 s window. The resulting signal was then shifted to have the same mean value as before subtraction. Detrending did not affect the size of Vm fluctuations (data not shown).
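The percentile detrending step can be sketched in Python with NumPy. This is a minimal illustration, not the code used for the study: the function name `detrend_percentile` and the `step` shortcut (evaluating the 5th percentile every 100 samples and linearly interpolating between those points) are our assumptions, and line-noise removal, spike excision, and the 100 Hz filter are omitted.

```python
import numpy as np

def detrend_percentile(v, fs=10_000, window_s=2.0, pct=5.0, step=100):
    """Subtract a sliding low-percentile baseline, then restore the original mean.

    Assumption: the percentile is evaluated every `step` samples and linearly
    interpolated in between, to keep the sketch fast on 10 kHz traces.
    """
    v = np.asarray(v, dtype=float)
    half = int(window_s * fs) // 2
    centers = np.arange(0, v.size, step)
    # 5th percentile of the 2 s window centered on each evaluation point
    baseline_at_centers = np.array(
        [np.percentile(v[max(0, c - half): c + half + 1], pct) for c in centers]
    )
    baseline = np.interp(np.arange(v.size), centers, baseline_at_centers)
    detrended = v - baseline
    # shift so the detrended trace has the same mean as the input
    return detrended - detrended.mean() + v.mean()

# Usage on a synthetic, slowly drifting trace (mV), not experimental data
rng = np.random.default_rng(0)
t = np.arange(40_000) / 10_000
vm = -65 + 2.0 * t + rng.normal(0, 0.5, t.size)
vm_detrended = detrend_percentile(vm)
```

Subtracting a low percentile rather than a moving mean removes slow drift while leaving the upward (depolarizing) excursions that later become avalanches largely intact.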

Extracellular recordings

Extracellular recordings were achieved with tungsten microelectrodes (microprobes heat-treated tapered tip) with ∼0.5 MΩ impedance. Electrodes were slowly advanced through tissue under visual guidance using a manipulator (Narishige) while monitoring for activity using custom acquisition software (National Instruments). The extracellular recording electrode was located within ∼300 μm of patched neurons. Extracellular activity was collected using an AM Systems Model 1800 amplifier, band-pass filtered between 1 Hz and 20,000 Hz, digitized (NI PCIe-6231), and processed using custom software (National Instruments). Extracellular recordings were downsampled to 10,000 Hz and then filtered (100 Hz low-pass), yielding the LFP. The LFP was filtered and detrended as described above (see “Intracellular recordings” section) except that the mean of the entire signal was subtracted and the signal was multiplied by −1 before it was detrended. This final inverted signal is commonly featured in the literature as negative LFP or nLFP (Kelly et al., 2010; Kajikawa and Schroeder, 2011; Okun et al., 2015; Ness et al., 2016).

Experimental design and statistical analysis

Setwise comparisons

To measure differences between sets of statistics, we relied on three nonparametric measures. We used the MATLAB Statistics and Machine Learning Toolbox implementation of Fisher's exact test (Hammond et al., 2015). This allowed us to measure the effect size (odds ratio rOR) and statistical significance (p-value) of finding that consistency-with-criticality is more frequent or less frequent in an experimental group than a control group.
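For readers without MATLAB, the two-sided Fisher's exact test and the odds ratio can be sketched with the Python standard library alone. This is an illustrative equivalent, not the toolbox routine used in the study; the function name is hypothetical, and the table in the usage line is a toy example rather than study data.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows: experimental group, control group.
    Columns: consistent with criticality, not consistent.
    Returns (odds ratio r_OR, p-value).
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # hypergeometric probability of x in the top-left cell, margins fixed
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    support = range(max(0, col1 - row2), min(row1, col1) + 1)
    # sum every table no more probable than the observed one (tolerance for rounding)
    p_value = sum(prob(x) for x in support if prob(x) <= p_observed * (1 + 1e-7))
    if b * c == 0:
        odds_ratio = float("nan") if a * d == 0 else float("inf")
    else:
        odds_ratio = (a * d) / (b * c)
    return odds_ratio, min(p_value, 1.0)

# Toy table: 3 of 4 experimental vs 1 of 4 control groups classified as consistent
r_or, p = fisher_exact_two_sided(3, 1, 1, 3)
```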

To quantify the similarity between the exponents measured in different sets of data, we used the MATLAB Statistics and Machine Learning Toolbox implementations of the exact Wilcoxon rank-sum test (Hammond et al., 2015) and the exact Wilcoxon signed-rank test. In both cases, the effect size rSDF is measured by the simple difference formula (Kerby, 2014). The rank-sum test is used when comparing nonsimultaneous recordings, such as MEA data and Vm data. The signed-rank test is used when comparing data that can be paired, such as Vm data and concurrent LFP. When testing whether a dataset differs from a specific value, we used the sign test.
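The simple difference formula can be written out in a few lines of Python. The sketch below is our reading of Kerby (2014), not the study's code: for independent samples, rSDF is the fraction of cross-sample pairs favoring the first sample minus the fraction favoring the second; for paired samples, it is (R+ − R−)/(R+ + R−) over the signed ranks. Function names are hypothetical, and zero differences are dropped in the paired case (one common convention).

```python
from itertools import product

def sdf_rank_sum(x, y):
    """Simple difference formula for two independent samples (rank-sum setting):
    fraction of (x, y) pairs with x > y minus fraction with x < y."""
    pairs = list(product(x, y))
    favorable = sum(xi > yi for xi, yi in pairs)
    unfavorable = sum(xi < yi for xi, yi in pairs)
    return (favorable - unfavorable) / len(pairs)

def sdf_signed_rank(x, y):
    """Simple difference formula for paired samples (signed-rank setting):
    (R+ - R-) / (R+ + R-), ranking |x - y| with zero differences dropped
    and tied magnitudes given their average rank."""
    diffs = [xi - yi for xi, yi in zip(x, y) if xi != yi]
    if not diffs:
        return 0.0
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        stop = start
        while stop + 1 < len(order) and abs(diffs[order[stop + 1]]) == abs(diffs[order[start]]):
            stop += 1
        for pos in range(start, stop + 1):
            ranks[order[pos]] = (start + stop) / 2 + 1  # average 1-based rank of the tie block
        start = stop + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return (r_plus - r_minus) / (r_plus + r_minus)
```

Both statistics range from −1 to 1, with 0 meaning neither sample tends to exceed the other.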

The significance level was set at p = 0.05 for all tests. Each setwise comparison test stands alone as its own conclusion. They were not combined to assess the significance of any effect across sets-of-sets. Thus, we are not making multiple comparisons and no corrections are warranted (Bender and Lange, 2001).

Random surrogate testing

It is possible that scale-free observations originate in independent random processes of a type demonstrated previously (Touboul and Destexhe, 2017). To control for this, we phase-shuffled the Vm fluctuations using the amplitude-adjusted Fourier transform (AAFT) algorithm (Theiler et al., 1992). This tests against the null hypothesis that a measure on a time series can be reproduced by performing a nonlinear rescaling of a linear Gaussian process with the same autocorrelation (same Fourier amplitudes) as the original process. Phase information is randomized, which removes higher-order correlations but preserves the scale-free power spectrum.

The AAFT tests only higher-order correlations, but a simpler algorithm tests against the null hypothesis that an un-rescaled linear Gaussian process with the same autocorrelation as the original process can produce the same results (Theiler et al., 1992). This is known as the unwindowed Fourier transform (UFT). Once we see what measures depend on the higher-order correlations with the AAFT, we can use the UFT to see how measures depend on the non-Gaussianity (nonlinear rescaling), which is inherent to excitable membranes. Using the UFT alone would make it difficult to attribute whether statistically significant differences are due to the rescaling or to the higher-order correlations (Rapp et al., 1994).
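Both surrogate types can be sketched with NumPy's FFT routines, following the recipe of Theiler et al. (1992) as we understand it. This is an illustrative sketch, not the study's implementation; the function names are hypothetical. The phase-randomized surrogate keeps the Fourier amplitudes and draws new phases; the AAFT surrogate first maps the data onto a Gaussian series with the same rank order, phase-randomizes that series, and then restores the original amplitude distribution by rank ordering.

```python
import numpy as np

def uft_surrogate(x, rng):
    """Phase-randomized surrogate: keeps the Fourier amplitudes (hence the
    autocorrelation) of x and draws new, uniformly random phases."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x - x.mean())
    phases = rng.uniform(0.0, 2.0 * np.pi, spectrum.size)
    phases[0] = 0.0              # zero-frequency term must stay real
    if x.size % 2 == 0:
        phases[-1] = 0.0         # so must the Nyquist term for even lengths
    randomized = np.abs(spectrum) * np.exp(1j * phases)
    return np.fft.irfft(randomized, n=x.size) + x.mean()

def aaft_surrogate(x, rng):
    """Amplitude-adjusted Fourier transform surrogate."""
    x = np.asarray(x, dtype=float)
    ranks = np.argsort(np.argsort(x))
    # 1) Gaussian series with the same rank order as x
    gaussian = np.sort(rng.standard_normal(x.size))[ranks]
    # 2) randomize its phases
    shuffled = uft_surrogate(gaussian, rng)
    # 3) place the original values of x in the rank order of the shuffled series
    return np.sort(x)[np.argsort(np.argsort(shuffled))]

rng = np.random.default_rng(1)
vm = np.cumsum(rng.standard_normal(1024))  # synthetic stand-in for a Vm trace
uft = uft_surrogate(vm, rng)
aaft = aaft_surrogate(vm, rng)
```

By construction, the UFT surrogate has exactly the original Fourier amplitudes but Gaussian-like values, while the AAFT surrogate has exactly the original values but only approximately the original spectrum; comparing the two separates the roles of non-Gaussianity and higher-order correlations.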

We performed AAFT and UFT on each Vm time series once and then compared how the two datasets performed on every metric used in this study. The datasets were compared with a matched Wilcoxon signed-rank test implemented via MATLAB's Statistics and Machine Learning Toolbox. Doing the comparison at the dataset level allowed us to obtain a discrimination statistic for every metric that we used without repeating the computationally expensive analysis procedure hundreds or thousands of times on every Vm trace. With enough individual recordings in each dataset, the matched Wilcoxon signed-rank test is a reliable measure that allowed us to efficiently compare all important metrics.

Neuronal avalanche analysis

Neuronal avalanches were defined by methods analogous to those described previously (Poil et al., 2012), which are used for uninterrupted ongoing signals; conversely, methods based on event detection (Beggs and Plenz, 2003) require periods of nonactivity. A threshold is defined, and an avalanche starts when the signal crosses the threshold from below and ends when the signal crosses the threshold from above. The choice of threshold is a free parameter, and we set it to the 25th percentile before conducting the complete analysis. In similar situations (continuous nonzero signals), researchers have chosen one-half the median (Poil et al., 2012; Larremore et al., 2014). However, one-half the median cannot work for negative signals or for signals with a high mean but low variance. Before analysis, thresholds between the 15th and 50th percentiles were tested on data from the five cells with the most recordings to see how the threshold might affect the number of avalanches. The 25th percentile was consistent with the existing literature and gave many avalanches compared with alternatives. Having a large number of avalanches is important because it gives the best statistical resolution. An analysis with a choice of threshold that yields fewer avalanches (or changing the threshold for each recording) would be suspect for selecting serendipitous results. After the analysis was conducted, eight percentiles between the 15th and 50th percentiles were tested and gave similar power-law exponents.

We quantified each neuronal avalanche by its size A and its duration D. The avalanche size is the area between the processed Vm recording and the baseline. The baseline is another free parameter that was set at the second percentile of the processed Vm recording. The second percentile was chosen because its value is more stable than the absolute minimum. The avalanche duration D is the time between threshold crossings.

The lower limit of avalanche duration is defined by the membrane time constant, which has been reported to be between 50 and 140 ms for the turtle brain at room temperature (Ulinski, 1990; Larkum et al., 2008). We took a conservative approach by setting the limit at less than half the lower bound on membrane time constant, which was significantly less than the lower cutoff from power-law fits. Only avalanches with a duration longer than 20 ms were included in the analysis. Thus, we avoided artificially retaining only the events most likely to be power-law distributed.
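The avalanche definition above (25th-percentile threshold, 2nd-percentile baseline, size as area above baseline, duration between crossings, and a 20 ms minimum) can be sketched as follows. This is a minimal Python/NumPy illustration; the function name is hypothetical, and dropping excursions that are already in progress at the start of a trace or still in progress at its end is our assumption.

```python
import numpy as np

def detect_avalanches(v, fs=10_000, threshold_pct=25.0, baseline_pct=2.0, min_duration_s=0.020):
    """Return (sizes, durations) of suprathreshold excursions of a processed trace.

    An avalanche starts at an upward threshold crossing and ends at the next
    downward crossing. Assumption: excursions cut off by the start or end of
    the trace are dropped.
    """
    v = np.asarray(v, dtype=float)
    threshold = np.percentile(v, threshold_pct)
    baseline = np.percentile(v, baseline_pct)
    above = v > threshold
    steps = np.diff(above.astype(np.int8))
    starts = np.flatnonzero(steps == 1) + 1   # first sample above threshold
    ends = np.flatnonzero(steps == -1) + 1    # first sample back at or below threshold
    if above[0]:
        ends = ends[1:]                       # excursion already in progress at t = 0
    starts = starts[: ends.size]              # excursion still in progress at the end
    durations = (ends - starts) / fs
    # size: area between the trace and the baseline over each avalanche (signal units x s)
    sizes = np.array([np.sum(v[s:e] - baseline) / fs for s, e in zip(starts, ends)])
    keep = durations > min_duration_s         # only avalanches longer than 20 ms
    return sizes[keep], durations[keep]
```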

Following the procedure described above, each processed Vm recording of uninterrupted ongoing activity (i.e., a recording of 2–5 min duration) yielded 327 ± 148 (mean ± SD) avalanches. This is insufficient for rigorous statistical fitting on recordings individually (Clauset et al., 2009). Therefore, we grouped avalanches from multiple recordings of ongoing activity of the same cells. Each cell produced between 3 and 19 recordings of ongoing activity (2–5 min duration each recording), with trials recorded intermittently over a period of 10–60 min. We grouped recordings based on whether they occurred in the first or second 20 min period since the beginning of recording from that neuron. Then, all the avalanches from the first or second 20 min period were grouped together, with one data object (the group) storing the size and duration of each avalanche. It is rare for neurons to have recordings in a third 20 min period, so these data were not included. Since there was a slow drift in the mean Vm over a period of several minutes, we scaled the avalanche sizes from each recording to have the same median as other recordings from the same group. Z-scoring was not useful for accounting for trial-to-trial variability because it is a monotonic transformation and therefore does not affect whether a specific time point is above or below a given percentile threshold; it thus cannot remove variability in avalanche duration. Windowed z-scoring introduces artifacts near action potentials. On average, four recordings were possible in each 20 min period. There were 51 neurons with multiple recordings of ongoing activity in the first 20 min of experimentation (thus 51 recording groups); of these, 18 neurons had an additional 20 min period with more than one recording. This produced a total of 69 groups with 1346 ± 1018 (mean ± SD) avalanches for each group. Of these 69 groups, 57% had >1000 avalanches. The largest number of avalanches was 7495 and the smallest was 313. Only five groups had <500 avalanches.
We report on the 51 groups from the first 20 min period separately from the 18 groups with recordings from the second 20 min period of experimentation.
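The median matching of avalanche sizes within a group can be sketched in a few lines of NumPy. The text does not specify which common median the recordings were matched to, so the target used here (the median of the per-recording medians) is our assumption, as is the function name.

```python
import numpy as np

def median_match(size_lists):
    """Rescale each recording's avalanche sizes so all recordings in a group share
    one median, then pool them into the group.

    Assumption: the common target is the median of the per-recording medians.
    """
    medians = [np.median(s) for s in size_lists]
    target = np.median(medians)
    scaled = [np.asarray(s, dtype=float) * (target / m) for s, m in zip(size_lists, medians)]
    return scaled, np.concatenate(scaled)

# Toy sizes from three recordings of one cell (not study data)
per_recording, pooled = median_match([[1, 2, 3], [10, 20, 30], [4, 5, 6]])
```

Because the rescaling is multiplicative, it changes where each recording's size distribution sits but not its shape on a log axis, which is what the power-law fits depend on.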

For each group, we evaluated the avalanche size and duration distributions with respect to power laws. To test whether a distribution followed a power law, we applied the rigorous statistical fitting routine described previously (Clauset et al., 2009). We tested three power-law forms: $P(x) \propto x^{-\alpha}$ with and without truncation (Deluca and Corral, 2013), as well as a power law with an exponential cutoff, $P(x) \propto x^{-\alpha} e^{-x/r}$. We compared these against log-normal and exponential alternative (non-power-law) hypotheses. Distribution parameters were estimated using maximum likelihood estimation (MLE), and the best model out of those fitted to the data was chosen using the Akaike information criterion (Bozdogan, 1987). A truncated power law with only a narrow power-law region would be suspect as a false positive, as would a power law with a strong exponential cutoff (Deluca and Corral, 2013). Finally, to decide whether a fitted model was plausible, pseudorandom datasets were drawn from a distribution with the estimated parameters, and the fraction that had a lower fit quality (larger Kolmogorov–Smirnov distance) than the experimental data was calculated. If this fraction, called the comparison quotient q, was >0.10, then the best-fit model (according to the Akaike information criterion) was accepted as the best candidate. Otherwise, the next best model was considered.
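The core of this procedure (the maximum likelihood exponent and the comparison quotient q) can be sketched for the simplest case, an untruncated continuous power law. This is a simplified illustration, not the full routine: the lower cutoff x_min is held fixed rather than re-estimated for each synthetic dataset, and the truncated, exponential-cutoff, log-normal, and exponential models and the Akaike comparison are omitted. Function names are hypothetical.

```python
import numpy as np

def fit_alpha(x, xmin):
    """Continuous power-law MLE for the tail x >= xmin: 1 + n / sum(ln(x / xmin))."""
    tail = np.asarray(x, dtype=float)
    tail = tail[tail >= xmin]
    alpha = 1.0 + tail.size / np.sum(np.log(tail / xmin))
    return alpha, tail

def ks_distance(tail, alpha, xmin):
    """Largest gap between the empirical CDF of the tail and the fitted CDF."""
    tail = np.sort(tail)
    n = tail.size
    model_cdf = 1.0 - (tail / xmin) ** (1.0 - alpha)
    upper = np.arange(1, n + 1) / n - model_cdf
    lower = model_cdf - np.arange(0, n) / n
    return max(upper.max(), lower.max())

def sample_power_law(n, alpha, xmin, rng):
    """Inverse-transform samples from P(x) ∝ x^-alpha on [xmin, inf)."""
    u = rng.uniform(size=n)
    return xmin * (1.0 - u) ** (-1.0 / (alpha - 1.0))

def comparison_quotient(x, xmin, n_sims=200, seed=0):
    """Fraction q of synthetic datasets that fit worse (larger KS distance) than
    the data. Simplification: xmin is held fixed rather than re-estimated."""
    rng = np.random.default_rng(seed)
    alpha, tail = fit_alpha(x, xmin)
    d_data = ks_distance(tail, alpha, xmin)
    worse = 0
    for _ in range(n_sims):
        sim_alpha, sim_tail = fit_alpha(sample_power_law(tail.size, alpha, xmin, rng), xmin)
        worse += int(ks_distance(sim_tail, sim_alpha, xmin) > d_data)
    return alpha, worse / n_sims

# Usage on synthetic power-law data; the model is accepted when q > 0.10
rng = np.random.default_rng(1)
sizes = sample_power_law(5000, 2.5, 1.0, rng)
alpha_hat, q = comparison_quotient(sizes, xmin=1.0, n_sims=100)
accepted = q > 0.10
```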

We applied several additional steps and strict criteria to control for false positives. One such step was assessing whether the scaling relation was obeyed over the whole avalanche distribution for each group (not just the portion above the apparent onset of power-law behavior). The scaling relation is another power law, $\langle A \rangle(D) \propto D^{\gamma}$, predicting how the measured size of avalanches increases geometrically with increasing duration (on average). For any dataset with three power laws, $\langle A \rangle(D) \propto D^{\gamma}$ (scaling relation), $P(A) \propto A^{-\tau}$ (size distribution), and $P(D) \propto D^{-\beta}$ (duration distribution), the scaling relation exponent is predicted by the other two exponents by $\gamma \approx \gamma_p = (\beta - 1)/(\tau - 1)$ (Scarpetta et al., 2018). Note that $\gamma_p = 1$ is a trivial value because it implies $\langle A \rangle(D) \propto D$, which would suggest that individual avalanches were just noise symmetric about a constant value. This would mean that the average avalanche shape is just a flat line at some constant of proportionality, $\mathcal{F}\big((t - t_0)/D\big) = a$, where $\mathcal{F}$ is a function describing the shape of an avalanche of duration D, $t_0$ is the beginning of the avalanche, and a is a constant.
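The predicted exponent γp can be motivated by a short, standard argument, sketched here under the simplifying assumption that avalanche size and duration are tied by $A \propto D^{\gamma}$, so that the probability of a duration in $[D, D + dD]$ equals the probability of a size in the corresponding interval $[A, A + dA]$:

$$
P(A)\,dA = P(D)\,dD, \qquad dA \propto D^{\gamma-1}\,dD
$$

$$
\left(D^{\gamma}\right)^{-\tau} D^{\gamma-1} \propto D^{-\beta}
\;\Longrightarrow\; \gamma(1-\tau) - 1 = -\beta
\;\Longrightarrow\; \gamma_p = \frac{\beta-1}{\tau-1}.
$$

For example, the mean-field exponents of a critical branching process, τ = 3/2 and β = 2, give γp = (2 − 1)/(3/2 − 1) = 2.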

Standards for consistency with critical point behavior.

We applied four standardized criteria to provide a transparent and systematic way to produce a binary classification; either “no inconsistencies with activity near a critical point were detected” or “some inconsistencies with activity near a critical point were detected.”

First, a collection of avalanches must be power-law distributed in both its size and duration distributions.

Second, the collection of avalanches must have a power-law scaling relation as determined by R² > 0.95 (coefficient of determination) for linear least-squares regression to a log-log plot of average size versus duration: $\log \langle A \rangle(D) = \gamma \log D + b$. This R² represents the best that any linear fit can achieve and must include all the avalanches, not a subset. We denote the scaling exponent (slope from linear regression) from this fit as $\gamma_f$.

Third, the scaling relation exponent predicted by theory (denoted as γp) must correspond to a trendline on a log-log scatter plot of 〈A〉(D) with an R² that is within 90% of that of the best-case fitted trendline from the second criterion. Again, the R² for the predicted scaling relation is calculated across all avalanches, not just the subset above the inferred lower cutoff of power-law behavior (which was found for the first criterion). This cross-validates agreement with theory.

Fourth, the fitted scaling relation exponent must be significantly greater than 1: $(\gamma_f - 1) > \sigma_{\gamma_f}$, where $\sigma_{\gamma_f}$ is the SD of $\gamma_f$ across the dataset. This last requirement eliminates scaling that might be trivial in origin. It is measured after getting the fitted scaling relation exponent for all of the data so that a dataset SD can be determined. It is also necessary to check that the set of scaling relation exponents from the power-law fits to all avalanche sets is significantly different from 1 at the dataset level. A scaling relation exponent equal to 1 suggests a linear relationship between mean size and duration that is not consistent with criticality in neural systems (Haldeman and Beggs, 2005).
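Criteria two through four can be sketched for a single group as below; criterion one comes from the distribution fits and is taken as given. This Python/NumPy version is an illustration under stated assumptions, not the study's code: logarithms are base 10, the intercept of the predicted trendline is chosen by least squares for the fixed predicted slope, and “within 90%” is read as R²(predicted) ≥ 0.9 × R²(fitted). The dataset SD `sigma_gamma_f` would be computed across all groups' γf values.

```python
import numpy as np

def r_squared(y, y_hat):
    """Coefficient of determination of predictions y_hat for observations y."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def scaling_relation_checks(sizes, durations, tau, beta, sigma_gamma_f):
    """Criteria 2-4 for one group of avalanches, using all avalanches."""
    sizes = np.asarray(sizes, dtype=float)
    durations = np.asarray(durations, dtype=float)
    unique_d = np.unique(durations)
    mean_size = np.array([sizes[durations == d].mean() for d in unique_d])
    log_d, log_a = np.log10(unique_d), np.log10(mean_size)

    gamma_f, b_f = np.polyfit(log_d, log_a, 1)          # fitted slope and intercept
    r2_fit = r_squared(log_a, gamma_f * log_d + b_f)

    gamma_p = (beta - 1.0) / (tau - 1.0)                # predicted exponent
    b_p = np.mean(log_a - gamma_p * log_d)              # assumption: least-squares intercept
    r2_pred = r_squared(log_a, gamma_p * log_d + b_p)

    return {
        "gamma_f": gamma_f,
        "gamma_p": gamma_p,
        "criterion_2": r2_fit > 0.95,
        "criterion_3": r2_pred >= 0.9 * r2_fit,         # assumption: reading of "within 90%"
        "criterion_4": (gamma_f - 1.0) > sigma_gamma_f,
    }

# Synthetic avalanches with <A>(D) = D^1.5 exactly (not study data)
d = np.repeat(np.arange(1, 51), 5).astype(float)
res = scaling_relation_checks(d ** 1.5, d, tau=1.5, beta=1.75, sigma_gamma_f=0.1)
```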

Our four-criterion test cannot measure distance from a critical point nor eliminate all risk of false positives. To complete our analysis, we also looked at three additional factors: (1) whether exponent values match exponent values from other experiments as expected from the universality prediction of theory, (2) whether all the exponents within our dataset have similar scaling relation predictions, and (3) whether the avalanches within our dataset exhibit shape collapse across all the recordings.

Obtaining shape collapse and analyzing it quantitatively and qualitatively.

Shape collapse is a very literal manifestation of scale-invariance (also called “self-similarity”) (Sethna et al., 2001; Beggs and Plenz, 2003; Friedman et al., 2012; Pruessner, 2012; Timme et al., 2016). Avalanches of different durations should rise and fall in the same way on average. This average avalanche profile is called a scaling function. The average avalanche profile for avalanches of duration D is predicted to be $\langle A(t, D) \rangle = D^{\gamma - 1} \mathcal{F}(t/D)$, where $D^{\gamma - 1}$ is the power-law scaling coefficient that modulates the height of the profile and $\mathcal{F}$ is the universal scaling function itself (normalized in time). Shape collapse analysis provides an independent estimate of the scaling relation exponent, γSC, which is only expected to be accurate at criticality (Sethna et al., 2001; Scarpetta and de Candia, 2013; Shaukat and Thivierge, 2016), and a visual test of conformity to an empirical scaling function.

Exponent estimation is very sensitive to the unrelated, intermediate rescaling steps involved in combining the avalanches from multiple recordings into one group. Therefore, to get an estimate of the scaling relation exponent for each group, γSC, we averaged the scaling exponents γi found individually for each recording in that group (where i denotes the ith recording and SC denotes shape collapse).

Naturally, individual avalanche profiles are vectors of variable length D. We must first “rescale in time” to make them vectors of equal length without losing track of what each vector's original duration was. We do that by linear interpolation onto 20 evenly spaced points. So, the jth avalanche profile of the ith recording is denoted as a 20-element vector $\vec{\Gamma}_{ij}$, where the top arrow denotes a vector.

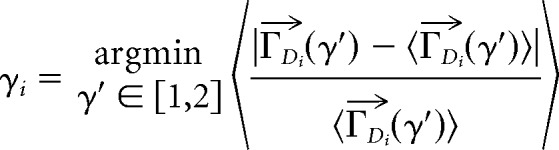

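Next, the set of all profiles from recording i with the exact same duration D, denoted as $\mathbf{\Gamma}_{Di}$ where bold indicates a set, were averaged and divided by a test scaling factor $D^{\gamma'_i - 1}$. We define this as $\vec{\Gamma}'_{Di}(\gamma') = \langle \vec{\Gamma}_{ij} \rangle_{\mathbf{\Gamma}_{Di}} D^{-(\gamma'_i - 1)}$. The prime indicates a test rescaling. The average is over all vectors in the set $\mathbf{\Gamma}_{Di}$. The choice of $\gamma_i$ was optimized using MATLAB's fminsearch function to minimize the mean relative error between the average over all durations, $\langle \vec{\Gamma}'_{Di}(\gamma') \rangle_D$, and the set members $\vec{\Gamma}'_{Di}(\gamma')$, so that for recording i:

$$\gamma_i = \arg\min_{\gamma'} \left\langle \frac{\left| \vec{\Gamma}'_{Di}(\gamma') - \langle \vec{\Gamma}'_{Di}(\gamma') \rangle_D \right|}{\langle \vec{\Gamma}'_{Di}(\gamma') \rangle_D} \right\rangle$$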

This error minimization and applying the rescaling is the “collapse” in “shape collapse.”
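The per-recording collapse can be sketched in Python/NumPy as follows. This is an illustration, not the study's code: a dense grid search over candidate exponents stands in for MATLAB's fminsearch (SciPy's `scipy.optimize.minimize` with `method="Nelder-Mead"` would be the closer analog), the relative-error objective is our reading of the description above, and the function names are hypothetical.

```python
import numpy as np

N_POINTS = 20  # time points per rescaled profile, as in the text

def rescale_in_time(profile, n_points=N_POINTS):
    """Linearly interpolate a profile of any length onto n_points evenly spaced times."""
    profile = np.asarray(profile, dtype=float)
    return np.interp(np.linspace(0, 1, n_points), np.linspace(0, 1, profile.size), profile)

def collapse_error(gamma, mean_profiles):
    """Mean relative error between each duration's rescaled mean profile and the
    average of those profiles across durations."""
    scaled = np.array([p * D ** (-(gamma - 1.0)) for D, p in mean_profiles.items()])
    template = scaled.mean(axis=0)
    return np.mean(np.abs(scaled - template) / np.abs(template))

def fit_collapse_exponent(profiles, durations, gammas=np.linspace(0.5, 3.0, 2501)):
    """Estimate gamma_i for one recording by minimizing the collapse error on a grid."""
    by_duration = {}
    for profile, D in zip(profiles, durations):
        by_duration.setdefault(D, []).append(rescale_in_time(profile))
    mean_profiles = {D: np.mean(group, axis=0) for D, group in by_duration.items()}
    errors = [collapse_error(g, mean_profiles) for g in gammas]
    return gammas[int(np.argmin(errors))]

# Synthetic profiles obeying D^(gamma - 1) * F(t/D) with F linear (not study data)
true_gamma = 1.8
durations = [20, 20, 40, 80, 160]
profiles = [D ** (true_gamma - 1) * (1 + np.linspace(0, 1, D)) for D in durations]
gamma_hat = fit_collapse_exponent(profiles, durations)
```

The group estimate γSC would then be the mean of `gamma_hat` over the recordings in that group.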

Once we have the γi for the avalanches in each individual recording of ongoing activity, we compare the average, γSC = 〈γi〉, with the predicted and fitted scaling relation exponents for the group of recordings, γp and γf (statistical comparison tests are described in a previous section). Thus, quantitative analysis of shape collapse was done by comparing γSC, γp, and γf for each of the 69 groups individually.

Visual assessment of how well avalanche profiles can be described by one universal scaling function supports the quantitative exponent estimation. This was performed by averaging all the profiles within specific duration bins (regardless of trial or group) and plotting them on top of one another. Shape collapse always requires a very large number of avalanches, so we had to combine avalanches from all 69 groups. However, the resting Vm differs from recording to recording and cell to cell. Therefore, avalanche profiles from different recordings are vertically misaligned. To combine avalanche profiles from different recordings, we divided all the profiles by a scalar value unique to each recording: the time average over all the collapsed profiles. This produced rescaled and mean-shifted profiles, denoted by a double prime, $\vec{\Gamma}''_{ij} = \vec{\Gamma}'_{ij} / \langle \Gamma'_{ijk} \rangle$, where k ∈ [1, 20] denotes the interpolated time point and the average runs over all collapsed profiles j and time points k of recording i. The set of avalanches from each recording was thus aligned, but individual variability was preserved, so profiles from different recordings could be averaged without introducing artifacts. This set, $\mathbf{\Gamma}''_{ij}$, contained a total of 106,220 shifted and rescaled profiles for the Vm data.

The set of shifted and rescaled profiles falling into a duration bin is denoted $\mathbf{\Gamma}''_D$. Each duration bin then provides its own estimate of the scaling function, $\mathcal{F} \sim \langle \vec{\Gamma}''_{ij} \rangle_{\mathbf{\Gamma}''_D}$. For each bin, D was defined as the average duration of all constituent profiles. If fewer than 700 avalanches had a particular duration, we iteratively included the next longest duration until we met or exceeded 700 avalanches. This only applied to long durations. The choice of 700 was made because it allowed smooth averaging without excessively wide duration bins.
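The per-recording normalization and the 700-avalanche duration binning can be sketched with NumPy. The text does not say what happens to the longest durations if they never reach 700 avalanches in total; dropping them is our assumption, as are the function names.

```python
import numpy as np

def normalize_recording(collapsed_profiles):
    """Divide one recording's collapsed profiles (n_avalanches x 20) by a single
    scalar: their mean over all profiles and time points."""
    p = np.asarray(collapsed_profiles, dtype=float)
    return p / p.mean()

def binned_scaling_functions(durations, profiles, min_count=700):
    """Pool normalized profiles into duration bins holding >= min_count avalanches.

    Durations are visited from shortest to longest; a duration with too few
    avalanches is merged with the next longest ones until the count is reached.
    Returns a list of (mean duration, mean profile) pairs.
    """
    durations = np.asarray(durations, dtype=float)
    profiles = np.asarray(profiles, dtype=float)
    values, counts = np.unique(durations, return_counts=True)
    bins, members, total = [], [], 0
    for value, count in zip(values, counts):
        members.append(value)
        total += count
        if total >= min_count:
            in_bin = np.isin(durations, members)
            bins.append((durations[in_bin].mean(), profiles[in_bin].mean(axis=0)))
            members, total = [], 0
    # assumption: trailing long durations that never reach min_count are dropped
    return bins
```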

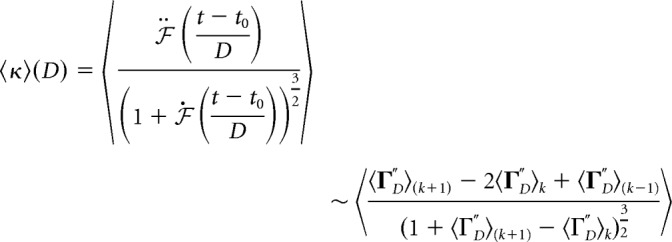

We also assessed the mean curvature of avalanche profiles from the rescaled profile for a particular duration, $\langle \Gamma''_D \rangle$. This allowed us to plot how curvature depends on duration. Mean curvature 〈κ〉 is defined as follows, where k still denotes time points:

|

Model simulations

We simulated a model network consisting of N = 10⁴ binary probabilistic model neurons. The model neurons form a directed random network (Erdős–Rényi random graph) in which the probability that neuron j connects to neuron i is c. In a network of N neurons, this results in a mean in-degree and out-degree of cN. We tested nine unevenly spaced connection probabilities, c ∈ [0.5, 1, 3, 5, 7.5, 10, 15, 20, 25] × 10⁻². As discussed previously (Kinouchi and Copelli, 2006; Larremore et al., 2011a, 2014), the impact of connectivity on network dynamics is nonlinear, so we sampled small connection probabilities more finely while maintaining thorough coverage of intermediate connection probabilities.

The strength of the connection from neuron j to neuron i is quantified in terms of the network adjacency or weight matrix, W, which has a simple and intuitive meaning. For each existing connection from neuron j to neuron i, Wij is the direct change in the probability that neuron i will fire at the next time step if neuron j spikes in the current time step.

The dynamics of this network are well characterized by the largest eigenvalue λ of the network weight matrix W, with criticality occurring at λ = 1 (Kinouchi and Copelli, 2006; Larremore et al., 2011a,b, 2012, 2014). The physical interpretation of λ is a “branching parameter” (Haldeman and Beggs, 2005) that governs the expected number of spikes immediately caused by the firing of one neuron. If λ = 1, then one spike causes one other spike on average; if λ > 1, then one spike causes more than one other spike on average; and if λ < 1, it causes fewer than one.

We tested five values of the largest eigenvalue at, near, and far from criticality, λ ∈ [0.9, 0.95, 1, 1.015, 1.03]. A fraction, χ, of the neurons is designated as inhibitory by multiplying all outgoing connections of each inhibitory neuron by −1. We tested nine values of the fraction of inhibitory neurons in the range from 0 to 0.25, thus including 0.2, the fraction of inhibitory neurons in the mammalian cortex (Meinecke and Peters, 1987). The magnitudes of nonzero weights are independently drawn from a uniform distribution on [0,2η], with mean η = λ/(cN(1 − 2χ)). The maximum eigenvalue is then fine-tuned to exactly the desired value by dividing W by its current maximum eigenvalue and multiplying by the target, W = λW′/λ′, where W′ and λ′ are the weight matrix and its maximum eigenvalue before correction.
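A sketch of the network construction, assuming NumPy, the convention that W[i, j] holds the weight from neuron j to neuron i, and no self-connections (the text does not say whether self-loops are allowed). The function name `build_weights` is hypothetical, and the "largest eigenvalue" is taken here as the eigenvalue with the largest real part, which for these parameters is the real outlier near cNη(1 − 2χ).

```python
import numpy as np

def build_weights(N, c, chi, lam, rng):
    """Random directed network with W[i, j] = weight from neuron j to neuron i."""
    # Erdős–Rényi mask: each directed edge j -> i exists with probability c.
    mask = rng.random((N, N)) < c
    np.fill_diagonal(mask, False)  # assumption: no self-connections
    # Weight magnitudes uniform on [0, 2*eta] with eta = lam / (c N (1 - 2 chi)).
    eta = lam / (c * N * (1.0 - 2.0 * chi))
    W = np.where(mask, rng.uniform(0.0, 2.0 * eta, size=(N, N)), 0.0)
    # A fraction chi of neurons is inhibitory: negate all of their outgoing
    # weights, i.e. the columns of W that belong to those neurons.
    n_inh = int(round(chi * N))
    inhibitory = rng.choice(N, size=n_inh, replace=False)
    W[:, inhibitory] *= -1.0
    # Fine-tune: rescale so the largest eigenvalue equals lam exactly.
    lam_prime = np.linalg.eigvals(W).real.max()
    W = lam * W / lam_prime
    excitatory = np.setdiff1d(np.arange(N), inhibitory)
    return W, excitatory
```

For N = 10⁴ the dense `np.linalg.eigvals` call is slow; an iterative solver for the leading eigenvalue only (for example `scipy.sparse.linalg.eigs` with `k=1`) would be the usual substitute.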

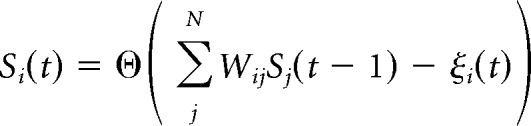

The binary state Si(t) of neuron i at time t denotes whether the model neuron spikes (Si(t) = 1) or does not spike (Si(t) = 0) at time t. At each time step, the states of all neurons are updated synchronously according to the following update rule:

$$S_i(t) = \Theta\left(\sum_{j=1}^{N} W_{ij}\, S_j(t-1) - \xi_i(t)\right)$$

where ξi(t) is a random number drawn from a uniform distribution on [0,1], and Θ is the Heaviside step function. In addition to this update rule, a refractory period of one time step (corresponding to ∼2 ms) was imposed for certain parameter conditions. Each simulation began by activating one randomly chosen excitatory neuron and continued until overall network activity had ceased. The process was then repeated.
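The synchronous update with an optional one-step refractory period can be sketched as a single cascade seeded by one excitatory neuron. The helper `run_cascade` and its `max_steps` cap (a guard for the ceaseless regime, where activity may never stop) are assumptions for illustration.

```python
import numpy as np

def run_cascade(W, excitatory, rng, refractory=False, max_steps=10_000):
    """Simulate one cascade of the binary probabilistic network.

    Returns a (T, N) boolean array of states S(t), starting from a single
    randomly chosen excitatory neuron and stopping when activity ceases
    or after max_steps updates.
    """
    N = W.shape[0]
    S = np.zeros(N, dtype=bool)
    S[rng.choice(excitatory)] = True
    history = [S.copy()]
    for _ in range(max_steps):
        if not S.any():
            break  # overall network activity has ceased
        # Input P_i(t) = sum_j W_ij S_j(t-1); neuron i spikes if P_i(t) > xi_i(t) ~ U[0, 1).
        P = W @ S.astype(float)
        S_next = P > rng.random(N)
        if refractory:
            S_next &= ~S  # one-step refractory period: no spike right after a spike
        S = S_next
        history.append(S.copy())
    return np.array(history)
```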

From the simulated binary states of 10⁴ model neurons, we extracted three measures of simulated activity. First, the network activity $F(t) = \frac{1}{N}\sum_{i=1}^{N} S_i(t)$ is the fraction of neurons spiking at time t. Second, the input to model neuron i at time t is $P_i(t) = \sum_{j=1}^{N} W_{ij}\, S_j(t-1)$, which is almost always positive for our parameters. Note that $P_i'(t) = P_i(t)\,\Theta(P_i(t))$ directly represents the probability for neuron i to spike at time t. Third, we constructed a proxy for the Vm signal, $\Phi_i(t) = (\alpha_h * P_i)(t)$, by convolving the input Pi(t) with an alpha function, αh(t), with h = 2 time steps (assumed to be ∼4 ms).
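The three simulated measures might be computed as below. The exact form of αh is not recoverable from the text, so this sketch assumes the common alpha-function shape (t/h)·exp(1 − t/h), which peaks at 1 when t = h, truncated after `kernel_len` steps; `network_measures` is a hypothetical name.

```python
import numpy as np

def network_measures(spikes, W, neuron, h=2.0, kernel_len=20):
    """Compute F(t), P_i(t) and the Vm proxy Phi_i(t) from a (T, N) spike array."""
    spikes = np.asarray(spikes, dtype=float)
    T, N = spikes.shape
    # F(t): fraction of neurons spiking at time t.
    F = spikes.sum(axis=1) / N
    # P_i(t) = sum_j W_ij S_j(t-1); it is defined from t = 1 onward.
    P = spikes[:-1] @ W[neuron]
    # Assumed alpha-function shape (t/h) exp(1 - t/h).
    t = np.arange(kernel_len, dtype=float)
    alpha = (t / h) * np.exp(1.0 - t / h)
    # Causal convolution, truncated to the length of P.
    Phi = np.convolve(P, alpha)[: len(P)]
    return F, P, Phi
```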

A total of 405 parameter combinations (9 connection densities × 9 inhibition fractions × 5 maximum eigenvalues) were simulated, each 10 times. Based on the connection probability c and the fraction of inhibition χ, we distinguish four regions in parameter space, classified according to the behavior of the model at criticality (λ = 1).

The first region is the “positive weights” region, defined by χ = 0. Without inhibition, activity grows or dies out in accordance with the branching parameter. With moderate inhibition and dense connectivity, there is a region of parameter space we call “quiet”; activity lasts only slightly longer than in a system with no inhibition. This region is defined by the ex post facto boundaries $c \ge e^{11\chi}/25$ and χ > 0. Further increasing inhibition relative to connection density produces behavior resembling “up and down” states (or “telegraph noise”) (Sachdev et al., 2004; Millman et al., 2010). We call this the “switching” regime because network activity switches between a low mean and a high mean. This region is defined by $c < e^{11\chi}/25$, $c \ge (10e^{12\chi} - 13)/100$, and χ > 0. When inhibition is high relative to connection density, the system enters the “ceaseless” region, where stimulating one neuron causes activity that effectively never dies out. An especially attractive feature of this model is that the “ceaseless” and “switching” regimes exhibit sustained self-generated activity, which provides a way to model spontaneous neural activity without externally imposed firing patterns.
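The region boundaries can be encoded directly. This sketch reads the boundary expressions as $e^{11\chi}/25$ and $(10e^{12\chi} - 13)/100$, with exponents in χ; the function `regime` is hypothetical.

```python
import math

def regime(c, chi):
    """Classify a (connection probability, inhibitory fraction) pair into a region."""
    if chi == 0:
        return "positive weights"
    upper = math.exp(11 * chi) / 25               # quiet / switching boundary
    lower = (10 * math.exp(12 * chi) - 13) / 100  # switching / ceaseless boundary
    if c >= upper:
        return "quiet"
    if c >= lower:
        return "switching"
    return "ceaseless"
```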

Refractoriness has been studied in the network without inhibition: dynamic range was inversely proportional to the refractory period (Larremore et al., 2011a), but the empirical branching parameter (criticality) displayed no dependence on the refractory period (Kinouchi and Copelli, 2006). In studies that featured inhibition and introduced ceaselessness, no refractory period was used (Larremore et al., 2014). However, we found that, for some networks in the switching regime, the maximum eigenvalue was a better predictor of the empirical branching ratio if the refractory period was one time step. Because this relationship is central to our understanding of criticality in this model, we ran an initial testing cycle before each simulation to decide whether to set the refractory period to one time step or to zero. Doing so ensures that the maximum eigenvalue of connectivity remains a good indicator of critical-like behavior in all regimes, while keeping the model consistent with prior studies.

We performed avalanche analysis on each of the simulated signals using the methods described above for Vm recordings. If the network was in the switching regime, we analyzed only the periods when the network was in the mode (high or low mean) in which it spent the majority of its time. As before, the 25th percentile defined the avalanche threshold. If the signal had negative values, as in the case of single-neuron Vm proxies in networks with inhibition, the signal was shifted by subtracting the second percentile. To obtain good statistics, we continued stimulating and extracting avalanches until a simulation either reached 10⁴ avalanches or reached 5 × 10³ avalanches together with a very large file size or a very long computation time. This ensured that there were between 2000 and 10,000 avalanches per trial.

Data and software accessibility

All raw data are available at https://github.com/jojker/continuous_signal_avalanche_analysis and the code developed for this analysis is available upon request to the corresponding author.

Results

Single-neuron Vm fluctuations are thought to be dominated by synaptic inputs from multitudes of presynaptic neurons (Stepanyants et al., 2002; Brunel et al., 2014; Petersen, 2017). Because the way neurons integrate their diverse inputs is central to information processing in the brain, it is important that neuroscience gain a thorough understanding of the relationship between subthreshold Vm fluctuations and population activity. A basic step is to compare statistical analyses, especially analyses in which a meaningful relationship is expected. We investigated whether an avalanche analysis on Vm fluctuations would reveal the same signatures of scale-freeness and critical network dynamics found in measures of population activity (Fig. 1) (Friedman et al., 2012; Shew et al., 2015; Marshall et al., 2016). To address this comparison across organizational levels, we recorded Vm fluctuations from 51 pyramidal neurons in visual cortex of 14 turtles and assessed evidence for critical network dynamics from these recordings.

In a model investigation, we corroborated these results and evaluated the conditions needed to infer dynamical network properties from the inputs to single neurons. Finally, we extended the analysis to other commonly recorded time series of neural activity to compare their information content about dynamical network properties with that of Vm fluctuations.

Vm fluctuations reveal signatures of critical point dynamics

We obtained whole-cell recordings from pyramidal neurons in the visual cortex of the turtle ex vivo eye-attached whole-brain preparation (Fig. 2A). Recorded Vm fluctuations taken in the dark (no visual stimulation) were interpreted as ongoing activity. We analyzed the recorded ongoing Vm fluctuations employing the concept of “neuronal avalanches” (Beggs and Plenz, 2003; Poil et al., 2012; Shew et al., 2015), which are positive fluctuations of network activity. For continuous time series such as the Vm recording, one selects a threshold and a baseline. We defined a neuronal avalanche based on the positive threshold crossing followed by a negative threshold crossing of the Vm time series (Poil et al., 2012; Hartley et al., 2014; Larremore et al., 2014; Karimipanah et al., 2017a). We quantified each neuronal avalanche by its size, A, defined as the area between the curve and the baseline, and its duration D, defined as the time between threshold crossings (Fig. 2B).
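A sketch of this avalanche definition for a sampled signal, assuming the 25th-percentile threshold and second-percentile baseline given in the Figure 2 caption; the function name `extract_avalanches` and the choice to discard excursions cut off at either end of a recording are assumptions.

```python
import numpy as np

def extract_avalanches(x, dt=1.0, thresh_pct=25, base_pct=2):
    """Avalanches of a continuous signal as excursions above a percentile threshold.

    Returns sizes A (area between signal and baseline during each excursion)
    and durations D (time between upward and downward threshold crossings).
    """
    x = np.asarray(x, dtype=float)
    threshold = np.percentile(x, thresh_pct)
    baseline = np.percentile(x, base_pct)
    above = x > threshold
    # +1 marks an upward threshold crossing, -1 a downward crossing.
    edges = np.diff(above.astype(int))
    starts = np.flatnonzero(edges == 1) + 1
    ends = np.flatnonzero(edges == -1) + 1
    if above[0]:
        ends = ends[1:]  # drop the excursion already under way at the start
    n = min(len(starts), len(ends))  # drop an excursion still open at the end
    starts, ends = starts[:n], ends[:n]
    sizes = np.array([(x[s:e] - baseline).sum() * dt for s, e in zip(starts, ends)])
    durations = (ends - starts) * dt
    return sizes, durations
```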

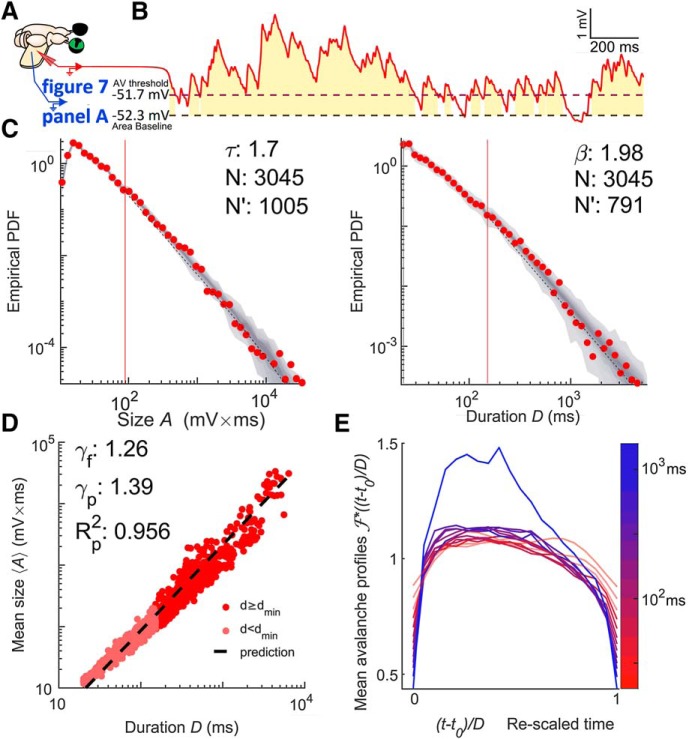

Figure 2.

Vm fluctuations reveal signatures of critical point dynamics. A, Whole-brain eye-attached preparation for joint Vm and LFP recording. B, Vm (red) thresholded at the 25th percentile (dashed line). Avalanches are defined by excursions above this threshold. The gold region represents the size of the avalanche, which is the area between the signal and its second percentile (dashed line). The duration of the avalanche is the duration of the excursion. C, Size (left) and duration (right) distributions of Vm-inferred avalanches when data are combined from seven recordings from the same neuron falling in the same 20 min period. The comparison quotients (q) are both above 0.10 (0.878 and 0.874, respectively), indicating that the size and duration distributions were better fits to power laws at the given cutoff than 87% of power laws produced by a random number generator with the same parameters (shown as a gray density cloud). N′ indicates the number of avalanches above the lower cutoff of the fit (red vertical line) and N indicates the total number of avalanches. The size distribution exponent is denoted τ and the duration distribution exponent β. D, The scaling relation relates average avalanche size to each given duration. The predicted exponent (γp) explains 95.6% of the variance of a log–log representation of the data. A linear least-squares regression explains 96.7% and gives the fitted exponent (γf). Therefore, γp comes within 1.2% of the best linear explanation despite a 10% difference in exponent values. E, Shape collapse. Each line represents the average time course of an avalanche of a given duration. The color indicates the duration according to the scale bar. Durations below 50 ms (the lower bound on turtle pyramidal time constants) are made translucent and slightly thickened. This shape collapse represents the global collapse across all recordings in all cells and confirms that a universal scaling function, $\mathcal{F}(t/D)$, is present.
For the seven recordings in the group represented in C and D, the mean scaling relation exponent derived from shape collapse was γSC = 1.23, a disagreement of 2.2% relative to γf.

To quantify the statistics of avalanche properties, we applied concepts and notation from the field of “critical phenomena” in statistical physics (Nishimori and Ortiz, 2011; Pruessner, 2012). Because the critical point is such a small target for any naturally occurring self-organization (Pruessner, 2012; Hesse and Gross, 2014; Cocchi et al., 2017) and there is considerable risk of false positives (Taylor et al., 2013; Hartley et al., 2014; Touboul and Destexhe, 2017; Priesemann and Shriki, 2018), asserting criticality in a new system or with a new tool requires extraordinary evidence. Because this is a new tool, we defined four criteria with quantifiable standards, based on avalanche power laws, for concluding that a system is consistent with criticality, and we completed this battery of tests with shape collapse, a geometrical analysis of self-similarity in the avalanche profiles (see “Experimental design and statistical analysis” section).

In brief, we found that the fluctuations treated as avalanches had size and duration distributions consistent with power laws (Fig. 2C), $P(A) \propto A^{-\tau}$ and $P(D) \propto D^{-\beta}$, with exponents matching widely reported values (Beggs and Plenz, 2003; Priesemann et al., 2009, 2014; Hahn et al., 2010; Klaus et al., 2011; Friedman et al., 2012; Shriki et al., 2013; Arviv et al., 2015; Shew et al., 2015; Karimipanah et al., 2017a,b), obeyed the scaling relation (Fig. 2D), and exhibited shape collapse over an expansive set of durations (Fig. 2E).

Specifically, of the 51 recording groups featuring data from the first 20 min period of recording from one cell, 98% had power laws in both size and duration distributions. The exponent values for the size distribution were τ = 1.91 ± 0.38 (median ±SD). Exponent values for the duration distribution were β = 2.06 ± 0.48. Of the 51 neurons with a recording group from the first 20 min, 18 had an additional 20 min period spanning multiple recordings. All these 18 groups had power laws in both size and duration; the exponent values for the size distribution were τ = 1.87 ± 0.29 and the exponent values for the duration distribution were β = 2.21 ± 0.39.

It is also important to confirm that power-law behavior extends across several orders of magnitude of avalanche durations. We demonstrate a power-law distribution over 2.45 ± 0.39 orders of magnitude of duration. For the scaling relation, we found a larger span with 2.62 ± 0.23 orders of magnitude across our whole avalanche duration range.
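A power-law fit and goodness-of-fit quotient could be sketched as below. This assumes a continuous power law above a fixed lower cutoff xmin, the maximum-likelihood exponent estimate, and a Kolmogorov–Smirnov comparison against synthetic power laws in the style of Clauset, Shalizi, and Newman; how the cutoff was selected and the exact statistic behind the q values in Figure 2 are described in the “Experimental design and statistical analysis” section and may differ. All function names are hypothetical.

```python
import numpy as np

def fit_tau(x, xmin):
    """Continuous power-law MLE exponent for the tail x >= xmin."""
    tail = np.asarray(x, dtype=float)
    tail = tail[tail >= xmin]
    return 1.0 + len(tail) / np.log(tail / xmin).sum(), tail

def ks_distance(tail, tau, xmin):
    """Largest gap between the empirical CDF and the fitted power-law CDF."""
    tail = np.sort(tail)
    n = len(tail)
    model = 1.0 - (tail / xmin) ** (1.0 - tau)
    upper = np.arange(1, n + 1) / n - model
    lower = model - np.arange(0, n) / n
    return max(upper.max(), lower.max())

def comparison_quotient(x, xmin, n_synth=500, seed=0):
    """Fraction of synthetic power laws that fit worse (larger KS) than the data."""
    rng = np.random.default_rng(seed)
    tau, tail = fit_tau(x, xmin)
    d_obs = ks_distance(tail, tau, xmin)
    worse = 0
    for _ in range(n_synth):
        # Inverse-CDF sampling from a power law with the fitted exponent.
        synth = xmin * (1.0 - rng.random(len(tail))) ** (-1.0 / (tau - 1.0))
        tau_s, _ = fit_tau(synth, xmin)
        worse += ks_distance(synth, tau_s, xmin) >= d_obs
    return tau, worse / n_synth
```

Data drawn from a true power law yield q values spread over [0, 1], whereas clearly non-power-law data (for example, exponentially distributed values) yield q near 0.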

Another statistic crucial to signatures of criticality measures the relationship between the power laws describing the size and duration of avalanches (Sethna et al., 2001; Beggs and Timme, 2012; Friedman et al., 2012). If the average avalanche size also scales with duration according to $\langle A \rangle(D) \propto D^{\gamma}$, then the exponent γ is not independent but depends on the exponents τ and β according to γ = (β − 1)/(τ − 1), regardless of criticality (Scarpetta et al., 2018). For critical systems, this condition is enforced because avalanche profiles follow the same shape for all durations; the prediction is therefore believed to be more precise for critical than for noncritical systems, and the exact values are important (Sethna et al., 2001; Nishimori and Ortiz, 2011). We found that average avalanche size scaled with duration according to a power law, $\langle A \rangle(D) \propto D^{\gamma}$, and that the observed values of τ and β provided a good prediction, γ = (β − 1)/(τ − 1), of the fitted γ (Fig. 2D).

Specifically, of the 51 recording groups from the first 20 min period, the fitted scaling relation exponents were γf = 1.19 ± 0.05 and the predicted scaling relation exponents were γp = 1.17 ± 0.35. For the additional second 20 min period (18 groups/neurons), the fitted scaling relation exponents were γf = 1.21 ± 0.05 and the predicted scaling relation exponents were γp = 1.28 ± 0.21.
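The predicted and fitted exponents and their R² values can be computed as in the following sketch. It assumes that the fit is done on log10 of the mean size per unique duration and that the predicted line keeps the slope γp with a least-squares intercept; both are illustrative choices, and `scaling_relation` is a hypothetical helper.

```python
import numpy as np

def scaling_relation(sizes, durations, tau, beta):
    """Predicted (gamma_p) vs fitted (gamma_f) exponents of <A>(D) ~ D^gamma."""
    sizes, durations = np.asarray(sizes, float), np.asarray(durations, float)
    D = np.unique(durations)
    mean_A = np.array([sizes[durations == d].mean() for d in D])
    logD, logA = np.log10(D), np.log10(mean_A)
    # Fitted exponent: least-squares slope in log-log coordinates.
    gamma_f, intercept_f = np.polyfit(logD, logA, 1)
    # Predicted exponent from the size and duration exponents.
    gamma_p = (beta - 1.0) / (tau - 1.0)

    def r_squared(pred):
        return 1.0 - ((logA - pred) ** 2).sum() / ((logA - logA.mean()) ** 2).sum()

    r2_f = r_squared(gamma_f * logD + intercept_f)
    # Predicted line: slope fixed at gamma_p, intercept chosen by least squares.
    intercept_p = (logA - gamma_p * logD).mean()
    r2_p = r_squared(gamma_p * logD + intercept_p)
    return gamma_p, gamma_f, r2_p, r2_f
```

As a worked check, inserting the median exponents τ = 1.91 and β = 2.06 into the formula gives γp = 1.06/0.91 ≈ 1.16; the median of per-group predictions need not equal this value.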

To effect a more convincing analysis, we defined four stringent criteria that must be independently satisfied before any set of avalanches can be deemed consistent with network dynamics near a critical point (see “Experimental design and statistical analysis” section). Overall, of the 69 groups of recordings (which include 18 of the 51 cells twice), 98.6% had power laws in both the size and duration distributions of avalanches, and 92.8% had scaling relations that were well fit by power laws (R² > 0.95). All were deemed nontrivial by the test (γf − 1) > σγf, where σγf = 0.051 is the dataset SD; the smallest value was γf = 1.094. The fourth criterion, that the R² of the predicted scaling relation be within 10% of that of the best-fit scaling relation, was satisfied 85.6% of the time. Taken together, these criteria can neither measure distance from a critical point nor eliminate false positives. However, the takeaway is that 81% of all recording groups examined were judged to be consistent with network activity near a critical point.

Separating out the results, 76% of the 51 recording groups from the first 20 min period and 94% of the recording groups from the second 20 min period were judged consistent with criticality. Both periods are thus consistent with criticality, but recording groups from the second period meet our criteria much more frequently. This could be an effect related to the length of time we are able to maintain a patch, or a better patch could result in both longer stable recordings and better inference of dynamical network properties.
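The four criteria can be combined into a single check. This is a hypothetical encoding: it assumes criterion 1 means q > 0.10 for both distributions (the threshold quoted in the Figure 2 caption) and reads "within 10%" as a relative R² shortfall of at most 10%.

```python
def consistent_with_criticality(q_size, q_dur, r2_f, r2_p, gamma_f,
                                sigma_gamma=0.051, q_min=0.10):
    """Hypothetical encoding of the four standardized criteria."""
    # 1. Power laws in both the size and duration distributions.
    power_laws = q_size > q_min and q_dur > q_min
    # 2. Scaling relation well fit by a power law.
    good_scaling = r2_f > 0.95
    # 3. Nontrivial scaling: gamma_f exceeds 1 by more than the dataset SD.
    nontrivial = (gamma_f - 1.0) > sigma_gamma
    # 4. Predicted relation explains nearly as much variance as the best fit
    #    (read here as a relative R^2 shortfall of at most 10%).
    prediction_ok = (r2_f - r2_p) / r2_f <= 0.10
    return power_laws and good_scaling and nontrivial and prediction_ok
```

For instance, the group in Figure 2 (q = 0.878 and 0.874; R² = 0.967 fitted and 0.956 predicted) passes criteria 1, 2, and 4, so the outcome then depends only on its γf.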

To further discount the possibility of false positives, we investigated whether the avalanches within our dataset exhibited “shape collapse” (Fig. 2E). The scaling relation is a consequence of self-similarity (Sethna et al., 2001; Papanikolaou et al., 2011; Friedman et al., 2012; Marshall et al., 2016; Shaukat and Thivierge, 2016; Cocchi et al., 2017). In other words, avalanches all have the same “hump shape” no matter how long they last; this shape is called the scaling function or avalanche profile. Shape collapse also provides an independent estimate of the scaling relation exponent γ. If the estimated exponent, γSC, matches the fitted exponent, γf, this is considered strong evidence of critical point behavior. For critical systems, the average profile of an avalanche of duration D is given as $A(t,D) = D^{\gamma-1}\,\mathcal{F}(t/D)$, where $D^{\gamma-1}$ is a coefficient governing the scaling of height with duration and $\mathcal{F}(t/D)$ is the scaling function that describes the universal shape of an avalanche at any duration. The similarity of avalanche profiles of different durations is judged qualitatively (Sethna et al., 2001; Beggs and Plenz, 2003; Friedman et al., 2012; Pruessner, 2012; Timme et al., 2016) by plotting empirically estimated scaling functions for several durations on top of one another after they have been rescaled as part of the process of estimating γSC.
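One common way to estimate γSC numerically is to scan candidate exponents, rescale each duration's average profile by D^(γ−1) on a common t/D grid, and pick the γ that minimizes the spread between rescaled profiles. The sketch below assumes 20 grid points (matching k ∈ [1,20] in the Methods) and a range-normalized variance as the collapse error; it is an illustration, not necessarily the procedure used here, and `shape_collapse_gamma` is a hypothetical name.

```python
import numpy as np

def shape_collapse_gamma(profiles, n_points=20, gammas=np.linspace(1.0, 2.0, 1001)):
    """Estimate gamma by collapsing average profiles A(t, D) = D^(gamma-1) F(t/D).

    profiles: list of 1-D arrays, each the average profile for one duration D,
    sampled at D equally spaced time steps.
    """
    grid = (np.arange(n_points) + 0.5) / n_points  # common rescaled time t/D
    D = np.array([len(p) for p in profiles], dtype=float)
    # Interpolate every profile onto the common t/D grid.
    interp = np.array([
        np.interp(grid, (np.arange(len(p)) + 0.5) / len(p), p) for p in profiles
    ])
    errors = []
    for g in gammas:
        scaled = interp / D[:, None] ** (g - 1.0)
        # Spread across durations, normalized by the squared range so that
        # shrinking all profiles (large gamma) is not rewarded.
        spread = scaled.var(axis=0).mean() / (scaled.max() - scaled.min()) ** 2
        errors.append(spread)
    return float(gammas[int(np.argmin(errors))])
```

Synthetic profiles built as D^0.2·sin(πt/D) for several durations collapse best near γ = 1.2, as the scaling form predicts.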

We obtained shape collapse across more than one order of magnitude (between ∼50 and 700 ms) of avalanche durations. Below 50 ms, distinct peaks arose. Above 700 ms, the profile height grew faster than the power-law scaling that worked for shorter duration avalanches. This is observed as an apparent outlier in Figure 2E. This likely marks the point where avalanches become so long and so large that they begin to weakly activate the nonlinear action potential mechanism of the neuron. When comparing to plausible alternatives to Vm in later sections, we included analysis of mean curvature and avalanche profile peak height along with visual inspection of shape collapse quality (Fig. 2E). The shape collapse plots begin with short avalanches (20 ms) that are below the median lower cutoff for power-law behavior (which was 256 ms) but are well predicted by the scaling relation.

The exponents estimated from shape collapse were a good match for both the predicted and fitted scaling relation exponents. The groups of recordings from the first 20 min yielded γSC = 1.1868 ± 0.042, with an average matched absolute percentage error of 1.3% with respect to γf. A matched-pairs signed-rank test of the median difference showed that γf was not significantly different from γSC (simple difference effect size rSDF = 0.089, p = 0.063, where p < 0.05 would indicate a difference).
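The two summary statistics can be sketched with NumPy alone. This assumes the simple difference effect size is the matched-pairs rank-biserial correlation, (R₊ − R₋)/(R₊ + R₋), computed on the signed ranks of γSC − γf; the p-value itself would come from a signed-rank test such as `scipy.stats.wilcoxon`, which is not reproduced here. Function names are hypothetical.

```python
import numpy as np

def average_ranks(x):
    """Ranks 1..n, with tied values sharing their average rank."""
    uniq, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    first = np.cumsum(counts) - counts + 1  # first rank of each distinct value
    return (first + (counts - 1) / 2.0)[inverse]

def matched_comparison(gamma_sc, gamma_f):
    """Mean absolute percentage error and matched-pairs rank-biserial correlation."""
    gamma_sc, gamma_f = np.asarray(gamma_sc, float), np.asarray(gamma_f, float)
    mape = 100.0 * np.mean(np.abs(gamma_sc - gamma_f) / gamma_f)
    d = gamma_sc - gamma_f
    d = d[d != 0]  # zero differences are dropped, as in the signed-rank test
    ranks = average_ranks(np.abs(d))
    r_plus, r_minus = ranks[d > 0].sum(), ranks[d < 0].sum()
    # Simple difference: favorable minus unfavorable share of the rank sum.
    return mape, (r_plus - r_minus) / (r_plus + r_minus)
```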

This stage of the analysis showed that, when fluctuations of Vm are treated like neuronal avalanches, they are consistent with criticality by the standards of power laws governing size and duration. We also showed that Vm avalanches exhibit geometrical self-similarity across more than one order of magnitude. These factors showed that the cortical circuits driving fluctuations of Vm are consistent with activity near a critical point according to standards of self-similarity. In our next investigation, we compared our results with population data from microelectrode arrays and other results from the literature to determine whether Vm fluctuations are consistent with the universality expected of behavior near critical points and whether they can be used to measure dynamical network properties.

Vm fluctuations are consistent with avalanches from previously obtained microelectrode array LFP recordings

We sought to interpret our results from the analysis of single-neuron Vm fluctuations in the context of the more commonly used analysis of multiunit spiking activity (Friedman et al., 2012; Shew et al., 2015; Marshall et al., 2016; Karimipanah et al., 2017a) or multisite LFP event detection from MEA data (also known as “multielectrode array”) (Beggs and Plenz, 2003; Shew et al., 2015).

In a previous study, avalanche analysis was performed on LFP multisite MEA recordings from the visual cortex of a different set of 13 ex vivo eye-attached whole-brain preparations in turtles (Shew et al., 2015). Avalanches were inferred from the steady-state (after on response transients but before off response transients) of responses to visual presentation of naturalistic movies as opposed to the resting-state activity between presentations (which is where the Vm data come from). Avalanche size and duration distributions followed power laws.

The median exponents were τ = 1.94 ± 0.27 for the avalanche size distributions and β = 2.14 ± 0.32 for the avalanche duration distributions (Fig. 3A). A scaling relation existed, with average exponent γf = 1.20 ± 0.06 fitted to the data and γp = 1.19 ± 0.07 from the average of the predicted scaling based on theory. The scaling power law extended over one to two orders of magnitude. Critical branching was more firmly established in Shew et al. (2015) by analyzing the branching ratio. The branching ratio is the average ratio of events (i.e., spikes) from one moment in time to the next, but only during identified avalanches. A critical branching network has a branching ratio of one, but empirically estimating it requires discrete events and an assiduous choice of time binning for analysis. Shew et al. (2015) found a branching ratio near one that was robust to reasonable choices of time bin and that varied with the choice of time bin as expected for critical branching. We are not aware of methods for estimating a branching ratio in continuous signals like Vm.
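For discrete events, the branching ratio described here can be sketched as follows, assuming events are binned at width Δt, that every nonempty bin belongs to an avalanche, and that the bin after an avalanche ends contributes a ratio of zero. This is one of several definitions in use; `bin_events` and `branching_ratio` are hypothetical helpers.

```python
import numpy as np

def bin_events(event_times_ms, bin_ms, t_max_ms):
    """Count events in consecutive bins of width bin_ms from 0 to t_max_ms."""
    edges = np.arange(0.0, t_max_ms + bin_ms, bin_ms)
    counts, _ = np.histogram(event_times_ms, bins=edges)
    return counts

def branching_ratio(counts):
    """Average of n(t+1)/n(t) over all nonempty bins, i.e. within avalanches."""
    counts = np.asarray(counts)
    ratios = [counts[t + 1] / counts[t]
              for t in range(len(counts) - 1) if counts[t] > 0]
    return float(np.mean(ratios)) if ratios else float("nan")
```

Recomputing `branching_ratio(bin_events(times, bin_ms, t_max))` for several `bin_ms` values is one way to examine how the estimate depends on the choice of time bin.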

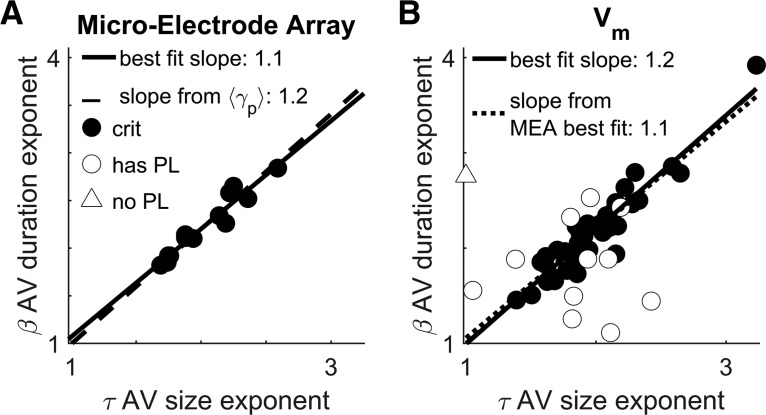

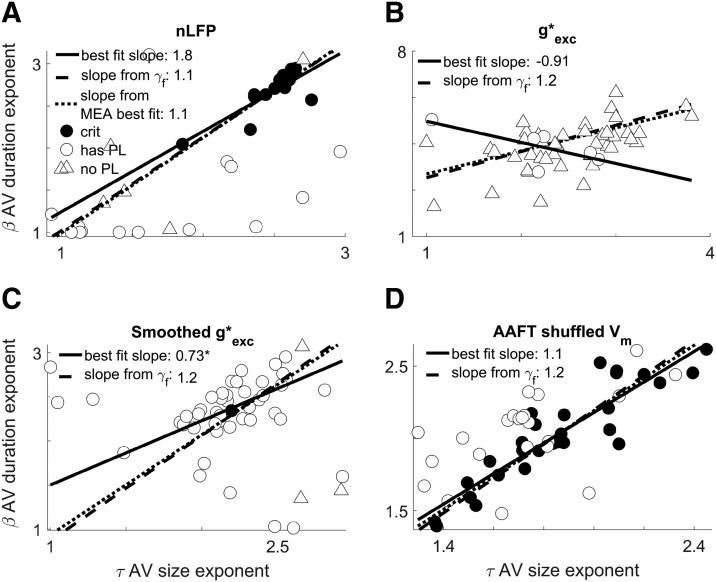

Figure 3.

Vm fluctuations are consistent with avalanches from previously obtained microelectrode array data. Each panel plots the exponents governing power-law scaling of avalanche duration versus the exponents governing avalanche size. Circles indicate data that were best fit by a power law in both size and duration. Triangles indicate otherwise (in that case, the MLE of a would-be power-law fit, the “scaling index,” is plotted; Jeżewski, 2004). Filled circles indicate data that meet all four standardized criteria for judging data to be consistent with criticality. A, Reproduction from Shew et al. (2015) showing the results of avalanche analysis on microelectrode array data collected during the steady state of stimulus presentation in an otherwise identical experimental preparation. The exponent values appear to covary to maintain a stable value of the scaling relation, γp = (β − 1)/(τ − 1). The correlation between β and τ was high (see “Predicted scaling relation exponent is more stable than avalanche size or duration exponents” section). B, Results of avalanche analysis performed on fluctuations in subthreshold Vm. We found power laws with closely matching exponents and the same scaling relation with a similar level of stability. The correlation between β and τ was high (see “Predicted scaling relation exponent is more stable than avalanche size or duration exponents” section).

The avalanche size, duration, and scaling relation exponents obtained from Vm fluctuations (Fig. 3B) were not distinguishable from the MEA-obtained set. The fitted scaling relation exponent γf had the least variability of the three kinds of exponents, so it is the most likely to reveal a difference. Thus, a nonsignificant difference in γf suggests universality more strongly than a nonsignificant difference in the avalanche size (τ) or duration (β) distribution exponents.

When we limited our analysis to the first 20 min period that contained multiple recordings (51 cells), neither the fitted nor the predicted scaling relation exponent was significantly different from the MEA results: the Wilcoxon rank-sum difference of medians test against the MEA data yielded rSDF = 0.164, p = 0.37 and rSDF = 0.08, p = 0.67, respectively. The median exponent values for the size and duration distributions were also not significantly different from the medians of the MEA data (rSDF = 0.164, p = 0.37 and rSDF = 0.204, p = 0.265, respectively).
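For two independent samples, the simple difference effect size can be sketched as the proportion of cross-sample pairs favoring one sample minus the proportion favoring the other (the rank-biserial correlation). This is an assumption about how rSDF was computed; a p-value would come from a rank-sum test such as `scipy.stats.mannwhitneyu`. The function name is hypothetical.

```python
import numpy as np

def simple_difference_unmatched(x, y):
    """Rank-biserial (simple difference) effect size for two independent samples."""
    x = np.asarray(x, float)[:, None]  # column: first sample
    y = np.asarray(y, float)[None, :]  # row: second sample
    n_pairs = x.size * y.size
    favorable = (x > y).sum()      # pairs where the first sample is larger
    unfavorable = (x < y).sum()    # pairs where the second sample is larger
    return (favorable - unfavorable) / n_pairs
```

Because it is a difference of proportions, the statistic always lies in [−1, 1].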

These results establish Vm fluctuations as an informative gauge of high-dimensional cortical activity, while also demonstrating that the power-law characteristics are universal properties of the brain by showing a close match between data at different scales and under different conditions. Further underscoring universality, our results are also similar to critical exponents measured in other animals, such as τ = 1.8 in anesthetized cats in vivo (Hahn et al., 2010). Although we did not conduct an exhaustive literature search, others have conducted partial surveys (Ribeiro et al., 2010; Priesemann et al., 2014).

Single-neuron estimate of network dynamics is optimized at the network critical point

To gain deeper insight into the relation between single-neuron input and network activity, we investigated a model network of probabilistic integrate-and-fire model neurons (Kinouchi and Copelli, 2006; Larremore et al., 2011a,b, 2012, 2014; Karimipanah et al., 2017a,b). This model network contains fundamental features of cortical populations, such as low connectivity, inhibition, and spiking, while being sufficiently tractable for mathematical analysis (see “Model simulations” section).

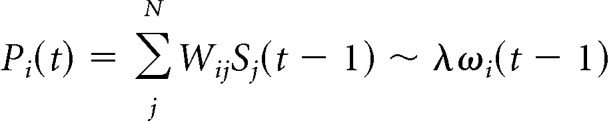

In brief, the model network consists of N = 10⁴ binary probabilistic model neurons (Fig. 4A). The connection probability c results in a mean in-degree and out-degree of cN. The connection strength from neuron j to neuron i is quantified in terms of the network adjacency matrix W. Each connection strength Wij is drawn from a distribution of (initially) positive numbers with mean η, where the distribution is uniform on [0,2η]. A fraction χ of the neurons is designated as inhibitory; that is, their outgoing connections are made negative. The binary state Si(t) of neuron i is updated according to $S_i(t) = \Theta\left(\sum_{j=1}^{N} W_{ij}\, S_j(t-1) - \xi_i(t)\right)$, where ξi(t) is a random number between 0 and 1 drawn from a uniform distribution and Θ is the Heaviside step function.

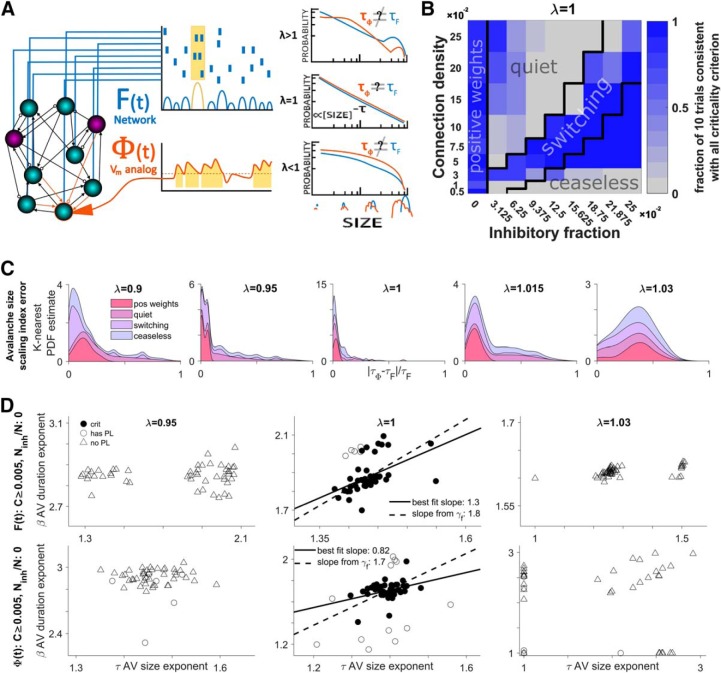

Figure 4.

The single-neuron estimate of network dynamics is optimized at the network critical point. A, Model network consisting of 10⁴ excitatory (cyan) and inhibitory (magenta) model neurons with sparse connectivity (line tips: arrows = excitation; circles = inhibition). The simulated model activity is represented in terms of single-neuron spiking (raster plot) and the active fraction of the network, F(t) = S(t)/N, where S(t) is the population spiking. Concurrently, the smoothed inputs (orange) to a single neuron represent the Vm proxy, Φi(t). The threshold (dashed line) crossings of Φi(t) define avalanches (see “Experimental design and statistical analysis” section). Avalanches of F(t) and Φi(t) are analyzed in terms of their size (shown) and duration (data not shown) distributions and their corresponding exponents, τ. Avalanche statistics depend on several network parameters, including the critical branching tuning parameter λ. B, Diagram showing how the inclusion of inhibition affects network behavior. The black lines mark the boundaries of arbitrarily defined parameter regions approximately corresponding to distinct kinds of behavior. The shade of blue indicates what fraction of 10 trials at each point met all four of our standardized criteria for consistency with expectations of critical branching behavior. C, Stacked area chart showing the probability density distribution of the size exponent error (between F(t) and Φi(t)) for different λ and dynamical regimes. The vertical thickness of each color band shows the probability density for that subset of the data, and the outer envelope shows the overall probability density. Probability density is estimated with a normal kernel smoothing function. In this panel, we can see that power-law scaling is most similar at criticality despite variability dependent on the parameter regime. D, Complete summary of the tests for criticality when applied to F(t) (top row) and Φi(t) (bottom row).
From this, we can confirm that the system is consistent with criticality when there is no inhibition. The subsampling method Φi(t) demonstrates consistency with criticality but displays a wider dispersion of exponent estimates. For the experimental Vm and MEA data, there was a large correlation between β and τ, showing that the scaling relation (which predicts the slope of the trendline) is much more stable than the individual exponent values. This is not the case for F(t) in the model, for which the correlation is low (see “Predicted scaling relation exponent is more stable than avalanche size or duration exponents” section).

The largest eigenvalue, λ = ηcN(1 − 2χ), of the network adjacency matrix W characterizes the network dynamics, with critical network dynamics occurring at λ = 1. This tuning parameter λ controls the degree to which spike propagation “branches”: λ = 1 means that one spike creates one other spike on average, λ > 1 implies that one spike creates more than one other spike, whereas λ < 1 means that one spike creates fewer than one other spike (Haldeman and Beggs, 2005; Kinouchi and Copelli, 2006; Levina et al., 2007; Larremore et al., 2011b, 2012; Kello, 2013).

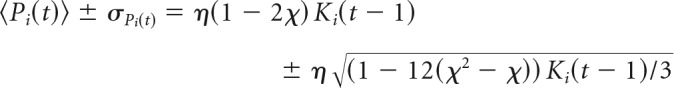

The input to model neuron i, $P_i(t) = \sum_{j=1}^{N} W_{ij}\, S_j(t-1)$, provides the link between network activity and single-neuron activity. From this we can derive a simple mathematical result characterizing how estimation of network properties is optimized at criticality.

If we let Ki(t − 1) denote the number of active neurons in the presynaptic population of neuron i, then we can rewrite the input to a model neuron as a sum of independent and identically distributed random variables drawn from the nonzero entries of W: $P_i(t) = \sum_{k=1}^{K_i(t-1)} W_{ij_k}$, where $j_k$ indexes the kth active presynaptic neuron. After implementing inhibition by inverting some elements of W, the distribution of weights is not uniform but piecewise uniform: weights are drawn uniformly from the interval [−2η,0] with probability χ and from the interval [0,2η] with probability 1 − χ. Denoting the nonzero entries of W with a prime, their mean is $\langle W'_{ij} \rangle = \eta(1-2\chi)$ and their SD is $\sigma_{W'} = \eta\sqrt{4/3 - (1-2\chi)^2}$, because every nonzero weight has second moment $(2\eta)^2/3$ regardless of its sign. Now we can find the mean behavior of the input integration function as it relates to the presynaptic population:

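$$\langle P_i(t)\rangle = K_i(t-1)\,\langle W'_{ij}\rangle = K_i(t-1)\,\eta(1-2\chi), \qquad \sigma\big(P_i(t)\big) = \sqrt{K_i(t-1)}\;\sigma(W'_{ij})$$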
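Because Pi(t) is a sum of Ki(t − 1) independent draws from the piecewise-uniform weight distribution, its mean is Ki(t − 1)η(1 − 2χ) and its SD is √Ki(t − 1) times the single-weight SD, which for this mixture works out to η√(4/3 − (1 − 2χ)²) (every draw has second moment 4η²/3 regardless of sign). The hypothetical sketch below checks these moments by Monte Carlo; the values of K, η, and χ and the function names are arbitrary illustrations.

```python
import numpy as np

def sample_inputs(k, eta, chi, trials, rng):
    """Monte Carlo draws of P_i = sum of k nonzero weights, each drawn from
    U[-2*eta, 0] with probability chi and from U[0, 2*eta] otherwise."""
    magnitude = rng.uniform(0.0, 2.0 * eta, size=(trials, k))
    sign = np.where(rng.random((trials, k)) < chi, -1.0, 1.0)
    return (sign * magnitude).sum(axis=1)

def analytic_moments(k, eta, chi):
    """Mean and SD of P_i given K_i(t-1) = k active presynaptic neurons."""
    mean_w = eta * (1.0 - 2.0 * chi)
    sd_w = eta * np.sqrt(4.0 / 3.0 - (1.0 - 2.0 * chi) ** 2)
    return k * mean_w, np.sqrt(k) * sd_w

rng = np.random.default_rng(1)
k, eta, chi = 20, 0.04, 0.25
samples = sample_inputs(k, eta, chi, trials=20000, rng=rng)
an_mean, an_sd = analytic_moments(k, eta, chi)
print(f"mean {samples.mean():.4f} vs {an_mean:.4f}; SD {samples.std():.4f} vs {an_sd:.4f}")
```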

We learn three things by examining the mean behavior of the input integration function. First, the mean grows as $O(K_i)$ but the SD grows only as $O(\sqrt{K_i})$, so the function becomes a more precise estimator of network activity as activity in the presynaptic population increases (increasing Ki). Second, the input integration function Pi(t) is rarely negative. At the parameter combination c = 0.005 and χ = 0.25 (which has the largest variance relative to the mean), the mean exceeds 0 by more than 1 SD when Ki > 5. Third, and most importantly, the input integration function is an averaging operator, and the tuning parameter λ biases that averaging operation. To show this, we need only two observations. The first is that the instantaneous firing rate averaged over the presynaptic population is the number of active neurons divided by the expected total number of presynaptic neurons, $\omega_i(t) = K_i(t)/cN$. The second is that rearranging the definition of λ gives $\lambda/cN = \eta(1-2\chi)$. Substituting these two observations into the mean behavior of the input integration function, we get the key mathematical result:

$$\langle P_i(t)\rangle = K_i(t-1)\,\frac{\lambda}{cN} = \lambda\,\omega_i(t-1)$$

Note that $P'_i(t) = P_i(t)\,\Theta\big(P_i(t)\big)$, where Θ is the Heaviside step function, directly represents the probability for the neuron to spike at time t.
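Two consequences of these moments can be checked with a few lines of arithmetic. The ratio σ(W′)/⟨W′⟩ does not depend on η, so the mean of Pi exceeds one SD once Ki(t − 1) is larger than the square of that ratio, about 4.3 at χ = 0.25, which is consistent with the Ki > 5 statement above; and choosing η so that ηcN(1 − 2χ) = λ turns the mean Ki η(1 − 2χ) into λωi. The function names below are hypothetical, and the rectification simply implements P × Θ(P) as max(P, 0).

```python
import math

def sd_to_mean_ratio(chi):
    """sigma(W') / <W'> for the piecewise-uniform weights (eta cancels)."""
    return math.sqrt(4.0 / 3.0 - (1.0 - 2.0 * chi) ** 2) / (1.0 - 2.0 * chi)

def min_active_for_one_sd(chi):
    """Smallest integer K with K*<W'> > sqrt(K)*sigma(W'), i.e. K > ratio**2."""
    return math.floor(sd_to_mean_ratio(chi) ** 2) + 1

def mean_input(k, lam, c, n, chi):
    """<P_i> = K * eta * (1 - 2*chi), with eta chosen so that eta*c*N*(1 - 2*chi) = lam."""
    eta = lam / (c * n * (1.0 - 2.0 * chi))
    return k * eta * (1.0 - 2.0 * chi)

def rectified(p):
    """P * Theta(P): negative inputs give zero spiking probability."""
    return max(p, 0.0)

omega = 10 / (0.005 * 10_000)              # K_i / (cN): presynaptic firing rate
print(min_active_for_one_sd(0.25))         # smallest K with mean > 1 SD
print(mean_input(10, 1.0, 0.005, 10_000, 0.25), 1.0 * omega)  # both equal lambda * omega
```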

These results demonstrate that the input to a neuron, Pi, and hence its instantaneous firing rate, result from an averaging operator acting on the presynaptic population, which is a subsample of the network. Furthermore, the tuning parameter λ not only modulates the relationship of single-neuron firing to downstream events (also known as branching) but also governs how the input to a neuron relates to activity in its presynaptic population. It biases the averaging operator either to amplify firing rate (λ > 1) or to dampen it (λ < 1). Therefore, our model implements both critical branching and the inverse of the critical branching condition, a critical coarse-graining condition. The model is a network of subsampling operators that capture whole-system statistics only when λ = 1, where each operator gives an unbiased stochastic estimate of the mean firing rate in its subsample (the presynaptic population). This averaging operation may exist in many kinds of networks, including structured networks and networks that are not critical branching networks, which supports the plausible generalizability of this result.

To further evaluate the relation between single-neuron input and network activity under different conditions, we simulated the described network of $10^4$ model neurons for a total of 405 different combinations of connection probability, inhibition, and maximum eigenvalue (Fig. 4A); each parameter combination was repeated 10 times. We then compared the avalanche analysis results of simulated network activity $F(t) = \sum_{i=1}^{N} S_i(t)$ with those of the input to a single neuron (the input integration function). However, $P_i(t) = \sum_{j=1}^{N} W_{ij} S_j(t-1)$ is the probability that neuron i will fire at time t, also known as the instantaneous firing rate of neuron i, rather than its membrane potential.

Vm is not a direct representation of firing rate; rather, firing rate is related to synaptic input through the F–I curve, which is nonlinearly related to Vm. This nonlinearity could destroy the correspondence between the simulated single-neuron signal and network activity. To better facilitate comparison of the simulated input integration function with the experimentally recorded Vm, we constructed a proxy for the subthreshold Vm of a model neuron, Φi(t), by convolving the simulated input Pi(t) with an alpha-function (see “Model Simulations” section).
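A reduced, hypothetical version of this simulation pipeline is sketched below: binary states Sj(t), inputs Pi(t) = Σj Wij Sj(t − 1), each neuron firing with probability Pi(t) rectified at zero, network activity F(t) as the sum of states, and a Vm proxy Φi(t) obtained by causally convolving one neuron's Pi(t) with an alpha-function. The 4 ms time constant comes from the Figure 5 caption; the network size, run length, random seeding, choice of neuron 0, 1 ms step size, unit-area kernel (t/τ²)e^(−t/τ), and truncation at 10τ are assumptions for illustration, not details of the authors' “Model Simulations” code. Large networks would call for a sparse weight matrix.

```python
import numpy as np

def build_weights(n, c, eta, chi, rng):
    """Edge probability c, magnitude ~ U[0, 2*eta], negative with probability chi."""
    w = rng.uniform(0.0, 2.0 * eta, size=(n, n))
    w[rng.random((n, n)) < chi] *= -1.0
    return np.where(rng.random((n, n)) < c, w, 0.0)

def simulate(W, steps, p_seed, rng):
    """Stochastic network dynamics; returns states S, inputs P, and activity F."""
    n = W.shape[0]
    S = np.zeros((steps, n), dtype=np.int8)
    P = np.zeros((steps, n))
    S[0] = rng.random(n) < p_seed          # hypothetical random initial seeding
    for t in range(1, steps):
        P[t] = W @ S[t - 1]                # P_i(t) = sum_j W_ij S_j(t-1)
        prob = np.clip(P[t], 0.0, 1.0)     # P * Theta(P); values above 1 fire surely anyway
        S[t] = rng.random(n) < prob
    return S, P, S.sum(axis=1)             # F(t) = sum_i S_i(t)

def alpha_kernel(tau, dt, length_factor=10.0):
    """Unit-area alpha function (t / tau**2) * exp(-t / tau), truncated at length_factor*tau."""
    t = np.arange(0.0, length_factor * tau, dt)
    k = (t / tau**2) * np.exp(-t / tau)
    return k / k.sum()                     # renormalize the sampled kernel to sum to 1

def vm_proxy(p, tau=4.0, dt=1.0):
    """Causal convolution of P_i(t) with the alpha kernel gives Phi_i(t)."""
    return np.convolve(p, alpha_kernel(tau, dt), mode="full")[: len(p)]

rng = np.random.default_rng(2)
n, c, chi, lam = 1000, 0.05, 0.0, 1.0
eta = lam / (c * n * (1.0 - 2.0 * chi))    # lambda = eta * c * N * (1 - 2*chi)
W = build_weights(n, c, eta, chi, rng)
S, P, F = simulate(W, steps=200, p_seed=0.05, rng=rng)
phi = vm_proxy(P[:, 0])                    # Vm proxy for (arbitrarily chosen) neuron 0
```

Normalizing the kernel to unit area keeps Φi(t) on the same scale as Pi(t), so the smoothed trace still fluctuates about the same mean.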

The parameter space has four distinct patterns of critical network behavior (Fig. 4B), which were qualitatively reflected in the network activity. These paradoxical behaviors may indicate the presence of a second phase transition tuned by the balance of excitation to inhibition (Shew et al., 2011; Poil et al., 2012; Kello, 2013; Hesse and Gross, 2014; Larremore et al., 2014; Scarpetta et al., 2018). Several key results differ strongly between these regions of parameter space and are therefore reported separately.

These regions are defined in terms of connection density and inhibition and are shown in Figure 4B. First is the “positive weights” region, where there is no inhibition (χ = 0) and the network is a standard critical branching network. The second region, “quiet,” has a small fraction of inhibitory neurons; activity lasts slightly longer than in the classically critical network. The third region is called the “switching” regime because network activity switches between a low mean and a high mean (like “up and down states”; Destexhe et al., 2003; Millman et al., 2010; Larremore et al., 2014; Scarpetta et al., 2018). This occurred in the middle range of connectivity and inhibition values. Last, the “ceaseless” region, with a large fraction of inhibition relative to connection density, is where activity never dies out. This region is defined by $c < (10e^{12\chi} - 13)/100$ and χ > 0. Three of these regimes are displayed in Figure 5A; the “quiet” region is largely redundant with the “positive weights” region. The “ceaseless” and “switching” regimes exhibit sustained self-generated activity and are included to model ongoing spontaneous activity dynamics without contamination by externally imposed firing patterns (Mao et al., 2001).
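The boundary of the “ceaseless” region can be written as a small classifier. This sketch assumes the boundary expression reads c < (10e^(12χ) − 13)/100, that is, ten times the exponential of 12χ; the text does not give the boundary between the “quiet” and “switching” regions, so the sketch leaves those two unresolved, and the function names are hypothetical.

```python
import math

def ceaseless_boundary(chi):
    """Connection density below which the 'ceaseless' region begins,
    under the assumed reading c < (10 * e**(12*chi) - 13) / 100."""
    return (10.0 * math.exp(12.0 * chi) - 13.0) / 100.0

def classify_region(c, chi):
    """Coarse region label for connection density c and inhibitory fraction chi."""
    if chi == 0:
        return "positive weights"
    if c < ceaseless_boundary(chi):
        return "ceaseless"
    return "quiet or switching"   # boundary between these two is not specified

for c, chi in [(0.01, 0.0), (0.01, 0.25), (0.05, 0.02)]:
    print(c, chi, classify_region(c, chi))
```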

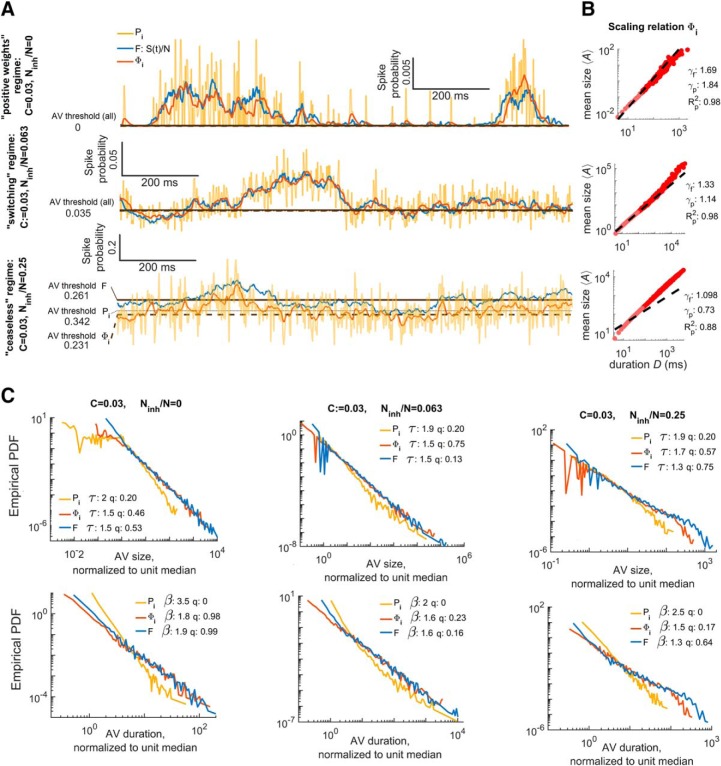

Figure 5.

Inputs to a neuron stochastically estimate firing of its presynaptic pool in this critical branching model. A, Differences in model activity dynamics across parameter regions (connectivity constant and λ = 1; inhibition, χ, varies). Each plot shows the active fraction of the network, F(t), in blue; the instantaneous firing rate of a node, Pi(t), in gold; and the Vm proxy for the same node, Φi(t), in orange. The node is randomly selected from the nodes with degree within 10% of the mean degree. The Vm proxy is produced by convolving the firing rate of a single neuron with an alpha-function with a 4 ms time constant. The top plot shows that with no inhibition (or very little inhibition), activity in this parameter region dies away to zero and is unimodally distributed about a small value. The middle plot shows that moderate amounts of inhibition result in self-sustained activity that is bimodally distributed about one high and one low value. The bottom plot shows that, when the fraction of inhibitory nodes is much larger than the connection density, activity is self-sustaining and unimodally distributed about a high value with low variance relative to the mean. B, Scaling relation for the avalanches inferred from Φi(t) at different levels of inhibition, as in A. Inhibition detrimentally impacts the validity of the scaling relation predictions, which are required for consistency with critical branching. The predicted (γp) and fitted (γf) scaling exponents are indicated, as is the goodness of fit ($R_p^2$) for the predicted exponent. C, Diagram showing how avalanche (fluctuation) statistics vary with the parameter sets displayed in A and B. The top row shows avalanche (fluctuation) size distributions and the bottom row shows duration distributions. Exponents τ (size distribution) and β (duration distribution) and comparison quotients q are annotated on the plot. From these plots, we can see that temporal smoothing (Φi(t)) is necessary to accurately capture F(t). Additionally, the mismatch between the F(t) and Pi(t) avalanche distributions varies with network parameters. At high levels of inhibition, the Pi(t) avalanches are power-law distributed over smaller portions of their support. For Φi(t), neither of the networks with less inhibition shows the cutoffs associated with undersampling a critical branching network.

We looked at the magnitude of relative error between estimated exponents for the avalanche size distribution (Fig. 4C) to determine how well our proxy neural inputs, Φi(t), reflected network activity, F(t), in different parameter regions and for different values of the tuning parameter, λ. Importantly, the least error occurred for λ = 1 both with and without inhibitory nodes. This insensitivity to parameter differences supports the claim (Larremore et al., 2014) that the system becomes critical when λ = 1 even in the presence of inhibition.
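The error measure used here is the magnitude of the relative difference between two exponent estimates. As a sketch (the helper names are hypothetical, and summarizing paired trials with a median is an illustrative choice, not necessarily the study's), it can be applied to the mean size exponents reported below for the positive-weights region at λ = 1 (τF = 1.43, τΦ = 1.40):

```python
from statistics import median

def relative_error(estimate, reference):
    """|estimate - reference| / |reference|, e.g. tau from Phi_i(t) vs tau from F(t)."""
    return abs(estimate - reference) / abs(reference)

def median_relative_error(estimates, references):
    """Median of per-trial relative errors over paired trials."""
    return median(relative_error(e, r) for e, r in zip(estimates, references))

print(round(relative_error(1.40, 1.43), 3))
```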

However, the four regions of parameter space perform differently according to our four standardized criteria for consistency with criticality. In the “positive weights” region, 90% of 90 trials (nine points in parameter space with 10 trials per point) have network activity that meets all four criteria when the tuning parameter is set at criticality (λ = 1) (Fig. 4C). A total of 39% meet the criteria in the “ceaseless” region, 19% in the “quiet” region, and 67% in the “switching” region, which may indicate the location of a second phase transition and shows that evidence for precise criticality in this model is limited once inhibition is included.

As we vary the tuning parameter, we can clearly distinguish critical from noncritical systems. Overall, 47% of trials met all four criteria when λ = 1, whereas 3% did when λ = 0.95, 18% when λ = 1.015, 1% when λ = 0.9, and 1% when λ = 1.03 (Fig. 4D).

The estimated power-law exponents show that the avalanche size distributions for F(t), Pi(t), and Φi(t) are most alike at criticality. Note that the estimated exponent serves as the “scaling index,” a measure of the heavy tail, even when a power law is not the statistical model that fits best (Jeżewski, 2004). That matching between network activity and the input integration function was best at criticality is important because it underscores the scale-free nature of critical phenomena and contrasts with the results obtained when testing subsampling methods with a different relationship to network structure (Priesemann et al., 2009; Yu et al., 2014; Levina and Priesemann, 2017).
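For reference, a standard way to estimate a power-law exponent (or scaling index) is the continuous maximum-likelihood estimator τ̂ = 1 + n / Σ ln(xi/xmin). The sketch below applies it to synthetic power-law data; it is not necessarily the fitting procedure used in this study, xmin is assumed known, and the helper names are hypothetical.

```python
import numpy as np

def mle_exponent(x, xmin):
    """Continuous MLE for P(x) ~ x**(-tau), x >= xmin: tau = 1 + n / sum(log(x / xmin))."""
    x = np.asarray(x, float)
    tail = x[x >= xmin]
    return 1.0 + tail.size / np.log(tail / xmin).sum()

def sample_power_law(tau, xmin, size, rng):
    """Inverse-transform sampling from a continuous power law with exponent tau."""
    u = rng.random(size)
    return xmin * (1.0 - u) ** (-1.0 / (tau - 1.0))

rng = np.random.default_rng(3)
x = sample_power_law(1.5, 1.0, 50_000, rng)
print(f"estimated tau = {mle_exponent(x, 1.0):.3f}")
```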

While the system was both critical (λ = 1) and in the positive weights region, our Vm proxy Φi(t) met all four criteria for consistency with criticality 74% of the time for 90 trials (Fig. 4D), whereas Pi(t) met all four only 1% of the time. The network activity had avalanche size and duration exponent values τF = 1.43 ± 0.04 and βF = 1.87 ± 0.09 (Fig. 4D), a fitted scaling relation exponent γFf = 1.83 ± 0.02, and a predicted exponent γFp = 1.99 ± 0.23. The Vm proxy, Φi(t), had slightly lower avalanche size and duration exponent values that fluctuated around the paired network values, τΦ = 1.40 ± 0.06 and βΦ = 1.73 ± 0.17 (Fig. 4D), and exclusively lower scaling relation exponents, γΦf = 1.68 ± 0.02. Although the unsmoothed Pi(t) varied considerably more, it had size and duration exponents that were almost exclusively higher than the paired network values, τP = 1.87 ± 0.50 and βP = 1.87 ± 0.50, with a fitted scaling relation exponent that was exclusively lower, γPf = 1.68 ± 0.02.
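The predicted scaling exponent follows from the size and duration exponents through γp = (β − 1)/(τ − 1), the standard crackling-noise form of the relation ⟨S⟩(T) ∼ T^γ; for the network values above (τF = 1.43, βF = 1.87) this gives about 2.02, in line with the reported γFp. The fitted exponent is the log-log slope of mean avalanche size against duration. A minimal sketch, with hypothetical helper names and a simple per-duration averaging that may differ from the study's fitting procedure:

```python
import numpy as np

def predicted_gamma(tau, beta):
    """Scaling relation: <S>(T) ~ T**gamma with gamma = (beta - 1) / (tau - 1)."""
    return (beta - 1.0) / (tau - 1.0)

def fitted_gamma(sizes, durations):
    """Slope of log(mean size) vs log(duration), averaging sizes per unique duration."""
    sizes = np.asarray(sizes, float)
    durations = np.asarray(durations, float)
    uniq = np.unique(durations)
    mean_sizes = np.array([sizes[durations == d].mean() for d in uniq])
    slope, _ = np.polyfit(np.log(uniq), np.log(mean_sizes), 1)
    return slope

print(f"predicted gamma for tau=1.43, beta=1.87: {predicted_gamma(1.43, 1.87):.2f}")
```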

In Figure 5, we compared different population dynamics estimation techniques by looking at avalanches inferred from Pi(t) (the inputs to neuron i) and from the Vm proxy Φi(t). Both Pi(t) and Φi(t) fluctuate about F(t), but Pi(t) is much noisier (Fig. 5A); in the ceaseless regime, Pi(t) and Φi(t) are systematically offset. Avalanches inferred from Φi(t) had average sizes that scaled with duration (Fig. 5B). Avalanches from Φi(t) consistently had duration and size distribution exponents that were closer to those of network avalanches than avalanches from Pi(t) did. However, Pi(t) still performed satisfactorily in the sense that its error was systematically offset and smallest at criticality (Fig. 5C).
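Avalanches can be inferred from a continuous signal such as Φi(t), Pi(t), or F(t) by treating each contiguous excursion above a threshold as one event. In the sketch below, size is the area between the signal and the threshold and duration is the number of samples; the threshold choice, this size definition, and the function name are assumptions for illustration and may differ from the adaptation of the avalanche definition used in this study.

```python
import numpy as np

def avalanches(signal, threshold):
    """Return (sizes, durations) of contiguous excursions above threshold.
    Size = area between signal and threshold; duration = number of samples."""
    x = np.asarray(signal, float)
    above = x > threshold
    # Pad with False so every run has both a rising and a falling edge.
    edges = np.diff(np.concatenate(([False], above, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)    # first sample of each run
    ends = np.flatnonzero(edges == -1)     # one past the last sample of each run
    sizes = np.array([(x[s:e] - threshold).sum() for s, e in zip(starts, ends)])
    durations = ends - starts
    return sizes, durations

sizes, durations = avalanches([0, 2, 3, 0, 0, 5, 0], threshold=1)
print(sizes, durations)
```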

Including inhibition introduced several important differences. For the ceaseless region with λ = 1, far fewer trials met our criteria; however, Pi(t) followed F(t) much more closely. The network activity had avalanche size and duration exponent values τF = 1.48 ± 0.09 and βF = 1.53 ± 0.09 and a fitted scaling relation exponent γFf = 1.23 ± 0.11. The Vm proxy, Φi(t), had slightly higher avalanche size and duration exponent values that fluctuated around the paired network values, τΦ = 1.51 ± 0.19 and βΦ = 1.57 ± 0.17, but nearly identical scaling relation exponents, γΦf = 1.23 ± 0.11. Although the unsmoothed Pi(t) varied considerably more, it had size and duration exponents that were almost exclusively higher than the paired network values, τP = 1.88 ± 0.20 and βP = 2.18 ± 0.34, with a fitted scaling relation exponent that was slightly lower, γPf = 1.19 ± 0.07.

When λ ≠ 1, both Φi(t) and Pi(t) failed to meet all four criteria for criticality at the same high rate as F(t) (to within 1%). This lack of false positives confirms that these signals are useful for characterizing critical branching. In Figure 4B, we calculated the absolute magnitude of relative error between the size exponent from avalanche analysis performed on F(t) and Φi(t). As expected, the avalanches were usually not power laws according to our standards; in this case, the exponent is known as the “scaling index” and describes the decay of the distribution's heavy tail (Jeżewski, 2004).

When we set λ = 0.95, we see a moderate deterioration in the ability of either Φi(t) or Pi(t) to recapitulate network exponent values. The error is no longer systematic, so these signals cannot be used to predict network values. The variability of the exponents increases greatly for Φi(t), whereas it decreases for Pi(t). The exponent error increases slightly relative to the λ = 1 case, and the base of its distribution is much broader.

When λ is reduced further, to λ = 0.90, the input integration function, $P_i(t) \sim \lambda\,\omega_i(t-1)$, rapidly dampens impulses (ωi is the instantaneous firing rate over the presynaptic population of neuron i). Variability continues to increase, and a systematic offset does not return. The distribution of exponent errors is now much broader. With branching this low, events often fail to propagate to the randomly selected neuron; an exception is the “ceaseless” regime, where activity is still long-lived.

When we set λ = 1.015, we see a dramatic deterioration in the ability of either Φi(t) or Pi(t) to recapitulate network values. Variability in exponent estimation increases for both Φi(t) and Pi(t). Exponent error increases rapidly, underscoring the inability to estimate network activity from neuron inputs.

Increasing λ further, to λ = 1.03, produces an input integration function, $P_i(t) \sim \lambda\,\omega_i(t-1)$, that rapidly amplifies all impulses, and the network saturates. As a result, variability in the estimated exponents decreases and a systematic offset returns, with both Φi(t) and Pi(t) producing exponents that are exclusively and considerably higher than network values. The exponent error shows that estimating network properties from the inputs to a neuron is probably not possible in the supercritical regime of this model.
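The mean-field relation Pi(t) ∼ λωi(t − 1) implies that an impulse is multiplied by roughly λ at each step, so after n steps it scales as λⁿ. The sketch below tabulates this for the λ values tested; it ignores fluctuations and the saturation reported for λ = 1.03, so it illustrates only the direction and rate of damping or amplification, and the function name is hypothetical.

```python
def impulse_after(lam, steps, initial=1.0):
    """Mean-field propagation: each step multiplies the impulse by lam (no saturation)."""
    value = initial
    for _ in range(steps):
        value *= lam
    return value

for lam in (0.90, 0.95, 1.0, 1.015, 1.03):
    print(f"lambda = {lam:<6} after 100 steps: {impulse_after(lam, 100):.3g}")
```

Even λ = 0.95 shrinks an impulse by more than two orders of magnitude over 100 steps, while λ = 1.03 amplifies it roughly 19-fold, which is why only λ = 1 preserves the relation between a neuron's input and network activity across scales.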