Abstract

Background The contribution of usability flaws to patient safety issues is acknowledged but not well-investigated. Free-text descriptions of incident reports may provide useful data to identify the connection between health information technology (HIT) usability flaws and patient safety.

Objectives This article examines the feasibility of using incident reports about HIT to learn about the usability flaws that affect patient safety. We posed three questions: (1) To what extent can we gain knowledge about usability issues from incident reports? (2) What types of usability flaws, related usage problems, and negative outcomes are reported in incident reports? (3) What are the reported usability issues that give rise to patient safety issues?

Methods A sample of 359 reports from the U.S. Food and Drug Administration Manufacturer and User Facility Device Experience database was examined. Descriptions of usability flaws, usage problems, and negative outcomes were extracted and categorized. A supplementary analysis was performed on the incidents which contained the full chain going from a usability flaw up to a patient safety issue to identify the usability issues that gave rise to patient safety incidents.

Results A total of 249 reports were included. We found that incident reports can provide knowledge about usability flaws, usage problems, and negative outcomes. Thirty-six incidents report how usability flaws affected patient safety (ranging from incidents without consequence, to death) involving electronic patient scales, imaging systems, and HIT for medication management. The most significant class of involved usability flaws is related to the reliability, the understandability, and the availability of the clinical information.

Conclusion Incident reports involving HIT are an exploitable source of information to learn about usability flaws and their effects on patient safety. Results can be used to convince all stakeholders involved in the HIT system lifecycle that usability should be considered seriously to prevent patient safety incidents.

Keywords: information technology; patient safety; ergonomics; databases, factual; software

Background and Significance

Health information technology (HIT) promises to improve the safety, efficiency, and overall quality of care delivery. 1 2 Yet, poor usability of HIT may lead to implementation failure or rejection, 3 usage difficulties, 4 and, even worse, to patient safety issues. 5 6 7 Poor usability is revealed by the presence of usability flaws, i.e., “aspect[s] of the system and/or demand on the user which makes it unpleasant, inefficient, onerous, perturbing, or impossible for the user to achieve their [sic] goals in [a] typical usage situation” 8 : these aspects may be related to the graphical user interface (GUI) of the technology, its behavior, and the suitability of both the knowledge implemented within it and the features available for users' needs. 9 Usability flaws represent violations of usability design principles (also known as usability heuristics or usability criteria) when designing HIT. 10

The contribution of usability flaws to patient safety issues is well-acknowledged but there is little research on the effects of usability flaws on care delivery and patient safety. Common methods for usability evaluation do not enable this connection to be studied. Indeed, expert-based evaluations (e.g., heuristics evaluations, 11 cognitive walkthrough 12 ) and hazard-oriented analyses 13 enable identification of usability flaws. However, since there are no observations of technology in use, only hypotheses can be drawn about the effect of usability flaws. 14 As for user-based evaluations (e.g., user-testing, 15 think-aloud protocols 16 ) where representative end-users interact with the technology in a controlled environment, they offer insights about how usability flaws can impair work (i.e., usage problems): however, hypotheses must still be drawn on their potential negative outcomes on the work system (including patient safety). 14 One way to examine the contribution of usability flaws to negative outcomes including patient harm is by field observations and interviews (e.g., see refs. 14 17 18 19 20 21 ). These study designs enable connection of usability flaws with their effects on users and even with patient safety issues (e.g., see ref. 18 ). However, such studies provide insights about a limited range of situations. To get a deeper understanding of how usability flaws contribute to negative outcomes, it is necessary to analyze a variety of situations where HIT problems affected care delivery and patient safety.

Incident reports are an accessible and significant source of information about patient safety issues with health technology. Yet, biases and limitations give incident reports a reputation as unexploitable material. Indeed, the blame culture may lead to underreporting and may impact the accuracy of the reports 22 23 : relevant facts may be missed or presented with less certainty. 24 In addition, the reports reflect the expertise of the reporters (e.g., vendors, clinicians) 25 with all the inherent limitations of such a system. Despite those limitations and biases, reports from a range of incident monitoring systems have been successfully investigated to analyze patient safety issues with technologies. 25 26 27 28 29 30 By analyzing the free-text descriptions provided in reports, those studies have highlighted that incident reports are valuable material for identifying and categorizing the types of issues with technology that affected patient safety. They have even described sociotechnical factors affecting the use of technology, including usability flaws that led to incidents.

Indeed, Magrabi et al identified that such factors made up 4% of the patient safety issues that were voluntarily reported by manufacturers to the U.S. Food and Drug Administration (FDA) Manufacturer and User Facility Device Experience (MAUDE) database 25 ; this ratio rose to 10% in the study by Warm and Edwards 29 and 16.77% in Samaranayake et al. 30 The analysis of reports from MAUDE noted good descriptions of technical issues and rich information about the types of software problems encountered. Reports provided by manufacturers were found to provide insights into how software and hardware systems were failing compared with those reported by health professionals which emphasized issues with clinical workflow integration and training.

In another study by Magrabi et al, 27 45% of the incidents reported involved sociotechnical factors. A study by Lyons and Blandford identified a few usability flaws which gave rise to errors and affected patient safety. 31 These studies show that despite their limitations and biases, incident reports, particularly those reported by manufacturers, may be a useful source of information to gain a deeper understanding about how usability flaws can affect care delivery and patient safety. However, as far as we know, no previous studies have attempted to explicitly analyze incident reports from a usability perspective.

Objectives

The present article reports a study to examine the feasibility of using incident reports about HIT to learn about the consequences of usability flaws, with a focus on patient safety. We posed three questions:

(1) To what extent can we gain knowledge about usability issues from incident reports?

(2) What types of usability flaws, related usage problems, and negative outcomes are reported in incident reports?

(3) What are the reported usability issues that give rise to patient safety incidents?

Methods

We performed a secondary analysis of a sample of incident reports that were previously identified as involving human factors issues. The method involved three main steps. First, out of this sample, we selected incident reports whose free-text descriptions presented a usability flaw. Second, we used the definitions provided by a usability framework to extract from the free-text descriptions three types of information: descriptions about (1) usability flaws, (2) usage problems, and (3) negative outcomes. Finally, we developed or reused coding schemes to analyze in detail each type of information.

Sample of Incidents Screened

We examined incident reports involving HIT (excluding medical devices such as infusion pumps and autoinjector devices) voluntarily reported by manufacturers to the U.S. FDA's MAUDE database that had been analyzed in a previous study. 25 That analysis identified broad categories of issues with HIT using 678 reports that had been submitted to MAUDE from January 2008 to July 2010. In the present study, we performed a secondary analysis on a subset of 359 reports that were previously identified as involving human factors issues. Some incidents spanned two reports 25 : an initial description and additional information (labeled hereafter “supplementary information”). Thus, the analyzed sample included a total of 359 reports corresponding to 242 different incidents (plus 117 “supplementary information” reports).

Eligibility Criteria

For a report to be included in the analysis, the free-text description had to present at least one meaningful semantic unit (i.e., a set of words representing a single idea that was sufficiently self-explanatory to be analyzed) describing factually a usability flaw (cf. the Background and Significance section for the definition). Reports not including usability flaws, or where descriptions were too poor or incomplete (requiring hypothesis) or not factual (report of hypotheses drawn by the reporter), were excluded from the analysis. “Supplementary information” reports were included if they provided relevant information not mentioned in the initial report of the incident; if not, they were excluded.
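The eligibility rule can be read as a simple predicate applied to judgments that a human coder records for each report. The sketch below is illustrative only: the class name, field names, and the assumption that “supplementary information” reports are judged solely on whether they add relevant information are ours, not part of the study protocol.

```python
from dataclasses import dataclass


@dataclass
class ScreenedReport:
    """Coder judgments for one report (hypothetical field names)."""
    report_id: str
    describes_usability_flaw: bool = False  # at least one meaningful semantic unit
    is_factual: bool = False                # not a hypothesis drawn by the reporter
    is_complete: bool = False               # readable without the coder hypothesizing
    is_supplementary: bool = False
    adds_new_information: bool = False      # only used for supplementary reports


def is_eligible(report: ScreenedReport) -> bool:
    """Return True when the report would be included in the analysis."""
    if report.is_supplementary:
        # Assumption: supplementary reports are kept only if they add
        # relevant information missing from the initial report.
        return report.adds_new_information
    return (report.describes_usability_flaw
            and report.is_factual
            and report.is_complete)


# Hypothetical examples, not reports from the study sample.
initial = ScreenedReport("r1", describes_usability_flaw=True,
                         is_factual=True, is_complete=True)
speculative = ScreenedReport("r2", describes_usability_flaw=True,
                             is_factual=False, is_complete=True)
redundant_supplement = ScreenedReport("r3", is_supplementary=True)
```

The judgments themselves remain expert decisions; the code only makes the combination rule explicit.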

Screening Process

The screening process was performed by three experts in human factors with a background in medical devices and usability evaluation of HIT (J.S., M.C.B.Z., and R.M.). The experts first trained on a randomly chosen set of reports ( n = 18) until they shared a common understanding of the eligibility criteria and could easily reach agreement about the inclusion of reports. Then, two human factors experts (J.S. and R.M.) independently examined 40% ( n = 142) of the remaining reports against the inclusion criteria. An interrater reliability analysis using Cohen's kappa showed good consistency among coders (kappa = 0.73). The remaining 60% ( n = 199) were then examined by R.M. using the same categories; the results were cross-checked by J.S. (kappa = 0.79). When experts disagreed on a report, or there were doubts about inclusion, the report was reexamined during a meeting until consensus was reached. Disagreements were resolved by consulting the third expert and were checked by F.M.
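For readers unfamiliar with the statistic, Cohen's kappa compares the observed agreement between two raters with the agreement expected by chance from each rater's label frequencies. The following is a minimal sketch on hypothetical include/exclude decisions; the data and the function name are illustrative and do not reproduce the study's ratings or tooling.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical decisions for ten reports (not data from the study).
expert_1 = ["include", "include", "exclude", "include", "exclude",
            "include", "include", "exclude", "include", "include"]
expert_2 = ["include", "include", "exclude", "exclude", "exclude",
            "include", "include", "include", "include", "include"]
kappa = cohens_kappa(expert_1, expert_2)
print(f"kappa = {kappa:.2f}")
```

In this toy example the raters agree on 8 of 10 reports, yet kappa is only about 0.52 because most of that agreement is expected by chance when both raters include 70% of reports.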

Data Extraction and Analysis

Data Extraction

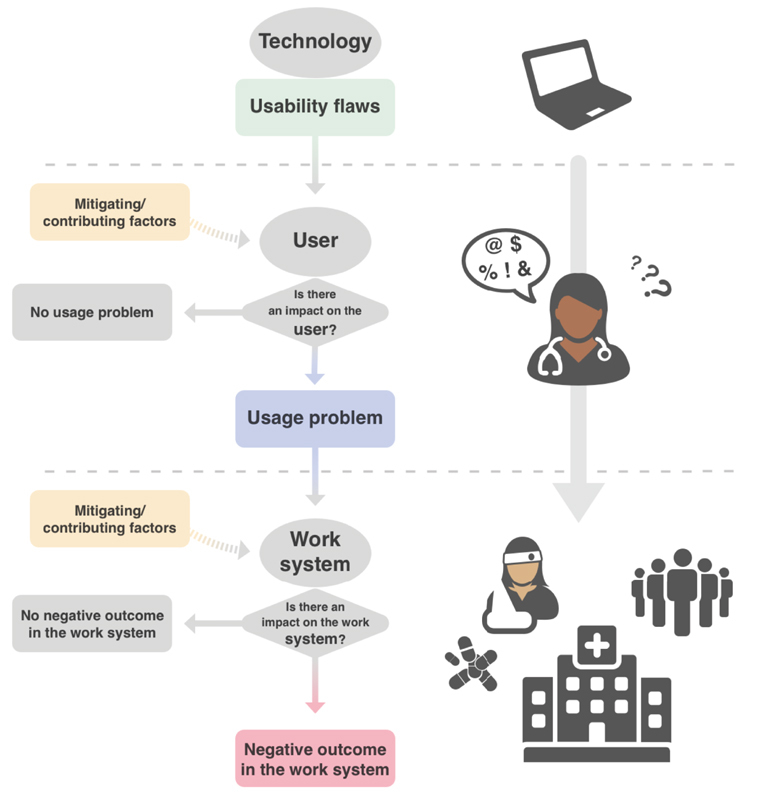

The data extraction was based on an existing usability framework 14 32 that describes the chain of latent consequences that leads from a usability flaw to a usage problem and then a negative outcome (Fig. 1). Usability flaws first impair the user's work and the tasks to be performed. These conscious or unconscious issues experienced by the user are referred to as “usage problems.” Other parts of the work system, including the patient, are then impacted through the user; those issues are referred to as “negative outcomes,” and include patient safety issues. The chain is not linear and depends on several factors including factors independent of the technology (e.g., training, clinical and technical skills, expertise, workload) that may either favor or mitigate the impact of usability flaws at both levels of usage and negative outcomes. We used the definitions of usability flaws, usage problems, and negative outcomes provided by this framework to extract those three types of data from the incident reports.

Fig. 1. Schematic representation of the consequences of violating a usability principle.

First, free-text from “supplementary information” was merged with the text of included reports. In each free-text description of the included incident, J.S. and R.M. extracted factual descriptions about usability flaws.

Then, for each included report, J.S. and R.M. independently examined consequences or absence of consequences of usability flaws, that is, usage problems and/or negative outcomes. An interrater agreement was calculated (kappa = 0.74). Disagreements were discussed till consensus arose. The following data were extracted from the reports that mentioned consequences of usability flaws:

Factual descriptions of usage problems : Any negative consequences of a usability flaw on the users and their tasks. Usage problems refer to the overall experience of the users interacting with the technology including their cognitive processes, decisions, behaviors, feelings, and emotions. 32 Usage problems include, but are not restricted to, use-errors as defined in reference 33 (e.g., the user entered inadvertently the wrong dose).

Factual descriptions of negative outcomes : Any negative impacts of the usability flaws on the work system or care delivery including tools, technologies, environment, organization, performance, and nonuser person (e.g., patient), 32 for example, the medication administration process was slowed down. Negative outcomes include patient safety (e.g., the patient got the wrong medication and experienced an adverse drug reaction).

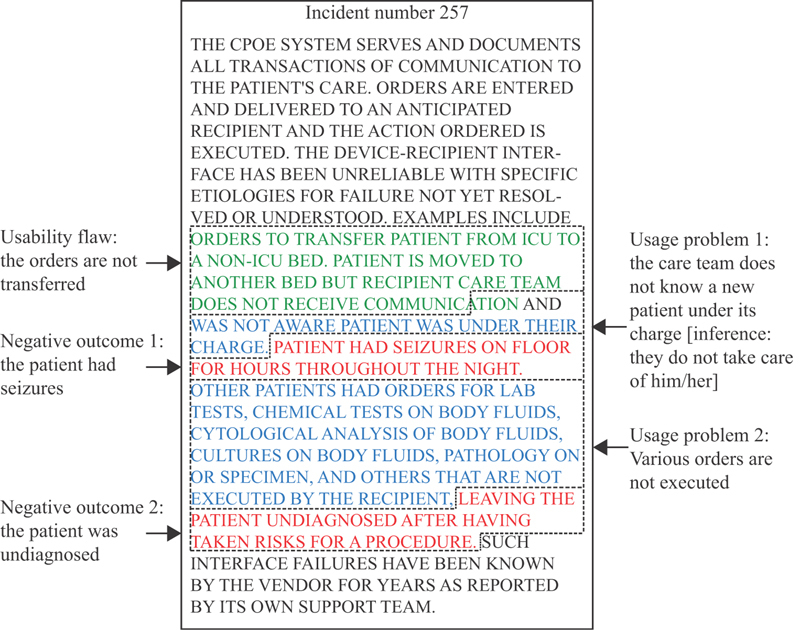

We also extracted information about the type of technology. It should be noted that a given incident may comprise one or more usability flaws and zero, one, or several usage problems and negative outcomes. Fig. 2 illustrates how an incident was systematically deconstructed to identify the usability flaws and their consequences for the user, the work system, and the patient.

Fig. 2. Deconstructing the free-text of an incident report included in the analysis to identify usability flaws, usage problems, and negative outcomes. Capitalization as written in the original report.
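The extraction step can be pictured as filling one record per incident, with at least one usability flaw and optional lists of usage problems and negative outcomes. The sketch below is a hypothetical data structure, not the instrument used in the study; the class, field, and label names are assumptions. The example values paraphrase incident #242 as summarized in Table 1.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DeconstructedIncident:
    """One incident split into the three types of extracted data."""
    incident_id: str
    technology: str
    usability_flaws: List[str]
    usage_problems: List[str] = field(default_factory=list)
    negative_outcomes: List[str] = field(default_factory=list)

    def __post_init__(self):
        # Included incidents describe at least one usability flaw.
        if not self.usability_flaws:
            raise ValueError("at least one usability flaw is required")

    def content_type(self) -> str:
        """Label the incident by which consequences were extracted."""
        if self.usage_problems and self.negative_outcomes:
            return "full chain"
        if self.usage_problems:
            return "usage problems only"
        if self.negative_outcomes:
            return "negative outcomes only"
        return "no usage problem or negative outcome extracted"


incident_242 = DeconstructedIncident(
    incident_id="#242",
    technology="electronic patient scale",
    usability_flaws=["scale allowed easy switching between pounds and kilograms"],
    usage_problems=["nurse did not notice the unit change and weighed a patient incorrectly"],
    negative_outcomes=["wrong dose administered; patient not harmed"],
)
print(incident_242.content_type())
```

Keeping the three lists separate preserves the many-to-many nature of the chain: one flaw may lead to several usage problems, and an incident may report outcomes without describing the intermediate usage problem.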

Classification Process

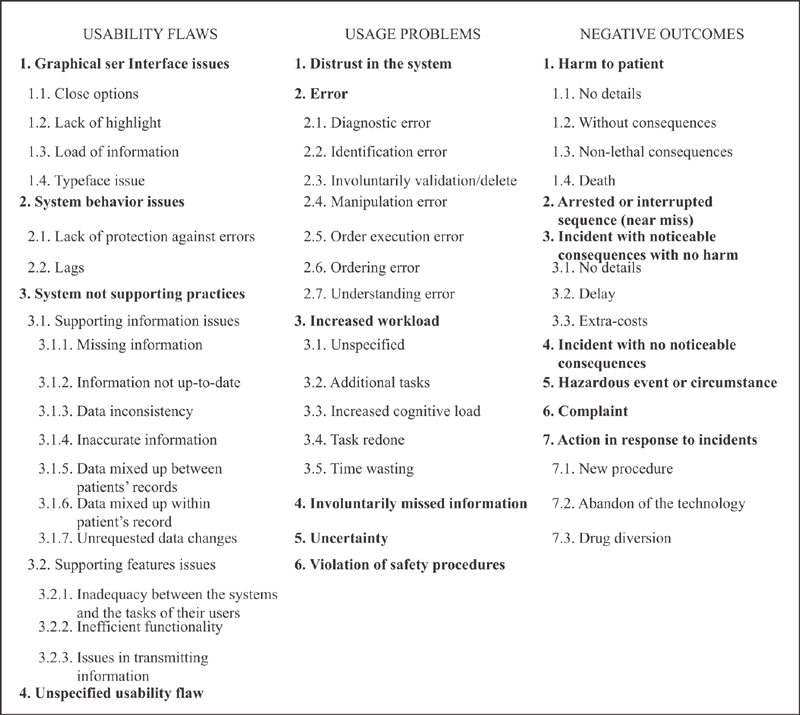

Data were analyzed by categorizing usability flaws, usage problems, and negative outcomes. For the usability flaws and usage problems, two separate coding schemes were developed inductively by J.S. and R.M. so that descriptions that represented the same types of issues were gathered together in unique classes. The coding schemes were developed to achieve unambiguous, clear, and mutually exclusive subcategories with high internal consistency. During the coding process, any disagreements were discussed until complete agreement was reached. At the end of the process, each usability flaw and each usage problem was assigned to a unique category and subcategory of its respective coding scheme (cf. Fig. 3, and Supplementary Appendices A and B [available in the online version] for the final coding schemes). Negative outcomes were examined using a standard approach 25 27 34 and are as follows:

Fig. 3. Final coding schemes used to categorize the usability flaws (left), the usage problems (center), and the negative outcomes (right).

Harm to a patient (an adverse event) : An incident that reached the patient, 35 for example, a patient had a severe allergic reaction to prescribed medication even though allergy was entered in the patient's electronic medical record.

An arrested or interrupted sequence or a near miss : An incident that was detected before reaching the patient, 35 for example, a prescription in a wrong name noticed and corrected while printing.

An incident with a noticeable consequence but no patient harm : Issue that affected the delivery of care but did no harm to a patient, for example, time wasted waiting for a printer to function correctly.

An incident with no noticeable consequence : Issue that did not directly affect the delivery of care, for example, an electronic backup copy of patient records was corrupted, but this was detected and the copy was not needed.

A hazardous event or circumstance : Issue that could potentially lead to an adverse event or a near miss, for example, a computerized physician order entry (CPOE) fails to display a patient's allergy status.

A complaint : An expression of user dissatisfaction, for example, a user found that training to use new software was inadequate.

New categories were developed when new themes emerged. As with the categorization process for usability flaws and usage problems, any disagreements were discussed till complete agreement was reached (cf. Fig. 3 and Supplementary Appendix C [available in the online version] for the final coding scheme).

Descriptive analyses of incidents were undertaken by the type of technology, usability flaws, usage problems, and negative outcomes.

Analysis of the Usability Issues that Give Rise to Patient Safety Incidents

We examined the subset of incidents which contained the full chain going from a usability flaw through the usage of the technology up to the patient. To be included in this analysis, reports needed to include:

Effects on patient safety that were objectively described for a patient or a group of patients (excluding hypotheses).

A full chain of usability flaws, usage problems, and negative outcomes that make sense regarding the clinical work logic and the chronology of the incident reported. We excluded incidents that required us to draw hypotheses to understand how the usability flaw led to a usage problem and negative outcome. For instance, when the usage problem is an emotion and it is not described how this emotion led to a negative outcome, the incident was excluded.

Incidents which contained the full chain but whose negative outcome was not related to a patient safety issue (e.g., work organization, process) were excluded from this analysis.

For each type of technology involved in the analyzed incidents, we performed a narrative synthesis of the typical pathways of the propagation of the usability flaws up to the patient. First, we gathered together incidents that shared similar kinds of usability flaws. Then, we summarized the categories of usage problems and negative outcomes arising from this type of flaw.
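The selection and grouping logic described in this subsection can be sketched as a filter followed by a group-by. The records, category labels, and function names below are hypothetical and serve only to make the inclusion rules explicit; the actual synthesis was a narrative, expert-driven process.

```python
from collections import defaultdict

# Hypothetical coded incidents. Identifiers and category labels are
# illustrative only; they are not the study's data or coding scheme.
coded_incidents = [
    {"id": "A", "technology": "CPOE", "flaw_category": "cluttered display",
     "usage_problems": ["missed information"],
     "negative_outcomes": ["harm to a patient"],
     "patient_safety_outcome": True, "link_needs_hypothesis": False},
    {"id": "B", "technology": "CPOE", "flaw_category": "cluttered display",
     "usage_problems": ["error"],
     "negative_outcomes": ["harm to a patient"],
     "patient_safety_outcome": True, "link_needs_hypothesis": False},
    {"id": "C", "technology": "imaging", "flaw_category": "missing information",
     "usage_problems": ["error"],
     "negative_outcomes": ["delay in the care process"],
     "patient_safety_outcome": False, "link_needs_hypothesis": False},
    {"id": "D", "technology": "imaging", "flaw_category": "missing information",
     "usage_problems": ["distrust"],
     "negative_outcomes": ["harm to a patient"],
     "patient_safety_outcome": True, "link_needs_hypothesis": True},
]


def eligible_for_synthesis(incident):
    """Full chain, a patient safety outcome, and no hypothesized link."""
    has_full_chain = bool(incident["usage_problems"]) and bool(incident["negative_outcomes"])
    return (has_full_chain
            and incident["patient_safety_outcome"]
            and not incident["link_needs_hypothesis"])


def group_pathways(incidents):
    """Group eligible incidents by technology and kind of usability flaw."""
    groups = defaultdict(lambda: {"ids": [], "usage_problems": set(),
                                  "negative_outcomes": set()})
    for incident in incidents:
        if not eligible_for_synthesis(incident):
            continue
        group = groups[(incident["technology"], incident["flaw_category"])]
        group["ids"].append(incident["id"])
        group["usage_problems"].update(incident["usage_problems"])
        group["negative_outcomes"].update(incident["negative_outcomes"])
    return dict(groups)


pathways = group_pathways(coded_incidents)
```

Here incident C is dropped because its outcome is not a patient safety issue, and incident D because the link between usage problem and outcome would have to be hypothesized.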

Results

Incident Reports can Provide Knowledge about Usability Issues

We found that incident reports could be analyzed from a usability perspective. A total of 249 reports out of 359 (69.3% inclusion) were included in the analysis, representing 229 different incidents along with 20 “supplementary information” reports. While the incidents involved a large variety of technology, the majority were associated with imaging software ( n = 107, 46.7%). CPOE, electronic health records (EHRs), medication administration records (MARs), and pharmacy clinical software (PCS) accounted for 79 incidents (34.5%). Twenty-five dealt with laboratory information systems (10.9%). Thirteen involved blood bank software (5.7%). Anatomic pathology systems, archiving software, data management systems, radiation systems, and electronic patient scales accounted for one incident each.

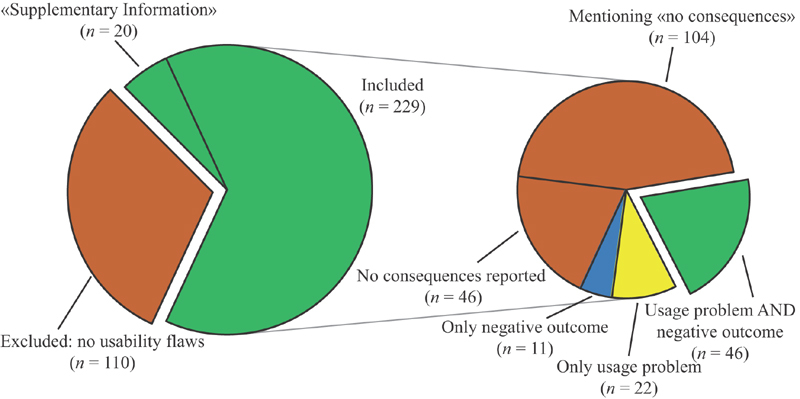

Of those 229 incidents analyzed, 46 did not report on any consequences, neither usage problems nor negative outcomes (20.1%), and 104 explicitly mentioned that there were no consequences (i.e., no error or no patient injury; 45.4%). In total, 46 incidents provided descriptions about usage problems and negative outcomes (20.1%) providing the full chain of propagation of the usability flaws. Twenty-two (9.6%) reported only usage problems, while 11 (4.8%) reported only negative outcomes. Fig. 4 summarizes the distribution of the incidents analyzed according to their content.

Fig. 4. Graphical representation of the distribution of the incidents analyzed according to their eligibility (left) and their content in terms of usage problems and negative outcomes (right).
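The distribution reported above can be checked with a few lines of arithmetic. The counts are taken from the text; the dictionary labels and variable names are ours.

```python
# Counts of analyzed incidents by content, as reported in the text.
content_counts = {
    "no consequences reported": 46,
    "explicitly no consequences": 104,
    "usage problems and negative outcomes (full chain)": 46,
    "usage problems only": 22,
    "negative outcomes only": 11,
}

total = sum(content_counts.values())
shares = {label: round(100 * count / total, 1)
          for label, count in content_counts.items()}

# Incidents mentioning each kind of consequence, with or without the other.
with_usage_problems = (content_counts["usage problems and negative outcomes (full chain)"]
                       + content_counts["usage problems only"])
with_negative_outcomes = (content_counts["usage problems and negative outcomes (full chain)"]
                          + content_counts["negative outcomes only"])

for label, share in shares.items():
    print(f"{label}: {content_counts[label]} ({share}%)")
print(f"total incidents: {total}")
```

The five groups sum to the 229 incidents analyzed, and the two derived totals (68 incidents mentioning usage problems, 57 mentioning negative outcomes) match the figures used in the following subsection.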

Reports Provide Information about Usability Flaws, Related Usage Problems, and Negative Outcomes

Our analyses successfully extracted usability flaws, usage problems, and negative outcomes from the free-text descriptions. Of the 229 incidents, 287 meaningful semantic units representing usability flaws were extracted and classed into a hierarchy of 4 meta-categories, 8 categories, and 10 subcategories (cf. Fig. 3 and Supplementary Appendix A [available in the online version] for details). Usability issues that were sufficiently described to be classified dealt with GUI issues, or with the behavior of the system including lack of protection against errors that may lead to preventable use errors. The last class dealt with violations of the needs of the users in terms of information and of features including missing, nonupdated, inconsistent, or inaccurate information and features that do not support users' individual and collective tasks, failing features, and information transmission issues.

Of the 68 incidents mentioning usage problems, 103 different meaningful semantic units were extracted and classed hierarchically into 6 categories and 12 subcategories (cf. Fig. 3 and Supplementary Appendix B [available in the online version] for details). Main classes dealt with the users distrusting the system, making errors, being uncertain, missing relevant information, violating safety procedures, or seeing their workload increased.

Of the 57 incidents mentioning negative outcomes, 64 meaningful semantic units were extracted and classed into 6 categories and 9 subcategories (cf. Fig. 3 and Supplementary Appendix C [available in the online version] for details). Main classes dealt with harm to patient (e.g., death, nonlethal consequences, no consequences), incident with noticeable consequences with no harm (e.g., delay in the care process), hazardous event or circumstance, and arrested or interrupted sequence (near miss). Two new categories were created: action in response to incidents (e.g., new procedures, abandonment of the technology) and drug diversion.

Usability Issues that Give Rise to Patient Safety Incidents

Forty-six incidents reported the full chain going from a usability flaw through the usage of the technology (usage problem) up to the work system and/or the patient (negative outcomes). Of these, nine were excluded from the analysis because their negative outcomes were related only to “actions in response to incidents,” “drug diversion,” or to “delays” in the care process, not to patient safety issues. Thirty-seven incidents reporting the full chain and leading to a patient safety issue were considered for analysis; one (incident number 253) was excluded because the link between the usage problem (“distrust for the functionality of the system by those using it”) and the patient safety issue (“varying degrees of adversity for the patients”) had to be hypothesized (cf. Supplementary Appendix D, available in the online version). Table 1 presents the deconstruction of the 36 incidents analyzed. These involved three types of technology: electronic patient scales ( n = 1), imaging systems ( n = 4), and CPOE/EHR/MAR/PCS ( n = 31). It should be noted that the usability flaw was not always the direct cause of the patient safety issue but could also be a contributory factor. Overall, the usability flaws were varied but a few constants can be highlighted as we show below:

Table 1. Deconstruction of the 36 incidents analyzed to highlight the usability issues that gave rise to patient safety incidents: the usability flaws, usage problems, and negative outcomes are summarized.

| ID | Usability flaw(s) | Usage problem(s) | Negative outcome(s): patient safety issue(s) |

|---|---|---|---|

| Electronic patient scale | |||

| #242 | A patient scale allowed users to switch easily between units (pounds vs. kilograms) while it is supposed to be kept in kilograms | A nurse did not notice the change and weighed a patient incorrectly. Based on this erroneous measure, (s)he administered the wrong dose of medication | Despite this incident, the patient was not harmed and did not require medical management |

| Imaging system | |||

| #202 | The system merged the incorrect data and rejected the original images: images had the wrong patient tag. No further details were available about the usability flaw | It led to the misidentification of a patient and the surgery (s)he had to undergo | A surgery was performed on the wrong patient. No further details were available about patient outcome |

| #229 | The date of the image was not visible or was missing (not detailed) | The radiologist mistook an old image for a recent one and misdiagnosed the spreading of a metastatic disease | The disease spread widely |

| #163 | The left-right markers of an image were not sufficiently visible | The patient's image was flipped left-right unnoticedly. Based on this image, a surgeon operated on the wrong side | The wrong side of the head of the patient was operated upon |

| #267 | Images supporting the placement of a Peripherally Inserted Central Catheter (PICC) line did not show the line that was inserted too far | A radiologist misunderstood the absence of the line on the image, thought it has been removed and did not check it | This misunderstanding contributed to the death of the baby |

| CPOE/EHR/MAR/PCS | |||

| #42 | A medication was ordered but its prescription was not populated in the administration plan | The medication was administered 3 days late | The patient suffered from an ulcer that required a gastrectomy |

| #92 | A volume less than 0.01 mL was not displayed with the order | The nurse had to calculate the volume to be administered and miscalculated the dose | A patient received almost a 10-fold overdose of insulin by injection |

| #123 | In the drug administration details screen, after a 30 mL bottle of azithromycin 200 mg/5 mL was scanned, the screen displayed 200 mg as the dose amount, and 30 mL as the volume: the volume to administer was incorrect | A clinician miscalculated the dose and administered 1,200 mg of azithromycin instead of 250 mg ordered | The patient received almost five times the ordered dose, but no adverse effect was reported |

| #237 | Manual entries of patient allergies were overwritten during automatic updates | A clinician prescribed a medication ignoring that the patient was allergic | The patient suffered a temporary allergic reaction (shortness of breath) to the medication but had no further effect |

| #239 | A dropdown menu for medication dosing frequency contained 225 options arranged in alphabetical order and included counterintuitively arranged items | A user scrolled through the menu and selected the wrong frequency leading to a dosing error | The patient received four times the expected dose of digoxin |

| #239bis | An update in the frequency field on an existing prescription was not transmitted to the pharmacy: the pharmacy received the order with the wrong frequency | A clinician administered more than the prescribed dose | An elderly patient received more than the ordered dose of blood thinner Levoxyl for 6 weeks but had no serious injury Another patient received inappropriate dosage of carbamazepine and was admitted to hospital with atypical chest pains |

| #247 | The concentration of the medication was displayed amidst extraneous information in small font | A clinician did not see the concentration and made a mistake in the dose administered to a patient | The patient received 10 times the dose of epinephrine ordered and sustained a myocardial infarction (heart attack) |

| #248 | An order to hold the sliding scale insulin at night time was delivered but without notification | A nurse did not see the order and gave the patient the usual dose of insulin | The patient endured hypoglycemia with severe symptoms |

| #249 | A CPOE did not warn about duplicate medications; the font size was small; and the screen contained excess extraneous information | A physician ordered medications twice at different doses and schedules A pharmacist missed the duplicate medications Physicians delivered all medications ordered |

The patient received all the medications ordered. No further details were available about patient outcome |

| #250 | Orders for stress tests were ambiguous and displayed over four lines | A clinician misunderstood the physician's order and gave the patient the incorrect pharmacological modality (i.e., wrong form) | The patient incorrectly received an infusion of adenosine which caused him/her a life-threatening acute asthma attack |

| #251 | To enter a postoperative order, physicians needed to delete orders that were no longer needed, i.e., inactive orders, leave orders that were still needed, and then add new ones. This was a time-consuming and unusual procedure | Clinicians did not always perform this review due to the extra work and time it required. This led to commingling of the pre- and postoperative orders | One patient got his/her clean postoperative abdomen irrigated based on a preoperative order |

| #252 | The interface of a CPOE was unfriendly and displayed extensive extraneous information | A physician did not see an existing order and ordered duplicate treatments for a patient | The patient received duplicate treatments: infusion of total parenteral nutrition and concentrated dextrose solution. Their cumulative dose caused pulmonary edema |

| #257 | A patient was moved to another bed. But the order to transfer the patient was not received by the recipient care team | The recipient care team was not aware that the patient was under their care | The patient had seizures on floor for many hours throughout the night without the care team taking care of him/her |

| #265 | The procedure to reconcile orders with the execution of the orders was complex | A clinician did not execute the order. It was not known that the order was not executed. This led to a missed diagnosis opportunity | A patient with a life-threatening disease was not treated appropriately, contributing to his/her death |

| #266 | On a CPOE interface, the orders were obfuscated by verbiage and the system discontinued them | A clinician missed the orders, and therefore did not execute them | A known consequence is that an order for a transcutaneous pacemaker with life-threatening consequences (no details) failed to be executed |

| #266bis | Once correctly ordered, the system switched doses of methadone syrup for two patients without informing the user | A clinician gave a patient 5 mg more of methadone syrup than initially ordered | The patient received an excess dose of methadone but was not harmed |

| #269 | Test orders (hypercoagulability tests) were spuriously cancelled by the system without notifying ordering physicians | Clinicians did not execute the hypercoagulability tests ordered for a patient having blood clots | The blood clots remained unexplained. No further details were available about patient outcome |

| #270 | The font size of the list of patients was small | A clinician clicked on the wrong patient and entered an order of a test using radioactive tracers | A patient received the radioactive injection intended for another patient |

| #271 | The interface does not specify the dose in mg of a combination medication (e.g., in the Acetaminophen-Oxycodone, the exact dose of Tylenol is not specified). Moreover, certain fields do not specify the volume, requiring users to open a pop-up screen to see this information |

A physician did not know the combination medication dose in the volume (s)he ordered An excessive dose of Acetaminophen-Oxycodone was ordered for a patient Neither the physician, the pharmacist, or the nurse recognized and intercepted this medication error The combination medication was given to the patient |

10 mL of Acetaminophen-Oxycodone was given three times over 4 hours, meaning 1,950 mg of Tylenol were administered in 4 hours to a patient in starvation receiving other medication increasing the effects of Tylenol. The patient developed acute renal failure and died |

| #274 | A screen displayed vital information tinctured with abundant clutter. There was no display of current treatments and what had been recently ordered. Moreover, the warning system was insufficient | A clinician did not see the medications already ordered for the patient and ordered duplicate medications and intravenous fluids. (S)he was not warned by the system. At least two intravenous solutions were active simultaneously and given to the patient | The patient received at least two intravenous fluids that were similar |

| #275 | A system variably changed the schedule of medications ordered daily at two distinct doses to be administered daily at two distinct times. The system scheduled both doses to be administered at the same time | A nurse gave an excessive dose at once and skipped the second dose | All patients treated at the facility were endangered |

| #280 | A system did not transfer an order to discontinue intravenous fluids in a postoperative setting to the task list of the nurse | The nurse did not see the order and continued the intravenous fluids | The patient was overloaded with fluid |

| #284 | A system did not provide an adequate representation of the current medications and orders, nor did it display what other members of the care team had ordered. The decision support module was also defective | Physicians ordered four medications that increased the propensity for bleeding. They were not warned by the decision support system | A patient was simultaneously given enoxaparin, unfractionated heparin, aspirin, and warfarin |

| #287 | A system prevented physicians from ordering medications while another service had opened up the patient record | The physician could not order critical medication immediately. The order was delayed | The patient was in danger. No further details were available about patient outcome |

| #290 | A system did not transmit a transportation order. Additionally, the way orders were displayed was excessively lengthy | An order to transport a patient with a monitor because of a heart risk was not seen and not executed | The patient travelled to at least one test without a monitor |

| #293 | To transfer a patient after surgery, physicians must discontinue orders that are no longer needed. It was a counterintuitive function | The physicians wasted time performing this procedure, leading them to neglect this medication reconciliation. A physician ordered medications that were already active, and prescriptions written after an operation contained duplicates and triplicates of five medications with distinct doses | The patient was in danger. No further details were available about patient outcome |

| #300 | Medication labels for infusion bags that were created by a software labeling system were in a small and uniform font | A nurse mistook two bags. She accidentally hung the bag of norepinephrine instead of the epinephrine one | A patient was almost infused with norepinephrine instead of epinephrine |

| #304 | A system did not prevent preoperative and postoperative orders from being commingled nor from allowing multiple orders and doses of the same medication | Physicians had ordered up to six distinct acetaminophen doses, two distinct vancomycin doses, and two distinct famotidine doses concomitantly with pantoprazole in a postop order | The patient was in danger. No further details were available about patient outcome |

| #305 | The function to discontinue medication orders was not working: the medication orders still appeared in the nurses' administration plan | A physician who was aware of the problem wrote a note to the nurses. The nurses did not see the note and continued medication orders as they appeared in the MAR: gentamicin was given to three patients despite instructions to discontinue the medication | Three patients received gentamicin although it had been discontinued. No immediate injury occurred |

| #501 | On the order entry screen intended for ancillary orders but not for medication orders, it was mentioned that no allergy information was recorded while there was a historical allergy entry. Allergy information from previous visits was not displayed without a specific medical record number | Not being able to see this information, a physician used this order entry screen to order a medication to which the patient was allergic | The patient received the medication to which (s)he was allergic resulting in an allergic reaction. The patient was discharged within 48 hours |

| #313 | When a patient is transferred from one service to another, the system considered the patient to be discharged and to have a new admission. Therefore, during the stay of the patient in a second service, the system provided results related to the previous services only when a search was made on previous reactions to medications using large date constraints. Furthermore, the system did not alert users that the date constraints used to make the search were beyond the range of the “current admission” | A clinician ordered a patient an infusion of famotidine while the patient had already suffered a reaction to this treatment during her/his “first admission.” A patient's relative informed a nurse that famotidine was contraindicated. The nurse searched with large date constraints but did not find any previously infused famotidine | The patient, who was suffering from serious delirium, received a medication which had previously resulted in an allergic reaction during her/his previous admission. The medication was stopped due to the relative's insistence |

Abbreviations: CPOE, computerized physician order entry; EHR, electronic health record; MAR, medication administration record; PCS, pharmacist clinical software.

Electronic patient scales: The unit of measure could easily be changed causing an erroneous measure and the administration of an inadequate dose of medication. This incident did not lead to noticeable consequence.

Imaging systems: In the four incidents involving imaging systems, the unavailability and the unreliability of the information provided on images were the causes of various errors (e.g., patient identification, diagnostic, manipulation, order execution, understanding) that led to patient harm and even death.

CPOE, EHR, MAR, and PCS: Despite the great diversity existing in the types of usability flaws identified and in the ways of their propagation up to the patient, five typical paths can be identified in the 31 incidents concerning software related to the medication use process.

If information is erroneous, ambiguous, changed, missing (including not transmitted), illegible, or nowhere to be found, clinicians miss it, which prevents them from making a correct order (e.g., they duplicate a medication) and from executing an order appropriately. Consequences for patients range from incidents without consequence to patient harm and even death (incident numbers 42, 92, 237, 247, 248, 252, 257, 265, 266, 269, 270, 271, 274, 249, 275, 280, 284, 290, 300, 305, 501, 313, 123, 239bis, 250, 266bis).

Issues in patient or medication menus (e.g., items not sufficiently separated in a list of medications) lead to erroneous orders (e.g., erroneous doses) and consequently to patient safety incidents without consequences (incident number 239).

The system does not prevent multiple orders of medications of the same pharmaceutical class or at different doses and does not warn clinicians about duplicates. Therefore, clinicians inadvertently order duplicate or more medications. Patients get the medications and are put in harm's way (incident numbers 249 and 304).

Some unintuitive procedures to check or change medication orders do not respect clinicians' way of thinking and logic. These procedures increase clinicians' workload and dissuade clinicians from following them. This leads to medication ordering errors and places patients at risk (incident numbers 251 and 293).

A physician cannot enter an order as long as the patient's record is opened by another clinician, even for a patient in an emergency condition. This compels the physician to delay the order. Ultimately, the patient's treatment is delayed despite its urgency, endangering the patient (incident number 287).
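The incident numbers listed for the five paths can be tallied to check them against the stated total of 31 incidents. The sketch below is only an illustration: the short path labels are our own shorthand (an assumption, not the authors' category names), and the incident identifiers are copied as they appear in the text above.

```python
from collections import Counter

# Incident numbers per propagation path, copied from the five paths above.
# The path labels are hypothetical shorthand introduced for this sketch.
PATHS = {
    "information issue": [
        "42", "92", "237", "247", "248", "252", "257", "265", "266", "269",
        "270", "271", "274", "249", "275", "280", "284", "290", "300", "305",
        "501", "313", "123", "239bis", "250", "266bis",
    ],
    "menu issue": ["239"],
    "no protection against duplicates": ["249", "304"],
    "unintuitive procedure": ["251", "293"],
    "record locked by another user": ["287"],
}


def summarize(paths):
    """Count incidents per path, distinct incidents overall, and shared ones."""
    per_path = {label: len(ids) for label, ids in paths.items()}
    counts = Counter(i for ids in paths.values() for i in ids)
    shared = sorted(i for i, n in counts.items() if n > 1)
    return per_path, len(counts), shared


per_path, unique_total, shared = summarize(PATHS)
print(per_path)       # incidents listed under each path
print(unique_total)   # distinct incidents across all paths
print(shared)         # incidents listed under more than one path
```

Under these assumptions the first path accounts for 26 incidents, and the five lists contain 31 distinct incidents because incident 249 is listed under two paths.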

Discussion

Answers to Questions

This study posed three questions to examine whether reports about incidents involving HIT are an exploitable source of information to learn about the consequences of usability flaws, including effects on patient safety.

- To what extent can we gain knowledge about usability issues from incident reports?

Our results show that 69.3% (n = 249) of the analyzed reports described a usability flaw as one of the causes of the incident as perceived by the reporter. Among them, 20.1% (n = 46) describe the full chain of propagation of the usability flaws through the user of the technology up to negative outcomes (including effects on care delivery and patient safety). The usability flaws extracted from the free-text descriptions form a coherent whole: no aberrant types of flaws were found, and several flaws recurred across reports. For instance, in the subcategory “Inaccurate information,” 17 separate incident reports mention that images were flipped. Moreover, the descriptions of usability flaws are consistent with those known in the literature. For instance, the fact that “Options are too close” to each other on the screen (subcategory “Close options”) is mentioned in Khajouei and Jaspers' systematic review on the usability characteristics of CPOE (table 5, p. 12) 36; this article also highlights the problems with “dropdown menu [having] numerous options” (subcategory “Information overload”). Although the free-text descriptions in incident reports were provided by reporters who may not have had expertise in usability, they were rich enough to provide information about the usability flaws that contributed to the incident: thus, they are an exploitable source of knowledge about usability flaws in HIT.

- What types of usability flaws, related usage problems, and negative outcomes are reported in incident reports?

The descriptions of the usability flaws, usage problems, and negative outcomes were expressed in the words of the reporters (usually vendors). Nonetheless, it was possible for the usability experts who performed the analysis to identify, understand, and classify the reported usability issues. Most of the usability flaws dealt with the GUI, the behavior of the system, and the reliability and display of the information. As for the resulting usage problems, they were mainly related to errors, missed information, increased workload, violated safety procedures, and users distrusting the system. Finally, negative outcomes on the work system mainly ranged from incidents with noticeable consequences but no harm (e.g., “Delays” in the care process) up to patient harm and even death.

The lack of usability background of the reporters impacted their investigation of the usability flaws. Some types of flaws, more noticeable or easier to investigate (e.g., subcategories of “GUI issues”), were more precisely and more completely described than others, whose initial cause might have been more complex, deeper, or less apparent (e.g., subcategories of “System not supporting practice”). Therefore, based on the usability flaws' description, it is possible to formulate recommendations to fix the more precisely described flaws but not for all the complex ones. For instance, the complex usability flaw “computer discontinuation of orders” (incident number 266) may have several technical causes: an expert-based usability evaluation could be performed to get a deeper understanding of such flaws before appropriate recommendations can be formulated to fix them.

- What are the reported usability issues that gave rise to patient safety incidents?

Free-text descriptions of incident reports are interesting in that the reporters themselves make the connection between the usability flaws, the usage problems, and the negative outcome (cf. Fig. 2). All in all, results tend to form a body of corroborating evidence that usability flaws of HIT can pose risks to patient safety. A total of 36 incidents out of the 249 describing a usability flaw (14.46%) reported the full chain of propagation up to a patient safety issue without requiring any hypotheses. These involved a variety of usability flaws (e.g., no protection against changes and errors, issues in the menus, procedures not fitting clinicians' way of thinking) but the most significant class is related to the reliability, the understandability, and the availability of the clinical information. The consequences of the latter range from near misses to incidents that reached patients, both with and without harm.

It must be kept in mind that the causal chain between the usability flaws, the usage problems, and the negative outcomes is not linear. A given usability flaw may lead to several usage problems that, in turn, may give rise to several negative outcomes; furthermore, a given negative outcome may be caused by several usage problems, themselves caused by several usability flaws. It is therefore not possible to identify the relative contributions of different usability flaws to a given patient safety incident.
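The many-to-many structure described above can be pictured as a small directed graph. The following sketch is an assumption-laden illustration: the node names and edges are our own reading of the table row for incident #274, not the authors' coding, and the helper functions `chains` and `root_causes` are hypothetical names introduced here.

```python
# Directed edges: usability flaw -> usage problem -> negative outcome.
# Nodes and edges are an illustrative reading of incident #274 (assumption).
EDGES = {
    "cluttered display": ["existing orders not seen"],
    "current treatments not displayed": ["existing orders not seen"],
    "insufficient warnings": ["duplicate order not intercepted"],
    "existing orders not seen": ["duplicate IV fluids given"],
    "duplicate order not intercepted": ["duplicate IV fluids given"],
}


def chains(node, edges):
    """Return every path from node to a terminal node (no outgoing edge)."""
    successors = edges.get(node, [])
    if not successors:
        return [[node]]
    return [[node] + rest for nxt in successors for rest in chains(nxt, edges)]


def root_causes(outcome, edges):
    """Return the starting nodes (flaws) from which the outcome is reachable."""
    targets = {t for ts in edges.values() for t in ts}
    roots = [n for n in edges if n not in targets]
    return sorted(
        r for r in roots if any(path[-1] == outcome for path in chains(r, edges))
    )


for flaw in root_causes("duplicate IV fluids given", EDGES):
    for path in chains(flaw, EDGES):
        print(" -> ".join(path))
```

In this toy graph, three distinct flaws reach the same outcome through two usage problems. The graph records that each flaw contributed but carries no weights, which mirrors the point that relative contributions cannot be read from the reports.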

Benefits of Usability-Oriented Analyses

Published analyses of HIT incident reports usually adopt a patient safety perspective and try to uncover the broad types of issues associated with incidents (e.g., technical vs. human–computer interaction 27 29 ). Nonetheless, they do not look deeper into those causes to learn how they propagate. To the best of our knowledge, this study is the first that systematically and explicitly analyzes incident reports from a usability perspective with a standardized and reproducible method to unveil the chain of propagation of the usability flaws through the user up to the work system and the patient. The added value of analyzing incident reports is twofold. First, it enables analysts to make the connection between the usability flaws and their consequences on the work system and the patient, unlike expert-based evaluations, hazard-oriented analyses, and user-based usability evaluations. Second, it enables analysts to examine a wider range of situations than in situ observational studies of usability.

Our results show that MAUDE's incident reports are suitable material for making the connection between HIT usability flaws and their consequences. In practical terms, results are consistent with known literature and add to the body of work that aims to provide evidence that poor usability impairs users' work and their work system, and puts patients at risk (e.g., see refs. 7, 32, 36–38). The results (especially Table 1) could be used to inform and convince all stakeholders in HIT development, evaluation, procurement, and implementation processes (e.g., designers, vendors, health care establishments' managers, certification bodies, health care authorities) that usability flaws in HIT do pose risks to patient safety. The material gathered highlights that usability of HIT must be taken seriously and that actions must be taken to consider it all along the HIT lifecycle.

Limitations

U.S. regulatory requirements on reporting medical device incidents in the MAUDE database are not enforced with respect to HIT. 39 Consequently, the HIT incidents we examined are unlikely to be representative of all systems and all incidents: HIT incidents may be underreported. Therefore, the body of corroborating evidence that usability flaws of HIT contribute to patient safety incidents may be even more significant.

As mentioned in the introduction, reporting biases may impact the accuracy of the incident reports. Despite those biases, previous studies pointed out that incident reports were a valuable material to identify the type of technology issues associated with the patient safety issues 25 26 27 28 29 30 and to identify incidentally usability flaws and consequences. 31 Moreover, analyzing a large collection of incidents enables identifying characteristic profiles. 40 In the present study, we deconstructed the free-text descriptions of 359 reports corresponding to 242 incidents. From previous studies, 27 41 this sample size may be sufficient to gain an overview about the types of usability issues reported and of their consequences. Besides, several usability flaws were found in many reports and were consistent with the literature: the knowledge extracted from incident reports has good internal and external consistency which underlines the reliability of the results. Nonetheless, the results must be considered carefully: factors that may have mitigated or favored the propagation of the usability flaws up to the patient were not identified. Therefore, the fact that some types of flaws did not lead to patient harm does not mean that this is always true: in other contexts, their consequences might be more severe. The reverse is also true: usability flaws that led to patient harm in the analyzed incidents may have less severe consequences in other contexts.

Finally, the reports analyzed date from 2008 to 2010. One could question the usefulness of performing the analysis on old incidents. However, this article aimed to examine the feasibility of the proposed analysis and to test the method. This sample of reports was known to be related to human factors issues, which made it easier to use for testing the feasibility of the analysis. Now that the feasibility of our method has been successfully demonstrated, the analysis can be extended to more recent reports and to reports from other databases.

Perspectives

This study has shown that analyzing the free-text descriptions of incident reports is feasible and effective to identify the usability flaws that led to patient safety incidents. Yet, to fully take advantage of the MAUDE database, it is necessary to improve the accuracy and the completeness of the reports by improving the guidance of reporting forms, 23 42 especially of the free-text entry. For instance, as recommended for the reporting of usability flaws in software engineering, 43 reporters should be assisted with question/wizard-based interaction guiding them through the steps of the report. The free-text field used to relate the incident can be structured to encourage reporters to describe separately the usability flaws, the resulting usage problems, and the negative outcomes. Moreover, providing the opportunity to upload pictures or screenshots of the technology and to annotate them would help describe the usability flaw more precisely. For the more complex usability flaws (i.e., usability flaws requiring an in-depth investigation to understand their causes, e.g., subcategory “Inaccurate information”), vendors should trigger an investigation procedure including an expert-based evaluation by usability experts to know precisely how to fix them.
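One way to picture such a structured free-text entry is a record with one field per step of the chain. The sketch below is purely hypothetical: the class name, field names, and completeness check are our own assumptions and do not describe the MAUDE reporting form or any existing tool. The example values paraphrase the table row for incident #280.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StructuredIncidentReport:
    """Hypothetical report layout: one free-text field per step of the chain."""

    usability_flaw: str = ""
    usage_problem: str = ""
    negative_outcome: str = ""
    screenshots: List[str] = field(default_factory=list)  # optional attachments

    def missing_steps(self) -> List[str]:
        """Name the chain steps that the reporter has left empty."""
        steps = {
            "usability_flaw": self.usability_flaw,
            "usage_problem": self.usage_problem,
            "negative_outcome": self.negative_outcome,
        }
        return [name for name, text in steps.items() if not text.strip()]

    def has_full_chain(self) -> bool:
        """True when flaw, usage problem, and outcome are all described."""
        return not self.missing_steps()


report = StructuredIncidentReport(
    usability_flaw="Order to discontinue IV fluids not sent to the nurse's task list",
    usage_problem="The nurse did not see the order and continued the fluids",
)
print(report.missing_steps())
report.negative_outcome = "The patient was overloaded with fluid"
print(report.has_full_chain())
```

A wizard-style form could use a check such as `missing_steps` to prompt the reporter for whichever step has not yet been described.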

The opportunity to automate the analysis process to analyze larger samples of incident reports must also be questioned. Automatic screening methods have been successfully tested for extracting incidents and identifying broad types of incidents. 44 Yet, as far as we know and regardless of the domain, there have been no attempts to automatically extract descriptions of usability flaws, related usage problems, and negative outcomes: automatic methods still must be tested. Moreover, classifying the descriptions of the usability flaws, related usage problems, and negative outcomes requires a sound knowledge of the technology, of usability concepts, of the medical specialties, and of the possible related work organizations and practices. As for the detailed classification of incidents, 44 this task cannot be allocated to automatic tools and needs to be done by humans. Yet, it may benefit from being supported by coding software (e.g., NVivo 45 ) to make data manipulation and exploration easier.

Finally, when several usability flaws are identified by the reporter of an incident as contributing factors to the patient safety incident, the limited and focused information provided by the free-text description does not allow examination of the relative contributions of each usability flaw. Larger-scale investigations must be undertaken. For instance, combining methods inspired by the fault tree analysis 46 with expert-based usability evaluations 11 12 of the HIT would allow for identifying different kinds of factors (e.g., technical, organizational, usability-related) that have contributed to the patient safety incident and to identify precisely the role of the usability flaws in the incident. Unfortunately, such an approach would have the same limitations as studies proceeding by field observations of HIT usage: they would allow the analysis of a limited range of situations. A balance must still be found between the need for large amounts of data to get evidence about the contribution of usability flaws to patient safety incidents and the need for precise information to model the propagation of usability flaws up to the patient.

Conclusion

When complete, free-text descriptions of incident reports are an amenable material to make the connection between the usability flaws and their consequences on the user, on the work system, and on the patient in a wide range of situations. Even if this knowledge must be interpreted with caution, it can be used to convince stakeholders in the development, evaluation, procurement, and implementation processes that usability flaws with HIT do pose risks to patient safety and that actions are required to seriously consider usability throughout the HIT lifecycle.

Clinical Relevance Statement

Be aware that problems with usability of HIT can put patients at risk of harm.

Report problems with using HIT, particularly issues with the reliability, understandability, and availability of clinical information that is used to support decision-making.

Structure the description of incidents you report so that each step of the propagation from the usability flaw, through the usage problem up to the negative outcome, may easily be identifiable for reanalysis.

Multiple Choice Questions

- Which element from the work system acts as an intermediary between a usability flaw and its negative outcomes for the patient?

a. The user.

b. The work organization.

c. The technology.

d. The environment.

Correct Answer: The correct answer is option a, “the user.” A usability flaw is a physical characteristic of the technology. If the technology is not used, it cannot have any consequence. As soon as the technology is used (directly or remotely), the usability flaw may disrupt the interaction of the user with the technology and then lead to use errors that may ultimately impact the work system or the patient.

- In the sample of analyzed incidents related to CPOE, EHR, MAR, and PCS, what is the main type of usability flaws observed that led to patient safety issues?

a. Menu issue.

b. System behavior issue.

c. Lack of feature.

d. Supporting information issue.

Correct Answer: The correct answer is option d, “supporting information issue.” Out of the 31 incidents that led to patient safety issues, 26 were related to “supporting information issues” (information erroneous, ambiguous, changed, missing [including, not transmitted], illegible, or nowhere to be found).

Acknowledgment

The authors would like to thank Pierre-François Gautier for the design of the figures.

Conflict of Interest None declared.

Protection of Human and Animal Subjects

Human and/or animal subjects were not included in the project.

Supplementary Material

References

- 1. Chaudhry B, Wang J, Wu S et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 2. Shekelle P G, Morton S C, Keeler E B. Rockville, MD: Agency for Healthcare Research and Quality; 2006. Costs and Benefits of Health Information Technology.

- 3. Kaplan B, Harris-Salamone K D. Health IT success and failure: recommendations from literature and an AMIA workshop. J Am Med Inform Assoc. 2009;16(03):291–299. doi: 10.1197/jamia.M2997.

- 4. Nolan M E, Siwani R, Helmi H, Pickering B W, Moreno-Franco P, Herasevich V. Health IT usability focus section: data use and navigation patterns among medical ICU clinicians during electronic chart review. Appl Clin Inform. 2017;8(04):1117–1126. doi: 10.4338/ACI-2017-06-RA-0110.

- 5. Kim M O, Coiera E, Magrabi F. Problems with health information technology and their effects on care delivery and patient outcomes: a systematic review. J Am Med Inform Assoc. 2017;24(02):246–250. doi: 10.1093/jamia/ocw154.

- 6. US Joint Commission on Accreditation of Healthcare Organizations. Sentinel Event Alert 2016;54. Available at: https://www.jointcommission.org/assets/1/6/SEA_54_HIT_4_26_16.pdf. Accessed February 21, 2019

- 7. Ratwani R, Fairbanks T, Savage E et al. Mind the Gap. A systematic review to identify usability and safety challenges and practices during electronic health record implementation. Appl Clin Inform. 2016;7(04):1069–1087. doi: 10.4338/ACI-2016-06-R-0105.

- 8. Lavery D, Cockton G, Atkinson M P. Comparison of evaluation methods using structured usability problem reports. Behav Inf Technol. 1997;16(4–5):246–266.

- 9. Marcilly R, Ammenwerth E, Vasseur F, Roehrer E, Beuscart-Zéphir M-C. Usability flaws of medication-related alerting functions: a systematic qualitative review. J Biomed Inform. 2015;55:260–271. doi: 10.1016/j.jbi.2015.03.006.

- 10. Marcilly R, Ammenwerth E, Roehrer E, Niès J, Beuscart-Zéphir M-C. Evidence-based usability design principles for medication alerting systems. BMC Med Inform Decis Mak. 2018;18(01):69. doi: 10.1186/s12911-018-0615-9.

- 11. Scapin D L, Bastien J MC. Ergonomic criteria for evaluating the ergonomic quality of interactive systems. Behav Inf Technol. 1997;16(4–5):220–231.

- 12. Lewis C, Wharton C. Amsterdam: Elsevier Science; 1997. Cognitive walkthroughs; pp. 717–732.

- 13. Masci P, Zhang Y, Jones P, Thimbleby H, Curzon P. A Generic User Interface Architecture for Analyzing Use Hazards in Infusion Pump Software. Schloss Dagstuhl - Leibniz-Zent Fuer Inform GmbH, Wadern/Saarbruecken, Ger; 2014.

- 14. Watbled L, Marcilly R, Guerlinger S, Bastien J C, Beuscart-Zéphir M-C, Beuscart R. Combining usability evaluations to highlight the chain that leads from usability flaws to usage problems and then negative outcomes. J Biomed Inform. 2018;78:12–23. doi: 10.1016/j.jbi.2017.12.014.

- 15. Bastien J MC. Usability testing: a review of some methodological and technical aspects of the method. Int J Med Inform. 2010;79(04):e18–e23. doi: 10.1016/j.ijmedinf.2008.12.004.

- 16. Peute L WP, de Keizer N F, Jaspers M WM. The value of Retrospective and Concurrent Think Aloud in formative usability testing of a physician data query tool. J Biomed Inform. 2015;55:1–10. doi: 10.1016/j.jbi.2015.02.006.

- 17. Ash J S, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11(02):104–112. doi: 10.1197/jamia.M1471.

- 18. Koppel R, Metlay J P, Cohen A et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293(10):1197–1203. doi: 10.1001/jama.293.10.1197.

- 19. Ash J S, Sittig D F, Dykstra R H, Guappone K, Carpenter J D, Seshadri V. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform. 2007;76 (Suppl 01):S21–S27. doi: 10.1016/j.ijmedinf.2006.05.017.

- 20. Russ A L, Zillich A J, McManus M S, Doebbeling B N, Saleem J J. Prescribers' interactions with medication alerts at the point of prescribing: a multi-method, in situ investigation of the human-computer interaction. Int J Med Inform. 2012;81(04):232–243. doi: 10.1016/j.ijmedinf.2012.01.002.

- 21. Saleem J J, Patterson E S, Militello L, Render M L, Orshansky G, Asch S M. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc. 2005;12(04):438–447. doi: 10.1197/jamia.M1777.

- 22. Waring J J. Beyond blame: cultural barriers to medical incident reporting. Soc Sci Med. 2005;60(09):1927–1935. doi: 10.1016/j.socscimed.2004.08.055.

- 23. Lewis A, Williams J G. Inefficient clinical incident reporting systems create problems in learning from errors. In: Ascona, Switzerland; 2013. Available at: http://www.chi-med.ac.uk/publicdocs/WP171.pdf. Accessed February 26, 2019

- 24. Myketiak C, Concannon S, Curzon P. Narrative perspective, person references, and evidentiality in clinical incident reports. J Pragmatics. 2017;117:139–154.

- 25. Magrabi F, Ong M-S, Runciman W, Coiera E. Using FDA reports to inform a classification for health information technology safety problems. J Am Med Inform Assoc. 2012;19(01):45–53. doi: 10.1136/amiajnl-2011-000369.

- 26. Fong A, Adams K T, Gaunt M J, Howe J L, Kellogg K M, Ratwani R M. Identifying health information technology related safety event reports from patient safety event report databases. J Biomed Inform. 2018;86:135–142. doi: 10.1016/j.jbi.2018.09.007.

- 27. Magrabi F, Ong M-S, Runciman W, Coiera E. An analysis of computer-related patient safety incidents to inform the development of a classification. J Am Med Inform Assoc. 2010;17(06):663–670. doi: 10.1136/jamia.2009.002444.

- 28. Magrabi F, Liaw S T, Arachi D, Runciman W, Coiera E, Kidd M R. Identifying patient safety problems associated with information technology in general practice: an analysis of incident reports. BMJ Qual Saf. 2016;25(11):870–880. doi: 10.1136/bmjqs-2015-004323.

- 29. Warm D, Edwards P. Classifying health information technology patient safety related incidents - an approach used in Wales. Appl Clin Inform. 2012;3(02):248–257. doi: 10.4338/ACI-2012-03-RA-0010.

- 30. Samaranayake N R, Cheung S TD, Chui W CM, Cheung B MY. Technology-related medication errors in a tertiary hospital: a 5-year analysis of reported medication incidents. Int J Med Inform. 2012;81(12):828–833. doi: 10.1016/j.ijmedinf.2012.09.002.

- 31. Lyons I, Blandford A. Safer healthcare at home: detecting, correcting and learning from incidents involving infusion devices. Appl Ergon. 2018;67:104–114. doi: 10.1016/j.apergo.2017.09.010.

- 32. Marcilly R, Ammenwerth E, Roehrer E, Pelayo S, Vasseur F, Beuscart-Zéphir M-C. Usability flaws in medication alerting systems: impact on usage and work system. Yearb Med Inform. 2015;10(01):55–67. doi: 10.15265/IY-2015-006.

- 33.IEC 62366–1:2015(en,fr), Medical devices — Part 1: Application of usability engineering to medical devices / Dispositifs médicaux — Partie 1: Application de l'ingénierie de l'aptitude à l'utilisation aux dispositifs médicauxAvailable at:https://www.iso.org/obp/ui/#iso:std:iec:62366:-1:ed-1:v1:en,fr. Accessed September 20, 2018

- 34.Magrabi F, Baker M, Sinha I et al. Clinical safety of England's national programme for IT: a retrospective analysis of all reported safety events 2005 to 2011. Int J Med Inform. 2015;84(03):198–206. doi: 10.1016/j.ijmedinf.2014.12.003. [DOI] [PubMed] [Google Scholar]

- 35.Runciman W, Hibbert P, Thomson R, Van Der Schaaf T, Sherman H, Lewalle P. Towards an International Classification for Patient Safety: key concepts and terms. Int J Qual Health Care. 2009;21(01):18–26. doi: 10.1093/intqhc/mzn057. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Khajouei R, Jaspers M WM. The impact of CPOE medication systems' design aspects on usability, workflow and medication orders: a systematic review. Methods Inf Med. 2010;49(01):3–19. doi: 10.3414/ME0630. [DOI] [PubMed] [Google Scholar]

- 37.Zahabi M, Kaber D B, Swangnetr M. Usability and safety in electronic medical records interface design: a review of recent literature and guideline formulation. Hum Factors. 2015;57(05):805–834. doi: 10.1177/0018720815576827. [DOI] [PubMed] [Google Scholar]

- 38.Zapata B C, Fernández-Alemán J L, Idri A, Toval A. Empirical studies on usability of mHealth apps: a systematic literature review. J Med Syst. 2015;39(02):1. doi: 10.1007/s10916-014-0182-2. [DOI] [PubMed] [Google Scholar]

- 39.FDA. Changes to Existing Medical Software Policies Resulting from Section 3060 of the 21st Century Cures Act - Draft Guidance for Industry and Food and Drug Administration Staff. December 2017. Available at: https://www.fda.gov/downloads/MedicalDevices/DeviceRegulationandGuidance/GuidanceDocuments/UCM587820.pdf. Accessed February 25, 2019

- 40.Runciman W B, Webb R K, Lee R, Holland R. The Australian Incident Monitoring Study. System failure: an analysis of 2000 incident reports. Anaesth Intensive Care. 1993;21(05):684–695. doi: 10.1177/0310057X9302100535. [DOI] [PubMed] [Google Scholar]

- 41.Makeham M A, Stromer S, Bridges-Webb C et al. Patient safety events reported in general practice: a taxonomy. Qual Saf Health Care. 2008;17(01):53–57. doi: 10.1136/qshc.2007.022491. [DOI] [PubMed] [Google Scholar]

- 42.CHI + MED - Incidents and investigation. Available at: http://www.chi-med.ac.uk/incidents/index.php. Accessed February 26, 2019

- 43.Yusop N SM, Grundy J, Vasa R. Reporting usability defects: a systematic literature review. IEEE Trans Softw Eng. 2017;43(09):848–867. [Google Scholar]

- 44.Chai K EK, Anthony S, Coiera E, Magrabi F. Using statistical text classification to identify health information technology incidents. J Am Med Inform Assoc. 2013;20(05):980–985. doi: 10.1136/amiajnl-2012-001409. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Richards L. London: Sage Publications; 1999. Using NVivo in Qualitative Research. [Google Scholar]

- 46.Hixenbaugh A F. Seattle, WA: The Boeing Company; 1968. Fault Tree for Safety. [Google Scholar]
