Abstract

Matching methods are heavily used in the social and health sciences due to their interpretability. We aim to create the highest-quality treatment-control matches possible for categorical data in the potential outcomes framework. The method proposed in this work matches units on a weighted Hamming distance that takes into account the relative importance of the covariates, so that units are matched on as many relevant variables as possible. To do this, the algorithm creates a hierarchy of covariate combinations on which to match (similar to downward closure), in the process solving an optimization problem for each unit in order to construct the optimal matches. The algorithm uses a single dynamic program to solve all of the units’ optimization problems simultaneously. Notable advantages of our method over existing matching procedures are its high-quality interpretable matches, versatility in handling different data distributions that may have irrelevant variables, and ability to handle missing data by matching on as many available covariates as possible.

1. INTRODUCTION

In observational causal inference where the scientist does not control the randomization of individuals into treatment, an ideal approach matches each treatment unit to a control unit with identical covariates. However, in high dimensions few such “identical twins” exist, because it becomes unlikely that any two units share all of their covariate values. In that case, how might we construct a match assignment that would lead to accurate estimates of conditional average treatment effects (CATEs)?

For categorical variables, we might choose a Hamming distance to measure similarity between covariates. Then, the goal is to find control units that are similar to the treatment units on as many covariates as possible. However, the fact that not all covariates are equally important has serious implications for CATE estimation. Matching methods generally suffer in the presence of many irrelevant covariates (covariates that are not related to either treatment or outcome): the irrelevant variables would dominate the Hamming distance calculation, so that the treatment units would mainly be matched to the control units on the irrelevant variables. This means that matching methods do not always pass an important sanity check, namely that irrelevant variables should be irrelevant. To handle this issue, in this work we match units based on a weighted Hamming distance, where the weights can be learned using machine learning on a hold-out training set. These weights act like variable importance measures for defining the Hamming distance.
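A minimal sketch of the weighted Hamming distance, assuming it is the sum of the weights of the covariates on which two units disagree. The function name, covariate values and weights below are hypothetical illustrations, not taken from the paper's experiments. With the irrelevant covariates given weight zero, the treated unit is closest to the control that agrees on the relevant covariates, whereas the unweighted distance prefers the control that agrees only on the irrelevant ones:

```python
def weighted_hamming(x, y, w):
    """Weighted Hamming distance between two categorical covariate vectors.

    Each mismatched covariate j contributes its weight w[j] >= 0; matched
    covariates contribute nothing. With all weights equal to 1 this is the
    ordinary Hamming distance.
    """
    if not (len(x) == len(y) == len(w)):
        raise ValueError("x, y and w must have the same length")
    return sum(wj for xj, yj, wj in zip(x, y, w) if xj != yj)


# Two relevant covariates (weight 5) followed by four irrelevant ones (weight 0).
w = [5.0, 5.0, 0.0, 0.0, 0.0, 0.0]
treated = [1, 0, 1, 1, 1, 1]
control_a = [1, 0, 0, 0, 0, 0]  # agrees on the relevant covariates only
control_b = [0, 1, 1, 1, 1, 1]  # agrees on the irrelevant covariates only

print(weighted_hamming(treated, control_a, w))         # 0.0
print(weighted_hamming(treated, control_b, w))         # 10.0
print(weighted_hamming(treated, control_a, [1] * 6))   # 4 (unweighted)
print(weighted_hamming(treated, control_b, [1] * 6))   # 2 (unweighted)
```

Under the unweighted distance, control_b looks closer even though it differs on both relevant covariates, which is exactly the failure mode described above.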

The choice to optimize matches using Hamming distance leads to a serious computational challenge: how does one compute optimal matches on Hamming distance? In this work, we define a matched group for a given unit as the solution to a constrained discrete optimization problem, which is to find the weighted Hamming distance of each treatment unit to the nearest control unit (and vice versa). There is one such optimization problem for each unit, and we solve all of these optimization problems efficiently with a single dynamic program. Our dynamic programming algorithm has the same basic monotonicity property (downward closure) as that of the apriori algorithm (Agrawal and Srikant, 1994) used in data mining for finding frequent itemsets. However, frequency of itemsets is irrelevant here; instead, the goal is to find the largest (weighted) set of covariates that a treatment unit and a control unit have in common. The algorithm, Dynamic Almost Matching Exactly (DAME), is efficient, owing to the use of bit-vector computations to match units in groups, and does not require an integer programming solver.

A more general version of our formulation (Full Almost Matching Exactly) adaptively chooses the features for matching in a data-driven way. Instead of using a fixed weighted Hamming distance, it uses the hold-out training set to determine how useful a set of variables is for prediction out of sample. For each treatment unit, it finds a set of variables that (i) allows a match to at least one control unit; (ii) together have the best out-of-sample prediction ability among all subsets of variables for which a match can be created (to at least one control unit). Again, even though for each unit we are searching for the best subset of variables, we can solve all of these optimization problems at once with our single dynamic program.

2. RELATED WORK

As mentioned earlier, exact matching is not possible in high dimensions, as “identical twins” in treatment and control samples are not likely to exist. Early on, this led to techniques that reduce dimension using propensity score matching (Rubin, 1973b,a, 1976; Cochran and Rubin, 1973), which extend to penalized regression approaches (Schneeweiss et al., 2009; Rassen and Schneeweiss, 2012; Belloni et al., 2014; Farrell, 2015). Propensity score matching methods project the entire dataset to one dimension and thus cannot be used for estimating CATEs, since units within the matched groups often differ on important covariates. In “optimal matching” (Rosenbaum, 2016), an optimization problem is formed to choose matches according to a pre-defined distance measure, though as discussed above, this distance measure can be dominated by irrelevant covariates, leading to poor matched groups and biased estimates. Coarsened exact matching (Iacus et al., 2012, 2011) has the same problem, since again, the distance metric is pre-defined rather than learned. Recent integer-programming-based methods consider extreme matches for all possible reasonable distance metrics, but this is computationally expensive and relies on manual effort to create the ranges (Morucci et al., 2018; Noor-E-Alam and Rudin, 2015); in contrast, we use machine learning to create a single good match assignment.

In the framework of almost-exact matching (Wang et al., 2017), each matched group contains units that are close on covariates that are important for predicting outcomes. For example, Coarsened Exact Matching (Iacus et al., 2012, 2011) is almost-exact if one were to use an oracle (should one ever become available) that bins covariates according to importance for estimating causal effects. DAME’s predecessor, the FLAME algorithm (Wang et al., 2017), is an almost-exact matching method that adapts the distance metric to the data using machine learning. It starts by matching “identical twins,” and proceeds by eliminating less important covariates one by one, attempting to match individuals on the largest set of covariates that produce valid matched groups. FLAME can handle huge datasets, even datasets that are too large to fit in memory, and scales well with the number of covariates, but removing covariates in exactly one order (rather than all possible orders as in DAME) means that many high-quality matches will be missed.

DAME tends to match on more covariates than FLAME; the distances between matched units are smaller in DAME than in FLAME, so its matches are of distinctly higher quality. This has implications for missing data, where DAME can find matched groups that FLAME cannot.

3. ALMOST MATCHING EXACTLY (AME) FRAMEWORK

Consider a dataframe D = [X, Y, T] where $X \in \{0, 1, \dots, k\}^{n \times p}$, $Y \in \mathbb{R}^{n}$ and $T \in \{0, 1\}^{n}$ respectively denote the categorical covariates for all units, the outcome vector and the treatment indicator (1 for treated, 0 for control). The j-th covariate of unit i is denoted $X_{ij} \in \{0, 1, \dots, k\}$. Notation $x_i \in \{0, 1, \dots, k\}^{p}$ indicates the covariates for the i-th unit, and $T_i \in \{0, 1\}$ is an indicator for whether or not unit i is treated.

Throughout, we make the SUTVA and ignorability assumptions (Rubin, 1980). The goal is to match treatment and control units on as many relevant covariates as possible. The relevance of covariate j is denoted by wj ≥ 0 and is determined using a hold-out training set. The weights wj can either be fixed beforehand or adjusted dynamically inside the algorithm (see Full-AME in Section 5).

For now, assuming that we have a fixed nonnegative weight wj for each covariate j, we would like to find a match for each treatment unit t that matches at least one control unit on as many relevant covariates as possible. Thus we consider the following problem:

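Almost Matching Exactly with Fixed Weights (AME): For each treatment unit t,

$$\theta_t^* \in \arg\max_{\theta \in \{0,1\}^p} \theta^\top w \quad \text{subject to} \quad \exists\, \ell \text{ with } T_\ell = 0 \text{ and } x_\ell \circ \theta = x_t \circ \theta,$$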

where ○ denotes the Hadamard (elementwise) product. The solution θt* to the AME problem is an indicator of the optimal set of covariates for the matched group of treatment unit t. The constraint says that the optimal matched group contains at least one control unit. When the solution of the AME problem is the same for multiple treatment units, they form a single matched group. For treatment unit t, the main matched group for t contains all units ℓ such that xt ○ θt* = xℓ ○ θt*. If any unit ℓ (either control or treatment) within t’s main matched group has its own different main matched group, then t’s matched group is an auxiliary matched group for ℓ. In this case, ℓ could have been matched to other units on more covariates than it was matched to t. Estimation of CATE for a unit should always be done on the main matched group for that unit.

The formulation of the AME and main matched group is symmetric for control units. There are two straightforward (but inefficient) approaches to solving the AME problem for all units.

AME Solution 1 (quadratic in n, linear in p): Brute force pairwise comparison of treatment points to control points. (Detailed in the appendix.)

AME Solution 2 (order n log n, exponential in p): Brute force iteration over all 2ᵖ subsets of the p covariates. (Detailed in the appendix.)

If n is in the millions, the first solution, or any simple variation of it, is practically infeasible. A straightforward implementation of the second solution is also inefficient. However, a monotonicity property (downward closure) allows us to prune the search space so that the second solution can be modified to be completely practical. The DAME algorithm does not enumerate all θ’s; monotonicity reduces the number of θ’s it considers.

Proposition 3.1. (Monotonicity of θ* in AME solutions) Fix treatment unit t. Consider feasible θ, meaning ∃ℓ with Tℓ = 0 and xℓ ○ θ = xt ○ θ. Then,

Any feasible θ′ such that θ′ ≤ θ elementwise will have θ′ᵀw ≤ θᵀw.

Consequently, consider feasible vectors θ and θ′. Define θ̃ as the elementwise min(θ, θ′). Then θ̃ᵀw ≤ θᵀw and θ̃ᵀw ≤ θ′ᵀw.

These follow from the fact that the elements of θ are binary and the elements of w are non-negative. The first property means that if we have found a feasible θ, we do not need to consider any θ′ ≤ θ elementwise (that is, any subset of the covariates selected by θ) as a possible solution of the AME for unit t. Thus, the DAME algorithm starts from θ being all 1’s (consider all covariates). It systematically sets one element of θ to zero at a time, then two, then three, ordered according to the values of θᵀw. The second property implies that we must evaluate both θ and θ′ as possible AME solutions before evaluating θ̃. Conversely, a new subset of variables defined by θ̃ cannot be considered unless all of its supersets have been considered. These two properties form the basis of the DAME algorithm.

The algorithm must be stopped early to avoid creating low-quality matches. A useful stopping criterion is to stop once the weighted sum θᵀw of the covariates used for matching becomes too low (for example, lower than a prespecified percentage of the total sum of weights ‖w‖₁).

Note that matching does not produce estimates; it produces a partition of the covariate space, based on which we can estimate CATEs. Within each main matched group, we use the difference between the average outcome of the treated units and the average outcome of the control units as an estimate of the CATE value, given the covariate values for that group. Smoothing the CATE estimates could be useful after matching.
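The difference-in-means estimate within one main matched group can be sketched as follows; the function name `group_cate` and the toy outcomes are hypothetical, and no smoothing is applied:

```python
from statistics import mean


def group_cate(outcomes, treated):
    """Difference-in-means CATE estimate for one main matched group.

    outcomes[i] is the outcome of the i-th unit in the group and treated[i]
    its treatment indicator (1 = treated, 0 = control).
    """
    y_t = [y for y, t in zip(outcomes, treated) if t == 1]
    y_c = [y for y, t in zip(outcomes, treated) if t == 0]
    if not y_t or not y_c:
        raise ValueError("a valid matched group needs treated and control units")
    return mean(y_t) - mean(y_c)


# Hypothetical group: two treated and three control units.
print(group_cate([5.0, 7.0, 2.0, 3.0, 4.0], [1, 1, 0, 0, 0]))  # 3.0
```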

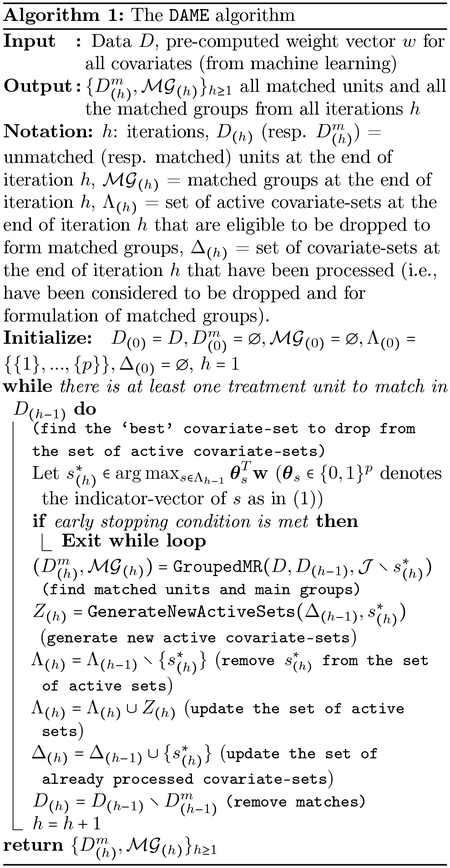

4. DYNAMIC ALMOST MATCHING EXACTLY (DAME)

We call a covariate-set any set of covariates. We denote by 𝒥 the original set of all covariates from the input dataset, where p = |𝒥|. When we drop a set of covariates s, it means we will match on 𝒥 \ s. For any covariate-set s, we associate an indicator-vector θs ∈ {0, 1}ᵖ defined as follows:

$$\theta_{s,i} = \begin{cases} 1 & \text{if } i \notin s, \\ 0 & \text{otherwise,} \end{cases} \qquad (1)$$

that is, the value is 1 if the covariate is not in s, implying that it is being used for matching.

Algorithm 1 gives the pseudocode of the DAME algorithm. It uses the monotonicity property stated in Proposition 3.1 and ideas from the apriori algorithm for association rule mining (Agrawal and Srikant, 1994). Instead of looping over all possible 2ᵖ vectors to solve the AME, it considers a covariate-set s for being dropped only if θs satisfies the monotonicity property of Proposition 3.1. For example, if {1} has been considered for being dropped to form matched groups, it would not process {1, 2, 3} next, because the monotonicity property requires {1, 2}, {1, 3}, and {2, 3} to have been considered previously for being dropped.

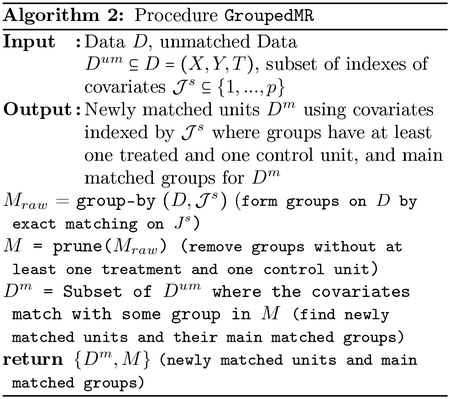

The DAME algorithm uses the GroupedMR (Grouped Matching with Replacement) subroutine given in Algorithm 2 to form all valid main matched groups having at least one treated and one control unit. GroupedMR takes a given subset of covariates and finds all subsets of treatment and control units that have identical values of those covariates. We use an efficient implementation of the group-by operation in the algorithm from Wang et al. (2017) that uses bit-vectors. To keep track of main matched groups, GroupedMR takes the entire set of units D as well as the set of unmatched units from the previous iteration D(h−1) as input along with the covariate-set to match on in this iteration. Instead of matching only the unmatched units in D(h−1) using the group-by procedure, it matches all units in D to allow for matching with replacement as in the AME objective. It keeps track of the main matched groups for the unmatched units D(h−1).
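The group-by step can be sketched in Python with NumPy. This is only an illustration of the idea, assuming covariates coded 0, …, k so that each unit's selected values can be packed into a single integer key (a base-(k + 1) number). It is not the bit-vector implementation of Wang et al. (2017), and it omits GroupedMR's bookkeeping of which units were still unmatched after the previous iteration. The name `grouped_mr` and its signature are hypothetical:

```python
import numpy as np


def grouped_mr(X, T, theta, n_levels):
    """Group-by on the covariates selected by theta (sketch of GroupedMR's core).

    Units with identical values on the selected covariates share an integer
    key; a group is valid if it has at least one treated and one control unit.
    All units are grouped, so matching is with replacement.
    Returns {key: array of unit indices} for the valid groups only.
    """
    X = np.asarray(X, dtype=np.int64)
    T = np.asarray(T)
    cols = np.flatnonzero(theta)
    # key_i = sum_j X[i, cols[j]] * n_levels**j  (a base-n_levels number)
    powers = n_levels ** np.arange(len(cols), dtype=np.int64)
    keys = X[:, cols] @ powers
    groups = {}
    for key in np.unique(keys):
        members = np.flatnonzero(keys == key)
        if T[members].min() == 0 and T[members].max() == 1:
            groups[int(key)] = members
    return groups


X = [[0, 1, 1], [0, 1, 0], [1, 0, 1], [1, 0, 1], [0, 0, 0]]
T = [1, 0, 1, 0, 1]
# Matching on covariates 0 and 1: valid groups {2, 3} and {0, 1};
# unit 4 has no control partner on these covariates.
print(grouped_mr(X, T, theta=[1, 1, 0], n_levels=2))
```

For large p the packed key can overflow a 64-bit integer; keying a dictionary on the tuple of selected values avoids this at some cost in speed.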

DAME keeps track of two sets of covariate-sets: (1) The set of processed sets Δ contains the covariate-sets whose main matched groups (if any exist) have already been formed. That is, Δ contains s if matches have been constructed on the covariates not in s by calling the GroupedMR procedure. (2) The set of active sets Λ contains the covariate-sets s that are eligible to be dropped according to Proposition 3.1. For any iteration h, Λ(h) ∩ Δ(h) = ∅, i.e., the sets are disjoint, where Λ(h), Δ(h) denote the states of Λ, Δ at the end of iteration h. Due to the monotonicity property stated in Proposition 3.1, if s ∈ Λ(h), then each proper subset r ⊂ s belonged to Λ(h′) in an earlier iteration h′ < h. Once an active set s ∈ Λ(h−1) is chosen as the optimal subset to drop in iteration h, s is excluded from Λ(h) (it is no longer active) and is included in Δ(h) as a processed set. In that sense, the active sets are generated and included in Λ(h) in a hierarchical manner similar to the apriori algorithm. A set s is included in Λ(h) only if all of its proper subsets of one less size, r ⊂ s with |r| = |s| − 1, have been processed.

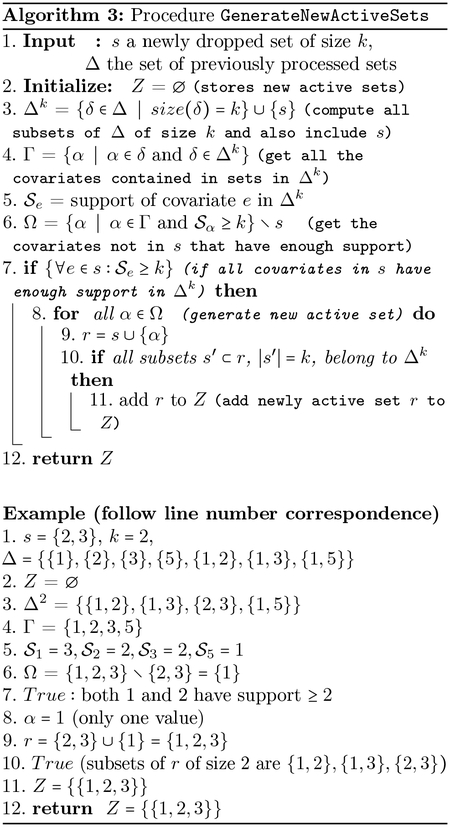

The procedure GenerateNewActiveSets gives an efficient implementation of the generation of new active sets in each iteration of DAME; it takes the currently processed sets Δ = Δ(h−1) and a newly processed set s as input. Let |s| = k. In this procedure, Δk ⊂ Δ ∪ {s} denotes the set of all processed covariate-sets in Δ of size k, together with s. Inclusion of s in Δk may lead to generation of a new active set r of size k + 1 only if all of r’s subsets of size k (one less) have been previously processed. The new active sets triggered by inclusion of s in Δk would be supersets r of s of size k + 1 such that all subsets s′ ⊂ r of size |s′| = k belong to Δk. To generate such candidate supersets r, we could append to s each covariate appearing in some covariate-set in Δ, except those in s. However, this naive approach would iterate over many superfluous candidates for active sets. Instead, GenerateNewActiveSets safely prunes some candidates that cannot be valid active sets, using the support of each covariate e in Δk, which is the number of sets in Δk containing e. Indeed, for any covariate that is not frequent enough in Δk, the monotonicity property ensures that no such candidate containing that covariate can become active yet. The following proposition shows that this pruning step does not eliminate any valid active set (proof is in the appendix):

Proposition 4.1. If for a superset r of a newly processed set s where |s| = k and |r| = k + 1, all subsets s′ of r of size k have been processed (i.e. r is eligible to be active after s is processed), then r is included in the set Z returned by GenerateNewActiveSets.

The explicit verification step of whether all possible subsets of r of one less size belong to Δk is necessary, i.e., the above optimization only prunes some candidate sets that are guaranteed not to be active. For instance, consider s = {2, 3}, k = 2, and Δ2 = {{1, 2}, {1, 3}, {3, 5}, {5, 6}} ∪ {{2, 3}}. For the superset r = {2, 3, 5} of s, all of 2, 3, 5 have support of ≥ 2 in Δ2, but this r cannot become active yet, since the subset {2, 5} of r does not belong to Δ2.
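A minimal Python sketch of GenerateNewActiveSets, reconstructed from the description above: support counting over Δk, a minimum-support check on the covariates of s, and the explicit verification that every size-k subset of a candidate has been processed. The function name and the data representation (frozensets of covariate indices) are assumptions. On the example above it returns {1, 2, 3}, whose size-2 subsets are all in Δ2, and rejects {2, 3, 5}:

```python
from itertools import combinations


def generate_new_active_sets(processed, s):
    """Return the new active covariate-sets triggered by processing s.

    processed: collection of processed covariate-sets (Delta);
    s: the newly processed covariate-set.
    """
    s = frozenset(s)
    k = len(s)
    # Delta_k: processed sets of size k, plus s itself.
    delta_k = {frozenset(d) for d in processed if len(d) == k} | {s}
    # Support of covariate e = number of sets in Delta_k containing e.
    support = {}
    for d in delta_k:
        for e in d:
            support[e] = support.get(e, 0) + 1
    omega = {e for e, c in support.items() if c >= k}  # high-support covariates
    new_active = set()
    # Every covariate of s must itself have support >= k.
    if all(support[e] >= k for e in s):
        for e in omega - s:
            r = s | {e}
            # Explicit check: every size-k subset of r must already be processed.
            if all(frozenset(c) in delta_k for c in combinations(r, k)):
                new_active.add(r)
    return new_active


delta = {frozenset(d) for d in ({1, 2}, {1, 3}, {3, 5}, {5, 6})}
print(generate_new_active_sets(delta, {2, 3}))  # {frozenset({1, 2, 3})}
```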

Finally, the following theorem states the correctness of the DAME algorithm (proof is in the appendix).

Theorem 4.2. (Correctness) The DAME algorithm solves the AME problem.
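To make the overall loop concrete, here is a compact, hypothetical Python sketch of DAME for fixed weights. It keeps the processed sets Δ and active sets Λ as Python sets of frozensets, always drops the active set with the smallest total weight (largest θᵀw), and, for brevity, generates candidate supersets naively, without the support-based pruning of GenerateNewActiveSets (correct but slower, as noted above). Grouping uses a plain dictionary rather than bit-vectors, and there is no early stopping:

```python
from itertools import combinations


def dame_fixed_weights(X, T, w):
    """Sketch of DAME for the fixed-weight AME problem (pure Python).

    Returns {unit index: dropped covariate-set}; the unit's main matched group
    consists of the units sharing its values on every covariate not dropped.
    """
    p, n = len(w), len(X)
    main = {}

    def match_on_all_but(dropped):
        # Group all units (matching with replacement) on the kept covariates.
        keep = [j for j in range(p) if j not in dropped]
        groups = {}
        for i in range(n):
            groups.setdefault(tuple(X[i][j] for j in keep), []).append(i)
        for g in groups.values():
            if {T[i] for i in g} == {0, 1}:  # valid: treated and control present
                for i in g:
                    main.setdefault(i, dropped)  # first valid group is the main one

    match_on_all_but(frozenset())  # exact matching on all covariates
    active = {frozenset([j]) for j in range(p)}
    processed = set()
    while active and len(main) < n:
        # Drop the active set with the smallest total weight (largest theta^T w).
        s = min(active, key=lambda a: (sum(w[j] for j in a), sorted(a)))
        match_on_all_but(s)
        active.remove(s)
        processed.add(s)
        # Naive generation: s plus one covariate becomes active once all of
        # its size-|s| subsets have been processed.
        for e in set(range(p)) - s:
            r = s | {e}
            if r not in active and r not in processed and all(
                frozenset(c) in processed for c in combinations(r, len(s))
            ):
                active.add(r)
    return main


X = [[0, 1], [0, 0], [1, 1]]
T = [1, 0, 0]
print(dame_fixed_weights(X, T, w=[2, 1]))
# {0: frozenset({1}), 1: frozenset({1}), 2: frozenset({0})}
```

Consistent with Theorem 4.2, on small inputs the value θᵀw of each unit's main matched group can be checked against a brute-force pairwise comparison (AME Solution 1).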

Once the problem is solved, the main matched groups can be used to estimate treatment effects, by considering the difference in outcomes between treatment and control units in each group, and possibly smoothing the estimates from the matched groups to prevent overfitting of treatment effect estimates.

5. ALMOST MATCHING EXACTLY WITH ADAPTIVE WEIGHTS

We now generalize the AME framework so the weights are adjusted adaptively for each subset of variables. The weights are chosen using machine learning on a hold-out training set. Let us consider a trivial variation of the AME problem with fixed weights and then generalize it to handle adaptive weights.

Almost Matching Exactly with Fixed Weights, Revisited: We will use squared rewards this time. For a given treatment unit u with covariates xu, compute the following, which is the maximum sum of rewards we can attain for a valid matched group (that contains at least one control unit):

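$$\theta_u^* \in \arg\max_{\theta \in \{0,1\}^p} \theta^\top (w \circ w) \quad \text{subject to} \quad \exists\, \ell \text{ with } T_\ell = 0 \text{ and } x_\ell \circ \theta = x_u \circ \theta. \qquad (2)$$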

The solution to this is an indicator of the optimal set of covariates to match unit u on. For treatment unit u, again, the main matched group for u contains all units ℓ so that xu ○ θu* = xℓ ○ θu*. Now we provide the (more general) adaptive version of AME.

Full Almost Matching Exactly (Full-AME): Denote θ ∈ {0, 1}ᵖ as an indicator vector for a subset of covariates to match on. Define the matched group for unit u with respect to covariates θ as the units that match u exactly on the covariates θ:

$$\mathrm{MG}(\theta, u) = \{\ell : x_\ell \circ \theta = x_u \circ \theta\}. \qquad (3)$$

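The usefulness of a set of covariates θ is now determined by how well they can be used together to make out-of-sample predictions. Specifically, the prediction error PE(θ) is defined with respect to a class of functions $\mathcal{F}$ as

$$PE_{\mathcal{F}}(\theta) = \min_{f \in \mathcal{F}} \mathbb{E}\left[\left(f(x \circ \theta, T) - Y\right)^2\right], \qquad (4)$$

where the expectation is taken over X, T and Y. Its empirical counterpart is defined with respect to a separate random sample from the distribution, used as a training set $S^{tr}$, specifically:

$$\widehat{PE}_{\mathcal{F}}(\theta) = \min_{f \in \mathcal{F}} \sum_{i \in S^{tr}} \left(f(x_i \circ \theta, T_i) - y_i\right)^2. \qquad (5)$$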

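The training set is only used to calculate prediction error, not for matching. Using this, the best empirical prediction error we could hope to achieve for a nontrivial matched group containing treatment unit u uses the following covariates for matching:

$$\theta_u^* \in \arg\min_{\theta \in \{0,1\}^p} \widehat{PE}_{\mathcal{F}}(\theta) \quad \text{subject to} \quad \exists\, \ell \text{ with } T_\ell = 0 \text{ and } x_\ell \circ \theta = x_u \circ \theta.$$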

The main matched group for u is defined as the matched group of u with respect to θu*, that is, all units ℓ with xℓ ○ θu* = xu ○ θu*. The goal of the Full-AME problem is to find the main matched group for all units u.

The class of functions $\mathcal{F}$ can include nonlinear functions. We can use variable importance measures for prediction with $\mathcal{F}$, such as permutation importance (also called model reliance), to determine each variable’s weight. If $\mathcal{F}$ consists of linear models, the weight wj for feature j would be the absolute value of feature j’s coefficient.

The Full-AME problem reduces to the fixed-squared-weights version under specific conditions, for example when the class $\mathcal{F}$ consists of a single linear function f with fixed coefficients (w, w_T), namely f(x ○ θ, T) = (w ○ θ)ᵀ(x ○ θ) + w_T T, where w is the ground-truth coefficient vector that generates y, and the prediction error is determined by the sum of weights of the covariates selected by the feature-selector vector θ. This reduction is discussed formally by Wang et al. (2017).

In order to solve Full-AME, a step is needed in Algorithm 1 at the top of the while loop that updates the weights for each covariate-set we could choose at that iteration. In particular, we let

$$s^{(h)} \in \arg\min_{s \in \Lambda^{(h-1)}} \widehat{PE}_{\mathcal{F}}(\theta_s),$$

where Λ(h−1) is the set of active covariate-sets, θs is the indicator vector of the covariates not in s, and the prediction error is computed over the training set with respect to a pre-specified class of models, $\mathcal{F}$. In the implementation in this paper we consider linear functions fit separately on the treated and the control units in the training set using ridge regression (that is, we add a ridge penalty to Eq (5)).
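A sketch of this prediction-error computation with NumPy, assuming squared-error loss, a closed-form ridge fit with an unpenalized intercept, and an arbitrary penalty `lam` (its value is not stated here). Separate models are fit on the treated and control training units, as described above, and their squared errors are summed. The helper names are hypothetical:

```python
import numpy as np


def ridge_sse(X, y, lam):
    """Training sum of squared errors of a ridge fit (intercept not penalized)."""
    A = np.column_stack([np.ones(len(y)), X])
    penalty = lam * np.eye(A.shape[1])
    penalty[0, 0] = 0.0  # do not shrink the intercept
    coef = np.linalg.solve(A.T @ A + penalty, A.T @ y)
    resid = y - A @ coef
    return float(resid @ resid)


def prediction_error(X, T, y, theta, lam=0.1):
    """Empirical PE of the covariates selected by theta: separate ridge models
    on treated and control training units, squared errors summed.
    Assumes both arms are present in the training set."""
    X = np.asarray(X, dtype=float)[:, np.flatnonzero(theta)]
    T, y = np.asarray(T), np.asarray(y, dtype=float)
    return sum(ridge_sse(X[T == arm], y[T == arm], lam) for arm in (0, 1))


def best_set_to_drop(active, X, T, y, p):
    """Among candidate covariate-sets to drop, pick the one whose remaining
    covariates give the lowest training-set prediction error."""
    def pe_without(s):
        theta = [0 if j in s else 1 for j in range(p)]
        return prediction_error(X, T, y, theta)
    return min(active, key=pe_without)
```

In this sketch, dropping a covariate that drives the outcome sharply raises the prediction error, so `best_set_to_drop` prefers to drop covariates that carry little predictive signal.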

5.1. Early Stopping of DAME

It is important that DAME be stopped early when the quality of the matches it produces falls. As covariates are dropped, the prediction error should never increase too far above its original value using all the covariates. This ensures the quality of every matched group: the covariates θ for every matched group thus obey

$$PE(\theta) \le (1 + \epsilon)\, PE(\mathbf{1}_p),$$

where $\mathbf{1}_p$ is the all-ones vector (all covariates used) and the choice of ϵ (perhaps 5%) determines stopping. As such, the while loop in Algorithm 1 should check not only whether there are more units to match, but also whether the prediction error has increased too much.

5.2. Hybrid FLAME-DAME

The DAME algorithm solves the Full-AME problem, whereas FLAME (Wang et al., 2017) approximates its solution. This is because FLAME uses backwards feature selection, whereas DAME calculates the solution without approximation. For problems with many features, we can use FLAME to remove the less relevant features, and then switch to DAME when we start to remove some of the more influential features. This hybrid algorithm scales substantially better, possibly without any noticeable loss in the quality of matches.

Matching-after-learning-to-stretch (MALTS) (Parikh et al., 2018) has been combined with FLAME and DAME to handle mixed real and categorical covariates.

5.3. Other Estimands

While CATEs are the most granular estimands, aggregate estimands such as the Average Treatment Effect (ATE) and the Average Treatment Effect on the Treated (ATT) may be of interest. Since DAME matches with replacement, standard techniques for estimation after matching with replacement (e.g., frequency weights) should be used (Stuart, 2010; Abadie et al., 2004).
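One simple way to aggregate, sketched below, assumes that each treated unit's CATE is estimated from its own main matched group and averages those estimates over the treated units; because matching is with replacement, a control unit may contribute to several groups. This is an illustrative sketch with hypothetical names and data, not the paper's estimator:

```python
from statistics import mean


def att_from_main_groups(main_group, T, y):
    """Average, over treated units, of the difference-in-means CATE estimate
    from each treated unit's main matched group.

    main_group[i] lists the indices of the units in unit i's main matched
    group; a unit may appear in several groups (matching with replacement).
    """
    def cate(group):
        y_t = [y[i] for i in group if T[i] == 1]
        y_c = [y[i] for i in group if T[i] == 0]
        return mean(y_t) - mean(y_c)

    return mean(cate(main_group[i]) for i in range(len(T)) if T[i] == 1)


# Hypothetical toy data: units 0 and 1 are treated; control unit 2 is reused.
T = [1, 1, 0, 0]
y = [5.0, 9.0, 2.0, 4.0]
main_group = {0: [0, 2], 1: [1, 2, 3], 2: [0, 2], 3: [1, 3]}
print(att_from_main_groups(main_group, T, y))  # 4.5
```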

6. SIMULATIONS

We present results under several data generating processes. We show that DAME produces higher quality matches than popular matching methods such as 1-PSNNM (propensity score nearest neighbor matching) and Mahalanobis distance nearest neighbor matching, and better treatment effect estimates than black box machine learning methods such as Causal Forest (which is not a matching method, and is not interpretable). The ‘MatchIt’ R-package (Ho et al., 2011) was used to perform 1-PSNNM and Mahalanobis distance nearest neighbor matching (‘Mahalanobis’). For Causal Forest, we used the ‘grf’ R-package (Athey et al., 2019). DAME also improves over FLAME (Wang et al., 2017) with regard to the quality of matches. Other matching methods (optmatch, cardinality matching) do not scale to large problems and were therefore omitted.

Throughout this section, the outcome is generated with

$$y = \sum_{i=1}^{p} \alpha_i x_i + T \sum_{i=1}^{p} \beta_i x_i + T \cdot U \sum_{i,\gamma:\ \gamma > i} x_i x_\gamma,$$

where T ∈ {0, 1} is the binary treatment indicator. This generation process includes a baseline linear effect, a linear treatment effect, and a quadratic (nonlinear) treatment effect. We vary the distribution of covariates, the coefficients (α’s, β’s, U), and the fraction of treated units. We report conditional average treatment effects on the treated.
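The outcome model can be written as a short Python function; the name `outcome` and the toy values are hypothetical. The treatment effect for a unit is the part multiplied by T, namely Σi βi xi + U Σi<γ xi xγ:

```python
def outcome(x, T, alpha, beta, U):
    """y = sum_i alpha_i x_i + T * sum_i beta_i x_i + T * U * sum_{i<g} x_i x_g."""
    p = len(x)
    baseline = sum(a * xi for a, xi in zip(alpha, x))          # baseline linear effect
    linear_te = sum(b * xi for b, xi in zip(beta, x))          # linear treatment effect
    quad_te = sum(x[i] * x[g] for i in range(p) for g in range(i + 1, p))  # pairwise terms
    return baseline + T * linear_te + T * U * quad_te


x = [1, 1, 0]
print(outcome(x, 1, alpha=[1, 2, 3], beta=[0.5, 0.5, 0.5], U=2))  # 6.0
print(outcome(x, 0, alpha=[1, 2, 3], beta=[0.5, 0.5, 0.5], U=2))  # 3
```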

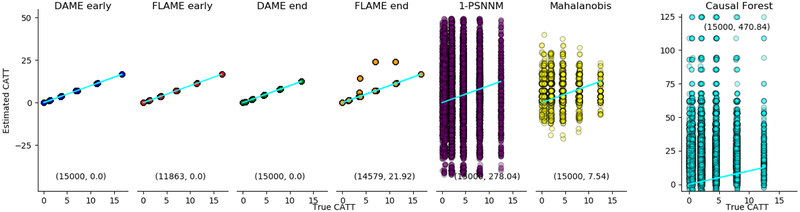

6.1. Presence of Irrelevant Covariates

A basic sanity check for matching algorithms is how sensitive they are to irrelevant covariates. To that end, we run experiments in which a majority of the covariates are irrelevant to the outcome. For important covariates 1 ≤ i ≤ 5, let αi ~ N(10s, 1) with s ~ Uniform{−1, 1}, βi ~ N(1.5, 0.15), and xi ~ Bernoulli(0.5). For unimportant covariates 5 < i ≤ 15, xi ~ Bernoulli(0.1) in the control group and xi ~ Bernoulli(0.9) in the treatment group, so there is little overlap between treatment and control distributions. This simulation generates 15000 control units, 15000 treatment units, 5 important covariates and 10 irrelevant covariates. Results: In Figure 1, DAME (even with early stopping) runs to the end and matches all units because the stopping criterion is never met. In this figure, DAME finds all high-quality matches even after important covariates are dropped. In contrast, FLAME achieves the optimal result before dropping any important covariates and generates some poor matches after dropping important covariates. However, even FLAME’s worst-case scenario is better than the comparative methods, all of which perform poorly in the presence of irrelevant covariates. Causal Forest is especially ill-suited for this case.
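A sketch of this covariate-generating process in NumPy. It assumes that the control units come first in the arrays, that s is drawn independently for each important covariate, and that the second parameter of N(·, ·) is a standard deviation; the function name and seed are hypothetical. Outcomes would then follow the formula above, with α and β set to zero for the irrelevant covariates:

```python
import numpy as np


def simulate_irrelevant(n_control=15000, n_treated=15000, seed=0):
    """Covariates and coefficients for the irrelevant-covariates experiment.

    Columns 0-4 are the important covariates, columns 5-14 the irrelevant ones.
    """
    rng = np.random.default_rng(seed)
    T = np.r_[np.zeros(n_control, dtype=int), np.ones(n_treated, dtype=int)]
    n = T.size
    # Important covariates: Bernoulli(0.5) in both groups.
    X_imp = rng.binomial(1, 0.5, size=(n, 5))
    # Irrelevant covariates: Bernoulli(0.1) for controls, Bernoulli(0.9) for treated.
    p_irr = np.where(T == 1, 0.9, 0.1)[:, None]
    X_irr = (rng.random((n, 10)) < p_irr).astype(int)
    X = np.hstack([X_imp, X_irr])
    # alpha_i ~ N(10 s, 1) with s uniform on {-1, 1}; beta_i ~ N(1.5, 0.15)
    # (second argument treated as a standard deviation, an assumption).
    s = rng.choice([-1, 1], size=5)
    alpha = rng.normal(10 * s, 1.0)
    beta = rng.normal(1.5, 0.15, size=5)
    return X, T, alpha, beta
```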

Figure 1:

Estimated CATT vs. True CATT (Conditional Average Treatment Effect on the Treated). DAME and FLAME perfectly estimate the CATTs before dropping important covariates. DAME matches all units without dropping important covariates, but FLAME needs to stop early in order to avoid poor matches. All other methods are sensitive to irrelevant covariates and give poor estimates. The two numbers on each plot are the number of matched units and MSE.

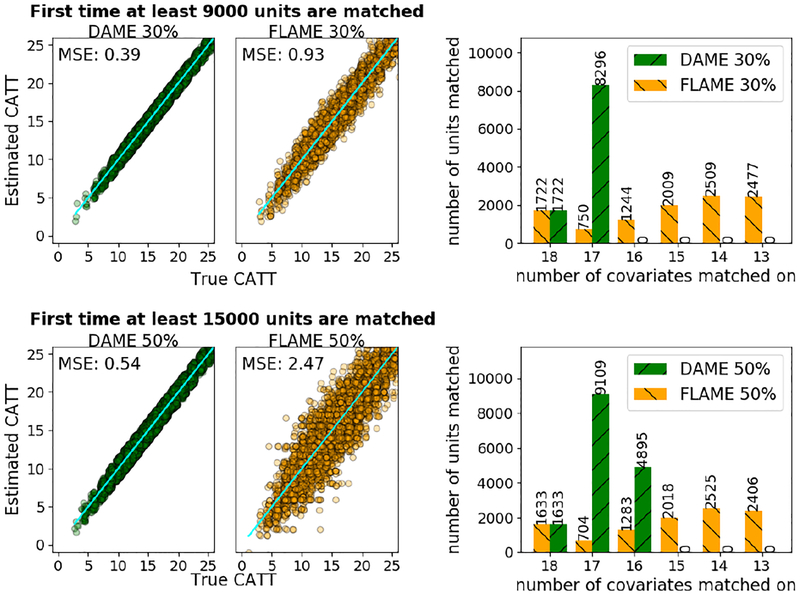

6.2. Exponentially Decaying Covariates

An advantage of DAME over FLAME is that it produces more high quality matches before resorting to lower quality matches. To test this, we considered covariates of decaying importance, letting the α parameters decrease exponentially as . We evaluated performance when ≈ 30% and 50% of units were matched. Results: As Figure 2 shows, DAME matches on more covariates, yielding better estimates than FLAME.

Figure 2:

DAME makes higher quality matches early on. Rows correspond to stopping thresholds (top row 30%, bottom row 50%). DAME matches on more covariates than FLAME, yielding lower MSE from matched groups.

6.3. Imbalanced Data

Imbalance is common in observational studies: there are often substantially more control than treatment units. The data for this experiment has covariates with decreasing importance. A fixed batch of 2000 treatment and 40000 control units was generated. We sampled from the controls to construct different imbalance ratios: 40000 in the most imbalanced case (Ratio 1), then 20000 (Ratio 2), and 10000 (Ratio 3). Results: Table 1 reveals that FLAME and DAME outperform the nearest neighbor matching methods. DAME is distinctly better than FLAME. Additionally, DAME has an average of 4 covariates not matched on, with ≈ 84% of units matched on all but 2 covariates, whereas FLAME averages 7 covariates not matched on and only ≈ 25% of units matched on all but 2 covariates. Detailed results are in the longer version (Liu et al., 2018).

Table 1:

MSE for different imbalance ratios

| Method | Ratio 1 | Ratio 2 | Ratio 3 |
|---|---|---|---|
| DAME | 0.47 | 0.83 | 1.39 |
| FLAME | 0.52 | 0.88 | 1.55 |
| Mahalanobis | 26.04 | 48.65 | 64.80 |
| 1-PSNNM | 246.08 | 304.06 | 278.87 |

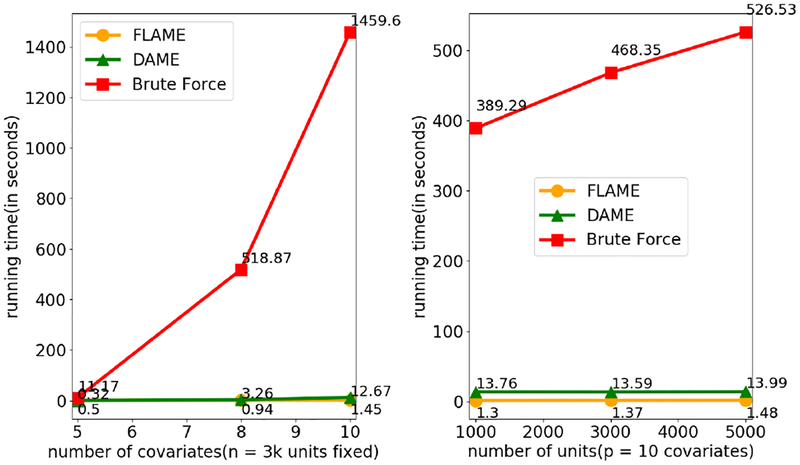

6.4. Run Time Evaluation

We compare the run time of DAME with a brute force solution (AME Solution 1 described in Section 3). All experiments were run on an Ubuntu 16.04.01 system with Intel Core i7 Processor (Cores: 8, Speed: 3.6 GHz), 8 GB RAM. Results: As shown in Figure 3, FLAME provides the best run-time performance because it incrementally reduces the number of covariates, rather than solving Full-AME. On the other hand, as shown in the previous simulations, DAME produces high quality matches that the other methods do not. It solves the AME much faster than brute force. The run time for DAME could be further optimized through simple parallelization of the checking of active sets.

Figure 3:

Run-time comparison between DAME, FLAME, and brute force. Left: varying number of units. Right: varying number of covariates.

6.5. Missing Data

Missing data problems are complicated in matching. Normally one would impute missing values, but matches become less interpretable when matching on imputed values. If we match only on the raw values, DAME has an advantage over FLAME because it can simply match on as many non-missing relevant covariates as possible. When data are imputed, DAME still maintains an advantage over FLAME, possibly because it can match on more raw covariate values and fewer imputed values. Details of the experiments are in the longer version (Liu et al., 2018).

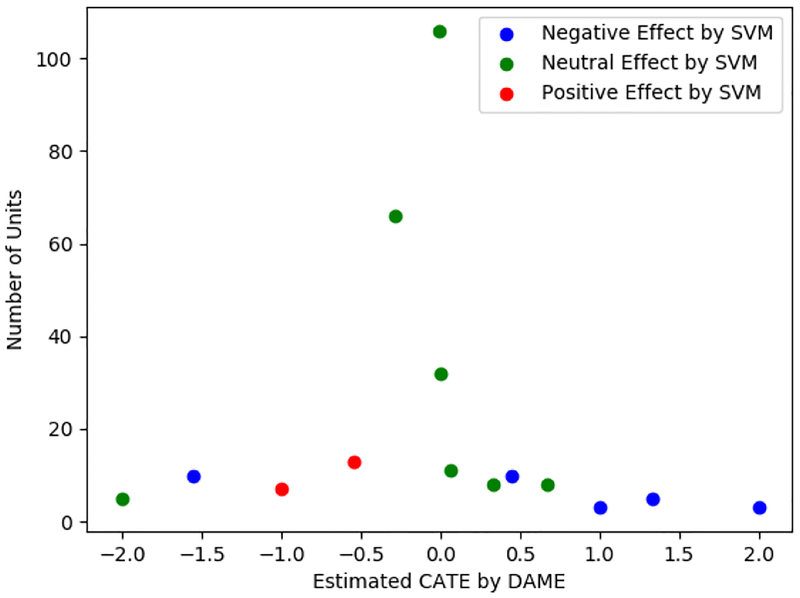

7. BREAKING THE CYCLE OF DRUGS AND CRIME

Breaking The Cycle (BTC) (Harrell et al., 2006) is a social program conducted in several U.S. states designed to reduce criminal involvement and substance abuse among current offenders. We study the effect of participating in the program on reducing non-drug future arrest rates. The details of the data and our results are in Appendix D. We compared the CATE predictions of DAME and FLAME to double-check the performance of a black box support vector machine (SVM) approach that predicts a positive, neutral, or negative treatment effect for each individual. DAME and the SVM approach agreed on most of the exactly matched units. All of the units for which exact matching predicted approximately zero treatment effect have a “neutral” treatment effect label predicted by the SVM. Most other predictions were similar between the two methods, and there were only a few disagreements. Upon further investigation, we found that the differences are due to the fact that DAME is a matching method and not a modeling method; the estimates could be smoothed afterwards if desired to create a model. In particular, one of the two disagreeing predictions between the SVM and DAME has a positive CATE prediction, but it was closer in Hamming distance to units predicted to have negative treatment effects. With smoothing, its predicted CATE may also have become negative.

8. CONCLUSIONS

DAME produces matches that are of high quality. Its estimates of individualized treatment effects are as good as those of the (black box) machine learning methods we have tried. Other methods can match individuals together whose covariates look nothing alike, whereas the matches from DAME are interpretable and meaningful, because they are almost exact; units are matched on covariates that together can be used to predict outcomes accurately. Code is publicly available at: https://github.com/almost-matching-exactly/DAME.

Acknowledgements:

This work was supported in part by NIH award 1R01EB025021–01, NSF awards IIS-1552538 and IIS-1703431, a DARPA award under the L2M program, and a Duke University Energy Initiative Energy Research Seed Fund (ERSF).

Appendix

A. Naïve AME solutions

In this section we present the complete outline of the two straightforward (but inefficient) solutions to the AME problem (described in Section 3) for all units.

AME Solution 1 (quadratic in n, linear in p): For all treatment units t, we (i) iterate over all control units c, (ii) find the vector θtc ∈ {0, 1}ᵖ where θtcj = 1 if t and c match on covariate j and 0 otherwise, (iii) find the control unit(s) with the highest value of θtcᵀw, and (iv) return them as the main matched group for the treatment unit t. Repeat the same procedure for each control unit c. Note that the CATE for each unit is computed based on its main matched group, which means that the outcome of each unit can contribute to the computation of CATEs for multiple units. This algorithm is polynomial in both n and p; however, the quadratic time complexity in n makes this approach impractical for large datasets (for instance, when we have more than a million units with half being treatment units).
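Solution 1 can be sketched in a few lines of Python (hypothetical names, pure Python, no attempt at efficiency). For each unit it scans all units of the opposite treatment status, scores the agreement vector θ by θᵀw, and keeps the units attaining the highest score:

```python
def ame_pairwise(X, T, w):
    """Brute-force AME, O(n^2 p): for each unit, the best weighted agreement
    with any unit of opposite treatment status, and the units attaining it."""
    n, p = len(X), len(w)
    result = {}
    for i in range(n):
        best_val, best_units = -1, []
        for c in range(n):
            if T[c] == T[i]:
                continue
            # theta_ic[j] = 1 if units i and c agree on covariate j
            theta = [int(X[i][j] == X[c][j]) for j in range(p)]
            val = sum(t * wj for t, wj in zip(theta, w))
            if val > best_val:
                best_val, best_units = val, [c]
            elif val == best_val:
                best_units.append(c)
        result[i] = (best_val, best_units)
    return result


X = [[0, 1], [0, 0], [1, 1]]
T = [1, 0, 0]
print(ame_pairwise(X, T, w=[2, 1]))  # {0: (2, [1]), 1: (2, [0]), 2: (1, [0])}
```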

AME Solution 2 (order $n \log n$, exponential in p): This approach solves the AME problem simultaneously for all treatment and control units for a fixed weight vector w. We (i) enumerate every $\theta \in \{0,1\}^p$ (which serves as an indicator for a subset of covariates), (ii) order the θ's in decreasing order of $\theta^T w$, (iii) call GroupedMR for every θ in that predetermined order, and (iv) the first time a unit is matched during a GroupedMR call, mark it with a ‘done’ flag, record its main matched group, and compute its CATE from that group. As before, each unit's outcome is used to estimate CATEs for every auxiliary group that it is a member of. Although this approach can use an efficient ‘group by’ function (e.g., an implementation using bit-vectors or database/SQL queries as discussed by Wang et al. (2017)) that runs in $O(n \log n)$ time by sorting the units, iterating over all $2^p$ possible vectors $\theta \in \{0,1\}^p$ makes it impractical (exponential in p).
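The sketch below enumerates all $2^p$ vectors θ in decreasing order of $\theta^T w$ and uses a plain hash-based group-by as a hypothetical stand-in for GroupedMR (the sort-based and SQL implementations mentioned above are not reproduced). It records each unit's main matched group the first time the unit falls in a group containing both treated and control units; all units stay in the pool, so already-matched units can still appear in later auxiliary groups. Ties in $\theta^T w$ are broken by enumeration order, which is an arbitrary choice.

```python
import itertools
from collections import defaultdict

import numpy as np


def ame_solution_2(X, T, w):
    """Naive AME Solution 2 (sketch): exponential in p.

    Returns a dict mapping unit index -> (theta, members of its main matched group).
    """
    X, T, w = np.asarray(X), np.asarray(T), np.asarray(w, dtype=float)
    n, p = X.shape
    # (i) enumerate every theta in {0,1}^p, (ii) order by theta^T w, largest first
    thetas = sorted(itertools.product((0, 1), repeat=p),
                    key=lambda th: -np.dot(th, w))
    main_group = {}
    for theta in thetas:
        cols = [j for j in range(p) if theta[j] == 1]
        # (iii) group units by their values on the selected covariates
        groups = defaultdict(list)
        for i in range(n):
            groups[tuple(X[i, cols])].append(i)
        for members in groups.values():
            if {int(T[i]) for i in members} != {0, 1}:
                continue  # a valid matched group needs treated and control units
            # (iv) units matched for the first time ('done') get this main group
            for i in members:
                if i not in main_group:
                    main_group[i] = (theta, members)
        if len(main_group) == n:
            break  # every unit already has a main matched group
    return main_group
```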

B. Proof of Proposition 4.1

Proposition 4.1 If for a superset r of a newly processed set s where |s| = k and |r| = k + 1, all subsets s′ of r of size k have been processed (i.e. r is eligible to be active after s is processed), then r is included in the set Z returned by GenerateNewActiveSets.

Proof. Suppose all subsets of r of size k have already been processed and belong to $\Delta_k$. Let f be the covariate in $r \setminus s$. Clearly, f appears in $\Delta_k$, since at least one subset $s' \neq s$ of r of size k contains f, and $s' \in \Delta_k$. Further, all covariates in r, including f and those in s, have support at least k in $\Delta_k$. To see this, note that r has k + 1 subsets of size k, and each covariate in r appears in exactly k of them. Hence $f \in \Omega$, the set of high-support covariates. Further, the ‘if’ condition checking minimum support for all covariates in s is also satisfied. Finally, the last ‘if’ condition, which eliminates false positives, is satisfied by assumption (all subsets of r have already been processed). Therefore r is included in the set Z returned by the procedure. □
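To make the checks used in this proof concrete, here is a hypothetical Python sketch of GenerateNewActiveSets reconstructed only from the steps the proof mentions: support counts over $\Delta_k$, the high-support set Ω, the minimum-support check on s, and the final all-subsets check. It is not the authors' pseudocode; covariate sets are represented as frozensets and the function name is illustrative.

```python
from itertools import combinations


def generate_new_active_sets(s, processed_k):
    """Hypothetical sketch of GenerateNewActiveSets.

    s: newly processed covariate set (frozenset) of size k
    processed_k: set of processed size-k covariate sets (Delta_k), including s
    Returns Z, the new active sets of size k + 1 that contain s.
    """
    k = len(s)
    # support of a covariate = number of processed size-k sets containing it
    support = {}
    for t in processed_k:
        for cov in t:
            support[cov] = support.get(cov, 0) + 1
    # Omega: high-support covariates (support at least k)
    omega = {cov for cov, cnt in support.items() if cnt >= k}
    Z = set()
    # every covariate in s must itself have support at least k
    if not all(support.get(cov, 0) >= k for cov in s):
        return Z
    for f in omega - s:
        r = s | {f}
        # eliminate false positives: all size-k subsets of r must be processed
        if all(frozenset(sub) in processed_k for sub in combinations(r, k)):
            Z.add(r)
    return Z
```

For example, with $\Delta_2 = \{\{1,2\},\{1,3\},\{2,3\},\{1,4\}\}$ and newly processed $s = \{2,3\}$, only $\{1,2,3\}$ passes every check.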

C. Proof of Theorem 4.2

Theorem 4.2 (Correctness) The DAME algorithm solves the AME problem.

Proof. Consider any treatment unit t. Let s be the set of covariates in its main matched group returned by DAME (the while loop in DAME runs as long as there is a treated unit and the stopping criteria have not been met, and GroupedMR returns the main matched group for every unit when it is matched for the first time). Let $\theta_s$ be the indicator vector of s (see Eq. 1). Since GroupedMR returns a main matched group only if it is a valid matched group containing at least one treated and one control unit (see Algorithm 2), and since all units in the matched group on s have the same values of the covariates selected by $\theta_s$, there exists a unit ℓ with $T_\ell = 0$ and $x_\ell \circ \theta_s = x_t \circ \theta_s$.

Hence it remains to show that the covariate set s in the main matched group for t corresponds to the maximum weight $\theta^T w$ over all θ for which there is a valid matched group. Assume that there exists another covariate set r such that $\theta_r^T w > \theta_s^T w$, there exists a unit ℓ′ with $T_{\ell'} = 0$ and $x_{\ell'} \circ \theta_r = x_t \circ \theta_r$, and $\theta_r^T w$ gives the maximum weight over all such r. Then:

(i) r cannot be a (strict) subset of s, since DAME ensures that all subsets are processed before a superset is processed, to satisfy the downward closure property in Proposition 3.1.

(ii) r cannot be a (strict) superset of s. Recall that $\theta_{s,j}$ is 1 for covariates j that are not in s (and analogously for r). If r were a strict superset of s, we would have $\theta_r^T w \le \theta_s^T w$ for non-negative weights, which violates the assumption that $\theta_r^T w > \theta_s^T w$.

(iii) Given (i) and (ii), r and s must be incomparable (there exist covariates in both $r \setminus s$ and $s \setminus r$). Suppose the active set s was chosen in iteration h. If r had been processed in an earlier iteration h′ < h, then, since r forms a valid matched group for t, it would have given the main matched group for t, contradicting the assumption that DAME chose s rather than r to form the main matched group for t.

Next, we argue that r must be active at the start of iteration h, and will be chosen as the best covariate set in iteration h, leading to a contradiction.

Note that the DAME algorithm starts with all singleton sets as active sets, $\Lambda^{(0)} = \{\{1\}, \ldots, \{p\}\}$. Consider any singleton subset $r_0 \subseteq r$ (comprising a single covariate in r). Due to the downward closure property in Proposition 3.1, $\theta_{r_0}^T w \ge \theta_r^T w > \theta_s^T w$. Hence all of the singleton subsets of r are processed in earlier iterations h′ < h and belong to the set of processed covariate sets $\Delta^{(h-1)}$.

Repeating the above argument, consider any proper subset $r' \subset r$. It holds that $\theta_{r'}^T w \ge \theta_r^T w > \theta_s^T w$. All such subsets r′ are therefore processed in earlier iterations h′ < h, starting with the singleton subsets of r. In particular, all subsets of size |r| − 1 belong to $\Delta^{(h-1)}$. As soon as the last of those subsets is processed, GenerateNewActiveSets includes r in the set of active sets, in some iteration h′ < h. Hence if r is not processed in an earlier iteration, it must be active at the start of iteration h, leading to a contradiction.

Hence for every treatment unit t, the covariate set r giving the maximum value of $\theta_r^T w$ is used to form the main matched group of t, which shows the correctness of the DAME algorithm. □
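The monotonicity fact behind both the superset step and the downward closure argument in this proof can be written out explicitly. Using the convention stated in the proof that $\theta_{s,j} = 1$ exactly for covariates j not in s, for any covariate sets $s \subseteq r$ and non-negative weights w:

$$
\theta_s^T w - \theta_r^T w = \sum_{j \notin s} w_j - \sum_{j \notin r} w_j = \sum_{j \in r \setminus s} w_j \;\ge\; 0 .
$$

So processing (dropping) additional covariates can never increase the weight: a strict superset r of s satisfies $\theta_r^T w \le \theta_s^T w$, and every subset $r' \subseteq r$ satisfies $\theta_{r'}^T w \ge \theta_r^T w$.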

D. Details of Breaking the Cycle of Drugs and Crime Study

D.1. Details About Survey

A survey was conducted in Alabama, Florida, and Washington regarding the program's effectiveness, with high-quality data for over 380 individuals. These data (and this type of data generally) can be a powerful tool in the war against opioids, and our ability to draw interpretable, trustworthy conclusions from them depends on our ability to construct high-quality matches. For the survey, participants were chosen to receive screening shortly after arrest and to participate in a drug intervention under supervision. Similar defendants from before the start of the BTC program were selected as the control group. The features are listed in Table 2.

Table 2:

Features for BTC data.

| No. | Feature |
|---|---|
| 1 | Live with anyone with an alcohol problem |
| 2 | Have trouble understanding in life |
| 3 | Live with anyone using non prescription drugs |
| 4 | Have problem getting along with father in life |
| 5 | Have an automobile |
| 6 | Have drivers license |
| 7 | Have serious depression or anxiety in past 30 days |
| 8 | Have serious anxiety in life |
| 9 | SSI benefit last 6 months |
| 10 | Have serious depression in life |

D.2. Order of Dropping Covariates

For both DAME and FLAME, we used ridge regression as the machine learning method for the Full-AME problem, calculating variable importance as the difference in mean squared error before and after dropping the variable. The order in which DAME and FLAME process covariates can differ. Table 3 shows the order in which the dynamic versions of the two algorithms process the covariates. The first covariate that the two algorithms process is the same, “Have problem getting along with father in life,” but the two diverge afterwards. In the second round, DAME processes the covariate “Have an automobile.” In the same round, FLAME instead processes “Have serious depression or anxiety in past 30 days,” which is now dropped along with “Have problem getting along with father in life.” Importantly, as early as the second and third iterations, DAME constructs matched groups by dropping only subsets of what FLAME drops.
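As an illustration of the drop-one importance described above, the following sketch fits closed-form ridge regression on a training split and reports how much held-out mean squared error increases when each covariate is removed. The single outcome model, the penalty `alpha=1.0`, and the function names are assumptions; the exact model specification behind Table 3 (for instance, whether separate models are fit per treatment arm) is not given here.

```python
import numpy as np


def ridge_fit_predict(X_train, y_train, X_test, alpha):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = X_train.mean(axis=0), y_train.mean()
    Xc = X_train - x_mean
    beta = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(Xc.shape[1]),
                           Xc.T @ (y_train - y_mean))
    return (X_test - x_mean) @ beta + y_mean


def drop_importance(X_train, y_train, X_hold, y_hold, alpha=1.0):
    """Increase in held-out MSE when each covariate is dropped (needs p >= 2)."""
    p = X_train.shape[1]
    base_pred = ridge_fit_predict(X_train, y_train, X_hold, alpha)
    base_mse = np.mean((base_pred - y_hold) ** 2)
    importance = np.empty(p)
    for j in range(p):
        keep = np.arange(p) != j  # all covariates except j
        pred = ridge_fit_predict(X_train[:, keep], y_train, X_hold[:, keep], alpha)
        importance[j] = np.mean((pred - y_hold) ** 2) - base_mse
    return importance
```

Covariates with small importance contribute little to $\theta^T w$, so they are the natural candidates to be processed (dropped) first.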

Table 3:

Order in which features were processed by DAME and FLAME. The feature numbers correspond to those in Table 2. The number in parentheses is the number of units matched for the first time in that round. Before any covariates are dropped, 287 individuals are matched on all features, which is 75% of the data.

| Round | DAME | FLAME |
|---|---|---|
| 1st | 4: problem with father (15 new units matched) | 4 (7 units) |
| 2nd | 5: have an automobile (9 units) | 4,7 (25 units) |
| 3rd | 7: have serious depression (24 units) | 4,7,9 (9 units) |
| 4th | 4,7 (3 units) | 4,7,9,1 (7 units) |
| 5th | 5,7 (1 unit) | 4,7,9,1,8 (12 units) |
| 6th | 4,5 (7 units) | 4,7,9,1,8,10 (6 units) |
| 7th | 4,5,7 (0 units) | 4,7,9,1,8,10,6 (5 units) |
| 8th | 9 (8 units) | 4,7,9,1,8,10,6,5 (11 units) |
| 9th | 4,9 (0 units) | 4,7,9,1,8,10,6,5,2 (5 units) |
| ⋮ | | |
| 196th | 1,2,4,5 (1 unit) | |

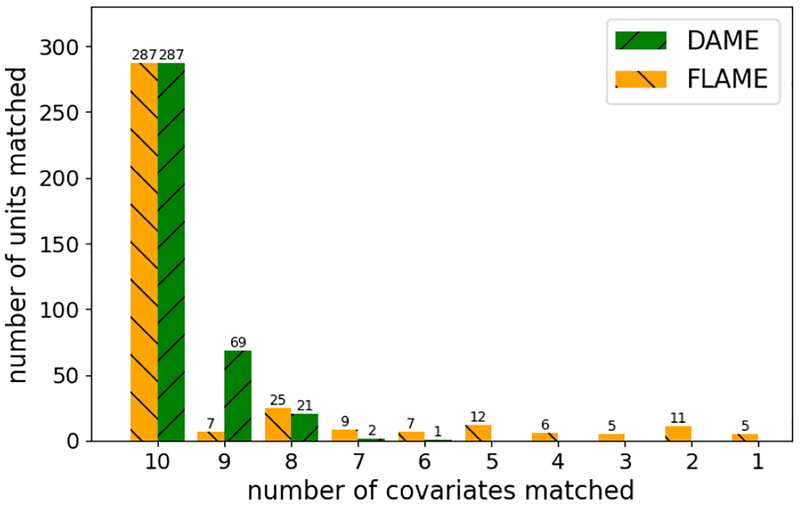

D.3. Match Quality for FLAME and DAME

We compare the quality of matches in the BTC data between FLAME and DAME in terms of the number of covariates used to match within the groups. Many units matched exactly on all covariates and were thus matched by both algorithms in the first round; in fact, 75% of the data are matched on all covariates. This is important because exact matching alone yields the highest-quality CATE estimates for most of the data; had we used a classical propensity score matching technique, we might not have noticed this aspect of the data.

For the remaining units, which do not have exact matches on all covariates, DAME matches on more covariates than FLAME. Figure 4 shows that DAME matched many more units than FLAME on 9 of the 10 variables; FLAME cannot match the same units on that many variables.

Figure 4:

Number Matched: number of units matched on each number of covariates, BTC data.

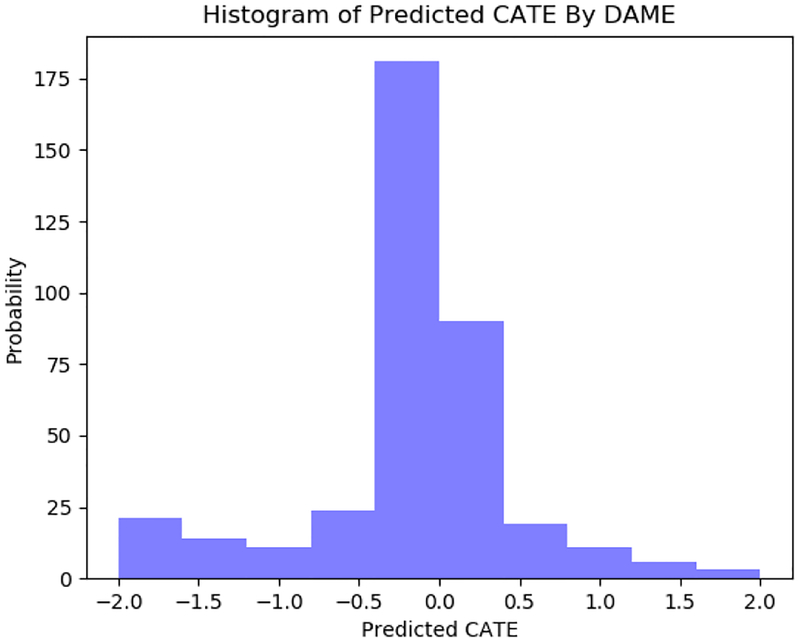

D.4. CATEs from BTC analysis

We plot a histogram of the estimated CATEs for BTC in Figure 5. The program does not seem to provide uniform protection from future arrests, but does seem to protect some individuals. The majority of people are estimated to experience little to no effect from the program.

Figure 5:

Histogram of CATEs estimated by DAME. A negative CATE means that BTC was estimated to reduce crime for that individual.

D.5. A Comparison of DAME with the SVM-Based Minimax Surrogate Loss Method

We can use DAME as a tool to check the performance of a black box machine learning approach. We chose a recent method that predicts whether treatment effects are positive, negative, or neutral using a support vector machine formulation (Goh and Rudin, 2018). We ran DAME on the BTC dataset and saved the CATE for each matched treatment and control unit. Units with a positive CATE (outcome of the treatment unit minus outcome of the control unit) are considered to have a negative treatment effect, meaning that the program increased the probability of crime. Analogously, units with a negative CATE have a positive predicted treatment effect. We also implemented the SVM approach and recorded a prediction of positive, negative, or neutral treatment effect for each unit. Figure 6 plots the CATEs for all units matched exactly by DAME and colors them according to the output of the SVM. Because the distribution of some covariates is unbalanced, the number of matched groups is small, with most units belonging to large groups.

Figure 6:

Comparison between DAME and SVM-based method

Figure 6 shows that DAME and the SVM approach agree on the direction of the treatment effect for most of the matched units: most positive CATEs correspond to negative treatment effects from the SVM. Only two points show a mismatch between DAME and the SVM: the leftmost green (neutral) labeled point and the leftmost blue (negative) labeled point.

The simplest explanation for the discrepancy between the two methods is that DAME is a matching method, not a statistical model, and so does not smooth CATEs. CATEs are sometimes computed from a very small number of units, so it is possible that the SVM smoothed the treatment effect estimates, producing a different predicted treatment effect for some of the units. To evaluate this hypothesis, we computed the Hamming distances between the units of each group on which DAME and the SVM disagree and the units in other groups.

In Figure 6, the units within the leftmost blue (negative) labeled matched group were much closer to other blue (negative) labeled matched groups than to green (neutral) or red (positive) labeled groups, suggesting that smoothing the estimates after running DAME would likely make them consistent with the SVM results. The units within the leftmost green (neutral) labeled matched group are not closer to other green (neutral) labeled matched groups than to groups of other colors, suggesting that neither the SVM nor DAME has enough information to properly identify the causal effect for this group. We similarly investigated the blue (negative) labeled group with CATE = 0.5; again, the covariate values of its units were closer in Hamming distance to other blue (negative) labeled groups than to other points. Thus, additional smoothing of the CATEs from the matched groups could likely yield estimated positive and negative treatment effects similar to those of the SVM.
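The sign convention and the Hamming-distance check described in this section can be sketched as follows. Averaging pairwise Hamming distances per SVM label, the tolerance `tol` used to call a CATE neutral, and the function names are illustrative assumptions; the text does not specify how distances to other groups were aggregated.

```python
import numpy as np


def cate_to_label(cate, tol=1e-8):
    """Map a CATE (treated minus control outcome) to an effect label.

    A positive CATE means the program increased arrests: a negative effect.
    """
    if cate > tol:
        return "negative"
    if cate < -tol:
        return "positive"
    return "neutral"


def nearest_label(group_X, other_X, other_labels):
    """Label whose units are closest, on average, in Hamming distance to group_X."""
    group_X, other_X = np.asarray(group_X), np.asarray(other_X)
    other_labels = np.asarray(other_labels)
    # dist[a, b] = number of covariates on which group unit a and other unit b differ
    dist = (group_X[:, None, :] != other_X[None, :, :]).sum(axis=2)
    mean_by_label = {lab: dist[:, other_labels == lab].mean()
                     for lab in np.unique(other_labels)}
    return min(mean_by_label, key=mean_by_label.get), mean_by_label
```

If a disagreeing group's nearest label matches the SVM's label rather than the label implied by its own CATE, that is consistent with the smoothing explanation given above.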

References

- Abadie A, Drukker D, Herr JL, and Imbens GW. Implementing matching estimators for average treatment effects in Stata. The Stata Journal, 4(3):290–311, 2004.

- Agrawal R and Srikant R. Fast algorithms for mining association rules in large databases. In Proceedings of the 20th International Conference on Very Large Data Bases, VLDB ‘94, pages 487–499, 1994.

- Athey S, Tibshirani J, and Wager S. Generalized random forests. The Annals of Statistics, 47(2):1148–1178, 2019.

- Belloni A, Chernozhukov V, and Hansen C. Inference on treatment effects after selection among high-dimensional controls. The Review of Economic Studies, 81(2):608–650, 2014.

- Cochran WG and Rubin DB. Controlling bias in observational studies: A review. Sankhyā: The Indian Journal of Statistics, Series A, pages 417–446, 1973.

- Farrell MH. Robust inference on average treatment effects with possibly more covariates than observations. Journal of Econometrics, 189(1):1–23, 2015.

- Goh S and Rudin C. A minimax surrogate loss approach to conditional difference estimation. arXiv e-prints: arXiv:1803.03769, March 2018.

- Harrell AV, Marlowe D, and Merrill J. Breaking the cycle of drugs and crime in Birmingham, Alabama, Jacksonville, Florida, and Tacoma, Washington, 1997–2001. Ann Arbor, MI: Inter-university Consortium for Political and Social Research, 2006-03-30.

- Ho D, Imai K, King G, and Stuart E. MatchIt: Nonparametric preprocessing for parametric causal inference. Journal of Statistical Software, 42(8):1–28, 2011.

- Iacus SM, King G, and Porro G. Multivariate matching methods that are monotonic imbalance bounding. Journal of the American Statistical Association, 106(493):345–361, 2011.

- Iacus SM, King G, and Porro G. Causal inference without balance checking: Coarsened exact matching. Political Analysis, 20:1–24, 2012.

- Liu Y, Dieng A, Roy S, Rudin C, and Volfovsky A. Interpretable almost-exact matching for causal inference. arXiv e-prints: arXiv:1806.06802, June 2018.

- Morucci M, Noor-E-Alam M, and Rudin C. Hypothesis tests that are robust to choice of matching method. arXiv e-prints: arXiv:1812.02227, December 2018.

- Noor-E-Alam M and Rudin C. Robust nonparametric testing for causal inference in observational studies. Optimization Online, December 2015.

- Parikh H, Rudin C, and Volfovsky A. MALTS: Matching After Learning to Stretch. arXiv e-prints: arXiv:1811.07415, November 2018.

- Rassen JA and Schneeweiss S. Using high-dimensional propensity scores to automate confounding control in a distributed medical product safety surveillance system. Pharmacoepidemiology and Drug Safety, 21(S1):41–49, 2012.

- Rosenbaum PR. Imposing minimax and quantile constraints on optimal matching in observational studies. Journal of Computational and Graphical Statistics, 26(1), 2016.

- Rubin DB. Matching to remove bias in observational studies. Biometrics, 29(1):159–183, March 1973a.

- Rubin DB. The use of matched sampling and regression adjustment to remove bias in observational studies. Biometrics, 29(1):185–203, March 1973b.

- Rubin DB. Multivariate matching methods that are equal percent bias reducing, I: Some examples. Biometrics, 32(1):109–120, March 1976.

- Rubin DB. Randomization analysis of experimental data: The Fisher randomization test comment. Journal of the American Statistical Association, 75(371):591–593, 1980.

- Schneeweiss S, Rassen JA, Glynn RJ, Avorn J, Mogun H, and Brookhart MA. High-dimensional propensity score adjustment in studies of treatment effects using health care claims data. Epidemiology (Cambridge, Mass.), 20(4):512, 2009.

- Stuart EA. Matching methods for causal inference: A review and a look forward. Statistical Science: a Review Journal of the Institute of Mathematical Statistics, 25(1):1, 2010.

- Wang T, Roy S, Rudin C, and Volfovsky A. FLAME: A fast large-scale almost matching exactly approach to causal inference. arXiv e-prints: arXiv:1707.06315, July 2017.