Summary:

In the analysis of next-generation sequencing technology, massive discrete data are generated from short read counts with varying biological coverage. Conducting conditional hypothesis testing such as Fisher’s Exact Test at every genomic region of interest thus leads to a heterogeneous multiple discrete testing problem. However, most existing multiple testing procedures for controlling the false discovery rate (FDR) assume that test statistics are continuous and become conservative for discrete tests. To overcome the conservativeness, in this article, we propose a novel multiple testing procedure for better FDR control on heterogeneous discrete tests. Our procedure makes decisions based on the marginal critical function (MCF) of randomized tests, which enables achieving a powerful and non-randomized multiple testing procedure. We provide upper bounds of the positive FDR (pFDR) and the positive false non-discovery rate (pFNR) corresponding to our procedure. We also prove that the set of detections made by our method contains every detection made by a naive application of the widely-used q-value method. We further demonstrate the improvement of our method over other existing multiple testing procedures by simulations and a real example of differentially methylated region (DMR) detection using whole-genome bisulfite sequencing (WGBS) data.

Keywords: Differentially methylated regions, Discrete p-value, Marginal Critical Function, Multiple testing, Randomized test, Whole-genome bisulfite sequencing

1. Introduction

Recent developments in next-generation sequencing (NGS) technology have revolutionized genomic research with its unprecedented throughput, scalability and speed. NGS data are typically presented in the form of short read counts and invoke large-scale multiple discrete testing. For example, in a whole-genome bisulfite sequencing (WGBS) experiment, input DNA is treated with sodium bisulfite and sequenced to help understand the DNA methylation pattern. Along the genome, the CpG sites are regions of DNA where a cytosine nucleotide occurs next to a guanine nucleotide in the linear sequence of bases along its length, and a comparative WGBS experiment gives methylated and unmethylated counts at each CpG site for the control and treatment samples. To identify the differentially methylated regions (DMRs) based on these discrete counts, a rudimentary method is to conduct Fisher’s Exact Test (FET) at each CpG site; a DMR is then detected as a genomic region of adjacent CpG sites that are differentially methylated (Challen et al., 2012; Gu et al., 2010). FET as a conditional test depends on its marginal counts, i.e. the sums of read counts in the two samples, which vary greatly across CpG sites. Thus testing at all the CpG sites leads to a heterogeneous multiple discrete testing problem, that is, tests with different discrete null distributions. However, most existing multiple testing methods are designed for continuous tests and lack sufficient power when applied to discrete tests (Pounds and Cheng, 2006). In this paper, we study how to overcome the conservativeness for general heterogeneous multiple discrete testing problems.

When testing multiple hypotheses, the false discovery rate (FDR) proposed by Benjamini and Hochberg (1995) is widely adopted to measure the overall error rate. Suppose that we test m hypotheses and reject R of them, out of which V tests are erroneously rejected. The false discovery proportion (FDP) is then the proportion of false rejections among all the rejections, i.e. $\mathrm{FDP} = V/\max(R, 1)$. The FDR is defined as the expectation of the FDP, i.e. $\mathrm{FDR} = E[V/\max(R, 1)]$. A slight variant of the FDR, the positive FDR (pFDR) (Storey, 2002), is defined as the conditional FDR given at least one rejection, i.e. $\mathrm{pFDR} = E[V/R \mid R > 0]$. The pFDR can be interpreted as a Bayesian Type-I error rate under a mixture model involving i.i.d. p-values (Storey, 2003). Storey (2002) also provided estimates of pFDR under the above mixture model for a single-step procedure that are related to the empirical Bayes FDR of Efron et al. (2001). When the number of tests is large, Pr(R > 0) is close to 1 and the pFDR serves as a good approximation to the FDR.
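As a small illustration of the error measure just described, the following sketch computes the FDP of a single set of decisions, with the convention that the FDP is zero when nothing is rejected. The function name and the boolean-list inputs are assumptions made for this example only; averaging the returned values over repeated simulated datasets would give a Monte Carlo estimate of the FDR.

```python
def false_discovery_proportion(rejected, is_null):
    """Return V / max(R, 1) for one set of decisions.

    rejected[i] is True if test i was rejected;
    is_null[i] is True if the ith null hypothesis is actually true.
    """
    if len(rejected) != len(is_null):
        raise ValueError("inputs must have the same length")
    # R: total number of rejections
    r = sum(1 for rej in rejected if rej)
    # V: rejections of true null hypotheses (false discoveries)
    v = sum(1 for rej, null in zip(rejected, is_null) if rej and null)
    return v / max(r, 1)
```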

Over the past decade, a large number of multiple testing methods on controlling the FDR or pFDR have been proposed in the literature, such as Benjamini and Hochberg (1995); Efron and Tibshirani (2007); Pounds and Cheng (2004); Ruppert et al. (2007) and Storey (2002). Most of them assume that test statistics are continuously distributed and heavily rely on the resulting fact that under null hypotheses, the p-values follow Unif(0,1), i.e. the uniform distribution on the interval (0,1), for example, the BH algorithm (Benjamini and Hochberg, 1995) and the q-value method (Storey, 2003). However, when the continuity assumption no longer holds in discrete tests, ignoring the discreteness leads to FDR estimators with inflated standard error, conservativeness and lower detection power (Efron, 2008; Pounds and Cheng, 2006). The challenges imposed by discrete tests come from two major parts. The first challenge is that the p-values from discrete tests follow discrete distributions that are stochastically larger than the Unif(0,1) distribution, which leads to the conservativeness of directly applying conventional multiple testing methods. To alleviate the discreteness of p-values, Gilbert (2005) proposed a two-step procedure combining Tarone’s adjustment (Tarone, 1990) with the BH-algorithm, by first removing tests that can not be rejected even with their smallest possible p-values. Heller and Gur (2011) suggested applying the BH-algorithm to mid p-values (Lancaster, 1961) instead of the raw p-values, but this approach does not guarantee controlling the FDR at the nominal level. Habiger and Pena (2011) and Habiger (2015) proposed a unifying framework that applies traditional multiple testing methods such as the BH-algorithm or q-value method to the randomized p-values. It avoids the issue of discreteness since the randomized p-values are continuously distributed. However, due to its random decision, this approach can be difficult for practitioners to adopt. 
Habiger (2015) suggested using this approach as additional information for understanding the properties of usual multiple testing procedures. The second challenge is that discrete tests usually have different null distributions of p-values, because many discrete tests are conditional tests, whereas the p-values of continuous tests always follow Unif(0,1) under the null. To handle this heterogeneity, Chen and Doerge (2015) proposed grouping discrete tests into groups with similar null distributions of p-values, then controlling the FDR by a weighted FDR-control procedure proposed by Hu et al. (2010). We note that there are special cases where tests are highly homogeneous, i.e. the p-values have discrete but identical distributions, such as those induced by permutation tests. This paper does not target this scenario, and we refer readers to the novel class of FDR estimators proposed by Liang (2016).

In this paper, we propose a novel procedure for heterogeneous multiple discrete testing based on the marginal critical function (MCF) of randomized tests (Kulinskaya and Lewin, 2009). Our MCF-based method reduces the discreteness of each individual test by utilizing the idea of randomized tests, and also provides non-random decisions based on the ranked MCF values. The MCF of a randomized test is the conditional probability that a randomized p-value is less than a given threshold and can serve as a measure of evidence against the null hypothesis (Kulinskaya and Lewin, 2009). Compared to the approach in Habiger (2015), which relies on a single realization of randomized p-values, our MCF-based method utilizes more distributional information of the randomized p-values and is shown to result in a higher detection power and a more stable control of the FDR, i.e. a smaller standard deviation of the FDP. When tests are heterogeneous enough, specifically when Condition 3.1 in Section 3 is satisfied, we prove that the pFDR of our method is asymptotically controlled at the nominal level, and provide an asymptotic upper bound for the pFNR of our method. We also show empirically that our MCF-based method outperforms many other existing methods designed for multiple discrete testing.

The paper is organized as follows. We describe our MCF-based method in Section 2, with its theory given in Section 3. In Section 4, we evaluate our method based on various simulated datasets. Section 5 presents its application to DMR detection using WGBS data from NGS experiments. Section 6 contains concluding remarks and discussions. Proofs of the theorems and additional numerical results, algorithms, figures and tables are provided in the Supplementary Material.

2. Methods

Tocher (1950) showed that a single discrete test may not achieve an exact significance level because its raw p-value is not continuously distributed. He further suggested using an efficient randomized test strategy as a remedy. Specifically, consider a right-tailed test (left-tailed and two-tailed tests are handled similarly) with discrete test statistic T, whose observed value is denoted by t. Let P denote the raw p-value of this test (viewed as a random variable), and let its observed value be p = P(t) = Pr0(T ⩾ t), calculated under the null distribution of T. Since the support of P is discrete, the test cannot attain an arbitrary significance level α exactly (Tocher, 1950). This difficulty can be solved by the introduction of randomized tests. Given the observed test statistic t, the randomized p-value is defined as $\tilde{p} = \Pr_0(T > t) + U \cdot \Pr_0(T = t)$ for U ~ Unif(0,1). The randomizer U can be interpreted as an extra Bernoulli experiment with probability of rejection (α − Pr0(T > t))/Pr0(T = t) when T = t. Given p, let p− = Pr0(T > t) be the largest possible value less than p in the support of the raw p-value (Geyer and Meeden, 2005), then

$$\tilde{P} \mid P = p \sim \mathrm{Unif}(p^-, p). \qquad (1)$$

Marginally, when integrating out P, the randomized p-value $\tilde{P}$ follows the Unif(0,1) distribution under the null hypothesis, and the exact level-α test can be achieved. Next we generalize the idea of randomized tests to multiple testing.
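To make the construction concrete, here is a minimal sketch for a right-tailed exact Binomial test, written under the assumption that the null distribution is Bin(n, q0). It computes Pr0(T > t) and Pr0(T ⩾ t) and then draws one randomized p-value uniformly between them. The function names and the choice of the Binomial test are illustrative and are not taken from the paper.

```python
import math
import random


def binom_pmf(k, n, q):
    """Pr(T = k) for T ~ Bin(n, q)."""
    return math.comb(n, k) * q**k * (1 - q) ** (n - k)


def right_tail_p_values(t, n, q0):
    """Return (p_minus, p) = (Pr0(T > t), Pr0(T >= t)) under Bin(n, q0)."""
    p_minus = sum(binom_pmf(k, n, q0) for k in range(t + 1, n + 1))
    p = p_minus + binom_pmf(t, n, q0)
    return p_minus, p


def randomized_p_value(t, n, q0, rng):
    """Draw one randomized p-value, uniform on (p_minus, p) given t."""
    p_minus, p = right_tail_p_values(t, n, q0)
    u = rng.random()  # the randomizer U ~ Unif(0, 1)
    return p_minus + u * (p - p_minus)


if __name__ == "__main__":
    rng = random.Random(0)
    print(right_tail_p_values(8, 10, 0.5))
    print(randomized_p_value(8, 10, 0.5, rng))
```

Each call with a fresh random draw can give a different value for the same data, which is the source of the random decisions discussed in this section.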

Consider testing m hypotheses with discrete test statistics. Let P1, …, Pm be their corresponding raw p-values and $\tilde{P}_1, \ldots, \tilde{P}_m$ be their randomized p-values. To reduce the conservativeness caused by the discrete raw p-values, Habiger (2015) suggested utilizing the randomized p-values instead. First assume that, when integrating out Pi, the randomized p-values $\tilde{P}_i$, i = 1, 2, …, m, independently follow the two-component mixture model,

$$\tilde{P}_i \mid \Delta_i \sim (1 - \Delta_i)\,\mathrm{Unif}(0,1) + \Delta_i F_{1i}, \qquad (2)$$

where ∆i is the indicator that equals zero if the ith null hypothesis is true (true null) and equals one if it is false (true non-null), and F1i is the alternative distribution of $\tilde{P}_i$. Suppose that Pr(∆i = 0) = π0 and Pr(∆i = 1) = π1 = 1 − π0, where π0 and π1 are called the null proportion and non-null proportion, respectively.

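Under the above mixture model, Storey (2003) showed that the pFDR corresponding to a threshold λ on the randomized p-values is $\mathrm{pFDR}(\lambda) = \pi_0\lambda / \{\pi_0\lambda + \pi_1 F_1(\lambda)\}$, where $F_1(\lambda) = m^{-1}\sum_{i=1}^{m} F_{1i}(\lambda)$. Given a nominal FDR level 0 < α < 1, Habiger (2015) then defined

$$\lambda^*(\alpha) = \sup\left\{\lambda \in [0, 1] : \frac{\pi_0\lambda}{\pi_0\lambda + \pi_1 F_1(\lambda)} \le \alpha\right\}, \qquad (3)$$

and suggested rejecting the ith test if $\tilde{p}_i < \lambda^*(\alpha)$ (referred to as Habiger’s method hereafter). We write λ*(α) as λ* when there is no confusion. Habiger’s method is shown to control the FDR under the nominal level α (Habiger, 2015).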

Although it enjoys good theoretical properties, Habiger’s method is likely to be unattractive to practitioners because of its random decisions. To resolve this, we consider a related measure called the marginal critical function (MCF) of a randomized test (Kulinskaya and Lewin, 2009).

Definition 1: Suppose that p is the observed p-value from a discrete test and p− is the largest possible value less than p in the support of the p-value. If no such p− exists, let it be 0. For a given threshold λ ∈ (0, 1), the marginal critical function (MCF) of a randomized test is defined as

$$r(p, \lambda) = \begin{cases} 1, & p < \lambda, \\ (\lambda - p^-)/(p - p^-), & p^- < \lambda \le p, \\ 0, & \lambda \le p^-. \end{cases}$$

It follows from the conditional distribution of $\tilde{P}$ in (1) that $r(p, \lambda) = \Pr(\tilde{P} < \lambda \mid P = p)$. In other words, the MCF represents the conditional probability of a test being rejected by Habiger’s method. Therefore, the tests with larger MCF values are more likely to be true non-null and shall be rejected. For simplicity, we use ri to refer to the MCF r(pi, λ*) conditionally on pi.
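The MCF can be evaluated directly from the observed p-value and its left neighbour in the support. The sketch below assumes the reading used throughout this section: given p, the randomized p-value is uniform on (p−, p), so the MCF is the probability that such a uniform draw falls below λ. The function name `mcf` is an illustrative choice.

```python
def mcf(p, p_minus, lam):
    """Probability that a Unif(p_minus, p) randomized p-value falls below lam."""
    if not (0.0 <= p_minus < p <= 1.0):
        raise ValueError("need 0 <= p_minus < p <= 1")
    if p < lam:
        return 1.0  # the whole interval (p_minus, p) lies below lam
    if lam <= p_minus:
        return 0.0  # the whole interval lies at or above lam
    # lam cuts the interval: fraction of (p_minus, p) that lies below lam
    return (lam - p_minus) / (p - p_minus)


if __name__ == "__main__":
    # Right-tailed Bin(10, 0.5) test with t = 8: p = 56/1024, p_minus = 11/1024
    print(mcf(56 / 1024, 11 / 1024, 0.05))
```

In this example the raw p-value slightly exceeds 0.05, so a non-randomized test at level 0.05 would not reject, while the MCF is close to 0.9 and records that most of the randomized p-value's mass lies below the threshold.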

Define the random indicators $X_i = I(\tilde{P}_i < \lambda^*)$, i = 1, 2, …, m, then Habiger’s method rejects the ith test if Xi = 1. To avoid the random decisions in Habiger’s method caused by the random variables Xi, we make decisions relying on the expected value of each Xi, E(Xi|pi) = ri, which is fixed given the observed pi. We also reject the same proportion of tests as Habiger’s method to ensure that the FDR is controlled. Specifically, following the two-component model (2), given level λ*, the expected proportion of rejections by Habiger’s method is $u = \pi_0\lambda^* + \pi_1 F_1(\lambda^*)$. Define the (1 − u)-th quantile of the empirical distribution of the pooled MCFs {r1, …, rm} as

$$Q(1 - u) = \inf\{x : \hat{G}_m(x) \ge 1 - u\}, \qquad (4)$$

where $\hat{G}_m(x) = m^{-1}\sum_{i=1}^{m} I(r_i \le x)$ is the empirical cdf of the ri’s. We then reject the ith test if ri > Q(1 − u). When some tests are homogeneous and have the same observed p-values, there are ties in the MCF values and the proportion of rejections by our MCF-based method will be smaller than that of Habiger’s method. However, when the tests are not highly homogeneous, ties happen rarely and the difference in the proportions of rejections is small. Our simulation studies in Section 4 also show that even though our MCF-based method may result in a slightly smaller number of rejections, it often has higher detection power than Habiger’s method due to its usage of the more informative MCFs.

Our method is summarized in the following Algorithm 1.

In Step 1, common estimation methods for multiple continuous tests can be directly applied because the randomized p-values are continuously distributed (Nettleton et al., 2006; Pounds and Cheng, 2006; Storey, 2002). This is another advantage of our method as we avoid the complications of accounting for discreteness. To estimate π0, we generate B sets of samples of the randomized p-values, $\{\tilde{p}_1^{(b)}, \ldots, \tilde{p}_m^{(b)}\}$, b = 1, 2, …, B, and apply Storey’s estimator with threshold t to each set. We then estimate π0 by the average $\hat{\pi}_0 = B^{-1}\sum_{b=1}^{B} \#\{i : \tilde{p}_i^{(b)} > t\}/\{m(1 - t)\}$. Storey (2002) provided a bootstrap method to find the optimal threshold t in Storey’s estimator, but found that the difference between thresholds was not drastic, and that optimal performance in estimating the pFDR was nearly attained in almost all the simulated situations (Storey, 2002). In the scenario of multiple discrete testing, we also found that different thresholds t led to similarly good estimators on our simulated datasets (see details in Web Appendix D). Therefore in our work, we suggest setting t = 0.5 by convention. To estimate F(λ) = π0λ + π1F1(λ), which is the denominator in (3), we use the empirical distribution of the pooled sample of randomized p-values, $\{\tilde{p}_i^{(b)} : i = 1, \ldots, m;\ b = 1, \ldots, B\}$, i.e. $\hat{F}(\lambda) = (mB)^{-1}\sum_{b=1}^{B}\sum_{i=1}^{m} I(\tilde{p}_i^{(b)} \le \lambda)$. In practice, a reasonably large B will ensure accurate estimation, and we find B = 1000 often sufficient. In Step 2, there is generally no closed-form solution for λ*. However, following Genovese et al. (2006) we may assume that F1 is concave; then, based on Lemma 1 in Web Appendix E, the pFDR π0λ/F(λ) is non-decreasing in λ. This allows us to apply the bisection algorithm to find λ* (see Web Appendix F).
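The steps described in this section can be sketched end to end as follows. This is a reading of the text, not the authors' Algorithm 1: the function names, the default of 200 draws (smaller than the B = 1000 mentioned above, to keep the example fast), the cap of the π0 estimate at 1, and the bisection tolerance are all assumptions. Each input is a pair (p, p−) of an observed raw p-value and the next smaller value in its support.

```python
import bisect
import math
import random


def mcf(p, p_minus, lam):
    """Probability that a Unif(p_minus, p) draw falls below lam."""
    if p < lam:
        return 1.0
    if lam <= p_minus:
        return 0.0
    return (lam - p_minus) / (p - p_minus)


def mcf_procedure(pvals, alpha, n_draws=200, t=0.5, seed=0, tol=1e-6):
    """pvals: list of (p, p_minus). Returns (rejected, lam_star, pi0_hat)."""
    rng = random.Random(seed)
    m = len(pvals)

    # Step 1: estimate pi0 and F from repeated draws of randomized p-values.
    pooled, pi0_sum = [], 0.0
    for _ in range(n_draws):
        draw = [pm + rng.random() * (p - pm) for p, pm in pvals]
        pi0_sum += sum(1 for x in draw if x > t) / (m * (1.0 - t))
        pooled.extend(draw)
    pi0_hat = min(1.0, pi0_sum / n_draws)  # capping at 1 is an assumption
    pooled.sort()

    def f_hat(lam):
        # Empirical cdf of the pooled randomized p-values.
        return bisect.bisect_right(pooled, lam) / len(pooled)

    def pfdr_hat(lam):
        f = f_hat(lam)
        return pi0_hat * lam / f if f > 0 else math.inf

    # Step 2: bisection for the largest lam with estimated pFDR <= alpha,
    # relying on the estimated pFDR being non-decreasing in lam.
    if pfdr_hat(1.0) <= alpha:
        lam_star = 1.0
    else:
        lo, hi = 0.0, 1.0
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            if pfdr_hat(mid) <= alpha:
                lo = mid
            else:
                hi = mid
        lam_star = lo

    # Step 3: rank MCF values and reject those above the (1 - u)-th quantile.
    r = [mcf(p, pm, lam_star) for p, pm in pvals]
    u = f_hat(lam_star)
    k = max(math.ceil(m * (1.0 - u)) - 1, 0)
    cutoff = sorted(r)[k]
    rejected = [ri > cutoff for ri in r]
    return rejected, lam_star, pi0_hat
```

Because the rejection rule uses a strict inequality, tests tied at the cutoff value are not rejected, which matches the remark above that ties can make the number of rejections smaller than in Habiger’s method.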

3. Theoretical Results

In this section, we derive upper bounds for the asymptotic pFDR and pFNR corresponding to our MCF-based method. We also show that the set of detections made by our method contains every detection made by the q-value method.

3.1. Asymptotic upper bound of pFDR

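Let $\mathcal{S}_i$ be the discrete support of the raw p-value Pi, i = 1, 2, …, m. Similar to the two-component model (2) of $\tilde{P}_i$, we assume that the raw p-value Pi also follows a mixture model,

$$P_i \mid \Delta_i \sim (1 - \Delta_i) H_{0i} + \Delta_i H_{1i}, \qquad (5)$$

where ∆i is defined as in (2), H0i and H1i are the null and alternative cdf of Pi, respectively, and H0i(p) = p for any $p \in \mathcal{S}_i$ (Geyer and Meeden, 2005). Let h0i and h1i denote the pmf of H0i and of H1i, respectively. Given a threshold 0 < λ < 1, for the ith test, i = 1, …, m, let $a_{i\lambda} = \max\{p \in \mathcal{S}_i : p < \lambda\}$ and $b_{i\lambda} = \min\{p \in \mathcal{S}_i : p \ge \lambda\}$, i.e. aiλ < λ ⩽ biλ are the two consecutive values in $\mathcal{S}_i$ around λ. Notice that, in the special case that biλ is the smallest value in $\mathcal{S}_i$, we let aiλ be 0. By Definition 1, the MCF value ri = r(pi, λ) takes values in {0, wi, 1}, where $w_i = (\lambda - a_{i\lambda})/(b_{i\lambda} - a_{i\lambda})$, and follows a two-component model,

$$r_i \mid \Delta_i \sim (1 - \Delta_i) G_{0i} + \Delta_i G_{1i}, \qquad (6)$$

where the cdf’s Gji, j = 0, 1, are step functions with jumps at {0, wi, 1} and the corresponding step sizes, i.e. pmf values, are {1 − Hji(biλ), hji(biλ), Hji(aiλ)}. Marginally over m tests, the average null and alternative cdf of the MCF values are $\bar{G}_0 = m^{-1}\sum_{i=1}^{m} G_{0i}$ and $\bar{G}_1 = m^{-1}\sum_{i=1}^{m} G_{1i}$, which have jumps at {0, w1, …, wm, 1} with corresponding step sizes $\{m^{-1}\sum_{k=1}^{m}[1 - H_{jk}(b_{k\lambda})],\ m^{-1}h_{j1}(b_{1\lambda}), \ldots, m^{-1}h_{jm}(b_{m\lambda}),\ m^{-1}\sum_{k=1}^{m} H_{jk}(a_{k\lambda})\}$ for j = 0, 1. As the number of tests m → ∞, each step size at the wk’s goes to 0 as hjk(bkλ) is bounded by 1. When tests are highly heterogeneous, in the sense that there are not infinitely many wi’s having the same value and the wi’s spread well within (0,1), we make the following assumption to linearly approximate the steps in the average null and alternative cdfs.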

Condition 3.1: For any threshold λ ∈ (0, 1), and j = 0, 1, suppose there exist constants , such that as m → ∞, and . Let be a random variable with cdf

then as m → ∞ for j = 0, 1.

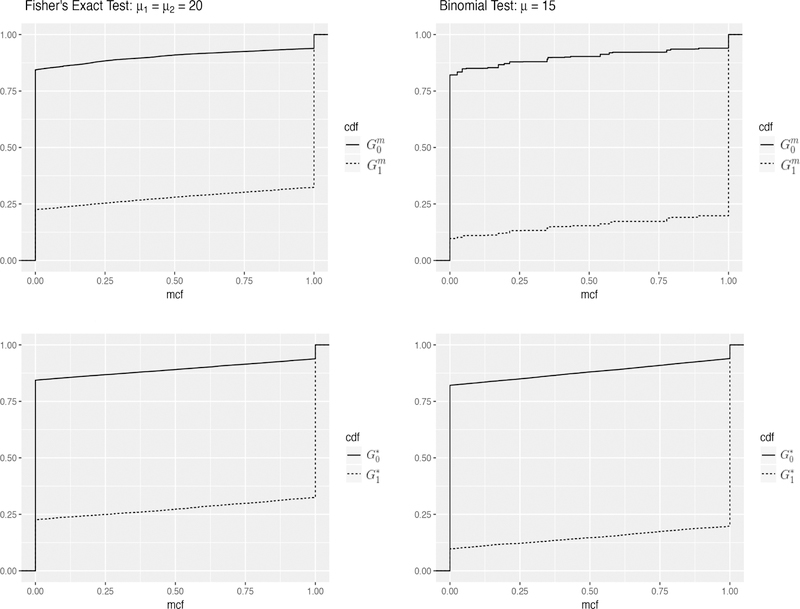

Condition 3.1 can be interpreted under a Bayesian hierarchical model setup. In discrete tests, for a given λ, the null and alternative distributions of the MCF value, (G0i, G1i), are usually determined by the configuration of the ith test, i = 1, …, m. For example, the configuration of a Binomial test includes the sample size and the probabilities of success under the null and alternative hypotheses. Following a Bayesian hierarchical model setup, when the number of tests is large, the test configurations can be considered as independently generated from an underlying mechanism (Allison et al., 2002; Catelan et al., 2010; Tang et al., 2007). Thus for j = 0, 1, Hji(aiλ) and Hji(biλ) are i.i.d. random variables, i = 1, …, m, and based on the law of large numbers (LLN), their means converge under mild conditions as m → ∞ (Lehmann, 2004). Condition 3.1 also assumes that this underlying mechanism generates highly heterogeneous tests so that the linear approximations of the average null and alternative cdfs of the MCF values hold. Figure 1 below shows these empirical average cdfs from simulated datasets under the two setups described in Section 4, together with their corresponding linear approximations defined in Condition 3.1.

Figure 1.

Empirical average null and alternative cdfs of the MCF values (top) and their corresponding linear approximations (bottom) described in Condition 3.1, under Setup 1 with µ1 = µ2 = 20 (left) and Setup 2 with µ = 15 (right).

When tests are randomly generated from a hierarchical model, although each test is discrete, when they are heterogeneous enough, the step sizes of the average null and alternative distributions of the MCF values are small and these distributions can be well approximated on (0,1) by a continuous distribution as in Condition 3.1. In Section 5, we also show that the real application of DMR detection based on WGBS read counts leads to a highly heterogeneous multiple discrete testing problem, and our MCF-based method applies well. When tests are highly homogeneous, for example when each test is a permutation test with the same sample size as described in Liang (2016), Condition 3.1 may not hold and one may adopt other methods, such as that of Liang (2016).

Under Condition 3.1, Theorem 1 gives the asymptotic upper bound of the pFDR for our method. See Web Appendix A for the proof of Theorem 1.

Theorem 1: In a multiple testing problem with m discrete tests, denote by Pi and the p-value and randomized p-value of the ith test, i = 1, 2, …, m, respectively. Suppose that Pi independently follows the distribution (5) and independently follows the distribution (2). With probability 1, as m → ∞, suppose that and for any 0 ⩽ t ⩽ 1, where 0 < π0 < 1 is a constant and is a continuous cdf. Given a nominal FDR level 0 < α < 1, let , and reject the ith test if ri > Q(1 −u*) where Q(1 − u*) is the (1 − u*)-th quantile of the pooled MCF values {r1, …, rm} as in (4). Suppose that Condition 3.1 holds, then as m → ∞, the corresponding pFDR satisfies,

| (7) |

3.2. Asymptotic upper bound of pFNR

Next we investigate the Type-II error performance of our MCF-based method in terms of the positive false non-discovery rate (pFNR) (Storey, 2003). Let W denote the number of nonsignificant tests, out of which T tests are true non-nulls, then $\mathrm{pFNR} = E[T/W \mid W > 0]$. Theorem 2 below provides an asymptotic pFNR bound of our method. See Web Appendix B for the proof of Theorem 2.

Theorem 2: Under the assumptions of Theorem 1, the corresponding pFNR satisfies

| (8) |

In general, methods for controlling the FDR do not necessarily minimize the pFNR, but Theorem 2 enables us to quantify the pFNR by its upper bound.

3.3. Comparison to a naive application of the q-value method

As many FDR-control methods for discrete tests are developed only recently, the discreteness issue is often ignored by practitioners and hence common methods, e.g. the q-value method (Storey, 2003), are directly applied. The advantage of our MCF-based method compared to the q-value method is shown in the following theorem. See Web Appendix C for the proof of Theorem 3.

Theorem 3: In a multiple testing problem with m discrete tests, if a test is rejected after directly applying the q-value method to the raw p-values, it will also be rejected by our MCF-based method under the same nominal FDR level.

Theorem 3 states that under the same nominal FDR level, our MCF-based method will not miss any significant test reported by the q-value method. In other words, the detection power of our MCF-based method is at least as large as that of the q-value method. This is also shown under various settings of simulations (Section 4) and in the real application to a WGBS dataset (Section 5). In contrast, Habiger’s method does not share this property, as its decisions depend solely on a one-time realization of the randomized p-values.
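For reference, a naive application of the q-value method to raw p-values can be sketched as below. This follows the standard step-up form of the q-value with Storey's estimator of π0 at threshold t; the default t = 0.5, the cap of the estimate at 1, and the function name are assumptions for this sketch and are not details given in this paper.

```python
def q_values(pvals, t=0.5):
    """Return (q, pi0_hat) for a list of raw p-values."""
    m = len(pvals)
    # Storey's estimator of the null proportion, capped at 1 (assumption).
    pi0_hat = min(1.0, sum(1 for p in pvals if p > t) / (m * (1.0 - t)))
    order = sorted(range(m), key=lambda i: pvals[i])
    q = [0.0] * m
    running = 1.0
    # Walk from the largest p-value down, enforcing monotone q-values.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running = min(running, pi0_hat * m * pvals[i] / rank)
        q[i] = running
    return q, pi0_hat


if __name__ == "__main__":
    q, pi0 = q_values([0.01, 0.02, 0.03, 0.6, 0.8])
    print(pi0, q)
```

A test is then declared significant at nominal level α when its q-value is at most α. With discrete p-values this rule ignores the support of each test, which is the source of the conservativeness discussed in the text.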

4. Simulation study

In this section, we demonstrate the performance of our method by simulations. We generate data under two different setups and consider testing m = 5000 hypotheses with the true null proportion π0 = 0.9, which emulates typical multiple-testing applications with relatively sparse signals, i.e. true non-nulls.

• Setup 1: The ith test, i = 1, 2, …, m, is an FET to test if the probabilities of success from two Binomial distributions are the same. This is consistent with the setup of the DMR detection in the real application in Section 5, where at each CpG site an FET is conducted. The FET is built on the data given in the following 2 × 2 contingency table, which consists of the counts (C, N − C) from two Binomial distributions, Bin(n1i, q1i) and Bin(n2i, q2i).

| | C | N − C | Total |
|---|---|---|---|
| Bin(n1i, q1i) | c1i | n1i − c1i | n1i |
| Bin(n2i, q2i) | c2i | n2i − c2i | n2i |

We first generate the number of trials nsi ~ Poisson(µs) and then the count csi ~ Binomial(nsi, qsi), s = 1, 2. Two cases are considered: µ1 = µ2 = 20 and µ1 = µ2 = 25. For the first π0 · m = 4500 tests (true nulls), we generate the common value of q1i and q2i independently from U[0,1]. For the remaining 500 tests (true non-nulls), we set q2i = 0.5ui and q1i = q2i + δi, where ui ~ U[0, 1] and δi ~ U[0.2, 0.5]. Then we apply FET to test H0i : q1i = q2i vs. H1i : q1i ≠ q2i, i = 1, …, m.

• Setup 2: The ith test, i = 1, 2, …, m, is an exact Binomial test of whether the probability of success in a Bernoulli experiment is 0.5. We generate counts Xi from a Binomial distribution Bin(ni, qi) with success probability qi = 0.5 for i = 1, …, 4500 and qi = 0.5 + δiϵi for i = 4501, …, 5000, where δi is a sign variable taking the values 1 and −1 with equal probability, and ϵi ~ U[0.2, 0.5]. Sample sizes n1, n2, …, nm are generated independently from a Poisson distribution with mean µ. Two cases are considered: µ = 10 and µ = 15. We then apply Binomial tests to test H0i : qi = 0.5 vs. H1i : qi ≠ 0.5, i = 1, …, m.

We run N = 100 Monte Carlo simulations to evaluate the average performance of our method. We keep nsi, qsi under Setup 1 and ni, qi under Setup 2 unchanged across Monte Carlo samples and only regenerate csi under Setup 1 and Xi under Setup 2.
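A data-generating sketch for Setup 2 is given below. It assumes the usual two-sided exact Binomial p-value (the sum of null probabilities of all outcomes no more likely than the observed one), which the text does not spell out, and it uses Knuth's multiplication method to draw Poisson sample sizes so that only the standard library is needed. For simplicity it draws one dataset; holding ni and qi fixed across Monte Carlo replicates, as described above, would require storing them. All function names are illustrative.

```python
import math
import random


def poisson(rng, mu):
    """Draw from Poisson(mu) by Knuth's method (adequate for small mu)."""
    limit, k, prod = math.exp(-mu), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def two_sided_binom(x, n, q0=0.5):
    """Return (p, p_minus) for the two-sided exact Binomial test."""
    pmf = [math.comb(n, k) * q0**k * (1 - q0) ** (n - k) for k in range(n + 1)]
    # p-value of each possible outcome: total mass of outcomes no more likely.
    pvals = [min(1.0, sum(v for v in pmf if v <= pk * (1 + 1e-9))) for pk in pmf]
    p = pvals[x]
    smaller = [v for v in pvals if v < p - 1e-12]
    return p, (max(smaller) if smaller else 0.0)


def simulate_setup2(m=5000, pi0=0.9, mu=10, seed=1):
    """Return a list of (p, p_minus, is_non_null) for one simulated dataset."""
    rng = random.Random(seed)
    m0 = round(pi0 * m)  # the first m0 tests are true nulls
    data = []
    for i in range(m):
        n = poisson(rng, mu)
        if i < m0:
            q = 0.5
        else:
            q = 0.5 + rng.choice((-1, 1)) * rng.uniform(0.2, 0.5)
        x = sum(rng.random() < q for _ in range(n))  # X_i ~ Bin(n, q)
        p, p_minus = two_sided_binom(x, n)
        data.append((p, p_minus, i >= m0))
    return data
```

The varying sample sizes give each test its own p-value support, which is what makes the tests heterogeneous in this setup.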

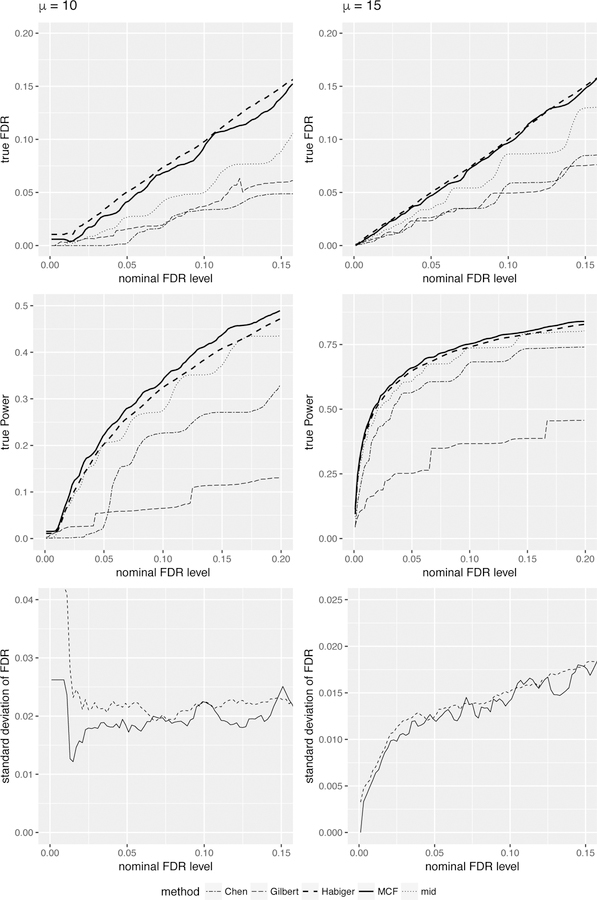

We compare our method with Habiger’s method and three other methods designed for multiple discrete testing (Chen and Doerge, 2015; Gilbert, 2005; Heller and Gur, 2011), in their true FDR and statistical power at different nominal FDR level α, where the statistical power is defined as the ratio between the number of correct rejections and the number of true non-null hypotheses.

The top rows of Figures 2 and 3 plot the average FDP of each method. We see that all five methods control the FDR under the nominal level, i.e. below the 45 degree line. However, except for our method and Habiger’s method, the other three methods are too conservative, i.e. far below the diagonal line. Although our MCF-based method results in a smaller FDR than Habiger’s method, it is not conservative and is even more powerful than Habiger’s method according to the statistical power. The second rows of Figures 2 and 3 display the statistical power of each method; our method achieves the best performance, very close to that of Habiger’s method. In addition, the third rows of Figures 2 and 3 plot the standard deviation of the FDPs over the N = 100 Monte Carlo samples, i.e. $\sqrt{\sum_{i=1}^{N} (\mathrm{FDP}_i - \overline{\mathrm{FDP}})^2/(N - 1)}$, where FDPi is the FDP based on the ith sample and $\overline{\mathrm{FDP}} = N^{-1}\sum_{i=1}^{N} \mathrm{FDP}_i$. We can see that our MCF-based method is more stable than Habiger’s method, with a lower standard deviation, which is expected as our method utilizes the distributional information of the randomized p-values rather than their one-time realizations as in Habiger’s method. We also plot the FNR corresponding to our method and Habiger’s method, and the asymptotic pFNR upper bound proposed in Theorem 2 (see Web Figures 2 and 3 in Web Appendix I). We see that the FNR of our method is similar to but slightly lower than that of Habiger’s method, and the asymptotic pFNR upper bound proposed in Theorem 2 is usually effective and close to the true FNR.

Figure 2.

Performance comparisons among different multiple testing methods for Setup 1. The average FDR vs. nominal FDR level (first row), the statistical power vs. nominal FDR level (second row) and the sample standard deviation of FDR (from 100 simulations) vs. nominal FDR level (third row), for Setup 1 with µ1 = µ2 = 25 (left column) and µ1 = µ2 = 20 (right column).

Figure 3.

Performance comparisons among different multiple testing methods for Setup 2. The average FDR vs. nominal FDR level (first row), the statistical power vs. nominal FDR level (second row) and the sample standard deviation of FDR (from 100 simulations) vs. nominal FDR level (third row), for Setup 2 with µ = 10 (left column) and µ = 15 (right column).

5. Applications

In this section, we apply our MCF-based multiple testing method to DMR detection, using a WGBS dataset from the NIH Roadmap Project (http://www.ncbi.nlm.nih.gov/geo/roadmap/epigenomics) in the Gene Expression Omnibus (GEO) database. We use three different types of sample cells: Brain, Skin and H1ES (Kundaje et al., 2015), where each sample has only one biological replicate. Following Challen et al. (2012), we considered three pairwise comparisons for detecting differentially methylated CpG sites between two biological samples, i.e., Brain versus H1ES, Skin versus H1ES and Brain versus Skin, by running FET at each CpG site. The data at the ith CpG site can then be expressed as the following 2 × 2 contingency table.

| | Methylated | Unmethylated | Total |
|---|---|---|---|
| Sample 1 | m1i | n1i − m1i | n1i |
| Sample 2 | m2i | n2i − m2i | n2i |

The CpG sites with at least one of the total counts (n1i, n2i) less than 15 were excluded from our analysis to ensure the reliability of the data source. The threshold 15 is applied to achieve a good compromise between the test accuracy and the genome coverage, as CpG sites with low read counts may not be measured accurately. We also repeated the same analysis using the cutoffs of 10 and 20, and our results were little affected by the cutoff value (see Web Appendix G). The three comparisons then contained 10,023,559, 15,894,438 and 9,384,917 tests, respectively. Boxplots of the total read count at different CpG sites in each sample (see Web Figure 4 in Web Appendix I) show that these read counts are highly variable. Since FET is a conditional discrete test depending on the marginal counts, i.e. the total read counts, combining all CpG sites leads to a heterogeneous discrete multiple testing problem, which is an ideal application setting for our MCF-based method. We then compared our method with the widely-used q-value method under two nominal FDR levels, α = 0.05 and 0.1. Because Habiger’s method produces random decisions, and the method of Chen and Doerge (2015) is computationally too intensive to handle multiple testing at this scale, we did not include them.
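To show how the read counts at one CpG site determine both the raw p-value and its support, the sketch below computes the two-sided FET p-value and the next smaller attainable p-value from a 2 × 2 table, conditioning on the margins. The two-sided rule used here (summing the hypergeometric probabilities of all tables no more likely than the observed one) is a common convention and is an assumption, since the text does not state which two-sided definition was used; the function name is illustrative.

```python
import math


def fisher_exact_support(c1, n1, c2, n2):
    """Two-sided FET on [[c1, n1 - c1], [c2, n2 - c2]].

    Returns (p, p_minus): the raw p-value and the largest attainable
    p-value below it (0.0 if none exists), given the table margins.
    """
    total = c1 + c2
    lo, hi = max(0, total - n2), min(n1, total)
    denom = math.comb(n1 + n2, total)
    # Hypergeometric null probabilities of every table with these margins.
    pmf = [math.comb(n1, k) * math.comb(n2, total - k) / denom
           for k in range(lo, hi + 1)]
    # p-value attached to each possible table.
    pvals = [min(1.0, sum(v for v in pmf if v <= pk * (1 + 1e-9))) for pk in pmf]
    p = pvals[c1 - lo]
    smaller = [v for v in pvals if v < p - 1e-12]
    return p, (max(smaller) if smaller else 0.0)


if __name__ == "__main__":
    # 3 of 4 methylated reads in sample 1 versus 1 of 4 in sample 2
    print(fisher_exact_support(3, 4, 1, 4))
```

Sites with different total read counts have different sets of attainable p-values, so the pair (p, p−) varies from site to site even when the methylation proportions are similar.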

Results in Table 1 show that our MCF-based method always gave more discoveries than the q-value method. Across three comparisons, our MCF-based method resulted in 13–44% more discoveries. Moreover, our MCF-based method detected every discovery made by the q-value method, in that the number of significant CpG sites found by the q-value method is always the same as the number of significant CpG sites found by both the MCF-based method and the q-value method, and this is consistent with Theorem 3 in Section 3. Hereafter, we refer to the significant CpG sites found by the MCF-based method but not the q-value method as the “additional” sites, and the significant CpG sites found by both methods as “overlap” sites. We plotted the number of significant CpG sites detected by the two methods at different nominal FDR levels (from 0 to 0.2 with step size 0.001) (see Web Figure 5 in Web Appendix I), which shows that our MCF-based method consistently detected more significant CpG sites than the q-value method. We also plotted the histogram of the standardized methylation level difference between two genomic samples at each significant CpG site (see Web Figure 6 in Web Appendix I). The standardized methylation level difference at the ith CpG site is defined as , where , and Then from the overlaps of histograms between the additional sites and the overlap sites, we see that the additional power of our method was not due to a simple shift of the cutoff on the standardized methylation level difference.

Table 1.

Number of the significant CpG sites. “MCF” represents the number of significant CpG sites found by the MCF-based method, “q-value” the number of significant CpG sites found by q-value method, “both” the number of significant CpG site found by both methods.

| Comparison | MCF (α = 0.05) | q-value (α = 0.05) | both (α = 0.05) | MCF (α = 0.1) | q-value (α = 0.1) | both (α = 0.1) |
|---|---|---|---|---|---|---|
| Brain vs H1ES | 1,572,027 | 1,136,401 | 1,136,401 | 2,225,930 | 1,541,358 | 1,541,358 |
| Skin vs H1ES | 2,239,725 | 1,986,017 | 1,986,017 | 2,715,394 | 2,325,530 | 2,325,530 |
| Brain vs Skin | 1,593,047 | 1,250,206 | 1,250,206 | 2,082,701 | 1,545,953 | 1,545,953 |

We further plotted the histograms of for the additional CpG sites and the overlap CpG sites in Figure 4. We see that the additional CpG sites are usually associated with large values in ‘s, i.e. pi is not very small but is. The intuition is that, for a CpG site with small a , even though the observed pi is not very small, a small means that if we run a repeated experiment at this CpG site, it is likely that the observed p-value will shift to due to the sampling variation. In other words, our procedure does not solely rely on the observed p-values, but also consider their possible values in a repeated experiment. Also from Definition 1, if pi and λ are fixed, the smaller is, the larger its MCF would be, and more likely the CpG site will be claimed as significant. Therefore, for those CpG sites that are not detected by the q-value method, our MCF-based method gains power by looking at their ‘s and identifies those sites as significant if their ‘s are small.

Figure 4.

Histogram of at each significant CpG site. The light color represents the overlap CpG sites, the dark color represents the additional CpG sites.

The goal of a WGBS experiment usually goes beyond identifying individual CpG sites that differ significantly between two samples: the aim is to find differentially methylated regions (DMRs), which are regarded as possible functional regions involved in gene transcriptional regulation and chromatin remodeling (Hebestreit et al., 2013). A DMR is a group of adjacent CpG sites that are mostly differentially methylated, and we used the merging technique of Feng et al. (2014) to combine nearby CpG sites into DMRs (see details in Web Appendix H). The results are presented in Table 2, which shows that our MCF-based method gave 14–78% more detections than the q-value method at the DMR level.
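
The general idea of combining nearby significant CpG sites into regions can be sketched as follows. This is an illustrative simplification, not the procedure of Feng et al. (2014) or Web Appendix H: the rule used here (merge consecutive significant sites separated by at most `max_gap` base pairs and keep regions with at least `min_sites` sites) and both default values are assumptions.

```python
from typing import List, Tuple


def merge_sites(positions: List[int], max_gap: int = 100,
                min_sites: int = 3) -> List[Tuple[int, int, int]]:
    """Merge significant CpG positions on one chromosome into candidate regions.

    Returns a list of (start, end, n_sites) tuples. max_gap and min_sites are
    hypothetical tuning parameters chosen only for illustration.
    """
    regions: List[Tuple[int, int, int]] = []
    if not positions:
        return regions
    ordered = sorted(positions)
    start = prev = ordered[0]
    count = 1
    for pos in ordered[1:]:
        if pos - prev <= max_gap:
            count += 1  # close enough: extend the current region
        else:
            if count >= min_sites:
                regions.append((start, prev, count))
            start = pos  # open a new region at this site
            count = 1
        prev = pos
    if count >= min_sites:
        regions.append((start, prev, count))
    return regions


if __name__ == "__main__":
    print(merge_sites([100, 150, 180, 1000, 1040, 5000], max_gap=100, min_sites=2))
```

A rule that tolerates a few non-significant sites inside a region, as "mostly differentially methylated" suggests, would need per-site significance flags rather than positions alone.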

Table 2.

Number of significant DMRs. “MCF” is the number of DMRs found by the MCF-based method, “q-value” the number found by the q-value method, and “both” the number found by both methods.

| Comparison | MCF (α = 0.05) | q-value (α = 0.05) | both (α = 0.05) | MCF (α = 0.1) | q-value (α = 0.1) | both (α = 0.1) |
|---|---|---|---|---|---|---|
| Brain vs H1ES | 18,177 | 12,390 | 12,387 | 32,547 | 18,283 | 18,268 |
| Skin vs H1ES | 51,218 | 44,956 | 44,955 | 63,746 | 54,134 | 54,132 |
| Brain vs Skin | 34,573 | 25,001 | 24,994 | 51,837 | 33,827 | 33,818 |

To validate the performance of our MCF-based method and to ensure that the additional DMRs detected by our method are biologically meaningful, we performed an enrichment analysis on the additional DMRs at the nominal FDR level α = 0.05. We used the Genomic Regions Enrichment of Annotations Tool (GREAT) (McLean et al., 2010) and present the biological pathway ontology enrichment results obtained with the Pathway Commons database (http://www.pathwaycommons.org/) (see Web Figures 7–9 in Web Appendix I). Biological pathway is one of the most widely used gene ontology types and includes biochemical reactions, complex assembly, transport and catalysis events, and physical interactions involving proteins, DNA, RNA, small molecules and complexes. GREAT performs both a binomial test over genomic regions and a hypergeometric test over genes to provide an accurate picture of annotation enrichments for genomic regions. A pathway is declared statistically enriched if it is significant in both tests and its Binom Fold Enrichment (column 3 in Web Figures 7–9 in Web Appendix I) is greater than 2. The Binom Fold Enrichment is defined as k/(n * p), where k is the number of DMRs that hit the annotation, n is the total number of genomic regions and p is the fraction of the genome annotated. For details about the GREAT analysis, refer to http://bejerano.stanford.edu/help/display/GREAT/Output. According to the enrichment analysis results in Web Figures 7–9 in Web Appendix I, for the three comparisons of Brain versus H1ES, Skin versus H1ES and Brain versus Skin, there are 32, 9 and 31 biological pathways enriched, respectively, based on the additional DMRs detected by the MCF-based method. Many of these enriched pathways are related to the specific biological functions of the compared sample cells.
For example, the ceramide signaling pathway was enriched in the comparison between Brain and H1ES cells, the vitamin C ascorbate metabolism pathway was enriched in the comparison between Skin and H1ES, and the glutamate degradation pathway and the organic anion transport pathway were enriched in the comparison between Brain and Skin. The enrichment analysis thus shows that the additional DMRs detected by our MCF-based method provided biologically meaningful information for retrieving the functional profile of biological samples, and that using the MCF-based method helped identify more biologically functional regions than the traditional q-value method.
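
The fold-enrichment formula and the binomial test over regions can be illustrated with a short sketch. It follows the definition k/(n * p) given above; the function names and the example numbers are ours, and the exact tail computation is only practical for small n, so this is not a substitute for GREAT itself.

```python
from math import comb


def binom_fold_enrichment(k, n, p):
    """Observed hits k divided by the expected number of hits n * p."""
    return k / (n * p)


def binom_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), summed exactly (small n only)."""
    return sum(comb(n, j) * p**j * (1.0 - p) ** (n - j) for j in range(k, n + 1))


if __name__ == "__main__":
    # Hypothetical numbers: 30 of 1000 regions hit an annotation covering 1% of the genome.
    k, n, p = 30, 1000, 0.01
    print("fold enrichment:", binom_fold_enrichment(k, n, p))
    print("binomial p-value:", binom_upper_tail(k, n, p))
```

In this hypothetical case the fold enrichment exceeds the cutoff of 2 used above; whether a pathway is declared enriched would still depend on both the binomial and the hypergeometric tests.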

6. Conclusion and Discussion

In this paper, we proposed a novel MCF-based method for multiple testing of heterogeneous discrete tests. Our proposed method utilizes the MCF of randomized tests to achieve a powerful testing procedure. Rather than making decisions directly according to a one-time realization of the randomized p-values, our method performs better by using more distributional information about the randomized p-values. Our test decision is non-random and is therefore easier to apply in practice than the randomized testing approach of Habiger (2015). When the tests are heterogeneous enough, specifically when Condition 3.1 is satisfied, we proved that the pFDR of our method is asymptotically controlled under the nominal level, and provided an asymptotic upper bound for the pFNR. Our method results in more detections than a naive application of the q-value method and does not miss any significant test detected by the q-value method. Another advantage of our method is that, to estimate the null proportion and the alternative distribution of the randomized p-values, we can generate samples of the randomized p-values and apply common estimation methods without being concerned about the discreteness. Under different simulation settings, we showed that our MCF-based method outperformed many other existing methods, with a higher detection power and a controlled and more stable FDR. In a real application of DMR detection using WGBS data, our method improved the detection power by reporting more biologically meaningful regions. We also found that our proposed method gains power by rejecting the tests that are likely to have smaller p-values if the experiments are repeated.
Furthermore, our MCF-based method is designed for general heterogeneous multiple discrete testing. Therefore, besides the DMR detection discussed in this paper, it has a broad range of applications in the analysis of next-generation sequencing experiments and in many other scientific fields where data are collected as discrete counts.
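
The remark about estimating the null proportion from generated randomized p-values can be sketched as follows. We assume each randomized p-value is drawn uniformly from an interval `(lower, upper)` attached to its test, and we plug the draws into the estimator of Storey (2002) with a fixed tuning parameter `lam`. The interval representation, the function names and the choice of this particular estimator are assumptions made for illustration.

```python
import random


def draw_randomized_pvalues(intervals, rng):
    """Draw one randomized p-value per test, uniform on its (lower, upper) interval."""
    return [rng.uniform(lower, upper) for lower, upper in intervals]


def storey_pi0(pvalues, lam=0.5):
    """Storey-type estimate of the null proportion, capped at 1.

    Counts p-values above lam and rescales by the number expected
    if every test were a true null with a uniform p-value.
    """
    m = len(pvalues)
    above = sum(1 for p in pvalues if p > lam)
    return min(1.0, above / (m * (1.0 - lam)))


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical intervals for four discrete tests.
    intervals = [(0.0, 0.02), (0.01, 0.30), (0.40, 0.75), (0.60, 1.0)]
    draws = draw_randomized_pvalues(intervals, rng)
    print(draws)
    print(storey_pi0(draws, lam=0.5))
```

Because the draws are continuous, estimators designed for continuous p-values can be applied without modification, which is the convenience noted above.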

Acknowledgements

This study made use of data generated by the NIH Roadmap Project (http://www.roadmapepigenomics.org). In the simulations, we used code from the R package fdrDiscreteNull to implement the method of Chen and Doerge (2015). In addition, the authors wish to acknowledge the editor, Professor Malka Gorfine, and the two anonymous reviewers for their helpful comments and valuable suggestions.

Supplementary Materials

Web Appendices, Web Tables, and Web Figures referenced in Sections 2, 3, 4 and 5 are available with this paper at the Biometrics website on Wiley Online Library. The R code implementing the simulations and the real applications in this paper is available with this paper at the Biometrics website on Wiley Online Library and in the GitHub repository https://github.com/nanlin999/MCF-multtest.

References

- Allison DB, Gadbury GL, Heo M, Fernández JR, Lee C-K, Prolla TA, and Weindruch R (2002). A mixture model approach for the analysis of microarray gene expression data. Computational Statistics and Data Analysis 39, 1–20.

- Benjamini Y and Hochberg Y (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B 57, 289–300.

- Catelan D, Lagazio C, and Biggeri A (2010). A hierarchical Bayesian approach to multiple testing in disease mapping. Biometrical Journal 52, 784–797.

- Challen GA, Sun D, Jeong M, Luo M, Jelinek J, Berg JS, Bock C, Vasanthakumar A, Gu H, Xi Y, et al. (2012). Dnmt3a is essential for hematopoietic stem cell differentiation. Nature Genetics 44, 23–31.

- Chen X and Doerge RW (2015). A weighted FDR procedure under discrete and heterogeneous null distributions. arXiv preprint arXiv:1502.00973.

- Efron B (2008). Microarrays, empirical Bayes and the two-groups model. Statistical Science 23, 1–22.

- Efron B and Tibshirani R (2007). On testing the significance of sets of genes. The Annals of Applied Statistics 1, 107–129.

- Efron B, Tibshirani R, Storey JD, and Tusher V (2001). Empirical Bayes analysis of a microarray experiment. Journal of the American Statistical Association 96, 1151–1160.

- Feng H, Conneely KN, and Wu H (2014). A Bayesian hierarchical model to detect differentially methylated loci from single nucleotide resolution sequencing data. Nucleic Acids Research 42, e69.

- Genovese CR, Roeder K, and Wasserman L (2006). False discovery control with p-value weighting. Biometrika 93, 509–524.

- Geyer CJ and Meeden GD (2005). Fuzzy and randomized confidence intervals and p-values. Statistical Science 20, 358–366.

- Gilbert PB (2005). A modified false discovery rate multiple-comparisons procedure for discrete data, applied to human immunodeficiency virus genetics. Journal of the Royal Statistical Society: Series C 54, 143–158.

- Gu H, Bock C, Mikkelsen TS, Jäger N, Smith ZD, Tomazou E, Gnirke A, Lander ES, and Meissner A (2010). Genome-scale DNA methylation mapping of clinical samples at single-nucleotide resolution. Nature Methods 7, 133–136.

- Habiger JD (2015). Multiple test functions and adjusted p-values for test statistics with discrete distributions. Journal of Statistical Planning and Inference 167, 1–13.

- Habiger JD and Pena EA (2011). Randomised p-values and nonparametric procedures in multiple testing. Journal of Nonparametric Statistics 23, 583–604.

- Hebestreit K, Dugas M, and Klein HU (2013). Detection of significantly differentially methylated regions in targeted bisulfite sequencing data. Bioinformatics 29, 1647–1653.

- Heller R and Gur H (2011). False discovery rate controlling procedures for discrete tests. arXiv preprint arXiv:1112.4627.

- Hu JX, Zhao H, and Zhou HH (2010). False discovery rate control with groups. Journal of the American Statistical Association 105, 1215–1227.

- Kulinskaya E and Lewin A (2009). On fuzzy familywise error rate and false discovery rate procedures for discrete distributions. Biometrika 96, 201–211.

- Kundaje A, Meuleman W, Ernst J, Bilenky M, Yen A, Heravi-Moussavi A, Kheradpour P, Zhang Z, Wang J, Ziller MJ, et al. (2015). Integrative analysis of 111 reference human epigenomes. Nature 518, 317–330.

- Lancaster HO (1961). Significance tests in discrete distributions. Journal of the American Statistical Association 56, 223–234.

- Lehmann EL (2004). Elements of Large-Sample Theory. Springer, New York.

- Liang K (2016). False discovery rate estimation for large-scale homogeneous discrete p-values. Biometrics 72, 639–648.

- McLean CY, Bristor D, Hiller M, Clarke SL, Schaar BT, Lowe CB, Wenger AM, and Bejerano G (2010). GREAT improves functional interpretation of cis-regulatory regions. Nature Biotechnology 28, 495–501.

- Nettleton D, Hwang JG, Caldo RA, and Wise RP (2006). Estimating the number of true null hypotheses from a histogram of p-values. Journal of Agricultural, Biological, and Environmental Statistics 11, 337–356.

- Pounds S and Cheng C (2004). Improving false discovery rate estimation. Bioinformatics 20, 1737–1745.

- Pounds S and Cheng C (2006). Robust estimation of the false discovery rate. Bioinformatics 22, 1979–1987.

- Ruppert D, Nettleton D, and Hwang JT (2007). Exploring the information in p-values for the analysis and planning of multiple-test experiments. Biometrics 63, 483–495.

- Storey J (2002). A direct approach to false discovery rates. Journal of the Royal Statistical Society: Series B 64, 479–498.

- Storey JD (2003). The positive false discovery rate: A Bayesian interpretation and the q-value. Annals of Statistics 31, 2013–2035.

- Tang Y, Ghosal S, and Roy A (2007). Nonparametric Bayesian estimation of positive false discovery rates. Biometrics 63, 1126–1134.

- Tarone RE (1990). A modified Bonferroni method for discrete data. Biometrics 46, 515–522.

- Tocher KD (1950). Extension of the Neyman-Pearson theory of tests to discontinuous variates. Biometrika 37, 130–144.
