Abstract

Despite efforts aimed at improving the integration of clinical data from health information exchanges (HIE) and electronic health records (EHR), interoperability remains limited. Inefficiencies and workflow interruptions make it difficult to use HIE data during care delivery. Capitalizing on the development of the Fast Healthcare Interoperability Resources (FHIR) specification, we designed and developed a Chest Pain Dashboard that integrates HIE data into EHRs. The Dashboard was implemented in one emergency department (ED) of Indiana University Health in Indiana. In this paper, we present preliminary findings from a mixed-methods evaluation of the Dashboard. A difference-in-difference analysis suggests that implementing the Dashboard was associated with a significant increase in HIE use in the intervention ED compared to EDs without the Dashboard. Qualitative interviews supported this finding. While these results are encouraging, we also identified areas for improvement. FHIR-based solutions may offer a promising approach to increasing the accessibility and use of HIE data.

Introduction

“The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it.1” While interoperability among health information technology (IT) applications aspires to this ideal articulated by Mark Weiser2,3, we can hardly say that we have achieved it4,5. A key question for health IT developers is how to design their systems so that the boundaries among various applications disappear, and the clinician user can concentrate fully on patient care rather than on navigating a collection of disparate systems6.

Ideally, clinicians would like to see patient information from multiple systems, including electronic health records (EHR), ancillary systems (e.g., imaging and specialty systems), and health information exchanges, in one place4. Further, this information should be presented in a way that makes clinical reasoning and decision-making efficient and reduces cognitive friction. From a software engineering perspective, realizing these goals faces numerous challenges, such as the difficulty or impossibility of modifying existing EHR user interfaces and the need to retrieve data using multiple protocols, such as HL7 messaging, the Simple Object Access Protocol (SOAP), or SQL-based database queries.

Consequently, the degree of integration of EHRs with health information exchanges (HIE) has been limited7, despite its demonstrated value and utility8,9. Currently, clinicians most commonly access information in an HIE through a secondary system, such as a Web portal, which forces them to interrupt their workflow. Even when the EHR passes user credentials and/or patient context automatically to the HIE application, this disruption has multiple negative consequences, such as an increase in the number of clicks and keystrokes, the time, and the cognitive effort needed to retrieve the desired information.

We recently confirmed these observations in a small-scale pilot study of HIE use during emergency care in Indianapolis. Our study focused on the use of the Indiana Network for Patient Care (INPC), the nation’s largest inter-organizational clinical data repository and the primary platform that enables the Indiana Health Information Exchange (IHIE). The IHIE connects more than 100 hospitals, 14,000 practices, and nearly 40,000 providers. Physicians access the INPC through a Web-based platform called CareWeb. During a two-day study period in March 2017, we observed seven physicians over 17 hours as they delivered emergency care at Eskenazi Health and Indiana University Health (IUH) Methodist Hospital, the two busiest emergency departments (>100,000 patient visits/year) in Indianapolis. Across the 70 patient encounters observed, CareWeb was accessed only twice, despite its potential utility10. Based on interviews with physicians, we identified several barriers to HIE use, such as technical difficulties, time delays, uncertainty about whether relevant information would be present, and having to leave the EHR to access CareWeb.

However, recent developments in how we write health IT software offer exciting opportunities to surmount these difficulties. For instance, the HL7 Fast Healthcare Interoperability Resources (FHIR)11 specification is an emerging standard that makes much of the information contained in EHRs accessible through a common, cross-platform interface. In the same vein, the Substitutable Medical Applications, Reusable Technologies (SMART) health IT platform12 allows innovators to create applications that integrate seamlessly with health IT applications such as EHRs.
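As a rough illustration of how a SMART app is launched from inside an EHR, the sketch below builds the OAuth 2.0 authorization request that the SMART App Launch specification defines for an EHR launch. It assumes the EHR has already opened the app with the standard `iss` and `launch` parameters and that the authorization endpoint has been discovered from the FHIR server's `.well-known/smart-configuration` document. The client ID, URLs, scope string, and function name are hypothetical placeholders, not the Dashboard's actual configuration.

```python
import secrets
from urllib.parse import urlencode


def build_authorize_url(
    authorize_endpoint: str,
    client_id: str,
    redirect_uri: str,
    launch: str,
    iss: str,
    scope: str = "launch openid fhirUser patient/*.read",  # hypothetical scope set
) -> tuple[str, str]:
    """Build the authorization request for a SMART EHR launch (sketch).

    Returns the redirect URL and the random `state` value that the app must
    compare against the `state` echoed back to its redirect URI.
    """
    state = secrets.token_urlsafe(16)
    params = {
        "response_type": "code",      # authorization-code grant
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "launch": launch,             # opaque launch context issued by the EHR
        "scope": scope,               # "launch" asks to bind the EHR's patient/user context
        "state": state,               # CSRF protection
        "aud": iss,                   # FHIR server the access token is meant for
    }
    return f"{authorize_endpoint}?{urlencode(params)}", state
```

In this flow the user never types credentials into the app: the EHR session and the `launch` token carry the context, which is what allows a one-click launch.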

Applied to the interaction of the clinician with the EHR and an HIE, these methods offer, for the first time, an easily generalizable way to integrate information from an HIE directly into an EHR. Correctly designed, this integration can be so seamless that the user perceives the EHR and HIE as one system, unburdened by the physical and cognitive effort typically associated with interacting with two or more systems.

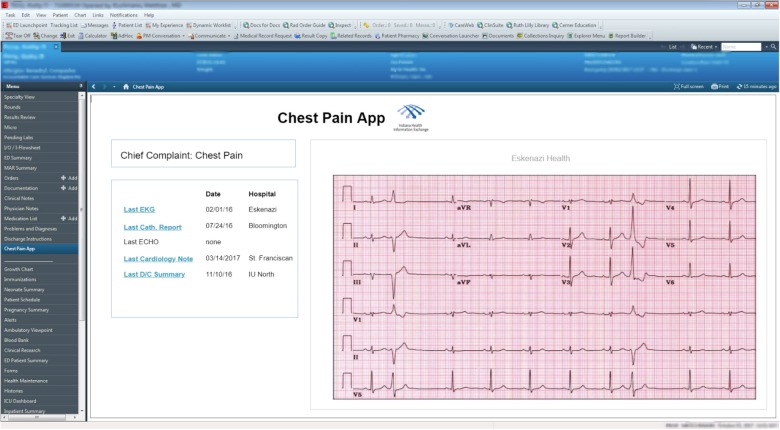

We used this paradigm to implement our Chest Pain Dashboard (previously called FHIR HIEdrant; referred to as the Dashboard in the remainder of this paper) using the INPC and the Cerner EHR. In brief, we built the Dashboard on top of a custom-written FHIR interface to the INPC (the FHIR-on-INPC Server), which exposes selected data resources to external applications in compliance with the FHIR standard. We integrated the Dashboard with the Cerner instance used in the emergency department (ED) of IUH Methodist Hospital, our pilot site. Providers in the ED can launch the Dashboard from within Cerner with one click, and the credentials of the authenticated user are passed to the Dashboard directly, so no separate login is needed. The Dashboard then queries our FHIR-on-INPC Server for five data elements that IUH Methodist ED physicians identified as most important for managing patients with the chief complaint of chest pain: the last EKG, cardiology note, discharge summary, cardiac catheterization report, and echo, stress, and nuclear medicine tests.
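A minimal sketch of how a client might query a FHIR server for the five data elements follows. It assumes that each element is stored as a `DocumentReference` that can be filtered with the standard `patient` and `type` search parameters and sorted newest first with `_sort=-date`, and that the Dashboard shows only the most recent match per element. The base URL, patient identifier, `type` tokens, and function name are hypothetical placeholders, not the codes actually used by the FHIR-on-INPC Server.

```python
from urllib.parse import urlencode

# Hypothetical mapping of the five Dashboard data elements to FHIR token searches.
# The "system|code" values are placeholders, not the FHIR-on-INPC Server's codes.
CHEST_PAIN_ELEMENTS = {
    "last_ekg": "http://example.org/doc-type|ekg",
    "cardiology_note": "http://example.org/doc-type|cardiology-note",
    "discharge_summary": "http://example.org/doc-type|discharge-summary",
    "cardiac_cath_report": "http://example.org/doc-type|cardiac-cath",
    "echo_stress_nuclear": "http://example.org/doc-type|echo-stress-nuclear",
}


def build_searches(base_url: str, patient_id: str) -> dict[str, str]:
    """Return one DocumentReference search URL per data element (sketch)."""
    searches = {}
    for element, type_token in CHEST_PAIN_ELEMENTS.items():
        params = {
            "patient": patient_id,
            "type": type_token,   # token search: system|code
            "_sort": "-date",     # newest document first
            "_count": "1",        # assume only the most recent match is displayed
        }
        searches[element] = f"{base_url.rstrip('/')}/DocumentReference?{urlencode(params)}"
    return searches
```

Each URL would then be fetched with the access token obtained at launch, and the returned Bundle rendered in the corresponding panel; issuing the five searches in parallel is one way to keep response time down.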

If the patient has records in the INPC that match the search, the Dashboard retrieves the data from the FHIR-on-INPC Server and displays it (see Figure). During development, we compared the traditional method of retrieving information from the INPC through CareWeb with retrieving it through the Dashboard. In a laboratory experiment, the Dashboard reduced the number of clicks from 50 to 6 and the search time from 3 minutes to 10 seconds.
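Expressed as relative reductions (with 3 minutes = 180 seconds), the laboratory figures correspond to roughly 88% fewer clicks and about 94% less search time:

$$
1 - \frac{6}{50} = 0.88, \qquad 1 - \frac{10\,\mathrm{s}}{180\,\mathrm{s}} \approx 0.94
$$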

Figure:

The Chest Pain Dashboard displayed within the Cerner EHR. The Dashboard retrieves five data elements from the INPC and displays them within Cerner. User interface and interaction design provide a seamless user experience.

To evaluate whether the Dashboard addressed the barriers to HIE use in the ED, we designed and conducted a comprehensive evaluation. The purpose of this paper is to present its preliminary findings. To our knowledge, this is the first evaluation of a FHIR app implemented in the ED care setting in the context of a mature HIE. While other projects, such as those at the Chesapeake Regional Information System13 and Beth Israel Deaconess Medical Center14, use FHIR to implement interoperability functionality, we could not find published evaluations of these efforts. Findings from our evaluation may provide insights into improving interoperability in the ED setting and may be of interest to researchers, decision-makers at hospital systems, and EHR vendors.

Methods

Our methods were informed by an integrated set of theoretical frameworks previously used to evaluate technology acceptance and success. We used the Unified Theory of Acceptance and Use of Technology (UTAUT) model, which has previously been used to examine the adoption and use of EHR systems15–17. The UTAUT model posits that the behavioral intention to use a technology is influenced by several factors, including performance expectancy, effort expectancy, social influence, and facilitating conditions. Performance expectancy (PE) is the degree to which an individual believes that using the system will help improve their performance16,18. Effort expectancy (EE) is the ease of using the system. Social influence (SI) is the degree to which an individual perceives that important others, such as a supervisor or influential colleague, believe he or she should use the new system. Facilitating conditions (FC) refer to the individual’s belief that sufficient organizational and technical infrastructure exists to support use of the system.

While the UTAUT model enables measurement of technology acceptance, the DeLone and McLean (D&M) information system (IS) success model conceptualizes success19,20. The model involves three distinct but interrelated levels of success: technical, involving system quality (e.g., does the system do what it was intended to do?); semantic, involving information quality (e.g., does the information convey the intended meaning?); and effectiveness, involving the effect of the IS on the receiver (e.g., user behavior, individual impact, organizational impact). Success is defined as net benefits to either the user or the organization.

Thus, to evaluate the use of the Dashboard (i.e., the technology in this case) in terms of the constructs described in the UTAUT and D&M models, we used a mixed-methods approach consisting of quantitative and qualitative components. The quantitative portion of the evaluation allows us to assess net benefits as described in the D&M model by examining outcomes that can be measured quantitatively, such as the number of readmissions and time spent in the EHR. However, net benefits can only accrue when the Dashboard is actually used. We therefore examined the UTAUT constructs that drive use (PE, EE, SI, FC) using a qualitative approach. Combining quantitative and qualitative methods allows us to examine both the motivations behind use and the resulting benefits, providing a more comprehensive evaluation of the Dashboard than either approach alone would afford.

Quantitative evaluation study

Our evaluation used a quasi-experimental, longitudinal study design to evaluate the relationship between Dashboard usage and various outcomes related to clinical care in encounters with a chief complaint of chest pain. For the purpose of the evaluation, chest pain was defined as any chief complaint in which the patient presented with explicit chest pain symptoms or with other symptoms that could be interpreted as precursors or manifestations of a cardiac diagnosis. These other symptoms included shortness of breath, difficulty breathing, syncope, rib pain, dyspnea, tachycardia, arm pain, chest tightness, and chest pressure. Since not all patients with a cardiac condition present with a chief complaint of chest pain, this broad definition enabled us to capture an expanded set of conditions for which our Dashboard was likely to provide useful information.
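The chief-complaint definition above can be sketched as a simple keyword filter. This is only an illustration: it assumes free-text chief complaints and case-insensitive whole-phrase matching on the terms listed in the text, and it would miss abbreviations (e.g., "SOB") or misspellings that a production rule would need to handle. The function name is hypothetical.

```python
import re

# Terms from the chest-pain definition in the text; the matching rule
# (case-insensitive, whole-phrase search) is an illustrative assumption.
CHEST_PAIN_TERMS = [
    "chest pain", "shortness of breath", "difficulty breathing", "syncope",
    "rib pain", "dyspnea", "tachycardia", "arm pain", "chest tightness",
    "chest pressure",
]
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in CHEST_PAIN_TERMS) + r")\b",
    re.IGNORECASE,
)


def is_chest_pain_encounter(chief_complaint: str) -> bool:
    """True if the chief complaint mentions any term in the broad definition."""
    return _PATTERN.search(chief_complaint or "") is not None
```

The word boundaries keep, for example, "forearm pain" from matching "arm pain", while "left arm pain" still matches.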

We linked INPC usage log data to encounter data to identify encounters in which the clinician accessed a patient’s clinical information in the HIE. INPC usage logs record every instance of a user querying a patient’s clinical information through CareWeb or the Dashboard. In addition to the INPC usage logs, we used log files generated by the Dashboard itself, which allowed us to distinguish between encounters in which patient information was accessed using CareWeb versus the Dashboard.
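One way to express this linkage is sketched below. It assumes, hypothetically, that an access event belongs to an encounter when it refers to the same patient and falls between the encounter's start and end times, and that each INPC log entry has already been flagged as Dashboard-originated by matching it against the Dashboard's own logs. The record types, field names, and function name are illustrative, not the actual INPC or Dashboard log schemas. The returned flag corresponds to the binary HIE Use variable.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Encounter:
    encounter_id: str
    patient_id: str
    start: datetime
    end: datetime


@dataclass
class AccessEvent:
    patient_id: str
    timestamp: datetime
    via_dashboard: bool  # True if the INPC log entry matches a Dashboard log entry


def classify_hie_use(enc: Encounter, events: list[AccessEvent]) -> tuple[int, str]:
    """Return (hie_use, source); source is 'none', 'careweb', 'dashboard' or 'both'."""
    hits = [
        e for e in events
        if e.patient_id == enc.patient_id and enc.start <= e.timestamp <= enc.end
    ]
    if not hits:
        return 0, "none"
    dashboard = any(e.via_dashboard for e in hits)
    careweb = any(not e.via_dashboard for e in hits)
    if dashboard and careweb:
        return 1, "both"
    return 1, "dashboard" if dashboard else "careweb"
```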

Evaluation Hypotheses

As determined through the small-scale pilot study described above, use of patient information from the HIE was low, mainly because of logistical and technical barriers to using CareWeb. The technical and user experience design of the Dashboard was intended to remove or mitigate these barriers so that clinicians could increase their use of HIE information for the benefit of the patient. Allowing physicians to retrieve key clinical information from the INPC with significantly less effort, instead of requiring lengthy navigation through CareWeb, should intuitively make it easier for clinicians to access information from the INPC. Thus, our primary hypothesis was:

HIE use in the intervention ED will increase after the implementation of the Dashboard.

Our broader evaluation also includes two additional hypotheses, which we will examine in future work. First, since the Dashboard allows clinicians to view relevant clinical information within the EHR with much less effort than using the EHR and CareWeb, our second hypothesis is: Clinicians in the intervention ED will spend less time within the EHR than clinicians in non-intervention EDs. Finally, increased use of the HIE and reduced time spent in the EHR may help clinicians be better informed and dedicate more time to optimal clinical decision-making. This could result in improved clinical management decisions; thus, our third hypothesis is: Clinicians in the intervention ED will make more favorable and fewer unfavorable clinical management decisions.

Dependent and Independent Variables

Based on our primary hypothesis, our dependent variable was HIE Use, coded 1 if the HIE was used in an encounter (through either CareWeb or the Dashboard) and 0 if not. While this outcome captures whether the HIE was accessed in each encounter, we also evaluated HIE use as a proportion of total encounters in a bivariate analysis. Our independent variables included indicators of whether a given encounter occurred before or after the Dashboard implementation and whether it took place at the intervention ED or a control ED.

Data Analysis

We first examined the distributions of the variables of interest. Using chi-squared tests or t-tests as appropriate, we evaluated the bivariate relationships between the independent variables and the binary dependent variable. Next, using a difference-in-difference (DID) model, we analyzed the relationship between the Dashboard implementation and HIE usage. The DID approach has previously been used to evaluate the impact of social policies and programs. The validity of any quasi-experimental design depends on a key, untestable identifying assumption: that a “counterfactual” can be used to estimate the effect of the program or intervention. The counterfactual represents what would have happened in the intervention ED in the absence of the intervention, i.e., the implementation of the Dashboard. In a DID design, this amounts to assuming that, without the Dashboard, HIE use in the intervention ED would have followed the same trend over time as in the control EDs (the parallel-trends assumption). To account for the non-independence of observations, we clustered standard errors at the provider level. Findings for the DID model are presented as odds ratios. Because the implementation of the Dashboard is still underway and data collection is ongoing, this preliminary analysis tests only Hypothesis 1. The quantitative study period covered three months of encounters before and three months after the implementation of the Dashboard in December 2017, i.e., six months in total, from 9/2017 to 3/2018.
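Because a logit model containing only the post indicator, the intervention indicator, and their interaction is saturated, its DID odds ratio equals a ratio of odds ratios computed directly from the four pre/post by intervention/control cells. The sketch below does this and adds a Wald interval from the cell counts. It is illustrative only: unlike the analysis described above, it treats encounters as independent and does not cluster standard errors by provider, and the function name and example counts are hypothetical.

```python
import math


def did_odds_ratio(counts: dict[tuple[int, int], tuple[int, int]], z: float = 1.96):
    """Difference-in-difference odds ratio from a 2x2 (intervention x post) table.

    counts maps (intervention, post) -> (encounters_with_hie_use, total_encounters),
    with intervention and post each coded 0/1. Returns (odds_ratio, lower, upper).
    """
    if set(counts) != {(0, 0), (0, 1), (1, 0), (1, 1)}:
        raise ValueError("need exactly the four (intervention, post) cells")
    log_or = 0.0
    variance = 0.0
    for (intervention, post), (used, total) in counts.items():
        not_used = total - used
        # log OR = +logit(1,1) - logit(1,0) - logit(0,1) + logit(0,0)
        sign = 1 if intervention == post else -1
        log_or += sign * math.log(used / not_used)
        # Large-sample variance of a log odds ratio: sum of 1/count over all eight counts
        variance += 1 / used + 1 / not_used
    se = math.sqrt(variance)
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)
```

For example, with made-up counts in which the intervention ED's odds of HIE use rise from 50/200 to 70/180 while the control EDs' odds rise from 100/900 to 110/890, the DID odds ratio is (70/180 ÷ 50/200) ÷ (110/890 ÷ 100/900), or about 1.40. A value of 1 would mean the odds changed by the same factor in both groups.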

Qualitative evaluation study

Study Design

The main component of the qualitative evaluation study was a semi-structured, in-person, video, or phone interview, designed to last no more than 25 minutes. Prior to the interview, we asked participants to complete a 1-page survey containing 22 closed-ended questions. The questionnaire, described in further detail below, was based on the Post-Study e-Health Usability Questionnaire (PSHUQ)21. We combined the semi-structured interview with a survey for several reasons. First, we wanted to make the interview process as efficient as possible, given that our participants were all busy ED physicians. Second, the survey instrument provided us with information that allowed us to summarize feedback quantitatively across respondents. Third, the interview component offered the opportunity to probe particular quantitative responses in more detail, helping generate a rich data set. Interviews took place at a location of the clinician’s choosing, either in person or through Zoom teleconference/phone technology.

Participants

A total of 111 clinicians at the IUH Methodist ED had access to the Dashboard during the study period from 1/1/2018 to 8/1/2018. Of those, 42 had used the Dashboard at least once. We identified 28 target participants based on their usage of the application (light, medium and heavy users) determined from application logs. Participation in the study was voluntary and none of the clinicians were compensated.
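The text does not state how light, medium, and heavy users were defined. As a purely hypothetical sketch, users could be ranked by their number of Dashboard launches in the application logs and split into rank-based tertiles, from which target participants are then drawn. The tertile rule and function name are assumptions for illustration.

```python
def usage_tiers(launch_counts: dict[str, int]) -> dict[str, str]:
    """Assign each user 'light', 'medium' or 'heavy' by rank-based tertiles (hypothetical rule)."""
    ranked = sorted(launch_counts, key=lambda user: launch_counts[user])  # fewest launches first
    n = len(ranked)
    tiers = {}
    for i, user in enumerate(ranked):
        # 3 * i // n maps ranks 0..n-1 onto 0, 1, 2
        tiers[user] = ("light", "medium", "heavy")[min(3 * i // n, 2)]
    return tiers
```

Ties are broken by input order here; a real selection would need an explicit rule for users with equal counts.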

Questionnaire

The survey instrument (included in the Appendix) was designed to measure user satisfaction with the Dashboard. It was based on the PSHUQ, an instrument that has undergone two rounds of validation21,22. To the dimensions of system usefulness and system/information quality measured in the PSHUQ, we added two questions each related to system efficiency and potential system improvements. In addition, we adapted the original instrument in minor ways to fit the objectives of our study.

The resulting instrument included 22 statements: 20 required answers on a seven-point, Likert-type scale ranging from extremely agree to extremely disagree, one required a yes/no answer, and one required an all of the time/sometimes/never answer. Sample survey statements include:

- system usefulness
  - “Overall, I am satisfied with how easy it is to use the Chest Pain Dashboard.”
  - “I felt comfortable using the Chest Pain Dashboard.”
- system/information quality
  - “The Chest Pain Dashboard has all the capabilities I expected it to have.”
  - “The information provided by the Chest Pain Dashboard helped me complete my clinical tasks.”
- system efficiency
  - “The response time of the Chest Pain Dashboard was adequate.”
- potential system improvements
  - “The system could be improved.”

We pilot-tested the survey with four individuals representative of our participants. The pilot test did not result in any changes.

Data analysis

We summarized quantitative survey responses descriptively using simple counts for each statement/question. The mean response score for each question was calculated by converting answer choices to numbers (extremely disagree=1, disagree=2, … extremely agree=7) and averaging the converted responses. In addition, we provide context from the semi-structured interviews for the four dimensions we intended to measure: system usefulness, system/information quality, system efficiency, and potential system improvements. We did not formally assess the validity and reliability of the instrument (planned for a future publication). This study was approved on November 27, 2017 by the Indiana University Institutional Review Board under the title Evaluation of SMART on FHIR CareWeb Dashboard for Chest Pain (protocol #1710793135).
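The scoring rule can be sketched as follows. The intermediate labels (somewhat disagree, neutral, somewhat agree) are assumed from the response categories used in the Results, and the split into "agree or extremely agree" versus "somewhat agree or lower" mirrors how the Results report counts; the function name is hypothetical.

```python
from statistics import mean

# Seven-point scale; intermediate labels are assumed from the categories used in the Results.
SCALE = {
    "extremely disagree": 1, "disagree": 2, "somewhat disagree": 3, "neutral": 4,
    "somewhat agree": 5, "agree": 6, "extremely agree": 7,
}


def summarize(responses: list[str]) -> tuple[float, int, int]:
    """Return (mean score, count of agree/extremely agree, count of somewhat agree or lower)."""
    scores = [SCALE[r.lower()] for r in responses]
    top_two = sum(s >= 6 for s in scores)  # agree (6) or extremely agree (7)
    return mean(scores), top_two, len(scores) - top_two
```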

The study periods for the quantitative (9/2017 to 3/2018) and qualitative (1/1/2018 to 8/1/2018) analyses differ slightly, primarily because of the availability of the dataset for the quantitative analysis. In addition, we deployed a significantly improved version of the Dashboard in April 2018, which affected the results of the qualitative but not the quantitative analysis.

Results

Quantitative evaluation results

Our data sample included a total of 266,291 encounters across all emergency departments in the IUH system during the quantitative study period (9/2017 to 3/2018). Overall, 20.2% of all encounters occurred in the intervention ED, and over half (52.2%) of all encounters across all EDs occurred after the Dashboard was implemented. Chest pain was the most common chief complaint in all EDs, accounting for about 11.4% of all encounters. Clinicians did not use the INPC, either through CareWeb or the Dashboard, in the vast majority (95.4%) of encounters. Compared to other EDs, the intervention ED had higher INPC usage (10.55% vs. 3.2%, p<0.001) during our study period. INPC use increased to a greater degree in the intervention ED than in other EDs in the post-implementation period (56.0% vs. 53.5%, p=0.006). In our regression analysis (see Table 1), the difference-in-difference estimator was significantly and positively related to INPC usage (OR: 1.15; CI: 1.02, 1.30; p=0.026), suggesting that after the implementation of the Dashboard, clinicians in the intervention ED used the INPC more than those in other EDs.

Table 1:

Estimates from difference-in-difference analysis for INPC usage. INPC usage refers to INPC usage through CareWeb or through the Dashboard. Post refers to the binary variable indicating time period, 1: post-implementation; 0: pre-implementation. Intervention refers to whether a given encounter was in the intervention ED or other EDs in the IUH system, 1: Intervention ED; 0: Other EDs

| Variables (outcome: INPC usage) | Odds Ratio | Confidence Interval | p-value |
|---|---|---|---|
| Post | 1.05 | (0.95, 1.16) | 0.329 |
| Intervention | 3.38 | (2.73, 4.20) | <0.001 |
| Post × Intervention (DID estimator) | 1.15 | (1.02, 1.30) | 0.026 |
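Under the usual reading of a logit DID model with two main effects and their interaction (the structure of Table 1), odds ratios combine multiplicatively. The implied pre-to-post change in the odds of INPC use in each group is therefore:

$$
\text{Other EDs: } \mathrm{OR}_{\text{post vs. pre}} = e^{\beta_{\text{Post}}} = 1.05
$$

$$
\text{Intervention ED: } \mathrm{OR}_{\text{post vs. pre}} = e^{\beta_{\text{Post}} + \beta_{\text{Post} \times \text{Intervention}}} = 1.05 \times 1.15 \approx 1.21
$$

This is a point-estimate calculation only; a confidence interval for the product cannot be derived from Table 1 alone, because the covariance of the two coefficients is not reported.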

Qualitative evaluation results

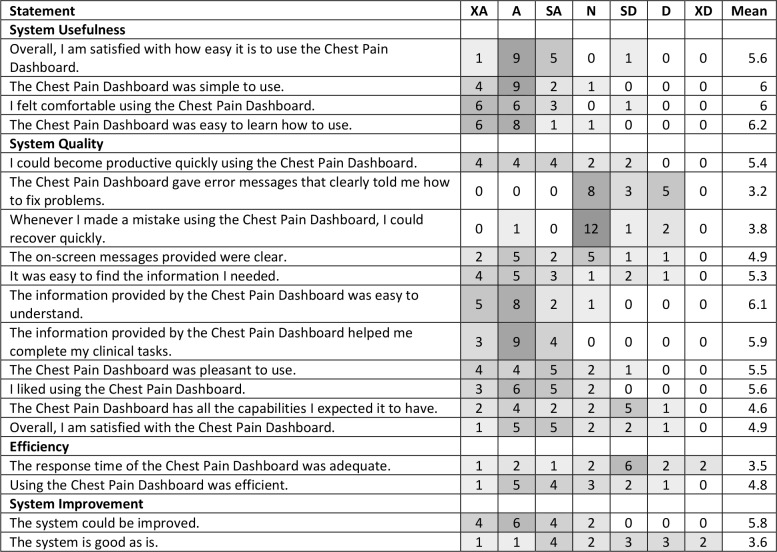

Of the 28 prospective participants invited by email, sixteen agreed to participate. The overwhelming majority (n=15) reported using the Dashboard sometimes, while one person used it all the time. Table 2 summarizes the quantitative responses to 19 other closed-ended questions. We report results by the dimension intended to be measured (system usefulness, system/information quality, system efficiency and potential system improvements).

Table 2:

Summary of responses to 19 survey questions. XA=Extremely Agree, A=Agree, SA=Somewhat Agree, N=Neutral, SD=Somewhat Disagree, D=Disagree, XD=Extremely Disagree. Cells are shaded in proportion to the number of respondents.

System usefulness

The overwhelming majority of respondents appeared to consider the system useful. In response to the four questions about system usefulness, 49 responses were either extremely agree or agree, while only 15 were somewhat agree or lower. Ease of use, as well as the ease of learning how to use the Dashboard, was highly rated. This assessment was borne out by comments made during the interviews, which included:

“Just a button. Has a lot of info needed with one click.”

“Easy to get to but have to direct others to the link for the app. Intuitive once it is shown to them.”

System/information quality

Compared to system usefulness, system/information quality was rated lower. Here, the number of responses in the extremely agree and agree categories (n=79) was outweighed by ratings of somewhat agree or lower (n=97). Some statements, such as “It was easy to find the information I needed,” “The information provided by the Chest Pain Dashboard was easy to understand,” “The information provided by the Chest Pain Dashboard helped me complete my clinical tasks,” and “I liked using the Chest Pain Dashboard,” were rated fairly high. However, the handling of and recovery from errors were rated quite low. Two important statements, “The Chest Pain Dashboard has all the capabilities I expected it to have” and “Overall, I am satisfied with the Chest Pain Dashboard,” received mixed responses. Illustrative comments were:

“Computer system is a hindrance takes long time to load. Slower than expected, placement on Cerner nav. is low and need to search for it. Needs to be easily available.”

“Errors would be when there is ‘no data available’ but its accessible other ways. No solution presented.”

“Early experiences tainted with lack of functionality and may be biased when speaking on newer experiences.”

“It has the last document, which is nice. Wish it would automatically pull up last cardio clinic note. Most often pulls general notes and discharge notes. Need notes with all chest pain information, such as meds.”

The response patterns in the system/information quality dimension corresponded to the progress of our development efforts. First, while we rolled out the Dashboard with input from clinical informatics leadership at the IUH Methodist ED, we did not work closely with a large group of clinicians during development. Thus, most clinicians to whom the Dashboard was made available were unlikely to have had clear expectations about its capabilities or lack thereof. Second, the first version of the Dashboard had significant problems returning clinical documents, as well as other errors. We therefore expected the highly negative assessment of error handling. In the subsequent version of the Dashboard, deployed in April 2018, the number of errors was significantly reduced, as several participants noted in their comments.

System efficiency

The assessment of system efficiency was predominantly negative: only nine responses indicated extremely agree or agree with the statements about adequate response time and efficient use, while 23 responses were somewhat agree or lower. This is illustrated in the following comments:

“Takes ten to thirty seconds. Would like instantaneous response. Time outs and freezes the interface which makes me logout.”

“Twenty seconds is a long time, for a single EKG. Compared multiple EKGs using the old method and was faster than finding a single EKG on the Dashboard.”

Again, our experience during the early phases of development is consistent with these findings. In the first version of the Dashboard, response time typically ranged from 15 to 30 seconds, which was clearly unacceptable to clinicians. In the second version, rolled out in April 2018, we reduced response time to between 10 and 20 seconds, with a mean of 15 seconds.

Potential system improvements

Agreement with the statement that the system could be improved was strong. Ten responses extremely agreed or agreed with the statement “The system could be improved,” while only six were somewhat agree or neutral. Only two responses extremely agreed or agreed with the statement “The system is good as is,” while twelve agreed with the statement “I feel there are some drawbacks of using the Chest Pain Dashboard.”

The question “I plan to use the Chest Pain Dashboard for all patients where it is useful” had an overall mixed but fairly positive response.

Discussion

This project implemented a novel approach to integrating information from a health information exchange directly into the clinical workflow in an EHR. The Dashboard we developed improves on the way clinicians typically access HIE information by providing a set of relevant data, in our case focused on managing patients with chest pain, directly on a tab in Cerner. The key finding from our quantitative evaluation suggests that implementation of the Dashboard was associated with an increase in overall HIE usage in the intervention ED. While we used a robust study design, our findings are preliminary and should be interpreted cautiously.

A key issue that influenced both the quantitative and qualitative evaluations was that we were evaluating “a moving target.” After a suboptimal implementation in December 2017, we re-designed and re-implemented the Dashboard in April 2018. As such, our quantitative evaluation is based on data from the earlier, non-optimized version of the Dashboard. If anything, this should bias our findings toward the null (i.e., no change in HIE use in the intervention ED), yet we observed a significant increase. Further, we observed that clinicians also used the Dashboard in encounters where patients had chief complaints other than chest pain, such as abdominal pain, vaginal bleeding, gunshot wounds, intoxication, seizure, and flu. Given the broad utility of some of the clinical information retrieved by the Dashboard (e.g., discharge summaries), clinicians may perceive the Dashboard as an efficient way to access useful information in general. However, further investigation is required to test this hypothesis.

Findings from our qualitative interviews generally support this assessment. With regard to the implementation of the re-designed Dashboard, several of our respondents remarked during the interviews that the Dashboard “had gotten a lot better recently,” reflecting partial success of our software engineering efforts. In summarizing clinician attitudes about the Dashboard, co-author AB, who conducted the majority of the interviews, remarked: “They love the idea, we just have to implement it better.” The findings of our qualitative study bear out this assessment. Attitudes towards system usefulness were overwhelmingly positive, highlighting strong support for the method. While the view of system/information quality was partially positive, it was marred by less-than-optimal response time and errors in retrieving documents. Response time was also rated low in the system efficiency category, and the idea of potential system improvements was fairly uniformly supported.

A few key aspects should be considered when interpreting the findings of this evaluation. First, the Dashboard is a pilot development designed to evaluate the feasibility of integrating HIE-based information directly into an EHR. Since this was our first attempt to do so, we did not apply the level of software architecture design and engineering effort that would typically be expended on a production-grade implementation. Our first priority was to determine whether the concept would work, using the tools, infrastructure, and resources at hand. Second, this was the first production implementation of a FHIR app developed and implemented by the Regenstrief Institute, the IHIE, or IUH, either individually or collectively. Implementing the Dashboard, even as a pilot installation, required covering a large technical space, ranging from the development of a FHIR interface for the INPC and user interface design within Cerner to implementation across a significant number of inter-organizational technical platforms and new approaches to DevOps.

This pilot, however, provided strong support for the general idea, as well as a strong foundation for future development. As of this writing, we have implemented a total of seven chief complaints in the system and integrated other useful functionality suggested by clinical end users. In addition, we are revisiting the system design and software architecture to work towards a robust production implementation of the concept.

Conclusion

To address barriers to HIE use in the ED, we created a FHIR-based Dashboard that integrated clinical information from the HIE with the EHR. Findings from our preliminary analysis suggest that the Dashboard resulted in an increase in HIE use in the intervention ED compared to other EDs. Further, while clinicians recommended improvements and requested additional features, they generally found the Dashboard useful. Our study suggests that providing additional services tailored to specific needs may facilitate the overarching goal of greater interoperability.

Acknowledgements

The authors would like to acknowledge the following individuals and institutions: Regenstrief Institute Data Core for the provision of data; Eric Puster and Luca Calzoni for helpful comments on the manuscript; Laura Ruppert for data collection; Abena Gyasiwa for formatting and preparing the manuscript for submission; and the following funding sources: the IUPUI’s Office of the Vice Chancellor for Research Funding Opportunities for Research Commercialization and Economic Success (FORCES) program; NLM award 1R01LM012605-01A1 Enhancing information retrieval in electronic health records through collaborative filtering; the IUH Methodist Foundation; the Lilly Endowment, Inc., Physician Scientist Initiative; and the Clem MacDonald chair account.

References

- 1. Weiser M. The Computer for the 21st Century. ACM SIGMOBILE Mob Comput Commun Rev. 1999 Jul 1;3(3):3–11.

- 2. Kuperman GJ. Health-information exchange: Why are we doing it, and what are we doing? J Am Med Inform Assoc. 2011 Sep;18:678–82. doi: 10.1136/amiajnl-2010-000021.

- 3. Agrawal R, Grandison T, Johnson C, Kiernan J. Enabling the 21st century health care information technology revolution. Commun ACM. 2007 Feb;50(2):35–42.

- 4. American Medical Association. Improving care: priorities to improve electronic health record usability. Executive Summary. 2014.

- 5. Sheikh A, Sood HS, Bates DW. Leveraging health information technology to achieve the “Triple Aim” of healthcare reform. J Am Med Inform Assoc. 2015;22(4):849–56. doi: 10.1093/jamia/ocv022.

- 6. McGinn CA, Grenier S, Duplantie J, Shaw N, Sicotte C, Mathieu L, et al. Comparison of user groups’ perspectives of barriers and facilitators to implementing electronic health records: a systematic review. BMC Med. 2011 Dec 28;9(1):46. doi: 10.1186/1741-7015-9-46.

- 7. Patel V, Henry J, Pylypchuk Y, Searcy T. Interoperability among U.S. non-federal acute care hospitals in 2015 [Internet]. ONC Data Brief. 2016. pp. 1–11. Available from: https://dashboard.healthit.gov/evaluations/data-briefs/non-federal-acute-care-hospital-interoperability-2015.php.

- 8. Menachemi N, Rahurkar S, Harle CA, Vest J. The benefits of health information exchange: An updated systematic review. J Am Med Inform Assoc. 2018;0(June):1–7. doi: 10.1093/jamia/ocy035.

- 9. Sadoughi F, Nasiri S, Ahmadi H. The impact of health information exchange on healthcare quality and cost-effectiveness: a systematic literature review. Comput Methods Programs Biomed. 2018 Jul;161:209–32. doi: 10.1016/j.cmpb.2018.04.023.

- 10. Overhage JM, Dexter PR, Perkins SM, Cordell WH, McGoff J, McGrath R, et al. A randomized, controlled trial of clinical information shared from another institution. Ann Emerg Med. 2002 Jan;39(1):14–23. doi: 10.1067/mem.2002.120794.

- 11. Bender D, Sartipi K. HL7 FHIR: An agile and RESTful approach to healthcare information exchange. In: Proceedings of CBMS 2013, 26th IEEE International Symposium on Computer-Based Medical Systems; 2013.

- 12. Mandel JC, Kreda DA, Mandl KD, Kohane IS, Ramoni RB. SMART on FHIR: A standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc. 2016;23(5):899–908. doi: 10.1093/jamia/ocv189.

- 13. Sinha S, Jensen M, Mullin S, Elkin PL. Safe opioid prescription: A SMART on FHIR approach to clinical decision support. Online J Public Health Inform. 2017;9(2):e193. doi: 10.5210/ojphi.v9i2.8034.

- 14. Ganta K. Centricity™ Healthcare’s FHIR application enables ‘Blue Button’ integration between Beth Israel Deaconess Medical Center and Fenway Health. AMIA Annual Symposium; 2018; San Francisco, CA.

- 15. Hu PJ, Chau PYK, Sheng ORL, Tam KY. Examining the technology acceptance model using physician acceptance of telemedicine technology. J Manag Inf Syst. 1999 Sep 2;16(2):91–112.

- 16. Venkatesh V, Sykes TA, Zhang X. “Just what the doctor ordered”: A revised UTAUT for EMR system adoption and use by doctors. 2011 44th Hawaii International Conference on System Sciences; IEEE; 2011. pp. 1–10.

- 17. Seeman E, Gibson S. Predicting acceptance of electronic medical records: Is the technology acceptance model enough? SAM Adv Manag J. 2009;74(4).

- 18. Venkatesh V, Morris MG, Davis GB, Davis FD. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003;27(3):425–78.

- 19. DeLone WH, McLean ER. The DeLone and McLean Model of Information Systems Success: A ten-year update. J Manag Inf Syst. 2003;19(4):9–30.

- 20. DeLone WH, McLean ER. Information systems success: The quest for the dependent variable. Inf Syst Res. 1992;3(1):60–95.

- 21. Fruhling A, Lee S. Assessing the reliability, validity and adaptability of PSSUQ. AMCIS 2005 Proc 11th Am Conf Inf Syst; 2005. pp. 2394–402.

- 22. Lewis JR. Psychometric evaluation of the Post-Study System Usability Questionnaire: The PSSUQ. Proc Hum Factors Ergon Soc Annu Meet. 1992;36(16):1259–63.