Abstract

Dynamic frequency changes in sound provide critical cues for speech perception. Most previous studies examining frequency discrimination in cochlear implant (CI) users have employed behavioral tasks in which target and reference tones (differing in frequency) are presented statically in separate time intervals. Participants are required to identify the target frequency by comparing stimuli across these time intervals. However, perceiving dynamic frequency changes in speech requires detecting frequency changes within a single interval. This study explored the relationship between detection of within-interval frequency changes and speech perception performance of CI users.

Frequency change detection thresholds (FCDTs) were measured in 20 adult CI users using a 3-alternative forced-choice (3AFC) procedure. Stimuli were 1-sec pure tones (base frequencies of 0.25, 1, and 4 kHz) with frequency changes occurring 0.5 sec after tone onset. Speech tests were 1) Consonant-Nucleus-Consonant (CNC) monosyllabic word recognition, 2) Arizona Biomedical Sentence Recognition (AzBio) in Quiet, 3) AzBio in Noise (AzBio-N, +10 dB signal-to-noise ratio, SNR), and 4) Digits-in-noise (DIN). Participants’ subjective satisfaction with the CI was also obtained. Results showed that the correlations between FCDTs and all speech perception measures were statistically significant. Satisfaction with CI use was not related to FCDTs after controlling for major demographic factors. DIN speech reception thresholds were significantly correlated with AzBio-N scores.

The current findings suggest that the ability to detect within-interval frequency changes may play an important role in speech perception performance of CI users. The FCDT and DIN tests may serve as simple, rapid tools for predicting CI speech outcomes that require little or no linguistic background.

Keywords: cochlear implant, speech perception, frequency change detection

Introduction

The World Health Organization reported that, as of 2018, approximately 5% of the world’s population had disabling hearing loss. For individuals with bilateral severe-to-profound hearing loss, cochlear implantation has been an effective treatment. There are about 500,000 cochlear implant (CI) users worldwide (150,000 in the US). The number of CI users is expected to increase substantially in the near future because of broadened implant candidacy criteria, earlier diagnosis and treatment of hearing loss, improved CI technologies, and reduced costs (Carlson et al., 2012; Alice et al., 2013; Vlastarakos et al., 2014; Raine et al., 2016).

Despite the impressive ability of CIs to improve sound audibility and speech understanding in general, several major challenges remain in realizing the maximum benefit from CIs. One challenge is the substantial variability of CI speech outcomes, which reflects the complex interplay of patient demographics, electrode placement, and neural and cognitive function (Raine et al., 2016; Holder et al., 2018). To address this challenge efficiently in the clinic, simple assessment measures are needed that can quickly evaluate or predict CI outcomes. Such measures are increasingly valuable as more people are implanted and the demand for convenient patient care (e.g., telehealth, remote care, and patient self-service) rises (Cullington et al., 2018).

The other challenge in the CI field is the relatively poor performance of CI users in pitch-based listening tasks, largely due to technological constraints (Carlson et al., 2012). Specifically, while a healthy cochlea transmits the temporal and frequency information of sounds through approximately 3000 inner hair cells, CIs deliver a highly degraded version of this information because of signal processing (e.g., signal compression, bandpass filtering, temporal envelope extraction) and a small number (up to 22) of electrodes. The effective number of spectral channels for most CI users is likely fewer than 8, owing to factors including channel interactions and frequency-to-electrode mismatches (Fu et al., 2004). Signal processing also removes the temporal fine structure that normal hearing listeners can use to extract pitch information (Lorenzi et al., 2016). Furthermore, neural degeneration related to long-term deafness further compromises CI users’ ability to detect frequency differences (Sek & Moore, 1995; Moore, 1996).

The perception of speech and non-speech stimuli in our environment generally requires the auditory system to detect rapid frequency changes over time (e.g., voice fundamental frequency contours, spectral shapes of vowels, and formant transitions; Parikh & Loizou, 2005; Patel & Grigos, 2006; Cheang & Pell, 2008; Moore, 2008; McDermott et al., 2010). Given the relatively poor frequency resolution provided by a CI speech processor, it is not surprising that CI users typically perform poorly in pitch-related listening tasks, such as melody perception, speech prosody (e.g., mood) differentiation, talker gender identification, tone perception, and segregation of sounds from different sources or different talkers (Gfeller et al., 2002; Fu et al., 2004; Looi et al., 2004; Zeng et al., 2005; Gfeller et al., 2007; Chatterjee & Peng, 2008; Drennan & Rubinstein, 2008; Oxenham, 2008; See et al., 2013; Looi et al., 2015; Brant et al., 2018).

Examining the correlations between psychoacoustic measures of frequency perception and speech perception performance in CI users has important implications. First, such studies can reveal fundamental mechanisms underlying speech perception through the CI, which is important for finding effective strategies to improve speech outcomes (Lorenzi et al., 2006). Second, psychoacoustic measures that correlate with speech outcomes can serve as non-linguistic tasks to assess CI outcomes in patients who cannot perform speech tasks reliably (e.g., because of young age or cognitive impairment; Drennan et al., 2016; Winn et al., 2016).

There are different approaches to measuring frequency discrimination (Sek & Moore, 1995). One approach is to use a pitch discrimination or ranking task (Looi et al., 2004; Vandali et al., 2005; Zeng et al., 2005; Gfeller et al., 2007; Pretorius & Hanekom, 2008; Kang et al., 2009; Kenway et al., 2015; Brown et al., 2017). Such tasks generally present target and reference tones in 2 or 3 separate time intervals. Participants must either identify the target tone (which one is different) or judge the direction of pitch change between the target and reference tones (which one is higher in pitch). These tasks do not isolate the detection of a frequency change per se, because the auditory system must detect the onsets of both the target and reference tones and then compare them. Evidence has shown that different neural mechanisms are involved in detecting the onset of a stimulus and detecting a frequency change embedded in the stimulus (Dimitrijevic et al., 2008; Liang et al., 2016; Brown et al., 2017).

A second approach is to use a modulation or spectral ripple task. The modulation task examines the minimum modulation frequency of an amplitude- or frequency-modulated stimulus relative to an unmodulated reference (e.g., participants indicate which stimulus is modulated). Spectral ripple tasks determine the minimum detectable modulation depth, or spectral contrast, of a spectral ripple stimulus. Numerous studies have used these tasks to examine the spectral resolution of CI users (Zeng, 2002; Zeng et al., 2005; Won et al., 2007; Chatterjee & Peng, 2008; Landsberger, 2008; Kreft et al., 2013; Gifford et al., 2014; Lopez Valdes et al., 2014; Jeon et al., 2015; Winn et al., 2016; Brown et al., 2017). As with the first approach, these tasks require the participant to detect a perceptual difference between sequentially presented target and reference stimuli, and thus test the ability to detect cross-interval frequency changes.

A third approach, used here, examines detection of the minimum frequency change within stimuli that contain an embedded frequency change. To our knowledge, this stimulus paradigm has been used only in electrophysiological studies involving normal hearing listeners (Dimitrijevic et al., 2008; Pratt et al., 2009; Liang et al., 2016; Patel et al., 2016). Our previous study first applied this stimulus approach to examine auditory evoked potentials in CI users (Liang et al., 2018). The advantage of this approach is that it allows separate examination of the neural response evoked by the stimulus onset (e.g., the onset cortical auditory evoked potential) and the response evoked by the frequency change (e.g., the acoustic change complex). This approach may also better estimate listeners’ ability to detect frequency changes embedded in speech signals (such as formant transitions) by minimizing the interference of stimulus onset cues.

While pitch discrimination in CI users can be examined using direct electrical stimulation (Zeng, 2002; Kreft & Litvak, 2005; Donaldson et al., 2005; Reiss et al., 2007; Chatterjee & Peng, 2008; Kong et al., 2009; Zeng et al., 2014), tasks presented in the sound field can provide information on pitch perception through the sound processor, which is how CI users perceive speech in their daily lives (Wei et al., 2007; Pretorius & Hanekom, 2008; Vandali et al., 2015). The specific aim of this study was to examine CI users’ ability to detect within-interval frequency changes in tones presented in the free field and to explore the correlations between this ability and measures of speech perception.

Materials and Methods

Participants

Twenty adult CI users (12 females and 8 males; 20–83 years old) participated in this study (Table 1). All participants were right-handed, native English speakers without any history of neurological or psychological disorders. All participants wore devices from Cochlear Corporation (Sydney, Australia) and had bilateral severe-to-profound sensorineural hearing loss prior to implantation. Of the 20 users, 11 wore CIs bilaterally and 9 wore one CI only (6 of whom wore a hearing aid in the non-implanted ear). A total of 28 CI ears were tested separately (3 bilateral CI users were tested in one CI ear only). Of these 28 CI ears, 3 used hybrid devices (short electrodes) and the other 25 used devices with standard-length electrodes. All tested CI ears had been used for daily communication for at least 3 months. This study was approved by the Institutional Review Board of the University of Cincinnati. Participants gave written informed consent before participating and were paid for their participation.

Table 1.

Cochlear implant (CI) users’ demographics.

| CI ear ID | Gender | Ear tested | Current age (years) | Type of CI user | Type of CI | Duration of severe-to-profound deafness (years) | Duration of CI use (years) | Etiology |
|---|---|---|---|---|---|---|---|---|
| Sci104GG | M | R | 63.9 | Bilateral | Nucleus 6 | 10 | 1.5 | Meniere’s |
| | | L | | | Nucleus 6 | 10 | 0.25 | Meniere’s |
| Sci02TJ | F | R | 50.3 | Unilateral | Nucleus 6 | 44 | 12 | Meniere’s |
| Sci105CS | M | R | 41.3 | Bilateral | Nucleus 6 | 40 | 3 | Possibly ototoxic |
| | | L | | | Nucleus 6 | 10 | 2 | Possibly ototoxic |
| Sci106LK | F | L | 55.1 | Bilateral | Nucleus 6 | 55 | 16 | Unknown |
| | | R | | | Nucleus 6 | 55 | 4 | Unknown |
| Sci103MT | M | R | 60.3 | Unilateral* | Nucleus 6 | 20 | 12 | Possibly genetic |
| Sci107RF | M | R | 83.4 | Unilateral | Kanso | 22 | 2 | Noise |
| Sci108SA | F | L | 61.3 | Unilateral | Nucleus 6 | 16 | 2 | Possibly genetic |
| Sci101DR | M | L | 65.2 | Bilateral | Nucleus 6 | 9 | 6 | Noise |
| | | R | | | Nucleus 6 | 9 | 6 | Noise |
| Sci44LF | M | L | 44.8 | Bilateral | Nucleus 6 | 34 | 4 | Possibly ototoxic |
| | | R | | | Hybrid | 34 | 5 | Possibly ototoxic |
| Sci39JC | F | L | 50.8 | Bilateral | Nucleus 6 | 46 | 7 | Measles |
| | | R | | | Nucleus 6 | 46 | 6 | Measles |
| Sci109EJ | F | L | 48.3 | Unilateral | Nucleus 6 | 15 | 3 | Possibly genetic |
| Sci100MM | F | R | 47.6 | Unilateral | Nucleus 6 | 17 | 2 | Unknown |
| Sci113RM | F | R | 53.3 | Bilateral | Nucleus 6 | 47 | 15 | Possibly infection |
| Sci110AW | F | L | 66.7 | Unilateral | Nucleus 6 | 47 | 5 | Chronic ear infections |
| Sci115MC | F | R | 20.4 | Bilateral | Nucleus 6 | 18 | 0.25 | Meningitis |
| | | L | | | Nucleus 6 | 18 | 0.25 | Meningitis |
| Sci45LK | F | R | 51.4 | Bilateral | Nucleus 6 | 41 | 9 | Unknown |
| Sci35KM | F | L | 65.7 | Unilateral | Nucleus 5 | 41 | 7 | Ototoxic |
| Sci46MF | M | R | 32.2 | Bilateral | Nucleus 5 | 11 | 10 | Trauma |
| Sci18RL | M | L | 44.5 | Unilateral | Nucleus 5 | 44 | 9 | Unknown |
| Sci114KZ | F | L | 65.0 | Bilateral | Hybrid | 1.5 | 0.5 | Possibly genetic |
| | | R | | | Hybrid | 5 | 4 | Possibly genetic |

Procedure

Participants’ hearing thresholds were first measured with pulsed tones to ensure audibility of sounds presented through their CI processors. The psychoacoustic task (frequency change detection) and speech tasks (CNC words, AzBio sentences in Quiet, AzBio sentences in Noise, and the Digit-in-Noise test) were then conducted in randomized order. All tests were performed in a double-walled, sound-treated room. Acoustic stimuli were presented at approximately 70 dBA from a loudspeaker located approximately 4 ft from the participant at head height and 0° azimuth. CI users were allowed to adjust their processor sensitivity to the most comfortable setting. A questionnaire was administered to collect demographic data and a rating of satisfaction with CI use in daily life (1–10, with 10 representing the greatest satisfaction). Bilateral CI users answered the questions for each ear. All procedures were completed for all 28 CI ears except 4, for which AzBio in Noise, the DIN test, and the satisfaction rating were not completed.

Psychoacoustic task

A series of pure-tone bursts (0.25, 1, and 4 kHz; 1-sec duration; 20-ms raised-cosine ramps) was generated in MATLAB at a sampling rate of 44.1 kHz. A second series of tones with the same base frequencies contained an upward frequency step 0.5 sec after onset. Step magnitude varied from 0.5% to 200%, and the step occurred after an integer number of base-frequency cycles. The change occurred at 0° phase (a zero crossing) to prevent audible transient clicks (Dimitrijevic et al., 2008). The amplitudes of all stimuli were equalized.
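The stimulus construction described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: the function name `step_tone`, the 0.5 peak amplitude, and snapping the change time to the nearest whole number of base-frequency cycles are our own choices. Because the stepped segment restarts at 0° phase exactly when the base tone completes a whole cycle, the waveform stays continuous at the step, so no click-like discontinuity is introduced. Passing `step_pct=0` yields the standard (no-change) tone.

```python
import numpy as np


def step_tone(base_hz, step_pct, fs=44100, dur_s=1.0, change_s=0.5,
              ramp_s=0.02, amp=0.5):
    """Pure tone with an upward frequency step; step_pct=0 gives the standard tone.

    Returns (waveform, actual change time in seconds). Illustrative sketch only.
    """
    t = np.arange(int(round(dur_s * fs))) / fs

    # Snap the change time to a whole number of base-frequency cycles so the
    # switch happens at 0 deg phase (a zero crossing of the base tone).
    n_cycles = int(round(change_s * base_hz))
    t_change = n_cycles / base_hz
    new_hz = base_hz * (1.0 + step_pct / 100.0)

    before = np.sin(2 * np.pi * base_hz * t)
    after = np.sin(2 * np.pi * new_hz * (t - t_change))  # restarts at 0 deg phase
    x = np.where(t < t_change, before, after)

    # 20-ms raised-cosine onset and offset ramps.
    n_ramp = int(round(ramp_s * fs))
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    env = np.ones_like(x)
    env[:n_ramp] = ramp
    env[-n_ramp:] = ramp[::-1]

    # Same peak amplitude for every stimulus (amplitude equalization).
    return amp * x * env, t_change


if __name__ == "__main__":
    standard, _ = step_tone(1000, 0.0)
    target, t_change = step_tone(1000, 10.0)  # 1-kHz tone with a 10% upward step
    print(t_change, len(target))
```

With a 0.5-sec change point, all three base frequencies (0.25, 1, and 4 kHz) complete a whole number of cycles (125, 500, and 2000), so the snapped change time stays at exactly 0.5 sec.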

Three base frequencies (0.25, 1, and 4 kHz) were used in the present study. The 0.25-kHz frequency is within the range of the fundamental frequency (F0) of the human voice. In the frequency allocation map, 0.25, 1, and 4 kHz are assigned to electrodes in the apical, middle, and basal regions of the cochlea, respectively (Skinner et al., 2002). Thus, using these three base frequencies may reveal differences or similarities in how the auditory system processes frequency changes in the low- and high-frequency ranges (Pratt et al., 2009).

Stimuli were presented using Angel Sound (http://angelsound.tigerspeech.com/). An adaptive 3-alternative forced-choice (3AFC) procedure was used to measure the minimum frequency change the participant could detect. Each trial included one target stimulus (a tone with a frequency change in the middle) and two standard stimuli (the same tone without a frequency change). The order of standard and target stimuli was randomized, and the interval between stimuli within a trial was 0.5 sec. Participants were instructed to choose the target (the stimulus that differed from the other two) by clicking the corresponding button on the computer screen; no feedback was given. The target in the first trial always contained a frequency change of 18% above the base frequency; the size of the change was then adjusted according to a transformed up-down staircase based on the participant’s responses. A 2-down 1-up staircase was used to track the 79% correct point on the psychometric function. Each change in the direction of the staircase was counted as a reversal. Any run with fewer than 6 reversals in 35 trials was discarded and repeated. The frequency change detection threshold (FCDT) at each base frequency was calculated as the average of the last 6 reversals. The order of the 3 base-frequency conditions was randomized and counterbalanced across participants.
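The adaptive track can be sketched as follows. The text specifies the 18% starting change, the 2-down 1-up rule, the 6-reversal minimum within 35 trials, and the mean of the last 6 reversals; everything else here is an assumption, including the multiplicative step factor of √2, running exactly 35 trials, clamping to the 0.5–200% stimulus range, and the hypothetical `simulated_listener` used only to exercise the code. For reference, a 2-down 1-up rule nominally converges on about 70.7% correct, whereas about 79.4% corresponds to a 3-down 1-up rule.

```python
import random
from statistics import mean


def run_staircase(respond, start_pct=18.0, factor=2 ** 0.5, n_trials=35,
                  n_rev_avg=6, min_rev=6, lo_pct=0.5, hi_pct=200.0):
    """2-down 1-up adaptive track on the frequency-change size (in %).

    respond(step_pct) -> True if the listener picked the target interval.
    Returns (threshold or None, list of step sizes at each reversal).
    """
    step = start_pct
    n_in_row = 0
    direction = 0  # -1 = track moving down (harder), +1 = moving up (easier)
    reversals = []
    for _ in range(n_trials):
        if respond(step):
            n_in_row += 1
            if n_in_row < 2:
                continue  # need two consecutive correct responses to step down
            n_in_row = 0
            if direction == +1:
                reversals.append(step)  # up -> down reversal
            direction = -1
            step = max(lo_pct, step / factor)
        else:
            n_in_row = 0
            if direction == -1:
                reversals.append(step)  # down -> up reversal
            direction = +1
            step = min(hi_pct, step * factor)
    if len(reversals) < min_rev:
        return None, reversals  # run would be discarded and repeated
    return mean(reversals[-n_rev_avg:]), reversals


def simulated_listener(true_thr_pct, slope=3.0, seed=0):
    """Hypothetical 3AFC observer: chance = 1/3, logistic in log step size."""
    rng = random.Random(seed)

    def respond(step_pct):
        p_detect = 1.0 / (1.0 + (true_thr_pct / step_pct) ** slope)
        p_correct = 1.0 / 3.0 + (2.0 / 3.0) * p_detect
        return rng.random() < p_correct

    return respond


if __name__ == "__main__":
    thr, revs = run_staircase(simulated_listener(true_thr_pct=5.0))
    print(thr, len(revs))
```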

Speech tests

Consonant-Nucleus-Consonant (CNC) Word Recognition Test:

The CNC word recognition test was administered to assess open-set monosyllabic word recognition in quiet. The CNC test is part of the Minimum Speech Test Battery (MSTB) recommended by clinicians and researchers for adult CI users (Firszt et al., 2002; Gifford et al., 2015). The test contains 500 monosyllabic (consonant-vowel-consonant) words divided into ten phonemically balanced lists of 50 words. Each recorded word is spoken by the same male speaker and is preceded by the carrier word “Ready,” with three practice words at the beginning of each list. In this study, one CNC word list was administered to each CI ear. Participants were instructed to repeat each word they heard and to guess if unsure. Responses were recorded manually by the experimenter and scored as percent correct for words and phonemes.

Arizona Biomedical Sentence Recognition Test in Quiet (AzBio-Q):

The AzBio sentence test (Spahr et al., 2012) is another speech perception measure commonly used in CI studies (Massa & Ruckenstein, 2014; Dorman et al., 2015; Roland et al., 2016; Holder et al., 2018). The AzBio sentences are recorded in a conversational speaking style by two male and two female talkers, contain limited contextual cues, and are organized into 33 lists equated for intelligibility. In this study, each CI ear was presented with one 20-sentence list, and participants were instructed to repeat each sentence they heard and to guess if unsure. Responses were recorded manually by the experimenter and expressed as percent correct.

Arizona Biomedical Sentence Recognition Test in Noise (AzBio-N):

AzBio sentences presented in noise (multi-talker babble) approximate real-world listening environments. A +10 dB signal-to-noise ratio (SNR) was chosen to avoid ceiling or floor effects in CI users (Dorman et al., 2012, 2015; Brant et al., 2018). Responses were recorded manually by the experimenter and expressed as percent correct.

Digit-in-Noise Test (DIN):

The DIN (Smits et al., 2013) is a simple test of speech perception in noise in which digit triplets (e.g., 2, 3, 5) are presented in speech-shaped noise. The targets are the digits 0–9, which impose a low cognitive demand and require no complicated vocabulary, so the test can be easily administered to a broad range of patients. The DIN also shows little training effect and high test-retest reliability. It has been normed in multiple languages and several regional English accents and can thus be used with individuals from different linguistic backgrounds. Variants of the DIN have been used in several CI studies (Kaandorp et al., 2015; Cullington & Agyemang-Prempeh, 2017). In this study, the DIN stimuli were generated with an internet app (provided by hearX Group) and presented adaptively (2-dB SNR step size) over 25 trials. After each trial, participants typed the 3 digits they heard on the computer keyboard, which bypassed the need for the experimenter to interpret spoken responses. Results were expressed as the speech reception threshold (SRT) in dB SNR, i.e., the SNR corresponding to 50% intelligibility.
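The adaptive DIN track and SRT estimate can be sketched as below. The hearX app's exact algorithm is not described here, so the following are assumptions: a 0-dB SNR starting level, whole-triplet scoring (a trial counts as correct only if all three digits are typed in order), a 1-down 1-up rule (which targets 50% correct), and an SRT computed as the mean SNR over the last 20 of the 25 trials. The names `triplet_correct` and `din_srt` are hypothetical.

```python
from statistics import mean


def triplet_correct(presented, typed):
    """Score one trial: correct only if all three digits match, in order (assumed rule)."""
    return [int(c) for c in typed if c.isdigit()] == list(presented)


def din_srt(trial_correct, start_snr_db=0.0, step_db=2.0, n_avg=20):
    """1-down/1-up SNR track, which converges on ~50% of triplets correct.

    trial_correct: sequence of booleans, one per trial (25 in this study).
    Returns (SRT in dB SNR, list of presented SNRs).
    """
    snr = start_snr_db
    presented = []
    for correct in trial_correct:
        presented.append(snr)
        # Harder (lower SNR) after a correct triplet, easier after an error.
        snr = snr - step_db if correct else snr + step_db
    return mean(presented[-n_avg:]), presented


if __name__ == "__main__":
    # Hypothetical response pattern for a 25-trial run (True = triplet correct).
    pattern = [True, True, True, False, True, False, True, True, False, False,
               True, False, True, False, True, True, False, True, False, False,
               True, False, True, False, True]
    srt, snrs = din_srt(pattern)
    print(f"SRT = {srt:.1f} dB SNR")
```

Typing responses on a keyboard makes the scoring step a plain string comparison, which is what removes experimenter interpretation from the measurement.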

Data Analysis

For each participant, the hearing threshold (dB nHL) at each test frequency, the FCDT (%) at each base frequency, the percent correct scores for the CNC and AzBio tests, and the SRT (dB) for the DIN test were obtained. Descriptive statistics were computed for each dependent variable. Hearing thresholds at different frequencies were compared using analysis of variance (ANOVA). The effects of demographic factors on hearing thresholds were further analyzed with a mixed-effects model. Relationships between the FCDT and CI outcomes (speech tests and satisfaction level) were assessed using linear regression models that controlled for demographic factors. Data were analyzed using SAS (Statistical Analysis System; SAS Institute, Inc., Cary, North Carolina). For all comparisons and correlations, p < 0.05 was considered statistically significant, with p values corrected for multiple comparisons and correlations.
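The covariate-adjusted analysis can be illustrated with an ordinary least squares sketch in NumPy (the study itself used SAS). The F statistic for the FCDT compares a reduced model containing only an intercept and the demographic covariates with a full model that adds log FCDT. Coding categorical covariates such as ear tested or gender as 0/1 columns, and the synthetic data in the usage block, are assumptions for illustration only. A p value could then be obtained from the F distribution with 1 and `df_resid` degrees of freedom (for example, with `scipy.stats.f.sf`).

```python
import numpy as np


def partial_f(y, x, covariates):
    """Partial F test for predictor x in an OLS model with an intercept.

    covariates: 2-D array (n, k) of demographic columns; k may be 0.
    Returns (F with 1 and df_resid degrees of freedom, df_resid, R^2 of full model).
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    C = np.asarray(covariates, dtype=float)
    if C.ndim == 1:
        C = C[:, None]
    reduced = np.column_stack([np.ones(n), C])                    # intercept + covariates
    full = np.column_stack([reduced, np.asarray(x, dtype=float)])  # ... + predictor

    def rss(X):
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)
        resid = y - X @ beta
        return float(resid @ resid)

    rss_red, rss_full = rss(reduced), rss(full)
    df_resid = n - full.shape[1]
    f_stat = (rss_red - rss_full) / (rss_full / df_resid)
    r2 = 1.0 - rss_full / float(np.sum((y - y.mean()) ** 2))
    return f_stat, df_resid, r2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 28
    log_fcdt = np.log10(rng.uniform(1, 40, n))  # synthetic, not study data
    ear_right = rng.integers(0, 2, n)          # 0 = left, 1 = right (assumed coding)
    age = rng.uniform(20, 85, n)
    cnc = 90 - 40 * log_fcdt + rng.normal(0, 8, n)
    print(partial_f(cnc, log_fcdt, np.column_stack([ear_right, age])))
```

With no covariates, the returned F equals the squared t statistic of the simple regression, r²(n−2)/(1−r²), which is a convenient sanity check.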

Results

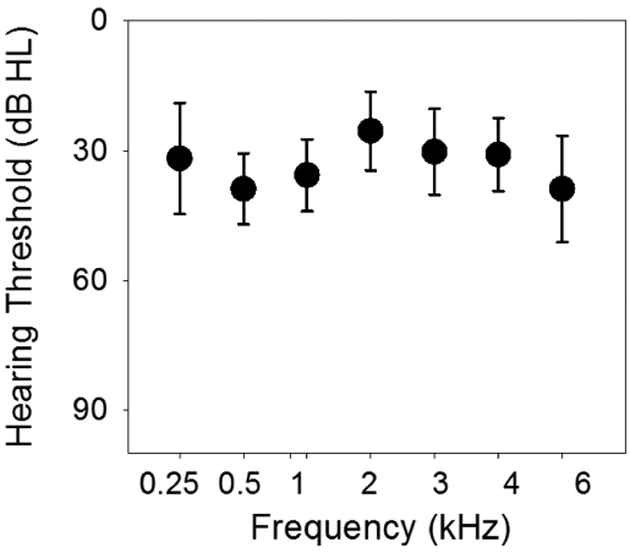

Using their CI(s), participants could generally hear pulsed tones up to 6 kHz with sensitivity equivalent to a mild hearing loss. Figure 1 shows the means and standard deviations of pulsed-tone thresholds at 0.25, 0.5, 1, 2, 4, and 6 kHz. Mean thresholds were approximately 30 dB HL at all frequencies. A one-way repeated-measures ANOVA showed no significant difference in hearing threshold across frequencies (F(6,163)=0.11, p=0.99).

Figure 1.

Mean pulsed-tone thresholds at 0.25, 0.5, 1, 2, 4, and 6 kHz in CI ears (n=24). Means (circles) and standard deviations (error bars) are plotted.

A mixed-effects model was used to model hearing threshold as a function of test frequency, adjusting for demographic variables, with an ear-specific random effect to account for repeated measurements on the same ear. Age had a significant effect on threshold (t=2.19, p=0.03), with higher thresholds in older participants. Duration of CI use also had a significant effect (t=−2.91, p=0.004), with lower thresholds in CI ears with longer CI use.

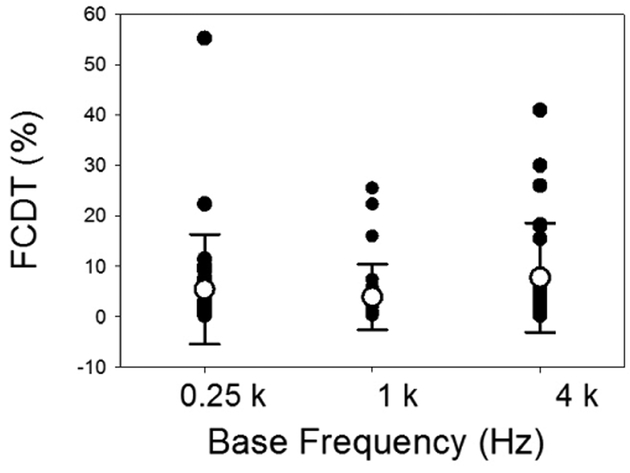

Figure 2 shows frequency change detection thresholds (FCDTs) for individual CI ears and the mean FCDT at the 3 base frequencies. Mean FCDTs were 5.48%, 3.94%, and 7.78% for 0.25, 1, and 4 kHz, respectively. FCDTs varied widely among CI users: most ears had an FCDT below 10%, while 3–7 ears, depending on the base frequency, had an FCDT above 10%. Some ears showed fine sensitivity at every base frequency. A one-way repeated-measures ANOVA was used to test the effect of base frequency on the FCDT; the effect was not significant [F(2,82)=2.03, p=0.14]. Because FCDTs did not differ across base frequencies, the FCDTs at the 3 base frequencies were averaged for the correlation analyses that follow.

Figure 2.

The frequency change detection thresholds (FCDTs) at 0.25, 1, and 4 kHz base frequencies in individual CI ears (n=28). Means (open circles) and standard deviations (error bars) are also plotted.

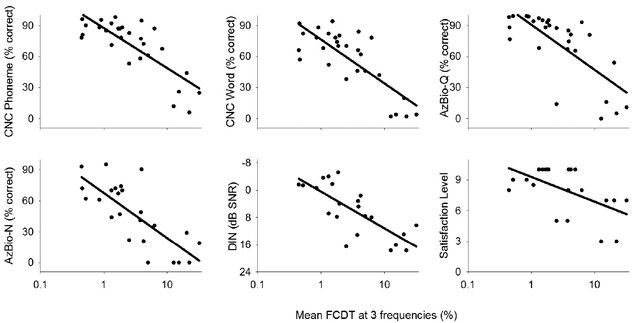

To examine the relationship between FCDTs and each outcome measure, Figure 3 plots speech perception performance and satisfaction level as a function of each individual’s mean FCDT (note the log scale). Six correlations were assessed using linear regression analysis. A Bonferroni correction for multiple correlations was applied, giving a corrected significance level of 0.0083 (0.05/6). The FCDT was significantly correlated with all speech measures and with satisfaction level. An FCDT criterion of 10% separated most moderate-to-good performers (>60% for CNC and AzBio-Q; >30% for AzBio-N; <10 dB for the DIN test) from poor performers. Similarly, the 10% criterion separated most CI users with a high satisfaction level (>8) from those with a low satisfaction level (<8).

Figure 3.

Scatterplots of CI outcomes (speech performance and satisfaction level) vs. FCDT (log scale) in CI users. Solid black lines show linear regression fits (see Table 2 for fitting parameters).
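The log-scale fits in Figure 3 and the corrected significance level can be sketched as follows. This is an illustrative NumPy version, not the authors' SAS analysis; `fit_on_log_fcdt` and `is_significant` are hypothetical helper names, and the check uses only a straight-line fit against log10(FCDT).

```python
import numpy as np

ALPHA = 0.05
N_TESTS = 6                        # six correlations assessed
ALPHA_CORRECTED = ALPHA / N_TESTS  # Bonferroni: 0.05 / 6, about 0.0083


def fit_on_log_fcdt(fcdt_pct, outcome):
    """Straight-line fit of an outcome against log10(FCDT), as on a log-axis scatterplot.

    Returns (slope per decade of FCDT, intercept at FCDT = 1%, Pearson r).
    """
    x = np.log10(np.asarray(fcdt_pct, dtype=float))
    y = np.asarray(outcome, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    r = float(np.corrcoef(x, y)[0, 1])
    return float(slope), float(intercept), r


def is_significant(p_value, alpha=ALPHA_CORRECTED):
    """Compare a p value against the Bonferroni-corrected level."""
    return p_value < alpha
```

Fitting on log10(FCDT) rather than raw percentages keeps a few very large thresholds (above 40%) from dominating the regression line.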

Linear regression models were further used to model CNC word, CNC phoneme, AzBio-Q, AzBio-N, DIN, and satisfaction scores on mean FCDTs (on a log scale), controlling for 6 major demographic variables (ear tested, type of CI user, gender, age at test, duration of deafness, and duration of CI use). After adjusting for demographic variables, the mean FCDT significantly predicted CNC word (F=35.9, p<0.0001), CNC phoneme (F=34.52, p<0.0001), AzBio-Q (F=28.59, p<0.0001), AzBio-N (F=24.73, p=0.0001), and DIN (F=13.71, p<0.0024) scores. Duration of deafness contributed significantly to the model for AzBio-N, with longer durations of deafness associated with poorer performance; ear tested contributed significantly to the models for AzBio-Q and DIN, with left CI ears performing more poorly than right ears. After controlling for demographic factors, the mean FCDT was no longer significantly correlated with satisfaction (F=4.07, p=0.06). Table 2 summarizes the linear regression analyses of the CI outcome measures vs. the mean FCDT.

Table 2.

Linear regression analysis of various CI outcome measures vs. the mean FCDT.

| Predictor | Statistic | CNC phoneme | CNC word | AzBio-Q | AzBio-N | DIN | Satisfaction level |
|---|---|---|---|---|---|---|---|
| Mean FCDTs | R² | 0.72 | 0.71 | 0.71 | 0.74 | 0.74 | 0.47 |
| Mean FCDTs | p | <0.0001* | <0.0001* | <0.0001* | 0.0001* | 0.002* | 0.06 |
| Ear tested | p | 0.173 | 0.132 | 0.048* | 0.475 | 0.046* | 0.369 |
| Type of CI user | p | 0.148 | 0.235 | 0.065 | 0.186 | 0.525 | 0.340 |
| Gender | p | 0.444 | 0.994 | 0.610 | 0.574 | 0.413 | 0.856 |
| Age | p | 0.683 | 0.850 | 0.955 | 0.064 | 0.193 | 0.998 |
| Dur. of deafness | p | 0.219 | 0.546 | 0.307 | 0.034* | 0.542 | 0.347 |
| Dur. of CI use | p | 0.536 | 0.618 | 0.398 | 0.264 | 0.675 | 0.962 |

Note. Statistical results reported are those after controlling for 6 major demographic variables: ear tested (left and right), type of CI user (unilateral and bilateral), gender (male and female), age (in years), duration of deafness (in years), and duration of CI use (in years).

* Indicates a statistically significant relationship.

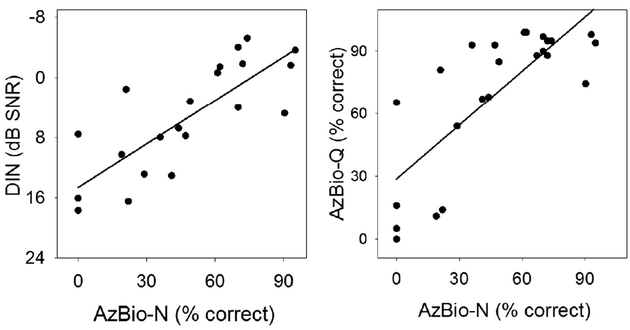

The correlations between DIN and AzBio-N (Figure 4, left) and between AzBio-Q and AzBio-N (Figure 4, right) were also highly significant. These data support the validity of the AzBio-N measure relative to the more psychophysically robust DIN. They further show that individuals who performed well in AzBio-Q also performed well in AzBio-N, although, as expected, performance was poorer in noise than in quiet.

Figure 4.

Scatterplots of DIN (left) and AzBio-Q (right) as a function of AzBio-N in CI users. Solid black lines represent linear regression fits (see Table 2 for fitting parameters).

Discussion

Our findings show that the ability to detect within-interval frequency changes is significantly correlated with CI outcomes (speech performance and, before adjustment for demographic factors, satisfaction with CI use). CI speech performance can be predicted from the FCDT and DIN tests, which have no or minimal language requirements.

Frequency discrimination in CI users

In CI users, neural deficits resulting from long-term deafness and the limitations of CI speech processing substantially limit spectral resolution, resulting in poor frequency discrimination (Fu et al., 2004; Chatterjee & Peng, 2008; Strelcyk & Dau, 2009). This study confirmed our previous findings that CI users’ FCDTs are substantially worse than those of normal hearing listeners (Liang et al., 2016; Liang et al., 2018). FCDTs varied substantially across CI users, from approximately 1% in some ears to greater than 40% in others.

Previous studies using other methods have also reported much poorer frequency discrimination in CI users than in normal hearing listeners. For instance, Goldsworthy (2015) reported that CI users’ pitch discrimination thresholds ranged between 1.5% and 9.9% for pure tones (0.5, 1, and 2 kHz) and between 2.6% and 28.5% for complex tones (fundamental frequencies of 110, 220, and 440 Hz). Wei et al. (2007) reported that the average frequency difference limen (FDL) in CI users was about 100 Hz regardless of the standard frequency (octave frequencies from 125 to 4000 Hz), whereas normal hearing listeners showed an FDL of 2–3 Hz at frequencies below 500 Hz and an increasing FDL for frequencies above 1000 Hz. Gfeller et al. (2002) reported that CI users had a mean discrimination threshold for complex tones (F0 between 73 and 553 Hz) of 7.56 semitones (range 1–24 semitones; 1 semitone is approximately a 6% change), significantly worse than that of normal hearing listeners (mean 1.12 semitones, range 1–2 semitones). Kang et al. (2009) reported that the FDL for CI users ranged from one to eight semitones, whereas normal hearing listeners can easily perceive a one-semitone pitch change.
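Because the studies above report thresholds in different units (percent, Hz, semitones), a small conversion helper makes them easier to compare with the FCDTs reported here. This is a sketch for illustration; the function names are hypothetical. A semitone corresponds to a frequency ratio of 2^(1/12), and a fixed difference limen in Hz corresponds to a smaller percentage at higher base frequencies.

```python
import math


def semitones_to_pct(st):
    """Percent frequency change corresponding to st semitones (ratio 2**(st/12))."""
    return (2.0 ** (st / 12.0) - 1.0) * 100.0


def pct_to_semitones(pct):
    """Semitones corresponding to an upward change of pct percent."""
    return 12.0 * math.log2(1.0 + pct / 100.0)


def hz_to_pct(delta_hz, base_hz):
    """Express a difference limen in Hz as a percentage of the base frequency."""
    return 100.0 * delta_hz / base_hz


if __name__ == "__main__":
    print(hz_to_pct(100, 1000))               # 10.0
    print(hz_to_pct(100, 4000))               # 2.5
    print(round(semitones_to_pct(1), 2))      # 5.95
    print(round(pct_to_semitones(5.48), 2))   # 0.92
```

For example, a 100-Hz difference limen corresponds to a 10% change at 1 kHz but only a 2.5% change at 4 kHz, and the mean FCDT of 5.48% at 0.25 kHz is a little under one semitone.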

Note that, although multiple studies have reported poorer frequency discrimination in CI users than in normal hearing listeners, the exact values vary among studies. This variation may arise from 1) heterogeneity of the CI participants recruited, 2) differences in the frequency discrimination tasks described earlier (pitch discrimination, pitch ranking, frequency change detection, etc.), 3) differences in stimulus type (pure vs. complex tones, acoustic vs. electric stimuli), and 4) differences in the reference frequencies chosen. In this study, we used base frequencies of 0.25, 1, and 4 kHz, which are assigned to electrodes in the apical, middle, and basal regions of the electrode array, respectively. However, no effect of base frequency was found, consistent with a previous study using a frequency discrimination task (Turgeon et al., 2014). A previous study reported that frequency discrimination can be influenced by the position of the stimulus frequency relative to the filter response curves in the CI output (Pretorius & Hanekom, 2008). Further research is needed to examine how the position of the base frequency affects frequency change detection.

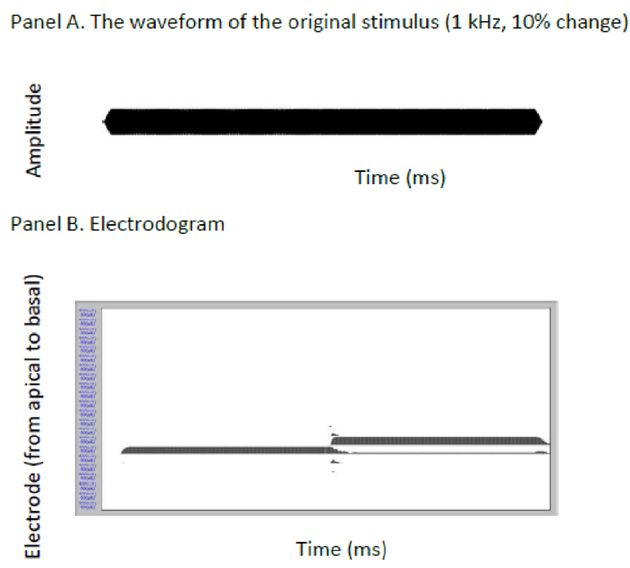

Although the variability in FCDTs was substantial, some good performers had FCDTs of approximately 1%, suggesting that these listeners are able to use the frequency change cues provided by the CI. At the CI speech processing stage, the acoustic signal is split into a number of contiguous frequency bands by band-pass filters before further processing. Detection of a frequency change embedded in a pure tone in the current study likely relies on temporal rate cues resulting from changes in signal intensity along the filter response curves in the CI output (different intensities evoke different neural activation patterns and neural timing; Eggermont, 2001) and/or place cues resulting from the activation of different electrodes (Pretorius & Hanekom, 2008). This speculation is supported by the electrodogram of the stimulus, which illustrates the CI output obtained by passing the original stimulus through an experimental speech processor. Figure 5 shows an example stimulus used in this study (a 1-kHz pure tone containing a 10% change) and its corresponding electrodogram. The electrodogram was created using the default stimulation parameters for Cochlear Corporation’s Freedom device: the Advanced Combination Encoder (ACE) strategy, 900 pulses per second (pps) per electrode, the default frequency allocation (input frequency range: 188–7988 Hz), 8 maxima, etc. (Galvin et al., 2008). The CI transmits both temporal cues (intensity changes) and place cues (electrode changes) at the time point where the frequency change occurs in the original stimulus.

Figure 5.

An example stimulus used in this study (top) and the corresponding electrodogram (CI stimulation patterns, bottom). The electrodogram was created using the default stimulation parameters for Cochlear Corporation’s Freedom device: ACE strategy, 900 pulses per second (pps) per electrode, default frequency allocation (input frequency range: 188–7988 Hz), 8 maxima, etc.
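The place-plus-level argument can be illustrated with a deliberately simplified filter-bank sketch. It is not Cochlear's filter bank or the ACE implementation: the 22 log-spaced bands spanning the 188–7988 Hz input range, the Gaussian filter shape on a log-frequency axis with a 0.35-octave standard deviation, and the helper names are all assumptions. Channels are indexed from lowest to highest frequency, which is the reverse of Cochlear's electrode numbering (electrode 22 is most apical). The sketch computes each channel's relative output for the 1-kHz base tone and for the tone after a 10% step, then picks the 8 largest outputs as an n-of-m strategy would.

```python
import numpy as np


def toy_channel_levels(freq_hz, n_channels=22, f_lo=188.0, f_hi=7988.0,
                       sd_oct=0.35):
    """Relative output of each channel of a toy filter bank to a pure tone.

    Hypothetical: log-spaced bands and Gaussian (on log-frequency) filter
    shapes; channel 0 is the lowest-frequency band.
    """
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)
    centres = np.sqrt(edges[:-1] * edges[1:])       # geometric band centres
    dist_oct = np.log2(freq_hz / centres)            # distance from each centre (octaves)
    return np.exp(-0.5 * (dist_oct / sd_oct) ** 2)   # overlapping filter skirts


def select_maxima(levels, n_maxima=8):
    """Channels an n-of-m strategy would stimulate in this frame (largest outputs)."""
    return sorted(np.argsort(levels)[-n_maxima:].tolist())


if __name__ == "__main__":
    before = toy_channel_levels(1000.0)  # base tone
    after = toy_channel_levels(1100.0)   # after a 10% upward step
    print("peak channel:", int(np.argmax(before)), "->", int(np.argmax(after)))
    print("selected:", select_maxima(before), select_maxima(after))
    print("level change per channel:", np.round(after - before, 2))
```

In this toy model the same eight channels are selected before and after the step, but the peak shifts up by one channel and the relative levels across channels change, loosely mirroring the combined place and level cues visible in the electrodogram.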

Speech perception in CI users

Speech perception performance varied widely across CI users. The means and standard deviations were 58.04% ± 28.66% for CNC words, 71.90% ± 31.98% for AzBio-Q, 47.26% ± 30.62% for AzBio-N, and 5.90 ± 7.50 dB SRT for the DIN test. For all tasks, approximately 60–75% of CI ears performed reasonably well (>60% for CNC, >60% for AzBio-Q, >30% for AzBio-N, and an SRT <10 dB for the DIN test).
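The summary statistics above can be reproduced with a short Python sketch. The criteria are those stated in the text; the scores in the usage line are hypothetical and are not data from this study, and the use of the sample standard deviation (n − 1) is an assumption because the text does not state which estimator was used.

```python
import statistics

# Criteria for "performing reasonably well", as stated in the text.
# Percent-correct tests use ">", while the DIN SRT (dB SNR) uses "<"
# because a lower speech reception threshold indicates better performance.
CRITERIA = {
    "CNC": lambda score: score > 60.0,
    "AzBio-Q": lambda score: score > 60.0,
    "AzBio-N": lambda score: score > 30.0,
    "DIN": lambda srt_db: srt_db < 10.0,
}


def summarize(test: str, scores: list[float]) -> tuple[float, float, float]:
    """Return (mean, sample SD, proportion of ears meeting the criterion)."""
    meets = sum(1 for s in scores if CRITERIA[test](s))
    # statistics.stdev uses the n - 1 (sample) denominator; assumed here.
    return statistics.mean(scores), statistics.stdev(scores), meets / len(scores)


# Hypothetical CNC scores (% correct) for five ears; NOT data from this study.
mean, sd, prop = summarize("CNC", [20.0, 48.0, 64.0, 72.0, 88.0])
print(f"CNC: {mean:.2f}% ± {sd:.2f}, {prop:.0%} of ears above criterion")
```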

Performance on AzBio-Q was significantly correlated with performance on AzBio-N (r = 0.78). A recent study (Brant et al., 2018) reported a similarly strong correlation (r = 0.77, p < 0.0001) between AzBio-Q and AzBio-N (+10 dB SNR) in CI users. Such a correlation suggests that the two tasks rely on common underlying mechanisms. However, performance in noise was lower than that in quiet. Whereas temporal envelope cues are sufficient to support reasonably good speech perception in quiet, speech perception in noise relies on temporal fine structure cues, which are missing from the outputs of contemporary CIs (Lorenzi et al., 2006).
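For reference, the Pearson coefficient reported above can be computed as in the following Python sketch (a textbook formula, not the authors' analysis code). Squaring r gives the proportion of shared variance: r = 0.78 implies that about 61% of the variance in AzBio-N scores is shared with AzBio-Q scores (r² ≈ 0.61), and the Brant et al. (2018) value of 0.77 implies about 59%.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)  # undefined if either sample is constant


# Shared variance (r squared) implied by the coefficients reported in the text.
for source, r in [("current study", 0.78), ("Brant et al., 2018", 0.77)]:
    print(f"{source}: r = {r:.2f}, r^2 = {r ** 2:.2f}")
```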

The significant correlation between DIN and AzBio-N scores suggests that the simpler and more psychophysically robust DIN test can be used to predict CI users’ speech performance in noise. The DIN is a very simple and quick test that can be easily administered and has high repeatability (Cullington & Agyemang-Prempeh, 2017). DIN data from the current study were normally distributed, suggesting that this test is appropriate for CI users, with minimal ceiling and floor effects. A recent randomized controlled trial (Cullington et al., 2018) used the DIN test in its remote-care group and showed that the DIN is feasible for long-term follow-up of CI users and for remote patient monitoring.

Correlations between FCDT and CI outcomes

To our knowledge, this study is the first to report that scores on several tests of speech perception (CNC, AzBio-Q, AzBio-N, and DIN) are significantly and strongly correlated with the ability to detect frequency changes contained in pure tones (within-interval frequency change detection). This finding is consistent with numerous studies using across-interval frequency discrimination tasks such as pitch ranking, pitch discrimination, or spectral-ripple tasks (Litvak et al., 2007; Drennan & Rubinstein, 2008; Gifford et al., 2014; Jeon et al., 2015; Kenway et al., 2015; Sheft et al., 2015; Turgeon et al., 2015; Drennan et al., 2016; Winn et al., 2016). For instance, using a modulation frequency discrimination task with stimulus trains delivered directly to the electrode, Chatterjee and Peng (2008) reported significant correlations between modulation frequency thresholds and vowel, consonant, and speech intonation recognition. The relationship could be described by an exponential decay curve, with r ranging from approximately 0.68 to 0.83 across modulation frequencies. Using a spectral-ripple detection task presented through the CI sound processor, Litvak et al. (2007) reported a strong correlation between the spectral modulation threshold and vowel recognition (r ≈ 0.85). Using a similar spectral-ripple task with free-field presentation, Won et al. (2007) reported that the spectral-ripple threshold was significantly correlated with speech outcomes, with r of approximately 0.50 for CNC word recognition in quiet and 0.60 for CNC in noise. Using a frequency discrimination task with tone stimuli presented in the free field, Turgeon et al. (2015) reported that proficient CI users (>65% speech recognition) had frequency discrimination thresholds below 10% at 0.5 and 4 kHz, whereas non-proficient users (<65% speech recognition) had thresholds of approximately 20% at 0.5 kHz and 15% at 4 kHz. Together with our results from the within-interval frequency change detection task, these studies consistently show that speech perception is strongly correlated with frequency discrimination or frequency change detection. Taken together, these findings suggest that variability in spectral resolution accounts for a significant portion of the variability in speech performance among CI users.
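The exponential decay relationship described for Chatterjee and Peng (2008) can be illustrated with a simple log-linear fit of y = a·e^(−b·x), where x is a psychophysical threshold and y a speech score. This Python sketch assumes a two-parameter model with no vertical offset and strictly positive scores; it is not necessarily the model or fitting method used in that study, and the example data are synthetic.

```python
import math


def fit_exp_decay(x: list[float], y: list[float]) -> tuple[float, float]:
    """Fit y = a * exp(-b * x) by ordinary least squares on ln(y); return (a, b).

    Assumes all y > 0 and no vertical offset (simplifying assumptions).
    """
    if any(v <= 0 for v in y):
        raise ValueError("log-linear fit requires strictly positive y values")
    log_y = [math.log(v) for v in y]
    n = len(x)
    mean_x, mean_ly = sum(x) / n, sum(log_y) / n
    slope = (sum((xi - mean_x) * (li - mean_ly) for xi, li in zip(x, log_y))
             / sum((xi - mean_x) ** 2 for xi in x))
    intercept = mean_ly - slope * mean_x
    return math.exp(intercept), -slope  # ln(a) is the intercept; b is minus the slope


# Synthetic thresholds (x) and scores (y) generated from a = 90, b = 0.05.
xs = [2.0, 5.0, 10.0, 20.0, 40.0]
ys = [90.0 * math.exp(-0.05 * v) for v in xs]
a, b = fit_exp_decay(xs, ys)
print(f"a = {a:.1f}, b = {b:.3f}")
```

Least squares on ln(y) weights errors differently from a nonlinear least-squares fit on y itself, so the two approaches can give somewhat different parameters on noisy data.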

In the present study, frequency resolution was measured using acoustic presentation in the sound field. In many previous studies, electrode discrimination via direct electric stimulation has been a commonly used and preferred method for measuring psychophysical frequency resolution in CI patients. However, such measures may not truly reflect CI patients’ ability to discriminate frequency changes, because sensitivity to frequency change may be further affected by speech processor settings, such as input-stage/front-end processing (e.g., automatic gain control, input frequency allocation, and input dynamic range). For example, different sensitivity settings may map the same stimulus to different electric levels, and different input frequency allocations may map the same stimulus to different electrodes in different CI patients, resulting in different electric stimulation patterns. Because FCDTs and speech perception were measured with the same processor settings in the current study, the effects of these settings would be reflected in both measures. In addition, frequency resolution assessed using direct electric stimulation reflects single-channel stimulation and thus may not capture CI patients’ ability to perceive the complex multi-channel stimulation patterns that underlie speech recognition. As shown in the electrodogram in Figure 5, a pure tone may activate multiple electrodes, suggesting that acoustic presentation of pure tones may better reflect the complex electric stimulation patterns experienced by CI patients. The correlation between FCDTs and speech performance observed in the current study suggests that, compared with frequency tasks presented through direct electric stimulation, FCDT measures using acoustic presentation in the sound field may better reflect CI patients’ ability to discriminate frequencies in their daily listening conditions, and thus better capture inter-subject variability.
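The point that processor settings can map the same acoustic input to different electric levels can be sketched as follows. The mapping is a hypothetical simplification: input levels inside an assumed 40-dB window are mapped linearly (in dB) onto the range between a threshold (T) and comfort (C) level, and levels outside the window are clipped. Real processors use manufacturer-specific loudness growth functions, and the current units here are arbitrary.

```python
def map_to_current(input_db: float, t_level: float, c_level: float,
                   window_top_db: float = 65.0, idr_db: float = 40.0) -> float:
    """Map an acoustic input level (dB) to a hypothetical electric level.

    Inputs within [window_top_db - idr_db, window_top_db] are mapped linearly
    (in dB) onto [t_level, c_level]; inputs outside the window are clipped.
    The linear-in-dB rule and the default values are assumptions.
    """
    window_floor_db = window_top_db - idr_db
    fraction = (input_db - window_floor_db) / idr_db
    fraction = min(max(fraction, 0.0), 1.0)  # clip to the input window
    return t_level + fraction * (c_level - t_level)


# The same 60-dB tone under two hypothetical sensitivity settings that shift
# the input window by 5 dB (arbitrary current units, T = 100, C = 200).
for top_db in (65.0, 70.0):
    level = map_to_current(60.0, t_level=100.0, c_level=200.0, window_top_db=top_db)
    print(f"window top {top_db:.0f} dB -> electric level {level:.1f}")
```

In this sketch, shifting the window from 65 to 70 dB lowers the output for the same 60-dB input from 187.5 to 175.0 arbitrary units, which is the kind of setting-dependent difference that a sound-field FCDT would include and a direct-stimulation measure would bypass.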

Implications and future direction

This study has important implications. First, the findings suggest that within-interval frequency change detection plays an important role in CI users’ speech outcomes; therefore, approaches focused on improving frequency change detection may result in better CI outcomes. For instance, one solution may be to provide a more detailed representation of frequency change information in the CI output (Donaldson et al., 2005; Nie et al., 2005). Another is to use auditory training to improve the sensitivity of the auditory system to frequency changes.

Second, our findings suggest that the frequency change detection task and the DIN test are quick, easy-to-administer tasks with no or minimal language requirements for CI users.

These tests can be completed within several minutes, yet they showed strong associations with speech perception performance. Criteria of an FCDT greater than 10% and a DIN SRT greater than 10 dB may be used to screen for individuals who are likely to be poor performers and may need post-implantation rehabilitation.
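The suggested screening rule can be written as a small Python sketch. The cutoffs are the ones proposed in the text; whether an ear should be flagged when either test exceeds its cutoff or only when both do is not specified, so that choice is exposed as a hypothetical `require_both` parameter.

```python
from dataclasses import dataclass


@dataclass
class ScreeningResult:
    fcdt_flag: bool  # FCDT above its cutoff
    din_flag: bool   # DIN SRT above its cutoff

    def at_risk(self, require_both: bool = False) -> bool:
        """Combine the two flags; the either/both rule is a hypothetical choice."""
        if require_both:
            return self.fcdt_flag and self.din_flag
        return self.fcdt_flag or self.din_flag


def screen(fcdt_pct: float, din_srt_db: float,
           fcdt_cutoff_pct: float = 10.0, din_cutoff_db: float = 10.0) -> ScreeningResult:
    """Flag an ear whose FCDT (% of base frequency) or DIN SRT (dB SNR) exceeds the cutoffs."""
    return ScreeningResult(fcdt_flag=fcdt_pct > fcdt_cutoff_pct,
                           din_flag=din_srt_db > din_cutoff_db)


result = screen(fcdt_pct=25.0, din_srt_db=4.0)  # hypothetical listener
print(result, result.at_risk(), result.at_risk(require_both=True))
```

For example, a hypothetical ear with an FCDT of 25% and a DIN SRT of 4 dB is flagged under the either-test rule but not under the both-tests rule.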

There are several directions for future studies. First, objective measures such as neurophysiological measures would be valuable for patients who cannot reliably perform behavioral tasks. Future studies will use the stimuli from this study to examine cortical auditory evoked potentials in response to frequency changes in CI users. Second, this study involved adult CI users only. Future studies will apply the same FCDT and DIN tasks to pediatric CI users, for whom easy and quick tests are especially needed.

Conclusion

To summarize, a within-interval frequency change detection threshold (FCDT) test and speech perception tests were administered to CI users. FCDTs were significantly correlated with speech perception, suggesting that the FCDT can serve as a simple and useful non-linguistic task for assessing CI outcomes. Moreover, digits-in-noise (DIN) results were significantly correlated with AzBio-N scores, indicating that the DIN, which is simpler, more psychoacoustically robust, and available online, could be a preferable speech perception test for CI users.

Correlations between the frequency change detection thresholds (FCDTs) and speech perception were statistically significant in cochlear implant users.

The FCDT and digits-in-noise (DIN) tests can serve as simple and rapid tasks that require no or minimal linguistic background for the prediction of CI speech outcomes.

Acknowledgement

We would like to thank all participants for their participation in this research. This research was partially supported by the University Research Council (URC) at the University of Cincinnati, the Center for Clinical and Translational Science and Training (CCTST), and the National Institutes of Health (NIH 1R15DC011004–01 to Dr. Zhang and NIH R01-DC004792 to Dr. Fu). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The DIN test was kindly provided for research by hearX Group. Finally, we would like to thank Dr. Nanhua Zhang for his statistical assistance.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Alice B, Silvia M, Laura G, Patrizia T, & Roberto B (2013). Cochlear implantation in the elderly: Surgical and hearing outcomes. BMC Surgery. 10.1186/1471-2482-13-S2-S1

- Brant JA, Eliades SJ, Kaufman H, Chen J, & Ruckenstein MJ (2018). AzBio speech understanding performance in quiet and noise in high performing cochlear implant users. Otology and Neurotology. 10.1097/MAO.0000000000001765

- Brown CJ, Jeon EK, Driscoll V, Mussoi B, Deshpande SB, Gfeller K, & Abbas PJ (2017). Effects of long-term musical training on cortical auditory evoked potentials. Ear and Hearing. 10.1097/AUD.0000000000000375

- Carlson ML, Driscoll CLW, Gifford RH, & McMenomey SO (2012). Cochlear implantation: Current and future device options. Otolaryngologic Clinics of North America. 10.1016/j.otc.2011.09.002

- Chatterjee M, & Peng SC (2008). Processing F0 with cochlear implants: Modulation frequency discrimination and speech intonation recognition. Hearing Research. 10.1016/j.heares.2007.11.004

- Cheang HS, & Pell MD (2008). The sound of sarcasm. Speech Communication. 10.1016/j.specom.2007.11.003

- Cullington HE, & Agyemang-Prempeh A (2017). Person-centred cochlear implant care: Assessing the need for clinic intervention in adults with cochlear implants using a dual approach of an online speech recognition test and a questionnaire. Cochlear Implants International. 10.1080/14670100.2017.1279728

- Cullington H, Kitterick P, Weal M, & Margol-Gromada M (2018). Feasibility of personalised remote long-term follow-up of people with cochlear implants: A randomised controlled trial. BMJ Open. 10.1136/bmjopen-2017-019640

- Dimitrijevic A, Michalewski HJ, Zeng F, Pratt H, & Starr A (2008). Frequency changes in a continuous tone: Auditory cortical potentials. Clinical Neurophysiology, 119, 2111–2124. 10.1016/j.clinph.2008.06.002

- Donaldson GS, Kreft HA, & Litvak L (2005). Place-pitch discrimination of single- versus dual-electrode stimuli by cochlear implant users. The Journal of the Acoustical Society of America. 10.1121/1.1937362

- Dorman MF, Cook S, Spahr A, Zhang T, Loiselle L, Schramm D, … Gifford R (2015). Factors constraining the benefit to speech understanding of combining information from low-frequency hearing and a cochlear implant. Hearing Research. 10.1016/j.heares.2014.09.010

- Dorman M, Spahr A, Gifford RH, Cook S, Zhang T, Loiselle L, … Schramm D (2012). Bilateral and bimodal benefits as a function of age for adults fitted with a cochlear implant. Journal of Hearing Science.

- Drennan WR, & Rubinstein JT (2008). Music perception in cochlear implant users and its relationship with psychophysical capabilities. Journal of Rehabilitation Research and Development, 45(5), 779–789. 10.1682/JRRD.2007.08.0118

- Drennan WR, Won JH, Timme AO, & Rubinstein JT (2016). Nonlinguistic outcome measures in adult cochlear implant users over the first year of implantation. Ear and Hearing. 10.1097/AUD.0000000000000261

- Eggermont JJ (2001). Between sound and perception: Reviewing the search for a neural code. Hearing Research, 157(1–2), 1–42.

- Fu QJ, Chinchilla S, & Galvin JJ (2004). The role of spectral and temporal cues in voice gender discrimination by normal-hearing listeners and cochlear implant users. JARO - Journal of the Association for Research in Otolaryngology. 10.1007/s10162-004-4046-1

- Galvin JJ 3rd, Fu QJ, & Oba S (2008). Effect of instrument timbre on melodic contour identification by cochlear implant users. The Journal of the Acoustical Society of America, 124(4), EL189–195.

- Gfeller K, Turner C, Oleson J, Zhang X, Gantz B, Froman R, & Olszewski C (2007). Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear and Hearing. 10.1097/AUD.0b013e3180479318

- Gfeller K, Witt S, Adamek M, Mehr M, Rogers J, Stordahl J, & Ringgenberg S (2002). Effects of training on timbre recognition and appraisal by postlingually deafened cochlear implant recipients. Journal of the American Academy of Audiology, 13(3), 132–145.

- Gifford RH, Driscoll CLW, Davis TJ, Fiebig P, Micco A, & Dorman MF (2015). A within-subject comparison of bimodal hearing, bilateral cochlear implantation, and bilateral cochlear implantation with bilateral hearing preservation: High-performing patients. Otology and Neurotology. 10.1097/MAO.0000000000000804

- Gifford RH, Hedley-Williams A, & Spahr AJ (2014). Clinical assessment of spectral modulation detection for adult cochlear implant recipients: A non-language based measure of performance outcomes. International Journal of Audiology. 10.3109/14992027.2013.851800

- Goldsworthy RL (2015). Correlations between pitch and phoneme perception in cochlear implant users and their normal hearing peers. JARO - Journal of the Association for Research in Otolaryngology, 16(6), 797–809. 10.1007/s10162-015-0541-9

- Holder JT, Reynolds SM, Sunderhaus LW, & Gifford RH (2018). Current profile of adults presenting for preoperative cochlear implant evaluation. Trends in Hearing. 10.1177/2331216518755288

- Jeon EK, Turner CW, Karsten SA, Henry BA, & Gantz BJ (2015). Cochlear implant users’ spectral ripple resolution. The Journal of the Acoustical Society of America. 10.1121/1.4932020

- Kaandorp MW, Smits C, Merkus P, Goverts ST, & Festen JM (2015). Assessing speech recognition abilities with digits in noise in cochlear implant and hearing aid users. International Journal of Audiology. 10.3109/14992027.2014.945623

- Kang R, Nimmons GL, Drennan W, Longnion J, Ruffin C, Nie K, … Rubinstein J (2009). Development and validation of the University of Washington clinical assessment of music perception test. Ear and Hearing. 10.1097/AUD.0b013e3181a61bc0

- Kenway B, Tam YC, Vanat Z, Harris F, Gray R, Birchall J, … Axon P (2015). Pitch discrimination: An independent factor in cochlear implant performance outcomes, (14), 1472–1479.

- Kong Y-Y, Deeks JM, Axon PR, & Carlyon RP (2009). Limits of temporal pitch in cochlear implants. The Journal of the Acoustical Society of America. 10.1121/1.3068457

- Kreft HA, Nelson DA, & Oxenham AJ (2013). Modulation frequency discrimination with modulated and unmodulated interference in normal hearing and in cochlear-implant users. JARO - Journal of the Association for Research in Otolaryngology. 10.1007/s10162-013-0391-2

- Landsberger DM (2008). Effects of modulation wave shape on modulation frequency discrimination with electrical hearing. The Journal of the Acoustical Society of America. 10.1121/1.2947624

- Liang C, Earl B, Thompson I, Whitaker K, Cahn S, Xiang J, … Zhang F (2016). Musicians are better than non-musicians in frequency change detection: Behavioral and electrophysiological evidence. Frontiers in Neuroscience, 10. 10.3389/fnins.2016.00464

- Liang C, Houston LM, Samy RN, Abedelrehim LMI, & Zhang F (2018). Cortical processing of frequency changes reflected by the acoustic change complex in adult cochlear implant users. Audiology & Neuro-Otology, 23(3), 152–164.

- Litvak LM, Spahr AJ, Saoji AA, & Fridman GY (2007). Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners. The Journal of the Acoustical Society of America. 10.1121/1.2749413

- Looi V, McDermott H, McKay C, & Hickson L (2004). Pitch discrimination and melody recognition by cochlear implant users. International Congress Series. 10.1016/j.ics.2004.08.038

- Looi V, Teo E-R, & Loo J (2015). Pitch and lexical tone perception of bilingual English–Mandarin-speaking cochlear implant recipients, hearing aid users, and normally hearing listeners. Cochlear Implants International. 10.1179/1467010015Z.000000000263

- Lopez Valdes A, Mc Laughlin M, Viani L, Walshe P, Smith J, Zeng FG, & Reilly RB (2014). Objective assessment of spectral ripple discrimination in cochlear implant listeners using cortical evoked responses to an oddball paradigm. PLoS ONE. 10.1371/journal.pone.0090044

- Lorenzi C, Gilbert G, Carn H, Garnier S, & Moore BCJ (2006). Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proceedings of the National Academy of Sciences. 10.1073/pnas.0607364103

- Massa ST, & Ruckenstein MJ (2014). Comparing the performance plateau in adult cochlear implant patients using HINT and AzBio. Otology and Neurotology. 10.1097/MAO.0000000000000264

- McDermott JH, Keebler MV, Micheyl C, & Oxenham AJ (2010). Musical intervals and relative pitch: Frequency resolution, not interval resolution, is special. The Journal of the Acoustical Society of America. 10.1121/1.3478785

- Moore BCJ (1996). Perceptual consequences of cochlear hearing loss and their implications for the design of hearing aids. Ear and Hearing. 10.1097/00003446-199604000-00007

- Moore BCJ (2008). Basic auditory processes involved in the analysis of speech sounds. Philosophical Transactions of the Royal Society B: Biological Sciences. 10.1098/rstb.2007.2152

- Nie K, Stickney G, & Zeng FG (2005). Encoding frequency modulation to improve cochlear implant performance in noise. IEEE Transactions on Biomedical Engineering. 10.1109/TBME.2004.839799

- Oxenham AJ (2008). Pitch perception and auditory stream segregation: Implications for hearing loss and cochlear implants. Trends in Amplification, 12(4), 316–331. 10.1177/1084713808325881

- Parikh G, & Loizou PC (2005). The influence of noise on vowel and consonant cues. The Journal of the Acoustical Society of America. 10.1121/1.2118407

- Patel R, & Grigos MI (2006). Acoustic characterization of the question-statement contrast in 4, 7 and 11 year-old children. Speech Communication. 10.1016/j.specom.2006.06.007

- Patel TR, Shahin AJ, Bhat J, Welling DB, & Moberly AC (2016). Cortical auditory evoked potentials to evaluate cochlear implant candidacy in an ear with long-standing hearing loss. Annals of Otology, Rhinology and Laryngology. 10.1177/0003489416656647

- Pratt H, Starr A, Michalewski HJ, Dimitrijevic A, Bleich N, & Mittelman N (2009). Auditory-evoked potentials to frequency increase and decrease of high- and low-frequency tones. Clinical Neurophysiology. 10.1016/j.clinph.2008.10.158

- Pretorius LL, & Hanekom JJ (2008). Free field frequency discrimination abilities of cochlear implant users. Hearing Research, 244(1–2), 77–84. 10.1016/j.heares.2008.07.005

- Raine C, Atkinson H, Strachan DR, & Martin JM (2016). Access to cochlear implants: Time to reflect. Cochlear Implants International. 10.1080/14670100.2016.1155808

- Reiss LAJ, Turner CW, Erenberg SR, & Gantz BJ (2007). Changes in pitch with a cochlear implant over time. JARO - Journal of the Association for Research in Otolaryngology. 10.1007/s10162-007-0077-8

- Roland JT, Gantz BJ, Waltzman SB, & Parkinson AJ (2016). United States multicenter clinical trial of the cochlear nucleus hybrid implant system. Laryngoscope. 10.1002/lary.25451

- See RL, Driscoll VD, Gfeller K, Kliethermes S, & Oleson J (2013). Speech intonation and melodic contour recognition in children with cochlear implants and with normal hearing. Otology and Neurotology. 10.1097/MAO.0b013e318287c985

- Sek A, & Moore BCJ (1995). Frequency discrimination as a function of frequency, measured in several ways. The Journal of the Acoustical Society of America. 10.1121/1.411968

- Sheft S, Cheng M-Y, & Shafiro V (2015). Discrimination of stochastic frequency modulation by cochlear implant users. Journal of the American Academy of Audiology. 10.3766/jaaa.14067

- Skinner MW, Ketten DR, Holden LK, Harding GW, Smith PG, Gates GA, … Blocker B (2002). CT-derived estimation of cochlear morphology and electrode array position in relation to word recognition in Nucleus-22 recipients. JARO - Journal of the Association for Research in Otolaryngology. 10.1007/s101620020013

- Smits C, Goverts ST, & Festen JM (2013). The digits-in-noise test: Assessing auditory speech recognition abilities in noise. The Journal of the Acoustical Society of America, 133(3), 1693–1706. 10.1121/1.4789933

- Spahr AJ, Dorman MF, Litvak LM, Van Wie S, Gifford RH, Loizou PC, … Cook S (2012). Development and validation of the AzBio sentence lists. Ear and Hearing, 33(1), 112–117. 10.1097/AUD.0b013e31822c2549

- Strelcyk O, & Dau T (2009). Relations between frequency selectivity, temporal fine-structure processing, and speech reception in impaired hearing. The Journal of the Acoustical Society of America. 10.1121/1.3097469

- Turgeon C, Champoux F, Lepore F, & Ellemberg D (2015). Deficits in auditory frequency discrimination and speech recognition in cochlear implant users. Cochlear Implants International. 10.1179/1754762814Y.0000000091

- Vandali AE, Sucher C, Tsang DJ, McKay CM, Chew JWD, & McDermott HJ (2005). Pitch ranking ability of cochlear implant recipients: A comparison of sound-processing strategies. The Journal of the Acoustical Society of America. 10.1121/1.1874632

- Vandali A, Sly D, Cowan R, & Van Hoesel R (2015). Training of cochlear implant users to improve pitch perception in the presence of competing place cues. Ear and Hearing, 36(2), e1–e13. 10.1097/AUD.0000000000000109

- Vlastarakos PV, Nazos K, Tavoulari EF, & Nikolopoulos TP (2014). Cochlear implantation for single-sided deafness: The outcomes. An evidence-based approach. European Archives of Oto-Rhino-Laryngology. 10.1007/s00405-013-2746-z

- Wei C, Cao K, Jin X, Chen X, & Zeng F-G (2007). Psychophysical performance and Mandarin tone recognition in noise by cochlear implant users. Asia-Pacific Symposium on Cochlear Implant and Related Sciences (APSCI). Ear and Hearing.

- Winn MB, Won JH, & Moon IJ (2016). Assessment of spectral and temporal resolution in cochlear implant users using psychoacoustic discrimination and speech cue categorization. Ear and Hearing. 10.1097/AUD.0000000000000328

- Won JH, Drennan WR, & Rubinstein JT (2007). Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. JARO - Journal of the Association for Research in Otolaryngology. 10.1007/s10162-007-0085-8

- Zeng F-G, Nie K, Stickney GS, Kong Y-Y, Vongphoe M, Bhargave A, … Cao K (2005). Speech recognition with amplitude and frequency modulations. Proceedings of the National Academy of Sciences. 10.1073/pnas.0406460102

- Zeng FG (2002). Temporal pitch in electric hearing. Hearing Research. 10.1016/S0378-5955(02)00644-5

- Zeng F, Tang Q, & Lu T (2014). Abnormal pitch perception produced by cochlear implant stimulation. PLoS ONE, 9(2). 10.1371/journal.pone.0088662