Abstract

Objectives/Hypothesis:

Cochlear implants (CIs) restore auditory sensation to patients with moderate-to-profound sensorineural hearing loss. However, the benefits to speech recognition vary considerably among patients. Advancing age contributes to this variability in postlingual adult CI users. Similarly, older individuals with normal hearing (NH) perform more poorly on tasks of recognition of spectrally degraded speech. The overarching hypothesis of this study was that the detrimental effects of advancing age on speech recognition can be attributed both to declines in auditory spectral resolution as well as declines in cognitive functions.

Study Design:

Case-control study.

Methods:

Speech recognition was assessed in CI users (in the clear) and NH controls (spectrally degraded using noise-vocoding), along with auditory spectral resolution using the Spectral–Temporally Modulated Ripple Test. Cognitive skills were assessed using nonauditory visual measures of working memory, inhibitory control, speed of lexical/phonological access, nonverbal reasoning, and perceptual closure. Linear regression models were tested for mediation to explain aging effects on speech recognition performance.

Results:

For both groups, older age predicted poorer sentence and word recognition. The detrimental effects of advancing age on speech recognition were partially mediated by declines in spectral resolution and in some measures of cognitive function.

Conclusions:

Advancing age contributes to poorer recognition of degraded speech for CI users and NH controls through declines in both auditory spectral resolution and cognitive functions. Findings suggest that improvements in spectral resolution as well as cognitive improvements may serve as therapeutic targets to optimize CI speech recognition outcomes.

Keywords: Aging, cochlear implants, cognition, spectral resolution, speech perception

INTRODUCTION

Cochlear implants (CIs) restore the sensation of hearing to patients with moderate-to-profound sensorineural hearing loss. However, the benefits to speech recognition afforded to CI users vary considerably among patients. This variability continues to frustrate patients, clinicians, and researchers alike, as a result of our inability to predict outcomes preoperatively, to explain performance postoperatively (e.g., in a patient with an unexpected poor outcome), and to develop rehabilitative approaches to optimize individual patients’ speech recognition with their devices. Thus, efforts to understand the factors underlying individual CI outcome variability, and the mechanisms through which these factors exert their effects, are essential to inform novel diagnostic and therapeutic approaches for this patient population.

Several patient factors have been identified that traditionally help predict postimplantation outcomes in CI users, specifically adults with postlingual hearing loss (e.g., duration and severity of hearing loss prior to implantation, or amount of residual hearing).1,2 Importantly, a consistent inverse relationship has been demonstrated in several studies between patient age and word or sentence recognition performance in postlingual adult CI users,3,4 although not all studies confirm this relation.5 Similarly, there is evidence that older individuals with clinically normal hearing (NH) perform more poorly on tasks of recognition of spectrally degraded speech that is processed in a fashion similar to CI speech processing, using noise-vocoding.6 Thus, the effects of aging on speech recognition in CI users and NH peers listening to spectrally degraded speech deserve exploration, because a link between advancing age and poorer performance stands on the strong theoretical grounds of known aging-related declines in auditory and cognitive processes. Additionally, recognizing the aging-related declines that contribute to poorer recognition of degraded speech has implications beyond just CI users; these declines may also impact listening abilities for patients with milder degrees of hearing loss or even NH when listening under adverse conditions (e.g., noise, reverberation, situations with high cognitive demands).

Effects of Aging on Auditory Processing

Aging contributes to declines across multiple foundational skills that are likely to impact a listener’s ability to recognize and understand speech. The most obvious age-associated decline is hearing loss itself, the progressive degradation of the quality of auditory input received, perceived, and interpreted by aging listeners with presbycusis. Most studied are the effects of presbycusis on hearing thresholds; that is, patients’ progressive declines in audibility, particularly at higher frequencies. Without correction, this decline in auditory sensitivity degrades the speech signal by making important spectro-temporal speech cues inaccessible, with consequent failure of the listener to interpret the signal appropriately. Less evident on clinical audiometry are the suprathreshold changes in spectro-temporal processing associated with advancing age. For example, temporal gap detection studies demonstrate increasing thresholds, and poorer duration discrimination, with increasing age.7 Auditory nerve synchrony appears to decline with increasing age,8 and broadening of spectral filters occurs, which may in part be due to loss of the outer hair cell filter-sharpening process of the basilar membrane.9 Additionally, there are likely changes within the spiral ganglion and auditory nerve itself that lead to poorer spectral resolution with more advanced cases of presbycusis.10 Aging-related declines in spectral resolution may occur even in the absence of elevated pure-tone thresholds.11

It is often assumed that restoration of auditory input through a CI bypasses, and thus mostly eliminates, the effects of aging-related changes to the peripheral auditory system. Clearly, with appropriate programming of the device, each intracochlear electrode can be set independently to restore access to sound and achieve stimulation at what are generally reasonable hearing thresholds (<35 dB HL). The device can provide relatively accurate representations of temporal envelope structure of acoustic signals, but is limited in its ability to transmit temporal fine structure. This limitation has been demonstrated with the use of a number of behavioral measures of spectral resolution, in which listeners are typically asked to discriminate a spectrally rippled stimulus (one that is amplitude modulated in the frequency domain) from another stimulus that is not rippled, that is phase-reversed, or that contains a different ripple density (ripples per octave).12,13 In CI users, the overall spectral resolution afforded by CIs, although known to be highly impoverished relative to NH, is also highly variable among patients.14 Moreover, performance on spectral resolution tasks has also been shown to decline with aging.12,13 It is likely that in addition to device-related peripheral limitations that are unrelated to aging, there are persistent aging-related effects that contribute to decreased spectral resolution, potentially leading to poorer speech recognition for older CI users. The first goal of this study was to test the hypothesis that advancing age contributes to a decline in spectral resolution, which, in turn, contributes to poorer speech recognition performance in both adult CI users and NH peers listening to degraded speech. In other words, it was predicted that spectral resolution would mediate, at least in part, the detrimental effects of advancing age on speech recognition skills.

Effects of Aging on Cognitive Functions

Along with aging-related declines in auditory functions, there is abundant evidence that advancing age is associated with declines in cognitive functions, including working memory capacity, inhibitory control, processing speed, nonverbal reasoning, and perceptual closure.9,15–17 These cognitive declines likely contribute to greater difficulties in understanding speech for older adults. Although not widely examined in adult CI users, cognition has repeatedly been found to play a role in speech recognition for patients with milder degrees of hearing loss, and for adults with NH listening to degraded speech (usually tested in noise).18,19 In particular, verbal working memory (WM), the capacity to simultaneously store and manipulate verbal information,20 has been found to underlie success in recognition of degraded speech. WM capacity is consistently associated with speech perception abilities in adults with hearing loss.21,22 For example, speech recognition of older listeners with poorer WM capacity is more susceptible to distortions in hearing aid signal processing than those with higher WM capacity.23 However, none of these studies specifically examined adult CI users or the effects of advancing age on speech perception skills.

In addition to an aging-related decline in verbal WM as a potential contributor to poorer speech recognition, a recent study identified the role of inhibitory control abilities on speech recognition in postlingual adult CI users. A significant correlation was found between sentence recognition in speech-shaped noise and inhibitory control skills, assessed using a visual computerized version of a verbal Stroop task.24 Better inhibitory control may permit a listener to more effectively ignore nontarget auditory stimuli and to inhibit the activation of incorrect lexical units during recognition of running speech. Advancing age has been found to be associated with poorer inhibitory control using various testing paradigms.16,25 Thus, it is reasonable to predict that declines in inhibitory control may account for some of the effects of advancing age on speech recognition outcomes for adult CI users, or for NH peers listening to degraded speech.

Two other relevant cognitive skills that are strongly affected by increasing age are processing speed and nonverbal reasoning/intelligence quotient (IQ).26,27 Although processing speed measures like simple reaction time have not generally been found to relate to speech recognition performance in patients with milder degrees of hearing loss,28 advancing age clearly leads to overall declines in processing speed.27 Understanding running speech requires the listener to rapidly access phonological and lexical information from the incoming signal, and a listener’s speed of processing is likely taxed even more heavily when listening to degraded acoustic input. Thus, it is plausible that processing speed, particularly when assessed using a task that measures speed of lexical or phonological access, relates to speech recognition outcomes for adult CI users, and can explain some of the detrimental effects of advancing age. Similarly, although general tests of IQ, such as the Wechsler Adult Intelligence Scale-Revised,29 generally have failed to demonstrate significant relations with speech recognition abilities,30 nonverbal reasoning has received little research attention as a factor contributing to performance in adult CI users, with the exception of two studies. Knutson and colleagues found that scores on a visual task of nonverbal reasoning, Raven’s matrices, were mildly predictive (r = 0.44) of audiovisual consonant recognition in a group of adults with early multichannel CIs.31 Holden and colleagues found a correlation between a composite cognitive score, including verbal memory, vocabulary, similarities and matrix reasoning, and word recognition outcomes in adult CI users1; however, it was unclear in that study which component of the cognitive measure drove this relationship.

A final cognitive ability that is worth consideration with regard to its potential effects on the recognition of degraded speech is perceptual closure, also known as perceptual organization. This skill refers to the ability to create a perceptual whole from degraded sensory input, whether it is visual or auditory in nature. Visual assessments of perceptual closure have been developed and assessed for their relations to recognition of degraded speech, including the Text Reception Threshold test, the visual Speech Perception in Noise, and the Fragmented Sentences task. Scores on these tasks have been identified in some studies to correlate with speech reception thresholds in adults with normal hearing or mild degrees of hearing loss.32,33

Thus, the second goal of the current study was to test the hypothesis that advancing age leads to declines in cognitive functions (verbal WM, inhibitory control, speed of lexical/phonological access, nonverbal reasoning, and perceptual closure), some of which, in turn, lead to poorer speech recognition performance in adult CI users and NH peers listening to degraded speech. That is, it was predicted that cognitive changes would mediate, again, at least partially, the effects of advancing age on speech recognition.

Current Study

The current study was motivated primarily by the clinical question, “Do declines in spectral resolution and cognitive skills explain aging-related declines in speech recognition for adults with CIs and for NH adults listening to spectrally degraded speech?” To answer this question, three hypotheses were tested: 1) Both postlingually deaf adult experienced CI users and NH adult controls would demonstrate aging-related declines in spectral resolution. 2) Adult CI users and NH controls would demonstrate aging-related declines in cognitive skills. 3) Adult CI users and NH controls would demonstrate aging-related deficits in speech recognition, either through a CI or when processing spectrally degraded speech, and these deficits would be mediated by poorer spectral resolution and poorer cognitive functions associated with advancing age.

Inclusion of a NH control group in this study served three main goals. First, it broadened the age range of study participants, allowing a more thorough investigation of the effects of aging on the perception of spectrally degraded speech. Cochlear implant users typically span a relatively limited age range. For the purposes of the current study, we aimed to investigate only postlingual patients who developed relatively normal speech and language skills prior to losing their hearing. Their language development would thus be more comparable to that of participants in the NH control group, whereas inclusion of pre- or perilingual patients could make our findings difficult to interpret. Second, understanding the factors that contribute to recognition of spectrally degraded speech through vocoding may have broader implications for understanding the effects of additional adverse listening conditions, such as noise, reverberation, or performance of auditory tasks under high cognitive load. Third, it allowed comparison of the perceptual processing that underlies perception of spectrally degraded speech in NH and CI participants. Simulation of CI processing by presenting noise-vocoded or sinewave-vocoded speech to NH participants is a popular experimental approach. It often assumes similar underlying perceptual processing by patients with CIs and their NH peers. However, this assumption still requires validation. Thus, in the current study, along with CI users, NH adult participants were enrolled to assess the auditory and cognitive contributions to their recognition of noise-vocoded speech materials. A final hypothesis of this study was that CI users and NH controls would demonstrate similar relations of cognitive measures and recognition of degraded speech (speech delivered through a CI, or noise-vocoded speech for NH controls), suggesting similar perceptual processing mechanisms between groups.

MATERIALS AND METHODS

Participants

Forty-two adult CI users and 96 adults who reported NH were enrolled and underwent initial testing. Participants were all native English speakers and had at least a high school diploma or equivalency. All participants were screened for vision using a basic near-vision test and were required to have better than 20/40 near vision, because cognitive measures were presented visually. Two CI participants had vision scores of 20/50; however, they still displayed normal reading scores, suggesting sufficient visual abilities to include their data in the analyses. A screening task for cognitive impairment was completed, using a visual version of the Mini-Mental State Examination (MMSE),34 with an MMSE raw score ≥26 required; all participants met this criterion, suggesting no evidence of cognitive impairment. A final screening test of basic word reading was completed, using the Wide Range Achievement Test.35 Participants were required to have a word reading standard score ≥80, suggesting reasonably normal general language proficiency. Socioeconomic status (SES) of participants was also collected because it may be a contributor to speech and language abilities. This was accomplished by quantifying SES based on a metric developed by Nittrouer and Burton, consisting of occupational and educational levels.36 There were two scales for occupational and education levels, each ranging from 1 to 8, with 8 being the highest level. These two numerical scores were then multiplied, resulting in scores between 1 and 64. Lastly, a screening audiogram of unaided hearing was performed for each ear separately for all participants to consider as covariates in analyses.

Thirty-four CI users met screening criteria, and they were between the ages of 50 and 83 years (mean = 69.0 years, standard deviation [SD] = 8.5) and were postlingually deafened, meaning they should have developed reasonably proficient language skills prior to losing their hearing. Thus, all but six CI patients reported onset of hearing loss no earlier than age 12 years (i.e., normal hearing until the time of puberty). The other six CI users reported some degree of congenital hearing loss or onset of hearing loss during childhood. However, all CI participants had experienced early hearing aid intervention and typical auditory-only spoken language development during childhood, had been mainstreamed in education, and had experienced progressive hearing losses into adulthood. All of the CI users received their CIs at or after the age of 35 years. Prior to implantation, all CI users had met candidacy requirements for cochlear implantation, including severe-to-profound hearing loss in both ears. The CI participants were enrolled from the patient population of the institution’s otolaryngology department and had demonstrated CI-aided thresholds in the clinic of better than 35 dB HL across speech frequencies. Duration of hearing loss ranged from 4 to 76 years (mean = 39.9 years, SD = 20.6 years), and duration of CI use ranged from 18 months to 34 years (mean = 7.1 years, SD = 6.9 years). Details of individual CI participants can be found in Table I.

Table I.

Cochlear Implant Participant Demographics

| Participant | Gender | Age (Years) | Implant Age (Years) | SES | Side of Implant | Contralateral Hearing Aid Used | Etiology of Hearing Loss | Better Ear PTA (dB HL) | Harvard Standard Sentence Recognition (% Correct Words) | PRESTO Sentence Recognition (% Correct Words) | CID Word Recognition (% Correct Words) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | F | 65 | 54 | 24 | Bilateral | No | Genetic | 120.0 | 89.8 | 87.9 | 92.0 |
| 2 | F | 66 | 62 | 35 | Right | Yes | Genetic/progressive as adult | 78.8 | 86.9 | 89.5 | 96.0 |
| 3 | F | 67 | 58 | 12 | Right | Yes | Genetic/progressive as adult | 103.8 | 88.1 | 71.9 | 74.0 |
| 4 | M | 70 | 65 | 30 | Right | No | Genetic/progressive as adult | 88.8 | 80.1 | 57.3 | 72.0 |
| 5 | F | 57 | 48 | 25 | Right | Yes | Genetic/progressive as child/adult | 82.5 | 83.1 | 84.4 | 90.0 |
| 6 | M | 79 | 76 | 48 | Right | Yes | Genetic/progressive as adult | 70.0 | 74.6 | 66.1 | 70.0 |
| 7 | F | 69 | 56 | 10.5 | Bilateral | No | Otosclerosis | 112.5 | 78.4 | 73.4 | 84.0 |
| 8 | M | 55 | 50 | 30 | Bilateral | No | Progressive as adult | 120.0 | 88.6 | 81.7 | 90.0 |
| 9 | F | 76 | 68 | 30 | Left | No | Progressive as adult | 108.8 | 62.3 | 37.1 | 34.0 |
| 10 | M | 79 | 74 | 10 | Left | No | Unknown | 108.8 | 61.4 | 56.5 | 56.0 |
| 11 | F | 81 | 71 | 30 | Right | No | Progressive as adult | 88.8 | 80.9 | 67.0 | 88.0 |
| 12 | M | 59 | 57 | 24 | Bilateral | No | Sudden hearing loss | 120.0 | 85.2 | 83.9 | 92.0 |
| 13 | M | 78 | 72 | 12.5 | Bilateral | No | Progressive as adult | 120.0 | 78.8 | 53.2 | 76.0 |
| 14 | M | 69 | 62 | 56 | Bilateral | No | Genetic/progressive as child | 120.0 | 76.7 | 66.9 | 76.0 |
| 15 | F | 50 | 35 | 32.5 | Bilateral | No | Progressive as child | 117.5 | 86.9 | 96.9 | 98.0 |
| 16 | F | 64 | 61 | 30 | Right | No | Progressive as adult | 103.8 | 86.9 | 57.1 | 70.0 |
| 17 | F | 67 | 58 | 9 | Bilateral | No | Meniere’s disease | 120.0 | 86.9 | 81.7 | 90.0 |
| 18 | M | 83 | 76 | 42 | Right | Yes | Progressive as adult/noise | 68.8 | 67.0 | 75.0 | 84.0 |
| 19 | F | 73 | 67 | 15 | Right | No | Progressive as child/adult | 98.8 | 78.4 | 66.1 | 74.0 |
| 20 | M | 76 | 73 | 49 | Left | Yes | Progressive as adult/noise | 72.5 | 45.3 | 12.1 | 56.0 |
| 21 | F | 79 | 45 | 15 | Right | Yes | Progressive as adult | 57.5 | 53.0 | 43.6 | 28.0 |
| 22 | M | 74 | 72 | 64 | Right | Yes | Congenital/progressive as child | 92.5 | 69.1 | 49.2 | 26.0 |
| 23 | M | 66 | 60 | 18 | Left | No | Meniere’s disease | 80.0 | 90.7 | 80.7 | 92.0 |
| 24 | M | 77 | 75 | 24 | Bilateral | No | Chronic ear infections/progressive | 105.0 | 12.3 | 6.7 | 32.0 |
| 25 | F | 65 | 63 | 36 | Right | No | Genetic/progressive as adult | 86.3 | 77.5 | 35.3 | 48.0 |
| 26 | F | 62 | 59 | 14 | Bilateral | No | Sepsis/ototoxicity | 95.0 | 78.8 | 31.7 | 50.0 |
| 27* | M | 80 | 79 | 9 | Left | No | Genetic/progressive as adult | 76.3 | – | – | 18.0 |
| 28 | M | 61 | 55 | 24 | Right | Yes | Unknown | 111.3 | 88.14 | 75.5 | 84.0 |
| 29 | M | 60 | 55 | 10.5 | Left | No | Genetic/progressive as adult | 115.0 | 78.81 | 57.1 | 66.0 |
| 30 | F | 64 | 59 | 24 | Left | Yes | Progressive as child | 95.0 | 63.98 | 33.0 | 46.0 |
| 31 | M | 59 | 54 | 6 | Right | No | Genetic/progressive as adult | 108.8 | 44.07 | 31.3 | 56.0 |
| 32 | M | 65 | 38 | 12 | Right | Yes | Progressive as adult/noise | 92.5 | 61.86 | 30.4 | 48.0 |
| 33 | M | 81 | 80 | 49 | Left | Yes | Progressive as adult | 75.0 | 64.41 | 33.5 | 42.0 |
| 34 | M | 70 | 68 | 42 | Left | Yes | Progressive as adult | 93.8 | 86.86 | 72.3 | 86.0 |

*Participant 27 did not have sentence recognition scores due to testing error.

CID = Central Institute of the Deaf; HL = hearing level; F = female; M = male; PRESTO = Perceptually Robust English Sentence Test Open-set; PTA = pure-tone average; SES = socioeconomic status.

Control participants were recruited from the otolaryngology clinic as patients with nonotologic complaints, or using ResearchMatch, a national research recruitment service. Because enrolling older adults with normal pure-tone thresholds is difficult, the pure-tone average (PTA) criterion for frequencies 0.5, 1, 2, and 4 kHz was relaxed to 30 dB HL or better, along with passing the other screening criteria. Eighty-nine NH control participants met criteria and were included in the analyses. These NH controls were between the ages of 18 and 81 years (mean = 43.3 years, SD = 20.8 years), with PTA ranging from −3.75 to 30 dB HL (mean = 8.9 dB HL, SD = 6.7 dB HL). Group mean demographic and screening measures for CI and NH control participants are shown in Table II.

Table II.

Participant Demographics for CI Participants and NH Controls

| Demographics | CI Mean | CI SD | NH Mean | NH SD |
|---|---|---|---|---|
| Age (yr) | 69.0 | 8.5 | 43.3 | 20.8 |
| Pure-tone average (dB HL) | 97.3 | 18.0 | 8.9 | 6.7 |
| Reading (standard score) | 99.4 | 11.3 | 102.3 | 8.7 |
| MMSE (raw score) | 28.7 | 1.3 | 29.3 | 1.0 |
| SES | 26.6 | 15.3 | 31.2 | 13.1 |
| Duration of hearing loss (yr) | 39.9 | 20.6 | – | – |
| Duration of CI use (yr) | 7.1 | 6.9 | – | – |

CI = cochlear implant; MMSE = Mini-Mental State Examination; NH = normal hearing; SD = standard deviation; SES = socioeconomic status.

Equipment and Materials

All testing took place at a central testing location using sound booths and acoustically insulated rooms for testing. All tests with auditory responses were audiovisually recorded for later scoring. Participants wore frequency modulation transmitters through the use of specially designed vests. This allowed for their responses to have direct input into the camera, permitting later offline scoring of tasks. Each task was scored by two separate individuals for 25% of responses to ensure reliable results. Reliability was determined to be >95% for all measures.

Visual stimuli were presented on paper or on a touch screen monitor made by Keytec Inc. (Richardson, TX) placed 2 feet in front of the participant. Auditory stimuli were presented via a Roland MA-12C speaker (Roland Corp., Los Angeles, CA) placed 1 m in front of the participant at 0° azimuth. All of the tests requiring auditory responses from the participant were audiovisually recorded via a Sony HDR-PJ260 High Definition Handycam (Sony Corp., Tokyo, Japan) with an 8.9 MP digital video camera for the purposes of scoring the tasks at a later time. The participants wore specially designed vests with a pocket that held a Sony UHF Synthesized Transmitter UTX-B1 (Sony Corp.), with the microphone attached to the neckline of the vest. The Sony Synthesized Diversity Tuner URX-91 (Sony Corp.) was attached to the video camera, which allowed the participants’ auditory responses to be directly transmitted to the camera for high-quality sound recording. Prior to the testing session, the Roland MA-12C speaker was calibrated to 68 dB SPL using a sound level meter positioned 1 m in front of the speaker at 0° azimuth. After the screening measures were completed, the measures outlined below were collected.

Speech Recognition Measures.

Speech recognition tasks were presented in the clear for CI users, and were processed using eight-channel noise-vocoding for NH controls. Vocoding was done using vocoder software in MATLAB (MathWorks, Natick, MA), using the Greenwood function with speech-modulated noise. Three speech recognition measures were included to test perception of speech under three different conditions:

HARVARD STANDARD SENTENCES.

Sentences were presented via loudspeaker, and participants were asked to repeat as much of the sentence as they could. Thirty sentences from the Harvard standard lists 1 to 10 were used, which were spoken and recorded by a single male talker.37 The sentences are long, complex, and semantically meaningful, consisting of an imperative or declarative structure. An example is, “A pot of tea helps to pass the evening.” Scores were percentage of total words repeated correctly, excluding the first two sentences as practice.

PRESTO SENTENCES.

These sentences were chosen from the Texas Instruments/Massachusetts Institute of Technology speech collection, and were created to balance talker gender, key words, frequency, and familiarity, with sentences varying broadly in speaker dialect and accent.38 Perceptually Robust English Sentence Test Open-set (PRESTO) sentences are high-variability, complex sentences, which would be expected to be more challenging for listeners to recognize. An example of a sentence is, “A flame would use up air.” Participants were asked to repeat 32 sentences. Scores were again the percentage of total words correct, excluding the first two sentences as practice.

CENTRAL INSTITUTE OF THE DEAF W-22 WORD LISTS.

Fifty Central Institute of the Deaf (CID) W-22 words were presented. The participants were instructed to repeat the last word that was said after the prompt, “Say the word __.” CID W-22 words are phonetically balanced and spoken and recorded by a single male speaker with a general American dialect.39 Because these are words presented without sentential context, performance should more closely represent sensitivity of the listener to acoustic-phonetic details of speech, as compared with the sentence recognition tasks above. List 1A, which consisted of 50 words, was used for testing. Scores were percentage of whole words correct.
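As a rough illustration of the channel layout implied by eight-channel vocoding with the Greenwood function, the sketch below spaces band edges equally along the cochlear place axis. It is not the MATLAB vocoder used in the study. The constants are the commonly cited human values of the Greenwood place-frequency map, and the 200 to 7,000 Hz analysis range and all function names are assumptions chosen for illustration, because the text does not report them.

```python
from math import log10

# Commonly cited human constants for the Greenwood place-frequency map,
# with x expressed as a proportion of cochlear length (0 = apex, 1 = base).
A, ALPHA, K = 165.4, 2.1, 0.88


def greenwood_hz(x):
    """Characteristic frequency (Hz) at relative cochlear position x."""
    return A * (10 ** (ALPHA * x) - K)


def greenwood_position(freq_hz):
    """Inverse map: relative cochlear position for a frequency in Hz."""
    return log10(freq_hz / A + K) / ALPHA


def band_edges(low_hz, high_hz, n_channels=8):
    """Return n_channels + 1 edges equally spaced in cochlear position."""
    x_low = greenwood_position(low_hz)
    x_high = greenwood_position(high_hz)
    step = (x_high - x_low) / n_channels
    return [greenwood_hz(x_low + i * step) for i in range(n_channels + 1)]


# Hypothetical analysis range; the study does not state its corner frequencies.
for lo, hi in zip(band_edges(200.0, 7000.0), band_edges(200.0, 7000.0)[1:]):
    print(f"{lo:8.1f} Hz to {hi:8.1f} Hz")
```

In a full noise vocoder, each band's temporal envelope would then modulate band-limited noise before the channels are summed; that signal-processing stage is omitted here.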

Spectral Resolution.

The Spectral-Temporally Modulated Ripple Test (SMRT) was used to assess CI users’ spectral resolution. This task was developed by Aronoff and Landsberger40 and is available free of charge at http://smrt.tigerspeech.com. The task is described in detail by Aronoff and Landsberger but discussed briefly here. Stimuli consisted of 202 pure-tone frequencies having amplitudes spectrally modulated by a sine wave. Ripple density and phase of ripple onset were determined by frequency and phase of the modulating sinusoid. The ripple-resolution threshold was determined using a three-interval, two-alternative forced-choice, one-up/one-down adaptive procedure. The reference stimulus consisted of 20 ripples per octave (RPO). The initial target stimulus had 0.5 RPO, with a step size of 0.2 RPO. Listeners discriminated reference from target stimuli. The test was completed after six runs of 10 reversals each. A listener’s score was based on the last six reversals of each run, with the first three runs discarded as practice. A higher score represented better spectral resolution.
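The adaptive logic of a single run can be sketched as follows. This is a simplified illustration under stated assumptions, not the SMRT software itself: the `respond` callback, the lower bound on ripple density, and the trial cap are hypothetical, and only the starting value (0.5 RPO), step size (0.2 RPO), 10 reversals, and averaging over the last six reversals are taken from the description above.

```python
def run_staircase(respond, start_rpo=0.5, step_rpo=0.2, n_reversals=10,
                  n_scored=6, floor_rpo=0.1, max_trials=500):
    """One-up/one-down track: a correct response raises ripple density,
    an incorrect response lowers it. Returns the mean ripple density at
    the last n_scored reversals."""
    rpo = start_rpo
    previous_direction = 0  # 0 until the first trial has been run
    reversals = []
    for _ in range(max_trials):
        direction = 1 if respond(rpo) else -1
        # A reversal is a change in the direction of the track.
        if previous_direction != 0 and direction != previous_direction:
            reversals.append(rpo)
            if len(reversals) == n_reversals:
                scored = reversals[-n_scored:]
                return sum(scored) / len(scored)
        previous_direction = direction
        rpo = max(floor_rpo, rpo + direction * step_rpo)
    raise RuntimeError("staircase did not reach the requested number of reversals")


# Hypothetical deterministic listener who is correct below 2.0 RPO.
def simulated_listener(rpo):
    return rpo < 2.0


print(run_staircase(simulated_listener))
```

A real listener responds probabilistically near threshold, so the reversal points would scatter around the threshold rather than alternate between two values as they do for this idealized listener.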

Cognitive Measures

VERBAL WORKING MEMORY–VISUAL DIGIT SPAN.

This task assessed participants’ verbal WM capacity using visual presentation. It was based on the original auditory digit span task from the Wechsler Intelligence Scale for Children, Fourth Edition, Integrated.29 Participants were presented with visual stimuli in the form of digits (1–9) on the touch screen computer monitor. To familiarize the participants with the stimuli, one digit appeared on the screen first, followed by a screen with all nine numbers, and participants were asked to touch the digit that had appeared first. Next, participants saw a sequence of digits presented one at a time. Once the digits disappeared and the screen with all nine numbers appeared, participants were asked to touch the numbers in the correct serial order. Sequence length began at two digits and increased gradually as the participant continued to answer correctly, up to a maximum of seven digits. Each sequence length was presented twice, using different digits. Once the participant answered two strings of the same length incorrectly, the task automatically ended. Total correct items served as the performance score.

INHIBITORY CONTROL–STROOP.

This computerized task evaluated inhibitory control abilities and is publicly available (http://www.millisecond.com). Participants were shown a color word on the computer, presented in either the same or a different color. The participant was asked to press the computer key on the keyboard that corresponded with the color of the text of the word, not the color represented by the word. The Stroop task was divided into congruent trials (color and color word matched) and incongruent trials (color and color word did not match). Response times were computed for each condition, and an interference score was calculated by subtracting the mean response time for congruent condition from the mean response time for incongruent condition across trials.
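The interference score described above reduces to a difference of two condition means. A minimal sketch follows, assuming trials are stored as (condition, response time in milliseconds) pairs and that all trials are included; the text does not state whether error trials were excluded, and the function name and sample values are illustrative only.

```python
from statistics import mean


def stroop_interference(trials):
    """Mean incongruent response time minus mean congruent response time.

    trials: iterable of (condition, response_time_ms) pairs, where
    condition is "congruent" or "incongruent".
    """
    trials = list(trials)
    congruent = [rt for condition, rt in trials if condition == "congruent"]
    incongruent = [rt for condition, rt in trials if condition == "incongruent"]
    return mean(incongruent) - mean(congruent)


# Made-up response times for illustration, not study data.
example_trials = [
    ("congruent", 600), ("congruent", 700),
    ("incongruent", 800), ("incongruent", 900),
]
print(stroop_interference(example_trials))
```

A larger positive score indicates greater slowing on incongruent trials, that is, poorer inhibitory control.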

PROCESSING SPEED FOR LEXICAL/PHONOLOGICAL ACCESS–TEST OF WORD READING EFFICIENCY, VERSION 2.

The Test of Word Reading Efficiency (TOWRE) is a measure of word reading accuracy and fluency, and can be considered an assessment of the speed of a participant’s lexical and phonological access.41 The test assesses two types of reading skills: the ability to accurately recognize and identify familiar real words, and the ability to “sound out” nonwords by phonetically decoding them. The participants read as many words as they could from the 108-word list in 45 seconds, followed by reading as many nonwords as they could in 45 seconds from the 66-nonword list. Two scores were computed: percent whole words correct and percent whole nonwords correct.

NONVERBAL REASONING–RAVEN’S MATRICES.

Visual patterns were presented on a touchscreen, and participants completed the patterns by selecting the best option. Participants completed as many items as possible in 10 minutes, and the score was the total number of items correct.

PERCEPTUAL CLOSURE–FRAGMENTED SENTENCES TEST.

This task was developed by Feld and Sommers,42 who based their work on that of Watson et al.43 During the task, meaningful sentences appeared briefly on the computer monitor with visually degraded (i.e., fragmented) words. The participants viewed 18 sentences. Participants were asked to read aloud as much of the sentence as possible while the sentence was on the screen, as well as in the 2 seconds after the sentence disappeared from the screen. Studies using similar tasks have found correlations between these abilities and speech perception in noise for NH individuals.44 The fragmented sentences test was scored using percentage of words correct.

General Approach

The study protocol was approved by the local institutional review board. All participants provided informed, written consent, and were reimbursed $15 per hour for participation. Testing was completed over a single 2-hour session, with frequent breaks to prevent fatigue. During testing, CI participants used their typical hearing prostheses, including any contralateral hearing aid, except during the unaided audiogram. Prior to the start of testing, examiners checked the integrity of the individual’s hearing prostheses by administering a brief vowel and consonant repetition task.

Data Analyses

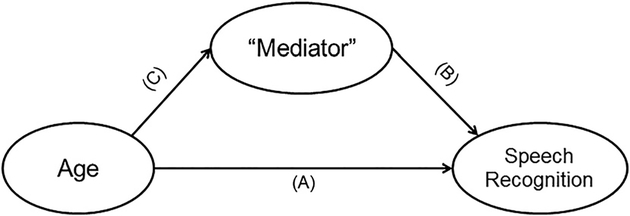

Linear regression analyses were performed for each group separately (CI and NH), testing different mediation models using the method of Baron and Kenny,45 with each speech recognition score as a separate outcome measure. Figure 1 illustrates the steps for testing a mediation model. To test the first hypothesis, that spectral resolution would mediate the effects of advancing age on speech recognition, two simple linear regression analyses were performed for each group separately, first with speech recognition as outcome and participant age as predictor, and then with speech recognition as outcome and spectral resolution (the potential mediator) as predictor. A third simple linear regression analysis was then conducted to determine whether participant age predicted spectral resolution. Last, a multiple linear regression analysis, with both spectral resolution and age entered as predictors, was planned to test for mediating effects. A full mediation effect of spectral resolution would be evidenced by a significant effect of spectral resolution and a nonsignificant effect of age in this model. A partial mediation effect would be suggested by a decline in the magnitude of the β value for age when spectral resolution was added to the model as a predictor along with age.

Fig. 1.

Steps for testing a mediation model. (A) First, a simple linear regression analysis is performed to determine whether age significantly predicts the speech recognition measure. (B) Second, a simple linear regression analysis is performed to determine whether the mediator measure of interest predicts the speech recognition measure. (C) Third, a simple linear regression is performed to determine whether age predicts the mediator measure of interest. Finally, a multiple linear regression analysis is performed, now with both age and the mediator as predictors of the speech recognition measure. A full mediation effect of the mediator would be evidenced by a significant effect of the mediator, but now a nonsignificant effect of age. A partial mediation effect of the mediator would be suggested by a decline in the β value for age when the mediator is added to the model.
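The sequence of regressions in Figure 1 can be sketched in a few lines. This is a minimal illustration rather than the analysis code used in the study: it computes only standardized coefficients (β), not significance tests, and it relies on two standard identities, namely that β equals Pearson's r in a simple regression and that the two-predictor β values follow from the three pairwise correlations. The function names and the data are hypothetical; the data were constructed so that the outcome depends on age only through the mediator.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def mediation_betas(age, mediator, outcome):
    """Standardized coefficients for the four regressions in Figure 1."""
    r_ay = pearson_r(age, outcome)       # (A) age -> outcome
    r_my = pearson_r(mediator, outcome)  # (B) mediator -> outcome
    r_am = pearson_r(age, mediator)      # (C) age -> mediator
    denom = 1.0 - r_am ** 2
    return {
        "age_alone": r_ay,
        "mediator_alone": r_my,
        "age_to_mediator": r_am,
        # Final step: outcome regressed on age and mediator together.
        "age_with_mediator": (r_ay - r_my * r_am) / denom,
        "mediator_with_age": (r_my - r_ay * r_am) / denom,
    }


# Made-up data for illustration: the outcome is an exact multiple of the
# mediator, so the mediator carries the entire age effect.
age = [25, 35, 45, 55, 65, 75]
mediator = [8.0, 7.5, 6.0, 5.5, 4.0, 3.5]
outcome = [10 * m for m in mediator]

for step, beta in mediation_betas(age, mediator, outcome).items():
    print(f"{step}: {beta:.3f}")
```

In this constructed case the β for age is strongly negative on its own and falls to essentially zero once the mediator is in the model, which is the pattern described as full mediation. A β for age that shrinks in magnitude without vanishing would correspond to partial mediation.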

To test the second hypothesis, that cognitive scores (verbal WM, inhibitory control, processing speed, nonverbal reasoning, and perceptual closure) would mediate the effects of advancing age on speech recognition, similar sets of analyses were performed as above, for each speech recognition outcome independently, for each group, and for each cognitive task independently.

RESULTS

Group mean scores for speech recognition, spectral resolution, and cognitive scores are shown in Table III. Results demonstrate variability among both CI users and NH controls in speech recognition performance, spectral resolution, and cognitive functions. Measures were not compared directly between groups because CI listeners heard unprocessed speech in the clear and NH controls heard noise-vocoded speech, and the groups were not equivalent in age range.

Table III.

Group Mean Scores for Speech Recognition, Spectral Resolution, and Cognitive Scores for CI Participants and NH Controls

| Measure | CI Mean | CI SD | NH Mean | NH SD |

|---|---|---|---|---|

| Speech recognition | ||||

| Harvard standard sentences (% words correct) | 73.8 | 16.8 | 71.2 | 11.2 |

| PRESTO sentences (% words correct) | 59.0 | 23.2 | 62.6 | 10.5 |

| CID words (% words correct) | 67.2 | 23.1 | 54.5 | 10.8 |

| Spectral resolution (ripples per octave) | 2.19 | 1.47 | 7.80 | 1.54 |

| Cognitive scores | ||||

| Digit span (number digits correct) | 42.6 | 15.8 | 55.5 | 19.0 |

| Stroop interference score (ms) | 381.5 | 638.2 | 206.7 | 259.7 |

| TOWRE words (% words correct) | 71.4 | 11.9 | 80.5 | 11.6 |

| TOWRE nonwords (% nonwords correct) | 63.1 | 18.3 | 73.6 | 15.2 |

| Raven’s nonverbal reasoning (total correct) | 9.3 | 4.9 | 18.1 | 7.4 |

| Fragmented sentences (% words correct) | 68.3 | 11.5 | 77.7 | 11.1 |

CI = cochlear implant; CID = Central Institute of the Deaf; NH = normal hearing; PRESTO = Perceptually Robust English Sentence Test Open-set; SD = standard deviation; TOWRE = Test of Word Reading Efficiency.

Because previous work has identified patient characteristics and audiologic findings as significant predictors of speech recognition in CI users, the following traditional measures were first assessed for correlations with speech recognition in each group (CI and NH where appropriate) separately: socioeconomic status, residual hearing PTA, age at implantation (first implant for those with bilateral CIs), duration of hearing loss (current age minus reported age at onset of hearing loss), and duration of CI use. None of these factors correlated with speech recognition outcomes in CI users. In contrast, for NH controls, PTA correlated with recognition scores for vocoded speech materials (ranging from r = −0.364 to −0.592 across speech measures, all P < .001). Thus, PTA was considered as a confounding factor in a later analysis in NH controls.

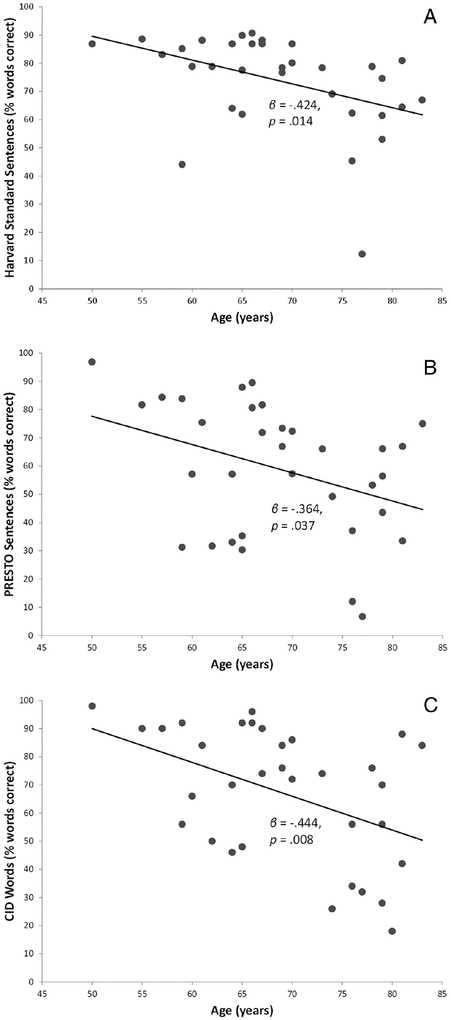

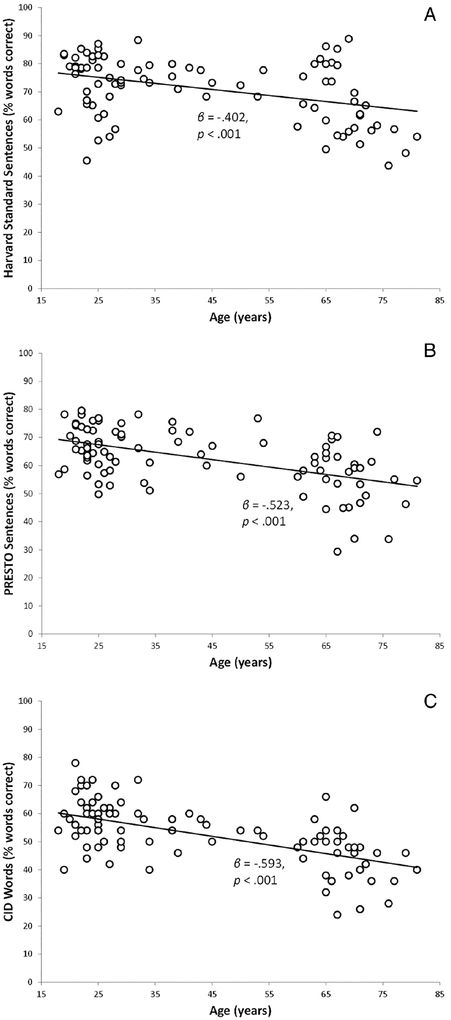

The first hypothesis was that spectral resolution would mediate the effects of advancing age on speech recognition, for each group separately (CI and NH). The first step was to determine whether advancing age predicted poorer speech recognition scores for each group separately. Scatterplots of speech recognition scores across age are shown in Figure 2 for CI users and Figure 3 for NH controls.

Fig. 2.

Scatterplots of speech recognition scores (in quiet) versus participant age for CI users. (A) Harvard standard sentences. (B) Perceptually Robust English Sentence Test Open-set (PRESTO) sentences. (C) Central Institute of the Deaf (CID) words.

Fig. 3.

Scatterplots of speech recognition scores (eight-channel vocoded) versus participant age for normal-hearing controls. (A) Harvard standard sentences. (B) Perceptually Robust English Sentence Test Open-set (PRESTO) sentences. (C) Central Institute of the Deaf (CID) words.

Simple linear regression analyses were performed for each group for each speech recognition outcome separately, with participant age as the predictor. Table IV shows results for CI users, and Table V shows results for NH controls. As predicted, age was a significant predictor for all speech recognition scores for both groups, such that younger participants displayed higher speech recognition scores.

Table IV.

Results of Simple Linear Regression Analyses for Cochlear Implant Participants With Speech Recognition Scores as Dependent Measures and Age as Predictor

| Predictor: Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | −0.844 | 0.324 | −0.424 | 2.61 | .014 | 0.180 |

| PRESTO sentences (% words correct) | −1.002 | 0.460 | −0.364 | 2.18 | .037 | 0.133 |

| CID words (% words correct) | −1.201 | 0.428 | −0.444 | 2.81 | .008 | 0.198 |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.
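As an aid to reading the columns of Table IV, two identities hold for any simple (one-predictor) linear regression: the t statistic is B divided by SE(B), and R2 equals the square of the standardized β. The short sketch below applies them to the rounded values reported in Table IV; the variable and function names are hypothetical, and small discrepancies are expected from rounding. The table lists t as a magnitude, so the absolute value is taken.

```python
# Rounded values copied from Table IV (CI users, predictor: age).
TABLE_IV = {
    "Harvard standard sentences": {"B": -0.844, "SE": 0.324, "beta": -0.424, "t": 2.61, "R2": 0.180},
    "PRESTO sentences": {"B": -1.002, "SE": 0.460, "beta": -0.364, "t": 2.18, "R2": 0.133},
    "CID words": {"B": -1.201, "SE": 0.428, "beta": -0.444, "t": 2.81, "R2": 0.198},
}


def derived_stats(row):
    """Return (|t|, R2) implied by B, SE(B), and the standardized beta."""
    return abs(row["B"] / row["SE"]), row["beta"] ** 2


for name, row in TABLE_IV.items():
    t_calc, r2_calc = derived_stats(row)
    print(f"{name}: t = {t_calc:.2f} (reported {row['t']}), "
          f"R2 = {r2_calc:.3f} (reported {row['R2']})")
```

The same relationships apply to the other simple regression tables in this section, but not to the multiple regression tables, where R2 describes the full two-predictor model.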

Table V.

Results of Simple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and Age as Predictor

| Predictor: Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | −0.216 | 0.053 | −0.402 | 4.09 | <.001 | 0.162 |

| PRESTO sentences (% words correct) | −0.264 | 0.046 | −0.523 | 5.72 | <.001 | 0.274 |

| CID words (% words correct) | −0.308 | 0.045 | −0.593 | 6.87 | <.001 | 0.352 |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

Next, simple linear regression analyses were performed for each speech recognition outcome separately, with spectral resolution entered as the predictor. This relationship was significant for all three speech recognition measures, both for CI users and for NH controls, as demonstrated in Tables VI and VII.

Table VI.

Results of Simple Linear Regression Analyses for Cochlear Implant Participants With Speech Recognition Scores as Dependent Measures and Spectral Resolution as Predictor

| Predictor: Spectral Resolution | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | 3.214 | 1.481 | .374 | 2.17 | .038 | 0.140 |

| PRESTO sentences (% words correct) | 7.266 | 2.266 | .512 | 3.21 | .003 | 0.262 |

| CID words (% words correct) | 8.442 | 2.345 | .549 | 3.60 | .001 | 0.302 |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

Table VII.

Results of Simple Linear Regression Analyses for Normal-Hearing Participants With Speech Recognition Scores as Dependent Measures and Spectral Resolution as Predictor

| Predictor: Spectral Resolution | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | 3.212 | 0.710 | 0.441 | 4.525 | <.001 | 0.194 |

| PRESTO sentences (% words correct) | 3.404 | 0.643 | 0.498 | 5.29 | <.001 | 0.248 |

| CID words (% words correct) | 3.436 | 0.646 | 0.499 | 5.32 | <.001 | 0.249 |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

Next, to determine whether age predicted spectral resolution, a simple linear regression analysis was performed with spectral resolution scores entered as the outcome and participant age entered as the predictor. Results demonstrated a significant relationship between age and spectral resolution for CI users, F(1,30) = 11.29, β = −0.523, P = .002, and for NH controls, F(1,85) = 82.38, β = −0.702, P < .001.

Lastly, to test for mediation effects, multiple linear regression analyses were performed for each group, for each speech recognition outcome separately, with both spectral resolution and participant age entered as predictors. A mediation effect would be demonstrated by 1) a significant effect of spectral resolution on speech recognition along with a decline in the β value for age (an attenuated relationship, indicating partial mediation), or 2) an effect of age that is no longer statistically significant (indicating full mediation). For CI users, spectral resolution significantly and fully mediated the effects of advancing age on speech recognition for PRESTO sentences and CID words, but not for Harvard standard sentences. For NH controls, spectral resolution fully mediated the effects of advancing age on speech recognition for Harvard standard sentences, but demonstrated no mediation effects for PRESTO sentences or CID words. Results of the multiple linear regressions are shown in Tables VIII and IX.

Table VIII.

Results of Multiple Linear Regression Analyses for Cochlear Implant Participants With Speech Recognition Scores as Dependent Measures and Both Spectral Resolution and Age as Predictors

| Predictors: Spectral Resolution and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.221 |

| Spectral resolution (spectral ripple threshold) | 1.702 | 1.687 | 0.198 | 1.01 | .322 | |

| Age (yr) | −0.502 | 0.294 | −0.334 | 1.71 | .099 | |

| PRESTO sentences (% words correct) | | | | | | 0.272 |

| Spectral resolution (spectral ripple threshold) | 6.363 | 2.692 | 0.448 | 2.36 | .025 | |

| Age (yr) | −0.300 | 0.470 | −0.121 | .64 | .529 | |

| CID words (% words correct) | | | | | | 0.334 |

| Spectral resolution (spectral ripple threshold) | 6.743 | 2.732 | 0.439 | 2.47 | .020 | |

| Age (yr) | −0.552 | 0.464 | −0.211 | 1.19 | .244 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

Table IX.

Results of Multiple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and Both Spectral Resolution and Age as Predictors

| Predictors: Spectral Resolution and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.217 |

| Spectral resolution (spectral ripple threshold) | 2.129 | 0.988 | 0.292 | 2.16 | .034 | |

| Age (yr) | −0.114 | 0.073 | −0.212 | 1.56 | .122 | |

| PRESTO sentences (% words correct) | | | | | | 0.316 |

| Spectral resolution (spectral ripple threshold) | 1.651 | 0.866 | 0.241 | 1.91 | .060 | |

| Age (yr) | −0.185 | 0.064 | −0.365 | 2.89 | .005 | |

| CID words (% words correct) | | | | | | 0.356 |

| Spectral resolution (spectral ripple threshold) | 1.225 | 0.845 | 0.178 | 1.45 | .151 | |

| Age (yr) | −0.233 | 0.063 | −0.458 | 3.73 | <.001 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

At this point, a consideration for the NH group was whether general hearing ability (PTA), which was also found to correlate significantly with speech recognition measures, might mediate the effect of age on degraded speech recognition. That is, even though speech materials in this study were all presented well above participants’ behavioral hearing thresholds, it could be that general audibility, rather than spectral resolution per se, would mediate the aging effects on degraded speech recognition. To test this hypothesis for the NH group alone, simple and multiple linear regressions were performed with each speech measure as the outcome, as described above, but now with age and PTA as predictors. Results demonstrated that PTA only partially mediated the effects of age on Harvard standard sentences, and did not mediate the effects of age at all on PRESTO sentences or CID word recognition. Thus, PTA itself did not generally serve as a mediator of the detrimental effects of aging on speech recognition in the NH group, but spectral resolution fully mediated the aging effect for Harvard standard sentences.

The above results supported the hypothesis that spectral resolution mediated the detrimental effects of advancing age on speech recognition for PRESTO sentences and CID word recognition in CI users; the same was true for NH controls for Harvard standard sentences only. Our second hypothesis was that the cognitive skills (verbal WM, inhibitory control, speed of lexical/phonological access, nonverbal reasoning, and perceptual closure) would mediate the effects of advancing age on speech recognition scores. Analyses were conducted for each group (CI or NH) separately, in a similar fashion as described above for spectral resolution, examining each speech recognition measure as a separate outcome and each cognitive measure for its ability to mediate the effect of advancing age on speech recognition. First, based on simple linear regression analyses, we found that increasing age predicted poorer cognitive scores for all measures for both groups, as demonstrated in Tables X and XI, except that digit span was not predicted by age for CI users. Of note, the β values for the Stroop interference score were positive because higher scores represent slower processing (i.e., poorer performance).

Table X.

Results of Simple Linear Regression Analyses for Cochlear Implant Users With Cognitive Scores as Dependent Measures and Age as Predictor

| Predictor: Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Digit span (total digits correct) | −0.033 | 0.327 | −0.018 | 0.10 | .92 | <0.001 |

| Stroop interference score (ms) | 30.574 | 12.044 | 0.409 | 2.54 | .016 | 0.168 |

| TOWRE words (% words correct) | −0.005 | 0.002 | −0.393 | 2.42 | .021 | 0.155 |

| TOWRE nonwords (% nonwords correct) | −1.210 | 0.313 | −0.565 | 3.87 | .001 | 0.319 |

| Raven’s nonverbal reasoning (total correct) | −0.420 | 0.070 | −0.728 | 6.00 | <.001 | 0.530 |

| Fragmented sentences (% words correct) | −0.671 | 0.206 | −0.499 | 3.26 | .003 | 0.249 |

B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Table XI.

Results of Simple Linear Regression Analyses for Normal-Hearing Participants With Cognitive Scores as Dependent Measures and Age as Predictor

| Predictor: Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Digit span (total digits correct) | −0.281 | 0.093 | −0.309 | 3.03 | .003 | 0.095 |

| Stroop interference score (ms) | 6.121 | 1.170 | 0.491 | 5.23 | <.001 | 0.242 |

| TOWRE words (% words correct) | −0.001 | 0.001 | −0.225 | 2.15 | .034 | 0.051 |

| TOWRE nonwords (% nonwords correct) | −0.286 | 0.072 | −0.393 | 3.98 | <.001 | 0.154 |

| Raven’s nonverbal reasoning (total correct) | −0.230 | 0.029 | −0.645 | 7.88 | <.001 | 0.416 |

| Fragmented sentences (% words correct) | −0.178 | 0.054 | −0.334 | 3.30 | .001 | 0.111 |

B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Next, simple linear regression analyses, with results shown in Table XII, demonstrated that all speech recognition measures were significantly predicted by better cognitive scores on TOWRE words and Raven’s scores for CI users; TOWRE nonwords and fragmented sentences also predicted CID word scores. For the NH group, as shown in Table XIII, digit span, Stroop interference score, TOWRE nonwords, and Raven’s scores predicted all three speech recognition scores; TOWRE words and fragmented sentences were also predictive of some speech recognition scores.

Table XII.

Results of Simple Linear Regression Analyses for Cochlear Implant Users With Speech Recognition Scores as Dependent Measures and Cognitive Scores as Predictors

| Dependent Measures | B | SE(B) | β | t | P | R2 |

|---|---|---|---|---|---|---|

| Predictor: Digit span (total digits correct) | ||||||

| Harvard standard sentences (% words correct) | 0.106 | 0.187 | 0.101 | 0.566 | .576 | 0.010 |

| PRESTO sentences (% words correct) | 0.083 | 0.259 | 0.057 | 0.320 | .751 | 0.003 |

| CID words (% words correct) | 0.099 | 0.257 | 0.068 | 0.384 | .704 | 0.005 |

| Predictor: Stroop interference score (ms) | ||||||

| Harvard standard sentences (% words correct) | −0.008 | 0.004 | −0.292 | 1.70 | .099 | 0.085 |

| PRESTO sentences (% words correct) | −0.005 | 0.006 | −0.131 | 0.74 | .468 | 0.017 |

| CID words (% words correct) | −0.009 | 0.006 | −0.236 | 1.37 | .180 | 0.056 |

| Predictor: TOWRE words (% words correct) | ||||||

| Harvard standard sentences (% words correct) | 64.729 | 25.254 | 0.418 | 2.56 | .015 | 0.175 |

| PRESTO sentences (% words correct) | 93.010 | 34.581 | 0.435 | 2.69 | .011 | 0.189 |

| CID words (% words correct) | 108.112 | 28.406 | 0.558 | 3.81 | .001 | 0.312 |

| Predictor: TOWRE nonwords (% nonwords correct) | ||||||

| Harvard standard sentences (% words correct) | 0.346 | 0.180 | 0.327 | 1.93 | .063 | 0.107 |

| PRESTO sentences (% words correct) | 0.470 | 0.249 | 0.321 | 1.89 | .068 | 0.103 |

| CID words (% words correct) | 0.525 | 0.203 | 0.416 | 2.59 | .014 | 0.173 |

| Predictor: Raven’s nonverbal reasoning (total correct) | ||||||

| Harvard standard sentences (% words correct) | 1.826 | 0.511 | 0.540 | 3.57 | .001 | 0.291 |

| PRESTO sentences (% words correct) | 2.793 | 0.673 | 0.598 | 4.15 | <.001 | 0.357 |

| CID words (% words correct) | 2.667 | 0.680 | 0.570 | 3.93 | <.001 | 0.325 |

| Predictor: Fragmented sentences (% words correct) | ||||||

| Harvard standard sentences (% words correct) | 0.396 | 0.296 | 0.234 | 1.34 | .190 | 0.055 |

| PRESTO sentences (% words correct) | 0.781 | 0.396 | 0.334 | 1.97 | .058 | 0.111 |

| CID words (% words correct) | 0.385 | 0.096 | 0.396 | 4.02 | <.001 | 0.157 |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Table XIII.

Results of Simple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and Cognitive Scores as Predictors

| Dependent Measures | B | SE(B) | β | t | P | R2 |

|---|---|---|---|---|---|---|

| Predictor: Digit span (total digits correct) | ||||||

| Harvard standard sentences (% words correct) | 0.204 | 0.059 | 0.347 | 3.45 | .001 | 0.120 |

| PRESTO sentences (% words correct) | 0.170 | 0.057 | 0.307 | 3.01 | .003 | 0.094 |

| CID words (% words correct) | 0.168 | 0.058 | 0.295 | 2.88 | .005 | 0.087 |

| Predictor: Stroop interference score (ms) | ||||||

| Harvard standard sentences (% words correct) | −0.019 | 0.004 | −0.444 | 4.59 | <.001 | 0.197 |

| PRESTO sentences (% words correct) | −0.020 | 0.004 | −0.479 | 5.06 | <.001 | 0.230 |

| CID words (% words correct) | −0.021 | 0.004 | −0.507 | 5.46 | <.001 | 0.257 |

| Predictor: TOWRE words (% words correct) | ||||||

| Harvard standard sentences (% words correct) | 19.845 | 10.136 | 0.205 | 1.96 | .053 | 0.042 |

| PRESTO sentences (% words correct) | 33.503 | 9.070 | 0.368 | 3.69 | <.001 | 0.136 |

| CID words (% words correct) | 23.513 | 9.697 | 0.252 | 2.43 | .017 | 0.063 |

| Predictor: TOWRE nonwords (% nonwords correct) | ||||||

| Harvard Standard Sentences (% words correct) | 0.402 | 0.066 | 0.546 | 6.08 | <.001 | 0.298 |

| PRESTO Sentences (% words correct) | 0.342 | 0.065 | 0.493 | 5.29 | <.001 | 0.243 |

| CID Words (% words correct) | 0.307 | 0.069 | 0.430 | 4.45 | <.001 | 0.185 |

| Predictor: Raven’s nonverbal reasoning (total correct) | ||||||

| Harvard standard sentences (% words correct) | 0.832 | 0.134 | 0.553 | 6.19 | <.001 | 0.305 |

| PRESTO sentences (% words correct) | 0.755 | 0.129 | 0.533 | 5.87 | <.001 | 0.284 |

| CID words (% words correct) | 0.724 | 0.135 | 0.497 | 5.34 | <.001 | 0.247 |

| Predictor: Fragmented sentences (% words correct) | ||||||

| Harvard standard sentences (% words correct) | 0.511 | 0.093 | 0.509 | 5.51 | <.001 | 0.259 |

| PRESTO sentences (% words correct) | 0.405 | 0.092 | 0.428 | 4.41 | <.001 | 0.183 |

| CID words (% words correct) | 0.639 | 0.474 | 0.252 | 1.353 | .187 | 0.063 |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Lastly, for each group separately, multiple linear regression analyses were performed for each speech recognition measure, entering age and each cognitive score as predictors, to examine for mediation effects of the cognitive scores. For CI users, these analyses were performed on TOWRE words and Raven’s scores, because each of these cognitive measures was predicted by age and each significantly predicted all three speech recognition scores. For the same reasons, in NH controls, multiple linear regression analyses were performed to include digit span, Stroop interference score, TOWRE nonwords, and Raven’s scores.

Results of multiple linear regression analyses for CI users are shown in Tables XIV (TOWRE words) and XV (Raven’s scores). For CI users, results demonstrated that both TOWRE rapid reading of words and Raven’s nonverbal reasoning significantly and fully mediated the effects of advancing age on speech recognition performance for PRESTO sentences and CID words. In each of those multiple linear regressions, adding the cognitive factor to the model made the effect of age nonsignificant. Raven’s scores also fully mediated the effects of advancing age on Harvard standard sentence scores.

Table XIV.

Results of Multiple Linear Regression Analyses for Cochlear Implant Users With Speech Recognition Scores as Dependent Measures and TOWRE Word Scores and Age as Predictors

| Predictors: TOWRE Words and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.266 |

| TOWRE words (% correct) | 48.142 | 25.708 | 0.311 | 1.87 | .071 | |

| Age (yr) | −0.636 | 0.331 | −0.320 | 1.92 | .064 | |

| PRESTO sentences (% words correct) | | | | | | 0.243 |

| TOWRE words (% correct) | 75.383 | 36.057 | 0.353 | 2.09 | .045 | |

| Age (yr) | −0.676 | 0.464 | −0.246 | 1.46 | .155 | |

| CID words (% words correct) | | | | | | 0.371 |

| TOWRE words (% correct) | 87.847 | 29.994 | 0.454 | 2.93 | .006 | |

| Age (yr) | −0.719 | 0.418 | −0.266 | 1.72 | .096 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Table XV.

Results of Multiple Linear Regression Analyses for Cochlear Implant Users With Speech Recognition Scores as Dependent Measures and Nonverbal Reasoning and Age as Predictors

| Predictors: Raven’s Nonverbal Reasoning and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.294 |

| Raven’s nonverbal reasoning (total correct) | 1.685 | 0.754 | 0.490 | 2.20 | .036 | |

| Age (yr) | −0.137 | 0.444 | −0.069 | 0.31 | .760 | |

| PRESTO sentences (% words correct) | | | | | | 0.368 |

| Raven’s nonverbal reasoning (total correct) | 3.288 | 0.985 | 0.704 | 3.34 | .002 | |

| Age (yr) | 0.402 | 0.580 | 0.146 | 0.693 | .494 | |

| CID words (% words correct) | | | | | | 0.327 |

| Raven’s nonverbal reasoning (total correct) | 2.454 | 1.006 | 0.524 | 2.44 | .021 | |

| Age (yr) | −0.169 | 0.581 | −0.063 | −0.29 | .772 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

Results for NH controls are shown in Tables XVI (digit span), XVII (Stroop interference), XVIII (TOWRE nonwords), and XIX (Raven’s scores). For NH controls, digit span partially mediated the effects of age on Harvard standard sentence scores, as demonstrated by a reduction in the β value for age when digit span was entered into the model, compared with when age was the only predictor. In the same fashion, Stroop interference and TOWRE nonwords were partial mediators of the effects of age on all speech recognition measures. Raven’s scores fully mediated the effects of advancing age on Harvard standard sentence scores, and partially mediated the effects on PRESTO sentences.

Table XVI.

Results of Multiple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and Digit Span Scores and Age as Predictors

| Predictors: Digit Span and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.216 |

| Digit span (total items correct) | 0.145 | 0.059 | 0.247 | 2.46 | .016 | |

| Age (yr) | −0.175 | 0.054 | −0.326 | 3.25 | .002 | |

| PRESTO sentences (% words correct) | | | | | | 0.297 |

| Digit span (total items correct) | 0.089 | 0.053 | 0.161 | 1.70 | .094 | |

| Age (yr) | −0.239 | 0.048 | −0.473 | 4.98 | <.001 | |

| CID words (% words correct) | | | | | | 0.366 |

| Digit span (total items correct) | 0.071 | 0.051 | 0.124 | 1.37 | .173 | |

| Age (yr) | −0.288 | 0.047 | −0.555 | 6.15 | <.001 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.

Table XVII.

Results of Multiple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and Stroop Interference Scores and Age as Predictors

| Predictors: Stroop Interference and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.243 |

| Stroop interference score (ms) | −0.014 | 0.005 | −0.322 | 2.97 | .004 | |

| Age (yr) | −0.133 | 0.058 | −0.247 | 2.28 | .025 | |

| PRESTO sentences (% words correct) | | | | | | 0.341 |

| Stroop interference score (ms) | −0.012 | 0.004 | −0.291 | 2.88 | .005 | |

| Age (yr) | −0.194 | 0.051 | −0.383 | 3.78 | <.001 | |

| CID words (% words correct) | | | | | | 0.411 |

| Stroop interference score (ms) | −0.012 | 0.004 | −0.286 | 2.99 | .004 | |

| Age (yr) | −0.234 | 0.050 | −0.450 | −4.70 | <.001 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Table XVIII.

Results of Multiple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and TOWRE Nonword Scores and Age as Predictors

| Predictors: TOWRE Nonwords and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.340 |

| TOWRE nonwords (% nonwords correct) | 0.338 | 0.070 | 0.459 | 4.82 | <.001 | |

| Age (yr) | −0.119 | 0.051 | −0.222 | 2.33 | .022 | |

| PRESTO sentences (% words correct) | | | | | | 0.372 |

| TOWRE nonwords (% nonwords correct) | 0.236 | 0.065 | 0.340 | 3.66 | <.001 | |

| Age (yr) | −0.197 | 0.047 | −0.389 | 4.19 | <.001 | |

| CID words (% words correct) | | | | | | 0.398 |

| TOWRE nonwords (% nonwords correct) | 0.166 | 0.065 | 0.233 | 2.57 | .012 | |

| Age (yr) | −0.260 | 0.047 | −0.502 | 5.51 | <.001 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error; TOWRE = Test of Word Reading Efficiency.

Table XIX.

Results of Multiple Linear Regression Analyses for Normal-Hearing Controls With Speech Recognition Scores as Dependent Measures and Nonverbal Reasoning and Age as Predictors

| Predictors: Raven’s Nonverbal Reasoning and Age | ||||||

|---|---|---|---|---|---|---|

| Dependent Measures | B | SE(B) | β | t | P | R2 |

| Harvard standard sentences (% words correct) | ||||||

| Predictors | | | | | | 0.309 |

| Raven’s nonverbal reasoning (total correct) | 0.756 | 0.177 | 0.503 | 4.28 | <.001 | |

| Age (yr) | −0.042 | 0.063 | −0.078 | .66 | .510 | |

| PRESTO sentences (% words correct) | | | | | | 0.339 |

| Raven’s nonverbal reasoning (total correct) | 0.474 | 0.163 | 0.334 | 2.91 | .005 | |

| Age (yr) | −0.155 | 0.058 | −0.307 | 2.68 | .009 | |

| CID words (% words correct) | 0.374 | |||||

| Raven’s nonverbal reasoning (total correct) | 0.285 | 0.163 | 0.196 | 1.75 | .083 | |

| Age (yr) | −0.242 | 0.058 | −0.467 | 4.18 | <.001 | |

CID = Central Institute of the Deaf; PRESTO = Perceptually Robust English Sentence Test Open-set; B = Unstandardized beta; SE = standard error.
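The regression tables above each enter one candidate mediator together with age as predictors of a speech recognition score. A minimal sketch of how such models can be read as a mediation check is given below, assuming a regression-based comparison of the age coefficient with and without the mediator in the model; this section does not spell out the exact procedure, so the approach is an assumption. The data are simulated, and the helper names (`solve`, `ols`) and all numeric settings are hypothetical choices for illustration, not values from the study.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(y, *predictors):
    """Least-squares fit; returns [intercept, b1, b2, ...] in predictor order."""
    rows = [[1.0, *vals] for vals in zip(*predictors)]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Simulated data (assumption): age lowers the mediator, and the mediator
# carries most of the effect of age on the speech score.
rng = random.Random(0)
age = [rng.uniform(20, 80) for _ in range(200)]
mediator = [100 - 0.5 * a + rng.gauss(0, 5) for a in age]
speech = [0.6 * m - 0.1 * a + rng.gauss(0, 5) for m, a in zip(mediator, age)]

_, total_age = ols(speech, age)                  # total effect of age
_, a_path = ols(mediator, age)                   # age -> mediator
_, b_path, direct_age = ols(speech, mediator, age)  # mediator and age together
indirect = a_path * b_path                       # effect carried by the mediator

print(f"total = {total_age:.3f}, direct = {direct_age:.3f}, indirect = {indirect:.3f}")
```

In this framing, an age coefficient that shrinks but stays nonzero once the mediator is added is read as partial mediation, and one that shrinks to about zero as full mediation. For ordinary least squares the total effect equals the direct effect plus the indirect effect.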

As a final consideration, the above findings (that both spectral resolution and cognitive scores fully or partially mediated the effects of advancing age on speech recognition in CI users and NH controls) suggest that spectral resolution and the cognitive mediators might covary. To test for such covariance in each group separately, Pearson bivariate correlation analyses were performed. For both groups, significant correlations were found between spectral resolution and each of the cognitive mediators, as shown in Table XX.

Table XX.

Results of Pearson Bivariate Correlation Analyses for CI and NH Participants Between Spectral Resolution and Each Group’s Cognitive Mediators of the Effects of Age on Speech Recognition

| | Correlation (r) With Spectral Resolution | P Value |
|---|---|---|
| CI users: cognitive mediator of age on speech recognition | | |
| TOWRE words (% words correct) | 0.386 | .029 |
| Raven’s nonverbal reasoning (total correct) | 0.520 | .002 |
| NH controls: cognitive mediator of age on speech recognition | | |
| Digit span (number digits correct) | 0.346 | .001 |
| Stroop interference score (ms) | −0.564 | <.001 |
| TOWRE nonwords (% nonwords correct) | 0.437 | <.001 |
| Raven’s nonverbal reasoning (total correct) | 0.589 | <.001 |

CI = cochlear implant; NH = normal hearing; TOWRE = Test of Word Reading Efficiency.

This finding raised the possibility that this moderate degree of covariance was simply associated with parallel declines in each ability with advancing age. To answer this question, a partial correlation analysis was performed between spectral resolution and each cognitive mediator, while controlling for age. Results are shown in Table XXI. This analysis demonstrated no significant partial correlation for CI users between spectral resolution and cognitive mediators when controlling for age. For NH controls, smaller but still significant partial correlations were found between spectral resolution and cognitive mediators after accounting for age, except for digit span. Thus, after accounting for age effects, spectral resolution and cognitive mediators did not correlate in CI users but did for most cognitive mediators in NH controls. In other words, spectral resolution and the cognitive mediators for CI users independently mediated the effects of aging on speech recognition; for NH controls, these mediation effects were not completely independent.

Table XXI.

Results of Partial Correlation Analyses for CI and NH Participants Between Spectral Resolution and Each Group’s Cognitive Mediators, Controlling for Age

| | Partial Correlation (r) With Spectral Resolution, Controlling for Age | P Value |
|---|---|---|
| CI users: cognitive mediator of age on speech recognition | | |
| TOWRE words (% words correct) | 0.229 | .214 |
| Raven’s nonverbal reasoning (total correct) | 0.238 | .198 |
| NH controls: cognitive mediator of age on speech recognition | | |
| Digit span (number digits correct) | 0.161 | .142 |
| Stroop interference score (ms) | −0.364 | .001 |
| TOWRE nonwords (% nonwords correct) | 0.245 | .024 |
| Raven’s nonverbal reasoning (total correct) | 0.247 | .022 |

CI = cochlear implant; NH = normal hearing; TOWRE = Test of Word Reading Efficiency.
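The partial correlation analysis described above can be illustrated with the standard first-order formula, which removes the variance shared with a third variable (here, age) from a correlation between two others. The sketch below is not the authors' analysis code. It uses simulated data in which two abilities decline with age but are otherwise unrelated, and the variable names and numeric settings are assumptions chosen only to show how a sizable zero-order correlation can shrink once age is controlled.

```python
import random
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_from_r(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_r(x, y, z):
    """Partial correlation of x and y controlling for z, from raw data."""
    return partial_from_r(pearson_r(x, y), pearson_r(x, z), pearson_r(y, z))


# Simulated data (assumption): both measures depend on age plus independent noise.
rng = random.Random(1)
age = [rng.uniform(20, 80) for _ in range(500)]
spectral = [-0.05 * a + rng.gauss(0, 1) for a in age]
cognitive = [-0.5 * a + rng.gauss(0, 10) for a in age]

zero_order = pearson_r(spectral, cognitive)
age_adjusted = partial_r(spectral, cognitive, age)
print(f"zero-order r = {zero_order:.3f}, partial r (age controlled) = {age_adjusted:.3f}")
```

In this simulation the two measures correlate only because both track age, which corresponds to the pattern reported for CI users. A partial correlation that remains clearly nonzero after controlling for age, as reported for most mediators in NH controls, would instead indicate shared variance beyond parallel age-related decline.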

DISCUSSION

This study addressed four main hypotheses regarding aging-related declines in the recognition of degraded speech, both for postlingual adults listening through CIs and for NH controls listening to spectrally degraded speech. First was the prediction that CI users and NH controls would demonstrate aging-related declines in spectral resolution. Second was the prediction that spectral resolution would partially mediate the effects of advancing age on speech recognition outcomes for both groups. Third was the prediction that listeners’ cognitive functions would also partially mediate the effects of aging on recognition of degraded speech. A fourth and final prediction was that CI users and NH controls would demonstrate similar perceptual processing, as suggested by similar mediation effects between groups of the cognitive factors measured. Results demonstrated general support for the first three hypotheses, with poorer spectral resolution and poorer cognitive functions mediating the detrimental effects of aging on speech recognition performance in both groups for at least some speech measures, and partial support for the fourth hypothesis.

The finding of spectral resolution as a significant mediator of the effects of age on speech recognition is consistent with previous findings that better spectral resolution contributes to better speech recognition performance in CI users.14 The current study extends these findings by demonstrating the impact that aging has on spectral resolution and speech recognition in CI users, as well as in NH controls. Although this decline in spectral resolution is well established in patients with milder degrees of presbycusis, it is generally assumed that spectral resolution after cochlear implantation can be attributed mostly to factors related to placement of the electrode array itself. For example, there is some evidence that perimodiolar arrays provide better speech recognition benefits than lateral wall hugging electrodes, presumably because they afford more refined neural stimulation, less spread of excitation, and, therefore, greater spectral resolution.1 Alternatively, it has been found that the health of the auditory nerve dendrites and spiral ganglion impacts speech recognition, likely as a result of a larger number of functional neural elements that can be stimulated by the electrode array.46 However, that suggestion does not necessarily imply that those listeners have greater spectral resolution with their CIs. Additional studies will be required to explore the mechanisms that underlie the poorer spectral resolution associated with advancing age in CI users, taking into account central and cognitive factors as possible contributors to performance on spectral resolution tests.

In the present study, the average performance of the CI users on the SMRT task was lower than that recently reported by Landsberger et al.47 In that study, adult CI participants demonstrated an average SMRT score of 4.3 RPO, whereas the present CI users demonstrated a mean score of 2.2 RPO. This difference may reflect the difference in the ages of the CI participants. The mean age of adult CI users in Landsberger et al. was 59.8 years, about nine years younger than the mean age of 69.0 years for the CI users in the present study. An examination of Figure 2 in Landsberger et al. further indicates a progressive decline in SMRT scores for older CI users, with uniformly poor performance in those few CI users over the age of 70 years, which is more consistent with the current findings. Additional sources of discrepancy in the results may have been differences in testing procedures and CI use. Whereas only bilateral CI users were tested in Landsberger et al., the current CI group was composed of only 10 bilateral users, 11 users tested with one CI alone, and 13 CI users tested with one CI and a contralateral hearing aid. Landsberger et al. also reported a significant correlation of −0.7 between SMRT scores and age. Thus, the results of Landsberger et al. and the current study are consistent in demonstrating a decline in spectral resolution abilities with aging.

When it comes to cognitive factors, we did not find verbal WM to be a significant predictor of speech recognition in adult CI users, but it did partially mediate the effects of age on speech recognition in NH controls. This lack of relation in CI users may be due to the measure chosen, which was a visually presented digit span task. It may be that performance on a visual task of verbal WM is not representative of the WM demands required by a CI listener attempting to recognize speech. For example, a recent study found no relation between sentence recognition in speech-shaped noise for CI users and scores on a reading span task, but strong correlations were found for an auditory listening span task of WM.48 However, in other populations of patients with lesser degrees of hearing loss, previous reports have found reading span to be correlated with speech recognition.23,49

In the sample of CI users tested in this study, inhibitory control abilities did not contribute substantially to speech recognition, but they did partially mediate aging effects on speech recognition in NH controls. The result for CI users is in contrast to previous findings using a Stroop task.24 In that study, correlations were found between Stroop response times for the incongruent condition and sentence recognition scores for CI users. However, listeners in that study were tested in speech-shaped noise. It could be that inhibitory control plays a more significant role in inhibiting the effects of noise on speech recognition than in simply listening to degraded speech under quiet conditions.

Speed of lexical access on the TOWRE words served as a partial mediator of aging effects on speech recognition in CI users, and speed of phonological access on the TOWRE nonwords served as a partial mediator for NH controls. Because the TOWRE task is timed, it taps participants’ abilities to rapidly access lexical and phonological information in long-term memory, even when words or nonwords are presented visually. CI users’ speed of lexical access was detrimentally affected by advancing age, and slower lexical access predicted poorer speech recognition. On the other hand, speed of phonological access (in contrast to lexical access) was more relevant to speech recognition in the NH group. This discrepancy between groups could be related to the finding that postlingual adult CI users perform significantly more poorly than their NH counterparts in tasks that require explicit access to phonological structure,50,51 possibly as a result of the degradation of phonological representations that CI users have stored in long-term memory.50 In other words, CI users may depend more heavily on coarser lexical structure, as compared with detailed phonological structure, when encoding and recognizing speech through their devices.

The Raven’s assessment of nonverbal reasoning or IQ served as the strongest cognitive predictor of speech recognition in this study, and the main cognitive mediator of advancing age on speech recognition for CI users. This finding was not consistent with previous reports indicating no significant relations between general measures of IQ or scholastic ability and speech recognition skills. However, it should be noted that during the version of the Raven’s task used here, a time restriction of 10 minutes to complete as many items as possible was enforced to limit overall testing time, which is not standard protocol. As a result of this time limit, an element of processing speed may have contributed to scores, in addition to true nonverbal reasoning and IQ. Nonetheless, in the current study, this measure of nonverbal reasoning alone was able to predict 29% to 43% of the variability in speech recognition scores among CI users, and 24% to 31% of that variability for NH controls.

In contrast, perceptual closure ability did not predict sentence recognition. The fragmented sentences task assesses the participants’ ability to perform perceptual closure (or perceptual organization) on a visually presented distorted sensory signal. Previous reports using a similar text reception threshold task demonstrated correlations with speech-in-noise recognition in patients with hearing loss.52 Similar results were not found in this study of CI users or NH controls listening to spectrally degraded speech. It is unclear whether this lack of relation with speech recognition is a result of the particular task used, or of the fact that the auditory stimuli heard by CI listeners, and the vocoded stimuli heard by our NH controls, were qualitatively distinct from those used in the earlier studies.

It is also possible that some differences between NH and CI listeners could arise based on their different levels of familiarity and practice with degraded speech. Although listening to spectrally degraded vocoded speech was a novel experience for NH listeners, CI users who have been using their CIs for years have had considerably more time to acclimate to spectrally degraded input. Over time, the greater experience of CI users might have led to different listening strategies and, potentially, to a different allocation of cognitive resources involved in perception of spectrally degraded speech.