Abstract

This study investigated the effects of unilateral hearing loss (UHL), of either conductive or sensorineural origin, on stereo sound localization and related visual bias in listeners with normal hearing, short-term (acute) UHL, and chronic UHL. Time-delay-based stereophony was used to isolate interaural-time-difference cues for sound source localization in free field. Listeners with acute moderate (<40 dB for tens of minutes) and chronic severe (>50 dB for more than 10 years) UHL showed poor localization and compressed auditory space that favored the intact ear. Listeners with chronic moderate (<50 dB for more than 12 years) UHL performed near normal. These results show that the auditory spatial mechanisms that allow stereo localization become less sensitive to moderate UHL in the long term. Presenting LED flashes at either the same or a different location as the sound source elicited visual bias in all groups but to different degrees. Hearing loss led to increased visual bias, especially on the impaired side, for the severe and acute UHL listeners, suggesting that vision plays a compensatory role in restoring perceptual spatial symmetry.

Keywords: binaural, learning, multisensory, sound localization, unilateral hearing loss

Introduction

According to a recent survey, unilateral hearing loss (UHL) affects 7.2% of the U.S. adult population (≈18 million). Yet less than half of the affected population report a perceived handicap, and hearing aid use is very low (4.2%) for those with moderate-or-worse UHL (Golub, Lin, Lustig, & Lalwani, 2018). Listener variability in laboratory tests is also large among UHL listeners (Bess, Tharpe, & Gibler, 1986; Humes, Allen, & Bess, 1980; Viehweg & Campbell, 1960). While the frequency and magnitude of localization errors tend to correlate with the severity of impairment and the origin of a lesion (cochlear vs. retrocochlear), audiometric patterns do not reliably predict patterns of binaural performance degradation (Gabriel, Koehnke, & Colburn, 1992; Hausler, Colburn, & Marr, 1983). To improve hearing restoration after UHL, it is important to understand the compensatory mechanisms that are related to subjective hearing well-being and objective performance variability for this population.

Visual guidance provides one potential compensatory mechanism for auditory localization. Vision provides an important spatial “frame-of-reference” for sound localization (Boring, 1926; Stein & Meredith, 1993; Warren, 1970). Exposure to a misaligned visual stimulus causes a rapid shift of perceived auditory location toward the visual stimulus, known as the “ventriloquism effect.” This effect has been documented in both humans (Jack & Thurlow, 1973; Spence & Driver, 2000; Stein & Meredith, 1990) and monkeys (Recanzone, 1998). Visual “capture” of auditory localization can also occur when multisensory stimuli are not perceptually fused together (Bertelson & Radeau, 1981; Welch & Warren, 1980) and when auditory stimuli are presented from behind a listener (Montagne & Zhou, 2018). Extensive research in barn owls shows that the recalibration of auditory space after monaural HL requires visual feedback (Knudsen & Knudsen, 1985). Supporting this finding, Brainard and Knudsen (1998) found that manipulating visual feedback with prisms shifted, point-by-point, the neural tuning to interaural time differences (ITDs) in the auditory midbrain of young owls. Visually guided training also reduces localization errors after monaural occlusion in humans (Strelnikov, Rosito, & Barone, 2011). Prism adaptation in humans, however, is found to extend beyond the altered field of vision, suggesting that the remapping of auditory space involves a change in the gain of many spatial channels (Zwiers, Van Opstal, & Paige, 2003).

Complete hearing deprivation also affects vision, as exemplified by enhanced visual attention to peripheral targets (Bavelier, Dye, & Hauser, 2006; Pavani & Bottari, 2012). Unlike the congenitally and profoundly deaf populations, for whom vision provides the main source of spatial information, the auditory spatial functions of the UHL population are not entirely lost but adjusted through learning (Strelnikov et al., 2011). Hypothetically, visual influences on auditory localization may also become asymmetrical after UHL. Visual mechanisms may follow the “better” ear for a better signal estimate or enhance the side of the “worse” ear to compensate for hearing loss. These opposing hypotheses, that is, whether auditory and visual processing undergo parallel or compensatory changes after the onset of UHL, have not been systematically tested in previous studies.

Another potential compensatory mechanism is a learned change in listening strategy after the onset of hearing loss. Compared with bilateral, symmetrical hearing loss, the hallmark of UHL deficit is an increased difficulty in identifying the location of a sound source (Durlach, Thompson, & Colburn, 1981). This spatial deficit is mainly caused by the dissociation between sound source location and auditory spatial cues, primarily ITD and interaural differences of level (ILD). With UHL, signal strength is reduced and signal transmission can also be delayed (e.g., after conductive hearing loss) at the impaired side (Hartley & Moore, 2003). In theory, this distortion should shift the perceived location of sounds presented at the midline toward the “better” ear. However, not all UHL listeners experience an unbalanced auditory space in real-life settings (Javer & Schwarz, 1995). Many studies have investigated the extent of spatial learning/adaptation by simulating UHL with monaural earplugging in humans. They show that, while there was an immediate, off-center shift in the perceived sound direction after occlusion of one ear, complex patterns of “recovery” occurred over a period of days (Bauer, Matuzsa, Blackmer, & Glucksberg, 1966; Florentine, 1976; McPartland, Culling, & Moore, 1997). For low-frequency stimuli (<1400 Hz), the auditory image could be recentered within days (Florentine, 1976) or even reversed to the plugged side (Bauer et al., 1966). However, Florentine (1976) observed very little evidence of learning/adaptation for high-frequency stimuli (>1400 Hz).

A growing body of studies proposes “cue reweighting” and “cue remapping” as two candidate restoration mechanisms underlying the adaptive nature of auditory spatial perception (see reviews by Keating & King, 2013, and Van Opstal, 2016). The “cue reweighting” hypothesis proposes that the impaired auditory system learns to minimize the role of distorted localization cues and emphasize other unaffected cues. One primary alternative to distorted ILDs is monaural spectral cues from pinna filtering at the intact ear. Results in humans (Agterberg, Hol, Van Wanrooij, Van Opstal, & Snik, 2014; Irving & Moore, 2011; Kumpik, Kacelnik, & King, 2010; Shub, Carr, Kong, & Colburn, 2008; Slattery & Middlebrooks, 1994; Van Wanrooij & Van Opstal, 2004, 2007) and animals (Bajo, Nodal, Moore, & King, 2010; Kacelnik, Nodal, Parsons, & King, 2006; Keating, Dahmen, & King, 2013) suggest that this is indeed possible, at least for some listeners. The “cue remapping” hypothesis proposes that the impaired auditory system could shift the neural tuning to binaural cues in order to overcome the disrupted relationship between localization cues and sound source location. Shifted ILD tuning after monaural occlusion has been reported in the midbrain structures of barn owls, where ILDs are initially processed (Mogdans & Knudsen, 1994). Recent studies show that these two adaptive mechanisms are implemented jointly but target different spectral ranges, where high-frequency adaptation involves more cue reweighting (Keating, Rosenior-Patten, Dahmen, Bell, & King, 2016).

However, the degree to which distorted high-frequency ILDs can be corrected or compensated for by low-frequency ITDs in localizing a broadband stimulus remains unclear. Because the coincidence-detection mechanisms for extracting ITD depend on the timing, but not the relative amplitude, of binaural stimuli (Goldberg & Brown, 1969), an ITD analysis should yield normal results for moderately impaired listeners for low-frequency stimuli, assuming that the signals are above threshold at both ears and that the timing and temporal precision of the signal associated with the impaired ear are minimally altered. By the same token, such a hypothesis would predict that listeners with a profound hearing loss would not be able to make such compensation because signals would be below the threshold at one ear, making ITD calculation impossible.

This study investigated how the duration and severity of UHL affect auditory localization in the presence of competing visual information. We compared the performances of three groups of adults—normal hearing, acute UHL (minutes, by earplugging), and chronic UHL (more than 10 years, no hearing aid experience) using the time-delay-based stereophony reported in our previous studies (Montagne & Zhou, 2016) and illustrated in Figure 1(a). Briefly, ITDs conveyed by the low-frequency stereo stimuli vary with the signal delay between loudspeakers (interchannel delay [ICD]). On the other hand, ILDs conveyed by the high-frequency stereo stimuli are relatively unaffected by the ICD because the sound waves from both loudspeakers reach both sides of the head, resulting in a near-zero overall distribution of ILDs across frequencies. In addition, in stereophony, monaural spectral cues remain relatively unchanged for different perceived sound locations (see further details and explanations in “Methods” section). Together, this results in ITDs being the primary cue for horizontal localization with time-delay-based stereo techniques. This feature allows us to investigate the relative roles of ITDs in auditory localization for hearing groups with various degrees and durations of UHL. We hypothesized that a “better” ear bias would be observed in stereo localization if the distorted ILDs after UHL remain uncompensated. Alternatively, we hypothesized that recalibrated binaural processing (through retuning or reweighting mechanisms) would lead to near-normal stereo localization after UHL. Our results suggest that compensatory mechanisms in both auditory and visual systems are recruited to offset compromised binaural cues, thereby restoring, over time, perceptual spatial symmetry after UHL.

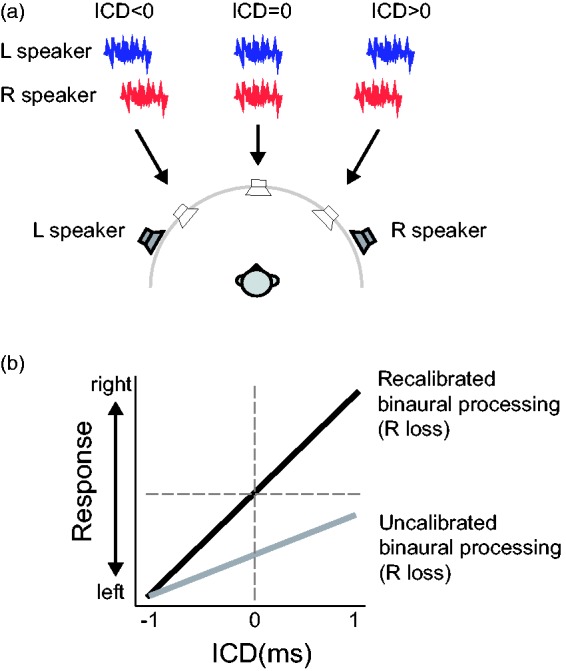

Figure 1.

Stereo perception and the hypothesis of this study. (a) In time-delay-based stereophony, two loudspeakers are positioned symmetrically about the listener’s frontal midline. The direction of a perceived sound source (arrows) is governed by the ICD between two identical signals presented from left and right loudspeakers (thick lines). A negative ICD (left speaker leads) causes a perceived sound direction from left and a positive ICD (right speaker leads) causes a perceived sound direction from right. (b) We hypothesized that the extent of recalibration after UHL would lead to different directional biases in stereo localization. If listeners do not recalibrate for distortion of ILDs, a zero ILD would cause a weaker stimulation at the loss ear. This would subsequently lead to a response bias toward the intact ear within a compressed response range (gray line). Alternatively, if distortion of ILDs is recalibrated perfectly to restore the mapping between the stimulus location and binaural disparity cues, near-normal stereo localization would be expected (black line) in which changing ICDs from negative to positive values would lead to a change in the perceived sound direction from left to right across the midline. The hypothetical relationship between ICD and listener response is based on right-ear hearing loss. ICD = interchannel delay.

Methods

Listeners

Nine normal-hearing listeners (age 21–28 years, five men) and nine chronic UHL listeners (age 19–43 years, five men) participated in this study. All listeners provided written informed consent and received financial compensation for their participation. The experiments were conducted in accordance with procedures approved by the Arizona State University’s Institutional Review Board.

Each normal-hearing listener performed the test twice: once in the natural hearing condition (“normal hearing”) and once in the UHL condition, wearing a foam earplug (3M E-A-R soft) in one ear (“acute UHL”). The order of natural and plugged conditions was randomized among listeners. The sidedness of earplugging was also randomized. Four listeners were plugged in their left ear, and the other five were plugged in their right ear.

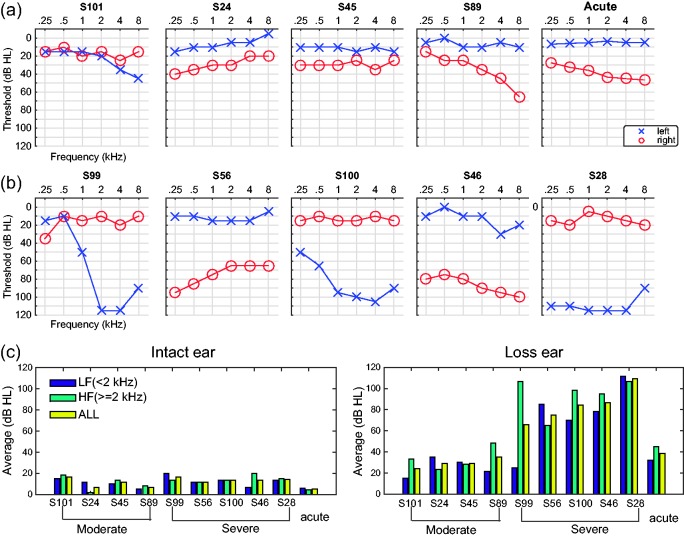

For the nine chronic UHL listeners, the duration of their hearing loss ranged from 10 to 24 years, and none had hearing aid experience before participating in our experiment. They were tested in their natural, unaided condition. As specified in Table 1, the history and etiology of their hearing loss are both variable. Before taking the test, the degree and type of UHL of each listener were evaluated using conventional pure-tone audiometry (Clark, 1981). All listeners showed an asymmetrical hearing loss (>20 dB) between intact and impaired ears at more than two frequencies tested between 0.25 and 8 kHz. Figure 2 shows the audiograms of all chronic UHL listeners and the effect of earplugging on the hearing level for the acute UHL group (averaged over five listeners). They all had normal hearing (<20 dB attenuation) in the intact ear and from mild-moderate (<50 dB) to severe (>50 dB) degrees of hearing loss in the impaired ear as measured by the across-frequency average. We refer to their results as the moderate (N = 4, Figure 2(a)) and severe (N = 5, Figure 2(b)) groups, respectively.

Table 1.

Demographic Information of All Participating Chronic Unilateral Hearing Loss Listeners.

| Category | ID | Gender | Age (years) | Duration of HL | HL side | Reported etiology | HL type |
|---|---|---|---|---|---|---|---|
| Moderate unilateral | S24 | F | 22 | 15 years | R | Eardrum perforation | Conductive |
| Moderate unilateral | S45 | F | 29 | ∼15 years | R | Otosclerosis | Conductive |
| Moderate unilateral | S89 | F | 27 | 24 years | R | Unknown; frequent OME since age 3 | Sensorineural |
| Moderate unilateral | S101 | M | 35 | ∼12 years | L | Unknown | Sensorineural |
| Severe unilateral | S28 | M | 31 | ∼12 years | L | Vestibular Schwannoma removed | Sensorineural |
| Severe unilateral | S46 | M | 19 | Congenital (19 years) | R | Facial nerve around stapes fixation | Conductive |
| Severe unilateral | S56 | M | 22 | Congenital (22 years) | R | Atresia | Conductive |
| Severe unilateral | S99 | F | 43 | 20 years | L | Unknown; history of severe OME | Sensorineural |
| Severe unilateral | S100 | M | 35 | 10 years | L | Unknown | Sensorineural |

Note. The assessment method for moderate and severe degrees of impairment was based on the standard criterion (Clark, 1981). Conductive hearing loss was defined as an air-bone gap greater than 10 dB. HL = hearing level; M = male; F = female; OME = Otitis media with effusion.

Figure 2.

Audiograms of nine chronic UHL listeners and simulated UHL with monaural earplug. Hearing levels, at six frequencies, of the left and right ears for (a) the moderate and acute UHL listeners and (b) for the severe UHL listeners. (c) Average dB HL at LF, HF, and ALL ranges for the intact ear (left) and loss ear (right). The results are ranked based on the all-frequency loss level of the loss ear. LF = low frequency; HF = high frequency; ALL = all-frequency; dB HL = decibels in hearing level.

Figure 2(c) shows the averaged hearing level at the low- (0.25, 0.5, and 1 kHz), high- (2, 4, and 8 kHz), and all-frequency ranges. With only one exception (S99), all chronic UHL listeners had comparable UHL levels between low and high frequencies. For the four moderate UHL listeners, the binaural disparity (loss ear minus intact ear) was less than 23 dB at low frequencies and less than 28 dB at high frequencies. For the severe UHL listeners (excluding S99), the binaural disparity was more than 56 dB at low frequencies and more than 49 dB at high frequencies. S99 had a predominantly high-frequency loss, with a mere 5-dB binaural disparity at low frequencies and a 93-dB binaural disparity at high frequencies.

Normal hearing sensitivity was verified in the acute UHL group prior to earplug insertion using standard audiometric methods with insert earphones. Sound-field thresholds were then obtained at 0° azimuth using pulsed-warble tones at octave frequencies from 250 to 8000 Hz in three conditions: bilateral open, bilateral plugged, and unilateral plugged with contralateral masking. The reported unilateral plug thresholds were obtained with the randomly assigned loss ear occluded by the 3M E-A-R soft plug and narrowband masking noise presented to the contralateral ear via an insert earphone at a level sufficient to isolate the plugged-ear thresholds. As shown in Figure 2(a) (far right panel), earplugging caused an overall degree of hearing loss similar to that of the moderate group, ranging from ∼25 dB at 250 Hz to ∼40 dB at 8000 Hz. This attenuation pattern is consistent with descriptions reported in other studies using foam earplugs (Kumpik et al., 2010; McPartland et al., 1997).

Apparatus and Stimuli

Detailed procedures were reported in our previous study in normal listeners (Montagne & Zhou, 2016). Briefly, free-field testing was conducted in a double-walled, sound-attenuated chamber (Acoustic Systems RE-243, 2.1 m × 2.1 m × 1.9 m). Sound stimuli were presented from two loudspeakers (full-range studio monitor, Adam F5, positioned at ±45° at a distance of 1.1 m) hidden behind a black, acoustically transparent curtain. Light stimuli were delivered by three high-power, surface-mounted white LEDs (10 mm × 10 mm, 6 cd.) attached to the acoustic curtain at 0° (center) and ±45° (left and right). All sound and light stimuli originated at eye level.

Auditory stimuli were Gaussian white-noise bursts gated on and off with a 15-ms rectangular window. The noise was left unfiltered, so the frequency range of each burst was bounded by the frequency response of the loudspeaker, which has a roll-off frequency at 45 kHz and a flat frequency response throughout the range of human hearing (±3 dB between 50 Hz and 20 kHz). The studio monitors were specifically chosen for their flat responses across a wide range of frequencies. Because their frequency range extends more than an octave above the upper limit of human hearing at 20 kHz, irregularities that typically occur at the edges of a loudspeaker’s frequency response fall outside the range of concern. A fresh noise token was used to generate all stimuli for a given experimental session. In pilot testing, we did not observe differences in localization results using different noise tokens. The average intensity for all auditory stimuli (single speaker and stereo signals) was maintained at 65 dBA, as verified using a sound level meter (Brüel & Kjær 2250-L) positioned at the location of the listener’s head. The root mean square amplitude of the individual channels of the stereo signals was adjusted so that, combined, they matched the power of the single-speaker control signals. Visual stimuli were 15-ms light flashes generated by LEDs. The onset of the light stimulus was synchronized with the onset of the sound stimulus at the leading loudspeaker. All auditory (A) and visual (V) stimuli were generated using custom-designed software written in MATLAB. Digitized stimuli were sent to an external sound card (RME Multiface II) at a sampling rate of 96 kHz to activate the loudspeakers and LEDs.

Time-Delay-Based Stereophony

We employed the method of time-delay-based stereophony using two symmetrically positioned loudspeakers (Figure 1(a)). When the two loudspeakers emit identical sounds with a submillisecond time delay, a phantom sound source is perceived at a location in between them (Leakey, 1959; see Blauert, 1997, and Leakey, 1959, for detailed analytical evaluations of stereo spatial information). To vary the horizontal position of a stereo sound from left to right, we employed seven ICDs, from −1 to 1 ms with a step size of 0.33 ms. We chose this stereophonic method because it renders a perceived change in sound direction by varying ITDs, not ILDs, in a free-field setup. This was confirmed in both analytical and numerical analyses of the stereo stimuli in our earlier work (see Figures 7 and 8 in Montagne & Zhou, 2016).
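As an illustration only, the construction of a stereo pair for a given ICD can be sketched in Python. The stimuli in this study were generated with custom MATLAB software that is not reproduced here, so the function name `make_stereo_pair`, the seeding scheme, and the 1/√2 per-channel scaling (one simple way to make two channels jointly match the power of a single-speaker burst) are assumptions rather than the actual implementation.

```python
import math
import random

FS_HZ = 96_000      # sampling rate stated in the text
BURST_MS = 15.0     # burst duration stated in the text
ICDS_MS = [-1.0, -0.67, -0.33, 0.0, 0.33, 0.67, 1.0]  # seven ICDs, ~0.33-ms steps


def make_stereo_pair(icd_ms, seed=0):
    """Return (left, right) sample lists for one interchannel delay.

    Negative ICD: the left channel leads. Positive ICD: the right channel leads.
    """
    rng = random.Random(seed)
    n_burst = round(FS_HZ * BURST_MS / 1000.0)
    # Rectangular gating: the burst simply starts and stops, with no ramps.
    burst = [rng.gauss(0.0, 1.0) for _ in range(n_burst)]
    # Assumption: scaling each channel by 1/sqrt(2) makes the two channels
    # together carry the power of the single-speaker burst.
    channel = [s / math.sqrt(2.0) for s in burst]
    delay = round(abs(icd_ms) * FS_HZ / 1000.0)
    lead = channel + [0.0] * delay      # leading channel, zero-padded at the end
    lag = [0.0] * delay + channel       # lagging channel, delayed by the ICD
    return (lead, lag) if icd_ms <= 0 else (lag, lead)


# One stereo pair per ICD, all built from the same noise token (same seed).
pairs = {icd: make_stereo_pair(icd) for icd in ICDS_MS}
```

At a 96-kHz sampling rate, a 1-ms ICD corresponds to 96 samples, and the 0.33-ms step rounds to 32 samples in this sketch.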

Briefly, time-delay-based stereophony creates four ITDs. One pair of ITDs is associated with the left and right loudspeaker locations (±0.3 ms), while the other pair is the cross-term associated with the ICD with opposing signs (±ICD). The relative weights of the four ITDs are not the same—the one related to the actual ICD dominates. On the other hand, because the stereo stimulation delivers sound waves of equal power to both left and right ears, the averaged ILD is evenly distributed around zero at all ICDs. However, this is a very crude estimate. The ILD cues can fluctuate across frequency and over time due to ongoing interference between lead and lag signals (Pastore & Braasch, 2015). Nevertheless, unlike ITDs, the fluctuation patterns of ILD do not exhibit any systematic relationship that could account for the left-to-right stereo perception when the ICD changes from −1 to 1 ms.

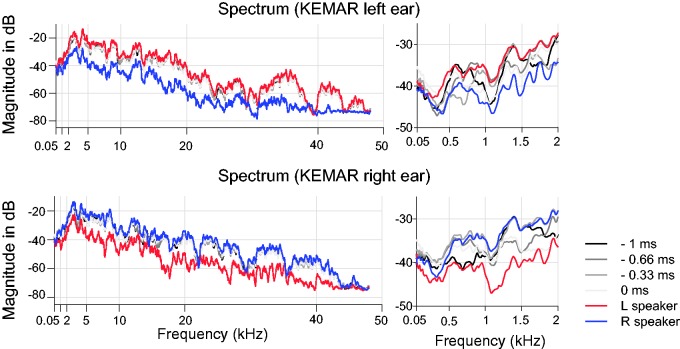

The other benefit of using stereo stimuli is controlled contribution of monaural spectrum in horizontal localization. In the single-source, free-field condition, monaural spectral cues could provide azimuthal information for the monaurally impaired (Kumpik et al., 2010; Shub et al., 2008). This is not the case for stereo stimulation, where the monaural spectrum manifests the combined spectral information of two fixed sound sources and does not covary with binaural localization cues. To illustrate this feature, Figure 3 shows the monaural spectrum of the ear-canal signals collected at the left and right ears of a KEMAR (Knowles Electronic Manikin for Acoustic Research) placed at the position of a listener. Measurements were made in response to identical 15-ms broadband stimuli in both stereo and control conditions. The signals were converted from analog to digital at 24-bit, 96-kHz resolution using the RME Multiface. Ear-canal signals were recorded 100 times and averaged to minimize the effect of system noise at each time-delay condition. For clarity, the results of positive ICDs associated with rightward directions are not shown. The analysis shows that the monaural spectra do not significantly change with ICDs at frequencies above 2 kHz (left panels, Figure 3). Rather, they are dominated by the signal spectrum from the speaker ipsilateral to that ear, while the contribution to the signal spectrum by the contralateral speaker is significantly attenuated due to head shadowing. As this phenomenon is the result of acoustic interactions of sound waves, it applies to sound frequencies within and above the human hearing range. At frequencies below 2 kHz (right panels, Figure 3), head shadowing is less prominent and the lead–lag interaction between two signals through ICDs causes spectral ripples in the monaural signals due to comb filtering (Zurek, 1980). 
However, these spectral ripples are unlikely to be informative about azimuth because they do not relate to a perceived sound source position in any simple way that is likely to be learned without feedback and substantial training in the laboratory (neither of which was provided).

Figure 3.

Monaural spectra of the KEMAR ear-canal signals to stereo and single-speaker control inputs. Gray lines show the results of stereo stimulation at various ICDs, and colored lines show the results of left and right single-speaker control stimulation. The two left panels show the spectral variations between 50 Hz and 48 kHz, and the two right panels show the details of variation in the low-frequency range (50 Hz–2 kHz). KEMAR = Knowles Electronic Manikin for Acoustic Research.

Thus, time-delay-based stereophony essentially isolates the ITD cue for studying its relative contributions to a free-field horizontal localization of broadband stimuli. In contrast, changes in the physical position of a single sound source will simultaneously vary ITD, ILD, and monaural spectral cues, making it difficult to assess the relative contribution of each cue in sound localization after UHL.

Procedure

Listeners sat in the center of the sound-attenuated chamber with their head supported by a chin rest to minimize head movement. In each experimental session, sound stimuli were presented with or without lights in randomized blocks, denoted as audio-visual (AV) and audio-only (AO) blocks, respectively. A total of four types of blocks (one AO block and three AV blocks for three light positions) were tested. Each block contained seven stereo stimuli (through time-delay manipulations) and two single-speaker control stimuli (L or R speaker alone). The presentation order for the stereo and control stimuli was also randomized within each block. Ten repeats were administered for each stimulus, resulting in a total of 360 trials (4 blocks × 9 stimuli × 10 repeats), which took on average 30 min to complete. The normal hearing listeners were tested twice (four listeners for the plugged condition first and five for the unplugged condition first; six listeners completed testing on the same day with breaks, three listeners completed testing on different days). All chronic UHL listeners were tested once.
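The session structure described above can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code. The labels, the helper name `build_session`, and the assumption that all ten repeats of a stimulus are shuffled together within their block are hypothetical.

```python
import random

BLOCKS = ["AO", "AVL", "AVM", "AVR"]            # one audio-only and three audio-visual blocks
STEREO_ICDS_MS = [-1.0, -0.67, -0.33, 0.0, 0.33, 0.67, 1.0]
CONTROLS = ["L_only", "R_only"]                  # single-speaker control stimuli
REPEATS = 10


def build_session(seed=None):
    """Return a list of (block, kind, value) trials for one session."""
    rng = random.Random(seed)
    blocks = BLOCKS[:]
    rng.shuffle(blocks)                          # randomized block order
    session = []
    for block in blocks:
        stimuli = [("stereo", icd) for icd in STEREO_ICDS_MS]
        stimuli += [("control", c) for c in CONTROLS]
        trials = stimuli * REPEATS               # assumption: repeats live inside the block
        rng.shuffle(trials)                      # randomized order within the block
        session.extend((block, kind, value) for kind, value in trials)
    return session


session = build_session(seed=1)
print(len(session))  # 4 blocks x 9 stimuli x 10 repeats
```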

Listener responses were recorded using a graphical user interface written in MATLAB. Listeners indicated their perceived sound source location by pushing one of the seven numerically labeled buttons (“1” to “7”), which were horizontally positioned from left to right in the graphical user interface. At the beginning of a task, listeners were given specific verbal instructions to maintain a center fixation before a trial started and to indicate the location of the sound they heard, not the light they saw. They were asked to keep their eyes open during the trial. Eye movement was not monitored. Listeners were encouraged to take breaks outside of the sound chamber every 15 min. They were not provided with any feedback or knowledge of their results during or after the experiments. Because loudspeakers were hidden behind a black curtain, listeners were unaware of the total number and locations of loudspeakers.

All listeners were asked to practice the testing procedure using a training panel. Unlike the testing panel, where the listener had to choose one of the response buttons after the stimulus was presented, during training, the listener chose a response button to trigger a stimulus from the desired location. The purpose of the training was to maintain a consistent numerical rating across listeners that bounded the perceived left and right directions. To do this, the system delivered the stimuli from the left loudspeaker when a listener pressed button “1” and delivered the stimuli from the right loudspeaker when a listener pressed button “7.” The center button “4” triggered a stereo stimulus with an ICD of 0 ms. The acute UHL group was trained in the normal hearing condition, without wearing earplugs.

Data Analysis

To study the effects of UHL on AV response patterns, the responses of acute and chronic UHL groups were rearranged so that “left” is associated with the normal functioning, intact ear and “right” is associated with the impaired (or blocked) ear. Sound and light stimuli directions were rearranged in the data analyses accordingly. All results were interpreted using the rating scale, from “1” (leftmost) to “7” (rightmost), that a listener reported. Responses were grouped into AO and AV conditions. The three AV conditions—AVL, AVM, and AVR—were associated with left, middle, and right light positions, respectively. The stimulus–response relationship for AO or AV condition was analyzed for individual listeners. The mean and standard deviation of each listener’s responses for each stimulus were used for the pooled analysis across the population.
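The rearrangement of stimulus and response directions can be illustrated with a short Python sketch. It assumes that mirroring is applied only to listeners whose impaired (or plugged) ear is the left one, and that mirroring flips the sign of the ICD, swaps the left and right light positions, and reflects the 1-to-7 rating about its midpoint. The function name and argument encoding are hypothetical.

```python
def mirror_trial(icd_ms, light, response, impaired_side):
    """Re-express one trial so that 'left' is the intact ear and 'right' the impaired ear.

    icd_ms: interchannel delay in ms (negative = left speaker leads)
    light: "L", "M", "R", or None for audio-only trials
    response: rating from 1 (leftmost) to 7 (rightmost)
    impaired_side: "L" or "R"
    """
    if impaired_side == "R":
        # The impaired ear is already on the right; nothing to change.
        return icd_ms, light, response
    swapped_light = {"L": "R", "R": "L"}.get(light, light)  # "M" and None stay as is
    return -icd_ms, swapped_light, 8 - response             # 1<->7, 2<->6, 3<->5, 4 fixed


# A left-impaired listener who rated a left-leading stimulus as "2":
print(mirror_trial(-1.0, "L", 2, "L"))
```

The single-speaker control stimuli would be swapped in the same way; they are omitted here for brevity.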

A one-way analysis of variance was conducted for the AO results of each hearing group. This analysis provides information about whether changing ICDs affects stereo localization for a hearing group. To evaluate whether or not a listener could perform stereo localization, which is manifested by correlated changes between response and ICDs, the performance of each listener was evaluated with a linear regression between response and stimulus.

The regression analysis offers a parametric assessment of localization accuracy as previously used by others (e.g., Van Wanrooij & Van Opstal, 2007). As listeners used the numerical range of “1” to “7” to signal the leftmost to rightmost change in a perceived sound direction, we remapped the seven ICDs (−1 ms to 1 ms) to the same numerical range. Thus, in the regression analysis, both stimuli and responses were bounded between 1 and 7. In so doing, a unity gain (slope) with zero bias (intercept) indicates perfect stereo perception. The gain, the bias of the linear fit, and the associated coefficient of determination (R²) are reported in the results for each listener in each of the AO and AV conditions (Table 2).
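A minimal sketch of this regression, assuming a linear remapping of the ICD onto the rating scale (0 ms maps to 4, ±1 ms to 7 and 1) and an ordinary least-squares fit, is given below. The helper names are hypothetical, and whether the fit is applied to single-trial or mean responses is left to the caller.

```python
def icd_to_scale(icd_ms):
    """Linearly remap an ICD in [-1, 1] ms onto the 1-to-7 rating scale."""
    return 4.0 + 3.0 * icd_ms


def fit_line(x, y):
    """Ordinary least-squares fit y = gain * x + bias; returns (gain, bias, r2)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    gain = sxy / sxx
    bias = mean_y - gain * mean_x
    # With a single predictor, R^2 equals the squared correlation coefficient.
    r2 = (sxy * sxy) / (sxx * syy) if syy > 0 else 0.0
    return gain, bias, r2


# Hypothetical listener whose responses are compressed toward the center.
stimuli = [icd_to_scale(icd) for icd in (-1.0, -2 / 3, -1 / 3, 0.0, 1 / 3, 2 / 3, 1.0)]
responses = [0.5 * s + 2.0 for s in stimuli]
print(fit_line(stimuli, responses))
```

In this convention the bias is the intercept at a stimulus value of 0 on the rating scale, so a listener with a gain below 1 and no lateral offset still shows a positive bias.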

Table 2.

Linear Regression of the Stereo Localization Results.

| Group | ID | AO gain | AO bias | AO R² | AVL gain | AVL bias | AVL R² | AVM gain | AVM bias | AVM R² | AVR gain | AVR bias | AVR R² |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Normal hearing | S25 | 0.77 | 0.84 | .84 | 0.68 | 0.42 | .63 | 0.33 | 2.71 | .38 | 0.50 | 2.66 | .53 |
| Normal hearing | S30 | 0.55 | 2.01 | .85 | 0.77 | 1.06 | .80 | 0.47 | 2.53 | .74 | 0.66 | 1.58 | .78 |
| Normal hearing | S35 | 0.53 | 1.97 | .60 | 0.65 | 1.04 | .51 | 0.38 | 2.54 | .47 | 0.40 | 3.39 | .27 |
| Normal hearing | S39 | 0.75 | 1.01 | .65 | 0.75 | 0.45 | .44 | 0.40 | 2.52 | .41 | 0.70 | 1.84 | .42 |
| Normal hearing | S40 | 0.78 | 0.81 | .90 | 0.87 | 0.01 | .83 | 0.56 | 1.61 | .74 | 0.88 | 0.94 | .71 |
| Normal hearing | *S26 | 0.64 | 1.37 | .82 | 0.67 | 0.93 | .70 | 0.47 | 2.07 | .71 | 0.68 | 1.70 | .73 |
| Normal hearing | S29 | 0.71 | 1.30 | .88 | 0.68 | 1.27 | .83 | 0.71 | 1.26 | .86 | 0.69 | 1.38 | .87 |
| Normal hearing | S31 | 0.75 | 0.89 | .80 | 0.72 | 0.86 | .72 | 0.63 | 1.59 | .75 | 0.61 | 1.92 | .54 |
| Normal hearing | S37 | 0.75 | 0.53 | .72 | 0.65 | 0.61 | .55 | 0.49 | 1.52 | .41 | 0.55 | 0.89 | .38 |
| Normal hearing | Median | 0.75 | 1.01 | .82 | 0.68 | 0.86 | .70 | 0.47 | 2.07 | .71 | 0.66 | 1.70 | .54 |
| Acute moderate | S25 | 0.39 | 2.59 | .23 | 0.07 | 2.46 | .01 | 0.09 | 3.57 | .03 | 0.20 | 4.37 | .09 |
| Acute moderate | S30 | 0.19 | 3.84 | .12 | 0.11 | 2.56 | .02 | 0.07 | 3.92 | .06 | 0.10 | 5.59 | .05 |
| Acute moderate | S35 | 0.41 | 1.44 | .22 | 0.39 | 1.69 | .11 | 0.56 | 1.20 | .27 | 0.35 | 2.95 | .09 |
| Acute moderate | S39 | 0.06 | 1.27 | .03 | −0.02 | 1.29 | .01 | 0.00 | 2.01 | .00 | −0.02 | 2.20 | .00 |
| Acute moderate | S40 | 0.10 | 1.79 | .02 | 0.05 | 1.06 | .02 | 0.04 | 3.30 | .01 | 0.30 | 3.41 | .06 |
| Acute moderate | *S26 | 0.11 | 2.60 | .04 | −0.01 | 1.96 | .00 | −0.01 | 4.01 | .00 | −0.01 | 5.89 | .00 |
| Acute moderate | S29 | 0.53 | 1.00 | .33 | 0.53 | 1.07 | .35 | 0.30 | 2.26 | .24 | 0.73 | 1.34 | .31 |
| Acute moderate | S31 | −0.04 | 3.73 | .00 | −0.11 | 4.29 | .02 | −0.07 | 4.27 | .02 | −0.04 | 4.25 | .00 |
| Acute moderate | S37 | 0.05 | 1.61 | .02 | 0.01 | 1.61 | .00 | 0.03 | 1.96 | .00 | 0.07 | 1.60 | .02 |
| Acute moderate | Median | 0.11 | 1.79 | .04 | 0.05 | 1.69 | .02 | 0.04 | 3.30 | .02 | 0.10 | 3.41 | .05 |
| Chronic moderate | S24 | 0.60 | 1.36 | .68 | 0.63 | −0.14 | .49 | 0.06 | 3.80 | .12 | 0.63 | 2.21 | .47 |
| Chronic moderate | S45 | 0.65 | 2.04 | .69 | 0.77 | 0.13 | .57 | 0.29 | 3.21 | .41 | 0.45 | 3.68 | .31 |
| Chronic moderate | *S89 | 0.63 | 1.47 | .74 | 0.39 | 1.27 | .39 | 0.21 | 3.06 | .36 | 0.18 | 5.04 | .11 |
| Chronic moderate | S101 | 0.50 | 2.16 | .80 | 0.41 | 2.67 | .55 | 0.51 | 2.17 | .70 | 0.49 | 2.14 | .78 |
| Chronic moderate | Median | 0.61 | 1.76 | .71 | 0.52 | 0.70 | .52 | 0.25 | 3.13 | .39 | 0.47 | 2.95 | .39 |
| Chronic severe | *S28 | 0.02 | 1.24 | .00 | 0.01 | 1.36 | .00 | −0.08 | 2.87 | .01 | 0.01 | 3.58 | .00 |
| Chronic severe | S46 | 0.05 | 2.76 | .01 | −0.06 | 2.51 | .01 | 0.05 | 3.00 | .00 | −0.05 | 4.23 | .02 |
| Chronic severe | S56 | −0.11 | 3.91 | .02 | −0.04 | 3.34 | .00 | −0.04 | 3.77 | .00 | −0.11 | 4.69 | .02 |
| Chronic severe | S99 | 0.26 | 3.16 | .12 | 0.29 | 1.99 | .11 | 0.09 | 3.60 | .02 | 0.14 | 4.74 | .04 |
| Chronic severe | S100 | 0.22 | 3.41 | .07 | 0.31 | 2.00 | .13 | 0.30 | 2.49 | .26 | 0.40 | 2.76 | .16 |
| Chronic severe | Median | 0.05 | 3.16 | .02 | 0.01 | 2.00 | .01 | 0.05 | 3.00 | .01 | 0.01 | 4.23 | .02 |

Note. The analysis was conducted for the AO and AV responses of all listeners. The slope of the linear regression line describes the gain, and the intercept describes the bias of localization performance. A unity gain with zero bias indicates perfect stereo perception. In the AO condition, nonsignificant correlations (p > .05) are marked by shaded gain values. Asterisks mark the results of the exemplary listeners shown in Figure 5. AO = audio-only; AV = audio-visual.
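As an illustration of how the gain, bias, and R² values in Table 2 can be read, the following minimal sketch fits a least-squares line to one listener's responses. It assumes, hypothetically, that each stimulus is coded by its expected button position (1 to 7), so that perfect stereo perception gives a gain of 1 and a bias of 0. The function name `fit_line` and the example responses are illustrative and are not taken from the study.

```python
def fit_line(x, y):
    """Least-squares fit y = gain * x + bias; returns (gain, bias, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    gain = sxy / sxx                      # slope of the regression line
    bias = mean_y - gain * mean_x         # intercept of the regression line
    r_squared = sxy ** 2 / (sxx * syy) if syy > 0 else 0.0
    return gain, bias, r_squared


# Hypothetical coding: expected button position for each of the seven stimuli.
expected = [1, 2, 3, 4, 5, 6, 7]

# Made-up listener whose responses are compressed toward the midline.
compressed = [2.5, 3.0, 3.5, 4.0, 4.5, 5.0, 5.5]
gain, bias, r2 = fit_line(expected, compressed)
print(round(gain, 2), round(bias, 2), round(r2, 2))  # 0.5 2.0 1.0
```

Under this coding, a listener who always points toward one side regardless of the stimulus would have a gain near 0 and an R² near 0, which is the pattern reported for several acute and severe UHL listeners.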

Because “good” or “bad” stereo performance was manifested by the degree of correlation between responses and ICDs (i.e., the slope or gain in the regression analysis and its significance level, along with R²), not by the magnitude of responses, we did not evaluate the group effect among the four hearing groups using summary statistics (i.e., group means) in a two-way analysis of variance. Instead, we compared the regression results with respect to the response gain across the four hearing groups, as shown in Table 2. The significance of group differences was assessed with the Wilcoxon rank-sum test, with the significance criterion corrected for a total of six possible comparisons among the four hearing groups (Bonferroni α = 0.05/6 = .0083).
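The group comparison can be sketched as follows. The text does not state whether an exact or an approximate rank-sum test was used; this sketch assumes a two-sided exact test, which is feasible for groups this small, and it handles only data without tied values. The function name `rank_sum_exact` is illustrative. The inputs are the AO gains listed in Table 2 for the acute, chronic moderate, and chronic severe groups.

```python
from itertools import combinations
from math import comb


def rank_sum_exact(a, b):
    """Two-sided exact Wilcoxon rank-sum p-value (assumes no tied values)."""
    pooled = sorted(a + b)
    if len(set(pooled)) != len(pooled):
        raise ValueError("this sketch does not handle tied values")
    ranks = {value: i + 1 for i, value in enumerate(pooled)}
    n, total = len(a), len(pooled)
    observed = sum(ranks[value] for value in a)
    expected = n * (total + 1) / 2        # mean rank sum under the null
    deviation = abs(observed - expected)
    # Count every possible rank assignment at least as extreme as observed.
    extreme = sum(
        1
        for subset in combinations(range(1, total + 1), n)
        if abs(sum(subset) - expected) >= deviation - 1e-9
    )
    return extreme / comb(total, n)


# AO gains from Table 2.
acute = [0.39, 0.19, 0.41, 0.06, 0.10, 0.11, 0.53, -0.04, 0.05]
moderate = [0.60, 0.65, 0.63, 0.50]
severe = [0.02, 0.05, -0.11, 0.26, 0.22]

alpha = 0.05 / comb(4, 2)                 # six pairwise comparisons among four groups
p_mod_acute = rank_sum_exact(moderate, acute)
p_mod_severe = rank_sum_exact(moderate, severe)
print(round(alpha, 4))                               # 0.0083
print(round(p_mod_acute, 4), p_mod_acute < alpha)    # 0.0056 True
print(round(p_mod_severe, 4), p_mod_severe < alpha)  # 0.0159 False
```

Under these assumptions the sketch gives p ≈ .0056 for moderate versus acute UHL and p ≈ .0159 for moderate versus severe UHL, so only the first falls below the corrected criterion.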

Visual influence on sound source localization was evaluated in terms of the extent of visual bias (ΔAV), defined as the difference in mean listener response between AV and AO conditions tested with identical auditory stimuli, ΔAV = mean (AV) − mean (AO). This yields three types of visual biases, ΔAVL, ΔAVM, and ΔAVR, with a positive ΔAV indicating a rightward shift and a negative ΔAV indicating a leftward shift in response. This analysis was conducted on each listener. Because we used a within-subject experimental design, comparisons of magnitudes of ΔAV could be made across different listener groups with minimized effects of response bias in the AO conditions. The group difference in visual bias was also assessed by Wilcoxon rank-sum test with the significance criterion corrected (Bonferroni α = 0.05/6 = .0083).
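The definition ΔAV = mean(AV) − mean(AO) can be sketched in a few lines. The response values below are made-up button presses for a single auditory stimulus, not data from the study, and the function name `visual_bias` is illustrative.

```python
from statistics import mean


def visual_bias(ao_responses, av_responses):
    """Delta-AV: mean AV response minus mean AO response for the same auditory stimulus."""
    return mean(av_responses) - mean(ao_responses)


# Hypothetical button responses (1 = far left, 7 = far right) to one stimulus.
ao = [3, 4, 4, 5]        # audio-only
av_left = [2, 3, 3, 4]   # with the left LED
av_right = [5, 5, 6, 6]  # with the right LED

print(visual_bias(ao, av_left))   # negative: leftward shift
print(visual_bias(ao, av_right))  # positive: rightward shift
```

Because the AO and AV responses come from the same listener and the same auditory stimulus, any constant response bias in the AO condition cancels in the difference.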

Results

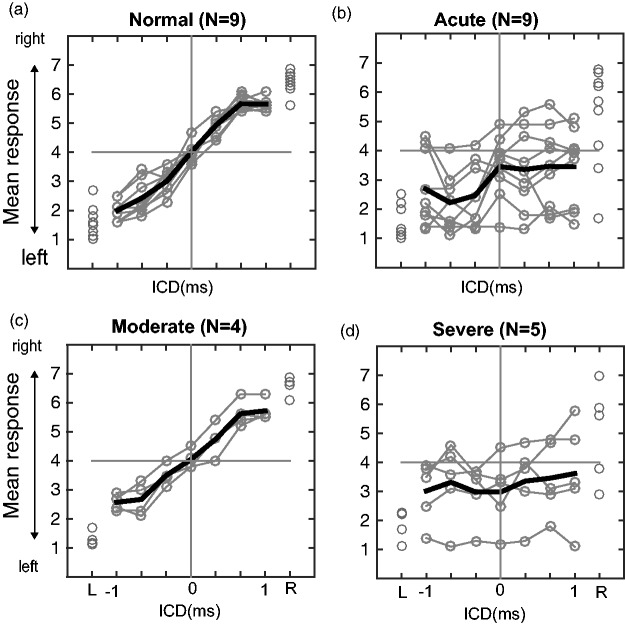

Stereo Localization Without Visual Stimulation

Figure 4 shows the stereo localization results of all listeners in the absence of lights (AO condition). There was a significant effect of ICD on the perceived direction of the sound source for the normal hearing, F(6, 56) = 162.63, p < 10⁻⁵, and moderate HL, F(6, 21) = 34.75, p < 10⁻⁵, groups, but not for the severe HL, F(6, 28) = 0.19, p = .98, and acute HL, F(6, 56) = 1.77, p = .122, groups. Detailed inspection of the data and the regression results revealed different response patterns among the four groups. For the normal hearing group (Figure 4(a)), all nine listeners experienced typical stereo perception. The leftmost response was reported when the signals from the left loudspeaker led those from the right loudspeaker (ICD = −1 ms). The rightmost response was reported for the opposite timing condition (ICD = 1 ms). All their results had a nearly linear relationship with ICD (median gain = 0.74; median R² = .82; p < 10⁻⁵; linear regression) as summarized in Table 2.

Figure 4.

Stereo localization in the AO condition of four hearing groups. (a) Localization responses of normal hearing, (b) acute UHL, (c) moderate UHL, and (d) severe UHL listeners. Listeners indicated the perceived direction of a sound source by pushing one of the seven numerically labeled buttons (“1” to “7”). Gray lines show individual responses, and thick black lines show the group average. Responses of acute and chronic UHL groups were rearranged so that “left” is associated with the properly functioning, intact ear and “right” is associated with the impaired (or blocked) ear. The single-speaker responses are shown on the very left and right margins of each panel for all listeners. ICD = interchannel delay.

When the same group of listeners was tested after monaural earplugging to induce acute UHL (Figure 4(b)), all listeners showed degraded response gain (median gain = 0.11) and weakened correlation between responses and ICD (median R² = .04) relative to their normal hearing results and exhibited a general bias toward the intact ear. High individual variability was noticeable, especially on the plugged side. Five of the nine acute UHL listeners could no longer perform the stereo localization task (linear regression, p > .05). The overall reduction in response gain was significant between the acute UHL and normal hearing groups (p < 10⁻³, Wilcoxon rank-sum test with Bonferroni α of .0083).

For the chronic UHL listeners, performance in stereo localization correlated with the severity of hearing loss. With moderate UHL (Figure 4(c)), all four listeners could localize the stereo sound (median gain = 0.61; p < 10⁻⁵; linear regression). Their overall response gain was not significantly different from that of the normal hearing group (p = .106, Wilcoxon rank-sum test), although their precision was reduced (median R² = .71). Importantly, although the acute and moderate UHL listeners had comparable overall levels of hearing loss (Figure 2), the patterns of their stereo localization performances differed in two ways: (a) the responses of moderate UHL listeners did not show a “better” ear bias and (b) they exhibited less individual variability than acute UHL listeners. The pair-wise comparison revealed that the moderate group had a significantly higher response gain than the acute group (p = .0056, Wilcoxon rank-sum test).

With severe UHL (Figure 4(d)), three of the five listeners could not perform the stereo localization task (p > .05; linear regression), and the responses of the other two listeners showed significant but weak correlation with ICD (R² = .12 and .07, respectively). Similar to the acute UHL group, high individual variability and biases toward the intact ear were observed. While the response gains of severe UHL listeners were, on average, lower than those of the other three hearing groups, the difference was significant only relative to the normal hearing group (p < 10⁻³), not relative to the moderate UHL (p = .0159) or acute UHL (p = .364) groups, when tested with a Bonferroni α of .0083.

The single-speaker responses showed between-group differences similar to those of the stereo responses. While normal hearing and moderate UHL listeners could accurately localize the L and R locations, large errors and variability were seen on the side of the loss ear (“R”) for acute and severe UHL listeners. As shown in our previous study (Montagne & Zhou, 2016), at ±1 ms ICD, time-delay-based stereophony could generate an ITD larger than that from single-speaker stimulation (at ±45°). However, we often found that the perceived stereo sound was closer to the midline than the perceived location of the single speakers (L or R). A compressed but symmetrical range of responses is more clearly shown for moderate UHL listeners (Figure 4(c)).

With UHL, stimulus loudness (or the perceived stimulus intensity) is much reduced at the side of the impaired ear, resulting in a de facto nonzero ILD favoring the intact ear. The near-normal stereo localization after chronic moderate UHL suggests that the performance of this group of listeners was less sensitive to this ILD distortion than the acute UHL group.

Stereo Localization With Visual Stimulation

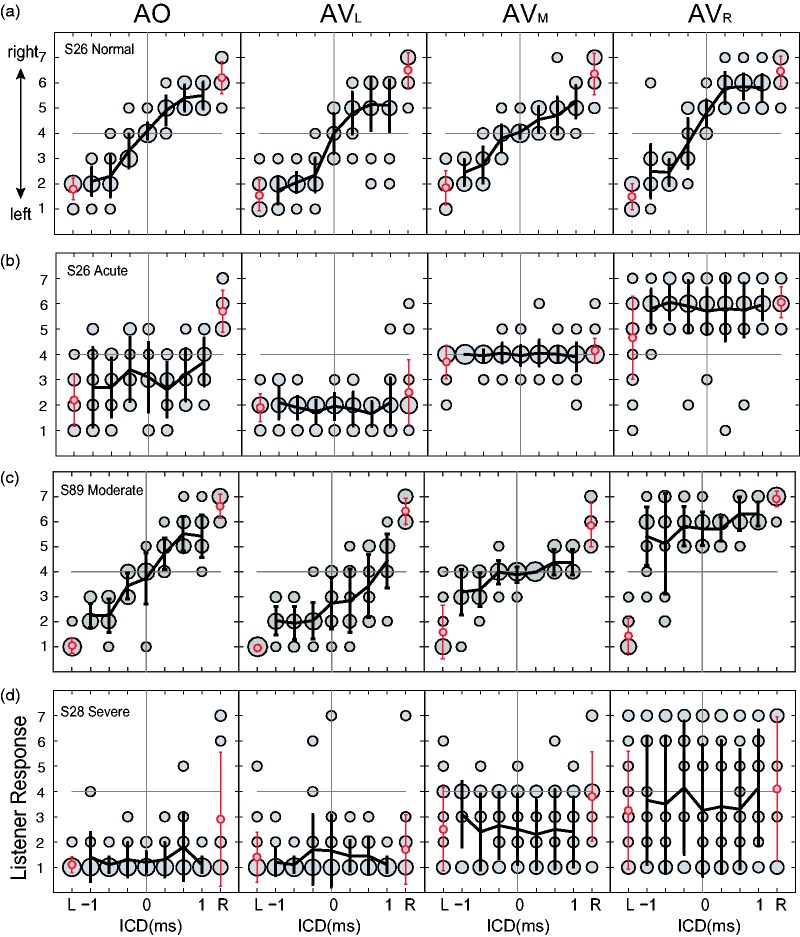

When light stimulation was introduced, the AV responses showed further contrasting patterns between normal hearing and UHL groups. Figure 5 shows the stereo responses of four representative listeners. The results are arranged in columns, from left to right, based on the lighting condition (AO, AVL, AVM, and AVR). The bubble plots depict the distribution of responses per stimulus and the overlaid lines indicate the corresponding mean and standard deviation.

Figure 5.

Stereo localization performance of four exemplar subjects. From Panels (a) to (d), the bubble plots show the stimulus–response relationship in AO and AV conditions. The lines show the mean and standard deviation of responses for each stereo stimulus. The one-speaker control stimuli are labeled as L and R on each panel and are shown in red. On all panels, responses were rearranged so that “left” was associated with the intact ear and “right” was associated with the loss ear. ICD = interchannel delay; AO = audio-only; AV = audio-visual.

The data patterns in the AO condition reveal that UHL affects not only the average but also the variability of position estimates in stereo localization. For the listeners with acute UHL (Figure 5(b)) and severe UHL (Figure 5(d)), stereo responses were largely insensitive to changes in ICD and exhibited a clear bias toward the intact ear. However, their biased responses do not exhibit the same level of certainty. The responses of the acute UHL listener are more variable for a given ICD, while those of the severe UHL listener are concentrated on the extreme left direction associated with the intact ear. Both random patterns and extreme bias were found in the results of the acute and severe UHL groups.

Based on visual inspection, the effects of light stimulation on stereo localization became stronger with UHL. In comparison, the responses of the moderate UHL listener were pulled toward the LED location (Figure 5(c)), more so than the responses of the normal hearing listeners (Figure 5(a)), despite their similar localization performance in the AO condition. Visual bias was most apparent for listeners with acute and severe UHL (Figure 5(b) and (d)), whose stereo responses were either completely dominated by the LED direction (e.g., AVR in Figure 5(b)), or became random (e.g., AVR in Figure 5(d)). The single-speaker responses (L or R) were also affected but to a different extent. For normal hearing and chronic, moderate UHL, single-speaker responses were affected less than stereo responses (e.g., the AVR response in Figure 5(c)). For acute and severe UHL, single-speaker and stereo responses appear to be equally affected (e.g., the AVR response in Figure 5(d)).

The performances of the four listeners shown in Figure 5 are typical of their individual hearing group. The visual stimulation-induced change in the stimulus–response relationship and individual variability are summarized in Table 2, which reports the linear regression analysis of responses of each listener at the three AV conditions. For all hearing groups, the effects of visual stimulation are evident in the distributions of the bias values. The left and right LED stimuli induce leftward (low bias value) and rightward (high bias value) responses, respectively.

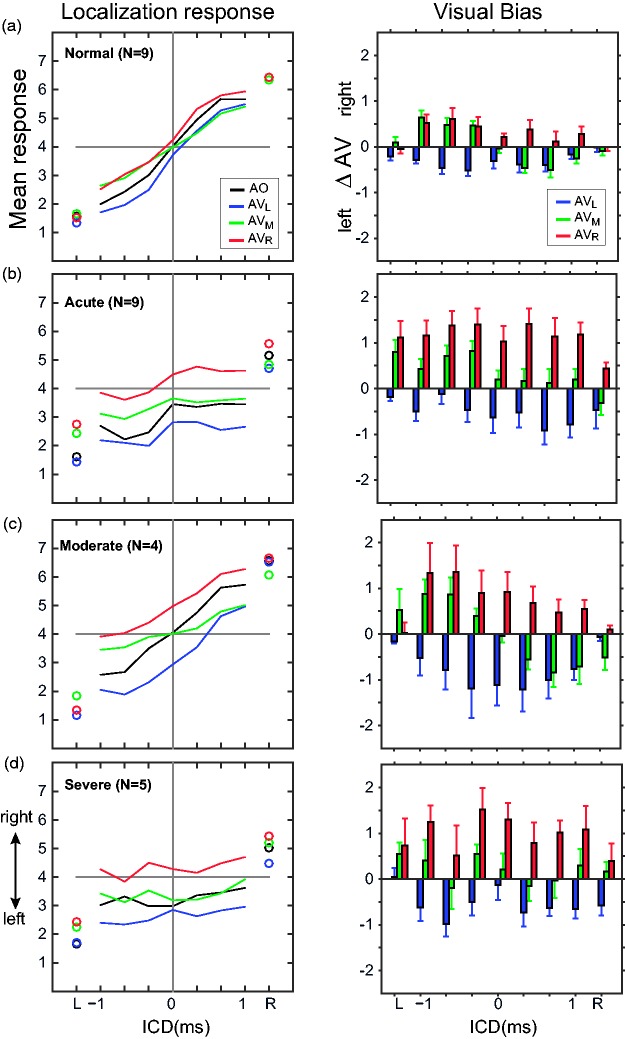

We then analyzed the group mean of AO and AV responses to compare the magnitude of visual bias across the three lighting conditions. The population averages (Figure 6, left column) largely reflect the characteristics of individual responses described in Figure 5. Using these data, we extracted the extent of visual bias based on the change in reported sound location between AO and AV conditions [ΔAV = mean(AV) − mean(AO)] for each listener. The right column of Figure 6 reports the population average of this analysis. For all hearing groups, there is a clear shift in the perceived sound direction after visual stimulation. In general, ΔAV has opposite signs, following the direction of the LED stimulus. For normal hearing and moderate UHL groups, the magnitude of the shift (|ΔAV|) was not significantly different between left and right LEDs (p > .05; Wilcoxon rank-sum test). This symmetry breaks down for acute and severe UHL groups. The right LED (red, the loss ear) caused a greater shift than the left LED (blue, the intact ear; acute UHL: p < .001; severe UHL: p = .019; Wilcoxon rank-sum test).

Figure 6.

Population analysis of the magnitude of visual bias. The left column shows the population average of the mean responses for each hearing group in the AO and three AV conditions. Single-speaker control responses are shown with circles, and stereo responses are shown with lines. The right column shows the population average of the magnitude of visual bias (ΔAV, mean + standard error of the mean) at the three lighting positions. Leftward and rightward visual biases are indicated by negative and positive ΔAV, respectively. ICD = interchannel delay; AV = audio-visual.

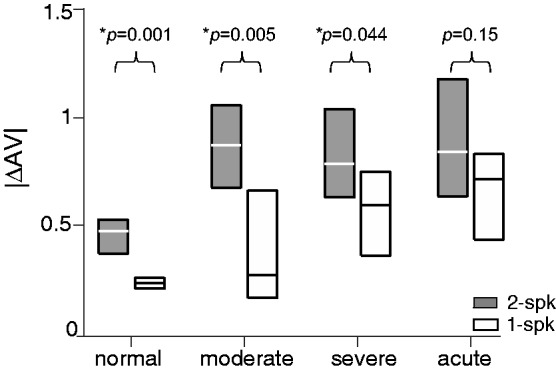

Figure 7 compares the distribution of visual bias (|ΔAV|) among the four hearing groups. This group analysis was based on averaged responses of listeners within a group at each ICD for all three light conditions. Single-speaker responses were separately analyzed. Several observations can be made based on pair-wise comparisons (Wilcoxon rank-sum test). First, UHL enhances the effects of visual stimuli on stereo localization. With the significance criterion corrected for a total of six possible between-group comparisons (Bonferroni α = 0.05/6 = .0083), all three UHL groups showed stronger visual bias than the normal hearing group (p < .0001). For single-speaker responses, visual bias was also stronger for acute and severe UHL groups than for the normal hearing (p < .0022). Second, despite differences in severity and duration of hearing loss, the magnitude of the overall visual bias is similar among three UHL groups during stereo localization (p > .05). Third, the magnitude of visual bias was, on average, greater for stereo than single-speaker stimuli. This difference, however, only reached the significance level for normal hearing (p < .0012) and the two chronic UHL groups (p < .044), not for the acute UHL group (p = .153) at a significance level of 0.05 for the within-group comparison.

Figure 7.

Comparison of the magnitude of visual bias among four hearing groups. For each hearing group, the results show the interquartile distribution (25%–75%) of visual bias (|ΔAV|) for stereo (2-spk) and single-speaker (1-spk) stimulation. The significance level of the difference between stereo and single-speaker results is shown above each group data. AV = audio-visual.

Discussion

This study investigated how the duration and severity of UHL affect auditory localization with and without simultaneous visual stimulation. Using time-delay-based stereophony, we compared the performances of four hearing groups: normal hearing, acute UHL (minutes, by earplugging), chronic moderate UHL, and chronic severe UHL (more than 10 years, no hearing aid experience). This time-delay technique allowed the experimenter to manipulate ITD cues while keeping ILDs near zero and the monaural spectral information relatively unchanged. As a result, ITDs were essentially isolated as the cue for sound source localization in the horizontal plane. The performance of the moderate UHL group was near normal when auditory stimuli were presented alone but showed stronger visual bias than the normal hearing group when both visual and auditory stimuli were presented. Hearing loss led to degraded stereo localization and increased visual bias, especially on the impaired side, for the severe and acute UHL groups. These results suggest that the spatial mechanisms underlying auditory localization are adaptable with long-term experience and that vision plays a compensatory role in restoring perceptual spatial symmetry after UHL.

Stereo Sound Localization for the Unilaterally Hearing Impaired

Auditory fusion due to “summing localization,” the basis of stereophony (Blauert, 1997), underlies successful applications of sound reproduction and entertainment systems (e.g., TV and theatre). It is a perceptual phenomenon closely related to the “Precedence Effect” (Litovsky, Colburn, Yost, & Guzman, 1999; Wallach, Newman, & Rosenzweig, 1949). It depends critically on how ITDs and ILDs are processed by the binaural system. We found that not all UHL listeners experienced a localizable stereo sound (Figure 4). The task was difficult for those whose UHL was either severe in degree or acute in duration, but not for listeners with chronic, moderate UHL (>12 years), who performed near normal.

Unlike the single-source condition, only ITDs are informative about azimuth in time-delay-based stereophony. For UHL listeners with difficulties in our study, one explanation is that their ITD processing is impaired to the extent that changing the signal delay of the stereo stimuli does not lead to resolvable directional information. This is a reasonable explanation for the performance of the severe UHL listeners with more than 50 dB unilateral loss, that is, they failed to localize a stereo sound because, with only one functional ear, viable ITD information was unavailable to them. As the monaural spectrum at the intact ear did not change with ICD in any systematic way (Figure 3), their responses were essentially dominated by the level cue pointing at the intact ear.

However, the contrasting performances of the two UHL groups with similar moderate impairment are not amenable to such an explanation, that is, the chronic moderate group (with more than 12 years of impairment) responded like normal hearing listeners, whereas the acute group (with tens of minutes of impairment) was largely insensitive to stereo localization cues. Their contrasting results demonstrate how the auditory system handles binaurally unbalanced cues before (i.e., acute group) and after (i.e., chronic group) a long-term exposure to these localization cues.

As the acute group was tested immediately after earplugging, their performance demonstrated how signal distortion at the level of the ear canal affects auditory localization in a normal binaural system with natural weightings of ITDs and ILDs. The strong “better” ear bias in their results suggests that ILDs play a strong role in constructing the internal estimate of sound position when low-frequency ITDs and high-frequency ILDs are both present. If ILD distortion simply shifted the midline of auditory space but did not interfere with the ITD computation, one would expect the stereo response to be compressed but still correlated with ICD. Instead, the stereo performance of most acute UHL listeners showed lower certainty than that of normal hearing and moderate UHL listeners (as indicated by R² values in Table 2); significant but weak correlations between responses and stimuli were found in the results of four of the nine acute UHL listeners.

Two factors could contribute to the degraded performance of the acute group. One explanation is that earplugging decreases the precision of signal timing required for ITD computation. Studies have shown that, besides level attenuation, earplugging could also cause delays of tens to hundreds of microseconds in the cochlear microphonic (Hartley & Moore, 2003; Lupo, Koka, Thornton, & Tollin, 2011). Lupo et al. (2011) revealed that earplug materials can cause a transmission delay that is larger at low frequencies than at high frequencies by a few hundred microseconds. If the frequency dependence of delays has rendered ITDs unreliable, an acute UHL listener may rely on ILDs to localize a sound immediately after earplugging. Earplugging causes between 20 dB and 50 dB of attenuation (see Figure 2(a) acute). For an acute UHL listener, this occlusion-induced “ILD” would shift the responses at all seven ICDs across the midline toward the direction of the unplugged ear (see hypothesis in Figure 1). However, as shown in Figure 4(b), this is not the case for the stereo responses of three acute UHL listeners. Similarly, for the control responses to the right (loss side) single-speaker stimulation, which presents natural combinations of ITD and ILD, six of the nine listeners pointed to the right side. This suggests that ITD cues may not become completely unusable after monaural earplugging in this study. Similar arguments have been made by Wightman and Kistler (1997).

This observation suggests another explanation for the degraded performance of the acute group: the unnaturally large low-frequency ILDs after earplugging (20–30 dB between 250 and 1000 Hz) interfered with low-frequency ITD analysis. Because such large ILDs are almost never combined with ITDs in natural conditions (Gaik, 1993), they are difficult for acute UHL listeners to process. This conjecture is supported by the observation of Florentine (1976) that earplugging imposed an immediate shift of perceived auditory localization for low-frequency pure tones at 500 and 1000 Hz. This interference was strong but temporary, as the listeners in her studies could quickly recenter the midline by using smaller ILDs within days. Nevertheless, this rapid adjustment was not feasible for our listeners because the task typically lasted about 30 min; their performance might therefore demonstrate the immediate effects of unnaturally large ILDs on ITD computations at low frequencies.

On the other hand, it is intriguing that altered ILDs had little effect on the performance of the chronic group with moderate impairment; none of the four listeners we tested showed the “better” ear bias in their AO responses to either stereo or single-speaker stimuli (Figure 4(c)). This finding suggests a weakened role of asymmetric HL in auditory localization after long-term hearing loss. Unlike severe UHL listeners, who may not have access to binaural cues and often resort to spectral cues in azimuthal localization (Van Wanrooij & Van Opstal, 2004), listeners with moderate UHL have access to ITDs. Because ITDs are the only useful cue for timing-based stereo localization, the fact that the chronic group could perform stereo localization like those with normal hearing suggests that adequate ITD processing is retained for this population. These results, however, cannot discern to what extent the near-normal results of the moderate UHL group are related to a learned emphasis on ITDs over disrupted ILDs through the reweighting mechanism or to recentered ILDs through the remapping mechanism. Carefully designed cue-manipulation experiments that address the naturally occurring binaural asymmetry after UHL (e.g., unlike those introduced by earplugging) are needed to answer this question in the future.

Finally, unilateral hearing impairment could also alter signal timing (for example, after conductive hearing loss) and thus the natural association between ITD and source position. Auditory localization on the basis of ITDs is also adaptable in humans (Javer & Schwarz, 1995; Trapeau & Schonwiesner, 2015). Adding an extra delay to one ear using a hearing aid caused the auditory event to be deflected to the contralateral side, but the magnitude of this deflection decreased within hours (Javer & Schwarz, 1995). Intuitively, the remapped spatial perception could be attributed to a new cue-location association that is gradually modified with repeated exposure to distorted binaural cues. However, this “cue retuning” mechanism, if maintained after hearing loss, conflicts with the observations that localization performance quickly returned to the normal level within minutes after the distortions in binaural cues were removed (Javer & Schwarz, 1995; Trapeau & Schonwiesner, 2015). It remains to be tested whether long-term learning mechanisms also apply to the ITD analysis itself in chronic UHL in humans.

Overall, increasing behavioral evidence suggests that the mammalian auditory system can adjust the relative weights of different localization cues and their relations to the external source locations in establishing a decision variable for auditory localization. It remains relatively less understood how the neural circuit functions support remodeling of spatial representation after hearing impairment. Understanding the recalibration process will require targeted auditory investigations such as those by Kumpik et al. (2010), Polley, Thompson, and Guo (2013), and Keating, Dahmen, and King (2015) using animal models that experience similar trends in spatial learning/adaptation as in humans.

Visual Influences in Stereo Performances of the Hearing Impaired

With UHL, we found that visual bias is much stronger on the loss side than the intact side for the severe and acute groups (Figures 6 and 7), suggesting that visual information is used to compensate for the broken symmetry in auditory spatial processing. We also found that time-delay-based stereophony evoked stronger visual bias than single-speaker stimulation for both normal hearing and UHL (Figure 7). Montagne and Zhou (2016) attributed the increased visual bias to uncertainty in the auditory spatial estimate as a result of conflicting ITDs and ILDs across frequency and with each other. The increased response variability and visual bias are evident for acute UHL subjects compared with their normal results (Table 2). Thus, our results are largely consistent with the cue reliability theory proposed for multisensory interaction (Ernst & Bülthoff, 2004), that is, sensory bias is proportional to the reliability of the unimodal estimates—the modality producing the most salient estimate dominates.
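The cue reliability account can be made concrete with the standard reliability-weighted (inverse-variance) combination rule commonly associated with that framework. The sketch below is a textbook formulation under Gaussian-noise assumptions, not a model fitted in this study; the function name `combine_cues` and all numerical values are hypothetical.

```python
def combine_cues(aud_pos, aud_var, vis_pos, vis_var):
    """Reliability-weighted average of an auditory and a visual position estimate.

    Each cue is weighted by its reliability (inverse variance), so the
    less variable modality pulls the combined estimate toward itself.
    """
    aud_rel = 1.0 / aud_var
    vis_rel = 1.0 / vis_var
    w_vis = vis_rel / (aud_rel + vis_rel)          # visual weight, between 0 and 1
    combined = (1.0 - w_vis) * aud_pos + w_vis * vis_pos
    return combined, w_vis


# Hypothetical positions on the 1-to-7 response scale: sound at 4, LED at 6.
# The visual estimate is assumed precise (variance 0.25) in both cases.
precise_audio, w1 = combine_cues(4.0, 0.5, 6.0, 0.25)
noisy_audio, w2 = combine_cues(4.0, 4.0, 6.0, 0.25)

print(round(precise_audio - 4.0, 2), round(w1, 2))  # shift toward the LED, visual weight
print(round(noisy_audio - 4.0, 2), round(w2, 2))    # larger shift with a noisier auditory cue
```

In this formulation the shift toward the LED grows as the auditory variance grows, which is the qualitative pattern described above for the acute UHL listeners.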

The decreased reliability of the auditory estimate may arise at the stage where individual cues are initially processed. Studies have shown that hearing loss degrades binaural sensitivity (Hancock, Noel, Ryugo, & Delgutte, 2010; Popescu & Polley, 2010) and changes the aural preferences of the midbrain and cortical neurons (Kral, Heid, Hubka, & Tillein, 2013; Polley et al., 2013; Tillein, Hubka, & Kral, 2016). However, it also remains a possibility that, for UHL, the cue-reweighting procedure itself is imperfect, causing a blurred auditory image due to inadequate combinations of available cues.

For people with hearing loss, changes in visual function may have also affected their performances. Previous studies have shown that, compared with normal hearing individuals, deaf individuals are more flexible in redirecting their attention to parafoveal visual targets, which are positioned 2° away from central fixation, in the presence of foveal distractors (Parasnis & Samar, 1985) and generally show faster reaction time to peripheral visual targets, whether static (Chen, Zhang, & Zhou, 2006; Colmenero, Catena, Fuentes, & Ramos, 2004) or in motion (Neville & Lawson, 1987). Interestingly, the results of the three groups of UHL showed a stronger visual bias than the normal hearing (Figure 7), suggesting a change in the overall strength of visual modulation. However, as this study did not directly measure the visual functions of participants, and visual attention was neither monitored nor controlled, interpretations of their visual function remain speculative.

Acknowledgments

William Yost and M. Torben Pastore provided critical feedback to early versions of the manuscript. The audiograms were collected in Andrea Pittman’s Laboratory of Pediatric Amplification at the Arizona State University. The authors appreciate their help.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was supported by NSF BCS-1539376 (Y. Z.).

References

- Agterberg M. J., Hol M. K., Van Wanrooij M. M., Van Opstal A. J., Snik A. F. (2014) Single-sided deafness and directional hearing: Contribution of spectral cues and high-frequency hearing loss in the hearing ear. Frontiers in Neuroscience 8: 188. doi: 10.3389/fnins.2014.00188.

- Bajo V. M., Nodal F. R., Moore D. R., King A. J. (2010) The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nature Neuroscience 13: 253–260. doi: 10.1038/nn.2466.

- Bauer R. W., Matuzsa J. L., Blackmer R. F., Glucksberg S. (1966) Noise localization after unilateral attenuation. The Journal of the Acoustical Society of America 40: 441–444.

- Bavelier D., Dye M. W., Hauser P. C. (2006) Do deaf individuals see better? Trends in Cognitive Sciences 10: 512–518.

- Bertelson P., Radeau M. (1981) Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Perception & Psychophysics 29: 578–584.

- Bess F. H., Tharpe A. M., Gibler A. M. (1986) Auditory performance of children with unilateral sensorineural hearing loss. Ear and Hearing 7: 20–26.

- Blauert J. (1997) Spatial hearing: The psychophysics of human sound localization, Cambridge, MA: MIT Press.

- Boring E. G. (1926) Auditory theory with special reference to intensity, volume, and localization. The American Journal of Psychology 37: 157–188.

- Brainard M. S., Knudsen E. I. (1998) Sensitive periods for visual calibration of the auditory space map in the barn owl optic tectum. Journal of Neuroscience 18: 3929–3942.

- Chen Q., Zhang M., Zhou X. (2006) Effects of spatial distribution of attention during inhibition of return (IOR) on flanker interference in hearing and congenitally deaf people. Brain Research 1109: 117–127.

- Clark J. G. (1981) Uses and abuses of hearing loss classification. Asha 23: 493–500.

- Colmenero J. M., Catena A., Fuentes L. J., Ramos M. M. (2004) Mechanisms of visuospatial orienting in deafness. European Journal of Cognitive Psychology 16: 791–805.

- Durlach N., Thompson C., Colburn H. (1981) Binaural interaction in impaired listeners: A review of past research. Audiology 20: 181–211.

- Ernst M. O., Bülthoff H. H. (2004) Merging the senses into a robust percept. Trends in Cognitive Sciences 8: 162–169.

- Florentine M. (1976) Relation between lateralization and loudness in asymmetrical hearing losses. Journal of the American Audiology Society 1: 243–251.

- Gabriel K. J., Koehnke J., Colburn H. S. (1992) Frequency dependence of binaural performance in listeners with impaired binaural hearing. The Journal of the Acoustical Society of America 91: 336–347.

- Gaik W. (1993) Combined evaluation of interaural time and intensity differences: Psychoacoustic results and computer modeling. The Journal of the Acoustical Society of America 94: 98–110.

- Goldberg J. M., Brown P. B. (1969) Response of binaural neurons of dog superior olivary complex to dichotic tonal stimuli: Some physiological mechanisms of sound localization. Journal of Neurophysiology 32: 613–636.

- Golub J. S., Lin F. R., Lustig L. R., Lalwani A. K. (2018) Prevalence of adult unilateral hearing loss and hearing aid use in the United States. Laryngoscope 128(7): 1681–1686. doi: 10.1002/lary.27017.

- Hancock K. E., Noel V., Ryugo D. K., Delgutte B. (2010) Neural coding of interaural time differences with bilateral cochlear implants: Effects of congenital deafness. Journal of Neuroscience 30: 14068–14079.

- Hartley D. E., Moore D. R. (2003) Effects of conductive hearing loss on temporal aspects of sound transmission through the ear. Hearing Research 177: 53–60.

- Hausler R., Colburn S., Marr E. (1983) Sound localization in subjects with impaired hearing: Spatial-discrimination and interaural-discrimination tests. Acta Oto-Laryngologica 96(sup400): 1–62. [DOI] [PubMed] [Google Scholar]

- Humes L. E., Allen S. K., Bess F. H. (1980) Horizontal sound localization skills of unilaterally hearing-impaired children. Audiology 19: 508–518.

- Irving S., Moore D. R. (2011) Training sound localization in normal hearing listeners with and without a unilateral ear plug. Hearing Research 280: 100–108.

- Jack C. E., Thurlow W. R. (1973) Effects of degree of visual association and angle of displacement on the “ventriloquism” effect. Perceptual and Motor Skills 37: 967–979.

- Javer A. R., Schwarz D. (1995) Plasticity in human directional hearing. The Journal of Otolaryngology 24: 111–117.

- Kacelnik O., Nodal F. R., Parsons C. H., King A. J. (2006) Training-induced plasticity of auditory localization in adult mammals. PLoS Biology 4: e71. doi: 10.1371/journal.pbio.0040071.

- Keating P., Dahmen J. C., King A. J. (2013) Context-specific reweighting of auditory spatial cues following altered experience during development. Current Biology 23: 1291–1299. doi: 10.1016/j.cub.2013.05.045.

- Keating P., Dahmen J. C., King A. J. (2015) Complementary adaptive processes contribute to the developmental plasticity of spatial hearing. Nature Neuroscience 18: 185–187.

- Keating P., King A. J. (2013) Developmental plasticity of spatial hearing following asymmetric hearing loss: Context-dependent cue integration and its clinical implications. Frontiers in Systems Neuroscience 7: 123. doi: 10.3389/fnsys.2013.00123.

- Keating P., Rosenior-Patten O., Dahmen J. C., Bell O., King A. J. (2016) Behavioral training promotes multiple adaptive processes following acute hearing loss. eLife 5: e12264. doi: 10.7554/eLife.12264.

- Knudsen E. I., Knudsen P. F. (1985) Vision guides the adjustment of auditory localization in young barn owls. Science 230: 545–548.

- Kral A., Heid S., Hubka P., Tillein J. (2013) Unilateral hearing during development: Hemispheric specificity in plastic reorganizations. Frontiers in Systems Neuroscience 7: 93. doi: 10.3389/fnsys.2013.00093.

- Kumpik D. P., Kacelnik O., King A. J. (2010) Adaptive reweighting of auditory localization cues in response to chronic unilateral earplugging in humans. Journal of Neuroscience 30: 4883–4894. doi: 10.1523/JNEUROSCI.5488-09.2010.

- Leakey D. M. (1959) Some measurements on the effects of interchannel intensity and time differences in two channel sound systems. The Journal of the Acoustical Society of America 31: 977–986.

- Litovsky R. Y., Colburn H. S., Yost W. A., Guzman S. J. (1999) The precedence effect. The Journal of the Acoustical Society of America 106: 1633–1654.

- Lupo J. E., Koka K., Thornton J. L., Tollin D. J. (2011) The effects of experimentally induced conductive hearing loss on spectral and temporal aspects of sound transmission through the ear. Hearing Research 272: 30–41.

- McPartland J. L., Culling J. F., Moore D. R. (1997) Changes in lateralization and loudness judgements during one week of unilateral ear plugging. Hearing Research 113: 165–172.

- Mogdans J., Knudsen E. I. (1994) Site of auditory plasticity in the brain stem (VLVp) of the owl revealed by early monaural occlusion. Journal of Neurophysiology 72: 2875–2891. doi: 10.1152/jn.1994.72.6.2875.

- Montagne C., Zhou Y. (2016) Visual capture of a stereo sound: Interactions between cue reliability, sound localization variability, and cross-modal bias. The Journal of the Acoustical Society of America 140: 471–485.

- Montagne C., Zhou Y. (2018) Audiovisual interactions in front and rear space. Frontiers in Psychology 9: 713. doi: 10.3389/fpsyg.2018.00713.

- Neville H. J., Lawson D. (1987) Attention to central and peripheral visual space in a movement detection task: An event-related potential and behavioral study. II. Congenitally deaf adults. Brain Research 405: 268–283.

- Parasnis I., Samar V. J. (1985) Parafoveal attention in congenitally deaf and hearing young adults. Brain and Cognition 4: 313–327.

- Pastore M. T., Braasch J. (2015) The precedence effect with increased lag level. The Journal of the Acoustical Society of America 138: 2079. doi: 10.1121/1.4929940.

- Pavani F., Bottari D. (2012) Visual abilities in individuals with profound deafness: A critical review. In: Murray M. M., Wallace M. T. (eds) The neural bases of multisensory processes, Boca Raton, FL: CRC Press.

- Polley D. B., Thompson J. H., Guo W. (2013) Brief hearing loss disrupts binaural integration during two early critical periods of auditory cortex development. Nature Communications 4: 2547. doi: 10.1038/ncomms3547.

- Popescu M. V., Polley D. B. (2010) Monaural deprivation disrupts development of binaural selectivity in auditory midbrain and cortex. Neuron 65: 718–731. doi: 10.1016/j.neuron.2010.02.019.

- Recanzone G. H. (1998) Rapidly induced auditory plasticity: The ventriloquism aftereffect. Proceedings of the National Academy of Sciences of the United States of America 95: 869–875.

- Shub D. E., Carr S. P., Kong Y., Colburn H. S. (2008) Discrimination and identification of azimuth using spectral shape. The Journal of the Acoustical Society of America 124: 3132–3141. doi: 10.1121/1.2981634.

- Slattery W. H., Middlebrooks J. C. (1994) Monaural sound localization: Acute versus chronic unilateral impairment. Hearing Research 75: 38–46.

- Spence C., Driver J. (2000) Attracting attention to the illusory location of a sound: Reflexive crossmodal orienting and ventriloquism. Neuroreport 11: 2057–2061.

- Stein B. E., Meredith M. A. (1990) Multisensory integration. Neural and behavioral solutions for dealing with stimuli from different sensory modalities. Annals of the New York Academy of Sciences 608: 51–65; discussion 65–70.

- Stein B. E., Meredith M. A. (1993) The merging of the senses, Cambridge, MA: MIT Press.

- Strelnikov K., Rosito M., Barone P. (2011) Effect of audiovisual training on monaural spatial hearing in horizontal plane. PLoS One 6: e18344. doi: 10.1371/journal.pone.0018344.

- Tillein J., Hubka P., Kral A. (2016) Monaural congenital deafness affects aural dominance and degrades binaural processing. Cerebral Cortex 26: 1762–1777.

- Trapeau R., Schönwiesner M. (2015) Adaptation to shifted interaural time differences changes encoding of sound location in human auditory cortex. Neuroimage 118: 26–38. doi: 10.1016/j.neuroimage.2015.06.006.

- Van Opstal J. (2016) The auditory system and human sound-localization behavior, San Diego, CA: Academic Press.

- Van Wanrooij M. M., Van Opstal A. J. (2004) Contribution of head shadow and pinna cues to chronic monaural sound localization. Journal of Neuroscience 24: 4163–4171. doi: 10.1523/JNEUROSCI.0048-04.2004.

- Van Wanrooij M. M., Van Opstal A. J. (2007) Sound localization under perturbed binaural hearing. Journal of Neurophysiology 97: 715–726. doi: 10.1152/jn.00260.2006.

- Viehweg R., Campbell R. A. (1960) Localization difficulty in monaurally impaired listeners. Annals of Otology, Rhinology & Laryngology 69: 622–634.

- Wallach H., Newman E. B., Rosenzweig M. R. (1949) The precedence effect in sound localization. The American Journal of Psychology 62: 315–336.

- Warren D. H. (1970) Intermodality interactions in spatial localization. Cognitive Psychology 1: 114–133.

- Welch R. B., Warren D. H. (1980) Immediate perceptual response to intersensory discrepancy. Psychological Bulletin 88: 638–667.

- Wightman F. L., Kistler D. J. (1997) Monaural sound localization revisited. The Journal of the Acoustical Society of America 101: 1050–1063.

- Zurek P. M. (1980) The precedence effect and its possible role in the avoidance of interaural ambiguities. The Journal of the Acoustical Society of America 67: 953–964.

- Zwiers M. P., Van Opstal A. J., Paige G. D. (2003) Plasticity in human sound localization induced by compressed spatial vision. Nature Neuroscience 6: 175–181. doi: 10.1038/nn999.