Abstract

Understanding the dynamical processes that govern the performance of functional materials is essential for the design of next-generation materials to tackle global energy and environmental challenges. Many of these processes involve the dynamics of individual atoms or small molecules in condensed phases, e.g. lithium ions in electrolytes, water molecules in membranes, and molten atoms at interfaces, which are difficult to understand due to the complexity of their local environments. In this work, we develop graph dynamical networks, an unsupervised learning approach for understanding atomic scale dynamics in arbitrary phases and environments from molecular dynamics simulations. We show that important dynamical information, which would be difficult to obtain otherwise, can be learned for various multi-component amorphous material systems. With the large amounts of molecular dynamics data generated every day in nearly every aspect of materials design, this approach provides a broadly applicable, automated tool to understand atomic scale dynamics in material systems.

Subject terms: Materials science, Computational methods, Computer science

Understanding local dynamical processes in materials is challenging due to the complexity of the local atomic environments. Here the authors propose a graph dynamical networks approach that is shown to learn the atomic scale dynamics in arbitrary phases and environments from molecular dynamics simulations.

Introduction

Understanding the atomic scale dynamics in condensed phases is essential for the design of functional materials to tackle global energy and environmental challenges1–3. The performance of many materials depends on the dynamics of individual atoms or small molecules in complex local environments. Despite rapid advances in experimental techniques4–6, molecular dynamics (MD) simulations remain one of the few tools for probing these dynamical processes with atomic scale temporal and spatial resolution. However, because each MD simulation generates large amounts of data, it is often challenging to extract statistically relevant dynamics for each atom, especially in multi-component, amorphous material systems. At present, atomic scale dynamics are usually learned by designing system-specific descriptions of coordination environments or by computing the average behavior of atoms7–10. A general approach for understanding the dynamics in different types of condensed phases, including solid, liquid, and amorphous, is still lacking.

The advances in applying deep learning to scientific research open new opportunities for utilizing the full trajectory data from MD simulations in an automated fashion. Ideally, one would trace every atom or small molecule of interest in the MD trajectories, and summarize their dynamics into a linear, low dimensional model that describes how their local environments evolve over time. Recent studies show that combining Koopman analysis and deep neural networks provides a powerful tool to understand complex biological processes and fluid dynamics from data11–13. In particular, VAMPnets13 develop a variational approach for Markov processes to learn an optimal latent space representation that encodes the long-time dynamics, which enables the end-to-end learning of a linear dynamical model directly from MD data. However, in order to learn the atomic dynamics in complex, multi-component material systems, sharing knowledge learned for similar local chemical environments is essential to reduce the amount of data needed. The recent development of graph convolutional neural networks (GCN) has led to a series of new representations of molecules14–17 and materials18,19 that are invariant to permutation and rotation operations. These representations provide a general approach to encode the chemical structures in neural networks which shares parameters between different local environments, and they have been used for predicting properties of molecules and materials14–19, generating force fields19,20, and visualizing structural similarities21,22.

In this work, we develop a deep learning architecture, Graph Dynamical Networks (GDyNets), that combines Koopman analysis and graph convolutional neural networks to learn the dynamics of individual atoms in material systems. The graph convolutional neural networks allow for the sharing of knowledge learned for similar local environments across the system, and the variational loss developed in VAMPnets13,23 is employed to learn a linear model for atomic dynamics. Thus, our method focuses on the modeling of local atomic dynamics instead of global dynamics. This significantly improves the sampling of the atomic dynamical processes, because a typical material system includes a large number of atoms or small molecules moving in structurally similar but distinct local environments. We demonstrate this distinction using a toy system that shows global dynamics can be exponentially more complex than local dynamics. Then, we apply this method to two realistic material systems—silicon dynamics at solid–liquid interfaces and lithium ion transport in amorphous polymer electrolytes—to demonstrate the new dynamical information one can extract for such complex materials and environments. Given the enormous amount of MD data generated in nearly every aspect of materials research, we believe the broad applicability of this method could help uncover important new physical insights from atomic scale dynamics that may have otherwise been overlooked.

Results

Koopman analysis of atomic scale dynamics

In materials design, the dynamics of target atoms, such as lithium ions in electrolytes and water molecules in membranes, provide key information about material performance. We describe the dynamics of the target atoms and their surrounding atoms in MD simulations as a discrete-time process,

$$x_{t+\tau} = F(x_t) \tag{1}$$

where $x_t$ and $x_{t+\tau}$ denote the local configuration of the target atoms and their surrounding atoms at time steps $t$ and $t + \tau$, respectively. Note that Eq. (1) implies that the dynamics of $x$ is Markovian, i.e. $x_{t+\tau}$ depends only on $x_t$ and not on earlier configurations. This is exact when $x$ includes all atoms in the system, but an approximation if only neighboring atoms are included. We also assume that each set of target atoms follows the same dynamics $F$. These assumptions are reasonable because (1) most interactions in materials are short-range and (2) most materials are either periodic or have similar local structures. They can also be tested by validating the dynamical models on new MD data, as discussed later.

The Koopman theory24 states that there exists a function χ(x) that maps the local configuration of target atoms x into a lower dimensional feature space, such that the non-linear dynamics F can be approximated by a linear transition matrix K,

$$\chi(x_{t+\tau}) \approx K^{T}\chi(x_t) \tag{2}$$

The approximation becomes exact when the feature space has infinite dimensions. However, for most material systems, the dynamics can be approximated with a low-dimensional feature space if τ is sufficiently large, owing to the existence of characteristic slow processes. The goal is to identify such slow processes by finding the feature map function χ(x).
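To make Eq. (2) concrete, the sketch below estimates a linear K from feature vectors by least squares, $K = C_{00}^{-1} C_{01}$, where $C_{00}$ and $C_{01}$ are the instantaneous and time-lagged second-moment matrices of χ. This is a minimal illustration under stated assumptions, not the GDyNets implementation: the features are one-hot encodings of a synthetic two-state Markov chain, and `P_true`, `simulate_markov_chain`, and `estimate_koopman` are hypothetical names introduced here, so the estimated K should simply recover the transition matrix.

```python
import numpy as np


def simulate_markov_chain(P, n_steps, rng, start=0):
    """Sample a discrete state trajectory from a row-stochastic matrix P (hypothetical helper)."""
    cum = np.cumsum(P, axis=1)
    cum[:, -1] = 1.0                      # guard against round-off in the last bin
    u = rng.random(n_steps)
    states = np.empty(n_steps, dtype=int)
    states[0] = start
    for t in range(1, n_steps):
        # pick the next state by inverting the cumulative distribution of the current row
        states[t] = np.searchsorted(cum[states[t - 1]], u[t], side="right")
    return states


def estimate_koopman(chi, lag):
    """Least-squares Koopman matrix K = C00^{-1} C01 from features chi of shape (T, d)."""
    x0, x1 = chi[:-lag], chi[lag:]
    c00 = x0.T @ x0 / len(x0)
    c01 = x0.T @ x1 / len(x0)
    return np.linalg.solve(c00, c01)


rng = np.random.default_rng(0)
P_true = np.array([[0.95, 0.05],
                   [0.10, 0.90]])
states = simulate_markov_chain(P_true, 20_000, rng)
chi = np.eye(2)[states]          # one-hot features chi(x_t), shape (T, 2)
K = estimate_koopman(chi, lag=1)
print(np.round(K, 3))
```

With one-hot features, $C_{00}$ is diagonal and this estimator reduces to row-normalized transition counts; with Softmax features the same formula applies, but the states overlap.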

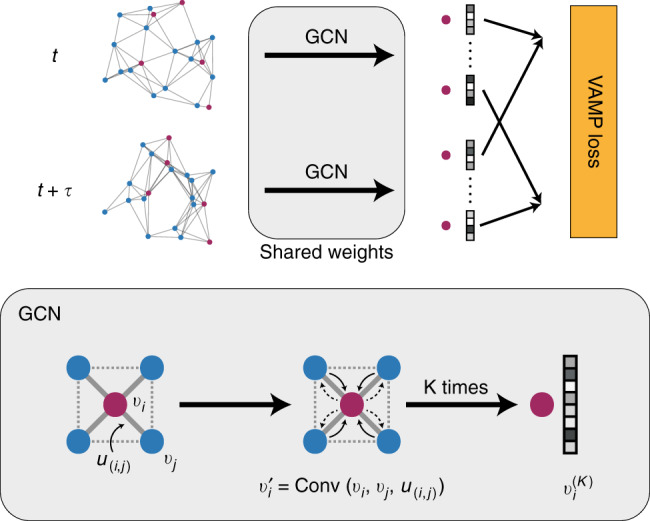

Learning feature map function with graph dynamical networks

In this work, we use GCN to learn the feature map function χ(x). GCN provides a general framework to encode the structure of materials that is invariant to permutation, rotation, and reflection18,19. As shown in Fig. 1, for each time step in the MD trajectory, a graph is constructed from the current configuration, with each node $v_i$ representing an atom and each edge $u_{(i,j)}$ representing a bond connecting nearby atoms. We connect each atom to its M nearest neighbors, taking periodic boundary conditions into account, and a gated architecture18 is used in the GCN to reweigh the strength of each connection (see Supplementary Note 1 for details). Note that the graphs are constructed separately for each step, so the topology of each graph may be different. Also, three-dimensional structural information is preserved in the graphs since the bond length is encoded in $u_{(i,j)}$. Each graph is then input to the same GCN to learn an embedding for each atom through graph convolution (or neural message passing16) that incorporates information about its surrounding environment,

$$v_i^{(t+1)} = \mathrm{Conv}\left(v_i^{(t)}, v_j^{(t)}, u_{(i,j)}\right) \tag{3}$$

After K convolution operations, information from atoms up to K bonds away has been propagated to each atom, resulting in an embedding $v_i^{(K)}$ that encodes its local environment.
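The graph construction described above (M nearest neighbors under periodic boundary conditions) can be sketched as follows. This is an illustrative sketch, not the authors' code: it assumes an orthorhombic box and enumerates periodic images explicitly, so that M may exceed the number of atoms in a small cell; the function name `nearest_neighbor_graph` and the parameter `n_images` are hypothetical.

```python
import itertools

import numpy as np


def nearest_neighbor_graph(positions, box, m, n_images=1):
    """Return (neighbor_idx, distances), each of shape (N, m), for an orthorhombic periodic box.

    Periodic images within +/- n_images cells are enumerated explicitly, so the same
    atom may appear several times (as different images) when the cell is small.
    """
    box = np.asarray(box, dtype=float)
    shifts = np.array(list(itertools.product(range(-n_images, n_images + 1), repeat=3))) * box
    n, s = len(positions), len(shifts)
    # displacement from atom i to periodic image s of atom j: shape (N, N, S, 3)
    disp = positions[None, :, None, :] + shifts[None, None, :, :] - positions[:, None, None, :]
    dist = np.linalg.norm(disp, axis=-1)
    zero_shift = int(np.flatnonzero(np.all(shifts == 0, axis=1))[0])
    dist[np.arange(n), np.arange(n), zero_shift] = np.inf   # exclude each atom itself
    flat = dist.reshape(n, n * s)
    order = np.argsort(flat, axis=1)[:, :m]                   # m closest (atom, image) pairs
    neighbor_idx = order // s                                 # recover the atom index j
    distances = np.take_along_axis(flat, order, axis=1)
    return neighbor_idx, distances


# Usage: the four atoms of an FCC conventional cell (lattice parameter 1) with M = 12
fcc = np.array([[0.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.5, 0.0, 0.5], [0.0, 0.5, 0.5]])
idx, d = nearest_neighbor_graph(fcc, box=[1.0, 1.0, 1.0], m=12)
```

In this example every atom has 12 neighbors at $a/\sqrt{2}$, each of the three other basis atoms appearing as four different periodic images. The dense (N, N, S) distance array keeps the sketch short; a production code would use a cell list or a library neighbor search instead.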

Fig. 1.

Illustration of the graph dynamical networks architecture. The MD trajectories are represented by a series of graphs dynamically constructed at each time step. The red nodes denote the target atoms whose dynamics we are interested in, and the blue nodes denote the rest of the atoms. The graphs are input to the same graph convolutional neural network to learn an embedding for each atom that represents its local configuration. The embeddings of the target atoms at t and t + τ are merged to compute a VAMP loss that minimizes the errors in Eq. (2)

To learn a feature map function for the target atoms whose dynamics we want to model, we focus on the embeddings learned for these atoms. Assume that the material system contains n sets of target atoms, each made up of k atoms. For instance, in a system of 10 water molecules, n = 10 and k = 3. We use the label $v_{[l,m]}$ to denote the mth atom in the lth set of target atoms. With a pooling function18, we obtain an overall embedding $v_{[l]}$ for each set of target atoms that represents its local configuration,

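$$v_{[l]} = \mathrm{Pool}\left(v_{[l,1]}^{(K)}, v_{[l,2]}^{(K)}, \ldots, v_{[l,k]}^{(K)}\right) \tag{4}$$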

Finally, we build a shared two-layer fully connected neural network with an output layer using a Softmax activation function to map the embeddings v[l] to a feature space with a pre-determined dimension. This is the feature space described in Eq. (2), and we can select an appropriate dimension to capture the important dynamics in the material system. The Softmax function used here allows us to interpret the feature space as a probability over several states13. Below, we will use the term “number of states” and “dimension of feature space” interchangeably.
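A minimal NumPy sketch of the pooling step and the Softmax head is given below. It assumes mean pooling (as in Eq. (10) of the Methods) and ReLU hidden activations; the text only specifies two fully connected layers followed by a Softmax output, so the layer widths, the random weights, and the names `feature_map` and `params` are illustrative assumptions.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def feature_map(atom_emb, target_sets, params):
    """Mean-pool target-atom embeddings, then map them to state probabilities.

    atom_emb: (N_atoms, d) embeddings after the last convolution
    target_sets: (n, k) integer indices of the k atoms in each of the n target sets
    """
    pooled = atom_emb[target_sets].mean(axis=1)                  # (n, d), mean pooling
    h = np.maximum(0.0, pooled @ params["W1"] + params["b1"])    # hidden layer 1 (ReLU assumed)
    h = np.maximum(0.0, h @ params["W2"] + params["b2"])         # hidden layer 2 (ReLU assumed)
    return softmax(h @ params["W3"] + params["b3"])              # (n, n_states)


rng = np.random.default_rng(0)
d, hidden, n_states = 16, 32, 4
params = {
    "W1": rng.normal(size=(d, hidden)), "b1": np.zeros(hidden),
    "W2": rng.normal(size=(hidden, hidden)), "b2": np.zeros(hidden),
    "W3": rng.normal(size=(hidden, n_states)), "b3": np.zeros(n_states),
}
atom_emb = rng.normal(size=(30, d))            # e.g. 10 water molecules, 3 atoms each
target_sets = np.arange(30).reshape(10, 3)     # n = 10 sets, k = 3 atoms per set
chi = feature_map(atom_emb, target_sets, params)
```

Each row of `chi` is a probability vector over the states, and because pooling averages over the atoms in a set, reordering the atoms within a set leaves the output unchanged.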

To minimize the errors of the approximation in Eq. (2), we compute the loss of the system using a VAMP-2 score13,24 that measures the consistency between the feature vectors learned at timesteps t and t + τ,

$$\mathrm{loss} = -\,\text{VAMP-2}\left(\chi(x_t), \chi(x_{t+\tau})\right) \tag{5}$$

A single VAMP-2 score is computed over the whole trajectory and all sets of target atoms. The entire network is trained on the MD trajectories by minimizing the VAMP loss, i.e. maximizing the VAMP-2 score.
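The VAMP-2 score of refs. 13,24 is the squared Frobenius norm of $C_{00}^{-1/2} C_{01} C_{11}^{-1/2}$. The NumPy sketch below only evaluates that score; training, as described above, would require an autodiff framework to backpropagate through the negative score. Two assumptions are worth flagging: the means are not subtracted, and the features are rows stacked over all time origins and all target-atom sets. Because Softmax features sum to one, the constant function then contributes a singular value of exactly 1, so the score lies between 1 (no memory between $t$ and $t+\tau$) and the number of states. The helper names are hypothetical.

```python
import numpy as np


def inv_sqrt(c, eps=1e-10):
    """Inverse matrix square root of a symmetric PSD matrix via eigendecomposition."""
    w, v = np.linalg.eigh(c)
    w = np.maximum(w, eps)                 # regularize near-zero eigenvalues
    return (v / np.sqrt(w)) @ v.T


def vamp2_score(chi_t, chi_tau):
    """VAMP-2 score ||C00^{-1/2} C01 C11^{-1/2}||_F^2 for paired feature rows.

    chi_t, chi_tau: (n_pairs, n_states); row r pairs chi(x_t) with chi(x_{t+tau}),
    stacked over all time origins and all sets of target atoms.
    """
    n = len(chi_t)
    c00 = chi_t.T @ chi_t / n
    c11 = chi_tau.T @ chi_tau / n
    c01 = chi_t.T @ chi_tau / n
    m = inv_sqrt(c00) @ c01 @ inv_sqrt(c11)
    return float(np.sum(m ** 2))           # squared Frobenius norm


rng = np.random.default_rng(0)
onehot = np.eye(3)[rng.integers(0, 3, size=20_000)]
score_identity = vamp2_score(onehot, onehot)                 # perfectly persistent states
shuffled = onehot[rng.permutation(len(onehot))]
score_uncorrelated = vamp2_score(onehot, shuffled)           # no memory between t and t + tau
```

The two extremes bracket the behavior of the loss: persistent (slow) states reach the maximum score equal to the number of states, while features with no temporal correlation score about 1.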

Hyperparameter optimization and model validation

There are several hyperparameters in the GDyNets that need to be optimized, including the architecture of the GCN, the dimension of the feature space, and the lag time τ. We divide the MD trajectory into training, validation, and testing sets. The models are trained with trajectories from the training set, and a VAMP-2 score is computed with trajectories from the validation set. The GCN architecture is optimized according to this validation VAMP-2 score, similar to ref. 18.

The accuracy of Eq. (2) can be evaluated with a Chapman-Kolmogorov (CK) equation,

$$K(n\tau) = K^{n}(\tau) \tag{6}$$

This equation holds if the learned dynamical model is Markovian, in which case the model can predict the long-time dynamics of the system. In general, increasing the dimension of the feature space makes the dynamical model more accurate, but it may result in overfitting when the dimension is very large. Since a higher feature space dimension makes the model harder to interpret and a larger τ resolves fewer dynamical details, we select the smallest feature space dimension and τ that fulfill the CK equation within statistical uncertainty. The resulting model is therefore interpretable and retains as many dynamical details as possible. Further details regarding the effects of feature space dimension and τ can be found in refs. 13,24.
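The CK check in Eq. (6) can be sketched by comparing $K(\tau)^n$, the model prediction, with $K(n\tau)$ estimated directly from the data. In the sketch below the data come from a synthetic three-state Markov chain, so the test passes by construction up to statistical noise; with features learned from MD, the size of the mismatch is the diagnostic. The chain, the lag multiples, and all function names are hypothetical choices for illustration.

```python
import numpy as np


def simulate_markov_chain(P, n_steps, rng):
    cum = np.cumsum(P, axis=1)
    cum[:, -1] = 1.0                       # guard against round-off in the last bin
    u = rng.random(n_steps)
    s = np.zeros(n_steps, dtype=int)
    for t in range(1, n_steps):
        s[t] = np.searchsorted(cum[s[t - 1]], u[t], side="right")
    return s


def estimate_koopman(chi, lag):
    x0, x1 = chi[:-lag], chi[lag:]
    return np.linalg.solve(x0.T @ x0, x0.T @ x1)    # K = C00^{-1} C01 (the 1/n factors cancel)


def ck_test(chi, lag, multiples):
    """Compare K(tau)^n (model prediction) with K(n tau) (estimated directly), Eq. (6)."""
    k_tau = estimate_koopman(chi, lag)
    predicted = [np.linalg.matrix_power(k_tau, n) for n in multiples]
    estimated = [estimate_koopman(chi, n * lag) for n in multiples]
    return predicted, estimated


rng = np.random.default_rng(1)
P = np.array([[0.90, 0.08, 0.02],
              [0.05, 0.90, 0.05],
              [0.02, 0.08, 0.90]])
chi = np.eye(3)[simulate_markov_chain(P, 60_000, rng)]
predicted, estimated = ck_test(chi, lag=1, multiples=[2, 5, 10])
for n, kp, ke in zip([2, 5, 10], predicted, estimated):
    # diagonal entries: probability of remaining in state i after n lag times
    print(n, np.round(np.diag(kp), 3), np.round(np.diag(ke), 3))
```

The CK panels in Figs. 2d, 3f, and 4f plot this kind of comparison over a range of multiples of τ.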

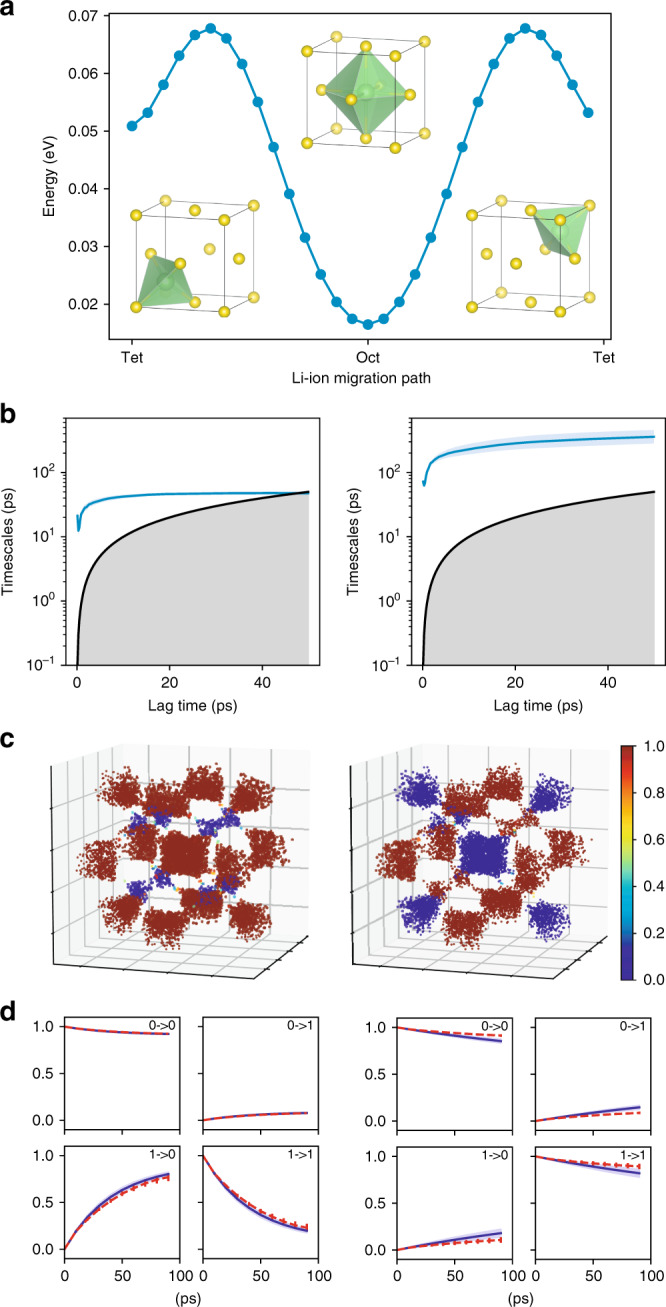

Local and global dynamics in the toy system

To demonstrate the advantage of learning local dynamics in material systems, we use the same input data for a simple model system to compare the dynamics learned by the GDyNet with VAMP loss with those learned by a standard VAMPnet with fully connected neural networks, which learns global dynamics. As shown in Fig. 2a, we generated a 200 ns MD trajectory of a lithium atom moving in a face-centered cubic (FCC) lattice of sulfur atoms at a constant temperature, which describes an important lithium ion transport mechanism in solid-state electrolytes7. There are two types of sites for the lithium atom to occupy in an FCC lattice, tetrahedral sites and octahedral sites, and hopping between the two types of sites should be the only dynamics in this system. As shown in Fig. 2b–d, after training and validation with the first 100 ns of the trajectory, the GDyNet correctly identified the transition between the two sites with a relaxation timescale of 42.3 ps when tested on the second 100 ns, and it performs well in the CK test. In contrast, the standard VAMPnet, which takes the same data as input, learns a global transition with a much longer relaxation timescale of 236 ps, and it performs much worse in the CK test. This is because the model views the four octahedral sites as different sites due to their different spatial locations. As a result, the transitions between these equivalent sites are learned as the slowest global dynamics.

Fig. 2.

A two-state dynamic model learned for a lithium ion in the face-centered cubic lattice. a Structure of the FCC lattice and the relative energies of the tetrahedral and octahedral sites. b–d Comparison between the local dynamics (left) learned with GDyNet and the global dynamics (right) learned with a standard VAMPnet. b Relaxation timescales computed from the Koopman models as a function of the lag time. The black lines are reference lines where the relaxation timescale equals the lag time. c Assignment of the two states in the FCC lattice. The color denotes the probability of being in state 0, which corresponds to the more populated of the two states. d CK test comparing the long-time dynamics predicted by Koopman models at τ = 10 ps (blue) and actual dynamics (red). The shaded areas and error bars in b, d report the 95% confidence interval from five independent chunks obtained by dividing the test data equally

It is theoretically possible to identify the faster local dynamics from a global dynamical model by increasing the dimension of the feature space (Supplementary Fig. 1). However, as the size of the system increases, the number of slower global transitions increases exponentially, making it practically impossible to discover important atomic scale dynamics within a reasonable simulation time. In addition, in this simple system it is possible to design a symmetry-invariant coordinate that accounts for the equivalence of the octahedral sites and of the tetrahedral sites. But in a more complicated multi-component or amorphous material system, it is difficult to design such coordinates that take the complex local atomic environments into account. Finally, it is also possible to reconstruct global dynamics from the local dynamics. Since we know how the four octahedral and eight tetrahedral sites are connected in an FCC lattice, we can construct the 12-dimensional global transition matrix from the 2-dimensional local transition matrix (see Supplementary Note 2 for details). This yields a slowest global relaxation timescale of 531 ps, which is close to the slowest timescale of 528 ps observed in the global dynamical model in Supplementary Fig. 1. Note that the timescale from the two-state global model in Fig. 2 is less accurate since it fails to learn the correct transition. In sum, the built-in invariances in GCN provide a general approach to reduce the complexity of learning atomic dynamics in material systems.

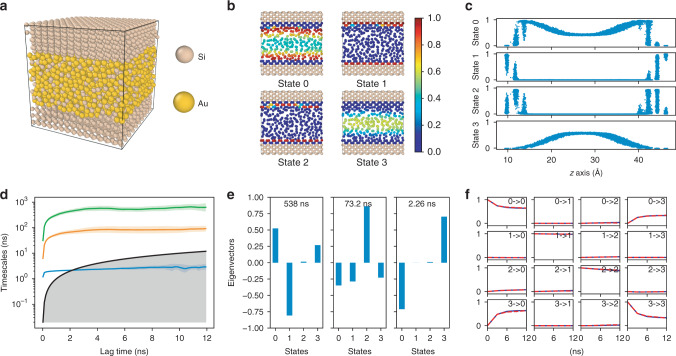

Silicon dynamics at a solid–liquid interface

To evaluate the performance of the GDyNets with VAMP loss for a more complicated system, we study the dynamics of silicon atoms at a binary solid–liquid interface. Understanding the dynamics at interfaces is notoriously difficult due to the complex local structures formed during phase transitions25,26. As shown in Fig. 3a, an equilibrium system made of two crystalline Si {110} surfaces and a liquid Si–Au solution is constructed at the eutectic point (629 K, 23.4% Si27) and simulated for 25 ns using MD. We train and validate a four-state model using the first 12.5 ns trajectory, and use it to identify the dynamics of Si atoms in the last 12.5 ns trajectory. Note that we only use the Si atoms in the liquid phase and the first two layers of the solid {110} surfaces as the target atoms (Fig. 3b). This is because the Koopman models are optimized for finding the slowest transition in the system, and including additional solid Si atoms will result in a model that learns the slower Si hopping in the solid phase which is not our focus.

Fig. 3.

A four-state dynamical model learned for silicon atoms at a solid–liquid interface. a Structure of the silicon-gold two-phase system. b Cross section of the system, where only silicon atoms are shown and color-coded with the probability of being in each state. c The distribution of silicon atoms in each state as a function of the z coordinate. d Relaxation timescales computed from the Koopman models as a function of the lag time. The black lines are reference lines where the relaxation timescale equals the lag time. e Eigenvectors projected to each state for the three relaxations of Koopman models at τ = 3 ns. f CK test comparing the long-time dynamics predicted by Koopman models at τ = 3 ns (blue) and actual dynamics (red). The shaded areas and error bars in d, f report the 95% confidence interval from five sets of Si atoms obtained by randomly dividing the target atoms in the test data

In Fig. 3b, c, the model identified four states that are crucial for the Si dynamics at the solid–liquid interface – liquid Si at the interface (state 0), solid Si (state 1), solid Si at the interface (state 2), and liquid Si (state 3). These states provide a more detailed description of the solid–liquid interface structure than conventional methods. In Supplementary Fig. 2, we compare our results with the distribution of the q3 order parameter of the Si atoms in the system, which measures how much a site deviates from a diamond-like structure and is often used for studying Si interfaces28. We learn from the comparison that (1) our method successfully identifies the bulk liquid and solid states, and learns additional interface states that cannot be obtained from q3; (2) the states learned by our method are more robust due to access to dynamical information, while q3 can be affected by the accidental ordered structures in the liquid phase; (3) q3 is system specific and only works for diamond-like structures, but the GDyNets can potentially be applied to any material given the MD data.

In addition, important dynamical processes at the solid–liquid interface can be learned with the model. Remarkably, the model identified the relaxation process of the solid–liquid transition with a timescale of 538 ns (Fig. 3d, e), which is more than an order of magnitude longer than the 12.5 ns trajectory. This is possible because the large number of Si atoms in the material system provides an ensemble of independent trajectories that enables the identification of rare events29–31. The other two relaxation processes correspond to the transitions of solid Si atoms into/out of the interface (73.2 ns) and of liquid Si atoms into/out of the interface (2.26 ns). These processes are difficult to obtain with conventional methods due to the complex structures at solid–liquid interfaces, and the results are consistent with the expectation that the solid relaxation is significantly slower than the liquid relaxation. Finally, the model performs excellently in the CK test on predicting the long-time dynamics.

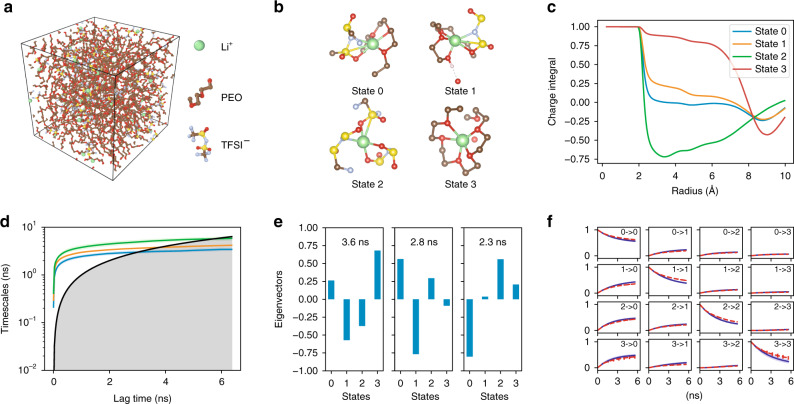

Lithium ion dynamics in polymer electrolytes

Finally, we apply GDyNets with VAMP loss to study the dynamics of lithium ions (Li-ions) in solid polymer electrolytes (SPEs), an amorphous material system composed of multiple chemical species. SPEs are candidates for next-generation battery technology due to their safety, stability, and low manufacturing cost, but they suffer from low Li-ion conductivity compared with liquid electrolytes32,33. Understanding the key dynamics that affect the transport of Li-ions is important to the improvement of Li-ion conductivity in SPEs.

We focus on a state-of-the-art33 SPE system, a mixture of poly(ethylene oxide) (PEO) and lithium bis-trifluoromethyl sulfonimide (LiTFSI) with Li/EO = 0.05 and a degree of polymerization of 50, as shown in Fig. 4a. Five independent 80 ns trajectories are generated to model Li-ion transport at 363 K, following the same approach as described in ref. 67. We train a four-state GDyNet with one of the trajectories and use the model to identify the dynamics of Li-ions in the remaining four trajectories. The model identified four different solvation environments, i.e. states, for the Li-ions in the SPE. In Fig. 4b, the state 0 Li-ion has a population of 50.6 ± 0.8%, and it is coordinated by a PEO chain on one side and a TFSI anion on the other side. State 1 has a structure similar to state 0 and a population of 27.3 ± 0.4%, but the Li-ion is coordinated by a hydroxyl end group on the PEO side rather than by an ether oxygen. In state 2, which has a population of 15.1 ± 0.4%, the Li-ion is coordinated entirely by TFSI anions. Finally, the state 3 Li-ion is coordinated by PEO chains, with a population of 7.0 ± 0.9%. Note that the structures in Fig. 4b only show a representative configuration for each state. We compute the element-wise radial distribution function (RDF) for each state in Supplementary Fig. 3 to show the average configurations, which are consistent with the above description. We also analyze the total charge carried by the Li-ions in each state, considering their solvation environments, in Fig. 4c (see Supplementary Note 3 and Supplementary Table 1 for details). Interestingly, both state 0 and state 1 carry almost zero total charge in their first solvation shell due to the one TFSI anion in their solvation environments.

Fig. 4.

A four-state dynamical model learned for lithium ions in a PEO/LiTFSI polymer electrolyte. a Structure of the PEO/LiTFSI polymer electrolyte. b Representative configurations of the four Li-ion states learned by the dynamical model. c Charge integral of each state around a Li-ion as a function of radius. d Relaxation timescales computed from the Koopman models as a function of the lag time. The black lines are reference lines where the relaxation timescale equals the lag time. e Eigenvectors projected to each state for the three relaxations of Koopman models at τ = 0.8 ns. f CK test comparing the long-time dynamics predicted by Koopman models at τ = 0.8 ns (blue) and actual dynamics (red). The shaded areas and error bars in d, f report the 95% confidence interval from four independent trajectories in the test data

We further study the transitions between the four Li-ion states. Three relaxation processes are identified in the dynamical model, as shown in Fig. 4d, e. By analyzing the eigenvectors, we learn that the slowest relaxation is a process involving the transport of a Li-ion into and out of a PEO-coordinated environment. The second slowest relaxation happens mainly between state 0 and state 1, corresponding to a movement of the hydroxyl end group. The transitions from state 0 to states 2 and 3 constitute the last relaxation process, as state 0 can be thought of as an intermediate state between state 2 and state 3. The model performs well in CK tests (Fig. 4f). Relaxation processes in the PEO/LiTFSI systems have been extensively studied experimentally34,35, but it is difficult to pinpoint the exact atomic scale dynamics related to these relaxations. The dynamical model learned by GDyNet provides additional insights into Li-ion transport in polymer electrolytes.

Implications to lithium ion conduction

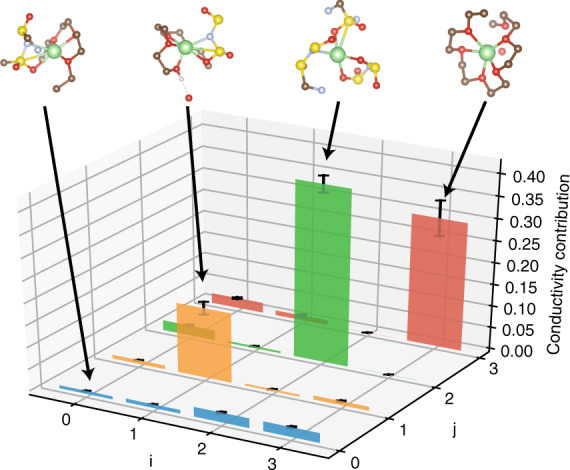

The state configurations and dynamical model allow us to further quantify the transitions that are responsible for Li-ion conduction. In Fig. 5, we compute the contribution from each state transition to the Li-ion conduction using the Koopman model at τ = 0.8 ns. First, we learn that the majority of conduction results from transitions within the same state (i → i). This is because the transport of Li-ions in PEO is strongly coupled with the segmental motion of the polymer chains8,36, in contrast to the hopping mechanism in inorganic solid electrolytes37. In addition, due to the low charge carried by state 0 and state 1, the majority of charge conduction results from the diffusion of states 2 and 3, despite their relatively low populations. Interestingly, the diffusion of state 2, a negatively charged species, accounts for ~40% of the Li-ion conduction. This provides an atomic scale explanation for the recently observed negative transference number in PEO/LiTFSI systems at high salt concentration38.

Fig. 5.

Contribution from each transition to lithium ion conduction. Each bar denotes the percentage that the transition from state i to state j contributes to the overall lithium ion conduction. The error bars report the 95% confidence interval from four independent trajectories in test data

Discussion

We have developed a general approach, GDyNets, to understand the atomic scale dynamics in material systems. Despite being widely used in biophysics31, fluid dynamics39, and kinetic modeling of chemical reactions40–42, Koopman models (or Markov state models31 and master equation methods43,44) have not been used to learn atomic scale dynamics in materials from MD simulations, except for a few examples in understanding solvent dynamics45–47. Our approach also differs from several other unsupervised learning methods48–50 by directly learning a linear Koopman model from MD data. Many crucial processes that affect the performance of materials involve the local dynamics of atoms or small molecules, like the dynamics of lithium ions in battery electrolytes51,52, the transport of water and salt ions in water desalination membranes53,54, and the adsorption of gas molecules in metal organic frameworks55,56, among many other examples. With the improvement of computational power and the continued increase in the use of molecular dynamics to study materials, this work could have broad applicability as a general framework for understanding atomic scale dynamics from MD trajectory data.

Compared with the Koopman models previously used in biophysics and fluid dynamics, the introduction of graph convolutional neural networks enables parameter sharing between the atoms and an encoding of local environments that is invariant to permutation, rotation, and reflection. This symmetry facilitates the identification of similar local environments throughout the materials, which allows the learning of local dynamics instead of exponentially more complicated global dynamics. In addition, the method can be readily extended to learn global dynamics with a global pooling function18. However, a hierarchical pooling function may be needed to directly learn the global dynamics of large biological systems including thousands of atoms. It is also possible to represent the local environments using other symmetry functions such as smooth overlap of atomic positions (SOAP)57 or social permutation invariant (SPRINT) coordinates58. By adding a few neural network layers, a similar architecture can be designed to learn the local dynamics of atoms. However, these built-in invariances may also cause the Koopman model to ignore dynamics between symmetrically equivalent structures that might be important to material performance. One simple example is the flip of an ammonia molecule: the two states are mirror images of each other, so the GCN cannot differentiate them by design. This can potentially be resolved by partially breaking the symmetry of the GCN based on the symmetry of the material system.

The graph dynamical networks can be further improved by incorporating ideas from both Koopman models and graph neural networks. For instance, auto-encoder architectures12,59,60 and deep generative models61 have begun to enable the direct generation of future structures in configuration space. Our method currently lacks a generative component, but this could potentially be achieved with a proper graph decoder62,63. Furthermore, transfer learning on graph embeddings may reduce the number of MD trajectories needed for learning the dynamics64,65.

In summary, graph dynamical networks present a general approach for understanding the atomic scale dynamics in materials. With a toy system of lithium ion transport in a face-centered cubic lattice, we demonstrate that learning the local dynamics of atoms can be exponentially easier than learning global dynamics in material systems with representative local structures. The dynamics learned for two more complicated systems, solid–liquid interfaces and solid polymer electrolytes, indicate the potential of applying the method to a wide range of material systems to understand atomic dynamics that are crucial to their performance.

Methods

Construction of the graphs from trajectory

A separate graph is constructed from the configuration at each time step. Each atom in the simulation box is represented by a node i whose embedding $v_i$ is initialized randomly according to the element type. Edges connect each atom to its M nearest neighbors, and the embedding $u_{(i,j)}$ of each edge is calculated by expanding the interatomic distance in a basis of Gaussians,

$$u_{(i,j)}[t] = \exp\left(-\frac{(d_{(i,j)} - \mu_t)^2}{\sigma^2}\right) \tag{7}$$

where $\mu_t = t \cdot 0.2$ Å for $t = 0, 1, \ldots, K$, $\sigma = 0.2$ Å, and $d_{(i,j)}$ denotes the distance between i and j considering the periodic boundary conditions. The number of nearest neighbors M is 12, 20, and 20 for the toy system, the Si–Au binary system, and the PEO/LiTFSI system, respectively.
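The Gaussian distance expansion of Eq. (7) is a one-liner in NumPy. The sketch below uses the stated spacing and width of 0.2 Å. The number of centers (K + 1) is not given in the text, so `n_centers` is a free parameter here, and the 26 centers spanning 0 to 5 Å are an arbitrary illustrative choice; `gaussian_expand` is a hypothetical name.

```python
import numpy as np


def gaussian_expand(distances, n_centers, spacing=0.2, sigma=0.2):
    """Expand distances (Å) into Gaussian features exp(-(d - mu_t)^2 / sigma^2), Eq. (7).

    Centers are mu_t = t * spacing for t = 0, ..., n_centers - 1; the output gains
    one trailing axis of length n_centers.
    """
    mu = spacing * np.arange(n_centers)
    d = np.asarray(distances, dtype=float)[..., None]
    return np.exp(-((d - mu) ** 2) / sigma ** 2)


u = gaussian_expand(1.0, n_centers=26)                          # centers 0.0, 0.2, ..., 5.0 Å
edge_feats = gaussian_expand(np.full((4, 12), 2.5), n_centers=26)  # (N, M) distances -> (N, M, 26)
```

A distance of 1.0 Å activates the center at 1.0 Å fully and its 0.2 Å neighbors at $e^{-1}$, giving a smooth, fixed-length edge feature that a neural network can consume.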

Graph convolutional neural network architecture details

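The convolution function we employed in this work is similar to those in refs. 18,22 but features an attention layer66. For each node i, we first concatenate the neighbor vectors from the last iteration, $z_{(i,j)}^{(t-1)} = v_i^{(t-1)} \oplus v_j^{(t-1)} \oplus u_{(i,j)}$, where ⊕ denotes concatenation, and then compute the attention coefficient of each neighbor,

$$\alpha_{ij} = \frac{\exp\left(z_{(i,j)}^{(t-1)} W_a^{(t-1)} + b_a^{(t-1)}\right)}{\sum_{j'} \exp\left(z_{(i,j')}^{(t-1)} W_a^{(t-1)} + b_a^{(t-1)}\right)} \tag{8}$$

where $W_a^{(t-1)}$ and $b_a^{(t-1)}$ denote the weights and biases of the attention layer, and the output $\alpha_{ij}$ is a scalar between 0 and 1. Finally, we compute the embedding of node i by,

$$v_i^{(t)} = v_i^{(t-1)} + \sum_{j} \alpha_{ij}\, g\left(z_{(i,j)}^{(t-1)} W_n^{(t-1)} + b_n^{(t-1)}\right) \tag{9}$$

where g denotes a non-linear ReLU activation function, and $W_n^{(t-1)}$ and $b_n^{(t-1)}$ denote the weights and biases in the network.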
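One attention-weighted convolution step can be sketched in NumPy as below. It follows the form of Eqs. (8) and (9) written here: a Softmax over each node's neighbors and a residual ReLU update. It is not the authors' implementation, and the shapes, random weights, and the name `attention_conv` are hypothetical. Because the update sums over neighbors with normalized weights, the result does not depend on the order in which the neighbors are listed.

```python
import numpy as np


def attention_conv(v, u, nbr_idx, W_a, b_a, W_n, b_n):
    """One attention-weighted graph convolution step in the form of Eqs. (8)-(9).

    v: (N, d) node embeddings v_i^(t-1); u: (N, M, e) edge embeddings u_(i,j);
    nbr_idx: (N, M) indices j of the M neighbors of each node i.
    W_a: (2d + e, 1), b_a: (1,), W_n: (2d + e, d), b_n: (d,).
    """
    m = nbr_idx.shape[1]
    z = np.concatenate([np.repeat(v[:, None, :], m, axis=1),   # v_i
                        v[nbr_idx],                              # v_j
                        u], axis=-1)                             # u_(i,j) -> z: (N, M, 2d + e)
    logits = (z @ W_a + b_a)[..., 0]                             # (N, M), one score per edge
    logits = logits - logits.max(axis=1, keepdims=True)
    alpha = np.exp(logits)
    alpha /= alpha.sum(axis=1, keepdims=True)                    # softmax over the neighbors of i
    messages = np.maximum(0.0, z @ W_n + b_n)                    # g = ReLU, (N, M, d)
    return v + np.sum(alpha[..., None] * messages, axis=1)       # residual update of v_i


rng = np.random.default_rng(0)
N, M, d, e = 5, 4, 8, 6
v = rng.normal(size=(N, d))
u = rng.random(size=(N, M, e))
nbr_idx = rng.integers(0, N, size=(N, M))
W_a, b_a = rng.normal(size=(2 * d + e, 1)), np.zeros(1)
W_n, b_n = rng.normal(size=(2 * d + e, d)), np.zeros(d)
v_new = attention_conv(v, u, nbr_idx, W_a, b_a, W_n, b_n)
```

The residual form means that, if the message weights are zero, the embeddings pass through unchanged. This is one reason such updates can be stacked K times without the embeddings collapsing.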

The pooling function computes the average of the embeddings of each atom for the set of target atoms,

$$v_{[l]} = \frac{1}{k}\sum_{m=1}^{k} v_{[l,m]}^{(K)} \tag{10}$$

Determination of the relaxation timescales

The relaxation timescales represent the characteristic timescales implied by the transition matrix K(τ), where τ denotes the lag time of the transition matrix. By performing an eigenvalue decomposition of K(τ), we can compute the relaxation timescales as a function of lag time by,

$$t_i(\tau) = -\frac{\tau}{\ln\lvert\lambda_i(\tau)\rvert} \tag{11}$$

where $\lambda_i(\tau)$ denotes the ith eigenvalue of the transition matrix K. Note that the largest eigenvalue is always 1, corresponding to an infinite relaxation timescale and the equilibrium distribution. The finite $t_i(\tau)$ are plotted in Figs. 2b, 3d, and 4d for each material system as a function of τ by performing this computation using the corresponding K(τ). If the dynamics of the system is Markovian, i.e. Eq. (6) holds, one can prove that the relaxation timescales $t_i(\tau)$ will be constant for any τ13,24. Therefore, we select from Figs. 2b, 3d, and 4d the smallest τ* at which the timescales have converged, to obtain a dynamical model that is Markovian and contains the most dynamical details. We then compute the relaxation timescales using this τ* for each material system, and these timescales remain approximately constant for any τ > τ*.
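Eq. (11) can be evaluated directly from the eigenvalues of K(τ). In the sketch below, the 2 × 2 matrix and the lag of 10 (in, say, ps) are arbitrary illustrative values, and `relaxation_timescales` is a hypothetical name. The second call demonstrates the Markovian property used above: for a Markovian model, K(2τ) = K(τ)², so the implied timescale does not change with the lag time.

```python
import numpy as np


def relaxation_timescales(K, lag):
    """Implied relaxation timescales t_i = -lag / ln|lambda_i|, Eq. (11), slowest first.

    The eigenvalue of largest magnitude (lambda = 1, the stationary process) is
    dropped because it corresponds to an infinite timescale.
    """
    mags = np.sort(np.abs(np.linalg.eigvals(K)))[::-1]
    return -lag / np.log(mags[1:])


K = np.array([[0.9, 0.1],
              [0.1, 0.9]])                      # eigenvalues 1.0 and 0.8
t_10 = relaxation_timescales(K, lag=10.0)       # lag of 10 (e.g. ps)
t_20 = relaxation_timescales(K @ K, lag=20.0)   # a Markovian model at twice the lag time
```

Both calls return the same timescale, $-10/\ln 0.8 \approx 44.8$. With models learned from data, the lag at which the curves flatten in this way is what identifies τ*.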

State-weighted radial distribution function

The RDF describes how particle density varies as a function of distance from a reference particle. The RDF is usually determined by counting the neighbor atoms at different distances over MD trajectories. We calculate the RDF of each state by weighting the counting process according to the probability of the reference particle being in state i,

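$$g_i(r) = \frac{1}{\rho_i}\,\frac{\sum_{t}\sum_{A} p_i(t)\,\delta\left(r - r_A(t)\right)}{4\pi r^2 \sum_{t} p_i(t)} \tag{12}$$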

where rA denotes the distance between atom A and the reference particle, pi denotes the probability of the reference particle being in state i, and ρi denotes the average density of state i.
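In practice such a state-weighted RDF is estimated from a histogram in which each neighbor count is weighted by pi. The sketch below assumes that normalization (weighted counts divided by the summed weights, the shell volume, and ρi); the function name `state_weighted_rdf` and the input layout are hypothetical. Several reference particles can be handled by treating every (configuration, reference particle) pair as one entry of `frames` and `p_state`.

```python
import math


def state_weighted_rdf(frames, p_state, edges, rho_i):
    """State-weighted RDF from histogram counts (sketch).

    frames  : for each sampled configuration, the distances r_A (Å) from the reference particle
    p_state : for each configuration, the probability p_i that the reference particle is in state i
    edges   : increasing radial bin edges (Å)
    rho_i   : average number density (Å^-3) used to normalize state i
    """
    n_bins = len(edges) - 1
    weighted_counts = [0.0] * n_bins
    for distances, p in zip(frames, p_state):
        for r in distances:
            for b in range(n_bins):
                if edges[b] <= r < edges[b + 1]:
                    weighted_counts[b] += p  # each neighbor counts with weight p_i
                    break
    total_weight = sum(p_state)
    if total_weight == 0.0:
        return [0.0] * n_bins
    rdf = []
    for b in range(n_bins):
        shell_volume = 4.0 / 3.0 * math.pi * (edges[b + 1] ** 3 - edges[b] ** 3)
        rdf.append(weighted_counts[b] / (total_weight * shell_volume * rho_i))
    return rdf


# Hypothetical input: two configurations, the second with zero probability of state i.
print(state_weighted_rdf([[1.5], [2.5]], [1.0, 0.0], [1.0, 2.0, 3.0], rho_i=1.0))
```

Configurations in which the reference particle is unlikely to be in state i contribute little, so the neighbor at 2.5 Å in the second configuration leaves the second bin empty.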

Analysis of Li-ion conduction

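We first compute the expected mean-squared displacement of each transition at different t using Bayes' rule,

$$\langle d^2_{i\to j}(t)\rangle = \frac{\sum_{t'} p_i(t')\,p_j(t'+t)\,d^2(t', t'+t)}{\sum_{t'} p_i(t')\,p_j(t'+t)} \tag{13}$$

where pi(t) is the probability of state i at time t, and d²(t′, t′ + t) is the mean-squared displacement between t′ and t′ + t. Then, the diffusion coefficient of each transition Di→j(τ) at the lag time τ can be calculated by

$$D_{i\to j}(\tau) = \frac{\langle d^2_{i\to j}(\tau)\rangle}{6\tau} \tag{14}$$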

which is shown in Supplementary Table 2.
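The Bayes-weighted displacement and the per-transition diffusion coefficient can be sketched for a single particle as follows. The sketch assumes unwrapped coordinates, frames sampled at a fixed interval `dt`, and the three-dimensional Einstein relation D = ⟨d²⟩/(6τ); the function names are hypothetical, and averaging over all Li ions is left out for brevity.

```python
def transition_msd(positions, p_i, p_j, lag):
    """Bayes-weighted MSD of i -> j transitions over `lag` frames (one particle, unwrapped coordinates)."""
    num = den = 0.0
    for t0 in range(len(positions) - lag):
        weight = p_i[t0] * p_j[t0 + lag]  # probability of being in i at t0 and in j at t0 + lag
        d2 = sum((b - a) ** 2 for a, b in zip(positions[t0], positions[t0 + lag]))
        num += weight * d2
        den += weight
    return num / den if den > 0.0 else float("nan")


def transition_diffusion(positions, p_i, p_j, lag, dt):
    """Einstein relation in three dimensions: D_{i->j} = <d^2_{i->j}(tau)> / (6 tau), tau = lag * dt."""
    return transition_msd(positions, p_i, p_j, lag) / (6.0 * lag * dt)


# Hypothetical trajectory: a particle moving 1 Å per frame along x, always in state i = j.
traj = [(float(t), 0.0, 0.0) for t in range(4)]
ones = [1.0] * 4
print(transition_msd(traj, ones, ones, lag=2), transition_diffusion(traj, ones, ones, lag=2, dt=1.0))
```

With positions in Å and `dt` in ps, the resulting coefficient is in Å²/ps.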

Finally, we compute the contribution of each transition to Li-ion conduction with the Koopman matrix K(τ) using the cluster Nernst–Einstein equation67,

$$\sigma_{i\to j} = \frac{e^2}{V k_B T}\, N_{\mathrm{Li}}\, \pi_i\, K_{ij}(\tau)\, z_{ij}^2\, D_{i\to j}(\tau) \tag{15}$$

where e is the elementary charge, kB is the Boltzmann constant, V and T are the volume and temperature of the system, NLi is the number of Li ions, πi is the stationary population of state i, and zij is the averaged charge of states i and j. The percentage contribution is computed by

$$P_{i\to j} = \frac{\sigma_{i\to j}}{\sum_{k,l}\sigma_{k\to l}} \times 100\% \tag{16}$$
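The bookkeeping behind the per-transition contributions can be sketched as below. We assume the form σi→j = e²/(V kB T) · NLi · πi · Kij(τ) · zij² · Di→j(τ), i.e. the Nernst–Einstein expression applied to the fraction πi Kij of Li ions undergoing each transition; this form, the function names, and all numerical inputs are illustrative assumptions. SI units are used throughout (D in m²/s, V in m³), so σ is in S/m.

```python
E_CHARGE = 1.602176634e-19  # elementary charge (C), exact SI value
K_B = 1.380649e-23  # Boltzmann constant (J/K), exact SI value


def transition_conductivities(pi, K, z, D, n_li, volume, temperature):
    """sigma[i][j] = e^2 / (V k_B T) * N_Li * pi_i * K_ij * z_ij^2 * D_ij (assumed form, SI units)."""
    prefactor = E_CHARGE ** 2 / (volume * K_B * temperature)
    n = len(pi)
    return [
        [prefactor * n_li * pi[i] * K[i][j] * z[i][j] ** 2 * D[i][j] for j in range(n)]
        for i in range(n)
    ]


def percentage_contributions(sigma):
    """Share of each transition in the summed conductivity, in percent."""
    total = sum(sum(row) for row in sigma)
    return [[100.0 * s / total for s in row] for row in sigma]


# Hypothetical 2-state example; D in m^2/s, V in m^3, T in K.
pi = [0.6, 0.4]
K = [[0.90, 0.10], [0.15, 0.85]]
z = [[1.0, 0.5], [0.5, 0.0]]
D = [[1e-11, 2e-11], [2e-11, 5e-12]]
sigma = transition_conductivities(pi, K, z, D, n_li=10, volume=1e-26, temperature=353.0)
print(percentage_contributions(sigma))
```

Because the charge enters squared, a transition between neutral clusters (zij = 0) contributes nothing, however fast its diffusion.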

Lithium diffusion in the FCC lattice toy system

The molecular dynamics simulations were performed using the Large-scale Atomic/Molecular Massively Parallel Simulator (LAMMPS)68, as implemented in the MedeA®69 simulation environment. A purely repulsive interatomic potential in the form of a Born–Mayer term was used to describe the interactions between the Li particles and the S sublattice, while all other interactions (Li–Li and S–S) were ignored. The cubic unit cell contains one Li atom and four S atoms, with a lattice parameter of 6.5 Å, a large value that yields a low migration energy barrier. MD simulations of 200 ns were run in the canonical ensemble (nVT) at a temperature of 64 K, using a timestep of 1 fs, with the S particles frozen. The atomic positions, which constituted the only data provided to the GDyNet and VAMPnet models, were sampled every 0.1 ps. In addition, the energy along the Tet-Oct-Tet migration path was obtained from static simulations by inserting Li particles on a grid.
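A static Tet-Oct-Tet energy scan of the kind described above can be sketched as follows. The Born–Mayer form A·exp(−r/ρ) is standard, but `BM_A`, `BM_RHO`, and `R_CUT` below are hypothetical placeholders (the toy-system parameters are not given here), and the helper names are our own. The Li particle is moved along [111] from the tetrahedral site at (¼,¼,¼) through the octahedral site at (½,½,½) to the tetrahedral site at (¾,¾,¾), and only Li–S repulsion is summed over periodic images of the frozen S sublattice.

```python
import itertools
import math

A_LAT = 6.5  # lattice parameter from the text (Å)
# Fractional coordinates of the four S atoms of the FCC sublattice in the cubic cell.
S_FRAC = [(0.0, 0.0, 0.0), (0.0, 0.5, 0.5), (0.5, 0.0, 0.5), (0.5, 0.5, 0.0)]
BM_A = 1000.0  # Born-Mayer prefactor (eV), hypothetical
BM_RHO = 0.3  # Born-Mayer decay length (Å), hypothetical
R_CUT = 6.0  # cutoff (Å), below A_LAT so images in adjacent cells suffice


def li_energy(frac_point):
    """Born-Mayer repulsion between one Li at frac_point and the periodic S sublattice (eV)."""
    x = [c * A_LAT for c in frac_point]
    energy = 0.0
    for shift in itertools.product((-1, 0, 1), repeat=3):
        for s in S_FRAC:
            r = math.dist(x, [(s[k] + shift[k]) * A_LAT for k in range(3)])
            if r < R_CUT:
                energy += BM_A * math.exp(-r / BM_RHO)
    return energy


def tet_oct_tet_profile(n_points=11):
    """Energies on a straight grid from Tet (1/4,1/4,1/4) via Oct (1/2,1/2,1/2) to Tet (3/4,3/4,3/4)."""
    profile = []
    for i in range(n_points):
        f = 0.25 + 0.5 * i / (n_points - 1)
        profile.append((f, li_energy((f, f, f))))
    return profile


for f, e in tet_oct_tet_profile():
    print(f"{f:.3f}  {e:.4f} eV")
```

Because the S sublattice is centrosymmetric, the profile should be symmetric about the octahedral site, which is a convenient sanity check on any such scan.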

Silicon dynamics at solid–liquid interface

The molecular dynamics simulation for the Si–Au binary system was carried out in LAMMPS68, using the modified embedded-atom method interatomic potential27,28. A sandwich-like initial configuration was created, in which a Si–Au liquid alloy was placed in the middle, in contact with two {110}-oriented crystalline Si thin films. MD simulations of 25 ns were run in the canonical ensemble (nVT) at the eutectic point (629 K, 23.4% Si27), using a timestep of 1 fs. The atomic positions, which constituted the only data provided to the GDyNet model, were sampled every 20 ps.

Scaling of the algorithm

The scaling of the GDyNet algorithm is $\mathcal{O}(NMK)$, where N is the number of atoms in the simulation box, M is the number of neighbors used in graph construction, and K is the depth of the neural network.

Acknowledgements

This work was supported by Toyota Research Institute. Computational support was provided by Google Cloud, the National Energy Research Scientific Computing Center, a DOE Office of Science User Facility supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231, and the Extreme Science and Engineering Discovery Environment, supported by National Science Foundation grant number ACI-1053575.

Author contributions

T.X. developed the software and performed the analysis. A.F.-L. and Y.W. performed the molecular dynamics simulations. T.X., A.F.-L., Y.W., Y.S.H., and J.C.G. contributed to the interpretation of the results. T.X. and J.C.G. conceived the idea and approach presented in this work. All authors contributed to the writing of the paper.

Data availability

The MD simulation trajectories of the toy system, the Si–Au binary system, and the PEO/LiTFSI system are available at 10.24435/materialscloud:2019.0017/v1.

Code availability

GDyNets is implemented using TensorFlow70, and the code for the VAMP loss function is adapted from ref. 13. The code is available from https://github.com/txie-93/gdynet.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information: Nature Communications thanks Stefan Chmiela and other anonymous reviewers for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information accompanies this paper at 10.1038/s41467-019-10663-6.

References

- 1. Etacheri V, Marom R, Elazari R, Salitra G, Aurbach D. Challenges in the development of advanced Li-ion batteries: a review. Energy Environ. Sci. 2011;4:3243–3262.

- 2. Imbrogno J, Belfort G. Membrane desalination: where are we, and what can we learn from fundamentals? Annu. Rev. Chem. Biomol. Eng. 2016;7:29–64. doi: 10.1146/annurev-chembioeng-061114-123202.

- 3. Peighambardoust SJ, Rowshanzamir S, Amjadi M. Review of the proton exchange membranes for fuel cell applications. Int. J. Hydrog. Energy. 2010;35:9349–9384.

- 4. Zheng A, Li S, Liu S-B, Deng F. Acidic properties and structure–activity correlations of solid acid catalysts revealed by solid-state NMR spectroscopy. Acc. Chem. Res. 2016;49:655–663. doi: 10.1021/acs.accounts.6b00007.

- 5. Yu C, et al. Unravelling Li-ion transport from picoseconds to seconds: bulk versus interfaces in an argyrodite Li6PS5Cl–Li2S all-solid-state Li-ion battery. J. Am. Chem. Soc. 2016;138:11192–11201. doi: 10.1021/jacs.6b05066.

- 6. Perakis F, et al. Vibrational spectroscopy and dynamics of water. Chem. Rev. 2016;116:7590–7607. doi: 10.1021/acs.chemrev.5b00640.

- 7. Wang Y, et al. Design principles for solid-state lithium superionic conductors. Nat. Mater. 2015;14:1026. doi: 10.1038/nmat4369.

- 8. Borodin O, Smith GD. Mechanism of ion transport in amorphous poly(ethylene oxide)/LiTFSI from molecular dynamics simulations. Macromolecules. 2006;39:1620–1629.

- 9. Miller TF, III, Wang Z-G, Coates GW, Balsara NP. Designing polymer electrolytes for safe and high capacity rechargeable lithium batteries. Acc. Chem. Res. 2017;50:590–593. doi: 10.1021/acs.accounts.6b00568.

- 10. Getman RB, Bae Y-S, Wilmer CE, Snurr RQ. Review and analysis of molecular simulations of methane, hydrogen, and acetylene storage in metal–organic frameworks. Chem. Rev. 2011;112:703–723. doi: 10.1021/cr200217c.

- 11. Li Q, Dietrich F, Bollt EM, Kevrekidis IG. Extended dynamic mode decomposition with dictionary learning: a data-driven adaptive spectral decomposition of the Koopman operator. Chaos: Interdiscip. J. Nonlinear Sci. 2017;27:103111. doi: 10.1063/1.4993854.

- 12. Lusch B, Kutz JN, Brunton SL. Deep learning for universal linear embeddings of nonlinear dynamics. Nat. Commun. 2018;9:4950. doi: 10.1038/s41467-018-07210-0.

- 13. Mardt A, Pasquali L, Wu H, Noé F. VAMPnets for deep learning of molecular kinetics. Nat. Commun. 2018;9:5. doi: 10.1038/s41467-017-02388-1.

- 14. Duvenaud, D. K. et al. Convolutional networks on graphs for learning molecular fingerprints. In Advances in Neural Information Processing Systems 2224–2232 (2015).

- 15. Kearnes S, McCloskey K, Berndl M, Pande V, Riley P. Molecular graph convolutions: moving beyond fingerprints. J. Comput.-Aided Mol. Des. 2016;30:595–608. doi: 10.1007/s10822-016-9938-8.

- 16. Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry, arXiv preprint arXiv:1704.01212 (2017).

- 17. Schütt KT, Arbabzadah F, Chmiela S, Müller KR, Tkatchenko A. Quantum-chemical insights from deep tensor neural networks. Nat. Commun. 2017;8:13890. doi: 10.1038/ncomms13890.

- 18. Xie T, Grossman JC. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 2018;120:145301. doi: 10.1103/PhysRevLett.120.145301.

- 19. Schütt KT, Sauceda HE, Kindermans P-J, Tkatchenko A, Müller K-R. SchNet – a deep learning architecture for molecules and materials. J. Chem. Phys. 2018;148:241722. doi: 10.1063/1.5019779.

- 20. Zhang L, Han J, Wang H, Car R, Weinan E. Deep potential molecular dynamics: a scalable model with the accuracy of quantum mechanics. Phys. Rev. Lett. 2018;120:143001. doi: 10.1103/PhysRevLett.120.143001.

- 21. Zhou Q, et al. Learning atoms for materials discovery. Proc. Natl Acad. Sci. USA. 2018;115:E6411–E6417. doi: 10.1073/pnas.1801181115.

- 22. Xie T, Grossman JC. Hierarchical visualization of materials space with graph convolutional neural networks. J. Chem. Phys. 2018;149:174111. doi: 10.1063/1.5047803.

- 23. Wu, H. & Noé, F. Variational approach for learning Markov processes from time series data, arXiv preprint arXiv:1707.04659 (2017).

- 24. Koopman BO. Hamiltonian systems and transformation in Hilbert space. Proc. Natl Acad. Sci. USA. 1931;17:315–318. doi: 10.1073/pnas.17.5.315.

- 25. Sastry S, Angell CA. Liquid–liquid phase transition in supercooled silicon. Nat. Mater. 2003;2:739. doi: 10.1038/nmat994.

- 26. Angell CA. Insights into phases of liquid water from study of its unusual glass-forming properties. Science. 2008;319:582–587. doi: 10.1126/science.1131939.

- 27. Ryu S, Cai W. A gold–silicon potential fitted to the binary phase diagram. J. Phys.: Condens. Matter. 2010;22:055401. doi: 10.1088/0953-8984/22/5/055401.

- 28. Wang Y, Santana A, Cai W. Atomistic mechanisms of orientation and temperature dependence in gold-catalyzed silicon growth. J. Appl. Phys. 2017;122:085106.

- 29. Pande VS, Beauchamp K, Bowman GR. Everything you wanted to know about Markov state models but were afraid to ask. Methods. 2010;52:99–105. doi: 10.1016/j.ymeth.2010.06.002.

- 30. Chodera JD, Noé F. Markov state models of biomolecular conformational dynamics. Curr. Opin. Struct. Biol. 2014;25:135–144. doi: 10.1016/j.sbi.2014.04.002.

- 31. Husic BE, Pande VS. Markov state models: from an art to a science. J. Am. Chem. Soc. 2018;140:2386–2396. doi: 10.1021/jacs.7b12191.

- 32. Meyer WH. Polymer electrolytes for lithium-ion batteries. Adv. Mater. 1998;10:439–448. doi: 10.1002/(SICI)1521-4095(199804)10:6<439::AID-ADMA439>3.0.CO;2-I.

- 33. Hallinan DT, Jr, Balsara NP. Polymer electrolytes. Annu. Rev. Mater. Res. 2013;43:503–525.

- 34. Mao G, Perea RF, Howells WS, Price DL, Saboungi M-L. Relaxation in polymer electrolytes on the nanosecond timescale. Nature. 2000;405:163. doi: 10.1038/35012032.

- 35. Do C, et al. Li+ transport in poly(ethylene oxide) based electrolytes: neutron scattering, dielectric spectroscopy, and molecular dynamics simulations. Phys. Rev. Lett. 2013;111:018301. doi: 10.1103/PhysRevLett.111.018301.

- 36. Diddens D, Heuer A, Borodin O. Understanding the lithium transport within a Rouse-based model for a PEO/LiTFSI polymer electrolyte. Macromolecules. 2010;43:2028–2036.

- 37. Bachman JC, et al. Inorganic solid-state electrolytes for lithium batteries: mechanisms and properties governing ion conduction. Chem. Rev. 2015;116:140–162. doi: 10.1021/acs.chemrev.5b00563.

- 38. Pesko DM, et al. Negative transference numbers in poly(ethylene oxide)-based electrolytes. J. Electrochem. Soc. 2017;164:E3569–E3575.

- 39. Mezić I. Analysis of fluid flows via spectral properties of the Koopman operator. Annu. Rev. Fluid Mech. 2013;45:357–378.

- 40. Georgiev GS, Georgieva VT, Plieth W. Markov chain model of electrochemical alloy deposition. Electrochim. Acta. 2005;51:870–876.

- 41. Valor, A., Caleyo, F., Alfonso, L., Velázquez, J. C. & Hallen, J. M. Markov chain models for the stochastic modeling of pitting corrosion. Math. Probl. Eng. 2013 (2013).

- 42. Miller JA, Klippenstein SJ. Master equation methods in gas phase chemical kinetics. J. Phys. Chem. A. 2006;110:10528–10544. doi: 10.1021/jp062693x.

- 43. Buchete N-V, Hummer G. Coarse master equations for peptide folding dynamics. J. Phys. Chem. B. 2008;112:6057–6069. doi: 10.1021/jp0761665.

- 44. Sriraman S, Kevrekidis IG, Hummer G. Coarse master equation from Bayesian analysis of replica molecular dynamics simulations. J. Phys. Chem. B. 2005;109:6479–6484. doi: 10.1021/jp046448u.

- 45. Gu, C. et al. Building Markov state models with solvent dynamics. In BMC Bioinformatics, Vol. 14, S8 (BioMed Central, 2013). doi: 10.1186/1471-2105-14-S2-S8.

- 46. Hamm P. Markov state model of the two-state behaviour of water. J. Chem. Phys. 2016;145:134501. doi: 10.1063/1.4963305.

- 47. Schulz R, et al. Collective hydrogen-bond rearrangement dynamics in liquid water. J. Chem. Phys. 2018;149:244504. doi: 10.1063/1.5054267.

- 48. Cubuk ED, Schoenholz SS, Kaxiras E, Liu AJ. Structural properties of defects in glassy liquids. J. Phys. Chem. B. 2016;120:6139–6146. doi: 10.1021/acs.jpcb.6b02144.

- 49. Nussinov, Z. et al. Inference of hidden structures in complex physical systems by multi-scale clustering. In Information Science for Materials Discovery and Design, 115–138 (Springer International Publishing, 2016). doi: 10.1007/978-3-319-23871-5_6.

- 50. Kahle, L., Musaelian, A., Marzari, N. & Kozinsky, B. Unsupervised landmark analysis for jump detection in molecular dynamics simulations. Phys. Rev. Materials 3, 055404 (2019).

- 51. Funke K. Jump relaxation in solid electrolytes. Prog. Solid State Chem. 1993;22:111–195.

- 52. Xu K. Nonaqueous liquid electrolytes for lithium-based rechargeable batteries. Chem. Rev. 2004;104:4303–4418. doi: 10.1021/cr030203g.

- 53. Corry B. Designing carbon nanotube membranes for efficient water desalination. J. Phys. Chem. B. 2008;112:1427–1434. doi: 10.1021/jp709845u.

- 54. Cohen-Tanugi D, Grossman JC. Water desalination across nanoporous graphene. Nano Lett. 2012;12:3602–3608. doi: 10.1021/nl3012853.

- 55. Rowsell JLC, Spencer EC, Eckert J, Howard JAK, Yaghi OM. Gas adsorption sites in a large-pore metal-organic framework. Science. 2005;309:1350–1354. doi: 10.1126/science.1113247.

- 56. Li J-R, Kuppler RJ, Zhou H-C. Selective gas adsorption and separation in metal–organic frameworks. Chem. Soc. Rev. 2009;38:1477–1504. doi: 10.1039/b802426j.

- 57. Bartók AP, Kondor R, Csányi G. On representing chemical environments. Phys. Rev. B. 2013;87:184115.

- 58. Pietrucci F, Andreoni W. Graph theory meets ab initio molecular dynamics: atomic structures and transformations at the nanoscale. Phys. Rev. Lett. 2011;107:085504. doi: 10.1103/PhysRevLett.107.085504.

- 59. Wehmeyer C, Noé F. Time-lagged autoencoders: deep learning of slow collective variables for molecular kinetics. J. Chem. Phys. 2018;148:241703. doi: 10.1063/1.5011399.

- 60. Ribeiro JML, Bravo P, Wang Y, Tiwary P. Reweighted autoencoded variational Bayes for enhanced sampling (RAVE). J. Chem. Phys. 2018;149:072301. doi: 10.1063/1.5025487.

- 61. Wu, H., Mardt, A., Pasquali, L. & Noé, F. Deep generative Markov state models. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, 3979–3988 (Curran Associates Inc., USA, 2018). http://dl.acm.org/citation.cfm?id=3327144.3327312

- 62. Jin, W., Barzilay, R. & Jaakkola, T. Junction tree variational autoencoder for molecular graph generation, arXiv preprint arXiv:1802.04364 (2018).

- 63. Simonovsky, M. & Komodakis, N. GraphVAE: towards generation of small graphs using variational autoencoders, arXiv preprint arXiv:1802.03480 (2018).

- 64. Sultan, M. M. & Pande, V. S. Transfer learning from Markov models leads to efficient sampling of related systems. J. Phys. Chem. B (2017). doi: 10.1021/acs.jpcb.7b06896.

- 65. Altae-Tran H, Ramsundar B, Pappu AS, Pande V. Low data drug discovery with one-shot learning. ACS Cent. Sci. 2017;3:283–293. doi: 10.1021/acscentsci.6b00367.

- 66. Veličković, P. et al. Graph attention networks, arXiv preprint arXiv:1710.10903 (2017).

- 67. France-Lanord, A. & Grossman, J. C. Correlations from ion pairing and the Nernst–Einstein equation. Phys. Rev. Lett. 122, 136001 (2019).

- 68. Plimpton S. Fast parallel algorithms for short-range molecular dynamics. J. Comput. Phys. 1995;117:1–19.

- 69. MedeA-2.22. Materials Design, Inc., San Diego (2018).

- 70. Abadi, M. et al. TensorFlow: a system for large-scale machine learning. In 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), 265–283 (Savannah, GA, USA, 2016). http://dl.acm.org/citation.cfm?id=3026877.3026899
