Sir,

Publication bias is defined as the failure to publish the results of a study on the basis of the direction or strength of the study findings.[1] This may mean that only studies with statistically significant positive results get published, while statistically nonsignificant or negative studies do not. Among the several reasons for this bias, the most important are rejection (by editors or reviewers), lack of interest in revising the manuscript, competing interests, and lack of motivation to write up a study that has already been conducted.[2] Many researchers do not publish research with negative results because they regard it as failed research, which is not true. If their hypothesis is rejected on the basis of a study with sound methodology, the research has not failed.

Negative results can arise for three reasons: a small sample size with insufficient power, a genuine absence of difference between groups, or more complications or adverse events in the study group. Such manuscripts are often either not sent for further review by the editor or rejected outright by the reviewers.[3] An unpublished study with negative results also represents a considerable loss of money and of the time invested by the researchers and/or the funding body. Clinical trials funded by pharmaceutical companies, at various stages and involving volunteers or patients, that demonstrate adverse events often do not get published in peer-reviewed journals, for obvious reasons.

Systematic reviews and meta-analyses have an important place in modern-day evidence-based clinical practice. Meta-analyses involve statistical analysis of the pooled data from all the available randomised controlled trials. However, because of publication bias, the final analysis may not include negative data, which have either never been published or have been rejected. Therefore, practice guidelines that evolve from the results of systematic reviews and meta-analyses, which are classed as level 1 evidence, have to be taken with a pinch of salt.
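To make the effect of missing negative data concrete, the following Python sketch pools hypothetical effect estimates with the inverse-variance fixed-effect method. This is only one common pooling method, chosen here as an assumption since the letter does not specify one. The function name `pooled_fixed_effect` and the four studies are hypothetical. The sketch pools once with all studies and once with only those that would have reached statistical significance, mimicking selective publication.

```python
import math

def pooled_fixed_effect(effects, std_errors):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]  # more precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    return pooled, math.sqrt(1.0 / total_weight)

# Hypothetical log odds ratios (below 0 favours treatment) with standard errors.
all_studies = [(-0.40, 0.15), (-0.45, 0.20), (0.05, 0.18), (0.10, 0.22)]
# Simulated publication bias: keep only studies significant (two-sided P < 0.05)
# in favour of treatment.
published = [(y, se) for y, se in all_studies if y / se < -1.96]

for label, studies in (("All studies", all_studies), ("Published only", published)):
    effects, ses = zip(*studies)
    estimate, se = pooled_fixed_effect(effects, ses)
    low, high = estimate - 1.96 * se, estimate + 1.96 * se
    print(f"{label}: pooled log OR = {estimate:.3f} (95% CI {low:.3f} to {high:.3f})")
```

With these made-up numbers, dropping the two nonsignificant studies moves the pooled estimate roughly twice as far from zero. This illustrates how a meta-analysis restricted to published trials can overstate a benefit.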

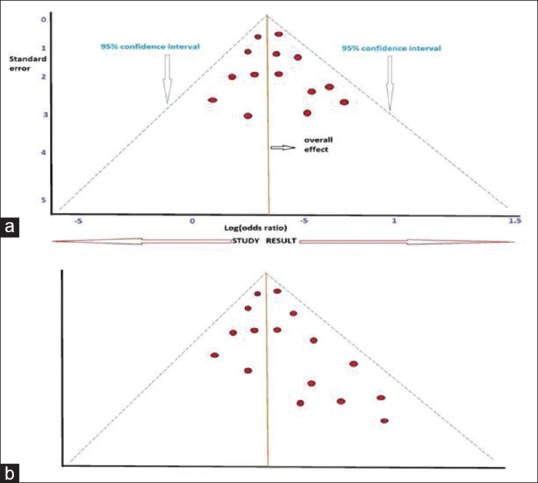

Systematic reviews and meta-analyses use a funnel plot to check for publication bias or systematic heterogeneity among the studies included in the analysis. If the plot has a symmetric, inverted-funnel shape, publication bias is unlikely.[4] If the funnel plot is asymmetric, there is a systematic difference between studies of higher and lower precision [Figure 1a and b]. Egger's regression is a statistical method for quantifying funnel plot asymmetry.[5] Rosenthal's fail-safe number, or “fail-safe N method”, is another way of assessing publication bias.[6] It estimates the number of additional negative (null) studies that would be needed to raise the combined P value of a meta-analysis above 0.05. Although it is a simple number to derive, it depends entirely on the P value rather than on the size of the effect.

Figure 1.

(a) Hypothetical symmetric funnel plot showing no publication bias. The figure also shows where to look for the 95% confidence interval, the overall effect, and the individual study results. (b) An unlabelled, hypothetical asymmetric funnel plot showing publication bias
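The letter does not give formulas for the two measures described above, so the following Python sketch shows them under stated assumptions. For Egger's regression it uses the usual formulation: each study's standard normal deviate (effect / SE) is regressed on its precision (1 / SE), and the intercept is tested with k - 2 degrees of freedom. For Rosenthal's fail-safe N it combines studies with Stouffer's Z and uses a one-tailed z of 1.645 (P = 0.05), assuming the missing studies average Z = 0. The function names and the six studies are hypothetical. No P value is computed for the Egger intercept, because that would need a t-distribution routine (for example, from SciPy) that this standard-library sketch avoids.

```python
import math

def egger_test(effects, std_errors):
    """Egger's regression asymmetry test (ordinary least squares).

    Regresses the standard normal deviate (effect / SE) on precision (1 / SE).
    An intercept far from zero suggests funnel plot asymmetry. Returns
    (intercept, SE of intercept, t statistic); the t statistic has k - 2 df.
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1.0 / se for se in std_errors]                  # precision
    z = [y / se for y, se in zip(effects, std_errors)]   # standard normal deviate
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z)) / sxx
    intercept = z_bar - slope * x_bar
    residuals = [zi - (intercept + slope * xi) for xi, zi in zip(x, z)]
    sigma2 = sum(r ** 2 for r in residuals) / (k - 2)    # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept


def rosenthal_fail_safe_n(z_scores, z_alpha=1.645):
    """Rosenthal's fail-safe N.

    Number of additional null studies (mean Z = 0) that would pull Stouffer's
    combined Z, sum(Z) / sqrt(k + N), down to z_alpha, i.e. raise the
    one-tailed combined P value to 0.05.
    """
    k = len(z_scores)
    return max(0.0, (sum(z_scores) / z_alpha) ** 2 - k)


# Hypothetical data: smaller studies (larger SE) report larger effects.
effects = [0.10, 0.15, 0.30, 0.45, 0.60, 0.80]
std_errors = [0.05, 0.08, 0.12, 0.18, 0.25, 0.35]

intercept, se_b0, t_stat = egger_test(effects, std_errors)
print(f"Egger intercept = {intercept:.2f} (SE {se_b0:.2f}), "
      f"t = {t_stat:.2f}, df = {len(effects) - 2}")

z_scores = [y / se for y, se in zip(effects, std_errors)]
print(f"Fail-safe N = {rosenthal_fail_safe_n(z_scores):.1f}")
```

In this made-up example, the positive intercept reflects the small-study pattern that would make a funnel plot lopsided, as in Figure 1b. The fail-safe N is computed from the Z scores alone, so it says nothing about how large the pooled effect is. That is the P-value dependence noted in the text.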

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement and its flow diagram are mandatory, as they help authors improve the reporting of systematic reviews and meta-analyses. Item 16 of the PRISMA-P (protocol) checklist is titled “Meta-bias(es)”. Under this item, authors need to specify any planned assessment of meta-bias(es), such as publication bias across studies or selective reporting within studies.

(http://www.prisma-statement.org/documents/PRISMA-P-checklist.pdf)

However, this item may be of limited help if few negative trials have been published or reported.

In conclusion, the editorial board should insist that authors submit negative results as well. Such manuscripts should be considered for publication if their methods, statistical analysis, and discussion are found to be appropriate.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

REFERENCES

1. DeVito NJ, Goldacre B. Catalogue of bias: Publication bias. BMJ Evid Based Med. 2018. doi: 10.1136/bmjebm-2018-111107.

2. Mlinarić A, Horvat M, Šupak Smolčić V. Dealing with the positive publication bias: Why you should really publish your negative results. Biochem Med (Zagreb). 2017;27:030201. doi: 10.11613/BM.2017.030201.

3. Joober R, Schmitz N, Annable L, Boksa P. Publication bias: What are the challenges and can they be overcome? J Psychiatry Neurosci. 2012;37:149–52. doi: 10.1503/jpn.120065.

4. Hedin RJ, Umberham BA, Detweiler BN, Kollmorgen L, Vassar M. Publication bias and nonreporting found in majority of systematic reviews and meta-analyses in anesthesiology journals. Anesth Analg. 2016;123:1018–25. doi: 10.1213/ANE.0000000000001452.

5. Furuya-Kanamori L, Barendregt JJ, Doi SAR. A new improved graphical and quantitative method for detecting bias in meta-analysis. Int J Evid Based Healthc. 2018;16:195–203. doi: 10.1097/XEB.0000000000000141.

6. Fragkos KC, Tsagris M, Frangos CC. Publication bias in meta-analysis: Confidence intervals for Rosenthal's fail-safe number. Int Sch Res Notices. 2014;2014:825383. doi: 10.1155/2014/825383.