Abstract

A fundamental problem faced by the auditory system of humans and other vertebrates is the segregation of concurrent vocal signals. To discriminate between individual vocalizations, the auditory system must extract information about each signal from the single temporal waveform that results from the summation of the simultaneous acoustic signals. Here, we present the first report of midbrain coding of simultaneous acoustic signals in a vocal species, the plainfin midshipman fish, that routinely encounters concurrent vocalizations. During the breeding season, nesting males congregate and produce long-duration, multiharmonic mate calls that overlap, producing beat waveforms. Neurophysiological responses to two simultaneous tones near the fundamental frequencies of natural calls reveal that midbrain units temporally code the difference frequency (dF). Many neurons are tuned to a specific dF; their selectivity overlaps the range of dFs for naturally occurring acoustic beats. Beats and amplitude-modulated (AM) signals are also coded differently by most units. Although some neurons exhibit differential tuning for beat dFs and the modulation frequencies (modFs) of AM signals, others exhibit similar temporal selectivity but differ in their degree of synchronization to dFs and modFs. The extraction of dF information, together with other auditory cues, could enable the detection and segregation of concurrent vocalizations, whereas differential responses to beats and AM signals could permit discrimination of beats from other AM-like signals produced by midshipman. A central code of beat dFs may be a general vertebrate mechanism used for coding concurrent acoustic signals, including human vowels.

Keywords: temporal coding, periodicity coding, auditory midbrain, hearing, acoustic beats, AM signals, concurrent vocalizations, vowels, acoustic communication

The temporal waveforms of concurrent vocalizations from independent senders summate into a single resultant signal at a listener’s ear. To identify individual vocalizations, a listener’s auditory system must first separate information about each signal using cues contained in this single acoustic waveform. Psychophysical experiments in humans have demonstrated that listeners can segregate two concurrent multiharmonic signals, namely vowels, that differ in fundamental frequency (F0) (Brokx and Nooteboom, 1982; Chalikia and Bregman, 1989). Several models propose mechanisms for segregating two concurrent vowels with small differences in F0 (<10 Hz) (Assman and Summerfield, 1990; Meddis and Hewitt, 1992; Culling and Darwin, 1994). However, few studies have examined the coding of concurrent acoustic signals in the auditory periphery (Palmer, 1990; Cariani and Delgutte, 1996b; McKibben et al., 1993) and cochlear nucleus (Keilson et al., 1995). Hence, the central computations used to segregate concurrent vocalizations in vertebrates remain largely unknown.

To identify the neural mechanisms that permit the segregation of concurrent multiharmonic vocal signals, the coding of simultaneous signals must first be assessed. Here, we report the first neurophysiological investigation of the central coding of concurrent acoustic signals (beats) in the auditory midbrain of a vertebrate that routinely encounters concurrent vocal signals, the plainfin midshipman fish (Porichthys notatus). Reproductively active males generate long-duration (>1 min) advertisement signals, “hums,” that attract females to their nests (Ibara et al., 1983; Brantley and Bass, 1994; McKibben et al., 1995). Hums are multiharmonic signals with an F0 of ∼100 Hz (Fig. 1A). During the breeding season, males cluster and hum simultaneously (Ibara et al., 1983; DeMartini, 1988; Brantley and Bass, 1994; Bass, 1996). In behavioral phonotaxis experiments in which two concurrent tones near the F0s of natural hums were played from separate underwater loudspeakers, individual midshipman localized and approached the tone from a single speaker (McKibben et al., 1995). This suggests that mechanisms within the midshipman auditory system enable the segregation of concurrent hums.

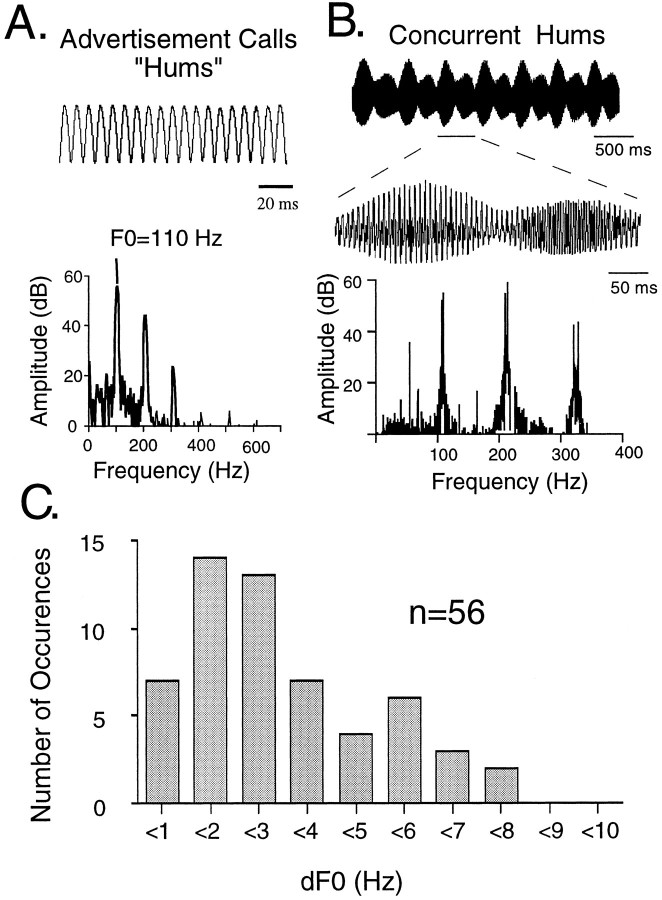

Fig. 1.

Acoustic signals of plainfin midshipman fish. A, An example of the temporal waveform and power spectrum of a hum produced by a nesting male. B, An example of the temporal waveform and power spectrum of two field-recorded simultaneous hums with different F0s (F01 = 117.8 Hz and F02 = 120.2 Hz; dF0 = 2.4 Hz). C, Distribution of the dFs between the fundamental frequencies (dF0) of concurrent hums recorded in natural habitats. The recorded F0s ranged from 96 to 126 Hz. Because F0 varies directly with water temperature (Bass and Baker, 1990; Brantley and Bass, 1994), measuring dF between simultaneously recorded males ensured that both animals were at the same water temperature.

Concurrent hums with small differences in F0 interfere to produce beat waveforms characterized by amplitude and phase modulations at the difference frequencies (dFs) of their harmonic components (Fig. 1B,C). These acoustic beats are analogous to the electric beats of weakly electric fish, whose central neurons encode the dFs of interacting electric organ discharges (Heiligenberg, 1991). Here, in midshipman, we demonstrate that midbrain units temporally code the dF of acoustic beats composed of two tones near the F0s, with dFs comparable to those of natural beats. Comparisons of responses to beats and amplitude-modulated (AM) signals indicate that approximately one-third of the units exhibit differential tuning for beat dF and the modulation frequency of AM signals, whereas most others differ in their degree of synchronization to the two signals. Interestingly, males also produce trains of short-duration (50–200 msec), AM-like signals called “grunts” in agonistic encounters with other males (Brantley and Bass, 1994). Hence, the coding of dF information, in conjunction with other auditory cues, could enable the detection and segregation of concurrent hums, as well as the discrimination of hums from grunts.
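Why two tones produce an envelope fluctuation at dF, together with a phase modulation, can be made explicit with the standard sum-to-product identity. The first two lines below assume two equal-amplitude, zero-phase tones, an idealization of real hums; the last line gives the envelope for unequal amplitudes a1 and a2, which still repeats with period 1/|dF| but no longer falls to zero.

```latex
\begin{aligned}
\sin(2\pi F_1 t) + \sin(2\pi F_2 t)
  &= 2\cos\!\big(\pi\,\mathrm{dF}\,t\big)\,\sin\!\big(\pi (F_1 + F_2)\,t\big),
  \qquad \mathrm{dF} = F_2 - F_1,\\[4pt]
E(t) &= 2\,\big|\cos(\pi\,\mathrm{dF}\,t)\big|
  \qquad \text{(period } 1/|\mathrm{dF}|\text{)},\\[4pt]
E_{a_1,a_2}(t) &= \sqrt{a_1^2 + a_2^2 + 2a_1a_2\cos(2\pi\,\mathrm{dF}\,t)} .
\end{aligned}
```

The carrier runs at the mean frequency (F1 + F2)/2, and each sign change of cos(π dF t) at an envelope null shifts the carrier phase by π; this is the phase modulation that a pure AM signal lacks. For the field-recorded pair in Fig. 1B (dF0 = 2.4 Hz), the envelope period is 1/2.4 Hz ≈ 0.42 sec.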

Portions of these results have appeared earlier in abstract form (Bodnar and Bass, 1996; Bodnar et al., 1996).

MATERIALS AND METHODS

The midshipman is a sound-producing teleost fish found along the western coast of the United States and Canada (Walker and Rosenblatt, 1988). Concurrent midshipman hums were recorded from neighboring nests during 1993 and 1995 at two habitats (Tomales Bay, CA, and Brinnon, WA) using hydrophones (Cornell University Laboratory of Ornithology) and either a Sony ProWalkman or a Marantz cassette recorder. Recordings were digitized at 2 kHz with 16-bit resolution, and their power spectra were calculated with a fast Fourier transform size of either 16K (0.11 Hz frequency resolution) or 8K (0.22 Hz frequency resolution) using Canary 1.1 (Cornell University Laboratory of Ornithology). The dFs of the fundamental frequencies (dF0s) of concurrent signals were determined by measuring the frequencies of the two F0 spectral peaks and calculating their difference (Fig. 1C). Only F0 peaks that could be clearly resolved were used.
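The dF0 measurement can be sketched as follows. This is a minimal illustration, not the Canary analysis: the Hann window, the local-maximum peak picking, and the 95–130 Hz search band (chosen to bracket the recorded F0 range) are assumptions, and the test signal is two synthetic tones at the Fig. 1B F0s rather than a field recording. Only DFT bins inside the search band are evaluated, which keeps a plain-Python loop fast.

```python
import cmath
import math

FS = 2000.0   # digitization rate given in the Methods (Hz)
NFFT = 8192   # the "8K" transform size

def hann(n):
    """Symmetric Hann window (an assumption; the Methods do not name a window)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def band_spectrum(x, f_lo, f_hi, fs=FS):
    """|DFT| of the windowed signal at bins k*fs/n that fall inside [f_lo, f_hi]."""
    n = len(x)
    xw = [v * w for v, w in zip(x, hann(n))]
    spectrum = []
    for k in range(math.ceil(f_lo * n / fs), math.floor(f_hi * n / fs) + 1):
        step = cmath.exp(-2j * math.pi * k / n)  # phasor rotation per sample for bin k
        z, acc = 1 + 0j, 0j
        for v in xw:
            acc += v * z
            z *= step
        spectrum.append((k * fs / n, abs(acc)))
    return spectrum

def estimate_df0(x, f_lo=95.0, f_hi=130.0, fs=FS):
    """dF0 = difference between the two largest local spectral maxima in the band."""
    spec = band_spectrum(x, f_lo, f_hi, fs)
    peaks = [spec[i] for i in range(1, len(spec) - 1)
             if spec[i][1] > spec[i - 1][1] and spec[i][1] >= spec[i + 1][1]]
    two_largest = sorted(peaks, key=lambda p: p[1], reverse=True)[:2]
    f_a, f_b = sorted(f for f, _ in two_largest)
    return f_b - f_a

# Hypothetical test signal: tones at 117.8 and 120.2 Hz (Fig. 1B F0s),
# the second about 3 dB weaker, standing in for two recorded hums.
x = [math.sin(2 * math.pi * 117.8 * i / FS) + 0.7 * math.sin(2 * math.pi * 120.2 * i / FS)
     for i in range(NFFT)]
df0 = estimate_df0(x)
```

Because each peak is read off the nearest DFT bin, the estimate is only accurate to about one bin spacing (fs/n); longer transforms such as the 16K size narrow that spacing at the cost of assuming the F0s stay steady over a longer stretch of recording.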

Animals for physiological experiments were collected from nests (Tomales Bay, CA), housed initially in running seawater holding tanks and later in artificial seawater aquaria at 15–16°C, and maintained on a diet of minnows. Midshipman have two male reproductive “morphs” (Bass, 1996). Type I males build and guard nests and generate both hums and grunts. Type II males do not build nests or acoustically court females; instead, they sneak spawn and, like females, only infrequently produce low-amplitude grunts (Brantley and Bass, 1994). In this study, we used 52 type I males ranging in body mass from 30 to 80 g for neurophysiological recordings; possible sex differences will be assessed in future studies.

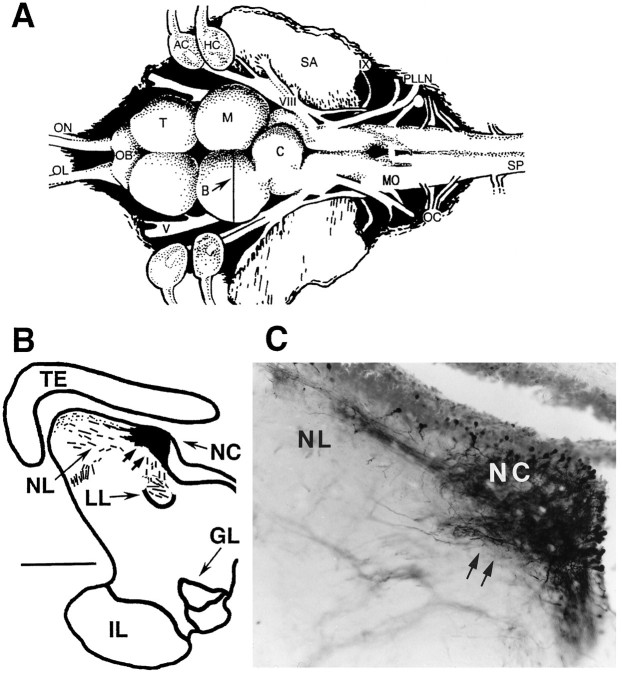

As in many other teleosts (Fay, 1993), the principal acoustic end organ of the midshipman’s inner ear is the sacculus (Fig. 2A, SA) (Cohen and Winn, 1967), an otolith organ that also responds to acoustic stimuli in terrestrial vertebrates (Lewis et al., 1982; McCue and Guinan, 1994). Eighth nerve primary afferents (Fig. 2A, VIII) terminate in octaval nuclei of the medulla (Fig. 2A, MO) (Bass et al., 1994). These nuclei in turn project to a variety of nuclei, including a nucleus centralis in the torus semicircularis of the midbrain (Knudsen, 1977; Bass et al., 1996; McCormick and Hernandez, 1996), a homolog of the mammalian inferior colliculus (McCormick, 1992). Recordings were made from the nucleus centralis (Fig. 2A,B, NC). The locations of central recording sites were verified after iontophoretic injection of neurobiotin (Vector Laboratories, Inc., Burlingame, CA; 5% in 3 M KCl, +3 μA, 10 min) and survival times of ∼15 min–15 hr (after Bass et al., 1996; Kawasaki and Guo, 1996); the procedures used for fixation and visualization of a dense reaction product (Fig. 2C) have been described elsewhere (Bass et al., 1994).

Fig. 2.

Neurobiotin verification of midbrain recording site. A, Dorsal view of the brain of a midshipman; the vertical line indicates the level of the line drawing in B. AC, Anterior canal ampulla; C, cerebellum; HC, horizontal canal ampulla; M, midbrain; OB, olfactory bulb; OC, occipital nerve roots; OL, olfactory nerve; ON, optic nerve; PLLN, posterior lateral line nerve; SA, saccular otolith; SP, spinal cord; T, telencephalon; V, trigeminal nerve; VIII, eighth nerve; IX, glossopharyngeal nerve (modified from Bass et al., 1994). B, Line drawing of a cross-section at the level of the midbrain recording site. The blackened area encompasses the center of the injection site and the surrounding area of dense neurobiotin labeling; hatched lines indicate labeled fibers. Scale bar, 600 μm. GL, Nucleus glomerulosus; IL, inferior lobe of hypothalamus; LL, lateral lemniscus; NC, nucleus centralis; NL, nucleus lateralis; TE, mesencephalic tectum. C, Photomicrograph through the region of neurobiotin labeling at the site outlined in B. The densest area of staining represents the center of the injection site. Double arrows point to corresponding positions in B and C.

For surgery, animals were anesthetized by immersion in 0.2% ethyl p-aminobenzoate (Sigma, St. Louis, MO) in seawater from their housing unit. The midbrain was exposed, and a plastic dam attached to the skin surrounding the opening allowed the fish to be submerged below the water surface. During recording, pancuronium bromide (0.5 mg/kg) was used for immobilization and fentanyl (1 mg/kg) for analgesia. Acoustic signals were synthesized using custom software (CASSIE, designed by J. Vrieslander, Cornell University) and delivered through a UW30 underwater speaker (Newark Electronics) positioned beneath the fish in a 32-cm-diameter tank (design after Lu and Fay, 1993). The frequency response of the speaker was measured with a Brüel & Kjær 4130 minihydrophone, and sound pressure was equalized using CASSIE software. Hydrophone recordings of acoustic stimuli verified that reflections from the tank walls and water surface did not alter the sound pressure of the signals. All experiments were conducted inside a soundproof chamber.

Single-unit extracellular recordings were made with glass micropipettes or indium-filled electrodes; signals were amplified and bandpass-filtered between 250 Hz and 3 kHz. There were no obvious differences in the kinds of units recorded with either electrode type. Single units were distinguished from multiunit activity on the basis of signal-to-noise ratio and spike shape; single units had distinct, large-amplitude peaks and fast rise times. In addition, during data acquisition, a pattern-matching algorithm within CASSIE was used to extract visually identified single units. After a single unit was isolated, its threshold frequency tuning curve and/or isointensity response curve was measured in most cases. To measure isointensity curves, 10 repetitions of 1-sec-duration pure tones were presented in 2 or 5 Hz increments between 70 and 120 Hz at various intensity levels, usually 6, 12, and 18 dB above threshold. The best excitatory frequency of a unit was defined as the frequency that evoked the maximum average spike rate. The 80% bandwidth of an isointensity curve was measured by determining the frequencies at which the average spike rate fell below 80% of the maximum response.
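The isointensity measures can be sketched as below, together with the narrow/broad/low categories used in the Results. This is a hypothetical implementation: the function names are illustrative, linear interpolation of the 80% crossings between tested frequencies is an assumption (the Methods do not say how crossings between 2 or 5 Hz steps were resolved), and the spike rates are invented example values, not data.

```python
def _peak_index(rates):
    return max(range(len(rates)), key=rates.__getitem__)

def best_excitatory_frequency(freqs_hz, rates):
    """Tested frequency that evoked the highest mean spike rate."""
    return freqs_hz[_peak_index(rates)]

def bandwidth_80(freqs_hz, rates, criterion=0.8):
    """80% bandwidth of an isointensity curve, or None if it cannot be defined.

    Walks outward from the peak while the rate stays >= criterion * max, then
    linearly interpolates each crossing between neighbouring tested frequencies
    (an assumption). Returns None when the >= 80% region reaches the first or
    last tested frequency, because the true edge then lies outside the range.
    """
    i = _peak_index(rates)
    thresh = criterion * rates[i]
    lo = hi = i
    while lo > 0 and rates[lo - 1] >= thresh:
        lo -= 1
    while hi < len(rates) - 1 and rates[hi + 1] >= thresh:
        hi += 1
    if lo == 0 or hi == len(rates) - 1:
        return None
    f_lo = freqs_hz[lo - 1] + (thresh - rates[lo - 1]) / (rates[lo] - rates[lo - 1]) * (
        freqs_hz[lo] - freqs_hz[lo - 1])
    f_hi = freqs_hz[hi] + (rates[hi] - thresh) / (rates[hi] - rates[hi + 1]) * (
        freqs_hz[hi + 1] - freqs_hz[hi])
    return f_hi - f_lo

def tuning_category(freqs_hz, rates):
    """'narrow' (<= 10 Hz), 'broad' (> 10 Hz), or 'low' (>= 80% at the lowest frequency)."""
    bw = bandwidth_80(freqs_hz, rates)
    if bw is None:
        return "low" if rates[0] >= 0.8 * max(rates) else "undetermined"
    return "narrow" if bw <= 10 else "broad"

freqs = list(range(70, 125, 5))                      # 70-120 Hz in 5 Hz steps
narrow_unit = [1, 2, 5, 8, 10, 8, 5, 2, 1, 1, 1]     # hypothetical mean spike rates
broad_unit = [1, 3, 8, 9, 10, 9.5, 9, 8.5, 6, 3, 1]
low_unit = [10, 9.5, 9, 7, 5, 3, 2, 1, 1, 1, 1]
```

For the hypothetical curves above, the narrow unit peaks at 90 Hz with a 10 Hz bandwidth, the broad unit spans 80 to 106 Hz, and the low unit is still at its maximum at 70 Hz, so no 80% bandwidth is returned.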

To investigate the coding of beats, we presented stimuli composed of two tones (F1 and F2) near the F0s of natural hums. F1 was held constant at 90 Hz, which is close to the characteristic frequency of most auditory midbrain units; F2 varied from F1 up to ±20 Hz in 2 Hz increments (Fig. 3A). For most units, the intensity level of the beat stimuli was 12 dB above the threshold measured for a 90 Hz pure tone; for some units it was either 6 or 18 dB above threshold. Beat stimuli were presented in order of increasing, decreasing, or random dF; results did not differ among presentation orders. Stimuli consisted of either 10 repetitions of a 1 sec stimulus (n = 76 units), two repetitions of a 5 sec stimulus (n = 6), or one repetition of a 10 sec stimulus (n = 13), all with rise and fall times of 50 msec. For experiments testing beat stimuli composed of different primary tones, F1 was held constant at either 80 or 100 Hz, and F2 varied from F1 up to ±20 Hz. Comparable AM signals were generated by multiplying a 90 Hz carrier by a sinusoidal modulating waveform (modulation frequency, 2–10 Hz) with 80% depth of modulation (Fig. 3B). The intensity level of the AM stimulus was approximately equivalent (within 2 dB) to that of the beat stimuli. We chose 80% AM because its envelope shape and rise time more closely resemble those of a beat waveform, whereas 100% AM produces a steeper rise time and a more sharply peaked envelope (Fig. 3C). In this study, we considered envelope shape and rise time, rather than absolute depth of modulation, to be the most salient features for comparing AM signals and beats. The effects of different depths of modulation on beat and AM coding will be explored in a subsequent study.
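The beat and AM stimuli described above can be sketched as follows. This is not the CASSIE implementation: the 10 kHz sampling rate, the raised-cosine shape of the 50 msec ramps, the 2 Hz spacing of modulation frequencies, and the peak-normalized scaling are all assumptions. Actual stimulus levels were set by calibration, not by these amplitude factors.

```python
import math

FS = 10000  # hypothetical sampling rate (Hz); the Methods do not give the D/A rate

def ramp_gain(i, n, fs=FS, ramp_s=0.05):
    """Gain for sample i of n: raised-cosine 50 ms onset and offset ramps, 1 elsewhere."""
    n_ramp = int(round(ramp_s * fs))
    k = min(i, n - 1 - i)  # distance (in samples) from the nearer end of the stimulus
    if k >= n_ramp:
        return 1.0
    return 0.5 - 0.5 * math.cos(math.pi * k / n_ramp)

def beat_stimulus(f1=90.0, df=10.0, dur_s=1.0, fs=FS):
    """Two equal-amplitude tones, F1 and F2 = F1 + dF (dF may be negative)."""
    n = int(round(dur_s * fs))
    f2 = f1 + df
    return [ramp_gain(i, n, fs) * 0.5 * (math.sin(2 * math.pi * f1 * i / fs)
                                          + math.sin(2 * math.pi * f2 * i / fs))
            for i in range(n)]

def am_stimulus(fc=90.0, modf=10.0, depth=0.8, dur_s=1.0, fs=FS):
    """Carrier times (1 + depth * sin(2*pi*modF*t)); components at Fc and Fc +/- modF."""
    n = int(round(dur_s * fs))
    return [ramp_gain(i, n, fs) * (1 + depth * math.sin(2 * math.pi * modf * i / fs))
            * math.sin(2 * math.pi * fc * i / fs) / (1 + depth)
            for i in range(n)]

# F1 = 90 Hz, dF from -20 to +20 Hz in 2 Hz steps (dF = 0 reduces to a single 90 Hz tone).
beat_set = {df: beat_stimulus(df=float(df)) for df in range(-20, 21, 2)}
# 80% AM at modF = 2-10 Hz; the 2 Hz spacing is an assumption.
am_set = {m: am_stimulus(modf=float(m)) for m in range(2, 11, 2)}
```

With dF = modF = 10 Hz, the beat envelope passes through zero every 100 msec, whereas the 80% AM envelope swings between 0.2 and 1.8 times the carrier amplitude (normalized here by 1.8), which is the envelope-shape difference the depth choice trades against.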

Fig. 3.

Experimental stimuli and measures. A, An example of the power spectrum (left) and waveform (right) of a beat stimulus used in neurophysiological experiments. In this case, F1 = 90 Hz (solid line), F2 = 100 Hz (dashed line), and dF = 10 Hz. B, The power spectrum (left) and waveform (right) of a representative AM stimulus created by multiplying a carrier frequency (Fc; solid line) by a varied modulation frequency (modF; dashed line). As the modulation frequency is varied, the sidebands (Fc ± modF) shift accordingly. In the example shown, Fc = 90 Hz and modF = 10 Hz. C, Comparisons of the rise times and envelope shapes of 80% AM (left) and 100% AM (right) with beat stimuli. D, Period histograms of single-unit responses, overlaid with one cycle of the beat stimulus; the histograms are constructed with a cycle period of 1/dF, and vector strength is calculated according to the method of Goldberg and Brown (1969).

Spike train responses were quantified by measuring the average spike rate and the vector strength of synchronization (VS) to the individual tones. VS measures the accuracy of phase locking to a periodic signal on a scale from 0 (no phase locking) to 1 (perfect phase locking) (Goldberg and Brown, 1969). In addition, the vector strength of synchronization to the beat frequency, VSdF, was measured by constructing period histograms with a cycle period of 1/dF (Fig. 3D). Traditionally, VS is calculated over the entire spike train. However, to quantify and compare beat responses between units and stimuli, we computed VSdF over 1 sec intervals and derived statistical measures from 10 sec of data. A Rayleigh Z test, based on the mean VSdF and the mean number of spikes per repetition, was used to test whether synchronization to dF differed significantly from random (p < 0.05) (Batschelet, 1981). An ANOVA was used to test the effect of dF on VS. To assess differences between responses to beats with different primaries, or between beats and AM stimuli, we performed an ANOVA to test the effect of stimulus type and a two-factor ANOVA to test the dF × stimulus type interaction (written dF*stimulus type below).
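The synchronization measures can be sketched as follows. Vector strength is the length of the mean unit vector of spike phases (Goldberg and Brown, 1969), and the Rayleigh statistic is Z = n·VS². Assumptions: the p-value uses the large-sample approximation p ≈ exp(−Z) (Batschelet gives more exact small-sample values), the 20-bin period histogram is an arbitrary choice, and the spike train is a hypothetical, perfectly phase-locked example rather than recorded data.

```python
import math
from statistics import mean

def spike_phases(spike_times_s, freq_hz):
    """Phase (rad, in [0, 2*pi)) of each spike within a cycle of period 1/freq_hz."""
    return [(2 * math.pi * freq_hz * t) % (2 * math.pi) for t in spike_times_s]

def vector_strength(spike_times_s, freq_hz):
    """Goldberg-Brown vector strength: length of the mean unit vector of spike phases."""
    phases = spike_phases(spike_times_s, freq_hz)
    if not phases:
        return 0.0
    c = sum(math.cos(p) for p in phases)
    s = sum(math.sin(p) for p in phases)
    return math.hypot(c, s) / len(phases)

def period_histogram(spike_times_s, freq_hz, n_bins=20):
    """Spike counts in n_bins equal phase bins of one stimulus cycle (period 1/freq_hz)."""
    counts = [0] * n_bins
    for p in spike_phases(spike_times_s, freq_hz):
        counts[min(int(p / (2 * math.pi) * n_bins), n_bins - 1)] += 1
    return counts

def windowed_vs(spike_times_s, freq_hz, window_s=1.0, total_s=10.0):
    """VS and spike count in consecutive windows (1 s windows over 10 s by default)."""
    vs, counts = [], []
    for w in range(int(round(total_s / window_s))):
        t0, t1 = w * window_s, (w + 1) * window_s
        seg = [t for t in spike_times_s if t0 <= t < t1]
        vs.append(vector_strength(seg, freq_hz))
        counts.append(len(seg))
    return vs, counts

def rayleigh_test(mean_vs, mean_n):
    """Rayleigh Z = n * VS**2, with the large-sample approximation p ~= exp(-Z)."""
    z = mean_n * mean_vs ** 2
    return z, math.exp(-z)

# Hypothetical spike train: one spike per cycle of a 4 Hz beat, always at the same phase.
locked = [k / 4 + 0.02 for k in range(40)]
vs_by_window, n_by_window = windowed_vs(locked, freq_hz=4.0)
z, p = rayleigh_test(mean(vs_by_window), mean(n_by_window))
```

In this idealized example every 1 sec window has VSdF = 1 with four spikes, so Z = 4 and p ≈ 0.018; spikes spread evenly across the beat cycle would instead give VSdF near 0 and a nonsignificant Z.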

The research reported here was performed within the guidelines of the Cornell University Animal Care and Use Committee and the National Institutes of Health.

RESULTS

Single-unit recordings were obtained from a total of 126 auditory midbrain neurons. In general, midbrain neurons in midshipman exhibit low-frequency tuning and poor synchronization in their responses to pure tones. The majority of units responded to frequencies ranging from 60 to 150 Hz, with thresholds ranging from 105 to 120 dB (re: 1 μPa). Based on isointensity curves (Fig. 4A), best excitatory frequencies ranged from 70 to 110 Hz for all units tested (Fig. 4B). The 80% bandwidths, which ranged from <10 to 40 Hz, identified three categories of frequency tuning (Fig. 4C). “Narrowly” tuned units had 80% bandwidths ≤10 Hz, with best excitatory frequencies centered mainly at ∼90 Hz (Fig. 4D). A second group of “broadly” tuned units had 80% bandwidths >10 Hz and up to 40 Hz. For a third group, designated “low”-frequency units, spike rates at 70 Hz, the lowest frequency tested, remained >80% of the maximum; hence, an 80% bandwidth could not be defined. For these units, the best excitatory frequency, taken as the frequency evoking the highest spike rate within the range tested, was always ≤80 Hz. Most auditory midbrain units exhibited very low synchronization to pure tone stimuli; in 40 of 44 units, VS was <0.30 for a 90 Hz pure tone 6 or 12 dB above threshold.

Fig. 4.

Frequency tuning of midshipman auditory midbrain units. A, Examples of isointensity curves of three different units with broad, narrow, and low frequency tuning. B, Distribution of the best excitatory frequencies of all units tested (n = 49) based on isointensity curves. C, Distribution of the 80% bandwidth of isointensity curves. D, Distribution of the best excitatory frequency of narrowly tuned (80% bandwidth, ≤10 Hz) units.

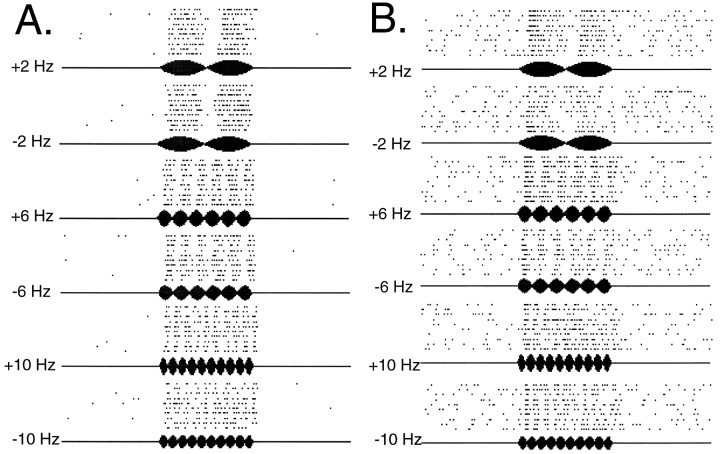

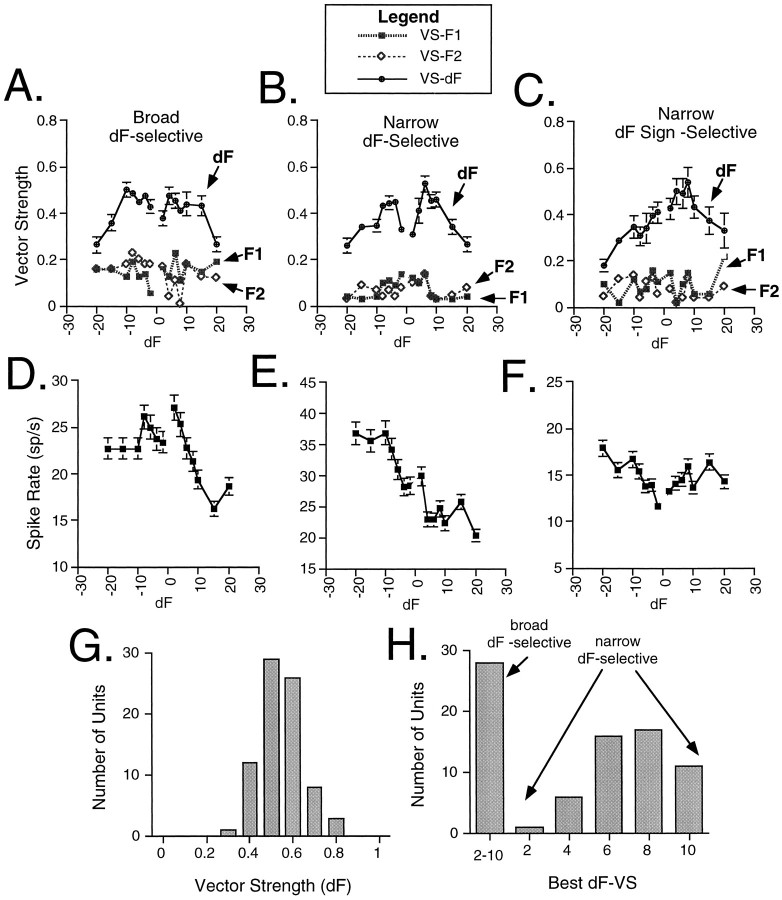

The range of beat dF0s recorded in a natural population is 0–8 Hz (Fig. 1C). With this information as a guide, we measured responses to low-frequency beats composed of two tones near the F0s of natural hums in 95 auditory midbrain units over an even broader range of dFs, within ±20 Hz (Fig. 5A,B). Plots of VS and average spike rate versus dF for three representative units are shown in Figure 6. Auditory midbrain units exhibited low synchronization to the individual components (F1 and F2) of a beat, whereas synchronization to beat dF was higher (Fig. 6A–C). Synchronization to dF was significant (Rayleigh Z test, p < 0.05) for at least one dF within the ±10 Hz range in 83% of the units; we refer to these units as dF-selective. The VSdF versus dF profiles of dF-selective units reflected three response types for beat stimuli. Thirty-five percent of the dF-selective units showed moderate synchronization to all dFs between ±10 Hz (Fig. 6A) but no significant variation in their VSdF values (ANOVA, effect of dF, p > 0.05); we refer to these units as “broad dF-selective.” The remaining 65% of the dF-selective units (Fig. 6B,C) showed distinct peaks at a particular dF (ANOVA, effect of dF, p < 0.05); we refer to these units as “narrow dF-selective.” Both response types were observed for all three stimulus durations (1, 5, and 10 sec). Among the narrow dF-selective units, 75% exhibited symmetrical tuning to positive and negative dFs (Fig. 6B), whereas the remaining 25% (Fig. 6C) displayed different responses to positive and negative dFs; we refer to these asymmetrically tuned units as “narrow dF sign-selective” units. In general, changes in average spike rate did not reflect changes in VSdF (Fig. 6D–F); a temporal code of dF appears to be the most salient feature of spike train representations of concurrent acoustic signals. The distribution of the maximum VSdF for all dF-selective units is shown in Figure 6G. Figure 6H presents the distribution of best dFs, based on VSdF, for both broad and narrow dF-selective units. Midbrain units show their best synchronization to dFs of 2–10 Hz.
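The decision rules stated above can be summarized in a short sketch. It assumes the per-dF Rayleigh p-values and the ANOVA p-value for the effect of dF have already been computed elsewhere; the function names and example values are hypothetical. The Results give no numerical criterion for sign selectivity, so that category is deliberately left out.

```python
def classify_df_unit(rayleigh_p_by_df, anova_p_effect_of_df, alpha=0.05, df_limit_hz=10.0):
    """Label a unit using the criteria stated in the Results.

    rayleigh_p_by_df: dict mapping signed dF (Hz) -> Rayleigh p-value of VSdF at that dF.
    anova_p_effect_of_df: p-value for the effect of dF on VSdF (ANOVA computed elsewhere).
    """
    # dF-selective: significant synchronization at one or more dFs within +/-10 Hz.
    selective = any(p < alpha for df, p in rayleigh_p_by_df.items() if abs(df) <= df_limit_hz)
    if not selective:
        return "not dF-selective"
    # Narrow: VSdF varies significantly with dF; broad: it does not.
    if anova_p_effect_of_df < alpha:
        return "narrow dF-selective"
    return "broad dF-selective"

def best_df(mean_vsdf_by_df):
    """Signed dF (Hz) with the highest mean VSdF, as used for a best-dF distribution."""
    return max(mean_vsdf_by_df, key=mean_vsdf_by_df.get)
```

For example, a unit with a significant Rayleigh test only at dF = +14 Hz would not count as dF-selective under these rules, because the criterion is restricted to the ±10 Hz range.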

Fig. 5.

Midshipman auditory midbrain responses to beat stimuli with low-frequency dFs. A, B, Examples of raster plot responses to beat stimuli from two representative midshipman auditory midbrain units. Each panel shows raster plots for ±2, ±6, and ±10 Hz beats.

Fig. 6.

Midshipman auditory midbrain responses to beat stimuli with low-frequency dFs. Plots of vector strength of synchronization (±SE) (A–C) and average spike rate (±SE) (D–F) for three representative units. Each beat stimulus is composed of F1, which in all cases is 90 Hz, and F2, which is 90 ± 2–20 Hz. For example, in C, F2 is 86 Hz at −4 dF, 80 Hz at −10 dF, and 75 Hz at −15 dF. Similarly, F2 is 94 Hz at +4 dF, 100 Hz at +10 dF, and 105 Hz at +15 dF. A–C, Examples of midbrain responses in which vector strength either does not significantly change (A) or does significantly change (B, C) with different dFs. Units exhibited low synchronization to the individual components (F1 and F2) of a beat. G, Histogram of the distribution of the maximum vector strength of synchronization to dF. H, Distribution of best dFs based on VS measures for midbrain units over a 10 Hz range that encompasses the natural range of dFs (see Fig. 1C).

The graphs in Figure 7 directly compare beat responses with isointensity responses to separately presented pure tones at the same intensity level and within the same frequency range as the beat stimuli, for the same units shown in Figure 6. Comparisons of isointensity curves and beat responses in 28 units revealed that only two units had frequency selectivities that directly reflected their dF selectivity. Of the remaining units, 38% were similar to those shown in Figure 7A,B, in which VSdF was similar for positive and negative dFs (broad and narrow dF-selective units), whereas isointensity spike rates decreased dramatically over either positive or negative dF frequencies. For the majority of units (62%), VSdF profiles did not follow their isointensity curves over either positive or negative frequencies. For example, for the unit illustrated in Figure 7C, the isointensity profile showed broad frequency sensitivity, whereas the VSdF profile had a distinct positive peak (a narrow dF sign-selective unit). With regard to changes in spike rate, 28% of the units exhibited spike rate changes in response to beat stimuli that generally followed the changes in spike rate in their isointensity curves over the entire frequency range (e.g., Fig. 7D,E), whereas another 22% exhibited similar spike rate changes only over either positive or negative frequencies. The remaining 50% showed no correspondence between increases and decreases in their spike rates for beat stimuli and for pure tones (e.g., Fig. 7F).

Fig. 7.

Comparison of isointensity response curves for pure tone stimuli with responses to beat stimuli. A–C, Overlays of isointensity curves (thickened lines) with vector strength of synchronization to dF (±SE) (same units as in Fig. 6). D–F, Overlays of isointensity curves (thickened lines) with average spike rate.

Responses to beats with the same dFs but different primary tones were tested in 25 units; 64% displayed no significant variation in their dF selectivity (Fig. 8A; ANOVA, effect of dF*primary, p > 0.05), whereas the remaining units showed shifts in their peak dF when the primary tones were changed (Fig. 8B; ANOVA, effect of dF*primary, p < 0.05). Thus, some units appeared to be selective for a specific dF, whereas in others the coding of dF and spectral components were coupled. In general, changes in average spike rate did not reflect changes in VSdF (Fig. 8C,D).

Fig. 8.

Comparison of responses to beat stimuli with different primary tones. A, B, Plots show the vector strength of synchronization (±SE) versus dF for beats with F1 = 90 Hz (filled circles) and F1 = 80 Hz (open circles). C, D, These graphs show average spike rate versus dF for beats with F1 = 90 Hz (filled squares) and F1 = 80 Hz (open squares). A, B, Examples of units that show no significant (A) or a significant (B) shift in vector strength tuning.

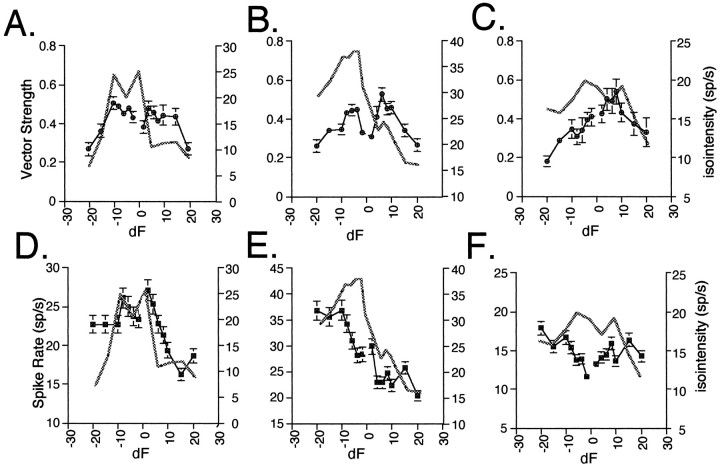

Comparisons of responses to beat and AM stimuli in which F1 and the carrier frequency were equal (see Fig. 3A,B) were made in 33 units. Sixty-five percent of the neurons (n = 21) were tuned to the same dF and modulation frequency (modF) (Fig. 9A; ANOVA, effect of dF*stimulus type, p > 0.05). However, in 87% of these units, synchronization to AM signals was significantly higher than to beat signals (ANOVA, effect of stimulus type, p < 0.05; across the population, paired t test for VSmax, p = 0.0002). The remaining units (35%; n = 12) showed significant differences in tuning (Fig. 9B; ANOVA, effect of dF*stimulus type, p < 0.05), but across the population there was no significant difference in their maximum degree of synchronization to AM and beat signals (paired t test, p = 0.3769). Here too, changes in spike rate usually did not reflect changes in VSdF (Fig. 9C,D). There was no correspondence between whether a cell responded similarly or differentially to AM and beat stimuli and its categorization by frequency selectivity as narrow, broad, or low (see Fig. 4).

Fig. 9.

Comparison of responses to beat stimuli with AM signals. A, B, Plots show the vector strength of synchronization (±SE) versus dF for beats with F1 = 90 Hz (filled circles) and vector strength versus modF for AM signals with Fc = 90 Hz (open circles). AM modF values are plotted as both positive and negative to facilitate comparison with beat data. C, D, These graphs show average spike rate (±SE) versus dF for beats (filled squares) and modF for AM (open squares). A, Example of a unit that shows a significant effect of stimulus type on dF–modF synchronization but no significant change in dF–modF selectivity for beats versus AM signals. B, Example of a unit that exhibits a significant change in dF–modF selectivity for beats versus AM signals.

DISCUSSION

A temporal dF code in midshipman

Species that use acoustic communication in their social behavior often encounter overlapping vocal signals. A major question in auditory processing is what mechanisms are used by the auditory system to segregate concurrent vocal signals, i.e., to detect, discriminate, and identify individual signals that overlap in time. Because of the long duration of midshipman hums and the close proximity of males, concurrent vocalizations are a regular occurrence for these fish. Concurrent hums produce complex beating signals with envelope fluctuations at the dFs between the F0s as well as the upper harmonics (see Fig. 1B,C). Here, we focused on the coding of simple beats composed of two tones near the F0s of natural hums as a first step in assessing the coding of concurrent acoustic signals. We find that the dF of acoustic beats is temporally coded by auditory midbrain units, and that many of these units are selective for a particular dF. To our knowledge, this is the first demonstration of a central dF code within the auditory system of a vertebrate.

The range of temporal dF tuning overlaps with the dFs of natural concurrent hums. Behavioral phonotaxis experiments show that when presented with concurrent tones near the F0s of natural hums, midshipman localize and approach an individual tone from a single speaker (McKibben et al., 1995), i.e., midshipman are able to segregate concurrent signals. Hence, it is plausible that the extraction of dF information plays a role in the segregation of overlapping vocal signals.

More complex envelope modulations created by the presence of upper harmonics or multiple concurrent signals may influence dF coding. In the auditory afferents of mammals and amphibians, synchronization to the fundamental frequency of a multiharmonic signal can increase or decrease depending on the relative phase angle of the harmonics and resultant shape of the temporal envelope (for review, see Bodnar and Vrieslander, 1997). Midbrain dF coding in midshipman may be similarly enhanced or degraded by the effects of upper harmonics on the shape of the beat waveform.

What mechanisms might underlie temporal dF tuning within the auditory midbrain? One possibility is that the observed dF tuning simply results from frequency tuning. In this case, the responses of a unit to beat stimuli should reflect its isointensity curve. Yet, comparisons of vector strength versus dF profiles with the frequency tuning of a unit indicate that a simple linear-filtering mechanism does not explain temporal dF tuning (see Fig. 7). Alternatively, auditory midbrain dF coding could reflect afferent coding of beat dF. However, in contrast to midbrain units, auditory afferents exhibit a low degree of synchronization to the beat dF, although they show high synchronization to the individual components of a low-frequency beat (Bodnar et al., 1996; McKibben and Bass, 1996). Together, the data suggest that computational mechanisms within auditory nuclei transform an afferent periodicity code of the individual frequency components of a beat into a central periodicity code of dF.

Coding of AM

The temporal features of many vertebrate communication signals, including human speech, serve as the primary cues for distinguishing between different signals (e.g., Gerhardt, 1988; Shannon et al., 1995). Studies in amphibians, birds, and mammals show that midbrain auditory units exhibit tuning in their responses to different AM rates (Langner, 1983; Rose and Capranica, 1985; Langner and Schreiner, 1988; Batra et al., 1989; Gooler and Feng, 1992; Condon et al., 1994, 1996). Similarly, in a sound-producing mormyrid electric fish, the intervals of click stimuli are represented by the spike outputs of midbrain units (Crawford, 1993, 1997). In frogs, for example, AM tuning is based primarily on changes in spike rate rather than in vector strength of synchronization, although changes in vector strength are also observed (Rose and Capranica, 1985). In contrast, dF selectivity in midshipman is based primarily on changes in synchronization to dF.

Beats and AM signals with the same dF and modF are similar in the amplitude modulations of their envelopes but differ in their spectral and fine temporal structures, e.g., phase modulation (see Fig. 3). Differential coding of AM and beat stimuli would be essential for the discrimination of two concurrent signals from an individual AM signal. This is important for midshipman given that in agonistic encounters, they produce short-duration grunts that have the same frequency components as hums, but with sidebands characteristic of AM signals (Brantley and Bass, 1994). The vast majority of midbrain units temporally code both beat dFs and AM modFs. Although some neurons exhibit differential dF and modF tuning for beats and AM signals, others exhibit similar temporal selectivity but differ in their degree of synchronization to dF and modF; synchronization to dF is lower. This suggests that midbrain units play a role in both the detection of and discrimination between concurrent courtship hums and agonistic grunts.
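The spectral difference between a beat and an AM signal with the same envelope rate can be checked numerically. The sketch below (the sampling rate and unit amplitudes are assumptions) reads the amplitude of the 80, 90, and 100 Hz components from single DFT bins of a 1 sec signal, so integer-frequency components fall exactly on bins and do not leak into each other. The beat (F1 = 90 Hz, dF = 10 Hz) has two components, whereas the 80% AM signal (Fc = 90 Hz, modF = 10 Hz) has a carrier plus two sidebands of relative amplitude 0.8/2 = 0.4.

```python
import cmath
import math

FS = 2000   # hypothetical sampling rate (Hz)
N = FS      # exactly 1 s, so integer-Hz components fall on DFT bins with no leakage

def component_amplitude(x, f_hz, fs=FS):
    """Amplitude of the sinusoid at f_hz, from a single DFT bin: 2|X(f)|/N."""
    acc = sum(v * cmath.exp(-2j * math.pi * f_hz * i / fs) for i, v in enumerate(x))
    return 2 * abs(acc) / len(x)

t = [i / FS for i in range(N)]
# Beat with F1 = 90 Hz and dF = 10 Hz: two unit-amplitude tones.
beat = [math.sin(2 * math.pi * 90 * ti) + math.sin(2 * math.pi * 100 * ti) for ti in t]
# 80% AM with Fc = 90 Hz and modF = 10 Hz.
am = [(1 + 0.8 * math.sin(2 * math.pi * 10 * ti)) * math.sin(2 * math.pi * 90 * ti) for ti in t]

for name, x in (("beat", beat), ("80% AM", am)):
    print(name, [round(component_amplitude(x, f), 3) for f in (80, 90, 100)])
# beat [0.0, 1.0, 1.0]
# 80% AM [0.4, 1.0, 0.4]
```

Both signals fluctuate at 10 Hz, so a unit that responded only to envelope periodicity could not tell them apart; the differing component structure, and the phase reversals of the beat carrier at each envelope null, are what a differential code could exploit.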

Although lower synchronization to beat dF compared with AM modF suggests a poorer temporal code for beats, synchronization to beat dFs is still significant and in many neurons relatively high. Thus, the detection and segregation of concurrent signals is not necessarily compromised. Furthermore, the discrimination of an individual AM signal may only require information about modF, whereas the segregation of concurrent signals would require information about dF and at least one frequency component. Thus, high-fidelity dF coding may have to be sacrificed to maintain some frequency information. Although midbrain units do not code frequency via simple synchronization, other temporal coding strategies may be used. Studies are currently in progress to examine the concomitant coding of beat dF and frequency information.

Comparisons with other vertebrates

Mechanisms for computing an analogous electric beat dF have been identified in weakly electric fish that produce a quasi-sinusoidal electric organ discharge (EOD) used in social communication and electrolocation (Heiligenberg, 1991). When two fish have EODs whose F0s differ by a few hertz (dF), a fish shifts its EOD frequency away from that of its conspecific, a behavior known as the jamming avoidance response (Bullock et al., 1972; Heiligenberg, 1991; Kawasaki, 1993). Within the midbrain of gymnotiforms, dF-selective units have been identified, and information about the magnitude of dF for two interacting EODs is derived from primary afferent coding of the amplitude and phase modulations of the beat waveform (Heiligenberg, 1991). In some units, the sign of dF is computed from differential amplitude and phase modulations across the animal’s body surface, with the animal’s own signal serving as the reference (Heiligenberg and Bastian, 1984; Heiligenberg and Rose, 1985; Rose and Heiligenberg, 1986).
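The idea of reading the sign of dF from joint amplitude and phase modulation (Rose and Heiligenberg, 1986) can be illustrated with an idealized calculation. The sketch below assumes a single recording point, the fish's own EOD as a perfect phase reference, a neighbour of relative amplitude 0.3, and dF defined as neighbour minus own frequency; these are simplifying assumptions, since the animal compares modulations across body regions. Over one beat cycle the summed signal traces a closed loop in the amplitude-phase plane, and the direction of travel around that loop flips with the sign of dF.

```python
import cmath
import math

def amp_phase_trajectory(d_f, a=0.3, n=400):
    """(phase, amplitude) of own EOD plus a neighbour, relative to the own EOD.

    Own signal: cos(2*pi*f*t). Neighbour: a*cos(2*pi*(f + d_f)*t), with a < 1.
    Their sum equals Re[exp(i*2*pi*f*t) * (1 + a*exp(i*2*pi*d_f*t))], so the
    amplitude and phase modulations are |z| and arg(z) for
    z = 1 + a*exp(i*theta), where theta advances by 2*pi*d_f per second.
    """
    period = 1.0 / abs(d_f)
    pts = []
    for k in range(n):
        theta = 2 * math.pi * d_f * (k * period / n)
        z = 1 + a * cmath.exp(1j * theta)
        pts.append((cmath.phase(z), abs(z)))
    return pts

def signed_area(pts):
    """Shoelace signed area of the closed loop (x = phase, y = amplitude).

    Negative for clockwise travel, positive for counterclockwise travel.
    """
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]):
        area += x0 * y1 - x1 * y0
    return 0.5 * area

def infer_sign_of_df(pts):
    """Hypothetical read-out: clockwise loop means the neighbour is higher (d_f > 0)."""
    return 1 if signed_area(pts) < 0 else -1

if __name__ == "__main__":
    print(infer_sign_of_df(amp_phase_trajectory(4.0)))   # neighbour 4 Hz above own EOD
    print(infer_sign_of_df(amp_phase_trajectory(-4.0)))  # neighbour 4 Hz below own EOD
```

`infer_sign_of_df` is a hypothetical helper; the signed (shoelace) area is used here only as a convenient way to read the sense of rotation, and the magnitude of dF is available separately from the loop's repetition rate.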

In mammals, studies of the coding of concurrent vowels in the eighth nerve and cochlear nucleus indicate that individual F0s and upper harmonics are encoded in the temporal patterns of afferent spike trains (Palmer, 1990; Keilson et al., 1995; Cariani and Delgutte, 1996a,b). Within the midbrain, many neurons code the interaural phase modulation produced by binaural beats, which are generated by presenting slightly different pure tones or AM signals separately to each ear (Yin and Kuwada, 1983; Batra et al., 1989). This dichotic paradigm differs from ours, in which both ears receive an acoustic beat. Sensitivity to binaural phase differences in concurrent signals could serve as a mechanism for sign selectivity or for the spatial segregation of concurrent signals.
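For the dichotic paradigm, the interaural phase modulation follows directly from the tone frequencies. Assuming pure tones of frequency $f_L$ and $f_R$ with starting phases $\phi_L$ and $\phi_R$ at the left and right ears (symbols introduced here for illustration), the interaural phase difference is

$$
\Delta\phi(t) = \left(2\pi f_R t + \phi_R\right) - \left(2\pi f_L t + \phi_L\right) = 2\pi\,(f_R - f_L)\,t + (\phi_R - \phi_L),
$$

so it sweeps through a full cycle every $1/|f_R - f_L|$ seconds, and its direction of rotation reflects the sign of $f_R - f_L$. A 1 Hz difference between the ears, for example, rotates the interaural phase once per second.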

For humans, models of concurrent-vowel segregation based on small differences in F0 (<10 Hz) rely on two different coding strategies. Assman and Summerfield (1990) and Meddis and Hewitt (1992) propose that segregation depends on the temporal coding of the individual F0s at both peripheral and central levels. In contrast, the model of Culling and Darwin (1994) proposes that information about modulations in the beat waveform itself may be used for vowel segregation. As noted earlier, auditory afferents in midshipman synchronize strongly to the periodicity of the individual components (F0s) of concurrent signals but relatively weakly to the dF of the beat waveform. These findings are consistent with the peripheral coding level of the models of Assman and Summerfield (1990) and Meddis and Hewitt (1992), namely that the F0s of two multiharmonic signals are contained within the firing pattern of primary afferents. Because afferents synchronize to both F0s, information about the fine temporal structure of the beat waveform, i.e., its phase and amplitude modulations, is also present in afferent spike trains. By contrast, midbrain auditory units synchronize strongly to the dF of a beat but weakly to its F0s (this report), which is consistent with the model of Culling and Darwin (1994). In sum, the results for midshipman suggest a “combinatorial” coding strategy in which a peripheral periodicity code of individual F0s is transformed into a central dF code.
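How a representation of two F0s can give rise to a dF code can be illustrated with a generic nonlinearity. The sketch below assumes half-wave rectification followed by moving-average smoothing, a standard envelope-extraction scheme swapped in for illustration; it is not the mechanism identified in midshipman, and the F0 values (90 and 94 Hz), sampling rate, and function names are hypothetical. The linear sum of two tones contains no energy at dF, whereas the rectified and smoothed signal has its strongest low-frequency component at f2 − f1.

```python
import numpy as np

FS = 8000  # sampling rate (Hz), assumed for illustration

def rectify_and_smooth(x, window_s=0.011):
    """Half-wave rectify, then apply a moving average (about one carrier period) as a crude low-pass."""
    r = np.maximum(x, 0.0)
    w = max(1, int(round(window_s * FS)))
    return np.convolve(r, np.ones(w) / w, mode="same")

def amplitude_at(x, f):
    """Amplitude of the cosine component nearest f (Hz)."""
    amp = np.abs(np.fft.rfft(x)) / (len(x) / 2)
    freqs = np.fft.rfftfreq(len(x), d=1 / FS)
    return float(amp[np.argmin(np.abs(freqs - f))])

def dominant_low_frequency(x, fmax=50.0):
    """Frequency (Hz) of the strongest non-DC component below fmax."""
    amp = np.abs(np.fft.rfft(x - x.mean()))
    freqs = np.fft.rfftfreq(len(x), d=1 / FS)
    band = (freqs > 0) & (freqs < fmax)
    return float(freqs[band][np.argmax(amp[band])])

t = np.arange(FS) / FS   # 1 s of samples, so FFT bins are 1 Hz apart
f1, f2 = 90.0, 94.0      # hypothetical F0s of two overlapping hums
pair = np.cos(2 * np.pi * f1 * t) + np.cos(2 * np.pi * f2 * t)
smoothed = rectify_and_smooth(pair)

print(amplitude_at(pair, f2 - f1))       # linear sum: essentially no energy at dF
print(amplitude_at(smoothed, f2 - f1))   # after rectification: a clear component at dF
print(dominant_low_frequency(smoothed))  # strongest slow component of the smoothed output
```

The nonlinearity is the essential step: any linear filtering of the summed waveform can only reweight the existing components at f1 and f2, whereas rectification creates a new component at their difference.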

A midbrain extraction of dF information may be common to other vertebrates that rely on acoustic signals for communication. Studies in a wide range of species suggest a prominent role for the midbrain in coding the temporal features of acoustic communication signals (Rose, 1986; Langner, 1992; Casseday and Covey, 1996; Condon et al., 1996). The coding of beat dF, as well as AM, in the midbrain of midshipman fish supports this hypothesis and now demonstrates a midbrain role in processing the temporal features of not only single but also concurrent vocalizations.

Footnotes

This work was supported by National Institutes of Health Grant DC-00092 and Cornell University grants (A.H.B.). We thank C. Clark, B. Corzelius, J. Corwin, J. Crawford, A. Lee, and M. Marchaterre for technical advice and assistance and T. H. Bullock, J. McKibben, and T. Natoli for help with this manuscript.

Correspondence should be addressed to Deana A. Bodnar, Section of Neurobiology and Behavior, Mudd Hall, Cornell University, Ithaca, NY 14853.

REFERENCES

- 1. Assman PF, Summerfield Q. Modeling the perception of concurrent vowels with different fundamental frequencies. J Acoust Soc Am. 1990;88:680–697. doi: 10.1121/1.399772.

- 2. Bass AH. Shaping brain sexuality. Am Sci. 1996;84:352–363.

- 3. Bass AH, Baker R. Sexual dimorphisms in the vocal control system of a teleost fish: morphology of physiologically identified neurons. J Neurobiol. 1990;21:1155–1168. doi: 10.1002/neu.480210802.

- 4. Bass AH, Marchaterre MA, Baker R. Vocal-acoustic pathways in a teleost fish. J Neurosci. 1994;14:4025–4039. doi: 10.1523/JNEUROSCI.14-07-04025.1994.

- 5. Bass AH, Bodnar DA, Marchaterre M. Auditory pathways in a vocal fish: inputs to a midbrain nucleus encoding acoustic beats. Soc Neurosci Abstr. 1996;22:447.

- 6. Batra R, Kuwada S, Standford Y. Temporal coding of envelopes and their interaural delays in the inferior colliculus of the unanesthetized rabbit. J Neurophysiol. 1989;61:257–268. doi: 10.1152/jn.1989.61.2.257.

- 7. Batschelet E. Circular statistics in biology. Academic; New York: 1981.

- 8. Bodnar DA, Bass AH. The coding of concurrent vocal signals within the auditory midbrain of a sound producing fish, the plainfin midshipman. Assoc Res Otolaryngol 19th Midwinter Abstr. 1996:155.

- 9. Bodnar DA, Vrieslander JD. The contribution of changes in stimulus temporal features and peripheral nonlinearities to the phase sensitivity of auditory afferents in the bullfrog (Rana catesbeiana). Auditory Neurosci. 1997;3:231–254.

- 10. Bodnar DA, McKibben JR, Bass AH. Temporal computation of the difference frequency of concurrent acoustic signals in the central auditory system of a vocal fish. Soc Neurosci Abstr. 1996;22:447.

- 11. Brantley RK, Bass AH. Alternative male spawning tactics and acoustic signals in the plainfin midshipman fish, Porichthys notatus (Teleostei, Batrachoididae). Ethology. 1994;96:213–232.

- 12. Brokx JPL, Nooteboom SG. Intonation and the perception of simultaneous voices. J Phon. 1982;10:23–26.

- 13. Bullock TH, Hamstra RH, Scheich H. The jamming avoidance response of high frequency electric fish. J Comp Physiol. 1972;77:1–48.

- 14. Cariani P, Delgutte B. Neural correlates of the pitch of complex tones. I. Pitch and pitch salience. J Neurophysiol. 1996a;76:1698–1716. doi: 10.1152/jn.1996.76.3.1698.

- 15. Cariani P, Delgutte B. Neural correlates of the pitch of complex tones. II. Pitch shift, pitch ambiguity, phase invariance, pitch circularity, rate pitch, and the dominance region for pitch. J Neurophysiol. 1996b;76:1717–1734. doi: 10.1152/jn.1996.76.3.1717.

- 16. Casseday JH, Covey E. A neuroethological theory of the operation of the inferior colliculus. Brain Behav Evol. 1996;47:311–336. doi: 10.1159/000113249.

- 17. Chalikia M, Bregman A. The perceptual segregation of simultaneous auditory signals: pulse train segregation and vowel segregation. Percept Psychophys. 1989;46:487–496. doi: 10.3758/bf03210865.

- 18. Cohen MJ, Winn HW. Electrophysiological observations on hearing and sound production in the fish, Porichthys notatus. J Exp Zool. 1967;165:355–370. doi: 10.1002/jez.1401650305.

- 19. Condon CJ, White KR, Feng AS. Processing of amplitude-modulated signals that mimic echoes from fluttering targets in the inferior colliculus of the little brown bat, Myotis lucifugus. J Neurophysiol. 1994;71:768–784. doi: 10.1152/jn.1994.71.2.768.

- 20. Condon CJ, White KR, Feng AS. Neurons with different temporal firing patterns in the inferior colliculus of the little brown bat differentially process sinusoidal amplitude-modulated signals. J Comp Physiol. 1996;178:147–157. doi: 10.1007/BF00188158.

- 21. Crawford JD. Central auditory neurophysiology of a sound producing fish: the mesencephalon of Pollimyrus isidori (Mormyridae). J Comp Physiol. 1993;172:139–152. doi: 10.1007/BF00189392.

- 22. Crawford JD. Feature-detecting auditory neurons in the brain of a sound producing fish. J Comp Physiol. 1997;180:439–450. doi: 10.1007/s003590050061.

- 23. Culling JF, Darwin CJ. Perceptual and computational separation of simultaneous vowels: cues arising from low frequency beating. J Acoust Soc Am. 1994;95:1559–1569. doi: 10.1121/1.408543.

- 24. DeMartini EE. Spawning success of the male plainfin midshipman. I. Influences of male body size and area of spawning site. J Exp Mar Biol Ecol. 1988;121:177–192.

- 25. Fay RR. Structure and function in sound discrimination among vertebrates. In: Webster DB, Fay RR, Popper A, editors. The evolutionary biology of hearing. Springer; New York: 1993. pp. 229–266.

- 26. Gerhardt HC. Acoustic properties used in call recognition by frogs and toads. In: Fritzsch B, Ryan MJ, Wilczynski W, Hetherington TE, Walkowiak W, editors. The evolution of the amphibian auditory system. Wiley; New York: 1988. pp. 455–483.

- 27. Goldberg JM, Brown PB. Response of binaural neurons of dog superior olivary complex to dichotic tonal stimuli: some physiological mechanisms of sound localization. J Neurophysiol. 1969;32:613–636. doi: 10.1152/jn.1969.32.4.613.

- 28. Gooler DM, Feng AS. Temporal coding in the frog auditory midbrain: the influence of duration and rise-fall time on the processing of complex amplitude-modulated stimuli. J Neurophysiol. 1992;67:1–22. doi: 10.1152/jn.1992.67.1.1.

- 29. Heiligenberg W. Neural nets in electric fish. MIT; Cambridge: 1991.

- 30. Heiligenberg W, Bastian J. The electric sense of weakly electric fish. Annu Rev Physiol. 1984;46:561–583. doi: 10.1146/annurev.ph.46.030184.003021.

- 31. Heiligenberg W, Rose GJ. Phase and amplitude computations in the midbrain of an electric fish: intracellular studies of neurons participating in the jamming avoidance response of Eigenmannia. J Neurosci. 1985;5:515–531. doi: 10.1523/JNEUROSCI.05-02-00515.1985.

- 32. Ibara RM, Penny LT, Ebeling AW, van Dykhuizen G, Cailliet G. The mating call of the plainfin midshipman fish, Porichthys notatus. In: Noakes DGL, Lindquist DG, Helfman GS, Ward JA, editors. Predators and prey in fishes. Junk; The Hague, Netherlands: 1983. pp. 205–212.

- 33. Kawasaki M. Independently evolved jamming avoidance responses employ identical computational algorithms: a behavioral study of the African electric fish, Gymnarchus niloticus. J Comp Physiol. 1993;173:9–22. doi: 10.1007/BF00209614.

- 34. Kawasaki M, Guo YX. Neuronal circuitry for comparison of timing in the electrosensory lateral line lobe of the African wave-type electric fish, Gymnarchus niloticus. J Neurosci. 1996;16:380–391. doi: 10.1523/JNEUROSCI.16-01-00380.1996.

- 35. Keilson SE, Richards VM, Wyman BT, Young ED. Pitch-tagged spectral representation in the cochlear nucleus. Assoc Res Otolaryngol 18th Midwinter Abstr. 1995:128.

- 36. Knudsen EI. Distinct auditory and lateral line nuclei in the midbrain of catfishes. J Comp Neurol. 1977;173:417–432. doi: 10.1002/cne.901730302.

- 37. Langner G. Evidence for neuronal periodicity detection in the auditory system of the guinea fowl: implications for pitch analysis in the time domain. Exp Brain Res. 1983;52:333–335. doi: 10.1007/BF00238028.

- 38. Langner G. Periodicity coding in the auditory system. Hear Res. 1992;60:115–142. doi: 10.1016/0378-5955(92)90015-f.

- 39. Langner G, Schreiner CE. Periodicity coding in the inferior colliculus of the cat. I. Neuronal mechanisms. J Neurophysiol. 1988;60:1799–1822. doi: 10.1152/jn.1988.60.6.1799.

- 40. Lewis ER, Baird RA, Leverenz EL, Koyama H. Inner ear: dye injection reveals peripheral origins of specific sensitivities. Science. 1982;215:1641–1643. doi: 10.1126/science.6978525.

- 41. Lu Z, Fay RR. Acoustic response properties of single units in the torus semicircularis of the goldfish, Carassius auratus. J Comp Physiol. 1993;173:33–48. doi: 10.1007/BF00209616.

- 42. McCormick CA. Evolution of central auditory pathways in anamniotes. In: Webster DB, Fay RR, Popper AN, editors. The evolutionary biology of hearing. Springer; New York: 1992. pp. 323–350.

- 43. McCormick CA, Hernandez DV. Connections of octaval and lateral line nuclei of the medulla in the goldfish, including the cytoarchitecture of the secondary octaval population in goldfish and catfish. Brain Behav Evol. 1996;47:113–137. doi: 10.1159/000113232.

- 44. McCue MP, Guinan JJ Jr. Acoustically responsive fibers in the vestibular nerve of the cat. J Neurosci. 1994;14:6058–6070. doi: 10.1523/JNEUROSCI.14-10-06058.1994.

- 45. McKibben JR, Bass AH. Peripheral encoding of behaviorally relevant acoustic signals in a vocal fish. Soc Neurosci Abstr. 1996;22:447.

- 46. McKibben JR, Bodnar DA, Capranica RR. Encoding of auditory beats in the frog VIIIth nerve. Soc Neurosci Abstr. 1993;19:576.

- 47. McKibben JR, Bodnar D, Bass AH. Everybody’s humming but is anybody listening: acoustic communication in a marine teleost fish. In: Burrows M, Matheson T, Newland PL, Schuppe, editors. Nervous systems and behavior. Proceedings of the Fourth International Congress of Neuroethology. Thieme; New York: 1995. p. 351.

- 48. Meddis R, Hewitt MJ. Modelling the identification of concurrent vowels with different fundamental frequencies. J Acoust Soc Am. 1992;91:233–245. doi: 10.1121/1.402767.

- 49. Palmer AR. The representation of the spectra and fundamental frequency of steady-state single- and double-vowel sounds in the temporal discharge patterns of guinea pig cochlear nerve fibers. J Acoust Soc Am. 1990;88:1412–1426. doi: 10.1121/1.400329.

- 50. Rose GJ. A temporal processing mechanism for all species. Brain Behav Evol. 1986;28:134–144. doi: 10.1159/000118698.

- 51. Rose GJ, Capranica RR. Sensitivity to amplitude modulated sounds in the anuran auditory nervous system. J Neurophysiol. 1985;53:446–465. doi: 10.1152/jn.1985.53.2.446.

- 52. Rose GJ, Heiligenberg W. Neural coding of difference frequencies in the midbrain of the electric fish Eigenmannia: reading the sense of rotation in an amplitude-phase plane. J Comp Physiol. 1986;158:613–624. doi: 10.1007/BF00603818.

- 53. Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- 54. Walker HJ, Rosenblatt RH. Pacific toadfishes of the genus Porichthys (Batrachoididae) with descriptions of three new species. Copeia. 1988;1988:887–904.

- 55. Yin TCT, Kuwada S. Binaural interaction in low frequency neurons in inferior colliculus of cat. II. Effects of changing rate and direction of interaural phase. J Neurophysiol. 1983;50:1000–1019. doi: 10.1152/jn.1983.50.4.1000.