Abstract

Using functional magnetic resonance imaging (fMRI), we found an area in the fusiform gyrus in 12 of the 15 subjects tested that was significantly more active when the subjects viewed faces than when they viewed assorted common objects. This face activation was used to define a specific region of interest individually for each subject, within which several new tests of face specificity were run. In each of five subjects tested, the predefined candidate “face area” also responded significantly more strongly to passive viewing of (1) intact than scrambled two-tone faces, (2) full front-view face photos than front-view photos of houses, and (in a different set of five subjects) (3) three-quarter-view face photos (with hair concealed) than photos of human hands; it also responded more strongly during (4) a consecutive matching task performed on three-quarter-view faces versus hands. Our technique of running multiple tests applied to the same region defined functionally within individual subjects provides a solution to two common problems in functional imaging: (1) the requirement to correct for multiple statistical comparisons and (2) the inevitable ambiguity in the interpretation of any study in which only two or three conditions are compared. Our data allow us to reject alternative accounts of the function of the fusiform face area (area “FF”) that appeal to visual attention, subordinate-level classification, or general processing of any animate or human forms, demonstrating that this region is selectively involved in the perception of faces.

Keywords: extrastriate cortex, face perception, functional MRI, fusiform gyrus, ventral visual pathway, object recognition

Evidence from cognitive psychology (Yin, 1969; Bruce et al., 1991; Tanaka and Farah, 1993), computational vision (Turk and Pentland, 1991), neuropsychology (Damasio et al., 1990; Behrmann et al., 1992), and neurophysiology (Desimone, 1991; Perrett et al., 1992) suggests that face and object recognition involve qualitatively different processes that may occur in distinct brain areas. Single-unit recordings from the superior temporal sulcus (STS) in macaques have demonstrated neurons that respond selectively to faces (Gross et al., 1972; Desimone, 1991; Perrett et al., 1991). Evidence for a similar cortical specialization in humans has come from epilepsy patients with implanted subdural electrodes. In discrete portions of the fusiform and inferotemporal gyri, large N200 potentials have been elicited by faces but not by scrambled faces, cars, or butterflies (Ojemann et al., 1992; Allison et al., 1994; Nobre et al., 1994). Furthermore, many reports have described patients with damage in the occipitotemporal region of the right hemisphere who have selectively lost the ability to recognize faces (De Renzi, 1997). Thus, several sources of evidence support the existence of specialized neural “modules” for face perception in extrastriate cortex.

The evidence from neurological patients is powerful but limited in anatomical specificity; however, functional brain imaging allows us to study cortical specialization in the normal human brain with relatively high spatial resolution and large sampling area. Past imaging studies have reported regions of the fusiform gyrus and other areas that were more active during face than object viewing (Sergent et al., 1992), during face matching than location matching (Haxby et al., 1991, 1994; Courtney et al., 1997), and during the viewing of faces than of scrambled faces (Puce et al., 1995; Clark et al., 1996), consonant strings (Puce et al., 1996), or textures (Malach et al., 1995; Puce et al., 1996). Although these studies are an important beginning, they do not establish that these cortical regions are selectively involved in face perception, because each of these findings is consistent with several alternative interpretations of the mental processes underlying the observed activations, such as (1) low-level feature extraction (given the differences between the face and various control stimuli), (2) visual attention, which may be recruited more strongly by faces, (3) “subordinate-level” visual recognition (Damasio et al., 1990; Gauthier et al., 1996) of particular exemplars of a basic-level category (Rosch et al., 1976), and (4) recognition of any animate (or human) objects (Farah et al., 1996).

Such ambiguity of interpretation is almost inevitable in imaging studies in which only two or three conditions are compared. We attempted to overcome this problem by using functional magnetic resonance imaging (fMRI) to run multiple tests applied to the same cortical region within individual subjects to search for discrete regions of cortex specialized for face perception. (For present purposes, we define face perception broadly to include any higher-level visual processing of faces from the detection of a face as a face to the extraction from a face of any information about the individual’s identity, gaze direction, mood, sex, etc.). Our strategy was to ask first whether any regions of occipitotemporal cortex were significantly more active during face than object viewing; only one such area (in the fusiform gyrus) was found consistently across most subjects. To test the hypothesis that this fusiform region was specialized for face perception, we then measured the activity in this same functionally defined area in individual subjects during four subsequent comparisons, each testing one or more of the alternative accounts listed in the previous paragraph.

MATERIALS AND METHODS

General design. This study had three main parts. In Part I, we searched for any occipitotemporal areas that might be specialized for face perception by looking within each subject for regions in the ventral (occipitotemporal) pathway that responded significantly more strongly during passive viewing of photographs of faces than photographs of assorted common objects. This comparison served as a scout, allowing us to (1) anatomically localize candidate “face areas” within individual subjects, (2) determine which if any regions are activated consistently across subjects, and (3) specify precisely the voxels in each subject’s brain that would be used as that subject’s previously defined region of interest (ROI) for the subsequent tests in Parts II and III.

We used a stimulus manipulation with a passive viewing task (rather than a task manipulation on identical stimuli) because the perception of foveally presented faces is a highly automatic process that is difficult to bring under volitional control (Farah et al., 1995). Imagine, for example, being told that a face will be flashed at fixation for 500 msec and that you must analyze its low-level visual features but not recognize the face. If the face is familiar it will be virtually impossible to avoid recognizing it. Thus when faces are presented foveally, all processes associated with face recognition are likely to occur no matter what the task is, and the most effective way to generate a control condition in which those processes do not occur is to present a nonface stimulus (Kanwisher et al., 1996).

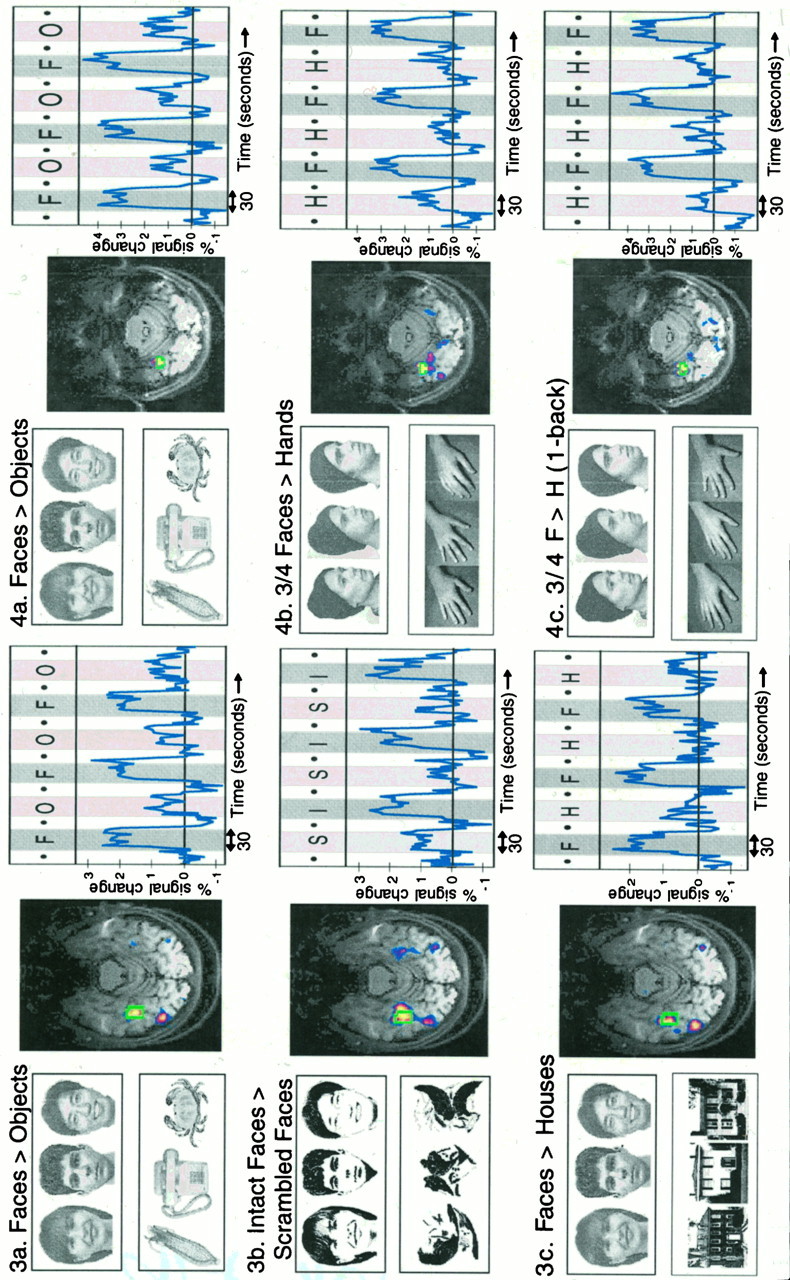

The results of Part I showed only one region that was activated consistently across subjects for the faces versus objects comparison; this area was in the right fusiform gyrus (and/or adjacent sulci). We hypothesized that this region was specialized for some aspect of face perception, and we tested alternatives to this hypothesis with several different stimulus comparisons in Parts II and III. In Part II, each of five subjects who had revealed a clear fusiform face activation in Part I was tested on two new stimulus comparisons. In each, the methodological details were identical to those of the faces versus objects runs, and only the stimulus sets differed. Our first new stimulus comparison in Part II was between intact two-tone faces (created by thresholding the photographs used in Part I) and scrambled two-tone faces in which the component black regions were rearranged to create a stimulus unrecognizable as a face (see Fig. 3b). This manipulation preserved the mean luminance and some low-level features of the two-tone face stimuli and avoided producing the “cut-and-paste” marks that have been a problem in the scrambling procedures of some earlier studies; this contrast therefore served as a crude test of whether the “face areas” were simply responding to the low-level visual features present in face but not nonface stimuli. Our second stimulus contrast—front view photographs of faces versus front view photographs of houses (see Fig. 3c)—was designed to test whether the “face area” was involved not in face perception per se but rather in processing and/or distinguishing between any different exemplars of a single class of objects.

Fig. 3.

Results of Part II. Left column, Sample stimuli used for the faces versus objects comparison as well as the two subsequent tests. Center column, Areas that produced significantly greater activation for faces than control stimuli for subject S1. a, The faces versus objects comparison was used to define a single ROI (shown in green outline for S1), separately for each subject. The time courses in the right column were produced by (1) averaging the percentage signal change across all voxels in a given subject’s ROI (using the original unsmoothed data), and then (2) averaging these ROI averages across the five subjects. F and O in a indicate face and object epochs; I and S in b indicate intact and scrambled face epochs; and F and H in c indicate face and house epochs.

In Part III, a new but overlapping set of five subjects who had revealed clear candidate face areas in Part I were tested on two new comparisons. (Subjects S1 and S5 participated in both Parts II and III.) In the first new comparison, subjects passively viewed three-quarter-view photographs of faces (all were of people whose hair was tucked inside a black knit ski hat) versus photographs of human hands (all shot from the same angle and in roughly the same position). This comparison (see Fig. 4b) was designed to address several questions. First, would the response of the candidate face area generalize to a different viewpoint? Second, is this area involved in recognizing the face on the basis of the hair and other external features of the head (Sinha and Poggio, 1996) or on the basis of its internal features? Because the external features were largely hidden (and highly similar across exemplars) in the ski hat faces, a response of this area to these stimuli would suggest that it is primarily involved in processing the internal rather than external features of the face. Third, the use of human hands as a control condition also provided a test of whether the face area would respond to any animate or human body part. In the second new comparison, the same stimuli (three-quarter-view faces vs hands) were presented while subjects performed a “1-back” task searching for consecutive repetitions of identical stimuli (pressing a button whenever they detected a repetition). For this task, a 250 msec blank gray field was inserted between successive 500 msec stimulus presentations. The gray field produced sensory transients over the whole stimulus and thereby required subjects to rely on higher-level visual information to perform the task (Rensink et al., 1997). Because the 1-back task was, if anything, more difficult for hand than face stimuli, the hand condition should engage general attentional mechanisms at least as strongly as the face condition, which rules out any account of the greater activation for faces in terms of general attention.

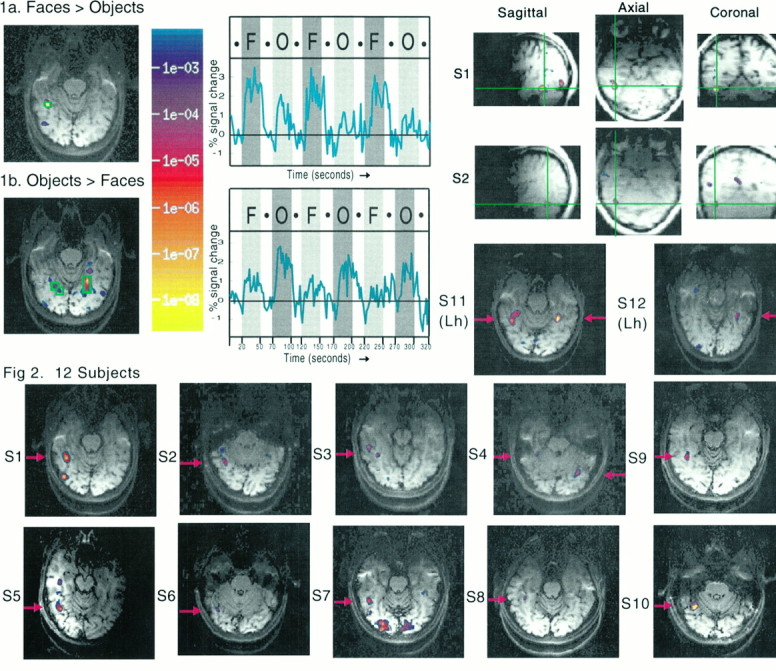

Fig. 4.

Results of Part III. Stimulus contrasts for each test are shown in the left column. a, Face ROIs were defined separately for each subject using the average of two face versus object scans as described for Figure 3a. The resulting brain slice with statistical overlay for one subject (S10) is shown in the center column, and the time course of signal intensity averaged over the five subjects’ ROIs is shown at the right. As described for Figure 3a (Part II), the ROI specified on the basis of the faces versus objects comparison was used for the two subsequent comparisons of passive viewing of three-quarter faces versus hands (b), and the consecutive matching task on three-quarter faces versus hands (c).

Each subject in Parts II and III was also run on the basic faces versus objects comparison from Part I in the same session, so that the results of Part I could be used to define the precise ROIs for that subject for the comparisons in Parts II and III. For the passive viewing conditions, subjects were instructed to maintain fixation on the dot when it was present and otherwise simply to look at the stimuli attentively without performing any other mental task at the same time.

Subjects. Twenty normal subjects under the age of 40 were tested; all reported normal or corrected-to-normal vision and no previous neurological history. The data from five of them were omitted because of excessive head motion or other artifacts. Of the remaining 15 subjects (9 women and 6 men), 13 described themselves as right-handed and two as left-handed. All 15 subjects participated in Part I. (Subject S1 was run on Part I many times in different scanning sessions spread over a 6 month period, both to measure test–retest reliability within a subject across sessions and to compare the results from Part I with a number of other pilot studies conducted over this period.) Subjects S1, S2, S5, S7, and S8 from Figure 2 were run in Part II, and subjects S1, S5, S9, S10, and S11 from Figure 2 were run in Part III. Subjects S1–S10 described themselves as right-handed, whereas subjects S11 and S12 described themselves as left-handed. The experimental procedures were approved by both the Harvard University Committee on the Use of Human Subjects in Research and the Massachusetts General Hospital Subcommittee on Human Studies; informed consent was obtained from each participant.

Fig. 2.

Bottom two rows, Anatomical images overlaid with color-coded statistical maps from the 10 right-handed subjects in Part I who showed regions that produced a significantly stronger MR signal during face than object viewing. For each of the right-handed subjects (S1–S10), the slice containing the right fusiform face activation is shown; for left-handed subjects S11 and S12, all the fusiform face activations are visible in the slices shown. Data from subjects S1 and S2 resliced into sagittal, coronal, and axial slices (top right). Data from the three subjects who showed no regions that responded significantly more strongly for faces than objects are not shown.

Stimuli. Samples of the stimuli used in these experiments are shown in Figures 3 and 4. All stimuli were ∼300 × 300 pixels in size and were gray-scale photographs (or photograph-like images), except for the intact and scrambled two-tone faces used in Part II. The face photographs in Parts I and II were 90 freshman ID photographs obtained with consent from members of the Harvard class of 1999. The three-quarter-view face photos used in Part III depicted members of or volunteers at the Harvard Vision Sciences Lab. (For most subjects, none of the faces were familiar.) The 90 assorted object photos (and photo-like pictures) were obtained from various sources and included canonical views of familiar objects such as a spoon, lion, or car. The 90 house photographs were scanned from an architecture book and were unfamiliar to the subjects.

Each scan lasted 5 min and 20 sec and consisted of six 30 sec stimulus epochs interleaved with seven 20 sec epochs of fixation. During each stimulus epoch in Parts I and II, 45 different photographs were presented foveally at a rate of one every 670 msec (with the stimulus on for 500 msec and off for 170 msec). Stimulus epochs alternated between the two different conditions being compared, as shown in Figures 1, 3, and 4. The 45 different stimuli used in the first stimulus epoch were the same as those used in the fifth stimulus epoch; the stimuli used in the second stimulus epoch were the same as those used in the sixth. The stimuli in Part III were the same in structure and timing, except that (1) a total of 22 face stimuli and 22 hand stimuli were used (with most stimuli occurring twice in each epoch), and (2) the interval between face or hand stimuli was 250 msec.
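The epoch arithmetic above can be checked with a short calculation. The sketch below is a hypothetical helper, not the MacProbe sequence actually used; it assumes that each run begins and ends with fixation and that the first condition named comes first (the text says only that the two conditions alternate), and it expands the design into one label per 2 sec volume.

```python
TR_SEC = 2.0     # repetition time (see MRI acquisition)
FIX_SEC = 20.0   # each of the seven fixation epochs
STIM_SEC = 30.0  # each of the six stimulus epochs


def block_design(cond_a: str, cond_b: str) -> list[tuple[str, float]]:
    """Seven fixation epochs interleaved with six stimulus epochs alternating A, B, A, ..."""
    epochs = [("fixation", FIX_SEC)]
    for i in range(6):
        epochs.append((cond_a if i % 2 == 0 else cond_b, STIM_SEC))
        epochs.append(("fixation", FIX_SEC))
    return epochs


def volume_labels(epochs: list[tuple[str, float]], tr: float = TR_SEC) -> list[str]:
    """Expand (label, duration) epochs into one condition label per acquired volume."""
    labels: list[str] = []
    for label, duration in epochs:
        labels.extend([label] * round(duration / tr))
    return labels


design = block_design("faces", "objects")
total_sec = sum(duration for _, duration in design)
labels = volume_labels(design)
print(total_sec, len(labels))  # 320.0 160

# Presentation timing within one 30 sec stimulus epoch
print(round(45 * 0.670, 2))     # Parts I and II: 45 stimuli at 500 on + 170 off -> 30.15
print(round(STIM_SEC / 0.750))  # Part III: 500 on + 250 off -> 40 presentation slots
```

Six 30 sec epochs plus seven 20 sec epochs give 320 sec, and at a 2 sec TR that is the 160 images per slice referred to in the data analysis.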

Fig. 1.

Results from subject S1 on Part I. The right hemisphere appears on the left for these and all brain images in this paper (except the resliced images labeled “Axial” in Fig. 2). The brain images at the left show in color the voxels that produced a significantly higher MR signal intensity (based on smoothed data) during the epochs containing faces than during those containing objects (a) and vice versa (b) for 1 of the 12 slices scanned. These significance images (see color key at right for this and all figures in this paper) are overlaid on a T1-weighted anatomical image of the same slice. Most of the other 11 slices showed no voxels that reached significance at the p < 10⁻³ level or better in either direction of the comparison. In each image, an ROI is shown outlined in green, and the time course of raw percentage signal change over the 5 min 20 sec scan (based on unsmoothed data and averaged across the voxels in this ROI) is shown at the right. Epochs in which faces were presented are indicated by the vertical gray bars marked with an F; gray bars with an O indicate epochs during which assorted objects were presented; white bars indicate fixation epochs.

Stimulus sequences were generated using MacProbe software (Hunt, 1994) and recorded onto videotape for presentation via a video projector during the scans. Stimuli were back-projected onto a ground-glass screen and viewed in a mirror over the subject’s forehead (visual angle of the stimuli was ∼15 × 15°).

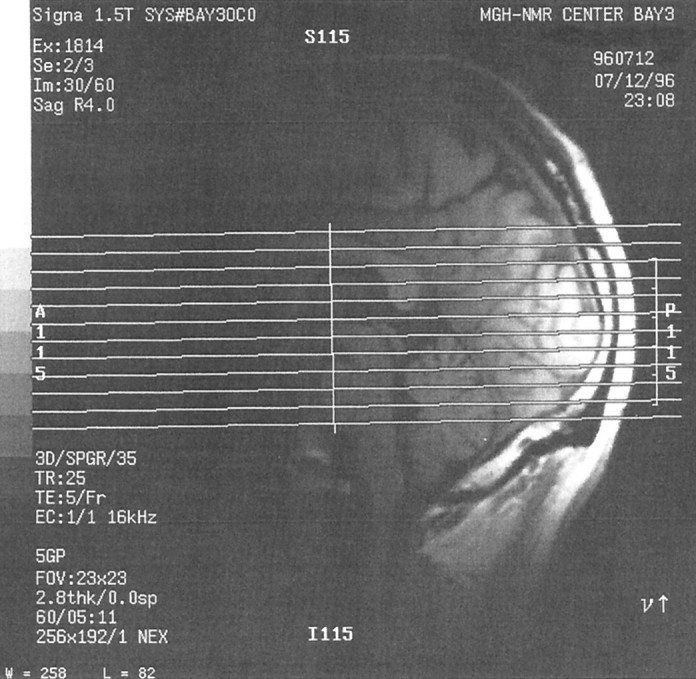

MRI acquisition. Scans were conducted using the 1.5 T MRI scanner (General Electric Signa, Milwaukee, WI) at the Massachusetts General Hospital NMR Center (Charlestown, MA), using echo-planar imaging (Instascan, ANMR Systems, Wilmington, MA) and a bilateral quadrature receive-only surface coil (made by Patrick Ledden, Massachusetts General Hospital NMR Center). Functional data were obtained using an asymmetric spin echo sequence (TR = 2 sec, TE = 70 msec, flip angle = 90°, 180° offset = 25 msec). Our twelve 6 mm slices were oriented parallel to the inferior edge of the occipital and temporal lobes and covered the entire occipital lobe and most of the temporal lobe (see Fig. 5). Head motion was minimized with a bite bar. Voxel size was 3.25 × 3.25 × 6 mm. Details of our procedure are as described in Tootell et al. (1995), except as noted here.

Fig. 5.

Midsagittal anatomical image from subject S1 showing the typical placing of the 12 slices used in this study. Slices were selected so as to include the entire ventral surface of the occipital and temporal lobes.

Data analysis. Five subjects of the 20 scanned had excessive head motion and/or reported falling asleep during one or more runs; the data from these subjects were omitted from further analysis. Motion was assessed within a run by looking for (1) a visible shift in the functional image from a given slice between the first and last functional image in one run, (2) activated regions that curved around the edge of the brain and/or shifted sides when the sign of the statistical comparison was reversed, and/or (3) ramps in the time course of signal intensity from a single voxel or set of voxels. Motion across runs was assessed by visually inspecting the raw functional images for any change in the shape of a brain slice across runs.
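Criterion (3) above was applied by eye. Purely as a hypothetical automated stand-in (not the procedure used in this study), one could fit a straight line to a voxel’s raw time course and flag the voxel when the fitted drift over the run exceeds some tolerance. The 2% tolerance and the function names below are illustrative assumptions; NumPy is assumed.

```python
import numpy as np


def run_drift_pct(timecourse: np.ndarray) -> float:
    """Linear drift over the whole run, as a percentage of the mean signal."""
    t = np.arange(timecourse.size)
    slope, _intercept = np.polyfit(t, timecourse, deg=1)  # least-squares line
    return 100.0 * slope * (timecourse.size - 1) / timecourse.mean()


def looks_like_ramp(timecourse: np.ndarray, max_drift_pct: float = 2.0) -> bool:
    """Flag a time course whose fitted drift exceeds a hypothetical tolerance."""
    return abs(run_drift_pct(timecourse)) > max_drift_pct


rng = np.random.default_rng(1)
steady = 1000 + rng.normal(0, 5, 160)        # 160 volumes of noise around a constant level
drifting = steady + np.linspace(0, 50, 160)  # same noise plus a 50-unit (about 5%) ramp
print(looks_like_ramp(steady), looks_like_ramp(drifting))  # False True
```

A block-design response that returns to baseline in every fixation epoch contributes little to a straight-line fit, which is why a linear trend is a reasonable proxy for the slow ramps that head motion tends to produce.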

For the remaining 15 subjects no motion correction was carried out. Pilot data had indicated that the significance from a single run was sometimes weak, but became much stronger when we averaged across two identical runs within a subject (i.e., when the two corresponding values for each voxel, one from each scan, were averaged together for each of the 160 images × 12 slices collected during a single 5 min 20 sec scan). We therefore ran each test twice on each subject, and averaged over the two runs of each test. The data were then analyzed statistically using a Kolmogorov–Smirnov test, after smoothing with a Hanning kernel over a 3 × 3 voxel area to produce an approximate functional resolution of 6 mm. This analysis was run on each voxel (after incorporating a 6 sec lag for estimated hemodynamic delay), testing whether the MR signal intensity in that voxel was significantly greater during epochs containing one class of stimuli (e.g., faces) than epochs containing the other (e.g., objects). Areas of activation were displayed in color representations of significance level, overlaid on high-resolution anatomical images of the same slice. Voxels of significant activation were also inspected visually by plotting the time course of raw (unsmoothed) signal intensity over the 5 min 20 sec of the scan.
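The per-voxel analysis can be sketched for a single slice as follows, assuming NumPy and SciPy. Several details are assumptions rather than taken from the text: the separable 1-D Hann weights (0.25, 0.5, 0.25), the use of `scipy.stats.ks_2samp` with a one-sided alternative, and labeling the first 6 sec after the shift as fixation. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage, stats

TR_SEC = 2.0   # from the acquisition parameters
LAG_SEC = 6.0  # estimated hemodynamic delay


def hann_smooth(slice_2d: np.ndarray) -> np.ndarray:
    """Smooth one slice with a 3 x 3 Hann-weighted kernel (assumed weights).
    np.hanning(3) returns (0, 1, 0), which would not smooth at all."""
    w = np.array([0.25, 0.5, 0.25])
    return ndimage.convolve(slice_2d, np.outer(w, w), mode="nearest")


def lag_labels(labels: list[str], lag_volumes: int) -> np.ndarray:
    """Give each volume the label of the condition shown lag_volumes earlier."""
    shifted = ["fixation"] * lag_volumes + list(labels[: len(labels) - lag_volumes])
    return np.asarray(shifted)


def ks_pmap(run1, run2, labels, cond_a, cond_b) -> np.ndarray:
    """Per-voxel one-sided KS p-values for 'cond_a > cond_b' on one slice.
    run1, run2: arrays of shape (n_volumes, ny, nx) from two identical runs."""
    avg = (run1 + run2) / 2.0                               # average the two runs
    smoothed = np.stack([hann_smooth(vol) for vol in avg])  # smooth each volume
    lagged = lag_labels(labels, int(round(LAG_SEC / TR_SEC)))
    in_a, in_b = lagged == cond_a, lagged == cond_b
    pmap = np.ones(avg.shape[1:])
    for y, x in np.ndindex(*pmap.shape):
        tc = smoothed[:, y, x]
        # In SciPy, alternative="less" means the CDF of the first sample lies
        # below that of the second, i.e. cond_a values tend to be larger.
        pmap[y, x] = stats.ks_2samp(tc[in_a], tc[in_b], alternative="less").pvalue
    return pmap


# Synthetic example: 160 volumes; a 3 x 3 patch rises 2% during (lagged) face epochs.
labels = (["fixation"] * 10 + ["faces"] * 15 + ["fixation"] * 10 + ["objects"] * 15) * 3 + ["fixation"] * 10
rng = np.random.default_rng(0)
runs = 1000 + rng.normal(0, 5, size=(2, 160, 8, 8))
face_vols = lag_labels(labels, 3) == "faces"
runs[:, face_vols, 2:5, 2:5] += 20.0
pmap = ks_pmap(runs[0], runs[1], labels, "faces", "objects")
```

Shifting the labels rather than the data keeps every acquired volume and simply reassigns each one to the condition that was on the screen 6 sec earlier.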

To identify all regions within our chosen slices and coil range that responded more strongly to faces than objects in Part I, as well as their Talairach coordinates, each subject’s anatomical and functional data were first fitted into their own Talairach space and then analyzed (using the program Tal-EZ by Bush et al., 1996) to find all the regions that produced a stronger signal for faces than objects at the p < 10⁻⁴ level of significance (uncorrected for multiple comparisons). This analysis was intended as a scout for candidate face areas and revealed that the only region in which most of our subjects showed a significantly greater activation for faces than objects was in the right fusiform gyrus. This region therefore became the focus of our more detailed investigations in Parts II and III.

For each subject in Parts II and III, a face ROI was identified that was composed of all contiguous voxels in the right fusiform region in which (1) the MR signal intensity was significantly stronger during face than object epochs at the p < 10⁻⁴ level, and (2) a visual inspection of the raw time course data from that voxel did not reveal any obvious ramps, spikes, or other artifacts. For subject S11, who was left-handed and had very large and highly significant activations in both left and right fusiform gyri, the ROI used in Part III included both of these regions.
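One way to implement the two ROI criteria, assuming SciPy’s connected-component labeling, is to threshold the faces versus objects p-map at 10⁻⁴, drop voxels flagged on inspection, and keep the contiguous cluster that contains a seed voxel. The seed and the artifact mask are hypothetical inputs standing in for the anatomical choice of the right fusiform region and the visual check of each time course; “contiguous” is taken to mean face-adjacent, which is the default neighborhood of `scipy.ndimage.label`.

```python
import numpy as np
from scipy import ndimage

P_THRESHOLD = 1e-4  # criterion (1)


def define_face_roi(pmap, seed, artifact_mask=None):
    """Boolean ROI: the contiguous supra-threshold cluster containing `seed`.

    pmap: per-voxel p-values for faces > objects (any dimensionality).
    seed: index tuple of a voxel in the region of interest (hypothetical input).
    artifact_mask: optional boolean array of voxels rejected on inspection.
    """
    supra = pmap < P_THRESHOLD
    if artifact_mask is not None:
        supra &= ~artifact_mask        # criterion (2): drop flagged voxels
    labeled, _ = ndimage.label(supra)  # face-connected components by default
    cluster = labeled[seed]
    if cluster == 0:                   # the seed itself is not significant
        return np.zeros_like(supra)
    return labeled == cluster
```

For a subject with bilateral activations such as S11, two such masks (one seeded in each hemisphere) could be combined with `roi_left | roi_right`.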

For each of the comparisons in Parts II and III, we first averaged over the two runs from each subject and then averaged across the voxels in that subject’s predefined face ROI (from Part I) to derive the time course of raw signal intensity in that subject’s ROI. Two further analyses were then carried out. First, the average MR signal intensity in each subject’s ROI for each epoch was calculated (by averaging within a subject across all the voxels in the ROI and across all the images collected in each epoch). The average MR signal intensities for each subject and stimulus epoch were then entered into a three-way ANOVA across subjects (epoch number × face/control × test) separately for Parts II and III. The factor of epoch number had three levels corresponding to the first, second, and third epochs for each condition; the test factor had three levels for the three different stimulus comparisons (faces vs objects/scrambled vs intact faces/faces vs houses for Part II and faces vs objects/passive faces vs hands/1-back faces vs hands for Part III). These ANOVAs allowed us to test for the significance of the differences in signal intensity between the various face and control conditions and also to test whether this difference interacted with epoch number and/or comparison type.
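The first of these analyses, reducing each pair of runs to one mean per epoch within the ROI, might look like the hypothetical helper below (NumPy assumed). It uses the nominal epoch boundaries without a hemodynamic shift, since the text does not say whether the 6 sec lag was applied at this step. The three face and three control means per test and subject are the cells of the epoch × face/control × test design; a repeated-measures ANOVA routine (for example `AnovaRM` in statsmodels) could then be applied to them, which this sketch does not do.

```python
import numpy as np


def roi_epoch_means(run1, run2, roi_mask, labels):
    """Mean ROI signal per epoch, grouped by condition in temporal order.

    run1, run2: (n_volumes, ...) arrays from the two runs of one test.
    roi_mask: boolean array over the spatial dimensions.
    labels: one condition label per volume; an epoch is a run of equal labels.
    """
    avg = (run1 + run2) / 2.0               # 1) average the two runs
    roi_tc = avg[:, roi_mask].mean(axis=1)  # 2) average over ROI voxels per volume
    means: dict[str, list[float]] = {}
    start = 0
    for i in range(1, len(labels) + 1):     # 3) average within each epoch
        if i == len(labels) or labels[i] != labels[start]:
            means.setdefault(labels[start], []).append(float(roi_tc[start:i].mean()))
            start = i
    return means
```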

Second, for each subject we converted the raw time course of MR signal intensity from that subject’s face ROI into a time course of percent signal change, using that subject’s average signal across all the fixation epochs in the same runs (in the face ROI) as a baseline. These time courses of percent signal change for each subject’s face ROI could then be averaged across the five subjects who were run on the same test, for all the tests in Parts I through III. By averaging across each subject’s ROI and across all the data collected during each epoch type, we derived an average percentage signal change for the face and control conditions for each test. The ratio of the percentage signal change for the faces versus control condition for each test provides a measure of the selectivity of the face ROI to the stimulus contrast used in that test.
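A minimal sketch of the percent-signal-change conversion and the selectivity ratio, assuming NumPy and one condition label per volume (the function names are hypothetical). Each subject’s ROI time course is normalized by that subject’s own mean fixation signal before the subjects’ time courses are averaged, as described above.

```python
import numpy as np


def percent_signal_change(roi_tc, labels, baseline="fixation"):
    """Convert a raw ROI time course to % change from its mean fixation signal."""
    labels = np.asarray(labels)
    base = roi_tc[labels == baseline].mean()
    return 100.0 * (roi_tc - base) / base


def selectivity(psc_by_subject, labels, face_cond, control_cond):
    """Average % change across subjects, then within each condition.
    Returns (face %, control %, face/control ratio)."""
    labels = np.asarray(labels)
    group = np.mean(np.stack(psc_by_subject), axis=0)  # average across subjects
    face = group[labels == face_cond].mean()
    control = group[labels == control_cond].mean()
    return face, control, face / control  # ratio is only meaningful if control > 0
```

Because each subject is normalized to their own baseline first, subjects with different raw signal levels contribute equally to the group time course.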

RESULTS

Part I

In Part I we asked whether any brain areas were significantly more active during face viewing than object viewing. Figure 1a shows the results from a single subject (S1), revealing a region in the right fusiform gyrus that produced a significantly higher signal intensity during epochs in which faces were presented than during epochs in which objects were presented (in five adjacent voxels at the p < 10⁻⁴ level, based on an analysis of smoothed data). This pattern is clearly visible in the raw (unsmoothed) data for this subject shown in Figure 1a (right), where the percentage signal change is plotted over the 5 min 20 sec of the scan, averaged over the five voxels outlined in green (Fig. 1a, left). The opposite effect, a significantly higher MR signal during object than face viewing (each voxel significant at the p < 10⁻⁴ level), was seen in a different, bilateral and more medial area, including two adjacent voxels in the right hemisphere and eight in the left, in the same slice of the same data set (Fig. 1b). A similar bilateral activation in the parahippocampal region for objects compared with faces was seen in most of the subjects run in this study; this result is described briefly in Kanwisher et al. (1996), where images of this activation are shown for three different subjects. The two opposite activations for faces and objects constitute a double dissociation and indicate that the face activation cannot merely be an artifact of an overall tendency for the faces to be processed more extensively than the objects or vice versa.

To scout for any regions of the brain that might be specialized for face perception consistently across subjects, we tabulated (in Table 1) the Talairach coordinates of all the regions in each subject that produced a stronger signal for faces than objects at the p < 10⁻⁴ level of significance (uncorrected for multiple comparisons). The only region in which most of our subjects showed a significantly greater activation for faces than objects was in the right fusiform gyrus. This region therefore became the focus of our more detailed investigations in Parts II and III.

Table 1.

Talairach coordinates of brain regions with stronger responses to faces than objects in individual subjects

| Subject | Fusiform face area | MT gyrus/ST sulcus | Other activation loci | Other activation loci |
|---|---|---|---|---|
| A. Right-handed subjects | | | | |
| S1 | (40, −48, −12), 2.1, −10 | (43, −75, −6), 2.1, −10 | | |
| S2 | (37, −57, −9), 0.4, −7 | (50, −54, 15), 0.3, −6 | (−37, −57, 21), 0.6, −5 | (−43, −72, 25), 0.7, −5 |
| (0, −54, 28), 6.1, −8 | | | | |
| S3 | (43, −54, −18), 1.8, −9 | (65, −51, 9), 3.6, −6 | (37, −78, −15), 0.6, −8 | (40, −69, 40), 2.8, −5 |
| (56, −60, 3), 1.6, −5 | (56, −27, 18), 1.1, −5 | | | |
| S4 | (31, −62, −6), 0.9, −10 | (56, −57, 6), 0.9, 2e−7 | (34, −42, −21), 0.3, −4 | |
| (−31, −62, −15), 1.3, −6 | | | | |
| S5 | (50, −63, −9), 2.1, −10 | (34, −81, 6), 0.6, −6 | (40, −30, −9), 0.2, −6 | |
| S6 | (37, −69, −3), 0.1, −4 | (46, −48, 12), 0.7, −4 | (−34, −69, 0), 0.2, −4 | |
| (−34, −69, 0), 0.1, −4 | | | | |
| S7 | (46, −54, −12), 0.8, −6 | (43, −69, −3), 0.4, −5 | (−12, −87, 0), 3.1, −5 | |
| (−40, −69, −12), 0.5, −5 | (0, −75, 6), 1.9, −9 | (21, −90, −3), 3.7, −6 | | |
| (−6, −75, 34), 4.2, −8 | (40, 54, 34), 2.8, −5 | | | |
| (12, −81, 46), 1.7, −6 | | | | |
| S8 | (40, −39, −6), 0.06, −6 | (3, −72, 31), 2.7, −6 | | |
| (−34, −75, −3), 0.06, −8 | | | | |
| S9 | (40, −51, −12), 0.7, −1.10 | | | |
| S10 | (34, −57, −15), 1.4, −13 | (56, −60, 6), 0.2, −5 | | |
| (−37, −41, −12), 0.4, −6 | | | | |
| B. Left-handed subjects | | | | |
| S11 | (−37, −42, −12), 1.9, −12 | (46, −69, 0), 0.4, −5 | (−62, −30, 12), 0.4, −5 | |
| (40, −48, −12), 1.1, −8 | (−53, −54, 0), 1.4, −5 | | | |
| S12 | (−34, −48, −6), 0.4, −8 | (56, −42, 21), 0.5, −5 | (3, −60, 12), 0.3, −5 | (34, −90, 6), 0.3, −4 |
| (6, −60, 31), 3.5, −5 | | | | |

Regions that responded significantly (at the p < 10⁻⁴ level) more strongly during face than object epochs (Part I) for each subject. For each activated region, the table gives (1) the Talairach coordinates (M-L x, A-P y, S-I z), (2) the size (in cm³), and (3) the exponent (base 10) of the p level of the most significant voxel in that region (based on an analysis of unsmoothed data). Rows without a subject label list additional regions for the subject above. This table was generated using a program (Tal-EZ) supplied by G. Bush et al. (1996). Subject S5 was run with a surface coil placed over the right hemisphere, so only right hemisphere activations could be detected.

Fusiform activations for faces compared with objects were observed in 12 of the 15 subjects analyzed; for the other three subjects, no brain areas produced a significantly stronger MR signal intensity during face than object epochs at the p < 10⁻⁴ level or better. [Null results are difficult to interpret in functional imaging data: the failure to see face activations in these subjects could reflect either the absence of a face area in these subjects or the failure to detect a face module that was actually present because of (1) insufficient statistical power, (2) susceptibility artifact, or (3) any of numerous other technical limitations.] The slice showing the fusiform face activation for each of the 12 subjects is shown in Figure 2. An inspection of flow-compensated anatomical images did not reveal any large vessels in the vicinity of the activations. Despite some variability, the locus of this fusiform face activation is quite consistent across subjects, both in terms of gyral/sulcal landmarks and in terms of Talairach coordinates (see Table 1). Half of the 10 right-handed subjects showed this fusiform activation only in the right hemisphere; the other half showed bilateral activations. For the right-handed subjects, the right hemisphere fusiform area averaged 1 cm³ in size and was located at Talairach coordinates 40x, −55y, −10z (mean across subjects of the coordinates of the most significant voxel). The left hemisphere fusiform area was found in only five of the right-handed subjects; in these it averaged 0.5 cm³ in size and was located at −35x, −63y, −10z. (As shown in Table 1, the significance level was also typically higher for right hemisphere than left hemisphere face activations.) For cortical parcellation, the data for individual subjects were resliced into sagittal, coronal, and axial slices (as shown for S1 and S2 in Fig. 2). This allowed localization of these activated areas to the fusiform gyrus at the level of the occipitotemporal junction (parcellation unit TOF in the system of Rademacher et al., 1993), although in several cases we cannot rule out the possibility that the activation is in the adjacent collateral and/or occipitotemporal sulci.
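As a worked check of the right-hemisphere averages quoted above, the sketch below averages the right fusiform entries for S1–S10 (the first data column of each subject’s labeled row in Table 1). It assumes that the tabulated coordinates are those of each region’s most significant voxel, which is how the text describes the averaged quantity.

```python
# Right fusiform entries (x, y, z, size in cm^3) for right-handed subjects S1-S10,
# copied from the first data column of each subject's labeled row in Table 1.
right_ffa = {
    "S1": (40, -48, -12, 2.1),
    "S2": (37, -57, -9, 0.4),
    "S3": (43, -54, -18, 1.8),
    "S4": (31, -62, -6, 0.9),
    "S5": (50, -63, -9, 2.1),
    "S6": (37, -69, -3, 0.1),
    "S7": (46, -54, -12, 0.8),
    "S8": (40, -39, -6, 0.06),
    "S9": (40, -51, -12, 0.7),
    "S10": (34, -57, -15, 1.4),
}

n = len(right_ffa)
mean_x, mean_y, mean_z, mean_size = (
    sum(entry[k] for entry in right_ffa.values()) / n for k in range(4)
)
print(f"({mean_x:.1f}, {mean_y:.1f}, {mean_z:.1f}), {mean_size:.2f} cm^3")
# (39.8, -55.4, -10.2), 1.04 cm^3
```

Rounded to whole millimeters and to 1 cm³, these means agree with the values of 40x, −55y, −10z and 1 cm³ given in the text.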

Subject S1 was run on the basic faces versus objects comparison in Part I in many different testing sessions spread over a period of 6 months. A striking demonstration of the test–retest reliability of this comparison can be seen by inspecting the activation images for this subject from four different sessions in which this same faces versus objects comparison was run; these are shown in Figure 1a, the two different axial images for subject S1 in Figure 2 (bottom left and top right), and Figure 3a. The high degree of consistency in the locus of activation suggests that the complete lateralization of the face activation to the right hemisphere in this subject is not an artifact of partial voluming (i.e., a chance positioning of the slice plane so as to divide a functional region over two adjacent slices, thereby reducing the signal in each slice compared with the case in which the entire region falls in a single slice). Although our sample size is too small to permit confident generalizations about the effects of handedness, it is worth noting that our 10 right-handed subjects showed either unilateral right-hemisphere or bilateral activations in the fusiform region, whereas one left-handed subject (S11) showed a bilateral activation and the other (S12) showed a unilateral left-hemisphere activation.

In addition to the activation in the fusiform region, seven subjects also showed an activation for faces compared with objects in the region of the middle temporal gyrus/superior temporal (ST) sulcus of the right hemisphere. Talairach coordinates for these activations are provided in the second column of Table 1. Most subjects also showed additional face (compared with object) activations in other regions (Table 1, third and fourth columns), but none of these appeared to be systematic across subjects.

Table 2A.

| Part I: Faces | Part I: Objects | Ratio | Part II: Intact faces | Part II: Scrambled faces | Ratio | Part II: Faces | Part II: Houses | Ratio |
|---|---|---|---|---|---|---|---|---|
| 1.9% | 0.7% | 2.8 | 1.9% | 0.6% | 3.2 | 1.6% | 0.2% | 6.6 |

Table 2B.

| Part I: Faces | Part I: Objects | Ratio | Part III passive: 3/4 faces | Part III passive: Hands | Ratio | Part III 1-back: 3/4 faces | Part III 1-back: Hands | Ratio |
|---|---|---|---|---|---|---|---|---|
| 3.3% | 1.2% | 2.7 | 2.7% | 0.7% | 4.0 | 3.2% | 0.7% | 4.5 |

Mean percent signal change (from the average fixation baseline) in the face ROI, averaged across the five subjects tested in each part (A, subjects run on Parts I and II; B, subjects run on Parts I and III), for face epochs versus control epochs in each comparison. The ratio of the percent signal change for faces to the percent signal change for the control condition is a measure of face selectivity.

Part II

Part II tested whether the activation for faces compared with objects described in Part I was attributable to (1) differences in luminance between the face and object stimuli and/or (2) the fact that the face stimuli, but not the object stimuli, were all different exemplars of the same category. Five subjects who had also been run in Part I were run on Part II in the same scanning session, allowing us to use the results from Part I to derive predefined face ROIs for the analysis of the data in Part II.

First we defined a “face” ROI in the right fusiform region separately for each of the five subjects, as described above, and then averaged the response across all the voxels in that subject’s own face ROI during the new tests. The pattern of higher activation for face than nonface stimuli was clearly visible in the raw data from each subject’s face ROI for each of the tests in Part II. To test this quantitatively, we averaged the MR signal intensity across each subject’s ROI and across all the images collected within a given stimulus epoch and entered these data into a three-way ANOVA across subjects (face/control × epoch number × test). This analysis revealed a main effect of higher signal intensity during face epochs than during control stimulus epochs (F(1,4) = 27.1; p < 0.01). No other main effects or interactions reached significance. In particular, there was no interaction of face/control × test (F < 1), indicating that the effect of higher signal intensity during face than control stimuli did not differ significantly across the three tests. As a further check, separate pairwise comparisons between the face and control stimuli were run for each of the three tests, revealing that each reached significance independently (p < 0.001 for faces vs objects, p < 0.05 for intact vs scrambled faces, and p < 0.01 for faces vs houses). Note that because the ROI and exact hypothesis were specified in advance for the latter two tests (and because we averaged over all the voxels in a given subject’s ROI to produce a single number for each ROI), only a single comparison was carried out for each subject in each test, and no correction for multiple comparisons is necessary for the intact versus scrambled faces and faces versus houses comparisons.
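Two steps of this analysis can be sketched in code: collapsing each subject's data to one number per epoch by averaging over the ROI voxels and then over the images in the epoch, and testing the face/control factor across subjects. The sketch assumes a fully within-subjects (repeated-measures) design; under that assumption the main-effect F(1, n − 1) for a two-level factor equals the squared paired t statistic computed on each subject's face and control means averaged over the remaining factors. The array layout, the epoch-label encoding, and the function names are hypothetical, and this is not the authors' code.

```python
import numpy as np


def roi_epoch_means(timecourse_4d, roi_mask, epoch_labels):
    """Average the signal over ROI voxels, then over the images within each epoch.

    timecourse_4d: array of shape (x, y, z, t).
    roi_mask: boolean array of shape (x, y, z) marking the subject's face ROI.
    epoch_labels: integer array of length t giving each image's epoch index;
        negative values mark images to exclude (hypothetical encoding).
    Returns one mean per epoch, in ascending epoch-index order.
    """
    epoch_labels = np.asarray(epoch_labels)
    roi_ts = timecourse_4d[roi_mask].mean(axis=0)  # shape (t,): one value per image
    epochs = np.unique(epoch_labels[epoch_labels >= 0])
    return np.array([roi_ts[epoch_labels == e].mean() for e in epochs])


def within_subject_main_effect(face_means, control_means):
    """F and its degrees of freedom for a two-level within-subject factor.

    face_means, control_means: one value per subject, each already averaged
    over the other factors (e.g., epoch number and test). For a balanced
    repeated-measures design this F equals the squared paired t statistic.
    """
    diffs = np.asarray(face_means, dtype=float) - np.asarray(control_means, dtype=float)
    n = diffs.size
    t = diffs.mean() / (diffs.std(ddof=1) / np.sqrt(n))
    return t ** 2, (1, n - 1)
```

With five subjects the degrees of freedom come out as (1, 4), matching the F(1,4) values reported. Because each subject's ROI is reduced to a single average, each test is one comparison rather than one per voxel.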

For each subject, the ROI-averaged time course data were then converted into percentage signal change, using the average MR signal intensity across the fixation epochs in that subject’s face ROI as a baseline. The average across the five subjects’ time courses of percentage signal change is plotted in Figure 3, where the data clearly show higher peaks during face epochs than during nonface epochs. An index of the selectivity of the face ROI was then derived by calculating the ratio of the average percentage signal change across all subjects’ face ROIs during face epochs to the average percentage signal change during nonface epochs. This ratio (see Table 2) varies from 2.8 (faces vs objects) to 6.6 (faces vs houses), indicating a high degree of stimulus selectivity in the face ROIs. For comparison, Tootell et al. (1995) reported analogous selectivity ratios from 2.2 to 16.1 for the response of visual area MT to moving versus stationary displays.
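The percent-signal-change conversion and the selectivity ratio described here can be sketched as below. The sketch assumes boolean masks marking which images in each subject's ROI-averaged time course belong to fixation, face, and control epochs (a hypothetical encoding; no hemodynamic lag is modeled). Following the text, the face and control averages are each taken across subjects before the ratio is formed. Recomputing the ratio from the rounded percentages in Table 2 will not exactly reproduce the tabulated ratios.

```python
import numpy as np


def percent_signal_change(roi_ts, fixation_mask):
    """Express an ROI-averaged time course as % change from its mean fixation signal."""
    roi_ts = np.asarray(roi_ts, dtype=float)
    baseline = roi_ts[np.asarray(fixation_mask, dtype=bool)].mean()
    return 100.0 * (roi_ts - baseline) / baseline


def selectivity_ratio(psc_by_subject, face_masks, control_masks):
    """Mean % change during face epochs divided by mean % change during control epochs.

    Each subject contributes one face mean and one control mean; these are
    averaged across subjects before the ratio is taken.
    """
    face = np.mean([np.asarray(p)[np.asarray(m, dtype=bool)].mean()
                    for p, m in zip(psc_by_subject, face_masks)])
    control = np.mean([np.asarray(p)[np.asarray(m, dtype=bool)].mean()
                       for p, m in zip(psc_by_subject, control_masks)])
    return face / control
```

Taking the ratio of averages, rather than averaging per-subject ratios, keeps a subject with a very small control response from dominating the index.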

In sum, these data indicate that the region in each subject’s fusiform gyrus that responds more strongly to faces than objects also responds more strongly to intact than scrambled two-tone faces and more strongly to faces than houses.

The selectivity of the MT gyrus/ST sulcus activation could not be adequately addressed with the current data set because only one of the five subjects run in Part II showed a greater response for faces than objects in this region. (For this subject, S2, the MT gyrus/ST sulcus region activated for faces vs objects was activated only weakly, if at all, in the comparisons of intact vs scrambled faces and faces vs houses.)

Part III

Part III tested whether the activation for faces compared with objects described in Part I was attributable to (1) a differential response to animate (or human) versus inanimate objects, (2) greater visual attentional recruitment by faces than objects, or (3) subordinate-level classification. Five subjects (including two who had been run on Part II in a different session) were run on Parts I and III in the same session. The data were analyzed in the same way as the data from Part II: fusiform face ROIs were defined on the basis of the faces versus objects data from Part I, and these ROIs were used for the analysis of the two new tests. Each subject’s raw data clearly showed higher signal intensities in the face ROI during face than nonface epochs in both new tests (passive three-quarter faces vs hands and 1-back faces vs hands). A three-way ANOVA across subjects on the mean signal intensity in each subject’s face ROI for each stimulus epoch (face/control × epoch number × test) revealed a significant main effect of higher signal intensity for face than nonface stimuli (F(1,4) = 35.2; p < 0.005); no other main effects or interactions reached significance. Separate analyses of the mean signal intensity during face versus control stimulus epochs confirmed that each of the three tests independently reached significance (p < 0.001 for faces vs objects, p < 0.02 for faces vs hands passive, and p < 0.005 for faces 1-back vs hands 1-back).

As in Part II, we also calculated the percentage signal change in each subject’s prespecified face ROI. The averages across the five subjects’ time courses of percentage signal change are plotted in Figure 4, where the data clearly show higher peaks during face than nonface epochs. The face selectivity ratios (derived as described for Part II) varied from 2.7 for faces versus objects to 4.5 for faces versus hands 1-back (Table 2), once again indicating a high degree of selectivity for faces. Thus, the data from Part III indicate that the same region in the fusiform gyrus that responds more strongly to faces than objects also responds more strongly during passive viewing of three-quarter views of faces than of hands, and more strongly during the 1-back matching task on faces than on hands.

We have only partial information about the selectivity of the MT gyrus/ST sulcus activation in the two comparisons of Part III, because only two of the five subjects run in Part III (S10 and S11) showed activations in the MT/ST region for faces versus objects. Both of these subjects showed significantly greater signal intensities in this region for faces than for hands, suggesting that it is at least partially selective for faces; however, this result will need to be replicated in additional subjects before it can be considered solid.

Although a technical limitation prevented us from recording subjects’ behavioral responses in the scanner during the 1-back task, the experimenters were able to verify that each subject was performing the task by monitoring both the subject’s responses and the stimulus on-line during the scan. All subjects performed both tasks well above chance. Subsequent behavioral measurements on different subjects (n = 12) under similar viewing conditions in the lab found similar performance on the two tasks (86% correct for hands and 92% correct for faces, corrected for guessing), although all subjects reported greater difficulty with the hands task than with the faces task. Thus the hands task was at least as difficult as the faces task, and general attentional mechanisms should be at least as actively engaged by the hands task as by the faces task.
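The text does not say which correction for guessing was applied. One common choice for a yes/no detection task such as the 1-back task is the high-threshold correction (H − F)/(1 − F), where H is the hit rate on repeated items and F is the false-alarm rate on nonrepeated items. The sketch below assumes that formula, a hypothetical encoding of one stimulus identifier and one boolean response per trial, and hypothetical function names; it is an illustration, not the scoring procedure used in the study.

```python
def one_back_targets(stimuli):
    """Mark each trial whose stimulus repeats the immediately preceding one."""
    return [i > 0 and stimuli[i] == stimuli[i - 1] for i in range(len(stimuli))]


def corrected_hit_rate(stimuli, responses):
    """Hit rate corrected for guessing with the high-threshold formula (H - F) / (1 - F).

    stimuli: sequence of stimulus identifiers, one per trial.
    responses: sequence of booleans, True where the subject reported a repeat.
    """
    targets = one_back_targets(stimuli)
    n_targets = sum(targets)
    n_lures = len(targets) - n_targets
    if n_targets == 0 or n_lures == 0:
        raise ValueError("need both repeated and nonrepeated trials")
    hits = sum(bool(r) and t for r, t in zip(responses, targets))
    false_alarms = sum(bool(r) and not t for r, t in zip(responses, targets))
    h = hits / n_targets
    f = false_alarms / n_lures
    if f == 1:
        raise ValueError("correction undefined when every nonrepeat is a false alarm")
    return (h - f) / (1 - f)
```

For the sequence A, A, B, C, C, D with "repeat" responses on trials 2 and 4, there is one hit out of two targets (H = 0.5) and one false alarm out of four lures (F = 0.25), giving a corrected rate of 1/3.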

DISCUSSION

This study found a region in the fusiform gyrus in 12 of 15 subjects that responded significantly more strongly during passive viewing of face than object stimuli. This region was identified within individual subjects and used as a specific ROI within which further tests of face selectivity were conducted. One test showed that the face ROIs in each of five subjects responded more strongly during passive viewing of intact two-tone faces than of scrambled versions of the same faces, ruling out luminance differences as an account of the face activation. In a second test, the average percentage signal increase (from the fixation baseline) across the five subjects’ face ROIs was more than six times greater during passive viewing of faces than during passive viewing of houses, indicating a high degree of stimulus selectivity and demonstrating that the face ROI does not simply respond whenever any set of different exemplars of the same category is presented. In a third test, the face ROIs in a new set of five subjects responded more strongly during passive viewing of three-quarter-view faces with hair concealed than during viewing of photographs of human hands, indicating that (1) this region does not simply respond to any animal or human images or body parts and (2) its response generalizes to face images taken from a different viewpoint, which differ considerably in their low-level visual features from the original set of face images. Finally, in a fourth test, the face ROI of each of the five subjects was shown to respond more strongly during a consecutive matching task carried out on the three-quarter-view faces than during the same matching task on the hand stimuli.
Because both tasks required subordinate-level categorization, and the hand task was at least as difficult as the face task, the greater activation of the face ROIs during the face task indicates that the activity of this region does not reflect general processes associated either with visual attention or with subordinate-level classification of any class of stimuli (contrary to suggestions by Gauthier et al., 1996). The elimination of these main alternative hypotheses provides compelling evidence that the fusiform face area described in this study, which we will call area “FF,” is specifically involved in the perception of faces.

Area FF responds to a wide variety of face stimuli, including front-view gray-scale photographs of faces, two-tone versions of the same faces, and three-quarter-view gray-scale faces with hair concealed. Although it is possible that some low-level visual feature present in each of these stimuli can account for the activation observed, this seems unlikely given the diversity of faces and nonface control stimuli used in the present study. Furthermore, another study in our lab (E. Wojciulik, N. Kanwisher, and J. Driver, unpublished observations) has shown that area FF also responds more strongly during attention to faces than during attention to houses, even when the retinal stimulation is identical in the two cases. (The faces in that study were also smaller and were presented to the side of fixation, indicating further that area FF generalizes across the size and retinal position of the face stimuli.) We therefore conclude that area FF responds to faces in general rather than to some particular low-level feature that happens to be present in all the face but not nonface stimuli that have been tested so far. In addition, the fact that area FF responds as strongly to faces in which the external features (e.g., hair) are largely concealed under a hat suggests that area FF is more involved in face recognition proper than in “head recognition” (Sinha and Poggio, 1996).

Our use of a functional definition of area FF allowed us to assess the variability in the locus of the “same” cortical area across different individual subjects. Before considering the variability across individuals, it is important to note that our face-specific patterns of activation were highly consistent across testing sessions within a single subject. The remarkable degree of test–retest reliability can be seen in the results from four different testing sessions in subject S1 (see the brain images in Fig. 1a, S1 in the bottom left of Fig. 2, S1 in the top right of Fig. 2, and in Fig. 3a). Given the consistency of our within-subject results, it is reasonable to suppose that the variation observed across individuals primarily reflects actual individual differences.

Area FF was found in the fusiform gyrus or the immediately adjacent cortical areas in most right-handed subjects (Fig. 2, Table 1). This activation locus is near those reported in previous imaging studies using face stimuli, and virtually identical in Talairach coordinates to the locus reported in one of them (40x, −55y, −10z for the mean of our right-hemisphere activations; 37x, −55y, −10z in Clark et al., 1996). We found greater activation in the right than in the left fusiform, in agreement with earlier imaging studies (Sergent et al., 1992; Puce et al., 1996). We suspect that our face activation is somewhat more lateralized to the right hemisphere than that seen in Courtney et al. (1997) and Puce et al. (1995, 1996) because our use of objects as comparison stimuli allowed us to subtract out the contribution of general object processing and thereby isolate face-specific processing. In contrast, if scrambled faces are used as comparison stimuli (Puce et al., 1995; Courtney et al., 1997), then regions associated with both face-specific processing and general shape analysis are revealed, and a more bilateral activation is produced. (See the image in Fig. 3b for a bilateral activation in our own intact vs scrambled faces run.) Our results showing complete lateralization of face-specific processing in some subjects (e.g., S1) but not in others (e.g., S4) are consistent with the developing consensus from the neuropsychology literature that damage restricted to the posterior right hemisphere is often, although not always, sufficient to produce prosopagnosia (De Renzi, 1997).
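As a worked check of “virtually identical,” the Euclidean distance between the two mean right-hemisphere coordinates (Talairach units are millimeters) is computed below. This compares group means only and says nothing about the spread across individual subjects.

```latex
d = \sqrt{(40 - 37)^2 + \bigl(-55 - (-55)\bigr)^2 + \bigl(-10 - (-10)\bigr)^2} = \sqrt{9} = 3\ \text{mm}
```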

In addition to the fusiform face area described above, seven subjects in the present study also showed a greater activation for faces than objects in a more superior and lateral location in the right hemisphere in the region of the middle temporal gyrus/STS (Table 1). Although other areas were observed to be activated by faces compared with objects in individual subjects (Table 1), they were not consistent across subjects.

Physiological studies in macaques have shown that neurons that respond selectively to faces (Gross et al., 1972; Desimone, 1991; Perrett et al., 1991) are located both in the inferior temporal gyrus and on the banks of the STS. Cells in inferotemporal cortex tend to be selective for individual identity, whereas cells in STS tend to be selective for facial expression (Hasselmo et al., 1989) or direction of gaze or head orientation (Perrett et al., 1991). Lesion studies have reinforced this view, with bilateral STS lesions leaving face-identity matching tasks unimpaired but producing deficits in gaze discrimination (Heywood and Cowey, 1993). Similarly, studies of human neurological patients have demonstrated double dissociations between the abilities to extract individual identity and emotional expression from faces, and between individual identity and gaze direction discrimination, suggesting that there may be two or three distinct brain areas involved in these different computations (Kurucz and Feldmar, 1979; Bruyer et al., 1983; Adolphs et al., 1996). A reasonable hypothesis is that the fusiform face area reported here for humans is the homolog of the inferotemporal region in macaques, whereas the face-selective regions in the STS of humans and macaques are homologs of each other. If so, then we would expect future studies to demonstrate that the human fusiform face area is specifically involved in the discrimination of individual identity, whereas the MT gyrus/STS area is involved in the extraction of emotional expression and/or gaze direction.

The import of our study is threefold. First, it demonstrates the existence of a region in the fusiform gyrus that is not only responsive to face stimuli (Haxby et al., 1991; Sergent et al., 1992; Puce et al., 1995, 1996) but is selectively activated by faces compared with various control stimuli. Second, we show how strong evidence for cortical specialization can be obtained by testing the responsiveness of the same region of cortex on many different stimulus comparisons (also see Tootell et al., 1995). Finally, the fact that special-purpose cortical machinery exists for face perception suggests that a single general and overarching theory of visual recognition may be less successful than a theory that proposes qualitatively different kinds of computations for the recognition of faces compared with other kinds of objects.

Recent behavioral and neuropsychological research has suggested that face recognition may be more “holistic” (Behrmann et al., 1992; Tanaka and Farah, 1993) or “global” (Rentschler et al., 1994) than the recognition of other classes of objects. Future functional imaging studies may clarify and test this claim, for example, by asking (1) whether area FF can be activated by inducing similarly holistic or global processing on nonface stimuli, and (2) whether the response of area FF to faces is attenuated if subjects are induced to process the faces in a more local or part-based fashion. Future studies can also evaluate whether extensive visual experience with any novel class of visual stimuli is sufficient for the development of a local region of cortex specialized for the analysis of that stimulus class, or whether cortical modules like area FF must be innately specified (Fodor, 1983).

Footnotes

We thank the many people who helped with this project, especially Oren Weinrib, Roy Hamilton, Mike Vevea, Kathy O’Craven, Bruce Rosen, Roger Tootell, Ken Kwong, Lia Delgado, Ken Nakayama, Susan Bookheimer, Roger Woods, Daphne Bavelier, Janine Mendola, Patrick Ledden, Mary Foley, Jody Culham, Ewa Wojciulik, Patrick Cavanagh, Nikos Makris, Chris Moore, Bruno Laeng, Raynald Comtois, and Terry Campbell.

Correspondence should be addressed to Nancy Kanwisher, Department of Psychology, Harvard University, Cambridge, MA 02138.

REFERENCES

- 1. Adolphs R, Damasio H, Tranel D, Damasio AR. Cortical systems for the recognition of emotion in facial expressions. J Neurosci. 1996;16:7678–7687. doi: 10.1523/JNEUROSCI.16-23-07678.1996.

- 2. Allison T, Ginter H, McCarthy G, Nobre AC, Puce A, Belger A. Face recognition in human extrastriate cortex. J Neurophysiol. 1994;71:821–825. doi: 10.1152/jn.1994.71.2.821.

- 3. Behrmann M, Winocur G, Moscovitch M. Dissociation between mental imagery and object recognition in a brain-damaged patient. Nature. 1992;359:636–637. doi: 10.1038/359636a0.

- 4. Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–887. doi: 10.1016/s0896-6273(00)80219-6.

- 5. Bruce V, Doyle T, Dench N, Burton M. Remembering facial configurations. Cognition. 1991;38:109–144. doi: 10.1016/0010-0277(91)90049-a.

- 6. Bruyer R, Laterre C, Seron X, Feyereisen P, Strypstein E, Pierrand E, Rectem D. A case of prosopagnosia with some preserved covert remembrance of familiar faces. Brain Cogn. 1983;2:257–284. doi: 10.1016/0278-2626(83)90014-3.

- 7. Bush G, Jiang A, Talavage T, Kennedy D. An automated system for localization and characterization of functional MRI activations in four dimensions. NeuroImage. 1996;3:S55.

- 8. Clark VP, Keil K, Maisog JM, Courtney S, Ungerleider LG, Haxby JV. Functional magnetic resonance imaging of human visual cortex during face matching: a comparison with positron emission tomography. NeuroImage. 1996;4:1–15. doi: 10.1006/nimg.1996.0025.

- 9. Courtney SM, Ungerleider LG, Keil K, Haxby JV. Transient and sustained activity in a distributed neural system for human working memory. Nature. 1997; in press.

- 10. Damasio AR, Tranel D, Damasio H. Face agnosia and the neural substrates of memory. Annu Rev Neurosci. 1990;13:89–109. doi: 10.1146/annurev.ne.13.030190.000513.

- 11. De Renzi E. Prosopagnosia. In: Feinberg TE, Farah MJ, editors. Behavioral neurology and neuropsychology. McGraw-Hill; New York: 1997. pp. 245–255.

- 12. Desimone R. Face-selective cells in the temporal cortex of monkeys. Special issue: face perception. J Cognit Neurosci. 1991;3:1–8. doi: 10.1162/jocn.1991.3.1.1.

- 13. Farah MJ, Wilson KD, Drain WH, Tanaka JR. The inverted face inversion effect in prosopagnosia: evidence for mandatory, face-specific perceptual mechanisms. Vision Res. 1995;35:2089–2093. doi: 10.1016/0042-6989(94)00273-o.

- 14. Farah MJ, Meyer MM, McMullen PA. The living/nonliving dissociation is not an artifact: giving an a priori implausible hypothesis a strong test. Cognit Neuropsychol. 1996;13:137–154. doi: 10.1080/026432996382097.

- 15. Fodor J. Modularity of mind. MIT; Cambridge, MA: 1983.

- 16. Gauthier I, Behrmann M, Tarr MJ, Anderson AW, Gore J, McClelland JL. Subordinate-level categorization in human inferior temporal cortex: converging evidence from neuropsychology and brain imaging. Soc Neurosci Abstr. 1996;10:11.

- 17. Gross CG, Roche-Miranda GE, Bender DB. Visual properties of neurons in the inferotemporal cortex of the macaque. J Neurophysiol. 1972;35:96–111. doi: 10.1152/jn.1972.35.1.96.

- 18. Hasselmo ME, Rolls ET, Baylis GC. The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res. 1989;32:203–218. doi: 10.1016/s0166-4328(89)80054-3.

- 19. Haxby JV, Grady CL, Horwitz B, Ungerleider LG, Mishkin M, Carson RE, Herscovitch P, Schapiro MB, Rapoport SI. Dissociation of spatial and object visual processing pathways in human extrastriate cortex. Proc Natl Acad Sci USA. 1991;88:1621–1625. doi: 10.1073/pnas.88.5.1621.

- 20. Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J Neurosci. 1994;14:6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- 21. Heywood CA, Cowey A. Colour and face perception in man and monkey: the missing link. In: Gulyas B, Ottoson D, Roland PE, editors. Functional organisation of human visual cortex. Pergamon Press; Oxford: 1993. pp. 195–210.

- 22. Hunt SMJ. MacProbe: A Macintosh-based experimenter’s work station for the cognitive sciences. Behav Res Methods Instrum Comput. 1994;26:345–351.

- 23. Kanwisher N, Chun MM, McDermott J, Ledden P. Functional imaging of human visual recognition. Cognit Brain Res. 1996;5:55–67. doi: 10.1016/s0926-6410(96)00041-9.

- 24. Kurucz J, Feldmar G. Prosop-affective agnosia as a symptom of cerebral organic disease. J Am Geriatr Soc. 1979;27:91–95. doi: 10.1111/j.1532-5415.1979.tb06037.x.

- 25. Malach R, Reppas JB, Benson RB, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RBH. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci USA. 1995;92:8135–8138. doi: 10.1073/pnas.92.18.8135.

- 26. Nobre AC, Allison T, McCarthy G. Word recognition in the human inferior temporal lobe. Nature. 1994;372:260–263. doi: 10.1038/372260a0.

- 27. Ojemann JG, Ojemann GA, Lettich E. Neuronal activity related to faces and matching in human right nondominant temporal cortex. Brain. 1992;115:1–13. doi: 10.1093/brain/115.1.1.

- 28. Perrett DI, Oram MW, Harries MH, Bevan R, Hietanen JK, Benson PJ, Thomas S. Viewer-centered and object-centered coding of heads in the macaque temporal cortex. Exp Brain Res. 1991;86:159–173. doi: 10.1007/BF00231050.

- 29. Perrett DI, Hietanen JK, Oram MW, Benson PJ. Organisation and functions of cells responsive to faces in the temporal cortex. Philos Trans R Soc Lond [Biol]. 1992;335:23–30. doi: 10.1098/rstb.1992.0003.

- 30. Puce A, Allison T, Gore JC, McCarthy G. Face-sensitive regions in human extrastriate cortex studied by functional MRI. J Neurophysiol. 1995;74:1192–1199. doi: 10.1152/jn.1995.74.3.1192.

- 31. Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J Neurosci. 1996;16:5205–5215. doi: 10.1523/JNEUROSCI.16-16-05205.1996.

- 32. Rademacher J, Caviness VS Jr, Steinmetz H, Galaburda AM. Topographical variation of the human primary cortices: implications for neuroimaging, brain mapping, and neurobiology. Cereb Cortex. 1993;3:313–329. doi: 10.1093/cercor/3.4.313.

- 33. Rensink RA, O’Regan JK, Clark JJ. To see or not to see: the need for attention to perceive changes in scenes. Psychol Sci. 1997; in press.

- 34. Rentschler I, Treurwein B, Landis T. Dissociation of local and global processing in visual agnosia. Vision Res. 1994;34:963–971. doi: 10.1016/0042-6989(94)90045-0.

- 35. Rosch E, Mervis CB, Gray WD, Johnson DM, Boyes-Braem P. Basic objects in natural categories. Cognit Psychol. 1976;8:382–439.

- 36. Sergent J, Ohta S, MacDonald B. Functional neuroanatomy of face and object processing: a positron emission tomography study. Brain. 1992;115:15–36. doi: 10.1093/brain/115.1.15.

- 37. Sinha P, Poggio T. I think I know that face. Nature. 1996;384:404. doi: 10.1038/384404a0.

- 38. Tanaka JW, Farah MJ. Parts and wholes in face recognition. Q J Exp Psychol [A]. 1993;46A:225–245. doi: 10.1080/14640749308401045.

- 39. Tootell RBH, Reppas JB, Kwong KK, Malach R, Born RT, Brady TJ, Rosen BR, Belliveau JW. Functional analysis of human MT and related visual cortical areas using magnetic resonance imaging. J Neurosci. 1995;15:3215–3230. doi: 10.1523/JNEUROSCI.15-04-03215.1995.

- 40. Turk M, Pentland A. Eigenfaces for recognition. Special issue: face perception. J Cognit Neurosci. 1991;3:71–86. doi: 10.1162/jocn.1991.3.1.71.

- 41. Yin RK. Looking at upside-down faces. J Exp Psychol. 1969;81:141–145.