Abstract

This study is part of an effort to map neural systems involved in the processing of emotion, and it focuses on the possible cortical components of the process of recognizing facial expressions. We hypothesized that the cortical systems most responsible for the recognition of emotional facial expressions would draw on discrete regions of right higher-order sensory cortices and that the recognition of specific emotions would depend on partially distinct subsets of such cortical regions. We tested these hypotheses using lesion analysis in 37 subjects with focal brain damage. Subjects were asked to recognize facial expressions of six basic emotions: happiness, surprise, fear, anger, disgust, and sadness. Data were analyzed with a novel technique, based on three-dimensional reconstruction of brain images, in which anatomical description of surface lesions and task performance scores were jointly mapped onto a standard brain space. We found that all subjects recognized happy expressions normally but that some subjects were impaired in recognizing negative emotions, especially fear and sadness. The cortical surface regions that best correlated with impaired recognition of emotion were in the right inferior parietal cortex and in the right mesial anterior infracalcarine cortex. We did not find impairments in recognizing any emotion in subjects with lesions restricted to the left hemisphere. These data provide evidence for a neural system important to processing facial expressions of some emotions, involving discrete visual and somatosensory cortical sectors in the right hemisphere.

Keywords: emotion, somatosensory cortex, right hemisphere, facial expression, lesion method, brain mapping, fear, human

Clinical and experimental studies have suggested that the right hemisphere is preferentially involved in processing emotion in humans (Ley and Bryden, 1979; DeKosky et al., 1980; Ross, 1985; Silberman and Weingartner, 1986; Bowers et al., 1987, 1991; Blonder et al., 1991; Borod et al., 1992; Van Strien and Morpurgo, 1992; Borod, 1993; Darby, 1993). Earlier studies showed that damage to the right hemisphere can impair the processing of emotional faces or scenes (DeKosky et al., 1980) and that electrical stimulation of right temporal visual-related cortices can disrupt the processing of facial expressions (Fried et al., 1982). Damage to several discrete sectors in the right hemisphere has been reported to result in defects in processing emotion. Lesions in the right temporal and parietal cortices have been shown to impair emotional experience and arousal (Heller, 1993) and to impair imagery for emotion (Blonder et al., 1991; Bowers et al., 1991), and it has been proposed that the right hemisphere contains modules for nonverbal affect computation (Bowers et al., 1993), which may have evolved to subserve aspects of social cognition (Borod, 1993).

Much recent work has focused on the visual recognition of emotion signaled by human facial expressions. Selective impairments in recognizing facial expressions, sparing the ability to recognize identity, can occur after right temporoparietal lesions (Bowers et al., 1985). Specific anomia for emotional facial expressions has been reported after right middle temporal gyrus lesions (Rapcsak et al., 1989, 1993). The evidence that the right temporoparietal cortex is important in processing emotional facial expressions is corroborated by data from PET imaging (Gur et al., 1994) and neuronal recording (Ojemann et al., 1992) in humans.

The above findings suggest, therefore, that damage to right temporal or parietal cortices can impair recognition of emotional facial expressions, but they leave open the possibility that only specific anatomical sectors are involved and that not all emotions are impaired equally, as has been reported recently with respect to subcortical structures (Adolphs et al., 1994, 1995). Accordingly, the purpose of the present study was to extend the characterization of the system components involved in recognizing facial expressions to a deeper level of anatomical detail and to relate the anatomical findings to distinct emotions as opposed to emotion in general.

Based on the findings reviewed above, we undertook to test the following hypotheses: (1) that higher-order sensory cortices within the right, but not the left, hemisphere would be essential to recognize emotion in facial expressions; and (2) that partly different sets of such cortical regions might be important in processing different basic emotions. We are aware, of course, that brain regions in frontal cortex and subcortical nuclei may also be involved in processing emotion, but the present study concentrates on investigating the contribution of sensory cortices, and on one aspect of emotion processing: that of recognizing facial expressions of emotion.

Previous studies have often relied on single case data and have used a variety of different experimental tasks, making comparisons and generalizations difficult. To obtain results that circumvent these problems, we tested our hypotheses in a large number of subjects with circumscribed lesions in left or right sensory neocortex on a carefully designed, quantitative task of the recognition of facial expressions of emotion (Adolphs et al., 1994, 1995), using both standard (Damasio and Damasio, 1989) and novel lesion analysis techniques. The results allow us to infer the existence of putative cortical systems important to processing facial expressions of emotions.

MATERIALS AND METHODS

Thirty-seven brain-damaged subjects [verbal IQ (WAIS-R) = 99 ± 10; age = 53 ± 16 (mean ± SD)] who were all right-handed participated in a task of the recognition of facial expressions of emotion. We compared their performances to the mean performance of 15 normal controls (7 males, 8 females) of similar age and IQ [estimated verbal IQ (NART-R) = 104 ± 7; age = 55 ± 13]. Brain-damaged subjects were selected from the Patient Registry of the Division of Behavioral Neurology and Cognitive Neuroscience at the University of Iowa and had been fully characterized neuroanatomically and neuropsychologically according to the standard protocols of the Benton Neuropsychology Laboratory (Tranel, 1996) and the Laboratory of Neuroimaging and Human Neuroanatomy (Damasio and Damasio, 1989; Damasio and Frank, 1992). For each brain-damaged subject, MR and/or CT scan data were available. Three-dimensional reconstructions of MR images were obtained wherever possible.

The neurological diagnoses of the subjects included stroke (n = 28), neurosurgical lobectomies for the treatment of epilepsy (n = 6), or herpes simplex encephalitis (n = 3).

Subject selection

Brain-damaged subjects were chosen on the basis of neuroanatomical criteria. Out of an initial pool of 68 subjects, we first chose any subjects who satisfied the inclusion and exclusion criteria below.

Inclusion criteria. Included were subjects with (1) stable, chronic lesions (>3 months post onset) (2) in primary or higher-order sensory cortices.

We included subjects with lesions of any size.

Exclusion criteria. We excluded 31 subjects before data analysis, for the following reasons: (1) no clear lesions were visible on CT or MR scans taken at the time the subject was tested on our task; (2) the subject had predominantly subcortical or prefrontal lesions; (3) the subject was judged to be too aphasic to give a valid task performance; or (4) the subject had questionable or atypical cerebral dominance.

These criteria yielded an initial group of 34 subjects. After an examination of the distribution of the sites of lesions of these subjects, we found it necessary to control for the fact that some subjects with very large, posteriorly centered lesions nonetheless had some involvement of frontal cortex. Although it was not an aim of this study to examine frontal cortex, we decided to add 3 subjects (#1331, #1656, and #1569) with lesions primarily in the right frontal lobe, specifically to control for those right frontal sectors that were also involved in some of our subjects who had lesions centered in the right parietal cortex.

This brought our final group to 37 subjects, 22 with unilateral right hemisphere lesions, 13 with unilateral left hemisphere lesions, and 2 with bilateral lesions. The 2 subjects with bilateral lesions were included in both the left hemisphere group and the right hemisphere group for neuroanatomical analyses, but were excluded from statistical comparisons of left versus right hemisphere damage (both subjects had lesions in primary visual cortex and turned out to perform entirely normally on our task).

Experimental tasks

Subjects were shown black-and-white slides of faces with emotional expressions and were asked to judge the expressions with respect to several verbal labels (the adjectives that corresponded to the emotions we showed), as described previously (Adolphs et al., 1994, 1995). We chose 39 facial expressions from Ekman and Friesen (Ekman, 1976) that had all been shown to be identified reliably by normal subjects at >80% success rate. Each of the 39 expressions was presented 6 times in two blocks separated by several hours. Six faces (both male and female) each of anger, fear, happiness, surprise, sadness, and disgust, as well as three neutral faces were projected on a screen, one at a time, in randomized order. Subjects had in front of them cards with the names of the emotions typed in large print and were reminded periodically of these by the experimenter. Before each rating of the faces on a new emotion label, subjects were involved in a brief discussion that clarified the meaning of that label through examples. Subjects were asked to judge each face on a scale of 0–5 (0 = not at all, 5 = very much) on the following six labels: happy, sad, disgusted, angry, afraid, surprised (1 adjective per block of slides), in random order. There was no time limit. Subjects gave verbal responses whenever possible or pointed to the numbers on a scale if they could not give verbal responses. Care was taken to ensure that all subjects knew which label they were using for the rating and that they used the scale correctly. All subjects understood the labels, as assessed by their ability to comprehend scenarios pertaining to that emotional label.

Neuropsychological analysis

We calculated the correlations between a subject’s ratings of an expression on the six emotion labels and the mean rating given to that expression by 15 normal control subjects. This yielded a measure of recognition of facial expressions of emotion. The correlations were Z-transformed to normalize their distribution, averaged over faces that expressed the same emotion, and inverse Z-transformed to give the mean Pearson correlation to normal ratings for each emotion category.
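The scoring procedure above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the Z-transform is taken to be the Fisher transform (the inverse hyperbolic tangent), the function names are hypothetical, and the clipping of correlations near ±1 is an added safeguard that the text does not describe.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length rating vectors.
    Undefined (division by zero) if either vector is constant; not handled here."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def fisher_z(r, eps=1e-6):
    """Fisher Z-transform; clipping at +/-(1 - eps) is an assumption to avoid infinities."""
    r = max(-1.0 + eps, min(1.0 - eps, r))
    return math.atanh(r)

def mean_correlation(rs):
    """Average correlations in Z space, then transform back to a correlation."""
    zs = [fisher_z(r) for r in rs]
    return math.tanh(sum(zs) / len(zs))

def emotion_score(subject_ratings, control_mean_ratings):
    """Score for one emotion category.
    Each argument is a list with one entry per face of that emotion; each entry
    is the vector of ratings on the six emotion labels for that face."""
    rs = [pearson(s, c) for s, c in zip(subject_ratings, control_mean_ratings)]
    return mean_correlation(rs)
```

A score near 1 indicates that the subject's rating profile across the six labels closely follows the control profile; averaging in Z space avoids the bias that arises from averaging raw correlations directly.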

Neuroanatomical analysis

The neuroanatomical data were analyzed with a new method for quantitative visualization of lesion overlaps in two dimensions, MAP-2. We traced the surface damage of each subject’s brain in the group onto the corresponding regions of cortex in the image of a normal reference brain that had been reconstructed in three dimensions (Damasio and Frank, 1992). Straight lateral and mesial views were used. The method for transferring a lesion onto the normal brain is described below.

(a) In those cases in which a three-dimensional reconstruction of the lesioned brain was available, lateral and mesial views of the brain with the lesion were matched to the corresponding views of the normal brain. The surface contour of the lesion was then mapped onto the normal brain, taking into account its relation to sulcal and gyral landmarks (which had been color-coded previously in both brains).

(b) In those cases in which only two-dimensional MR or CT data were available, we used a modification of the template method (Damasio and Damasio, 1989) as follows.

(1) Using the program BRAINVOX (Damasio and Frank, 1992), the normal brain was resliced so as to match the slice orientation and thickness of the two-dimensional images of the lesioned brain. In this manner, we created a complete set of images matched for level and attitude between the two brains.

(2) For each matched pair of brain slices, we manually transferred the region that was lesioned from the subject’s brain onto the normal brain, taking care to maintain the same relations to identifiable anatomical landmarks.

(3) The cumulative transfer of lesions from each slice of the subject’s damaged brain onto the normal brain resulted in a series of normal brain slices with a trace of the subject’s entire lesion. When the normal brain slices were reconstructed in three dimensions, we obtained mesial and lateral views showing the lesion on the surface of the brain.

After lesions had been traced onto the normal reference brain, we verified the lesion transfer by visually comparing the lesion in the original subject’s brain to the transferred lesion in the normal reference brain. In all cases, the two representations of the subject’s lesion corresponded closely with respect to neuroanatomical landmarks.

We computed overlaps of subjects’ lesions so as to determine which lesion sites were shared among subjects. Additionally, we computed the mean neuropsychological scores associated with all the subjects who had lesions that included a particular neuroanatomical location, so as to obtain a measure of the extent to which different neuroanatomical loci contribute to task performance.

The lesion traces in the normal reference brain were convolved with a 2-pixel-wide Gaussian filter (pixel size = 0.937 mm). This minimized sharp discontinuities in the images by blurring the boundaries of the lesion trace. The composite traces for all the lesions, together with the neuropsychological data for each subject, were subsequently averaged as follows. Images were composed in a hue-saturation-lightness (HSL) space. Pixel hue was used to encode the average, or weighted average, scores of those subjects whose lesion included the pixel position; pixel saturation encoded the number of subjects who had lesions that included that pixel; and pixel lightness encoded the underlying view of the normal brain onto which the lesion and neuropsychological data were mapped. This procedure yielded a map of the superimposed lesions on the surface of the normal brain, color-coded to reflect the mean (or weighted mean) task performance score for all subjects who had a lesion that encompassed a particular neuroanatomical location.
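The per-pixel bookkeeping behind such a map can be illustrated with a minimal Python sketch. It is not the MAP-2 implementation: it assumes each lesion trace is a binary set of (row, column) pixels, skips the Gaussian smoothing, and returns the two quantities that would drive hue (mean score) and saturation (subject count, here normalized by the maximum count) without committing to any particular color scale. All names are hypothetical.

```python
import math
from collections import defaultdict

def overlap_map(lesions, scores):
    """lesions: list of sets of (row, col) pixels, one set per subject.
    scores:  list of correlation scores (strictly between -1 and 1), one per subject.
    Returns {pixel: {"n", "mean_score", "saturation"}} for every lesioned pixel."""
    z_sum = defaultdict(float)
    count = defaultdict(int)
    for pixels, r in zip(lesions, scores):
        z = math.atanh(r)              # average in Z space
        for p in pixels:
            z_sum[p] += z
            count[p] += 1
    max_n = max(count.values(), default=1)
    result = {}
    for p, n in count.items():
        result[p] = {
            "n": n,                                 # subjects lesioned at this pixel
            "mean_score": math.tanh(z_sum[p] / n),  # would be encoded as hue
            "saturation": n / max_n,                # would be encoded as saturation
        }
    return result
```

Pixels that no lesion covers are absent from the result, which corresponds to showing only the underlying brain image (lightness) at those locations.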

We computed both mean and weighted mean neuropsychological scores in our analysis. Z-transforms of correlations were used in all averaging procedures. Mean scores are simply the average score of all the subjects whose lesion included a particular neuroanatomical location. Weighted mean scores are obtained by averaging subjects’ scores such that more weight is given to some subjects’ scores than to others, as described below. The rationale for computing weighted mean scores is that subjects with normal performances should contribute more to the mean performance index for a given pixel than subjects with impaired performances. Subjects with more normal performances, therefore, will tend to override subjects with more impaired performances when both share lesion sectors, consequently permitting us to infer which sectors are most important to normal task performance. For example, a subject with a large lesion might be impaired, but the lesion will give little information about the specific neuroanatomical substrate of the impairment. However, when other subjects with partly overlapping lesions perform normally, we can infer that the first subject’s impairment may depend on that sector of the lesion that does not overlap with the lesions of the subjects who performed normally.

Weighted mean scores were calculated by assigning a weight, w(x) = 0.01 + 0.99/(1 + exp(−10(x − 0.5))), to each subject’s score (x), such that subjects with more normal scores (closer to 1) were weighted more than subjects with very defective scores (close to 0). The function w(x) is a well behaved sigmoid function commonly used to sum inputs in neural network simulations. This method in effect subtracts from an impaired subject’s lesion all sectors that are shared in common with lesions of subjects who are not impaired, allowing us to focus on those sectors of the lesion that correlate best with defective performance. During our analysis, we examined a large number of different functions of the form w(x) that varied in steepness and offset. In all cases, the analysis converged on very similar results, indicating that the method is robust for the data in our sample.
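As an illustration, the weighting function and its effect on a shared pixel can be sketched in Python. The sketch assumes that the weight is computed from the raw correlation x, that the weighted average is taken over Fisher Z-transformed scores and then transformed back, and that scores are clipped just inside ±1; these details and the function names are assumptions, not a description of the original software.

```python
import math

def weight(x):
    """Sigmoid weight from the text: w(x) = 0.01 + 0.99 / (1 + exp(-10 (x - 0.5)))."""
    return 0.01 + 0.99 / (1.0 + math.exp(-10.0 * (x - 0.5)))

def _z(x, eps=1e-6):
    # Fisher Z-transform with clipping (the clipping is an assumption).
    return math.atanh(max(-1.0 + eps, min(1.0 - eps, x)))

def unweighted_mean_score(scores):
    """Plain mean of the scores, computed in Z space."""
    return math.tanh(sum(_z(x) for x in scores) / len(scores))

def weighted_mean_score(scores):
    """Mean in Z space in which more normal scores receive larger weights."""
    ws = [weight(x) for x in scores]
    z_bar = sum(w * _z(x) for w, x in zip(ws, scores)) / sum(ws)
    return math.tanh(z_bar)
```

For a pixel shared by one subject scoring 0.9 and one scoring 0.1, the weighted mean is roughly 0.89 compared with roughly 0.66 unweighted, so the pixel appears nearly normal. Only pixels lesioned exclusively in impaired subjects retain a low weighted mean, which is how the procedure isolates candidate sectors.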

Multiple interactive regression analysis

We wanted to control for the possibility that impaired recognition of facial expressions of emotion might be attributable to other defects. Of special interest were general visuoperceptual function, IQ, and measures of depression. We examined subjects’ scores on the following neuropsychological tests: verbal and performance IQ (Wechsler, 1981), perceptual matching of unfamiliar faces (Benton et al., 1983), judgment of line orientation (Benton et al., 1983), the Rey–Osterrieth complex figure test (copy), three-dimensional block construction (Benton et al., 1983), the D-scale of the Minnesota Multiphasic Personality Inventory (Greene, 1980), the Beck Depression Inventory (Beck, 1987), and naming and recognition of famous faces (Tranel et al., 1995). We used an interactive regression analysis so as to examine to what extent performances on our experimental tasks covaried with performances on these neuropsychological control tasks.

RESULTS

We first examined the effects on emotion recognition caused by side of lesion (left or right) and by the emotion to be recognized in the task (happy, surprised, afraid, angry, disgusted, or sad) with a 2 × 6 ANOVA, with side of lesion as a between-subjects factor and type of emotion as a within-subjects factor. There was a main effect of emotion type: performances differed significantly depending on the specific emotion (F = 13.0; p < 0.0001). There was no significant main effect of side of lesion (F = 2.2; p = 0.14), but a significant interaction between side of lesion and emotion (F = 4.0; p = 0.002), showing that subjects with right hemisphere damage did not differ from subjects with left hemisphere damage with respect to recognition of emotion in general, but that they did differ with respect to specific emotions, as we had predicted.

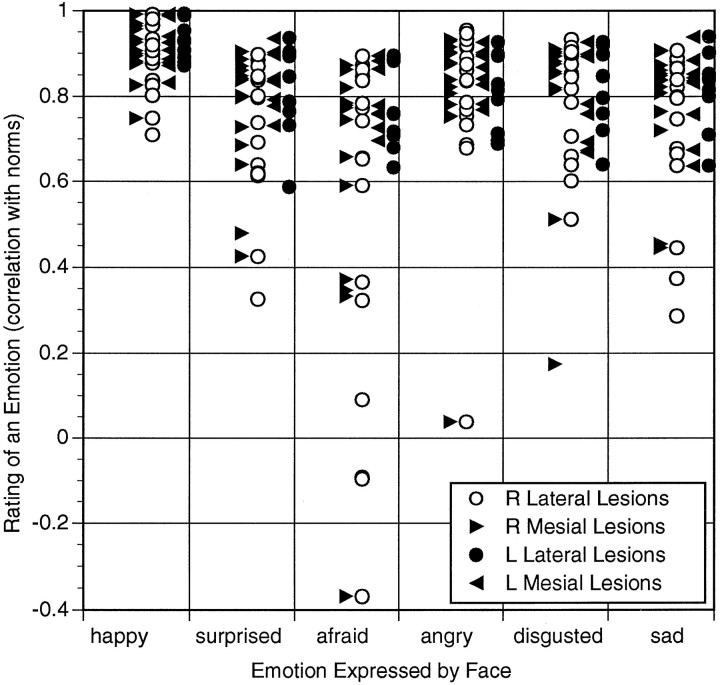

Analysis with respect to individual emotions revealed that different emotions were differentially impaired (Fig. 1). Recognition of happy emotions was not impaired, whereas recognition of several negative emotions, especially fear, was notably impaired. Recognition of happy faces differed significantly from recognition of all faces except angry faces, and recognition of afraid faces differed from recognition of all other faces (Scheffe test, p < 0.01). To analyze these data further with respect to the specific anatomical sectors that might be responsible for our results, we calculated surface overlap between lesions together with mean performance scores for all subjects (see Materials and Methods for details). The results are depicted on the lateral and mesial views of the left and right hemispheres of a normal brain in the following sections.

Fig. 1.

Performance scores on recognition of facial expression for all subjects. Pearson correlations between a brain-damaged subject’s ratings and normal ratings are shown for each subject and for each emotion category used in the task. The recognition of fearful faces is impaired in the largest number of subjects, and the recognition of happy faces is never impaired.

Left hemisphere lesions

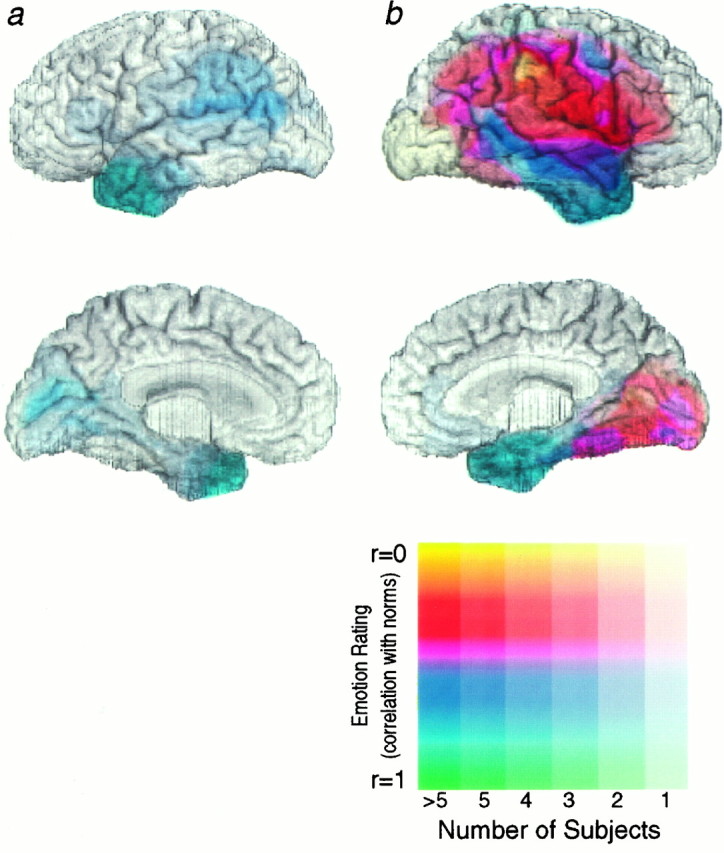

Fifteen subjects with lesions of the left hemisphere were tested on their recognition of facial expressions of emotion. None had difficulty recognizing any facial expressions of emotion. We computed average performances for all subjects sharing a lesion locus, as detailed in Materials and Methods. We show the mean (unweighted) performance scores for subjects with left hemisphere lesions in Figure 2a. To obtain a lower limit to subjects’ performance with regard to any emotion, we show the means of each subject’s lowest correlation on any of the six emotions.

Fig. 2.

Mean performance scores on recognition of facial expressions for subjects with left (a) and right (b) hemisphere lesions. Performance scores are correlations of a subject’s rating of a facial expression with the mean ratings given by normal controls. The unweighted mean scores were calculated for that emotion on which each subject performed the worst, so as to give a lower limit to the ability to process emotions in general. Thus, if a subject was impaired in recognizing any of the six emotions, he would be impaired on this measure. Composite extents of lesions for all subjects, together with their mean scores on their worst individual emotion performances, are shown on the lateral and mesial surfaces of the hemispheres. The number of subjects sharing a lesion locus is encoded by the saturation (fainter colors correspond to small numbers of subjects, and stronger colors correspond to larger numbers of subjects), and the mean score is encoded by the color of each pixel (yellow and red hues correspond to more impaired performances, and blue and green hues correspond to more normal performances), as indicated in the scale. The figure shows that there were no impairments in recognizing any facial expressions among subjects with left hemisphere lesions, but that some subjects with lesions in regions of the right hemisphere were impaired.

Right hemisphere lesions

We tested 24 subjects with lesions of the right hemisphere. Several of these subjects were impaired on our task. The composite image pertaining to the analysis of right hemisphere lesions is shown in Figure 2b.

Initial analysis of mean performance scores showed that there are sectors in the right hemisphere that contribute differentially to impaired recognition of emotion (Fig. 2b). Anterior and inferior temporal cortex appeared not to be essential to the recognition of emotion in facial expressions, whereas parietal and mesial occipital cortices were involved when there was impaired recognition of emotion.

Subjects were not equally impaired on the recognition of all emotional expressions. The recognition of expressions of fear was the most impaired, whereas the recognition of expressions of happiness was not impaired (Figs. 1, 3). Although some subjects who were impaired in recognizing fear were also impaired in recognizing other negative emotions, the impaired recognition of negative emotions other than fear did not result in a mean impaired score at any anatomical location (Fig. 3). Possible exceptions to this observation are anger and sadness, which showed very small regions of somewhat impaired mean performance (Fig. 3); however, the relatively small number of subjects associated with these results (compare Fig. 1) does not allow us to draw any firm conclusions.

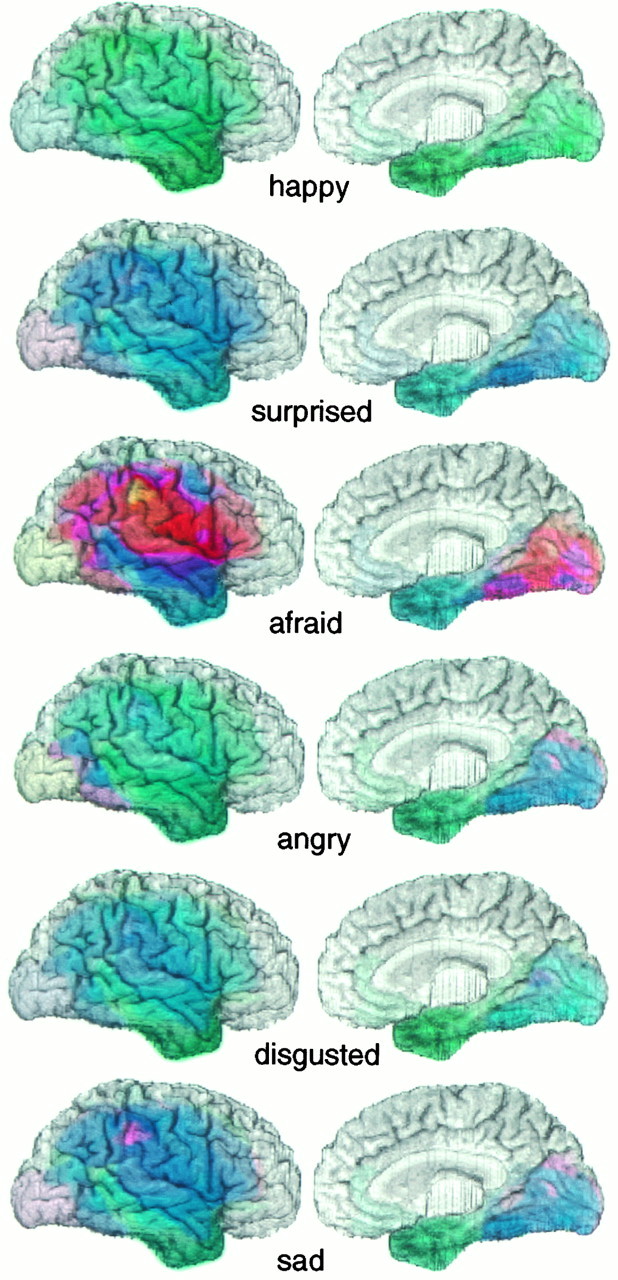

Fig. 3.

Unweighted mean performance scores on recognition of specific facial expressions for subjects with right hemisphere lesions. Unweighted mean correlation scores are shown for each emotion for all subjects with lesions in the lateral (left) or mesial (right) aspects of the right hemisphere. Pixel attributes are as in Figure 2; hue corresponds to the mean score of all the subjects who had a lesion that included a given pixel location. The recognition of fear was most impaired in subjects with lesions in the right hemisphere. However, there are also more subtle differences among the other emotions. Happiness was recognized entirely normally (green) with respect to lesions at any location, whereas lesions that included a region within the supramarginal gyrus resulted in a somewhat impaired (purple) recognition of sad faces. Lesions restricted to the anterior and inferior temporal cortex did not result in impairments in recognizing any emotion (green–blue of this region in all images).

To examine directly the overlap of lesions of those subjects who were the most impaired in recognizing fear, we generated overlap images for various subject groups with respect to the lateral and mesial surfaces of the right hemisphere. We calculated the surface overlaps of the lesions of all subjects whose scores in recognition of fear were less than a specific cut-off. In all cases, this was equivalent to choosing the subject’s worst score on any emotion. We chose cut-offs of 0.5 and 0.3 and show these overlaps together with the lesion overlaps of the entire subject sample in Figure 4a. The maximal overlap of subjects with the most impaired performance is in parietal and mesial occipital sectors in the right hemisphere. The top panel in Figure 4a shows the lesions of the entire subject pool and demonstrates that our results are not likely to be attributable to the way in which different neuroanatomical loci were sampled.

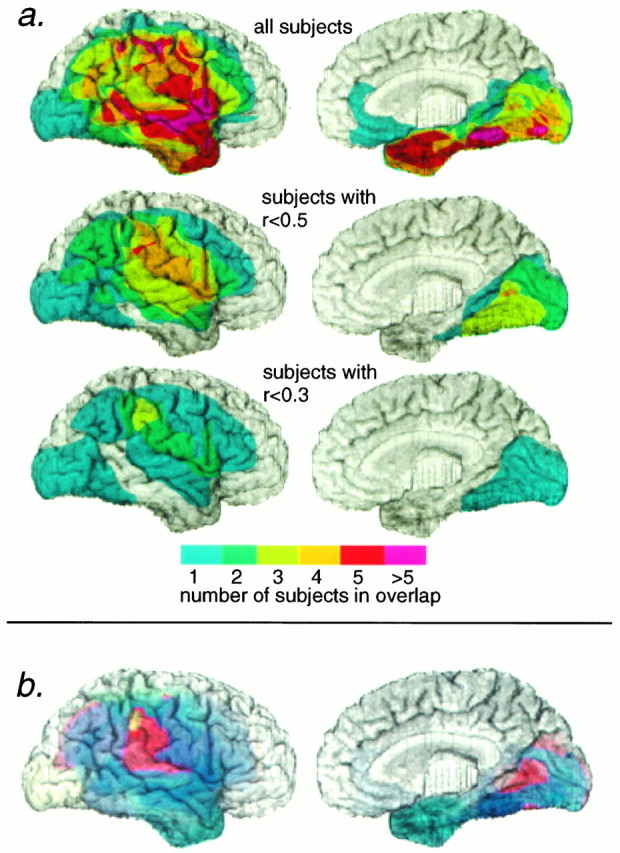

Fig. 4.

Anatomical regions involved in the recognition of fear. a, Anatomical overlap of lesions of subject groups. We calculated overlaps only for the lateral (left) and mesial (right) aspects of the right hemisphere, because all subjects with left hemisphere lesions were normal on our task. The top panel shows the overlap of the lesions of all subjects. Bottom panels show the overlap of the lesions of all subjects whose score on recognition of fear (equivalent to their lowest score on any emotion) was less than a given cut-off value, indicated on the figure. The maximal overlap of the lesions of those subjects with the most impaired scores was in the right inferior parietal cortex and in the right infracalcarine cortex. It should be noted that a single impaired subject whose lesion encompassed both mesial and lateral right occipital cortex is visible in all panels. Although this subject appears on the lateral views, we think it likely that his impaired performance in fact results from the inclusion of right mesial occipital sectors, which he shares in common with other impaired subjects. b, Weighted mean performance scores on recognition of facial expressions of fear for subjects with lesions of the right hemisphere. In this figure, pixel hue corresponds to the mean of subjects’ weighted scores, such that more normal scores contribute more to the mean than do more impaired scores; see Materials and Methods for details. In the lateral aspect of the right hemisphere (left), there is a hot-spot in the right supramarginal and right posterior superior temporal gyri. In the mesial aspect of the right hemisphere (right) there is a hot-spot in the posterior sector of the right anterior infracalcarine cortex. Subjects whose lesions included the hot-spot region were the most impaired in their recognition of fear. Pixel hue and saturation are encoded as in the scale to Figure 2. Convergent results were obtained in a and b.

As an additional method to extract specific sectors that may account for impaired performance, we used a weighted mean analysis in which subjects with higher (more normal) scores were weighted more than subjects with lower (more impaired) scores. With this analysis, sectors shared by subjects who performed normally and by subjects whose performance was impaired would show up as essentially normal (see Materials and Methods for details). Our analysis suggested that specific and circumscribed sectors on the lateral and mesial surfaces of the right hemisphere were most important in contributing to impaired recognition of fear; we call such loci “hot-spots.” On the lateral surface of the brain, the territory of the supramarginal gyrus and the posterior sector of the superior temporal gyrus appear to be hot-spots with this approach. On the mesial surface of the brain, there appears to be a hot-spot in a sector of the infracalcarine cortex corresponding to the anterior segment of the lingual gyrus (Fig. 4b). To determine the reliability of these findings, we next conducted statistical comparisons between subject groups. With respect to recognition of fear, subjects whose lesions included one of the two hot-spots (n = 9) differed significantly from subjects whose lesions did not include a hot-spot (n = 28; Mann–Whitney U test, p < 0.0001).

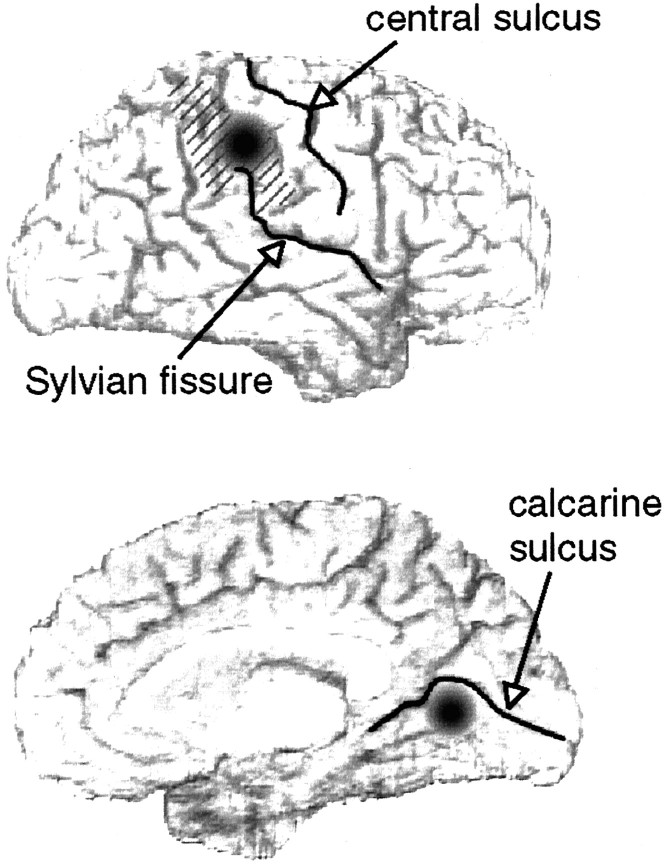

Thus, the regions of maximal overlap of lesion for impaired subjects (Fig. 4a) and the “hot-spots” obtained from the weighted MAP-2 analysis of all subjects (Fig. 4b) both point to two neuroanatomical regions: the inferior parietal cortex and the mesial anterior infracalcarine cortex. With respect to our subject sample, lesions within either of these two areas are the most important contributors to impaired recognition of emotional facial expressions, specifically fear.

Relationships between the processing of different emotions and between the processing of emotions and other neuropsychological measures

We found that recognition of fear tends to be more consistently impaired by specific brain lesions than does recognition of other negative emotions and that recognition of happiness is never impaired. Does impaired recognition of some emotions covary within subjects? For each subject, we calculated Pearson correlations between the performance scores on all the different emotions (we calculated correlations between Z-transforms). The mean results of this analysis for all subjects are given in Table 1. The Bonferroni-corrected probabilities that these correlations are significant suggest that (1) damage that includes the right inferior parietal cortex results in recognition impairments that correlate for most negative emotions, especially fear and sadness, and (2) damage that includes the right anterior infracalcarine cortex results in recognition impairments that appear to be more specific to fear, and that correlate for surprise and fear. Recognition scores on happy expressions did not correlate with the recognition of any other emotion for any group of subjects, suggesting that happy expressions are processed differently from all other expressions.

Table 1.

Pearson correlations between subjects’ scores on different emotions

Right lateral lesion group; n = 18; Bartlett χ² = 73 (p < 0.0001)

| | Happy | Surprised | Afraid | Angry | Disgusted | Sad |
|---|---|---|---|---|---|---|
| Happy | 1 | 0.499 | 0.474 | 0.56 | 0.405 | 0.423 |
| Surprised | | 1 | †0.786 | 0.599 | 0.552 | †0.748 |
| Afraid | | | 1 | †0.735 | †0.827 | †0.779 |
| Angry | | | | 1 | †0.769 | *0.698 |
| Disgusted | | | | | 1 | †0.803 |
| Sad | | | | | | 1 |

Right mesial lesion group; n = 14; Bartlett χ² = 44 (p < 0.0001)

| | Happy | Surprised | Afraid | Angry | Disgusted | Sad |
|---|---|---|---|---|---|---|
| Happy | 1 | 0.36 | 0.317 | 0.529 | −0.214 | 0.416 |
| Surprised | | 1 | †0.812 | 0.389 | 0.53 | 0.521 |
| Afraid | | | 1 | 0.582 | *0.733 | 0.722 |
| Angry | | | | 1 | 0.241 | 0.665 |
| Disgusted | | | | | 1 | 0.387 |
| Sad | | | | | | 1 |

Bonferroni-adjusted p-values: *p < 0.05; †p < 0.01.

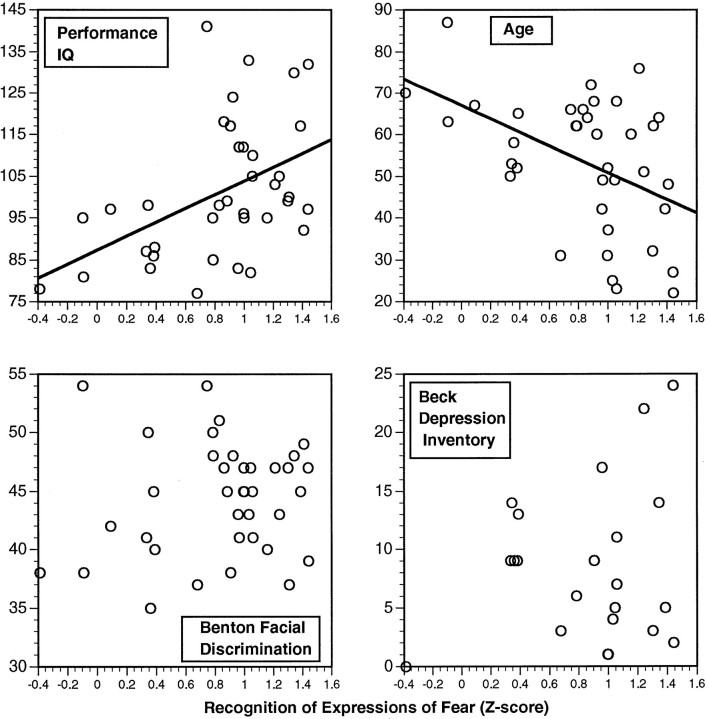

We also wanted to investigate to what extent other factors such as visuoperceptual function, IQ, or depression might correlate with impaired recognition of facial expressions. We consequently examined subjects on a large number of neuropsychological tasks (see Materials and Methods; Table 2), including measures of visuoperceptual and visuospatial capability and depression. All of these variables, in addition to subject age and gender, were entered into an interactive multiple linear regression program so as to calculate the extent to which each of these variables could predict the scores on our experimental task of emotion recognition. Significant regressions were found only for the recognition of afraid and sad faces. For both of these emotions, age and performance IQ were the only significant predictors (Fig. 5). For fear, PIQ t-ratio = 3.71 (p < 0.01), age t-ratio = −2.58 (p = 0.018), and Beck Depression Inventory t-ratio = 1.7 (p = 0.1; not significant); adjusted R² = 52.1%. For sadness, PIQ t-ratio = 2.15 (p = 0.038), age t-ratio = −2.12 (p = 0.041), and adjusted R² = 30.5%. Thus, age and performance IQ correlate with recognition of facial expressions of fear and sadness, although these two factors could not fully account for the impairments in recognizing the emotions. Importantly, there was no correlation between performance on our experimental task and performance on visuoperceptual discrimination tasks (compare Fig. 5), showing that the impairments in emotion recognition cannot be attributed to impaired perception but, instead, reflect a difficulty in recognizing the emotion signaled by the perceived face.

Table 2.

Subject neuropsychology

| ID | VIQ | PIQ | Age/gender | Face dsc. | Line or. | R-O copy | 3-D | MMPI D-scale | BDI | Vision | Faces: recogn. | Faces: naming |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Left hemisphere lesions | ||||||||||||

| 194 | 84 | 92 | 48/F | 49 | 27 | 35 | 28 | normal | normal | impaired | ||

| 580 | 81 | 112 | 31/F | 45 | 23 | 36 | 1 | normal | normal | normal | ||

| 674 | 108 | 117 | 42/F | 45 | 26 | 35 | 5 | normal | impaired | impaired | ||

| 1023 | 96 | 117 | 68/M | 38 | 23 | 35 | 29 | 9 | normal | normal | normal | |

| 1077 | 113 | 132 | 22/M | 39 | 27 | 36 | 29 | 2 | normal | normal | normal | |

| 1251 | 91 | 95 | 37/M | 45 | 21 | 34 | 29 | normal | normal | normal | ||

| 1366 | 108 | 98 | 66/M | 51 | 25 | 33 | 29 | normal | normal | |||

| 1374 | 96 | 96 | 52/M | 47 | 24 | 34 | 29 | 47 | 1 | RHH | ||

| 1713 | 106 | 99 | 72/F | 45 | 30 | 35 | RHH | normal | normal | |||

| 1861 | 106 | 124 | 60/F | 48 | 30 | 33 | RUQ | normal | normal | |||

| 1899 | 120 | 118 | 64/M | 47 | 26 | 30 | RHH | normal | impaired | |||

| 1962 | 141 | 66/M | 54 | 24 | 31 | 29 | normal | normal | impaired | |||

| 1976 | 100 | 100 | 62/M | 37 | 25 | 28 | RHH | |||||

| Right hemisphere lesions | ||||||||||||

| 650 | 88 | 86 | 52/M | 45 | 26 | 32 | 29 | 72 | 9 | LHH | normal | impaired |

| 692 | 87 | 77 | 31/F | 37 | 19 | 26 | 29 | 76 | 3 | normal | normal | normal |

| 1078 | 101 | 98 | 53/F | 50 | 30 | 36 | 63 | 14 | [1] | impaired | impaired | |

| 1103 | 94 | 78 | 70/M | 38 | 22 | 29 | 25 | 60 | 0 | L neglect | normal | normal |

| 1106 | 96 | 87 | 50/M | 41 | 20 | 34 | 29 | 77 | 9 | normal | normal | impaired |

| 1331 | 117 | 95 | 62/M | 50 | 23 | 33 | 28 | 70 | 6 | normal | normal | impaired |

| 1362 | 96 | 110 | 68/M | 41 | 29 | 35 | 27 | 7 | [2] | normal | normal | |

| 1377 | 84 | 81 | 63/M | 38 | 28 | 16 | 29 | normal | normal | normal | ||

| 1441 | 97 | 95 | 87/F | 54 | 21 | 36 | normal | normal | normal | |||

| 1465 | 98 | 130 | 64/M | 48 | 28 | 36 | 29 | 68 | 14 | normal | normal | |

| 1512 | 97 | 88 | 65/M | 40 | 32 | 30 | 29 | 13 | [3] | |||

| 1569 | 98 | 103 | 76/F | 47 | 26 | 28 | LHH | normal | normal | |||

| 1575 | 89 | 83 | 58/M | 35 | 24 | 20 | 23 | 63 | 9 | normal | normal | |

| 1580 | 110 | 105 | 23/M | 45 | 25 | 36 | 29 | 11 | normal | normal | normal | |

| 1603 | 106 | 133 | 25/F | 43 | 25 | 36 | 29 | 47 | 4 | normal | normal | normal |

| 1605 | 114 | 112 | 49/M | 41 | ||||||||

| 1620 | 102 | 97 | 67/F | 42 | 26 | 31 | 62 | |||||

| 1656 | 93 | 105 | 51/M | 43 | 25 | 29 | 22 | normal | normal | |||

| 1660 | 95 | 97 | 27/F | 47 | 24 | 35 | 29 | 24 | normal | normal | normal | |

| 1737 | 117 | 95 | 60/M | 40 | 28 | 33 | 29 | LUQ | normal | normal | ||

| 1932 | 95 | 99 | 32/F | 47 | 31 | 33 | 29 | 3 | normal | normal | normal | |

| 1933 | 98 | 83 | 42/F | 43 | 25 | 35 | 46 | 17 | normal | normal | normal | |

| Bilateral lesions | ||||||||||||

| 1658 | 101 | 82 | 49/M | 47 | 16 | 32 | 29 | 5 | [4] | normal | normal | |

| 1790 | 97 | 85 | 62/F | 48 | 26 | 28 | 29 | LHH; alexia | normal | normal | ||

[1] L. visual field cut; dyschromatopsia in inferior left quadrant.

[2] Small central left homonymous field cut.

[3] L. homon. hemianopia; L. neglect; severe visuospatial defect.

[4] Complete blindness in L. visual field and macular sparing not extending past 10° in R. visual field.

ID, Subject ID number; VIQ/PIQ, WAIS-R verbal and performance IQ; Face dsc., Benton facial discrimination test (raw score); Line or., judgment of line orientation (raw score); R-O copy, Rey–Osterrieth complex figure copy (raw score); 3-D, 3-D block construction (raw score); MMPI D-scale, D-scale of the MMPI (t-score); BDI, Beck Depression Inventory (raw score); Vision, abbreviations refer to field defects (UQ, upper quadrantanopia; HH, homonymous hemianopia); Faces, recognition and naming of famous faces. See Materials and Methods for references.

Fig. 5.

Performance scores of the recognition of fear are plotted against four independent variables: performance IQ, age, performance on the Benton facial discrimination test (raw score), and score on the Beck Depression Inventory. Scores on the recognition of fear correlated only with performance IQ and age; no other neuropsychological variable covaried significantly.

To ensure that nonspecific visuoperceptual impairment could not account for our findings, we repeated our original ANOVA (first section of Results) with visuoperceptual performance as a covariate. We used the Benton Facial Discrimination Test, a task in which subjects have to match an unfamiliar face with one or more different aspects of that same face embedded in a number of other faces (compare Table 2 and Fig. 5 for subjects’ scores on this task). This test provides a sensitive measure of the ability to discriminate between different people’s faces and provides the most relevant control task for our purposes, because our experimental task also used faces as stimuli. The ANCOVA of emotion × side of lesion, using the scores on the Benton task as a covariate, yielded the same significant effects as we reported above.
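An ANCOVA can be viewed as a comparison of two nested linear models that both contain the covariate, one with and one without the factor of interest. The sketch below illustrates this for a deliberately simplified case: a single two-level factor (side of lesion) and one covariate (a Benton-like score). It is an illustration under these assumptions only and does not reproduce the emotion × side of lesion design reported here. The function names `rss` and `ancova_group_F`, the variable names, and the randomly generated data are all hypothetical.

```python
import numpy as np


def rss(design, y):
    """Residual sum of squares of a least-squares fit of y on design."""
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return float(resid @ resid)


def ancova_group_F(y, group, covariate):
    """F statistic for a two-level group effect, adjusted for one covariate.

    Full model:    intercept + covariate + group
    Reduced model: intercept + covariate
    """
    y = np.asarray(y, dtype=float)
    g = np.asarray(group, dtype=float)       # 0/1 indicator of group membership
    c = np.asarray(covariate, dtype=float)
    n = y.size
    ones = np.ones(n)
    reduced = np.column_stack([ones, c])
    full = np.column_stack([ones, c, g])
    rss_reduced = rss(reduced, y)
    rss_full = rss(full, y)
    df_num = 1                                # one extra parameter in the full model
    df_den = n - full.shape[1]                # residual df of the full model
    F = ((rss_reduced - rss_full) / df_num) / (rss_full / df_den)
    return F, df_num, df_den


# Synthetic data for illustration only; these are NOT the study's scores.
rng = np.random.default_rng(1)
n_per_group = 20
group = np.repeat([0, 1], n_per_group)        # 0 = left lesion, 1 = right lesion
benton = rng.normal(45, 5, 2 * n_per_group)
score = 4.0 + 0.02 * benton - 1.5 * group + rng.normal(0, 0.5, 2 * n_per_group)

F, df_num, df_den = ancova_group_F(score, group, benton)
```

A large F for the group term after the covariate has been entered indicates that the group difference is not accounted for by the covariate, which is the logic of the control analysis described above.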

DISCUSSION

The most salient results in this study are as follows. First, no impairment in the processing of facial expressions of emotion was found in subjects with lesions restricted to left hemisphere; only damage in right hemisphere was ever associated with an impairment. Second, most of the impaired processing of facial expressions of emotion correlated with damage to two discrete regions in right neocortex: (1) the right inferior parietal cortex on the lateral surface, and (2) the anterior infracalcarine cortex on the mesial surface (Fig. 6). Third, expressions of happiness were recognized normally by all subjects. Fourth, the impaired recognition of facial expressions pertained to a few negative emotions, especially fear. An ANCOVA showed that these results cannot be explained on the basis of impaired visuoperceptual function but, instead, are specific to processing facial expressions of emotion. We attribute impaired recognition of facial expressions of fear to damage in the anatomical regions identified here, although it will be important to establish the reliability of this finding in additional subjects. The findings support the widely held notion that the right hemisphere contains essential components of systems specialized in the processing of emotion. However, the findings further suggest that impairments in the recognition of emotional facial expressions occur relative to discrete and specific visual and somatosensory cortical system components, and that processing different emotions draws on different sets of such components. The results also provide specific predictions for future studies with alternate methods, such as functional imaging studies in normal subjects.

Fig. 6.

Summary of findings. Recognition of facial expressions of emotion is most impaired by lesions in specific right hemisphere locations. Our data point to two loci (black circles), in right inferior parietal cortex on the lateral surface (supramarginal gyrus, hatched region) and in right infracalcarine cortex on the mesial surface.

The impaired recognition of emotion that we report might also be a consequence of damage to essential white matter communications between visual and somatosensory cortices. It is probable, in fact, that most lesions we reported in either infracalcarine or inferior parietal cortices also disrupt underlying white matter. Future studies will need to address the possibility that damage to such white matter connections could result in impaired recognition of facial expressions of emotion.

Different emotions are differentially impaired

None of our subjects was impaired in recognizing happy faces, whereas several subjects had difficulty recognizing certain negative emotions. In attempting to account for this result, we propose that two factors may have resulted in a relative separation of the neural systems that process positive or negative emotions. First, there are fewer kinds of positive than negative emotions, which probably makes it more difficult to distinguish among negative emotions, at a basic level, than among positive emotions. In fact, it seems possible that, at a basic level, there is only one positive emotion, happiness, and that recognizing happiness is thus a simpler task than recognizing specific negative emotions. Second, virtually all happy faces contain some variant of a stereotypic signal, the smile. Our findings are also consistent with EEG studies that suggest the right hemisphere may be specialized for processing negative, but not positive, emotions (Davidson and Fox, 1982; Davidson, 1992).

With respect to the especially impaired recognition of fear and sadness, there are two possible explanations. One is that these two emotions are the most difficult ones to process and, therefore, those whose recognition is most impaired. Another is that there may be specific systems for processing specific negative emotions such as fear. The presence of a significant interaction in the ANOVA of lesion group × emotion, and the finding that recognition of fear differed significantly from recognition of all other emotions, suggest that lesions in the right hemisphere regions specifically impair the processing of fear. Additionally, there is no evidence from normal subjects to suggest that fear is any more difficult to process than other emotional expressions (Ekman, 1976; our unpublished observations). Instead, we believe that there are right hemisphere systems dedicated to processing stimuli that signal fear. This proposal is also consonant with lexical priming studies indicating that the right hemisphere may be specialized to process stimuli related to threat (Van Strien and Morpurgo, 1992).

Networks for the acquisition and retrieval of information about emotion

Our working hypothesis regarding the recognition of expressions of emotion proposes that perceptual representations of facial expressions (in early visual cortices) normally lead to the retrieval of information from diverse neural systems (located “downstream”), including those that represent pertinent past states of the organism’s body, and those that represent factual knowledge associated with certain types of facial expressions during development and learning (Damasio, 1994, 1995; Adolphs et al., 1995). The retrieval of previous body-state information would rely on structures such as the somatosensory and motor cortices, as well as limbic structures involved in visceral and autonomic/neuroendocrine control. Lack of access to such information would result in defective concept retrieval and, therefore, impaired performance on our task. (It should be clear that we are not suggesting that body-state information is accessed necessarily in the form of a conscious emotional experience during our task.)

The present findings on emotion recognition are thus especially interesting, because the right hemisphere is also preferentially involved in emotional expression and experience. For instance, there is substantial evidence that the right hemisphere has an important role in regulating the autonomic and somatovisceral components of emotion (Gainotti et al., 1993), and it has been proposed that right temporoparietal sectors regulate both the experience of emotion and autonomic arousal (Heller, 1993). When facial expressions are used as conditioned stimuli, conditioning of stimuli to autonomic responses is most vulnerable to right hemisphere lesions (Johnsen and Hugdahl, 1993), and right posterior hemisphere damage leads to impaired autonomic responses to emotionally charged stimuli (Morrow et al., 1981; Zoccolotti et al., 1982; Tranel and Damasio, 1994). Tachistoscopic presentation of emotional stimuli to the right hemisphere has been reported to result in larger blood pressure changes than do presentations to the left hemisphere (Wittling, 1990).

We would like to advance the hypothesis that the experience of some emotions, notably fear, during development would play an important role in the acquisition of conceptual knowledge of those emotions (by conceptual knowledge we mean all pertinent information, not just lexical knowledge). It seems plausible that the partial reevocation of such conceptual knowledge would be a prerequisite for the ability to recognize the corresponding emotions normally.

These considerations raise an important issue regarding the role of different neural systems in the acquisition and in the retrieval of information about emotions. We have described previously a subject who acquired bilateral amygdala damage early in life and who was impaired in recognizing facial expressions of fear (Adolphs et al., 1994, 1995). We subsequently reported in a collaborative study that two subjects who acquired bilateral amygdala damage in adulthood did not show the same impairment (Hamann et al., 1996). We believe that these findings support the following hypothesis: during development, the human infant/child acquires the connection between faces expressing fear and the conceptual knowledge of what fear is (which includes instances of the subject’s experience of fear). Such a process requires two neural components: (1) a structure that can link perceptual information about the face to information about the emotion that the face denotes; and (2) structures in which conceptual knowledge of the emotion can be recorded, and from where it can be retrieved in the future. Two candidates for structures fulfilling roles (1) and (2) would be, respectively, the amygdala and neocortical regions in the right hemisphere. Our previous data (Adolphs et al., 1994, 1995; Hamann et al., 1996) suggest that the amygdala is required during development so as to establish the networks that permit recognition of facial expressions of fear. Once established, however, these networks may function independently of the amygdala. The present study suggests two cortical sectors that are important components of the system by which adults retrieve knowledge about facial expressions of emotion. We therefore expect that impaired recognition of facial emotion could result from amygdala damage provided that the lesion occurred early in life, but could result from damage to right hemisphere cortical regions at any age. 
This framework is open to further testing in both human and nonhuman primates, part of which is currently under way in our laboratory.

Footnotes

This study was supported by National Institute of Neurological Diseases and Stroke Grant NS 19632. R.A. is a Burroughs-Wellcome Fund Fellow of the Life Sciences Research Foundation. We thank Randall Frank for help with image analysis and Kathy Rockland for many helpful discussions concerning neuroanatomy.

Correspondence should be addressed to Dr. Ralph Adolphs, Department of Neurology, University Hospitals and Clinics, 200 Hawkins Drive, Iowa City, IA 52242.

REFERENCES

- 1. Adolphs R, Tranel D, Damasio H, Damasio AR. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- 2. Adolphs R, Tranel D, Damasio H, Damasio AR. Fear and the human amygdala. J Neurosci. 1995;15:5879–5892. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- 3. Beck AT. Beck depression inventory. Psychological Corporation; San Antonio, TX: 1987.

- 4. Benton AL, Hamsher K, Varney NR, Spreen O. Contributions to neuropsychological assessment. Oxford UP; New York: 1983.

- 5. Blonder LX, Bowers D, Heilman K. The role of the right hemisphere in emotional communication. Brain. 1991;114:1115–1127. doi: 10.1093/brain/114.3.1115.

- 6. Borod JC. Cerebral mechanisms underlying facial, prosodic, and lexical emotional expression: a review of neuropsychological studies and methodological issues. Neuropsychology. 1993;7:445–463.

- 7. Borod JC, Andelman F, Obler LK, Tweedy JR, Welkowitz J. Right hemisphere specializations for the identification of emotional words and sentences: evidence from stroke patients. Neuropsychologia. 1992;30:827–844. doi: 10.1016/0028-3932(92)90086-2.

- 8. Bowers D, Bauer RM, Coslett HB, Heilman KM. Processing of faces by patients with unilateral hemisphere lesions. Brain Cognit. 1985;4:258–272. doi: 10.1016/0278-2626(85)90020-x.

- 9. Bowers D, Coslett HB, Bauer RM, Speedie LJ, Heilman KH. Comprehension of emotional prosody following unilateral hemispheric lesions: processing defect versus distraction defect. Neuropsychologia. 1987;25:317–328. doi: 10.1016/0028-3932(87)90021-2.

- 10. Bowers D, Blonder LX, Feinberg T, Heilman KM. Differential impact of right and left hemisphere lesions on facial emotion and object imagery. Brain. 1991;114:2593–2609. doi: 10.1093/brain/114.6.2593.

- 11. Bowers D, Bauer RM, Heilman KM. The nonverbal affect lexicon: theoretical perspectives from neuropsychological studies of affect perception. Neuropsychology. 1993;7:433–444.

- 12. Damasio AR. Descartes’ error: emotion, reason, and the human brain. Grosset/Putnam; New York: 1994.

- 13. Damasio AR. Toward a neurobiology of emotion and feeling: operational concepts and hypotheses. The Neuroscientist. 1995;1:19–25.

- 14. Damasio H, Damasio AR. Lesion analysis in neuropsychology. Oxford UP; New York: 1989.

- 15. Damasio H, Frank R. Three-dimensional in vivo mapping of brain lesions in humans. Arch Neurol. 1992;49:137–143. doi: 10.1001/archneur.1992.00530260037016.

- 16. Darby DG. Sensory aprosodia: a clinical clue to lesions of the inferior division of the right middle cerebral artery. Neurology. 1993;43:567–572. doi: 10.1212/wnl.43.3_part_1.567.

- 17. Davidson RJ. Anterior cerebral asymmetry and the nature of emotion. Brain Cognit. 1992;6:245–268. doi: 10.1016/0278-2626(92)90065-t.

- 18. Davidson RJ, Fox N. Asymmetrical brain activity discriminates between positive and negative affective stimuli in 10 month old infants. Science. 1982;218:1235–1237. doi: 10.1126/science.7146906.

- 19. DeKosky ST, Heilman KM, Bowers D, Valenstein E. Recognition and discrimination of emotional faces and pictures. Brain Language. 1980;9:206–214. doi: 10.1016/0093-934x(80)90141-8.

- 20. Ekman P. Pictures of facial affect. Consulting Psychologists; Palo Alto, CA: 1976.

- 21. Fried I, Mateer C, Ojemann G, Wohns R, Fedio P. Organization of visuospatial functions in human cortex. Brain. 1982;105:349–371. doi: 10.1093/brain/105.2.349.

- 22. Gainotti G, Caltagirone C, Zoccolotti P. Left/right and cortical/subcortical dichotomies in the neuropsychological study of human emotions. Cognit Emotion. 1993;7:71–94.

- 23. Greene R. The MMPI: an interpretive manual. Grune and Stratton; New York: 1980.

- 24. Gur RC, Skolnick BE, Gur RE. Effects of emotional discrimination tasks on cerebral blood flow: regional activation and its relation to performance. Brain Cognit. 1994;25:271–286. doi: 10.1006/brcg.1994.1036.

- 25. Hamann SB, Stefanacci L, Squire LR, Adolphs R, Tranel D, Damasio H, Damasio A. Recognizing facial emotion. Nature. 1996;379:497. doi: 10.1038/379497a0.

- 26. Heller W. Neuropsychological mechanisms of individual differences in emotion, personality, and arousal. Neuropsychology. 1993;7:476–489.

- 27. Johnsen BH, Hugdahl K. Right hemisphere representation of autonomic conditioning to facial emotional expressions. Psychophysiology. 1993;30:274–278. doi: 10.1111/j.1469-8986.1993.tb03353.x.

- 28. Ley RG, Bryden MP. Hemispheric differences in processing emotion and faces. Brain Language. 1979;7:127–138. doi: 10.1016/0093-934x(79)90010-5.

- 29. Morrow L, Vrtunski PB, Kim Y, Boller F. Arousal responses to emotional stimuli and laterality of lesion. 1981;19:65–71.

- 30. Ojemann JG, Ojemann GA, Lettich E. Neuronal activity related to faces and matching in human right nondominant temporal cortex. Brain. 1992;115:1–13. doi: 10.1093/brain/115.1.1.

- 31. Rapcsak SZ, Kaszniak AW, Rubens AB. Anomia for facial expressions: evidence for a category specific visual-verbal disconnection syndrome. Neuropsychologia. 1989;27:1031–1041. doi: 10.1016/0028-3932(89)90183-8.

- 32. Rapcsak SZ, Comer JF, Rubens AB. Anomia for facial expressions: neuropsychological mechanisms and anatomical correlates. Brain Language. 1993;45:233–252. doi: 10.1006/brln.1993.1044.

- 33. Ross ED. Modulation of affect and nonverbal communication by the right hemisphere. In: Mesulam M-M, editor. Principles of behavioral neurology. F.A. Davis; Philadelphia: 1985. pp. 239–258.

- 34. Silberman EK, Weingartner H. Hemispheric lateralization of functions related to emotion. Brain Cognit. 1986;5:322–353. doi: 10.1016/0278-2626(86)90035-7.

- 35. Tranel D. The Iowa-Benton school of neuropsychological assessment. In: Grant I, Adams KM, editors. Neuropsychological assessment of neuropsychiatric disorders. Oxford UP; New York: 1996.

- 36. Tranel D, Damasio H. Neuroanatomical correlates of electrodermal skin conductance responses. Psychophysiology. 1994;31:427–438. doi: 10.1111/j.1469-8986.1994.tb01046.x.

- 37. Tranel D, Damasio H, Damasio AR. Double dissociation between overt and covert face recognition. J Cog Neurosci. 1995;7:425–432. doi: 10.1162/jocn.1995.7.4.425.

- 38. Van Strien JW, Morpurgo M. Opposite hemispheric activations as a result of emotionally threatening and non-threatening words. Neuropsychologia. 1992;30:845–848. doi: 10.1016/0028-3932(92)90087-3.

- 39. Wechsler DA. The Wechsler Adult Intelligence Scale: revised. Psychological Corporation; New York: 1981.

- 40. Wittling W. Psychophysiological correlates of human brain asymmetry: blood pressure changes during lateralized presentation of an emotionally laden film. Neuropsychologia. 1990;28:457–470. doi: 10.1016/0028-3932(90)90072-v.

- 41. Zoccolotti P, Scabini D, Violani C. Electrodermal responses in patients with unilateral brain damage. J Clin Neuropsychol. 1982;4:143–150. doi: 10.1080/01688638208401124.