Abstract

It has become routine to collect data that are structured as multiway arrays (tensors). There is an enormous literature on low rank and sparse matrix factorizations, but limited consideration of extensions to the tensor case in statistics. The most common low rank tensor factorization relies on parallel factor analysis (PARAFAC), which expresses a rank k tensor as a sum of rank one tensors. When observations are only available for a tiny subset of the cells of a big tensor, the low rank assumption is not sufficient and PARAFAC has poor performance. We induce an additional layer of dimension reduction by allowing the effective rank to vary across dimensions of the table. For concreteness, we focus on a contingency table application. Taking a Bayesian approach, we place priors on terms in the factorization and develop an efficient Gibbs sampler for posterior computation. Theory is provided showing posterior concentration rates in high-dimensional settings, and the methods are shown to have excellent performance in simulations and several real data applications.

Keywords: Big data, Bayesian, Categorical data, Contingency table, Low rank, Matrix completion, PARAFAC, Tensor factorization

1. Introduction

Sparsely observed big tabular data sets are commonly collected in many applied domains. One example is recommender systems, in which the dimensions of the table correspond to users, items and different contexts (Karatzoglou et al. (2010)), and only a tiny proportion of the cells are filled in, by users providing rankings. The task is to fill in the rest of the huge table in order to recommend to each user the items they are likely to prefer in each context. This extends the widely studied matrix completion problem (Candes and Recht (2009)), of which the Netflix challenge was one example. Another setting is contingency tables, in which multivariate categorical data are collected for each individual and the cells of the table contain counts of the number of individuals having a particular combination of values. In contingency table analyses, the focus is typically on inferring associations among the different variables, but challenges arise when there are many variables, so that the number of cells in the table is vastly bigger than the sample size.

Suppose that the tensor of interest is $\pi \in \mathcal{T}$, with $\mathcal{T}$ a space of p-way tensors having dj rows in the jth direction. Often there are constraints on the elements of the tensor. For recommender systems, ratings are non-negative, so that one is faced with a non-negative tensor factorization problem (Paatero and Tapper (1994); Lee and Seung (1999); Friedlander and Hatz (2005); Lim and Comon (2009); Liu et al. (2012)). For contingency tables, the tensor corresponds to the joint probability mass function for multivariate categorical data, so that the elements are non-negative and add to one across all the cells (Dunson and Xing (2009); Bhattacharya and Dunson (2012)). Let Y denote the data collected on tensor π. For recommender systems, Y consists of ratings for a small subset of the cells in the tensor, while for contingency tables Y includes response vectors $y_i = (y_{i1}, \ldots, y_{ip})^{\mathrm T}$ for subjects i = 1, …, n, with yij ∈ {1, …, dj} for j = 1, …, p. In both cases, data are extremely sparse, with no observations in the overwhelming majority of cells.

To combat this data sparsity, it is necessary to substantially reduce dimensionality in estimating π. The usual way to accomplish this is through a low rank assumption. Unlike for matrices, there is no unique definition of rank but the most common convention is to define the rank k of a tensor π as the smallest value of k such that π can be expressed as

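$$\pi = \sum_{h=1}^{k} \lambda_h^{(1)} \otimes \lambda_h^{(2)} \otimes \cdots \otimes \lambda_h^{(p)}, \qquad \lambda_h^{(j)} \in \mathbb{R}^{d_j}, \tag{1}$$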

which is a sum of k rank one tensors, each an outer product of vectors $\lambda_h^{(j)}$, one for each dimension (Kolda and Bader, 2009). Expression (1) is commonly referred to as parallel factor analysis (PARAFAC) (Harshman (1970); Bro (1997)). For k small, the number of parameters is massively reduced from $\prod_{j=1}^{p} d_j$ to $k\sum_{j=1}^{p} d_j$; as the low rank assumption often holds approximately, this leads to an effective approach in many applications, and a rich variety of algorithms are available for estimation.
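As an illustration of (1), the following minimal numpy sketch builds a tensor from p factor matrices by summing outer products, and compares the number of cells with the number of factor entries. The helpers `parafac_tensor` and `parameter_counts` are hypothetical names used only for this example.

```python
import numpy as np
from functools import reduce


def parafac_tensor(factors):
    """Sum of k rank-one tensors as in (1).

    factors[j] has shape (d_j, k); column h is the vector lambda_h^(j).
    """
    k = factors[0].shape[1]
    tensor = 0.0
    for h in range(k):
        # outer product lambda_h^(1) o ... o lambda_h^(p)
        tensor = tensor + reduce(np.multiply.outer, [F[:, h] for F in factors])
    return tensor


def parameter_counts(dims, k):
    """Number of cells (prod_j d_j) vs. PARAFAC factor entries (k * sum_j d_j)."""
    return int(np.prod(dims, dtype=np.int64)), k * int(sum(dims))


rng = np.random.default_rng(0)
factors = [rng.random((d, 2)) for d in (3, 4, 2)]
T = parafac_tensor(factors)
print(T.shape)                         # (3, 4, 2)
print(parameter_counts([2] * 30, 5))   # (1073741824, 300)
```

With 30 binary dimensions and k = 5, the table has about 10^9 cells while the factorization has only 300 entries.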

However, the decrease in degrees of freedom from exponential in p to linear in p is not sufficient when p is big. Large p small n problems arise routinely, and a usual solution outside of tensor settings is to incorporate sparsity. For example, in linear regression, many of the coefficients are set to zero, while in estimation of large covariance matrices, sparse factor models are used that assume few factors and many zeros in the factor loadings matrices (West (2003); Carvalho et al. (2008)). In the matrix factorization literature, low rank plus sparse decompositions have been considered (Chartrand (2012)), but this approach does not solve our problem of too many parameters. Including zeros in the component vectors is not a viable solution either, particularly as we do not want to enforce exact zeros in blocks of the tensor π; we require an alternative notion of sparsity.

Our notion is as follows. For component h (h = 1, …, k), we partition the dimensions into two mutually exclusive subsets, $\{1, \ldots, p\} = S_h \cup S_h^c$. The proposed sparse PARAFAC (sp-PARAFAC) factorization is then

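$$\pi = \sum_{h=1}^{k} \bigotimes_{j=1}^{p} \bigl\{ \lambda_h^{(j)}\, 1(j \in S_h) + \lambda_0^{(j)}\, 1(j \in S_h^c) \bigr\}. \tag{2}$$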

Hence, instead of having to introduce a separate vector for every h and j, we allow more degrees of freedom to be used in characterizing the tensor structure in certain directions than in others. Consider the recommender systems application and suppose we have three dimensions, including users (j = 1), items (j = 2) and context (j = 3). If we let $S_h = \{1, 2\}$ and $S_h^c = \{3\}$ for h = 1, …, k, then

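$$\pi = \sum_{h=1}^{k} \lambda_h^{(1)} \otimes \lambda_h^{(2)} \otimes \lambda_0^{(3)} = \Bigl( \sum_{h=1}^{k} \lambda_h^{(1)} \otimes \lambda_h^{(2)} \Bigr) \otimes \lambda_0^{(3)}, \tag{3}$$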

so that we factorize the user-item matrix as being of rank k and then include a multiplier specific to each level of the context factor. This assumes that ratings are systematically higher or lower depending on context, but that there is no interaction. In the contingency table application, π is the joint probability mass function, with $\pi_{c_1 \cdots c_p} = \Pr(y_{i1} = c_1, \ldots, y_{ip} = c_p)$. If $j \in S_h^c$ for h = 1, …, k, then the jth variable is independent of the other variables, with marginal distribution $\lambda_0^{(j)}$. By including $j \in S_h^c$ for some but not all h ∈ {1, …, k}, one can use fewer degrees of freedom in characterizing the interaction between the jth factor and the other factors. In practice, we will learn {Sh} using a Bayesian approach, as the appropriate lower dimensional structure is typically not known in advance.
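The recommender example can be checked numerically. In this sketch, the helper `sp_parafac_tensor` and the random inputs are hypothetical, and dimensions are indexed from 0. Each component uses its own vector for the dimensions in S_h and the shared baseline vector otherwise. With S_h = {users, items} for every h, the result should equal a rank-k user-item matrix multiplied by a context-specific factor.

```python
import numpy as np
from functools import reduce


def sp_parafac_tensor(active, baseline, subsets):
    """Sparse PARAFAC (2).

    active[j]: (d_j, k) array whose column h is lambda_h^(j);
    baseline[j]: length-d_j vector lambda_0^(j);
    subsets[h]: set of dimensions (0-based) in S_h.
    """
    p = len(baseline)
    tensor = 0.0
    for h, S_h in enumerate(subsets):
        # component-specific vector on S_h, shared baseline on its complement
        vecs = [active[j][:, h] if j in S_h else baseline[j] for j in range(p)]
        tensor = tensor + reduce(np.multiply.outer, vecs)
    return tensor


rng = np.random.default_rng(1)
dims, k = (5, 6, 3), 2                 # users, items, contexts
active = [rng.random((d, k)) for d in dims]
baseline = [rng.random(d) for d in dims]
subsets = [{0, 1}] * k                 # S_h = {users, items} for every h
T = sp_parafac_tensor(active, baseline, subsets)

# (3): rank-k user-item matrix times a context-specific multiplier
M = active[0] @ active[1].T
print(np.allclose(T, np.multiply.outer(M, baseline[2])))   # True
```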

We conjecture that many tensor data sets can be concisely represented via (2), with results substantially improved over usual PARAFAC factorizations due to the second layer of dimension reduction. For concreteness and brevity, we focus on contingency tables, but the methods are easily modified to other settings. Contingency table analysis is routine in practice; see Agresti (2002) and Fienberg and Rinaldo (2007). However, in stark contrast to the well developed literature on linear regression and covariance matrix estimation in big data settings, very few flexible methods scale beyond small tables. Throughout the rest of the paper, we assume that the observed data yi = (yi1, …, yip)T, i = 1, …, n, are multivariate unordered categorical, with yij ∈ {1, …, dj}. Our interest is in situations where the dimensionality p is comparable to or even larger than the number of samples n.

2. Sparse Factor Models for Tables

2.1. Model and prior

We focus on a Bayesian implementation of sp-PARAFAC in (2). Let $\Delta^{(r-1)} = \{x \in \mathbb{R}^r : x_c \ge 0,\ \sum_{c=1}^{r} x_c = 1\}$ denote the (r − 1)-dimensional probability simplex. In the contingency table case, Dunson and Xing (2009) proposed the following probabilistic PARAFAC factorization:

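$$\pi = \sum_{h=1}^{k} \nu_h\, \lambda_h^{(1)} \otimes \lambda_h^{(2)} \otimes \cdots \otimes \lambda_h^{(p)}, \tag{4}$$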

where $\nu = (\nu_1, \ldots, \nu_k)^{\mathrm T} \in \Delta^{(k-1)}$ and $\lambda_h^{(j)} = (\lambda_{h1}^{(j)}, \ldots, \lambda_{hd_j}^{(j)})^{\mathrm T} \in \Delta^{(d_j-1)}$ is a vector of probabilities of yij = 1, …, dj in component h. Introducing a latent sub-population index zi ∈ {1, …, k} for subject i, the elements of yi are conditionally independent given zi with $\Pr(y_{ij} = c \mid z_i = h) = \lambda_{hc}^{(j)}$, and marginalizing out the latent index zi leads to a mixture of product multinomial distributions for yi. Placing Dirichlet priors on the component vectors leads to a simple and efficient Gibbs sampler for posterior computation. We will refer to model (4) as standard PARAFAC.
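The latent class representation gives a direct way to simulate from (4). The minimal sketch below assumes categories coded 0, …, d_j − 1 and uses hypothetical helper names. It draws z_i from ν and then draws each y_ij independently from λ_{z_i}^{(j)}, so empirical cell frequencies should approach π.

```python
import numpy as np
from functools import reduce


def parafac_pmf(nu, lambdas):
    """Probability tensor (4); lambdas[j] has shape (k, d_j), row h = lambda_h^(j)."""
    return sum(nu[h] * reduce(np.multiply.outer, [lam[h] for lam in lambdas])
               for h in range(len(nu)))


def sample_latent_class(n, nu, lambdas, rng):
    """Draw z_i ~ Categorical(nu), then y_ij | z_i = h ~ Categorical(lambda_h^(j))
    independently across j. Categories are coded 0, ..., d_j - 1."""
    z = rng.choice(len(nu), size=n, p=nu)
    y = np.empty((n, len(lambdas)), dtype=int)
    for j, lam in enumerate(lambdas):
        cdf = np.cumsum(lam[z], axis=1)          # row i: CDF of lambda_{z_i}^(j)
        u = rng.random((n, 1))
        # inverse-CDF draw; the minimum guards against rounding in the last entry
        y[:, j] = np.minimum((u > cdf).sum(axis=1), lam.shape[1] - 1)
    return z, y


rng = np.random.default_rng(2)
nu = np.array([0.6, 0.4])
lambdas = [rng.dirichlet(np.ones(d), size=2) for d in (2, 3, 2)]
pi = parafac_pmf(nu, lambdas)
z, y = sample_latent_class(200_000, nu, lambdas, rng)
freq = np.zeros(pi.shape)
np.add.at(freq, tuple(y.T), 1)
freq /= len(y)
print(np.abs(freq - pi).max())   # Monte Carlo error only
```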

This approach has excellent performance in small to moderate p problems, but as p increases there is an inevitable breakdown point. The number of parameters increases linearly in p, as for other PARAFAC factorizations, so problems arise as p approaches the order of n or p ≫ n. For example, we are particularly motivated by epidemiology studies collecting many categorical predictors, such as occupation type, demographic variables, and single nucleotide polymorphisms. For continuous response vectors $y_i \in \mathbb{R}^p$, there is a well developed literature on Gaussian sparse factor models that are adept at accommodating p ≫ n data (West (2003); Lucas et al. (2006); Carvalho et al. (2008); Bhattacharya and Dunson (2011)). These models include many zeros in the loadings matrices to induce additional dimension reduction on top of the low rank assumption. Pati et al. (2013a) provided theoretical support through characterizing posterior concentration.

Our sp-PARAFAC factorization provides an analog of sparse factor models in the tensor setting. Modifying for the categorical data case, we let

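$$\pi = \sum_{h=1}^{k} \nu_h \bigotimes_{j=1}^{p} \bigl\{ \lambda_h^{(j)}\, 1(j \in S_h) + \lambda_0^{(j)}\, 1(j \notin S_h) \bigr\}, \tag{5}$$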

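where |Sh| ≪ p (|S| denotes the cardinality of a set S) and the baseline vectors $\lambda_0^{(j)} \in \Delta^{(d_j-1)}$ are fixed in advance; we consider two cases:

$$\lambda_{0c}^{(j)} = \frac{1}{d_j} \quad \text{or} \quad \lambda_{0c}^{(j)} = \frac{1}{n} \sum_{i=1}^{n} 1(y_{ij} = c), \qquad c = 1, \ldots, d_j,$$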

corresponding, respectively, to a discrete uniform distribution and to empirical estimates of the marginal category probabilities. By fixing the baseline dictionary vectors in advance and allocating a large subset of the variables within each cluster h to the baseline component, we dramatically reduce the size of the model space. In particular, the probability tensor π in (5) can be parameterized as $\pi = \pi(\nu, \Lambda, \mathcal{S})$, where $\mathcal{S} = \{S_1, \ldots, S_k\}$ and $\Lambda = \{\lambda_h^{(j)} : j \in S_h,\ h = 1, \ldots, k\}$. Thus, the effective number of model parameters is now reduced to $(k-1) + \sum_{h=1}^{k} \sum_{j \in S_h} (d_j - 1)$, which is substantially smaller than the $(k-1) + k \sum_{j=1}^{p} (d_j - 1)$ parameters in the original specification, provided |Sh| ≪ p for all h = 1, …, k. This is encouraged by the sparsity-favoring prior on |Sh| introduced below. We will illustrate that this can lead to huge differences in practical performance.
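The parameter counts above are simple to tabulate. The sketch below uses a hypothetical helper that counts only continuous free parameters and ignores the discrete subsets S_h. It compares standard PARAFAC (4) with sp-PARAFAC (5) for k = 10 components, each with |S_h| = 10 active variables, out of p = 1000 four-level variables.

```python
def free_parameter_counts(dims, subsets):
    """Continuous free parameters in standard PARAFAC (4) vs. sp-PARAFAC (5).

    dims[j] = d_j; subsets[h] = S_h (0-based). Each probability vector in the
    simplex contributes d_j - 1 free entries and nu contributes k - 1. The
    baseline vectors are fixed, and the discrete subsets S_h are not counted.
    """
    k = len(subsets)
    standard = (k - 1) + k * sum(d - 1 for d in dims)
    sparse = (k - 1) + sum(dims[j] - 1 for S_h in subsets for j in S_h)
    return standard, sparse


dims = [4] * 1000
subsets = [set(range(5 * h, 5 * h + 10)) for h in range(10)]   # |S_h| = 10
print(free_parameter_counts(dims, subsets))   # (30009, 309)
```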

Completing a Bayesian specification with priors for the unknown parameter vectors and expressing the model in hierarchical form, we have

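$$\begin{aligned}
y_{ij} \mid z_i = h &\sim \mathrm{Multinomial}\bigl(\{1, \ldots, d_j\};\ \lambda_{h1}^{(j)}, \ldots, \lambda_{hd_j}^{(j)}\bigr), \\
\lambda_h^{(j)} &\sim (1 - \tau_h)\, \delta_{\lambda_0^{(j)}} + \tau_h\, \mathrm{Diri}(a_{j1}, \ldots, a_{jd_j}), \\
\tau_h &\sim \mathrm{beta}(1, \gamma), \\
\Pr(z_i = h) &= \nu_h = V_h \prod_{l<h} (1 - V_l), \qquad V_h \sim \mathrm{beta}(1, \alpha), \\
\alpha &\sim \mathrm{gamma}(a_\alpha, b_\alpha).
\end{aligned} \tag{6}$$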

It is evident that the hierarchical prior in (6) is supported on the space of probability tensors with an sp-PARAFAC decomposition as in (5), since (6) is equivalent to letting the subset size |Sh| ~ Binom(p, τh) and drawing a random subset Sh uniformly from all subsets of {1, …, p} of size |Sh| in (5). A stick-breaking prior (Sethuraman, 1994) is chosen for the component weights {νh}, taking a nonparametric Bayes approach that allows k = ∞, with a hyperprior placed on the concentration parameter α in the stick-breaking process to allow the data to inform more strongly about the component weights. The probability τh of allocation to the active (non-baseline) category in component h is given a beta(1, γ) prior, with γ > 1 favoring allocation of many of the $\lambda_h^{(j)}$'s to the baseline category $\lambda_0^{(j)}$. In the limiting case γ → ∞, the joint probability tensor π becomes an outer product of the baseline probabilities for the individual variables, $\pi = \lambda_0^{(1)} \otimes \cdots \otimes \lambda_0^{(p)}$. On the other hand, as γ → 0, one reduces back to standard PARAFAC (4).
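A single draw from (6) can be sketched as follows. This is an illustration under stated assumptions and not the authors' code. The stick-breaking prior is truncated at K components, α is held fixed instead of being drawn from its gamma hyperprior, and the active vectors get a Diri(1, …, 1) prior. Drawing each indicator 1(j ∈ S_h) as Bernoulli(τ_h) is equivalent to drawing |S_h| ~ Binom(p, τ_h) and then a uniformly random subset of that size. The function name is hypothetical.

```python
import numpy as np


def draw_sp_parafac_prior(p, d, K, gamma, baseline, alpha, rng):
    """One draw of (nu, lambda, S) from (6) under sketch assumptions:
    stick-breaking truncated at K, alpha fixed, Diri(1, ..., 1) for active vectors.

    Returns nu (K,), lam (K, p, d) and a boolean (K, p) matrix with
    active[h, j] = 1(j in S_h).
    """
    V = rng.beta(1.0, alpha, size=K)
    V[-1] = 1.0                                  # close the stick at level K
    nu = V * np.concatenate(([1.0], np.cumprod(1.0 - V[:-1])))
    tau = rng.beta(1.0, gamma, size=K)           # tau_h ~ beta(1, gamma)
    active = rng.random((K, p)) < tau[:, None]   # 1(j in S_h) ~ Bernoulli(tau_h)
    lam = np.broadcast_to(baseline, (K, p, d)).copy()
    lam[active] = rng.dirichlet(np.ones(d), size=int(active.sum()))
    return nu, lam, active


rng = np.random.default_rng(3)
p, d, K = 200, 4, 10
baseline = np.full(d, 1.0 / d)                   # discrete uniform lambda_0^(j)
nu, lam, active = draw_sp_parafac_prior(p, d, K, gamma=0.2 * p,
                                        baseline=baseline, alpha=1.0, rng=rng)
print(active.sum(axis=1))   # |S_h| for each component, typically far below p
```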

Line 2 of expression (6) is key in inducing the second level of dimensionality reduction in our Bayesian sparse PARAFAC factorization. The inclusion of the baseline component that does not vary with h massively reduces the number of parameters, and can additionally be argued to have minimal impact on the flexibility of the specification. The $\lambda_h^{(j)}$'s enter the cell probabilities through products $\prod_{j=1}^{p} \lambda_{hc_j}^{(j)}$, which for large p are highly concentrated around their mean since the $\lambda_h^{(j)}$'s are independent across j. This is a manifestation of the concentration of measure phenomenon (Talagrand, 1996), which roughly states that a random variable that depends in a smooth way on many independent variables, but not too much on any one of them, is essentially constant. For example, if $\Theta = \prod_{j=1}^{p} \theta_j$ with $\theta_j \sim U(0,1)$ independently, then $E(\Theta) = (1/2)^p$ and $\mathrm{var}(\Theta) = (1/3)^p - (1/4)^p$, which rapidly converges to zero. This implies that replacing a large randomly chosen subset of the $\lambda_h^{(j)}$'s by $\lambda_0^{(j)}$ should have minimal impact on modeling flexibility.
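The moments in this example follow from E(θ_j) = 1/2 and E(θ_j²) = 1/3. The quick numpy check below tabulates the exact values. It then compares them with a Monte Carlo estimate at p = 5, where simulation error is small. `product_moments` is a hypothetical helper name.

```python
import numpy as np


def product_moments(p):
    """Exact moments of Theta = prod_{j=1}^p theta_j with theta_j iid U(0, 1):
    E(theta_j) = 1/2 and E(theta_j^2) = 1/3, so E(Theta) = 2^-p and
    var(Theta) = E(Theta^2) - E(Theta)^2 = 3^-p - 4^-p."""
    return 0.5 ** p, (1.0 / 3.0) ** p - 0.25 ** p


for p in (5, 10, 20, 50):
    print(p, product_moments(p))

# Monte Carlo check at p = 5
rng = np.random.default_rng(4)
Theta = rng.random((200_000, 5)).prod(axis=1)
print(Theta.mean(), Theta.var(), product_moments(5))
```

For larger p, a direct Monte Carlo estimate of the variance becomes unreliable, because Θ is heavy tailed relative to its tiny mean. This is why the check is done at small p.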

2.2. Induced prior in log-linear parameterization

An important challenge is accommodating higher order interactions, which play an important role in many applications (e.g., genetics) but are typically assumed to equal zero for tractability. As p grows, it is challenging to even accommodate two-way interactions in traditional categorical data models (log-linear, logistic regression) due to an explosion in the number of terms. In contrast, the tensor factorization does not explicitly parameterize interactions, but indirectly induces a shrinkage prior on the terms in a saturated log-linear model. One can then reparameterize in terms of the log-linear model when conducting inferences in a post model-fitting step. We illustrate the induced priors on the main effects and interactions below.

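For ease of exposition, we first focus on the case p = 3 and dj = d = 2 for j = 1, 2, 3. We generate 10,000 random probability tensors π(t), t = 1, …, 10,000, distributed according to (6), where we fix the baseline $\lambda_0^{(j)} = (1/2, 1/2)^{\mathrm T}$ for all j. Given a 2 × 2 × 2 tensor π, we can equivalently characterize π in terms of its log-linear parameterization

$$\log \pi_{c_1 c_2 c_3} = \beta_0 + \sum_{j=1}^{3} \beta_j x_j + \sum_{1 \le j < j' \le 3} \beta_{jj'} x_j x_{j'} + \beta_{123}\, x_1 x_2 x_3, \qquad x_j = 1(c_j = 2),$$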

consisting of 3 main effect terms β1, β2, β3, three second-order interaction terms β12, β13, β23 and one third order interaction term β123; refer to §5.3.5 of Agresti (2002). Given each prior sample π(t), we equivalently obtain a sample β(t) from the induced prior on β, which allows us to estimate the marginal densities of the main effects and interactions and also their joint distributions. In particular, since γ plays an important role in placing weights on the baseline component, we would like to see how our induced priors differ with different γ values.
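Going from a prior draw π^(t) to β^(t) requires inverting the saturated log-linear model. We assume the indicator (reference-cell) coding x_j = 1(c_j = 2); with a different coding, the numerical values of the β's change. Under this coding, each coefficient is an alternating sum of log cell probabilities, by Möbius inversion. The sketch below uses a hypothetical function name and checks the inversion on a tensor built from known coefficients.

```python
import itertools
import numpy as np


def loglinear_coefficients(pi):
    """Saturated log-linear coefficients of a 2 x ... x 2 probability tensor.

    Assumes the indicator coding x_j = 1(c_j = 2), i.e. array index 1 along
    axis j. By Mobius inversion, beta_S = sum over T subset of S of
    (-1)^{|S| - |T|} log pi(T), where pi(T) is the cell at level 2 exactly
    on the positions in T. Keys are 0-based tuples of variable indices.
    """
    p = pi.ndim
    log_pi = np.log(pi)
    beta = {}
    for r in range(1, p + 1):
        for S in itertools.combinations(range(p), r):
            total = 0.0
            for m in range(r + 1):
                for T in itertools.combinations(S, m):
                    cell = tuple(1 if j in T else 0 for j in range(p))
                    total += (-1) ** (r - m) * log_pi[cell]
            beta[S] = total
    return beta


# Round trip: build pi from known coefficients, then recover them
true_beta = {(0,): 0.3, (1,): -0.2, (2,): 0.5, (0, 1): 0.4,
             (0, 2): -0.1, (1, 2): 0.2, (0, 1, 2): 0.7}
log_w = np.zeros((2, 2, 2))
for cell in itertools.product((0, 1), repeat=3):
    log_w[cell] = sum(b for S, b in true_beta.items() if all(cell[j] for j in S))
pi = np.exp(log_w) / np.exp(log_w).sum()   # normalizing only changes beta_0
est = loglinear_coefficients(pi)
print(all(np.isclose(est[S], true_beta[S]) for S in true_beta))   # True
```

Applying `loglinear_coefficients` to each prior draw π^(t) yields a sample from the induced prior on β.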

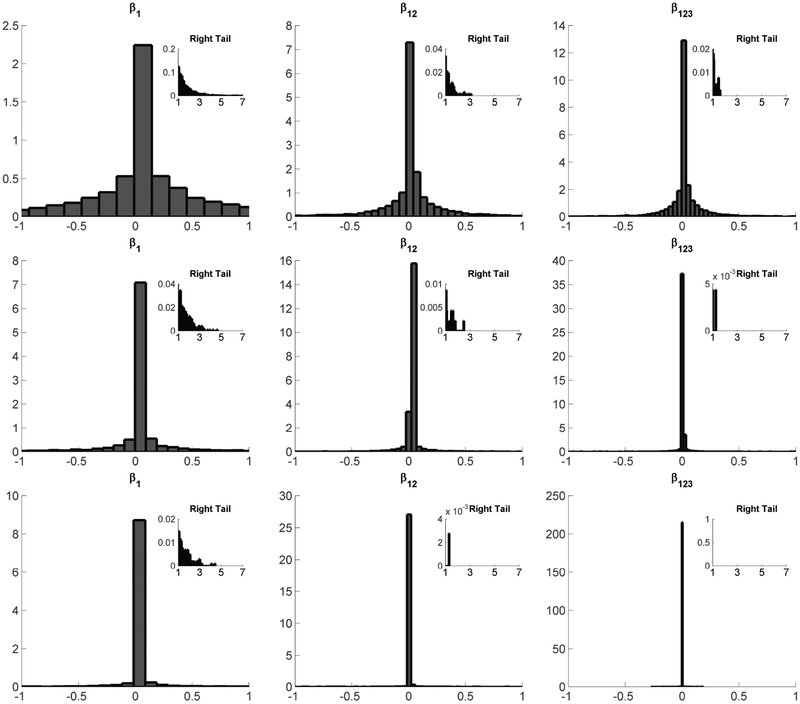

In our simulation exercise, we fix three values of γ, namely, γ = 1, 5, 20. Note that γ = 1 corresponds to a U(0,1) prior on τh. For different values of γ, we show the histograms of one main effect term β1, one two-way interaction β12 and the three-way interaction β123 in Figure 1. Table 1 additionally reports summary statistics.

Figure 1:

Histograms of induced priors for one main effect β1, one two-way interaction β12, and the three-way interaction β123 - Top Row: γ = 1; Middle Row: γ = 5; Bottom Row: γ = 20.

Table 1:

Summary statistics of induced priors on coefficients in log-linear model parameterization.

| γ | Coefficient | Mean | Std. dev. | Min | Max | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|
| 1 | β1 | 0.014 | 0.831 | −6.765 | 6.389 | 0.210 | 9.109 |
| 1 | β12 | −0.002 | 0.340 | −2.895 | 3.105 | −0.025 | 16.583 |
| 1 | β123 | 0.002 | 0.196 | −2.223 | 2.632 | 0.525 | 24.686 |
| 5 | β1 | −0.002 | 0.485 | −5.648 | 5.433 | 0.031 | 27.980 |
| 5 | β12 | 0.000 | 0.124 | −2.085 | 2.244 | 0.495 | 93.438 |
| 5 | β123 | 0.000 | 0.051 | −1.214 | 0.745 | −3.701 | 159.360 |
| 20 | β1 | 0.002 | 0.246 | −3.109 | 5.669 | 2.474 | 99.554 |
| 20 | β12 | 0.000 | 0.042 | −1.126 | 1.819 | 9.488 | 632.790 |
| 20 | β123 | 0.000 | 0.009 | −0.664 | 0.214 | −44.051 | 3014.000 |

In high-dimensional regression, $y_i = x_i^{\mathrm T}\beta + \epsilon_i$ with $\beta = (\beta_1, \ldots, \beta_p)^{\mathrm T}$, there has been substantial interest in shrinkage priors, which draw βj a priori from a density concentrated at zero with heavy tails. Such priors strongly shrink the small coefficients to zero, while limiting shrinkage of the larger signals (Park and Casella, 2008; Carvalho et al., 2010; Polson and Scott, 2010; Hans, 2011; Armagan et al., 2013a). In Figure 1, the induced prior on any of the log-linear model parameters is symmetric about zero, with a large spike very close to zero, and heavy tails. Thus, we have indirectly induced a continuous shrinkage prior on the main effects and interactions through our tensor decomposition approach. In addition, the prior automatically shrinks more aggressively as the interaction order increases. Such greater shrinkage of interactions is commonly recommended (Gelman et al., 2008). Importantly, we do not zero out small interactions but allow many small coefficients, which is an important distinction in applications, such as genomics, having many small signals. The hyperparameter γ serves as a penalty controlling the degree of shrinkage.

Our next set of simulations involves larger values of p, where the necessity of the regularization implied by γ becomes strikingly evident. In the log-linear parameterization, we now have p main effects β1, …, βp; let βmain = (β1, …, βp)T. In the p ≫ n setting, one cannot hope to consistently recover all the main effects unless a large fraction of the βj’s are zero or close to zero. One would thus favor a shrinkage prior on βmain, with any particular draw resembling a near-sparse vector. Since the induced prior on the βj’s is continuous, we use the ℓ1 norm as a surrogate for the ℓ0 norm to quantify sparsity.

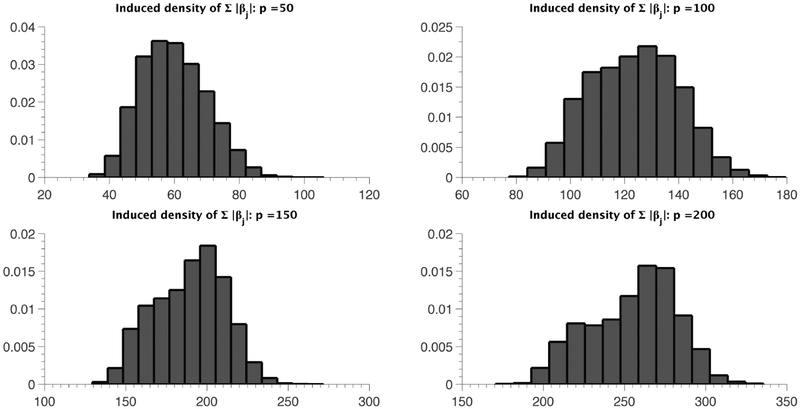

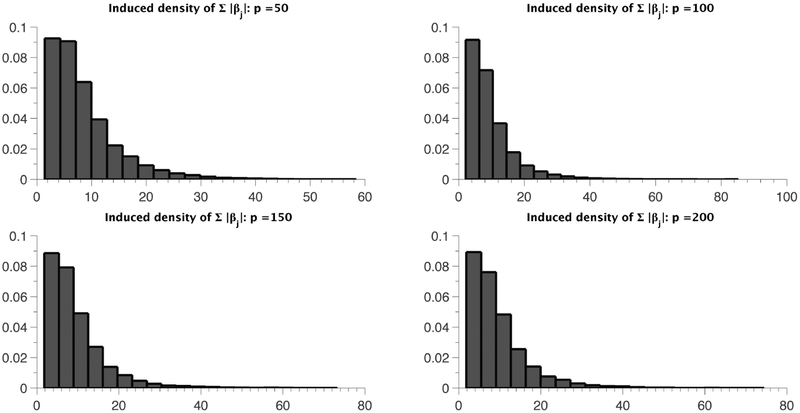

We consider p = 50, 100, 150, 200 and plot histograms of the induced density of ∥βmain∥1 based on 10,000 prior draws in Figures 2 and 3. Figure 2 corresponds to the case γ = 0, i.e., when the sp-PARAFAC formulation reduces back to the standard PARAFAC (4), while γ/p is set to a constant β ∈ (0,1) in Figure 3. Figure 2 reveals a highly undesirable property of the standard PARAFAC in high dimensions: the entire distribution of ∥βmain∥1 shifts to the right with increasing p. The induced prior clearly lacks any automatic multiplicity adjustment property (Scott and Berger, 2010) and would bias inferences for moderate to large values of p. On the other hand, under the sp-PARAFAC model, the induced prior on ∥βmain∥1 is robust to increasing p, as is evident from Figure 3. The choice γ = βp essentially forces a constant proportion of the variables to be assigned to the null group; see Castillo and van der Vaart (2012) for a similar choice of the hyperparameter in a regression setting.

Figure 2:

Histograms of ∥βmain∥1 for different values of p under the standard PARAFAC model.

Figure 3:

Histograms of ∥βmain∥1 for different values of p under the sp-PARAFAC model with γ = 0.1p.

3. Posterior concentration

3.1. Preliminaries

In this section, we provide theoretical justification for the proposed sp-PARAFAC procedure in high-dimensional settings by studying the concentration properties of the posterior with growing sample size. When the parameter space is finite dimensional, it is well known that the posterior contracts at the parametric rate $n^{-1/2}$ under mild regularity conditions (Ghosal et al., 2000). However, we are interested in the asymptotic framework where the dimension p = pn grows with the sample size n, potentially at a faster rate, reflecting the applications that motivate this work. There is a small but increasing literature on asymptotic properties of Bayesian procedures in models with growing dimensionality, with most of the focus being on linear models or generalized linear models belonging to the exponential family; refer to Ghosal (1999, 2000); Belitser and Ghosal (2003); Jiang (2007); Armagan et al. (2013b); Bontemps (2011); Castillo and van der Vaart (2012); Yang and Dunson (2013) among others. In all these cases, the object of interest is a vector of high-dimensional regression coefficients or, more generally, the conditional distribution f(y | x) of a univariate response y given high-dimensional predictors x. In contrast, our object of interest is the high-dimensional joint probability tensor π.

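Let $\mathcal{P}_n$ denote the class of all $d_1 \times \cdots \times d_{p_n}$ probability tensors; we shall assume $d_j = d$ for all j in the sequel for notational convenience. Let $\{\pi^{(0n)} \in \mathcal{P}_n\}$ be a sequence of true tensors. We observe $y_1, \ldots, y_n \sim \pi^{(0n)}$ and set $y^{(n)} = (y_1, \ldots, y_n)$. We denote the prior distribution on $\mathcal{P}_n$ induced by the sp-PARAFAC formulation by $\Pi_n$ and the corresponding posterior distribution by $\Pi_n(\cdot \mid y^{(n)})$.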

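For two probability tensors $\pi^{(1)}$ and $\pi^{(2)}$ in $\mathcal{P}_n$, the L1 distance is defined as

$$\|\pi^{(1)} - \pi^{(2)}\|_1 = \sum_{c_1=1}^{d} \cdots \sum_{c_{p_n}=1}^{d} \bigl| \pi^{(1)}_{c_1 \cdots c_{p_n}} - \pi^{(2)}_{c_1 \cdots c_{p_n}} \bigr|.$$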

For a sequence of numbers ϵn → 0 and a constant M > 0 independent of ϵn, let

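$$\mathcal{B}_n(\pi^{(0n)}, M\epsilon_n) = \bigl\{ \pi \in \mathcal{P}_n : \|\pi - \pi^{(0n)}\|_1 < M\epsilon_n \bigr\} \tag{7}$$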

denote a ball of radius Mϵn around π(0n) in the L1 norm. We seek the smallest possible sequence ϵn such that

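$$\Pi_n\bigl\{ \mathcal{B}_n^{c}(\pi^{(0n)}, M\epsilon_n) \mid y^{(n)} \bigr\} \to 0 \quad \text{in } \pi^{(0n)}\text{-probability as } n \to \infty. \tag{8}$$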
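In simulations where π^(0n) is known, (8) can be monitored from posterior draws. The minimal sketch below uses hypothetical helper names. It computes the L1 distance between tensors and estimates the posterior mass outside the ball (7) as the fraction of draws whose distance from π^(0n) is at least Mϵn.

```python
import numpy as np


def l1_distance(pi1, pi2):
    """L1 distance between two probability tensors: sum of |pi1 - pi2| over all cells."""
    return float(np.abs(np.asarray(pi1, float) - np.asarray(pi2, float)).sum())


def posterior_mass_outside_ball(draws, pi0, radius):
    """Monte Carlo estimate of the posterior probability in (8), i.e. the share of
    posterior draws with ||pi - pi0||_1 >= radius, where radius plays the role of M * eps_n."""
    return float(np.mean([l1_distance(pi, pi0) >= radius for pi in draws]))


pi0 = np.full((2, 2), 0.25)
draws = [np.array([[0.4, 0.1], [0.1, 0.4]]), np.array([[0.26, 0.24], [0.25, 0.25]])]
print(l1_distance(draws[0], pi0))
print(posterior_mass_outside_ball(draws, pi0, 0.1))  # 0.5
```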

3.2. Assumptions

In this section we state our assumptions on the true data generating model and briefly discuss their implications.

Assumption 3.1. The true sequence of probability tensors π(0n) are of the form

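$$\pi^{(0n)} = \sum_{h=1}^{k_n} \nu_{0h} \bigotimes_{j=1}^{p_n} \bigl\{ \lambda_{0h}^{(j)}\, 1(j \in S_{0h}) + \lambda_0^{(j)}\, 1(j \notin S_{0h}) \bigr\}, \tag{A0}$$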

where the baseline vectors $\lambda_0^{(j)}$ are assumed to be known. Unless otherwise specified, we shall assume that $\lambda_0^{(j)}$ is the probability vector corresponding to the uniform distribution on {1, …, d}.

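We now provide some intuition for assumption (A0). Letting $S_0 = \bigcup_{h=1}^{k_n} S_{0h}$, we can rewrite the expansion of π(0n) in (A0) as

$$\pi^{(0n)} = \Bigl[ \sum_{h=1}^{k_n} \nu_{0h} \bigotimes_{j \in S_0} \bigl\{ \lambda_{0h}^{(j)}\, 1(j \in S_{0h}) + \lambda_0^{(j)}\, 1(j \notin S_{0h}) \bigr\} \Bigr] \otimes \Bigl\{ \bigotimes_{j \in S_0^c} \lambda_0^{(j)} \Bigr\}, \tag{9}$$

where $S_0^c = \{1, \ldots, p_n\} \setminus S_0$ and the modes of the tensor are reordered so that those in $S_0$ come first.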

In (9), the term $\bigotimes_{j \in S_0^c} \lambda_0^{(j)}$ does not involve h and can be factored out completely. Assumption (A0) thus posits that the variables in $S_0^c$ are marginally independent and that the entire dependence structure is driven by the variables in S0. We shall refer to S0 and $S_0^c$ as the non-null and null groups of variables, respectively.

Let qn = |S0| and define a mapping j → ej from {1, …, qn} to the ordered elements of S0, so that $S_0 = \{e_1, \ldots, e_{q_n}\}$ with $e_1 < \cdots < e_{q_n}$. As j varies from 1 to qn, ej ranges over the elements of S0. Denote by ψ(0n) the joint probability tensor for the variables {yij : j ∈ S0}, so that

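$$\psi^{(0n)} = \sum_{h=1}^{k_n} \nu_{0h} \bigotimes_{j=1}^{q_n} \bigl\{ \lambda_{0h}^{(e_j)}\, 1(e_j \in S_{0h}) + \lambda_0^{(e_j)}\, 1(e_j \notin S_{0h}) \bigr\}. \tag{10}$$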

Thus, after factoring out the marginally independent variables in $S_0^c$, (A0) implies a standard PARAFAC expansion (10) for ψ(0n) with kn components. Since any non-negative tensor admits a standard PARAFAC decomposition (Lim and Comon, 2009), we can always write an expansion of ψ(0n) as in (10).

The remaining assumptions are stated below.

Assumption 3.2. In addition to (A0), π(0n) satisfies

(A1) The number of components kn = O(1).

(A2) Letting $s_n = \max_{1 \le h \le k_n} |S_{0h}|$, one has sn = o(log pn).

(A3) There exists a constant ε0 ∈ (0,1) such that $\lambda_{0hc}^{(j)} \ge \epsilon_0$ for all 1 ≤ h ≤ kn, 1 ≤ c ≤ d, j ∈ S0h.

(A1) and (A2) imply that the size of the non-null group is much smaller than pn, since $q_n = |S_0| \le \sum_{h=1}^{k_n} |S_{0h}| \le k_n s_n = o(\log p_n)$.

Some discussion is in order for condition (A3). First, note that we can choose ε0 small enough (with the uniform baseline, any ε0 ≤ 1/d works) so that both $\lambda_{0hc}^{(j)} \ge \epsilon_0$ and $\lambda_{0c}^{(j)} \ge \epsilon_0$ for all h, c and j ∈ S0. Hence, (A3) implies a lower bound on the joint probability ψ(0n) in (10). Such a lower bound on a compactly supported target density is a standard assumption in Bayesian nonparametric theory; see for example van der Vaart and van Zanten (2008). However, unlike univariate or multivariate density estimation in fixed dimensions, where the density can be assumed to be bounded below by a constant, we need to precisely characterize the decay rate of the lower bound of the joint probability. Since ψ(0n) is a probability tensor with $d^{q_n}$ cells, its smallest entry is at most $d^{-q_n}$. Assumption (A3) implies that

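$$\min_{c_1, \ldots, c_{q_n}} \psi^{(0n)}_{c_1 \cdots c_{q_n}} \ge \epsilon_0^{q_n} = \exp(-c_0 q_n) \tag{11}$$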

for some constant c0 > 0.

3.3. Main result

We are now in a position to state a theorem on posterior convergence rates.

Theorem 3.1. Assume the true sequence of tensors satisfy assumptions (A0) – (A3) and sn log pn/n → 0. Also, assume the sp-PARAFAC model is fitted with the stick-breaking prior truncated to kn many components and for some constant β ∈ (0,1) in (6). Then, (8) is satisfied with in (7).

A proof of Theorem 3.1 can be found in the appendix. As an implication of Theorem 3.1, if $p_n = n^{\kappa}$ for some constant κ > 0, then the posterior contracts at the near parametric rate $n^{-1/2}(\log n)^{c}$ for some constant c > 0. Moreover, consistent estimation is possible even if pn is exponentially large, as long as $s_n \log p_n / n \to 0$. In particular, with $p_n = \exp(n^{\delta/2})$ for δ ∈ (0,1), the posterior contracts at least at the rate $n^{-(1-\delta)/2}$.

Remark 3.2. We assume the number of components kn is known in Theorem 3.1 for ease of exposition, with our main focus on dimensionality reduction. Adapting to an unknown number of components in mixture models is a well-studied problem; see, for example, Ge and Jiang (2006); Pati et al. (2013b); Shen et al. (2011). For the infinite stick-breaking prior on the mixture components, one can use the sieving technique developed in Pati et al. (2013b) to estimate deviation bounds for the tail sum of a stick-breaking process.

Remark 3.3. In practice, we recommend the choice γ = βpn for numerical stability, with β = 0.2 used as a default choice in all our examples. The probability mass function of the induced beta-bernoulli prior on |Sh| with behaves like exp(−cs log pn) for small s, while the same is exp(−cs) for γ = βpn; refer to the proof of Theorem 3.1 for further details.
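The behaviour described in Remark 3.3 can be seen by computing the prior on |S_h| exactly. Integrating τ_h ~ beta(1, γ) out of |S_h| | τ_h ~ Binom(p, τ_h) gives a beta-binomial pmf. The sketch below has a hypothetical function name, uses only the standard library, and evaluates the pmf on the log scale.

```python
import math


def subset_size_pmf(p, gamma):
    """Prior pmf of |S_h| under (6): tau_h ~ beta(1, gamma) and
    |S_h| | tau_h ~ Binom(p, tau_h), so marginally |S_h| is beta-binomial:
    P(|S_h| = s) = C(p, s) B(1 + s, gamma + p - s) / B(1, gamma)."""
    def log_beta(a, b):
        return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

    def log_choose(s):
        return math.lgamma(p + 1) - math.lgamma(s + 1) - math.lgamma(p - s + 1)

    return [math.exp(log_choose(s) + log_beta(1 + s, gamma + p - s) - log_beta(1, gamma))
            for s in range(p + 1)]


for p in (100, 1000):
    pmf = subset_size_pmf(p, gamma=0.2 * p)
    mean = sum(s * q for s, q in enumerate(pmf))
    print(p, round(mean, 3), [round(q, 4) for q in pmf[:6]])
```

Successive ratios of this pmf equal (p − s)/(γ + p − s − 1). For γ = βp and s ≪ p, this is close to 1/(1 + β), so the pmf decays geometrically, like exp(−cs) with c = log(1 + β). Its mean p/(1 + γ) stays bounded as p grows.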

4. Posterior Computation

Under model (6), we can easily proceed to draw posterior samples from a Gibbs sampler since all the full conditionals have recognizable forms. The algorithm iterates through the following steps:

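- For each variable j and latent class h, update $\lambda_h^{(j)}$ from a two-component mixture. Given the prior specified for $\lambda_h^{(j)}$ in (6), conjugacy is preserved: the full conditional mixes a point mass at the baseline $\lambda_0^{(j)}$ with a Dirichlet distribution, i.e., for j = 1, …, p and h = 1, …, k*, where k* = max{z1, …, zn},

$$\lambda_h^{(j)} \mid - \;\sim\; \hat w_{0hj}\, \delta_{\lambda_0^{(j)}} + \hat w_{1hj}\, \mathrm{Diri}\bigl(a_{j1} + n_{hj1}, \ldots, a_{jd_j} + n_{hjd_j}\bigr), \qquad n_{hjc} = \sum_{i=1}^{n} 1(z_i = h,\ y_{ij} = c),$$

where $\mathrm{Diri}(a_{j1}, \ldots, a_{jd_j})$ is the Dirichlet prior on an active $\lambda_h^{(j)}$ in (6), and $\hat w_{0hj}$ and $\hat w_{1hj} = 1 - \hat w_{0hj}$ are the mixture weights:

$$\hat w_{0hj} \propto (1 - \tau_h) \prod_{c=1}^{d_j} \bigl(\lambda_{0c}^{(j)}\bigr)^{n_{hjc}}, \qquad \hat w_{1hj} \propto \tau_h\, \frac{\Gamma\bigl(\sum_{c=1}^{d_j} a_{jc}\bigr)}{\Gamma\bigl(\sum_{c=1}^{d_j} (a_{jc} + n_{hjc})\bigr)} \prod_{c=1}^{d_j} \frac{\Gamma(a_{jc} + n_{hjc})}{\Gamma(a_{jc})}. \tag{12}$$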

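- Let Shj be the allocation variable, with Shj = 0 if $\lambda_h^{(j)}$ is drawn from the baseline component and Shj = 1 if it is drawn from the Dirichlet full conditional. Update τh, h = 1, …, k*, from a beta full conditional:

$$\tau_h \mid - \;\sim\; \mathrm{beta}\Bigl(1 + \sum_{j=1}^{p} S_{hj},\ \gamma + \sum_{j=1}^{p} (1 - S_{hj})\Bigr). \tag{13}$$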

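- The full conditional of Vh, h = 1, …, k*, only requires the current latent class allocations of all subjects:

$$V_h \mid - \;\sim\; \mathrm{beta}\Bigl(1 + n_h,\ \alpha + \sum_{l > h} n_l\Bigr), \qquad n_h = \sum_{i=1}^{n} 1(z_i = h). \tag{14}$$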

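- We sample zi, i = 1, …, n, from the multinomial full conditional

$$\Pr(z_i = h \mid -) = \frac{\nu_h \prod_{j=1}^{p} \lambda_{h y_{ij}}^{(j)}}{\sum_{l=1}^{k^*} \nu_l \prod_{j=1}^{p} \lambda_{l y_{ij}}^{(j)}}, \qquad h = 1, \ldots, k^*, \tag{15}$$

where $\nu_h = V_h \prod_{l<h} (1 - V_l)$.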

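- Update α from its gamma full conditional (shape and rate parameterization):

$$\alpha \mid - \;\sim\; \mathrm{gamma}\Bigl(a_\alpha + k^*,\ b_\alpha - \sum_{h=1}^{k^*} \log(1 - V_h)\Bigr). \tag{16}$$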
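The steps above can be assembled into a compact sampler. The following numpy sketch is written under several assumptions of our own and is not the authors' implementation. All variables share d levels coded 0, …, d − 1, and the active vectors have a symmetric Diri(a, …, a) prior. Instead of k* = max{z_i}, it uses a fixed truncation level K with V_K = 1 (a blocked Gibbs variant), so the α update uses only the K − 1 free stick-breaking fractions. The λ update draws all Dirichlet vectors at once by normalizing independent gamma variables. The function name `gibbs_sp_parafac` is hypothetical.

```python
import math
import numpy as np

_lgamma = np.vectorize(math.lgamma)   # elementwise log-gamma using only the stdlib


def gibbs_sp_parafac(y, d, K=10, gamma=None, a=1.0, a_alpha=1.0, b_alpha=1.0,
                     baseline=None, n_iter=2000, burn=1000, thin=5, seed=0):
    """Sketch of a Gibbs sampler for (6) with a fixed truncation level K.

    y: (n, p) integer array, categories coded 0, ..., d - 1 for every variable.
    baseline: (p, d) array of lambda_0^(j) with positive entries (default uniform).
    Returns kept draws of nu, shape (m, K), and lambda, shape (m, K, p, d).
    """
    rng = np.random.default_rng(seed)
    n, p = y.shape
    gamma = 0.2 * p if gamma is None else gamma          # default gamma = 0.2 p
    lam0 = np.full((p, d), 1.0 / d) if baseline is None else np.asarray(baseline)
    Y = np.eye(d)[y]                                      # one-hot data, (n, p, d)
    z = rng.integers(K, size=n)
    tau = rng.beta(1.0, gamma, size=K)
    alpha = 1.0
    nus, lams = [], []
    for it in range(n_iter):
        # sufficient statistics n_hjc = #{i : z_i = h, y_ij = c}
        counts = np.einsum('nk,npd->kpd', np.eye(K)[z], Y)
        tot = counts.sum(axis=2)
        # (12): log weights of the baseline atom and of the Dirichlet component
        log_w0 = np.log1p(-tau)[:, None] + (counts * np.log(lam0)).sum(axis=2)
        log_w1 = (np.log(tau)[:, None] + math.lgamma(d * a) - _lgamma(d * a + tot)
                  + (_lgamma(a + counts) - math.lgamma(a)).sum(axis=2))
        prob1 = np.exp(-np.logaddexp(0.0, log_w0 - log_w1))  # P(S_hj = 1 | -)
        S = rng.random((K, p)) < prob1
        g = rng.gamma(a + counts)                             # Dirichlet draws via
        dir_draw = g / g.sum(axis=2, keepdims=True)           # normalized gammas
        lam = np.where(S[:, :, None], dir_draw, lam0[None, :, :])
        # (13): tau_h | - ~ beta(1 + sum_j S_hj, gamma + p - sum_j S_hj)
        m = S.sum(axis=1)
        tau = rng.beta(1.0 + m, gamma + p - m)
        # (14): V_h | - ~ beta(1 + n_h, alpha + sum_{l > h} n_l); V_K = 1
        nh = np.bincount(z, minlength=K)
        # clip guards against V_h rounding to exactly 1 in floating point
        V = np.minimum(rng.beta(1.0 + nh, alpha + (n - np.cumsum(nh))), 1 - 1e-12)
        V[-1] = 1.0
        nu = V * np.concatenate(([1.0], np.cumprod(1.0 - V[:-1])))
        # (15): P(z_i = h | -) proportional to nu_h prod_j lambda_{h, y_ij}^(j)
        loglik = np.log(lam)[:, np.arange(p), y].sum(axis=2).T   # (n, K)
        logp = np.log(nu) + loglik
        prob = np.exp(logp - logp.max(axis=1, keepdims=True))
        cdf = np.cumsum(prob / prob.sum(axis=1, keepdims=True), axis=1)
        z = np.minimum((rng.random((n, 1)) > cdf).sum(axis=1), K - 1)
        # (16), truncated: gamma(a_alpha + K - 1, rate b_alpha - sum_{h<K} log(1 - V_h))
        rate = b_alpha - np.log1p(-V[:-1]).sum()
        alpha = rng.gamma(a_alpha + K - 1, 1.0 / rate)
        if it >= burn and (it - burn) % thin == 0:
            nus.append(nu)
            lams.append(lam)
    return np.array(nus), np.array(lams)
```

For example, `nus, lams = gibbs_sp_parafac(y, d=4)` returns kept draws of ν and of all λ_h^{(j)}. These can be converted to any functional of π, such as pairwise Cramer's V values.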

These steps are simple to implement, and we gain efficiency by updating the parameters in blocks. For example, instead of updating each $\lambda_h^{(j)}$ one at a time, we sample the $\lambda_h^{(j)}$'s jointly, storing the corresponding parameters in matrix form. In all our examples, we ran the chain for 25,000 iterations, discarding the first 10,000 iterations as burn-in and collecting every fifth sample post burn-in to thin the chain. Mixing and convergence were satisfactory based on examination of trace plots, and the run time scaled linearly with n and p. We also carried out a sensitivity analysis by multiplying and dividing the hyperparameters aα, bα and γ in (6) by a factor of 2; the conclusions remained unchanged from the default setting aα = bα = 1 and γ = 0.2p.

5. Simulation Studies

5.1. Estimating sparse interactions

We first conduct a replicated simulation study to assess the estimation of sparse interactions using the proposed sp-PARAFAC model. We simulated p = 100 binary variables yij ∈ {0,1}, j = 1, …, p (dj = d = 2), for i = 1, …, n = 100 subjects from a log-linear model having up to three-way interactions:

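$$\Pr(y_{i1} = c_1, \ldots, y_{ip} = c_p) \propto \exp\Bigl\{ \sum_{S \subseteq \{1, \ldots, p\},\ 1 \le |S| \le 3} \beta_S \prod_{j \in S} 1(c_j = 1) \Bigr\}. \tag{17}$$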

For example, if S = {1, 2, 4}, then βS = β1,2,4 and $\prod_{j \in S} 1(c_j = 1) = 1(c_1 = 1,\ c_2 = 1,\ c_4 = 1)$, with 1(·) denoting the indicator function. To mimic the situation where only a few interactions are present, we restrict to S ⊆ S* = {2, 4, 12, 14} and set all coefficients except

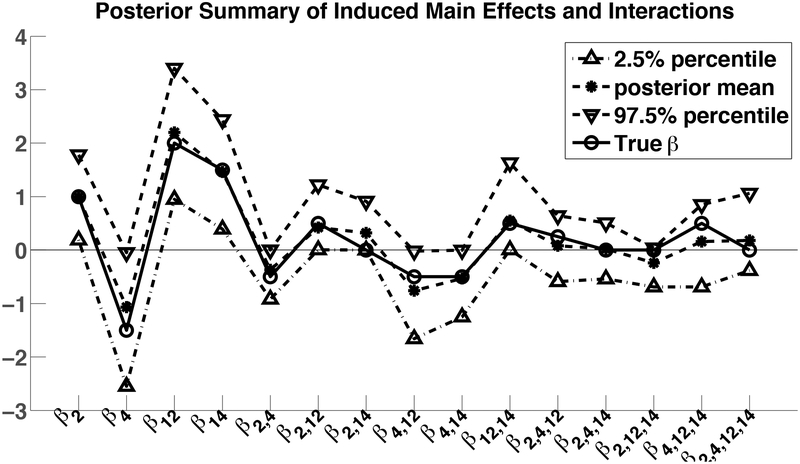

to zero. This data generating mechanism induces dependence among the variables in S*, while rendering the other variables marginally independent. Figure 4 reports the posterior means and 95% credible intervals for all main effects and interactions for the variables in S*, averaged across 100 simulation replicates, along with the true coefficients. Averaging across the simulation replicates and the different parameters, the 95% credible intervals cover the true parameter values 80% of the time.
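Because only the variables in S* interact, data from (17) can be simulated without enumerating all 2^p cells. The 2^4 configurations of S* are enumerated exactly, and the remaining variables, whose coefficients are all zero, are drawn as independent Bernoulli(1/2). The sketch below uses the true coefficients listed in Table 2 and assumes the indicator coding 1(c_j = 1) of (17). The helper names are hypothetical.

```python
import itertools
import numpy as np

# Nonzero coefficients on S* = {2, 4, 12, 14} ("True coefficient" row of Table 2);
# every other beta_S in (17) is zero.
BETA = {(2,): 1.0, (4,): -1.5, (12,): 2.0, (14,): 1.5,
        (2, 4): -0.5, (2, 12): 0.5, (4, 12): -0.5, (4, 14): -0.5, (12, 14): 0.5,
        (2, 4, 12): 0.25, (4, 12, 14): 0.5}
S_STAR = (2, 4, 12, 14)


def joint_pmf_on_s_star(beta=BETA, s_star=S_STAR):
    """Enumerate the 2^4 configurations of the variables in S* and normalize
    exp{sum_S beta_S prod_{j in S} 1(y_j = 1)} as in (17)."""
    cells = list(itertools.product((0, 1), repeat=len(s_star)))
    pos = {v: i for i, v in enumerate(s_star)}
    logw = np.array([sum(b for S, b in beta.items() if all(c[pos[j]] for j in S))
                     for c in cells])
    w = np.exp(logw - logw.max())
    return cells, w / w.sum()


def simulate(n=100, p=100, seed=0):
    """Variables outside S* have zero main effects and no interactions, so
    they are independent Bernoulli(1/2) under (17)."""
    rng = np.random.default_rng(seed)
    y = rng.integers(2, size=(n, p))
    cells, probs = joint_pmf_on_s_star()
    draws = rng.choice(len(cells), size=n, p=probs)
    cols = [j - 1 for j in S_STAR]        # variables are numbered 1, ..., p in the text
    y[:, cols] = np.array(cells)[draws]
    return y


y = simulate()
print(y.shape)   # (100, 100)
```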

Figure 4:

Posterior means and 95% credible intervals for all main effects and interactions in S* compared with the true coefficients.

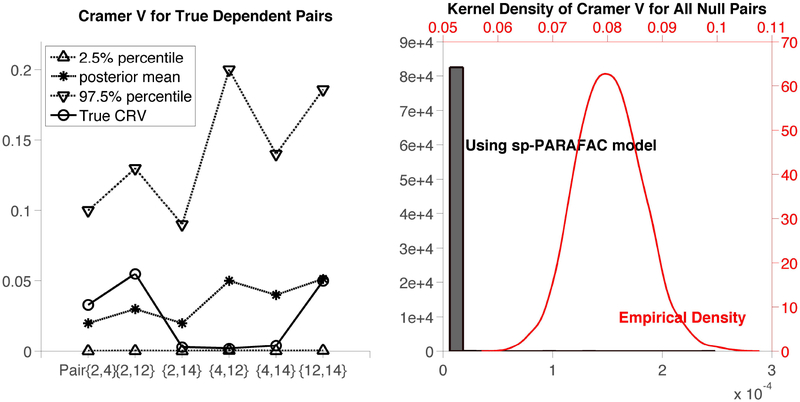

Next, we study performance in estimating the dependence structure. Cramer’s V is a popular statistic measuring the strength of association between two nominal categorical variables in a contingency table, ranging from 0 (no association) to 1 (perfect association). Let ρjj′ denote the Cramer’s V statistic for variables j and j′, so that

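$$\rho_{jj'}^2 = \frac{1}{\min(d_j, d_{j'}) - 1} \sum_{c=1}^{d_j} \sum_{c'=1}^{d_{j'}} \frac{\bigl(\pi_{cc'}^{(jj')} - \pi_c^{(j)} \pi_{c'}^{(j')}\bigr)^2}{\pi_c^{(j)} \pi_{c'}^{(j')}}, \tag{18}$$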

where $\pi_{cc'}^{(jj')} = \Pr(y_{ij} = c,\ y_{ij'} = c')$ and $\pi_c^{(j)} = \Pr(y_{ij} = c)$. Under the log-linear model (17), ρ = (ρjj′) is a sparse matrix, with the Cramer’s V for all pairs except those in S* × S* being zero. This is an immediate consequence of the fact that if (j, j′) ∉ S* × S*, then yij and yij′ are independent.

We compare estimation of the off-diagonal entries of ρ under the sp-PARAFAC model with the empirical Cramer’s V matrix $\hat\rho$. Posterior samples of the model parameters can be converted to posterior samples of ρjj′ through (18). The empirical estimator is obtained by replacing $\pi_{cc'}^{(jj')}$ and $\pi_c^{(j)}$ by their empirical counterparts. The left panel in Figure 5 shows the posterior summaries (averaged across simulation replicates) of the Cramer’s V values for all possible dependent pairs, along with the true Cramer’s V values (which can be calculated from (17)). In the right panel of Figure 5, we overlay kernel density estimates of the posterior samples (in grey) and of the empirical estimates (in red) of the Cramer’s V values for all null pairs across all simulation replicates. Note that the axes are also marked in grey and red for the respective cases. The sp-PARAFAC method clearly outperforms the empirical estimator: the posterior density for the null pairs is highly concentrated near zero, while the empirical estimator has a mean Cramer’s V value of 0.08 across the null pairs.
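Computing (18) from a two-way table is straightforward. In this sketch, `cramers_v` evaluates (18) from a joint pmf. `pairwise_joint` forms the two-way marginal Σ_h ν_h λ_h^{(j)} (λ_h^{(j′)})^T implied by one posterior draw, and `empirical_cramers_v` is the plug-in estimator. All three names are hypothetical. Levels with zero marginal probability are dropped to avoid division by zero; this is an implementation choice not discussed in the text.

```python
import numpy as np


def cramers_v(joint):
    """Cramer's V (18) from the joint pmf of two categorical variables.
    Levels with zero marginal probability are dropped to avoid division by zero."""
    joint = np.asarray(joint, dtype=float)
    joint = joint[joint.sum(axis=1) > 0][:, joint.sum(axis=0) > 0]
    if min(joint.shape) < 2:
        return 0.0                                     # degenerate: a single observed level
    expected = np.outer(joint.sum(axis=1), joint.sum(axis=0))   # pi_c^(j) pi_c'^(j')
    phi2 = ((joint - expected) ** 2 / expected).sum()
    return float(np.sqrt(phi2 / (min(joint.shape) - 1)))


def pairwise_joint(nu, lam, j, k):
    """Two-way marginal of variables j and k implied by one draw (nu, lam):
    sum_h nu_h lambda_h^(j) (lambda_h^(k))^T, with lam of shape (K, p, d)."""
    return np.einsum('h,hc,hd->cd', nu, lam[:, j], lam[:, k])


def empirical_cramers_v(y, j, k, d):
    """Plug-in estimator: replace the probabilities in (18) by sample proportions."""
    counts = np.zeros((d, d))
    np.add.at(counts, (y[:, j], y[:, k]), 1)
    return cramers_v(counts / counts.sum())


nu = np.array([0.5, 0.5])
lam = np.array([[[0.9, 0.1], [0.8, 0.2]],
                [[0.1, 0.9], [0.2, 0.8]]])             # shape (K=2, p=2, d=2)
print(cramers_v(pairwise_joint(nu, lam, 0, 1)))        # about 0.48
```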

Figure 5:

Left: Posterior summaries of the Cramer’s V values for all dependent pairs vs. the true Cramer’s V values; Right: Estimated density of Cramer’s V combining all null pairs under sp-PARAFAC vs. empirical estimation.

Furthermore, we can estimate the power for any non-null coefficient, or the type I error for any null coefficient, as the percentage of simulation replicates in which it is detected as significant. For the main effects and interactions in S*, most power and type I error values are satisfactory, although a few are far from it (see Table 2 and Table 3). Given the Cramer’s V results in the right panel of Figure 5, the type I error for any variable not in S* should be very small or zero. As an example, we tested the main effects and all possible interactions for positions 20, 30, 40 and 50; the type I error rates were 0 for all of them. These results are based on examining whether 95% intervals contain zero, and, as expected, the approach may have difficulty recovering the exact interaction structure among a set of associated variables from limited data.
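The power and type I error in Tables 2 and 3 are detection rates over replicates. In this minimal sketch, which uses hypothetical names, a coefficient counts as significant in a replicate when its 95% interval excludes zero. The detection rate is the average over replicates. Equal-tailed intervals from posterior draws are one common choice, and are assumed here.

```python
import numpy as np


def detection_rate(lower, upper):
    """Share of replicates whose interval excludes zero, per coefficient.
    lower, upper: arrays of shape (replicates, coefficients). This estimates power
    for nonzero coefficients and type I error for coefficients that are truly zero."""
    lower, upper = np.asarray(lower), np.asarray(upper)
    return ((lower > 0) | (upper < 0)).mean(axis=0)


def equal_tailed_interval(draws, level=0.95):
    """Equal-tailed credible interval from posterior draws along axis 0."""
    tail = (1 - level) / 2
    return np.quantile(draws, tail, axis=0), np.quantile(draws, 1 - tail, axis=0)


rng = np.random.default_rng(5)
# hypothetical posterior draws: 1000 draws x 100 replicates x 2 coefficients
draws = rng.normal(loc=[1.0, 0.0], scale=0.3, size=(1000, 100, 2))
lower, upper = equal_tailed_interval(draws)
print(detection_rate(lower, upper))   # first entry near 1 (power), second small (type I error)
```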

Table 2:

Power for Non-null Variables Based on 100 Simulations

| | β2 | β4 | β12 | β14 | β2,4 | β2,12 | β4,12 | β4,14 | β12,14 | β2,4,12 | β4,12,14 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Power | 0.97 | 0.9 | 1 | 1 | 0.95 | 0.99 | 0.98 | 0.97 | 0.99 | 0 | 0 |
| True coefficient | 1 | −1.5 | 2 | 1.5 | −0.5 | 0.5 | −0.5 | −0.5 | 0.5 | 0.25 | 0.5 |

Table 3:

Type I Error for Null Variables Based on 100 Simulations

| | β2,14 | β2,4,14 | β2,12,14 | β2,4,12,14 |
|---|---|---|---|---|
| Type I error | 0.97 | 0 | 0.68 | 0 |
| True coefficient | 0 | 0 | 0 | 0 |

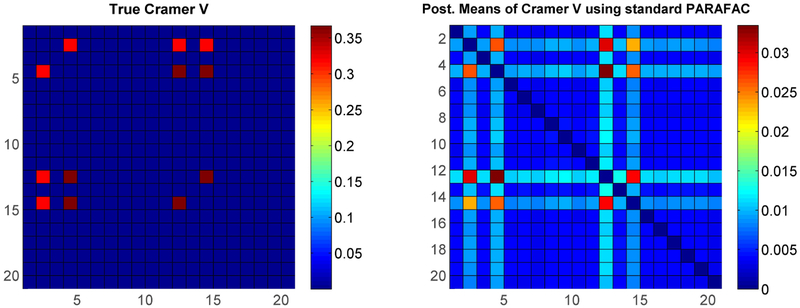

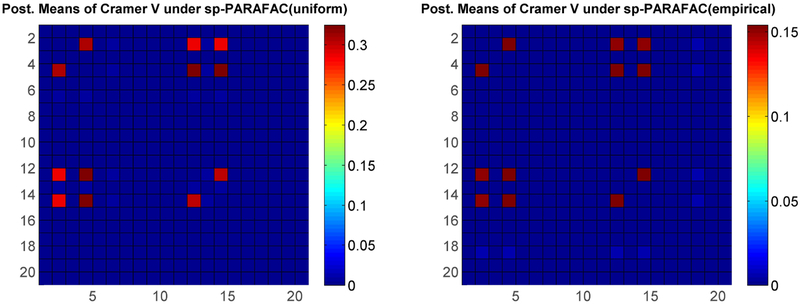

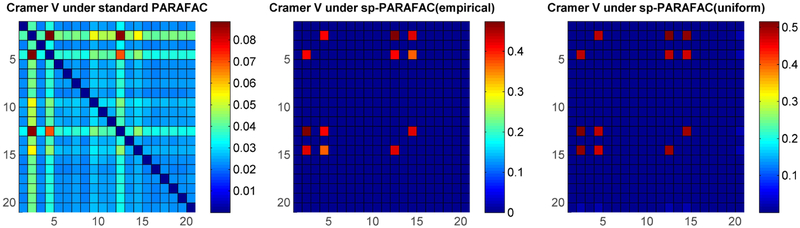

5.2. Comparison with standard PARAFAC

We now conduct a simulation study to compare estimation of the Cramer’s V matrix ρ under the proposed approach with the usual specification of the PARAFAC model without any sparsity as in (4), which is equivalent to setting γ = 0 in (6). We considered 100 simulation replicates, with data in each replicate consisting of p = 100 categorical variables for n = 100 subjects, with each variable having 4 possible levels (dj = d = 4). Two simulation settings were considered to induce dependence between the variables in S* = {2, 4, 12, 14}: (i) via multiple subpopulations, as in the simulation study in Dunson and Xing (2009), and (ii) via a nominal GLM for j ∈ S*, where $y_{i(j)}\beta_c$ is a linear combination of all variables that are associated with the jth variable, excluding the jth variable itself. The remaining variables were independently generated from a discrete uniform distribution.

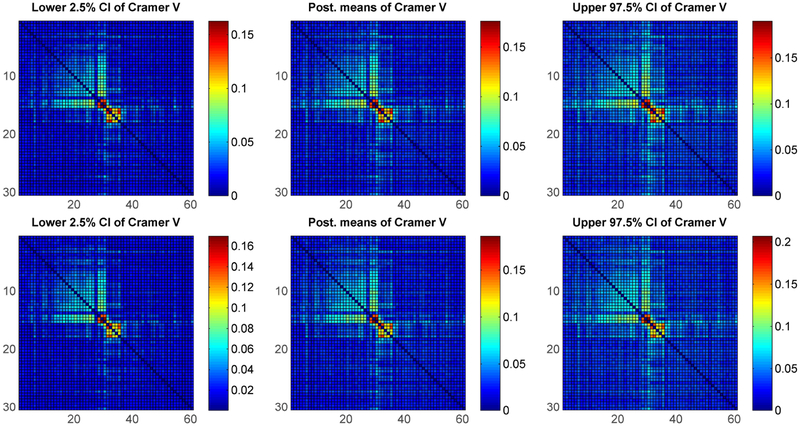

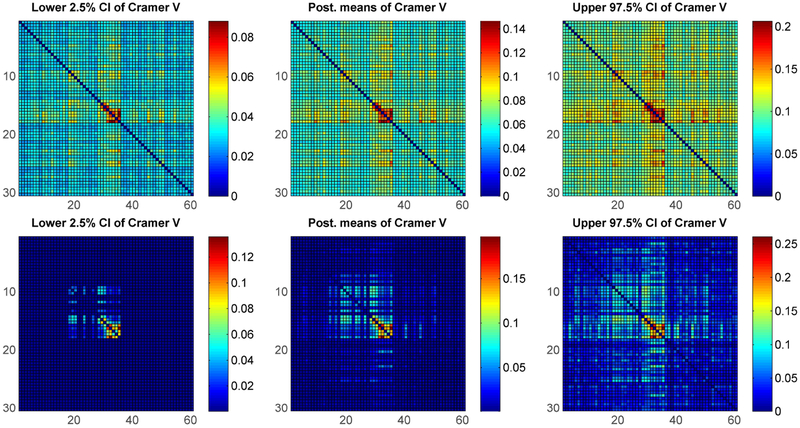

The color plot on the left in Figure 6 shows the true pairwise Cramer’s V values under simulation setting (i) (only the top-left 20 × 20 submatrix of ρ is shown for clarity). Figure 6 (right) and Figure 7 correspond to one of the replicates: the right plot in Figure 6 shows the Cramer’s V estimates under the standard non-sparse PARAFAC method, while Figure 7 shows the estimates under our method with two different baseline components. Our approach gives much better estimates not only for the truly dependent pairs but also for the true nulls. The results for simulation setting (ii), shown in Figure 8, again demonstrate the superiority of the sparse extension over standard PARAFAC.

Figure 6:

Simulation setting (i) - Left: True Cramér’s V matrix; Right: Posterior means of Cramér’s V using standard PARAFAC.

Figure 7:

Posterior means of Cramér’s V under simulation setting (i) using the proposed method - Left: with λ0 discrete uniform; Right: with λ0 set to empirical estimates of the marginal category probabilities.

Figure 8:

Posterior means of Cramér’s V under simulation setting (ii) – Left: using standard PARAFAC; Middle: using the proposed method with the empirical marginal and a Diri(1, …, 1) prior for λ0; Right: using the proposed method with discrete uniform λ0.

6. Application

6.1. Splice-junction Gene Sequences

We applied the method to the Splice-junction Gene Sequences data (abbreviated as splice data below). Splice junctions are points on a DNA sequence at which ‘superfluous’ DNA is removed during the process of protein creation in higher organisms. These data consist of the nucleotides A, C, G and T at p = 60 positions for N = 3,175 sequences. Since the sample size is much larger than the number of variables, we compared our approach with standard PARAFAC in two scenarios: first, a small randomly selected subset (of size n = 2p = 120) of the full data set, and second, the full data set itself. Using two sample sizes in this manner allows both methods to be studied in a small-sample setting and compared against a gold standard (a sufficiently large data set). We estimated the pairwise positional dependence structure under the standard PARAFAC method and under the proposed approach with a discrete uniform baseline component. As Figure 10 shows, both methods perform similarly when n ≫ p. In the smaller sample, however, Figure 9 shows that the proposed method identifies the dependence structure and pushes the independent pairs to zero, giving results closer to those in the large-sample case (Figure 10).
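The two-scenario design can be sketched as follows, assuming each sequence is a string over A, C, G, T (any other character raises a KeyError). The level coding A→0, C→1, G→2, T→3 and the helper names are hypothetical choices for illustration; loading the data is not shown.

```python
import numpy as np

# Hypothetical coding of the four nucleotides as categorical levels 0..3.
LEVELS = {"A": 0, "C": 1, "G": 2, "T": 3}


def encode_sequences(seqs):
    """Map equal-length strings over {A, C, G, T} to an n x p integer array."""
    return np.array([[LEVELS[c] for c in s] for s in seqs], dtype=int)


def random_subset(Y, n_sub, seed=0):
    """Draw n_sub rows of Y uniformly at random without replacement."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(Y.shape[0], size=n_sub, replace=False)
    return Y[idx]
```

With `Y_full = encode_sequences(seqs)` of shape (3175, 60), the small-sample scenario would use `random_subset(Y_full, 2 * Y_full.shape[1])`, i.e. n = 120 rows, while the large-sample scenario uses `Y_full` itself.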

Figure 10:

Posterior quantiles of Cramér’s V with 3,175 sequences of splice data – Upper panel: under standard PARAFAC; Lower panel: under proposed method.

Figure 9:

Posterior quantiles of Cramér’s V with 120 sequences of splice data – Upper panel: under standard PARAFAC; Lower panel: under proposed method.

7. Discussion

We have proposed a sparse modification of the widely used PARAFAC tensor factorization, and have applied it in a Bayesian context to improve analyses of huge, ultra-sparse contingency tables. Given its success in this application area, we hope that the proposed notion of sparsity will have a major impact in other areas, including tensor completion problems in machine learning. There is an enormous literature on low rank and sparse matrix factorizations, and sp-PARAFAC should facilitate scaling such approaches to many-way tables while dealing with the inevitable curse of dimensionality. Although we take a Bayesian approach, we suspect that frequentist penalized optimization methods could also exploit the same concept of sparsity to learn a compressed characterization of a huge array from limited data.

Appendix

7.1. Proof of Theorem 3.1

We verify the conditions of Theorem 4 in Yang & Dunson (2013), which is a minor modification of Theorem 2 in Ghosal et al. (2000). Let ϵn → 0 be such that and . Suppose there exist a sequence of sets and a constant C > 0 such that the following hold:⁴

Then, the posterior contracts at the rate ϵn, i.e., (8) is satisfied. We now proceed to verify conditions (1)–(3). We define

(19)

where denotes the (r − 1)-dimensional probability simplex and A > 0 is an absolute constant. We shall use C to denote an absolute constant whose meaning may change from one line to the next.

To estimate , we make use of the following lemma, which follows in a straightforward manner by repeated use of the triangle inequality.

Lemma 7.1. Let π(1), with

Then,

Lemma 7.1 implies that if π(1), with , then

Based on the above observation, we create an ϵ-net of as follows: In (19), (i) vary over all possible subsets of {1, …, pn} with for h = 1, …, kn, (ii) for h ∈ {1, …, kn} and , vary over an ϵn/(2Adsn)-net of and (iii) vary ν* over an ϵn/(2kn)-net of .

For a fixed h, there are subsets of size no larger than Asn. Using the inequality for s ≤ p/2, the number of possible subsets in (i) can be bounded above by exp(Cknsn log pn). Hence,
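Assuming the inequality intended here is the standard bound Σ_{j=0}^{s} (p choose j) ≤ (ep/s)^s for 1 ≤ s ≤ p, the log of the number of candidate subsets for one h is at most s log(ep/s) ≤ 2s log p once p ≥ 3; taking the kn-fold product gives the exp(Cknsn log pn) bound. The short Python check below compares the exact count with this bound; the function names are hypothetical.

```python
from math import comb, e, log


def log_num_subsets(p, s):
    """Exact log of the number of subsets of {1, ..., p} with at most s elements."""
    return log(sum(comb(p, j) for j in range(s + 1)))


def log_subset_bound(p, s):
    """log of (e p / s)^s, the standard upper bound on that count (1 <= s <= p)."""
    return s * log(e * p / s)


# For kn sets chosen separately, the count is raised to the power kn, so its log
# is at most kn * s * log(e p / s) <= 2 * kn * s * log(p) whenever p >= 3.
```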

Using the fact that (Vershynin, 2010), the right hand side in the above display can be bounded above by , since kn = O(1).
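To make the ϵ-net step concrete, one standard way to cover the (r − 1)-dimensional probability simplex uses grid points whose coordinates are multiples of 1/m. Rounding a point to the grid with the largest-remainder rule changes each coordinate by less than 1/m, so m ≥ r/ϵ yields an ϵ-net in ℓ1 distance with (m + r − 1 choose r − 1) points. This is a minimal sketch assuming ℓ1 distance; it is not necessarily the covering argument behind the cited fact, and the function names are hypothetical.

```python
import numpy as np
from math import ceil, comb


def grid_size(r, m):
    """Number of points of the (r-1)-simplex with all coordinates in {0, 1/m, ..., 1}."""
    return comb(m + r - 1, r - 1)


def round_to_grid(x, m):
    """Round a probability vector x to a grid point by the largest-remainder rule."""
    scaled = np.asarray(x, dtype=float) * m
    base = np.floor(scaled)
    deficit = int(round(m - base.sum()))        # number of 1/m units still missing
    order = np.argsort(-(scaled - base))        # coordinates with largest remainders first
    base[order[:deficit]] += 1.0
    return base / m                             # each coordinate moved by less than 1/m


def net_resolution(r, eps):
    """An m with r / m <= eps, so that rounding gives l1 error below eps."""
    return ceil(r / eps)
```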

We now bound . Recall that in the sp-PARAFAC model, the induced prior on the subset size |Sh| is Bin(pn, τh), with τh ~ Beta(1, γ). Now,

Integrating out τ1, the distribution of |S1| is a beta-binomial distribution with probability mass function

for s = 0,1,…, pn. B(·, ·) denotes the Beta function in the above display. Hence, for s ≥ 1,
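The beta-binomial probability mass function can be checked numerically. Integrating τ1 ~ Beta(1, γ) out of Bin(pn, τ1) gives Pr(|S1| = s) = (pn choose s) B(s + 1, pn − s + γ)/B(1, γ), and consecutive probabilities satisfy Pr(|S1| = s)/Pr(|S1| = s − 1) = (pn − s + 1)/(pn − s + γ), the ratio that appears in the bound below. A minimal Python sketch follows; the value of γ used in the check is an arbitrary illustration, not the choice made in the theorem, and the function names are hypothetical.

```python
from math import comb, exp, lgamma, log


def log_beta(a, b):
    """log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def subset_size_pmf(s, p, gamma):
    """Pr(|S| = s) when |S| | tau ~ Bin(p, tau) and tau ~ Beta(1, gamma)."""
    return exp(log(comb(p, s)) + log_beta(s + 1, p - s + gamma) - log_beta(1.0, gamma))


def consecutive_ratio(s, p, gamma):
    """Closed form of Pr(|S| = s) / Pr(|S| = s - 1) for 1 <= s <= p."""
    return (p - s + 1) / (p - s + gamma)
```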

Now, letting , one has for any pn ≥ 2 and 1 ≤ s ≤ pn/2,

In general, for , we can bound this from both sides by C/pn. Noting that , we have

implying there exist constants c1, c2 > 0 such that

(20)

for 0 ≤ s ≤ pn/2. In particular, the upper bound holds for all 0 ≤ s ≤ pn, since (pn − s + 1)/(pn − s + γ) ≤ C/pn for all s. Hence, for n large enough so that sn ≥ 3,

We finally show that (3) holds. Recall the decomposition of π(0n) from (9). A probability tensor π following an sp-PARAFAC model with a truncated stick-breaking prior on ν can be parameterized as

where , Sh ⊂ {1, …, pn}, . Consider the following subset of the parameter space,

We now show that implies , so that can be bounded below by . First, observe that since Sh = S0 for all h on , π/π(0n) = ψ/ψ(0n), where ψ(0n) is as in (10) and ψ is the d^{qn}-cell joint probability tensor implied by the sp-PARAFAC model for the variables {yij : j ∈ S0},

Hence,

where the penultimate step follows from an application of the triangle inequality and the last step uses log(1 + x) ≤ x for x ≥ 0. For any , by an application of the triangle inequality,

(21)

We now state a Lemma to facilitate bounding the second term of the above display.

Lemma 7.2. Let v1, …, vr ∈ (ε0, 1 − ε0) for some ε0 > 0. Let δ > 0 be such that rδ < ε0/2. Then, if u1, …, ur satisfy |uj − vj| ≤ δ for all j = 1, …, r, then

Apply Lemma 7.2 with r = qn, and (clearly ) to obtain that for any 1 ≤ h ≤ qn, . Substituting this bound in (21), we have on ,

For the two terms in the above display after the first inequality, we used the lower bound (11) for the first term along with on , and by definition of ψ(0n), the second term is .

It thus remains to lower bound . By independence across h, . Further, by exchangeability of the prior on S1, since all subsets of a particular size receive the same prior probability, Pr(S1 = S0) = Pr(|S1| = qn)/(pn choose qn). From (20), Pr(|S1| = qn) ≥ exp(−Csn log pn). Using , we conclude that Pr(S1 = S0) ≥ exp(−Csn log pn).
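The exchangeability step can be checked by simulation. Because |S1| given τ1 is Bin(pn, τ1) and all subsets of a given size are equally likely, S1 given τ1 has the same law as including each variable independently with probability τ1. Hence, for a fixed S0 with |S0| = qn, Pr(S1 = S0) = E[τ1^qn (1 − τ1)^(pn − qn)] = B(qn + 1, pn − qn + γ)/B(1, γ), which is Pr(|S1| = qn) divided by (pn choose qn). The Python sketch below compares this closed form with a Monte Carlo estimate for small, arbitrarily chosen values of p, q and γ; the function names are hypothetical.

```python
import numpy as np
from math import comb, exp, lgamma


def log_beta(a, b):
    """log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def prob_fixed_subset(q, p, gamma):
    """Pr(S = S0) for any fixed S0 with |S0| = q, after integrating out tau."""
    return exp(log_beta(q + 1, p - q + gamma) - log_beta(1.0, gamma))


def size_pmf(q, p, gamma):
    """Pr(|S| = q): by exchangeability, (p choose q) times Pr(S = S0)."""
    return comb(p, q) * prob_fixed_subset(q, p, gamma)


def mc_prob_fixed_subset(s0, p, gamma, n_draws=200_000, seed=0):
    """Monte Carlo estimate of Pr(S = s0) with tau ~ Beta(1, gamma), iid inclusions."""
    rng = np.random.default_rng(seed)
    tau = rng.beta(1.0, gamma, size=n_draws)
    include = rng.random((n_draws, p)) < tau[:, None]   # row i: subset drawn at step i
    target = np.zeros(p, dtype=bool)
    target[list(s0)] = True
    return float(np.mean(np.all(include == target, axis=1)))
```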

Recall that , where independently. Find numbers {} such that . It is easy to see that there exists a constant C > 0 such that for all h = 1, …, kn implies . Hence, using a general result on small ball probability estimate of Dirichlet random vectors (Lemma 6.1 of Ghosal et al. (2000)), one has

Again, applying Lemma 6.1 of Ghosal et al. (2000),

Combining, we get . Hence, we have established (1)–(3), completing the proof.

7.2. Proof of Lemma 7.2

Observe that

Now, since uh ≤ vh + δ for all h,

Using the binomial theorem, . Next, bound and use the fact that rδ/ε0 < 1/2 to conclude that .

On the other hand, using uh ≥ vh − δ for all h,

The proof is concluded by observing that

Footnotes

For p = 2, ψ(1) ⊗ ψ(2) = ψ(1)ψ(2)ᵀ. In general,
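Entrywise, the p-fold outer product is (ψ(1) ⊗ ⋯ ⊗ ψ(p))_{c1⋯cp} = ψ(1)_{c1} ⋯ ψ(p)_{cp}. A minimal Python sketch using NumPy's `np.multiply.outer` follows. The hypothetical `parafac_tensor` helper assumes a PARAFAC form π = Σ_h ν_h λ_h1 ⊗ ⋯ ⊗ λ_hp with probability vectors ν and λ_hj; it is an illustration, not the paper's code, and because it materializes all d^p cells it is usable only for small p.

```python
import numpy as np
from functools import reduce


def outer(*vectors):
    """psi(1) ⊗ ... ⊗ psi(p): entry (c1, ..., cp) is psi(1)[c1] * ... * psi(p)[cp]."""
    return reduce(np.multiply.outer, [np.asarray(v, dtype=float) for v in vectors])


def parafac_tensor(nu, lambdas):
    """sum_h nu[h] * lambdas[h][0] ⊗ ... ⊗ lambdas[h][p-1] (dense; small p only)."""
    return sum(w * outer(*lam) for w, lam in zip(nu, lambdas))
```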

Mult({1, …, d}; λ1, …, λd) denotes a discrete distribution on {1, …, d} with probabilities λ1, …, λd associated to each atom.

For sequences an, bn, we write an = o(bn) if an/bn → 0 as n → ∞ and an = O(bn) if an ≤ Cbn for some constant C > 0 and all large n.

Given a metric space , let denote its ϵ-covering number, i.e., the minimum number of d-balls of radius ϵ needed to cover .

Contributor Information

Jing Zhou, Department of Biostatistics, The University of North Carolina at Chapel Hill.

Anirban Bhattacharya, Department of Statistics, Texas A&M University.

Amy Herring, Department of Biostatistics and Carolina Population Center, The University of North Carolina at Chapel Hill.

David Dunson, Department of Statistical Science, Duke University.

References

- Agresti A (2002), Categorical data analysis, Vol. 359, Wiley-Interscience.

- Armagan A, Dunson D, and Lee J (2013a), “Generalized double Pareto shrinkage,” Statistica Sinica, 23, 119–143.

- Armagan A, Dunson D, Lee J, Bajwa W, and Strawn N (2013b), “Posterior consistency in high-dimensional linear models,” Biometrika (to appear).

- Belitser E, and Ghosal S (2003), “Adaptive Bayesian inference on the mean of an infinite-dimensional normal distribution,” The Annals of Statistics, 31, 536–559.

- Bhattacharya A, and Dunson D (2011), “Sparse Bayesian infinite factor models,” Biometrika, 98, 291–306.

- Bhattacharya A, and Dunson D (2012), “Simplex factor models for multivariate unordered categorical data,” Journal of the American Statistical Association, 107, 362–377.

- Bontemps D (2011), “Bernstein-von Mises theorems for Gaussian regression with increasing number of regressors,” The Annals of Statistics, 39, 2557–2584.

- Bro R (1997), “PARAFAC. Tutorial and applications,” Chemometrics and Intelligent Laboratory Systems, 38, 149–171.

- Candes E, and Recht B (2009), “Exact matrix completion via convex optimization,” Foundations of Computational Mathematics, 9, 717–772.

- Carvalho C, Lucas J, Wang Q, Nevins J, and West M (2008), “High-dimensional sparse factor modelling: applications in gene expression genomics,” Journal of the American Statistical Association, 103, 1438–1456.

- Carvalho C, Polson N, and Scott J (2010), “The horseshoe estimator for sparse signals,” Biometrika, 97, 465–480.

- Castillo I, and van der Vaart A (2012), “Needles and straws in a haystack: Posterior concentration for possibly sparse sequences,” The Annals of Statistics, 40, 2069–2101.

- Chartrand R (2012), “Nonconvex splitting for regularized low-rank plus sparse decomposition,” IEEE Transactions on Signal Processing, 60, 5810–5819.

- Dunson DB, and Xing C (2009), “Nonparametric Bayes modeling of multivariate categorical data,” Journal of the American Statistical Association, 104, 1042–1051.

- Fienberg S, and Rinaldo A (2007), “Three centuries of categorical data analysis: Log-linear models and maximum likelihood estimation,” Journal of Statistical Planning and Inference, 137, 3430–3445.

- Friedlander M, and Hatz K (2005), “Computing non-negative tensor factorizations,” Optimization Methods and Software, 23, 631–647.

- Ge Y, and Jiang W (2006), “On consistency of Bayesian inference with mixtures of logistic regression,” Neural Computation, 18, 224–243.

- Gelman A, Jakulin A, Pittau M, and Su Y (2008), “A weakly informative default prior distribution for logistic and other regression models,” Annals of Applied Statistics, 2, 1360–1383.

- Ghosal S (1999), “Asymptotic normality of posterior distributions in high-dimensional linear models,” Bernoulli, 5, 315–331.

- Ghosal S (2000), “Asymptotic normality of posterior distributions for exponential families when the number of parameters tends to infinity,” Journal of Multivariate Analysis, 74, 49–68.

- Ghosal S, Ghosh J, and van der Vaart A (2000), “Convergence rates of posterior distributions,” Annals of Statistics, 28, 500–531.

- Hans C (2011), “Elastic net regression modeling with the orthant normal prior,” Journal of the American Statistical Association, 106, 1383–1393.

- Harshman R (1970), “Foundations of the PARAFAC procedure: Models and conditions for an “explanatory” multi-modal factor analysis,” UCLA Working Papers in Phonetics, 16, 84.

- Jiang W (2007), “Bayesian variable selection for high dimensional generalized linear models: convergence rates of the fitted densities,” The Annals of Statistics, 1487–1511.

- Karatzoglou A, Amatriain X, Baltrunas L, and Oliver N (2010), “Multiverse recommendation: n-dimensional tensor factorization for context-aware collaborative filtering,” Proceedings of the Fourth ACM Conference on Recommender Systems.

- Kolda T, and Bader B (2009), “Tensor decompositions and applications,” SIAM Review, 51, 455–500.

- Lee DD, and Seung HS (1999), “Learning the parts of objects by non-negative matrix factorization,” Nature, 401, 788–791.

- Lim L, and Comon P (2009), “Nonnegative approximations of nonnegative tensors,” Journal of Chemometrics, 432–441.

- Liu J, Liu J, Wonka P, and Ye J (2012), “Sparse non-negative tensor factorization using column-wise coordinate descent,” Pattern Recognition, 45, 649–656.

- Lucas JE, Carvalho C, Wang Q, Bild A, Nevins J, and West M (2006), “Sparse statistical modelling in gene expression genomics,” in Bayesian Inference for Gene Expression and Proteomics, eds. Do K, Müller P and Vannucci M, Cambridge University Press, pp. 155–176.

- Paatero P, and Tapper U (1994), “Positive matrix factorization: A non-negative factor model with optimal utilization of error estimates of data values,” Environmetrics, 5, 111–126.

- Park T, and Casella G (2008), “The Bayesian lasso,” Journal of the American Statistical Association, 103, 681–686.

- Pati D, Bhattacharya A, Pillai N, and Dunson D (2013a), “Posterior contraction in sparse Bayesian factor models for massive covariance matrices,” arXiv:1206.3627.

- Pati D, Dunson DB, and Tokdar ST (2013b), “Posterior consistency in conditional distribution estimation,” Journal of Multivariate Analysis.

- Polson N, and Scott J (2010), “Shrink globally, act locally: Sparse Bayesian regularization and prediction,” in Bayesian Statistics 9 (Bernardo JM, Bayarri MJ, Berger JO, Dawid AP, Heckerman D, Smith AFM and West M, eds.), Oxford University Press, New York, pp. 501–538.

- Scott J, and Berger J (2010), “Bayes and empirical-Bayes multiplicity adjustment in the variable selection problem,” The Annals of Statistics, 38, 2587–2619.

- Sethuraman J (1994), “A constructive definition of Dirichlet priors,” Statistica Sinica, 4, 639–650.

- Shen W, Tokdar S, and Ghosal S (2011), “Adaptive Bayesian multivariate density estimation with Dirichlet mixtures,” arXiv preprint arXiv:1109.6406.

- Talagrand M (1996), “A new look at independence,” The Annals of Probability, 24, 1–34.

- van der Vaart A, and van Zanten J (2008), “Rates of contraction of posterior distributions based on Gaussian process priors,” The Annals of Statistics, 36, 1435–1463.

- Vershynin R (2010), “Introduction to the non-asymptotic analysis of random matrices,” arXiv preprint arXiv:1011.3027.

- West M (2003), “Bayesian factor regression models in the “large p, small n” paradigm,” in Bayesian Statistics 7 (Bernardo JM, Bayarri MJ, Berger JO, Dawid AP, Heckerman D, Smith AFM and West M, eds.), Oxford University Press, New York, pp. 733–742.

- Yang Y, and Dunson DB (2013), “Bayesian conditional tensor factorizations for high-dimensional classification,” arXiv preprint arXiv:1301.4950.