Abstract

Objective

To describe nurses’ preferences for the design of a probability-based clinical decision support (PB-CDS) tool for in-hospital clinical deterioration.

Methods

A convenience sample of bedside nurses, charge nurses, and rapid response nurses (n = 20) from adult and pediatric hospitals completed participatory design sessions with researchers in a simulation laboratory to elicit preferred design considerations for a PB-CDS tool. Following theme-based content analysis, we shared findings with user interface designers and created a low-fidelity prototype.

Results

Three major themes and several considerations for design elements of a PB-CDS tool surfaced from end users. Themes focused on “painting a picture” of the patient condition over time, promoting empowerment, and aligning probability information with what a nurse already believes about the patient. The most notable design element consideration was visualizing a temporal trend of the predicted probability of the outcome along with user-selected overlapping depictions of vital signs, laboratory values, and outcome-related treatments and interventions. Participants expressed that the prototype adequately operationalized requests from the design sessions.

Conclusions

Participatory design served as a valuable method in taking the first step toward developing PB-CDS tools for nurses. This information about preferred design elements of tools that support, rather than interrupt, nurses’ cognitive workflows can benefit future studies in this field as well as nurses’ practice.

Keywords: decision support systems, clinical; decision-making, computer-assisted; models, statistical; nursing

INTRODUCTION

Sound clinical decision-making depends on one’s ability to access, process, and use the array of information at one’s disposal. Growing complexity in health care has made decision-making in today’s clinical environment more challenging than ever. Increased information-providing technology in the clinical setting has added to this complexity and influences the decision-making of clinicians.1 Clinical decision-support (CDS) tools are intended to assist decision-making, but the rapidity of technological advancement has outpaced our knowledge of tool use, design display, and decision-making influence in the clinical environment.2,3 The value of CDS tools, in general, is increasingly recognized; however, gaps remain in understanding interactions between CDS tools and users.3,4 Notably, probability-based CDS (PB-CDS) tools (also referred to as predictive analytics), with an inherent focus on mathematical probabilities, are increasingly prevalent but have not received adequate attention regarding their influence on clinician behavior.5,6

Most work on PB-CDS tools has focused on statistical model development, variable inclusion, and model accuracy.7–11 These factors are necessary but insufficient to influence patient outcomes, because a change in clinician behavior is also required for patient care to be impacted. Some studies have simply provided clinicians with information from the predictive model,12 while others have attempted to automatically initiate an intervention.13 To facilitate the study of these tools’ benefits, an initial approach would be to study a clinical situation where a clinician’s prompt decision and action are warranted. Additionally, the prototypic situation would include an outcome where the anticipated and actual events occur close together, so that additional variables (eg, other clinicians’ actions or nonhospital factors) have less opportunity to weaken the temporal connection between the predicted probability and the actual occurrence of events. Therefore, using currently available data to predict events likely to occur within 24–48 h would be ideal. Rapid clinical deterioration, similar to that observed in conditions like sepsis, hypovolemic shock, and cardiopulmonary arrest, meets this criterion and served as the clinical condition for this study.

We specifically selected rapid clinical deterioration leading to cardiopulmonary arrest as an exemplar by which to study PB-CDS phenomena, because the onset of cardiopulmonary arrest is more easily defined and is the result of other clinical deterioration etiologies if left untreated. Furthermore, cardiopulmonary arrests are common (approximately 209 000 hospitalized patients in the United States every year14) and have substantial associated mortality (survival rates are only 23%–37%15). Cardiopulmonary arrests occurring outside of the intensive care unit (ICU) are of particular interest, because these in-hospital events might be preventable, or at least survivable with early intervention, such as increased vigilance, timely medication administration, and escalation to a higher level of care.15–17 Published reports demonstrating the accuracy of predictive analytic models in health care exist,7,8 and many of these have been developed to predict cardiopulmonary arrest.18–23 Even though studies examining the impact of predictive models on identification and management of patients preceding cardiopulmonary arrest have demonstrated high accuracy,12 especially when compared to traditional scoring systems,22 the CDS tools lack demonstrable benefit on patient outcomes outside of modest improvements in length of stay.12,13 One reason for this inefficacy could be that most studies progress directly from model development to implementation in the clinical environment4,24 without adequate preliminary testing (eg, user-centered design and usability studies).

Because nurses spend more time with hospitalized patients than any other clinicians, they became the focus of this study. Compared to physician-focused studies, relatively few nursing-focused decision-support studies have been published,4,6,25,26 but some evidence suggests that nurses prefer being able to see relationships between variables when viewing physiological data.27 Others have noted that nurses benefit from information displays focused on trends and the recall of relevant patient information, while physicians benefit from displays that promote inference for decision-making.28 Given the interdisciplinary differences, we also questioned whether unique decision-support tools might be needed for the multiple intradisciplinary nursing roles that interact with patients at risk for cardiopulmonary arrest. Bedside nurses have detailed knowledge of a few patients, provide sustained observations, and might use a PB-CDS tool to facilitate early recognition. In contrast, charge nurses have less detailed knowledge of each patient, oversee many patients (typically an entire ward), and might use a PB-CDS tool to reassign high-risk patients to more experienced bedside nurses. Rapid response team nurses review only 1 patient’s information, must quickly decide if a deteriorating patient warrants additional treatment, and might use a PB-CDS tool to determine whether the patient should transfer to a higher level of care. These varied tasks warrant further exploration into whether different nursing roles need separate decision-support tools.

Our study took what Friedman calls a “small ball” approach29 to developing an information resource and challenges previous research approaches by exploring the user interface in a simulated environment before introducing the tool in clinical practice. This approach permits assessment of clinician preferences as well as modifications of the PB-CDS tool before significant resources have been spent. The overall objective of this study was to describe nurses’ preferences for the design of a PB-CDS tool. In this paper, we report our findings on the information preferences of nurses for the design of a tool to assist with cardiopulmonary arrest identification.

METHODS

Design

We conducted 3 separate participatory design sessions, each with different participants, in this study. Participatory design is a qualitative method that engages participants as co-investigators in the design process. Technology and engineering fields commonly use participatory design because it applies iterative changes seeking to align technology design with users and the environment; the method is less commonly used in health care informatics research.30–32 Three major activities comprise each session of a participatory design study: priming, designing, and debriefing. The priming activity helps participants understand the intended tasks and context surrounding the study purpose while preparing them to become active participants in the designing activity. The designing activity is the more active portion of the study, where all participants (ie, researchers, designers, intended end users) co-create design elements of the tool. The debriefing activity allows participants to describe their experience of creating and reflect on the words and actions of others. The final product is a report, possibly with prototypes.30,33

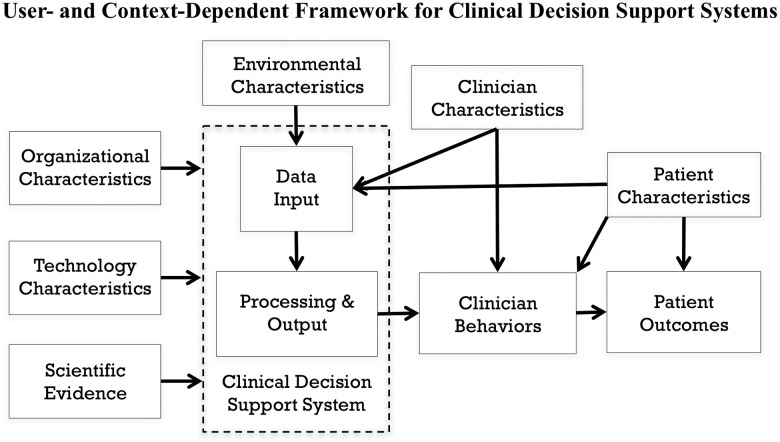

Conceptual framework

To guide the study, we developed a conceptual model (see Figure 1) informed by human factors engineering (Carayon),34 CDS system rule development (Brokel),35 and information technology acceptance theories (Venkatesh).36 The technology characteristics of a CDS system (specifically, the user interface of a prediction model’s output) and clinician characteristics (ie, roles) were the primary variables of interest. We assumed a relationship between the CDS system and clinician behaviors, which mediate patient outcomes. We appreciated the influence of organizational characteristics (eg, culture, capital resources), environmental characteristics (eg, lighting), and patient characteristics (eg, nonmodifiable risk factors) on patient outcomes, but they were not of primary interest.

Figure 1.

Conceptual framework used to guide the study, with major relationships identified.

Participants and setting

The Vanderbilt University Institutional Review Board reviewed and approved our study. Using e-mail flyers and word of mouth, we recruited a convenience sample of nurses working in an adult teaching hospital, a pediatric teaching hospital, and an adult federal hospital in Nashville, Tennessee. To be included in the study, participants had to be bedside nurses or charge nurses working in non–critical care inpatient departments (eg, medical wards, surgical wards) with either adults or children. We also included nurses working in ICUs who responded to rapid response team calls. Participants received a $75 gift card for their participation. Data collection occurred in the Vanderbilt University School of Nursing Simulation Center, which houses high- and low-fidelity simulation manikins, specially trained personnel to operate the manikins, several patient rooms that mimic a hospital unit, and a large open space for small-group work. High-fidelity manikins were capable of connecting to continuous telemetry monitoring, receiving general physical assessments (eg, chest rise and palpable pulses), and communicating with participants.

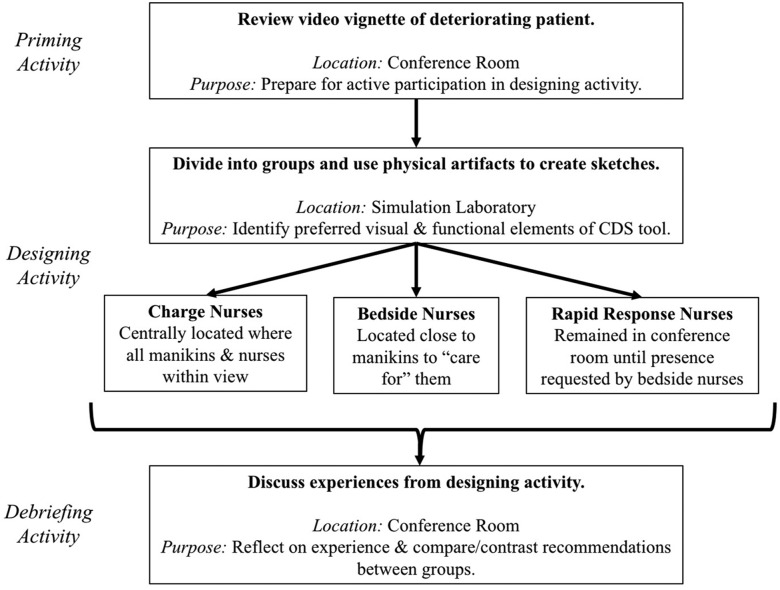

Procedures

We conducted 3 participatory design sessions, each 2 h in length and comprising 5–10 end users currently working as either bedside nurses, charge nurses, or rapid response team nurses. Facilitated by at least 2 of the researchers, each session contained a priming activity (∼20 min in length), a designing activity (∼60 min), and a debriefing activity (∼30 min). Figure 2 illustrates the sequence of events for each session.

Figure 2.

Sequence of events during each participatory design session.

Priming activity

During the priming activity, we gathered all participants into a conference room and watched an 8-min video vignette in which a patient experienced clinical deterioration that warranted activation of a rapid response team. We modified a video developed by the Agency for Healthcare Research and Quality37 to create the vignette scenario, which is available upon request. We validated vignette content by asking researchers (n = 5) and nurse and physician subject matter experts (n = 7) whether the new video was clinically accurate and aligned with the purpose of the priming activity. Following validation, we made no major changes but included additional screen captions emphasizing technology-focused actions. During the priming activity, we instructed the nurses to take notes on what they observed and remembered from the vignette, along with additional information they would have requested in a real scenario. Following the video, we collected those notes to include in data analysis.

Designing activity

The designing activity, which occurred in a simulation laboratory, engaged the nurses in hands-on creation of a physical representation of an electronic CDS tool using paper, colored pencils, scissors, rulers, and adhesive note paper. We recreated a clinical environment with manikins in order to mimic the context in which the PB-CDS tool would realistically be used. Bedside nurses were physically located near patient manikins, charge nurses were located farther from the manikins but within sight of bedside nurses, and rapid response team nurses initially remained in the nearby conference room where the priming activity occurred. The simulation laboratory included 3 low-fidelity manikins and 1 high-fidelity manikin, the latter representing the patient described in the priming activity vignette and operated by a laboratory staff member. A brief narrative of each patient’s history and physical assessment along with vital signs, laboratory values, and the numerical result of a fictitious PB-CDS tool (eg, 1.3%, 56.8%) were available at each bedside. We provided an abbreviated overview of all patients (including the results of a fictitious CDS tool) to charge nurses. We gave no patient information to the rapid response team. Researchers interacted with all nurse end-user participants throughout the designing activity, and examples of previously published cardiopulmonary arrest CDS tools were available to assist with brainstorming.

To provide an additional use case for the proposed CDS tool, approximately halfway through the designing activity, the high-fidelity manikin experienced an acute deteriorating condition. Researchers encouraged the group of bedside nurses to ask for help from the charge nurses and rapid response team nurses. Feedback from the first session revealed that a greater emphasis on statistical probabilities could increase participants’ design-focused dialogue. Thus, in an attempt to induce cognitive dissonance (which we expected would increase discussion), the second and third participatory design sessions included a very high numerical result for a patient whose history and physical assessment suggested a very low probability of cardiopulmonary arrest.

Debriefing activity

After the designing activity, all participants returned to the conference room for the debriefing activity. Researchers used semistructured open-ended questions to ask nurse end users to share their sketches and provide a rationale for each of the chosen visual and functional elements. We audio-recorded the debriefing conversations, took notes of the discussion, and captured photos of physical artifacts. In all participatory design sessions following the first, we shared concepts and photos from previous sessions with participants, offering the opportunity for convergence of ideas.

Analysis

Consistent with usability testing principles, the research team leveraged theme-based content analysis, ongoing aggregation of results, and discussion and deliberation of nurse end-user comments and artifacts.38,39 The principal investigator (AJ) developed an initial codebook with concepts and definitions and applied codes to handwritten notes, physical artifacts, and transcripts of the audio-recorded debriefing activities. A co-investigator (LN) reviewed and revised the codebook and application of codes. A computer-based qualitative data analysis software program (Dedoose40) facilitated deliberation among researchers, and the 2 investigators discussed differences in coding schemes until consensus was reached. After the 2 investigators applied codes to all data, all investigators participated in development and confirmation of the final themes.

After a preliminary analysis, we consulted with human-computer interaction and design experts, who provided an informal evaluation of the tool’s proposed visual and functional elements. We synthesized all recommendations, developed a low-fidelity prototype, and shared the prototype with 14 (70%) of the nurse end users who participated in the sessions. We used this final step as a form of “member checking”41 to ensure that participants felt their preferences were appropriately converted into the prototype.

RESULTS

Six bedside nurses, 8 charge nurses, and 6 rapid response team nurses (n = 20) from 14 unique units attended the sessions (see Table 1). In addition to several minor themes identified in the priming activity notes, 3 major themes and several considerations for design elements of a PB-CDS tool surfaced.

Table 1.

Sample size and composition of participants at each stage of the study

| Descriptor | Session #1 | Session #2 | Session #3 | Member Checking |
|---|---|---|---|---|
| Total | 5 | 9 | 6 | 14 |
| Bedside Nurses | 1 | 3 | 2 | 4 |
| Charge Nurses | 2 | 4 | 2 | 6 |
| Rapid Response Nurses | 2 | 2 | 2 | 4 |
| Work Areas Represented | Adult Med-Surg | Adult Med-Surg | Adult Med-Surg | Adult Med-Surg |
| | Ped. Cardiac | Adult Cardiac, Adult ICU | Adult ICU | Adult ICU |
| | Ped. ICU | Ped. Cardiac | Ped. Cardiac | Ped. Cards |
| | Ped. ICU | Ped. ICU | | |
| Age (Min/Median/Max) | 28/30/46 | 22/30/47 | 24/28/50 | 22/30/50 |

Note: Med-Surg = medical-surgical; Ped. = pediatric.

Themes

During the priming activity, end users took notes describing a need for: (1) communication, (2) bedside nurse autonomy, (3) attention to the patient’s physical assessment, (4) review of historical vital signs and laboratory values, (5) timing of treatments, and (6) standardization of actions. Three major themes emerged from the designing and debriefing activities and represent participants’ goals for the CDS tool.

Goal 1: Communication of patient status

First, participants reported they wanted a CDS tool that “paints a picture” or “tells the story” of the patient’s condition over time. They requested that individual users be able to select which variables become visible and layer those variables’ trends for hypothesis generation and succinct communication. For example, the electronic health record could provide a visual depiction of heart rate values layered over the probability-based cardiopulmonary arrest summary value. One participant noted:

I like the idea that you could see the trending vital signs during that rapid response call, like we started here and this is where we’re going, so you can easily see at a glance. Have things go up or down. We mentioned seeing the interventions, like a little tab, where you just tap – “Look, IV fluids given and who did an EKG”… Timing, to go with it, so you can see where it goes and all that’s trending. That way anybody that walks into the room, they can easily see what’s going without asking a bunch of questions, repeating the story every time…

Goal 2: Empowerment

The concepts of advocacy and autonomy surfaced in the second goal. If a CDS tool is designed well, it could empower nurses to advocate for patients and contribute to treatment decision-making. As an objective assessment of a patient’s condition, the CDS tool has the potential to provide nurses with a structured method by which to garner support for their recommendations. Regarding the tool’s benefit, one participant noted:

…[the tool] gives that gut feeling some weight and autonomy.

Goal 3: Consistency with context

In the third goal, nurses agreed that the model had to make sense, and the general perception was that probability-based models are more helpful for confirming what one already thinks rather than identifying unrecognized patient conditions. If the CDS tool provides results that are discrepant with what one thinks or does not appear to consider a patient’s “context” or “baseline,” it prompts many questions, which has potential for both benefit and harm. To paraphrase several of the participants, one of the researchers noted in a post-session discussion:

…changes in the number need interpretation. Why or what contributed to a rapid change?…a slow steady trend also needs interpretation… Don’t let a tool overtake critical thinking. It’s all about the trends and the baseline.

Design elements

Table 2 lists the design elements requested by nurse end users along with expert recommendations. Participants frequently expressed a desire to be able to visualize the temporal trend of the predicted probability of the outcome along with user-selected overlapping depictions of vital signs, laboratory values, and outcome-related treatments and interventions. Charge nurses and rapid response team nurses strongly requested viewing only a ranked order of the highest-risk patients at first; however, when viewing individual patient information (the focus of most design elements), all nurse roles expressed similar preferences. Less prominent but fairly common requests included alerts only for values exceeding an absolute threshold or high degree of change, a green/yellow/red color scheme, and the ability to view the tool on both a mobile device and a dashboard.
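The alert and color-scheme requests can be read as a simple rule, sketched below in Python as an illustration only. The study reports no numeric cutoffs, so `YELLOW_AT`, `RED_AT`, and `MAX_RISE` are hypothetical values, and treating a “high degree of change” as an increase between two consecutive predictions is our assumption rather than something participants specified.

```python
# Illustrative sketch only: every cutoff below is a hypothetical placeholder,
# not a value reported by study participants or experts.
YELLOW_AT = 0.20  # assumed lower bound of the "yellow" band
RED_AT = 0.50     # assumed lower bound of the "red" band (absolute threshold)
MAX_RISE = 0.15   # assumed rise between consecutive predictions that counts as rapid


def color_for(probability: float) -> str:
    """Map a predicted probability (0 to 1) to a green/yellow/red band."""
    if probability >= RED_AT:
        return "red"
    if probability >= YELLOW_AT:
        return "yellow"
    return "green"


def should_alert(previous: float, current: float) -> bool:
    """Alert only on an absolute threshold breach or a rapid increase."""
    exceeds_threshold = current >= RED_AT
    rapid_increase = (current - previous) >= MAX_RISE
    return exceeds_threshold or rapid_increase


if __name__ == "__main__":
    # 0.013 and 0.568 mirror the fictitious example results used in the sessions.
    for prev, curr in [(0.013, 0.013), (0.10, 0.30), (0.10, 0.568)]:
        print(prev, curr, color_for(curr), should_alert(prev, curr))
```

Keeping the alert rule separate from the color band reflects the request that alerts fire only for notable values, while color remains visible at all times.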

Table 2.

Design element considerations for PB-CDS tools

| Elements | Participant preferences | Expert evaluation |
|---|---|---|
| Trends | | Consider combinations of color-coding and ranking |
| Layers and Filters | | |
| Treatments and Interventions | | Might need to be unit-specific |
| Ranking | | |
| Alert Notification | | Consider building statistics for 12–24 h early so that nurses are “helping the next shift out” as opposed to “depending on a statistical model to tell them how to do their job” |
| Color Scheme | | |
| Medium | | |
| Communication | | |

Notes: EHR = electronic health record; RRT = rapid response team. Expert evaluation refers to heuristic usability considerations provided by human-computer interaction and design experts.

Participants also gave generic recommendations for future technology development and identified potential barriers (see Table 3). The most prominent findings include ensuring that the tool is readily available to all health care team members, balancing ease of information access with patient privacy, and being concerned about discrepancies between objective probabilities and subjective perceptions. Regarding this latter point, some participants expressed concern over the potential for overreliance on CDS tools, with a loss in critical thinking as these tools become more common.

Table 3.

Recommendations for future technology development and potential barriers

| Category | Participant Request | Heuristic Perspective |
|---|---|---|
| Other Desired Features | | |
| Barriers | | How this tool will embed itself within the workflow of multiple disciplines (ie, consider the context of “routinized practice”) |

Notes: RRT = rapid response team.

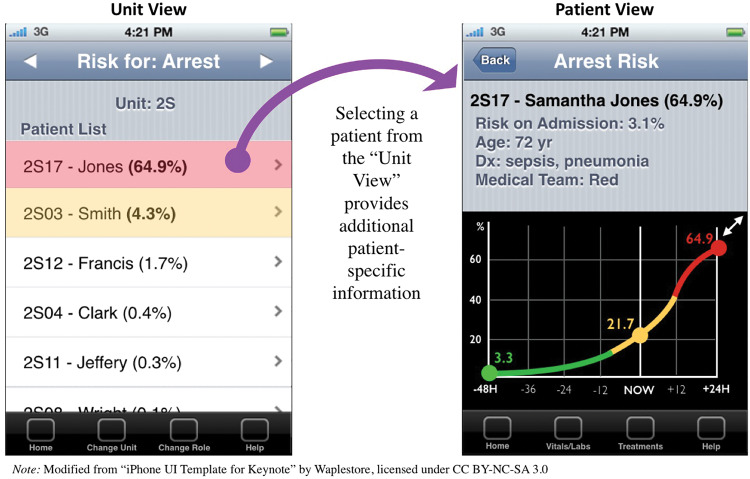

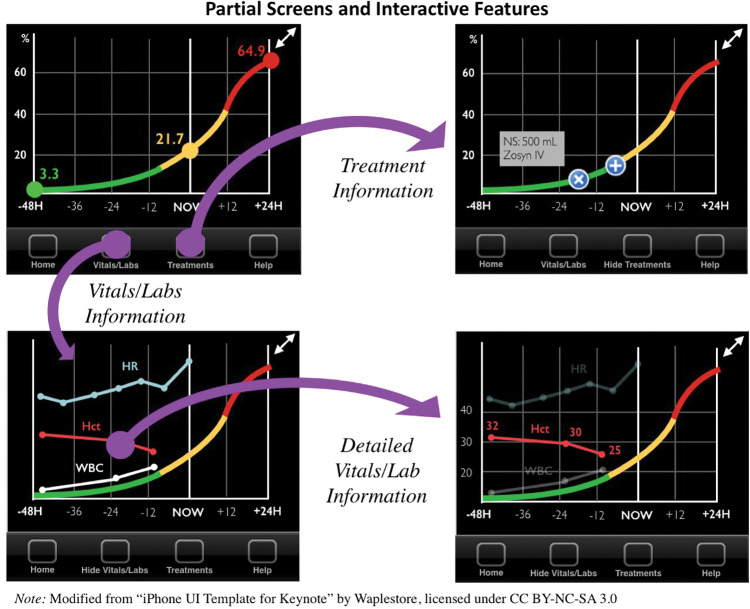

Prototype development

Figures 3 and 4 show example screenshots of a prototype that represents the most salient preferences from participants. Consistent with requests for ranking, Figure 3 provides a prototype of what a charge nurse might use to review a list of all patients on that unit, ranked in descending order of risk to promote easy recognition of high-risk patients. To illustrate individual patient trends and the accompanying “baseline,” Figure 3 also displays how all types of nurse end users preferred to see an individual patient’s risk. Combining the most prominent themes of trend lines, filters, layers, and treatments, Figure 4 exemplifies several interactive screenshots: (1) vital signs and laboratory values layered over a predicted probability of cardiopulmonary arrest, (2) additional detail of 1 vital sign selected, and (3) cardiopulmonary arrest–related interventions layered over the time period in which they occurred. Participatory design session end users who reviewed the prototype did not recommend any changes to the current design; however, they did provide additional suggestions for future implementation, including a desire for automaticity of data exchange with the electronic health record and individual configuration of all filters and layers.

Figure 3.

Prototype of charge nurses’ “unit view” of all patients (left) and individual “patient view” (right). The unit view shows all patients ranked in descending order of those most at risk for a cardiopulmonary arrest. The patient view contains basic patient information accompanied by a 72-h trend of predicted probability of cardiopulmonary arrest.
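The ranking behind the unit view can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not the prototype’s implementation: the `PatientRisk` record, the `unit_view` function, and the optional `top_n` cutoff (reflecting the request to see only the highest-risk patients at first) are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PatientRisk:
    """Hypothetical record: a bed label and a predicted probability (0 to 1)."""
    bed: str
    probability: float


def unit_view(patients: List[PatientRisk], top_n: Optional[int] = None) -> List[PatientRisk]:
    """Return patients in descending order of predicted risk.

    If top_n is given, keep only the top_n highest-risk patients.
    """
    ranked = sorted(patients, key=lambda p: p.probability, reverse=True)
    return ranked if top_n is None else ranked[:top_n]


if __name__ == "__main__":
    # Fictitious values; 0.013 and 0.568 echo the examples used in the sessions.
    ward = [
        PatientRisk("Bed 1", 0.013),
        PatientRisk("Bed 2", 0.568),
        PatientRisk("Bed 3", 0.210),
    ]
    for patient in unit_view(ward, top_n=2):
        print(f"{patient.bed}: {patient.probability:.1%}")
```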

Figure 4.

Partial screens and interactive capabilities of prototype with filters and layers applied to predicted probability of cardiopulmonary arrest. Upper left: Initial “patient view” from Figure 3. Upper right: Cardiopulmonary arrest–related preventive treatment displayed on the clinical shift in which they occurred. Lower left: User-selected vital signs and laboratory values displayed. Lower right: Additional detail (including modified y-axis and measured values) for 1 variable among user-selected vital signs and laboratory values. Nonselected variables’ colors are softened.
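The filter-and-layer behavior described in this caption might be modeled as a mapping from each variable to a display style. The Python sketch below is one possible reading, with hypothetical names (`layer_styles` and the style labels `hidden`, `normal`, `emphasized`, `softened`); the prototype itself was low-fidelity and did not specify such logic.

```python
from typing import Dict, Iterable, Optional, Set


def layer_styles(
    available: Iterable[str],
    selected: Set[str],
    focused: Optional[str] = None,
) -> Dict[str, str]:
    """Decide how each variable is drawn over the probability trend.

    - Variables the user has not selected are hidden.
    - With no focused variable, selected variables are drawn normally.
    - With a focused variable, it is emphasized and the other selected
      variables are softened.
    """
    styles: Dict[str, str] = {}
    for name in available:
        if name not in selected:
            styles[name] = "hidden"
        elif focused is None or focused not in selected:
            styles[name] = "normal"
        elif name == focused:
            styles[name] = "emphasized"
        else:
            styles[name] = "softened"
    return styles


if __name__ == "__main__":
    # Hypothetical variable names used purely for illustration.
    variables = ["heart_rate", "lactate", "respiratory_rate"]
    print(layer_styles(variables, {"heart_rate", "lactate"}, focused="heart_rate"))
```

Returning a style per variable, rather than drawing directly, keeps each user’s configuration separate from rendering, which fits the participants’ request for individually configurable filters and layers.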

DISCUSSION

We used the participatory design method to identify important design elements for nurses and create a prototype for a PB-CDS tool that would predict the likelihood of cardiopulmonary arrest in the context of a patient demonstrating rapid clinical deterioration. Co-creation of the CDS tool via participatory design was beneficial, because the active involvement of multiple nurse stakeholders facilitated the identification of novel, integrative design concepts that groups of participants (ie, researchers and end users) might not have identified separately. Designers and developers can use and extend the goals and associated recommended design elements to create PB-CDS tools for in-hospital nurses in the future. We condensed preferred design elements into a mobile phone–based prototype due to participants’ requests for a mobile-friendly tool; this will hopefully ease the transition to computer monitor–sized displays (in contrast to removing key elements during screen-size reduction). When reviewing the priming activity notes provided by end-user participants, we treated these as a type of needs assessment, and we believe our recommended design elements and prototype would contribute to meeting these needs. Others have developed CDS tools for nurses that are probability-based or cardiopulmonary arrest–focused,18–23,27 but to our knowledge, the participatory design method has never been reported for studying these types of tools. Seeking input from clinician users during the design of the CDS tool user interface could increase the likelihood of adoption,42 especially for probability-based tools.

Similar to how the information needs of nurses differ from those of physicians due to their different practice and diagnostic models,28,43 we had hypothesized that the needs of participants with various specialties would differ, given their different settings and work. Even though others’ work has suggested that nurses benefit more from trend-containing displays than the inference-focused displays preferred by physicians,28 we did not find such distinct differences among our participants. In fact, we found the desire for inference-support and hypothesis-generation assistance to be present across all participant roles. When considering this desire paired with participants’ request for exploring a patient’s “baseline” and “context,” our findings appear consistent with the view that nurses’ diagnostic reasoning skills are context-dependent in the social and humanistic domains.43 Finally, a recent simulation study of nurses’ acceptance of CDS suggestions found that the primary reason to accept a suggestion resulted from the belief that it was “good for the patient,”44 and we believe this supports our theme of “consistency with context” as a CDS tool goal.

Strengths and limitations

Strengths of the study include placing participants in an environment that mimicked real workflows, recruiting 3 unique roles of nurses from 3 hospitals located in 2 health care systems, and conducting iterative design testing in collaboration with end users, researchers, and human-computer interaction experts. Sharing findings from previous sessions with participants in the second and third sessions, collaborating with human-computer interaction experts, and engaging participants in member-checking increase the credibility of our findings. Although we focused on a specific outcome, cardiopulmonary arrest, many of the findings should be generalizable to similar patient outcomes involving clinical deterioration. Limitations of the study include the use of a convenience sample from neighboring hospitals and the inability to determine if CDS tool–based information is capable of changing behavior. Bedside nurses and charge nurses from the ICU and emergency department were excluded, because the workflows of these nurses are different from those of non–critical care inpatient nurses. These environments might require design elements of CDS tools that differ from our reported findings.

Future directions

The terms “baseline” and “context” surfaced frequently and are likely specific to health care clinicians and perhaps even nurses or practice specialties. Future studies could explore their meanings across settings and how information technology can provide information within this mental framework. These terms might be related to participants’ expression of fears regarding the loss of critical thinking skills as new tools are added to the clinical environment. These fears are reminiscent of the possibility of “cookbook medicine” in response to the advent of evidence-based medicine.45 Exploring these terms, associated fears, and possible interventions would further facilitate successful PB-CDS tool implementation efforts. Finally, developing more robust prototypes (based on our recommended design elements) followed by formal usability testing is needed. Usability testing will be especially important when comparing some design elements head-to-head, and simulation laboratories will serve as ideal environments, because patient safety would not be compromised. As the prototypes become more robust and prepare for integration into a clinical setting, we plan to crosswalk our recommendations with the recently released international standards for nursing process–focused CDS tools, which contain additional criteria necessary for optimal integration into workflows that support practice and advance the science.46

CONCLUSION

The information we gained about the preferred design elements of predictive analytics tools that support, rather than interrupt, nurses’ cognitive workflows can benefit future studies in this field as well as nurses’ practice. As these themes and elements undergo additional testing and refinement, we anticipate that they can eventually serve as standards for developing PB-CDS tools that are more likely to influence clinician behavior and ultimately patient outcomes.

ACKNOWLEDGMENTS

We would like to thank the Agency for Healthcare Research and Quality for allowing us to modify their video vignette. We would also like to thank Dr Sally Miller and the Vanderbilt University School of Nursing Simulation Center staff for allowing us to use their laboratory space and for providing personnel support, and the Center for Research and Innovation in Systems Safety for input on prototype design. Finally, we acknowledge and appreciate the time and input of our research participants.

Funding

The work was supported by Clinical and Translational Science Award no. UL1TR000445 from the National Center for Advancing Translational Sciences, as well as resources and the use of facilities at the VA Tennessee Valley Healthcare System. The contents are solely the responsibility of the authors and do not necessarily represent official views of the National Center for Advancing Translational Sciences, the National Institutes of Health, the Department of Veterans Affairs, or the United States government.

Competing Interests

The authors have no competing interests to declare.

Contributors

AJ, LN, and LM conceived the study. AJ, LN, and BK collected and analyzed the data. All authors contributed to study design, interpreted the data, wrote or revised the article for intellectual content, and approved the final version.

References

- 1. Dekker S. Complexity, signal detection, and the application of ergonomics: reflections on a healthcare case study. Appl Ergon. 2012;43(3):468–72.

- 2. Bright TJ, Wong A, Dhurjati R. et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29–43.

- 3. Jones SS, Rudin RS, Perry T, Shekelle PG. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med. 2014;160(1):48–54.

- 4. Dunn Lopez K, Gephart SM, Raszewski R, Sousa V, Shehorn LE, Abraham J. Integrative review of clinical decision support for registered nurses in acute care settings. J Am Med Inform Assoc. 2017;24(2):441–50.

- 5. Bates DW, Saria S, Ohno-Machado L, Shah A, Escobar G. Big data in health care: using analytics to identify and manage high-risk and high-cost patients. Health Aff (Millwood). 2014;33(7):1123–31.

- 6. Jeffery AD. Methodological challenges in examining the impact of healthcare predictive analytics on nursing-sensitive patient outcomes. Comput Inform Nurs. 2015;33(6):258–64.

- 7. Collins G, Le Manach Y. Multivariable risk prediction models: it’s all about the performance. Anesthesiology. 2013;118(6):1252–53.

- 8. Collins GS, de Groot JA, Dutton S. et al. External validation of multivariable prediction models: a systematic review of methodological conduct and reporting. BMC Med Res Methodol. 2014;14:40.

- 9. Kansagara D, Englander H, Salanitro A. et al. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–98.

- 10. Ettema RG, Peelen LM, Schuurmans MJ, Nierich AP, Kalkman CJ, Moons KG. Prediction models for prolonged intensive care unit stay after cardiac surgery: systematic review and validation study. Circulation. 2010;122(7):682–89, 7 p following p 9.

- 11. Collins GS, Omar O, Shanyinde M, Yu LM. A systematic review finds prediction models for chronic kidney disease were poorly reported and often developed using inappropriate methods. J Clin Epidemiol. 2013;66(3):268–77.

- 12. Bailey TC, Chen Y, Mao Y. et al. A trial of a real-time alert for clinical deterioration in patients hospitalized on general medical wards. J Hosp Med. 2013;8(5):236–42.

- 13. Kollef MH, Chen Y, Heard K. et al. A randomized trial of real-time automated clinical deterioration alerts sent to a rapid response team. J Hosp Med. 2014;9(7):424–29.

- 14. Merchant RM, Yang L, Becker LB. et al. Incidence of treated cardiac arrest in hospitalized patients in the United States. Crit Care Med. 2011;39(11):2401–06.

- 15. Go AS, Mozaffarian D, Roger VL. et al. Heart disease and stroke statistics—2014 update: a report from the American Heart Association. Circulation. 2014;129(3):e28–e292.

- 16. Hodgetts TJ, Kenward G, Vlackonikolis I. et al. Incidence, location and reasons for avoidable in-hospital cardiac arrest in a district general hospital. Resuscitation. 2002;54(2):115–23.

- 17. Cleverley K, Mousavi N, Stronger L. et al. The impact of telemetry on survival of in-hospital cardiac arrests in non–critical care patients. Resuscitation. 2013;84(7):878–82.

- 18. Alvarez CA, Clark CA, Zhang S. et al. Predicting out of intensive care unit cardiopulmonary arrest or death using electronic medical record data. BMC Med Inform Decis Mak. 2013;13:28.

- 19. Churpek MM, Yuen TC, Park SY, Gibbons R, Edelson DP. Using electronic health record data to develop and validate a prediction model for adverse outcomes in the wards. Crit Care Med. 2014;42(4):841–48.

- 20. Escobar GJ, LaGuardia JC, Turk BJ, Ragins A, Kipnis P, Draper D. Early detection of impending physiologic deterioration among patients who are not in intensive care: development of predictive models using data from an automated electronic medical record. J Hosp Med. 2012;7(5):388–95.

- 21. Evans RS, Kuttler KG, Simpson KJ. et al. Automated detection of physiologic deterioration in hospitalized patients. J Am Med Inform Assoc. 2014;22:350–60.

- 22. Finlay GD, Rothman MJ, Smith RA. Measuring the Modified Early Warning Score and the Rothman Index: advantages of utilizing the electronic medical record in an early warning system. J Hosp Med. 2014;9(2):116–19.

- 23. Kirkland LL, Malinchoc M, O’Byrne M. et al. A clinical deterioration prediction tool for internal medicine patients. Am J Med Qual. 2013;28(2):135–42.

- 24. Amarasingham R, Patzer RE, Huesch M, Nguyen NQ, Xie B. Implementing electronic health care predictive analytics: considerations and challenges. Health Aff (Millwood). 2014;33(7):1148–54.

- 25. Fillmore CL, Bray BE, Kawamoto K. Systematic review of clinical decision support interventions with potential for inpatient cost reduction. BMC Med Inform Decis Mak. 2013;13:135.

- 26. Jaspers MW, Smeulers M, Vermeulen H, Peute LW. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc. 2011;18(3):327–34.

- 27. Effken JA, Loeb RG, Kang Y, Lin ZC. Clinical information displays to improve ICU outcomes. Int J Med Inform. 2008;77(11):765–77.

- 28. Johnson CM, Turley JP. The significance of cognitive modeling in building healthcare interfaces. Int J Med Inform. 2006;75(2):163–72.

- 29. Friedman CP. “Smallball” evaluation: a prescription for studying community-based information interventions. J Med Libr Assoc. 2005;93(4 Suppl):S43–S48.

- 30. Reeder B, Hills RA, Turner AM, Demiris G. Participatory design of an integrated information system design to support public health nurses and nurse managers. Public Health Nurs. 2014;31(2):183–92.

- 31. Thursky KA, Mahemoff M. User-centered design techniques for a computerised antibiotic decision support system in an intensive care unit. Int J Med Inform. 2007;76(10):760–68.

- 32. Ventura F, Koinberg I, Sawatzky R, Karlsson P, Öhlén J. Exploring the person-centeredness of an innovative E-supportive system aimed at person-centered care: prototype evaluation of the care expert. Comput Inform Nurs. 2016;34(5):231–39.

- 33. Bodker K, Kensing F, Simonsen J. Participatory IT Design: Designing for Business and Workplace Realities. Cambridge, MA: MIT Press; 2004.

- 34. Carayon P, Schoofs Hundt A, Karsh BT. et al. Work system design for patient safety: the SEIPS model. Qual Saf Health Care. 2006;15(Suppl 1):i50–58.

- 35. Brokel JM, Schwichtenberg TJ, Wakefield DS, Ward MM, Shaw MG, Kramer JM. Evaluating clinical decision support rules as an intervention in clinician workflows with technology. Comput Inform Nurs. 2011;29(1):36–42.

- 36. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. Manag Inform Sys Quart. 2003;27(3):425–78.

- 37. Agency for Healthcare Research and Quality. Training Videos: TeamSTEPPS Rapid Response Systems Guide. December 2012. http://www.ahrq.gov/teamstepps/rrs/videos/index.html. Accessed September 20, 2016.

- 38. Miami University of Ohio. Usability Testing: Developing Useful and Usable Products. 2004. http://www.units.miamioh.edu/mtsc/usabilitytestingrevisedFINAL.pdf. Accessed June 1, 2017.

- 39. Li AC, Kannry JL, Kushniruk A. et al. Integrating usability testing and think-aloud protocol analysis with “near-live” clinical simulations in evaluating clinical decision support. Int J Med Inform. 2012;81(11):761–72.

- 40. SocioCultural Research Consultants, LLC. Dedoose Version 7.0.23, web application for managing, analyzing, and presenting qualitative and mixed method research data. 2016. http://www.dedoose.com/. Accessed October 4, 2016.

- 41. Miles MB, Huberman AM, Saldana J. Qualitative Data Analysis: A Methods Sourcebook. 3rd ed. Los Angeles: SAGE Publications; 2014.

- 42. Jaspers MW. A comparison of usability methods for testing interactive health technologies: methodological aspects and empirical evidence. Int J Med Inform. 2009;78(5):340–53.

- 43. Chiffi D, Zanotti R. Medical and nursing diagnoses: a critical comparison. J Eval Clin Pract. 2015;21(1):1–6.

- 44. Sousa VE, Lopez KD, Febretti A. et al. Use of simulation to study nurses’ acceptance and nonacceptance of clinical decision support suggestions. Comput Inform Nurs. 2015;33(10):465–72.

- 45. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312(7023):71–72.

- 46. Müller-Staub M, de Graaf-Waar H, Paans W. An internationally consented standard for nursing process-clinical decision support systems in electronic health records. Comput Inform Nurs. 2016;34(11):493–502.