Abstract

Due to their high signal-to-noise ratio (SNR) and robustness to artifacts, steady state visual evoked potentials (SSVEPs) are a popular technique for studying neural processing in the human visual system. SSVEPs are conventionally analyzed at individual electrodes or linear combinations of electrodes which maximize some variant of the SNR. Here we exploit the fundamental assumption of evoked responses – reproducibility across trials – to develop a technique that extracts a small number of high SNR, maximally reliable SSVEP components. This novel spatial filtering method operates on an array of Fourier coefficients and projects the data into a low-dimensional space in which the trial-to-trial spectral covariance is maximized. When applied to two sample data sets, the resulting technique recovers physiologically plausible components (i.e., the recovered topographies match the lead fields of the underlying sources) while drastically reducing the dimensionality of the data (i.e., more than 90% of the trial-to-trial reliability is captured in the first four components). Moreover, the proposed technique achieves a higher SNR than that of the single-best electrode or the Principal Components. We provide a freely-available MATLAB implementation of the proposed technique, herein termed “Reliable Components Analysis”.

Keywords: SSVEP, EEG, reliability, coherence, spatial filtering

Introduction

When presented with a temporally periodic stimulus, the visual system responds with a periodic response at the stimulus frequency (and its harmonics). The resulting steady state visual evoked potential (SSVEP) [21, 37, 44, 45] is readily measurable via electroencephalography (EEG) and has been used extensively to probe the spatiotemporal dimensions of visual sensory processing in the human brain (see [38, 46]). In addition to their use in cognitive [3] and developmental/clinical neuroscience [2], SSVEPs have also been commonly applied to the development of brain-computer interfaces (BCIs) [27, 28, 52].

The volume conduction inherent to EEG spatially smooths the electric currents generated by cortical sources, thus lowering the spatial resolution of the resulting scalp measurements. Viewed in another manner, however, the spatial diversity brought about by volume conduction means that the underlying neural signal may be picked up across multiple locations, each with generally different noise statistics. Consequently, modern-day SSVEP paradigms employ multichannel recording arrays and afford the experimenter high-dimensional data sets spanning the electrode montage. The conventional procedure is to select a priori one or a few target electrodes and then to analyze the evoked data in the space of the chosen subset. In contrast, spatial filtering approaches [13] exploit the spatial redundancy inherent to EEG and form linear combinations of the data, yielding signal “components”. A variety of approaches to computing the spatial filter weights have been proposed: maximizing statistical independence [47], maximizing the variance explained [36], minimizing the noise power [11], and maximizing a variant of the signal-to-noise ratio (SNR) [5, 11]. The spatial filtering approach to SSVEPs yields a parsimonious, low-rank representation of the experimental data, with the SNR of the components generally exceeding that of individual electrodes. Moreover, the topography of weights comprising the linear combination can potentially inform one of the (at least approximate) location of the underlying neuronal generators.

However, this latter potential has not been fully realized by existing spatial filtering approaches [10, 13]. The predominant criterion being optimized in current spatial filtering paradigms is the SNR, which varies inversely with noise power. The SNR maximizing approaches thus often operate by steering the array orthogonal to the noise subspace, without controlling for the ensuing signal distortion. Consequently, the topographies of the resulting components do not always bear resemblance to the scalp projections of actual cortical sources, and are thus difficult to interpret (see Figure 8 in [5], for example).

The primary application of spatial filtering SSVEP techniques has been the BCI [4, 13, 25, 31, 34, 48, 51], where SNR optimization, rather than faithful signal representation, is the primary goal. By contrast, the cognitive neuroscience and clinical assessment communities commonly employ SSVEPs to elucidate neural information processing, typically examining the SSVEP at a single electrode or by its (raw unfiltered) topography across the electrode array (see, for example, [19, 22, 29, 30, 42, 43]).

Recently, a novel spatial filtering technique which maximizes the inter-subject correlations among a set of continuous EEG records has been proposed [8]. This method projects the data of multiple subjects onto a common space such that the resulting projections capture the neural responses common to all viewers. Here, we adopt a similar approach in the SSVEP context by focusing on the across-trial correlations. The technique exploits the fundamental assumption of evoked responses – reproducibility across trials – to identify spatial components of the SSVEP which exhibit maximal trial-to-trial covariance. In other words, we project the data into a space in which the reliability (i.e., correlation across trials) of the real and imaginary SSVEP Fourier coefficients is maximal. The proposed technique operates on single-trial SSVEP spectra and explicitly represents the trial dimension. This is in contrast to existing component analysis techniques which “stack” or concatenate the trial dimension in order to achieve a two-dimensional data matrix (space-by-time or space-by-frequency) from which covariance matrices are typically formed (notable exceptions include [50, 51] which employ a tensor formulation of the data in conjunction with multiway CCA to perform trial selection). Note that such stacking throws away the structure of evoked response data. Here, we instead use the third (trial) dimension to focus the spatial filters onto features which are evoked in each trial.

We apply the technique to one simulated and one real SSVEP data set, and from each extract components that exhibit behavior consistent with physiology (for example, the method recovers dipolar topographies which contralateralize with the stimulated visual hemifield). Moreover, the SNR of the captured components is significantly higher than that of the “best” (i.e., highest SNR) individual electrode. We contrast the method with both Principal Components Analysis (PCA) and the Common Spatial Patterns (CSP) technique [5], which optimizes an SNR-related criterion, and find that the proposed technique yields favorable tradeoffs between plausibility of components and achieved SNR. Additionally, the proposed method provides a drastic dimensionality reduction, as the number of components required to capture the bulk of the trial-to-trial reliability is shown to be more than an order of magnitude lower than the number of acquired channels. In summary, the proposed technique yields a compact representation of SSVEP data sets with high-SNR, physiologically plausible components by optimizing the characteristic feature of evoked responses – reliability across trials. A MATLAB toolbox which contains source code to implement the technique, herein referred to as “Reliable Components Analysis” (RCA), is available at github.com/dmochow/rca.

Methods

Reliable components analysis

The following details the extension of the method of [8] to the SSVEP context; namely, we propose a component analysis technique which explicitly maximizes the trial-to-trial spectral covariance of the SSVEP. The approach is inspired by canonical correlation analysis [20] and its generalizations to multiple subjects [23], differing in that it uses the same projection for all data sets. It is conceptually similar to the “common canonical variates” method [32], which is based on a maximum likelihood formulation, as opposed to the conventional eigenvalue problem developed in [8] and herein.

Consider an experimental paradigm in which a stimulus is presented N times, such that we have a set of N data matrices {X1, …, XN} where Xn represents the neural response during trial n. Specifically, the (mean-centered) rows of Xn denote channels, with the columns carrying real and imaginary Fourier coefficients across the frequency range of interest (i.e., a three-response-frequency paradigm will have 6 columns in Xn).

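In the following, let $\mathcal{P} = \{p_1, \ldots, p_P\}$ denote the set of all P = N × (N − 1)/2 unique trial pairs, where $p_i = (p_{i1}, p_{i2})$ indexes the two trials forming pair i. We then form the following trial-aggregated data matrices:

$$\bar{\mathbf{X}}_1 = \left[ \mathbf{X}_{p_{11}} \;\; \mathbf{X}_{p_{21}} \;\; \cdots \;\; \mathbf{X}_{p_{P1}} \right], \qquad \bar{\mathbf{X}}_2 = \left[ \mathbf{X}_{p_{12}} \;\; \mathbf{X}_{p_{22}} \;\; \cdots \;\; \mathbf{X}_{p_{P2}} \right] \tag{1}$$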
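To give a sense of scale, the following minimal Python sketch counts the unique trial pairs and the resulting size of each trial-aggregated matrix. The helper name `aggregated_shape` is hypothetical and introduced only for illustration; the example values (90 trials, 128 channels, 3 frequencies) are those of the real data set described later.

```python
from itertools import combinations

def aggregated_shape(n_trials, n_channels, n_freqs):
    """Return (P, shape) for one trial-aggregated matrix (hypothetical helper)."""
    pairs = list(combinations(range(n_trials), 2))  # unique unordered trial pairs
    n_pairs = len(pairs)                            # P = N (N - 1) / 2
    cols_per_trial = 2 * n_freqs                    # real + imaginary part per frequency
    return n_pairs, (n_channels, cols_per_trial * n_pairs)

print(aggregated_shape(90, 128, 3))  # (4005, (128, 24030))
```

With 90 trials, each aggregated matrix therefore already holds 24030 columns, which is why the covariance estimates derived from it are well conditioned relative to a single 128-by-6 trial record.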

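We apply a linear spatial filter w to the aggregated spectral data, denoted here by $\bar{\mathbf{X}}_1$ and $\bar{\mathbf{X}}_2$:

$$\mathbf{y}_1 = \bar{\mathbf{X}}_1^T \mathbf{w}, \qquad \mathbf{y}_2 = \bar{\mathbf{X}}_2^T \mathbf{w} \tag{2}$$

where T denotes matrix transposition. The correlation coefficient between the resulting spatially filtered data records is given by:

$$\rho = \frac{\mathbf{y}_1^T \mathbf{y}_2}{\lVert \mathbf{y}_1 \rVert \, \lVert \mathbf{y}_2 \rVert} \tag{3}$$

Substituting (2) into (3) yields:

$$\rho = \frac{\mathbf{w}^T \mathbf{R}_{12} \mathbf{w}}{\sqrt{\mathbf{w}^T \mathbf{R}_{11} \mathbf{w}} \, \sqrt{\mathbf{w}^T \mathbf{R}_{22} \mathbf{w}}} \tag{4}$$

where

$$\mathbf{R}_{ij} = \frac{1}{2FP} \bar{\mathbf{X}}_i \bar{\mathbf{X}}_j^T, \qquad i, j \in \{1, 2\} \tag{5}$$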

where F is the number of analyzed frequencies, R11 and R22 denote within-trial spatial covariance matrices, and R12 is the across-trial spatial covariance matrix which captures trial-to-trial reliability. Note from (4) that the correlation coefficient ρ is the ratio of across- to within-trial covariance. We seek to find the spatial filter w which maximizes this ratio:

$$\hat{\mathbf{w}} = \arg\max_{\mathbf{w}} \; \rho \tag{6}$$

It is shown in [8] that assuming wTR11w = wTR22w, the solution to (6) is an eigenvalue problem:

$$\left( \mathbf{R}_{11} + \mathbf{R}_{22} \right)^{-1} \left( \mathbf{R}_{12} + \mathbf{R}_{21} \right) \mathbf{w} = \lambda \mathbf{w} \tag{7}$$

where $\mathbf{R}_{21} = \mathbf{R}_{12}^T$ and λ is the eigenvalue corresponding to the maximal trial-aggregated correlation coefficient (i.e., the optimal value of ρ) achieved by projecting the data onto the spatial filter w. There are multiple such solutions, ranked in decreasing order of trial-to-trial reliability: λ1 > λ2 > … > λD, where D = min [rank (R12), rank (R11 + R22)]. The associated eigenvectors, w1, w2, …, wD, are not generally orthogonal. This is in contrast to PCA, which yields spatially orthogonal filter weights. However, the component spectra recovered by the various w’s are mutually uncorrelated [15].

It is also worthwhile to point out that the assumption wTR11w = wTR22w does not limit generality: writing $\bar{\mathbf{X}}_1$ and $\bar{\mathbf{X}}_2$ for the two trial-aggregated matrices of (1), one can simply define $\tilde{\mathbf{X}}_1 = [\bar{\mathbf{X}}_1 \;\; \bar{\mathbf{X}}_2]$ and $\tilde{\mathbf{X}}_2 = [\bar{\mathbf{X}}_2 \;\; \bar{\mathbf{X}}_1]$ and then substitute these in (1) to ensure that R11 = R22; this was performed throughout our analyses. Moreover, when computing the eigenvalues of (7), we regularize the within-trial pooled covariance by keeping only the first K dimensions, where K corresponds to the “knee” of the eigenvalue spectrum, in the spectral representation of R11 + R22. For the data sets considered here, K ≈ 2F. Finally, it will be subsequently shown that the bulk of the across-trial reliability is captured in the first C dimensions, where C ≪ D. This fact is responsible for the dimensionality reduction of RCA, which we quantify in the forthcoming results by defining the following measure:

$$\eta(C) = \frac{\sum_{i=1}^{C} \lambda_i}{\sum_{i=1}^{D} \lambda_i} \tag{8}$$

where η(C) is termed the proportion of reliability explained by the first C RCs.
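The procedure described in this section can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the MATLAB toolbox: the names `rca` and `reliability_explained`, the toy topography and spectrum, and the whitening-based solution (which gives the same eigenvalues and filters as applying the K-dimensional truncated inverse of R11 + R22 to R12 + R21) are all introduced here for illustration.

```python
from itertools import combinations
import numpy as np

def rca(trials, n_keep=None):
    """Sketch of RCA. trials: list of (channels x 2F) arrays with centered rows.

    n_keep is the number K of leading eigen-dimensions of R11 + R22 to retain
    (all of them if None, which assumes the pooled covariance has full rank).
    Returns eigenvalues in descending order and the filters as columns of W.
    """
    pairs = list(combinations(range(len(trials)), 2))
    X1 = np.hstack([trials[i] for i, _ in pairs])   # first trial of each pair
    X2 = np.hstack([trials[j] for _, j in pairs])   # second trial of each pair
    # Symmetrize the aggregated matrices so that R11 equals R22.
    X1s, X2s = np.hstack([X1, X2]), np.hstack([X2, X1])
    n_cols = X1s.shape[1]
    R11 = X1s @ X1s.T / n_cols
    R22 = X2s @ X2s.T / n_cols
    R12 = X1s @ X2s.T / n_cols
    # Regularize: keep the K leading eigen-dimensions of the pooled covariance.
    d, V = np.linalg.eigh(R11 + R22)                # eigenvalues in ascending order
    k = len(d) if n_keep is None else n_keep
    d, V = d[::-1][:k], V[:, ::-1][:, :k]
    Z = V / np.sqrt(d)                              # Z @ Z.T is the truncated inverse
    # Symmetric K x K eigenproblem in the whitened space.
    lam, U = np.linalg.eigh(Z.T @ (R12 + R12.T) @ Z)
    order = np.argsort(lam)[::-1]
    return lam[order], Z @ U[:, order]

def reliability_explained(lam, n_components):
    """Proportion of reliability captured by the first n_components eigenvalues."""
    return lam[:n_components].sum() / lam.sum()

# Toy data: one reliable source (hypothetical topography and spectrum) plus noise.
rng = np.random.default_rng(0)
topo = np.array([1.0, 0.8, 0.2, -0.3, -0.9, 0.1])   # 6 channels
spec = 3.0 * np.array([1.0, -1.0, 0.5, -0.5])        # Re/Im parts at F = 2 frequencies
trials = []
for _ in range(20):
    X = np.outer(topo, spec) + rng.standard_normal((6, 4))
    trials.append(X - X.mean(axis=1, keepdims=True))

lam, W = rca(trials, n_keep=4)                       # K = 2F
print(lam[0], reliability_explained(lam, 1))
```

Because the whitened filters satisfy wT(R11 + R22)w = 1, each eigenvalue equals the correlation coefficient achieved by its filter and cannot exceed one. Eigenvalues associated with pure-noise directions can be slightly negative in finite samples, so the cumulative proportion is not guaranteed to be monotonic in this sketch.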

Comparison techniques

Throughout the results, we compare the behavior of the proposed technique (shown diagrammatically in Figure 1) with two of the more commonly employed component analysis techniques: CSP [5] and PCA. To allow for a fair comparison among these three techniques, care was taken to ensure that all three methods were driven by the same spatial covariances. To that end, CSP seeks to project the sensor data onto a space in which the difference between two conditions is maximized [5]. In the SSVEP context, these two conditions are simply “stimulation-on” and “stimulation-off”. As a result, CSP effectively maximizes the following criterion [13]:

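$$\mathbf{w}_{\mathrm{CSP}} = \arg\max_{\mathbf{w}} \; \frac{\mathbf{w}^T \mathbf{R}_x \mathbf{w}}{\mathbf{w}^T \mathbf{R}_n \mathbf{w}} \tag{9}$$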

where Rx is the spatial covariance matrix of the observed data during stimulation and Rn is the noise-only spatial covariance (i.e., no stimulation). By contrast, PCA identifies linear combinations of electrodes which maximize the proportion of variance explained in the observed data:

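$$\mathbf{w}_{\mathrm{PCA}} = \arg\max_{\mathbf{w}} \; \frac{\mathbf{w}^T \mathbf{R}_x \mathbf{w}}{\mathbf{w}^T \mathbf{w}} \tag{10}$$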
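Both comparison criteria are ratios of quadratic forms and can be solved as (generalized) eigenvalue problems. The sketch below is a minimal NumPy illustration under assumed synthetic covariances; the helper names `top_generalized_eigvec` and `ratio` are hypothetical, and the data are random rather than EEG.

```python
import numpy as np

def top_generalized_eigvec(A, B):
    """Filter w maximizing (w^T A w) / (w^T B w); A symmetric, B positive definite."""
    L = np.linalg.cholesky(B)                  # B = L L^T
    Linv = np.linalg.inv(L)
    vals, vecs = np.linalg.eigh(Linv @ A @ Linv.T)
    return Linv.T @ vecs[:, -1]                # eigh sorts ascending; take the largest

def ratio(w, A, B):
    """Value of the criterion (w^T A w) / (w^T B w) for a given filter."""
    return (w @ A @ w) / (w @ B @ w)

rng = np.random.default_rng(1)
n_ch = 5
noise = rng.standard_normal((n_ch, 200))
Rn = noise @ noise.T / 200                     # noise-only covariance (synthetic)
a = rng.standard_normal(n_ch)                  # hypothetical source topography
Rx = Rn + 0.5 * np.outer(a, a)                 # observed covariance: noise plus signal

w_csp = top_generalized_eigvec(Rx, Rn)             # stimulation-on vs. noise-only power
w_pca = top_generalized_eigvec(Rx, np.eye(n_ch))   # variance explained
print(ratio(w_csp, Rx, Rn), ratio(w_pca, Rx, Rn))
```

By construction the CSP filter attains the largest possible stimulation-to-noise power ratio, so the first printed value is never smaller than the second; the PCA filter instead maximizes output variance regardless of whether that variance is signal or noise.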

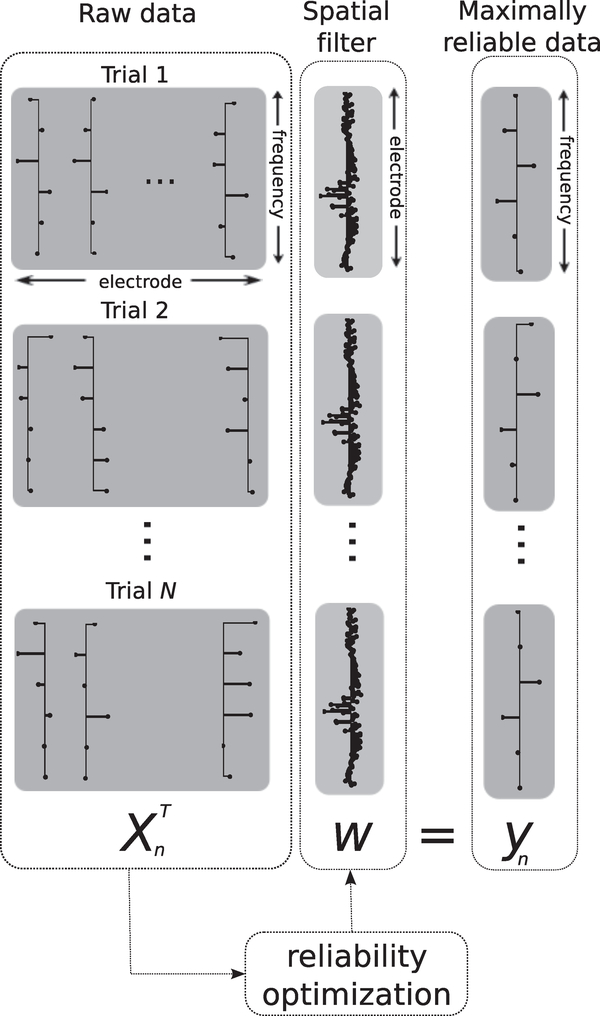

Figure 1:

A diagrammatic view of reliable components analysis (RCA). An electrode-by-frequency data matrix Xn which captures the real and imaginary components of the SSVEP across the array is constructed for every trial n. An optimally tuned spatial filter w then projects all N such data matrices onto a common space in which the trial-to-trial covariance of the resulting spectra yn is maximized. In our implementation, the raw data matrices Xn contain the real and imaginary parts of Fourier coefficients at frequencies corresponding to the stimulus frequency and its harmonics. The projected spectra consist of linear combinations of the spectra at individual electrodes, with the weights of the linear combination chosen to maximize trial-to-trial reliability.

Covariance computation and SNR definition

Covariance matrices were computed over trial-aggregated data records according to Equation 1, with Rx = R11 = R22; that is, the within-trial spatial covariance used in the denominator of the RCA objective function is precisely the observed spatial covariance operated on by CSP and PCA. For the simulated data set (details forthcoming), we estimated the noise covariance Rn by assuming that a noise-only period was available (i.e., we formed the noise covariance by simply omitting the propagation of the desired signal to the array in the computation of Rx). Analogously, a signal-only covariance Rs was also computed by omitting the contribution of the noise. The output SNR then followed as:

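$$\mathrm{SNR} = \frac{\mathbf{w}^T \mathbf{R}_s \mathbf{w}}{\mathbf{w}^T \mathbf{R}_n \mathbf{w}} \tag{11}$$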

For the real data set, we estimated the noise covariance from the temporal frequency bands directly adjacent (one below and one above) the signal frequencies considered (i.e., the even harmonics of the stimulation frequency). The SNR was then computed according to:

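$$\mathrm{SNR} = \frac{\mathbf{w}^T \mathbf{R}_x \mathbf{w}}{\mathbf{w}^T \mathbf{R}_n \mathbf{w}} \tag{12}$$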

where it should be noted that Rx replaces Rs in the numerator as one does not have access to the true signal-only covariance with real data.

Note that Equations 11 and 12 constitute an aggregated single-trial SNR criterion. It would also have been possible to instead form trial-averaged covariance matrices and hence optimize trial-averaged SNR. In the SSVEP context, however, when the number of frequencies is typically much smaller than the number of channels, trial-averaged covariance matrices are rank-deficient. For this reason, and to maintain consistency with RCA’s inherent use of trial-aggregated data, we considered the single-trial version of SNR in this study.

Component scalp projections

When comparing component topographies, we contrast not the spatial filter weights yielded by the appropriate optimization problem, but rather the resulting scalp projection of the activity recovered by that spatial filter. This inverse topography is generally more informative than the weights in that it encompasses both the filter weights as well as the data that is being multiplied by them [18]. Specifically, let us construct a weight matrix W whose columns represent the spatial filter weight vectors w yielded by a component analysis technique. The projections of the resulting components onto the scalp data are given by [18, 35]:

$$\mathbf{A} = \mathbf{R}_x \mathbf{W} \left( \mathbf{W}^T \mathbf{R}_x \mathbf{W} \right)^{-1} \tag{13}$$

where Rx = R11 = R22 is the (within-trial) spatial covariance matrix of the observed data. The columns of A represent the pattern of electric potentials that would be observed on the scalp if only the source signal recovered by w was active, and inform us of the approximate location of the underlying neuronal sources (up to the inherent limits imposed by volume conduction in EEG).
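As a minimal sketch of this step, the code below evaluates the commonly used forward-model expression A = Rx W (WT Rx W)^-1 with NumPy. The function name `scalp_projections`, the random data, and the arbitrary filters are assumptions made for illustration only.

```python
import numpy as np

def scalp_projections(W, Rx):
    """Scalp projections A = Rx W (W^T Rx W)^{-1}; one column per component."""
    return Rx @ W @ np.linalg.inv(W.T @ Rx @ W)

rng = np.random.default_rng(2)
data = rng.standard_normal((6, 300))   # synthetic 6-channel record
Rx = data @ data.T / 300               # spatial covariance of the observed data
W = rng.standard_normal((6, 2))        # two arbitrary filters, for illustration only
A = scalp_projections(W, Rx)
print(A.shape)  # (6, 2)
```

A useful sanity check is that WT A equals the identity matrix: each filter passes its own component's projection with unit gain and rejects the projections of the other components.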

Synthetic data set

Prior to delving into real data, we evaluated the proposed technique in a simulated environment. Simulations possess several desirable properties stemming from the fact that one has access to ground-truth signals, which is particularly beneficial for the computation of the achieved SNR. A simulation also allows one to easily sweep through parameter spaces; here, we perform a Monte Carlo simulation which evaluates the effect of the number of trials on the recovered components. 500 draws were simulated for each of the following number of trials per draw: {10, 20, 30, 50, 100}. Our aim was to assess the behavior of the proposed and conventional component analysis techniques as a function of the amount of available data from which to learn the required spatial filters.

With the availability of detailed, anatomically accurate head models, the volume conduction aspect of EEG can be readily modeled in a simulation [16]. To that end, we employed a three-layer boundary element model (BEM) which was accompanied by labeled cortical surface mesh regions-of-interest (ROIs, see [1] for details). We chose two of these cortical surface ROIs as the desired signal sources (details below). The topographies of the recovered components were subsequently contrasted with the lead fields of these simulated sources, thus shedding light on the ability of the various techniques to recover the desired signal generators. In other words, we probed whether the techniques recover the underlying sources, and if so, under what conditions.

Specifically, the BEM head model consisted of 20484 cortical surface mesh nodes and 128 electrodes placed on the scalp according to a subset of the 10/5 system [33]. Two SSVEP generators were modeled: source 1 was located at the set of all nodes adjacent to the calcarine sulcus (“peri-calcarine”) with a waveform given by s1(n) = cos[2π(1/20)n] + cos[2π(3/20)n], n = 1, …, 1000. Meanwhile, source 2 originated in the bilateral temporal poles with a waveform given by s2(n) = uQ[sin 2π(1/20)n + sin 2π(3/20)n], n = 1, …, 1000, where the amplitude u was a uniform random variable on the range (0, 1) which models trial-to-trial amplitude variability, and Q is a scaling factor which compensates for the different number of mesh nodes in the ROIs of the two sources. Moreover, spatially uncorrelated noise with a temporal frequency content following the 1/f pattern (generated by applying a 100-tap finite-impulse-response filter designed by the MATLAB function firls() to a white Gaussian noise sequence) was added to the signal at each electrode. The time-domain SNR of the resulting signal was −34 dB, falling in the range corresponding to real EEG [14]. The array data were then converted to the frequency domain using a 1024-point FFT, and only the frequency bins corresponding to the F = 2 SSVEP frequencies (1/20 and 3/20) were retained for the component analysis.
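To illustrate how one row of a data matrix arises from such a waveform, the sketch below generates s1(n) and extracts its real and imaginary Fourier coefficients at the two SSVEP frequencies. Note the swapped-in technique: instead of the 1024-point FFT used in the study, this sketch evaluates the normalized DFT sum directly at the exact stimulus frequencies, which is an assumption made to keep the example dependency-free; the helper name `fourier_coefficient` is hypothetical.

```python
import cmath
import math

def fourier_coefficient(x, freq):
    """Normalized DFT sum of x at `freq` (cycles per sample), samples indexed from 1."""
    n_samples = len(x)
    total = sum(v * cmath.exp(-2j * math.pi * freq * n)
                for n, v in enumerate(x, start=1))
    return total / n_samples

freqs = (1 / 20, 3 / 20)
# Noise-free waveform of source 1 over n = 1, ..., 1000.
s1 = [sum(math.cos(2 * math.pi * f * n) for f in freqs) for n in range(1, 1001)]

# One row of a data matrix: real and imaginary parts at each frequency (2F = 4 values).
row = []
for f in freqs:
    c = fourier_coefficient(s1, f)
    row += [c.real, c.imag]
print(row)
```

Because 1000 samples span an integer number of cycles of both frequencies, each unit-amplitude cosine contributes a real part of approximately 0.5 and an imaginary part of approximately zero at its own frequency, with no leakage from the other component.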

Real data set

SSVEPs were collected from 22 subjects (gender-balanced, mean age 20 years) with normal or corrected-to-normal visual acuity. Informed consent was obtained prior to study initiation under a protocol that was approved by the Institutional Review Board of Stanford University. Visual stimuli were presented using in-house software on a contrast-linearized CRT monitor with a resolution of 800-by-600 and a vertical refresh rate of 72 Hz. Stimuli consisted of oblique sinusoidal gratings windowed by a 10 degree square centered vertically to the left or right of fixation, depending on the hemifield being stimulated. Stimuli for each hemifield were mirror symmetric, with gratings on the left oriented at 45°, and those on the right oriented at 135°. For both hemifields, the spatial frequency of the gratings was 3 cycles per degree, with mean luminance kept constant throughout the experiments. Stimulus contrast was defined as the difference between the maximum and minimum luminance of the grating divided by their sum. The contrast of the stimulus was temporally modulated (i.e., contrast reversal) by a 9 Hz sinusoid. Each stimulus presentation consisted of ten 1-second presentations of contrast reversal. Each 1-second presentation occurred at a fixed contrast, with the first set to 0.05, the last to 0.8, and the rest logarithmically spaced between these two values. The 22 subjects were split into two groups of 11. For one group, the stimulus was presented 90 times in the right visual field, and 10 times in the left visual field, with the ordering randomized before the beginning of the session. For the other group, these numbers were reversed. In the analysis, we retained only the 90 trials corresponding to the predominantly stimulated hemifield.

The EEG was acquired using a 128-channel electrode array (Electrical Geodesics Inc, OR) at a sampling rate of 500 Hz with a vertex reference electrode. All pre-processing was done offline using in-house software. Signals were band-pass filtered between 0.1 Hz and 200 Hz. Channels in which 15% of the samples exceeded a fixed threshold of 30 μV were replaced with a spatial average of the six nearest neighbors. Within each channel, 1 second epochs containing samples exceeding a fixed threshold (30 μV) were rejected. The EEG was then re-referenced to the common average of all channels. Spectral analysis was performed via a Discrete Fourier Transform with 0.5 Hz resolution. The contrast reversing stimuli generated VEPs whose spectra were dominated by even multiples of the presentation frequency (i.e., 2nd, 4th and 6th harmonics); as such, the real- and imaginary-components of these 3 Fourier coefficients across the array formed the 128-by-6 data record stemming from each trial.
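The mapping from stimulus parameters to spectral bins can be made concrete with a short sketch. It assumes that DFT bin 0 corresponds to DC and that the "directly adjacent" noise bands used for the real-data SNR are the neighbouring bins at ±0.5 Hz; both are assumptions of this illustration, and the helper name `bin_index` is hypothetical.

```python
def bin_index(freq_hz, resolution_hz=0.5):
    """Index of the DFT bin centred on freq_hz, with bin 0 taken as DC."""
    return round(freq_hz / resolution_hz)

stim_hz = 9.0
harmonics = [2, 4, 6]                                # even harmonics retained in the analysis
signal_bins = [bin_index(h * stim_hz) for h in harmonics]
noise_bins = [(b - 1, b + 1) for b in signal_bins]   # one bin below and one above
print(signal_bins)           # [36, 72, 108]
print(noise_bins)            # [(35, 37), (71, 73), (107, 109)]
print(2 * len(signal_bins))  # 6 columns per trial (real and imaginary parts)
```

The three retained coefficients thus sit at 18, 36 and 54 Hz, and splitting each into real and imaginary parts yields the six columns of the 128-by-6 trial record.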

Results

To evaluate the proposed technique, we applied RCA to two SSVEP data sets whose full details are described in the Methods. Briefly, the first is a synthetic data set which employs a BEM head model to simulate the propagation of cortical signals to an array of scalp electrodes; the simulation analysis allows for ground-truth measurements of the SNR as well as a comparison of recovered component topographies with the lead fields of the underlying sources. The second (real) data set was acquired in a paradigm consisting of visual stimulation of the left- or right-hemifield with sinusoidal gratings presented at a temporal frequency of f = 9 Hz. In addition to RCA, we evaluated the popular CSP [5] method as well as PCA.

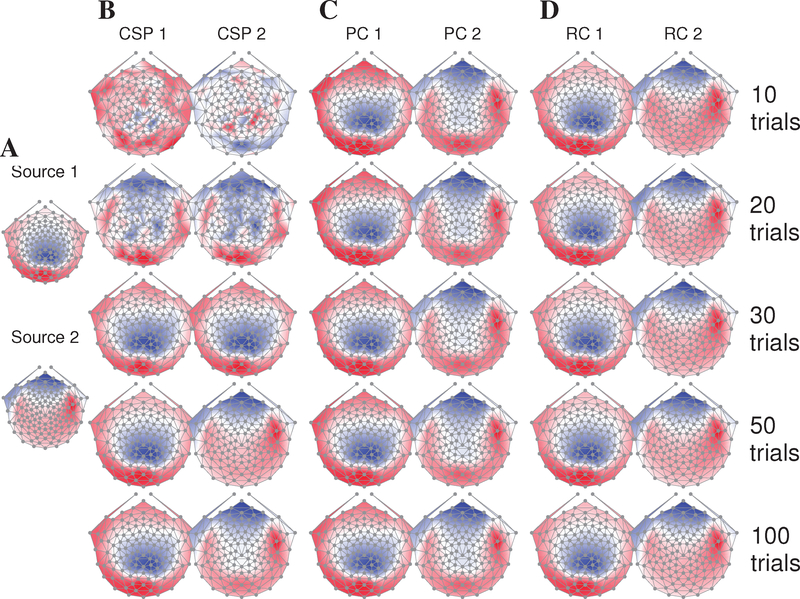

We first present the results of evaluating the three component analysis techniques on the synthetic data set, beginning with an examination of the extracted components. When comparing the scalp topographies of the various components, we depict not the weights themselves (i.e., the “W”) but rather their projection onto the scalp (i.e., the “A”); the computation of this scalp projection is detailed in the Methods section “Component scalp projections” and in [18, 35].

Figure 2 illustrates the scalp projections of the first two components recovered by each method, where we have chosen a representative draw from the Monte Carlo simulation to construct the figure. The lead fields corresponding to the ground-truth signal sources are illustrated in Panel A: source 1 has a symmetric front-to-back dipolar topography roughly centered over electrode Oz. Meanwhile, source 2 is marked by bilateral poles over temporal electrodes and frontal negativity. Panel B depicts the topographies yielded by the CSP technique: the lead fields of sources 1 and 2 are recovered upon inclusion of 30 and 50 trials, respectively, in the analysis. On the other hand, PCA recovers the desired signal topographies at just 10 trials; note, however, that the orthogonality constraint inherent to PCA leads to a distortion (i.e., lack of positivity) in the recovered topography of PC2 over central electrodes (Panel C). RCA cleanly recovers the topographies of both SSVEP generators at 10 trials (panel D).

Figure 2:

Sample topographies recovered by component analysis techniques as a function of the number of available trials per simulation draw. (A) Lead fields of the first (top panel) and second (bottom panel) SSVEP sources employed in the simulation, as computed from a three-layer BEM head model. (B) CSP requires 30 (50) trials to recover source 1 (2). (C) PCA recovers both SSVEP generators at 10 trials; however, due to its requirement of spatially orthogonal weight vectors, the topography of PC2 is distorted over central electrodes. (D) RCA cleanly recovers the lead fields of both sources at 10 trials.

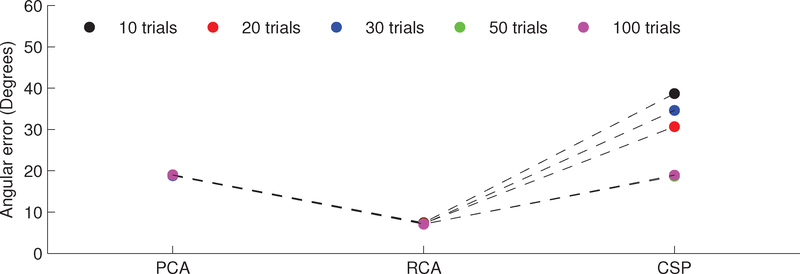

Figure 2 was derived from a representative but single simulation draw; a more complete evaluation of the recovered components is shown in Figure 3, which depicts the median deviations (across simulation draws) between the obtained component scalp projections and the underlying lead field. That is, we use the vector angle between the scalp projection of the first component of each method and the topography of source 1 as a measure of “goodness”. For all values of number of trials, RCA yields the lowest angular error (p < 0.001, n = 500, paired, left-tailed Wilcoxon signed rank test). PCA outperforms CSP for 10–30 trials (p < 0.001, n = 500, paired, left-tailed Wilcoxon signed rank test).
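The angular error used here can be sketched as follows. The function name `topography_angle` is hypothetical, and treating the angle as invariant to the sign of the component (since the polarity of a spatial filter is arbitrary) is an assumption of this sketch rather than a detail stated in the text.

```python
import math

def topography_angle(a, b, sign_invariant=True):
    """Angle in degrees between two scalp maps given as equal-length sequences."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos = dot / norm
    if sign_invariant:                       # component polarity is arbitrary
        cos = abs(cos)
    cos = max(-1.0, min(1.0, cos))           # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos))

print(topography_angle([1.0, 0.0], [1.0, 1.0]))    # close to 45 degrees
print(topography_angle([1.0, 2.0], [-2.0, -4.0]))  # close to 0: same map, flipped polarity
```

An angle near zero indicates that the recovered projection is proportional to the lead field, whereas an angle near 90 degrees indicates that the two maps share no spatial structure.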

Figure 3:

Assessing the ability of component analysis techniques to recover the desired source in a synthetic data set. Each data point conveys the median (across Monte Carlo simulation draws) vector angle between the first component’s scalp projection and the lead field of the primary SSVEP generator. For all values of number of trials, RCA yields the lowest angular error between component topography and underlying lead field (p < 0.001, n = 500, paired, left-tailed Wilcoxon signed rank test).

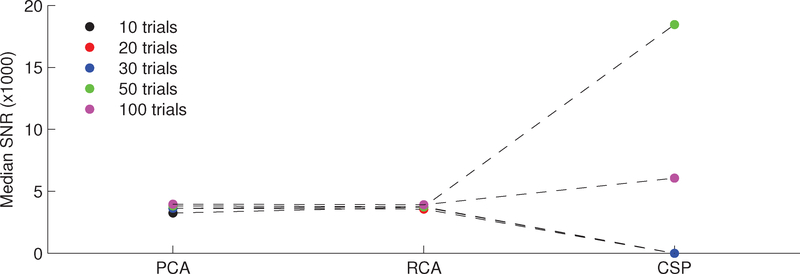

While the physiological plausibility of an extracted component is certainly important to inferring the corresponding source, in some applications (for example, signal detection), it may be appropriate to sacrifice physiological meaning in order to achieve a high SNR. This entails focusing the spatial filter weights on the channels which exhibit low noise power. To that end, Figure 4 displays the SNRs yielded by the first components of each method as a function of the number of available trials. The data points convey the median value across simulation draws. At 10 trials, RCA and PCA yield significantly higher output SNRs than CSP (p < 0.001, n = 500, paired, right-tailed Wilcoxon signed rank test). However, given enough input data (i.e., >50 trials) CSP is able to identify the null space and thus achieves significantly higher SNR (p < 0.001, n = 500, paired, right-tailed Wilcoxon signed rank test).

Figure 4:

A comparison of the SNRs yielded by component analysis techniques on a synthetic data set, shown as a function of the number of trials. The data points convey the median value across simulation draws. RCA and PCA significantly outperform CSP at 10 trials per simulation draw (p < 0.001, n = 500, paired, right-tailed Wilcoxon signed rank test). Given at least 50 trials, however, CSP is able to learn the null space of the noise and thus achieves significantly higher output SNRs (p < 0.001, n = 500, paired, right-tailed Wilcoxon signed rank test).

Evaluation on real data

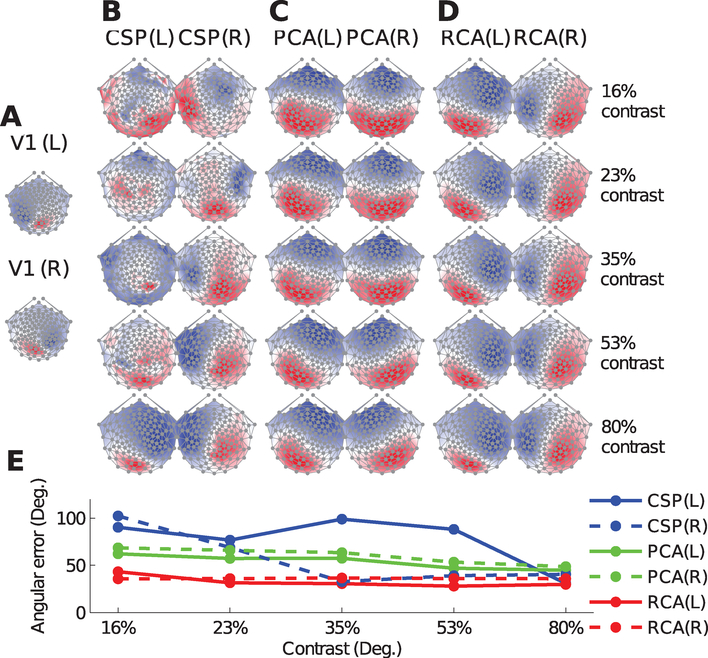

We now turn to the evaluation of the component analysis techniques on a real data set. Similar to what was described above for the simulated data, we sought to evaluate the physiological plausibility of the components yielded by RCA and its alternatives. Here, however, we do not possess ground-truth information as to the lead fields of the underlying cortical sources. From the anatomy of the human visual system, however, input in the left visual field (i.e., left “hemifield”) is processed in the right cerebral hemisphere and vice versa. Thus, one way of assessing the physiological relevance of the obtained components is to compute the projections separately for stimulation of the left and right hemifields (LH and RH, respectively), and then observe whether a contralateralization of the scalp topographies emerges. Moreover, to assess the role of the input SNR in the physiological plausibility of the resulting components, we performed the analysis separately for varying levels of stimulus contrast and thus response amplitude. In what follows, we focus exclusively on the first component (i.e., CSP1, PC1, and RC1) of each candidate method.

Figure 5A displays the lead fields from both left and right primary visual cortices (V1 (L) and V1 (R), respectively), as computed from a BEM model of a sample head. Striate visual cortex is expected to be a major generator of the activity evoked by this SSVEP paradigm. The dipolar topographies exhibit mirror symmetry with the left primary visual cortex projecting positively to the right occipital electrodes, and vice versa.

Figure 5:

RCA yields physiologically plausible scalp topographies insensitive to input SNR. (A) Theoretical scalp projections from the left (L) and right (R) primary visual cortices as computed by the boundary element model (BEM) of an individual human head. (B) The scalp projections of the first CSP as computed from data recorded during visual stimulation of the left (L) and right (R) hemifields. At low contrasts, the topographies lack physiological plausibility and appear to be driven by noise; moreover, a lack of lateralization is apparent until the highest contrast level. (C) Same as (B) but now for the first principal component (PC). The level of observed contralateralization increases with the stimulus contrast, highlighting that PCA components become more physiologically plausible as the input SNR increases. (D) The scalp projections of the first reliable component (RC) exhibit physiologically plausible topographies with clear lateralization even at low contrast values, with the topographies remaining relatively stable over the entire contrast range. (E) Moreover, RCA topographies bear a close resemblance to the lead fields from primary visual cortex, achieving lower angular errors (assuming V1 as the ground-truth) than CSP and PCA at low contrast values.

Figure 5B depicts the scalp projections of CSP for both LH and RH at each stimulus contrast. At low contrast, the topographies are visibly noisy and lack the spatial structure expected in a visual paradigm (i.e., concentration of activity at the occipital electrodes). A contralateralization of the scalp topographies with stimulated hemifield is not apparent until 80% contrast. PCA topographies more closely resemble the maps expected from this visual paradigm (Panel C). Moreover, there is a progressively greater level of mirror symmetry in the obtained scalp projections with increasing contrast, and clear contralateralization emerges at 53% contrast. The scalp projections of RCA are shown in Panel D: a contralateralization with the stimulated hemifield is readily observed at 16% contrast. Moreover, the topographies remain quite stable with contrast.

In order to quantify the goodness of the recovered components, we computed the angular errors between all topographies and the corresponding lead fields from primary visual cortex (Panel E). As expected from visual inspection of Panels B-D, RCA achieves smaller angular errors at the low contrast values. Note, however, that it is difficult to conclusively claim RCA as better at recovering the underlying sources in this data set: extrastriate visual areas such as V2, V3, V3a, V4, and MT, whose lead fields also contralateralize with the stimulated hemifield, may also have been activated.

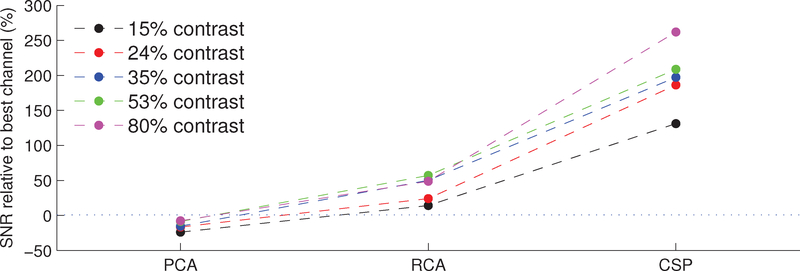

Next, we computed the single-trial SNR of the components found by the three candidate methods, computing the spatial filter weights individually for each subject to take into account inter-subject variability. SNR estimation was facilitated by defining noise frequency bands as lying directly adjacent to the frequencies of interest (i.e., the first three even harmonics). We pool across the subject dimension to yield a distribution of n = 1980 single-trial SNRs for each method and stimulus contrast level. Additionally, we performed a post-hoc exhaustive search of the electrode space to identify the single electrode yielding the highest single-channel SNR. The results are shown in Figure 6, where the data points depict the median SNR improvement over this best individual channel.

Figure 6:

RCA yields SNRs greater than the best single electrode. The figure shows the median (across n = 1980 trials pooled across subjects) SNR improvement over the best (post-hoc selected) individual electrode as yielded by various component analysis methods. For all contrast levels, PCA yields degradations in SNR relative to the best channel. RCA provides a 14±7% improvement in the low-contrast condition, while yielding 49±18% improvement in the high-contrast condition. For all contrast values, the median RCA SNR is significantly greater than that of PCA (p < 0.001, paired, right-tailed Wilcoxon signed rank test). Meanwhile, the CSP method offers improvements as high as 262 ± 52% in the high-contrast case. The median CSP SNR is significantly higher than the corresponding median SNR yielded by RCA (p < 0.001) for all stimulus contrasts.

Notice first that the median SNR yielded by PCA is lower than that given by the best individual channel for all contrast values. This is indicative of the fact that dimensions explaining the majority of the variance in EEG often capture noise sources. Meanwhile, RCA offers a median SNR improvement of 14±7% (mean ± s.e.m.) at low-contrast, and 49 ± 18% at high contrast, relative to the best channel. Finally, CSP yields large improvements over the best channel: 131 ± 116% at low-contrast, and 262 ± 52% at high contrast. We performed a Wilcoxon signed rank test to determine whether the differences in SNR improvements between methods are significant: for all input contrasts, the SNRs yielded by RCA are significantly greater than those yielded by PCA (p < 0.001, n = 1980, paired, right-tailed Wilcoxon signed rank test). Similarly, the CSP SNRs are significantly greater than those of RCA (p < 0.001, n = 1980, paired, right-tailed Wilcoxon signed rank test).

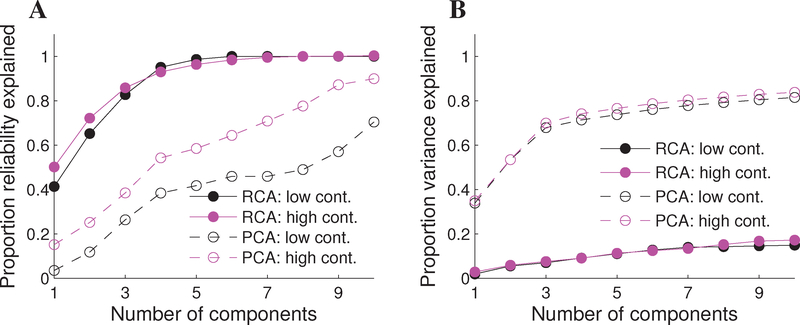

To quantify the level of dimensionality reduction afforded by RCA on this data set, we computed the proportion of reliability explained as a function of the number of RCs (see equation 8 in Methods). This is the reliability analogue of the proportion of variance explained as a function of the number of PCs, which was also computed on the data. To shed light on the tradeoff between trial-to-trial covariance and variance explained, we then performed a “cross-over” analysis which considers the amount of trial-to-trial reliability captured by the PCs, and the amount of variance explained by the RCs.

Figure 7A displays the proportion of reliability explained as a function of the number of retained RCs (solid line, filled markers) and PCs (dashed line, open markers) for the low (black markers) and high (magenta markers) contrast cases. The first RC captures 41% and 50% of the trial-to-trial covariance for the low- and high-contrast case, respectively. More than 93% of the reliability is contained in the first four RCs, representing a dimensionality reduction by a factor of 128/4 = 32 while sacrificing less than 10% of the reliable SSVEP. In contrast, the PCs are tuned to optimally capture within-trial variance and thus capture substantially less of the trial-to-trial reliability: the first four PCs capture 38% and 54% of the trial-to-trial covariance for the low- and high-contrast data, respectively. Moreover, while the cumulative curve converges to unity at C = 6 RCs, more than ten PCs are required to explain the full reliability spectrum.

Figure 7:

Reducing dimensionality of SSVEP data sets by forming components optimizing the reliability and variance. (A) Proportion of reliability explained as a function of the number of retained components, shown separately for low and high contrast stimulation (“low cont.” and “high cont.”, respectively). The first four RCs capture > 95% of the trial-to-trial covariance in the data, with 35 – 55% captured by the first four PCs. (B) Proportion of within-trial variance explained as a function of the number of retained components. The first four PCs explain 75% of the variance, with the first four RCs capturing < 10% of the within-trial variance.

Figure 7B displays the corresponding amount of variance explained as a function of the number of retained PCs and RCs. The first four PCs explain 71% and 74% of the within-trial variance for the low- and high-contrast case, respectively. Note, however, that the number of PCs required to account for the bulk (i.e., virtually all) of the within-trial variance is still substantially greater than ten. Meanwhile, the first four RCs capture just 9% of the within-trial variance, exemplifying the stark difference in criteria being optimized by PCA and RCA.
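The cumulative curves discussed for Figure 7 can be illustrated with a minimal sketch. It assumes that the proportion explained by the first C components is the sum of the C largest values of the relevant spectrum (reliability for RCs, variance for PCs) divided by the sum of all values, and that these values are non-negative. The exact form of equation 8 is not reproduced here, and both function names are hypothetical.

```python
import numpy as np

def cumulative_proportion(spectrum):
    """Cumulative proportion explained by components sorted in descending order.

    spectrum: 1-D array of non-negative values, one per component
    (e.g. reliability per RC or variance per PC). This normalization is an assumption.
    """
    values = np.sort(np.asarray(spectrum, dtype=float))[::-1]
    running = np.cumsum(values)
    return running / running[-1]

def components_needed(spectrum, threshold):
    """Smallest number of components whose cumulative proportion reaches threshold (0 < threshold <= 1)."""
    proportions = cumulative_proportion(spectrum)
    return int(np.argmax(proportions >= threshold)) + 1
```

Under these assumptions, retaining four of 128 dimensions corresponds to the 32-fold reduction quoted above, and `components_needed` gives the point at which a curve such as that in Figure 7A crosses a chosen level.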

Discussion

We have presented a novel component analysis technique which drastically reduces the dimensionality of SSVEP data sets while retrieving physiologically plausible scalp topographies and yielding SNRs greater than the best single electrode. The method follows from the fundamental assumption of evoked responses, namely that the neural activity evoked by the experimental paradigm is reproducible from trial-to-trial.

The results of the simulation study revealed that RCA provides a desirable tradeoff between physiological plausibility and output SNR. Specifically, the technique recovered the desired source topography with comparable or lower angular error relative to PCA and CSP for all numbers of trials per simulation draw (Figures 2 and 3). Moreover, RCA achieves the highest output SNR at ≤ 30 trials, with CSP significantly outperforming RCA/PCA at ≥ 50 trials (Figure 4).

The analysis of real data acquired in a single-hemifield visual stimulation paradigm demonstrated the ability of RCA to yield physiologically plausible components which contralateralize with the stimulated hemifield even at low contrast (Figure 5). PCA recovered components which exhibit progressively more physiological plausibility with increasing contrast; however, the output SNR of these extracted components fell below that yielded by the best single channel. Finally, CSP yielded high SNR components for all contrast levels, but sacrificed physiological plausibility of the topographies, particularly at low contrast.

Application to time-domain evoked responses

While the focus of this work has been on reliability in the SSVEP spectrum, maximization of trial-to-trial covariance can also be readily exploited in the case of time-domain evoked responses (both transient and steady state). By inserting time-domain electric potentials into the columns of Xn and carrying forward the development in the Methods, the optimization of Equation 6 identifies linear combinations of electrodes whose temporal dynamics are maximally reproducible from trial to trial. Each resulting RC then comprises a spatial topography and a corresponding temporal waveform. The advantage of such a time-domain approach is the greater number of samples typically comprising a single trial. On the other hand, the SNR of the input data is significantly lower than that of the SSVEP spectrum.
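A minimal sketch of this time-domain variant is given below. It is not the released MATLAB implementation. It assumes that the optimization can be posed as a generalized eigenvalue problem between a cross-trial covariance (summed over all pairs of distinct trials) and a pooled within-trial covariance, and it adds a small diagonal loading term for numerical stability. The function name `reliable_components`, the array layout, and the `shrinkage` parameter are all assumptions for illustration.

```python
import numpy as np

def reliable_components(trials, n_components=3, shrinkage=1e-6):
    """Spatial filters maximizing trial-to-trial covariance (illustrative sketch).

    trials: array of shape (n_trials, n_samples, n_channels).
    Returns (W, lam): filters as columns of W, and the corresponding
    generalized eigenvalues in descending order.
    """
    X = np.asarray(trials, dtype=float)
    X = X - X.mean(axis=1, keepdims=True)       # remove per-trial channel means
    n_trials, _, n_channels = X.shape

    # Within-trial covariance pooled over trials (unnormalized).
    within = np.einsum("tsc,tsd->cd", X, X)
    # Cross-trial covariance summed over all ordered pairs of distinct trials.
    total = X.sum(axis=0)
    between = total.T @ total - within
    # Scale so that perfectly identical trials give a ratio of one.
    within = (n_trials - 1) * within
    # Diagonal loading (an assumption of this sketch) keeps the Cholesky factor stable.
    within += shrinkage * np.trace(within) / n_channels * np.eye(n_channels)

    # Solve between @ w = lam * within @ w by whitening with the Cholesky factor.
    L = np.linalg.cholesky(within)
    L_inv = np.linalg.inv(L)
    M = L_inv @ between @ L_inv.T
    lam, V = np.linalg.eigh((M + M.T) / 2)
    order = np.argsort(lam)[::-1][:n_components]
    W = L_inv.T @ V[:, order]
    return W, lam[order]
```

The temporal waveform of a component would then follow by projecting each trial onto the corresponding column of `W` and averaging across trials.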

Relevance of simulation results

The simulation study performed here is clearly a simplification of the actual neural environment that generates SSVEPs. Aspects of the simulation that reflect potential deviations from reality include: dipolar sources [39], average isotropic conductivity values [49], and uncorrelated additive sensor noise. Nevertheless, we feel that simulations are useful here in order to quantify the tradeoffs inherent to the existing methods. While the figures of merit obtained in a simulation may not necessarily translate to real settings, their relative values (i.e., comparisons across methods) are more likely to hold. Moreover, the validity of simulation results is boosted when consistent with that found in evaluations on real data. Notice, for example, the general agreement between the results of Figures 2 and 5. Finally, simulations are useful in that the ground truth lead field for the sources is available. We showed in Figure 3 that PCA distorts the expected topography for the second source, but RCA does not.

Learning on individual versus aggregated data

As with any component analysis technique, a learning procedure is employed by RCA to estimate the reliability-maximizing spatial filters. An important question is whether one should learn on subject-aggregated data, yielding a set of uniform RCs for the entire data set, or rather compute the components separately for each subject. The tradeoff here is between the noise level in the estimated covariance matrices (subject-aggregated covariance has lower estimation noise) and the ability to exploit individual differences in component topographies. For example, to construct Figure 5, which aimed to characterize the reliable activity evoked by the visual stimulation paradigm, we pooled data from all subjects to learn the (smoothed) RCA spatial filters. On the other hand, when comparing achieved SNR across component analysis methods in Figure 6, we opted instead to learn the optimal filters individually for each subject, as structural and functional variations are expected to lead to disparate topographies. Note that when learning the filter weights individually, the resulting dimensionality-reduced data is not congruent across subjects (i.e., RC1 of subject 1 generally lies in a different space than RC1 of subject 2). As such, care should be taken when comparing the projected spectra across data computed using different spatial filters.

RCA as a reliability filter

Here we have focused the analysis on the space of the formed components, which encompass data integrated across multiple electrodes. In some cases, it may be preferable to analyze the data in the original electrode space. To that end, it is also possible to treat RCA as a “reliability filter” which outputs a data set whose dimensionality is that of the original data set. This is achieved by first projecting the data onto a set of C RCs, and then back-projecting this rank-reduced data onto the scalp: if Y denotes the frequency-by-component RCA data matrix, and the electrode-by-component matrix A denotes the corresponding scalp projections (see Methods), then the reconstructed sensor-space data matrix follows as $\hat{X} = YA^{T}$. This procedure removes dimensions exhibiting low trial-to-trial reliability, presumably corresponding to noise sources, from the data. Conventional analysis methods such as trial-averaging may then be employed on $\hat{X}$.
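The projection and back-projection steps can be sketched as follows. The sketch assumes that the reconstruction is the product of the component data with the transpose of the scalp projections, which is the only ordering consistent with the stated matrix dimensions. It further assumes that the scalp projections are obtained from the filters W and the electrode covariance R as A = R W (WᵀR W)⁻¹, a common forward-model formula that may differ from the definition in the Methods. The function name is hypothetical.

```python
import numpy as np

def reliability_filter(X, W):
    """Project data onto C components and back-project to electrode space.

    X: (n_frequencies, n_electrodes) data matrix.
    W: (n_electrodes, C) spatial filters, one per column.
    Returns a matrix with the shape of X and rank at most C.
    """
    X = np.asarray(X, dtype=float)
    W = np.asarray(W, dtype=float)
    Y = X @ W                                  # frequency-by-component data
    R = X.T @ X                                # electrode covariance (unnormalized)
    # Assumed forward model: scalp projections of the components.
    A = R @ W @ np.linalg.inv(W.T @ R @ W)     # electrode-by-component
    return Y @ A.T
```

With a full set of linearly independent filters the procedure returns the original data, so any denoising comes from retaining only the most reliable C components.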

Application to source imaging

We have shown here that the topographies of the various RCs bear strong resemblance to the underlying lead fields generating the observed SSVEP. This suggests that RCA may be combined with source localization approaches to yield robust estimates of the location of the neuronal generators. Note that the conventional manner of performing EEG source localization is to select an array of scalp potentials at a given latency (or frequency-band) and project the resulting vector onto the cortical surface using an appropriately generated inverse matrix. While we do not engage here in a discussion of the legitimacy of the resulting source location estimates, we do propose that the RCA scalp projections (for example, Figure 5D) may themselves be employed as inputs into a source localization algorithm. Note that these scalp projections are not tied to any particular time instant: rather, they correspond to the source of activity which is reliably evoked across trials. As such, their use as source localization inputs eliminates the need to choose a particular time instant at which to localize. The resulting cortical source distribution (i.e., the output of the source localization) bears a time course given by the RC whose scalp projection was used for the input. Moreover, this procedure yields insight into the sources underlying the reliable activity extracted by the component, and whether the RCs represent vast mixtures of generators or more spatially localized dipoles.

Application to BCIs

The proposed technique is primarily aimed at data sets collected in neurobiological or clinical assessment settings. However, we anticipate that RCA may also become relevant for BCI applications which learn patterns of electrodes associated with a particular cognitive state. We propose that RCA be employed at the front-end as a feature selection step which reduces the dimensionality of the input feature vector while still capturing the reproducible neural features. Note that the technique is inherently blind, requiring only multiple congruent data records (i.e., without labels indicating the outcome of any associated task) to learn the RCs. Moreover, the resulting features may yield better generalization due to their closer link to physiology.

Complex versus real-based implementation

Our optimization of SSVEP reliability was formulated with a single spatial filter coefficient applied to each electrode’s spectrum. Thus, the procedure identifies linear combinations of channels which exhibit reliable even and odd spectra. By doing so, we have maintained purely real input data and resulting filter coefficients, easing the interpretation of the resulting components. An alternative implementation is to combine the real and imaginary parts into a single complex coefficient and then perform a joint optimization of the real and imaginary parts of the filter coefficients across the array. Such an approach can potentially identify reliable phase relationships among electrodes.
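The distinction between the two implementations can be made concrete with a small sketch. It assumes that the real-valued input is formed by stacking the real and imaginary parts of the Fourier coefficients at the frequencies of interest as separate rows, so that a single real weight per electrode acts on both parts. The stacking order and the function names are assumptions for illustration.

```python
import numpy as np

def complex_fourier_features(epoch, bins):
    """Complex Fourier coefficients at the selected bins.

    epoch: (n_samples, n_electrodes) time-domain data; bins: FFT bin indices.
    """
    spectrum = np.fft.rfft(np.asarray(epoch, dtype=float), axis=0)
    return spectrum[list(bins), :]

def real_fourier_features(epoch, bins):
    """Real-valued matrix with real parts stacked above imaginary parts (assumed layout)."""
    coeffs = complex_fourier_features(epoch, bins)
    return np.vstack([coeffs.real, coeffs.imag])
```

Applying a real filter vector to the stacked matrix yields the stacked real and imaginary parts of the filtered complex spectrum, whereas a complex filter could additionally apply a phase shift to each electrode.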

Goodness of the SNR as a quality metric

The SNR is a natural metric which conveys the most obvious goal of a signal processing algorithm: reducing the noise. However, it is worthwhile to point out that the SNR becomes infinite for zero noise even if the desired signal has been greatly distorted. In other words, the SNR down-weights signal distortion in favor of noise reduction. However, electrodes which possess the lowest noise levels are not necessarily those to which the cortical sources project most strongly. Thus, we caution against interpreting the SNR as a “gold-standard” in measuring the goodness of a spatial filtering algorithm. There are cases in which one may be willing to sacrifice noise reduction in order to obtain a minimally distorted version of the underlying source signal. To that end, several approaches to managing the tradeoff between signal distortion and noise reduction have been proposed in related signal processing fields [6].

Emergence of reliability in neuroscience

Our findings add to the emerging body of evidence pointing to the utility of employing reliability as a criterion with which to measure and extract meaningful neural signals. Highly reliable neural responses have been observed in extensive parts of cortex during naturalistic audio(visual) stimulation in fMRI [17], EEG [8, 9], and magnetoencephalography (MEG) [24, 26]. Moreover, a recent fMRI study has reported that the level of inter-subject correlation in the blood-oxygenation-level-dependent (BOLD) signal is greater when the stimulus is presented in 3D [12]. In terms of trial-based applications, a method to identify correlations among spectral envelopes of multivariate electrophysiological recordings has been proposed in [7]. Finally, reproducibility of neural activation has been linked to conscious perception [40]. Collectively, these findings highlight the increasing use of reliability as a meaningful feature in neuroscience: indeed, data collection in the brain sciences almost always encompasses multiple data records (i.e., multiple trials, multiple subjects, or both). Given that the desired signal is expected to be common to these records, reliability represents a natural means of separating the reliable signal from the variable noise.

Application matters

While we have presented RCA as an alternative to commonly employed methods such as CSP and PCA, we do not suggest that it is the “best” component analysis method for analyzing SSVEPs. Rather, we feel that the field of cognitive neuroimaging has not reaped the benefits of spatial filtering approaches in the same way that the BCI world has. For BCIs, SNR may in fact be the most appropriate metric, as it may best relate to information bit rate. However, for elucidating neural processing in human visual cortex, extracting components which have physiological relevance is of utmost importance. Here, we believe that exploiting the trial-to-trial reliability of evoked responses is an appropriate way of bringing the recovered components closer to physiology.

Highlights.

A technique that maximizes trial-to-trial reliability of SSVEPs was developed.

The method recovered high SNR, physiologically plausible components on real data.

A freely-available software implementation of the proposed technique was provided.

Acknowledgments

This work was supported by NIH Grants RO1-EY018875 and RO1-EY015790.


References

- [1] Ales JM, Yates JL, and Norcia AM. V1 is not uniquely identified by polarity reversals of responses to upper and lower visual field stimuli. Neuroimage, 52(4):1401–1409, 2010.

- [2] Almoqbel F, Leat SJ, and Irving E. The technique, validity and clinical use of the sweep VEP. Ophthalmic Physiol Opt, 28(5):393–403, 2008.

- [3] Andersen SK, Muller MM, and Martinovic J. Bottom-up biases in feature-selective attention. The Journal of Neuroscience, 32(47):16953–16958, 2012.

- [4] Bin G, Gao X, Yan Z, Hong B, and Gao S. An online multi-channel SSVEP-based brain-computer interface using a canonical correlation analysis method. Journal of neural engineering, 6(4):046002, 2009.

- [5] Blankertz B, Tomioka R, Lemm S, Kawanabe M, and Muller K-R. Optimizing spatial filters for robust eeg single-trial analysis. Signal Processing Magazine, IEEE, 25(1):41–56, 2008.

- [6] Chen J, Benesty J, Huang Y, and Doclo S. New insights into the noise reduction Wiener filter. IEEE Transactions on Audio, Speech, and Language Processing, 14(4):1218–1234, 2006.

- [7] Dahne S, Nikulin VV, Ramírez D, Schreier PJ, Muller KR, and Haufe S. Finding brain oscillations with power dependencies in neuroimaging data. NeuroImage, 96:334–348, 2014.

- [8] Dmochowski JP, Sajda P, Dias J, and Parra LC. Correlated components of ongoing eeg point to emotionally laden attention-a possible marker of engagement? Frontiers in human neuroscience, 6, 2012.

- [9] Dmochowski JP, Bezdek MA, Abelson BP, Johnson JS, Schumacher EH, and Parra LC. Audience preferences are predicted by reliability of temporal neural processing. Nature Communications, 2014.

- [10] de Cheveigné A and Parra LC. Joint decorrelation, a versatile tool for multichannel data analysis. NeuroImage, 2014.

- [11] Friman O, Volosyak I, and Graser A. Multiple channel detection of steady-state visual evoked potentials for brain-computer interfaces. Biomedical Engineering, IEEE Transactions on, 54(4):742–750, 2007.

- [12] Gaebler M, Biessmann F, Lamke JP, Muller KR, Walter H, and Hetzer S. Stereoscopic depth increases intersubject correlations of brain networks. NeuroImage, 2014.

- [13] Garcia-Molina G and Zhu D. Optimal spatial filtering for the steady state visual evoked potential: Bci application. In Neural Engineering (NER), 2011 5th International IEEE/EMBS Conference on, pages 156–160. IEEE, 2011.

- [14] Goldenholz DM, Ahlfors SP, Hämäläinen MS, Sharon D, Ishitobi M, Vaina LM, and Stufflebeam SM. Mapping the signal-to-noise-ratios of cortical sources in magnetoencephalography and electroencephalography. Human brain mapping, 30(4):1077–1086, 2009.

- [15] Golub GH and Van Loan CF. Matrix computations, volume 3. JHU Press, 2012.

- [16] Hallez H, Vanrumste B, Grech R, Muscat J, De Clercq W, Vergult A, D’Asseler Y, Camilleri KP, Fabri SG, Van Huffel S, et al. Review on solving the forward problem in eeg source analysis. Journal of neuro-engineering and rehabilitation, 4(1):46, 2007.

- [17] Hasson U, Nir Y, Levy I, Fuhrmann G, and Malach R. Intersubject synchronization of cortical activity during natural vision. Science, 303(5664):1634–1640, 2004.

- [18] Haufe S, Meinecke F, Gorgen K, Dahne S, Haynes JD, Blankertz B, and Biessmann F. On the interpretation of weight vectors of linear models in multivariate neuroimaging. NeuroImage, 87:96–110, 2014.

- [19] Herrmann CS. Human eeg responses to 1–100 hz flicker: resonance phenomena in visual cortex and their potential correlation to cognitive phenomena. Experimental brain research, 137(3–4):346–353, 2001.

- [20] Hotelling H. Relations between two sets of variates. Biometrika, pages 321–377, 1936.

- [21] Kamp A, Sem-Jacobsen CW, and Leeuwen W. Cortical responses to modulated light in the human subject. Acta physiologica scandinavica, 48(1):1–12, 1960.

- [22] Kemp A, Gray M, Eide P, Silberstein R, and Nathan P. Steady-state visually evoked potential topography during processing of emotional valence in healthy subjects. NeuroImage, 17(4):1684–1692, 2002.

- [23] Kettenring JR. Canonical analysis of several sets of variables. Biometrika, 58(3):433–451, 1971.

- [24] Koskinen M and Seppä M. Uncovering cortical MEG responses to listened audiobook stories. NeuroImage, 2014.

- [25] Lin Z, Zhang C, Wu W, and Gao X. Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Transactions on Biomedical Engineering, 53(12):2610–2614, 2006.

- [26] Lankinen K, Saari J, Hari R, and Koskinen M. Intersubject consistency of cortical meg signals during movie viewing. NeuroImage, 92:217–224, 2014.

- [27] Middendorf M, McMillan G, Calhoun G, Jones KS, et al. Brain-computer interfaces based on the steady-state visual-evoked response. IEEE Transactions on Rehabilitation Engineering, 8(2):211–214, 2000.

- [28] Ming C and Shangkai G. An eeg-based cursor control system. In [Engineering in Medicine and Biology, 1999. 21st Annual Conference and the 1999 Annual Fall Meeting of the Biomedical Engineering Society] BMES/EMBS Conference, 1999 Proceedings of the First Joint, volume 1, pages 669-vol. IEEE, 1999.

- [29] Morgan S, Hansen J, and Hillyard S. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proceedings of the National Academy of Sciences, 93(10):4770–4774, 1996.

- [30] Müller M, Andersen S, Trujillo N, Valdes-Sosa P, Malinowski P, and Hillyard S. Feature-selective attention enhances color signals in early visual areas of the human brain. Proceedings of the National Academy of Sciences, 103(38):14250–14254, 2006.

- [31] Nam Y, Cichocki A, and Choi S. Common spatial patterns for steady-state somatosensory evoked potentials. Engineering in Medicine and Biology Society (EMBC), 2013 35th Annual International Conference of the IEEE, 2013.

- [32] Neuenschwander BE and Flury BD. Common canonical variates. Biometrika, 82(3):553–560, 1995.

- [33] Oostenveld R and Praamstra P. The five percent electrode system for high-resolution eeg and erp measurements. Clinical neurophysiology, 112(4):713–719, 2001.

- [34] Pan J, Gao X, Duan F, Yan Z, and Gao S. Enhancing the classification accuracy of steady-state visual evoked potential-based brain-computer interfaces using phase constrained canonical correlation analysis. Journal of neural engineering, 8(3):036027, 2011.

- [35] Parra LC, Spence CD, Gerson AD, and Sajda P. Recipes for the linear analysis of eeg. Neuroimage, 28(2):326–341, 2005.

- [36] Pouryazdian S and Erfanian A. Detection of steady-state visual evoked potentials for brain-computer interfaces using pca and high-order statistics. In World Congress on Medical Physics and Biomedical Engineering, September 7-12, 2009, Munich, Germany, pages 480–483. Springer, 2009.

- [37] Regan D. Some characteristics of average steady-state and transient responses evoked by modulated light. Electroencephalography and clinical neurophysiology, 20(3):238–248, 1966.

- [38] Regan D. Human brain electrophysiology: evoked potentials and evoked magnetic fields in science and medicine. 1989.

- [39] Riera JJ, Ogawa T, Goto T, Sumiyoshi A, Nonaka H, Evans A, Miyakawa H, and Kawashima R. Pitfalls in the dipolar model for the neocortical eeg sources. Journal of neurophysiology, 108(4):956–975, 2012.

- [40] Schurger A, Pereira F, Treisman A, and Cohen JD. Reproducibility distinguishes conscious from nonconscious neural representations. Science, 327(5961):97–99, 2010.

- [41] Silberstein RB. Steady-state visually evoked potentials, brain resonances, and cognitive processes. In Nunez PL, editor, Neocortical dynamics and human brain rhythms. Oxford University Press, Oxford, 1995.

- [42] Silberstein RB, Pipingas A, et al. Steady-state visually evoked potential topography during the wisconsin card sorting test. Electroencephalography and Clinical Neurophysiology/Evoked Potentials Section, 96(1):24–35, 1995.

- [43] Srinivasan R and Petrovic S. Meg phase follows conscious perception during binocular rivalry induced by visual stream segregation. Cerebral Cortex, 16(5):597–608, 2006.

- [44] Van der Tweel LH and Verduyn Lunel HFE. Human visual responses to sinusoidally modulated light. Electroencephalography and Clinical Neurophysiology, 18(6):587–598, 1965.

- [45] Van der Tweel LH and Spekreijse H. Signal transport and rectification in the human evoked-response system. Ann N Y Acad Sci, 156(2):678–95, 1969.

- [46] Vialatte F-B, Maurice M, Dauwels J, and Cichocki A. Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Progress in neurobiology, 90(4):418–438, 2010.

- [47] Wang Y, Wang R, Gao X, Hong B, and Gao S. A practical vep-based brain-computer interface. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 14(2):234–240, 2006.

- [48] Wang Y, Gao X, Hong B, Jia C, and Gao S. Brain-computer interfaces based on visual evoked potentials. IEEE Engineering in Medicine and Biology Magazine, 27(5):64–71, 2008.

- [49] Wolters C, Anwander A, Tricoche X, Weinstein D, Koch M, and MacLeod R. Influence of tissue conductivity anisotropy on eeg/meg field and return current computation in a realistic head model: a simulation and visualization study using high-resolution finite element modeling. NeuroImage, 30(3):813–826, 2006.

- [50] Zhang Y, Zhou G, Zhao Q, Onishi A, Jin J, Wang X, and Cichocki A. Multiway canonical correlation analysis for frequency components recognition in SSVEP-based BCIs. In Proceedings Neural Information Processing, 287–295, 2011.

- [51] Zhang Y, Zhou G, Jin J, Wang M, Wang X, and Cichocki A. L1-regularized multiway canonical correlation analysis for SSVEP-based BCI. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 21(6):887–897, 2013.

- [52] Zhu D, Bieger J, Molina GG, and Aarts RM. A survey of stimulation methods used in SSVEP-based BCIs. Computational intelligence and neuroscience, 2010:1, 2010.