Abstract

Background:

Poor EHR design adds further challenges, especially in the areas of order entry and information visualization, with a net effect of increased rates of incidents, accidents, and mortality in ICU settings.

Objective:

The purpose of the study was to propose a novel, mixed-methods framework to understand EHR-related information overload by identifying and characterizing areas of suboptimal usability and clinician frustration within a vendor-based, provider-facing EHR interface.

Methods:

A mixed-methods, live observational usability study was conducted at a single, large, tertiary academic medical center in the Southeastern US utilizing a commercial, vendor-based EHR. Physicians were asked to complete usability patient cases, provide responses to three surveys, and participate in a semi-structured interview.

Results:

Of the 25 enrolled ICU physician participants, there were 5 (20%) attending physicians, 9 (36%) fellows, and 11 (44%) residents; 52% of participants were female. A significant negative correlation was found between EHR usability and ease of use with frustration, such that as usability and ease of use increased, frustration levels decreased (p=.007 and p=.012, respectively). Performance was negatively correlated with complexity and inconsistency in the interface design, such that higher degrees of complexity or inconsistency in the EHR were associated with lower perceived performance of physicians (p=.039 and p=.004, respectively).

Discussion:

Physicians remain frustrated with the EHR due to difficulty in finding patient information. EHR usability remains a critical challenge in healthcare, with implications for medical errors, patient safety, and clinician burnout. There is a need for scientific findings on current information needs and ways to improve EHR-related information overload.

Keywords: Electronic Health Records, Usability, Evaluation, Physicians, Providers, Satisfaction

INTRODUCTION

Background and Rationale

Now that electronic health records (EHRs) have become a permanent fixture across the healthcare landscape, clinicians must navigate through digital data streams of increasing volume and complexity. This reality is of particular importance in Intensive Care Units (ICUs) where clinicians are already presented with over 200 variables and an average of 1300 individual data points per patient per day [1, 2], despite having only two minutes on average per patient to synthesize this data [3]. In this environment, rates of diagnostic errors and near misses have increased, which can lead to patient harm [4]. Information overload has been identified as a pivotal culprit in this cycle [5]. Thus, finding ways to mitigate information overload and improve clinician workflows remains a key objective for improving patient safety.

EHRs are major hubs of patient information and clinical management in the ICU. Nonetheless, ICU clinicians often report EHRs to be cumbersome, and studies have shown that EHR use disrupts ICU workflow [6]. Poor EHR design adds further challenges, especially in the areas of order entry and information visualization (among others), with a net effect of increased rates of incidents, accidents, and mortality in ICU settings [6-8]. Compared to other patient populations, critically ill patients require constant monitoring and are more vulnerable to acute clinical changes, which may not always be clearly reflected within the EHR. This combination of clinical instability and abundant electronic health data makes the ICU an ideal setting to study information overload in the context of EHR usability.

Objective

The purpose of the study is to propose a novel, mixed-methods framework to understand EHR-related information overload by identifying and characterizing areas of suboptimal usability and clinician frustration within a vendor-based, provider-facing EHR interface. Our intention is to better understand the relationship between the EHR and provider workload, satisfaction, and performance. We hypothesize that information overload, marked by high levels of information density (data elements per screen) and information sprawl (data elements scattered among multiple screens), will measurably impact ICU clinicians’ decision-making and satisfaction. Herein, we describe the protocol for a novel, mixed-methods evaluation framework of EHR usability that incorporates simulation-based testing, semi-structured interview questions, and survey questionnaires.

Theoretical Framework

This mixed-methods study involves high-fidelity simulation testing with various patient scenarios and clinical tasks (discussed in detail below and highlighted in Table 3). The goal with simulation-based EHR testing is to achieve high levels of clinical validity by replicating real-world activities that are both familiar and common to clinical end-users: for this study, tasks that clinicians would be expected to complete routinely for an average ICU patient.

Table 3.

Overview of simulation cases with selected user tasks. For each task, specific aspects of EHR functionality are examined to better understand usability challenges, key screens used, and user processes for answering the clinical question.

| Case | Selected Clinical Tasks and EHR Functionality |
|---|---|
| Multi-system organ failure | |
| Acute hypoxic respiratory failure | |
| Sepsis | |
| Volume Overload | |

In addition to a focus on clinical validity, the scenarios and tasks developed by the research team were also informed by the usability heuristic framework described by Nielsen [9]. Nielsen’s usability heuristics have become foundational in the fields of human-computer interaction and user-centered design. While rigorous usability testing has penetrated other industries such as aviation and transportation, recent studies have shown that the use of user-centered design principles among EHR vendors has been more limited [10].

Clinical tasks have been constructed such that suboptimal usability patterns or potential violations of usability heuristics might be exposed if and where they exist. For instance, to examine the principle of “Consistency” (i.e., the extent to which common interface and formatting features appear throughout), one task involves retrieving information about a patient’s IV fluids and urine output: since users can access this clinical information in multiple places within the EHR, this task may help explore potential variation among various user-facing tabs and screens, potentially uncovering patterns related to user preferences.

METHODS

Setting

This mixed-methods, live observational usability study was conducted at a single, large, tertiary academic medical center in the Southeastern US utilizing a commercial, vendor-based EHR (Epic 2016, Epic Systems, Verona, WI). Participants were brought to a biobehavioral lab located at the institution for simulation testing and other portions of the study. This locked, secured space was equipped with a standard workstation that included a desk, computer, and monitor and was configured with the usability technology described below. The study received full approval from the Institutional Review Board.

Participants

Recruited participants were required to meet the eligibility criteria outlined in Table 1. Any participants who did not meet the inclusion criteria were excluded. Given the time and resource constraints associated with development of the simulation cases, we chose to focus on physician providers and scenario-based tasks most representative of medical ICU patients. Thus, clinical providers from other roles (medical students, nurses, nurse practitioners) and other critical care settings (Surgical ICU, Neurosurgical ICU, Pediatric ICU, etc.) were excluded. Furthermore, given that participants were required to wear eye-tracking goggles during the simulation exercise, accommodations were made on a case-by-case basis for participants who used eyeglasses for vision correction.

Table 1.

Study Eligibility Criteria

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| | |

Recruitment

Participants were recruited from the academic medical center via word of mouth, email, and flyers posted in physician workrooms. Persons interested in the study were directed to the study coordinators by email for scheduling. Participants were compensated with gift cards upon full completion of the one-hour session.

The target population was ICU physicians. While prior work has demonstrated that five participants are typically adequate for usability studies [11], our observations suggest that EHR user preferences vary widely among individuals. We therefore aimed to recruit a broader cohort of participants to develop a more comprehensive picture of current usability challenges faced by physician users with varying levels of experience and training.

Measures

This protocol employed usability software, eye-tracking goggles, semi-structured interview questions, and surveys to generate a robust set of quantitative and qualitative data for each individual user. Key metrics of interest included: time, task completion, click burden, gaze patterns, workload, and satisfaction. These metrics, when analyzed en masse, may build a compelling case for EHR-related information overload.

Time

For each participant, time was tracked by the usability software so that more granular metrics could be derived, such as time per patient, time per screen, and time to complete each task. This enabled further analysis and comparison by case type, task, physician role, and discrete EHR screens.
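As an illustration of how per-screen time could be derived from a timestamped log, the following minimal sketch sums dwell time for each screen. The log format, the screen names, and the function `time_per_screen` are hypothetical and are not taken from the usability software used in the study.

```python
from collections import defaultdict


def time_per_screen(events, session_end):
    """Sum dwell time (seconds) per screen from (timestamp, screen) events.

    Assumes `events` is sorted by timestamp. Each visit lasts until the
    next event; the final visit lasts until `session_end`.
    """
    totals = defaultdict(float)
    for i, (start, screen) in enumerate(events):
        end = events[i + 1][0] if i + 1 < len(events) else session_end
        totals[screen] += end - start
    return dict(totals)


# Hypothetical log for one case: seconds since case start, screen name.
log = [(0.0, "Summary"), (42.5, "Flowsheet"), (90.0, "Summary"), (120.0, "Orders")]
per_screen = time_per_screen(log, session_end=150.0)
print(per_screen)
```

Summing the per-screen values for a case gives time per patient, so the same log can feed both metrics.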

Task Completion

Task completion was measured as the percentage of clinical tasks that participants answered or completed correctly during the simulation cases. Scoring involved a grading scale for each task with 1 point (response is fully correct), 0.5 points (partially correct), or 0 points (incorrect).
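The grading scale above can be sketched as a short calculation. The function name and the example scores are illustrative assumptions; only the 1 / 0.5 / 0 point values come from the protocol.

```python
ALLOWED_SCORES = {0, 0.5, 1}  # incorrect, partially correct, fully correct


def task_completion_percent(scores):
    """Return the percentage of available points earned across graded tasks."""
    if not scores:
        raise ValueError("at least one graded task is required")
    if any(s not in ALLOWED_SCORES for s in scores):
        raise ValueError("each task must be scored 0, 0.5, or 1")
    return 100.0 * sum(scores) / len(scores)


# Hypothetical participant: two correct, one partial, one incorrect task.
example = task_completion_percent([1, 0.5, 0, 1])
print(example)
```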

Click Burden

Click burden was assessed by determining the number of mouse clicks per case and clicks per screen. These data were captured by the usability software; click counts are a well-known metric in the usability literature.

Screen Pathways

The discrete EHR screens that physicians visited, as well as the cumulative screen pathway throughout the simulation activity, were recorded for each participant. These data revealed not only where physicians looked for information within the EHR but also 1) the extent to which they searched in different locations for the same information and 2) the extent to which they returned to the same screens repeatedly. Screen traffic data may also provide insight into the most-utilized screens across a cohort of providers, which could lead to targeted efforts for EHR redesign. Pathway mapping also revealed usage patterns and preferences that may provide insight related to navigational efficiency. Lastly, data about individual screens and screen pathways, in conjunction with time and completion data, may shed light on areas of information overload.
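A minimal sketch of how a recorded screen pathway might be summarized is given here. It assumes the pathway is an ordered list of screen names with one entry per screen change; the screen names and the function `pathway_summary` are hypothetical.

```python
from collections import Counter


def pathway_summary(pathway):
    """Summarize an ordered list of visited screens.

    Returns visit counts per screen, the screens visited more than once,
    and counts of each screen-to-screen transition.
    """
    visits = Counter(pathway)
    revisited = {screen: n for screen, n in visits.items() if n > 1}
    transitions = Counter(zip(pathway, pathway[1:]))
    return {
        "distinct_screens": len(visits),
        "visits": dict(visits),
        "revisited": revisited,
        "transitions": dict(transitions),
    }


# Hypothetical pathway for one participant on one case.
path = ["Summary", "Flowsheet", "Labs", "Flowsheet", "Orders", "Flowsheet"]
summary = pathway_summary(path)
print(summary["revisited"])
```

Aggregating the `visits` counts across participants would give the screen traffic view described above, while `transitions` supports pathway mapping.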

Gaze Fixation

Gaze location and fixation were captured for each participant using state-of-the-art eye-tracking goggles (Tobii Pro Glasses 2, Tobii Group, Stockholm, Sweden) [12]. These goggles provided millisecond-level data on where a participant was focusing on a given screen. These data were analyzed in Tobii Pro Lab©, and detailed heat maps were generated to depict participants’ eye gaze patterns on key EHR screens. Individual and group analyses were performed, with the goal of uncovering “hot spots” and common pathways.

Workload

Cognitive workload was evaluated using the NASA-TLX, a validated tool for quantifying subjective workload that has been used in multiple industries including aeronautics and healthcare [13]. The NASA-TLX measured six domains on a ten-point scale: mental demands, physical demands, temporal demands, frustration, effort, and performance. A score was calculated for each domain, then multiplied by 10 to generate a 100-point scale. The six domain scores were averaged to calculate a composite score. Previous studies examining EHR usage in healthcare have established a threshold for “overwork” with NASA-TLX scores ≥ 55 [14].
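The scoring steps described above (scale each domain to 100 points, average the six domains, compare against the overwork threshold) can be sketched as follows. The domain keys, the function name, and the example ratings are illustrative assumptions; the sketch uses an unweighted average, as described in the text.

```python
TLX_DOMAINS = ("mental", "physical", "temporal", "frustration", "effort", "performance")
OVERWORK_THRESHOLD = 55  # composite scores >= 55 are treated as "overwork" [14]


def tlx_scores(ratings):
    """Scale ten-point domain ratings to 100 points and average them.

    Returns (scaled domain scores, composite score, overwork flag).
    """
    missing = [d for d in TLX_DOMAINS if d not in ratings]
    if missing:
        raise ValueError(f"missing NASA-TLX domains: {missing}")
    scaled = {d: ratings[d] * 10 for d in TLX_DOMAINS}
    composite = sum(scaled.values()) / len(TLX_DOMAINS)
    return scaled, composite, composite >= OVERWORK_THRESHOLD


# Hypothetical ratings from one participant.
ratings = {"mental": 7, "physical": 2, "temporal": 6,
           "frustration": 8, "effort": 6, "performance": 4}
scaled, composite, overworked = tlx_scores(ratings)
print(composite, overworked)
```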

Satisfaction

Clinician satisfaction with the EHR system was assessed using a modified version of the validated Questionnaire for User Interaction Satisfaction (QUIS) [15]. The original QUIS includes nine categories, of which this modified version examined four: overall reaction to the system, screen factors, terminology and system feedback, and learning factors. Across these four categories, participants were asked a total of 20 equally weighted questions. Responses were recorded on a bipolar Likert scale (range: 1 to 9). We added two qualitative, free-response questions to the end of the survey to assess participants’ “top three” most-favorite and most-frustrating features of the Epic EHR from their own personal user experience.

In addition to the QUIS, clinician satisfaction was also assessed using the System Usability Scale (SUS) [16]. This instrument includes scores for 10 questions related to usability on a scale of 1-5. The raw scores were adjusted to generate a total score on a 100-point scale. In prior EHR usability studies of physicians, scores ≥ 70 have been considered to denote average or acceptable usability, with scores < 70 reflective of poor usability [17].
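The text does not spell out how raw SUS responses were adjusted. The sketch below assumes the conventional SUS scoring rule: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name and example responses are hypothetical.

```python
ACCEPTABLE_SUS = 70  # scores >= 70 are treated as average or acceptable [17]


def sus_score(responses):
    """Convert ten 1-5 SUS responses to a 0-100 score (conventional rule)."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded; even items are negatively worded.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical participant: agrees with positive items, disagrees with negative.
score = sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])
print(score, score >= ACCEPTABLE_SUS)
```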

Semi-Structured Interview

Brief semi-structured interviews followed the simulation and survey portions of the study. These interviews were conducted by the primary investigator and consisted of eight questions that aimed to determine physicians’ opinions regarding clinical information that is difficult to find in the EHR, most-needed information for patient care (specifically for critically ill patients), perceived usefulness of key screens and screen features, and perceived relationship between EHR use and physician burnout. These questions were developed in conjunction with the Odum Institute for Research in Social Science to ensure that they did not present any form of bias or leading language. Interviews were audio recorded, transcribed, and coded to support qualitative and thematic analysis using grounded theory to better understand users’ screen preferences and to inform suggestions for user-centered interface redesign.

Usability Software

Turf 4.0 (University of Texas Health Science Center, Houston, TX), a standard usability software package developed specifically for assessing the usability of health IT, was used during testing [18]. This software ran in the background while participants progressed through the EHR simulation exercises and captured an abundance of user-generated data: mouse/cursor tracking patterns and hover for each screen, audio recording, video screen capture, number of mouse clicks, and time between mouse clicks. Turf generated detailed heat maps to depict patterns of mouse/cursor location in relation to key screens. This software also provided an interface for users to input their demographics (age, level of training, etc.) and complete the SUS survey.

Simulation Cases

Four realistic ICU patient cases (Table 3) were developed by the research team and were used in the one-hour simulation sessions. The cases had no sequential order, to mimic typical ICU cases that a physician would encounter. Cases were independent and mutually exclusive to reduce case dependencies and to ensure more accurate findings. For each case, a full patient record was populated in the training instance of the institution’s commercial EHR (Epic Playground). Due to time and resource constraints, the research team proceeded with development of the four cases as follows: strategic modifications were made to two existing training patients, and two additional training patients were created from scratch and added to the Playground environment. Clinical validity was prioritized throughout development and implementation of the cases through close collaboration and consultation with a domain expert (board certified Pulmonary and Critical Care physician). Two of the four simulation patients contain over 24 hours’ worth of clinical data and are representative of a multi-day hospitalization; the other two contain less than 24 hours of clinical data and are representative of patients admitted overnight.

Clinical Development: Patient Cases, Scenarios, and Tasks

To begin, the research team reviewed multiple existing ICU patient cases within the training instance of the institution’s EHR (Epic Playground) which had previously been developed for onboarding and training purposes. Unfortunately, it was apparent that most of these training ICU patient cases had not been developed with significant clinician input, as many were highly synthetic and lacked clinical validity, rendering them unsuitable for simulation testing. For example, one ICU training patient with a lower extremity wound and relatively normal vital signs had received unusual antibiotics (Azithromycin and Tobramycin) in the Emergency Department; most clinical users would balk at this case for many reasons, including both the medication decisions (macrolide and aminoglycoside for skin and soft tissue infection?) and the level of care (ICU with stable vital signs and no oxygen requirement?). However, two training patients were ultimately deemed suitable for use in the simulation study after key modifications were made to their profiles which increased their fidelity to real medical ICU patients (e.g. modifications to confer greater vital sign instability, more severe laboratory abnormalities, expanded clinical notes, etc.).

In parallel, one of the authors (GCC), in conjunction with physician colleagues, identified representative medical ICU patient cases from an actual ICU case log at the study institution. Two patient cases were selected and clinical data for these cases (including hourly vital signs, ventilator settings, hemodynamic data, medication administration data, laboratory data, clinical orders, etc.) were transcribed into Excel spreadsheets. No personal protected health information (PHI) was collected, documented, or transcribed during case development. Where necessary, mock information was developed for sensitive fields such as patient name, date of birth, medical record number, etc. For all cases, a complete and robust data set was generated including height, weight, past medical history, past surgical history, allergies, physiologic data, lab data, medication data, and a comprehensive hourly intake/output report (including hemodialysis where appropriate). Finally, clinical notes were developed to include documentation from the primary ICU team, nursing, respiratory therapy, and in some cases Emergency Department; in all cases, notes for the simulation cases were developed as text files, modeled on actual EHR notes but with PHI replaced by sham data.

In close collaboration with the consulting domain expert, the research team developed representative tasks for each simulation patient. These tasks involved information retrieval and synthesis as well as clerical functions and were designed to be relevant for physicians regardless of level of training. A summary of the four simulation patients and tasks is provided below and in Table 3:

Case 1: A 44-year-old female patient with multisystem organ failure. Participants were asked to manage medication orders and determine input from consulting clinical teams.

Case 2: A 60-year-old female patient with acute hypoxic respiratory failure. Participants were asked to review clinical documentation and flowsheets, to evaluate changes related to the patient’s condition and mechanical ventilation, and to analyze microbiology data.

Case 3: A 25-year-old male patient with severe infection (sepsis). Participants were asked to assess the clinical flowsheet, assess laboratory data, evaluate antibiotics and intravenous fluid management, and manage laboratory studies.

Case 4: A 56-year-old male trauma patient with postoperative heart failure and volume overload. Participants were asked to identify trends in the patient’s weight during previous clinical encounters and to manage orders for IV fluids and other medications.

EHR (Epic) Development

The research team worked closely with an in-house health IT consultant to build the above simulation cases. The consultant provided spreadsheet templates which the research team used to organize all relevant discrete data points such as hourly vital signs, laboratory orders and associated results, medications administered, etc. The consultant used these spreadsheets and Word documents with clinical notes to populate the patient record for each simulation case. The four simulation cases were built in succession, with close communication between the IT consultant and the research team throughout. The research team, as well as the consulting domain expert, performed an iterative review of each simulation case in the Epic Playground, making modifications and corrections as needed.

After final review and assurance of clinical validity by the domain expert, five duplicate copies were made of each simulation patient case in the Epic Playground. Case copies involved identical first names but unique medical record numbers and last names. This was done to allow for up to five simulation sessions in a given day, such that each individual participant would have access to the same baseline copy of each simulation patient, but user-generated modifications to a simulation patient would not affect the patient data seen by the subsequent participant. For instance, if participant 1 modified the laboratory or medication orders for patient 1a, participant 2 would be unaffected as he/she would use patient 1b for simulation (patient 1b is identical to patient 1a without participant 1’s activity).

Planned Statistical Analyses

Data collected during interviews, video/screen captures, and surveys were synthesized to develop cognitive and digital workflows and to identify gaps in (categories of): cognitive workflows (e.g., information storage, retrieval, and interpretation); EHR workload and satisfaction (workload, interface design and functionality); and EHR information needs.

Descriptive statistics (means, standard deviations, medians, etc.) of responses to the QUIS, SUS, and NASA-TLX survey instruments (i.e., individual survey items, subscales, and total scores) were used to characterize the three groups (i.e., attendings, fellows, and residents). Descriptive analysis reflected the levels of satisfaction, and satisfaction levels were correlated with other collected variables (years of experience, role, etc.). Pearson correlation was used to examine relationships between participant characteristics (age, sex, and training) and EHR satisfaction. Statistical analyses were performed in Stata. (Outputs: QUIS, SUS, qualitative data.)
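For readers who want to check a reported correlation by hand, the sketch below computes Pearson's r and its two-tailed p-value using the standard test statistic t = r·sqrt((n−2)/(1−r²)) with n−2 degrees of freedom. This is an illustrative pure-Python calculation, not the Stata procedure used in the study; the function names are hypothetical, and the p-value is obtained by numerically integrating the t density with Simpson's rule. With r = −.529 and n = 25 (the usability and frustration entry in Table 5), it gives a p-value of roughly .007.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_pdf(t, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + t * t / df) ** (-(df + 1) / 2)


def two_tailed_p(r, n, steps=2000):
    """Two-tailed p-value for Pearson r from n pairs (requires |r| < 1).

    `steps` must be even because Simpson's rule is used for the integral
    of the t density from 0 to |t|.
    """
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = 2 * (h / 3) * total  # probability mass within [-|t|, |t|]
    return 1 - central_area


p_value = two_tailed_p(-0.529, 25)
print(round(p_value, 3))
```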

Data collected during observations, video/screen captures, and surveys were synthesized to identify gaps in cognitive workflows based on high workload (NASA TLX>55 [19]) and poor performance (longer task completion times, lower task completion percentage, and poor self-assessment).

Study Protocol

Participants arrived individually for a scheduled one-hour time slot in the biobehavioral lab as described above. On arrival, each study participant was given a written consent form to review and sign, followed by a verbal introduction and overview of the study procedure. After signing the consent form and expressing readiness to proceed, the participant was guided to the workstation, where the Tobii eye-tracking goggles were calibrated. Turf usability software was activated in the background to initiate the session.

The participant was provided with default login credentials for an inpatient physician profile. During the login process, the participant confirmed his/her usual profile settings (“hospitalist physician, Pulmonary physician”) to best match the training interface to the participant’s customary interface; this was vital to mimic real-life EHR experience. A research assistant was seated at the workstation and read from a standard script, first providing the name of a simulation patient and a brief one-line description of the patient’s case. The participant was instructed to take as much time as needed to review the chart as if he or she were pre-rounding on the patient, to become familiar with the clinical case at hand. Given that physicians are not subject to “external clocks” when reviewing patient records in the real world, no artificial time limit was imposed during this phase of simulation testing. Upon completion, the participant signaled verbally that he or she was ready to proceed. At this time, the research assistant guided the participant through a series of verbal questions and clinical tasks, during which the participant was free to interact with the EHR as needed, including going back to look up information where necessary. Participants provided a verbal answer to questions asked by the research assistant but were not informed whether their response was correct or incorrect. During this time, a second research assistant took written notes to record the participant’s verbal answers. This process was repeated for all four simulation cases.

Upon completion of the simulation cases, participants were given a demographics questionnaire and the SUS survey to complete on the desktop computer at the workstation. Following this, participants completed two additional written items: the NASA-TLX and QUIS surveys (measuring workload and satisfaction, respectively). Participants were instructed to complete these survey instruments based on their typical experience with the EHR (not solely on their experience during the simulation exercise). Lastly, time permitting, the participants engaged in a semi-structured interview with the primary investigator in which they responded to eight scripted questions related to their experience as end-users of the EHR and key EHR functionality. Verbal responses were audio recorded for future transcription, and a research assistant took notes during this portion of the session.

All files for the simulations, surveys, and interviews were stored on local machines as well as on a secure, HIPAA-compliant, cloud-based server accessible only by the research team, as protection against unauthorized access. Hard copies of written notes and surveys were stored, when applicable, in a locked drawer on site. All data were de-identified, and a unique participant ID was generated for tracking and data storage. Data from the files were extracted, coded, and analyzed by research assistants and uploaded to the research team’s cloud-based server.

RESULTS

Of the 25 enrolled ICU physician participants, there were 5 (20%) attending physicians, 9 (36%) fellows, and 11 (44%) residents; 52% of participants were female. The number of years of experience with Epic was similar for most participants because the institution had migrated to Epic from a homegrown system only a few years earlier. All participants completed the surveys after completing the test cases described above. The baseline characteristics of participants can be found in Table 4.

Table 4.

Demographics table of study participants.

| Characteristic | Category | Count (%) |
|---|---|---|
| Gender | Females | 13 (52%) |
| | Males | 12 (48%) |
| Education | DO | 1 (4%) |
| | MD | 23 (92%) |
| | MD-PhD | 1 (4%) |
| Role | Attending | 5 (20%) |
| | Fellow | 9 (36%) |
| | Resident | 11 (44%) |
| Average Epic Experience (years) | | 4.3 |
| Mean Task Completion Time (mm:ss) (SD) | | 34:43 (11:56) |
| Total | | 25 |

Understanding the Association between Perceived Workload and Usability Levels

A Pearson correlation was conducted to assess the association between NASA-TLX and SUS survey variables (Table 5). A significant negative correlation was found between EHR usability and ease of use with frustration, such that as usability and ease of use increased, frustration levels decreased (p=.007 and p=.012, respectively). A positive correlation was found between how complex and cumbersome the EHR was and frustration, such that participants who viewed the EHR as more complex and cumbersome reported higher frustration levels (p=.055 and p=.010, respectively).

Table 5.

Correlations between NASA-TLX and SUS surveys

| SUS Item | Statistic | Temporal Demand | Performance | Effort | Frustration |
|---|---|---|---|---|---|
| Usability | Pearson Correlation | −.036 | −.189 | −.267 | −.529** |
| | Sig. (2-tailed) | .863 | .366 | .197 | .007 |
| Complexity | Pearson Correlation | .583** | −.414* | .323 | .389* |
| | Sig. (2-tailed) | .002 | .039 | .116 | .055 |
| Ease of Use | Pearson Correlation | −.362* | .039 | −.349* | −.497* |
| | Sig. (2-tailed) | .075 | .853 | .087 | .012 |
| Inconsistent | Pearson Correlation | .482* | −.553** | .235 | .228 |
| | Sig. (2-tailed) | .015 | .004 | .258 | .274 |
| Cumbersome | Pearson Correlation | .391* | −.130 | .377 | .507** |
| | Sig. (2-tailed) | .053 | .534 | .063 | .010 |

** Indicates significant correlation (p<0.05)

* Indicates marginal correlation (p<0.1)

Performance was negatively correlated with complexity and inconsistency in the interface design, such that higher degrees of complexity or inconsistency in the EHR were associated with lower perceived performance of physicians (p=.039 and p=.004, respectively).

The level of perceived effort was negatively correlated with ease of use, such that participants who reported higher ease of use found that the system required less effort to complete the tasks (p=.08). There was a positive relationship between the complexity level of screens and temporal demand, such that participants who thought the interface was complex or cumbersome reported higher scores for temporal demand, indicating that they felt rushed while completing the task (p=.002). Conversely, there was a negative correlation between ease of use and temporal demand, meaning that the easier the EHR was to use, the less rushed participants felt while completing the task (p=.07).

Understanding the Association between Perceived Workload and Satisfaction Levels

The NASA-TLX responses were correlated with the QUIS survey to help understand possible associations between perceived workload and satisfaction levels. Table 6 shows a significant negative correlation between ease of using the interface and effort required to complete tasks, such that an easy-to-follow interface design was associated with less required effort (p=.04). Frustration levels were negatively correlated with the ability to find information in the EHR, such that the more difficult it was for participants to find information, the higher their frustration with the system (p=.01). Participants’ responses significantly associated unhelpful error messages, such as alerts, with prolonged task completion time in the EHR (p=.03). Similarly, the more helpful the error messages provided, the higher the perceived performance (p=.03).

Table 6.

Correlations between NASA-TLX/QUIS surveys

| NASA-TLX Domain | Statistic | Difficult-Easy | Frustrating-Satisfying | Information Accessibility | Error Messages |
|---|---|---|---|---|---|
| Temporal Demand | Pearson Correlation | −.114 | −.353* | .008 | −.427** |
| | Sig. (2-tailed) | .587 | .084 | .969 | .033 |
| Performance | Pearson Correlation | .007 | .065 | .088 | .415** |
| | Sig. (2-tailed) | .973 | .758 | .676 | .039 |
| Effort | Pearson Correlation | −.405** | −.110 | −.356* | −.217 |
| | Sig. (2-tailed) | .045 | .600 | .081 | .298 |
| Frustration | Pearson Correlation | −.342* | −.344* | −.507** | −.177 |
| | Sig. (2-tailed) | .094 | .092 | .010 | .397 |

** Indicates significant correlation (p<0.05)

* Indicates marginal correlation (p<0.1)

Frustration levels were marginally associated with difficulty navigating the interface design: difficult and poorly designed interfaces were correlated with higher frustration levels (p=.09 and p=.09, respectively). Ease of finding information in the EHR was negatively correlated with the effort required to complete a task: the easier it was to find information, the less effort was required (p=.08). Temporal demand was marginally and negatively correlated with satisfaction with the EHR interface design: the quicker participants were able to complete a task in the EHR, the higher their satisfaction with the interface (p=.08).

Understanding the Association between Usability and Satisfaction levels

The SUS responses were correlated with the QUIS responses to explore possible associations between perceived usability and satisfaction levels. Table 7 demonstrates that information organization and representation in the EHR had a significant, positive correlation with participants’ perceptions of EHR usability, ease of use, workflow integration, and confidence. Better information presentation was tied to higher scores on usability, ease of use, workflow integration, and confidence (p=.00; p=.01; p=.01; p=.02 respectively). Information organization and representation also had a significant, negative correlation with perceived complexity and cumbersomeness: poor information presentation was tied to higher perceptions of complexity and cumbersomeness in the EHR (p=.00; p=.00 respectively).

Table 7.

Correlations between SUS /QUIS surveys

| SUS item | Statistic | Organization of Information | Sequence of Screens | Prompts for Input | Learning to Operate the System | Straightforwardness of Tasks | Help Messages on the Screen |
|---|---|---|---|---|---|---|---|
| Usability | Pearson Correlation | .654** | .623** | .144 | .366* | .446** | .143 |
| | Sig. (2-tailed) | .000 | .001 | .491 | .072 | .026 | .495 |
| Complexity | Pearson Correlation | −.587** | −.642** | −.282 | −.347* | −.478** | −.223 |
| | Sig. (2-tailed) | .002 | .001 | .172 | .089 | .016 | .284 |
| Ease of Use | Pearson Correlation | .503** | .584** | .473** | .336 | .196 | .363* |
| | Sig. (2-tailed) | .010 | .002 | .017 | .101 | .348 | .075 |
| Integration | Pearson Correlation | .507** | .480** | .156 | .422** | .312 | .232 |
| | Sig. (2-tailed) | .010 | .015 | .456 | .036 | .129 | .265 |
| Inconsistent | Pearson Correlation | −.421* | −.440** | −.209 | −.199 | −.251 | −.390* |
| | Sig. (2-tailed) | .036 | .028 | .316 | .340 | .226 | .054 |
| Cumbersome | Pearson Correlation | −.607** | −.595** | −.426** | −.522** | −.430** | −.415** |
| | Sig. (2-tailed) | .001 | .002 | .034 | .007 | .032 | .039 |
| Confidence | Pearson Correlation | .441** | .350 | .334 | .359* | .565** | .127 |
| | Sig. (2-tailed) | .027 | .087 | .103 | .078 | .003 | .544 |

Note: ** indicates significant correlation (p<0.05); * indicates marginal correlation (p<0.1).

The sequence of EHR screens, rated from confusing to clear, had a significant, positive association with usability, ease of use, and integration (p=.00; p=.00; p=.01 respectively) and a significant, negative association with complexity, inconsistency, and cumbersomeness (p=.00; p=.02; p=.00 respectively). Physicians’ comprehension of prompts or alerts was significantly associated with ease of use: prompts that were rated as clear correlated with higher perceived ease of use (p=.01) and less cumbersomeness (p=.03).

Difficulty learning to operate the EHR was marginally associated with poor usability, high complexity, and low self-confidence in EHR skills (p=.07; p=.08; p=.07 respectively). A significant, positive relationship was found between the straightforwardness of completing tasks and both EHR usability and self-confidence in EHR skills (p=.02; p=.00 respectively). The on-screen location and content of in-system help messages were positively correlated with ease of use (p=.07) and negatively correlated with inconsistency and cumbersomeness (p=.05; p=.03 respectively).

DISCUSSION

EHR usability has emerged as a critical challenge in healthcare, with implications for medical errors, patient safety, and clinician burnout. Indeed, ONC’s recent Easy EHR Issue Reporting Challenge [20] highlights the increased attention to EHR usability among policy makers, health IT researchers, application developers, and commercial vendors. Thus, the current study serves a vital role in revealing key insights with regard to information overload and breakdowns in digital workflows in ICU settings. Furthermore, recent calls to action by Sittig et al and Khairat et al have identified the urgency of, and the collaboration vital to, this work [21, 22].

This study provides a novel mixed-methods approach to better understand areas of limitation in the EHR. We ran inter-survey correlations to understand possible associations between perceived workload, usability, and satisfaction following the EHR usability study. The relationships between those areas not only provide insightful feedback on areas of limitation but also suggest how improving one aspect of the EHR can influence another. For example, improving information representation can reduce the complexity of EHR screens.
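The inter-survey analysis described above amounts to computing a Pearson coefficient for every pairing of an item from one survey with an item from another. A minimal sketch follows. The response values are hypothetical and purely illustrative (they are not study data), and the names `pearson_r` and `inter_survey_correlations` are assumptions introduced for this example.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def inter_survey_correlations(survey_a, survey_b):
    """Correlate every item of survey_a with every item of survey_b.

    Each survey maps an item name to one response per participant,
    listed in the same participant order in both surveys.
    """
    return {
        (item_a, item_b): pearson_r(resp_a, resp_b)
        for item_a, resp_a in survey_a.items()
        for item_b, resp_b in survey_b.items()
    }


# Hypothetical responses from six participants (illustrative only).
sus = {
    "Usability": [4, 2, 5, 3, 1, 4],
    "Complexity": [2, 4, 1, 3, 5, 2],
}
quis = {
    "Organization of Information": [7, 4, 8, 5, 2, 6],
}

for (sus_item, quis_item), r in inter_survey_correlations(sus, quis).items():
    print(f"{sus_item} x {quis_item}: r={r:+.3f}")
```

Keeping each survey as a mapping from item name to per-participant responses makes it straightforward to add a third instrument, such as the NASA-TLX, without changing the correlation routine.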

We found significant associations between perceived usability and satisfaction levels, as well as between perceived workload within the EHR and both usability and satisfaction levels. Participants associated high effort in completing tasks with poor information accessibility and low ease of use of the interface design. The overall perceived EHR usability score for all participants was below average, indicating the need for further interface optimization. The study showed that physicians used four screens to retrieve information 50% of the time, which calls for further investigation into the reasons behind the low usage of other EHR screens.
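For readers unfamiliar with how an overall SUS score is derived, the standard scoring procedure is sketched below: each of the ten items is answered on a 1 to 5 scale, odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. A score of 68 is a commonly cited benchmark for average usability; the text above does not state which threshold the authors applied, so the constant here is an assumption.

```python
def sus_score(responses):
    """Score one participant's System Usability Scale questionnaire.

    responses: ten integers from 1 (strongly disagree) to 5 (strongly agree),
    in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += response - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - response
    return total * 2.5


# Assumption: commonly cited benchmark for an average SUS score.
AVERAGE_SUS_BENCHMARK = 68

# Hypothetical participant (illustrative only).
example = [3, 3, 4, 2, 3, 4, 3, 3, 3, 2]
score = sus_score(example)
print(score, "below average" if score < AVERAGE_SUS_BENCHMARK else "at or above average")
```

Averaging `sus_score` over all participants gives the overall score that is compared against the benchmark.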

The current study is, to the best of our knowledge, one of the largest EHR usability studies involving physicians in simulation-based testing, and it combines mixed-methods techniques that generate robust qualitative and quantitative data. Prior EHR usability work has also employed eye-tracking technology [23] as well as simulation-based testing [24, 25], but our study provides the largest dataset with regard to physicians in critical care settings, where the stakes are quite high.

The current study follows prior work from March et al who piloted EHR simulation testing to study patient safety and medical errors in the ICU [26]. Our novel, mixed-methods protocol expands greatly upon this important work by including attending physicians in the cohort of users, utilizing multiple ICU patients instead of one, examining the process of physician order entry, leveraging sophisticated usability and eye-tracking software, and integrating usability testing with a more robust exploration of physician satisfaction.

We note that our study provides important contributions to the ongoing conversations regarding EHR use and physician burnout. Prior work has examined the relationship between EHR use and clerical burden, demonstrating low provider satisfaction and higher risk of burnout [27]. Our work helps to better quantify the burdens of EHR use among ICU providers, including the number of screens and mouse clicks needed to review a patient’s chart, and to identify key usability challenges associated with EHR interfaces in critical care. Furthermore, our work is informative to a broader population of physician end-users in inpatient settings because we intentionally included some tasks that are relevant not only to the ICU but also to other inpatient settings (reviewing a patient’s net volume status or medication administration record, for instance). Thus, this study addresses a current gap in the literature by responding to the call from Sinsky and Privitera to develop measures of a “manageable cockpit” for clinicians (including measures of cognitive workload and time associated with EHR tasks) [28].

Data from our simulation study may also be useful in determining the extent to which one’s pattern of EHR use is associated with level of experience and clinical role. If, for instance, participants from across roles identify one common pathway for accessing key clinical data, then it may suggest an area of “low-hanging fruit” for screen re-design or an opportunity for improved and more standardized EHR training. Conversely, if participants from different groups report different EHR pathways, or if members of the same group use different pathways, this may indicate 1) variation in user practices, 2) multiple pathways as a potential source of error, and/or 3) an opportunity for enhanced provider satisfaction through targeted customization.

Limitations and future direction

Our study also has several limitations. Many of these are intrinsic to simulation testing, such as the possibility of the Hawthorne effect: physicians’ awareness that they were participating in a simulation may have altered their normal interactions with the EHR. In addition, participants in our simulation necessarily used a generic provider login account, which may not confer the unique customized features or layouts associated with their profiles in the EHR production environment. This may reduce the fidelity of our data with regard to true user preferences in the areas of information-seeking and execution of clerical tasks. Furthermore, while our simulation patients are high fidelity, they are not perfect (as an example, for some patients, chest x-ray films are lacking in number and resolution). Lastly, given that our simulation testing occurred in a usability lab, we did not capture the interruptions, distractions, and other interactions that occur in real-life clinical environments. While we did not impose a strict time limit for participants to complete a specific simulation case, we note that the entire one-hour session included four patient cases, three survey questionnaires, and eight semi-structured interview questions, so we did not anticipate that participants would spend an unreasonable amount of time on the simulation cases and thus introduce procedural bias.

Future work includes the development of eye-tracking evaluation methods by investigating EHR-specific behaviors and by proposing validated thresholds for fatigue and cognitive overload based on pupil dilation measurements. A follow-up study will focus on improving information representation by developing a dashboard that aggregates the most-needed ICU patient information (as reported by clinicians) and conducting a comparative effectiveness study comparing the current EHR interface with the new user-centered dashboard in a simulated environment. In addition, further work could involve user interface testing of multiple EHR vendors to establish best practices for user interfaces based on comparative analysis of user performance and satisfaction. Future work will also include investigating users from different clinical roles (nursing, respiratory therapists, etc.) and different clinical units (Surgical ICU, Neonatal ICU, etc.).

CONCLUSION

Few studies have investigated clinicians’ digital information needs and information overload with regard to the EHR. There is a need for scientific findings on current information needs and ways to reduce EHR-related information overload. This research aims to fill a current gap in the literature by creating a mixed-methods approach that presents the top EHR data commonly used by physicians in the ICU, which serves as a scientific foundation for future studies (whether in critical care or other clinical settings). Studies investigating EHR use are typically done in simulated environments. This research aims to expand upon this paradigm and conduct innovative usability testing by harnessing eye-tracking and screen-tracking technologies, leveraging high-fidelity simulation testing, and incorporating surveys and semi-structured end-user interviews to better capture the physician-EHR interaction. Furthermore, the qualitative and quantitative methods deployed in this study can be applied to any clinical setting and any EHR product.

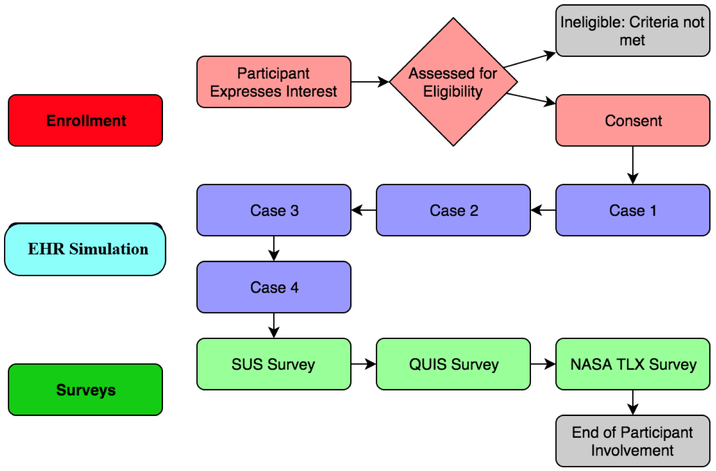

Figure 1:

System Assessment for Information overload Framework (SAIF) demonstrating recommended participant flow sheet.

Table 2.

Metrics of interest and data collection strategies

| Metric | Units | Measurement Strategy |
|---|---|---|
| Time | seconds | usability software |
| Task Completion | % | Direct observation |
| Click Burden | # mouse clicks | usability software |
| Eye Fixation | Gaze density (heat map) | eye-tracking goggles |
| Workload | NASA-TLX | Standardized survey |
| Satisfaction | SUS/QUIS | Standardized surveys; semi-structured interviews |

Highlights.

The use of mixed-methods for usability evaluation provides more comprehensive analysis of health IT systems.

Conducting inter-survey analysis may reveal further explanations of users' perceptions.

Inconsistent EHR interface and complex screen designs lead to high frustration levels among providers.

*Acknowledgments

The authors would like to acknowledge Dr. Shannon Carson, MD and Dr. Donald Spencer, MD for their support.

*Funding

This work was supported by the National Library of Medicine (NLM) under grant number 1T15LM012500-01.

Footnotes

*Ethics approval and consent to participate

Institutional Review Board approval was obtained prior to conducting the study. Study information was presented to participants and verbal consent was obtained.

*Consent for publication

Authors consent publication of this work and ensure that it has not been published elsewhere before.

*Availability of data and material

Datasets are available from the corresponding author upon request.

*Competing interests

The authors declare that they have no competing interests.

Conflict of Interest: The authors declare no conflicts of interest.

All authors had access to the data and a role in writing the manuscript.


REFERENCES

1. Morris A. Computer applications. Princ Crit Care. 1992:500–514.

2. Manor-Shulman O, Beyene J, Frndova H, Parshuram CS. Quantifying the volume of documented clinical information in critical illness. J Crit Care. 2008;23(2):245–250. doi: 10.1016/j.jcrc.2007.06.003

3. Brixey JJ, Tang Z, Robinson DJ, et al. Interruptions in a level one trauma center: A case study. Int J Med Inform. 2008;77(4):235–241. doi: 10.1016/j.ijmedinf.2007.04.006

4. Pickering BW, Hurley K, Marsh B. Identification of patient information corruption in the intensive care unit: Using a scoring tool to direct quality improvements in handover. Crit Care Med. 2009;37(11):2905–2912. doi: 10.1097/CCM.0b013e3181a96267

5. Kohn L, Donaldson MS. To Err Is Human: Building a Safer Health System. 1999.

6. Cheng CH, Goldstein MK, Geller E, Levitt RE. The Effects of CPOE on ICU workflow: an observational study. AMIA Annu Symp Proc. 2003;2003:150–154. pii: D030002925

7. Thimbleby H, Oladimeji P, Cairns PH. Unreliable numbers: error and harm induced by bad design can be reduced by better design. J R Soc Interface. 2015;12(110):20150685. doi: 10.1098/rsif.2015.0685. PMCID: PMC4614478

8. Mack EH, Wheeler DS, Embi PJ. Clinical decision support systems in the pediatric intensive care unit. Pediatr Crit Care Med. 2009;10(1):23–28. doi: 10.1097/PCC.0b013e3181936b23

9. Nielsen J. Enhancing the explanatory power of usability heuristics. Conf companion Hum factors Comput Syst - CHI ’94. 1994:210. doi: 10.1145/259963.260333

10. Ratwani RM, Benda NC, Zachary Hettinger A, Fairbanks RJ. Electronic health record vendor adherence to usability certification requirements and testing standards. JAMA - J Am Med Assoc. 2015;314(10):1070–1071. doi: 10.1001/jama.2015.8372

11. Nielsen J, Landauer TK. A mathematical model of the finding of usability problems. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems - CHI ’93. New York, New York, USA: ACM Press; 1993:206–213. doi: 10.1145/169059.169166

12. Tobii Pro Lab - Software for eye tracking and biometrics. 2015. https://www.tobiipro.com/product-listing/tobii-pro-lab/. Accessed May 29, 2018.

13. Pickering BW, Herasevich V, Ahmed A, Gajic O. Novel Representation of Clinical Information in the ICU. Appl Clin Inform. 2010;1(2):116–131. doi: 10.4338/ACI-2009-12-CR-0027

14. Chera BS, Mazur L, Jackson M, et al. Quantification of the impact of multifaceted initiatives intended to improve operational efficiency and the safety culture: a case study from an academic medical center radiation oncology department. Pract Radiat Oncol. 2014;4(2):e101–8. doi: 10.1016/j.prro.2013.05.007

15. Chin JP, Diehl VA, Norman LK. Development of an instrument measuring user satisfaction of the human-computer interface. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems - CHI ’88. New York, New York, USA: ACM Press; 1988:213–218. doi: 10.1145/57167.57203

16. Brooke J. SUS - A quick and dirty usability scale. Usability Eval Ind. 1996;189(194):4–7. doi: 10.1002/hbm.20701

17. Clarke MA, Belden JL, Kim MS. How Does Learnability of Primary Care Resident Physicians Increase After Seven Months of Using an Electronic Health Record? A Longitudinal Study. JMIR Hum Factors. 2016 Jan-Jun;3(1):e9. doi: 10.2196/humanfactors.4601

18. EHR Usability Toolkit - School of Biomedical Informatics. UTHealth Health Science Center at Houston. https://sbmi.uth.edu/nccd/turf/. Published 2018. Accessed May 29, 2018.

19. Calkin BA. Parameters Affecting Mental Workload and the Number of Simulated UCAVs That Can Be Effectively Supervised. Wright State University; 2007.

20. Gettinger A. A New Challenge Competition – Can you Help Make EHR Safety Reporting Easy. Health IT Buzz. https://www.healthit.gov/buzz-blog/electronic-health-and-medical-records/a-new-challenge-competition-can-you-help-make-ehr-safety-reporting-easy/. Published 2018. Accessed May 29, 2018.

21. Sittig DF, Belmont E, Singh H. Improving the safety of health information technology requires shared responsibility: It is time we all step up. Healthcare. https://www.sciencedirect.com/science/article/pii/S2213076417300209?via%3Dihub. Published March 1, 2017. Accessed May 29, 2018.

22. Khairat S, Coleman GC, Russomagno S, Gotz D. Assessing the Status Quo of EHR Accessibility, Usability, and Knowledge Dissemination. eGEMs (Generating Evid Methods to Improv patient outcomes). 2018;6(1). doi: 10.5334/egems.228

23. Asan O, Yang Y. Using Eye Trackers for Usability Evaluation of Health Information Technology: A Systematic Literature Review. JMIR Hum Factors. 2015 April 14;2(1):e5. doi: 10.2196/humanfactors.4062

24. Mazur LM, Mosaly PR, Moore C, et al. Toward a better understanding of task demands, workload, and performance during physician-computer interactions. J Am Med Informatics Assoc. 2016;23(6):1113–1120. doi: 10.1093/jamia/ocw016

25. Khairat S, Burke G, Archambault H, et al. Perceived Burden of EHRs on Physicians at Different Stages of Their Career. Appl Clin Inform. 2018;9:336–347. doi: 10.1055/s-0038-1648222

26. March CA, Steiger D, Scholl G. Use of simulation to assess electronic health record safety in the intensive care unit: a pilot study. BMJ Open. 2013 April 10;3(4). pii: e002549. doi: 10.1136/bmjopen-2013-002549

27. Shanafelt TD, Dyrbye LN, Sinsky C, et al. Relationship Between Clerical Burden and Characteristics of the Electronic Environment With Physician Burnout and Professional Satisfaction. Mayo Clin Proc. 2016 July;91(7):836–48. doi: 10.1016/j.mayocp.2016.05.007

28. Sinsky CA, Privitera MR. Creating a "Manageable Cockpit" for Clinicians: A Shared Responsibility. JAMA Intern Med. 2018 June 1;178(6):741–742. doi: 10.1001/jamainternmed.2018.0575