Abstract

While most models of randomly connected neural networks assume single-neuron models with simple dynamics, neurons in the brain exhibit complex intrinsic dynamics over multiple timescales. We analyze how the dynamical properties of single neurons and recurrent connections interact to shape the effective dynamics in large randomly connected networks. A novel dynamical mean-field theory for strongly connected networks of multi-dimensional rate neurons shows that the power spectrum of the network activity in the chaotic phase emerges from a nonlinear sharpening of the frequency response function of single neurons. For the case of two-dimensional rate neurons with strong adaptation, we find that the network exhibits a state of “resonant chaos”, characterized by robust, narrow-band stochastic oscillations. The coherence of stochastic oscillations is maximal at the onset of chaos and their correlation time scales with the adaptation timescale of single units. Surprisingly, the resonance frequency can be predicted from the properties of isolated neurons, even in the presence of heterogeneity in the adaptation parameters. In the presence of these internally-generated chaotic fluctuations, the transmission of weak, low-frequency signals is strongly enhanced by adaptation, whereas signal transmission is not influenced by adaptation in the non-chaotic regime. Our theoretical framework can be applied to other mechanisms at the level of single neurons, such as synaptic filtering, refractoriness or spike synchronization. These results advance our understanding of the interaction between the dynamics of single units and recurrent connectivity, which is a fundamental step toward the description of biologically realistic neural networks.

Author summary

Biological neural networks are formed by a large number of neurons whose interactions can be extremely complex. Such systems have been successfully studied using random network models, in which the interactions among neurons are assumed to be random. However, the dynamics of single units are usually described using over-simplified models, which might not capture several salient features of real neurons. Here, we show how accounting for richer single-neuron dynamics results in shaping the network dynamics and determines which signals are better transmitted. We focus on adaptation, an important mechanism present in biological neurons that consists in the decrease of their firing rate in response to a sustained stimulus. Our mean-field approach reveals that the presence of adaptation shifts the network into a previously unreported dynamical regime, that we term “resonant chaos”, in which chaotic activity has a strong oscillatory component. Moreover, we show that this regime is advantageous for the transmission of low-frequency signals. Our work bridges the microscopic dynamics (single neurons) to the macroscopic dynamics (network), and shows how the global signal-transmission properties of the network can be controlled by acting on the single-neuron dynamics. These results paves the way for further developments that include more complex neural mechanisms, and considerably advance our understanding of realistic neural networks.

Introduction

The existence of a chaotic phase is a common property of large networks of neurons with random connectivity [1, 2]. Chaotic dynamics has been proposed as a mechanism for internally-generated cortical variability [3–5] and the richness of the dynamics at the edge of chaos has been exploited to learn complex tasks involving generation of temporal patterns [6–12]. In these and other related approaches, the chaotic behavior of the network mainly arises from the random interactions, whereas the dynamics of single neurons are typically given by first-order differential equations. The simplicity of single neuron dynamics in these models allows to quantitatively determine the chaotic phase of synaptically coupled neurons using dynamical mean-field theory (DMFT) [1], even in networks with more realistic connectivity structure [2, 12–14].

A fascinating question is what kind of activity emerges in neural networks that are subject to additional biological constraints. Biological neurons exhibit rich multi-dimensional internal dynamics [15–18] that are inconsistent with first-order equations. However, a theoretical understanding of the emergent activity patterns in networks of more realistic multi-dimensional neuron models is largely lacking. Here, we develop a theoretical framework that extends DMFT to multi-dimensional rate neurons. Using this framework, we show that the power spectrum of the network activity in the nonlinear, strongly coupled regime, emerges from a sharpening of the single-neuron frequency response function due to strong recurrent connections.

Our theory uses firing rate models with two or more variables per unit. While rate-based models [19, 20] discard information on the exact spike-timing of single neurons, they have the advantage of being accessible to an analytical characterization of their dynamics. However, commonly-used one-dimensional rate models cannot fully capture the dynamics of the mean activity of a population of spiking neurons, such as the synchronization of neurons in response to a stimulus onset [21–23], an effect that is readily observed in integrate-and-fire models [24–28]. To capture rapid synchronization after stimulus onset in rate models, it is necessary to consider at least two equations per rate neuron [29–31]. Multi-dimensional models also account for additional cellular mechanisms such as refractoriness [32], spike-frequency adaptation (SFA) [28, 33–35], synaptic filtering [36, 37], subthreshold resonance [38] or for the effect of dendritic compartments [39, 40].

To be specific, we focus on SFA, the decrease of a neuron’s firing rate in response to a sustained stimulus, but our theory can also be applied to other phenomena. SFA is present in neurons at all stages of sensory processing, and is believed to play a crucial role for efficient coding of external stimuli [15]. Moreover, SFA over multiple timescales represents an efficient solution for information transmission of sensory signals whose statistics change dynamically [16, 18, 41]. It is therefore of great interest to understand how adaptation and recurrent connections interact to shape network dynamics and signal transmission [42, 43]. If connections and adaptation are weak, the network dynamics can be largely understood within linear response theory. In particular, in the presence of signals and noise, linear response theory predicts that adaptation shapes signal and noise in precisely the same manner [35], canceling the noise-shaping effect of adaptation [42–44]. In contrast, in strongly coupled networks generating chaotic fluctuations [1], linear response theory is not applicable and the effect of adaptation on the signal transmission in this case remains poorly understood. Here, we show that introducing adaptation into a strongly-coupled network of rate units shifts the network to a state of “resonant” chaos that is qualitatively different from the chaotic behavior of the network without adaptation. In this state, the network generates a stable rhythm corresponding to a narrow-band peak in the power spectrum which is robust against quenched disorder in adaptation parameters (heterogeneity). We show that in this new regime the network has two interesting functional properties: first, the correlation time increases with the adaptation timescale; second, the low-frequency power of the chaotic activity is strongly decreased, enabling a better transmission of slow signals.

In the Results section, we first present the microscopic model for the network of rate neurons with adaptation and describe its dynamical regimes. Then, we introduce the mean-field approximation that allows us to describe the resonant chaotic state and to study its functional consequences. Finally, we present the general multi-dimensional model that allows to introduce multiple mechanisms at the single-neuron level. Detailed derivations are provided in the Methods section, while in the Discussion we examine possible extensions and generalizations.

Results

We study the dynamics of a network of rate neurons that undergo rate adaptation. Each neuron is described by two variables, xi(t) and ai(t). The first variable xi is the activation variable that defines the output rate y via a nonlinear function ϕ, i.e. yi(t) = ϕ(xi(t)). More precisely, ϕ(xi(t)) should be interpreted as the deviation of the firing rate from some reference rate. Therefore, ϕ(xi(t)) can take both positive and negative values. The adaptation variable ai(t) of neuron i is driven by the neuron activation variable xi(t) and provides negative feedback onto xi. In what follows, we measure time in units of the timescale of x (i.e. time is considered dimensionless). The corresponding network equations are

| (1) |

| (2) |

where the dot indicates the temporal derivative and N is the number of units in the network. The synaptic weights are sampled i.i.d from a Gaussian distribution, i.e. . The parameter γ > 0 can be interpreted as the ratio of the timescales of the two variables x and a, while β > 0 is a parameter that controls the strength of adaptation.

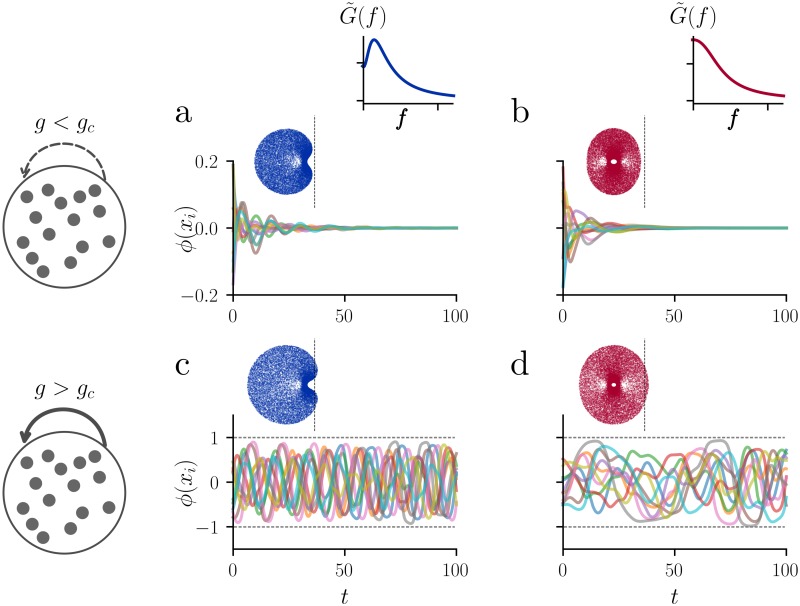

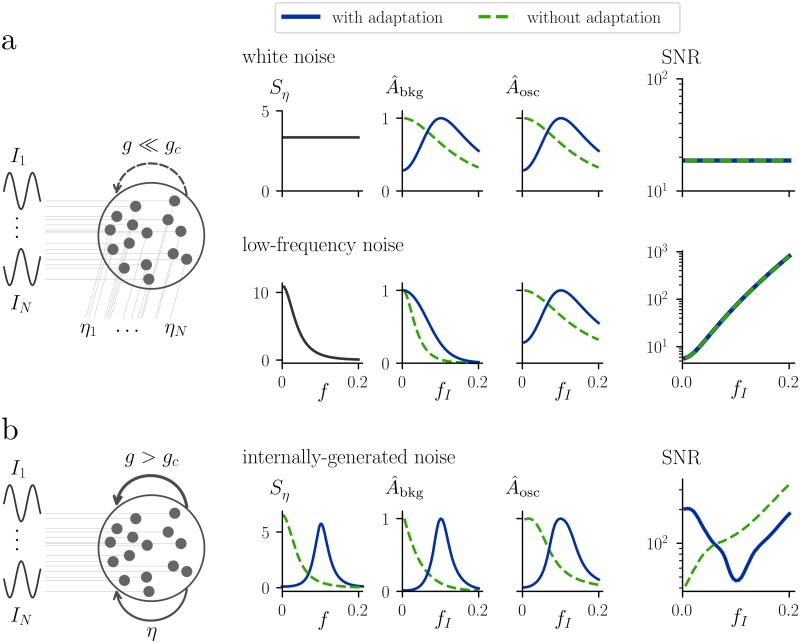

Numerical simulations of the network with adaptation show that for low connection strength g, the network exhibits transient dynamics before it settles to a fixed point in which all xi and ai are zero (Fig 1a and 1b). By analyzing the stability of this fixed point (see Methods), we find that, in the N → ∞ limit, the critical value of g at which stability is lost depends on the adaptation parameters via

| (3) |

where . Notice that gc(γ, β)>1 for all γ, β > 0, i.e. adaptation stabilizes the dynamics since in the case without adaptation we have gc(0, 0) = 1 [1]. Interestingly, the two different cases in Eq 3 correspond to two different bifurcation types: for β < βH(γ) the eigenvalue spectrum is convex, exhibiting a rightmost eigenvalue (in the complex plane) which is real (Fig 1b and 1d, insets). In this regime the system undergoes a saddle-node bifurcation at g = gc(γ, β). In contrast, for β > βH(γ) the eigenvalue spectrum is deformed such that the eigenvalues with the largest real part are complex (Fig 1a and 1c). This indicates the presence of a Hopf bifurcation at g = gc(γ, β) which is a consequence of the introduction of adaptation (see Methods for details). Above the bifurcation, i.e. for g > gc(γ, β), the network exhibits self-sustained, irregular fluctuations (Fig 1c and 1d) that we will characterize in the next section.

Fig 1. Microscopic network dynamics with firing rate adaptation.

In the top row (panels a and b), the network is below the bifurcation, (g = 0.96gc(γ, β)), and it exhibits a transient activity to the stable fixed point. In the bottom row (panels c and d), the fixed point is unstable (g = 1.3gc) and the network exhibits irregular, self-sustained oscillations. In the left column (panels a and c), the network is in the resonant regime (γ = 0.2, β = 0.5), as it can be seen from the single-neuron linear frequency response function (cf. Eq 9). In the right column (panels b and d), the network is in the non-resonant regime (γ = 1, β = 0.1). For each panel, ten randomly chosen units are shown, out of N = 1000 units. Panel c corresponds to the resonant chaotic state, while in panel d the system exhibits chaotic activity similar to the case described in [1]. The insets show the eigenvalue spectrum in the complex plane for the four different sets of parameters. The dashed black line indicates the imaginary axis. Comparing the eigenvalue spectrum of panel a with the one of panel c, we see that the network undergoes a Hopf bifurcation.

In all the simulations and numerical integrations, we choose ϕ(x) as a piecewise-linear function given by

| (4) |

unless stated otherwise.

Resonant chaos in random networks with adaptation

The dynamics of the 2N-dimensional dynamical system in Eqs 1 and 2 for large N is too high-dimensional to be studied at the microscopic level. In contrast, using dynamical mean-field theory [1], we can find properties of the network dynamics that are independent of the specific connectivity realization. In what follows, we will assume that the external input Ii(t) to each unit is an independent realization of the same stationary Gaussian process with zero mean. Following [1], we approximate the network input to a representative unit i with a Gaussian process η, an approximation valid in the large-N limit [45, 46]. The mean-field equations read (see Methods)

| (5) |

| (6) |

where η(t) is a Gaussian process with zero mean and whose autocorrelation must be computed self-consistently by imposing (see Methods)

| (7) |

Due to the linearity of the mean-field equations, x(t) is a zero-mean Gaussian process which is fully characterized by its second-order statistics, i.e. the autocorrelation function in time domain, or the power spectral density Sx (power spectrum) in frequency domain, defined as the Fourier transform of the autocorrelation . By Fourier transforming Eqs (5) and (6), we find that the power spectrum is the solution of

| (8) |

where Sϕ(x)(f) and SI(f) are the power spectra of ϕ(x) and I respectively, defined analogously to the one of x. Importantly, Sϕ(x)(f) is a functional of Sx(f), which can be computed semi-analytically for simple nonlinearities such as the piecewise-linear function, Eq 4, as detailed in Methods, section “Effect of nonlinearities on second-order statistics”). The factor is the square modulus of the linear response function of an uncoupled single unit, and is given by

| (9) |

with ω = 2πf.

By solving iteratively the mean-field equation for the power spectrum (Eq 8) in the absence of external input (see Methods, section “Iterative procedure to solve the mean-field theory” for details), we find that if g < gc(γ, β), the power spectrum converges to zero, Sx(f) → 0, at all frequencies. Therefore the mean-field variable x is constantly equal to zero. This is consistent with the presence of a stable fixed point at zero and it indicates that, for large N, the fixed point solution is the only possible one.

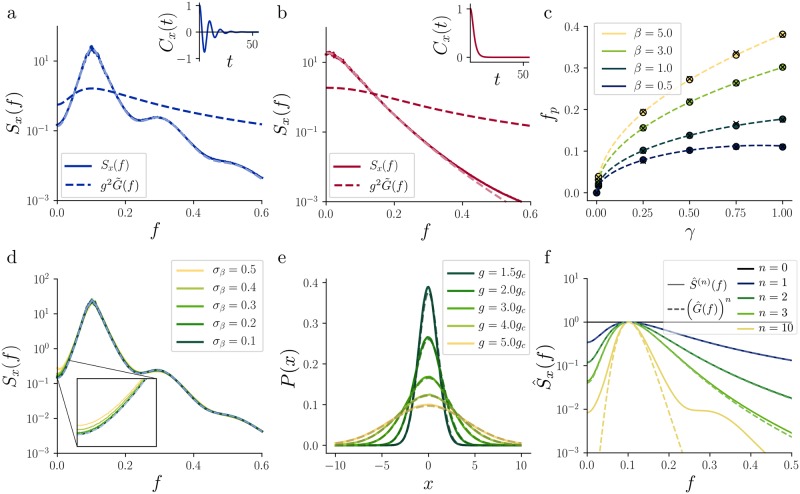

On the other hand, if g > gc(γ, β), the mean-field network is characterized by a nonzero, continuous power spectral density (Fig 2). This is an indication that, at the microscopic level, the network is in a chaotic state [47]. However, we stress that a more rigorous proof of chaos would require the computation of the maximum Lyapunov exponent of the network, which we will not perform. In contrast to a network without adaptation [1], we find that in the presence of adaptation the network can be in two qualitatively different chaotic regimes. For very weak and/or fast adaptation, the chaotic fluctuations are qualitatively the same as for the network without adaptation, i.e. the power spectrum is broad-band with maximum at f = 0 (Fig 2b). We refer to this regime as to the non-resonant regime. On the other hand, for strong and/or slow adaptation, the mean-field network settles in a new regime, characterized by an autocorrelation that decays to zero via damped oscillations and, equivalently, by a power spectrum that exhibits a pronounced resonance band around a nonzero resonance frequency fp (Fig 2a). The decaying autocorrelation function and the continuous power spectral density are an indication that the network is—also in this regime—in a state of microscopic chaos. This new dynamical state, that we refer to as resonant chaos, is qualitatively different from the one of the non-resonant regime and from the one of the non-adaptive network.

Fig 2. Self-consistent statistics in the chaotic regime.

a: Resonant (narrow-band) chaos. Power spectral density obtained from mean-field theory (solid line) and microscopic simulations (light blue, dashed) for γ = 0.25, β = 1 and g = 2gc(γ, β). The dashed, dark blue line indicates the square modulus of the linear response function for the same adaptation parameters. Inset: Normalized mean-field autocorrelation Cx(τ) for the same parameters, plotted against the time lag in units of τx. b: Non-resonant (broad-band) chaotic regime. Curves and inset are the same as in a, but with γ = 1, β = 0.1 and g = 2gc(γ, β). c: Maximum-power frequency fp of the recurrent network plotted against γ, for different β. Crosses depict results obtained from microscopic simulations, circles show the semi-analytical prediction based on the iterative method and dashed lines shows the theory based on the single neuron response function. For γ = 0 all curves start at fp = 0. d: Power spectral density Sx(f) for different levels of heterogeneity of the parameter β (solid lines), compared to the case without heterogeneity (dashed line). All the curves are almost superimposed, except at very low frequencies where small deviations are visible (inset). Parameters: γ = 0.25, , . e: Distributions P(x) of the activation x from microscopic simulation (N = 2000, solid lines) and theoretical prediction (dashed lines). The adaptation parameter were γ = 0.25 and β = 1. f: Normalized power spectral density (solid lines) at different iterations n, for the network with adaptation. For the first iterations, the powers of (dashed lines), provide a good approximations of the power spectrum width. The initial power spectral density is a constant and the network parameters are the same as in panel a.

Strikingly, whether the network settles in the resonant or in the non-resonant regime can be predicted purely based on the single-unit adaptation properties. More precisely, if β < βH(γ), the function is monotonically decreasing with the frequency f, i.e. it exhibits a low-pass characteristic (Fig 2b). This low-pass behavior of the single neuron is reflected by a power spectrum of the network that is also dominated by low frequencies, albeit less broad. The network power spectrum corresponds exactly to the non-resonant regime discussed above.

In contrast, if β > βH(γ), the single neuron response amplitude exhibits a maximum at a nonzero frequency . Such a resonance peak is typical of a band-pass filter (Fig 2a). The frequency f0 is identical to fm (Eq 28), which is derived from the imaginary part of the critical eigenvalue at the Hopf bifurcation (see Methods, section “Fixed-point stability”). The single-neuron linear response characteristics are qualitatively preserved in the fluctuating activity of the recurrent network, which also exhibits a power spectral density dominated by a nonzero frequency fp. This regime corresponds to the resonant regime discussed above. Interestingly, we find numerically that fp = f0, i.e. the resonance frequency is not affected by the introduction of recurrent connections (Fig 2c, tested up to g = 5gc(γ, β)). We notice that the non-resonant and resonant regimes are consistent with the fixed point stability analysis of the network in the microscopic description. Indeed, the resonant and non-resonant regimes match the regions in which we observe Hopf or saddle-node bifurcations, respectively (see Methods).

Using simulations of the full microscopic network, we verify that the mean-field description is a good approximation of the system for large but finite N. In Fig 2e we show that the probability density of the activation variable x measured from the microscopic simulations matches the Gaussian distribution predicted by the mean-field theory, with relatively small finite-size effects that increase close to the criticality (see Fig 2e, g = 1.5gc(γ, β)). Moreover, the mean-field solution provides a good description of the system for a wide range of adaptation parameters γ, β (Fig 2c).

To summarize, single-neuron properties determine whether the network settles in a resonant or non-resonant chaotic state through the factor . This is a general property that is also valid for more complex rate models. Indeed, for a single-neuron model with squared linear response function given by an arbitrary , we have that the network power spectrum Sx(f) is the result of a nonlinear sharpening of (see Methods, section “Qualitative study of the iterative map” for details). As a result, the network activity exhibits the same frequency bands that are preferred by single neurons, albeit much narrower. We will discuss more general rate model in section “Network of multi-dimensional rate neurons”.

Network with heterogeneous adaptation

The narrow-band oscillations in networks of adapting neurons reported so far have been obtained for networks of identical neurons. The variability of physiological properties of real neurons, however, suggests that adaptation parameters differ among neurons. Heterogeneity of neuronal parameters is known to sensitively influence synchronization in networks of neural oscillators [48]. In particular, mismatches of oscillation frequencies can impede the formation of neural rhythms. Does heterogeneity have a similar effect in strongly coupled random networks of adapting neurons? To address this question, we introduce a second source of disorder in the system by considering quenched randomness in the adaptation parameters. Specifically, we construct the heterogeneous network by adding Gaussian noise to the parameter β, i.e. by sampling independently for each neuron. Numerical simulations of the network in the presence of heterogeneous adaptation show that the dynamics of the random network are surprisingly robust to this type of noise (Fig 2d). Even for relatively high variability (), the only effect is a barely visible increase of the power spectral density at low-frequencies (Fig 2d, inset). In Methods, section “Mean-field theory with heterogeneous adaptation” we derive the mean-field equations that correspond to the network with heterogeneous adaptation, and we compute the effective factor in this case. The semi-analytical solution of the mean-field theory for heterogeneous adaptation predicts, similar to simulations, a stronger power at low frequencies than in the homogeneous case. However, the deviations predicted by the theory are smaller than the mismatch between theory and simulations for the homogeneous case, so that we could not perform a quantitative verification of the mean-field theory for the heterogeneous case.

Recurrent connectivity sharpens the single-neuron response properties

We have seen that the power spectrum of the network activity in the presence of adaptation inherits the properties of the single-neuron reponse function. However, the resonance band present in the network power spectrum is much narrower than the one predicted by the linear response function. Since the linear response function of a single unit is equivalent to the power spectrum of a network of unconnected units driven by white noise, we conclude that the sharpening of the power spectrum is due to the recurrent connections. Such sharpening can be understood by studying the evolution of the mean-field solution during the iterative procedure that leads to the solution. In Methods, section “Qualitative study of the iterative map”, we derive the following first-order approximation of the power spectrum at iteration n:

| (10) |

where an is a frequency-independent factor of proportionality. This approximation breaks down for large n and hence does not allow for a self-consistent solution of the mean-field theory corresponding to the limit n → ∞. However, Eq 10 is sufficient to explain the sharpening of the power spectrum. In Fig 2f we compare the evolution of the normalized network power spectrum over iterations with the theoretical prediction according to Eq 10. We see that the theory predicts the width of the power spectrum quite well for the first few iterations. The sharpening is clearly visible when comparing the power spectrum at iteration 2 and 3 with the one at iteration 1, that is proportional to the single-unit linear response function. As expected, the approximation breaks down after more iterations, as the higher-order terms are necessary to reach a self-consistent solution (see also Methods, section “Qualitative study of the iterative map”).

We now focus on the resonant chaotic regime, that represents the novel dynamical state that emerges from the introduction of adaptation and study the functional properties of the network in this regime.

Adaptation increases the network correlation time

While the resonance frequency in the resonant regime seems to depend solely on the single-neuron properties, the introduction of recurrent connections increases the coherence of the stochastic oscillations, i.e. decreases the width of the resonance band. The narrower the resonance band, the more coherent the oscillatory behavior will be. To quantify the increase of the oscillation coherence, we measure the quality factor (Q-factor) of the stochastic oscillations, defined as

| (11) |

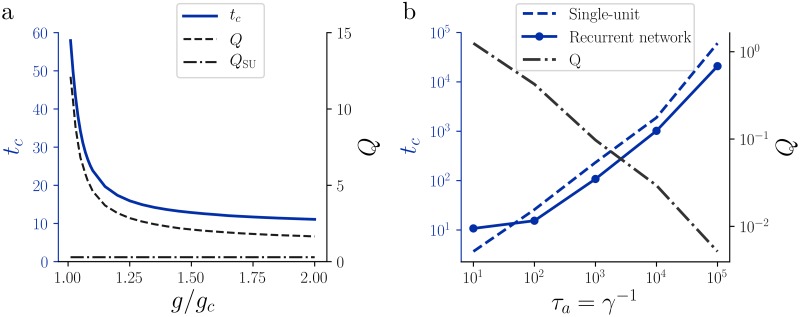

where fp is the frequency of maximum height of the power spectrum Sx and ΔfHM is the frequency width of the power spectrum Sx(f) at the half-maximum. Intuitively, for a narrow-band oscillation, the quality factor quantifies the number of oscillation cycles during the characteristic decay time of the autocorrelation function. For a single neuron driven by white noise, the single-neuron power spectrum of x is proportional to . Compared to this reference shape, we find a higher Q-factor in the recurrent network (Fig 3a), corresponding to a sharper resonance peak in the power spectrum (see also Methods, section “Qualitative study of the iterative map”). When approaching the criticality from the chaotic phase, g → gc(γ, β)+, the quality factor diverges (Fig 3a), i.e. the dynamics approach regular oscillations.

Fig 3. Correlation time and effect of recurrent connections.

a: Correlation time (blue solid line) and Q-factor (dashed line) as a function of the connectivity strength. The weakest connectivity level plotted is g = 1.01gc(γ, β). Adaptation parameters: γ = 0.1 and β = 1. The dash-dotted horizontal line indicates the Q-factor of a single unit with the same adaptation parameters, driven by white noise. b: Correlation time (blue) and Q-factor (black, dash-dotted line) as a function of the adaptation timescale τa ≔ γ−1. Both the recurrent network (solid line) and the single unit driven by white noise (dashed line) scale with τa. β = 1 and g = 1.5gc(γ, β).

While the Q-factor measures the decay time constant of the autocorrelation function relative to the mean oscillation period, it is also interesting to consider the absolute correlation time of the activity. As a measure of correlation time of a stochastic process we use the normalized first moment (center of mass) of the absolute value of the autocorrelation function (e.g. [49]),

| (12) |

Since the Q-factor diverges when g → gc(γ, β), in this limit the corresponding autocorrelation exhibits sustained oscillations with a diverging correlation time. Due to the increase of the Q-factor, the correlation time also diverges when g → gc(γ, β) (Fig 3a).

In the regime of slow adaptation, a single unit driven by white noise can have a larger correlation time than a recurrent network (Fig 3b). This is due to the fact that in this regime the correlation time of the single unit driven by white noise is dominated by the long tail of the autocorrelation. The introduction of recurrent connections increases the oscillatory component, giving a larger “weight” to the short time lags, thus decreasing tc. Nevertheless, the correlation time increases with the timescale of adaptation τa for both the single unit driven by white noise and the recurrent network (Fig 3b). Note that the Q-factor goes to zero for very large adaptation timescale (γ → 0), so that the dominant contribution to the correlation time in this regime is the non-oscillatory one.

Adaptation shapes signal transmission in the presence of internally-generated noise

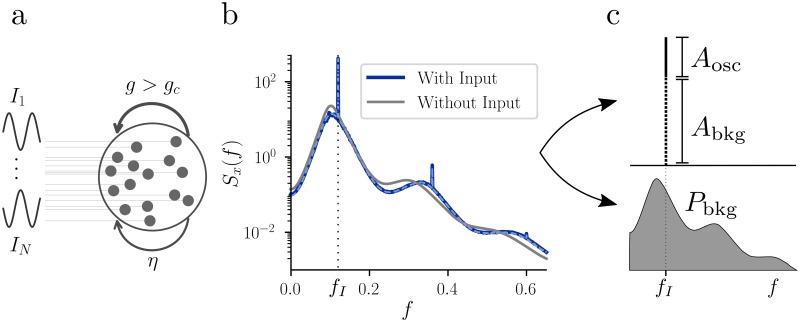

In order to go beyond the study of the spontaneous activity of the network, we consider its response to an external oscillatory signal. While signal transmission in linear systems is fully characterized by the frequency response function of the system and by the noise spectrum of the output, the situation is different in the nonlinear neural network that we study here. Similarly to previous approaches [4], we provide oscillatory input to each unit in the microscopic network, randomizing the phase (Fig 4a)

| (13) |

where θi ∼ U(0, 2π). The corresponding power spectral density of the input is given by . Thanks to the phase randomization, the network still reaches a stationary state and the mean 〈x(t)〉 remains at zero. Notice that even if in the case in which the input is a perfect sinusoidal and therefore non-Gaussian, the mean-field equation for the power spectrum (Eq 8) is still valid. However, since x is also not Gaussian anymore, in order to find the mean-field solution we need to modify our iterative scheme by splitting the activation variable x into its Gaussian and its oscillatory part [4].

Fig 4. Response of the mean-field network to an oscillatory input.

a: Schematic representation of the random network driven by an external input, with phase randomization. For g > gc, the chaotic activity can be seen as internally-generated noise. b: Effect of an oscillatory external input on the power spectral density Sx(f). In the example, γ = 0.25, β = 1, g = 2gc(γ, β), fI = 0.12, while AI = 0.5 (blue) and AI = 0 (gray). Simulations (solid blue) and theory (dashed blue) are superimposed. c: Top: Schematic representation of the separation of the power spectral density into its oscillatory (Aosc) and chaotic (Abkg) components. Note that these quantities depend on the size of the frequency discretization bin. Bottom: Graphical interpretation of Pbkg, i.e. the total variance of the network activity due to chaotic activity (shaded gray area).

The presence of the input affects the dynamics of the mean-field network, quantified by the power spectral density (Fig 4b). If the input is given while the network is in the chaotic regime (g > gc), sharp peaks at the driving frequency fI and multiples thereof are elicited by the external input, standing out from a background power spectrum that is deformed compared to the case without the external input. For fI > fp, as in the example, the bumps of the background spectrum are slightly shifted toward larger values. The opposite happens if fI < fp. Notice that both this shift and the shaping of the chaotic activity are nonlinear effects due to the recurrent dynamics. As an additional nonlinear effect, the network activity also exhibits harmonics at the driving frequency of the external input.

To characterize the response to the external stimulus, we split the power spectrum Sx(f) into an oscillatory component and a chaotic component that constitutes the background activity

| (14) |

where bk are positive coefficients and we included the multiples of the driving frequency in order to account for the harmonics. To solve the mean-field equations numerically, we have to consider a finite frequency bin Δf (in our numerical results, Δf = 0.001). As a consequence, the heights of the delta peaks in the power spectrum in Eq 14 are finite and depend on Δf. First, we will look at the transmission of the oscillatory signal near the driving frequency, i.e. how much of the peak in the power spectrum Sx(f) at f = fI is due to the oscillatory drive and how much is due to the background activity. At the driving frequency fI we write (see Fig 4c)

| (15) |

i.e. we measure the contribution of chaotic activity to the power spectrum at the driving frequency by interpolating the power spectrum at neighboring frequencies. The signal-to-noise ratio (SNR) at the driving frequency fI is then given by

| (16) |

Notice the size of the frequency bin Δf scales the SNR, but since we are interested in the dependency of the SNR on fI and not in its numerical value, this scaling factor can be neglected. Finally, we have seen in the example in Fig 4b that the oscillatory input can suppress background activity at frequencies far from fI. In order to quantify this chaos-suppression effect, we split the total variance of x into two contributions (Fig 4c)

| (17) |

Weak drive and signal transmission

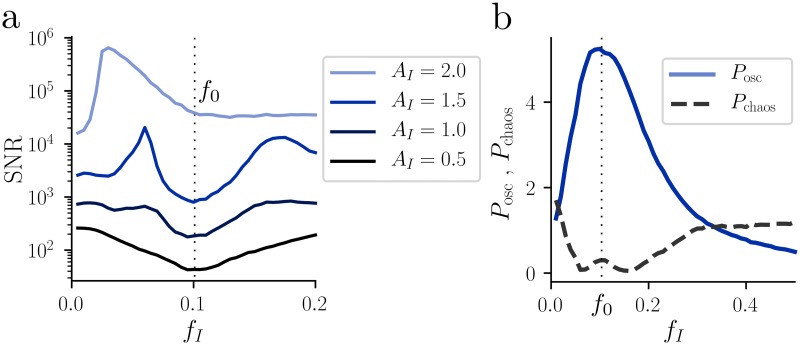

If the oscillatory input is weak, chaos is not entirely suppressed and acts as internally-generated noise on the transmission of the oscillatory input. We now study how the network transmits this oscillatory input signal, and how the transmission quality depends on the signal frequency fI. It is known from linear response theory that the transmission of weak signals through single homogeneous populations with strong (intrinsic or external) noise does not benefit from adaptation [35, 50]. This is because in the signal-to-noise ratio (SNR) both the signal and the noise are affected in the same way [35]. We wondered whether in a strongly coupled, large random network, adaptation could have a different effect on the oscillatory signal than on the noise, thereby re-shaping the SNR. A particularly interesting question is how signals are transmitted in the presence of purely intrinsically-generated chaotic fluctuations that are shaped by adaptation and recurrent connectivity.

To understand why adaptation cannot shape the SNR in a weakly-coupled network, consider our random network in the non-chaotic regime, with g ≪ gc (Fig 5a). If we drive the non-chaotic network with oscillatory input together with a noise source η, the typical response of one unit in the network can be approximated using the mean-field linear frequency response function , whose square modulus is given by (see Methods, section “Fixed point stability in the mean-field network”)

| (18) |

where we add the subscript β to stress that depends on the adaptation parameters γ and β (cf. Eq 2). If we indicate by SI(f) and Sη(f) the power spectral density of the oscillatory input and of the external noise respectively, the power spectral density of the output (taken as the network activity x) can be approximated by

| (19) |

Fig 5. Adaptation shapes the SNR in the chaotic regime.

a: For small g, a recurrent network driven by an oscillatory input and external noise can be analyzed in the linear response theory framework. Top row: response of the network to oscillatory drive and independent white noise to each neuron. Bottom row: response of the network to oscillatory drive and independent low-frequency noise to each neuron. For each row, from left to right, we plot the power spectrum of the input noise, the background component of the power spectrum , the oscillatory component of the power spectrum , and the SNR as a function of the driving frequency. The hat over the symbols Abkg and Aosc indicates that, to highlight the network shaping, they are normalized to have the same maximum height (equal to one). Notice that, since both signal and noise are shaped in the same way in the linear response framework, the introduction of adaptation does not affect the SNR. b: For large g, the network is subject to internally generated noise and driven by oscillatory input. We plot the same quantities as in panel a. Notice that, due to the nonlinearity of the network, signal and internally-generated noise are shaped in different ways, with the signal being subject to a broader effective filter. As a consequence, the introduction of adaptation in the nonlinear network shapes the SNR by favoring low frequencies. Parameters of the network with adaptation for all panels: γ = 0.25, β = 1 g = 2gc(γ, β) and AI = 0.5.

This means that both the signal and the noise are shaped by the same factor that characterizes the network (Fig 5a). The SNR of the output at the driving frequency, defined as in Eq 16, is given by

| (20) |

i.e. the parameters of the network, reflected in the linear response function χβ, do not influence the SNR (Fig 5a). Notice that we considered the activation variable x as our output. We verified that considering instead the firing rate ϕ(x) as the output yields qualitatively the same results, therefore we will for simplicity continue our analysis for the output x. Eq 20 implies that the SNR depends only on the power spectra of the signal and of the noise. For example, if we consider low-frequency dominated noise, high-frequency signals will be transmitted more easily, but once again the introduction of adaptation will not play any role (Fig 5a). While this argument is based on a linear response approximation, we verified using the DMFT solution that the linear approximation is quite accurate. Deviations are visible very close to the criticality, but once again the SNR is almost entirely independent of the neuron parameters.

The findings are completely different for a network in the chaotic phase, i.e. g > gc. As discussed above, in this regime the network produces internal fluctuations whose power spectrum depends on single neuron parameters (Fig 5b, see also section “Resonant chaos in random networks with adaptation”). For clarity, let us assume that there is no external noise, such that the noise is only internally generated by the network. In this case, the linear response theory framework cannot be applied; in order to predict the effect of the network in shaping both the input and the internally-generated noise, we need to solve the DMFT equations (Eq 8) iteratively. As in the previous section, the resulting power spectrum can be split into a chaotic component and into an oscillatory component (see Eq 14). How does the introduction of adaptation shape these two components? We have seen that in the presence of adaptation the network can enter a state of resonant chaos that differs from the traditional chaos of a network without adaptation because of the presence of a dominant frequency band centered at f0 (Fig 5b, see also section “Resonant chaos in random networks with adaptation”). The state of resonant chaos survives in the presence of weak input. As the driving frequency changes, the amplitude of the transmitted signal Aosc(fI) passes through a maximum at the resonance frequency f0 of the network; however, Aosc(fI) decreases with the distance from the resonance frequency more slowly than the noise amplitude Abgk(fI) does (Fig 5b). As a consequence, the SNR is maximal at very slow driving frequencies, goes through a minimum at the resonance frequency f0 before it increases again (Fig 5b). In other words, resonant chaos acts as a notch filter with respect to the transmission of weak signals.

The shaping of the SNR can be understood as follows: For weak signals, the noise amplitude is , where is the spontaneous power spectrum for I = 0. The factor exhibits a maximum at the resonance frequency, and therefore sharpens Abgk(fI). The signal power that sticks out of the noise power is for a weak input signal, where the first term is the direct influence of the signal on x(t) and the second term represents indirect contributions caused by recurent connections. If the direct effect dominates, the signal-to-noise ratio is approximately . Importantly, even though the expression for the SNR looks formally similar to Eq (20) for the case of external noise, the effect of single neuron dynamics is markedly different: As shown above, the power spectrum of internally generated noise depends on the single neuron filter , whereas the corresponding power spectrum of external noise in Eq (20) is, by definition, independent of single neuron dynamics. Thus, in the case of internally generated fluctuations, single neuron dynamics strongly affects the SNR through network-enhanced noise shaping. We conclude that in the chaotic regime adaptation improves the SNR at low frequencies, whereas in weakly-coupled, non-chaotic networks such an improvement cannot be observed, independently of the choice of the adaptation parameters γ, β. If the strength of the input is increased, the interaction between noise and signal becomes stronger, leading to a deformation of the SNR (Fig 6a). However, even for strong drive we observe a peak of the SNR at frequencies that are lower than the resonance one.

Fig 6. Effect of a strong oscillatory input.

a: SNR at the driving frequency fI as a function of the driving frequency, for different values of the signal amplitude AI. As AI increases, nonlinear interaction between signal and noise become stronger, leading to a qualitative change in the SNR profile. b: Total power of the chaotic (black dashed) and oscillatory (light blue) components of the power spectrum, in the case of strong input (AI = 1.5). For both panels, γ = 0.25, β = 1.0, and g = 2gc(γ, β).

Strong drive and chaos suppression

In the presence of strong input, chaos suppression together with the formation of a sharp peak are indications that at the microscopic level the network is driven towards a limit cycle. Similarly to [4], we now study how chaos suppression depends on the driving frequency fI. By solving the DMFT equations (Eq 8) in the presence of external input, we find that both Pchaos and Posc exhibit a non-monotonic dependence on fI (Fig 6b). Aosc depends smoothly on fI, reaching its largest value around f0. On the other hand, Achaos is zero for input frequencies that are close to f0, indicating that the network is driven into a limit cycle. While a network without adaptation also exhibits such a non-monotonic dependence [4], in our case this effect is more pronounced due to the resonant power spectrum of the spontaneous activity in the presence of adaptation.

Network of multi-dimensional rate neurons

We have seen how adaptation, by changing the response function of singe neurons, shapes the chaotic dynamics of a recurrent network and consequently the signal-transmission properties of the network. In biology, several other mechanisms could contribute to the response properties of neurons, such as synaptic filtering, facilitation or the presence of dendritic compartments [28–40]. We account for multiple of such mechanisms by considering a general D-dimensional linear-nonlinear rate model. The first variable is an activation variable that defines the output rate y via a nonlinear function ϕ, i.e. , as in the adaptation case. The remaining D − 1 variables are auxiliary variables. We assume that the rate is the only signal that unit j uses to communicate with other units. Conversely, the signals coming from other units only influence the variable , i.e. the rate of unit j is directly coupled only to the first variable of unit i. The choice of having the same variable sending and receiving signals is dictated by simplicity and is not necessary for the development of the theory. Unit i receives input from all the other units, via a set of random connections Jij, sampled i.i.d. from a Gaussian distribution with mean zero and variance g2/N. The resulting network equations are

| (21) |

where δαβ is the Kronecker delta symbol. Subscripts (in Latin letters) indicate the index of the unit in the network and run from 1 to N, while superscripts (in Greek letters) indicate the index of the variable in the rate model and run from 1 to D. The matrix A is assumed to be non-singular and to have eigenvalues with negative real parts.

By generalizing the mean-field theory to the case of the D-dimensional rate model (see Methods, section “Mean-field theory”), we obtain the analogous of the self-consistent equation for the power spectrum (Eq 8) for the general case

| (22) |

where Sϕ(x1)(f) is the power spectrum of ϕ(x1), i.e. the mean-field firing rate. As in the case of adaptation, is the squared modulus of the linear response function of single neurons in the frequency domain (see Methods).

By solving the mean-field theory, we find that, similarly to the case of adaptation, for small coupling the power spectrum converges to zero for all frequencies. The critical value of the coupling g is defined implicitly by (see Methods, section “Fixed point stability in the mean-field network”)

| (23) |

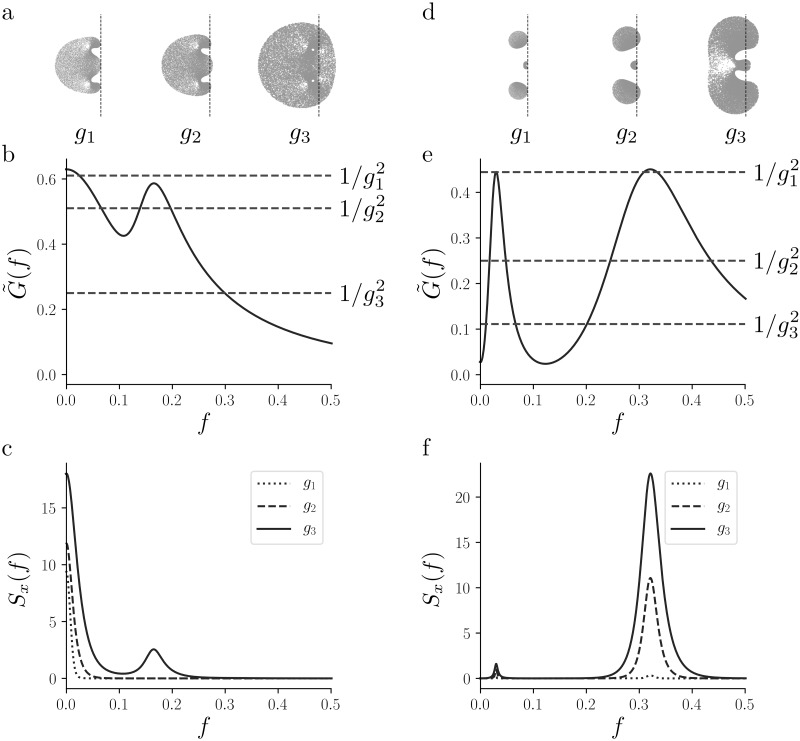

On the other hand, for g > gc we find that the network, also in this more general case, exhibits fluctuating activity, whose power spectrum results from a sharpening of the single-neuron linear response function . In Methods, section “Qualitative study of the iterative map” we show how this property can be understood from the mean-field equations and we provide two examples of network power spectra for higher-dimensional rate models.

Discussion

We studied how the dynamics of a random network of rate neurons are shaped by the properties of single neurons, and in particular by the presence of history-dependent mechanisms such as adaptation. To this end, we generalized DMFT, a well-established theoretical tool [1], to the case of multi-dimensional rate units. This allowed us to reduce the high-dimensional, deterministic network model to a low dimensional system of stochastic differential equations. Standard approaches to solving the mean-field equations [1] were not fruitful in the multi-dimensional setting. However, the mean-field solution could be found efficiently in a semi-analytical way using an iterative approach. The iterative approach highlights how recurrent connections sharpen the response function of single neurons, i.e. how bands of preferred frequencies become narrower (see also Methods, section “Qualitative study of the iterative map”). Previous studies that considered the role of single neuron properties on random network dynamics focused only on the role of the gain function [2, 14]. To our knowledge, this is the first result that relates the single neuron frequency response function to the spectral properties of random network dynamics.

We studied in detail the important case of neuronal adaptation, using a two-dimensional rate model. We showed that adaptation extends the stability region of a recurrent network of rate units because the transition from a stable fixed point to a fluctuating regime happens at g = gc > 1, i.e. for higher coupling strength than for the network without adaptation. Crucially, above the criticality and for slow adaptation, the dynamics settle in a state of “resonant chaos” that, unlike the chaotic activity of networks of rate units without adaptation, is dominated by a nonzero resonance frequency. We observed that the resonance frequency can be computed from the single unit properties and it is therefore independent of the connection strength g. On the other hand, the presence of recurrent connections increases the coherence of the oscillations and therefore influences the correlation time. The oscillation coherence is maximal at the onset of chaos and decreases with g, for g > gc(γ, β). Indeed, as it is typical of critical behavior, the correlation time in the chaotic phase diverges when approaching the criticality. In the presence of adaptation, this happens because the system approaches a limit cycle.

It is interesting to observe that for slow adaptation there are two separate contributions to the correlation time of the network activity: an oscillatory component, related to the resonance frequency, and a long tail that scales with the adaptation timescale. For finite τa, the correlation time diverges when g → gc due to the oscillatory component. For τa → ∞, the correlation time also diverges, but this is due to the long tail, since both the resonance frequency and the Q-factor go to zero for large τa, yielding a finite and therefore sub-dominant contribution to the correlation time. Such multi-scale structure of the autocorrelation could be advantageous for network computations that require expressive dynamics over multiple timescales, as it is often the case in motor control. Indeed, adaptation has been proposed to play a role in sequential memory retrieval [51], slow activity propagation [52], perceptual bistability [53] and decision making [54]. Moreover, SFA has beneficial consequences both for reservoir computing approaches [10] and for spiking neuron-based machine learning architectures [55]. Further work could explore the relation between long correlation time induced by adaptation and computational properties.

We found that, when a network in the resonant chaotic state is driven by an oscillatory input, chaos is more easily suppressed when the driving frequency is close to the resonance frequency. In the presence of weak input, in contrast, chaos is not fully suppressed. Interestingly, we found that in the chaotic regime the presence of adaptation shapes the SNR in frequency space. In particular, adaptation increases the SNR for low-frequency signals, a possibly important feature since behaviorally relevant stimuli can have information encoded in slow signal components [56]. Crucially, this effect is not present in the sub-critical regime (g < gc), since signal and external noise are shaped together [35]. It is known that the properties of biological neurons, including adaptation parameters, can be dynamically adjusted using neuromodulators [57, 58]. In view of our results, this would allow to dynamically shape the SNR depending on the requirements imposed by the behavioral context.

While our theory is applicable to single units with D interacting variables, the effect of a single adaptation variable (D = 2) on the dynamics of random recurrent networks was also studied independently and simultaneously by another group [59], who reached results consistent with ours [60]. The authors of [59] used a slightly different network architecture and did not focus on the relation between single neuron response and spectral properties, but rather on the correlation time of the network activity and on the effect of white noise input. One major difference is the conclusion reached regarding correlation time: by using a different definition, in [59] the authors conclude that the correlation time does not scale with the adaptation timescale. Based on our analysis, we infer that the definition of correlation time used in [59] captures only the oscillatory contribution to the correlation time, and not its long tail.

Current mean-field theories for spiking neural networks [61] are self-consistent only with respect to mean activities (firing rates), whereas second-order statistics such as autocorrelation function or power spectral density of inputs and outputs are inconsistent [62]. While iterative numerical procedures are available [62–64], a self-consistent analytical calculation of the autocorrelation (or power spectrum) via DMFT for networks of spiking neurons is known to be a hard theoretical problem. In the present manuscript, the rate-based modeling framework allowed us to put forward explicit expressions for the map of autocorrelations. For a general nonlinearity ϕ(x), this map takes the form of an infinite series (according to Eq 37 in Methods, section “Qualitative study of the iterative map”). However, for polynomial nonlinearities the series simplifies to a finite sum, e.g. Eq 43 in Methods, section “Effect of nonlinearities on second-order statistics”, which permits a closed-form analytical expression for the iterative map. Therefore, our study offers a unique method for the calculation of the autocorrelation in biologically constrained random neural networks, and thus represents a promising step towards a self-consistent mean-field theory beyond first-order rate models [1, 2].

Random network models are widely used to model different biologically-relevant systems, such as metabolic networks [65], protein regulatory networks [66–68] or in the study of epidemic outbreaks [69]. In all the above examples, the collective dynamics result from the interplay between network connectivity and the dynamics of single units.

Extensions and generalizations

We see four extensions to the work presented in the present manuscript. First, our study is limited to rate neurons while it would be interesting to extend the analysis to spiking neuron models. As a first step in this direction, previous work has already investigated the introduction of white noise in random rate networks [2, 47, 59], which would be straightforward to include in the case of D-dimensional rate units. Second, our framework can readily be extended to multiple adaptation variables (see Methods, section “Qualitative study of the iterative map” for two examples). This is a key feature in order to account for realistic SFA, which is known to have multiple timescales and it has been shown to have power-law structure [16–18, 41]. Interestingly, our framework can be extended to power-law adaptation, since we require only the knowledge of the linear frequency-response function of the single neurons. We expect that in this situation the internal noise generated by the network will also have a power-law profile of the type fα, with α > 0. With such a noise spectrum, the signal that maximizes information transmission should be dominated by low-frequencies in a power-law fashion [18, 70]. Third, the introduction of additional structure in the connectivity, such as low-rank perturbations [12], attractor structure [71], or large scale connectivity of the brain [20], could give rise to interesting dynamics when combined with single units with multiple adaptation variables. In particular, the state of resonant chaos may also arise from an interaction of excitatory and inhibitory spiking neurons in networks with partially random, and partially structured connections. Finally, while our study focused on neural networks, random network models are used in other areas of biology and physics [72]. By extending mean-field theory techniques to more complex node dynamics, our approach also contributes to understanding the interaction between node dynamics and network structure in more general settings. We hypothesize that our approach can be used in the future to provide and understanding the variability of single-neuron activity across trials in the presence of one or several peaks in the power spectrum at gamma and theta frequencies.

Methods

In most of the following sections, we first present the derivations for the general D-dimensional model presented in section “Network of multi-dimensional rate neurons”, and then apply the result to the case of adaptation.

Fixed-point stability

General theory

The system of N ⋅ D coupled nonlinear differential equations (Eq 21) becomes intractable for large N. However, because ϕ(0) = 0, the system has a fixed point at the origin , the stability of which can be studied owing to the clustered structure of the system. The Jacobian at the fixed point is given by

| (24) |

where J is the random connectivity matrix and IN is the N-dimensional identity matrix. The matrix B is of size ND × ND and it therefore has ND eigenvalues. Since all the blocks of B commute with each other, we can apply the result of [73] to find a relation between the eigenvalues of J, A and B

| (25) |

where A− is the matrix obtained by removing the first column and the first row from the matrix A. This expression is valid for all the eigenvalues of B that are not coincident with those of A−. Eq 25 can be transformed into a polynomial equation of degree D in λB, so that for every value of λJ we obtain D eigenvalues of B, as expected. From now on we will assume that, without loss of generality, ϕ′(0) = 1.

In the N → ∞ limit, the eigenvalues λJ are known to be uniformly distributed on a disk in the complex plane, centered at zero and of radius g [74]. If one can invert Eq 25, it becomes computationally fast to compute the eigenvalues of the Jacobian in the N → ∞ limit without finite-size effects. Whether one can obtain an explicit inverse formula depends on the dimensionality and on the entries of the matrix A.

Network with adaptation

For the two-dimensional model defined by Eqs 1 and 2, we can invert Eq 25, and obtain an expression for the eigenvalues of the Jacobian

| (26) |

Using the mapping between the eigenvalues of the connectivity matrix J and those of the Jacobian matrix B (Eq 26), we find the critical value of g for which the stability of the fixed point is lost

| (27) |

where . The critical value gc can also be calculated from dynamical mean-field theory (see Methods, section “Fixed point stability in the mean-field network”).

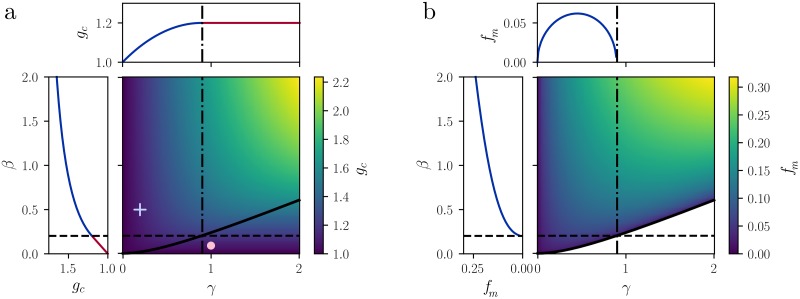

The bifurcation that characterizes the loss of stability depends on two parameters, viz. the ratio of timescales γ and the strength of the adaptation β. To further characterize the bifurcation at g = gc(γ, β), we can study the imaginary part of the critical eigenvalue, i.e. the one with real part equal to zero at g = gc(γ, β). If the adaptation strength β has a value β ≤ βH(γ), then the imaginary part of the critical eigenvalue is equal to zero corresponding to a saddle-node bifurcation at g = gc(γ, β). On the other hand, if β > βH(γ), then the critical eigenvalue is a pair of complex-conjugate, purely imaginary eigenvalues, a signature of a Hopf bifurcation. Therefore, we introduce the curve β = βH(γ), which separates the positive quadrant of the γ − β plane in two regions: one in which the system becomes unstable at the critical value gc(γ, β) via a saddle-node bifurcation, and another one in which the instability occurs via a Hopf bifurcation (Fig 7a). In the Hopf-bifurcation region, the imaginary part of the critical eigenvalues can be computed analytically:

| (28) |

Fig 7. Stability of the fixed point and local properties.

a: Critical value of the coupling gc (color code, right) for different adaptation parameters γ (horizontal axis) and β (vertical axis). The curve βH(γ) (solid black line) separates the regions of the γ − β plane in which for increasing g we encounter a Hopf bifurcation (above βH(γ)) or a saddle-node bifurcation (below βH(γ)). Cross and filled circle: parameters used in Fig 1. Left inset: dependence of gc on β for fixed γ = 0.9. Top inset: dependence of gc on γ for fixed β = βH(γ = 0.9). Blue line: Hopf bifurcation; red line: saddle-node bifurcation. b: Resonance frequency fm for different adaptation parameters γ, β. Notice that in the non-resonant region the resonance frequency is not defined. Left inset: square-root increase of fm as a function of β for fixed γ = 0.9. Top inset: non-monotonic behavior of fm as a function of γ, for fixed β = βH(γ = 0.9).

The parameter fm is the frequency of low-amplitude oscillations close to the bifurcation, if N < ∞. In the finite-N case, we find numerically that these low-amplitude oscillations are stable. When N → ∞, however, we find that chaotic dynamics onset right above the bifurcation (see section “Resonant chaos in random networks with adaptation”). The frequency fm is monotonic in β but non-monotonic in γ (Fig 7b), indicating that a slower adaptation variable (smaller γ) does not necessarily correspond to slower oscillations. When considering codimension-two bifurcations, we have that for g = gc and β = βH(γ) the system undergoes a Bogdanov-Takens bifurcation.

Mean-field theory

General theory

The dynamics of the ND-dimensional dynamical system in Eq 21 for large N is too high-dimensional to be studied at the microscopic level. In contrast, using dynamical mean-field theory [1], we can find properties of the network dynamics that are independent of the specific connectivity realization. In what follows, we will assume that the external input Ii(t) to each unit is an independent realization of the same Gaussian process. Following [1], we approximate the network input to a representative unit i with a Gaussian process η and substitute the average over time, initial conditions and network realizations with the average over realizations of η. This approximation is valid in the large-N limit, in which neurons become independent [45, 46]. In the mean-field description, the activity of each individual unit in the network follows a realization of the following system of D stochastic differential equations, to which we refer to as mean-field equations (see Methods, section “Mean-field theory derivation” for more details)

| (29) |

where η(t) is a Gaussian process. The mean 〈η(t)〉 vanishes, because the averaging over the Gaussian process statistics mimics the average over different neurons and network realizations, and the connections Jij in Eq 21 are sampled from a Gaussian distribution with mean zero. Thanks to the fact that neurons become independent in the large-N limit [1], the average of the network input over network realizations is also zero (see Methods, section “Mean-field theory derivation” for more details). On the other hand, the autocorrelation function 〈η(t)η(s)〉 needs to be determined self-consistently by imposing (cf. Methods, section “Mean-field theory derivation”)

| (30) |

Thanks to the mean-field approximations, we reduced the ND-dimensional, deterministic, nonlinear system of Eq 21 to the D-dimensional, stochastic system of Eq 29, which looks linear at first glance. However, the nonlinearity is important and is hidden in the self-consistent match of the second moment, as expressed by Eq 30. The linear mathematical structure of Eq 29 allows us to write, in the frequency domain

| (31) |

where is the linear response function (susceptibility) of the mean-field system (Eq 29), which is equal to the linear response function of an uncoupled single neuron in the microscopic description. For the linear dynamics given by Eq 29, the linear response function is given by

| (32) |

where ID is the D-dimensional identity matrix and the upper indices 1,1 indicate the first element of the first row of the matrix inside the square brackets.

In what follows, we assume that the external input I(t) is stationary and zero-mean, and that the network is in the stationary regime. Therefore, the mean of all variables is equal to zero. The second-order statistics must be determined self-consistently. In the frequency domain, this requires a self-consistent determination of the power spectral density (“power spectrum” for short) Sx(f) of the activation variable x1, defined as the Fourier transform of the autocorrelation function, . Using the squared modulus of the linear response function , the power spectrum can be expressed as

| (33) |

where Sη(f) and SI(f) denote the power spectral densities of η(t) and I(t), respectively. Importantly, from Eq 30 we have that Sη(f) depends implicitly on Sx(f) through the self-consistency condition

| (34) |

The factor can be expressed as a function of the matrix A as

| (35) |

where adj(2πifID − A) is the adjoint matrix of (2πifID − A) and are the eigenvalues of A. In Methods, section “Fixed point stability in the mean-field network”, we show that knowing the maximum of is sufficient to compute the critical value of the coupling gc:

| (36) |

Iterative procedure to solve the mean-field theory

The traditional approach in the DMFT literature is to consider the time-domain version of Eq 22 [1]. Applying the inverse Fourier transform to Eq 22 would lead to a differential equation of order 2D. Unfortunately, by contrast with the case D = 1, for the multi-dimensional case D > 1 the dynamics is no longer conservative, which precludes the determination of the initial conditions (see [1]). We propose an alternative approach to find a self-consistent solution to Eq 22 in the Fourier domain. This approach is based on an iterative map, the fixed point of which is the self-consistent solution. Iterative methods have been proposed previously both in the context of spiking [62, 64] and rate-based networks [75] using Monte-Carlo methods. Here, we use a semi-analytical iteration method that allows to rapidly solve for the self-consistent power spectrum, and hence to qualitatively understand several features of the network dynamics.

In the frequency domain, the linear transform associated with is simple, whereas the nonlinearity ϕ(x) is difficult to handle. Concretely, we need to express Sϕ(x1) as a functional of Sx(f). This calculation can be performed semi-analytically for the piecewise-linear nonlinearity (a detailed treatment of the nonlinear step is given in Methods, section “Effect of nonlinearities on second-order statistic”). The idea of our iterative method is to start with an arbitrary initial power spectral density , which we choose to be constant (white noise). We then apply multiple iterations each consisting of a linear step followed by a nonlinear one (Fig 2f). At each iteration, the linear step is simply a multiplication by and it allows us to compute (Sx)(n+ 1)(f). The nonlinear step afterwards transforms (Sx)(n+1)(f) into .

By studying the iterative map that defines the mean-field solution, we conclude that the power spectrum of the network activity emerges from a sharpening of the linear response function of single units. The sharpening mainly arises from repeated multiplications with the factor in the iteration, which however is balanced by cross-frequency interactions and saturation effects of the nonlinear steps (see Methods, section “Qualitative study of the iterative map” for a detailed discussion). As a result, the network activity exhibits the same frequency bands that are preferred by single neurons, albeit much narrower.

Qualitative study of the iterative map

For a qualitative understanding of the effect of the iterations on the power spectral density, we exploit the fact that x1 is a Gaussian process, for which the following formula holds [76]

| (37) |

where the angular brackets indicate the mean over the statistics of x1. Eq 37 gives the effect of a nonlinearity ϕ on a the autocorrelation of a Gaussian process x1. By truncating the series after the first term, we get

| (38) |

Fourier transforming this equation we get an approximation of the power spectral density of ϕ(x1)

| (39) |

where we we introduced the function to highlight the fact that the coefficient that multiplies Sx(f) depends on the area under the power spectral density, i.e. on the variance of x1, and is therefore nonlocal in frequency space. We stress that retaining only the first term in Eq 37 is different than considering a linear approximation of ϕ, since the dependence of the coefficient on the variance would not appear in that case.

Using this approximation, we can express the power spectral density at the nth iteration of the iterative method, as a function of the initial power spectral density from which we started to iterate. We obtain

| (40) |

where . If we take to be constant and we define , we can rewrite the above expression as

| (41) |

If g > gc, there will be a range of frequencies for which , which implies that its nth power diverges when n grows. In a purely linear network, this phenomenon would lead to a blow-up of the power spectral density, in agreement with the fact that activity in a linear network is unbounded for g > gc. If ϕ is a compressive nonlinearity however, the coefficient an will tend to zero for growing n, counterbalancing the unbounded growth of . Based on Eq 41, we would predict that all the modes for which will get amplified over multiple iterations, while all the other modes will get suppressed. While this is a highly simplified description, the suppression and the amplification of modes is clearly visible when observing the evolution of the power spectrum over iterations (Fig 2f) and when comparing the dynamics of the self-consistent solution (Fig 8c and 8f, parameters in Table 1) to the corresponding linear response function (Fig 8b and 8e, parameters in Table 1). When truncating the series after the first order however, the mean-field network does not admit a self-consistent solution, for which we need to retain also higher order terms. Such terms will balance the progressive sharpening of the power spectrum, allowing for a self-consistent solution.

Fig 8. Two examples of multi-dimensional rate models.

Parameters defining both models can be found in Table 1. a-b-c: Analysis of a three-dimensional rate model. Eigenvalue spectra (a) corresponding to the coupling values g1 = 1.28, g2 = 1.4 and g3 = 2. The dashed line indicates the imaginary axis. In b we plot the linear response function of the single unit (solid line), and the instability threshold corresponding to the three coupling values g1, g2 and g3 (dashed lines). In c we plot the solution of the mean field theory obtained with the iterative method for the three values of g, g1 = 1.5, g2 = 2 and g3 = 3. d-e-f: Same as a,b,c, but for a four-dimensional rate model.

Table 1. Parameters of the models in Fig 8.

Matrix A defining the rate model for the different examples in Fig 8.

As an example of higher-order term, consider the next term in the series in Eq 37, given by

| (42) |

where Ψ2 is defined analogously to Ψ1. In general, higher-order terms will contain convolutions of the power spectral density with itself, which are responsible for the creation of higher harmonics. To qualitatively understand this effect, consider the case in which Sx(f) is a Dirac δ-function with support in f0. In this case, the two-fold convolution of Sx(f) with itself is again equal to a Dirac δ-function, but centered in 2f0. A similar argument can be given for resonant power spectral densities, which implies that a self-consistent solution should exhibit harmonics of the fundamental resonance frequency. Note that in this paper we considered odd functions, for which only odd terms in the series are nonzero.

For higher values of g, the relative importance of higher-order terms in the series in Eq 37 will increase, leading to a broader power spectrum. The self-consistent power spectrum however, seems to be always narrower than the single neuron linear response function. For a possible explanation of this phenomenon, we consider the g → ∞ limit, which was already studied in [46] for the network without adaptation. Using the same technique, we conclude that in this limit the autocorrelation decay tends to be the same as one obtained for a single unit driven by white noise [46]. In the frequency domain, this is equivalent to say that the power spectral density of the network tends to the one of a single unit driven by white noise.

Effect of nonlinearities on second-order statistics

In this section, we provide some additional details on how to compute the effect of nonlinearities on the second order statistics (autocorrelation or power spectral density) of a Gaussian process. We consider three cases of interest: polynomials, piecewise linear functions and arbitrary nonlinear functions. To simplify our notation, we drop the superscript of and consider a generic Gaussian process x.

The effect of polynomial nonlinearities can be expressed in closed form in time domain. This can be seen by considering again the infinite series expression (Eq 37), valid for stationary Gaussian processes x

| (43) |

where the angular brackets indicate the average over the statistics of x. In the case in which ϕ is a polynomial of degree p, only the terms in the sum up to p are nonzero. As an example, we can compute the effect of a cubic approximation of the hyperbolic tangent, i.e.

| (44) |

As expected, the effect of the nonlinearity depends on Cx(0) i.e. on the variance of x itself. Notice that the coefficient of the first term is compressive (i.e. smaller than one) only if Cx(0) is smaller than one itself. This type of behavior is expected since ϕ3 is unbounded.

Another interesting case are piecewise linear nonlinearities. In this case, we use Price’s theorem twice to get

| (45) |

For a piecewise linear ϕ, the second derivative ϕ′′ is a sum of Dirac’s delta functions with variable coefficients. More precisely, we consider

| (46) |

where xp are the points in which the first derivative is discontinuous, cp are some arbitrary coefficients and Θ(⋅) is the Heaviside function. The second derivative of ϕPL is given by

| (47) |

The delta functions allow us to compute the correlation function explicitly

| (48) |

where we defined . Inserting Eq 48 in Eq 45 and integrating twice with respect to Cx(t) we get

| (49) |

In the case in which ϕ is an odd function, the term fϕ(0; Cx(0)) is equal to zero. For the specific case of the piecewise linear approximation of the hyperbolic tangent considered in this paper, i.e.

| (50) |

the expression in Eq 49 reduces to

| (51) |

For the piecewise linear function, an alternative approach is based on the infinite series in Eq 37, which yields [77, 78]:

| (52) |

with input variance σ2 = Cx(0) and cumulative Gaussian distribution function . For the figures in this paper, we used the map in Eq 51.

For an arbitrary nonlinear function, we can use two methods. The first method is a semi-analytical approach that relies on the integral form of the autocorrelation of the rate Cϕ(x)(τ) as a functional of the autocorrelation Cx(τ) of x [45]

| (53) |

where . Notice that a slightly different version of this formula was already proposed in [1]. Therefore, to obtain the effect of ϕ on the power spectral density, one should 1) inverse Fourier transform Sx(f) to get Cx(τ) 2) apply Eq 53, by computing the two integrals numerically 3) Fourier transform Cϕ(x)(τ) to get Sϕ(x)(f). Practically, this procedure requires the application of the fast Fourier transform algorithm and the numerical evaluation of two integrals.

The second method is fully numerical and it can be useful in cases in which the integrals in the first method are expensive to evaluate numerically. This method consists in approximating the power spectral density Sϕ(x) via Monte Carlo sampling. More precisely, we sample multiple realizations in frequency domain of the Gaussian process with zero mean and power spectral density Sx(f). We then transform each sample to time domain and apply the nonlinearity ϕ(x) to each sample x(t) individually. Finally, we transform back to Fourier domain and get Sϕ(x) by averaging. Despite being computationally more expensive than the closed form expressions, this sampling method provides a solution of the mean-field theory for an arbitrary nonlinearity and it is computationally much cheaper than running the full microscopic simulation. Moreover, this method can easily be extended to be used in the presence of a non-Gaussian sinusoidal input (cf. section “Adaptation shapes signal transmission in the presence of internally-generated noise” and [4]).

Mean-field theory derivation

In this section, we extend the derivation of dynamical mean-field theory (DMFT) to the case of the network of multi-dimensional rate units. Since there are no additional complication with respect to the standard case, we report here only the main steps. For a review of the path-integral approach to DMFT, see e.g. [45, 46]. The moment-generating functional corresponding to the microscopic system in Eq 21 is given by

| (54) |

where

| (55) |

and we introduced the notation . The integral is over paths and bold symbols indicate vectors, over both the network space and the rate model space, so that .

We are interested in properties that are independent of the particular realization of the coupling matrix J. In order to extract those properties, we average over the quenched disorder by defining the averaged generating function

| (56) |

The average over each Jij can be computed by noticing that the terms corresponding to different Jij factorize and the integral can be solved by completing the square. Since the details of this calculation are analogous to the one-dimensional case, we directly report the result

| (57) |

We now aim to decouple the interaction term in the last line by introducing the auxiliary field

| (58) |

We introduce Q1 in the generating functional by inserting the following representation of the unity

| (59) |

where δ[⋅] is the delta functional. Using the integral representation of the delta functional leads to the introduction of a second auxiliary field, which we call Q2. We obtain

| (60) |

This expression has the advantage that any interaction between different units is removed and all the contribution coming from different units factorize. It is convenient to rewrite the averaged generating functional as a field theory for two auxiliary fields Q1, Q2, i.e. we remove the vectorial response terms and we add two scalar response terms for the auxiliary fields. The result is

| (61) |

where we extended our notation to . The crucial observation to make is that essentially all factors associated to different units factorized yielding the factor N. For this reason, the integration is now not over all rate model indices but over only one unit index. The remainder is the problem of one unit, characterized by D variables, interacting with two external fields Q1, Q2.

The final step is to perform a saddle-point approximation, i.e. replace Q1, Q2 by their values that make the action stationary. To do this, we need to solve the two saddle-point equations

| (62) |

These equations are analogous to the ones in the one-dimensional case, and lead to the saddle-point solution

| (63) |

where Cϕ(x1)(s, t) is the autocorrelation function of ϕ(x1) evaluated at the saddle point solution. The averaged generating functional at the leading order in N can be written as

| (64) |

This is the statistical field theory corresponding to D linearly interacting variables, with x1 that receives a Gaussian noise whose autocorrelation is given by Cϕ(x1). Writing the corresponding differential equations results in our mean-field description (Eq 29).

Mean-field theory with heterogeneous adaptation

In this section, we will extend the derivation of the dynamic mean-field theory (DMFT) for the case of the network with heterogeneous adaptation. We consider the case in which each neuron has different parameters, sampled i.i.d from the same distributions, and different parameters of the same neuron are uncorrelated with each other. More precisely, we sample the elements of the matrix Ai for neuron i as

| (65) |

where the subscript i runs over the neurons in the network.

In deriving the mean-field theory, most of the steps are identical to those in Methods, section “Mean-field theory derivation”, so we will focus on the additional terms due to the new source of disorder. We separate the contribution of mean adaptation parameters from the deviations, so that the generating functional reads

| (66) |

where

| (67) |

and is the matrix of the expected values of A.

The action S0 is the same as for the network without heterogeneity, and when averaging over the connectivity disorder, we obtain the same result as for homogeneous network. In this case however, we need to also average over the disorder due to heterogeneity, i.e. over all the . The averaged generating functional will then result from the average

| (68) |