Abstract

Speech separation is the task of separating target speech from background interference. Traditionally, speech separation is studied as a signal processing problem. A more recent approach formulates speech separation as a supervised learning problem, where the discriminative patterns of speech, speakers, and background noise are learned from training data. Over the past decade, many supervised separation algorithms have been put forward. In particular, the recent introduction of deep learning to supervised speech separation has dramatically accelerated progress and boosted separation performance. This paper provides a comprehensive overview of the research on deep learning based supervised speech separation in the last several years. We first introduce the background of speech separation and the formulation of supervised separation. Then, we discuss three main components of supervised separation: learning machines, training targets, and acoustic features. Much of the overview is on separation algorithms where we review monaural methods, including speech enhancement (speech-nonspeech separation), speaker separation (multitalker separation), and speech dereverberation, as well as multimicrophone techniques. The important issue of generalization, unique to supervised learning, is discussed. This overview provides a historical perspective on how advances are made. In addition, we discuss a number of conceptual issues, including what constitutes the target source.

Keywords: Speech separation, speaker separation, speech enhancement, supervised speech separation, deep learning, deep neural networks, speech dereverberation, time-frequency masking, array separation, beamforming

I. INTRODUCTION

THE goal of speech separation is to separate target speech from background interference. Speech separation is a fundamental task in signal processing with a wide range of applications, including hearing prosthesis, mobile telecommunication, and robust automatic speech and speaker recognition. The human auditory system has the remarkable ability to extract one sound source from a mixture of multiple sources. In an acoustic environment like a cocktail party, we seem capable of effortlessly following one speaker in the presence of other speakers and background noises. Speech separation is commonly called the “cocktail party problem,” a term coined by Cherry in his famous 1953 paper [26].

Speech separation is a special case of sound source separation. Perceptually, source separation corresponds to auditory stream segregation, a topic of extensive research in auditory perception. The first systematic study on stream segregation was conducted by Miller and Heise [124], who noted that listeners split a signal with two alternating sine-wave tones into two streams. Bregman and his colleagues have carried out a series of studies on the subject, and in a seminal book [15] he introduced the term auditory scene analysis (ASA) to refer to the perceptual process that segregates an acoustic mixture and groups the signal originating from the same sound source. Auditory scene analysis is divided into simultaneous organization and sequential organization. Simultaneous organization (or grouping) integrates concurrent sounds, while sequential organization integrates sounds across time. With auditory patterns displayed on a time-frequency representation such as a spectrogram, the main organizational principles responsible for ASA include proximity in frequency and time, harmonicity, common amplitude and frequency modulation, onset and offset synchrony, common location, and prior knowledge (see among others [11], [15], [29], [30], [32], [163]). These grouping principles also govern speech segregation [4], [31], [49], [93], [154], [201]. From ASA studies, there seems to be a consensus that the human auditory system segregates and attends to a target sound, which can be a tone sequence, a melody, or a voice. More debatable is the role of auditory attention in stream segregation [17], [120], [148], [151]. In this overview, we use speech separation (or segregation) primarily to refer to the computational task of separating the target speech signal from a noisy mixture.

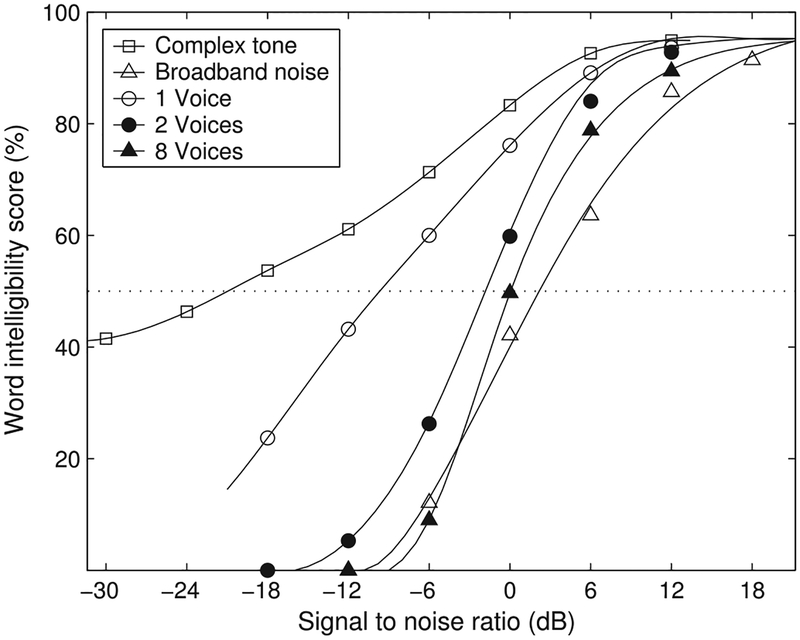

How well do we perform speech segregation? One way of quantifying speech perception performance in noise is to measure the speech reception threshold (SRT), the SNR level required for a 50% intelligibility score. Miller [123] reviewed human intelligibility scores under interference from a variety of tones, broadband noises, and other voices. Listeners were tested for their word intelligibility scores, and the results are shown in Fig. 1. In general, tones are not as interfering as broadband noises. For example, speech is intelligible even when mixed with a complex tone glide that is 20 dB more intense (pure tones are even weaker interferers). Broadband noise is the most interfering for speech perception, and the corresponding SRT is about 2 dB. When interference consists of other voices, the SRT depends on how many interfering talkers are present. As shown in Fig. 1, the SRT is about −10 dB for a single interferer but rapidly increases to −2 dB for two interferers. The SRT stays about the same (around −1 dB) when the interference contains four or more voices. There is a whopping SRT gap of 23 dB for different kinds of interference! Furthermore, it should be noted that listeners with hearing loss show substantially higher SRTs than normal-hearing listeners, ranging from a few decibels for broadband stationary noise to as high as 10–15 dB for interfering speech [44], [127], indicating a poorer ability of speech segregation.

Fig. 1.

Word intelligibility score with respect to SNR for different kinds of interference (from [172], redrawn from [123]). The dashed line indicates 50% intelligibility. For speech interference, scores are shown for 1, 2, and 8 interfering speakers.

With speech as the most important means of human communication, the ability to separate speech from background interference is crucial, as the speech of interest, or target speech, is usually corrupted by additive noises from other sound sources and reverberation from surface reflections. Although humans perform speech separation with apparent ease, it has proven to be very challenging to construct an automatic system to match the human auditory system in this basic task. In his 1957 book [27], Cherry made an observation: “No machine has yet been constructed to do just that [solving the cocktail party problem].” His conclusion, unfortunately for our field, has remained largely true for six more decades, although recent advances reviewed in this article have started to crack the problem.

Given the importance, speech separation has been extensively studied in signal processing for decades. Depending on the number of sensors or microphones, one can categorize separation methods into monaural (single-microphone) and array-based (multi-microphone). Two traditional approaches for monaural separation are speech enhancement [113] and computational auditory scene analysis (CASA) [172]. Speech enhancement analyzes general statistics of speech and noise, followed by estimation of clean speech from noisy speech with a noise estimate [40], [113]. The simplest and most widely used enhancement method is spectral subtraction [13], in which the power spectrum of the estimated noise is subtracted from that of noisy speech. In order to estimate background noise, speech enhancement techniques typically assume that background noise is stationary, i.e., its spectral properties do not change over time, or at least are more stationary than speech. CASA is based on perceptual principles of auditory scene analysis [15] and exploits grouping cues such as pitch and onset. For example, the tandem algorithm separates voiced speech by alternating pitch estimation and pitch-based grouping [78].
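As a rough illustration of the spectral subtraction idea described above, the following Python sketch subtracts an estimated noise power spectrum from every frame of a noisy power spectrogram. The function name, the array layout (frames by frequency bins), and the flooring of negative results are assumptions made for this example and are not taken from [13].

```python
import numpy as np

def spectral_subtraction(noisy_power, noise_power, floor=0.0):
    """Subtract a stationary noise power estimate from a noisy power spectrogram.

    noisy_power: (T, F) array, power spectrum of noisy speech per frame
    noise_power: (F,) array, estimated noise power per frequency bin
    floor:       lower bound applied after subtraction (assumed, to avoid negative power)
    """
    noisy_power = np.asarray(noisy_power, dtype=float)
    noise_power = np.asarray(noise_power, dtype=float)
    # The same noise estimate is used for all frames (stationarity assumption).
    estimate = noisy_power - noise_power[np.newaxis, :]
    return np.maximum(estimate, floor)
```

The stationarity assumption appears here as a single noise vector shared by all frames; a nonstationary noise would require a time-varying estimate.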

An array with two or more microphones uses a different principle to achieve speech separation. Beamforming, or spatial filtering, boosts the signal that arrives from a specific direction through proper array configuration, hence attenuating interference from other directions [9], [14], [88], [164]. The simplest beamformer is a delay-and-sum technique that adds multiple microphone signals from the target direction in phase and uses phase differences to attenuate signals from other directions. The amount of noise attenuation depends on the spacing, size, and configuration of the array – generally the attenuation increases as the number of microphones and the array length increase. Obviously, spatial filtering cannot be applied when target and interfering sources are co-located or near to one another. Moreover, the utility of beamforming is much reduced in reverberant conditions, which smear the directionality of sound sources.
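The delay-and-sum principle can be sketched in a few lines of Python. This is a minimal illustration that assumes the arrival delay of the target at each microphone is already known and is a nonnegative whole number of samples; the function name and argument layout are hypothetical.

```python
import numpy as np

def delay_and_sum(signals, delays):
    """Align microphone signals on the target direction and average them.

    signals: (M, L) array, one row per microphone
    delays:  length-M sequence of nonnegative integer sample delays with which
             the target reaches each microphone (assumed known)
    """
    signals = np.asarray(signals, dtype=float)
    num_mics, length = signals.shape
    out = np.zeros(length)
    for x, d in zip(signals, delays):
        aligned = np.zeros(length)
        # Advance the channel by d samples so the target adds in phase.
        aligned[: length - d] = x[d:]
        out += aligned
    return out / num_mics
```

After alignment the target components add coherently, while signals from other directions remain misaligned across channels and are attenuated by the averaging.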

A more recent approach treats speech separation as a supervised learning problem. The original formulation of supervised speech separation was inspired by the concept of time-frequency (T-F) masking in CASA. As a means of separation, T-F masking applies a two-dimensional mask (weighting) to the time-frequency representation of a source mixture in order to separate the target source [117], [170], [172]. A major goal of CASA is the ideal binary mask (IBM) [76], which denotes whether the target signal dominates a T-F unit in the time-frequency representation of a mixed signal. Listening studies show that ideal binary masking dramatically improves speech intelligibility for normal-hearing (NH) and hearing-impaired (HI) listeners in noisy conditions [1], [16], [109], [173]. With the IBM as the computational goal, speech separation becomes binary classification, an elementary form of supervised learning. In this case, the IBM is used as the desired signal, or target function, during training. During testing, the learning machine aims to estimate the IBM. Although it served as the first training target in supervised speech separation, the IBM is by no means the only training target and Section III presents a list of training targets, many shown to be more effective.

Since the formulation of speech separation as classification, the data-driven approach has been extensively studied in the speech processing community. Over the last decade, supervised speech separation has substantially advanced the state-of-the-art performance by leveraging large training data and increasing computing resources [21]. Supervised separation has especially benefited from the rapid rise in deep learning – the topic of this overview. Supervised speech separation algorithms can be broadly divided into the following components: learning machines, training targets, and acoustic features. In this paper, we will first review the three components. We will then move to describe representative algorithms, where monaural and array-based algorithms will be covered in separate sections. As generalization is an issue unique to supervised speech separation, this issue will be treated in this overview.

Let us clarify a few related terms used in this overview to avoid potential confusion. We refer to speech separation or segregation as the general task of separating target speech from its background interference, which may include nonspeech noise, interfering speech, or both, as well as room reverberation. Furthermore, we equate speech separation and the cocktail party problem, which goes beyond the separation of two speech utterances originally experimented with by Cherry [26]. By speech enhancement (or denoising), we mean the separation of speech and nonspeech noise. If one is limited to the separation of multiple voices, we use the term speaker separation.

This overview is organized as follows. We first review the three main aspects of supervised speech separation, i.e., learning machines, training targets, and features, in Sections II, III, and IV, respectively. Section V is devoted to monaural separation algorithms, and Section VI to array-based algorithms. Section VII concludes the overview with a discussion of a few additional issues, such as what signal should be considered as the target and what a solution to the cocktail party problem may look like.

II. CLASSIFIERS AND LEARNING MACHINES

Over the past decade, DNNs have significantly elevated the performance of many supervised learning tasks, such as image classification [28], handwriting recognition [53], automatic speech recognition [73], language modeling [156], and machine translation [157]. DNNs have also advanced the performance of supervised speech separation by a large margin. This section briefly introduces the types of DNNs for supervised speech separation: feedforward multilayer perceptrons (MLPs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs).

The most popular model in neural networks is an MLP that has feedforward connections from the input layer to the output layer, layer-by-layer, and the consecutive layers are fully connected. An MLP is an extension of Rosenblatt’s perceptron [142] by introducing hidden layers between the input layer and the output layer. An MLP is trained with the classical backpropagation algorithm [143] where the network weights are adjusted to minimize the prediction error through gradient descent. The prediction error is measured by a cost (loss) function between the predicted output and the desired output, the latter provided by the user as part of supervision. For example, when an MLP is used for classification, a popular cost function is cross entropy:

$$-\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} I_{i,c}\,\log\left(p_{i,c}\right)$$

where $i$ indexes an output model neuron and $p_{i,c}$ denotes the predicted probability of $i$ belonging to class $c$. $N$ and $C$ indicate the number of output neurons and the number of classes, respectively. $I_{i,c}$ is a binary indicator, which takes 1 if the desired class of neuron $i$ is $c$ and 0 otherwise. For function approximation or regression, a common cost function is mean square error (MSE):

$$\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^2$$

where $\hat{y}_i$ and $y_i$ are the predicted output and desired output for neuron $i$, respectively.
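The two cost functions can be written compactly in Python with NumPy. This is a minimal sketch: the function names, the use of integer class labels in place of the binary indicator, and the small constant `eps` that guards the logarithm are assumptions made for illustration.

```python
import numpy as np

def cross_entropy(p, labels, eps=1e-12):
    """Cross entropy averaged over N output neurons.

    p:      (N, C) array, p[i, c] is the predicted probability of class c for neuron i
    labels: (N,) integer array, desired class of each neuron
    """
    p = np.asarray(p, dtype=float)
    labels = np.asarray(labels)
    n = p.shape[0]
    # The binary indicator keeps only the probability of the desired class.
    picked = p[np.arange(n), labels]
    return float(-np.mean(np.log(picked + eps)))

def mean_square_error(y_hat, y):
    """MSE between predicted outputs y_hat and desired outputs y."""
    y_hat = np.asarray(y_hat, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.mean((y_hat - y) ** 2))
```

For instance, a two-class prediction of 0.5 for every class yields a cross entropy of about log 2, the value expected from an uninformed classifier.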

The representational power of an MLP increases as the number of layers increases [142] even though, in theory, an MLP with two hidden layers can approximate any function [70]. The backpropagation algorithm is applicable to an MLP of any depth. However, a deep neural network (DNN) with many hidden layers is difficult to train from a random initialization of connection weights and biases because of the so-called vanishing gradient problem, which refers to the observation that, at lower layers (near the input end), gradients calculated from error signals backpropagated from upper layers become progressively smaller or vanish. As a result of vanishing gradients, connection weights at lower layers are not modified much and therefore lower layers learn little during training. This explains why MLPs with a single hidden layer were the most widely used neural networks prior to the advent of DNNs.

A breakthrough in DNN training was made by Hinton et al. [74]. The key idea is to perform layerwise unsupervised pretraining with unlabeled data to properly initialize a DNN before supervised learning (or fine tuning) is performed with labeled data. More specifically, Hinton et al. [74] proposed restricted Boltzmann machines (RBMs) to pretrain a DNN layer by layer, and RBM pretraining is found to improve subsequent supervised learning. A later remedy was to use a rectified linear unit (ReLU) [128] to replace the traditional sigmoid activation function, which converts a weighted sum of the inputs to a model neuron to the neuron’s output. Recent practice shows that a moderately deep MLP with ReLUs can be effectively trained with large training data without unsupervised pretraining. Recently, skip connections have been introduced to facilitate the training of very deep MLPs [62], [153].

A class of feedforward networks, known as convolutional neural networks (CNNs) [10], [106], has been demonstrated to be well suited for pattern recognition, particularly in the visual domain. CNNs incorporate well-documented invariances in pattern recognition such as translation (shift) invariance. A typical CNN architecture is a cascade of pairs of a convolutional layer and a subsampling layer. A convolutional layer consists of multiple feature maps, each of which learns to extract a local feature regardless of its position in the previous layer through weight sharing: the neurons within the same module are constrained to have the same connection weights despite their different receptive fields. A receptive field of a neuron in this context denotes the local area of the previous layer that is connected to the neuron, whose operation of a weighted sum is akin to a convolution.1 Each convolutional layer is followed by a subsampling layer that performs local averaging or maximization over the receptive fields of the neurons in the convolutional layer. Subsampling serves to reduce resolution and sensitivity to local variations. The use of weight sharing in CNN also has the benefit of cutting down the number of trainable parameters. Because a CNN incorporates domain knowledge in pattern recognition via its network structure, it can be better trained by the backpropagation algorithm despite the fact that a CNN is a deep network.

RNNs allow recurrent (feedback) connections, typically between hidden units. Unlike feedforward networks, which process each input sample independently, RNNs treat input samples as a sequence and model the changes over time. A speech signal exhibits strong temporal structure, and the signal within the current frame is influenced by the signals in the previous frames. Therefore, RNNs are a natural choice for learning the temporal dynamics of speech. We note that an RNN through its recurrent connections introduces the time dimension, which is flexible and infinitely extensible, a characteristic not shared by feedforward networks no matter how deep they are [169]; in a way, an RNN can be viewed as a DNN with an infinite depth [146]. The recurrent connections are typically trained with backpropagation through time [187]. However, such RNN training is susceptible to the vanishing or exploding gradient problem [137]. To alleviate this problem, an RNN with long short-term memory (LSTM) introduces memory cells with gates to facilitate the information flow over time [75]. Specifically, a memory cell has three gates: input gate, forget gate and output gate. The forget gate controls how much previous information should be retained, and the input gate controls how much current information should be added to the memory cell. With these gating functions, LSTM allows relevant contextual information to be maintained in memory cells to improve RNN training.
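To make the role of the three gates concrete, the sketch below implements one time step of a commonly used LSTM formulation in Python. The parameter layout (stacked weight matrices `W`, `U`, and bias `b`) and the gate ordering are assumptions chosen for this example; they are not prescribed by the text or by [75].

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: (D,) input; h_prev, c_prev: (H,) previous hidden state and memory cell
    W: (4H, D), U: (4H, H), b: (4H,), stacked as [input, forget, output, candidate]
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to add
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell to retain
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate content for the memory cell
    c = f * c_prev + i * g       # updated memory cell
    h = o * np.tanh(c)           # updated hidden state
    return h, c
```

Because the memory cell is updated additively, scaled by the forget gate, information can persist across many time steps, which is what eases the gradient problem discussed above.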

Generative adversarial networks (GANs) were recently introduced with two simultaneously trained models: a generative model G and a discriminative model D [52]. The generator G learns to model labeled data, e.g., the mapping from noisy speech samples to their clean counterparts, while the discriminator – usually a binary classifier – learns to discriminate between generated samples and target samples from training data. This framework is analogous to a two-player adversarial game, where minimax is a proven strategy [144]. During training, G aims to learn an accurate mapping so that the generated data can imitate the real data well enough to fool D; on the other hand, D learns to better tell the difference between the real data and synthetic data generated by G. Competition in this game, or adversarial learning, drives both models to improve their accuracy until generated samples are indistinguishable from real ones. The key idea of GANs is to use the discriminator to shape the loss function of the generator. GANs have recently been used in speech enhancement (see Section V.A).

In this overview, a DNN refers to any neural network with at least two hidden layers [10], [73], in contrast to popular learning machines with just one hidden layer such as commonly used MLPs, support vector machines (SVMs) with kernels, and Gaussian mixture models (GMMs). As DNNs get deeper in practice, with more than 100 hidden layers actually used, the depth required for a neural network to be considered a DNN can be a matter of a qualitative, rather than quantitative, distinction. Also, we use the term DNN to denote any neural network with a deep structure, whether it is feedforward or recurrent.

We should mention that DNN is not the only kind of learning machine that has been employed for speech separation. Alternative learning machines used for supervised speech separation include GMM [97], [147], SVM [55], and neural networks with just one hidden layer [91]. Such studies will not be further discussed in this overview as its theme is DNN based speech separation.

III. TRAINING TARGETS

In supervised speech separation, defining a proper training target is important for learning and generalization. There are mainly two groups of training targets, i.e., masking-based targets and mapping-based targets. Masking-based targets describe the time-frequency relationships of clean speech to background interference, while mapping-based targets correspond to the spectral representations of clean speech. In this section, we survey a number of training targets proposed in the field.

Before reviewing training targets, let us first describe evaluation metrics commonly used in speech separation. A variety of metrics has been proposed in the literature, depending on the objectives of individual studies. These metrics can be divided into two classes: signal-level and perception-level. At the signal level, metrics aim to quantify the degrees of signal enhancement or interference reduction. In addition to the traditional SNR, speech distortion (loss) and noise residue in a separated signal can be individually measured [77], [113]. A prominent set of evaluation metrics comprises SDR (source-to-distortion ratio), SIR (source-to-interference ratio), and SAR (source-to-artifact ratio) [165].

As the output of a speech separation system is often consumed by the human listener, a lot of effort has been made to quantitatively predict how the listener perceives a separated signal. Because intelligibility and quality are two primary but different aspects of speech perception, objective metrics have been developed to separately evaluate speech intelligibility and speech quality. With the IBM’s ability to elevate human speech intelligibility and its connection to the articulation index (AI) [114] – the classic model of speech perception – the HIT−FA rate has been suggested as an evaluation metric with the IBM as the reference [97]. HIT denotes the percent of speech-dominant T-F units in the IBM that are correctly classified and FA (false-alarm) refers to the percent of noise-dominant units that are incorrectly classified. The HIT−FA rate is found to be well correlated with speech intelligibility [97]. In recent years, the most commonly used intelligibility metric is STOI (short-time objective intelligibility), which measures the correlation between the short-time temporal envelopes of a reference (clean) utterance and a separated utterance [89], [158]. The value range of STOI is typically between 0 and 1, which can be interpreted as percent correct. Although STOI tends to overpredict intelligibility scores [64], [102], no alternative metric has been shown to consistently correlate with human intelligibility better. For speech quality, PESQ (perceptual evaluation of speech quality) is the standard metric [140] and is recommended by the International Telecommunication Union (ITU) [87]. PESQ applies an auditory transform to produce a loudness spectrum, and compares the loudness spectra of a clean reference signal and a separated signal to produce a score in a range of −0.5 to 4.5, corresponding to the prediction of the perceptual MOS (mean opinion score).
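The HIT−FA rate follows directly from its definition. The Python sketch below is a minimal illustration; the function name is hypothetical, and the result is returned as a fraction between −1 and 1 rather than a percentage.

```python
import numpy as np

def hit_minus_fa(ibm, estimated_mask):
    """HIT-FA rate of an estimated binary mask, with the IBM as reference.

    Both inputs are arrays of 0/1 values with the same shape (time x frequency).
    Assumes the IBM contains at least one unit of each class.
    """
    ibm = np.asarray(ibm).astype(bool)
    est = np.asarray(estimated_mask).astype(bool)
    hit = est[ibm].mean()   # speech-dominant units correctly labeled 1
    fa = est[~ibm].mean()   # noise-dominant units incorrectly labeled 1
    return float(hit - fa)
```

A perfect estimate gives 1, while a mask that labels units independently of the IBM gives a value near 0.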

A. Ideal Binary Mask

The first training target used in supervised speech separation is the ideal binary mask [76], [77], [141], [168], which is inspired by the auditory masking phenomenon in audition [126] and the exclusive allocation principle in auditory scene analysis [15]. The IBM is defined on a two-dimensional T-F representation of a noisy signal, such as a cochleagram or a spectrogram:

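$$\mathrm{IBM}(t, f) = \begin{cases} 1, & \text{if } \mathrm{SNR}(t, f) > \mathrm{LC} \\ 0, & \text{otherwise} \end{cases} \tag{1}$$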

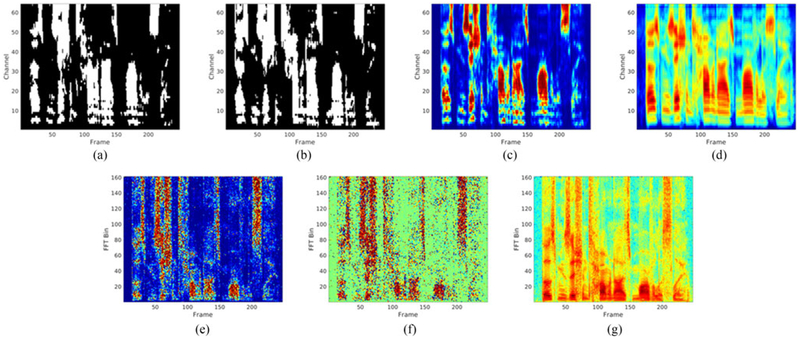

where t and f denote time and frequency, respectively. The IBM assigns the value 1 to a unit if the SNR within the T-F unit exceeds the local criterion (LC) or threshold, and 0 otherwise. Fig. 2(a) shows an example of the IBM, which is defined on a 64-channel cochleagram. As mentioned in Section I, IBM masking dramatically increases speech intelligibility in noise for normal-hearing and hearing-impaired listeners. The IBM labels every T-F unit as either target-dominant or interference-dominant. As a result, IBM estimation can naturally be treated as a supervised classification problem. A commonly used cost function for IBM estimation is cross entropy, as described in Section II.
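Given the premixed speech and noise energies in each T-F unit, the IBM can be computed as in the following Python sketch. The function name, the decibel form of the local SNR, and the constant `eps` guarding the division are assumptions made for this example.

```python
import numpy as np

def ideal_binary_mask(speech_energy, noise_energy, lc_db=0.0, eps=1e-12):
    """IBM over a T-F representation.

    speech_energy, noise_energy: (T, F) arrays of energy within each T-F unit
    lc_db: local criterion (LC) in dB
    """
    speech_energy = np.asarray(speech_energy, dtype=float)
    noise_energy = np.asarray(noise_energy, dtype=float)
    snr_db = 10.0 * np.log10((speech_energy + eps) / (noise_energy + eps))
    # A unit is labeled 1 only if its local SNR exceeds the LC.
    return (snr_db > lc_db).astype(np.float32)
```

Raising `lc_db` yields a sparser mask, since fewer units pass the threshold.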

Fig. 2.

Illustration of various training targets for a TIMIT utterance mixed with a factory noise at −5 dB SNR. (a) IBM. (b) TBM. (c) IRM. (d) GF-TPS. (e) SMM. (f) PSM. (g) TMS.

B. Target Binary Mask

Like the IBM, the target binary mask (TBM) categorizes all T-F units with a binary label. Different from the IBM, the TBM derives the label by comparing the target speech energy in each T-F unit with a fixed interference: speech-shaped noise, which is a stationary signal corresponding to the average of all speech signals. An example of the TBM is shown in Fig. 2(b). Target binary masking also leads to dramatic improvement of speech intelligibility in noise [99], and the TBM has been used as a training target [51], [112].

C. Ideal Ratio Mask

Instead of a hard label on each T-F unit, the ideal ratio mask (IRM) can be viewed as a soft version of the IBM [84], [130], [152], [178]:

$$\mathrm{IRM}(t, f) = \left( \frac{S(t, f)^2}{S(t, f)^2 + N(t, f)^2} \right)^{\beta} \tag{2}$$

where $S(t, f)^2$ and $N(t, f)^2$ denote speech energy and noise energy within a T-F unit, respectively. The tunable parameter β scales the mask, and is commonly chosen to be 0.5. With the square root, the IRM preserves the speech energy within each T-F unit, under the assumption that S(t, f) and N(t, f) are uncorrelated. This assumption holds well for additive noise, but not for convolutive interference as in the case of room reverberation (late reverberation, however, can be reasonably considered as uncorrelated interference). Without the root, the IRM in (2) is similar to the classical Wiener filter, which is the optimal estimator of target speech in the power spectrum. MSE is typically used as the cost function for IRM estimation. An example of the IRM is shown in Fig. 2(c).
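The IRM and its energy-preserving property for β = 0.5 can be checked numerically. The Python sketch below assumes per-unit speech and noise energies are available; the function name and the constant `eps` are illustrative.

```python
import numpy as np

def ideal_ratio_mask(speech_energy, noise_energy, beta=0.5, eps=1e-12):
    """IRM from speech and noise energy within each T-F unit."""
    speech_energy = np.asarray(speech_energy, dtype=float)
    noise_energy = np.asarray(noise_energy, dtype=float)
    return (speech_energy / (speech_energy + noise_energy + eps)) ** beta

speech = np.array([[1.0, 3.0]])
noise = np.array([[1.0, 1.0]])
irm = ideal_ratio_mask(speech, noise)
# With beta = 0.5, the squared mask applied to the mixture energy (speech plus
# noise energy, under the uncorrelatedness assumption) recovers speech energy.
recovered = irm ** 2 * (speech + noise)
```

Setting `beta=1.0` gives the Wiener-like form mentioned above.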

D. Spectral Magnitude Mask

The spectral magnitude mask (SMM) (called FFT-MASK in [178]) is defined on the STFT (short-time Fourier transform) magnitudes of clean speech and noisy speech:

$$\mathrm{SMM}(t, f) = \frac{|S(t, f)|}{|Y(t, f)|} \tag{3}$$

where |S(t, f)| and |Y(t, f)| represent spectral magnitudes of clean speech and noisy speech, respectively. Unlike the IRM, the SMM is not upper-bounded by 1. To obtain separated speech, we apply the SMM or its estimate to the spectral magnitudes of noisy speech, and resynthesize separated speech with the phases of noisy speech (or an estimate of clean speech phases). Fig. 2(e) illustrates the SMM.
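The following Python sketch computes the SMM from complex STFT arrays and applies it with the noisy phase. It assumes the STFTs of clean and noisy speech are already available as complex NumPy arrays; the function names and `eps` are hypothetical, and the inverse STFT needed for waveform resynthesis is omitted.

```python
import numpy as np

def spectral_magnitude_mask(S, Y, eps=1e-12):
    """SMM from the STFTs of clean speech S and noisy speech Y."""
    return np.abs(S) / (np.abs(Y) + eps)

def apply_magnitude_mask(mask, Y):
    """Scale noisy magnitudes by the mask and reuse the noisy phase."""
    return mask * np.abs(Y) * np.exp(1j * np.angle(Y))

S = np.array([[1.0 + 0.0j, 0.0 + 2.0j]])
N = np.array([[0.0 + 1.0j, 0.0 - 1.0j]])
Y = S + N
smm = spectral_magnitude_mask(S, Y)
estimate = apply_magnitude_mask(smm, Y)
```

In the second T-F unit, speech and noise partly cancel in the mixture, so the mask exceeds 1, which illustrates why the SMM has no upper bound of 1.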

E. Phase-Sensitive Mask

The phase-sensitive mask (PSM) extends the SMM by including a measure of phase [41]:

$$\mathrm{PSM}(t, f) = \frac{|S(t, f)|}{|Y(t, f)|} \cos\theta \tag{4}$$

where θ denotes the difference of the clean speech phase and the noisy speech phase within the T-F unit. The inclusion of the phase difference in the PSM leads to a higher SNR, and tends to yield a better estimate of clean speech than the SMM [41]. An example of the PSM is shown in Fig. 2(f).
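A minimal Python sketch of the PSM is given below, again assuming complex STFT arrays for clean and noisy speech; the function name and `eps` are illustrative. The example also checks the identity that the PSM equals the real part of the complex ratio S/Y.

```python
import numpy as np

def phase_sensitive_mask(S, Y, eps=1e-12):
    """PSM from the STFTs of clean speech S and noisy speech Y."""
    theta = np.angle(S) - np.angle(Y)  # clean phase minus noisy phase
    return np.abs(S) / (np.abs(Y) + eps) * np.cos(theta)

S = np.array([[1.0 + 0.0j, 0.0 + 2.0j, -1.0 + 0.0j]])
Y = np.array([[1.0 + 1.0j, 0.0 + 1.0j, 1.0 + 0.0j]])
psm = phase_sensitive_mask(S, Y)
```

Unlike the SMM, the PSM can take negative values when the clean and noisy phases differ by more than 90 degrees, as in the third unit of this example.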

F. Complex Ideal Ratio Mask

The complex ideal ratio mask (cIRM) is an ideal mask in the complex domain. Unlike the aforementioned masks, it can perfectly reconstruct clean speech from noisy speech [188]:

$$S = \mathrm{cIRM} * Y \tag{5}$$

where S, Y denote the STFT of clean speech and noisy speech, respectively, and ‘*’ represents complex multiplication. Solving for mask components results in the following definition:

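$$\mathrm{cIRM} = \frac{Y_r S_r + Y_i S_i}{Y_r^2 + Y_i^2} + i\,\frac{Y_r S_i - Y_i S_r}{Y_r^2 + Y_i^2} \tag{6}$$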

where $Y_r$ and $Y_i$ denote real and imaginary components of noisy speech, respectively, and $S_r$ and $S_i$ real and imaginary components of clean speech, respectively. The imaginary unit is denoted by ‘i’. Thus the cIRM has a real component and an imaginary component, which can be separately estimated in the real domain. Because of complex-domain calculations, mask values become unbounded. So some form of compression should be used to bound mask values, such as a hyperbolic tangent or sigmoidal function [184], [188].
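The real and imaginary components of the cIRM, and one possible bounding of their values, can be sketched in Python as follows. The function names are hypothetical, and the scale `k` and slope `c` of the hyperbolic tangent compression are illustrative values chosen for this example rather than settings confirmed by [184] or [188].

```python
import numpy as np

def complex_ideal_ratio_mask(S, Y, eps=1e-12):
    """Real and imaginary components of the cIRM from STFTs S (clean) and Y (noisy)."""
    S = np.asarray(S, dtype=complex)
    Y = np.asarray(Y, dtype=complex)
    denom = Y.real ** 2 + Y.imag ** 2 + eps
    mask_real = (Y.real * S.real + Y.imag * S.imag) / denom
    mask_imag = (Y.real * S.imag - Y.imag * S.real) / denom
    return mask_real, mask_imag

def compress_mask(m, k=10.0, c=0.1):
    """Bound mask values to (-k, k) with a hyperbolic tangent (k, c are assumed)."""
    return k * np.tanh(0.5 * c * m)

S = np.array([[1.0 + 2.0j, -0.5 + 0.1j]])
Y = np.array([[0.5 - 1.0j, 2.0 + 0.3j]])
m_r, m_i = complex_ideal_ratio_mask(S, Y)
reconstructed = (m_r + 1j * m_i) * Y  # complex multiplication recovers S
```

The two components are plain real-valued arrays, so each can serve as a regression target in the real domain.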

Williamson et al. [188] observe that, in Cartesian coordinates, structure exists in both real and imaginary components of the cIRM, whereas in polar coordinates, structure exists in the magnitude spectrogram but not in the phase spectrogram. Without clear structure, direct phase estimation would be intractable through supervised learning, although we should mention a recent paper that uses a complex-domain DNN to estimate complex STFT coefficients [107]. On the other hand, an estimate of the cIRM provides a phase estimate, a property not possessed by PSM estimation.

G. Target Magnitude Spectrum

The target magnitude spectrum (TMS) of clean speech, or |S(t, f)|, is a mapping-based training target [57], [116], [196], [197]. In this case supervised learning aims to estimate the magnitude spectrogram of clean speech from that of noisy speech. Power spectrum, or other forms of spectra such as mel spectrum, may be used instead of magnitude spectrum, and a log operation is usually applied to compress the dynamic range and facilitate training. A prominent form of the TMS is the log-power spectrum normalized to zero mean and unit variance [197]. An estimated speech magnitude is then combined with noisy phase to produce the separated speech waveform. In terms of cost function, MSE is usually used for TMS estimation. Alternatively, maximum likelihood can be employed to train a TMS estimator that explicitly models output correlation [175]. Fig. 2(g) shows an example of the TMS.
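The normalized log-power form of this target can be sketched in Python as below. The example computes the mean and standard deviation per frequency bin over the frames of a single utterance, which is an assumption made for brevity; in practice such statistics would more plausibly be gathered over a training set. Function names and `eps` are hypothetical.

```python
import numpy as np

def log_power_target(S, eps=1e-12):
    """Log-power spectrum normalized to zero mean and unit variance per frequency bin.

    S: (T, F) STFT (or magnitude) of clean speech
    Returns the normalized target and the statistics needed to undo it.
    """
    lps = np.log(np.abs(S) ** 2 + eps)
    mean = lps.mean(axis=0)
    std = lps.std(axis=0) + eps
    return (lps - mean) / std, mean, std

def magnitude_from_target(target, mean, std):
    """Invert the normalization and the log to get a spectral magnitude."""
    return np.sqrt(np.exp(target * std + mean))
```

The recovered magnitude would then be paired with the noisy phase for resynthesis, as described above.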

H. Gammatone Frequency Target Power Spectrum

Another closely related mapping-based target is the gammatone frequency target power spectrum (GF-TPS) [178]. Unlike the TMS defined on a spectrogram, this target is defined on a cochleagram based on a gammatone filterbank. Specifically, this target is defined as the power of the cochleagram response to clean speech. An estimate of the GF-TPS is easily converted to the separated speech waveform through cochleagram inversion [172]. Fig. 2(d) illustrates this target.

I. Signal Approximation

The idea of signal approximation (SA) is to train a ratio mask estimator that minimizes the difference between the spectral magnitude of clean speech and that of estimated speech [81], [186]:

$$\mathrm{SA}(t, f) = \left[ \mathrm{RM}(t, f)\,|Y(t, f)| - |S(t, f)| \right]^2 \tag{7}$$

RM(t, f) refers to an estimate of the SMM. So, SA can be interpreted as a target that combines ratio masking and spectral mapping, seeking to maximize SNR [186]. A related, earlier target aims for the maximal SNR in the context of IBM estimation [91]. For the SA target, better separation performance is achieved with two-stage training [186]. In the first stage, a learning machine is trained with the SMM as the target. In the second stage, the learning machine is fine-tuned by minimizing the loss function of (7).
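The signal approximation objective can be illustrated with a short Python sketch. The function name is hypothetical, and averaging the squared error over all T-F units is an assumption about how the per-unit quantity would be aggregated into a single loss value.

```python
import numpy as np

def signal_approximation_loss(ratio_mask, noisy_mag, clean_mag):
    """Squared error between masked noisy magnitude and clean magnitude.

    ratio_mask: (T, F) estimated ratio mask RM(t, f)
    noisy_mag:  (T, F) noisy speech magnitude |Y(t, f)|
    clean_mag:  (T, F) clean speech magnitude |S(t, f)|
    """
    ratio_mask = np.asarray(ratio_mask, dtype=float)
    noisy_mag = np.asarray(noisy_mag, dtype=float)
    clean_mag = np.asarray(clean_mag, dtype=float)
    # The error is measured on the estimated speech, not on the mask itself.
    return float(np.mean((ratio_mask * noisy_mag - clean_mag) ** 2))
```

The loss is zero when the estimated mask equals the SMM, which is consistent with using the SMM as the first-stage target before fine-tuning.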

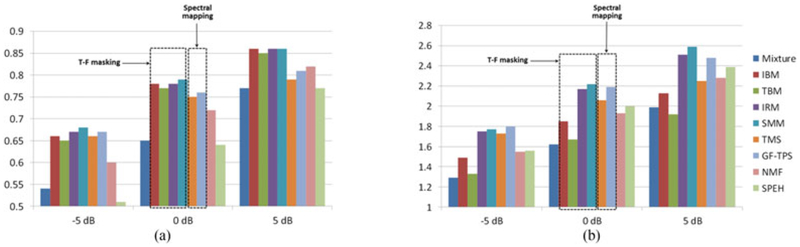

A number of training targets have been compared using a fixed feedforward DNN with three hidden layers and the same set of input features [178]. The separated speech using various training targets is evaluated in terms of STOI and PESQ, for predicted speech intelligibility and speech quality, respectively. In addition, a representative speech enhancement algorithm [66] and a supervised nonnegative matrix factorization (NMF) algorithm [166] are evaluated as benchmarks. The evaluation results are given in Fig. 3. A number of conclusions can be drawn from this study. First, in terms of objective intelligibility, the masking-based targets as a group outperform the mapping-based targets, although a recent study [155] indicates that masking is advantageous only at higher input SNRs and at lower SNRs mapping is more advantageous.2 In terms of speech quality, ratio masking performs better than binary masking. Particularly illuminating is the contrast between the SMM and the TMS, which are the same except for the use of |Y(t, f)| in the denominator of the SMM (see (3)). The better estimation of the SMM may be attributed to the fact that the target magnitude spectrum is insensitive to the interference signal and SNR, whereas the SMM is. The many-to-one mapping in the TMS makes its estimation potentially more difficult than SMM estimation. In addition, the estimation of unbounded spectral magnitudes tends to magnify estimation errors [178]. Overall, the IRM and the SMM emerge as the preferred targets. In addition, DNN based ratio masking performs substantially better than supervised NMF and unsupervised speech enhancement.

Fig. 3.

Comparison of training targets. (a) In terms of STOI. (b) In terms of PESQ. Clean speech is mixed with a factory noise at −5 dB, 0 dB and 5 dB SNR. Results for different training targets as well as a speech enhancement (SPEH) algorithm and an NMF method are highlighted for 0 dB mixtures. Note that the results and the data in this figure can be obtained from a Matlab toolbox at http://web.cse.ohio-state.edu/pnl/DNN_toolbox/.

The above list of training targets is not meant to be exhaustive, and other targets have been used in the literature. Perhaps the most straightforward target is the waveform (time-domain) signal of clean speech. This indeed was used in an early study that trains an MLP to map from a frame of noisy speech waveform to a frame of clean speech waveform, which may be called temporal mapping [160]. Although simple, such direct mapping does not perform well even when a DNN is used in place of a shallow network [34], [182]. In [182], a target is defined in the time domain but the DNN for target estimation includes modules for ratio masking and inverse Fourier transform with noisy phase. This target is closely related to the PSM3. A recent study evaluates oracle results of a number of ideal masks and additionally introduces the so-called ideal gain mask (IGM) [184], defined in terms of a priori SNR and a posteriori SNR commonly used in traditional speech enhancement [113]. In [192], the so-called optimal ratio mask, which takes into account the correlation between target speech and background noise [110], was evaluated and found to be an effective target for DNN-based speech separation.

IV. FEATURES

Features as input and learning machines play complementary roles in supervised learning. When features are discriminative, they place less demand on the learning machine in order to perform a task successfully. On the other hand, a powerful learning machine places less demand on features. At one extreme, a linear classifier, like Rosenblatt’s perceptron, is all that is needed when features make a classification task linearly separable. At the other extreme, the input in the original form without any feature extraction (e.g., waveform in audio) suffices if the classifier is capable of learning appropriate features. In between are a majority of tasks where both feature extraction and learning are important.

Early studies in supervised speech separation use only a few features such as interaural time differences (ITD) and interaural level (intensity) differences (IID) [141] in binaural separation, and pitch-based features [55], [78], [91] and amplitude modulation spectrogram (AMS) [97] in monaural separation. A subsequent study [177] explores more monaural features including mel-frequency cepstral coefficient (MFCC), gammatone frequency cepstral coefficient (GFCC) [150], perceptual linear prediction (PLP) [67], and relative spectral transform PLP (RASTA-PLP) [68]. Through feature selection using group Lasso, the study recommends a complementary feature set comprising AMS, RASTA-PLP, and MFCC (and pitch if it can be reliably estimated), which has since been used in many studies.

We conducted a study to examine an extensive list of acoustic features for supervised speech separation at low SNRs [22]. The features have been previously used for robust automatic speech recognition and classification-based speech separation. The feature list includes mel-domain, linear-prediction, gammatone-domain, zero-crossing, autocorrelation, medium-time-filtering, modulation, and pitch-based features. The mel-domain features are MFCC and delta-spectral cepstral coefficient (DSCC) [104], which is similar to MFCC except that a delta operation is applied to mel-spectrum. The linear prediction features are PLP and RASTA-PLP. The three gammatone-domain features are gammatone feature (GF), GFCC, and gammatone frequency modulation coefficient (GFMC) [119]. GF is computed by passing an input signal to a gammatone filterbank and applying a decimation operation to subband signals. A zero-crossing feature, called zero-crossings with peak-amplitudes (ZCPA) [96], computes zero-crossing intervals and corresponding peak amplitudes from subband signals derived using a gammatone filterbank. The autocorrelation features are relative autocorrelation sequence MFCC (RAS-MFCC) [204], autocorrelation sequence MFCC (AC-MFCC) [149] and phase autocorrelation MFCC (PAC-MFCC) [86], all of which apply the MFCC procedure in the autocorrelation domain. The medium-time filtering features are power normalized cepstral coefficients (PNCC) [95] and suppression of slowly-varying components and the falling edge of the power envelope (SSF) [94]. The modulation domain features are Gabor filterbank (GFB) [145] and AMS features. Pitch-based (PITCH) features calculate T-F level features based on pitch tracking and use periodicity and instantaneous frequency to discriminate speech-dominant T-F units from noise-dominant ones.
In addition to existing features, we proposed a new feature called Multi-Resolution Cochleagram (MRCG) [22], which computes four cochleagrams at different spectrotemporal resolutions to provide both local information and a broader context.
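The idea of combining local and contextual views can be sketched as follows. The sketch assumes that two log-cochleagrams with the same frame shift are already available (one computed with a short frame and one with a long frame), and that the remaining two views are obtained by averaging the first over square windows of two sizes with zero padding at the edges. The window sizes, the padding, and the function names are assumptions made for illustration and may differ from the configuration in [22].

```python
def box_smooth(cg, half):
    """Average each T-F unit over a (2*half+1) x (2*half+1) window, zero-padded."""
    n_ch, n_fr = len(cg), len(cg[0])
    area = (2 * half + 1) ** 2
    out = [[0.0] * n_fr for _ in range(n_ch)]
    for c in range(n_ch):
        for t in range(n_fr):
            total = 0.0
            for dc in range(-half, half + 1):
                for dt in range(-half, half + 1):
                    cc, tt = c + dc, t + dt
                    if 0 <= cc < n_ch and 0 <= tt < n_fr:
                        total += cg[cc][tt]
            out[c][t] = total / area
    return out


def mrcg(cg_fine, cg_coarse, half3=5, half4=11):
    """Stack four views along the channel axis: output has 4 * n_channels rows."""
    cg3 = box_smooth(cg_fine, half3)   # moderate context around each unit
    cg4 = box_smooth(cg_fine, half4)   # broad context around each unit
    return cg_fine + cg_coarse + cg3 + cg4


# Toy input: 4 channels x 6 frames (hypothetical values).
fine = [[1.0] * 6 for _ in range(4)]
coarse = [[2.0] * 6 for _ in range(4)]
feature = mrcg(fine, coarse, half3=1, half4=2)
print(len(feature), len(feature[0]))
```

Each frame of the stacked feature therefore carries four times as many values as a single cochleagram frame.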

The features are post-processed with the auto-regressive moving average (ARMA) filter [19] and evaluated with a fixed MLP based IBM mask estimator. The estimated masks are evaluated in terms of classification accuracy and the HIT−FA rate. The HIT−FA results are shown in Table I. As shown in the table, gammatone-domain features (MRCG, GF, and GFCC) consistently outperform the other features in both accuracy and HIT−FA rate, with MRCG performing the best. Cepstral compaction via discrete cosine transform (DCT) is not effective, as revealed by comparing GF and GFCC features. Neither is modulation extraction, as shown by comparing GFCC and GFMC, the latter calculated from the former. It is worth noting that the poor performance of pitch features is largely due to inaccurate estimation at low SNRs, as ground-truth pitch is shown to be quite discriminative.
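For reference, the HIT−FA rate can be computed from an ideal and an estimated binary mask as in the sketch below, where HIT is the percentage of speech-dominant units correctly labeled 1 and FA is the percentage of noise-dominant units wrongly labeled 1. The function name and toy masks are hypothetical, and both classes are assumed to be present in the ideal mask.

```python
def hit_fa(ideal, estimated):
    """Return (HIT, FA, HIT-FA, accuracy) in percent for flattened binary masks."""
    pairs = list(zip(ideal, estimated))
    n_target = sum(1 for i, _ in pairs if i == 1)
    n_noise = len(pairs) - n_target
    hits = sum(1 for i, e in pairs if i == 1 and e == 1)
    false_alarms = sum(1 for i, e in pairs if i == 0 and e == 1)
    hit = 100.0 * hits / n_target
    fa = 100.0 * false_alarms / n_noise
    accuracy = 100.0 * sum(1 for i, e in pairs if i == e) / len(pairs)
    return hit, fa, hit - fa, accuracy


# Toy masks over ten T-F units (hypothetical values).
ideal_mask = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
estimated_mask = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(hit_fa(ideal_mask, estimated_mask))
```

Unlike plain accuracy, HIT−FA penalizes a classifier that labels every unit as noise-dominant, which matters at low SNRs where noise-dominant units are the majority.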

TABLE I.

Classification Performance of a List of Acoustic Features in Terms of HIT−FA (in %) for Six Noises at −5 dB SNR, Where FA is Shown in Parentheses (From [22])

| Feature | Factory | Babble | Engine | Cockpit | Vehicle | Tank | Average |
|---|---|---|---|---|---|---|---|
| MRCG | 63 (7) | 49 (13) | 77 (4) | 73 (4) | 80 (10) | 77 (6) | 70 (7) |
| GF | 61 (7) | 45 (15) | 75 (4) | 71 (3) | 80 (10) | 76 (6) | 68 (8) |
| GFCC | 61 (6) | 46 (14) | 73 (4) | 70 (3) | 78 (11) | 74 (6) | 67 (7) |
| DSCC | 56 (7) | 42 (14) | 70 (5) | 66 (3) | 77 (11) | 73 (6) | 64 (8) |
| MFCC | 57 (7) | 43 (14) | 69 (5) | 67 (4) | 77 (11) | 72 (7) | 64 (8) |
| PNCC | 56 (6) | 44 (14) | 69 (5) | 66 (4) | 77 (11) | 71 (7) | 64 (8) |
| PLP | 56 (6) | 41 (12) | 68 (5) | 66 (4) | 77 (11) | 71 (7) | 63 (8) |
| AC-MFCC | 56 (6) | 42 (14) | 67 (5) | 65 (4) | 77 (11) | 71 (7) | 63 (8) |
| RAS-MFCC | 57 (6) | 41 (14) | 68 (5) | 66 (4) | 76 (11) | 71 (7) | 63 (8) |
| GFB | 57 (7) | 41 (18) | 67 (5) | 66 (4) | 75 (12) | 70 (7) | 63 (9) |
| ZCPA | 55 (8) | 40 (16) | 68 (5) | 65 (4) | 75 (13) | 70 (8) | 62 (9) |
| SSF | 54 (7) | 39 (15) | 67 (5) | 60 (4) | 76 (11) | 69 (7) | 61 (8) |
| RASTA-PLP | 52 (6) | 38 (15) | 64 (5) | 61 (4) | 76 (12) | 67 (7) | 60 (8) |
| GFMC | 48 (7) | 35 (15) | 61 (6) | 60 (5) | 67 (17) | 59 (9) | 55 (10) |
| PITCH | 46 (3) | 29 (22) | 50 (5) | 50 (2) | 59 (16) | 53 (7) | 48 (9) |
| AMS | 40 (6) | 27 (9) | 49 (5) | 52 (4) | 50 (31) | 45 (11) | 44 (11) |
| PAC-MFCC | 17 (5) | 11 (8) | 30 (9) | 29 (7) | 40 (48) | 21 (17) | 25 (16) |

Boldtype Indicates Best Scores

Recently, Delfarah and Wang [34] performed another feature study that considers room reverberation, and both speech denoising and speaker separation. Their study uses a fixed DNN trained to estimate the IRM, and the evaluation results are given in terms of STOI improvements over unprocessed noisy and reverberant speech. The features added in this study include log spectral magnitude (LOG-MAG) and log mel-spectrum feature (LOG-MEL), both of which are commonly used in supervised separation [82], [196]. Also included is waveform signal (WAV) without any feature extraction. For reverberation, simulated room impulse responses (RIRs) and recorded RIRs are both used with reverberation time up to 0.9 seconds. For denoising, evaluation is done separately for matched noises where the first half of each nonstationary noise is used in training and second half for testing, and unmatched noises where completely new noises are used for testing. For cochannel (two-speaker) separation, the target talker is male while the interfering talker is either female or male. Table II shows the STOI gains for the individual features evaluated. In the anechoic, matched noise case, STOI results are largely consistent with Table I. Feature results are also broadly consistent using simulated and recorded RIRs. However, the best performing features are different for the matched noise, unmatched noise, and speaker separation cases. Besides MRCG, PNCC and GFCC produce the best results for the unmatched noise and cochannel condition, respectively. For feature combination, this study concludes that the most effective feature set consists of PNCC, GF, and LOG-MEL for speech enhancement, and PNCC, GFCC, and LOG-MEL for speaker separation.

TABLE II.

STOI Improvements (in %) for a List of Features Averaged on a set of Test Noises (From [34])

| Feature | Matched noise, anechoic | Matched noise, sim. RIRs | Matched noise, rec. RIRs | Unmatched noise, anechoic | Unmatched noise, sim. RIRs | Unmatched noise, rec. RIRs | Cochannel, anechoic | Cochannel, sim. RIRs | Cochannel, rec. RIRs | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| MRCG | 7.12 | 14.25 | 12.15 | 7.00 | 7.28 | 8.99 | 21.25 (13.00) | 22.93 (13.19) | 21.29 (12.81) | 12.92 |
| GF | 6.19 | 13.10 | 11.37 | 6.71 | 7.87 | 8.24 | 22.56 (11.87) | 23.95 (12.31) | 22.35 (12.87) | 12.71 |
| GFCC | 5.33 | 12.56 | 10.99 | 6.32 | 6.92 | 7.01 | 23.53 (14.34) | 23.95 (14.01) | 22.76 (13.90) | 12.50 |
| LOG-MEL | 5.14 | 12.07 | 10.28 | 6.00 | 6.98 | 7.52 | 21.18 (13.88) | 22.75 (13.54) | 21.71 (13.18) | 12.08 |
| LOG-MAG | 4.86 | 12.13 | 9.69 | 5.75 | 6.64 | 7.19 | 20.82 (13.84) | 22.57 (13.40) | 21.82 (13.55) | 11.91 |
| GFB | 4.99 | 12.47 | 11.51 | 6.22 | 7.01 | 7.86 | 19.61 (13.34) | 20.86 (11.97) | 19.97 (11.60) | 11.75 |
| PNCC | 1.74 | 8.88 | 10.76 | 2.18 | 8.68 | 10.52 | 19.97 (10.73) | 19.47 (10.03) | 19.35 (9.56) | 10.78 |
| MFCC | 4.49 | 11.03 | 9.69 | 5.36 | 5.96 | 6.26 | 19.82 (11.98) | 20.32 (11.47) | 19.66 (11.54) | 10.72 |
| RAS-MFCC | 2.61 | 10.47 | 9.56 | 3.08 | 6.74 | 7.37 | 18.12 (11.38) | 19.07 (11.19) | 17.87 (10.30) | 10.44 |
| AC-MFCC | 2.89 | 9.63 | 8.89 | 3.31 | 5.61 | 5.91 | 18.66 (12.50) | 18.64 (11.59) | 17.73 (11.27) | 9.87 |
| PLP | 3.71 | 10.36 | 9.10 | 4.39 | 5.03 | 5.81 | 16.84 (11.29) | 16.73 (10.92) | 15.46 (9.50) | 9.46 |
| SSF-II | 3.41 | 8.57 | 8.68 | 4.18 | 5.45 | 6.00 | 16.76 (10.07) | 17.72 (9.18) | 18.07 (8.93) | 9.09 |
| SSF-I | 3.31 | 8.35 | 8.53 | 4.09 | 5.17 | 5.77 | 16.25 (10.44) | 17.70 (9.40) | 18.04 (9.35) | 8.97 |
| RASTA-PLP | 1.79 | 7.27 | 8.56 | 1.97 | 6.62 | 7.92 | 11.03 (6.76) | 10.96 (6.06) | 10.27 (6.28) | 7.46 |
| PITCH | 2.35 | 4.62 | 4.79 | 3.36 | 3.36 | 4.61 | 19.71 (9.37) | 17.82 (8.45) | 16.87 (6.72) | 7.03 |
| GFMC | −0.68 | 7.05 | 5.00 | −0.54 | 4.44 | 4.16 | 5.04 (0.07) | 6.01 (0.33) | 4.97 (0.28) | 4.40 |
| WAV | 0.94 | 2.32 | 2.68 | 0.02 | 0.99 | 1.63 | 11.62 (4.81) | 11.92 (6.25) | 10.54 (1.05) | 3.89 |
| AMS | 0.31 | 0.30 | −1.38 | 0.19 | −2.99 | −3.40 | 11.73 (5.96) | 10.97 (6.76) | 10.20 (4.90) | 1.71 |
| PAC-MFCC | 0.00 | −0.33 | −0.82 | 0.18 | −0.92 | −0.67 | 0.95 (0.15) | 1.25 (0.26) | 1.17 (0.09) | −0.17 |

“Sim.” and “Rec.” Indicate Simulated and Recorded Room Impulse Responses. Boldface Indicates the Best Scores in Each Condition. In Cochannel (Two-Talker) Cases, the Performance is Shown Separately for a Female Interferer and Male Interferer (in Parentheses) with a Male Target Talker

The large performance differences caused by features in both Tables I and II demonstrate the importance of features for supervised speech separation. The inclusion of raw waveform signal in Table II further suggests that, without feature extraction, separation results are poor. It should be noted, however, that the feedforward DNN used in [34] may not couple well with waveform signals, and CNNs and RNNs may be better suited for so-called end-to-end separation. We will come to this issue later.

V. MONAURAL SEPARATION ALGORITHMS

In this section, we discuss monaural algorithms for speech enhancement, speech dereverberation as well as dereverberation plus denoising, and speaker separation. We explain representative algorithms and discuss generalization of supervised speech separation.

A. Speech Enhancement

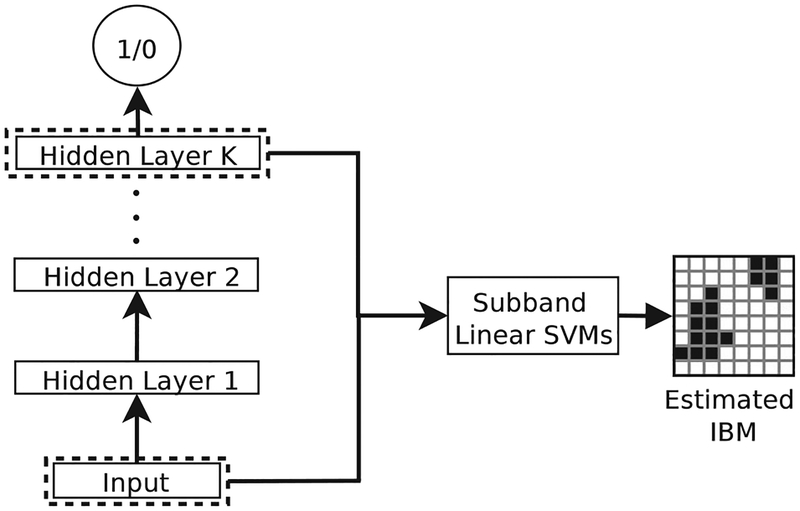

To our knowledge, deep learning was first introduced to speech separation by Wang and Wang in 2012 in two conference papers [179], [180], which were later extended to a journal version in 2013 [181]. They used DNN for subband classification to estimate the IBM. In the conference versions, feedforward DNNs with RBM pretraining were used as binary classifiers, as well as feature encoders for structured perceptrons [179] and conditional random fields [180]. They reported strong separation results in all cases of DNN usage, with better results for DNN used for feature learning due to the incorporation of temporal dynamics in structured prediction.

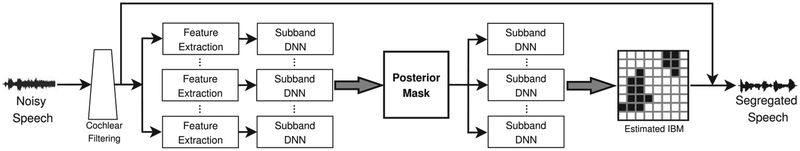

In the journal version [181], the input signal is passed through a 64-channel gammatone filterbank to derive subband signals, from which acoustic features are extracted within each T-F unit. These features form the input to subband DNNs (64 in total) to learn more discriminative features. This use of DNN for speech separation is illustrated in Fig. 4. After DNN training, input features and learned features of the last hidden layer are concatenated and fed to linear SVMs to estimate the subband IBM efficiently. This algorithm was further extended to a two-stage DNN [65], where the first stage is trained to estimate the subband IBM as usual and the second stage explicitly incorporates the T-F context in the following way. After the first-stage DNN is trained, a unit-level output before binarization can be interpreted as the posterior probability that speech dominates the T-F unit. Hence the first-stage DNN output is considered a posterior mask. In the second stage, a T-F unit takes as input a local window of the posterior mask centered at the unit. The two-stage DNN is illustrated in Fig. 5. This second-stage structure is reminiscent of a convolutional layer in CNN but without weight sharing. This way of leveraging contextual information is shown to significantly improve classification accuracy. Subject tests demonstrate that this DNN produced large intelligibility improvements for both HI and NH listeners, with HI listeners benefiting more [65]. This is the first monaural algorithm to provide substantial speech intelligibility improvements for HI listeners in background noise, so much so that HI subjects with separation outperformed NH subjects without separation.

Fig. 4.

Illustration of DNN for feature learning, and learned features are then used by linear SVM for IBM estimation (from [181]).

Fig. 5.

Schematic diagram of a two-stage DNN for speech separation (from [65]).
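The second-stage input described above can be sketched as the extraction of a local patch from the first-stage posterior mask. The window extents, the zero padding at the mask boundaries, and the function name are assumptions for illustration; [65] should be consulted for the actual configuration.

```python
def context_window(mask, ch, fr, half_ch, half_fr, pad=0.0):
    """Flatten the patch of `mask` centered at channel `ch`, frame `fr`.

    Units that fall outside the mask are filled with `pad` (an assumption).
    """
    n_ch, n_fr = len(mask), len(mask[0])
    patch = []
    for c in range(ch - half_ch, ch + half_ch + 1):
        for t in range(fr - half_fr, fr + half_fr + 1):
            inside = 0 <= c < n_ch and 0 <= t < n_fr
            patch.append(mask[c][t] if inside else pad)
    return patch


# Toy posterior mask: 3 channels x 4 frames (hypothetical probabilities).
posterior = [
    [0.1, 0.2, 0.3, 0.4],
    [0.5, 0.6, 0.7, 0.8],
    [0.9, 1.0, 0.0, 0.2],
]
print(context_window(posterior, 1, 1, 1, 1))
```

Each T-F unit then has its own second-stage classifier operating on such a patch, which is why the structure resembles a convolutional layer without weight sharing.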

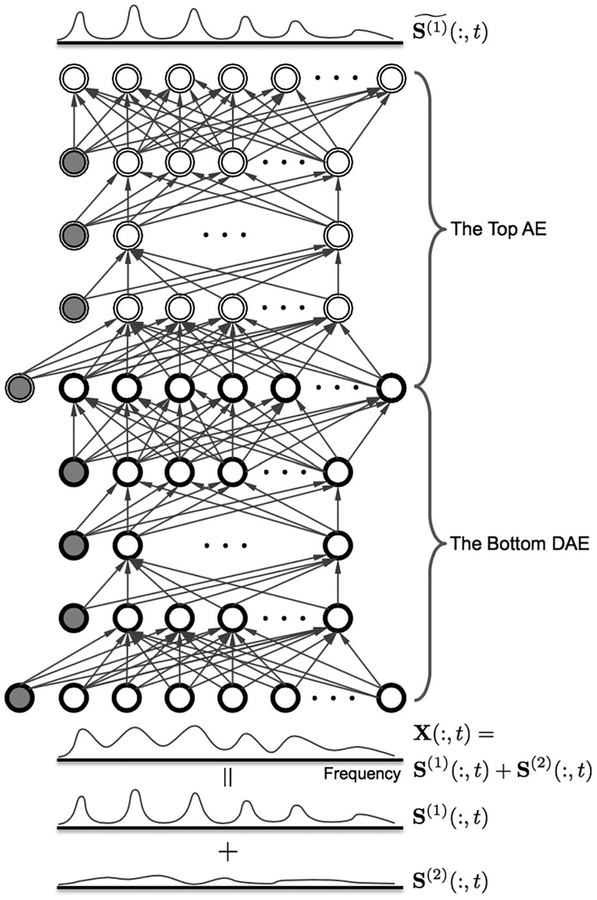

In 2013, Lu et al. [116] published an Interspeech paper that uses a deep autoencoder (DAE) for speech enhancement. A basic autoencoder (AE) is an unsupervised learning machine, typically having a symmetric architecture with one hidden layer with tied weights, that learns to map an input signal to itself. Multiple trained AEs can be stacked into a DAE that is then subject to traditional supervised fine-tuning, e.g., with a back-propagation algorithm. In other words, autoencoding is an alternative to RBM pretraining. The algorithm in [116] learns to map from the mel-frequency power spectrum of noisy speech to that of clean speech, so it can be regarded as the first mapping based method.4
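A basic autoencoder with tied weights can be sketched in a few lines. The sigmoid encoder, the linear decoder, and all names below are assumptions chosen for illustration and are not necessarily the configuration used in [116].

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def autoencode(x, W, b, c):
    """Tied-weight AE: encode with W, decode with the transpose of W.

    W has one row per hidden unit; b and c are hidden and output biases.
    """
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj)
              for row, bj in zip(W, b)]
    recon = [sum(W[j][i] * hidden[j] for j in range(len(W))) + c[i]
             for i in range(len(x))]
    return hidden, recon


def reconstruction_error(x, recon):
    """Squared error that unsupervised AE training would minimize."""
    return sum((a - r) ** 2 for a, r in zip(x, recon))


# Toy example with two inputs and two hidden units (hypothetical weights).
W = [[1.0, 0.0], [0.0, 1.0]]
hidden, recon = autoencode([0.0, 0.0], W, b=[0.0, 0.0], c=[0.0, 0.0])
print(hidden, recon, reconstruction_error([0.0, 0.0], recon))
```

For enhancement, the fine-tuning stage replaces the self-reconstruction target with the clean-speech spectrum while the input remains the noisy spectrum.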

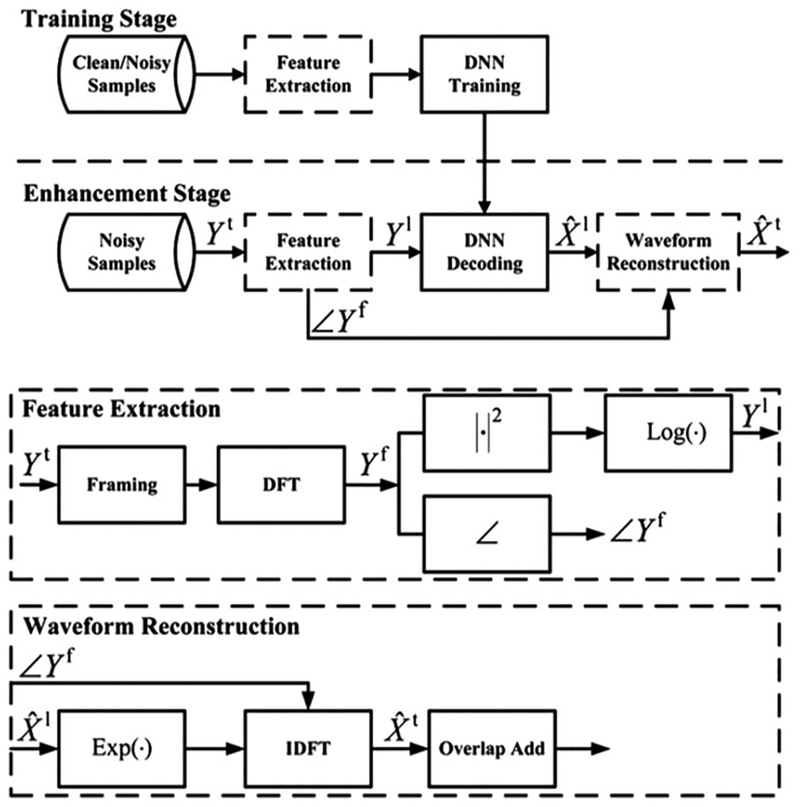

Subsequently, but independent of [116], Xu et al. [196] published a study using a DNN with RBM pretraining to map from the log power spectrum of noisy speech to that of clean speech, as shown in Fig. 6. Unlike [116], the DNN used in [196] is a standard feedforward MLP with RBM pretraining. After training, the DNN estimates the spectrum of clean speech from a noisy input. Their experimental results show that the trained DNN yields about 0.4 to 0.5 PESQ gains over noisy speech on untrained noises, which are higher than those obtained by a representative traditional enhancement method.

Fig. 6.

Diagram of a DNN-based spectral mapping method for speech enhancement (from [196]). The feature extraction and waveform reconstruction modules are further detailed.
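The feature extraction and reconstruction steps around a spectral mapping DNN can be sketched for a single frame of complex spectral coefficients. The sketch assumes that the estimated log power spectrum is combined with the phase of the noisy input before the inverse transform; the function names, the log floor, and the toy values are hypothetical.

```python
import cmath
import math


def log_power(frame, floor=1e-12):
    """Log power spectrum of one frame of complex STFT coefficients."""
    return [math.log(max(abs(c) ** 2, floor)) for c in frame]


def reconstruct(est_log_power, noisy_frame):
    """Combine estimated magnitudes with the noisy phase of each frequency bin."""
    out = []
    for lp, c in zip(est_log_power, noisy_frame):
        magnitude = math.exp(0.5 * lp)  # undo the log and the squaring
        out.append(cmath.rect(magnitude, cmath.phase(c)))
    return out


# Toy frame with two frequency bins (hypothetical values).
noisy = [3 + 4j, -2j]
features = log_power(noisy)            # what the DNN would receive
estimate = [0.0, 0.0]                  # stand-in for a DNN output (unit power)
print(reconstruct(estimate, noisy))
```

An inverse Fourier transform with overlap-add, omitted here, would then turn the reconstructed frames into a waveform.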

Many subsequent studies have since been published along the lines of T-F masking and spectral mapping. In [185], [186], RNNs with LSTM were used for speech enhancement and its application to robust ASR, where training aims for signal approximation (see Section III.I). RNNs were also used in [41] to estimate the PSM. In [132], [210], a deep stacking network was proposed for IBM estimation and a mask estimate was then used for pitch estimation. The accuracy of both mask estimation and pitch estimation improves after the two modules iterate for several cycles. A DNN was used to simultaneously estimate the real and imaginary components of the cIRM, yielding better speech quality over IRM estimation [188]. Speech enhancement at the phoneme level has been recently studied [18], [183]. In [59], the DNN takes into account perceptual masking with a piecewise gain function. In [198], multi-objective learning is shown to improve enhancement performance. It has been demonstrated that a hierarchical DNN performing subband spectral mapping yields better enhancement than a single DNN performing full-band mapping [39]. In [161], skip connections between nonconsecutive layers are added to DNN to improve enhancement performance. Multi-target training with both masking and mapping based targets is found to outperform single-target training [205]. CNNs have also been used for IRM estimation [83] and spectral mapping [46], [136], [138].

Aside from masking and mapping based approaches, there is recent interest in using deep learning to perform end-to-end separation, i.e., temporal mapping without resorting to a T-F representation. A potential advantage of this approach is to circumvent the need to use the phase of noisy speech in reconstructing enhanced speech, which can be a drag for speech quality, particularly when input SNR is low. Recently, Fu et al. [47] developed a fully convolutional network (a CNN with fully connected layers removed) for speech enhancement. They observe that full connections make it difficult to map both high and low frequency components of a waveform signal, and with their removal, enhancement results improve. As a convolution operator is the same as a filter or a feature extractor, CNNs appear to be a natural choice for temporal mapping.

A recent study employs a GAN to perform temporal mapping [138]. In the so-called speech enhancement GAN (SEGAN), the generator (G) is a fully convolutional network, performing enhancement or denoising. The discriminator (D) follows the same convolutional structure as G, and it transmits information of generated waveform signals versus clean signals back to G. D can be viewed as providing a trainable loss function for G. SEGAN was evaluated on untrained noisy conditions, but the results are inconclusive and worse than masking or mapping methods. In another GAN study [122], G tries to enhance the spectrogram of noisy speech while D tries to distinguish between the enhanced spectrograms and those of clean speech. The comparisons in [122] show that the enhancement results by this GAN are comparable to those achieved by a DNN.

Not all deep learning based speech enhancement methods build on DNNs. For example, Le Roux et al. [105] proposed deep NMF that unfolds NMF operations and includes multiplicative updates in backpropagation. Vu et al. [167] presented an NMF framework in which a DNN is trained to map NMF activation coefficients of noisy speech to their clean version.

B. Generalization of Speech Enhancement Algorithms

For any supervised learning task, generalization to untrained conditions is a crucial issue. In the case of speech enhancement, data-driven algorithms bear the burden of proof when it comes to generalization, because the issue does not arise in traditional speech enhancement and CASA algorithms which make minimal use of supervised training. Supervised enhancement has three aspects of generalization: noise, speaker, and SNR. Regarding SNR generalization, one can simply include more SNR levels in a training set and practical experience shows that supervised enhancement is not sensitive to precise SNRs used in training. Part of the reason is that, even though a few mixture SNRs are included in training, local SNRs at the frame level and T-F unit level usually vary over a wide range, providing a necessary variety for a learning machine to generalize well. An alternative strategy is to adopt progressive training with increasing numbers of hidden layers to handle lower SNR conditions [48].
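The point about local SNR variety can be illustrated with a small sketch that mixes speech and noise at a chosen utterance-level SNR and then measures the SNR of individual frames. The function names, the non-overlapping framing, and the toy signals are hypothetical.

```python
import math


def energy(x):
    return sum(v * v for v in x)


def snr_db(speech, noise):
    """SNR in dB between a speech segment and a noise segment."""
    return 10.0 * math.log10(energy(speech) / energy(noise))


def mix_at_snr(speech, noise, target_db):
    """Scale the noise so the utterance-level SNR equals target_db, then add."""
    gain = math.sqrt(energy(speech) / (energy(noise) * 10.0 ** (target_db / 10.0)))
    scaled = [gain * n for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled)]
    return mixture, scaled


def frame_snrs(speech, noise, frame_len):
    """SNR of each non-overlapping frame of length frame_len."""
    return [snr_db(speech[i:i + frame_len], noise[i:i + frame_len])
            for i in range(0, len(speech) - frame_len + 1, frame_len)]


# Toy signals: a loud speech segment followed by a quiet one (hypothetical values).
speech = [1.0, -1.0, 0.1, -0.1]
noise = [0.5, 0.5, 0.5, 0.5]
mixture, scaled_noise = mix_at_snr(speech, noise, 0.0)
print(snr_db(speech, scaled_noise), frame_snrs(speech, scaled_noise, 2))
```

Even though the mixture is created at a single utterance-level SNR, the two frames end up at very different local SNRs, one well above and one well below the nominal value.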

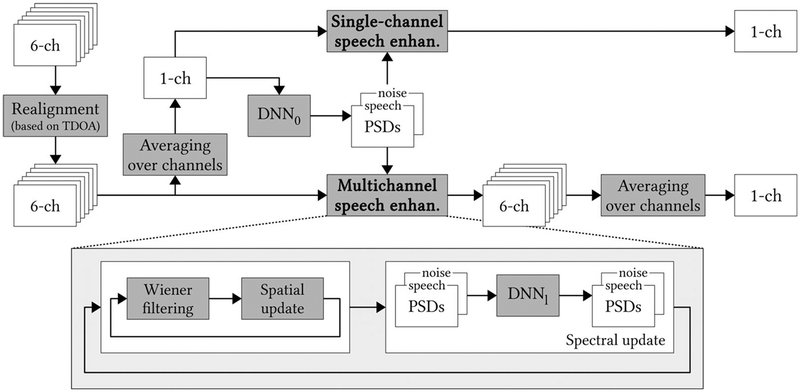

In an effort to address the mismatch between training and test conditions, Kim and Smaragdis [98] proposed a two-stage DNN where the first stage is a standard DNN to perform spectral mapping and the second stage is an autoencoder that performs unsupervised adaptation during the test stage. The AE is trained to map the magnitude spectrum of a clean utterance to itself, much like [115], and hence its training does not need labeled data. The AE is then stacked on top of the DNN, and serves as a purity checker as shown in Fig. 7. The rationale is that well enhanced speech tends to produce a small difference (error) between the input and the output of the AE, whereas poorly enhanced speech should produce a large error. Given a test mixture, the already-trained DNN is fine-tuned with the error signal coming from the AE. The introduction of an AE module provides a way of unsupervised adaptation to test conditions that are quite different from the training conditions, and is shown to improve the performance of speech enhancement.

Fig. 7.

DNN architecture for speech enhancement with an autoencoder for unsupervised adaptation (from [98]). The AE stacked on top of a DNN serves as a purity checker for estimated clean speech from the bottom DNN. S(1) denotes the spectrum of a speech signal, S(2) the spectrum of a noise signal, and an estimate of S(1).

Noise generalization is fundamentally challenging as all kinds of stationary and nonstationary noises may interfere with a speech signal. When available training noises are limited, one technique is to expand training noises through noise perturbation, particularly frequency perturbation [23]; specifically, the spectrogram of an original noise sample is perturbed to generate new noise samples. To make the DNN-based mapping algorithm of Xu et al. [196] more robust to new noises, Xu et al. [195] incorporate noise aware training, i.e., the input feature vector includes an explicit noise estimate. With noise estimated via binary masking, the DNN with noise aware training generalizes better to untrained noises.

Noise generalization is systematically addressed in [24]. The DNN in this study was trained to estimate the IRM at the frame level. In addition, the IRM is simultaneously estimated over several consecutive frames and different estimates for the same frame are averaged to produce a smoother, more accurate mask (see also [178]). The DNN has five hidden layers with 2048 ReLUs in each. The input features for each frame are cochleagram response energies (the GF feature in Tables I and II). The training set includes 640,000 mixtures created from 560 IEEE sentences and 10,000 (10K) noises from a sound effect library (www.sound-ideas.com) at the fixed SNR of −2 dB. The total duration of the noises is about 125 hours, and the total duration of training mixtures is about 380 hours. To evaluate the impact of the number of training noises on noise generalization, the same DNN is also trained with 100 noises as done in [181]. The test sets are created using 160 IEEE sentences and nonstationary noises at various SNRs. Neither test sentences nor test noises are used during training. The separation results measured in STOI are shown in Table III, and large STOI improvements are obtained by the 10K-noise model. In addition, the 10K-noise model substantially outperforms the 100-noise model, and its average performance matches the noise-dependent models trained with the first half of the training noises and tested with the second half. Subject tests show that the noise-independent model resulting from large-scale training significantly improves speech intelligibility for NH and HI listeners in unseen noises. This study strongly suggests that large-scale training with a wide variety of noises is a promising way to address noise generalization.
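The averaging of multiple estimates for the same frame can be sketched as follows. The sketch assumes that, for a window centered at frame t, the network outputs one mask vector for each frame from t − half to t + half; the data layout and the function name are hypothetical.

```python
def average_overlapping(preds, half):
    """Average all mask estimates that refer to the same frame.

    preds[t][j] is the estimate for frame t - half + j, produced when the
    output window is centered at frame t. Estimates that fall outside the
    utterance are ignored.
    """
    n_frames = len(preds)
    n_freq = len(preds[0][0])
    sums = [[0.0] * n_freq for _ in range(n_frames)]
    counts = [0] * n_frames
    for t, window in enumerate(preds):
        for j, est in enumerate(window):
            target = t - half + j
            if 0 <= target < n_frames:
                for f, v in enumerate(est):
                    sums[target][f] += v
                counts[target] += 1
    return [[v / counts[t] for v in sums[t]] for t in range(n_frames)]


# Toy example: 3 frames, 1 frequency channel, windows of 3 frames (half = 1).
preds = [
    [[0.0], [0.2], [0.4]],   # centered at frame 0: frames -1, 0, 1
    [[0.4], [0.6], [0.8]],   # centered at frame 1: frames 0, 1, 2
    [[0.2], [0.4], [0.0]],   # centered at frame 2: frames 1, 2, 3
]
print(average_overlapping(preds, half=1))
```

Interior frames receive one estimate from every overlapping window, so individual estimation errors are smoothed out.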

TABLE III.

Speech Enhancement Results at −2 dB SNR Measured in STOI (From [24])

| Babble1 | Cafeteria | Factory | Babble2 | Average | |

|---|---|---|---|---|---|

| Unprocessed | 0.612 | 0.596 | 0.611 | 0.611 | 0.608 |

| 100-noise model | 0.683 | 0.704 | 0.750 | 0.688 | 0.706 |

| 10K-noise model | 0.792 | 0.783 | 0.807 | 0.786 | 0.792 |

| Noise-dependent model | 0.833 | 0.770 | 0.802 | 0.762 | 0.792 |

As for speaker generalization, a separation system trained on a specific speaker would not work well for a different speaker. A straightforward attempt for speaker generalization would be to train with a large number of speakers. However, experimental results [20], [100] show that a feedforward DNN appears incapable of modeling a large number of talkers. Such a DNN typically takes a window of acoustic features for mask estimation, without using the long-term context. Unable to track a target speaker, a feedforward network has a tendency to mistake noise fragments for target speech. RNNs naturally model temporal dependencies, and are thus expected to be more suitable for speaker generalization than feedforward DNN.

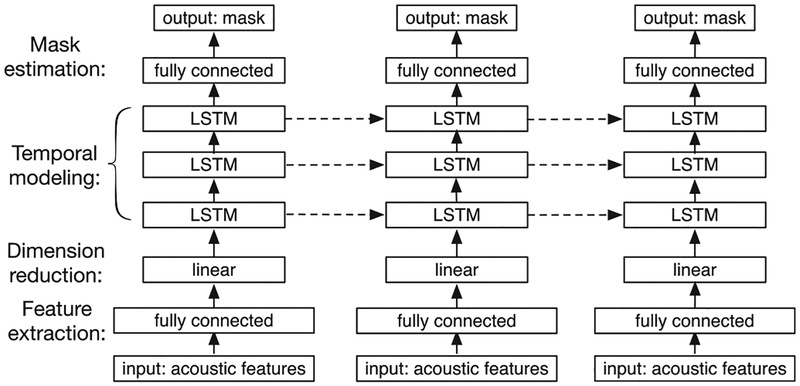

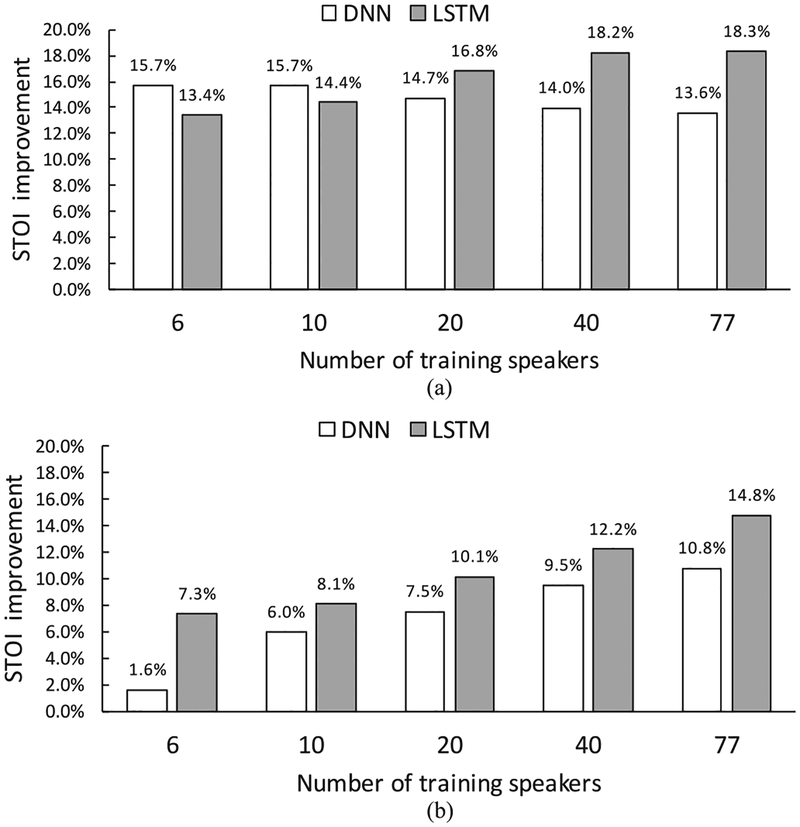

We have recently employed RNN with LSTM to address speaker generalization of noise-independent models [20]. The model, shown in Fig. 8, is trained on 3,200,000 mixtures created from 10,000 noises mixed with 6, 10, 20, 40, and 77 speakers. When tested with trained speakers, as shown in Fig. 9(a), the performance of the DNN degrades as more training speakers are added to the training set, whereas the LSTM benefits from additional training speakers. For untrained test speakers, as shown in Fig. 9(b), the LSTM substantially outperforms the DNN in terms of STOI. LSTM appears able to track a target speaker over time after being exposed to many speakers during training. With large-scale training with many speakers and numerous noises, RNNs with LSTM represent an effective approach for speaker- and noise-independent speech enhancement.

Fig. 8.

Diagram of an LSTM based speech separation system (from [20]).

Fig. 9.

STOI improvements of a feedforward DNN and an RNN with LSTM (from [20]). (a) Results for trained speakers at −5 dB SNR. (b) Results for untrained speakers at −5 dB SNR.

C. Speech Dereverberation and Denoising

In a real environment, speech is usually corrupted by reverberation from surface reflections. Room reverberation corresponds to a convolution of the direct signal and an RIR, and it distorts speech signals along both time and frequency. Reverberation is a well-recognized challenge in speech processing, particularly when it is combined with background noise. As a result, dereverberation has been actively investigated for a long time [5], [61], [131], [191].
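The convolutional model of reverberation can be written out directly. The sketch below uses a plain discrete convolution; the toy signal and the three-tap impulse response are hypothetical and far shorter than a real RIR.

```python
def convolve(signal, rir):
    """Discrete convolution of a direct (anechoic) signal with a room impulse response."""
    out = [0.0] * (len(signal) + len(rir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(rir):
            out[i + j] += s * h
    return out


# Toy direct signal and a decaying three-tap RIR (hypothetical values).
direct = [1.0, 0.0, 0.0, 2.0]
rir = [1.0, 0.5, 0.25]
print(convolve(direct, rir))

# A unit-impulse RIR (no reflections) leaves the signal unchanged.
print(convolve(direct, [1.0]))
```

Each sample of the direct signal is smeared over later samples by the decaying taps, which is the temporal distortion that dereverberation aims to undo.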

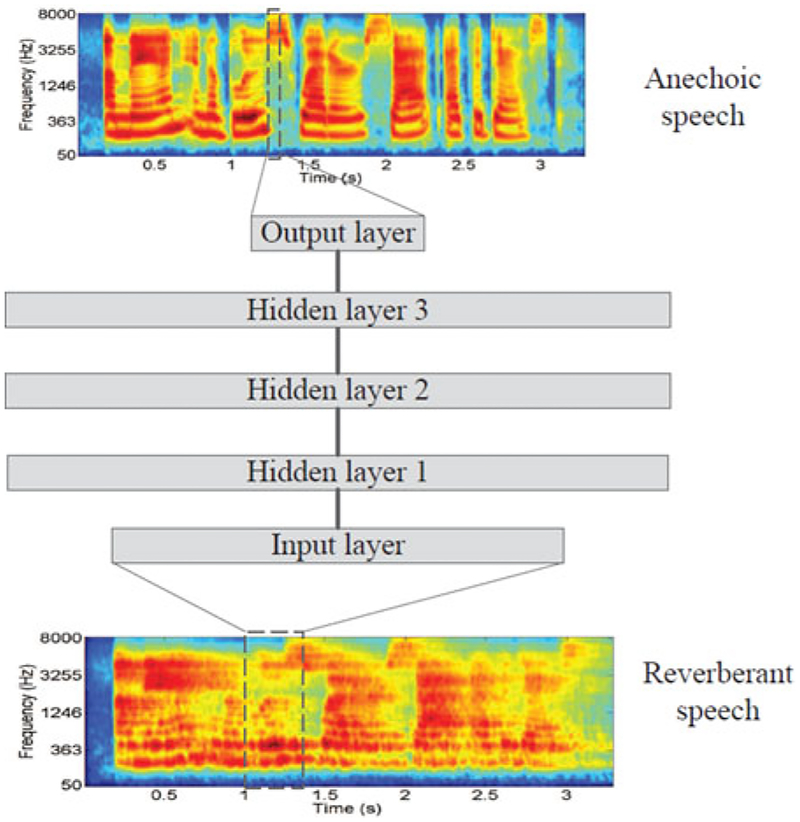

Han et al. [57] proposed the first DNN based approach to speech dereverberation. This approach uses spectral mapping on a cochleagram. In other words, a DNN is trained to map from a window of reverberant speech frames to a frame of anechoic speech, as illustrated in Fig. 10. The trained DNN can reconstruct the cochleagram of anechoic speech with surprisingly high quality. In their later work [58], they apply spectral mapping on a spectrogram and extend the approach to perform both dereverberation and denoising.

Fig. 10.

Diagram of a DNN for speech dereverberation based on spectral mapping (from [57]).

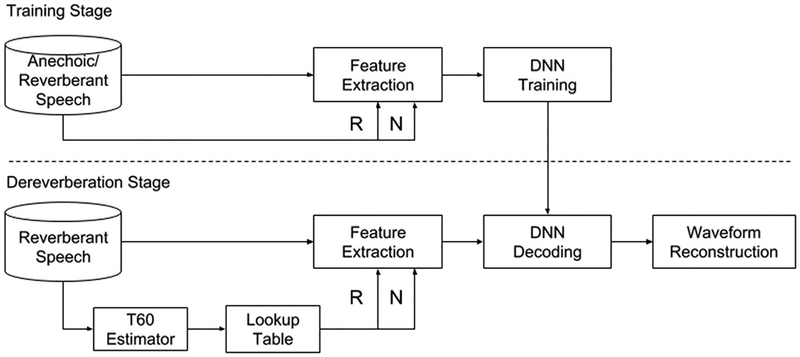

A more sophisticated system was proposed recently by Wu et al. [190], who observe that dereverberation performance improves when frame length and shift are chosen differently depending on the reverberation time (T60). Based on this observation, their system includes T60 as a control parameter in feature extraction and DNN training. During the dereverberation stage, T60 is estimated and used to choose appropriate frame length and shift for feature extraction. This so-called reverberation-time-aware model is illustrated in Fig. 11. Their comparisons show an improvement in dereverberation performance over the DNN in [58].

Fig. 11.

Diagram of a reverberation time aware DNN for speech dereverberation (redrawn from [190]).

To improve the estimation of anechoic speech from reverberant and noisy speech, Xiao et al. [194] proposed a DNN trained to predict static, delta and acceleration features at the same time. The static features are log magnitudes of clean speech, and the delta and acceleration features are derived from the static features. It is argued that a DNN that predicts static features well should also predict delta and acceleration features well. The incorporation of dynamic features in the DNN structure helps to improve the estimation of static features for dereverberation.
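How delta and acceleration targets can be derived from static features is sketched below using the regression formula common in speech recognition front ends, with the first and last frames repeated at the utterance edges. The formula, the regression width, and the function names are assumptions and may differ from what is used in [194].

```python
def deltas(frames, n_max=2):
    """Regression-based deltas: sum_n n * (c[t+n] - c[t-n]) / (2 * sum_n n^2)."""
    n_frames = len(frames)
    denom = 2.0 * sum(n * n for n in range(1, n_max + 1))
    out = []
    for t in range(n_frames):
        row = []
        for d in range(len(frames[t])):
            acc = 0.0
            for n in range(1, n_max + 1):
                ahead = frames[min(t + n, n_frames - 1)][d]   # repeat last frame
                behind = frames[max(t - n, 0)][d]             # repeat first frame
                acc += n * (ahead - behind)
            row.append(acc / denom)
        out.append(row)
    return out


def static_delta_accel(frames, n_max=2):
    """Concatenate static, delta, and acceleration features for every frame."""
    d = deltas(frames, n_max)
    a = deltas(d, n_max)
    return [s + dd + aa for s, dd, aa in zip(frames, d, a)]


# Toy static features: one log-magnitude value per frame, rising linearly.
static = [[0.0], [1.0], [2.0], [3.0], [4.0]]
print(static_delta_accel(static)[2])
```

Because the dynamic features are fixed linear functions of the static ones, an error in a static estimate propagates to its deltas, which is the coupling that joint prediction exploits.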

Zhao et al. [211] observe that spectral mapping is more effective for dereverberation than T-F masking, whereas masking works better than mapping for denoising. Consequently, they construct a two-stage DNN where the first stage performs ratio masking for denoising and the second stage spectral mapping for dereverberation. Furthermore, to alleviate the adverse effects of using the phase of reverberant-noisy speech in resynthesizing the waveform signal of enhanced speech, this study extends the time-domain signal reconstruction technique in [182]. Here the training target is defined in the time-domain, but clean phase is used during training unlike in [182] where noisy phase is used. The two stages are individually trained first, and then jointly trained. The results in [211] show that the two-stage DNN model significantly outperforms the single-stage models for either mapping or masking.

D. Speaker Separation

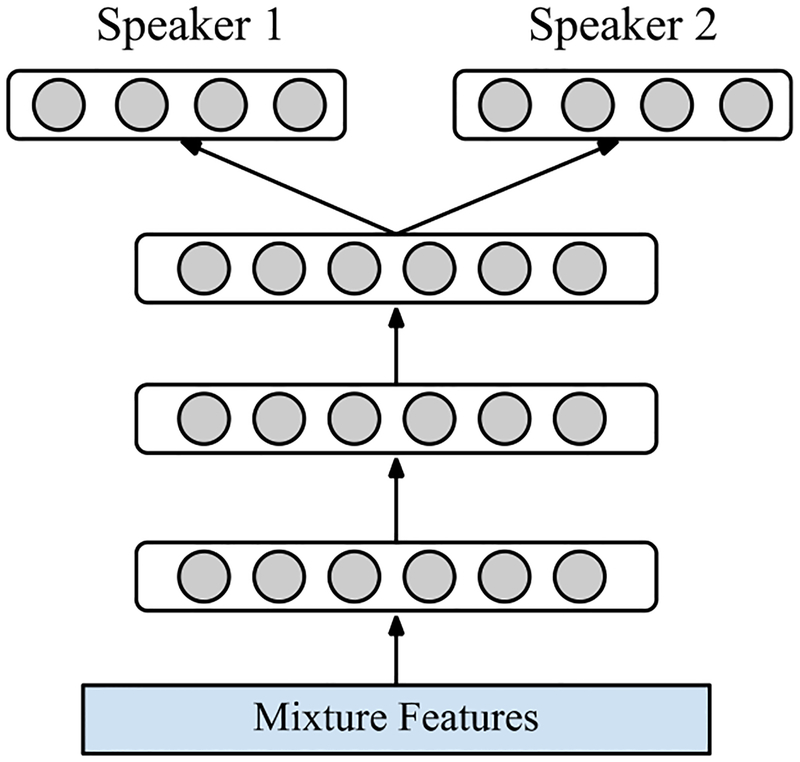

The goal of speaker separation is to extract multiple speech signals, one for each speaker, from a mixture containing two or more voices. After deep learning was demonstrated to be capable of speech enhancement, DNN has been successfully applied to speaker separation under a similar framework, which is illustrated in Fig. 12 in the case of two-speaker or cochannel separation.

Fig. 12.

Diagram of DNN based two-speaker separation.

According to our literature search, Huang et al. [81] were the first to introduce DNN for this task. This study addresses two-speaker separation using both a feedforward DNN and an RNN. The authors argue that the summation of the spectra of two estimated sources at frame t, Ŝ1 (t) and Ŝ2 (t), is not guaranteed to equal the spectrum of the mixture. Therefore, a masking layer is added to the network, which produces two final outputs shown in the following equations:

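$$\tilde{S}_1(t) = \frac{|\hat{S}_1(t)|}{|\hat{S}_1(t)| + |\hat{S}_2(t)|} \odot Y(t) \tag{8}$$

$$\tilde{S}_2(t) = \frac{|\hat{S}_2(t)|}{|\hat{S}_1(t)| + |\hat{S}_2(t)|} \odot Y(t) \tag{9}$$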

where Y (t) denotes the mixture spectrum at t. This amounts to a signal approximation training target introduced in Section III.I. Both binary and ratio masking are found to be effective. In addition, discriminative training is applied to maximize the difference between one speaker and the estimated version of the other. During training, the following cost is minimized:

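$$\frac{1}{2}\sum_{t}\Big(\|S_1(t) - \tilde{S}_1(t)\|^2 + \|S_2(t) - \tilde{S}_2(t)\|^2 - \gamma\|S_1(t) - \tilde{S}_2(t)\|^2 - \gamma\|S_2(t) - \tilde{S}_1(t)\|^2\Big) \tag{10}$$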

where S1(t) and S2(t) denote the ground truth spectra for Speaker 1 and Speaker 2, respectively, and γ is a tunable parameter. Experimental results have shown that both the masking layer and discriminative training improve speaker separation [82].
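The masking layer and the discriminative cost can be illustrated with a minimal NumPy sketch. It assumes that the masking layer normalizes each estimated magnitude by the sum of the two estimated magnitudes and multiplies the result with the mixture spectrum, and that the cost subtracts γ times the cross-speaker errors from the same-speaker errors. The function names, the small constant `eps`, the value of `gamma`, and the toy data are illustrative choices, not taken from [81].

```python
import numpy as np

def masking_layer(s1_hat, s2_hat, y, eps=1e-8):
    """Soft-mask two raw magnitude estimates so that the outputs sum to the mixture."""
    a1, a2 = np.abs(s1_hat), np.abs(s2_hat)
    denom = a1 + a2 + eps  # eps guards against division by zero (assumption of this sketch)
    return a1 / denom * y, a2 / denom * y

def discriminative_cost(s1, s2, s1_tilde, s2_tilde, gamma=0.05):
    """Same-speaker squared error minus gamma times the cross-speaker squared error."""
    own = np.sum((s1 - s1_tilde) ** 2) + np.sum((s2 - s2_tilde) ** 2)
    cross = np.sum((s1 - s2_tilde) ** 2) + np.sum((s2 - s1_tilde) ** 2)
    return 0.5 * (own - gamma * cross)

# Toy magnitude spectra: 5 frames, 4 frequency bins (hypothetical data).
rng = np.random.default_rng(0)
s1 = 0.5 + rng.random((5, 4))
s2 = 0.5 + rng.random((5, 4))
y = s1 + s2  # the toy mixture assumes additive magnitudes
s1_hat = s1 + 0.1 * rng.standard_normal((5, 4))
s2_hat = s2 + 0.1 * rng.standard_normal((5, 4))

s1_tilde, s2_tilde = masking_layer(s1_hat, s2_hat, y)
cost = discriminative_cost(s1, s2, s1_tilde, s2_tilde)
```

By construction, the two masked outputs add up to the mixture spectrum (up to `eps`), which is the constraint that the raw network outputs do not satisfy on their own.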

A few months later, Du et al. [38] appeared to have independently proposed a DNN for speaker separation similar to [81]. In this study [38], the DNN is trained to estimate the log power spectrum of the target speaker from that of a cochannel mixture. In a different paper [162], they trained a DNN to map a cochannel signal to the spectrum of the target speaker as well as the spectrum of an interfering speaker, as illustrated in Fig. 12 (see [37] for an extended version). A notable extension compared to [81] is that these papers also address the situation where only the target speaker is the same between training and testing, while interfering speakers are different between training and testing.

In speaker separation, if the underlying speakers are not allowed to change from training to testing, this is the speaker-dependent situation. If interfering speakers are allowed to change, but the target speaker is fixed, this is called target-dependent speaker separation. In the least constrained case where none of the speakers are required to be the same between training and testing, this is called speaker-independent separation. From this perspective, Huang et al.’s approach is speaker-dependent [81], [82] and the studies in [38], [162] deal with both speaker-dependent and target-dependent separation. Their way of relaxing the constraint on interfering speakers is simply to train with cochannel mixtures of the target speaker and many interferers.

Zhang and Wang proposed a deep ensemble network to address speaker-dependent as well as target-dependent separation [206]. They employ multi-context networks to integrate temporal information at different resolutions. An ensemble is constructed by stacking multiple modules, each performing multi-context masking or mapping. Several training targets were examined in this study. For speaker-dependent separation, signal approximation is shown to be most effective; for target-dependent separation, a combination of ratio masking and signal approximation is most effective. Furthermore, the performance of target-dependent separation is close to that of speaker-dependent separation. Recently, Wang et al. [174] took a step further towards relaxing speaker dependency in talker separation. Their approach assigns each speaker to one of four clusters (two for male and two for female), and then trains a DNN-based gender mixture detector to determine the clusters of the two underlying speakers in a mixture. Although the models are trained on a subset of speakers in each cluster, their evaluation results show that the speaker separation approach works well for the other untrained speakers in each cluster; in other words, this speaker separation approach exhibits a degree of speaker independence.

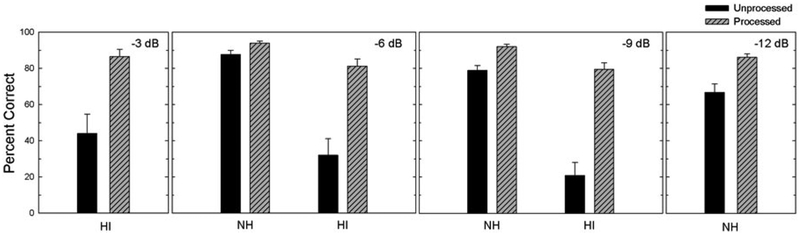

Healy et al. [63] have recently used a DNN for speaker-dependent cochannel separation and performed speech intelligibility evaluation of the DNN with both HI and NH listeners. The DNN was trained to estimate the IRM and its complement, corresponding to the target talker and interfering talker. Compared to earlier DNN-based cochannel separation studies, the algorithm in [63] uses a diverse set of features and predicts multiple IRM frames, resulting in better separation. The intelligibility results are shown in Fig. 13. For the HI group, intelligibility improvement from DNN-based separation is 42.5, 49.2, and 58.7 percentage points at −3 dB, −6 dB, and −9 dB target-to-interferer ratio (TIR), respectively. For the NH group, there are statistically significant improvements, but to a smaller extent. It is remarkable that the large intelligibility improvements obtained by HI listeners allow them to perform equivalently to NH listeners (without algorithm help) at the common TIRs of −6 and −9 dB.

Fig. 13.

Mean intelligibility scores and standard errors for HI and NH subjects listening to target sentences mixed with interfering sentences and separated target sentences (from [63]). Percent correct results are given at four different target-to-interferer ratios.

Speaker-independent separation can be treated as unsupervised clustering where T-F units are clustered into distinct classes dominated by individual speakers [6], [79]. Clustering is a flexible framework in terms of the number of speakers to separate, but it does not benefit as much from discriminative information fully utilized in supervised training. Hershey et al. were the first to address speaker-independent multi-talker separation in the DNN framework [69]. Their approach, called deep clustering, combines DNN based feature learning and spectral clustering. With a ground truth partition of T-F units, the affinity matrix A can be computed as:

$$A = YY^T \tag{11}$$

where Y is the indicator matrix built from the IBM. $Y_{i,c}$ is set to 1 if unit i belongs to (or is dominated by) speaker c, and 0 otherwise. The DNN is trained to embed each T-F unit. The estimated affinity matrix $\hat{A} = VV^T$ can be derived from the embeddings. The DNN learns to output similar embeddings for T-F units originating from the same speaker by minimizing the following cost function:

$$C_Y(V) = \|\hat{A} - A\|_F^2 = \|VV^T - YY^T\|_F^2 \tag{12}$$

where V is an embedding matrix for T-F units. Each row of V represents one T-F unit. $\|\cdot\|_F^2$ denotes the squared Frobenius norm. Low rank formulation can be applied to efficiently calculate the cost function and its derivatives. During inference, a mixture is segmented and the embedding matrix V is computed for each segment. Then, the embedding matrices of all segments are concatenated. Finally, the K-means algorithm is applied to cluster the T-F units of all the segments into speaker clusters. Segment-level clustering is more accurate than utterance-level clustering, but with clustering results only for individual segments, the problem of sequential organization has to be addressed. Deep clustering is shown to produce high quality speaker separation, significantly better than a CASA method [79] and an NMF method for speaker-independent separation.
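The low rank formulation mentioned above can be sketched as follows, assuming the cost is the squared Frobenius norm of the difference between the estimated affinity matrix $VV^T$ and the ideal affinity matrix $YY^T$. Expanding the norm gives $\|V^TV\|_F^2 - 2\|V^TY\|_F^2 + \|Y^TY\|_F^2$, which avoids forming any N × N matrix for N T-F units. The function names, dimensions, and random data are illustrative.

```python
import numpy as np

def affinity_loss_naive(V, Y):
    """||V V^T - Y Y^T||_F^2 with explicit N x N affinity matrices."""
    return np.sum((V @ V.T - Y @ Y.T) ** 2)

def affinity_loss_low_rank(V, Y):
    """Same value using only D x D, D x C, and C x C products."""
    return (np.sum((V.T @ V) ** 2)
            - 2.0 * np.sum((V.T @ Y) ** 2)
            + np.sum((Y.T @ Y) ** 2))

# Toy setup (hypothetical sizes): N T-F units, D-dimensional embeddings, C speakers.
rng = np.random.default_rng(0)
N, D, C = 50, 8, 2
labels = rng.integers(0, C, size=N)   # dominant speaker of each T-F unit
Y = np.eye(C)[labels]                 # N x C indicator matrix
V = rng.standard_normal((N, D))
V /= np.linalg.norm(V, axis=1, keepdims=True)  # unit-norm embeddings

loss_naive = affinity_loss_naive(V, Y)
loss_low_rank = affinity_loss_low_rank(V, Y)
```

The two functions return the same value, but the second needs memory that grows with D and C rather than with N², which matters because the number of T-F units in a segment is typically far larger than the embedding dimension.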

A recent extension of deep clustering is the deep attractor network [25], which also learns high-dimensional embeddings for T-F units. Unlike deep clustering, this deep network creates attractor points akin to cluster centers in order to pull T-F units dominated by different speakers to their corresponding attractors. Speaker separation is then performed as mask estimation by comparing embedded points and each attractor. The results in [25] show that the deep attractor network yields better results than deep clustering.
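One plausible reading of attractor-based mask estimation is sketched below. It assumes that, during training, each attractor is the mean embedding of the T-F units dominated by one speaker, and that masks are obtained from a softmax over the dot products between embeddings and attractors. These specifics, the function names, and the toy data are assumptions of this sketch and should be checked against [25].

```python
import numpy as np

def attractors_from_assignments(V, Y):
    """Attractor of each speaker: mean embedding of the T-F units assigned to it."""
    counts = Y.sum(axis=0, keepdims=True)           # shape (1, C)
    return (V.T @ Y / np.maximum(counts, 1.0)).T    # shape (C, D)

def masks_from_attractors(V, attractors):
    """Soft masks from a softmax over embedding-attractor dot products."""
    logits = V @ attractors.T                       # shape (N, C)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)

# Toy embeddings: two well-separated groups of T-F units (hypothetical data).
rng = np.random.default_rng(0)
labels = np.array([0, 1] * 10)
centers = np.array([[3.0, 0.0], [0.0, 3.0]])
V = centers[labels] + 0.1 * rng.standard_normal((20, 2))
Y = np.eye(2)[labels]

attractors = attractors_from_assignments(V, Y)
masks = masks_from_attractors(V, attractors)
```

In contrast to the K-means step of deep clustering, the masks here are a differentiable function of the embeddings, so a reconstruction error on the masked mixture can be used directly for training.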

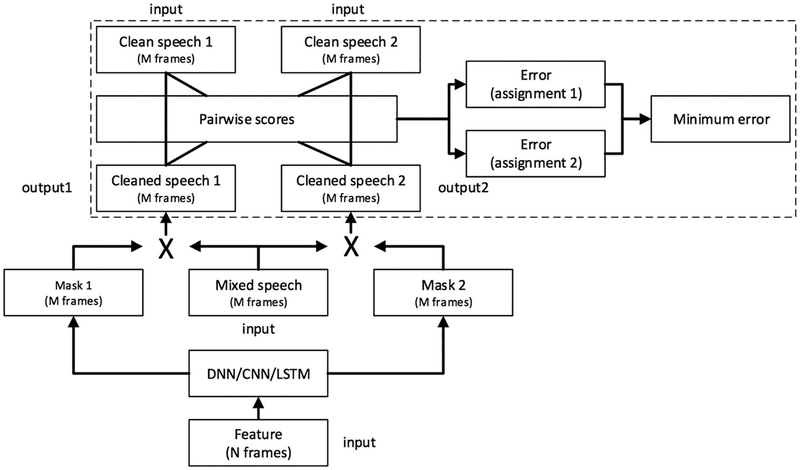

While clustering-based methods naturally lead to speaker-independent models, DNN based masking/mapping methods tie each output of the DNN to a specific speaker, and lead to speaker-dependent models. For example, mapping based methods minimize the following cost function:

$$J = \sum_{k}\sum_{t} \big\| |\tilde{S}_k(t)| - |S_k(t)| \big\|^2 \tag{13}$$

where $|\tilde{S}_k(t)|$ and $|S_k(t)|$ denote estimated and actual spectral magnitudes for speaker k, respectively, and t denotes time frame. To untie DNN outputs from speakers and train a speaker-independent model using a masking or mapping technique, Yu et al. [202] recently proposed permutation-invariant training, which is shown in Fig. 14. For two-speaker separation, a DNN is trained to output two masks, each of which is applied to noisy speech to produce a source estimate. During DNN training, the cost function is dynamically calculated. If we assign each output to a reference speaker $|S_k(t)|$ in training data, there are two possible assignments, each of which is associated with an MSE. The assignment with the lower MSE is chosen and the DNN is trained to minimize the corresponding MSE. During both training and inference, the DNN takes a segment or multiple frames of features, and estimates two sources for the segment. Since the two outputs of the DNN are not tied to any speaker, the same speaker may switch from one output to another across consecutive segments. Therefore, the estimated segment-level sources need to be sequentially organized unless segments are as long as utterances. Although permutation-invariant training is much simpler, its speaker separation results are shown to match those obtained with deep clustering [101], [202].
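The dynamic calculation of the cost can be sketched in a few lines of NumPy. The sketch evaluates the MSE for every assignment of outputs to reference speakers and keeps the lowest one. The function name `pit_mse`, the array shapes, and the toy data are illustrative, and an actual training setup would compute this inside an automatic differentiation framework.

```python
import itertools
import numpy as np

def pit_mse(estimates, references):
    """Lowest MSE over all output-to-speaker assignments, and that assignment.

    estimates, references: arrays of shape (K, T, F) holding spectral magnitudes.
    best_perm[k] is the index of the reference speaker assigned to output k.
    """
    K = estimates.shape[0]
    best_cost, best_perm = np.inf, None
    for perm in itertools.permutations(range(K)):
        cost = np.mean((estimates - references[list(perm)]) ** 2)
        if cost < best_cost:
            best_cost, best_perm = cost, perm
    return best_cost, best_perm

# Toy segment: the two outputs hold the speakers in swapped order (hypothetical data).
rng = np.random.default_rng(0)
references = rng.random((2, 6, 4))
estimates = references[::-1] + 0.01 * rng.standard_normal((2, 6, 4))

best_cost, best_perm = pit_mse(estimates, references)
fixed_cost = np.mean((estimates - references) ** 2)  # cost with outputs tied to speakers
```

In this example the swapped assignment is selected, and its cost is far lower than the cost obtained when each output is tied to a fixed speaker. The loop visits K! assignments, which is negligible for two speakers but grows quickly with the number of talkers.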

Fig. 14.

Two-talker separation with permutation-invariant training (from [202]).

It should be noted that, although speaker separation evaluations typically focus on two-speaker mixtures, the separation framework can be generalized to separating more than two talkers. For example, the diagrams in both Figs. 12 and 14 can be straightforwardly extended to handle, say, three-talker mixtures. One can also train target-independent models using multi-speaker mixtures. For speaker-independent separation, deep clustering [69] and permutation-invariant training [101] are both formulated for multi-talker mixtures and evaluated on such data. Scaling deep clustering from mixtures of two speakers to more than two is more straightforward than scaling permutation-invariant training.

An insight from the body of work overviewed in this speaker separation subsection is that a DNN model trained with many pairs of different speakers is able to separate a pair of speakers never included in training, a case of speaker-independent separation, but only at the frame level. For speaker-independent separation, the key issue is how to group well-separated speech signals at individual frames (or segments) across time. This is precisely the issue of sequential organization, which is much investigated in CASA [172]. Permutation-invariant training may be considered as imposing sequential grouping constraints during DNN training. On the other hand, typical CASA methods utilize pitch contours, vocal tract characteristics, rhythm or prosody, and even common spatial direction when multiple sensors are available, and these methods do not usually involve supervised learning. It seems to us that integrating traditional CASA techniques and deep learning is a fertile ground for future research.

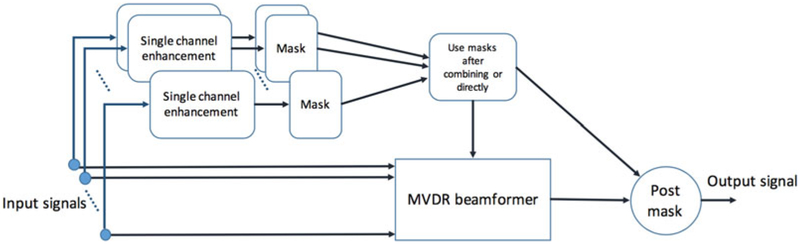

VI. ARRAY SEPARATION ALGORITHMS

An array of microphones provides multiple monaural recordings, which contain information indicative of the spatial origin of a sound source. When sound sources are spatially separated, with sensor array inputs one may localize sound sources and then extract the source from the target location or direction. Traditional approaches to source separation based on spatial information include beamforming, as mentioned in Section I, and independent component analysis [3], [8], [85]. Sound localization and location-based grouping are among the classic topics in auditory perception and CASA [12], [15], [172].

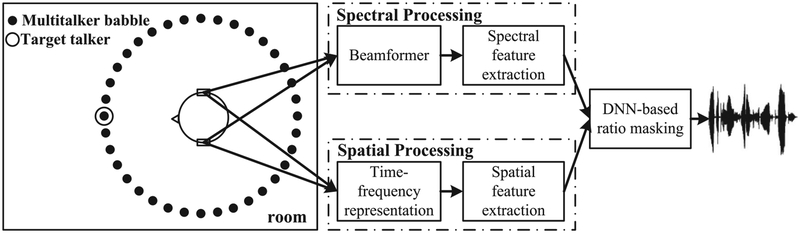

A. Separation Based on Spatial Feature Extraction

The first study in supervised speech segregation was conducted by Roman et al. [141] in the binaural domain. This study performs supervised classification to estimate the IBM based on two binaural features: ITD and ILD, both extracted from individual T-F unit pairs from the left-ear and right-ear cochleagrams. Note that, in this case, the IBM is defined on the noisy speech at a single ear (reference channel). Classification is based on maximum a posteriori (MAP) probability where the likelihood is given by a density estimation technique. Another classic two-sensor separation technique, DUET (Degenerate Unmixing Estimation Technique), was published by Yilmaz and Rickard [199] at about the same time. DUET is based on unsupervised clustering, and the spatial features used are phase and amplitude differences between the two microphones. The contrast between classification and clustering in these studies is a persistent theme and anticipates similar contrasts in later studies, e.g., binary masking [71] versus clustering [72] for beamforming (see Section VI.B), and deep clustering [69] versus mask estimation [101] for talker-independent speaker separation (see Section V.D).
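To make the two binaural features concrete, the following sketch computes a level difference and a time difference for one pair of short signal frames. It assumes that ILD is the left-to-right energy ratio in dB and that ITD is the lag that maximizes the cross-correlation. The cited studies extract these features from T-F unit pairs of cochleagrams, whereas this sketch operates on raw frames; the function names and the toy signals are illustrative.

```python
import numpy as np

def ild_db(left, right, eps=1e-12):
    """Interaural level difference: left-to-right energy ratio in dB."""
    return 10.0 * np.log10((np.sum(left ** 2) + eps) / (np.sum(right ** 2) + eps))

def itd_samples(left, right, max_lag):
    """Lag in samples that maximizes the cross-correlation.

    A positive value means that the left signal lags the right signal.
    """
    n = len(left)
    lags = np.arange(-max_lag, max_lag + 1)
    xcorr = [np.dot(left[max(l, 0):n + min(l, 0)],
                    right[max(-l, 0):n + min(-l, 0)]) for l in lags]
    return int(lags[int(np.argmax(xcorr))])

# Toy frames: the left signal is the right signal delayed by 3 samples and halved.
rng = np.random.default_rng(0)
src = rng.standard_normal(260)
right = src[4:260]
left = 0.5 * src[1:257]

itd = itd_samples(left, right, max_lag=16)
ild = ild_db(left, right)
```

For this toy pair the estimated delay is 3 samples and the level difference is close to −6 dB, consistent with a source located towards the right sensor. A classifier or a clustering algorithm would then operate on such feature pairs, one per T-F unit.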

The use of spatial information afforded by an array as features in deep learning is a straightforward extension of the earlier use of DNN in monaural separation; one simply substitutes spatial features for monaural features. Indeed, this way of leveraging spatial information provides a natural framework for integrating monaural and spatial features for source separation, which is a point worth emphasizing as traditional research tends to pursue array separation without considering monaural grouping. It is worth noting that human auditory scene analysis integrates monaural and binaural analysis in a seamless fashion, taking advantage of whatever discriminant information exists in a particular environment [15], [30], [172].

The first study to employ DNN for binaural separation was published by Jiang et al. [90]. In this study, the signals from two ears (or microphones) are passed to two corresponding auditory filterbanks. ITD and ILD features are extracted from T-F unit pairs and sent to a subband DNN for IBM estimation, one DNN for each frequency channel. In addition, a monaural feature (GFCC, see Table I) is extracted from the left-ear input. A number of conclusions can be drawn from this study. Perhaps most important is the observation that the trained DNN generalizes well to untrained spatial configurations of sound sources. A spatial configuration refers to a specific placement of sound sources and sensors in an acoustic environment. This is key to the use of supervised learning as there are infinitely many configurations and a training set cannot enumerate them. DNN based binaural separation is found to generalize well to RIRs and reverberation times. It is also observed that the incorporation of the monaural feature improves separation performance, especially when the target and interfering sources are co-located or close to each other.